SOFTWARE TESTING Definition The process of finding errors

- Slides: 33

SOFTWARE TESTING

- Definition: the process of finding errors in software so that it works according to the end user's requirements.
- Testing by itself is not a way of ensuring quality; its purpose is to detect defects in the software product.
- Other methods are also employed to achieve the same functions.

VERIFICATION AND VALIDATION

The idea is to catch defects before the testing phase.

- Verification: "Are we building the product right?" Procedures carried out to prevent defects before they take shape (proactive).
- Validation: "Are we building the right product?" Procedures carried out to check whether the product is built as per the specification (reactive). Includes unit testing, integration testing and system testing.

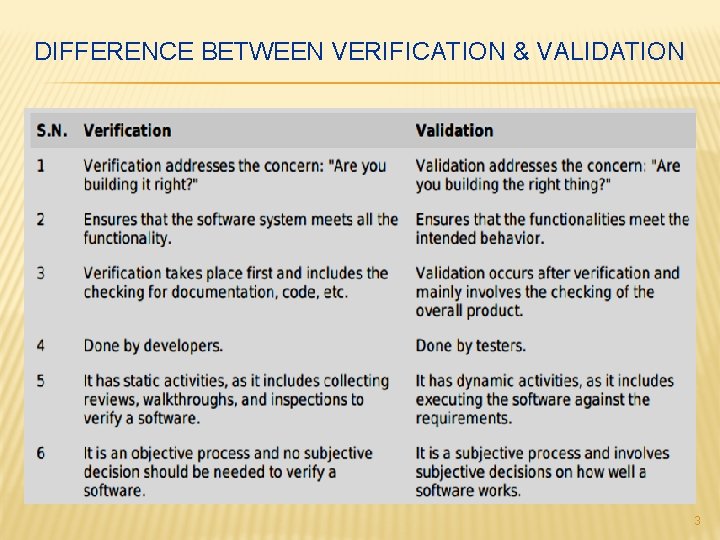

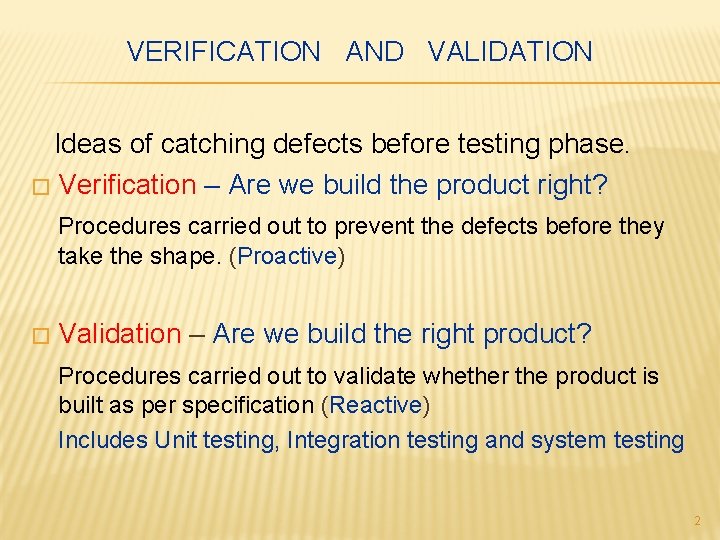

DIFFERENCE BETWEEN VERIFICATION & VALIDATION

TESTER'S ROLE

- Reveals defects in the software
- Finds weak points in the software
- Detects inconsistent behavior of the software
- Checks whether the software works as expected
- Needs very good software development and testing skills
- Should work with the developers
- Should have the correct ratio with industry and project personnel
- Works with the requirements engineer to make sure the requirements are testable

TESTERS SHOULD HAVE KNOWLEDGE OF

- Programming
- Test principles
- Process
- Measurements
- Plans
- Tools and methods, and how to apply them to testing tasks

ROLE OF PROCESS IN S/W QUALITY

Software development is similar to an engineering activity, in that the software is:

- Designed
- Implemented
- Evaluated
- Maintained

Software development must be carried out in a consistent and predictable manner. Testing is a quality-related process, and it follows engineering principles.

BASIC DEFINITIONS

1. Error: a mistake or misunderstanding on the part of the developer.
2. Defect: an irregularity in the software that leads it to behave incorrectly.
3. Failure: the inability of the software or a component to perform its required functions.
4. Test case: involves (1) a set of inputs, (2) execution conditions, (3) expected outputs.
5. Test: a group of related test cases and test procedures.
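As a rough illustration of definitions 4 and 5, a test case can be modeled as a record holding its inputs, execution conditions and expected output, and a test as a group of such records. This is only a sketch: the names `SoftwareTestCase`, `run_test_case` and the sample function `add` are assumptions made for the example and do not come from the slides.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class SoftwareTestCase:
    """One test case: inputs, execution conditions and expected output."""
    name: str
    inputs: tuple
    expected_output: Any
    execution_conditions: dict = field(default_factory=dict)


def run_test_case(func: Callable, case: SoftwareTestCase) -> bool:
    """Run func on the case inputs and compare with the expected output."""
    actual = func(*case.inputs)
    return actual == case.expected_output


def add(a: int, b: int) -> int:
    """Hypothetical software under test."""
    return a + b


# A "test" in the sense of definition 5: a group of related test cases.
related_cases = [
    SoftwareTestCase("two positives", (2, 3), 5, {"platform": "any"}),
    SoftwareTestCase("with zero", (0, 7), 7),
    SoftwareTestCase("negative operand", (-4, 1), -3),
]

results = {case.name: run_test_case(add, case) for case in related_cases}
```

A case whose expected output does not match the actual output evaluates to `False`, which is how a failure would be observed through such a record.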

BASIC DEFINITIONS CONT.

7. Test bed: an environment containing the hardware and software needed to test a software component or system.
8. Software quality: the degree to which a system, component or process meets specified requirements.
9. Metric: a quantitative measure of the degree to which a system, component or process has a given attribute.
10. Quality metric: a quantitative measure of the degree to which an item possesses a given quality attribute. Quality attributes include correctness, reliability, usability, integrity, portability, maintainability and interoperability.

WHO DOES TESTING?

It depends on the process and the associated stakeholders of the project. The following professionals take part in testing, each in their own capacity:

- Software tester
- Software developer
- Project lead/manager
- End user

WHEN TO START TESTING?

An early start reduces the cost and time of rework and helps produce error-free software. In the SDLC, testing can start at requirements gathering and continue until the deployment of the software. It also depends on the SDLC model being used. For example, in the Waterfall model, formal testing is conducted in the testing phase; in the incremental model, testing is performed at the end of every iteration and the whole application is tested at the end. Testing is done in different forms at every phase of the SDLC.

WHEN TO STOP TESTING?

It is difficult to determine when to stop testing, as testing is a never-ending process and no one can claim that software is 100% tested. Aspects to consider for stopping the testing process:

- Testing deadlines
- Completion of test case execution
- Completion of functional and code coverage to a certain point
- Bug rate falls below a certain level, or no high-priority bugs are identified
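The stopping aspects listed above can be sketched as a small check that reports which criteria currently hold. The thresholds (80% coverage, fewer than 2 bugs per week) and all names such as `ProjectStatus` and `stopping_criteria_met` are hypothetical values chosen for illustration; the slides do not prescribe any particular numbers.

```python
from dataclasses import dataclass


@dataclass
class ProjectStatus:
    """Snapshot of the testing effort (all fields are illustrative)."""
    deadline_reached: bool
    executed_cases: int
    planned_cases: int
    coverage_percent: float
    bugs_found_per_week: int
    open_high_priority_bugs: int


def stopping_criteria_met(status, min_coverage=80.0, max_bug_rate=2):
    """Return the names of the stopping criteria that currently hold."""
    met = []
    if status.deadline_reached:
        met.append("deadline")
    if status.executed_cases >= status.planned_cases:
        met.append("test execution complete")
    if status.coverage_percent >= min_coverage:
        met.append("coverage target")
    # The slide joins these two conditions with "or".
    if (status.bugs_found_per_week < max_bug_rate
            or status.open_high_priority_bugs == 0):
        met.append("low bug rate or no high-priority bugs")
    return met


status = ProjectStatus(
    deadline_reached=False,
    executed_cases=180,
    planned_cases=200,
    coverage_percent=85.0,
    bugs_found_per_week=1,
    open_high_priority_bugs=0,
)
criteria = stopping_criteria_met(status)
```

How many of these criteria must hold before testing stops is a project decision; the function only reports them.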

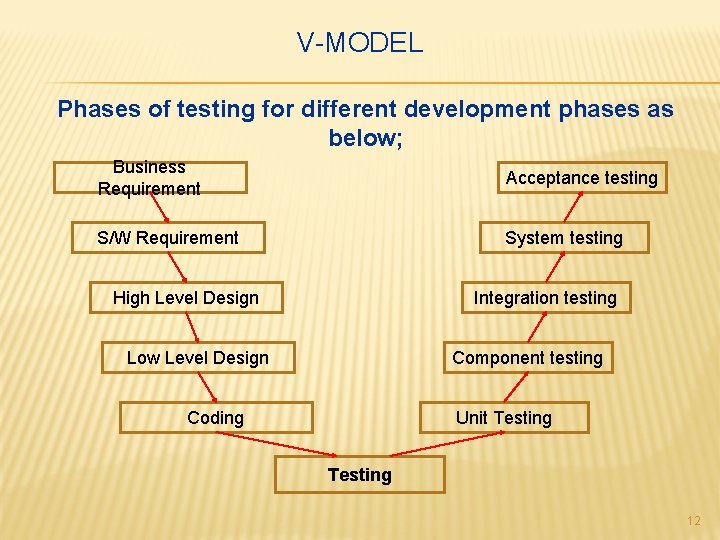

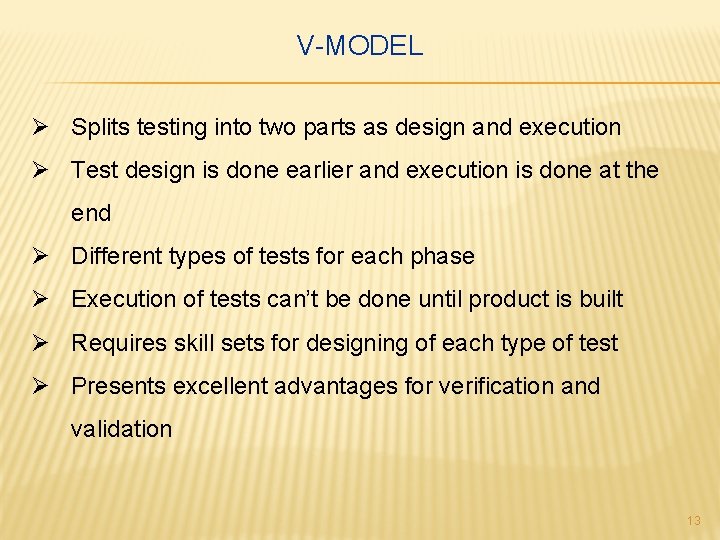

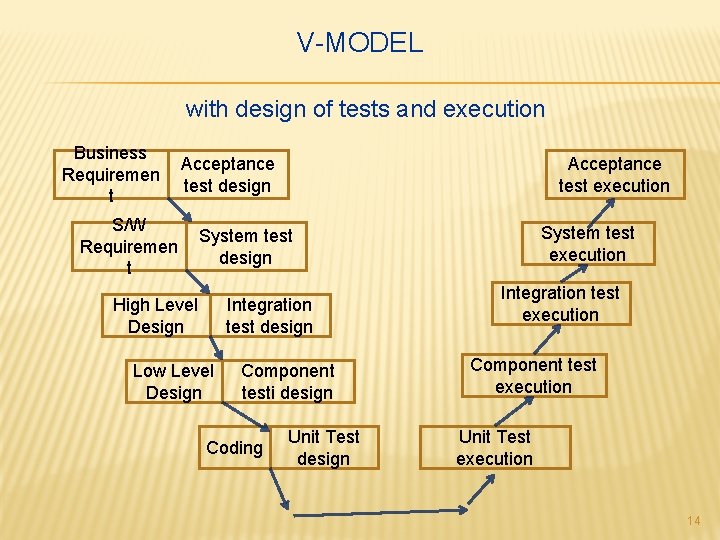

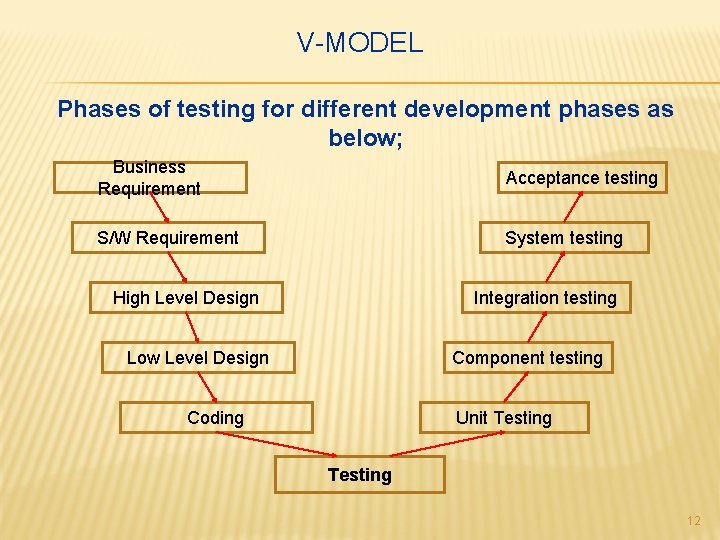

V-MODEL

Phases of testing for the different development phases:

- Business requirement: acceptance testing
- S/W requirement: system testing
- High level design: integration testing
- Low level design: component testing
- Coding: unit testing

V-MODEL

- Splits testing into two parts: design and execution
- Test design is done early and execution is done at the end
- Different types of tests for each phase
- Execution of tests can't be done until the product is built
- Requires skill sets for designing each type of test
- Presents excellent advantages for verification and validation

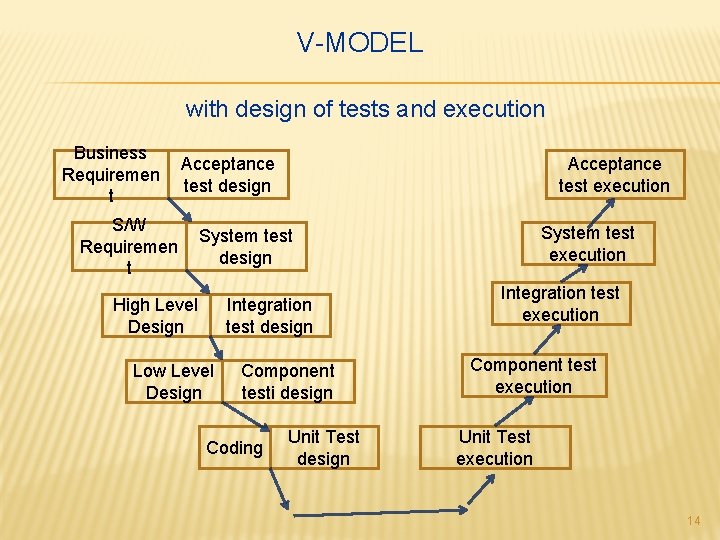

V-MODEL with design of tests and execution

Test design, done alongside each development phase:

- Business requirement: acceptance test design
- S/W requirement: system test design
- High level design: integration test design
- Low level design: component test design
- Coding: unit test design

Test execution, done once the product is built:

- Acceptance test execution
- Integration test execution
- Component test execution
- Unit test execution

SOFTWARE TESTING PRINCIPLES

- Exhaustive testing is not possible
- Defect clustering
- Pesticide paradox
- Testing shows the presence of defects
- Absence-of-errors fallacy
- Early testing
- Testing is context dependent

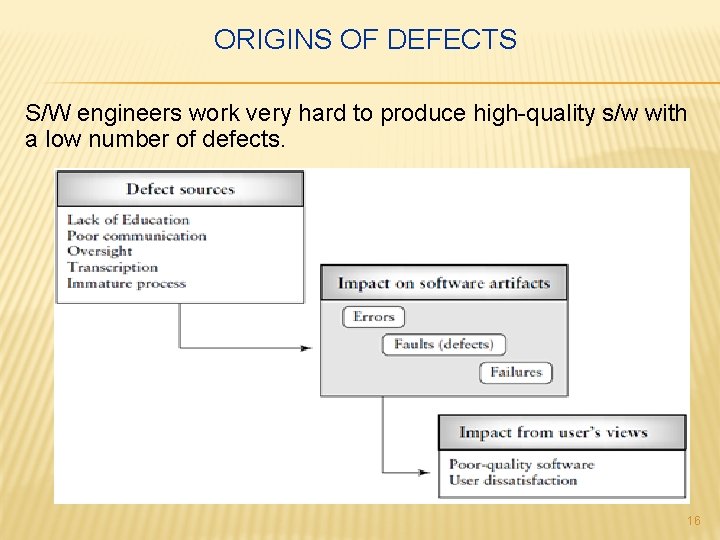

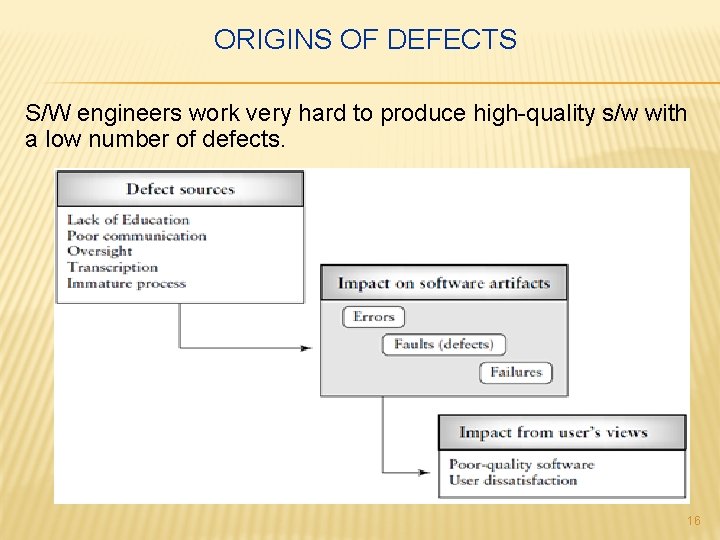

ORIGINS OF DEFECTS

Software engineers work very hard to produce high-quality software with a low number of defects.

- Education: the software engineer did not have the proper educational background to prepare the software artifact.
- Communication: the software engineer was not informed about something by a colleague.
- Oversight: the software engineer omitted to do something.
- Transcription: the software engineer knows what to do, but makes a mistake in doing it.
- Process: the process used misdirected the software engineer's actions.

HYPOTHESES

A tester develops hypotheses about possible defects and then designs test cases based on them. The hypotheses are used to:

- Design test cases
- Design test procedures
- Assemble test sets
- Select the testing levels suitable for the tests
- Evaluate the results of the tests

DEFECT REPOSITORY

Software organizations need to create a defect database. It supports storage and retrieval of defect data from all projects in a centrally accessible location.

- Defects can be classified in many ways.
- Some defects will fit into more than one class or category.
- The defect types and their frequency of occurrence should be used in test planning and test design.

The four classes of defects are as follows:

1. Requirements and specifications defects
2. Design defects
3. Code defects
4. Testing defects
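A defect repository of this kind can be sketched as a small in-memory store that records defects per project and reports how often each class occurs. This is a simplified illustration, not a prescribed design: the names `DefectRecord` and `DefectRepository`, the project name and the sample records are assumptions, and each record carries a single class even though, as noted above, a real defect may fit more than one.

```python
from collections import Counter
from dataclasses import dataclass

# The four defect classes named on the slide.
DEFECT_CLASSES = ("requirements/specification", "design", "code", "testing")


@dataclass(frozen=True)
class DefectRecord:
    project: str
    defect_class: str
    defect_type: str
    description: str


class DefectRepository:
    """Central store for defect data from all projects."""

    def __init__(self):
        self._records = []

    def add(self, record):
        if record.defect_class not in DEFECT_CLASSES:
            raise ValueError(f"unknown defect class: {record.defect_class}")
        self._records.append(record)

    def find(self, defect_class):
        """Retrieve all records of one defect class."""
        return [r for r in self._records if r.defect_class == defect_class]

    def frequency_by_class(self):
        """Frequency of occurrence per class, usable in test planning."""
        return Counter(r.defect_class for r in self._records)


repo = DefectRepository()
repo.add(DefectRecord("coin", "design", "data", "wrong value in coin_values"))
repo.add(DefectRecord("coin", "design", "control", "loop stops one step early"))
repo.add(DefectRecord("coin", "code", "algorithmic", "no check for negative input"))
frequencies = repo.frequency_by_class()
```

The frequency counts are the kind of data that the slide suggests feeding back into test planning and test design.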

1. REQUIREMENTS AND SPECIFICATIONS DEFECTS

Defects injected in early phases can be very difficult to remove in later phases. Because many requirements documents are written in natural language, they may become:

- Ambiguous
- Contradictory
- Unclear
- Redundant
- Imprecise

1. Functional Description Defects: the description of what the product does, and how it should behave (inputs/outputs), is incorrect, ambiguous, and/or incomplete.
2. Feature Defects: feature descriptions are missing, incorrect, incomplete, or unnecessary.
3. Feature Interaction Defects: the description of how the features should interact with each other is incorrect.
4. Interface Description Defects: these occur in the description of how the target software is to interface with external software, hardware, and users.

2. DESIGN DEFECTS

Design defects occur when the following are incorrectly designed:

- System components
- Interactions between system components
- Interactions between the components and outside software/hardware, or users
- Algorithms, control, logic, data elements, module interface descriptions, and external software/hardware/user interface descriptions

1. Algorithmic and Processing Defects: the processing steps in the algorithm, as described by the pseudo code, are incorrect.
2. Control, Logic, and Sequence Defects: the logic flow in the pseudo code is not correct.
3. Data Defects: associated with incorrect design of data structures.
4. Module Interface Description Defects: incorrect or inconsistent usage of parameter types, an incorrect number of parameters, or incorrect ordering of parameters.
5. Functional Description Defects: include incorrect, missing, or unclear design elements.
6. External Interface Description Defects: derived from incorrect design descriptions for interfaces with components, external software systems, databases, and hardware devices.

3. CODING DEFECTS

Coding defects are derived from errors in implementing the code. Some coding defects come from a failure to understand programming language constructs, and from miscommunication with the designers.

1. Algorithmic and Processing Defects: include unchecked overflow and underflow conditions, comparing inappropriate data types, converting one data type to another, incorrect ordering of arithmetic operators, misuse or omission of parentheses, precision loss, and incorrect use of signs.
2. Control, Logic and Sequence Defects: include incorrect expression of case statements, incorrect iteration of loops, and missing paths.
3. Typographical Defects: syntax errors and incorrectly spelled variable names, which are usually detected by a compiler, self-reviews, or peer reviews.
4. Initialization Defects: initialization statements are omitted or are incorrect. This may happen because of misunderstandings or lack of communication between programmers and designers, carelessness, or misunderstanding of the programming environment.
5. Data-Flow Defects: occur when the code does not follow the necessary data-flow conditions.

6. Data Defects: indicated by incorrect implementation of data structures.
7. Module Interface Defects: occur because of using incorrect or inconsistent parameter types, an incorrect number of parameters, or improper ordering of the parameters.
8. Code Documentation Defects: the code documentation does not describe what the program actually does, or is incomplete or ambiguous.
9. External Hardware, Software Interfaces Defects: occur because of problems related to system calls, links to databases, input/output sequences, memory usage, resource usage, interrupts and exception handling, data exchanges with hardware, protocols, formats, interfaces with build files, and timing sequences.
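Two of the coding defect types listed above can be shown in a few lines. The sketch below pairs a defective and a corrected version of an omitted-parentheses defect (algorithmic and processing) and of a wrong starting value (initialization). The function names are made up for this illustration.

```python
def average_defective(a, b):
    # Algorithmic defect: parentheses omitted, so only b is divided by 2.
    return a + b / 2


def average_fixed(a, b):
    return (a + b) / 2


def total_defective(values):
    # Initialization defect: the accumulator starts at 1 instead of 0.
    total = 1
    for value in values:
        total += value
    return total


def total_fixed(values):
    total = 0
    for value in values:
        total += value
    return total


print(average_defective(4, 6), average_fixed(4, 6))      # 7.0 5.0
print(total_defective([1, 2, 3]), total_fixed([1, 2, 3]))  # 7 6
```

Both defective versions run without any error message, which is why such defects usually have to be found by test cases with known expected outputs or by reviews, not by the compiler or interpreter.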

4. TESTING DEFECTS

Test plans, test cases, test harnesses, and test procedures can also contain defects. Defects in test plans are best detected using review techniques.

1. Test Harness Defects: at the unit and integration levels, auxiliary code must be developed. This is called the test harness or scaffolding code. The test harness code should be carefully designed, implemented, and tested, since it is a work product and can be reused when new releases of the software are developed.
2. Test Case Design and Test Procedure Defects: consist of incorrect, incomplete, missing, or inappropriate test cases and test procedures.
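Test harness code commonly takes the form of a driver that calls the unit under test and a stub that stands in for a component the unit depends on. The slides do not name these two parts, so the sketch below is an assumption about typical scaffolding; `price_with_tax`, `TaxServiceStub` and `driver` are hypothetical names.

```python
def price_with_tax(amount, tax_service):
    """Unit under test: depends on a tax service that may not exist yet."""
    rate = tax_service.rate_for("IN")
    return round(amount * (1 + rate), 2)


class TaxServiceStub:
    """Stub: replaces the real collaborator and returns a canned rate."""

    def __init__(self, rate):
        self.rate = rate
        self.calls = []

    def rate_for(self, region):
        self.calls.append(region)  # record the call so it can be inspected
        return self.rate


def driver():
    """Driver: feeds test inputs to the unit and collects pass/fail results."""
    cases = [
        (100.0, 0.18, 118.0),
        (0.0, 0.18, 0.0),
        (50.0, 0.0, 50.0),
    ]
    results = []
    for amount, rate, expected in cases:
        stub = TaxServiceStub(rate)
        results.append(price_with_tax(amount, stub) == expected)
    return results


harness_results = driver()
```

Because the harness is itself code, a wrong expected value in `cases` or a stub that returns the wrong rate would be a test harness defect in the sense described above.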

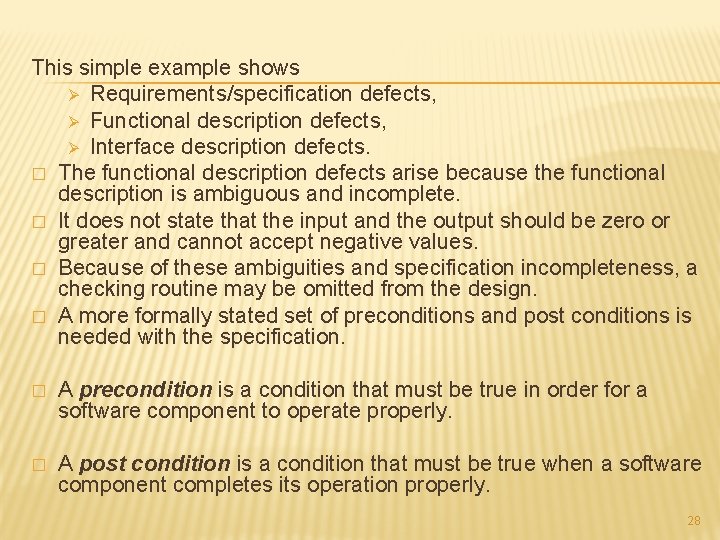

DEFECT EXAMPLE: THE COIN PROBLEM

Specification for the program calculate_coin_value:

This program calculates the total rupees value for a set of coins. The user inputs the number of 25 p, 50 p and 1 rs coins. There are six different denominations of coins. The program outputs the total rupees and paise value of the coins to the user.

- Input: number_of_coins is an integer
- Output: number_of_rupees is an integer; number_of_paise is an integer

This simple example shows:

- Requirements/specification defects
- Functional description defects
- Interface description defects

Points to note:

- The functional description defects arise because the functional description is ambiguous and incomplete.
- It does not state that the input and the output should be zero or greater, and that negative values cannot be accepted.
- Because of these ambiguities and the incompleteness of the specification, a checking routine may be omitted from the design.
- A more formally stated set of preconditions and postconditions is needed with the specification.
- A precondition is a condition that must be true in order for a software component to operate properly.
- A postcondition is a condition that must be true when a software component completes its operation properly.
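The preconditions and postconditions described above can be made explicit in code. The following is a minimal sketch, assuming only the three denominations named in the specification (25 p, 50 p and 1 rs, that is 25, 50 and 100 paise); the signature, the error messages and the use of `ValueError` are choices made for this illustration and are not part of the original specification.

```python
def calculate_coin_value(counts, coin_values=(25, 50, 100)):
    """Return (number_of_rupees, number_of_paise) for the given coin counts.

    Precondition: one non-negative integer count per denomination.
    Postcondition: rupees >= 0, 0 <= paise < 100, and the two parts
    together equal the total value in paise.
    """
    # Precondition checks (the checking routine the specification omits).
    if len(counts) != len(coin_values):
        raise ValueError("one count is required per denomination")
    for count in counts:
        if isinstance(count, bool) or not isinstance(count, int):
            raise ValueError("coin counts must be integers")
        if count < 0:
            raise ValueError("coin counts must be zero or greater")

    total_paise = sum(c * v for c, v in zip(counts, coin_values))
    rupees, paise = divmod(total_paise, 100)

    # Postcondition checks.
    assert rupees >= 0 and 0 <= paise < 100
    assert rupees * 100 + paise == total_paise
    return rupees, paise


print(calculate_coin_value((3, 1, 2)))  # (3, 25)
```

With the precondition stated and checked, a negative count is rejected at the input instead of silently producing a meaningless total.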

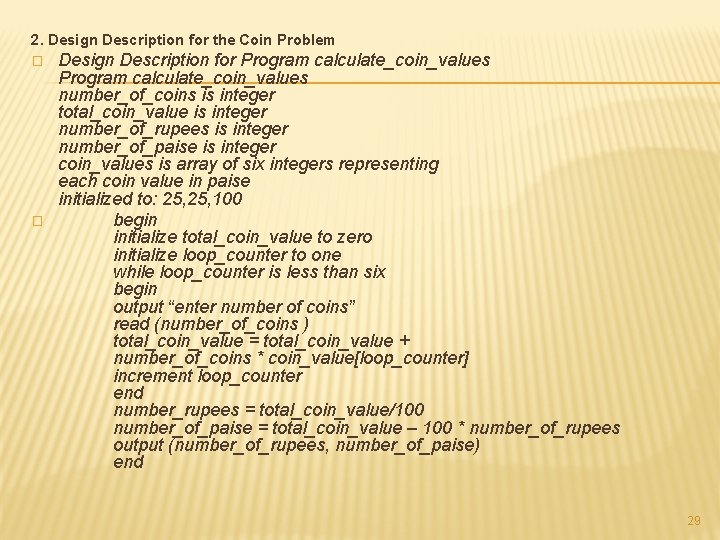

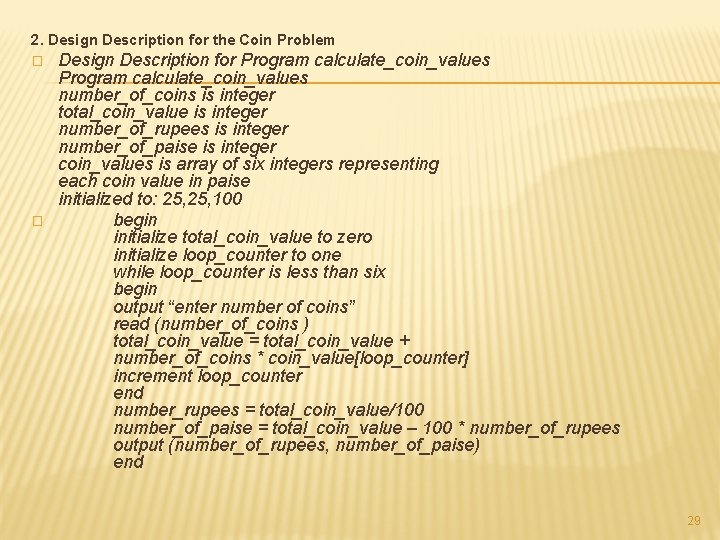

2. Design Description for the Coin Problem

Design Description for Program calculate_coin_values

    number_of_coins is integer
    total_coin_value is integer
    number_of_rupees is integer
    number_of_paise is integer
    coin_values is array of six integers representing each coin value in paise
        initialized to: 25, 100
    begin
        initialize total_coin_value to zero
        initialize loop_counter to one
        while loop_counter is less than six
        begin
            output “enter number of coins”
            read (number_of_coins)
            total_coin_value = total_coin_value + number_of_coins * coin_value[loop_counter]
            increment loop_counter
        end
        number_rupees = total_coin_value/100
        number_of_paise = total_coin_value - 100 * number_of_rupees
        output (number_of_rupees, number_of_paise)
    end

DESIGN DEFECTS IN THE COIN PROBLEM

- Control, logic, and sequencing defects: the defect in this subclass arises from an incorrect "while" loop condition (it should be less than or equal to six).
- Algorithmic and processing defects: these arise from the lack of error checks for incorrect and/or invalid inputs, the lack of a path where users can correct erroneous inputs, and the lack of a path for recovery from input errors.
- Data defects: this defect relates to an incorrect value for one of the elements of the integer array coin_values, which should be 25, 50, 100.
- External interface description defects: these are defects arising from the absence of input messages or prompts that introduce the program to the user and request inputs.

3. Coding Defects in the Coin Problem

- Control, logic, and sequence defects: these include the loop variable increment step, which is out of the scope of the loop. Note that the incorrect loop condition (i<6) is carried over from the design and should be counted as a design defect.
- Algorithmic and processing defects: the division operator may cause problems if negative values are divided, although this problem could be eliminated with an input check.
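Two of the defects above can be checked with a short experiment: the loop condition "less than six" runs one pass too few when the counter starts at one, and dividing a negative total gives different rupee and paise values depending on how the language rounds integer division. The sketch below uses Python, where `//` rounds toward negative infinity, and emulates truncating division (as found in C-family languages) with `int()`. All function names are illustrative.

```python
def design_loop_iterations():
    """Loop as written in the design: while loop_counter is less than six."""
    loop_counter, iterations = 1, 0
    while loop_counter < 6:
        iterations += 1
        loop_counter += 1
    return iterations


def corrected_loop_iterations():
    """Corrected condition: less than or equal to six."""
    loop_counter, iterations = 1, 0
    while loop_counter <= 6:
        iterations += 1
        loop_counter += 1
    return iterations


def split_floor(total_paise):
    """Split using floor division (rounds toward negative infinity)."""
    rupees = total_paise // 100
    return rupees, total_paise - 100 * rupees


def split_truncate(total_paise):
    """Split using truncating division (rounds toward zero)."""
    rupees = int(total_paise / 100)
    return rupees, total_paise - 100 * rupees


print(design_loop_iterations(), corrected_loop_iterations())  # 5 6
print(split_floor(-130), split_truncate(-130))  # (-2, 70) (-1, -30)
```

For non-negative totals both division styles agree, which is why an input check that rejects negative counts removes the problem.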

DEVELOPER/TESTER SUPPORT FOR DEVELOPING A DEFECT REPOSITORY

- Software engineers and test specialists should follow the example of engineers in other disciplines who make use of defect data.
- A requirement for repository development should be a part of testing and/or debugging policy statements.
- Forms and templates should be designed to collect the data.
- Each defect and its frequency of occurrence must be recorded after testing.
- Defect monitoring should be done for each ongoing project.
- The distribution of defects will change when changes are made to the process.

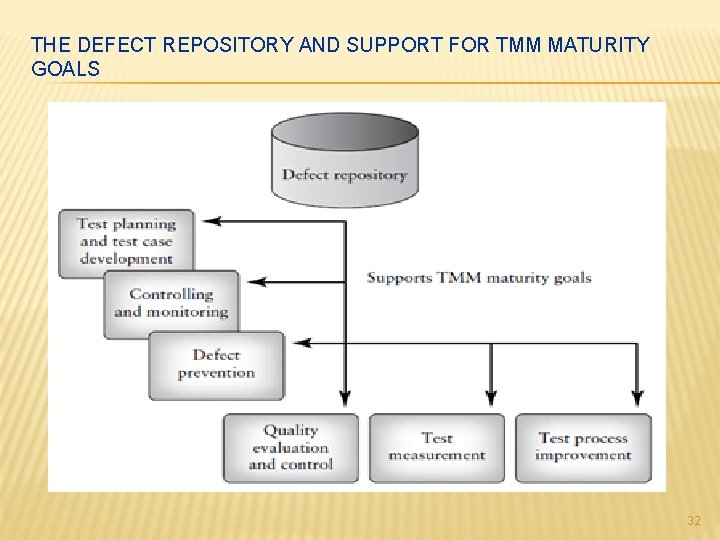

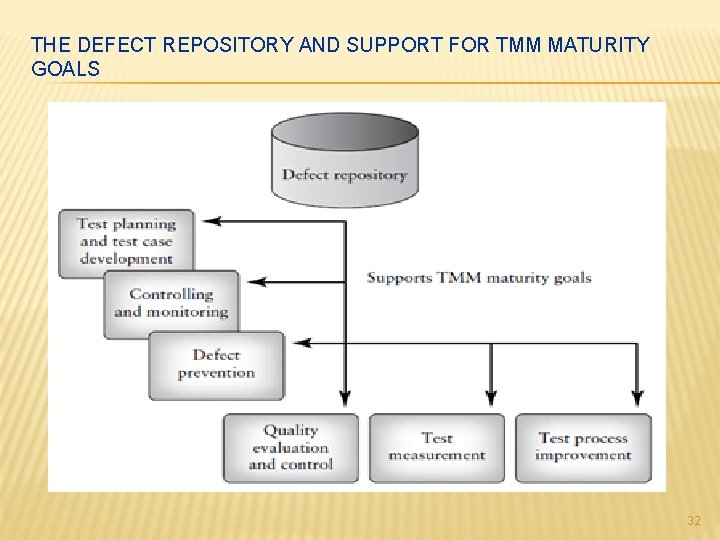

THE DEFECT REPOSITORY AND SUPPORT FOR TMM MATURITY GOALS

The defect data is useful for test planning. It helps a tester to select applicable testing techniques, design the test cases, and allocate the resources needed to detect and remove defects. This allows the tester to estimate testing schedules and costs. The defect data can also support debugging activities. A defect repository can help in implementing several TMM (Testing Maturity Model) maturity goals, including:

- Controlling and monitoring of test
- Software quality evaluation and control
- Test measurement
- Test process improvement