UNIT5 CHAPTER12 EFFICIENT TEST SUITE MANAGEMENT 12 1

- Slides: 82

UNIT-5 CHAPTER-12 EFFICIENT TEST SUITE MANAGEMENT

Ø 12.1 Why does a test suite grow:
§ Test engineers measure the extent to which a criterion is satisfied in terms of coverage; a test set achieves 100% coverage if it completely satisfies the criterion.
§ Coverage is measured in terms of the requirements that are imposed.
§ Partial coverage is defined as the percentage of requirements that are satisfied.
§ Test requirements are specific things that must be satisfied or covered; e.g., for statement coverage, each statement is a requirement; in mutation testing, each mutant is a requirement; and in data flow testing, each DU pair is a requirement.
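Partial coverage is simply a ratio over the set of test requirements. A minimal Python sketch, assuming requirements are represented as a set of labels (the statement labels and the function name `coverage_percent` are hypothetical):

```python
def coverage_percent(requirements, satisfied):
    """Percentage of test requirements satisfied by a test set."""
    reqs = set(requirements)
    if not reqs:
        return 100.0  # assumption: an empty requirement set counts as fully covered
    return 100.0 * len(reqs & set(satisfied)) / len(reqs)


# Statement coverage: every statement is a requirement (labels are hypothetical).
statements = {"S1", "S2", "S3", "S4"}
executed_by_tests = {"S1", "S2", "S4"}
print(coverage_percent(statements, executed_by_tests))  # 75.0
```

Swapping the statement labels for mutant IDs or DU pairs gives mutation or data flow coverage with the same function.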

§ Coverage criteria are used as a stopping point to decide when a program is sufficiently tested.
§ In this case, additional tests are added until the test suite has achieved a specified coverage level according to a specific adequacy criterion.
§ Test suites can also be reused later as the software evolves; such test suite reuse takes the form of regression testing.
§ Running all the test cases in a test suite, however, can require a lot of effort.
§ Because of obsolete and redundant test cases, the size of a test suite continues to grow unnecessarily.
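A test case is redundant when every requirement it covers is also covered by the rest of the suite. The sketch below, assuming coverage is known as a map from test case to the set of requirements it satisfies, keeps tests greedily until every requirement of the full suite is covered. This is the standard greedy set-cover heuristic, swapped in for illustration rather than taken from this chapter, and it does not guarantee the smallest possible subset. The names `minimize`, `suite` and the requirement labels are hypothetical.

```python
def minimize(suite):
    """Greedy minimization: keep tests until every requirement that the full
    suite covers is covered by the kept subset. suite maps test -> set of requirements."""
    remaining = set().union(*suite.values())  # requirements still to cover
    kept = []
    while remaining:
        # Pick the test that covers the most still-uncovered requirements.
        best = max(suite, key=lambda t: len(suite[t] & remaining))
        kept.append(best)
        remaining -= suite[best]
    return kept


# Hypothetical suite: T2 and T4 add nothing once T1 and T3 are kept.
suite = {
    "T1": {"r1", "r2"},
    "T2": {"r2"},
    "T3": {"r3"},
    "T4": {"r1", "r3"},
}
print(minimize(suite))  # ['T1', 'T3']
```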

12.2 Minimizing the test suite and its benefits:
§ A test suite can sometimes grow to an extent that it is nearly impossible to execute.
§ The following are the reasons why minimization is important:
• The release date of the product is near.
• Limited staff is available to execute all the test cases.
• Test equipment is limited or testing tools are unavailable.
§ Minimizing a test suite has the following benefits:
§ As the test suite grows, it can become prohibitively expensive to execute on new versions of the program.

§ Some test cases in the suite no longer contribute to satisfying the coverage criteria, because they are now obsolete or redundant. Through minimization, these redundant test cases are eliminated.
§ Test suite minimization techniques lower costs by reducing a test suite to a minimal subset.
§ Reducing the size of a test suite decreases both the overhead of maintaining the test suite and the number of test cases that must be rerun.

Ø 12.4 Test suite prioritization:
§ The purpose of prioritization is to reduce the set of test cases based on some rational, non-arbitrary criteria.
§ The following priorities can be assigned to the test cases:

§ Priority 1: The test cases must be executed; otherwise there may be worse consequences after the product is released. For example, if the test cases in this category are not executed, critical bugs may appear.
§ Priority 2: The test cases may be executed if time permits.
§ Priority 3: The test case is not important prior to the current release.
§ Priority 4: The test case is never important, as its impact is nearly negligible.
Several goals can become the basis for prioritizing the test cases. Some of them are:
§ Testers or customers may want to get some critical features tested and presented in the first version of the software.

§ Prioritization can be on the basis of the functionality advertised in the market.
§ Increase the rate of fault detection of a test suite, so that faults are revealed earlier.
§ Increase the coverage of coverable code in the system under test at a faster rate.
§ Increase the rate at which high-risk faults are detected by a test suite.
§ Increase the likelihood of revealing faults related to specific code changes.

Ø 12.5 Types of test case prioritization:
General test case prioritization:
§ We prioritize the test cases so that they will be useful over a succession of subsequent modified versions of P, without any knowledge of the modified versions.

§ A general test case prioritization can be performed following the release of a program version during off-peak hours, and the cost of performing the prioritization is amortized over the subsequent releases.
Version-specific test case prioritization:
§ We prioritize the test cases such that they will be useful on a specific version P' of P.

Ø 12.6 Prioritization techniques:
§ Various prioritization techniques may be applied to a test suite with the aim of meeting a particular prioritization goal.
§ For example, in an attempt to increase the rate of fault detection of a test suite, we might prioritize the test cases in terms of their increasing cost per coverage of code components.
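Ordering by increasing cost per coverage can be sketched as a single sort. This assumes each test case has a known execution cost and a count of the code components it covers; the costs, counts and the function name below are hypothetical.

```python
def order_by_cost_per_coverage(tests):
    """tests maps name -> (execution cost, number of code components covered).
    Returns names in increasing order of cost per covered component."""
    def cost_per_component(name):
        cost, covered = tests[name]
        # A test that covers nothing gets an infinite ratio, so it goes last.
        return cost / covered if covered else float("inf")
    return sorted(tests, key=cost_per_component)


# Hypothetical costs (e.g. seconds) and component counts.
tests = {"T1": (10.0, 5), "T2": (3.0, 3), "T3": (12.0, 12), "T4": (8.0, 2)}
print(order_by_cost_per_coverage(tests))  # ['T2', 'T3', 'T1', 'T4']
```

T2 and T3 tie at 1.0 per component; `sorted` is stable, so they keep their input order.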

Prioritization for regression test suite:
§ This category prioritizes the test suite of regression testing.
§ This matters because regression testing is performed whenever there is a change in the software.
Prioritization for system test suite:
§ This category prioritizes the test suite of system testing.
§ The test cases of system testing are prioritized based on several criteria: risk analysis, user feedback, fault-detection rate, etc.

Ø 12.6.1 Coverage-based test case prioritization:
§ This type of prioritization is based on the coverage of code, such as statement coverage, branch coverage, etc., and on the fault-exposing potential of the test cases.

Total statement coverage prioritization:
§ This prioritization orders the test cases based on the total number of statements covered.
§ It counts the number of statements covered by each test case and orders the test cases in descending order.
§ If multiple test cases cover the same number of statements, a random order may be used among them.
§ For example, if T1 covers 5 statements, T2 covers 3 statements and T3 covers 12 statements, then according to this prioritization the order will be T3, T1, T2.
Additional statement coverage prioritization:
§ Total statement coverage prioritization schedules the test cases based on the total statements covered.

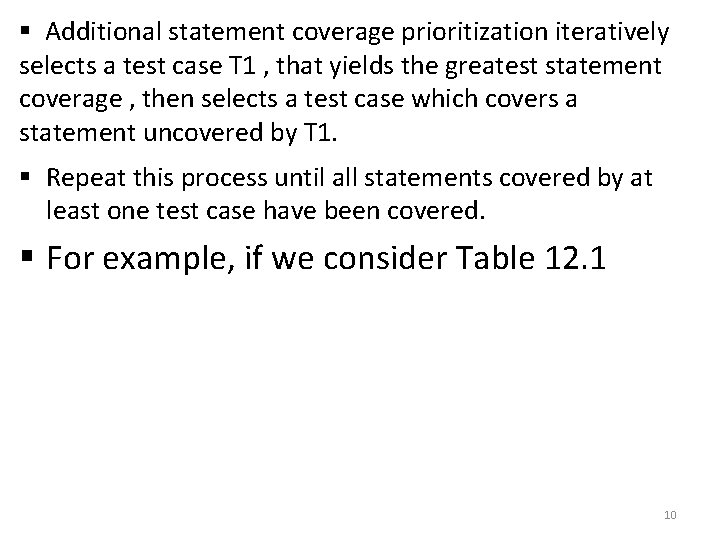

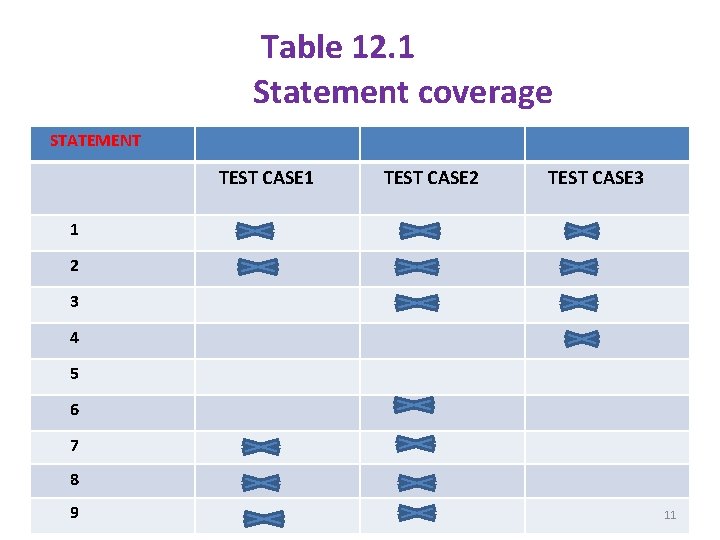

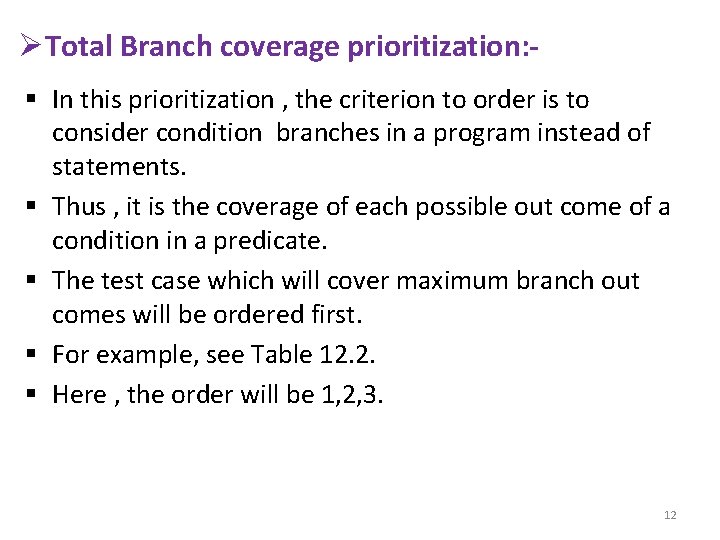

§ Additional statement coverage prioritization iteratively selects a test case T1 that yields the greatest statement coverage, then selects the test case that covers the most statements left uncovered by T1.
§ This process is repeated until all statements covered by at least one test case have been covered.
§ For example, consider Table 12.1.

Table 12.1 Statement coverage (columns: Statement, Test case 1, Test case 2, Test case 3; rows: statements 1 to 9)

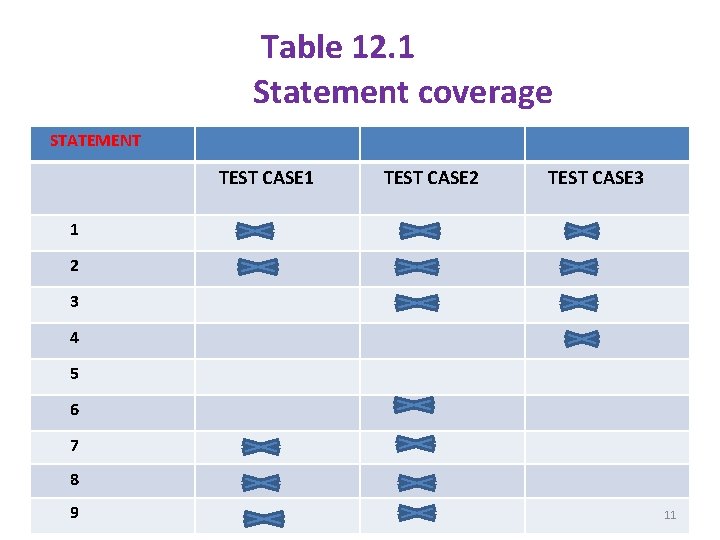

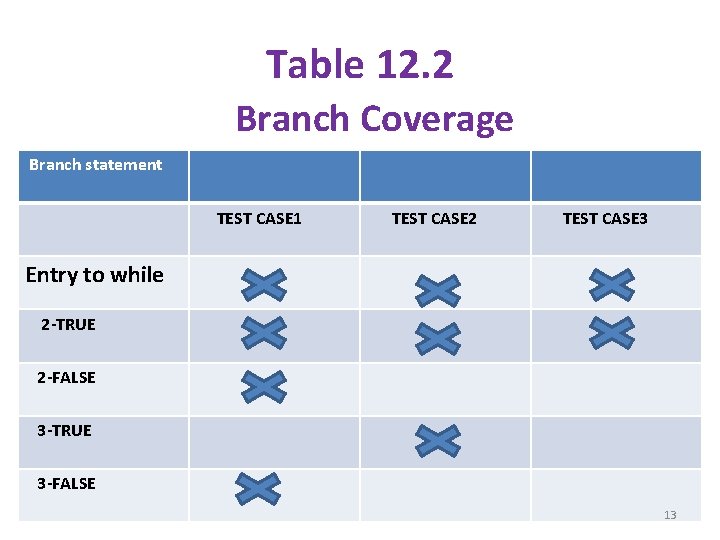

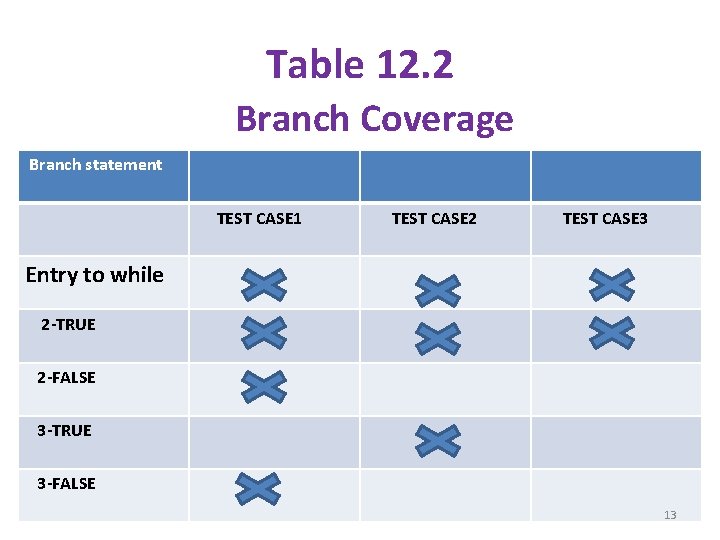
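Both statement coverage orderings can be sketched in Python, assuming the coverage of each test is known as a set of statement numbers. The statement sets below are hypothetical, chosen to match the counts in the earlier example (T1: 5, T2: 3, T3: 12). For the additional variant, the sketch appends the leftover tests in total-coverage order once everything coverable is covered, which is one simplifying choice among several.

```python
def total_coverage_order(coverage):
    """Total statement coverage: most statements covered first.
    sorted() is stable, so tied tests keep their input order."""
    return sorted(coverage, key=lambda t: len(coverage[t]), reverse=True)


def additional_coverage_order(coverage):
    """Additional statement coverage: repeatedly pick the test covering the most
    statements not yet covered. Once every coverable statement is covered, the
    leftover tests are appended in total-coverage order (a simplifying choice)."""
    remaining = dict(coverage)
    uncovered = set().union(*coverage.values())
    order = []
    while remaining and uncovered:
        best = max(remaining, key=lambda t: len(remaining[t] & uncovered))
        order.append(best)
        uncovered -= remaining.pop(best)
    return order + total_coverage_order(remaining)


# Hypothetical statement sets matching the counts in the text (T1: 5, T2: 3, T3: 12).
coverage = {
    "T1": set(range(1, 6)),     # statements 1-5
    "T2": {13, 14, 15},
    "T3": set(range(1, 13)),    # statements 1-12
}
print(total_coverage_order(coverage))       # ['T3', 'T1', 'T2']
print(additional_coverage_order(coverage))  # ['T3', 'T2', 'T1']
```

T1 drops to last in the additional ordering because every statement it covers is already covered by T3, while T2 still adds three new statements.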

Ø Total branch coverage prioritization:
§ In this prioritization, the ordering criterion considers the condition branches in a program instead of statements.
§ Thus, it is the coverage of each possible outcome of a condition in a predicate.
§ The test case that covers the maximum number of branch outcomes is ordered first.
§ For example, see Table 12.2. Here, the order will be 1, 2, 3.

Table 12.2 Branch coverage (columns: Branch statement, Test case 1, Test case 2, Test case 3; rows: Entry to while, 2-TRUE, 2-FALSE, 3-TRUE, 3-FALSE)

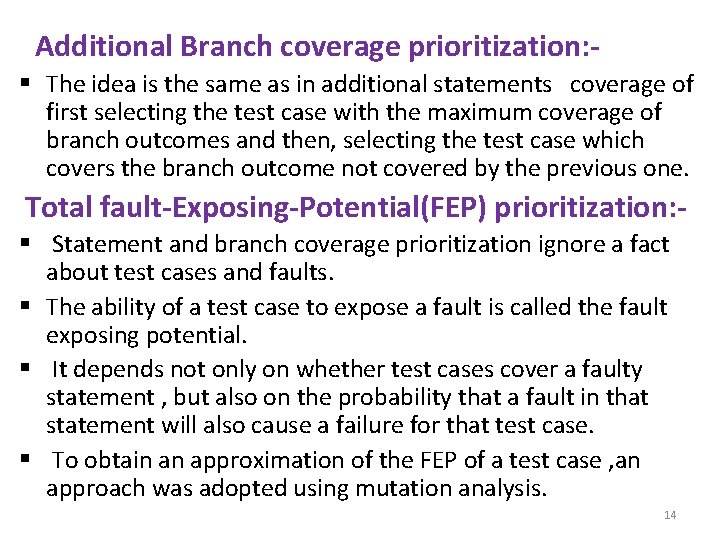

Additional branch coverage prioritization:
§ The idea is the same as in additional statement coverage: first select the test case with the maximum coverage of branch outcomes, then select the test case that covers the branch outcomes not covered by the previous one.
Total fault-exposing-potential (FEP) prioritization:
§ Statement and branch coverage prioritization ignore the fact that some faults are more easily exposed than others, and some test cases are better at exposing particular faults.
§ The ability of a test case to expose a fault is called its fault-exposing potential.
§ It depends not only on whether the test case covers a faulty statement, but also on the probability that a fault in that statement will cause a failure for that test case.
§ To obtain an approximation of the FEP of a test case, an approach using mutation analysis was adopted.

§ This approach is discussed below. Given a program p and a test suite T:
§ First, create a set of mutants $N = \{n_1, n_2, \ldots, n_m\}$ for p, noting which statement $S_j$ in p contains each mutant.
§ Next, for each test case $t_i \in T$, execute each mutant version $n_k$ of p on $t_i$, noting whether $t_i$ kills that mutant.
§ Having collected this information for every test case and mutant, consider each test case $t_i$ and each statement $S_j$ in p, and calculate the FEP of $t_i$ on $S_j$, $FEP(S_j, t_i)$, as the ratio:

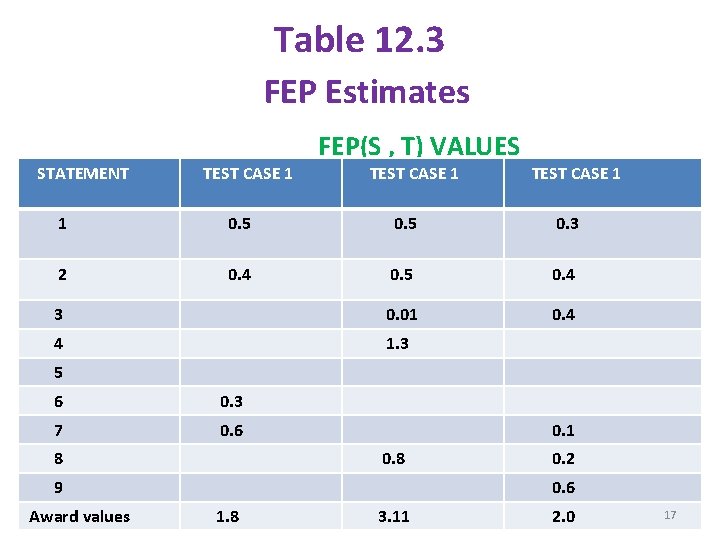

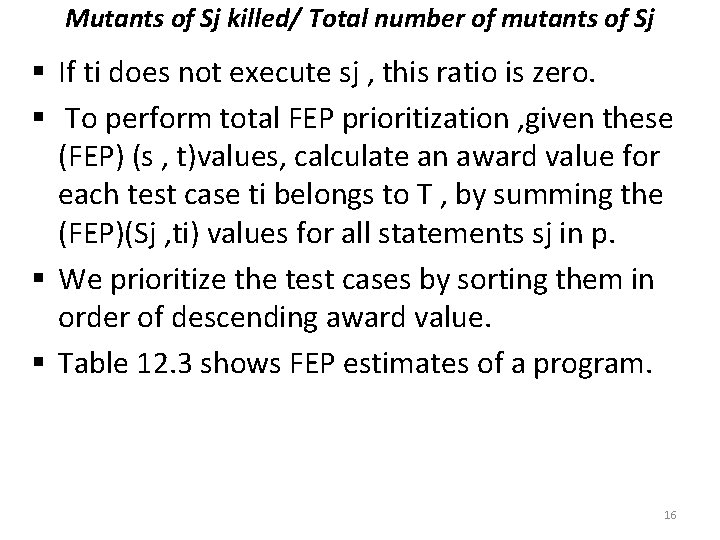

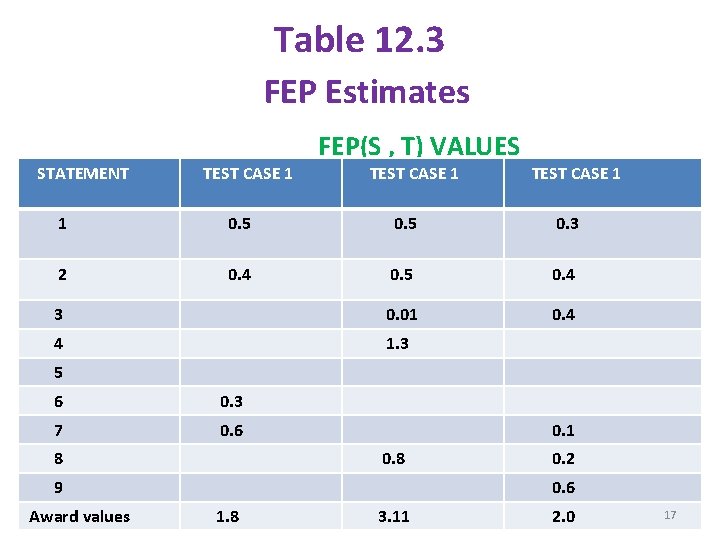

$$FEP(S_j, t_i) = \frac{\text{mutants of } S_j \text{ killed by } t_i}{\text{total number of mutants of } S_j}$$
§ If $t_i$ does not execute $S_j$, this ratio is zero.
§ To perform total FEP prioritization, given these $FEP(S_j, t_i)$ values, calculate an award value for each test case $t_i \in T$ by summing the $FEP(S_j, t_i)$ values for all statements $S_j$ in p.
§ We prioritize the test cases by sorting them in descending order of award value.
§ Table 12.3 shows FEP estimates of a program.

Table 12.3 FEP estimates: STATEMENT TEST CASE 1 FEP(S, T) VALUES TEST CASE 1 1 0.5 0.3 2 0.4 0.5 0.4 3 0.01 0.4 4 1.3 5 6 0.3 7 0.6 8 0.1 0.8 9 Award values 0.2 0.6 1.8 3.11 2.0

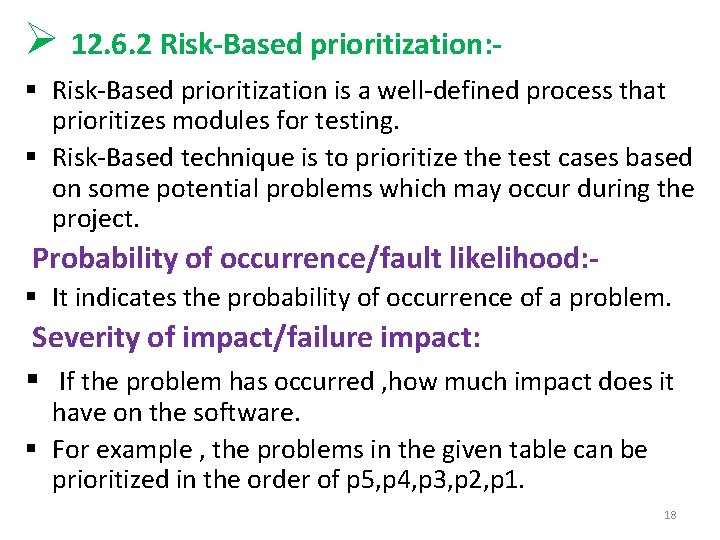

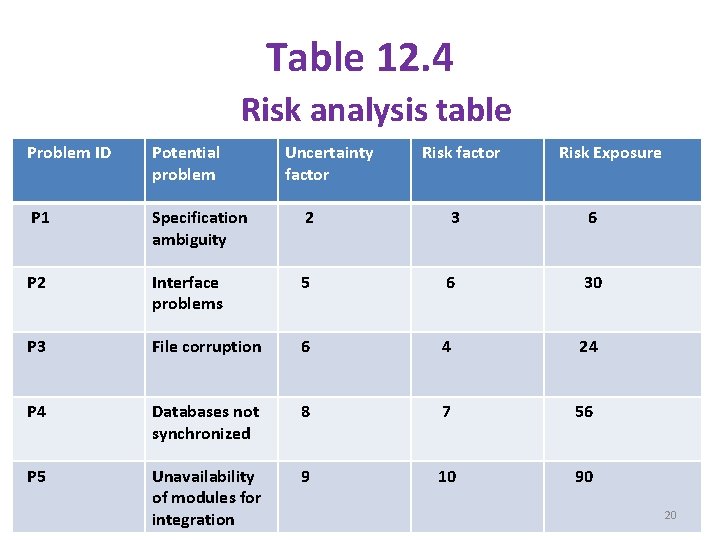

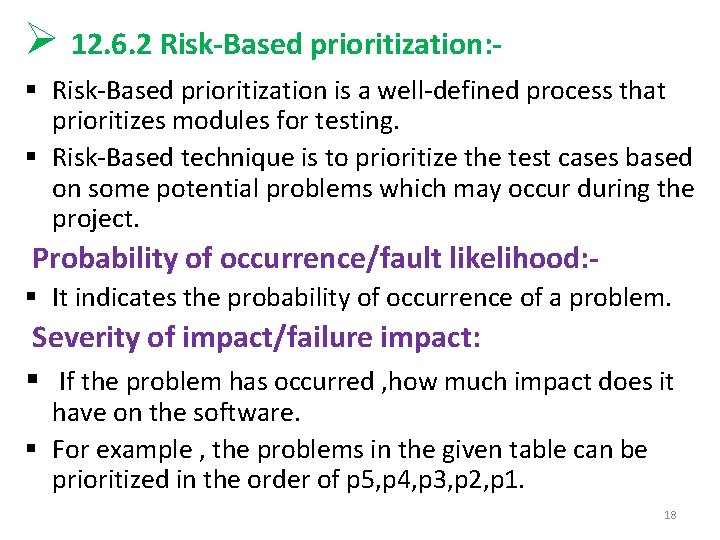
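The FEP computation and the award values can be sketched in Python. This assumes the mutation analysis has already been run and summarized as, per test case, how many of each statement's mutants it kills; the mutant counts and kill data below are hypothetical, as are the function names.

```python
def fep(killed, total):
    """FEP(Sj, ti): fraction of the mutants of Sj that ti kills (0 if Sj has none)."""
    return killed / total if total else 0.0


def total_fep_order(kills, mutant_counts):
    """kills: test -> {statement: number of that statement's mutants the test kills}.
    Statements a test does not execute are simply absent (FEP 0).
    mutant_counts: statement -> total number of mutants created for it.
    Returns (tests in descending award order, award values)."""
    award = {
        test: sum(fep(k, mutant_counts[stmt]) for stmt, k in per_stmt.items())
        for test, per_stmt in kills.items()
    }
    return sorted(award, key=award.get, reverse=True), award


# Hypothetical mutation-analysis results.
mutant_counts = {"S1": 4, "S2": 2, "S3": 5}
kills = {
    "T1": {"S1": 2, "S2": 1},   # award 0.5 + 0.5 = 1.0
    "T2": {"S1": 4, "S3": 1},   # award 1.0 + 0.2 = 1.2
    "T3": {"S3": 5},            # award 1.0
}
order, award = total_fep_order(kills, mutant_counts)
print(order)  # ['T2', 'T1', 'T3']
```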

Ø 12.6.2 Risk-based prioritization:
§ Risk-based prioritization is a well-defined process that prioritizes modules for testing.
§ The risk-based technique prioritizes the test cases based on potential problems that may occur during the project. Two factors are considered:
Probability of occurrence/fault likelihood:
§ It indicates the probability of occurrence of a problem.
Severity of impact/failure impact:
§ If the problem occurs, how much impact does it have on the software?
§ For example, the problems in Table 12.4 can be prioritized in decreasing order of risk exposure: P5, P4, P2, P3, P1.

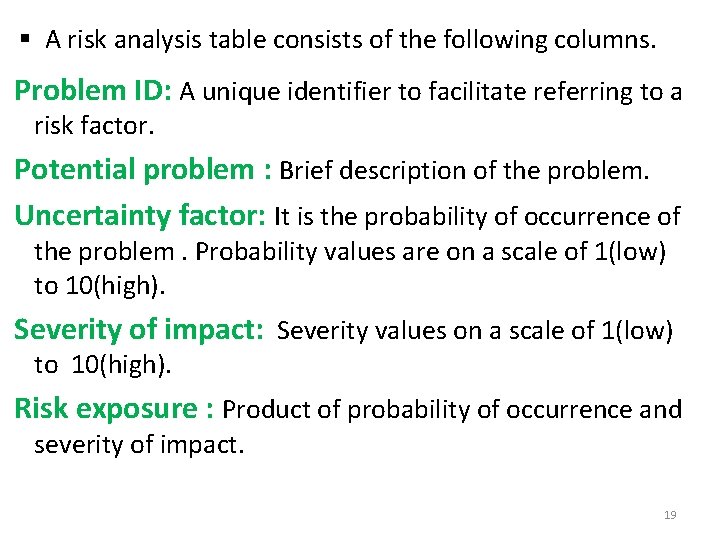

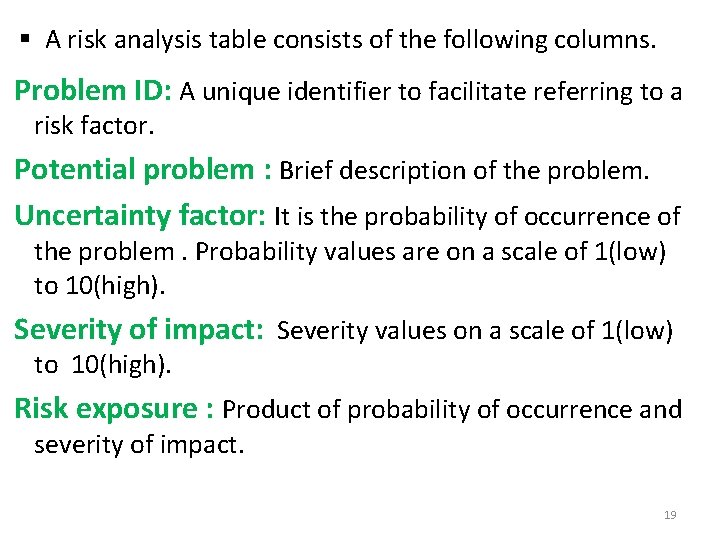

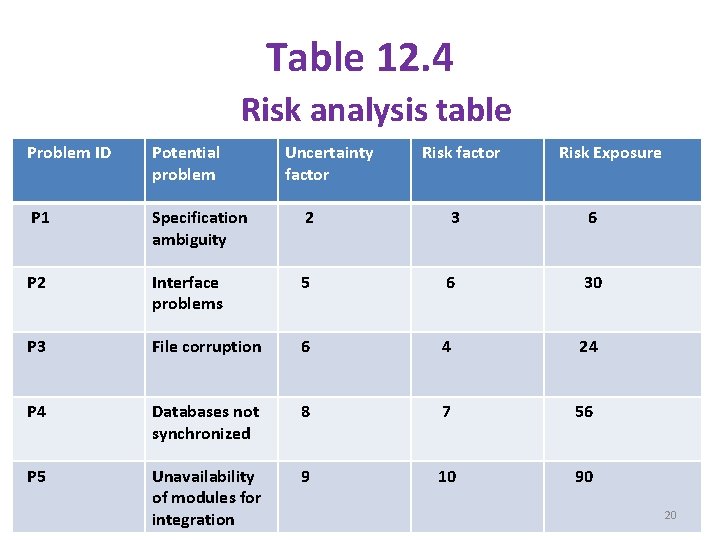

§ A risk analysis table consists of the following columns:
Problem ID: A unique identifier to facilitate referring to a risk factor.
Potential problem: Brief description of the problem.
Uncertainty factor: The probability of occurrence of the problem. Probability values are on a scale of 1 (low) to 10 (high).
Severity of impact: Severity values are on a scale of 1 (low) to 10 (high).
Risk exposure: Product of the probability of occurrence and the severity of impact.

Table 12.4 Risk analysis table

| Problem ID | Potential problem | Uncertainty factor | Severity of impact | Risk exposure |
|---|---|---|---|---|
| P1 | Specification ambiguity | 2 | 3 | 6 |
| P2 | Interface problems | 5 | 6 | 30 |
| P3 | File corruption | 6 | 4 | 24 |
| P4 | Databases not synchronized | 8 | 7 | 56 |
| P5 | Unavailability of modules for integration | 9 | 10 | 90 |
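Computing risk exposure and ordering the problems is a one-line product and a sort. The sketch below uses the values of Table 12.4; the function name `risk_order` is hypothetical. Sorting by exposure ranks P2 (30) above P3 (24).

```python
# (problem ID, uncertainty factor, severity of impact) from Table 12.4
problems = [
    ("P1", 2, 3),
    ("P2", 5, 6),
    ("P3", 6, 4),
    ("P4", 8, 7),
    ("P5", 9, 10),
]


def risk_order(rows):
    """Risk exposure = probability x severity; highest exposure is tested first."""
    exposure = {pid: prob * sev for pid, prob, sev in rows}
    return sorted(exposure, key=exposure.get, reverse=True), exposure


order, exposure = risk_order(problems)
print(exposure)  # {'P1': 6, 'P2': 30, 'P3': 24, 'P4': 56, 'P5': 90}
print(order)     # ['P5', 'P4', 'P2', 'P3', 'P1']
```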

Ø 12.6.3 Prioritization based on operational profiles:
§ In this approach, test planning is done based on the operational profiles of the important functions that are of use to the customers.
§ An operational profile is a set of tasks performed by the system and their probabilities of occurrence.

Ø 12.6.4 Prioritization using relevant slices:
§ During regression testing, the modified program is executed on all existing regression test cases to check that it still works the same way as the original program; re-running the whole test suite for every change, however, makes regression testing a time-consuming process.
§ If we can find the portion of the software that has been affected by the change, then we can prioritize the test cases based on this information. This is called the slicing technique.

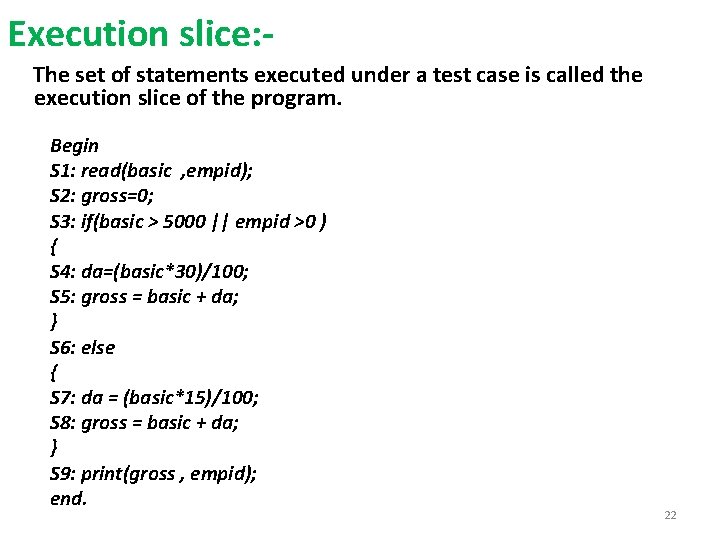

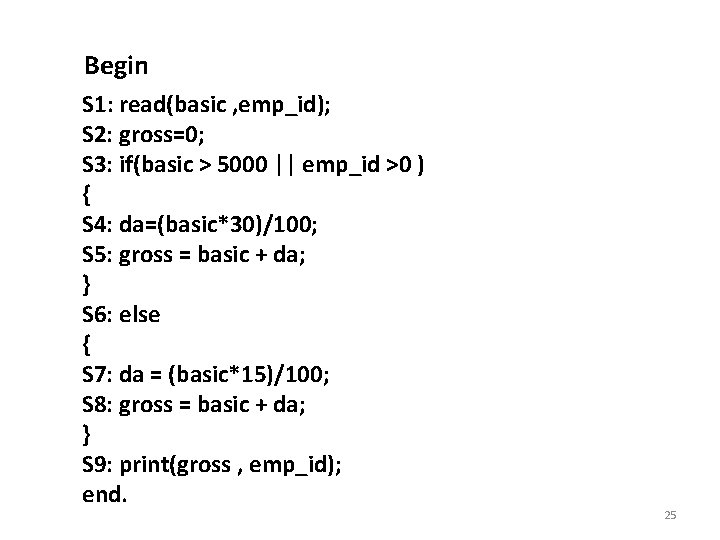

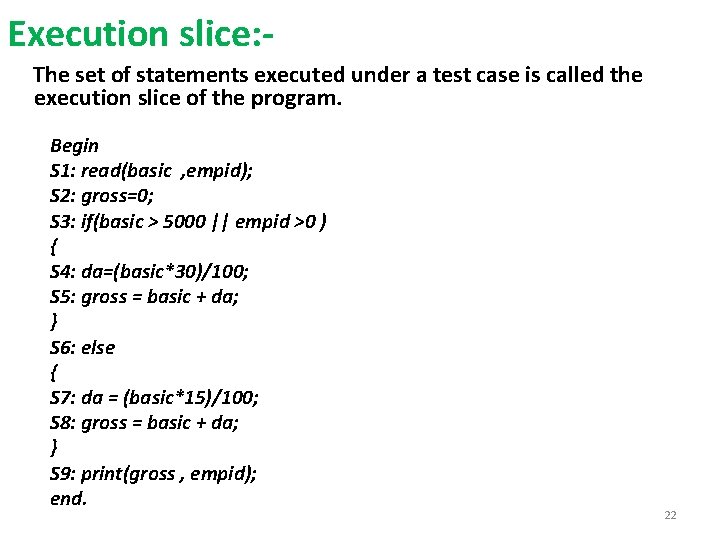

Execution slice: The set of statements executed under a test case is called the execution slice of the program.

Begin
S1: read(basic, empid);
S2: gross = 0;
S3: if (basic > 5000 || empid > 0) {
S4:     da = (basic*30)/100;
S5:     gross = basic + da;
    }
S6: else {
S7:     da = (basic*15)/100;
S8:     gross = basic + da;
    }
S9: print(gross, empid);
end.

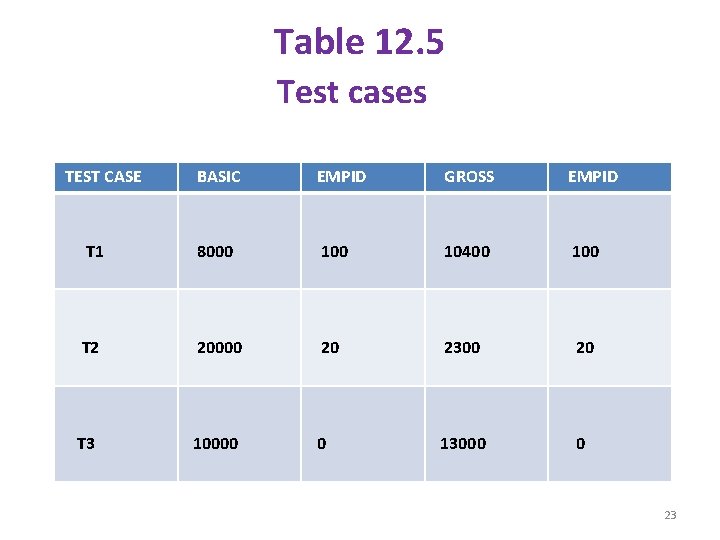

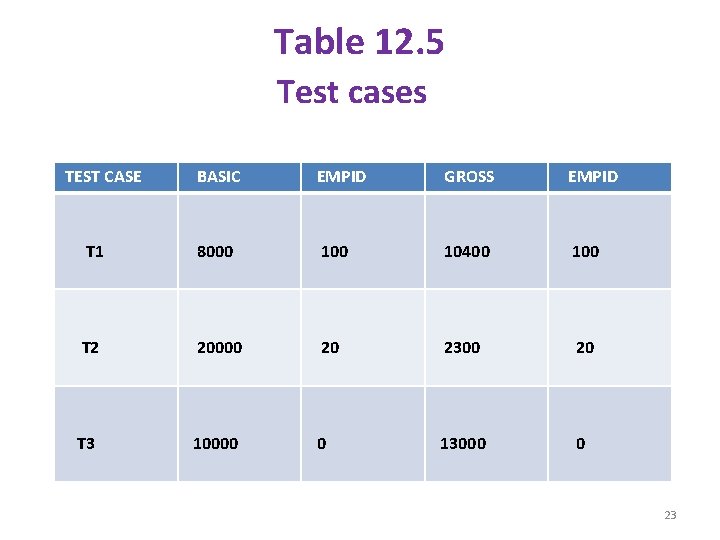

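Table 12.5 Test cases

| Test case | basic (input) | empid (input) | gross (output) | empid (output) |
|---|---|---|---|---|
| T1 | 8000 | 100 | 10400 | 100 |
| T2 | 20000 | 20 | 2300 | 20 |
| T3 | 10000 | 0 | 13000 | 0 |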

§ T1 and T2 produce correct values; on the other hand, T3 produces an incorrect result.
§ The program is syntactically correct, but an employee with emp_id '0' will not get any salary, even if his basic salary is read as input. So the program has to be modified.
§ Suppose S3 is modified as [if (basic > 5000 && empid > 0)].
§ Then the execution slice will contain fewer statements. The execution slice is highlighted in the given code segment.

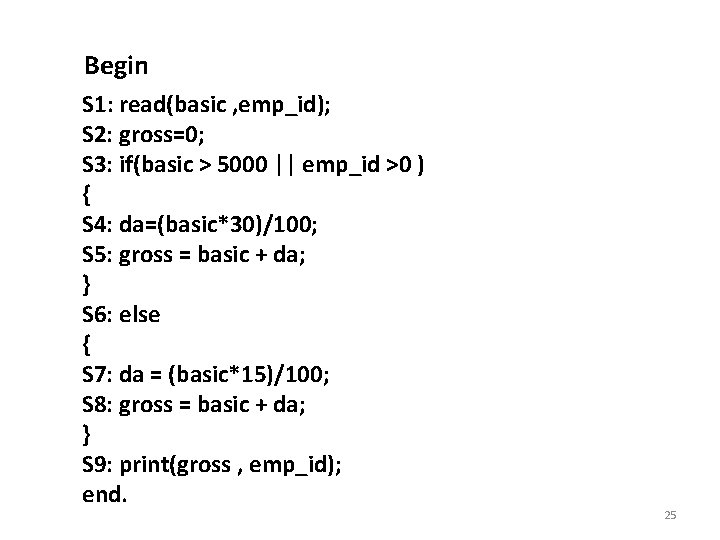

Begin
S1: read(basic, emp_id);
S2: gross = 0;
S3: if (basic > 5000 || emp_id > 0) {
S4:     da = (basic*30)/100;
S5:     gross = basic + da;
    }
S6: else {
S7:     da = (basic*15)/100;
S8:     gross = basic + da;
    }
S9: print(gross, emp_id);
end.

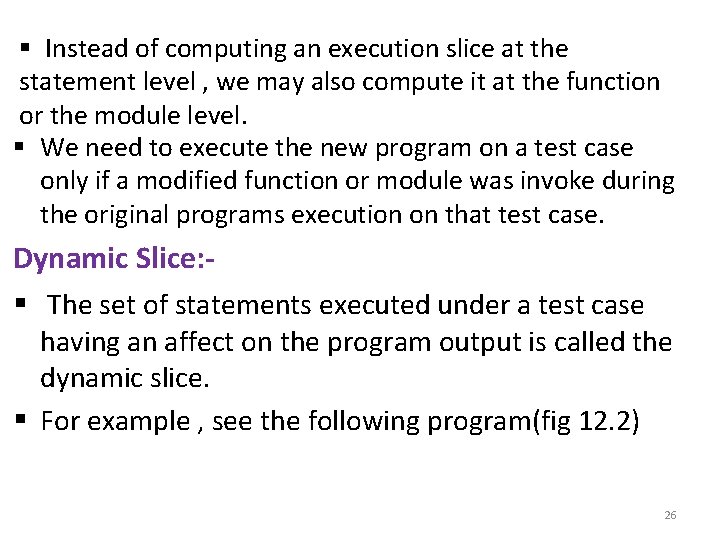

§ Instead of computing an execution slice at the statement level, we may also compute it at the function or the module level.
§ We need to execute the new program on a test case only if a modified function or module was invoked during the original program's execution on that test case.
Dynamic slice:
§ The set of statements executed under a test case that have an effect on the program output is called the dynamic slice.
§ For example, see the following program (Fig 12.2):

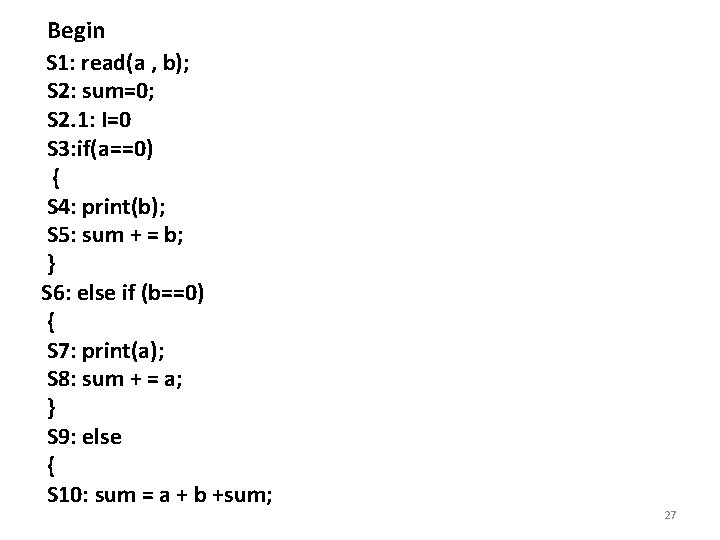

Begin S 1: read(a , b); S 2: sum=0; S 2. 1: I=0 S 3: if(a==0) { S 4: print(b); S 5: sum + = b; } S 6: else if (b==0) { S 7: print(a); S 8: sum + = a; } S 9: else { S 10: sum = a + b +sum; 27

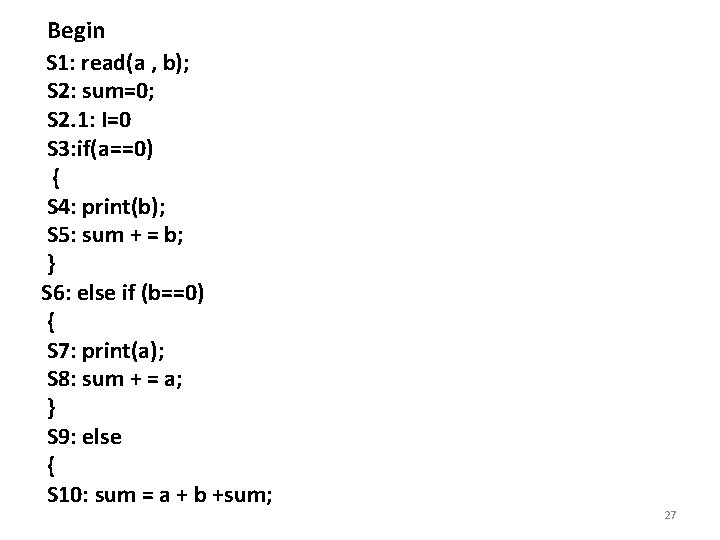

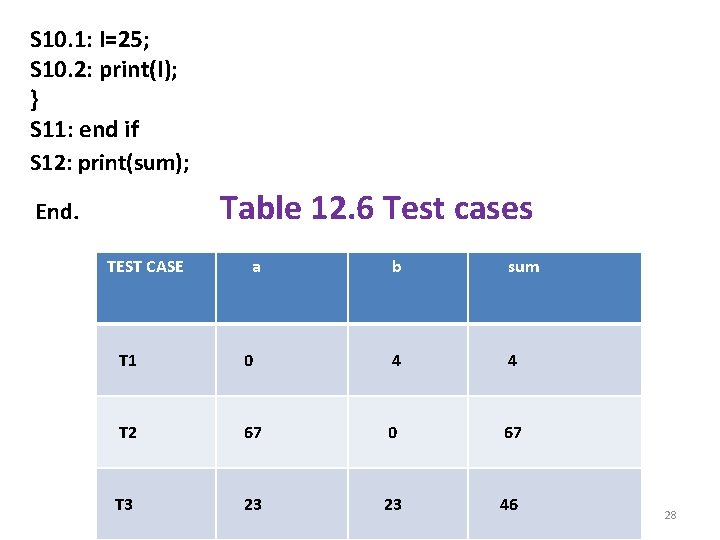

S 10. 1: I=25; S 10. 2: print(I); } S 11: end if S 12: print(sum); Table 12. 6 Test cases End. TEST CASE a b sum T 1 0 4 4 T 2 67 0 67 T 3 23 23 46 28

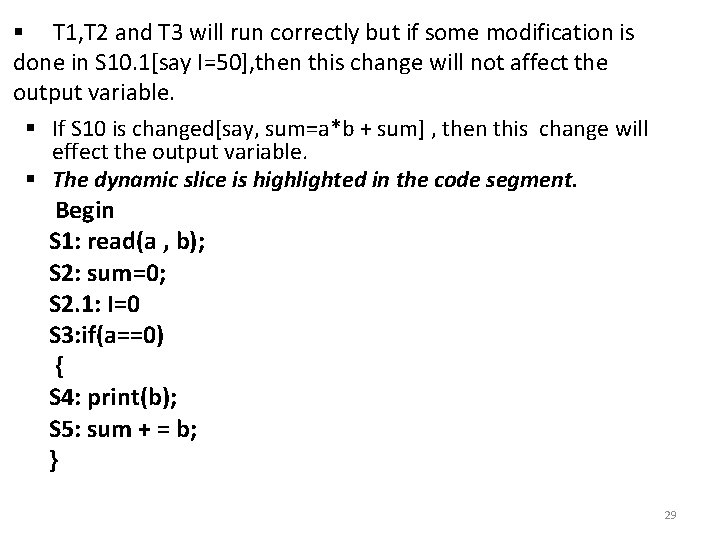

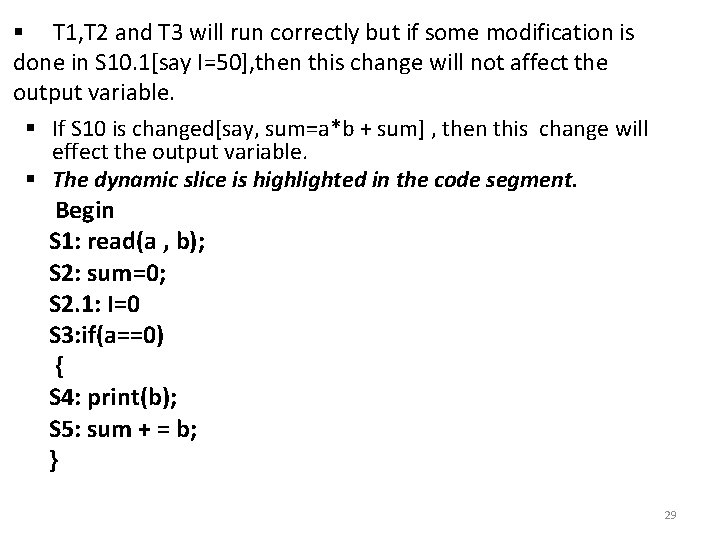

§ T 1, T 2 and T 3 will run correctly but if some modification is done in S 10. 1[say I=50], then this change will not affect the output variable. § If S 10 is changed[say, sum=a*b + sum] , then this change will effect the output variable. § The dynamic slice is highlighted in the code segment. Begin S 1: read(a , b); S 2: sum=0; S 2. 1: I=0 S 3: if(a==0) { S 4: print(b); S 5: sum + = b; } 29

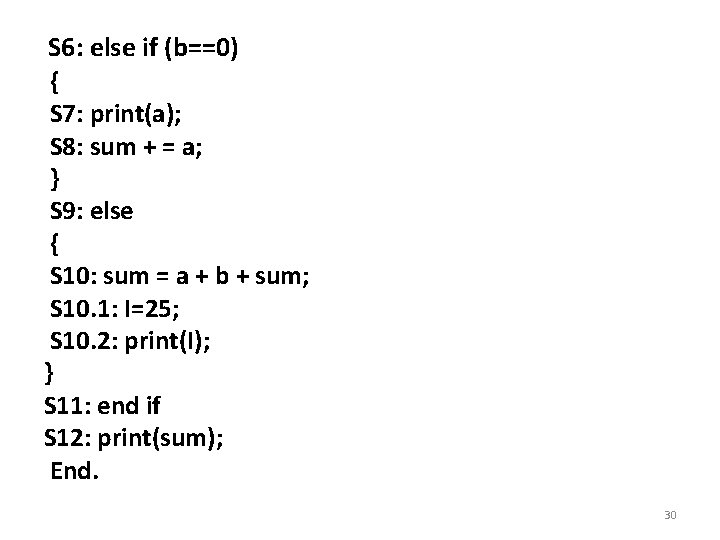

S 6: else if (b==0) { S 7: print(a); S 8: sum + = a; } S 9: else { S 10: sum = a + b + sum; S 10. 1: I=25; S 10. 2: print(I); } S 11: end if S 12: print(sum); End. 30

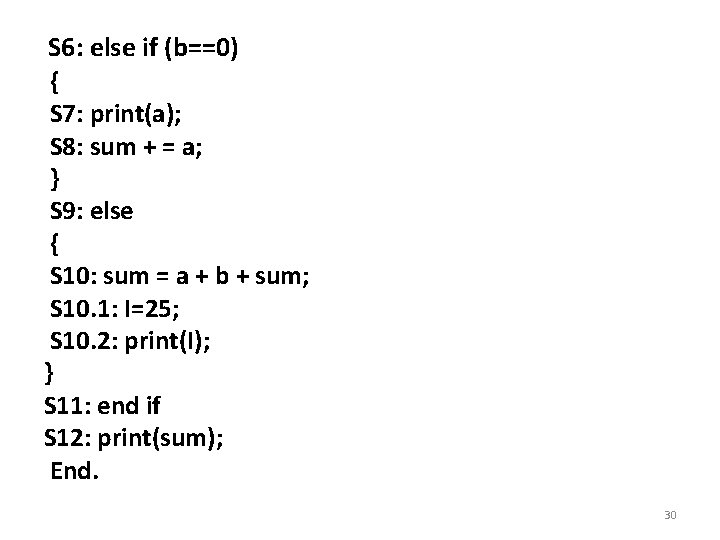

Relevant slice:
§ The relevant slice of a program for a test case contains the dynamic slice and, in addition, those statements that were executed under the test case and did not affect the output, but have the potential to affect the output produced by that test case.
§ In other words, it includes those statements which, if corrected, may modify the variables at which the program failure manifests.
§ For example, in the program of Fig 12.2, statements S3 and S6 have the potential to affect the output if modified.
§ This technique is helpful for prioritizing the regression test suite, which saves time and effort in regression testing.
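The dynamic and relevant slices of the Fig 12.2 program can be computed from an execution trace. The sketch below is an illustration under stated assumptions: each executed statement is annotated by hand with the variables it defines and uses, the slicing criterion is the final print(sum) at S12, the dynamic slice follows data dependences only (consistent with the S10 and S10.1 discussion above), and the relevant slice is approximated by adding every executed predicate and its data dependences. That approximation is an assumption of this sketch, not the exact potential-dependence rule from the slicing literature. All function names are hypothetical.

```python
def run_fig_12_2(a, b):
    """Run the program of Fig 12.2 and record an execution trace.
    Each trace entry is (label, variables defined, variables used, is_predicate)."""
    trace = [("S1", {"a", "b"}, set(), False),   # read(a, b)
             ("S2", {"sum"}, set(), False),      # sum = 0
             ("S2.1", {"I"}, set(), False),      # I = 0
             ("S3", set(), {"a"}, True)]         # if (a == 0)
    total = 0
    if a == 0:
        total += b
        trace += [("S4", set(), {"b"}, False),            # print(b)
                  ("S5", {"sum"}, {"sum", "b"}, False)]   # sum += b
    else:
        trace.append(("S6", set(), {"b"}, True))          # else if (b == 0)
        if b == 0:
            total += a
            trace += [("S7", set(), {"a"}, False),            # print(a)
                      ("S8", {"sum"}, {"sum", "a"}, False)]   # sum += a
        else:
            total = a + b + total
            trace += [("S10", {"sum"}, {"a", "b", "sum"}, False),
                      ("S10.1", {"I"}, set(), False),     # I = 25
                      ("S10.2", set(), {"I"}, False)]     # print(I)
    trace.append(("S12", set(), {"sum"}, False))          # print(sum)
    return trace, total


def data_slice(trace, start):
    """Backward data-dependence slice of trace[start] over the executed trace."""
    label, _, uses, _ = trace[start]
    needed, result = set(uses), {label}
    for lbl, defs, used, _ in reversed(trace[:start]):
        if defs & needed:          # this statement defined a value we still need
            result.add(lbl)
            needed = (needed - defs) | used
    return result


def dynamic_slice(trace):
    """Statements that actually affected the final print(sum) (data dependences)."""
    return data_slice(trace, len(trace) - 1)


def relevant_slice(trace):
    """Over-approximation: the dynamic slice plus every executed predicate and
    whatever those predicates read, since changing a predicate could send
    execution to a different update of sum."""
    result = dynamic_slice(trace)
    for i, entry in enumerate(trace):
        if entry[3]:               # is_predicate
            result |= data_slice(trace, i)
    return result


trace, total = run_fig_12_2(23, 23)   # T3 of Table 12.6
dyn, rel = dynamic_slice(trace), relevant_slice(trace)
print(total)                                     # 46
print([lbl for lbl, *_ in trace if lbl in dyn])  # ['S1', 'S2', 'S10', 'S12']
print([lbl for lbl, *_ in trace if lbl in rel])  # ['S1', 'S2', 'S3', 'S6', 'S10', 'S12']
```

S10.1 and S10.2 stay out of both slices, which matches the observation that changing S10.1 cannot affect the output sum.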

§ Jeffrey and Gupta stated: 'If a modification in the program has to affect the output of a test case in the regression test suite, it must affect some computation in the relevant slice of the output for that test case.'

Ø 12.6.5 Prioritization based on requirements:
§ This technique is used for prioritizing the system test cases.
§ System test cases are largely dependent on the requirements.
§ Some requirements are more important than others. Thus, the test cases corresponding to important and critical requirements are given more weight than others, and the test cases with more weight are executed earlier.

§ Hema Srikanth et al. have applied a requirements engineering approach to prioritizing system test cases.
§ It is known as PORT (Prioritization of Requirements for Test).
§ They have considered the following four factors for analyzing and measuring the criticality of requirements:
Customer-assigned priority of requirements: Based on priority, the customer assigns a weight (on a scale of 1 to 10) to each requirement.
Requirement volatility: This is a rating based on the frequency of change of a requirement.

Developer-perceived implementation complexity: The developer gives more weight to a requirement that he thinks is more difficult to implement.
Fault proneness of requirements:
§ This factor is identified based on previous versions of the system.
§ Based on these four factor values, a prioritization factor value (PFV) is computed for each requirement i as given below:
$$PFV_i = \sum_{j=1}^{4} \left( FV_{ij} \times FW_j \right)$$
where $FV_{ij}$ (factor value) is the value of factor j for requirement i, and $FW_j$ (factor weight) is the weight given to factor j.

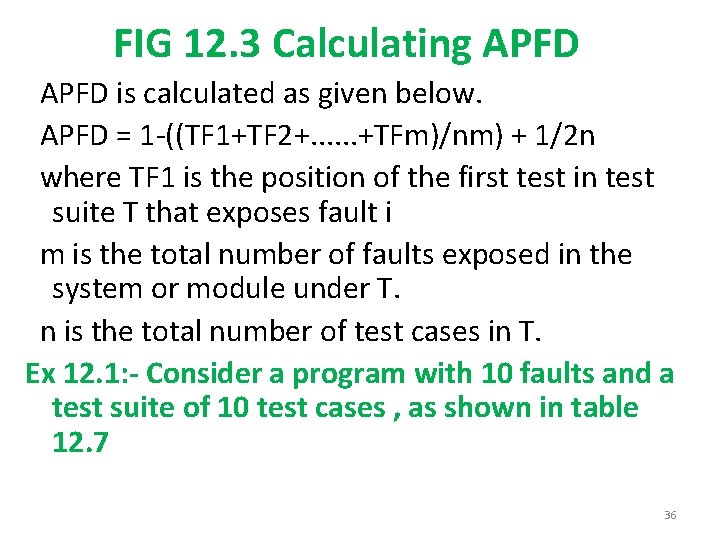
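The PFV formula is a weighted sum per requirement. A minimal Python sketch, assuming the four PORT factors are always listed in a fixed order; the weights, factor values and requirement names are hypothetical. Test cases mapped to the requirement with the highest PFV would be run first.

```python
# The four PORT factors, in a fixed order (j = 1..4):
# customer-assigned priority, requirement volatility,
# implementation complexity, fault proneness.
def pfv(factor_values, factor_weights):
    """PFV_i = sum over j of FV_ij * FW_j for a single requirement i."""
    if len(factor_values) != len(factor_weights):
        raise ValueError("need exactly one value per factor")
    return sum(v * w for v, w in zip(factor_values, factor_weights))


weights = [4, 2, 2, 2]          # hypothetical FW_j
requirements = {                # hypothetical FV_ij on a 1-10 scale
    "R1": [9, 2, 5, 3],
    "R2": [4, 8, 7, 6],
    "R3": [6, 5, 3, 2],
}
scores = {r: pfv(v, weights) for r, v in requirements.items()}
print(scores)                                        # {'R1': 56, 'R2': 58, 'R3': 44}
print(sorted(scores, key=scores.get, reverse=True))  # ['R2', 'R1', 'R3']
```

R2 outranks R1 despite a lower customer priority, because its volatility, complexity and fault proneness are all higher.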

12.7 Measuring the effectiveness of a prioritized test suite:
§ When a prioritized test suite is prepared, how do we check its effectiveness?
§ For this purpose, the rate of fault detection can be taken as the criterion.
§ Elbaum et al. developed the APFD (average percentage of faults detected) metric, which measures the weighted average of the percentage of faults detected during the execution of a test suite.
§ Its value ranges from 0 to 100 when expressed as a percentage.
§ If we plot the percentage of the test suite run on the x-axis and the percentage of faults detected on the y-axis, then the area under the curve is the APFD value, as shown in Fig 12.3.

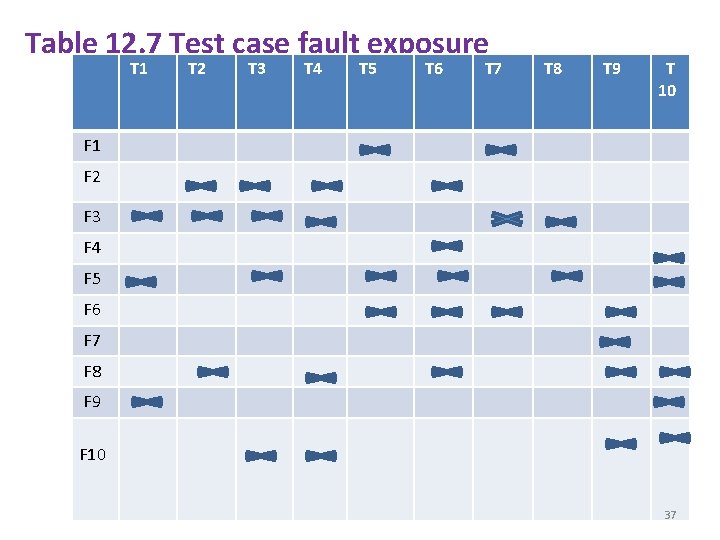

Fig 12.3 Calculating APFD

APFD is calculated as given below:
$$APFD = 1 - \frac{TF_1 + TF_2 + \cdots + TF_m}{nm} + \frac{1}{2n}$$
where $TF_i$ is the position of the first test in test suite T that exposes fault i, m is the total number of faults exposed in the system or module under T, and n is the total number of test cases in T.

Ex 12.1: Consider a program with 10 faults and a test suite of 10 test cases, as shown in Table 12.7.

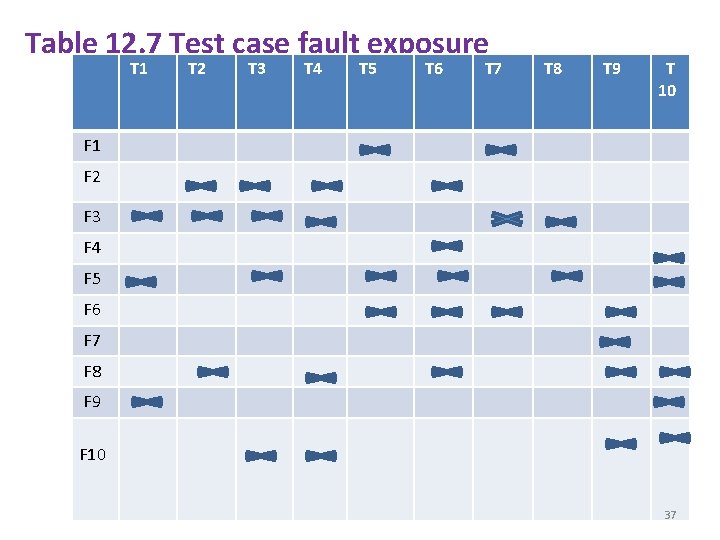

Table 12.7 Test case fault exposure (columns: test cases T1 to T10; rows: faults F1 to F10)

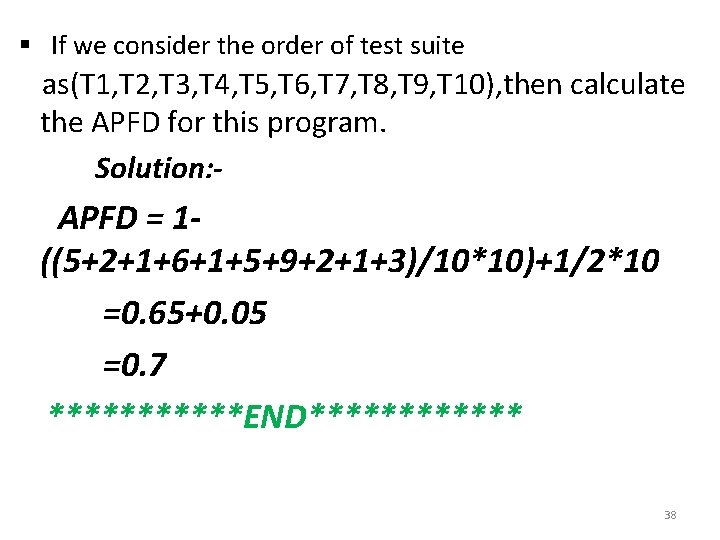
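The APFD formula translates directly into code. A minimal Python sketch: `first_detection_positions` derives the $TF_i$ values from a test order and a hypothetical map of which faults each test exposes, and `apfd` applies the formula. The $TF_i$ list below is the one used in the solution of Ex 12.1; the function names are hypothetical.

```python
def first_detection_positions(order, exposes):
    """TF_i: 1-based position of the first test in `order` that exposes fault i.
    exposes maps test -> set of faults it exposes; unexposed faults are omitted."""
    tf = {}
    for position, test in enumerate(order, start=1):
        for fault in exposes.get(test, ()):
            tf.setdefault(fault, position)
    return tf


def apfd(tf_values, n):
    """APFD = 1 - (TF_1 + ... + TF_m) / (n * m) + 1 / (2n)."""
    m = len(tf_values)
    if m == 0 or n == 0:
        raise ValueError("need at least one exposed fault and one test")
    return 1 - sum(tf_values) / (n * m) + 1 / (2 * n)


# TF values for faults F1..F10 as used in the solution of Ex 12.1.
tf = [5, 2, 1, 6, 1, 5, 9, 2, 1, 3]
print(round(apfd(tf, n=10), 2))  # 0.7
```

Reordering the suite changes only the $TF_i$ values, so comparing two prioritizations means recomputing `first_detection_positions` for each order.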

§ If we consider the order of the test suite as (T1, T2, T3, T4, T5, T6, T7, T8, T9, T10), calculate the APFD for this program.
Solution:
$$APFD = 1 - \frac{5+2+1+6+1+5+9+2+1+3}{10 \times 10} + \frac{1}{2 \times 10} = 0.65 + 0.05 = 0.7$$
******END******

UNIT-5 CHAPTER 13 Software Quality Management

Software quality metrics:
§ Software quality metrics are a subset of software metrics that focus on the quality aspects of the product, process and project.
§ Software quality metrics can be grouped into the following three categories in accordance with the software life cycle:
1) Product quality metrics
2) In-process quality metrics
3) Metrics for software maintenance

1) Product quality metrics: In product quality, the following metrics are considered.
Mean time to failure (MTTF):
§ The MTTF metric is an estimate of the average or mean time until a product's first failure occurs. It is typically used for safety-critical systems, e.g., an air traffic control system.
§ It is calculated by dividing the total operating time of the units tested by the total number of failures encountered.
Defect density metrics:
§ It measures the defects relative to the software size.
Defect density = Number of defects / Size of product
§ The defect rate metric is generally used for commercial software systems.
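Both product metrics are simple ratios. A minimal Python sketch; the input numbers are hypothetical, and measuring size in KLOC is an assumption (any size measure, such as function points, works the same way).

```python
def mttf(total_operating_time, failures):
    """Mean time to failure: total operating time of the units tested / number of failures."""
    if failures == 0:
        raise ValueError("MTTF is undefined when no failures were observed")
    return total_operating_time / failures


def defect_density(defects, size):
    """Defects relative to product size (here size is assumed to be in KLOC)."""
    return defects / size


print(mttf(5000, 4))           # 1250.0 (same time unit as the input, e.g. hours)
print(defect_density(30, 12))  # 2.5 defects per KLOC
```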

Customer problem metrics:
§ This metric measures the problems that customers face while using the product.
§ The problem metric is usually expressed in terms of problems per user-month (PUM):
PUM = Total problems reported by customers for a time period / Total number of license-months of the software during the period
§ where the number of license-months = number of installed licenses of the software × number of months in the calculation period.
§ Approaches to achieving a low PUM include:
§ Improving the development process and reducing the product defects.
§ Increasing the number of installed licenses of the product.
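PUM divides the reported problems by the license-months in the period. A minimal Python sketch with hypothetical numbers:

```python
def pum(problems_reported, installed_licenses, months):
    """Problems per user-month for one calculation period."""
    license_months = installed_licenses * months  # total licensed months
    return problems_reported / license_months


print(pum(problems_reported=90, installed_licenses=1500, months=3))  # 0.02
```

Doubling the installed licenses with the same number of reported problems halves the PUM, which is why a larger installed base lowers this metric.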

Customer satisfaction metrics:
§ Customer satisfaction is measured through various methods of customer survey, using a five-point scale:
1. Very satisfied
2. Satisfied
3. Neutral
4. Dissatisfied
5. Very dissatisfied

2) In-process quality metrics: The following are some of these metrics.

Defect density during testing:
§ This metric is a good indicator of quality while the software is still being tested.
§ It is especially useful for monitoring subsequent releases of a product in the same development organization.
Defect arrival pattern during testing:
§ Different patterns of defect arrivals indicate different quality levels in the field.
Defect removal efficiency (DRE):
§ DRE = (Defects removed during a development phase / Defects latent in the product at that phase) × 100
§ The number of latent defects is usually estimated as the defects removed during the phase plus the defects found later.

3) Metrics for software maintenance:
§ The following metrics are important in software maintenance.
Fix backlog and backlog management index:
§ The fix backlog metric is the count of reported problems that remain open at the end of a month or a week.
§ The backlog management index (BMI) is also a metric for managing the backlog of open, unresolved problems:
BMI = (Number of problems closed during the month / Number of problem arrivals during the month) × 100
§ If BMI is larger than 100, the backlog is reduced; if BMI is less than 100, the backlog is increased.
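BMI and its interpretation fit in a few lines. A minimal Python sketch; the monthly counts are hypothetical, and raising an error for a month with no arrivals is a choice of this sketch.

```python
def bmi(closed, arrivals):
    """Backlog management index (%) = problems closed / problem arrivals x 100."""
    if arrivals == 0:
        raise ValueError("BMI is undefined for a period with no arrivals")
    return 100.0 * closed / arrivals


def backlog_trend(closed, arrivals):
    """Interpret the BMI: above 100 shrinks the backlog, below 100 grows it."""
    index = bmi(closed, arrivals)
    if index > 100:
        return "backlog reduced"
    if index < 100:
        return "backlog increased"
    return "backlog unchanged"


print(bmi(120, 100), backlog_trend(120, 100))  # 120.0 backlog reduced
print(backlog_trend(80, 100))                  # backlog increased
```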

Fix response time and fix responsiveness:
§ The fix response time metric is calculated as the mean time taken to fix high-severity defects, from open to closed.
§ This metric can be enhanced from the customer's viewpoint.
§ The customer sets a time limit to fix the defects.
§ This agreed-to fix time is the basis of the fix responsiveness metric.
Percent delinquent fixes:
§ For each fix, if the turnaround time greatly exceeds the required response time, the fix is classified as delinquent.
§ Percent delinquent fixes = (Number of fixes that exceeded the response time criteria by severity level / Number of fixes delivered in a specified time) × 100
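Percent delinquent fixes can be sketched by comparing each fix's turnaround time with the limit for its severity. This sketch treats any overrun as delinquent, whereas the text speaks of turnaround that greatly exceeds the limit; the severity levels, limits and fixes are hypothetical.

```python
def percent_delinquent(fixes, required_days):
    """fixes: list of (severity, days taken) for fixes delivered in the period.
    required_days: severity -> agreed response time in days.
    A fix counts as delinquent when it exceeds the limit for its severity."""
    if not fixes:
        return 0.0
    late = sum(1 for severity, days in fixes if days > required_days[severity])
    return 100.0 * late / len(fixes)


limits = {1: 2, 2: 7, 3: 30}  # hypothetical response-time criteria by severity
fixes = [(1, 1), (1, 4), (2, 6), (2, 10), (3, 12)]
print(percent_delinquent(fixes, limits))  # 40.0
```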

Fix quality:
§ It is the metric that measures the number of fixes that turn out to be defective.
§ Fix quality is measured as the percentage of defective fixes.
§ Percent defective fixes is the percentage of all fixes in a time interval that are defective.

SQA Models:
--> Organizations must adopt a model for standardizing their SQA activities.
--> Some of them are discussed below.

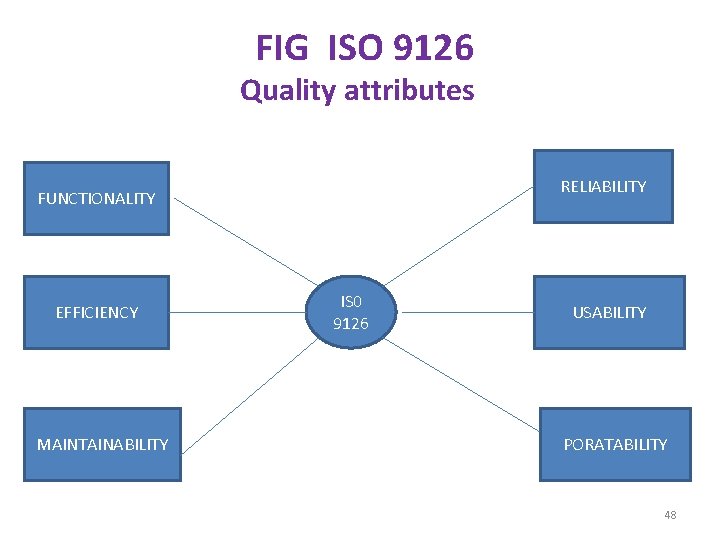

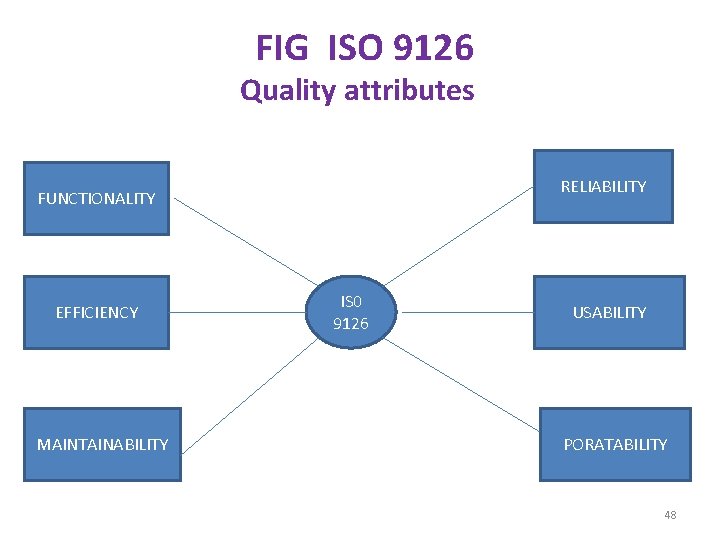

ISO 9126:
§ This is an international software quality standard from ISO.
§ It aims to eliminate any misunderstanding between customers and developers.
§ There may be problems with both customers and developers which may affect the delivery and quality of software projects.
§ ISO 9126 targets a common understanding between customers and developers.
§ ISO 9126 provides a set of six quality characteristics, which are shown in the figure.

Fig ISO 9126 quality attributes: Functionality, Reliability, Usability, Efficiency, Maintainability, Portability

ISO 9126 defines the following guidelines for implementation of this model:
Quality requirement definition:
§ A quality requirement specification is prepared with implied needs, technical documentation of the project and the ISO standard.
Evaluation preparation:
§ This stage involves the selection of appropriate metrics, a rating-level definition and the definition of assessment criteria.
§ Metrics are quantifiable measures mapped onto scales.
§ The rating-level definition determines what ranges of values on these scales count as satisfactory or unsatisfactory.
§ The assessment criteria definition takes as input the management criteria specific to the project and prepares a procedure for summarizing the results of evaluation.

Evaluation procedure:
§ The selected metrics are applied to the software product and values are taken on the scales.
§ For each measured value, the rating level is determined.
§ The set of rated levels is summarized.
§ This summary is then compared with other aspects such as time and cost.
§ The final managerial decision is taken based on the managerial criteria.
Capability maturity model (CMM):
§ It is a framework meant for the software development process.
§ This model aims to measure the quality of a process by placing it on a maturity scale.
§ The development of CMM was started in 1986 by the Software Engineering Institute (SEI) with the MITRE Corporation.

§ They developed a process maturity framework for improving software processes in organizations.
§ After working for four years on this framework, the SEI developed the CMM for software.
CMM structure:
§ The structure of CMM consists of five maturity levels.
§ These levels consist of key process areas (KPAs), which are organized by common features.
§ The common features in turn contain the key practices of each KPA, as shown in Fig 13.7(a).
Fig 13.7(a) CMM structure: maturity levels contain key process areas; key process areas are organized by common features; common features contain key practices.

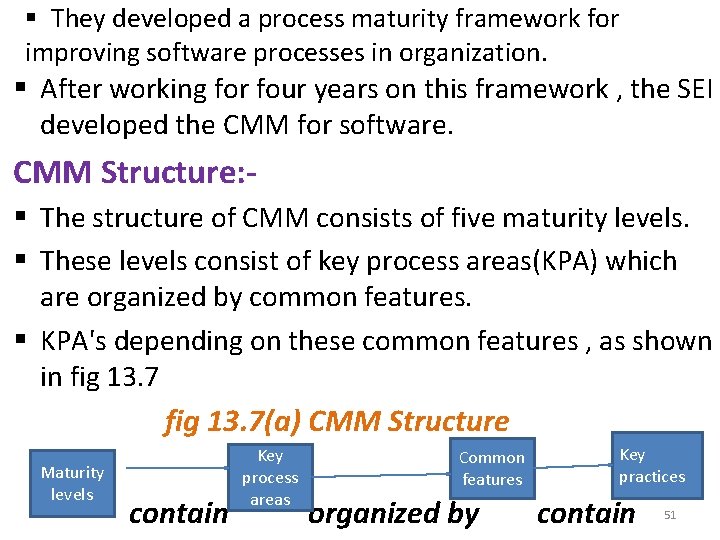

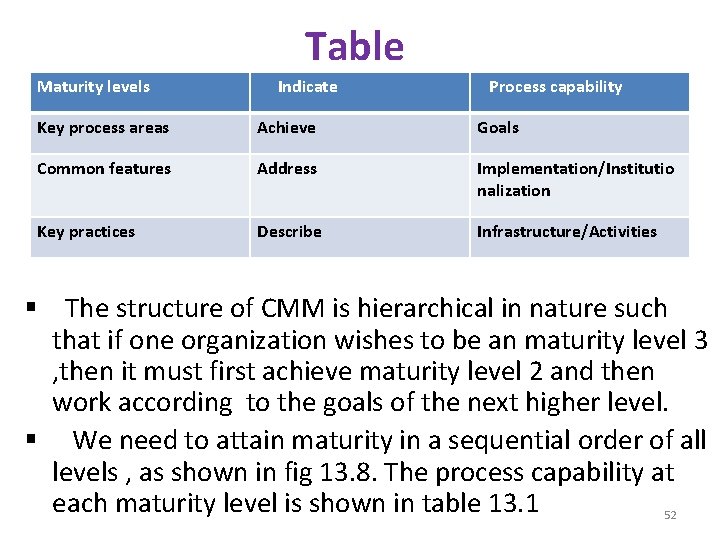

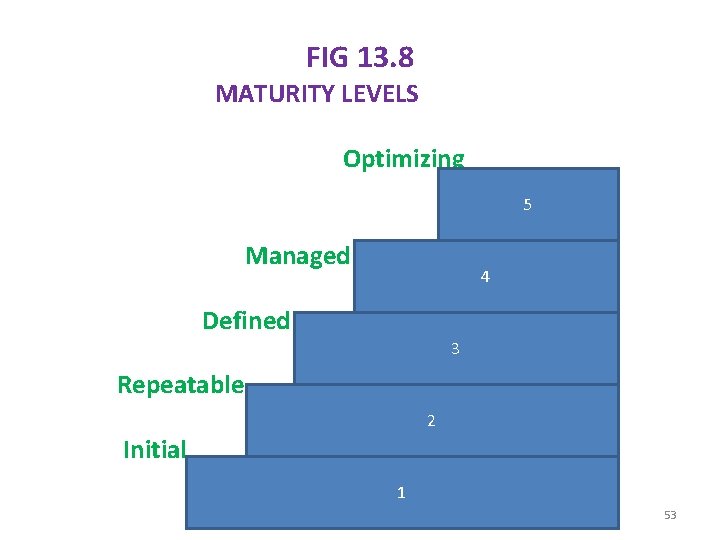

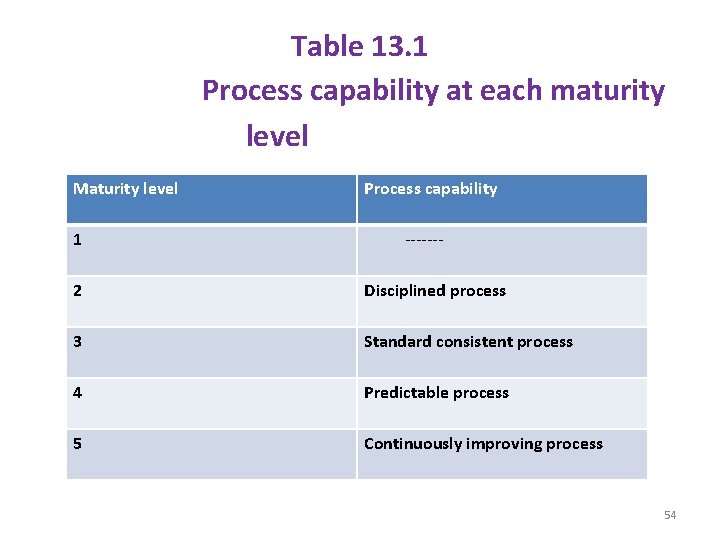

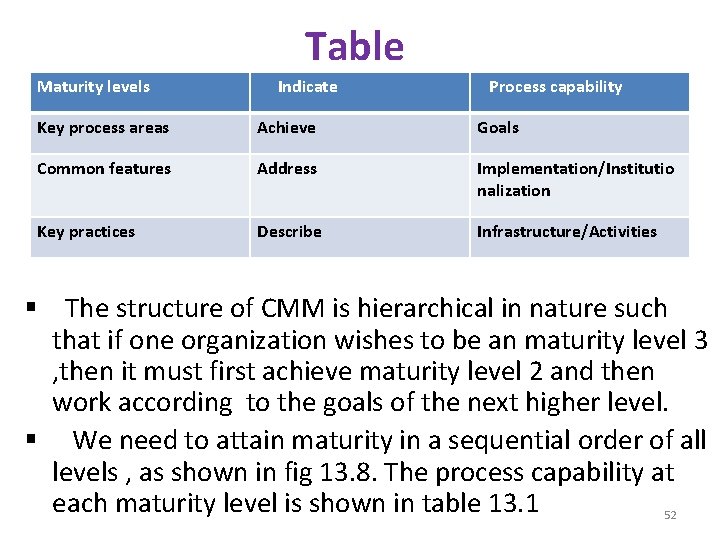

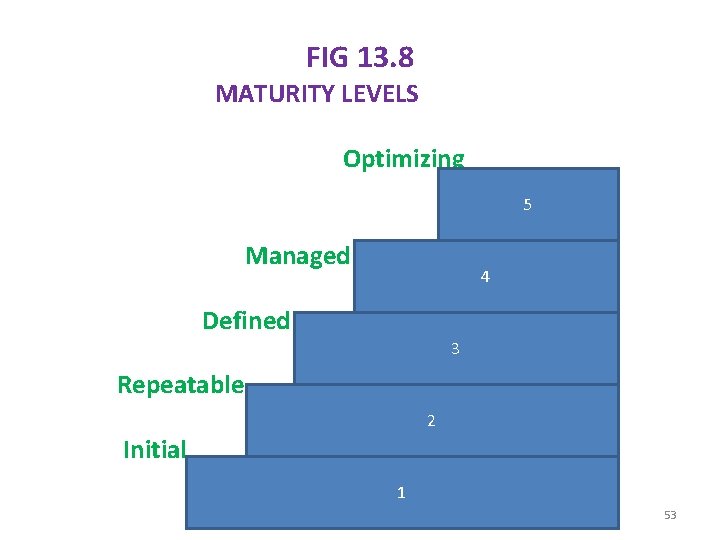

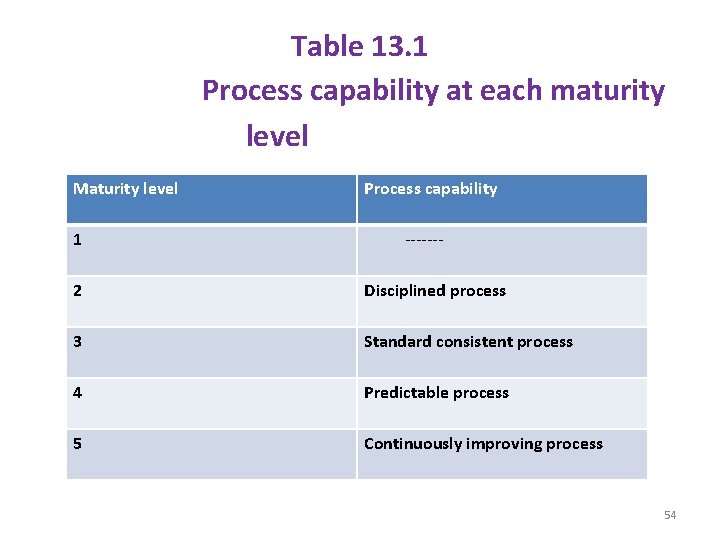

The roles of these elements are:
• Maturity levels indicate process capability.
• Key process areas achieve goals.
• Common features address implementation/institutionalization.
• Key practices describe infrastructure/activities.
§ The structure of CMM is hierarchical in nature, such that if an organization wishes to be at maturity level 3, it must first achieve maturity level 2 and then work according to the goals of the next higher level.
§ We need to attain maturity in sequential order through all the levels, as shown in Fig 13.8. The process capability at each maturity level is shown in Table 13.1.

Fig 13.8 Maturity levels (lowest to highest): 1 Initial, 2 Repeatable, 3 Defined, 4 Managed, 5 Optimizing

Table 13.1 Process capability at each maturity level

| Maturity level | Process capability |
|---|---|
| 1 | - |
| 2 | Disciplined process |
| 3 | Standard, consistent process |
| 4 | Predictable process |
| 5 | Continuously improving process |

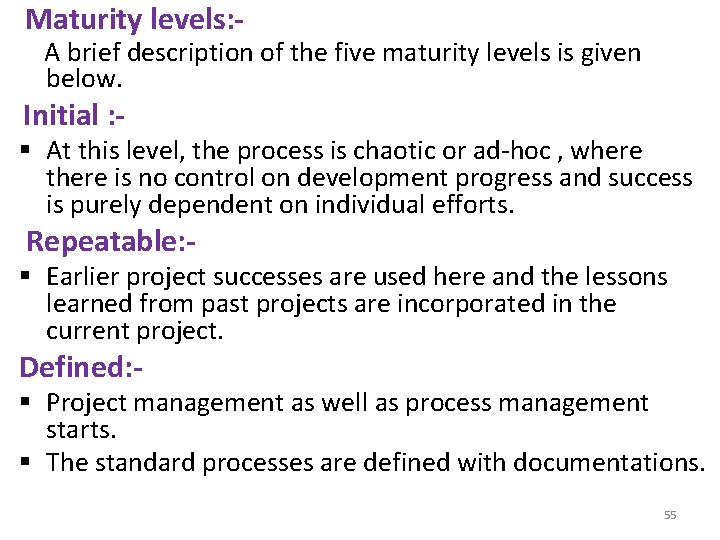

Maturity levels: A brief description of the five maturity levels is given below.
Initial:
§ At this level, the process is chaotic or ad hoc; there is no control over development progress, and success depends purely on individual efforts.
Repeatable:
§ Earlier project successes are used here, and the lessons learned from past projects are incorporated in the current project.
Defined:
§ Project management as well as process management starts.
§ The standard processes are defined with documentation.

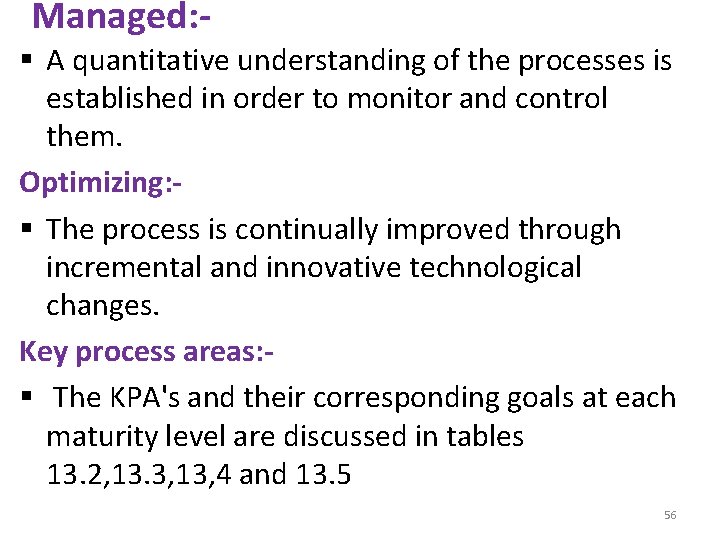

Managed:
§ A quantitative understanding of the processes is established in order to monitor and control them.
Optimizing:
§ The process is continually improved through incremental and innovative technological changes.
Key process areas:
§ The KPAs and their corresponding goals at each maturity level are discussed in Tables 13.2, 13.3, 13.4 and 13.5.

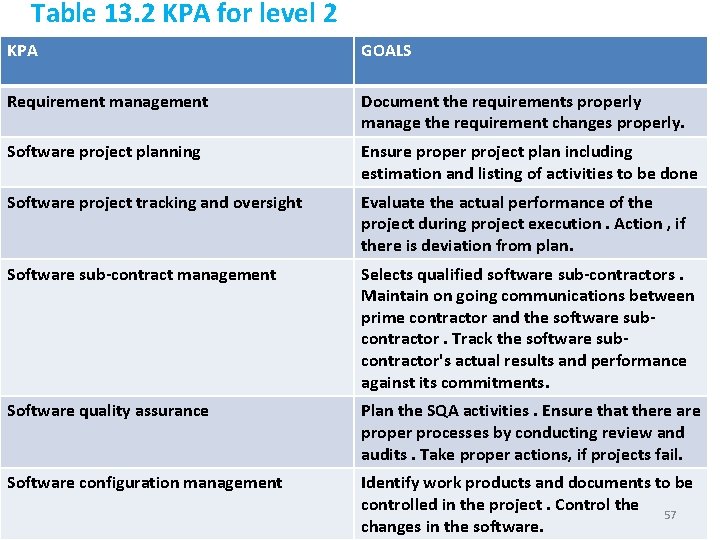

Table 13.2 KPAs for level 2

| KPA | Goals |
|---|---|
| Requirement management | Document the requirements properly. Manage the requirement changes properly. |
| Software project planning | Ensure a proper project plan, including estimation and a list of the activities to be done. |
| Software project tracking and oversight | Evaluate the actual performance of the project during project execution. Take action if there is a deviation from the plan. |
| Software sub-contract management | Select qualified software sub-contractors. Maintain ongoing communication between the prime contractor and the software sub-contractor. Track the software sub-contractor's actual results and performance against its commitments. |
| Software quality assurance | Plan the SQA activities. Ensure that there are proper processes by conducting reviews and audits. Take proper actions if projects fail. |
| Software configuration management | Identify the work products and documents to be controlled in the project. Control the changes in the software. |

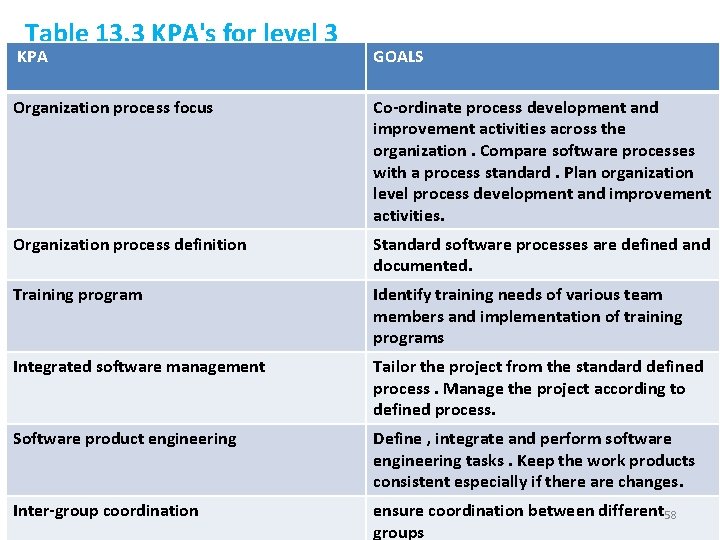

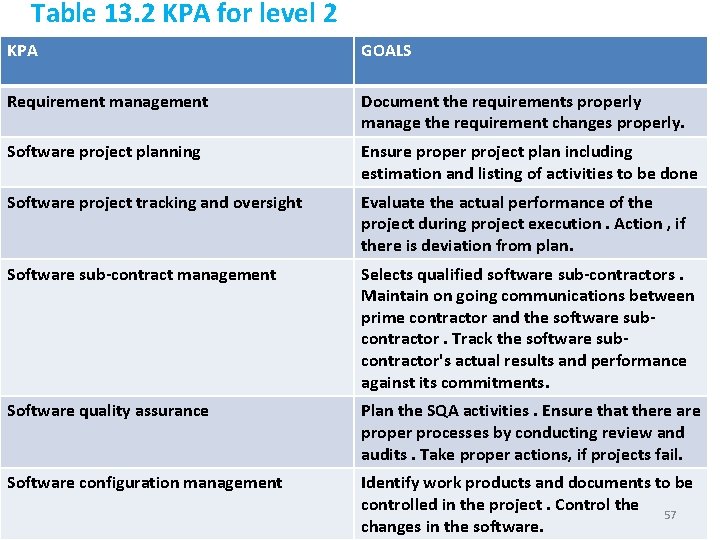

Table 13.3 KPAs for level 3

| KPA | Goals |
|---|---|
| Organization process focus | Coordinate process development and improvement activities across the organization. Compare software processes with a process standard. Plan organization-level process development and improvement activities. |
| Organization process definition | Standard software processes are defined and documented. |
| Training program | Identify the training needs of various team members and implement training programs. |
| Integrated software management | Tailor the project's process from the standard defined process. Manage the project according to the defined process. |
| Software product engineering | Define, integrate and perform software engineering tasks. Keep the work products consistent, especially if there are changes. |
| Inter-group coordination | Ensure coordination between different groups. Address project and organizational issues. |

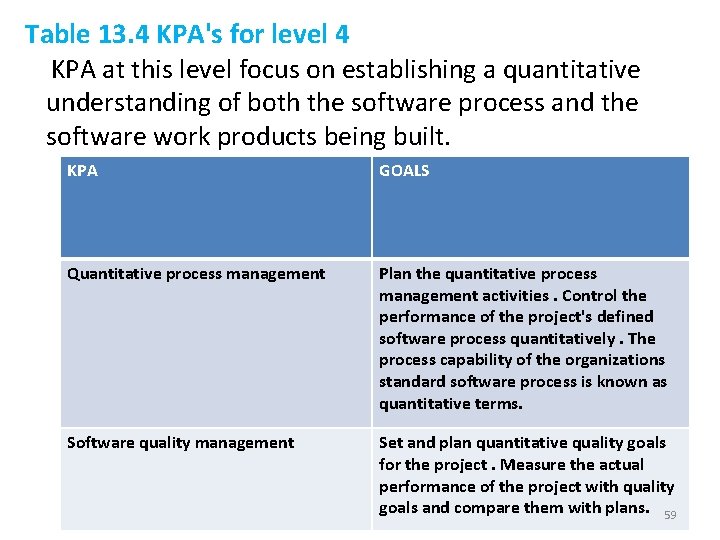

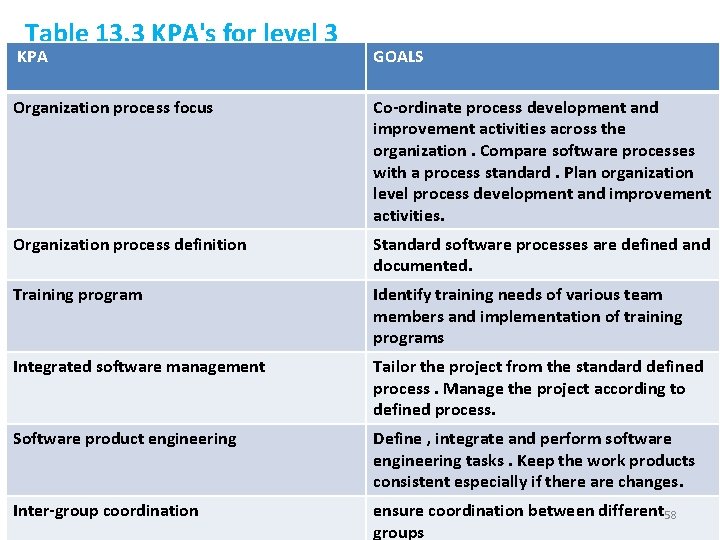

Table 13.4 KPAs for level 4

KPAs at this level focus on establishing a quantitative understanding of both the software process and the software work products being built.

| KPA | Goals |
|---|---|
| Quantitative process management | Plan the quantitative process management activities. Control the performance of the project's defined software process quantitatively. The process capability of the organization's standard software process is known in quantitative terms. |
| Software quality management | Set and plan quantitative quality goals for the project. Measure the actual performance of the project against the quality goals and compare it with the plans. |

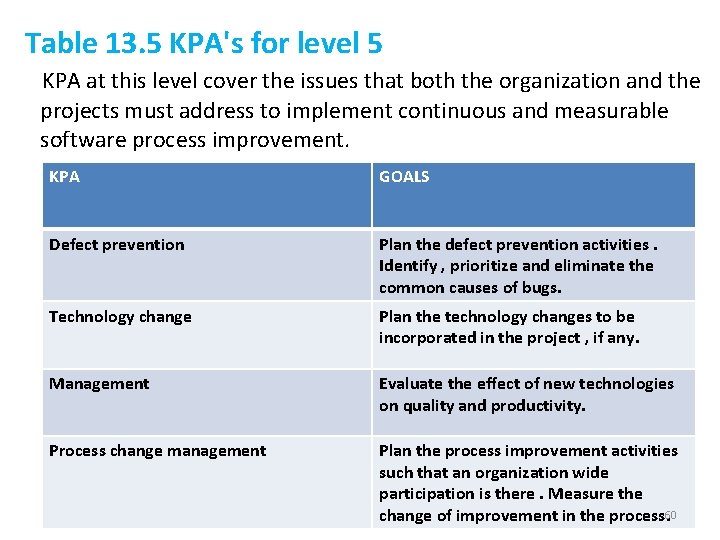

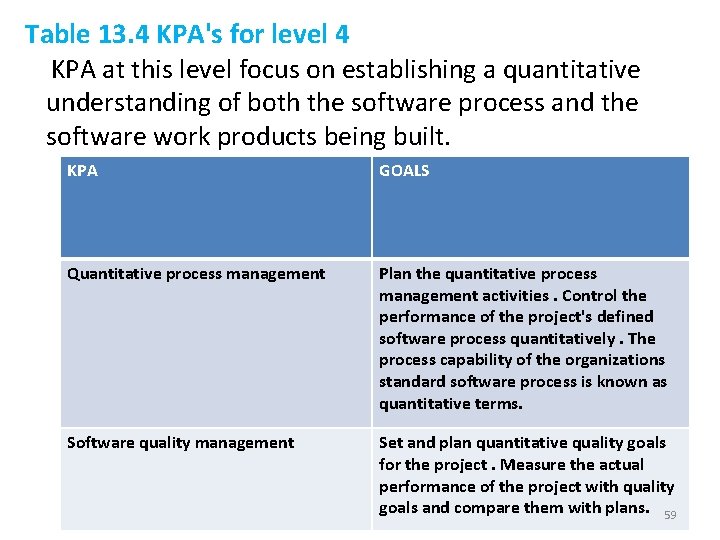

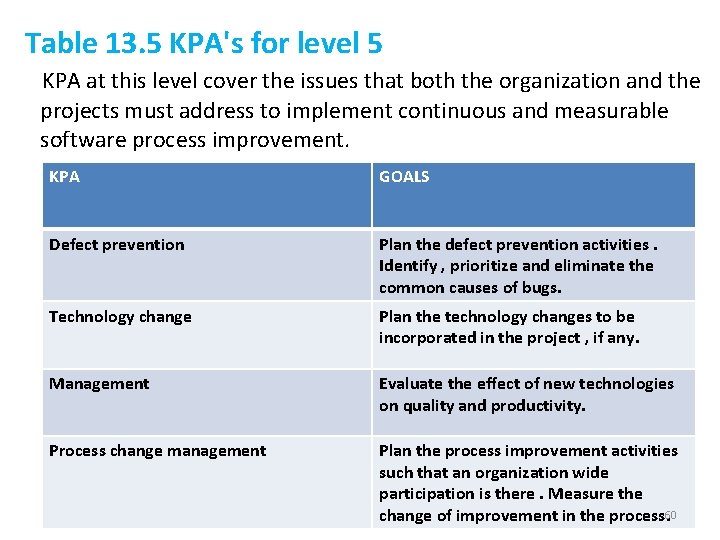

Table 13.5 KPAs for level 5

KPAs at this level cover the issues that both the organization and the projects must address to implement continuous and measurable software process improvement.

| KPA | Goals |
|---|---|
| Defect prevention | Plan the defect prevention activities. Identify, prioritize and eliminate the common causes of bugs. |
| Technology change management | Plan the technology changes to be incorporated in the project, if any. Evaluate the effect of new technologies on quality and productivity. |
| Process change management | Plan the process improvement activities such that there is organization-wide participation. Measure the improvement in the process. |

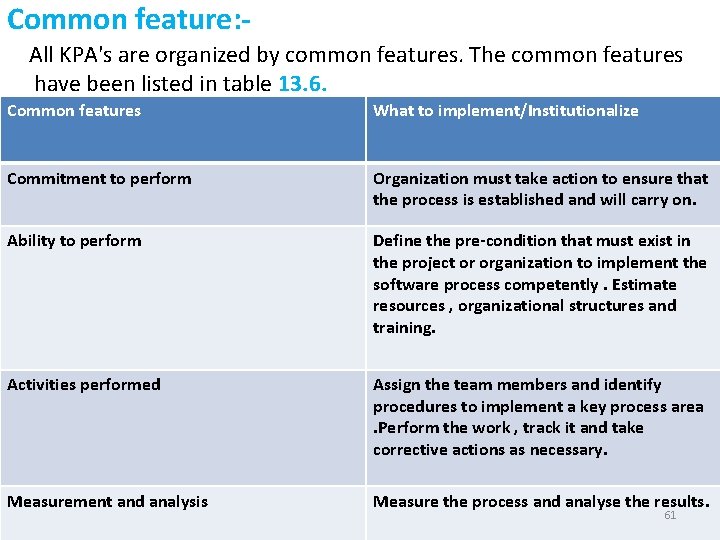

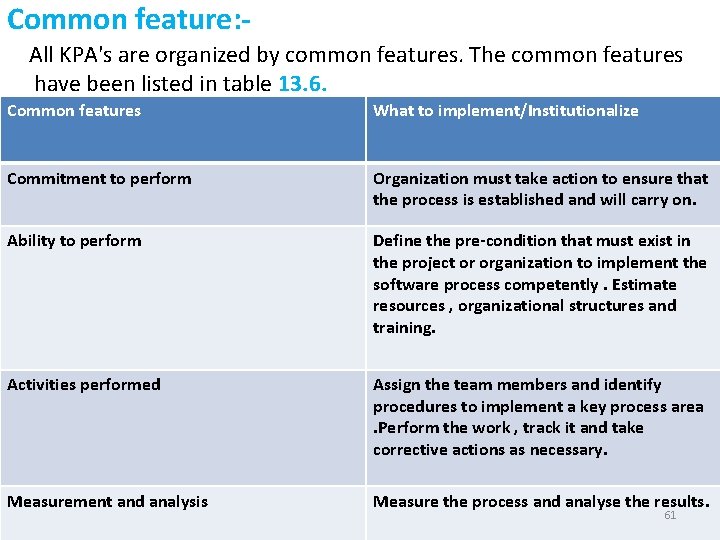

Common features: All KPAs are organized by common features. The common features are listed in Table 13.6.

Table 13.6 Common features

| Common feature | What to implement/institutionalize |
|---|---|
| Commitment to perform | The organization must take action to ensure that the process is established and will carry on. |
| Ability to perform | Define the preconditions that must exist in the project or organization to implement the software process competently. Estimate resources, organizational structures and training. |
| Activities performed | Assign the team members and identify procedures to implement a key process area. Perform the work, track it and take corrective actions as necessary. |
| Measurement and analysis | Measure the process and analyse the results. |

Assessment of process maturity:
§ The assessment is done by an assessment team consisting of some experienced team members.
§ These members start the assessment by collecting information about the process being assessed.
§ The information about the process can be collected in the form of maturity questionnaires, documentation or interviews.
Software total quality management (STQM):
Total quality management is a term that was originally coined in 1985 by the Naval Air Systems Command to describe its Japanese-style management approach to quality improvement.

§ TQM is defined as a quality-centered, customer-focused, fact-based, team-driven, senior-management-led process to achieve an organization's strategic imperative through continuous process improvement.
T = Total = everyone in the organization
Q = Quality = customer satisfaction
M = Management = people and processes
§ The elements of a TQM system can be summarized as follows:

1) Customer focus: The goal is to attain total customer satisfaction.
2) Process: The goal is to achieve continuous process improvement.
3) Human side of quality: The goal is to create a company-wide quality culture.
4) Measurement and analysis:
§ The goal is to drive continuous improvement in all quality parameters through a goal-oriented measurement system.
§ Long-term benefits that may be expected from TQM are higher productivity, increased morale, reduced costs and greater customer commitment.

Customer focus in software development: Requirement bugs constitute a large portion of software bugs.
Process, technology and development quality: The central question is how to develop the software effectively so that it conforms to the customers' requirements.
Human side of software quality: Quality, process and schedule management must be fully integrated for software development to be effective.
Measurement and analysis: Software development must be controlled so that the product is delivered on time and within budget. Software metrics help in measuring various factors during development and in monitoring and controlling them.

Six Sigma: § Six Sigma is a quality model originally developed for manufacturing processes. § It was developed by Motorola. § Six Sigma derives its meaning from the field of statistics. § Sigma (σ) is the standard deviation of a statistical population. § The goal of this model is to reach process quality at the six-sigma level, i.e., a process whose variation is small enough that six standard deviations fit between the process mean and the nearest specification limit. § Six Sigma can be applied to many fields, such as services, medical and insurance procedures, call centers and software.
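Because the sigma level is a statistical measure, it can be computed from defect counts. The sketch below is an illustration, not part of the Six Sigma standard itself: it computes defects per million opportunities (DPMO) and converts DPMO to a sigma level with the normal distribution. It assumes the conventional 1.5σ long-term shift, which is why a six-sigma process corresponds to roughly 3.4 DPMO rather than the much smaller tail beyond 6σ. The project figures (17 defects, 500 modules, 10 opportunities per module) are hypothetical.

```python
from statistics import NormalDist

# Assumption: the conventional 1.5-sigma long-term shift used in Six Sigma tables.
LONG_TERM_SHIFT = 1.5


def dpmo(defects: int, units: int, opportunities_per_unit: int) -> float:
    """Defects per million opportunities."""
    total_opportunities = units * opportunities_per_unit
    return defects / total_opportunities * 1_000_000


def sigma_level(dpmo_value: float, shift: float = LONG_TERM_SHIFT) -> float:
    """Sigma level for a given DPMO, read off the standard normal distribution."""
    yield_fraction = 1 - dpmo_value / 1_000_000  # fraction of defect-free opportunities
    return NormalDist().inv_cdf(yield_fraction) + shift


# Hypothetical data: 17 defects found in 500 modules, 10 opportunities per module.
project_dpmo = dpmo(defects=17, units=500, opportunities_per_unit=10)
print(f"DPMO = {project_dpmo:.1f}")
print(f"sigma level = {sigma_level(project_dpmo):.2f}")
print(f"sigma level at 3.4 DPMO = {sigma_level(3.4):.2f}")
```

The 3.4 DPMO case evaluates to approximately 6.0, which reproduces the Six Sigma target under the 1.5σ shift assumption.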

§ Six Sigma processes produce fewer than 3.4 defects per million opportunities. § To achieve this target, Six Sigma uses a methodology known as DMAIC, with the following steps:
1. Define opportunities
2. Measure performance
3. Analyse opportunity
4. Improve performance
5. Control performance
******END*******

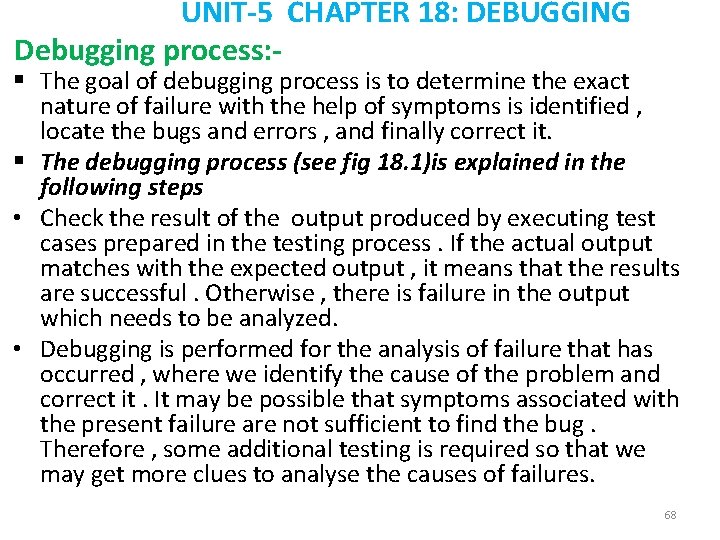

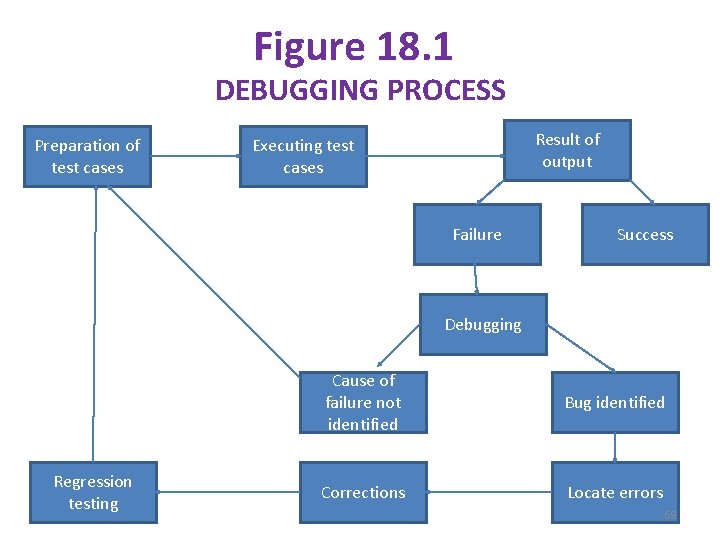

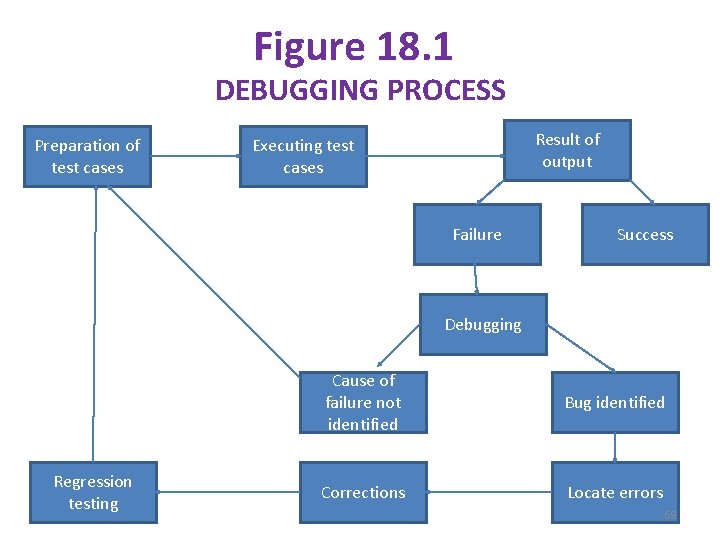

UNIT-5 CHAPTER 18: DEBUGGING

Debugging process: § The goal of the debugging process is to determine the exact nature of a failure from its identified symptoms, locate the bugs and errors, and finally correct them. § The debugging process (see Fig. 18.1) is explained in the following steps:
• Check the output produced by executing the test cases prepared in the testing process. If the actual output matches the expected output, the results are successful. Otherwise, there is a failure in the output, which needs to be analysed.
• Debugging is performed to analyse the failure that has occurred: we identify the cause of the problem and correct it. The symptoms associated with the present failure may not be sufficient to find the bug. Therefore, some additional testing is required to get more clues for analysing the causes of the failure.

Figure 18.1: Debugging process (preparation of test cases, executing test cases, result of output, success or failure, debugging, cause of failure not identified, locate errors, bug identified, corrections, regression testing).

Debugging techniques:

Debugging with memory dump: § In this technique, a printout (dump) of the contents of memory locations and registers is taken, and the relevant data of the program is examined through these memory locations and registers for any bug in the program. § This method is inefficient. § Following are some drawbacks of this method:
1. It is difficult to establish the correspondence between storage locations and the variables in one's source program.
2. Much of the massive amount of data one is faced with is irrelevant.
3. It is limited to the static state of the program, as it shows the state of the program at only one instant of time.

Debugging with watch points: § The program's final output failure may not give sufficient clues about the bug. § At a particular point of execution in the program, the values of variables or other actions can be verified. § These particular points of execution are known as watch points. § Debugging with watch points can be implemented with the following methods.

Output statements: § In this method, output statements are used to check the state of a condition or a variable at some watch point in the program. § Executing the output statements may give some clues to find the bug.
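A minimal sketch of watch points implemented as output statements. The function `sum_of_values` is hypothetical and deliberately contains an off-by-one bug; the printed loop state at the watch point is the clue that reveals it.

```python
def sum_of_values(values):
    """Intended to return the sum of all items in `values` (contains a planted bug)."""
    total = 0
    for i in range(1, len(values)):  # Bug: the loop should start at index 0
        total += values[i]
        # Watch point: an output statement exposing the loop state.
        print(f"[watch] i={i} value={values[i]} total={total}")
    return total


result = sum_of_values([2, 4, 6])
print(f"[watch] result={result}")
```

The watch-point output shows the loop starting at `i=1`, so the first element is never added and the result is 10 instead of 12. Note that these extra `print` calls are exactly the kind of code changes that must be removed after debugging.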

§ This method has the following drawbacks:
1. It may require many changes in the code. These changes may mask an error or introduce new errors in the program.
2. After analysing the bug, we may forget to remove the added statements, which may cause other failures or misinterpretations of the result.

Breakpoint execution: § This is an advanced form of the watch point, used with an automated debugger program. § A breakpoint is a watch point inserted at various places in the program. § The other difference in this method is that the program is executed up to the inserted breakpoint. § At that point, you can examine whatever is desired.

§ Afterwards, the program resumes and executes up to the next breakpoint. § Breakpoints have obvious advantages over output statements. Some are discussed here:
a) There is no need to recompile the program after inserting a breakpoint, whereas recompilation is necessary after inserting output statements.
b) Removing breakpoints once they are no longer required is easy compared to removing all the output statements inserted in the program.
c) The status of a program variable or a particular condition can be seen when execution reaches a breakpoint, as execution temporarily stops at the breakpoint.

§ Breakpoints can be categorized as follows:
1) Unconditional breakpoint: A simple breakpoint without any condition to be evaluated.
2) Conditional breakpoint: When this breakpoint is reached, an expression is evaluated for its Boolean value. If it is true, the breakpoint causes a stop; otherwise, execution continues.
3) Temporary breakpoint: This breakpoint is used only once in the program. When it is set, the program starts running, and once it stops there, the temporary breakpoint is removed.
4) Internal breakpoint: These are invisible to the user but are key to the debugger's correct handling of its algorithms.
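The four categories above differ only in how a debugger decides whether to stop. The sketch below models that decision with a hypothetical `ToyDebugger` class; it is not the API of any real debugger. It assumes breakpoints are keyed by source line number and that conditions are Python callables over a dictionary of variables, whereas real debuggers evaluate expressions written in the debugged program's language.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Breakpoint:
    line: int
    # None means unconditional; otherwise a predicate over the program's variables.
    condition: Optional[Callable[[Dict[str, object]], bool]] = None
    temporary: bool = False  # removed after it causes its first stop
    internal: bool = False   # used by the debugger itself, hidden from the user


class ToyDebugger:
    """Hypothetical model of breakpoint handling (illustration only)."""

    def __init__(self) -> None:
        self.breakpoints: List[Breakpoint] = []

    def set(self, bp: Breakpoint) -> None:
        self.breakpoints.append(bp)

    def visible(self) -> List[Breakpoint]:
        """Breakpoints shown to the user (internal ones are hidden)."""
        return [bp for bp in self.breakpoints if not bp.internal]

    def should_stop(self, line: int, variables: Dict[str, object]) -> bool:
        """Decide whether execution stops when it reaches `line`."""
        for bp in list(self.breakpoints):
            if bp.line != line:
                continue
            if bp.condition is not None and not bp.condition(variables):
                continue  # conditional breakpoint whose expression is false
            if bp.temporary:
                self.breakpoints.remove(bp)  # a temporary breakpoint is used only once
            return True
        return False


dbg = ToyDebugger()
dbg.set(Breakpoint(line=10))                                   # unconditional
dbg.set(Breakpoint(line=20, condition=lambda v: v["i"] == 3))  # conditional
dbg.set(Breakpoint(line=30, temporary=True))                   # temporary
dbg.set(Breakpoint(line=40, internal=True))                    # internal

print(dbg.should_stop(10, {}))        # True
print(dbg.should_stop(20, {"i": 1}))  # False: condition is false
print(dbg.should_stop(20, {"i": 3}))  # True
print(dbg.should_stop(30, {}))        # True, and the temporary breakpoint is removed
print(dbg.should_stop(30, {}))        # False
print(len(dbg.visible()))             # 2: the internal breakpoint is hidden
```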

Single stepping: § The idea of single stepping is that users should be able to watch the execution of the program after every executable instruction. § Single stepping is implemented with the help of internal breakpoints.

Step-into: § Execution proceeds into any function called in the current source statement and stops at the first executable source line in that function.

Step-over: § It is also called skip. § It treats a call to a function as an atomic operation and proceeds past any function calls to the textually succeeding source line in the current scope.
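The difference between step-into and step-over can be observed with a line tracer. The sketch below does not use internal breakpoints; it swaps in Python's `sys.settrace` hook, which reports each executed source line. `record_steps`, `double` and `program` are hypothetical names for illustration. With `step_into=False`, lines inside called functions are not recorded, so each call behaves as one atomic step.

```python
import sys
from typing import Callable, List, Tuple


def record_steps(func: Callable[[], object], step_into: bool) -> List[Tuple[str, int]]:
    """Run func() and record (function name, line offset) for each executed line.

    step_into=True  -> also record lines inside functions that func() calls.
    step_into=False -> treat those calls as atomic (step over them).
    """
    steps: List[Tuple[str, int]] = []
    top_code = func.__code__

    def line_tracer(frame, event, arg):
        if event == "line":
            code = frame.f_code
            steps.append((code.co_name, frame.f_lineno - code.co_firstlineno))
        return line_tracer

    def call_tracer(frame, event, arg):
        # Invoked when a new Python frame is entered while tracing is active.
        if frame.f_code is top_code or step_into:
            return line_tracer  # trace the lines of this frame
        return None             # skip this frame's lines (step over)

    previous = sys.gettrace()
    sys.settrace(call_tracer)
    try:
        func()
    finally:
        sys.settrace(previous)  # restore whatever tracer was active before
    return steps


def double(x):
    y = x * 2
    return y


def program():
    a = double(1)
    b = a + 1
    return b


print("step-over:", record_steps(program, step_into=False))
print("step-into:", record_steps(program, step_into=True))
```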

Backtracking: § This is a logical approach to debugging a program. The following are the steps of the backtracking process (see Fig. 18.2).

Figure 18.2: Backtracking (source code of various modules, site where symptoms are uncovered, modular design).

• If the symptoms are sufficient to provide clues about the bug, the cause of the failure is identified. The bug is traced to find the actual location of the error.
• Once we find the actual location of the error, the bug is removed with corrections.
• Regression testing is performed because the bug has been removed through corrections to the software. To validate the corrections, regression testing is necessary after every modification.

Explanation:
a) Observe the symptom of the failure at the output side and reach the site where the symptom can be uncovered. For example, suppose a value is not displayed on the output. After examining the symptom of this problem, you uncover that one module is not sending the proper message to another module.
b) Once you have reached the site where the symptom has been uncovered, trace the source code backwards and move to the highest level of abstraction of the design.
c) Slowly isolate the module in which the bug resides through logical backtracking, using data flow diagrams (DFDs) of the modules.
d) Logical backtracking in the isolated module will lead to the actual bug, and the error can thus be removed.
§ Compared to the other methods, this method is very effective in pinpointing the error location quickly.
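Step (c) can be pictured as walking a data flow diagram backwards from the site where the symptom appears. The sketch below assumes the DFD is given as a hypothetical dictionary mapping each module to the modules it sends data to; it lists the upstream modules, nearest first, as the order in which to inspect them. It illustrates the idea only; the actual isolation still depends on examining each module's logic.

```python
from collections import deque
from typing import Dict, List


def backtrack_suspects(data_flow: Dict[str, List[str]], symptom_site: str) -> List[str]:
    """Return the modules upstream of symptom_site, nearest first.

    data_flow maps each module to the modules it sends data to (DFD edges).
    """
    # Invert the edges so we can walk from a receiver back to its senders.
    senders: Dict[str, List[str]] = {}
    for src, targets in data_flow.items():
        for dst in targets:
            senders.setdefault(dst, []).append(src)

    suspects: List[str] = []
    seen = {symptom_site}
    queue = deque([symptom_site])
    while queue:  # breadth-first search over the reversed data flow
        module = queue.popleft()
        for upstream in senders.get(module, []):
            if upstream not in seen:
                seen.add(upstream)
                suspects.append(upstream)
                queue.append(upstream)
    return suspects


# Hypothetical DFD: a value fails to appear on the display.
dfd = {
    "input": ["parse"],
    "parse": ["compute"],
    "config": ["compute"],
    "compute": ["format"],
    "format": ["display"],
}
print(backtrack_suspects(dfd, "display"))
# ['format', 'compute', 'parse', 'config', 'input']
```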

18.5 Correcting the bugs: § The second phase of the debugging process is to correct the error once it has been uncovered. § Before correcting the errors, we should concentrate on the following points:
a) Evaluate the coupling of the logic and data structures where corrections are to be made.
b) After recognizing the influence of the corrections on other modules or parts, plan the regression test cases.
c) Perform regression testing after every correction to the software to ensure that the corrections have not introduced bugs in other parts of the software.

18.5.1 Debugging Guidelines:

Fresh thinking leads to good debugging: § Don't spend hours and hours continuously debugging a problem. § Rest in between and start again with a fresh mind to concentrate on the problem.

Don't isolate the bug from your colleagues: § It has been observed that many bugs cannot be solved in isolation. § Show the problem to your colleagues, explain to them what is happening and discuss the solution. § It has also been observed that just discussing the problem with others may suddenly lead you to a solution.

Don't attempt code modification in the first attempt: § Debugging always starts with the analysis of clues. § If you don't analyse the failure and the clues and simply change the code randomly, you will only add junk code to the software.

Additional test cases are a must if you don't get the symptoms or clues to solve the problem: § Design test cases with a view to executing those parts of the program that are causing the problem.

Regression testing is a must after debugging: § The process of debugging does not end with fixing the error. § After fixing the error, you need to understand the effects of this change on other parts of the software. § That is why regression testing is necessary after fixing the errors.
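Understanding the effects of a change on other parts can be made concrete with a dependency map. The sketch below shows one simple, assumed form of selective regression testing: starting from the changed modules, it collects every module that depends on them (directly or transitively) and selects the tests that exercise any of those modules. The module names, the `callers` map and the test-to-module `coverage` map are hypothetical. When time allows, rerunning the full suite remains the safer choice.

```python
from typing import Dict, List, Set


def affected_modules(changed: Set[str], callers: Dict[str, List[str]]) -> Set[str]:
    """Changed modules plus every module that (transitively) depends on them."""
    affected = set(changed)
    stack = list(changed)
    while stack:
        module = stack.pop()
        for caller in callers.get(module, []):
            if caller not in affected:
                affected.add(caller)
                stack.append(caller)
    return affected


def select_regression_tests(changed: Set[str],
                            callers: Dict[str, List[str]],
                            test_coverage: Dict[str, Set[str]]) -> List[str]:
    """Select the tests that exercise at least one affected module."""
    affected = affected_modules(changed, callers)
    return sorted(test for test, modules in test_coverage.items() if modules & affected)


# Hypothetical project: who calls each module, and which modules each test covers.
callers = {"parser": ["compiler"], "compiler": ["cli"], "logger": ["cli"]}
coverage = {
    "test_parser": {"parser"},
    "test_compiler": {"compiler"},
    "test_cli": {"cli"},
    "test_logger": {"logger"},
}
print(select_regression_tests({"parser"}, callers, coverage))
# ['test_cli', 'test_compiler', 'test_parser']
```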

Design should be referred to before fixing the error: § Any change in the code to fix the bug should conform to the pre-specified design of the software. *******