Synchronizing Processes Clocks External clock synchronization Cristian Internal

- Slides: 46

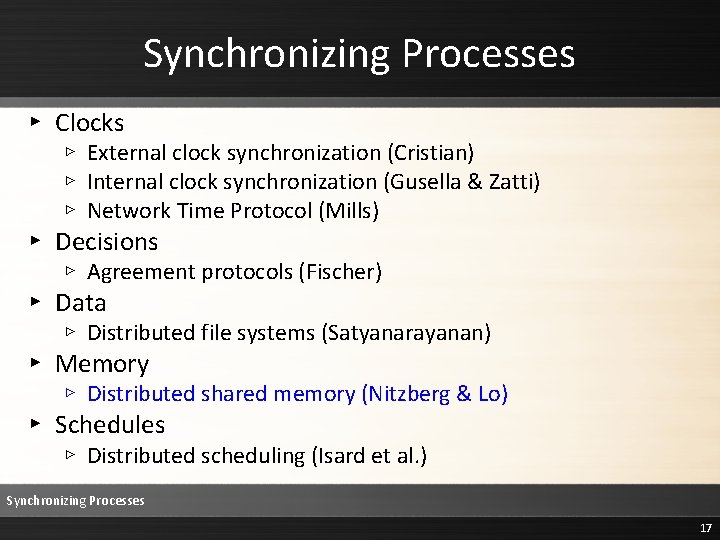

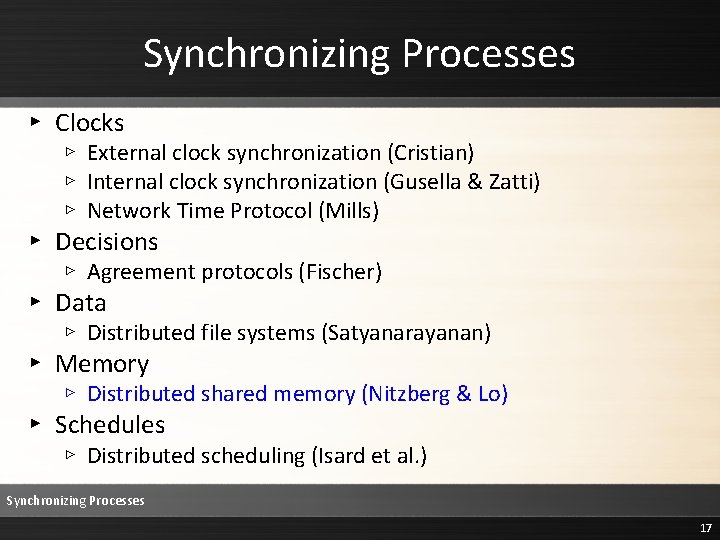

Synchronizing Processes
▸ Clocks
  ▹ External clock synchronization (Cristian)
  ▹ Internal clock synchronization (Gusella & Zatti)
  ▹ Network Time Protocol (Mills)
▸ Decisions
  ▹ Agreement protocols (Fischer)
▸ Data
  ▹ Distributed file systems (Satyanarayanan)
▸ Memory
  ▹ Distributed shared memory (Nitzberg & Lo)
▸ Schedules
  ▹ Distributed scheduling (Isard et al.)

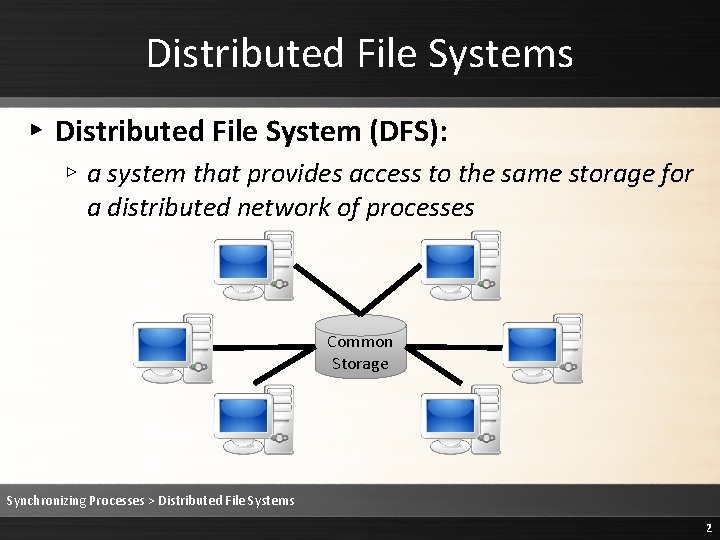

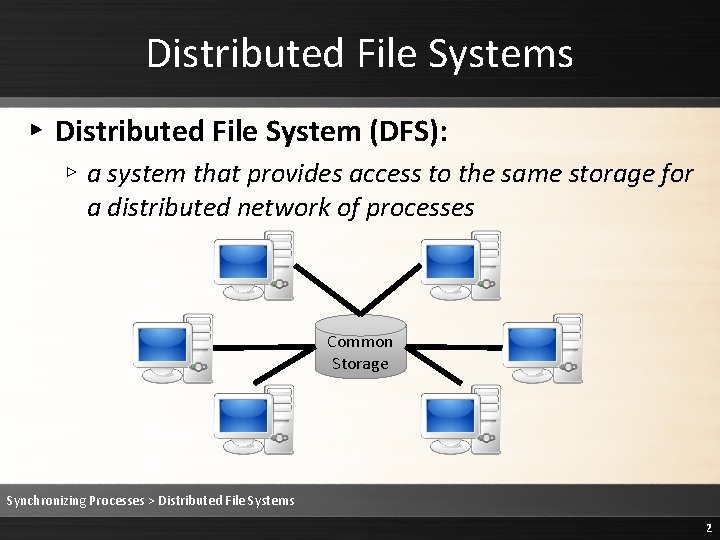

Distributed File Systems
▸ Distributed File System (DFS):
  ▹ a system that provides access to the same storage for a distributed network of processes
(Figure: processes accessing a common storage)

Benefits of DFSs
▸ Data sharing is simplified
  ▹ Files appear to be local
  ▹ Users are not required to specify remote servers to access
▸ User mobility is supported
  ▹ Any workstation in the system can access the storage
▸ System administration is easier
  ▹ Operations staff can focus on a small number of servers instead of a large number of workstations
▸ Better security is possible
  ▹ Servers can be physically secured
  ▹ No user programs are executed on the servers
▸ Site autonomy is improved
  ▹ Workstations can be turned off without disrupting the storage

Design Principles for DFSs
▸ Utilize workstations when possible
  ▹ Opt to perform operations on workstations rather than servers to improve scalability
▸ Cache whenever possible
  ▹ This reduces contention on centralized resources and transparently makes data available whenever it is used
▸ Exploit file usage characteristics
  ▹ Knowledge of how files are accessed can be used to make better choices
  ▹ Ex: Temporary files are rarely shared, hence can be kept locally
▸ Minimize system-wide knowledge and change
  ▹ Scalability is enhanced if global information is rarely monitored or updated
▸ Trust the fewest possible entities
  ▹ Security is improved by trusting a smaller number of processes
▸ Batch if possible
  ▹ Transferring files in large chunks improves overall throughput

Mechanisms for Building DFSs
▸ Mount points
  ▹ Enable filename spaces to be “glued” together to provide a single, seamless, hierarchical namespace
▸ Client caching
  ▹ Contributes the most to better performance in DFSs
▸ Hints
  ▹ Pieces of information that can substantially improve performance if correct, but have no semantically negative consequence if erroneous
  ▹ Ex: Caching mappings of pathname prefixes
▸ Bulk data transfer
  ▹ Reduces network communication overhead by transferring data in bulk
▸ Encryption
  ▹ Used for remote authentication, with either private or public keys
▸ Replication
  ▹ Storing the same data on multiple servers increases availability
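The hint mechanism can be sketched in a few lines of Python. This is a hypothetical illustration, not code from AFS or Coda: the `PrefixHintCache` class and its `authoritative_lookup` and `server_has` callables are assumptions. A correct hint skips the slow lookup; a wrong hint costs only one failed check before it is replaced.

```python
class PrefixHintCache:
    """Caches pathname-prefix -> server mappings as hints (hypothetical sketch)."""

    def __init__(self, authoritative_lookup, server_has):
        self.hints = {}                      # prefix -> server; may be stale
        self.lookup = authoritative_lookup   # slow but always correct: path -> (server, prefix)
        self.server_has = server_has         # cheap check: does `server` really hold `path`?

    @staticmethod
    def _covers(prefix, path):
        # True if `prefix` names `path` itself or one of its ancestor directories
        return path == prefix or path.startswith(prefix.rstrip("/") + "/")

    def resolve(self, path):
        matches = [p for p in self.hints if self._covers(p, path)]
        if matches:
            best = max(matches, key=len)     # longest matching prefix wins
            server = self.hints[best]
            if self.server_has(server, path):
                return server                # correct hint: fast path
            del self.hints[best]             # wrong hint: only a wasted check, no harm done
        server, prefix = self.lookup(path)   # fall back to the authoritative answer
        self.hints[prefix] = server
        return server
```

Correctness never depends on the hint: every answer is either verified by `server_has` or comes from the authoritative lookup.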

DFS Case Studies
▸ Two case studies:
  ▹ Andrew (AFS)
  ▹ Coda
▸ Both were:
  ▹ Developed at Carnegie Mellon University (CMU)
  ▹ Unix-based DFSs
  ▹ Focused on scalability, security, and availability

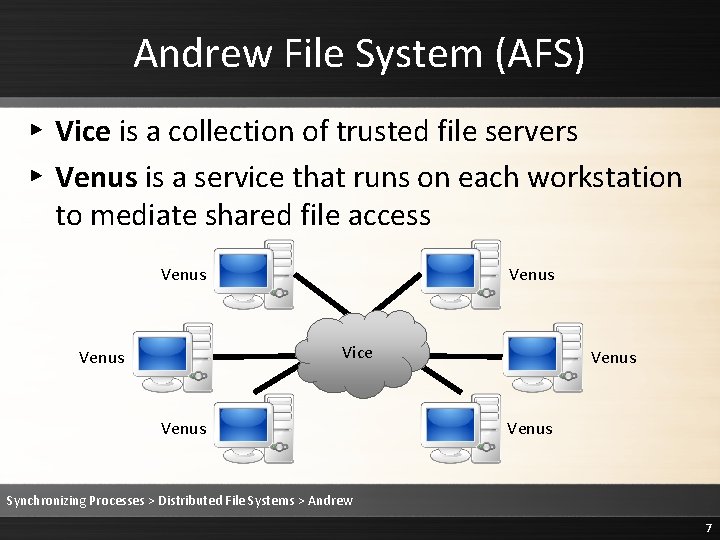

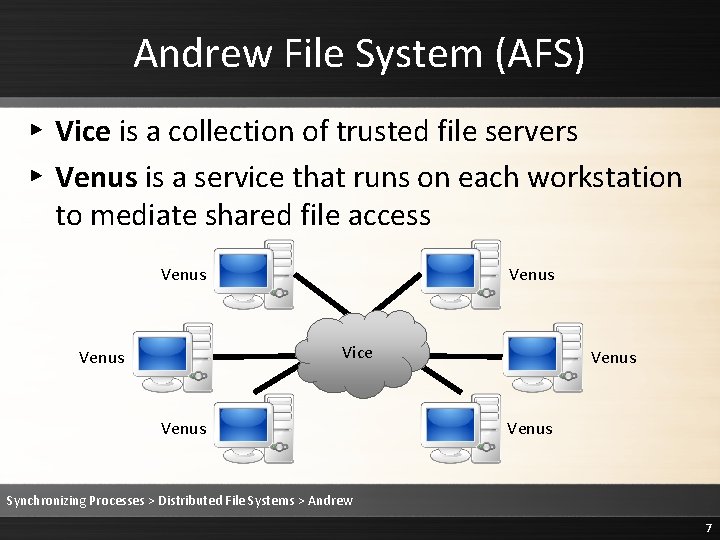

Andrew File System (AFS)
▸ Vice is a collection of trusted file servers
▸ Venus is a service that runs on each workstation to mediate shared file access
(Figure: Venus on each workstation accessing the shared Vice servers)

AFS-2
▸ Used from 1985 through 1989
▸ Venus now used an optimistic approach to maintaining cache coherence
  ▹ All cached files were considered valid
  ▹ Callbacks were used
    ▸ When a file is cached on a workstation, the server promises to notify the workstation if the file is about to be modified by another machine
▸ A remote procedure call (RPC) mechanism was used to optimize bulk file transfers
▸ Mount points and volumes were used instead of stub directories so that files could easily be moved among the servers
  ▹ Each user was normally assigned a volume and a disk quota
  ▹ Read-only replication of volumes increased availability
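A toy model of the callback scheme follows. It is a sketch under simplifying assumptions (a single server, in-process method calls standing in for RPCs, and hypothetical class names), not AFS code. A workstation trusts its cached copy until the server "breaks" its callback because another machine stored a new version.

```python
from collections import defaultdict


class CallbackServer:
    """Toy file server that keeps callback promises (hypothetical sketch)."""

    def __init__(self):
        self.files = {}
        self.callbacks = defaultdict(set)   # file name -> workstations promised a notification

    def fetch(self, client, name):
        self.callbacks[name].add(client)    # promise to notify this client of changes
        return self.files[name]

    def store(self, writer, name, data):
        self.files[name] = data
        for client in self.callbacks[name] - {writer}:
            client.break_callback(name)     # notify every other caching workstation
        self.callbacks[name] = {writer}


class Workstation:
    def __init__(self, server):
        self.server = server
        self.cache = {}

    def read(self, name):
        if name not in self.cache:          # optimistic: a cached copy is assumed valid
            self.cache[name] = self.server.fetch(self, name)
        return self.cache[name]

    def write(self, name, data):
        self.cache[name] = data
        self.server.store(self, name, data)

    def break_callback(self, name):
        self.cache.pop(name, None)          # next read will refetch from the server
```

Reads of a cached file cost no server traffic at all; the server pays instead by tracking which workstations hold each file.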

Security in Andrew
▸ Protection domains
  ▹ Each is composed of users and groups
  ▹ Each group is associated with a unique owner (user)
  ▹ A protection server is used to immediately reflect changes in domains
▸ Authentication
  ▹ Upon login, a user’s password is used to obtain tokens from an authentication server
  ▹ Venus uses these tokens to establish secure RPC connections to the file servers
▸ File system protection
  ▹ Access lists are used to determine access to directories instead of files, including negative rights
▸ Resource usage
  ▹ Andrew’s protection and authentication mechanisms protect against denial of service and of resources

Coda Overview
▸ Clients cache entire files on their local disks
▸ Cache coherence is maintained by the use of callbacks
▸ Clients dynamically find files on servers and cache location information
▸ Token-based authentication and end-to-end encryption are used for security
▸ Provides failure resiliency through two mechanisms:
  ▹ Server replication: storing copies of files on multiple servers
  ▹ Disconnected operation: a mode of optimistic execution in which the client relies solely on cached data

Server Replication
▸ Replicated Volume:
  ▹ consists of several physical volumes or replicas that are managed as one logical volume by the system
▸ Volume Storage Group (VSG):
  ▹ a set of servers maintaining a replicated volume
▸ Accessible VSG (AVSG):
  ▹ the subset of the VSG that is currently accessible
  ▹ Venus performs periodic probes to detect changes in the AVSG
  ▹ One member is designated as the preferred server

Server Replication
▸ Venus employs a Read-One, Write-All strategy
▸ For a read request,
  ▹ If the file is in the local cache,
    ▸ Venus reads the cached copy instead of contacting the VSG
  ▹ If the file is not cached,
    ▸ Venus contacts the preferred server for its copy
    ▸ Venus also contacts the other AVSG members for their version numbers
    ▸ If the preferred server’s copy is stale, a new, up-to-date preferred server is selected from the AVSG and the fetch is repeated
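The read path above can be sketched as a small Python function. The data layout is a hypothetical simplification: `avsg` maps each reachable server to a dict of `path -> (version, data)`, versions are plain integers rather than the richer version information Coda actually keeps, and cache validation by callbacks is left out.

```python
def coda_read(cache, path, avsg, preferred):
    """Read-one sketch: returns (data, preferred_server). Structures are hypothetical."""
    if path in cache:
        return cache[path], preferred            # cached copy: no server contact at all
    while True:
        version, data = avsg[preferred][path]    # fetch the file from the preferred server
        # the other AVSG members are asked only for their version numbers
        versions = {srv: files[path][0] for srv, files in avsg.items()}
        newest = max(versions, key=versions.get)
        if version >= versions[newest]:
            cache[path] = data
            return data, preferred
        preferred = newest                       # stale copy: pick an up-to-date server, refetch
```

The loop runs at most twice: once the preferred server is switched to one holding the highest version, the check succeeds.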

Server Replication
▸ Venus employs a Read-One, Write-All strategy
▸ For a write,
  ▹ When a file is closed, it is transferred to all members of the AVSG
  ▹ If the server’s copy does not conflict with the client’s copy, an update operation handles transferring file contents, making directory entries, and changing access lists
  ▹ A data structure called the update set, which summarizes the client’s knowledge of which servers did not have conflicts, is distributed to the servers
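A minimal sketch of the write-all step, assuming (as a simplification) that a server conflicts exactly when its integer version differs from the version the client started from. Coda's real conflict detection is more elaborate; the function name and data layout here are hypothetical.

```python
def close_and_store(path, data, client_version, avsg):
    """Write-all sketch: send the closed file to every AVSG member and build the update set.

    `avsg` maps server name -> {path: (version, data)} (hypothetical layout).
    """
    update_set = set()
    for name, files in avsg.items():
        server_version, _ = files.get(path, (0, None))
        if server_version == client_version:     # assumption: equal versions mean no conflict
            files[path] = (client_version + 1, data)
            update_set.add(name)
        # a conflicting server keeps its copy; it is left out of the update set
    return update_set                            # the client then distributes this to the servers
```

Servers missing from the update set learn, when it is distributed, that their copy did not receive this update.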

Disconnected Operation
▸ Begins at a client when no member of its VSG is accessible
▸ Clients are allowed to rely solely on their local caches
▸ If a file is not cached, the system call that triggered the file access is aborted
▸ Disconnected operation ends when Venus reestablishes a connection with the VSG
▸ Venus then executes a series of update processes to reintegrate the client with the VSG

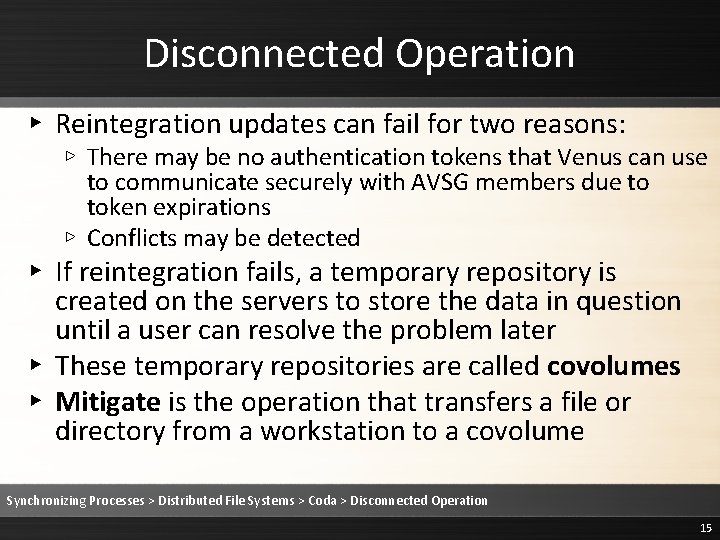

Disconnected Operation
▸ Reintegration updates can fail for two reasons:
  ▹ Venus may have no valid authentication tokens with which to communicate securely with AVSG members, because its tokens have expired
  ▹ Conflicts may be detected
▸ If reintegration fails, a temporary repository is created on the servers to store the data in question until a user can resolve the problem later
▸ These temporary repositories are called covolumes
▸ Migrate is the operation that transfers a file or directory from a workstation to a covolume

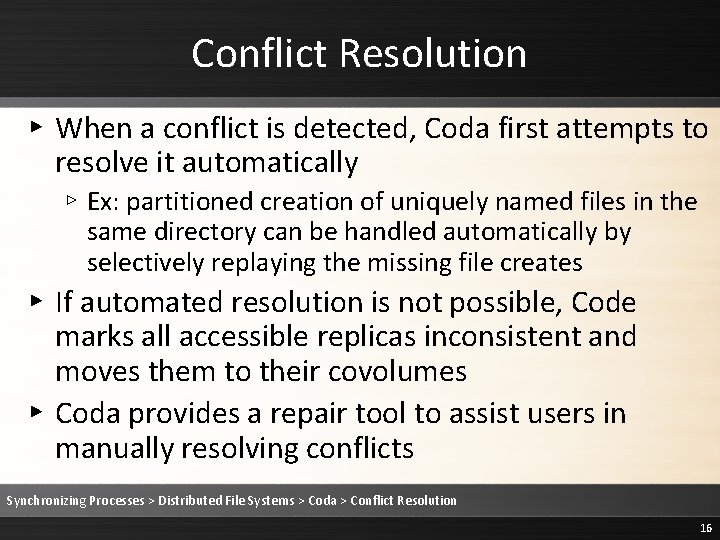

Conflict Resolution
▸ When a conflict is detected, Coda first attempts to resolve it automatically
  ▹ Ex: partitioned creation of uniquely named files in the same directory can be handled automatically by selectively replaying the missing file creates
▸ If automated resolution is not possible, Coda marks all accessible replicas inconsistent and moves them to their covolumes
▸ Coda provides a repair tool to assist users in manually resolving conflicts


Synchronizing Processes: Distributed Shared Memory
CS/CE/TE 6378 Advanced Operating Systems

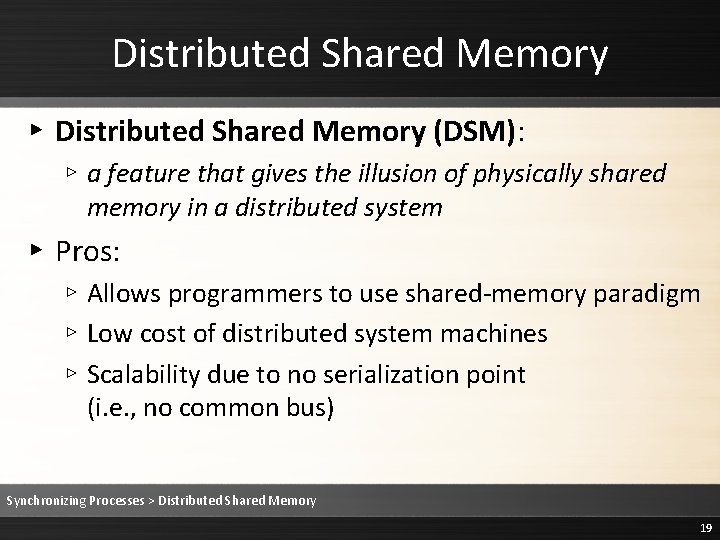

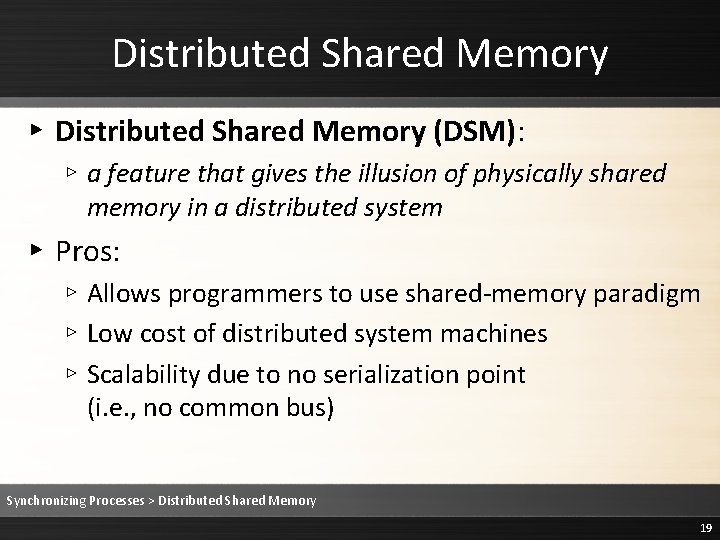

Distributed Shared Memory
▸ Distributed Shared Memory (DSM):
  ▹ a feature that gives the illusion of physically shared memory in a distributed system
▸ Pros:
  ▹ Allows programmers to use the shared-memory paradigm
  ▹ Low cost of distributed system machines
  ▹ Scalability due to no serialization point (i.e., no common bus)

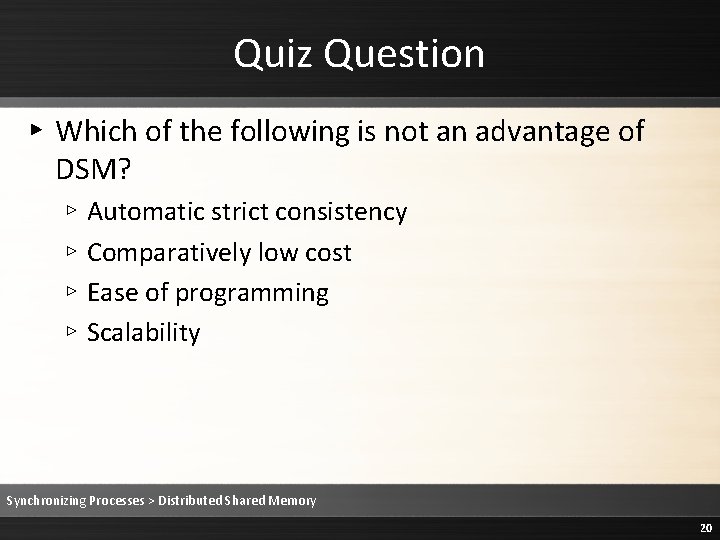

Quiz Question
▸ Which of the following is not an advantage of DSM?
  ▹ Automatic strict consistency
  ▹ Comparatively low cost
  ▹ Ease of programming
  ▹ Scalability

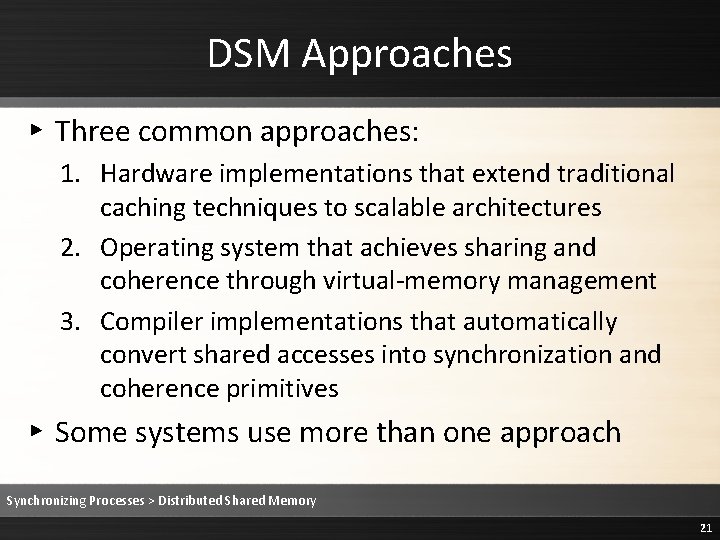

DSM Approaches
▸ Three common approaches:
  1. Hardware implementations that extend traditional caching techniques to scalable architectures
  2. Operating system implementations that achieve sharing and coherence through virtual-memory management
  3. Compiler implementations that automatically convert shared accesses into synchronization and coherence primitives
▸ Some systems use more than one approach

Quiz Question
▸ Which of the following is not an approach to implementing a DSM system?
  ▹ Compiler
  ▹ Hardware architecture
  ▹ Network topology
  ▹ Operating system

DSM Design Choices
▸ Four key aspects:
  ▹ Structure and granularity
  ▹ Coherence semantics
  ▹ Scalability
  ▹ Heterogeneity

Structure and Granularity
▸ Structure:
  ▹ refers to the layout of the shared data in memory
  ▹ Most DSMs do not structure memory (linear array of words)
  ▹ But some structure data as objects, language types, or associative memory structures (i.e., tuple space)
▸ Granularity:
  ▹ refers to the size of the unit of sharing
  ▹ Examples include byte, word, page, or complex data structure

Quiz Question
▸ Which of the following is not an example of granularity?
  ▹ Byte
  ▹ Object
  ▹ Page
  ▹ Word

Granularity
▸ A process is likely to access a large region of its shared address space in a small amount of time
▸ Hence, larger page sizes reduce paging overhead
▸ However, larger page sizes increase the likelihood that more than one process will require access to a page (i.e., contention)
▸ Larger page sizes also increase false sharing
▸ False Sharing:
  ▹ occurs when two unrelated variables (each used by different processes) are placed in the same page
  ▹ The page then moves between nodes as if the data were shared, even though neither process touches the other’s variable
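The effect of page size on false sharing can be checked with a short Python sketch. The variable layout, addresses, and process names are hypothetical, and each variable is assumed to be used by exactly one process, so any page touched by two processes is falsely shared.

```python
from collections import defaultdict


def falsely_shared_pages(layout, users, page_size):
    """Return pages holding variables of more than one process.

    layout: variable -> byte address; users: variable -> the single process using it.
    """
    procs_on_page = defaultdict(set)
    for var, addr in layout.items():
        procs_on_page[addr // page_size].add(users[var])
    return {page for page, procs in procs_on_page.items() if len(procs) > 1}


layout = {"a": 0, "b": 100, "c": 5000}
users = {"a": "P1", "b": "P2", "c": "P1"}
print(falsely_shared_pages(layout, users, 4096))  # {0}: a and b share page 0
print(falsely_shared_pages(layout, users, 64))    # set(): every variable sits on its own page
```

The same layout shows no false sharing at a 64-byte granularity but one falsely shared page at 4 KB, which is the trade-off against paging overhead described above.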

Quiz Question
▸ Which of the following is not an advantage of using larger page sizes for DSMs?
  ▹ Decreased probability of false sharing
  ▹ Reduction of paging overhead
  ▹ Smaller paging directory
  ▹ All of the above are advantages

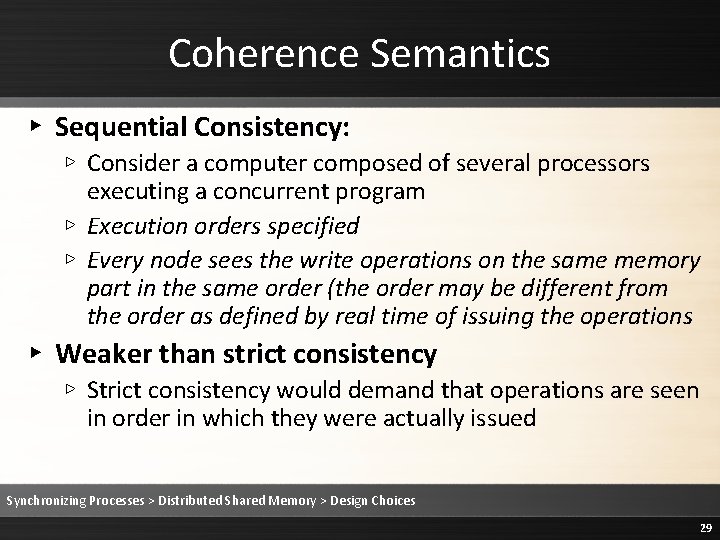

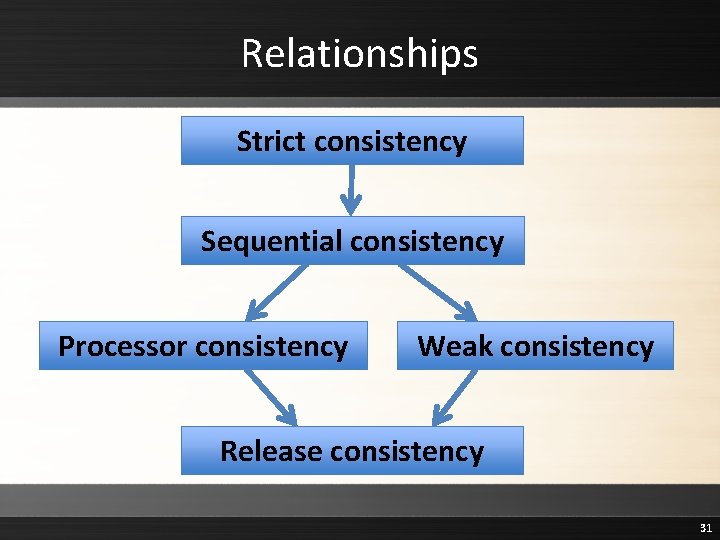

Coherence Semantics
▸ Coherence Semantics:
  ▹ how parallel memory updates are propagated throughout a distributed system
▸ Strict Consistency:
  ▹ a read returns the most recently written value
▸ Sequential Consistency:
  ▹ the result of any execution appears as some interleaving of the operations of the individual nodes when executed on a multithreaded sequential machine

Coherence Semantics
▸ Sequential Consistency:
  ▹ Consider a computer composed of several processors executing a concurrent program
  ▹ Each processor’s operations execute in the order specified by its program
  ▹ Every node sees the write operations on the same memory location in the same order (this order may differ from the real-time order in which the operations were issued)
▸ Weaker than strict consistency
  ▹ Strict consistency would demand that operations be seen in the order in which they were actually issued
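Sequential consistency can be illustrated by brute force: enumerate every interleaving that respects each program's order and run it on one memory. The sketch below uses the classic store-buffering litmus test (both processors write one variable, then read the other). The operation encoding is an assumption made for this example.

```python
from itertools import combinations

# Store-buffering litmus test; x and y both start at 0.
P1 = [("write", "x", 1), ("read", "y", "r1")]
P2 = [("write", "y", 1), ("read", "x", "r2")]


def interleavings(a, b):
    """Yield every merge of a and b that keeps each program's own order."""
    n = len(a) + len(b)
    for slots in combinations(range(n), len(a)):
        ia = ib = 0
        merged = []
        for i in range(n):
            if i in slots:
                merged.append(a[ia])
                ia += 1
            else:
                merged.append(b[ib])
                ib += 1
        yield merged


def sc_outcomes(a, b):
    """Run each interleaving on a single shared memory and collect (r1, r2)."""
    outcomes = set()
    for order in interleavings(a, b):
        memory = {"x": 0, "y": 0}
        regs = {}
        for op, loc, arg in order:
            if op == "write":
                memory[loc] = arg
            else:
                regs[arg] = memory[loc]
        outcomes.add((regs["r1"], regs["r2"]))
    return outcomes


print(sorted(sc_outcomes(P1, P2)))  # [(0, 1), (1, 0), (1, 1)]
```

The outcome `r1 == r2 == 0` never appears, so a sequentially consistent DSM must forbid it. Weaker models such as processor consistency, which let a node's read overtake its own earlier write to a different location, can produce it.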

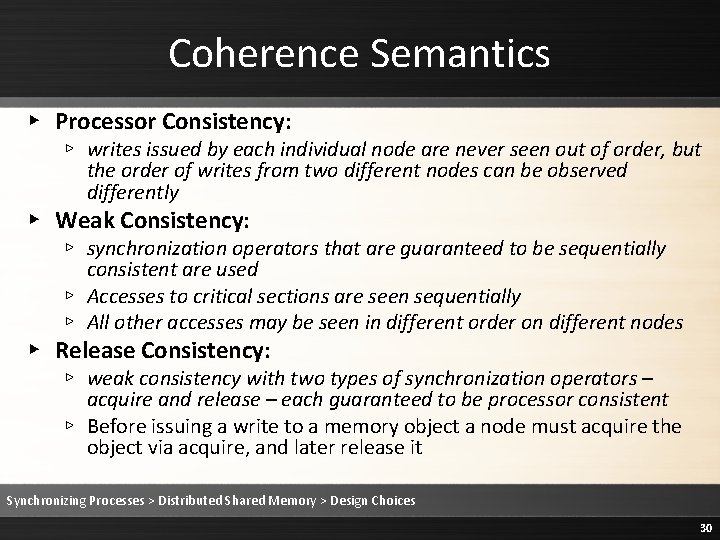

Coherence Semantics
▸ Processor Consistency:
  ▹ writes issued by each individual node are never seen out of order, but the order of writes from two different nodes can be observed differently
▸ Weak Consistency:
  ▹ synchronization operators that are guaranteed to be sequentially consistent are used
  ▹ Accesses to critical sections are seen sequentially
  ▹ All other accesses may be seen in different orders on different nodes
▸ Release Consistency:
  ▹ weak consistency with two types of synchronization operators (acquire and release), each guaranteed to be processor consistent
  ▹ Before issuing a write to a memory object, a node must acquire the object via acquire, and later release it

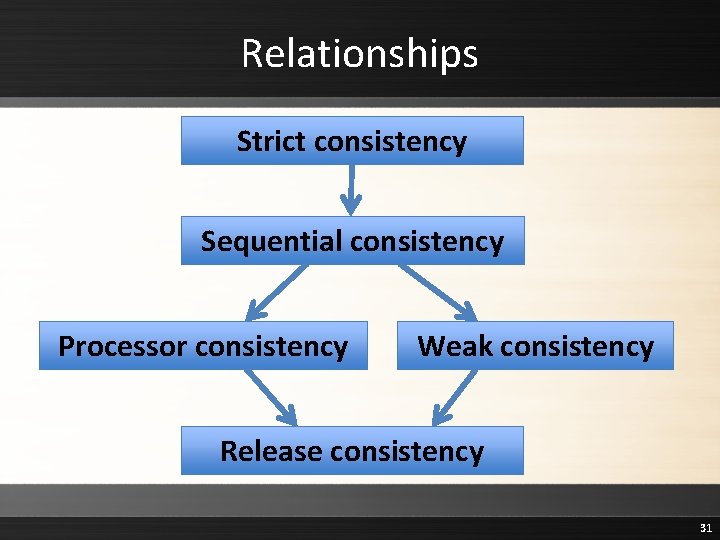

(Figure: relationships among strict, sequential, processor, weak, and release consistency)

Quiz Question
▸ Which of the following coherence semantics returns the most recently written value for a read?
  ▹ Processor consistency
  ▹ Release consistency
  ▹ Strict consistency
  ▹ Weak consistency

Scalability
▸ Scalability is reduced by two factors:
  ▹ Central bottlenecks
    ▸ Ex: the bus of a tightly coupled shared-memory multiprocessor
  ▹ Global knowledge operations and storage
    ▸ Ex: broadcast messages

Heterogeneity
▸ If the DSM is structured as variables or objects in the source language, memory can be shared between two machines with different architectures
▸ The DSM compiler can add conversion routines to all accesses to shared memory, translating between the machines’ representations of basic data types
▸ Heterogeneous DSM allows more machines to participate in a computation
▸ But the overhead of conversion usually outweighs the benefits

DSM Implementation
▸ A DSM system must automatically transform shared-memory accesses into inter-process communication
▸ Hence, DSM algorithms are required to locate and access shared data, maintain coherence, and replace data
▸ Additionally, operating system algorithms must support process synchronization and memory management

DSM Implementation
▸ Five key aspects:
  ▹ Data location and access
  ▹ Coherence protocol
  ▹ Replacement strategy
  ▹ Thrashing
  ▹ Related algorithms

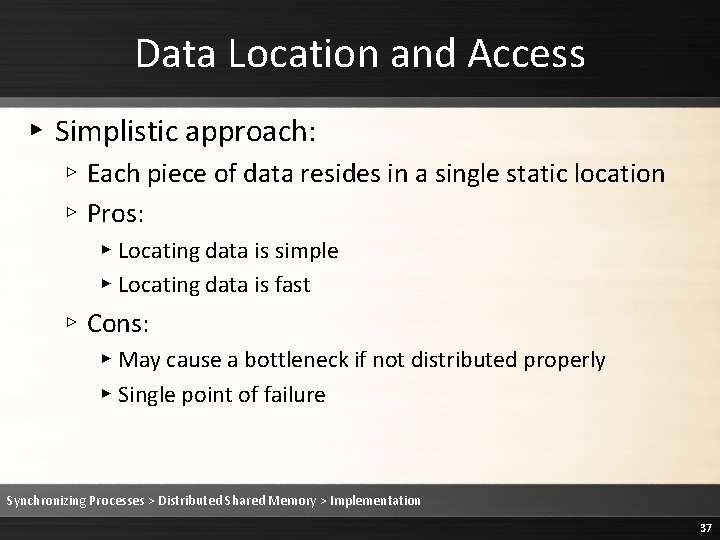

Data Location and Access
▸ Simplistic approach:
  ▹ Each piece of data resides in a single static location
  ▹ Pros:
    ▸ Locating data is simple
    ▸ Locating data is fast
  ▹ Cons:
    ▸ May cause a bottleneck if not distributed properly
    ▸ Single point of failure

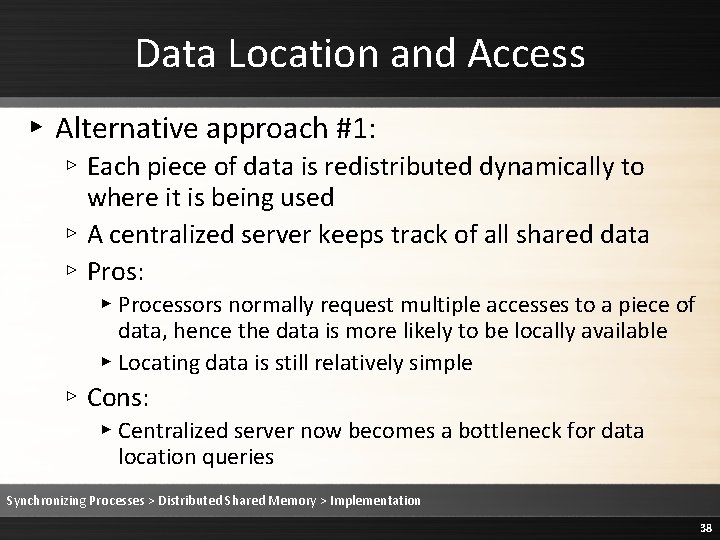

Data Location and Access
▸ Alternative approach #1:
  ▹ Each piece of data is redistributed dynamically to where it is being used
  ▹ A centralized server keeps track of all shared data
  ▹ Pros:
    ▸ Processors normally request multiple accesses to a piece of data, hence the data is more likely to be locally available
    ▸ Locating data is still relatively simple
  ▹ Cons:
    ▸ The centralized server now becomes a bottleneck for data location queries

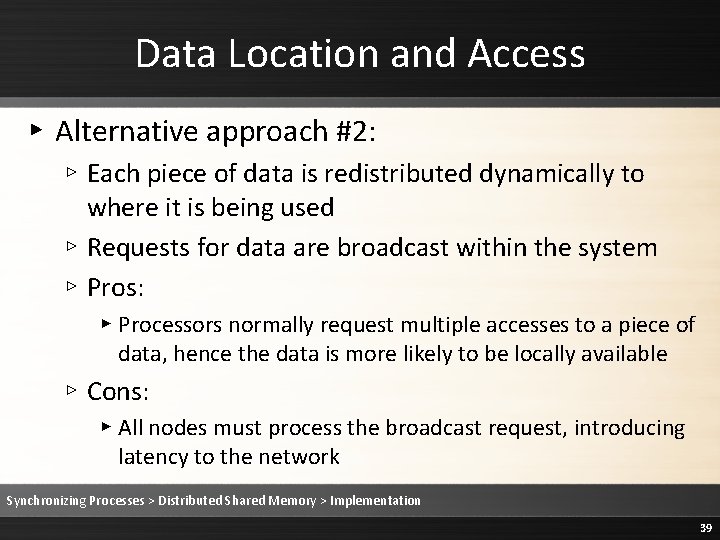

Data Location and Access
▸ Alternative approach #2:
  ▹ Each piece of data is redistributed dynamically to where it is being used
  ▹ Requests for data are broadcast within the system
  ▹ Pros:
    ▸ Processors normally request multiple accesses to a piece of data, hence the data is more likely to be locally available
  ▹ Cons:
    ▸ All nodes must process the broadcast request, introducing latency to the network

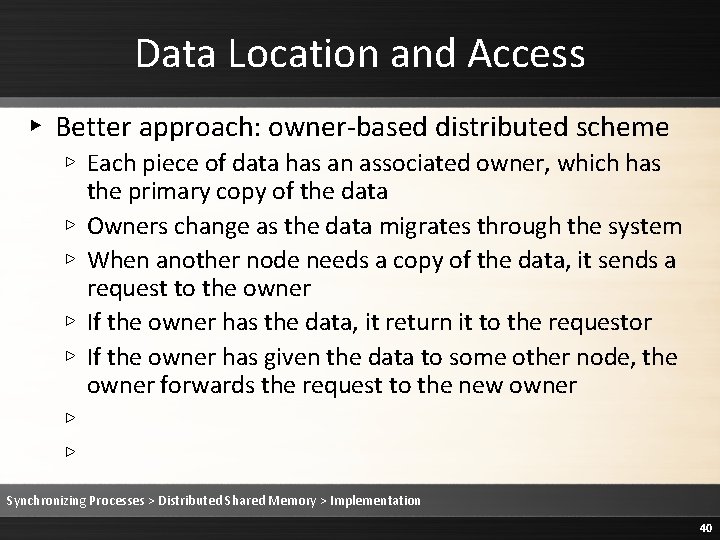


Data Location and Access
▸ Better approach: owner-based distributed scheme
  ▹ Each piece of data has an associated owner, which has the primary copy of the data
  ▹ Owners change as the data migrates through the system
  ▹ When another node needs a copy of the data, it sends a request to the owner
  ▹ If the owner has the data, it returns it to the requestor
  ▹ If the owner has given the data to some other node, the owner forwards the request to the new owner
▸ Drawback:
  ▹ A request may be forwarded many times
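The forwarding behavior can be sketched as follows. The `Node` class, its `owns` and `prob_owner` fields, and `request` are hypothetical names; each node remembers where it last sent an item, and a request follows those pointers until it reaches the current owner. The returned hop count makes the drawback visible.

```python
class Node:
    def __init__(self, name):
        self.name = name
        self.owns = {}          # item -> data, for items this node currently owns
        self.prob_owner = {}    # item -> node this one believes owns the item


def request(requester, item):
    """Fetch `item` for `requester`, following forwarding pointers.

    Returns (data, hops), where hops counts forwards past the first node asked.
    """
    node = requester.prob_owner[item]
    hops = 0
    while item not in node.owns:
        node = node.prob_owner[item]        # not the owner any more: forward the request
        hops += 1
    data = node.owns.pop(item)              # ownership migrates to the requester
    node.prob_owner[item] = requester       # old owner remembers where the item went
    requester.owns[item] = data
    return data, hops
```

Each transfer can lengthen the chain seen by nodes with out-of-date pointers; one common refinement is to have every node on the forwarding path update its pointer as well.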

Coherence Protocol
▸ If shared data is not replicated, enforcing memory coherence is trivial
  ▹ The underlying network automatically serializes requests in the order they are received
  ▹ This ensures strict memory consistency
  ▹ But it also creates a bottleneck

Coherence Protocol
▸ If shared data is replicated, two types of protocols handle replication and coherence
▸ Write-Invalidate Protocol:
  ▹ allows many copies of a read-only piece of data, but only one copy of a writable piece of data
  ▹ invalidates all copies of a piece of data except one before a write can proceed
▸ Write-Update Protocol:
  ▹ a write updates all copies of a piece of data
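The difference between the two protocols can be seen in a toy copy set for one data item. This is a hypothetical sketch (`SharedItem`, its `policy` flag, and the message counter are assumptions), ignoring the messaging layer and concurrency.

```python
class SharedItem:
    """Copy set for one replicated data item (hypothetical sketch)."""

    def __init__(self, value, policy):
        assert policy in ("invalidate", "update")
        self.policy = policy
        self.value = value
        self.copies = {}     # node -> locally cached value
        self.messages = 0    # coherence messages sent to other nodes

    def read(self, node):
        if node not in self.copies:
            self.copies[node] = self.value    # fetch a read-only replica
        return self.copies[node]

    def write(self, node, value):
        others = [n for n in self.copies if n != node]
        self.messages += len(others)
        if self.policy == "invalidate":
            for n in others:
                del self.copies[n]            # only the writer keeps a copy
        else:
            for n in others:
                self.copies[n] = value        # push the new value to every replica
        self.copies[node] = value
        self.value = value
```

Both protocols send one message per other copy on a write. Write-invalidate makes later reads elsewhere fetch the data again, while write-update keeps every replica readable but pays on every subsequent write.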

Replacement Strategy
▸ A strategy for freeing space when shared memory fills up
  ▹ Two issues: which data should be replaced, and where should it go?
▸ Most DSM systems differentiate the status of data items and prioritize them
  ▹ E.g., shared items get higher replacement priority (are replaced first) than exclusively owned items
▸ Deleting read-only shared copies of data is preferable because no data is lost
▸ But the system must ensure that data is not lost when it is replaced to free space
  ▹ E.g., in a caching system of a multiprocessor, the item would simply be placed in main memory
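A minimal victim-selection sketch, assuming two item states and least-recently-used ordering within each state (the LRU tie-break is an assumption, not something the slide specifies). Read-only shared copies are dropped first; an exclusively owned item is moved to a hypothetical `backing_store` rather than discarded.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    key: str
    state: str      # "shared" (read-only replica) or "owned" (only copy)
    last_use: int   # logical timestamp used for LRU tie-breaking


def choose_victim(entries):
    """Prefer shared read-only copies (no data lost); LRU within each class."""
    rank = {"shared": 0, "owned": 1}
    return min(entries, key=lambda e: (rank[e.state], e.last_use))


def evict(entries, backing_store):
    victim = choose_victim(entries)
    entries.remove(victim)
    if victim.state == "owned":
        backing_store.append(victim.key)   # owned data must go somewhere, not be dropped
    return victim
```

Dropping a shared copy needs no further work because another node still holds the data; only owned items answer the second question of where the data should go.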

Thrashing
▸ Thrashing occurs when a computer’s virtual memory is in a constant state of paging (i.e., rapidly exchanging data in memory for data on disk)
▸ DSM systems are particularly prone to thrashing
  ▹ Ex: two nodes competing for write access to a single data item may cause it to be transferred at such a high rate that no real work can get done
▸ Easy solution:
  ▹ Add a tunable parameter to the coherence protocol
  ▹ This parameter determines the minimum amount of time a page will be available at a node
  ▹ This prevents a page from being stolen away before even a single request on that node can be satisfied
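The minimum-residence parameter can be sketched for a single page. The class name, the `window_ms` parameter, and passing the current time in explicitly (to keep the example deterministic) are all assumptions of this sketch.

```python
class PageHolder:
    """Tracks which node holds one page and when it arrived (hypothetical sketch)."""

    def __init__(self, window_ms):
        self.window_ms = window_ms   # tunable minimum time a page stays at a node
        self.holder = None
        self.acquired_at = 0

    def request(self, node, now_ms):
        """Return True if `node` holds the page after the request, False if it must retry."""
        if self.holder == node:
            return True
        if self.holder is not None and now_ms - self.acquired_at < self.window_ms:
            return False             # too soon: let the current holder do some work first
        self.holder, self.acquired_at = node, now_ms
        return True


page = PageHolder(window_ms=10)
page.request("A", 0)    # True: A gets the page
page.request("B", 4)    # False: A's 10 ms window has not expired
page.request("B", 12)   # True: the page moves to B
```

A larger window reduces ping-ponging between competing writers at the cost of making other nodes wait longer, which is why the parameter is left tunable.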

Related Algorithms
▸ Synchronization operations and memory management must be specifically tuned
▸ Synchronization of semaphores should avoid thrashing
  ▹ One possible solution is to use specialized synchronization primitives along with DSM
  ▹ E.g., grouping semaphores into centrally managed segments
▸ Memory management should be restructured
  ▹ Linear search of all shared memory is expensive
  ▹ One possible solution is partitioning available memory into private buffers on each node
  ▹ Allocate memory from the global buffer space only when the private buffer is empty
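The private-buffer idea can be sketched as a two-level allocator. Block numbers stand in for memory, and the `Allocator` class and its `batch` refill size are hypothetical; the point is that the expensive global pool is touched only when a node's private buffer runs dry.

```python
class Allocator:
    """Per-node private free lists backed by a global pool (hypothetical sketch)."""

    def __init__(self, global_blocks, batch):
        self.global_pool = list(global_blocks)  # shared and expensive to access
        self.batch = batch                      # blocks moved per refill
        self.private = {}                       # node -> list of free blocks
        self.global_accesses = 0

    def alloc(self, node):
        buf = self.private.setdefault(node, [])
        if not buf:                             # private buffer empty: refill from global pool
            self.global_accesses += 1
            buf.extend(self.global_pool[:self.batch])
            del self.global_pool[:self.batch]
        if not buf:
            raise MemoryError("shared memory exhausted")
        return buf.pop()

    def free(self, node, block):
        self.private.setdefault(node, []).append(block)  # freed blocks stay local
```

With a batch of 8, a node allocating 8 blocks touches the global pool once instead of 8 times.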