Time, Clocks, and the Ordering of Events in a Distributed System

- Slides: 27

Time, Clocks, and the Ordering of Events in a Distributed System
Leslie Lamport, Massachusetts Computer Associates, Inc.
Presented by Lokendra
(Slide 1)

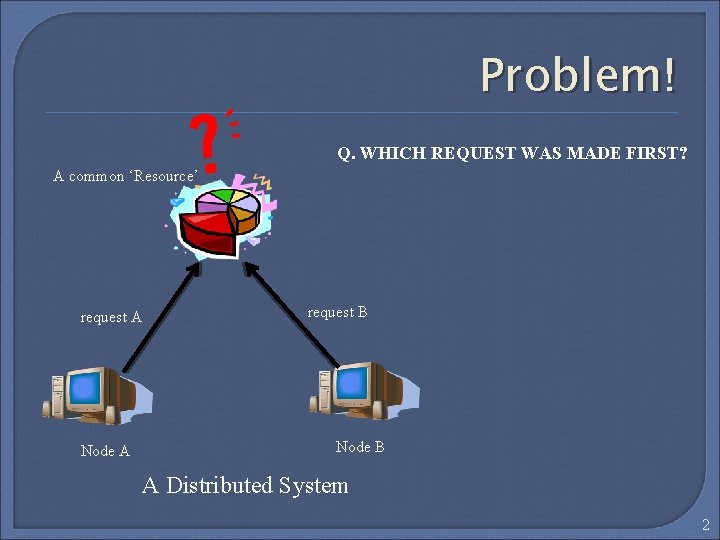

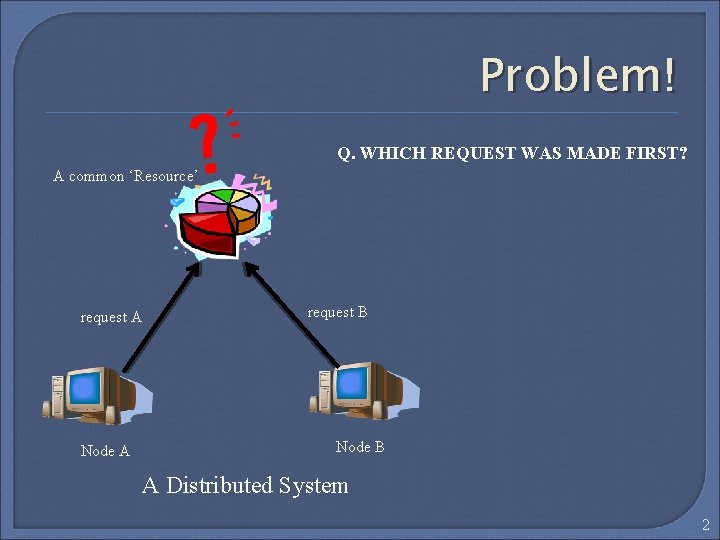

Problem!
Q. WHICH REQUEST WAS MADE FIRST?
[Figure: a distributed system in which Node A sends request A and Node B sends request B to a common ‘Resource’.]
(Slide 2)

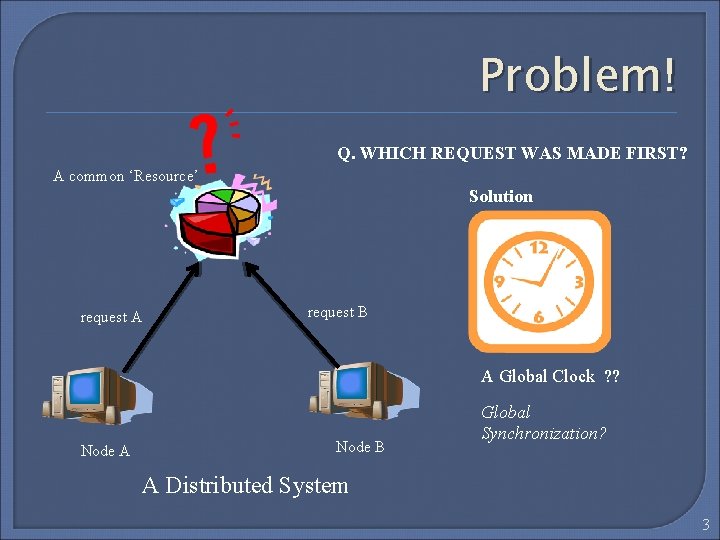

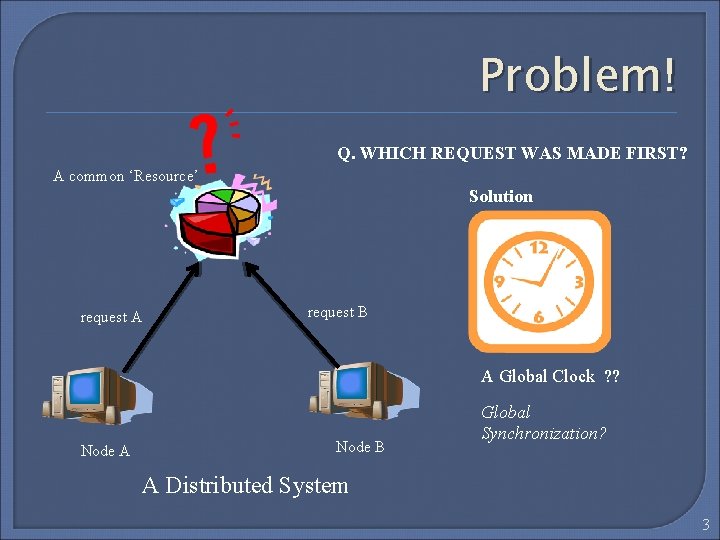

Problem!
Q. WHICH REQUEST WAS MADE FIRST?
Solution: a global clock? Global synchronization?
[Figure: Node A and Node B send request A and request B to a common ‘Resource’; a single global clock is shared by both nodes.]
(Slide 3)

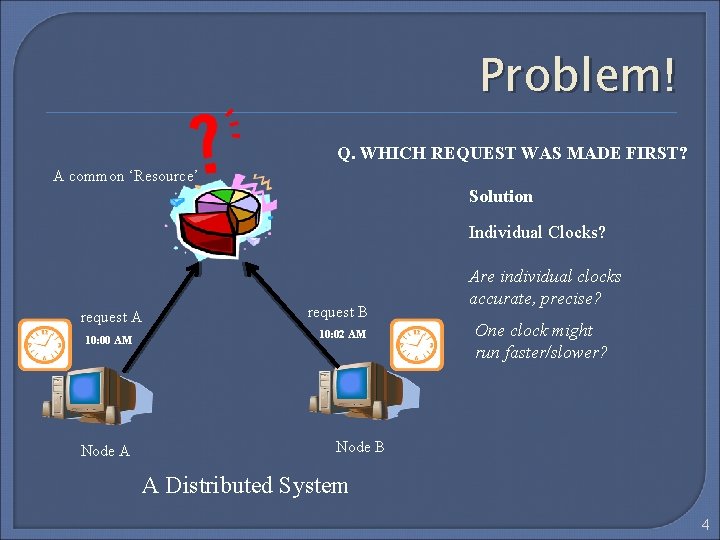

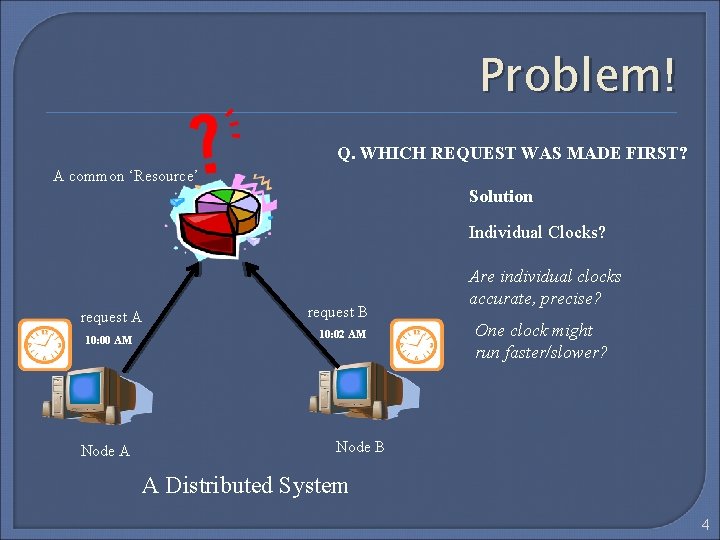

Problem!
Q. WHICH REQUEST WAS MADE FIRST?
Solution: individual clocks?
[Figure: Node A stamps request A at 10:00 AM; Node B stamps request B at 10:02 AM; both requests go to a common ‘Resource’.]
• Are the individual clocks accurate and precise?
• One clock might run faster or slower than another.
(Slide 4)

Problem! Synchronization in a Distributed System!
• Event ordering: which event occurred first?
• How do we synchronize the clocks across the nodes?
Can we define a notion of ‘happened before’ without using physical clocks?
(Slide 5)

Approach towards a solution
1. Develop a logical model of event ordering (without the use of physical clocks).
  • Might this result in anomalous behavior?
2. Bring in physical clocks and integrate them with the logical model.
  • This fixes the anomalous behavior.
3. Make sure that the physical clocks are synchronized.
(Slide 6)

Partial Ordering
• The system is composed of a collection of processes.
• Each process consists of a sequence of events (instructions/subprograms), and these events are totally ordered within the process:
  Process P: instr 1, instr 2, instr 3, …
• Processes send and receive messages among themselves:
  • ‘Send’ is an event.
  • ‘Receive’ is an event.
(Slide 7)

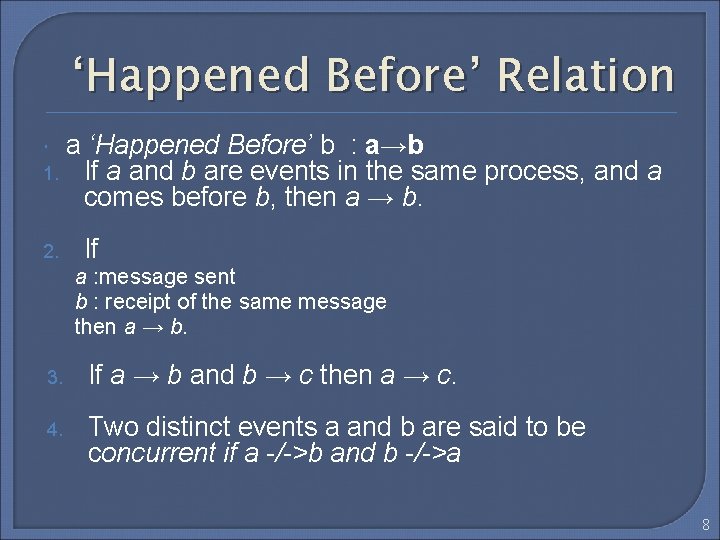

‘Happened Before’ Relation
"a happened before b" is written a → b. It is the smallest relation satisfying:
1. If a and b are events in the same process, and a comes before b, then a → b.
2. If a is the sending of a message by one process and b is the receipt of the same message by another process, then a → b.
3. If a → b and b → c, then a → c.
Two distinct events a and b are said to be concurrent if a -/-> b and b -/-> a.
(Slide 8)
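The three rules translate directly into a small computation. A minimal Python sketch, assuming events are named by strings and messages are given as (send, receive) pairs (both representations, and the trace itself, are hypothetical rather than taken from the slides): it builds → from rules 1 and 2, closes it transitively (rule 3), and then tests concurrency.

```python
def happened_before(processes, messages):
    """Return the set of pairs (a, b) such that a -> b.

    processes: dict mapping a process name to its events in local order
    messages:  list of (send_event, receive_event) pairs
    """
    rel = set()
    # Rule 1: events of the same process are ordered by their position.
    for events in processes.values():
        for i, a in enumerate(events):
            for b in events[i + 1:]:
                rel.add((a, b))
    # Rule 2: sending a message happens before receiving it.
    rel.update(messages)
    # Rule 3: transitive closure (Warshall's algorithm, k outermost).
    all_events = [e for events in processes.values() for e in events]
    for k in all_events:
        for a in all_events:
            if (a, k) in rel:
                for b in all_events:
                    if (k, b) in rel:
                        rel.add((a, b))
    return rel


def concurrent(a, b, rel):
    """Two distinct events are concurrent if neither happened before the other."""
    return a != b and (a, b) not in rel and (b, a) not in rel


# Hypothetical trace: p1 sends a message received at q2; q3 sends one received at p3.
processes = {"P": ["p1", "p2", "p3"], "Q": ["q1", "q2", "q3"]}
messages = [("p1", "q2"), ("q3", "p3")]
rel = happened_before(processes, messages)

print(("p1", "q3") in rel)          # True: p1 -> q2 -> q3
print(concurrent("p2", "q2", rel))  # True: no chain of events links them
```

The closure is cubic in the number of events, which is fine for illustrating the definition on small traces; real systems never materialize → and instead rely on clocks, as the following slides do.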

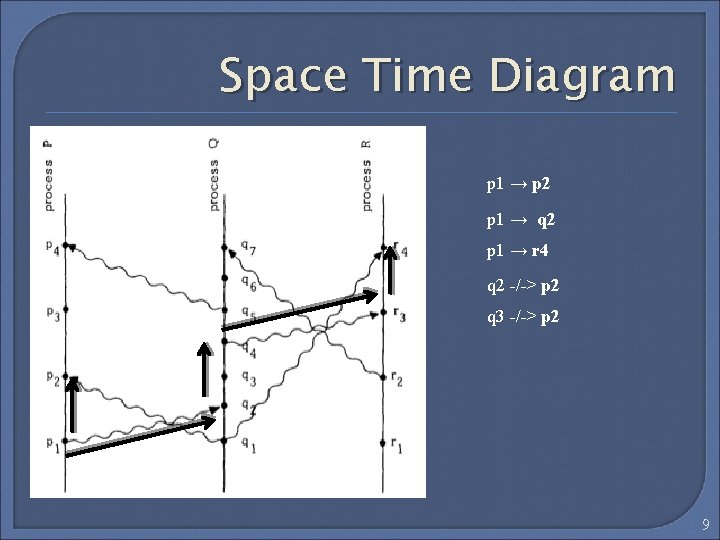

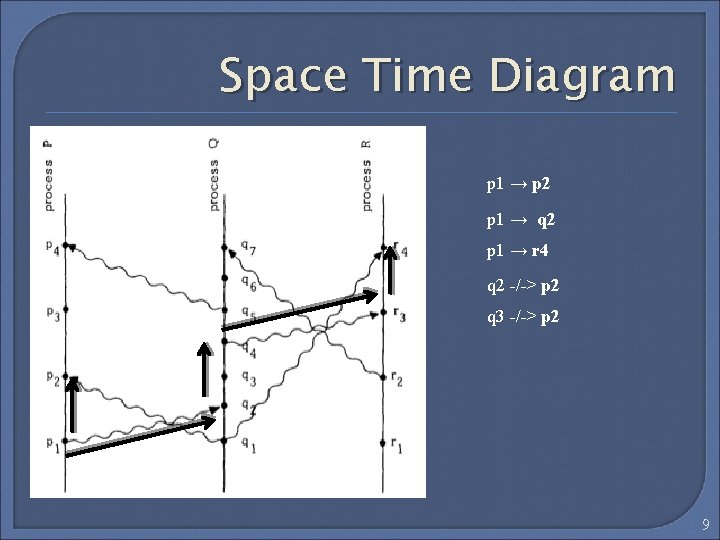

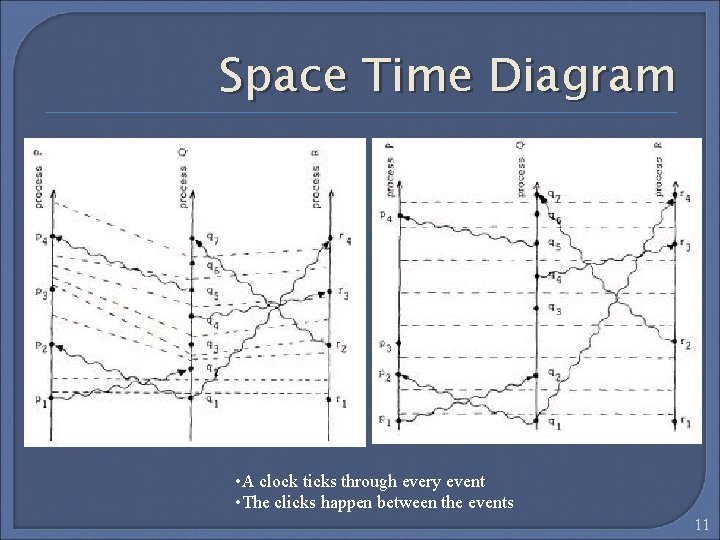

Space-Time Diagram
• p1 → p2, p1 → q2, p1 → r4
• q2 -/-> p2, q3 -/-> p2
(Slide 9)

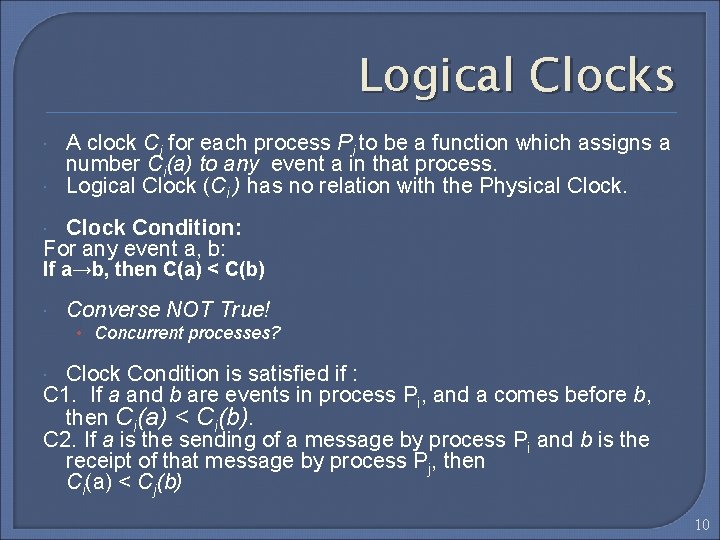

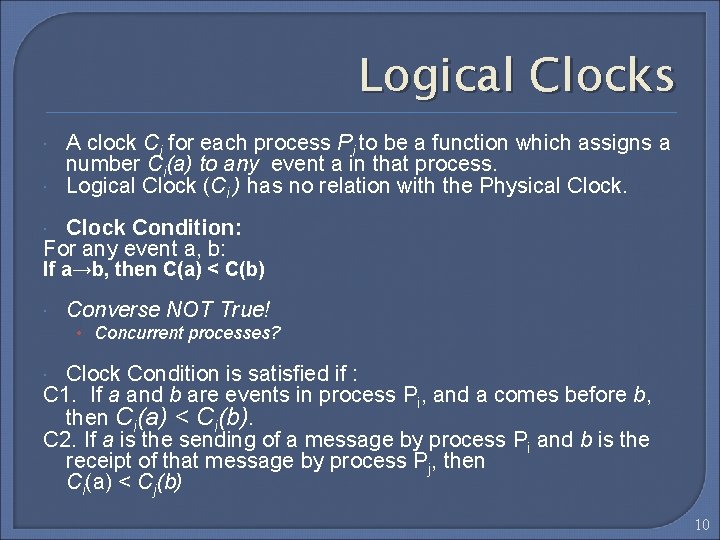

Logical Clocks
• A clock Ci for each process Pi is a function that assigns a number Ci(a) to any event a in that process.
• A logical clock Ci has no relation to any physical clock.
Clock Condition: for any events a, b: if a → b, then C(a) < C(b).
• The converse is NOT true! Two concurrent events can still have C(a) < C(b).
The Clock Condition is satisfied if:
C1. If a and b are events in process Pi, and a comes before b, then Ci(a) < Ci(b).
C2. If a is the sending of a message by process Pi and b is the receipt of that message by process Pj, then Ci(a) < Cj(b).
(Slide 10)
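Conditions C1 and C2 can be checked mechanically for any proposed assignment of clock values. The sketch below assumes the same hypothetical trace representation as before (process name to ordered event list, messages as (send, receive) pairs); the clock values are made up for illustration. It also shows why the converse of the Clock Condition fails.

```python
def satisfies_clock_condition(processes, messages, C):
    """Check conditions C1 and C2 for a clock assignment C: event -> number."""
    # C1: values strictly increase along each process. Checking neighbours
    # is enough, since < is transitive.
    for events in processes.values():
        for earlier, later in zip(events, events[1:]):
            if not C[earlier] < C[later]:
                return False
    # C2: each send is stamped lower than the matching receive.
    return all(C[send] < C[recv] for send, recv in messages)


# Hypothetical trace and clock values (not taken from the slides).
processes = {"P": ["p1", "p2", "p3"], "Q": ["q1", "q2", "q3"]}
messages = [("p1", "q2"), ("q3", "p3")]
C = {"p1": 1, "p2": 2, "p3": 5, "q1": 1, "q2": 3, "q3": 4}

print(satisfies_clock_condition(processes, messages, C))  # True
# Converse fails: C(p2) < C(q2), yet p2 and q2 are concurrent.
print(C["p2"] < C["q2"])  # True
```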

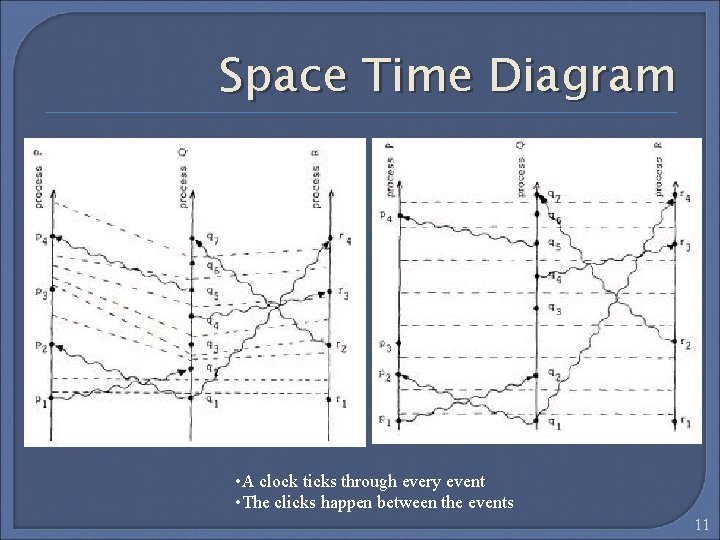

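Space-Time Diagram (with clock ticks)
• Dashed tick lines are drawn through the diagram, one for each tick of the clocks.
• The ticks happen between the events: C1 means there must be a tick line between any two events on a process line, and C2 means every message line must cross a tick line.
(Slide 11)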

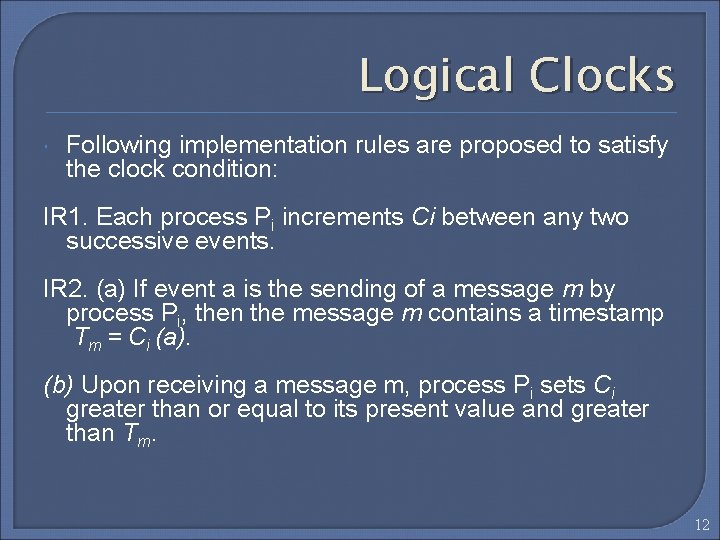

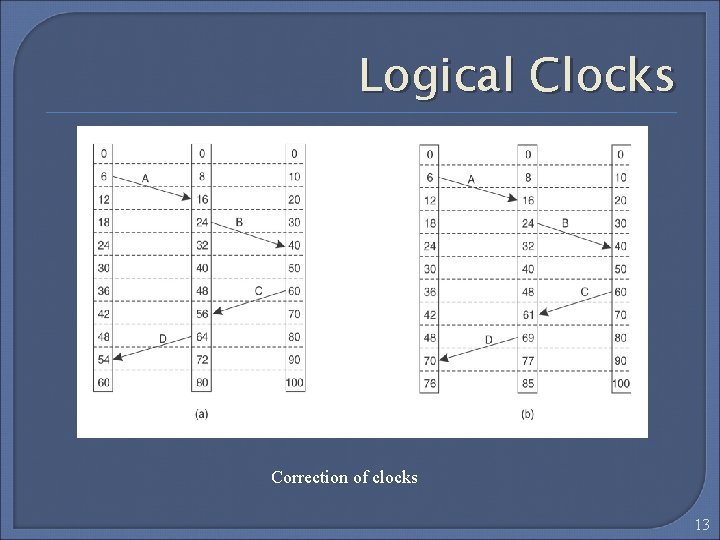

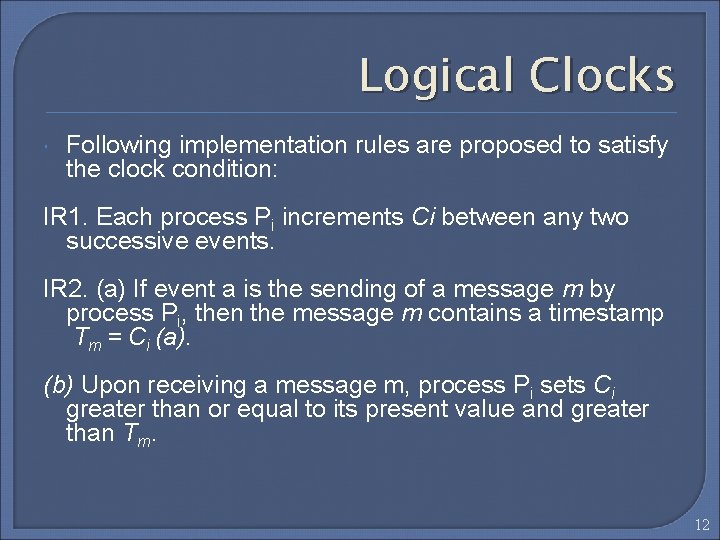

Logical Clocks
The following implementation rules are proposed to satisfy the Clock Condition:
IR1. Each process Pi increments Ci between any two successive events.
IR2. (a) If event a is the sending of a message m by process Pi, then the message m contains a timestamp Tm = Ci(a).
(b) Upon receiving a message m, process Pj sets Cj greater than or equal to its present value and greater than Tm.
(Slide 12)
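IR1 and IR2 fit in a few lines. This is a minimal sketch, not the paper's code; the class and method names are hypothetical, and it uses the common convention of incrementing before stamping each event, so the stamp already reflects the IR1 tick.

```python
class LamportClock:
    """Logical clock Ci for one process, following IR1 and IR2 (sketch)."""

    def __init__(self) -> None:
        self.time = 0

    def tick(self) -> int:
        # IR1: increment between successive events; the event gets the new value.
        self.time += 1
        return self.time

    def send(self) -> int:
        # IR2(a): sending is an event; the message carries Tm = Ci(send).
        return self.tick()

    def receive(self, tm: int) -> int:
        # IR2(b): never move backwards and jump to at least Tm; the tick for
        # the receive event then makes the clock strictly greater than Tm.
        self.time = max(self.time, tm)
        return self.tick()


p, q = LamportClock(), LamportClock()
for _ in range(3):
    p.tick()              # p's clock runs ahead: 1, 2, 3
tm = p.send()             # Tm = 4
q.tick()                  # q lags behind: 1
t_recv = q.receive(tm)    # corrected to max(1, 4) + 1 = 5
print(tm, t_recv)         # 4 5
```

The receive step is exactly the "correction of clocks" shown on the next slide: a lagging receiver is pushed forward so that C2 holds.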

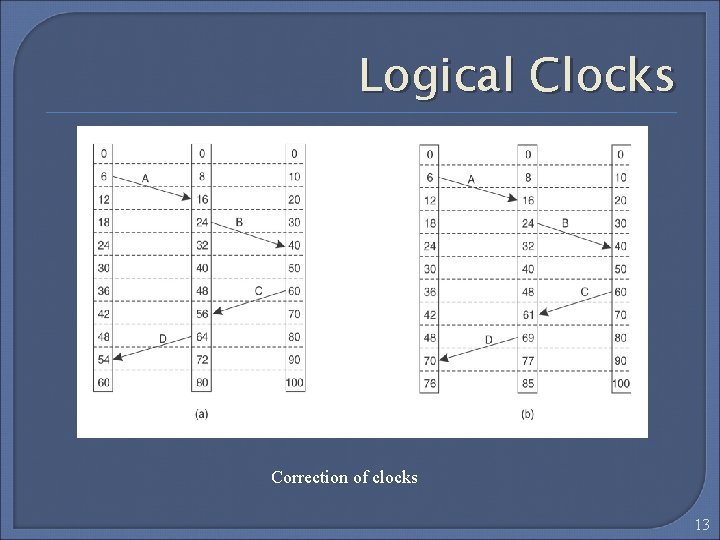

Logical Clocks: Correction of Clocks
(Slide 13)

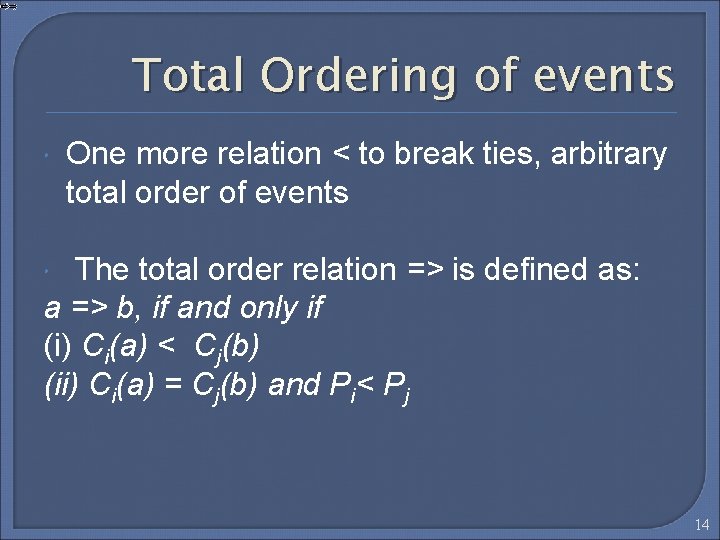

Total Ordering of Events
• Use an arbitrary total ordering ≺ of the processes to break ties.
• The total order relation ⇒ is defined as follows: if a is an event in process Pi and b is an event in process Pj, then a ⇒ b if and only if either
  (i) Ci(a) < Cj(b), or
  (ii) Ci(a) = Cj(b) and Pi ≺ Pj.
(Slide 14)
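Because ⇒ compares the clock value first and the process only on a tie, it is just lexicographic order on (clock, process) pairs. A short sketch, assuming (hypothetically) that ≺ orders processes by an integer index:

```python
from typing import NamedTuple


class Event(NamedTuple):
    name: str
    clock: int     # Ci(a), the logical timestamp
    process: int   # index of Pi; tie-breaking order is assumed to be by index


def total_order_key(e: Event) -> tuple:
    # a => b iff Ci(a) < Cj(b), or the clocks are equal and Pi precedes Pj.
    return (e.clock, e.process)


events = [Event("b", 2, 1), Event("a", 2, 0), Event("c", 1, 2)]
ordered = [e.name for e in sorted(events, key=total_order_key)]
print(ordered)  # ['c', 'a', 'b']
```

Two events never share both key components: C1 forces distinct clock values within one process, so ⇒ is a genuine total order.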

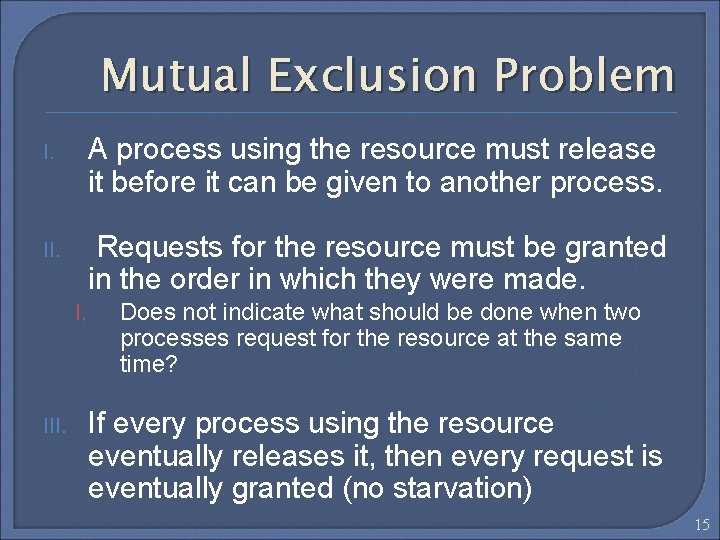

Mutual Exclusion Problem
I. A process that has been granted the resource must release it before it can be granted to another process.
II. Requests for the resource must be granted in the order in which they were made.
  • This does not say what should be done when two processes request the resource at the same time.
III. If every process that is granted the resource eventually releases it, then every request is eventually granted (no starvation).
(Slide 15)

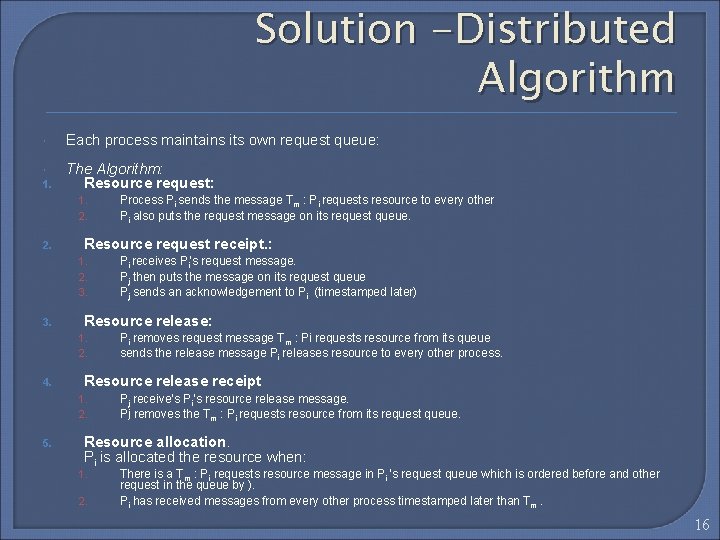

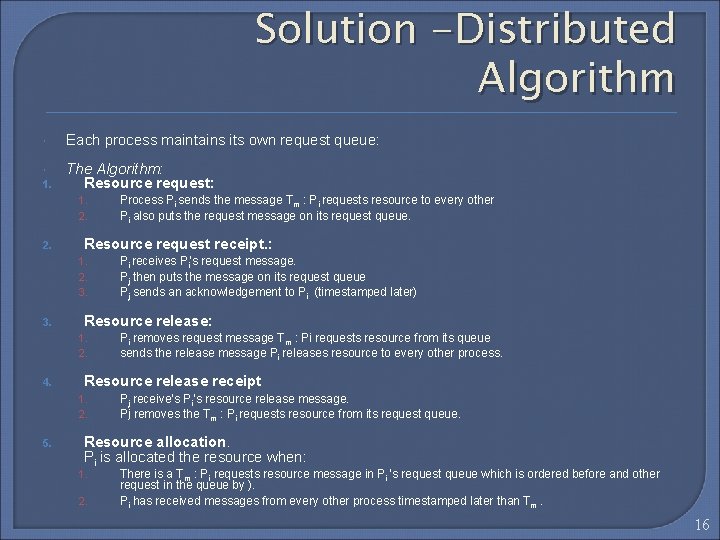

Solution: Distributed Algorithm
Each process maintains its own request queue. The algorithm:
1. Resource request: Pi sends the message ‘Tm: Pi requests resource’ to every other process, and also puts that request message on its own request queue.
2. Resource request receipt: when Pj receives Pi’s request message, Pj puts the message on its request queue and sends a timestamped acknowledgement to Pi.
3. Resource release: Pi removes the ‘Tm: Pi requests resource’ message from its request queue and sends the message ‘Pi releases resource’ to every other process.
4. Resource release receipt: when Pj receives Pi’s ‘Pi releases resource’ message, Pj removes the ‘Tm: Pi requests resource’ message from its request queue.
5. Resource allocation: Pi is allocated the resource when both:
  (i) there is a ‘Tm: Pi requests resource’ message in Pi’s request queue that is ordered before any other request in the queue by the relation ⇒, and
  (ii) Pi has received a message from every other process timestamped later than Tm.
(Slide 16)

Solution: Distributed Algorithm
NOTE:
• Each process independently follows these rules.
• There is no central synchronizing process or central storage.
• The algorithm can be viewed as a state machine e: C × S → S, where C is the set of commands (e.g. a resource request) and S is the set of machine states.
(Slide 17)
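The five rules can be simulated in a single Python process to see them interact. This is a sketch under stated assumptions, not Lamport's implementation: messages are reliable and delivered in FIFO order per channel (modelled by one global `deque`), no process holds the resource initially, and the class and function names (`Process`, `deliver_all`, `try_acquire`) are hypothetical. Requests are kept as (Tm, pid) tuples so that Python's tuple ordering matches ⇒ with ties broken by process index.

```python
from collections import deque


class Process:
    """One participant in the request-queue mutual exclusion algorithm (sketch)."""

    def __init__(self, pid, n, net):
        self.pid, self.n, self.net = pid, n, net
        self.clock = 0
        self.queue = []            # pending requests as (Tm, pid) pairs
        self.last_seen = [0] * n   # latest timestamp received from each process
        self.holding = False

    def _tick(self):
        self.clock += 1            # IR1
        return self.clock

    def _broadcast(self, kind, tm):
        for j in range(self.n):
            if j != self.pid:
                self.net.append((j, kind, tm, self.pid))

    def request(self):             # rule 1: resource request
        tm = self._tick()
        self.queue.append((tm, self.pid))
        self._broadcast("request", tm)

    def release(self):             # rule 3: resource release
        self.holding = False
        self.queue = [r for r in self.queue if r[1] != self.pid]
        self._broadcast("release", self._tick())

    def on_message(self, kind, tm, sender):
        self.clock = max(self.clock, tm)          # IR2(b), then count the receive
        self._tick()
        self.last_seen[sender] = max(self.last_seen[sender], tm)
        if kind == "request":                     # rule 2: request receipt
            self.queue.append((tm, sender))
            self.net.append((sender, "ack", self._tick(), self.pid))
        elif kind == "release":                   # rule 4: release receipt
            self.queue = [r for r in self.queue if r[1] != sender]

    def try_acquire(self):                        # rule 5: resource allocation
        if self.holding:
            return True
        mine = [r for r in self.queue if r[1] == self.pid]
        if not mine:
            return False
        tm = mine[0][0]
        # (i) own request is first under =>, i.e. lexicographic (Tm, pid) order
        first = min(self.queue) == mine[0]
        # (ii) a later-timestamped message has arrived from every other process
        heard = all(self.last_seen[j] > tm for j in range(self.n) if j != self.pid)
        self.holding = first and heard
        return self.holding


def deliver_all(net, procs):
    # A single FIFO queue keeps every channel FIFO, as the algorithm assumes.
    while net:
        dest, kind, tm, sender = net.popleft()
        procs[dest].on_message(kind, tm, sender)


net = deque()
procs = [Process(i, 3, net) for i in range(3)]
procs[1].request()           # P1 and P0 both request at logical time 1
procs[0].request()
deliver_all(net, procs)
granted = (procs[0].try_acquire(), procs[1].try_acquire())
print(granted)               # (True, False): the tie is broken by process index
procs[0].release()
deliver_all(net, procs)
print(procs[1].try_acquire())  # True
```

Note that no process consults any shared state: each decision in `try_acquire` uses only that process's own queue and the timestamps it has received, matching the NOTE above.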

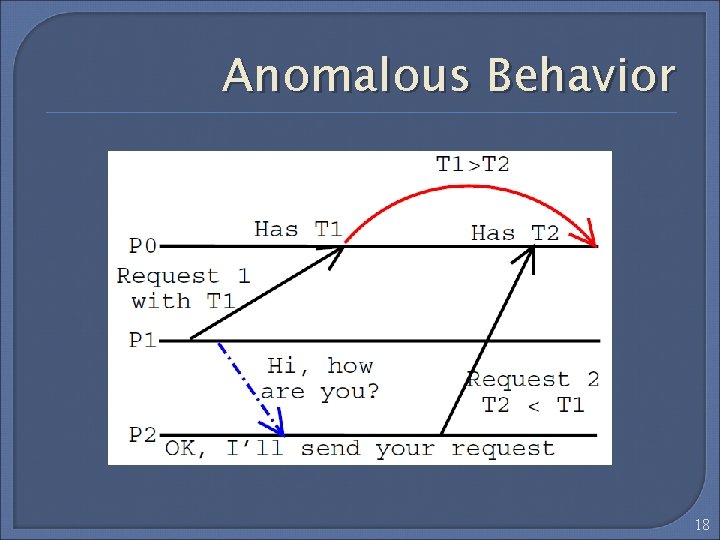

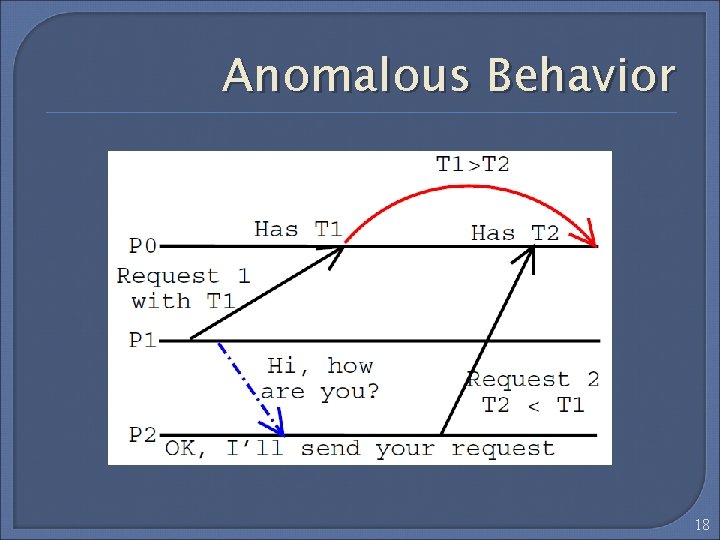

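Anomalous Behavior
Example: a user issues request a on computer A, then telephones a friend in another city, who issues request b on computer B. Request b may receive a lower timestamp than a and be ordered before it, because the system has no way of knowing that a actually preceded b: that precedence information travels outside the system.
(Slide 18)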

Anomalous Behavior (contd)
Two possible ways to avoid such anomalous behavior:
1. Give the user the responsibility for avoiding anomalous behavior.
  • When the call is made, the user must ensure that b is given a later timestamp than a.
2. Strengthen the clock condition to cover external events:
  • Let S be the set of all system events, and let Š be the set containing S together with the relevant external events.
  • Let → denote the ‘happened before’ relation for Š.
  • Strong Clock Condition: for any events a, b in Š: if a → b then C(a) < C(b).
One can construct physical clocks that run quite independently yet satisfy the Strong Clock Condition, thereby eliminating anomalous behavior.
(Slide 19)

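Physical Clocks
PC1: $\left| \frac{dC_i(t)}{dt} - 1 \right| < \kappa$, where $\kappa \ll 1$.
PC2: for all $i, j$: $|C_i(t) - C_j(t)| < \epsilon$.
• Two clocks never run at exactly the same rate, so they may drift further and further apart.
• We need an algorithm that ensures PC2 always holds.
(Slide 20)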

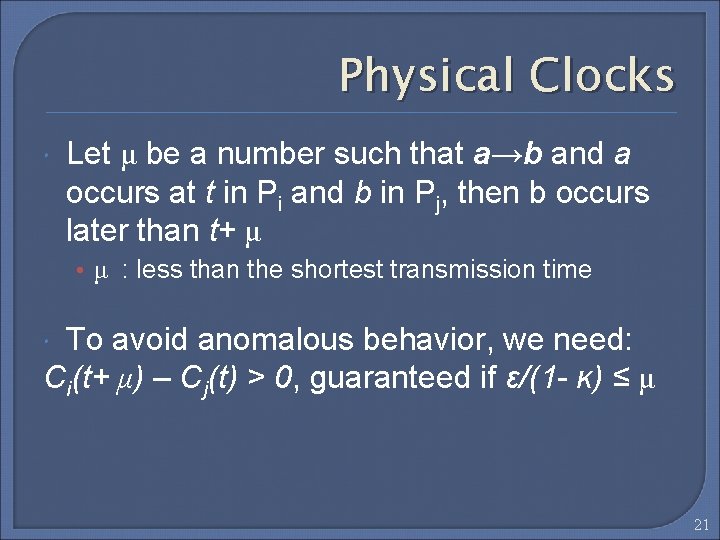

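Physical Clocks
• Let $\mu$ be a number such that if a → b, where a occurs at physical time $t$ in $P_i$ and b occurs in $P_j$, then b occurs later than $t + \mu$.
• In other words, $\mu$ is less than the shortest transmission time for interprocess messages.
To avoid anomalous behavior, we need $C_i(t + \mu) - C_j(t) > 0$ for all $i$, $j$, $t$, which is guaranteed if $\epsilon / (1 - \kappa) \le \mu$.
(Slide 21)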
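The sufficiency of $\epsilon/(1-\kappa) \le \mu$ follows in two steps from PC1 and PC2. A short derivation, assuming $C_i$ runs continuously on $[t, t+\mu]$ except for forward jumps (which can only enlarge the left-hand side of the first line):

```latex
\begin{aligned}
&\text{PC1: } \frac{dC_i(t)}{dt} > 1 - \kappa
  \;\Rightarrow\; C_i(t+\mu) - C_i(t) > (1-\kappa)\,\mu \\
&\text{PC2: } C_i(t) - C_j(t) > -\epsilon \\
&\text{Adding: } C_i(t+\mu) - C_j(t) > (1-\kappa)\,\mu - \epsilon \;\ge\; 0
  \quad\text{whenever } \frac{\epsilon}{1-\kappa} \le \mu .
\end{aligned}
```

Since $\kappa \ll 1$, the factor $1-\kappa$ is positive, so $\epsilon/(1-\kappa) \le \mu$ is equivalent to $(1-\kappa)\,\mu - \epsilon \ge 0$.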

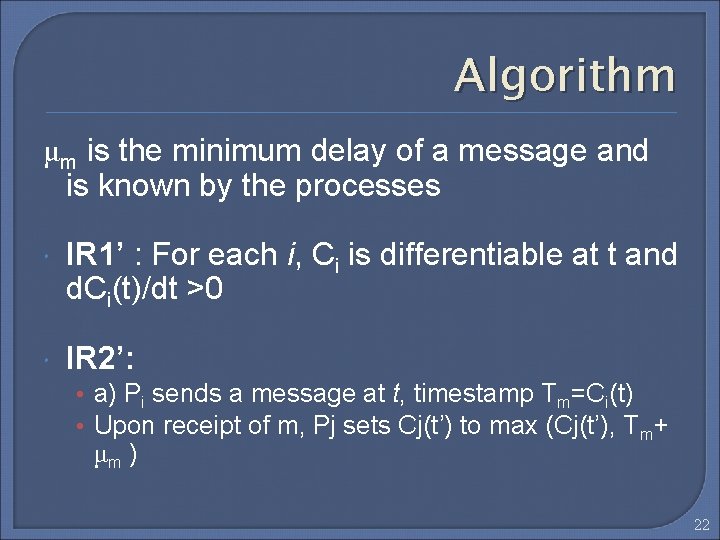

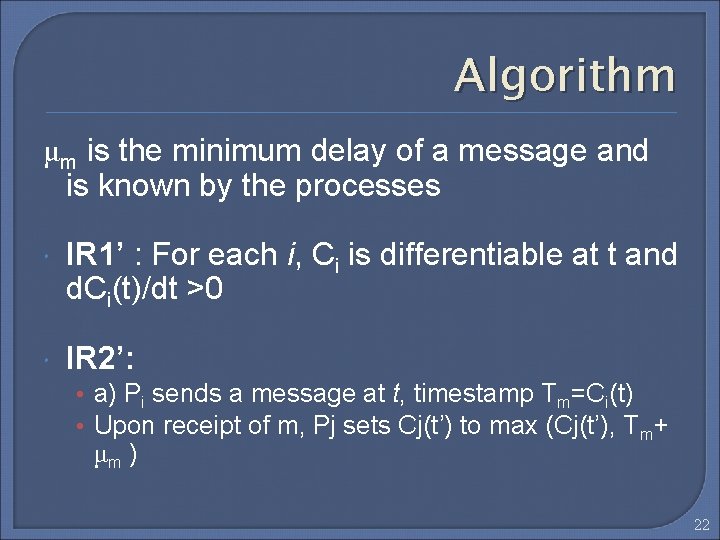

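Algorithm
• $\mu_m \ge 0$ is the minimum delay of a message $m$ and is known to the receiving process.
IR1': For each $i$, if $P_i$ does not receive a message at physical time $t$, then $C_i$ is differentiable at $t$ and $dC_i(t)/dt > 0$.
IR2': (a) If $P_i$ sends a message $m$ at physical time $t$, then $m$ contains a timestamp $T_m = C_i(t)$.
(b) Upon receiving $m$ at time $t'$, process $P_j$ sets $C_j(t') = \max(C_j(t' - 0),\, T_m + \mu_m)$, where $C_j(t' - 0)$ is the clock value just before $t'$.
(Slide 22)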
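IR1' and IR2' can be simulated with a clock that advances at its own rate and only ever jumps forward on receipt. A minimal sketch; the class, its methods, and the numeric values are hypothetical, chosen so the floating-point results are exact.

```python
class PhysicalClock:
    """Hypothetical simulation of one process's physical clock under IR1' and IR2'."""

    def __init__(self, value: float, rate: float) -> None:
        if rate <= 0:
            raise ValueError("IR1': the clock must always run forward")
        self.value = value
        self.rate = rate           # dCi/dt; PC1 asks that |rate - 1| < kappa

    def advance(self, dt: float) -> None:
        # Between message receipts the clock runs continuously at its own rate.
        self.value += self.rate * dt

    def stamp(self) -> float:
        # IR2'(a): a message sent now carries Tm = Ci(t).
        return self.value

    def receive(self, tm: float, mu_m: float) -> None:
        # IR2'(b): Cj(t') = max(Cj(t' - 0), Tm + mu_m); the clock never moves back.
        self.value = max(self.value, tm + mu_m)


sender = PhysicalClock(100.0, 1.0)
receiver = PhysicalClock(95.0, 1.0)   # this clock lags the sender by 5 units
tm = sender.stamp()
receiver.advance(2.0)                 # receiver reads 97.0 when m arrives
receiver.receive(tm, 1.5)             # pushed forward to 100.0 + 1.5
print(receiver.value)                 # 101.5
```

Adding $\mu_m$ rather than just taking $T_m$ uses the knowledge that at least $\mu_m$ time units passed in transit, which pulls a lagging clock closer to the sender's.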

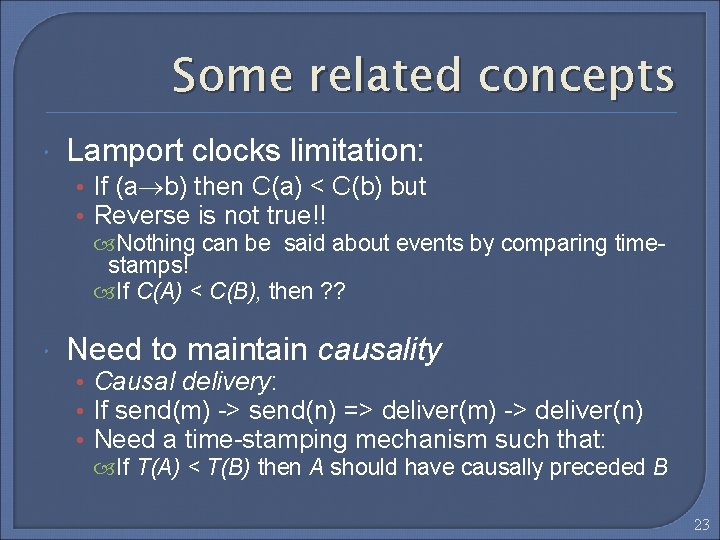

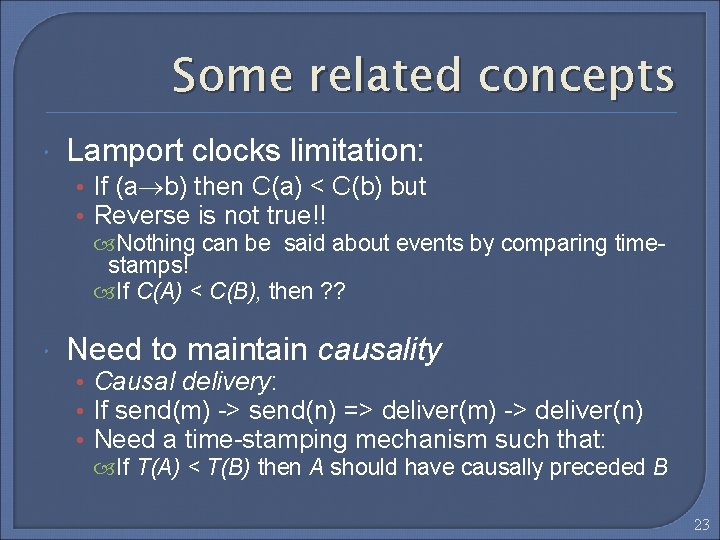

Some related concepts
Lamport clocks limitation:
• If a → b then C(a) < C(b), but
• the reverse is not true! Comparing timestamps alone cannot establish causality: if C(a) < C(b), we only know that b -/-> a, not that a → b.
Need to maintain causality:
• Causal delivery: if send(m) → send(n), then deliver(m) → deliver(n).
• Need a timestamping mechanism such that if T(a) < T(b), then a causally preceded b.
(Slide 23)

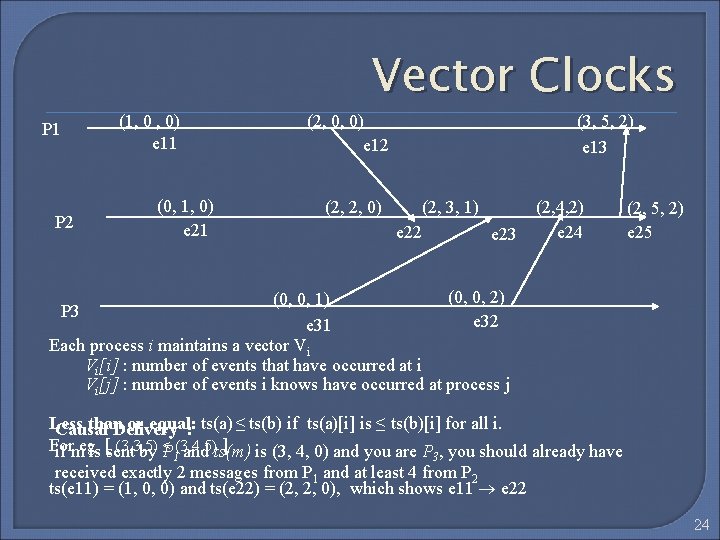

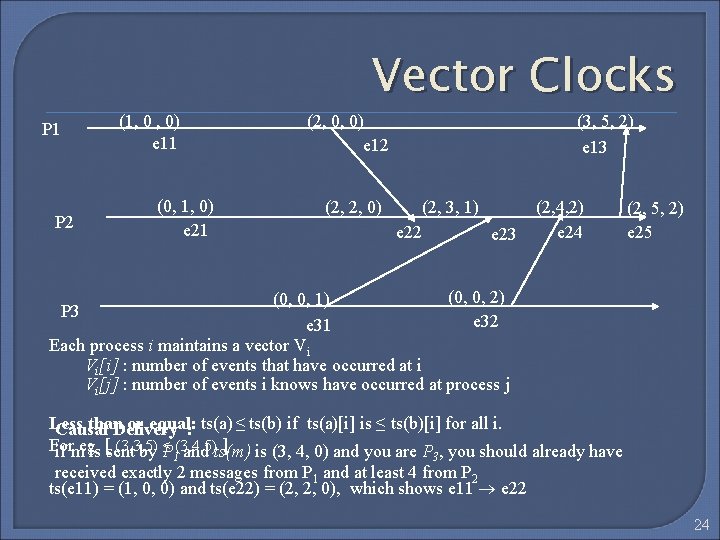

Vector Clocks
[Figure: three processes with vector timestamps.
  P1: e11 (1, 0, 0), e12 (2, 0, 0), e13 (3, 5, 2)
  P2: e21 (0, 1, 0), e22 (2, 2, 0), e23 (2, 3, 1), e24 (2, 4, 2), e25 (2, 5, 2)
  P3: e31 (0, 0, 1), e32 (0, 0, 2)]
• Each process Pi maintains a vector Vi:
  • Vi[i]: number of events that have occurred at Pi
  • Vi[j]: number of events Pi knows have occurred at process Pj
• Less than or equal: ts(a) ≤ ts(b) if ts(a)[k] ≤ ts(b)[k] for all k, e.g. (3, 3, 5) ≤ (3, 4, 5).
• ts(e11) = (1, 0, 0) and ts(e22) = (2, 2, 0), which shows e11 → e22.
• Causal delivery: if message m is sent by P1 with ts(m) = (3, 4, 0) and you are P3, you should already have received exactly 2 messages from P1 and at least 4 from P2 before delivering m.
(Slide 24)
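A sketch of the vector clock rules that replays the figure's timestamps. The slide does not say which events are sends and receives; the pattern below (P1's e12 received at e22, P3's e31 and e32 received at e23 and e24, P2's e25 received at e13) is an assumption inferred from the vectors themselves. The `can_deliver` helper is a separate, hypothetical illustration of the causal-delivery example, and it assumes the causal-broadcast convention in which entries count messages sent rather than all events.

```python
class VectorClock:
    """Vector clock for process `pid` among `n` processes (0-based indices)."""

    def __init__(self, pid: int, n: int) -> None:
        self.pid = pid
        self.v = [0] * n

    def local_event(self) -> tuple:
        # Every event increments this process's own entry.
        self.v[self.pid] += 1
        return tuple(self.v)

    def send(self) -> tuple:
        # A send is an event; the message carries the updated vector.
        return self.local_event()

    def receive(self, ts: tuple) -> tuple:
        # Merge by component-wise maximum, then count the receive event itself.
        self.v = [max(a, b) for a, b in zip(self.v, ts)]
        return self.local_event()


def leq(a, b) -> bool:
    return all(x <= y for x, y in zip(a, b))


def happened_before(a, b) -> bool:
    return leq(a, b) and a != b


def concurrent(a, b) -> bool:
    return not happened_before(a, b) and not happened_before(b, a)


def can_deliver(ts_m, sender, delivered) -> bool:
    """Causal-broadcast test; delivered[k] = messages from Pk delivered so far."""
    return ts_m[sender] == delivered[sender] + 1 and all(
        ts_m[k] <= delivered[k] for k in range(len(ts_m)) if k != sender
    )


# Replay of the figure (P1, P2, P3 are indices 0, 1, 2).
p1, p2, p3 = VectorClock(0, 3), VectorClock(1, 3), VectorClock(2, 3)
e11 = p1.local_event()   # (1, 0, 0)
e12 = p1.send()          # (2, 0, 0), sent to P2
e21 = p2.local_event()   # (0, 1, 0)
e22 = p2.receive(e12)    # (2, 2, 0)
e31 = p3.send()          # (0, 0, 1), sent to P2
e23 = p2.receive(e31)    # (2, 3, 1)
e32 = p3.send()          # (0, 0, 2), sent to P2
e24 = p2.receive(e32)    # (2, 4, 2)
e25 = p2.send()          # (2, 5, 2), sent to P1
e13 = p1.receive(e25)    # (3, 5, 2)

print(happened_before(e11, e22))  # True
print(concurrent(e12, e21))       # True
# Slide example: m from P1 with ts (3, 4, 0) arriving at P3.
print(can_deliver((3, 4, 0), 0, [2, 4, 0]))  # True
print(can_deliver((3, 4, 0), 0, [2, 3, 0]))  # False: one of P2's messages is missing
```

Unlike a single Lamport timestamp, `happened_before` here is exact: for the vectors of two events, ts(a) ≤ ts(b) with ts(a) ≠ ts(b) holds precisely when a → b.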

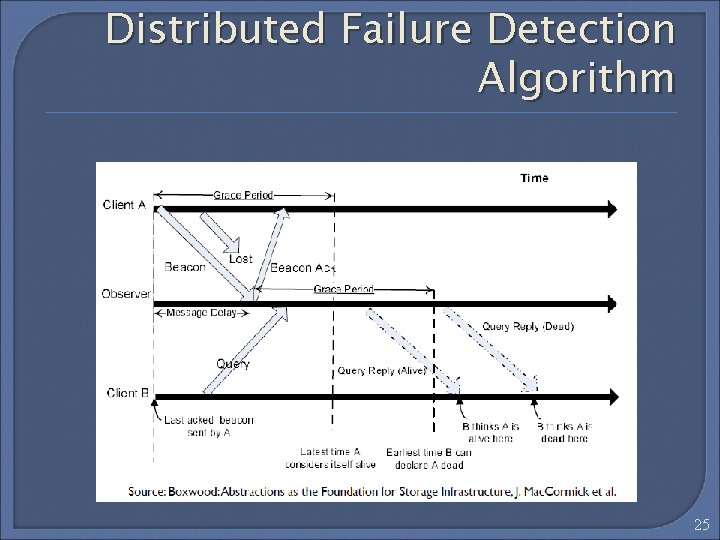

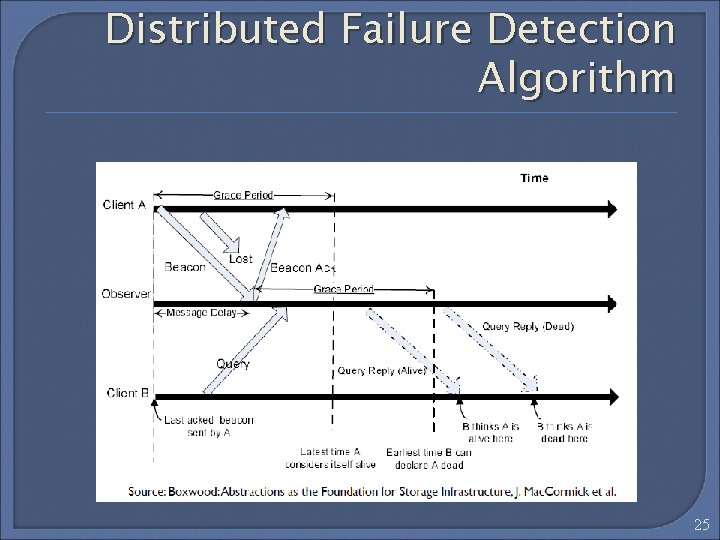

Distributed Failure Detection Algorithm
(Slide 25)

Conclusion
• ‘Happened before’ is only a partial ordering.
• To make it total, ties can be broken with an arbitrary ordering of the processes.
  • But this can lead to anomalous behavior.
• Synchronized physical clocks can be used to resolve it.
(Slide 26)

QUESTIONS?
(Slide 27)