Synchronizing Processes

- Slides: 60

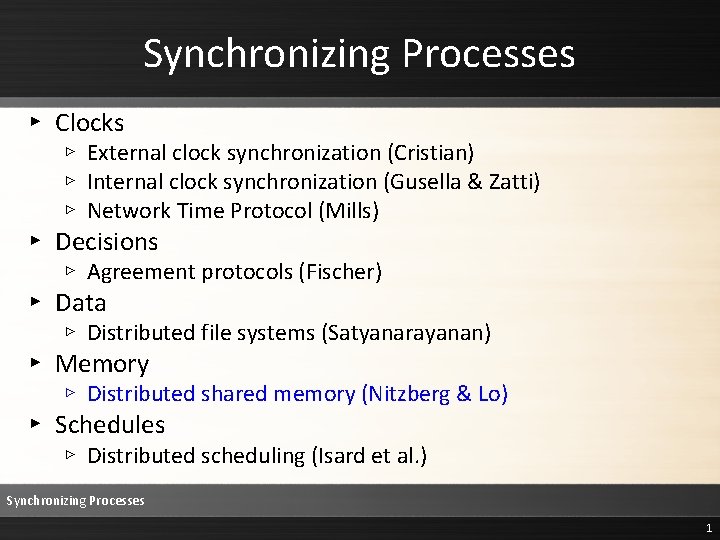

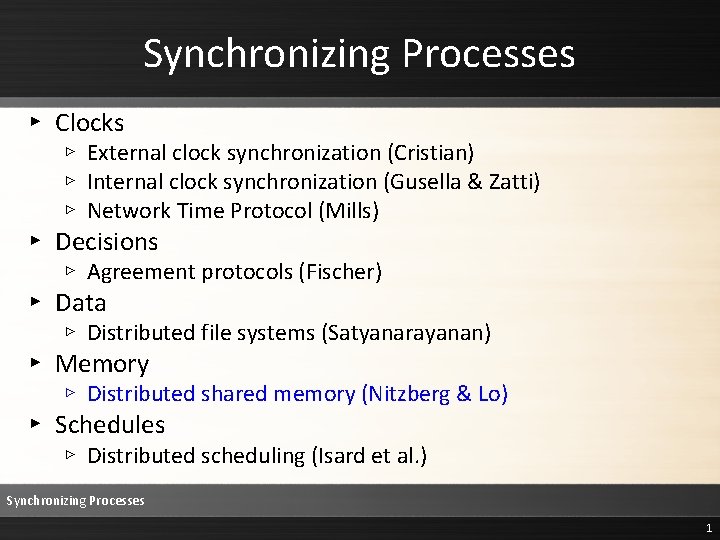

Synchronizing Processes
▸ Clocks
  ▹ External clock synchronization (Cristian)
  ▹ Internal clock synchronization (Gusella & Zatti)
  ▹ Network Time Protocol (Mills)
▸ Decisions
  ▹ Agreement protocols (Fischer)
▸ Data
  ▹ Distributed file systems (Satyanarayanan)
▸ Memory
  ▹ Distributed shared memory (Nitzberg & Lo)
▸ Schedules
  ▹ Distributed scheduling (Isard et al.)

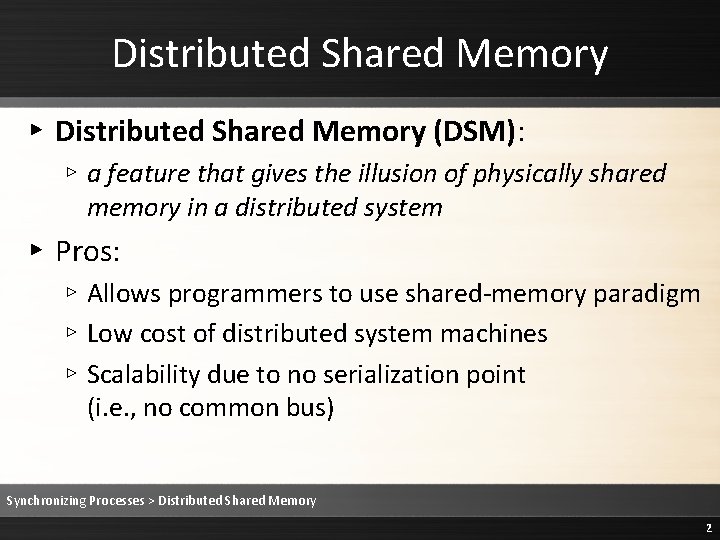

Distributed Shared Memory
▸ Distributed Shared Memory (DSM):
  ▹ a feature that gives the illusion of physically shared memory in a distributed system
▸ Pros:
  ▹ Allows programmers to use the shared-memory paradigm
  ▹ Low cost of distributed system machines
  ▹ Scalability due to no serialization point (i.e., no common bus)

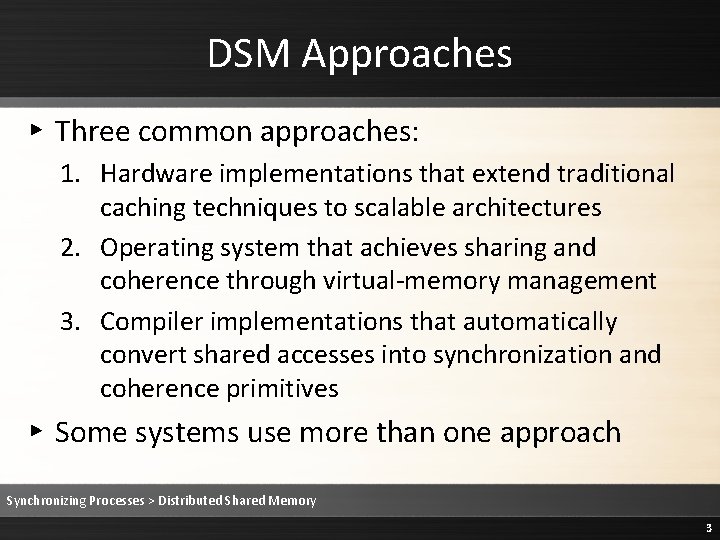

DSM Approaches
▸ Three common approaches:
  1. Hardware implementations that extend traditional caching techniques to scalable architectures
  2. Operating system implementations that achieve sharing and coherence through virtual-memory management
  3. Compiler implementations that automatically convert shared accesses into synchronization and coherence primitives
▸ Some systems use more than one approach

DSM Design Choices
▸ Four key aspects:
  ▹ Structure and granularity
  ▹ Coherence semantics
  ▹ Scalability
  ▹ Heterogeneity

Granularity
▸ A process is likely to access a large region of its shared address space in a small amount of time
▸ Hence, larger page sizes reduce paging overhead
▸ However, larger page sizes increase the likelihood that more than one process will require access to a page (i.e., contention)
▸ Larger page sizes also increase false sharing
▸ False Sharing:
  ▹ occurs when two unrelated variables (each used by different processes) are placed in the same page
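To make the page-size trade-off concrete, the following minimal sketch checks whether two unrelated variables end up on the same page. The addresses, page sizes, and function names are hypothetical and chosen only for illustration.

```python
PAGE_SIZE = 4096  # bytes; hypothetical default page size


def page_of(address: int, page_size: int = PAGE_SIZE) -> int:
    """Return the index of the page that contains a byte address."""
    return address // page_size


def falsely_shared(addr_a: int, addr_b: int, page_size: int = PAGE_SIZE) -> bool:
    """True if two unrelated variables are placed in the same page."""
    return page_of(addr_a, page_size) == page_of(addr_b, page_size)


# Two unrelated variables 1 KiB apart, each used by a different process.
x_addr, y_addr = 8192, 9216
print(falsely_shared(x_addr, y_addr, page_size=4096))
print(falsely_shared(x_addr, y_addr, page_size=1024))
```

With the larger page both variables share a page, so a write by one process disturbs the other; with the smaller page they do not.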

DSM Implementation
▸ Five key aspects:
  ▹ Data location and access
  ▹ Coherence protocol
  ▹ Replacement strategy
  ▹ Thrashing
  ▹ Related algorithms

Coherence Protocol
▸ If shared data is replicated, two types of protocols handle replication and coherence
▸ Write-Invalidate Protocol:
  ▹ invalidates all copies of a piece of data except one before a write can proceed
▸ Write-Update Protocol:
  ▹ a write updates all copies of a piece of data
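The difference between the two protocols can be sketched with a toy model of one replicated datum. This is an illustration only: the class and method names are hypothetical, and real DSM systems track ownership and messaging that are omitted here.

```python
class ReplicatedDatum:
    """Toy model of one shared datum replicated on several nodes."""

    def __init__(self, nodes, value=0):
        # Each node maps to its local copy; None marks an invalidated copy.
        self.copies = {node: value for node in nodes}

    def write_invalidate(self, writer, value):
        # Invalidate every copy except the writer's, then let the write proceed.
        for node in self.copies:
            if node != writer:
                self.copies[node] = None
        self.copies[writer] = value

    def write_update(self, writer, value):
        # The write is applied to every copy, including the writer's own.
        for node in self.copies:
            self.copies[node] = value


datum = ReplicatedDatum(["A", "B", "C"])
datum.write_invalidate("A", 7)
print(datum.copies)
datum.write_update("B", 9)
print(datum.copies)
```

After the invalidating write only node A holds a valid copy; after the updating write every node holds the new value.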

Synchronizing Processes: Data-Intensive Scheduling CS/CE/TE 6378 Advanced Operating Systems

Data-Intensive Computing
▸ Increasingly important for applications such as web-scale data mining, machine learning, and traffic analysis
▸ Fairness
  ▹ More than 50% of jobs are small (less than 30 minutes)
  ▹ A large job should not monopolize the cluster
  ▹ If job X takes t seconds when it runs exclusively on a cluster, X should take no more than Jt seconds when the cluster has J concurrent jobs (for N computers and J jobs, each job should get at least N/J computers)
▸ Data locality
  ▹ Large disks are directly attached to the computers
  ▹ Network bandwidth is expensive

Distinguishing Feature
▸ Every computer in the cluster has a large disk directly attached to it
▸ This allows application data to be stored on the same computers on which it will be processed
▸ But maintaining high bandwidth between arbitrary pairs of computers becomes increasingly expensive as the size of a cluster grows
▸ If computations are not placed close to their input data, the network can therefore become a bottleneck
▸ Hence, optimizing the placement of computation to minimize network traffic is a primary goal

Data-Intensive Scheduling
▸ The primary challenge is to balance the requirements of data locality and fairness
▸ Fairness:
  ▹ all applications will be affected equally
▸ A strategy that achieves optimal data locality will typically delay a job until its ideal resources are available
▸ A strategy that achieves fairness by allocating available resources to a job as soon as possible will typically be forced to choose resources not closest to the computation’s data

Fair Scheduling
▸ Most users desire fair sharing of cluster resources
▸ The most common request is that one user’s large job should not monopolize the whole cluster, delaying the completion of everyone else’s small jobs
▸ It is also important that ensuring low latency for short jobs does not come at the expense of the overall throughput of the system

Throughput
▸ Throughput:
  ▹ the amount of data transferred from one node to another or processed in a specified amount of time
▸ Data transfer rates for disk drives and networks are measured in terms of throughput
▸ Throughput is normally measured in kbps, Mbps, and Gbps

Computational Model
▸ Each job is managed by a root task, which is a process running on one of the cluster computers that contains a state machine managing the workflow of that job
▸ The computation is executed by worker tasks, which are individual processes
▸ A job’s workflow is represented by a directed acyclic graph of workers where edges represent dependencies

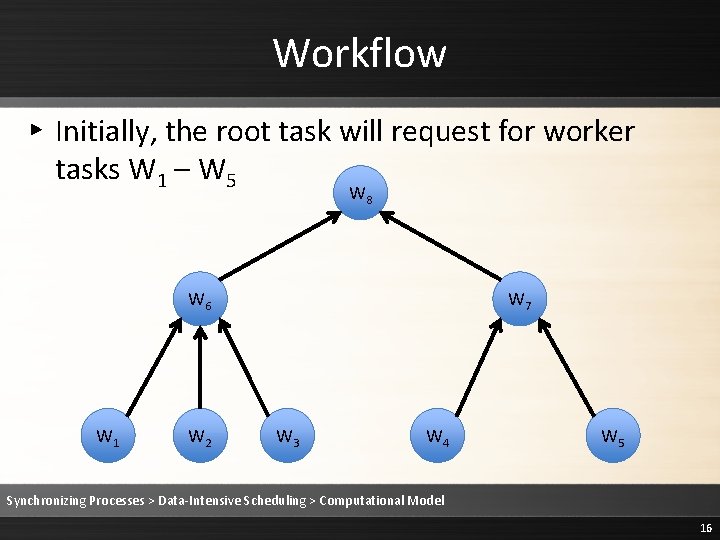

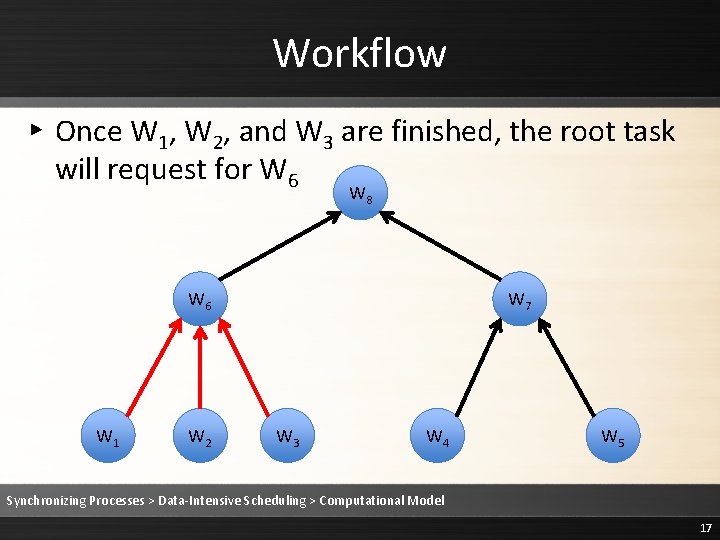

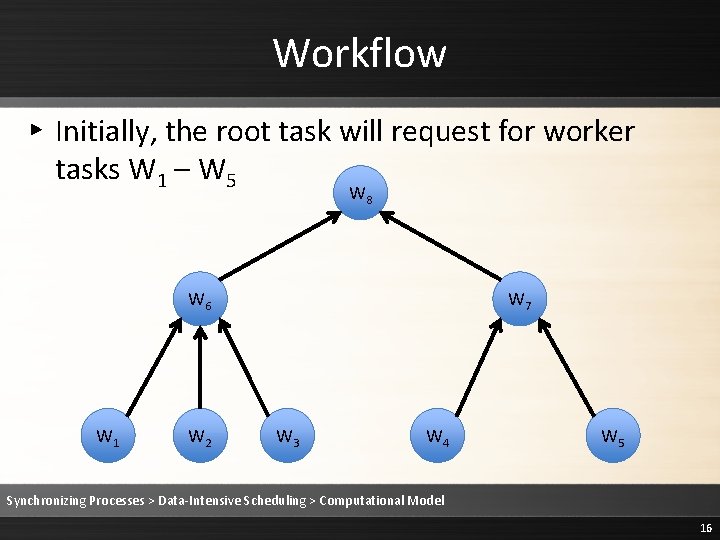

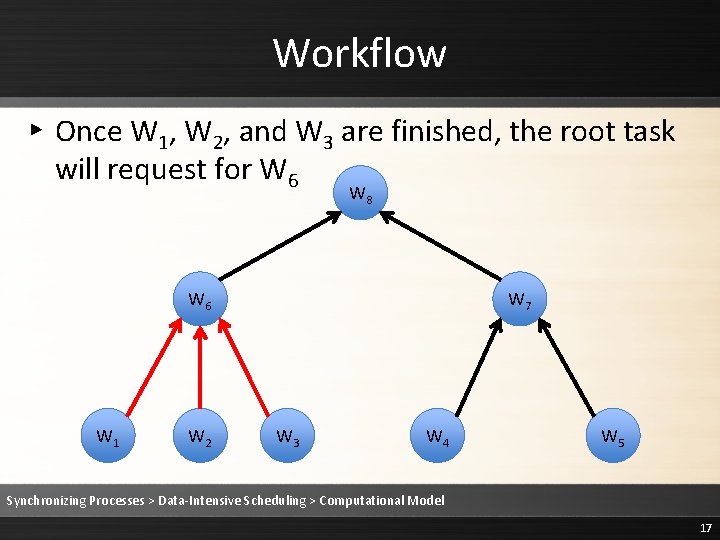

Workflow
▸ Initially, the root task requests worker tasks W1–W5

Workflow
▸ Once W1, W2, and W3 are finished, the root task requests W6

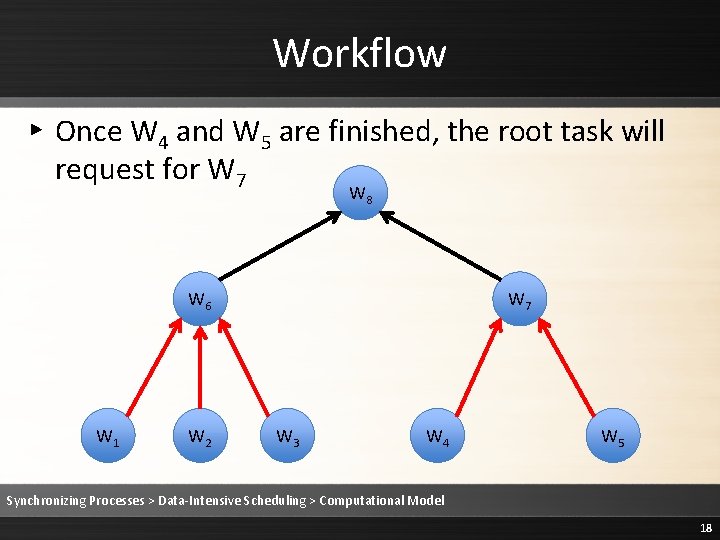

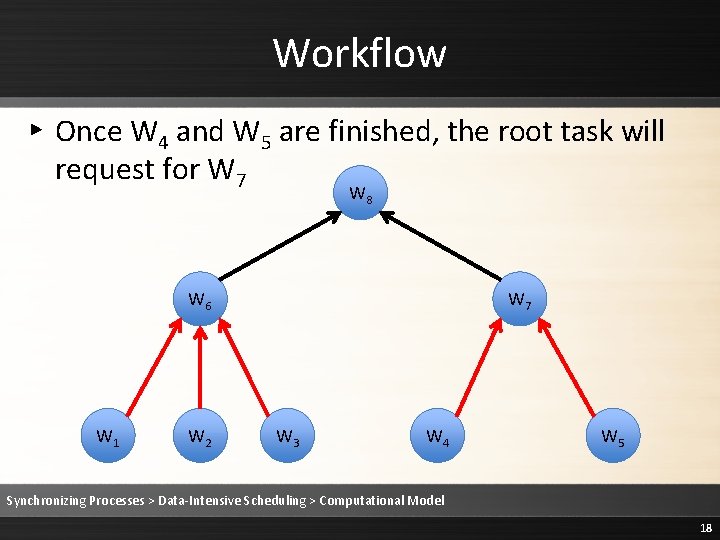

Workflow
▸ Once W4 and W5 are finished, the root task requests W7

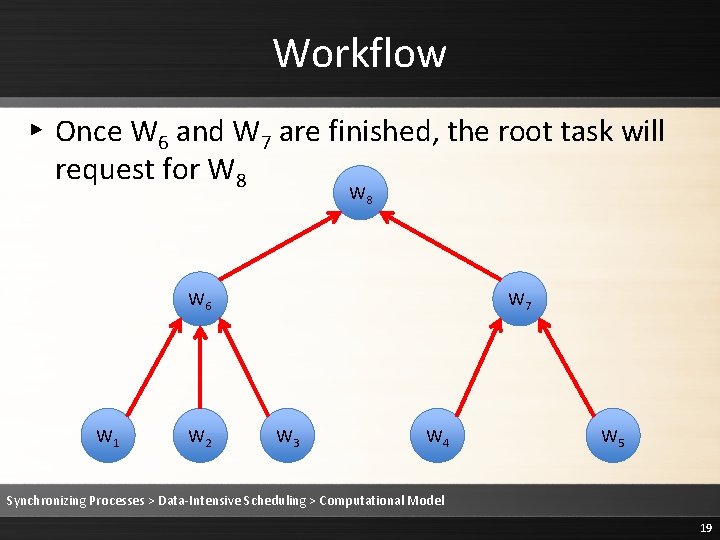

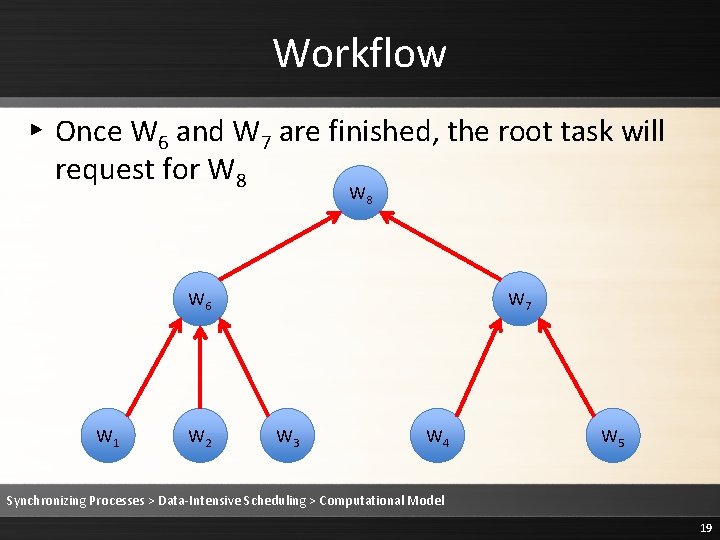

Workflow
▸ Once W6 and W7 are finished, the root task requests W8
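The workflow steps above can be sketched as a small dependency table plus a function that reports which workers a root task could request next. The dependencies are taken from the workflow slides; the function name and data layout are hypothetical.

```python
# Each worker maps to the workers it must wait for (from the workflow slides).
DEPENDS_ON = {
    "W1": [], "W2": [], "W3": [], "W4": [], "W5": [],
    "W6": ["W1", "W2", "W3"],
    "W7": ["W4", "W5"],
    "W8": ["W6", "W7"],
}


def ready_tasks(finished, requested):
    """Workers not yet requested whose dependencies have all finished."""
    return sorted(
        task
        for task, deps in DEPENDS_ON.items()
        if task not in requested and all(dep in finished for dep in deps)
    )


first_wave = ready_tasks(finished=set(), requested=set())
print(first_wave)
print(ready_tasks(finished={"W1", "W2", "W3"}, requested=set(first_wave)))
```

The first call yields W1 through W5, and the second yields W6 once its three inputs are done, matching the sequence on the slides.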

Computational Model
▸ The root process monitors which tasks have completed and which are ready for execution
▸ While running, tasks are independent of each other, so killing one task will not impact another
▸ A worker task may also be executed multiple times, for example to recreate data lost as a result of a failed computer
▸ But a worker task will always generate the same result

Cluster Architecture
▸ A single centralized scheduling service maintains a batch queue of jobs
▸ Several concurrent jobs can be running and sharing resources within the cluster
▸ Each computer is restricted to run only one task at a time
▸ Other jobs can be queued and waiting for admission
▸ When a job is started, the scheduler allocates a computer for its root task
  ▹ If that computer fails, the job will be re-executed from its beginning
▸ Each running job’s root task submits a list of ready workers and their input data summaries to the scheduler

Input Data Locality
▸ A worker task may read data from storage attached to computers in the cluster
▸ Data is either
  ▹ Inputs stored as partitioned files in a DFS, or
  ▹ Intermediate output files generated by other workers
▸ Consequently, the scheduler can be made aware of detailed information about the data transfer costs that would result from executing a worker on a given computer

Input Data Summaries
▸ When the nth worker in job j (denoted wjn) is ready, its root rj computes for each computer m the amount of data that wjn would have to read across the network if executed on m
▸ The root rj then constructs two lists for wjn:
  ▹ A list of preferred computers, and
  ▹ A list of preferred racks

Preferred Localities
▸ Any computer that stores more than a fraction δc of wjn’s total input data is added to the list of preferred computers
▸ Any rack whose computers in sum store more than a fraction δr of wjn’s total input data is added to the list of preferred racks
▸ In practice, the authors recommend a value of 0.1 for δc and δr
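As a rough illustration of the threshold rule, the sketch below builds both preferred lists from a per-computer byte count. The input layout (`bytes_on_computer`, `rack_of`) and the function name are assumptions made for this example, not the authors' data structures.

```python
def preferred_lists(bytes_on_computer, rack_of, delta_c=0.1, delta_r=0.1):
    """Return (preferred computers, preferred racks) for one worker.

    bytes_on_computer: computer -> bytes of the worker's input stored there.
    rack_of: computer -> rack that contains it.
    """
    total = sum(bytes_on_computer.values())

    # Computers storing more than a fraction delta_c of the total input.
    computers = sorted(
        m for m, stored in bytes_on_computer.items() if stored > delta_c * total
    )

    # Sum the stored bytes per rack, then apply the delta_r threshold.
    per_rack = {}
    for m, stored in bytes_on_computer.items():
        per_rack[rack_of[m]] = per_rack.get(rack_of[m], 0) + stored
    racks = sorted(n for n, stored in per_rack.items() if stored > delta_r * total)

    return computers, racks


stored = {"m1": 90, "m2": 4, "m3": 3, "m4": 3}
racks = {"m1": "r1", "m2": "r1", "m3": "r2", "m4": "r2"}
print(preferred_lists(stored, racks))
```

Here only m1 and its rack r1 clear the 0.1 threshold, since rack r2 holds just 6% of the input.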

Cluster Architecture
▸ Each running job’s root task submits a list of ready workers and their input data summaries to the scheduler
▸ The scheduler then matches tasks to computers and instructs the appropriate root task to set them running
▸ When a worker completes, its root task is informed and this may trigger a new set of ready tasks to be sent to the scheduler

Monitoring
▸ The root task also monitors the execution time of worker tasks
▸ It can submit a duplicate of a task that is taking longer than expected to complete
▸ When a worker task fails because of unreliable cluster resources, its root task is responsible for back-tracking through the dependency graph and re-submitting tasks as necessary to regenerate any intermediate data that has been lost

Termination
▸ The scheduler may decide to kill a worker task before it completes in order to allow other jobs to have access to its resources or to move the worker to a more suitable location
▸ In this case, the scheduler will automatically inform the worker’s root task so the task can be restarted on a different computer at a later time

Fairness Goals
▸ A job which runs for t seconds given exclusive access to a cluster should take no more than Jt seconds when there are J jobs concurrently executing on that cluster
▸ The authors attempt to achieve this through admission control

Admission Control
▸ Admission control is implemented to ensure that at most K jobs are executing at any time
▸ When the limit of K jobs is reached, new jobs are queued and started in order of submission time as other jobs complete
▸ A large K increases the likelihood that several jobs will be competing for access to data stored on the same computer
▸ A small K may leave some cluster computers idle if the jobs do not submit enough tasks
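The admission rule can be sketched as a bounded running set backed by a first-in, first-out waiting queue. The class and method names below are hypothetical; the sketch only models the "at most K running, start in submission order" behaviour described above.

```python
from collections import deque


class AdmissionController:
    """Admit at most k jobs at a time; queue the rest in submission order."""

    def __init__(self, k):
        self.k = k
        self.running = set()
        self.waiting = deque()

    def submit(self, job):
        # Start the job immediately if there is room, otherwise queue it.
        if len(self.running) < self.k:
            self.running.add(job)
        else:
            self.waiting.append(job)

    def complete(self, job):
        # Free the slot and start the oldest waiting job, if any.
        self.running.discard(job)
        if self.waiting and len(self.running) < self.k:
            self.running.add(self.waiting.popleft())


controller = AdmissionController(k=2)
for name in ["a", "b", "c"]:
    controller.submit(name)
controller.complete("a")
print(sorted(controller.running), list(controller.waiting))
```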

Queue-Based Scheduling
▸ Queue-based architecture:
  ▹ One queue for each computer m in the cluster (Cm)
  ▹ One queue for each rack of computers n (Rn)
  ▹ One cluster-wide queue (X)
▸ When a worker task is submitted to the scheduler it is added to multiple queues:
  ▹ Cm for each computer m on its preferred list
  ▹ Rn for each rack n on its preferred rack list
  ▹ X for the entire cluster
▸ When a task starts, it is removed from all queues

Queue-Based Scheduling
▸ When a new job is started, its root task is assigned a computer at random from among the computers that are not currently executing root tasks
▸ Any worker task currently running on that computer is killed and re-entered into the scheduler queues as though it had just been submitted
▸ K must be small enough that there are at least K + 1 working computers in the cluster, so that at least one computer is available to execute worker tasks

Queue-Based Scheduling
▸ Four types of queue-based algorithms
  ▹ Greedy without fairness
  ▹ Fair greedy
  ▹ Fairness with preemption
  ▹ Sticky slots

Greedy Without Fairness
▸ Whenever a computer m becomes free, the first ready task on Cm is dispatched to m
▸ If Cm does not have any ready tasks, then the first ready task on Rn is dispatched to m, where n is m’s rack
▸ If neither Cm nor Rn contains a ready task, then the first ready task on X is dispatched to m
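The queue structure and the greedy dispatch order can be sketched together as follows. This is a simplified illustration: the class and method names are hypothetical, every queued task is treated as ready, and insertion-ordered dictionaries stand in for the queues so a task can be removed from all of them cheaply.

```python
class GreedyScheduler:
    """Per-computer (C), per-rack (R), and cluster-wide (X) task queues."""

    def __init__(self, rack_of):
        self.rack_of = rack_of  # computer -> rack
        self.C = {m: {} for m in rack_of}
        self.R = {n: {} for n in set(rack_of.values())}
        self.X = {}

    def submit(self, task, preferred_computers=(), preferred_racks=()):
        # A task joins the queue of every preferred computer and rack, plus X.
        for m in preferred_computers:
            self.C[m][task] = None
        for n in preferred_racks:
            self.R[n][task] = None
        self.X[task] = None

    def dispatch(self, m):
        """Pick a task for free computer m: try Cm, then m's rack, then X."""
        for queue in (self.C[m], self.R[self.rack_of[m]], self.X):
            if queue:
                task = next(iter(queue))  # first task in insertion order
                self._remove_everywhere(task)
                return task
        return None

    def _remove_everywhere(self, task):
        # A started task is removed from all queues.
        for queue in [*self.C.values(), *self.R.values(), self.X]:
            queue.pop(task, None)


scheduler = GreedyScheduler({"m1": "r1", "m2": "r1", "m3": "r2"})
scheduler.submit("t1")
scheduler.submit("t2", preferred_computers=["m1"], preferred_racks=["r1"])
scheduler.submit("t3", preferred_racks=["r1"])
print(scheduler.dispatch("m1"), scheduler.dispatch("m2"), scheduler.dispatch("m3"))
```

In this run m1 takes the task that prefers it, m2 falls back to its rack queue, and m3 falls back to the cluster-wide queue.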

Greedy Without Fairness
▸ Cons:
  1. If a job submits a large number of tasks on every computer’s queue, then other jobs will not execute any workers until the first job’s tasks have been run
  2. In a loaded cluster, a task that has no preferred computers or racks may wait for a long time before being executed anywhere, since there will always be at least one preferred task ready for every computer or rack

Fair Greedy
▸ Blocked:
  ▹ when a job is blocked, its waiting tasks will not be matched to any computers, thus allowing unblocked jobs to take precedence when starting new tasks
▸ Simple Fairness:
  ▹ the first “ready” task from a queue is pulled, where a task is ready only if its job is not blocked
▸ A job j is blocked when it is running Aj tasks or more, where Aj = min(M/K, Nj), M is the number of computers in the cluster, and Nj is the number of workers that j currently has running or queued
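The blocking rule is a short calculation. The sketch below assumes M is the number of computers in the cluster and rounds M/K down to a whole number of computers; both the rounding and the function names are assumptions made for illustration.

```python
def allocation(m_computers: int, k_jobs: int, n_j: int) -> int:
    """A_j = min(M / K, N_j), with M / K rounded down (an assumption)."""
    return min(m_computers // k_jobs, n_j)


def is_blocked(running: int, m_computers: int, k_jobs: int, n_j: int) -> bool:
    """A job is blocked when it is running A_j tasks or more."""
    return running >= allocation(m_computers, k_jobs, n_j)


# A 240-computer cluster shared by 8 jobs gives each job a quota of 30,
# unless the job has fewer than 30 workers running or queued.
print(allocation(240, 8, n_j=100))
print(allocation(240, 8, n_j=5))
print(is_blocked(running=30, m_computers=240, k_jobs=8, n_j=100))
```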

Fairness With Preemption
▸ When a job j is running more than Aj workers, the scheduler will kill its over-quota tasks, starting with the most recently scheduled task, to try to minimize wasted work
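Choosing which tasks to kill can be sketched as a sort by start time. The `(task, start_time)` tuple layout and the function name are hypothetical.

```python
def tasks_to_kill(running_tasks, quota):
    """Return the over-quota tasks of one job, most recently started first.

    running_tasks: list of (task, start_time) pairs for the job.
    quota: the job's allocation A_j.
    """
    excess = len(running_tasks) - quota
    if excess <= 0:
        return []
    # Newest tasks have done the least work, so killing them wastes the least.
    newest_first = sorted(running_tasks, key=lambda pair: pair[1], reverse=True)
    return [task for task, _ in newest_first[:excess]]


print(tasks_to_kill([("a", 1), ("b", 5), ("c", 3)], quota=1))
```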

Sticky Slots
▸ Consider the steady state in which each job is occupying exactly its allocated quota of computers
▸ Whenever a task from job j completes on computer m, j becomes unblocked but all of the other jobs remain blocked
▸ Consequently, m is reassigned to one of j’s tasks again
▸ This is the “sticky slot” problem

Sticky Slots Solution
▸ A job j is not unblocked immediately if its number of running tasks falls below Aj
▸ Instead, the scheduler waits to unblock j until either the number of j’s running tasks falls below Aj – H or H seconds have passed, where H is a hysteresis margin
▸ In many cases, this delay is sufficient to allow another job’s worker, with better locality, to steal computer m
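The hysteresis rule can be sketched as a single predicate. The slide uses H for both the task-count margin and the time limit; the sketch keeps them as two separate, hypothetical parameters (`margin` and `timeout`) so the two conditions stay distinguishable.

```python
def should_unblock(running, quota, margin, seconds_below_quota, timeout):
    """Decide whether a blocked job may be unblocked.

    running: number of the job's tasks currently running.
    quota: the job's allocation A_j.
    margin: hysteresis margin in tasks (hypothetical parameter name).
    seconds_below_quota: how long the job has been under its quota.
    timeout: hysteresis time limit in seconds (hypothetical parameter name).
    """
    if running >= quota:
        return False  # still at or over quota, so the job stays blocked
    # Unblock only once the job is well under quota or has waited long enough.
    return running < quota - margin or seconds_below_quota >= timeout


print(should_unblock(9, 10, margin=2, seconds_below_quota=0.0, timeout=5.0))
print(should_unblock(7, 10, margin=2, seconds_below_quota=0.0, timeout=5.0))
print(should_unblock(9, 10, margin=2, seconds_below_quota=5.0, timeout=5.0))
```

The first call leaves the job blocked even though one slot has freed up, which is what gives another job's worker the chance to take the computer.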

Flow-Based Scheduling
▸ The primary data structure used by this approach is a graph that encodes both the structure of the cluster’s network and the set of waiting tasks along with their locality metadata
▸ By assigning appropriate weights and capacities to the edges in this graph, a standard graph solver can be used to convert the graph into an instantaneous set of scheduling assignments
▸ There is a quantifiable cost to every scheduling decision
  ▹ Transfer cost for running a task on a particular computer
  ▹ Time cost for killing a task that has begun execution

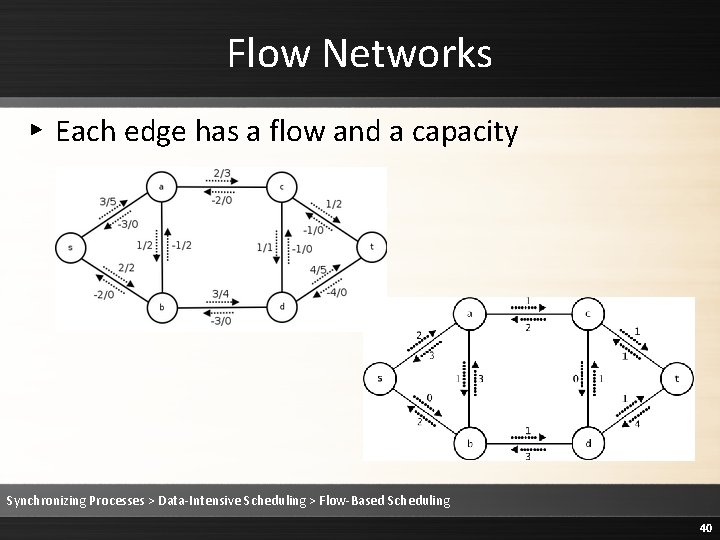

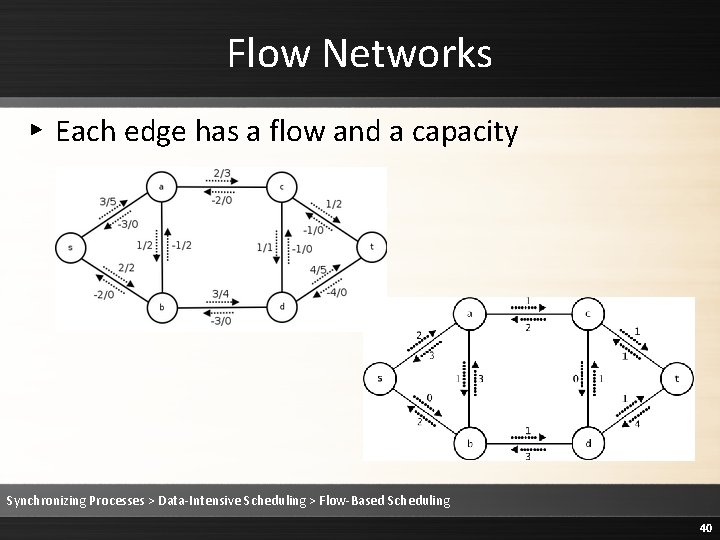

Flow Networks
▸ Each edge has a flow and a capacity

Minimum-cost flow problem
▸ The goal is to find the cheapest possible way of sending a certain amount of flow through a flow network
▸ The MCF solution represents the job scheduling decisions that yield the minimum global cost
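To show what "minimum global cost" means, the sketch below finds the cheapest one-task-per-computer assignment by exhaustive search. This is not a min-cost flow solver: it is a brute-force stand-in that is only practical for tiny inputs, and the cost table and function name are made up for illustration.

```python
from itertools import permutations


def cheapest_assignment(cost):
    """Exhaustively find the lowest-total-cost assignment of tasks to computers.

    cost: task -> {computer -> cost of running the task there}.
    Every task must list a cost for every computer; each computer runs
    at most one task.
    """
    tasks = sorted(cost)
    computers = sorted({m for row in cost.values() for m in row})
    best = None
    for chosen in permutations(computers, len(tasks)):
        total = sum(cost[t][m] for t, m in zip(tasks, chosen))
        if best is None or total < best[0]:
            best = (total, dict(zip(tasks, chosen)))
    return best


costs = {
    "w1": {"m1": 1, "m2": 4},
    "w2": {"m1": 2, "m2": 8},
}
print(cheapest_assignment(costs))
```

Giving w1 its individually cheapest computer (m1) would force w2 onto m2 for a total of 9, whereas the globally cheapest assignment swaps them for a total of 6.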

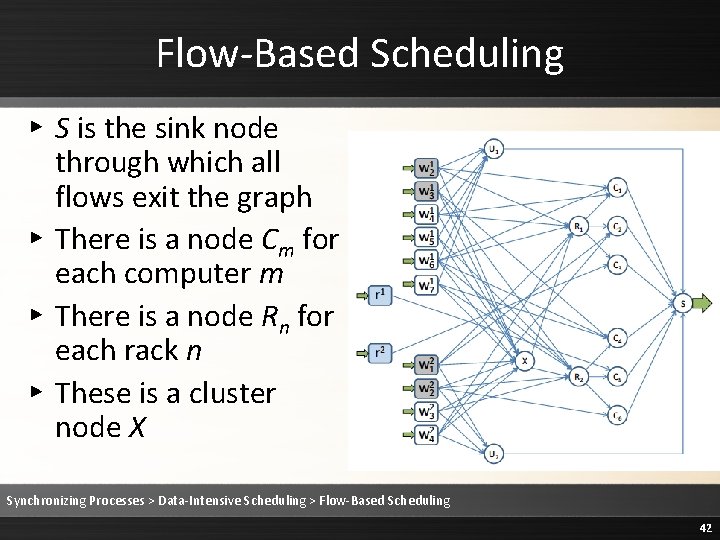

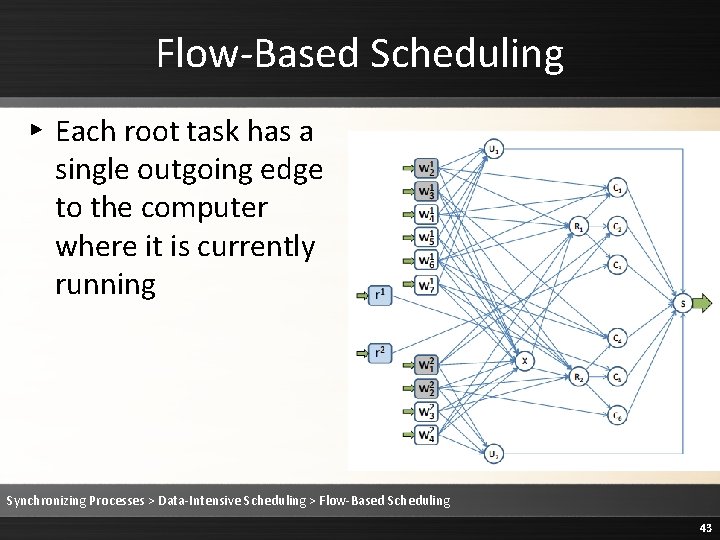

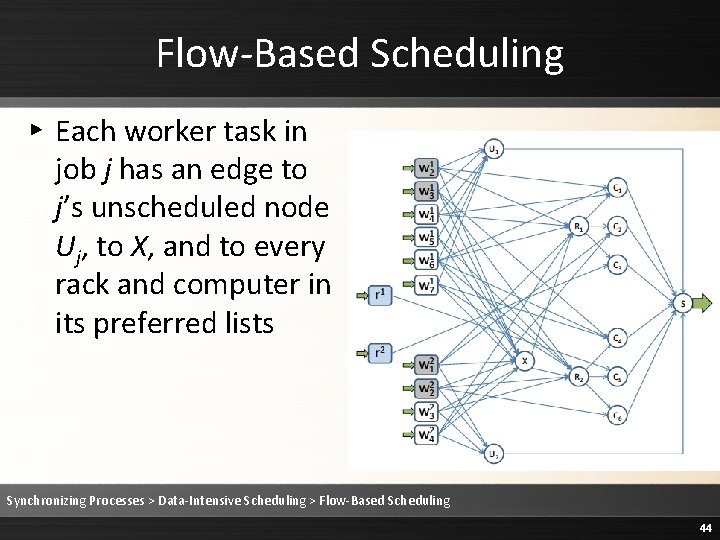

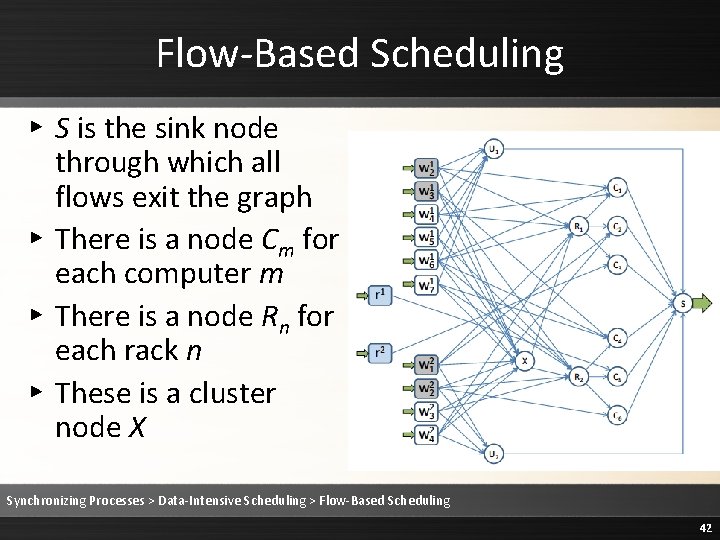

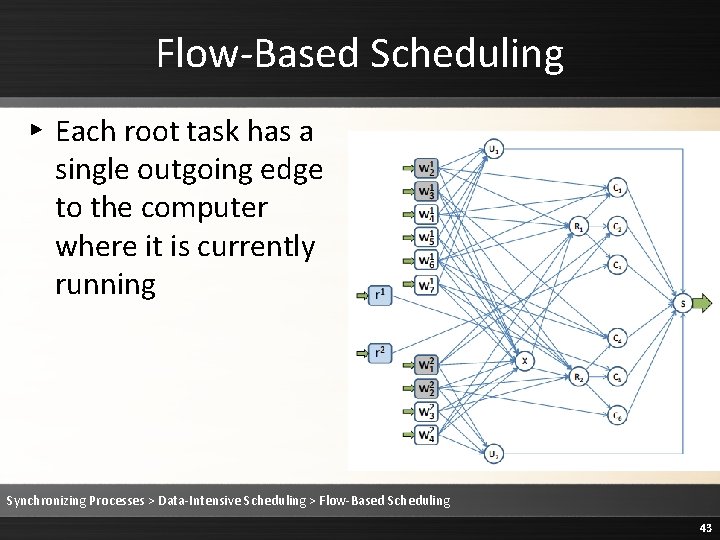

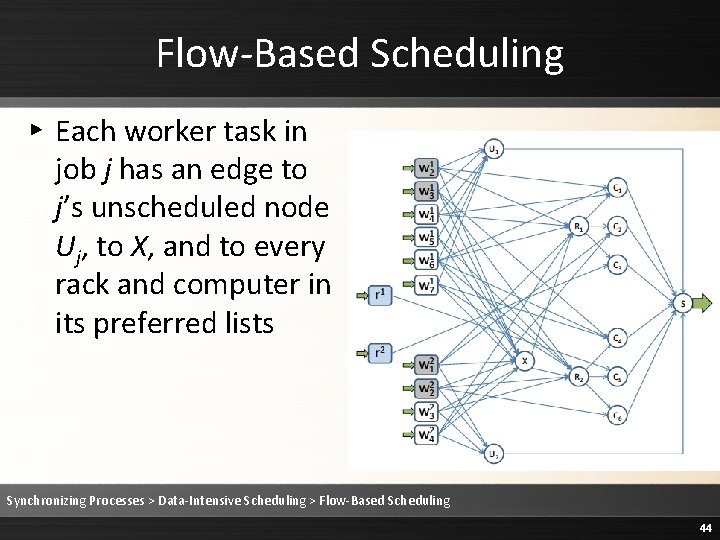

Flow-Based Scheduling
▸ S is the sink node through which all flows exit the graph
▸ There is a node Cm for each computer m
▸ There is a node Rn for each rack n
▸ There is a cluster node X

Flow-Based Scheduling
▸ Each root task has a single outgoing edge to the computer where it is currently running

Flow-Based Scheduling
▸ Each worker task in job j has an edge to j’s unscheduled node Uj, to X, and to every rack and computer in its preferred lists

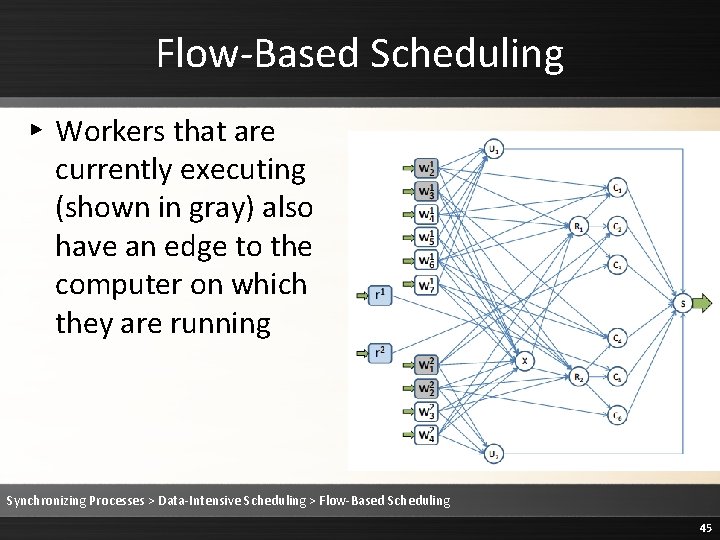

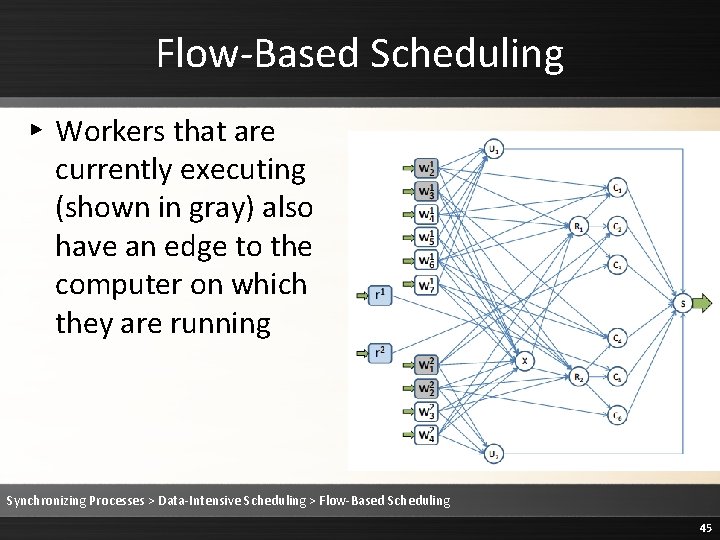

Flow-Based Scheduling
▸ Workers that are currently executing (shown in gray) also have an edge to the computer on which they are running

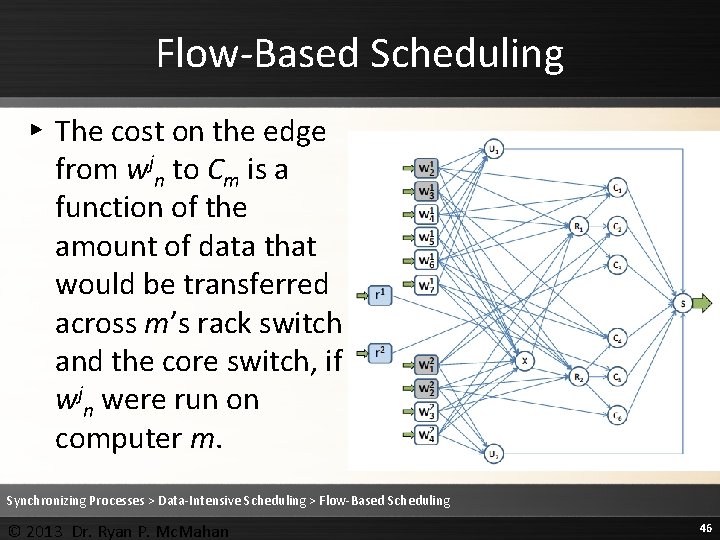

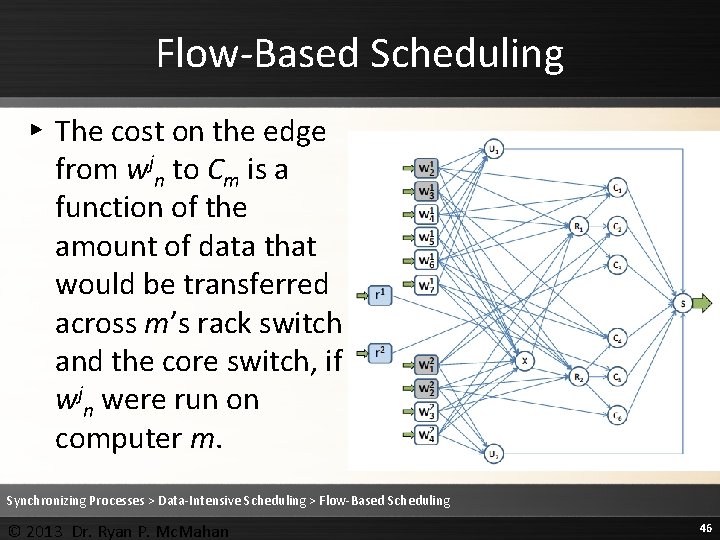

Flow-Based Scheduling
▸ The cost on the edge from wjn to Cm is a function of the amount of data that would be transferred across m’s rack switch and the core switch if wjn were run on computer m
© 2013 Dr. Ryan P. McMahan

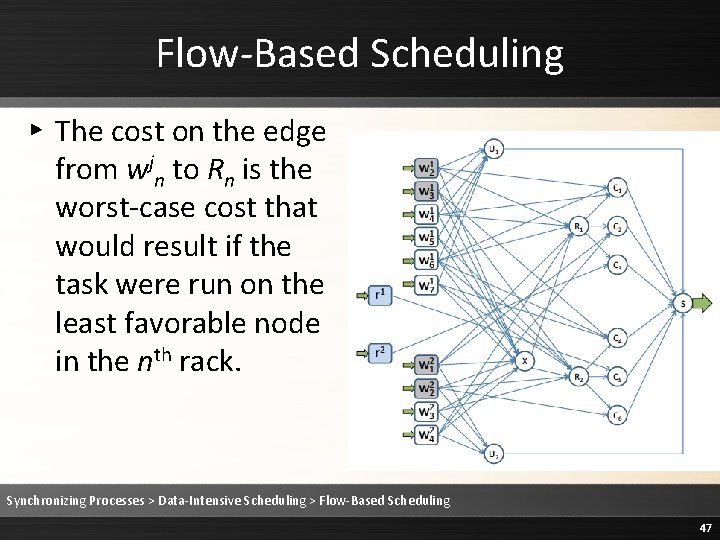

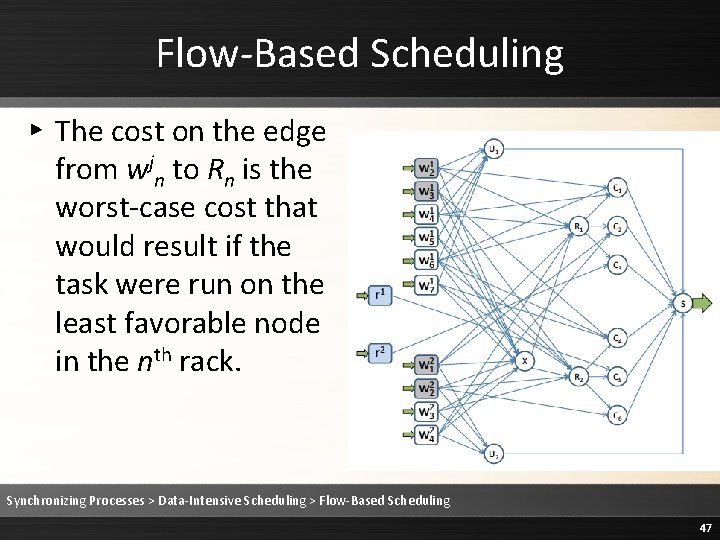

Flow-Based Scheduling
▸ The cost on the edge from wjn to Rn is the worst-case cost that would result if the task were run on the least favorable node in the nth rack

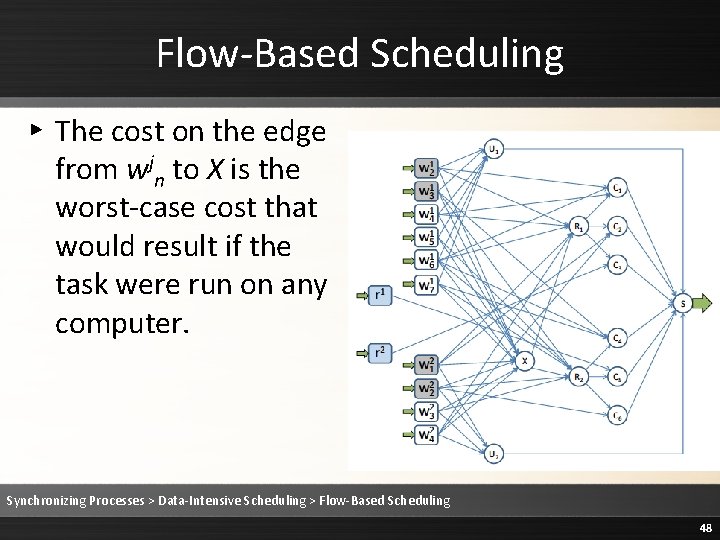

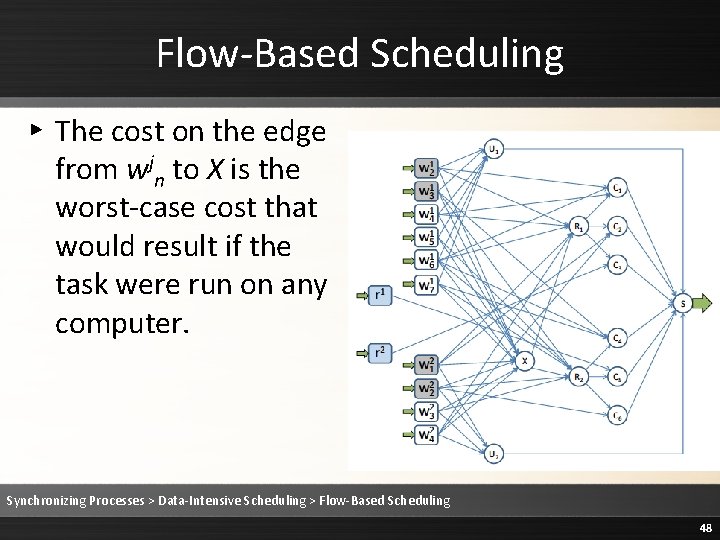

Flow-Based Scheduling
▸ The cost on the edge from wjn to X is the worst-case cost that would result if the task were run on any computer
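The three edge-cost rules can be sketched for a single worker as follows. The per-computer cost table, the node labels, and the function name are hypothetical, and the edge to the unscheduled node Uj is left out because the slides do not state its cost.

```python
def worker_edge_costs(cost_on, rack_of, preferred_computers, preferred_racks):
    """Return {node label: cost} for one worker's outgoing edges.

    cost_on: computer -> transfer cost of running the worker there.
    rack_of: computer -> rack that contains it.
    """
    edges = {}
    # Edge to a preferred computer: the cost of running on that computer.
    for m in preferred_computers:
        edges[f"C:{m}"] = cost_on[m]
    # Edge to a preferred rack: worst case over the computers in that rack.
    for n in preferred_racks:
        edges[f"R:{n}"] = max(c for m, c in cost_on.items() if rack_of[m] == n)
    # Edge to the cluster node X: worst case over every computer.
    edges["X"] = max(cost_on.values())
    return edges


cost_on = {"m1": 0, "m2": 5, "m3": 9}
rack_of = {"m1": "r1", "m2": "r1", "m3": "r2"}
print(worker_edge_costs(cost_on, rack_of, ["m1"], ["r1"]))
```

The costs grow from the computer edge to the rack edge to the cluster edge, so a solver minimizing total cost is steered toward the more local placement when one is available.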

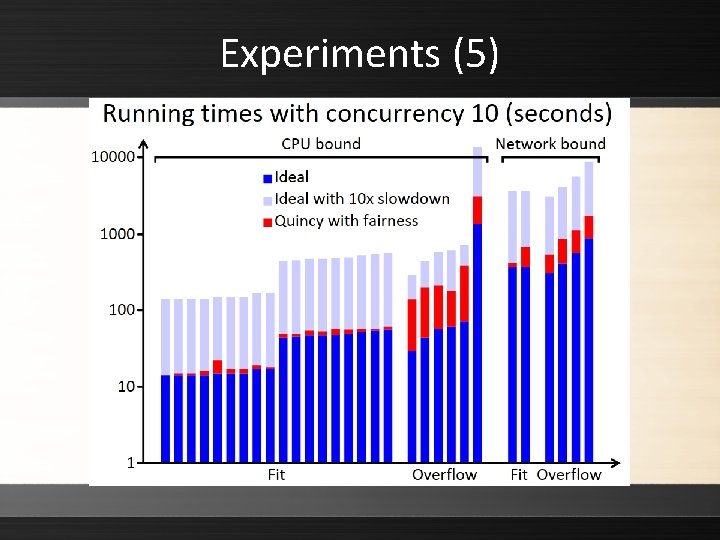

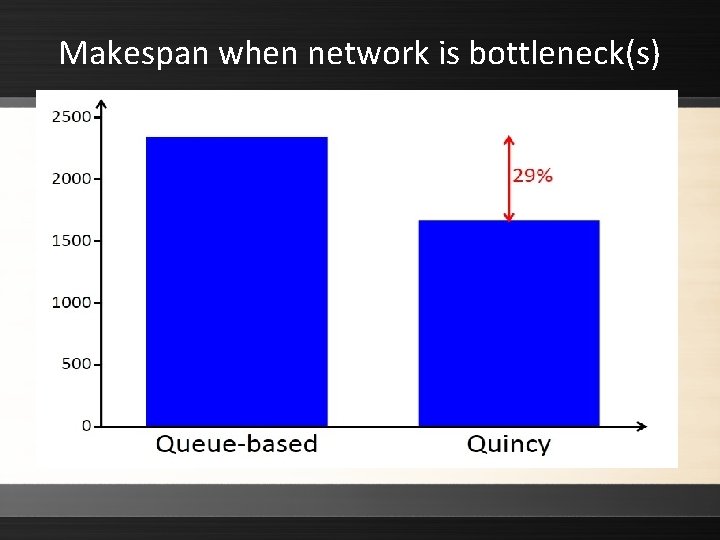

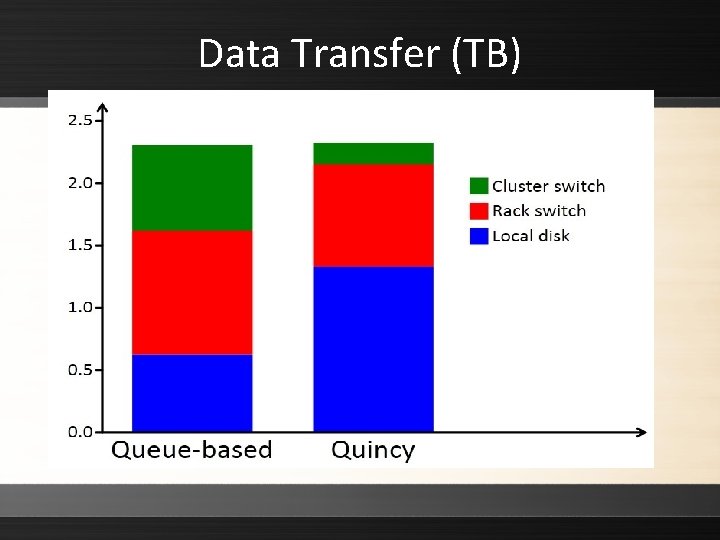

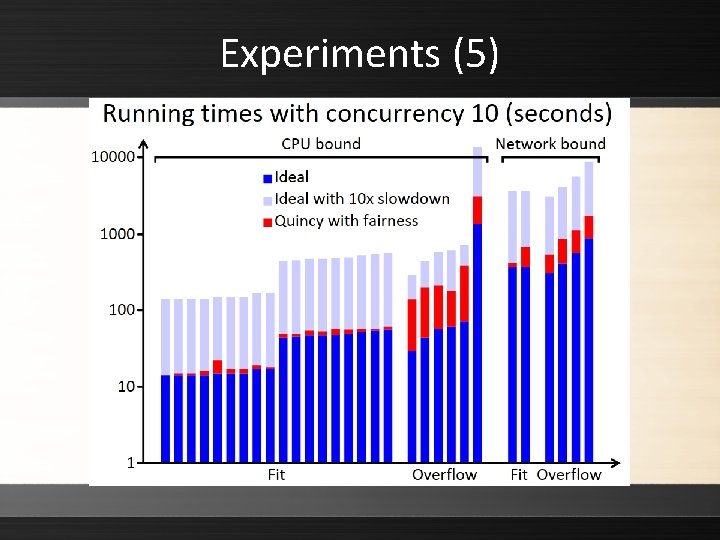

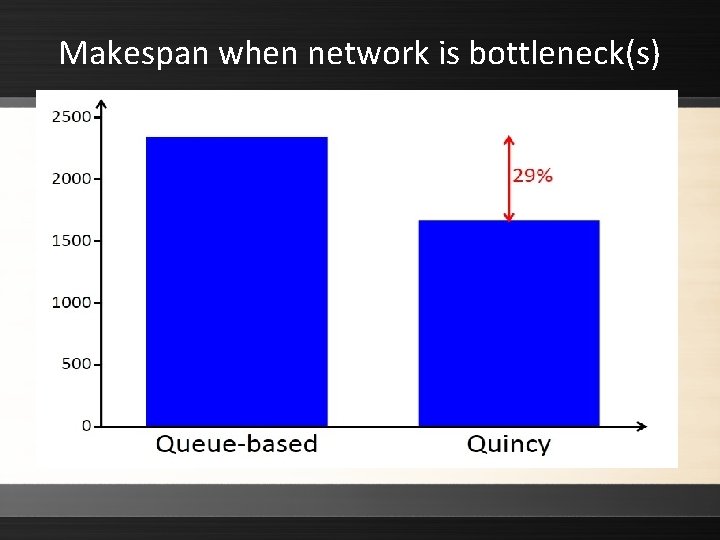

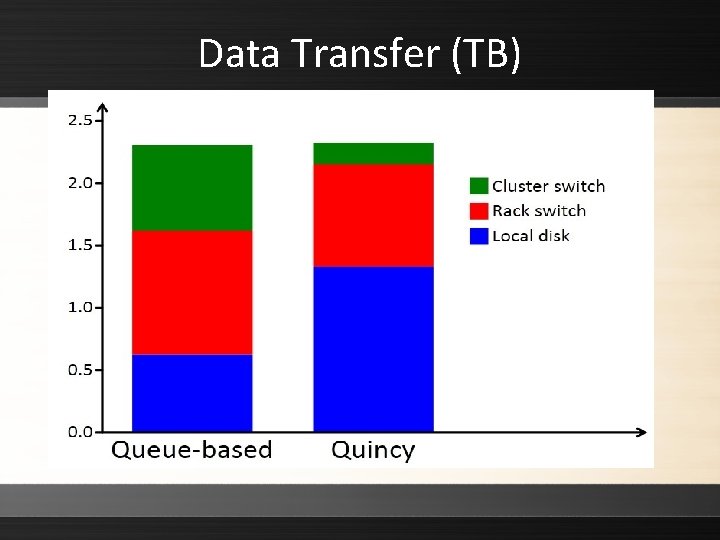

Experiment
▸ The authors conducted experiments to compare the queue-based and flow-based (Quincy) algorithms
▸ Quincy with preemption performed the best in unconstrained and constrained networks
▸ Overall, flow-based scheduling was found to reduce traffic through the core switch of a hierarchical network by a factor of three, while at the same time increasing the throughput of the cluster

Credit
▸ Modified version of:
  ▹ www.cse.unl.edu/~ylu/csce990/notes/Quincy_Weiyue.ppt

Evaluation
▸ Typical Dryad jobs (Sort, Join, PageRank, WordCount, Prime)
▸ Prime is used as a worst-case job that hogs the cluster if started first
▸ 240 computers in the cluster: 8 racks, 29–31 computers per rack
▸ More than one metric is used for evaluation

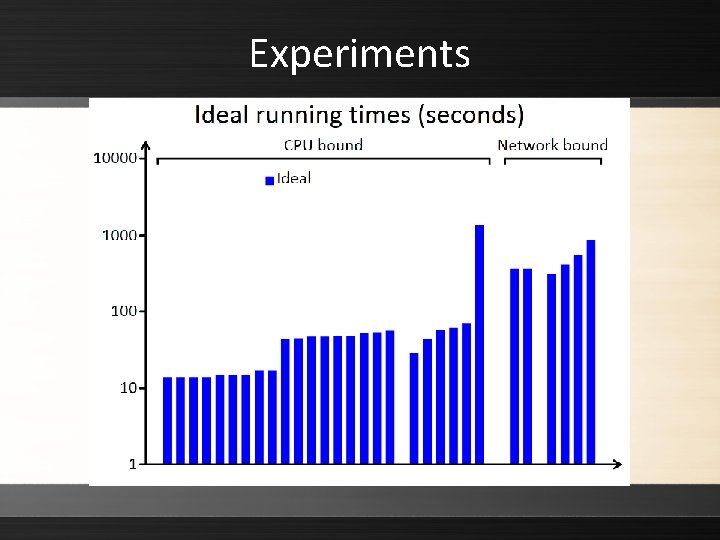

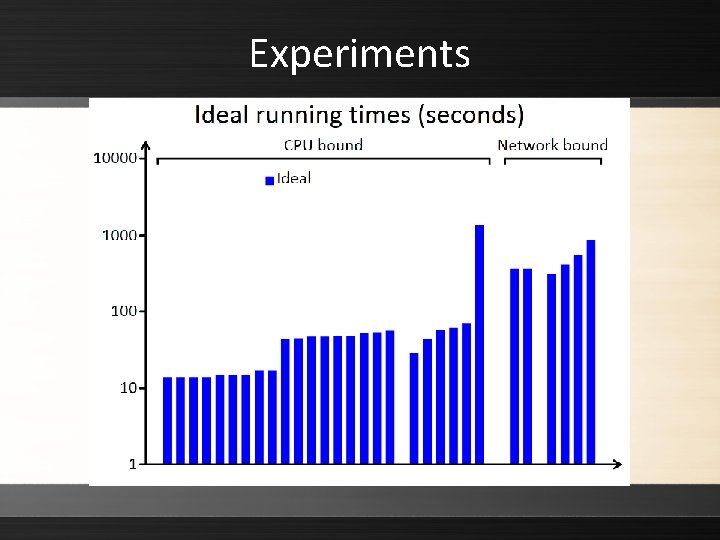

Experiments

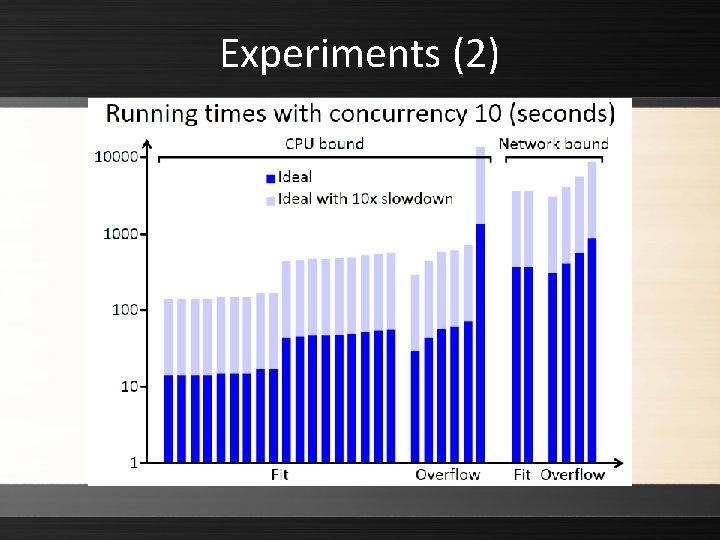

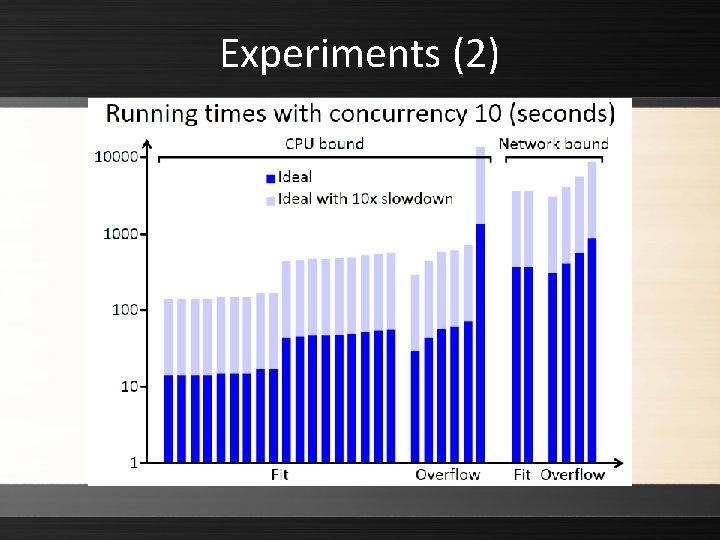

Experiments (2)

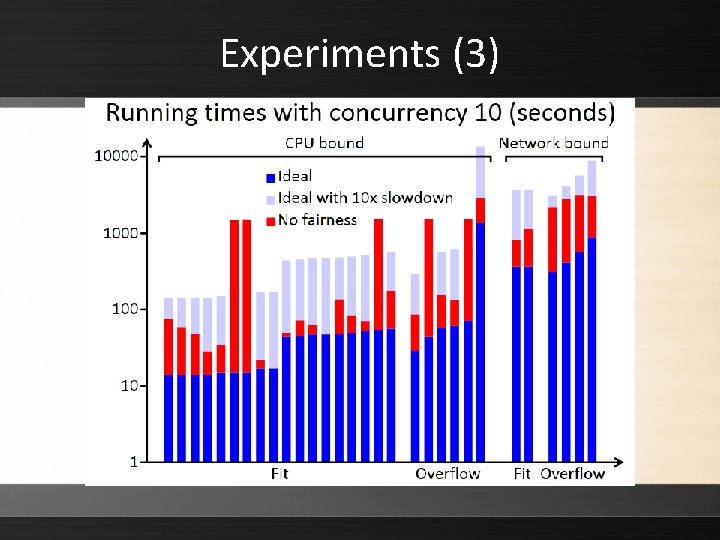

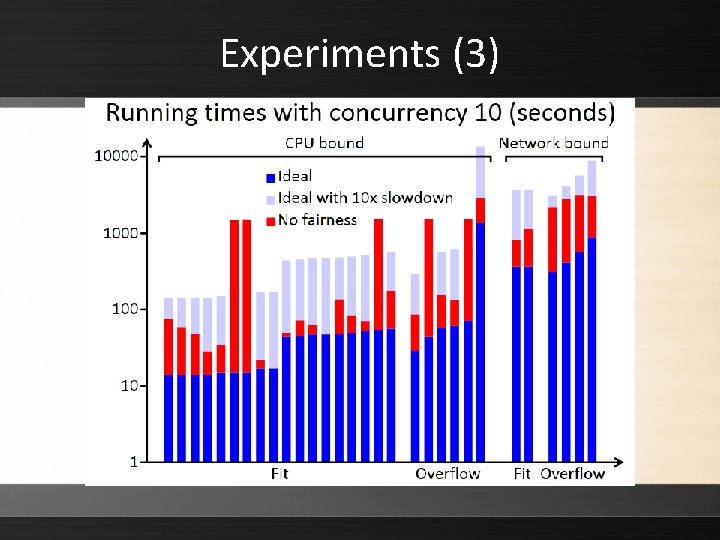

Experiments (3)

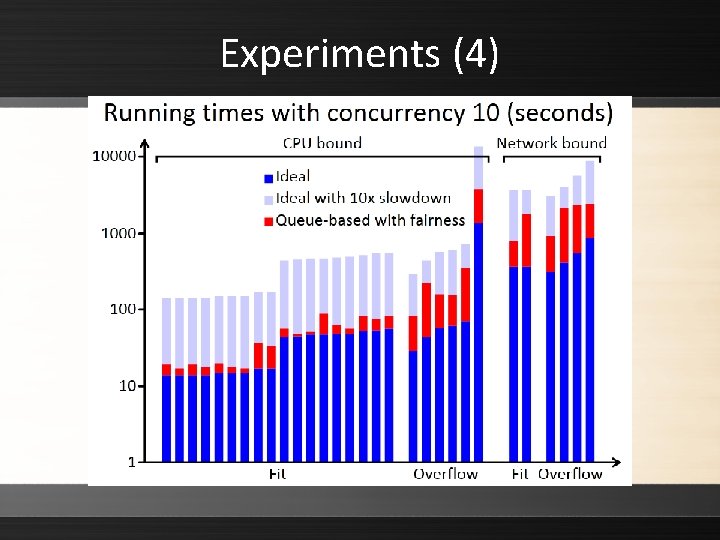

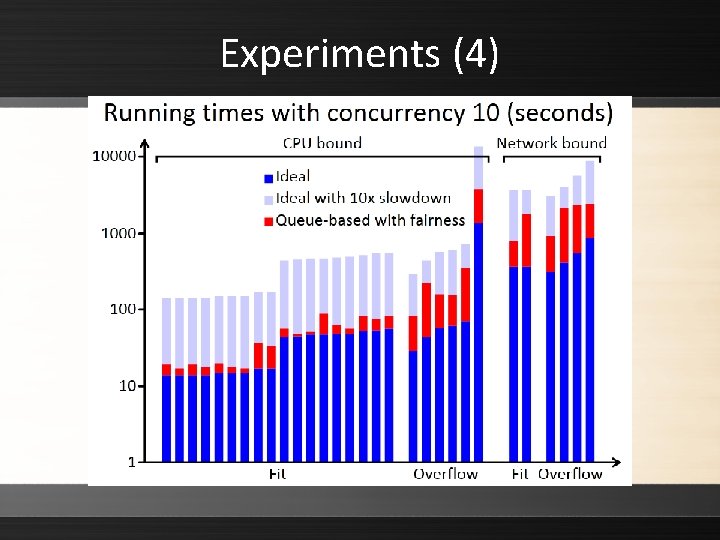

Experiments (4)

Experiments (5)
▸ Figure panels: makespan (s) when the network is the bottleneck; data transfer (TB)

Conclusion
▸ New computational model for data-intensive computing
▸ Elegant mapping of scheduling to a min-cost flow/matching problem

Discussion
▸ Homogeneous environment
▸ Centralized Quincy controller: single point of failure
▸ No theoretical stability guarantee
▸ Cost measure: fairness, cost of kill