SOFT COMPUTING TECHNIQUES R ANIL KUMAR Assistant Professor

- Slides: 29

SOFT COMPUTING TECHNIQUES R. ANIL KUMAR (Assistant Professor) GRIET

Importance of Soft Computing Techniques
• Soft computing methods play a major role in many newly developed applications.
• These techniques are embedded or implemented in present-day industries, both IT and non-IT.
• In any industry, controlling complex parameters is a major task, which raises the importance of Soft Computing Techniques (SCT).
• Traditional controllers, which are complex, were designed from mathematical modelling equations framed under stable operating conditions of electrical systems.
• Better performance has been observed when soft computing techniques are used in combination with conventional methods.
• SCT resolve these issues by learning: they train themselves through a mechanism built on expert experience.
Unit-1: SCT-NN - I : R. Anil Kumar Gokaraju Rangaraju Institute of Engineering and Technology

Unit – 1: Neural Networks (NN) - I
Objectives: At the end of this Unit, students must be able to
• State the types of activation functions used in Artificial Neural Networks.
• List the types of associative memories.
• Classify the learning methods available for training a neural network.
• Summarize the applications of NN.

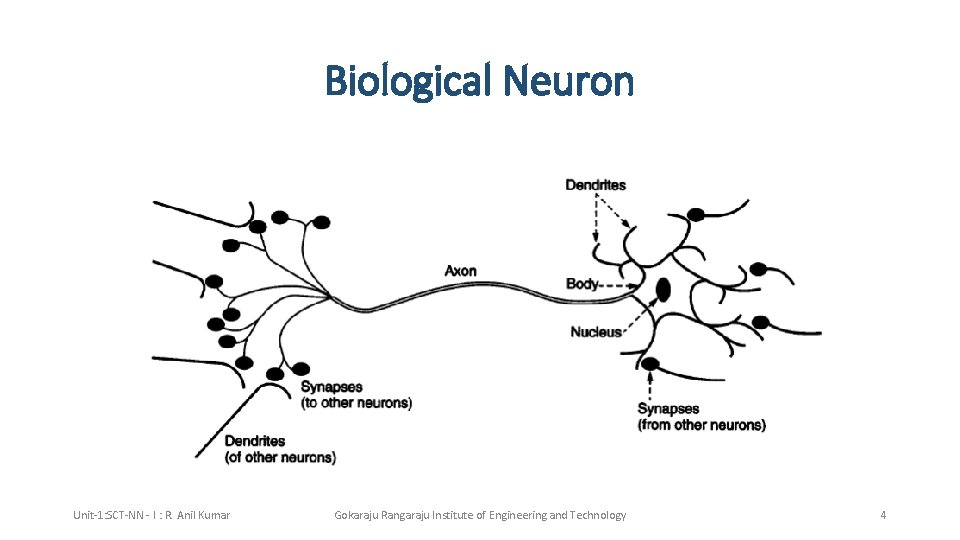

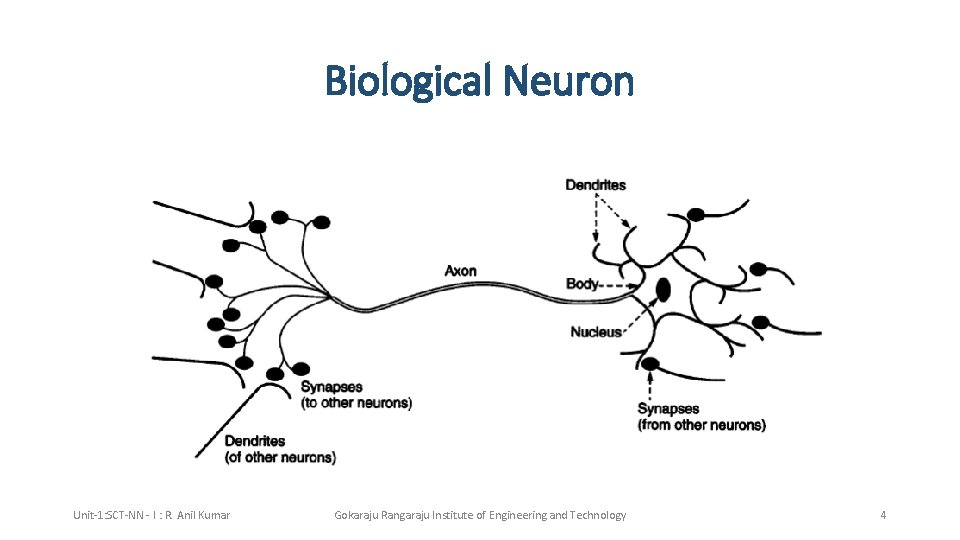

Biological Neuron

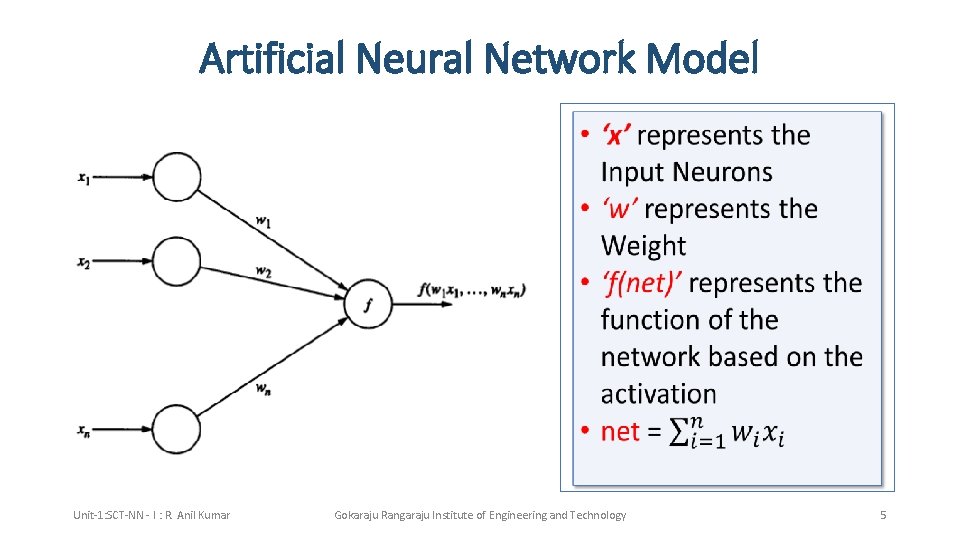

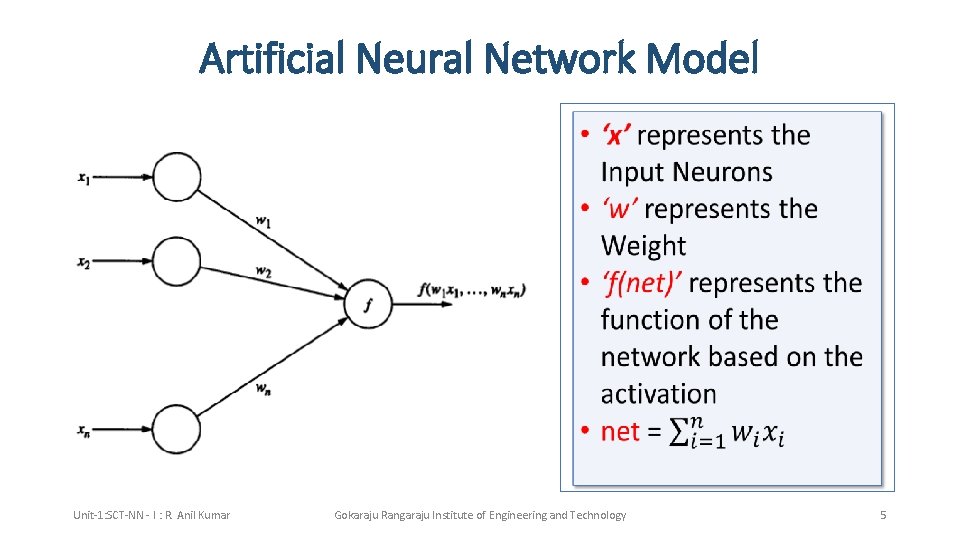

Artificial Neural Network Model

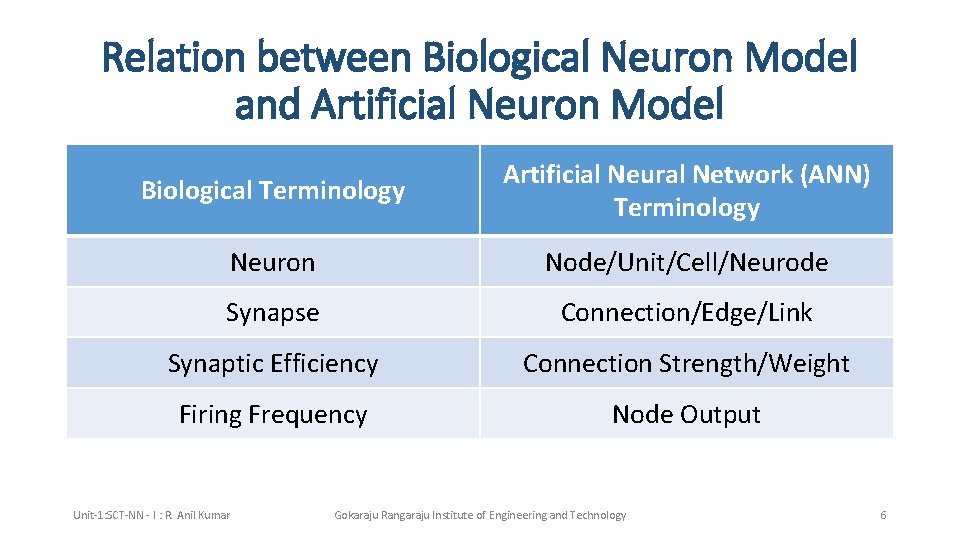

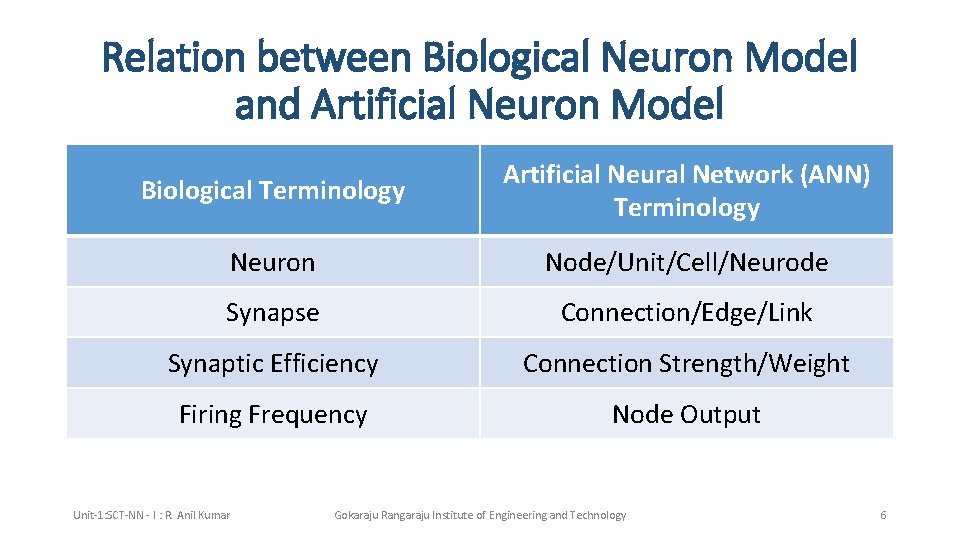

Relation between Biological Neuron Model and Artificial Neuron Model

| Biological Terminology | Artificial Neural Network (ANN) Terminology |
| --- | --- |
| Neuron | Node/Unit/Cell/Neurode |
| Synapse | Connection/Edge/Link |
| Synaptic Efficiency | Connection Strength/Weight |
| Firing Frequency | Node Output |

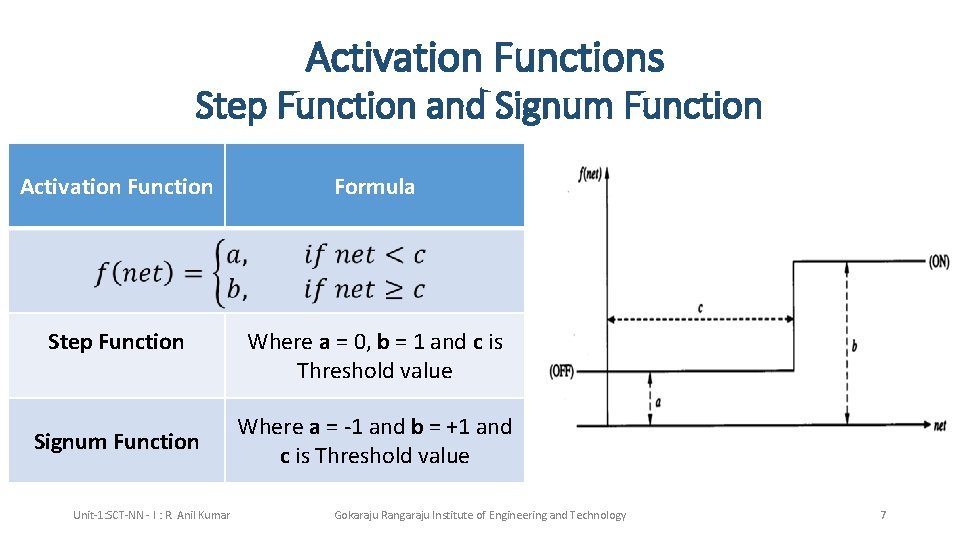

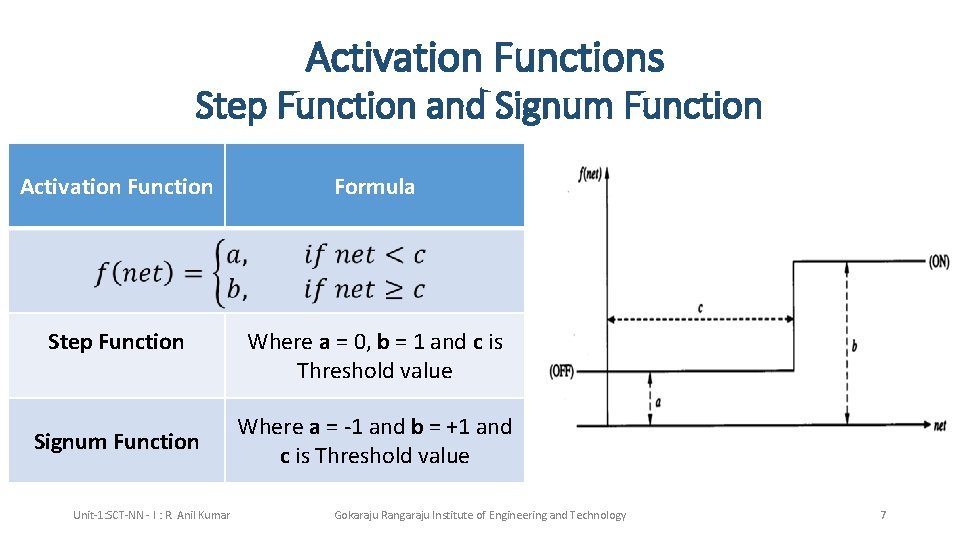

Activation Functions: Step Function and Signum Function
• Step Function: output levels a = 0 and b = 1; c is the threshold value.
• Signum Function: output levels a = -1 and b = +1; c is the threshold value.
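The formulas on this slide were images and did not survive extraction. A minimal sketch, assuming the common textbook form in which the output is a below the threshold c and b at or above it. The function names and the choice to return b exactly at net = c are assumptions, not taken from the slide.

```python
def step(net, c=0.0, a=0.0, b=1.0):
    """Two-level activation: a below the threshold c, b otherwise.

    Returning b when net == c is a convention chosen for this sketch.
    """
    return b if net >= c else a


def signum(net, c=0.0):
    """Signum as the bipolar case of the step function (a = -1, b = +1)."""
    return step(net, c=c, a=-1.0, b=1.0)


print(step(0.7), step(-0.7))      # unipolar outputs
print(signum(0.7), signum(-0.7))  # bipolar outputs
```

Writing signum as a call to `step` makes the slide's point explicit: the two functions differ only in the output levels a and b.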

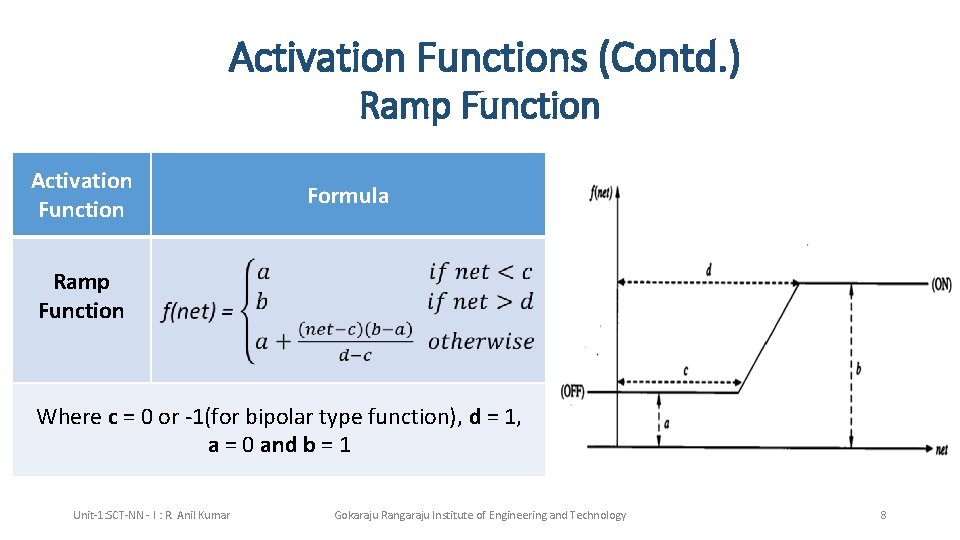

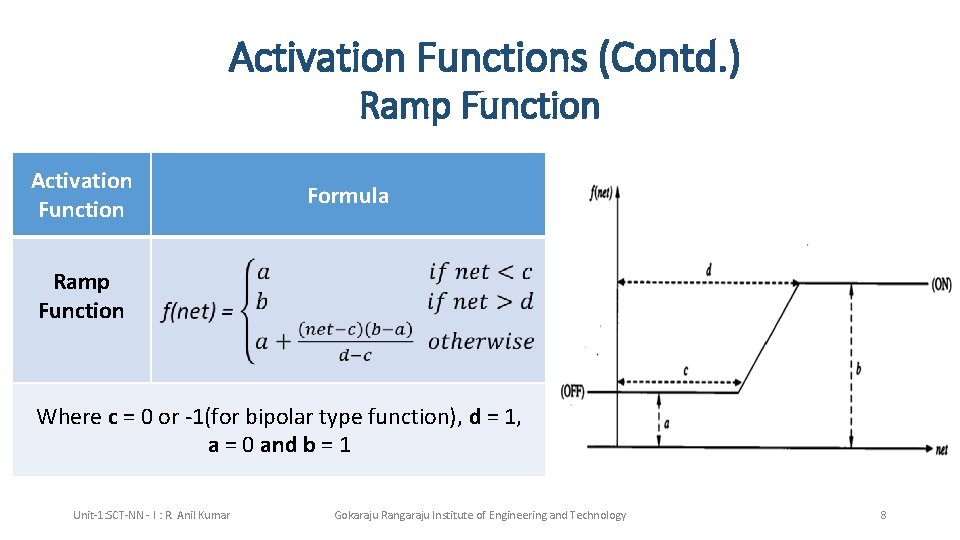

Activation Functions (Contd.): Ramp Function
• Ramp Function: c = 0 (or c = -1 for the bipolar type), d = 1, a = 0 and b = 1.
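The ramp formula itself was an image on the slide. A minimal sketch, assuming the usual textbook definition: the output is a for net at or below c, b for net at or above d, and rises linearly in between. The function name and default values are illustrative assumptions.

```python
def ramp(net, c=0.0, d=1.0, a=0.0, b=1.0):
    """Ramp activation: flat at a up to c, linear from c to d, flat at b after d."""
    if net <= c:
        return a
    if net >= d:
        return b
    # Linear interpolation between the points (c, a) and (d, b).
    return a + (net - c) * (b - a) / (d - c)


for net in (-0.5, 0.25, 0.75, 1.5):
    print(net, ramp(net))
```

With c = -1 the linear segment stretches over the interval from -1 to 1, so `ramp(0.0, c=-1.0)` falls halfway between a and b.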

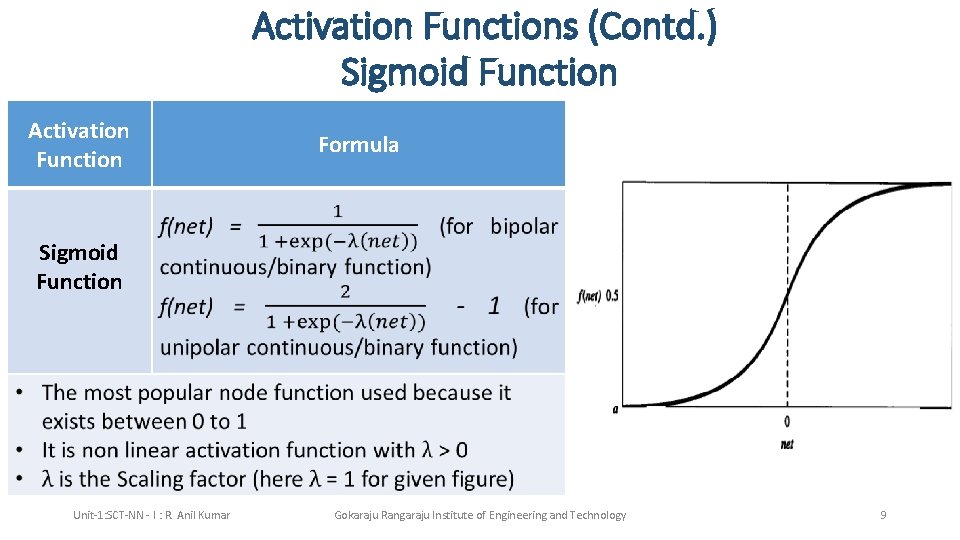

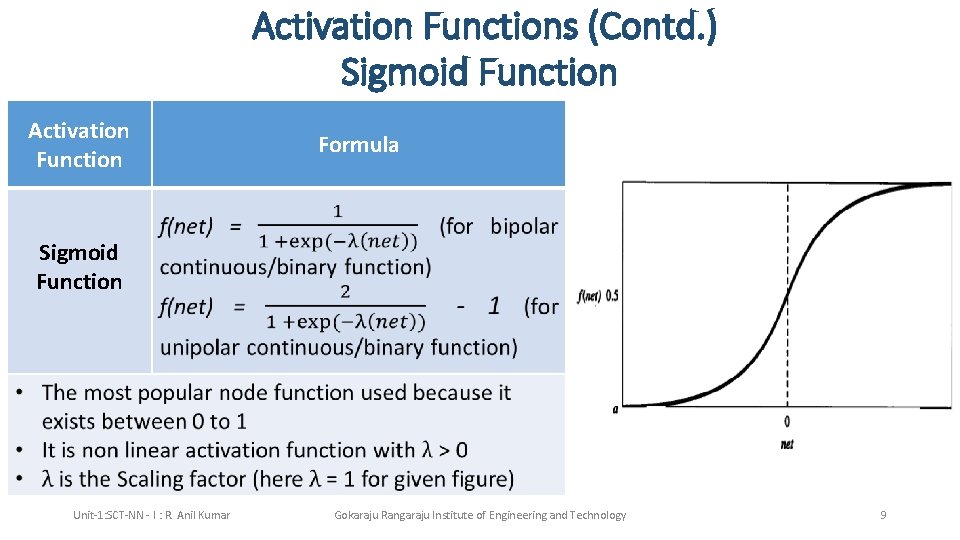

Activation Functions (Contd.): Sigmoid Function
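The sigmoid formula was an image on the slide. A minimal sketch, assuming the common parameterised form f(net) = z + 1 / (1 + exp(-x·net + y)), where x, y and z are the same kind of scale, shift and offset parameters that appear in the tanh formula later in this deck. With x = 1, y = 0, z = 0 this is the standard logistic function.

```python
import math


def sigmoid(net, x=1.0, y=0.0, z=0.0):
    """Parameterised logistic sigmoid (assumed form, not copied from the slide).

    Not hardened against overflow for very large negative net values.
    """
    return z + 1.0 / (1.0 + math.exp(-x * net + y))


for net in (-4.0, 0.0, 4.0):
    print(net, round(sigmoid(net), 4))
```

Unlike the step and ramp functions, this curve is smooth everywhere, which is why it is commonly used with gradient-based learning rules.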

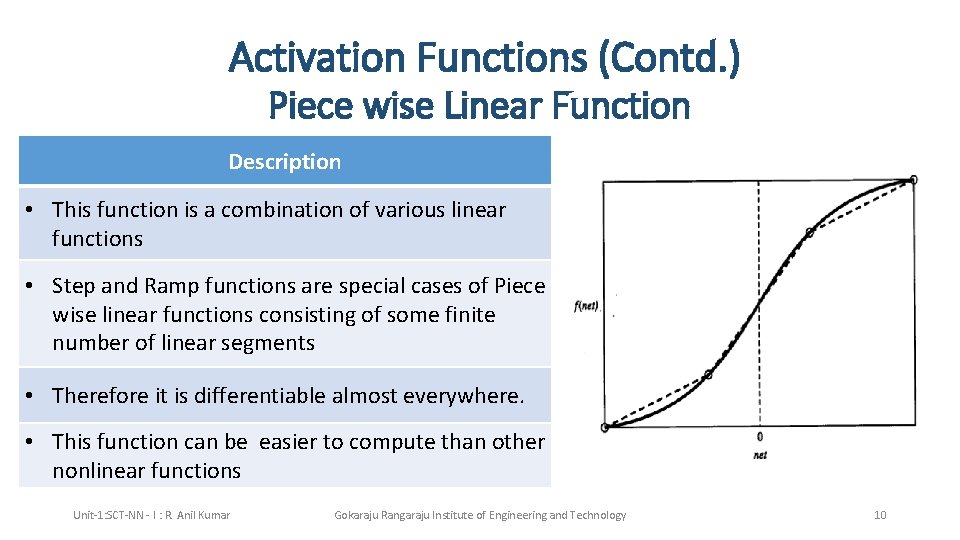

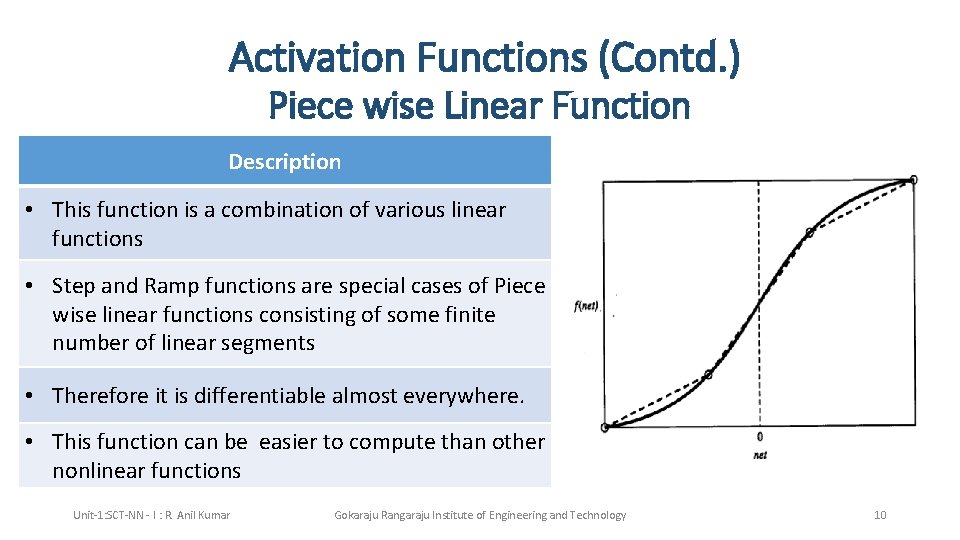

Activation Functions (Contd.): Piecewise Linear Function
• This function is a combination of several linear functions, consisting of a finite number of linear segments.
• Step and ramp functions are special cases of piecewise linear functions.
• Because it has finitely many segments, it is differentiable almost everywhere (everywhere except at the breakpoints between segments).
• It can be easier to compute than other nonlinear functions.

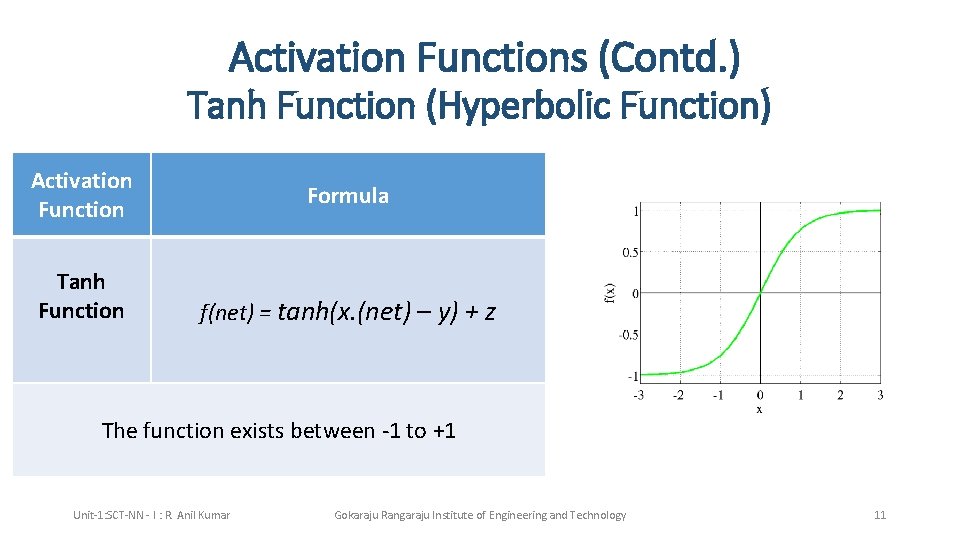

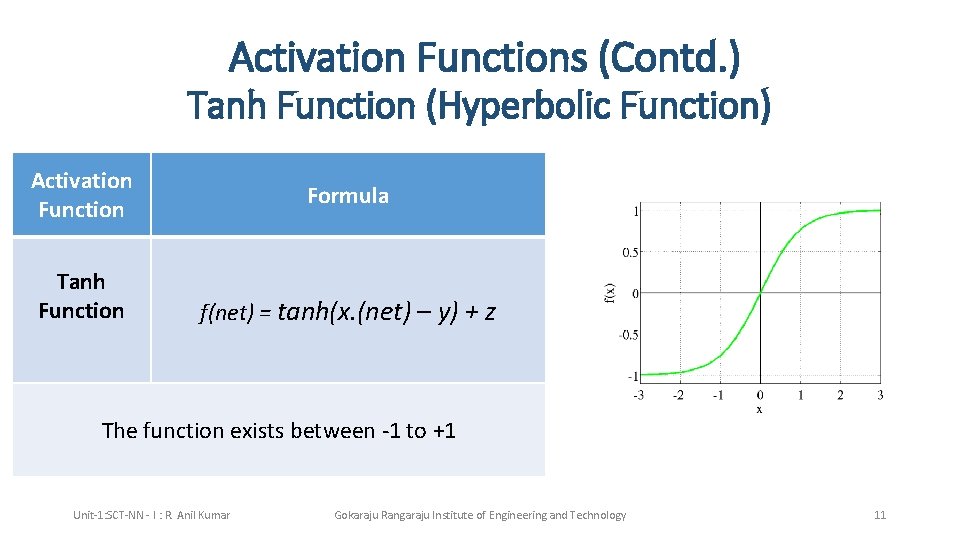

Activation Functions (Contd.): Tanh Function (Hyperbolic Tangent Function)
• Formula: f(net) = tanh(x·net - y) + z
• The tanh term lies between -1 and +1; the offset z shifts this range.
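The formula on this slide translates directly into code. A minimal sketch using the standard library; the function name `tanh_activation` and the default parameter values are assumptions.

```python
import math


def tanh_activation(net, x=1.0, y=0.0, z=0.0):
    """f(net) = tanh(x * net - y) + z, as given on the slide."""
    return math.tanh(x * net - y) + z


for net in (-3.0, 0.0, 3.0):
    print(net, round(tanh_activation(net), 4))
```

With the defaults the output stays strictly between -1 and +1 and is zero at net = 0, which makes tanh the natural bipolar counterpart of the sigmoid.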

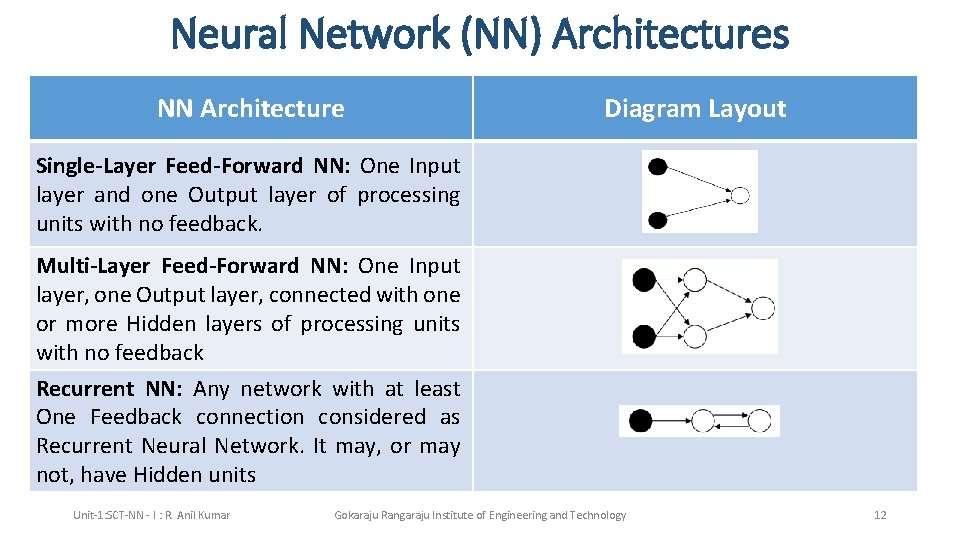

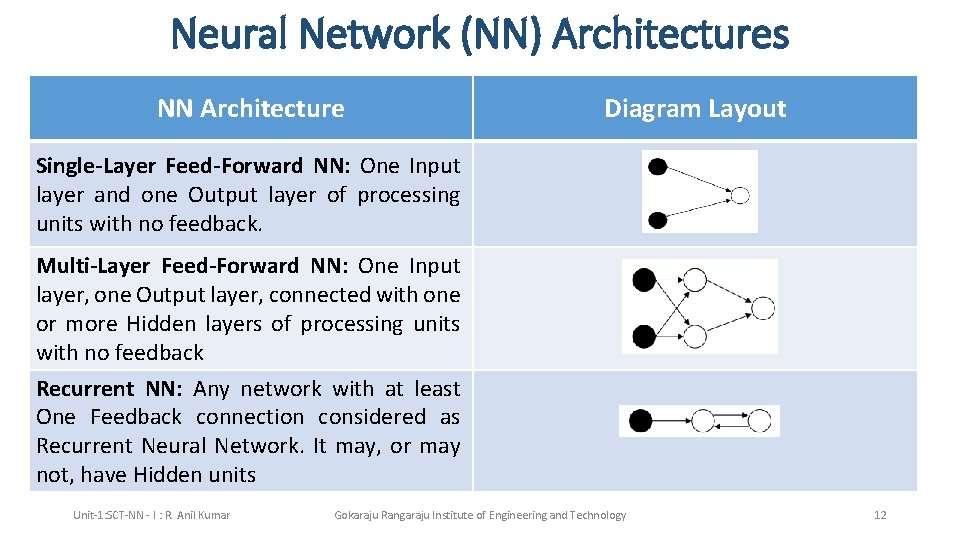

Neural Network (NN) Architectures
• Single-Layer Feed-Forward NN: one input layer and one output layer of processing units, with no feedback.
• Multi-Layer Feed-Forward NN: one input layer and one output layer, connected through one or more hidden layers of processing units, with no feedback.
• Recurrent NN: any network with at least one feedback connection is considered a recurrent neural network. It may or may not have hidden units.
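The difference between the two feed-forward architectures can be sketched in a few lines. This is an illustration only: the `layer` helper, the hand-picked weights, and the AND/XOR examples are assumptions chosen for the sketch, not material from the slide.

```python
def step(net):
    """Unipolar step with threshold 0."""
    return 1.0 if net >= 0.0 else 0.0


def layer(inputs, weights, biases, activation):
    """One layer of units: each row of weights feeds one output unit."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def and_net(x1, x2):
    """Single-layer feed-forward network: inputs connect directly to the output."""
    return layer([x1, x2], [[1.0, 1.0]], [-1.5], step)[0]


def xor_net(x1, x2):
    """Multi-layer feed-forward network: one hidden layer (OR and NAND units)."""
    hidden = layer([x1, x2], [[1.0, 1.0], [-1.0, -1.0]], [-0.5, 1.5], step)
    return layer(hidden, [[1.0, 1.0]], [-1.5], step)[0]


for a in (0.0, 1.0):
    for b in (0.0, 1.0):
        print(a, b, and_net(a, b), xor_net(a, b))
```

In both cases signals flow strictly from input to output. A recurrent network would feed some output back into an earlier layer, which this sketch does not do.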

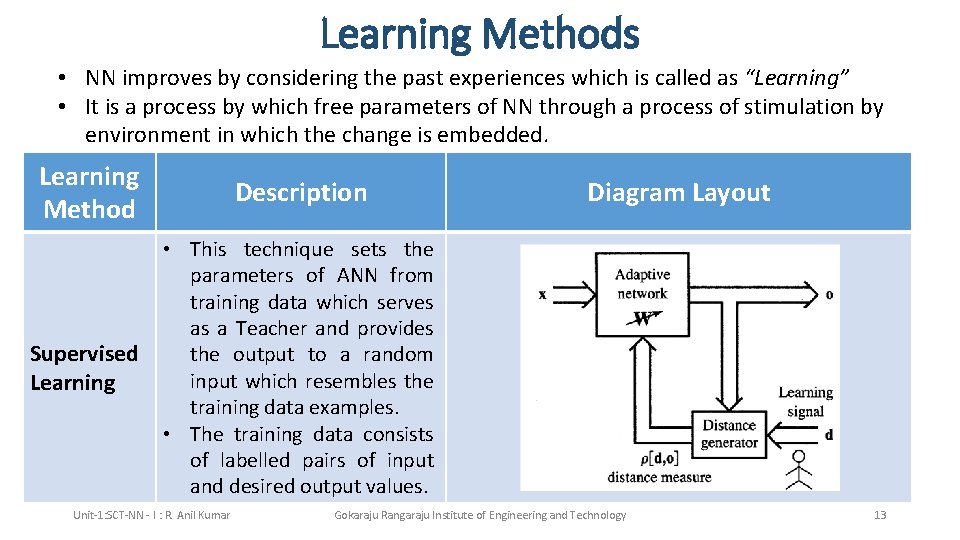

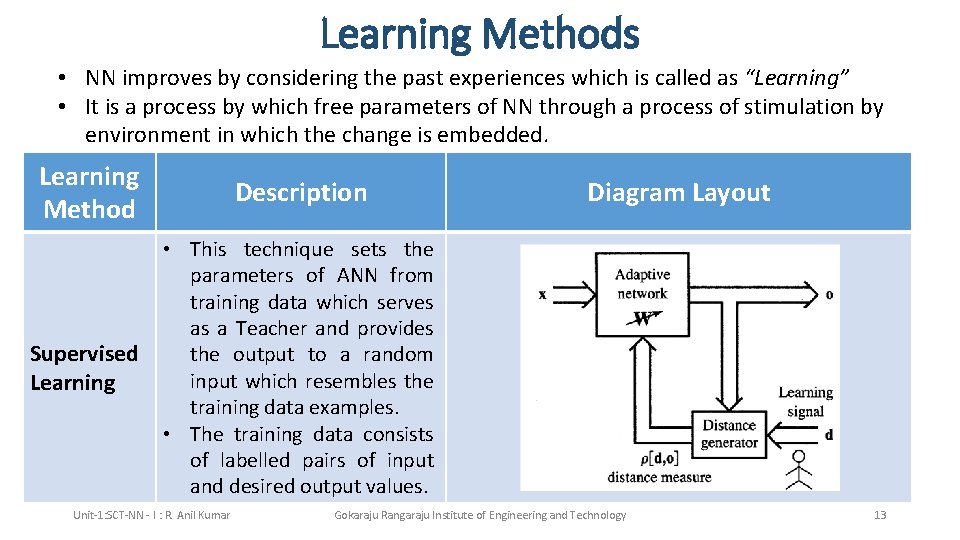

Learning Methods
• A NN improves by taking past experience into account; this is called "learning".
• Learning is a process by which the free parameters of a NN are adapted through stimulation by the environment in which the network is embedded.
Supervised Learning
• This technique sets the parameters of the ANN from training data, which serves as a teacher. The trained network then provides the output for a new input that resembles the training examples.
• The training data consists of labelled pairs of input and desired output values.

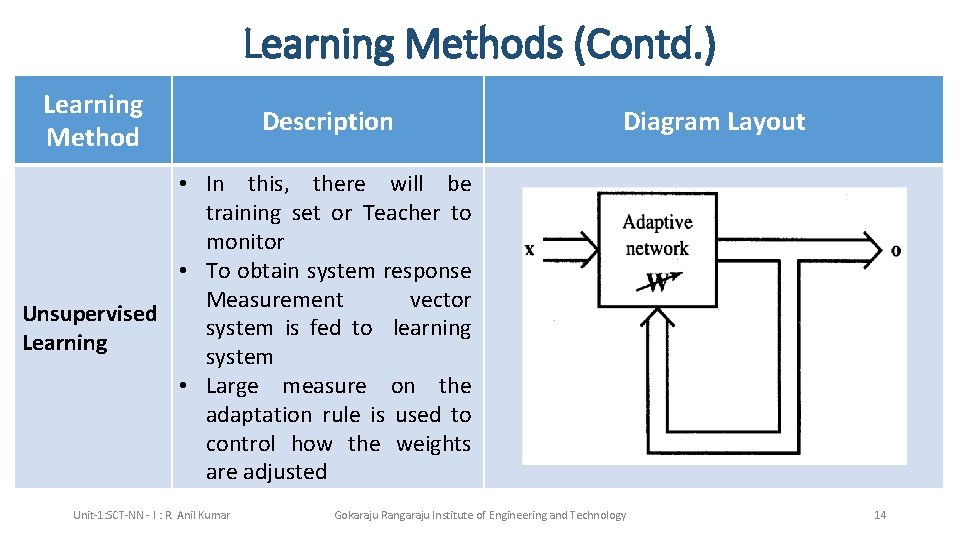

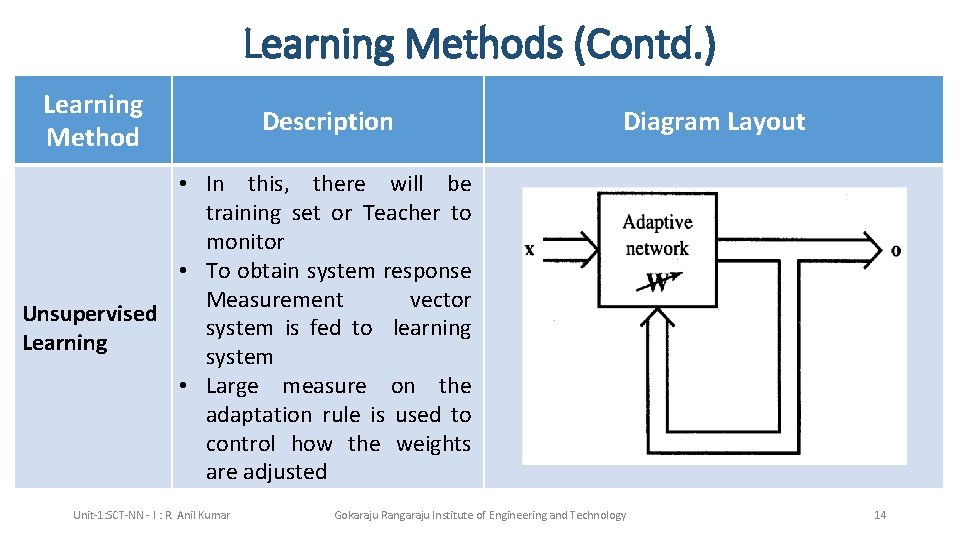

Learning Methods (Contd.)
Unsupervised Learning
• In this method there is no teacher (no labelled training set) to monitor the learning.
• To obtain the system response, the measurement vector is fed to the learning system.
• In large measure, the adaptation rule controls how the weights are adjusted.

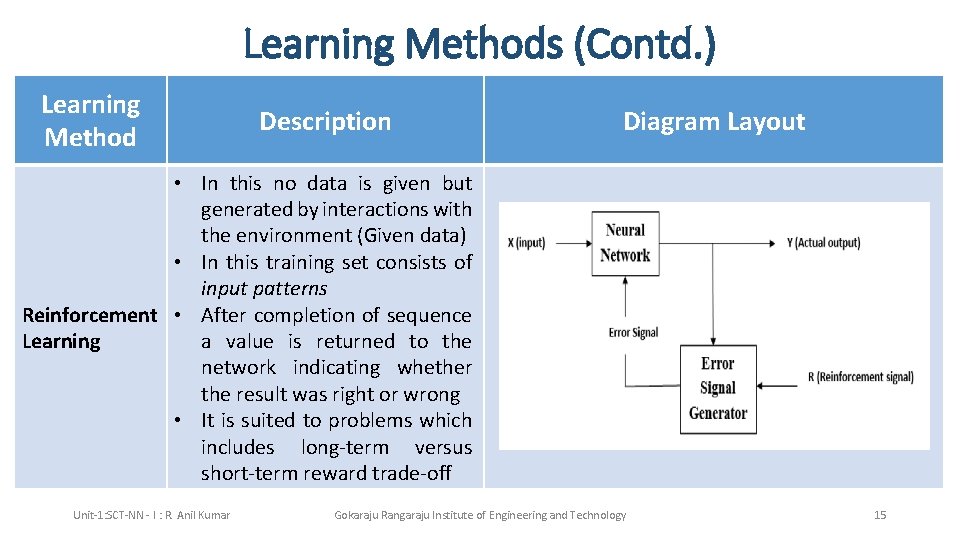

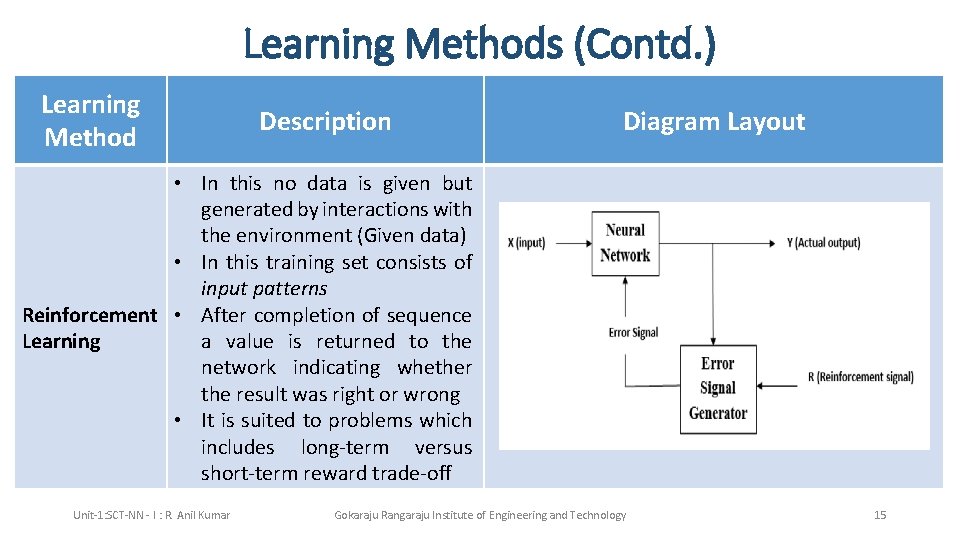

Learning Methods (Contd.)
Reinforcement Learning
• In this method no data is given in advance; it is generated by interactions with the environment.
• The training set consists of input patterns.
• After a sequence is completed, a value is returned to the network indicating whether the result was right or wrong.
• It is suited to problems that involve a trade-off between long-term and short-term reward.

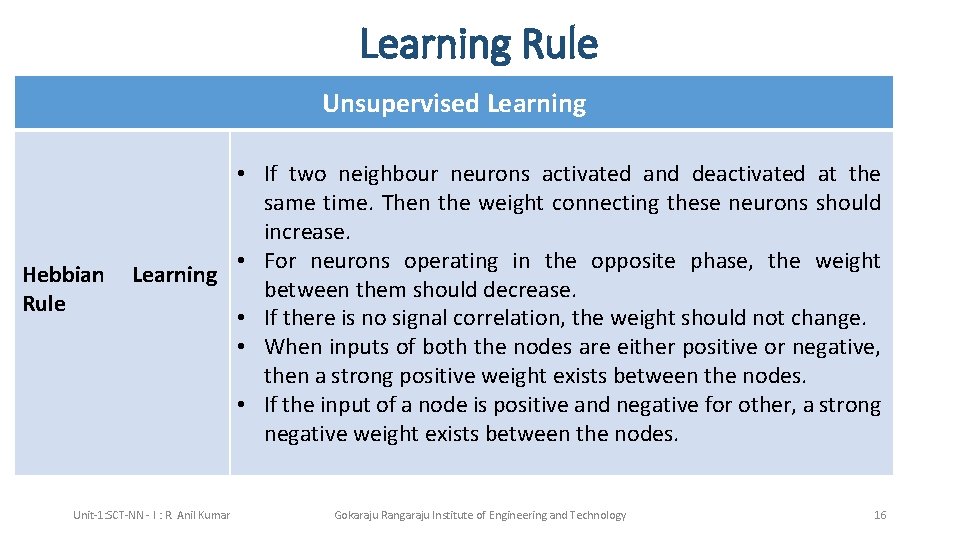

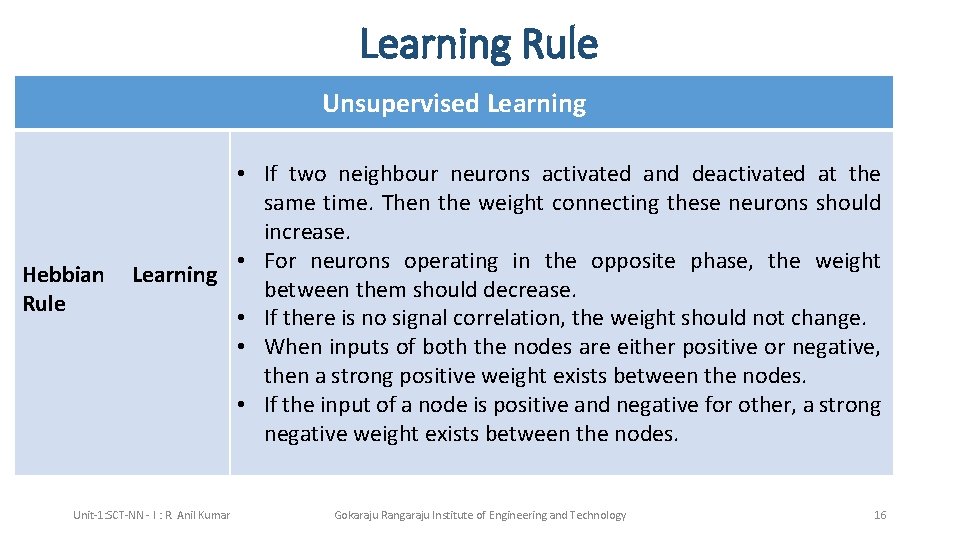

Learning Rule: Unsupervised Learning (Hebbian Rule)
• If two neighbouring neurons are activated and deactivated at the same time, the weight connecting them should increase.
• For neurons operating in opposite phase, the weight between them should decrease.
• If there is no signal correlation, the weight should not change.
• When the inputs of both nodes are both positive or both negative, a strong positive weight exists between the nodes.
• If the input of one node is positive and that of the other is negative, a strong negative weight exists between the nodes.
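The behaviour described in these bullets follows from the basic Hebbian update, in which the weight change is proportional to the product of the two activities. A minimal sketch, assuming bipolar activities (+1 or -1) and a hypothetical learning rate `eta`:

```python
def hebbian_update(w, x_i, y_j, eta=0.1):
    """Basic Hebbian rule: delta_w = eta * x_i * y_j."""
    return w + eta * x_i * y_j


w = 0.0
print(hebbian_update(w, +1, +1))  # same phase: weight increases
print(hebbian_update(w, -1, -1))  # same phase: weight increases
print(hebbian_update(w, +1, -1))  # opposite phase: weight decreases
print(hebbian_update(w, 0, +1))   # no activity on one side: weight unchanged
```

The sign of the product x_i * y_j is what encodes "same phase" versus "opposite phase", so no explicit case analysis is needed.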

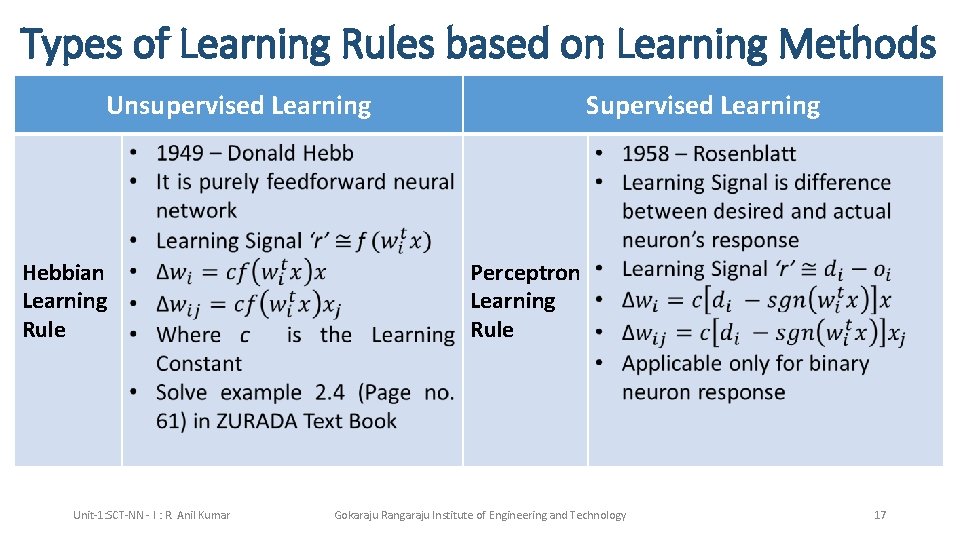

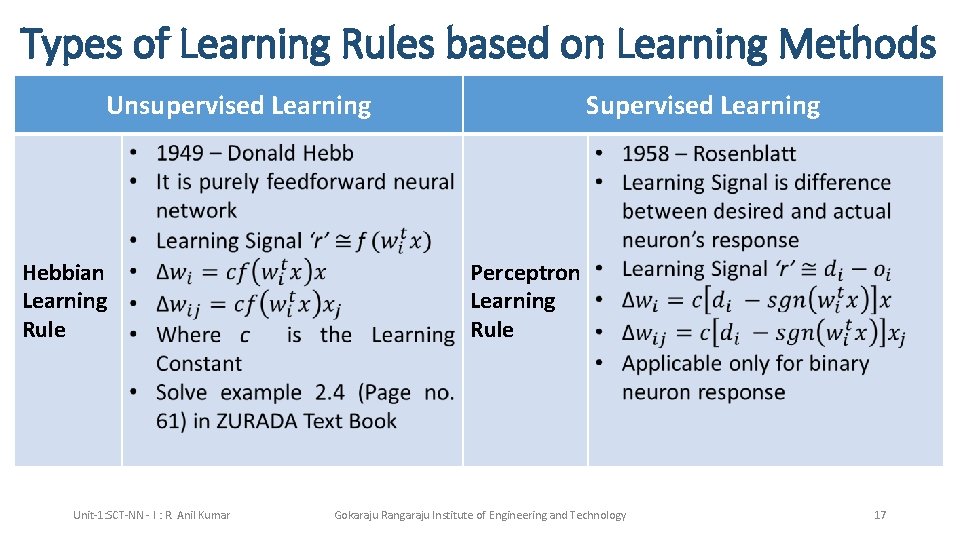

Types of Learning Rules based on Learning Methods
• Unsupervised Learning: Hebbian Learning Rule
• Supervised Learning: Perceptron Learning Rule

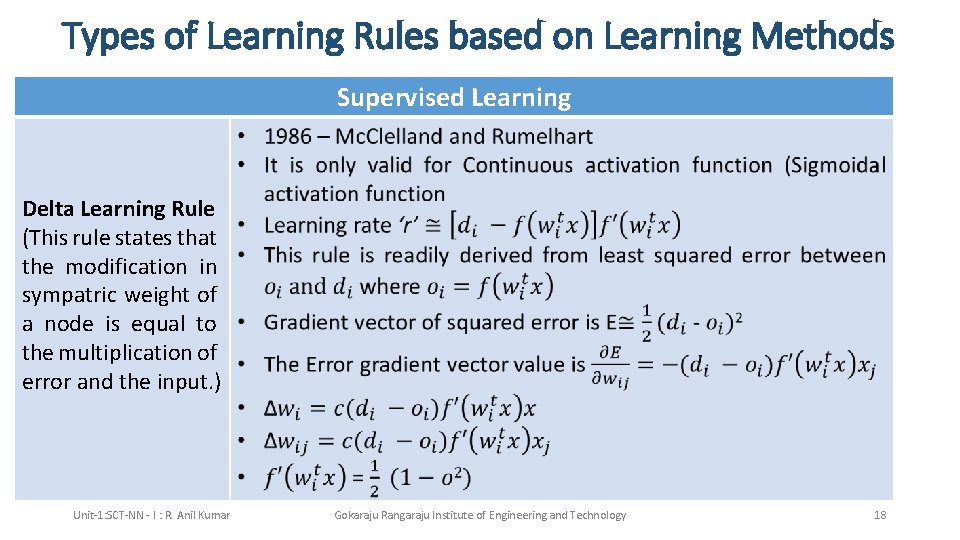

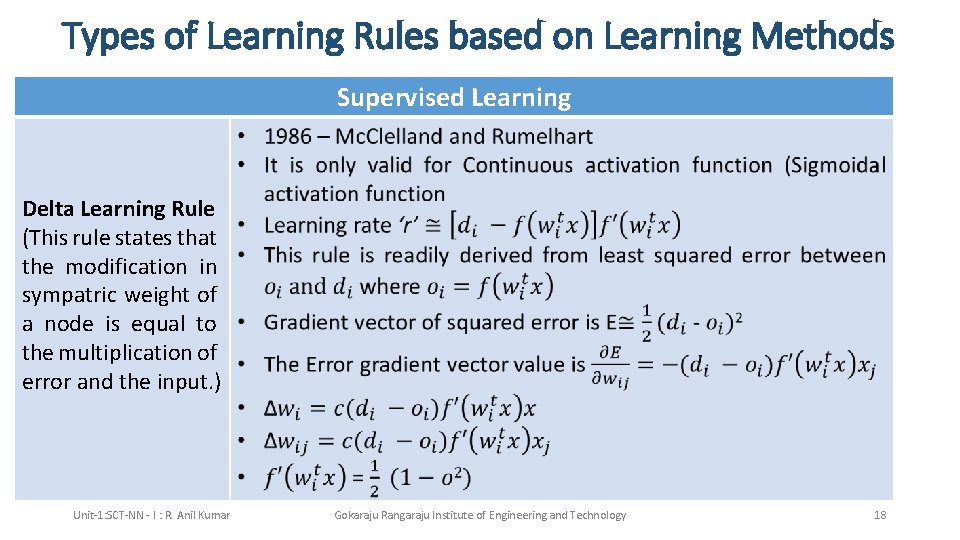

Types of Learning Rules based on Learning Methods
• Supervised Learning: Delta Learning Rule (this rule states that the modification in the synaptic weight of a node is equal to the product of the error and the input).
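The statement of the delta rule above can be sketched as a weight update. This is the simplified form given on the slide (error times input, scaled by a learning rate); the learning rate `eta` and the function name are assumptions, and the fuller form of the rule for nonlinear units also multiplies by the derivative of the activation function.

```python
def delta_update(weights, inputs, target, output, eta=0.1):
    """Simplified delta rule: delta_w_i = eta * (target - output) * x_i."""
    error = target - output
    return [w + eta * error * x for w, x in zip(weights, inputs)]


# One update for a unit that produced 0.0 when the target was 1.0.
print(delta_update([0.0, 0.0], [1.0, 2.0], target=1.0, output=0.0, eta=0.5))
```

When the output already matches the target the error is zero and the weights are left unchanged.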

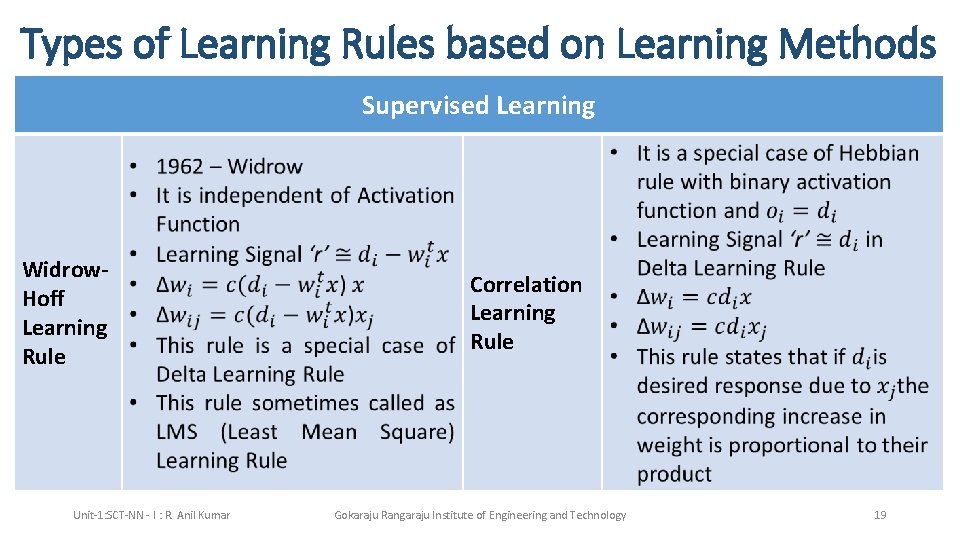

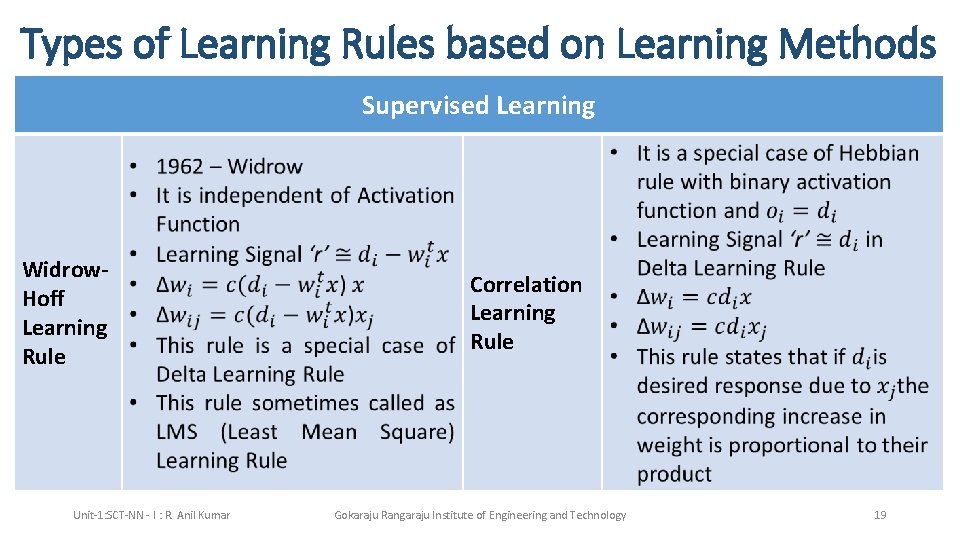

Types of Learning Rules based on Learning Methods
• Supervised Learning: Widrow-Hoff Learning Rule
• Supervised Learning: Correlation Learning Rule

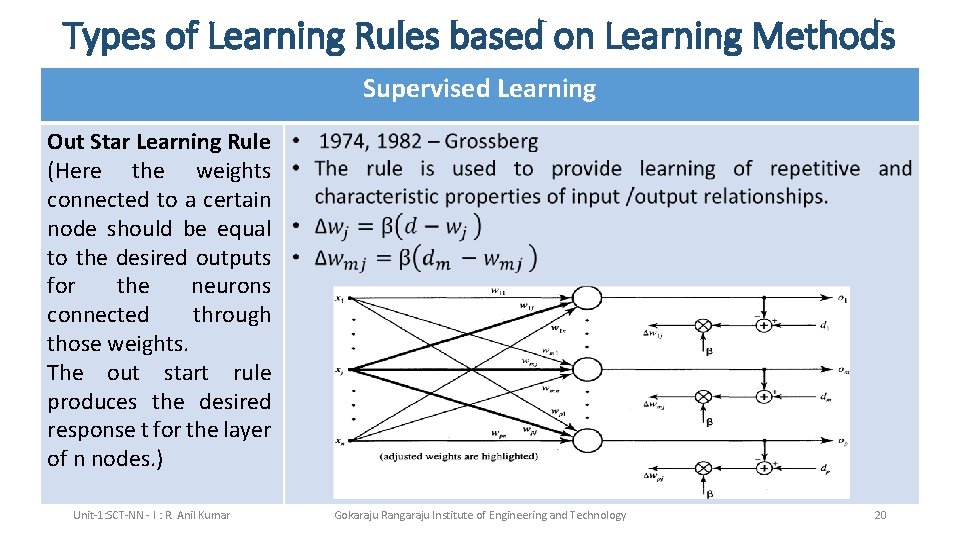

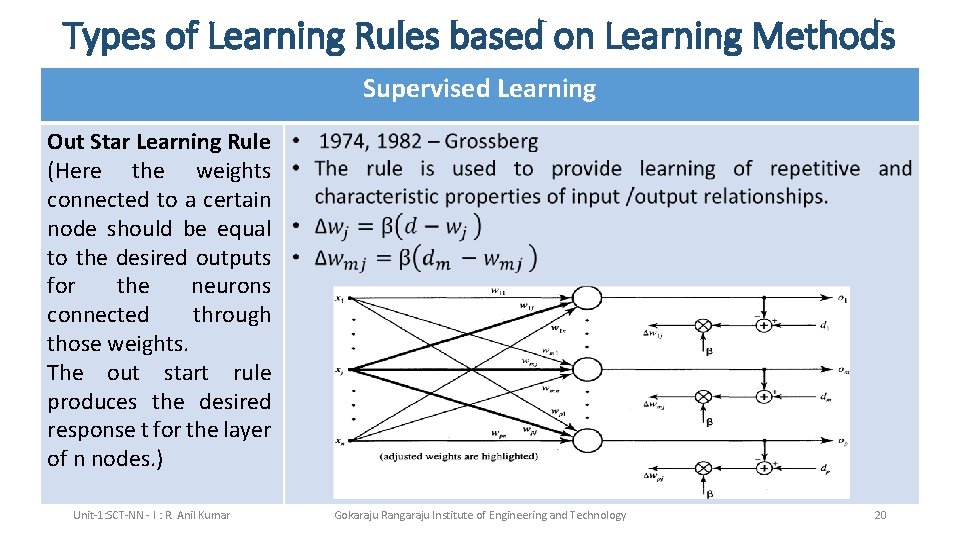

Types of Learning Rules based on Learning Methods
• Supervised Learning: Outstar Learning Rule (here the weights connected to a certain node should be equal to the desired outputs of the neurons connected through those weights; the outstar rule produces the desired response t for the layer of n nodes).

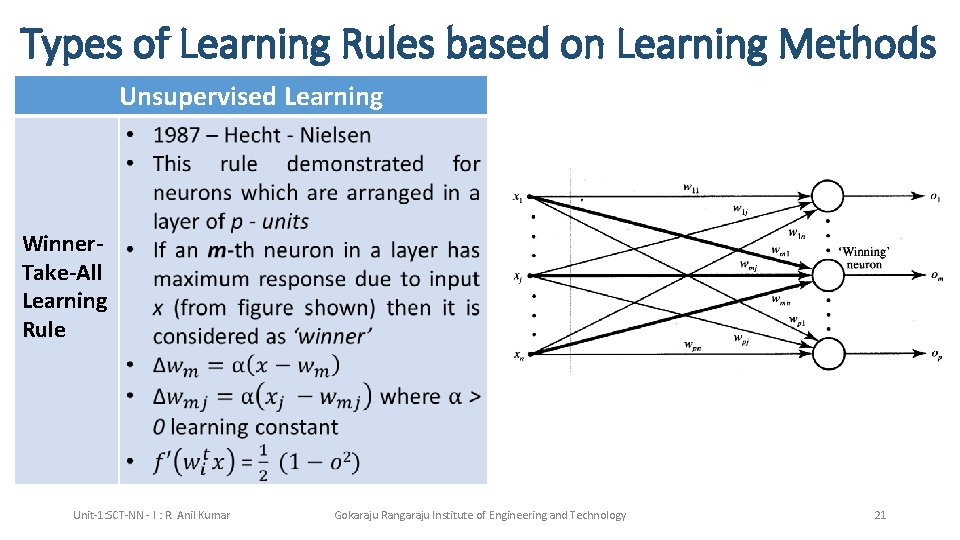

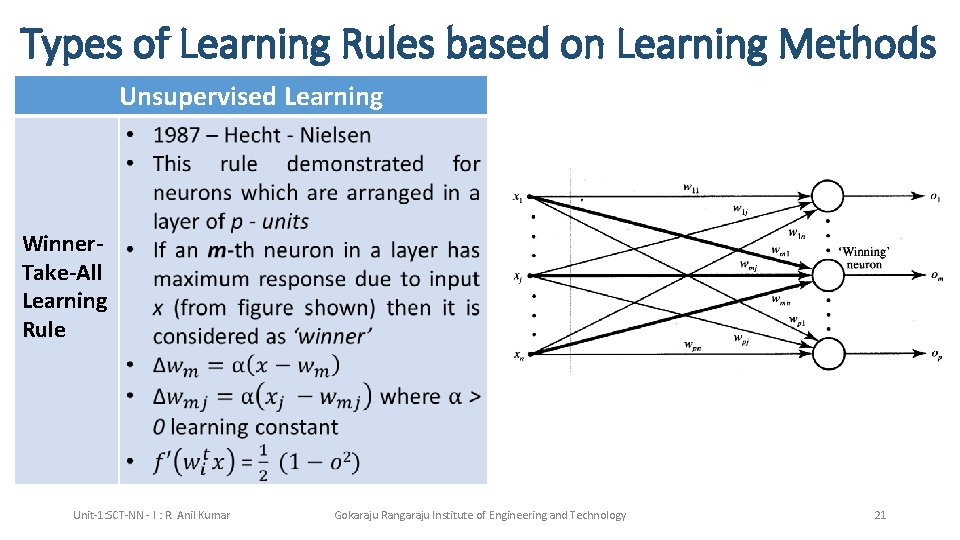

Types of Learning Rules based on Learning Methods
• Unsupervised Learning: Winner-Take-All Learning Rule
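The formula for this rule was an image on the slide. A minimal sketch, assuming the common competitive-learning form: the unit with the largest net input wins, and only the winner's weight vector is moved toward the input by delta_w = alpha * (x - w). The function name and the value of `alpha` are assumptions.

```python
def winner_take_all_step(weights, x, alpha=0.5):
    """One competitive-learning step; returns (winner index, updated weights)."""
    # Net input of each unit is the dot product of its weight row with x.
    nets = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    winner = nets.index(max(nets))
    updated = [list(row) for row in weights]
    # Only the winning unit learns: its weights move toward the input.
    updated[winner] = [w + alpha * (xi - w) for w, xi in zip(weights[winner], x)]
    return winner, updated


winner, new_weights = winner_take_all_step([[1.0, 0.0], [0.0, 1.0]], [0.2, 0.8])
print(winner, new_weights)
```

Because the losing units are untouched, repeated presentation of inputs tends to make each unit specialise on one cluster of inputs.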

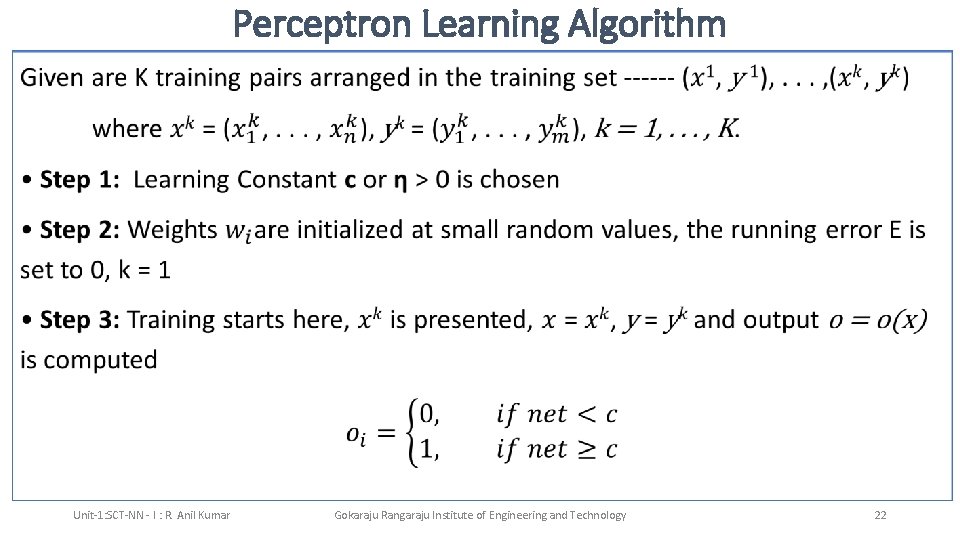

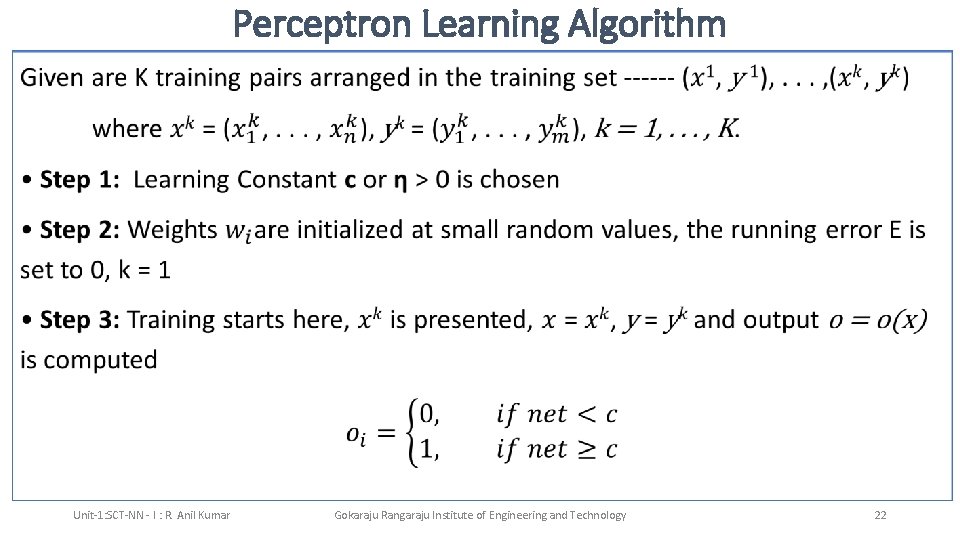

Perceptron Learning Algorithm

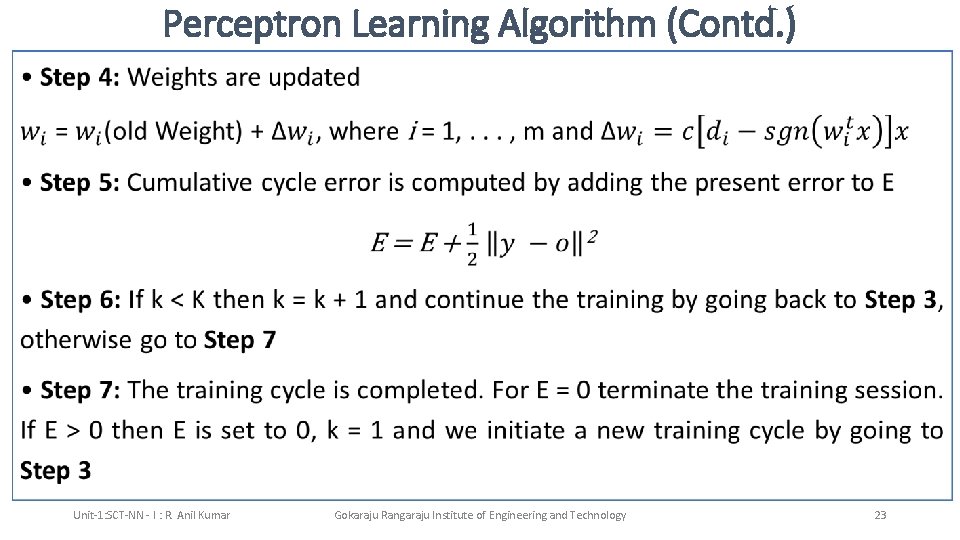

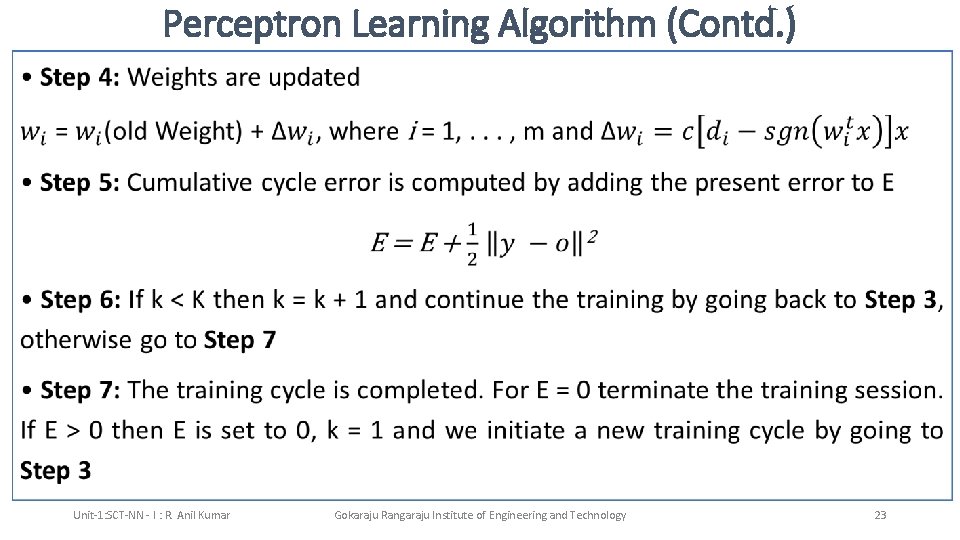

Perceptron Learning Algorithm (Contd.)

Perceptron Convergence Theorem
• It states that for any data set which is linearly separable, the perceptron learning rule is guaranteed to find a solution in a finite number of steps.
• In other words, the perceptron learning rule is guaranteed to converge to a weight vector that correctly classifies the training examples, provided that those examples are linearly separable.
• A function is said to be linearly separable when its outputs can be discriminated by a function which is a linear combination of features, i.e., its outputs can be separated by a line or a hyperplane.
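The theorem can be observed on a small example. A minimal sketch of the standard perceptron rule (weights change by eta * error * input on each misclassified sample); the algorithm steps on the preceding slides were images, so the details here, including the zero initial weights, the step threshold at 0 and the names used, are assumptions. AND is linearly separable; XOR is not.

```python
def predict(weights, bias, x):
    """Threshold unit: 1 if the net input is non-negative, else 0."""
    net = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if net >= 0 else 0


def train_perceptron(samples, eta=1.0, max_epochs=100):
    """Return (weights, bias, epochs); epochs is None if no error-free pass occurred."""
    weights, bias = [0.0] * len(samples[0][0]), 0.0
    for epoch in range(1, max_epochs + 1):
        errors = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)
            if error != 0:
                weights = [w + eta * error * xi for w, xi in zip(weights, x)]
                bias += eta * error
                errors += 1
        if errors == 0:  # a full pass with no mistakes: converged
            return weights, bias, epoch
    return weights, bias, None


AND_DATA = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
XOR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]

print(train_perceptron(AND_DATA))               # converges to a separating line
print(train_perceptron(XOR_DATA, max_epochs=50))  # epochs is None: no line exists
```

The XOR run never completes an error-free pass, whatever `max_epochs` is, because no single line separates its two classes. That is the condition the theorem excludes.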

Associative Memory
• An associative memory is one in which the stimulus of an incomplete, noisy or corrupted pattern leads to the response of a stored pattern that corresponds in some manner to the input pattern.
• For example, the human brain can recognise a word and its meaning even when the word is misspelled. This is possible because of the dense interconnections between neurons and the stored data that can be mapped or associated with one another.

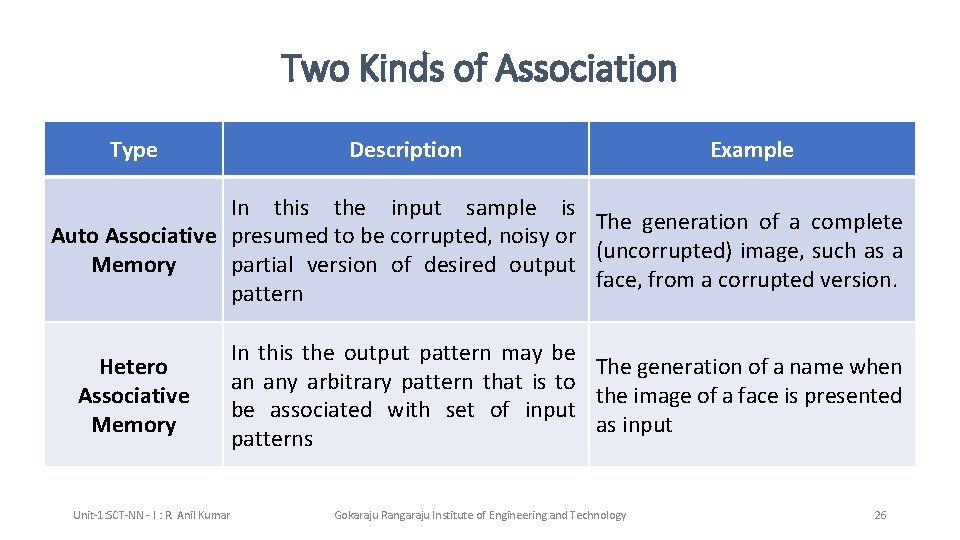

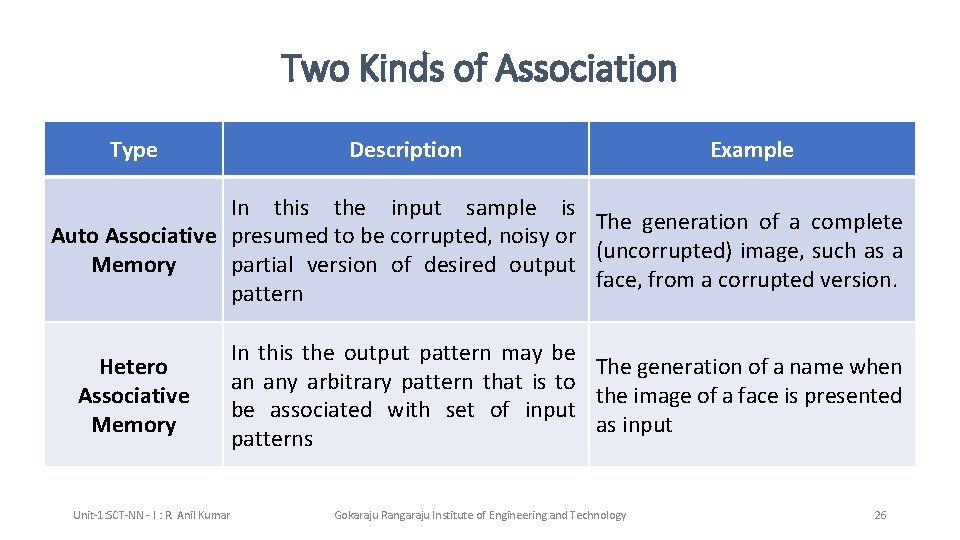

Two Kinds of Association

| Type | Description | Example |
| --- | --- | --- |
| Auto Associative Memory | The input sample is presumed to be a corrupted, noisy or partial version of the desired output pattern. | The generation of a complete (uncorrupted) image, such as a face, from a corrupted version. |
| Hetero Associative Memory | The output pattern may be any arbitrary pattern that is to be associated with a set of input patterns. | The generation of a name when an image of a face is presented as input. |
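The two kinds of association can be sketched with a small linear associator. This is an illustration under stated assumptions: bipolar patterns, Hebbian outer-product storage, and two hand-picked orthogonal 8-element patterns standing in for "faces", with 2-element codes standing in for "names". None of these specifics come from the slides.

```python
def sign(v):
    return 1 if v >= 0 else -1


def store(pairs):
    """Hebbian outer-product weights: W[j][i] = sum over pairs of y[j] * x[i]."""
    n_in, n_out = len(pairs[0][0]), len(pairs[0][1])
    return [[sum(y[j] * x[i] for x, y in pairs) for i in range(n_in)]
            for j in range(n_out)]


def recall(W, x):
    """Thresholded response of the memory to the input pattern x."""
    return [sign(sum(w * xi for w, xi in zip(row, x))) for row in W]


P1 = [1, 1, 1, 1, -1, -1, -1, -1]
P2 = [1, -1, 1, -1, 1, -1, 1, -1]
NAME1, NAME2 = [1, -1], [-1, 1]

auto_memory = store([(P1, P1), (P2, P2)])          # pattern -> same pattern
hetero_memory = store([(P1, NAME1), (P2, NAME2)])  # pattern -> different pattern

noisy_p1 = [-1] + P1[1:]  # P1 with its first element corrupted
print(recall(auto_memory, noisy_p1) == P1)       # auto: restores the stored pattern
print(recall(hetero_memory, noisy_p1) == NAME1)  # hetero: returns the associated code
```

The same storage and recall functions serve both cases. The only difference is whether each stored pair maps a pattern to itself or to a different pattern.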

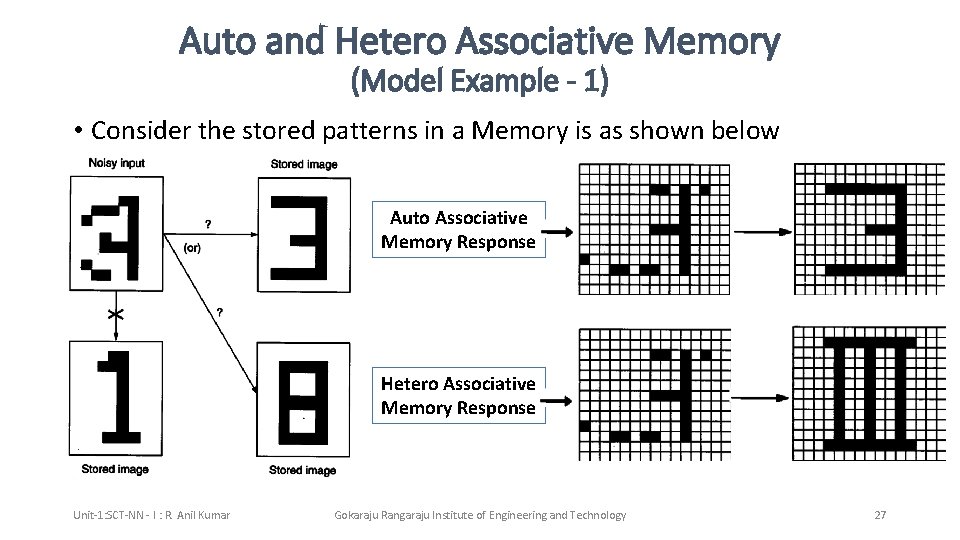

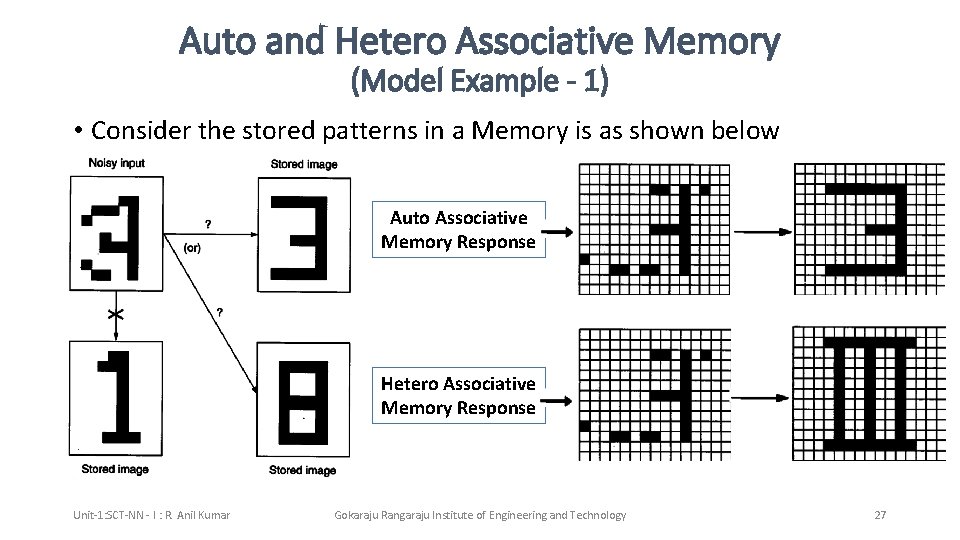

Auto and Hetero Associative Memory (Model Example - 1)
• Consider the patterns stored in a memory, as shown in the figure.
• Figure panels: Auto Associative Memory Response; Hetero Associative Memory Response.

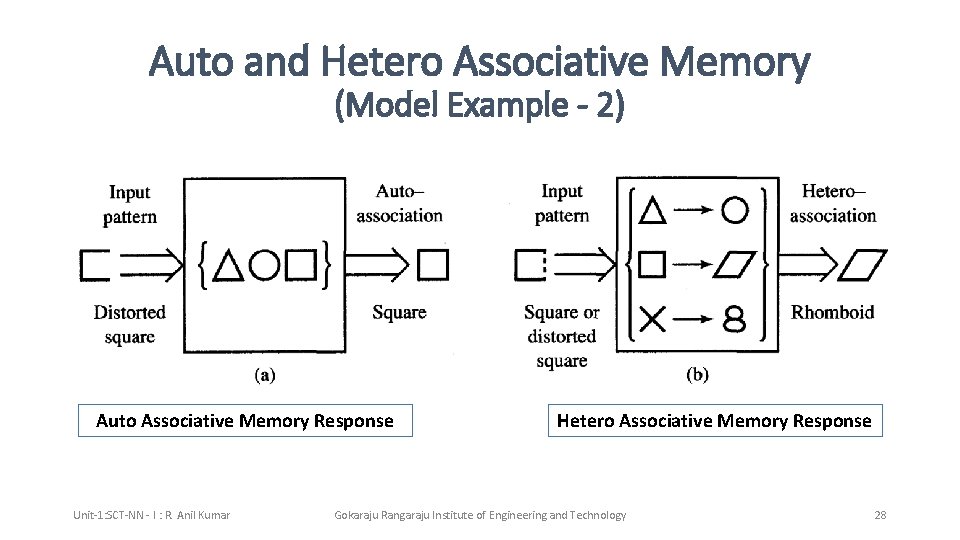

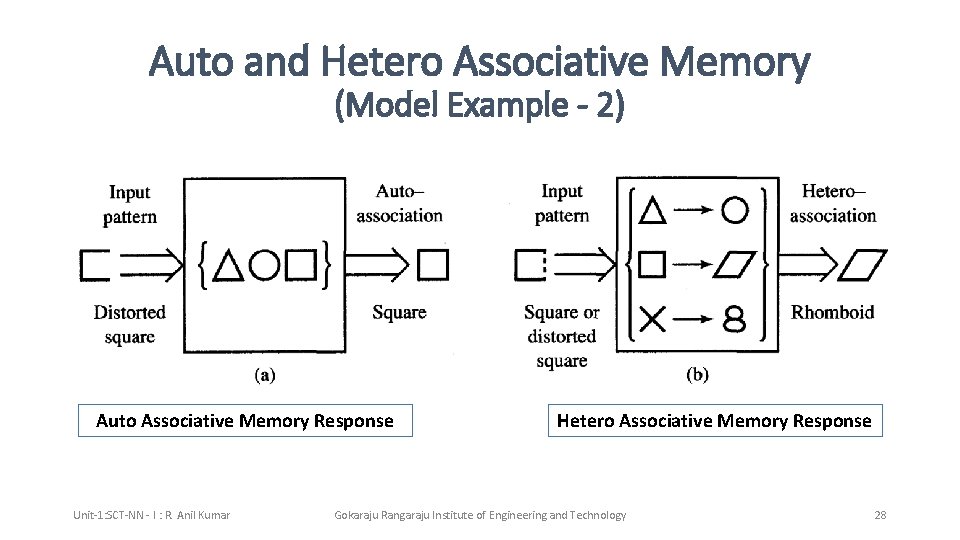

Auto and Hetero Associative Memory (Model Example - 2)
• Figure panels: Auto Associative Memory Response; Hetero Associative Memory Response.

Unit – 1: Neural Networks - I
Outcomes: After completing this Unit, students will be able to
• Choose the type of activation function for a selected Artificial Neural Network model.
• Illustrate the learning rules and the working of single-layer and multi-layer perceptron models.
• State the importance of auto and hetero associative memories.
• Summarize the importance of NN in various fields.