Semisupervised Support Vector Machine SVM Algorithms And Their

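Semi-supervised Support Vector Machine (SVM) Algorithms and Their Applications in Brain Computer Interfaces (BCIs)
Yuanqing Li
Center for Brain-Computer Interfaces and Brain Information Processing, School of Automation Science and Engineering, South China University of Technology
2022/1/9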

Outline
1. My research topics in recent years
2. Semi-supervised Support Vector Machine (SVM)
   - A self-training semi-supervised SVM algorithm and its convergence
   - Feature extraction based on Rayleigh coefficient maximization
   - An extended semi-supervised SVM algorithm and its effectiveness
3. Applications in Brain Computer Interfaces (BCIs)

1. My research topics in recent years
Motivation: to extract components of interest from complex data using some principles.
Complex data: high-dimensional, highly noisy, ill-conditioned, highly dynamic, insufficient, etc., e.g., EEG and fMRI data.
Principles: independence, sparseness, ML, MAP, information maximization, entropy, etc.

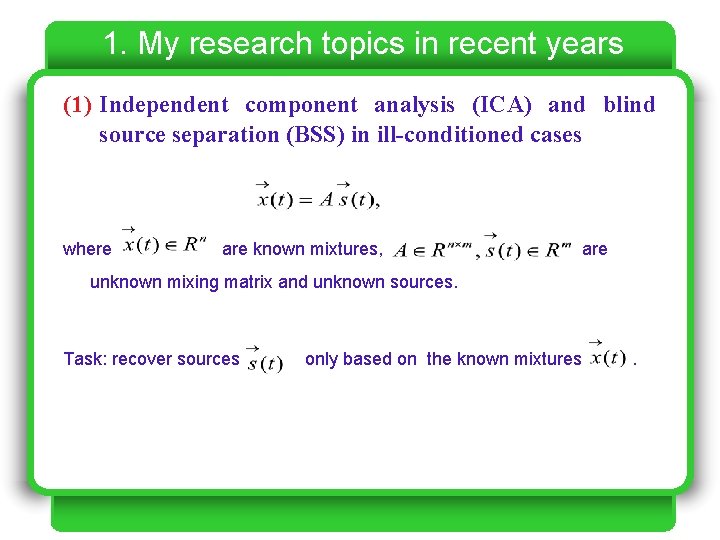

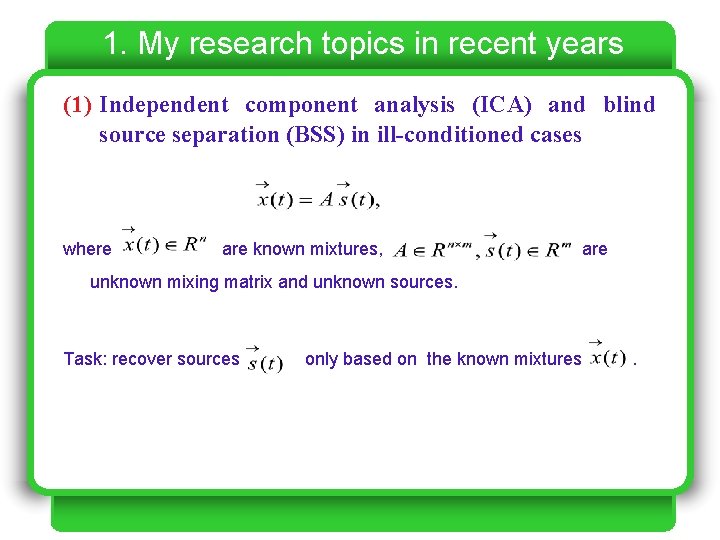

1. My research topics in recent years
(1) Independent component analysis (ICA) and blind source separation (BSS) in ill-conditioned cases
Model: the known mixtures are generated from an unknown mixing matrix and unknown sources.
Task: recover the sources based only on the known mixtures.

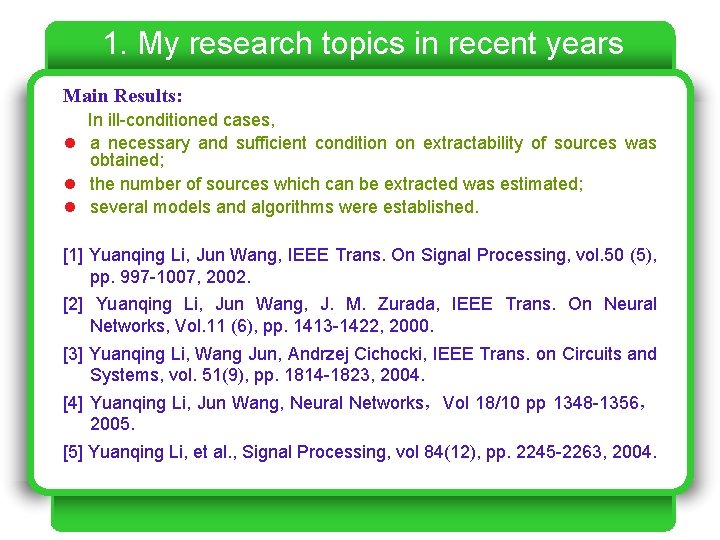

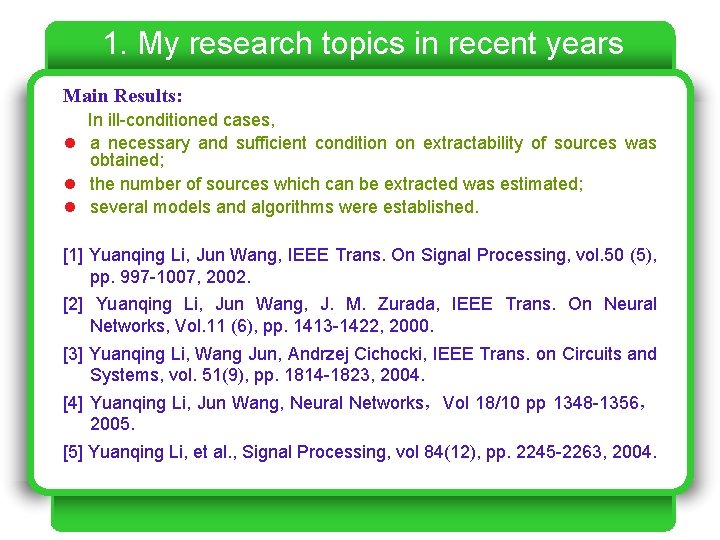

1. My research topics in recent years
Main results: in ill-conditioned cases,
- a necessary and sufficient condition on the extractability of sources was obtained;
- the number of sources that can be extracted was estimated;
- several models and algorithms were established.
[1] Yuanqing Li, Jun Wang, IEEE Trans. on Signal Processing, vol. 50(5), pp. 997-1007, 2002.
[2] Yuanqing Li, Jun Wang, J. M. Zurada, IEEE Trans. on Neural Networks, vol. 11(6), pp. 1413-1422, 2000.
[3] Yuanqing Li, Jun Wang, Andrzej Cichocki, IEEE Trans. on Circuits and Systems, vol. 51(9), pp. 1814-1823, 2004.
[4] Yuanqing Li, Jun Wang, Neural Networks, vol. 18(10), pp. 1348-1356, 2005.
[5] Yuanqing Li, et al., Signal Processing, vol. 84(12), pp. 2245-2263, 2004.

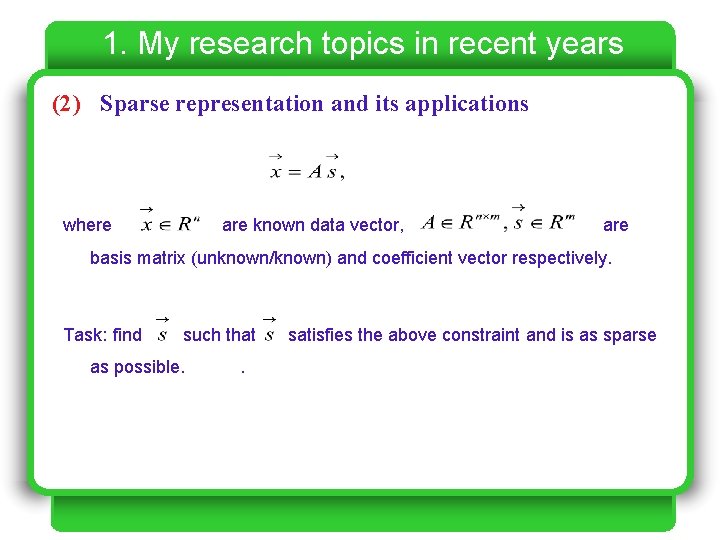

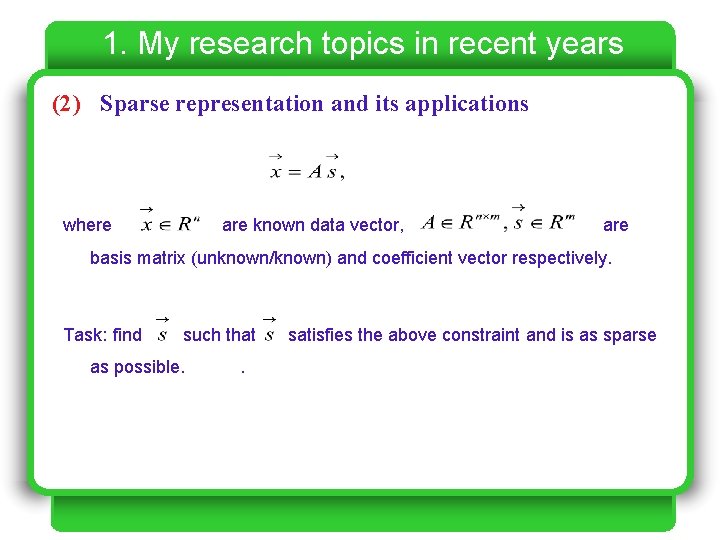

1. My research topics in recent years
(2) Sparse representation and its applications
Model: a known data vector is represented by a basis matrix (unknown or known) and a coefficient vector.
Task: find a coefficient vector that satisfies the above constraint and is as sparse as possible.

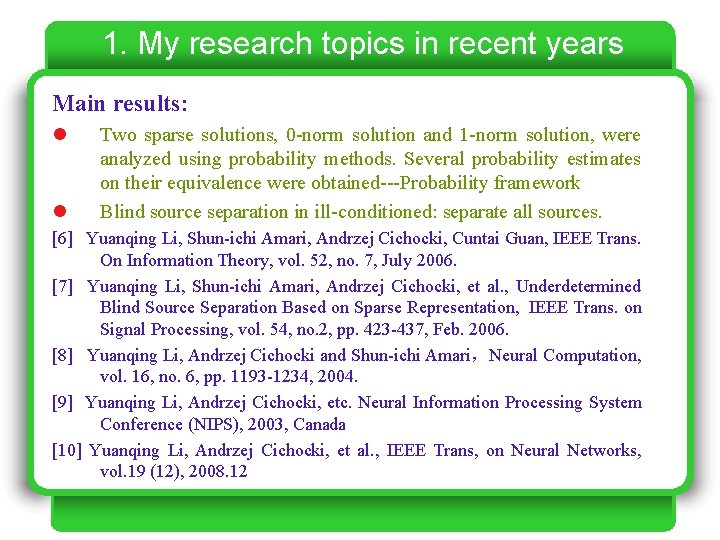

1. My research topics in recent years
Main results:
- Two sparse solutions, the 0-norm solution and the 1-norm solution, were analyzed using probabilistic methods. Several probability estimates on their equivalence were obtained (a probability framework).
- Blind source separation in ill-conditioned cases: separate all sources.
[6] Yuanqing Li, Shun-ichi Amari, Andrzej Cichocki, Cuntai Guan, IEEE Trans. on Information Theory, vol. 52, no. 7, July 2006.
[7] Yuanqing Li, Shun-ichi Amari, Andrzej Cichocki, et al., Underdetermined Blind Source Separation Based on Sparse Representation, IEEE Trans. on Signal Processing, vol. 54, no. 2, pp. 423-437, Feb. 2006.
[8] Yuanqing Li, Andrzej Cichocki, Shun-ichi Amari, Neural Computation, vol. 16, no. 6, pp. 1193-1234, 2004.
[9] Yuanqing Li, Andrzej Cichocki, et al., Neural Information Processing Systems Conference (NIPS), 2003, Canada.
[10] Yuanqing Li, Andrzej Cichocki, et al., IEEE Trans. on Neural Networks, vol. 19(12), 2008.

1. My research topics in recent years
(3) EEG and fMRI data analysis
- Event-related synchronization and desynchronization of EEG components obtained by sparse representation were analyzed;
- Pre-processing of EEG signals based on sparse representation;
- Voxel selection in fMRI data analysis.
[11] Yuanqing Li, Andrzej Cichocki, Shun-ichi Amari, IEEE Trans. on Neural Networks, vol. 17, no. 2, pp. 419-431, Mar. 2006.
[12] Yuanqing Li, et al., Voxel selection in fMRI data analysis: A sparse representation method, IEEE Trans. on Biomedical Engineering (accepted).

1. My research topics in recent years
(4) Semi-supervised learning and its applications in BCIs
- For example, two semi-supervised learning algorithms, based on SVM and EM respectively, were developed for joint feature extraction and classification with a small training data set.
- These algorithms can be used to reduce the training effort and improve adaptability in BCIs.
[13] Yuanqing Li, Cuntai Guan, Neural Computation, vol. 18, pp. 2730-2761, 2006.
[14] Yuanqing Li, Cuntai Guan, Machine Learning, vol. 71, no. 1.
[15] Yuanqing Li, Huiqi Li, Cuntai Guan, Pattern Recognition Letters, vol. 29(9), pp. 1285-1294, 2008.7.
[16] A. Cichocki, …, Yuanqing Li, Noninvasive BCIs: Multiway Signal Processing and Array Decomposition, IEEE Computer Magazine, vol. 41(10), pp. 34-42, 2008.

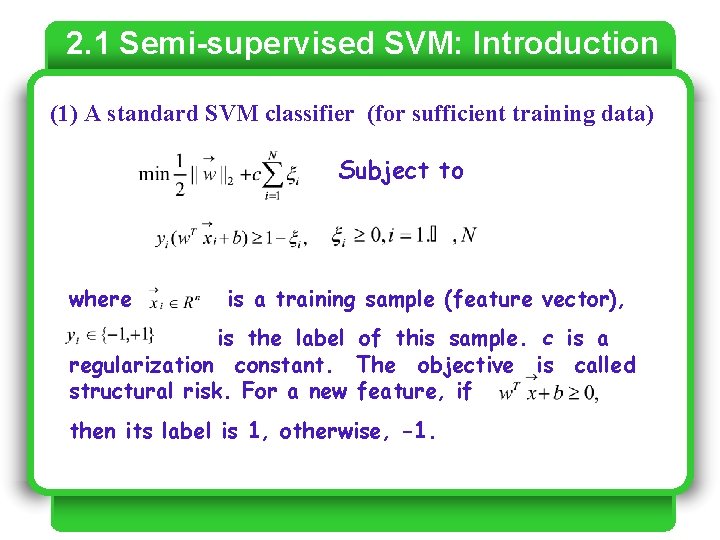

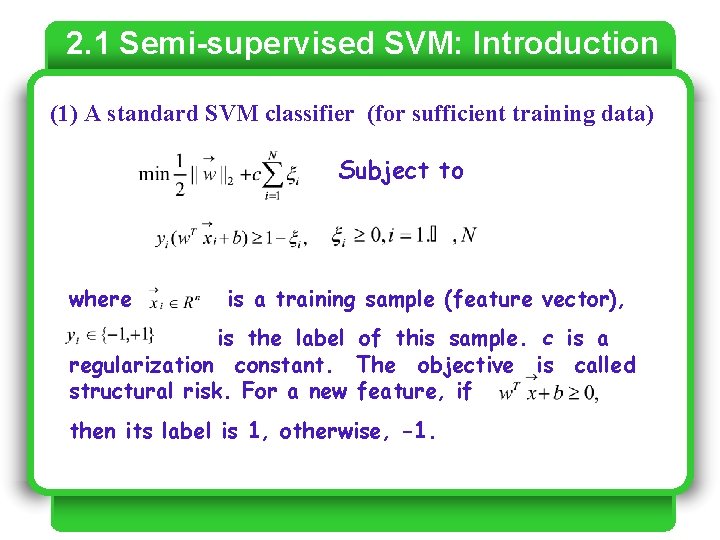

2.1 Semi-supervised SVM: Introduction
(1) A standard SVM classifier (for sufficient training data)
The classifier is obtained by minimizing an objective subject to margin constraints on the training data, where each training sample is a feature vector with a known label and c is a regularization constant. The objective is called the structural risk. A new feature vector is assigned the label 1 or -1 according to a threshold condition on the learned decision function.
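The formulas on this slide did not survive extraction. As a point of reference only, the widely used soft-margin SVM matches the description above; the symbols $w$, $b$, $\xi_i$, $x_i$, $y_i$ and $N$ are assumptions here, and the formulation actually used in the talk may differ (for example in the norm applied to $w$).

```latex
\min_{w,\,b,\,\xi}\ \ \frac{1}{2}\,\|w\|^{2} + c\sum_{i=1}^{N}\xi_i
\qquad \text{subject to}\qquad
y_i\,(w^{T}x_i + b) \ \ge\ 1-\xi_i,\quad \xi_i \ge 0,\quad i=1,\dots,N,

\text{label}(x) =
\begin{cases}
 1, & w^{T}x + b \ge 0,\\
-1, & \text{otherwise.}
\end{cases}
```

Here $x_i$ is a training feature vector, $y_i \in \{1,-1\}$ its label, and the objective is the structural risk that the regularization constant $c$ trades off against the margin violations $\xi_i$.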

2.1 Semi-supervised SVM: Introduction
(2) Why is semi-supervised learning with feature re-extraction important?
- In many real applications, labelling data is time-consuming and expensive (e.g., BCI training, disease diagnosis, etc.).
- When only a small amount of labeled data and a large amount of unlabeled data are available, semi-supervised learning, which uses labeled and unlabeled data simultaneously, can often provide a satisfactory classifier.

2.1 Semi-supervised SVM: Introduction
- In recent years, semi-supervised learning has received a great deal of attention because of its potential for reducing the effort of labeling data.
- Until now, existing semi-supervised learning methods have been developed only for classification. However, many features are also extracted from labeled training data (e.g., by LDA). How can reliable features be extracted when the training data set is small? This problem has not been discussed.

2.1 Semi-supervised SVM: Introduction
(3) The main contributions of this work
- We propose a self-training SVM algorithm and prove its convergence.
- How can reliable (or consistent) features be extracted and classification performed when the training data set is small? This problem is discussed here for the first time. An extended semi-supervised learning algorithm is proposed for joint feature extraction and classification, and its convergence and effectiveness are analyzed.
- Applications in EEG-based BCIs.

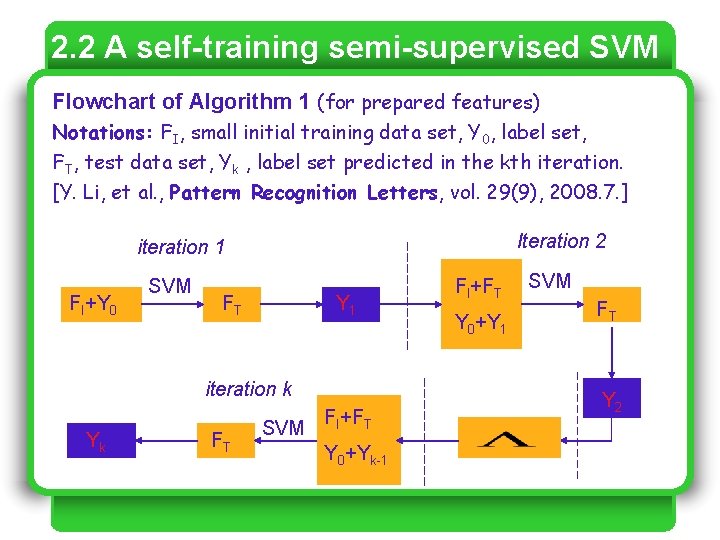

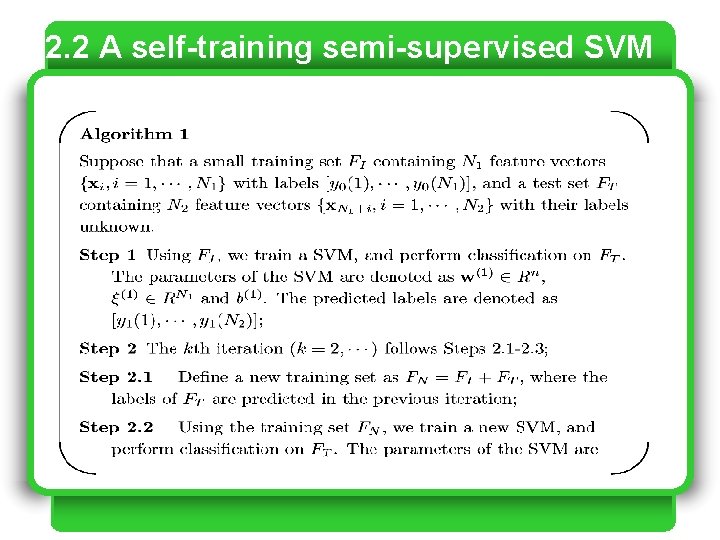

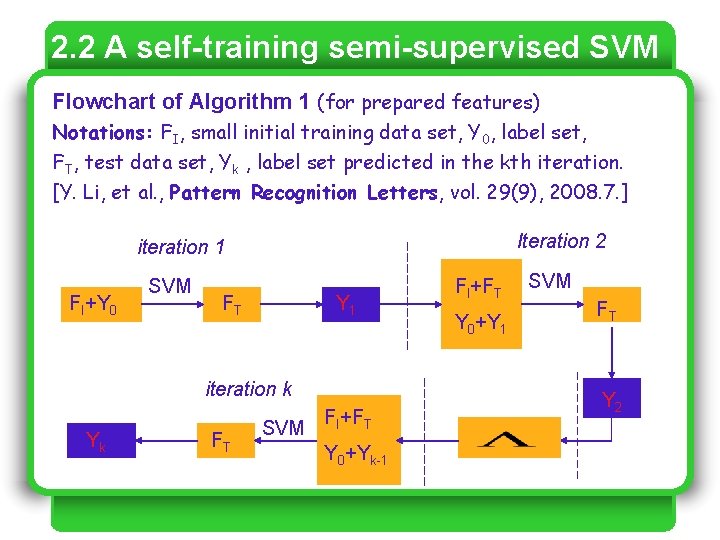

2.2 A self-training semi-supervised SVM
Flowchart of Algorithm 1 (for prepared features) [Y. Li, et al., Pattern Recognition Letters, vol. 29(9), 2008.7]
Notations: FI, small initial training data set; Y0, its label set; FT, test data set; Yk, label set predicted in the kth iteration.
- Iteration 1: train an SVM on FI with labels Y0, then classify FT to obtain Y1.
- Iteration 2: train an SVM on FI+FT with labels Y0+Y1, then classify FT to obtain Y2.
- Iteration k: train an SVM on FI+FT with labels Y0+Yk-1, then classify FT to obtain Yk.
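The iteration in the flowchart can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the linear SVM is fitted by plain subgradient descent on the soft-margin objective, the loop stops when the predicted labels no longer change, and the names `train_linear_svm`, `predict`, `self_training_svm`, `epochs`, `lr` and `max_iter` are all hypothetical.

```python
import numpy as np

def train_linear_svm(X, y, c=1.0, epochs=300, lr=0.001):
    """Fit (w, b) by subgradient descent on 0.5*||w||^2 + c * sum(hinge loss).

    Assumption: a simple stand-in solver, not the solver used in the talk.
    """
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        viol = y * (X @ w + b) < 1          # samples with nonzero hinge loss
        grad_w = w - c * (X[viol].T @ y[viol])
        grad_b = -c * y[viol].sum()
        w = w - lr * grad_w
        b = b - lr * grad_b
    return w, b

def predict(w, b, X):
    """Label 1 if the decision value is nonnegative, otherwise -1."""
    return np.where(X @ w + b >= 0, 1, -1)

def self_training_svm(F_I, Y0, F_T, max_iter=20):
    """Self-training loop: returns the final test labels and iteration count."""
    # Iteration 1: train on the small labeled set only.
    w, b = train_linear_svm(F_I, Y0)
    Y_prev = predict(w, b, F_T)
    n_iter = 1
    X_all = np.vstack([F_I, F_T])
    while n_iter < max_iter:
        # Iteration k: retrain on F_I + F_T with labels Y0 + Y_{k-1}.
        w, b = train_linear_svm(X_all, np.concatenate([Y0, Y_prev]))
        Y_k = predict(w, b, F_T)
        n_iter += 1
        converged = np.array_equal(Y_k, Y_prev)
        Y_prev = Y_k
        if converged:                        # assumed stopping rule
            break
    return Y_prev, n_iter
```

Calling `self_training_svm(F_I, Y0, F_T)` with a labeled feature array `F_I`, labels `Y0` in {1, -1}, and an unlabeled feature array `F_T` returns the predicted labels for `F_T`. The stopping rule on label stability is a convenience of this sketch; the talk instead argues convergence through the objective function.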

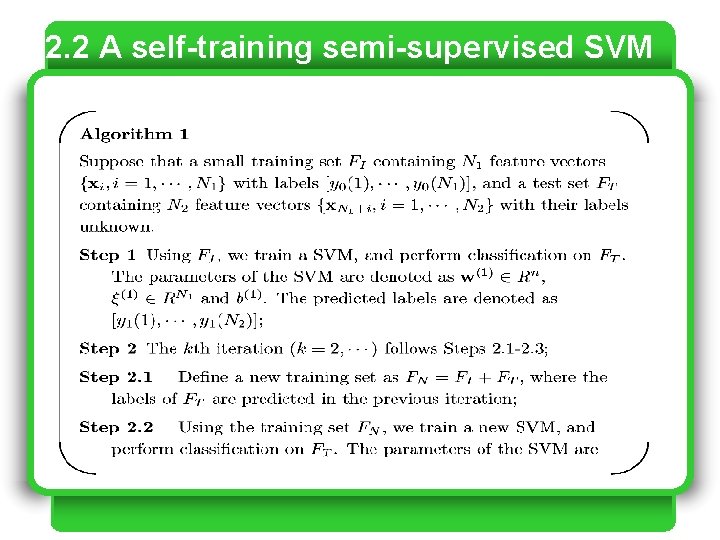

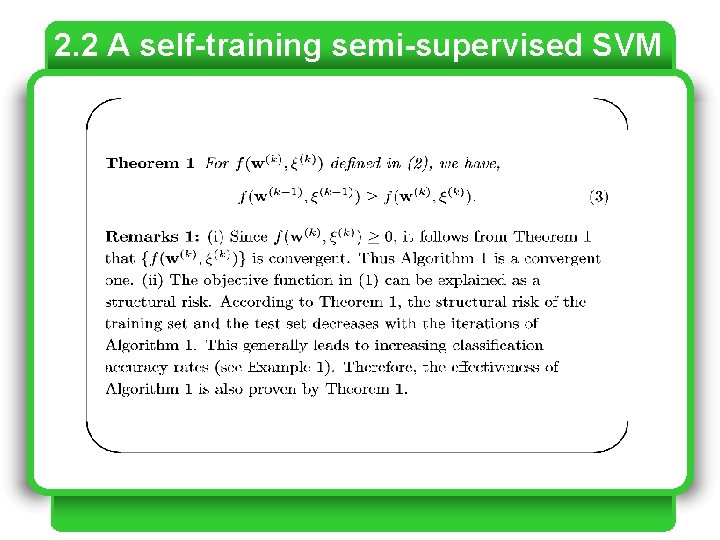

2.2 A self-training semi-supervised SVM

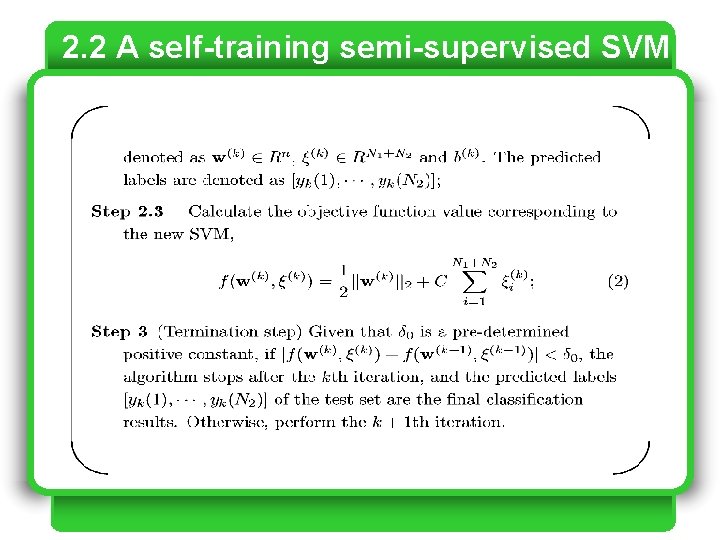

2.2 A self-training semi-supervised SVM

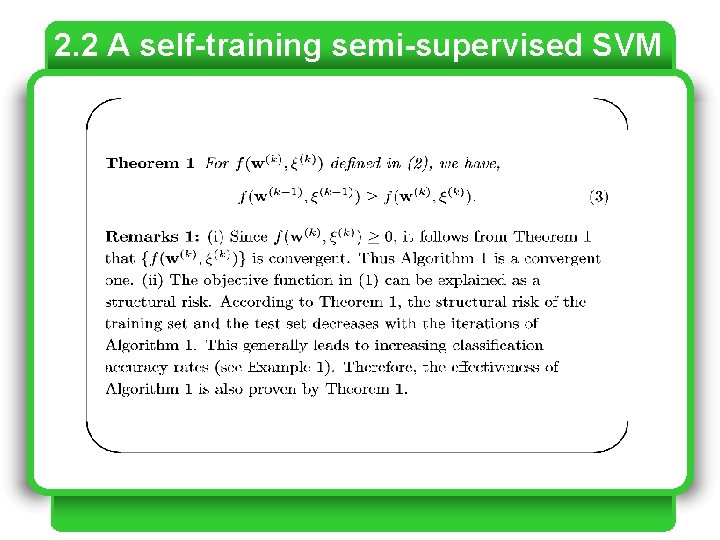

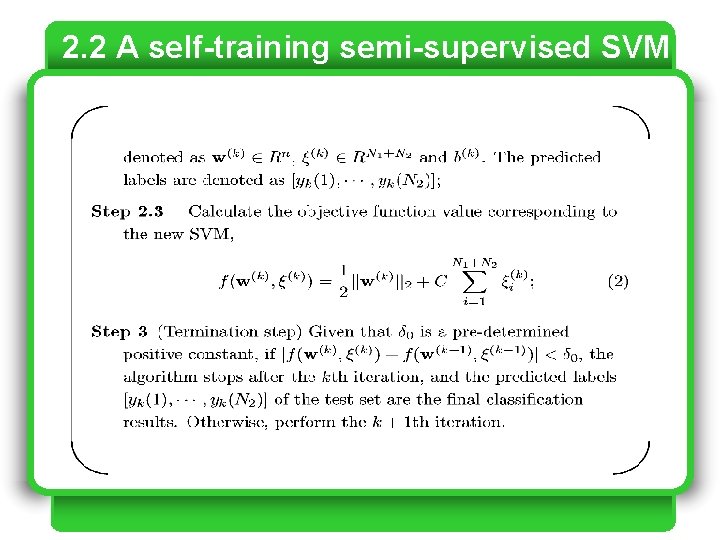

2.2 A self-training semi-supervised SVM

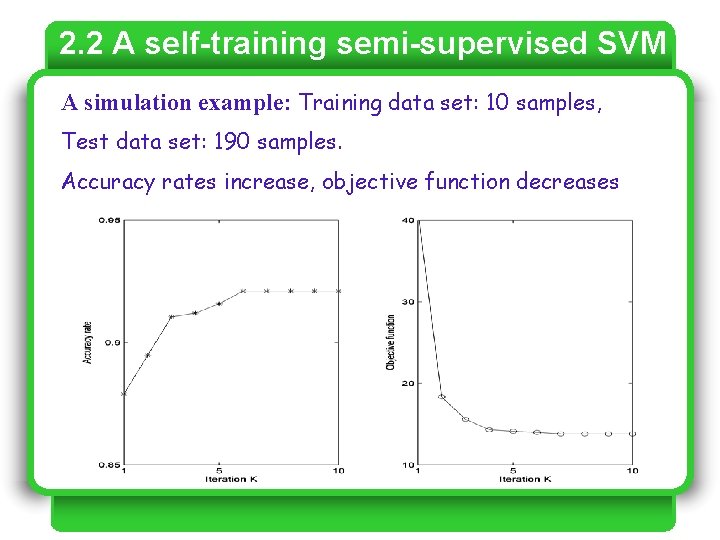

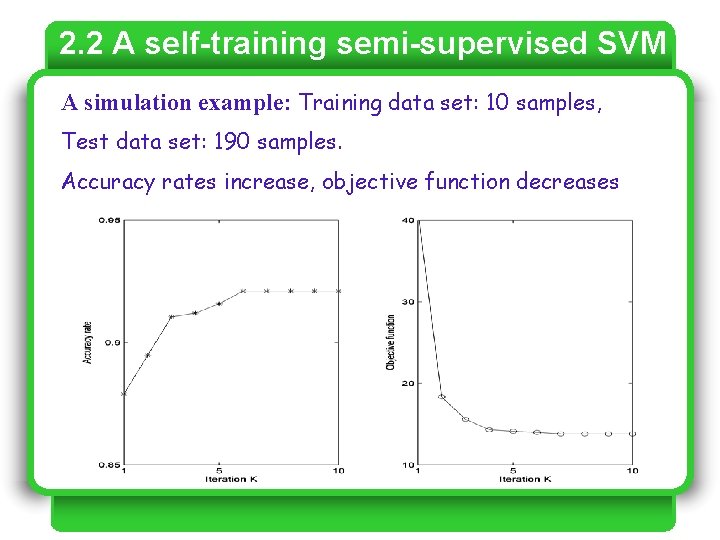

2.2 A self-training semi-supervised SVM
A simulation example: the training data set has 10 samples and the test data set has 190 samples. Over the iterations, the accuracy rate increases and the objective function decreases.

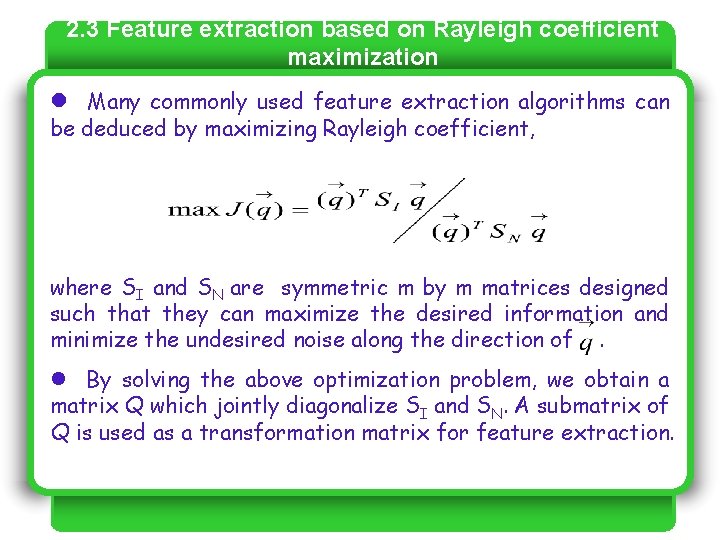

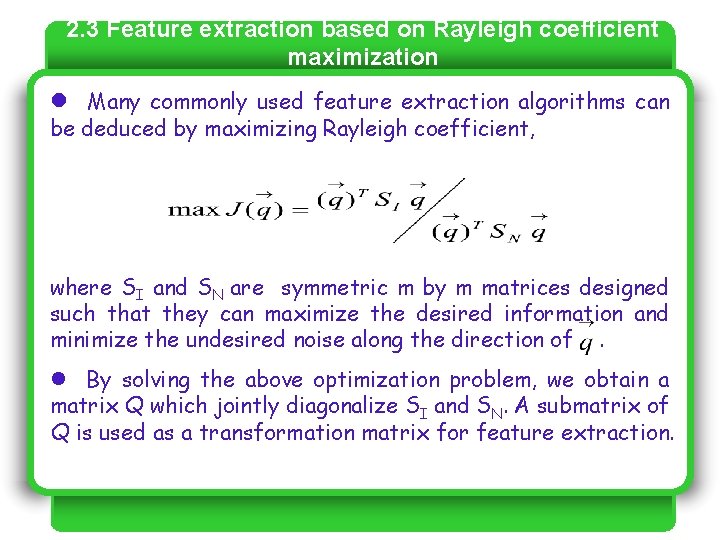

2.3 Feature extraction based on Rayleigh coefficient maximization
- Many commonly used feature extraction algorithms can be derived by maximizing a Rayleigh coefficient, where SI and SN are symmetric m-by-m matrices designed so that the desired information is maximized and the undesired noise is minimized along the projection direction.
- By solving this optimization problem, we obtain a matrix Q that jointly diagonalizes SI and SN. A submatrix of Q is used as the transformation matrix for feature extraction.
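One common way to obtain such a jointly diagonalizing matrix is to whiten SN and then eigen-decompose the whitened SI. The sketch below assumes the Rayleigh coefficient has the usual ratio form (w' SI w) / (w' SN w) and that SN is positive definite; neither is confirmed by the extracted slide text, and the function names `joint_diagonalize` and `rayleigh` are hypothetical.

```python
import numpy as np

def joint_diagonalize(S_I, S_N):
    """Return (Q, values) with Q.T @ S_N @ Q = I and Q.T @ S_I @ Q = diag(values).

    Assumes S_I is symmetric and S_N is symmetric positive definite.
    Columns of Q are sorted by decreasing Rayleigh coefficient.
    """
    # Whitening step: with S_N = L L^T, P = inv(L) gives P S_N P^T = I.
    L = np.linalg.cholesky(S_N)
    P = np.linalg.inv(L)
    # Eigen-decomposition of the whitened (still symmetric) S_I.
    values, U = np.linalg.eigh(P @ S_I @ P.T)
    order = np.argsort(values)[::-1]
    Q = P.T @ U[:, order]
    return Q, values[order]

def rayleigh(w, S_I, S_N):
    """Assumed ratio form of the Rayleigh coefficient along direction w."""
    return (w @ S_I @ w) / (w @ S_N @ w)
```

Taking the first few columns, `W = Q[:, :k]`, gives a transformation matrix, and `W.T @ x` is then a k-dimensional feature vector for a sample `x`. The first column attains the largest value of the ratio over all directions.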

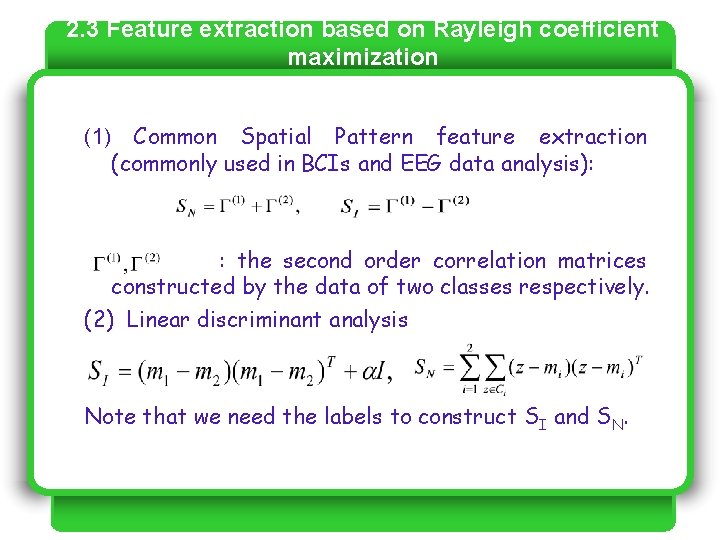

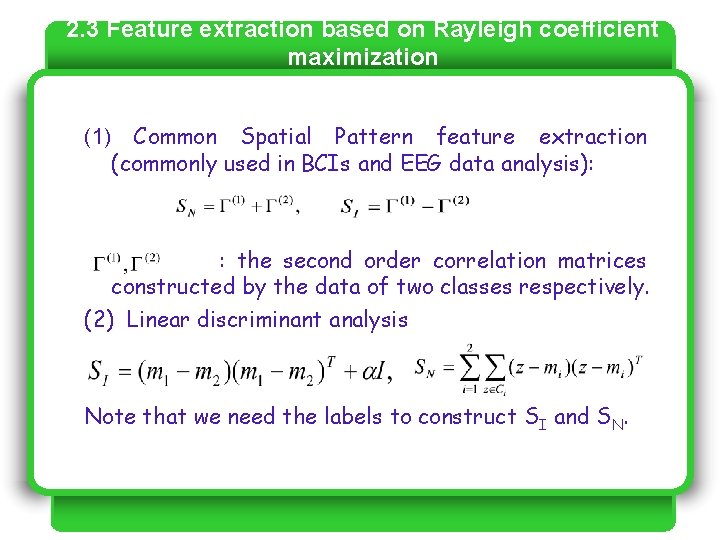

2.3 Feature extraction based on Rayleigh coefficient maximization
(1) Common Spatial Pattern (CSP) feature extraction (commonly used in BCIs and EEG data analysis): SI and SN are the second-order correlation matrices constructed from the data of the two classes, respectively.
(2) Linear discriminant analysis (LDA).
Note that the labels are needed to construct SI and SN.
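For reference, the textbook forms of these two special cases can be written as follows. The notation is an assumption, since the slide formulas were lost in extraction: $R_1, R_2$ denote the class correlation matrices, $m_1, m_2$ the class means, and $C_1, C_2$ the index sets of the two classes. Some CSP variants use $R_1 + R_2$ in the denominator instead of $R_2$.

```latex
J(w) = \frac{w^{T} S_I\, w}{w^{T} S_N\, w}

\text{CSP:}\quad S_I = R_1,\qquad S_N = R_2

\text{LDA:}\quad S_I = (m_1 - m_2)(m_1 - m_2)^{T},\qquad
S_N = \sum_{c=1}^{2}\ \sum_{i \in C_c} (x_i - m_c)(x_i - m_c)^{T}
```

In both cases every quantity on the right-hand side is computed per class, which is why labels are required before the features can be extracted.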

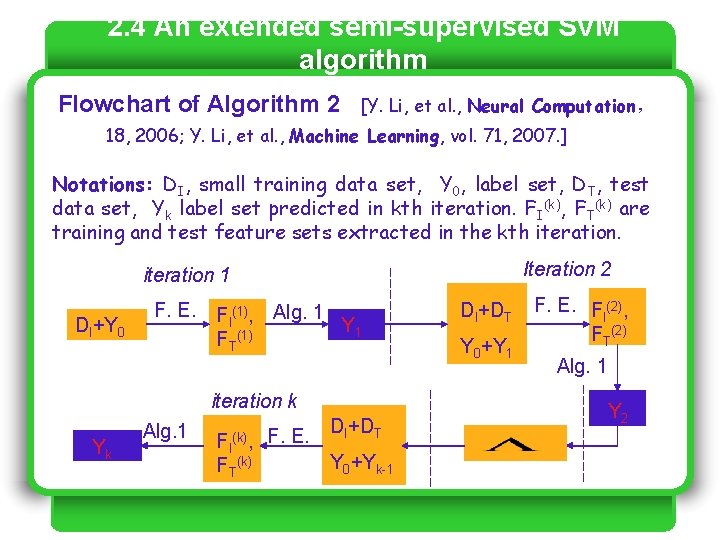

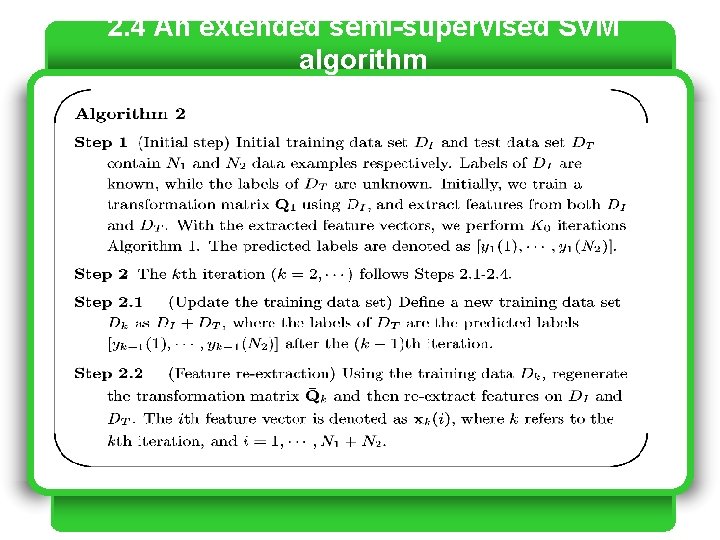

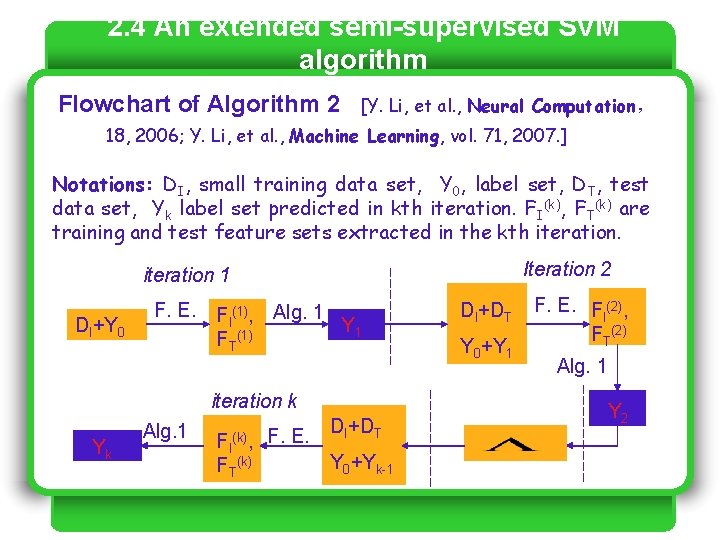

2.4 An extended semi-supervised SVM algorithm
Flowchart of Algorithm 2 [Y. Li, et al., Neural Computation, 18, 2006; Y. Li, et al., Machine Learning, vol. 71, 2007]
Notations: DI, small training data set; Y0, its label set; DT, test data set; Yk, label set predicted in the kth iteration; FI(k) and FT(k), training and test feature sets extracted in the kth iteration.
- Iteration 1: feature extraction (F.E.) using DI with labels Y0 gives FI(1) and FT(1); Algorithm 1 then gives Y1.
- Iteration 2: F.E. using DI+DT with labels Y0+Y1 gives FI(2) and FT(2); Algorithm 1 then gives Y2.
- Iteration k: F.E. using DI+DT with labels Y0+Yk-1 gives FI(k) and FT(k); Algorithm 1 then gives Yk.
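The outer loop with feature re-extraction can be sketched as below. This is an illustration under strong assumptions rather than the published algorithm: the feature extractor is a regularized Fisher LDA direction, the classifier `classify_stub` is a hypothetical midpoint-threshold stand-in for Algorithm 1 (not an SVM), and the loop stops when the predicted labels stop changing. All function and parameter names are invented for this example.

```python
import numpy as np

def lda_direction(D, y):
    """Fisher direction from data D and labels y in {1, -1} (assumed extractor)."""
    m_pos = D[y == 1].mean(axis=0)
    m_neg = D[y == -1].mean(axis=0)
    centered = np.vstack([D[y == 1] - m_pos, D[y == -1] - m_neg])
    # Within-class scatter, lightly regularized so it is always invertible.
    S_w = centered.T @ centered + 1e-6 * np.eye(D.shape[1])
    return np.linalg.solve(S_w, m_pos - m_neg)

def classify_stub(F_I, Y0, F_T):
    """Hypothetical stand-in for Algorithm 1: threshold 1-D features at the
    midpoint between the two labeled class means."""
    mean_pos = F_I[Y0 == 1].mean()
    mean_neg = F_I[Y0 == -1].mean()
    sign = 1.0 if mean_pos >= mean_neg else -1.0
    mid = 0.5 * (mean_pos + mean_neg)
    return np.where(sign * (F_T - mid) >= 0, 1, -1)

def extended_semi_supervised(D_I, Y0, D_T, max_iter=10):
    """Alternate feature re-extraction and classification."""
    # Iteration 1: extract features using the labeled data only.
    w = lda_direction(D_I, Y0)
    Y_prev = classify_stub(D_I @ w, Y0, D_T @ w)
    n_iter = 1
    D_all = np.vstack([D_I, D_T])
    while n_iter < max_iter:
        # Iteration k: re-extract features from D_I + D_T with Y0 + Y_{k-1}.
        w = lda_direction(D_all, np.concatenate([Y0, Y_prev]))
        Y_k = classify_stub(D_I @ w, Y0, D_T @ w)
        n_iter += 1
        converged = np.array_equal(Y_k, Y_prev)
        Y_prev = Y_k
        if converged:                 # assumed stopping rule
            break
    return Y_prev, n_iter
```

The point of the structure is that the predicted labels from one pass feed the label-dependent feature extractor in the next pass, so the features are re-estimated from far more data than the small labeled set alone. In a closer reproduction, `classify_stub` would be replaced by a self-training SVM run on the extracted features.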

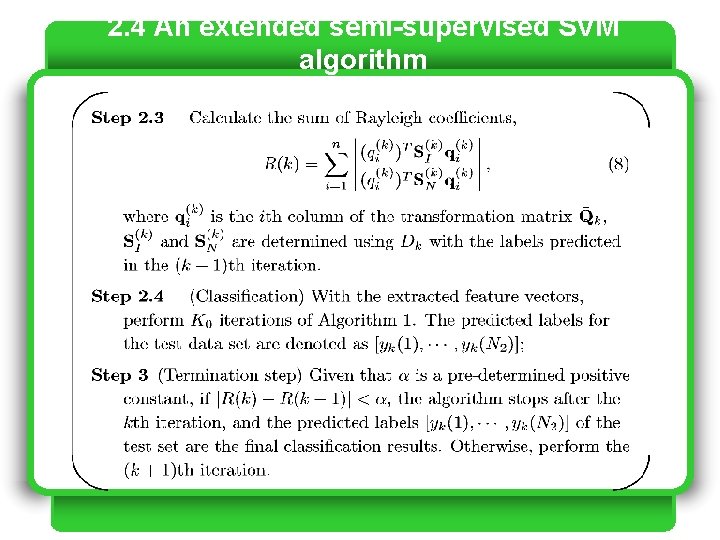

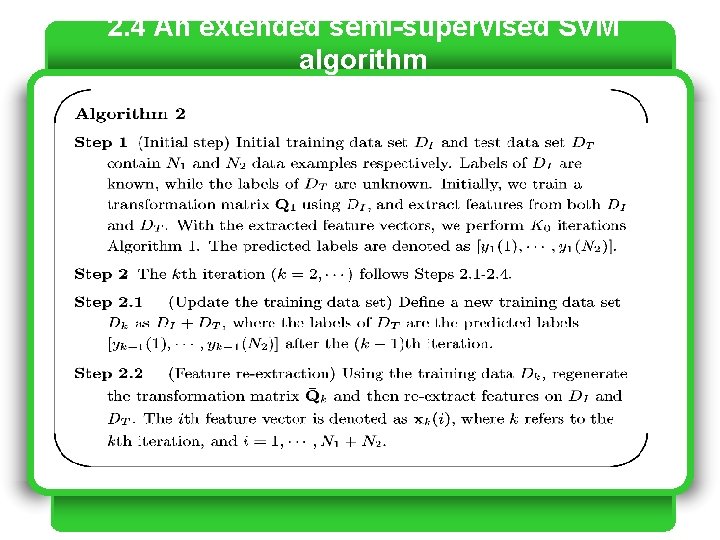

2.4 An extended semi-supervised SVM algorithm

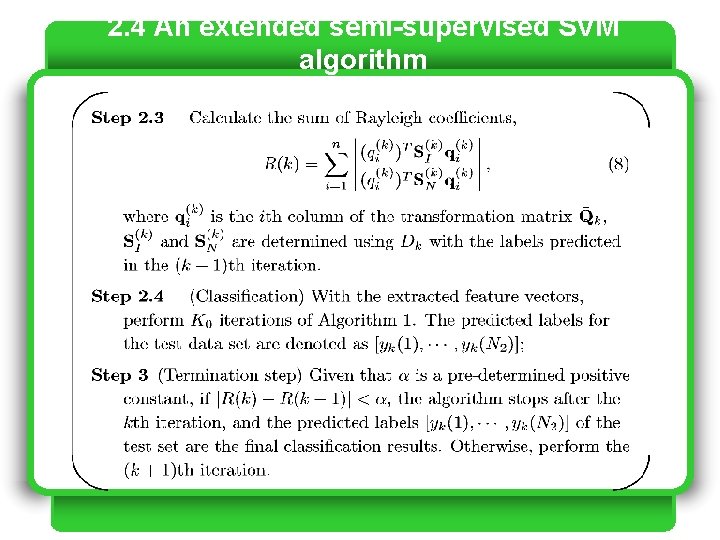

2.4 An extended semi-supervised SVM algorithm
Convergence: We have proved that the sum of Rayleigh coefficients generally increases over the iterations of Algorithm 2 and is bounded. That is, Algorithm 2 is convergent. This will be demonstrated in our experimental data analysis.

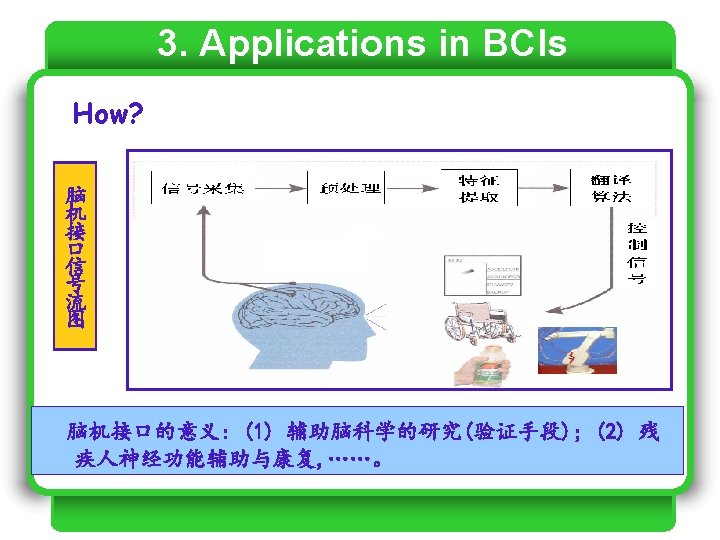

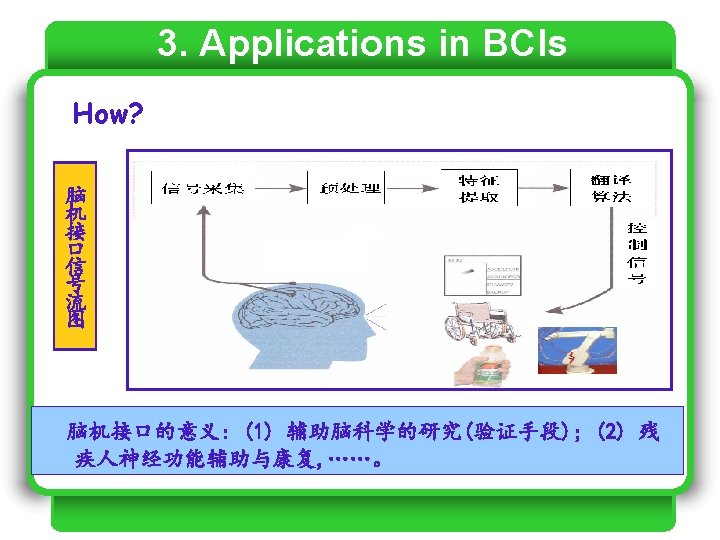

3. Applications in BCIs
Introduction to BCIs
What? An interface between the brain and a computer.
Why?
- A Brain-Computer Interface (BCI) provides an alternative communication and control method for people with severe motor disabilities.
- Neural rehabilitation.

3. Applications in BCIs
How many? There are two classes of BCIs. Invasive BCIs use neuronal signals as input, collected by micro-electrodes implanted in the brain [2], while noninvasive BCIs use EEG, MEG, fMRI, etc. as inputs (collected from outside the brain).
[1] N. Birbaumer, N. Ghanayim, et al., A spelling device for the paralysed, Nature, vol. 398, pp. 297-298, 1999.
[2] Leigh R. Hochberg, et al., Neuronal ensemble control of prosthetic devices by a human with tetraplegia, Nature, vol. 442, 2006.

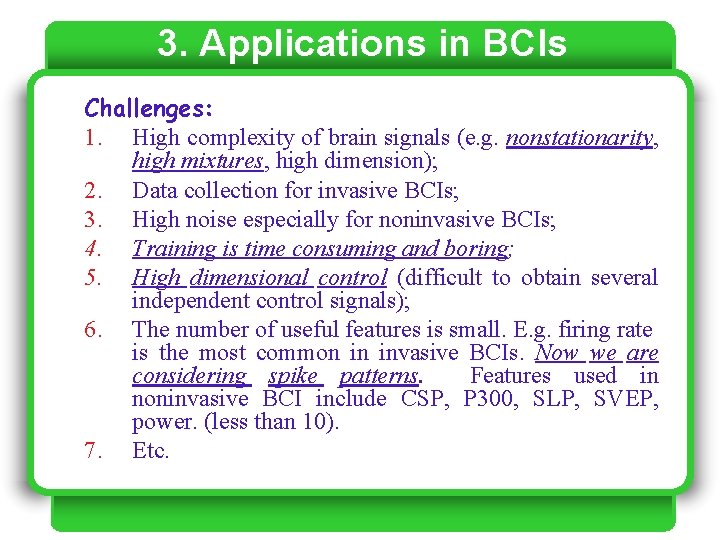

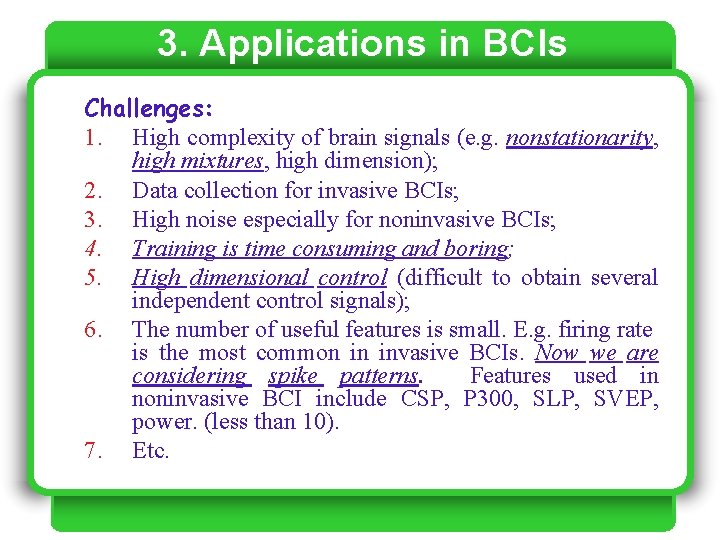

3. Applications in BCIs
Challenges:
1. High complexity of brain signals (e.g., nonstationarity, heavy mixing, high dimension);
2. Data collection for invasive BCIs;
3. High noise, especially for noninvasive BCIs;
4. Training is time-consuming and boring;
5. High-dimensional control (it is difficult to obtain several independent control signals);
6. The number of useful features is small. For example, firing rate is the most common feature in invasive BCIs, and we are now considering spike patterns. Features used in noninvasive BCIs include CSP, P300, SLP, SVEP and power (fewer than 10);
7. Etc.

3. Applications in BCIs
Demos:
- Demo 1 (Tracking the hand movement of a monkey using neuronal signals [John P. Donoghue et al.])
- Demo 2 (EEG-based BCI speller using P300)
- Demo 3 (BCI soccer game using motor imagery)
- Demo 4 (2D cursor control)
- Demo 5 (Rehabilitation)

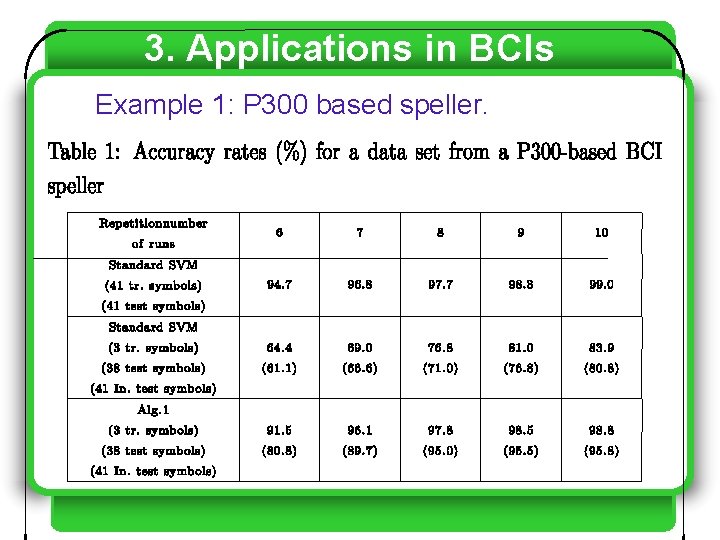

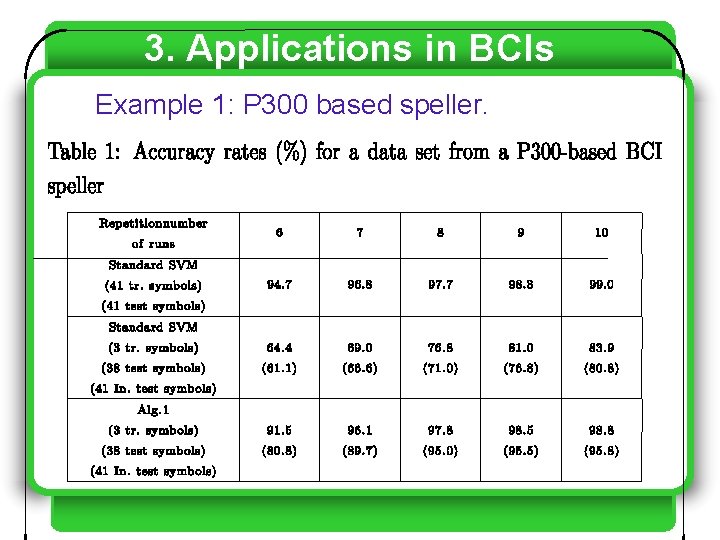

3. Applications in BCIs
Example 1: P300-based speller.

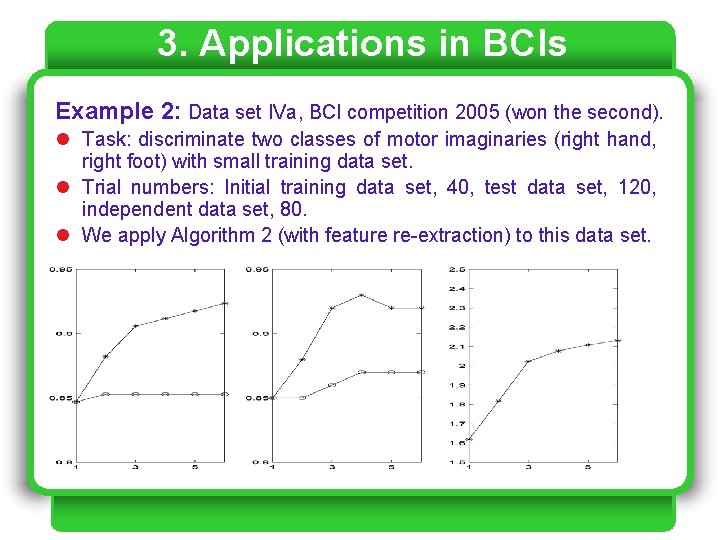

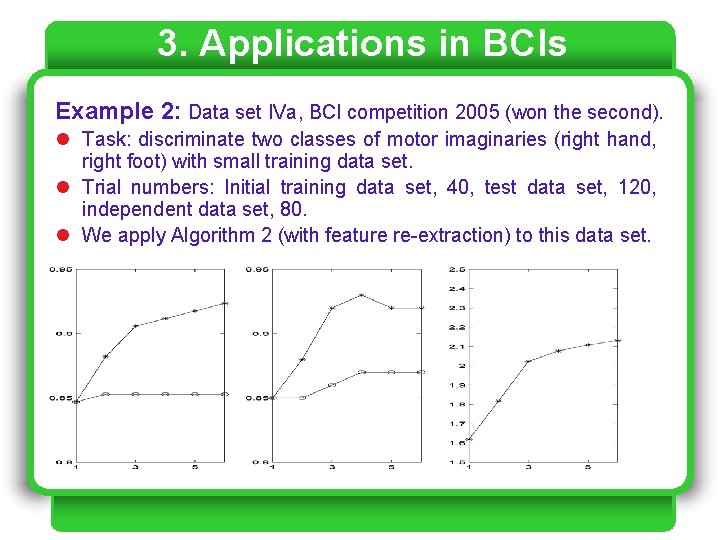

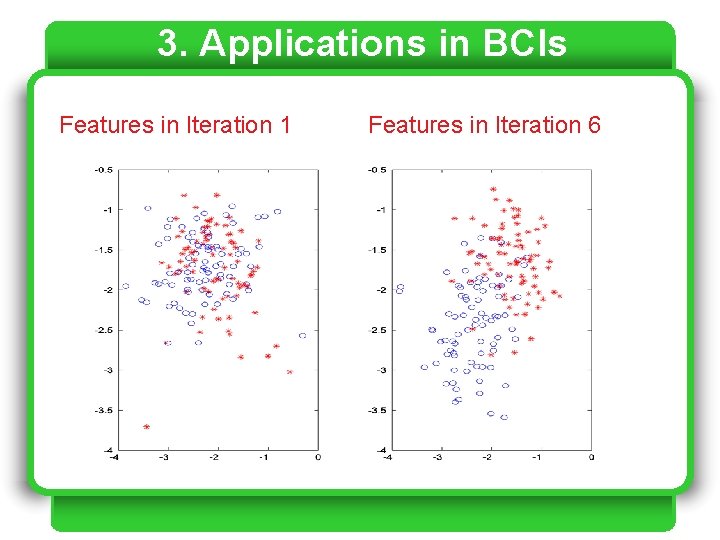

3. Applications in BCIs
Example 2: Data set IVa, BCI Competition 2005 (won second place).
- Task: discriminate two classes of motor imagery (right hand, right foot) with a small training data set.
- Trial numbers: initial training data set, 40; test data set, 120; independent data set, 80.
- We apply Algorithm 2 (with feature re-extraction) to this data set.

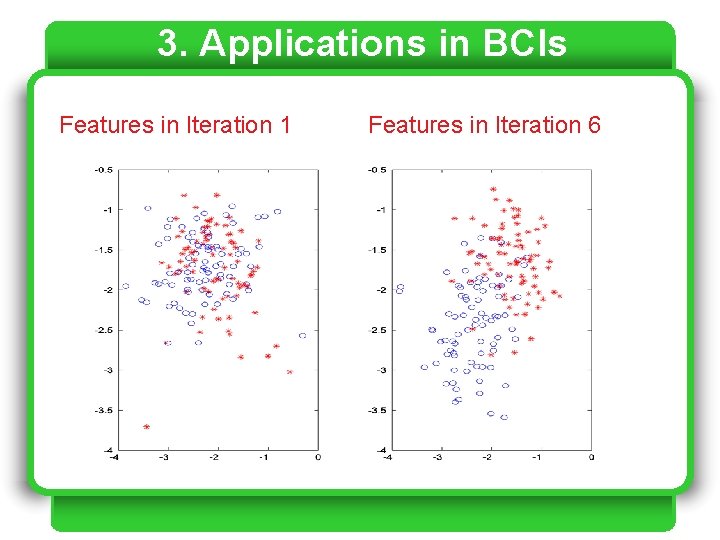

3. Applications in BCIs
Figure panels: features in iteration 1; features in iteration 6.

Conclusions
- We proposed two semi-supervised SVM algorithms and proved their convergence.
- Algorithm 1 is a self-training algorithm. By embedding feature re-extraction into Algorithm 1, we obtain Algorithm 2.
- The two algorithms can be used to reduce training effort. In particular, Algorithm 2 can perform joint feature extraction and classification when the training data set is small.
- Our algorithms were applied to EEG-based BCIs.

References
1. Yuanqing Li, Cuntai Guan, An Extended EM Algorithm for Joint Feature Extraction and Classification in Brain Computer Interfaces, Neural Computation, vol. 18, pp. 2730-2761, 2006.
2. Yuanqing Li, Cuntai Guan, Joint Feature Re-extraction and Classification Using An Iterative Semi-supervised Support Vector Machine Algorithm, Machine Learning, vol. 71, no. 1, 2007.
3. Yuanqing Li, et al., A Self-training Semi-supervised SVM Algorithm and Its Application in An EEG-based Brain Computer Interface Speller System, Pattern Recognition Letters, vol. 29(9), pp. 1285-1294, 2008.7.
4. Yuanqing Li, Cuntai Guan, A Semi-supervised SVM Learning Algorithm for Joint Feature Extraction and Classification in Brain Computer Interfaces, 28th International Conference of the IEEE EMBS, Aug. 30 - Sept. 3, 2006, New York City, USA.
5. Jianzhao Qin, Yuanqing Li, An Improved Semi-Supervised Support Vector Machines Based Translation Algorithm for BCI Systems, International Conference on Pattern Recognition (ICPR), 2006, Hong Kong.

Thanks a lot!