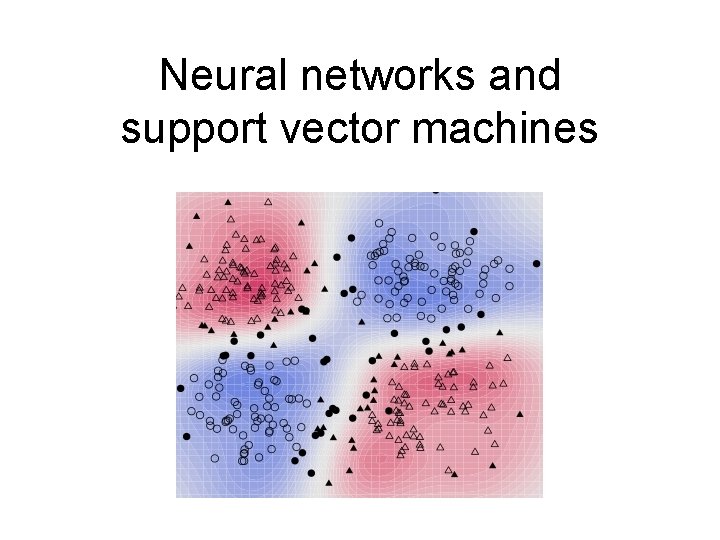


- Slides: 55

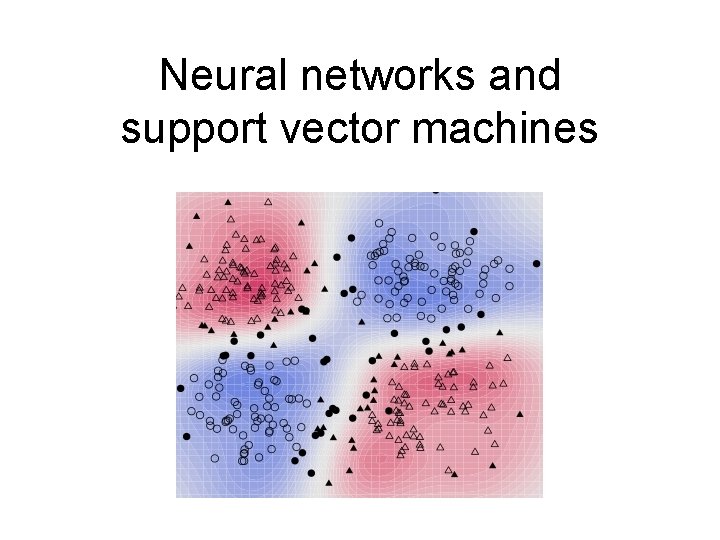

Neural networks and support vector machines

Outline

- Neural Networks
  - Perceptron review
  - Differentiable perceptron
  - Multilayer perceptron
  - Support vector machine (SVM)
- Other Machine Learning Tasks
  - Structured prediction
  - Clustering, quantization, density estimation
  - Semi-supervised and active learning

Perceptron·MLP·SVM·Structure·Supervision

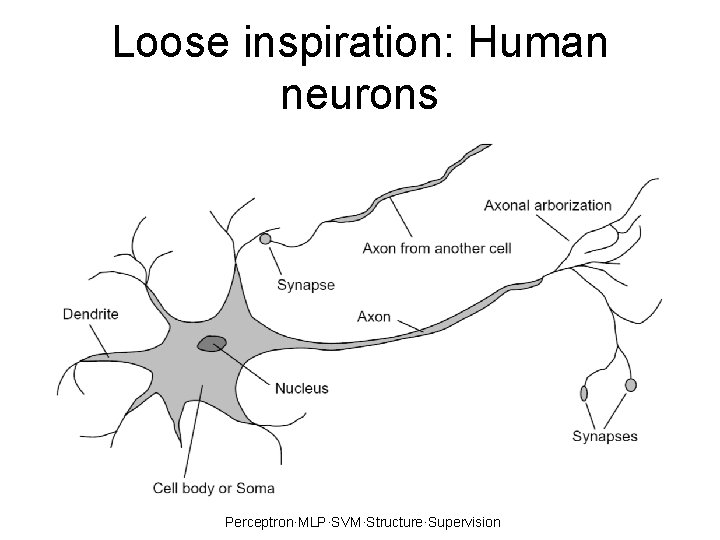

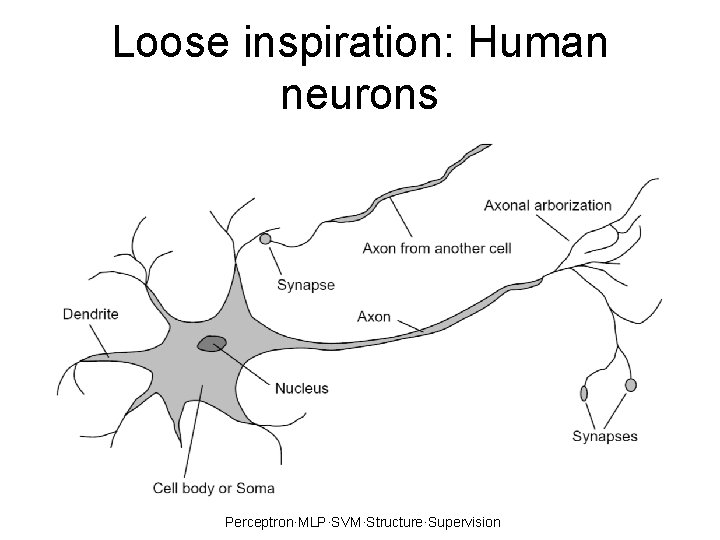

Loose inspiration: Human neurons

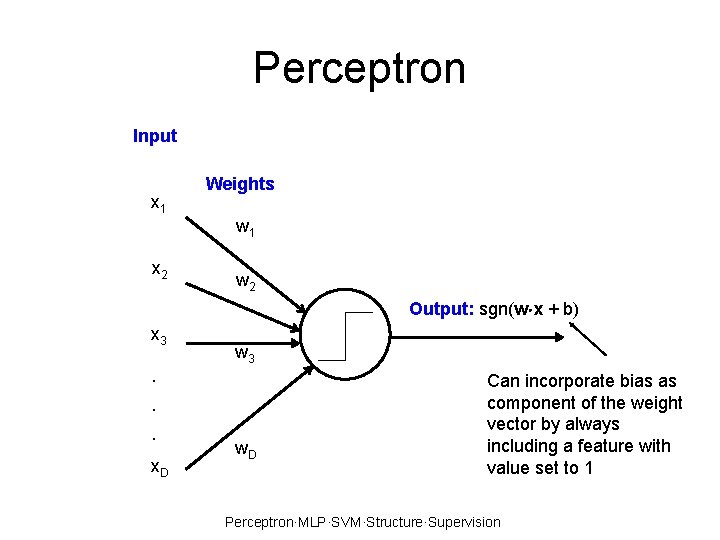

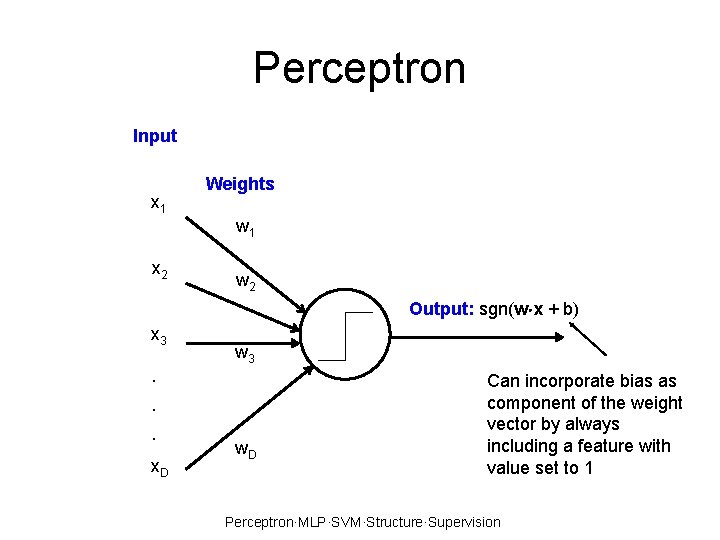

Perceptron

[Figure: inputs $x_1, x_2, \dots, x_D$ connected to a single output unit by weights $w_1, w_2, \dots, w_D$]

- Output: $\operatorname{sgn}(\mathbf{w}\cdot\mathbf{x} + b)$
- The bias can be incorporated as a component of the weight vector by always including a feature whose value is set to 1
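A minimal NumPy sketch of the perceptron output, with the bias folded into the weight vector by appending a constant feature of 1. The helper names `add_bias_feature` and `perceptron_predict` and the example weights are illustrative assumptions; ties ($\mathbf{w}\cdot\mathbf{x} = 0$) are mapped to $+1$ here by choice.

```python
import numpy as np

def add_bias_feature(X):
    """Append a constant feature equal to 1 to every input row."""
    X = np.atleast_2d(np.asarray(X, dtype=float))
    return np.hstack([X, np.ones((X.shape[0], 1))])

def perceptron_predict(w, X):
    """Return sgn(w . x) for each row of X; the bias is the last entry of w.

    Ties (w . x == 0) are mapped to +1 (an arbitrary choice).
    """
    scores = add_bias_feature(X) @ w
    return np.where(scores >= 0, 1, -1)

# Hypothetical weights (w_1, w_2) = (1, 1) with bias b = -1.5 stored last.
w = np.array([1.0, 1.0, -1.5])
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
print(perceptron_predict(w, X))  # [-1 -1 -1  1]
```

With these weights the unit computes logical AND on binary inputs.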

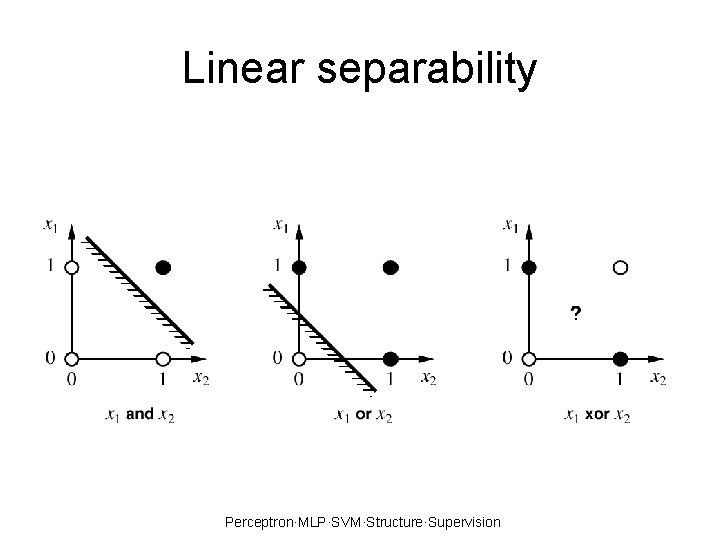

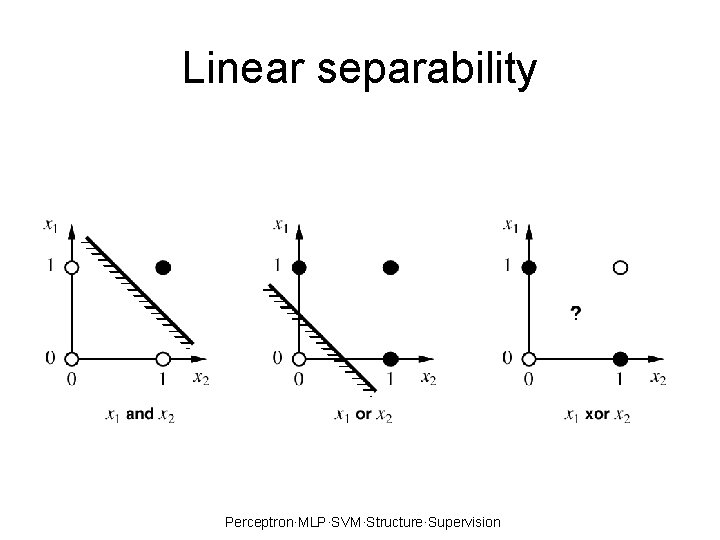

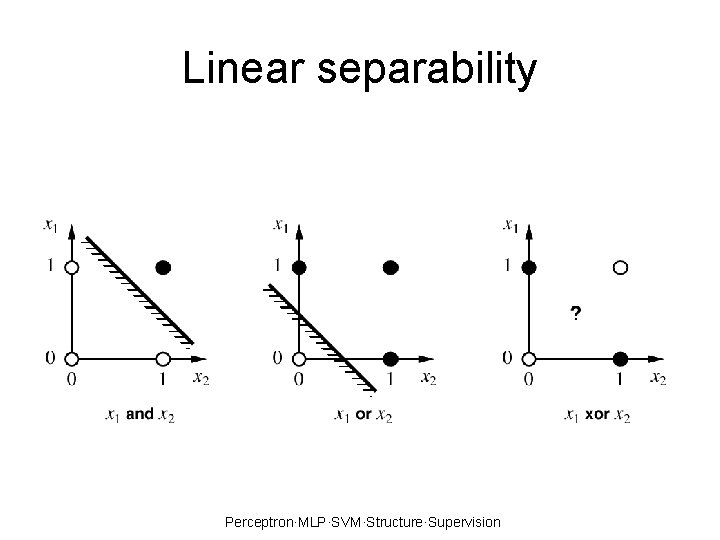

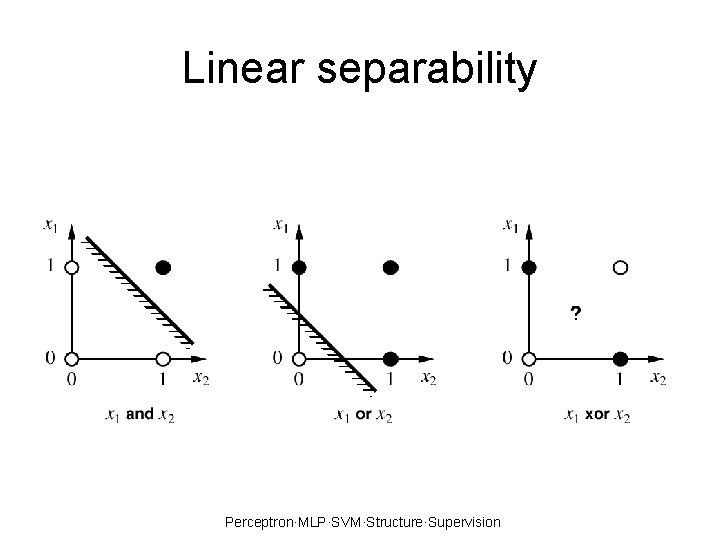

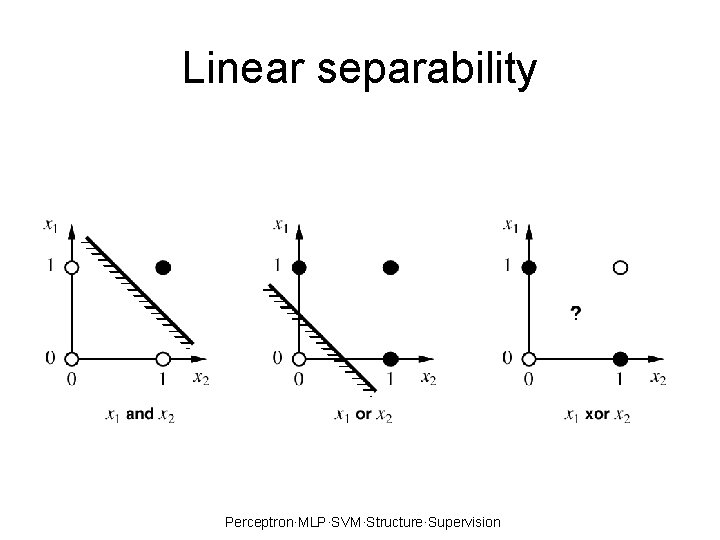

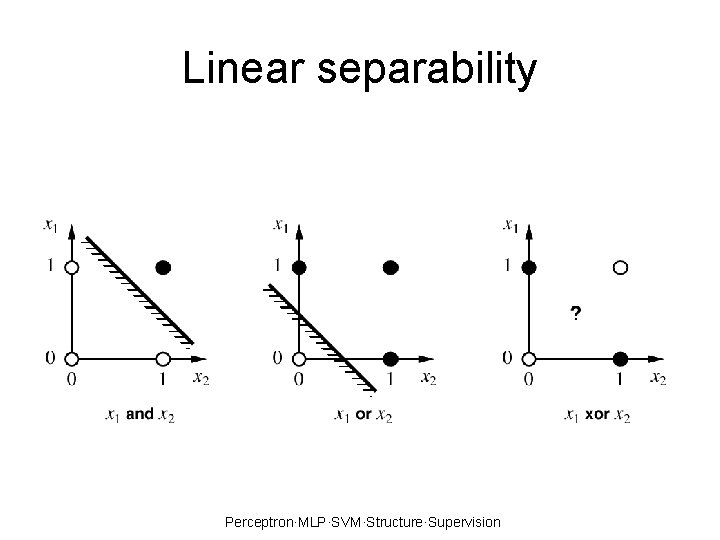

Linear separability

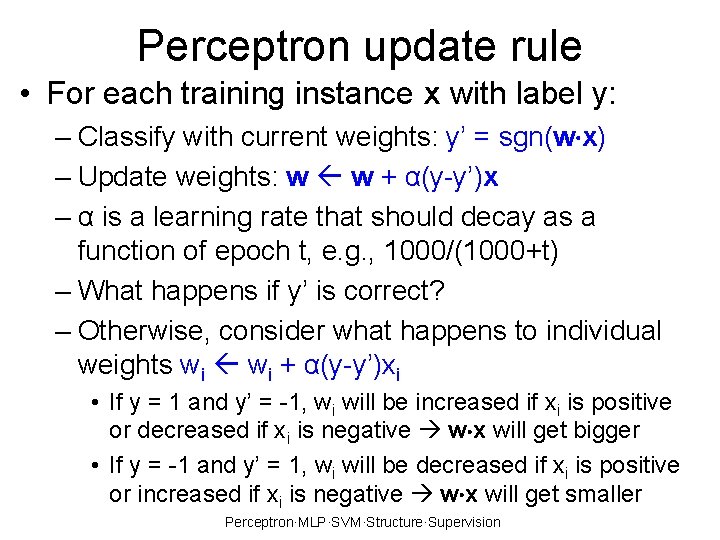

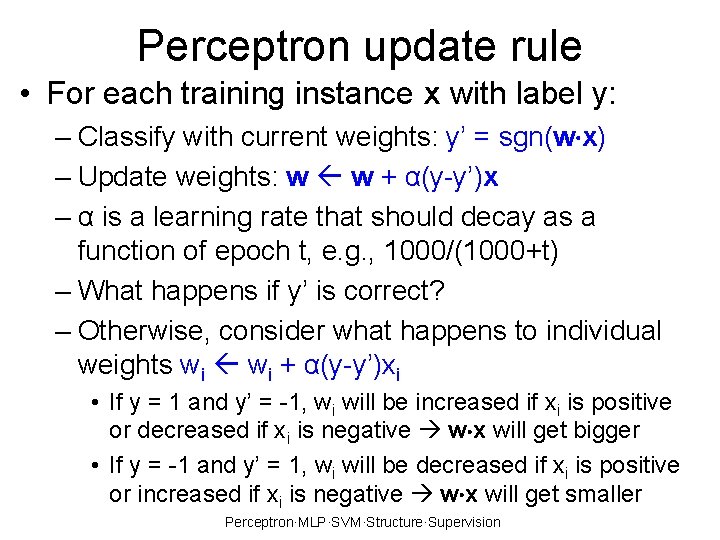

Perceptron update rule

- For each training instance $\mathbf{x}$ with label $y$:
  - Classify with the current weights: $y' = \operatorname{sgn}(\mathbf{w}\cdot\mathbf{x})$
  - Update the weights: $\mathbf{w} \leftarrow \mathbf{w} + \alpha\,(y - y')\,\mathbf{x}$
  - $\alpha$ is a learning rate that should decay as a function of the epoch $t$, e.g., $1000/(1000 + t)$
  - What happens if $y'$ is correct? Then $y - y' = 0$, so the weights do not change.
  - Otherwise, consider what happens to an individual weight, $w_i \leftarrow w_i + \alpha\,(y - y')\,x_i$:
    - If $y = 1$ and $y' = -1$, $w_i$ increases if $x_i$ is positive and decreases if $x_i$ is negative, so $\mathbf{w}\cdot\mathbf{x}$ gets bigger
    - If $y = -1$ and $y' = 1$, $w_i$ decreases if $x_i$ is positive and increases if $x_i$ is negative, so $\mathbf{w}\cdot\mathbf{x}$ gets smaller
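The update rule above can be sketched as a short training loop. This is a minimal NumPy illustration, assuming labels in $\{+1, -1\}$, the bias folded in as a constant feature, and the example decay schedule $1000/(1000 + t)$; the function names and the early stop after an error-free epoch are hypothetical conveniences, not part of the slide.

```python
import numpy as np

def train_perceptron(X, y, epochs=20):
    """Perceptron learning with w <- w + alpha * (y - y') * x.

    Labels must be +1/-1. The bias is folded in as a constant feature of 1,
    and the learning rate decays as alpha = 1000 / (1000 + t) for epoch t.
    """
    X = np.hstack([np.asarray(X, dtype=float), np.ones((len(X), 1))])
    y = np.asarray(y, dtype=float)
    w = np.zeros(X.shape[1])
    for t in range(epochs):
        alpha = 1000.0 / (1000.0 + t)
        mistakes = 0
        for x_i, y_i in zip(X, y):
            y_pred = 1.0 if w @ x_i >= 0 else -1.0
            if y_pred != y_i:              # a correct prediction leaves w unchanged
                w += alpha * (y_i - y_pred) * x_i
                mistakes += 1
        if mistakes == 0:                  # a full pass with no mistakes: stop early
            break
    return w

def predict(w, X):
    X = np.hstack([np.asarray(X, dtype=float), np.ones((len(X), 1))])
    return np.where(X @ w >= 0, 1.0, -1.0)

# Hypothetical linearly separable 2-D data.
X = [[2, 2], [1, 3], [-2, -1], [-1, -3]]
y = [1, 1, -1, -1]
w = train_perceptron(X, y)   # learned (w_1, w_2, b)
print(predict(w, X))
```

On this toy data a single mistake (on the third point, during the first epoch) is enough; the second epoch makes no mistakes, so training stops.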


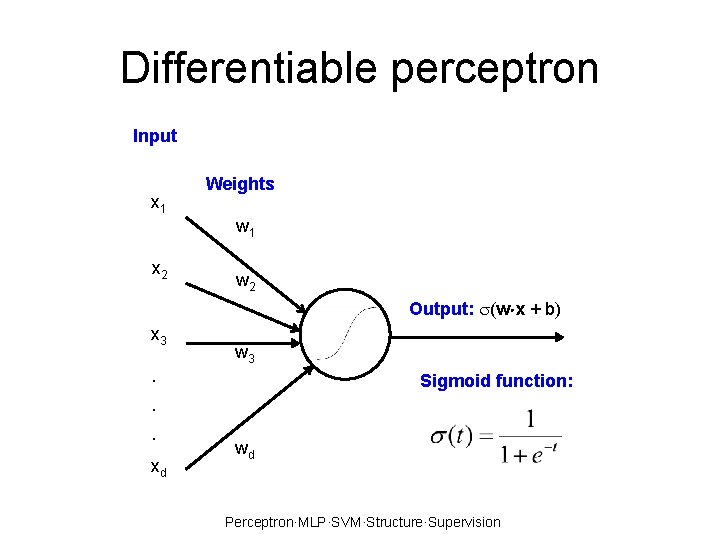

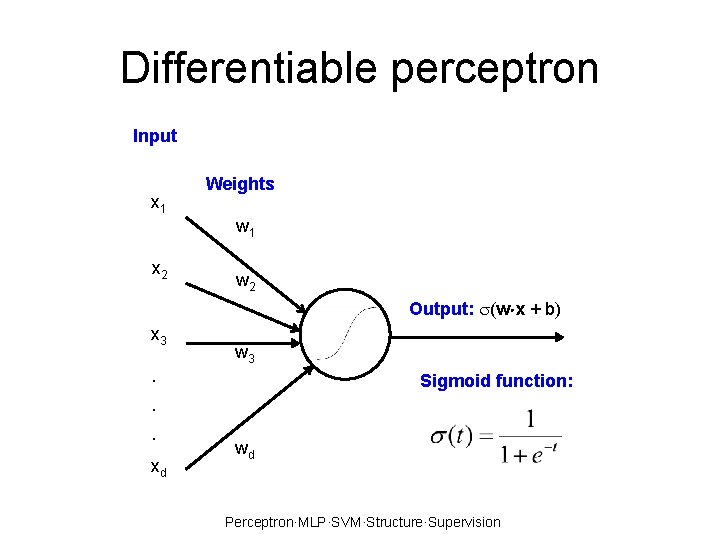

Differentiable perceptron

[Figure: inputs $x_1, x_2, \dots, x_d$ connected to a single output unit by weights $w_1, w_2, \dots, w_d$]

- Output: $\sigma(\mathbf{w}\cdot\mathbf{x} + b)$
- Sigmoid function: $\sigma(t) = \dfrac{1}{1 + e^{-t}}$
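A small sketch of the sigmoid unit, assuming the standard logistic sigmoid. The derivative identity $\sigma'(t) = \sigma(t)\,(1 - \sigma(t))$ is what makes gradient-based training of this unit convenient; the function names are illustrative.

```python
import numpy as np

def sigmoid(t):
    """Logistic sigmoid: 1 / (1 + exp(-t))."""
    return 1.0 / (1.0 + np.exp(-t))

def sigmoid_prime(t):
    """Derivative of the sigmoid, expressed through the sigmoid itself."""
    s = sigmoid(t)
    return s * (1.0 - s)

def differentiable_perceptron(w, b, x):
    """Output sigma(w . x + b): a smooth, differentiable stand-in for sgn."""
    return sigmoid(np.dot(w, x) + b)

print(sigmoid(0.0), sigmoid_prime(0.0))  # 0.5 0.25
```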

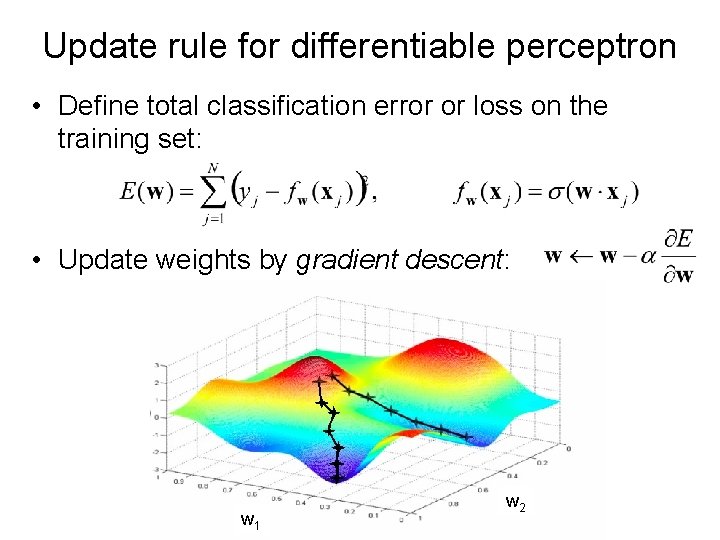

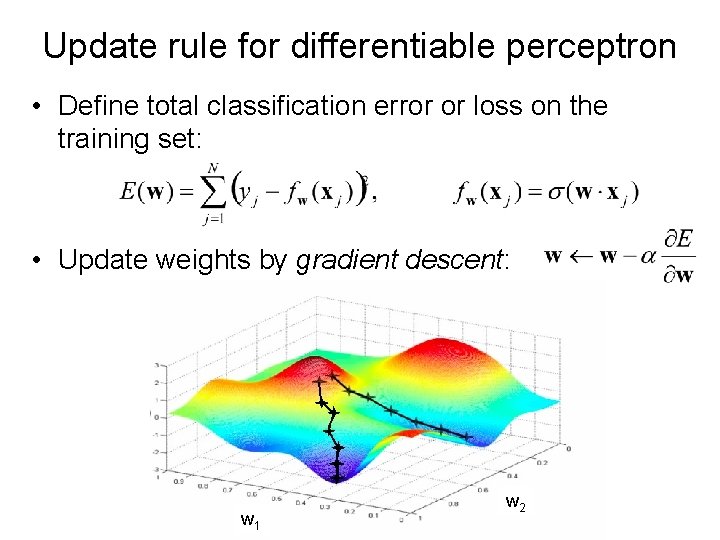

Update rule for differentiable perceptron

- Define the total classification error, or loss, on the training set:
- Update the weights by gradient descent:

[Figure: loss surface plotted over weights $w_1$ and $w_2$]

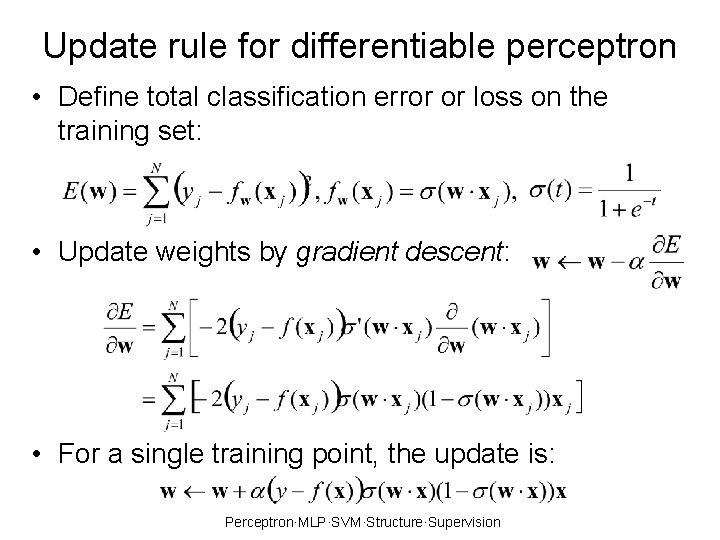

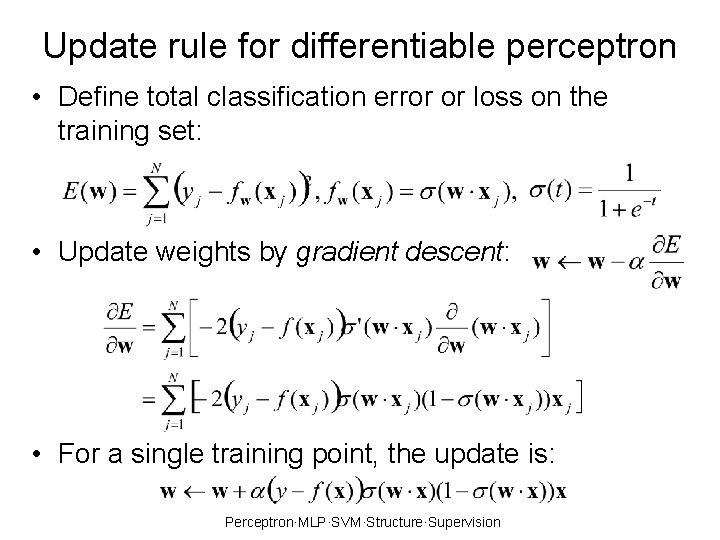

Update rule for differentiable perceptron

- Define the total classification error, or loss, on the training set:
- Update the weights by gradient descent:
- For a single training point, the update is:
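As a worked example, assume a squared-error loss over $N$ training points with targets $y_j \in \{0, 1\}$, output $\sigma(\mathbf{w}\cdot\mathbf{x})$ (bias folded into $\mathbf{w}$), and learning rate $\eta$. This loss is an illustrative choice; the chain rule with $\sigma' = \sigma(1 - \sigma)$ then gives the gradient step below, where in the last line the constant factor 2 is absorbed into $\eta$.

```latex
\begin{aligned}
E(\mathbf{w}) &= \sum_{j=1}^{N} \bigl(y_j - \sigma(\mathbf{w}\cdot\mathbf{x}_j)\bigr)^2 \\
\frac{\partial E}{\partial \mathbf{w}} &= -2 \sum_{j=1}^{N} \bigl(y_j - \sigma(\mathbf{w}\cdot\mathbf{x}_j)\bigr)\,
  \sigma(\mathbf{w}\cdot\mathbf{x}_j)\bigl(1 - \sigma(\mathbf{w}\cdot\mathbf{x}_j)\bigr)\,\mathbf{x}_j \\
\mathbf{w} &\leftarrow \mathbf{w} - \eta\,\frac{\partial E}{\partial \mathbf{w}} \\
\text{single point: }\ \mathbf{w} &\leftarrow \mathbf{w} + \eta\,\bigl(y - \sigma(\mathbf{w}\cdot\mathbf{x})\bigr)\,
  \sigma(\mathbf{w}\cdot\mathbf{x})\bigl(1 - \sigma(\mathbf{w}\cdot\mathbf{x})\bigr)\,\mathbf{x}
\end{aligned}
```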

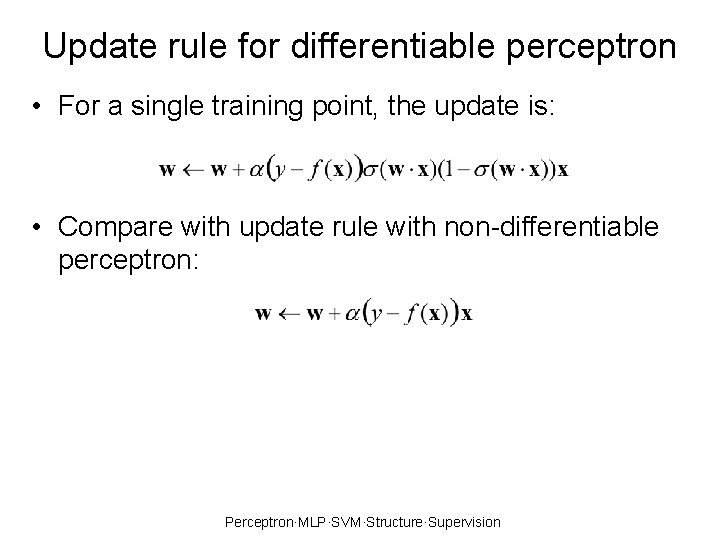

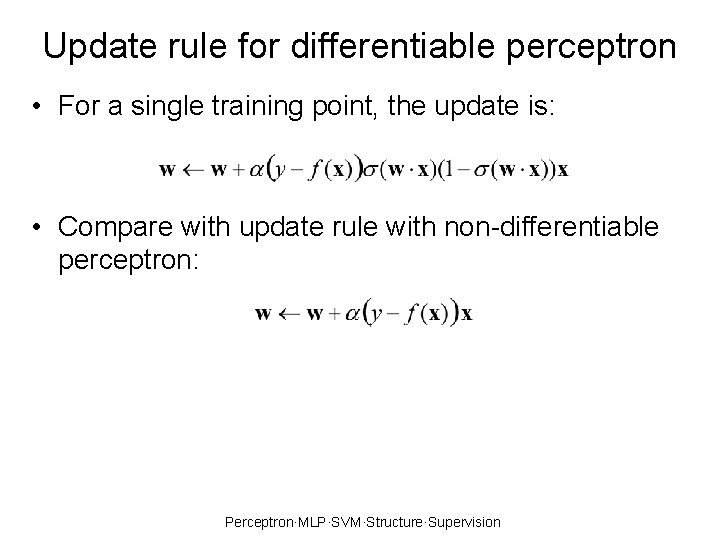

Update rule for differentiable perceptron

- For a single training point, the update is:
- Compare with the update rule for the non-differentiable perceptron: $\mathbf{w} \leftarrow \mathbf{w} + \alpha\,(y - y')\,\mathbf{x}$, where $y' = \operatorname{sgn}(\mathbf{w}\cdot\mathbf{x})$
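A side-by-side sketch of the two single-point updates. It assumes $\pm 1$ labels for the perceptron and $0/1$ targets with a squared-error loss for the sigmoid unit (constant factor 2 absorbed into `eta`); the function names are hypothetical.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def perceptron_update(w, x, y, alpha):
    """Non-differentiable perceptron: w <- w + alpha * (y - sgn(w . x)) * x."""
    y_pred = 1.0 if np.dot(w, x) >= 0 else -1.0
    return w + alpha * (y - y_pred) * x

def sigmoid_update(w, x, y, eta):
    """Sigmoid unit, one gradient step on the squared loss for a single point:
    w <- w + eta * (y - s) * s * (1 - s) * x, with s = sigma(w . x)."""
    s = sigmoid(np.dot(w, x))
    return w + eta * (y - s) * s * (1.0 - s) * x

w0 = np.zeros(2)
x = np.array([1.0, 2.0])
print(perceptron_update(w0, x, -1.0, 1.0))  # moves by -2 * x
print(sigmoid_update(w0, x, 0.0, 1.0))      # moves by -0.125 * x
```

The perceptron changes $\mathbf{w}$ only on a mistake, by a fixed step; the sigmoid unit always moves $\mathbf{w}$, by an amount proportional to the error $y - \sigma$ and scaled by the slope $\sigma(1 - \sigma)$.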


Linear separability

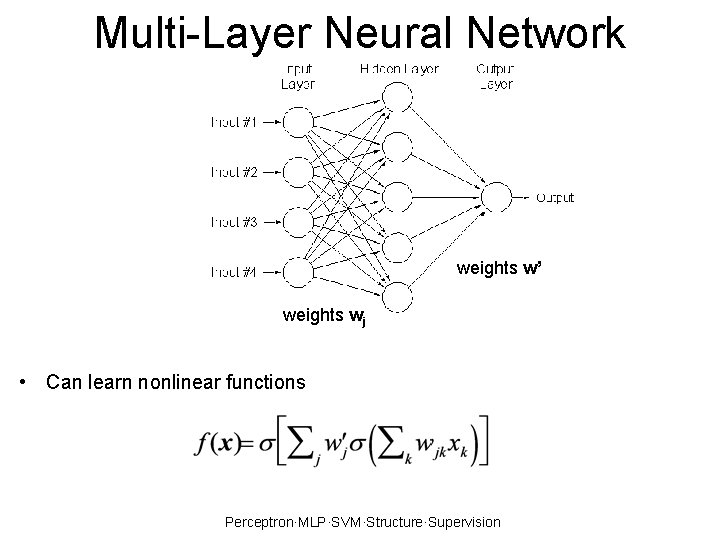

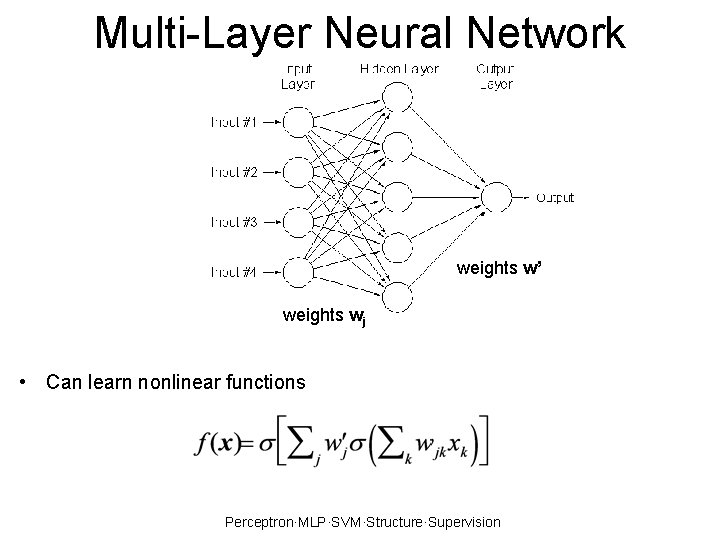

Multi-Layer Neural Network

[Figure: network with hidden-layer weights $w_j$ and output-layer weights $w'$]

- Can learn nonlinear functions
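To make "nonlinear" concrete: XOR is not linearly separable, so no single perceptron computes it, but a network with one hidden layer can. The weights below are hypothetical and hand-picked rather than learned, and step units are used only because they make the logic easy to read (training needs a differentiable unit such as the sigmoid).

```python
import numpy as np

def step(t):
    """Threshold unit: 1 if t >= 0, else 0."""
    return (t >= 0).astype(float)

# Hand-picked (not learned) weights, one row per hidden unit.
# Hidden unit 1 computes OR(x1, x2); hidden unit 2 computes AND(x1, x2).
W_hidden = np.array([[1.0, 1.0],
                     [1.0, 1.0]])
b_hidden = np.array([-0.5, -1.5])
# The output unit fires for OR and not AND, which is XOR.
w_out = np.array([1.0, -2.0])
b_out = -0.5

def mlp_forward(X):
    h = step(X @ W_hidden.T + b_hidden)   # hidden-layer activations
    return step(h @ w_out + b_out)        # output activation

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
print(mlp_forward(X))  # [0. 1. 1. 0.]
```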

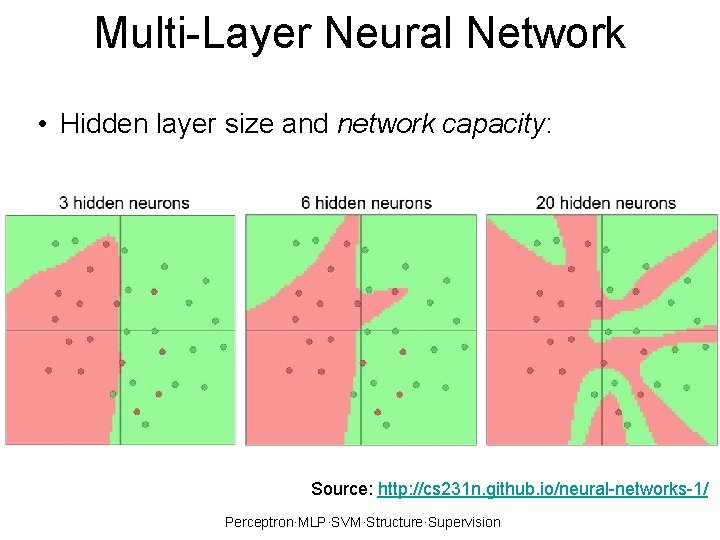

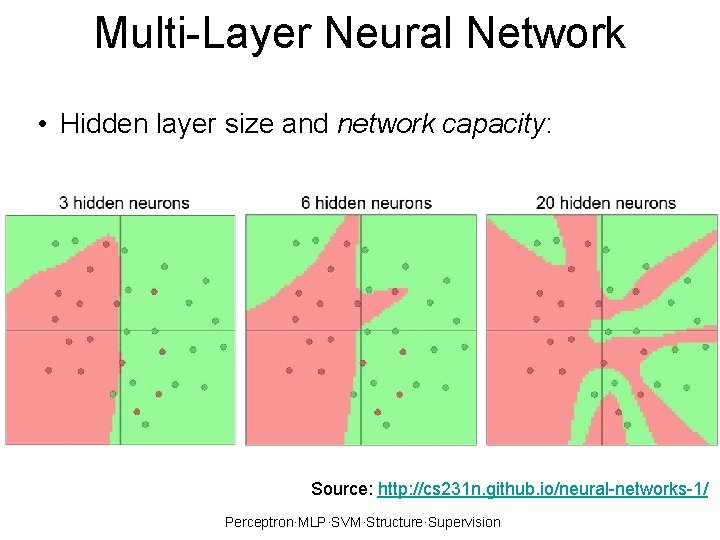

Multi-Layer Neural Network

- Hidden layer size and network capacity:

Source: http://cs231n.github.io/neural-networks-1/

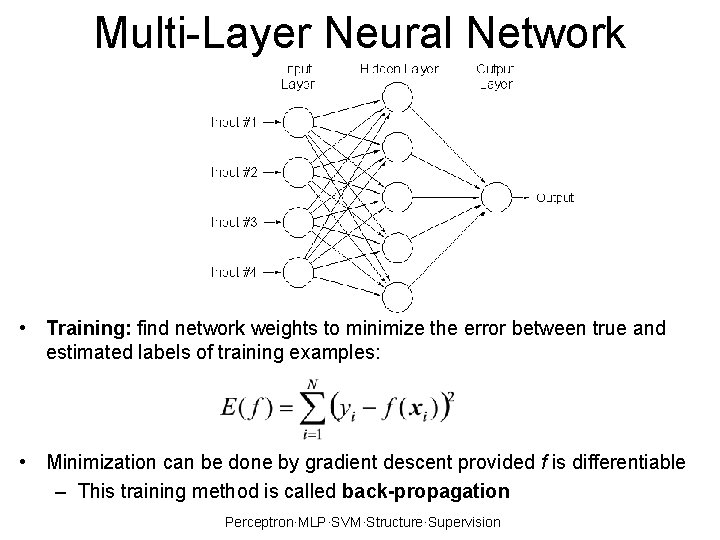

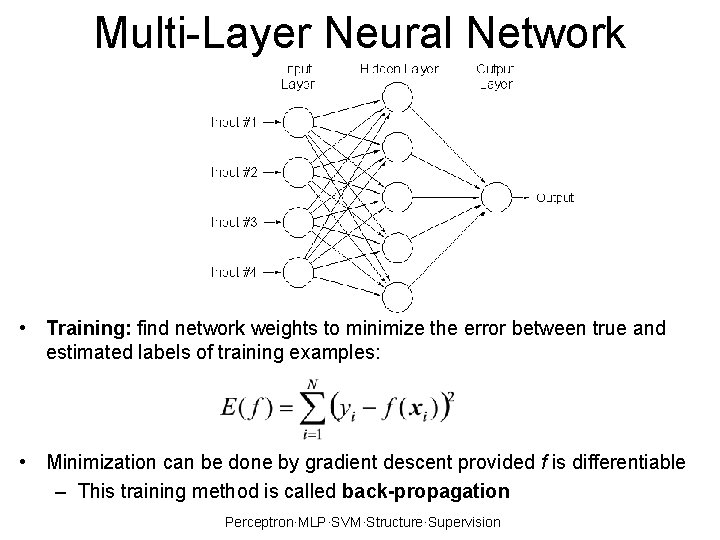

Multi-Layer Neural Network

- Training: find network weights to minimize the error between the true and estimated labels of the training examples:
- Minimization can be done by gradient descent, provided $f$ is differentiable
  - This training method is called back-propagation
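A minimal NumPy sketch of back-propagation for a network with one sigmoid hidden layer and a sigmoid output, assuming a squared-error loss on XOR targets; the architecture, loss, and all names are illustrative choices. A central finite-difference check on `W1` confirms the back-propagated gradient, and one small gradient-descent step lowers the loss.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def loss_and_grads(params, X, y):
    """Squared-error loss of a one-hidden-layer sigmoid network, plus its
    gradients computed by back-propagation."""
    W1, b1, w2, b2 = params
    # Forward pass.
    h = sigmoid(X @ W1 + b1)                    # hidden activations, shape (N, H)
    out = sigmoid(h @ w2 + b2)                  # network outputs, shape (N,)
    err = out - y
    loss = np.sum(err ** 2)
    # Backward pass: apply the chain rule from the output layer back to the input.
    d_out = 2.0 * err * out * (1.0 - out)       # dLoss / d(output pre-activation)
    grad_w2 = h.T @ d_out
    grad_b2 = np.sum(d_out)
    d_h = np.outer(d_out, w2) * h * (1.0 - h)   # dLoss / d(hidden pre-activations)
    grad_W1 = X.T @ d_h
    grad_b1 = np.sum(d_h, axis=0)
    return loss, (grad_W1, grad_b1, grad_w2, grad_b2)

def gradient_descent_step(params, X, y, lr=0.01):
    loss, grads = loss_and_grads(params, X, y)
    return tuple(p - lr * g for p, g in zip(params, grads)), loss

def numerical_grad_W1(params, X, y, eps=1e-6):
    """Central finite differences on W1, used only to check back-propagation."""
    W1, b1, w2, b2 = params
    g = np.zeros_like(W1)
    for idx in np.ndindex(W1.shape):
        Wp, Wm = W1.copy(), W1.copy()
        Wp[idx] += eps
        Wm[idx] -= eps
        g[idx] = (loss_and_grads((Wp, b1, w2, b2), X, y)[0]
                  - loss_and_grads((Wm, b1, w2, b2), X, y)[0]) / (2 * eps)
    return g

rng = np.random.default_rng(0)
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 0], dtype=float)                     # XOR targets
params = (rng.normal(size=(2, 3)), np.zeros(3), rng.normal(size=3), 0.0)
```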

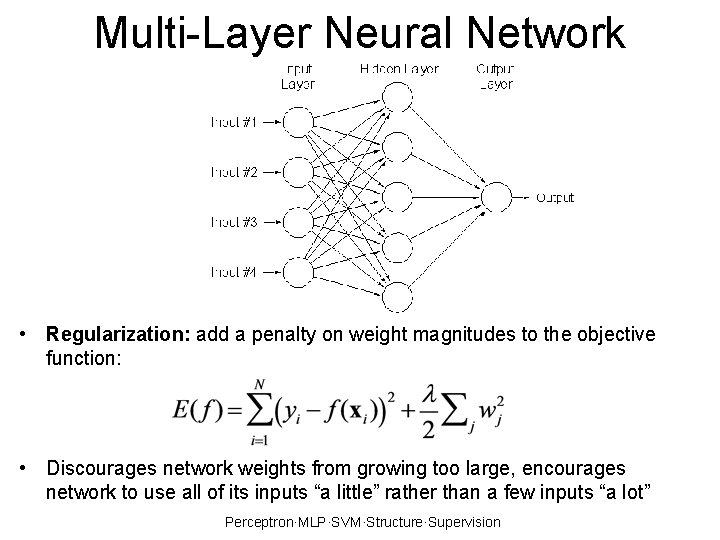

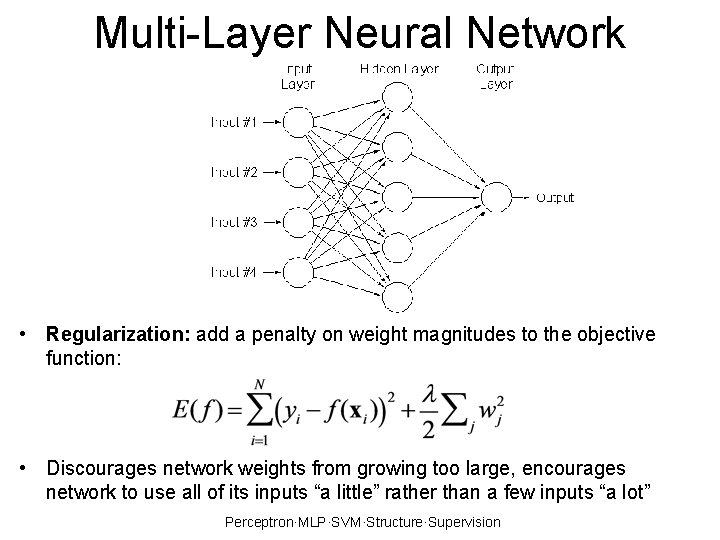

Multi-Layer Neural Network

- Regularization: add a penalty on weight magnitudes to the objective function:
- Discourages network weights from growing too large, and encourages the network to use all of its inputs “a little” rather than a few inputs “a lot”
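A small sketch of an L2 penalty, assuming the common form $\lambda \lVert \mathbf{w} \rVert^2$ (the slide's exact penalty is not in the text). Two weight vectors that give the same output on input $(1, 1)$ are penalized differently, which is the “a little” versus “a lot” effect; the penalty also adds $2\lambda\mathbf{w}$ to the gradient, shrinking weights toward zero. Names are hypothetical.

```python
import numpy as np

def l2_penalty(weight_arrays, lam):
    """lam times the sum of squared weights, over all weight arrays."""
    return lam * sum(float(np.sum(w ** 2)) for w in weight_arrays)

def l2_regularized_step(w, data_grad, lr, lam):
    """One gradient-descent step on E(w) + lam * ||w||^2.

    The penalty adds 2 * lam * w to the gradient, pulling every weight toward 0.
    """
    return w - lr * (data_grad + 2.0 * lam * w)

x = np.array([1.0, 1.0])
w_few = np.array([2.0, 0.0])   # relies on one input "a lot"
w_all = np.array([1.0, 1.0])   # uses both inputs "a little"
print(np.dot(w_few, x), np.dot(w_all, x))                  # 2.0 2.0
print(l2_penalty([w_few], 1.0), l2_penalty([w_all], 1.0))  # 4.0 2.0
```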

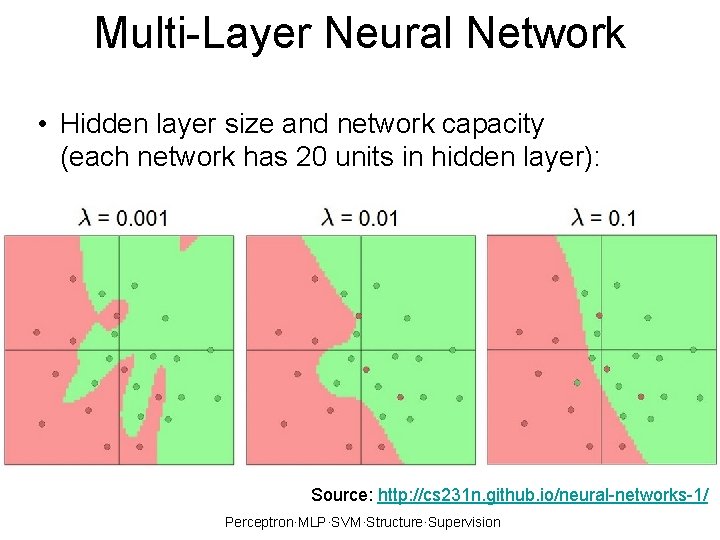

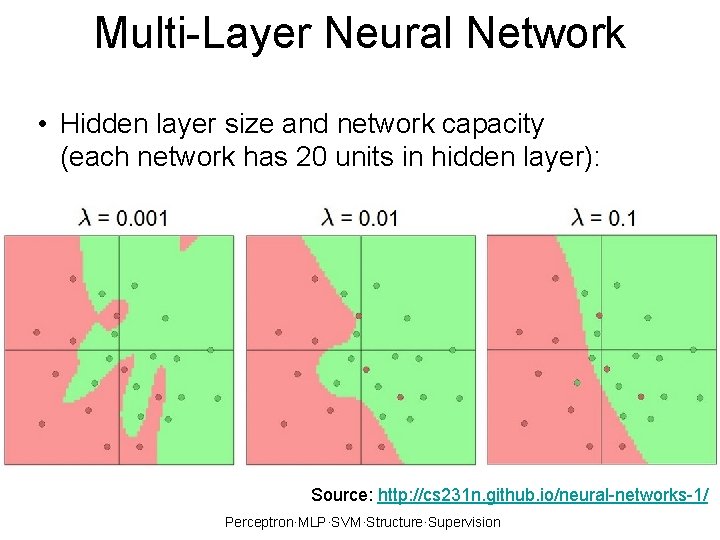

Multi-Layer Neural Network

- Hidden layer size and network capacity (each network has 20 units in the hidden layer):

Source: http://cs231n.github.io/neural-networks-1/

Neural networks: Pros and cons

- Pros
  - Flexible and general function approximation framework
  - Can build extremely powerful models by adding more layers
- Cons
  - Hard to analyze theoretically (e.g., training is prone to local optima)
  - Require huge amounts of training data and computing power to get good performance
  - The space of implementation choices is huge (network architectures, parameters)


Linear separability

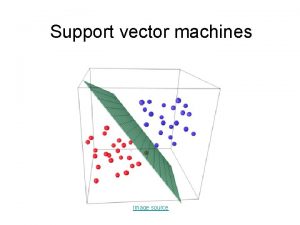

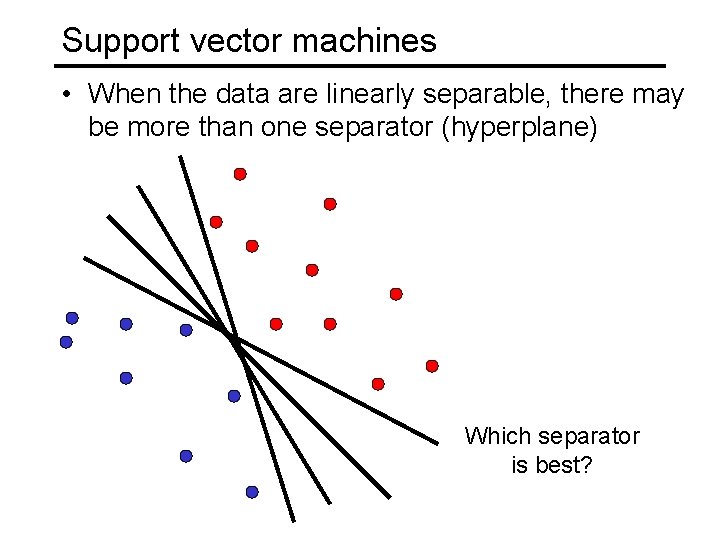

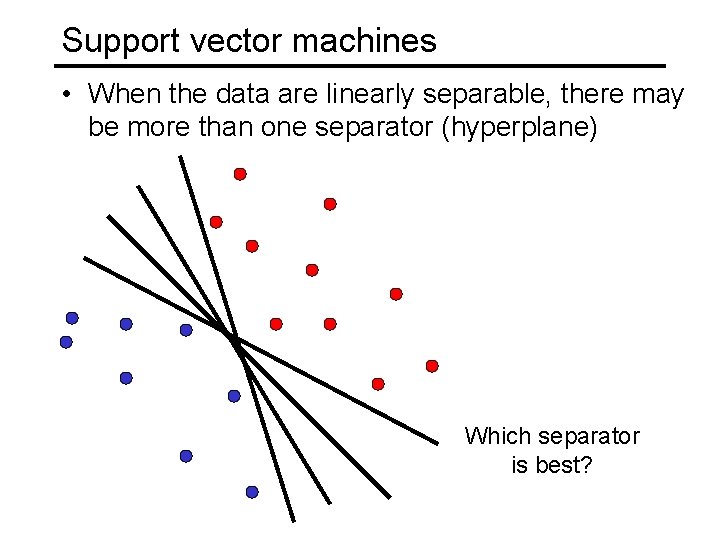

Support vector machines

- When the data are linearly separable, there may be more than one separator (hyperplane)

Which separator is best?

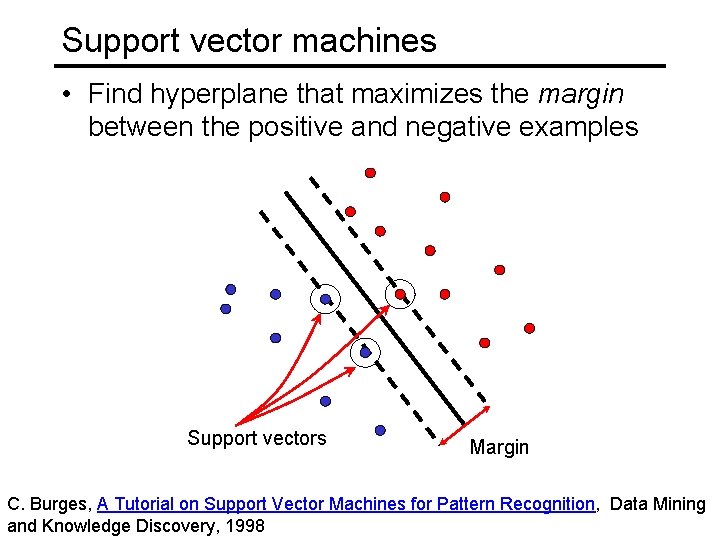

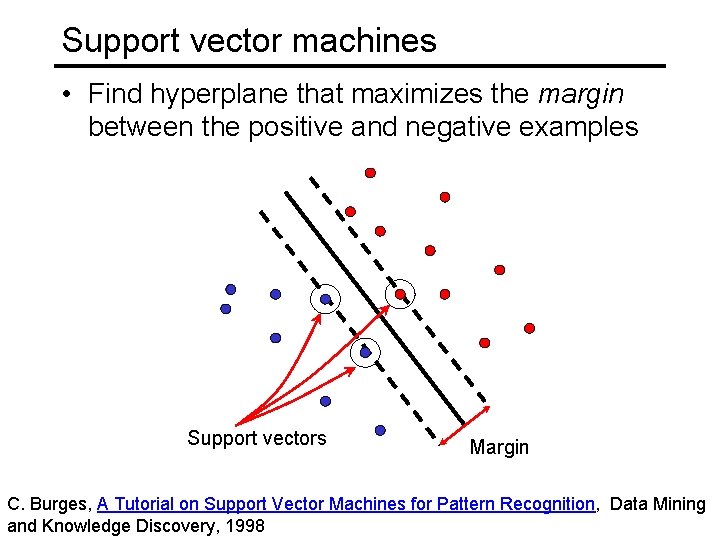

Support vector machines

- Find the hyperplane that maximizes the margin between the positive and negative examples

[Figure: separating hyperplane and its margin, with the support vectors marked]

C. Burges, A Tutorial on Support Vector Machines for Pattern Recognition, Data Mining and Knowledge Discovery, 1998

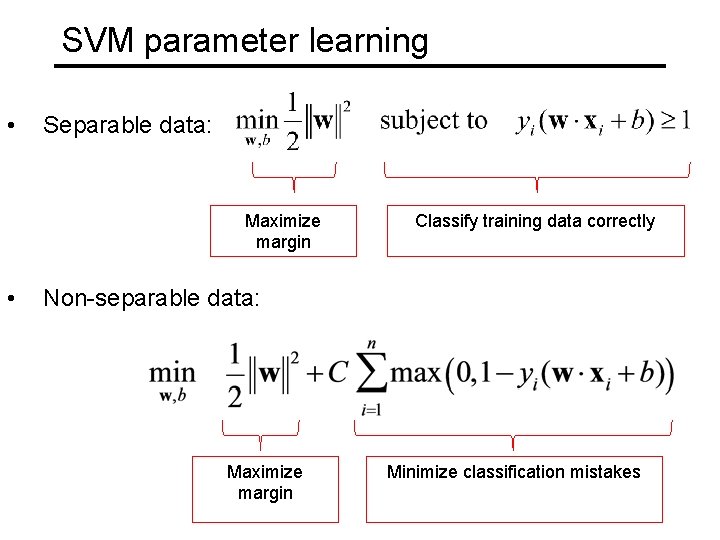

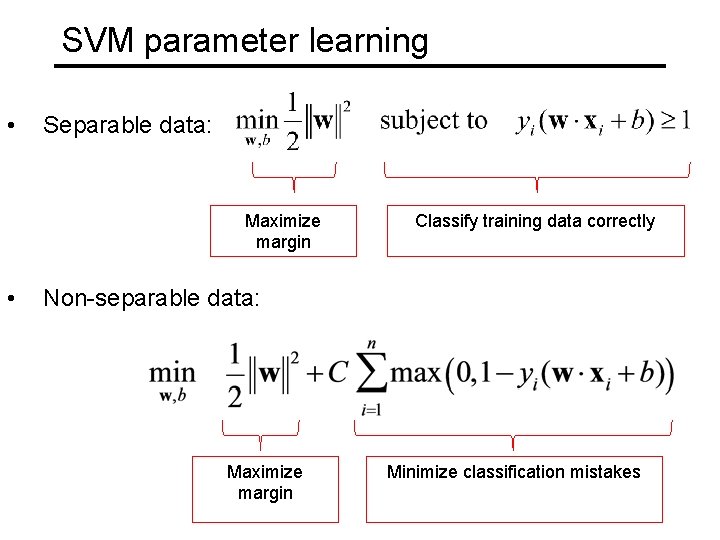

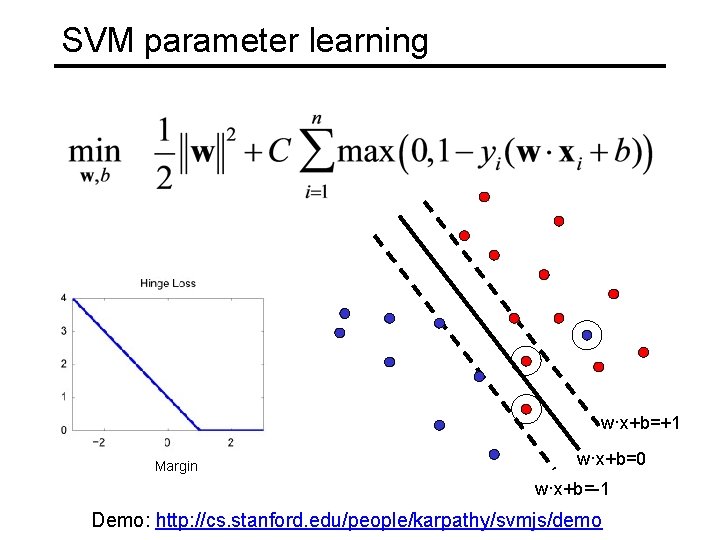

SVM parameter learning

- Separable data: maximize the margin, while classifying the training data correctly
- Non-separable data: maximize the margin, while minimizing classification mistakes

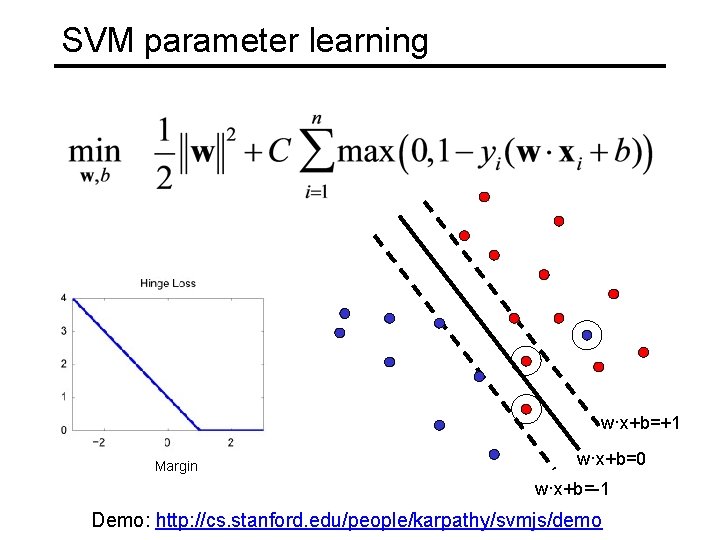

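SVM parameter learning

[Figure: decision boundary $\mathbf{w}\cdot\mathbf{x} + b = 0$, with the margin bounded by $\mathbf{w}\cdot\mathbf{x} + b = +1$ and $\mathbf{w}\cdot\mathbf{x} + b = -1$]

Demo: http://cs.stanford.edu/people/karpathy/svmjs/demo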
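One standard way to write "maximize the margin, minimize mistakes" is the soft-margin objective $\frac{\lambda}{2}\lVert\mathbf{w}\rVert^2 + \frac{1}{N}\sum_i \max(0, 1 - y_i(\mathbf{w}\cdot\mathbf{x}_i + b))$. The sketch below minimizes it by full-batch subgradient descent with a constant step, which only approximates the optimum; the hyperparameters, data, and function names are assumptions. The margin between the hyperplanes $\mathbf{w}\cdot\mathbf{x} + b = \pm 1$ has width $2/\lVert\mathbf{w}\rVert$.

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Soft-margin linear SVM by full-batch subgradient descent on
        lam/2 * ||w||^2 + mean(max(0, 1 - y_i (w . x_i + b))).
    Labels must be +1/-1. The bias b is not regularized.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1                 # inside the margin or misclassified
        grad_w = lam * w - (y[active][:, None] * X[active]).sum(axis=0) / n
        grad_b = -y[active].sum() / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def margin_width(w):
    """Distance between the hyperplanes w.x + b = +1 and w.x + b = -1."""
    return 2.0 / np.linalg.norm(w)

# Hypothetical separable data.
X = [[2, 2], [3, 3], [-2, -2], [-3, -3]]
y = [1, 1, -1, -1]
w, b = train_linear_svm(X, y)
print(np.sign(np.asarray(X, dtype=float) @ w + b), margin_width(w))
```

For this data the exact maximum-margin solution is $\mathbf{w} = (0.25, 0.25)$, $b = 0$, with margin width $4\sqrt{2}$; the subgradient iterate classifies every point correctly but need not match it exactly.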

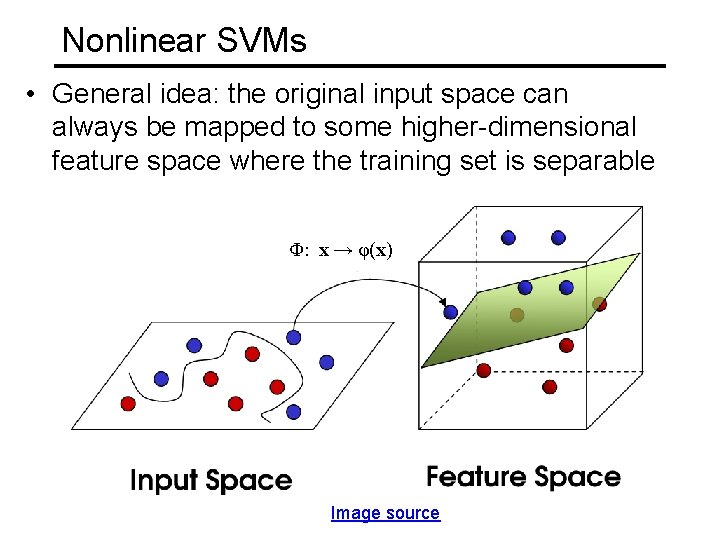

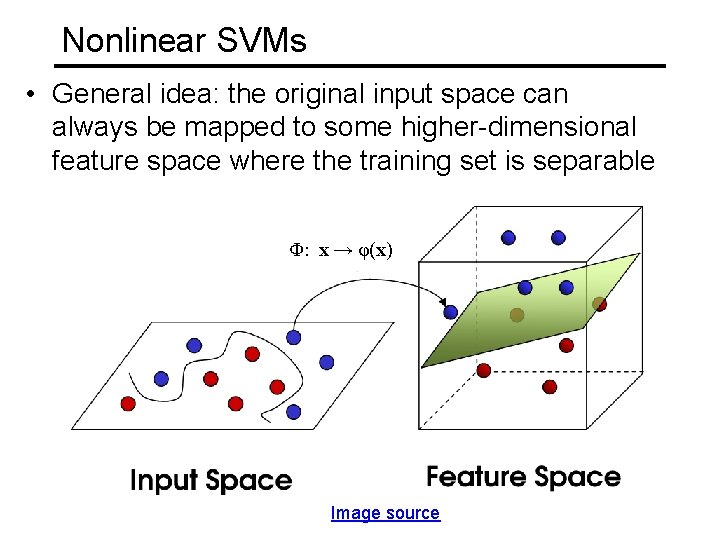

Nonlinear SVMs

- General idea: the original input space can always be mapped to some higher-dimensional feature space where the training set is separable:

$\Phi: \mathbf{x} \rightarrow \varphi(\mathbf{x})$

Image source

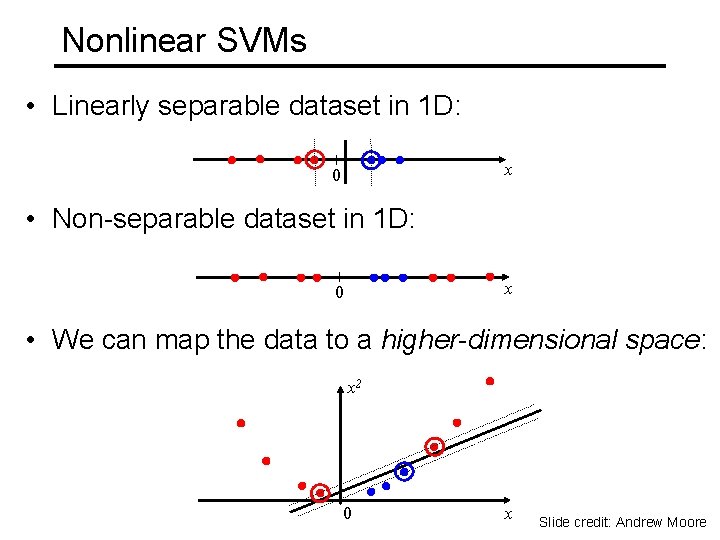

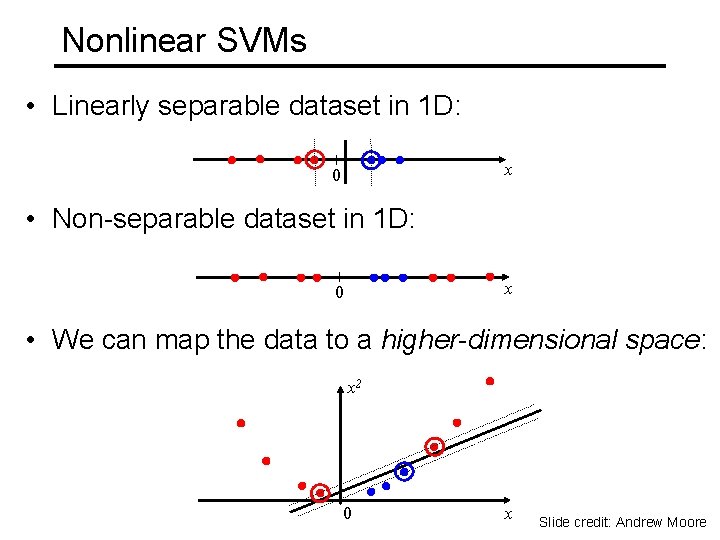

Nonlinear SVMs

- Linearly separable dataset in 1D:
- Non-separable dataset in 1D:
- We can map the data to a higher-dimensional space:

[Figure: 1D points on an $x$ axis through 0; after mapping, the same points plotted against axes $x$ and $x^2$]

Slide credit: Andrew Moore
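A small sketch of the 1D example: positives on both sides of the negatives cannot be split by one threshold on $x$, but after the hypothetical lifting $x \mapsto (x, x^2)$ a straight line does separate them. The data and helper names are illustrative.

```python
import numpy as np

# Hypothetical 1-D data: positives on both sides of the negatives.
x = np.array([-3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0])
y = np.array([1, 1, -1, -1, -1, 1, 1])

def lift(x):
    """Map each scalar x to the 2-D point (x, x^2)."""
    return np.stack([x, x ** 2], axis=1)

def linearly_separable_1d(x, y):
    """True if a single threshold on x separates the labels (distinct x values)."""
    labels = y[np.argsort(x)]
    return np.count_nonzero(labels[1:] != labels[:-1]) <= 1

phi = lift(x)
# In the lifted space, the line x^2 = 2.5 (w = (0, 1), b = -2.5) separates the classes.
w, b = np.array([0.0, 1.0]), -2.5
pred = np.where(phi @ w + b >= 0, 1, -1)
print(linearly_separable_1d(x, y))  # False
print(np.array_equal(pred, y))      # True
```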

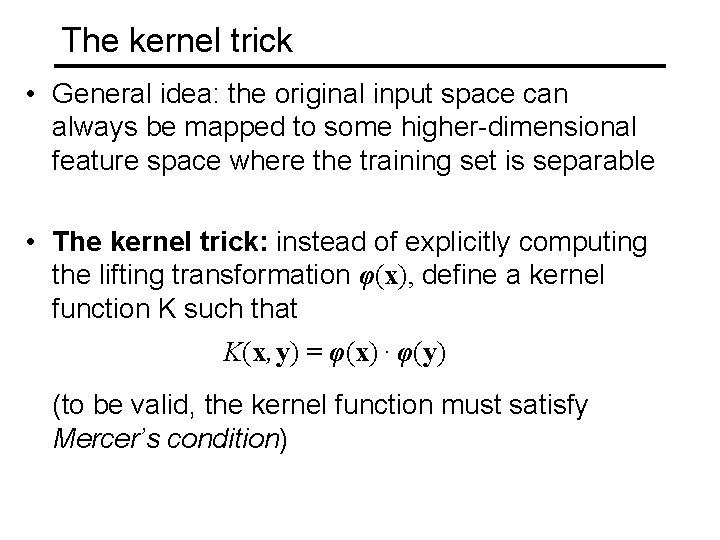

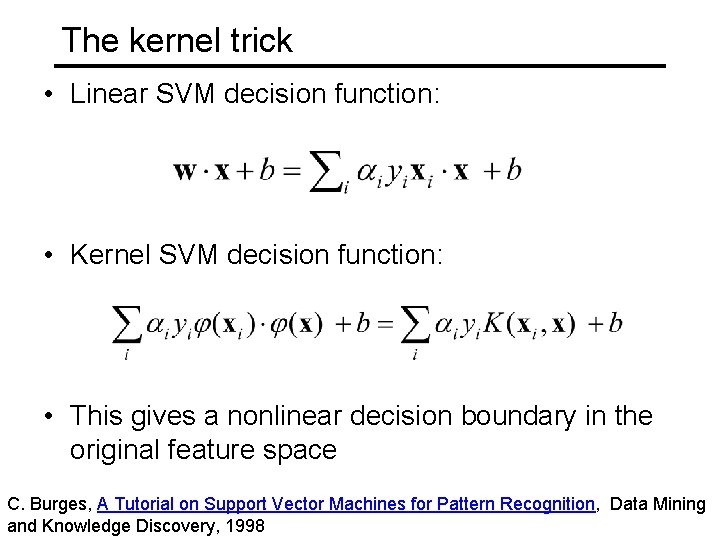

The kernel trick

- General idea: the original input space can always be mapped to some higher-dimensional feature space where the training set is separable
- The kernel trick: instead of explicitly computing the lifting transformation $\varphi(\mathbf{x})$, define a kernel function $K$ such that $K(\mathbf{x}, \mathbf{y}) = \varphi(\mathbf{x}) \cdot \varphi(\mathbf{y})$ (to be valid, the kernel function must satisfy Mercer’s condition)
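A quick numerical check of the kernel trick for 2-D inputs, using the homogeneous quadratic kernel $K(\mathbf{u}, \mathbf{v}) = (\mathbf{u}\cdot\mathbf{v})^2$ as an example. Its explicit lifting is $\varphi(\mathbf{v}) = (v_1^2, \sqrt{2}\,v_1 v_2, v_2^2)$, so $K$ returns the 3-D inner product while only ever computing a 2-D dot product. The example vectors are arbitrary.

```python
import numpy as np

def phi(v):
    """Explicit lifting for 2-D input: (v1^2, sqrt(2) v1 v2, v2^2)."""
    v1, v2 = v
    return np.array([v1 ** 2, np.sqrt(2.0) * v1 * v2, v2 ** 2])

def K(u, v):
    """Quadratic kernel (u . v)^2, computed without forming phi."""
    return np.dot(u, v) ** 2

u = np.array([1.0, 2.0])
v = np.array([3.0, -1.0])
print(K(u, v), np.dot(phi(u), phi(v)))  # both 1, up to floating-point rounding
```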

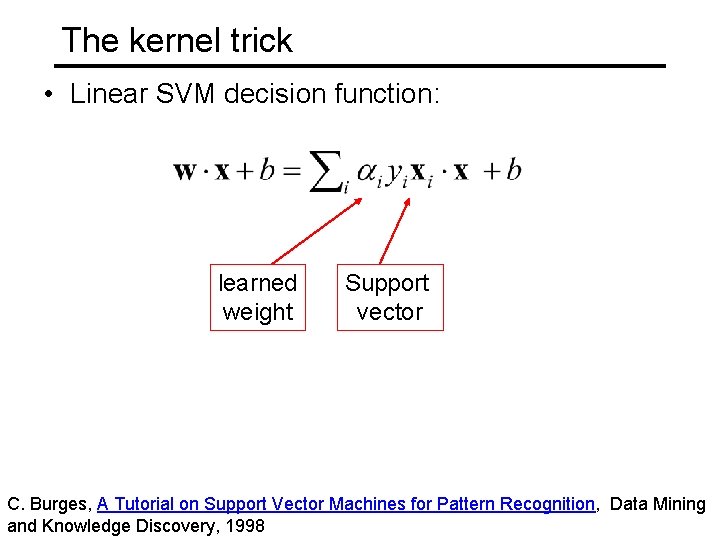

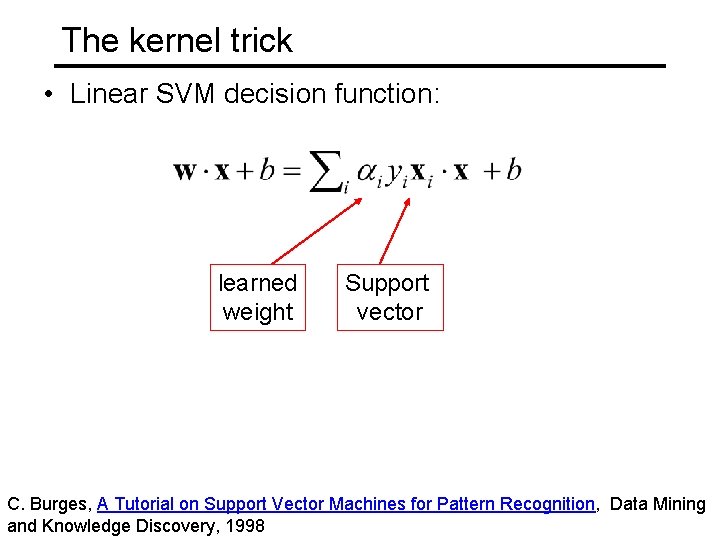

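The kernel trick

- Linear SVM decision function: $\mathbf{w}\cdot\mathbf{x} + b = \sum_i \alpha_i y_i \, \mathbf{x}_i \cdot \mathbf{x} + b$, where $\alpha_i$ is a learned weight and $\mathbf{x}_i$ is a support vector

C. Burges, A Tutorial on Support Vector Machines for Pattern Recognition, Data Mining and Knowledge Discovery, 1998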

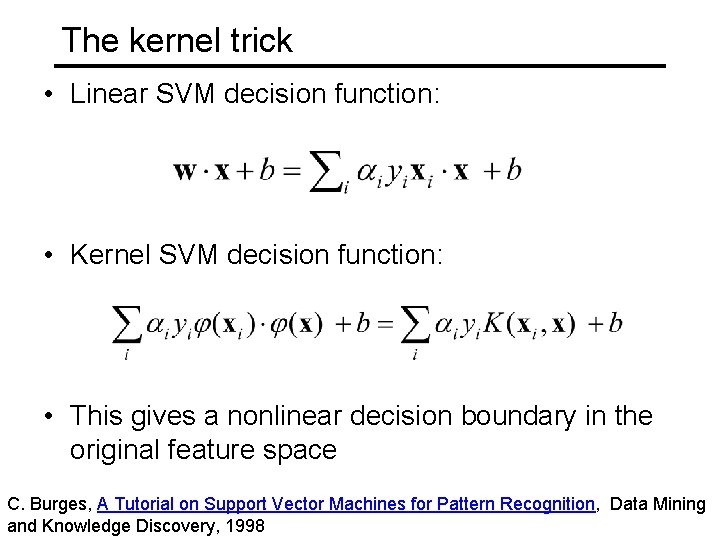

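The kernel trick

- Linear SVM decision function: $\mathbf{w}\cdot\mathbf{x} + b = \sum_i \alpha_i y_i \, \mathbf{x}_i \cdot \mathbf{x} + b$
- Kernel SVM decision function: $\sum_i \alpha_i y_i \, \varphi(\mathbf{x}_i) \cdot \varphi(\mathbf{x}) + b = \sum_i \alpha_i y_i \, K(\mathbf{x}_i, \mathbf{x}) + b$
- This gives a nonlinear decision boundary in the original feature space

C. Burges, A Tutorial on Support Vector Machines for Pattern Recognition, Data Mining and Knowledge Discovery, 1998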
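A sketch of evaluating the kernel SVM decision function $\sum_i \alpha_i y_i K(\mathbf{x}_i, \mathbf{x}) + b$. The support vectors, $\alpha_i$, labels, and $b$ are made-up values, not the output of training. With the linear kernel the sum collapses to $\mathbf{w}\cdot\mathbf{x} + b$ with $\mathbf{w} = \sum_i \alpha_i y_i \mathbf{x}_i$; with a quadratic kernel the same coefficients give the boundary $x_1^2 = x_2^2$, a pair of lines that no single hyperplane in the original space can represent.

```python
import numpy as np

def linear_kernel(u, v):
    return float(np.dot(u, v))

def quadratic_kernel(u, v):
    return float(np.dot(u, v)) ** 2

def kernel_decision(x, support_vectors, alphas, labels, b, kernel):
    """f(x) = sum_i alpha_i * y_i * K(x_i, x) + b, summed over the support vectors."""
    return sum(a * y_i * kernel(x_i, x)
               for x_i, a, y_i in zip(support_vectors, alphas, labels)) + b

# Hypothetical "trained" values, chosen by hand for illustration only.
support_vectors = np.array([[1.0, 0.0], [0.0, 1.0]])
alphas = [1.0, 1.0]
labels = [1.0, -1.0]
b = 0.0

x = np.array([2.0, 1.0])
print(kernel_decision(x, support_vectors, alphas, labels, b, linear_kernel))     # 1.0
print(kernel_decision(x, support_vectors, alphas, labels, b, quadratic_kernel))  # 3.0
```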

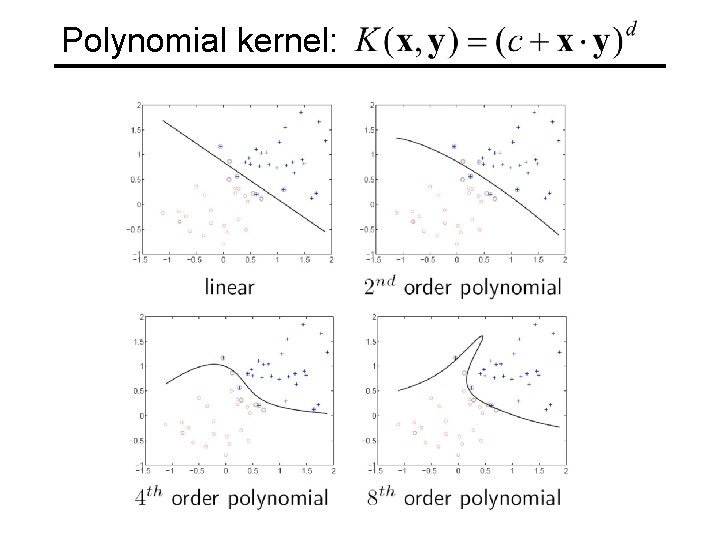

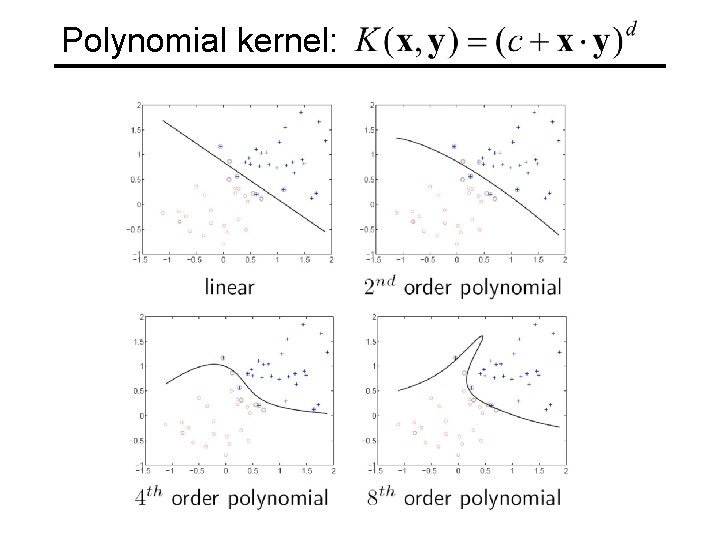

Polynomial kernel:

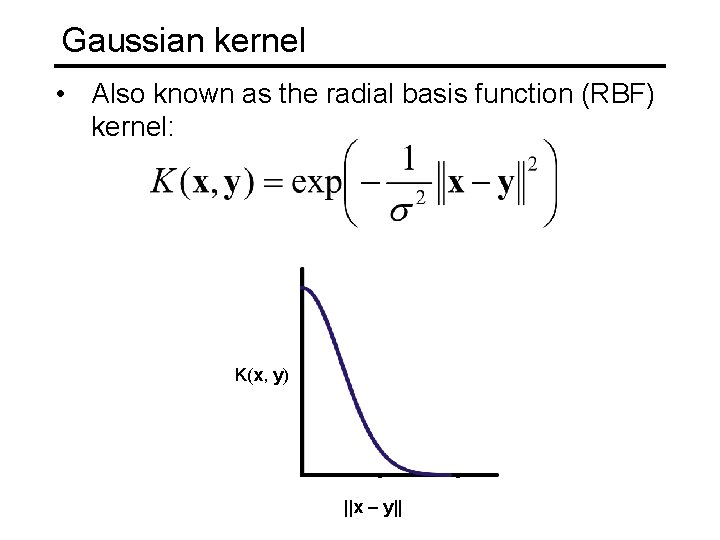

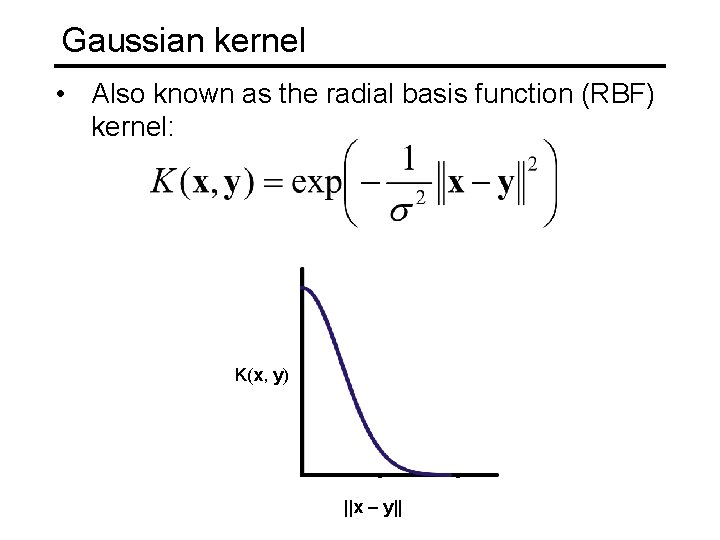

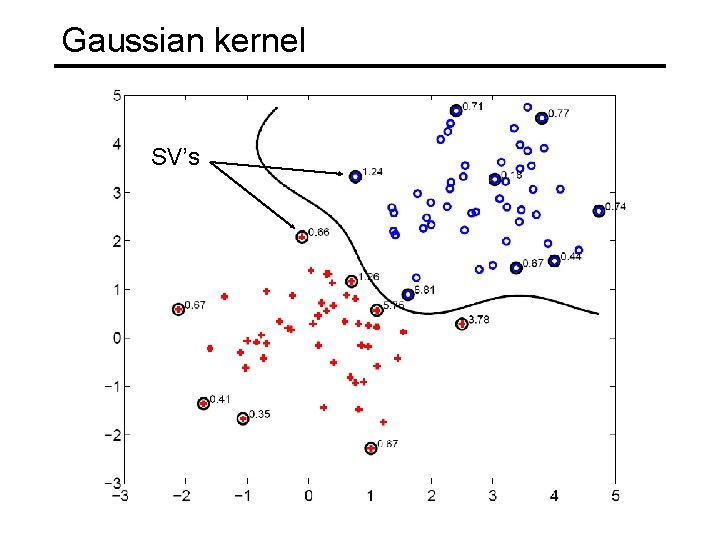
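A common form of the polynomial kernel is $K(\mathbf{x}, \mathbf{y}) = (c + \mathbf{x}\cdot\mathbf{y})^d$. Assuming that form, with hypothetical parameter names `c` and `d`, the sketch below checks the 1-D case $c = 1$, $d = 2$ against its explicit features $\varphi(t) = (1, \sqrt{2}\,t, t^2)$.

```python
import numpy as np

def polynomial_kernel(x, y, c=1.0, d=2):
    """Polynomial kernel (c + x . y)^d."""
    return (c + np.dot(x, y)) ** d

def phi_1d(t):
    """Explicit features for 1-D input when c = 1, d = 2: (1, sqrt(2) t, t^2)."""
    return np.array([1.0, np.sqrt(2.0) * t, t ** 2])

print(polynomial_kernel(1.5, 2.0))            # 16.0, since (1 + 3)^2 = 16
print(np.dot(phi_1d(1.5), phi_1d(2.0)))       # 16, up to floating-point rounding
```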

Gaussian kernel

- Also known as the radial basis function (RBF) kernel:

[Figure: $K(\mathbf{x}, \mathbf{y})$ plotted against $\lVert \mathbf{x} - \mathbf{y} \rVert$]
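A sketch of the Gaussian kernel, assuming the common parameterization $K(\mathbf{x}, \mathbf{y}) = \exp\!\left(-\lVert\mathbf{x} - \mathbf{y}\rVert^2 / (2\sigma^2)\right)$; other texts write $\exp(-\gamma \lVert\mathbf{x} - \mathbf{y}\rVert^2)$. The value is 1 for identical points and decays toward 0 as the distance grows, with $\sigma$ setting how fast.

```python
import numpy as np

def gaussian_kernel(x, y, sigma=1.0):
    """RBF kernel exp(-||x - y||^2 / (2 sigma^2))."""
    diff = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return np.exp(-np.dot(diff, diff) / (2.0 * sigma ** 2))

print(gaussian_kernel([1.0, 2.0], [1.0, 2.0]))  # 1.0 for identical points
print(gaussian_kernel([0.0], [1.0]))            # exp(-0.5) at distance 1
```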

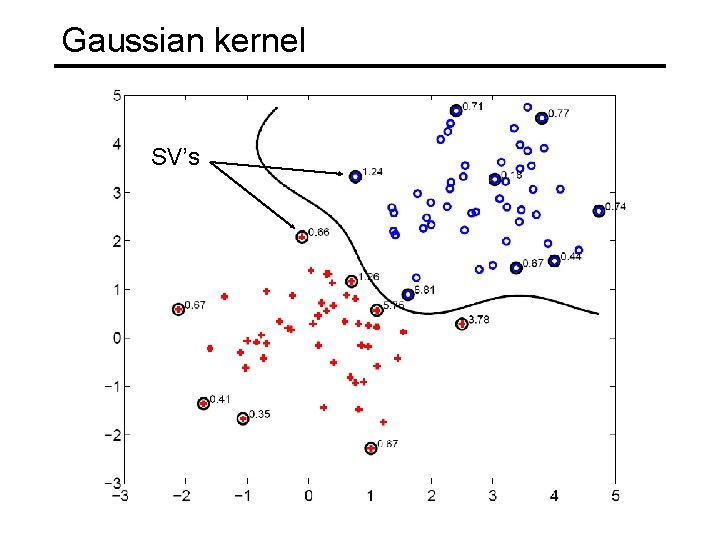

Gaussian kernel

[Figure: decision boundary of an SVM with a Gaussian kernel, with the support vectors (SVs) marked]

SVMs: Pros and cons

- Pros
  - Kernel-based framework is very powerful and flexible
  - Training is a convex optimization problem, so a globally optimal solution can be found
  - Amenable to theoretical analysis
  - SVMs work very well in practice, even with very small training sample sizes
- Cons
  - No “direct” multi-class SVM; two-class SVMs must be combined (e.g., with one-vs-others)
  - Computation and memory cost (especially for nonlinear SVMs)
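A minimal sketch of combining two-class classifiers one-vs-others: train one scorer per class (that class versus all the rest), then predict the class whose scorer is most confident. The three linear scorers below are hypothetical stand-ins for trained two-class SVMs.

```python
import numpy as np

def one_vs_rest_predict(score_fns, X):
    """Combine K two-class scorers (class k vs. all others) into a K-class
    prediction by taking the class with the highest score."""
    scores = np.column_stack([f(X) for f in score_fns])   # shape (N, K)
    return np.argmax(scores, axis=1)

# Hypothetical linear scorers w_k . x + b_k for three classes in 2-D.
W = np.array([[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0]])
b = np.array([0.0, 0.0, -0.5])
score_fns = [lambda X, k=k: X @ W[k] + b[k] for k in range(3)]

X = np.array([[2.0, 0.0], [-2.0, 0.5], [0.0, 3.0]])
print(one_vs_rest_predict(score_fns, X))  # [0 1 2]
```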


Other machine learning scenarios

- Other prediction scenarios
  - Regression
  - Structured prediction
- Other supervision scenarios
  - Unsupervised learning
  - Semi-supervised learning
  - Active learning
  - Lifelong learning

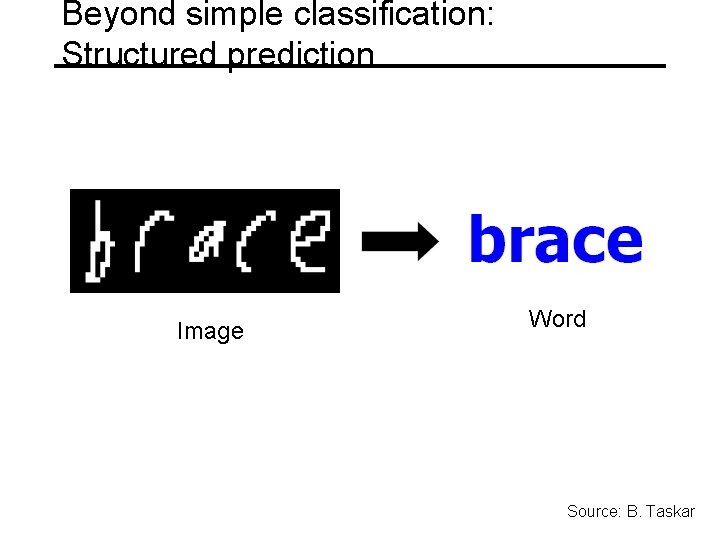

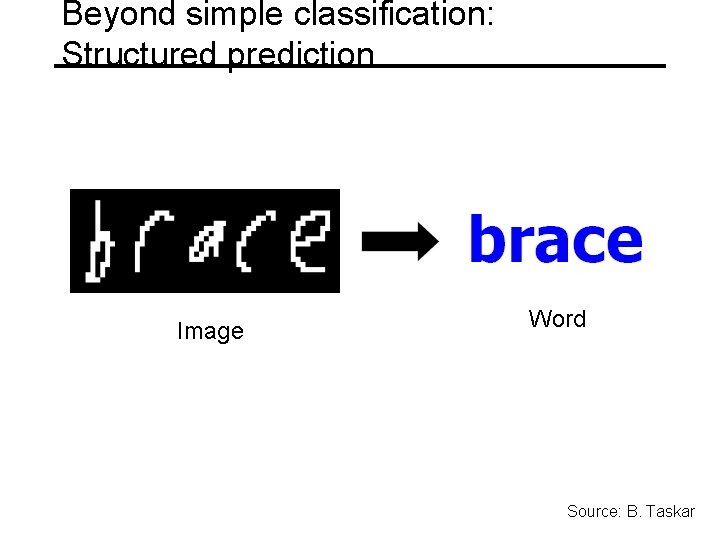

Beyond simple classification: Structured prediction

[Figure: input image mapped to an output word]

Source: B. Taskar

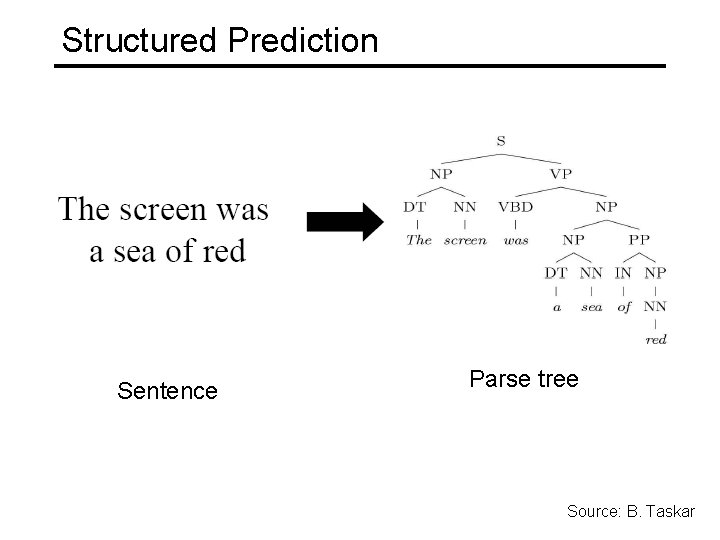

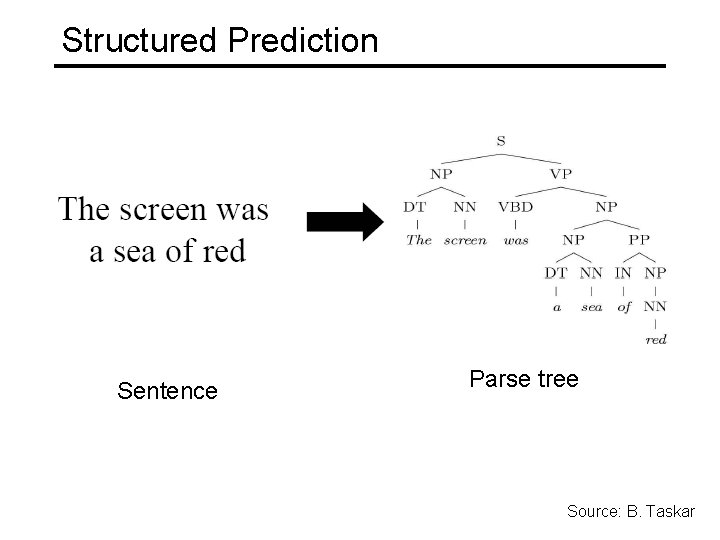

Structured Prediction

[Figure: input sentence mapped to a parse tree]

Source: B. Taskar

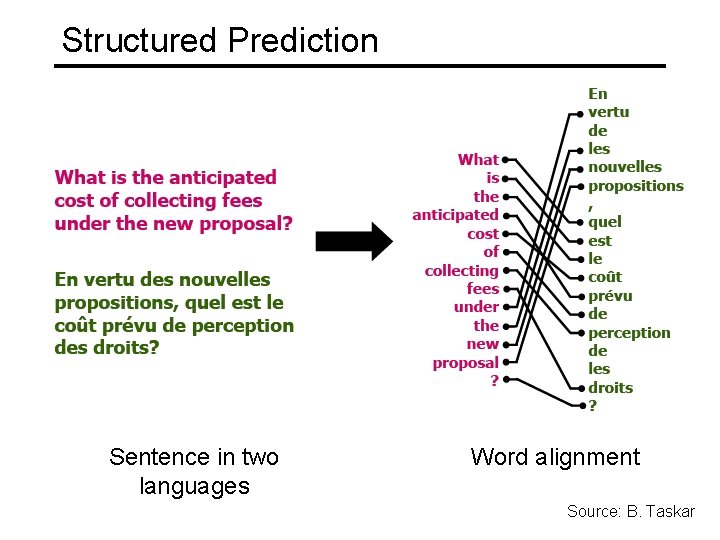

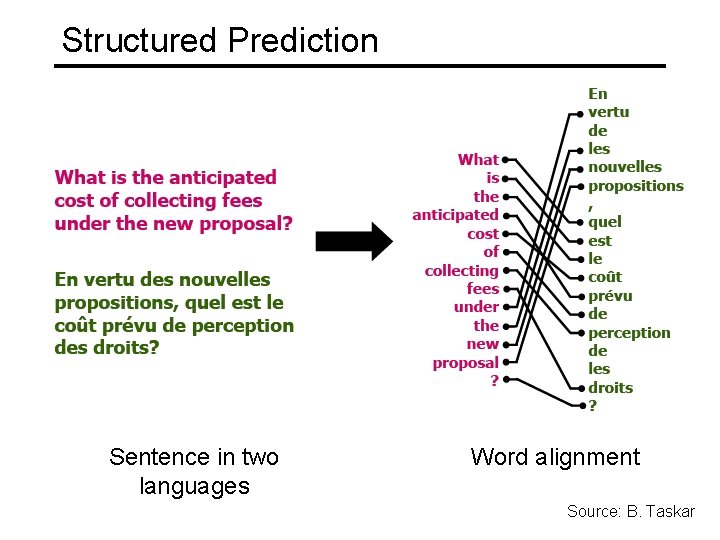

Structured Prediction

[Figure: a sentence in two languages mapped to a word alignment]

Source: B. Taskar

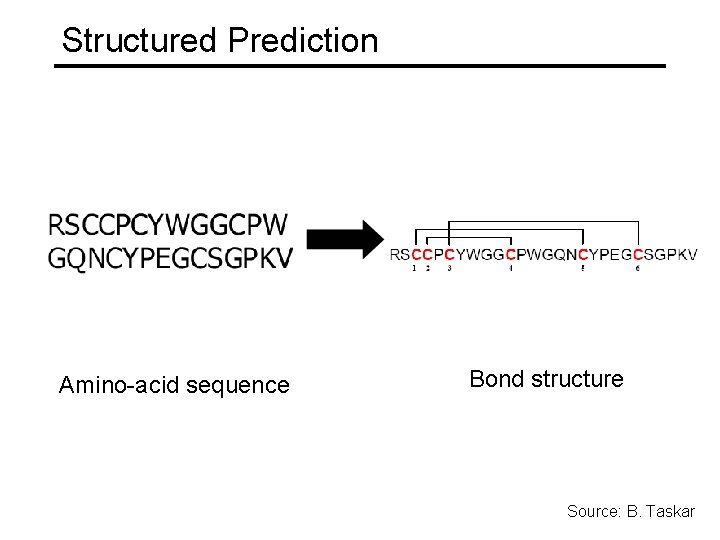

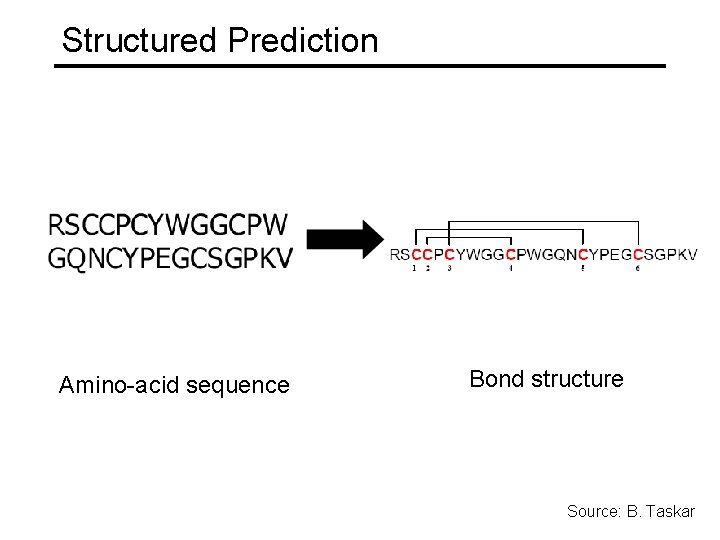

Structured Prediction

[Figure: amino-acid sequence mapped to a bond structure]

Source: B. Taskar

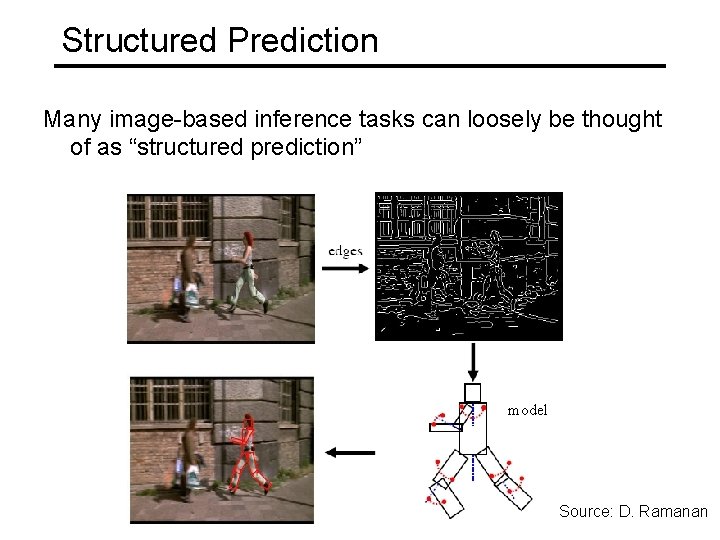

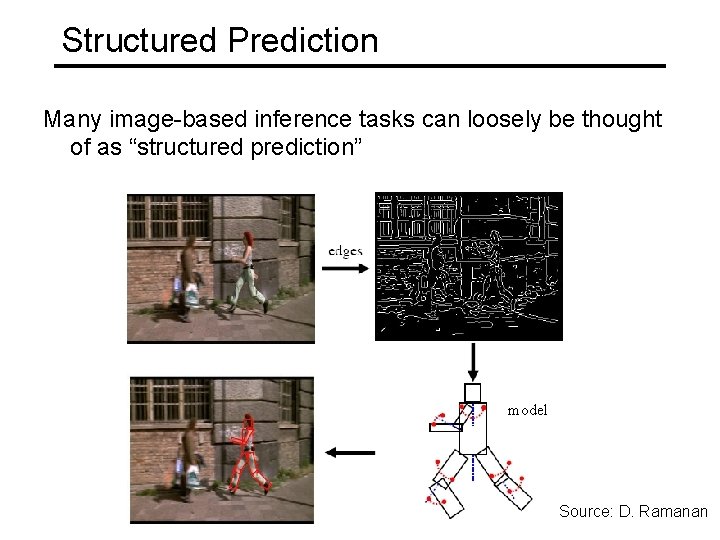

Structured Prediction

- Many image-based inference tasks can loosely be thought of as “structured prediction”

[Figure: model]

Source: D. Ramanan


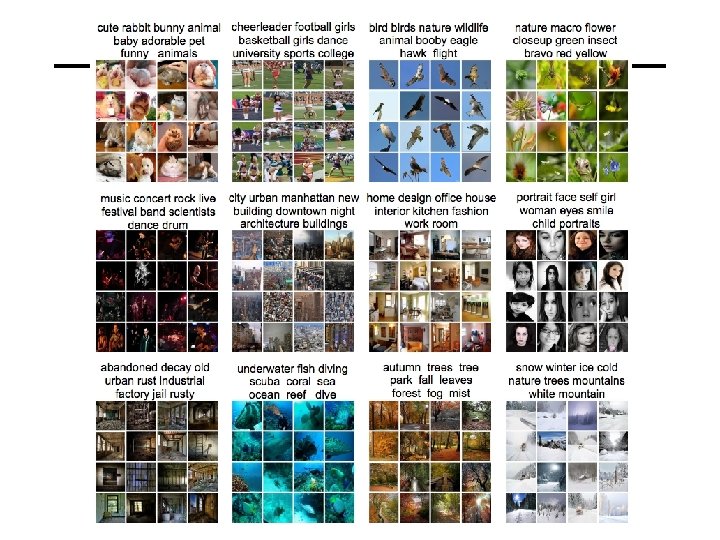

Unsupervised Learning

- Idea: given only unlabeled data as input, learn some sort of structure
- The objective is often more vague or subjective than in supervised learning
- This is more of an exploratory/descriptive data analysis

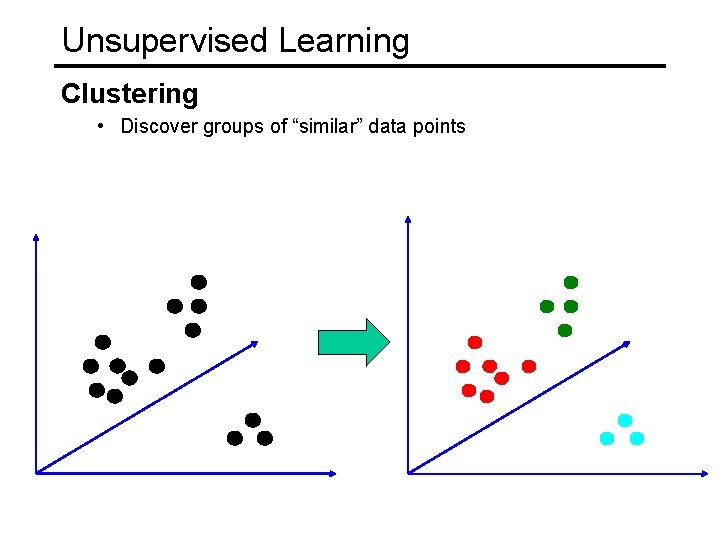

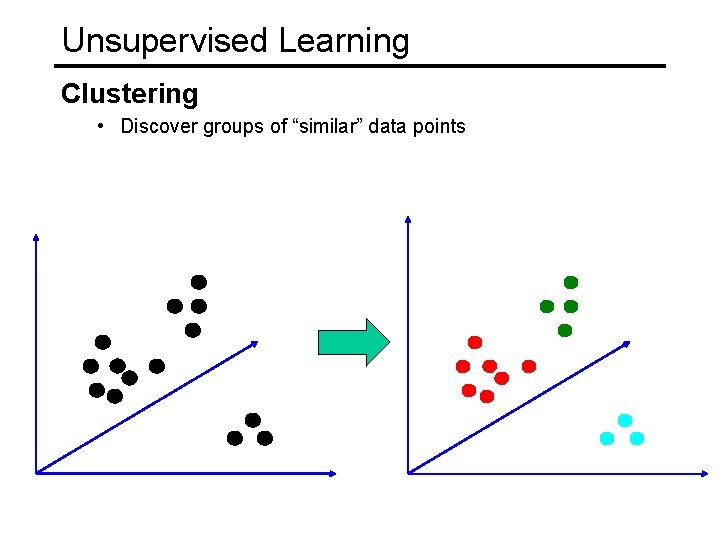

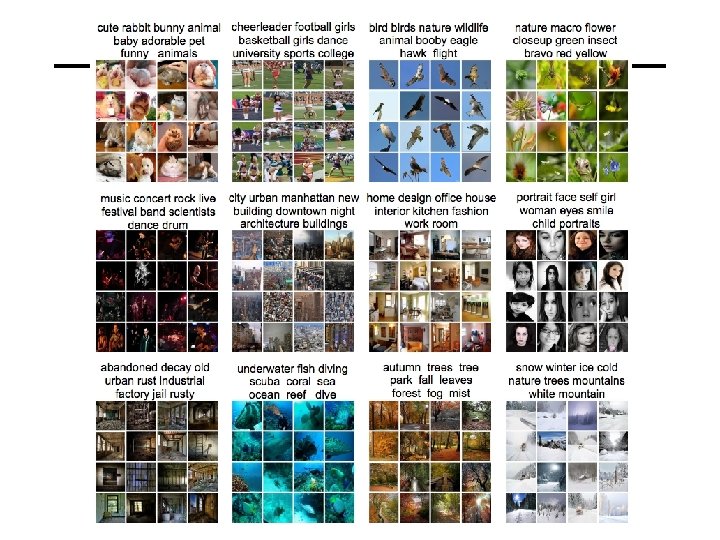

Unsupervised Learning

- Clustering
  - Discover groups of “similar” data points
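k-means (Lloyd's algorithm) is one widely used clustering method; the sketch below is a minimal NumPy version, with the data, seed, and function name as illustrative assumptions. It alternates between assigning each point to its nearest center and moving each center to the mean of its points.

```python
import numpy as np

def kmeans(X, k, iters=100, seed=0):
    """Lloyd's algorithm: alternate nearest-center assignment and mean updates."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)]   # random initial centers
    for _ in range(iters):
        dists = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        assign = np.argmin(dists, axis=1)                    # nearest center per point
        # Move each center to the mean of its points (keep it if the cluster is empty).
        new_centers = np.array([X[assign == j].mean(axis=0) if np.any(assign == j)
                                else centers[j] for j in range(k)])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return centers, assign

# Hypothetical data: two well-separated groups of three points.
X = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
centers, assign = kmeans(X, k=2)
print(assign)
```

k-means needs the number of clusters `k` in advance and can depend on the initial centers; on well-separated data like this it recovers the two groups.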

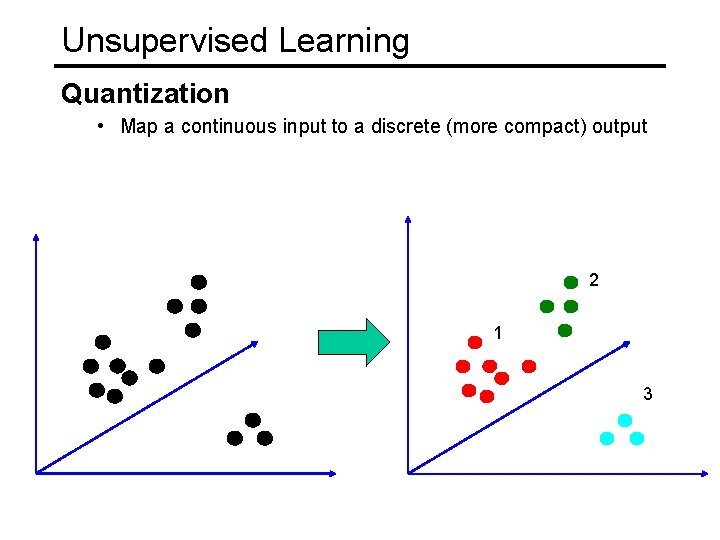

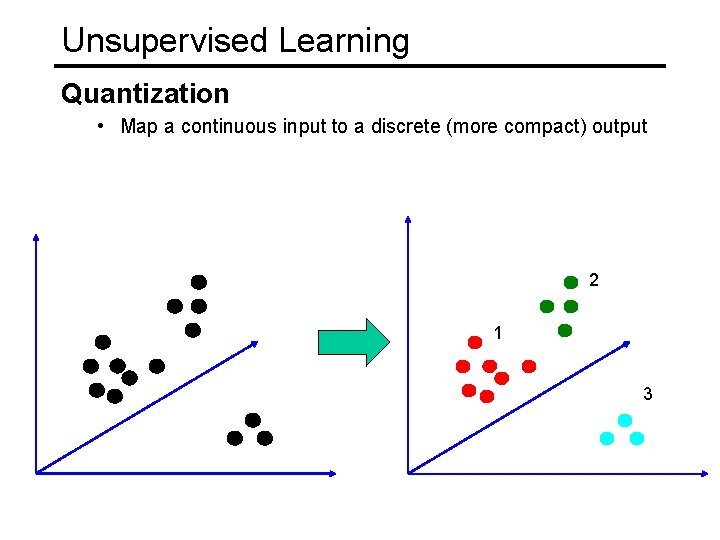

Unsupervised Learning

- Quantization
  - Map a continuous input to a discrete (more compact) output

[Figure: continuous inputs assigned to discrete codes 1, 2, 3]
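A minimal sketch of scalar quantization: each continuous value is replaced by the index of its nearest entry in a small codebook, and can be approximately reconstructed from that index. The 3-level codebook is hypothetical; in practice a codebook can be learned, for example with k-means (vector quantization).

```python
import numpy as np

def quantize(values, codebook):
    """Map each continuous value to the index of its nearest codebook entry."""
    values = np.asarray(values, dtype=float)
    codebook = np.asarray(codebook, dtype=float)
    return np.argmin(np.abs(values[:, None] - codebook[None, :]), axis=1)

def dequantize(indices, codebook):
    """Reconstruct approximate values from the compact indices."""
    return np.asarray(codebook, dtype=float)[indices]

codebook = [0.0, 0.5, 1.0]            # hypothetical 3-level codebook
idx = quantize([0.1, 0.4, 0.9, 0.74], codebook)
print(idx)                            # [0 1 2 1]
print(dequantize(idx, codebook))      # approximate reconstruction
```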

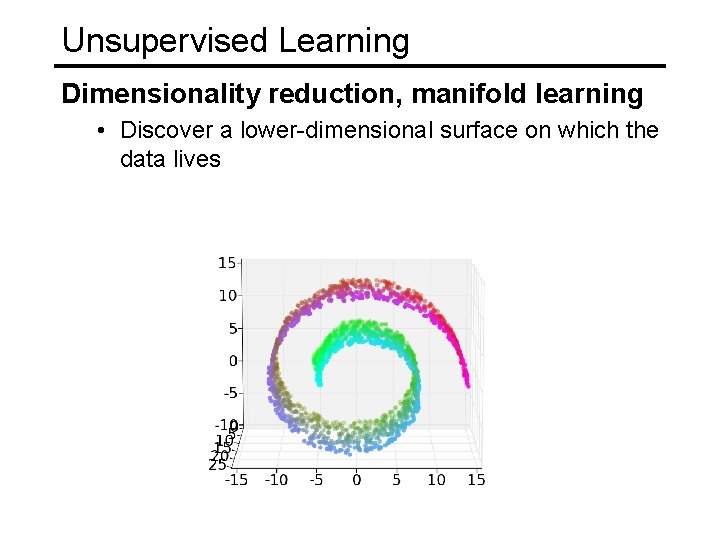

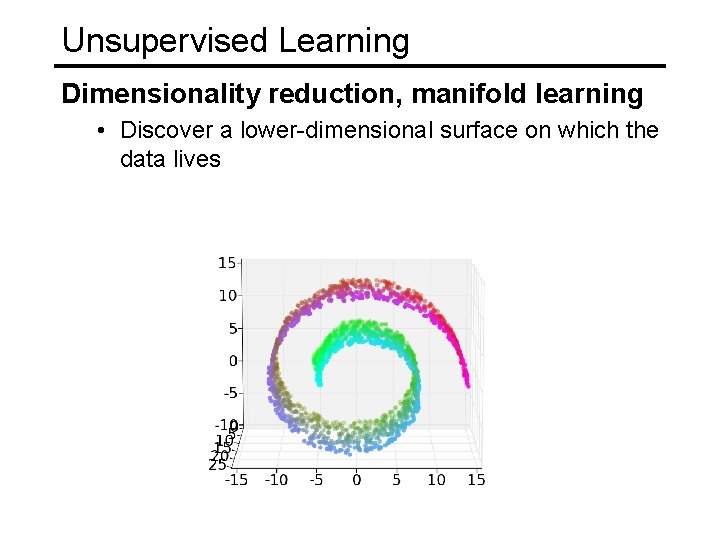

Unsupervised Learning

- Dimensionality reduction, manifold learning
  - Discover a lower-dimensional surface on which the data lives
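Principal component analysis (PCA) is the linear special case: it finds the flat subspace that captures the most variance. This NumPy sketch uses the SVD of the centered data; nonlinear manifold-learning methods are not shown. The data (points on a line in 3-D) and names are illustrative.

```python
import numpy as np

def pca(X, n_components):
    """Project centered data onto its top principal directions via the SVD."""
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    U, S, Vt = np.linalg.svd(X - mean, full_matrices=False)
    components = Vt[:n_components]            # principal directions, one per row
    explained = S ** 2 / np.sum(S ** 2)       # fraction of variance per direction
    return (X - mean) @ components.T, components, explained[:n_components]

# Hypothetical data: 3-D points that all lie on a line, i.e. a 1-D "surface".
t = np.arange(5.0)
X = np.outer(t, [1.0, 2.0, 2.0])
Z, comps, explained = pca(X, 1)
print(Z.shape, explained)   # one coordinate per point captures ~all variance
```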

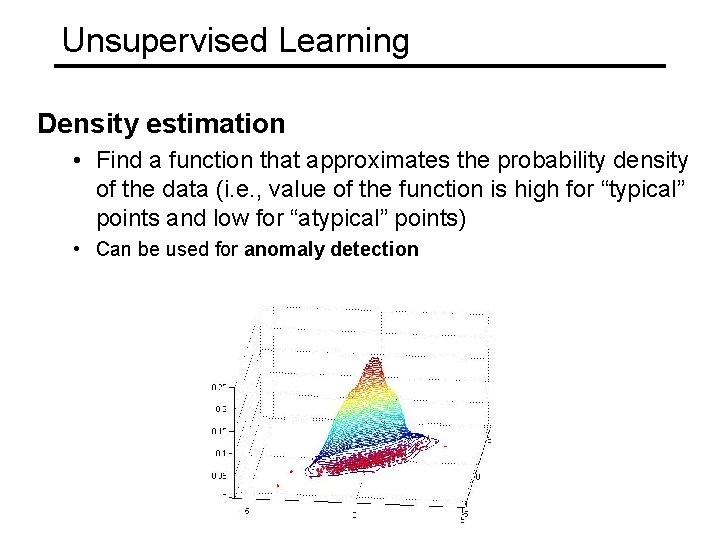

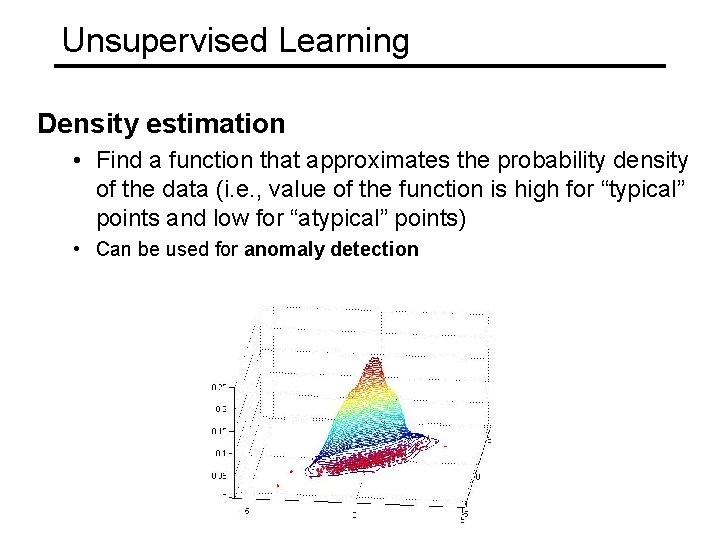

Unsupervised Learning

- Density estimation
  - Find a function that approximates the probability density of the data (i.e., the value of the function is high for “typical” points and low for “atypical” points)
  - Can be used for anomaly detection
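Kernel density estimation is one simple way to do this. The 1-D sketch below averages Gaussian bumps centered on the samples, then flags points whose estimated density falls below a threshold as anomalies. The bandwidth, threshold, synthetic data, and names are assumptions chosen for illustration.

```python
import numpy as np

def gaussian_kde_1d(samples, bandwidth):
    """Return a density estimate: the average of Gaussian bumps of width
    `bandwidth` centered on the samples."""
    samples = np.asarray(samples, dtype=float)
    norm = 1.0 / (len(samples) * bandwidth * np.sqrt(2.0 * np.pi))
    def density(x):
        x = np.atleast_1d(np.asarray(x, dtype=float))
        z = (x[:, None] - samples[None, :]) / bandwidth
        return norm * np.exp(-0.5 * z ** 2).sum(axis=1)
    return density

rng = np.random.default_rng(0)
samples = rng.normal(0.0, 1.0, size=500)       # synthetic "typical" data
density = gaussian_kde_1d(samples, bandwidth=0.3)

p = density([0.0, 5.0])
is_anomaly = p < 1e-3                          # hypothetical threshold
print(p, is_anomaly)                           # x = 0 is typical, x = 5 is flagged
```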


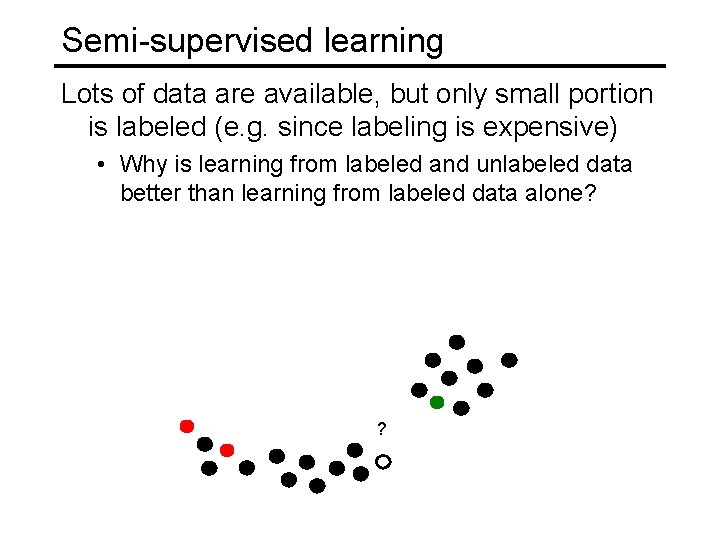

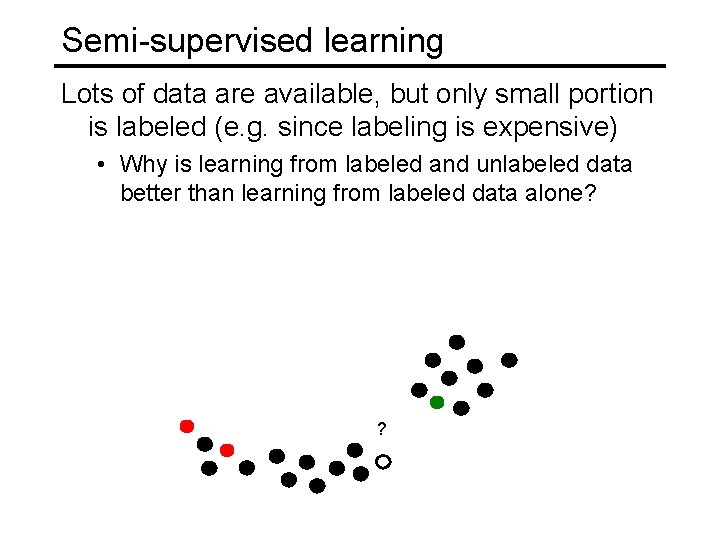

Semi-supervised learning

- Lots of data are available, but only a small portion is labeled (e.g., because labeling is expensive)
- Why is learning from labeled and unlabeled data better than learning from labeled data alone?

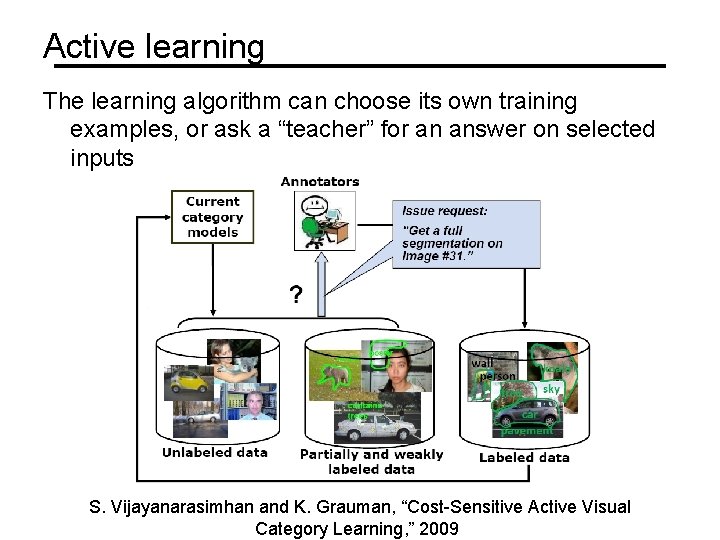

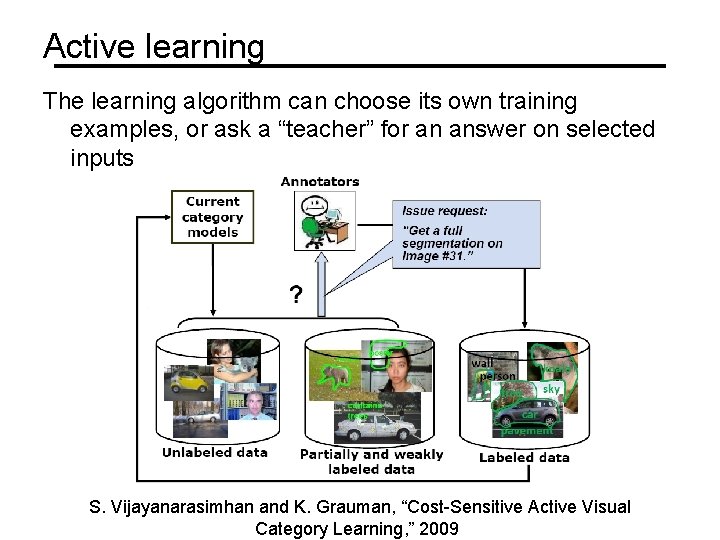

Active learning

- The learning algorithm can choose its own training examples, or ask a “teacher” for an answer on selected inputs

S. Vijayanarasimhan and K. Grauman, “Cost-Sensitive Active Visual Category Learning,” 2009
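One common way to choose which input to ask the teacher about is uncertainty sampling: query the unlabeled example on which the current classifier is least sure, here the one whose score is closest to the decision boundary. This is a generic strategy sketch, not the cost-sensitive method of the cited paper; the classifier and pool are hypothetical.

```python
import numpy as np

def most_uncertain(score_fn, pool):
    """Index of the unlabeled example whose score is closest to the decision
    boundary (smallest |score|): the query an uncertainty-sampling learner sends."""
    scores = score_fn(np.asarray(pool, dtype=float))
    return int(np.argmin(np.abs(scores)))

w, b = np.array([1.0, -1.0]), 0.0          # hypothetical current linear classifier
pool = [[3.0, 0.0], [0.2, 0.0], [-2.0, 1.0]]
print(most_uncertain(lambda X: X @ w + b, pool))  # 1
```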

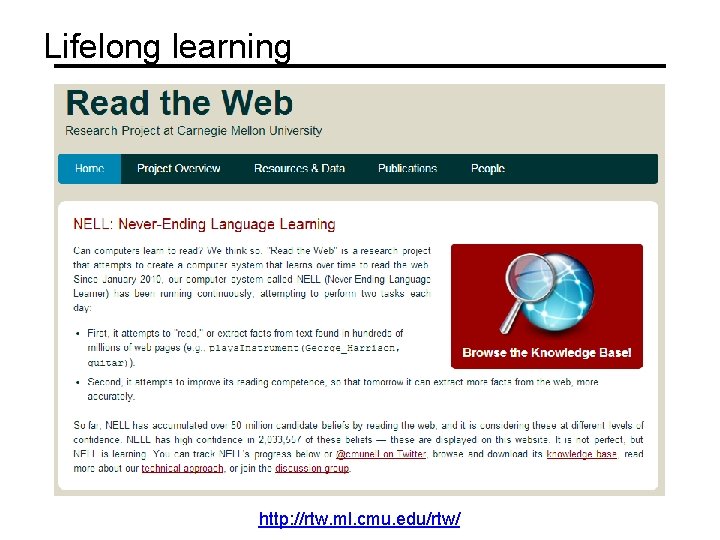

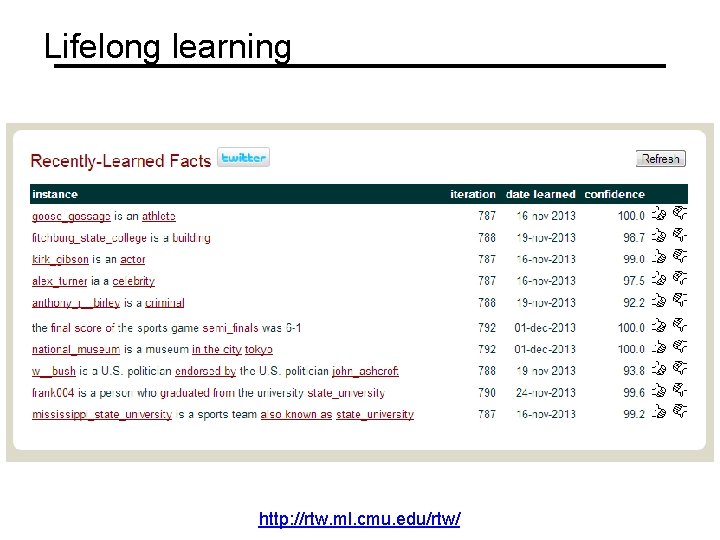

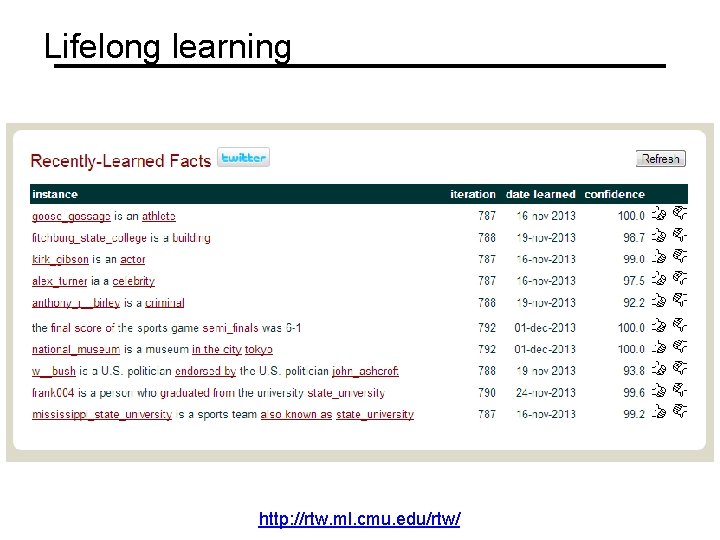

Lifelong learning

http://rtw.ml.cmu.edu/rtw/


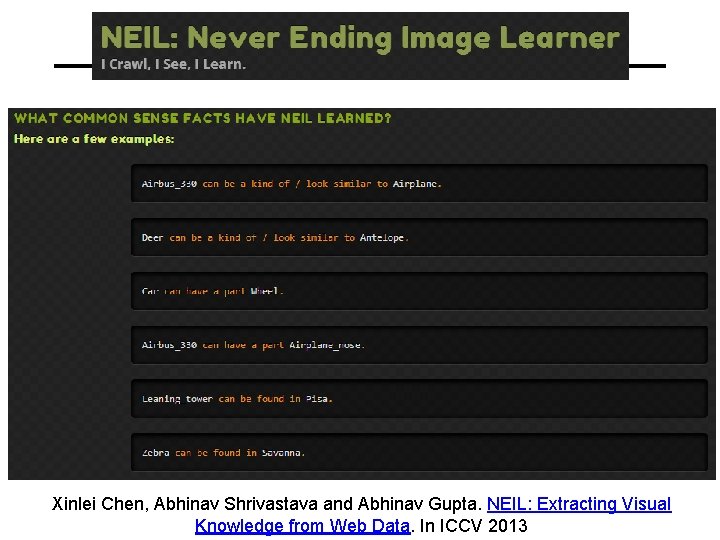

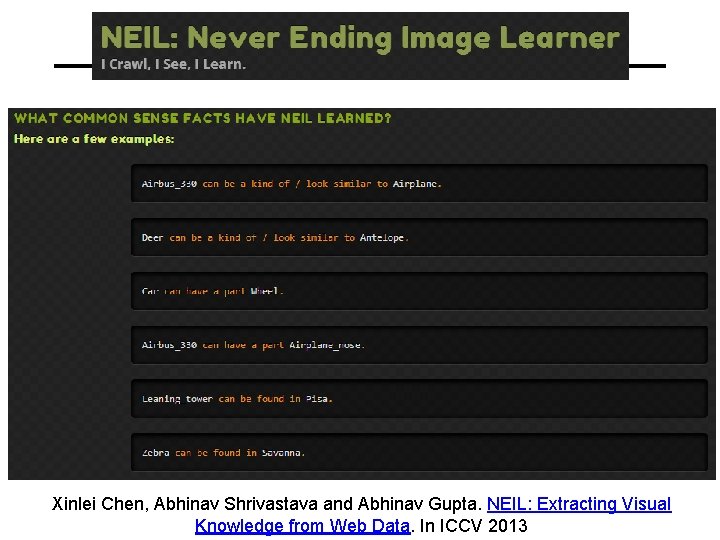

Xinlei Chen, Abhinav Shrivastava and Abhinav Gupta. NEIL: Extracting Visual Knowledge from Web Data. In ICCV 2013

Outline

- Neural Networks
  - Perceptron review
  - Differentiable perceptron
  - Multilayer perceptron
  - Support vector machine (SVM)
- Other Machine Learning Tasks
  - Structured prediction
  - Unsupervised learning
    - Clustering, quantization, density estimation
  - Semi-supervised and active learning
    - Example: the Never-Ending Language Learner

Neural networks and learning machines 3rd edition

Neural networks and learning machines 3rd edition Neural networks and learning machines

Neural networks and learning machines Transductive support vector machines

Transductive support vector machines Visualizing and understanding convolutional neural networks

Visualizing and understanding convolutional neural networks Deep neural networks and mixed integer linear optimization

Deep neural networks and mixed integer linear optimization Neural networks for rf and microwave design

Neural networks for rf and microwave design Fuzzy logic lecture

Fuzzy logic lecture Datagram switching vs virtual circuit

Datagram switching vs virtual circuit Vc bound

Vc bound Neural pruning ib psychology

Neural pruning ib psychology Audio super resolution using neural networks

Audio super resolution using neural networks Convolutional neural networks for visual recognition

Convolutional neural networks for visual recognition Leon gatys

Leon gatys Efficient processing of deep neural networks

Efficient processing of deep neural networks Introduction to convolutional neural networks ppt

Introduction to convolutional neural networks ppt Pixelrnn

Pixelrnn Neural network matlab toolbox

Neural network matlab toolbox 11-747 neural networks for nlp

11-747 neural networks for nlp Perceptron xor

Perceptron xor Csrmm

Csrmm On the computational efficiency of training neural networks

On the computational efficiency of training neural networks Threshold logic unit

Threshold logic unit Xooutput

Xooutput Lmu cis

Lmu cis Few shot learning with graph neural networks

Few shot learning with graph neural networks Deep forest towards an alternative to deep neural networks

Deep forest towards an alternative to deep neural networks Convolutional neural networks

Convolutional neural networks Neuraltools neural networks

Neuraltools neural networks Lstm andrew ng

Lstm andrew ng Predicting nba games using neural networks

Predicting nba games using neural networks The wake-sleep algorithm for unsupervised neural networks

The wake-sleep algorithm for unsupervised neural networks Bharath subramanyam

Bharath subramanyam Convolutional neural network alternatives

Convolutional neural network alternatives Backbone networks in computer networks

Backbone networks in computer networks Sandwich paragraph example

Sandwich paragraph example Support control and movement lesson outline

Support control and movement lesson outline Signal words

Signal words Vector directed line segment

Vector directed line segment Cosenos directores de un vector

Cosenos directores de un vector How is vector resolution the opposite of vector addition

How is vector resolution the opposite of vector addition Position vector defination

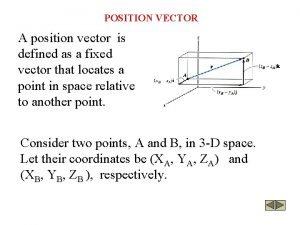

Position vector defination Support vector machine icon

Support vector machine icon Support vector machine regression

Support vector machine regression Father of support vector machine

Father of support vector machine Svm exercises

Svm exercises Support vector machine pdf

Support vector machine pdf Support vector regression

Support vector regression Support vector regression

Support vector regression Andrew ng support vector machine

Andrew ng support vector machine Structured support vector machine

Structured support vector machine Support vector machine intuition

Support vector machine intuition Chapter 4 work and energy section 1 work and machines

Chapter 4 work and energy section 1 work and machines Networks and graphs circuits paths and graph structures

Networks and graphs circuits paths and graph structures Wired data transfer

Wired data transfer Section 1 work and machines answer key

Section 1 work and machines answer key Section 1 work and machines

Section 1 work and machines