
Support Vector Machine 2 (SVM), Lecture 15
Courtesy of Dr. David Mease for many slides in section 4

Nonlinear SVM
1. Problems that cannot be solved by a linear SVM
2. Nonlinear transformation of data
3. Characteristics of Nonlinear SVM
4. Ensemble Methods

1. Data that can be separated by a line

Maximize the margin using the Lagrange multiplier method

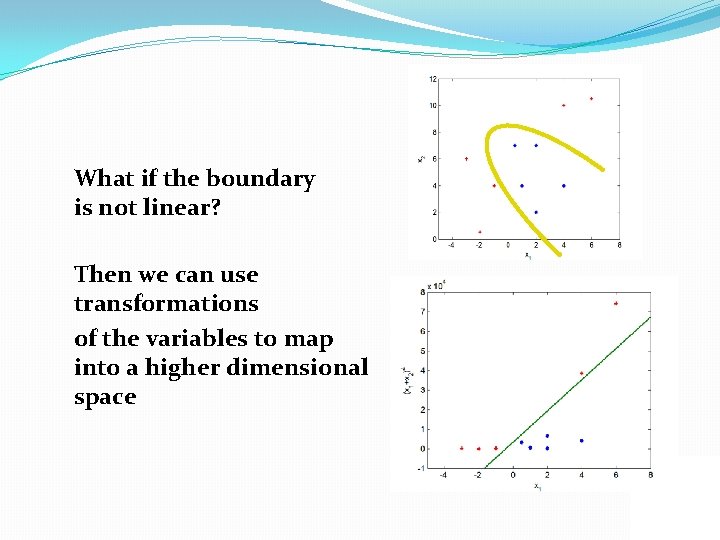

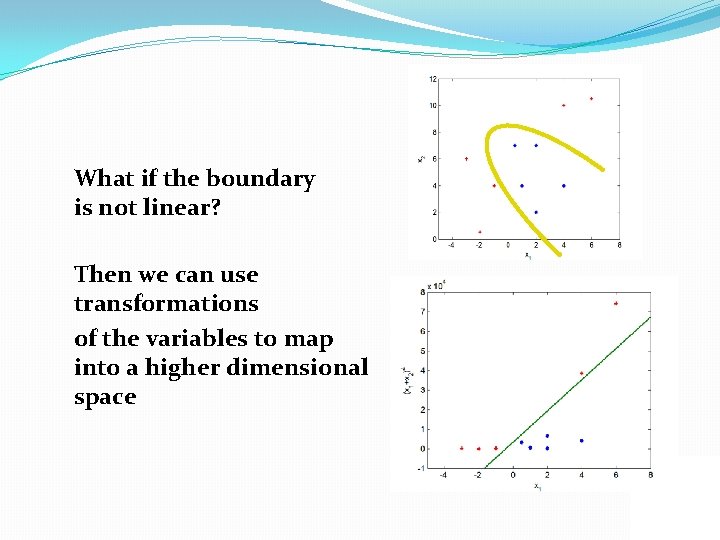
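For reference, the linear (hard-margin) problem can be written in one standard notation as follows. This is a sketch; the slide images may use different symbols. Labels are $y_i \in \{-1, +1\}$ and $\lambda_i$ are the Lagrange multipliers. Setting the derivatives of the Lagrangian to zero eliminates $\mathbf{w}$ and $b$ and leaves the dual $L_D$, which is maximized subject to $\lambda_i \ge 0$ and $\sum_i \lambda_i y_i = 0$. The resulting margin width is $2/\lVert\mathbf{w}\rVert$.

$$
\begin{aligned}
&\min_{\mathbf{w},\,b}\ \tfrac{1}{2}\lVert\mathbf{w}\rVert^{2}
\quad\text{subject to}\quad y_i(\mathbf{w}\cdot\mathbf{x}_i + b) \ge 1,\quad i = 1,\dots,N \\
&L_P = \tfrac{1}{2}\lVert\mathbf{w}\rVert^{2} - \sum_{i=1}^{N}\lambda_i\bigl[y_i(\mathbf{w}\cdot\mathbf{x}_i + b) - 1\bigr], \qquad \lambda_i \ge 0 \\
&\frac{\partial L_P}{\partial \mathbf{w}} = 0 \;\Rightarrow\; \mathbf{w} = \sum_{i=1}^{N}\lambda_i y_i\mathbf{x}_i,
\qquad
\frac{\partial L_P}{\partial b} = 0 \;\Rightarrow\; \sum_{i=1}^{N}\lambda_i y_i = 0 \\
&L_D = \sum_{i=1}^{N}\lambda_i - \tfrac{1}{2}\sum_{i=1}^{N}\sum_{j=1}^{N}\lambda_i\lambda_j y_i y_j\,\mathbf{x}_i\cdot\mathbf{x}_j
\end{aligned}
$$

The training points enter $L_D$ only through the dot products $\mathbf{x}_i\cdot\mathbf{x}_j$. That property is what the kernel trick in the nonlinear SVM relies on.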

What if the boundary is not linear? Then we can transform the variables to map the data into a higher-dimensional space.

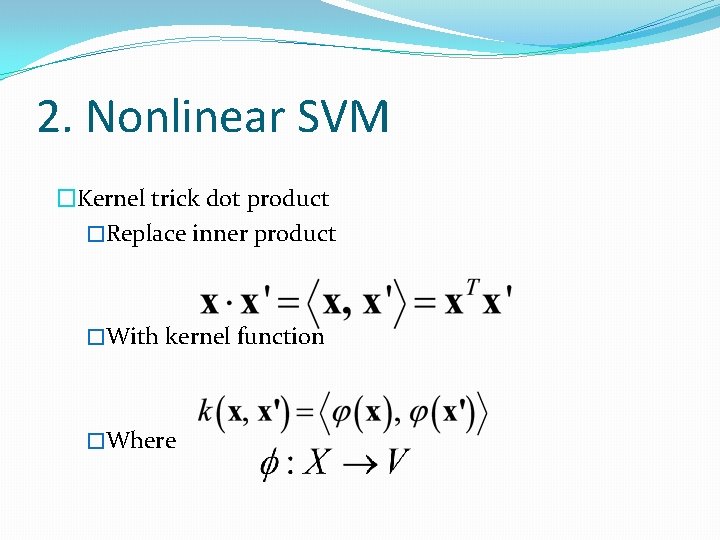

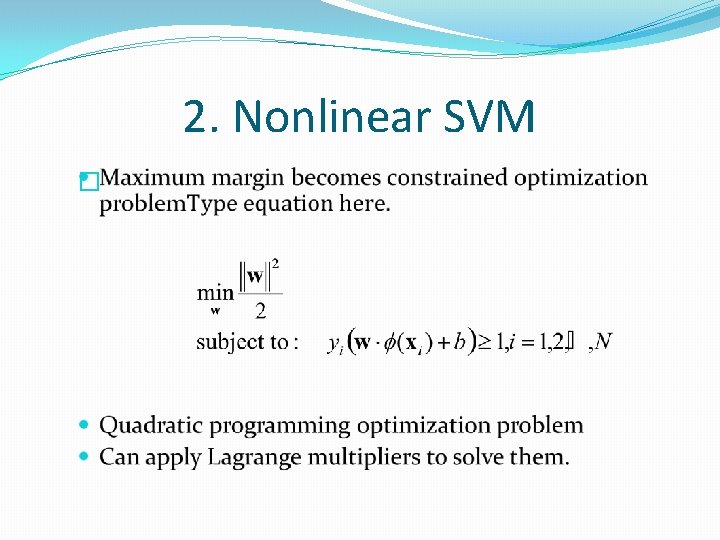

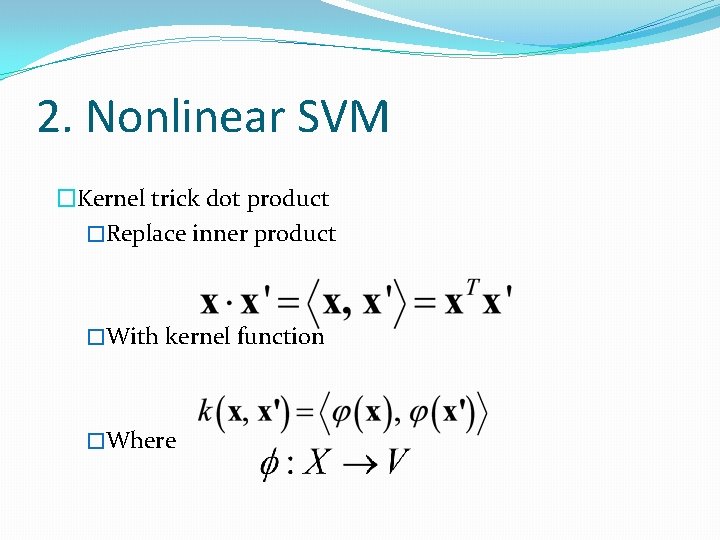

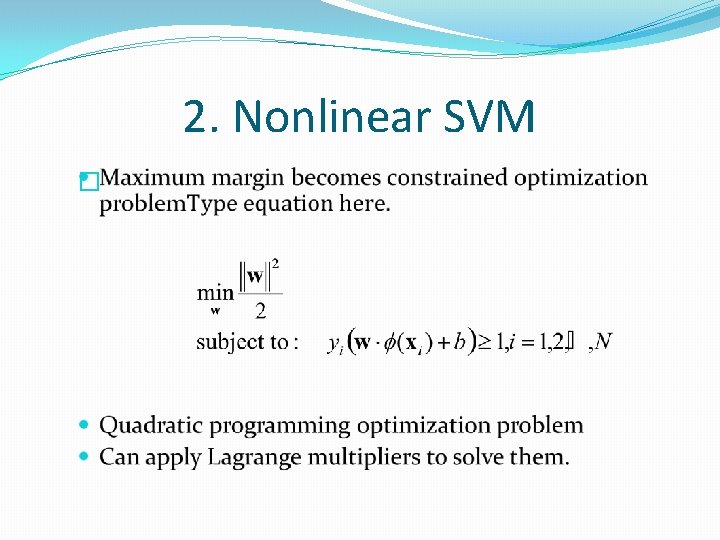
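Here is a toy illustration of such a transformation. The data are hypothetical and do not come from the slides. Points inside the unit circle cannot be separated from points outside it by any straight line in $(x_1, x_2)$. After the map $(x_1, x_2) \mapsto (z_1, z_2) = (x_1^2, x_2^2)$, the circular boundary $x_1^2 + x_2^2 = 1$ becomes the straight line $z_1 + z_2 = 1$, so a linear classifier suffices in the new space.

```python
# Hypothetical toy data: +1 inside the unit circle, -1 outside
points = [(0.0, 0.0), (0.5, 0.5), (-0.6, 0.2), (0.3, -0.7),
          (1.5, 0.0), (-1.2, 1.0), (0.0, -1.4), (1.1, 1.1)]
labels = [1 if x1 ** 2 + x2 ** 2 < 1 else -1 for x1, x2 in points]


def phi(p):
    """Nonlinear map (x1, x2) -> (z1, z2) = (x1^2, x2^2)."""
    x1, x2 = p
    return (x1 ** 2, x2 ** 2)


def linear_rule(z):
    """A LINEAR classifier in the transformed space: the line z1 + z2 = 1."""
    return 1 if z[0] + z[1] < 1 else -1


predicted = [linear_rule(phi(p)) for p in points]
print(predicted == labels)
```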

2. Nonlinear SVM
- Kernel trick: the training data enter the dual problem only through dot products
- Replace the inner product with a kernel function, where:

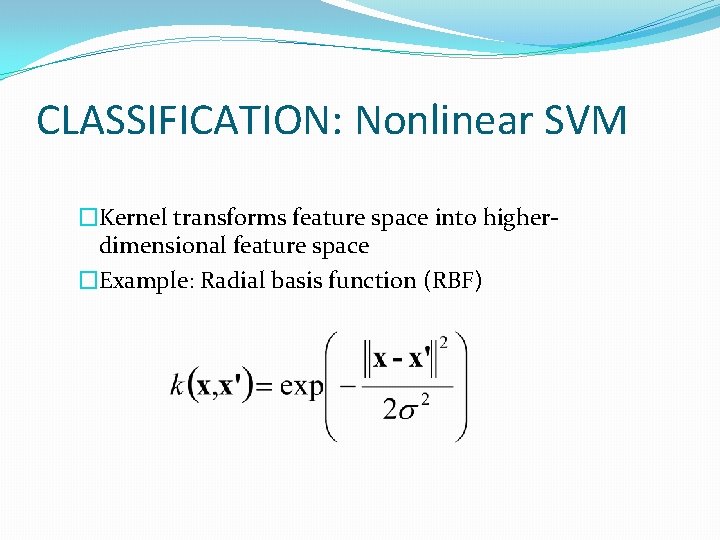

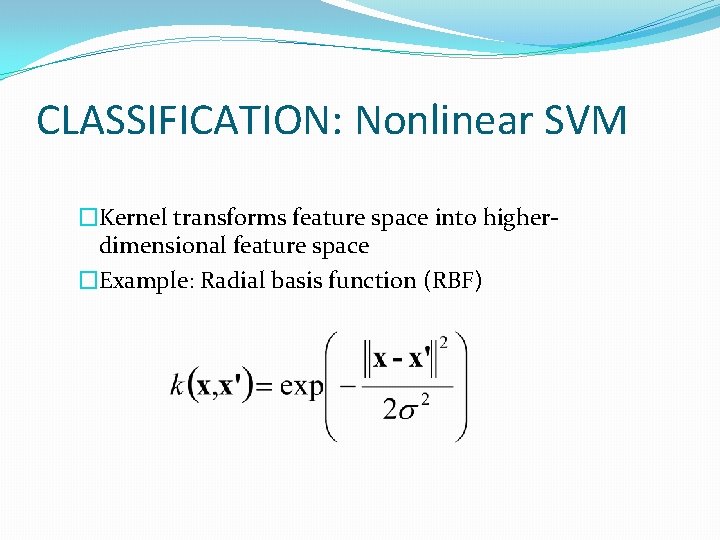

Classification: Nonlinear SVM
- The kernel transforms the feature space into a higher-dimensional feature space
- Example: radial basis function (RBF) kernel

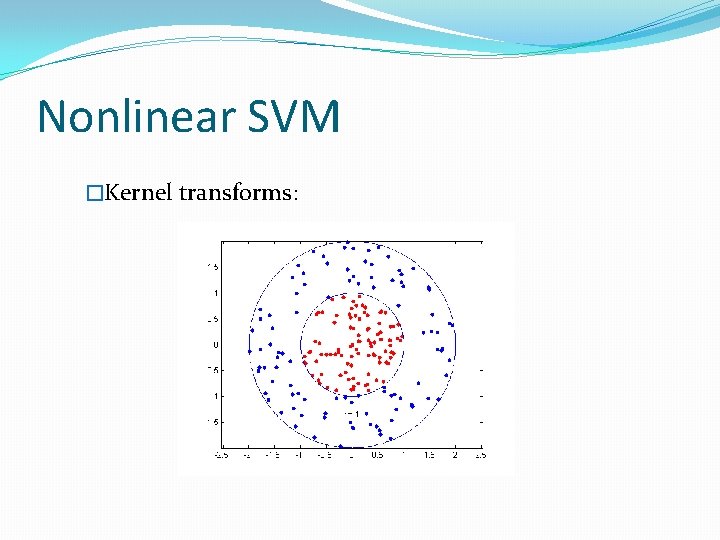

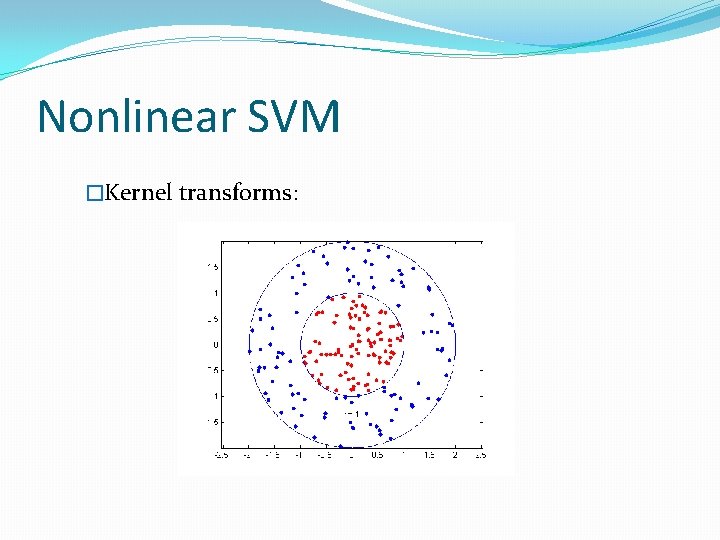
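The slide's RBF formula is only in the image. It is often written as $\exp(-\lVert\mathbf{x}-\mathbf{z}\rVert^2 / 2\sigma^2)$. LibSVM parameterizes it as $\exp(-\gamma\lVert\mathbf{x}-\mathbf{z}\rVert^2)$, which is the same kernel with $\gamma = 1/(2\sigma^2)$. The sketch uses the $\gamma$ form. $K(\mathbf{x}, \mathbf{x}) = 1$, and the value decays toward 0 with distance, so each training point mainly influences the decision function in its own neighborhood. A larger $\gamma$ means a faster decay.

```python
import math


def rbf_kernel(x, z, gamma):
    """Gaussian (RBF) kernel: exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


def gamma_from_sigma(sigma):
    """The form exp(-||x - z||^2 / (2 sigma^2)) is the same kernel with this gamma."""
    return 1.0 / (2.0 * sigma ** 2)


origin = (0.0, 0.0)
for other in [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0), (2.0, 0.0)]:
    print(other, rbf_kernel(origin, other, gamma=1.0))
```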

Nonlinear SVM
- The kernel transforms:

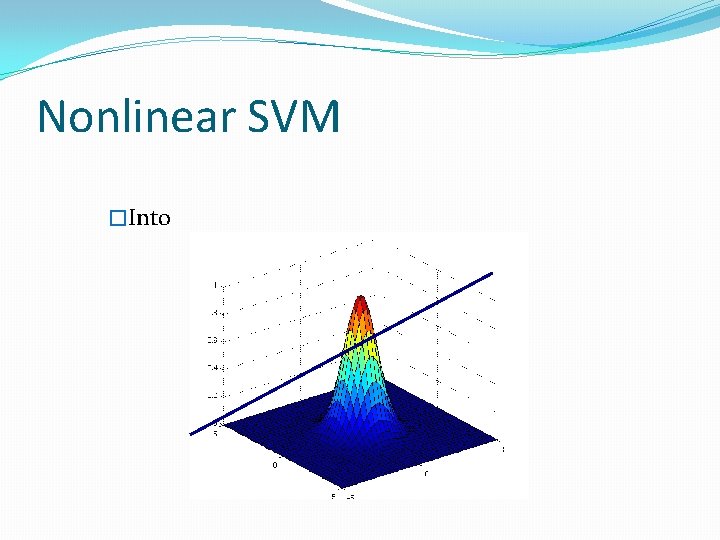

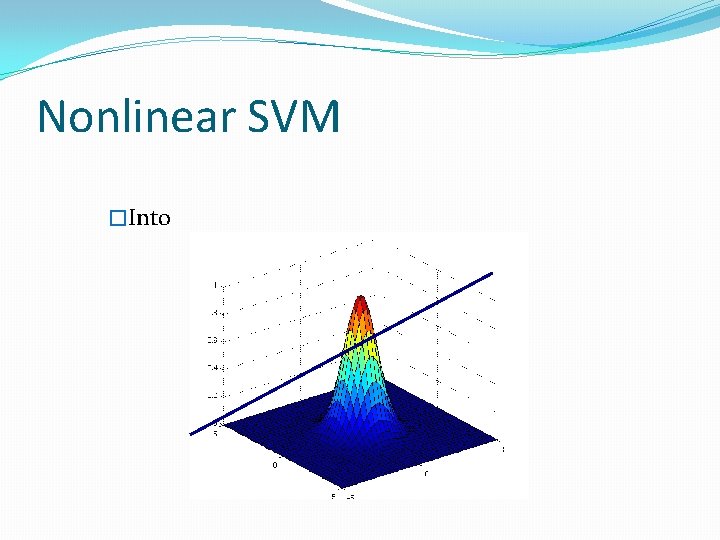

Nonlinear SVM
- into:

2. Nonlinear SVM

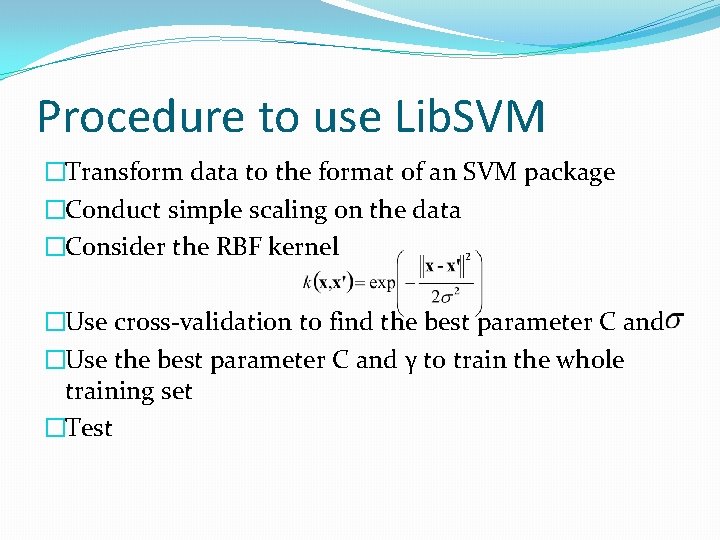

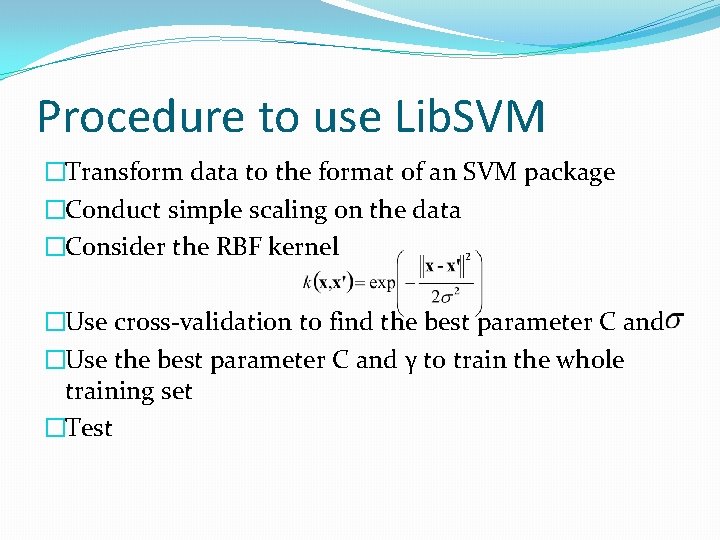

Procedure to use LibSVM
- Transform the data to the format of an SVM package
- Conduct simple scaling on the data
- Consider the RBF kernel
- Use cross-validation to find the best parameters C and γ
- Use the best parameters C and γ to train on the whole training set
- Test

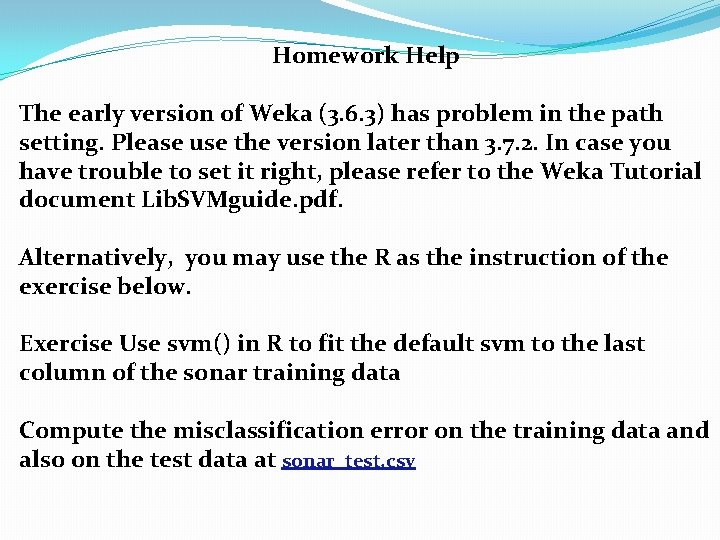

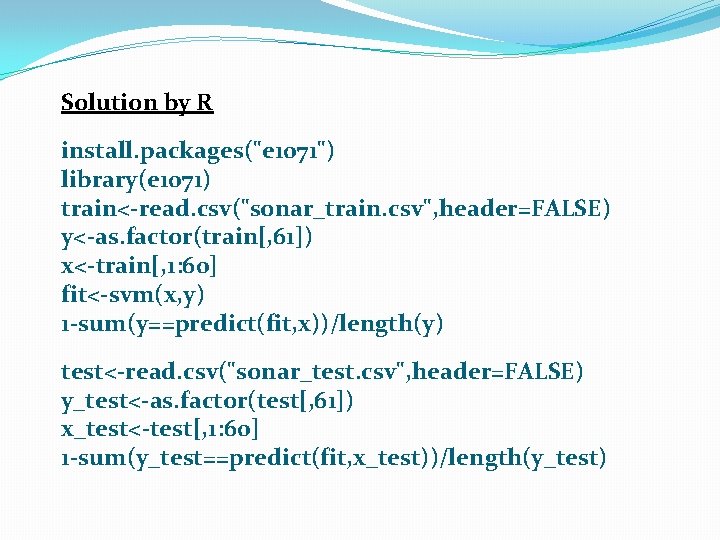

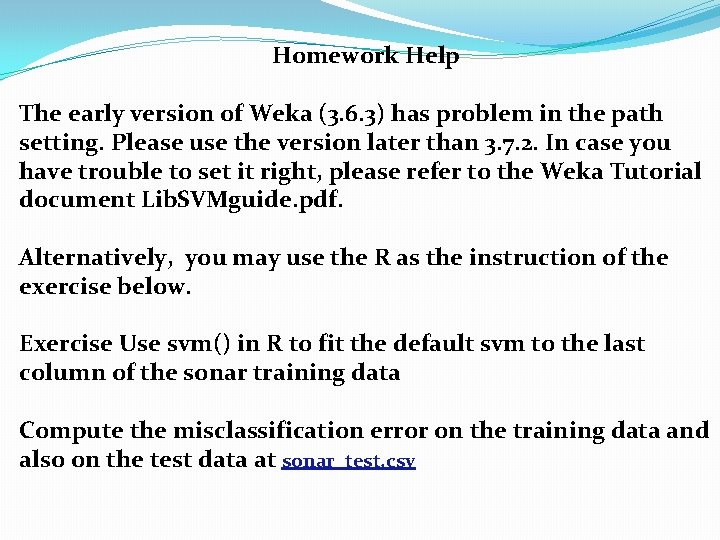

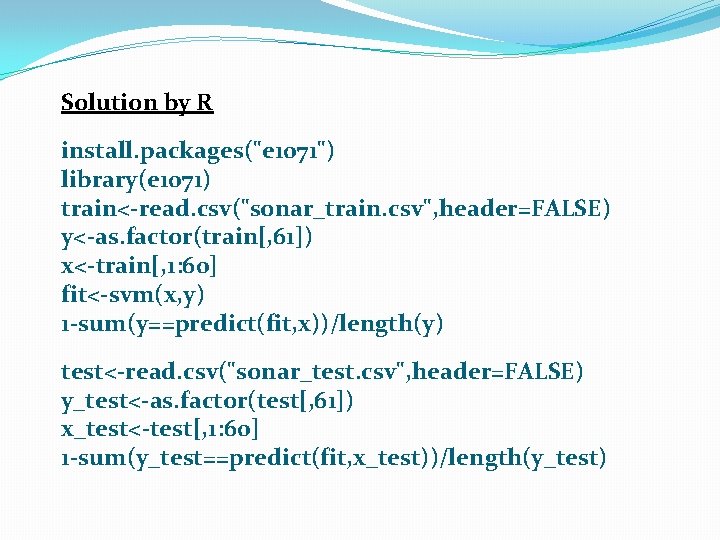
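The scaling and parameter-search steps of the procedure can be sketched without any SVM library. This is a minimal sketch under several assumptions. The grid ranges are the coarse exponential grid suggested in the LibSVM guide, to be treated as a starting point. The helper names are hypothetical. The SVM training inside each cross-validation fold is not shown. The key point in the scaling step is that the min and max are learned on the training data and reused unchanged for the test data.

```python
def fit_scaler(rows, lo=-1.0, hi=1.0):
    """Record each feature's min and max on the TRAINING data only."""
    columns = list(zip(*rows))
    return [(min(c), max(c)) for c in columns], lo, hi


def apply_scaler(scaler, rows):
    """Map each feature linearly so the training range becomes [lo, hi].
    Test rows reuse the training min/max, so they may fall outside [lo, hi]."""
    bounds, lo, hi = scaler
    scaled_rows = []
    for row in rows:
        scaled = []
        for value, (mn, mx) in zip(row, bounds):
            if mx == mn:
                scaled.append(lo)  # constant feature: arbitrary choice for this sketch
            else:
                scaled.append(lo + (hi - lo) * (value - mn) / (mx - mn))
        scaled_rows.append(scaled)
    return scaled_rows


def parameter_grid():
    """Coarse grid of exponentially spaced (C, gamma) pairs."""
    Cs = [2.0 ** k for k in range(-5, 16, 2)]      # 2^-5, 2^-3, ..., 2^15
    gammas = [2.0 ** k for k in range(-15, 4, 2)]  # 2^-15, 2^-13, ..., 2^3
    return [(C, g) for C in Cs for g in gammas]


def kfold_indices(n, k):
    """Split indices 0..n-1 into k contiguous folds of nearly equal size
    (shuffle the data first in real use)."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


train = [[0.0, 10.0], [5.0, 20.0], [10.0, 30.0]]
test = [[20.0, 10.0]]
scaler = fit_scaler(train)
print(apply_scaler(scaler, train), apply_scaler(scaler, test))
print(len(parameter_grid()), kfold_indices(10, 3))
```

Each (C, γ) pair would be scored by its average cross-validation accuracy over the folds. The best pair is then used to retrain on the whole scaled training set.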

Homework Help
The early version of Weka (3.6.3) has a problem with the path setting. Please use a version later than 3.7.2. If you have trouble setting it up, please refer to the Weka Tutorial document LibSVMguide.pdf. Alternatively, you may use R, as described in the exercise below.

Exercise
Use svm() in R to fit the default SVM to the last column of the sonar training data. Compute the misclassification error on the training data and also on the test data in sonar_test.csv.

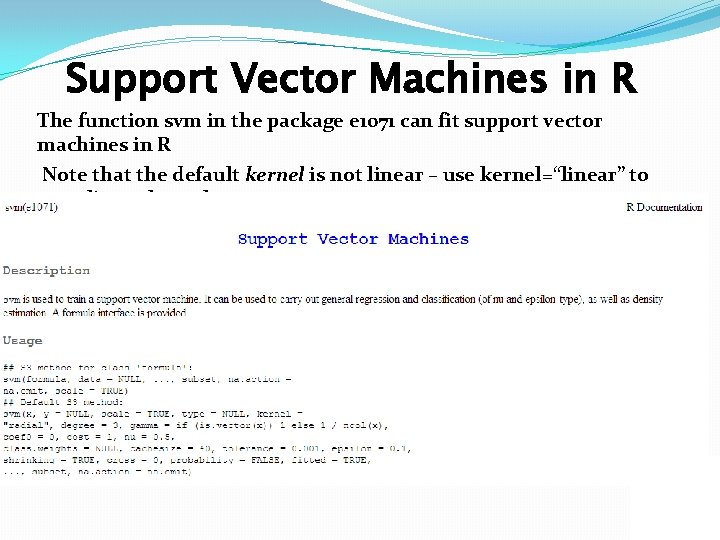

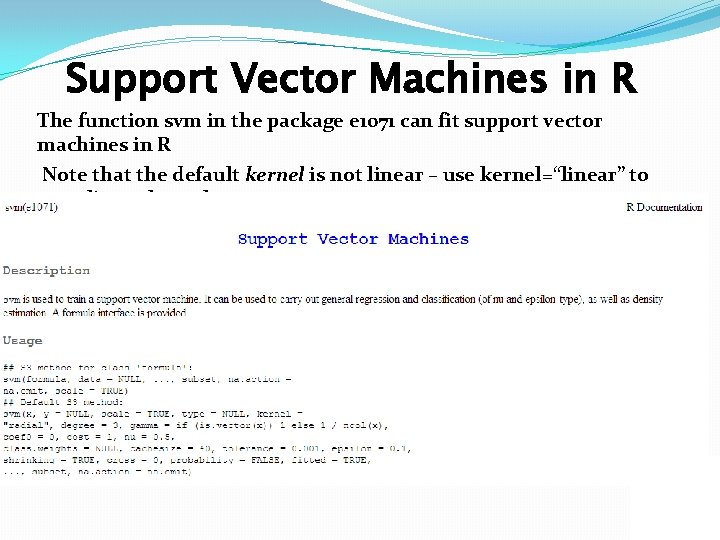

Support Vector Machines in R
The function svm() in the package e1071 can fit support vector machines in R. Note that the default kernel is not linear (it is the radial basis kernel); use kernel = "linear" to get a linear kernel.

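Solution in R:

```r
install.packages("e1071")   # only needed once
library(e1071)

# Training data: columns 1-60 are features, column 61 is the class label
train <- read.csv("sonar_train.csv", header = FALSE)
y <- as.factor(train[, 61])
x <- train[, 1:60]

# Fit the default SVM (radial basis kernel) and compute the training error
fit <- svm(x, y)
1 - sum(y == predict(fit, x)) / length(y)

# Apply the same fitted model to the test data and compute the test error
test <- read.csv("sonar_test.csv", header = FALSE)
y_test <- as.factor(test[, 61])
x_test <- test[, 1:60]
1 - sum(y_test == predict(fit, x_test)) / length(y_test)
```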

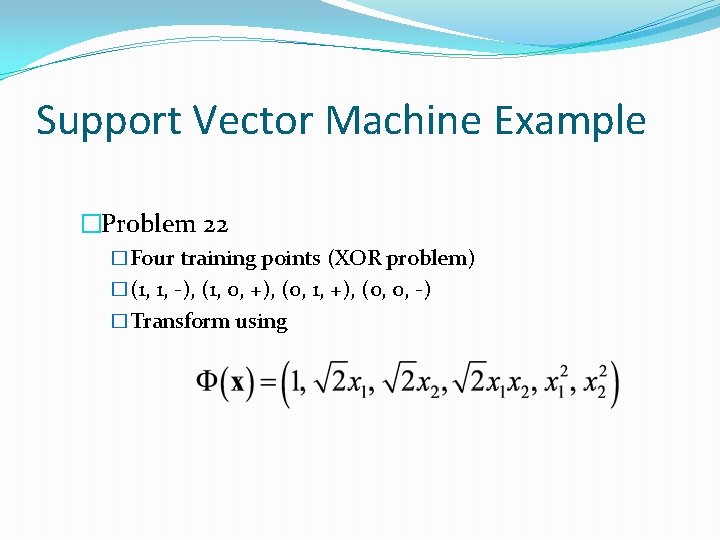

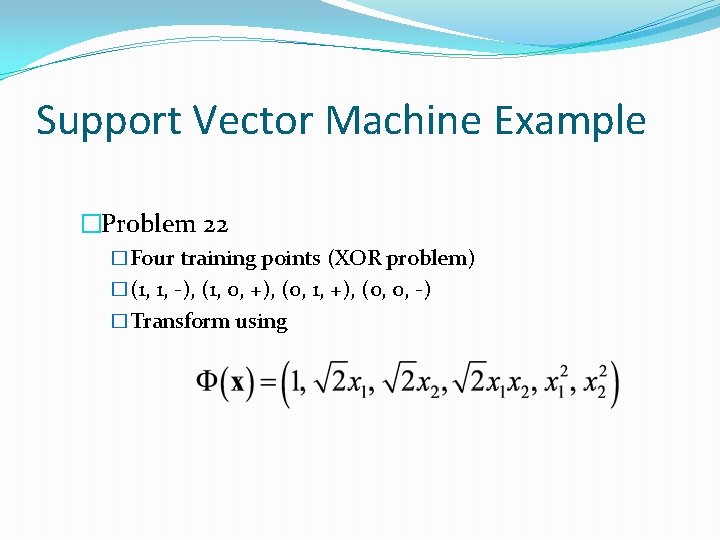

Support Vector Machine Example
- Problem 22
- Four training points (XOR problem): (1, 1, -), (1, 0, +), (0, 1, +), (0, 0, -)
- Transform using:

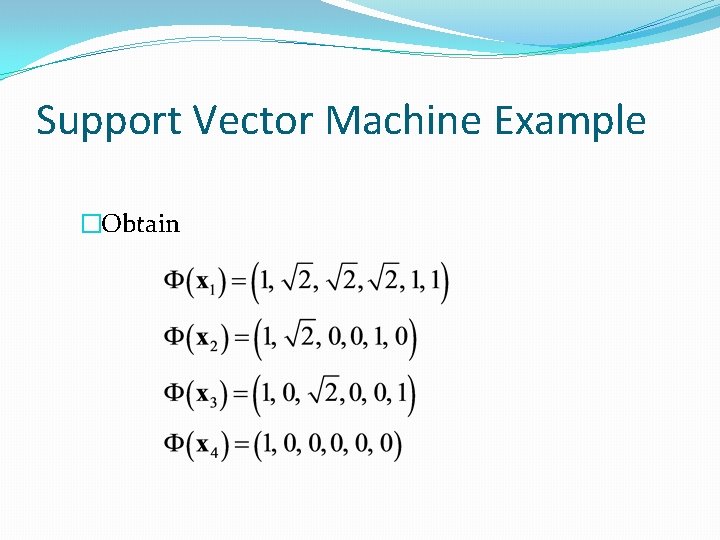

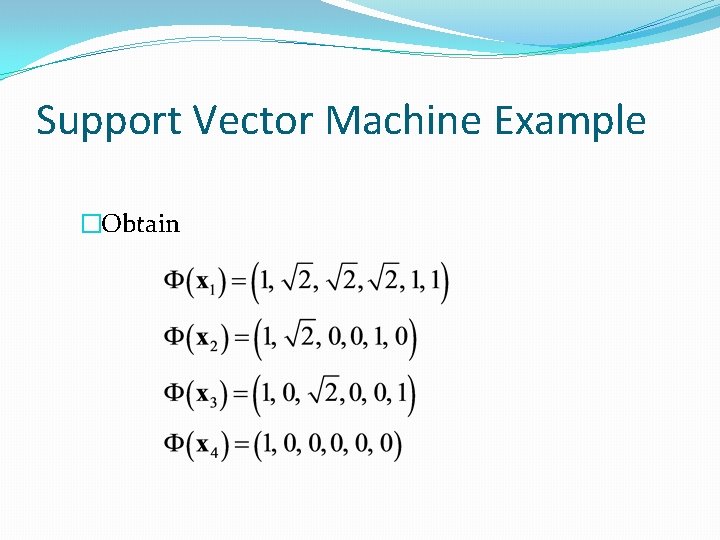

Support Vector Machine Example
- Obtain:

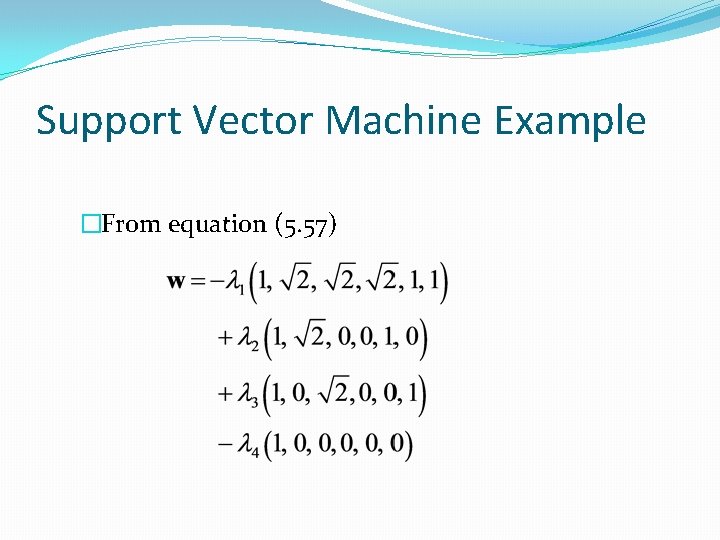

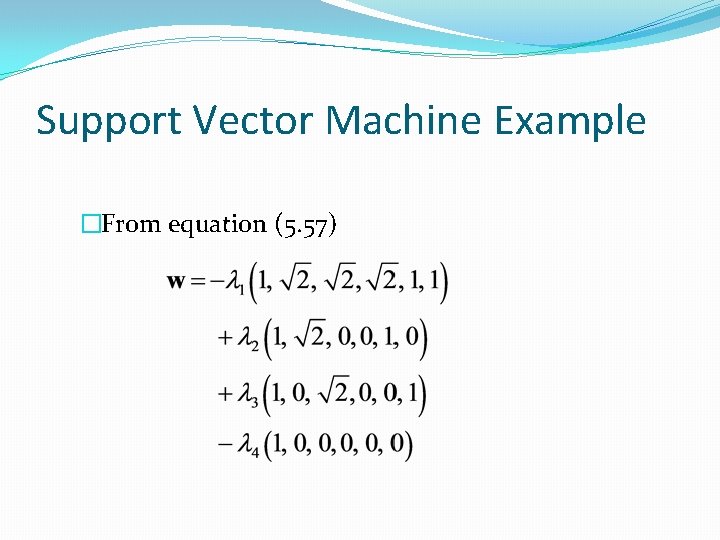

Support Vector Machine Example
- From equation (5.57):

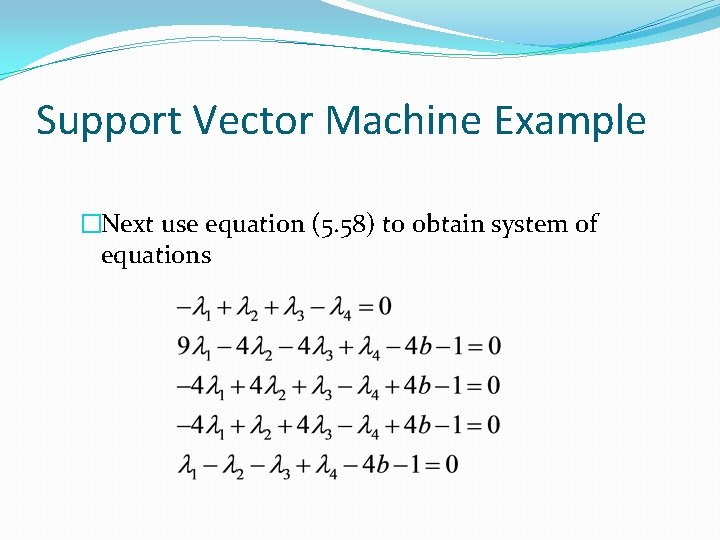

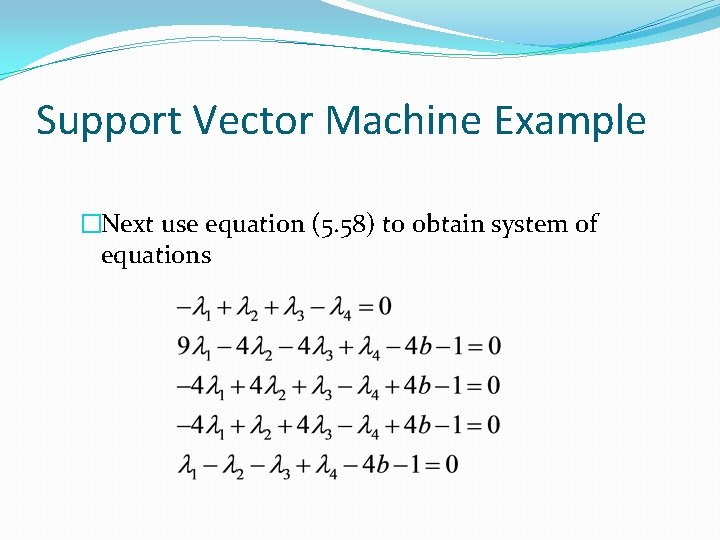

Support Vector Machine Example
- Next, use equation (5.58) to obtain a system of equations:

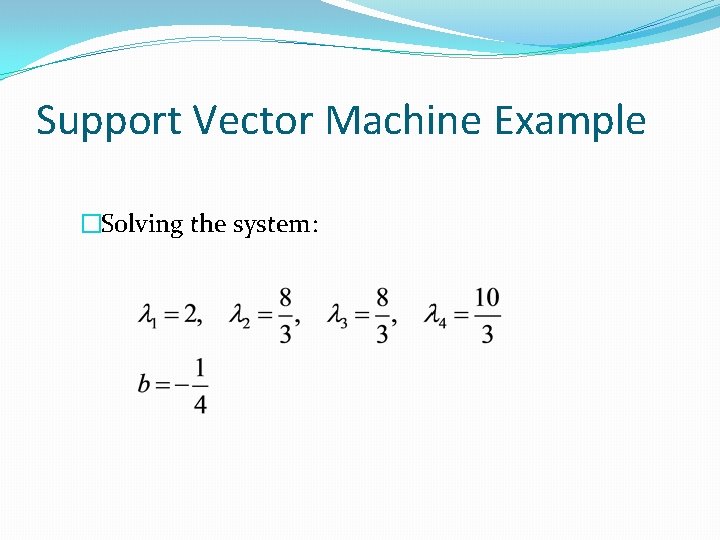

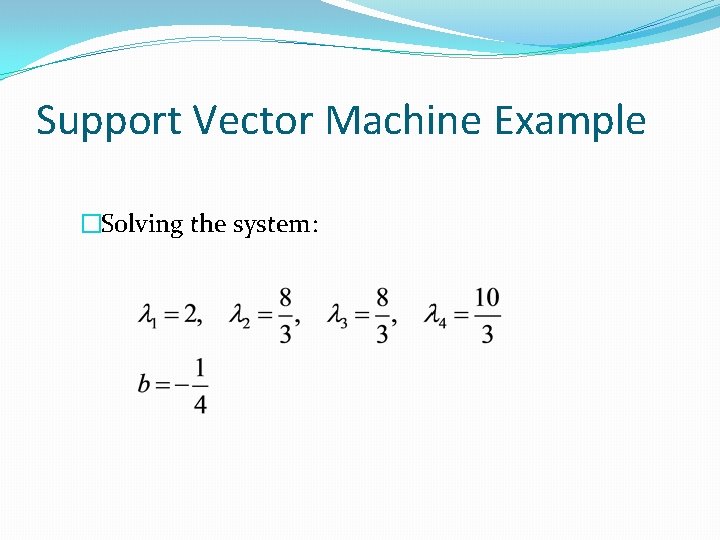

Support Vector Machine Example
- Solving the system:
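The transformation and the system of equations for this example survive only as slide images, so the following is a sketch under stated assumptions. It assumes the degree-2 map $\Phi(\mathbf{x}) = (1, \sqrt{2}x_1, \sqrt{2}x_2, \sqrt{2}x_1x_2, x_1^2, x_2^2)$, for which $\Phi(\mathbf{u})\cdot\Phi(\mathbf{v}) = (\mathbf{u}\cdot\mathbf{v} + 1)^2$. It also assumes that all four points are support vectors. That second assumption is checked afterwards: every $\lambda_i$ must come out nonnegative. The four margin conditions $y_i\bigl(\sum_j \lambda_j y_j K(\mathbf{x}_j, \mathbf{x}_i) + b\bigr) = 1$ together with $\sum_i \lambda_i y_i = 0$ form a 5×5 linear system in $\lambda_1$ to $\lambda_4$ and $b$. The sketch solves that system with exact fractions.

```python
from fractions import Fraction

# XOR training points from the slide; label + -> +1, - -> -1
X = [(1, 1), (1, 0), (0, 1), (0, 0)]
y = [-1, 1, 1, -1]


def kernel(u, v):
    """(u.v + 1)^2, equal to phi(u).phi(v) for the ASSUMED map
    phi(x) = (1, sqrt2*x1, sqrt2*x2, sqrt2*x1*x2, x1^2, x2^2)."""
    return (u[0] * v[0] + u[1] * v[1] + 1) ** 2


def solve(A, rhs):
    """Gauss-Jordan elimination with exact fractions (assumes A is nonsingular)."""
    n = len(A)
    M = [[Fraction(a) for a in row] + [Fraction(r)] for row, r in zip(A, rhs)]
    for col in range(n):
        # swap a row with a nonzero entry into the pivot position
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        # eliminate this column from every other row
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * c for a, c in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


n_points = len(X)
# Unknowns: lambda_1..lambda_4, b. Margin rows (every point assumed a support vector):
#   sum_j lambda_j y_j K(x_j, x_i) + b = y_i   (same as y_i f(x_i) = 1, since y_i = +-1)
A = [[y[j] * kernel(X[j], X[i]) for j in range(n_points)] + [1] for i in range(n_points)]
rhs = [y[i] for i in range(n_points)]
# Equality constraint of the dual: sum_j lambda_j y_j = 0
A.append([y[j] for j in range(n_points)] + [0])
rhs.append(0)

*lam, b = solve(A, rhs)
print("lambda =", lam, " b =", b)
```

Under this assumed map, the system gives λ = (2, 8/3, 8/3, 10/3) and b = -1. All multipliers are positive, so the assumption that every point is a support vector is consistent.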

Support Vector Machine Example
- Finally, use equation (5.59):

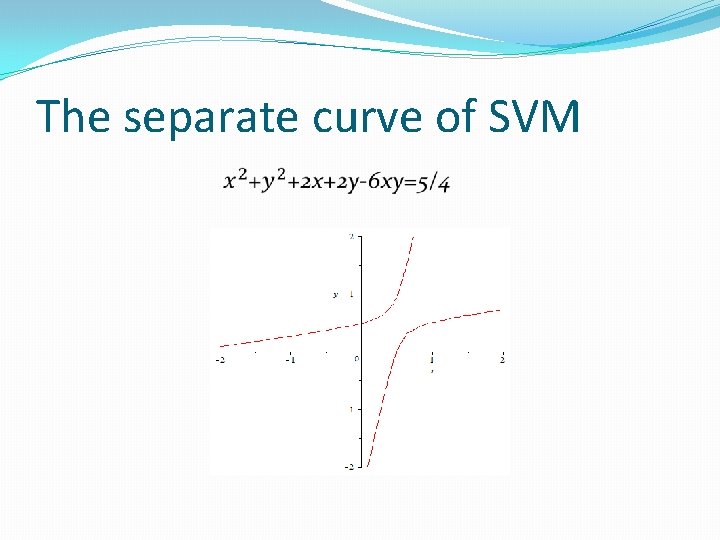

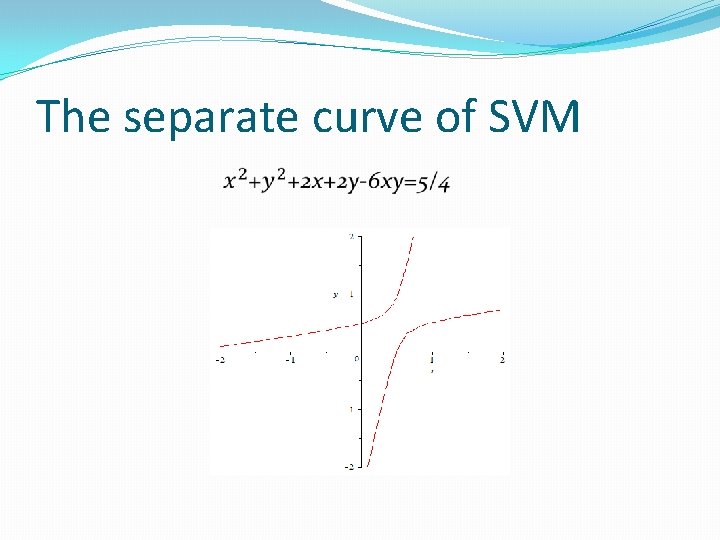

The separating curve of the SVM
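Here the multipliers are plugged into the standard kernel decision function $f(\mathbf{z}) = \operatorname{sign}\bigl(\sum_i \lambda_i y_i K(\mathbf{x}_i, \mathbf{z}) + b\bigr)$. Equation (5.59) itself is only in the slide image. The sketch assumes the degree-2 polynomial kernel $K(\mathbf{u}, \mathbf{v}) = (\mathbf{u}\cdot\mathbf{v} + 1)^2$, an assumption because the slide's transformation is not recoverable. It also uses λ = (2, 8/3, 8/3, 10/3) and b = -1, which satisfy $y_i f(\mathbf{x}_i) = 1$ at all four XOR points under that kernel. Expanding the sum by hand gives the separating curve as a conic: $\tfrac{2}{3}x_1^2 + \tfrac{2}{3}x_2^2 - 4x_1x_2 + \tfrac{4}{3}x_1 + \tfrac{4}{3}x_2 - 1 = 0$.

```python
from fractions import Fraction

X = [(1, 1), (1, 0), (0, 1), (0, 0)]
y = [-1, 1, 1, -1]
# Multipliers and bias that satisfy y_i f(x_i) = 1 for the ASSUMED kernel below
lam = [Fraction(2), Fraction(8, 3), Fraction(8, 3), Fraction(10, 3)]
b = Fraction(-1)


def kernel(u, v):
    """Assumed degree-2 polynomial kernel (u.v + 1)^2."""
    return (u[0] * v[0] + u[1] * v[1] + 1) ** 2


def decision_value(z):
    """sum_i lambda_i y_i K(x_i, z) + b; its sign is the predicted class."""
    return sum(l * yi * kernel(xi, z) for l, yi, xi in zip(lam, y, X)) + b


def classify(z):
    return 1 if decision_value(z) > 0 else -1


def boundary_polynomial(z):
    """The same decision value, expanded by hand into a quadratic in x1, x2."""
    x1, x2 = z
    return (Fraction(2, 3) * (x1 * x1 + x2 * x2) - 4 * x1 * x2
            + Fraction(4, 3) * (x1 + x2) - 1)


for point, label in zip(X, y):
    print(point, label, classify(point), decision_value(point))
```

Because the kernel is quadratic, the boundary is curved in the original $(x_1, x_2)$ plane even though it is a hyperplane in the transformed space. For example, the centre point (1/2, 1/2) gets decision value -1/3 and is classified as negative.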

3. Characteristics of Nonlinear SVM
- Different kernel functions lead to different general shapes of the decision boundary:
  - Linear kernel
  - Polynomial kernel (textbook pages 273-274)
  - Gaussian (RBF) kernel (shown above)
  - Exponential kernel
  - Sigmoid (hyperbolic tangent) kernel
  - Circular kernel

Nonlinear SVM
- Even more kernels:
  - Spherical kernel
  - Wave kernel
  - Power kernel
  - Logarithm kernel
  - Spline/B-spline kernel
  - Wavelet kernel
  - ...
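Swapping the kernel only changes the function used to fill the Gram matrix $K_{ij} = K(\mathbf{x}_i, \mathbf{x}_j)$. The rest of the SVM optimization stays the same. The sketch below defines three kernels from the list. The parameter names `gamma`, `coef0` and `degree` follow LibSVM's conventions; whether the textbook uses the same parameterization is an assumption. The Gaussian kernel was covered earlier in the lecture.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def linear_kernel(u, v):
    return dot(u, v)


def polynomial_kernel(u, v, gamma=1.0, coef0=1.0, degree=3):
    return (gamma * dot(u, v) + coef0) ** degree


def sigmoid_kernel(u, v, gamma=1.0, coef0=0.0):
    # Not positive semidefinite for every (gamma, coef0), so not always a valid kernel
    return math.tanh(gamma * dot(u, v) + coef0)


def gram_matrix(kernel, points):
    """K[i][j] = kernel(points[i], points[j]); the SVM dual only needs these values."""
    return [[kernel(p, q) for q in points] for p in points]


points = [(0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
for name, k in [("linear", linear_kernel), ("poly", polynomial_kernel),
                ("sigmoid", sigmoid_kernel)]:
    print(name, gram_matrix(k, points))
```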

4. Ensemble Methods
Ensemble methods aim at “improving classification accuracy by aggregating the predictions from multiple classifiers” (page 276). One of the most obvious ways of doing this is simply to average classifiers that make errors somewhat independently of each other.
Suppose I have 5 classifiers, each of which classifies a point correctly 70% of the time. If these 5 classifiers are completely independent and I take the majority vote, how often is the majority vote correct for that point?
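Under the stated independence assumption, the number of correct classifiers is binomial. The majority vote is correct when at least 3 of the 5 are correct:
$\binom{5}{3}(0.7)^3(0.3)^2 + \binom{5}{4}(0.7)^4(0.3) + (0.7)^5 = 0.3087 + 0.36015 + 0.16807 = 0.83692$.
The sketch below computes the same sum for any odd number of classifiers. Real classifiers are rarely fully independent, so this is an upper-bound-style idealization rather than a prediction.

```python
from math import comb


def majority_vote_accuracy(n, p):
    """Probability that a strict majority of n independent classifiers is correct,
    when each one is correct with probability p (use odd n to avoid ties)."""
    k_min = n // 2 + 1
    return sum(comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(k_min, n + 1))


# 5 classifiers, each 70% accurate, assumed completely independent
print(round(majority_vote_accuracy(5, 0.7), 4))  # 0.8369
```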

4. Ensemble Methods
- Ensemble methods include:
  - Bagging (page 283)
  - Random Forests (page 290)
  - Boosting (page 285)
- Bagging builds many classifiers by training on repeated samples (with replacement) from the data
- Random Forests averages many trees which are constructed with some amount of randomness
- Boosting combines simple base classifiers by upweighting data points which are classified incorrectly
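The two ingredients of bagging are bootstrap sampling (drawing n rows with replacement) and combining the base classifiers by majority vote. The sketch below shows only those two ingredients. The base classifier is deliberately left out, since any learner could be plugged in, and the helper names are hypothetical. Each bootstrap sample contains on average a fraction $1 - (1 - 1/n)^n \approx 1 - 1/e \approx 0.632$ of the distinct original rows. That is why the bagged classifiers see different data and make somewhat different errors.

```python
import random
from collections import Counter


def bootstrap_indices(n, rng):
    """One bootstrap sample: n row indices drawn uniformly WITH replacement."""
    return [rng.randrange(n) for _ in range(n)]


def expected_unique_fraction(n):
    """Expected fraction of distinct original rows in a bootstrap sample of size n."""
    return 1 - (1 - 1 / n) ** n


def majority_vote(predictions):
    """Combine the base classifiers' predicted labels by plurality vote."""
    return Counter(predictions).most_common(1)[0][0]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
sample = bootstrap_indices(1000, rng)
print("distinct fraction:", len(set(sample)) / 1000)
print("expected:", round(expected_unique_fraction(1000), 4))
print("vote:", majority_vote(["+", "-", "+", "+", "-"]))
```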

4. Boosting
- Boosting has been called the “best off-the-shelf classifier in the world”. See an old reference
- There are a number of explanations for boosting, but it is not completely understood why it works so well
- The original popular algorithm is AdaBoost from

4. Boosting
- Boosting can use any classifier as its weak learner (base classifier), but classification trees are by far the most popular
- Boosting usually gives zero training error but rarely overfits, which is very curious
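Here is a minimal AdaBoost sketch with one-dimensional decision stumps as the weak learner. The toy data and all function names are hypothetical. Each round works in three steps:
- Fit the stump with the smallest weighted error ε.
- Give that stump the vote weight α = ½ ln((1 - ε)/ε).
- Multiply each point's weight by exp(-α y h(x)), which upweights the misclassified points, then renormalize.

The final classifier is the sign of the α-weighted vote of all stumps.

```python
import math


def stump_predict(t, s, xi):
    """Decision stump: predict s when x > t, otherwise -s."""
    return s if xi > t else -s


def fit_stump(x, y, w):
    """Return (threshold, sign, weighted_error) of the best stump under weights w."""
    xs = sorted(set(x))
    thresholds = [xs[0] - 0.5] + [(a + b) / 2 for a, b in zip(xs, xs[1:])] + [xs[-1] + 0.5]
    best = None
    for t in thresholds:
        for s in (1, -1):
            err = sum(wi for xi, yi, wi in zip(x, y, w) if stump_predict(t, s, xi) != yi)
            if best is None or err < best[2]:
                best = (t, s, err)
    return best


def adaboost(x, y, n_rounds):
    n = len(x)
    w = [1.0 / n] * n
    model = []  # list of (alpha, threshold, sign)
    for _ in range(n_rounds):
        t, s, err = fit_stump(x, y, w)
        if err >= 0.5:
            break  # weak learner is no better than chance
        err = max(err, 1e-10)  # avoid division by zero for a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)
        model.append((alpha, t, s))
        # upweight misclassified points, downweight correct ones, then renormalize
        w = [wi * math.exp(-alpha * yi * stump_predict(t, s, xi))
             for xi, yi, wi in zip(x, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return model


def predict(model, xi):
    score = sum(alpha * stump_predict(t, s, xi) for alpha, t, s in model)
    return 1 if score > 0 else -1


x = [1, 2, 3, 4, 5, 6]
y = [1, 1, -1, -1, 1, 1]
model = adaboost(x, y, 3)
print(model)
print([predict(model, xi) for xi in x])
```

No single stump can fit the pattern +, +, -, -, +, + (the best one misclassifies two points). After three rounds, however, the weighted vote classifies every training point correctly, which mirrors the "zero training error" remark above.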

4. References
1. Mathematical Modeling and Simulation, Module 2, Lessons 2-6. https://modelsim.wordpress.com/modules/optimization/
2. LibSVM Guide, posted on the shared Google Drive under the Weka Tutorial.
3. Y. Freund and R. E. Schapire, “Experiments with a New Boosting Algorithm,” in Machine Learning: Proceedings of the Thirteenth International Conference, pp. 148-156, 1996.