Relation Extraction: Slides from Dan Jurafsky, Rion Snow


Relation Extraction Slides from Dan Jurafsky, Rion Snow, Jim Martin, Chris Manning and William Cohen

Background: Information Extraction
- Extract information from text
  - Sometimes called text analytics commercially
  - Extract entities (the people, organizations, locations, times, dates, genes, diseases, medicines, etc. in a text)
  - Extract the relations between entities
  - Figure out the larger events that are taking place

Information Extraction
- Creating knowledge bases and ontologies
- Implications for cognitive modeling
- Digital libraries
  - Google Scholar and CiteSeer need to extract the title, author and references
- Bioinformatics
- Patent analysis
- Specific market segments for stock analysis
- SEC filings
- Intelligence analysis

Paradigms: Data Mining vs Text Mining
- Data mining is a set of techniques that aims to extract knowledge by analyzing large amounts of data, e.g. from databases, using simple patterns and statistical methods
- In contrast, the goal of text mining (or machine reading) is to extract relationships between entities that already exist in the text being examined, at a semantically deeper level than data mining
- The difference is in the source information:
  - the relationships in the text already exist in our source;
  - we don't need to guess them, only discover them!

Outline
- Reminder: Named Entity Tagging
- Relation Extraction
  - Hand-built patterns
  - Seed (bootstrap) methods
  - Supervised classification
  - Distant supervision

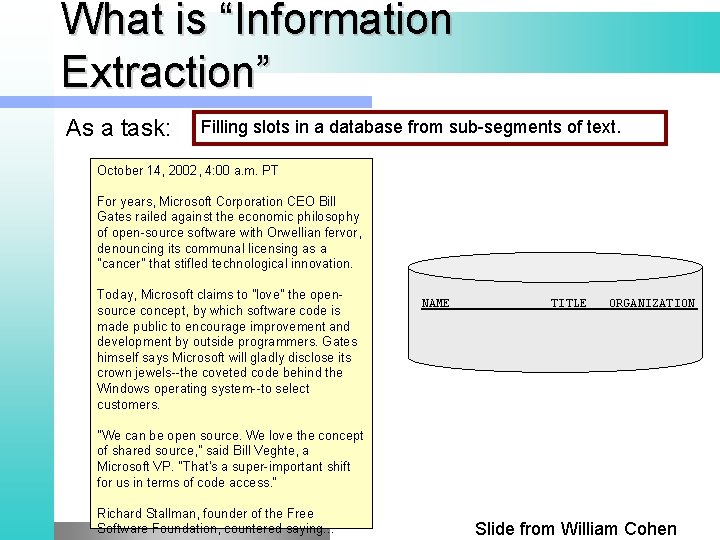

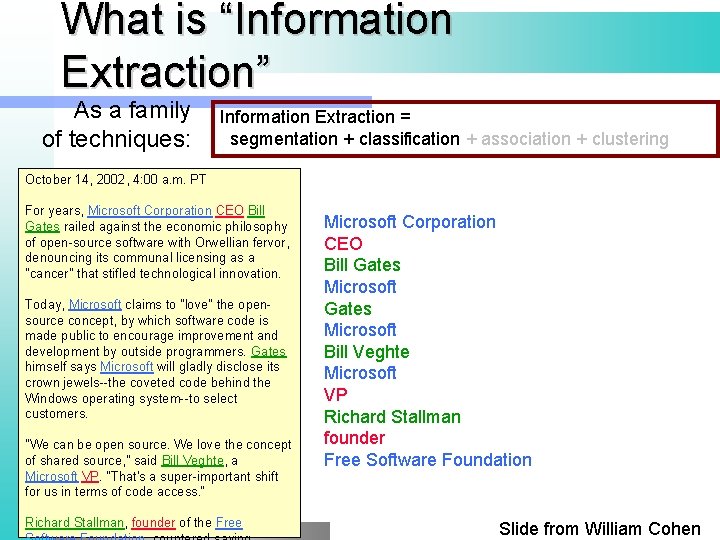

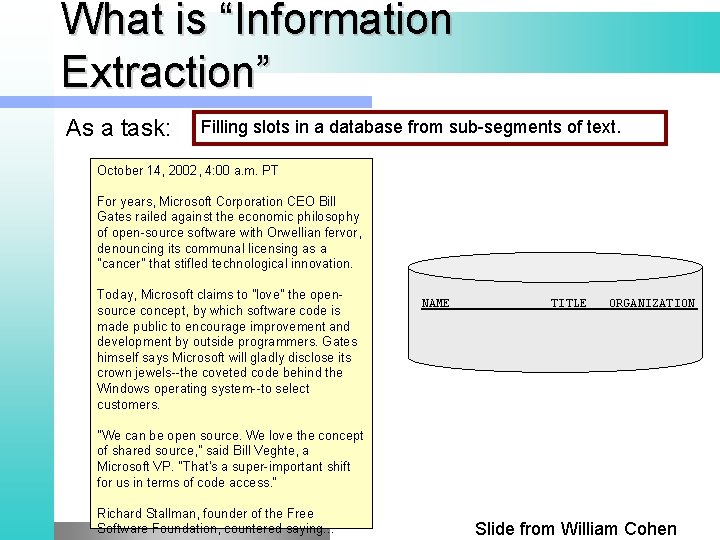

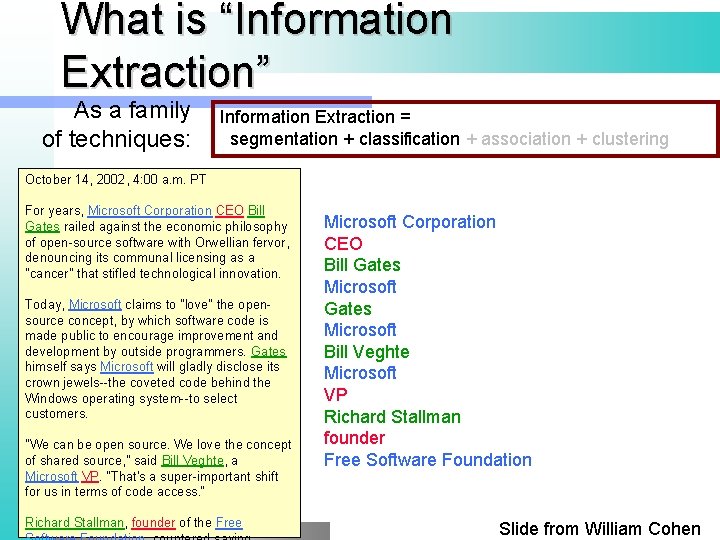

What is "Information Extraction"

As a task: filling slots in a database from sub-segments of text.

> October 14, 2002, 4:00 a.m. PT
>
> For years, Microsoft Corporation CEO Bill Gates railed against the economic philosophy of open-source software with Orwellian fervor, denouncing its communal licensing as a "cancer" that stifled technological innovation.
>
> Today, Microsoft claims to "love" the open-source concept, by which software code is made public to encourage improvement and development by outside programmers. Gates himself says Microsoft will gladly disclose its crown jewels--the coveted code behind the Windows operating system--to select customers.
>
> "We can be open source. We love the concept of shared source," said Bill Veghte, a Microsoft VP. "That's a super-important shift for us in terms of code access."
>
> Richard Stallman, founder of the Free Software Foundation, countered saying…

| NAME | TITLE | ORGANIZATION |
|---|---|---|

Slide from William Cohen

What is "Information Extraction"

As a task: filling slots in a database from sub-segments of text.

> October 14, 2002, 4:00 a.m. PT
>
> For years, Microsoft Corporation CEO Bill Gates railed against the economic philosophy of open-source software with Orwellian fervor, denouncing its communal licensing as a "cancer" that stifled technological innovation.
>
> Today, Microsoft claims to "love" the open-source concept, by which software code is made public to encourage improvement and development by outside programmers. Gates himself says Microsoft will gladly disclose its crown jewels--the coveted code behind the Windows operating system--to select customers.
>
> "We can be open source. We love the concept of shared source," said Bill Veghte, a Microsoft VP. "That's a super-important shift for us in terms of code access."
>
> Richard Stallman, founder of the Free Software Foundation, countered saying…

IE:

| NAME | TITLE | ORGANIZATION |
|---|---|---|
| Bill Gates | CEO | Microsoft |
| Bill Veghte | VP | Microsoft |
| Richard Stallman | founder | Free Soft.. |

Slide from William Cohen

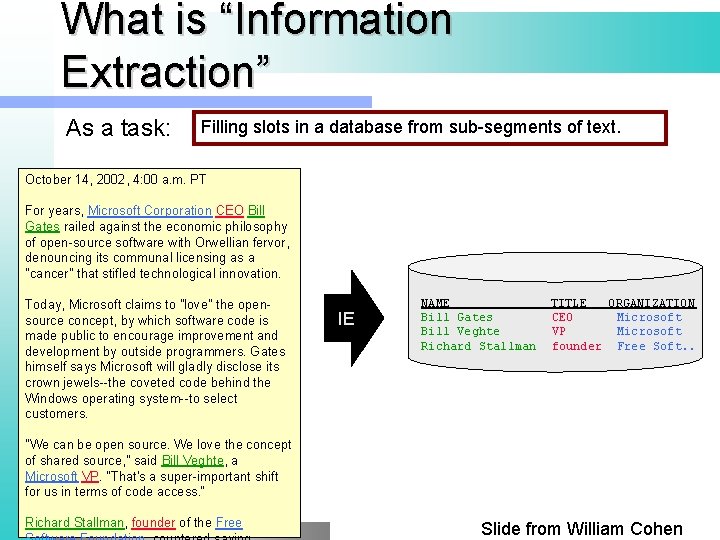

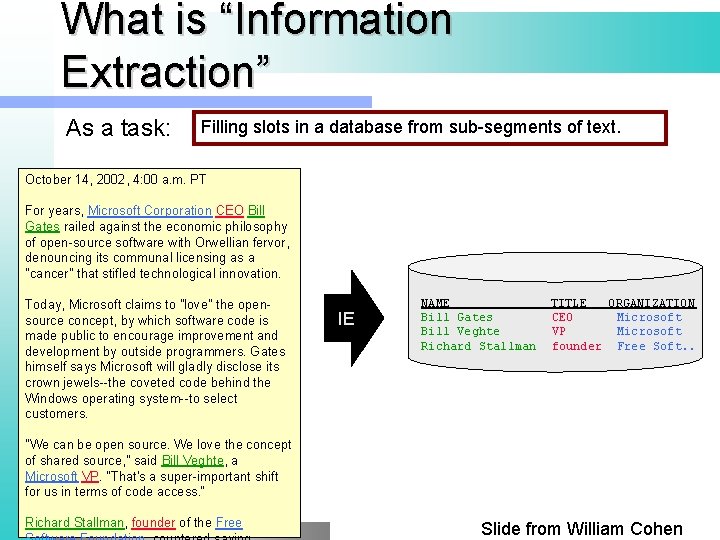

What is "Information Extraction"

As a family of techniques: Information Extraction = segmentation + classification + association + clustering

> October 14, 2002, 4:00 a.m. PT
>
> For years, Microsoft Corporation CEO Bill Gates railed against the economic philosophy of open-source software with Orwellian fervor, denouncing its communal licensing as a "cancer" that stifled technological innovation.
>
> Today, Microsoft claims to "love" the open-source concept, by which software code is made public to encourage improvement and development by outside programmers. Gates himself says Microsoft will gladly disclose its crown jewels--the coveted code behind the Windows operating system--to select customers.
>
> "We can be open source. We love the concept of shared source," said Bill Veghte, a Microsoft VP. "That's a super-important shift for us in terms of code access."
>
> Richard Stallman, founder of the Free Software Foundation, countered saying…

Segments found ("named entity extraction"): Microsoft Corporation, CEO, Bill Gates, Microsoft, Gates, Microsoft, Bill Veghte, Microsoft, VP, Richard Stallman, founder, Free Software Foundation

Slide from William Cohen

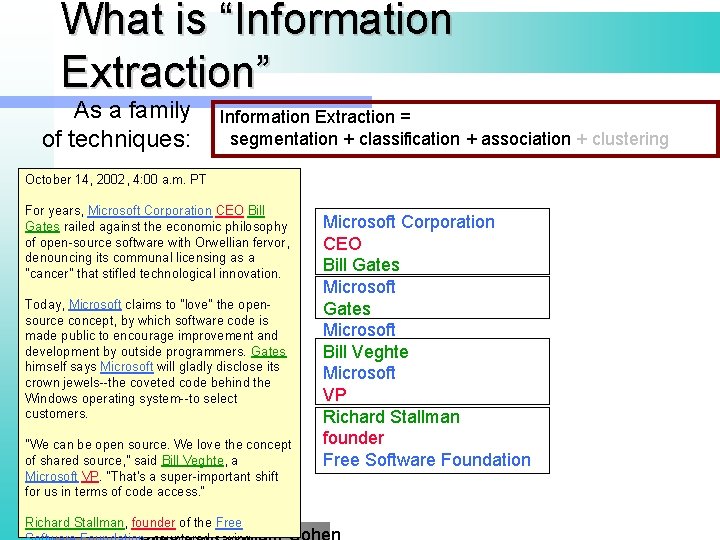

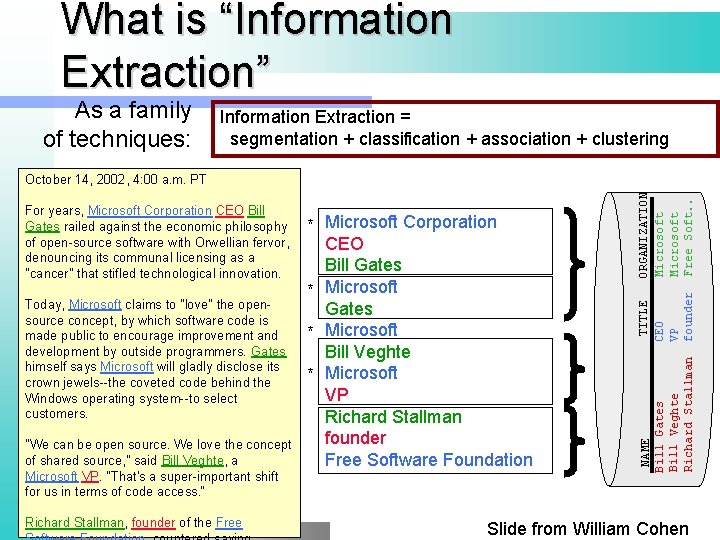

What is "Information Extraction"

As a family of techniques: Information Extraction = segmentation + classification + association + clustering

> October 14, 2002, 4:00 a.m. PT
>
> For years, Microsoft Corporation CEO Bill Gates railed against the economic philosophy of open-source software with Orwellian fervor, denouncing its communal licensing as a "cancer" that stifled technological innovation.
>
> Today, Microsoft claims to "love" the open-source concept, by which software code is made public to encourage improvement and development by outside programmers. Gates himself says Microsoft will gladly disclose its crown jewels--the coveted code behind the Windows operating system--to select customers.
>
> "We can be open source. We love the concept of shared source," said Bill Veghte, a Microsoft VP. "That's a super-important shift for us in terms of code access."
>
> Richard Stallman, founder of the Free Software Foundation, countered saying…

Segments: Microsoft Corporation, CEO, Bill Gates, Microsoft, Bill Veghte, Microsoft, VP, Richard Stallman, founder, Free Software Foundation

Slide from William Cohen

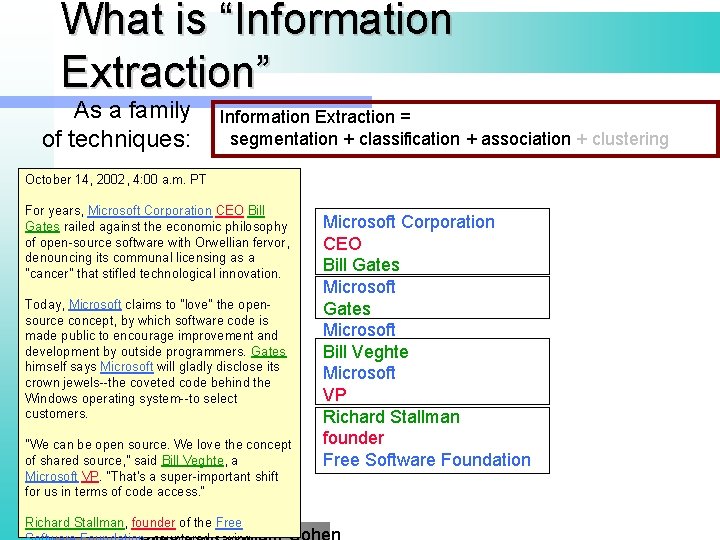

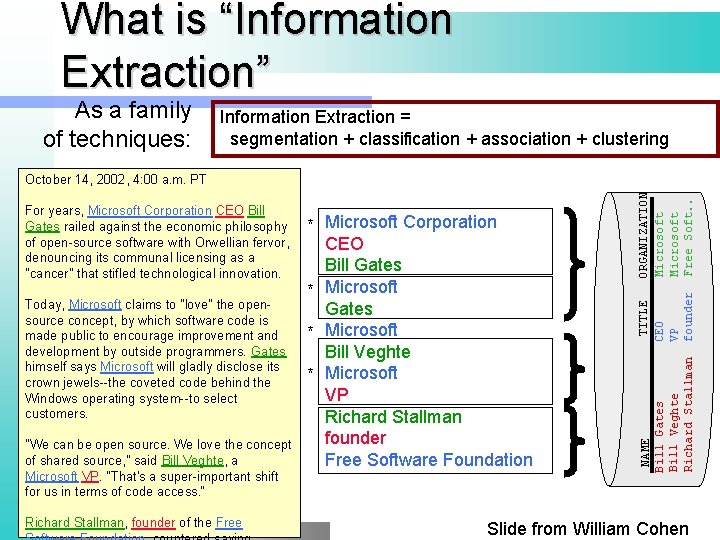


What is "Information Extraction"

As a family of techniques: Information Extraction = segmentation + classification + association + clustering

> October 14, 2002, 4:00 a.m. PT
>
> For years, Microsoft Corporation CEO Bill Gates railed against the economic philosophy of open-source software with Orwellian fervor, denouncing its communal licensing as a "cancer" that stifled technological innovation.
>
> Today, Microsoft claims to "love" the open-source concept, by which software code is made public to encourage improvement and development by outside programmers. Gates himself says Microsoft will gladly disclose its crown jewels--the coveted code behind the Windows operating system--to select customers.
>
> "We can be open source. We love the concept of shared source," said Bill Veghte, a Microsoft VP. "That's a super-important shift for us in terms of code access."
>
> Richard Stallman, founder of the Free Software Foundation, countered saying…

Segments: \*Microsoft Corporation, CEO, Bill Gates, \*Microsoft, Bill Veghte, \*Microsoft, VP, Richard Stallman, founder, Free Software Foundation

| NAME | TITLE | ORGANIZATION |
|---|---|---|
| Bill Gates | CEO | Microsoft |
| Bill Veghte | VP | Microsoft |
| Richard Stallman | founder | Free Soft.. |

Slide from William Cohen
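A minimal sketch of the slot-filling view, assuming that three hand-written regular expressions stand in for segmentation, classification and association. The patterns are hypothetical and tuned only to the phrasings in this (abbreviated) article; clustering "Microsoft Corporation" with "Microsoft" is reduced to dropping the word "Corporation".

```python
import re

# Hypothetical patterns for the NAME / TITLE / ORGANIZATION template.
# Named groups map each match directly onto the slots.
NAME = r"(?P<name>[A-Z][a-z]+ [A-Z][a-z]+)"
PATTERNS = [
    # ORG TITLE NAME: "Microsoft Corporation CEO Bill Gates"
    re.compile(r"(?P<org>[A-Z][a-z]+)(?: Corporation)? (?P<title>CEO|VP) " + NAME),
    # NAME, a ORG TITLE: "Bill Veghte, a Microsoft VP"
    re.compile(NAME + r", an? (?P<org>[A-Z][a-z]+) (?P<title>CEO|VP)"),
    # NAME, TITLE of the ORG: "Richard Stallman, founder of the Free Software Foundation"
    re.compile(NAME + r", (?P<title>founder) of the (?P<org>[A-Z][a-z]+(?: [A-Z][a-z]+)*)"),
]

def fill_slots(text):
    """Return one NAME/TITLE/ORGANIZATION record per pattern match."""
    rows = []
    for pattern in PATTERNS:
        for m in pattern.finditer(text):
            rows.append({"NAME": m.group("name"),
                         "TITLE": m.group("title"),
                         "ORGANIZATION": m.group("org")})
    return rows

ARTICLE = (
    "For years, Microsoft Corporation CEO Bill Gates railed against the "
    "economic philosophy of open-source software. "
    '"We love the concept of shared source," said Bill Veghte, a Microsoft VP. '
    "Richard Stallman, founder of the Free Software Foundation, countered saying..."
)

for row in fill_slots(ARTICLE):
    print(row)
```

Each pattern covers exactly one phrasing, which is the weakness of hand-built extraction discussed later in these slides.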

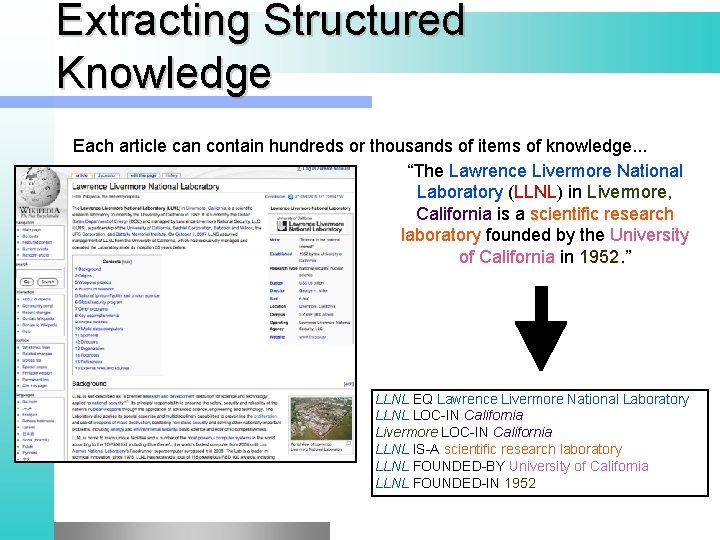

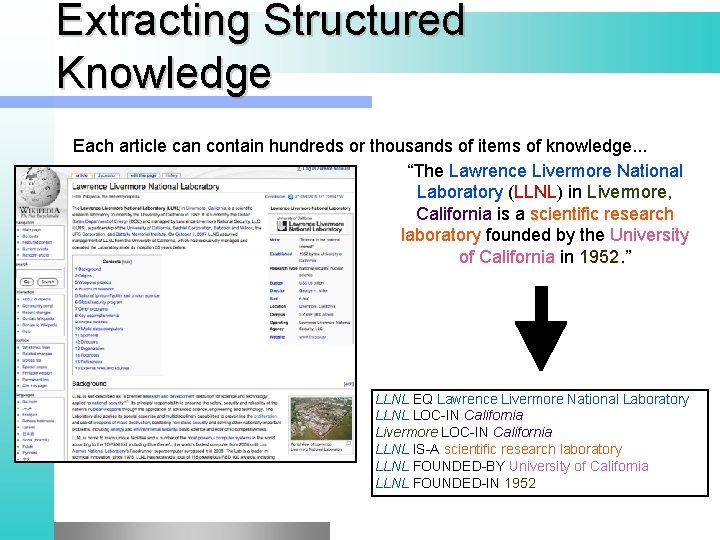

Extracting Structured Knowledge

Each article can contain hundreds or thousands of items of knowledge...

> "The Lawrence Livermore National Laboratory (LLNL) in Livermore, California is a scientific research laboratory founded by the University of California in 1952."

- LLNL EQ Lawrence Livermore National Laboratory
- LLNL LOC-IN California
- Livermore LOC-IN California
- LLNL IS-A scientific research laboratory
- LLNL FOUNDED-BY University of California
- LLNL FOUNDED-IN 1952

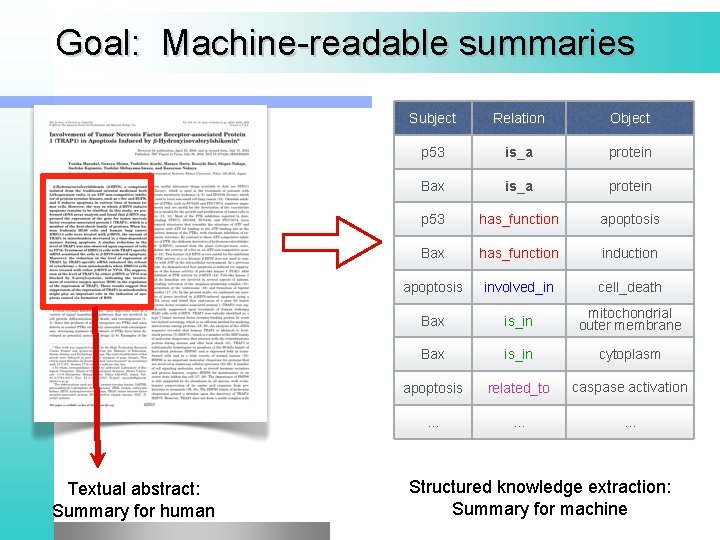

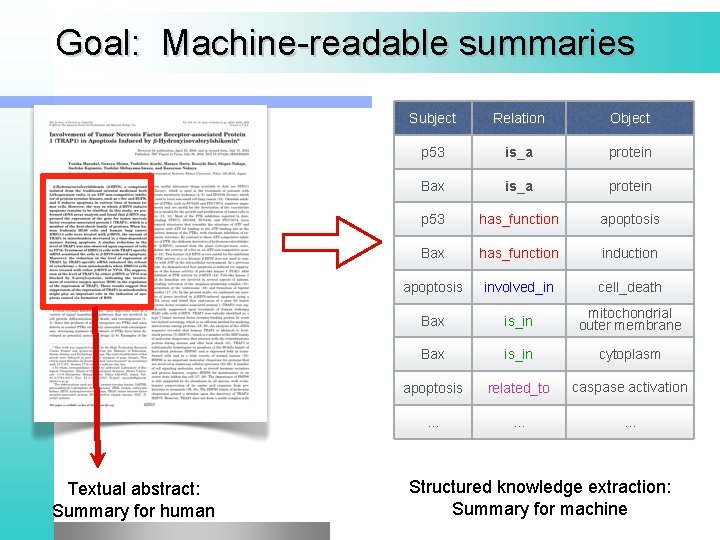

Goal: Machine-readable summaries
- Textual abstract: summary for humans
- Structured knowledge extraction: summary for machines

| Subject | Relation | Object |
|---|---|---|
| p53 | is_a | protein |
| Bax | is_a | protein |
| p53 | has_function | apoptosis |
| Bax | has_function | induction |
| apoptosis | involved_in | cell_death |
| Bax | is_in | mitochondrial outer membrane |
| Bax | is_in | cytoplasm |
| apoptosis | related_to | caspase activation |
| ... | ... | ... |

From Unstructured Text to Structured Knowledge

Unstructured text: news articles...

Slide from Rion Snow

From Unstructured Text to Structured Knowledge

Unstructured text: blog posts...

Slide from Rion Snow

From Unstructured Text to Structured Knowledge

Unstructured text: scientific journal articles...

Slide from Rion Snow

From Unstructured Text to Structured Knowledge

Unstructured text: tweets, instant messages, chat logs...

Slide from Rion Snow

From Unstructured Text to Structured Knowledge

(Figure: unstructured text.)

Slide from Rion Snow

From Unstructured Text to Structured Knowledge

(Figure: unstructured text → structured knowledge.)

Slide from Rion Snow


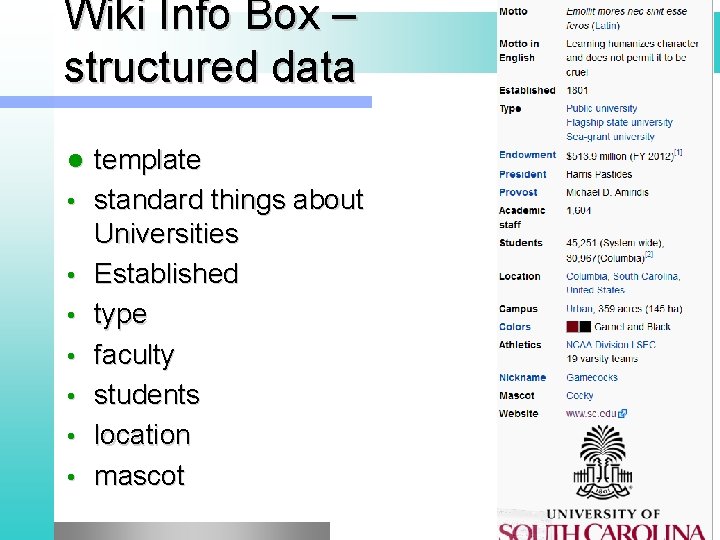

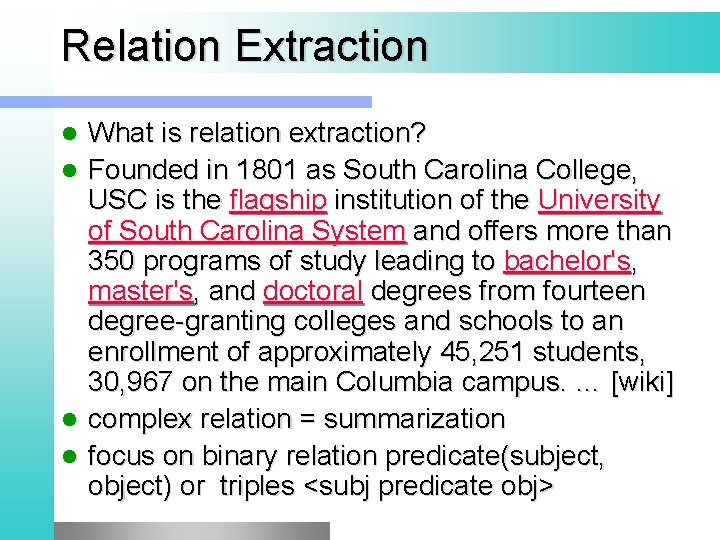

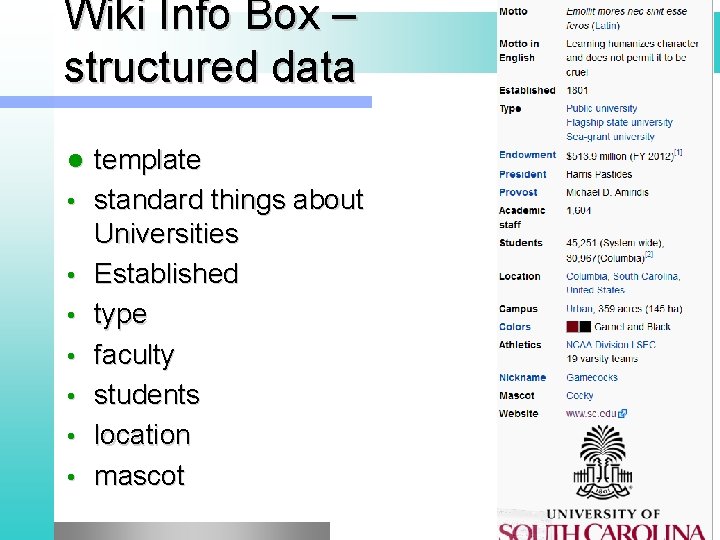

Relation Extraction
- What is relation extraction?

> Founded in 1801 as South Carolina College, USC is the flagship institution of the University of South Carolina System and offers more than 350 programs of study leading to bachelor's, master's, and doctoral degrees from fourteen degree-granting colleges and schools to an enrollment of approximately 45,251 students, 30,967 on the main Columbia campus. … [wiki]

- Complex relation = summarization
- Focus on binary relations: predicate(subject, object), or triples `<subj predicate obj>`

Wiki Infobox: structured data
- Template
- Standard things about universities: established, type, faculty, students, location, mascot

Focus on extracting binary relations
- predicate(subject, object), from predicate logic
- triples `<subj relation object>`
- directed graphs
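A binary relation maps naturally onto an edge-labelled directed graph. This sketch, assuming plain Python dictionaries rather than any particular triple store, loads the LLNL triples from the "Extracting Structured Knowledge" slide and answers predicate(subject, ?) queries; `build_graph` and `objects` are illustrative names.

```python
from collections import defaultdict

# Triples <subject, relation, object> from the LLNL example.
TRIPLES = [
    ("LLNL", "EQ", "Lawrence Livermore National Laboratory"),
    ("LLNL", "LOC-IN", "California"),
    ("Livermore", "LOC-IN", "California"),
    ("LLNL", "IS-A", "scientific research laboratory"),
    ("LLNL", "FOUNDED-BY", "University of California"),
    ("LLNL", "FOUNDED-IN", "1952"),
]

def build_graph(triples):
    """Directed, edge-labelled graph: subject -> list of (relation, object)."""
    graph = defaultdict(list)
    for subj, rel, obj in triples:
        graph[subj].append((rel, obj))
    return graph

def objects(graph, subj, rel):
    """All objects o such that rel(subj, o) holds."""
    return [o for r, o in graph.get(subj, []) if r == rel]

g = build_graph(TRIPLES)
print(objects(g, "LLNL", "LOC-IN"))      # ['California']
print(objects(g, "LLNL", "FOUNDED-IN"))  # ['1952']
```

Storing edges per subject keeps predicate(subject, ?) lookups cheap; answering predicate(?, object) would need a second index keyed on the object.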

Why relation extraction?
- Create new structured knowledge bases
- Augment existing ones: words -> WordNet, facts -> Freebase or DBpedia
- Support question answering: Jeopardy

Which relations?
- Automated Content Extraction (ACE): http://www.itl.nist.gov/iad/mig//tests/ace/
  - 17 relations
  - ACE examples

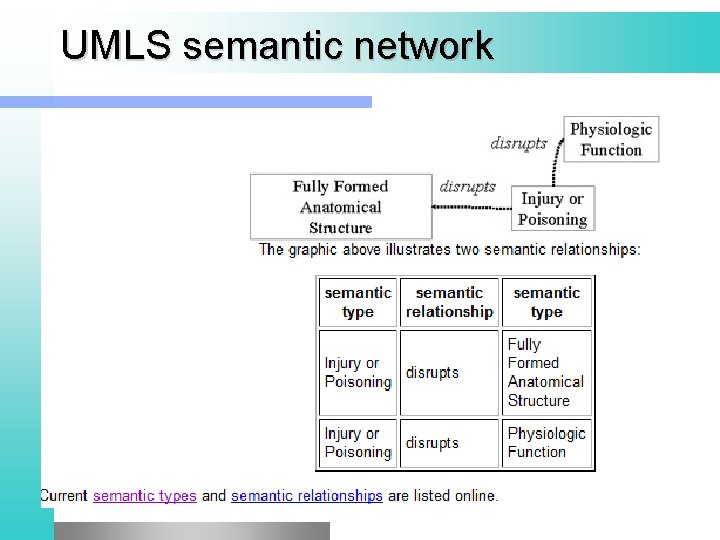

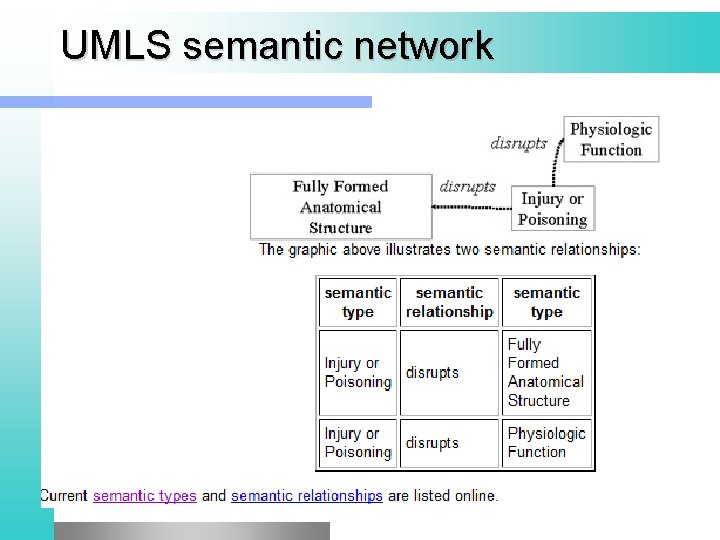

Unified Medical Language System (UMLS)
- UMLS: Unified Medical Language System
- 134 entities, 54 relations
- http://www.nlm.nih.gov/research/umls/

UMLS semantic network

Current Relations in the UMLS Semantic Network
- isa
- associated_with
  - physically_related_to: part_of, consists_of, contains, connected_to, interconnects, branch_of, tributary_of, ingredient_of
  - spatially_related_to: location_of, adjacent_to, surrounds, traverses
  - functionally_related_to: affects, …
  - …
  - temporally_related_to: co-occurs_with, precedes
  - conceptually_related_to: evaluation_of, degree_of, analyzes, assesses_effect_of, measurement_of, measures, diagnoses, property_of, derivative_of, developmental_form_of, method_of, …

Databases of Wikipedia Relations
- DBpedia is a crowd-sourced community effort to extract structured information from Wikipedia and to make this information readily available
- DBpedia allows you to make sophisticated queries
- http://dbpedia.org/About

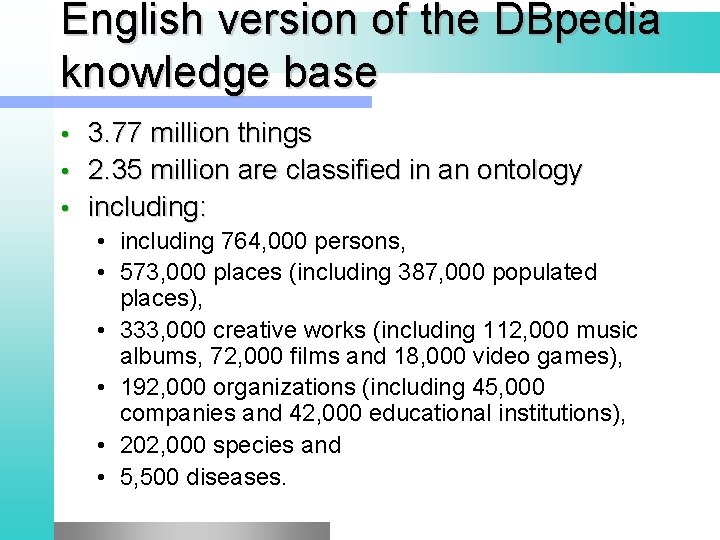

English version of the DBpedia knowledge base
- 3.77 million things
- 2.35 million are classified in an ontology, including:
  - 764,000 persons
  - 573,000 places (including 387,000 populated places)
  - 333,000 creative works (including 112,000 music albums, 72,000 films and 18,000 video games)
  - 192,000 organizations (including 45,000 companies and 42,000 educational institutions)
  - 202,000 species
  - 5,500 diseases

Freebase
- Google (Freebase wiki): http://wiki.freebase.com/wiki/Main_Page

Ontological relations
- IS-A (hypernym)
- Instance-of
- Has-Part
- Hyponym (opposite of hypernym)

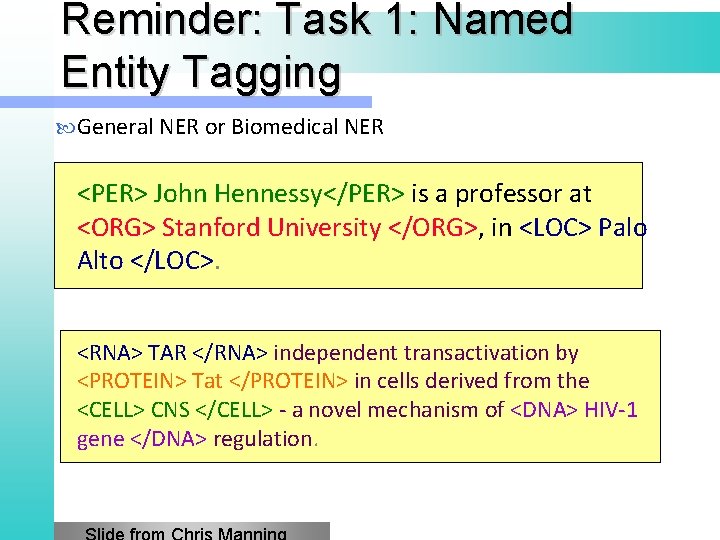

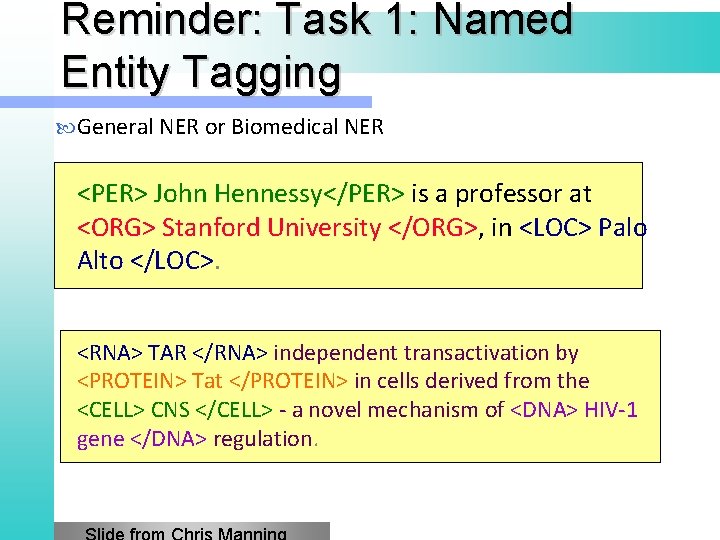

Reminder: Task I: Named Entity Tagging

General NER or biomedical NER:
- <PER> John Hennessy</PER> is a professor at <ORG> Stanford University </ORG>, in <LOC> Palo Alto </LOC>.
- <RNA> TAR </RNA> independent transactivation by <PROTEIN> Tat </PROTEIN> in cells derived from the <CELL> CNS </CELL> - a novel mechanism of <DNA> HIV-1 gene </DNA> regulation.
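The inline-tag notation can be turned into (type, text) spans with a single regular expression. A sketch, assuming tags are never nested and each closing tag repeats its opening tag's name; `entities` is an illustrative helper name.

```python
import re

# <TYPE> text </TYPE>; the backreference (?P=type) forces matching tag names,
# and the lazy .*? stops at the first closing tag.
TAG = re.compile(r"<(?P<type>[A-Z]+)>\s*(?P<text>.*?)\s*</(?P=type)>")

def entities(tagged):
    """List of (entity type, entity text) in order of appearance."""
    return [(m.group("type"), m.group("text")) for m in TAG.finditer(tagged)]

GENERAL = ("<PER> John Hennessy</PER> is a professor at "
           "<ORG> Stanford University </ORG>, in <LOC> Palo Alto </LOC>.")
BIOMEDICAL = ("<RNA> TAR </RNA> independent transactivation by <PROTEIN> Tat "
              "</PROTEIN> in cells derived from the <CELL> CNS </CELL> - a novel "
              "mechanism of <DNA> HIV-1 gene </DNA> regulation.")

print(entities(GENERAL))
print(entities(BIOMEDICAL))
```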

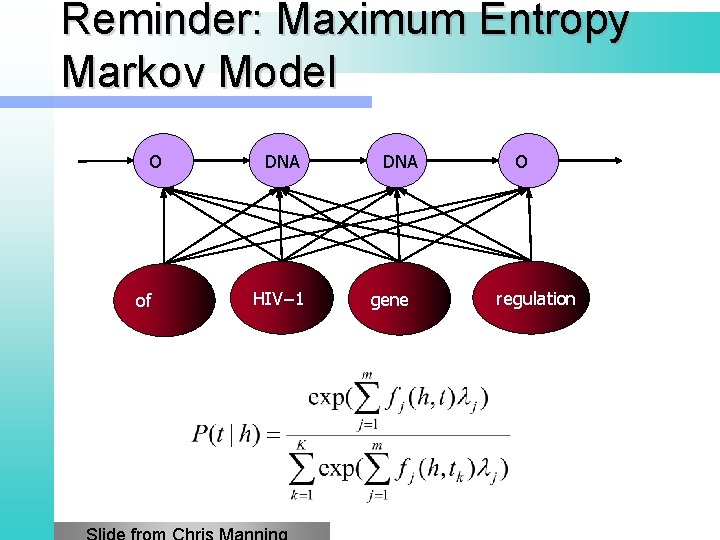

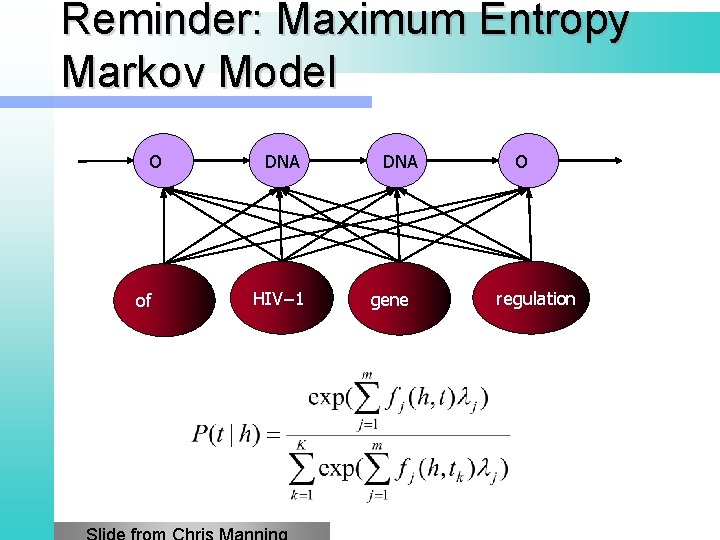

Reminder: Maximum Entropy Markov Model

(Figure: MEMM tagging of "of HIV-1 gene regulation" as of/O, HIV-1/DNA, gene/DNA, regulation/O.)

Task II: Relation Extraction

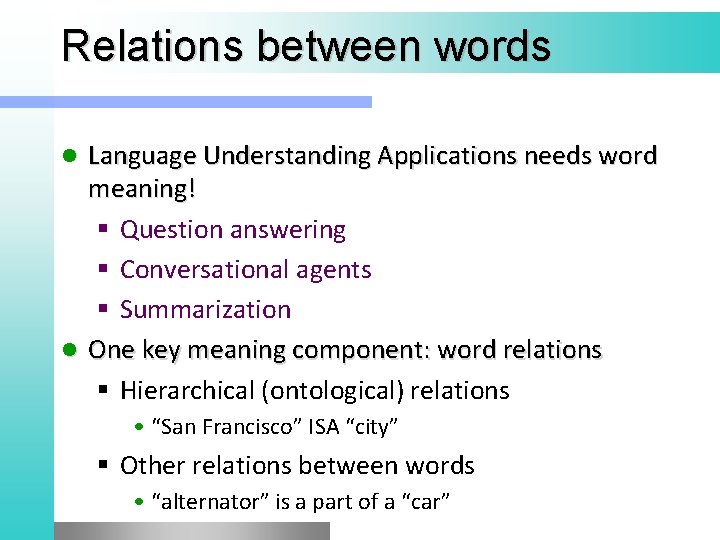

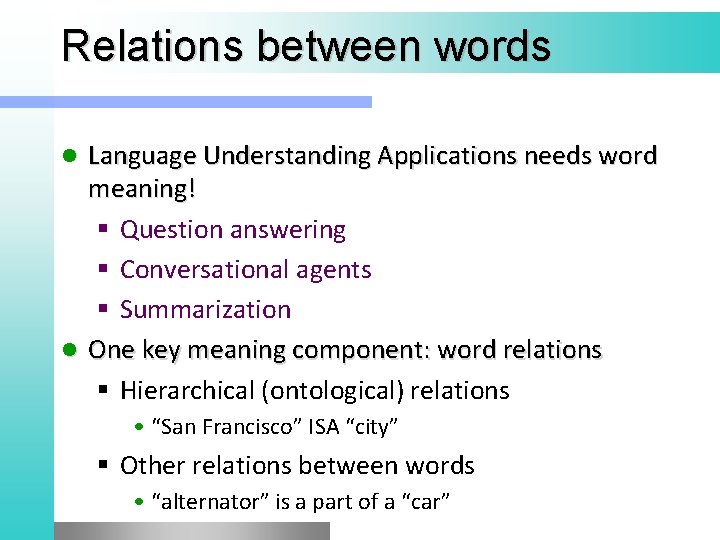

Relations between words
- Language understanding applications need word meaning!
  - Question answering
  - Conversational agents
  - Summarization
- One key meaning component: word relations
  - Hierarchical (ontological) relations
    - "San Francisco" ISA "city"
  - Other relations between words
    - "alternator" is a part of a "car"

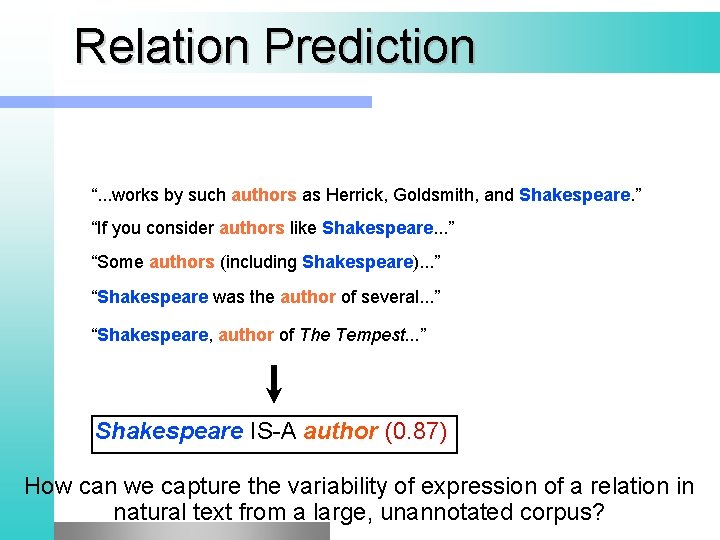

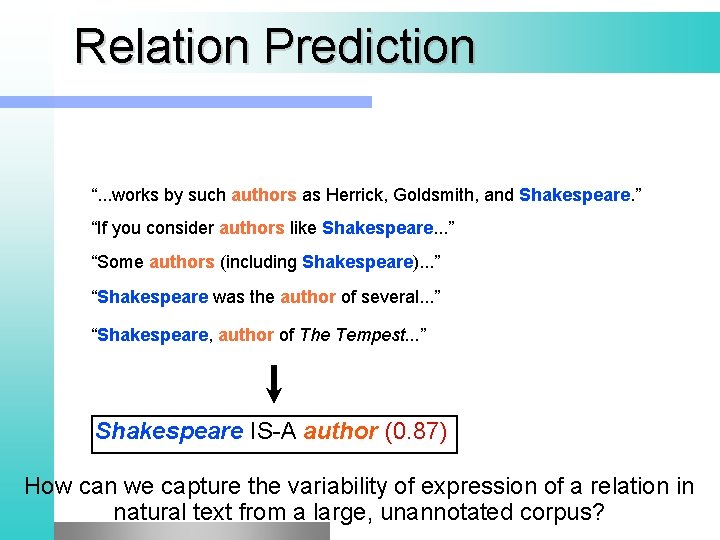

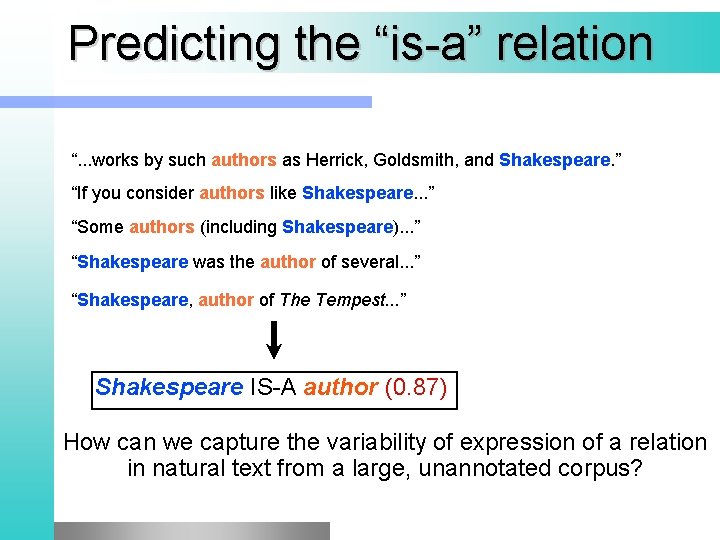

Relation Prediction
- "...works by such authors as Herrick, Goldsmith, and Shakespeare."
- "If you consider authors like Shakespeare..."
- "Some authors (including Shakespeare)..."
- "Shakespeare was the author of several..."
- "Shakespeare, author of The Tempest..."

Shakespeare IS-A author (0.87)

How can we capture the variability of expression of a relation in natural text from a large, unannotated corpus?

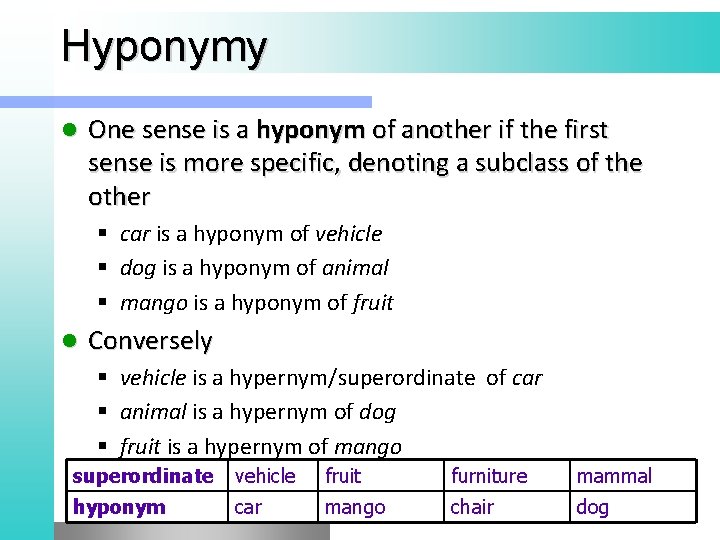

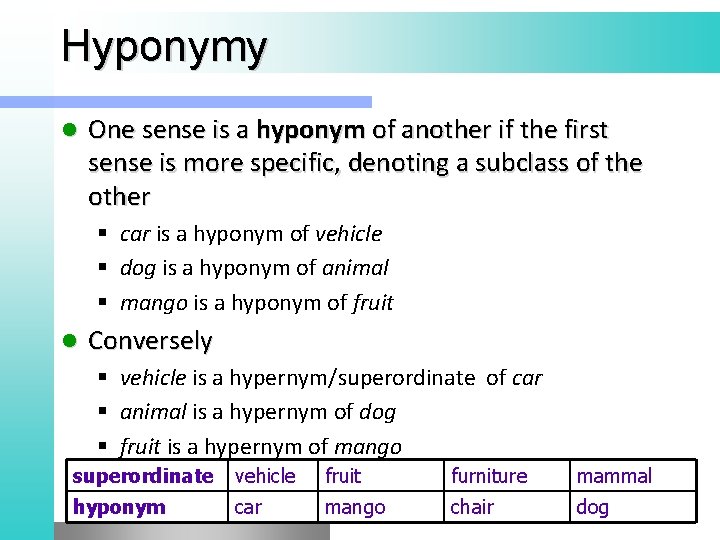

Hyponymy
- One sense is a hyponym of another if the first sense is more specific, denoting a subclass of the other
  - car is a hyponym of vehicle
  - dog is a hyponym of animal
  - mango is a hyponym of fruit
- Conversely
  - vehicle is a hypernym/superordinate of car
  - animal is a hypernym of dog
  - fruit is a hypernym of mango

| Superordinate | Hyponym |
|---|---|
| vehicle | car |
| fruit | mango |
| furniture | chair |
| mammal | dog |
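Hyponymy is transitive, so testing whether X is a hyponym of Y means walking up the hypernym chain. A minimal sketch, assuming a single direct hypernym per word (WordNet allows several) and one extra link, mammal → animal, that is an illustrative assumption rather than part of the table:

```python
# Direct hypernym links (hyponym -> hypernym) from the table, plus one
# ASSUMED link, mammal -> animal, added for illustration.
# Simplification: one parent per word, whereas WordNet allows several.
HYPERNYM = {
    "car": "vehicle",
    "mango": "fruit",
    "chair": "furniture",
    "dog": "mammal",
    "mammal": "animal",
}

def hypernyms(word):
    """All ancestors of `word`, nearest first (assumes no cycles)."""
    chain = []
    while word in HYPERNYM:
        word = HYPERNYM[word]
        chain.append(word)
    return chain

def is_hyponym(x, y):
    """True if x is a direct or indirect hyponym of y."""
    return y in hypernyms(x)

print(hypernyms("dog"))             # ['mammal', 'animal']
print(is_hyponym("dog", "animal"))  # True
print(is_hyponym("animal", "dog"))  # False
```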

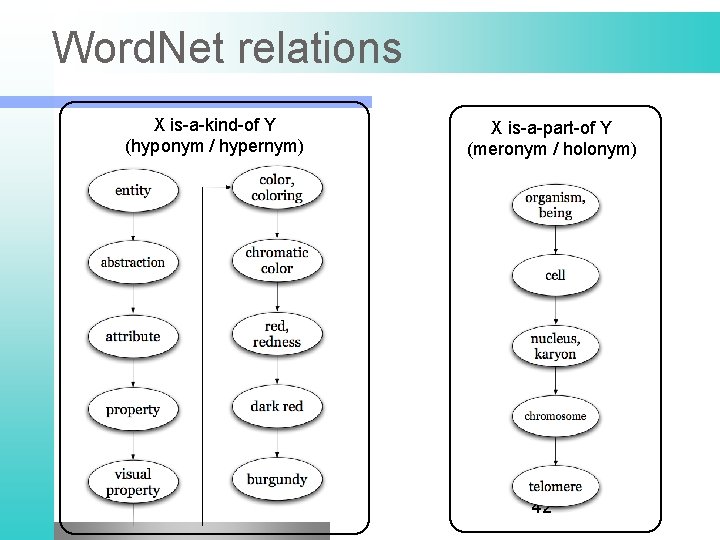

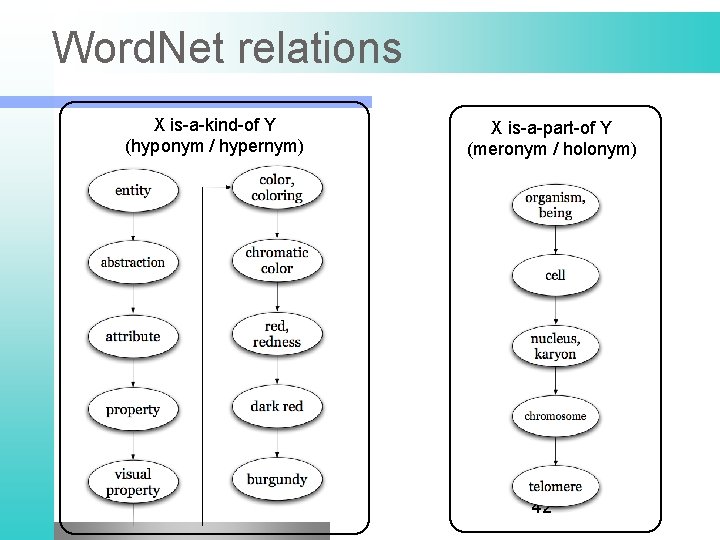

WordNet relations
- X is-a-kind-of Y (hyponym / hypernym)
- X is-a-part-of Y (meronym / holonym)

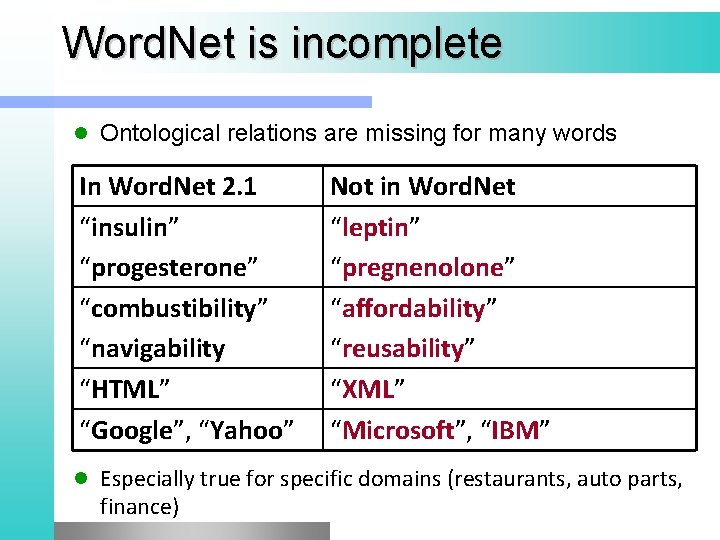

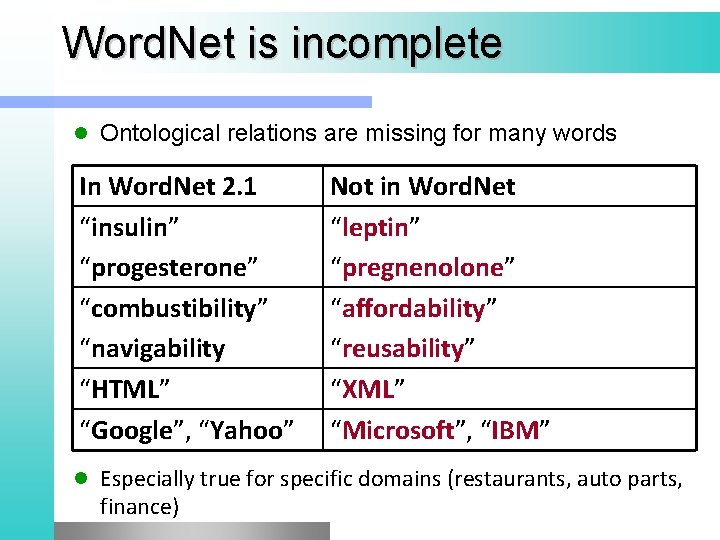

WordNet is incomplete
- Ontological relations are missing for many words:

| In WordNet 2.1 | Not in WordNet |
|---|---|
| insulin | leptin |
| progesterone | pregnenolone |
| combustibility | affordability |
| navigability | reusability |
| HTML | XML |
| Google, Yahoo | Microsoft, IBM |

- Especially true for specific domains (restaurants, auto parts, finance)

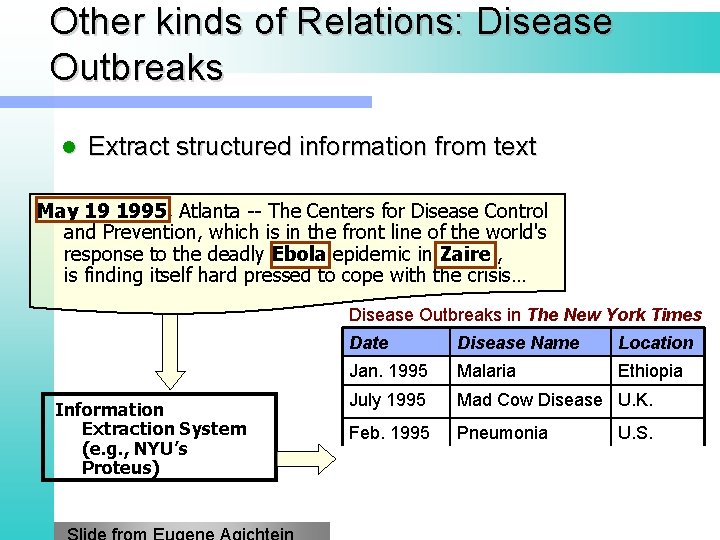

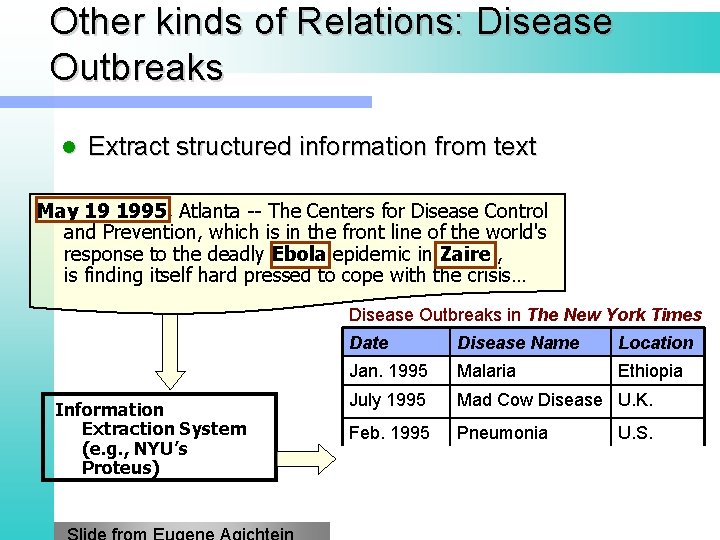

Other kinds of Relations: Disease Outbreaks
- Extract structured information from text

> May 19 1995, Atlanta -- The Centers for Disease Control and Prevention, which is in the front line of the world's response to the deadly Ebola epidemic in Zaire, is finding itself hard pressed to cope with the crisis…

Disease Outbreaks in The New York Times → Information Extraction System (e.g., NYU's Proteus) →

| Date | Disease Name | Location |
|---|---|---|
| Jan. 1995 | Malaria | Ethiopia |
| July 1995 | Mad Cow Disease | U.K. |
| Feb. 1995 | Pneumonia | U.S. |
| May 1995 | Ebola | Zaire |

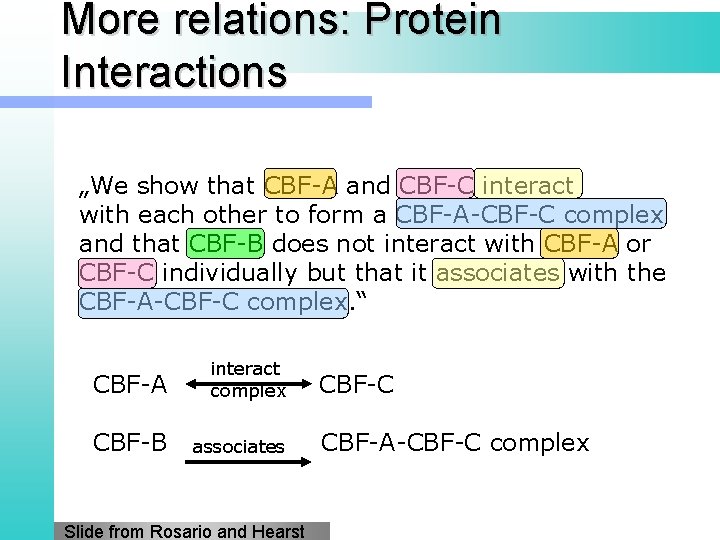

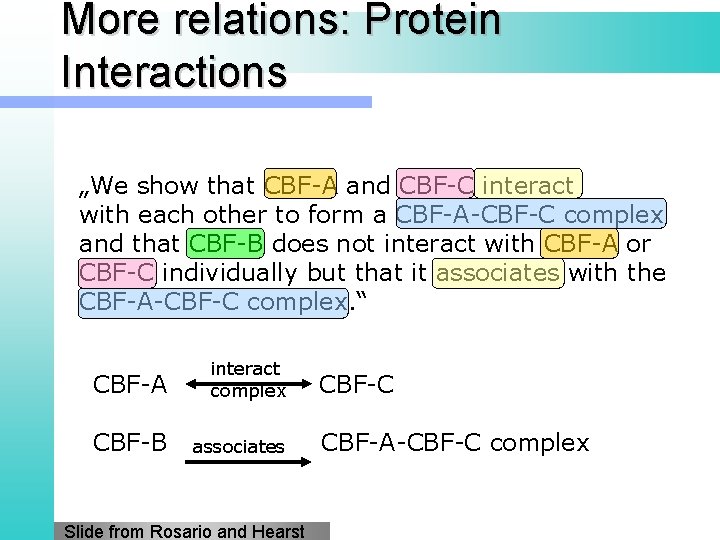

More relations: Protein Interactions

> "We show that CBF-A and CBF-C interact with each other to form a CBF-A-CBF-C complex and that CBF-B does not interact with CBF-A or CBF-C individually but that it associates with the CBF-A-CBF-C complex."

(Figure: CBF-A and CBF-C interact to form the CBF-A-CBF-C complex; CBF-B associates with that complex.)

Slide from Rosario and Hearst

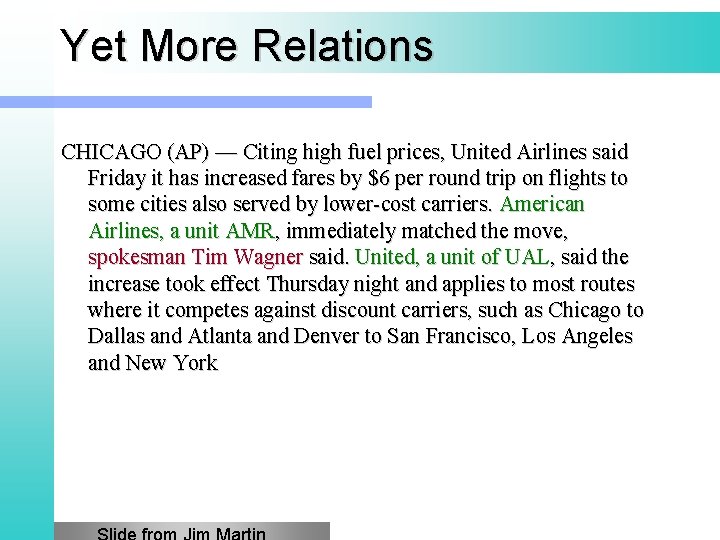

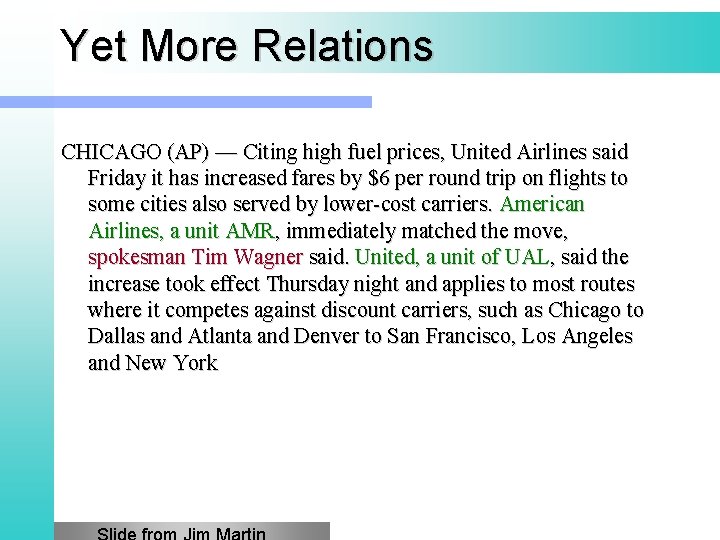

Yet More Relations

> CHICAGO (AP) -- Citing high fuel prices, United Airlines said Friday it has increased fares by $6 per round trip on flights to some cities also served by lower-cost carriers. American Airlines, a unit of AMR, immediately matched the move, spokesman Tim Wagner said. United, a unit of UAL, said the increase took effect Thursday night and applies to most routes where it competes against discount carriers, such as Chicago to Dallas and Atlanta and Denver to San Francisco, Los Angeles and New York

Relation Types
- For generic news texts...

Slide from Jim Martin

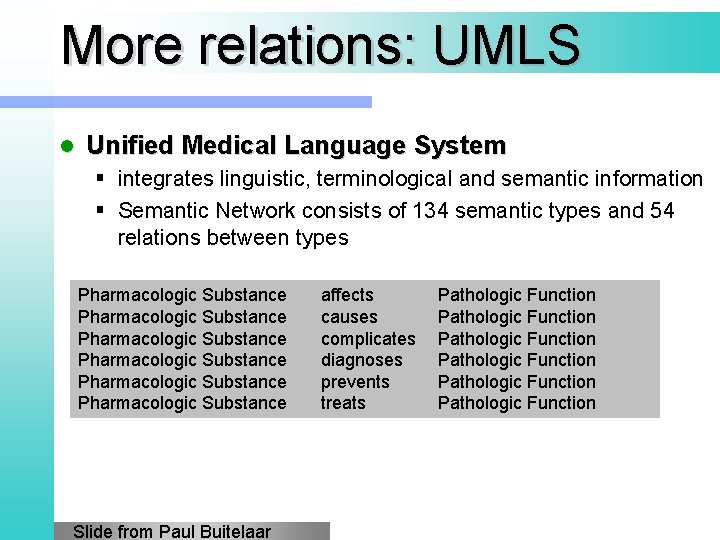

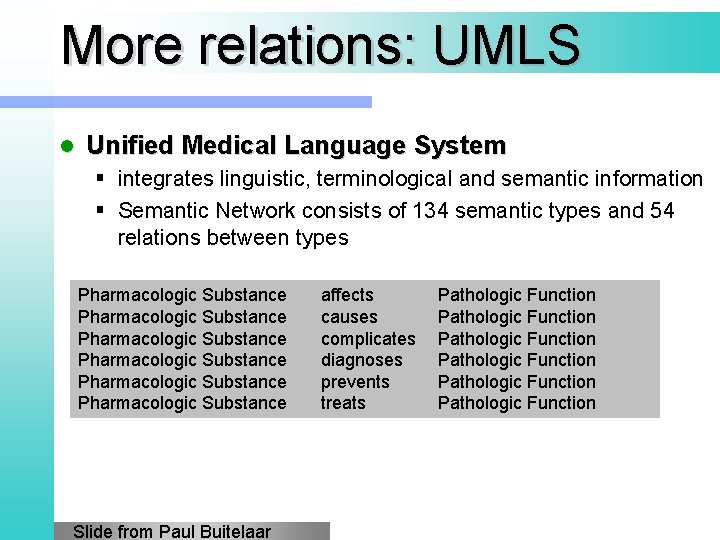

More relations: UMLS
- Unified Medical Language System
  - integrates linguistic, terminological and semantic information
  - Semantic Network consists of 134 semantic types and 54 relations between types

(Figure: relations from Pharmacologic Substance to Pathologic Function: affects, causes, complicates, diagnoses, prevents, treats.)

Slide from Paul Buitelaar

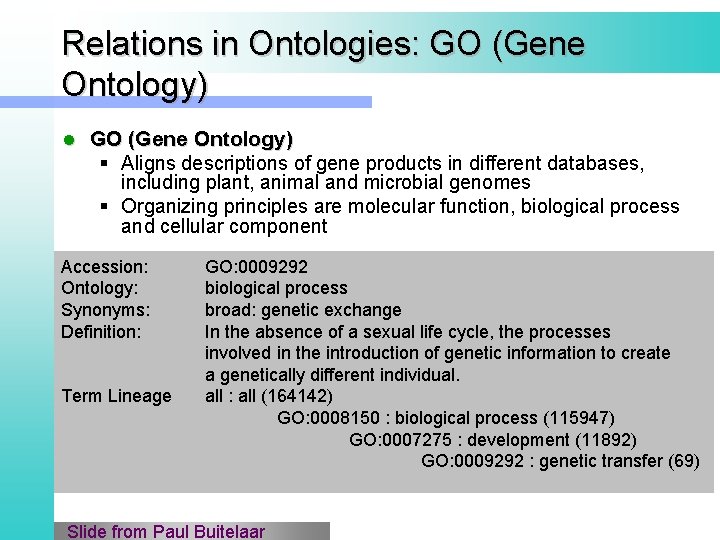

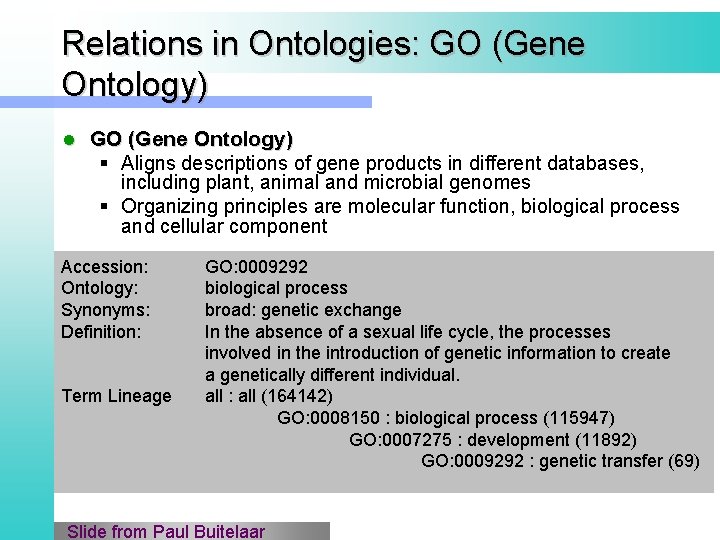

Relations in Ontologies: GO (Gene Ontology)
- GO (Gene Ontology)
  - Aligns descriptions of gene products in different databases, including plant, animal and microbial genomes
  - Organizing principles are molecular function, biological process and cellular component

| Field | Value |
|---|---|
| Accession | GO:0009292 |
| Ontology | biological process |
| Synonyms | broad: genetic exchange |
| Definition | In the absence of a sexual life cycle, the processes involved in the introduction of genetic information to create a genetically different individual. |

Term lineage:
- all : all (164142)
  - GO:0008150 : biological process (115947)
    - GO:0007275 : development (11892)
      - GO:0009292 : genetic transfer (69)

Slide from Paul Buitelaar

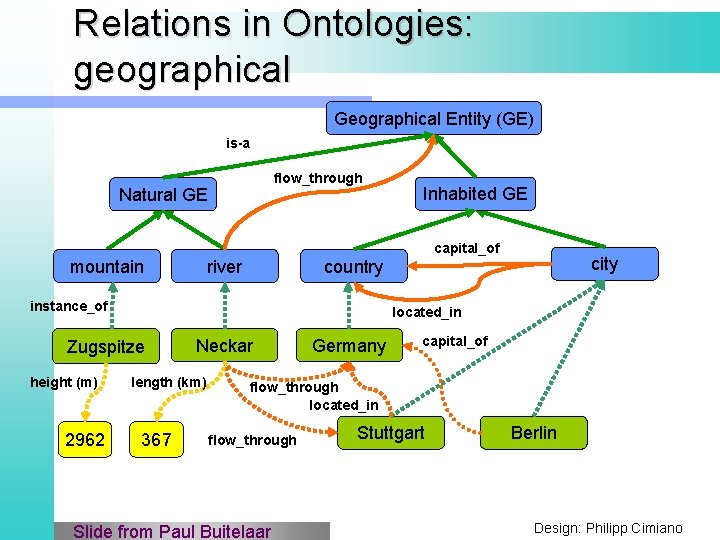

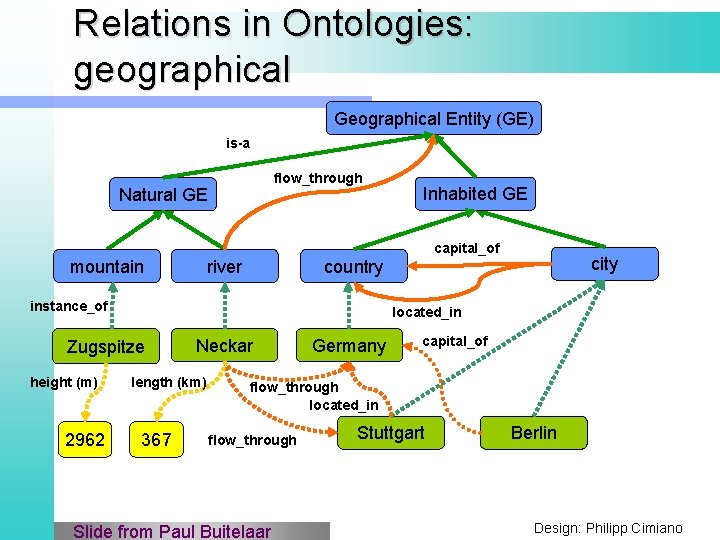

Relations in Ontologies: geographical

(Figure: an F-Logic ontology. Geographical Entity (GE) has the subclasses (is-a) Natural GE and Inhabited GE, with mountain, river, city and country as further classes. Relations shown include flow_through, capital_of, located_in, instance_of and similar, with instances such as Zugspitze (height 2962 m), Neckar (length 367 km), Stuttgart, Berlin and Germany.)

Slide from Paul Buitelaar; design: Philipp Cimiano

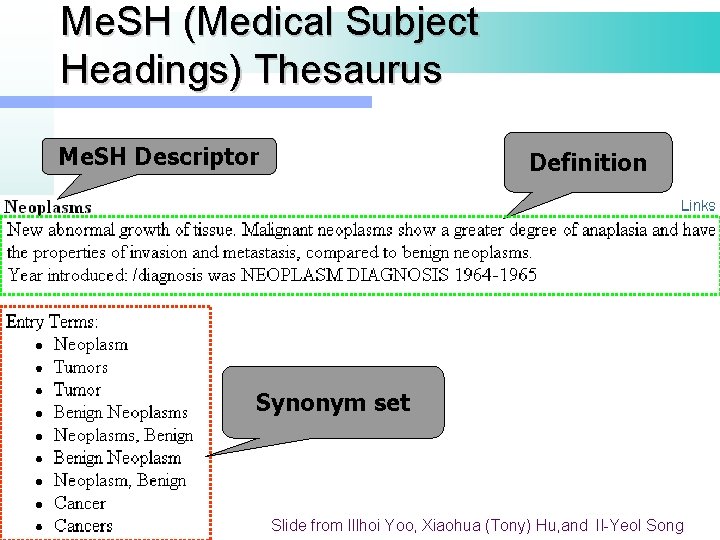

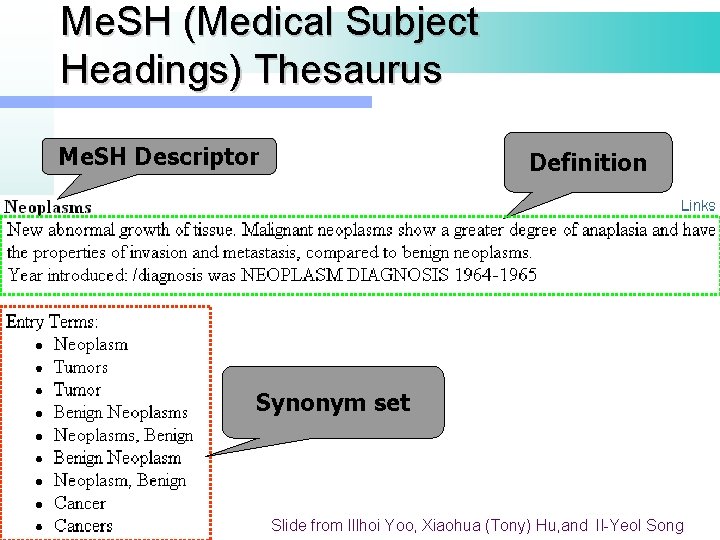

MeSH (Medical Subject Headings) Thesaurus

(Figure: a MeSH descriptor with its definition and synonym set.)

Slide from Illhoi Yoo, Xiaohua (Tony) Hu, and Il-Yeol Song

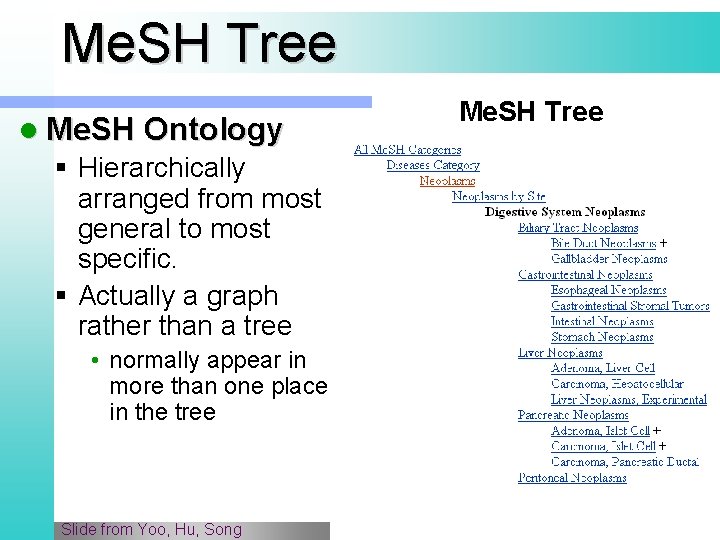

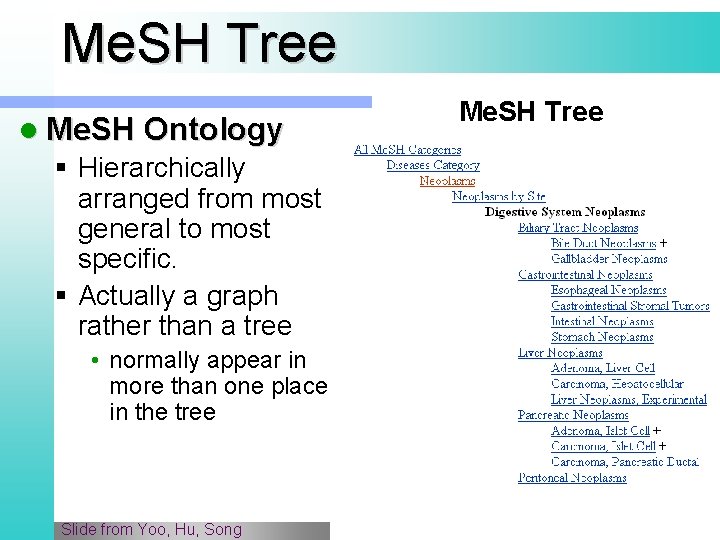

MeSH Tree
- MeSH Ontology
  - Hierarchically arranged from most general to most specific
  - Actually a graph rather than a tree
    - terms normally appear in more than one place in the tree

(Figure: MeSH tree.)

Slide from Yoo, Hu, Song

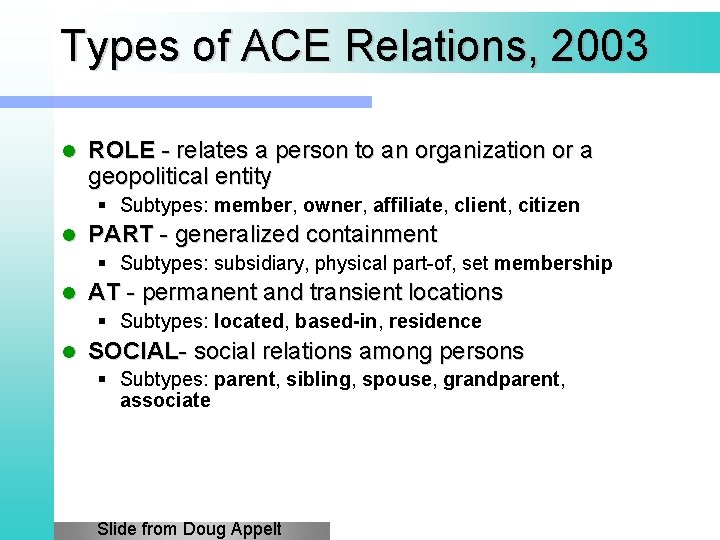

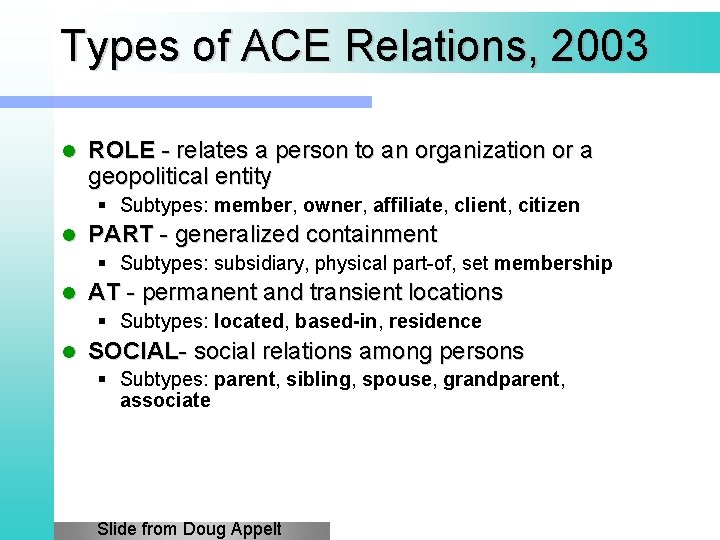

Types of ACE Relations, 2003
- ROLE: relates a person to an organization or a geopolitical entity
  - Subtypes: member, owner, affiliate, client, citizen
- PART: generalized containment
  - Subtypes: subsidiary, physical part-of, set membership
- AT: permanent and transient locations
  - Subtypes: located, based-in, residence
- SOCIAL: social relations among persons
  - Subtypes: parent, sibling, spouse, grandparent, associate

Slide from Doug Appelt

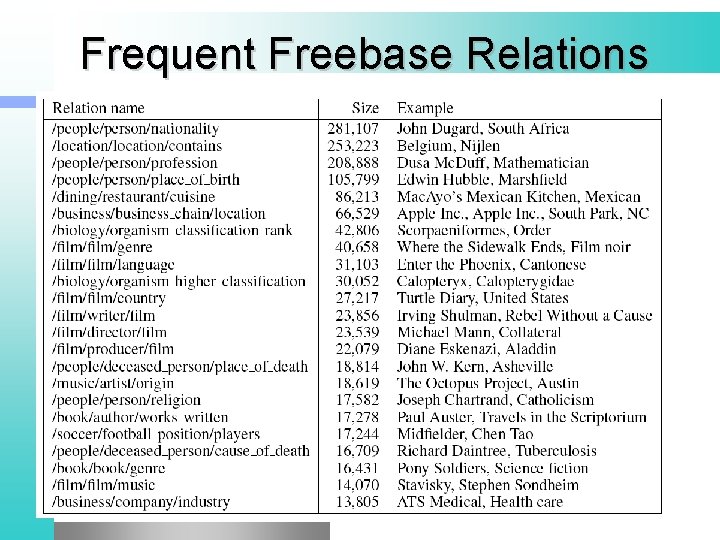

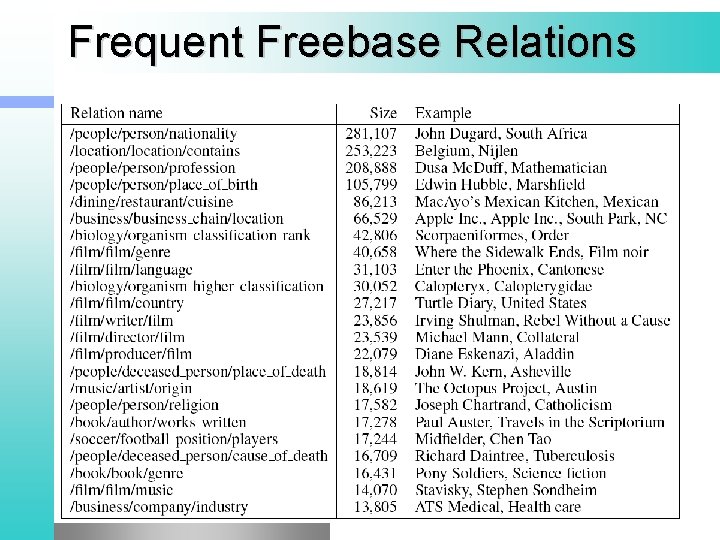

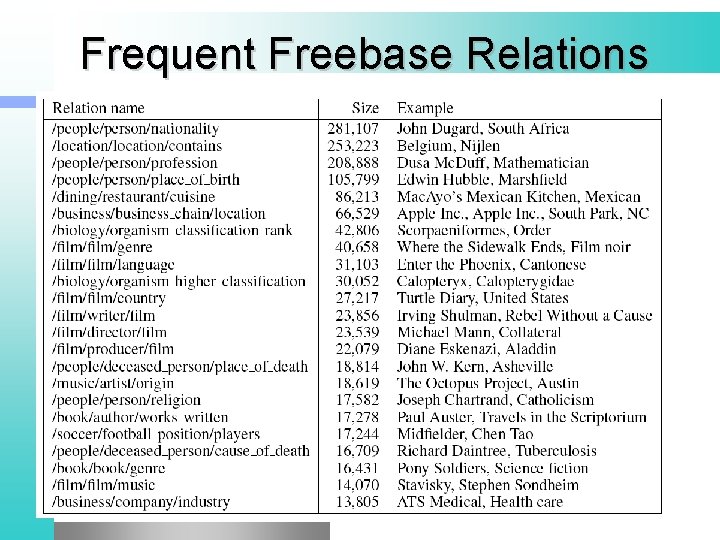

Frequent Freebase Relations

Predicting the "is-a" relation
- "...works by such authors as Herrick, Goldsmith, and Shakespeare."
- "If you consider authors like Shakespeare..."
- "Some authors (including Shakespeare)..."
- "Shakespeare was the author of several..."
- "Shakespeare, author of The Tempest..."

Shakespeare IS-A author (0.87)

How can we capture the variability of expression of a relation in natural text from a large, unannotated corpus?

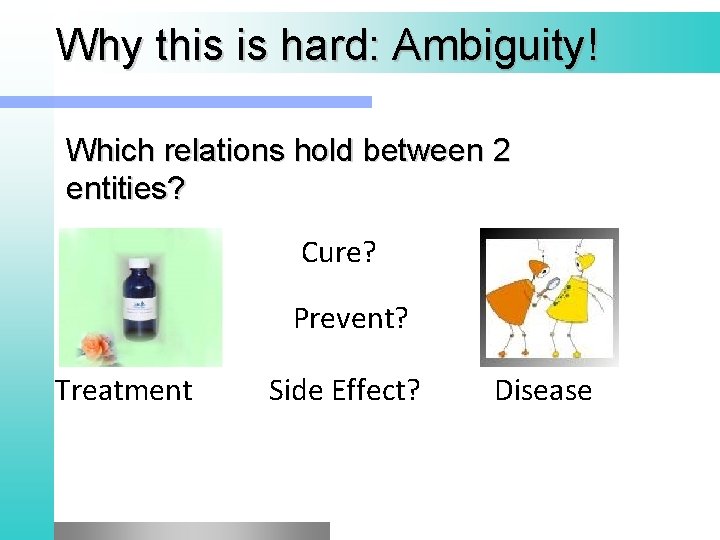

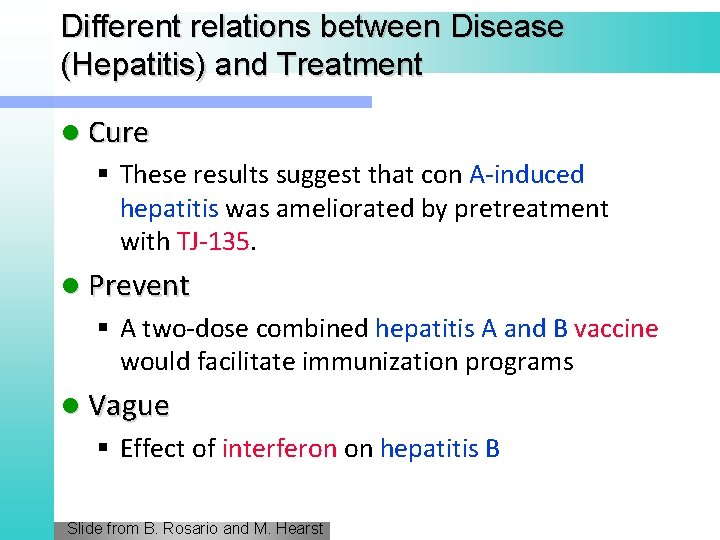

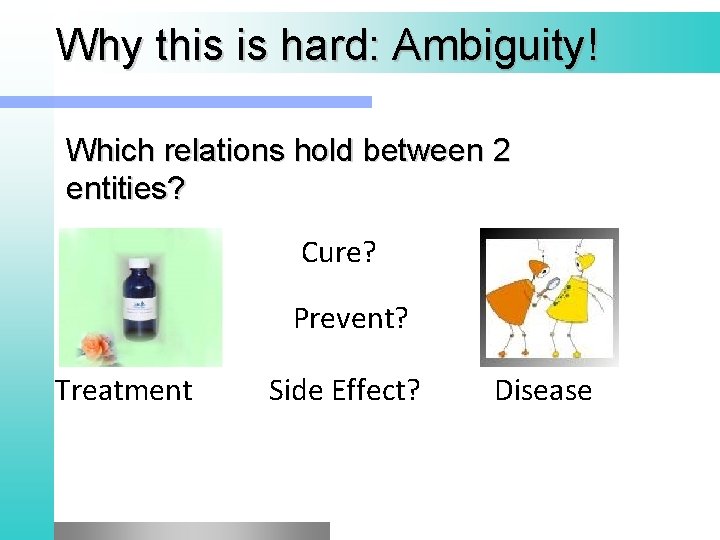

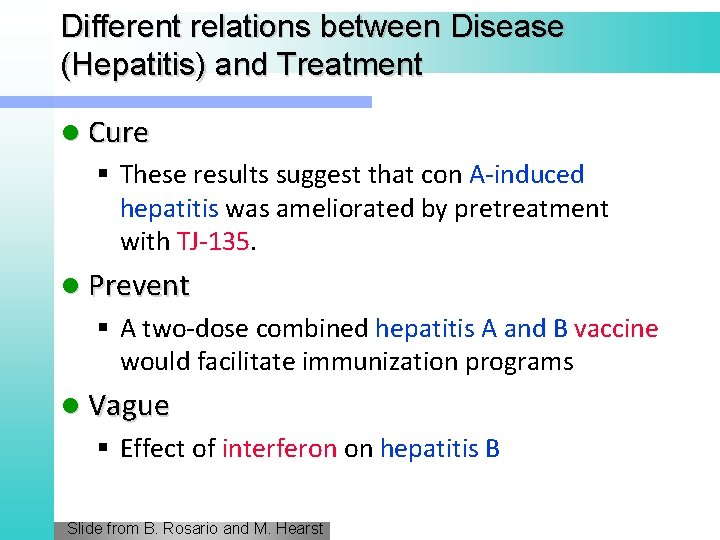

Why this is hard: Ambiguity!
- Which relations hold between 2 entities?

(Figure: candidate relations Cure? Prevent? Side Effect? between a Treatment and a Disease.)

Different relations between Disease (Hepatitis) and Treatment
- Cure
  - These results suggest that con A-induced hepatitis was ameliorated by pretreatment with TJ-135.
- Prevent
  - A two-dose combined hepatitis A and B vaccine would facilitate immunization programs
- Vague
  - Effect of interferon on hepatitis B

Slide from B. Rosario and M. Hearst

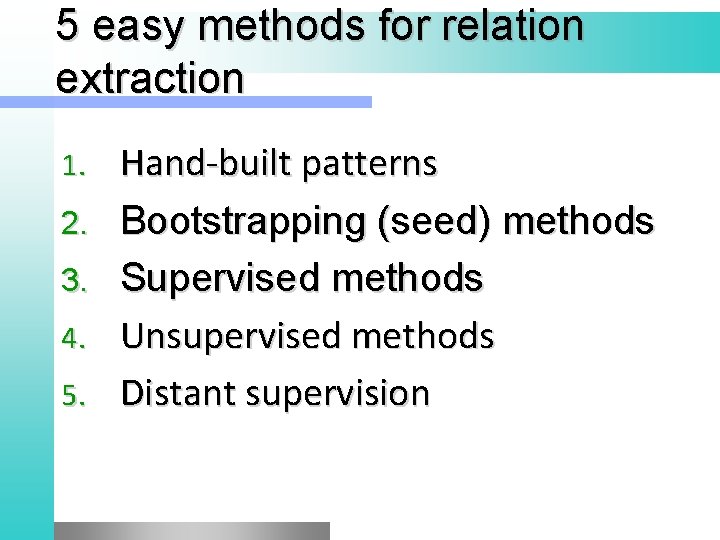

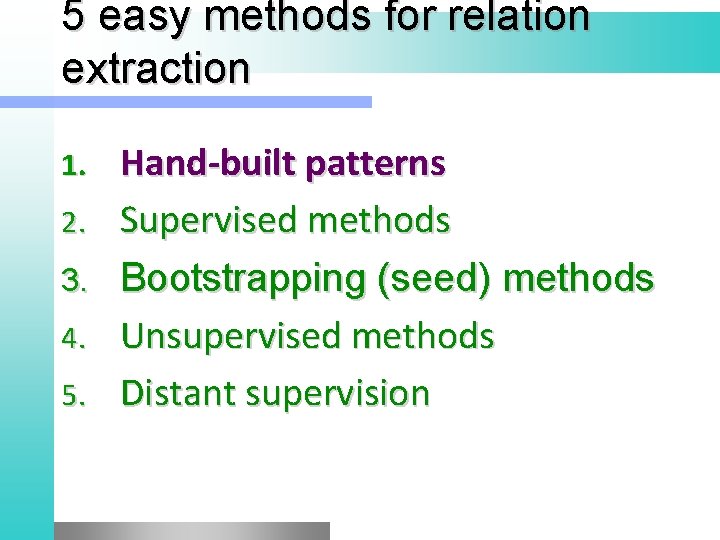

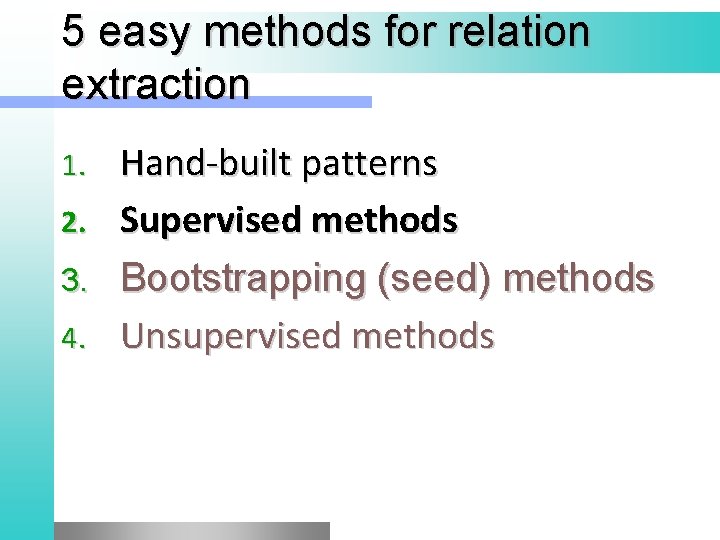

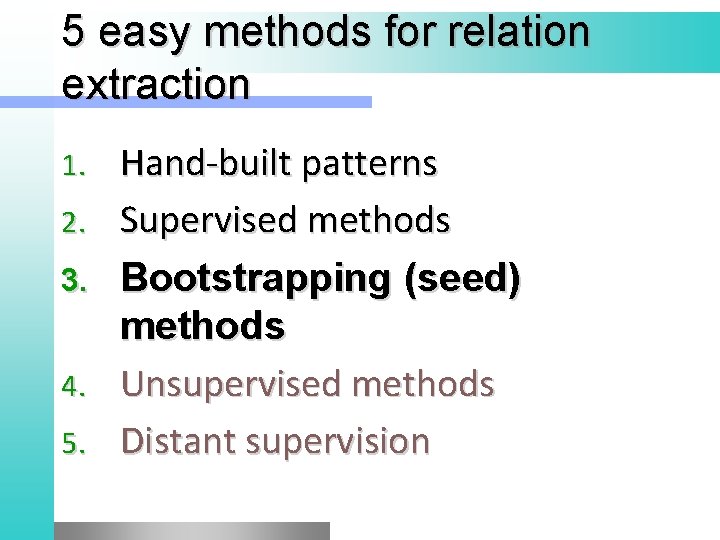

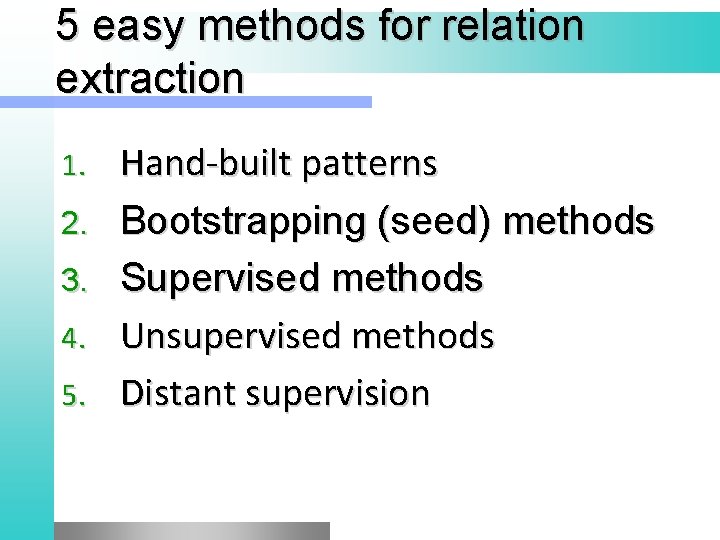

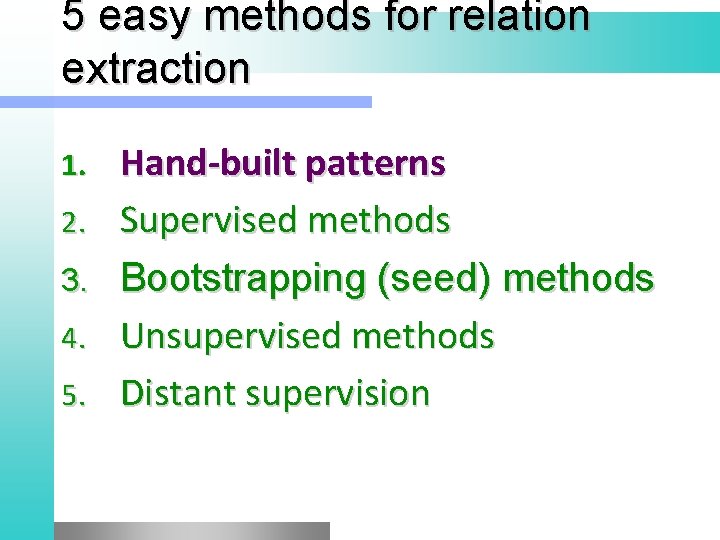

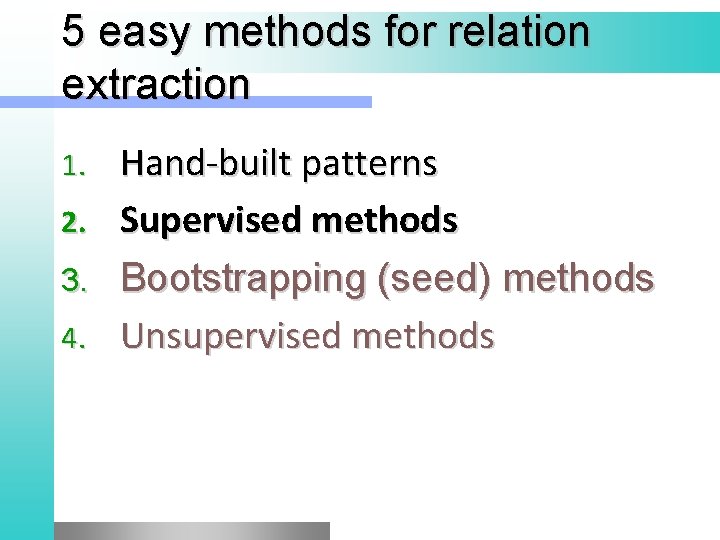

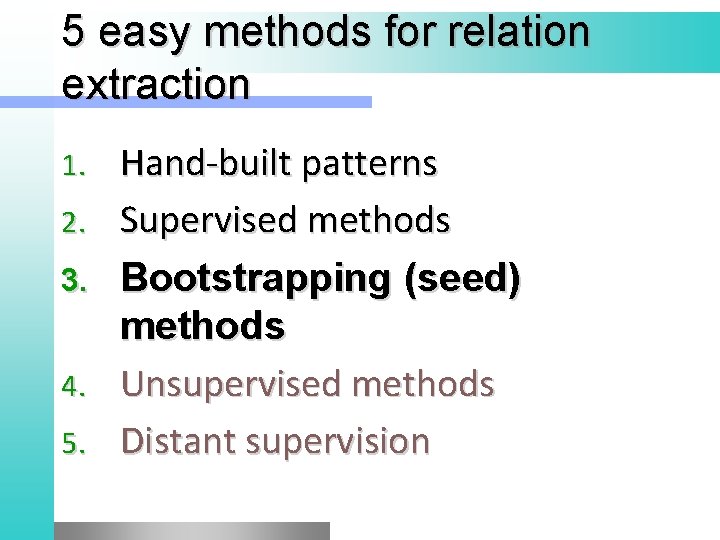

5 easy methods for relation extraction
1. Hand-built patterns
2. Bootstrapping (seed) methods
3. Supervised methods
4. Unsupervised methods
5. Distant supervision

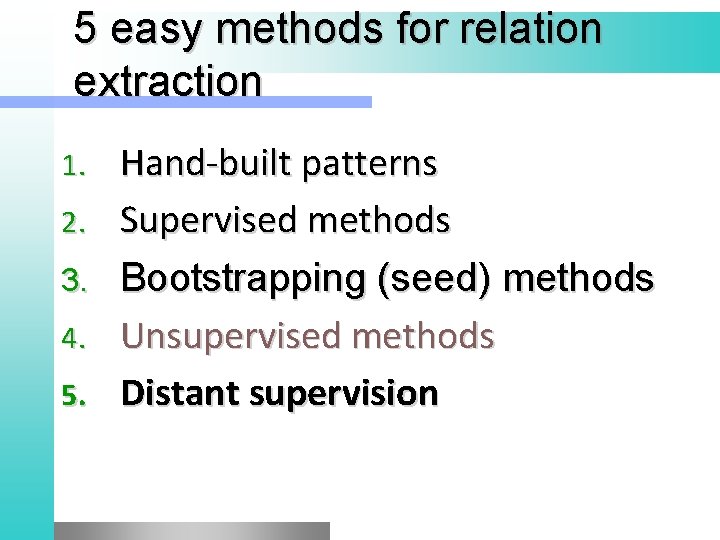

5 easy methods for relation extraction
1. Hand-built patterns
2. Supervised methods
3. Bootstrapping (seed) methods
4. Unsupervised methods
5. Distant supervision

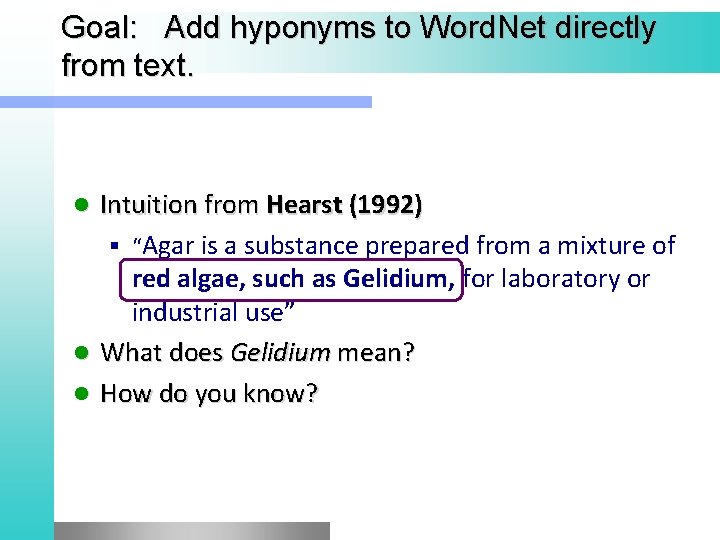

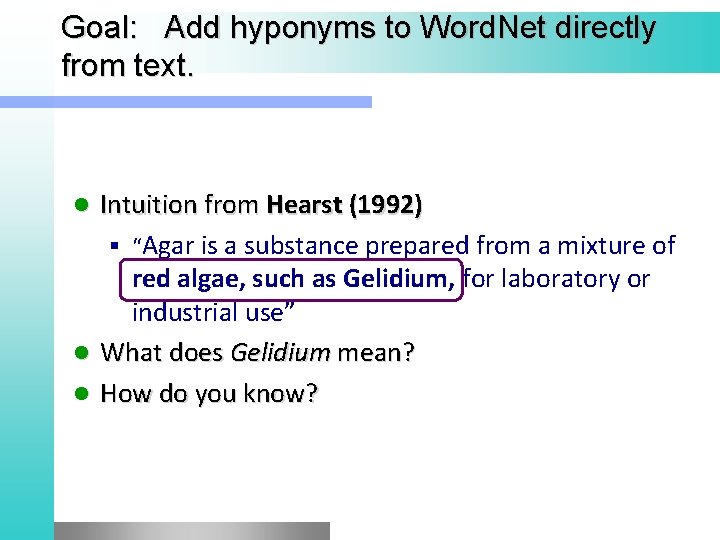

![A complex hand-built extraction rule [NYU Proteus]](https://slidetodoc.com/presentation_image_h2/0d8e954fc2154cba3592c5c7317ee4bf/image-60.jpg)

A complex hand-built extraction rule [NYU Proteus]

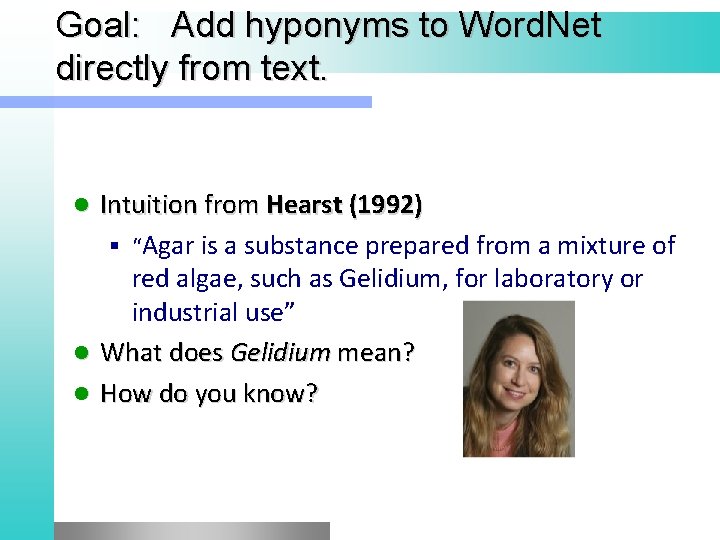

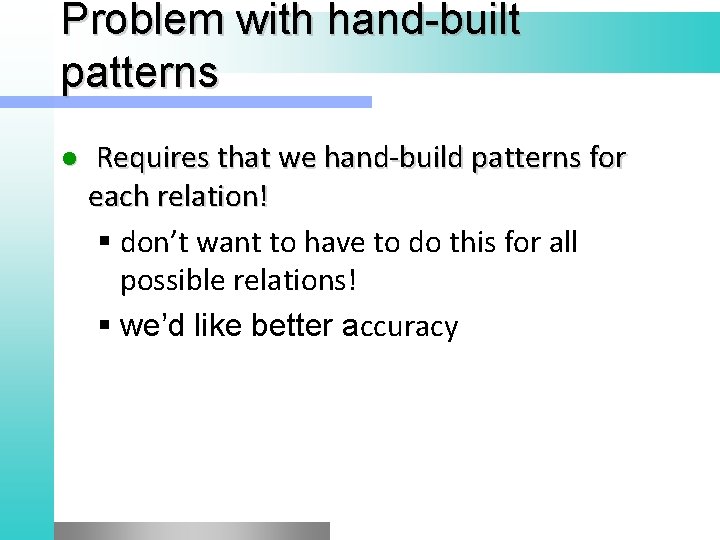

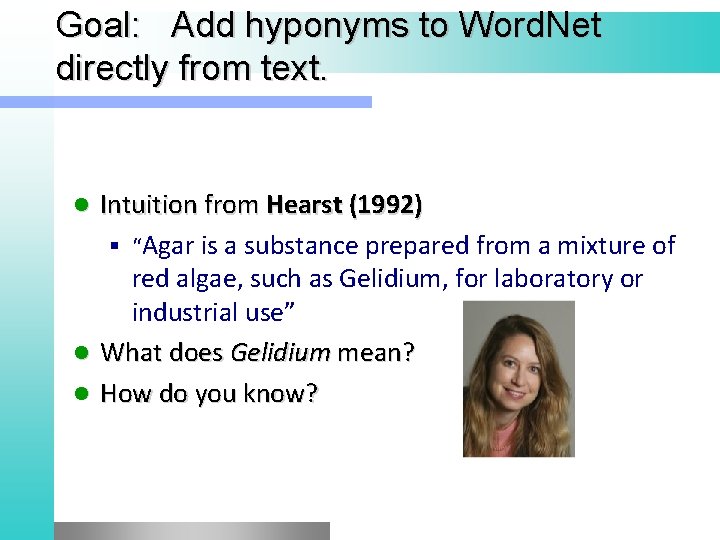

Goal: Add hyponyms to WordNet directly from text
- Intuition from Hearst (1992)
  - "Agar is a substance prepared from a mixture of red algae, such as Gelidium, for laboratory or industrial use"
- What does Gelidium mean?
- How do you know?


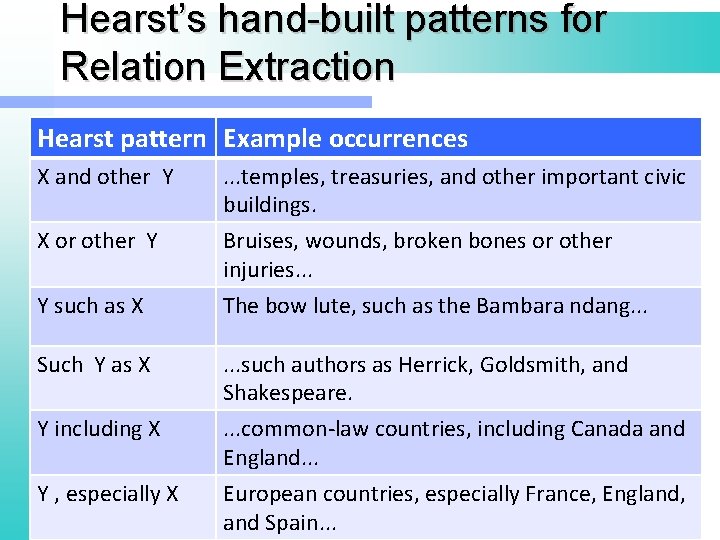

Hearst's Hand-Designed Lexico-Syntactic Patterns

(Hearst, 1992): Automatic Acquisition of Hyponyms
- "Y such as X ((, X)* (, and/or) X)"
- "such Y as X…"
- "X… or other Y"
- "X… and other Y"
- "Y including X…"
- "Y, especially X…"

Hearst's hand-built patterns for Relation Extraction

| Hearst pattern | Example occurrences |
|---|---|
| X and other Y | ...temples, treasuries, and other important civic buildings. |
| X or other Y | Bruises, wounds, broken bones or other injuries... |
| Y such as X | The bow lute, such as the Bambara ndang... |
| Such Y as X | ...such authors as Herrick, Goldsmith, and Shakespeare. |
| Y including X | ...common-law countries, including Canada and England... |
| Y, especially X | European countries, especially France, England, and Spain... |
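A rough regex rendering of these patterns, assuming single-token hyponyms, a one-word hypernym for the "Y ... X" patterns, and the last word of the phrase as the hypernym head for "X and/or other Y". There is no NP chunking or lemmatization, so plurals such as "authors" come out unchanged and multiword hyponyms such as "broken bones" are cut short; this is a sketch, not Hearst's implementation.

```python
import re

# One token; the connectives and/or/other are excluded so they are never
# swallowed as list items.
W = r"\b(?!(?:and|or|other)\b)[A-Za-z][A-Za-z-]*"
# A hyponym list: "X", "X and X", "X, X, and X", ...
LIST = rf"{W}(?:(?:, and|, or|,| and| or) {W})*"

HEARST = [
    rf"(?P<y>{W}),? such as (?P<xs>{LIST})",                    # Y such as X
    rf"\b[Ss]uch (?P<y>{W}) as (?P<xs>{LIST})",                 # such Y as X
    rf"(?P<xs>{LIST}),? (?:and|or) other (?P<y>{W}(?: {W})*)",  # X and/or other Y
    rf"(?P<y>{W}),? including (?P<xs>{LIST})",                  # Y including X
    rf"(?P<y>{W}),? especially (?P<xs>{LIST})",                 # Y, especially X
]
SPLIT = re.compile(r",? (?:and|or) |, ")

def hearst_pairs(sentence):
    """Return (hyponym, hypernym head) pairs found by any pattern."""
    pairs = []
    for pattern in HEARST:
        for m in re.finditer(pattern, sentence):
            head = m.group("y").split()[-1]  # crude head: last word of Y
            for x in SPLIT.split(m.group("xs")):
                pairs.append((x, head))
    return pairs

print(hearst_pairs("such authors as Herrick, Goldsmith, and Shakespeare"))
print(hearst_pairs("temples, treasuries, and other important civic buildings"))
```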

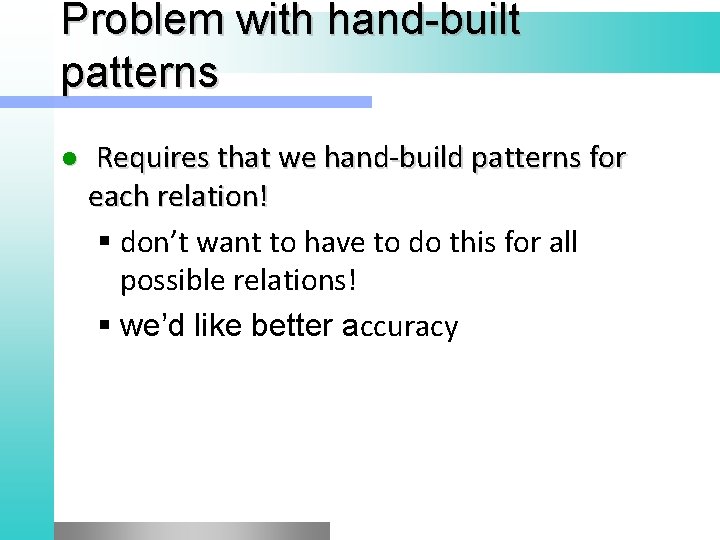

Problem with hand-built patterns
- Requires that we hand-build patterns for each relation!
  - don't want to have to do this for all possible relations!
  - we'd like better accuracy

5 easy methods for relation extraction
1. Hand-built patterns
2. Supervised methods
3. Bootstrapping (seed) methods
4. Unsupervised methods
5. Distant supervision

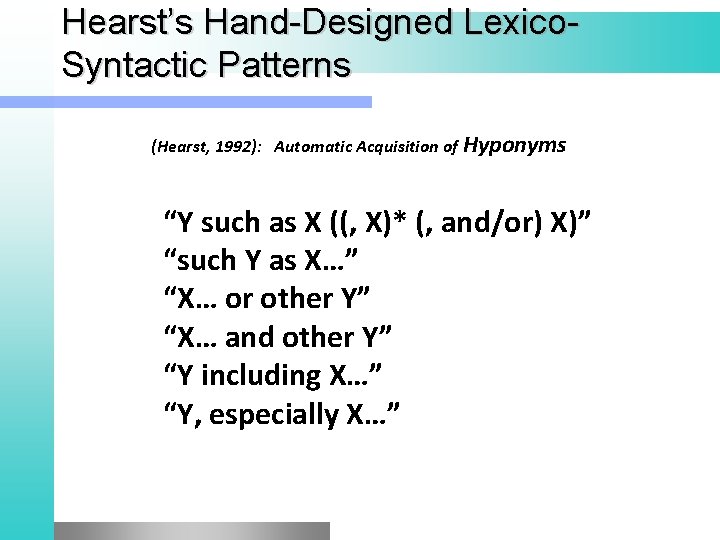

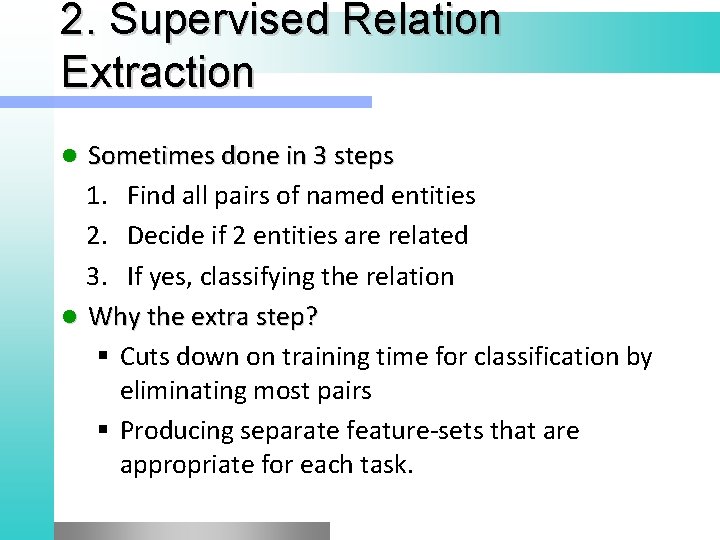

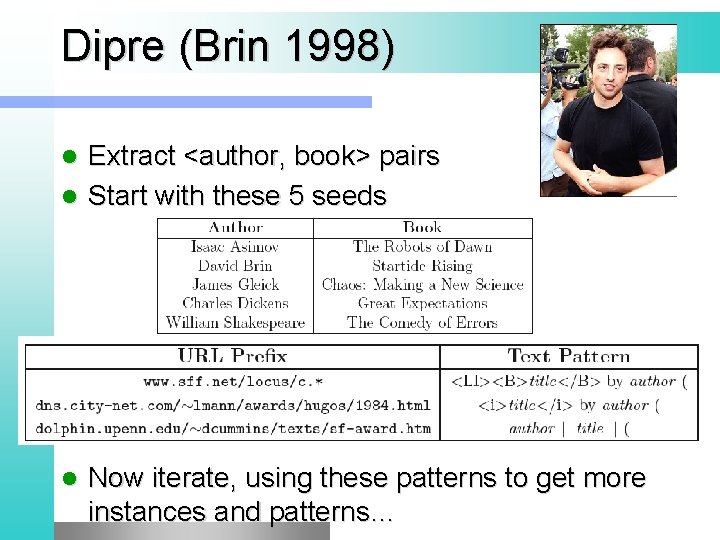

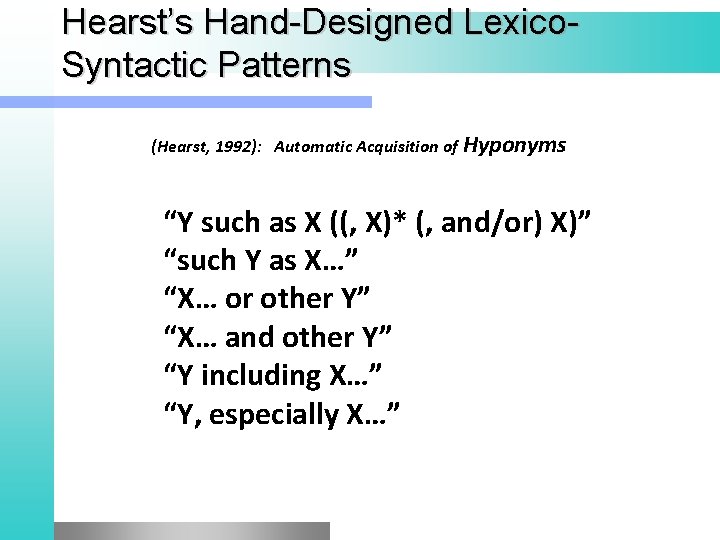

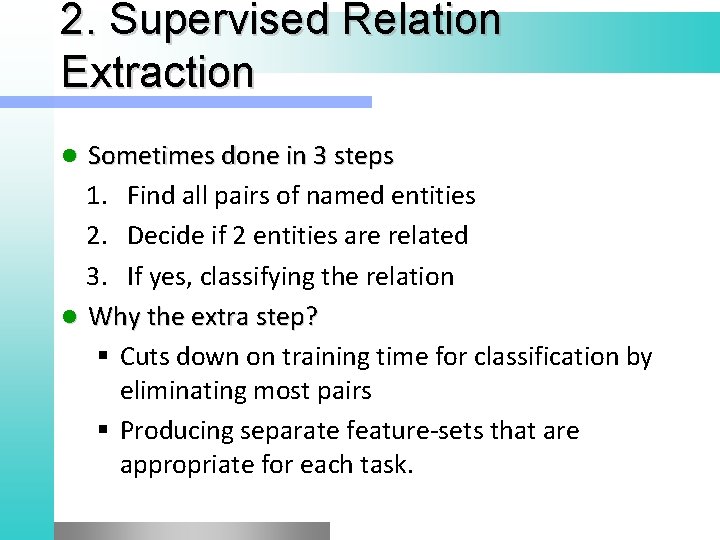

2. Supervised Relation Extraction
- Sometimes done in 3 steps:
  1. Find all pairs of named entities
  2. Decide if the 2 entities are related
  3. If yes, classify the relation
- Why the extra step?
  - Cuts down on training time for classification by eliminating most pairs
  - Produces separate feature sets that are appropriate for each task
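The three steps can be sketched as a small pipeline. Both the step-2 filter (a token-distance cut-off) and the step-3 labeller (a lookup on the entity-type pair, using ACE-style labels) are hypothetical stand-ins for trained classifiers; mentions are assumed to be (text, type, start, end) tuples with token offsets, end exclusive.

```python
from itertools import combinations

def candidate_pairs(mentions):
    """Step 1: every pair of mentions in the sentence, in textual order."""
    return list(combinations(mentions, 2))

def is_related(m1, m2, max_gap=5):
    """Step 2 (toy stand-in for a binary classifier): keep pairs whose
    mentions are at most `max_gap` tokens apart."""
    return m2[2] - m1[3] <= max_gap

# Step 3 (toy stand-in for a multi-class classifier): label by type pair.
TYPE_PAIR_LABEL = {("ORG", "ORG"): "PART", ("PER", "ORG"): "ROLE", ("ORG", "PER"): "ROLE"}

def classify(m1, m2):
    return TYPE_PAIR_LABEL.get((m1[1], m2[1]), "NIL")

def extract(mentions):
    return [(m1[0], classify(m1, m2), m2[0])
            for m1, m2 in candidate_pairs(mentions)
            if is_related(m1, m2)]

# "American Airlines , a unit of AMR , immediately matched the move ,
#  spokesman Tim Wagner said ."
mentions = [("American Airlines", "ORG", 0, 2),
            ("AMR", "ORG", 6, 7),
            ("Tim Wagner", "PER", 14, 16)]
print(extract(mentions))  # [('American Airlines', 'PART', 'AMR')]
```

The cheap filter discards two of the three candidate pairs before the (normally expensive) classifier runs, which is the motivation given for the extra step.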

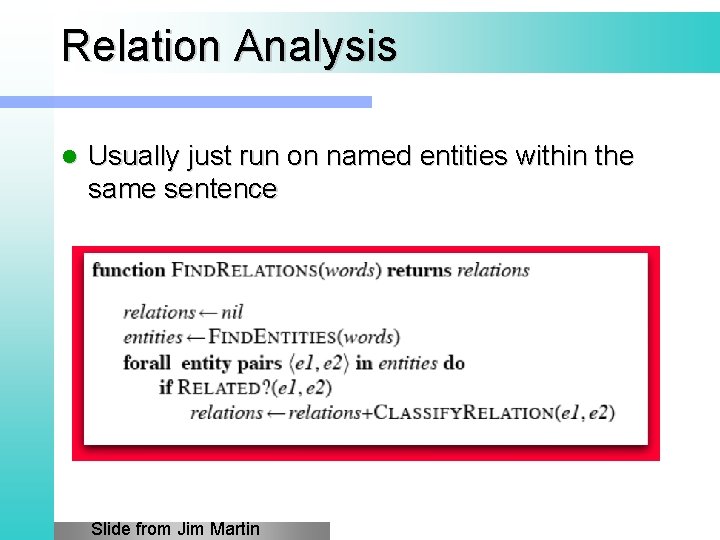

Relation Analysis
- Usually just run on named entities within the same sentence

Slide from Jim Martin

Relation Extraction
- Task definition: to label the semantic relation between a pair of entities in a sentence (fragment)
  - …[leader arg-1] of a minority [government arg-2]…
  - Candidate labels: PHYS, PER-SOC, EMP-ORG, NIL
    - PHYS: Physical
    - PER-SOC: Personal / Social
    - EMP-ORG: Employment / Membership / Subsidiary

Slide from Jing Jiang

![Supervised Learning: supervised machine learning](https://slidetodoc.com/presentation_image_h2/0d8e954fc2154cba3592c5c7317ee4bf/image-70.jpg)

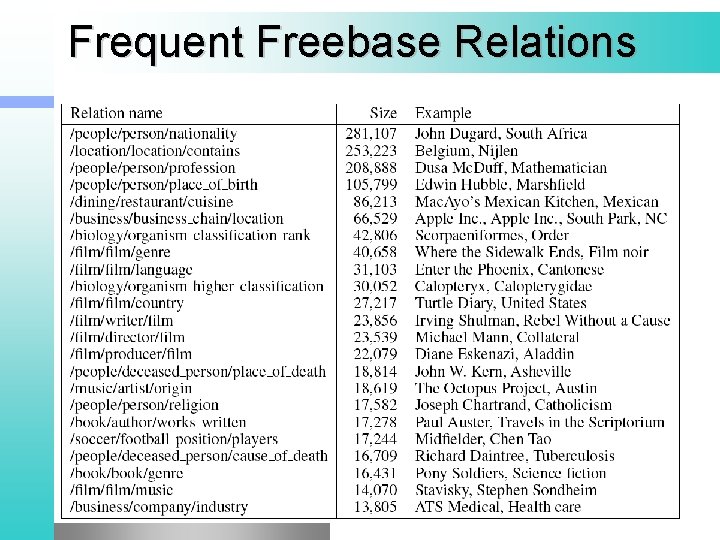

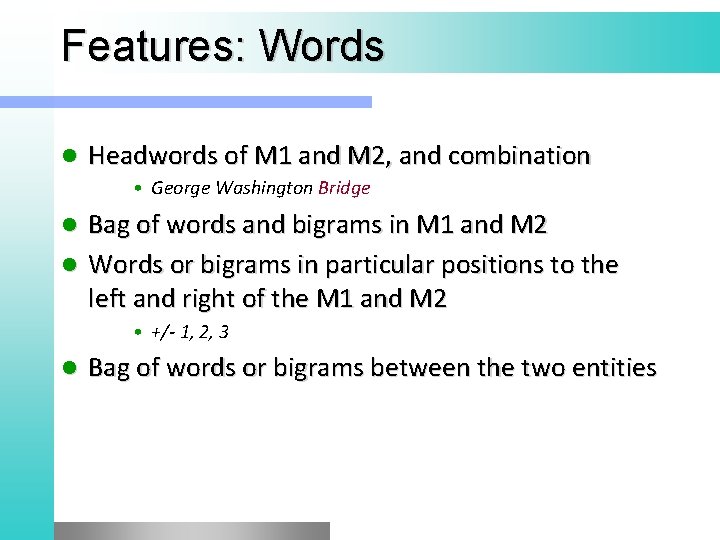

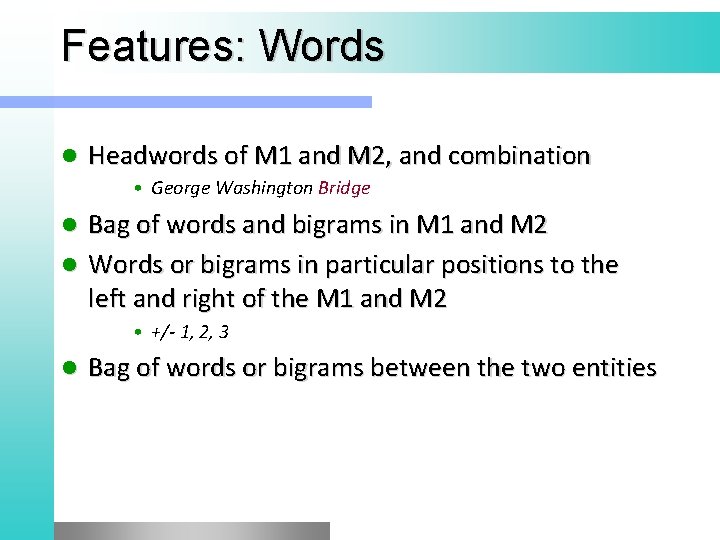

Supervised Learning
- Supervised machine learning (e.g. [Zhou et al. 2005], [Bunescu & Mooney 2005], [Zhang et al. 2006], [Surdeanu & Ciaramita 2007])
  - …[leader arg-1] of a minority [government arg-2]…
  - Features: arg-1 word: leader; arg-2 type: ORG; dependency: arg-1 of arg-2
  - Labels: PHYS, PER-SOC, EMP-ORG, NIL
- Training data is needed for each relation type

Slide from Jing Jiang

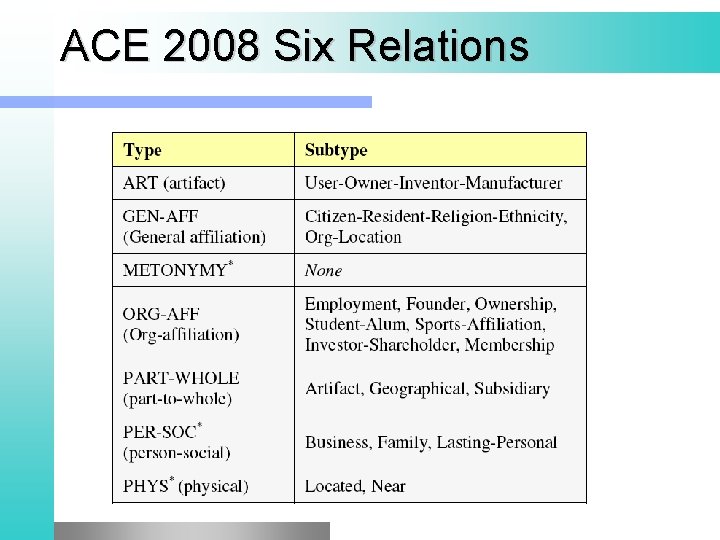

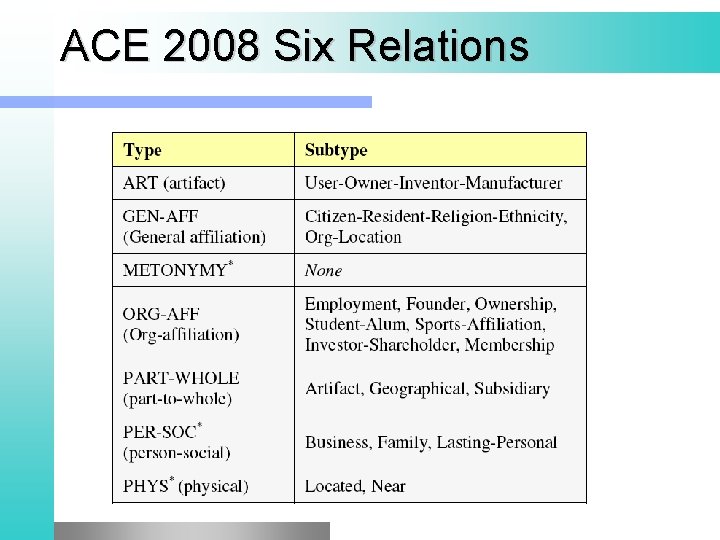

ACE 2008 Six Relations

Features: Words
- Headwords of M1 and M2, and their combination
  - e.g. George Washington Bridge
- Bag of words and bigrams in M1 and M2
- Words or bigrams in particular positions to the left and right of M1 and M2
  - +/- 1, 2, 3
- Bag of words or bigrams between the two entities
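A sketch of these lexical features as a sparse feature dictionary, assuming whitespace-tokenized input, (start, end) token spans for M1 and M2 (end exclusive, M1 first), and the mention's last token as a stand-in for its headword. The feature-name strings are illustrative.

```python
def word_features(tokens, m1, m2, window=3):
    """Lexical features for mention spans m1 and m2 over `tokens`."""
    f = {}
    # Headword approximated as the mention's last token (an assumption;
    # a real system would apply parser head rules).
    h1, h2 = tokens[m1[1] - 1], tokens[m2[1] - 1]
    f["head1=" + h1] = 1
    f["head2=" + h2] = 1
    f["heads=" + h1 + "+" + h2] = 1
    # Bag of words inside each mention.
    for w in tokens[m1[0]:m1[1]]:
        f["bow1=" + w] = 1
    for w in tokens[m2[0]:m2[1]]:
        f["bow2=" + w] = 1
    # Words at fixed offsets left of M1 and right of M2.
    for i in range(1, window + 1):
        if m1[0] - i >= 0:
            f[f"m1-{i}={tokens[m1[0] - i]}"] = 1
        if m2[1] + i - 1 < len(tokens):
            f[f"m2+{i}={tokens[m2[1] + i - 1]}"] = 1
    # Bag of words and bigrams between the two mentions.
    between = tokens[m1[1]:m2[0]]
    for w in between:
        f["between=" + w] = 1
    for a, b in zip(between, between[1:]):
        f["between2=" + a + "_" + b] = 1
    return f

tokens = "American Airlines , a unit of AMR , immediately matched the move".split()
feats = word_features(tokens, (0, 2), (6, 7))
print(sorted(feats))
```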

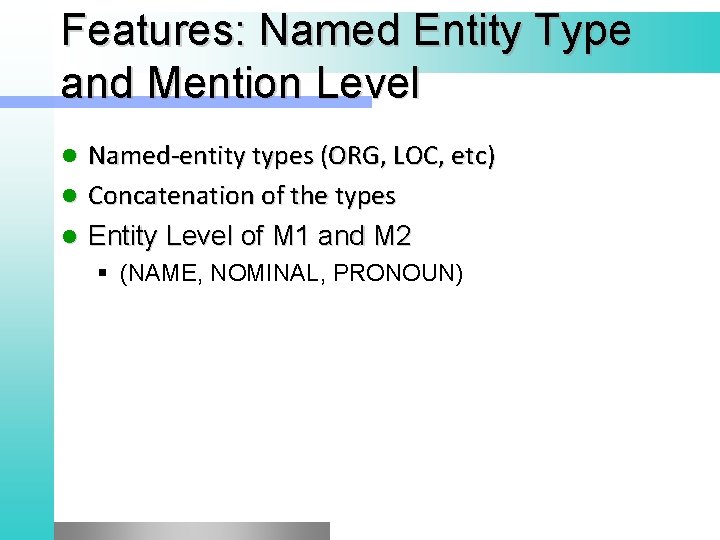

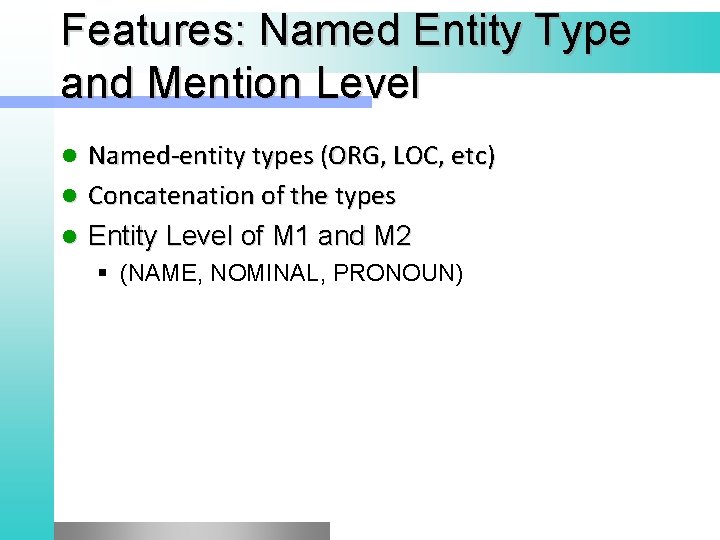

Features: Named Entity Type and Mention Level
- Named-entity types (ORG, LOC, etc.)
- Concatenation of the types
- Entity level of M1 and M2
  - (NAME, NOMINAL, PRONOUN)
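A minimal sketch of these entity features as a sparse feature dictionary. The feature-name strings are illustrative, and the mention levels are checked against the three values on the slide.

```python
LEVELS = {"NAME", "NOMINAL", "PRONOUN"}

def entity_features(type1, type2, level1, level2):
    """Entity-type and mention-level features for a mention pair (M1, M2)."""
    if level1 not in LEVELS or level2 not in LEVELS:
        raise ValueError("mention level must be NAME, NOMINAL or PRONOUN")
    return {
        "type1=" + type1: 1,
        "type2=" + type2: 1,
        "types=" + type1 + "-" + type2: 1,      # concatenation of the types
        "level1=" + level1: 1,
        "level2=" + level2: 1,
        "levels=" + level1 + "-" + level2: 1,
    }

# "American Airlines" (ORG, NAME) and "a unit" (ORG, NOMINAL)
print(entity_features("ORG", "ORG", "NAME", "NOMINAL"))
```

The concatenated features let a linear classifier learn weights for type combinations (e.g. PER-ORG) that neither type feature captures on its own.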

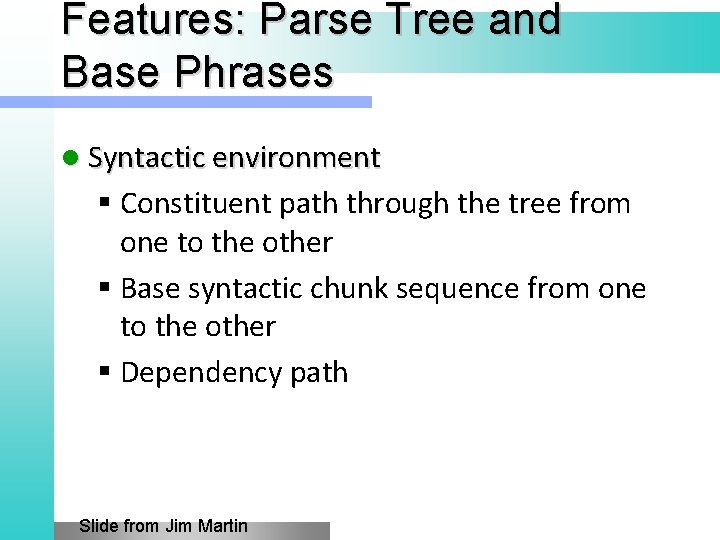

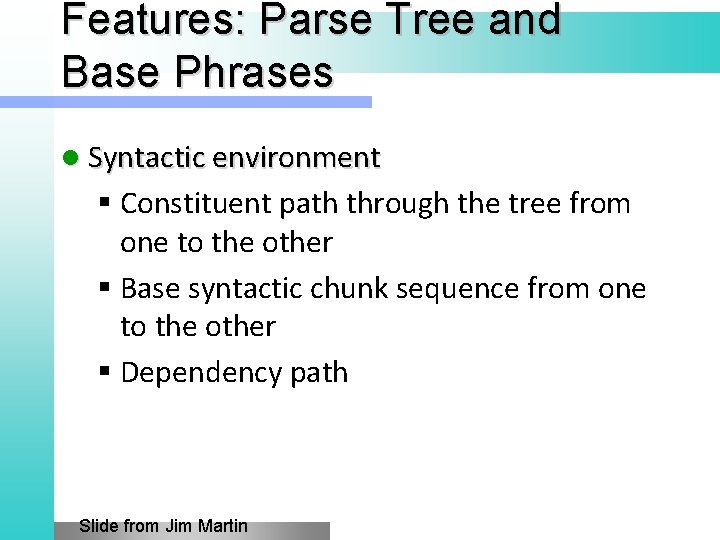

Features: Parse Tree and Base Phrases
- Syntactic environment
  - Constituent path through the tree from one to the other
  - Base syntactic chunk sequence from one to the other
  - Dependency path

Slide from Jim Martin

Features: Gazetteers and trigger words
- Personal relative trigger list
  - from WordNet: parent, wife, husband, grandparent, etc.
- Country name list
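A sketch of trigger-word and gazetteer features. The trigger set holds only the four words on the slide, typed in by hand rather than pulled from WordNet, and the country list is a hypothetical four-entry gazetteer; spans are (start, end) token offsets, end exclusive.

```python
# Trigger words from the slide; typed in by hand here, not read from WordNet.
RELATIVE_TRIGGERS = {"parent", "wife", "husband", "grandparent"}
# Hypothetical, tiny country gazetteer for illustration.
COUNTRIES = {"Canada", "England", "France", "Spain"}

def gazetteer_features(tokens, m1, m2):
    """Trigger words between M1 and M2, and country-list membership."""
    f = {}
    for w in tokens[m1[1]:m2[0]]:
        if w.lower() in RELATIVE_TRIGGERS:
            f["relative_trigger=" + w.lower()] = 1
    for name, (start, end) in (("m1", m1), ("m2", m2)):
        if " ".join(tokens[start:end]) in COUNTRIES:
            f[name + "_in_country_list"] = 1
    return f

tokens = "Mary , the wife of John , moved to France".split()
print(gazetteer_features(tokens, (0, 1), (5, 6)))   # {'relative_trigger=wife': 1}
print(gazetteer_features(tokens, (5, 6), (9, 10)))  # {'m2_in_country_list': 1}
```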

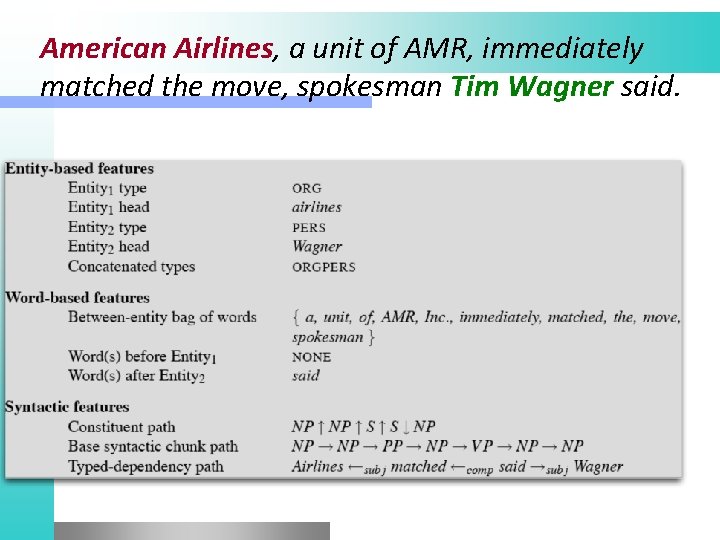

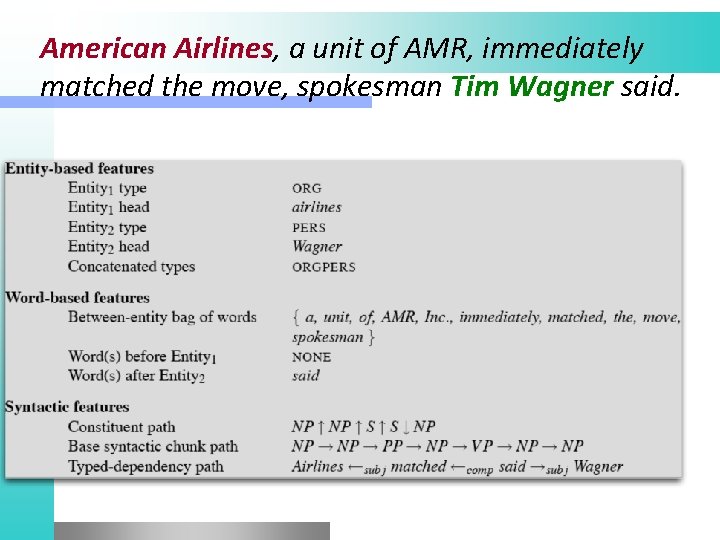

American Airlines, a unit of AMR, immediately matched the move, spokesman Tim Wagner said.

Classifiers for supervised methods
- Now you can use any classifier you like
  - SVM
  - Logistic regression
  - Naïve Bayes
  - etc.
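As one example of "any classifier", here is binary logistic regression trained by stochastic gradient ascent over sparse feature dictionaries of the kind described on the feature slides. It is a from-scratch sketch for illustration: the four training examples are made up, and a multi-class relation labeller could be built from it one-vs-rest.

```python
import math

def predict(w, feats):
    """P(related | feats) under weight dict w."""
    z = sum(w.get(k, 0.0) * v for k, v in feats.items())
    return 1.0 / (1.0 + math.exp(-z))

def train_logreg(examples, epochs=50, lr=0.5):
    """Stochastic gradient ascent on the log-likelihood.
    examples: list of (feature_dict, label) with label 0 or 1."""
    w = {}
    for _ in range(epochs):
        for feats, y in examples:
            err = y - predict(w, feats)
            for k, v in feats.items():
                w[k] = w.get(k, 0.0) + lr * err * v
    return w

# Made-up training data: is the mention pair related?
train = [
    ({"between=unit": 1, "types=ORG-ORG": 1}, 1),
    ({"between=subsidiary": 1, "types=ORG-ORG": 1}, 1),
    ({"between=matched": 1, "types=ORG-PER": 1}, 0),
    ({"between=said": 1, "types=ORG-PER": 1}, 0),
]
w = train_logreg(train)
print(round(predict(w, {"between=of": 1, "types=ORG-ORG": 1}), 3))
```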

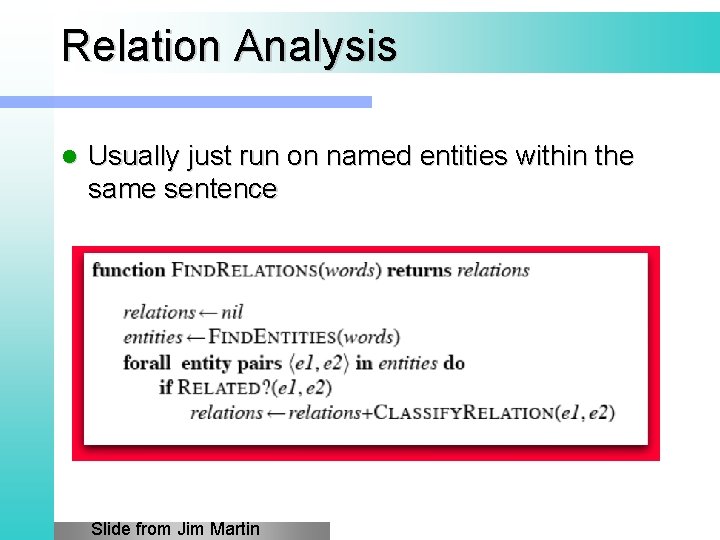

Summary
- Can get high accuracies with enough hand-labeled training data, if the test data looks exactly like the training data
- But
  - labeling 5000 relations (and named entities) is expensive
  - the approach doesn't generalize to different genres

5 easy methods for relation extraction
1. Hand-built patterns
2. Supervised methods
3. Bootstrapping (seed) methods
4. Unsupervised methods
5. Distant supervision

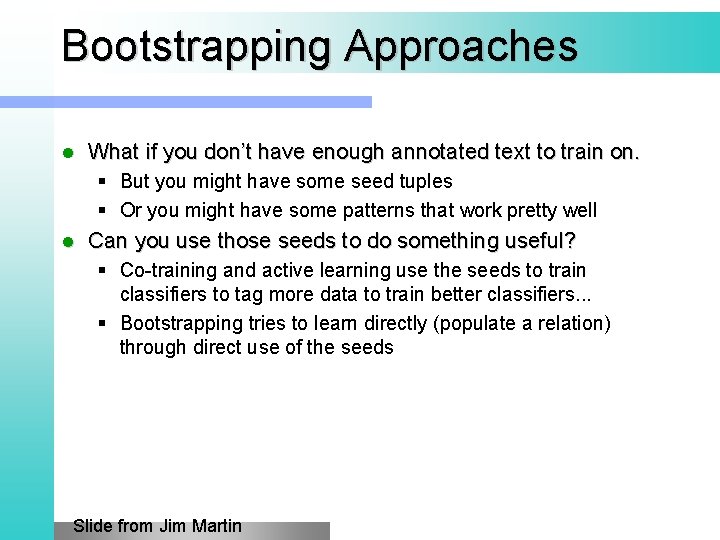

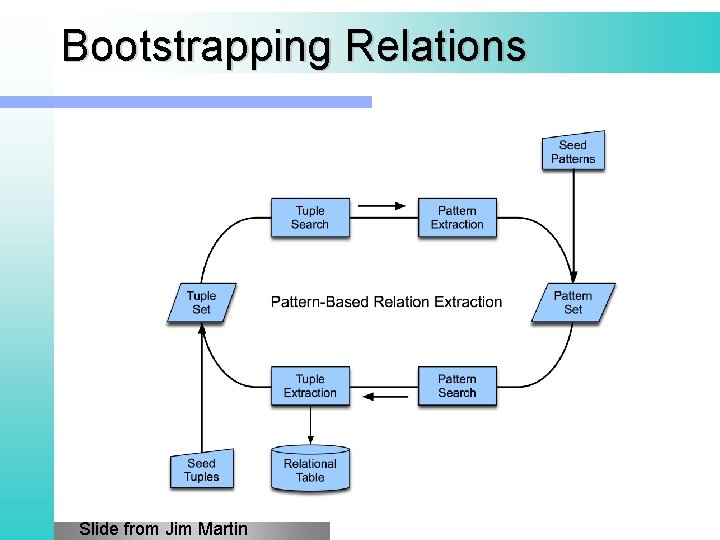

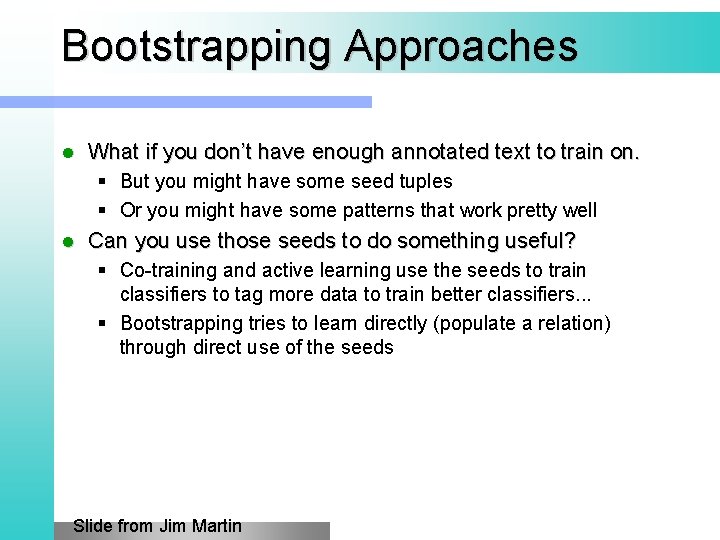

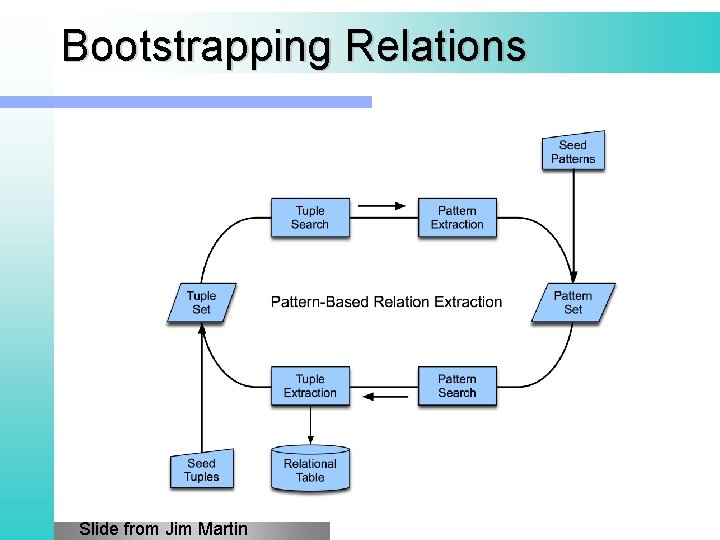

Bootstrapping Approaches
- What if you don't have enough annotated text to train on?
  - But you might have some seed tuples
  - Or you might have some patterns that work pretty well
- Can you use those seeds to do something useful?
  - Co-training and active learning use the seeds to train classifiers to tag more data to train better classifiers...
  - Bootstrapping tries to learn directly (populate a relation) through direct use of the seeds

Slide from Jim Martin

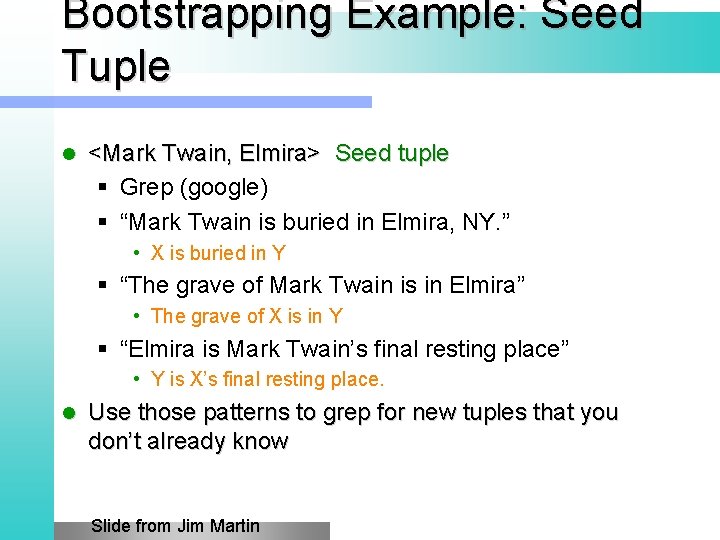

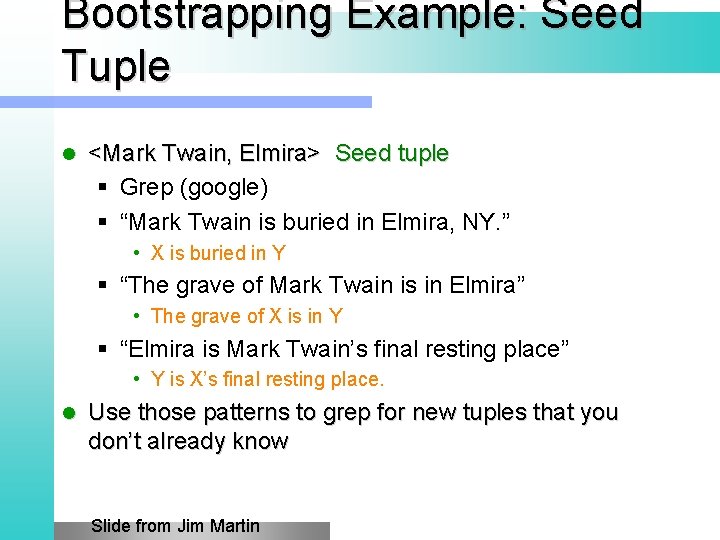

Bootstrapping Example: Seed Tuple
- Seed tuple: <Mark Twain, Elmira>
  - Grep (Google):
    - "Mark Twain is buried in Elmira, NY." gives the pattern: X is buried in Y
    - "The grave of Mark Twain is in Elmira" gives: The grave of X is in Y
    - "Elmira is Mark Twain's final resting place" gives: Y is X's final resting place
- Use those patterns to grep for new tuples that you don't already know

Slide from Jim Martin
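The seed-to-pattern-to-tuple loop can be sketched with plain string substitution. Assumptions: a pattern is a whole sentence with the seed strings replaced by `<X>` and `<Y>`, X is a capitalized phrase, Y is a single capitalized word, and the last two corpus sentences are invented for illustration. Real systems generalize the patterns (and score them) rather than using whole sentences.

```python
import re

def induce_patterns(sentences, pairs):
    """Turn each sentence mentioning both members of a known pair into a
    pattern by replacing them with the slot markers <X> and <Y>."""
    patterns = set()
    for x, y in pairs:
        for s in sentences:
            if x in s and y in s:
                patterns.add(s.replace(x, "<X>").replace(y, "<Y>"))
    return patterns

def to_regex(pattern):
    """Compile a slot pattern: X = capitalized phrase, Y = capitalized word."""
    out = []
    for part in re.split(r"(<X>|<Y>)", pattern):
        if part == "<X>":
            out.append(r"(?P<x>[A-Z][\w ]*?)")
        elif part == "<Y>":
            out.append(r"(?P<y>[A-Z]\w*)")
        else:
            out.append(re.escape(part))
    return re.compile("".join(out))

def bootstrap(seeds, corpus, iterations=2):
    """Alternate between learning patterns and harvesting new tuples."""
    known = set(seeds)
    for _ in range(iterations):
        for pattern in induce_patterns(corpus, known):
            rx = to_regex(pattern)
            for s in corpus:
                m = rx.search(s)
                if m:
                    known.add((m.group("x"), m.group("y")))
    return known

CORPUS = [
    "Mark Twain is buried in Elmira",
    "The grave of Mark Twain is in Elmira",
    "Elmira is Mark Twain's final resting place",
    "Emily Dickinson is buried in Amherst",    # invented example
    "The grave of Jim Morrison is in Paris",   # invented example
]
print(sorted(bootstrap([("Mark Twain", "Elmira")], CORPUS)))
```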

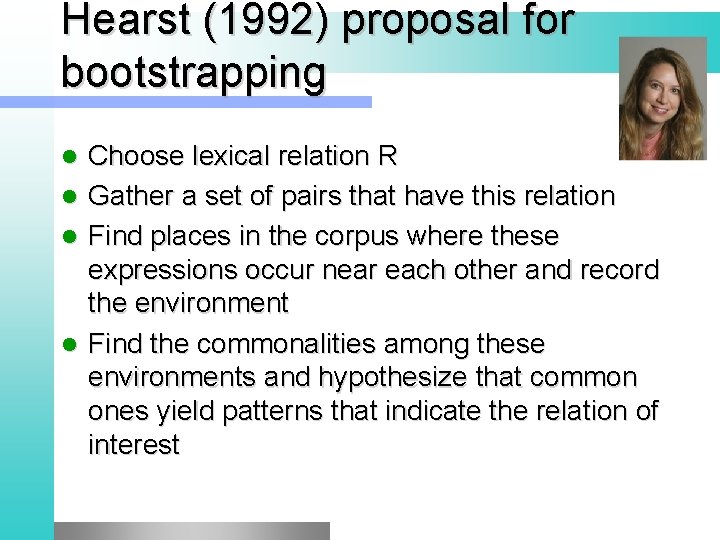

Hearst (1992) proposal for bootstrapping
- Choose lexical relation R
- Gather a set of pairs that have this relation
- Find places in the corpus where these expressions occur near each other and record the environment
- Find the commonalities among these environments and hypothesize that common ones yield patterns that indicate the relation of interest

Bootstrapping Relations

Slide from Jim Martin

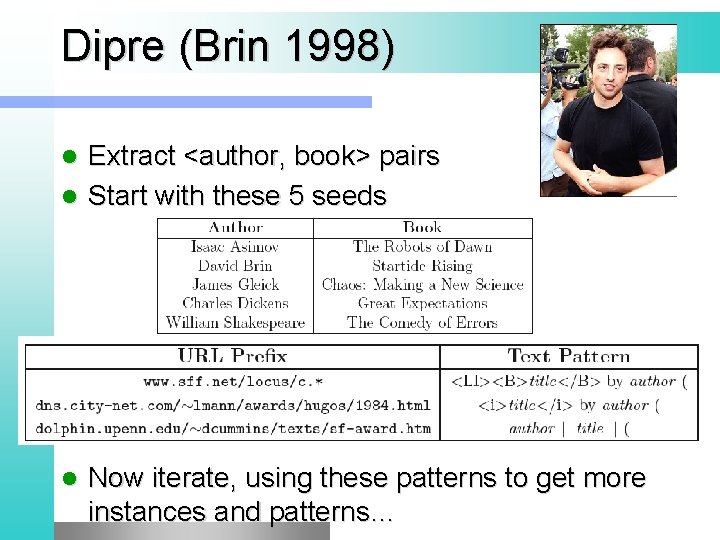

DIPRE (Brin 1998)
- Extract <author, book> pairs
- Start with these 5 seeds
- Learn these patterns:
- Now iterate, using these patterns to get more instances and patterns…

![Snowball [Agichtein & Gravano 2000]: exploit duality between patterns and tuples](https://slidetodoc.com/presentation_image_h2/0d8e954fc2154cba3592c5c7317ee4bf/image-85.jpg)

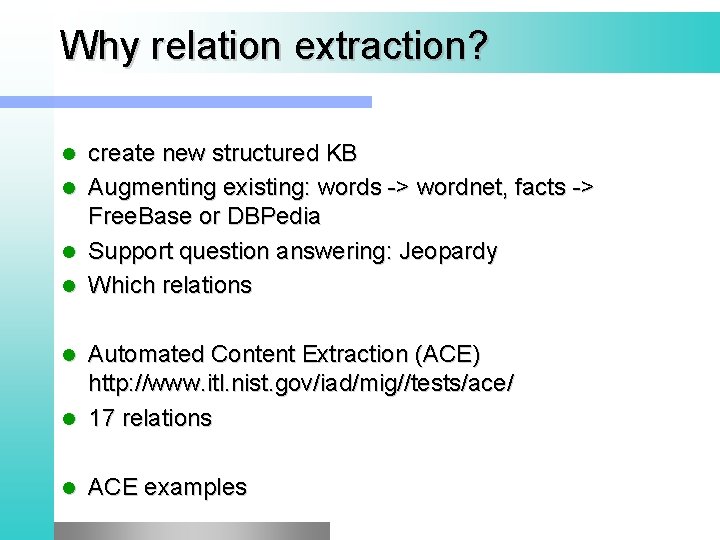

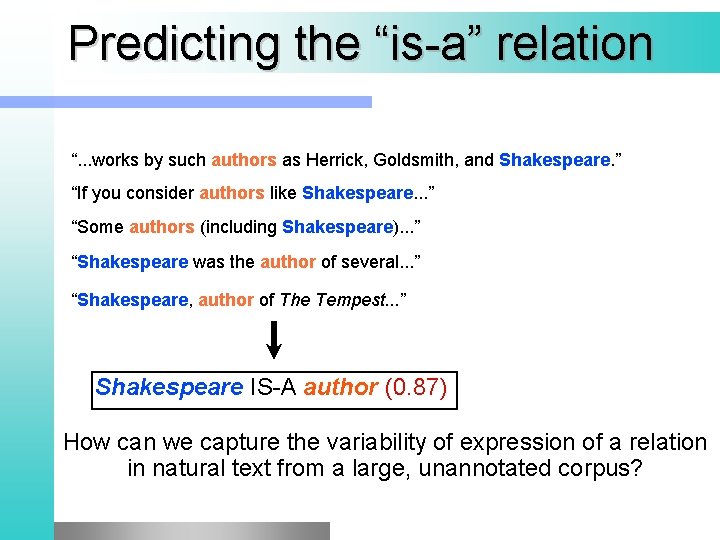

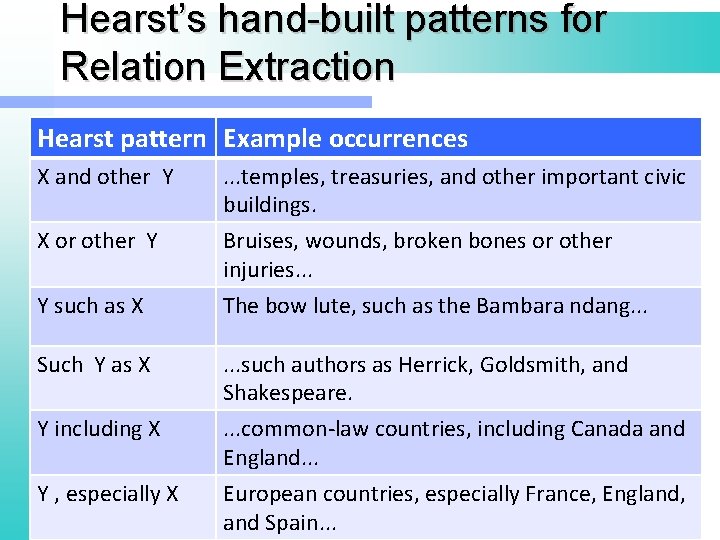

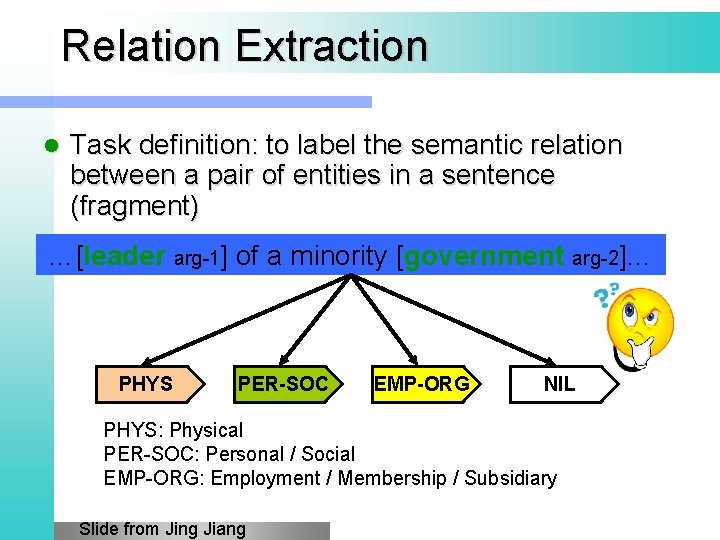

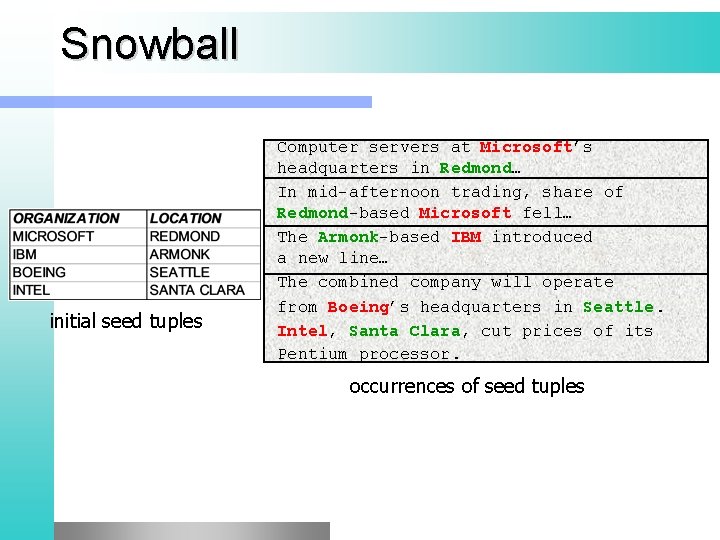

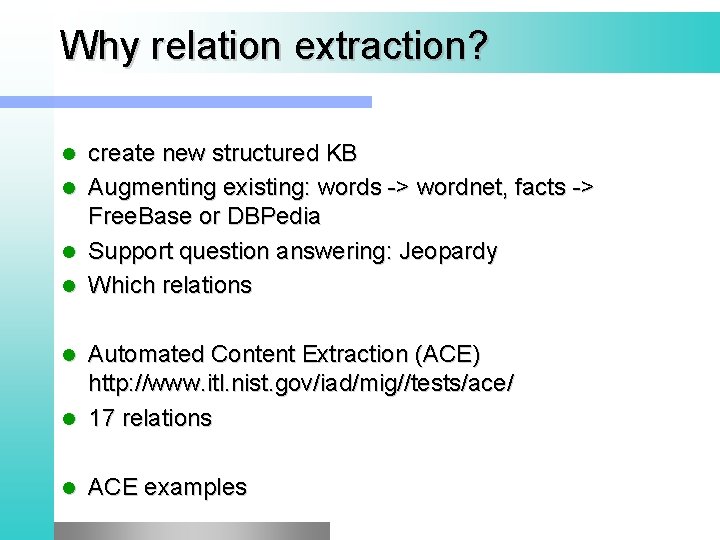

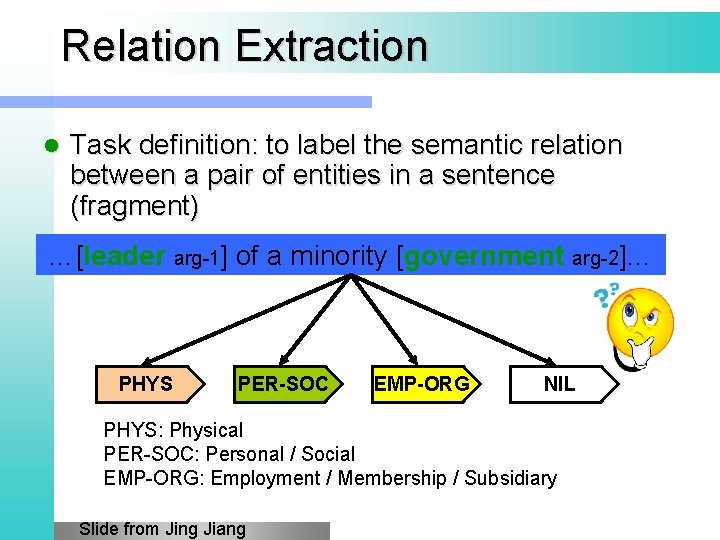

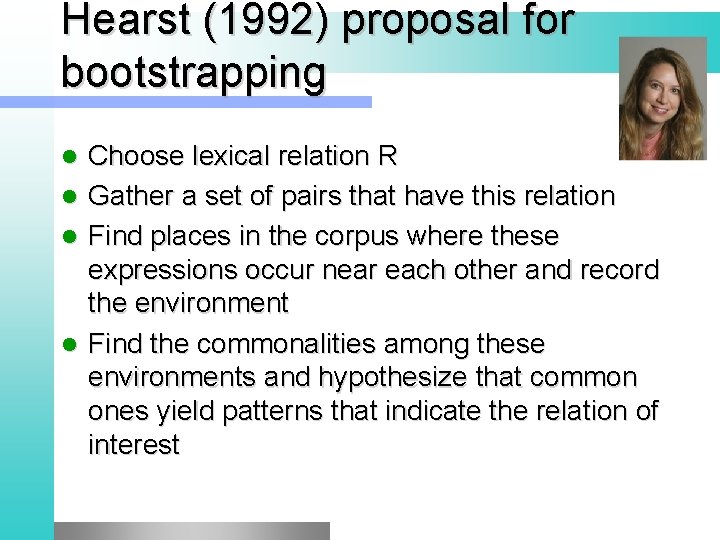

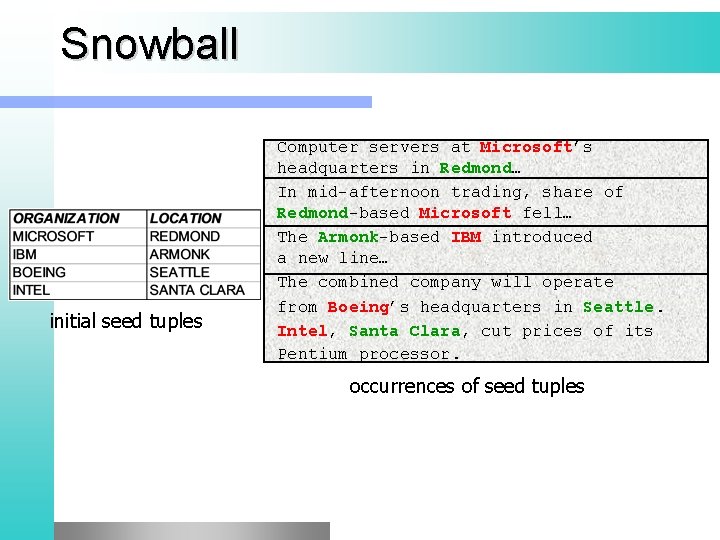

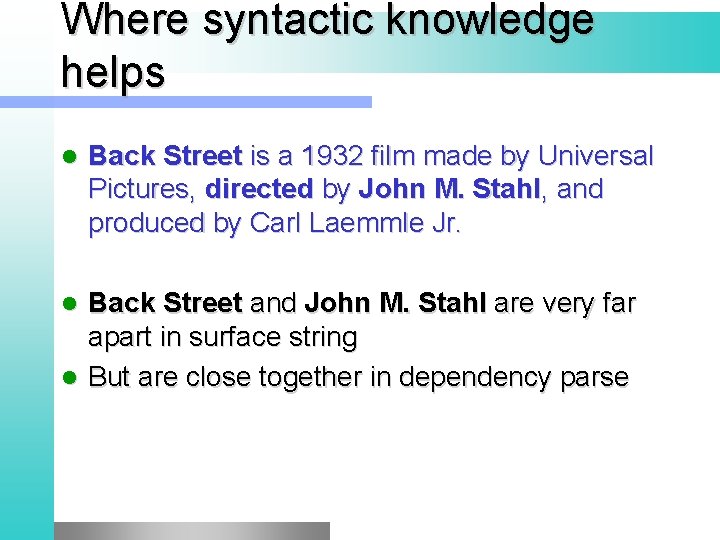

Snowball [Agichtein & Gravano 2000]
- Exploit duality between patterns and tuples
  - find tuples that match a set of patterns
  - find patterns that match a lot of tuples
  - bootstrapping approach

(Figure: Initial Seed Tuples → Occurrences of Seed Tuples → Tag Entities → Generate Extraction Patterns → Generate New Seed Tuples → Augment Table.)

Snowball initial seed tuples

Occurrences of seed tuples:
- Computer servers at Microsoft's headquarters in Redmond…
- In mid-afternoon trading, share of Redmond-based Microsoft fell…
- The Armonk-based IBM introduced a new line…
- The combined company will operate from Boeing's headquarters in Seattle.
- Intel, Santa Clara, cut prices of its Pentium processor.

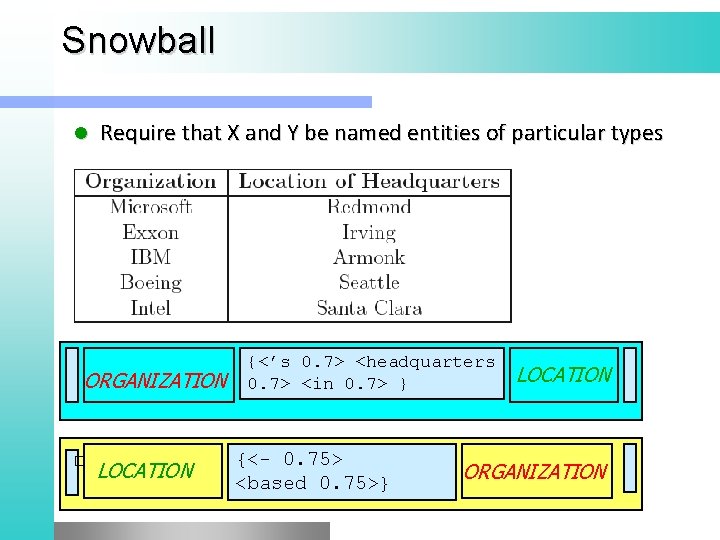

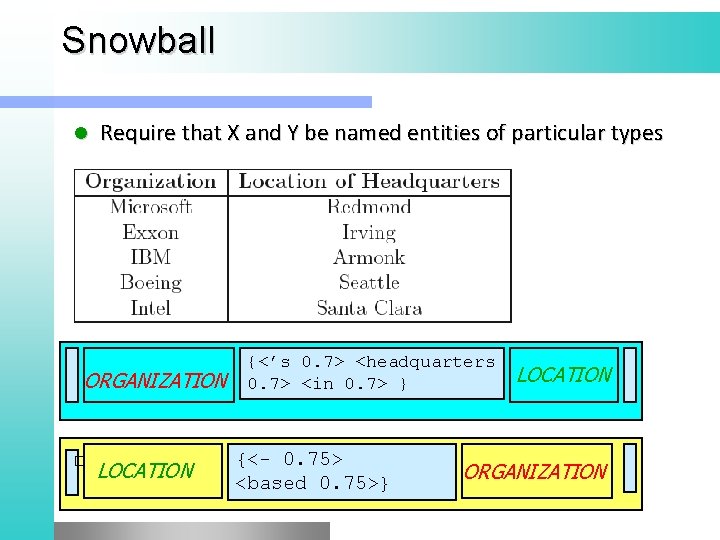

Snowball
- Require that X and Y be named entities of particular types:
  - `ORGANIZATION {<'s 0.7> <headquarters 0.7> <in 0.7>} LOCATION`
  - `LOCATION {<- 0.75> <based 0.75>} ORGANIZATION`

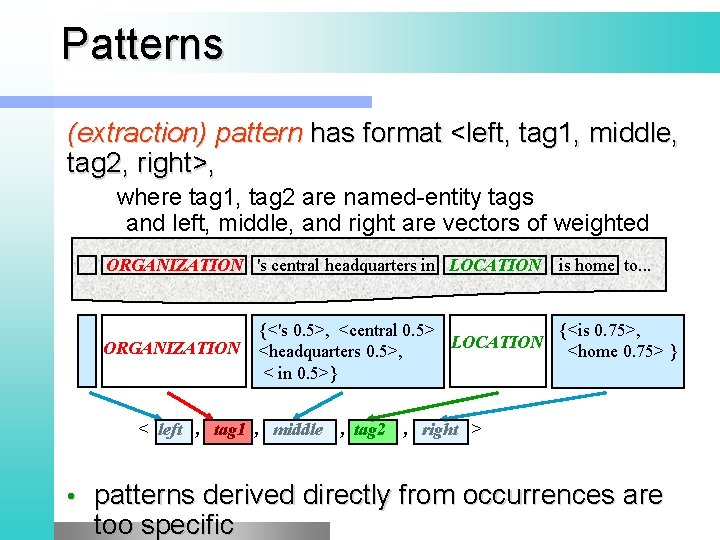

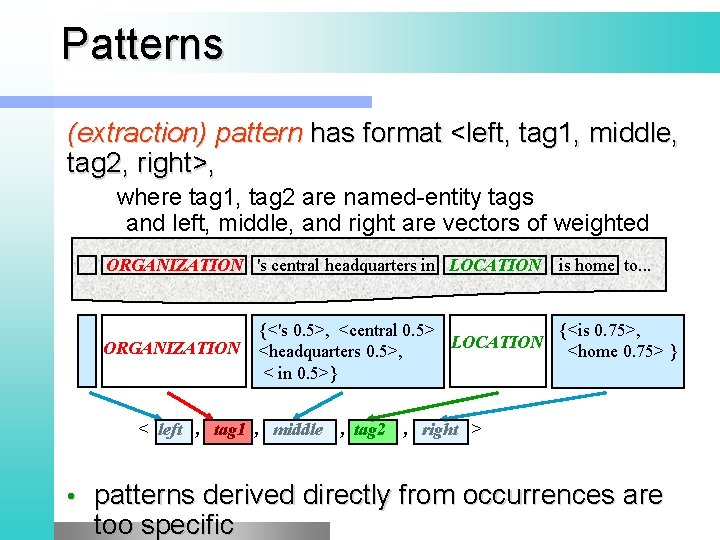

Patterns
- An (extraction) pattern has the format `<left, tag1, middle, tag2, right>`, where tag1 and tag2 are named-entity tags and left, middle, and right are vectors of weighted terms
- Example: "ORGANIZATION's central headquarters in LOCATION is home to..."
  - `ORGANIZATION {<'s 0.5>, <central 0.5>, <headquarters 0.5>, <in 0.5>} LOCATION {<is 0.75>, <home 0.75>}`
- Patterns derived directly from occurrences are too specific
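A sketch of how a Snowball-style pattern can be scored against a new occurrence: each context becomes a unit-length term vector, and the degree of match is the sum of the left, middle and right dot products, or zero when the entity tags differ. Equal weighting of the three contexts and raw-count term weights are simplifying assumptions here, so the weights will not reproduce the numbers on the slide.

```python
import math

def normalize(terms):
    """Count terms, then scale the vector to unit length."""
    counts = {}
    for t in terms:
        counts[t] = counts.get(t, 0) + 1
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {t: c / norm for t, c in counts.items()} if norm else {}

def dot(a, b):
    return sum(w * b.get(t, 0.0) for t, w in a.items())

def match(p, s):
    """Degree of match between two 5-tuples (left, tag1, middle, tag2, right);
    0 unless both entity tags agree."""
    if p[1] != s[1] or p[3] != s[3]:
        return 0.0
    return dot(p[0], s[0]) + dot(p[2], s[2]) + dot(p[4], s[4])

# Pattern from "ORGANIZATION's central headquarters in LOCATION is home to"
pattern = ({}, "ORGANIZATION", normalize(["'s", "central", "headquarters", "in"]),
           "LOCATION", normalize(["is", "home"]))
# Occurrence from "Microsoft's headquarters in Redmond"
occurrence = ({}, "ORGANIZATION", normalize(["'s", "headquarters", "in"]), "LOCATION", {})
print(round(match(pattern, occurrence), 3))  # 0.866
```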

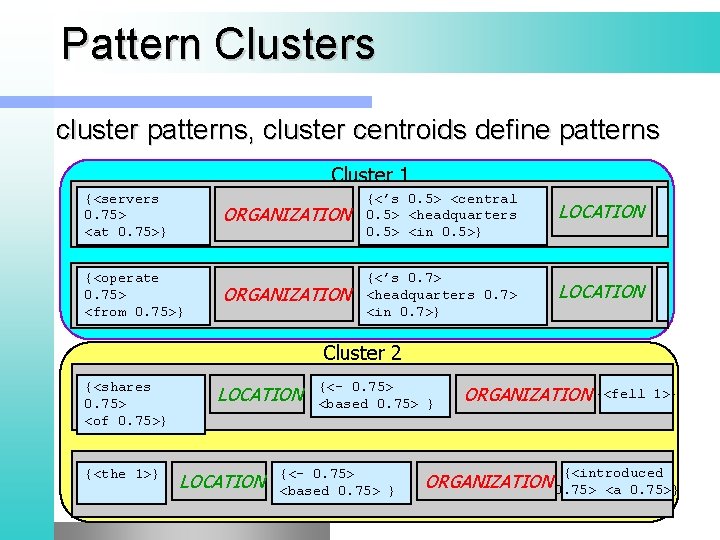

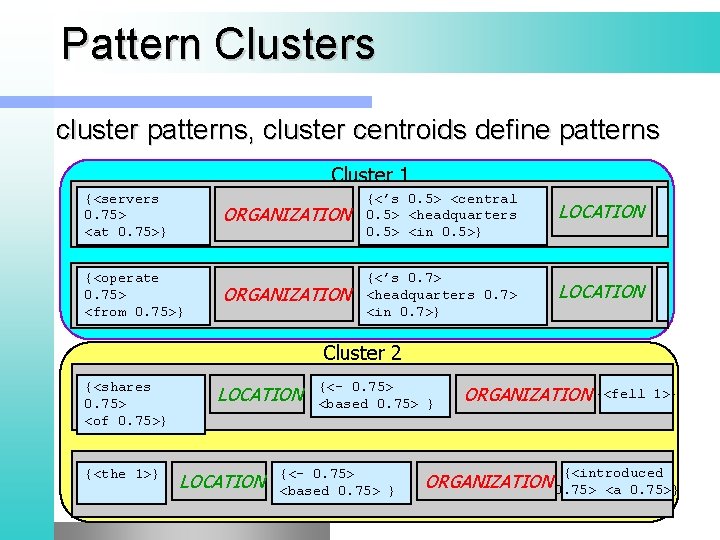

Pattern Clusters
- Cluster patterns; cluster centroids define patterns
- Cluster 1:
  - `{<servers 0.75> <at 0.75>} ORGANIZATION {<'s 0.5> <central 0.5> <headquarters 0.5> <in 0.5>} LOCATION`
  - `{<operate 0.75> <from 0.75>} ORGANIZATION {<'s 0.7> <headquarters 0.7> <in 0.7>} LOCATION`
- Cluster 2:
  - `{<shares 0.75> <of 0.75>} LOCATION {<- 0.75> <based 0.75>} ORGANIZATION {<fell 1>}`
  - `{<the 1>} LOCATION {<- 0.75> <based 0.75>} ORGANIZATION {<introduced 0.75> <a 0.75>}`

5 easy methods for relation extraction
1. Hand-built patterns
2. Supervised methods
3. Bootstrapping (seed) methods
4. Unsupervised methods
5. Distant supervision

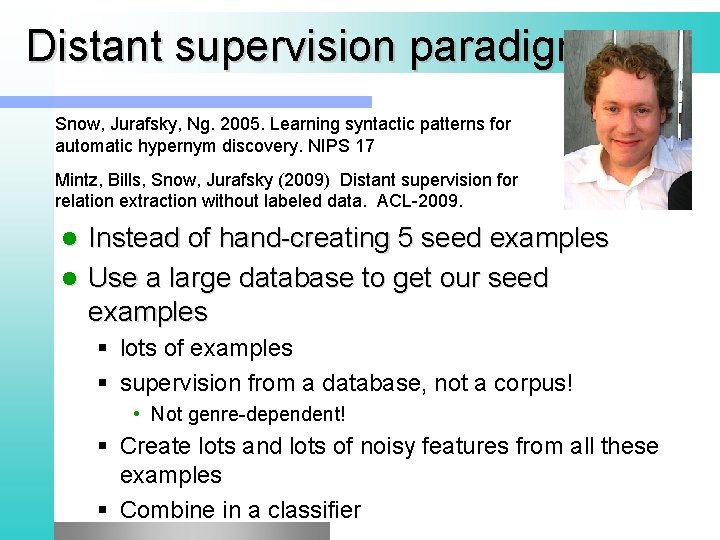

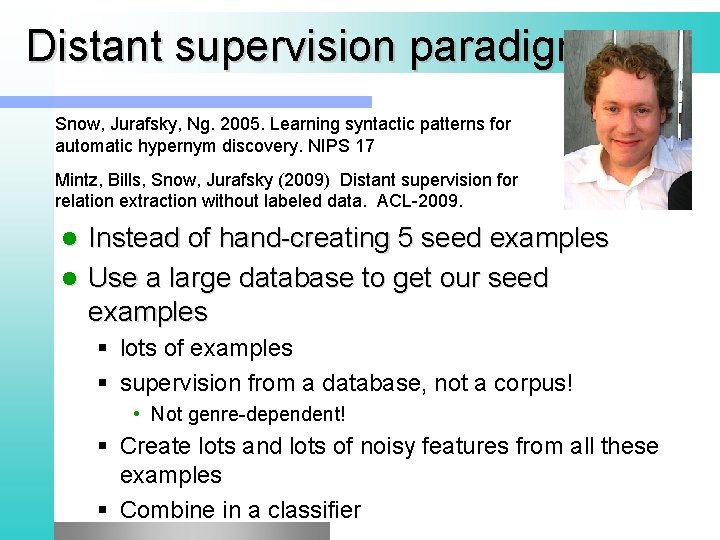

Distant supervision paradigm

Snow, Jurafsky, Ng. 2005. Learning syntactic patterns for automatic hypernym discovery. NIPS 17

Mintz, Bills, Snow, Jurafsky. 2009. Distant supervision for relation extraction without labeled data. ACL-2009.

- Instead of hand-creating 5 seed examples
- Use a large database to get our seed examples
  - lots of examples
  - supervision from a database, not a corpus!
    - Not genre-dependent!
  - Create lots and lots of noisy features from all these examples
  - Combine in a classifier

Distant supervision paradigm
- Has advantages of supervised classification:
  - use of rich hand-created knowledge
- Has advantages of unsupervised classification:
  - effectively unlimited amounts of data
  - allows a very large number of weak features
  - not sensitive to the training corpus

Relation Classification with “Distant Supervision”
We construct a noisy training set consisting of occurrences from our corpus that contain an IS-A pair according to WordNet.
Suppose scientists could erase memories with a single substance in the brain.
Slide from Rion Snow

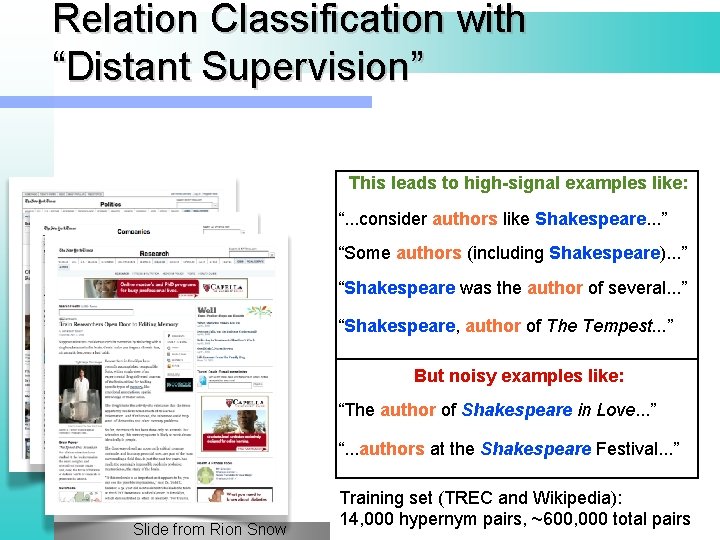


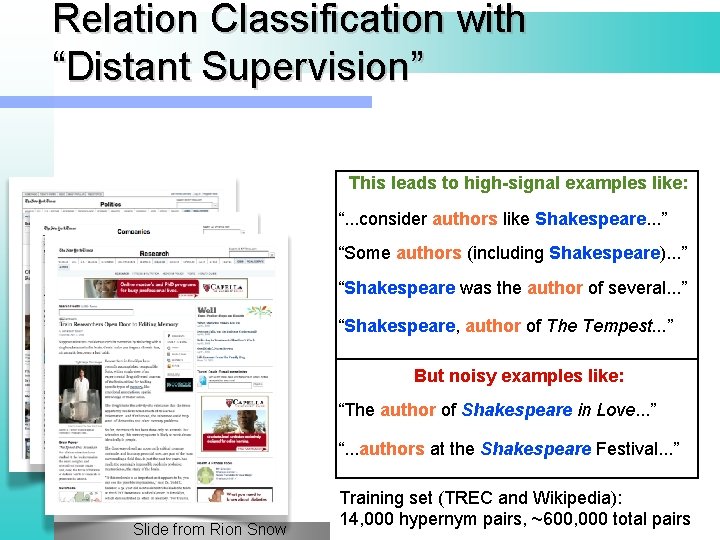

Relation Classification with “Distant Supervision”
This leads to high-signal examples like:
- “...consider authors like Shakespeare...”
- “Some authors (including Shakespeare)...”
- “Shakespeare was the author of several...”
- “Shakespeare, author of The Tempest...”

But noisy examples like:
- “The author of Shakespeare in Love...”
- “...authors at the Shakespeare Festival...”

Slide from Rion Snow
Training set (TREC and Wikipedia): 14,000 hypernym pairs, ~600,000 total pairs
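The labeling step can be illustrated with a toy IS-A table standing in for WordNet (hypothetical data, plus a crude plural rule that is our own assumption). Every sentence that mentions both words of a known pair is labeled positive, which is exactly how the noisy examples slip in: the label comes from the database, not from reading the sentence.

```python
import re

# Toy stand-in for WordNet: known (hyponym, hypernym) IS-A pairs (hypothetical data).
ISA_PAIRS = {("shakespeare", "author")}

SENTENCES = [
    "... consider authors like Shakespeare ...",
    "Some authors (including Shakespeare) ...",
    "Shakespeare was the author of several ...",
    "Shakespeare, author of The Tempest ...",
    "The author of Shakespeare in Love ...",        # noisy: a film title
    "... authors at the Shakespeare Festival ...",  # noisy: an event name
    "Marlowe was a playwright.",                    # no known pair: not kept
]


def label_with_database(sentences, isa_pairs):
    """Keep each sentence that mentions both words of a known IS-A pair and
    label it positive, whether or not the sentence actually expresses IS-A."""
    kept = []
    for sentence in sentences:
        tokens = set(re.findall(r"[a-z]+", sentence.lower()))
        for hypo, hyper in isa_pairs:
            # Crude plural handling: "authors" also counts as a mention of "author".
            if hypo in tokens and (hyper in tokens or hyper + "s" in tokens):
                kept.append((hypo, hyper, sentence))
    return kept


for _, _, sentence in label_with_database(SENTENCES, ISA_PAIRS):
    print("IS-A(shakespeare, author):", sentence)
```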

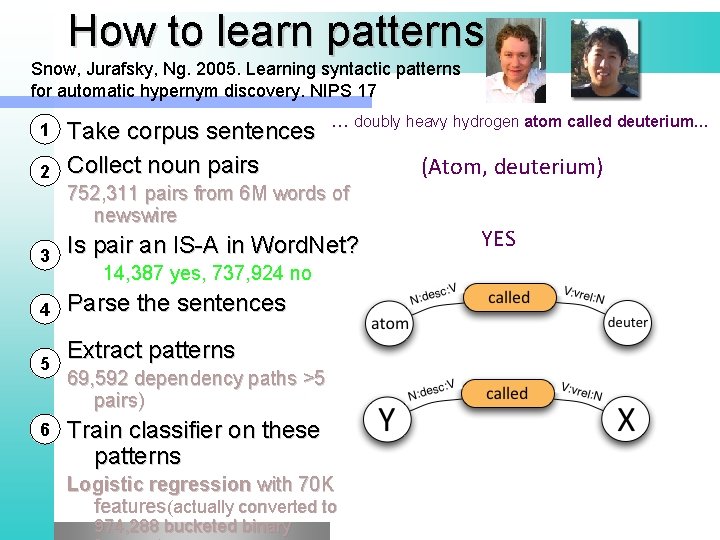

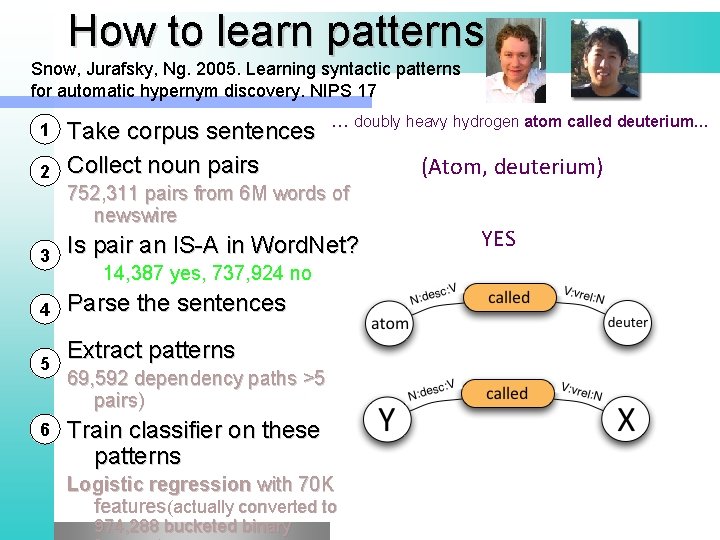

How to learn patterns
Snow, Jurafsky, Ng. 2005. Learning syntactic patterns for automatic hypernym discovery. NIPS 17
1. Take corpus sentences: “… doubly heavy hydrogen atom called deuterium…”
2. Collect noun pairs, e.g. (atom, deuterium): 752,311 pairs from 6M words of newswire
3. Is the pair an IS-A in WordNet? (atom, deuterium): YES; overall 14,387 yes, 737,924 no
4. Parse the sentences
5. Extract patterns: 69,592 dependency paths (>5 pairs)
6. Train a classifier on these patterns: logistic regression with 70K features (actually converted to 974,288 bucketed binary features)
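Step 5 keeps only patterns that generalize across pairs. The sketch below reads “>5 pairs” as “seen with more than five distinct noun pairs”, which is our interpretation. It also uses surface strings such as `"X called Y"` where the real system uses dependency paths; the function name and data are hypothetical.

```python
from collections import defaultdict


def frequent_patterns(occurrences, min_pairs=5):
    """occurrences: iterable of (pattern, noun_pair) tuples, one per sentence.
    Keep only patterns seen with more than `min_pairs` distinct noun pairs."""
    pairs_by_pattern = defaultdict(set)
    for pattern, pair in occurrences:
        pairs_by_pattern[pattern].add(pair)
    return {p for p, pairs in pairs_by_pattern.items() if len(pairs) > min_pairs}


# Hypothetical occurrences: "X called Y" links six different pairs, while
# "X such as Y" is seen three times but with only two distinct pairs.
occurrences = [("X called Y", (f"hypernym{i}", f"hyponym{i}")) for i in range(6)]
occurrences += [("X such as Y", ("author", "shakespeare")),
                ("X such as Y", ("author", "shakespeare")),
                ("X such as Y", ("atom", "deuterium"))]
print(frequent_patterns(occurrences))  # {'X called Y'}
```

Counting distinct pairs rather than raw occurrences keeps a pattern that happens to repeat one fixed phrase from looking general.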

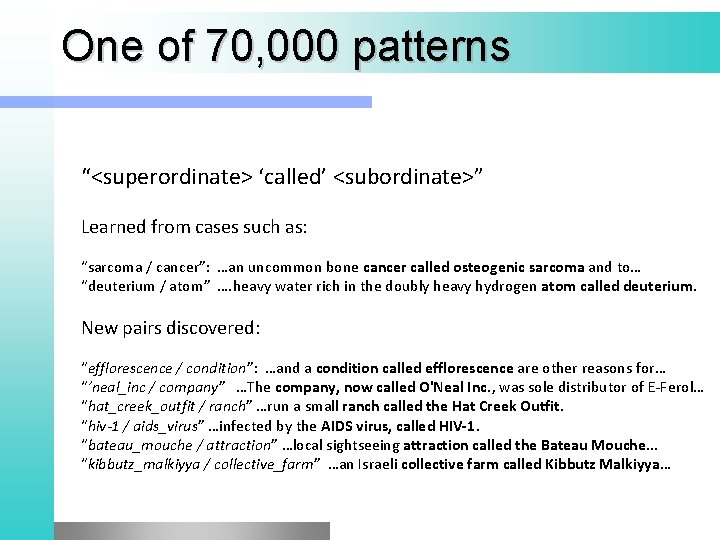

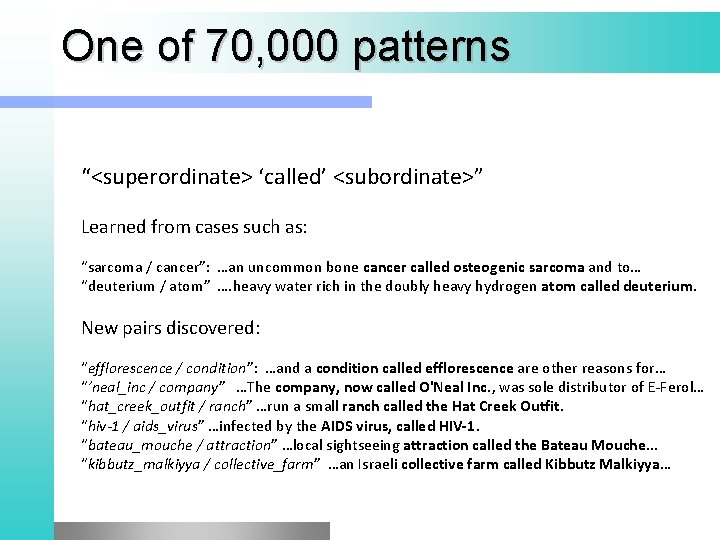

One of 70,000 patterns: “<superordinate> ‘called’ <subordinate>”
Learned from cases such as:
- “sarcoma / cancer”: …an uncommon bone cancer called osteogenic sarcoma and to…
- “deuterium / atom”: …heavy water rich in the doubly heavy hydrogen atom called deuterium.

New pairs discovered:
- “efflorescence / condition”: …and a condition called efflorescence are other reasons for…
- “o'neal_inc / company”: …The company, now called O'Neal Inc., was sole distributor of E-Ferol…
- “hat_creek_outfit / ranch”: …run a small ranch called the Hat Creek Outfit.
- “hiv-1 / aids_virus”: …infected by the AIDS virus, called HIV-1.
- “bateau_mouche / attraction”: …local sightseeing attraction called the Bateau Mouche...
- “kibbutz_malkiyya / collective_farm”: …an Israeli collective farm called Kibbutz Malkiyya…
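For comparison, here is a crude surface approximation of this pattern. It assumes a regular expression in place of the dependency path the slide describes: the word just before “called” is taken as the hypernym, and the following capitalized phrase (or single word) as the hyponym. The regex and function name are hypothetical. As the last call shows, surface matching returns the wrong hypernym for the O'Neal Inc. sentence, the kind of case a dependency path handles.

```python
import re

# Hypernym: the word before "called" (an optional comma is allowed).
# Hyponym: a run of capitalized words, or else a single word, after an optional "the".
CALLED = re.compile(r"([\w-]+),? called (?:the )?([A-Z][\w'-]*(?: [A-Z][\w'-]*)*|[\w-]+)")


def extract_called(sentence):
    """Return (hyponym, hypernym) pairs matched by the surface pattern."""
    return [(m.group(2), m.group(1)) for m in CALLED.finditer(sentence)]


print(extract_called("the doubly heavy hydrogen atom called deuterium."))
print(extract_called("run a small ranch called the Hat Creek Outfit."))
print(extract_called("infected by the AIDS virus, called HIV-1."))
# Surface matching fails here: "now" is returned as the hypernym, not "company".
print(extract_called("The company, now called O'Neal Inc., was sole distributor"))
```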

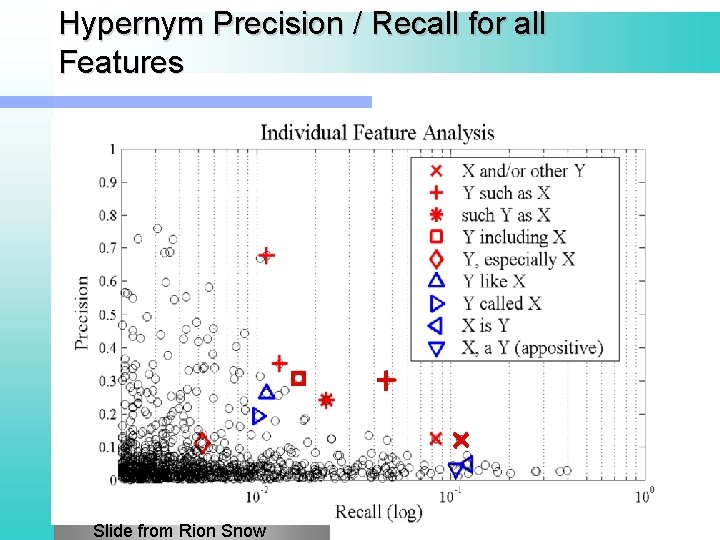

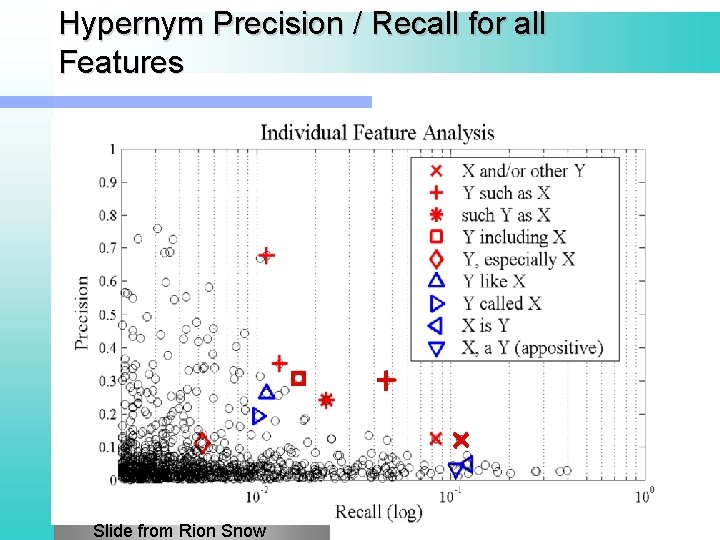

Hypernym Precision / Recall for all Features Slide from Rion Snow

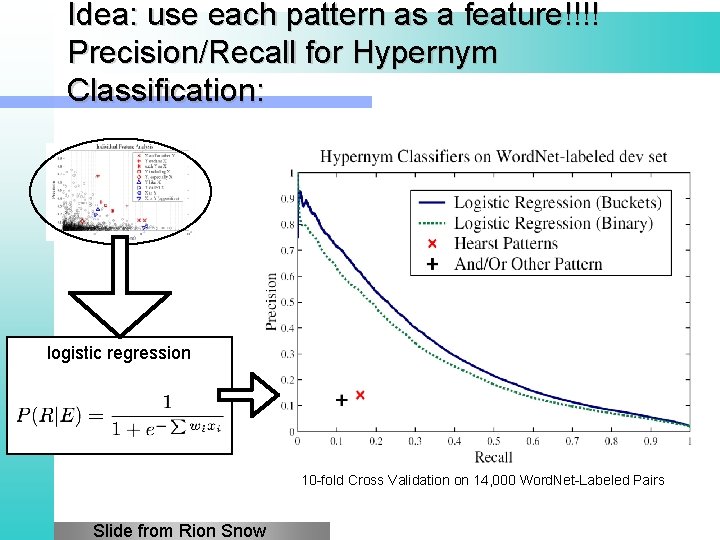

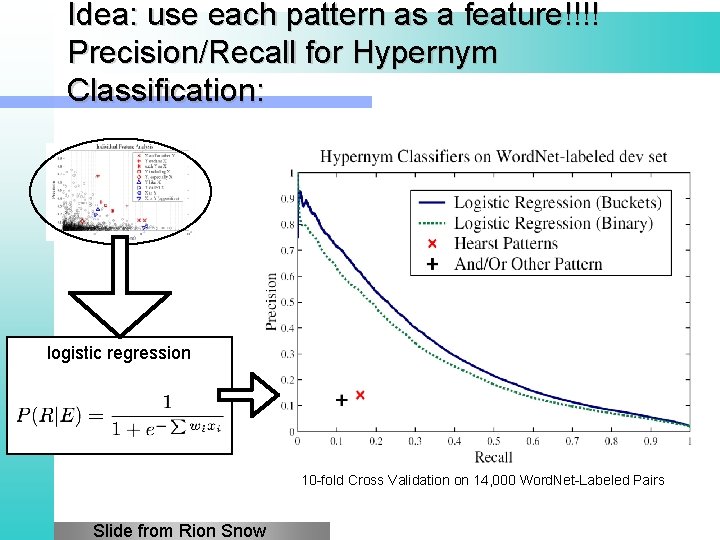

Idea: use each pattern as a feature!
Precision/recall for hypernym classification: logistic regression, 10-fold cross-validation on 14,000 WordNet-labeled pairs
Slide from Rion Snow
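Here is a sketch of “each pattern as a feature” with a logistic regression trained by plain stochastic gradient descent. The slide's numbers work out to exactly 14 binary features per pattern (974,288 / 69,592 = 14). The log-scale bucket boundaries used to reach 14 are our assumption, not the published scheme, and the training pairs and function names are hypothetical.

```python
import math


def bucket(count):
    """Map a positive count to one of 14 log-scale buckets: 1, 2-3, 4-7, ..., >= 8192."""
    return min(count.bit_length(), 14)


def featurize(pattern_counts):
    """{pattern: count} for one noun pair -> set of binary "pattern|bucket" features."""
    return {f"{p}|{bucket(c)}" for p, c in pattern_counts.items() if c > 0}


def score(w, b, feats):
    """P(IS-A) = sigmoid(b + sum of the weights of the active features)."""
    z = b + sum(w.get(f, 0.0) for f in feats)
    return 1.0 / (1.0 + math.exp(-z))


def train(examples, epochs=200, lr=0.5):
    """Stochastic gradient descent on log loss; examples are (feature set, 0 or 1)."""
    w, b = {}, 0.0
    for _ in range(epochs):
        for feats, y in examples:
            g = score(w, b, feats) - y  # derivative of the log loss w.r.t. z
            b -= lr * g
            for f in feats:
                w[f] = w.get(f, 0.0) - lr * g
    return w, b


# Hypothetical training pairs: pattern counts observed for a noun pair, plus its WordNet label.
examples = [
    (featurize({"X called Y": 1}), 1),
    (featurize({"X called Y": 3}), 1),
    (featurize({"Y such as X": 2}), 1),
    (featurize({"X of Y": 1}), 0),
    (featurize({"X of Y": 5}), 0),
    (featurize({"X at Y": 1}), 0),
]
w, b = train(examples)
print(score(w, b, featurize({"X called Y": 1})))  # > 0.5
print(score(w, b, featurize({"X of Y": 1})))      # < 0.5
```

Bucketing lets the model give different weights to “seen once” and “seen many times” for the same pattern while staying linear in binary features.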

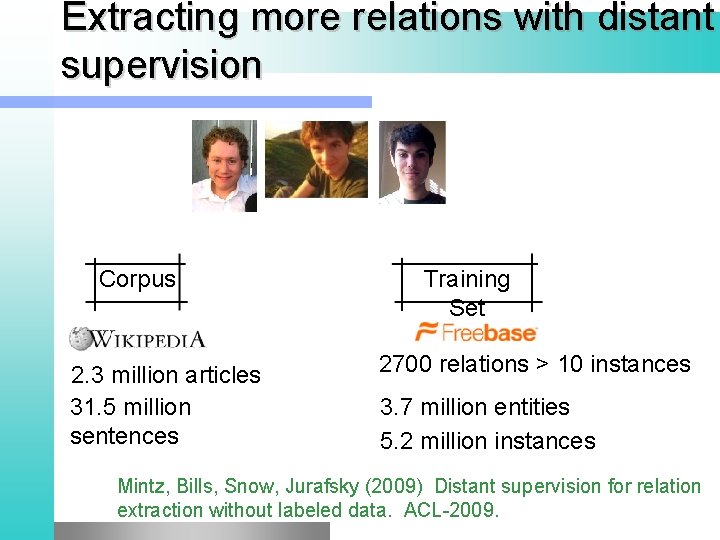

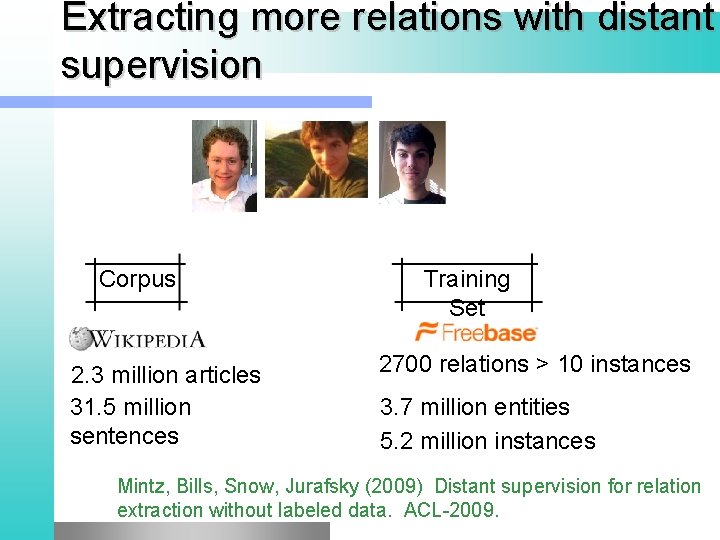

Extracting more relations with distant supervision
- Corpus: 2.3 million articles, 31.5 million sentences
- Training set: 2,700 relations with > 10 instances, 3.7 million entities, 5.2 million instances

Mintz, Bills, Snow, Jurafsky (2009). Distant supervision for relation extraction without labeled data. ACL-2009.

Frequent Freebase Relations

Algorithm: Distant Supervision
- A kind of weakly supervised learning:
  - Use a large database to get seed examples
  - Create lots and lots of noisy pattern features from all these examples
  - Combine in a classifier
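A minimal sketch of the labeling half of this algorithm follows, with a toy dictionary standing in for Freebase. It pools the features of every sentence that mentions the same entity pair and labels the pool with the relation from the database. Treating pairs missing from the database as negative (`NONE`) examples is a simplification on our part, and `KB`, `distant_label` and the feature strings are hypothetical.

```python
from collections import defaultdict

# Hypothetical miniature knowledge base: (entity1, entity2) -> relation name.
KB = {("Edwin Hubble", "Marshfield"): "born-in"}


def distant_label(mentions, kb):
    """mentions: (e1, e2, features) for each sentence containing both entities.
    Features from all sentences about the same pair are pooled, and the pool is
    labeled with the database relation, whether or not each sentence expresses it."""
    pooled = defaultdict(set)
    for e1, e2, feats in mentions:
        pooled[(e1, e2)].update(feats)
    return [(feats, kb.get(pair, "NONE")) for pair, feats in pooled.items()]


mentions = [
    ("Edwin Hubble", "Marshfield", {"was born in"}),
    ("Edwin Hubble", "Marshfield", {"native of"}),
    ("Edwin Hubble", "Mount Wilson", {"worked at"}),  # pair missing from the toy KB
]
for feats, label in distant_label(mentions, KB):
    print(label, sorted(feats))
```

The pooled `(features, label)` pairs are what the classifier in the last step is trained on.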

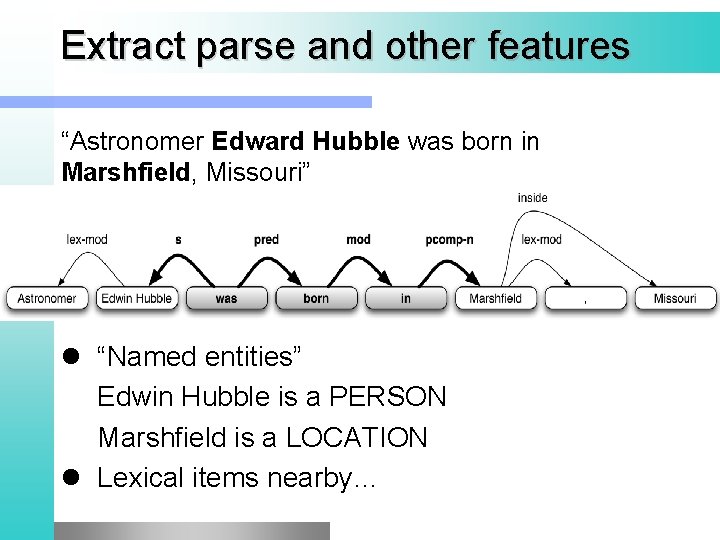

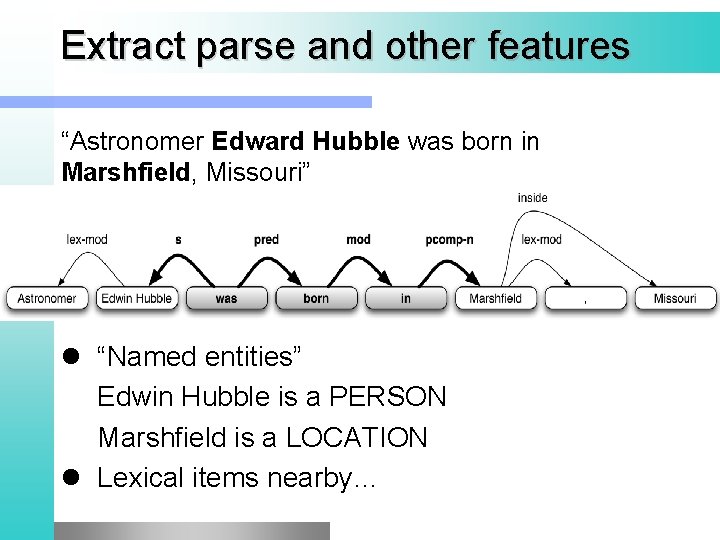

Extract parse and other features
“Astronomer Edwin Hubble was born in Marshfield, Missouri”
- “Named entities”: Edwin Hubble is a PERSON, Marshfield is a LOCATION
- Lexical items nearby…
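Mintz et al. describe conjunctive lexical features built from the words between and around the two entities. The sketch below is loosely modeled on that idea, with simplifications of our own: whitespace tokenization, no part-of-speech information, no padding, and windows of 0 to 2 words. The function name and feature format are hypothetical.

```python
def lexical_features(tokens, e1_span, e2_span, type1, type2, max_window=2):
    """Conjunctive lexical features for one entity-pair mention.
    tokens: list of words; e1_span/e2_span: (start, end) token indices, end exclusive,
    with e1 before e2. Each feature joins the left window, the first entity's type,
    the words between the entities, the second entity's type and the right window."""
    middle = " ".join(tokens[e1_span[1]:e2_span[0]])
    feats = []
    for k in range(max_window + 1):
        left = " ".join(tokens[max(0, e1_span[0] - k):e1_span[0]])
        right = " ".join(tokens[e2_span[1]:e2_span[1] + k])
        feats.append(f"[{left}] {type1} [{middle}] {type2} [{right}]")
    return feats


tokens = "Astronomer Edwin Hubble was born in Marshfield , Missouri".split()
for feature in lexical_features(tokens, (1, 3), (6, 7), "PERSON", "LOCATION"):
    print(feature)
```

Each feature is very specific, which is why the approach relies on the large number of examples that distant supervision provides.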

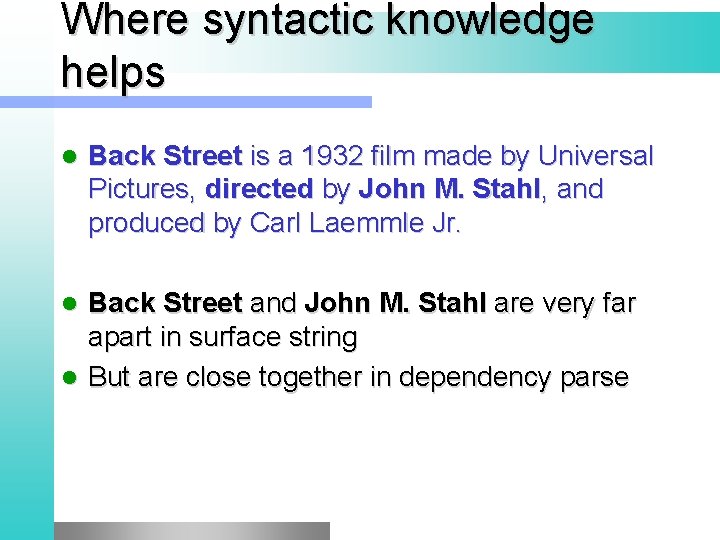

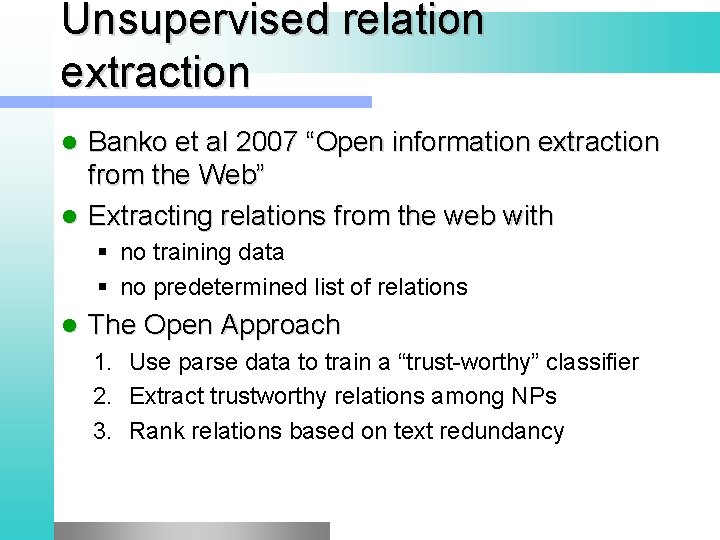

New relations learned
- Montmartre IS-IN Paris
- Fort Erie IS-IN Ontario
- Fyodor Kamensky DIED-IN Clearwater
- Upton Sinclair WROTE Lanny Budd
- Vince McMahon FOUNDED WWE
- Thomas Mellon HAS-PROFESSION Judge

Human evaluation: precision using Mechanical Turk labelers

| Features | Syntactic | Lexical | Both |
|---|---|---|---|
| Precision | 0.67 | 0.66 | 0.69 |

Where syntactic knowledge helps
- “Back Street is a 1932 film made by Universal Pictures, directed by John M. Stahl, and produced by Carl Laemmle Jr.”
- Back Street and John M. Stahl are very far apart in the surface string
- but are close together in the dependency parse
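The point about distance can be checked with a breadth-first search over a dependency graph. The arcs below are a hand-written, simplified analysis of the example sentence: multi-word names are collapsed into single nodes and function words like “by” are dropped. This is an illustrative assumption, not the output of any particular parser.

```python
from collections import deque

# Hand-written, simplified dependency arcs (head, dependent) for:
# "Back Street is a 1932 film made by Universal Pictures, directed by
#  John M. Stahl, and produced by Carl Laemmle Jr."
EDGES = [
    ("film", "Back_Street"), ("film", "is"), ("film", "a"), ("film", "1932"),
    ("film", "made"), ("made", "Universal_Pictures"),
    ("film", "directed"), ("directed", "John_M._Stahl"),
    ("film", "produced"), ("produced", "Carl_Laemmle_Jr."),
]

TOKENS = ["Back_Street", "is", "a", "1932", "film", "made", "by", "Universal_Pictures",
          ",", "directed", "by", "John_M._Stahl", ",", "and", "produced", "by",
          "Carl_Laemmle_Jr."]


def dependency_path(edges, source, target):
    """Shortest path between two nodes via breadth-first search, arcs treated as undirected."""
    graph = {}
    for head, dep in edges:
        graph.setdefault(head, []).append(dep)
        graph.setdefault(dep, []).append(head)
    prev = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            break
        for nxt in graph.get(node, []):
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    if target not in prev:
        return None
    path, node = [], target
    while node is not None:
        path.append(node)
        node = prev[node]
    return path[::-1]


path = dependency_path(EDGES, "Back_Street", "John_M._Stahl")
surface = TOKENS.index("John_M._Stahl") - TOKENS.index("Back_Street")
print(" -> ".join(path))  # Back_Street -> film -> directed -> John_M._Stahl
print(f"surface distance: {surface} tokens, dependency distance: {len(path) - 1} arcs")
```

A feature built from this short path (film, directed) stays the same however many modifiers sit between the two names in the surface string.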

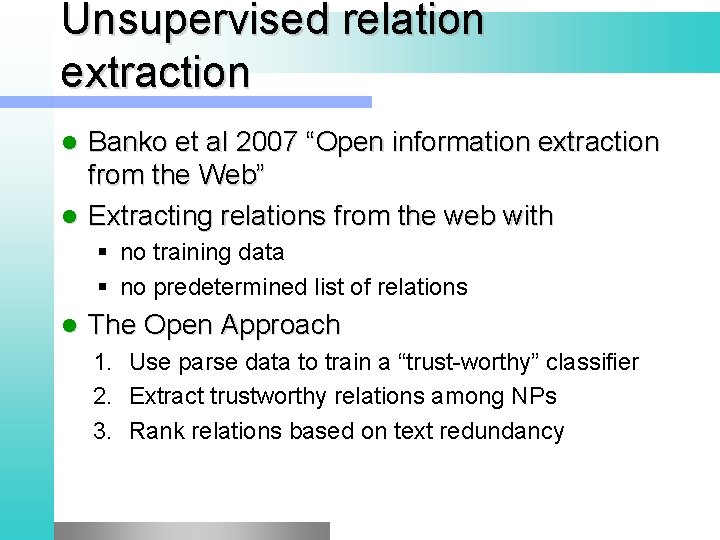

Unsupervised relation extraction
Banko et al. 2007, “Open information extraction from the Web”
- Extracting relations from the web with:
  - no training data
  - no predetermined list of relations
- The Open Approach:
  1. Use parse data to train a “trustworthy” classifier
  2. Extract trustworthy relations among NPs
  3. Rank relations based on text redundancy
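Step 3 can be illustrated with a plain frequency count. This is an assumption on our part: as we understand it, the original system scores redundancy with a probabilistic model rather than a raw count. The sketch lowercases each extracted triple, counts the distinct sentences that support it, and ranks triples by that count. The function name, tuple layout and sample extractions are hypothetical.

```python
def rank_by_redundancy(extractions):
    """extractions: (arg1, relation, arg2, sentence_id) tuples from the extractor.
    Normalize case, count distinct supporting sentences per triple, and sort by
    that count, most redundant first (ties broken alphabetically)."""
    support = {}
    for arg1, rel, arg2, sid in extractions:
        key = (arg1.lower(), rel.lower(), arg2.lower())
        support.setdefault(key, set()).add(sid)
    return sorted(((len(sids), triple) for triple, sids in support.items()),
                  key=lambda item: (-item[0], item[1]))


extractions = [
    ("Upton Sinclair", "wrote", "Lanny Budd", 1),
    ("upton sinclair", "wrote", "Lanny Budd", 2),
    ("Upton Sinclair", "wrote", "Lanny Budd", 2),  # same sentence again: counted once
    ("Montmartre", "is in", "Paris", 3),
]
for count, triple in rank_by_redundancy(extractions):
    print(count, triple)
```

Counting distinct sentences rather than raw extractions keeps one sentence that yields the same triple twice from inflating its support.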