Parallel Computing Project OPENMP using LINUX for Parallel

![References [1] Michael Resch, Edgar Gabriel, Alfred Geiger (1999). An Approach for MPI Based References [1] Michael Resch, Edgar Gabriel, Alfred Geiger (1999). An Approach for MPI Based](https://slidetodoc.com/presentation_image/c4ae671c0b13dfbcfaaa15b8f34c0b97/image-29.jpg)

- Slides: 30

Parallel Computing Project (OPENMP using LINUX for Parallel application) Summer 2008 Group Project Instructor: Prof. Nagi Mekhiel August 12 th, , 2008 Ravi Illapani Kyunghee Ko Lixiang Zhang

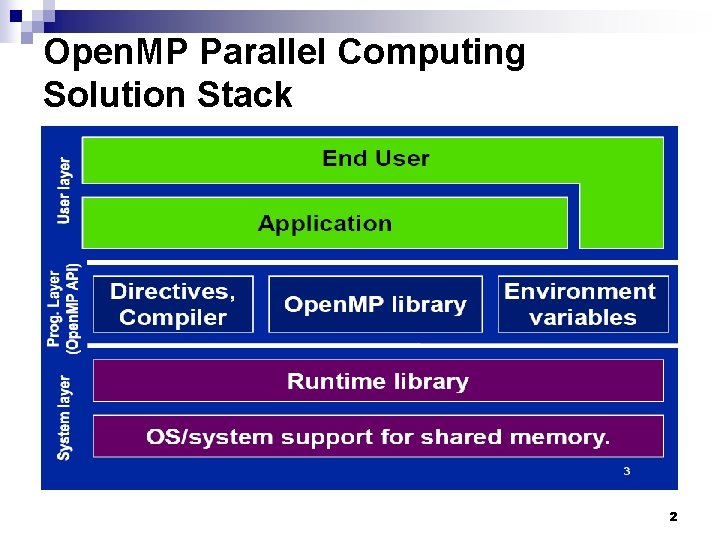

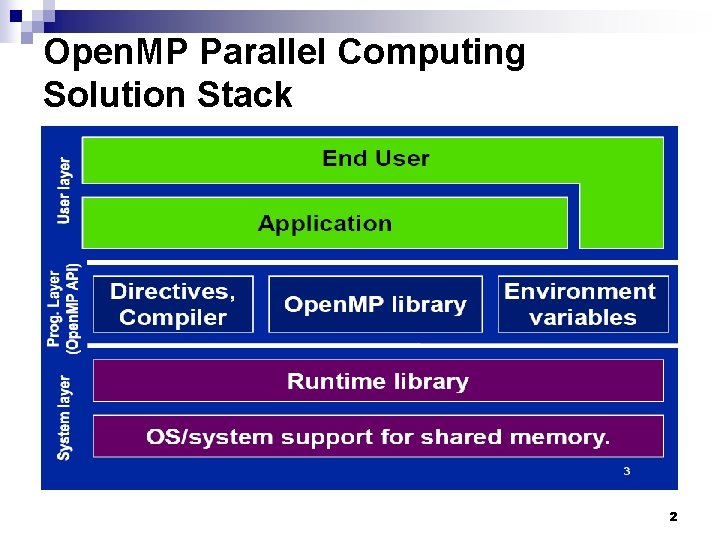

Open. MP Parallel Computing Solution Stack 2

Recall Basic Idea of Open. MP n The program generated by the compiler is executed by multiple threads ¨ One thread per processor or core n Each thread performs part of the work ¨ Parallel parts executed by multiple threads ¨ Sequential parts executed by single thread n Dependences in parallel parts require synchronization between threads 3

Recall Basic Idea: How Open. MP Works n User must decide what is parallel in program ¨ Makes any changes needed to original source code ¨ E. g. to remove any dependences in parts that should run in parallel n User inserts directives telling compiler how statements are to be executed ¨ What parts of the program are parallel ¨ How to assign code in parallel regions to threads ¨ Specifies data sharing attributes: shared, private, threadprivate… 4

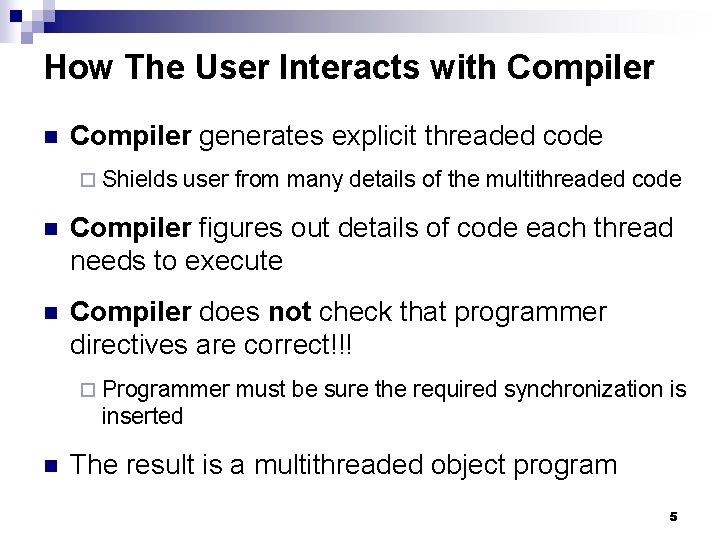

How The User Interacts with Compiler n Compiler generates explicit threaded code ¨ Shields user from many details of the multithreaded code n Compiler figures out details of code each thread needs to execute n Compiler does not check that programmer directives are correct!!! ¨ Programmer must be sure the required synchronization is inserted n The result is a multithreaded object program 5

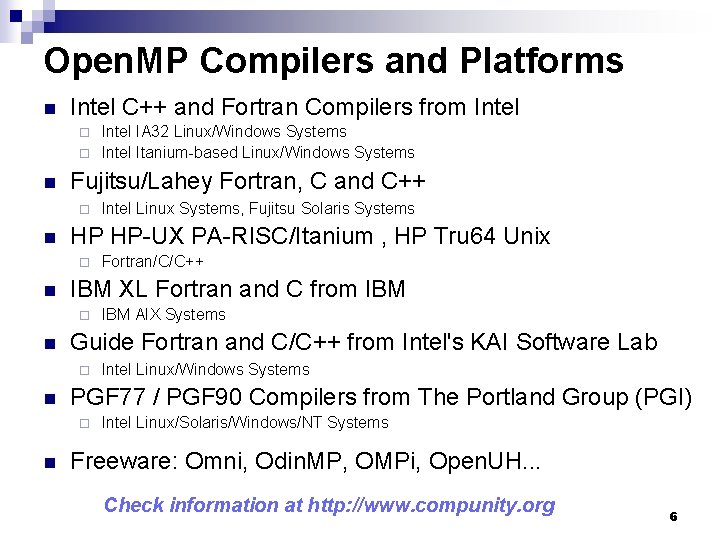

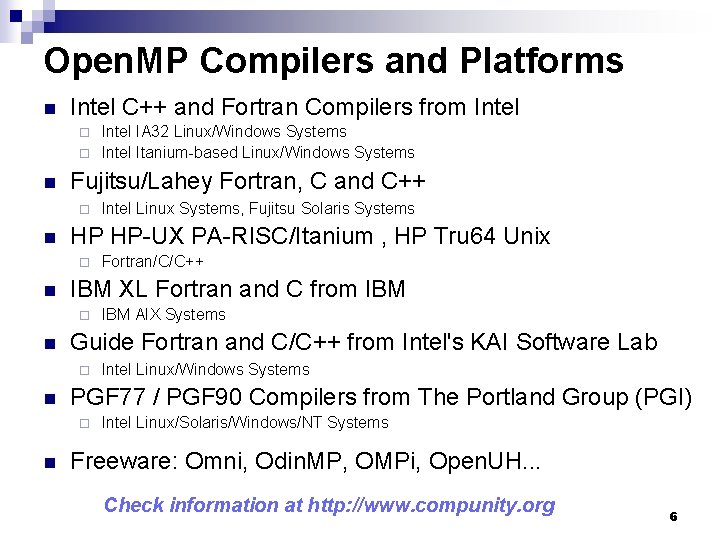

Open. MP Compilers and Platforms n Intel C++ and Fortran Compilers from Intel IA 32 Linux/Windows Systems ¨ Intel Itanium-based Linux/Windows Systems ¨ n Fujitsu/Lahey Fortran, C and C++ ¨ n HP HP-UX PA-RISC/Itanium , HP Tru 64 Unix ¨ n Intel Linux/Windows Systems PGF 77 / PGF 90 Compilers from The Portland Group (PGI) ¨ n IBM AIX Systems Guide Fortran and C/C++ from Intel's KAI Software Lab ¨ n Fortran/C/C++ IBM XL Fortran and C from IBM ¨ n Intel Linux Systems, Fujitsu Solaris Systems Intel Linux/Solaris/Windows/NT Systems Freeware: Omni, Odin. MP, OMPi, Open. UH. . . Check information at http: //www. compunity. org 6

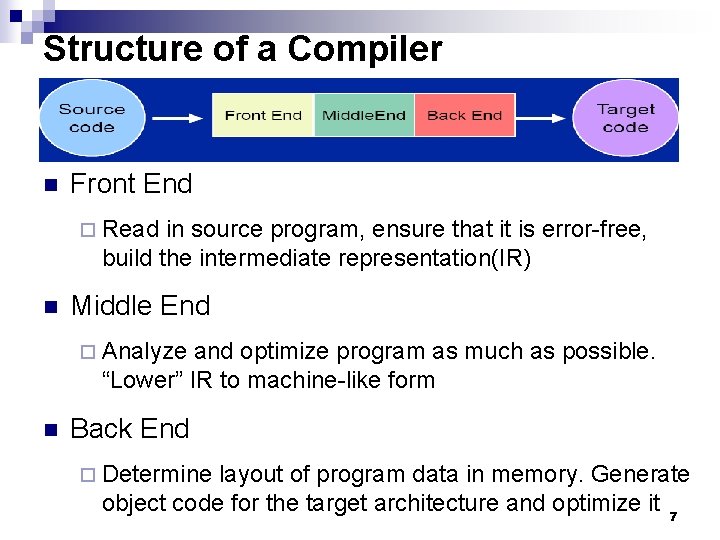

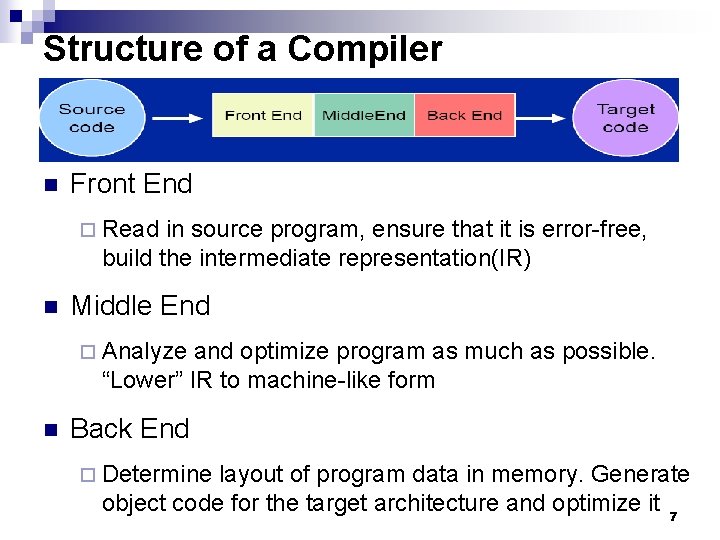

Structure of a Compiler n Front End ¨ Read in source program, ensure that it is error-free, build the intermediate representation(IR) n Middle End ¨ Analyze and optimize program as much as possible. “Lower” IR to machine-like form n Back End ¨ Determine layout of program data in memory. Generate object code for the target architecture and optimize it 7

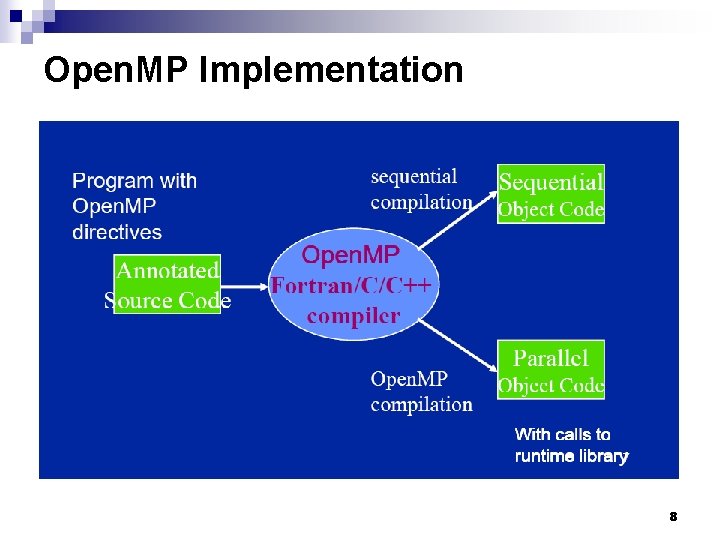

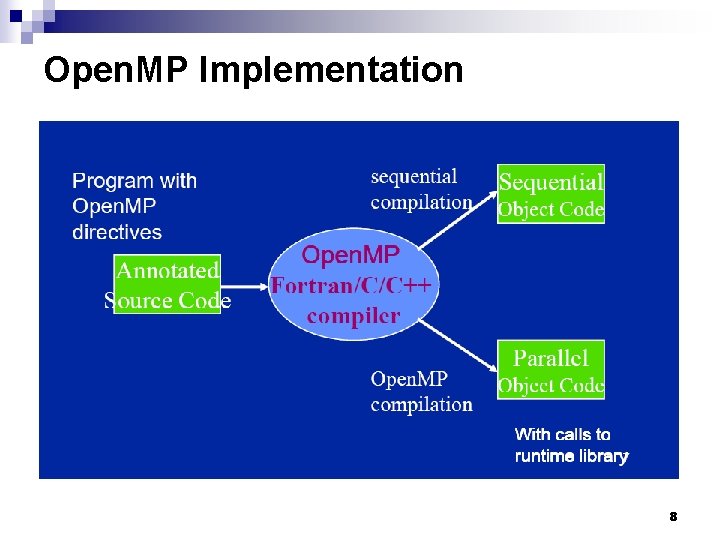

Open. MP Implementation 8

Open. MP Implementation (con’t) n If program is compiled sequentially ¨ Open. MP comments and pragmas are ignored n If code is compiled for parallel execution ¨ Comments and/or pragmas are read, and ¨ Drive translation into parallel program n Ideally, one source for both sequential and parallel program (big maintenance plus) n Usually this is accomplished by choosing a specific compiler option 9

Open. MP Implementation (con’t) n Transforms Open. MP programs into multi-threaded code n Figures out the details of the work to be performed by each thread n Arranges storage for different data and performs their initializations: shared, private. . . n Manages threads: creates, suspends, wakes up, terminates threads n Implements thread synchronization 10

Implementation-Defined Issues n Open. MP leaves some issues to the implement ¨ Default number of threads ¨ Default schedule and default for schedule (runtime) ¨ Number of threads to execute nested parallel regions ¨ Behaviour in case of thread exhaustion ¨ And many others. . Despite many similarities, each implementation is a little different from all others 11

Butterfly effect n The butterfly effect is a phrase that encapsulates the more technical notion of sensitive dependence on initial conditions in chaos theory. Small variations of the initial condition of a dynamical system may produce large variations in the long term behavior of the system n As butterfly describes, we gave parameters a little change and we got the totally different results.

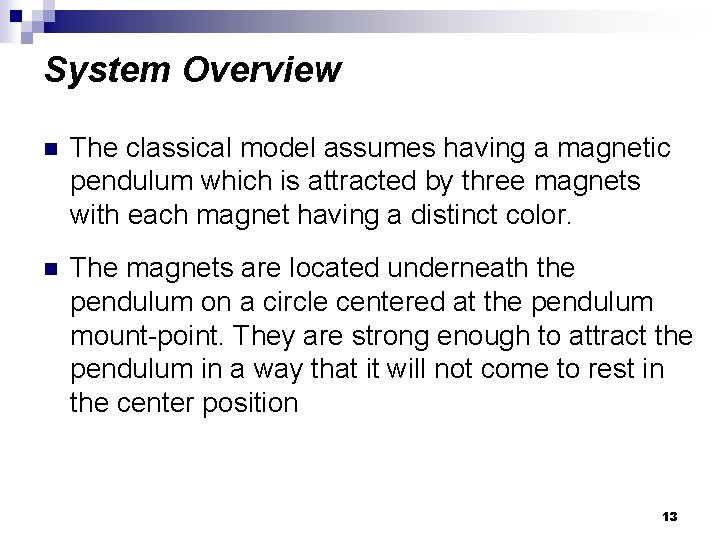

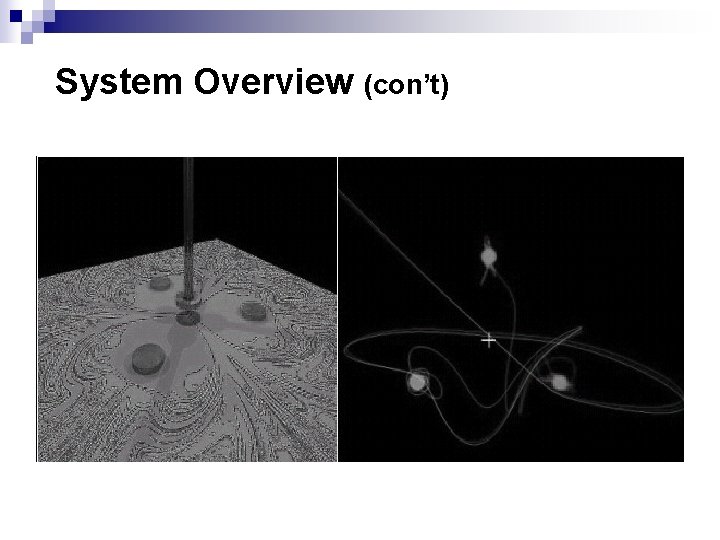

System Overview n The classical model assumes having a magnetic pendulum which is attracted by three magnets with each magnet having a distinct color. n The magnets are located underneath the pendulum on a circle centered at the pendulum mount-point. They are strong enough to attract the pendulum in a way that it will not come to rest in the center position 13

System Overview (con’t)

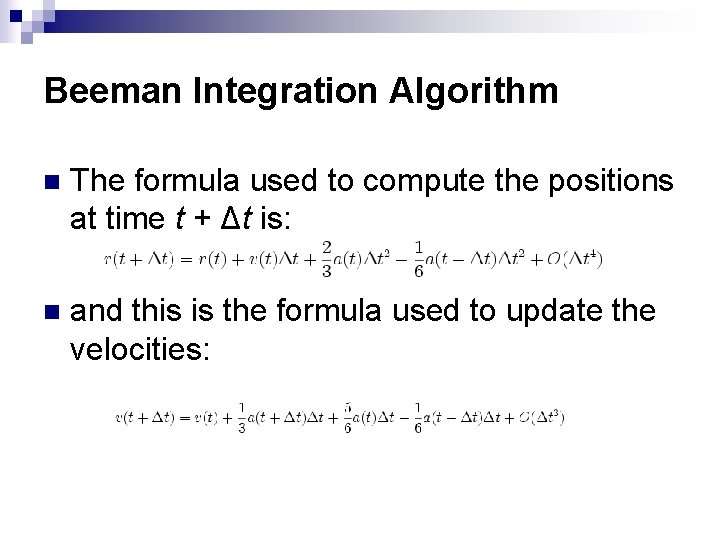

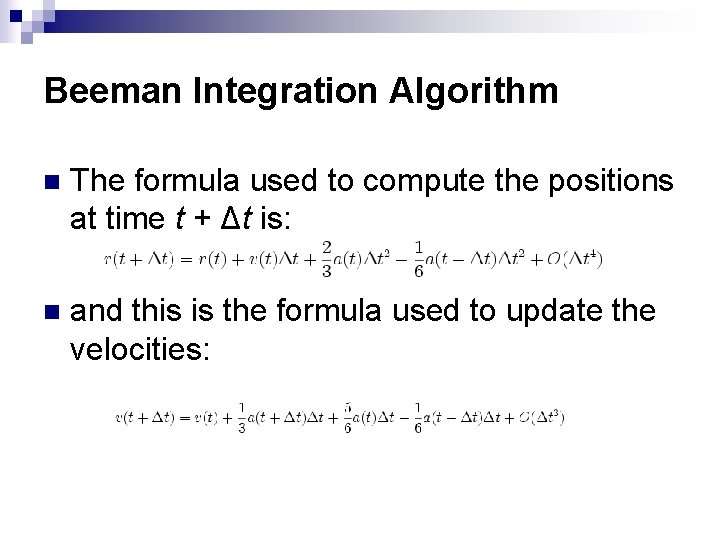

Beeman Integration Algorithm n The formula used to compute the positions at time t + Δt is: n and this is the formula used to update the velocities:

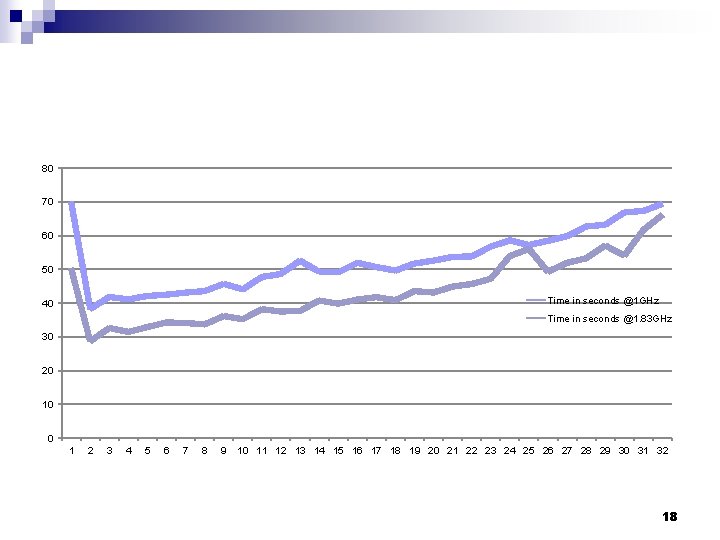

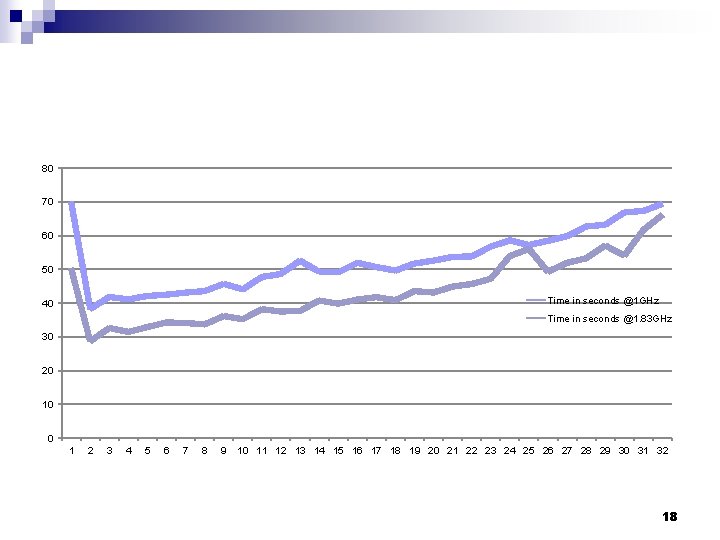

Simulation results Exp 1: Single core vs dual core…. Performance w. r. t number of threads…. . Serial vs parallel…. . 32 tests were conducted… n 17

80 70 60 50 Time in seconds @1 GHz 40 Time in seconds @1. 83 GHz 30 20 10 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 18

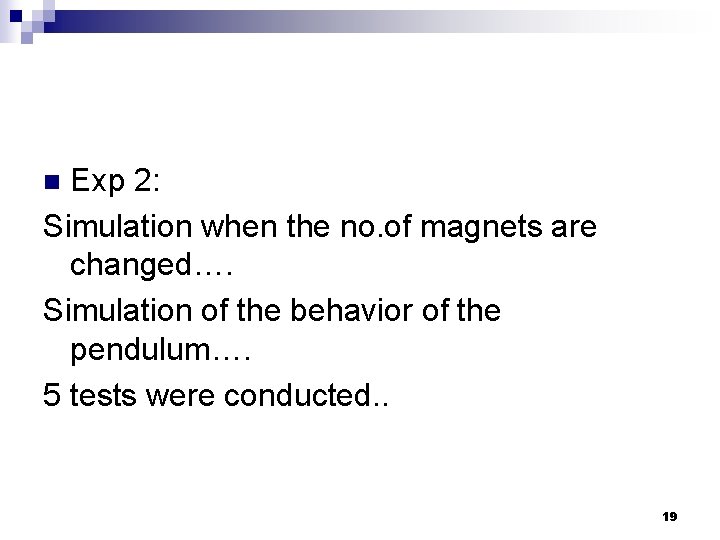

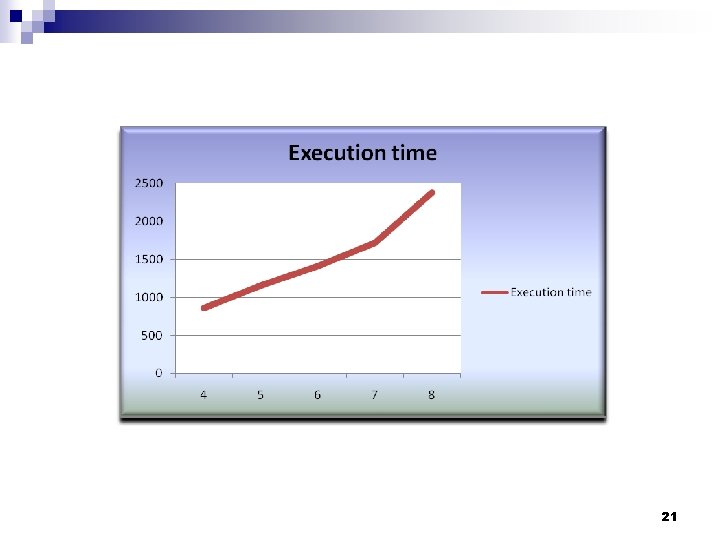

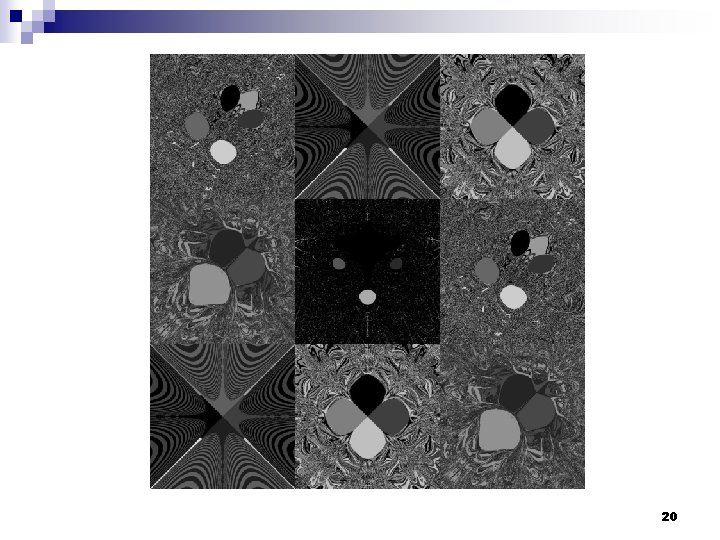

Exp 2: Simulation when the no. of magnets are changed…. Simulation of the behavior of the pendulum…. 5 tests were conducted. . n 19

20

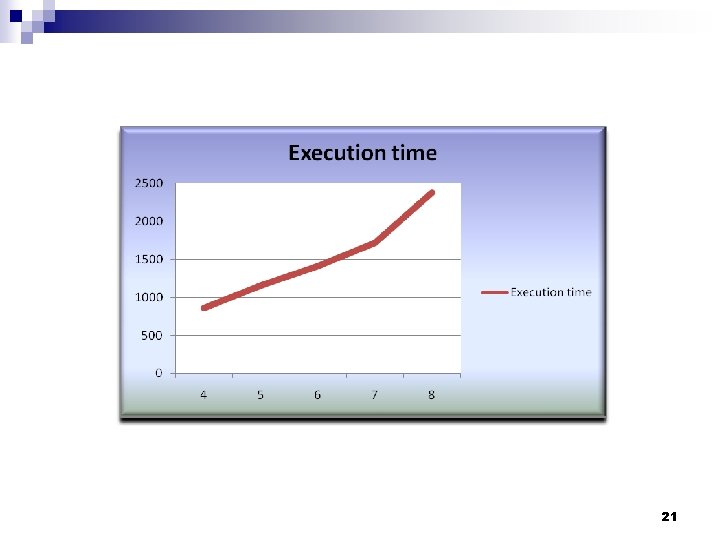

21

Exp 3 n In this experiment, we simulate the pendulum in a field of 2 magnets with varying values of friction and gravitation forces. n A total number of 63 simulations were run: n 22

23

Exp 4 n In this experiment, we simulate the pendulum in a field of 3 magnets with varying values of friction and gravitation forces. n A total number of 63 simulations were run: n 24

25

Exp 5 n In this experiment, we simulate the pendulum in a field of 8 magnets with varying values of friction and gravitation forces. n A total number of 26 simulations were run: n 26

27

Conclusion n n Even though the hardware is available, effective programming is required to maximize code efficiency. Complex simulations can be performed faster using parallel architecture. Openmp helps!! Simple: everybody can learn it in 11 weeks Not so simple: Don’t stop learning! keep learning it for better performance 28

![References 1 Michael Resch Edgar Gabriel Alfred Geiger 1999 An Approach for MPI Based References [1] Michael Resch, Edgar Gabriel, Alfred Geiger (1999). An Approach for MPI Based](https://slidetodoc.com/presentation_image/c4ae671c0b13dfbcfaaa15b8f34c0b97/image-29.jpg)

References [1] Michael Resch, Edgar Gabriel, Alfred Geiger (1999). An Approach for MPI Based Metacomputing, High Performance Distributed Computing Proceedings of the 8 th IEEE International Symposium on High Performance Distributed Computing, 17, retrieved from ACM website August, 2008 http: //portal. acm. org/citation. cfm? id=823264&coll=ACM&dl=ACM&CFID=12436242&CFTOKEN=36621280 n [2] William Gropp, Ewing Lusk, Rajeev Thakur (1998), A case for using OPENMP's derived datatypes to improve I/O performance, Conference on High Performance Networking and Computing Proceedings of the 1998 ACM/IEEE conference on Supercomputing, 1 -10, retrieved from ACM website August, 2008 http: //portal. acm. org/citation. cfm? id=509059&coll=ACM&dl=ACM&CFID=12436242&CFTOKEN=36621280 n [3] Michael Kagan (2006), Application acceleration through OPENMP overlap, Proceedings of the 2006 ACM/IEEE conference on Supercomputing, , retrieved from ACM website August, 2008 http: //portal. acm. org/citation. cfm? id=1188736&coll=ACM&dl=ACM&CFID=12436242&CFTOKEN=36621280 n [4] Kai Shen, Hong Tang, Tao Yang (1999), Compile/run-time support for threaded OPENMP execution on multiprogrammed shared memory machines, ACM SIGPLAN Notices Volume 34, Issue 8, 107 -118, , retrieved from ACM website August, 2008 http: //portal. acm. org/citation. cfm? id=301114&coll=ACM&dl=ACM&CFID=12436242&CFTOKEN=36621280 n [5] Wikipedia Reference, retrieved from Wikipedia. org website August, 2008 http: //en. wikipedia. org/wiki/Beeman's_algorithm http: //www. bugman 123. com/Fractals. html http: //www. inf. ethz. ch/personal/muellren/pendulum/index. html#simulation http: //en. wikipedia. org/wiki/Chaos_theory http: //en. wikipedia. org/wiki/Butterfly_effect n [6] Software install, compiler, code Reference, retrieved website August, 2008 http: //www. openmp. org/wp/ http: //www. intel. com/cd/software/products/asmo-na/eng/compilers/277618. htm http: //www. codeproject. com/KB/recipes/Magnetic. Pendulum. aspx n 29

30