MPI-izing Your Program CSCI 317 Mike Heroux

- Slides: 53


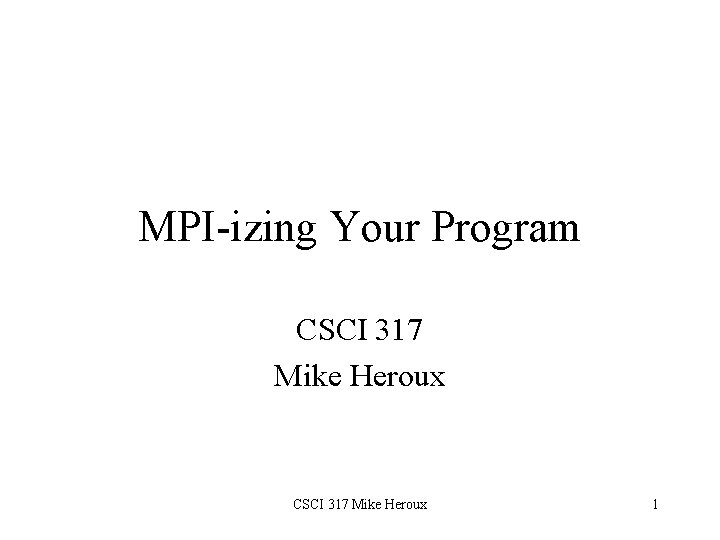

Simple Example
• Example: Find the max of n positive numbers.
  – Way 1: Single processor (SISD, for comparison).
  – Way 2: Multiple processors, single memory space (SPMD/SMP).
  – Way 3: Multiple processors, multiple memory spaces (SPMD/DMP).

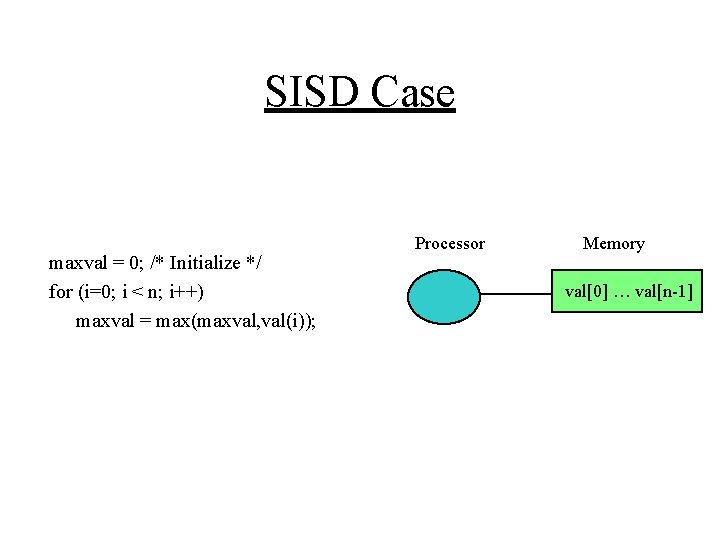

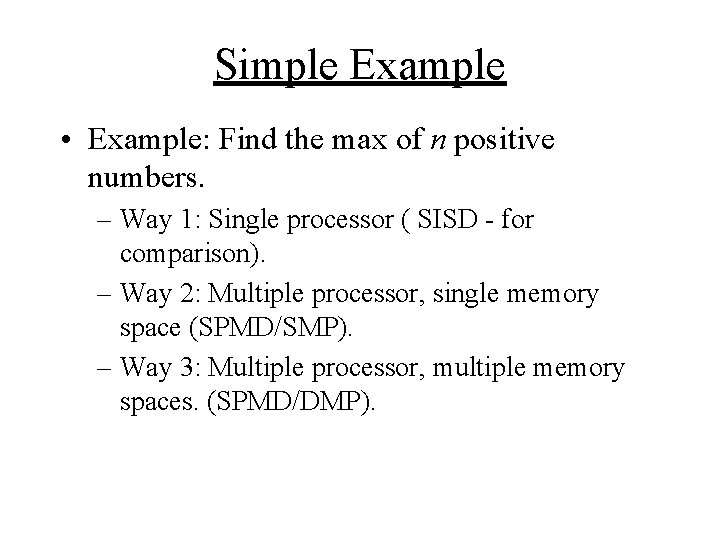

SISD Case
    maxval = 0; /* Initialize */
    for (i = 0; i < n; i++)
        maxval = max(maxval, val[i]);
Diagram: one processor attached to one memory holding val[0] … val[n-1].

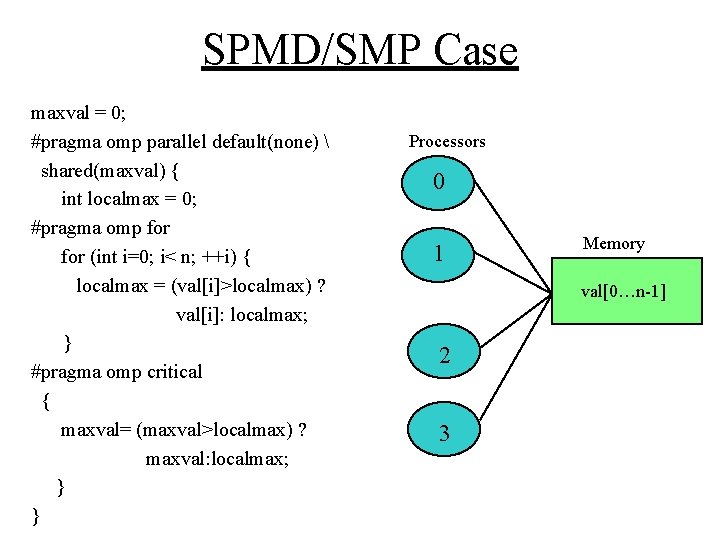

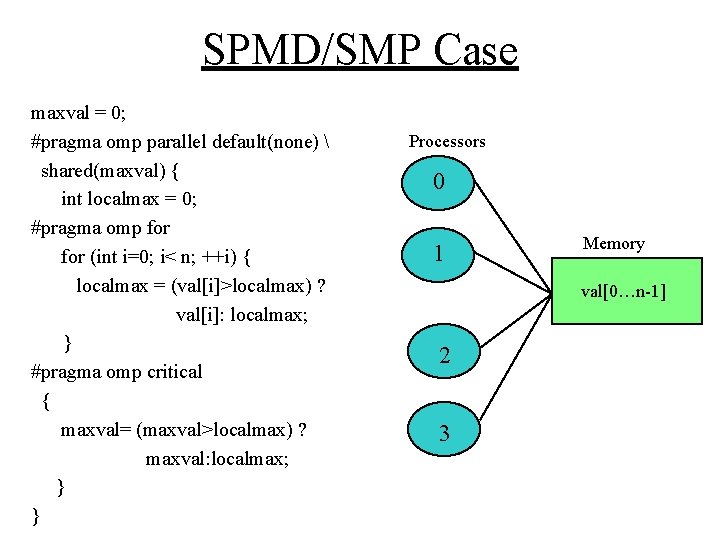

SPMD/SMP Case
    maxval = 0;
    #pragma omp parallel default(none) shared(maxval, val, n)
    {
        int localmax = 0;
        #pragma omp for
        for (int i = 0; i < n; ++i) {
            localmax = (val[i] > localmax) ? val[i] : localmax;
        }
        #pragma omp critical
        {
            maxval = (maxval > localmax) ? maxval : localmax;
        }
    }
Diagram: processors 0 to 3 share one memory holding val[0…n-1].

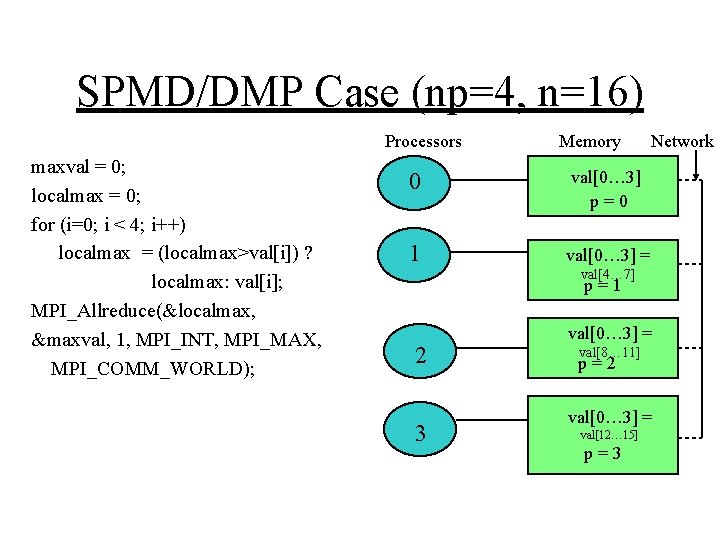

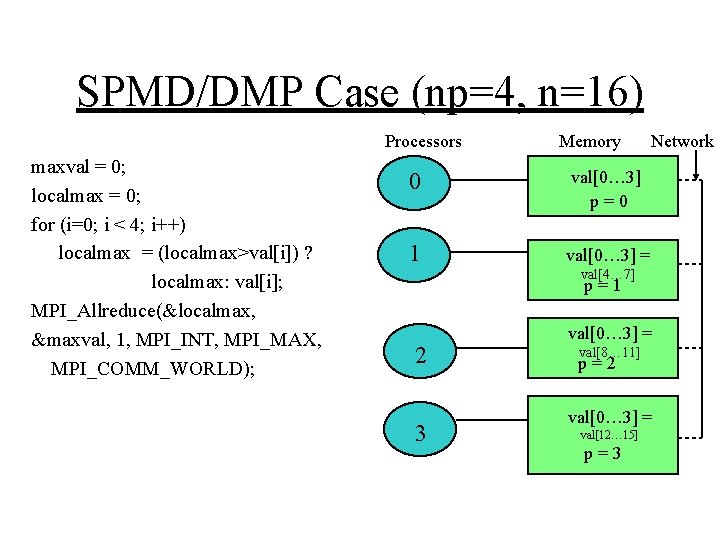

SPMD/DMP Case (np=4, n=16)
    maxval = 0;
    localmax = 0;
    for (i = 0; i < 4; i++)
        localmax = (localmax > val[i]) ? localmax : val[i];
    MPI_Allreduce(&localmax, &maxval, 1, MPI_INT, MPI_MAX, MPI_COMM_WORLD);
Diagram: processors 0 to 3 are connected by a network, each with its own memory:
• p=0: local val[0…3] = global val[0…3]
• p=1: local val[0…3] = global val[4…7]
• p=2: local val[0…3] = global val[8…11]
• p=3: local val[0…3] = global val[12…15]
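The DMP pattern on this slide can be imitated without an MPI installation. The following is a minimal serial sketch, not real MPI: the function name `simulated_distributed_max` is hypothetical, each simulated rank owns one contiguous block of the global array, and a final loop plays the role of `MPI_Allreduce` with `MPI_MAX`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Serial stand-in for the SPMD/DMP max (hypothetical helper, not MPI).
// The global array is split into np equal blocks, one per simulated rank.
int simulated_distributed_max(const std::vector<int>& global_val, std::size_t np) {
    assert(np > 0 && global_val.size() % np == 0);
    const std::size_t local_n = global_val.size() / np;

    // Values are assumed positive, so 0 is a safe starting value.
    std::vector<int> localmax(np, 0);
    for (std::size_t p = 0; p < np; ++p) {
        // Rank p addresses its block as val[0..local_n-1].
        const int* val = global_val.data() + p * local_n;
        for (std::size_t i = 0; i < local_n; ++i)
            localmax[p] = std::max(localmax[p], val[i]);
    }

    // Plays the role of MPI_Allreduce(..., MPI_MAX, ...): combine the partial results.
    int maxval = 0;
    for (std::size_t p = 0; p < np; ++p)
        maxval = std::max(maxval, localmax[p]);
    return maxval;
}
```

Calling it with 16 values and np = 4 mirrors the slide: every rank loops over four local entries, and only the four partial maxima are combined.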

Shared Memory Model Overview
• All processes share the same memory image.
• Parallelism is often achieved by having processors take iterations of a for-loop that can be executed in parallel.
• Examples: OpenMP, Intel TBB.

Message Passing Overview
• SPMD/DMP programming requires “message passing”.
• Traditional two-sided message passing
  – Node p sends a message.
  – Node q receives it.
  – p and q are both involved in the transfer of data.
  – Data is sent/received by calling library routines.
• One-sided message passing (mentioned only here)
  – Node p puts data into the memory of node q, or
  – Node p gets data from the memory of node q.
  – Node q is not involved in the transfer.
  – Putting and getting are done by library calls.

MPI - Message Passing Interface
• The most commonly used message passing standard.
• The focus of intense optimization by computer system vendors.
• MPI-2 includes I/O support and one-sided message passing.
• The vast majority of today’s scalable applications run on top of MPI.
• Supports derived data types and communicators.

Hybrid DMP/SMP Models
• Many applications exhibit a coarse-grain parallel structure with a fine-grain parallel structure nested within it.
• Many parallel computers are essentially clusters of SMP nodes.
  – SMP parallelism is possible within a node.
  – DMP is required across nodes.
• This compels us to consider programming models where, for example, MPI runs across nodes and OpenMP runs within nodes.

First MPI Program
• Simple program to measure:
  – Asymptotic bandwidth (send big messages).
  – Latency (send zero-length messages).
• Works with exactly two processors.
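One way to read these two measurements is through the usual linear cost model for a single message. This model is an assumption added here for illustration, not something the slides state; the symbols $\alpha$ (latency), $\beta$ (asymptotic bandwidth), and $m$ (message size in bytes) are introduced only for this sketch.

```latex
% Assumed linear cost model for sending one message of m bytes:
T(m) \approx \alpha + \frac{m}{\beta}
% A zero-length message isolates the latency:
T(0) \approx \alpha
% A very large message isolates the bandwidth:
\lim_{m \to \infty} \frac{m}{T(m)} = \beta
```

Under this model, timing zero-length messages estimates $\alpha$, and timing big messages estimates $\beta$, which matches the two cases the test program exercises.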

SimpleCommTest.cpp
• Go to SimpleCommTest.cpp
• Download on Linux system.
• Setup:
  – module avail (locate MPI environment, GCC or Intel).
  – module load …
• Compile/run:
  – mpicxx SimpleCommTest.cpp
  – mpirun -np 2 a.out
• Try: mpirun -np 4 a.out
  – Why does it fail? How?

Going from Serial to MPI
• One of the most difficult aspects of DMP is that there is no incremental way to parallelize your existing full-featured code.
• Either a code runs in DMP mode or it doesn’t.
• One way to address this problem is to:
  – Start with a stripped-down version of your code.
  – Parallelize it and incrementally introduce features into the code.
• We will take this approach.

Parallelizing CG
• To have a parallel CG solver we need to:
  – Introduce MPI_Init/MPI_Finalize into main.cc
  – Provide parallel implementations of:
    • waxpby.cpp, compute_residual.cpp, ddot.cpp (easy)
    • HPCCG.cpp (also easy)
    • HPC_sparsemv.cpp (hard).
• Approach:
  – Do the easy stuff.
  – Replace (temporarily) the hard stuff with easy.

Parallelizing waxpby
• How do we parallelize waxpby?
• Easy: You are already done!!
  – Each processor updates only the vector entries it owns, so no communication is needed.

Parallelizing ddot
• Parallelizing ddot is very straightforward given MPI:
    // Reduce what you own on a processor.
    ddot(my_nrow, x, y, &my_result);
    // Use MPI's reduce function to collect all partial sums.
    MPI_Allreduce(&my_result, &result, 1, MPI_DOUBLE, MPI_SUM, MPI_COMM_WORLD);
• Note:
  – A similar approach works for compute_residual.
    • Replace MPI_SUM with MPI_MAX.
  – There is a bug in the current version!
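The two-step structure (local reduction, then a global sum) can be checked serially. The sketch below is an illustration under stated assumptions: it does not use MPI, the `ddot` signature here returns its result instead of writing through a pointer as on the slide, and `simulated_parallel_ddot` is a hypothetical name. The running sum over blocks stands in for `MPI_Allreduce` with `MPI_SUM`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Local kernel: dot product of the first n entries of x and y.
double ddot(std::size_t n, const double* x, const double* y) {
    double result = 0.0;
    for (std::size_t i = 0; i < n; ++i) result += x[i] * y[i];
    return result;
}

// Serial stand-in for the parallel ddot (hypothetical helper, not MPI).
// The vectors are split into np contiguous blocks; each block plays one rank.
double simulated_parallel_ddot(const std::vector<double>& x,
                               const std::vector<double>& y,
                               std::size_t np) {
    assert(x.size() == y.size() && np > 0);
    const std::size_t n = x.size();
    double result = 0.0;
    for (std::size_t p = 0; p < np; ++p) {
        const std::size_t begin = p * n / np;
        const std::size_t end = (p + 1) * n / np;
        // Reduce what this simulated rank owns.
        const double my_result = ddot(end - begin, x.data() + begin, y.data() + begin);
        // Stands in for MPI_Allreduce(..., MPI_SUM, ...).
        result += my_result;
    }
    return result;
}
```

With real floating-point data the blocked sum can differ from the serial sum in the last bits, because the additions happen in a different order.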

Distributed Memory Sparse Matrix-vector Multiplication

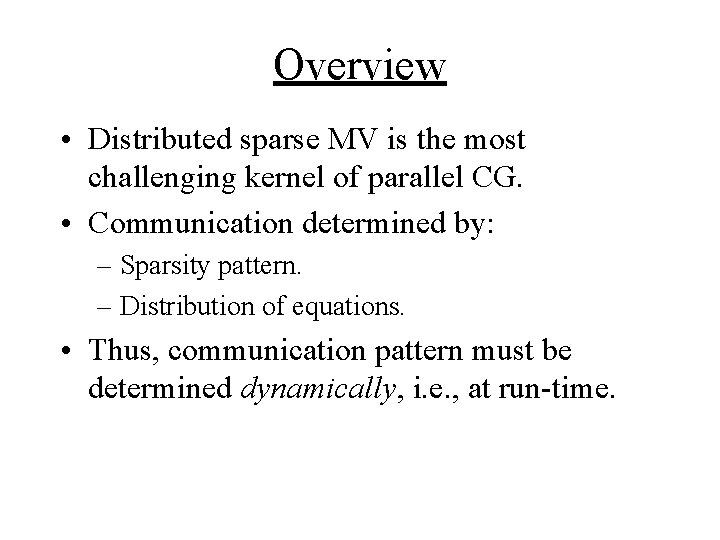

Overview
• Distributed sparse MV is the most challenging kernel of parallel CG.
• Communication is determined by:
  – Sparsity pattern.
  – Distribution of equations.
• Thus, the communication pattern must be determined dynamically, i.e., at run time.

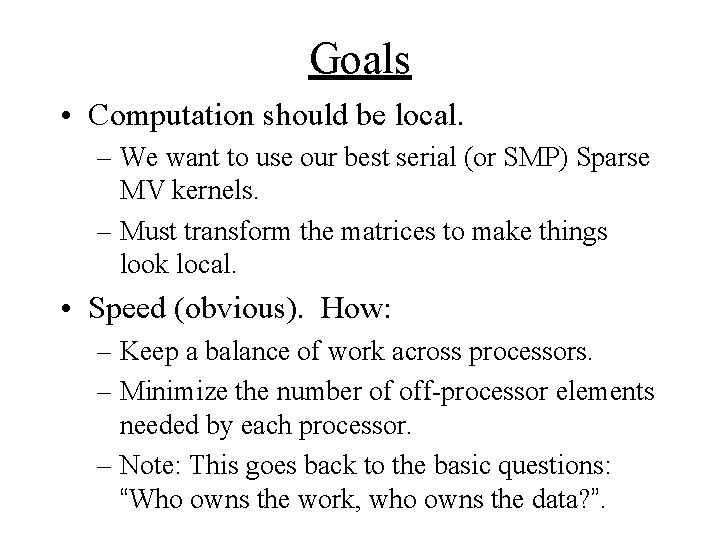

Goals
• Computation should be local.
  – We want to use our best serial (or SMP) sparse MV kernels.
  – Must transform the matrices to make things look local.
• Speed (obvious). How:
  – Keep a balance of work across processors.
  – Minimize the number of off-processor elements needed by each processor.
  – Note: This goes back to the basic questions: “Who owns the work, who owns the data?”

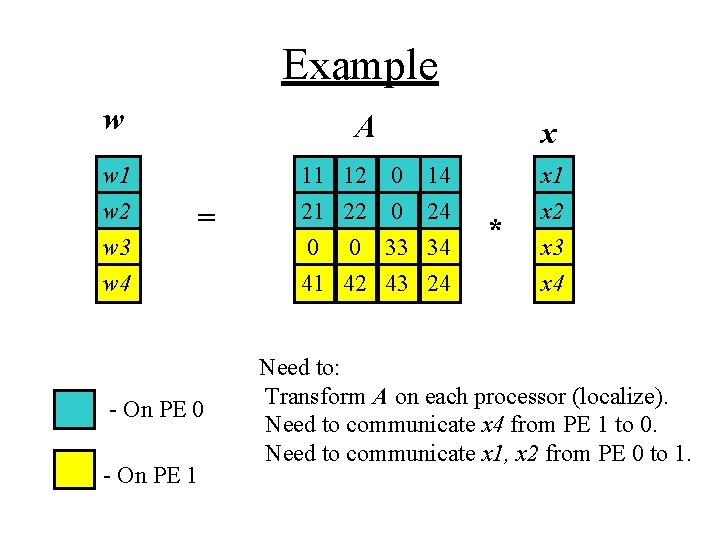

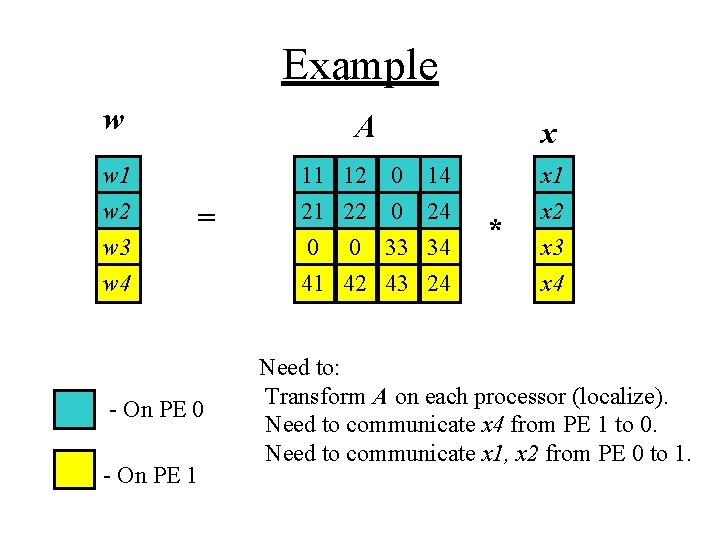

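Example
$$
\begin{bmatrix} w_1 \\ w_2 \\ w_3 \\ w_4 \end{bmatrix}
=
\begin{bmatrix} 11 & 12 & 0 & 14 \\ 21 & 22 & 0 & 24 \\ 0 & 0 & 33 & 34 \\ 41 & 42 & 43 & 44 \end{bmatrix}
\begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \end{bmatrix}
$$
Matrix entries are labeled by row and column. Rows 1 and 2 (with w1, w2, x1, x2) are on PE 0; rows 3 and 4 (with w3, w4, x3, x4) are on PE 1.
Need to:
• Transform A on each processor (localize).
• Communicate x4 from PE 1 to PE 0.
• Communicate x1, x2 from PE 0 to PE 1.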

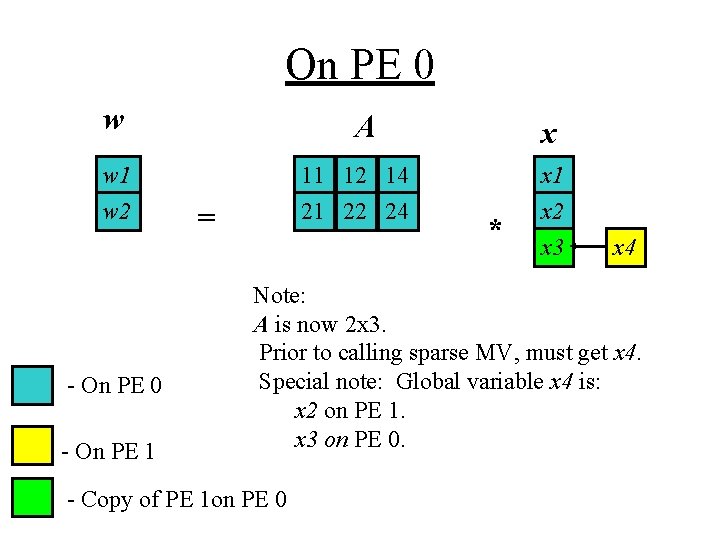

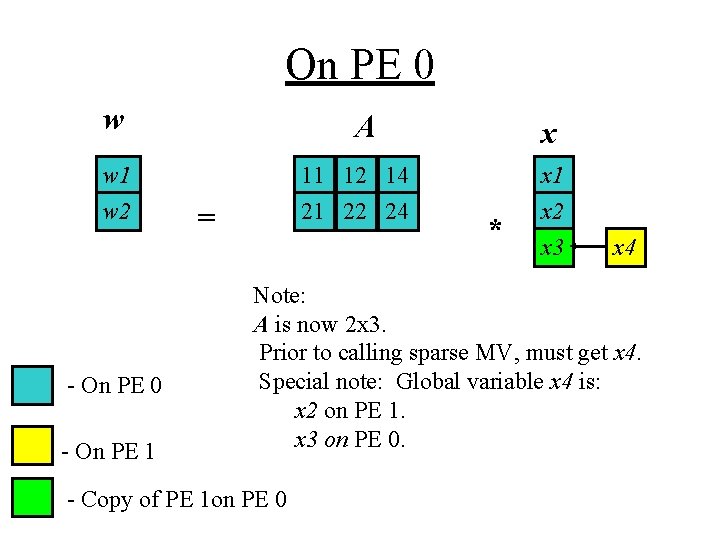

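On PE 0
$$
\begin{bmatrix} w_1 \\ w_2 \end{bmatrix}
=
\begin{bmatrix} 11 & 12 & 14 \\ 21 & 22 & 24 \end{bmatrix}
\begin{bmatrix} x_1 \\ x_2 \\ x_4 \end{bmatrix}
$$
Here x1 and x2 are on PE 0; x4 is a copy of PE 1 data stored on PE 0.
• Note: A is now 2 x 3.
• Prior to calling sparse MV, PE 0 must get x4.
• Special note: Global variable x4 is:
  – x2 on PE 1.
  – x3 on PE 0.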

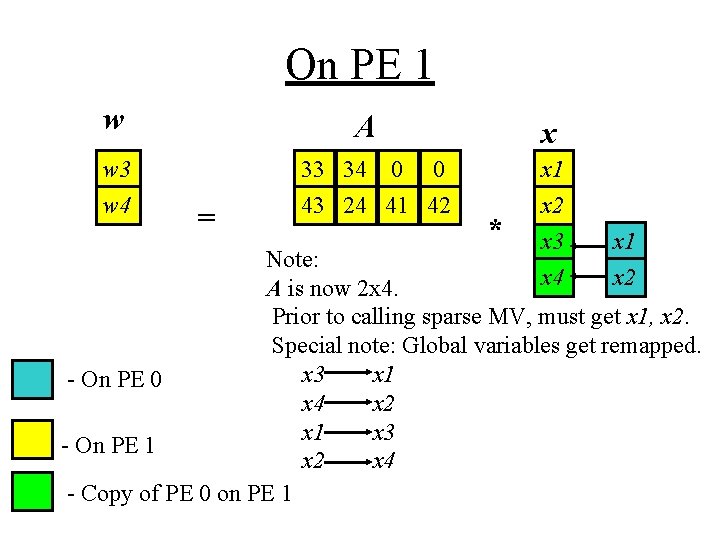

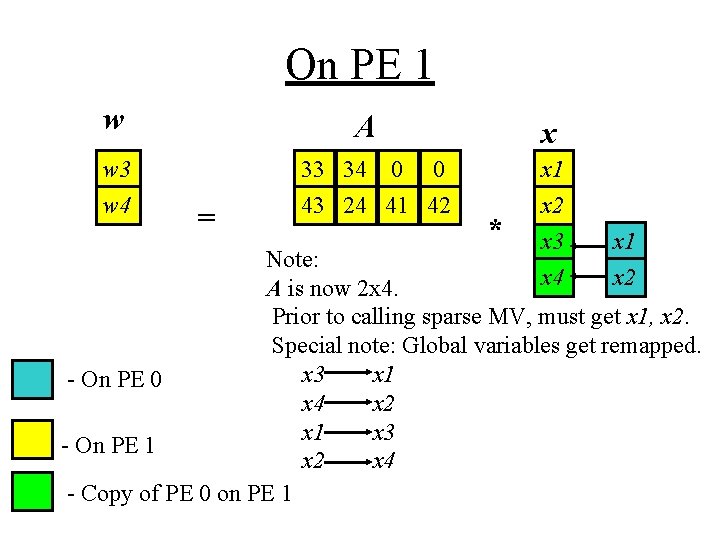

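On PE 1
$$
\begin{bmatrix} w_3 \\ w_4 \end{bmatrix}
=
\begin{bmatrix} 33 & 34 & 0 & 0 \\ 43 & 44 & 41 & 42 \end{bmatrix}
\begin{bmatrix} x_3 \\ x_4 \\ x_1 \\ x_2 \end{bmatrix}
$$
Here x3 and x4 are on PE 1; x1 and x2 are copies of PE 0 data stored on PE 1.
• Note: A is now 2 x 4.
• Prior to calling sparse MV, PE 1 must get x1, x2.
• Special note: Global variables get remapped on PE 1:
  – Global x3 becomes local x1 (on PE 1).
  – Global x4 becomes local x2 (on PE 1).
  – Global x1 becomes local x3 (copy of PE 0 on PE 1).
  – Global x2 becomes local x4 (copy of PE 0 on PE 1).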

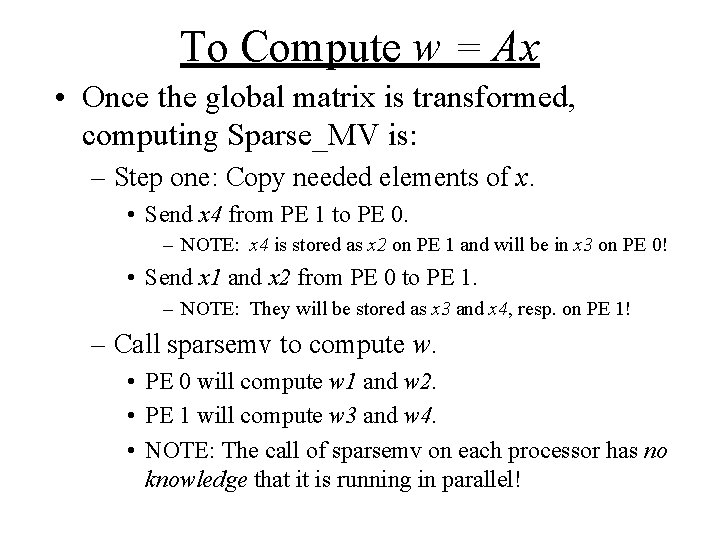

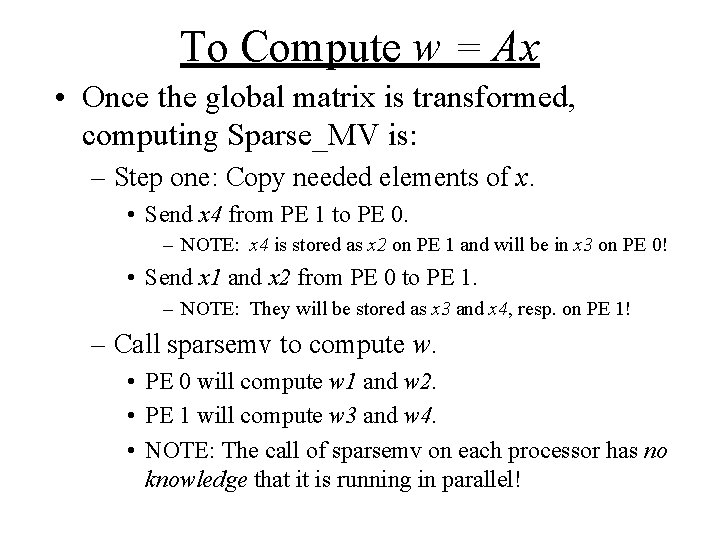

To Compute w = Ax
• Once the global matrix is transformed, computing Sparse_MV is:
  – Step one: Copy needed elements of x.
    • Send x4 from PE 1 to PE 0.
      – NOTE: x4 is stored as x2 on PE 1 and will be in x3 on PE 0!
    • Send x1 and x2 from PE 0 to PE 1.
      – NOTE: They will be stored as x3 and x4, resp., on PE 1!
  – Step two: Call sparsemv to compute w.
    • PE 0 will compute w1 and w2.
    • PE 1 will compute w3 and w4.
    • NOTE: The call of sparsemv on each processor has no knowledge that it is running in parallel!
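The two steps above can be sketched serially for the 4 x 4 example. This is an illustration only, under several assumptions: both PEs live in one process, plain assignments stand in for the send/receive pairs, the matrix entries take the numeric values of their labels (11, 12, ...), and the names `LocalMatrix` and `two_pe_sparsemv` are hypothetical. Indices are 0-based, so local x3 on PE 0 is `x0[2]`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Row-wise sparse storage with LOCAL column indices (hypothetical type).
struct LocalMatrix {
    std::vector<std::vector<double>> vals;
    std::vector<std::vector<int>> inds;
};

// Serial kernel: it cannot tell owned entries of x from received copies.
std::vector<double> sparsemv(const LocalMatrix& A, const std::vector<double>& x) {
    assert(A.vals.size() == A.inds.size());
    std::vector<double> y(A.vals.size(), 0.0);
    for (std::size_t i = 0; i < A.vals.size(); ++i)
        for (std::size_t j = 0; j < A.vals[i].size(); ++j)
            y[i] += A.vals[i][j] * x[A.inds[i][j]];
    return y;
}

// Computes w = A x for the 4 x 4 example with two simulated PEs.
std::vector<double> two_pe_sparsemv(const std::vector<double>& x) {
    assert(x.size() == 4);

    // PE 0: local columns 0,1 are x1,x2; local column 2 holds the copy of x4.
    LocalMatrix A0;
    A0.vals = {{11.0, 12.0, 14.0}, {21.0, 22.0, 24.0}};
    A0.inds = {{0, 1, 2}, {0, 1, 2}};

    // PE 1: local columns 0,1 are x3,x4; local columns 2,3 hold copies of x1,x2.
    LocalMatrix A1;
    A1.vals = {{33.0, 34.0}, {43.0, 44.0, 41.0, 42.0}};
    A1.inds = {{0, 1}, {0, 1, 2, 3}};

    // Owned entries first, then space for the received copies.
    std::vector<double> x0 = {x[0], x[1], 0.0};
    std::vector<double> x1 = {x[2], x[3], 0.0, 0.0};

    // Step one: copy needed elements of x (assignments stand in for messages).
    x0[2] = x1[1];  // x4: local x2 on PE 1 becomes local x3 on PE 0
    x1[2] = x0[0];  // x1: becomes local x3 on PE 1
    x1[3] = x0[1];  // x2: becomes local x4 on PE 1

    // Step two: each PE calls the unmodified serial kernel.
    const std::vector<double> w0 = sparsemv(A0, x0);
    const std::vector<double> w1 = sparsemv(A1, x1);
    return {w0[0], w0[1], w1[0], w1[1]};
}
```

The design point is that `sparsemv` is identical to a serial kernel; all parallel knowledge sits in the localized index arrays and the exchange step.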

Observations
• This approach to computing sparse MV keeps all computation local.
  – Achieves first goal.
• Still need to look at:
  – Balancing work.
  – Minimizing communication (minimize the number of transfers of x entries).

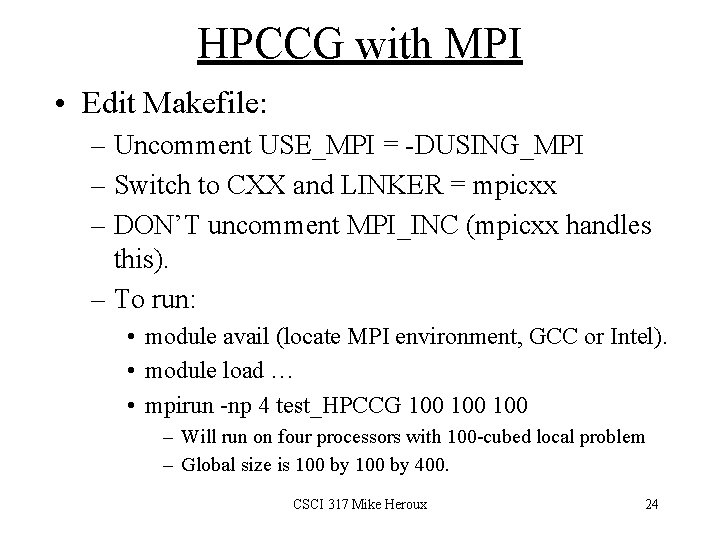

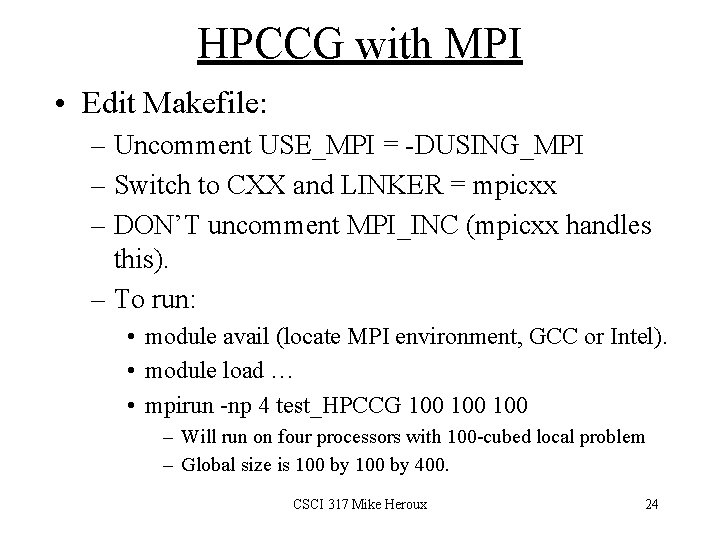

HPCCG with MPI
• Edit Makefile:
  – Uncomment USE_MPI = -DUSING_MPI
  – Switch to CXX and LINKER = mpicxx
  – DON’T uncomment MPI_INC (mpicxx handles this).
• To run:
  – module avail (locate MPI environment, GCC or Intel).
  – module load …
  – mpirun -np 4 test_HPCCG 100 100
    • Will run on four processors with 100-cubed local problem.
    • Global size is 100 by 400.

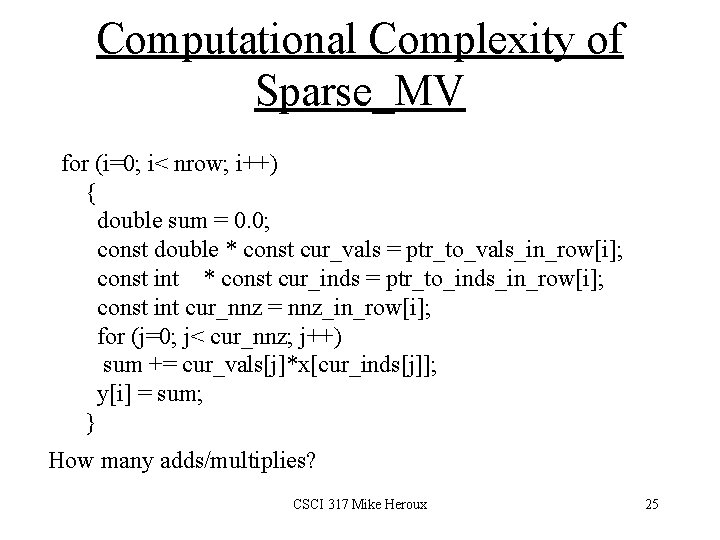

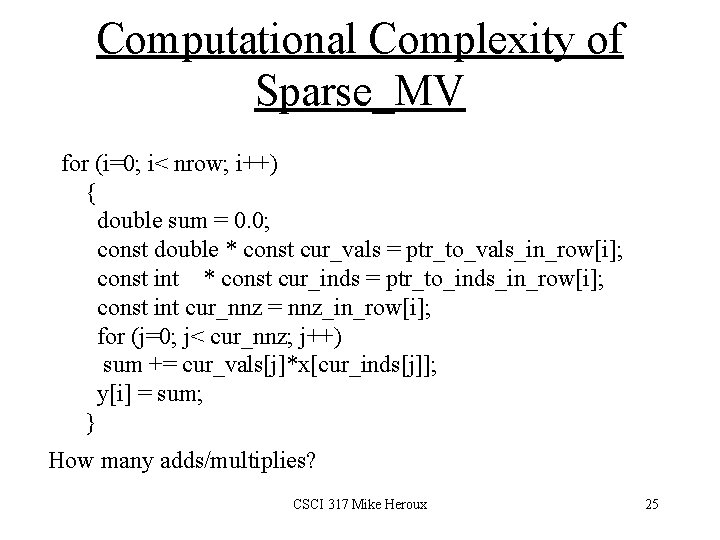

Computational Complexity of Sparse_MV
    for (i = 0; i < nrow; i++) {
        double sum = 0.0;
        const double * const cur_vals = ptr_to_vals_in_row[i];
        const int * const cur_inds = ptr_to_inds_in_row[i];
        const int cur_nnz = nnz_in_row[i];
        for (j = 0; j < cur_nnz; j++)
            sum += cur_vals[j] * x[cur_inds[j]];
        y[i] = sum;
    }
How many adds/multiplies?

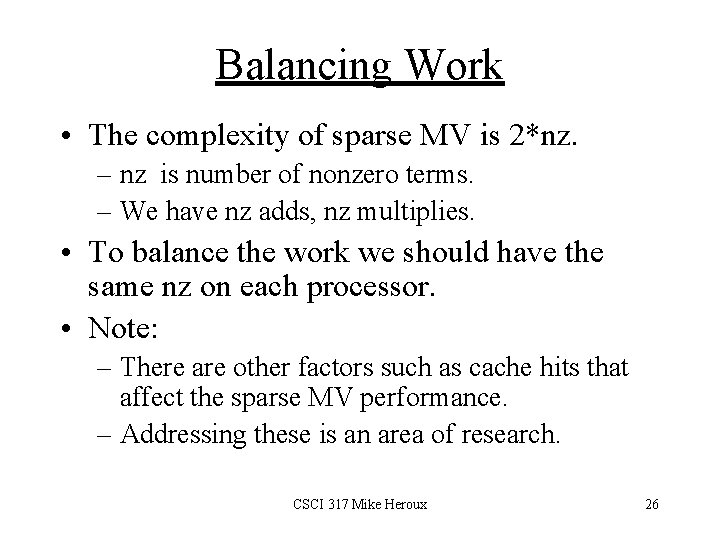

Balancing Work
• The complexity of sparse MV is 2*nz.
  – nz is the number of nonzero terms.
  – We have nz adds, nz multiplies.
• To balance the work we should have the same nz on each processor.
• Note:
  – There are other factors, such as cache hits, that affect sparse MV performance.
  – Addressing these is an area of research.
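The 2*nz count makes it easy to check how balanced a given row distribution is. The sketch below assumes a contiguous block distribution of rows across processors, which is one possible choice rather than the distribution the course code necessarily uses; `flops_per_processor` is a hypothetical helper name, and `nnz_in_row` follows the array name in the Sparse_MV loop.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sparse MV flop count per processor for a contiguous block row distribution.
// Each nonzero costs one multiply and one add, hence the factor of 2.
std::vector<long> flops_per_processor(const std::vector<int>& nnz_in_row, std::size_t np) {
    assert(np > 0);
    const std::size_t nrow = nnz_in_row.size();
    std::vector<long> flops(np, 0);
    for (std::size_t p = 0; p < np; ++p) {
        const std::size_t begin = p * nrow / np;
        const std::size_t end = (p + 1) * nrow / np;
        for (std::size_t i = begin; i < end; ++i)
            flops[p] += 2L * nnz_in_row[i];
    }
    return flops;
}
```

For example, eight rows with nonzero counts 1, 1, 1, 1, 5, 5, 5, 5 split evenly by row count across two processors give 8 and 40 flops: equal rows per processor does not imply equal work.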

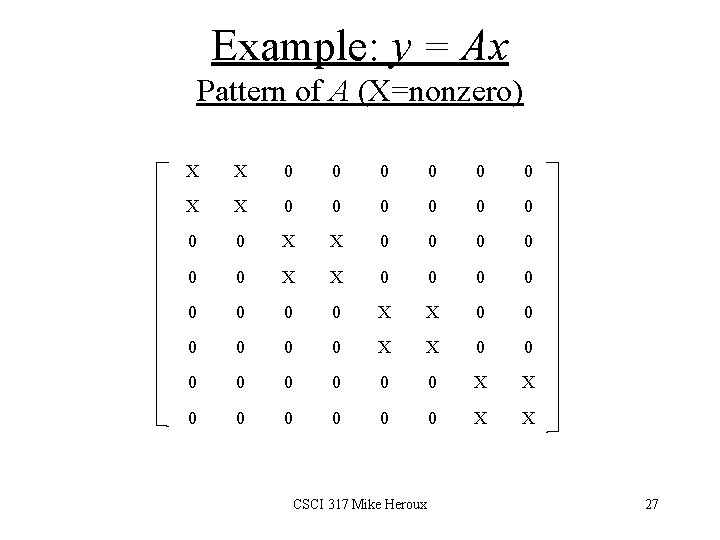

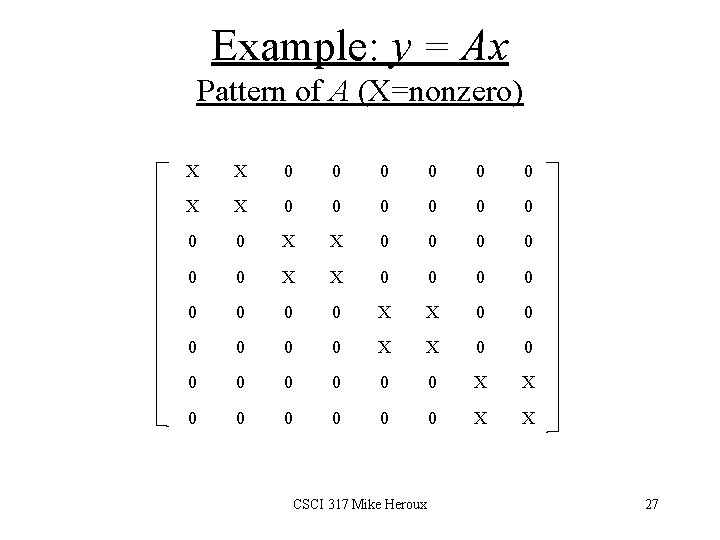

Example: y = Ax Pattern of A (X=nonzero) X X 0 0 0 0 0 0 0 0 X X 0 0 0 0 X X 0 0 0 X X

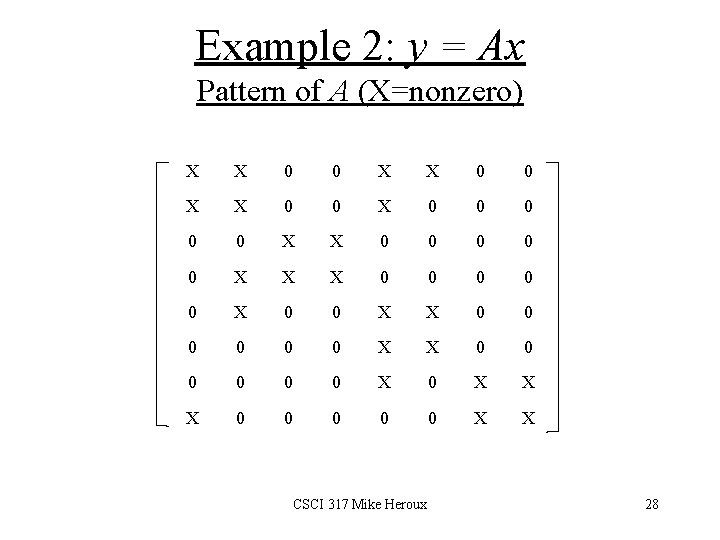

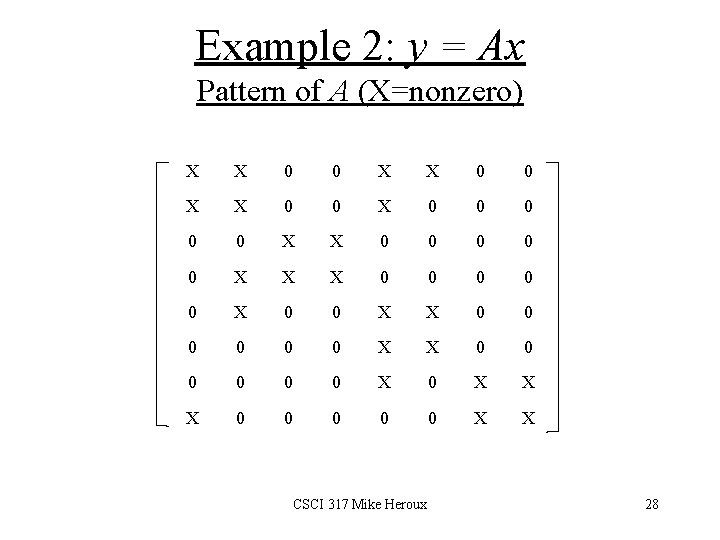

Example 2: y = Ax Pattern of A (X=nonzero) X X 0 0 0 0 0 X X 0 0 0 0 0 X X 0 0 0 X X X 0 0 0 X X

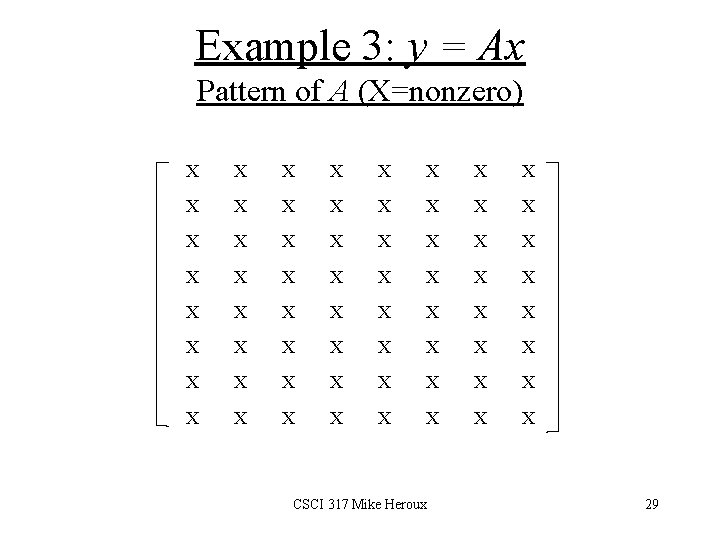

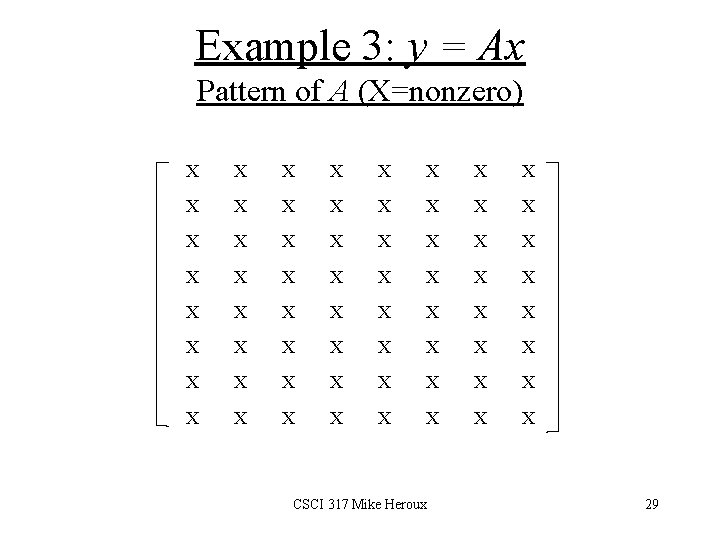

Example 3: y = Ax Pattern of A (X=nonzero) X X X X X X X X X X X X X X X X

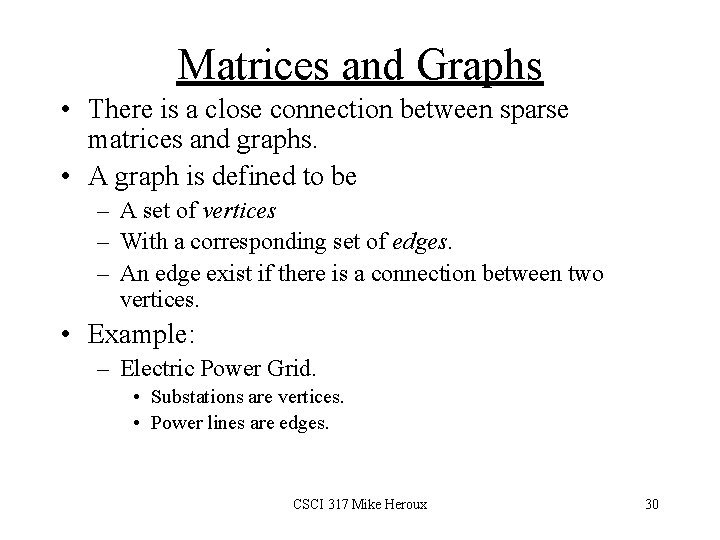

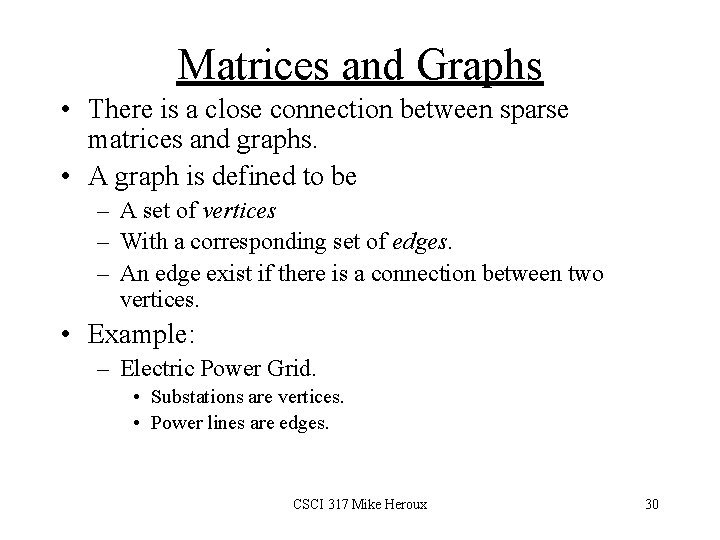

Matrices and Graphs
• There is a close connection between sparse matrices and graphs.
• A graph is defined to be:
  – A set of vertices
  – With a corresponding set of edges.
  – An edge exists if there is a connection between two vertices.
• Example:
  – Electric power grid.
    • Substations are vertices.
    • Power lines are edges.

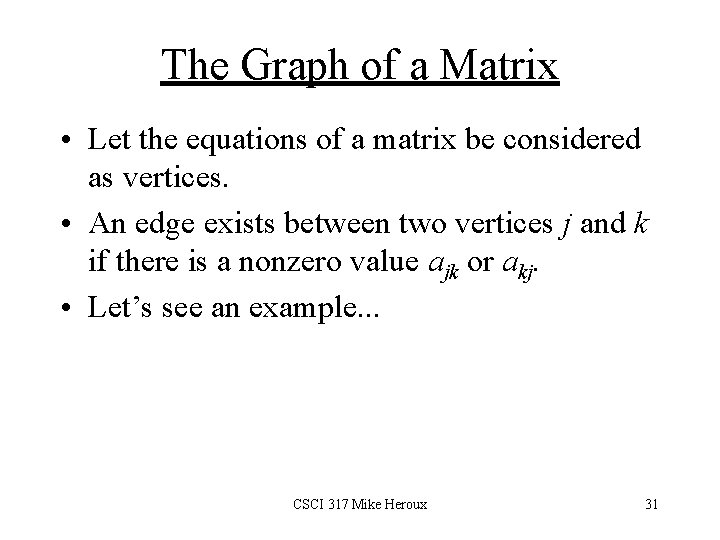

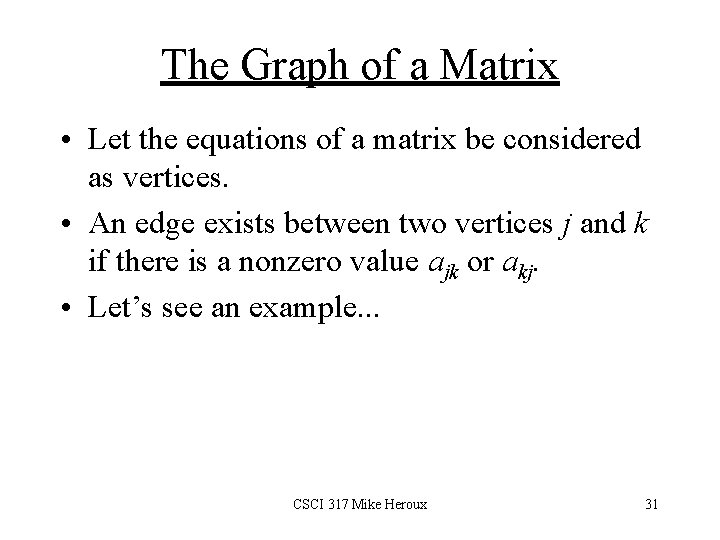

The Graph of a Matrix
• Let the equations of a matrix be considered as vertices.
• An edge exists between two vertices j and k if there is a nonzero value $a_{jk}$ or $a_{kj}$.
• Let’s see an example...

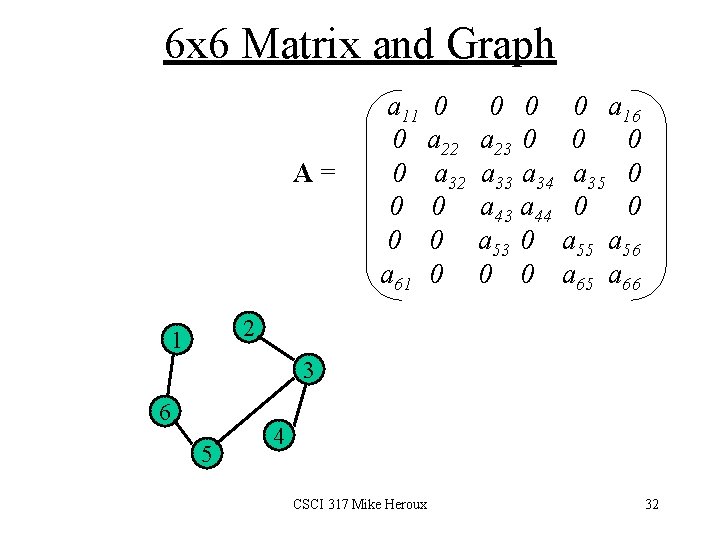

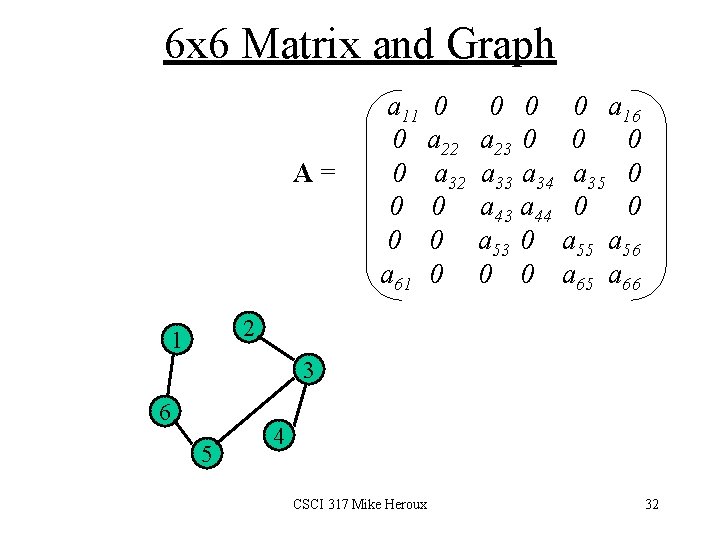

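6 x 6 Matrix and Graph
$$
A = \begin{bmatrix}
a_{11} & 0 & 0 & 0 & 0 & a_{16} \\
0 & a_{22} & a_{23} & 0 & 0 & 0 \\
0 & a_{32} & a_{33} & a_{34} & a_{35} & 0 \\
0 & 0 & a_{43} & a_{44} & 0 & 0 \\
0 & 0 & a_{53} & 0 & a_{55} & a_{56} \\
a_{61} & 0 & 0 & 0 & a_{65} & a_{66}
\end{bmatrix}
$$
Figure: the corresponding graph on vertices 1 through 6.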
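The rule for building the graph of a matrix can be sketched in a few lines. This is a minimal illustration, assuming the sparsity pattern is given as one list of 0-based column indices per row; `graph_of_matrix` is a hypothetical name. Each off-diagonal nonzero contributes an undirected edge, and storing edges as ordered pairs in a set merges $a_{jk}$ and $a_{kj}$ into one edge.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <set>
#include <utility>
#include <vector>

// cols_in_row[j] lists the 0-based column indices of the nonzeros in row j.
// Returns the undirected edges (j, k) with j < k.
std::set<std::pair<int, int>> graph_of_matrix(
        const std::vector<std::vector<int>>& cols_in_row) {
    const int n = static_cast<int>(cols_in_row.size());
    std::set<std::pair<int, int>> edges;
    for (int j = 0; j < n; ++j) {
        for (int k : cols_in_row[j]) {
            assert(k >= 0 && k < n);
            if (k == j) continue;  // diagonal entries do not create edges
            edges.emplace(std::min(j, k), std::max(j, k));
        }
    }
    return edges;
}
```

Applied to the 6 x 6 pattern above, this yields five edges; in 1-based numbering they are 1-6, 2-3, 3-4, 3-5, and 5-6.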

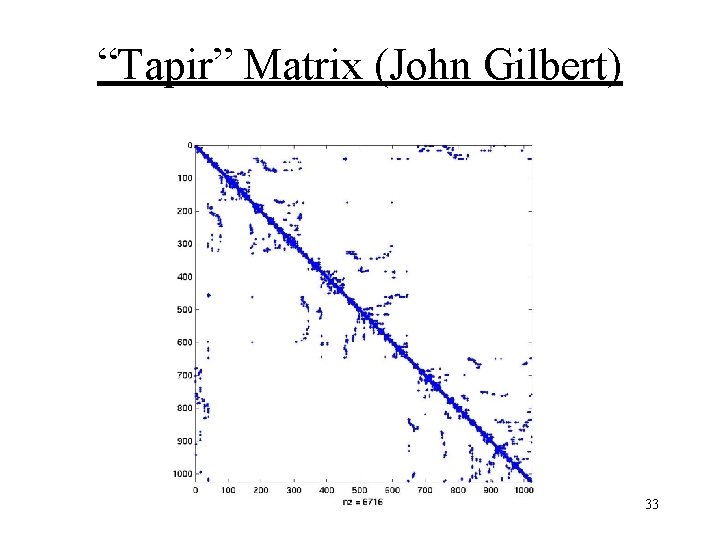

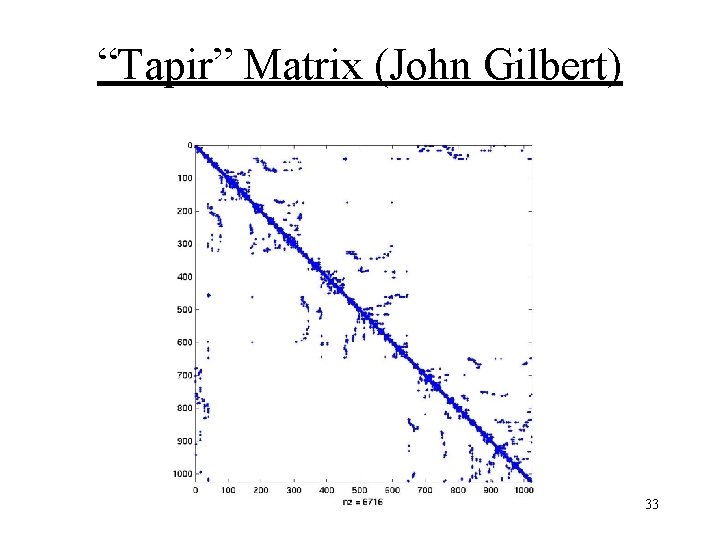

“Tapir” Matrix (John Gilbert)

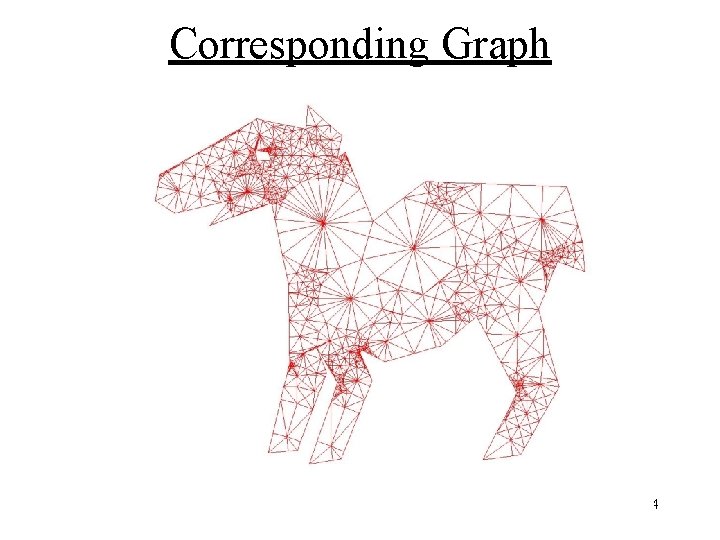

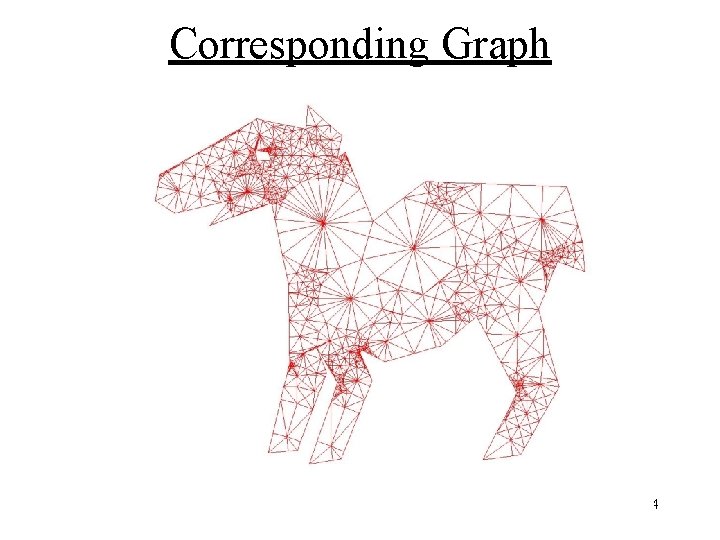

Corresponding Graph

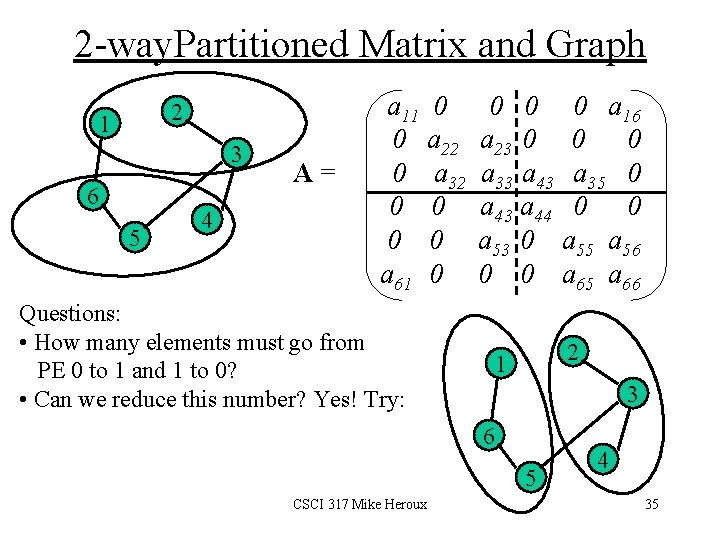

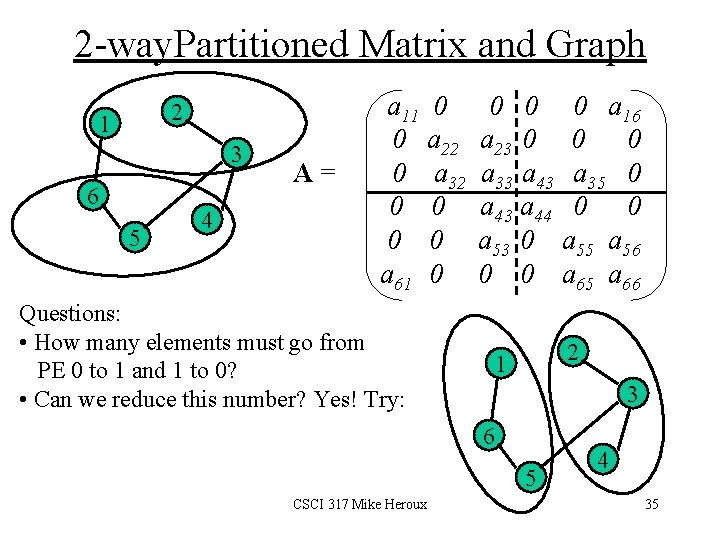

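2-way Partitioned Matrix and Graph
$$
A = \begin{bmatrix}
a_{11} & 0 & 0 & 0 & 0 & a_{16} \\
0 & a_{22} & a_{23} & 0 & 0 & 0 \\
0 & a_{32} & a_{33} & a_{34} & a_{35} & 0 \\
0 & 0 & a_{43} & a_{44} & 0 & 0 \\
0 & 0 & a_{53} & 0 & a_{55} & a_{56} \\
a_{61} & 0 & 0 & 0 & a_{65} & a_{66}
\end{bmatrix}
$$
Figure: the graph on vertices 1 through 6, split into two parts.
Questions:
• How many elements must go from PE 0 to 1 and 1 to 0?
• Can we reduce this number? Yes! Try the alternative partition shown in the second graph figure.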

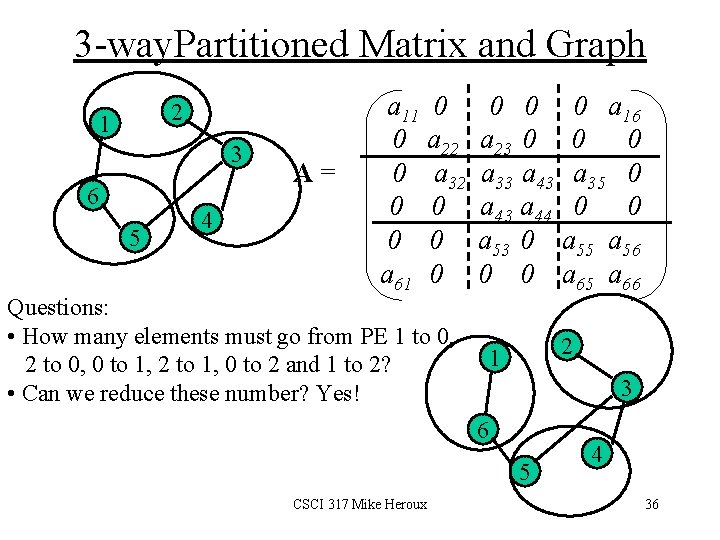

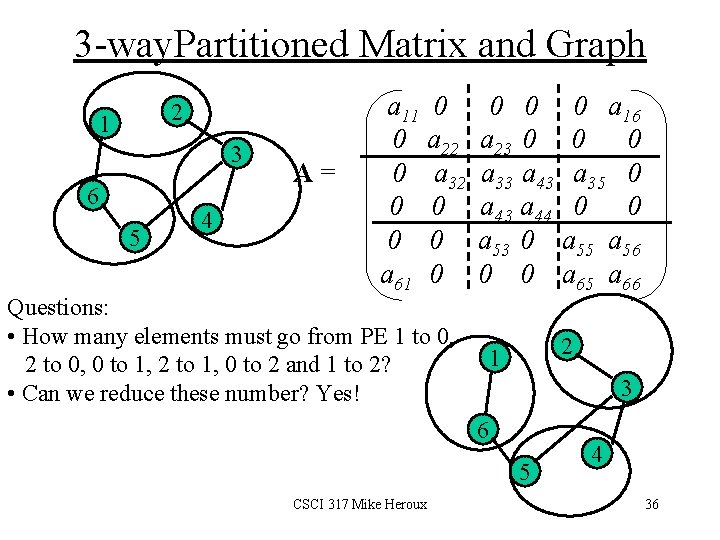

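3-way Partitioned Matrix and Graph
$$
A = \begin{bmatrix}
a_{11} & 0 & 0 & 0 & 0 & a_{16} \\
0 & a_{22} & a_{23} & 0 & 0 & 0 \\
0 & a_{32} & a_{33} & a_{34} & a_{35} & 0 \\
0 & 0 & a_{43} & a_{44} & 0 & 0 \\
0 & 0 & a_{53} & 0 & a_{55} & a_{56} \\
a_{61} & 0 & 0 & 0 & a_{65} & a_{66}
\end{bmatrix}
$$
Figure: the graph on vertices 1 through 6, split into three parts.
Questions:
• How many elements must go from PE 1 to 0, 2 to 0, 0 to 1, 2 to 1, 0 to 2 and 1 to 2?
• Can we reduce these numbers? Yes! See the alternative partition in the second graph figure.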

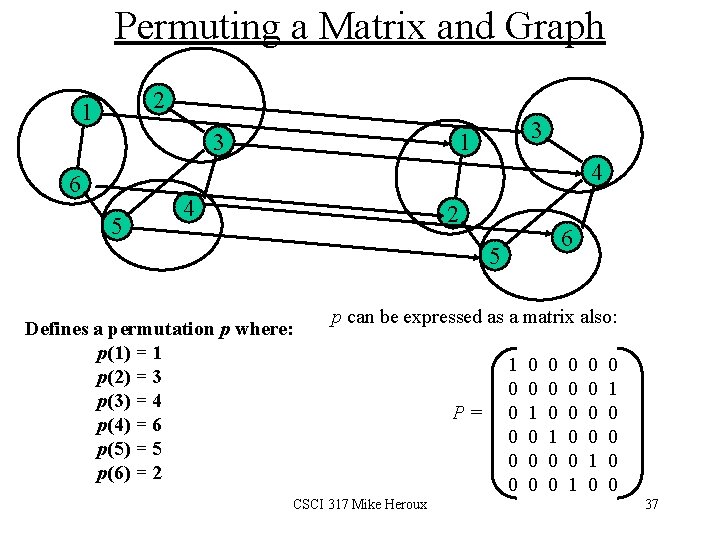

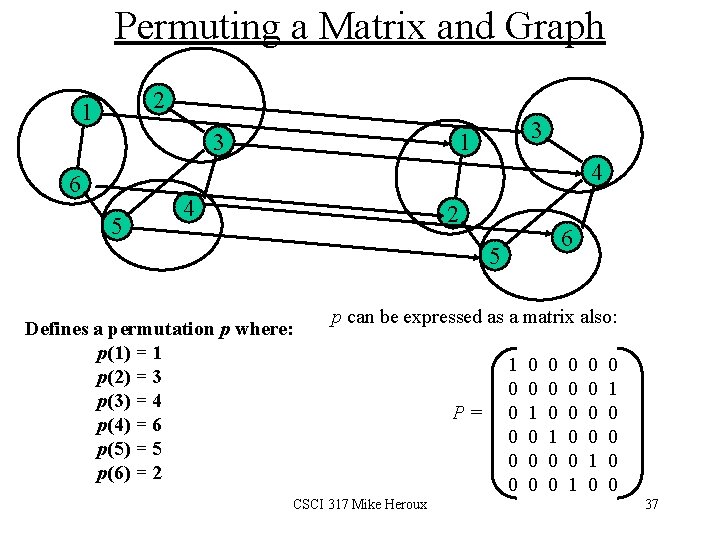

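Permuting a Matrix and Graph
Figure: the graph with its original vertex labels and with permuted labels.
Defines a permutation p where:
• p(1) = 1
• p(2) = 3
• p(3) = 4
• p(4) = 6
• p(5) = 5
• p(6) = 2
p can also be expressed as a matrix:
$$
P = \begin{bmatrix}
1 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 1 \\
0 & 1 & 0 & 0 & 0 & 0 \\
0 & 0 & 1 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 1 & 0 \\
0 & 0 & 0 & 1 & 0 & 0
\end{bmatrix}
$$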

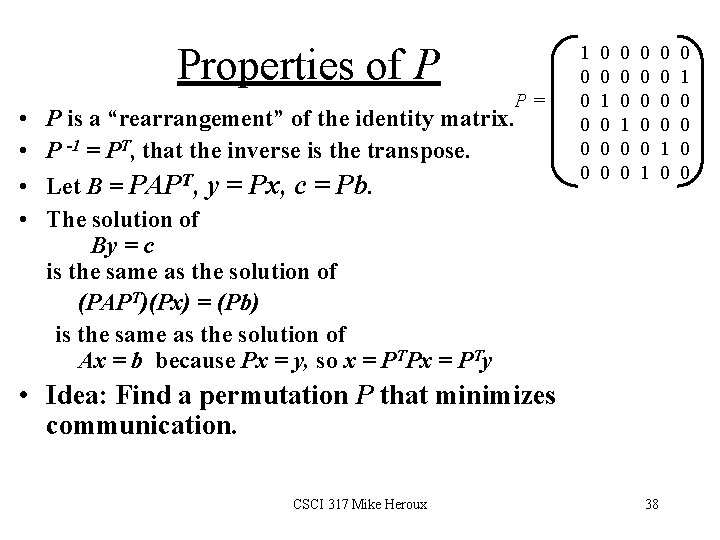

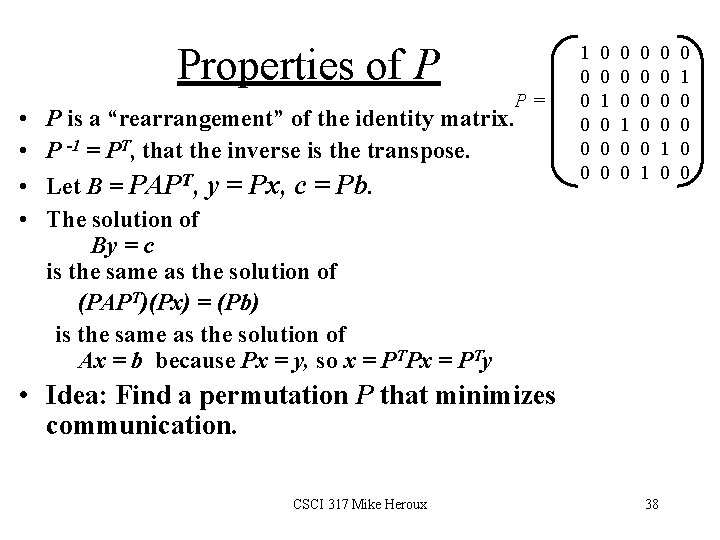

Properties of P
• P is a “rearrangement” of the identity matrix.
• $P^{-1} = P^T$; that is, the inverse is the transpose.
• Let $B = PAP^T$, $y = Px$, $c = Pb$.
• The solution of $By = c$ is the same as the solution of $(PAP^T)(Px) = (Pb)$, which is the same as the solution of $Ax = b$, because $Px = y$, so $x = P^TPx = P^Ty$.
• Idea: Find a permutation P that minimizes communication.
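The equivalence of the two systems follows from $P^TP = I$ alone. The short derivation below spells out the intermediate steps; it uses only the symbols already defined on this slide.

```latex
\begin{aligned}
By = c
&\iff (PAP^T)(Px) = Pb \\
&\iff PA\,(P^TP)\,x = Pb \\
&\iff PAx = Pb && \text{since } P^TP = I \\
&\iff Ax = b   && \text{multiply on the left by } P^T = P^{-1}
\end{aligned}
```

So one can solve the permuted system for $y$ and recover the original unknowns with $x = P^Ty$.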

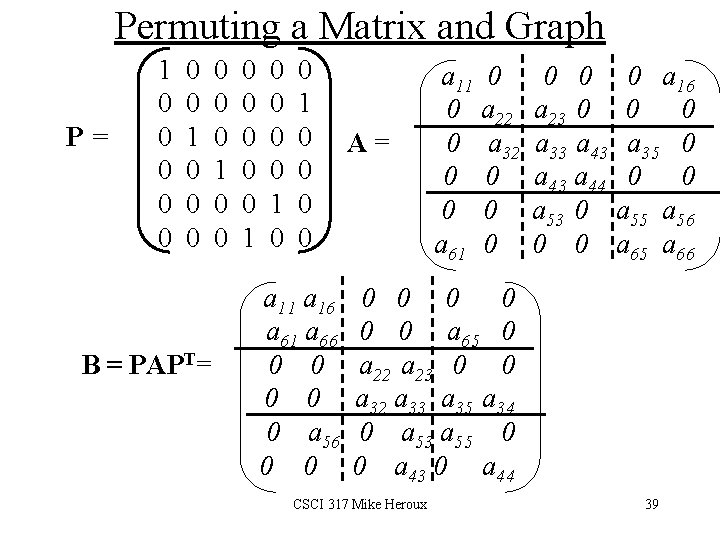

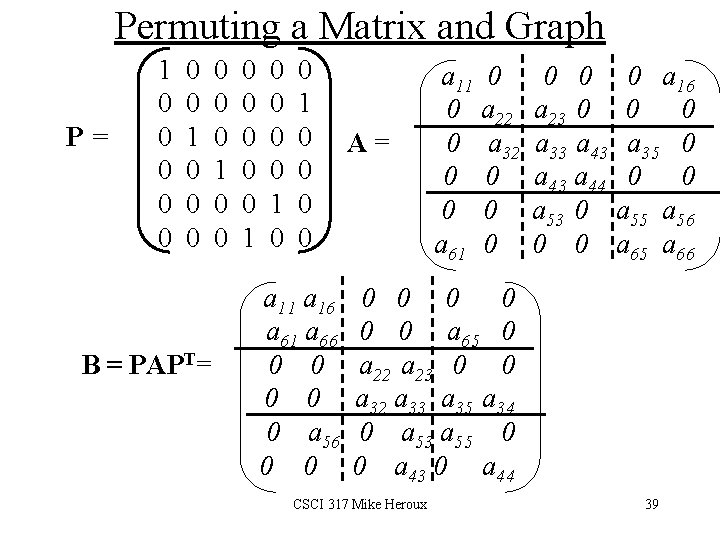

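Permuting a Matrix and Graph
$$
P = \begin{bmatrix}
1 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 1 \\
0 & 1 & 0 & 0 & 0 & 0 \\
0 & 0 & 1 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 1 & 0 \\
0 & 0 & 0 & 1 & 0 & 0
\end{bmatrix}
\qquad
A = \begin{bmatrix}
a_{11} & 0 & 0 & 0 & 0 & a_{16} \\
0 & a_{22} & a_{23} & 0 & 0 & 0 \\
0 & a_{32} & a_{33} & a_{34} & a_{35} & 0 \\
0 & 0 & a_{43} & a_{44} & 0 & 0 \\
0 & 0 & a_{53} & 0 & a_{55} & a_{56} \\
a_{61} & 0 & 0 & 0 & a_{65} & a_{66}
\end{bmatrix}
$$
$$
B = PAP^T = \begin{bmatrix}
a_{11} & a_{16} & 0 & 0 & 0 & 0 \\
a_{61} & a_{66} & 0 & 0 & a_{65} & 0 \\
0 & 0 & a_{22} & a_{23} & 0 & 0 \\
0 & 0 & a_{32} & a_{33} & a_{35} & a_{34} \\
0 & a_{56} & 0 & a_{53} & a_{55} & 0 \\
0 & 0 & 0 & a_{43} & 0 & a_{44}
\end{bmatrix}
$$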
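Forming $B = PAP^T$ never requires building P explicitly; it is an index relabeling. The sketch below assumes dense storage for brevity and the convention that p sends old index i to new index p[i], so that B[p[i]][p[j]] = A[i][j]. The names `Dense` and `permute_symmetric` are hypothetical, and indices are 0-based, so the slide's p becomes {0, 2, 3, 5, 4, 1}.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Dense = std::vector<std::vector<int>>;

// Returns B = P A P^T, where P moves old index i to new index p[i] (0-based).
// Entry-wise this means B[p[i]][p[j]] = A[i][j].
Dense permute_symmetric(const Dense& A, const std::vector<std::size_t>& p) {
    const std::size_t n = A.size();
    assert(p.size() == n);
    for (std::size_t i = 0; i < n; ++i)
        assert(A[i].size() == n && p[i] < n);

    Dense B(n, std::vector<int>(n, 0));
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t j = 0; j < n; ++j)
            B[p[i]][p[j]] = A[i][j];
    return B;
}
```

If each nonzero $a_{ij}$ is encoded as the integer 10i + j (an encoding chosen here only for checking), row 4 of the result reads 0, 0, 32, 33, 35, 34, matching the fourth row of B.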

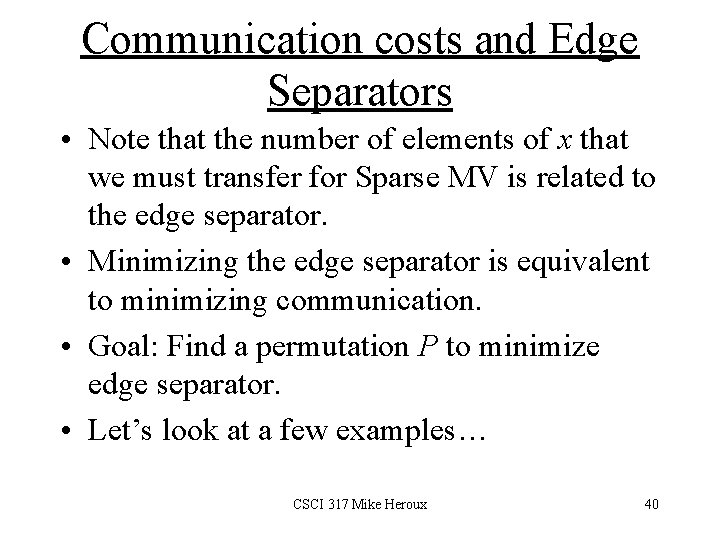

Communication Costs and Edge Separators
• Note that the number of elements of x that we must transfer for sparse MV is related to the edge separator.
• Minimizing the edge separator is equivalent to minimizing communication.
• Goal: Find a permutation P to minimize the edge separator.
• Let’s look at a few examples…
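Both quantities can be computed directly from a graph and an assignment of vertices to processors. The sketch below is an illustration with hypothetical names (`Edge`, `edge_cut`, `x_entries_transferred`); `owner[v]` is assumed to give the processor that owns vertex v, with 0-based vertex numbers. The second function counts each needed x entry once per receiving processor, which is why it can be smaller than twice the edge cut.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <utility>
#include <vector>

using Edge = std::pair<int, int>;

// Number of edges whose two endpoints are owned by different processors.
std::size_t edge_cut(const std::vector<Edge>& edges, const std::vector<int>& owner) {
    std::size_t cut = 0;
    for (const Edge& e : edges) {
        assert(static_cast<std::size_t>(e.first) < owner.size());
        assert(static_cast<std::size_t>(e.second) < owner.size());
        if (owner[e.first] != owner[e.second]) ++cut;
    }
    return cut;
}

// Total number of distinct off-processor x entries that processors must receive.
std::size_t x_entries_transferred(const std::vector<Edge>& edges,
                                  const std::vector<int>& owner) {
    std::set<std::pair<int, int>> needed;  // (receiving processor, vertex)
    for (const Edge& e : edges) {
        if (owner[e.first] == owner[e.second]) continue;
        needed.emplace(owner[e.first], e.second);   // owner of e.first needs x[e.second]
        needed.emplace(owner[e.second], e.first);   // and vice versa
    }
    return needed.size();
}
```

As a usage example on the 6 x 6 graph, assuming (this split is not stated in the slides) that vertices 1 to 3 go to PE 0 and 4 to 6 to PE 1, the edge cut is 3 and 5 entries of x move. Placing vertices 1, 5, 6 on one PE and 2, 3, 4 on the other cuts 1 edge and moves 2 entries.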

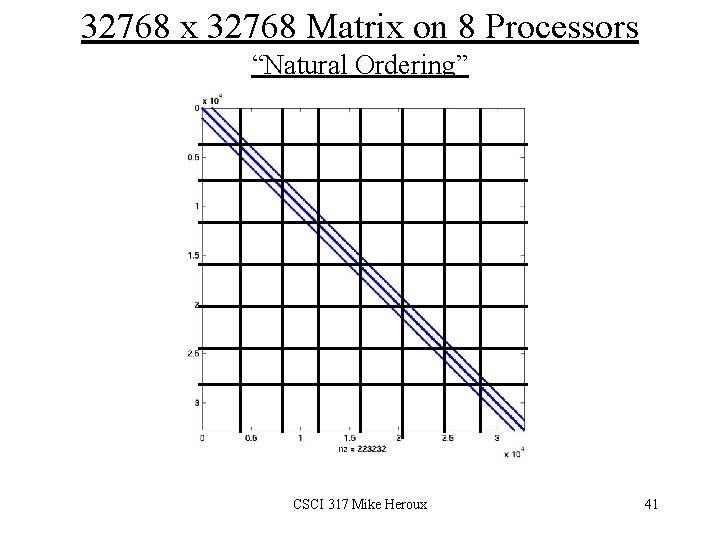

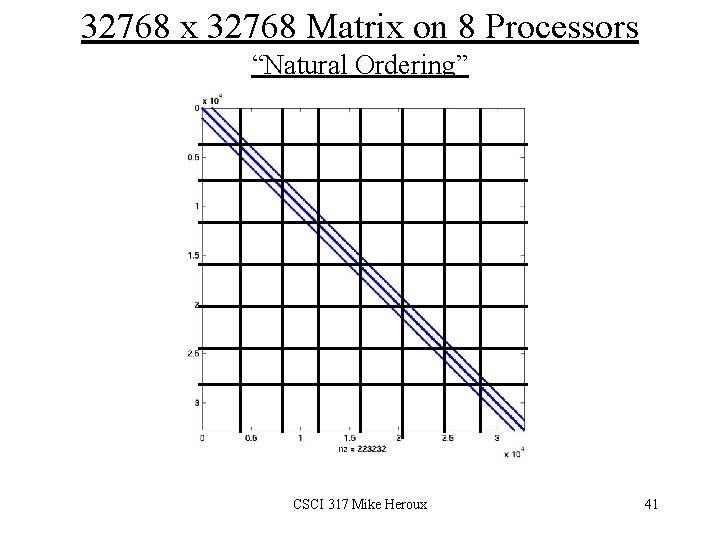

32768 x 32768 Matrix on 8 Processors “Natural Ordering”

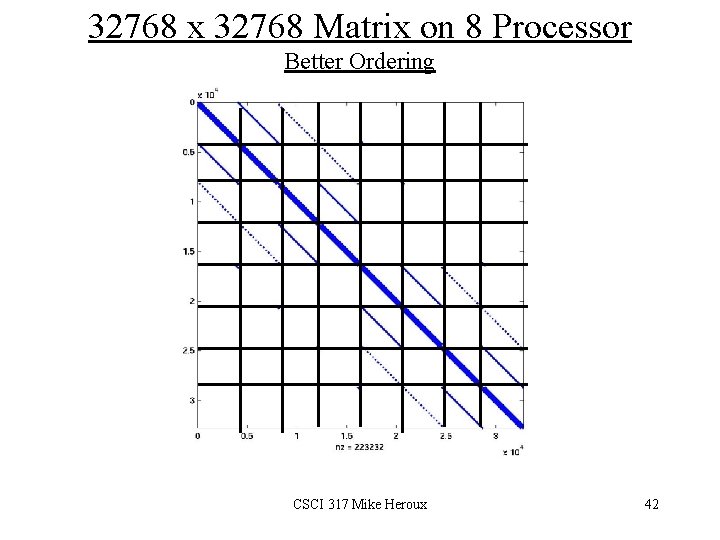

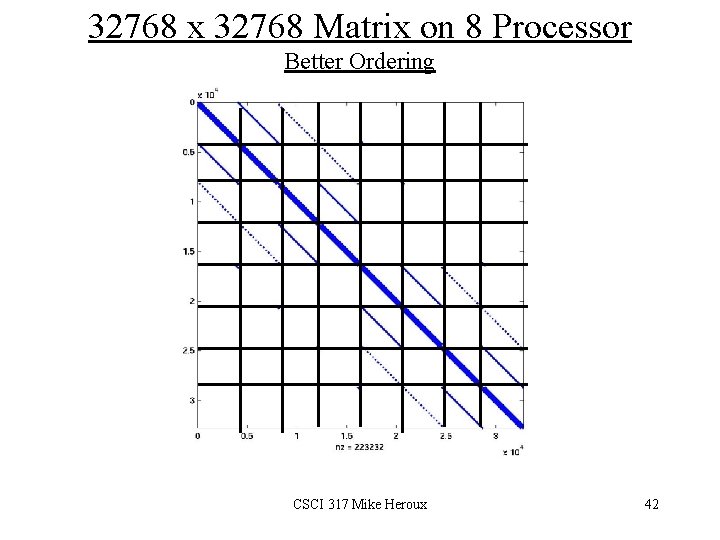

32768 x 32768 Matrix on 8 Processors Better Ordering

MFLOP Results

Edge Cuts

Message Passing Flexibility
• Message passing (specifically MPI):
  – Each process runs independently in separate memory.
  – Can run across multiple machines.
  – Portable across any processor configuration.
• Shared memory parallel:
  – Parallelism restricted by what?
    • Number of shared memory procs.
    • Amount of memory.
    • Contention for shared resources. Which ones?
      – Memory and channels, I/O speed, disks, …

MPI-capable Machines
• Which machines are MPI-capable?
  – Beefy. How many processors, how much memory?
    • 8, 48 GB.
  – Beast?
    • 48, 64 GB.
  – PE 212 machines. How many processors?
    • 24 machines X 4 cores = 96 !!!, X 4 GB = 96 GB !!!

pe212hostfile
• List of machines.
• Requirements: passwordless ssh access.
    % cat pe212hostfile
    lin2
    lin3
    …
    lin24
    lin1

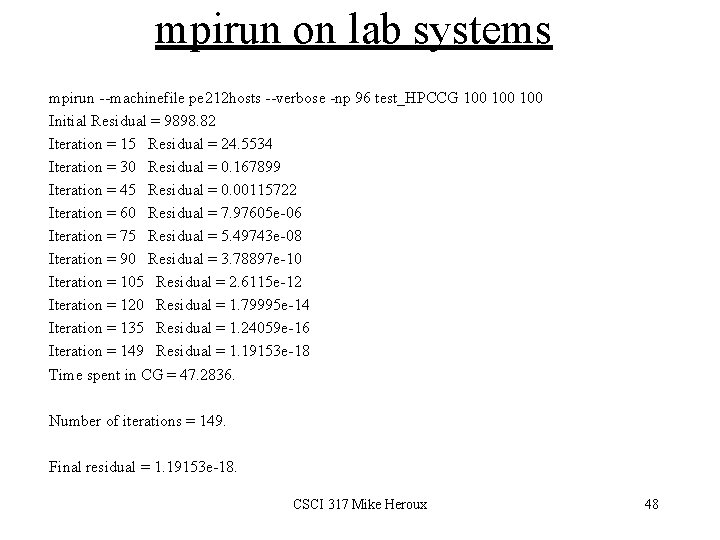

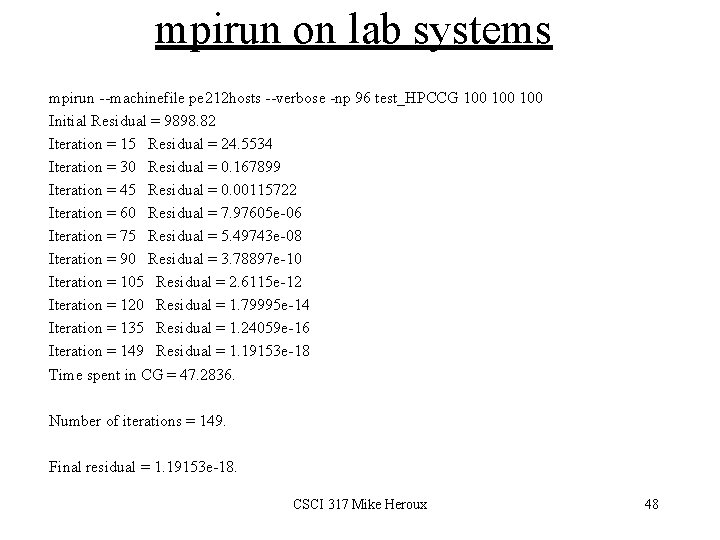

mpirun on lab systems
    mpirun --machinefile pe212hosts --verbose -np 96 test_HPCCG 100 100
    Initial Residual = 9898.82
    Iteration = 15 Residual = 24.5534
    Iteration = 30 Residual = 0.167899
    Iteration = 45 Residual = 0.00115722
    Iteration = 60 Residual = 7.97605e-06
    Iteration = 75 Residual = 5.49743e-08
    Iteration = 90 Residual = 3.78897e-10
    Iteration = 105 Residual = 2.6115e-12
    Iteration = 120 Residual = 1.79995e-14
    Iteration = 135 Residual = 1.24059e-16
    Iteration = 149 Residual = 1.19153e-18
    Time spent in CG = 47.2836.
    Number of iterations = 149.
    Final residual = 1.19153e-18.

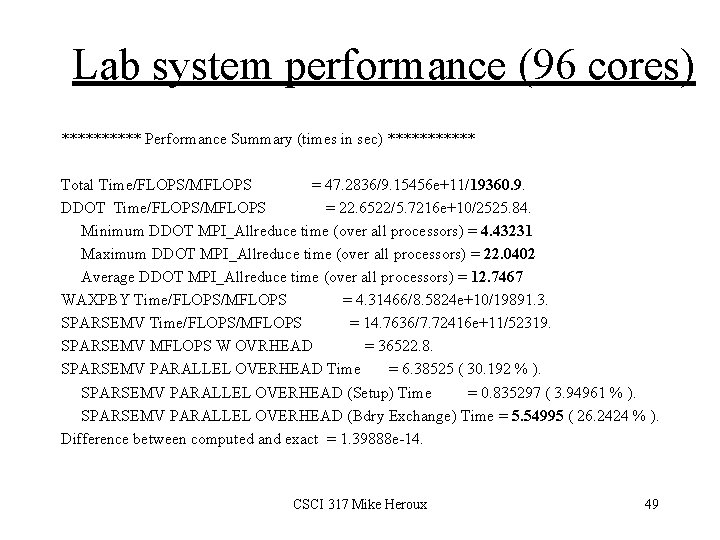

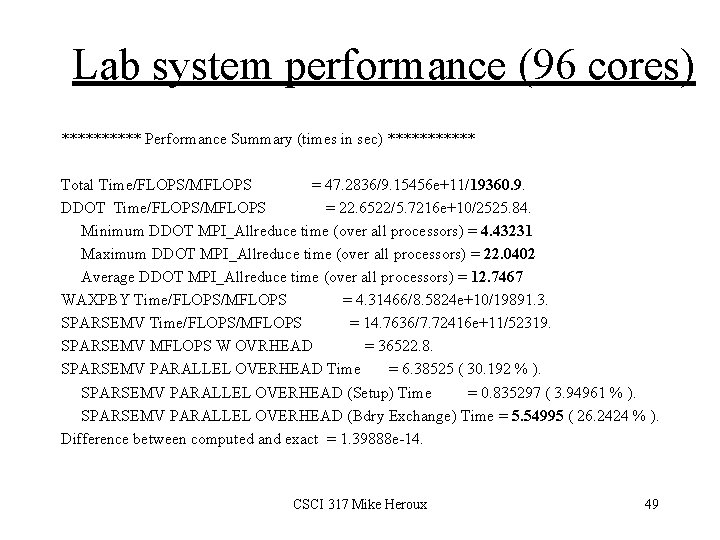

Lab system performance (96 cores)
    ***** Performance Summary (times in sec) ******
    Total Time/FLOPS/MFLOPS = 47.2836/9.15456e+11/19360.9.
    DDOT Time/FLOPS/MFLOPS = 22.6522/5.7216e+10/2525.84.
    Minimum DDOT MPI_Allreduce time (over all processors) = 4.43231
    Maximum DDOT MPI_Allreduce time (over all processors) = 22.0402
    Average DDOT MPI_Allreduce time (over all processors) = 12.7467
    WAXPBY Time/FLOPS/MFLOPS = 4.31466/8.5824e+10/19891.3.
    SPARSEMV Time/FLOPS/MFLOPS = 14.7636/7.72416e+11/52319.
    SPARSEMV MFLOPS W OVRHEAD = 36522.8.
    SPARSEMV PARALLEL OVERHEAD Time = 6.38525 ( 30.192 % ).
    SPARSEMV PARALLEL OVERHEAD (Setup) Time = 0.835297 ( 3.94961 % ).
    SPARSEMV PARALLEL OVERHEAD (Bdry Exchange) Time = 5.54995 ( 26.2424 % ).
    Difference between computed and exact = 1.39888e-14.

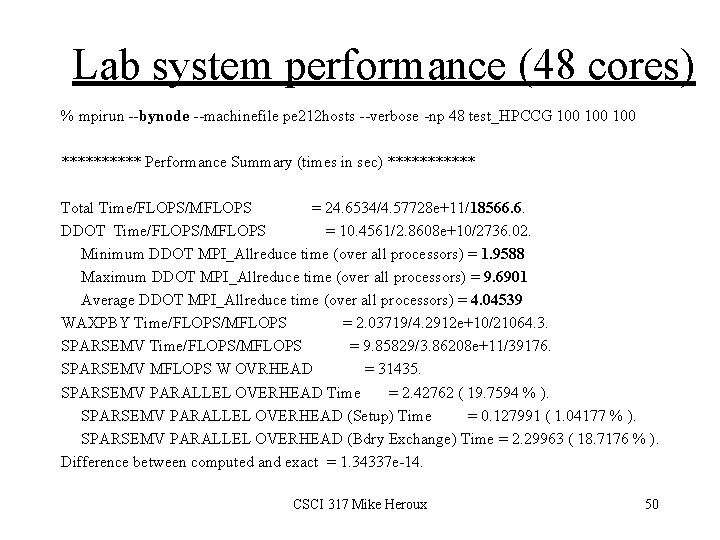

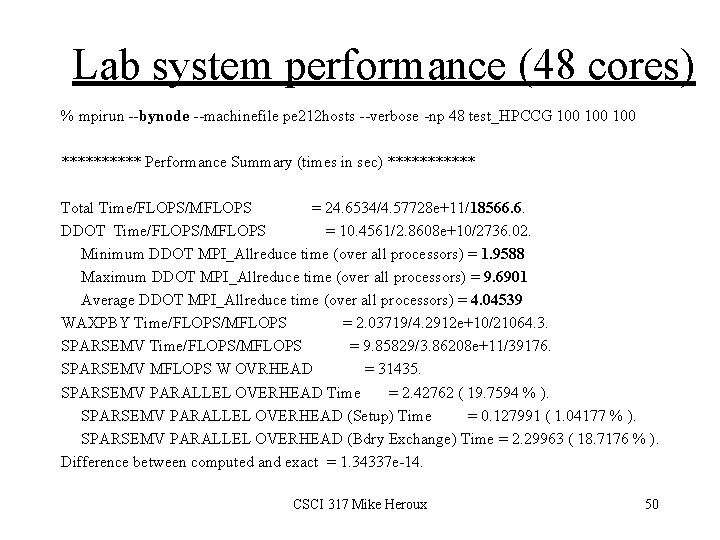

Lab system performance (48 cores)
    % mpirun --bynode --machinefile pe212hosts --verbose -np 48 test_HPCCG 100 100
    ***** Performance Summary (times in sec) ******
    Total Time/FLOPS/MFLOPS = 24.6534/4.57728e+11/18566.6.
    DDOT Time/FLOPS/MFLOPS = 10.4561/2.8608e+10/2736.02.
    Minimum DDOT MPI_Allreduce time (over all processors) = 1.9588
    Maximum DDOT MPI_Allreduce time (over all processors) = 9.6901
    Average DDOT MPI_Allreduce time (over all processors) = 4.04539
    WAXPBY Time/FLOPS/MFLOPS = 2.03719/4.2912e+10/21064.3.
    SPARSEMV Time/FLOPS/MFLOPS = 9.85829/3.86208e+11/39176.
    SPARSEMV MFLOPS W OVRHEAD = 31435.
    SPARSEMV PARALLEL OVERHEAD Time = 2.42762 ( 19.7594 % ).
    SPARSEMV PARALLEL OVERHEAD (Setup) Time = 0.127991 ( 1.04177 % ).
    SPARSEMV PARALLEL OVERHEAD (Bdry Exchange) Time = 2.29963 ( 18.7176 % ).
    Difference between computed and exact = 1.34337e-14.

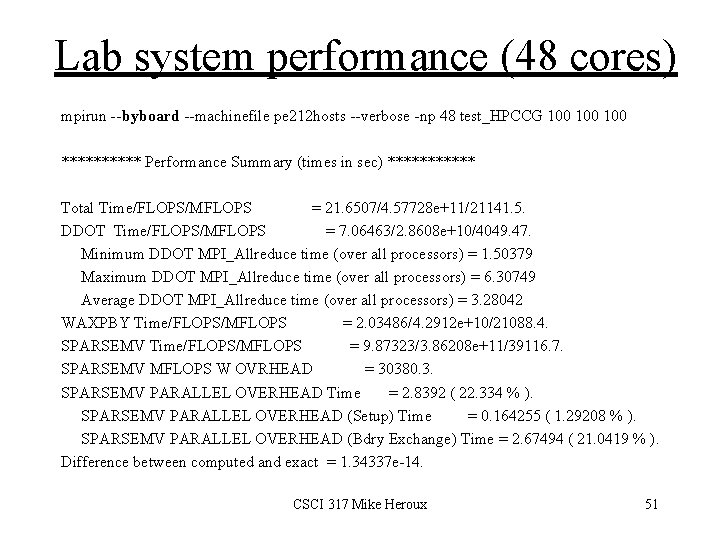

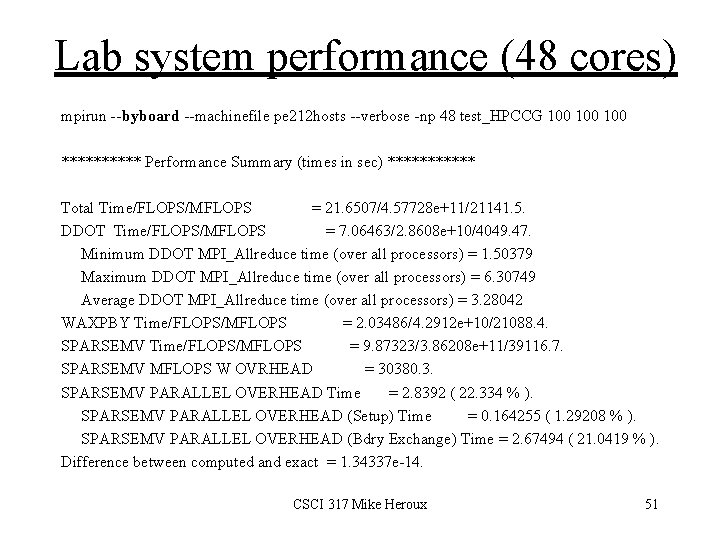

Lab system performance (48 cores)
    mpirun --byboard --machinefile pe212hosts --verbose -np 48 test_HPCCG 100 100
    ***** Performance Summary (times in sec) ******
    Total Time/FLOPS/MFLOPS = 21.6507/4.57728e+11/21141.5.
    DDOT Time/FLOPS/MFLOPS = 7.06463/2.8608e+10/4049.47.
    Minimum DDOT MPI_Allreduce time (over all processors) = 1.50379
    Maximum DDOT MPI_Allreduce time (over all processors) = 6.30749
    Average DDOT MPI_Allreduce time (over all processors) = 3.28042
    WAXPBY Time/FLOPS/MFLOPS = 2.03486/4.2912e+10/21088.4.
    SPARSEMV Time/FLOPS/MFLOPS = 9.87323/3.86208e+11/39116.7.
    SPARSEMV MFLOPS W OVRHEAD = 30380.3.
    SPARSEMV PARALLEL OVERHEAD Time = 2.8392 ( 22.334 % ).
    SPARSEMV PARALLEL OVERHEAD (Setup) Time = 0.164255 ( 1.29208 % ).
    SPARSEMV PARALLEL OVERHEAD (Bdry Exchange) Time = 2.67494 ( 21.0419 % ).
    Difference between computed and exact = 1.34337e-14.

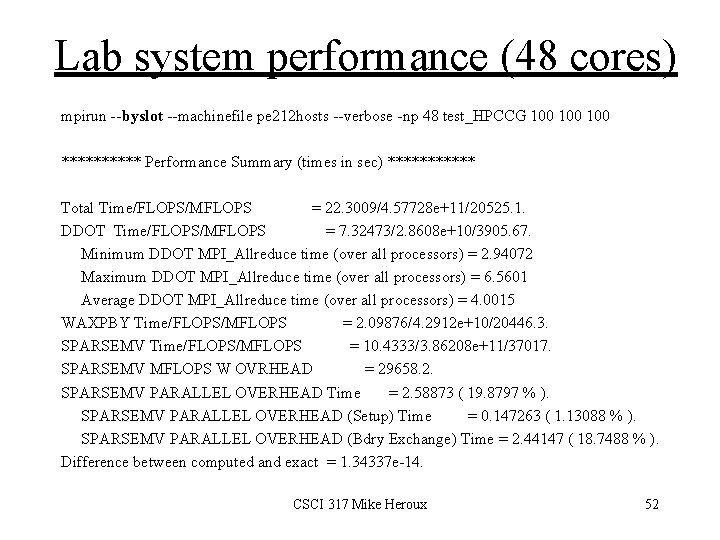

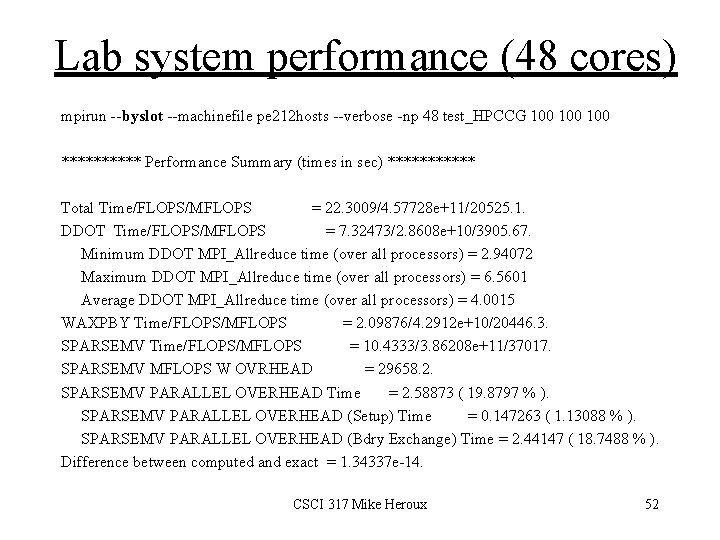

Lab system performance (48 cores)
    mpirun --byslot --machinefile pe212hosts --verbose -np 48 test_HPCCG 100 100
    ***** Performance Summary (times in sec) ******
    Total Time/FLOPS/MFLOPS = 22.3009/4.57728e+11/20525.1.
    DDOT Time/FLOPS/MFLOPS = 7.32473/2.8608e+10/3905.67.
    Minimum DDOT MPI_Allreduce time (over all processors) = 2.94072
    Maximum DDOT MPI_Allreduce time (over all processors) = 6.5601
    Average DDOT MPI_Allreduce time (over all processors) = 4.0015
    WAXPBY Time/FLOPS/MFLOPS = 2.09876/4.2912e+10/20446.3.
    SPARSEMV Time/FLOPS/MFLOPS = 10.4333/3.86208e+11/37017.
    SPARSEMV MFLOPS W OVRHEAD = 29658.2.
    SPARSEMV PARALLEL OVERHEAD Time = 2.58873 ( 19.8797 % ).
    SPARSEMV PARALLEL OVERHEAD (Setup) Time = 0.147263 ( 1.13088 % ).
    SPARSEMV PARALLEL OVERHEAD (Bdry Exchange) Time = 2.44147 ( 18.7488 % ).
    Difference between computed and exact = 1.34337e-14.

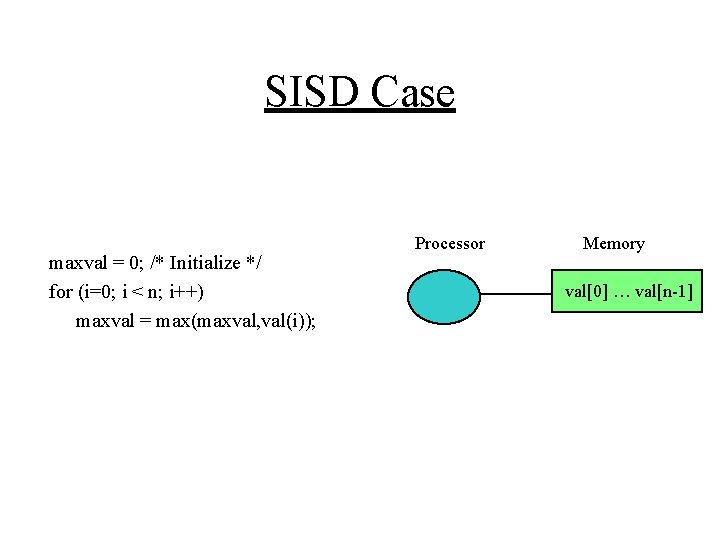

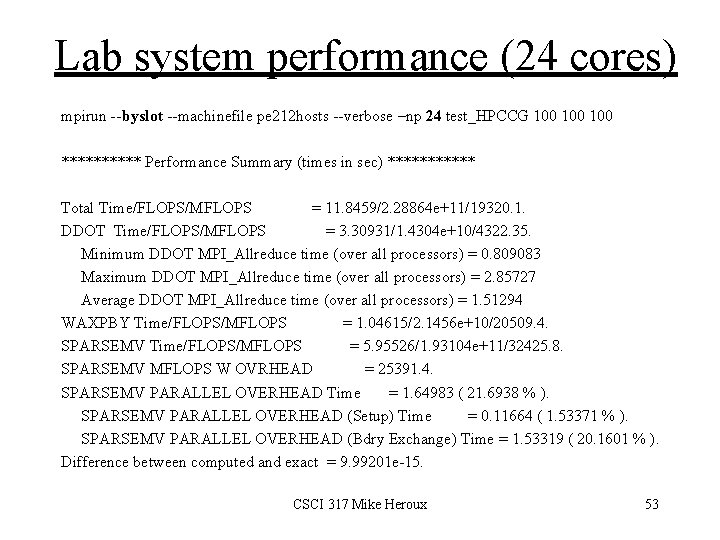

Lab system performance (24 cores)
    mpirun --byslot --machinefile pe212hosts --verbose -np 24 test_HPCCG 100 100
    ***** Performance Summary (times in sec) ******
    Total Time/FLOPS/MFLOPS = 11.8459/2.28864e+11/19320.1.
    DDOT Time/FLOPS/MFLOPS = 3.30931/1.4304e+10/4322.35.
    Minimum DDOT MPI_Allreduce time (over all processors) = 0.809083
    Maximum DDOT MPI_Allreduce time (over all processors) = 2.85727
    Average DDOT MPI_Allreduce time (over all processors) = 1.51294
    WAXPBY Time/FLOPS/MFLOPS = 1.04615/2.1456e+10/20509.4.
    SPARSEMV Time/FLOPS/MFLOPS = 5.95526/1.93104e+11/32425.8.
    SPARSEMV MFLOPS W OVRHEAD = 25391.4.
    SPARSEMV PARALLEL OVERHEAD Time = 1.64983 ( 21.6938 % ).
    SPARSEMV PARALLEL OVERHEAD (Setup) Time = 0.11664 ( 1.53371 % ).
    SPARSEMV PARALLEL OVERHEAD (Bdry Exchange) Time = 1.53319 ( 20.1601 % ).
    Difference between computed and exact = 9.99201e-15.