Maximum Entropy Language Modeling with Syntactic Semantic and

- Slides: 43

Maximum Entropy Language Modeling with Syntactic, Semantic and Collocational Dependencies

Jun Wu

Advisor: Sanjeev Khudanpur

Department of Computer Science, Johns Hopkins University, Baltimore, MD 21218

April, 2001

NSF STIMULATE Grant No. IRI-9618874

Center for Language and Speech Processing, The Johns Hopkins University. April 2001

Outline

- Language modeling in speech recognition
- The maximum entropy (ME) principle
- Semantic (topic) dependencies in natural language
- Syntactic dependencies in natural language
- ME models with topic and syntactic dependencies
- Conclusion and future work
- Topic assignment during test (15 min)
- Role of syntactic head (15 min)
- Training ME models in an efficient way (1 hour)

Motivation

Example:

- A research team led by two Johns Hopkins scientists ___ found the strongest evidence yet that a virus may …
  - have
  - has
  - his

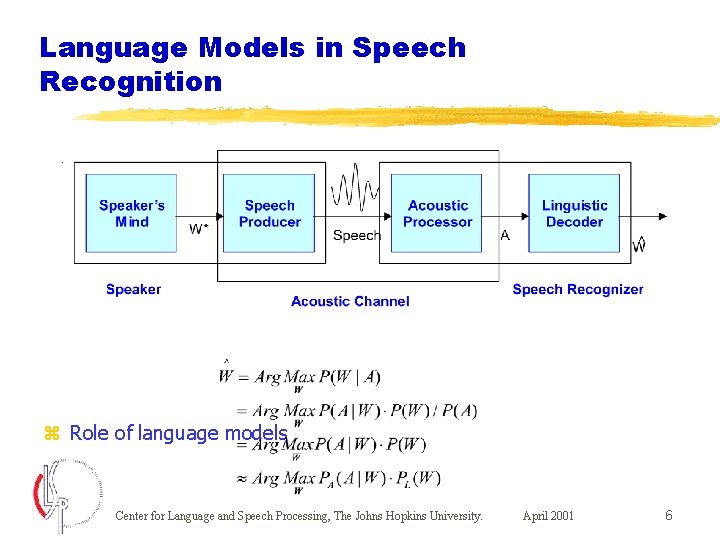

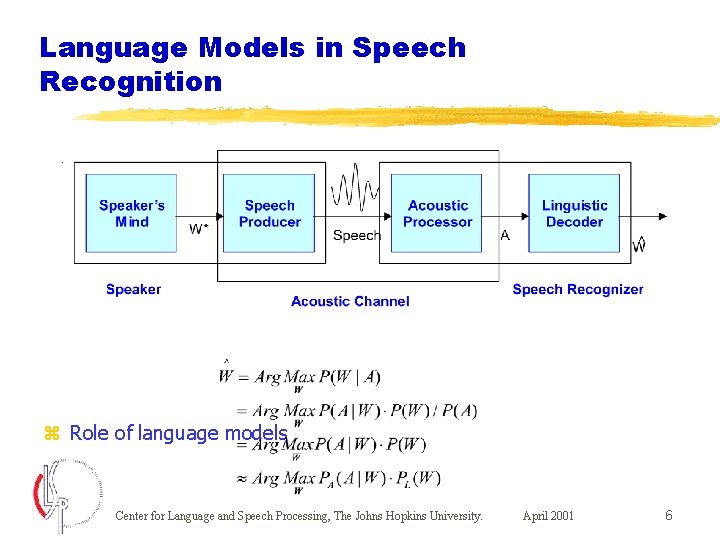

Language Models in Speech Recognition

- Role of language models

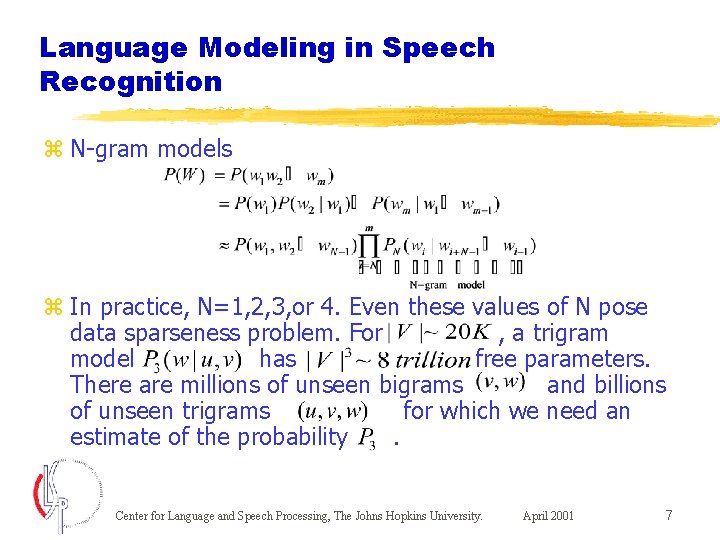

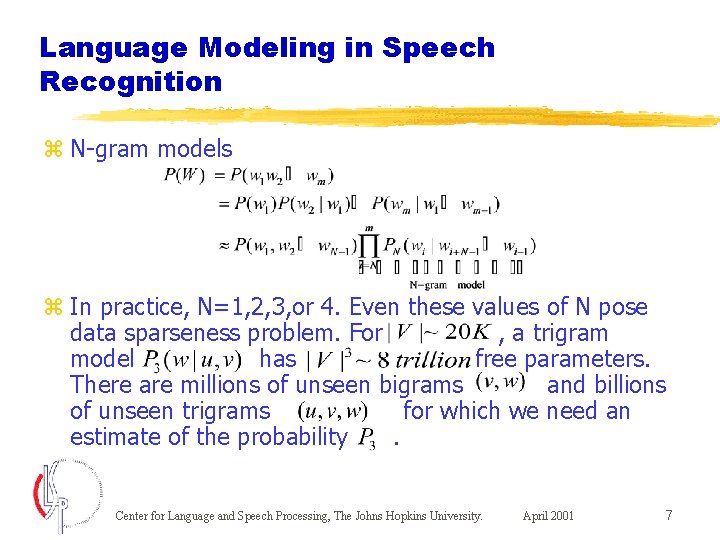

Language Modeling in Speech Recognition

- N-gram models
- In practice, N = 1, 2, 3, or 4. Even these values of N pose a data sparseness problem: the number of free parameters of a trigram model grows with the cube of the vocabulary size. There are millions of unseen bigrams and billions of unseen trigrams for which we need an estimate of the probability.

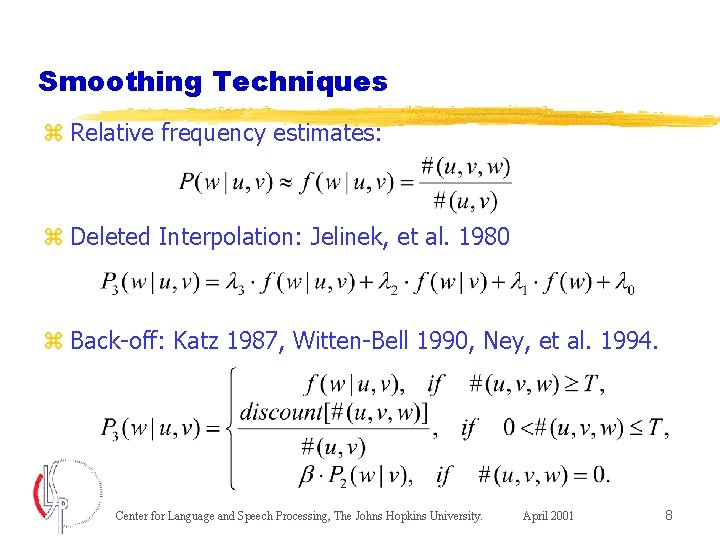

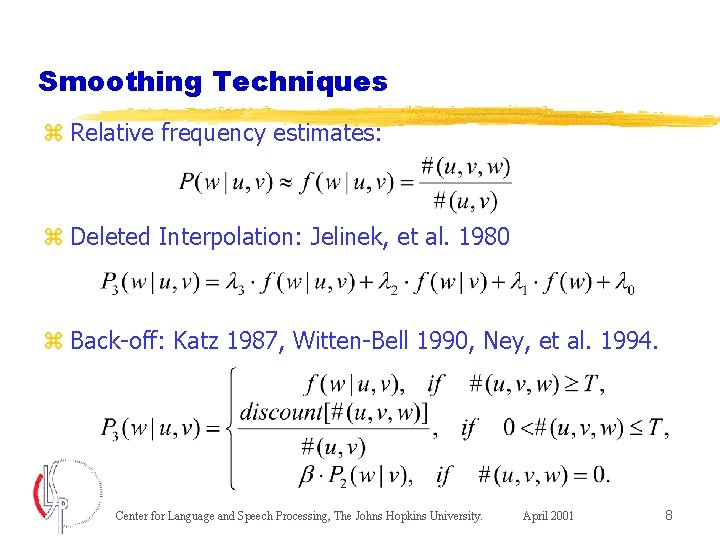

Smoothing Techniques

- Relative frequency estimates
- Deleted interpolation: Jelinek, et al. 1980
- Back-off: Katz 1987, Witten-Bell 1990, Ney, et al. 1994
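The first two techniques on this slide can be sketched in a few lines. This is a minimal illustration, not the estimator used in the experiments: the function names are hypothetical, and the interpolation weights are fixed placeholder values, whereas deleted interpolation would estimate them on held-out data.

```python
from collections import Counter

def ngram_counts(tokens, n):
    """Count all n-grams (as tuples) in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def relative_frequency(w, history, counts_n, counts_hist):
    """Relative frequency #[history, w] / #[history]; 0.0 if the history is unseen."""
    denom = counts_hist[tuple(history)]
    return counts_n[tuple(history) + (w,)] / denom if denom else 0.0

def interpolated_trigram(w, u, v, c1, c2, c3, weights=(0.2, 0.3, 0.5)):
    """Linear interpolation of unigram, bigram and trigram relative frequencies.

    The weights are illustrative constants (an assumption of this sketch).
    """
    total = sum(c1.values())
    p1 = c1[(w,)] / total
    p2 = relative_frequency(w, (v,), c2, c1)
    p3 = relative_frequency(w, (u, v), c3, c2)
    l1, l2, l3 = weights
    return l1 * p1 + l2 * p2 + l3 * p3

tokens = "the contract ended with a loss the contract began".split()
c1, c2, c3 = (ngram_counts(tokens, n) for n in (1, 2, 3))
# An unseen trigram gets zero relative frequency but a non-zero interpolated estimate.
print(relative_frequency("began", ("a", "loss"), c3, c2))
print(interpolated_trigram("began", "a", "loss", c1, c2, c3))
```

The interpolated estimate stays positive for unseen trigrams because the unigram term never vanishes for in-vocabulary words, which is the point of smoothing.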

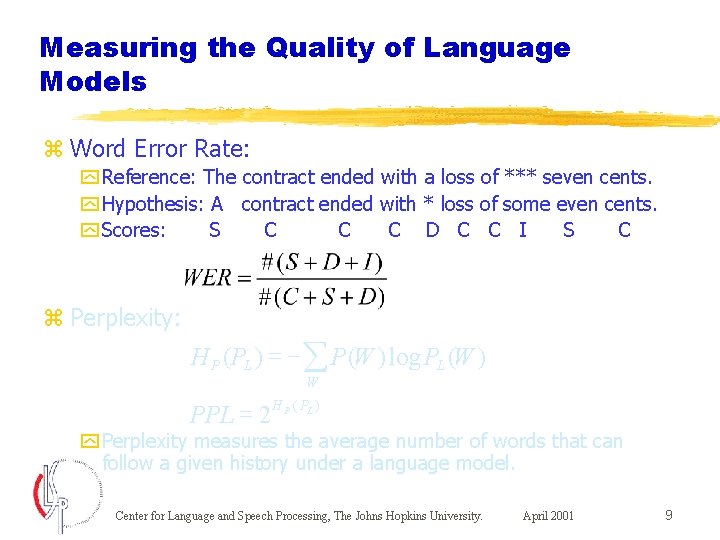

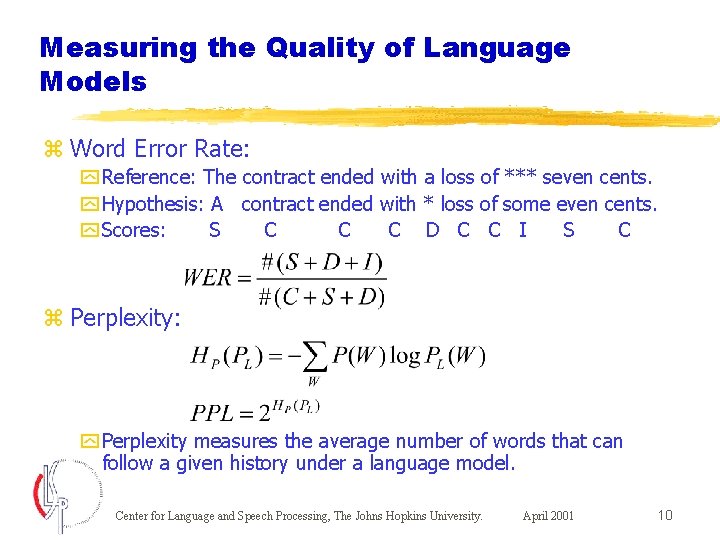

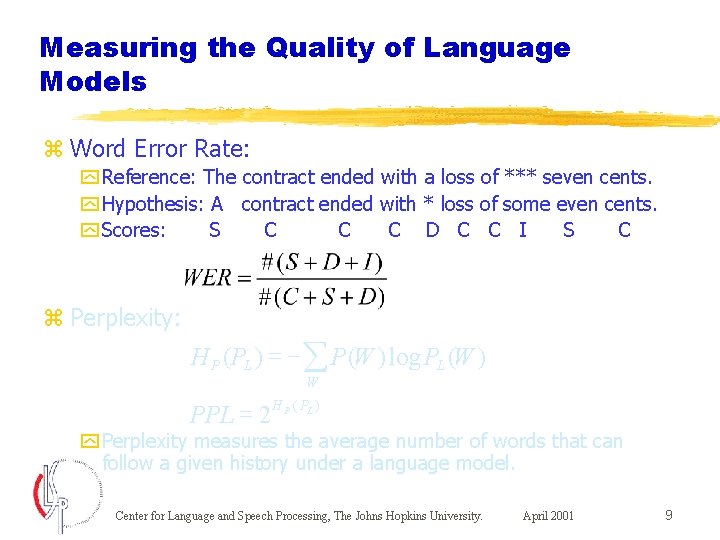

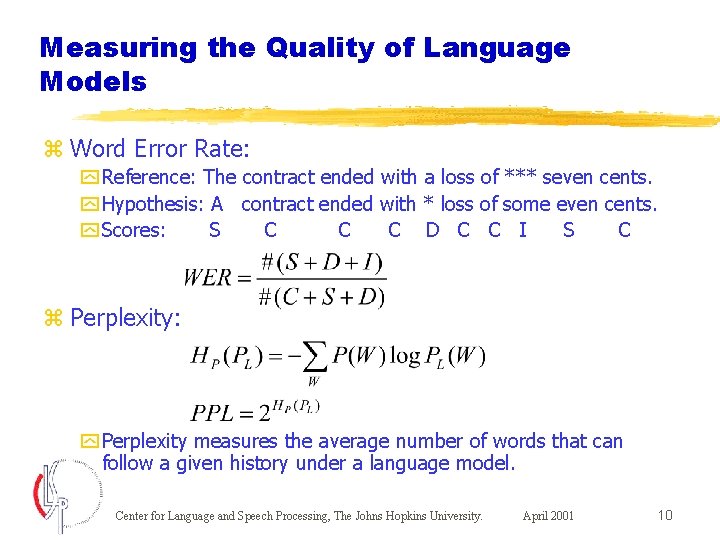

Measuring the Quality of Language Models

- Word Error Rate:
  - Reference: The contract ended with a loss of *** seven cents.
  - Hypothesis: A contract ended with * loss of some even cents.
  - Scores: S C C C D C C I S C
- Perplexity:

$$H_P(P_L) = -\sum_{W} P(W) \log P_L(W), \qquad \mathrm{PPL} = 2^{H_P(P_L)}$$

  - Perplexity measures the average number of words that can follow a given history under a language model.
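Both measures on this slide are easy to compute. The sketch below is illustrative only: the function names are hypothetical, the word error count is a plain Levenshtein alignment over lowercased words, and the perplexity is the usual empirical estimate from per-word model probabilities on a test text (with base-2 logarithms), not the exact expectation in the formula.

```python
import math

def word_errors(reference, hypothesis):
    """Return (minimum number of word edits, number of reference words)."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    # dist[i][j] = fewest substitutions, deletions and insertions
    # needed to turn ref[:i] into hyp[:j].
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i
    for j in range(len(hyp) + 1):
        dist[0][j] = j
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = dist[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1])
            dist[i][j] = min(substitution, dist[i - 1][j] + 1, dist[i][j - 1] + 1)
    return dist[-1][-1], len(ref)

def perplexity(word_probs):
    """2 ** (average negative log2 probability the model gave each test word)."""
    entropy = -sum(math.log2(p) for p in word_probs) / len(word_probs)
    return 2.0 ** entropy

errors, n_ref = word_errors(
    "The contract ended with a loss of seven cents",
    "A contract ended with loss of some even cents",
)
print(errors, n_ref, errors / n_ref)  # 4 errors over 9 reference words
```

On the slide's example the alignment has two substitutions, one deletion and one insertion, so the word error rate is 4/9. A model that assigns probability 1/8 to every test word has perplexity 8, matching the "average number of words that can follow" reading.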

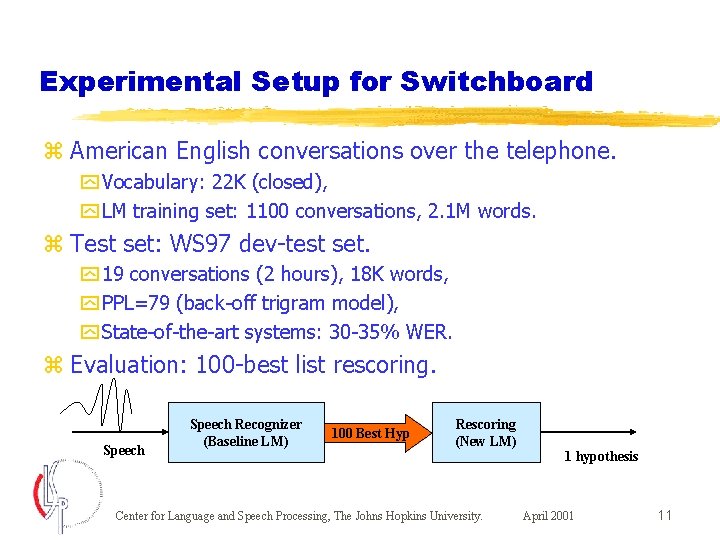

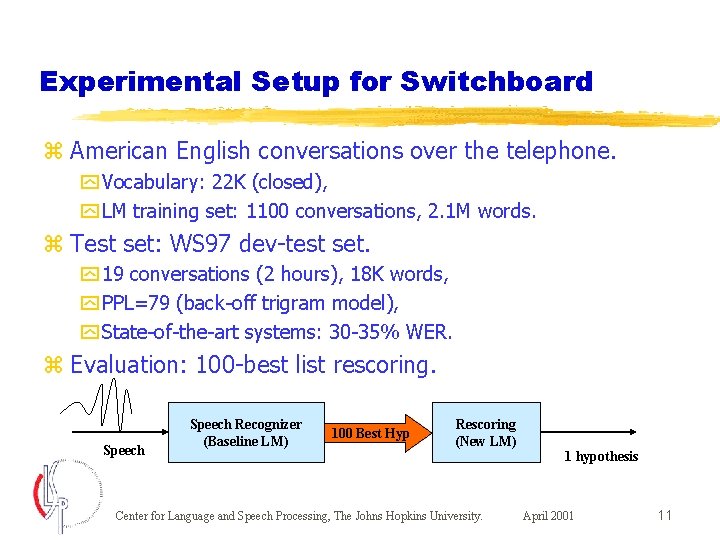

Experimental Setup for Switchboard

- American English conversations over the telephone.
  - Vocabulary: 22K (closed),
  - LM training set: 1100 conversations, 2.1M words.
- Test set: WS97 dev-test set.
  - 19 conversations (2 hours), 18K words,
  - PPL = 79 (back-off trigram model),
  - State-of-the-art systems: 30-35% WER.
- Evaluation: 100-best list rescoring.

[Figure: Speech Recognizer (Baseline LM) → 100 best hypotheses → Rescoring (New LM) → 1 hypothesis]
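The rescoring step in this setup can be sketched as follows. The slide does not say how scores are combined, so the weighted sum of a first-pass acoustic log-score and the new LM log-probability, the `lm_weight` parameter, and all names here are assumptions made for illustration.

```python
def rescore_nbest(nbest, lm_logprob, lm_weight=1.0):
    """Pick the best hypothesis from an N-best list under a new language model.

    nbest: list of (text, acoustic_logprob) pairs from the first pass.
    lm_logprob: callable returning the new LM's log-probability of a text.
    lm_weight: scaling of the LM score (hypothetical tuning parameter).
    """
    best_text, _ = max(
        nbest, key=lambda item: item[1] + lm_weight * lm_logprob(item[0])
    )
    return best_text

# Toy stand-in for a real language model (purely illustrative).
def toy_lm(text):
    return -1.0 if text.startswith("the") else -3.0

hypotheses = [("a contract ended", -10.0), ("the contract ended", -10.5)]
print(rescore_nbest(hypotheses, toy_lm))
```

Rescoring a fixed list keeps the experiment cheap: the new model only has to score 100 candidate strings per utterance instead of being integrated into the recognizer's search.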

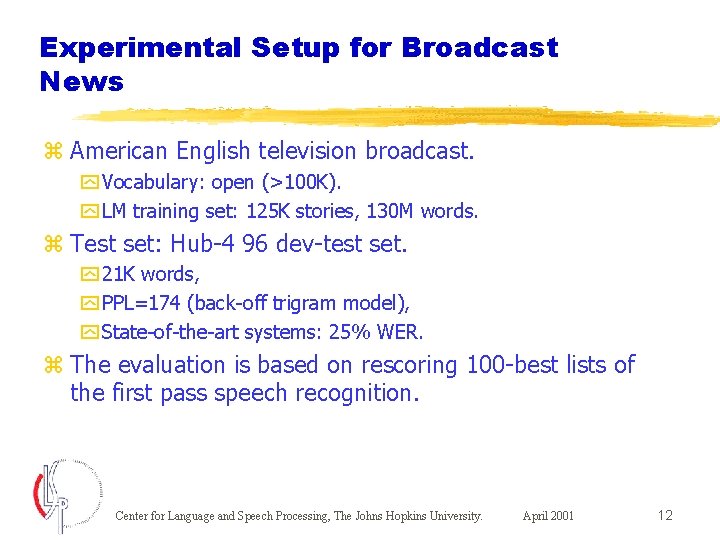

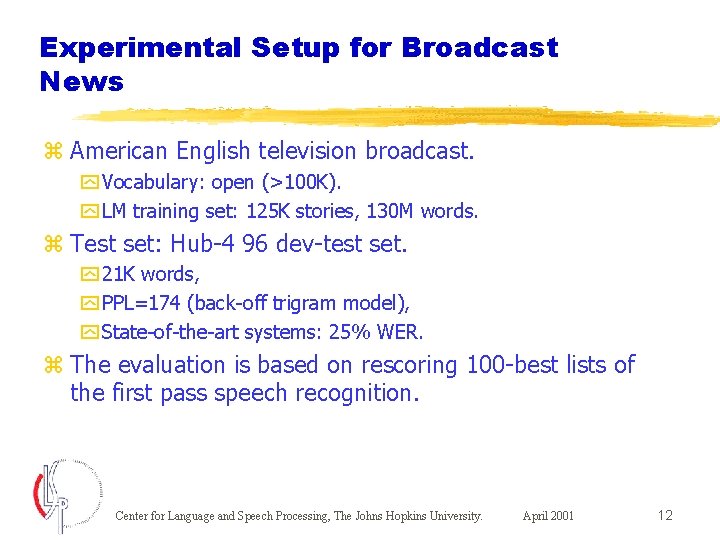

Experimental Setup for Broadcast News

- American English television broadcast.
  - Vocabulary: open (>100K).
  - LM training set: 125K stories, 130M words.
- Test set: Hub-4 96 dev-test set.
  - 21K words,
  - PPL = 174 (back-off trigram model),
  - State-of-the-art systems: 25% WER.
- The evaluation is based on rescoring 100-best lists from the first-pass speech recognition.

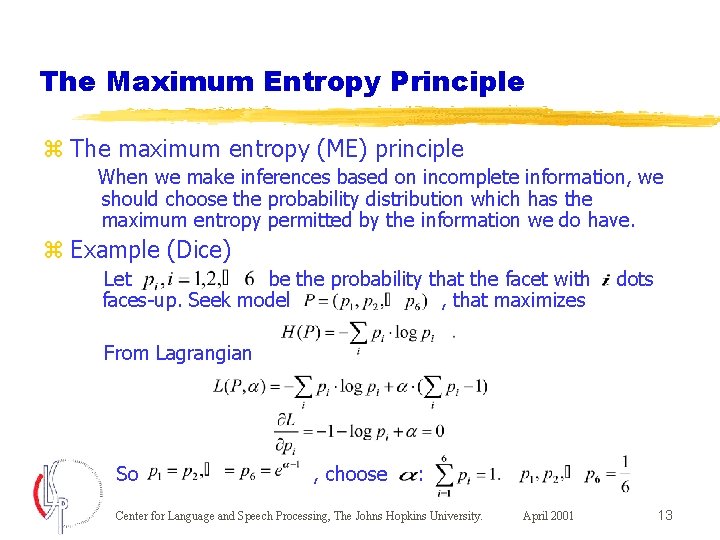

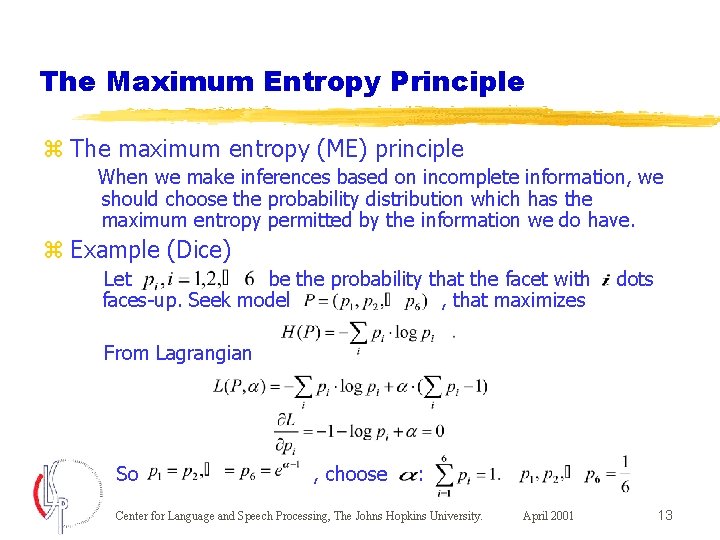

The Maximum Entropy Principle

- The maximum entropy (ME) principle: when we make inferences based on incomplete information, we should choose the probability distribution that has the maximum entropy permitted by the information we do have.
- Example (dice): let $p_i$ be the probability that the face with $i$ dots lands face up. Seek the model $P = (p_1, \dots, p_6)$ that maximizes the entropy $H(P) = -\sum_i p_i \log p_i$; the solution follows from the Lagrangian.
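The dice example can be worked out explicitly under one assumption that the extracted slide text does not confirm: that normalization is the only constraint on the distribution. Writing $p_i$ for the probability of the face with $i$ dots and $\mu$ for the Lagrange multiplier (both symbols chosen here for illustration), the derivation would run:

```latex
\begin{align*}
\mathcal{L}(p, \mu) &= -\sum_{i=1}^{6} p_i \log p_i + \mu \Big( \sum_{i=1}^{6} p_i - 1 \Big) \\
\frac{\partial \mathcal{L}}{\partial p_i} &= -\log p_i - 1 + \mu = 0
  \;\Longrightarrow\; p_i = e^{\mu - 1} \quad \text{(the same for every } i\text{)} \\
\sum_{i=1}^{6} p_i = 1 &\;\Longrightarrow\; p_i = \tfrac{1}{6}, \qquad H(P) = \log 6
\end{align*}
```

With no information beyond normalization, the maximum entropy choice is therefore the uniform distribution; any additional constraint would tilt it away from uniform.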

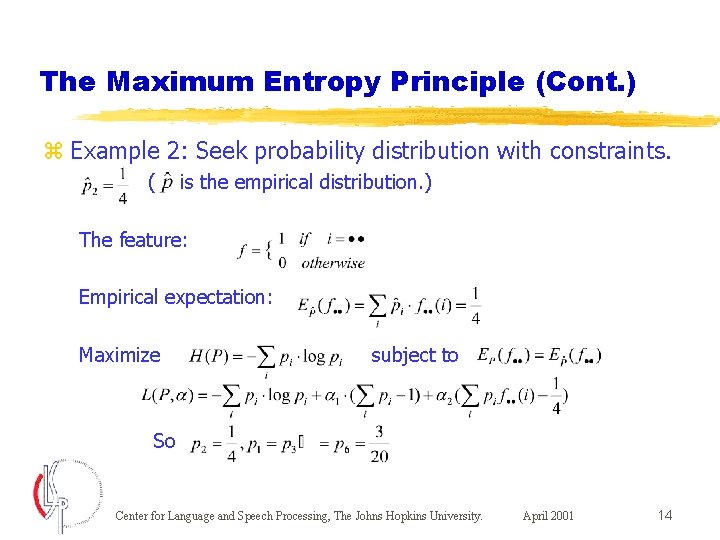

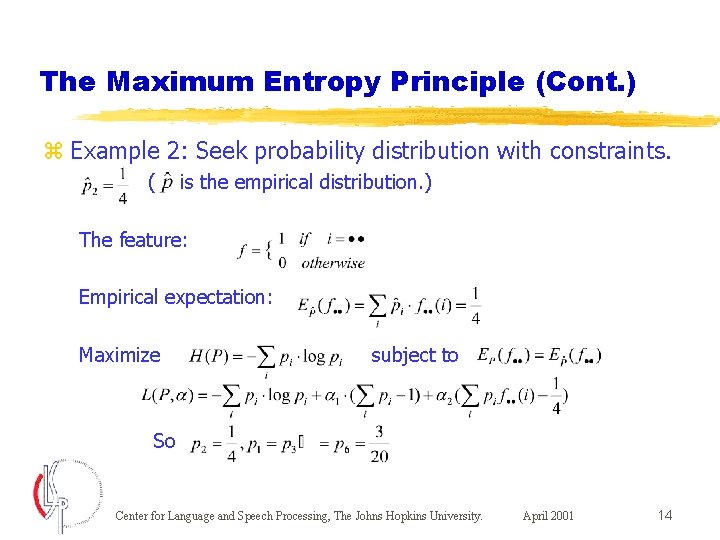

The Maximum Entropy Principle (Cont.)

- Example 2: seek a probability distribution subject to constraints derived from the empirical distribution.
  - Define a feature.
  - Compute its empirical expectation.
  - Maximize the entropy subject to the model expectation of the feature matching the empirical expectation.
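A constrained example of this kind can be solved numerically. The sketch below uses a toy setup that is not from the slides: a six-sided die, one binary feature ("the face is even") and an assumed target expectation of 0.6. It relies on the standard result that the maximum entropy solution has the form $p(x) \propto e^{\lambda f(x)}$ and finds $\lambda$ by bisection, since the model expectation increases monotonically with $\lambda$.

```python
import math

def maxent_single_feature(outcomes, feature, target, lo=-20.0, hi=20.0, iters=100):
    """Find lam so that E_p[f] = target for p(x) proportional to exp(lam * f(x))."""
    def model(lam):
        weights = [math.exp(lam * feature(x)) for x in outcomes]
        z = sum(weights)
        return [w / z for w in weights]

    def expectation(lam):
        return sum(p * feature(x) for p, x in zip(model(lam), outcomes))

    # Bisection: the model expectation is non-decreasing in lam.
    for _ in range(iters):
        mid = (lo + hi) / 2.0
        if expectation(mid) < target:
            lo = mid
        else:
            hi = mid
    lam = (lo + hi) / 2.0
    return lam, model(lam)

faces = [1, 2, 3, 4, 5, 6]
is_even = lambda x: 1.0 if x % 2 == 0 else 0.0
lam, probs = maxent_single_feature(faces, is_even, target=0.6)
print(lam, probs)
```

The result spreads the constrained mass evenly: each even face gets 0.2 and each odd face gets 0.4/3, which is the least committal distribution consistent with the single constraint.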

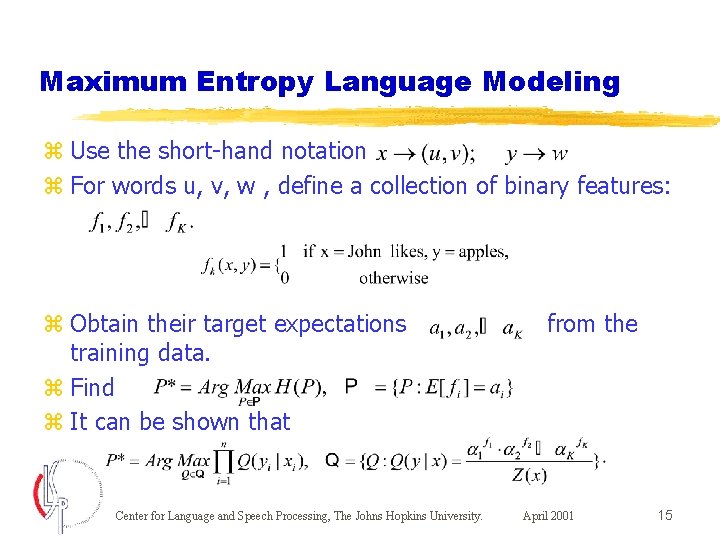

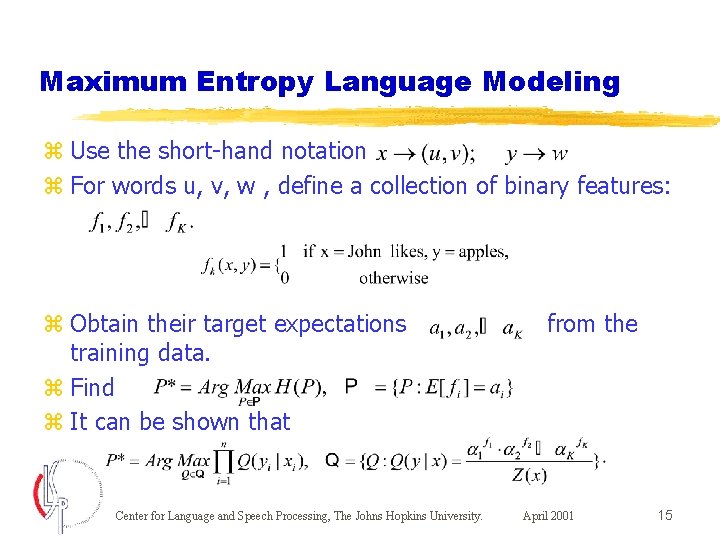

Maximum Entropy Language Modeling

- Use a short-hand notation.
- For words u, v, w, define a collection of binary features.
- Obtain their target expectations from the training data.
- Find the maximum entropy model that satisfies these constraints.
- It can be shown that the solution has an exponential (log-linear) form.
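As a rough illustration of the resulting model, the sketch below evaluates a conditional trigram ME model from a table of feature weights. It assumes that u and v are the two preceding words and w the predicted word, that the active features are the unigram, bigram and trigram indicators, and that weights are stored in a dictionary keyed by word tuples; these are assumptions of this sketch rather than details taken from the slide.

```python
import math

def me_trigram_prob(w, u, v, vocab, lam):
    """P(w | u, v) = exp(lam(w) + lam(v, w) + lam(u, v, w)) / Z(u, v).

    lam maps feature tuples to weights; missing features have weight 0.
    """
    def score(x):
        return lam.get((x,), 0.0) + lam.get((v, x), 0.0) + lam.get((u, v, x), 0.0)

    # Z(u, v) normalizes over the whole vocabulary for this history.
    z = sum(math.exp(score(x)) for x in vocab)
    return math.exp(score(w)) / z

vocab = ["have", "has", "his"]
weights = {("has",): 0.3, ("scientists", "have"): 1.2}  # illustrative values
for word in vocab:
    print(word, me_trigram_prob(word, "Hopkins", "scientists", vocab, weights))
```

The normalizer has to be recomputed for every distinct history, which is where most of the training cost of ME models comes from.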

Advantages and Disadvantage of Maximum Entropy Language Modeling

- Advantages:
  - Creating a "smooth" model that satisfies all empirical constraints.
  - Incorporating various sources of information in a unified language model.
- Disadvantage:
  - Computational complexity of the model parameter estimation procedure.

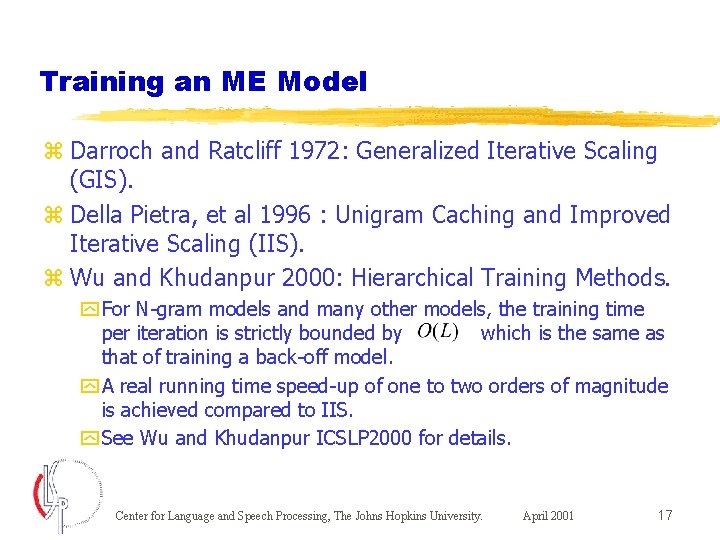

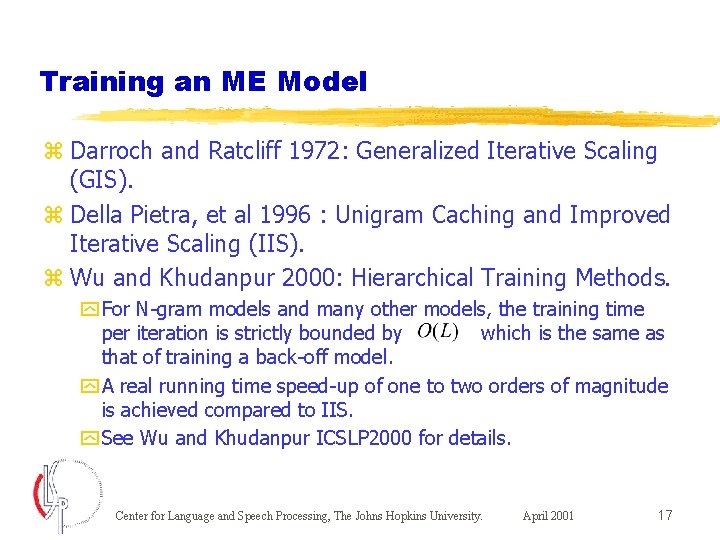

Training an ME Model

- Darroch and Ratcliff 1972: Generalized Iterative Scaling (GIS).
- Della Pietra, et al. 1996: Unigram Caching and Improved Iterative Scaling (IIS).
- Wu and Khudanpur 2000: Hierarchical Training Methods.
  - For N-gram models and many other models, the training time per iteration is strictly bounded, and the bound is the same as that of training a back-off model.
  - A real running time speed-up of one to two orders of magnitude is achieved compared to IIS.
  - See Wu and Khudanpur ICSLP 2000 for details.
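For orientation, here is a minimal sketch of plain GIS, the first method listed above, on a toy problem. It is not the hierarchical training method of Wu and Khudanpur and makes no attempt at efficiency. The die, the two features and their target expectations are invented for illustration, and a slack feature is added so that feature values sum to a constant, as GIS requires.

```python
import math

def gis(outcomes, features, targets, iterations=2000):
    """Generalized Iterative Scaling for p(x) proportional to exp(sum_j lam_j * f_j(x))."""
    rows = [[f(x) for f in features] for x in outcomes]
    # Pad every row with a slack feature so that all rows sum to the constant C.
    C = max(sum(row) for row in rows)
    rows = [row + [C - sum(row)] for row in rows]
    targets = list(targets) + [C - sum(targets)]
    lam = [0.0] * len(targets)

    def model():
        weights = [math.exp(sum(l * v for l, v in zip(lam, row))) for row in rows]
        z = sum(weights)
        return [w / z for w in weights]

    for _ in range(iterations):
        probs = model()  # expectations use the weights from the previous sweep
        for j in range(len(lam)):
            expected = sum(p * row[j] for p, row in zip(probs, rows))
            if targets[j] > 0 and expected > 0:
                lam[j] += math.log(targets[j] / expected) / C
    return lam, model()

faces = [1, 2, 3, 4, 5, 6]
features = [
    lambda x: 1.0 if x % 2 == 0 else 0.0,  # face is even
    lambda x: 1.0 if x >= 5 else 0.0,      # face is 5 or 6
]
lam, probs = gis(faces, features, targets=[0.6, 0.5])
print([round(p, 4) for p in probs])
```

Every sweep recomputes the model expectation of each feature over all outcomes; in a language model that means summing over the vocabulary for every history, which is the cost the later training methods on this slide reduce.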

Motivation for Exploiting Semantic and Syntactic Dependencies

Analysts and financial officials in the former British colony consider the contract essential to the revival of the Hong Kong futures exchange.

- N-gram models only take local correlation between words into account.
- Several dependencies in natural language with longer and sentence-structure dependent spans may compensate for this deficiency.
- Need a model that exploits topic and syntax.

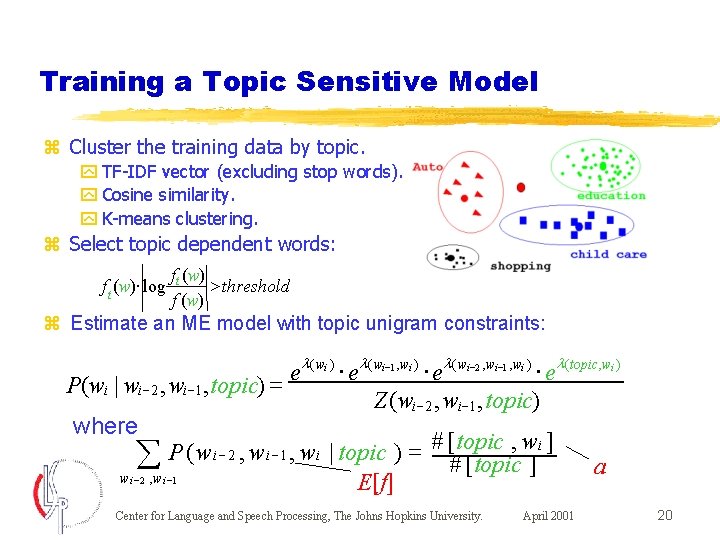

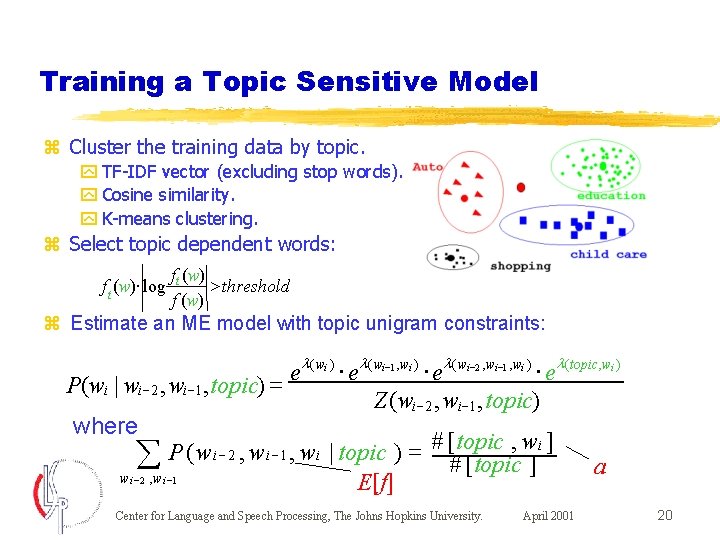

Training a Topic Sensitive Model

- Cluster the training data by topic.
  - TF-IDF vector (excluding stop words).
  - Cosine similarity.
  - K-means clustering.
- Select topic dependent words:

$$f_t(w) \times \log \frac{f_t(w)}{f(w)} > \text{threshold}$$

- Estimate an ME model with topic unigram constraints:

$$P(w_i \mid w_{i-2}, w_{i-1}, \text{topic}) = \frac{e^{\lambda(w_i)} \cdot e^{\lambda(w_{i-1}, w_i)} \cdot e^{\lambda(w_{i-2}, w_{i-1}, w_i)} \cdot e^{\lambda(\text{topic}, w_i)}}{Z(w_{i-2}, w_{i-1}, \text{topic})}$$

where

$$\sum_{w_{i-2}, w_{i-1}} P(w_{i-2}, w_{i-1}, w_i \mid \text{topic}) = \frac{\#[\text{topic}, w_i]}{\#[\text{topic}]}$$
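The word selection criterion above can be sketched directly. The slide does not define $f_t(w)$ and $f(w)$; this sketch assumes they are the relative frequencies of $w$ within the topic and within the whole corpus, and that the topic's text is part of the corpus. The function name, toy data and threshold value are hypothetical.

```python
import math
from collections import Counter

def topic_dependent_words(topic_tokens, all_tokens, threshold):
    """Select words w with f_t(w) * log(f_t(w) / f(w)) > threshold."""
    topic_counts, all_counts = Counter(topic_tokens), Counter(all_tokens)
    n_topic, n_all = len(topic_tokens), len(all_tokens)
    selected = set()
    for word, count in topic_counts.items():
        f_t = count / n_topic           # relative frequency within the topic
        f = all_counts[word] / n_all    # relative frequency in the whole corpus
        if f_t * math.log(f_t / f) > threshold:
            selected.add(word)
    return selected

finance = "stocks fell the market the stocks".split()
sports = "the game the team won the match".split()
print(topic_dependent_words(finance, finance + sports, threshold=0.2))
```

Words that are frequent everywhere, such as "the", score at or below zero because their topic frequency does not exceed their corpus frequency, so only words that are both frequent in the topic and unusually concentrated there pass the threshold.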

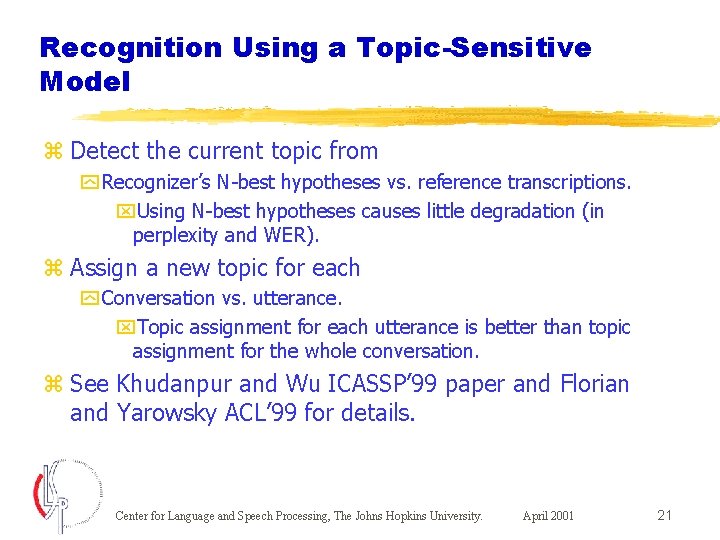

Recognition Using a Topic-Sensitive Model

- Detect the current topic from
  - Recognizer's N-best hypotheses vs. reference transcriptions.
    - Using N-best hypotheses causes little degradation (in perplexity and WER).
- Assign a new topic for each
  - Conversation vs. utterance.
    - Topic assignment for each utterance is better than topic assignment for the whole conversation.
- See Khudanpur and Wu ICASSP'99 paper and Florian and Yarowsky ACL'99 for details.
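One plausible way to carry out the topic assignment is a nearest-centroid rule under cosine similarity, mirroring the clustering used in training. The slide does not spell out the detection rule, so this is an assumption; the sketch also uses raw term counts instead of TF-IDF weights for brevity, and all names and data are hypothetical.

```python
import math
from collections import Counter

def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(value * b.get(key, 0.0) for key, value in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def assign_topic(tokens, centroids, stop_words=frozenset()):
    """Return the topic whose centroid is most similar to the given text.

    tokens: words of an utterance or conversation, e.g. from N-best hypotheses.
    centroids: dict mapping topic name to a sparse word-weight vector.
    """
    vector = Counter(t for t in tokens if t not in stop_words)
    return max(centroids, key=lambda topic: cosine(vector, centroids[topic]))

centroids = {
    "finance": {"stocks": 1.0, "market": 1.0},
    "sports": {"game": 1.0, "team": 1.0},
}
print(assign_topic("the market fell".split(), centroids, stop_words={"the"}))
```

Calling `assign_topic` once per utterance rather than once per conversation corresponds to the finer-grained assignment that the slide reports as the better choice.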

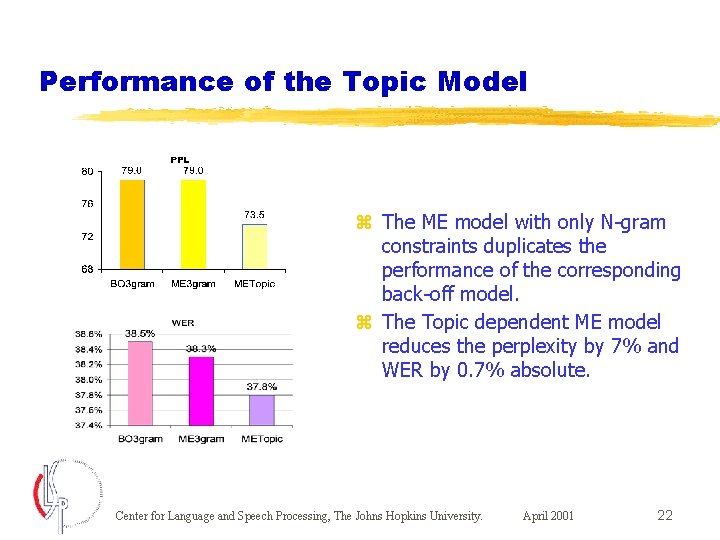

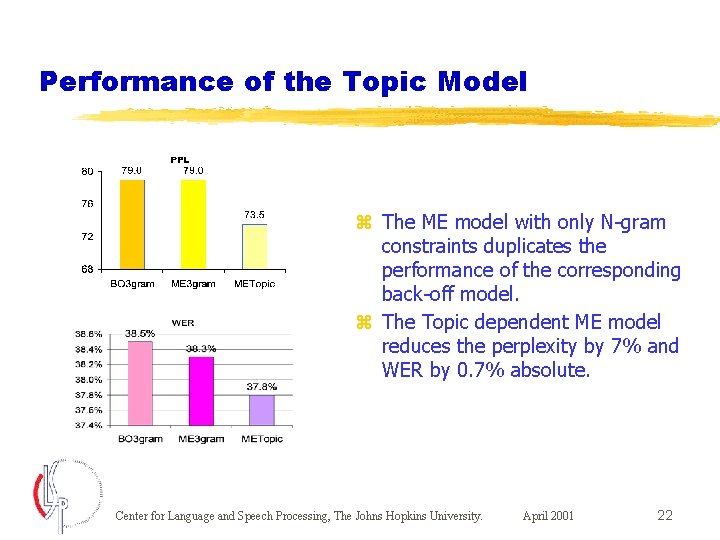

Performance of the Topic Model

- The ME model with only N-gram constraints duplicates the performance of the corresponding back-off model.
- The topic-dependent ME model reduces the perplexity by 7% and WER by 0.7% absolute.

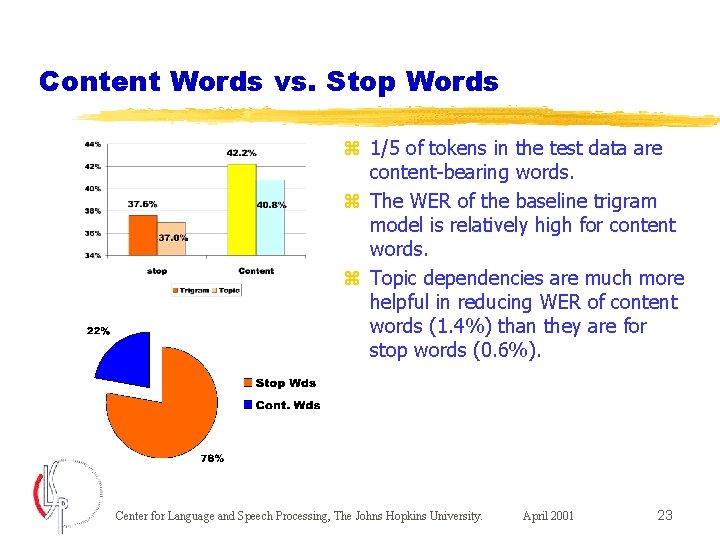

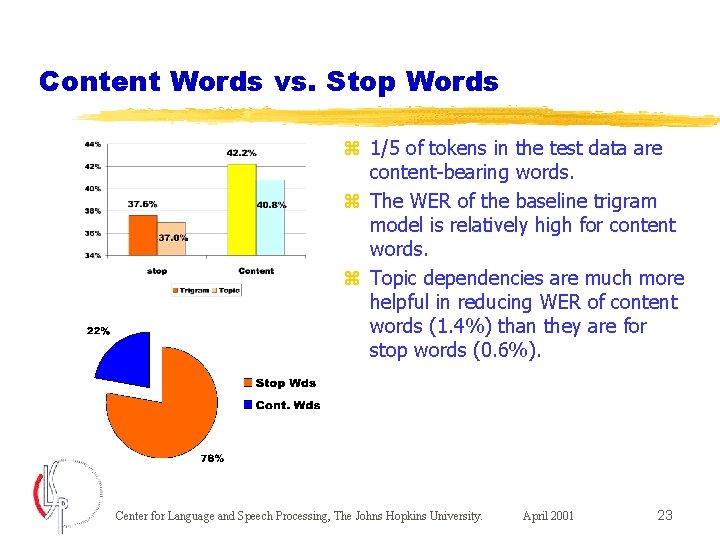

Content Words vs. Stop Words

- 1/5 of tokens in the test data are content-bearing words.
- The WER of the baseline trigram model is relatively high for content words.
- Topic dependencies are much more helpful in reducing WER of content words (1.4%) than they are for stop words (0.6%).

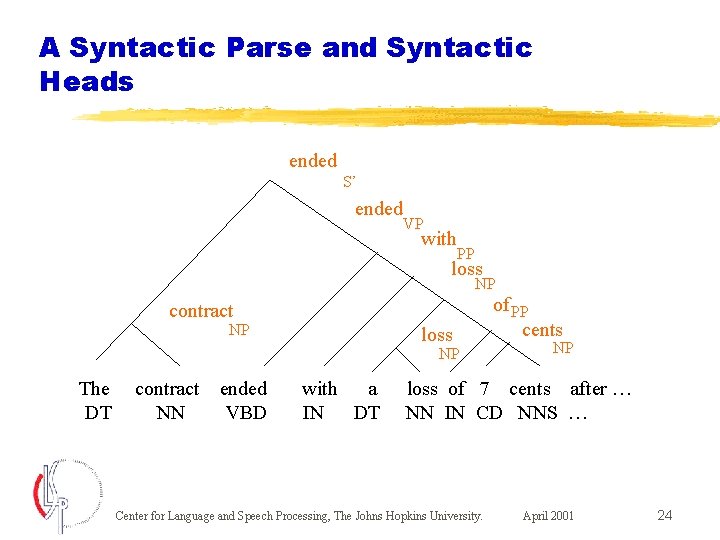

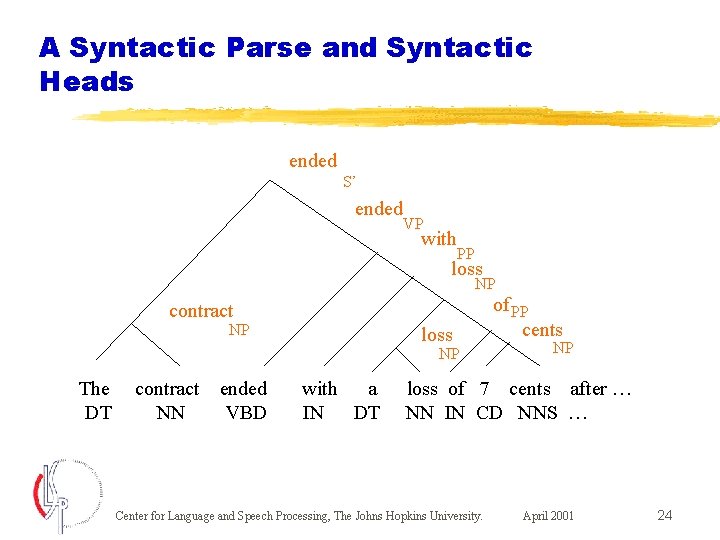

A Syntactic Parse and Syntactic Heads

[Figure: parse tree of "The/DT contract/NN ended/VBD with/IN a/DT loss/NN of/IN 7/CD cents/NNS after …", with each constituent labeled by its head word: NP (contract), NP (loss), NP (cents), PP (of), PP (with), VP (ended), S' (ended).]

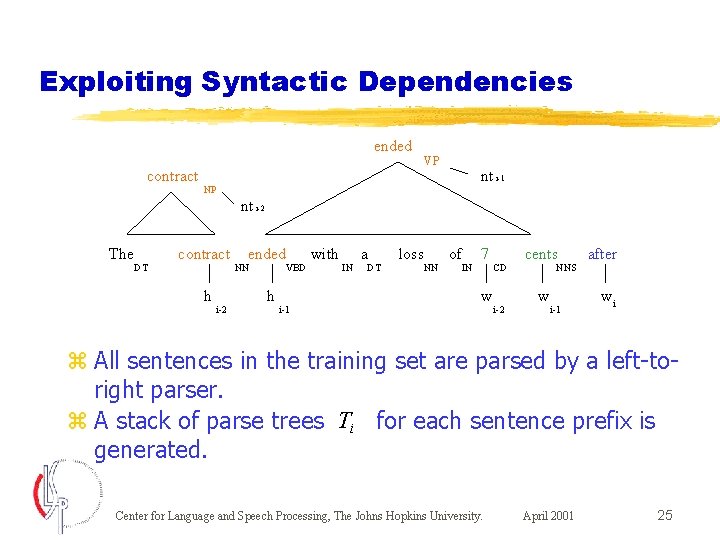

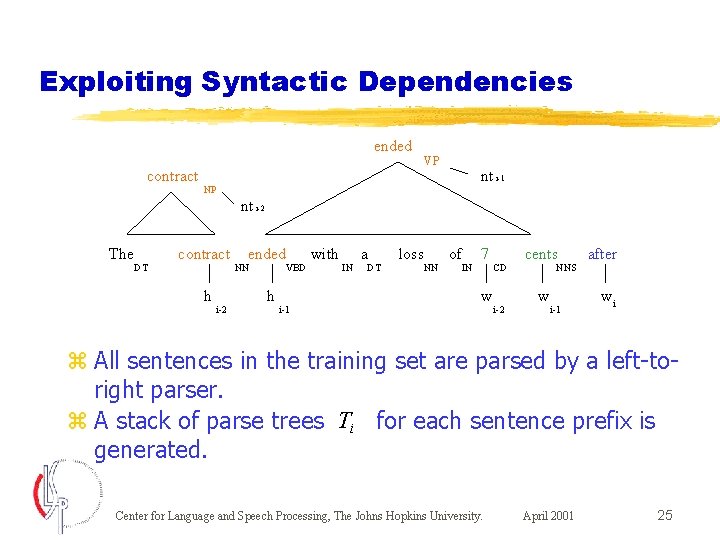

Exploiting Syntactic Dependencies

[Figure: partial parse of "The/DT contract/NN ended/VBD with/IN a/DT loss/NN of/IN 7/CD cents/NNS" used to predict the next word $w_i$ = after. The two preceding words are $w_{i-2}$ = 7 and $w_{i-1}$ = cents; the two preceding exposed heads are $h_{i-2}$ = contract (non-terminal $nt_{i-2}$ = NP) and $h_{i-1}$ = ended (non-terminal $nt_{i-1}$ = VP).]

- All sentences in the training set are parsed by a left-to-right parser.
- A stack of parse trees $T_i$ for each sentence prefix is generated.

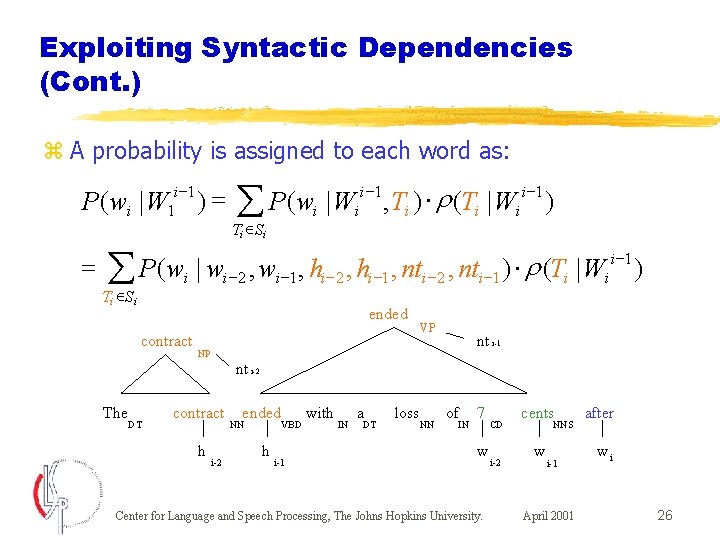

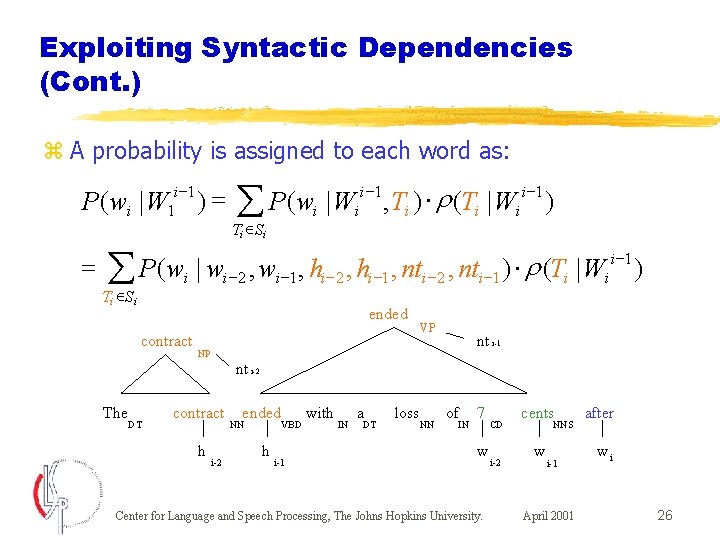

Exploiting Syntactic Dependencies (Cont.)

- A probability is assigned to each word as:

$$P(w_i \mid W_1^{i-1}) = \sum_{T_i \in S_i} P(w_i \mid W_1^{i-1}, T_i) \cdot \rho(T_i \mid W_1^{i-1}) = \sum_{T_i \in S_i} P(w_i \mid w_{i-2}, w_{i-1}, h_{i-2}, h_{i-1}, nt_{i-2}, nt_{i-1}) \cdot \rho(T_i \mid W_1^{i-1})$$

- It is assumed that most of the useful information is embedded in the 2 preceding words and 2 preceding heads.
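The sum over the parse stack amounts to a weighted mixture, which the following sketch makes concrete. The data layout (a list of context and weight pairs), the renormalization of the parse weights, and the toy conditional model are assumptions of this illustration, not details from the slides.

```python
def word_prob_over_parses(word, parses, cond_prob):
    """Mix per-parse word probabilities using the parse weights rho(T | prefix).

    parses: list of (context, weight) pairs, one per partial parse in the stack;
            weights are renormalized here so that they sum to one.
    cond_prob: callable (word, context) -> P(word | words, heads, non-terminals).
    """
    total = sum(weight for _, weight in parses)
    return sum((weight / total) * cond_prob(word, context) for context, weight in parses)

# Toy conditional model: "after" is likelier when the exposed head is "ended".
def toy_model(word, context):
    return 0.2 if context["heads"][-1] == "ended" else 0.04

stack = [
    ({"words": ("7", "cents"), "heads": ("contract", "ended")}, 0.75),
    ({"words": ("7", "cents"), "heads": ("loss", "cents")}, 0.25),
]
print(word_prob_over_parses("after", stack, toy_model))
```

Because each parse contributes in proportion to its weight, a single dominant parse makes the mixture behave almost like a model conditioned on that parse alone.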

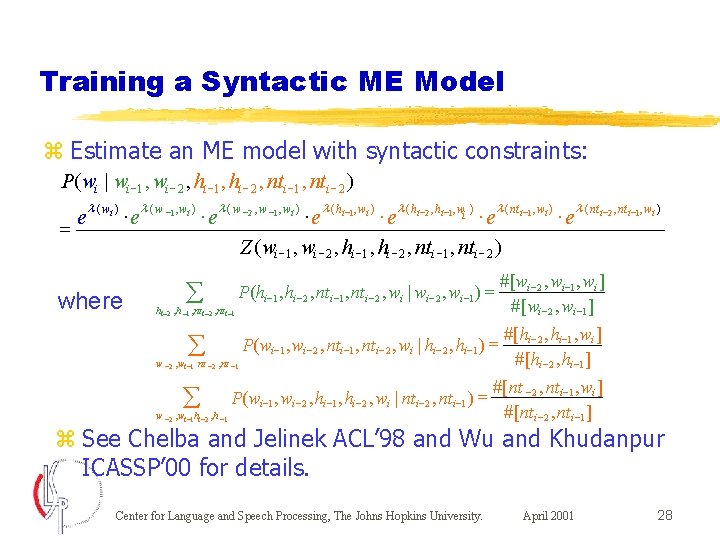

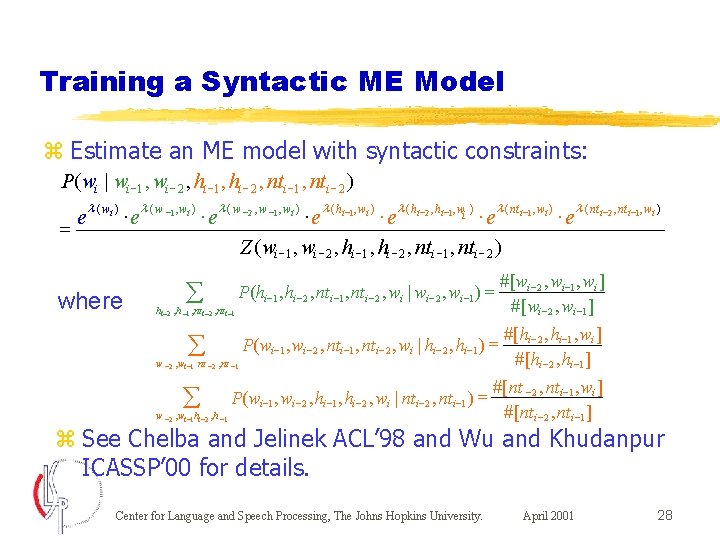

Training a Syntactic ME Model

- Estimate an ME model with syntactic constraints:

$$P(w_i \mid w_{i-1}, w_{i-2}, h_{i-1}, h_{i-2}, nt_{i-1}, nt_{i-2}) = \frac{e^{\lambda(w_i)} \cdot e^{\lambda(w_{i-1}, w_i)} \cdot e^{\lambda(w_{i-2}, w_{i-1}, w_i)} \cdot e^{\lambda(h_{i-2}, h_{i-1}, w_i)} \cdot e^{\lambda(nt_{i-2}, nt_{i-1}, w_i)}}{Z(w_{i-1}, w_{i-2}, h_{i-1}, h_{i-2}, nt_{i-1}, nt_{i-2})}$$

where

$$\sum_{h_{i-2}, h_{i-1}, nt_{i-2}, nt_{i-1}} P(h_{i-1}, h_{i-2}, nt_{i-1}, nt_{i-2}, w_i \mid w_{i-2}, w_{i-1}) = \frac{\#[w_{i-2}, w_{i-1}, w_i]}{\#[w_{i-2}, w_{i-1}]}$$

$$\sum_{w_{i-2}, w_{i-1}, nt_{i-2}, nt_{i-1}} P(w_{i-1}, w_{i-2}, nt_{i-1}, nt_{i-2}, w_i \mid h_{i-2}, h_{i-1}) = \frac{\#[h_{i-2}, h_{i-1}, w_i]}{\#[h_{i-2}, h_{i-1}]}$$

$$\sum_{w_{i-2}, w_{i-1}, h_{i-2}, h_{i-1}} P(w_{i-1}, w_{i-2}, h_{i-1}, h_{i-2}, w_i \mid nt_{i-2}, nt_{i-1}) = \frac{\#[nt_{i-2}, nt_{i-1}, w_i]}{\#[nt_{i-2}, nt_{i-1}]}$$

- See Chelba and Jelinek ACL'98 and Wu and Khudanpur ICASSP'00 for details.

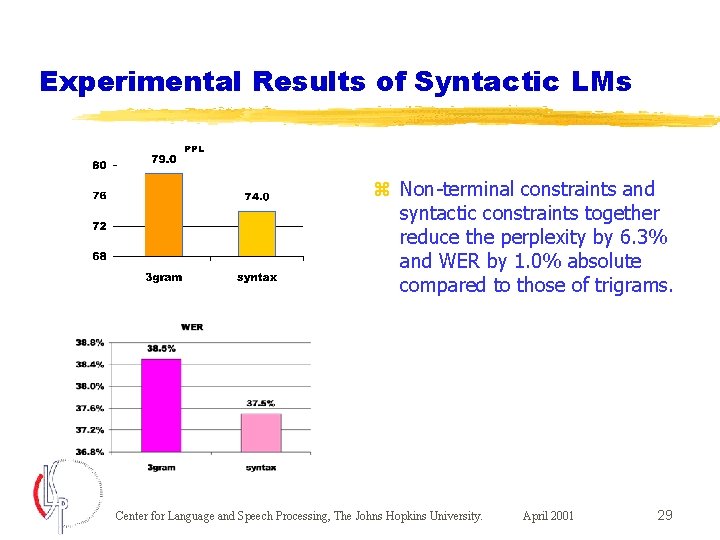

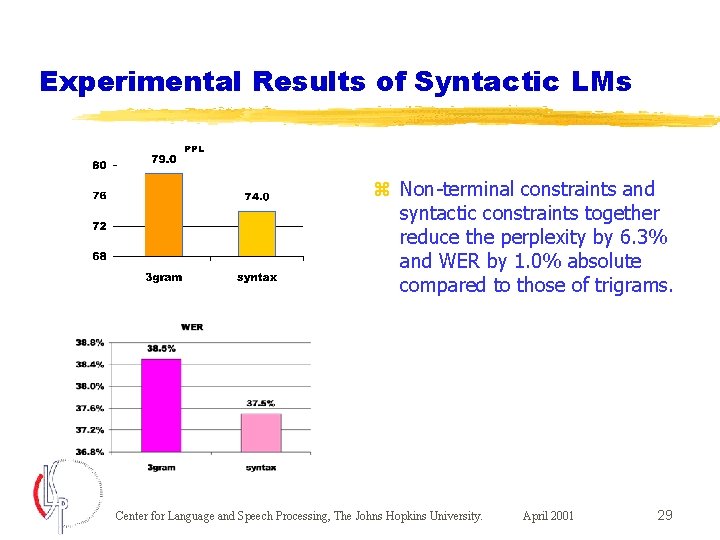

Experimental Results of Syntactic LMs

- Non-terminal constraints and syntactic constraints together reduce the perplexity by 6.3% and WER by 1.0% absolute compared to those of trigrams.

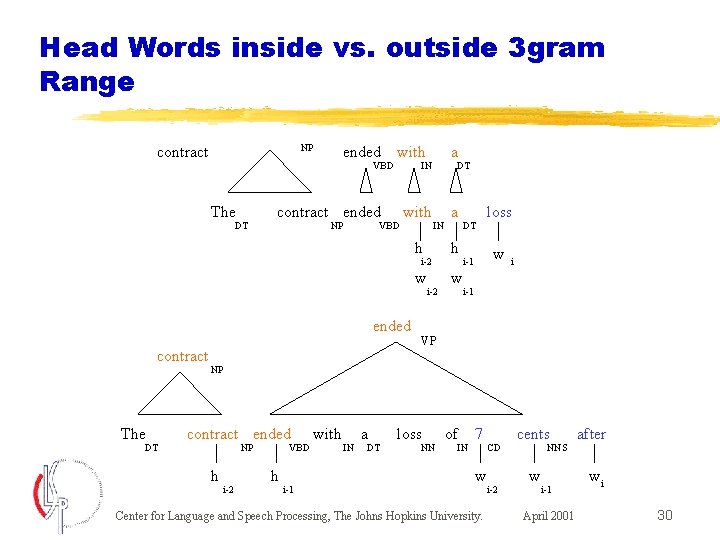

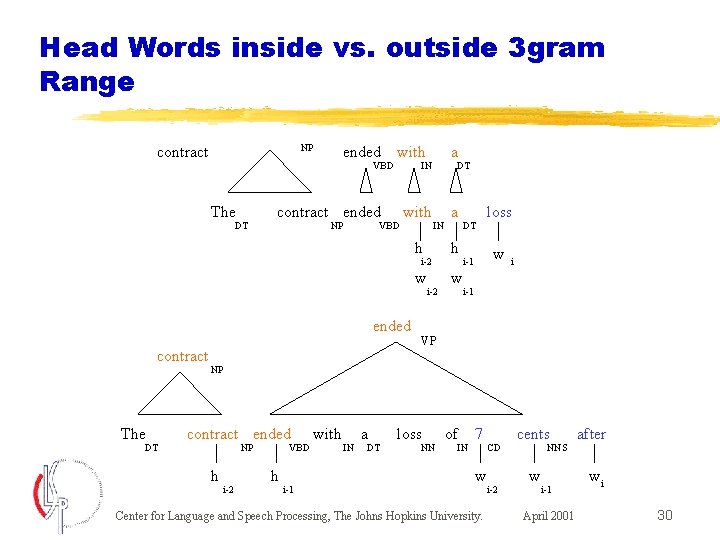

Head Words inside vs. outside 3-gram Range

[Figure: partial parses of "The contract ended with a loss of 7 cents after …" contrasting head words $h_{i-2}$, $h_{i-1}$ that fall inside the trigram window with head words that fall outside it, e.g. $h_{i-2}$ = contract and $h_{i-1}$ = ended when predicting $w_i$ = after from $w_{i-2}$ = 7 and $w_{i-1}$ = cents.]

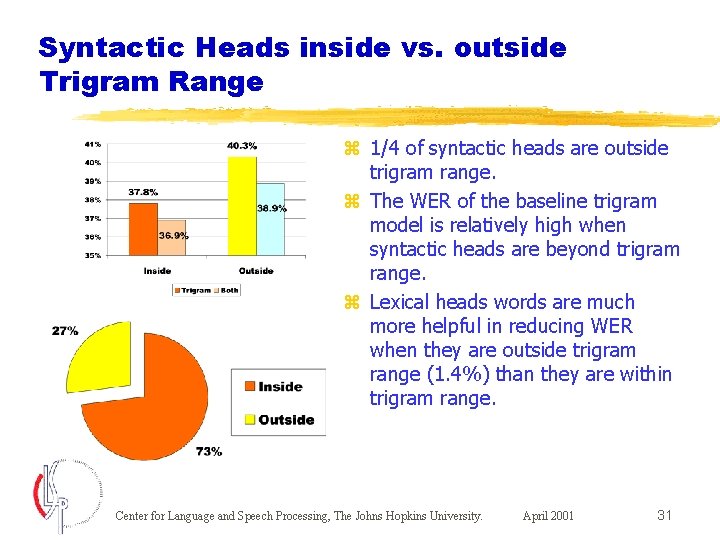

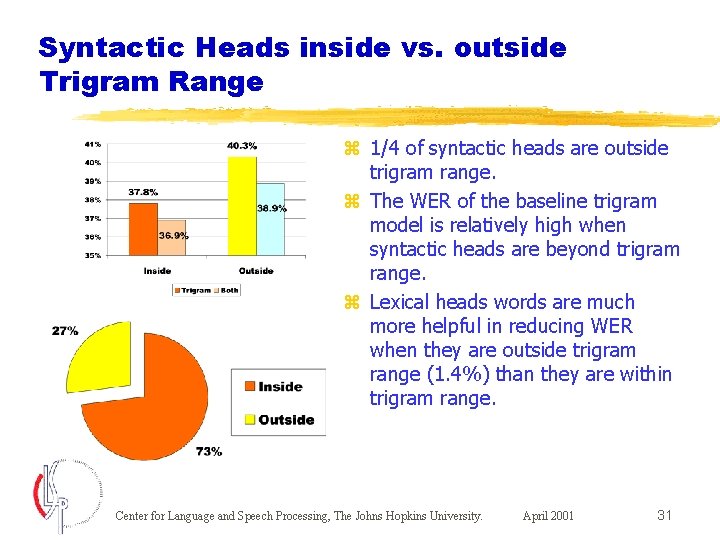

Syntactic Heads inside vs. outside Trigram Range

- 1/4 of syntactic heads are outside trigram range.
- The WER of the baseline trigram model is relatively high when syntactic heads are beyond trigram range.
- Lexical head words are much more helpful in reducing WER when they are outside trigram range (1.4%) than when they are within trigram range.

Combining Topic, Syntactic and N-gram Dependencies in an ME Framework

- Probabilities are assigned as:

$$P(w_i \mid W_1^{i-1}) = \sum_{T_i \in S_i} P(w_i \mid w_{i-2}, w_{i-1}, h_{i-2}, h_{i-1}, nt_{i-2}, nt_{i-1}, \text{topic}) \cdot \rho(T_i \mid W_1^{i-1})$$

- The ME composite model is trained:

$$P(w_i \mid w_{i-2}, w_{i-1}, h_{i-2}, h_{i-1}, nt_{i-2}, nt_{i-1}, \text{topic}) = \frac{e^{\lambda(w_i)} \cdot e^{\lambda(w_{i-1}, w_i)} \cdot e^{\lambda(w_{i-2}, w_{i-1}, w_i)} \cdot e^{\lambda(h_{i-2}, h_{i-1}, w_i)} \cdot e^{\lambda(nt_{i-2}, nt_{i-1}, w_i)} \cdot e^{\lambda(\text{topic}, w_i)}}{Z(w_{i-2}, w_{i-1}, h_{i-2}, h_{i-1}, nt_{i-2}, nt_{i-1}, \text{topic})}$$

- Only marginal constraints are necessary.

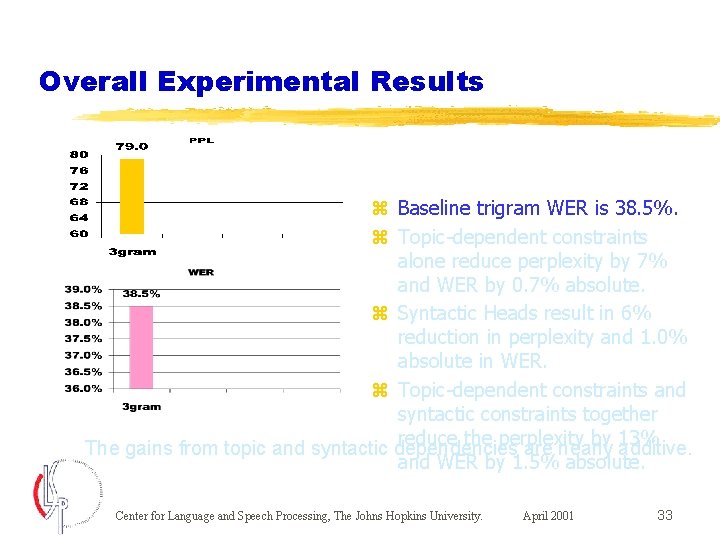

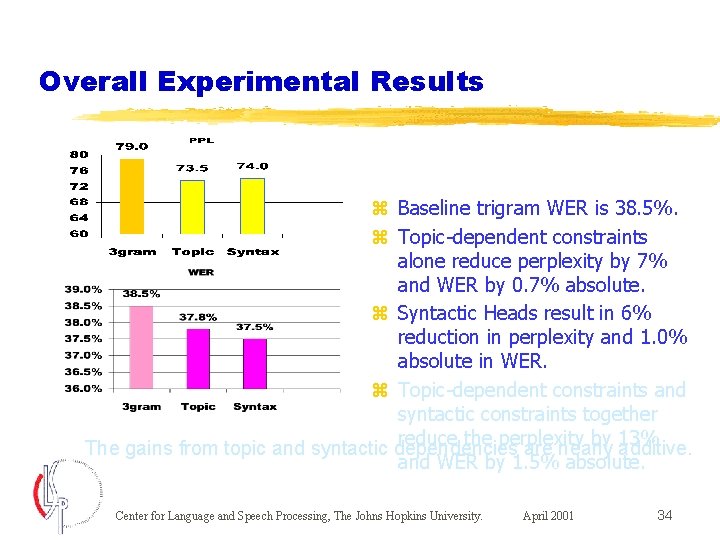

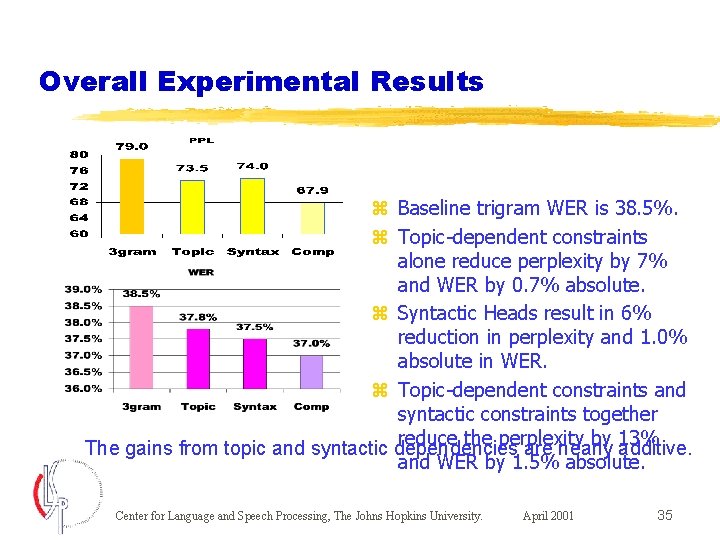

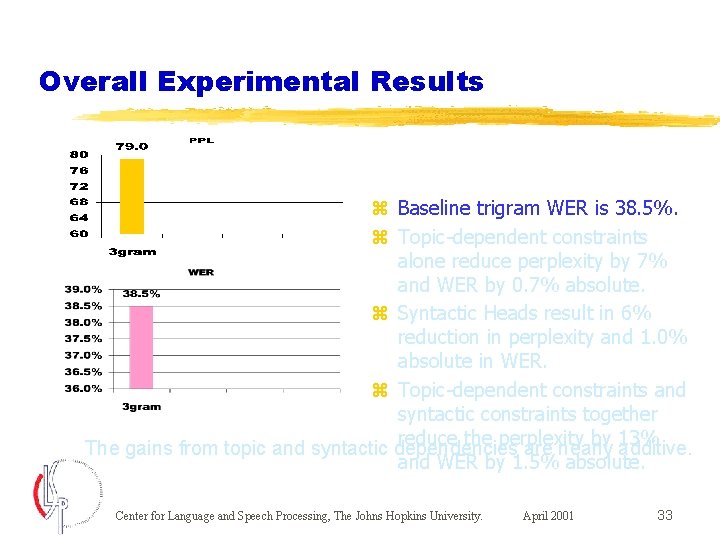

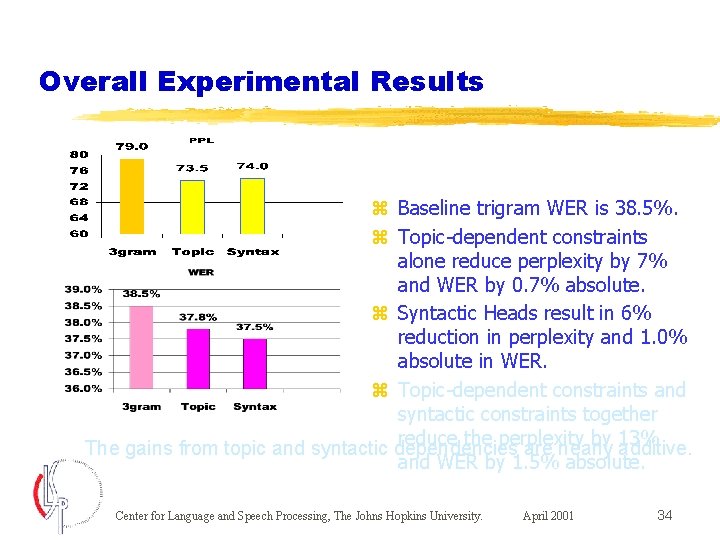

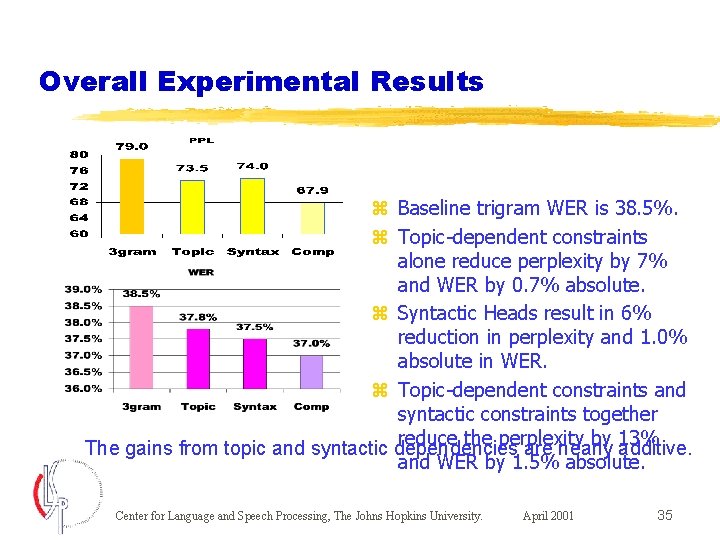

Overall Experimental Results

- Baseline trigram WER is 38.5%.
- Topic-dependent constraints alone reduce perplexity by 7% and WER by 0.7% absolute.
- Syntactic heads result in a 6% reduction in perplexity and 1.0% absolute in WER.
- Topic-dependent constraints and syntactic constraints together reduce the perplexity by 13% and WER by 1.5% absolute.

The gains from topic and syntactic dependencies are nearly additive.

Content Words vs. Stop Words

- The topic sensitive model reduces WER by 1.4% on content words, which is twice as much as the overall improvement (0.7%).
- The syntactic model improves WER on both content words and stop words evenly.
- The composite model has the advantage of both models and reduces WER on content words more significantly (2.1%).

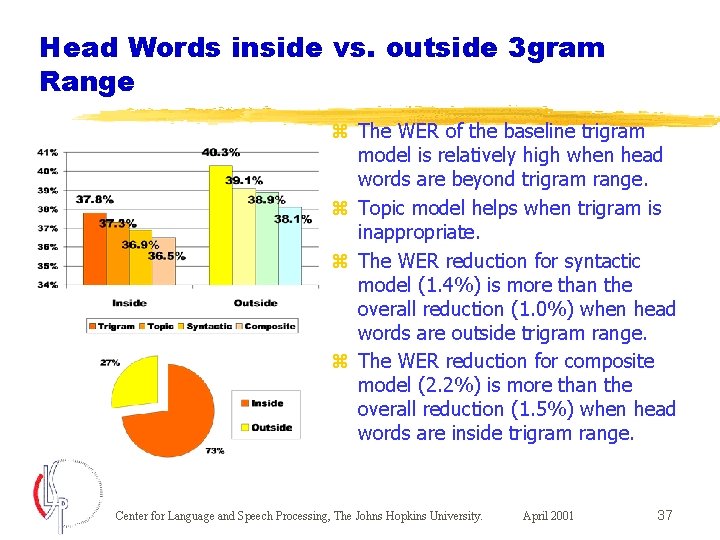

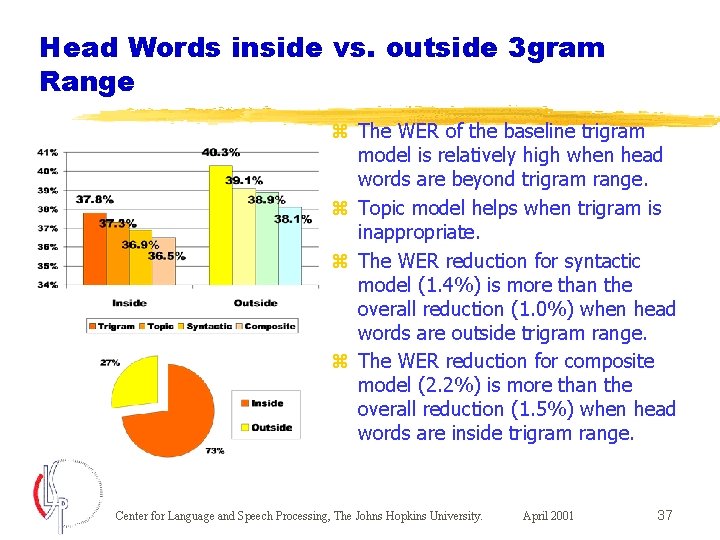

Head Words inside vs. outside 3-gram Range

- The WER of the baseline trigram model is relatively high when head words are beyond trigram range.
- The topic model helps when the trigram is inappropriate.
- The WER reduction for the syntactic model (1.4%) is more than the overall reduction (1.0%) when head words are outside trigram range.
- The WER reduction for the composite model (2.2%) is more than the overall reduction (1.5%) when head words are inside trigram range.

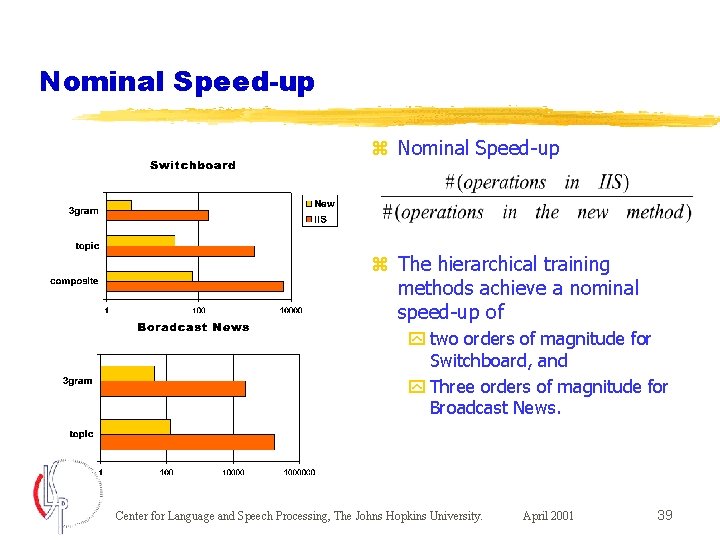

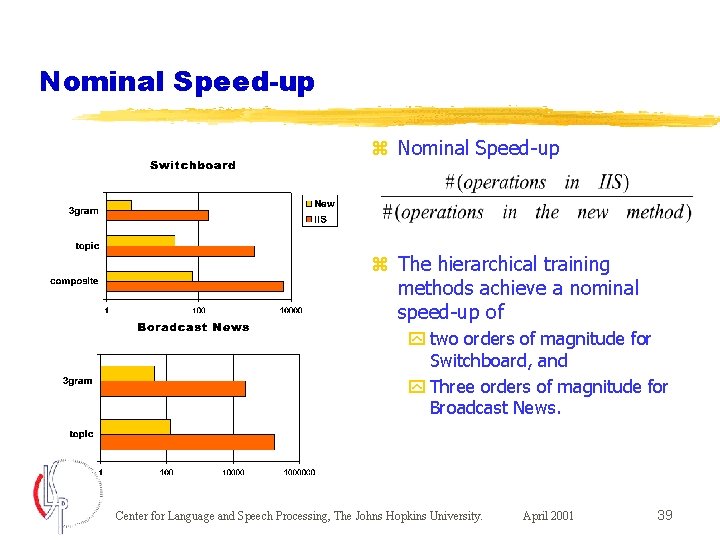

Nominal Speed-up

- The hierarchical training methods achieve a nominal speed-up of
  - two orders of magnitude for Switchboard, and
  - three orders of magnitude for Broadcast News.

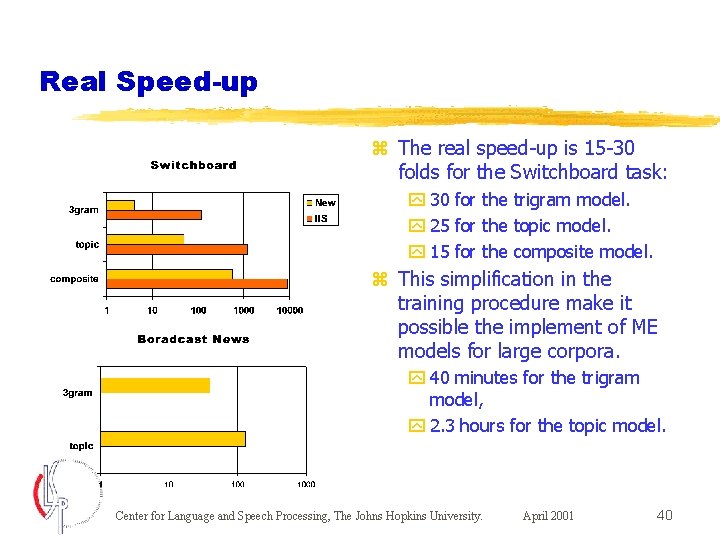

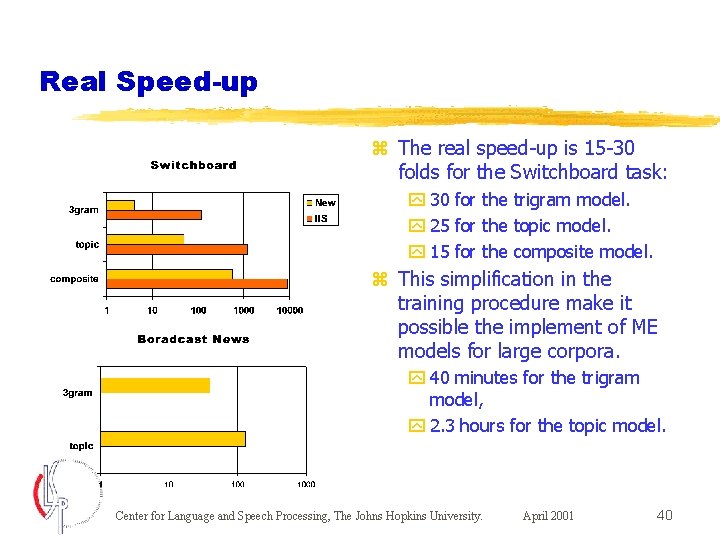

Real Speed-up

- The real speed-up is 15- to 30-fold for the Switchboard task:
  - 30 for the trigram model.
  - 25 for the topic model.
  - 15 for the composite model.
- This simplification in the training procedure makes it possible to implement ME models for large corpora.
  - 40 minutes for the trigram model,
  - 2.3 hours for the topic model.

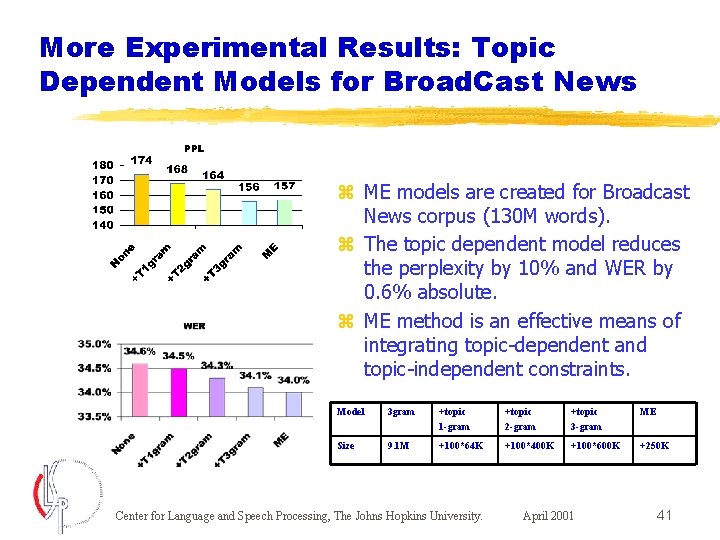

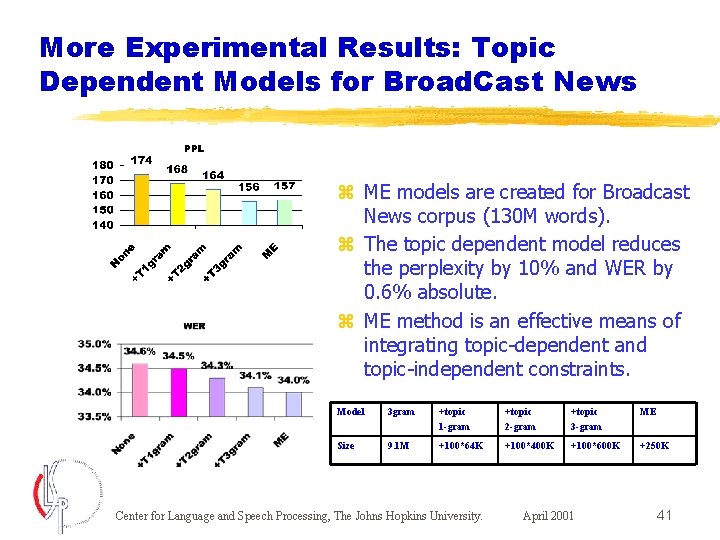

More Experimental Results: Topic Dependent Models for Broadcast News

- ME models are created for the Broadcast News corpus (130M words).
- The topic dependent model reduces the perplexity by 10% and WER by 0.6% absolute.
- The ME method is an effective means of integrating topic-dependent and topic-independent constraints.

| Model | Size |
| --- | --- |
| 3-gram | 9.1M |
| +topic 1-gram | +100*64K |
| +topic 2-gram | +100*400K |
| +topic 3-gram | +100*600K |
| ME | +250K |

Concluding Remarks

- Non-local and syntactic dependencies have been successfully integrated with N-grams. Their benefits have been demonstrated in the speech recognition application.
  - Switchboard: 13% reduction in PPL, 1.5% (absolute) in WER. (Eurospeech 99 best student paper award.)
  - Broadcast News: 10% reduction in PPL, 0.6% in WER. (Topic constraints only; syntactic constraints in progress.)
- The computational requirements for the estimation and use of maximum entropy techniques have been vastly simplified for a large class of ME models.
  - Nominal speedup: 100- to 1000-fold.
  - "Real" speedup: 15+ fold.
- A general purpose toolkit for ME models is being developed for public release.

Acknowledgement

- I thank my advisor Sanjeev Khudanpur, who led me to this field and always gave me wise advice and help when necessary, and David Yarowsky, who gave generous help during my Ph.D. program.
- I thank Radu Florian and David Yarowsky for their help on topic detection and data clustering, Ciprian Chelba and Frederick Jelinek for providing the syntactic model (parser) for the SWBD experimental results reported here, and Shankar Kumar and Vlasios Doumpiotis for their help on generating N-best lists for the BN experiments.
- I thank all the people in the NLP lab and CLSP for their assistance in my thesis work.
- This work is supported by the National Science Foundation through a STIMULATE grant (IRI-9618874).