
Maximum Entropy Language Modeling with Syntactic, Semantic and Collocational Dependencies

Jun Wu and Sanjeev Khudanpur
Center for Language and Speech Processing, The Johns Hopkins University, Baltimore, MD 21218
August 2000
NSF STIMULATE Grant No. IRI-9618874

STIMULATE Team in CLSP

- Faculty:
    - Frederick Jelinek: syntactic language modeling
    - Eric Brill: consensus lattice rescoring
    - Sanjeev Khudanpur: maximum entropy language modeling
    - David Yarowsky: topic/genre dependent language modeling
- Students:
    - Ciprian Chelba: syntactic language modeling
    - Radu Florian: topic/genre dependent language modeling
    - Lidia Mangu: consensus lattice rescoring
    - Jun Wu: maximum entropy language modeling
    - Peng Xu: syntactic language modeling

Outline

- The maximum entropy principle
- Semantic (topic) dependencies
- Syntactic dependencies
- ME models with topic and syntactic dependencies
- Conclusion and future work

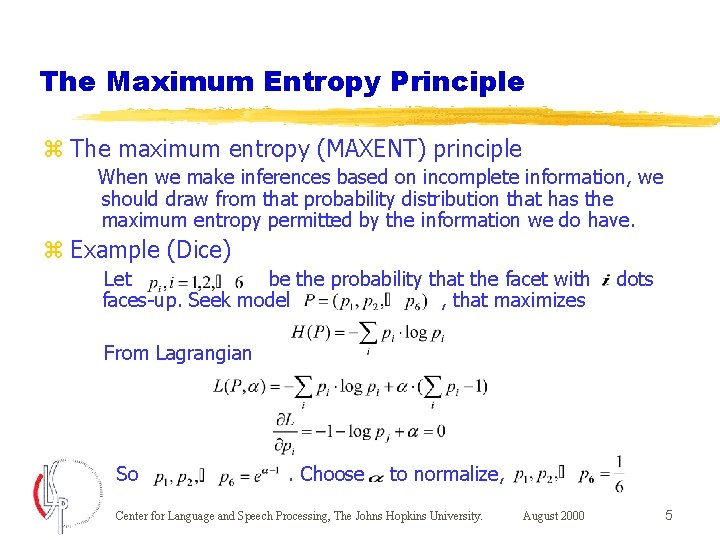

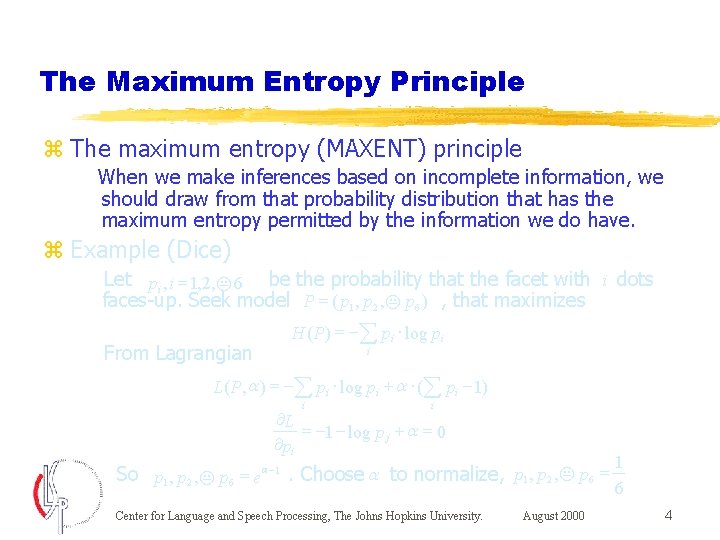

The Maximum Entropy Principle

- The maximum entropy (MAXENT) principle: when we make inferences based on incomplete information, we should draw them from the probability distribution that has the maximum entropy permitted by the information we do have.
- Example (dice): let $p_i$, $i = 1, 2, \ldots, 6$, be the probability that the face with $i$ dots lands face-up. Seek the model $P = (p_1, p_2, \ldots, p_6)$ that maximizes

$$H(P) = -\sum_i p_i \log p_i .$$

  From the Lagrangian

$$L(P, \alpha) = -\sum_i p_i \log p_i + \alpha \Big( \sum_i p_i - 1 \Big), \qquad \frac{\partial L}{\partial p_j} = -1 - \log p_j + \alpha = 0 ,$$

  so $p_1 = p_2 = \cdots = p_6 = e^{\alpha - 1}$. Choosing $\alpha$ to normalize gives $p_1 = p_2 = \cdots = p_6 = \frac{1}{6}$.
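As a quick numerical check of the dice example, the sketch below compares the entropy of the uniform distribution with two other candidates. The "loaded" and "certain" distributions are hypothetical illustrations, not taken from the slides.

```python
import math

def entropy(p):
    """Shannon entropy in nats; zero-probability outcomes contribute nothing."""
    return -sum(x * math.log(x) for x in p if x > 0)

uniform = [1 / 6] * 6                        # the MAXENT solution derived above
loaded = [0.5, 0.1, 0.1, 0.1, 0.1, 0.1]      # hypothetical loaded die
certain = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0]     # hypothetical die that always shows 1

for name, p in [("uniform", uniform), ("loaded", loaded), ("certain", certain)]:
    print(f"{name:8s} H = {entropy(p):.4f} nats")
```

With no constraint other than normalization, the uniform distribution attains the largest value, $\log 6$.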

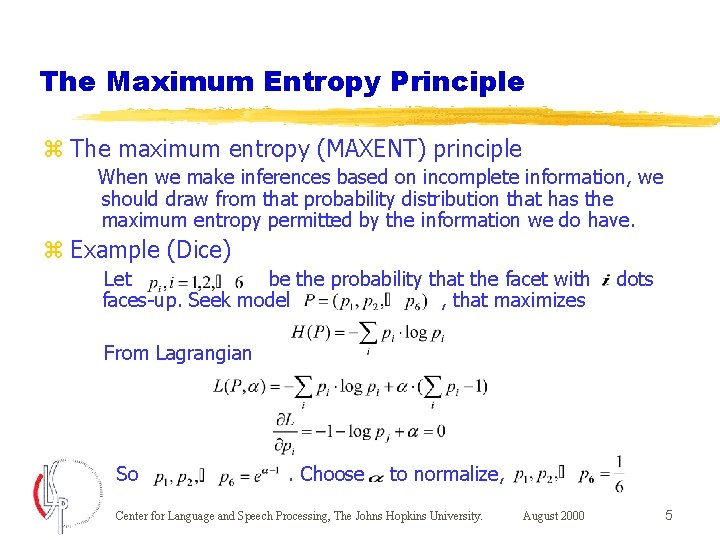

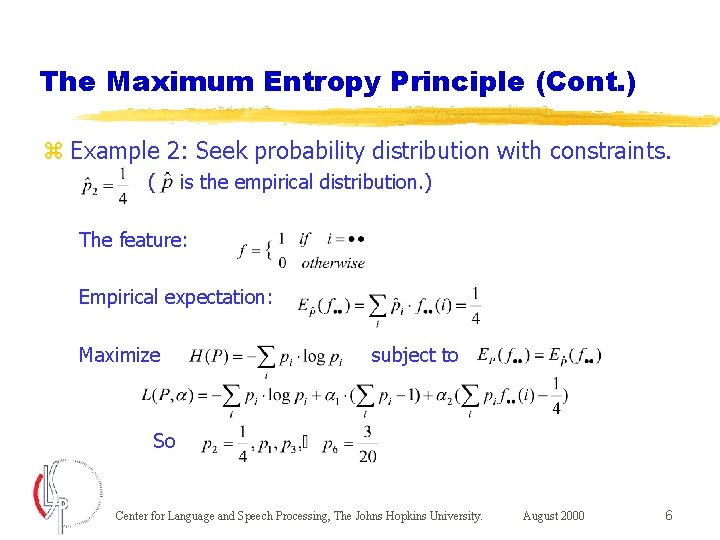

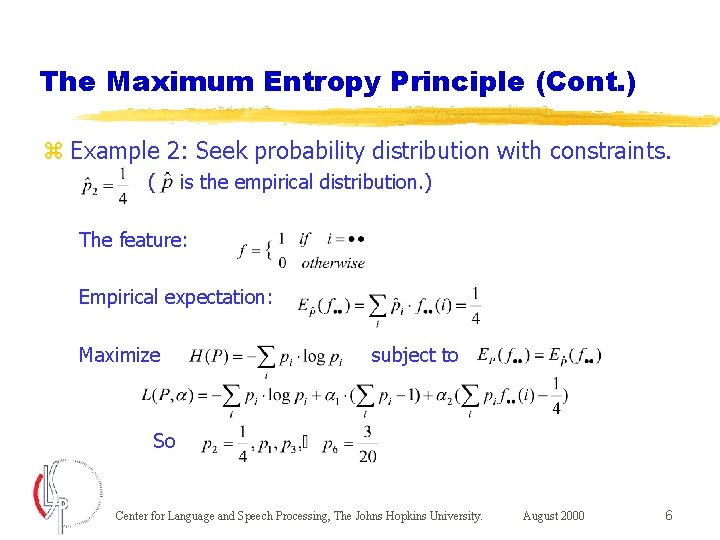

The Maximum Entropy Principle (Cont.)

- Example 2: seek a probability distribution subject to constraints taken from the empirical distribution. A feature is defined and its empirical expectation is computed; the entropy is then maximized subject to the resulting constraint. (The formulas for this example are not preserved in this transcript.)

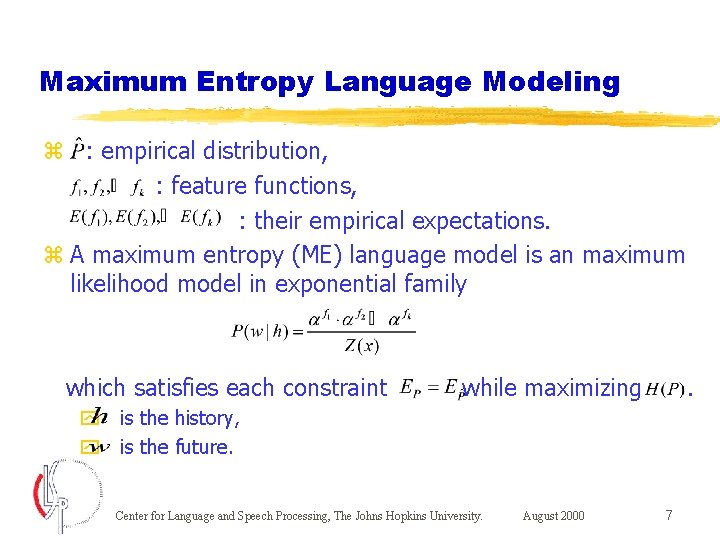

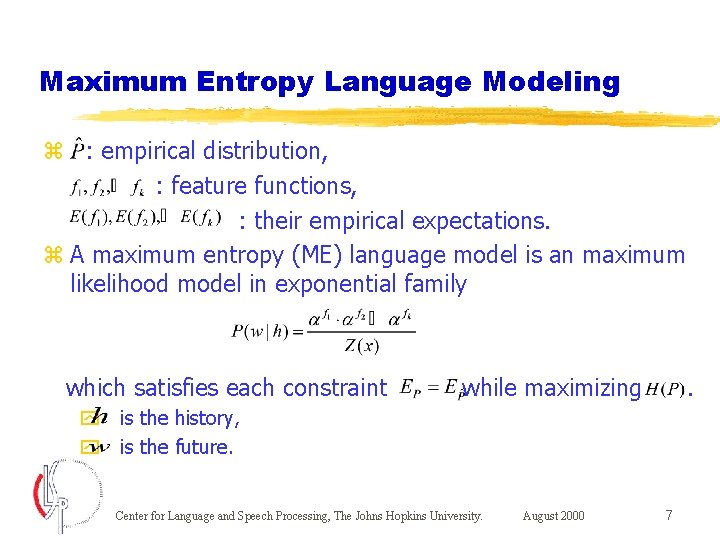

Maximum Entropy Language Modeling

- Ingredients: an empirical distribution, a set of feature functions, and the empirical expectations of those features.
- A maximum entropy (ME) language model is the maximum likelihood model in the exponential family; it satisfies each expectation constraint while maximizing the entropy.
    - The conditioning variable is the history.
    - The predicted variable is the future.
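For reference, the usual textbook form of a conditional ME model can be written as follows. The symbols are assumptions chosen for this note, since the slide's own notation is not preserved: $x$ is the history, $y$ the future, $f_k$ the feature functions, $\lambda_k$ their weights, and $\tilde{P}$ the empirical distribution.

```latex
\begin{align*}
P_\Lambda(y \mid x) &= \frac{1}{Z_\Lambda(x)} \exp\Big( \sum_k \lambda_k f_k(x, y) \Big),
\qquad
Z_\Lambda(x) = \sum_{y'} \exp\Big( \sum_k \lambda_k f_k(x, y') \Big), \\
\sum_{x, y} \tilde{P}(x)\, P_\Lambda(y \mid x)\, f_k(x, y) &= \sum_{x, y} \tilde{P}(x, y)\, f_k(x, y)
\quad \text{for every } k .
\end{align*}
```

The second line is the constraint set: the model's expectation of each feature matches its empirical expectation.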

Advantages and Disadvantage of Maximum Entropy Language Modeling

- Advantages:
    - Creating a “smooth” model that satisfies all empirical constraints.
    - Incorporating various sources of information in a unified language model.
- Disadvantage:
    - Time- and space-consuming.

Motivation for Exploiting Semantic and Syntactic Dependencies

Example sentence: "Analysts and financial officials in the former British colony consider the contract essential to the revival of the Hong Kong futures exchange."

- N-gram models take only local correlations between words into account.
- Several dependencies in natural language with longer, sentence-structure-dependent spans may compensate for this deficiency.
- We need a model that exploits topic and syntax.

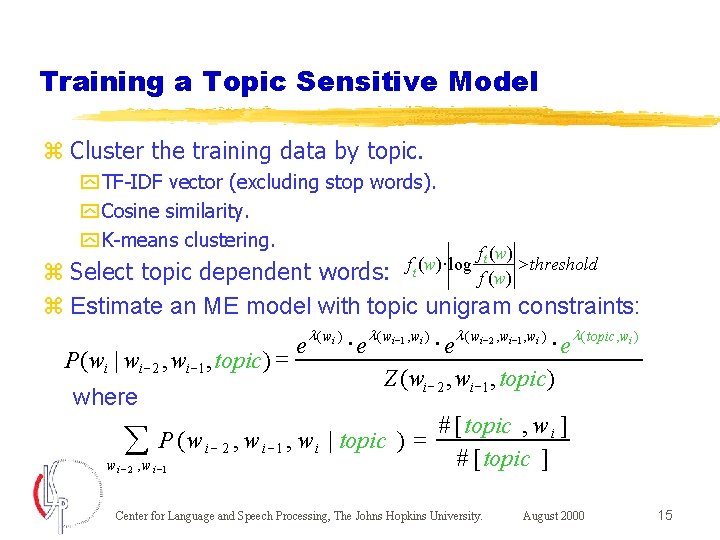

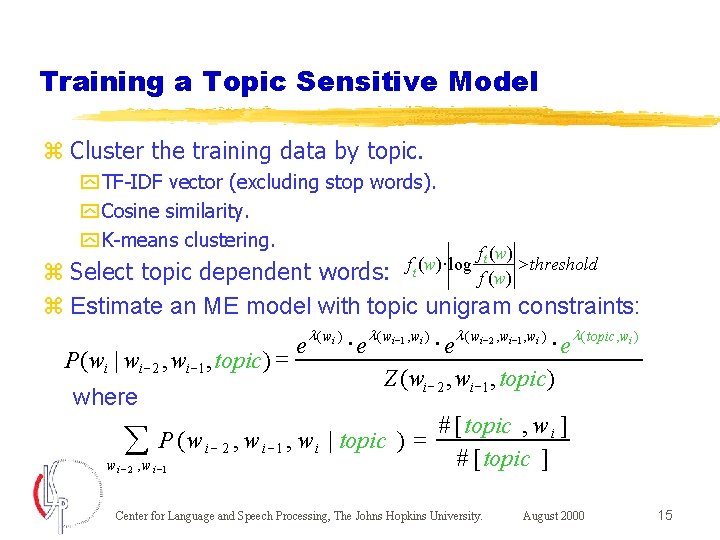

Training a Topic Sensitive Model

- Cluster the training data by topic.
    - TF-IDF vector (excluding stop words).
    - Cosine similarity.
    - K-means clustering.
- Select topic-dependent words:

$$f_t(w) \cdot \log \frac{f_t(w)}{f(w)} > \text{threshold}$$

- Estimate an ME model with topic unigram constraints:

$$P(w_i \mid w_{i-2}, w_{i-1}, \text{topic}) = \frac{e^{\lambda(w_i)} \cdot e^{\lambda(w_{i-1}, w_i)} \cdot e^{\lambda(w_{i-2}, w_{i-1}, w_i)} \cdot e^{\lambda(\text{topic}, w_i)}}{Z(w_{i-2}, w_{i-1}, \text{topic})}$$

  where

$$\sum_{w_{i-2}, w_{i-1}} P(w_{i-2}, w_{i-1}, w_i \mid \text{topic}) = \frac{\#[\text{topic}, w_i]}{\#[\text{topic}]}$$
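The word-selection criterion can be sketched in a few lines. This is a minimal illustration that assumes $f_t(w)$ is the relative frequency of $w$ within topic $t$ and $f(w)$ its relative frequency in the whole corpus; the slides do not spell out these definitions, and the toy corpus and threshold are hypothetical.

```python
import math
from collections import Counter

def topic_words(topic_docs, threshold):
    """Select words w with f_t(w) * log(f_t(w) / f(w)) > threshold.

    topic_docs maps a topic label to its list of tokens (assumed layout).
    Returns a dict mapping each topic to its set of selected words.
    """
    per_topic = {t: Counter(tokens) for t, tokens in topic_docs.items()}
    overall = Counter()
    for counts in per_topic.values():
        overall.update(counts)
    total = sum(overall.values())

    selected = {}
    for t, counts in per_topic.items():
        n_t = sum(counts.values())
        selected[t] = {
            w for w, c in counts.items()
            if (c / n_t) * math.log((c / n_t) / (overall[w] / total)) > threshold
        }
    return selected

# Hypothetical two-topic toy corpus.
corpus = {
    "finance": "the stock market fell the market".split(),
    "sports": "the team won the game the team".split(),
}
sel = topic_words(corpus, threshold=0.1)
print(sel)
```

A frequent word such as "the" scores low because its within-topic frequency is close to its overall frequency, so the log ratio is near zero or negative.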

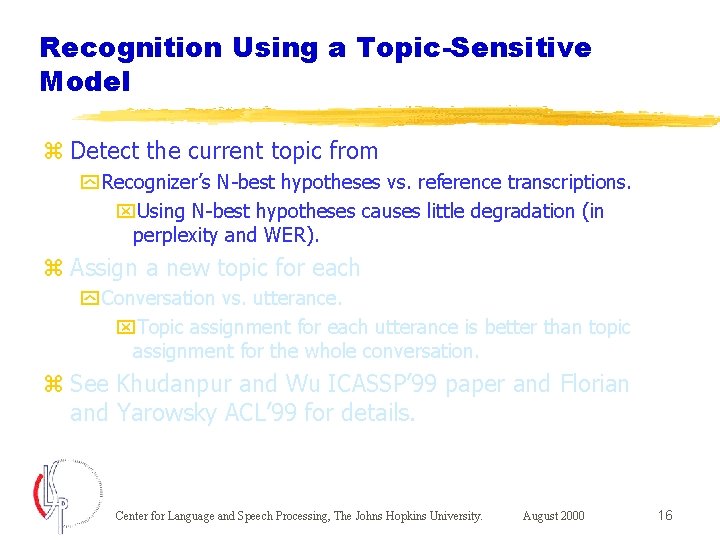

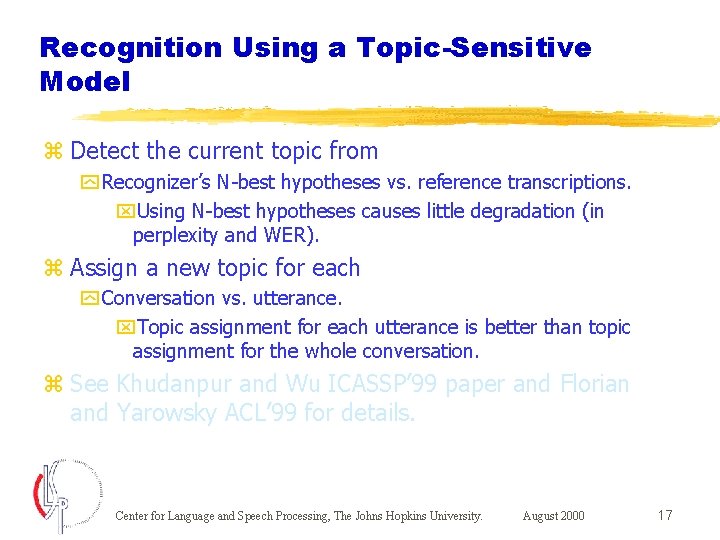

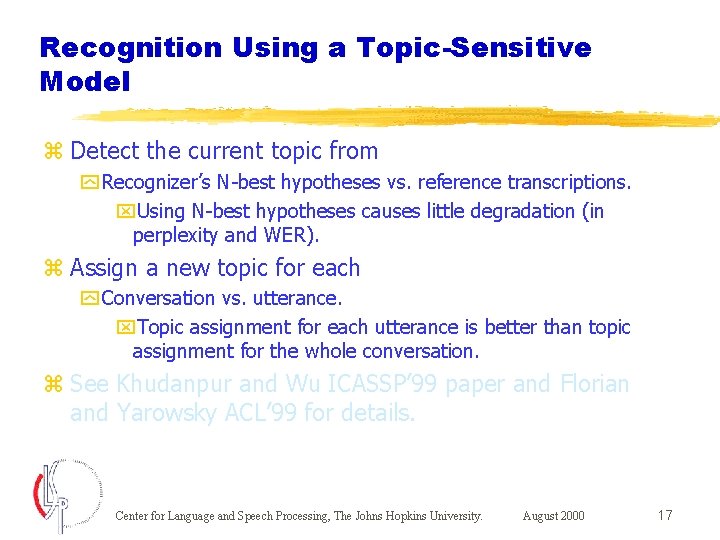

Recognition Using a Topic-Sensitive Model

- Detect the current topic from
    - the recognizer's N-best hypotheses vs. reference transcriptions.
        - Using N-best hypotheses causes little degradation (in perplexity and WER).
- Assign a new topic for each
    - conversation vs. utterance.
        - Topic assignment for each utterance is better than topic assignment for the whole conversation.
- See the Khudanpur and Wu ICASSP'99 paper and Florian and Yarowsky ACL'99 for details.
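A rough sketch of test-time topic assignment, assuming topics are represented by centroid vectors and compared with cosine similarity as in the clustering step. It uses raw word counts instead of TF-IDF weights for brevity, and the `min_sim` fallback to a topic-independent model is a hypothetical mechanism, not the authors' stated procedure.

```python
import math
from collections import Counter

def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(a[w] * b.get(w, 0.0) for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def assign_topic(tokens, centroids, min_sim=0.2):
    """Return the most similar topic, or None to fall back to a
    topic-independent model when no centroid exceeds min_sim (assumption)."""
    vec = Counter(tokens)
    best, best_sim = None, min_sim
    for topic, centroid in centroids.items():
        sim = cosine(vec, centroid)
        if sim > best_sim:
            best, best_sim = topic, sim
    return best

# Hypothetical centroids; in practice they would come from the clustering step.
centroids = {
    "finance": {"stock": 1.0, "market": 1.0},
    "sports": {"team": 1.0, "game": 1.0},
}
print(assign_topic("stock market today".split(), centroids))
print(assign_topic("uh huh yeah".split(), centroids))
```

The same function can be called once per conversation or once per utterance, which is the contrast the slide draws.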

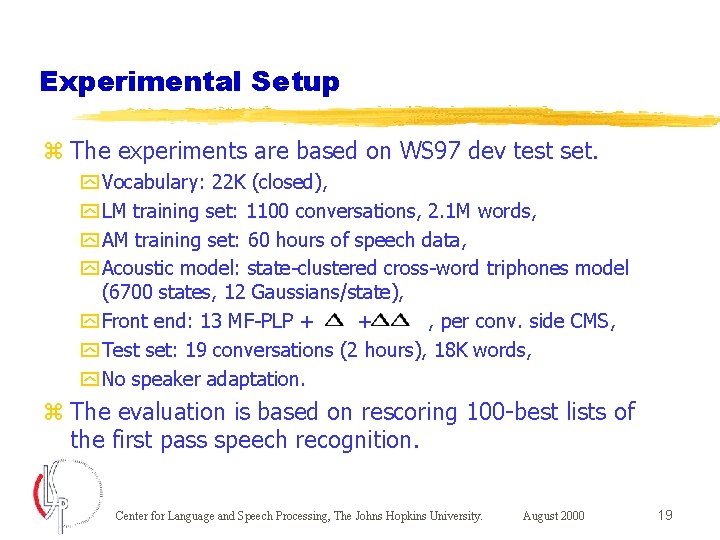

Experimental Setup

- The experiments are based on the WS97 dev test set.
    - Vocabulary: 22K (closed),
    - LM training set: 1100 conversations, 2.1M words,
    - AM training set: 60 hours of speech data,
    - Acoustic model: state-clustered cross-word triphone model (6700 states, 12 Gaussians/state),
    - Front end: 13 MF-PLP + Δ + ΔΔ, per-conversation-side CMS,
    - Test set: 19 conversations (2 hours), 18K words,
    - No speaker adaptation.
- The evaluation is based on rescoring 100-best lists from the first-pass speech recognition.
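N-best rescoring can be sketched as follows: each first-pass hypothesis keeps its acoustic score, receives a new language model score, and the best combined hypothesis is selected. The `lm_weight` and `word_penalty` parameters and the toy scores are hypothetical; the slides do not give the values used.

```python
def rescore_nbest(nbest, lm_logprob, lm_weight=1.0, word_penalty=0.0):
    """Pick the hypothesis with the best combined score.

    nbest: list of (words, acoustic_logprob) pairs from the first pass.
    lm_logprob: function mapping a word list to a log probability.
    """
    def combined(item):
        words, acoustic = item
        return acoustic + lm_weight * lm_logprob(words) + word_penalty * len(words)

    return max(nbest, key=combined)[0]

# Hypothetical two-hypothesis list and a toy language model lookup.
toy_lm = {"the contract ended": -5.0, "the contact ended": -9.0}
nbest = [
    ("the contact ended".split(), -99.0),    # slightly better acoustic score
    ("the contract ended".split(), -100.0),
]
best = rescore_nbest(nbest, lambda words: toy_lm[" ".join(words)])
print(" ".join(best))
```

Here the language model score outweighs the small acoustic difference, so the second hypothesis wins.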

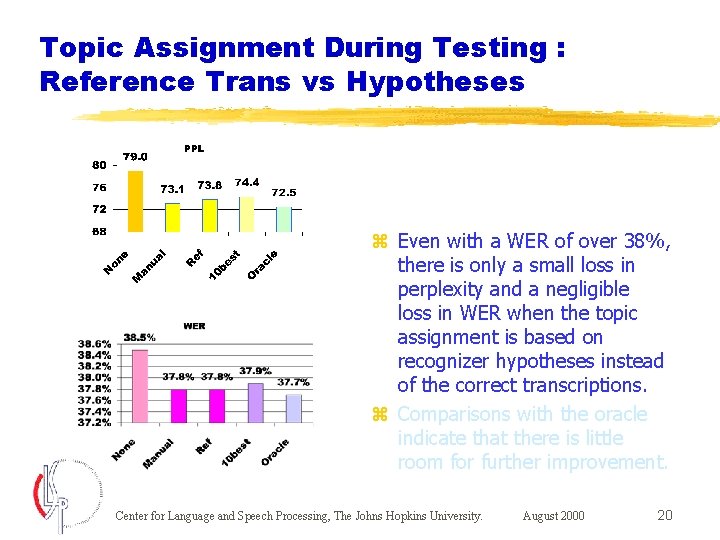

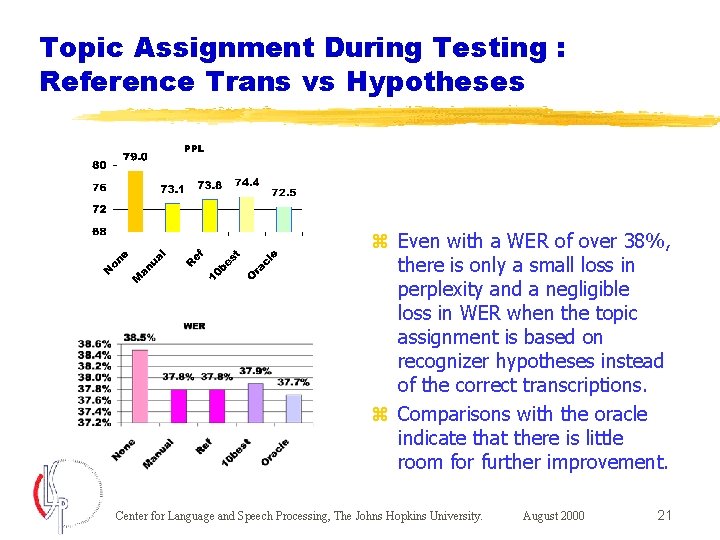

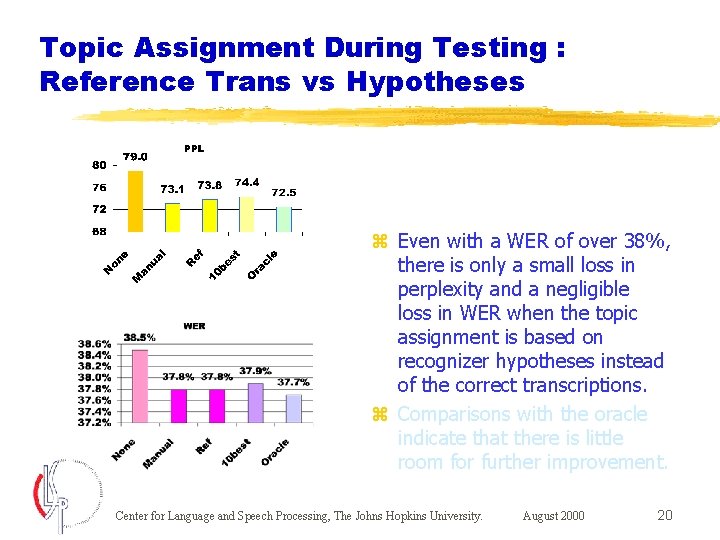

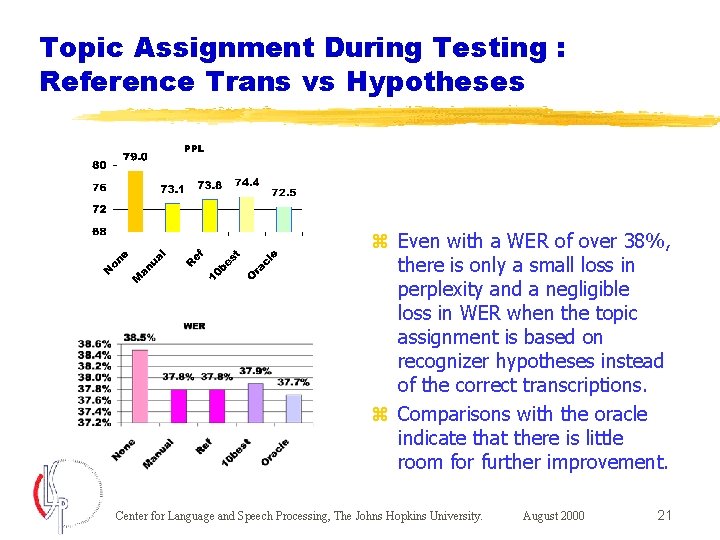

Topic Assignment During Testing: Reference Transcriptions vs. Hypotheses

- Even with a WER of over 38%, there is only a small loss in perplexity and a negligible loss in WER when the topic assignment is based on recognizer hypotheses instead of the correct transcriptions.
- Comparisons with the oracle indicate that there is little room for further improvement.

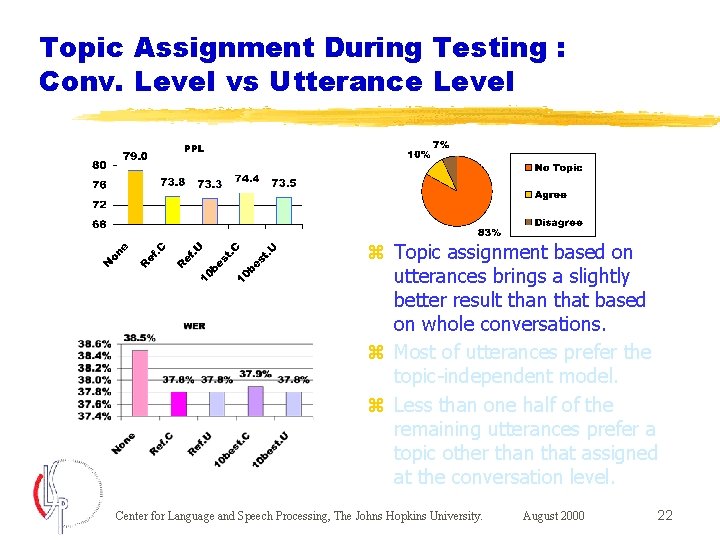

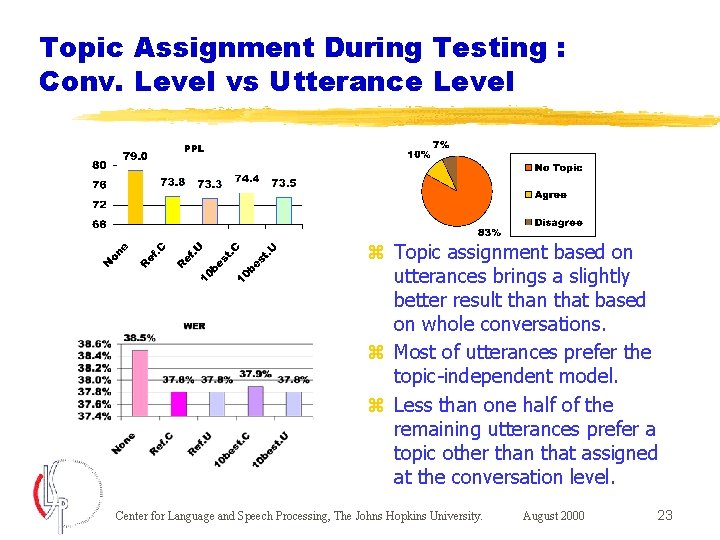

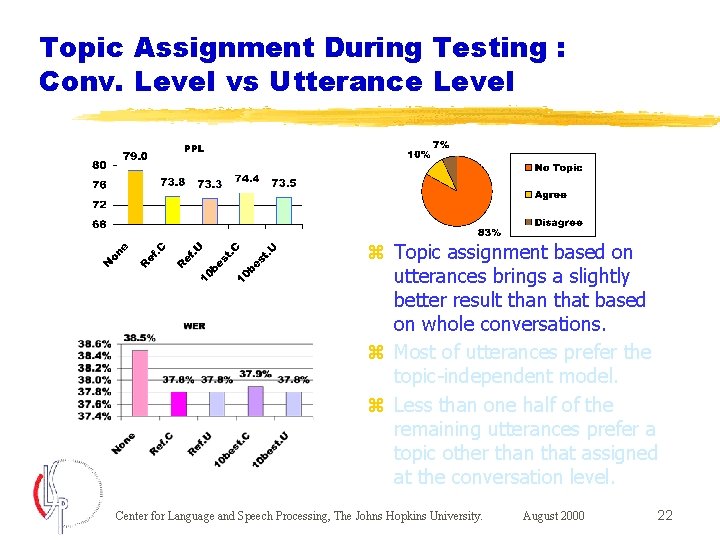

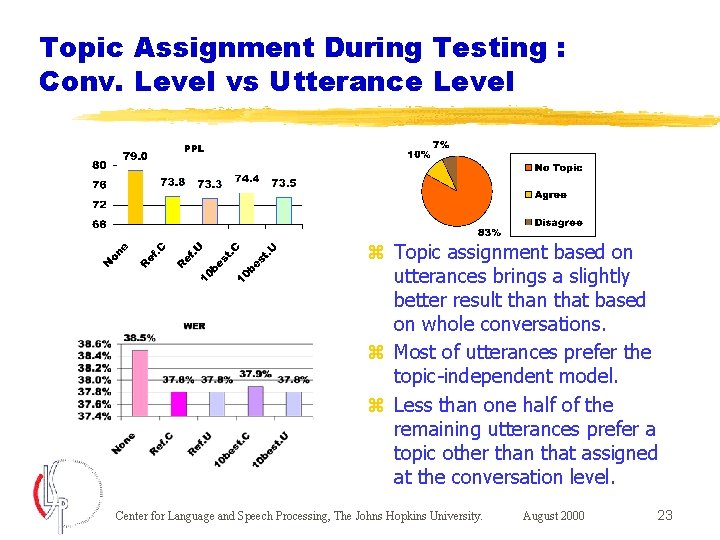

Topic Assignment During Testing: Conversation Level vs. Utterance Level

- Topic assignment based on utterances gives a slightly better result than assignment based on whole conversations.
- Most utterances prefer the topic-independent model.
- Less than one half of the remaining utterances prefer a topic other than the one assigned at the conversation level.

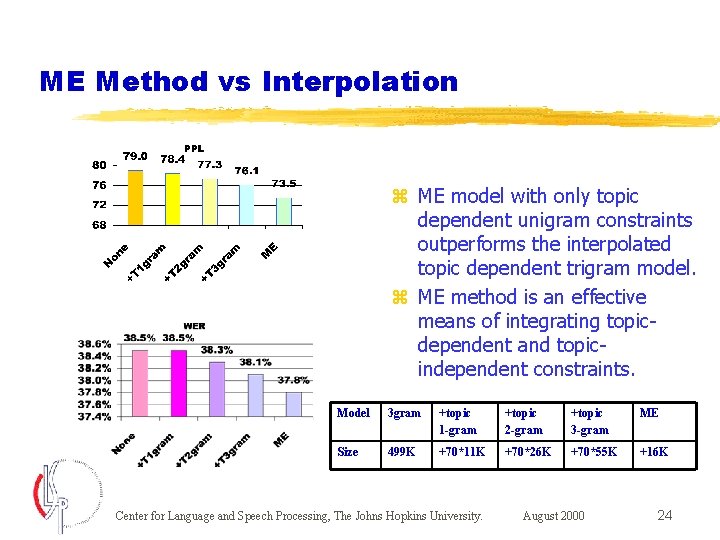

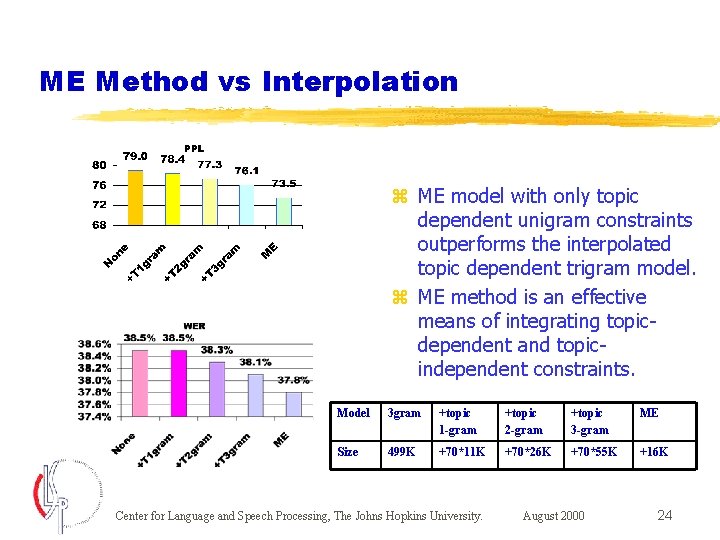

ME Method vs. Interpolation

- The ME model with only topic-dependent unigram constraints outperforms the interpolated topic-dependent trigram model.
- The ME method is an effective means of integrating topic-dependent and topic-independent constraints.

| Model | Size |
|---|---|
| 3-gram | 499K |
| + topic 1-gram | + 70 × 11K |
| + topic 2-gram | + 70 × 26K |
| + topic 3-gram | + 70 × 55K |
| ME | + 16K |
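The size column can be made concrete with a little arithmetic. This reading assumes that each "+" entry is added to the 499K baseline trigram and that the factor 70 is the number of topic clusters; neither is stated explicitly in the transcript.

```latex
\begin{align*}
\text{3-gram + topic 1-gram:} \quad & 499\text{K} + 70 \times 11\text{K} = 499\text{K} + 770\text{K} = 1269\text{K} \\
\text{3-gram + topic 2-gram:} \quad & 499\text{K} + 70 \times 26\text{K} = 499\text{K} + 1820\text{K} = 2319\text{K} \\
\text{3-gram + topic 3-gram:} \quad & 499\text{K} + 70 \times 55\text{K} = 499\text{K} + 3850\text{K} = 4349\text{K} \\
\text{ME:} \quad & 499\text{K} + 16\text{K} = 515\text{K}
\end{align*}
```

Under these assumptions the ME model adds roughly 3% to the baseline size, while the smallest interpolated model more than doubles it.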

ME vs. Cache-Based Models

- The cache-based model reduces perplexity but increases WER.
- The cache-based model produces more repeated errors (0.6%) than the trigram model does.
- A cache model may not be practical when the baseline WER is high.
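For context, a generic unigram cache model interpolates a base probability with the relative frequency of the word in a window of recent words. The sketch below is a textbook-style illustration with hypothetical `size` and `weight` values, not the specific cache model evaluated on the slide.

```python
from collections import Counter, deque

class UnigramCache:
    """Sliding-window unigram cache interpolated with a base LM probability."""

    def __init__(self, size=200, weight=0.1):
        self.window = deque(maxlen=size)   # most recent words
        self.counts = Counter()            # counts of words currently in the window
        self.weight = weight               # interpolation weight of the cache

    def add(self, word):
        if len(self.window) == self.window.maxlen:
            self.counts[self.window[0]] -= 1   # oldest word is about to be evicted
        self.window.append(word)
        self.counts[word] += 1

    def prob(self, word, base_prob):
        if not self.window:
            return base_prob
        cache_prob = self.counts[word] / len(self.window)
        return (1 - self.weight) * base_prob + self.weight * cache_prob

cache = UnigramCache(size=3, weight=0.5)
for w in ["a", "b", "a"]:
    cache.add(w)
p_before = cache.prob("a", base_prob=0.1)   # "a" fills 2 of 3 cache slots
cache.add("c")                              # evicts the oldest "a"
p_after = cache.prob("a", base_prob=0.1)    # "a" now fills 1 of 3 slots
```

When the cache is filled from recognizer output, a misrecognized word raises its own probability later in the conversation, which is one plausible reading of the repeated errors noted above.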

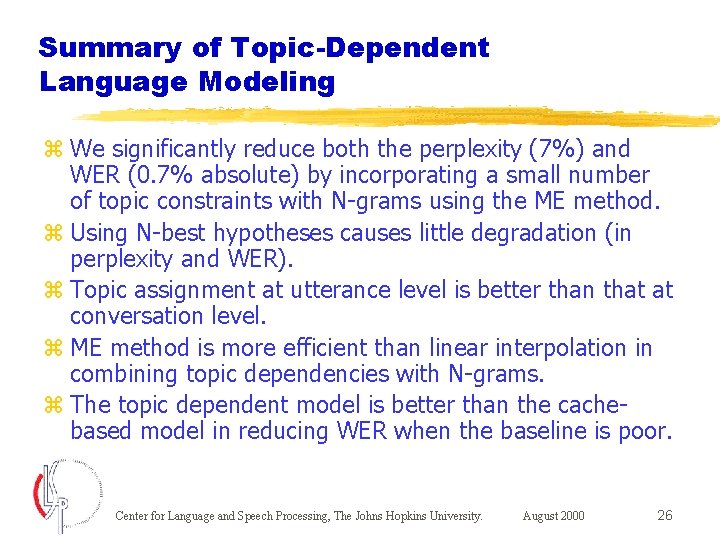

Summary of Topic-Dependent Language Modeling

- We significantly reduce both the perplexity (7%) and WER (0.7% absolute) by incorporating a small number of topic constraints with N-grams using the ME method.
- Using N-best hypotheses causes little degradation (in perplexity and WER).
- Topic assignment at utterance level is better than that at conversation level.
- The ME method is more efficient than linear interpolation in combining topic dependencies with N-grams.
- The topic-dependent model is better than the cache-based model in reducing WER when the baseline is poor.

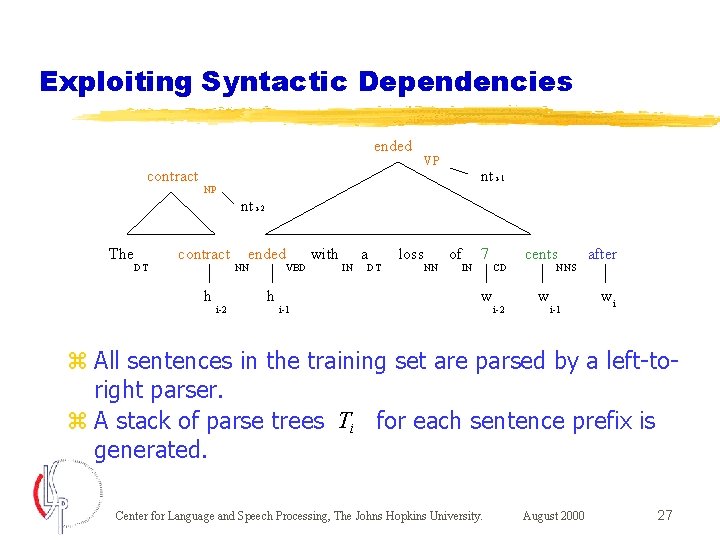

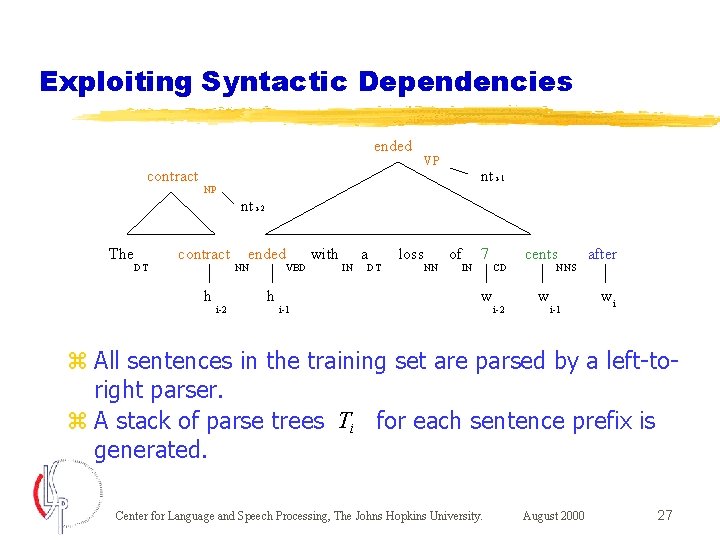

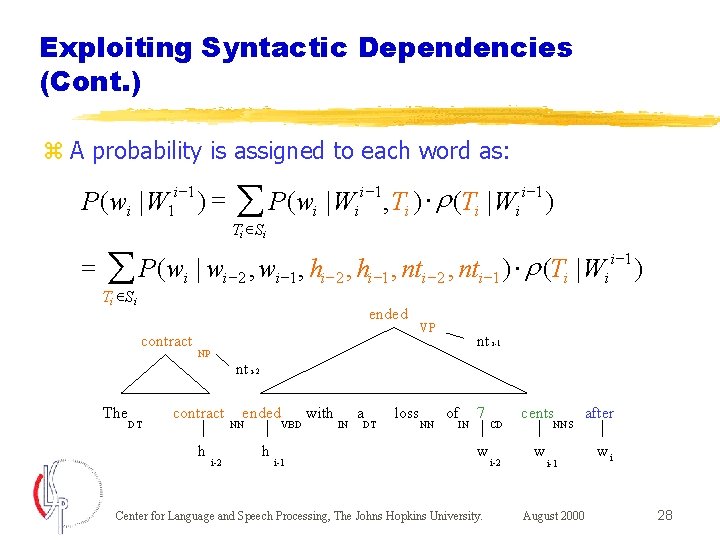

Exploiting Syntactic Dependencies

[Figure: partial parse of "The contract ended with a loss of 7 cents after ..." with POS tags DT NN VBD IN DT NN IN CD NNS. The exposed head words are $h_{i-2}$ = "contract" (non-terminal $nt_{i-2}$ = NP) and $h_{i-1}$ = "ended" ($nt_{i-1}$ = VP); the two preceding words are $w_{i-2}$ = "7" and $w_{i-1}$ = "cents"; the word being predicted is $w_i$ = "after".]

- All sentences in the training set are parsed by a left-to-right parser.
- A stack of parse trees $T_i$ is generated for each sentence prefix.

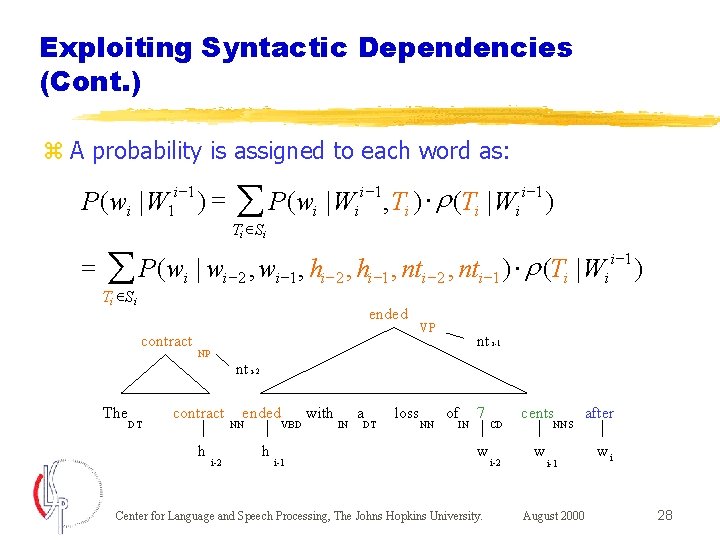

Exploiting Syntactic Dependencies (Cont.)

- A probability is assigned to each word as:

$$P(w_i \mid W_1^{i-1}) = \sum_{T_i \in S_i} P(w_i \mid W_1^{i-1}, T_i) \cdot \rho(T_i \mid W_1^{i-1}) = \sum_{T_i \in S_i} P(w_i \mid w_{i-2}, w_{i-1}, h_{i-2}, h_{i-1}, nt_{i-2}, nt_{i-1}) \cdot \rho(T_i \mid W_1^{i-1})$$

- It is assumed that most of the useful information is embedded in the 2 preceding words and 2 preceding heads.
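The sum over the parse stack amounts to a weighted mixture of per-parse predictions. A minimal sketch, assuming each stack entry carries an unnormalized weight and the context exposed by that parse; the data layout and the toy conditional model are hypothetical.

```python
def word_prob(word, parse_stack, cond_prob):
    """Mix per-parse predictions: sum over T of rho(T) * P(word | context(T)).

    parse_stack: list of (weight, context) pairs, one per partial parse.
    Weights are renormalized so the rho values sum to 1 over the stack.
    """
    total = sum(weight for weight, _ in parse_stack)
    return sum((weight / total) * cond_prob(word, ctx) for weight, ctx in parse_stack)

# Hypothetical stack with two partial parses exposing different head words.
stack = [
    (0.6, {"heads": ("contract", "ended")}),
    (0.2, {"heads": ("loss", "of")}),
]

def toy_cond_prob(word, ctx):
    # Stand-in for the ME model; returns made-up probabilities.
    return 0.4 if ctx["heads"] == ("contract", "ended") else 0.1

p = word_prob("after", stack, toy_cond_prob)
print(round(p, 3))
```

After renormalization the weights are 0.75 and 0.25, so the mixture is 0.75 × 0.4 + 0.25 × 0.1.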

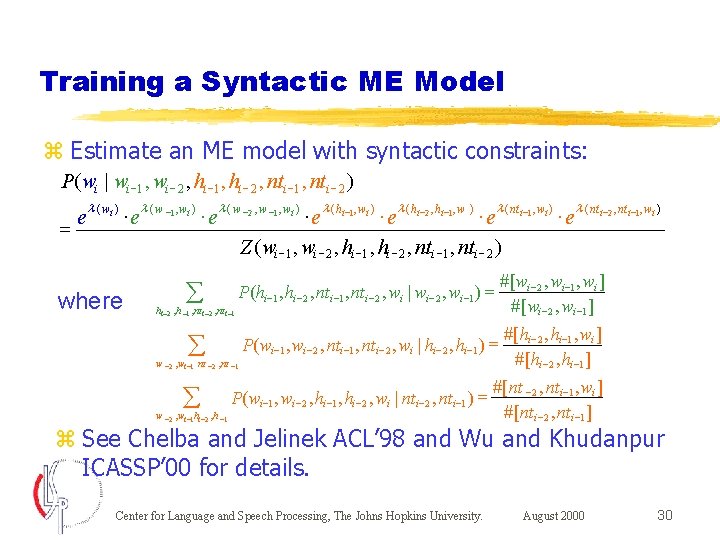

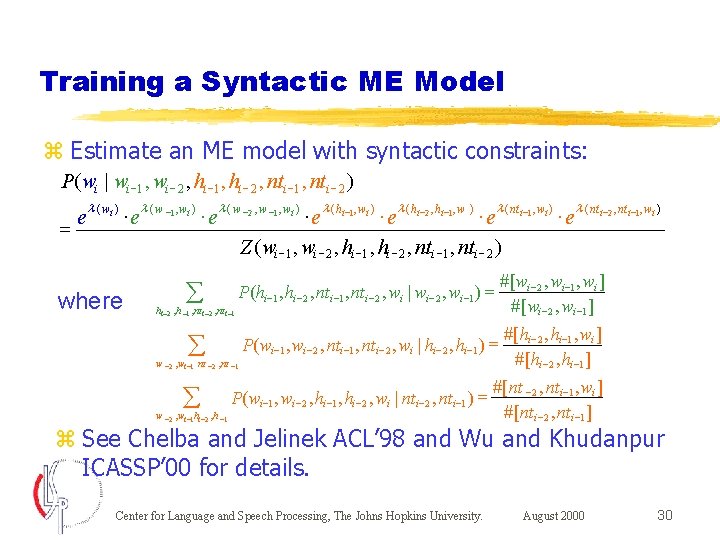

Training a Syntactic ME Model

- Estimate an ME model with syntactic constraints:

$$P(w_i \mid w_{i-1}, w_{i-2}, h_{i-1}, h_{i-2}, nt_{i-1}, nt_{i-2}) = \frac{e^{\lambda(w_i)} \cdot e^{\lambda(w_{i-1}, w_i)} \cdot e^{\lambda(w_{i-2}, w_{i-1}, w_i)} \cdot e^{\lambda(h_{i-2}, h_{i-1}, w_i)} \cdot e^{\lambda(nt_{i-1}, w_i)} \cdot e^{\lambda(nt_{i-2}, nt_{i-1}, w_i)}}{Z(w_{i-1}, w_{i-2}, h_{i-1}, h_{i-2}, nt_{i-1}, nt_{i-2})}$$

  where

$$\sum_{h_{i-2}, h_{i-1}, nt_{i-2}, nt_{i-1}} P(h_{i-1}, h_{i-2}, nt_{i-1}, nt_{i-2}, w_i \mid w_{i-2}, w_{i-1}) = \frac{\#[w_{i-2}, w_{i-1}, w_i]}{\#[w_{i-2}, w_{i-1}]}$$

$$\sum_{w_{i-2}, w_{i-1}, nt_{i-2}, nt_{i-1}} P(w_{i-1}, w_{i-2}, nt_{i-1}, nt_{i-2}, w_i \mid h_{i-2}, h_{i-1}) = \frac{\#[h_{i-2}, h_{i-1}, w_i]}{\#[h_{i-2}, h_{i-1}]}$$

$$\sum_{w_{i-2}, w_{i-1}, h_{i-2}, h_{i-1}} P(w_{i-1}, w_{i-2}, h_{i-1}, h_{i-2}, w_i \mid nt_{i-2}, nt_{i-1}) = \frac{\#[nt_{i-2}, nt_{i-1}, w_i]}{\#[nt_{i-2}, nt_{i-1}]}$$

- See Chelba and Jelinek ACL'98 and Wu and Khudanpur ICASSP'00 for details.
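Evaluating such a model for a given context reduces to summing the weights of the active features, exponentiating, and normalizing over the vocabulary. The sketch below mirrors the six factors of the formula above; the feature-key layout, the toy vocabulary, and the weight values are hypothetical, and training the weights is not shown.

```python
import math

def me_prob(word, ctx, lambdas, vocab):
    """P(word | ctx) for an exponential model with the six feature types above.

    ctx holds w1, w2 (previous words), h1, h2 (head words), nt1, nt2
    (non-terminal labels), where index 1 means position i-1 and 2 means i-2.
    Features without a stored weight contribute lambda = 0.
    """
    def unnormalized(w):
        features = [
            ("w", w),
            ("w1", ctx["w1"], w),
            ("w2", ctx["w2"], ctx["w1"], w),
            ("h", ctx["h2"], ctx["h1"], w),
            ("nt1", ctx["nt1"], w),
            ("nt2", ctx["nt2"], ctx["nt1"], w),
        ]
        return math.exp(sum(lambdas.get(f, 0.0) for f in features))

    z = sum(unnormalized(w) for w in vocab)   # normalizer Z for this context
    return unnormalized(word) / z

# Hypothetical toy setup based on the example sentence from the slides.
vocab = ["after", "before", "cents"]
ctx = {"w2": "7", "w1": "cents", "h2": "contract", "h1": "ended",
       "nt2": "NP", "nt1": "VP"}
lambdas = {("w", "after"): 0.5, ("h", "contract", "ended", "after"): 1.0}
p_after = me_prob("after", ctx, lambdas, vocab)
```

Computing Z requires a pass over the whole vocabulary for each distinct context, which gives a sense of why such models are time-consuming.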

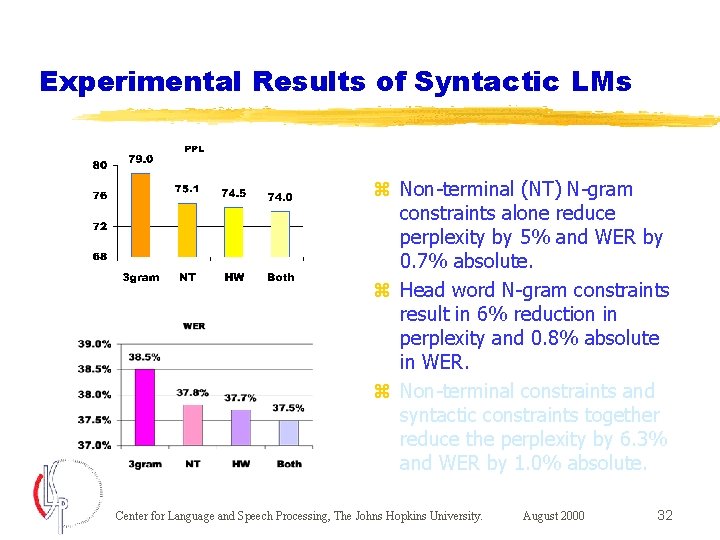

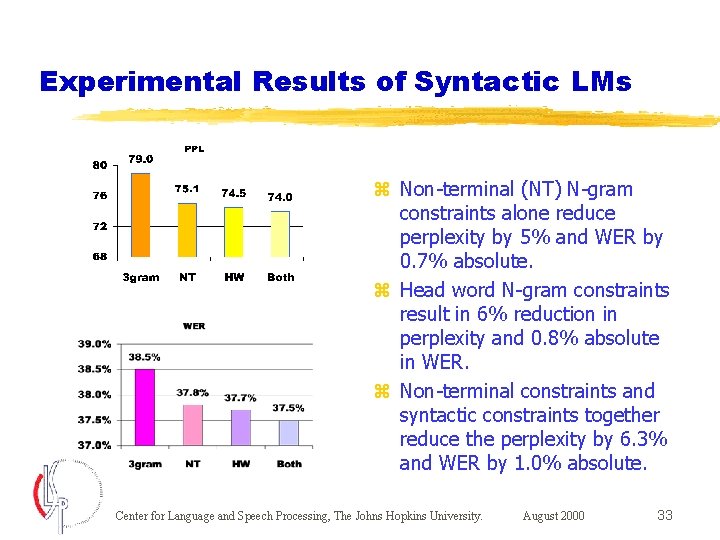

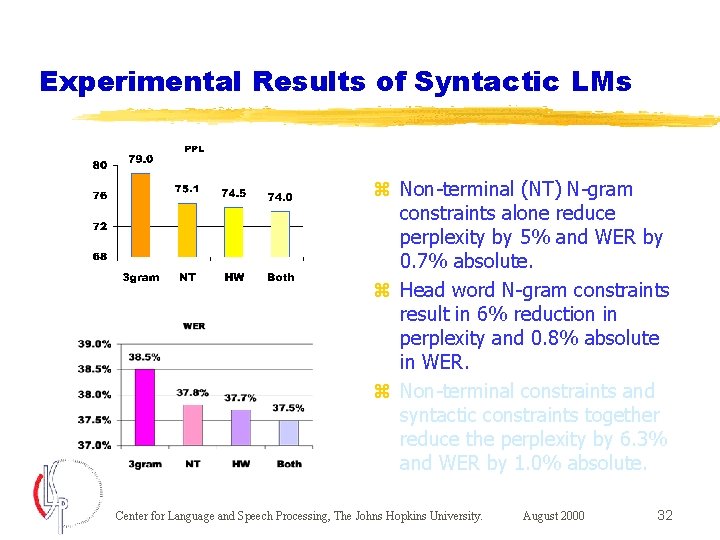

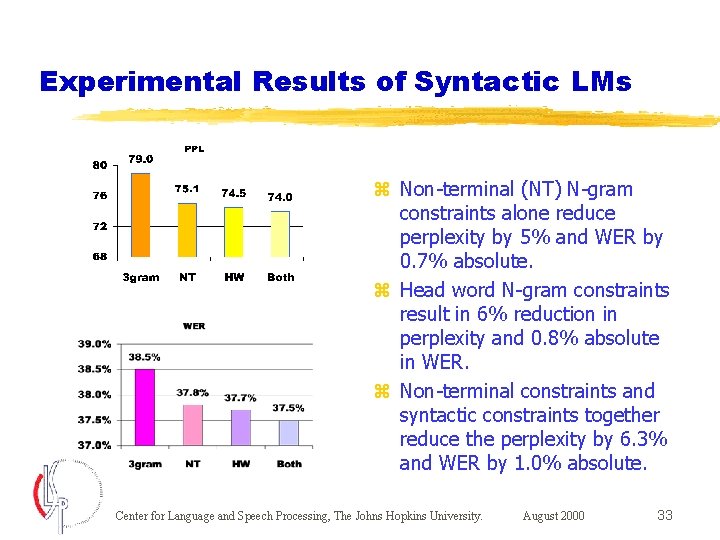

Experimental Results of Syntactic LMs

- Non-terminal (NT) N-gram constraints alone reduce perplexity by 5% and WER by 0.7% absolute.
- Head word N-gram constraints result in a 6% reduction in perplexity and a 0.8% absolute reduction in WER.
- Non-terminal and head word constraints together reduce perplexity by 6.3% and WER by 1.0% absolute.

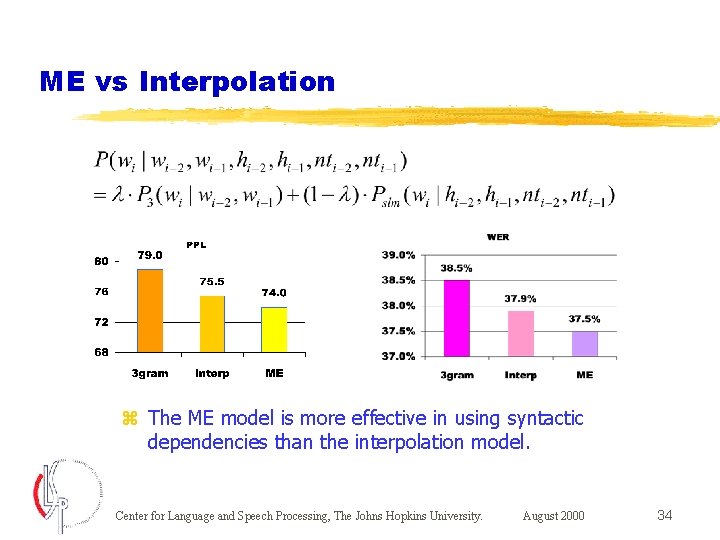

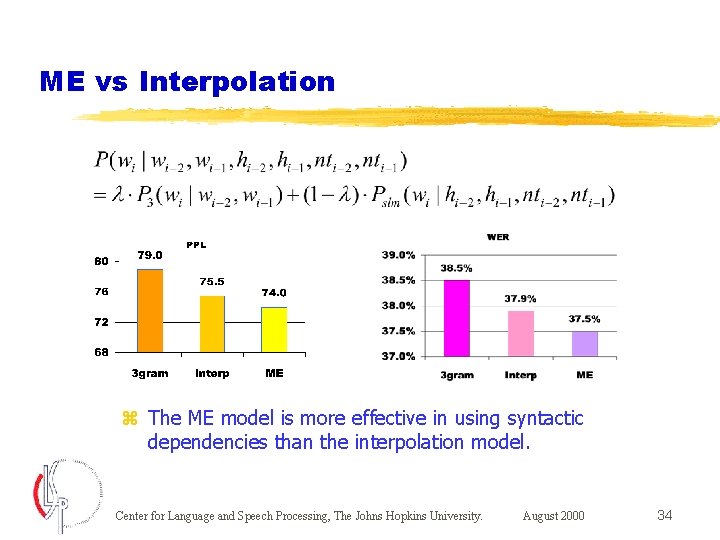

ME vs. Interpolation

- The ME model is more effective in using syntactic dependencies than the interpolation model.

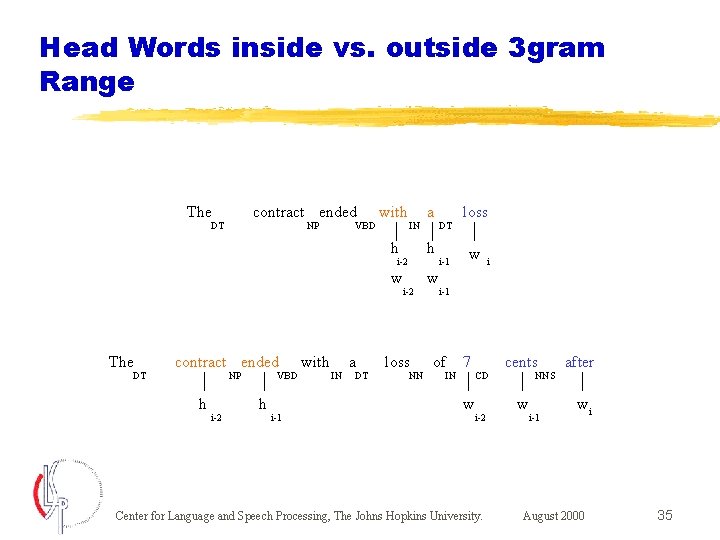

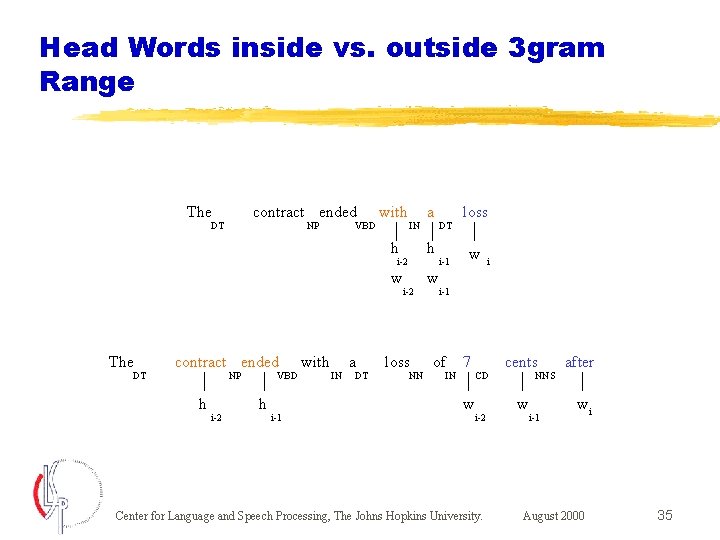

Head Words inside vs. outside 3-gram Range

[Figure: two partial parses of "The contract ended with a loss of 7 cents after ...", contrasting a position where the head words $h_{i-2}$, $h_{i-1}$ fall within the trigram window with the position $w_i$ = "after" ($w_{i-2}$ = "7", $w_{i-1}$ = "cents"), where the heads "contract" and "ended" lie outside it.]

Syntactic Heads inside vs. outside Trigram Range

- The WER of the baseline trigram model is relatively high when syntactic heads are beyond trigram range.
- Lexical head words are much more helpful in reducing WER when they are outside trigram range (1.5%) than when they are within it.
- However, non-terminal N-gram constraints help almost evenly in both cases.
    - Can this gain be obtained from a POS class model too?
- When head words are beyond trigram range, the WER reduction for the model with both head word and non-terminal constraints (1.4%) is larger than the overall reduction (1.0%).

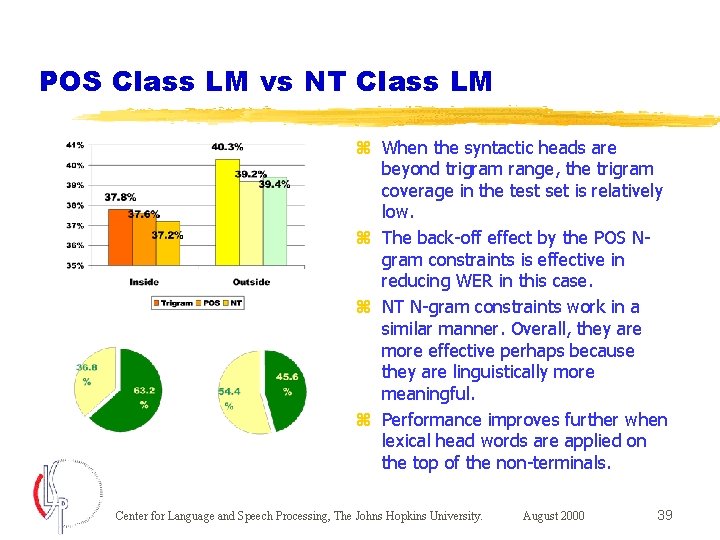

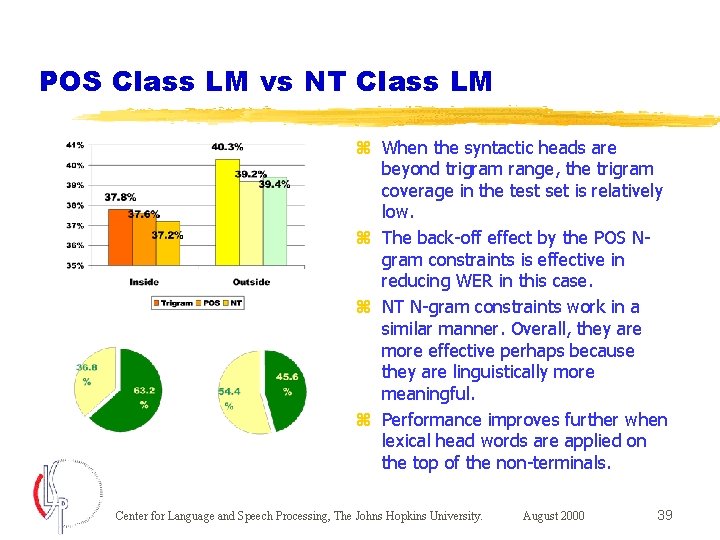

Contrasting the Smoothing Effect of NT Class LM vs. POS Class LM

- An ME model with part-of-speech (POS) N-gram constraints is built as:

$$P(w_i \mid w_{i-1}, w_{i-2}, pos_{i-1}, pos_{i-2}) = \frac{e^{\lambda(w_i)} \cdot e^{\lambda(w_{i-1}, w_i)} \cdot e^{\lambda(w_{i-2}, w_{i-1}, w_i)} \cdot e^{\lambda(pos_{i-2}, pos_{i-1}, w_i)}}{Z(w_{i-1}, w_{i-2}, pos_{i-1}, pos_{i-2})}$$

- The POS model reduces PPL by 4% and WER by 0.5%.
- The overall gains from POS N-gram constraints are smaller than those from NT N-gram constraints.
- Syntactic analysis seems to perform better than just using the two previous word positions.

POS Class LM vs. NT Class LM

- When the syntactic heads are beyond trigram range, the trigram coverage in the test set is relatively low.
- The back-off effect of the POS N-gram constraints is effective in reducing WER in this case.
- NT N-gram constraints work in a similar manner. Overall, they are more effective, perhaps because they are linguistically more meaningful.
- Performance improves further when lexical head words are applied on top of the non-terminals.

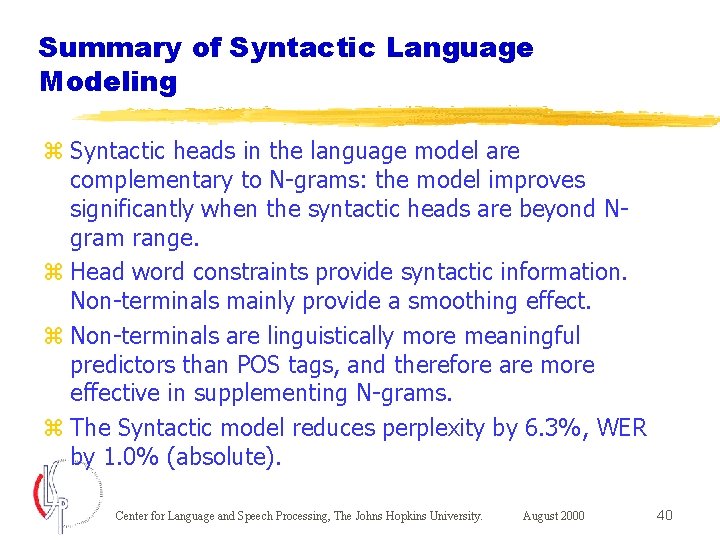

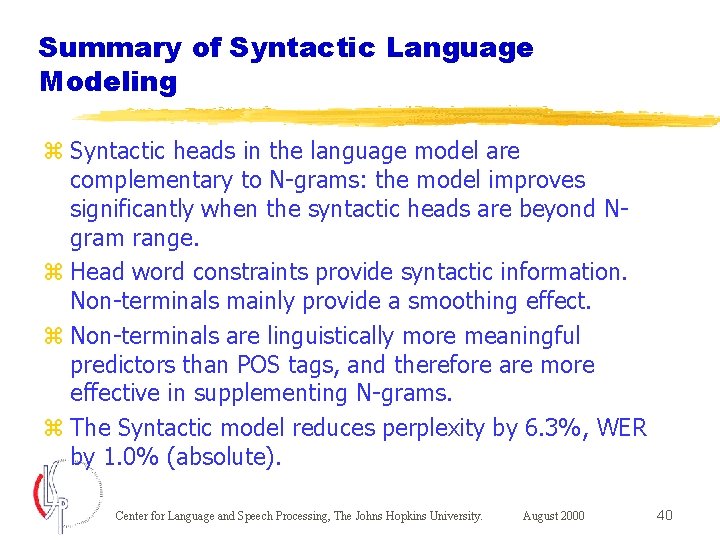

Summary of Syntactic Language Modeling

- Syntactic heads in the language model are complementary to N-grams: the model improves significantly when the syntactic heads are beyond N-gram range.
- Head word constraints provide syntactic information. Non-terminals mainly provide a smoothing effect.
- Non-terminals are linguistically more meaningful predictors than POS tags, and therefore are more effective in supplementing N-grams.
- The syntactic model reduces perplexity by 6.3% and WER by 1.0% (absolute).

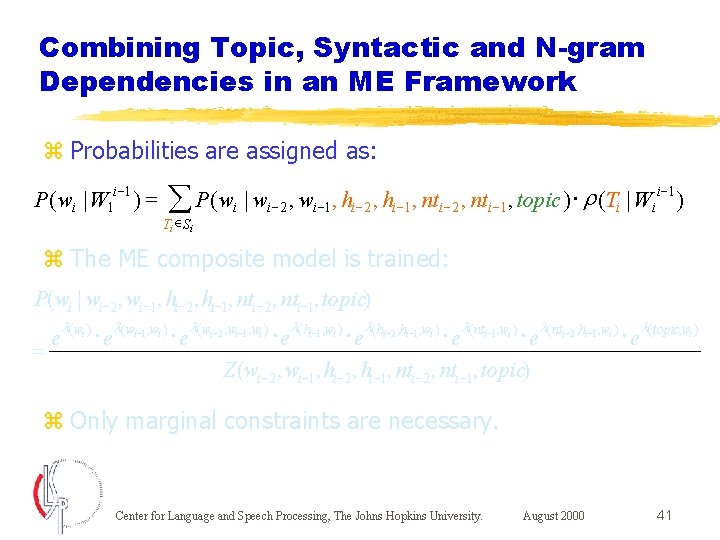

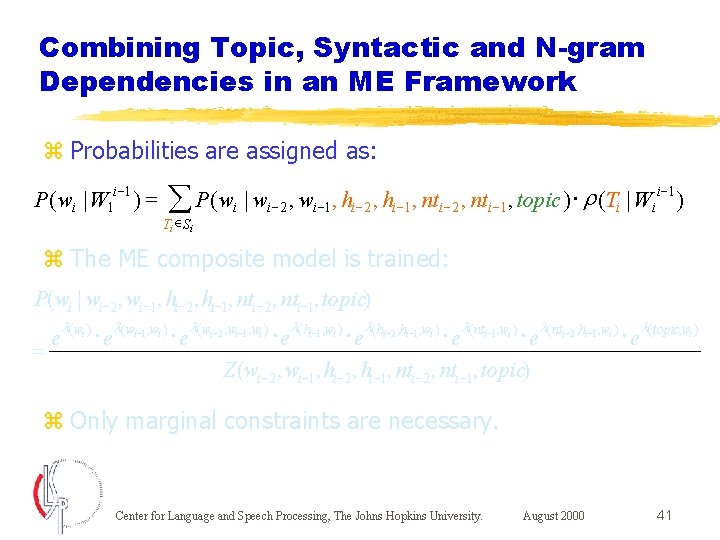

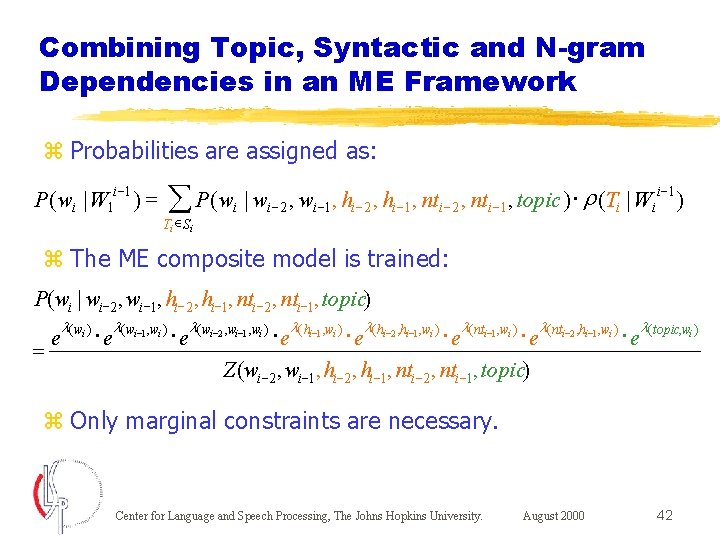

Combining Topic, Syntactic and N-gram Dependencies in an ME Framework

- Probabilities are assigned as:

$$P(w_i \mid W_1^{i-1}) = \sum_{T_i \in S_i} P(w_i \mid w_{i-2}, w_{i-1}, h_{i-2}, h_{i-1}, nt_{i-2}, nt_{i-1}, \text{topic}) \cdot \rho(T_i \mid W_1^{i-1})$$

- The ME composite model is trained:

$$P(w_i \mid w_{i-2}, w_{i-1}, h_{i-2}, h_{i-1}, nt_{i-2}, nt_{i-1}, \text{topic}) = \frac{e^{\lambda(w_i)} \cdot e^{\lambda(w_{i-1}, w_i)} \cdot e^{\lambda(w_{i-2}, w_{i-1}, w_i)} \cdot e^{\lambda(h_{i-2}, h_{i-1}, w_i)} \cdot e^{\lambda(nt_{i-2}, nt_{i-1}, w_i)} \cdot e^{\lambda(\text{topic}, w_i)}}{Z(w_{i-2}, w_{i-1}, h_{i-2}, h_{i-1}, nt_{i-2}, nt_{i-1}, \text{topic})}$$

- Only marginal constraints are necessary.

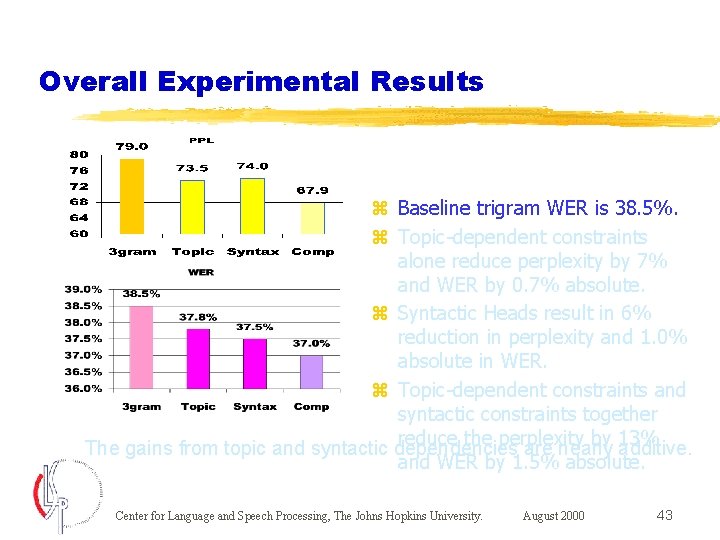

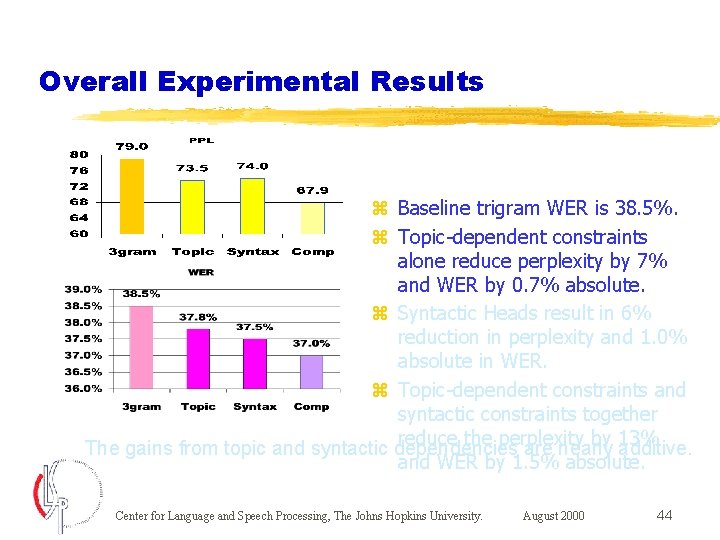

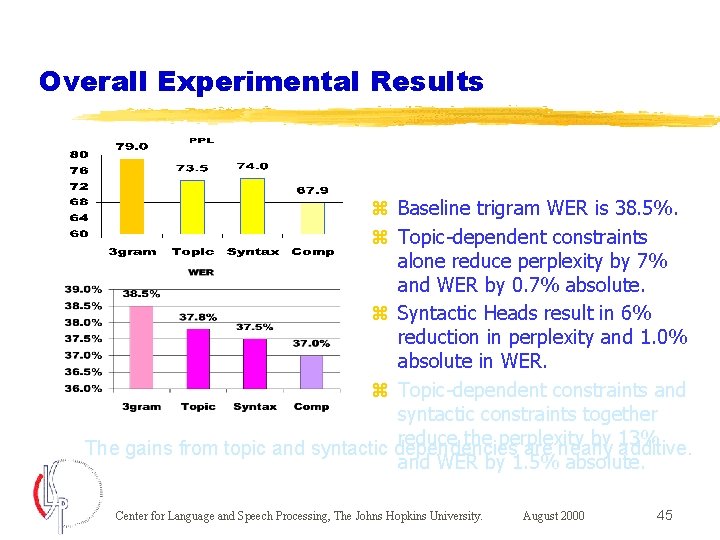

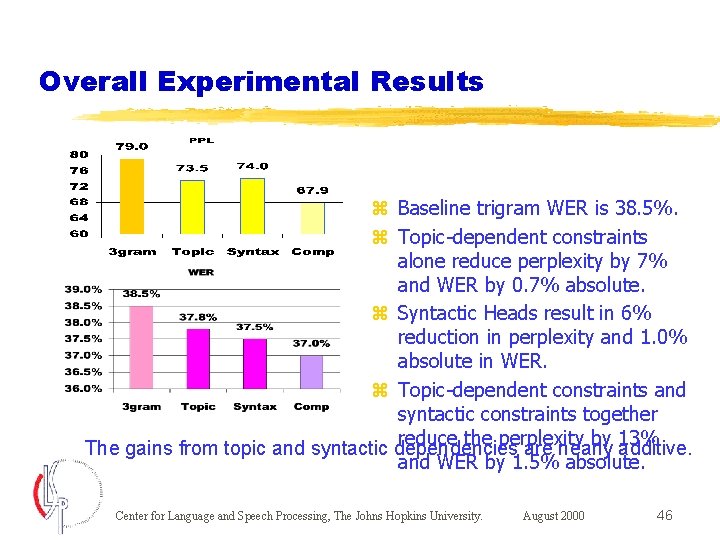

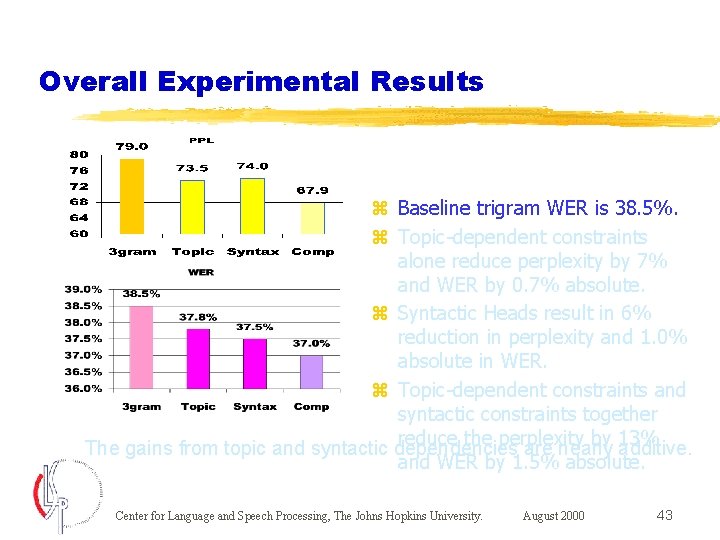

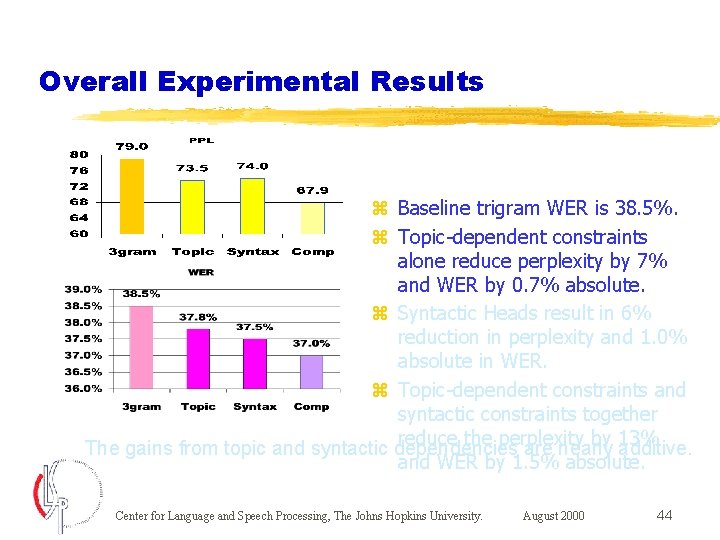

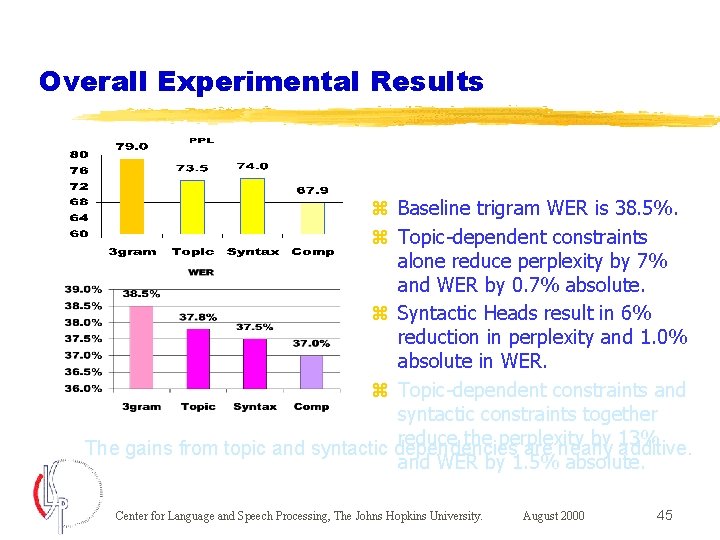

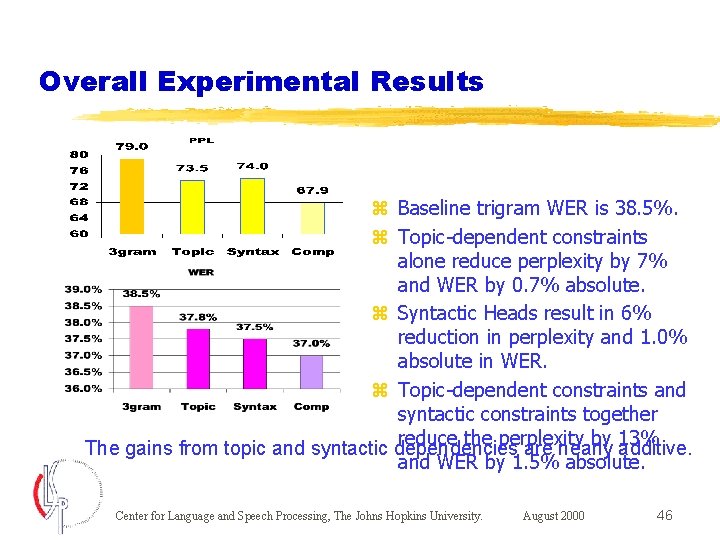

Overall Experimental Results

- Baseline trigram WER is 38.5%.
- Topic-dependent constraints alone reduce perplexity by 7% and WER by 0.7% absolute.
- Syntactic heads result in a 6% reduction in perplexity and a 1.0% absolute reduction in WER.
- Topic-dependent constraints and syntactic constraints together reduce perplexity by 13% and WER by 1.5% absolute.
- The gains from topic and syntactic dependencies are nearly additive.
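The "nearly additive" claim can be checked directly from the figures on this slide. The relative WER reduction in the last line is derived here from the 38.5% baseline and is not a number reported in the slides.

```latex
\begin{align*}
\text{Perplexity:} \quad & 7\% + 6\% = 13\% \quad \text{(composite model: } 13\%\text{)} \\
\text{WER (absolute):} \quad & 0.7\% + 1.0\% = 1.7\% \quad \text{(composite model: } 1.5\%\text{)} \\
\text{Composite WER:} \quad & 38.5\% - 1.5\% = 37.0\%, \qquad \frac{1.5}{38.5} \approx 3.9\% \text{ relative}
\end{align*}
```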

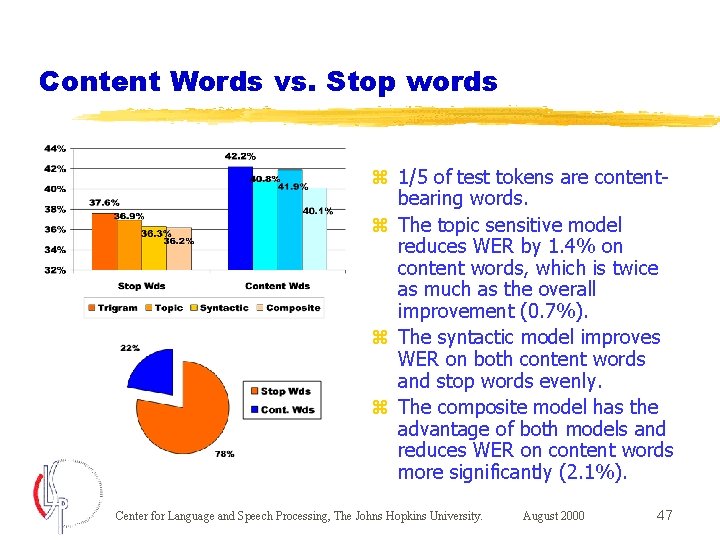

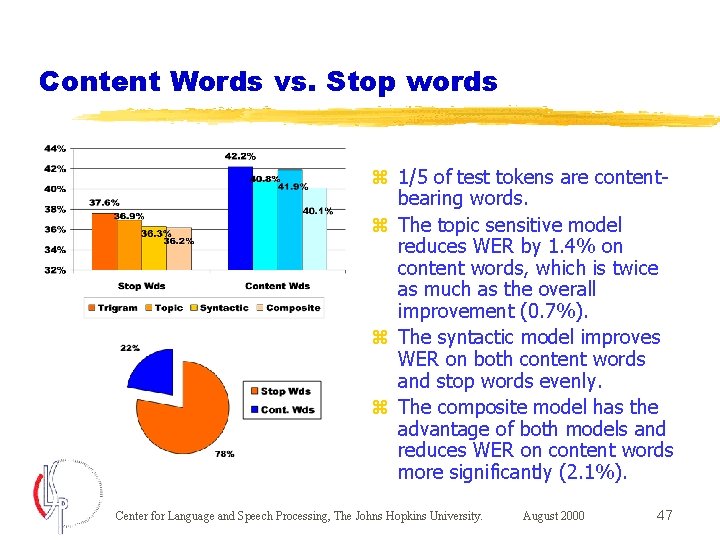

Content Words vs. Stop Words

- 1/5 of test tokens are content-bearing words.
- The topic-sensitive model reduces WER by 1.4% on content words, which is twice as much as the overall improvement (0.7%).
- The syntactic model improves WER on both content words and stop words evenly.
- The composite model has the advantage of both models and reduces WER on content words more significantly (2.1%).

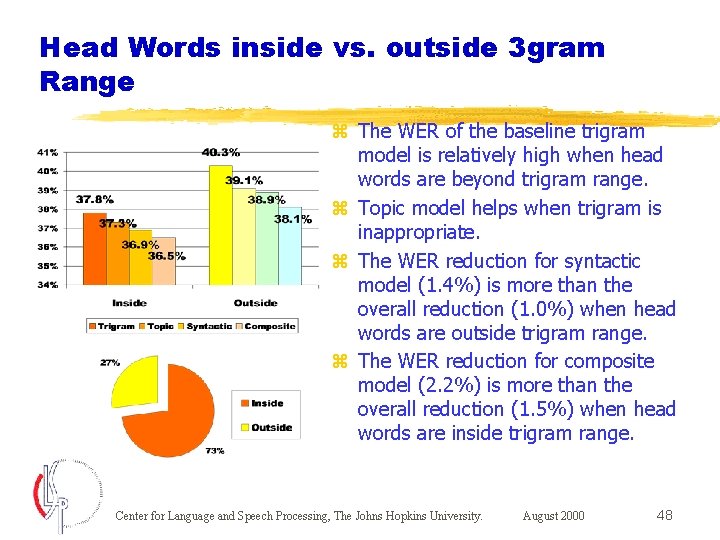

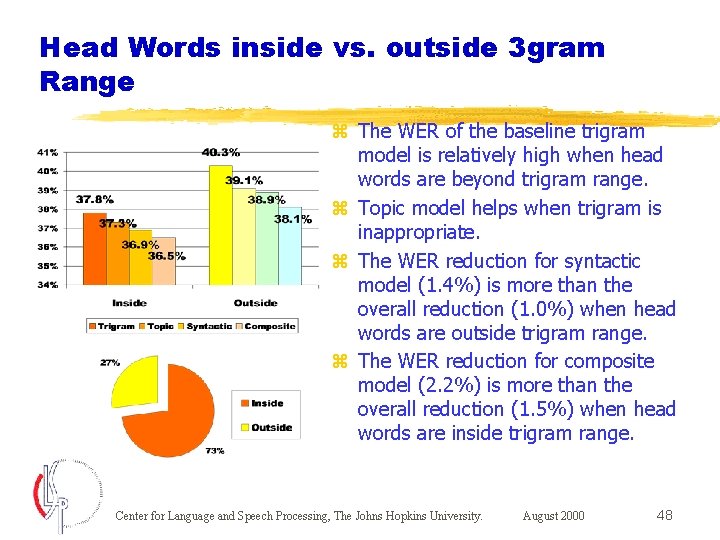

Head Words inside vs. outside 3-gram Range

- The WER of the baseline trigram model is relatively high when head words are beyond trigram range.
- The topic model helps when the trigram is inappropriate.
- The WER reduction for the syntactic model (1.4%) is larger than the overall reduction (1.0%) when head words are outside trigram range.
- The WER reduction for the composite model (2.2%) is larger than the overall reduction (1.5%) when head words are outside trigram range.

Further Insight Into the Performance

- The composite model reduces the WER of content words by 2.6% absolute when the syntactic predicting information is beyond trigram range.

Concluding Remarks

- A language model incorporating two diverse sources of long-range dependence with N-grams has been built.
- The WER on content words is reduced by 2.1%, most of it due to topic dependence.
- The WER on head words beyond trigram range is reduced by 2.2%, most of it due to syntactic dependence.
- These two sources of non-local dependencies are complementary and their gains are almost additive.
- An overall perplexity reduction of 13% and a WER reduction of 1.5% (absolute) are achieved on Switchboard.

Ongoing and Future Work

- Improve the training algorithm.
- Apply this method to other tasks (Broadcast News).

Acknowledgement

- We thank Radu Florian and David Yarowsky for their help on topic detection and data clustering, and Ciprian Chelba and Frederick Jelinek for providing the syntactic model (parser) for the experimental results reported here.
- This work is supported by the National Science Foundation under STIMULATE grant IRI-9618874.