
- Slides: 53

http://www.eng.fsu.edu/~mpf
EEL 4930 §6 / 5930 §5, Spring ’06
Physical Limits of Computing
Slides for a course taught by Michael P. Frank in the Department of Electrical & Computer Engineering
M. Frank, "Physical Limits of Computing"

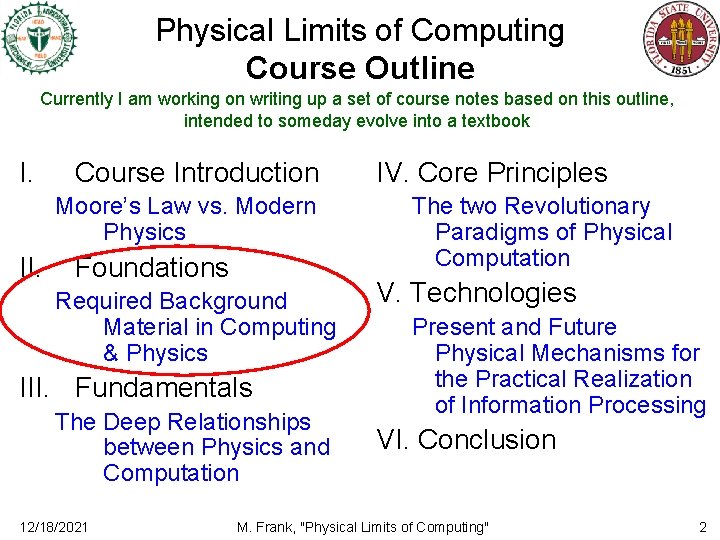

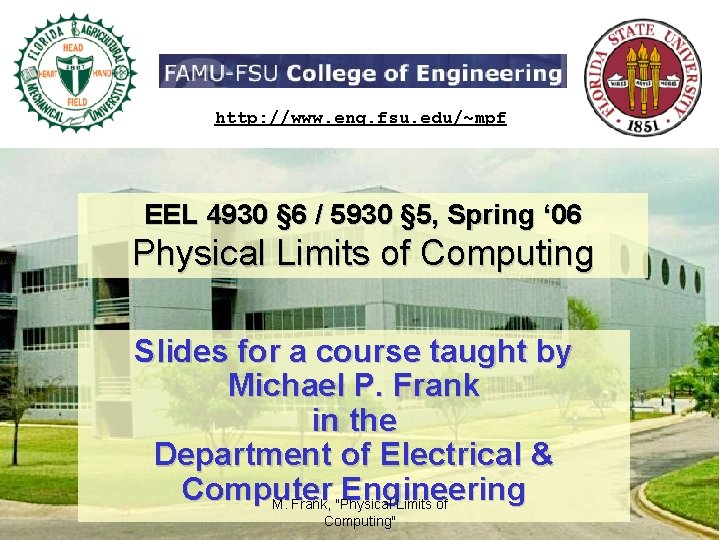

Physical Limits of Computing: Course Outline
Currently I am working on writing up a set of course notes based on this outline, intended to someday evolve into a textbook.
I. Course Introduction: Moore’s Law vs. Modern Physics
II. Foundations: Required Background Material in Computing & Physics
III. Fundamentals: The Deep Relationships between Physics and Computation
IV. Core Principles: The Two Revolutionary Paradigms of Physical Computation
V. Technologies: Present and Future Physical Mechanisms for the Practical Realization of Information Processing
VI. Conclusion
12/18/2021 M. Frank, "Physical Limits of Computing"

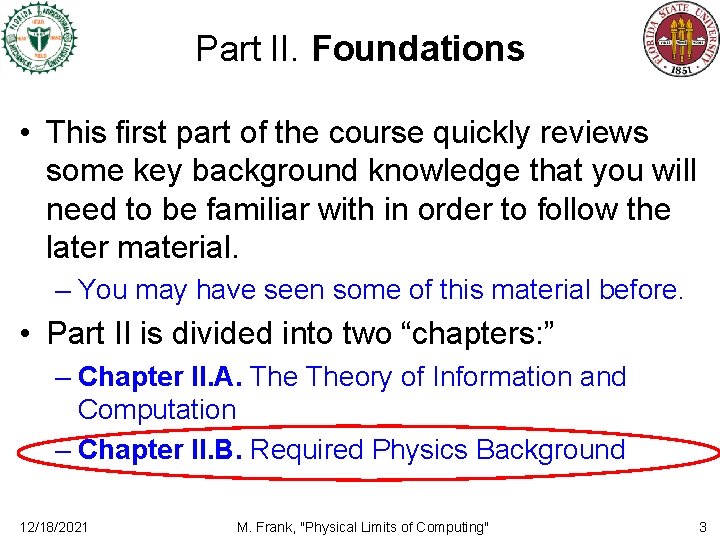

Part II. Foundations
• This first part of the course quickly reviews some key background knowledge that you will need to be familiar with in order to follow the later material.
  – You may have seen some of this material before.
• Part II is divided into two “chapters”:
  – Chapter II.A. Theory of Information and Computation
  – Chapter II.B. Required Physics Background

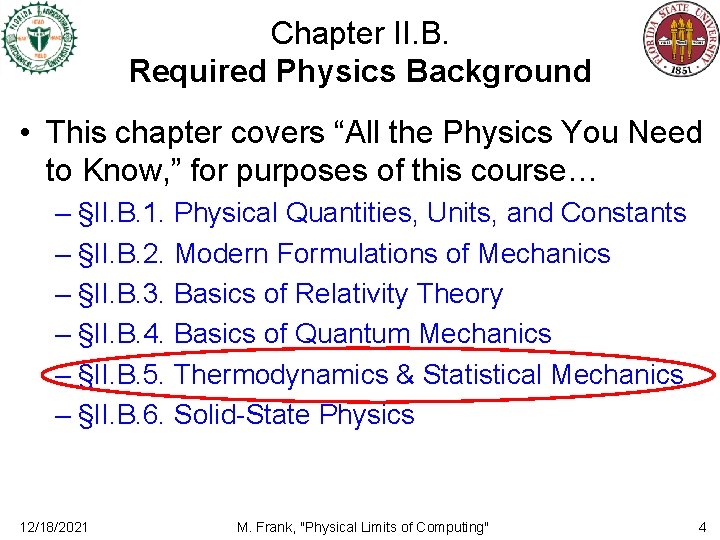

Chapter II.B. Required Physics Background
• This chapter covers “All the Physics You Need to Know,” for purposes of this course…
  – §II.B.1. Physical Quantities, Units, and Constants
  – §II.B.2. Modern Formulations of Mechanics
  – §II.B.3. Basics of Relativity Theory
  – §II.B.4. Basics of Quantum Mechanics
  – §II.B.5. Thermodynamics & Statistical Mechanics
  – §II.B.6. Solid-State Physics

Section II.B.5: Thermodynamics and Statistical Mechanics
• This section covers what you need to know, from a modern perspective
  – As informed by fields like quantum statistical mechanics, information theory, and quantum information theory
• We break this down into subsections as follows:
  – (a) What is Energy?
  – (b) Entropy in Thermodynamics
  – (c) Entropy Increase and the 2nd Law of Thermo.
  – (d) Equilibrium States and the Boltzmann Distribution
  – (e) The Concept of Temperature
  – (f) The Nature of Heat
  – (g) Reversible Heat Engines and the Carnot Cycle
  – (h) Helmholtz and Gibbs Free Energy

Subsection II.B.5.a: What is Energy?

What is energy, anyway?
• Related to the constancy of physical law.
• Noether’s theorem (proved 1915, published 1918) relates conservation laws to physical symmetries. (“Noether” rhymes with “mother.”)
  – Using this theorem, the conservation of energy (1st law of thermo.) can be shown to be a direct consequence of the time-translation symmetry of the laws of physics.
• We saw that energy eigenstates are those state vectors that remain constant (except for a phase rotation) over time. (The eigenvectors of the $U_{\delta t}$ matrix.)
  – Equilibrium states are particular statistical mixtures of these.
  – The state’s eigenvalue gives the energy of the eigenstate.
  – This is the rate of phase-angle accumulation of that state!
• Later, we will see that energy can also be viewed as the rate of (quantum) computing that is occurring within a physical system.
  – Or more precisely, the rate at which quantum “computational effort” is being exerted within that system.

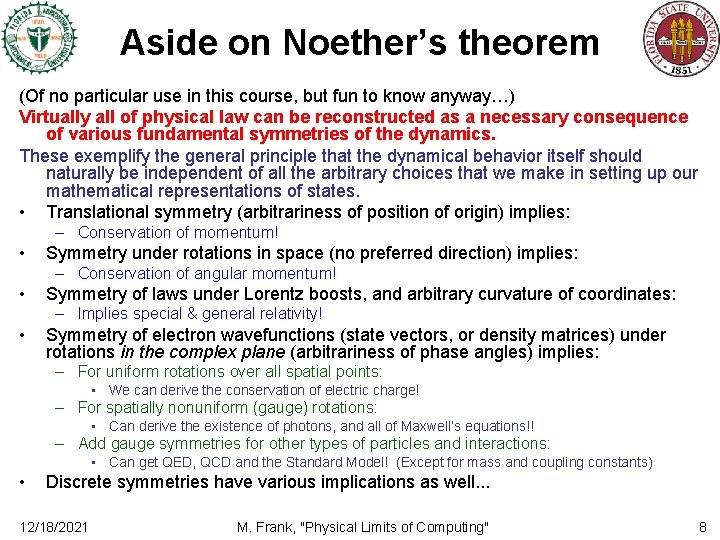

Aside on Noether’s theorem
(Of no particular use in this course, but fun to know anyway…)
Virtually all of physical law can be reconstructed as a necessary consequence of various fundamental symmetries of the dynamics. These exemplify the general principle that the dynamical behavior itself should naturally be independent of all the arbitrary choices that we make in setting up our mathematical representations of states.
• Translational symmetry (arbitrariness of position of origin) implies:
  – Conservation of momentum!
• Symmetry under rotations in space (no preferred direction) implies:
  – Conservation of angular momentum!
• Symmetry of laws under Lorentz boosts, and arbitrary curvature of coordinates:
  – Implies special & general relativity!
• Symmetry of electron wavefunctions (state vectors, or density matrices) under rotations in the complex plane (arbitrariness of phase angles) implies:
  – For uniform rotations over all spatial points:
    • We can derive the conservation of electric charge!
  – For spatially nonuniform (gauge) rotations:
    • Can derive the existence of photons, and all of Maxwell’s equations!!
  – Add gauge symmetries for other types of particles and interactions:
    • Can get QED, QCD and the Standard Model! (Except for mass and coupling constants)
• Discrete symmetries have various implications as well...

Types of Energy
• Over the course of this module, we will see how to break down total Hamiltonian energy in various ways, and identify portions of the total energy that are of different types:
  – Rest mass-energy vs. Kinetic energy vs. Potential energy (next slide)
  – Heat content vs. “chill” content (subsection f)
  – Free energy vs. spent energy (subsection h)

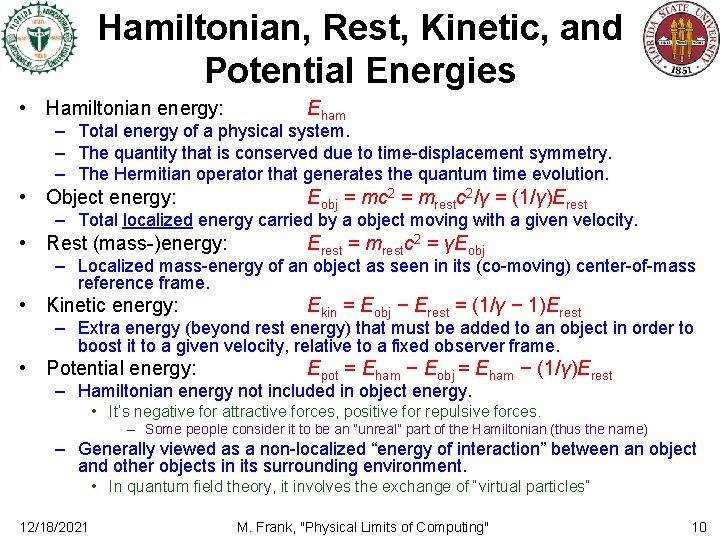

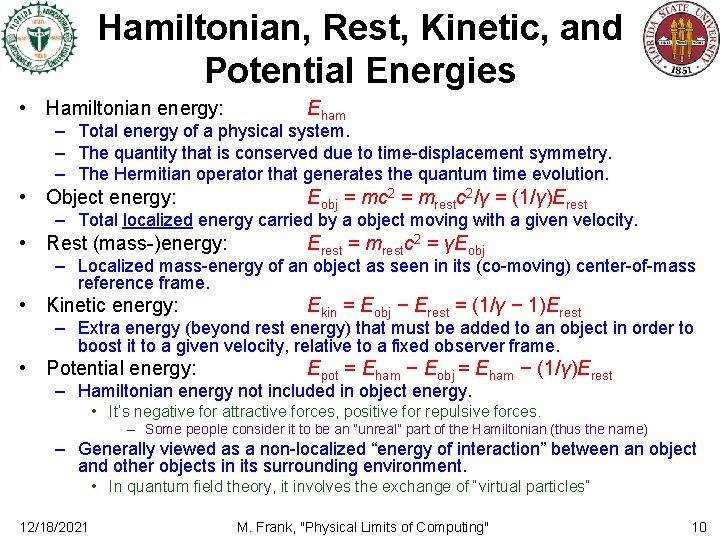

Hamiltonian, Rest, Kinetic, and Potential Energies
(In these slides $\gamma = \sqrt{1-\beta^2} \le 1$ with $\beta = v/c$, i.e., the reciprocal of the conventional Lorentz factor; the relations below require this convention.)
• Hamiltonian energy: $E_{\mathrm{ham}}$
  – Total energy of a physical system.
  – The quantity that is conserved due to time-displacement symmetry.
  – The Hermitian operator that generates the quantum time evolution.
• Object energy: $E_{\mathrm{obj}} = mc^2 = m_{\mathrm{rest}}c^2/\gamma = (1/\gamma)E_{\mathrm{rest}}$
  – Total localized energy carried by an object moving with a given velocity.
• Rest (mass-)energy: $E_{\mathrm{rest}} = m_{\mathrm{rest}}c^2 = \gamma E_{\mathrm{obj}}$
  – Localized mass-energy of an object as seen in its (co-moving) center-of-mass reference frame.
• Kinetic energy: $E_{\mathrm{kin}} = E_{\mathrm{obj}} - E_{\mathrm{rest}} = (1/\gamma - 1)E_{\mathrm{rest}}$
  – Extra energy (beyond rest energy) that must be added to an object in order to boost it to a given velocity, relative to a fixed observer frame.
• Potential energy: $E_{\mathrm{pot}} = E_{\mathrm{ham}} - E_{\mathrm{obj}} = E_{\mathrm{ham}} - (1/\gamma)E_{\mathrm{rest}}$
  – Hamiltonian energy not included in object energy.
    • It’s negative for attractive forces, positive for repulsive forces.
  – Some people consider it to be an “unreal” part of the Hamiltonian (thus the name).
  – Generally viewed as a non-localized “energy of interaction” between an object and other objects in its surrounding environment.
    • In quantum field theory, it involves the exchange of “virtual particles.”

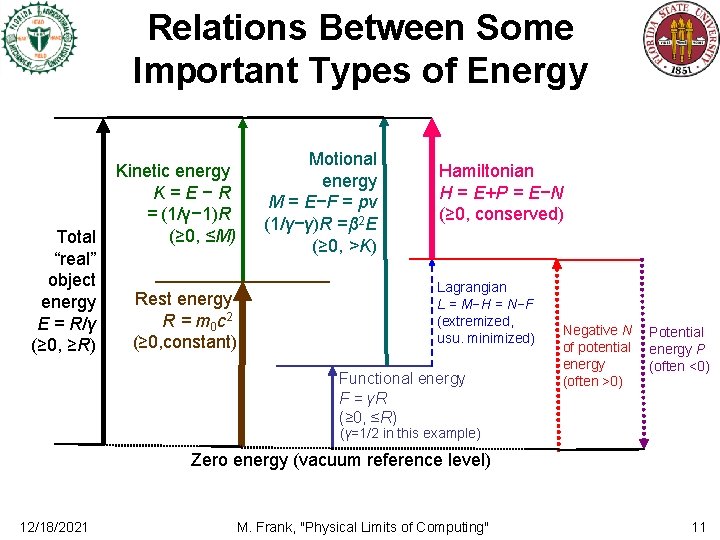

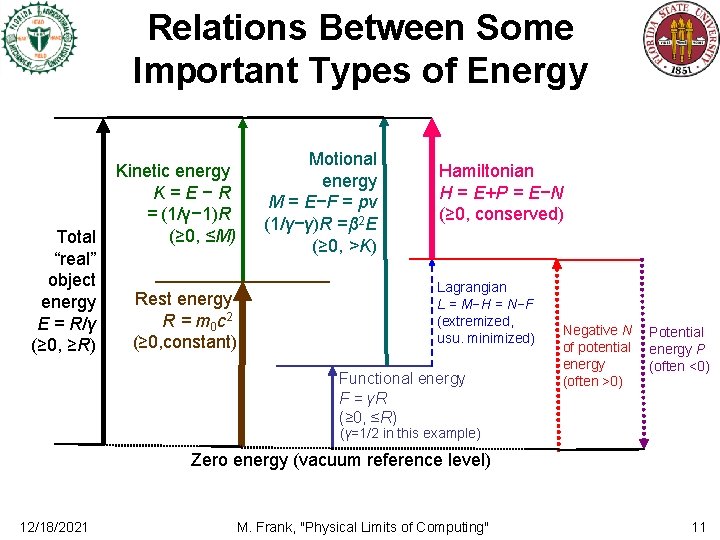

Relations Between Some Important Types of Energy
[Figure: stacked energy-level diagram relative to the zero-energy (vacuum) reference level, drawn for $\gamma = 1/2$. Its labels are listed below.]
• Rest energy: $R = m_0 c^2$ (≥ 0, constant)
• Total “real” object energy: $E = R/\gamma$ (≥ 0, ≥ R)
• Functional energy: $F = \gamma R$ (≥ 0, ≤ R)
• Motional energy: $M = E - F = pv = (1/\gamma - \gamma)R = \beta^2 E$ (≥ 0, ≥ K)
• Kinetic energy: $K = E - R = (1/\gamma - 1)R$ (≥ 0, ≤ M)
• Potential energy: $P$ (often < 0)
• Negative of potential energy: $N = -P$ (often > 0)
• Hamiltonian: $H = E + P = E - N$ (≥ 0, conserved)
• Lagrangian: $L = M - H = N - F$ (extremized, usu. minimized)
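The relations on this slide can be checked numerically. The following is a minimal sketch, assuming the slide's convention that γ = √(1 − β²) ≤ 1 (the reciprocal of the usual Lorentz factor); the function name `energy_breakdown` and the choice of units (rest energy R = 1) are illustrative assumptions, not part of the original slides.

```python
import math

def energy_breakdown(rest_energy, beta):
    """Split an object's energy using the slide's convention gamma = sqrt(1 - beta^2) <= 1."""
    gamma = math.sqrt(1.0 - beta ** 2)   # reciprocal of the conventional Lorentz factor
    E = rest_energy / gamma              # total object energy, E = R / gamma
    F = gamma * rest_energy              # functional energy, F = gamma * R
    M = E - F                            # motional energy, M = E - F
    K = E - rest_energy                  # kinetic energy, K = E - R
    return {"gamma": gamma, "E": E, "F": F, "M": M, "K": K}

# gamma = 1/2, as in the slide's diagram, corresponds to beta = sqrt(3)/2.
beta = math.sqrt(3) / 2
parts = energy_breakdown(rest_energy=1.0, beta=beta)
```

With these inputs, `parts["M"]` should agree with β²E and be at least as large as `parts["K"]`, matching the inequalities listed on the slide.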

Subsection II.B.5.b: Entropy in Thermodynamics

What is entropy?
• First characterized by Rudolf Clausius in 1850.
  – Originally was just defined via (marginal) heat ÷ temperature, $\delta S = \delta Q/T$
  – Noted to never decrease in thermodynamic processes.
  – Significance and physical meaning were mysterious.
• In the ~1880s, Ludwig Boltzmann proposed that entropy S is the logarithm of a system’s number N of states, $S = k \ln N$
  – What we would now call the information capacity of a system
  – Holds for systems at equilibrium, in a maximum-entropy state
• The modern understanding that emerged from 20th-century physics is that entropy is indeed the amount of unknown or incompressible information in a physical system.
  – Important contributions to this understanding were made by von Neumann, Shannon, Jaynes, and Zurek.
• Let’s explain this a little more fully…

Standard States
• A certain state (or state subset) of a system may be declared, by convention, to be “standard” within some context.
  – E.g., gas at standard temperature & pressure in physics experiments.
    • Another example: Newly allocated regions of computer memory are often standardly initialized to all 0’s.
• Information that a system is just in the/a standard state can be considered null information.
  – It is not very informative…
    • There are more nonstandard states than standard ones
      – Except in the case of isolated 2-state systems!
  – However, pieces of information that are in standard states can still be useful as “clean slates” on which newly measured or computed information can be recorded.

Computing Information
• Computing, in the most general sense, is just the time-evolution of any physical system.
  – Interactions between subsystems may cause correlations to exist that didn’t exist previously.
    • E.g., bits a=0 and b interact, assigning a=b
    • Bit a changes from a known, standard value (null information with zero entropy) to a value that correlates with b
  – When systems A, B interact in such a way that the state of A is changed in a way that depends on the state of B,
    • we can say that the information in A is “being computed” from the old information that was in A and B previously.

Decomputing Information
• When some piece of information has been computed using a series of known interactions,
  – it will often be possible to perform another series of interactions that will:
    • undo the effects of some or all of the earlier interactions,
    • and decompute the pattern of information
      – restoring it to a standard state, if desired
• E.g., if the original interactions that took place were thermodynamically reversible (did not increase entropy) then
  – performing the original series of interactions, inverted, is one way to restore the original state.
    • There will generally be other ways also.

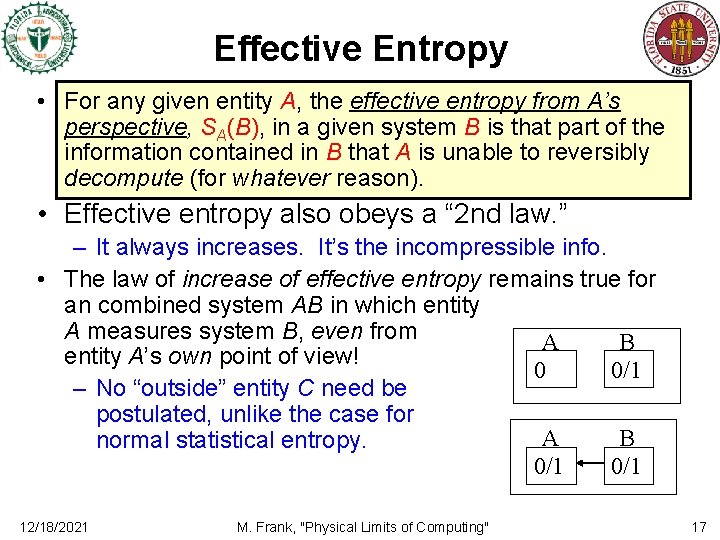

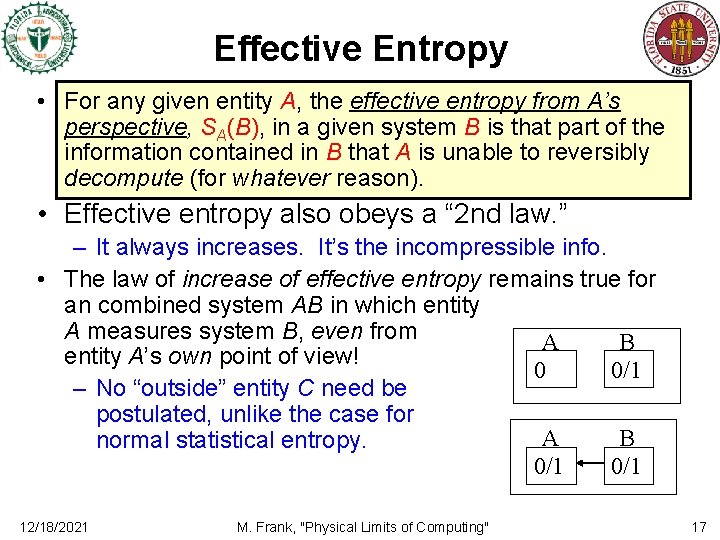

Effective Entropy
• For any given entity A, the effective entropy from A’s perspective, $S_A(B)$, in a given system B is that part of the information contained in B that A is unable to reversibly decompute (for whatever reason).
• Effective entropy also obeys a “2nd law.”
  – It never decreases. It’s the incompressible info.
• The law of increase of effective entropy remains true for a combined system AB in which entity A measures system B, even from entity A’s own point of view!
  – No “outside” entity C need be postulated, unlike the case for normal statistical entropy.
[Figure: entity A and system B before and after the measurement; diagram labels: A, B, 0, 0/1.]

Advantages of Effective Entropy
• (Effective) entropy, defined as non-reversibly-decomputable information, subsumes the following:
  – Unknown information (statistical entropy): Can’t be reversibly decomputed, because we don’t even know what its pattern is.
    • We don’t have any other info that is correlated with it.
      – Even if we measured it, it would just become known but incompressible.
  – Known but incompressible information: It can’t be reversibly decomputed because it’s incompressible!
    • To reversibly decompute it would be to compress it!
  – Inaccessible information: Also can’t be decomputed, because we can’t get to it!
    • E.g., a signal of known information, sent out into space at c.
• This simple yet powerful definition is, I submit, the “right” way to understand entropy.

Subsection II.B.5.c: Entropy Increase and the 2nd Law of Thermodynamics
The 2nd Law of Thermodynamics, Proving the 2nd Law, Maxwell’s Demon, Entropy and Measurement, The Arrow of Time, Boltzmann’s H-theorem

Supremacy of the 2nd Law of Thermodynamics
“The law that entropy increases—the Second Law of Thermodynamics—holds, I think, the supreme position among the laws of Nature. If someone points out to you that your pet theory of the Universe is in disagreement with Maxwell’s equations—then so much the worse for Maxwell’s equations. If it is found to be contradicted by observation—well, these experimentalists do bungle things sometimes. But if your theory is found to be against the Second Law of Thermodynamics I can give you no hope; there is nothing for it but to collapse in deepest humiliation.”
– Sir Arthur Eddington, The Nature of the Physical World. New York: Macmillan; 1930.
• We will see that Eddington was basically right,
  – because there’s a certain sense in which the 2nd Law can be viewed as an irrefutable mathematical fact, namely a theorem of combinatorics,
    • not even a statement about physics at all!

Brief History of the 2nd Law of Thermodynamics
• Early versions of the law were based on centuries of hard-won empirical experience, and had a strong phenomenological flavor, e.g.,
  – “Perpetual motion machines are impossible.”
  – “Heat always spontaneously flows from a hot body to a colder one, never vice-versa.”
  – “No process can have as its sole effect the transfer of heat from a cold body to a hotter one.”
• After Clausius introduced the entropy concept, the 2nd law could be made more quantitative and more general:
  – “The entropy of any closed system cannot decrease.”
• But, the underlying “reason” for the law remained a mystery.
  – Today, thanks to more than a century of progress in physics based on the pioneering work of Maxwell, Boltzmann, and others,
    • we now well understand the underlying mechanical and statistical reasons why the 2nd law must be true.

The 2nd Law of Thermodynamics Follows from Quantum Mechanics
• Closed systems evolve via unitary transforms $U_{t_1 \to t_2}$.
  – Unitary transforms just change the basis, so they do not change the system’s true (von Neumann) entropy.
    • Because, remember, it only depends on what the Shannon entropy is in the diagonalized basis.
• Theorem: Entropy is constant in all closed systems undergoing an exactly-known unitary evolution.
  – However, if $U_{t_1 \to t_2}$ is ever at all uncertain, or if we ever neglect or disregard some of our information about the state,
    • then we will get a mixture of possible resulting states, with provably greater-or-equal (≥) effective entropy.
• Theorem (2nd law of thermodynamics): Entropy may increase but never decreases in closed systems.
  – It can increase only if the system undergoes interactions whose details are not completely known, or if the observer discards some of his knowledge.
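A classical analogue may help make the two theorems concrete. The sketch below is an illustration under stated assumptions, not the slide's quantum argument: it uses a permutation of basis states as a stand-in for an exactly known reversible evolution, and an equal-weight mixture of two permutations as a stand-in for uncertain dynamics. The names `shannon_entropy`, `permute`, `perm_a`, and `perm_b` are hypothetical.

```python
import math

def shannon_entropy(p):
    """Entropy in bits of a probability vector (zero entries contribute nothing)."""
    return -sum(x * math.log2(x) for x in p if x > 0)

def permute(p, perm):
    """Apply a known reversible map: the probability of state i moves to state perm[i]."""
    out = [0.0] * len(p)
    for i, x in enumerate(p):
        out[perm[i]] = x
    return out

p = [0.7, 0.2, 0.1, 0.0]   # initial distribution over four states
perm_a = [1, 2, 3, 0]      # one candidate dynamics (a cyclic shift)
perm_b = [0, 1, 2, 3]      # another candidate dynamics (the identity)

# Exactly known dynamics: entropy is unchanged.
known = permute(p, perm_a)

# Uncertain dynamics (perm_a or perm_b with probability 1/2 each):
# the result is a mixture, whose entropy cannot be lower.
mixed = [0.5 * a + 0.5 * b for a, b in zip(permute(p, perm_a), permute(p, perm_b))]
```

Comparing `shannon_entropy(known)` and `shannon_entropy(mixed)` against `shannon_entropy(p)` shows the entropy staying fixed in the first case and growing in the second, which follows from the concavity of the entropy function.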

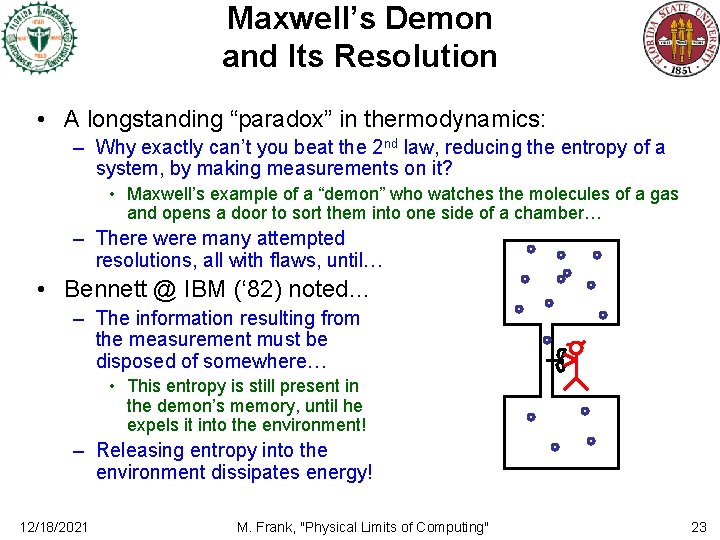

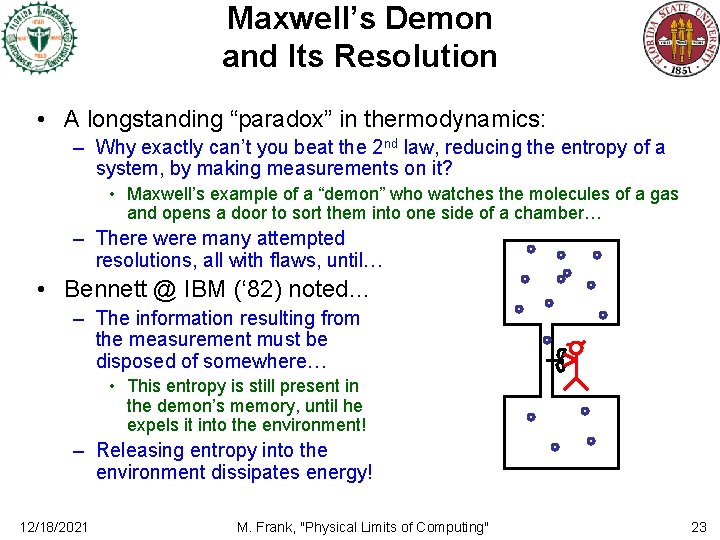

Maxwell’s Demon and Its Resolution
• A longstanding “paradox” in thermodynamics:
  – Why exactly can’t you beat the 2nd law, reducing the entropy of a system, by making measurements on it?
    • Maxwell’s example of a “demon” who watches the molecules of a gas and opens a door to sort them into one side of a chamber…
  – There were many attempted resolutions, all with flaws, until…
• Bennett @ IBM (’82) noted…
  – The information resulting from the measurement must be disposed of somewhere…
    • This entropy is still present in the demon’s memory, until he expels it into the environment!
  – Releasing entropy into the environment dissipates energy!

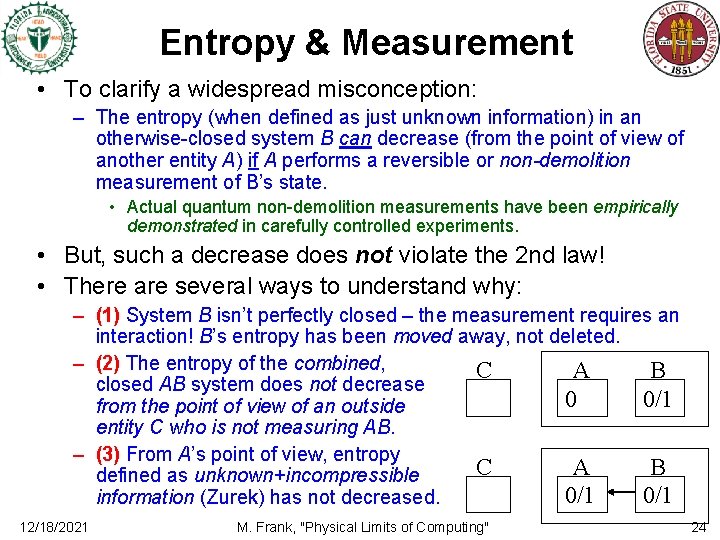

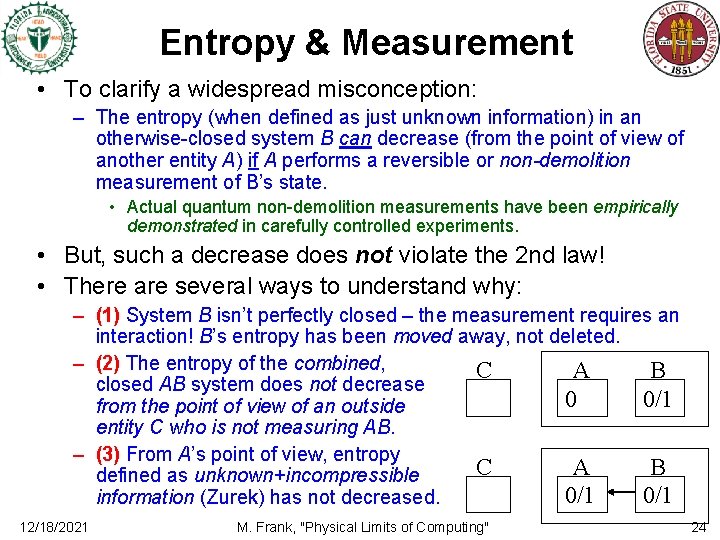

Entropy & Measurement
• To clarify a widespread misconception:
  – The entropy (when defined as just unknown information) in an otherwise-closed system B can decrease (from the point of view of another entity A) if A performs a reversible or non-demolition measurement of B’s state.
    • Actual quantum non-demolition measurements have been empirically demonstrated in carefully controlled experiments.
• But, such a decrease does not violate the 2nd law!
• There are several ways to understand why:
  – (1) System B isn’t perfectly closed: the measurement requires an interaction! B’s entropy has been moved away, not deleted.
  – (2) The entropy of the combined, closed AB system does not decrease from the point of view of an outside entity C who is not measuring AB.
  – (3) From A’s point of view, entropy defined as unknown+incompressible information (Zurek) has not decreased.
[Figure: entities C, A, and B before and after the measurement; diagram labels: C, A, B, 0, 0/1.]

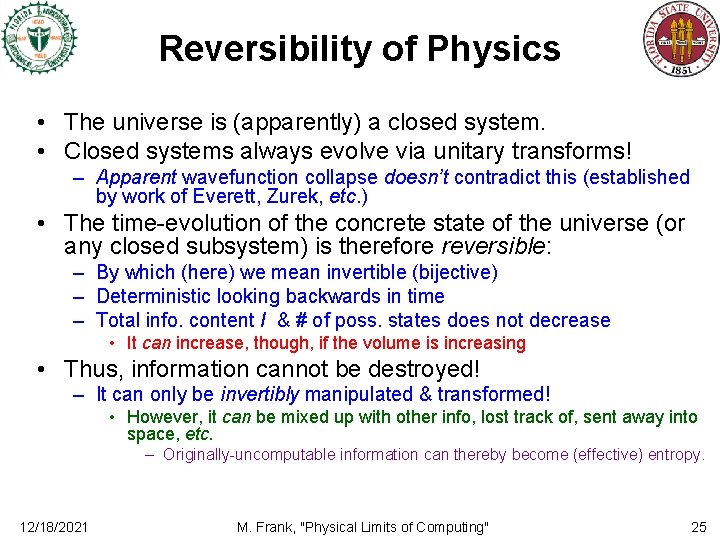

Reversibility of Physics
• The universe is (apparently) a closed system.
• Closed systems always evolve via unitary transforms!
  – Apparent wavefunction collapse doesn’t contradict this (established by work of Everett, Zurek, etc.)
• The time-evolution of the concrete state of the universe (or any closed subsystem) is therefore reversible:
  – By which (here) we mean invertible (bijective)
  – Deterministic looking backwards in time
  – Total info. content I & # of poss. states does not decrease
    • It can increase, though, if the volume is increasing
• Thus, information cannot be destroyed!
  – It can only be invertibly manipulated & transformed!
• However, it can be mixed up with other info, lost track of, sent away into space, etc.
  – Originally-uncomputable information can thereby become (effective) entropy.

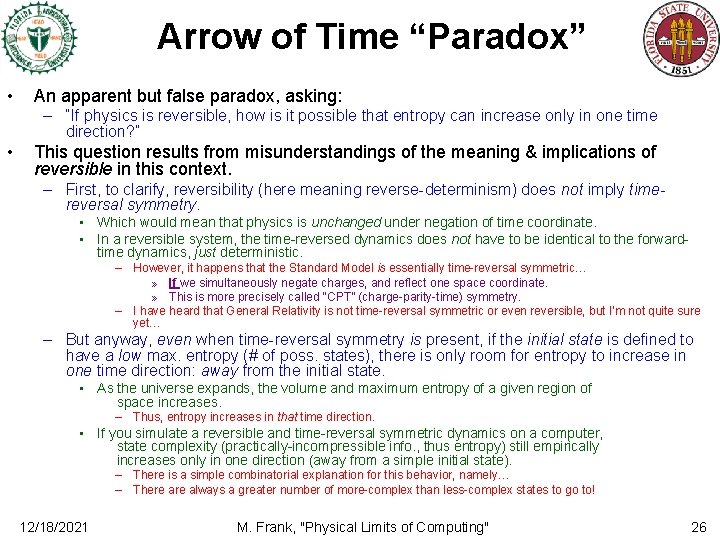

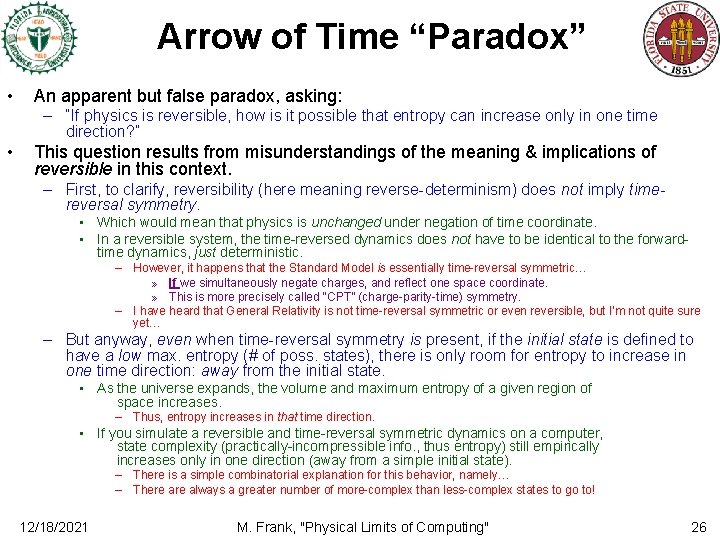

Arrow of Time “Paradox”
• An apparent but false paradox, asking:
  – “If physics is reversible, how is it possible that entropy can increase only in one time direction?”
• This question results from misunderstandings of the meaning & implications of reversible in this context.
  – First, to clarify, reversibility (here meaning reverse-determinism) does not imply time-reversal symmetry.
    • Which would mean that physics is unchanged under negation of the time coordinate.
    • In a reversible system, the time-reversed dynamics does not have to be identical to the forward-time dynamics, just deterministic.
      – However, it happens that the Standard Model is essentially time-reversal symmetric…
        » If we simultaneously negate charges, and reflect one space coordinate.
        » This is more precisely called “CPT” (charge-parity-time) symmetry.
      – I have heard that General Relativity is not time-reversal symmetric or even reversible, but I’m not quite sure yet…
  – But anyway, even when time-reversal symmetry is present, if the initial state is defined to have a low max. entropy (# of poss. states), there is only room for entropy to increase in one time direction: away from the initial state.
    • As the universe expands, the volume and maximum entropy of a given region of space increases.
      – Thus, entropy increases in that time direction.
    • If you simulate a reversible and time-reversal symmetric dynamics on a computer, state complexity (practically-incompressible info., thus entropy) still empirically increases only in one direction (away from a simple initial state).
      – There is a simple combinatorial explanation for this behavior, namely…
      – There is always a greater number of more-complex than less-complex states to go to!

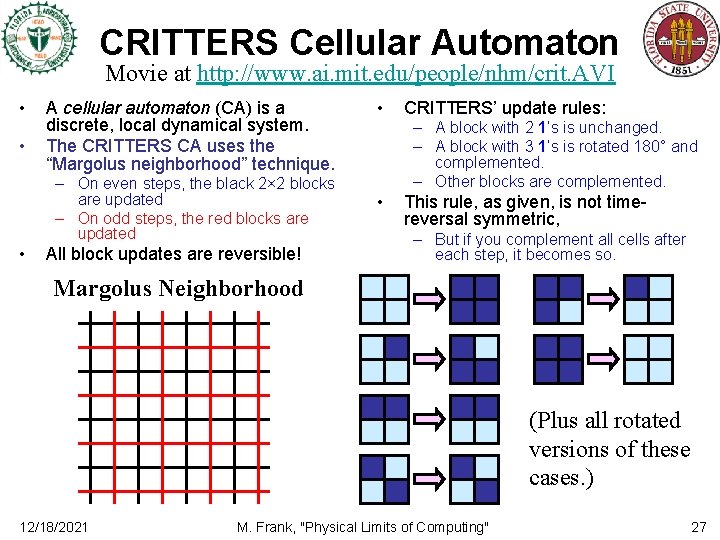

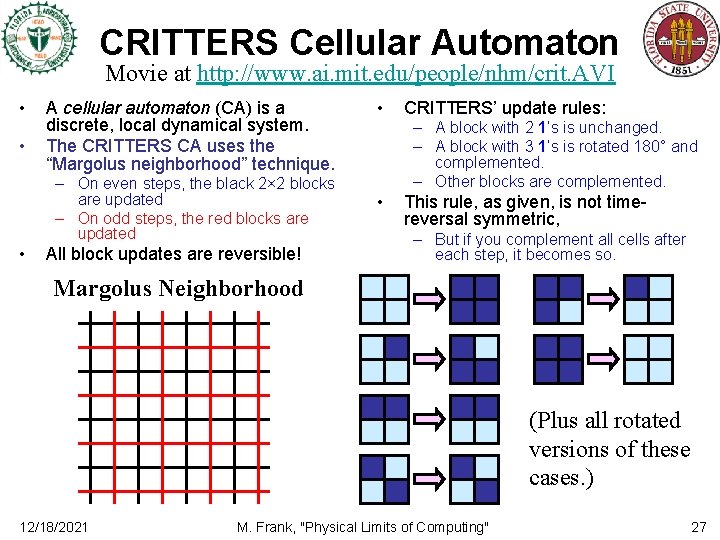

CRITTERS Cellular Automaton
Movie at http://www.ai.mit.edu/people/nhm/crit.AVI
• A cellular automaton (CA) is a discrete, local dynamical system.
• The CRITTERS CA uses the “Margolus neighborhood” technique.
  – On even steps, the black 2×2 blocks are updated
  – On odd steps, the red blocks are updated
• All block updates are reversible!
• CRITTERS’ update rules:
  – A block with 2 1’s is unchanged.
  – A block with 3 1’s is rotated 180° and complemented.
  – Other blocks are complemented.
  (Plus all rotated versions of these cases.)
• This rule, as given, is not time-reversal symmetric,
  – But if you complement all cells after each step, it becomes so.
[Figure: Margolus neighborhood, showing the two alternating partitions of the grid into 2×2 blocks.]
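The update rules above are short enough to sketch directly. The following is a minimal illustration, not the code behind the movie: it assumes a grid with even side lengths and wrap-around (toroidal) edges, and it assumes the "black" partition starts at offset 0 and the "red" partition at offset 1. The function name `critters_step` and its `inverse` flag are hypothetical.

```python
def critters_step(grid, t, inverse=False):
    """One CRITTERS update on a 0/1 grid (list of lists, even dimensions, toroidal).

    Even t updates the blocks at offset 0, odd t the blocks at offset 1
    (an assumed mapping of the slide's black and red partitions).
    With inverse=True the step is undone instead.
    """
    rows, cols = len(grid), len(grid[0])
    off = t % 2
    new = [row[:] for row in grid]
    # Forward: blocks with three 1s are rotated. The inverse must rotate the
    # images of those blocks, which contain exactly one 1.
    rotate_when = 1 if inverse else 3
    for i in range(off, rows + off, 2):
        for j in range(off, cols + off, 2):
            cells = [(i % rows, j % cols), (i % rows, (j + 1) % cols),
                     ((i + 1) % rows, j % cols), ((i + 1) % rows, (j + 1) % cols)]
            vals = [grid[r][c] for r, c in cells]
            ones = sum(vals)
            if ones != 2:                      # blocks with two 1s are unchanged
                if ones == rotate_when:
                    vals = vals[::-1]          # 180-degree rotation of a 2x2 block
                vals = [1 - v for v in vals]   # complement
            for (r, c), v in zip(cells, vals):
                new[r][c] = v
    return new
```

Running some number of forward steps and then the same steps in reverse order with `inverse=True` should restore the starting grid exactly, which is one way to confirm that every block update is a bijection.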

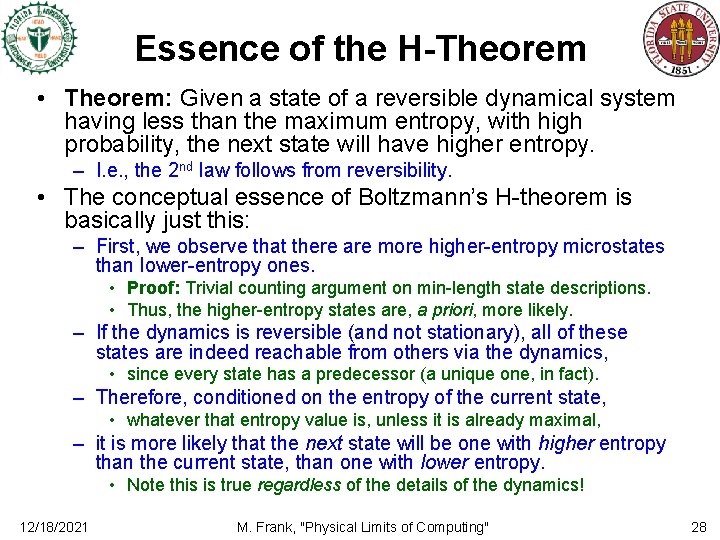

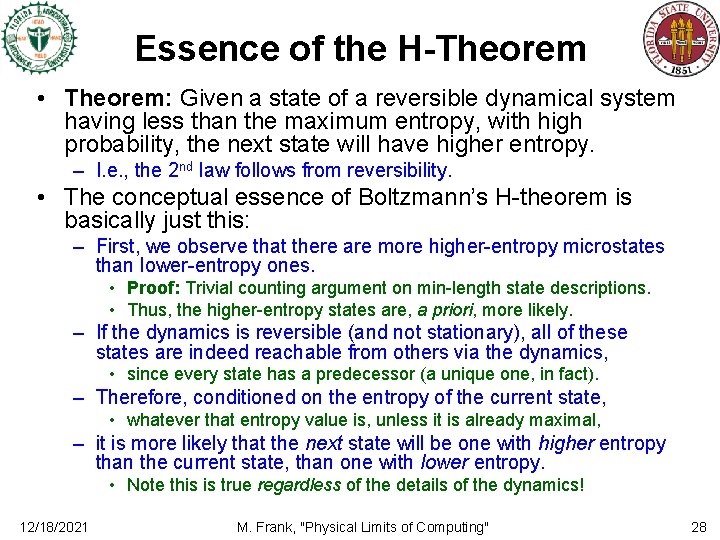

Essence of the H-Theorem
• Theorem: Given a state of a reversible dynamical system having less than the maximum entropy, with high probability, the next state will have higher entropy.
  – I.e., the 2nd law follows from reversibility.
• The conceptual essence of Boltzmann’s H-theorem is basically just this:
  – First, we observe that there are more higher-entropy microstates than lower-entropy ones.
    • Proof: Trivial counting argument on min-length state descriptions.
    • Thus, the higher-entropy states are, a priori, more likely.
  – If the dynamics is reversible (and not stationary), all of these states are indeed reachable from others via the dynamics,
    • since every state has a predecessor (a unique one, in fact).
  – Therefore, conditioned on the entropy of the current state,
    • whatever that entropy value is, unless it is already maximal,
      – it is more likely that the next state will be one with higher entropy than the current state, than one with lower entropy.
• Note this is true regardless of the details of the dynamics!

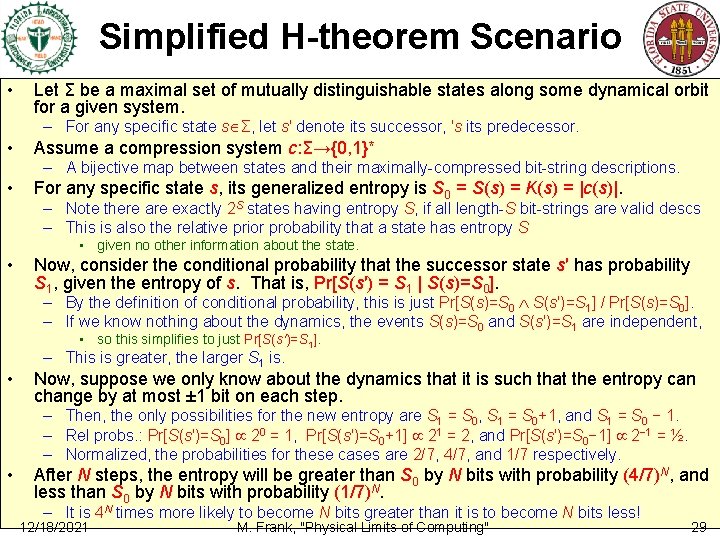

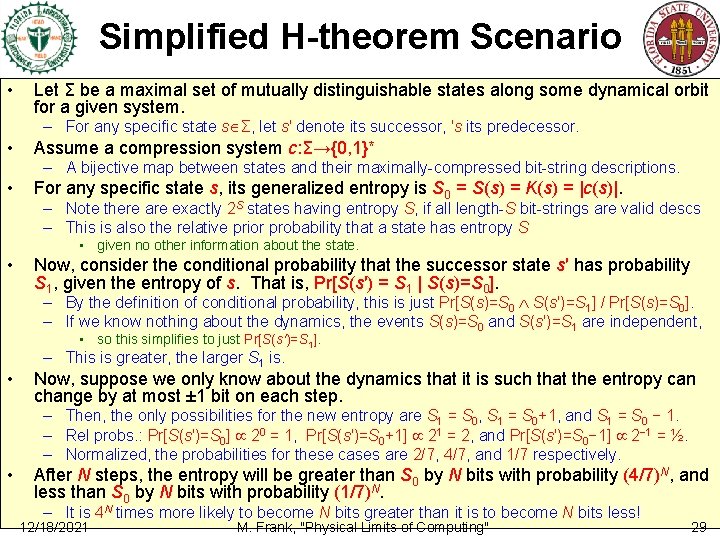

Simplified H-theorem Scenario
• Let Σ be a maximal set of mutually distinguishable states along some dynamical orbit for a given system.
  – For any specific state $s \in \Sigma$, let $s'$ denote its successor and $'s$ its predecessor.
• Assume a compression system $c: \Sigma \to \{0,1\}^*$
  – A bijective map between states and their maximally-compressed bit-string descriptions.
• For any specific state s, its generalized entropy is $S_0 = S(s) = K(s) = |c(s)|$.
  – Note there are exactly $2^S$ states having entropy S, if all length-S bit-strings are valid descriptions.
  – This is also the relative prior probability that a state has entropy S,
    • given no other information about the state.
• Now, consider the conditional probability that the successor state $s'$ has entropy $S_1$, given the entropy of s. That is, $\Pr[S(s') = S_1 \mid S(s) = S_0]$.
  – By the definition of conditional probability, this is just $\Pr[S(s) = S_0 \wedge S(s') = S_1] \,/\, \Pr[S(s) = S_0]$.
  – If we know nothing about the dynamics, the events $S(s) = S_0$ and $S(s') = S_1$ are independent,
    • so this simplifies to just $\Pr[S(s') = S_1]$.
  – This is greater, the larger $S_1$ is.
• Now, suppose we only know about the dynamics that it is such that the entropy can change by at most ±1 bit on each step.
  – Then, the only possibilities for the new entropy are $S_1 = S_0$, $S_1 = S_0 + 1$, and $S_1 = S_0 - 1$.
  – Relative probabilities: $\Pr[S(s') = S_0] \propto 2^0 = 1$, $\Pr[S(s') = S_0 + 1] \propto 2^1 = 2$, and $\Pr[S(s') = S_0 - 1] \propto 2^{-1} = \tfrac{1}{2}$.
  – Normalized, the probabilities for these cases are 2/7, 4/7, and 1/7 respectively.
• After N steps, the entropy will be greater than $S_0$ by N bits with probability $(4/7)^N$, and less than $S_0$ by N bits with probability $(1/7)^N$.
  – It is $4^N$ times more likely to become N bits greater than it is to become N bits less!
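The normalization on this slide is easy to reproduce with exact arithmetic. The sketch below weights each candidate entropy change of d bits by 2^d, the relative number of states at that entropy, and normalizes. The function name `step_probabilities` and its `max_change` parameter are illustrative assumptions.

```python
from fractions import Fraction

def step_probabilities(max_change=1):
    """Probability of each entropy change d (in bits), for |d| <= max_change.

    Each target entropy S0 + d is weighted by its relative number of states, 2**d.
    """
    weights = {d: Fraction(2) ** d for d in range(-max_change, max_change + 1)}
    total = sum(weights.values())
    return {d: w / total for d, w in weights.items()}

probs = step_probabilities()

def odds_up_vs_down(n_steps):
    """How much likelier N consecutive +1 steps are than N consecutive -1 steps."""
    return (probs[1] / probs[-1]) ** n_steps
```

For the ±1-bit case this gives 1/7, 2/7, and 4/7 for a decrease, no change, and an increase, and `odds_up_vs_down(N)` evaluates to 4^N, in line with the last bullet of the slide.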

Evolution of Entropy Distribution
From a spreadsheet simulation based on the results from the previous slide.
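The original spreadsheet is not reproduced in these notes, so the following is only a guess at an equivalent calculation. It evolves a probability distribution over entropy values using the step probabilities 1/7, 2/7, and 4/7 from the previous slide. Two choices here are assumptions of this sketch and may differ from the spreadsheet: entropy is confined to the range 0..`s_max`, and any step that would leave that range is treated as "stay put". All names are hypothetical.

```python
from fractions import Fraction

# Per-step probabilities of an entropy change of -1, 0, or +1 bit.
STEP = {-1: Fraction(1, 7), 0: Fraction(2, 7), +1: Fraction(4, 7)}

def evolve(dist, s_max):
    """Advance the distribution over entropy values 0..s_max by one time step."""
    new = [Fraction(0)] * (s_max + 1)
    for s, p in enumerate(dist):
        for d, q in STEP.items():
            # Assumed boundary handling: out-of-range moves leave the entropy unchanged.
            target = s + d if 0 <= s + d <= s_max else s
            new[target] += p * q
    return new

def mean(dist):
    """Expected entropy under the distribution."""
    return sum(s * p for s, p in enumerate(dist))

s_max = 20
start = [Fraction(0)] * (s_max + 1)
start[5] = Fraction(1)            # entropy is known to be exactly 5 bits initially

history = [start]
for _ in range(10):
    history.append(evolve(history[-1], s_max))
```

Away from the boundaries the expected entropy rises by 4/7 − 1/7 = 3/7 bit per step, so the distribution drifts steadily toward higher entropy while spreading out.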

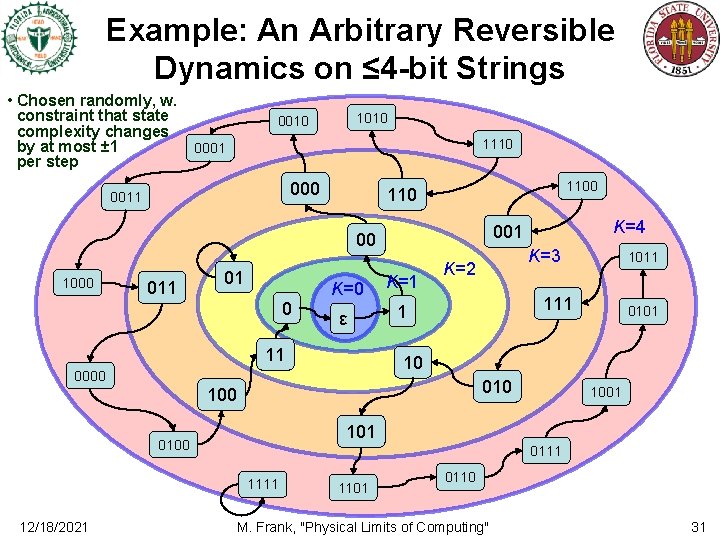

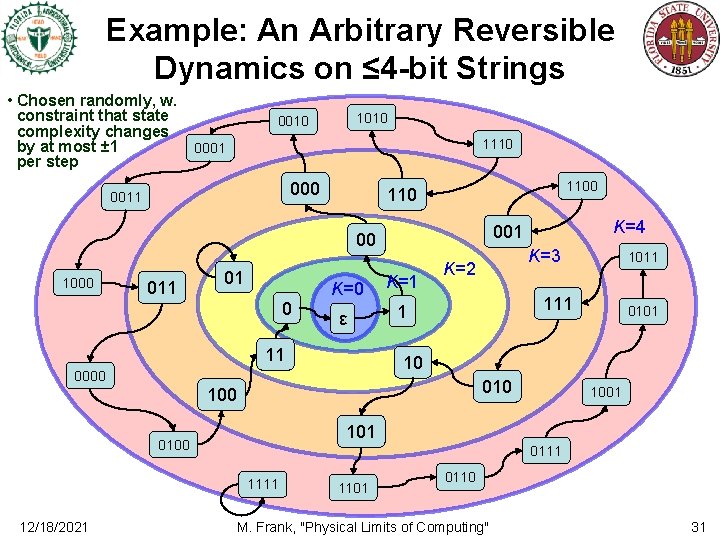

Example: An Arbitrary Reversible Dynamics on ≤ 4-bit Strings
• Chosen randomly, w. constraint that state complexity changes by at most ±1 per step
[Figure: transition graph over all bit-strings of length 0 to 4 (the empty string ε, then 0, 1, 00, …, 1111), grouped into rings by complexity K=1, K=2, K=3, and K=4; the individual transitions are not recoverable from the extracted text.]

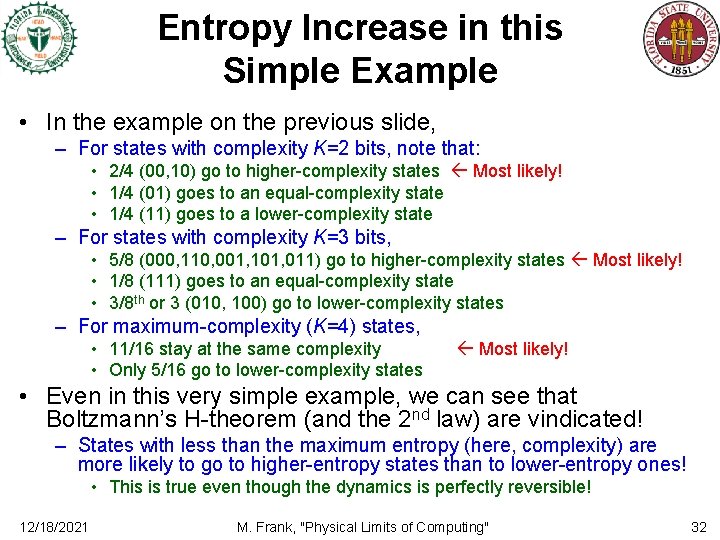

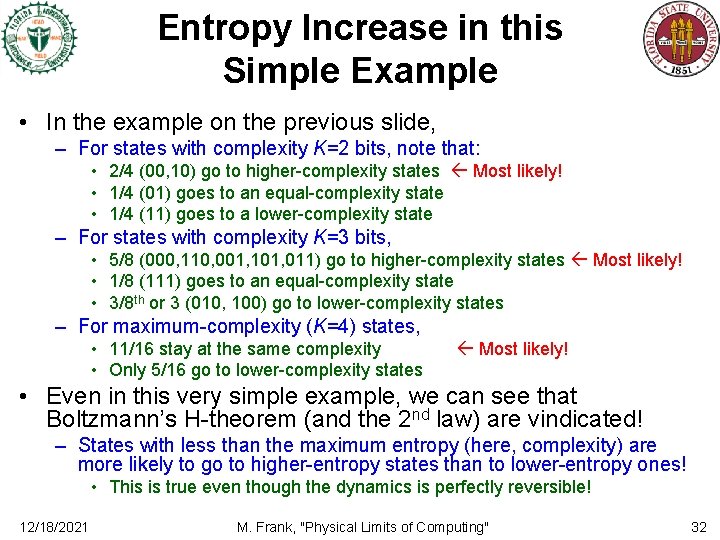

Entropy Increase in this Simple Example
• In the example on the previous slide,
  – For states with complexity K=2 bits, note that:
    • 2/4 (00, 10) go to higher-complexity states (Most likely!)
    • 1/4 (01) goes to an equal-complexity state
    • 1/4 (11) goes to a lower-complexity state
  – For states with complexity K=3 bits,
    • 5/8 (000, 110, 001, 101, 011) go to higher-complexity states (Most likely!)
    • 1/8 (111) goes to an equal-complexity state
    • 2/8 (010, 100) go to lower-complexity states
  – For maximum-complexity (K=4) states,
    • 11/16 stay at the same complexity (Most likely!)
    • Only 5/16 go to lower-complexity states
• Even in this very simple example, we can see that Boltzmann’s H-theorem (and the 2nd law) are vindicated!
  – States with less than the maximum entropy (here, complexity) are more likely to go to higher-entropy states than to lower-entropy ones!
    • This is true even though the dynamics is perfectly reversible!

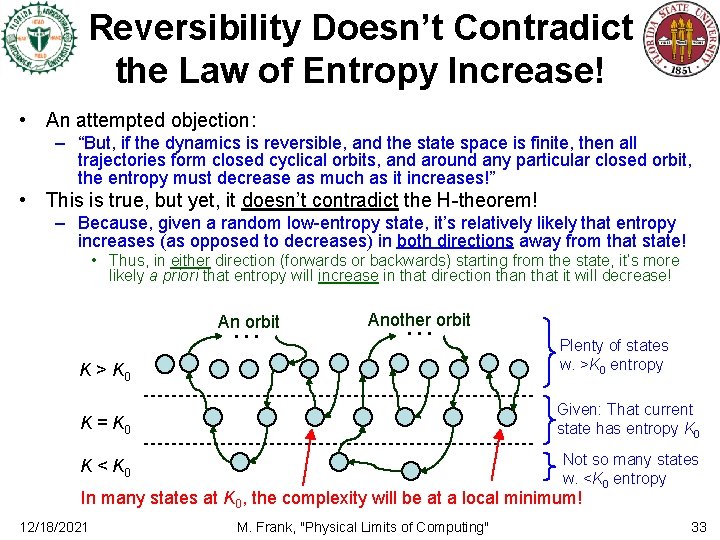

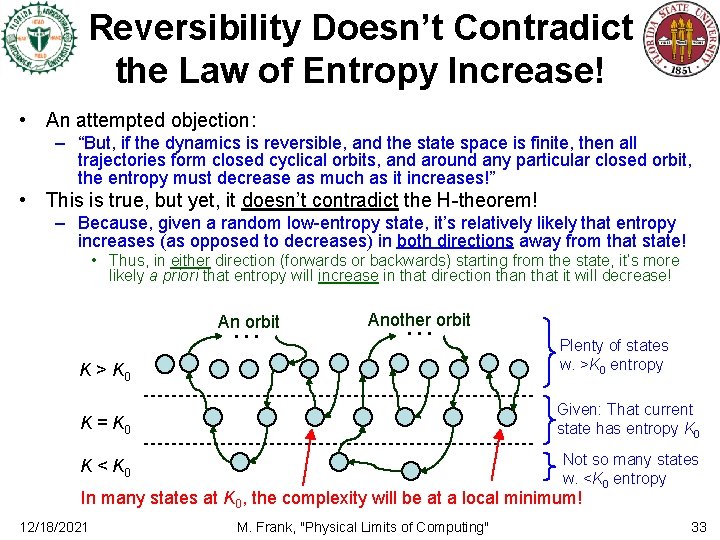

Reversibility Doesn’t Contradict the Law of Entropy Increase!
• An attempted objection:
  – “But, if the dynamics is reversible, and the state space is finite, then all trajectories form closed cyclical orbits, and around any particular closed orbit, the entropy must decrease as much as it increases!”
• This is true, but yet, it doesn’t contradict the H-theorem!
  – Because, given a random low-entropy state, it’s relatively likely that entropy increases (as opposed to decreases) in both directions away from that state!
    • Thus, in either direction (forwards or backwards) starting from the state, it’s more likely a priori that entropy will increase in that direction than that it will decrease!
[Figure: several orbits passing through the level K = K0 (given: the current state has entropy K0). Above it, K > K0: plenty of states w. >K0 entropy. Below it, K < K0: not so many states w. <K0 entropy. In many states at K0, the complexity will be at a local minimum!]

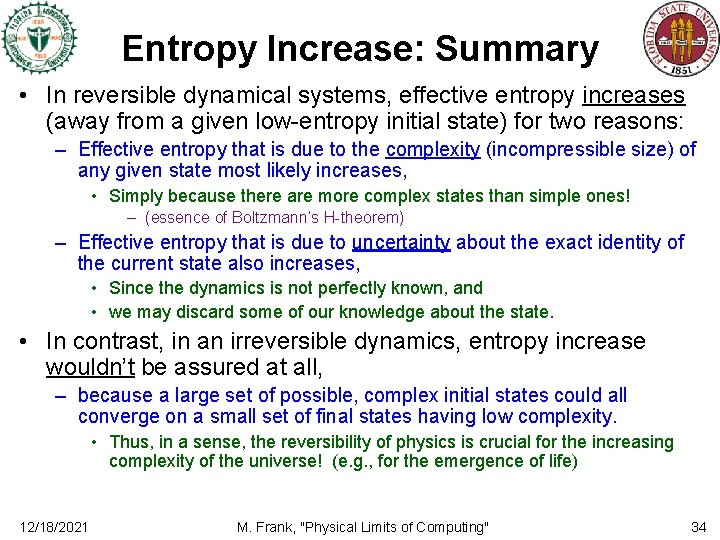

Entropy Increase: Summary
• In reversible dynamical systems, effective entropy increases (away from a given low-entropy initial state) for two reasons:
  – Effective entropy that is due to the complexity (incompressible size) of any given state most likely increases,
    • Simply because there are more complex states than simple ones!
      – (essence of Boltzmann’s H-theorem)
  – Effective entropy that is due to uncertainty about the exact identity of the current state also increases,
    • Since the dynamics is not perfectly known, and
    • we may discard some of our knowledge about the state.
• In contrast, in an irreversible dynamics, entropy increase wouldn’t be assured at all,
  – because a large set of possible, complex initial states could all converge on a small set of final states having low complexity.
• Thus, in a sense, the reversibility of physics is crucial for the increasing complexity of the universe! (e.g., for the emergence of life)

Subsection II.B.5.d: Equilibrium States and the Boltzmann Distribution

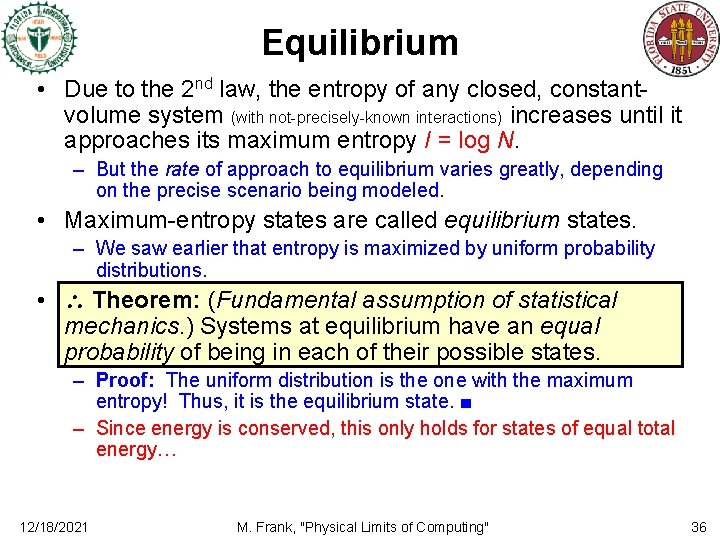

Equilibrium
• Due to the 2nd law, the entropy of any closed, constant-volume system (with not-precisely-known interactions) increases until it approaches its maximum entropy $I = \log N$.
  – But the rate of approach to equilibrium varies greatly, depending on the precise scenario being modeled.
• Maximum-entropy states are called equilibrium states.
  – We saw earlier that entropy is maximized by uniform probability distributions.
• Theorem: (Fundamental assumption of statistical mechanics.) Systems at equilibrium have an equal probability of being in each of their possible states.
  – Proof: The uniform distribution is the one with the maximum entropy! Thus, it is the equilibrium state. ■
  – Since energy is conserved, this only holds for states of equal total energy…

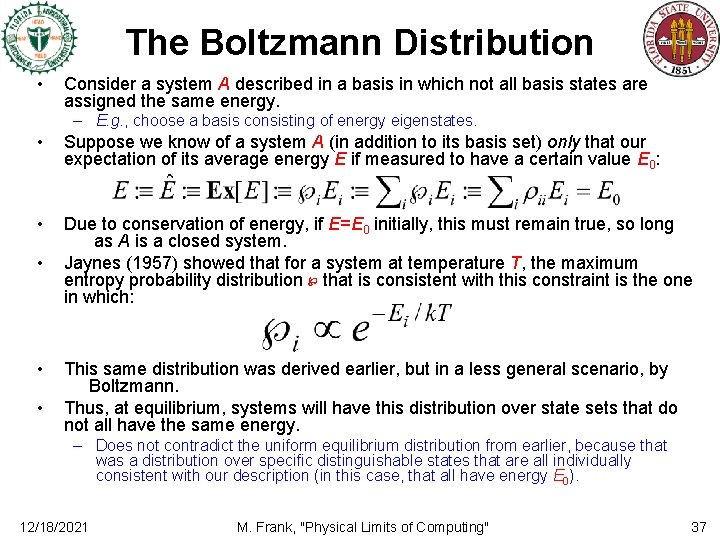

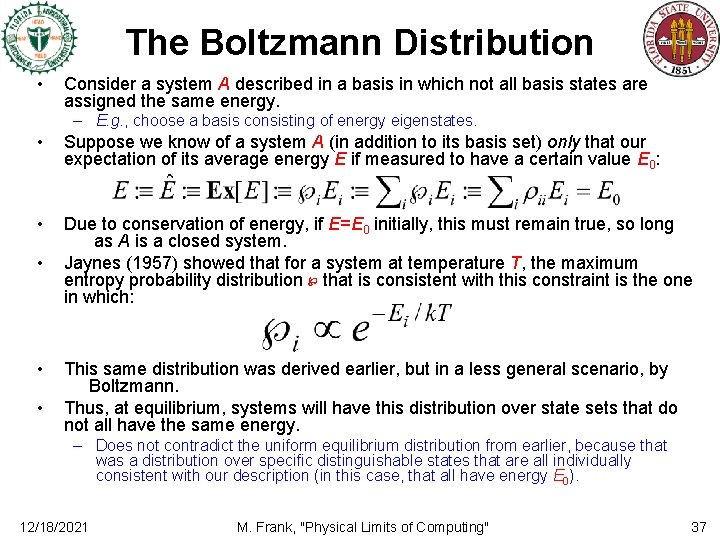

The Boltzmann Distribution
• Consider a system A described in a basis in which not all basis states are assigned the same energy.
  – E.g., choose a basis consisting of energy eigenstates.
• Suppose we know of a system A (in addition to its basis set) only that our expectation of its average energy E, if measured, has a certain value $E_0$: $\langle E \rangle = E_0$.
• Due to conservation of energy, if $E = E_0$ initially, this must remain true, so long as A is a closed system.
• Jaynes (1957) showed that for a system at temperature T, the maximum entropy probability distribution ℘ that is consistent with this constraint is the one in which the probability of a state of energy $E_i$ falls off exponentially with energy, $\wp_i \propto e^{-E_i/kT}$. (The slide’s original equation image was lost in extraction; this form is the one consistent with the factor $e^{-\Delta E/kT}$ used in the proof on the next slide.)
• This same distribution was derived earlier, but in a less general scenario, by Boltzmann.
• Thus, at equilibrium, systems will have this distribution over state sets that do not all have the same energy.
  – Does not contradict the uniform equilibrium distribution from earlier, because that was a distribution over specific distinguishable states that are all individually consistent with our description (in this case, that all have energy $E_0$).
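As a concrete illustration of the distribution discussed on this slide, the sketch below computes probabilities proportional to exp(−E/kT) for a list of state energies. The exponential form is taken from the proof slide that follows; the function name `boltzmann_distribution`, the three example energy levels, and the 300 K temperature are assumptions made for the example.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact value in the 2019 SI)

def boltzmann_distribution(energies, temperature):
    """Probabilities proportional to exp(-E / kT) for state energies given in joules."""
    e_min = min(energies)  # shifting by the minimum avoids overflow and cancels on normalization
    weights = [math.exp(-(e - e_min) / (K_B * temperature)) for e in energies]
    z = sum(weights)       # normalization constant (partition function, up to the shift)
    return [w / z for w in weights]

# Three hypothetical levels spaced by kT at 300 K.
kT = K_B * 300.0
levels = [0.0, kT, 2 * kT]
probs = boltzmann_distribution(levels, 300.0)
```

Each level spaced kT above the previous one should be less probable by a factor of e, so `probs[1] / probs[0]` and `probs[2] / probs[1]` both come out close to exp(−1).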

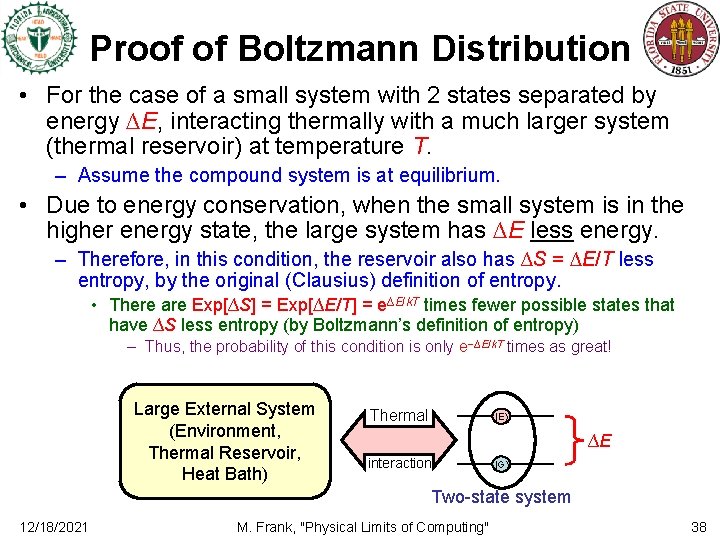

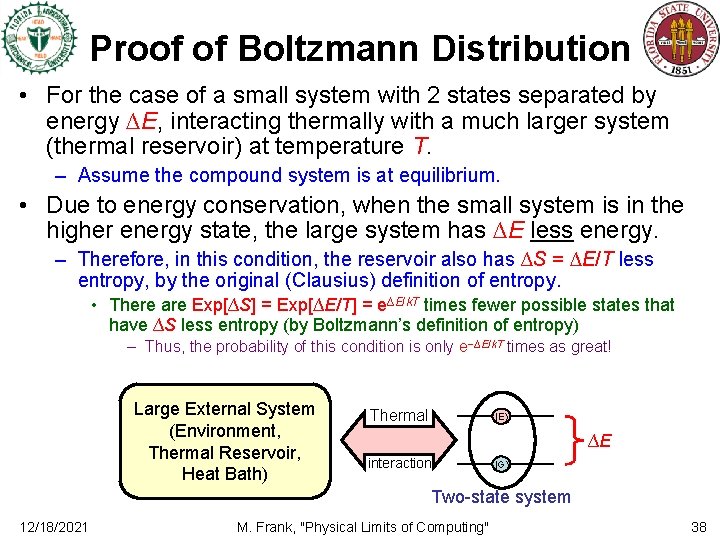

Proof of Boltzmann Distribution
• For the case of a small system with 2 states separated by energy ∆E, interacting thermally with a much larger system (thermal reservoir) at temperature T.
  – Assume the compound system is at equilibrium.
• Due to energy conservation, when the small system is in the higher energy state, the large system has ∆E less energy.
  – Therefore, in this condition, the reservoir also has $\Delta S = \Delta E/T$ less entropy, by the original (Clausius) definition of entropy.
• There are $\exp[\Delta S] = \exp[\Delta E/T] = e^{\Delta E/kT}$ times fewer possible states that have ∆S less entropy (by Boltzmann’s definition of entropy, with entropy expressed in units of k).
  – Thus, the probability of this condition is only $e^{-\Delta E/kT}$ times as great!
[Figure: a two-state system, with levels |G⟩ and |E⟩ separated by ∆E, in thermal interaction with a large external system (environment, thermal reservoir, heat bath).]
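The counting argument can be written out as a short worked derivation. This is a sketch under the slide's own assumptions (a reservoir large enough that T is unchanged by the exchange of ∆E); the symbol $\Omega_R$ for the reservoir's number of states and the labels $P_G$, $P_E$ for the two occupation probabilities are notation introduced here.

```latex
% Reservoir state counts, using Boltzmann's S = k ln(Omega) and Clausius' Delta S = Delta E / T:
\frac{\Omega_R(E_{\mathrm{tot}} - \Delta E)}{\Omega_R(E_{\mathrm{tot}})}
  = e^{-\Delta S / k}
  = e^{-\Delta E / kT}

% At equilibrium every joint state is equally likely, so the two-state
% system's occupation probabilities are in the same ratio:
\frac{P_E}{P_G} = e^{-\Delta E / kT},
\qquad
P_G + P_E = 1
\;\Longrightarrow\;
P_E = \frac{1}{1 + e^{\Delta E / kT}},
\quad
P_G = \frac{1}{1 + e^{-\Delta E / kT}}
```

As a sanity check, ∆E = 0 gives equal probabilities of 1/2, and ∆E much larger than kT drives $P_E$ toward zero.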

Subsection II.B.5.e: The Concept of Temperature

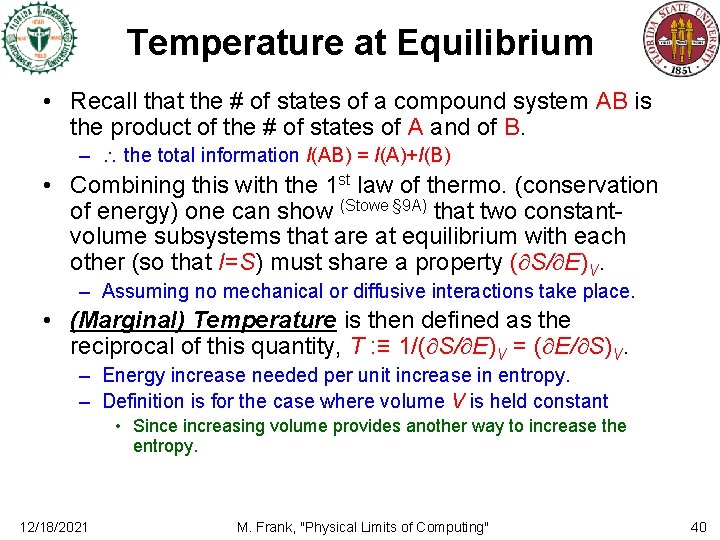

Temperature at Equilibrium
• Recall that the # of states of a compound system AB is the product of the # of states of A and of B.
  – the total information $I(AB) = I(A) + I(B)$
• Combining this with the 1st law of thermo. (conservation of energy) one can show (Stowe §9A) that two constant-volume subsystems that are at equilibrium with each other (so that I=S) must share a property $(\partial S/\partial E)_V$.
  – Assuming no mechanical or diffusive interactions take place.
• (Marginal) Temperature is then defined as the reciprocal of this quantity, $T :\equiv 1/(\partial S/\partial E)_V = (\partial E/\partial S)_V$.
  – Energy increase needed per unit increase in entropy.
  – Definition is for the case where volume V is held constant
    • Since increasing volume provides another way to increase the entropy.

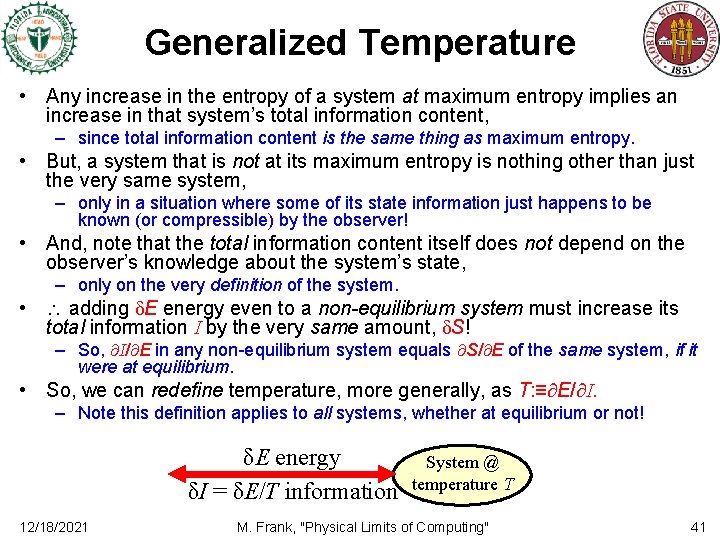

Generalized Temperature
• Any increase in the entropy of a system at maximum entropy implies an increase in that system’s total information content,
  – since total information content is the same thing as maximum entropy.
• But, a system that is not at its maximum entropy is nothing other than just the very same system,
  – only in a situation where some of its state information just happens to be known (or compressible) by the observer!
• And, note that the total information content itself does not depend on the observer’s knowledge about the system’s state,
  – only on the very definition of the system.
• Therefore, adding δE energy even to a non-equilibrium system must increase its total information I by the very same amount, δS!
  – So, $\partial I/\partial E$ in any non-equilibrium system equals $\partial S/\partial E$ of the same system, if it were at equilibrium.
• So, we can redefine temperature, more generally, as $T :\equiv \partial E/\partial I$.
  – Note this definition applies to all systems, whether at equilibrium or not!
[Figure: δE of energy added to a system at temperature T yields $\delta I = \delta E/T$ of information.]
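To get a feel for the scale implied by δI = δE/T, the sketch below converts an energy increment at a given temperature into bits, using the fact that one bit of information corresponds to k ln 2 of entropy. The function name `information_gain_bits` and the 300 K example are assumptions for illustration.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact value in the 2019 SI)

def information_gain_bits(delta_energy_joules, temperature_kelvin):
    """delta_I = delta_E / T, converted from J/K to bits (1 bit = k ln 2 of entropy)."""
    return delta_energy_joules / (K_B * temperature_kelvin * math.log(2))

# Energy that accompanies one bit of added information content at 300 K.
energy_per_bit_300K = K_B * 300.0 * math.log(2)
```

At room temperature this works out to roughly 2.9 × 10⁻²¹ J per bit, so `information_gain_bits(energy_per_bit_300K, 300.0)` returns 1 up to rounding.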

Subsection II.B.5.e: The Nature of Heat
Energy, Heat, Chill, and Work

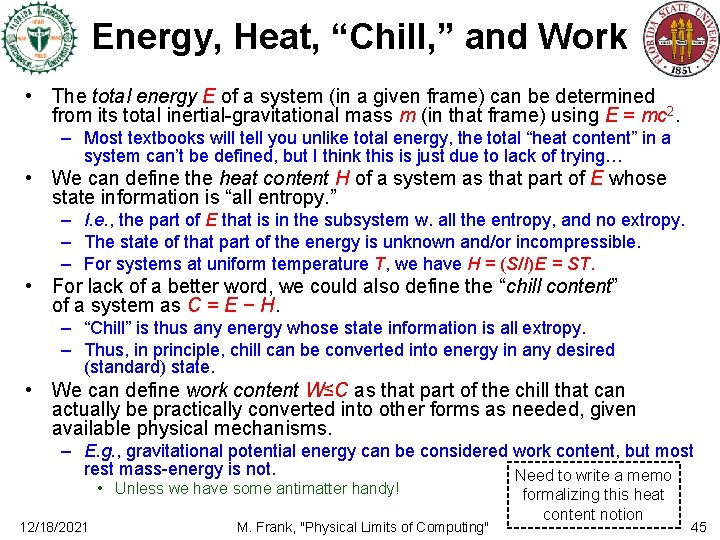

Energy, Heat, "Chill," and Work

• The total energy $E$ of a system (in a given frame) can be determined from its total inertial-gravitational mass $m$ (in that frame) using $E = mc^2$.
  – Most textbooks will tell you that, unlike total energy, the total "heat content" in a system can't be defined, but I think this is just due to lack of trying…
• We can define the heat content $H$ of a system as that part of $E$ whose state information is "all entropy."
  – I.e., the part of $E$ that is in the subsystem with all the entropy, and no extropy.
  – The state of that part of the energy is unknown and/or incompressible.
  – For systems at uniform temperature $T$, we have $H = (S/I)E = ST$.
• For lack of a better word, we could also define the "chill content" of a system as $C = E - H$.
  – "Chill" is thus any energy whose state information is all extropy.
  – Thus, in principle, chill can be converted into energy in any desired (standard) state.
• We can define work content $W \le C$ as that part of the chill that can actually be practically converted into other forms as needed, given available physical mechanisms.
  – E.g., gravitational potential energy can be considered work content, but most rest mass-energy is not.
    • Unless we have some antimatter handy!

[Slide note: Need to write a memo formalizing this heat content notion.]
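The step from $(S/I)E$ to $ST$ uses the generalized temperature $T = E/I$, which holds for the system as a whole when its temperature is uniform. A short worked version follows. Writing the chill in terms of $I - S$ is an assumption made here for illustration (the slides define chill only as $E - H$).

```latex
\begin{align*}
T &= \frac{E}{I} && \text{(uniform generalized temperature)} \\
H &= \frac{S}{I}\,E = S\,\frac{E}{I} = S\,T && \text{(heat content)} \\
C &= E - H = \frac{I - S}{I}\,E = (I - S)\,T && \text{(chill content)}
\end{align*}
```

Under this reading, heat and chill divide the total energy in the same proportion that entropy and the remaining (known or compressible) information divide the total information.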

Subsection II.B.5.f: Reversible Heat Engines and the Carnot Cycle
Ideal Extraction of Work from Heat, Carnot Cycle

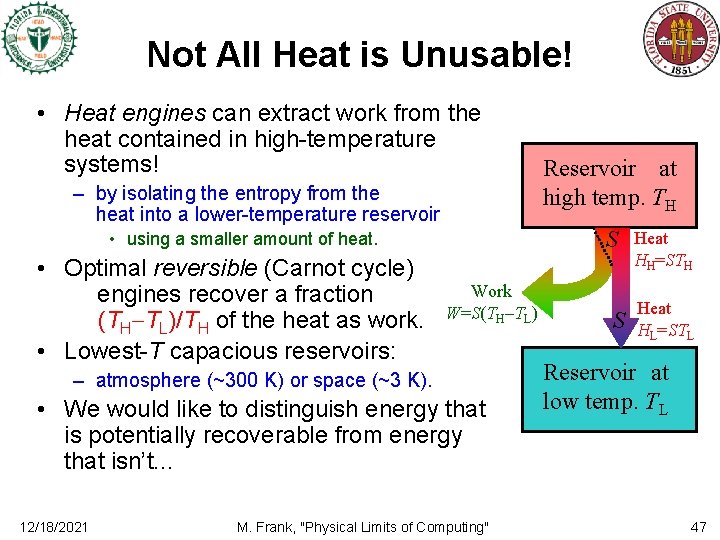

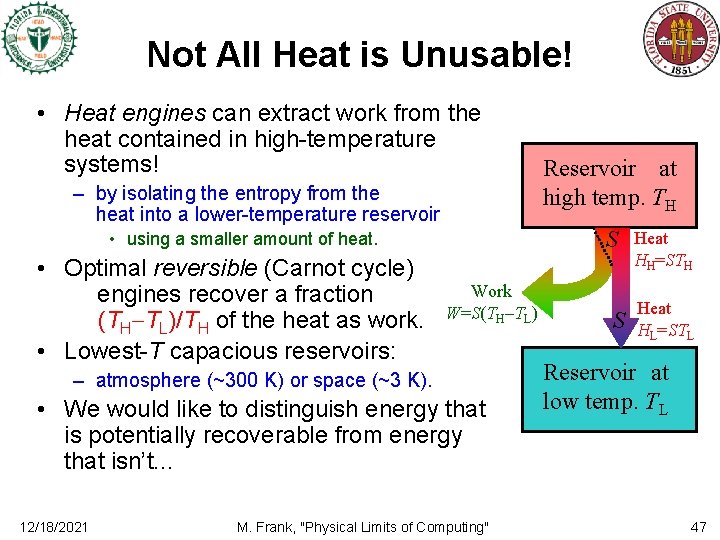

Not All Heat is Unusable!

• Heat engines can extract work from the heat contained in high-temperature systems!
  – by isolating the entropy from the heat into a lower-temperature reservoir
    • using a smaller amount of heat.
• Optimal reversible (Carnot cycle) engines recover a fraction $(T_H - T_L)/T_H$ of the heat as work.
• Lowest-$T$ capacious reservoirs:
  – atmosphere (~300 K) or space (~3 K).
• We would like to distinguish energy that is potentially recoverable from energy that isn't...

[Figure: entropy $S$ leaves the reservoir at high temperature $T_H$ carrying heat $H_H = S T_H$ and enters the reservoir at low temperature $T_L$ carrying heat $H_L = S T_L$; the difference is extracted as work $W = S(T_H - T_L)$.]
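The bookkeeping in the figure can be sketched in a few lines. The helper below is illustrative (its name and the sample numbers are assumptions, not from the slides). It takes the heat drawn from the hot reservoir, computes the entropy $S = H_H/T_H$ that must be moved, and splits the heat into rejected heat $H_L = S T_L$ and work $W = S(T_H - T_L)$.

```python
def carnot_split(heat_in: float, t_hot: float, t_cold: float) -> dict:
    """Split heat drawn from the hot reservoir into work and rejected heat.

    heat_in is in joules (must be positive); temperatures are in kelvin.
    """
    if heat_in <= 0 or not 0 < t_cold <= t_hot:
        raise ValueError("require heat_in > 0 and 0 < t_cold <= t_hot")
    entropy = heat_in / t_hot           # S = H_H / T_H, in J/K
    heat_out = entropy * t_cold         # H_L = S * T_L
    work = entropy * (t_hot - t_cold)   # W = S * (T_H - T_L)
    return {
        "entropy": entropy,
        "heat_out": heat_out,
        "work": work,
        "efficiency": work / heat_in,   # equals (T_H - T_L) / T_H
    }

# Hypothetical example: 1000 J drawn from a 600 K source.
to_atmosphere = carnot_split(1000.0, 600.0, 300.0)  # reject to ~300 K atmosphere
to_space = carnot_split(1000.0, 600.0, 3.0)         # reject to ~3 K space
print(to_atmosphere["work"], to_space["work"])
```

Rejecting the same entropy into a colder reservoir costs less heat, which is why the choice of lowest-temperature reservoir matters.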

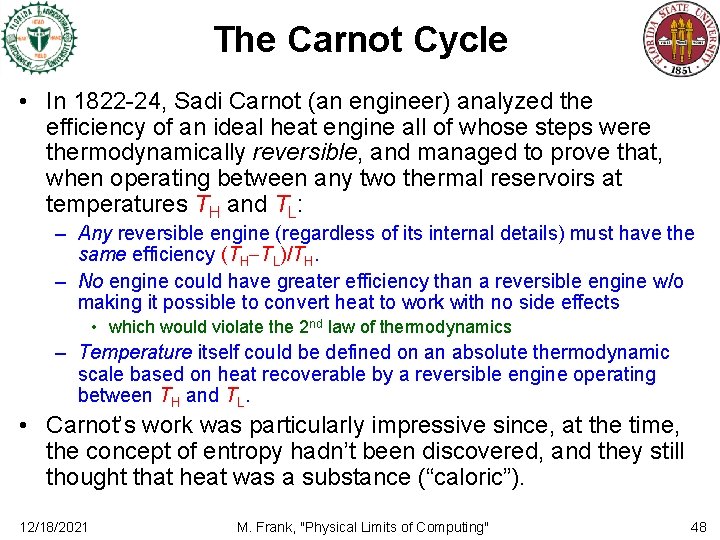

The Carnot Cycle

• In 1822-24, Sadi Carnot (an engineer) analyzed the efficiency of an ideal heat engine all of whose steps were thermodynamically reversible, and managed to prove that, when operating between any two thermal reservoirs at temperatures $T_H$ and $T_L$:
  – Any reversible engine (regardless of its internal details) must have the same efficiency $(T_H - T_L)/T_H$.
  – No engine could have greater efficiency than a reversible engine without making it possible to convert heat to work with no side effects,
    • which would violate the 2nd law of thermodynamics.
  – Temperature itself could be defined on an absolute thermodynamic scale based on the heat recoverable by a reversible engine operating between $T_H$ and $T_L$.
• Carnot's work was particularly impressive since, at the time, the concept of entropy hadn't been discovered, and scientists still thought that heat was a substance ("caloric").

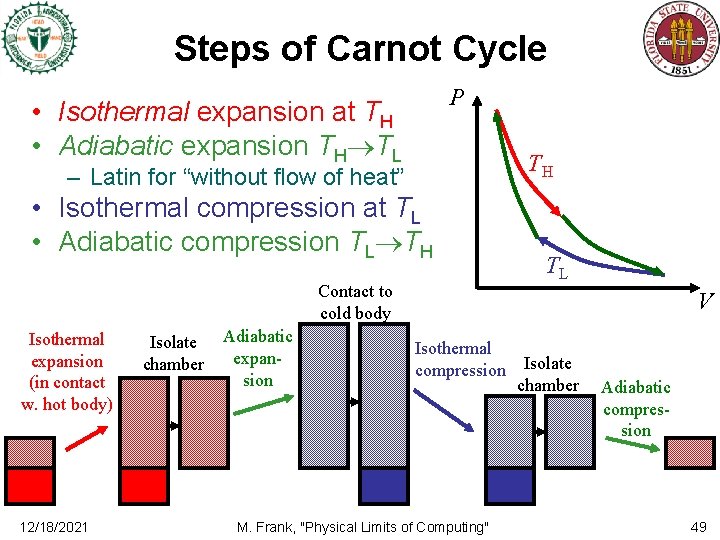

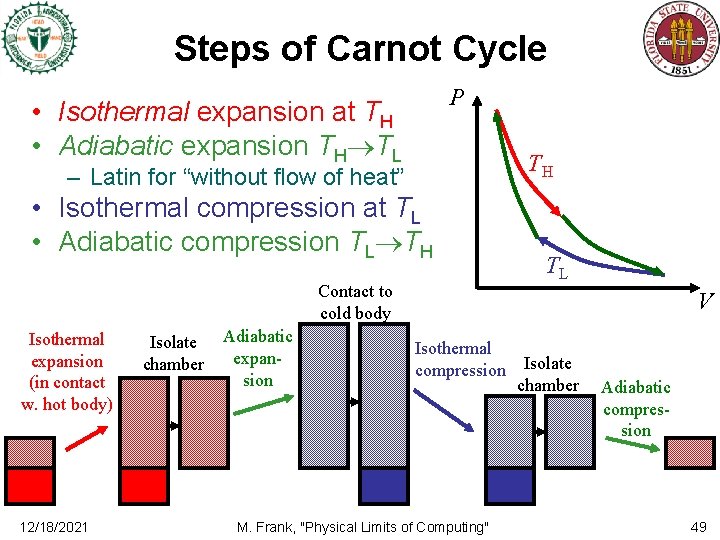

Steps of Carnot Cycle

• Isothermal expansion at $T_H$
• Adiabatic expansion $T_H \to T_L$
  – "Adiabatic" comes from the Greek for "impassable," i.e., without flow of heat
• Isothermal compression at $T_L$
• Adiabatic compression $T_L \to T_H$

[Figure: pressure-volume ($P$ vs. $V$) diagram of the cycle between the $T_H$ and $T_L$ isotherms. Isothermal expansion (in contact with hot body); isolate chamber; adiabatic expansion; contact to cold body; isothermal compression; isolate chamber; adiabatic compression.]
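The four steps can be traced numerically for a concrete working substance. The sketch below assumes an ideal gas with constant heat-capacity ratio `gamma` (monatomic by default). This choice, the function name, and the sample values are assumptions for illustration, since the slides do not specify the working fluid. It uses $Q = nRT\ln(V_f/V_i)$ on the isotherms and $TV^{\gamma-1} = \text{const}$ on the adiabats.

```python
from math import log

R = 8.314462618  # molar gas constant, J/(mol*K)

def carnot_cycle(n_mol, t_hot, t_cold, v1, v2, gamma=5.0 / 3.0):
    """Heat and work (joules) for one Carnot cycle of an ideal gas.

    v1 -> v2 is the isothermal expansion at t_hot; gamma is Cp/Cv.
    """
    # Adiabats obey T * V**(gamma - 1) = const, fixing the other two volumes.
    stretch = (t_hot / t_cold) ** (1.0 / (gamma - 1.0))
    v3, v4 = v2 * stretch, v1 * stretch
    c_v = R / (gamma - 1.0)                      # molar heat capacity at constant V

    q_hot = n_mol * R * t_hot * log(v2 / v1)     # step 1: heat absorbed at T_H
    w_step2 = n_mol * c_v * (t_hot - t_cold)     # step 2: work done by gas while cooling
    q_cold = n_mol * R * t_cold * log(v3 / v4)   # step 3: heat rejected at T_L
    w_step4 = -n_mol * c_v * (t_hot - t_cold)    # step 4: work done by gas (negative)

    net_work = q_hot + w_step2 - q_cold + w_step4
    return {
        "q_hot": q_hot,
        "q_cold": q_cold,
        "net_work": net_work,
        "efficiency": net_work / q_hot,
        "entropy_in": q_hot / t_hot,
        "entropy_out": q_cold / t_cold,
    }

# Hypothetical example: 1 mol, 600 K -> 300 K, volume doubling on the hot isotherm.
cycle = carnot_cycle(n_mol=1.0, t_hot=600.0, t_cold=300.0, v1=1.0e-3, v2=2.0e-3)
print(f"efficiency = {cycle['efficiency']:.4f}")
```

The work done in the two adiabatic steps cancels, and the entropy taken in at $T_H$ equals the entropy rejected at $T_L$, so the efficiency reduces to $(T_H - T_L)/T_H$.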

Subsection II.B.5.g: Free Energy
Spent Energy, Unspent Energy, Internal Energy, Free Energy, Helmholtz Free Energy, Gibbs Free Energy

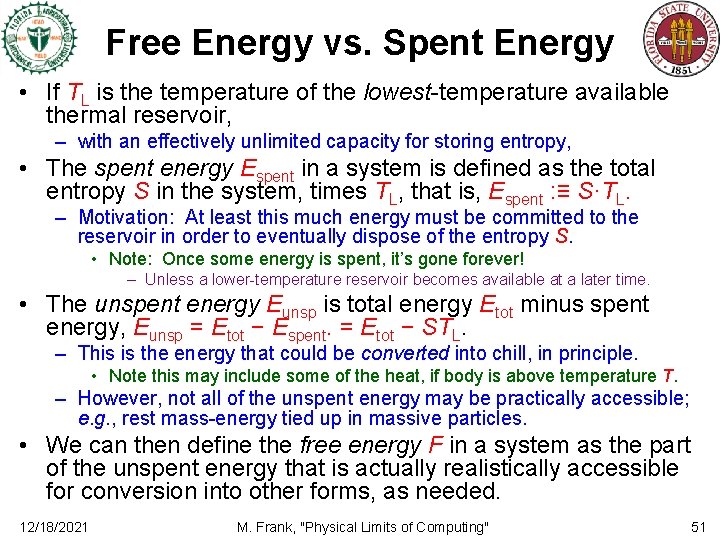

Free Energy vs. Spent Energy

• Let $T_L$ be the temperature of the lowest-temperature available thermal reservoir,
  – with an effectively unlimited capacity for storing entropy.
• The spent energy $E_{spent}$ in a system is defined as the total entropy $S$ in the system, times $T_L$; that is, $E_{spent} :\equiv S \cdot T_L$.
  – Motivation: At least this much energy must be committed to the reservoir in order to eventually dispose of the entropy $S$.
• Note: Once some energy is spent, it's gone forever!
  – Unless a lower-temperature reservoir becomes available at a later time.
• The unspent energy $E_{unsp}$ is the total energy $E_{tot}$ minus the spent energy: $E_{unsp} = E_{tot} - E_{spent} = E_{tot} - S T_L$.
  – This is the energy that could be converted into chill, in principle.
    • Note that this may include some of the heat, if the body is above temperature $T_L$.
  – However, not all of the unspent energy may be practically accessible; e.g., rest mass-energy tied up in massive particles.
• We can then define the free energy $F$ in a system as the part of the unspent energy that is actually realistically accessible for conversion into other forms, as needed.
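A small numerical sketch of these definitions follows. The sample body (2000 J total energy, entropy 2 J/K, uniform temperature 600 K) and the variable names are made up for illustration. The example shows that when the body is hotter than the reservoir, part of its heat $S T_{sys}$ counts as unspent.

```python
def spent_energy(entropy: float, t_low: float) -> float:
    """E_spent = S * T_L: energy that must accompany the entropy into the reservoir."""
    return entropy * t_low

def unspent_energy(e_total: float, entropy: float, t_low: float) -> float:
    """E_unsp = E_tot - S * T_L."""
    return e_total - spent_energy(entropy, t_low)

# Hypothetical body: values chosen only for illustration.
E_TOTAL = 2000.0   # total energy, J
ENTROPY = 2.0      # entropy, J/K
T_SYS = 600.0      # body temperature, K
T_LOW = 300.0      # coldest available reservoir (atmosphere), K

heat = ENTROPY * T_SYS                          # heat content H = S * T_sys
spent = spent_energy(ENTROPY, T_LOW)
unspent = unspent_energy(E_TOTAL, ENTROPY, T_LOW)
recoverable_heat = heat - spent                 # S * (T_sys - T_L), the Carnot work

print(f"heat = {heat} J, spent = {spent} J, unspent = {unspent} J")
print(f"portion of the heat that is unspent: {recoverable_heat} J")

# If a ~3 K reservoir (space) becomes available, far less energy counts as spent.
spent_to_space = spent_energy(ENTROPY, 3.0)
```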

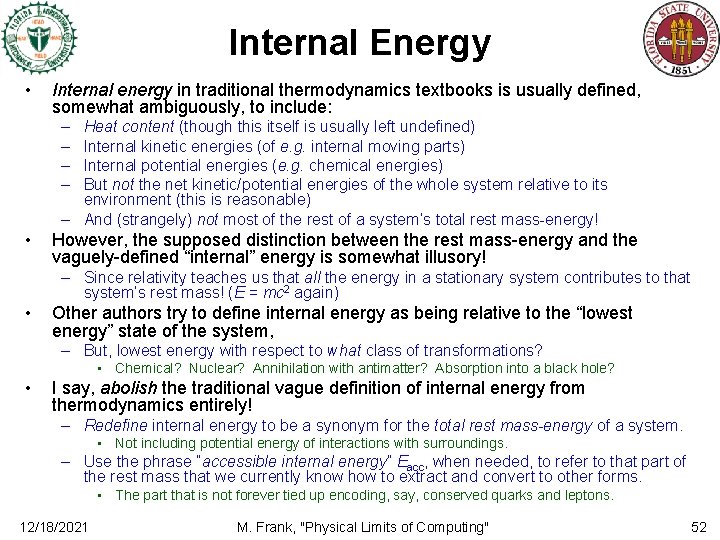

Internal Energy

• Internal energy in traditional thermodynamics textbooks is usually defined, somewhat ambiguously, to include:
  – Heat content (though this itself is usually left undefined)
  – Internal kinetic energies (e.g., of internal moving parts)
  – Internal potential energies (e.g., chemical energies)
  – But not the net kinetic/potential energies of the whole system relative to its environment (this is reasonable)
  – And (strangely) not most of the rest of a system's total rest mass-energy!
• However, the supposed distinction between the rest mass-energy and the vaguely defined "internal" energy is somewhat illusory!
  – Since relativity teaches us that all the energy in a stationary system contributes to that system's rest mass! ($E = mc^2$ again)
• Other authors try to define internal energy as being relative to the "lowest energy" state of the system,
  – But lowest energy with respect to what class of transformations?
    • Chemical? Nuclear? Annihilation with antimatter? Absorption into a black hole?
• I say, abolish the traditional vague definition of internal energy from thermodynamics entirely!
  – Redefine internal energy to be a synonym for the total rest mass-energy of a system.
    • Not including potential energy of interactions with surroundings.
  – Use the phrase "accessible internal energy" $E_{acc}$, when needed, to refer to that part of the rest mass that we currently know how to extract and convert to other forms.
    • The part that is not forever tied up encoding, say, conserved quarks and leptons.

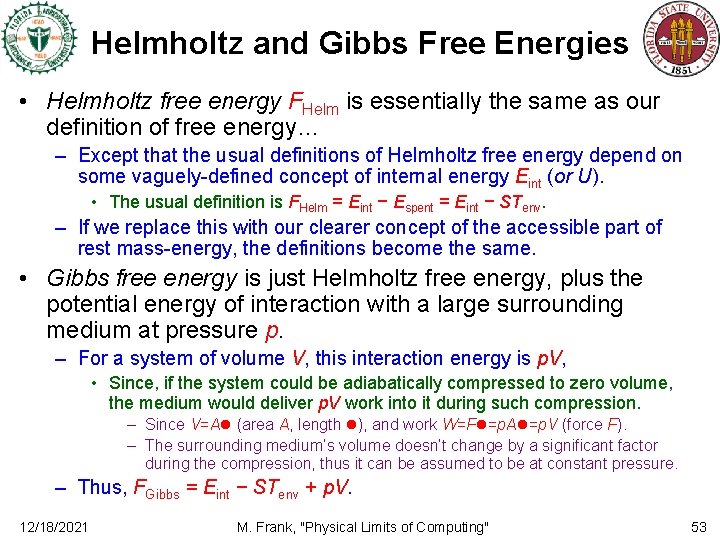

Helmholtz and Gibbs Free Energies

• Helmholtz free energy $F_{Helm}$ is essentially the same as our definition of free energy…
  – Except that the usual definitions of Helmholtz free energy depend on some vaguely defined concept of internal energy $E_{int}$ (or $U$).
• The usual definition is $F_{Helm} = E_{int} - E_{spent} = E_{int} - S T_{env}$.
  – If we replace $E_{int}$ with our clearer concept of the accessible part of rest mass-energy, the definitions become the same.
• Gibbs free energy is just Helmholtz free energy, plus the potential energy of interaction with a large surrounding medium at pressure $p$.
  – For a system of volume $V$, this interaction energy is $pV$,
    • Since, if the system could be adiabatically compressed to zero volume, the medium would deliver $pV$ work into it during such compression.
      – Since $V = A\ell$ (area $A$, length $\ell$), and work $W = F\ell = pA\ell = pV$ (force $F$).
      – The surrounding medium's volume doesn't change by a significant factor during the compression, thus it can be assumed to be at constant pressure.
  – Thus, $F_{Gibbs} = E_{int} - S T_{env} + pV$.
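To give a feel for the size of the $pV$ term, the sketch below evaluates both free energies for a made-up system: one litre in volume, immersed in a medium at one standard atmosphere and 300 K. The function names and sample values are illustrative assumptions, and `e_int` stands for whichever notion of (accessible) internal energy is adopted.

```python
STANDARD_ATMOSPHERE = 101325.0  # pascals (exact by definition)

def helmholtz_free_energy(e_int: float, entropy: float, t_env: float) -> float:
    """F_Helm = E_int - S * T_env."""
    return e_int - entropy * t_env

def gibbs_free_energy(e_int: float, entropy: float, t_env: float,
                      pressure: float, volume: float) -> float:
    """F_Gibbs = E_int - S * T_env + p * V."""
    return helmholtz_free_energy(e_int, entropy, t_env) + pressure * volume

# Hypothetical system: 1 litre, 500 J accessible internal energy, entropy 1 J/K.
E_INT, ENTROPY, T_ENV = 500.0, 1.0, 300.0
VOLUME = 1.0e-3  # cubic metres

interaction_energy = STANDARD_ATMOSPHERE * VOLUME  # p * V, in joules
f_helm = helmholtz_free_energy(E_INT, ENTROPY, T_ENV)
f_gibbs = gibbs_free_energy(E_INT, ENTROPY, T_ENV, STANDARD_ATMOSPHERE, VOLUME)
print(f"pV = {interaction_energy:.3f} J, F_Helm = {f_helm} J, F_Gibbs = {f_gibbs:.3f} J")
```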

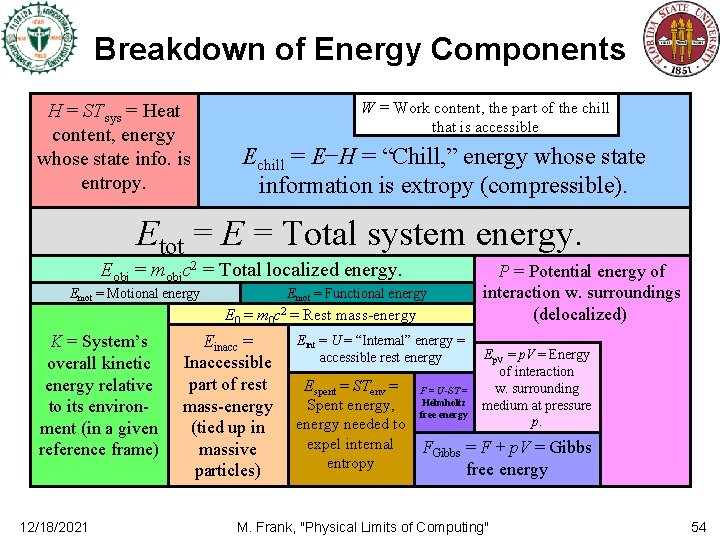

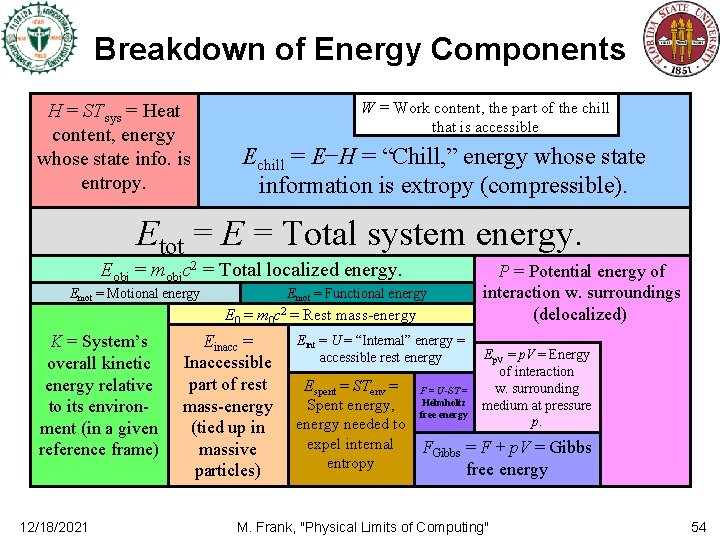

Breakdown of Energy Components

[Figure: nested breakdown of a system's total energy into the components listed below.]

• $E_{tot} = E$ = Total system energy.
• $H = S T_{sys}$ = Heat content, energy whose state information is entropy.
• $E_{chill} = E - H$ = "Chill," energy whose state information is extropy (compressible).
• $W$ = Work content, the part of the chill that is accessible.
• $E_{obj} = m_{obj} c^2$ = Total localized energy.
• $E_{mot}$ = Motional energy.
• $E_{mot}$ = Functional energy.
• $E_0 = m_0 c^2$ = Rest mass-energy.
• $K$ = System's overall kinetic energy relative to its environment (in a given reference frame).
• $E_{inacc}$ = Inaccessible part of rest mass-energy (tied up in massive particles).
• $E_{int} = U$ = "Internal" energy = accessible rest energy.
• $P$ = Potential energy of interaction with surroundings (delocalized).
• $E_{pV}$ = Energy of interaction with surrounding medium at pressure $p$.
• $E_{spent} = S T_{env}$ = Spent energy, needed to expel internal entropy.
• $F = U - ST$ = Helmholtz free energy.
• $F_{Gibbs} = F + pV$ = Gibbs free energy.

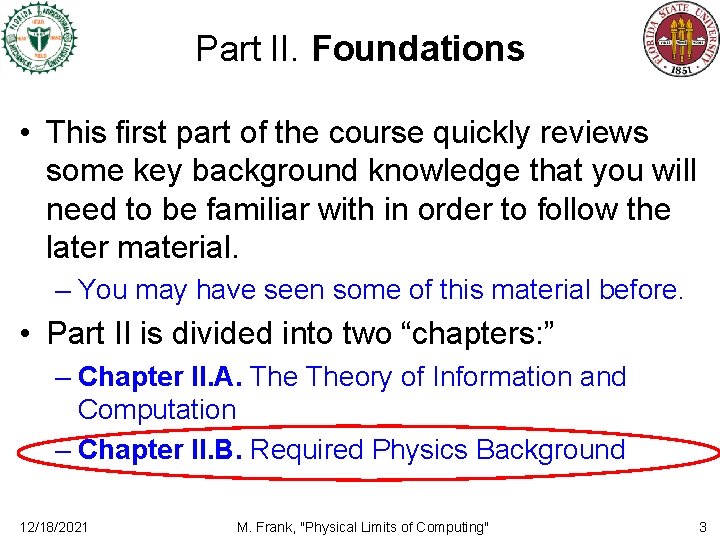

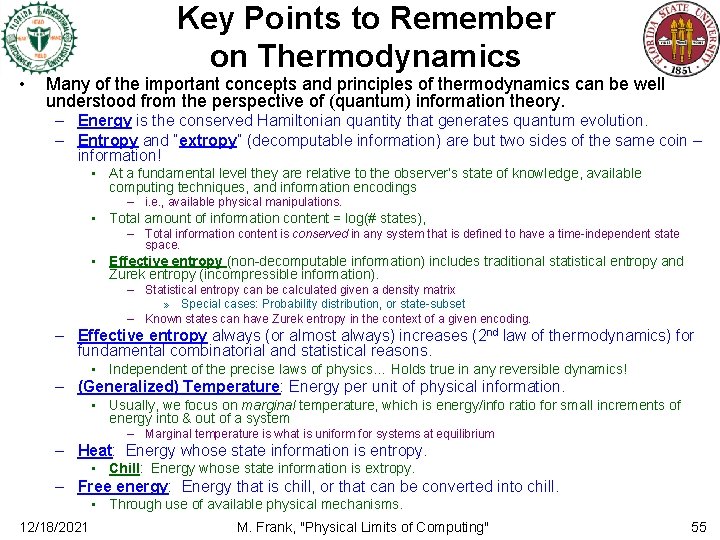

Key Points to Remember on Thermodynamics

• Many of the important concepts and principles of thermodynamics can be well understood from the perspective of (quantum) information theory.
  – Energy is the conserved Hamiltonian quantity that generates quantum evolution.
  – Entropy and "extropy" (decomputable information) are but two sides of the same coin: information!
    • At a fundamental level they are relative to the observer's state of knowledge, available computing techniques, and information encodings,
      – i.e., available physical manipulations.
    • Total amount of information content = log(# states).
      – Total information content is conserved in any system that is defined to have a time-independent state space.
    • Effective entropy (non-decomputable information) includes traditional statistical entropy and Zurek entropy (incompressible information).
      – Statistical entropy can be calculated given a density matrix.
        » Special cases: probability distribution, or state-subset.
      – Known states can have Zurek entropy in the context of a given encoding.
  – Effective entropy always (or almost always) increases (2nd law of thermodynamics) for fundamental combinatorial and statistical reasons.
    • Independent of the precise laws of physics… Holds true in any reversible dynamics!
  – (Generalized) Temperature: Energy per unit of physical information.
    • Usually, we focus on marginal temperature, which is the energy/information ratio for small increments of energy into and out of a system.
      – Marginal temperature is what is uniform for systems at equilibrium.
  – Heat: Energy whose state information is entropy.
    • Chill: Energy whose state information is extropy.
  – Free energy: Energy that is chill, or that can be converted into chill.
    • Through use of available physical mechanisms.
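For the probability-distribution special case, the relationship between total information and statistical entropy can be sketched directly. The example below is illustrative only: the four-state system and function names are made up, and it covers statistical entropy alone (not Zurek entropy). The difference $I - S$ is reported as the known part of the information; identifying that remainder with "extropy" is an assumption here.

```python
from math import log2

def total_information_bits(num_states: int) -> float:
    """Total information content I = log2(number of states)."""
    return log2(num_states)

def statistical_entropy_bits(probabilities) -> float:
    """Shannon entropy S = -sum(p * log2 p), skipping zero-probability states."""
    return -sum(p * log2(p) for p in probabilities if p > 0)

# Hypothetical four-state system under three states of observer knowledge.
uniform = [0.25, 0.25, 0.25, 0.25]      # nothing known: S equals I
partial = [0.5, 0.25, 0.125, 0.125]     # some knowledge: S is below I
known = [1.0, 0.0, 0.0, 0.0]            # state fully known: S is zero

info = total_information_bits(4)
for label, dist in (("uniform", uniform), ("partial", partial), ("known", known)):
    s = statistical_entropy_bits(dist)
    print(f"{label}: I = {info} bits, S = {s} bits, I - S = {info - s} bits")
```

The total information is fixed by the definition of the system (four states), while the entropy depends on what the observer knows.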