GPU Evolution: Will Graphics Morph Into Compute? (Norm Rubin)

- Slides: 48

GPU Evolution: Will Graphics Morph Into Compute? Norm Rubin, Fellow, Graphics Products Group (GPG), AMD. PACT 2008.

Performance is King
- Cinematic world: on average, studios typically use 100,000 minutes of compute per minute of final image.
- Blinn's Law (the flip side of Moore's Law):
  - Time per frame is roughly constant.
  - Audience expectation of quality per frame is higher every year.
  - Expectation of quality increases as compute increases.
- GPUs are real time: 1 minute of compute per minute of image, so users want machines 100,000 times faster.

Image: *Cars*, courtesy of Pixar Animation Studios.

Pact 2008 | Oct, 2008

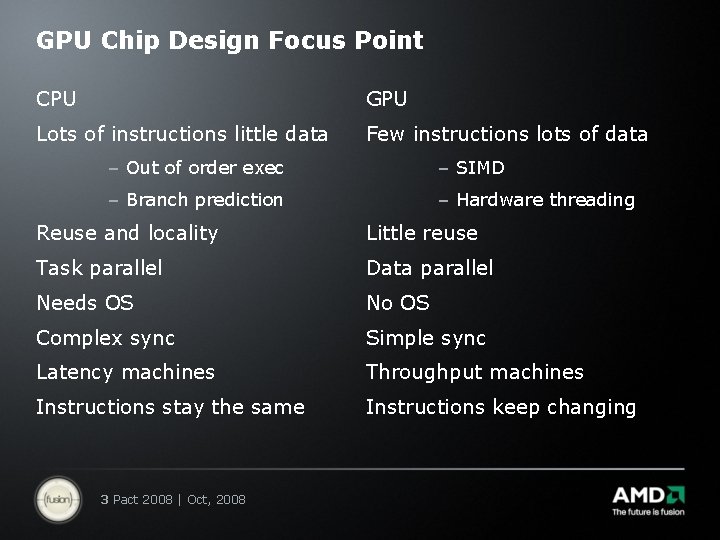

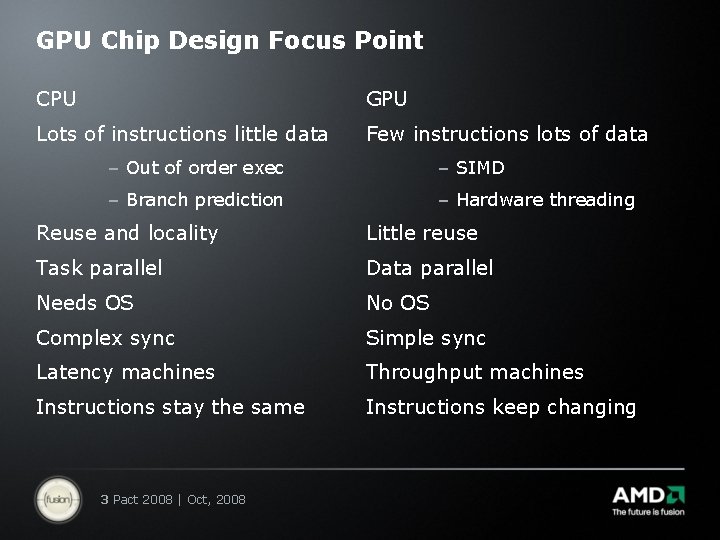

GPU Chip Design Focus Point

| CPU | GPU |
|---|---|
| Lots of instructions, little data | Few instructions, lots of data |
| Out-of-order execution | SIMD |
| Branch prediction | Hardware threading |
| Reuse and locality | Little reuse |
| Task parallel | Data parallel |
| Needs an OS | No OS |
| Complex sync | Simple sync |
| Latency machines | Throughput machines |
| Instructions stay the same | Instructions keep changing |

Effects of Hardware Changes: Call of Juarez

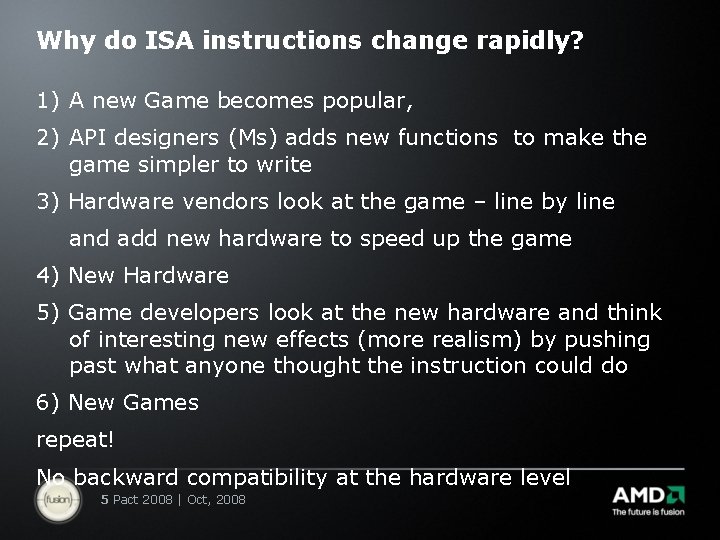

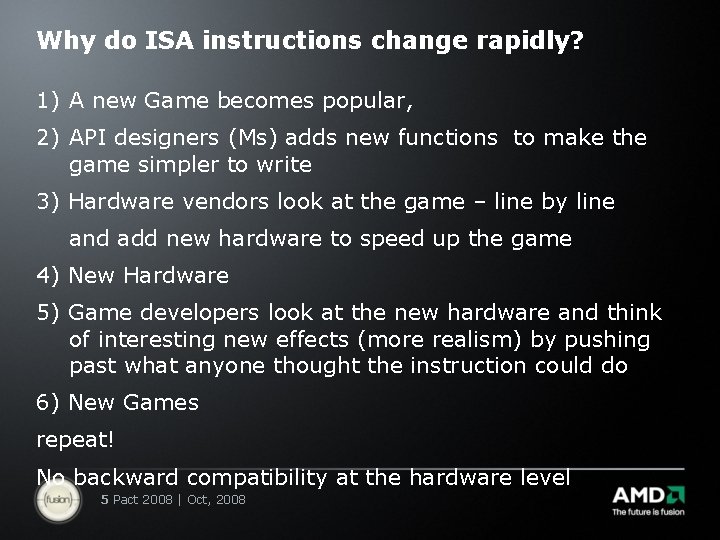

Why do ISA instructions change rapidly?
1. A new game becomes popular.
2. API designers (Microsoft) add new functions to make the game simpler to write.
3. Hardware vendors study the game line by line and add new hardware to speed it up.
4. New hardware ships.
5. Game developers look at the new hardware and think of interesting new effects (more realism) by pushing past what anyone thought the instructions could do.
6. New games: repeat!

There is no backward compatibility at the hardware level.

Co-evolution in action (photograph courtesy of Peter Chew, www.brisbaneinsects.com)

Is the GPU just a lot of CPU devices?
- GPUs and CPUs have been co-evolving; this is not a process of radical change.
- Current GPUs have rendering-specific hardware (transitional features), e.g. fixed-function systems:
  - Memory systems with filtering (== texture)
  - Depth buffer
  - Rasterizer
  - …
- These are crucial for graphics performance!

Latency or throughput?
- CPUs and GPUs are fundamentally different.
- CPU strength is single-thread performance (a latency machine).
- GPU strength is massively threaded performance (a throughput machine).
- What classes of problems can be solved by massively parallel processing?
- What exactly do latency and throughput mean?

GPU vs. CPU performance
- Each thread runs a load, `r1 = load(index)`, followed by a series of adds, `r1 = r1 + r1` …
- Run lots of threads.
- Can you get peak performance on a multi-core CPU or a cluster?
- Peak performance = doing ALU ops every cycle.

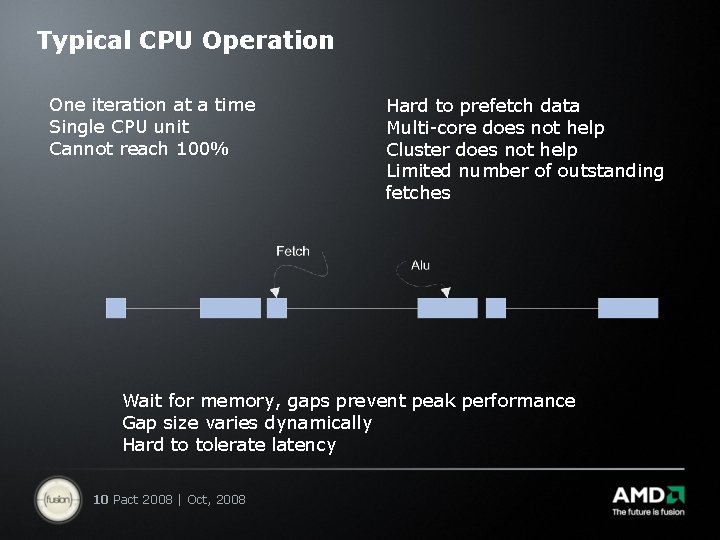

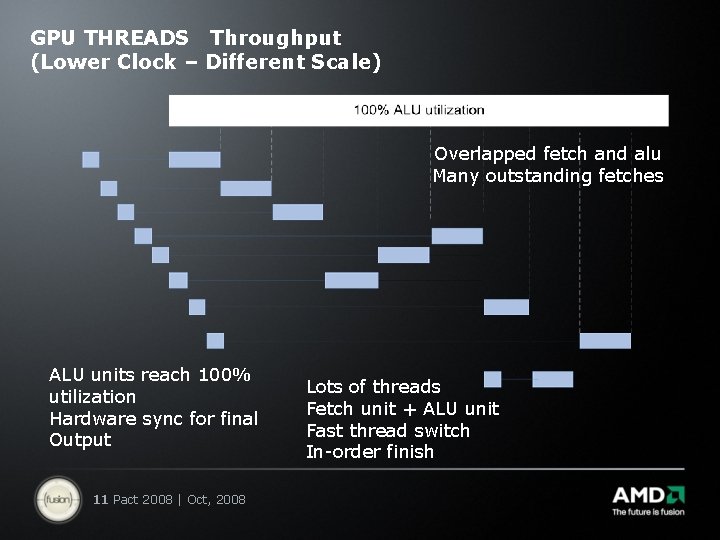

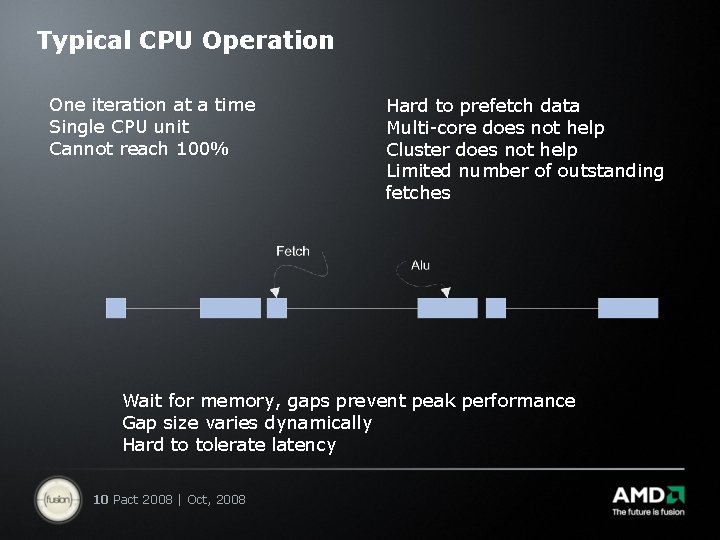

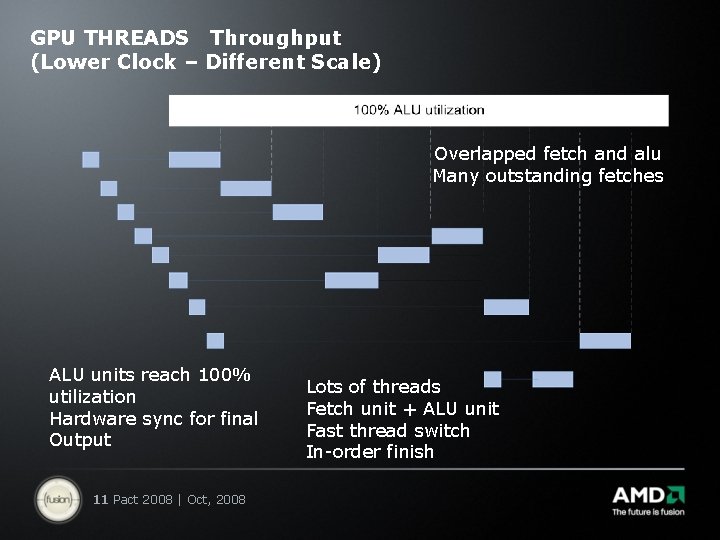

Typical CPU operation
- One iteration at a time on a single CPU unit; cannot reach 100% of peak.
- Hard to prefetch data.
- Multi-core does not help; a cluster does not help.
- Limited number of outstanding fetches.
- Waiting for memory leaves gaps that prevent peak performance.
- Gap size varies dynamically, so latency is hard to tolerate.

GPU threads: throughput (lower clock, different scale)
- Lots of threads, with a fetch unit plus an ALU unit
- Fast thread switch
- Overlapped fetch and ALU; many outstanding fetches
- ALU units reach 100% utilization
- In-order finish, with hardware sync for the final output
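The gap-filling idea on the CPU and GPU slides can be reproduced with a toy cycle-level model. This is a minimal sketch under our own simplifying assumptions, not a model of any real AMD GPU or CPU pipeline: each thread runs the slide's kernel (one load followed by a short series of dependent adds), every load takes a fixed `load_latency` cycles, the fetch unit can keep any number of loads in flight, the ALU issues one add per cycle, and thread switches cost nothing. The function name and all parameters are hypothetical.

```python
def alu_utilization(num_threads: int, adds_per_load: int, load_latency: int,
                    loads_per_thread: int = 10) -> float:
    """Fraction of cycles in which a single ALU issues an add (toy model).

    Assumptions (ours, not from the slides): every thread repeats
    `loads_per_thread` times "issue a load, wait `load_latency` cycles, then
    run `adds_per_load` dependent adds". A separate fetch unit keeps any
    number of loads in flight, the ALU issues one add per cycle, and
    switching threads is free.
    """
    ready_at = [load_latency] * num_threads   # all threads start with a load in flight
    adds_left = [adds_per_load] * num_threads
    loads_left = [loads_per_thread - 1] * num_threads
    busy = cycle = 0
    while any(a > 0 for a in adds_left):
        ready = [t for t in range(num_threads)
                 if adds_left[t] > 0 and ready_at[t] <= cycle]
        if ready:
            # Oldest-ready-first keeps every thread moving (no starvation)
            t = min(ready, key=lambda i: (ready_at[i], i))
            adds_left[t] -= 1
            busy += 1
            if adds_left[t] == 0 and loads_left[t] > 0:
                # Batch finished: issue the next load; its adds wait for the data
                loads_left[t] -= 1
                adds_left[t] = adds_per_load
                ready_at[t] = cycle + 1 + load_latency
        cycle += 1                             # counts as idle if nobody was ready
    return busy / cycle


print(alu_utilization(1, 4, 20))  # about 0.17: one thread, ALU idle during every load
print(alu_utilization(8, 4, 20))  # about 0.94: other threads' adds fill the gaps
```

With one thread the ALU sits idle for 20 of every 24 cycles, which is the CPU picture; with eight threads another thread's adds are almost always ready, and the main idle stretch is the very first load. The oldest-ready-first choice matters: a naive "lowest index first" policy can starve some threads and leave a poorly overlapped tail.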

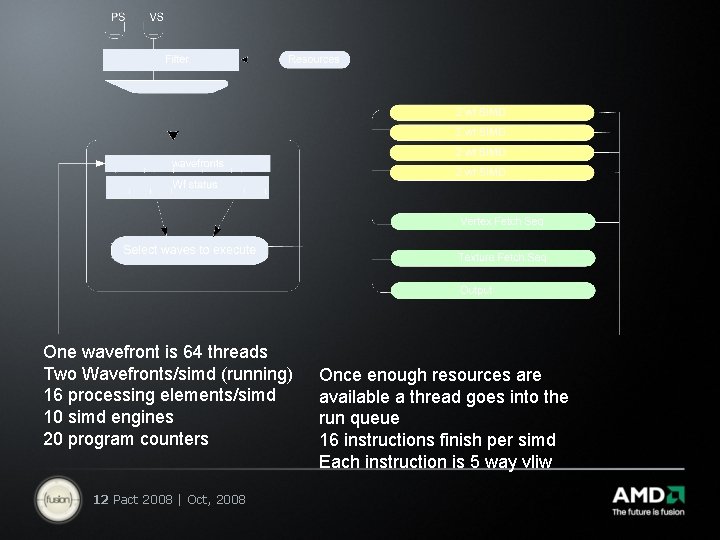

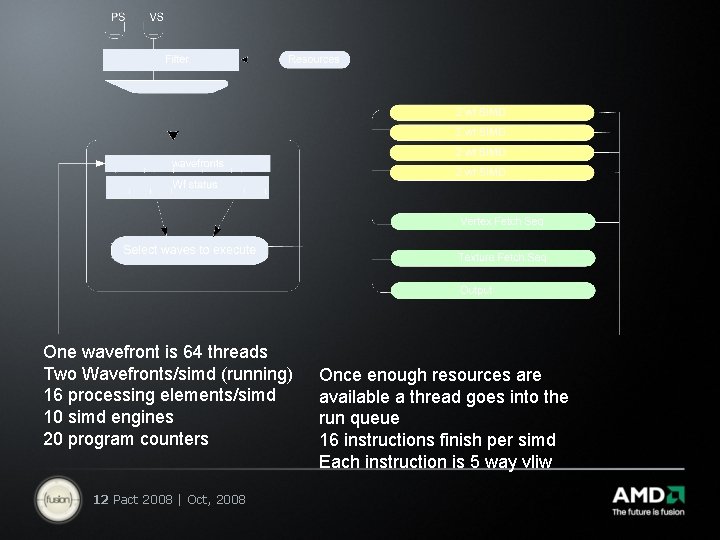

- One wavefront is 64 threads.
- Two wavefronts per SIMD are running at a time.
- 16 processing elements per SIMD; 16 instructions finish per SIMD.
- Each instruction is a 5-way VLIW bundle.
- 10 SIMD engines; 20 program counters.
- Once enough resources are available, a thread goes into the run queue.
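The figures on this slide combine into a few derived numbers. The sketch below only multiplies and divides the slide's own values; reading "16 instructions finish per SIMD" as "per clock", so that one instruction for a 64-thread wavefront takes 4 clocks, is our interpretation. The constant names are ours.

```python
WAVEFRONT_SIZE = 64           # threads per wavefront
PES_PER_SIMD = 16             # processing elements per SIMD engine
NUM_SIMDS = 10                # SIMD engines per chip
VLIW_WIDTH = 5                # each instruction is a 5-way VLIW bundle
RUNNING_WAVEFRONTS_PER_SIMD = 2

# Our reading: the 16 PEs each finish one thread's instruction per clock,
# so one instruction for a 64-thread wavefront takes 64 / 16 = 4 clocks.
clocks_per_wavefront_instruction = WAVEFRONT_SIZE // PES_PER_SIMD

# One program counter per running wavefront: 10 SIMDs x 2 wavefronts.
program_counters = NUM_SIMDS * RUNNING_WAVEFRONTS_PER_SIMD

# Scalar ALU lanes that can be busy in one clock across the chip.
alu_lanes = NUM_SIMDS * PES_PER_SIMD * VLIW_WIDTH

print(clocks_per_wavefront_instruction, program_counters, alu_lanes)  # 4 20 800
```

The 800 scalar lanes match the "800 stream processors" quoted for the ATI Radeon HD 4870.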

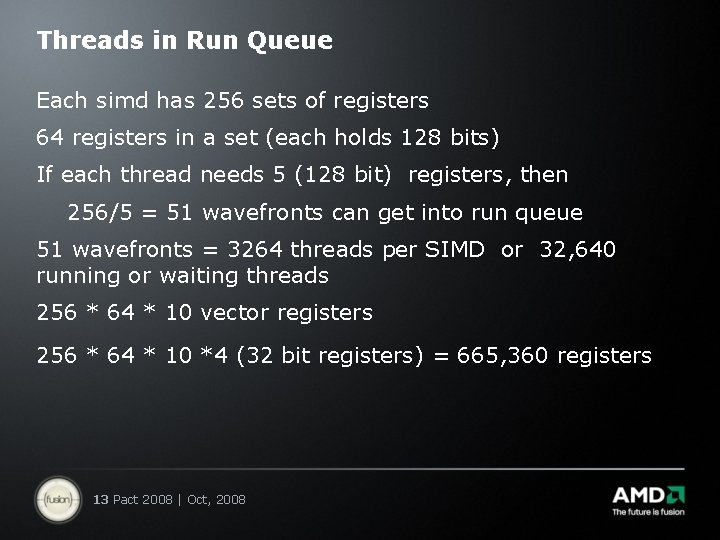

Threads in the run queue
- Each SIMD has 256 sets of registers, with 64 registers in a set (each register holds 128 bits).
- If each thread needs 5 (128-bit) registers, then 256/5 = 51 wavefronts (rounded down) can get into the run queue.
- 51 wavefronts = 3,264 threads per SIMD, or 32,640 running or waiting threads across the 10 SIMDs.
- 256 × 64 × 10 = 163,840 vector registers.
- 256 × 64 × 10 × 4 = 655,360 32-bit registers.
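The run-queue arithmetic can be checked mechanically. This sketch reads a "set" as one 128-bit register for each of the 64 threads in a wavefront, which is the reading that makes 256/5 a wavefront count; the helper names are hypothetical. Carried through, the final product is 655,360 32-bit registers.

```python
REGISTER_SETS_PER_SIMD = 256  # each set: one 128-bit register for each of 64 threads
WAVEFRONT_SIZE = 64
NUM_SIMDS = 10
LANES_PER_REGISTER = 4        # a 128-bit register holds four 32-bit values


def wavefronts_per_simd(regs_per_thread: int) -> int:
    """Wavefronts that fit in the run queue of one SIMD (rounded down)."""
    return REGISTER_SETS_PER_SIMD // regs_per_thread


def resident_threads(regs_per_thread: int) -> int:
    """Running or waiting threads across the whole chip."""
    return wavefronts_per_simd(regs_per_thread) * WAVEFRONT_SIZE * NUM_SIMDS


vector_registers = REGISTER_SETS_PER_SIMD * WAVEFRONT_SIZE * NUM_SIMDS
scalar_registers = vector_registers * LANES_PER_REGISTER

print(wavefronts_per_simd(5), resident_threads(5))  # 51 32640
print(vector_registers, scalar_registers)           # 163840 655360
print(wavefronts_per_simd(10))                      # 25: twice the registers, about half the threads
```

The last line shows why a GPU compiler tries to keep register use low: occupancy is the register file divided, rounded down, by the per-thread demand.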

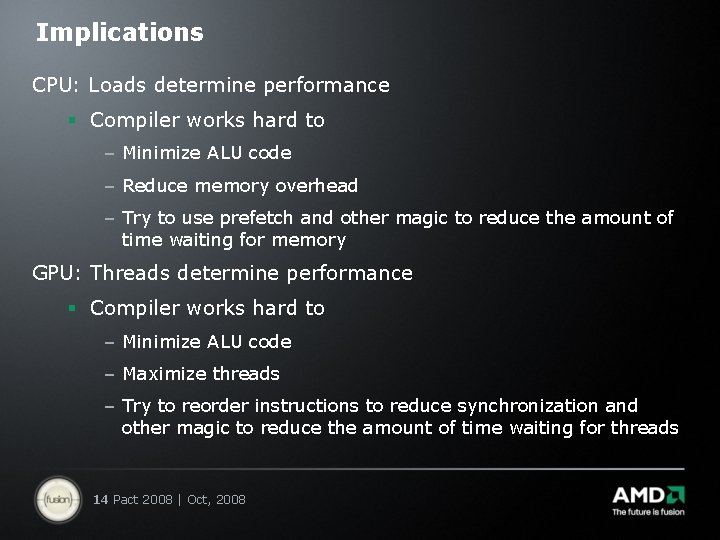

Implications
- CPU: loads determine performance. The compiler works hard to:
  - minimize ALU code;
  - reduce memory overhead;
  - use prefetch and other magic to reduce the time spent waiting for memory.
- GPU: threads determine performance. The compiler works hard to:
  - minimize ALU code;
  - maximize threads;
  - reorder instructions to reduce synchronization, and use other magic to reduce the time spent waiting for threads.

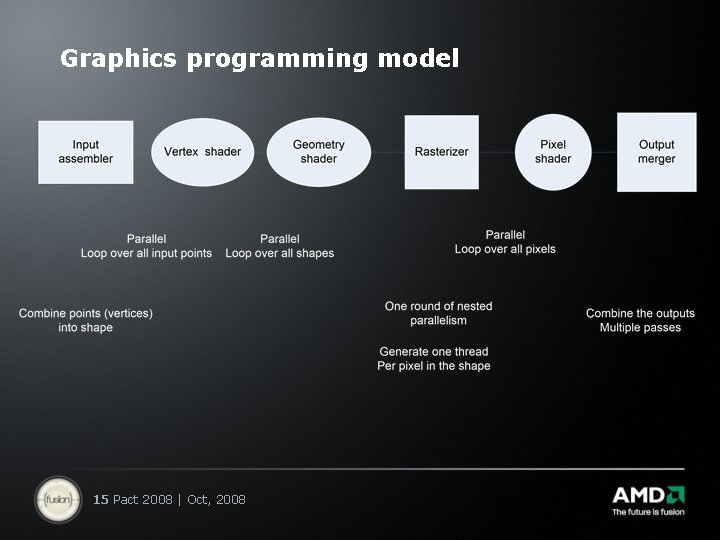

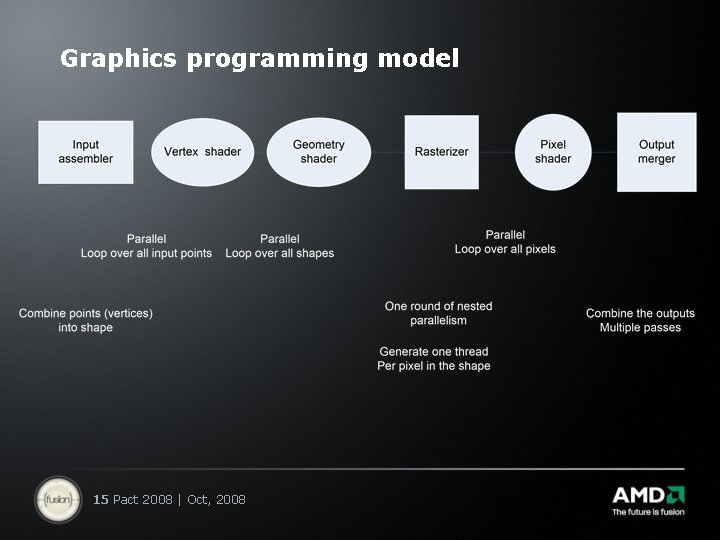

Graphics programming model

Parallelism model
- All parallel operations are hidden behind domain-specific API calls.
- Developers write sequential code plus kernels.
- Each kernel operates on one vertex or pixel.
- Developers never deal with parallelism directly.
- No need for auto-parallelizing compilers.

Observations
- Developers write only a small part of the program; the rest of the code comes from libraries.
- 200-300 small kernels, each under 100 lines.
- No race conditions are possible.
- No error reporting (just keep going).
- The program can be viewed as serial (per vertex/shape/pixel).
- No developer knows the number of processors; this is not like pthreads.
- Result: lots of success, and simple enough to program.
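The programming model on these two slides (sequential per-pixel kernels, with parallelism hidden behind an API call) can be sketched in a few lines. `run_pixel_kernel` and `gradient_shader` are hypothetical stand-ins, not Direct3D or any real API; the point is the shape of the contract: the kernel sees one pixel, never a thread id or a processor count, and produces only its own output.

```python
from typing import Callable, List, Tuple

Color = Tuple[float, float, float]
Kernel = Callable[[int, int, int, int], Color]


def run_pixel_kernel(width: int, height: int, kernel: Kernel) -> List[List[Color]]:
    """Hypothetical API entry point: call `kernel` once for every pixel.

    The caller never sees threads or a processor count. Each invocation
    reads only its own inputs and produces only its own pixel, so an
    implementation could spread these calls over any number of SIMD engines
    without races; this sketch simply runs them one after another.
    """
    return [[kernel(x, y, width, height) for x in range(width)]
            for y in range(height)]


def gradient_shader(x: int, y: int, width: int, height: int) -> Color:
    """Sequential per-pixel code, in the spirit of a pixel shader."""
    return (x / (width - 1), y / (height - 1), 0.0)


image = run_pixel_kernel(4, 2, gradient_shader)
print(image[1][3])  # (1.0, 1.0, 0.0)
```

Because no kernel invocation can observe another's output, the iteration order is irrelevant; that is what lets the same program run unchanged on parts with more or fewer SIMD engines.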

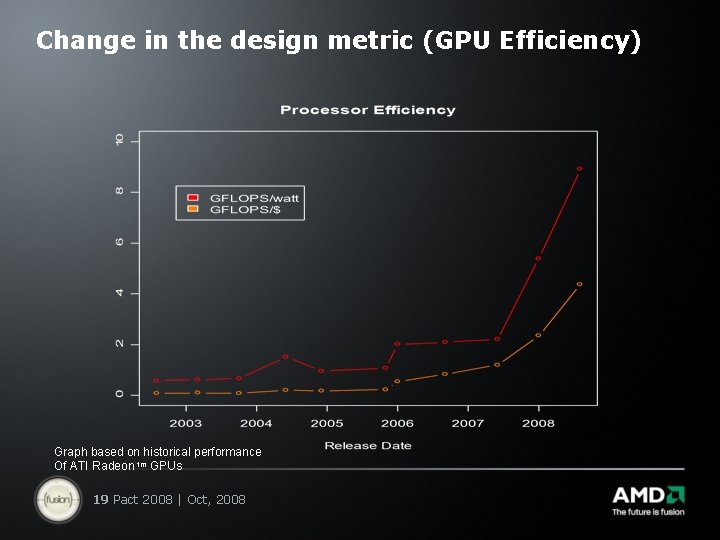

Power and cost have appeared as design constraints
- Vendors always release a family of cards, changing the number of SIMD engines per chip:
  - programs need to scale;
  - avoid huge monolithic cores.
- Goal: the best performance comes from two GPU chips on a card, mid-range performance from one GPU, and the low range simply removes SIMD engines.

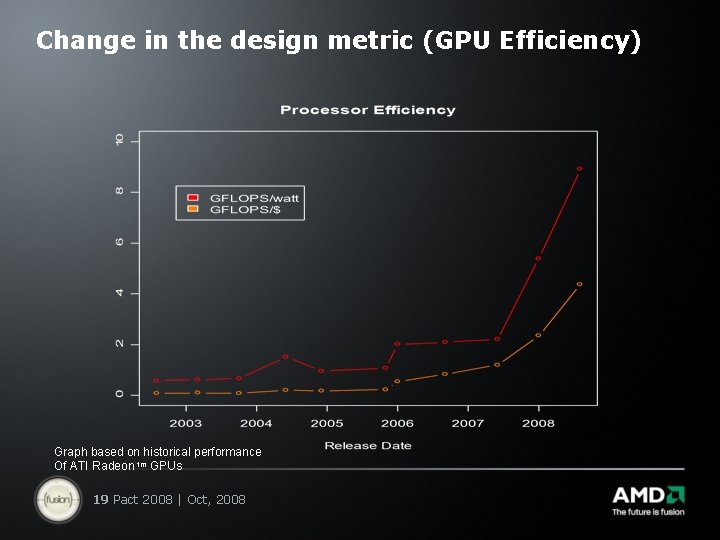

Change in the design metric (GPU efficiency): graph based on the historical performance of ATI Radeon™ GPUs

Changes in the last generation: ATI Radeon™ HD 38XX vs. ATI Radeon™ HD 48XX

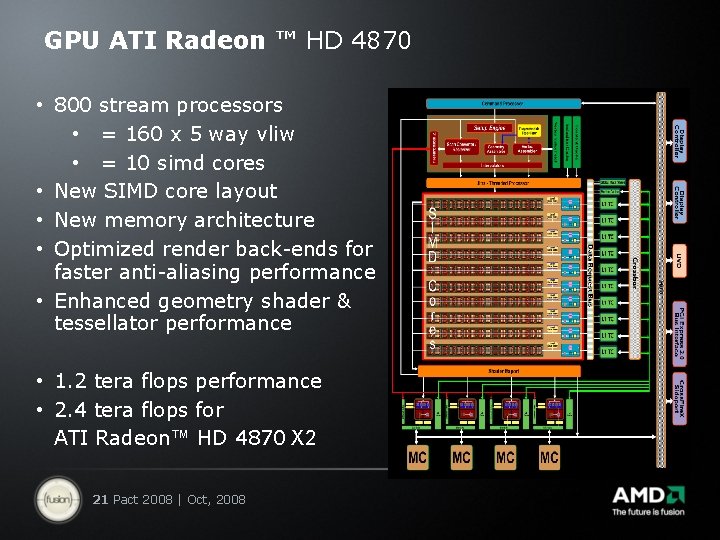

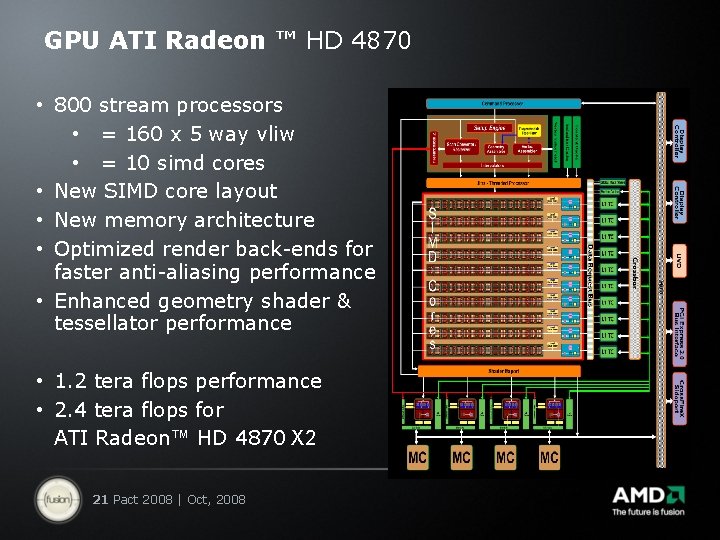

GPU: ATI Radeon™ HD 4870
- 800 stream processors = 160 × 5-way VLIW units = 10 SIMD cores
- New SIMD core layout
- New memory architecture
- Optimized render back-ends for faster anti-aliasing performance
- Enhanced geometry shader and tessellator performance
- 1.2 teraflops performance
- 2.4 teraflops for the ATI Radeon™ HD 4870 X2
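The headline teraflops numbers follow from the stream-processor count. This is a sketch assuming a 750 MHz engine clock (the published HD 4870 clock; it is not on the slide) and counting a multiply-add as two floating-point operations. `peak_gflops` is a hypothetical helper.

```python
PES_PER_SIMD = 16   # 5-way VLIW units per SIMD core (from the slides)
VLIW_WIDTH = 5      # scalar stream processors per VLIW unit
FLOPS_PER_SP = 2    # assumption: a multiply-add counts as two flops


def peak_gflops(simd_cores: int, clock_mhz: float, gpus: int = 1) -> float:
    """Single-precision peak in GFLOPS for a board with `gpus` identical chips."""
    stream_processors = simd_cores * PES_PER_SIMD * VLIW_WIDTH
    return gpus * stream_processors * FLOPS_PER_SP * clock_mhz / 1000.0


print(peak_gflops(10, 750))          # 1200.0, i.e. 1.2 teraflops (HD 4870)
print(peak_gflops(10, 750, gpus=2))  # 2400.0, i.e. 2.4 teraflops (HD 4870 X2)
```

Peak scales linearly with the number of SIMD cores and chips, which is how a family of cards can be built by adding a second GPU or removing SIMD engines.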

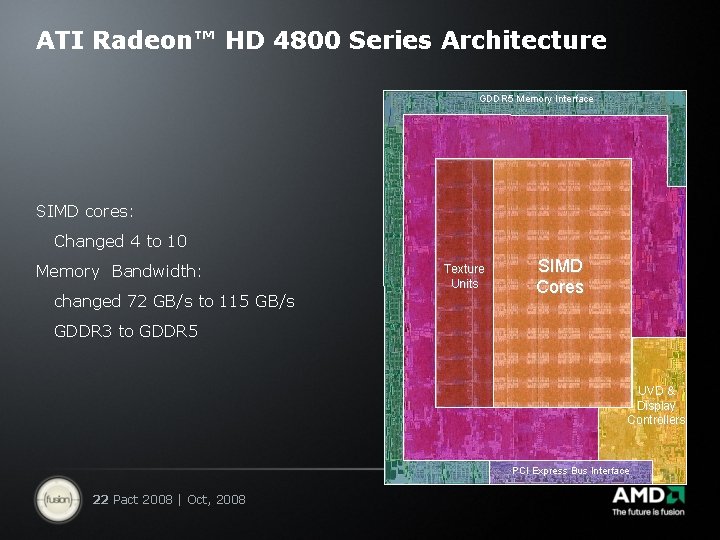

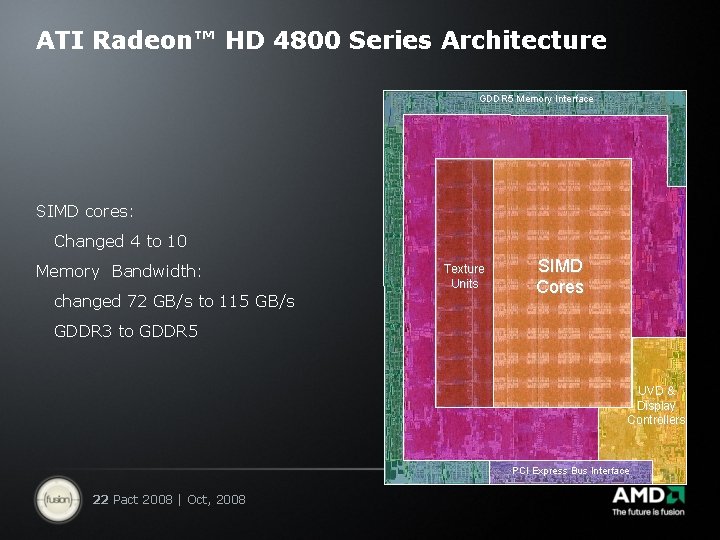

ATI Radeon™ HD 4800 Series architecture
- SIMD cores: changed from 4 to 10
- Memory bandwidth: changed from 72 GB/s to 115 GB/s
- Memory: GDDR3 to GDDR5
- Diagram blocks: GDDR5 memory interface, texture units, SIMD cores, UVD & display controllers, PCI Express bus interface
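Peak memory bandwidth is bus width in bytes times the per-pin data rate. The 256-bit bus and the data rates below (2.25 GT/s for the HD 3870, 3.6 GT/s for GDDR5 on the HD 4870) are our assumptions based on commonly published board specifications, not figures from the slide; they are used only to show where numbers like 72 and 115 GB/s come from. The function name is hypothetical.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gt_s: float) -> float:
    """Peak DRAM bandwidth: bytes per transfer x billions of transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_gt_s


# Assumed board parameters (not from the slide): a 256-bit bus on both cards.
hd3870 = peak_bandwidth_gb_s(256, 2.25)  # 72.0 GB/s
hd4870 = peak_bandwidth_gb_s(256, 3.6)   # about 115.2 GB/s
print(hd3870, round(hd4870, 1), round(hd4870 / hd3870, 2))
```

Under these assumptions bandwidth grew about 1.6x, while the SIMD core count grew 2.5x (4 to 10).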

What is new for GPGPU?
- Double precision: the 5-way VLIW lets pairs of units do a double add, and 4 units do a double multiply
- 5-way VLIW: 4 normal functional units + 1 "fat" unit
- Local memory on each SIMD (communication)
- Global memory on chip (more communication)
- Better thread scheduling
- General scatter/gather operations
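The double-precision rule on this slide (pairs of the four normal lanes for a double add, all four for a double multiply) gives per-clock rates per VLIW unit, which scale to the whole chip. Assumptions, all ours: 160 VLIW units (10 SIMD cores x 16), a 750 MHz clock, the fat fifth lane contributing nothing to double precision, and each add or multiply counted as one operation.

```python
VLIW_UNITS = 160    # 10 SIMD cores x 16 five-way VLIW units (from the slides)
NORMAL_LANES = 4    # the four "normal" lanes; assumed: the fat lane does no DP work

DP_ADDS_PER_UNIT = NORMAL_LANES // 2  # pairs of lanes -> 2 double adds per clock
DP_MULS_PER_UNIT = NORMAL_LANES // 4  # four lanes -> 1 double multiply per clock


def dp_gops(ops_per_unit: int, clock_mhz: float) -> float:
    """Billions of double-precision operations per second across the chip."""
    return VLIW_UNITS * ops_per_unit * clock_mhz / 1000.0


print(dp_gops(DP_ADDS_PER_UNIT, 750))  # 240.0 billion double adds per second
print(dp_gops(DP_MULS_PER_UNIT, 750))  # 120.0 billion double multiplies per second
```

Per 5-wide unit and clock, that is 2 double adds or 1 double multiply against 5 single-precision operations, i.e. 2/5 and 1/5 of the single-precision issue rate.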

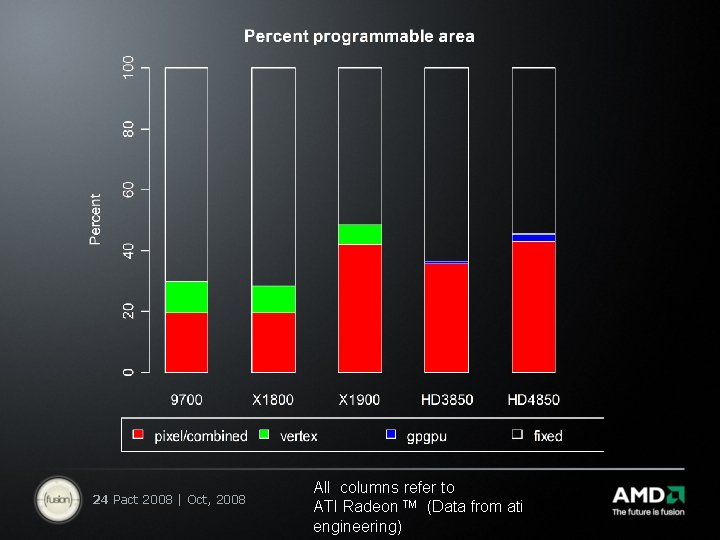

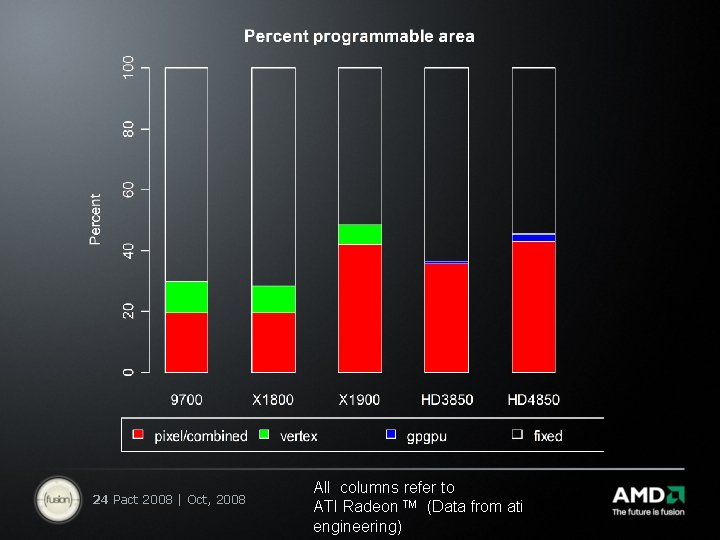

All columns refer to ATI Radeon™ GPUs (data from ATI engineering).

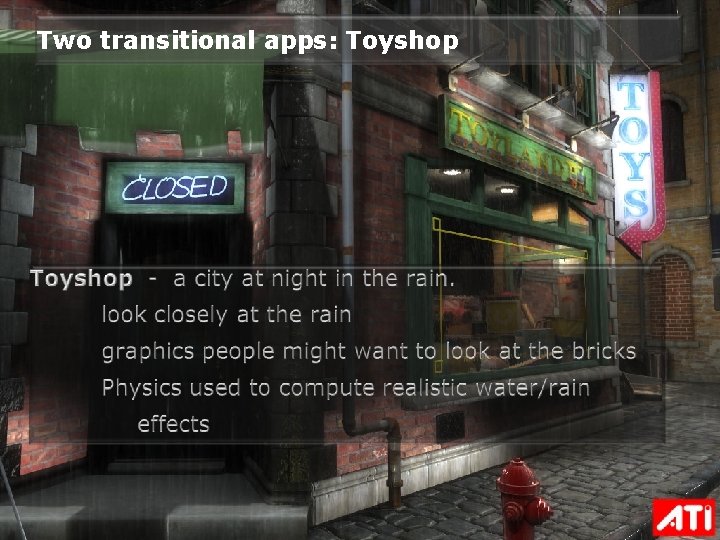

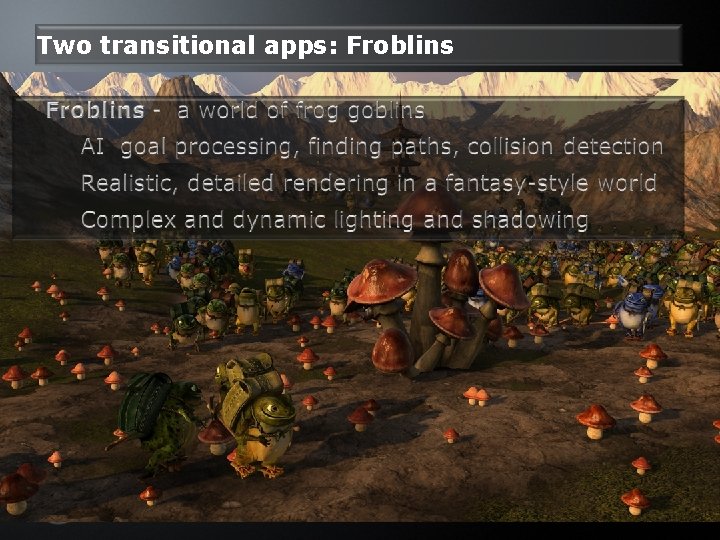

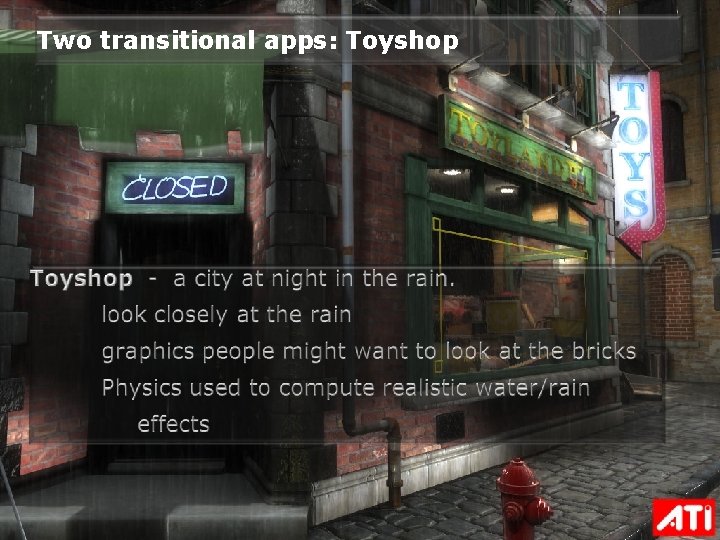

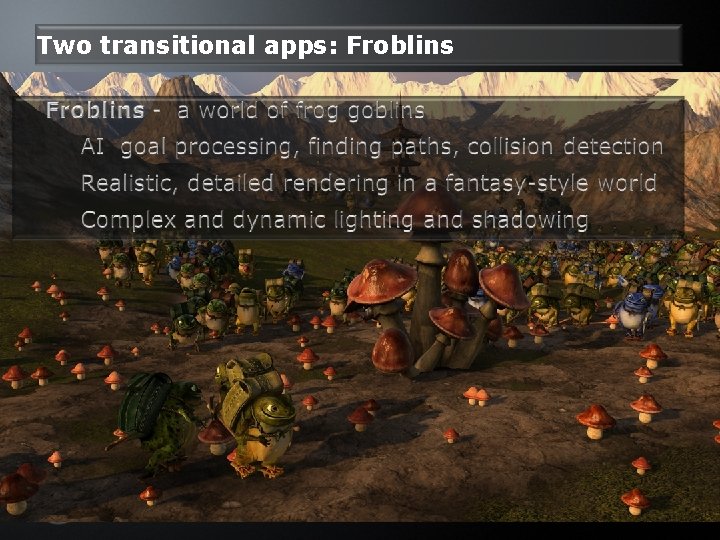

Transitional applications
- Written by graphics programmers.
- The only real connection with graphics is that the result is rendered and looks cute; they are really programming physics and AI.
- Evaluating physics, simulations, and artificial intelligence on a GPU is becoming an element of future game programs.
- Massively parallel algorithm formulations, combined with responsive gameplay and rendering.

How is software evolving?
- Graphics APIs have added compute shaders: DX11 compute shaders are another stage in the pipeline.
- Some programming languages are "sort of C": OpenCL, CUDA, Ct.
- Streaming languages: Brook+.

Two transitional apps: ToyShop

Two transitional apps: Froblins

ToyShop demo

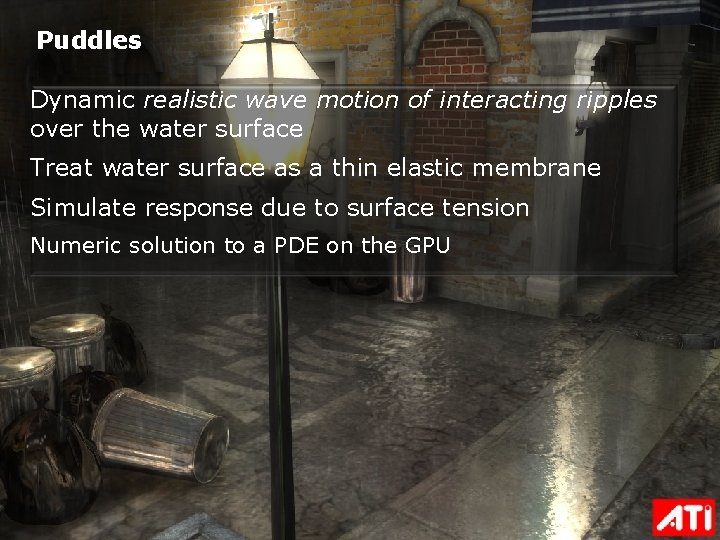

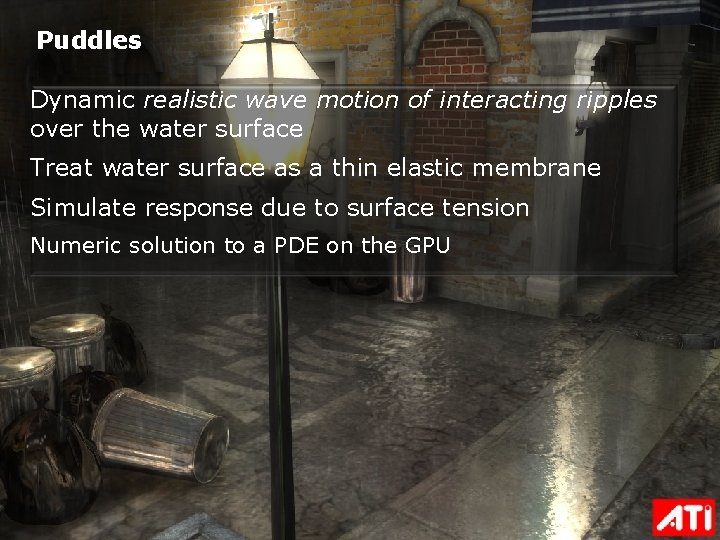

Puddles
- Dynamic, realistic wave motion of interacting ripples over the water surface
- Treat the water surface as a thin elastic membrane
- Simulate the response due to surface tension
- Numeric solution to a PDE on the GPU
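Treating the puddle as a thin elastic membrane leads to the 2D wave equation, and a common way to solve it on a height field is an explicit finite-difference step. This sketch shows that standard scheme with a damping factor; whether ToyShop's shaders use this exact discretization is an assumption, and `ripple_step` is a hypothetical name.

```python
from typing import List

Grid = List[List[float]]


def ripple_step(h: Grid, h_prev: Grid, k: float = 0.25,
                damping: float = 0.99) -> Grid:
    """One explicit step of the damped 2D wave equation on a height field.

    k = (c * dt / dx) ** 2 and must stay <= 0.5 for this scheme to be stable.
    Border cells are held at 0, like a membrane clamped at its edge.
    Each interior cell depends only on the two previous grids, so on a GPU
    every cell could be one thread, ping-ponging between textures.
    """
    rows, cols = len(h), len(h[0])
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(1, rows - 1):
        for j in range(1, cols - 1):
            laplacian = (h[i + 1][j] + h[i - 1][j] + h[i][j + 1] + h[i][j - 1]
                         - 4.0 * h[i][j])
            out[i][j] = damping * (2.0 * h[i][j] - h_prev[i][j] + k * laplacian)
    return out


# A raindrop hits the center of a still 9x9 puddle.
prev = [[0.0] * 9 for _ in range(9)]
cur = [row[:] for row in prev]
cur[4][4] = 1.0
nxt = ripple_step(cur, prev)
print(nxt[4][4], nxt[4][5])  # the ripple starts to spread to the neighbours
for _ in range(20):
    prev, cur = cur, ripple_step(cur, prev)
```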

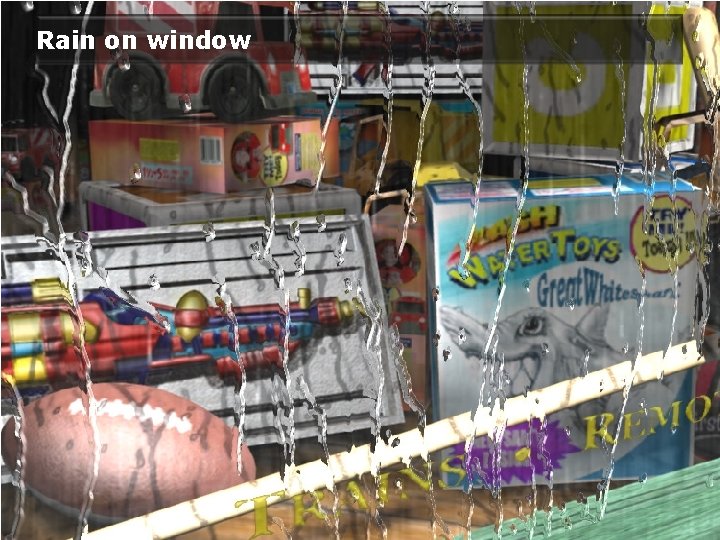

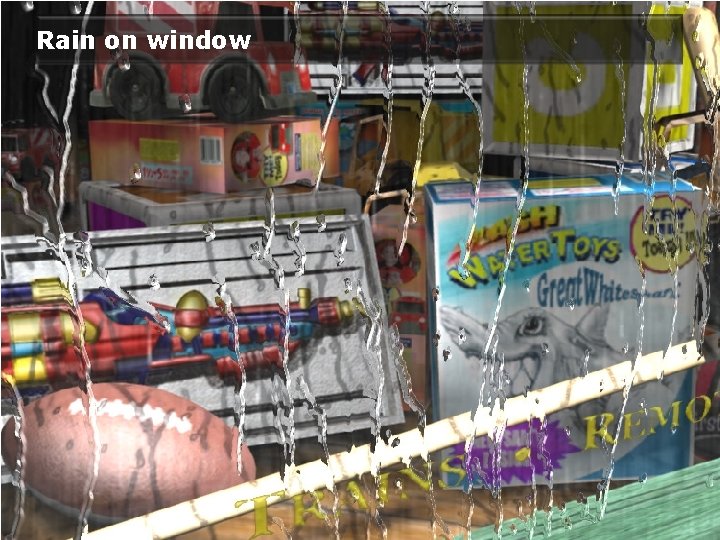

Rain on window

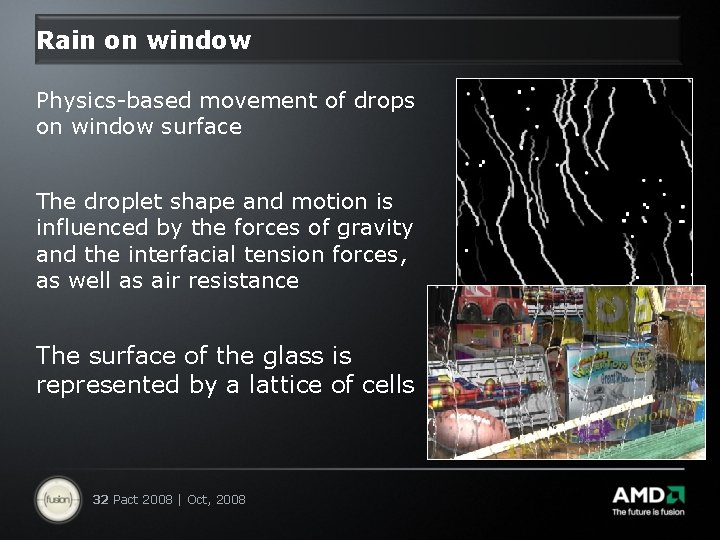

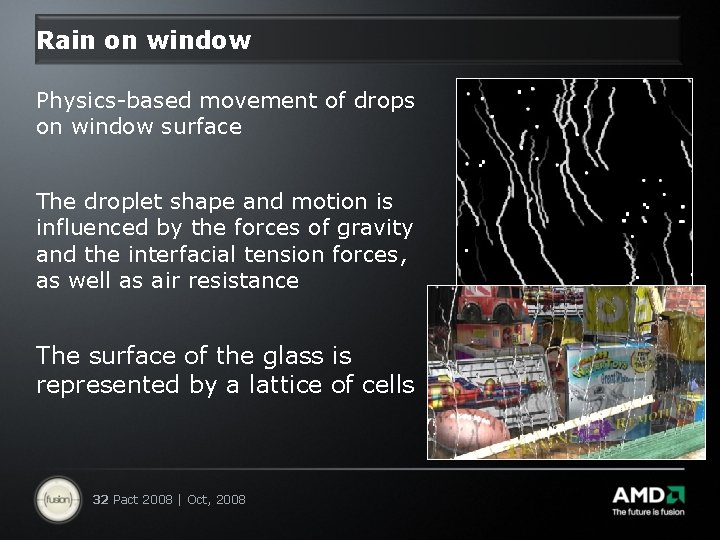

Rain on window
- Physics-based movement of drops on the window surface.
- The droplet shape and motion are influenced by gravity and interfacial tension forces, as well as air resistance.
- The surface of the glass is represented by a lattice of cells.
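A lattice representation lets each cell decide locally whether its water is held by surface tension or pulled down by gravity. The rule below is a deliberately crude, hypothetical stand-in for the demo's physics (it ignores air resistance and drop shape), included only to show how gravity and interfacial tension can compete on a grid of cells; `rain_step` and `pin_threshold` are our names.

```python
import random
from typing import List

Lattice = List[List[float]]


def rain_step(water: Lattice, pin_threshold: float, rng: random.Random) -> Lattice:
    """Advance a toy rain-on-glass lattice by one step (row 0 is the top).

    Hypothetical rule: a cell holding less than `pin_threshold` water is held
    in place (interfacial tension wins); a heavier cell slides to the row
    below (gravity wins), drifting randomly one column left, straight down,
    or right, and merging with any water already there. Water leaving the
    bottom row runs off the window.
    """
    rows, cols = len(water), len(water[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            w = water[r][c]
            if w < pin_threshold:
                out[r][c] += w                   # pinned: the drop stays put
            elif r + 1 < rows:
                nc = min(cols - 1, max(0, c + rng.choice((-1, 0, 1))))
                out[r + 1][nc] += w              # sliding: move down, maybe sideways
            # else: a heavy drop on the bottom row leaves the window
    return out


glass = [[0.0, 2.0, 0.0],
         [0.0, 0.0, 0.0],
         [0.5, 0.0, 0.0]]
after = rain_step(glass, pin_threshold=1.0, rng=random.Random(7))
```

For brevity the sketch scatters water to the row below; a pixel-shader version would instead gather, with each cell reading the cells above it, because a pixel shader can only write its own pixel.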

Rain
- Looks great, but do not try to predict rain using this random number technique.
- Random number generator: a load from a small table, indexed by the screen xy coordinate plus an offset that changes each frame.
- This GPU generation allowed only 16 persistent outputs per thread, so the generator could not save a seed.

Reference: Tatarchuk, N., and Isidoro, J. R. 2006. Artist-Directable Real-Time Rain Rendering in City Environments. In Proceedings of Eurographics Workshop on Natural Phenomena, Vienna, Austria.
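The table-lookup generator can be written as a pure function of screen position and frame number, which is why it needs no saved seed. The table size, screen width, and per-frame offset below are hypothetical values, and the exact index formula is our assumption; the slide only says the lookup uses the screen xy coordinate plus a per-frame offset.

```python
import random
from typing import List

TABLE_SIZE = 256      # hypothetical size of the small noise table
SCREEN_WIDTH = 1024   # hypothetical render-target width
FRAME_OFFSET = 37     # hypothetical per-frame offset step


def make_noise_table(seed: int = 2006) -> List[float]:
    """Filled once up front; on the GPU this would be a small texture."""
    rng = random.Random(seed)
    return [rng.random() for _ in range(TABLE_SIZE)]


def table_random(table: List[float], x: int, y: int, frame: int) -> float:
    """'Random' value for pixel (x, y) in a given frame, with no stored seed.

    The result depends only on the screen position and an offset that
    advances each frame, so no per-thread generator state has to survive
    between frames.
    """
    index = (y * SCREEN_WIDTH + x + frame * FRAME_OFFSET) % len(table)
    return table[index]


noise = make_noise_table()
a = table_random(noise, 10, 20, frame=0)
b = table_random(noise, 10, 20, frame=1)
# A pixel's sequence repeats every TABLE_SIZE frames (37 and 256 are coprime),
# one reason not to use it for anything that needs real randomness.
print(table_random(noise, 10, 20, frame=TABLE_SIZE) == a)  # True
```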

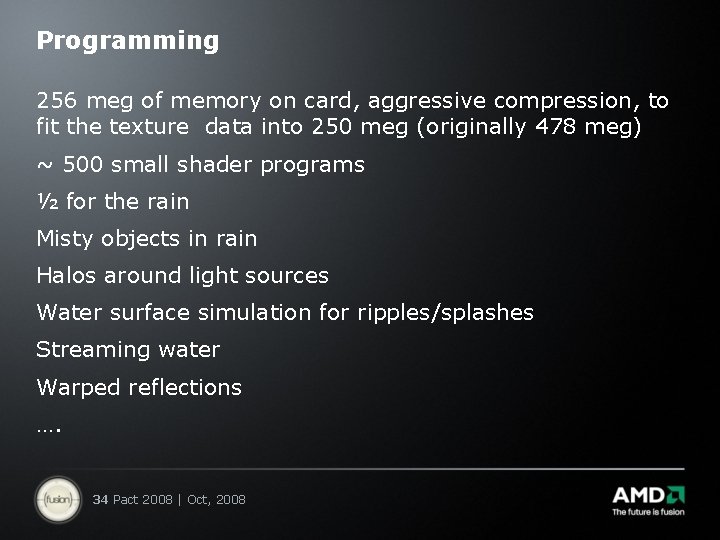

Programming
- 256 MB of memory on the card; aggressive compression to fit the texture data into 250 MB (originally 478 MB).
- ~500 small shader programs, ½ of them for the rain:
  - Misty objects in rain
  - Halos around light sources
  - Water surface simulation for ripples/splashes
  - Streaming water
  - Warped reflections
  - …

The ToyShop Team
- Lead Artist, Lead Programmer: Dan Roeger, Natalya Tatarchuk, David Gosselin
- Artists: Daniel Szecket, Eli Turner, and Abe Wiley
- Engine / Shader Programming: John Isidoro, Dan Ginsburg, Thorsten Scheuermann, and Chris Oat
- Producer: Lisa Close
- Manager: Callan McInally

Froblin demo: Simulation and Rendering Massive Crowds of Intelligent and Detailed Creatures on GPU

A Smörgåsbord of Features
- Dynamic pathfinding AI computations on the GPU
- Massive crowd rendering with LOD management
- Tessellation for high-quality close-ups and stable performance
- HDR lighting and post-processing effects with gamma-correct rendering
- Terrain system
- Cascaded shadows for large-range environments
- Advanced global illumination system
- Actual performance: 0.9 teraflops

Run the Froblin demo. Tessellation is made possible by hardware support for limited nested parallelism.

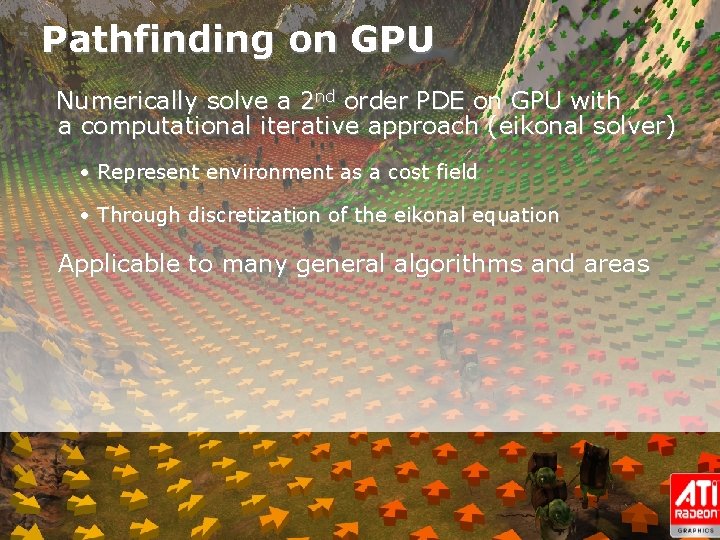

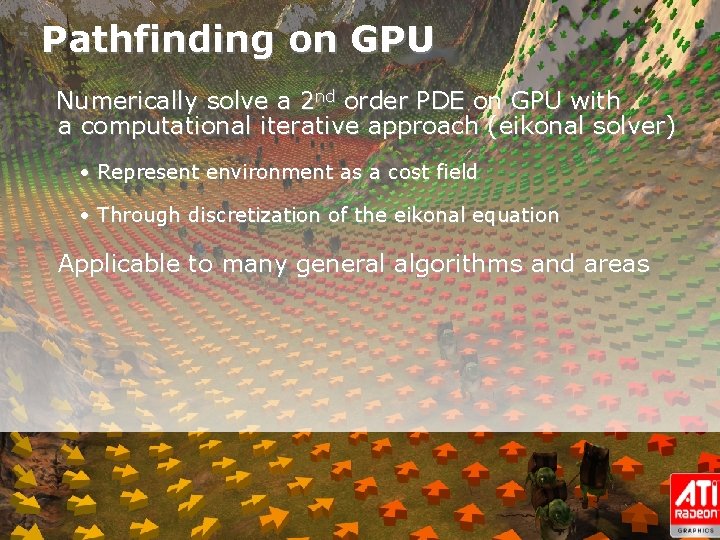

Pathfinding on GPU
- Numerically solve a nonlinear first-order PDE (the eikonal equation) on the GPU with an iterative computational approach (an eikonal solver):
  - Represent the environment as a cost field.
  - Discretize the eikonal equation.
- Applicable to many general algorithms and areas.
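The cost-field approach amounts to solving the eikonal equation |∇u| = f, where f is the cost of crossing each cell and u is the travel cost to the goal. This sketch uses the standard first-order upwind (Godunov) discretization with Jacobi iterations; whether the Froblins solver uses this exact update and iteration order is an assumption, and `eikonal_field` is a hypothetical name.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]


def eikonal_field(cost: Grid, goal: Tuple[int, int], h: float = 1.0,
                  max_iters: int = 10_000) -> Grid:
    """Approximate u with |grad u| = cost and u(goal) = 0 on a regular grid.

    Jacobi iteration of the first-order upwind (Godunov) update: every cell
    is recomputed from the previous iterate only, so each sweep is
    data-parallel (one GPU thread per cell). Agents can then walk downhill
    on u to reach the goal.
    """
    rows, cols = len(cost), len(cost[0])
    u = [[math.inf] * cols for _ in range(rows)]
    u[goal[0]][goal[1]] = 0.0

    def at(grid: Grid, r: int, c: int) -> float:
        return grid[r][c] if 0 <= r < rows and 0 <= c < cols else math.inf

    for _ in range(max_iters):
        new = [row[:] for row in u]
        changed = False
        for r in range(rows):
            for c in range(cols):
                a = min(at(u, r, c - 1), at(u, r, c + 1))  # best horizontal neighbour
                b = min(at(u, r - 1, c), at(u, r + 1, c))  # best vertical neighbour
                if min(a, b) == math.inf:
                    continue                                # front has not arrived yet
                fh = cost[r][c] * h
                if abs(a - b) >= fh:
                    cand = min(a, b) + fh                   # front arrives from one side
                else:
                    cand = (a + b + math.sqrt(2 * fh * fh - (a - b) ** 2)) / 2
                if cand < new[r][c]:
                    new[r][c] = cand
                    changed = True
        u = new
        if not changed:
            break
    return u


n = 10
open_field = eikonal_field([[1.0] * n for _ in range(n)], goal=(0, 0))
walled = [[1.0] * n for _ in range(n)]
for c in range(n - 1):
    walled[2][c] = 50.0  # expensive wall with a gap in the last column
around = eikonal_field(walled, goal=(0, 0))
print(open_field[0][5])                 # 5.0: along a grid axis the scheme is exact
print(around[4][0] > open_field[4][0])  # True: the cheap path detours through the gap
```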

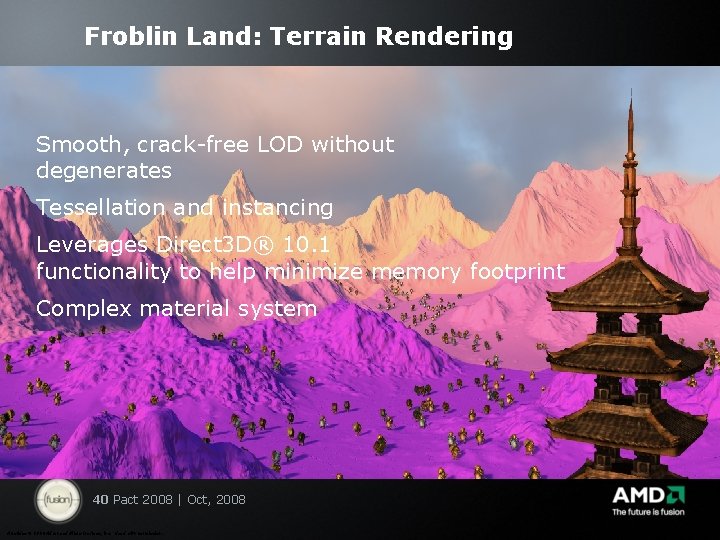

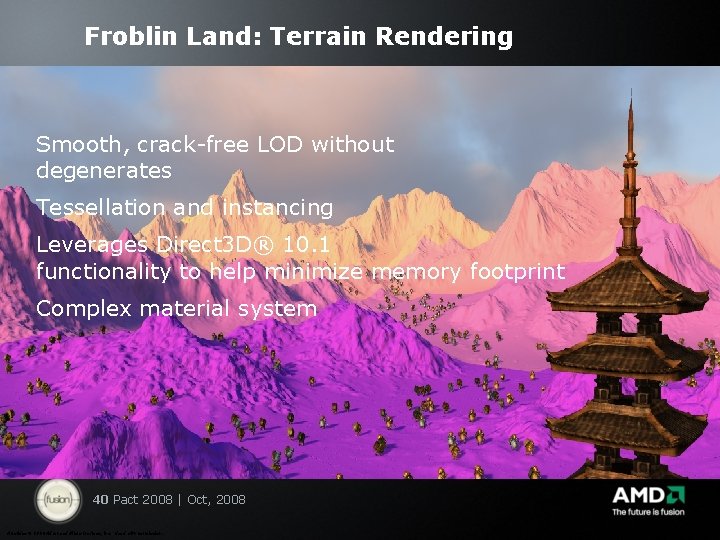

Froblin Land: Terrain Rendering
- Smooth, crack-free LOD without degenerates
- Tessellation and instancing
- Leverages Direct3D® 10.1 functionality to help minimize memory footprint
- Complex material system

All slides © 2008 Advanced Micro Devices, Inc. Used with permission.

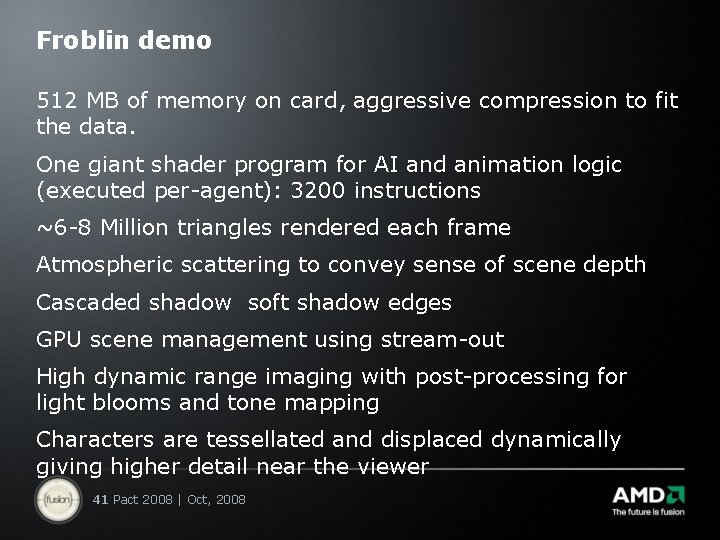

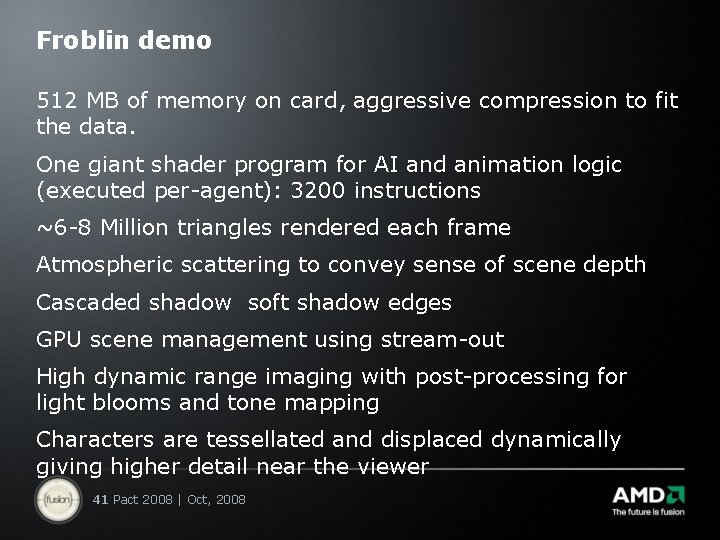

Froblin demo
- 512 MB of memory on the card; aggressive compression to fit the data.
- One giant shader program for AI and animation logic (executed per agent): 3,200 instructions.
- ~6-8 million triangles rendered each frame.
- Atmospheric scattering to convey a sense of scene depth.
- Cascaded shadows with soft shadow edges.
- GPU scene management using stream-out.
- High dynamic range imaging with post-processing for light blooms and tone mapping.
- Characters are tessellated and displaced dynamically, giving higher detail near the viewer.

More information about the Froblins demo

Reference: Shopf, J., Barczak, J., Oat, C., and Tatarchuk, N. 2008. March of the Froblins: simulation and rendering massive crowds of intelligent and detailed creatures on GPU. In ACM SIGGRAPH 2008 Classes (Los Angeles, California, August 11-15, 2008). SIGGRAPH '08.

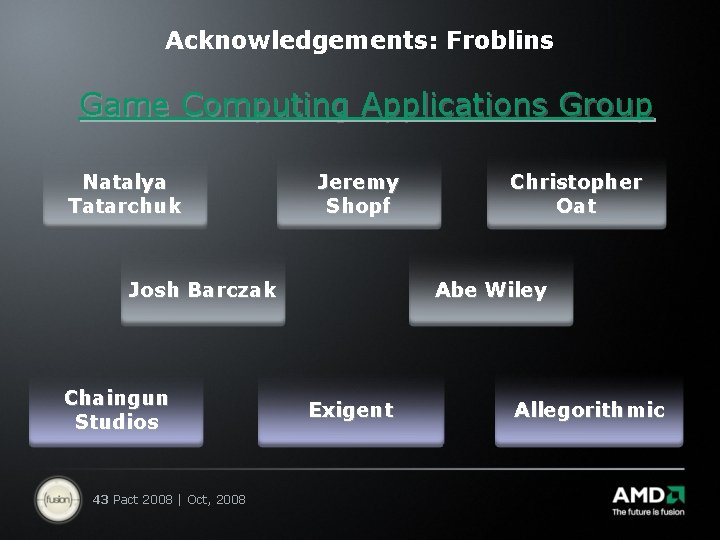

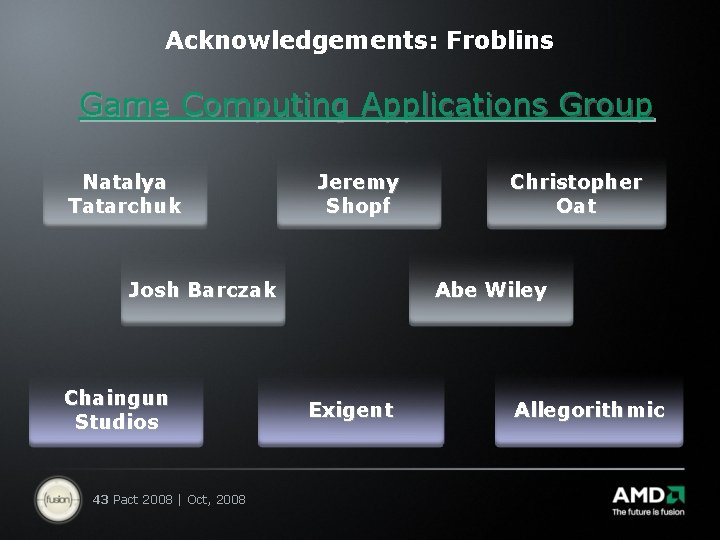

Acknowledgements: Froblins
- Game Computing Applications Group
- Natalya Tatarchuk, Jeremy Shopf, Josh Barczak, Christopher Oat, Abe Wiley
- Chaingun Studios, Exigent, Allegorithmic

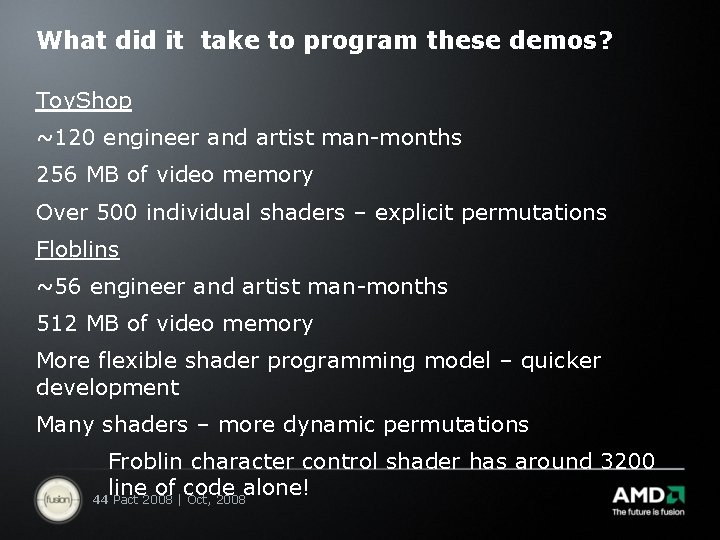

What did it take to program these demos?
- ToyShop:
  - ~120 engineer and artist man-months
  - 256 MB of video memory
  - Over 500 individual shaders (explicit permutations)
- Froblins:
  - ~56 engineer and artist man-months
  - 512 MB of video memory
  - A more flexible shader programming model, so quicker development
  - Many shaders, with more dynamic permutations
  - The Froblin character control shader alone has around 3,200 lines of code!

Architecture/software issue: improve the video interface
- To lower power, the GPU has a dedicated processor that handles video decode, offloading work from the CPU.
- Programmable cores are used for de-interlacing, scaling, color-space conversion, and composition.
- Today this is hand-coded; the challenge is to design a programming model for heterogeneous compute.

Challenge
- Most of the successful parallel applications seem to have dedicated languages for limited domains (DirectX® 11, map-reduce, Sawzall):
  - Small programs can build interesting applications.
  - Programmers are not super experts in the hardware.
  - Programs survive machine generations.
- Can you replicate this success in other domains?

Disclaimer and Attribution

DISCLAIMER: The information presented in this document is for informational purposes only and may contain technical inaccuracies, omissions and typographical errors. AMD MAKES NO REPRESENTATIONS OR WARRANTIES WITH RESPECT TO THE CONTENTS HEREOF AND ASSUMES NO RESPONSIBILITY FOR ANY INACCURACIES, ERRORS OR OMISSIONS THAT MAY APPEAR IN THIS INFORMATION. AMD SPECIFICALLY DISCLAIMS ANY IMPLIED WARRANTIES OF MERCHANTABILITY OR FITNESS FOR ANY PARTICULAR PURPOSE. IN NO EVENT WILL AMD BE LIABLE TO ANY PERSON FOR ANY DIRECT, INDIRECT, SPECIAL OR OTHER CONSEQUENTIAL DAMAGES ARISING FROM THE USE OF ANY INFORMATION CONTAINED HEREIN, EVEN IF AMD IS EXPRESSLY ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

ATTRIBUTION: © 2008 Advanced Micro Devices, Inc. All rights reserved. AMD, the AMD Arrow logo, ATI, the ATI logo, CrossFireX, PowerPlay and Radeon and combinations thereof are trademarks of Advanced Micro Devices, Inc. Other names are for informational purposes only and may be trademarks of their respective owners.

Questions?