Enhancing GPU for Scientific Computing: Some Thoughts

- Slides: 26

Outline
- Motivation
- Related work
- BLAS Library
- Execution Model
- Benchmarks
- Recommendations

Motivation
- GPU computing
  - Vertex and fragment processors
  - Streaming (super)computers
  - Enormous performance! (ATI 9700, NV30)
- GPUs have become programmable
- Emerging application areas
  - Numerical simulation [Schroder'03]
  - Sorting, genomics, etc.
- Goal: scientific computing

Motivation
- Most software is built from small, efficient parts
- Scientific applications are built on top of software library routines
- Harnessing GPU resources for routines that are
  - Arithmetic-intensive
  - Data-parallel
- Hence: a BLAS library

![Related work slide](https://slidetodoc.com/presentation_image/176f3b62a680e4cc05e8d17afa7646f9/image-5.jpg)

Related work
- Using non-programmable GPUs
- [Erik'01] Programmable vertex engine for lighting/morphing
- [Oskin'02] Vector processing using the VP
- [Ian'03] Stream processing using the FP
- Problems:
  - Monolithic, big programs
  - Only one of the VP or FP is used
  - CPU in passive mode
  - No cascading loop-backs (parallelism, setup times)

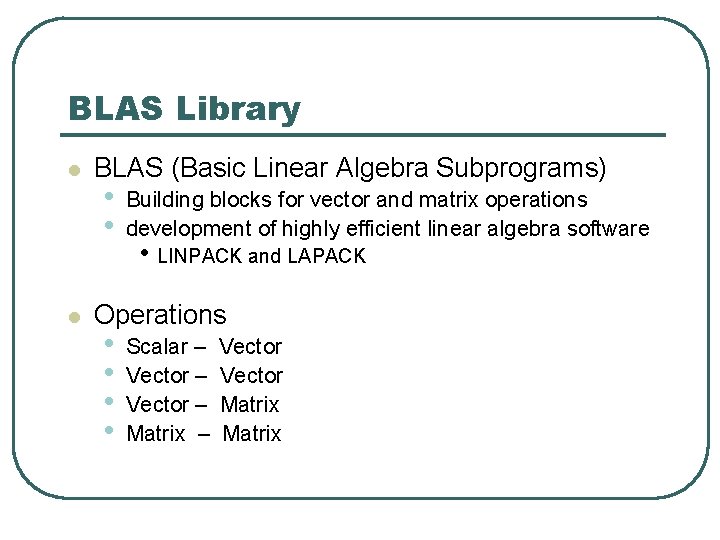

BLAS Library
- BLAS (Basic Linear Algebra Subprograms)
  - Building blocks for vector and matrix operations
  - Used in the development of highly efficient linear algebra software, e.g. LINPACK and LAPACK
- Operations
  - Combinations of scalars, vectors and matrices: scalar–vector, vector–vector, matrix–vector, matrix–matrix
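As a point of reference for these operation classes, here is a minimal CPU sketch in C of one Level 1 (vector–vector) and one Level 2 (matrix–vector) pattern. The names `ref_saxpy` and `ref_sgemv` and their simplified signatures (unit stride, row-major storage, no transpose flag) are illustrative assumptions, not the reference BLAS interface; a matrix–matrix routine follows the same loop structure with one more level of nesting.

```c
#include <assert.h>
#include <stddef.h>

/* Level 1 (vector-vector): y <- alpha * x + y, the AXPY pattern. */
void ref_saxpy(size_t n, float alpha, const float *x, float *y)
{
    for (size_t i = 0; i < n; ++i)
        y[i] += alpha * x[i];
}

/* Level 2 (matrix-vector): y <- alpha * A * x + beta * y,
   with A stored row-major as rows x cols. Simplified: y is always read. */
void ref_sgemv(size_t rows, size_t cols, float alpha, const float *A,
               const float *x, float beta, float *y)
{
    assert(A != NULL && x != NULL && y != NULL);
    for (size_t r = 0; r < rows; ++r) {
        float acc = 0.0f;                 /* dot product of row r with x */
        for (size_t c = 0; c < cols; ++c)
            acc += A[r * cols + c] * x[c];
        y[r] = alpha * acc + beta * y[r];
    }
}
```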

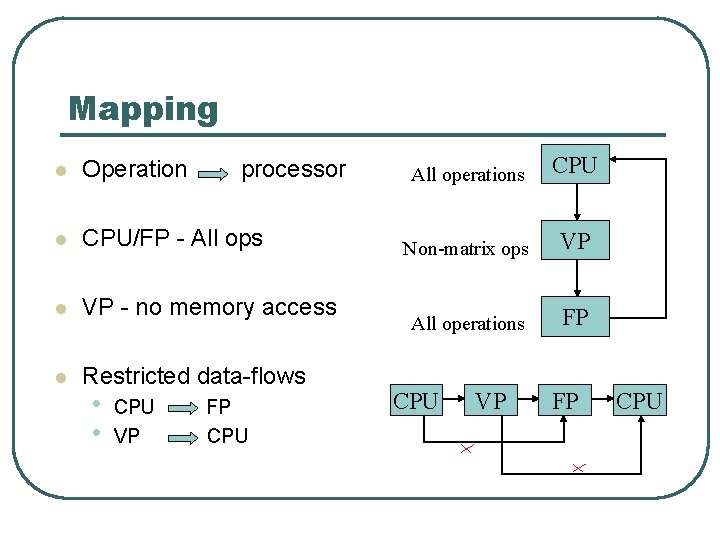

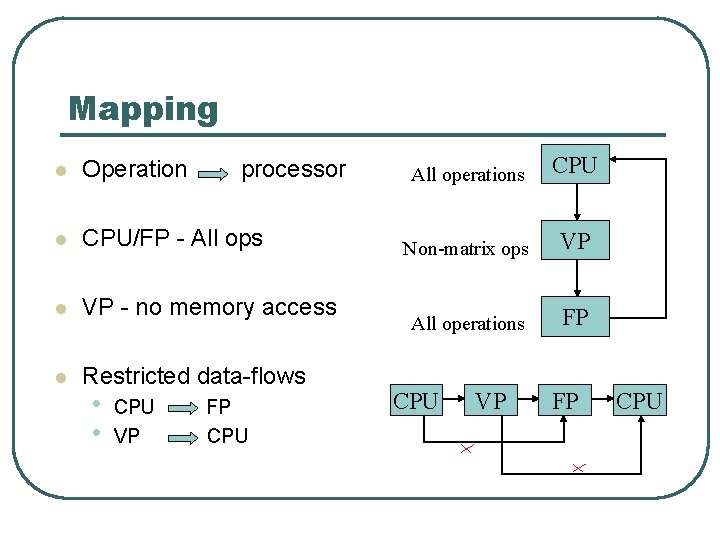

Mapping
- Operation mapping
  - CPU: all operations
  - VP: non-matrix operations only (no memory access)
  - FP: all operations
- Restricted data flows
  - CPU → VP → FP → CPU
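The mapping table can be encoded directly. The sketch below is a hypothetical C encoding (the enum and function names are invented for illustration): the CPU and FP accept every operation class, while the VP, which has no memory access on this hardware, only accepts non-matrix operations.

```c
#include <assert.h>
#include <stdbool.h>

/* Processors from the mapping slide (hypothetical encoding). */
typedef enum { PROC_CPU, PROC_VP, PROC_FP } Processor;

/* BLAS-style operation classes: scalar/vector/matrix combinations. */
typedef enum {
    OP_SCALAR_VECTOR,
    OP_VECTOR_VECTOR,
    OP_MATRIX_VECTOR,
    OP_MATRIX_MATRIX
} OpClass;

static bool is_matrix_op(OpClass op)
{
    return op == OP_MATRIX_VECTOR || op == OP_MATRIX_MATRIX;
}

/* True if operation class `op` can be mapped onto processor `p`. */
bool can_map(Processor p, OpClass op)
{
    switch (p) {
    case PROC_CPU: /* all operations */
    case PROC_FP:  /* all operations */
        return true;
    case PROC_VP:  /* no memory access, so no matrix operations */
        return !is_matrix_op(op);
    }
    assert(!"unknown processor");
    return false;
}
```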

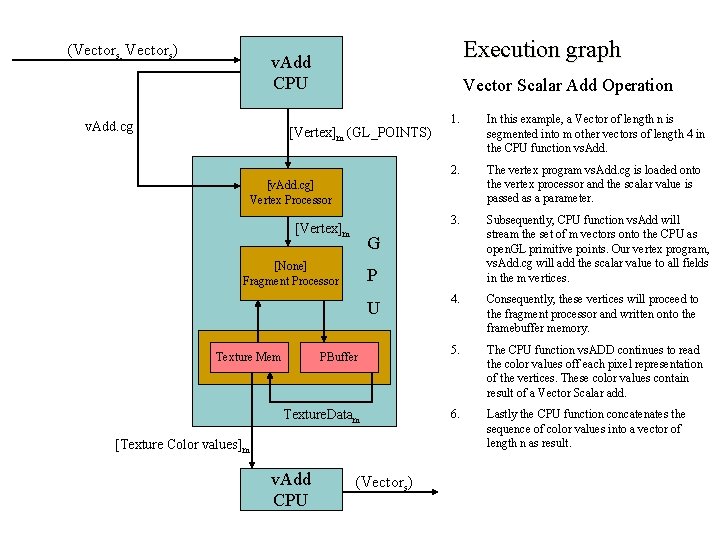

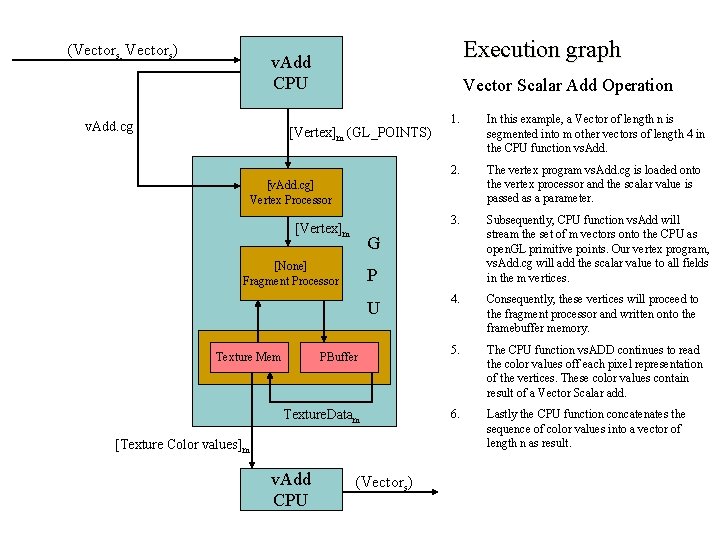

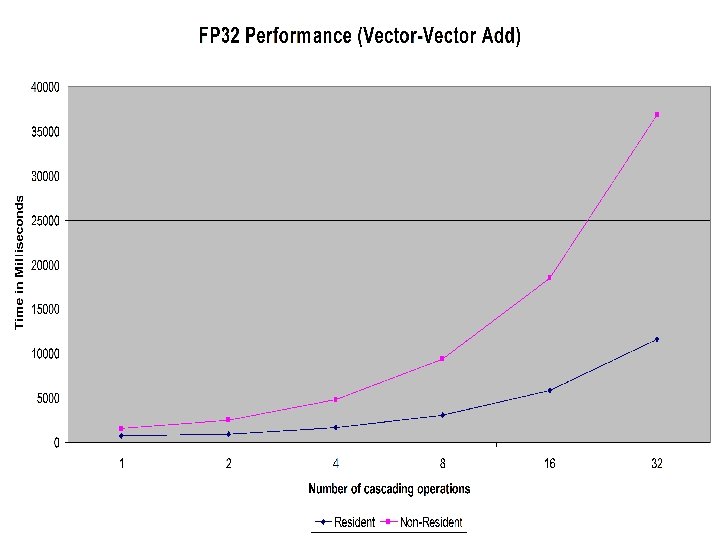

Vector–Scalar Add Operation

[Figure: execution graph. CPU function vsAdd → [Vertex]m as GL_POINTS → Vertex Processor (vsAdd.cg) → [Vertex]m → Fragment Processor (no program) → PBuffer → [Texture color values]m → CPU function vsAdd (vectors)]

1. In this example, a vector of length n is segmented into m vectors of length 4 (m = ⌈n/4⌉) in the CPU function vsAdd.
2. The vertex program vsAdd.cg is loaded onto the vertex processor, and the scalar value is passed to it as a parameter.
3. The CPU function vsAdd then streams the m vectors onto the GPU as OpenGL point primitives (GL_POINTS). Our vertex program, vsAdd.cg, adds the scalar value to every field of the m vertices.
4. These vertices then proceed to the fragment processor and are written to framebuffer memory.
5. The CPU function vsAdd reads the color values back from the pixel representing each vertex. These color values contain the result of the vector–scalar add.
6. Finally, the CPU function concatenates the sequence of color values into a vector of length n as the result.
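The data flow of vsAdd can be emulated on the CPU to make the segmentation and reassembly concrete. This is a minimal sketch, not the author's code: `Vertex4`, `vertex_program_vs_add` and `vs_add_emulated` are hypothetical names, no OpenGL or Cg calls are made, the pass-through fragment stage is folded into the framebuffer write, and n is assumed to be a multiple of 4.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* One vertex carrying four float components. */
typedef struct { float v[4]; } Vertex4;

/* Stand-in for the vertex program vsAdd.cg: adds the scalar parameter
   to every component of one vertex. */
static Vertex4 vertex_program_vs_add(Vertex4 in, float scalar)
{
    for (int k = 0; k < 4; ++k)
        in.v[k] += scalar;
    return in;
}

/* Emulated vsAdd: framebuffer must hold n / 4 vertices, result n floats. */
void vs_add_emulated(const float *x, size_t n, float scalar,
                     Vertex4 *framebuffer, float *result)
{
    assert(n % 4 == 0); /* sketch assumes n is a multiple of 4 */
    size_t m = n / 4;
    for (size_t i = 0; i < m; ++i) {
        Vertex4 vert;
        memcpy(vert.v, x + 4 * i, sizeof vert.v);            /* step 1: segment */
        framebuffer[i] = vertex_program_vs_add(vert, scalar); /* steps 2-4 */
    }
    for (size_t i = 0; i < m; ++i)                           /* steps 5-6: read back */
        memcpy(result + 4 * i, framebuffer[i].v, sizeof framebuffer[i].v);
}
```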

![Vector add operation slide](https://slidetodoc.com/presentation_image/176f3b62a680e4cc05e8d17afa7646f9/image-9.jpg)

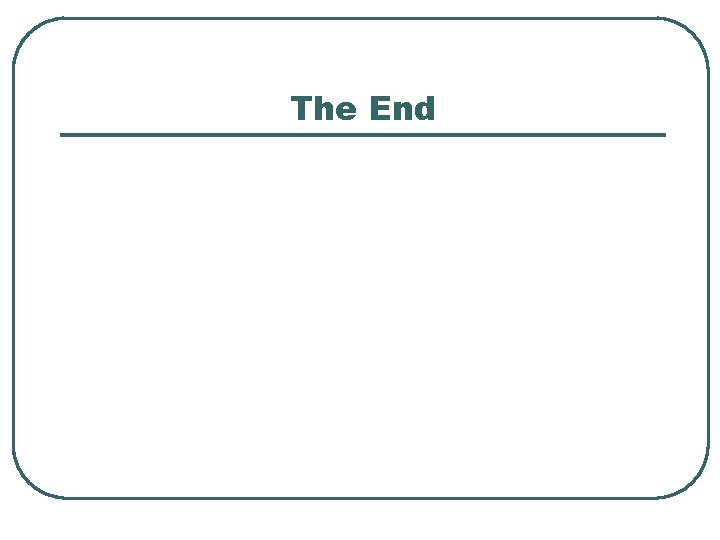

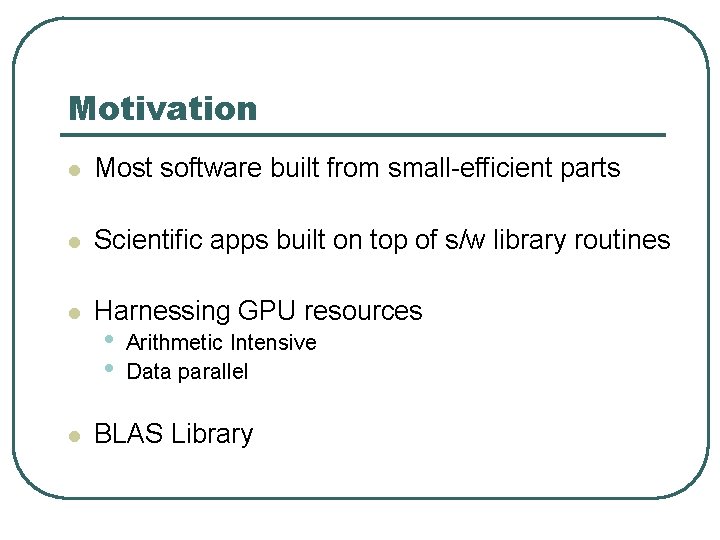

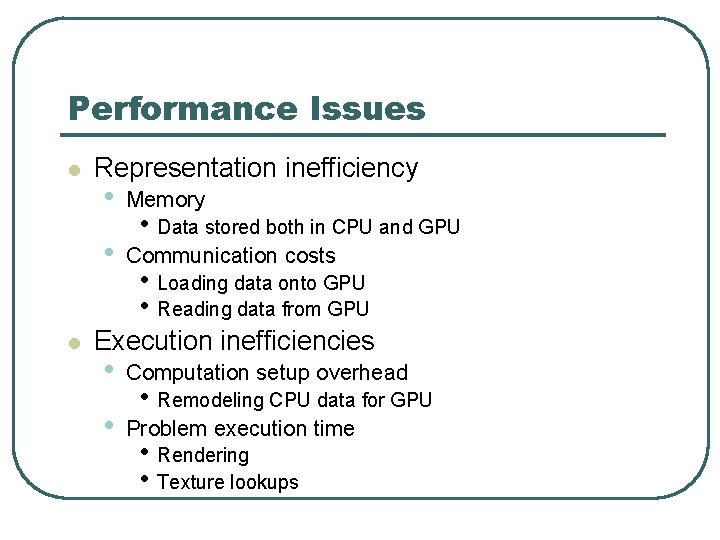

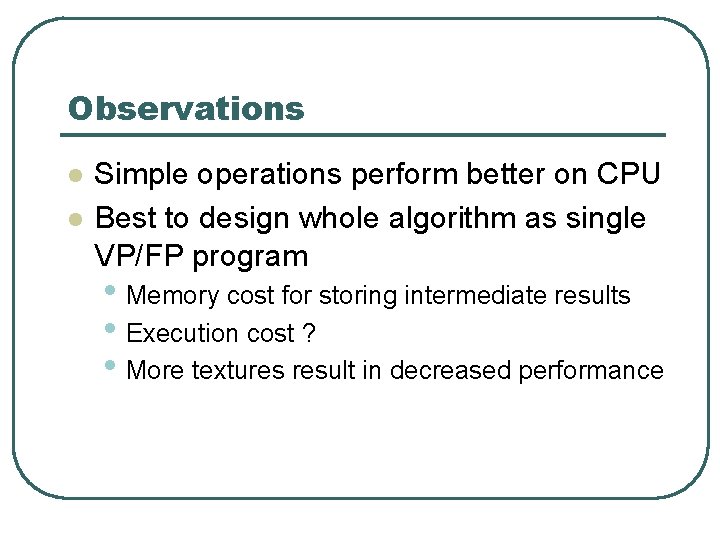

Vector Add Operation

[Figure: execution graph. CPU function vAdd → [Vertex 4]m as GL_QUADS → Vertex Processor (no program) → Fragment Processor (vAdd.cg), reading TextureData1 and TextureData2 from texture memory → PBuffer → TextureData3 → [Texture color values]m → CPU function vAdd (vectors)]

1. In this example, two vectors of length s are transformed into texture data in the CPU function vAdd.
2. The fragment program vAdd.cg and the texture data are loaded onto the fragment processor and into GPU memory, respectively.
3. The CPU function vAdd then draws a quadrilateral primitive covering s pixels.
4. The vertex processor does nothing; it passes the vertices on to the rasterizer, which converts them into a pixel representation.
5. The rasterizer creates the s pixels for fragment processing.
6. For each pixel, our fragment program looks up the values in both textures and determines the pixel's color value. These pixels are written to PBuffer memory.
7. The CPU function vAdd reads the color values back from the pixels. These color values contain the result of the vector add.
8. The output in the PBuffer is then converted into a texture entry.
9. Finally, the CPU function reads the texture entry and concatenates the sequence of color values into a vector of length s as the result.
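A similar CPU emulation of vAdd, again a hypothetical sketch rather than the deck's implementation: each texture holds one vector element per texel (matching the quad of s pixels), the vertex stage is treated as a pass-through, and the rasterizer is modelled as a loop over every pixel of the render target. `Texture`, `fragment_program_v_add` and `v_add_emulated` are invented names; when s = width × height, the output texels read back in row-major order are the result vector.

```c
#include <assert.h>
#include <stddef.h>

/* Single-channel 2D texture or render target: width x height texels,
   row-major, one vector element per texel. */
typedef struct {
    size_t width, height;
    float *texels;
} Texture;

/* Stand-in for the fragment program vAdd.cg: look up the same texel in
   both input textures and return their sum as the pixel's color. */
static float fragment_program_v_add(const Texture *a, const Texture *b,
                                    size_t x, size_t y)
{
    size_t idx = y * a->width + x;
    return a->texels[idx] + b->texels[idx];
}

/* Emulates drawing one quad that covers every pixel of `out` (the PBuffer):
   the "rasterizer" visits each pixel and runs the fragment program there. */
void v_add_emulated(const Texture *a, const Texture *b, Texture *out)
{
    assert(a->width == b->width && a->height == b->height);
    assert(out->width == a->width && out->height == a->height);
    for (size_t y = 0; y < out->height; ++y)
        for (size_t x = 0; x < out->width; ++x)
            out->texels[y * out->width + x] = fragment_program_v_add(a, b, x, y);
}
```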

![Two vector add operations slide](https://slidetodoc.com/presentation_image/176f3b62a680e4cc05e8d17afa7646f9/image-10.jpg)

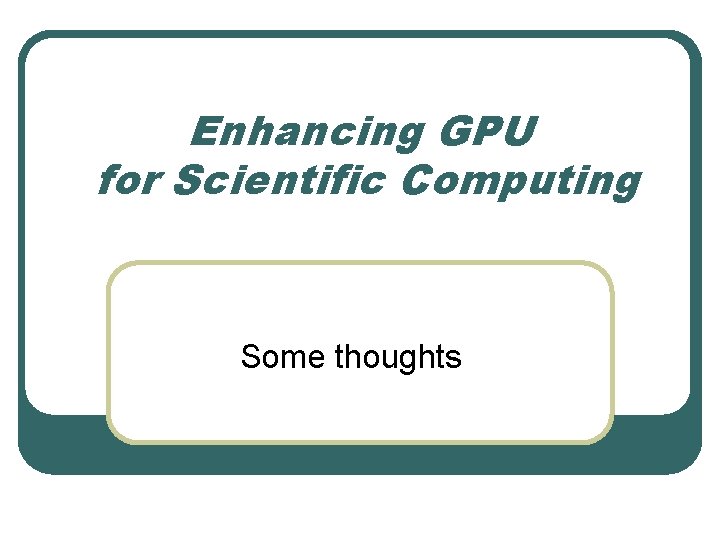

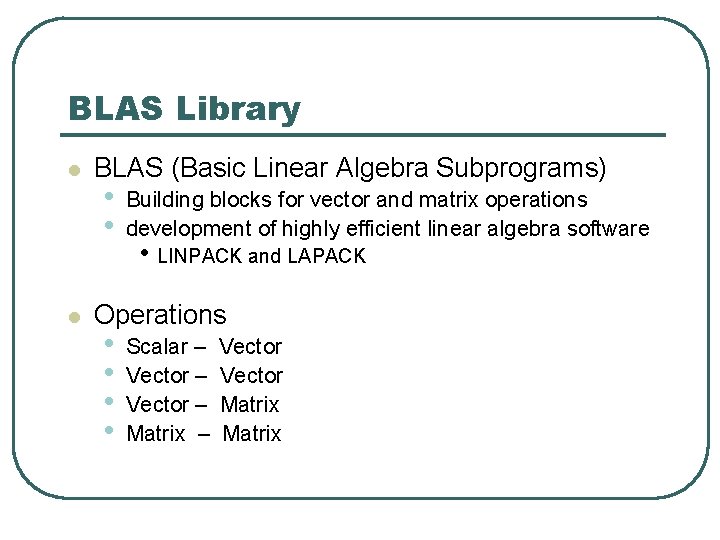

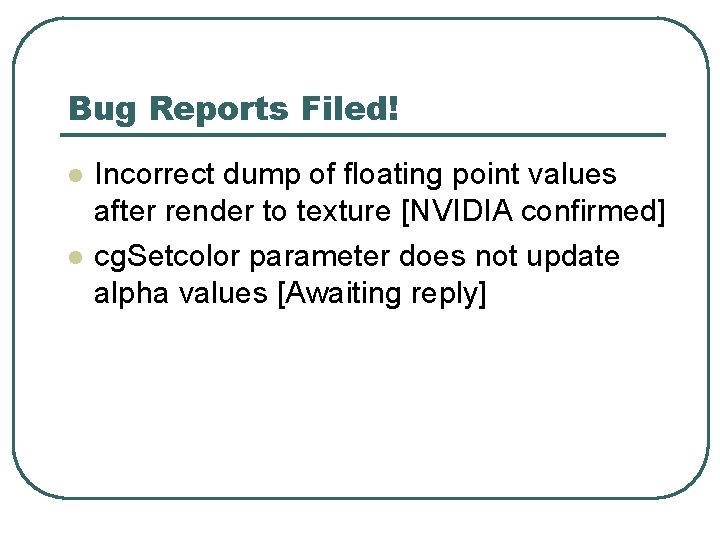

Two Vector Add Operations

[Figure: execution graph. CPU function vAdd → [Vertex 4]m as GL_QUADS → Vertex Processor (no program) → Fragment Processor (vAdd.cg), reading TextureData3 and TextureData4 from texture memory → PBuffer → [Texture color values]m → CPU function vAdd (vectors)]

1. In this example, we perform two separate vector add operations.
2. The first operation proceeds as described earlier for the vector add operation.
3. The output of the first operation is used as input to the second operation.
4. Since it is the same operation, we do not load a new vertex or fragment program; we only load new texture data.
5. The second operation proceeds as normal.
6. Finally, the CPU function concatenates the sequence of color values into a vector of length s as the result.
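The benefit of chaining shows up in how much data crosses the CPU/GPU boundary. The sketch below is hypothetical bookkeeping (the `TransferLog` counters and function names are assumptions, and plain arrays stand in for textures) for computing (a + b) + c as two chained vAdd passes, where the intermediate stays on the GPU and vAdd.cg is bound only once.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical counters for traffic and setup in a chain of vAdd passes. */
typedef struct {
    int uploads;       /* vectors loaded into GPU texture memory */
    int readbacks;     /* vectors read back to the CPU           */
    int program_loads; /* fragment programs bound                */
} TransferLog;

/* Elementwise add, standing in for one rendering pass of vAdd.cg. */
static void add_pass(const float *a, const float *b, float *out, size_t n)
{
    for (size_t i = 0; i < n; ++i)
        out[i] = a[i] + b[i];
}

/* out = (a + b) + c as two chained passes; tmp models the intermediate
   texture that stays in GPU memory between the passes. */
void chained_v_add(const float *a, const float *b, const float *c,
                   float *tmp, float *out, size_t n, TransferLog *xfer)
{
    assert(xfer != NULL);
    xfer->program_loads += 1;  /* bind vAdd.cg once for both passes  */
    xfer->uploads += 2;        /* a and b as input textures          */
    add_pass(a, b, tmp, n);    /* pass 1: result stays on the GPU    */
    xfer->uploads += 1;        /* only the new operand c is uploaded */
    add_pass(tmp, c, out, n);  /* pass 2 reads tmp without a readback */
    xfer->readbacks += 1;      /* only the final result returns      */
}
```

Running the same two adds as independent round trips would instead upload four vectors (a and b, then the read-back intermediate and c) and read back two results.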

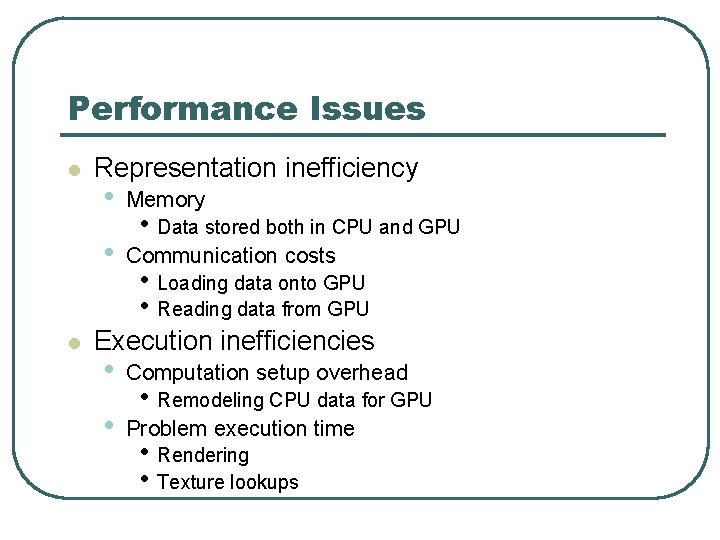

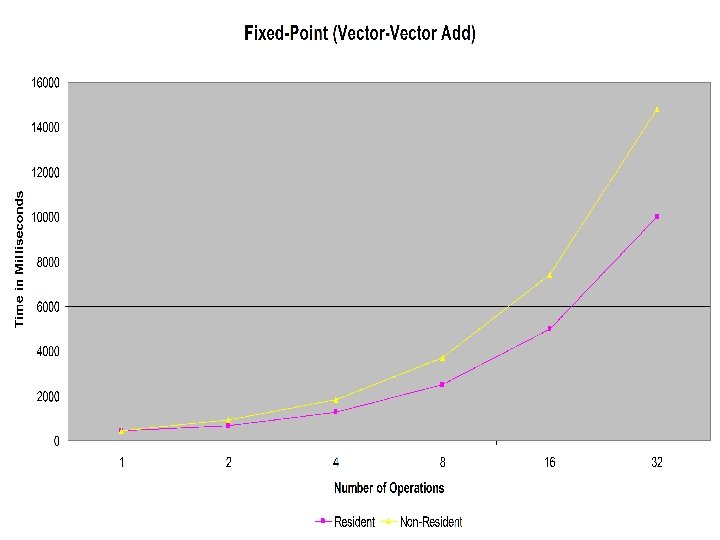

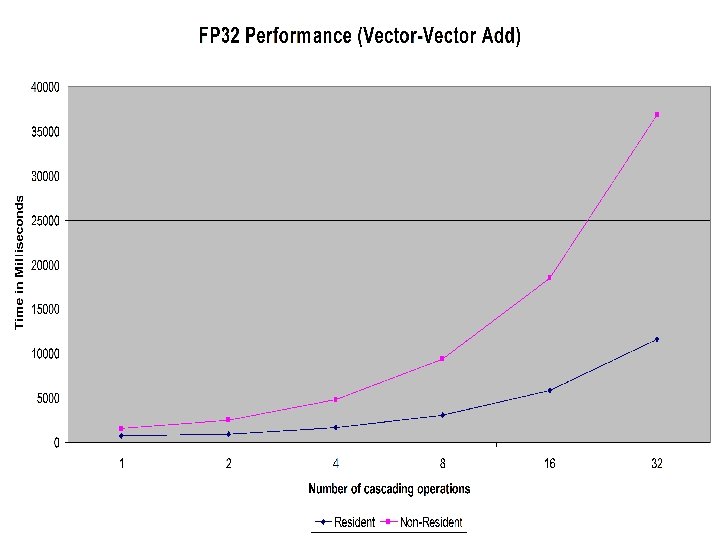

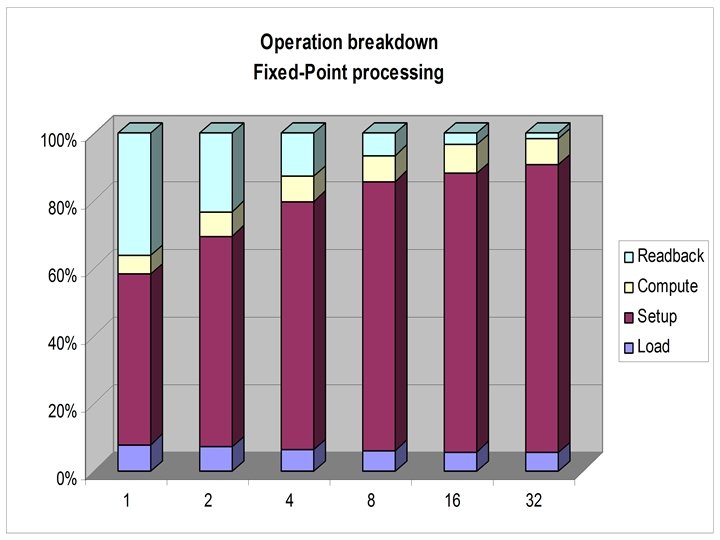

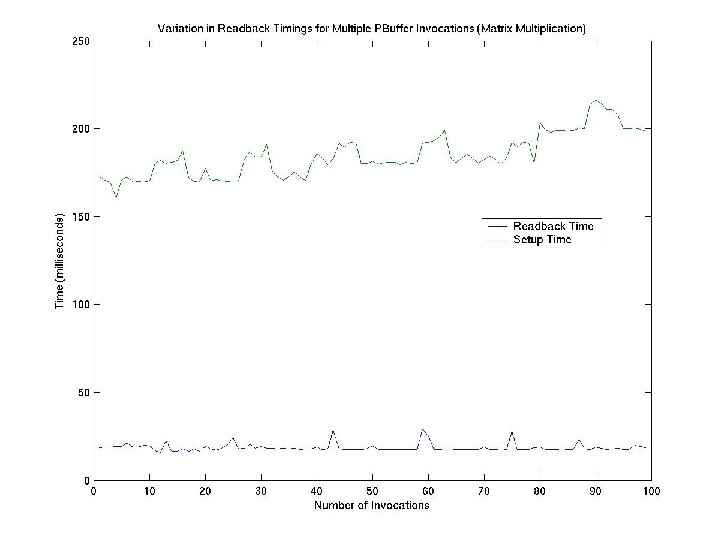

Performance Issues
- Representation inefficiency
  - Memory
    - Data stored on both the CPU and the GPU
  - Communication costs
    - Loading data onto the GPU
    - Reading data back from the GPU
- Execution inefficiencies
  - Computation setup overhead
    - Remodeling CPU data for the GPU
  - Problem execution time
    - Rendering
    - Texture lookups
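One way to reason about these issues is a back-of-the-envelope cost model in which a GPU call pays for setup, upload, rendering and readback, and offloading only helps when that total beats the CPU time. This sketch assumes the costs simply add (no overlap of transfers with computation); `GpuCost` and `gpu_offload_worthwhile` are hypothetical names, and the model contains no measured values from the benchmarks.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical per-call cost terms, all in the same time unit. */
typedef struct {
    double setup;    /* program binding, remodeling CPU data for the GPU */
    double upload;   /* loading operands into GPU memory                 */
    double render;   /* rendering and texture lookups                    */
    double readback; /* reading results back from the GPU                */
} GpuCost;

/* Total GPU round-trip time, assuming the terms simply add. */
double gpu_total(const GpuCost *c)
{
    assert(c->setup >= 0 && c->upload >= 0 && c->render >= 0 && c->readback >= 0);
    return c->setup + c->upload + c->render + c->readback;
}

/* Offloading pays off only if the whole GPU round trip beats the CPU. */
bool gpu_offload_worthwhile(const GpuCost *c, double cpu_time)
{
    return gpu_total(c) < cpu_time;
}
```

Under this model, an operation with little rendering work is dominated by the fixed setup and transfer terms, which is consistent with the observation that simple operations perform better on the CPU.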

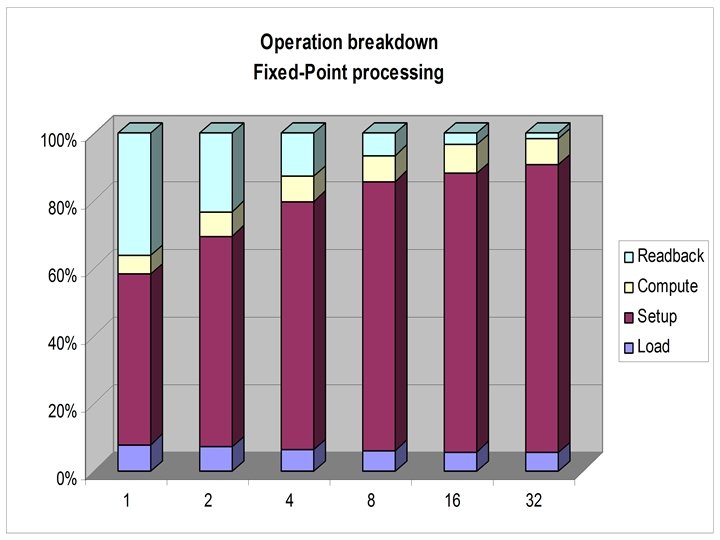

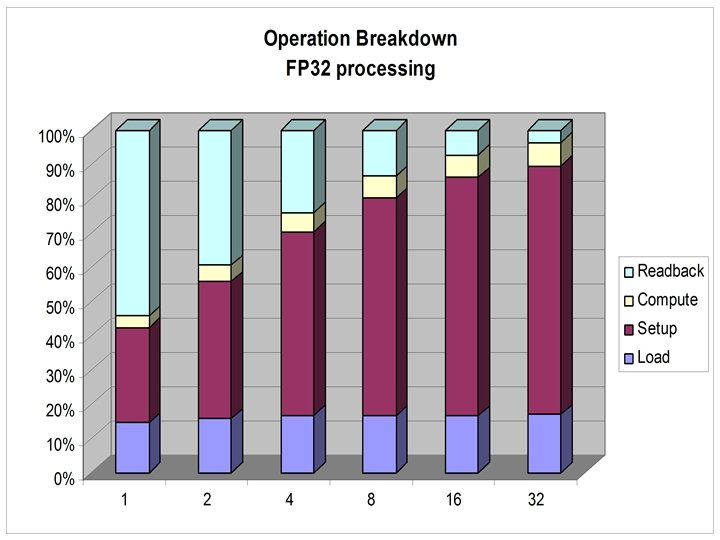

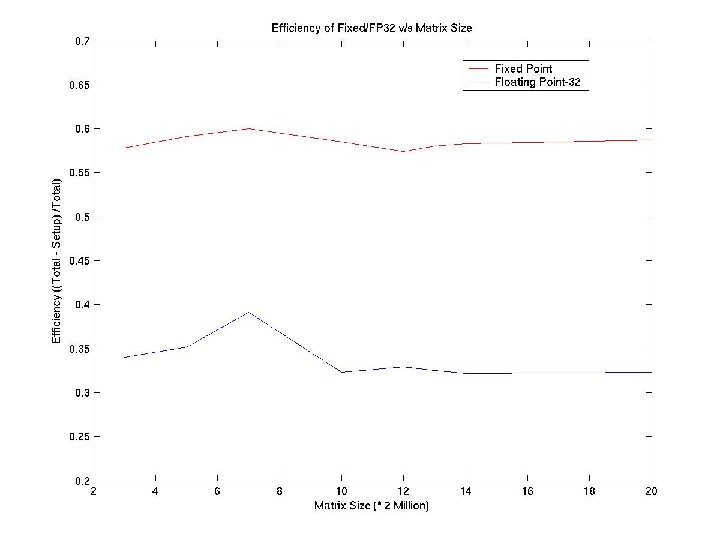

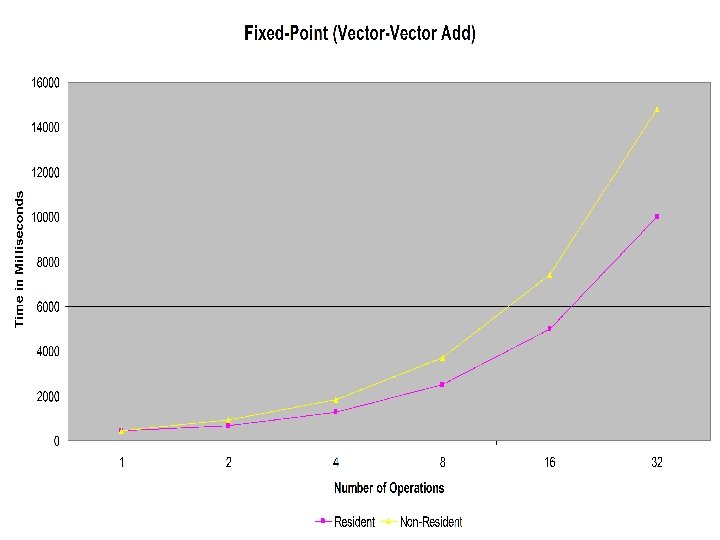

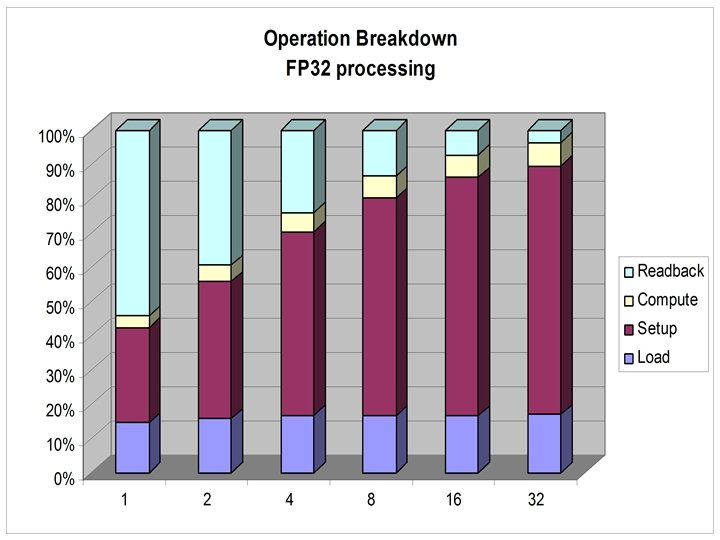

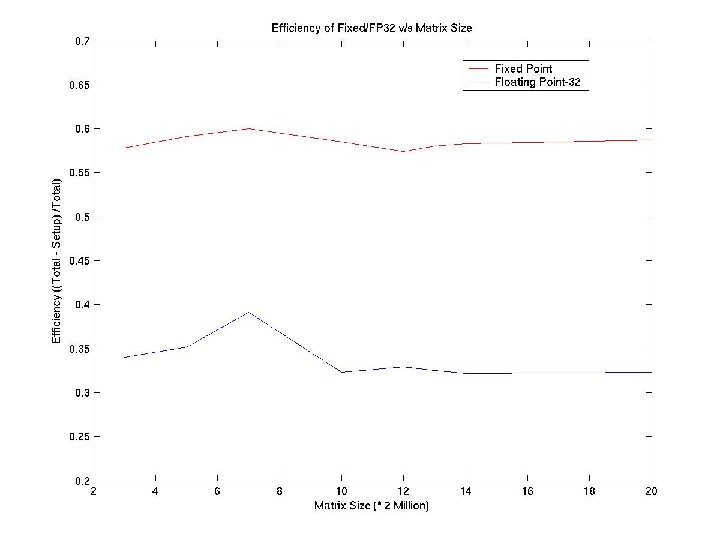

Observations
- Fixed-point operations are much faster than floating-point ones
- FP16 and FP32 operations have similar performance
- The VP is slower than the FP
  - Operation mappings involving both the VP and the FP result in an inefficient pipeline

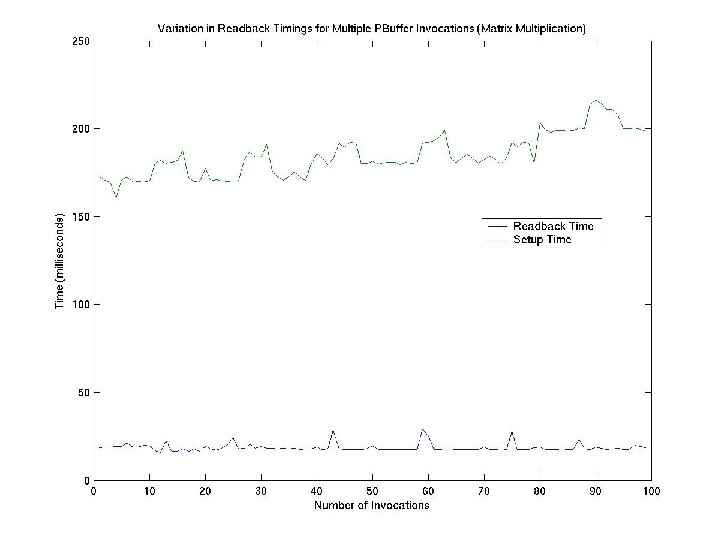

Observations
- Simple operations perform better on the CPU
- It is best to design the whole algorithm as a single VP/FP program
  - Memory cost of storing intermediate results
  - Execution cost?
  - More textures result in decreased performance
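The advice to fold a whole algorithm into a single program can be illustrated with a plain C analogy (not GPU code; the function names are hypothetical): computing a + b + c as two passes needs an intermediate buffer, the analogue of an extra texture, while a fused single pass keeps the intermediate in a register.

```c
#include <assert.h>
#include <stddef.h>

/* Unfused: two passes, like two separate vAdd operations; the
   intermediate a + b must be stored in tmp (an extra texture on a GPU). */
void add3_two_pass(const float *a, const float *b, const float *c,
                   float *tmp, float *out, size_t n)
{
    assert(n == 0 || (a && b && c && tmp && out));
    for (size_t i = 0; i < n; ++i) tmp[i] = a[i] + b[i];
    for (size_t i = 0; i < n; ++i) out[i] = tmp[i] + c[i];
}

/* Fused: one pass, like a single program computing a + b + c;
   the intermediate never leaves a register, so no tmp is needed. */
void add3_fused(const float *a, const float *b, const float *c,
                float *out, size_t n)
{
    assert(n == 0 || (a && b && c && out));
    for (size_t i = 0; i < n; ++i) out[i] = a[i] + b[i] + c[i];
}
```

The fused version still reads three inputs per element, so on the GPU it would still sample three textures; fusion trades intermediate storage for more texture inputs per pass.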

Bug Reports Filed!
- Incorrect dump of floating-point values after render-to-texture [NVIDIA confirmed]
- The cgSetcolor parameter does not update alpha values [Awaiting reply]

Recommendations (3D Graphics Hackers)
- Load important data into video memory
- Make maximum use of the fixed-point pipeline
- Code optimization is important (instructions, memory)
- Upgrade your video card drivers (a must!)
  - Hacking graphics hardware is a *real* pain!

Recommendations (Cg)
- Pointers (meaningful for numerical computing)
- Texture fetch instructions (addOffsets)
- Accumulation registers (sum)
- Preserving state across multiple calls
- Introduce stack mechanisms
- Introduce bitwise operators
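Accumulation registers matter because BLAS routines such as a dot product or a vector sum end in a reduction. Without them, a common workaround (assumed here; the deck does not describe it) is to sum in several rendering passes, each halving the data. The hypothetical `multipass_sum` below emulates that on the CPU, alternating between two halves of a scratch buffer the way a GPU would alternate between two render targets, and assumes n is a power of two.

```c
#include <assert.h>
#include <stddef.h>

/* Sums n floats (n a power of two) in log2(n) halving passes:
   dst[i] = src[2i] + src[2i+1]. `buf` is scratch space of at least n floats;
   its first half and second half act as two alternating render targets. */
float multipass_sum(const float *in, size_t n, float *buf)
{
    assert(n > 0 && (n & (n - 1)) == 0); /* power of two, for simplicity */
    float *ping = buf, *pong = buf + n / 2;
    const float *src = in;
    float *dst = ping;
    while (n > 1) {
        size_t half = n / 2;
        for (size_t i = 0; i < half; ++i)   /* one "rendering pass" */
            dst[i] = src[2 * i] + src[2 * i + 1];
        src = dst;                           /* output feeds the next pass */
        dst = (dst == ping) ? pong : ping;   /* swap render targets */
        n = half;
    }
    return src[0];
}
```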

Recommendations (Hardware)
- Allow the GPU to read/write CPU memory
- Make the VP and FP first-class processors on the GPU
  - Similar cores and instruction sets
  - Allow full parallelism
- Allow the CPU to read/write all registers in the GPU processors
- Introduce a stack
- Introduce bitwise operators

Deliverables!
- A draft subset of the BLAS library
- Architecture insights (issues/constraints)
- NV30 improvements (bug reports)
- Technical write-up

The End