TESTING AND EXPOSING WEAK GPU MEMORY MODELS MS

- Slides: 137

TESTING AND EXPOSING WEAK GPU MEMORY MODELS MS Thesis Defense by Tyler Sorensen Advisor: Ganesh Gopalakrishnan May 30, 2014 1

• Joint Work with: Jade Alglave (University College London), Daniel Poetzl (University of Oxford), Luc Maranget (Inria), Alastair Donaldson, John Wickerson (Imperial College London), Mark Batty (University of Cambridge) 2

Roadmap • Background and Approach • Prior Work • Testing Framework • Results • CUDA Spin Locks • Bulk Testing • Future Work and Conclusion 3

Roadmap • Background and Approach • Prior Work • Testing Framework • Results • CUDA Spin Locks • Bulk Testing • Future Work and Conclusion 4

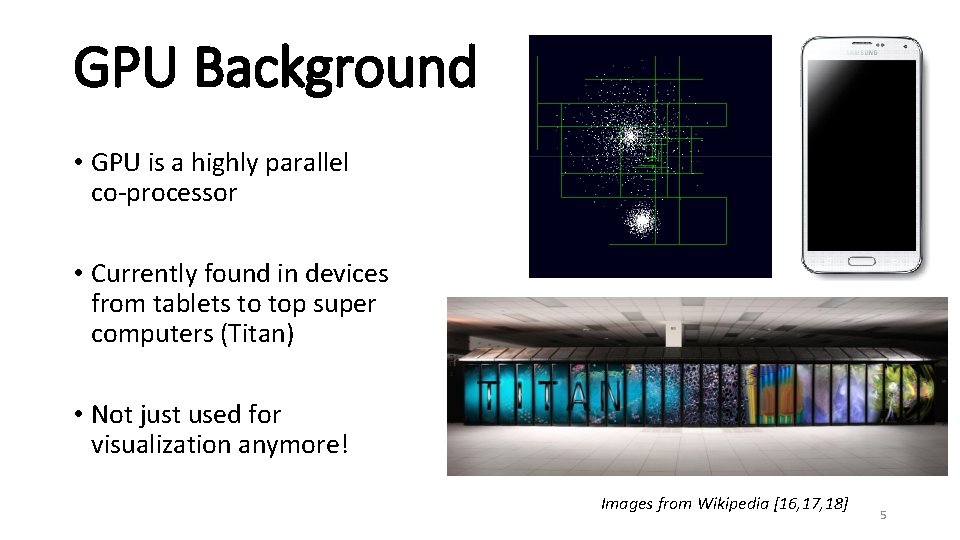

GPU Background • A GPU is a highly parallel co-processor • Currently found in devices from tablets to top supercomputers (Titan) • Not just used for visualization anymore! Images from Wikipedia [16, 17, 18] 5

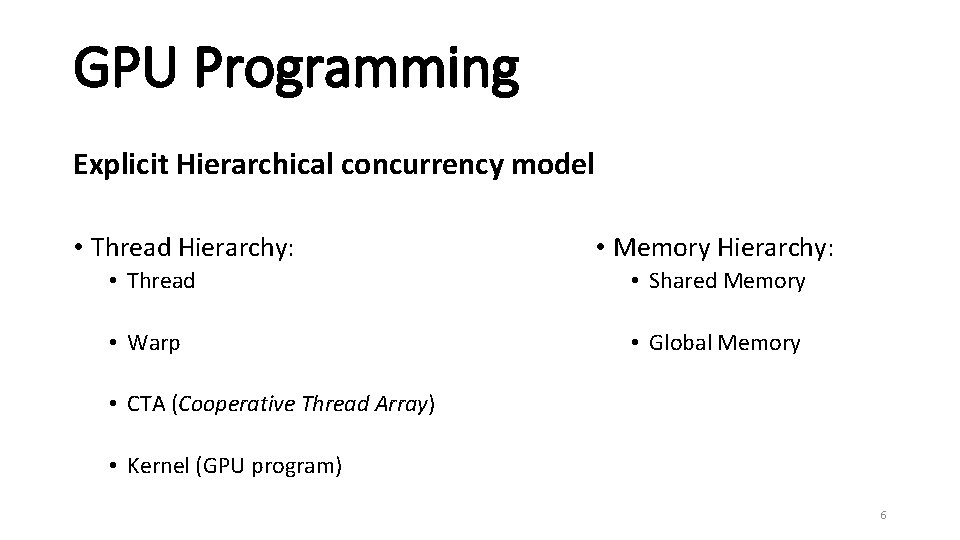

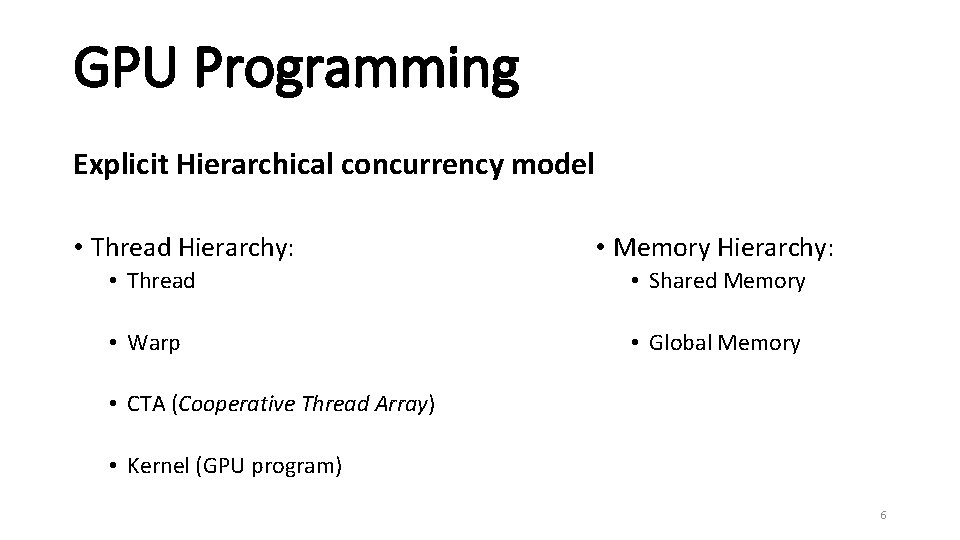

GPU Programming • Explicit hierarchical concurrency model • Thread Hierarchy: Thread, Warp, CTA (Cooperative Thread Array), Kernel (GPU program) • Memory Hierarchy: Shared Memory, Global Memory 6

GPU Programming 7

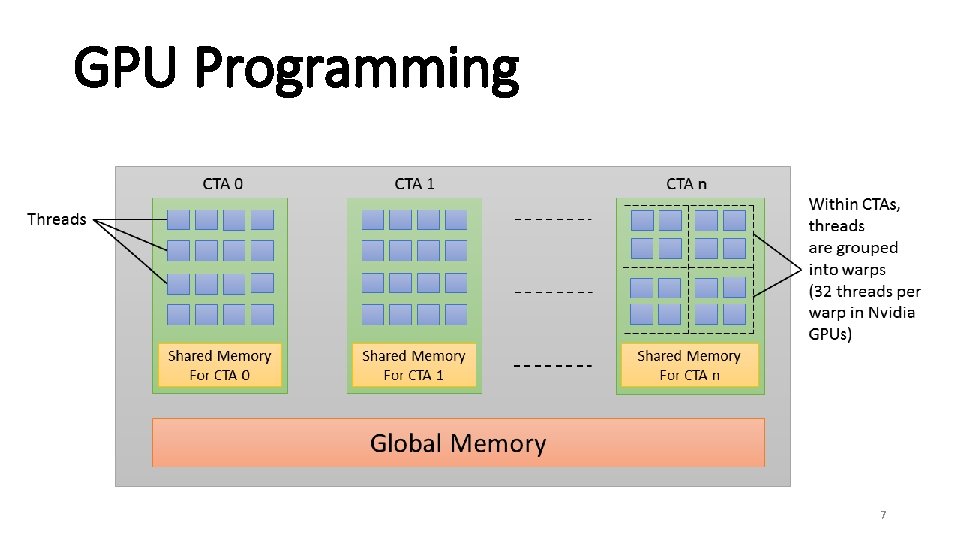

GPU Programming • GPUs are SIMT (Single Instruction, Multiple Thread) • NVIDIA GPUs may be programmed using CUDA or OpenCL 8

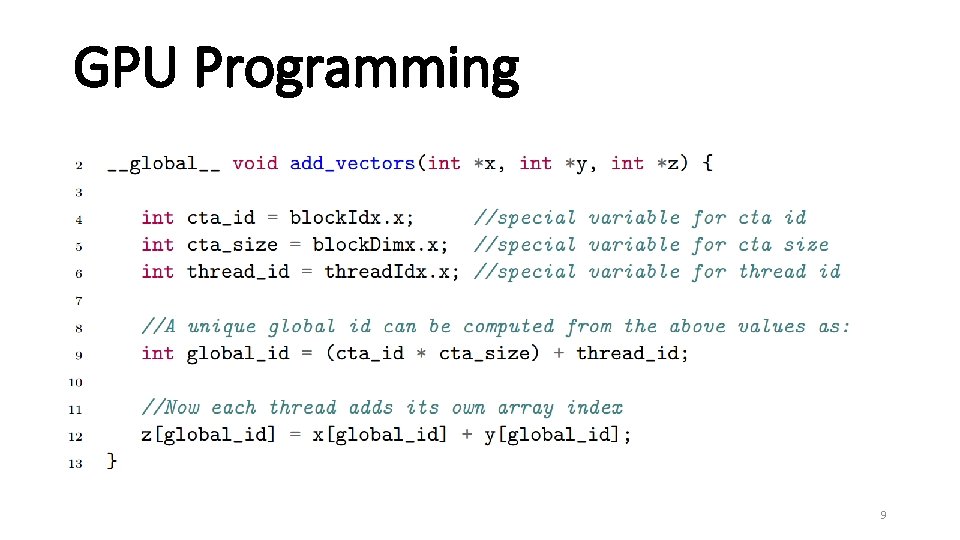

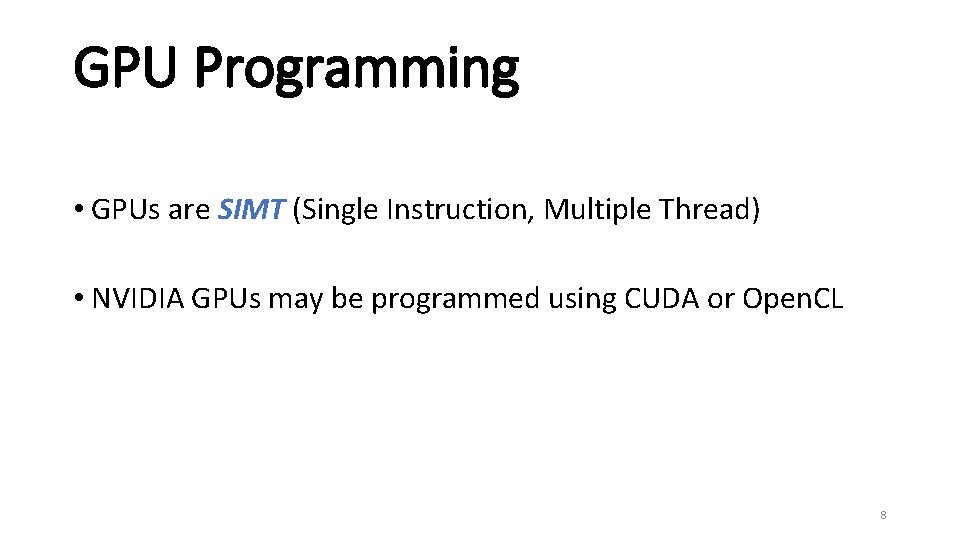

GPU Programming 9

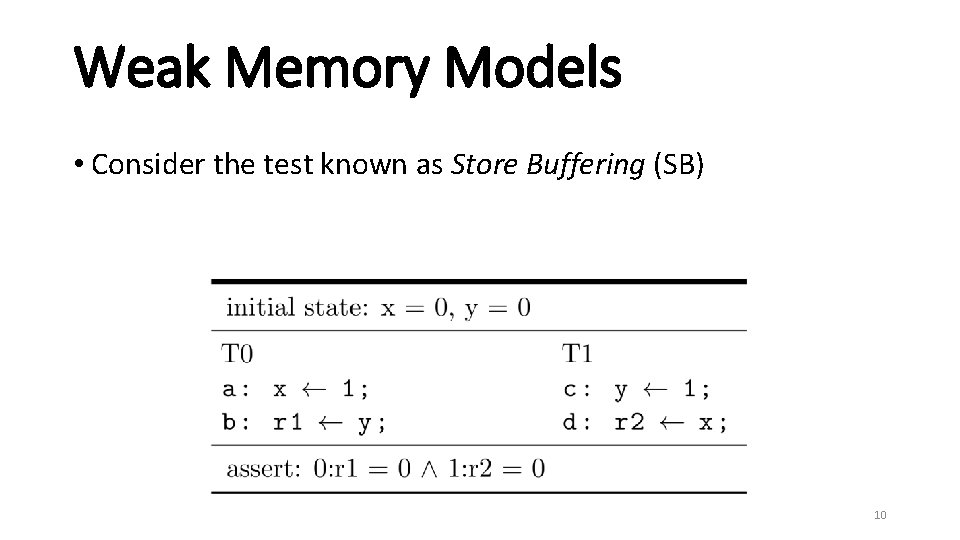

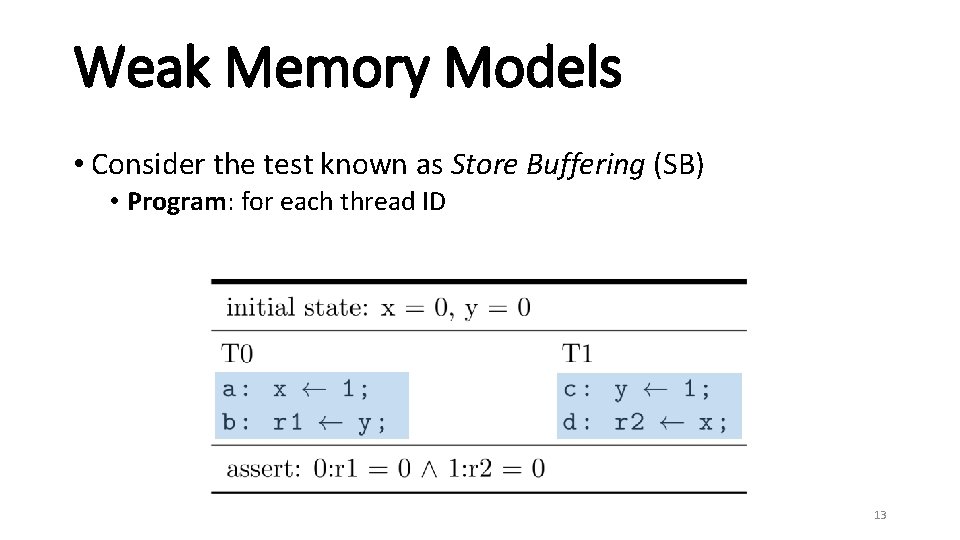

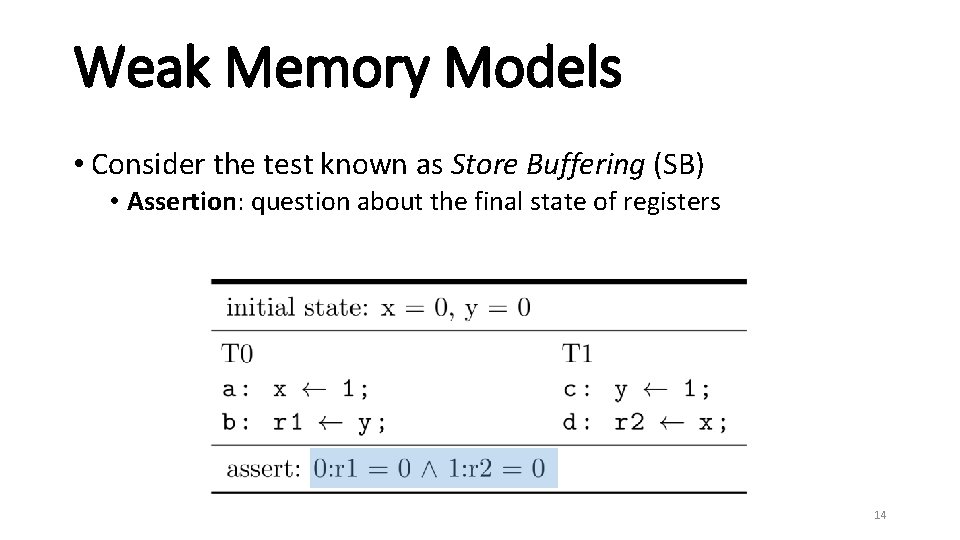

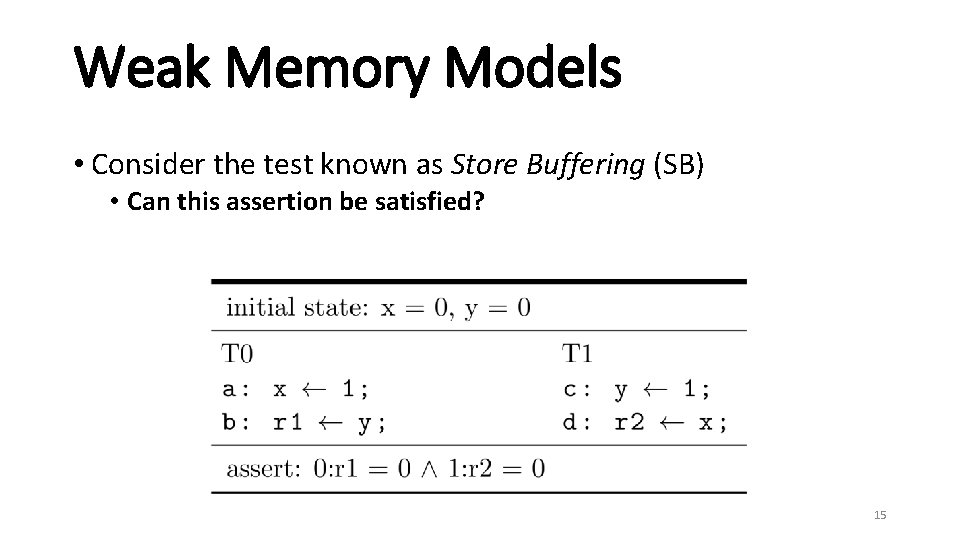

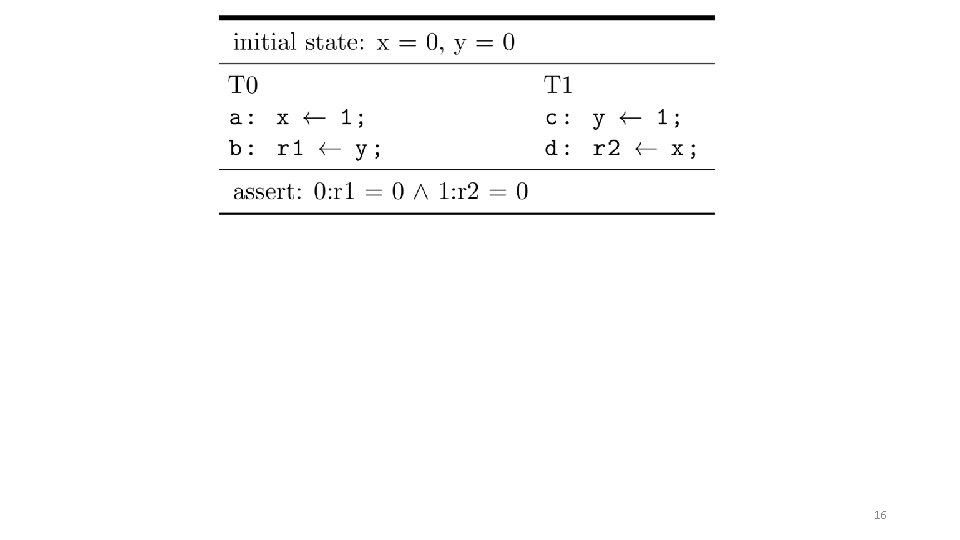

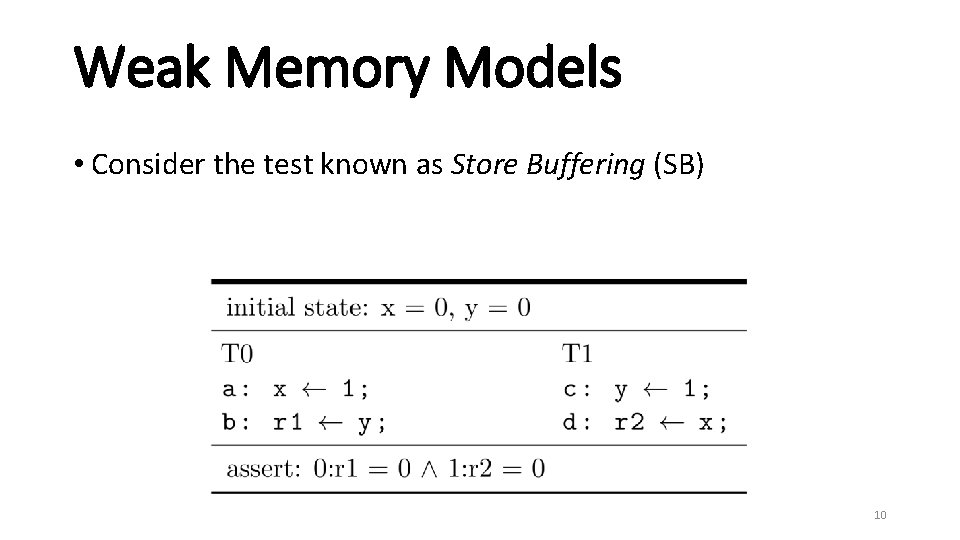

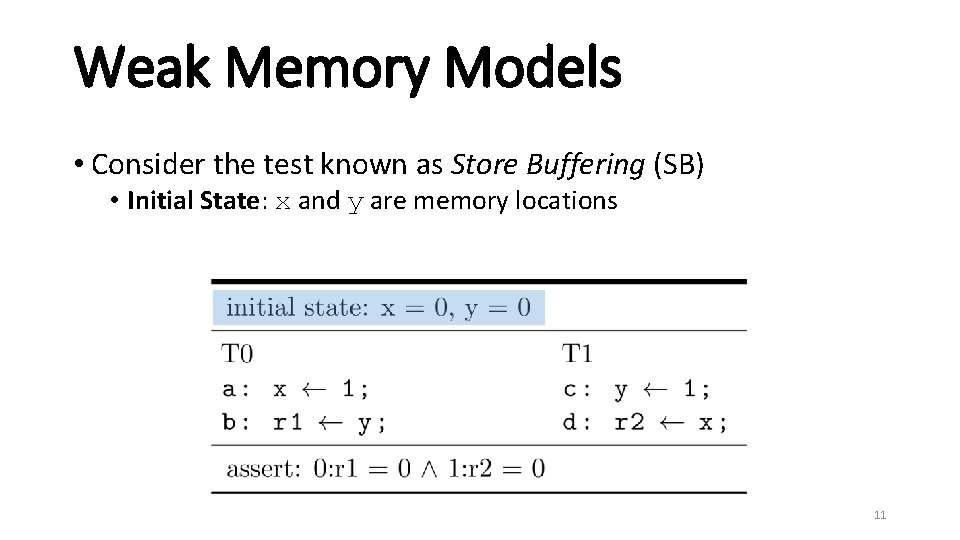

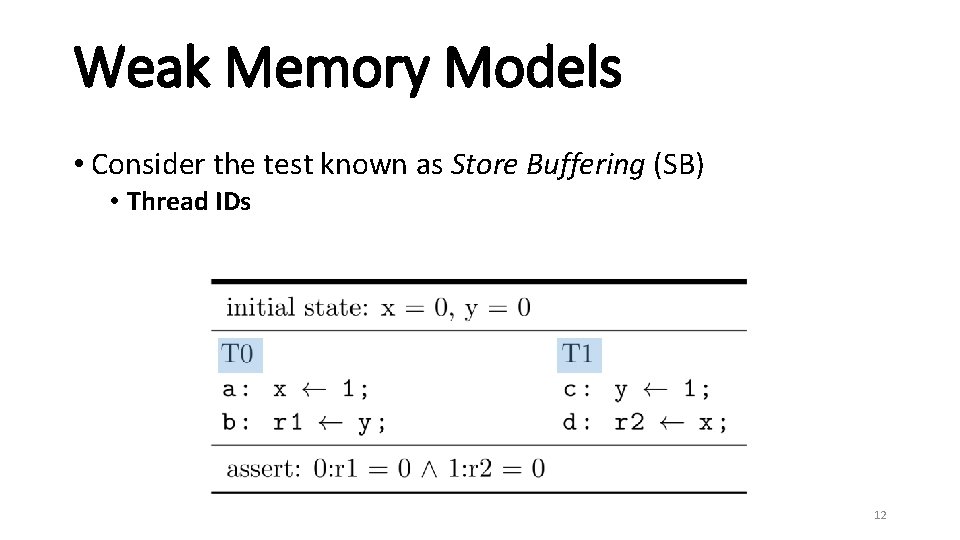

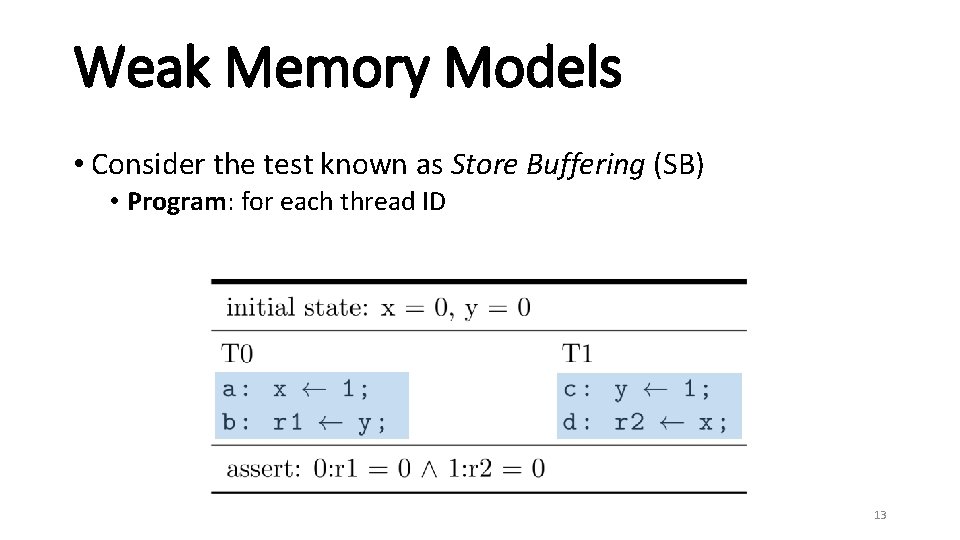

Weak Memory Models • Consider the test known as Store Buffering (SB) 10

Weak Memory Models • Consider the test known as Store Buffering (SB) • Initial State: x and y are memory locations 11

Weak Memory Models • Consider the test known as Store Buffering (SB) • Thread IDs 12

Weak Memory Models • Consider the test known as Store Buffering (SB) • Program: for each thread ID 13

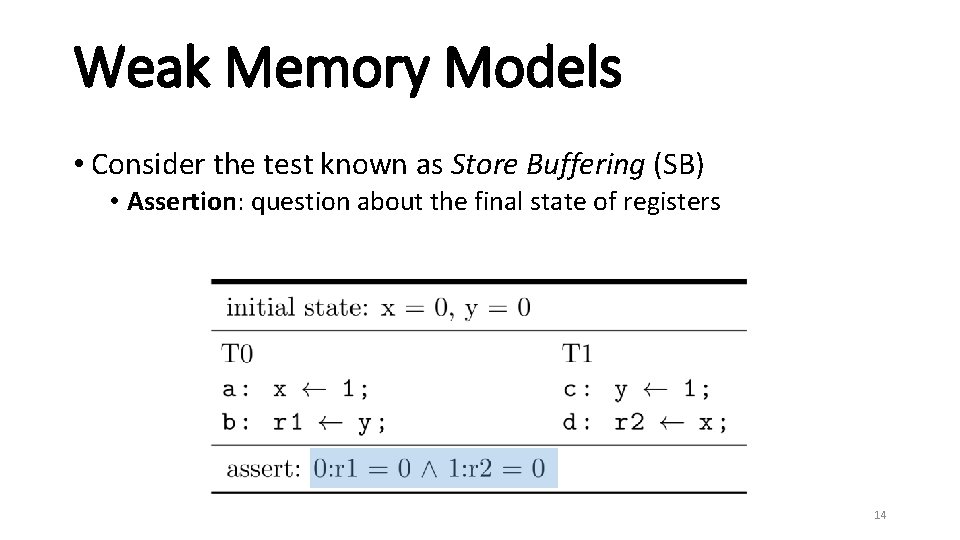

Weak Memory Models • Consider the test known as Store Buffering (SB) • Assertion: question about the final state of registers 14

Weak Memory Models • Consider the test known as Store Buffering (SB) • Can this assertion be satisfied? 15

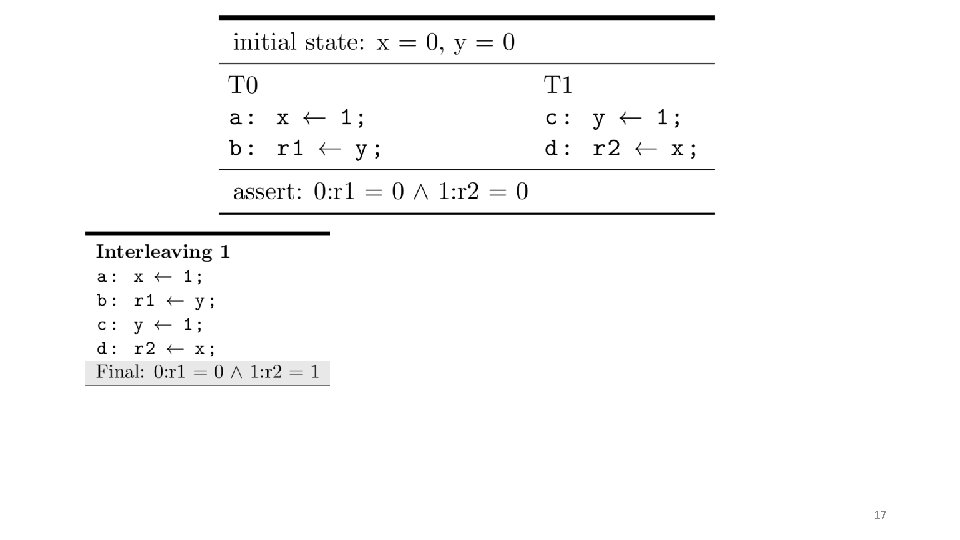

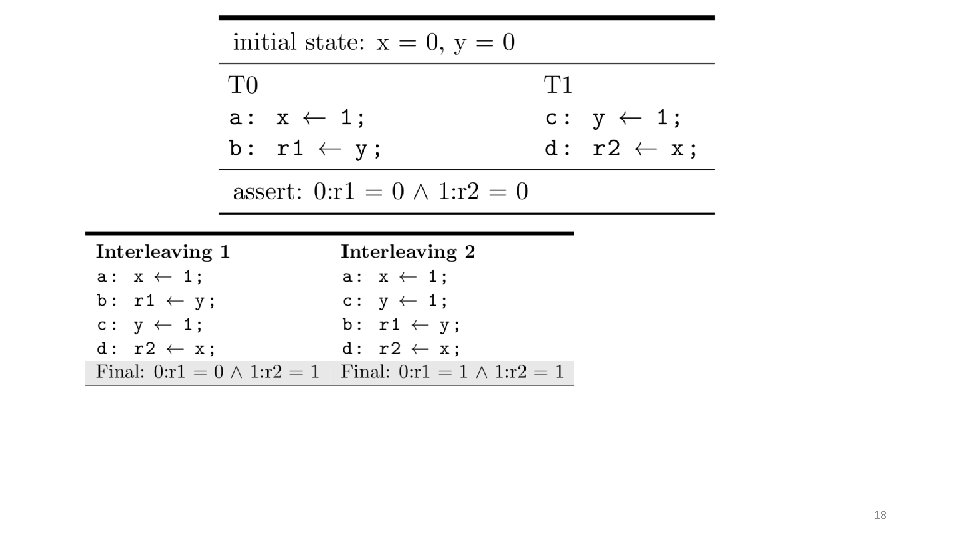

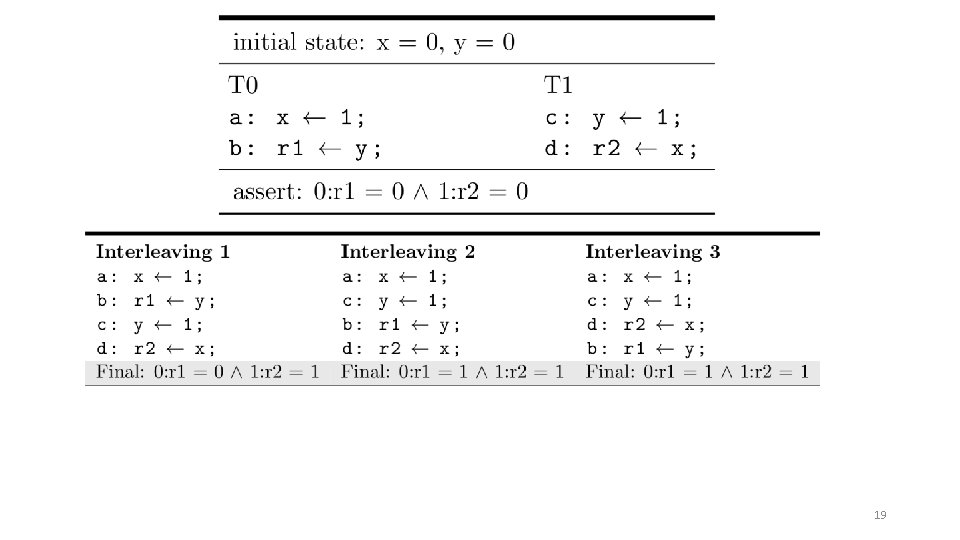

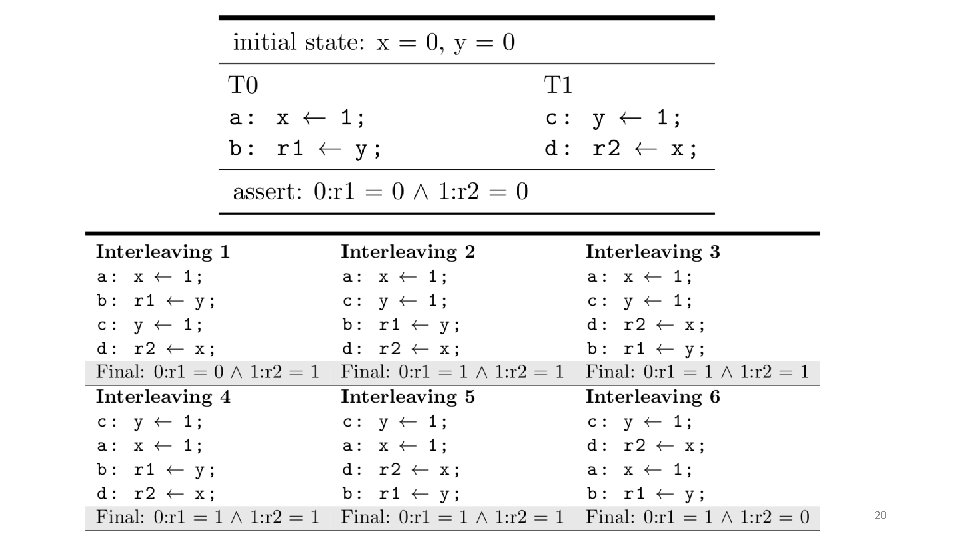

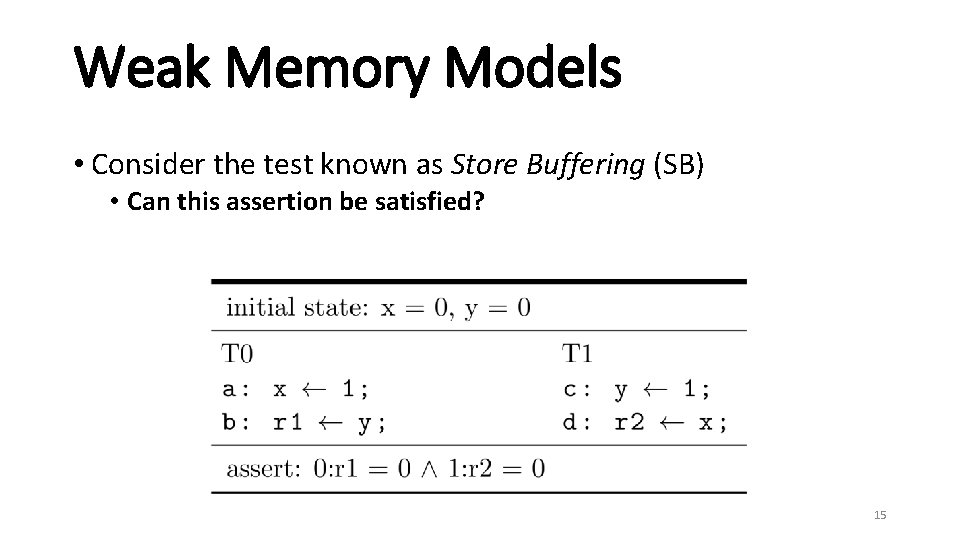

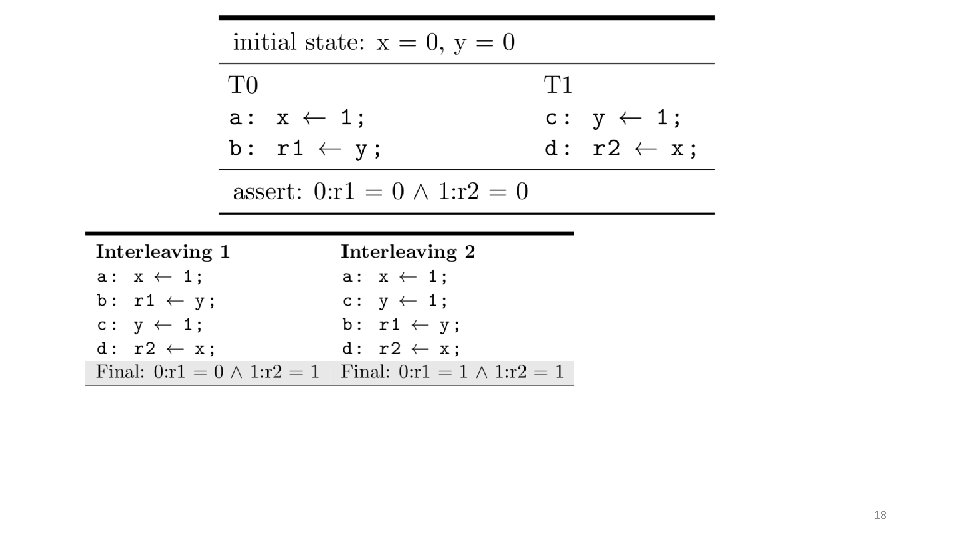

![Slide 21: sequential consistency (SC); the assertion cannot be satisfied by any interleaving](https://slidetodoc.com/presentation_image/279313662b46d2cffcee5b5a7a5a1f4a/image-21.jpg)

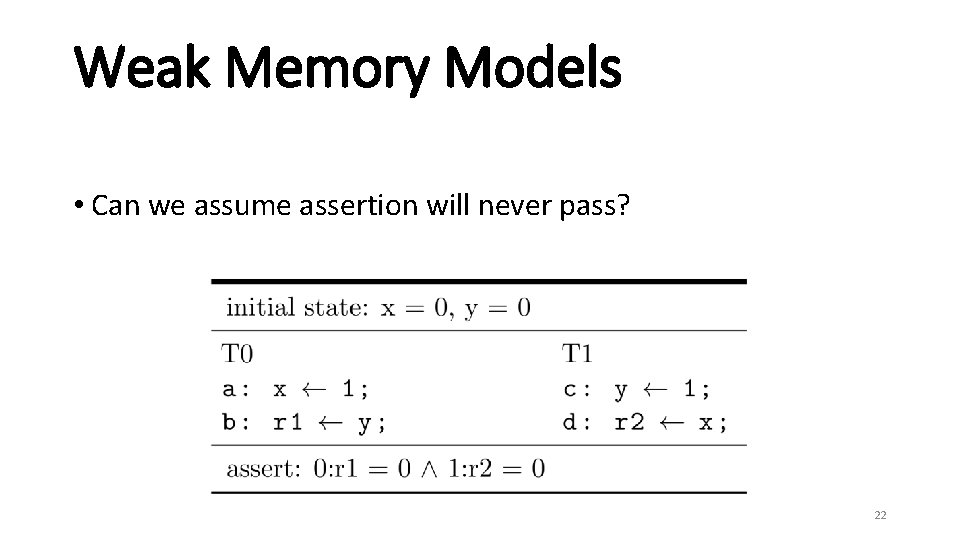

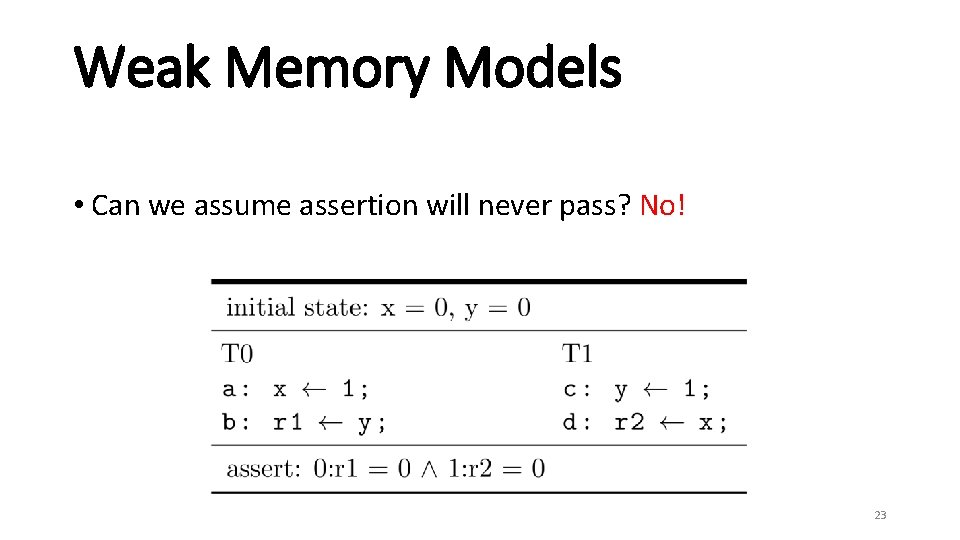

This is known as sequential consistency (or SC) [1]. The assertion cannot be satisfied by any interleaving 21
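The SC argument can be made concrete by enumerating every interleaving of the two SB threads and recording the final register values. The C++ sketch below assumes the usual SB shape: T0 runs x = 1 then r0 = y, T1 runs y = 1 then r1 = x, and the assertion asks for r0 == 0 && r1 == 0. The function and type names are illustrative and are not part of the Litmus tool.

```cpp
#include <cassert>
#include <set>
#include <utility>

// Minimal sequential-consistency model of Store Buffering (SB):
//   T0: x = 1; r0 = y;        T1: y = 1; r1 = x;
// Under SC an execution is some interleaving of the two programs, so we
// enumerate every interleaving and record the final (r0, r1).
using Outcome = std::pair<int, int>;

struct SBState {
    int x = 0, y = 0;        // shared locations, initially 0
    int r0 = -1, r1 = -1;    // registers (-1 = not yet written)
    int pc0 = 0, pc1 = 0;    // index of the next instruction of T0 / T1
};

// Execute the next instruction of thread `tid` (0 or 1).
static void step(SBState& s, int tid) {
    if (tid == 0) {
        if (s.pc0 == 0) s.x = 1; else s.r0 = s.y;
        ++s.pc0;
    } else {
        if (s.pc1 == 0) s.y = 1; else s.r1 = s.x;
        ++s.pc1;
    }
}

// Depth-first search over all interleavings.
static void explore(const SBState& s, std::set<Outcome>& out) {
    if (s.pc0 == 2 && s.pc1 == 2) { out.insert({s.r0, s.r1}); return; }
    if (s.pc0 < 2) { SBState t = s; step(t, 0); explore(t, out); }
    if (s.pc1 < 2) { SBState t = s; step(t, 1); explore(t, out); }
}

std::set<Outcome> sc_outcomes_sb() {
    std::set<Outcome> out;
    explore(SBState{}, out);
    return out;
}
```

`sc_outcomes_sb()` yields exactly (0, 1), (1, 0) and (1, 1). The pair (0, 0) never appears, which is why the assertion cannot pass under SC.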

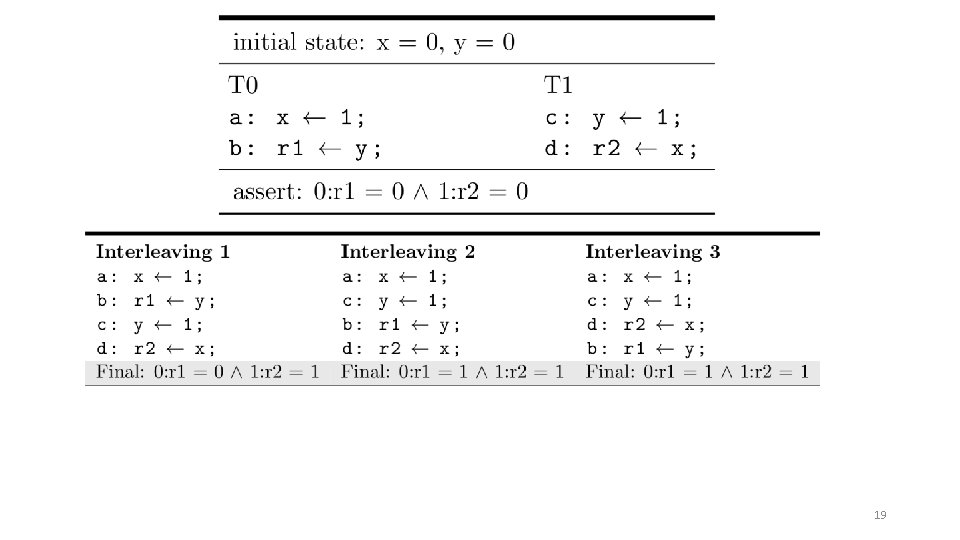

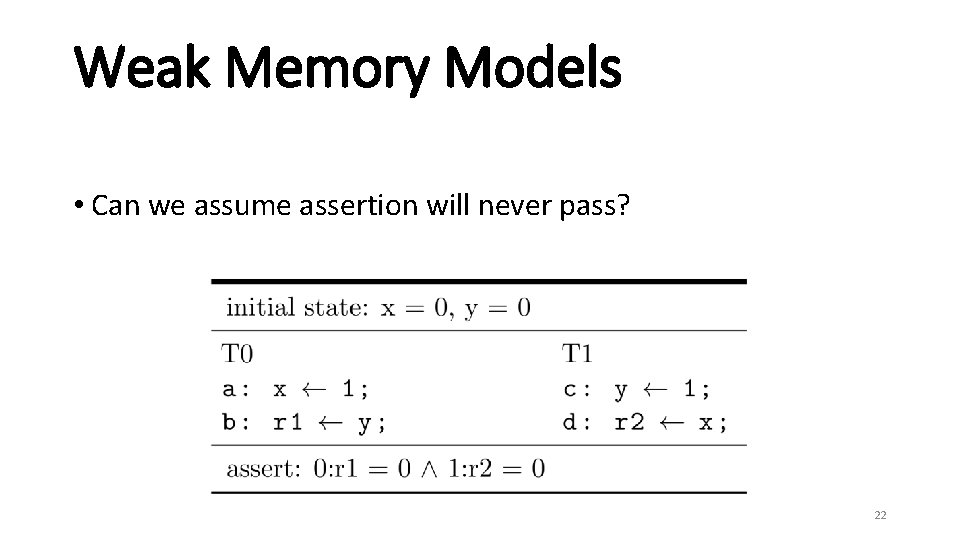

Weak Memory Models • Can we assume assertion will never pass? 22

Weak Memory Models • Can we assume assertion will never pass? No! 23

![Slide 24: running the SB test with the Litmus tool on an Intel i7 x86 processor](https://slidetodoc.com/presentation_image/279313662b46d2cffcee5b5a7a5a1f4a/image-24.jpg)

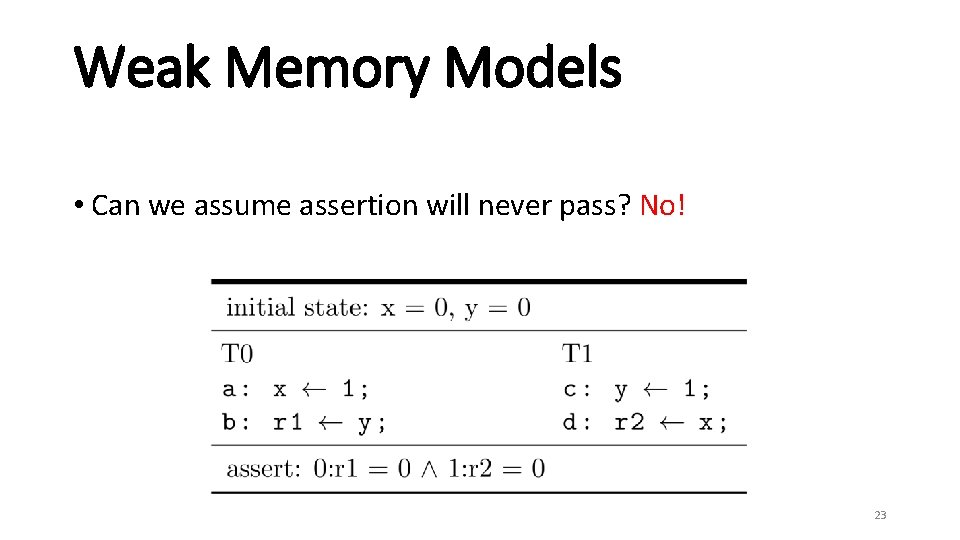

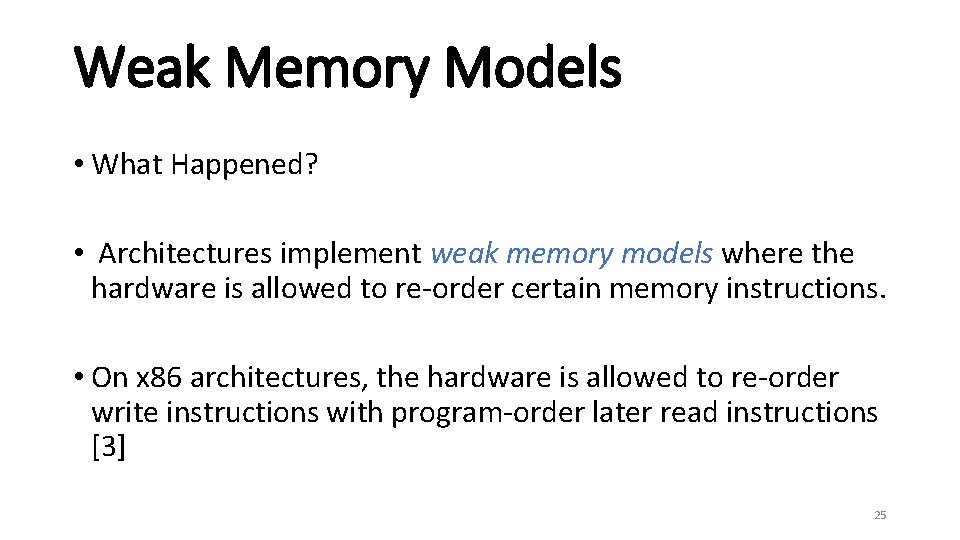

Weak Memory Models • Executing this test with the Litmus tool [2] on an Intel i7 x86 processor for 1,000,000 iterations, we get the following histogram of results: 24

Weak Memory Models • What happened? • Architectures implement weak memory models, in which the hardware is allowed to reorder certain memory instructions. • On x86 architectures, the hardware is allowed to reorder a write with a program-order-later read from a different location [3] 25
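The store-buffer intuition can be sketched as a small operational model in the spirit of x86-TSO. Each thread appends its stores to a private FIFO buffer, and buffered stores drain to memory at arbitrary times. A load first reads the thread's own newest buffered value for that location and otherwise falls back to memory. The C++ below is a simplified illustration under those assumptions, not a complete x86 model. It explores every schedule of SB.

```cpp
#include <cassert>
#include <deque>
#include <set>
#include <utility>

// Simplified store-buffer model (in the spirit of x86-TSO) for SB:
//   T0: x = 1; r0 = y;        T1: y = 1; r1 = x;
// Stores go into a per-thread FIFO buffer; the oldest buffered store may be
// flushed to memory at any point; a load first looks in its own buffer
// (newest entry wins) and otherwise reads memory.
struct Write { int loc; int val; };          // loc 0 = x, loc 1 = y

struct TSOState {
    int mem[2] = {0, 0};
    std::deque<Write> buf[2];                 // one store buffer per thread
    int pc[2] = {0, 0};                       // next instruction per thread
    int reg[2] = {-1, -1};                    // r0 for T0, r1 for T1
};

static int load(const TSOState& s, int tid, int loc) {
    for (auto it = s.buf[tid].rbegin(); it != s.buf[tid].rend(); ++it)
        if (it->loc == loc) return it->val;   // forward from own buffer
    return s.mem[loc];
}

// Explore every choice of "run next instruction" or "flush a buffer".
static void explore(const TSOState& s, std::set<std::pair<int, int>>& out) {
    bool finished = true;
    for (int t = 0; t < 2; ++t) {
        if (s.pc[t] < 2) {
            finished = false;
            TSOState n = s;
            const int mine = t;               // T0 writes x (0), T1 writes y (1)
            const int other = 1 - t;          // ...and each reads the other
            if (n.pc[t] == 0) n.buf[t].push_back({mine, 1});
            else n.reg[t] = load(n, t, other);
            ++n.pc[t];
            explore(n, out);
        }
        if (!s.buf[t].empty()) {
            finished = false;
            TSOState n = s;
            const Write w = n.buf[t].front();
            n.buf[t].pop_front();
            n.mem[w.loc] = w.val;
            explore(n, out);
        }
    }
    if (finished) out.insert({s.reg[0], s.reg[1]});
}

std::set<std::pair<int, int>> tso_outcomes_sb() {
    std::set<std::pair<int, int>> out;
    explore(TSOState{}, out);
    return out;
}
```

`tso_outcomes_sb()` returns all four combinations, including (0, 0). That outcome arises when both stores are still sitting in their buffers at the moment the loads read memory.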

GPU Memory Models • What type of memory model do current GPUs implement? • Documentation is sparse • CUDA has 1 page + 1 example [4] • PTX has 1 page + 0 examples [5] • No specifics about which instructions are allowed to be re-ordered • We need to know if we are to write correct GPU programs! 26

Our Approach • Empirically explore the memory model implemented on deployed NVIDIA GPUs • Achieved by developing a memory model testing tool for NVIDIA GPUs with specialized heuristics • We analyze classic memory model properties and CUDA applications in this framework with unexpected results • We test large families of tests on GPUs as a basis for modeling and bug hunting 27

Our Approach • Disclaimer: Testing is not guaranteed to reveal all behaviors 28

Roadmap • Background and Approach • Prior Work • Testing Framework • Results • CUDA Spin Locks • Bulk Testing • Future Work and Conclusion 29

Prior Work • Testing Memory Models: • Pioneered by Bill Collier in ARCHTEST in 1992 [6] • TSOtool in 2004 [7] • Litmus in 2011 [2] • We extend this tool 30

Prior Work (GPU Memory Models) • June 2013: • Hower et al. proposed an SC-for-race-free memory model for GPUs [8] • Sorensen et al. proposed an operational weak GPU memory model based on available documentation [9] • 2014: • Hower et al. proposed two SC-for-race-free memory models for GPUs, HRF-direct and HRF-indirect [10] • It remains unclear what memory model deployed GPUs implement 31

Roadmap • Background and Approach • Prior Work • Testing Framework • Results • CUDA Spin Locks • Bulk Testing • Future Work and Conclusion 32

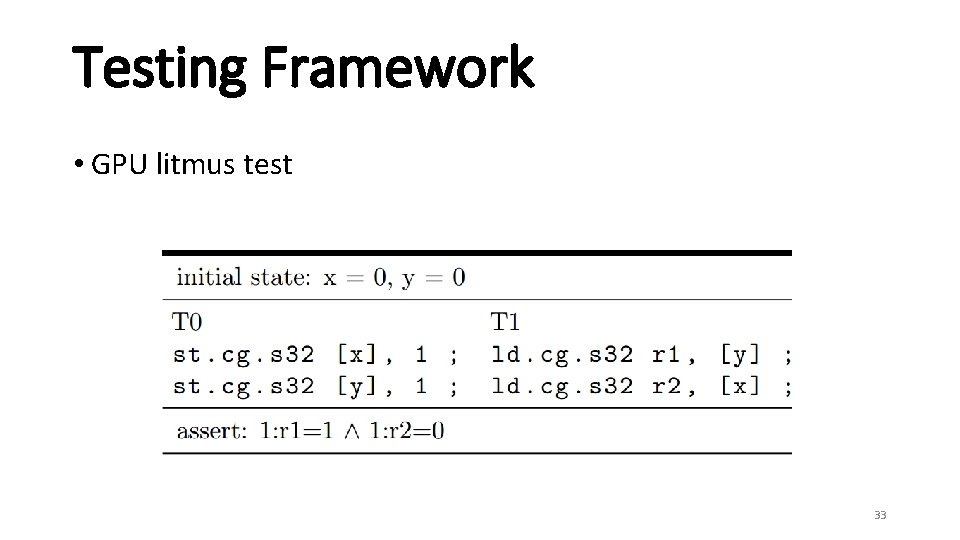

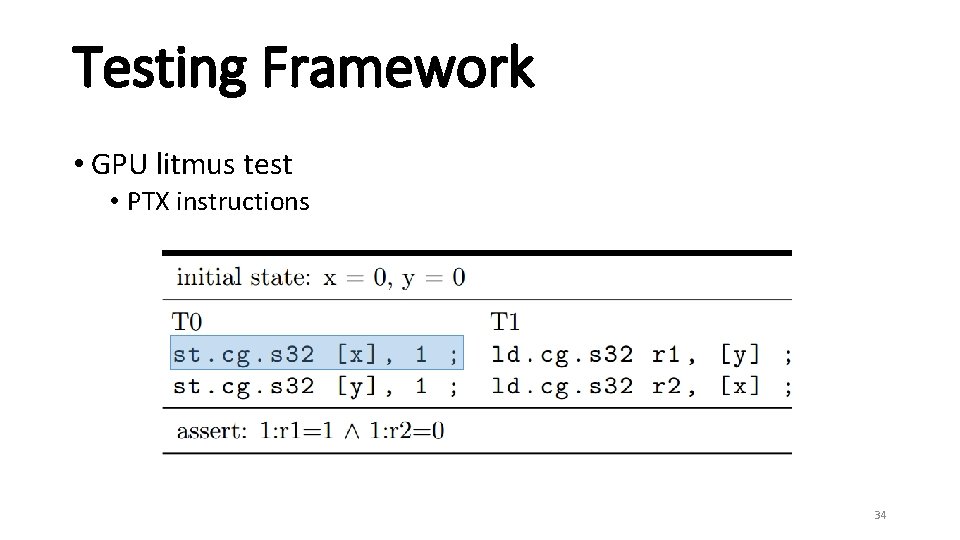

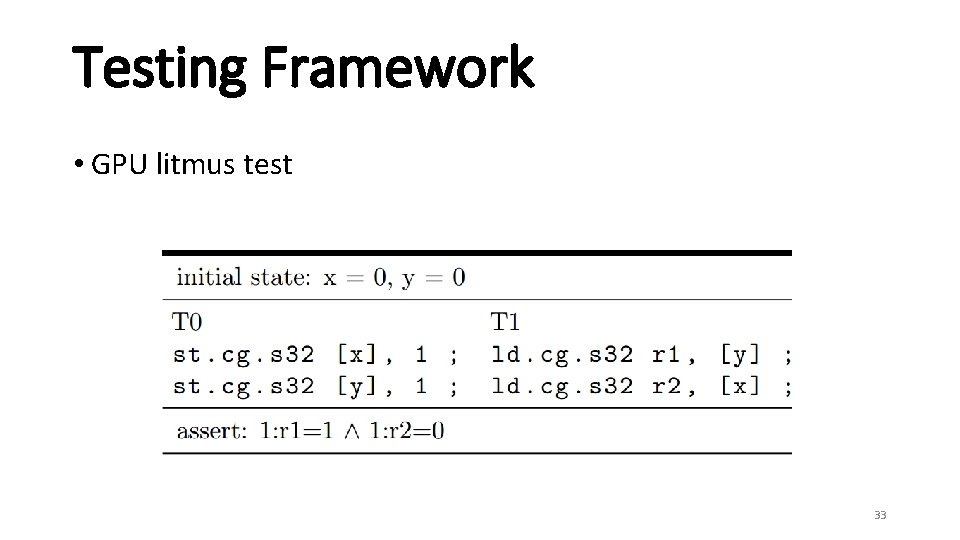

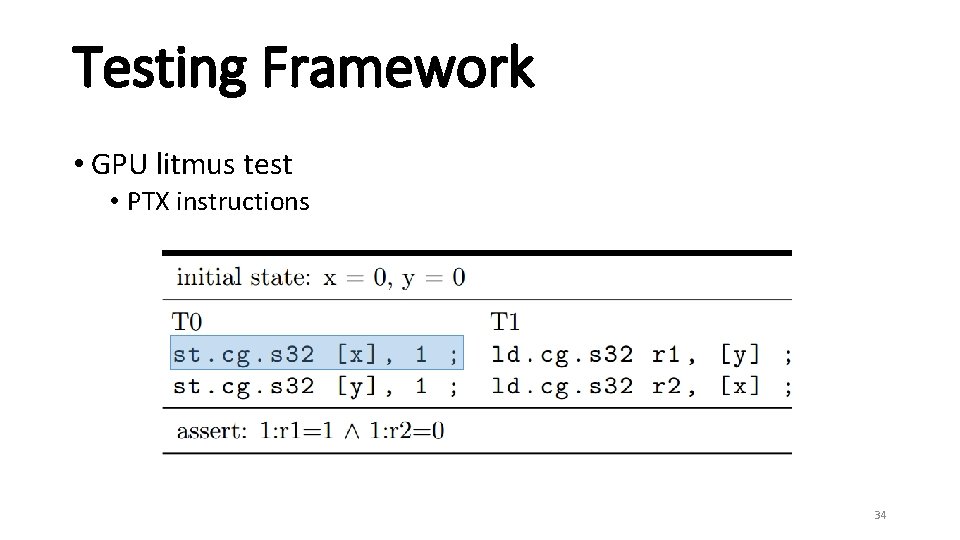

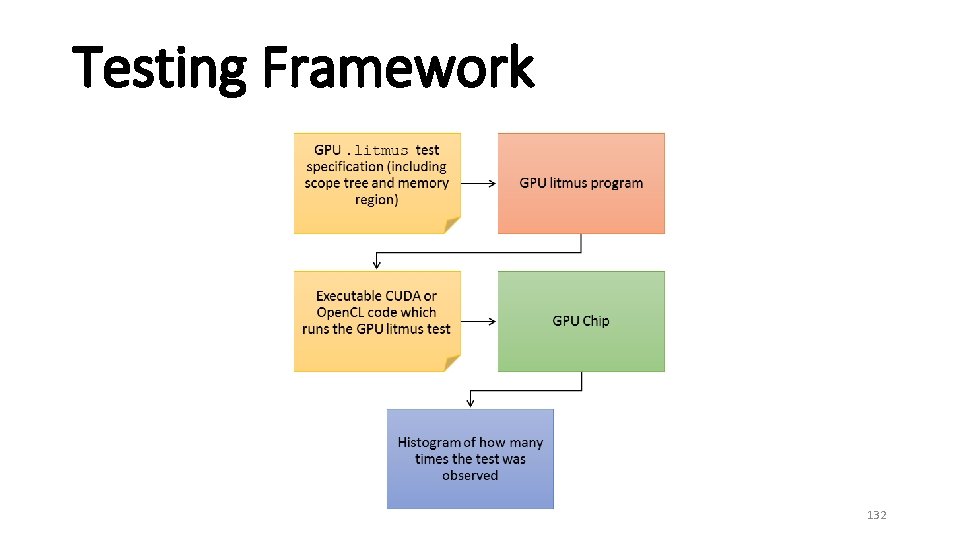

Testing Framework • GPU litmus test 33

Testing Framework • GPU litmus test • PTX instructions 34

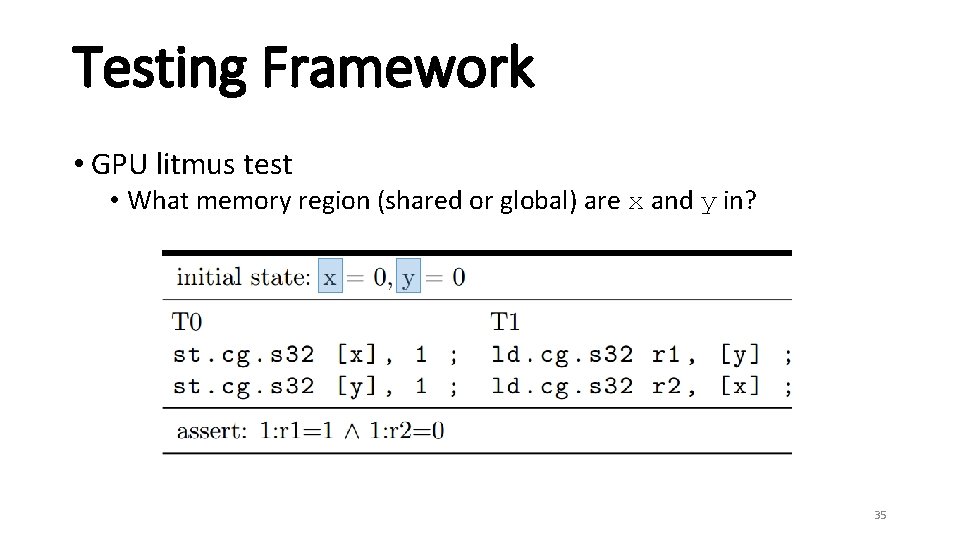

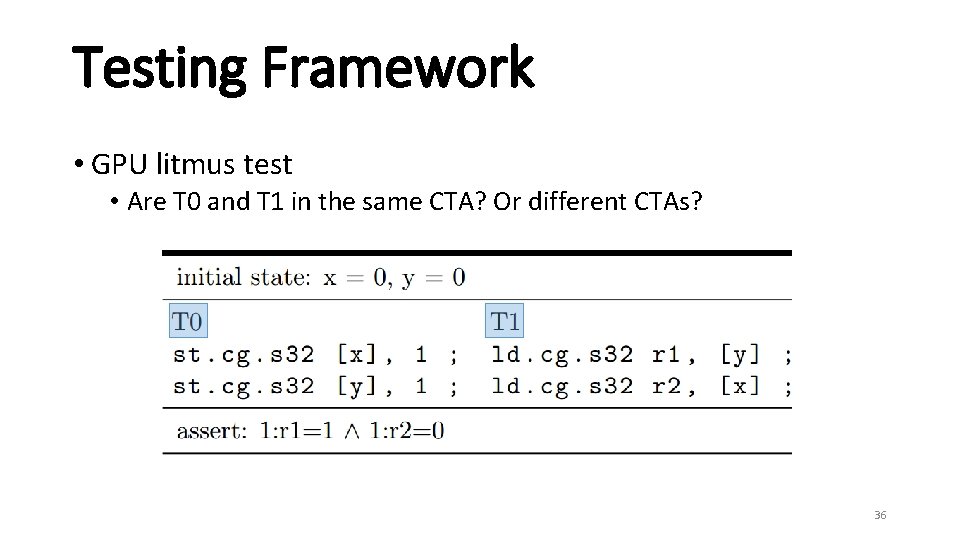

Testing Framework • GPU litmus test • What memory region (shared or global) are x and y in? 35

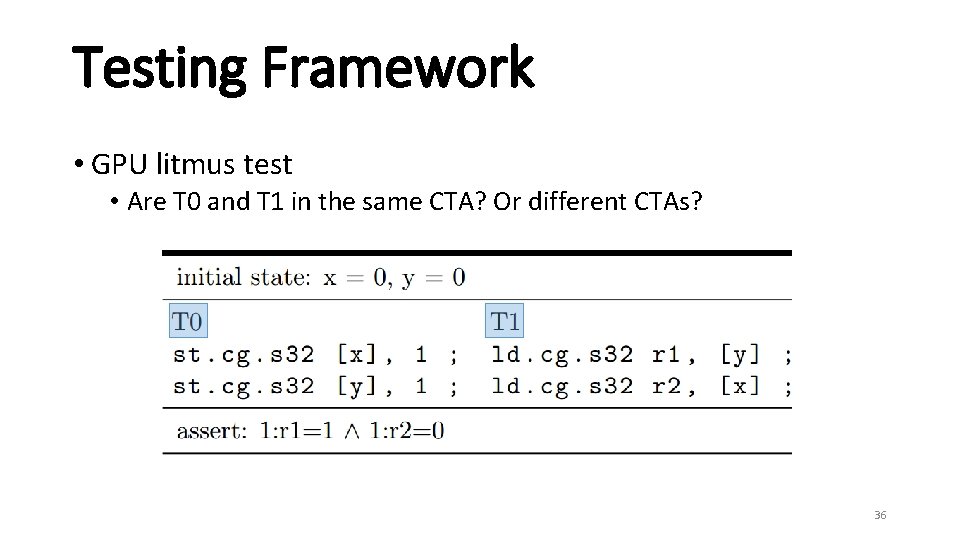

Testing Framework • GPU litmus test • Are T0 and T1 in the same CTA, or in different CTAs? 36

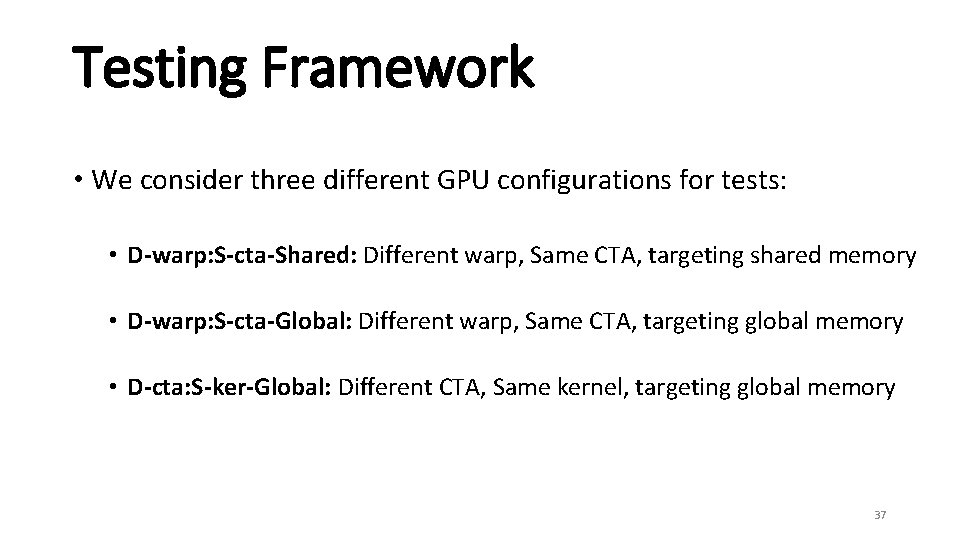

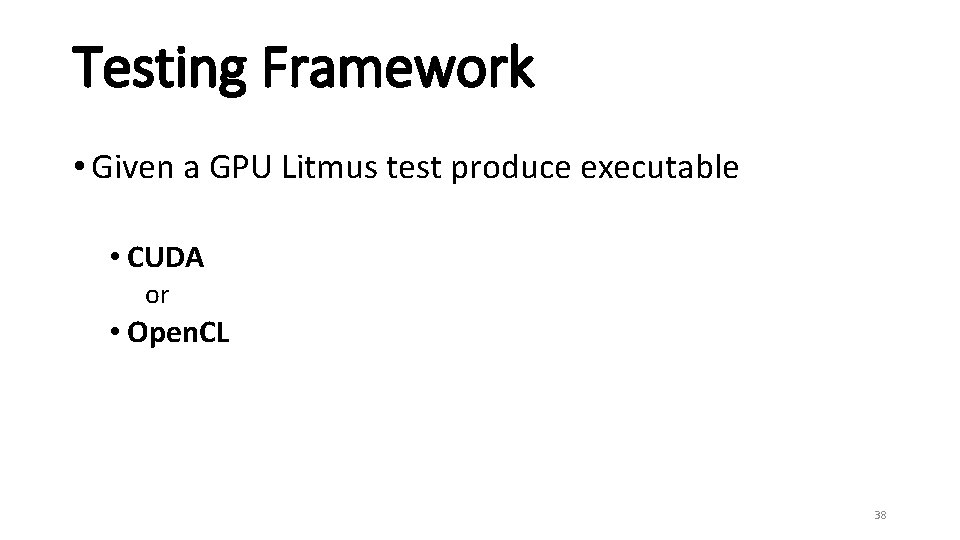

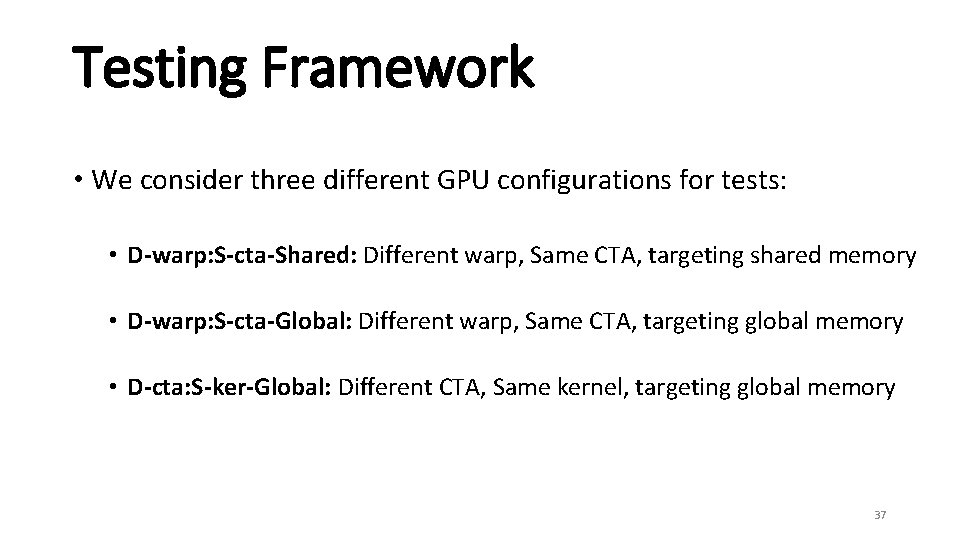

Testing Framework • We consider three different GPU configurations for tests: • D-warp: S-cta-Shared: Different warp, Same CTA, targeting shared memory • D-warp: S-cta-Global: Different warp, Same CTA, targeting global memory • D-cta: S-ker-Global: Different CTA, Same kernel, targeting global memory 37
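The three configurations differ only in where the two testing threads sit in the thread hierarchy. Shared memory is private to a CTA, so a test between different CTAs can only target global memory. This is why there is no D-cta shared-memory configuration. The helper below is hypothetical: its names and the 1-D launch are assumptions. It classifies a pair of threads and checks the memory-region constraint.

```cpp
#include <cassert>

// Hypothetical helper: how two testing threads relate in the GPU thread
// hierarchy. Assumes a 1-D launch, where warps are formed from 32
// consecutive thread indices within a CTA.
constexpr int kWarpSize = 32;

enum class Relation { SameWarp, DiffWarpSameCta, DiffCta };

struct ThreadPos {
    int cta;   // CTA (block) index, blockIdx.x in CUDA
    int tid;   // thread index within the CTA, threadIdx.x in CUDA
};

Relation relate(ThreadPos a, ThreadPos b) {
    if (a.cta != b.cta) return Relation::DiffCta;                 // D-cta:S-ker
    if (a.tid / kWarpSize != b.tid / kWarpSize)
        return Relation::DiffWarpSameCta;                          // D-warp:S-cta
    return Relation::SameWarp;
}

// Shared memory is per-CTA, so a shared-memory test needs both threads in
// one CTA; inter-CTA tests must use global memory.
bool config_valid(ThreadPos a, ThreadPos b, bool uses_shared) {
    return !uses_shared || a.cta == b.cta;
}
```

For example, threads 0 and 32 of the same CTA fall in different warps, so they form a D-warp:S-cta pair.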

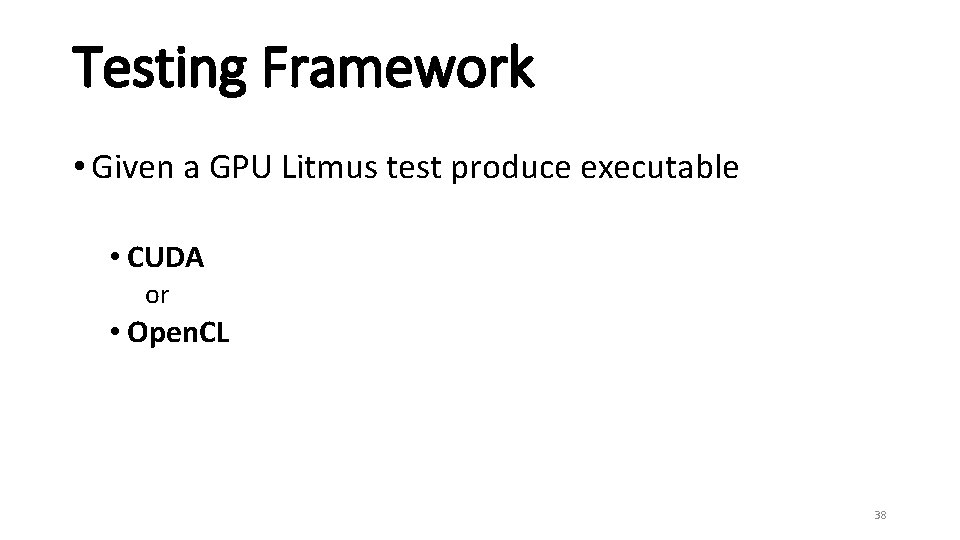

Testing Framework • Given a GPU litmus test, produce an executable in: • CUDA or • OpenCL 38

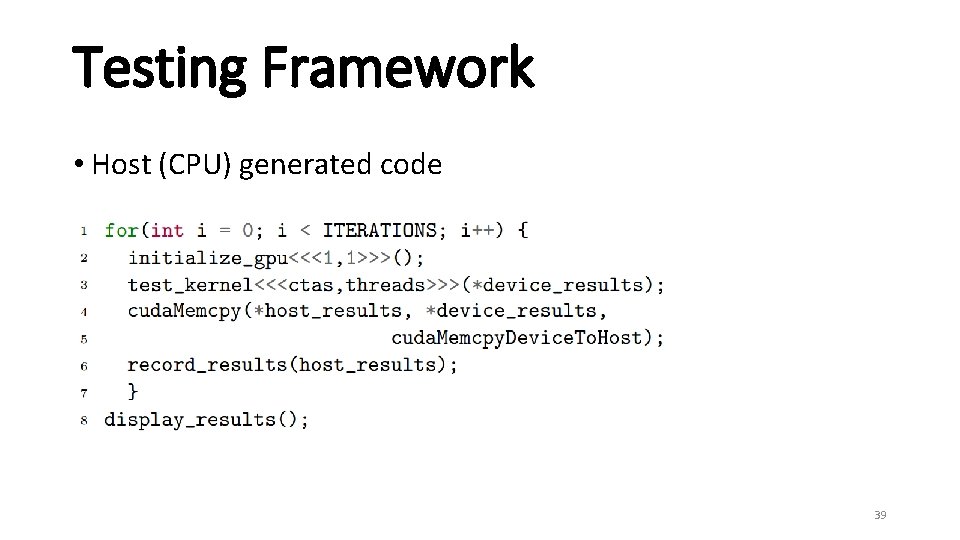

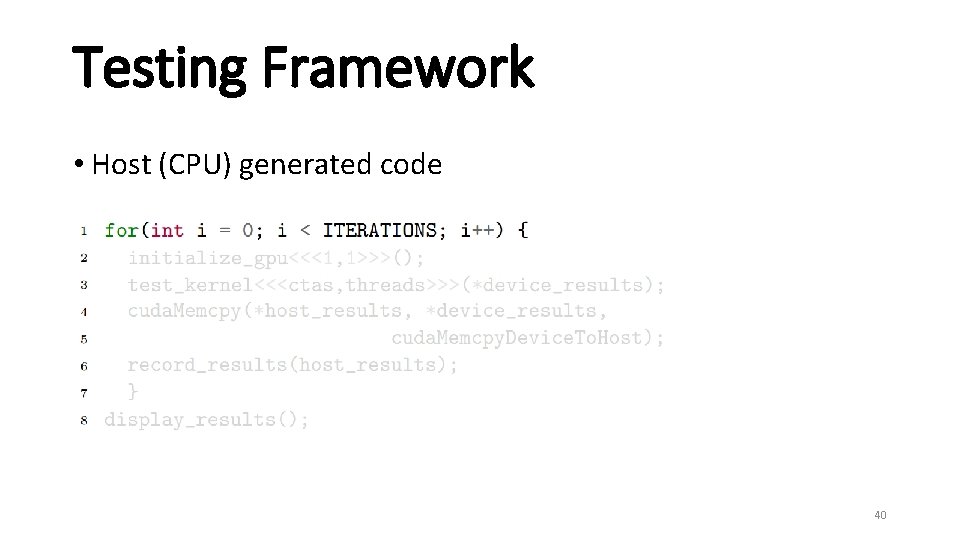

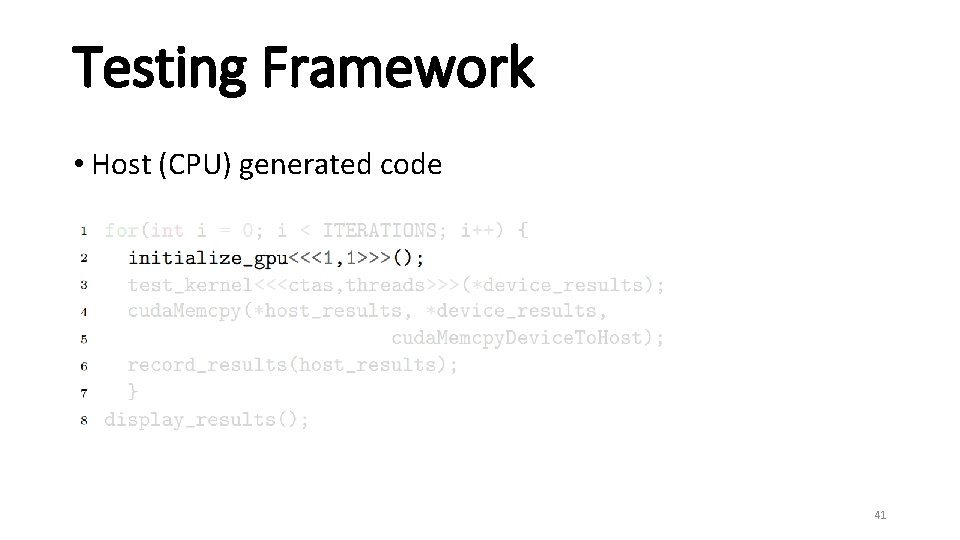

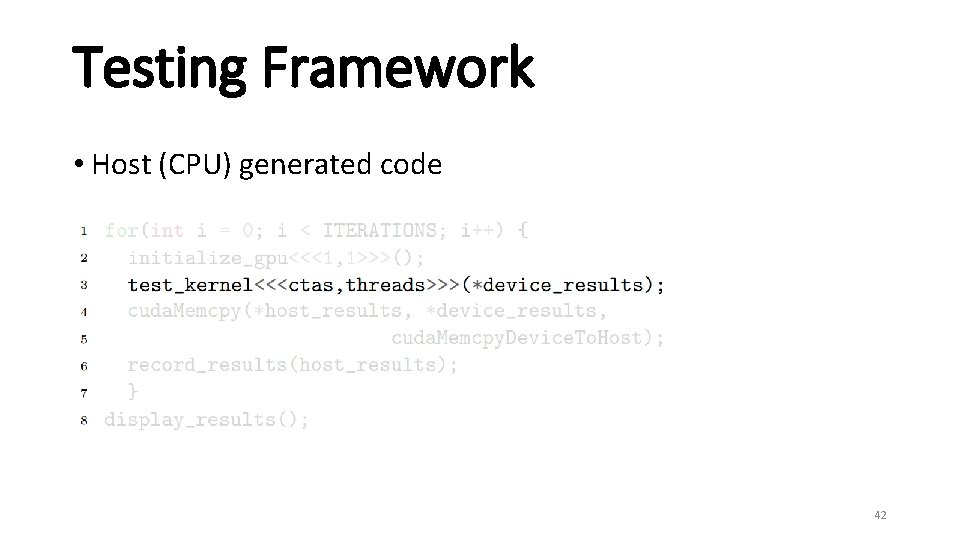

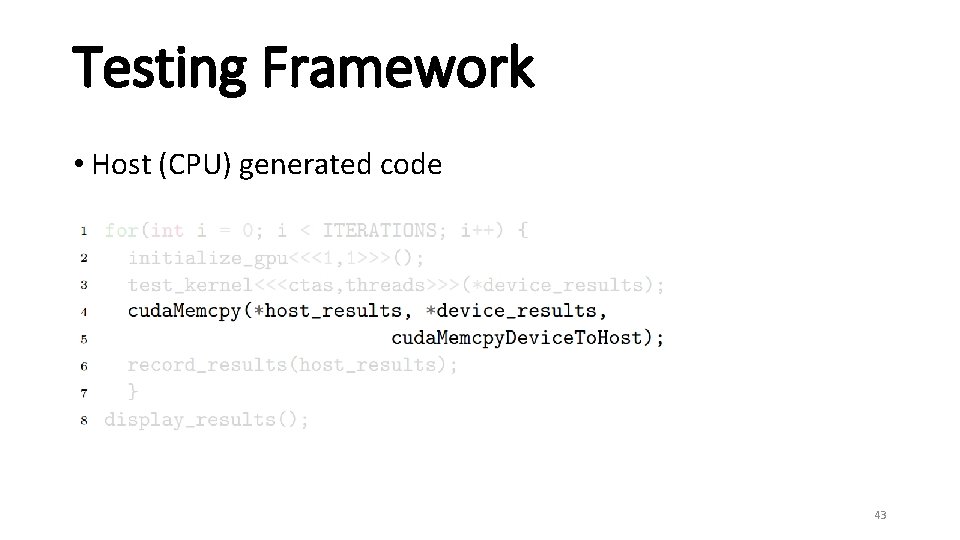

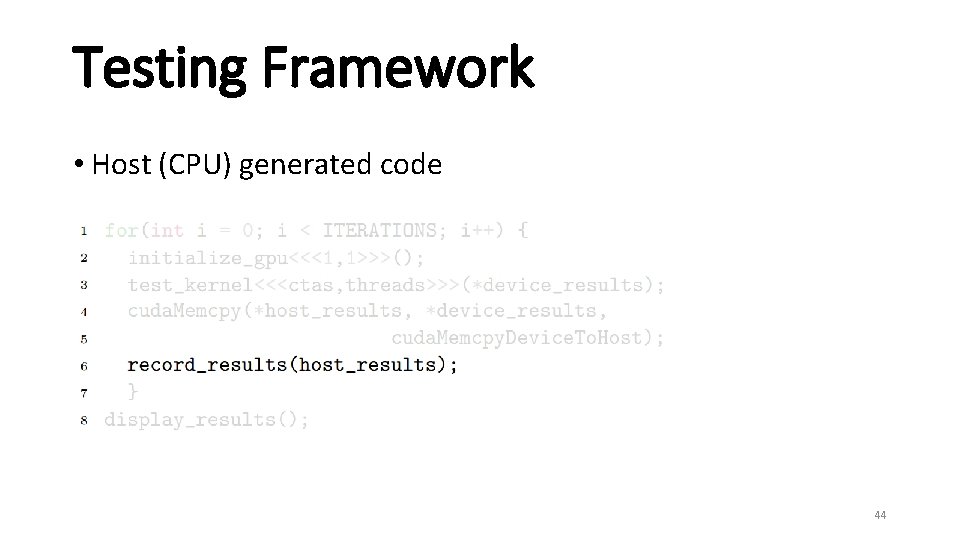

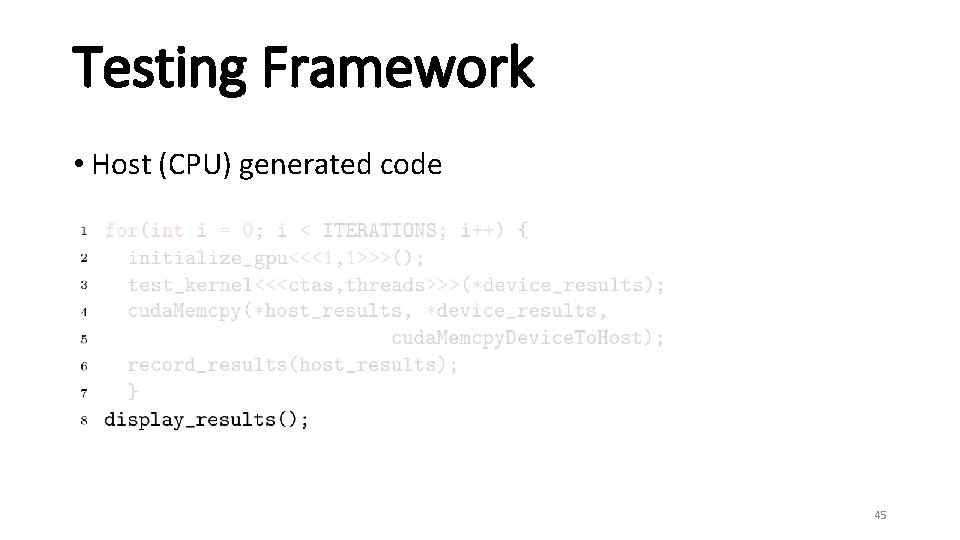

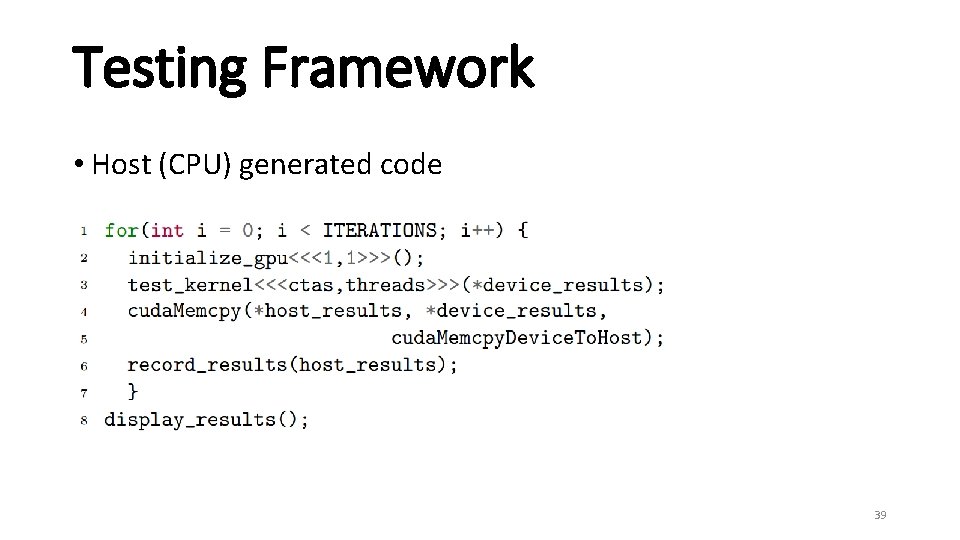

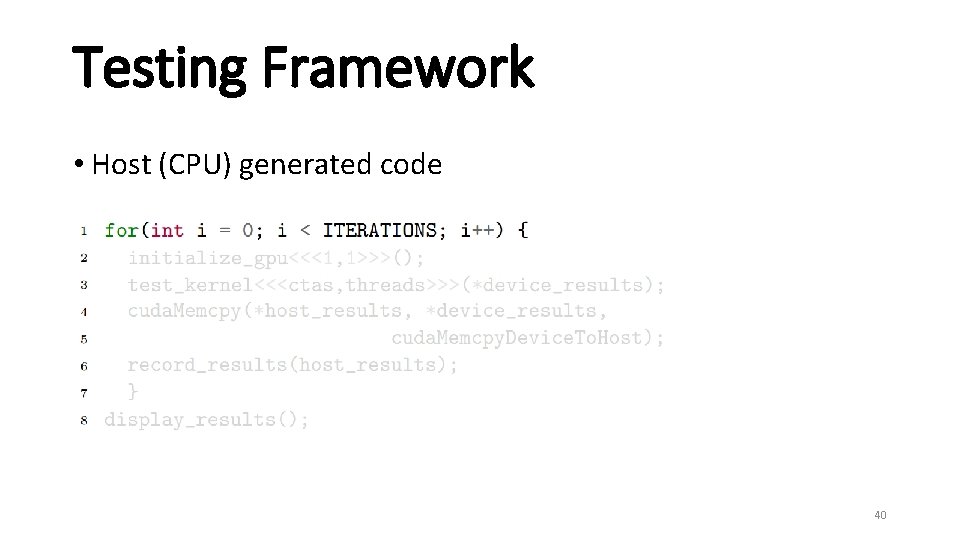

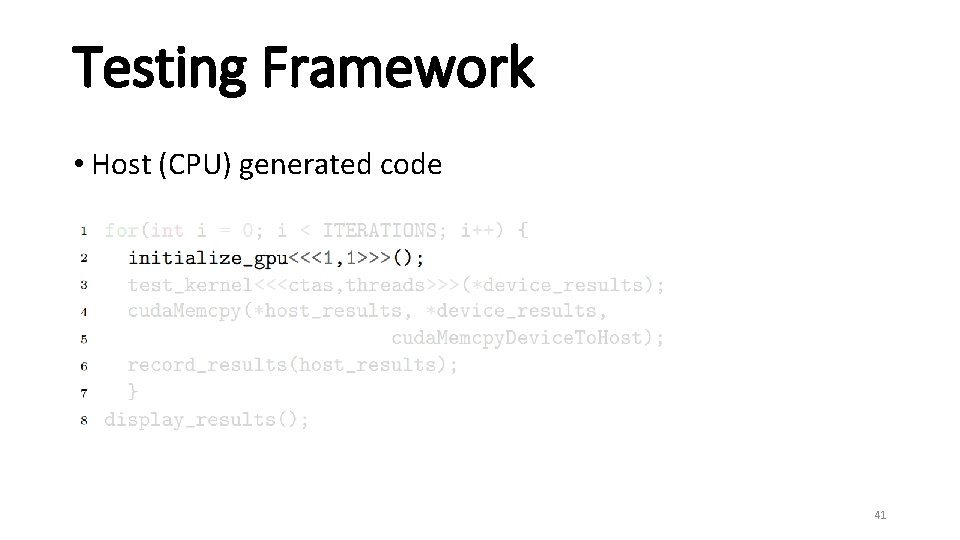

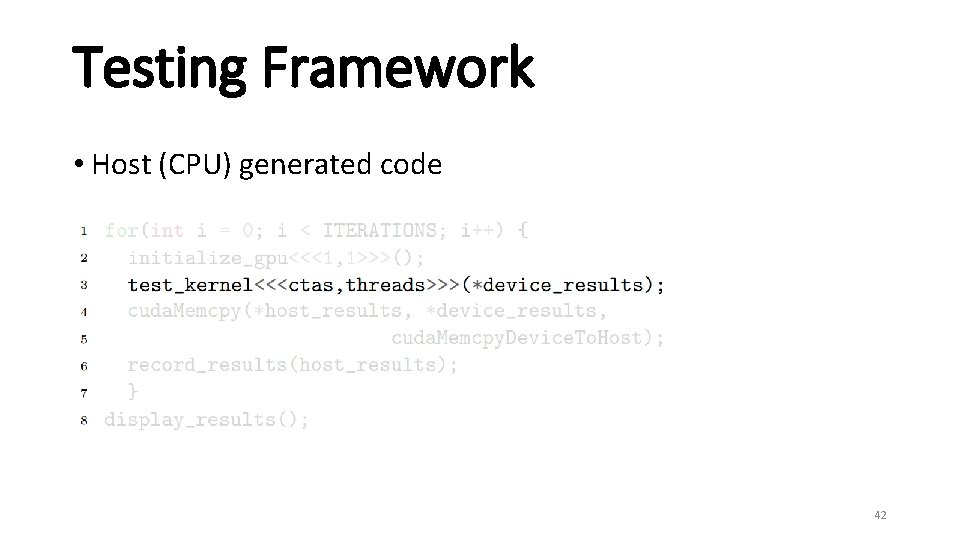

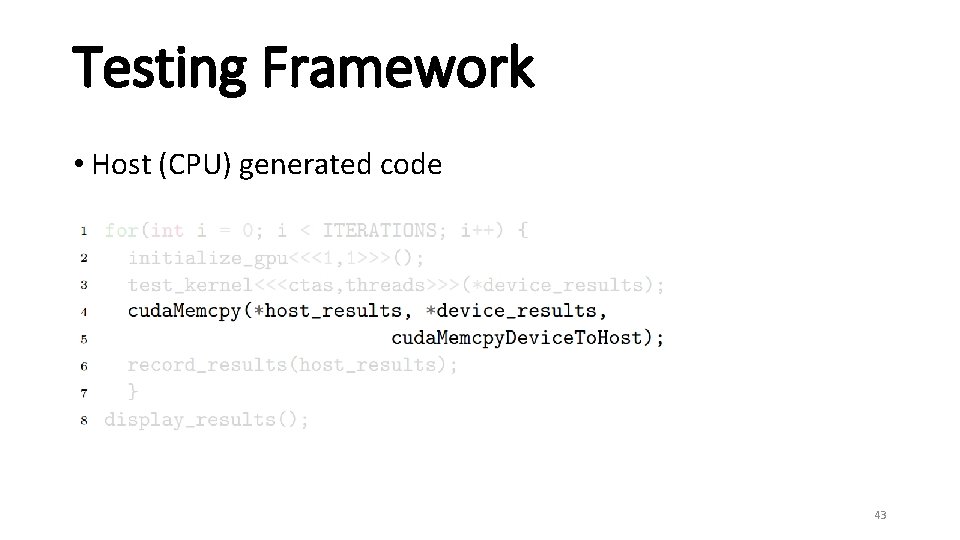

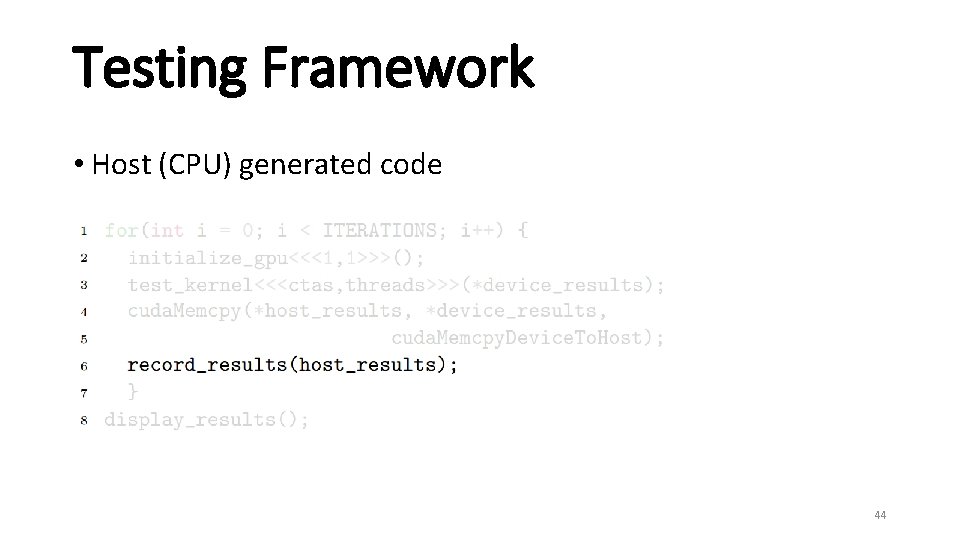

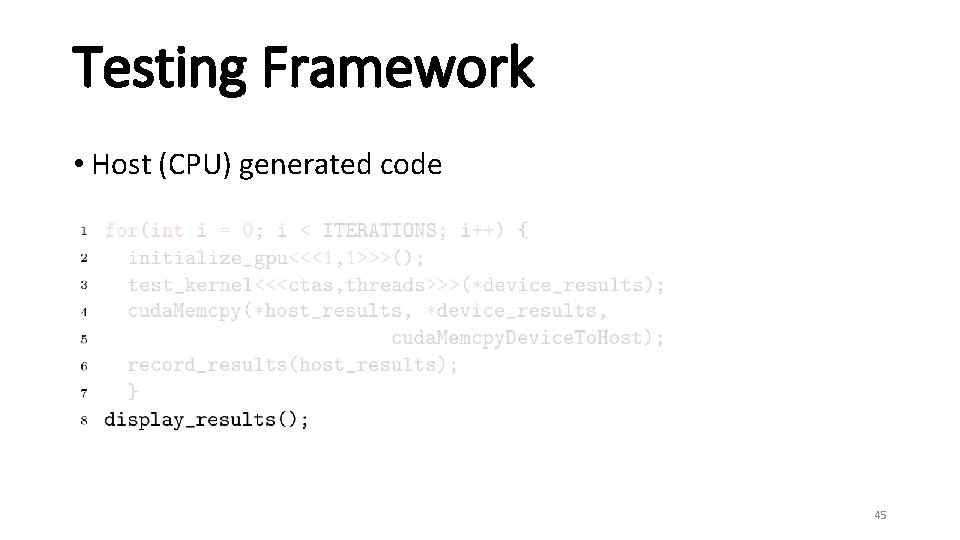

Testing Framework • Host (CPU) generated code 39

Testing Framework • Host (CPU) generated code 40

Testing Framework • Host (CPU) generated code 41

Testing Framework • Host (CPU) generated code 42

Testing Framework • Host (CPU) generated code 43

Testing Framework • Host (CPU) generated code 44

Testing Framework • Host (CPU) generated code 45

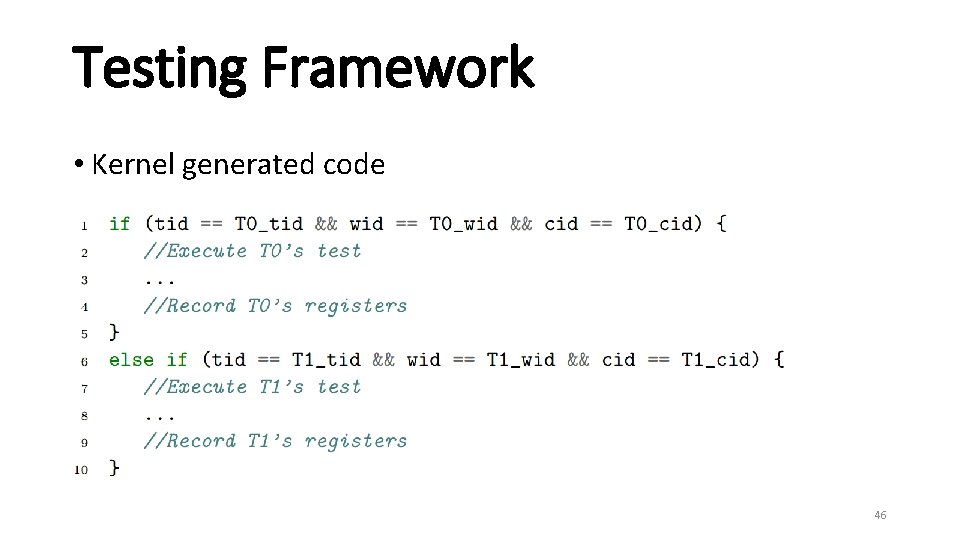

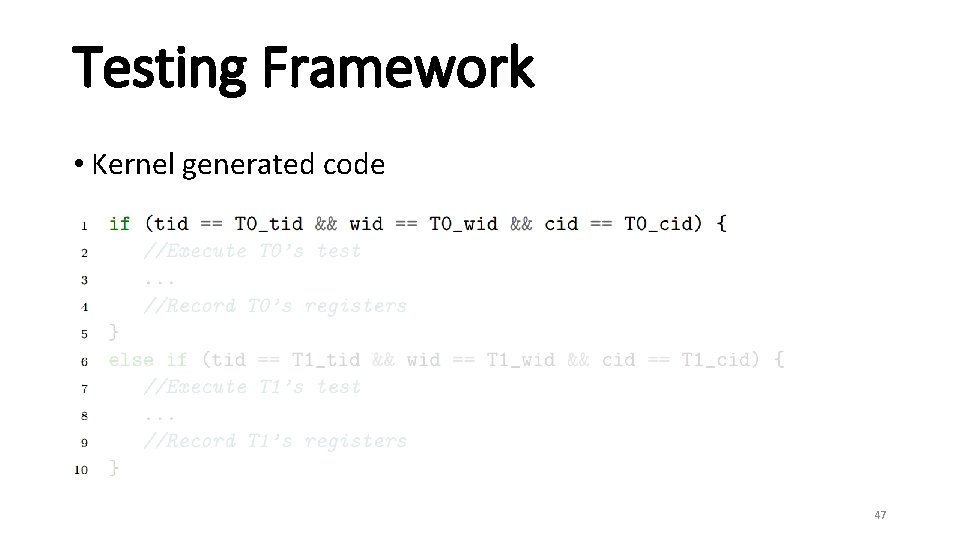

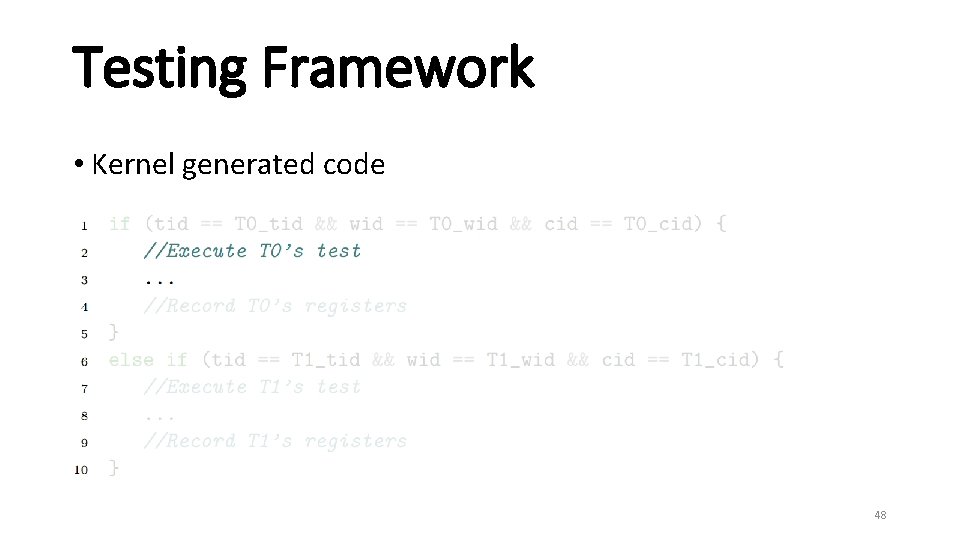

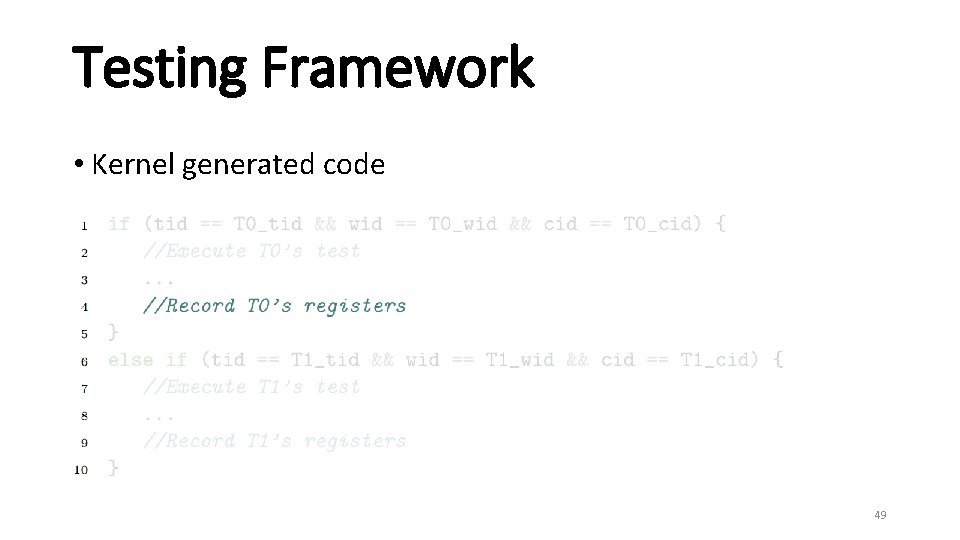

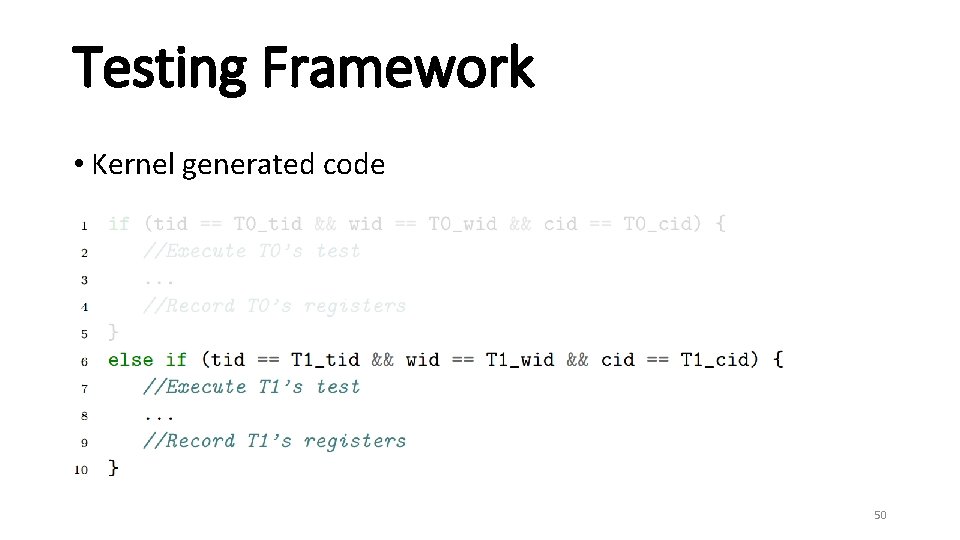

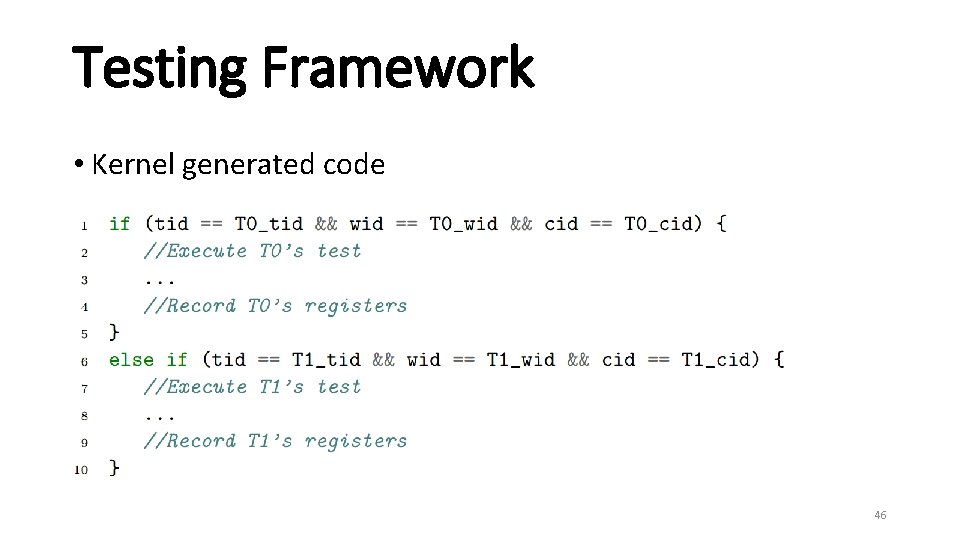

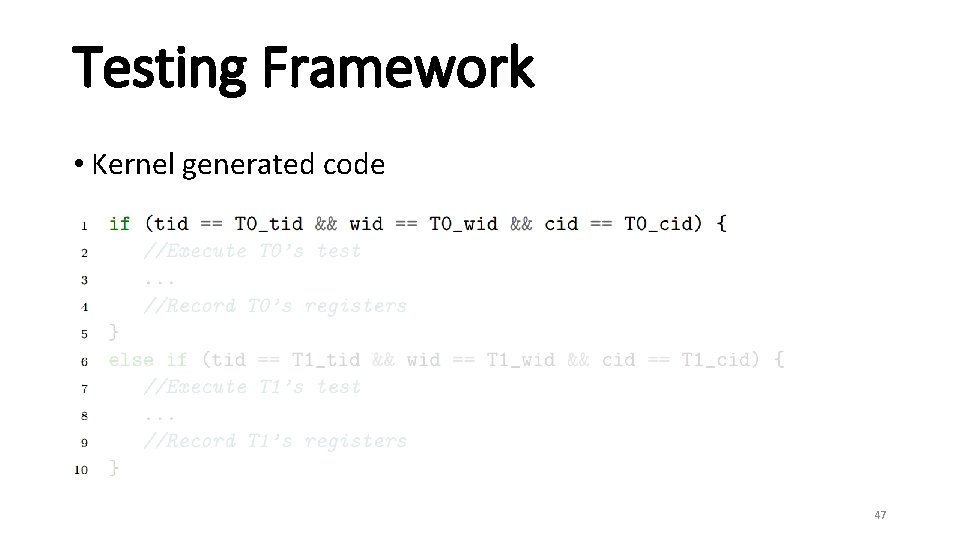

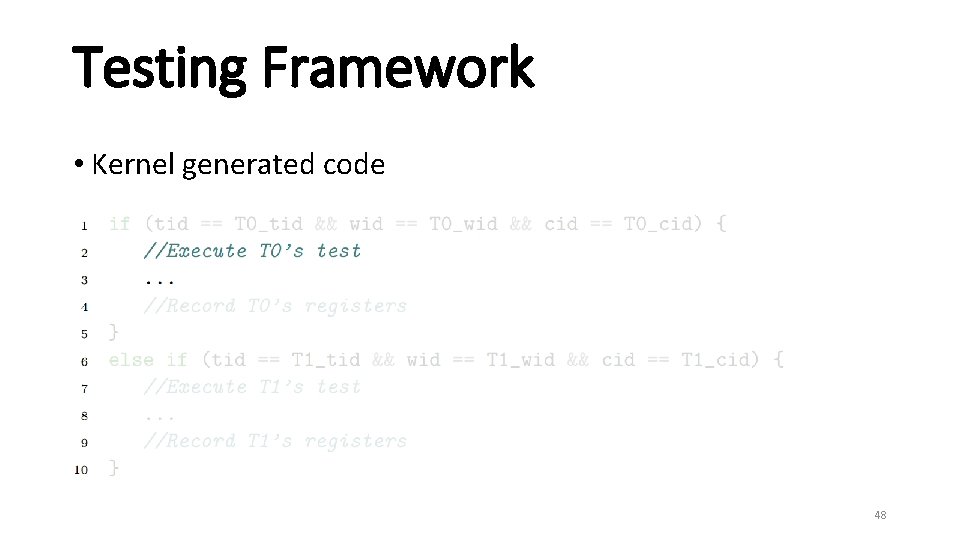

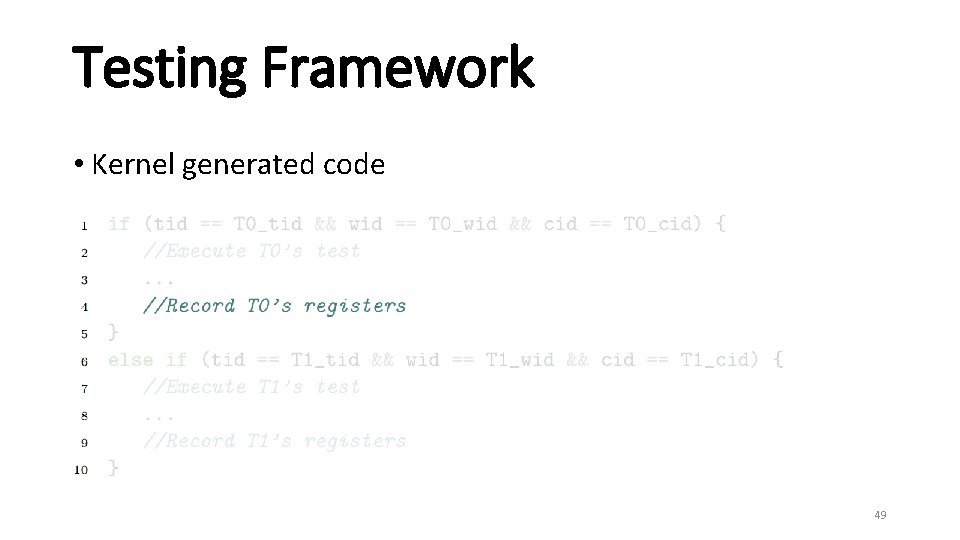

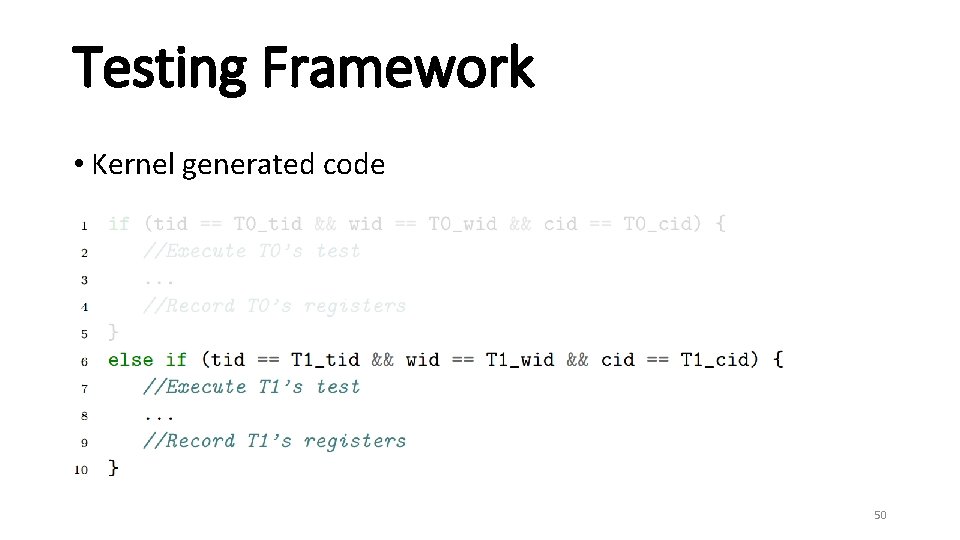

Testing Framework • Kernel generated code 46

Testing Framework • Kernel generated code 47

Testing Framework • Kernel generated code 48

Testing Framework • Kernel generated code 49

Testing Framework • Kernel generated code 50

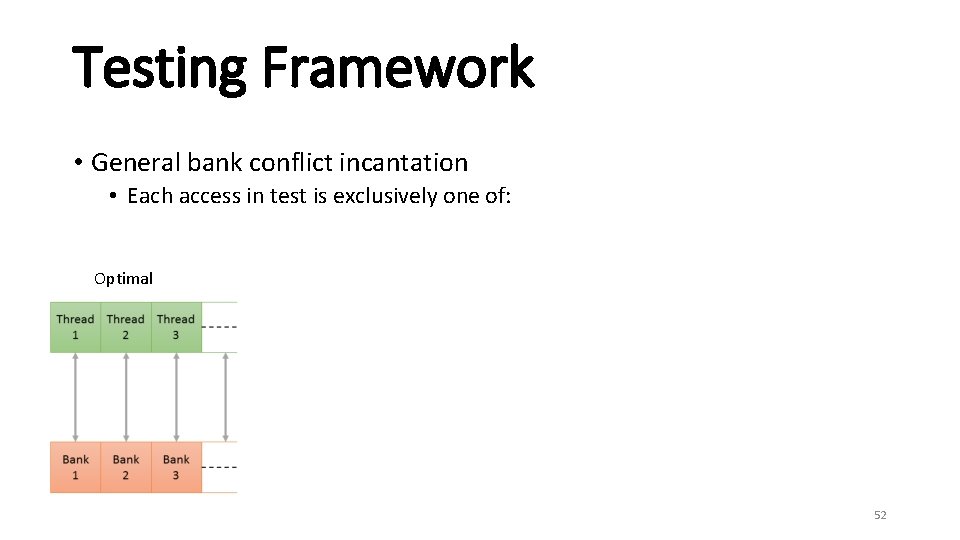

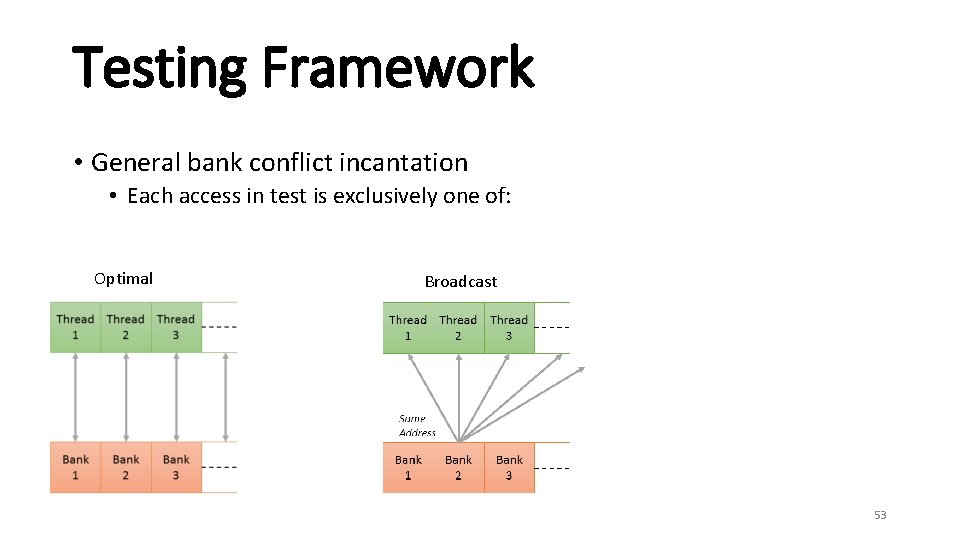

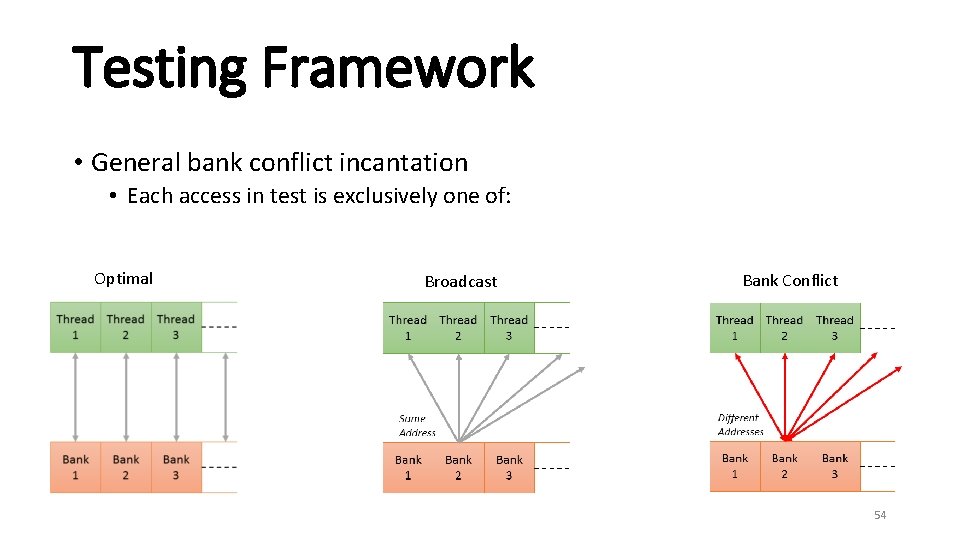

Testing Framework • The basic framework shows NO weak behaviors • We develop heuristics (which we dub incantations) to encourage weak behaviors to appear 51

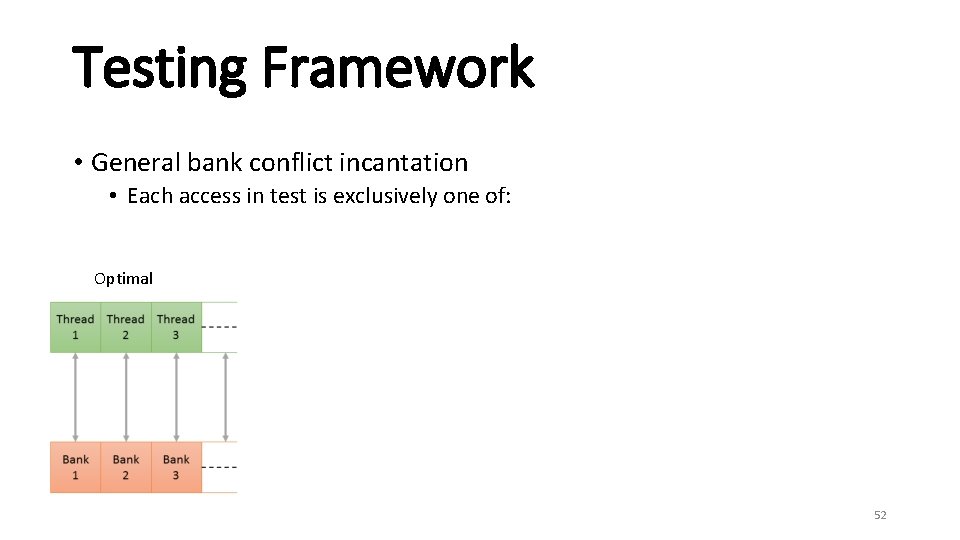

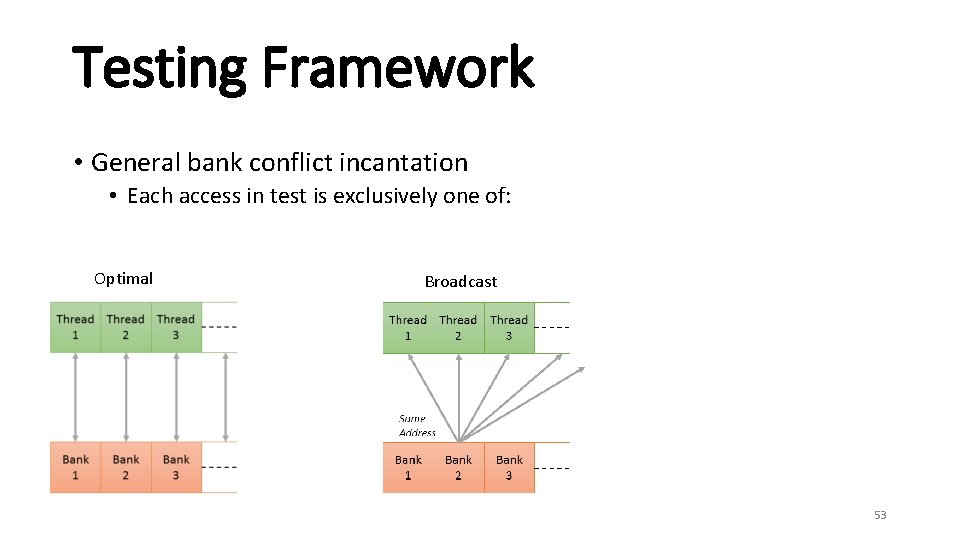

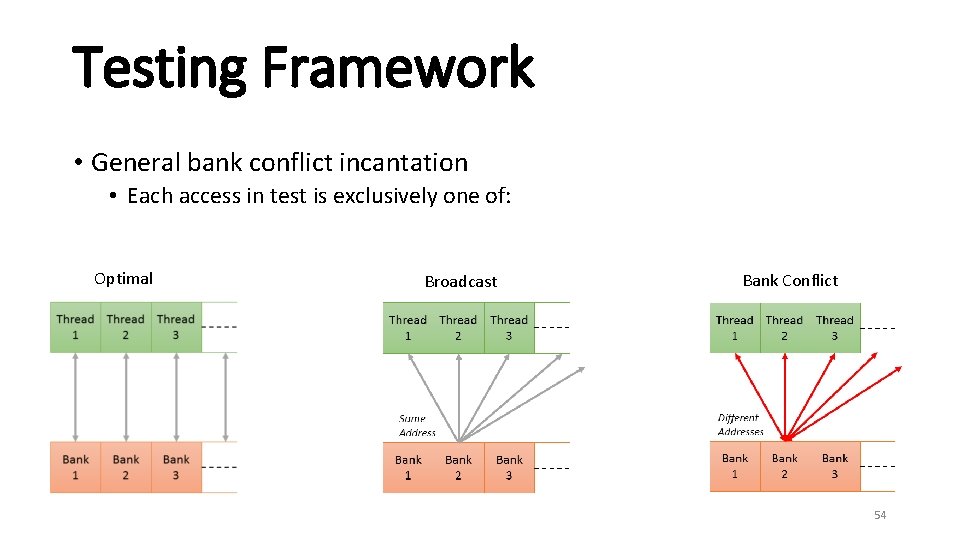

Testing Framework • General bank conflict incantation • Each access in test is exclusively one of: Optimal 52

Testing Framework • General bank conflict incantation • Each access in test is exclusively one of: Optimal Broadcast 53

Testing Framework • General bank conflict incantation • Each access in test is exclusively one of: Optimal Broadcast Bank Conflict 54

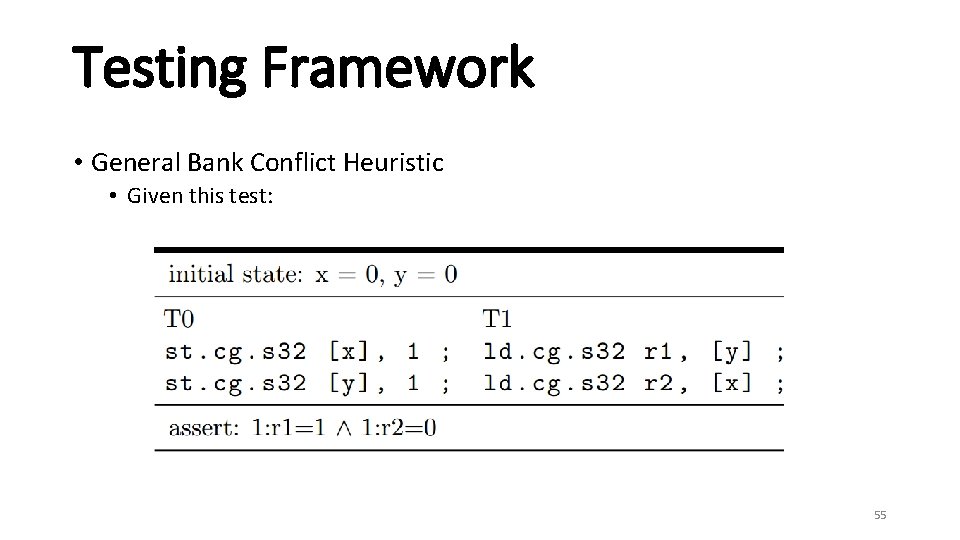

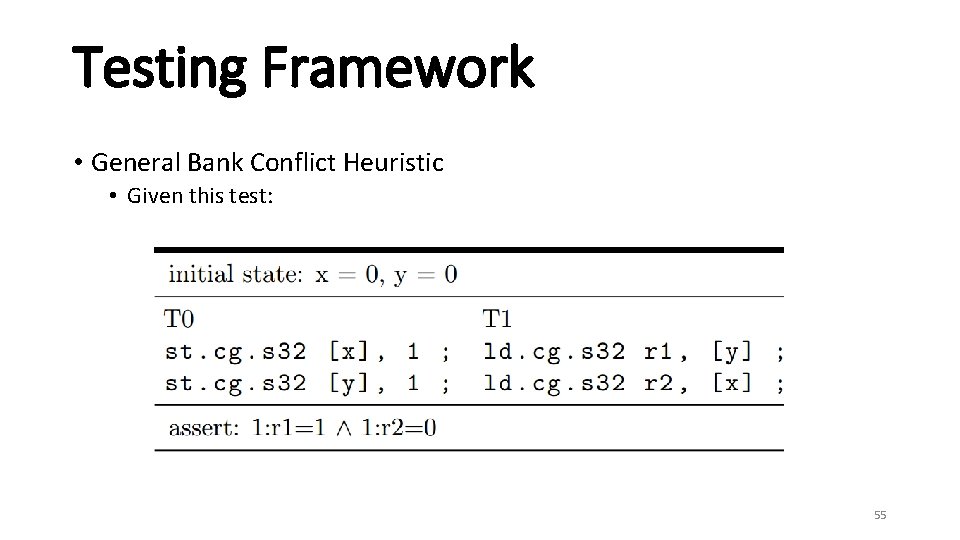

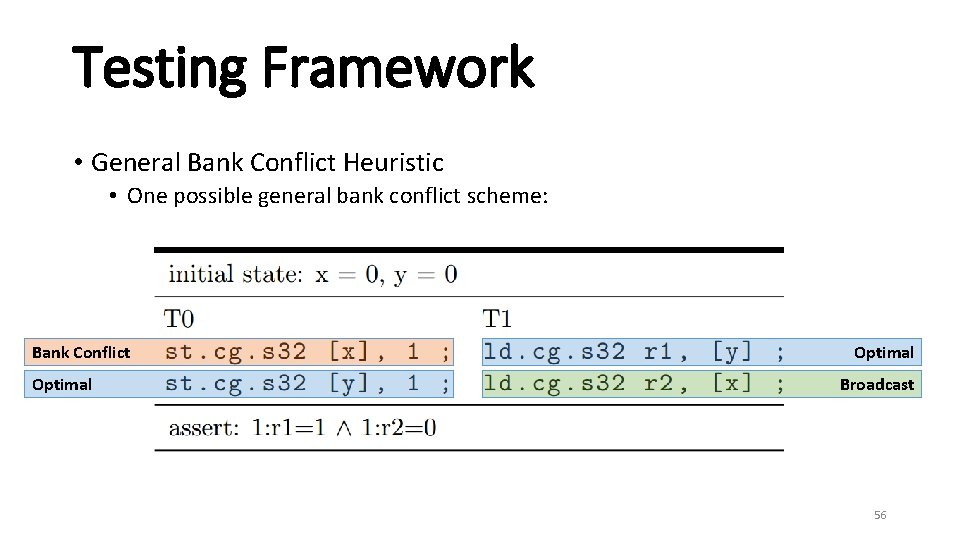

Testing Framework • General Bank Conflict Heuristic • Given this test: 55

Testing Framework • General Bank Conflict Heuristic • One possible general bank conflict scheme: Bank Conflict Optimal Broadcast 56
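As a rough illustration of the three access patterns, the sketch below classifies one warp-wide shared-memory access. It assumes 32 banks of 4-byte words, where word i maps to bank i mod 32, a common default on NVIDIA GPUs. The slides do not show how the tool actually picks the addresses. `classify` and `Access` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <vector>

// Sketch: classify one warp-wide shared-memory access, assuming 32 banks of
// 4-byte words (word i lives in bank i % 32). Addresses are byte offsets,
// one per participating thread.
constexpr std::uint32_t kBanks = 32;
constexpr std::uint32_t kWordBytes = 4;

enum class Access { Optimal, Broadcast, BankConflict };

Access classify(const std::vector<std::uint32_t>& byte_addrs) {
    assert(!byte_addrs.empty());
    const std::uint32_t first_word = byte_addrs[0] / kWordBytes;
    std::map<std::uint32_t, std::uint32_t> word_in_bank;   // bank -> word seen
    bool all_same = true;
    for (std::uint32_t a : byte_addrs) {
        const std::uint32_t word = a / kWordBytes;
        const std::uint32_t bank = word % kBanks;
        if (word != first_word) all_same = false;
        auto it = word_in_bank.find(bank);
        if (it == word_in_bank.end()) word_in_bank[bank] = word;
        else if (it->second != word) return Access::BankConflict;  // same bank, different words
    }
    return all_same ? Access::Broadcast : Access::Optimal;
}
```

In this model, a thread-to-word mapping of i -> i is Optimal, and having every thread read word 0 is a Broadcast. Mapping thread i to word 32 * i puts every access in bank 0, which is a bank conflict.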

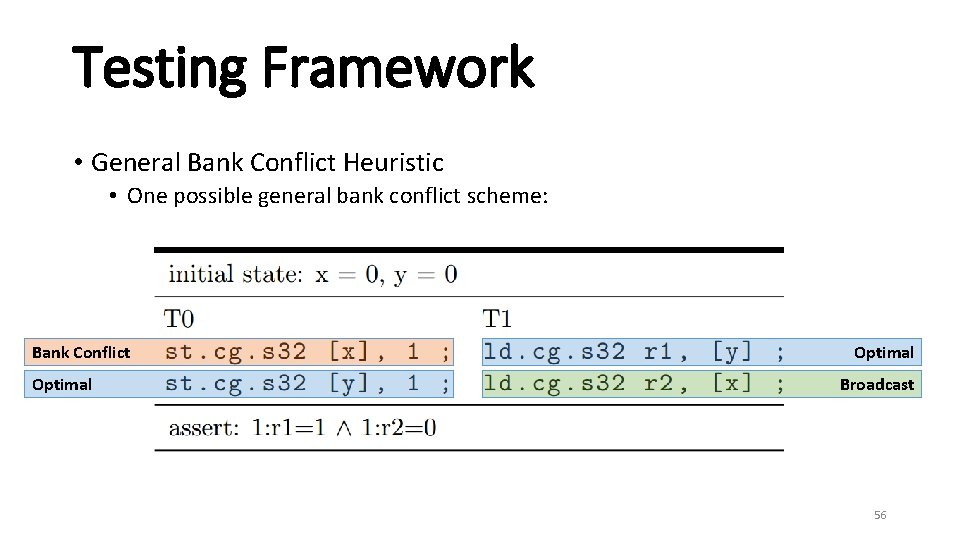

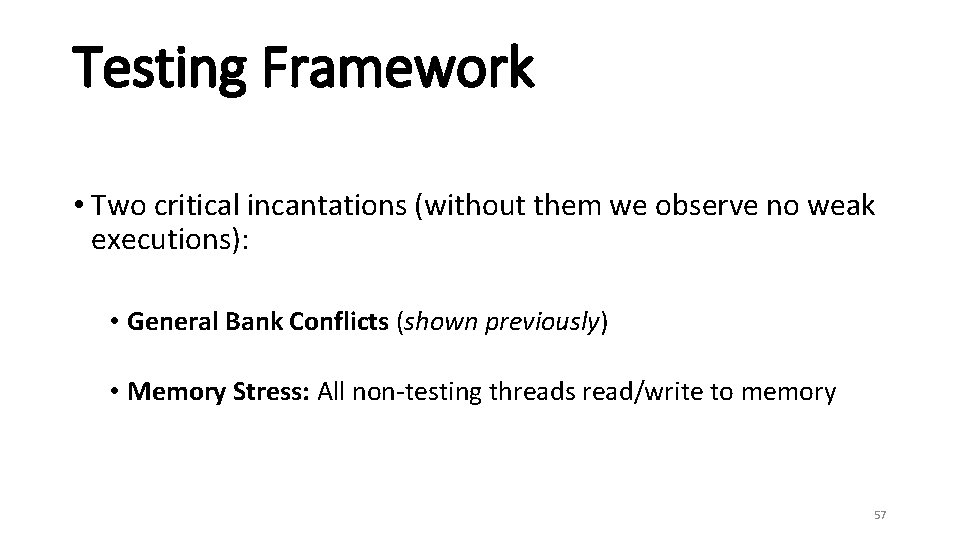

Testing Framework • Two critical incantations (without them we observe no weak executions): • General Bank Conflicts (shown previously) • Memory Stress: All non-testing threads read/write to memory 57

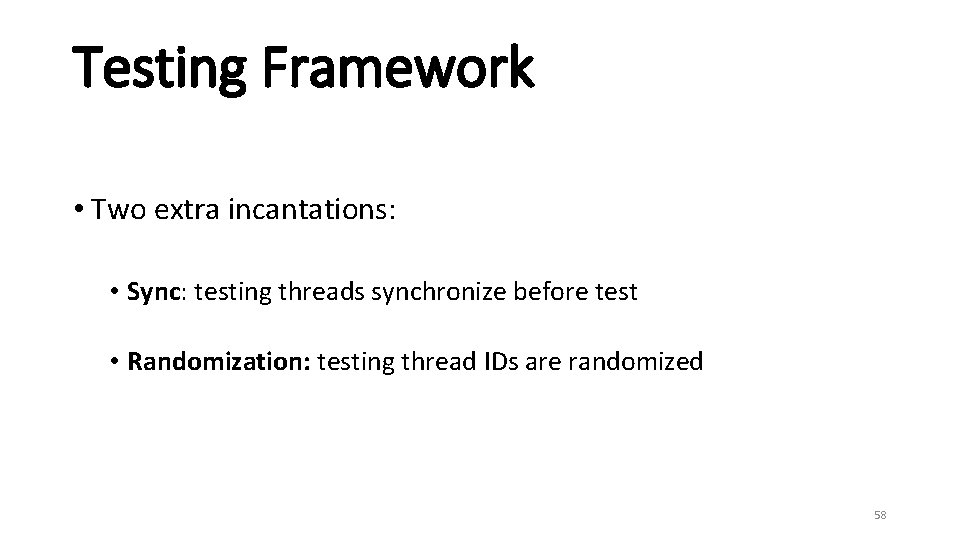

Testing Framework • Two extra incantations: • Sync: testing threads synchronize before test • Randomization: testing thread IDs are randomized 58
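The randomization and memory-stress incantations can be pictured as a role assignment made before each run. In the hypothetical C++ sketch below, two testing threads are drawn at random from the whole launch. They must satisfy the D-cta constraint of living in different CTAs, and every remaining thread is marked as a stress thread. The sketch does not model the stress loop itself (reads and writes to a scratch region) or the Sync incantation.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <random>
#include <vector>

// Hypothetical role assignment for one test run (names are illustrative):
// two testing threads are drawn at random from the whole launch, subject to
// the D-cta constraint (different CTAs); all other threads become
// memory-stress threads. Global thread id = cta * threads_per_cta + tid.
struct Roles {
    int t0;                    // global id of testing thread T0
    int t1;                    // global id of testing thread T1
    std::vector<int> stress;   // everyone else
};

Roles assign_roles(int num_ctas, int threads_per_cta, unsigned seed) {
    assert(num_ctas >= 2 && threads_per_cta >= 1);
    const int total = num_ctas * threads_per_cta;
    std::vector<int> ids(total);
    std::iota(ids.begin(), ids.end(), 0);
    std::mt19937 rng(seed);
    std::shuffle(ids.begin(), ids.end(), rng);      // randomize testing ids
    const int t0 = ids[0];
    // First shuffled id outside T0's CTA; one exists because num_ctas >= 2.
    const int t1 = *std::find_if(ids.begin() + 1, ids.end(), [&](int id) {
        return id / threads_per_cta != t0 / threads_per_cta;
    });
    Roles r{t0, t1, {}};
    for (int id = 0; id < total; ++id)
        if (id != t0 && id != t1) r.stress.push_back(id);
    return r;
}
```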

Roadmap • Background and Approach • Prior Work • Testing Framework • Results • CUDA Spin Locks • Bulk Testing • Future Work and Conclusion 59

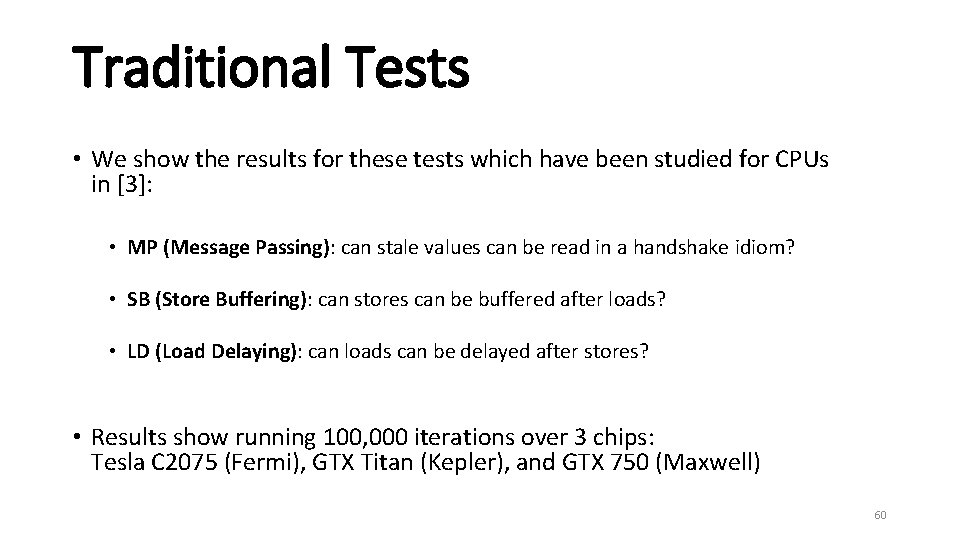

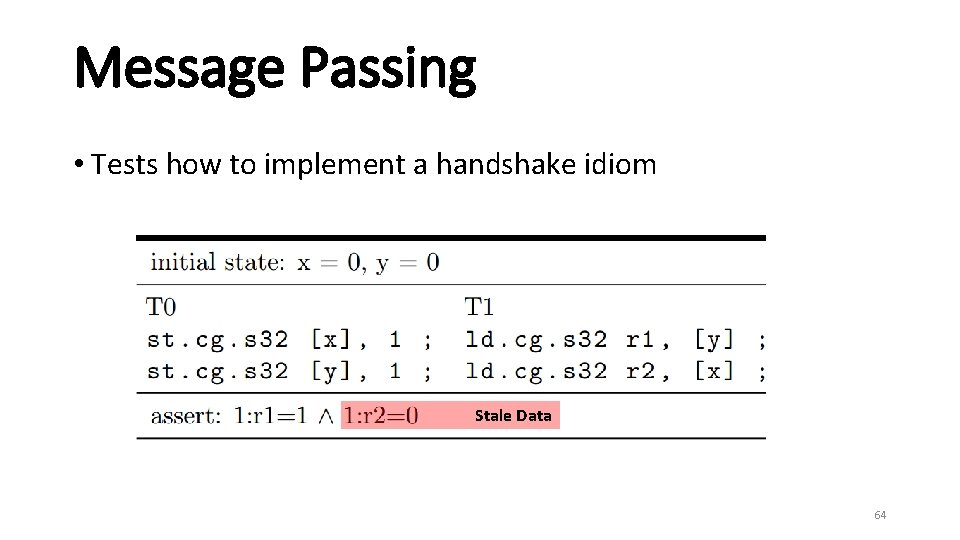

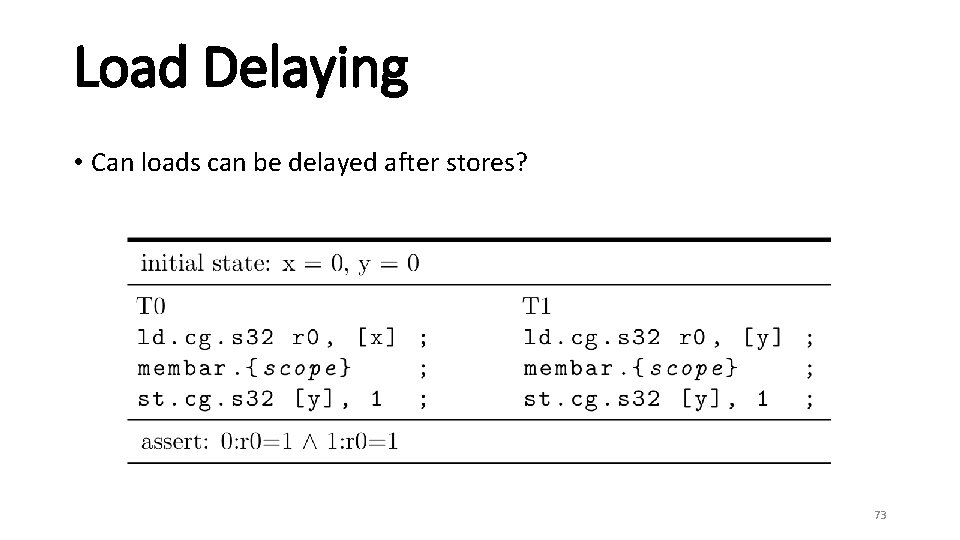

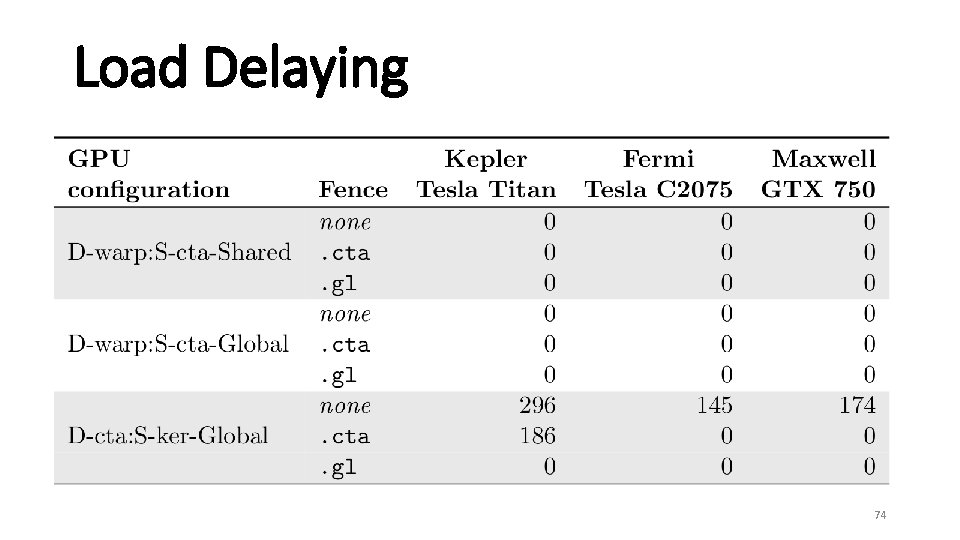

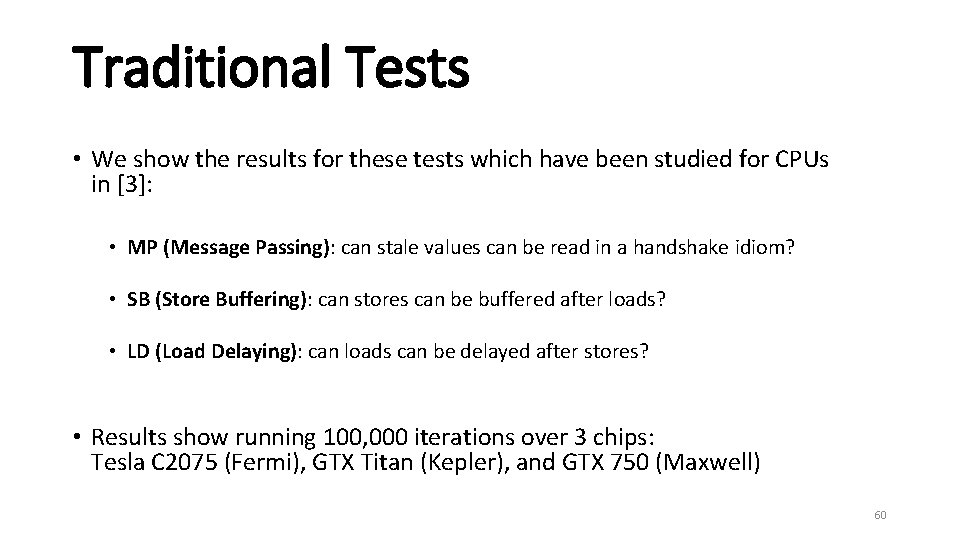

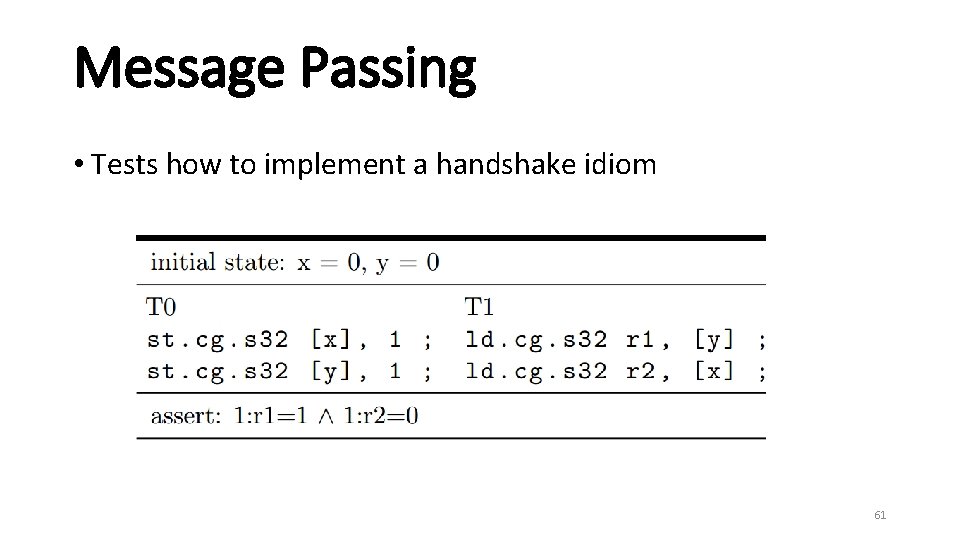

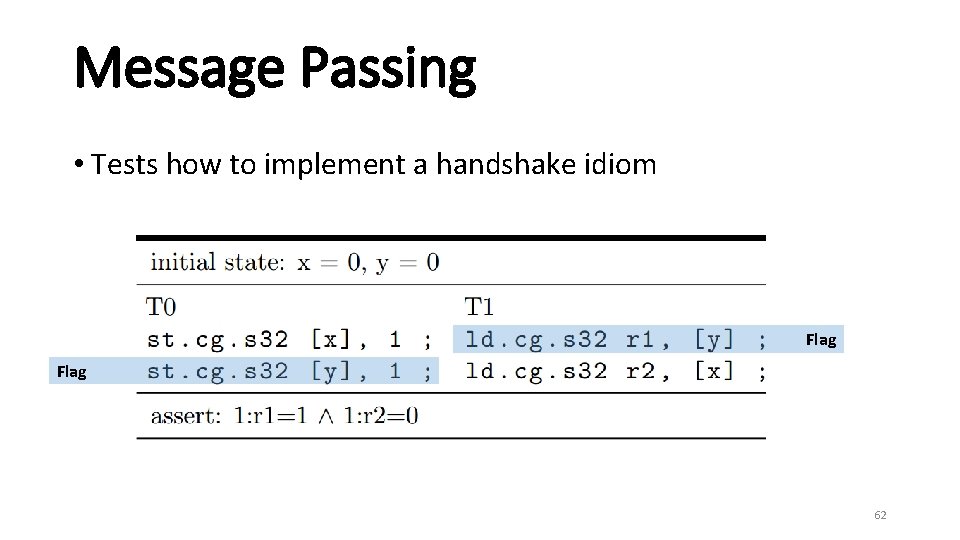

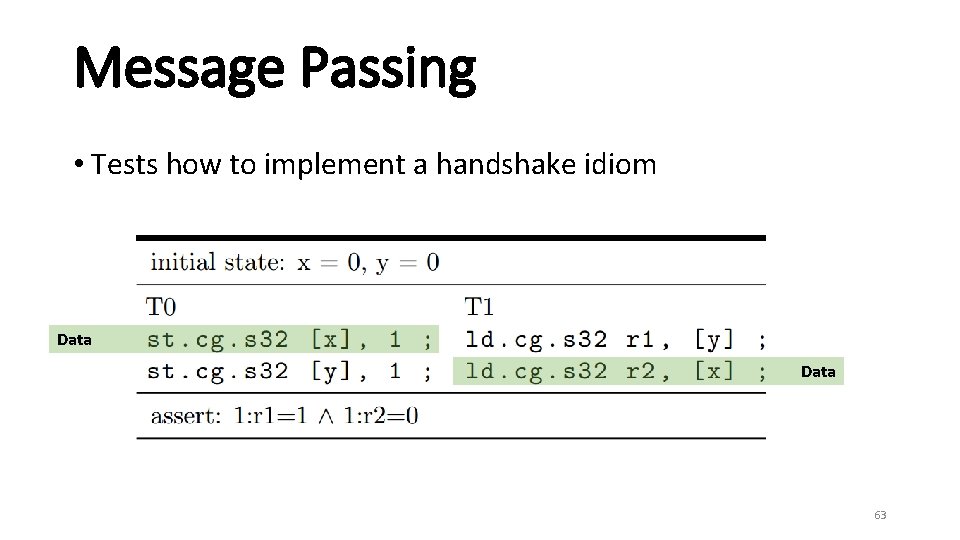

Traditional Tests • We show results for these tests, which have been studied for CPUs in [3]: • MP (Message Passing): can stale values be read in a handshake idiom? • SB (Store Buffering): can stores be buffered after loads? • LD (Load Delaying): can loads be delayed after stores? • Results show 100,000 iterations run on 3 chips: Tesla C2075 (Fermi), GTX Titan (Kepler), and GTX 750 (Maxwell) 60

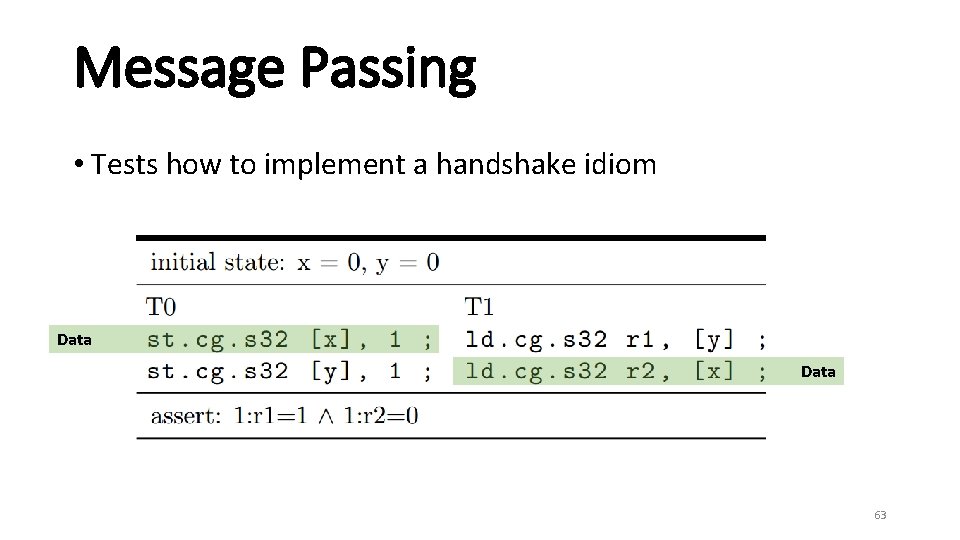

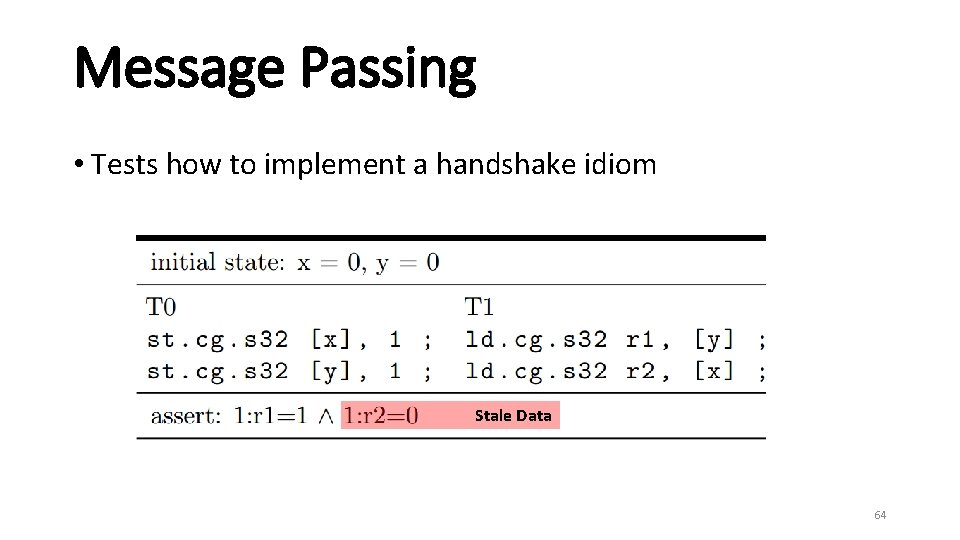

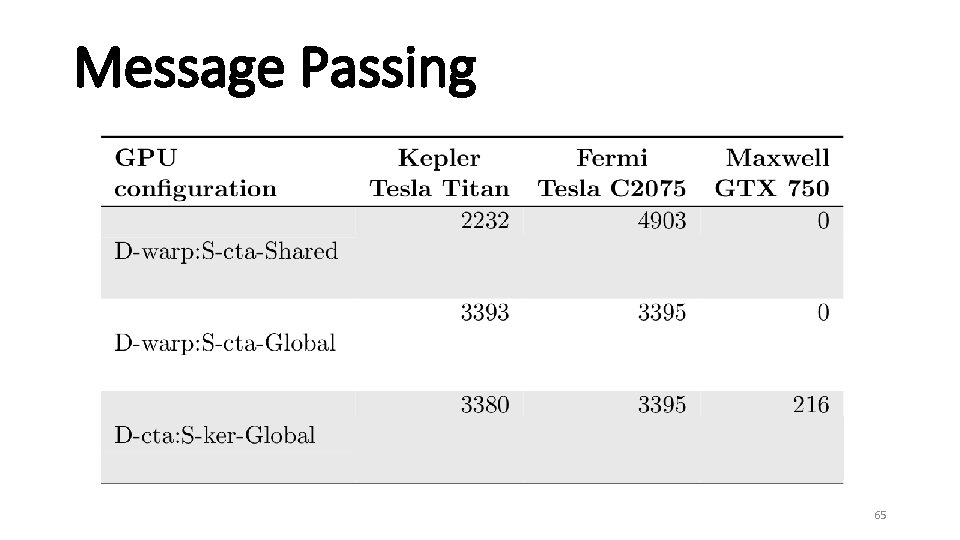

Message Passing • Tests how to implement a handshake idiom 61

Message Passing • Tests how to implement a handshake idiom Flag 62

Message Passing • Tests how to implement a handshake idiom Data 63

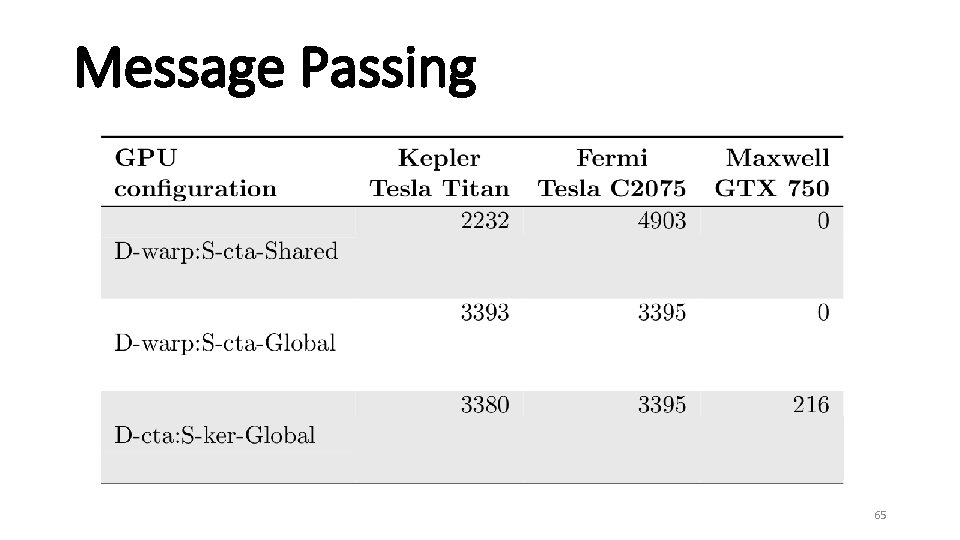

Message Passing • Tests how to implement a handshake idiom Stale Data 64

Message Passing 65

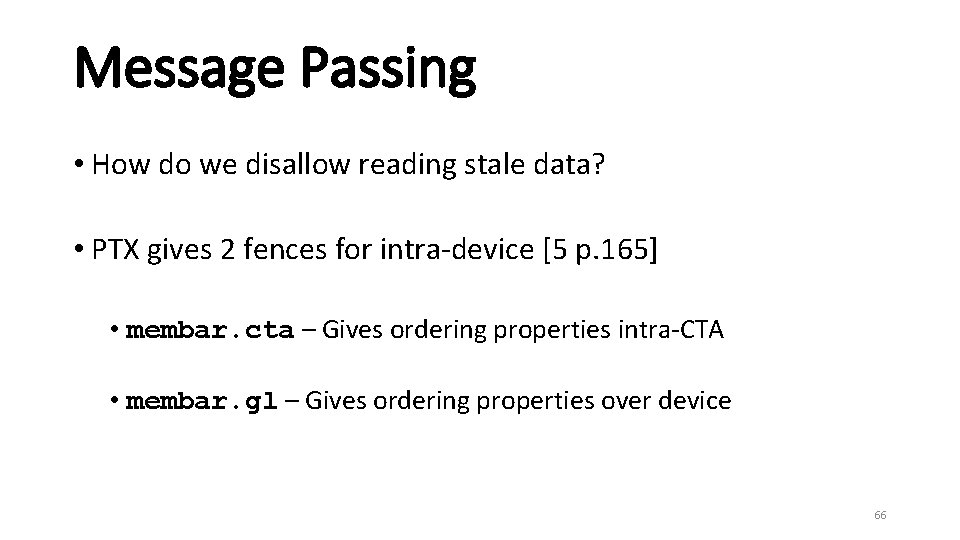

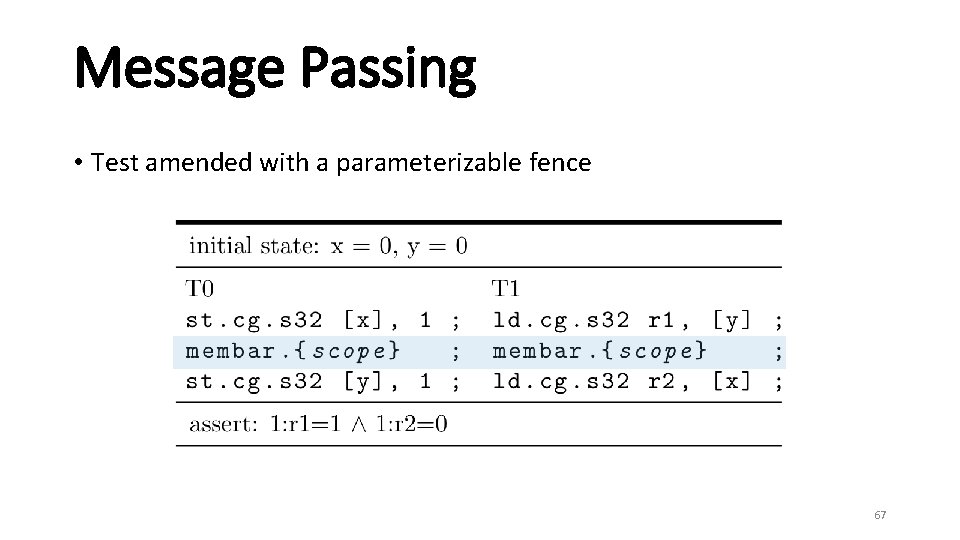

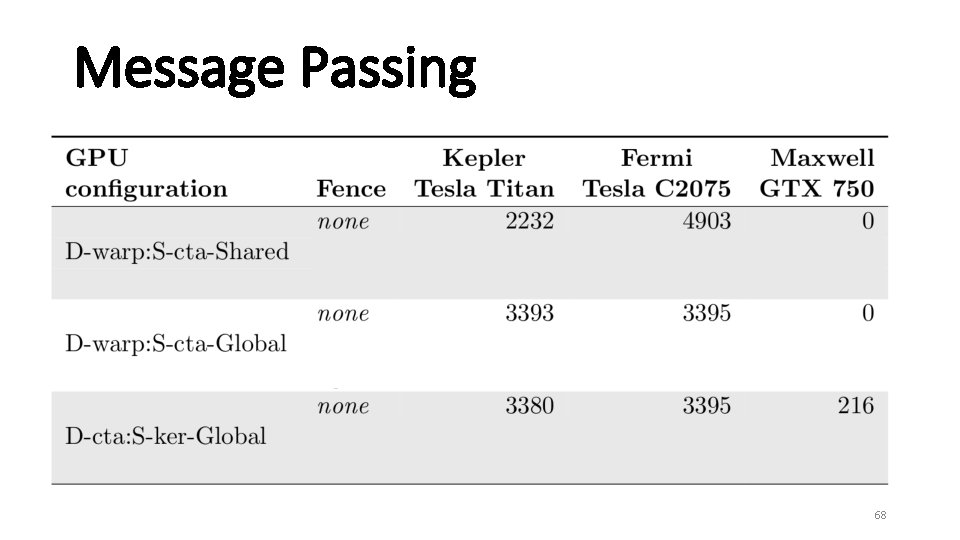

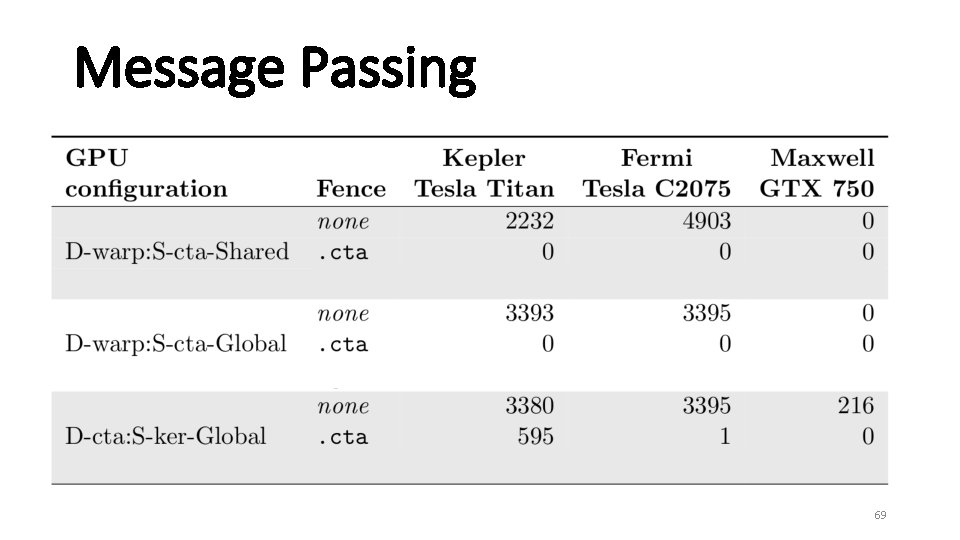

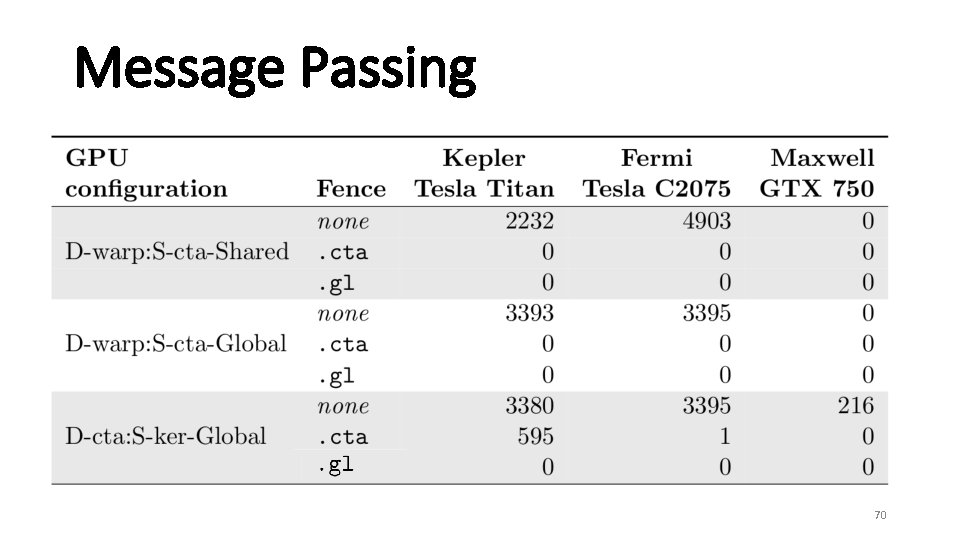

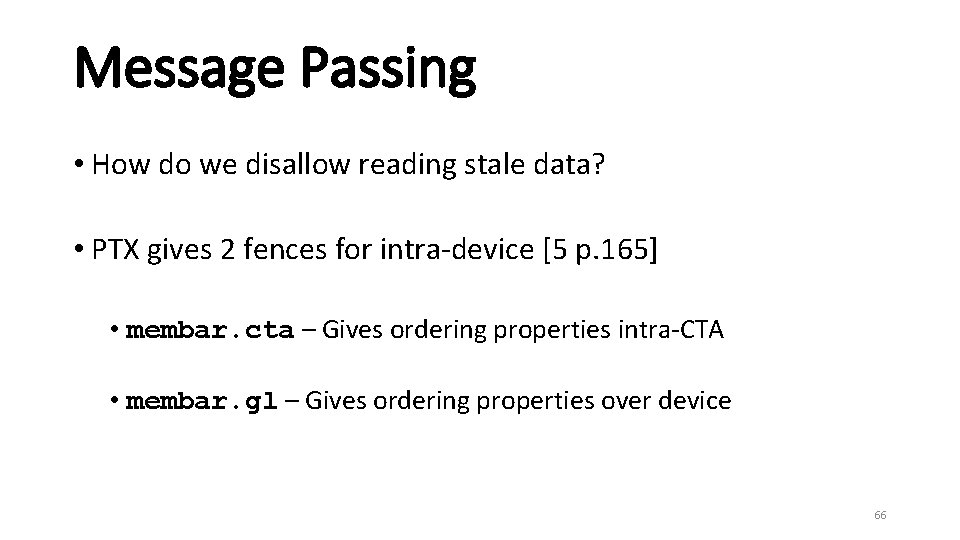

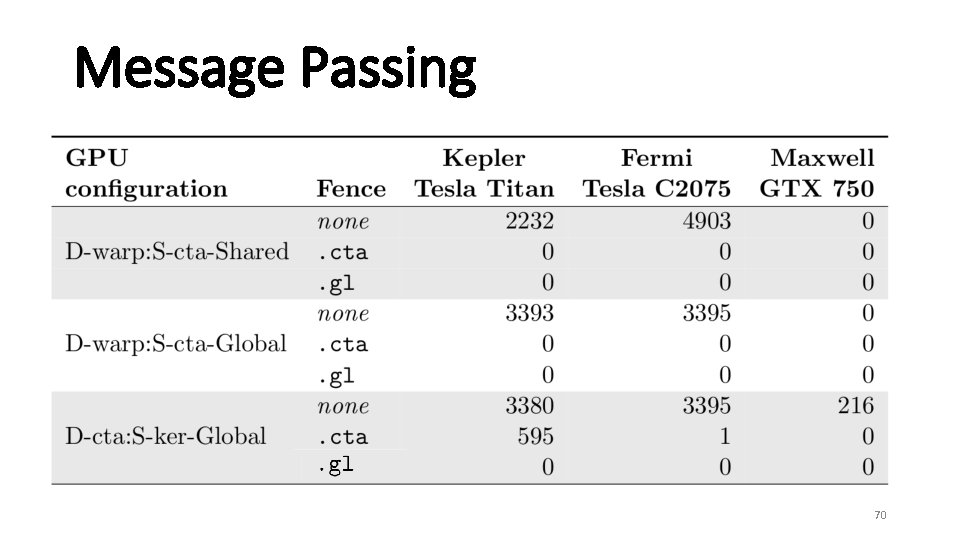

Message Passing • How do we disallow reading stale data? • PTX gives 2 fences for intra-device ordering [5, p. 165]: • membar.cta: gives ordering properties intra-CTA • membar.gl: gives ordering properties across the device 66

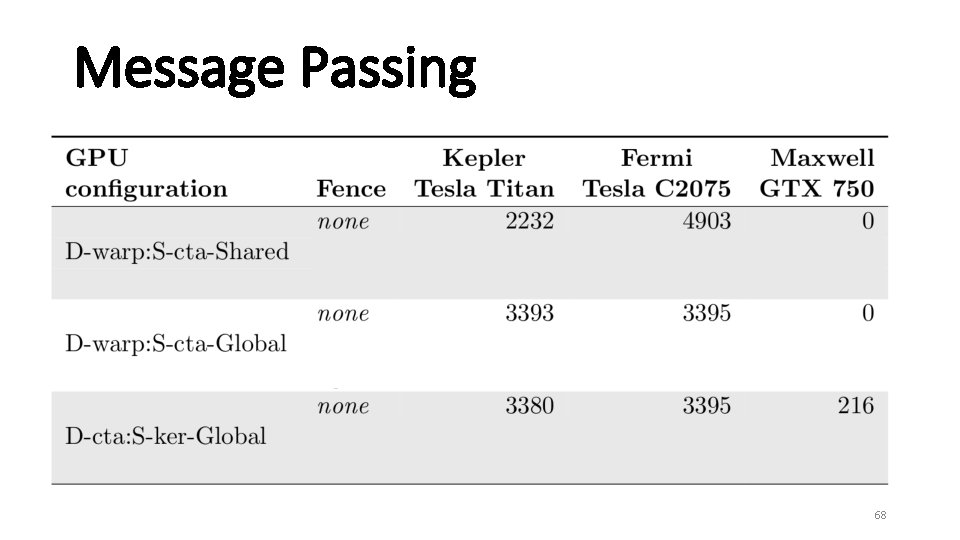

Message Passing • Test amended with a parameterizable fence 67

Message Passing 68

Message Passing 69

Message Passing 70
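A deliberately coarse model shows why a fence is needed in both threads. In this model, each thread's two accesses to different locations may take effect in either order unless a fence separates them, and the resulting programs are then interleaved. The sketch ignores scopes entirely, so it cannot distinguish membar.cta from membar.gl. It is an illustration, not the PTX memory model. The MP shape is also an assumption:

- T0 writes the data (x = 1) and then the flag (y = 1).
- T1 reads the flag into r1 and then the data into r2.
- The stale-data outcome is r1 == 1 && r2 == 0.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <utility>
#include <vector>

// Coarse reordering model (an illustration, not the PTX memory model):
// a thread's two accesses to different locations may take effect in either
// order unless a fence separates them. Scopes (cta vs gl) are ignored.
//   T0: x = 1 (data); [fence]; y = 1 (flag);
//   T1: r1 = y;       [fence]; r2 = x;
struct Op { bool is_store; int loc; int reg; };                // loc 0 = x, 1 = y
struct State { int mem[2] = {0, 0}; int reg[2] = {-1, -1}; };  // reg[0] = r1, reg[1] = r2
using Prog = std::vector<Op>;

static State exec(const Op& op, State s) {
    if (op.is_store) s.mem[op.loc] = 1; else s.reg[op.reg] = s.mem[op.loc];
    return s;
}

// Enumerate all interleavings of p0 and p1, recording (r1, r2).
static void run(const Prog& p0, const Prog& p1, std::size_t i, std::size_t j,
                const State& s, std::set<std::pair<int, int>>& out) {
    if (i == p0.size() && j == p1.size()) { out.insert({s.reg[0], s.reg[1]}); return; }
    if (i < p0.size()) run(p0, p1, i + 1, j, exec(p0[i], s), out);
    if (j < p1.size()) run(p0, p1, i, j + 1, exec(p1[j], s), out);
}

std::set<std::pair<int, int>> mp_outcomes(bool fence_t0, bool fence_t1) {
    const Prog t0 = {{true, 0, 0}, {true, 1, 0}};    // x = 1; y = 1
    const Prog t1 = {{false, 1, 0}, {false, 0, 1}};  // r1 = y; r2 = x
    std::vector<Prog> orders0 = {t0}, orders1 = {t1};
    if (!fence_t0) orders0.push_back({t0[1], t0[0]});  // no fence: may swap
    if (!fence_t1) orders1.push_back({t1[1], t1[0]});
    std::set<std::pair<int, int>> out;
    for (const Prog& p0 : orders0)
        for (const Prog& p1 : orders1) run(p0, p1, 0, 0, State{}, out);
    return out;
}
```

Only `mp_outcomes(true, true)` excludes (1, 0). In this model, fencing just the writer or just the reader still lets the stale-data outcome through.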

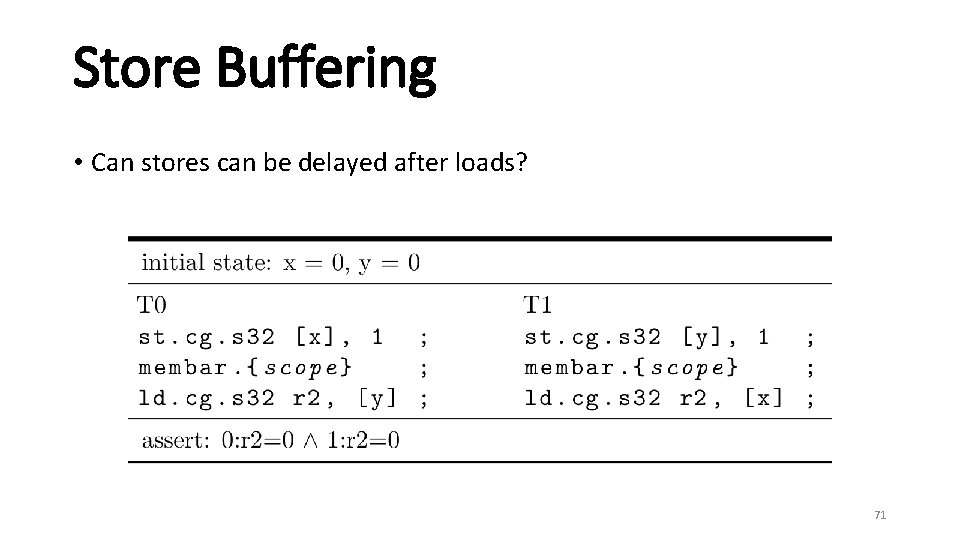

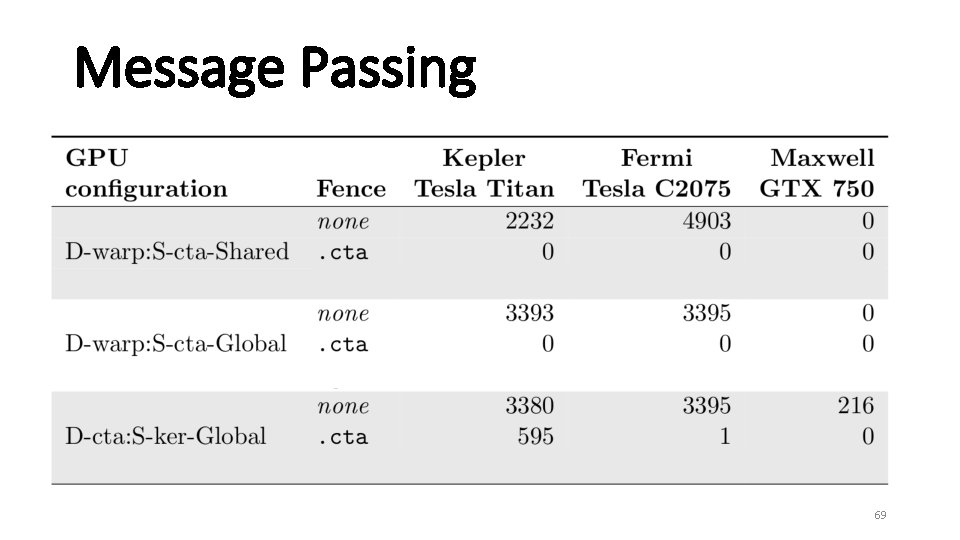

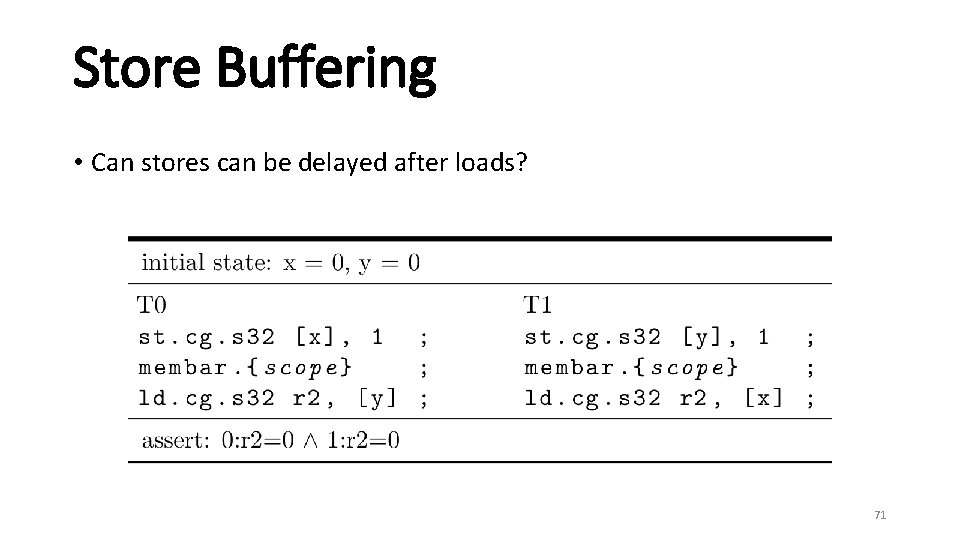

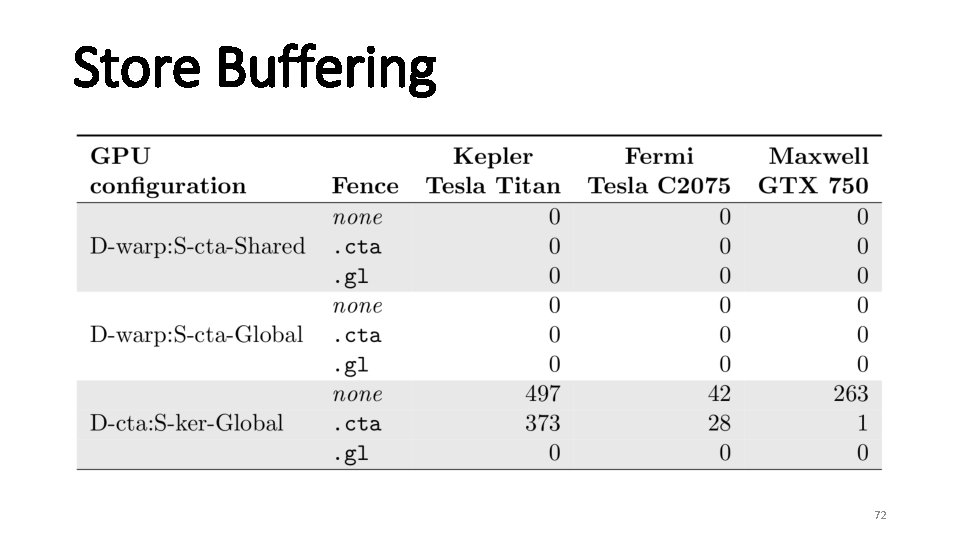

Store Buffering • Can stores be delayed after loads? 71

Store Buffering 72

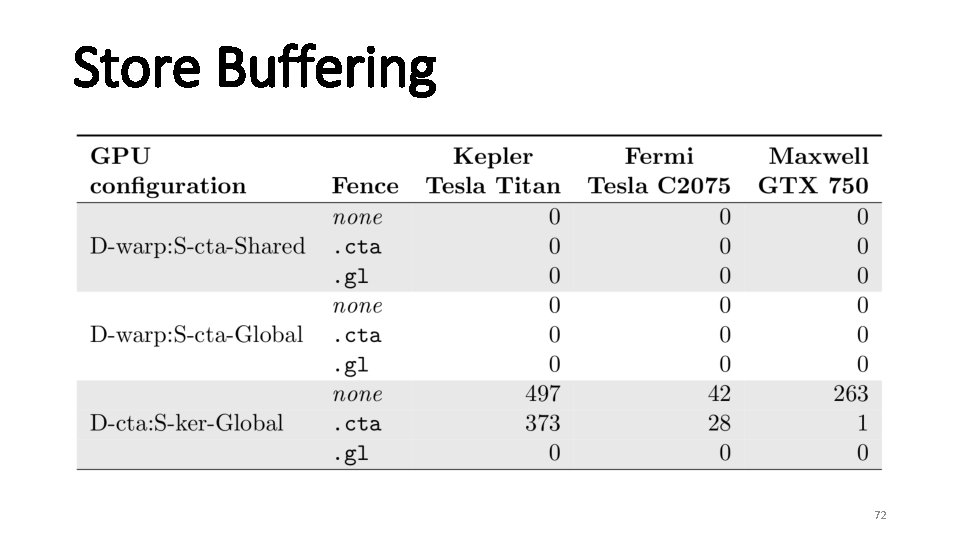

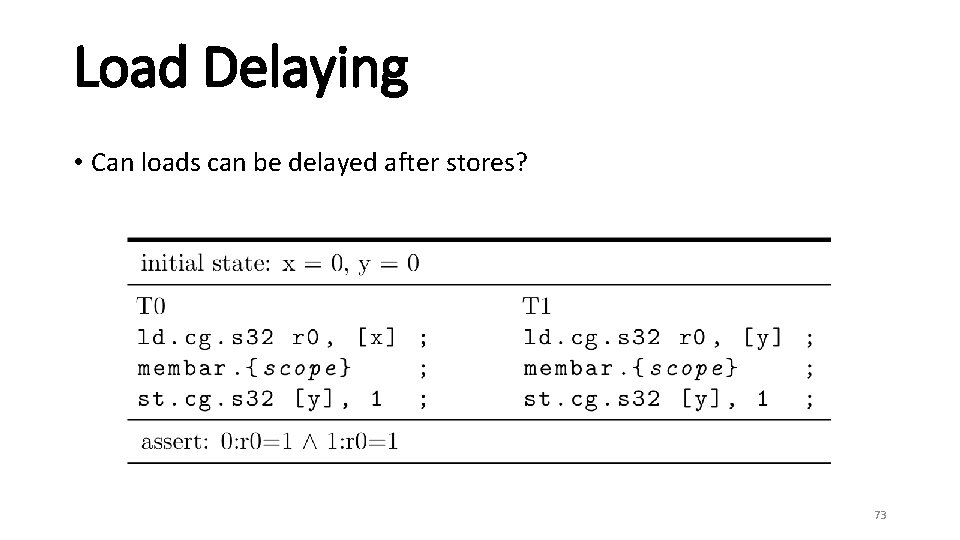

Load Delaying • Can loads be delayed after stores? 73

Load Delaying 74
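Assume LD has the usual load-buffering shape: T0 runs r0 = x then y = 1, T1 runs r1 = y then x = 1, and the weak outcome is r0 == 1 && r1 == 1. A similarly coarse model then shows what delaying a load after a store buys. The weak outcome needs each load to take effect after the other thread's store, and program order forbids that under SC. The `delay_loads` switch is an illustrative assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <utility>
#include <vector>

// Assumed LD shape (load buffering):
//   T0: r0 = x; y = 1;     T1: r1 = y; x = 1;     weak: r0 == 1 && r1 == 1
// If `delay_loads` is set, each thread may perform its store before its
// program-order-earlier load; otherwise executions are plain SC.
struct Op { bool is_store; int loc; int reg; };                // loc 0 = x, 1 = y
struct State { int mem[2] = {0, 0}; int reg[2] = {-1, -1}; };  // reg[0] = r0, reg[1] = r1
using Prog = std::vector<Op>;

static State exec(const Op& op, State s) {
    if (op.is_store) s.mem[op.loc] = 1; else s.reg[op.reg] = s.mem[op.loc];
    return s;
}

static void run(const Prog& p0, const Prog& p1, std::size_t i, std::size_t j,
                const State& s, std::set<std::pair<int, int>>& out) {
    if (i == p0.size() && j == p1.size()) { out.insert({s.reg[0], s.reg[1]}); return; }
    if (i < p0.size()) run(p0, p1, i + 1, j, exec(p0[i], s), out);
    if (j < p1.size()) run(p0, p1, i, j + 1, exec(p1[j], s), out);
}

std::set<std::pair<int, int>> ld_outcomes(bool delay_loads) {
    const Prog t0 = {{false, 0, 0}, {true, 1, 0}};   // r0 = x; y = 1
    const Prog t1 = {{false, 1, 1}, {true, 0, 0}};   // r1 = y; x = 1
    std::vector<Prog> o0 = {t0}, o1 = {t1};
    if (delay_loads) {                               // store may overtake load
        o0.push_back({t0[1], t0[0]});
        o1.push_back({t1[1], t1[0]});
    }
    std::set<std::pair<int, int>> out;
    for (const Prog& p0 : o0)
        for (const Prog& p1 : o1) run(p0, p1, 0, 0, State{}, out);
    return out;
}
```

`ld_outcomes(false)` (plain SC) never contains (1, 1), while `ld_outcomes(true)` does.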

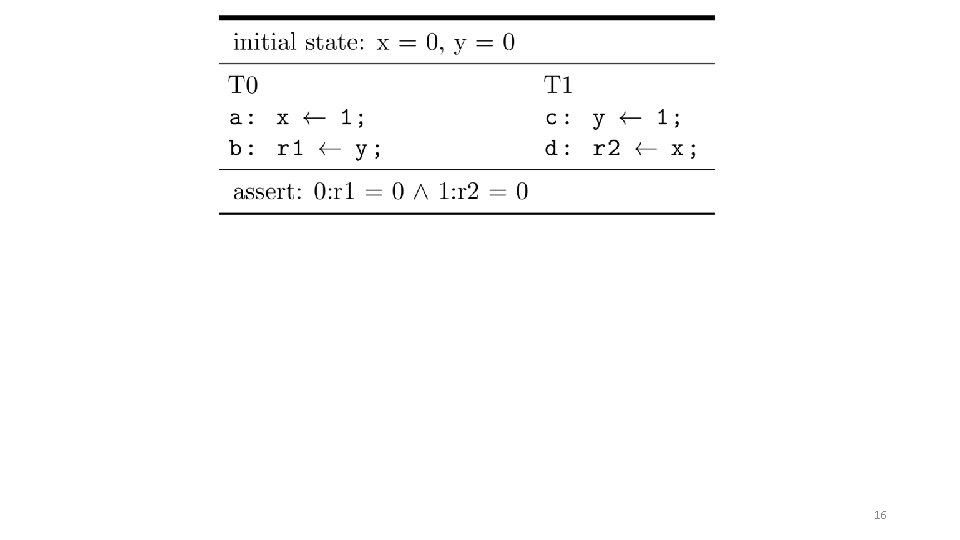

![Slide 75: CoRR test; coherence is SC per memory location](https://slidetodoc.com/presentation_image/279313662b46d2cffcee5b5a7a5a1f4a/image-75.jpg)

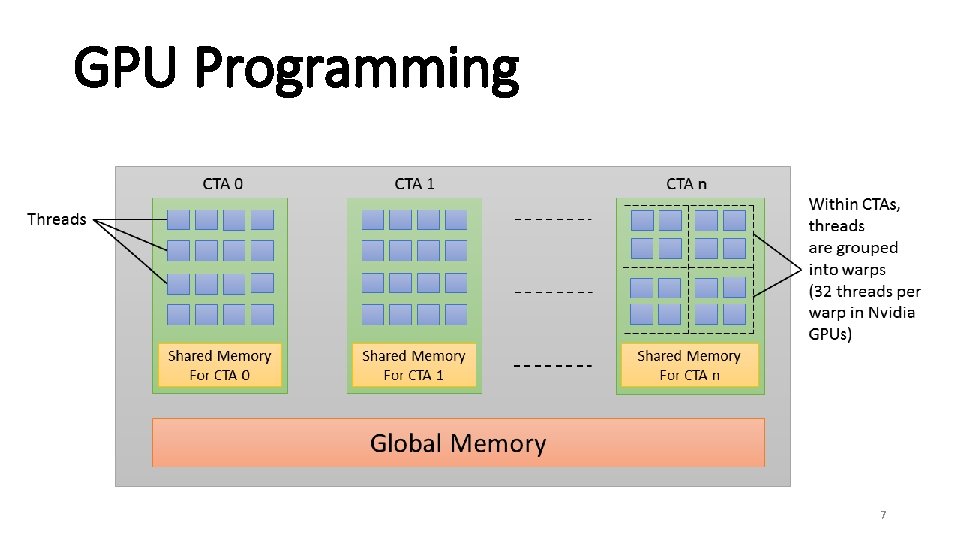

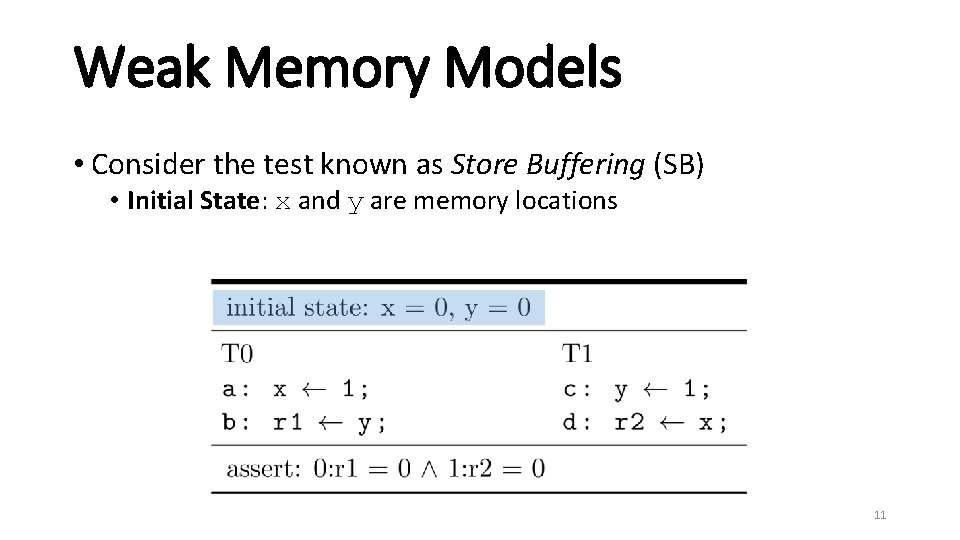

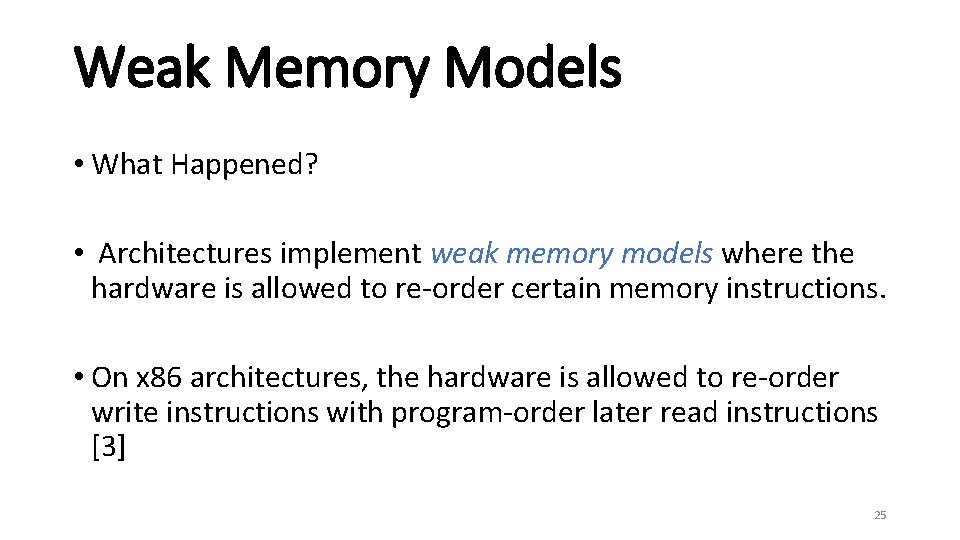

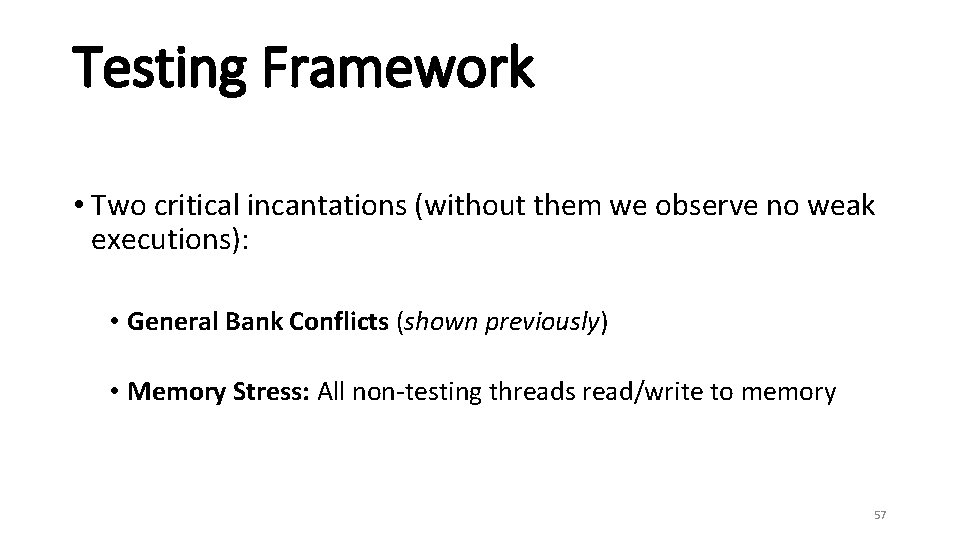

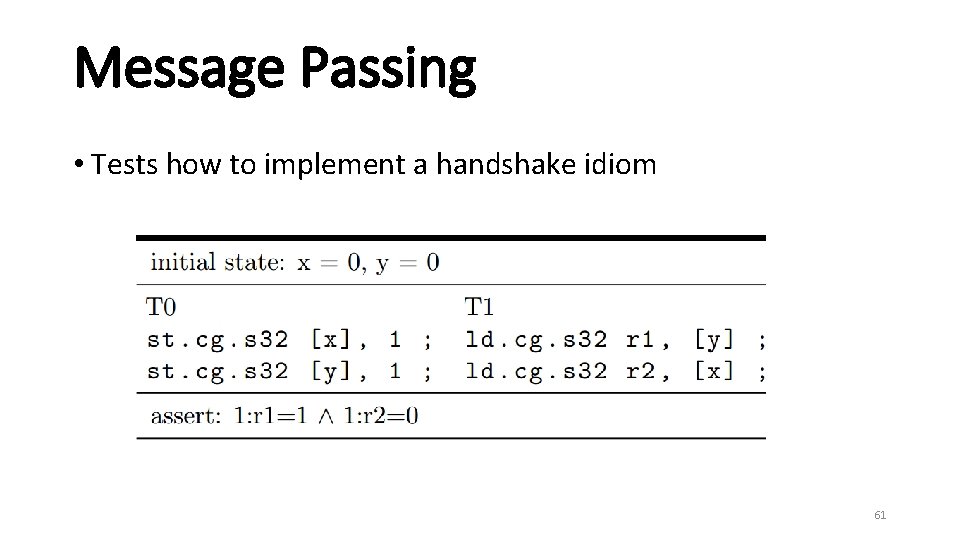

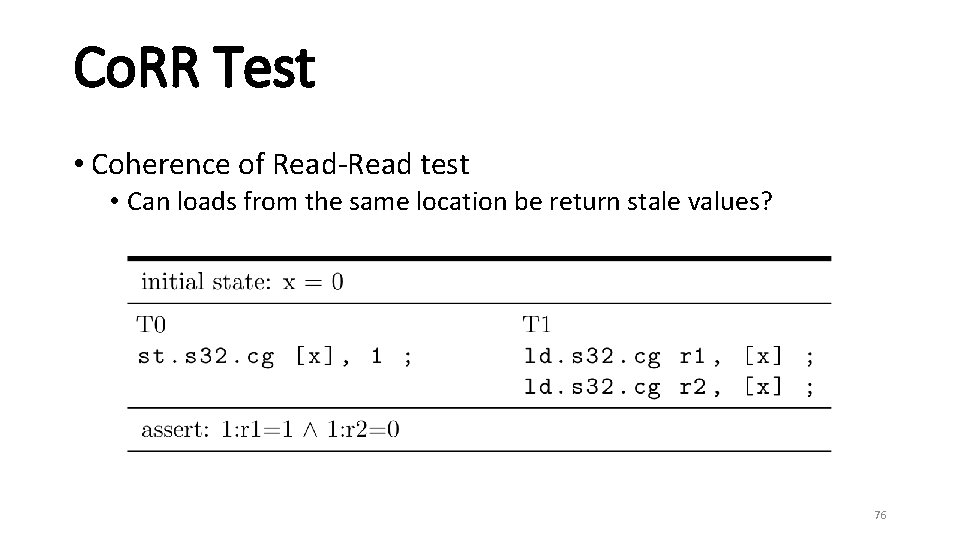

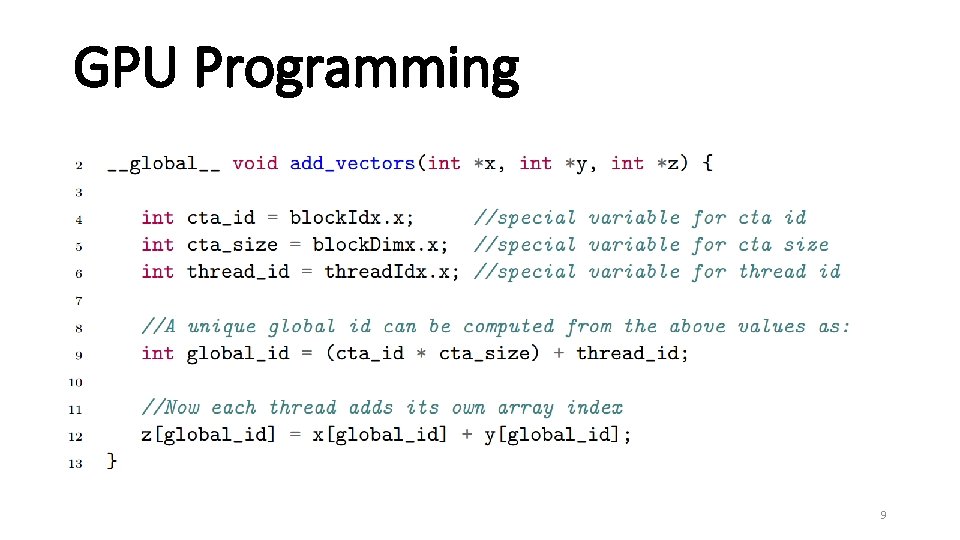

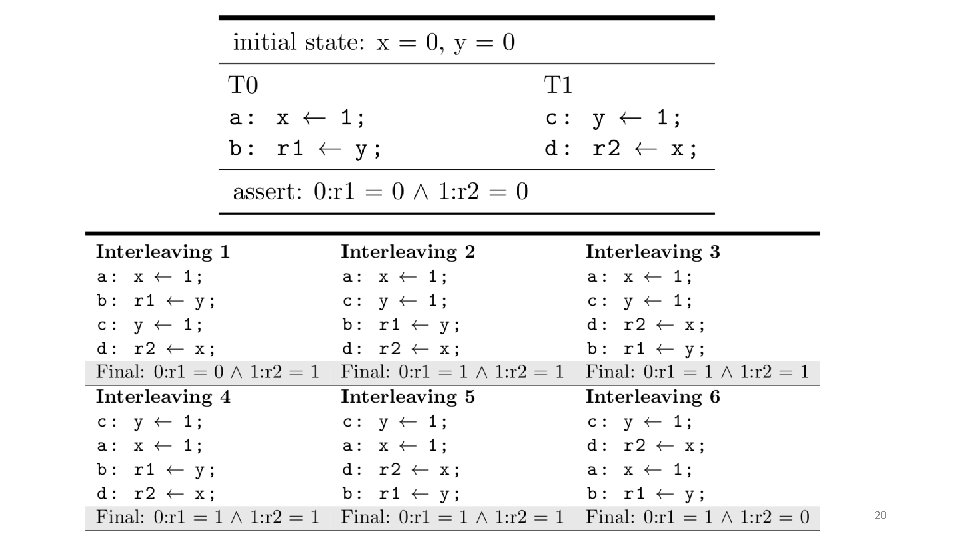

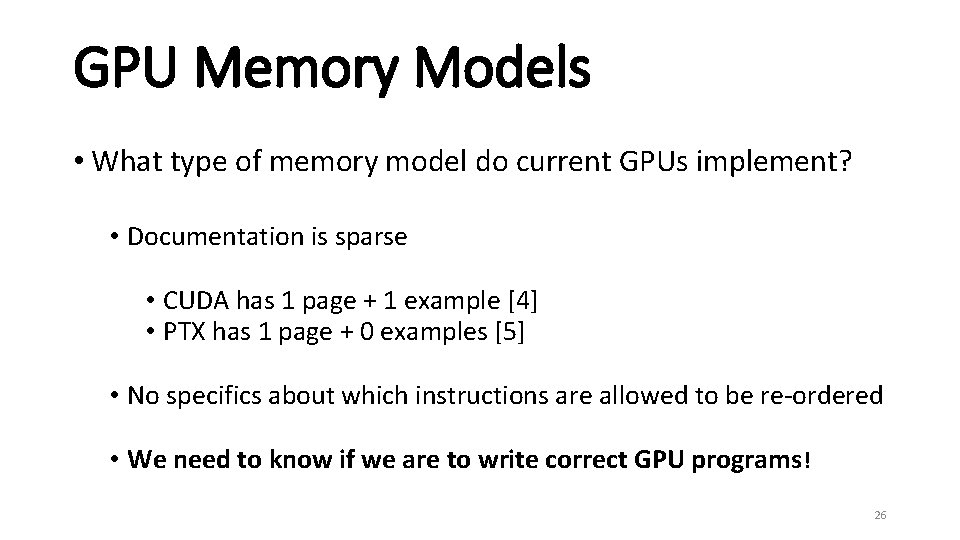

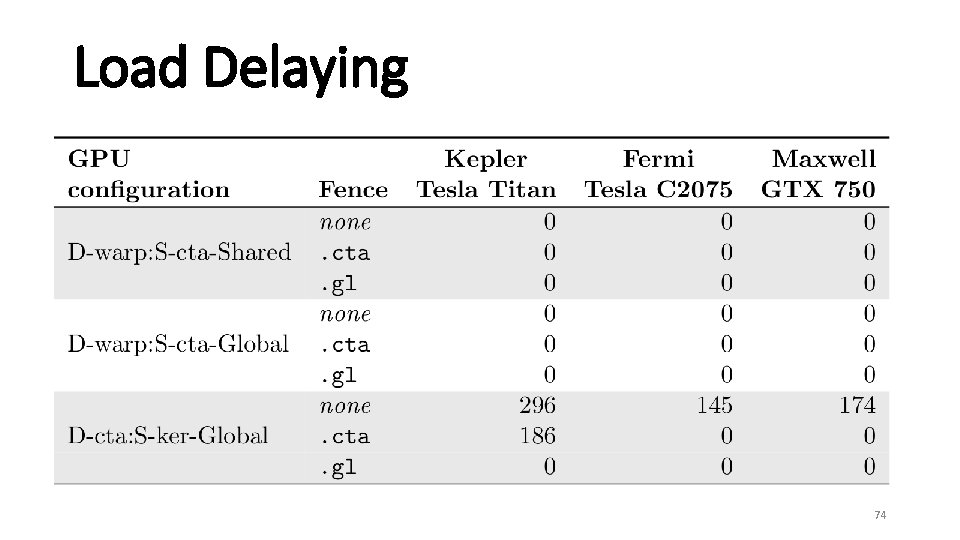

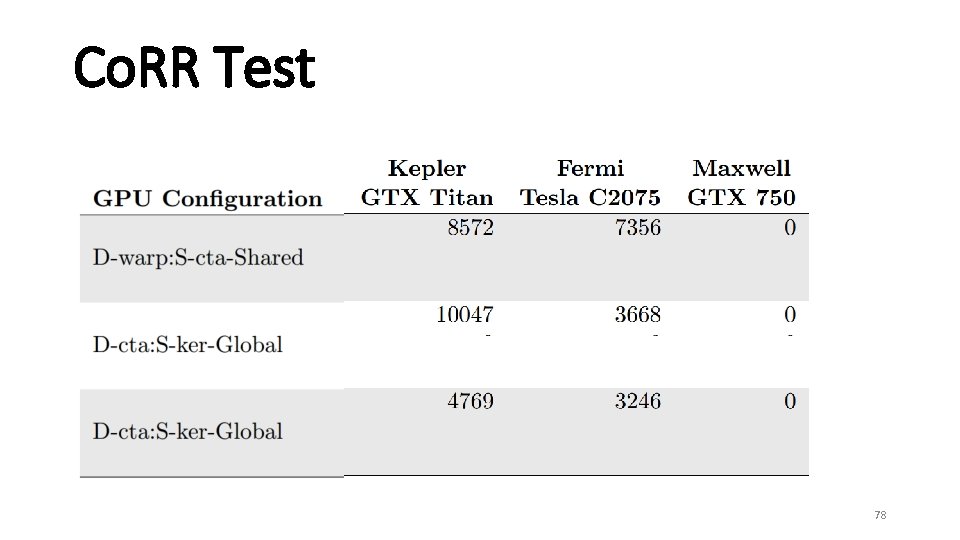

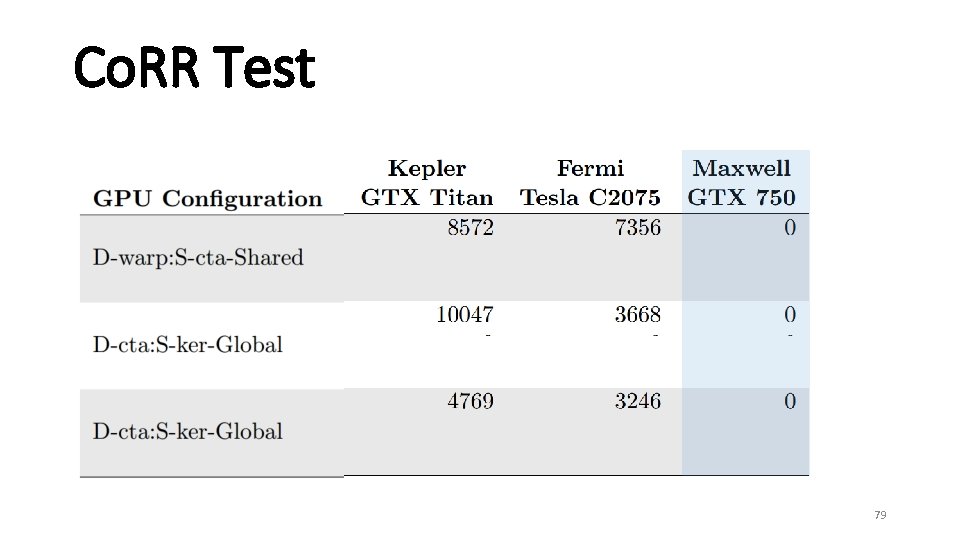

CoRR Test • Coherence is SC per memory location [11, p. 14] • Modern processors (ARM, POWER, x86) implement coherence • All language models require coherence (C++11, OpenCL 2.0) • Coherence violations have been observed on ARM chips and confirmed by ARM as a bug [3, 12] 75

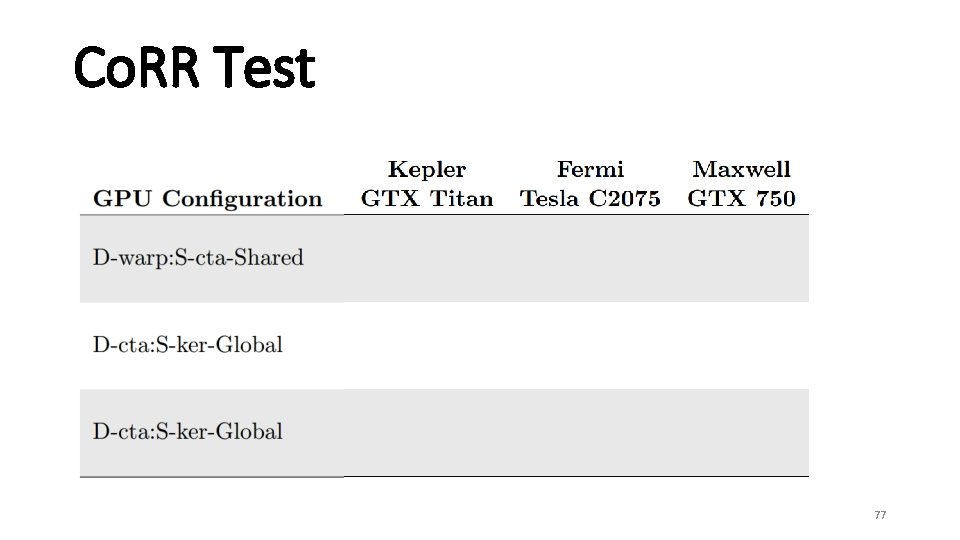

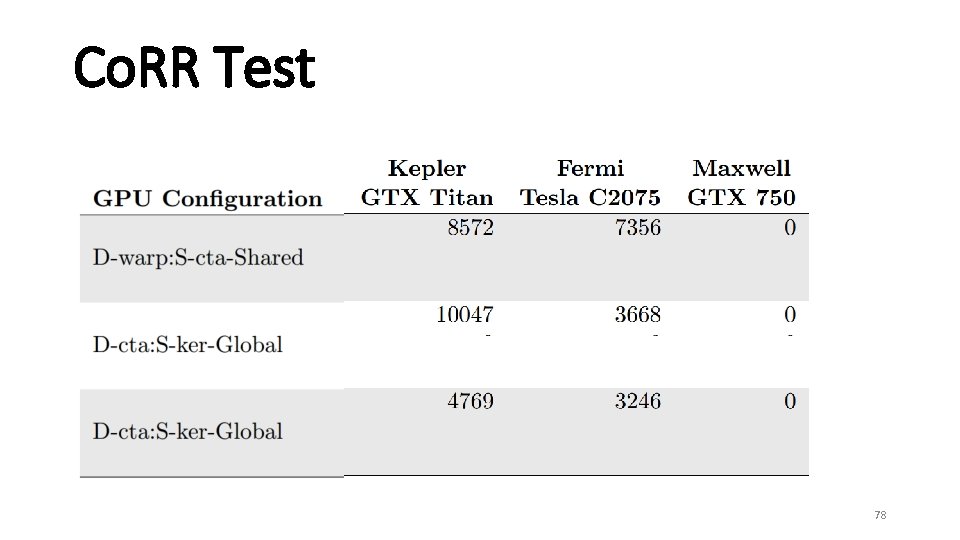

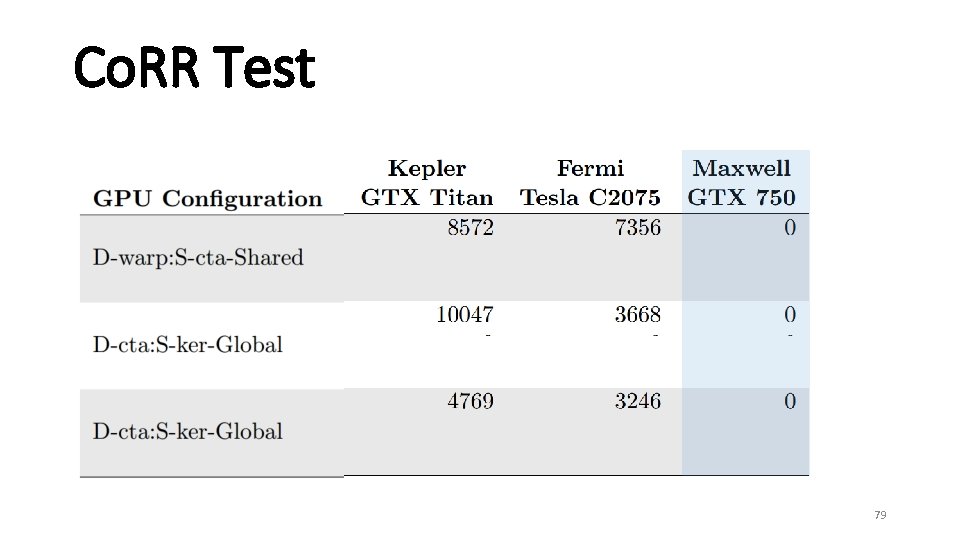

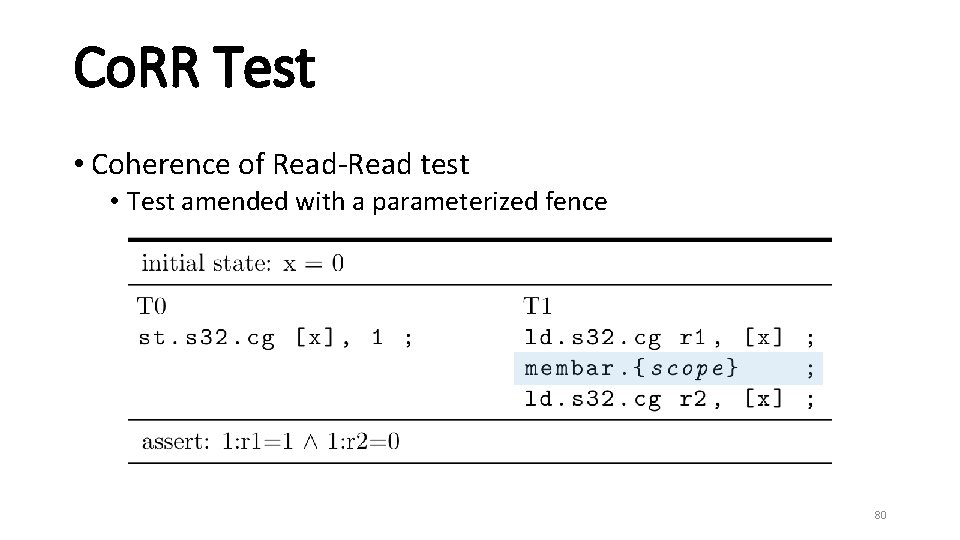

CoRR Test • Coherence of Read-Read test • Can loads from the same location return stale values? 76

CoRR Test 77

CoRR Test 78

CoRR Test 79
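The property CoRR probes can be stated as a check on one thread's reads of one location: their values must never move backwards in that location's coherence order. The following is a minimal sketch that assumes each write stores a distinct value, so that a value identifies its write. The function name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sketch of the per-location check behind CoRR: successive reads by one
// thread from one location must never observe values that go backwards in
// that location's coherence order (the order in which its writes are
// serialized). Assumes every written value is distinct.
bool reads_respect_coherence(const std::vector<int>& coherence_order,
                             const std::vector<int>& reads_in_program_order) {
    auto position = [&](int v) -> std::ptrdiff_t {
        for (std::size_t k = 0; k < coherence_order.size(); ++k)
            if (coherence_order[k] == v) return static_cast<std::ptrdiff_t>(k);
        return -1;                                   // value never written
    };
    std::ptrdiff_t last = 0;
    for (int v : reads_in_program_order) {
        const std::ptrdiff_t p = position(v);
        if (p < 0 || p < last) return false;         // unknown value or stale read
        last = p;
    }
    return true;
}
```

Assume the usual CoRR shape, in which T0 writes x = 1 and T1 reads x twice. The coherence order of x is then {0, 1}: the initial value, then T0's store. The reads {1, 0} fail the check because T1 saw the new value and then the stale one.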

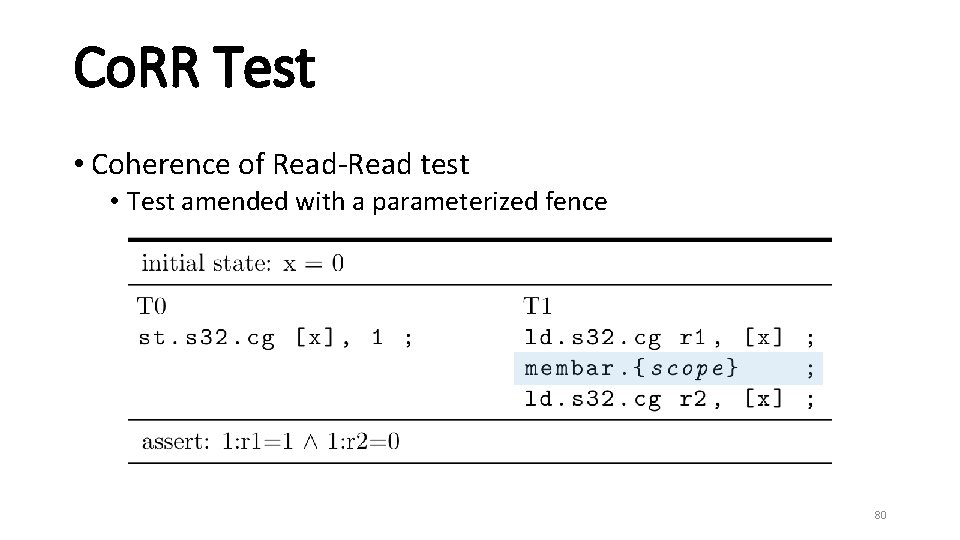

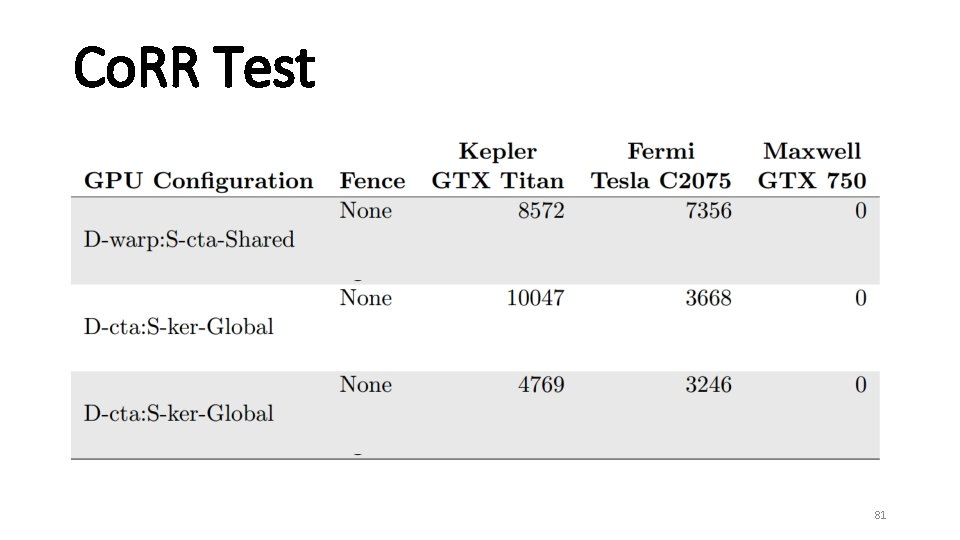

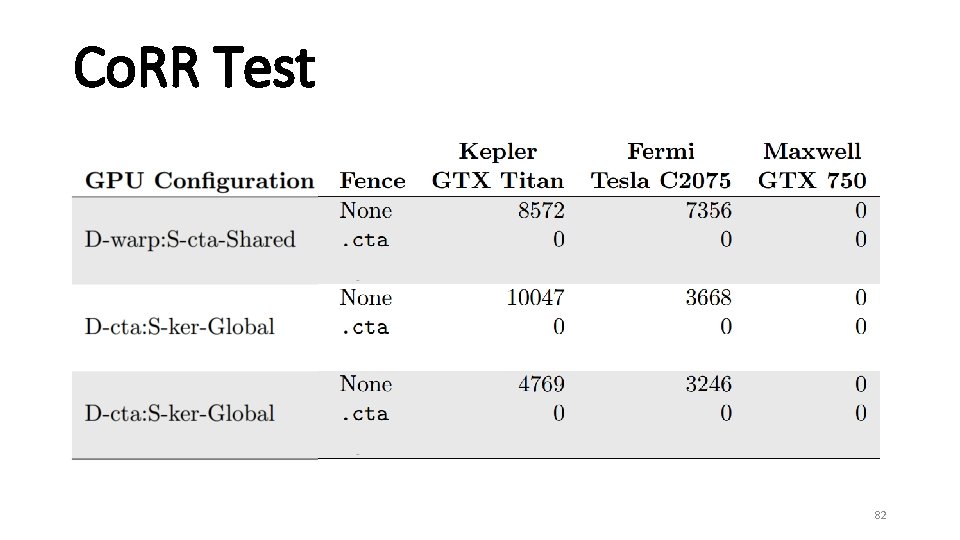

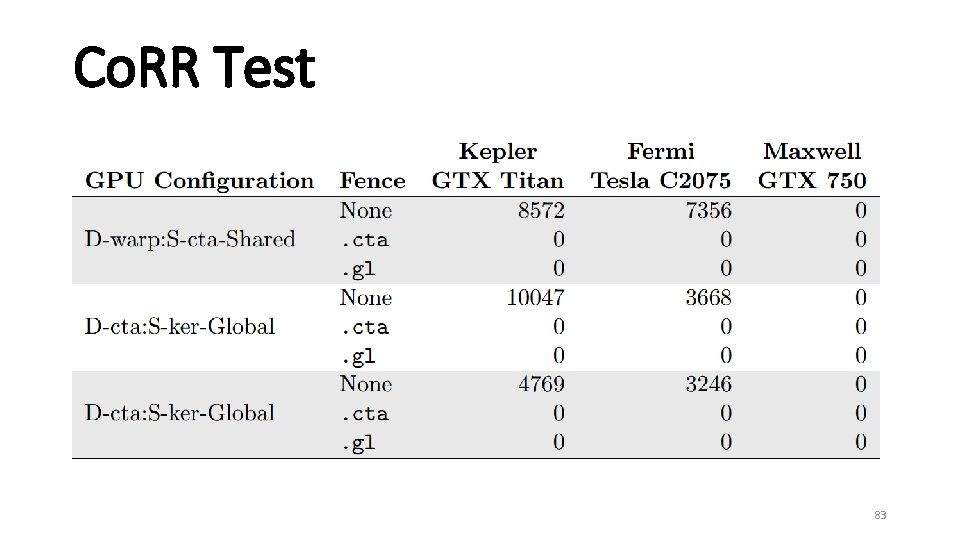

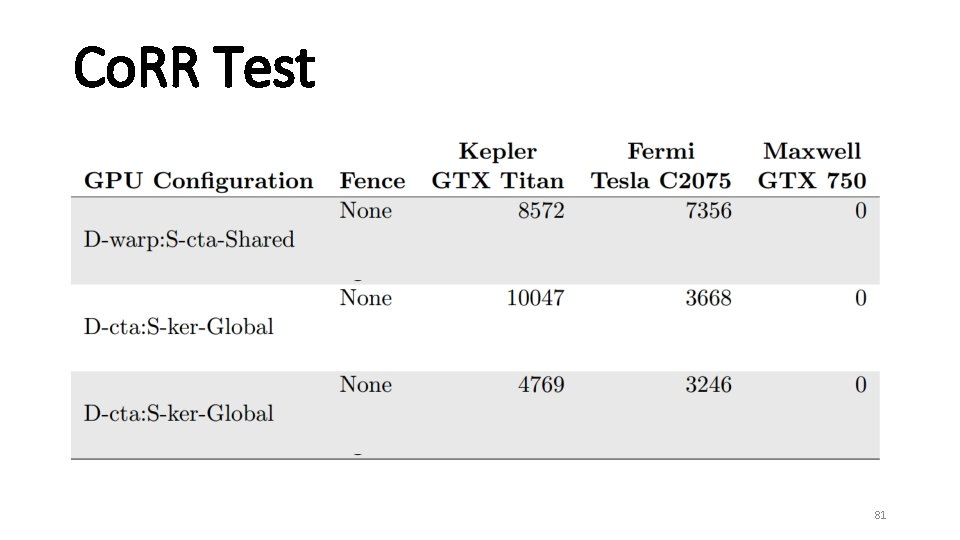

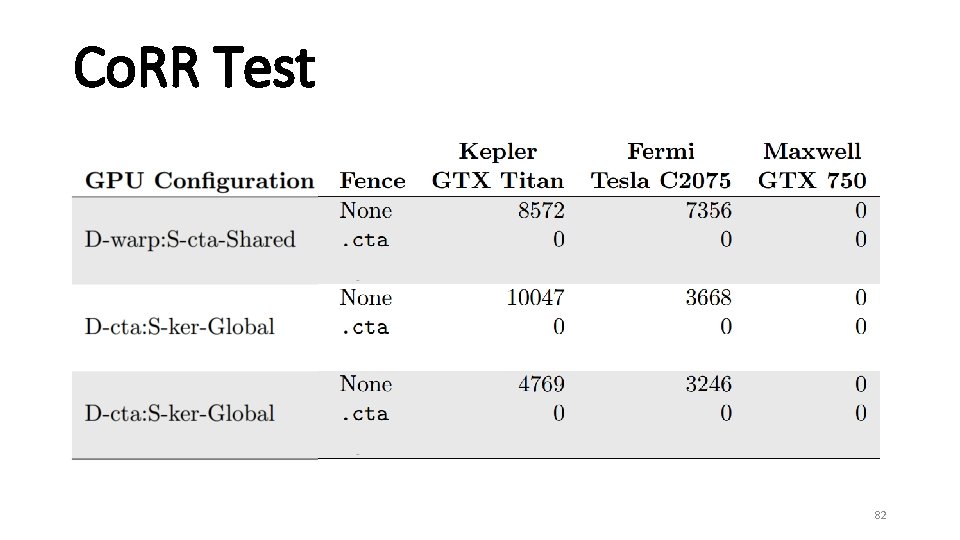

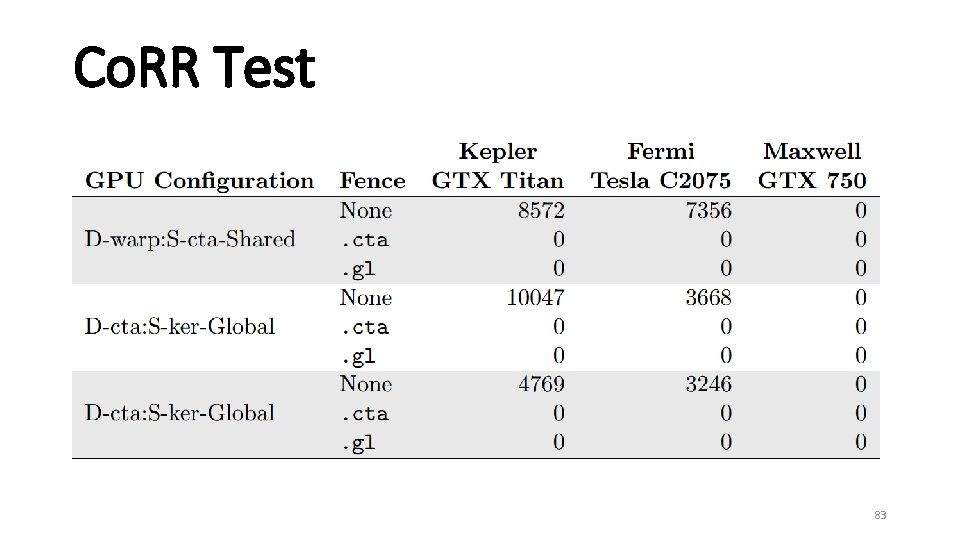

CoRR Test • Coherence of Read-Read test • Test amended with a parameterized fence 80

CoRR Test 81

CoRR Test 82

CoRR Test 83

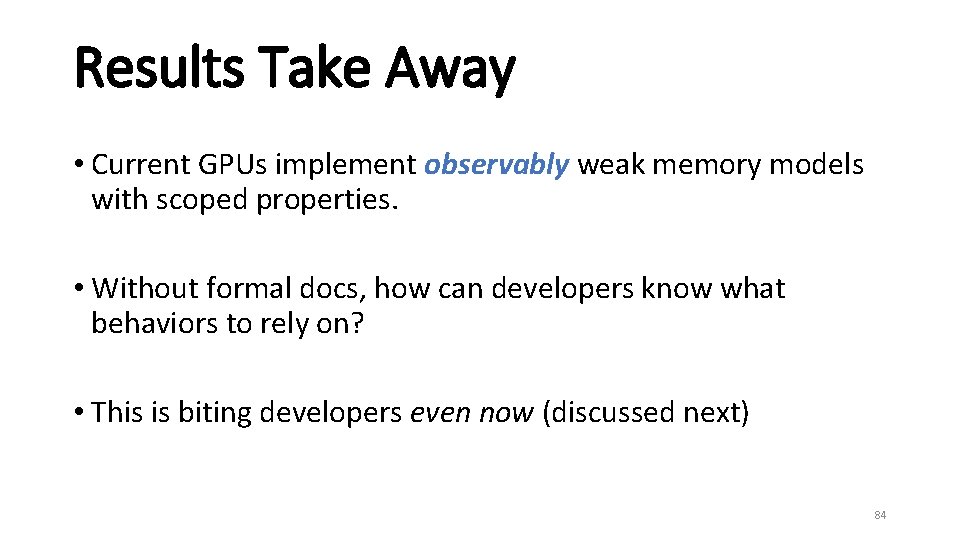

Results Take Away • Current GPUs implement observably weak memory models with scoped properties. • Without formal docs, how can developers know what behaviors to rely on? • This is biting developers even now (discussed next) 84

Roadmap • Background and Approach • Prior Work • Testing Framework • Results • CUDA Spin Locks • Bulk Testing • Future Work and Conclusion 85

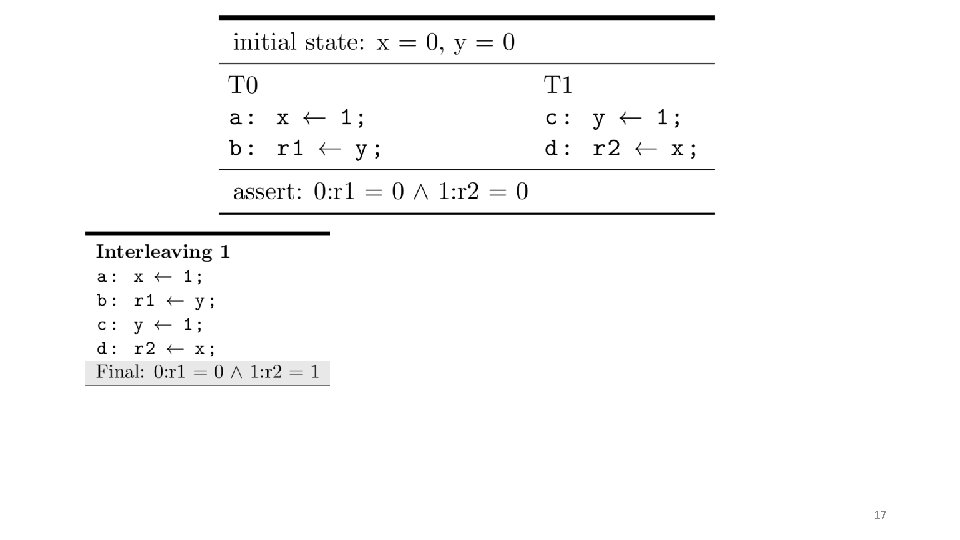

![Slide 86: inter-CTA spin lock from CUDA by Example](https://slidetodoc.com/presentation_image/279313662b46d2cffcee5b5a7a5a1f4a/image-86.jpg)

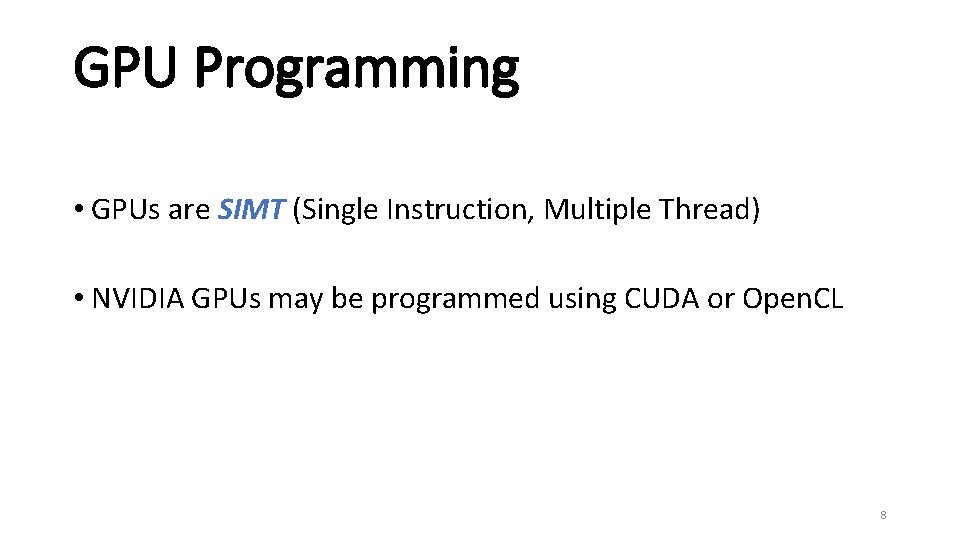

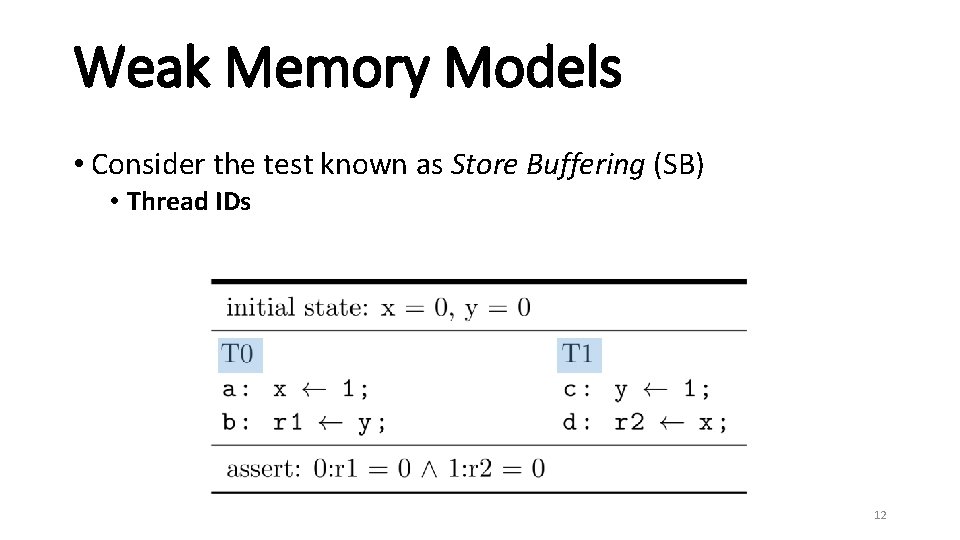

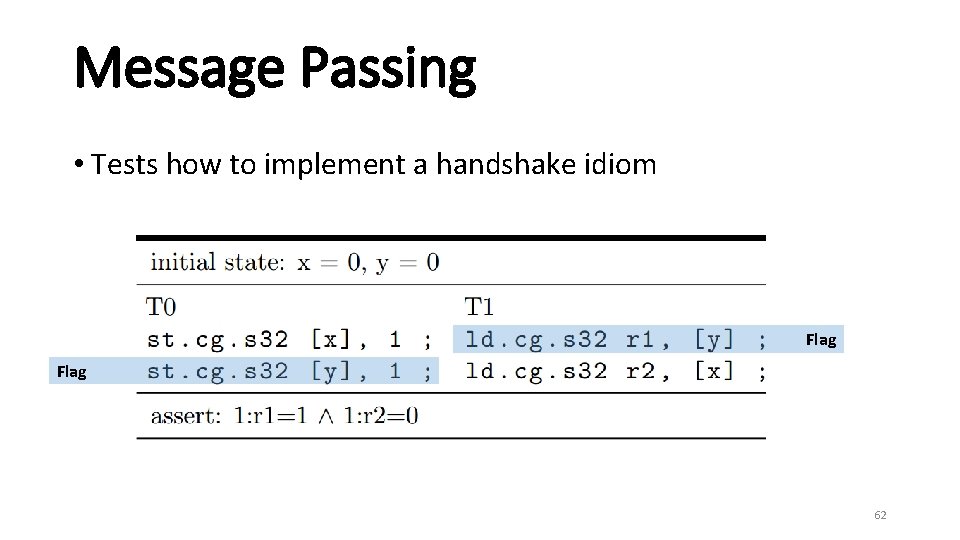

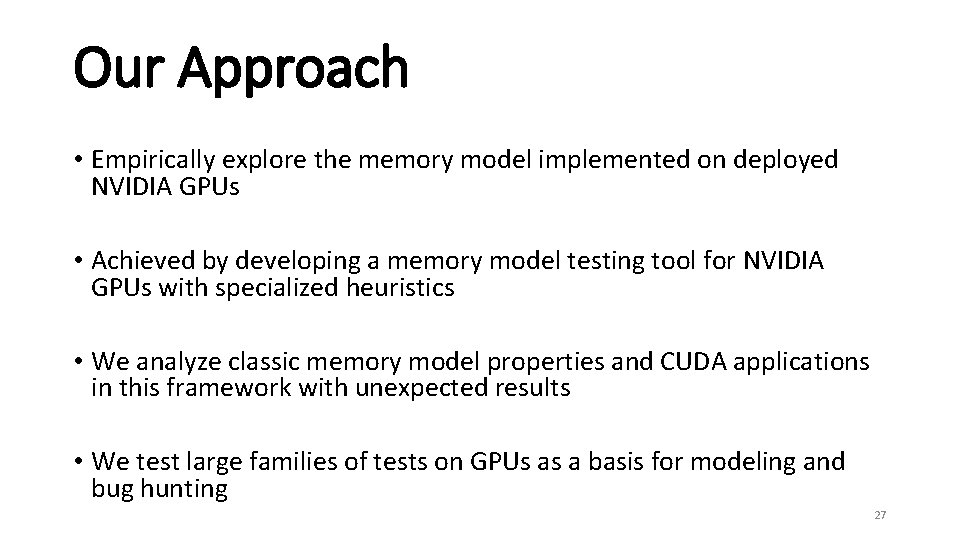

GPU Spin Locks • Inter-CTA lock presented in the book CUDA By Example [13] 86

![Slide 87: inter-CTA spin lock from CUDA by Example](https://slidetodoc.com/presentation_image/279313662b46d2cffcee5b5a7a5a1f4a/image-87.jpg)

GPU Spin Locks • Inter-CTA lock presented in the book CUDA By Example [13] 87

![Slide 88: inter-CTA spin lock from CUDA by Example](https://slidetodoc.com/presentation_image/279313662b46d2cffcee5b5a7a5a1f4a/image-88.jpg)

GPU Spin Locks • Inter-CTA lock presented in the book CUDA By Example [13] 88

![Slide 89: inter-CTA spin lock from CUDA by Example](https://slidetodoc.com/presentation_image/279313662b46d2cffcee5b5a7a5a1f4a/image-89.jpg)

GPU Spin Locks • Inter-CTA lock presented in the book CUDA By Example [13] 89

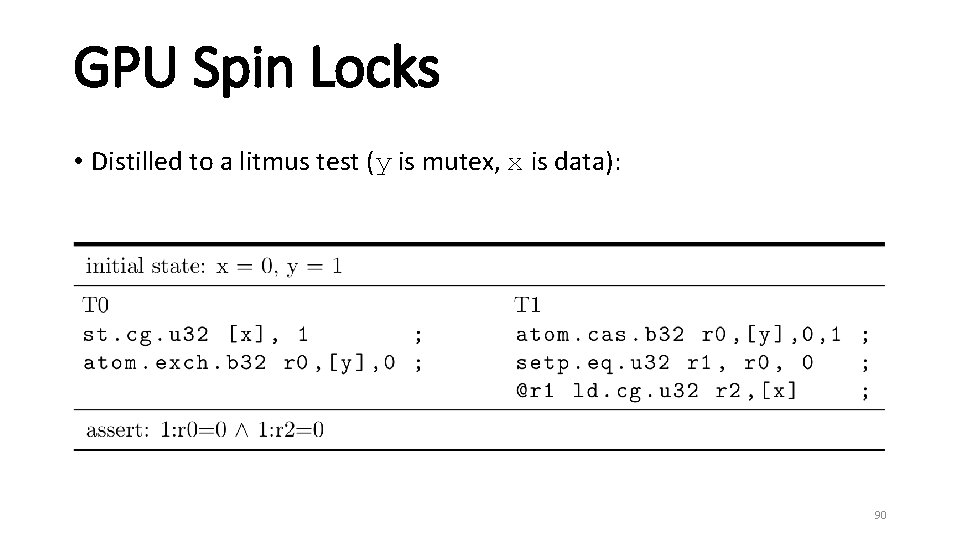

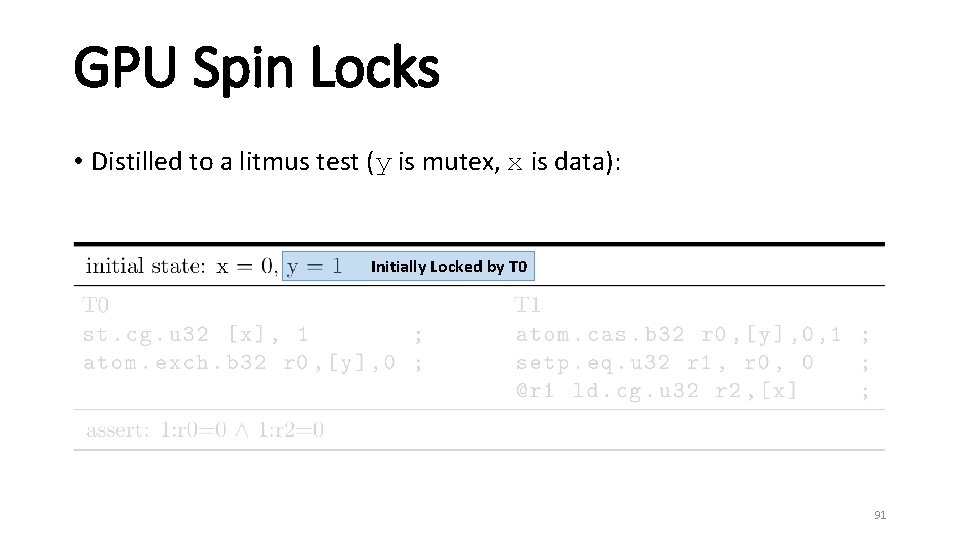

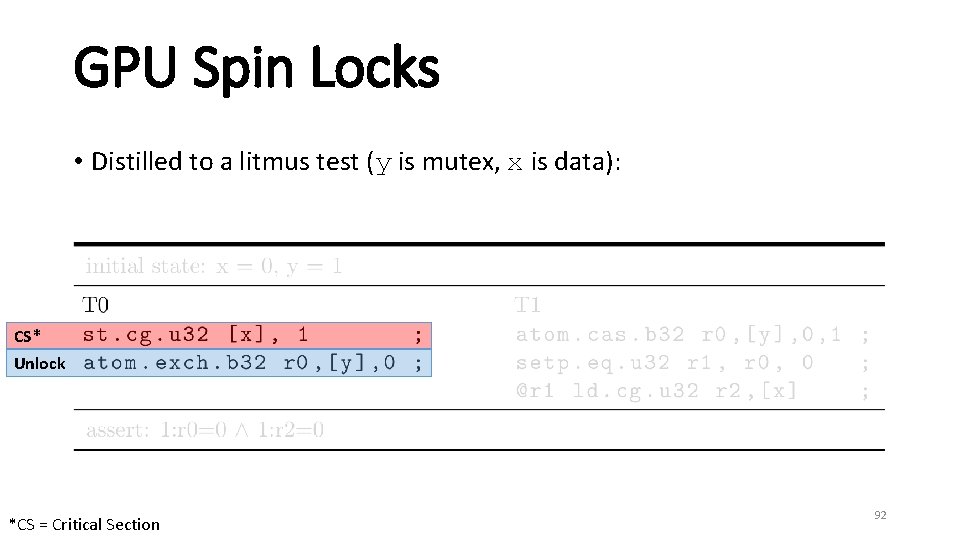

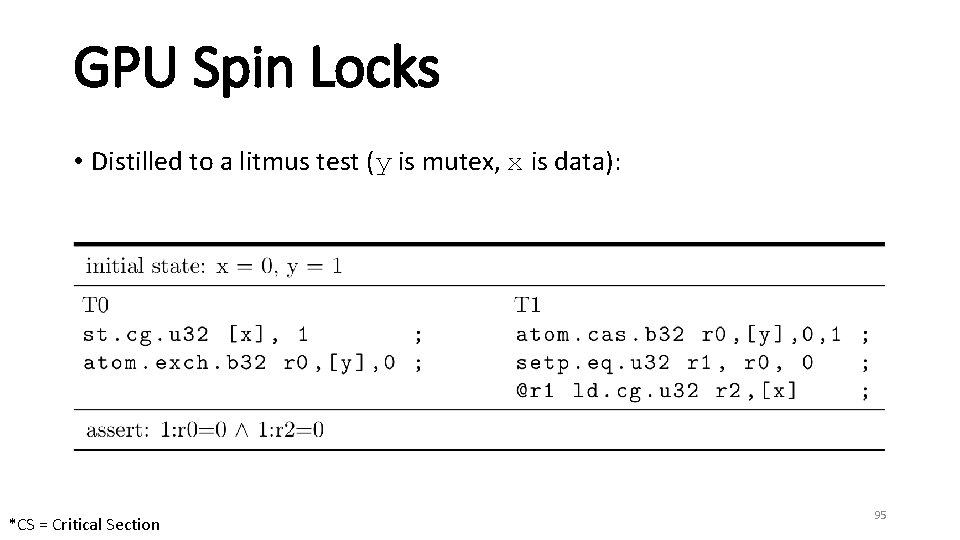

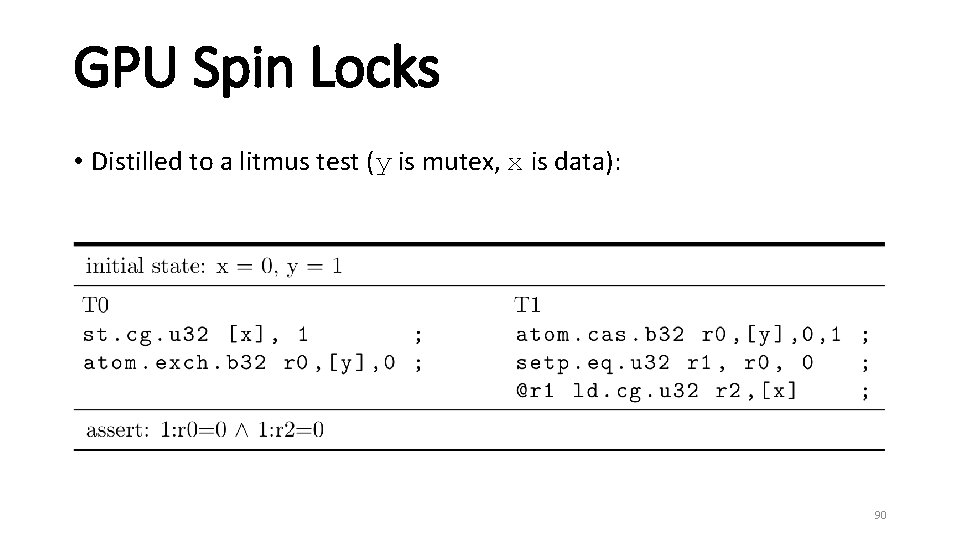

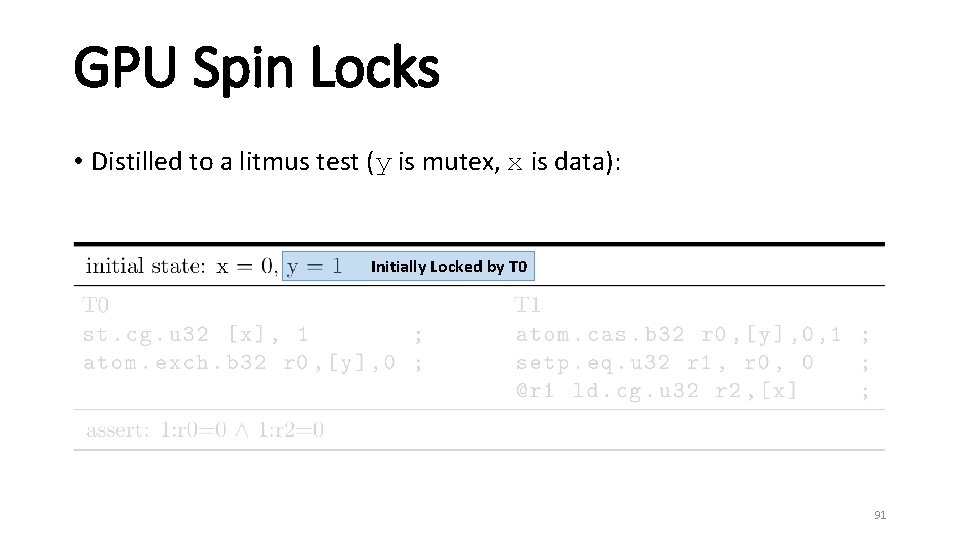

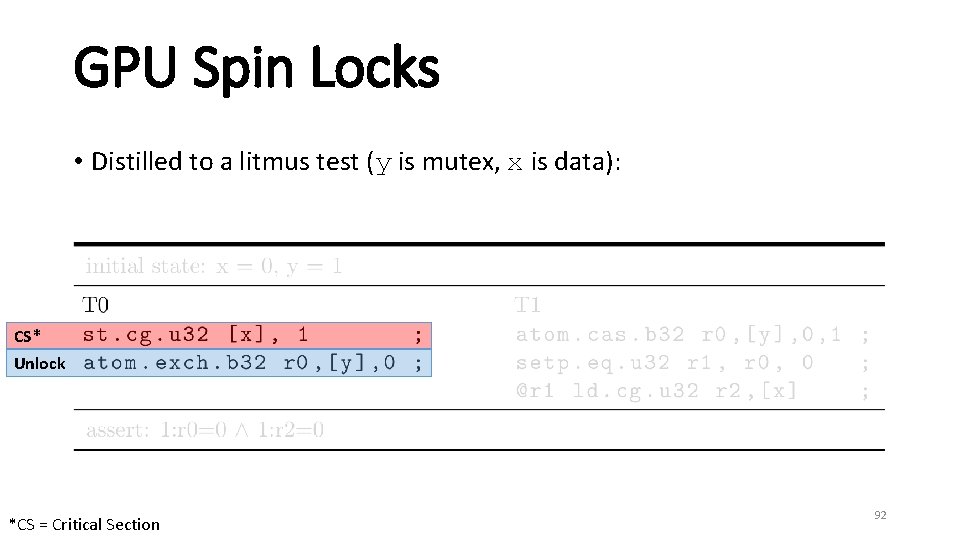

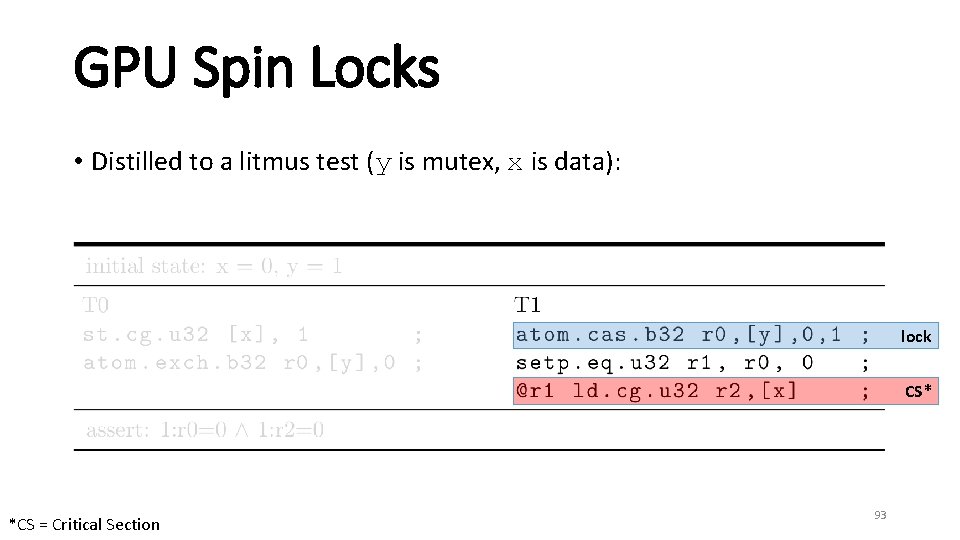

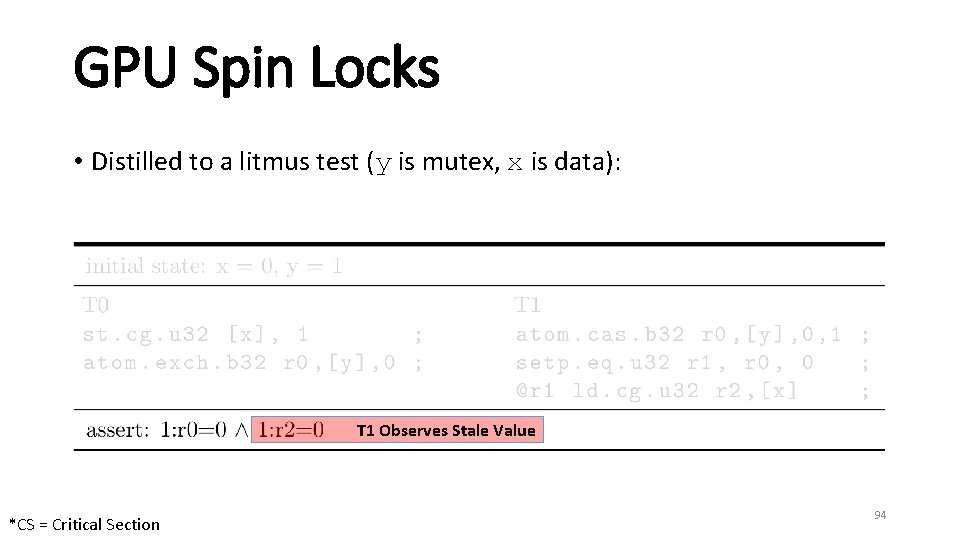

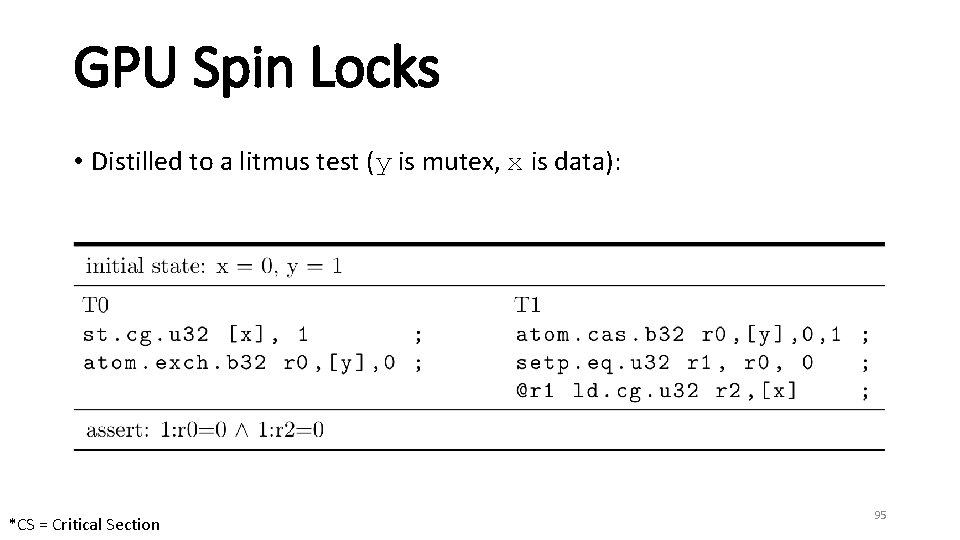

GPU Spin Locks • Distilled to a litmus test (y is mutex, x is data): 90

GPU Spin Locks • Distilled to a litmus test (y is mutex, x is data): Initially locked by T0 91

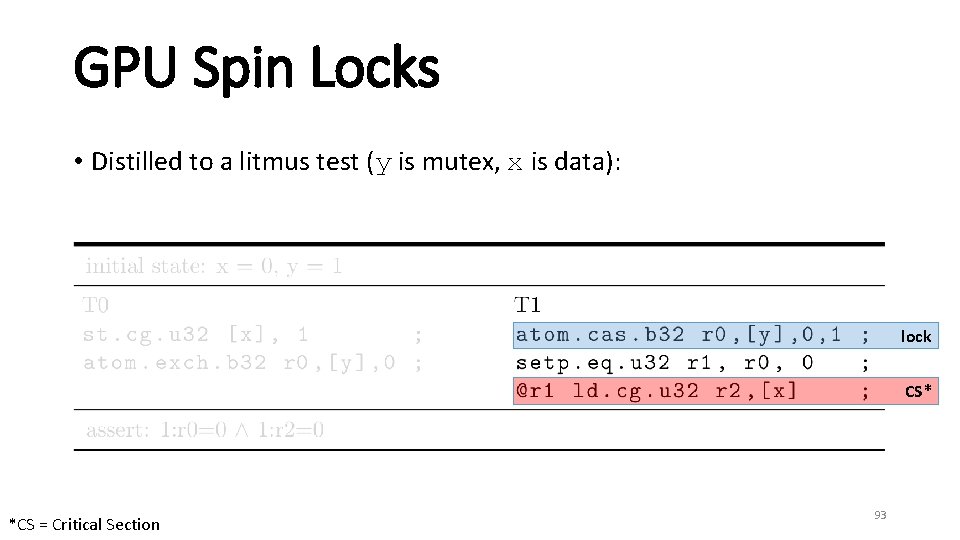

GPU Spin Locks • Distilled to a litmus test (y is mutex, x is data): CS* Unlock *CS = Critical Section 92

GPU Spin Locks • Distilled to a litmus test (y is mutex, x is data): lock CS* *CS = Critical Section 93

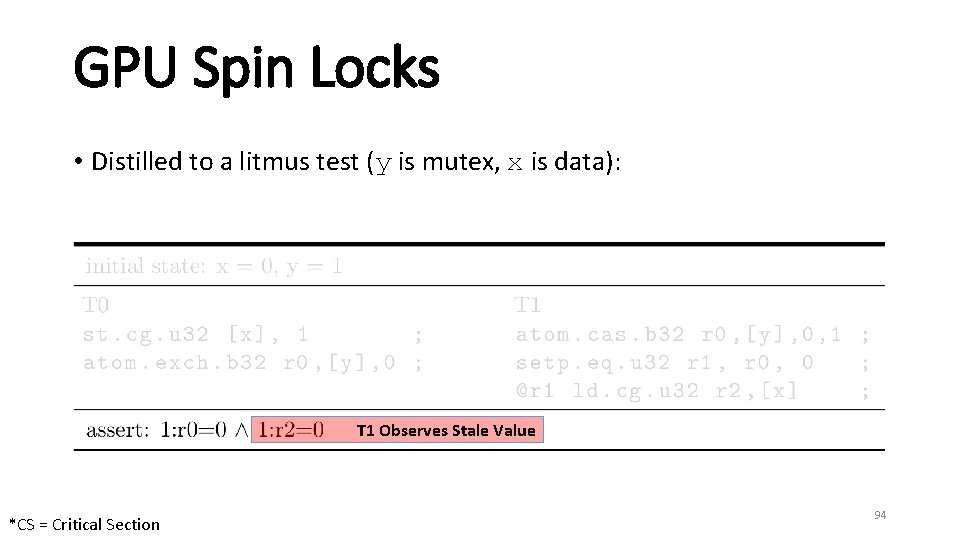

GPU Spin Locks • Distilled to a litmus test (y is mutex, x is data): T1 observes stale value *CS = Critical Section 94

GPU Spin Locks • Distilled to a litmus test (y is mutex, x is data): *CS = Critical Section 95

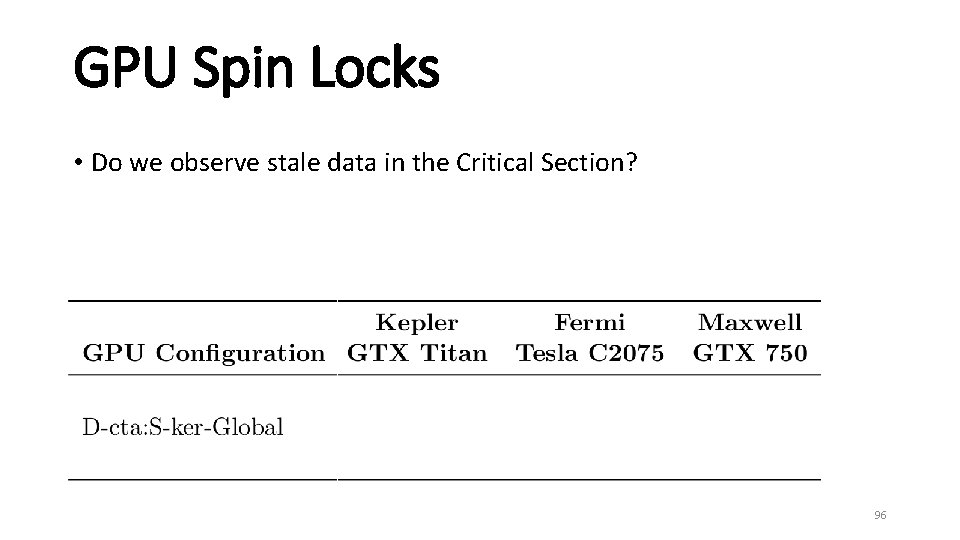

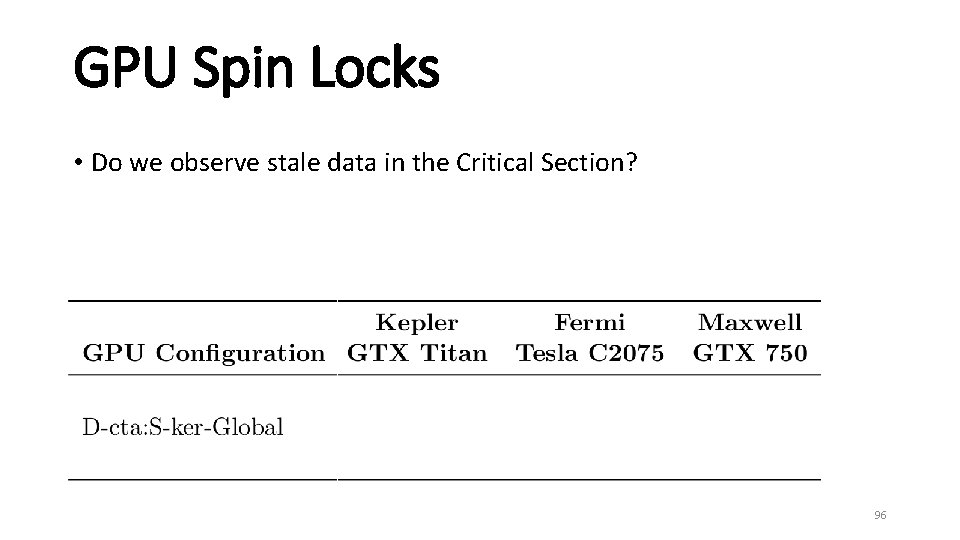

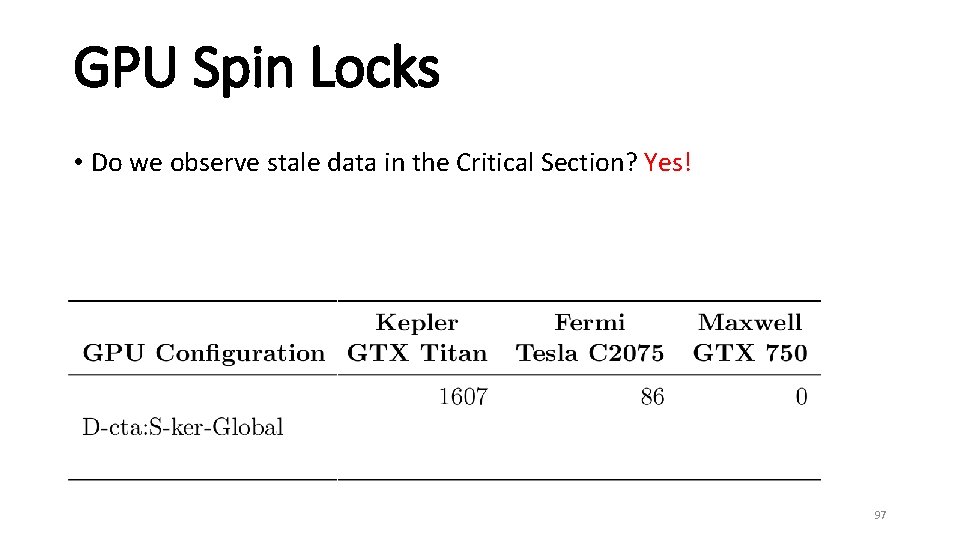

GPU Spin Locks • Do we observe stale data in the Critical Section? 96

GPU Spin Locks • Do we observe stale data in the Critical Section? Yes! 97

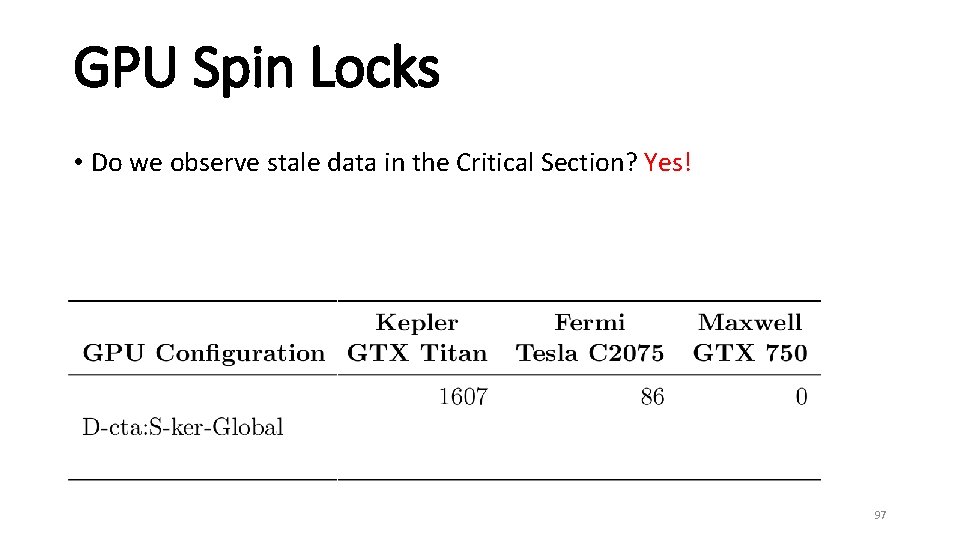

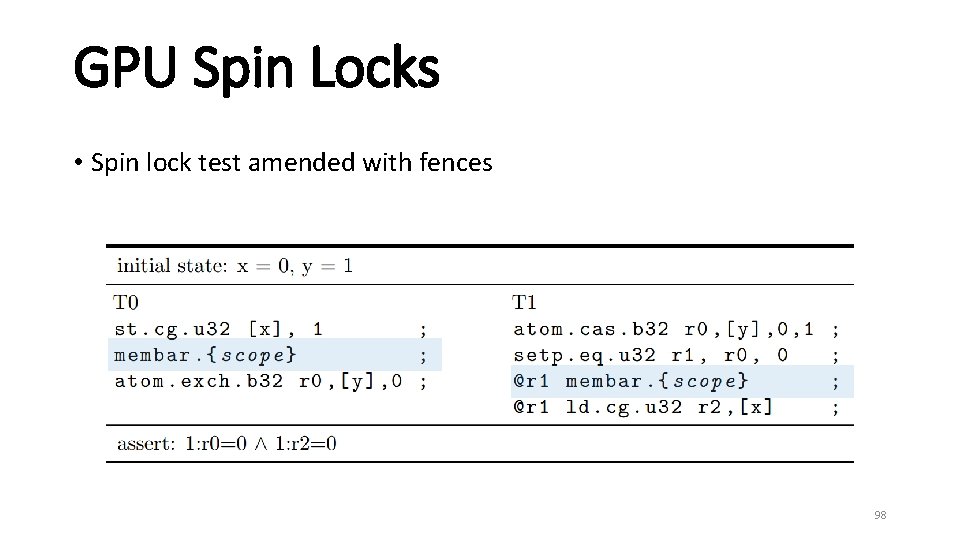

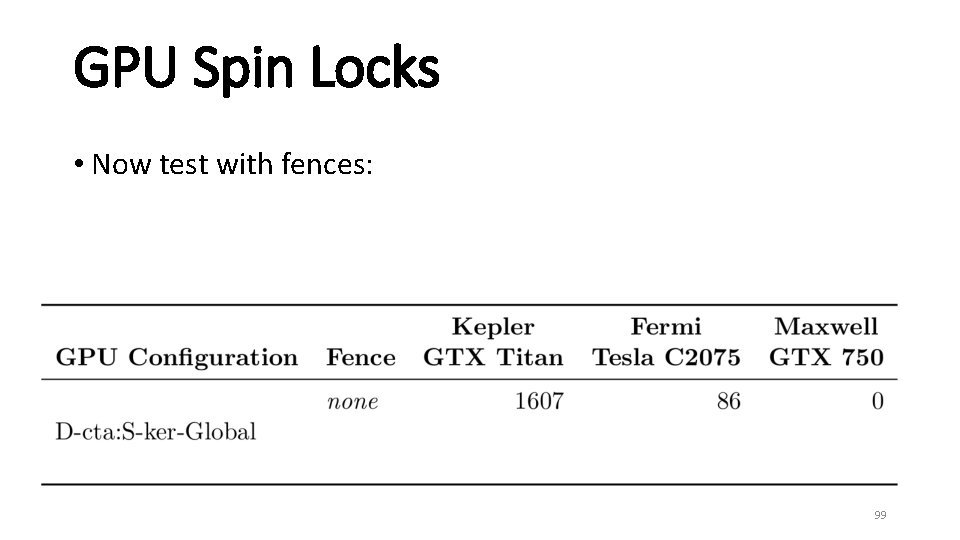

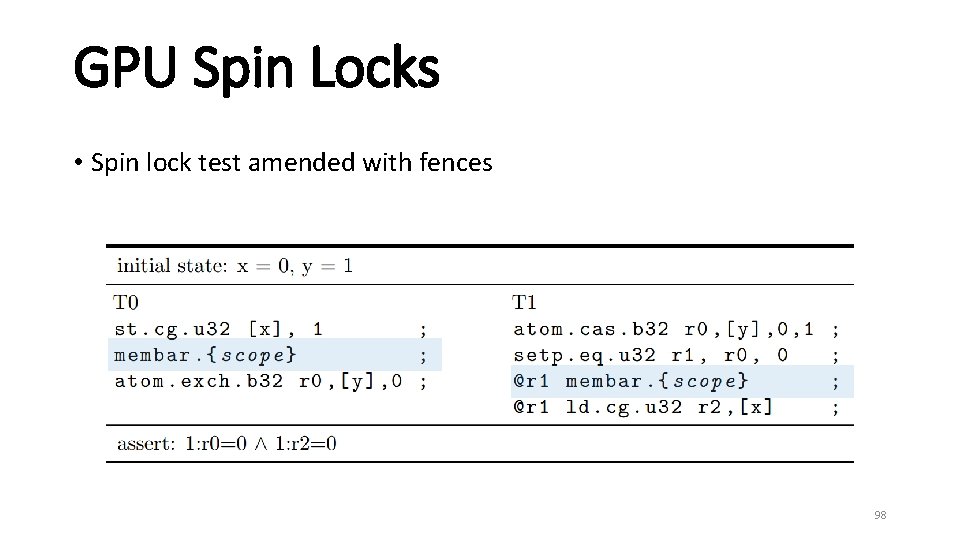

GPU Spin Locks • Spin lock test amended with fences 98

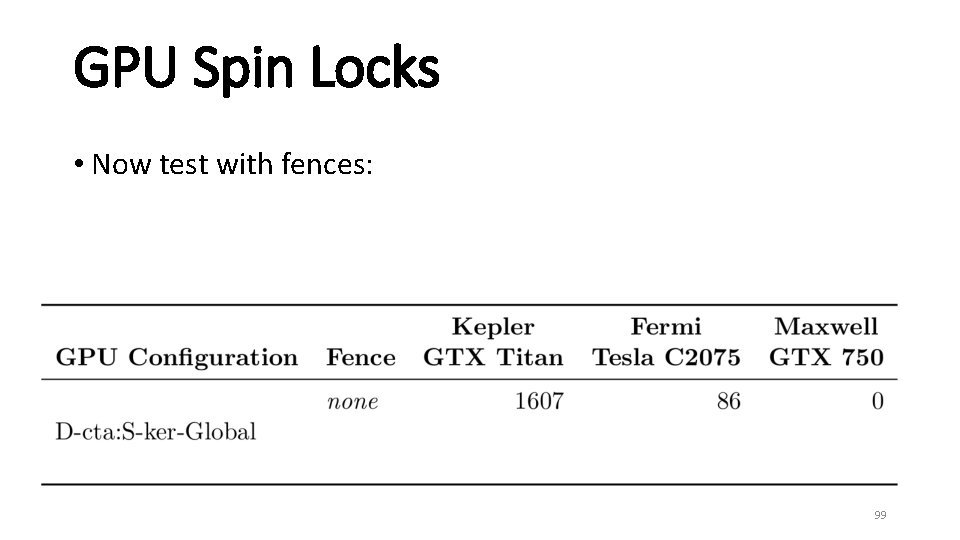

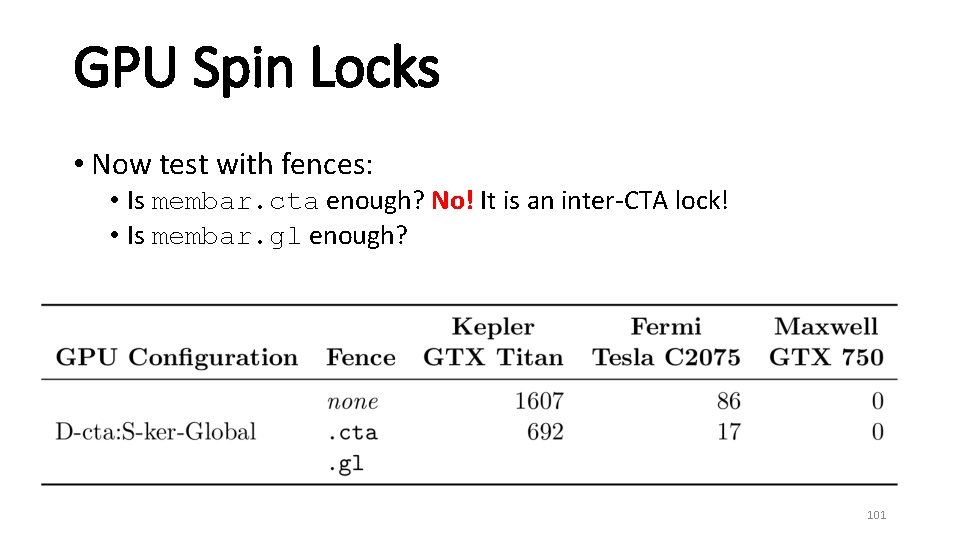

GPU Spin Locks • Now test with fences: 99

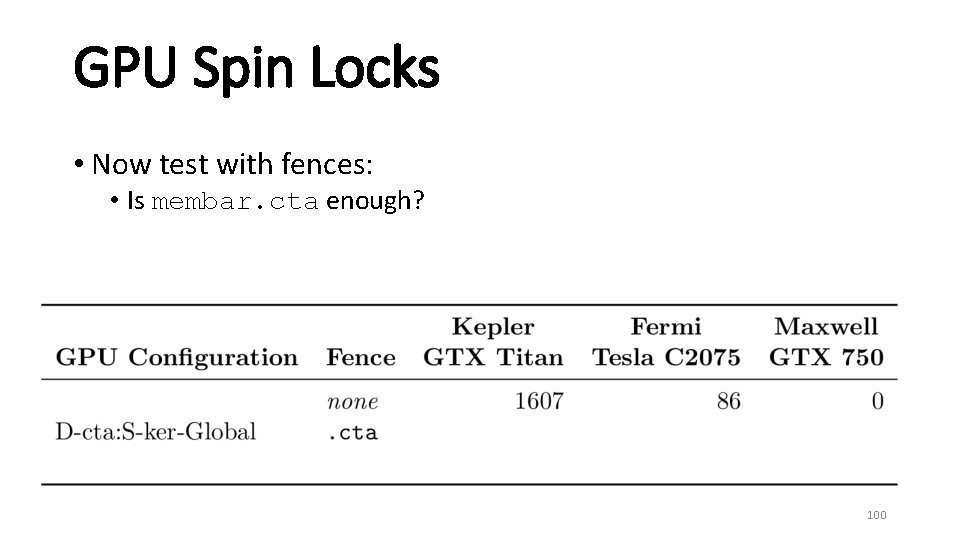

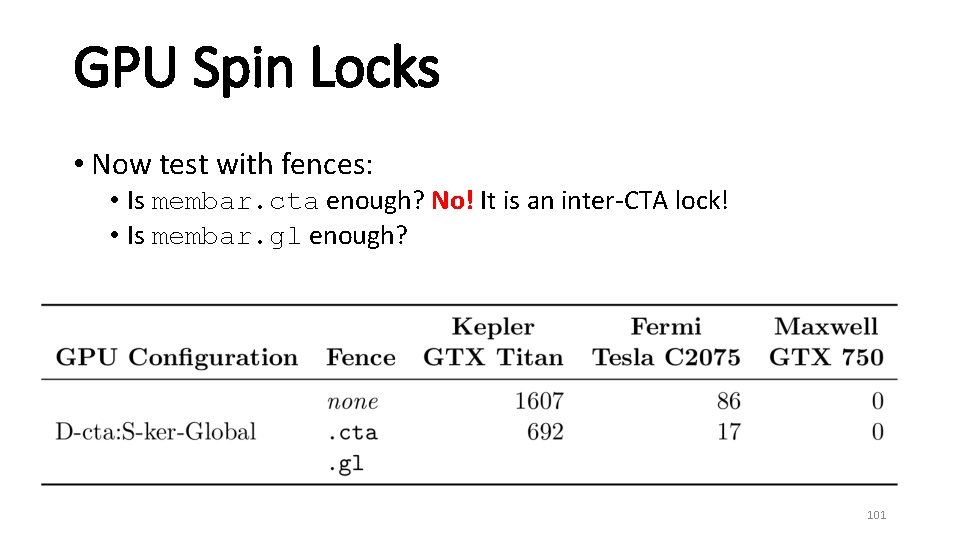

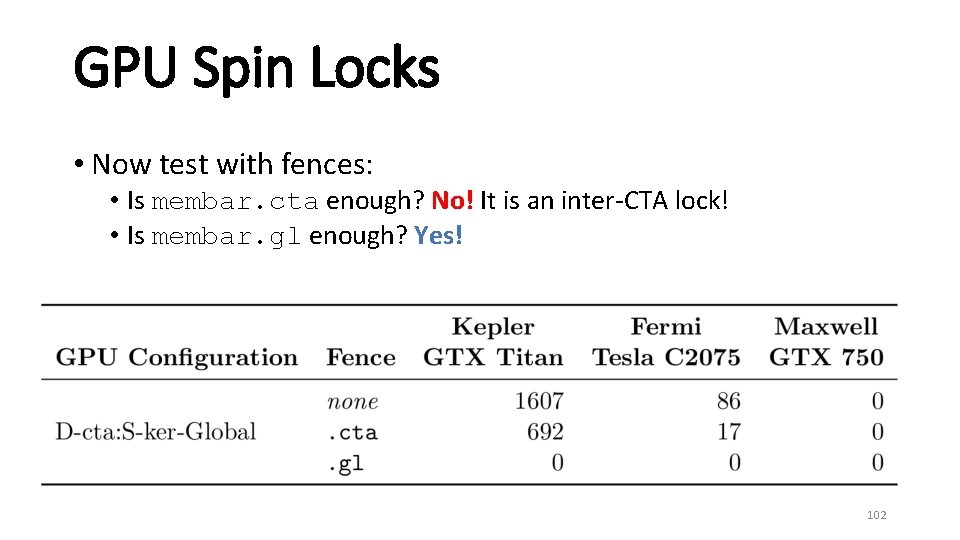

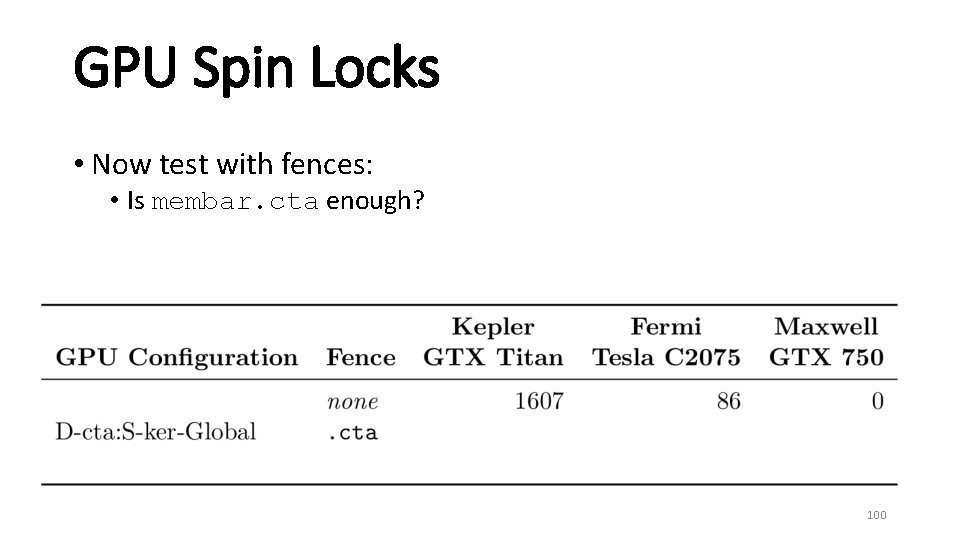

GPU Spin Locks • Now test with fences: • Is membar.cta enough? 100

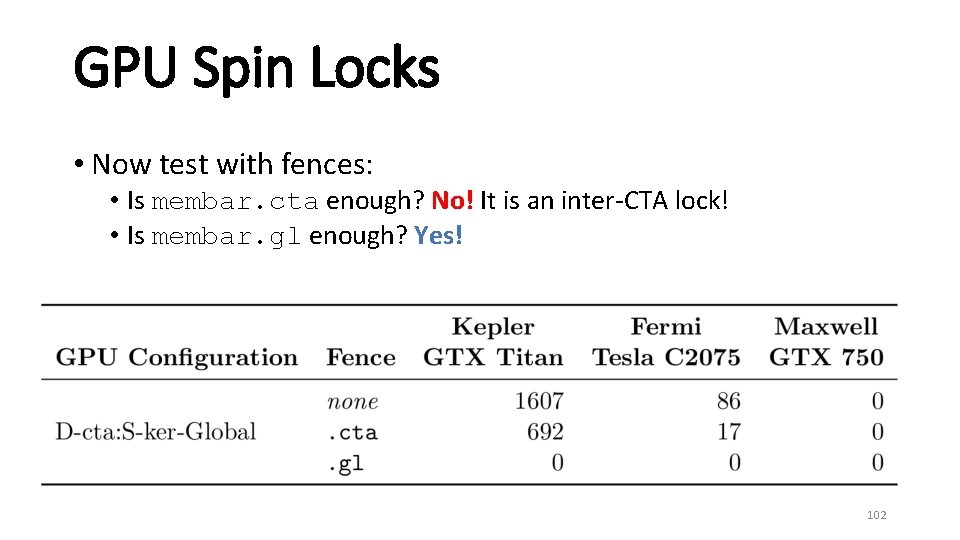

GPU Spin Locks • Now test with fences: • Is membar.cta enough? No! It is an inter-CTA lock! • Is membar.gl enough? 101

GPU Spin Locks • Now test with fences: • Is membar.cta enough? No! It is an inter-CTA lock! • Is membar.gl enough? Yes! 102
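For comparison, here is a C++11 analogue of the fenced lock. The CUDA by Example lock spins on atomicCAS(mutex, 0, 1) and releases with atomicExch(mutex, 0). The sketch assumes the usual fence placement: one fence after the loop that acquires the mutex, and another before the exchange that releases it. In CUDA C, the device-wide fence is `__threadfence()`. Here `std::atomic_thread_fence(std::memory_order_seq_cst)` stands in for membar.gl. This is an analogy on the C++ memory model, not a claim about PTX semantics.

```cpp
#include <atomic>
#include <cassert>

// C++11 analogue of the inter-CTA spin lock with fences added: the atomics on
// the mutex are relaxed, so all ordering of the critical section comes from
// the two fences (the role membar.gl plays in the fixed GPU lock).
class SpinLock {
public:
    void lock() {
        int expected = 0;
        // Spin until we swap 0 -> 1.
        while (!mutex_.compare_exchange_weak(expected, 1, std::memory_order_relaxed))
            expected = 0;                                  // failure overwrote `expected`
        std::atomic_thread_fence(std::memory_order_seq_cst);  // fence after acquiring
    }
    void unlock() {
        std::atomic_thread_fence(std::memory_order_seq_cst);  // fence before releasing
        mutex_.exchange(0, std::memory_order_relaxed);
    }
    bool is_locked() const { return mutex_.load(std::memory_order_relaxed) != 0; }

private:
    std::atomic<int> mutex_{0};
};
```

Without the two fences, relaxed atomics on the mutex alone would not order the critical-section accesses. That mirrors the stale read observed in the unfenced litmus test.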

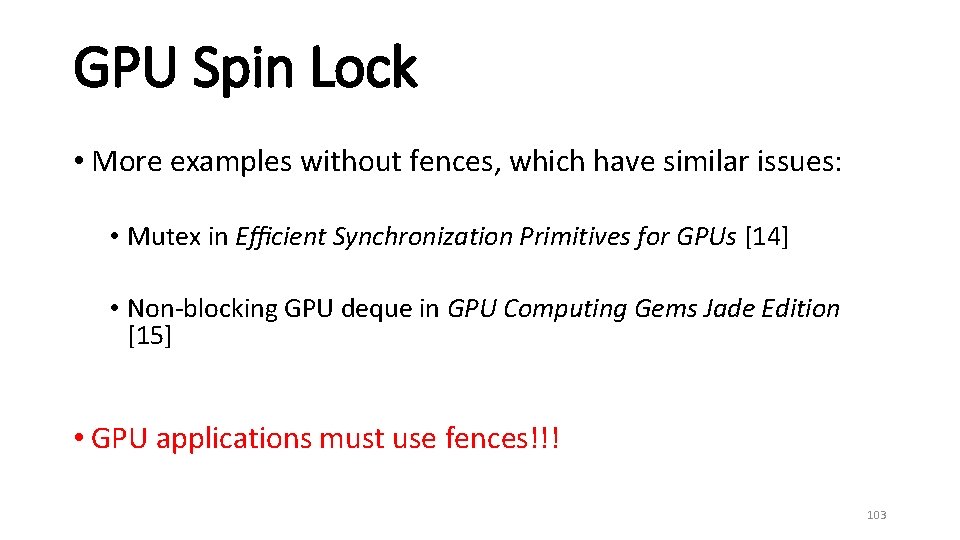

GPU Spin Locks • More examples without fences, which have similar issues: • Mutex in Efficient Synchronization Primitives for GPUs [14] • Non-blocking GPU deque in GPU Computing Gems Jade Edition [15] • GPU applications must use fences!!! 103

Roadmap • Background and Approach • Prior Work • Testing Framework • Results • CUDA Spin Locks • Bulk Testing • Future Work and Conclusion 104

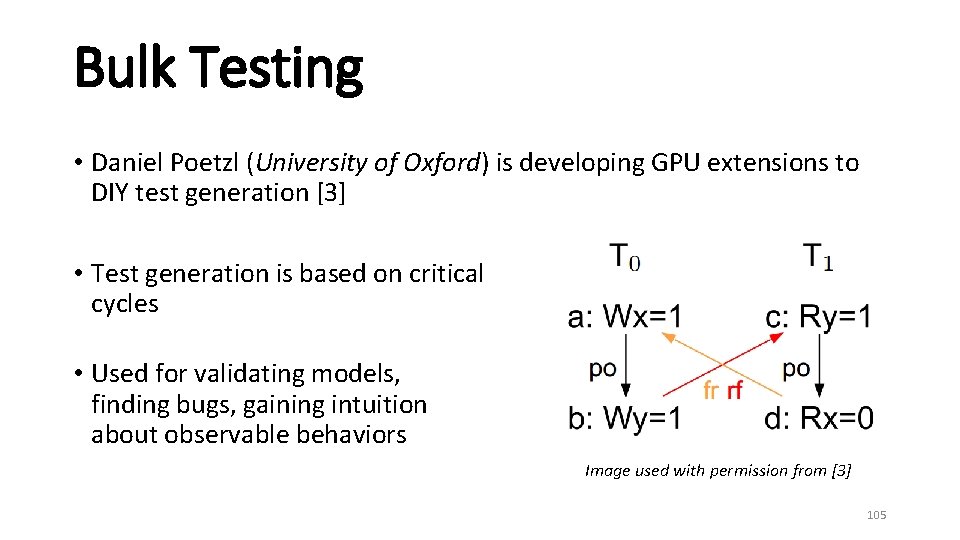

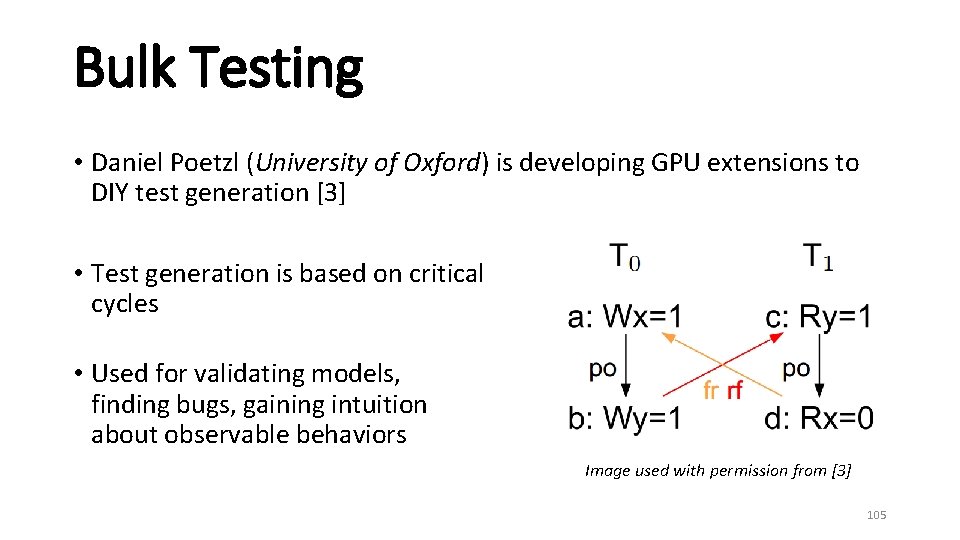

Bulk Testing • Daniel Poetzl (University of Oxford) is developing GPU extensions to DIY test generation [3] • Test generation is based on critical cycles • Used for validating models, finding bugs, gaining intuition about observable behaviors Image used with permission from [3] 105

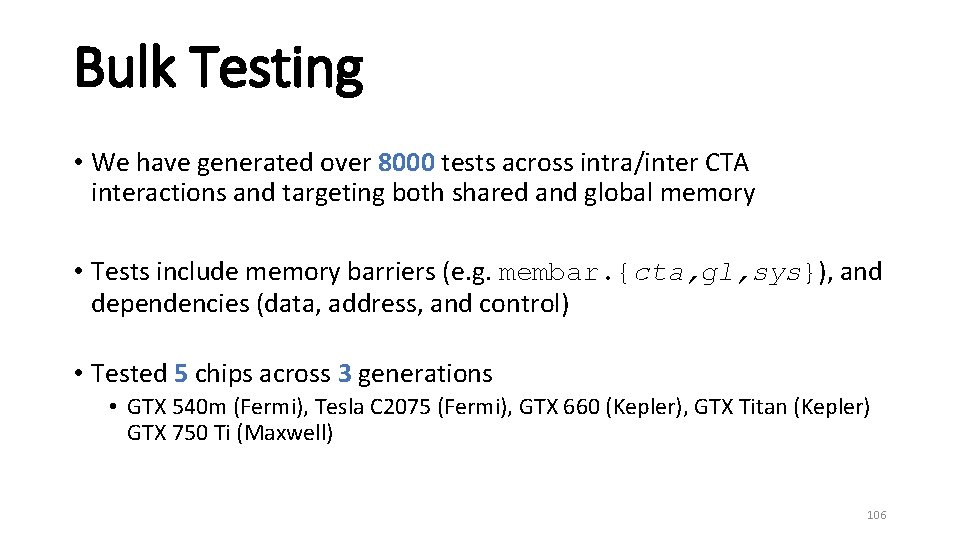

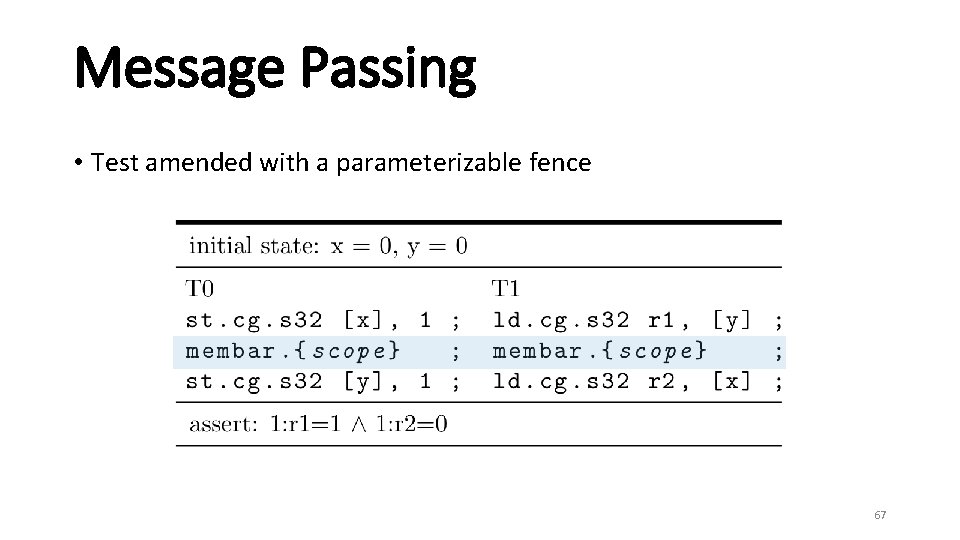

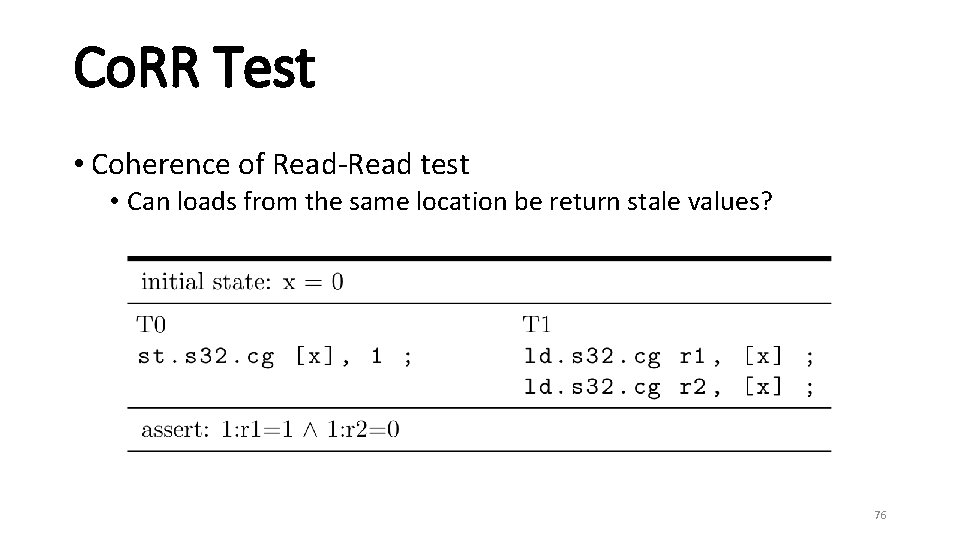

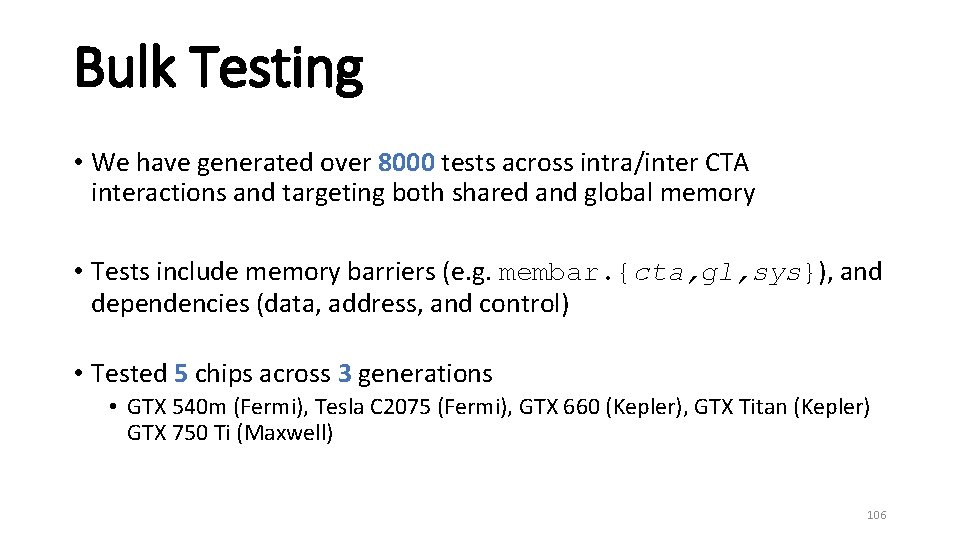

Bulk Testing • We have generated over 8000 tests across intra- and inter-CTA interactions, targeting both shared and global memory • Tests include memory barriers (e.g., membar.{cta, gl, sys}) and dependencies (data, address, and control) • Tested 5 chips across 3 generations: • GTX 540M (Fermi), Tesla C2075 (Fermi), GTX 660 (Kepler), GTX Titan (Kepler), GTX 750 Ti (Maxwell) 106

Roadmap • Background and Approach • Prior Work • Testing Framework • Results • CUDA Spin Locks • Bulk Testing • Future Work and Conclusion 107

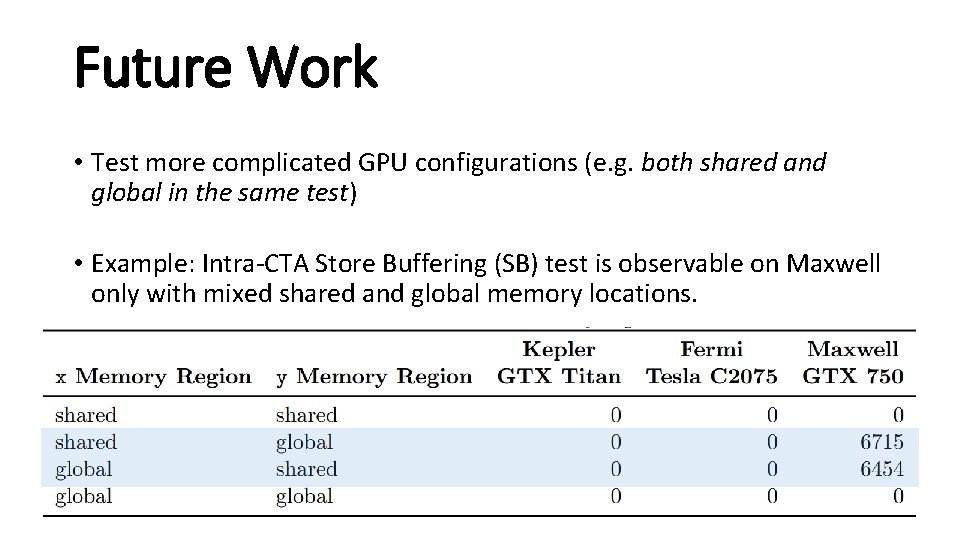

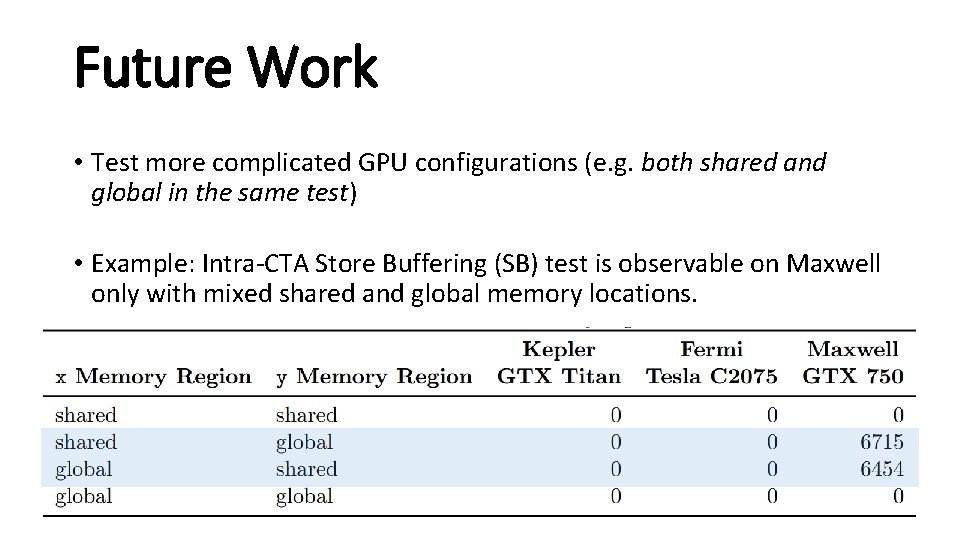

Future Work • Test more complicated GPU configurations (e.g., both shared and global memory in the same test) • Example: the intra-CTA Store Buffering (SB) test is observable on Maxwell only with mixed shared and global memory locations.

![Slide 109: future work, an axiomatic memory model in Herd with new scoped relations](https://slidetodoc.com/presentation_image/279313662b46d2cffcee5b5a7a5a1f4a/image-109.jpg)

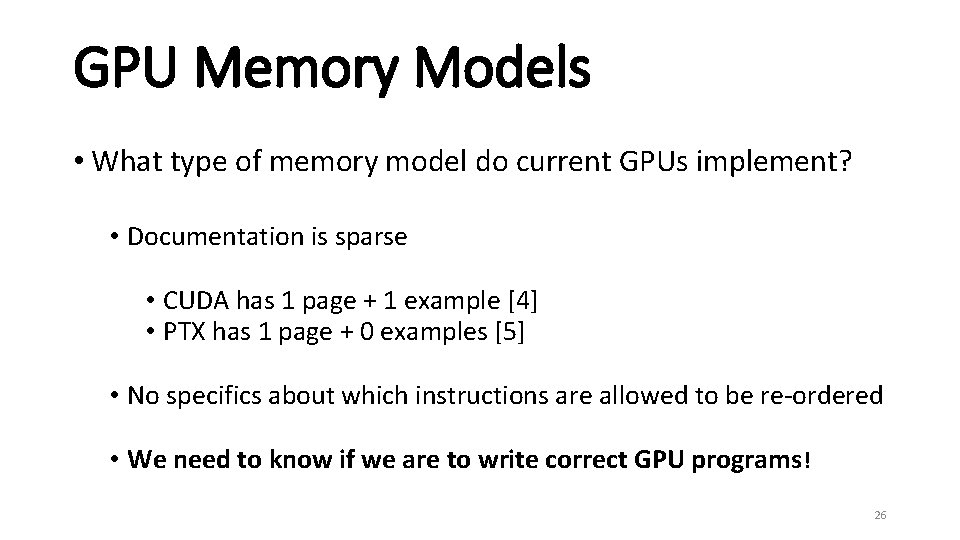

Future Work • Axiomatic memory model in Herd [3] • New scoped relations: • Internal-CTA: contains pairs of instructions that are in the same CTA • Can easily compare the model to observations • Based on acyclicity checks over relations Image used with permission from [3] 109
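The acyclicity idea behind such models can be sketched without Herd's cat language. A candidate execution is represented as a graph of events, and a model rejects it if the union of its chosen relations contains a cycle. Below, a plain depth-first search performs that check. The event numbering and the edge sets in the usage note are assumptions for illustration.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

// Acyclicity check over a relation given as directed edges between events
// 0..n-1. An axiomatic model forbids a candidate execution when the union
// of its chosen relations (e.g. po, rf, co, fr) has a cycle.
using Edge = std::pair<int, int>;

bool has_cycle(int n, const std::vector<Edge>& edges) {
    std::vector<std::vector<int>> adj(n);
    for (const Edge& e : edges) adj[e.first].push_back(e.second);
    std::vector<int> color(n, 0);            // 0 = unvisited, 1 = on stack, 2 = done
    std::function<bool(int)> dfs = [&](int u) {
        color[u] = 1;
        for (int v : adj[u]) {
            if (color[v] == 1) return true;  // back edge: cycle found
            if (color[v] == 0 && dfs(v)) return true;
        }
        color[u] = 2;
        return false;
    };
    for (int u = 0; u < n; ++u)
        if (color[u] == 0 && dfs(u)) return true;
    return false;
}
```

Take the SB execution with r0 = r1 = 0 and number its events as follows:

- 0: W x=1 (T0)
- 1: R y=0 (T0)
- 2: W y=1 (T1)
- 3: R x=0 (T1)

Program order gives 0->1 and 2->3, and from-read gives 1->2 and 3->0. Their union is cyclic, so SC forbids the execution. x86 drops the write-to-read program-order edges, which leaves only the acyclic from-read edges, so the outcome is allowed.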

Conclusion • Current GPUs have observably weak memory models, which are largely undocumented • GPU programming is proceeding without adequate guidelines, which results in buggy code (developing reliable GPU code is impossible without specs) • Rigorous documentation, testing, and verification of GPU programs based on formal tools is the way forward for developing reliable GPU applications 110

![Slide 111: references 1 to 7](https://slidetodoc.com/presentation_image/279313662b46d2cffcee5b5a7a5a1f4a/image-111.jpg)

References [1] L. Lamport, "How to make a multiprocessor computer that correctly executes multi-process programs," IEEE Trans. Comput., pp. 690-691, Sep. 1979. [2] J. Alglave, L. Maranget, S. Sarkar, and P. Sewell, "Litmus: Running tests against hardware," ser. TACAS'11. Springer-Verlag, pp. 41-44. [3] J. Alglave, L. Maranget, and M. Tautschnig, "Herding cats: modelling, simulation, testing, and data-mining for weak memory," 2014, to appear in TOPLAS. [4] NVIDIA, "CUDA C programming guide, version 6," http://docs.nvidia.com/cuda/pdf/CUDA_C_Programming_Guide.pdf, July 2014. [5] NVIDIA, "Parallel Thread Execution ISA: Version 4.0 (Feb. 2014)," http://docs.nvidia.com/cuda/parallel-thread-execution. [6] W. W. Collier, Reasoning About Parallel Architectures. Prentice-Hall, Inc., 1992. [7] S. Hangal, D. Vahia, C. Manovit, and J.-Y. J. Lu, "TSOtool: A program for verifying memory systems using the memory consistency model," ser. ISCA '04. IEEE Computer Society, 2004, pp. 114. 111

![Slide 112: references 8 to 13](https://slidetodoc.com/presentation_image/279313662b46d2cffcee5b5a7a5a1f4a/image-112.jpg)

References [8] D. R. Hower, B. M. Beckmann, B. R. Gaster, B. A. Hechtman, M. D. Hill, S. K. Reinhardt, and D. A. Wood, "Sequential consistency for heterogeneous-race-free," ser. MSPC'13. ACM, 2013. [9] T. Sorensen, G. Gopalakrishnan, and V. Grover, "Towards shared memory consistency models for GPUs," ser. ICS'13. ACM, 2013, pp. 489-490. [10] D. R. Hower, B. A. Hechtman, B. M. Beckmann, B. R. Gaster, M. D. Hill, S. K. Reinhardt, and D. A. Wood, "Heterogeneous-race-free memory models," ser. ASPLOS'14. ACM, 2014, pp. 427-440. [11] D. J. Sorin, M. D. Hill, and D. A. Wood, A Primer on Memory Consistency and Cache Coherence, ser. Synthesis Lectures on Computer Architecture. Morgan & Claypool Publishers, 2011. [12] ARM, "Cortex-A9 MPCore, programmer advice notice, read-after-read hazards," ARM Reference 761319. http://infocenter.arm.com/help/topic/com.arm.doc.uan0004a/UAN0004A_a9_read.pdf, accessed: May 2014. [13] J. Sanders and E. Kandrot, CUDA by Example: An Introduction to General-Purpose GPU Programming. Addison-Wesley Professional, 2010. 112

![Slide 113: references 14 to 18](https://slidetodoc.com/presentation_image/279313662b46d2cffcee5b5a7a5a1f4a/image-113.jpg)

References [14] J. A. Stuart and J. D. Owens, "Efficient synchronization primitives for GPUs," CoRR, 2011, http://arxiv.org/pdf/1110.4623.pdf. [15] W.-m. W. Hwu, GPU Computing Gems Jade Edition. Morgan Kaufmann Publishers Inc., 2011. [16] http://en.wikipedia.org/wiki/Samsung_Galaxy_S5 [17] http://en.wikipedia.org/wiki/Titan_(supercomputer) [18] http://en.wikipedia.org/wiki/Barnes_Hut_simulation 113

Acknowledgements • Advisor: Ganesh Gopalakrishnan • Committee: Zvonimir Rakamaric, Mary Hall • UK Group: Jade Alglave (University College London), Daniel Poetzl (University of Oxford), Luc Maranget (Inria), John Wickerson, Alastair Donaldson (Imperial College London), Mark Batty (University of Cambridge) • Mohammed for feedback on practice runs 114

Thank You 115

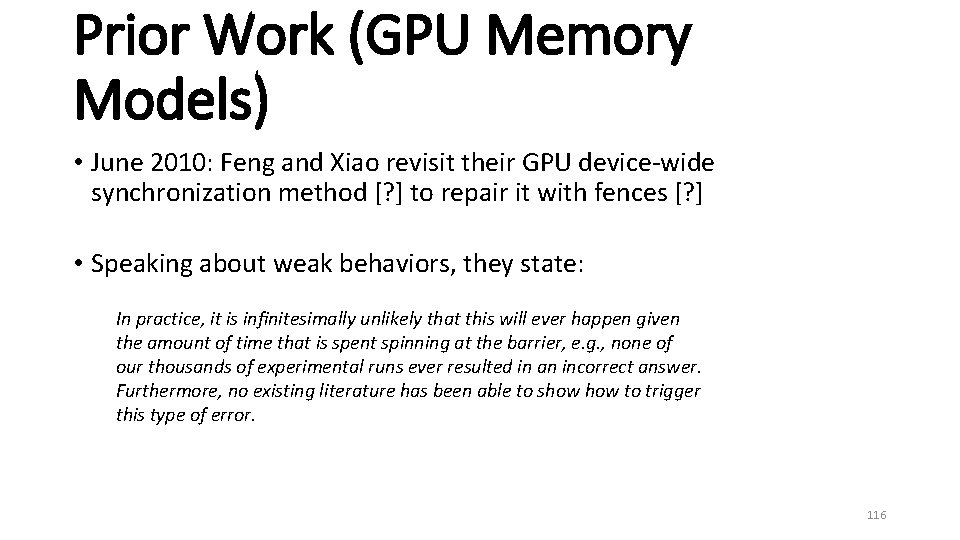

Prior Work (GPU Memory Models) • June 2010: Feng and Xiao revisit their GPU device-wide synchronization method [?] to repair it with fences [?] • Speaking about weak behaviors, they state: "In practice, it is infinitesimally unlikely that this will ever happen given the amount of time that is spent spinning at the barrier, e.g., none of our thousands of experimental runs ever resulted in an incorrect answer. Furthermore, no existing literature has been able to show [how] to trigger this type of error." 116

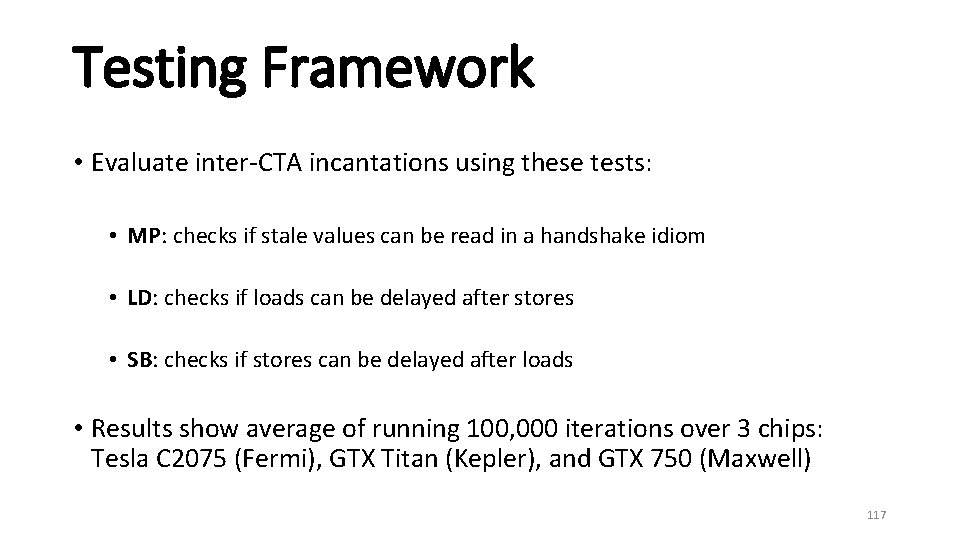

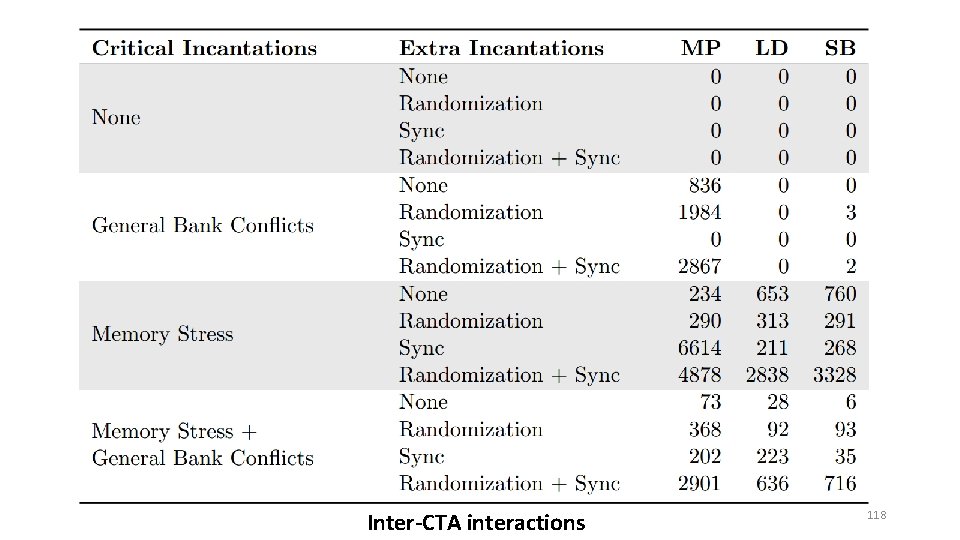

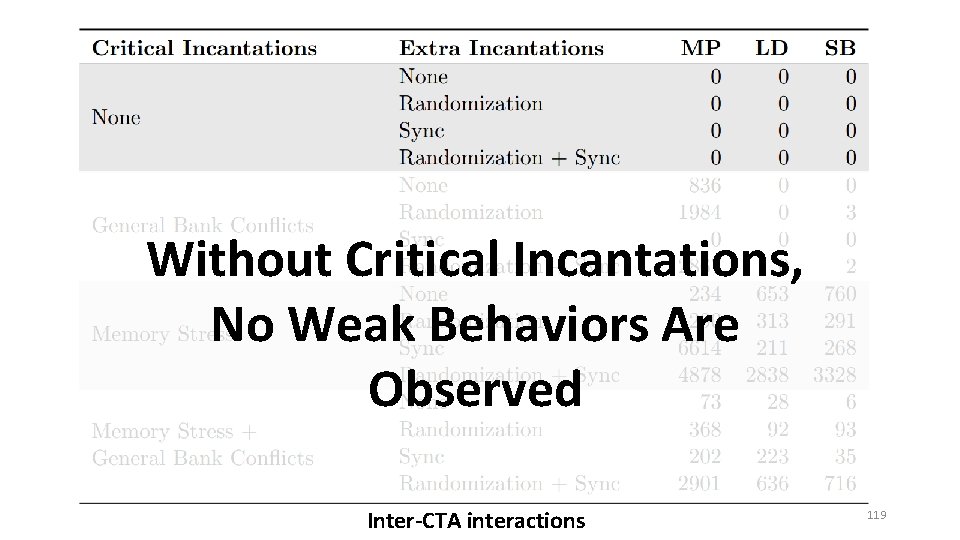

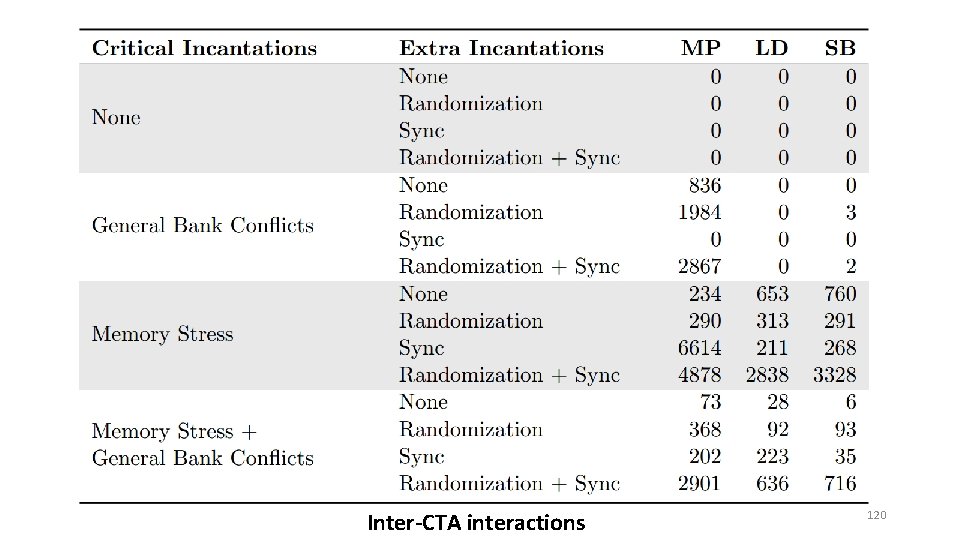

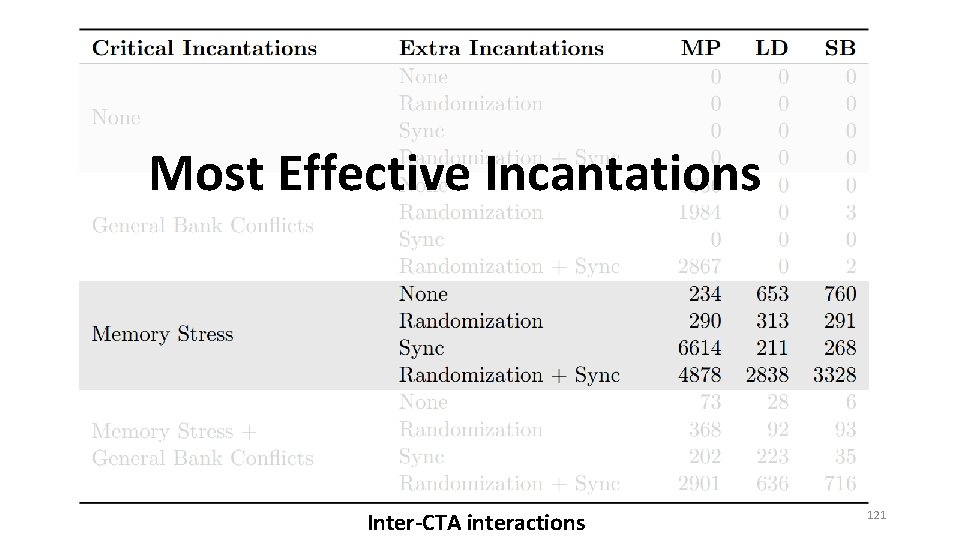

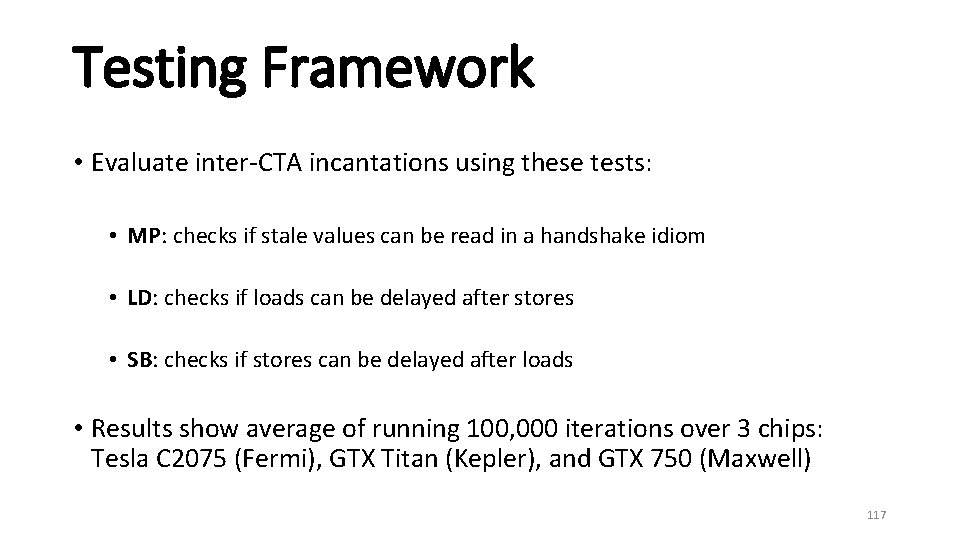

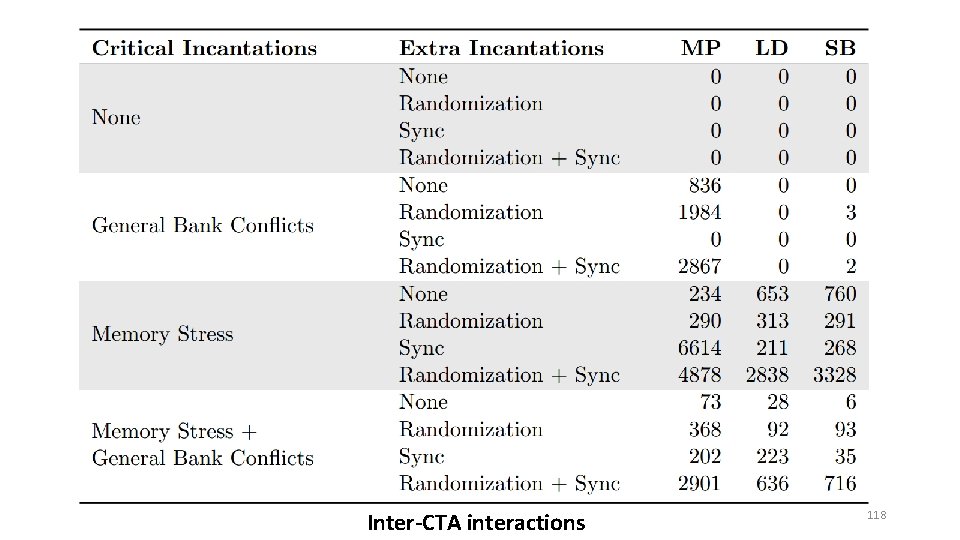

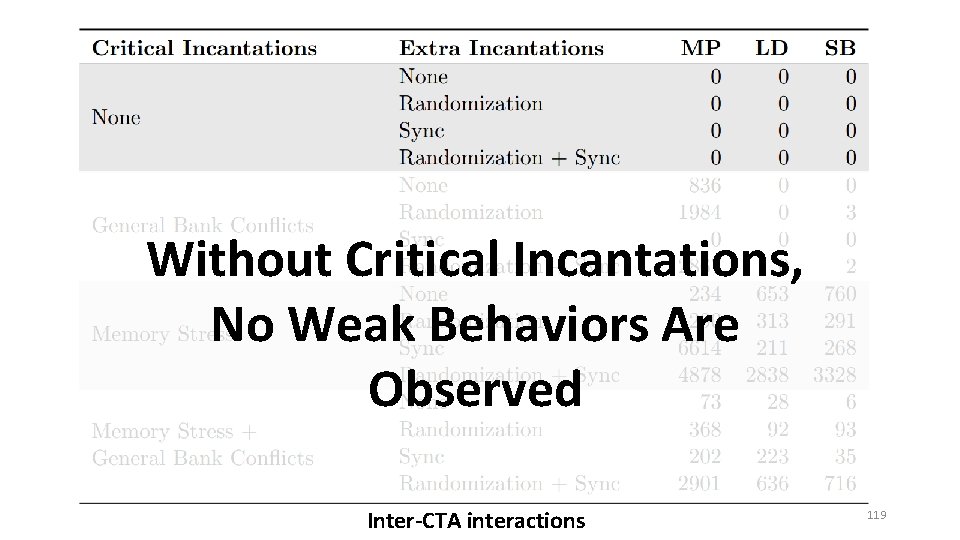

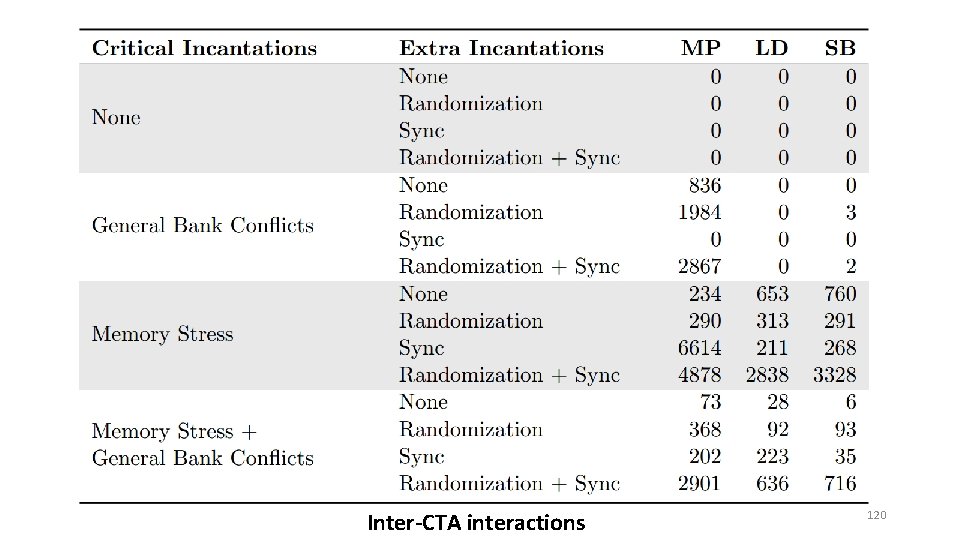

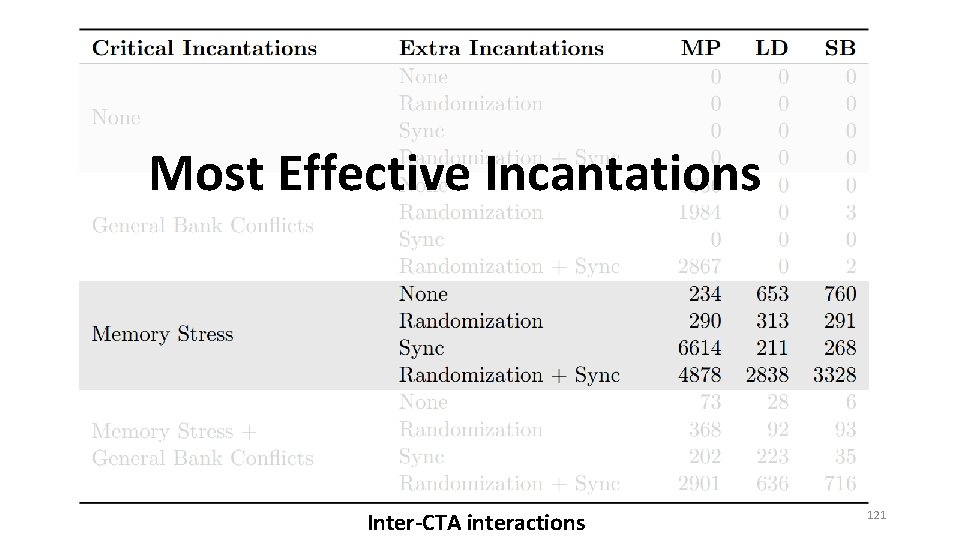

Testing Framework • Evaluate inter-CTA incantations using these tests: • MP: checks if stale values can be read in a handshake idiom • LD: checks if loads can be delayed after stores • SB: checks if stores can be delayed after loads • Results show the average of running 100,000 iterations over 3 chips: Tesla C2075 (Fermi), GTX Titan (Kepler), and GTX 750 (Maxwell) 117

Inter-CTA interactions 118

Without Critical Incantations, No Weak Behaviors Are Observed Inter-CTA interactions 119

Inter-CTA interactions 120

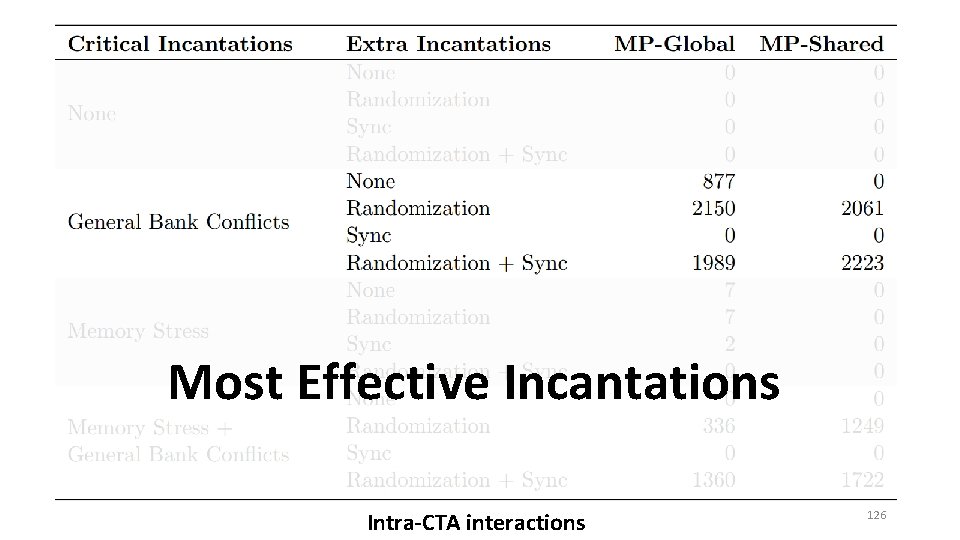

Most Effective Incantations Inter-CTA interactions 121

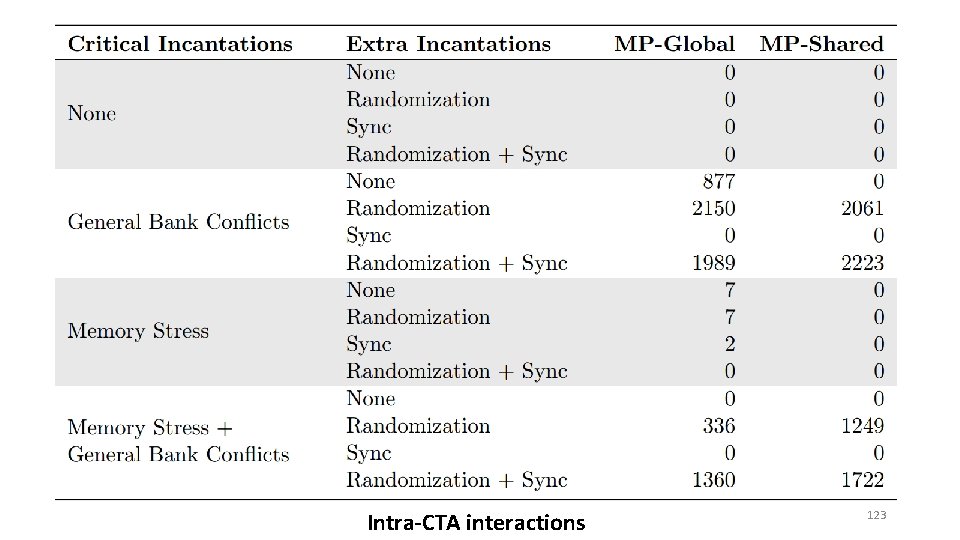

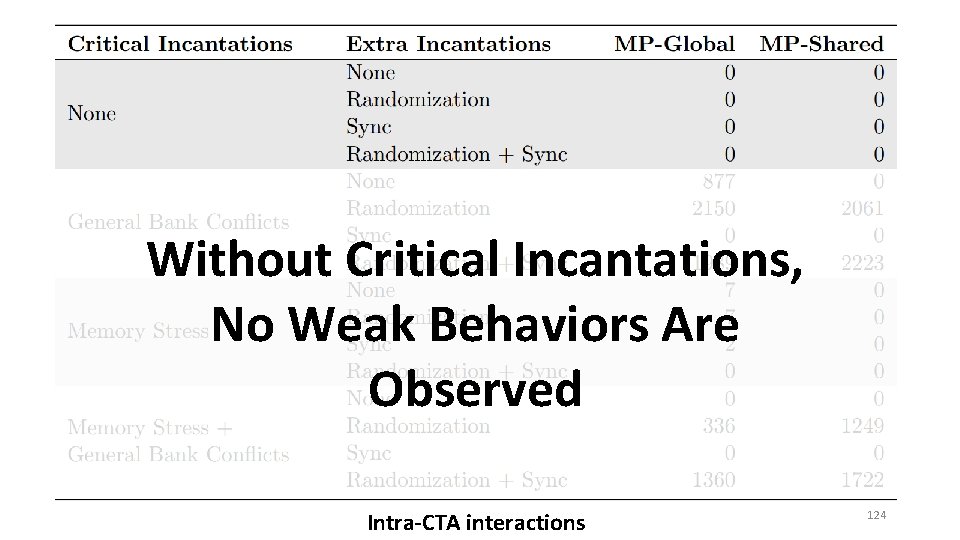

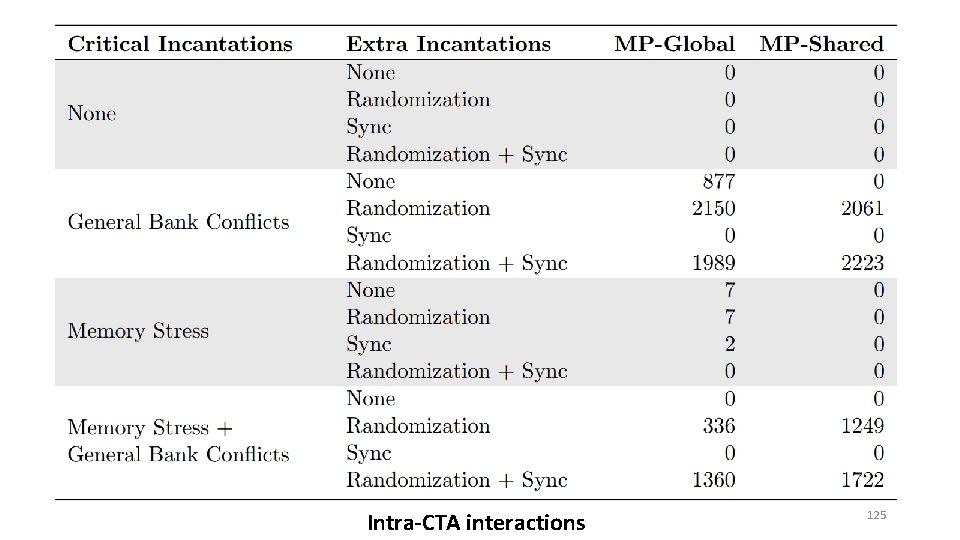

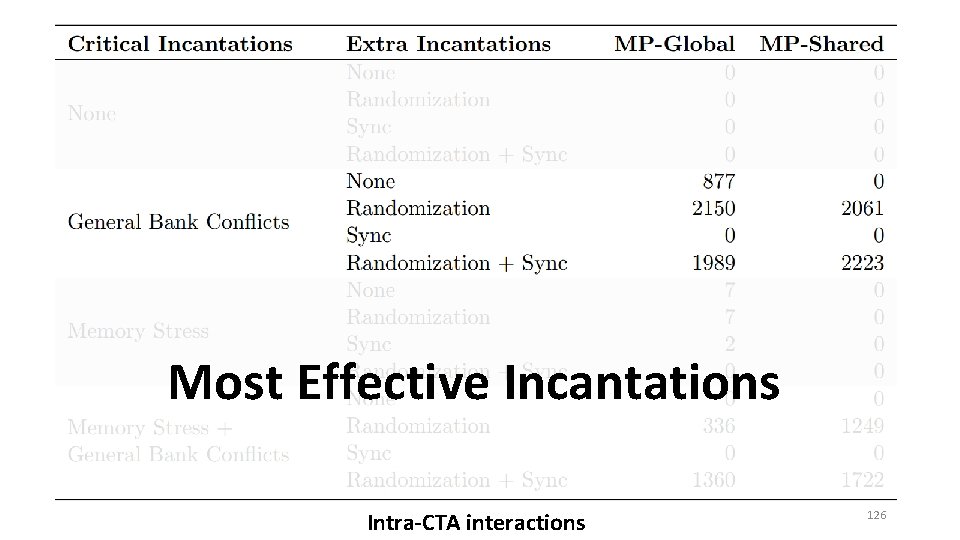

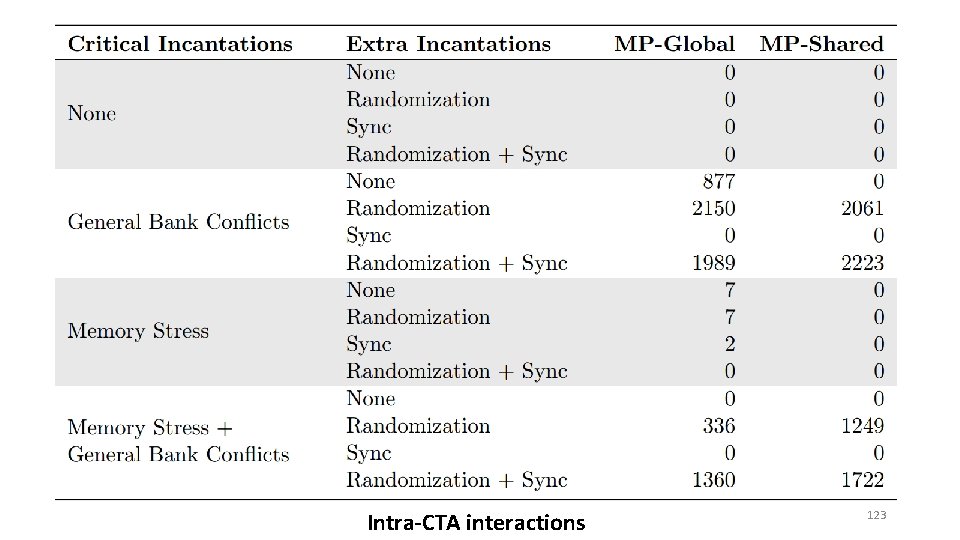

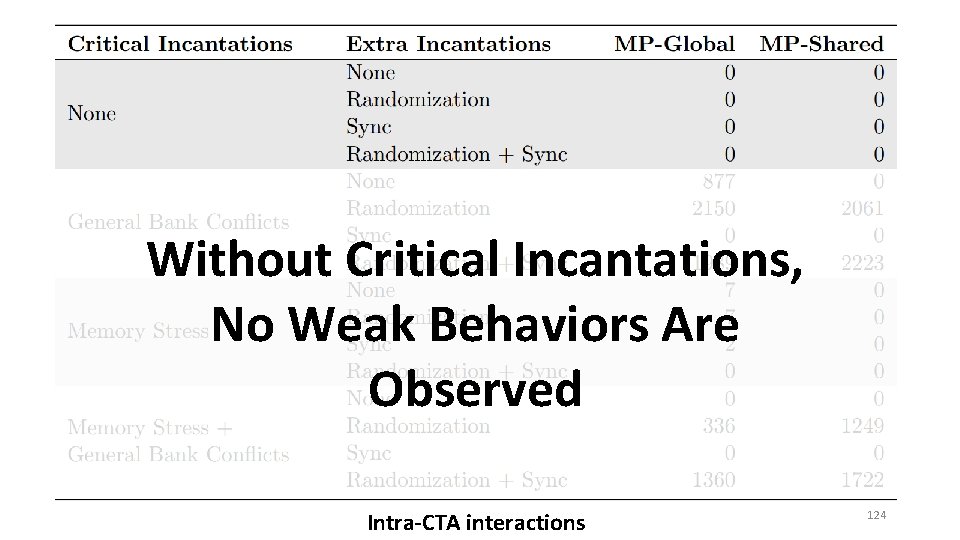

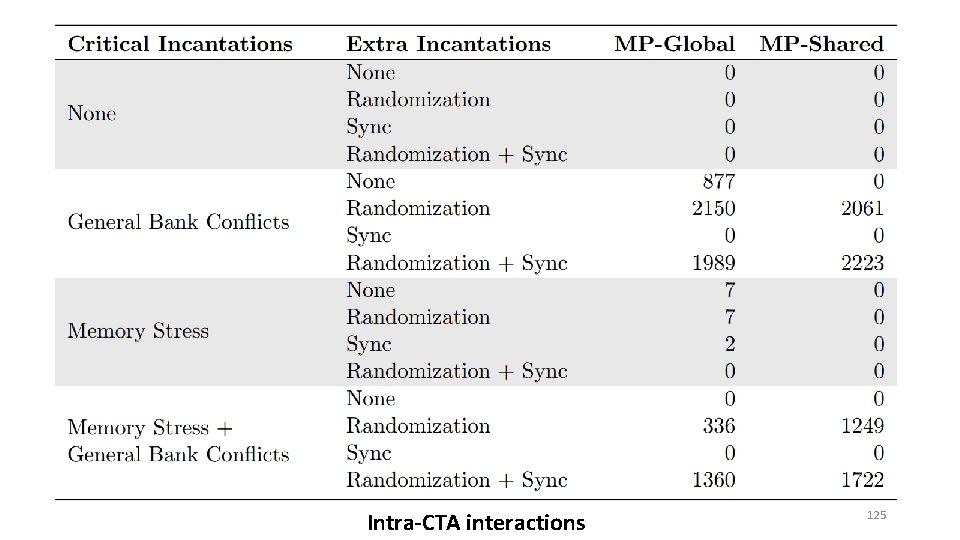

Testing Framework • Evaluate intra-CTA incantations using these tests*: • MP-Global: Message Passing tests targeting the global memory region • MP-Shared: Message Passing tests targeting the shared memory region *Weak behaviors of the other tests (LD, SB) are not observable intra-CTA 122

Intra-CTA interactions 123

Without Critical Incantations, No Weak Behaviors Are Observed Intra-CTA interactions 124

Intra-CTA interactions 125

Most Effective Incantations Intra-CTA interactions 126

![Slide 127: bulk testing invalidated a proposed GPU memory model](https://slidetodoc.com/presentation_image/279313662b46d2cffcee5b5a7a5a1f4a/image-127.jpg)

Bulk Testing • Invalidated the GPU memory model from [?] • The model disallows behaviors observed on hardware • It gives too strong an ordering to inter-CTA load operations 127

![Slide 128: bulk testing invalidated a proposed GPU memory model](https://slidetodoc.com/presentation_image/279313662b46d2cffcee5b5a7a5a1f4a/image-128.jpg)

Bulk Testing • Invalidated the GPU memory model from [?] • The model disallows behaviors observed on hardware • It gives too strong an ordering to inter-CTA load operations 128

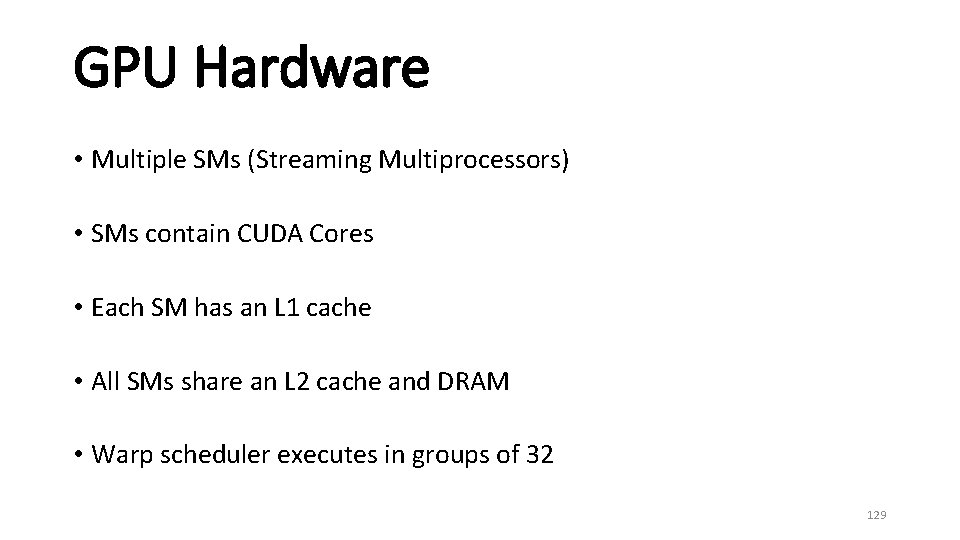

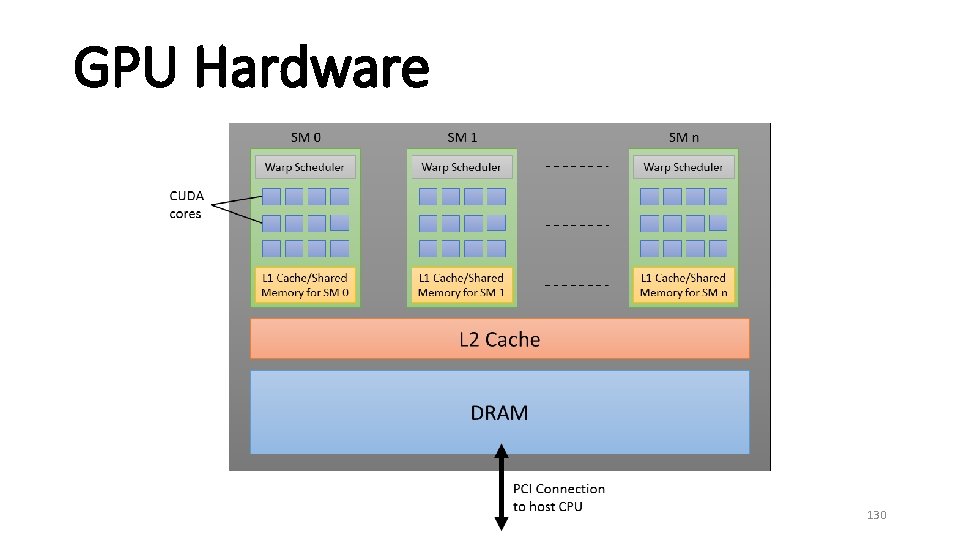

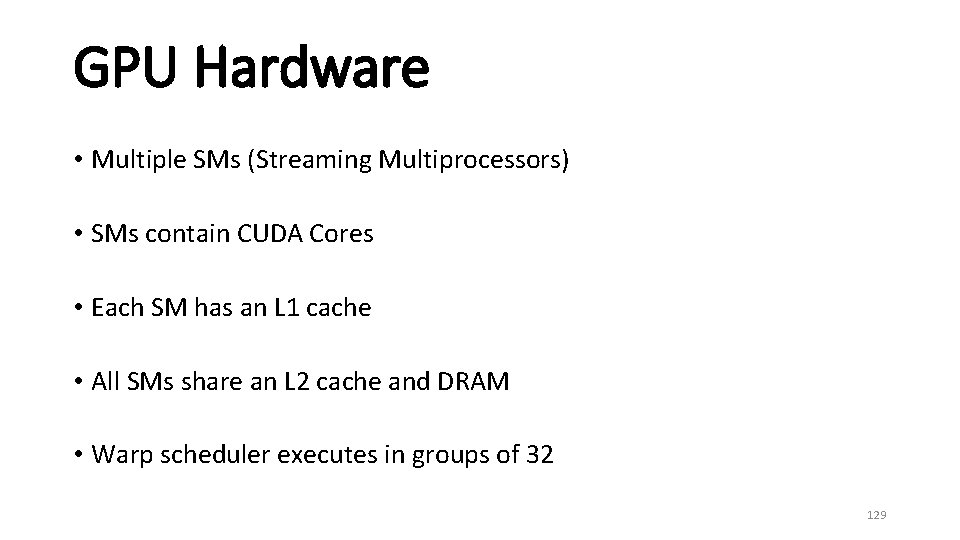

GPU Hardware • Multiple SMs (Streaming Multiprocessors) • SMs contain CUDA cores • Each SM has an L1 cache • All SMs share an L2 cache and DRAM • The warp scheduler executes threads in groups of 32 129

GPU Hardware 130

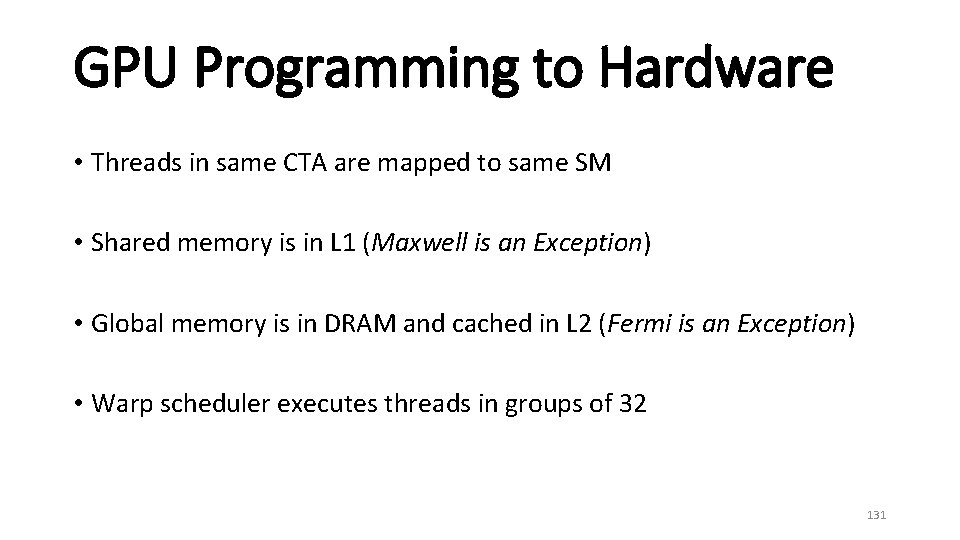

GPU Programming to Hardware • Threads in the same CTA are mapped to the same SM • Shared memory is in L1 (Maxwell is an exception) • Global memory is in DRAM and cached in L2 (Fermi is an exception) • The warp scheduler executes threads in groups of 32 131

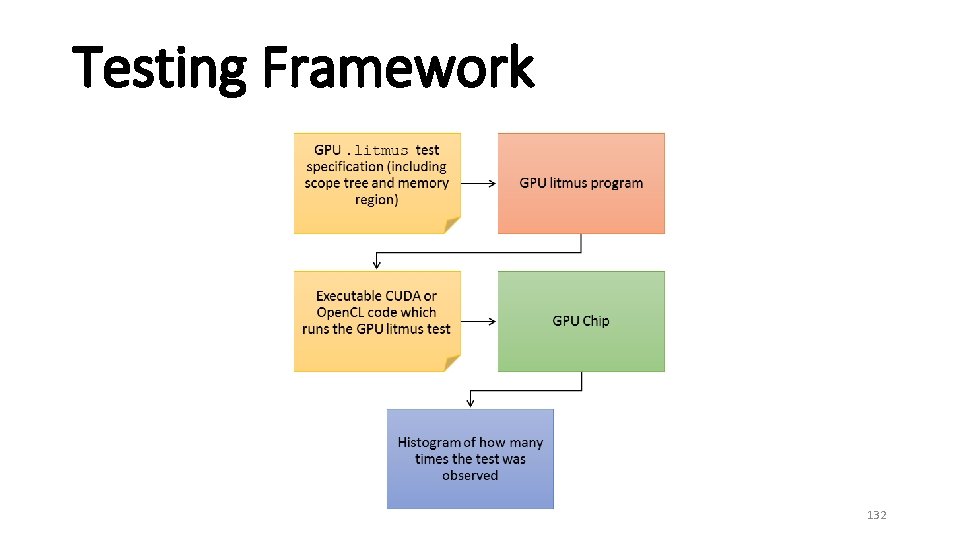

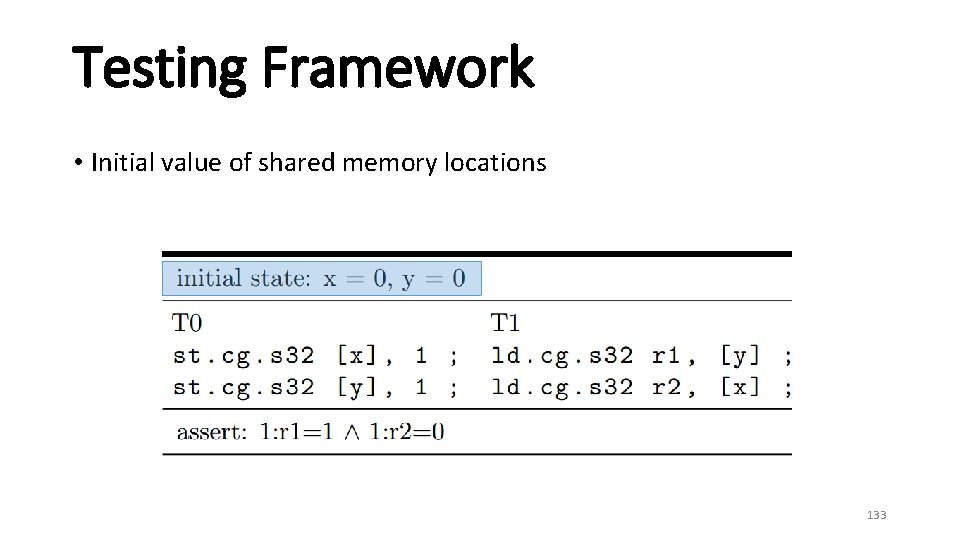

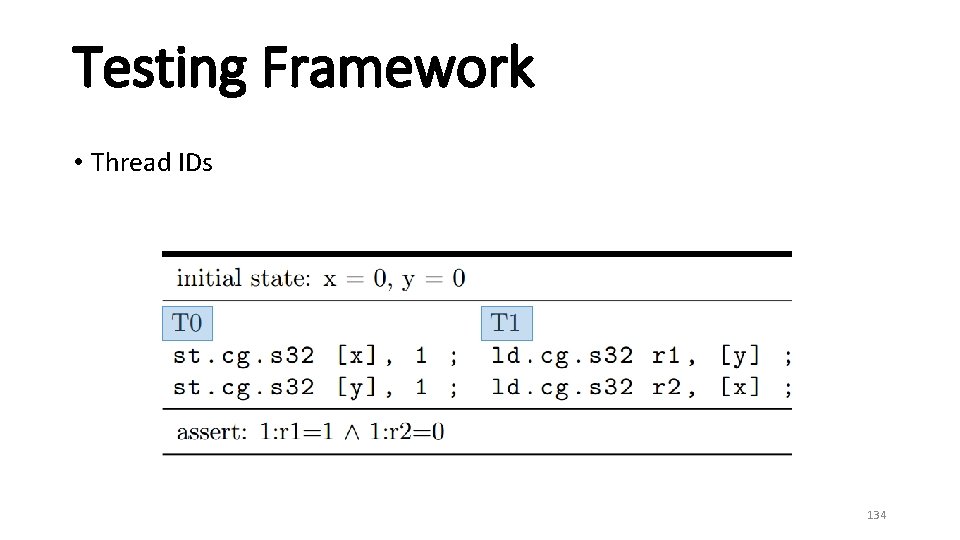

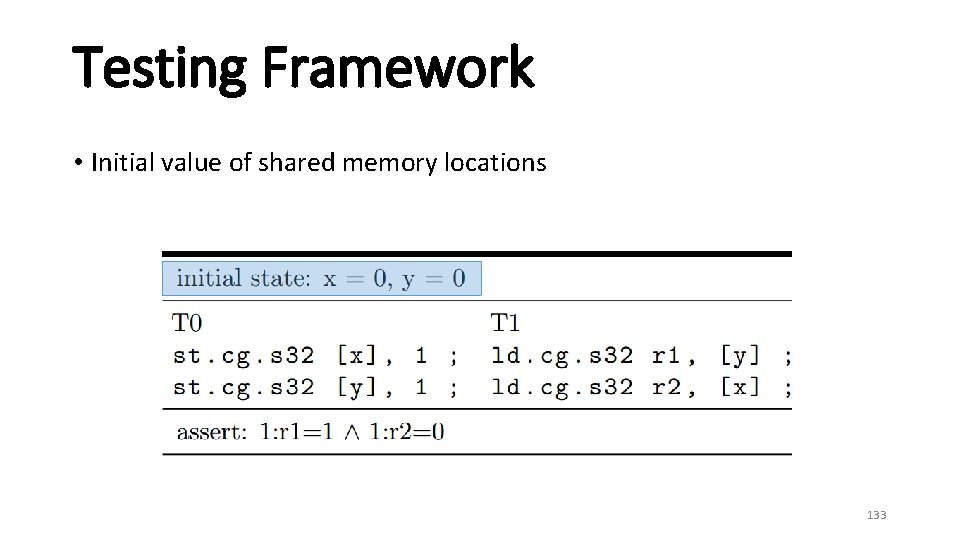

Testing Framework 132

Testing Framework • Initial value of shared memory locations 133

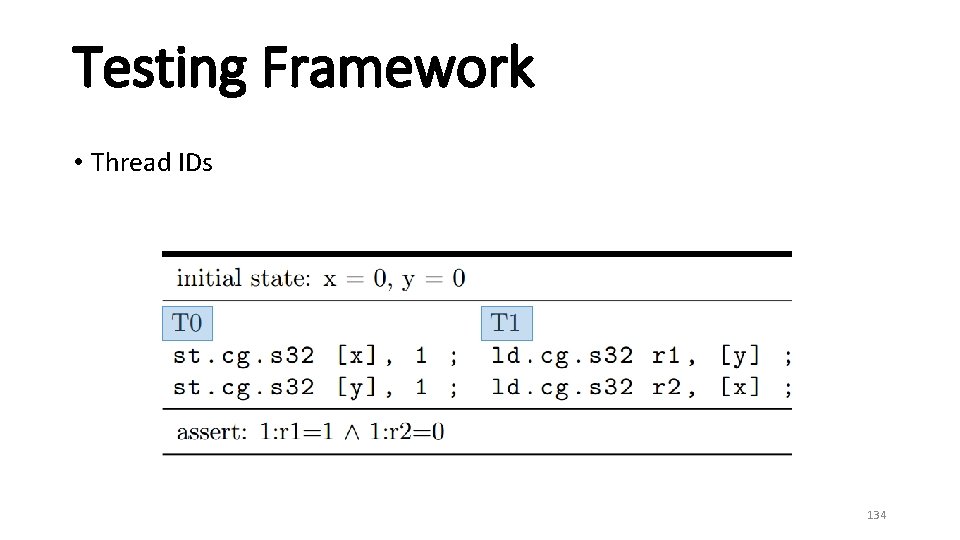

Testing Framework • Thread IDs 134

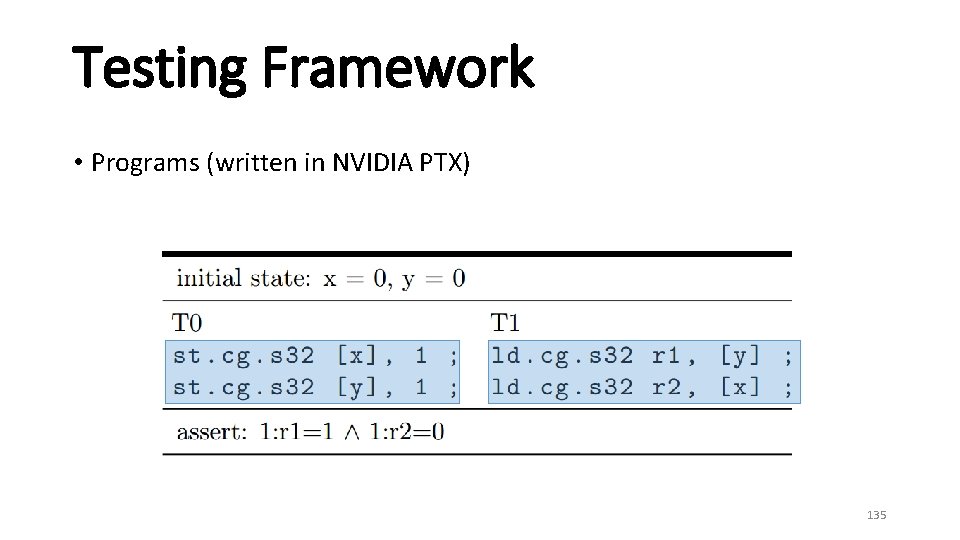

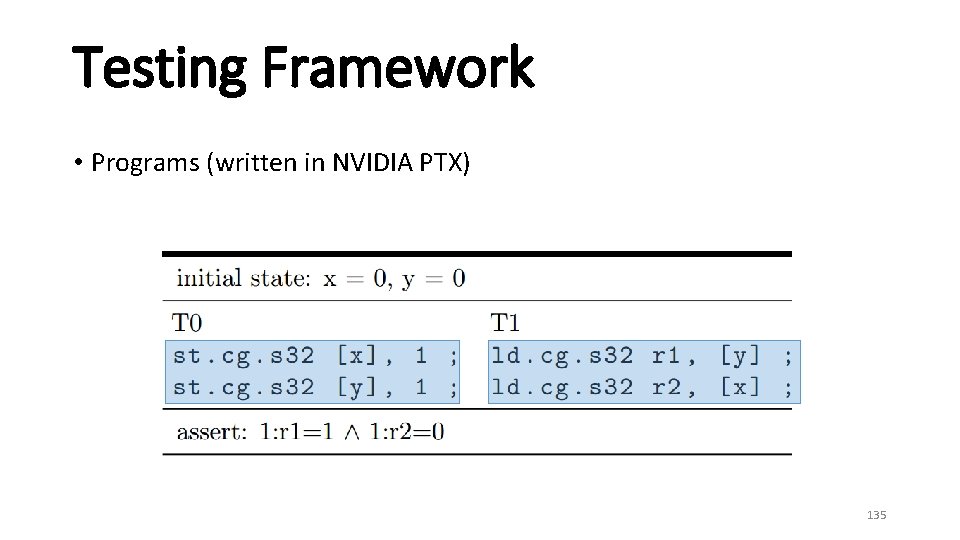

Testing Framework • Programs (written in NVIDIA PTX) 135

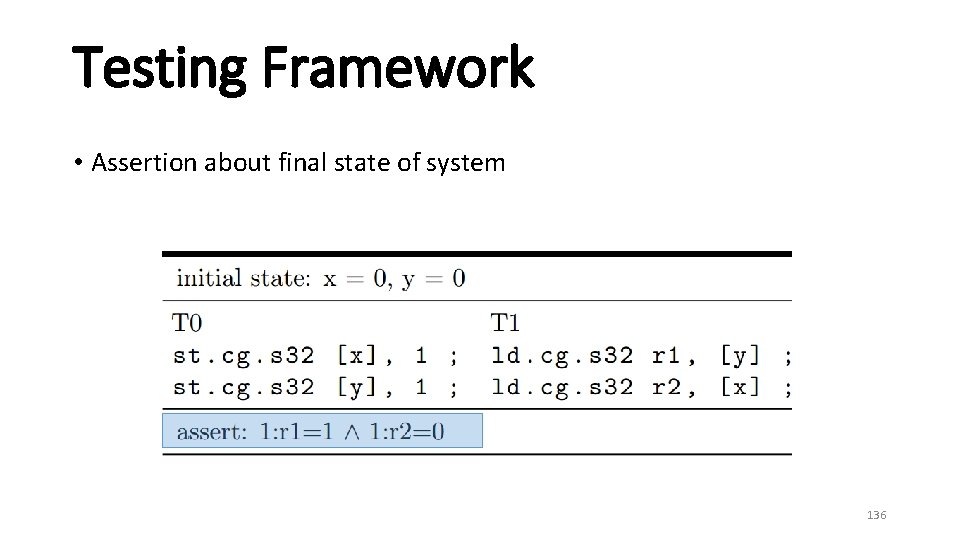

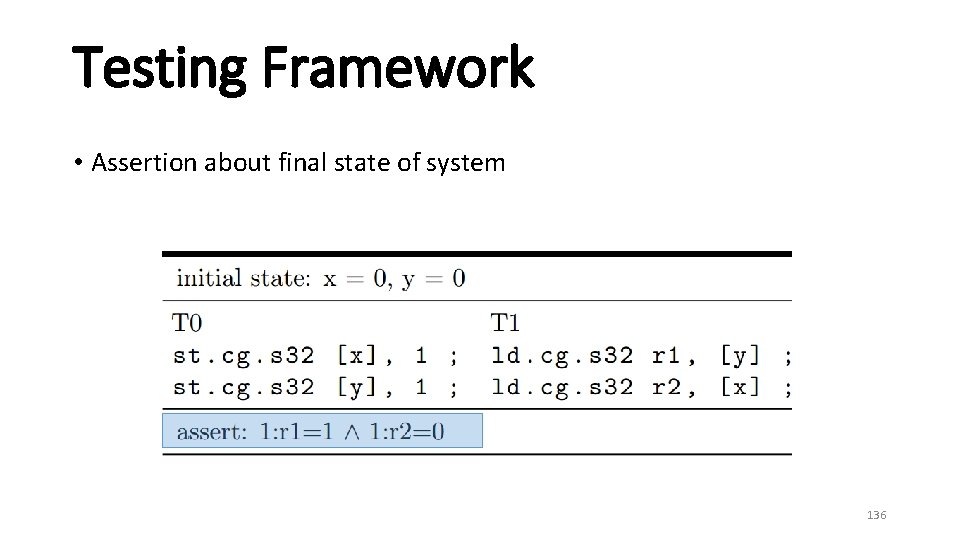

Testing Framework • Assertion about final state of system 136

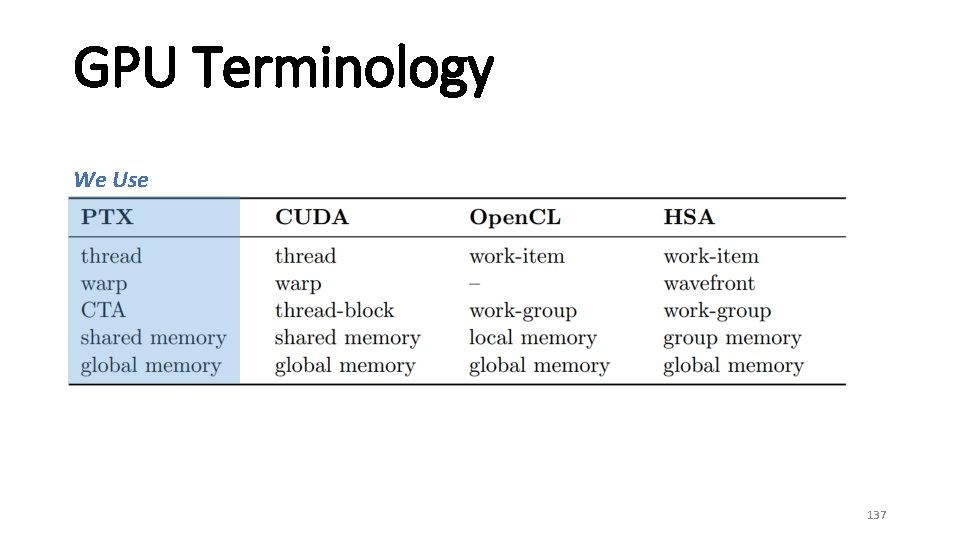

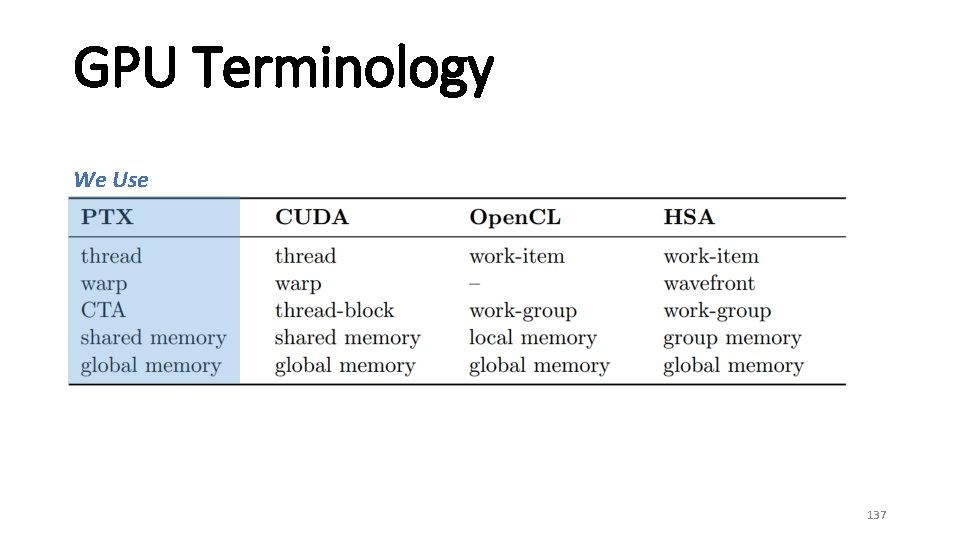

GPU Terminology We Use 137