- Slides: 34

GRAPHICS AND COMPUTING GPUS
Jehan-François Pâris
jfparis@uh.edu

Chapter Organization
• Why bother?
• Evolution
• GPU System Architecture
• Programming GPUs
• …

Why bother? (I)
• Yesterday's fastest computer was the Sequoia supercomputer
  – Can crunch 16.32 quadrillion calculations per second (16.32 petaflops)
  – 98,304 compute nodes
    • Each compute node is a 16-core PowerPC A2 processor

Why bother? (II)
• Today's fastest computer is the Cray XK7
  – Hits 17.59 petaflops on the LINPACK benchmark
  – Features 560,640 processors, including 261,632 NVIDIA K20x accelerating cores
    • Supercomputing version of the consumer-oriented GK104 GPU

Why bother? (III)
• Most techniques developed for high-speed computing end up trickling down to mass markets

EVOLUTION

History (I)
• Up to the late 1990s
  – No GPUs
  – Much simpler VGA controller
    • Consisted of
      – A memory controller
      – A display generator + DRAM
    • DRAM was either shared with the CPU or private

History (I)
• By 1997
  – More complex VGA controllers
    • Incorporated 3D accelerating functions in hardware
      – Triangle setup and rasterization
      – Texture mapping and shading

Rasterization
• Converting
  – An image described in a vector graphics format as a combination of shapes
    • Lines, polygons, letters, …
  into
  – A raster image consisting of individual pixels
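To make the vector-to-pixel conversion concrete, here is a minimal sketch that rasterizes a single line segment with Bresenham's algorithm. It is an illustration only: the function name `rasterize_line` is hypothetical, and real GPUs rasterize triangles in fixed-function hardware rather than with code like this.

```python
def rasterize_line(x0, y0, x1, y1):
    """Return the integer pixel coordinates covering the segment
    from (x0, y0) to (x1, y1), using Bresenham's line algorithm."""
    pixels = []
    dx = abs(x1 - x0)
    dy = -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1   # step direction along x
    sy = 1 if y0 < y1 else -1   # step direction along y
    err = dx + dy               # running error term
    while True:
        pixels.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:            # error says: step in x
            err += dy
            x0 += sx
        if e2 <= dx:            # error says: step in y
            err += dx
            y0 += sy
    return pixels


if __name__ == "__main__":
    # Draw the segment (0, 0) -> (7, 3) into a small character grid.
    width, height = 8, 4
    grid = [["." for _ in range(width)] for _ in range(height)]
    for x, y in rasterize_line(0, 0, 7, 3):
        grid[y][x] = "#"
    for row in grid:
        print("".join(row))
```

The input is a shape description (two endpoints); the output is the set of individual pixels that approximate it, which is the essence of the conversion described above.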

History (II)
• By 2000
  – Single-chip graphics processors incorporated nearly all functions of the graphics pipeline of high-end workstations
    • Beginning of the end of the high-end workstation market
  – VGA controllers were renamed Graphics Processing Units (GPUs)

Current trends (I)
• Graphics processing standards
  – Well-defined APIs
  – OpenGL: Open standard for 3D graphics programming
  – DirectX: Set of Microsoft multimedia programming interfaces (Direct3D for 3D graphics)
    • Xbox was named after it!

Current trends (II)
• Frequent doubling of GPU speeds
  – Every 12 to 18 months
• New paradigm:
  – Visual computing stands at the intersection of graphics processing and parallel computing
    • Can implement novel graphics algorithms
    • Use GPUs for non-conventional

Two results
• Triumph of heterogeneous architectures
  – Combining powers of CPU and GPU
• GPUs become scalable parallel processors
  – Moving from hardware-defined pipelining architectures to more flexible programmable architectures

From GPGPU to CUDA
• GPGPU
  – General-Purpose computing on GPU
  – Uses traditional graphics API and graphics pipeline

From GPGPU to CUDA
• CUDA
  – Compute Unified Device Architecture
  – Parallel computing platform and programming model
    • C/C++
    • Invented by NVIDIA
  – Single Program Multiple Data (SPMD) approach

GPU SYSTEM ARCHITECTURE

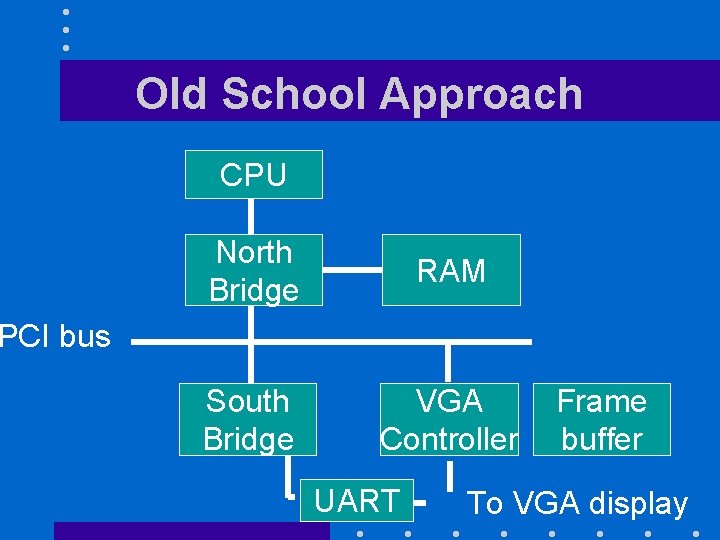

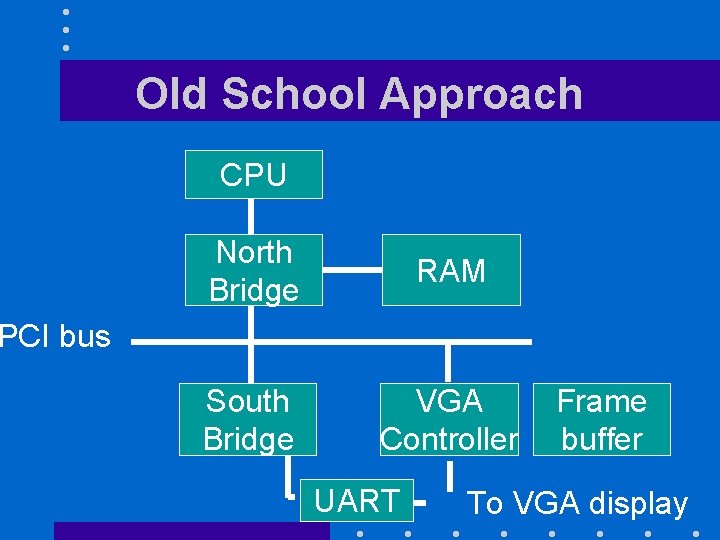

Old School Approach
[Diagram: CPU, North Bridge, RAM, South Bridge, PCI bus, VGA controller, frame buffer, UART; the VGA controller drives the VGA display]

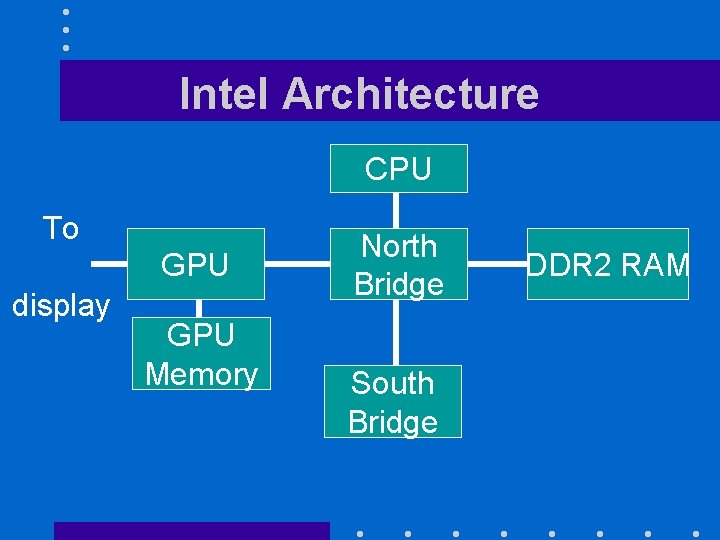

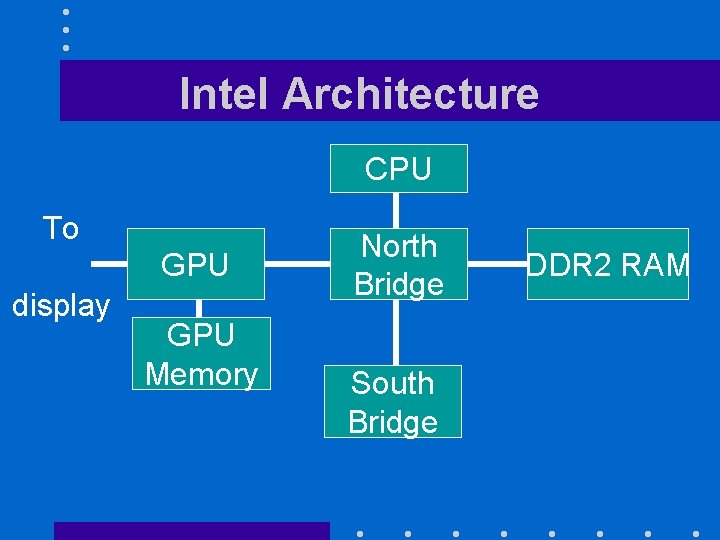

Intel Architecture
[Diagram: CPU, North Bridge, DDR2 RAM, South Bridge, GPU, GPU memory; the GPU drives the display]

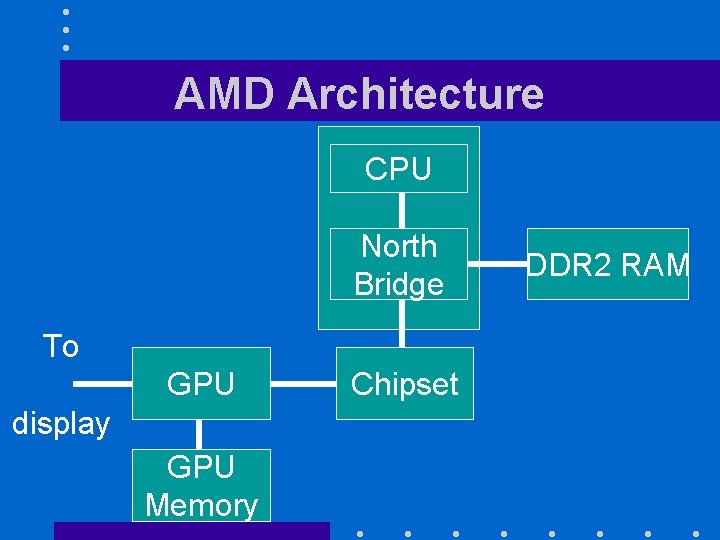

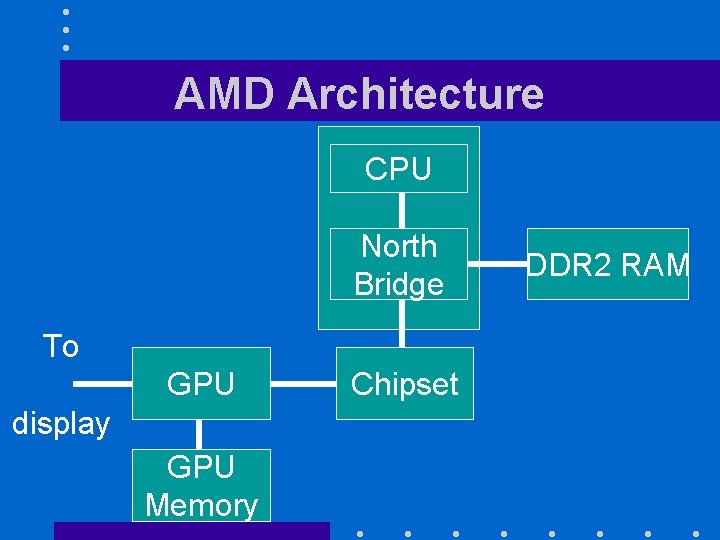

AMD Architecture
[Diagram: CPU, North Bridge, DDR2 RAM, chipset, GPU, GPU memory; the GPU drives the display]

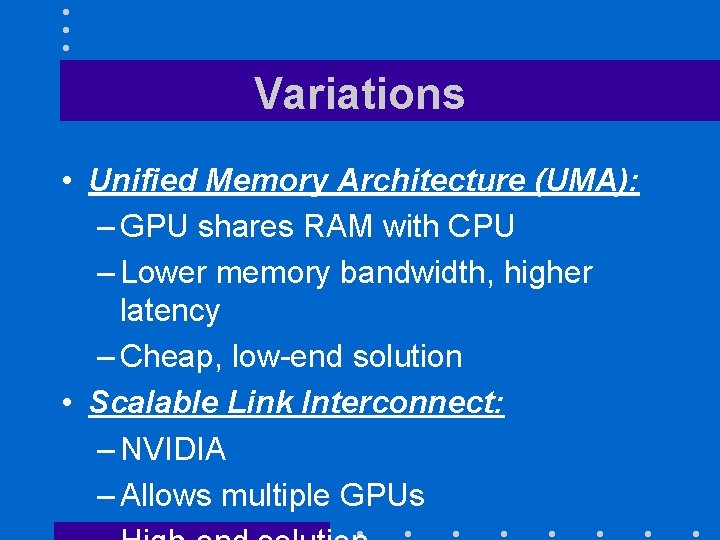

Variations
• Unified Memory Architecture (UMA):
  – GPU shares RAM with CPU
  – Lower memory bandwidth, higher latency
  – Cheap, low-end solution
• Scalable Link Interface (SLI):
  – NVIDIA
  – Allows multiple GPUs

Integrated solutions
• Integrate CPU and North Bridge
• Integrate GPU and chipset

Game console
• Similar architectures
• Architectures evolve over time
• Objective is to reduce costs while maintaining performance

GPU interfaces and drivers
• GPU attached to CPU via PCI-Express
  – Replaces older AGP
• Interfaces such as OpenGL and Direct3D use the GPU as a coprocessor
  – Send commands, programs and data to the GPU through a specific GPU device driver
    • Drivers are often buggy!

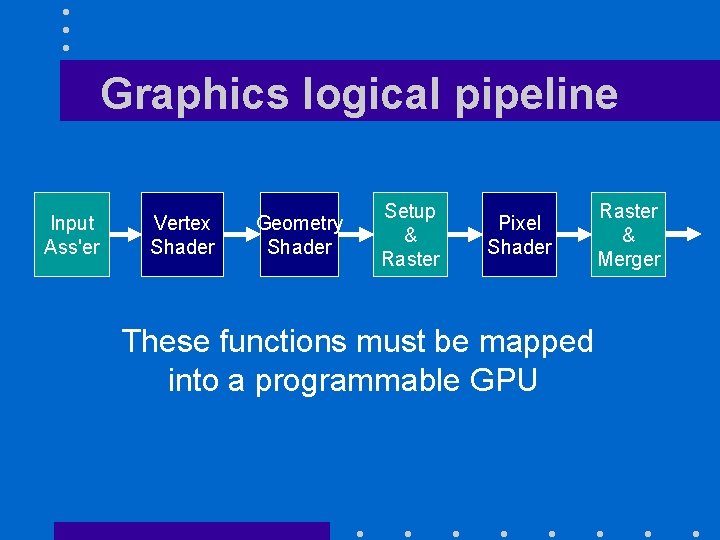

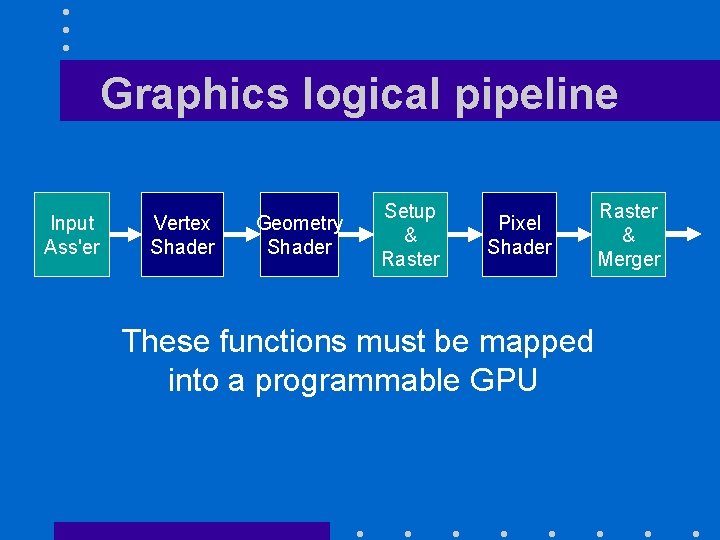

Graphics logical pipeline
Input Assembler → Vertex Shader → Geometry Shader → Setup & Raster → Pixel Shader → Raster & Merger
These functions must be mapped onto a programmable GPU

Basic Unified GPU Architecture
• Programmable processor array
  – Tightly integrated with fixed-function processors for texture filtering, rasterization, raster operations
  – Emphasis is on a very high level of parallelism

Example architecture
• Tesla architecture (NVIDIA GeForce 8800)
• 112 streaming processor (SP) cores
  – Organized as 14 multithreaded streaming multiprocessors (SM)
• Each SP core
  – Manages 96 concurrent threads
    • Thread state is maintained by hardware

Example architecture
• Each SM has
  – 8 SP cores
  – 2 special function units
  – Separate caches for instructions and constants
  – A multithreaded instruction unit
  – Shared memory (NUMA?)

PROGRAMMING GPUS
Will focus on parallel computing applications

Key idea
• Must decompose the problem into a set of parallel computations
  – Ideally two-level to match GPU organization
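As a rough illustration of a two-level decomposition, the sketch below sums a big array by first cutting it into independent blocks and then dividing each block among a few "threads". It is a sequential CPU-side emulation in Python, not CUDA code; the names `block_sum`, `two_level_sum`, `block_size` and `threads_per_block` are assumptions made for this example.

```python
def block_sum(data, block_start, block_size, threads_per_block):
    """Second level: within one block, each emulated thread `tid`
    handles the elements block_start + tid, + threads_per_block, ..."""
    block_end = min(block_start + block_size, len(data))
    partials = [0] * threads_per_block
    for tid in range(threads_per_block):          # would run in parallel on a GPU
        for i in range(block_start + tid, block_end, threads_per_block):
            partials[tid] += data[i]
    return sum(partials)                          # combine the per-thread results


def two_level_sum(data, block_size=8, threads_per_block=4):
    """First level: cut the big array into independent blocks."""
    block_results = [
        block_sum(data, start, block_size, threads_per_block)
        for start in range(0, len(data), block_size)  # blocks are independent
    ]
    return sum(block_results)                     # combine the per-block results


if __name__ == "__main__":
    values = list(range(100))
    print(two_level_sum(values), sum(values))
```

The outer level corresponds to work that could be spread over multiprocessors, and the inner level to work shared by the cores of one multiprocessor. Both loops are written sequentially here only to keep the sketch runnable anywhere.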

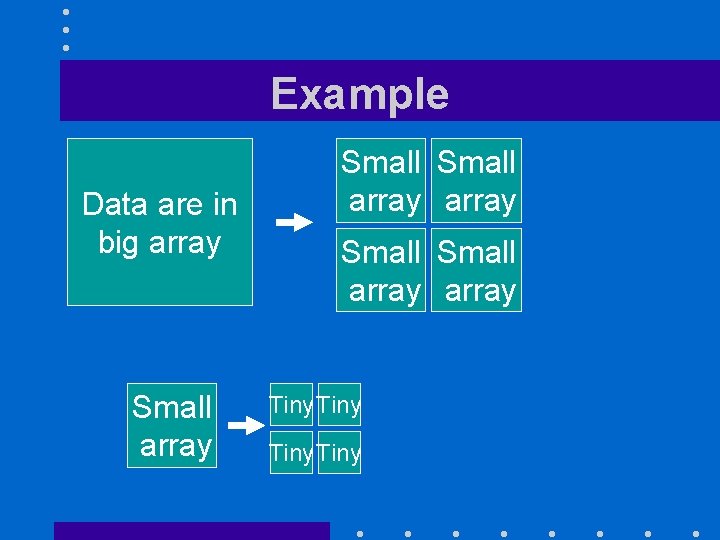

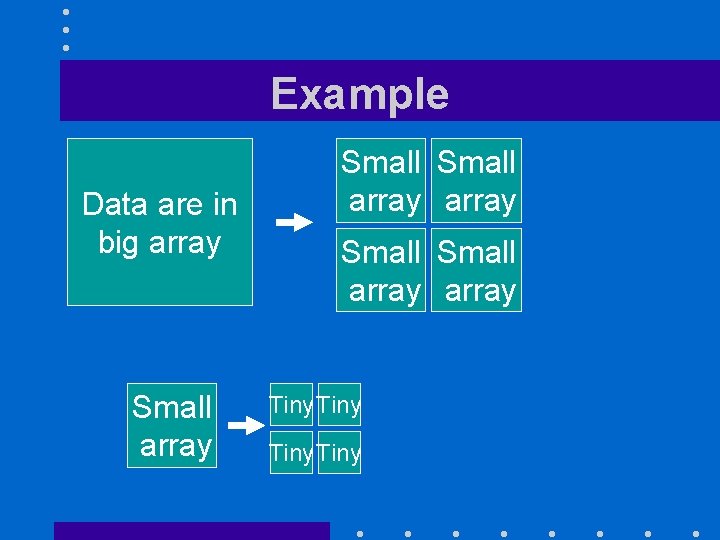

Example
[Diagram: data are in a big array, which is split into small arrays, which are split in turn into tiny pieces]

CUDA
• CUDA programs are written in C
• Provides three abstractions
  – Hierarchy of thread groups
  – Shared memory
  – Barrier synchronization

Barrier synchronization
• Barriers let threads
  – Wait for completion of a computation step by other cores so they can
    • Exchange results
    • Start next step
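The barrier pattern can be sketched on a CPU with Python's standard `threading.Barrier`. This is an analogy rather than CUDA code: the function `barrier_demo` and its parameters are hypothetical. Each worker computes a partial sum, waits at the barrier until all others have finished, and only then reads the others' results.

```python
import threading


def barrier_demo(data, num_workers=4):
    """Each worker sums its own chunk (step 1), waits at a barrier,
    then combines everyone's partial sums (step 2)."""
    partial = [0] * num_workers   # results exchanged between workers
    totals = [0] * num_workers    # what each worker computes after the barrier
    barrier = threading.Barrier(num_workers)
    chunk = (len(data) + num_workers - 1) // num_workers

    def worker(wid):
        # Step 1: independent computation on this worker's chunk.
        partial[wid] = sum(data[wid * chunk:(wid + 1) * chunk])
        # Wait until every worker has written its partial result.
        barrier.wait()
        # Step 2: safe to read the other workers' results now.
        totals[wid] = sum(partial)

    threads = [threading.Thread(target=worker, args=(w,))
               for w in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return totals


if __name__ == "__main__":
    print(barrier_demo(list(range(100))))
```

Without the `barrier.wait()` call, a fast worker could read `partial` before a slower worker has written its entry and would compute a wrong total.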

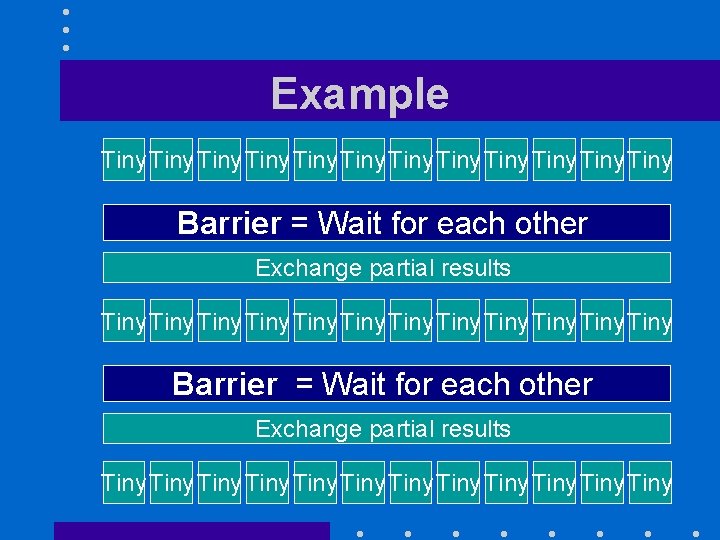

Example
[Diagram: a row of tiny tasks runs in parallel, then reaches a barrier (wait for each other, exchange partial results), then the next row of tiny tasks starts]

Big fallacies
• GPUs
  – Not good for general computation
  – Cannot run double precision arithmetic
  – Do not do floating point correctly
• Cannot speed up O(n) algorithms
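One way to see why the last claim is a fallacy: summing n values is O(n) work, yet the additions can be arranged as a tree whose levels each contain only independent additions. The sketch below (a sequential Python emulation, with the hypothetical name `tree_reduce_sum`) counts how many such levels are needed; with enough parallel cores, each level could take a single step.

```python
def tree_reduce_sum(values):
    """Sum `values` by repeated pairwise addition.
    Returns (total, steps), where `steps` is the number of levels
    in the reduction tree."""
    level = list(values)
    steps = 0
    while len(level) > 1:
        # All additions within one level are independent of each other,
        # so a parallel machine could perform them at the same time.
        nxt = [level[i] + level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:          # odd element out is carried to the next level
            nxt.append(level[-1])
        level = nxt
        steps += 1
    return (level[0] if level else 0), steps


if __name__ == "__main__":
    total, steps = tree_reduce_sum(range(1024))
    print(total, steps)
```

For 1,024 values the tree has 10 levels, compared with 1,023 additions performed one after another in the sequential version.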