A GPU Accelerated Storage System

Abdullah Gharaibeh
with: Samer Al-Kiswany, Sathish Gopalakrishnan, Matei Ripeanu
NetSysLab, The University of British Columbia

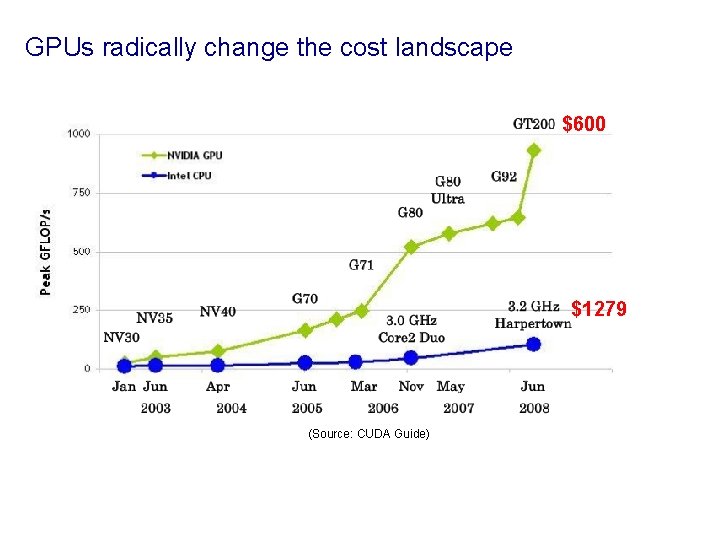

GPUs radically change the cost landscape

[Figure: price comparison, $600 and $1279. Source: CUDA Guide]

Harnessing GPU Power is Challenging
- More complex programming model
- Limited memory space
- Accelerator / co-processor model

Motivating Question: Does the 10x reduction in computation costs that GPUs offer change the way we design and implement distributed systems?

Context: Distributed Storage Systems

Distributed Systems: Computationally Intensive Operations

Operations:
- Hashing
- Erasure coding
- Encryption/decryption
- Membership testing (Bloom filter)
- Compression

Techniques:
- Similarity detection
- Content addressability
- Security
- Integrity checks
- Redundancy
- Load balancing
- Summary cache
- Storage efficiency

The operations are computationally intensive and limit performance.

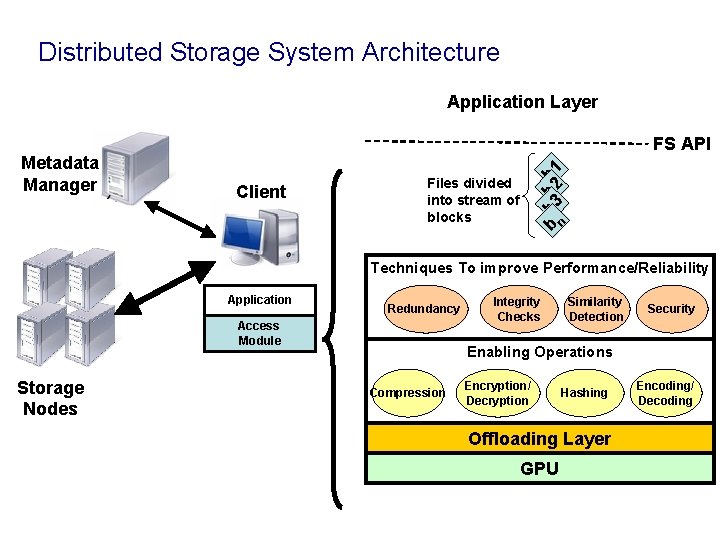

Distributed Storage System Architecture

[Diagram: an application uses the FS API of the client access module, which communicates with the metadata manager and the storage nodes. Files are divided into a stream of blocks (b1, b2, b3, ..., bn).]

- Application layer
- Techniques to improve performance/reliability: redundancy, integrity checks, similarity detection, security
- Enabling operations: compression, encryption/decryption, hashing, encoding/decoding
- Offloading layer: CPU, GPU

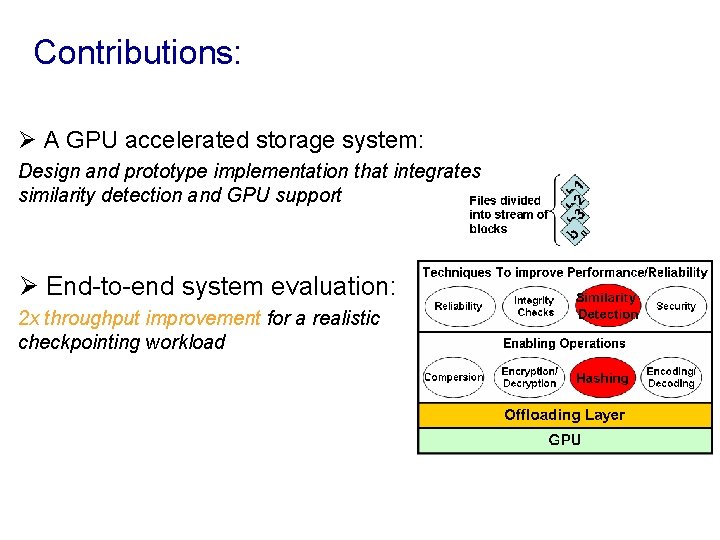

Contributions:
- A GPU-accelerated storage system: design and prototype implementation that integrates similarity detection and GPU support
- End-to-end system evaluation: 2x throughput improvement for a realistic checkpointing workload

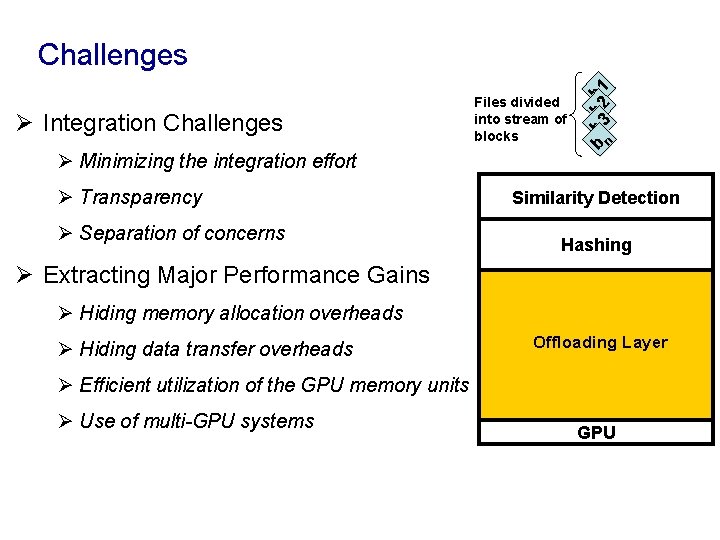

Challenges

- Integration challenges
  - Minimizing the integration effort
  - Transparency
  - Separation of concerns
- Extracting major performance gains
  - Hiding memory allocation overheads
  - Hiding data transfer overheads
  - Efficient utilization of the GPU memory units
  - Use of multi-GPU systems

[Diagram: files divided into a stream of blocks (b1, b2, b3, ..., bn) feed similarity detection and hashing, which run on the GPU through the offloading layer.]

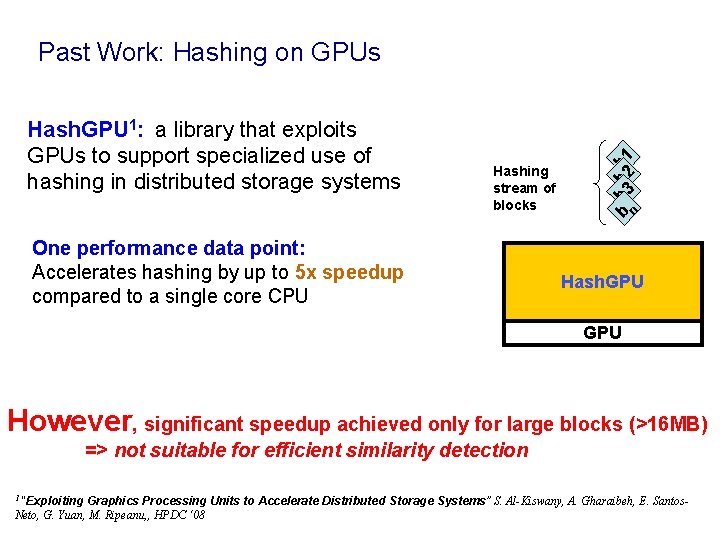

HashGPU [1]: a library that exploits GPUs to support specialized use of hashing in distributed storage systems

One performance data point: accelerates hashing by up to 5x compared to a single-core CPU.

However, significant speedup is achieved only for large blocks (>16 MB), so it is not suitable for efficient similarity detection.

[Diagram: hashing a stream of blocks (b1, b2, b3, ..., bn) with HashGPU.]

[1] Past work (hashing on GPUs): S. Al-Kiswany, A. Gharaibeh, E. Santos-Neto, G. Yuan, M. Ripeanu, "Exploiting Graphics Processing Units to Accelerate Distributed Storage Systems", HPDC '08.

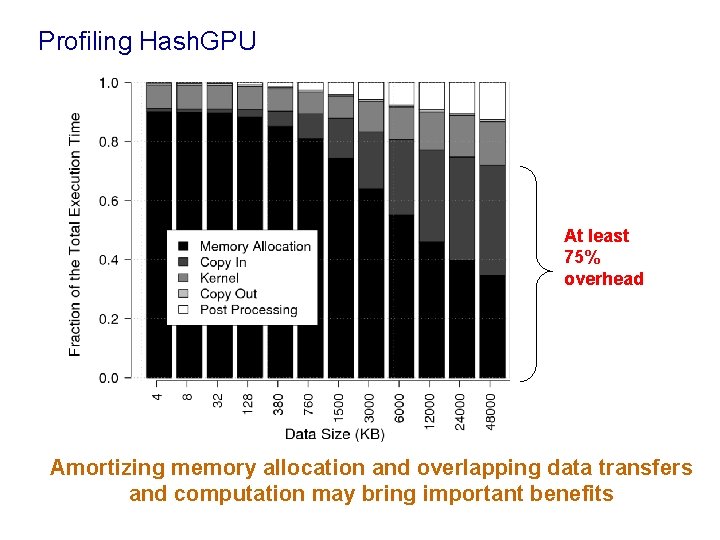

Profiling HashGPU

At least 75% of the execution time is overhead.

Amortizing memory allocation and overlapping data transfers with computation may bring important benefits.

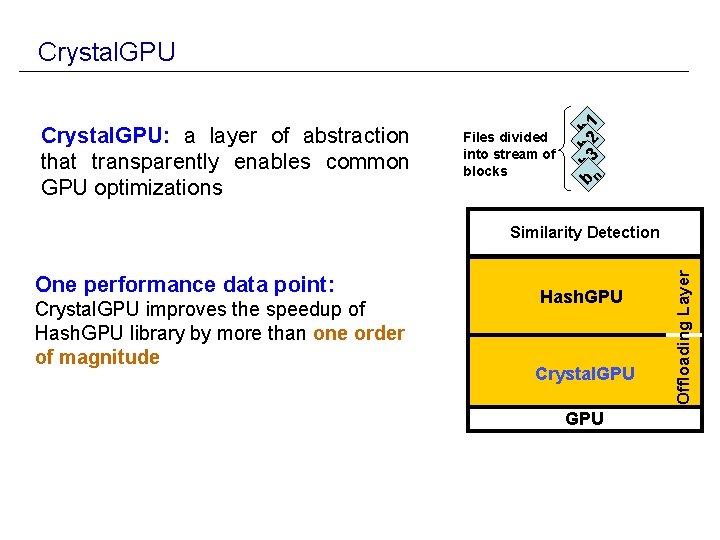

CrystalGPU: a layer of abstraction that transparently enables common GPU optimizations

One performance data point: CrystalGPU improves the speedup of the HashGPU library by more than one order of magnitude.

[Diagram: files divided into a stream of blocks (b1, b2, b3, ..., bn) pass through similarity detection and HashGPU, which runs on top of CrystalGPU in the offloading layer.]

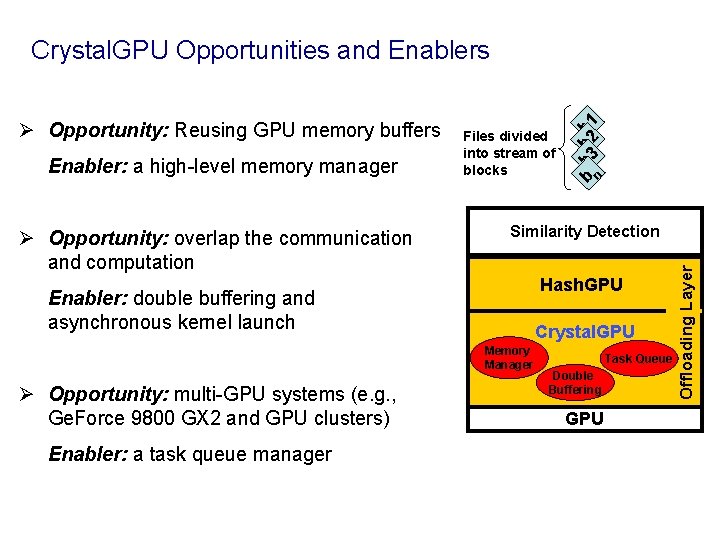

CrystalGPU Opportunities and Enablers

- Opportunity: reusing GPU memory buffers
  Enabler: a high-level memory manager
- Opportunity: overlapping communication and computation
  Enabler: double buffering and asynchronous kernel launch
- Opportunity: multi-GPU systems (e.g., GeForce 9800 GX2 and GPU clusters)
  Enabler: a task queue manager

[Diagram: within the offloading layer, CrystalGPU sits between HashGPU and the GPU and contains the memory manager, the task queue, and double buffering.]
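Two of the enablers above, buffer reuse and a task queue shared by several devices, can be sketched on the CPU. This is only an illustration under stated assumptions and not the CrystalGPU implementation: Python threads stand in for the two GPUs, a preallocated `bytearray` stands in for a device buffer, SHA-1 stands in for the hashing kernel, and the names `worker`, `hash_blocks`, `NUM_DEVICES`, and `BLOCK_SIZE` are hypothetical. Overlap of transfers and computation is not modeled.

```python
import hashlib
import queue
import threading

NUM_DEVICES = 2    # assumption: stands in for the two GPUs of a 9800 GX2
BLOCK_SIZE = 1024  # assumption: maximum block size in bytes


def worker(device_id, tasks, results):
    """Serve tasks from the shared queue until a None sentinel arrives."""
    buf = bytearray(BLOCK_SIZE)  # allocated once, then reused for every task
    while True:
        task = tasks.get()
        if task is None:
            break
        index, block = task
        n = len(block)
        buf[:n] = block  # stands in for the host-to-device copy
        digest = hashlib.sha1(buf[:n]).hexdigest()  # stands in for the kernel
        results[index] = (device_id, digest)


def hash_blocks(blocks):
    """Hash all blocks using NUM_DEVICES workers fed by one task queue."""
    tasks = queue.Queue()
    results = [None] * len(blocks)
    threads = [threading.Thread(target=worker, args=(dev, tasks, results))
               for dev in range(NUM_DEVICES)]
    for th in threads:
        th.start()
    for item in enumerate(blocks):
        tasks.put(item)   # whichever worker is idle picks the next block
    for _ in threads:
        tasks.put(None)   # one sentinel per worker
    for th in threads:
        th.join()
    return results


blocks = [bytes([i]) * BLOCK_SIZE for i in range(8)]
out = hash_blocks(blocks)
```

Each entry of `out` pairs the worker that served the block with its digest, so the order of results follows the order of the input blocks regardless of which worker finished first.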

Experimental Evaluation:
- CrystalGPU evaluation
- End-to-end system evaluation

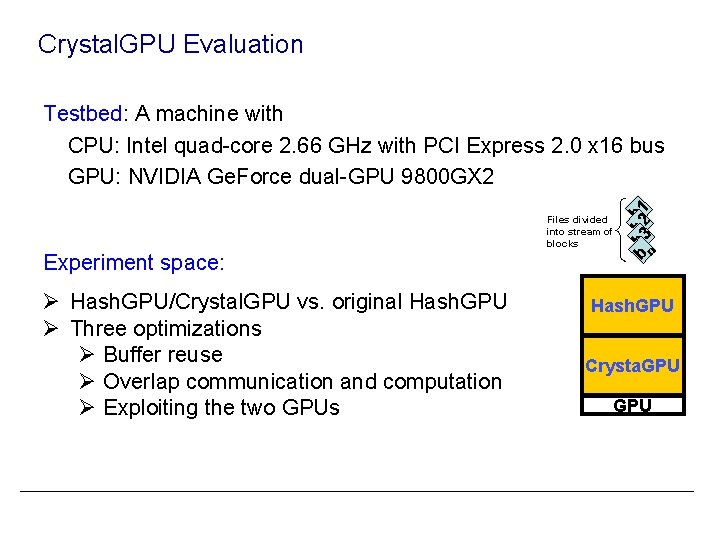

CrystalGPU Evaluation

Experiment space:
- HashGPU/CrystalGPU vs. original HashGPU
- Three optimizations
  - Buffer reuse
  - Overlap of communication and computation
  - Exploiting the two GPUs

Testbed: a machine with
- CPU: Intel quad-core 2.66 GHz with PCI Express 2.0 x16 bus
- GPU: NVIDIA GeForce dual-GPU 9800 GX2

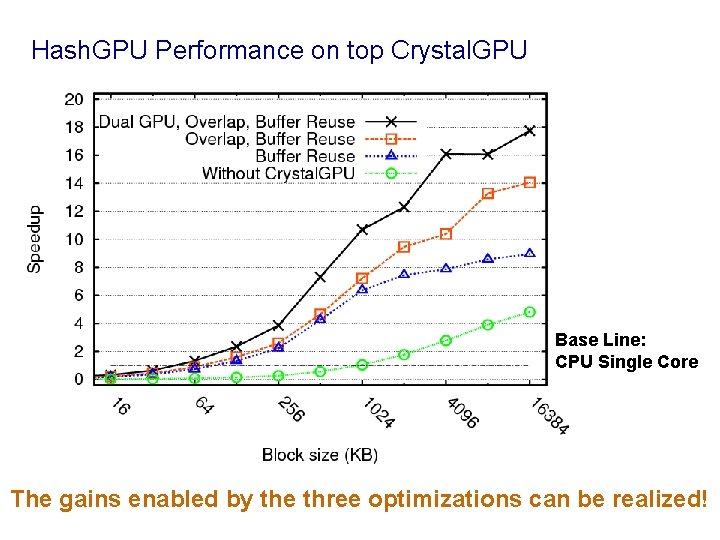

HashGPU Performance on Top of CrystalGPU

Baseline: single-core CPU

The gains enabled by the three optimizations can be realized!

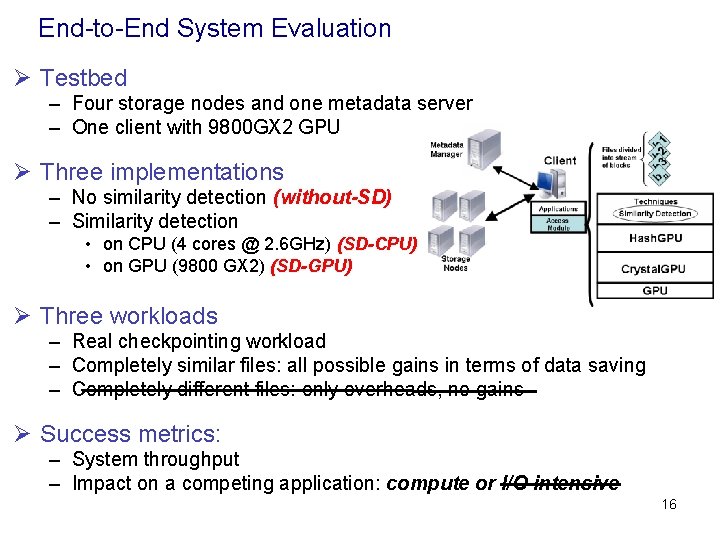

End-to-End System Evaluation

- Testbed
  - Four storage nodes and one metadata server
  - One client with 9800 GX2 GPU
- Three implementations
  - No similarity detection (without-SD)
  - Similarity detection on CPU (4 cores @ 2.6 GHz) (SD-CPU)
  - Similarity detection on GPU (9800 GX2) (SD-GPU)
- Three workloads
  - Real checkpointing workload
  - Completely similar files: all possible gains in terms of data saving
  - Completely different files: only overheads, no gains
- Success metrics
  - System throughput
  - Impact on a competing application: compute or I/O intensive

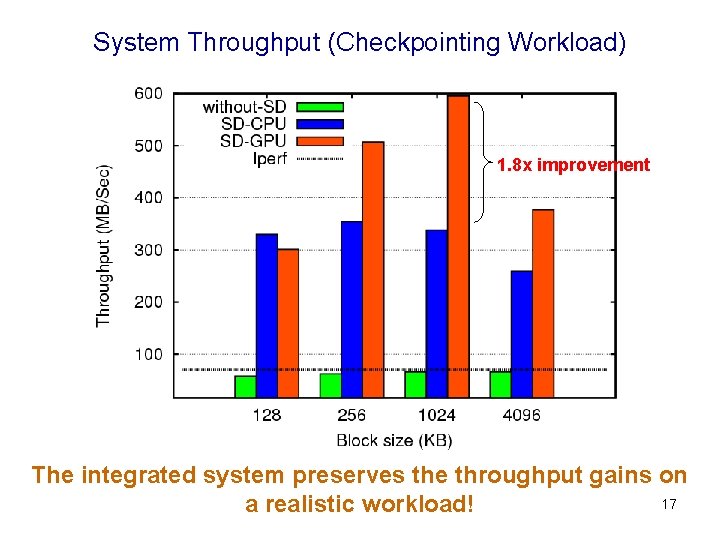

System Throughput (Checkpointing Workload)

1.8x improvement

The integrated system preserves the throughput gains on a realistic workload!

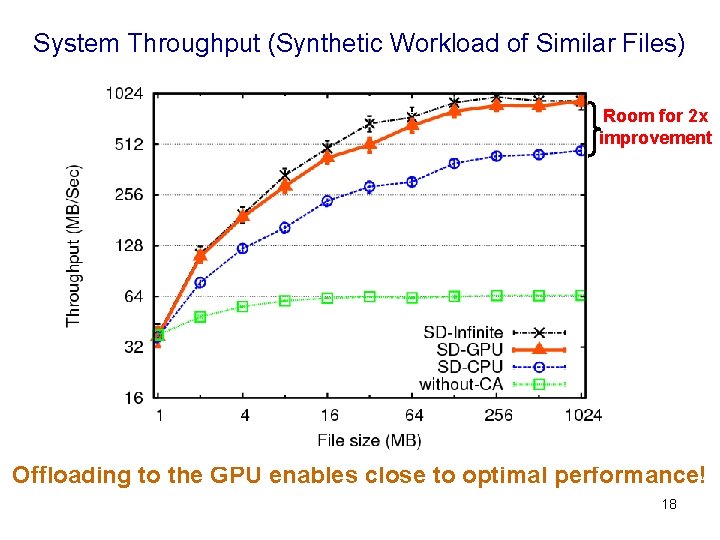

System Throughput (Synthetic Workload of Similar Files)

Room for 2x improvement

Offloading to the GPU enables close to optimal performance!

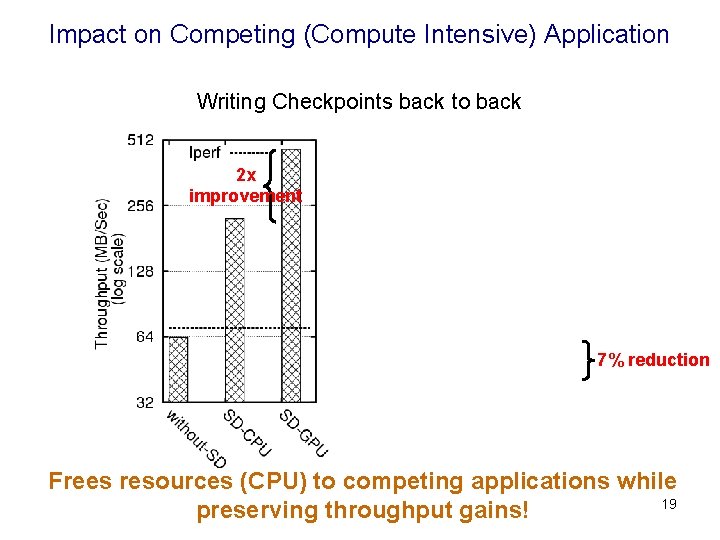

Impact on Competing (Compute-Intensive) Application

Workload: writing checkpoints back to back

Figure annotations: 2x improvement; 7% reduction

Frees resources (CPU) for competing applications while preserving throughput gains!

Summary
- We present the design and implementation of a distributed storage system that integrates GPU power
- We present CrystalGPU: a management layer that transparently enables common GPU optimizations across GPGPU applications
- We empirically demonstrate that employing the GPU enables close to optimal system performance
- We shed light on the impact of GPU offloading on competing applications running on the same node

netsyslab.ece.ubc.ca

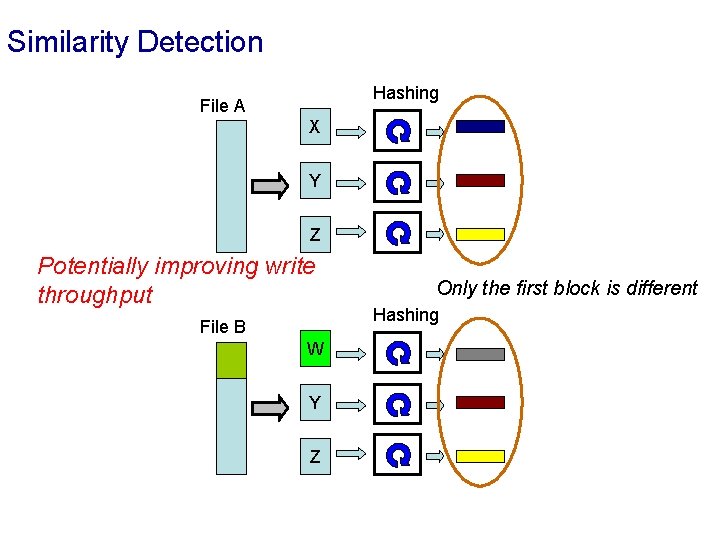

Similarity Detection

File A: X Y Z
File B: W Y Z

Hashing the blocks of both files shows that only the first block is different, potentially improving write throughput.
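The File A / File B example can be reproduced with a few lines of Python. This is a minimal sketch under assumptions the slide does not state: fixed-size blocks, SHA-1 as the hash function, and a tiny 4-byte block size chosen only for readability. The helper names `split_blocks`, `block_hashes`, and `blocks_to_write` are hypothetical.

```python
import hashlib

BLOCK_SIZE = 4  # bytes; tiny on purpose so the example is easy to follow


def split_blocks(data, block_size=BLOCK_SIZE):
    """Split data into fixed-size blocks (the last block may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def block_hashes(data, block_size=BLOCK_SIZE):
    """Hash every block; blocks with equal hashes are treated as identical."""
    return [hashlib.sha1(b).hexdigest() for b in split_blocks(data, block_size)]


def blocks_to_write(new_file, stored_hashes, block_size=BLOCK_SIZE):
    """Return the indices of blocks whose hash is not already stored."""
    return [i for i, h in enumerate(block_hashes(new_file, block_size))
            if h not in stored_hashes]


file_a = b"XXXXYYYYZZZZ"  # blocks X, Y, Z
file_b = b"WWWWYYYYZZZZ"  # blocks W, Y, Z
stored = set(block_hashes(file_a))
changed = blocks_to_write(file_b, stored)
print(changed)  # [0]
```

Only block 0 of File B is new, so a client that compares hashes first would need to send one block instead of three.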

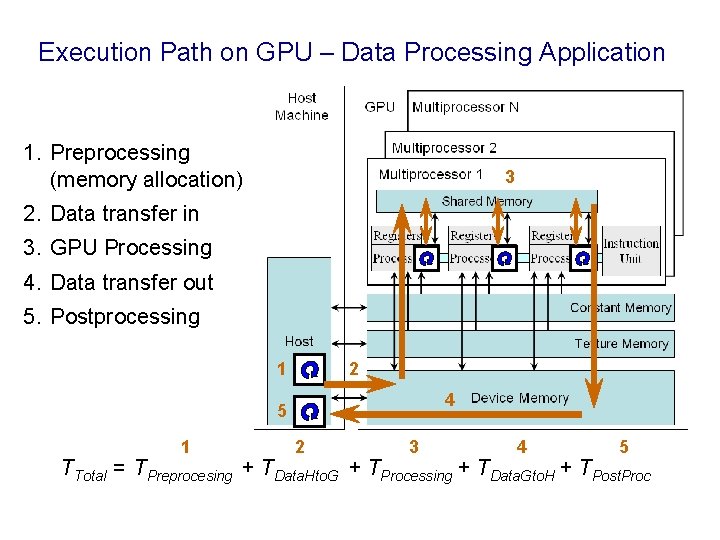

Execution Path on GPU: Data Processing Application

1. Preprocessing (memory allocation)
2. Data transfer in
3. GPU processing
4. Data transfer out
5. Postprocessing

$T_{Total} = T_{Preprocessing} + T_{DataHtoG} + T_{Processing} + T_{DataGtoH} + T_{PostProc}$
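The five-stage cost model can be turned into a small calculator to see how much of the total is overhead and what amortizing memory allocation would save. The sketch below is illustrative only: the stage times are made-up values, not measurements from the talk, and the class `StageTimes` with its method `stream_total` is hypothetical. `stream_total` assumes blocks are processed one after another with no overlap of transfers and computation.

```python
from dataclasses import dataclass


@dataclass
class StageTimes:
    """Per-block stage times in milliseconds (hypothetical values)."""
    preprocessing: float   # memory allocation
    data_h_to_g: float     # host-to-GPU transfer
    processing: float      # GPU kernel execution
    data_g_to_h: float     # GPU-to-host transfer
    postprocessing: float

    def total(self):
        """T_Total: the sum of the five stages."""
        return (self.preprocessing + self.data_h_to_g + self.processing
                + self.data_g_to_h + self.postprocessing)

    def overhead_fraction(self):
        """Share of T_Total that is not spent in GPU processing."""
        return (self.total() - self.processing) / self.total()

    def stream_total(self, n_blocks, reuse_buffers):
        """Serialized time for n_blocks; with reuse, allocation is paid once."""
        if reuse_buffers:
            per_block = self.total() - self.preprocessing
            return self.preprocessing + n_blocks * per_block
        return n_blocks * self.total()


t = StageTimes(preprocessing=4.0, data_h_to_g=2.0, processing=2.0,
               data_g_to_h=1.0, postprocessing=1.0)
print(t.total())                  # 10.0
print(t.overhead_fraction())      # 0.8
print(t.stream_total(10, False))  # 100.0
print(t.stream_total(10, True))   # 64.0
```

With these made-up numbers, 80% of the per-block time is overhead, and reusing buffers across ten blocks removes nine of the ten allocations.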

- Slides: 23