FAX Dress Rehearsal Status Report

Ilija Vukotic, on behalf of the atlas-adc-federated-xrootd working group
Computation and Enrico Fermi Institutes, University of Chicago
Software & Computing Workshop, March 13, 2013
efi.uchicago.edu ci.uchicago.edu

All the slides were made by Rob Gardner; I just updated them. He can’t be here due to the OSG All Hands meeting.

Data federation goals

• Create a common ATLAS namespace across all storage sites, accessible from anywhere
• Make easy to use, homogeneous access to data
• Identified initial use cases
  – Failover from stage-in problems with local storage
  – Gain access to more CPUs using WAN direct read access
    o Allow brokering to Tier 2s with partial datasets
    o Opportunistic resources without local ATLAS storage
  – Use as caching mechanism at sites to reduce local data management tasks
    o Eliminate cataloging, consistency checking, deletion services
• WAN data access group formed in ATLAS to determine use cases & requirements on infrastructure

Implications for Production & Analysis

• Behind the scenes in the Panda + Pilot systems:
  – Recover from stage-in to local disk failures
  – This is in production at a few sites
• Development coming to allow advanced brokering which includes network performance
  – Would mean jobs no longer require dataset to be complete at a site
  – Allows “diskless” compute sites
• Ability to use non-WLCG resources
  – “Off-grid” analysis clusters
  – Opportunistic resources
  – Cloud resources
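The stage-in recovery mentioned here can be pictured as an ordered list of access methods, tried until one works. The sketch below is only an illustration of that idea, not the pilot's actual code: the function names, the redirector host `redirector.example.org` and the `/atlas/` path prefix are all hypothetical.

```python
from typing import Callable, List, Optional, Sequence


def open_with_failover(
    lfn: str,
    openers: Sequence[Callable[[str], Optional[str]]],
) -> str:
    """Try each access method in order; return the first handle that works."""
    errors: List[str] = []
    for opener in openers:
        try:
            handle = opener(lfn)
        except OSError as exc:
            # Remember why this method failed, then fall through to the next.
            errors.append(f"{opener.__name__}: {exc}")
            continue
        if handle is not None:
            return handle
    raise OSError(f"all access methods failed for {lfn}: {errors}")


# Hypothetical stand-ins for the two access paths.
def local_stage_in(lfn: str) -> Optional[str]:
    raise OSError("local storage unavailable")


def federation_read(lfn: str) -> Optional[str]:
    # Assumed global-namespace URL layout, for illustration only.
    return f"root://redirector.example.org//atlas/{lfn}"


if __name__ == "__main__":
    print(open_with_failover("data.root", [local_stage_in, federation_read]))
```

Keeping the methods in a list makes the order (local first, federation second) a configuration choice rather than something hard-wired.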

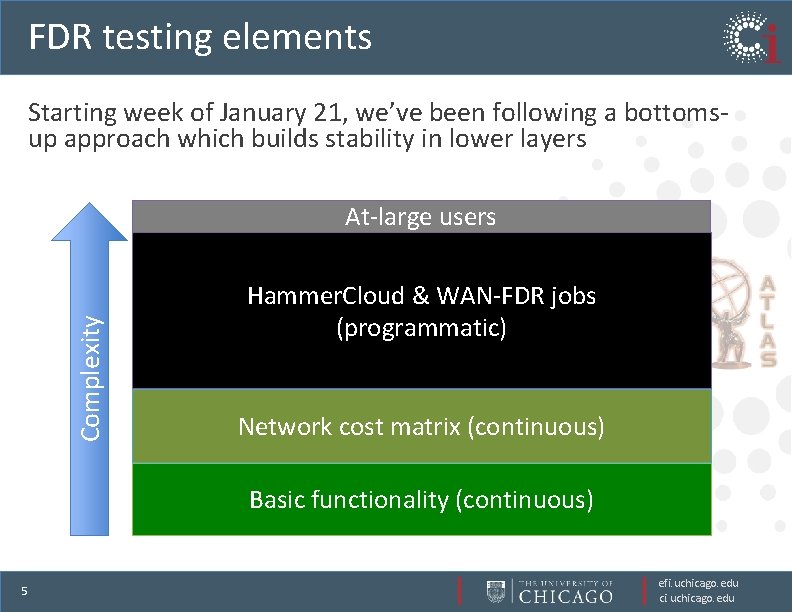

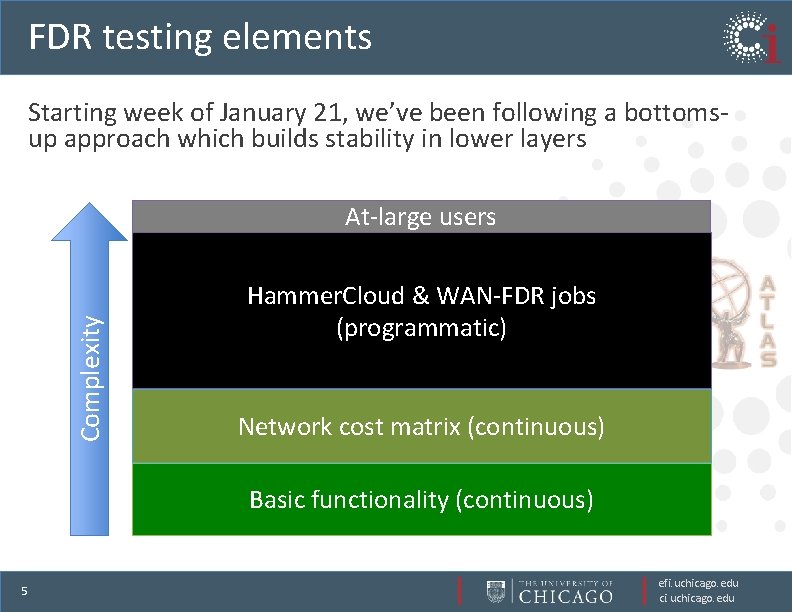

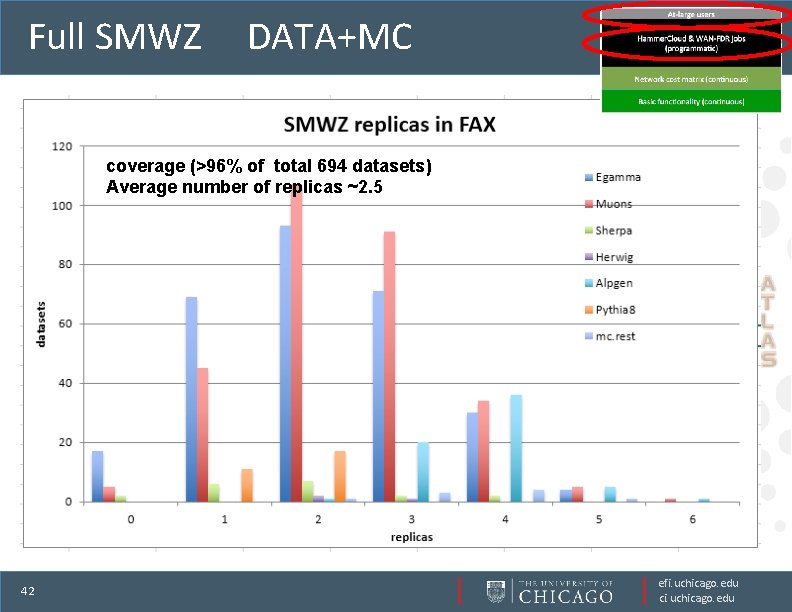

FDR testing elements

Starting the week of January 21, we’ve been following a bottom-up approach which builds stability in lower layers.

Layers, from most to least complex:
• At-large users
• HammerCloud & WAN-FDR jobs (programmatic)
• Network cost matrix (continuous)
• Basic functionality (continuous)

Site Metrics

• “Connectivity” – copy and read test matrices
• HC runs with modest job numbers
  – Stage-in & direct read
  – Local, nearby, far-away
• Load tests
  – For well functioning sites only
  – Graduated tests 50, 100, 200 jobs vs. various # files
  – Will notify the site and/or list when these are launched
• Results
  – Simple job efficiency
  – Wallclock, # files, CPU %, event rate
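As a rough illustration of how the result metrics listed here could be derived from per-job records, the sketch below computes job efficiency, CPU % and event rate. The `JobRecord` fields and the choice to average over successful jobs only are assumptions made for this example, not the HammerCloud definitions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class JobRecord:
    succeeded: bool
    wallclock_s: float  # wall-clock time of the job
    cpu_s: float        # CPU time consumed by the job
    events: int         # number of events processed


def summarize(jobs: List[JobRecord]) -> Dict[str, float]:
    """Aggregate simple site metrics from a list of job records."""
    ok = [j for j in jobs if j.succeeded]
    wall = sum(j.wallclock_s for j in ok)
    cpu = sum(j.cpu_s for j in ok)
    events = sum(j.events for j in ok)
    return {
        "job_efficiency": len(ok) / len(jobs) if jobs else 0.0,
        "cpu_percent": 100.0 * cpu / wall if wall else 0.0,
        "event_rate_hz": events / wall if wall else 0.0,
    }


if __name__ == "__main__":
    demo = [JobRecord(True, 100.0, 50.0, 1000), JobRecord(False, 10.0, 1.0, 0)]
    print(summarize(demo))
```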

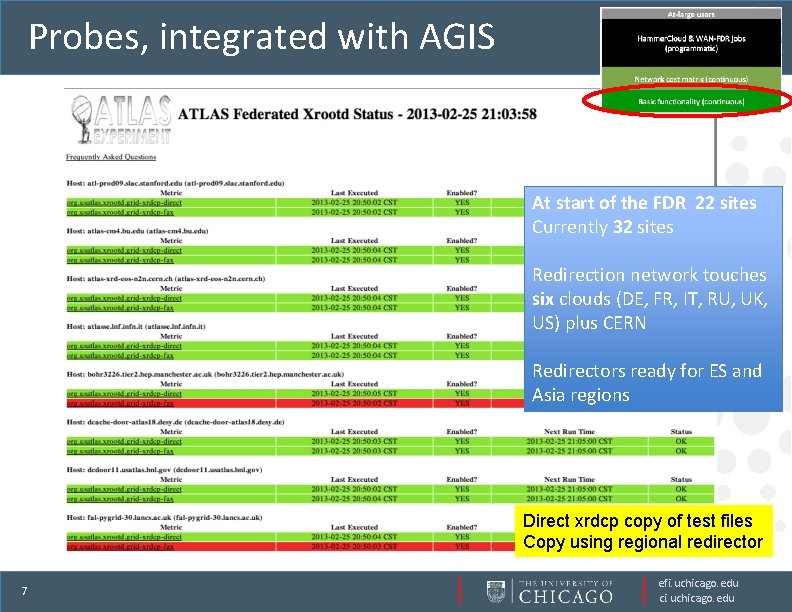

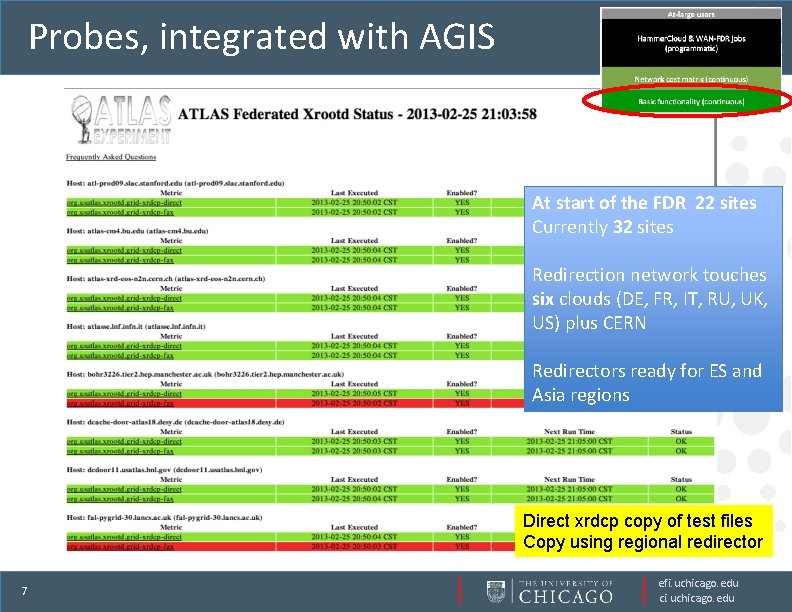

Probes, integrated with AGIS

• At start of the FDR: 22 sites
• Currently: 32 sites
• Redirection network touches six clouds (DE, FR, IT, RU, UK, US) plus CERN
• Redirectors ready for ES and Asia regions

Figure panels: direct xrdcp copy of test files; copy using regional redirector
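The two probe types (direct copy from a site, and copy through a regional redirector) differ only in which host the `xrdcp` client contacts. A minimal sketch of building both command lines follows; the host names and file path are placeholders, and 1094 is simply the usual default xrootd port, so a real probe would take all of these from its configuration.

```python
from typing import Dict, List


def xrootd_url(host: str, path: str, port: int = 1094) -> str:
    """Build a root:// URL; the double slash marks an absolute path."""
    return f"root://{host}:{port}//{path.lstrip('/')}"


def probe_commands(site_host: str, redirector_host: str, path: str) -> Dict[str, List[str]]:
    """Return the xrdcp command lines for the direct and redirected copy tests."""
    return {
        # -f overwrites the destination; /dev/null discards the copied data.
        "direct": ["xrdcp", "-f", xrootd_url(site_host, path), "/dev/null"],
        "via_redirector": ["xrdcp", "-f", xrootd_url(redirector_host, path), "/dev/null"],
    }


if __name__ == "__main__":
    cmds = probe_commands(
        "xrootd.site.example.org",        # hypothetical site door
        "redirector.region.example.org",  # hypothetical regional redirector
        "/atlas/test/file.root",          # hypothetical test file
    )
    for name, cmd in cmds.items():
        print(name, " ".join(cmd))
```

The lists can be passed unchanged to `subprocess.run`, with the exit code and elapsed time recorded as the probe result.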

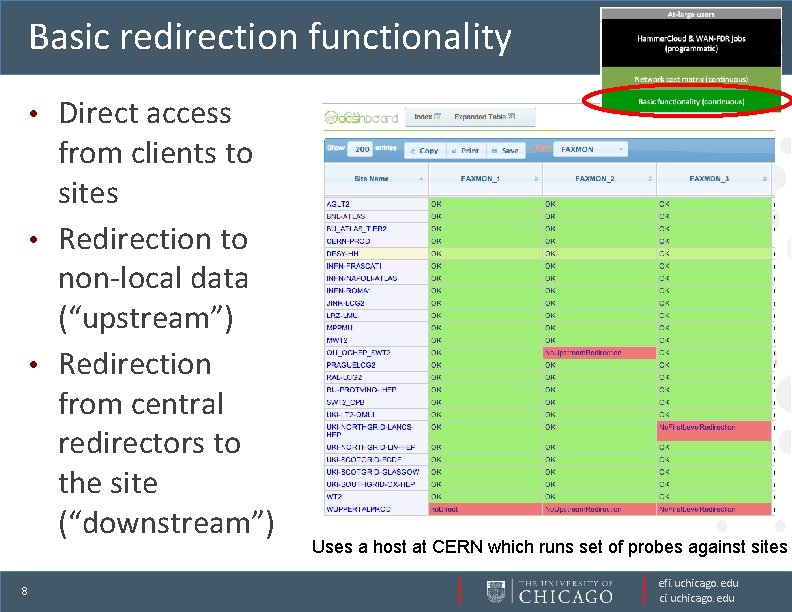

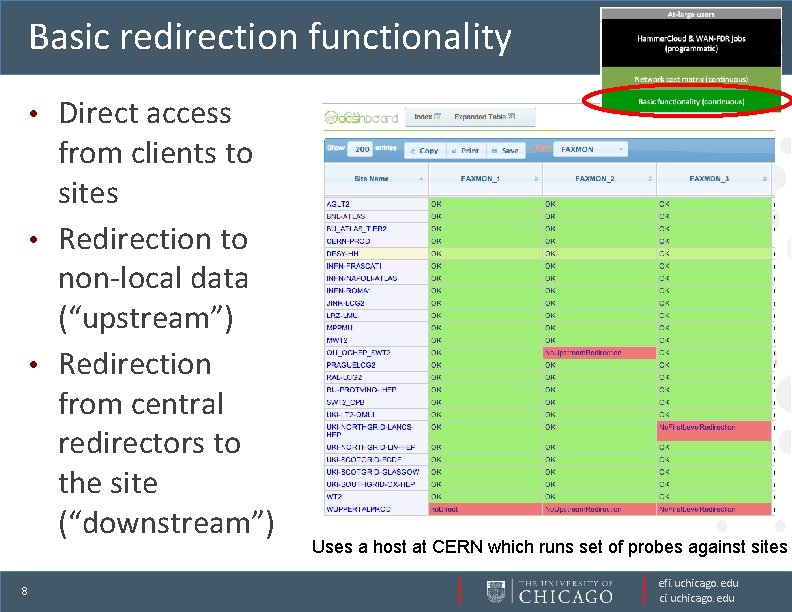

Basic redirection functionality

• Direct access from clients to sites
• Redirection to non-local data (“upstream”)
• Redirection from central redirectors to the site (“downstream”)
• Uses a host at CERN which runs a set of probes against sites

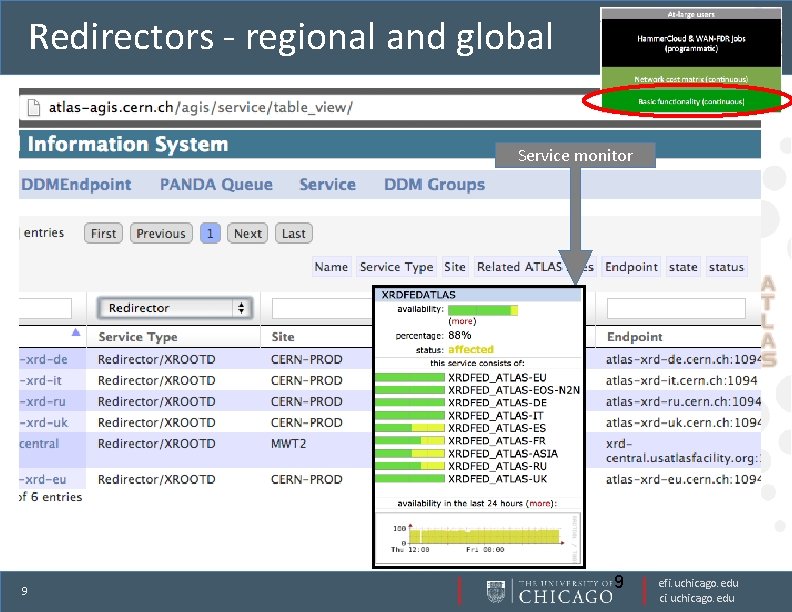

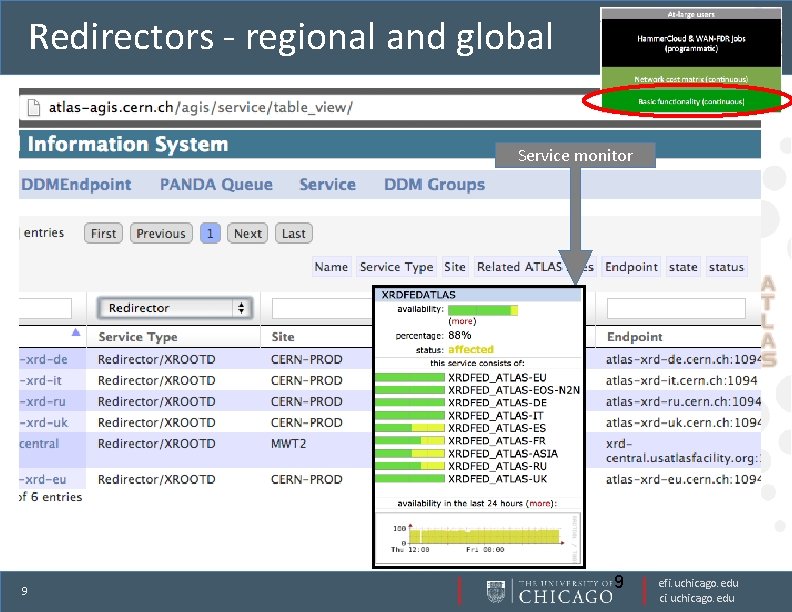

Redirectors - regional and global

Service monitor

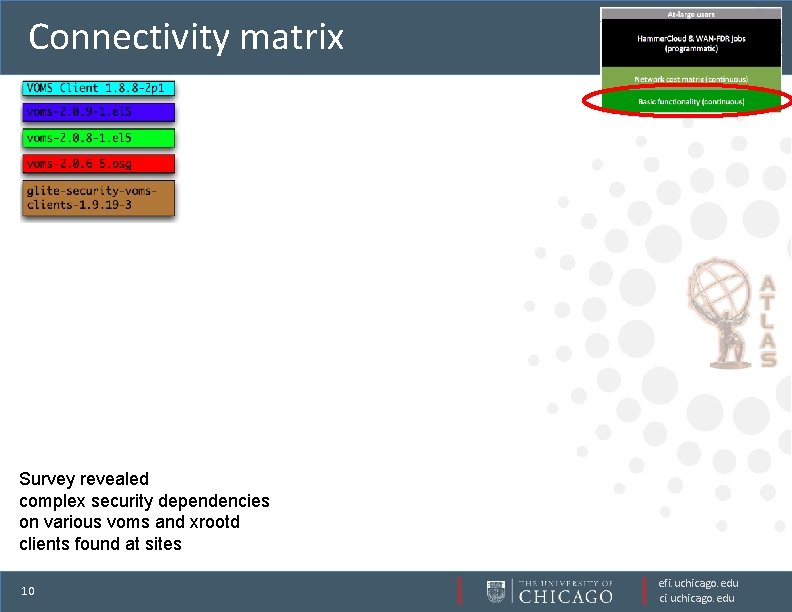

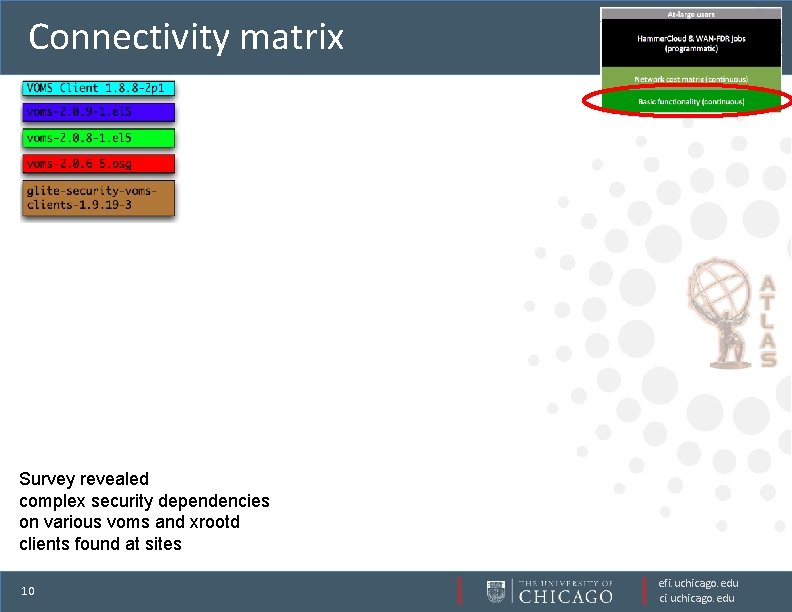

Connectivity matrix

Survey revealed complex security dependencies on various voms and xrootd clients found at sites

Cost matrix measurements

Cost-of-access: (pairwise network links, storage load, etc.)
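One way to picture a pairwise cost matrix is as a mapping from (source, destination) to a single number, which a broker can then minimise over the sites holding a replica. The sketch below assumes "lower is cheaper" and uses made-up cost values; how the real cost combines network and storage load is not specified on the slide.

```python
from typing import Dict, Iterable, Optional, Tuple

CostMatrix = Dict[Tuple[str, str], float]


def cheapest_source(
    cost: CostMatrix, destination: str, candidates: Iterable[str]
) -> Optional[str]:
    """Pick the candidate source with the lowest measured cost to `destination`."""
    known = {
        src: cost[(src, destination)]
        for src in candidates
        if (src, destination) in cost  # skip pairs that were never measured
    }
    if not known:
        return None
    return min(known, key=known.get)


if __name__ == "__main__":
    # Illustrative numbers only, not measurements from the FDR.
    demo: CostMatrix = {("MWT2", "AGLT2"): 1.5, ("BNL-ATLAS", "AGLT2"): 3.0}
    print(cheapest_source(demo, "AGLT2", ["MWT2", "BNL-ATLAS", "SLAC"]))
```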

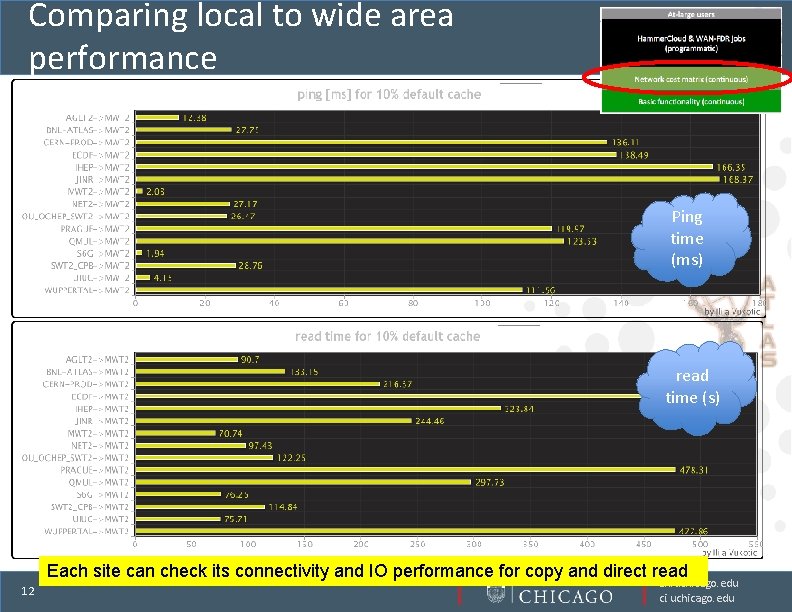

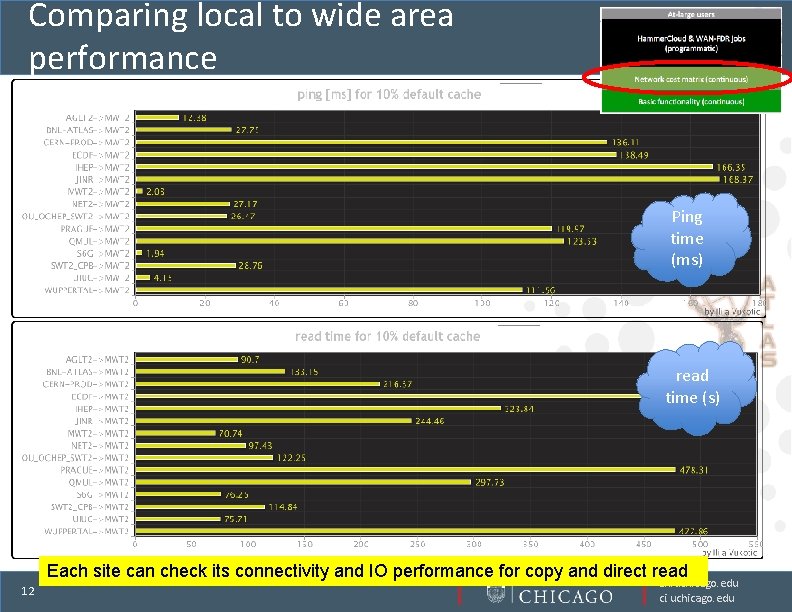

Comparing local to wide area performance

[Figure: read time (s) versus ping time (ms), with the local measurements marked]

Each site can check its connectivity and IO performance for copy and direct read

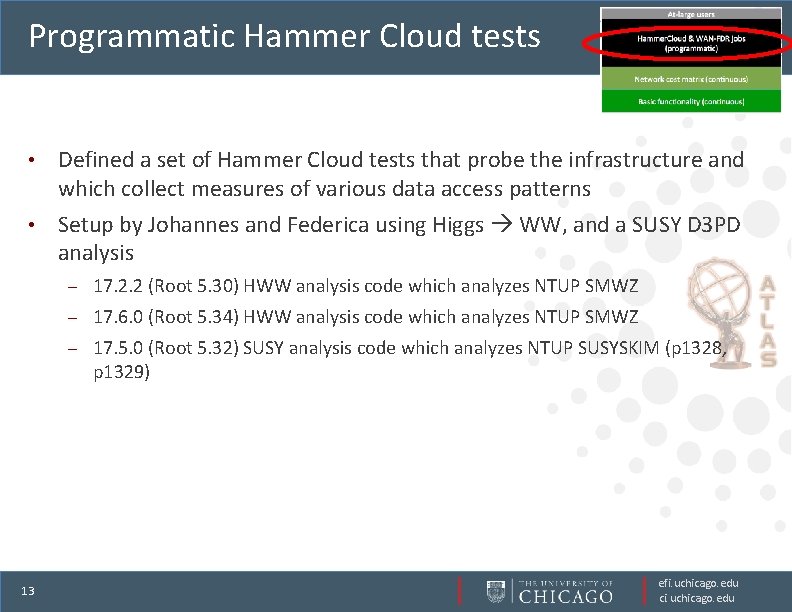

Programmatic HammerCloud tests

• Defined a set of HammerCloud tests that probe the infrastructure and collect measures of various data access patterns
• Set up by Johannes and Federica using Higgs WW and a SUSY D3PD analysis
  – 17.2.2 (ROOT 5.30) HWW analysis code which analyzes NTUP_SMWZ
  – 17.6.0 (ROOT 5.34) HWW analysis code which analyzes NTUP_SMWZ
  – 17.5.0 (ROOT 5.32) SUSY analysis code which analyzes NTUP_SUSYSKIM (p1328, p1329)

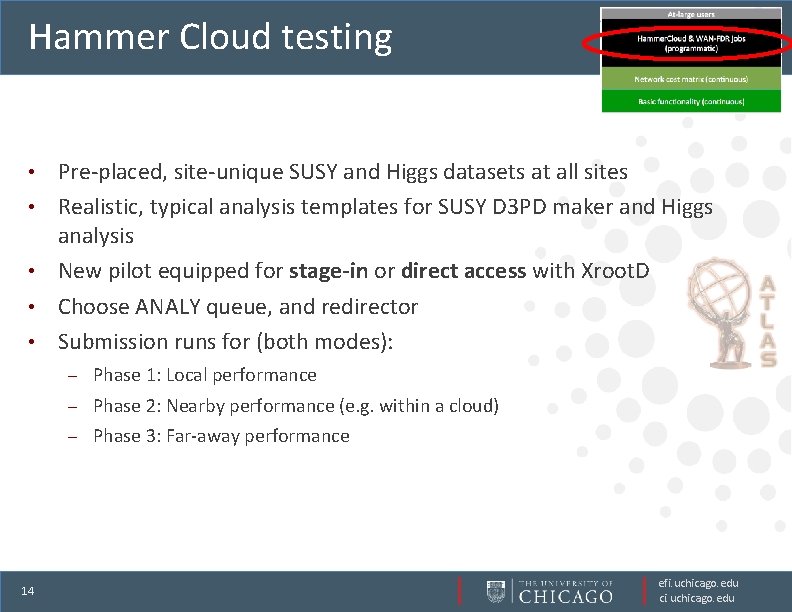

HammerCloud testing

• Pre-placed, site-unique SUSY and Higgs datasets at all sites
• Realistic, typical analysis templates for SUSY D3PD maker and Higgs analysis
• New pilot equipped for stage-in or direct access with XRootD
• Choose ANALY queue, and redirector
• Submission runs for (both modes):
  – Phase 1: Local performance
  – Phase 2: Nearby performance (e.g. within a cloud)
  – Phase 3: Far-away performance
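The three phases amount to classifying each (job site, data site) pair by distance. A minimal sketch, assuming "nearby" means "same cloud" as the slide suggests; the site-to-cloud map here is a small illustrative excerpt, not the AGIS topology.

```python
from typing import Dict

# Illustrative excerpt of a site-to-cloud mapping.
CLOUD_OF: Dict[str, str] = {
    "MWT2": "US",
    "AGLT2": "US",
    "ECDF": "UK",
    "QMUL": "UK",
}


def access_phase(job_site: str, data_site: str, cloud_of: Dict[str, str] = CLOUD_OF) -> str:
    """Classify a data access as local, nearby (same cloud) or far-away."""
    if job_site == data_site:
        return "local"      # Phase 1
    if cloud_of[job_site] == cloud_of[data_site]:
        return "nearby"     # Phase 2
    return "far-away"       # Phase 3


if __name__ == "__main__":
    for pair in [("MWT2", "MWT2"), ("MWT2", "AGLT2"), ("MWT2", "QMUL")]:
        print(pair, access_phase(*pair))
```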

Test datasets

Each of these datasets gets copied to a version with site-specific names, so as to automatically test redirection access and to provide a benchmark comparison.

SUSY
data12_8TeV.00203195.physics_JetTauEtmiss.merge.NTUP_SUSYSKIM.r4065_p1278_p1329_tid01163314_00
data12_8TeV.00203934.physics_JetTauEtmiss.merge.NTUP_SUSYSKIM.r4065_p1278_p1329_tid01163289_00
data12_8TeV.00209074.physics_JetTauEtmiss.merge.NTUP_SUSYSKIM.r4065_p1278_p1329_tid01106330_00
data12_8TeV.00209084.physics_JetTauEtmiss.merge.NTUP_SUSYSKIM.r4065_p1278_p1329_tid01106329_00
data12_8TeV.00209109.physics_JetTauEtmiss.merge.NTUP_SUSYSKIM.r4065_p1278_p1329_tid01106328_00
data12_8TeV.00209161.physics_JetTauEtmiss.merge.NTUP_SUSYSKIM.r4065_p1278_p1329_tid01106327_00
data12_8TeV.00209183.physics_JetTauEtmiss.merge.NTUP_SUSYSKIM.r4065_p1278_p1329_tid01106326_00
data12_8TeV.00209265.physics_JetTauEtmiss.merge.NTUP_SUSYSKIM.r4065_p1278_p1329_tid01106323_00
data12_8TeV.00209269.physics_JetTauEtmiss.merge.NTUP_SUSYSKIM.r4065_p1278_p1329_tid01106322_00
data12_8TeV.00209550.physics_JetTauEtmiss.merge.NTUP_SUSYSKIM.r4065_p1278_p1329_tid01106319_00
data12_8TeV.00209628.physics_JetTauEtmiss.merge.NTUP_SUSYSKIM.r4065_p1278_p1329_tid01106316_00
data12_8TeV.00209629.physics_JetTauEtmiss.merge.NTUP_SUSYSKIM.r4065_p1278_p1329_tid01106315_00

SMWZ
data12_8TeV.00211697.physics_Muons.merge.NTUP_SMWZ.f479_m1228_p1067_p1141_tid00987986_00
data12_8TeV.00211620.physics_Muons.merge.NTUP_SMWZ.f479_m1228_p1067_p1141_tid00986521_00
data12_8TeV.00211522.physics_Muons.merge.NTUP_SMWZ.f479_m1228_p1067_p1141_tid00986520_00
data12_8TeV.00212172.physics_Muons.merge.NTUP_SMWZ.f479_m1228_p1067_p1141_tid01007411_00
data12_8TeV.00212144.physics_Muons.merge.NTUP_SMWZ.f479_m1228_p1067_p1141_tid00999023_00
data12_8TeV.00211937.physics_Muons.merge.NTUP_SMWZ.f479_m1228_p1067_p1141_tid00994157_00
data12_8TeV.00212000.physics_Muons.merge.NTUP_SMWZ.f479_m1228_p1067_p1141_tid00994158_00
data12_8TeV.00212199.physics_Muons.merge.NTUP_SMWZ.f479_m1228_p1067_p1141_tid01007410_00
data12_8TeV.00211772.physics_Muons.merge.NTUP_SMWZ.f479_m1228_p1067_p1141_tid00990030_00
data12_8TeV.00211787.physics_Muons.merge.NTUP_SMWZ.f479_m1228_p1067_p1141_tid00990029_00
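The dataset names above are dot-separated, so their parts can be pulled apart mechanically, for example to group test datasets by run or data type. The sketch below splits one of the listed names; the field labels (`project`, `prod_step`, and so on) are this example's own reading of the six fields, and the scheme used for the site-specific copies is not shown on the slide, so it is not modelled here.

```python
from typing import List, NamedTuple


class DatasetName(NamedTuple):
    project: str
    run_number: str
    stream: str
    prod_step: str
    data_type: str
    tags: List[str]
    task_id: str


def parse_dataset_name(name: str) -> DatasetName:
    """Split a six-field, dot-separated dataset name into its parts."""
    project, run, stream, step, dtype, version = name.split(".")
    # The last field holds the processing tags, then "_tid" and the task id.
    tag_part, _, task_id = version.partition("_tid")
    return DatasetName(project, run, stream, step, dtype, tag_part.split("_"), task_id)


if __name__ == "__main__":
    example = (
        "data12_8TeV.00203195.physics_JetTauEtmiss.merge."
        "NTUP_SUSYSKIM.r4065_p1278_p1329_tid01163314_00"
    )
    print(parse_dataset_name(example))
```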

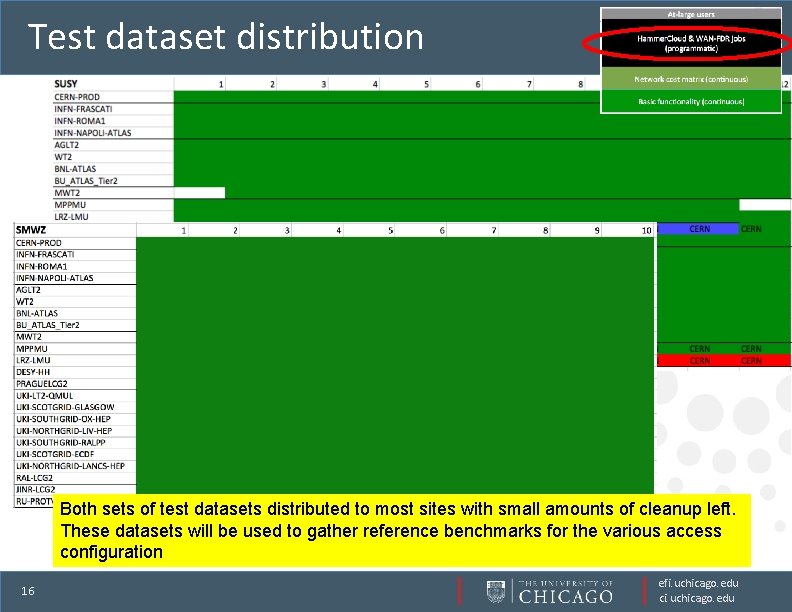

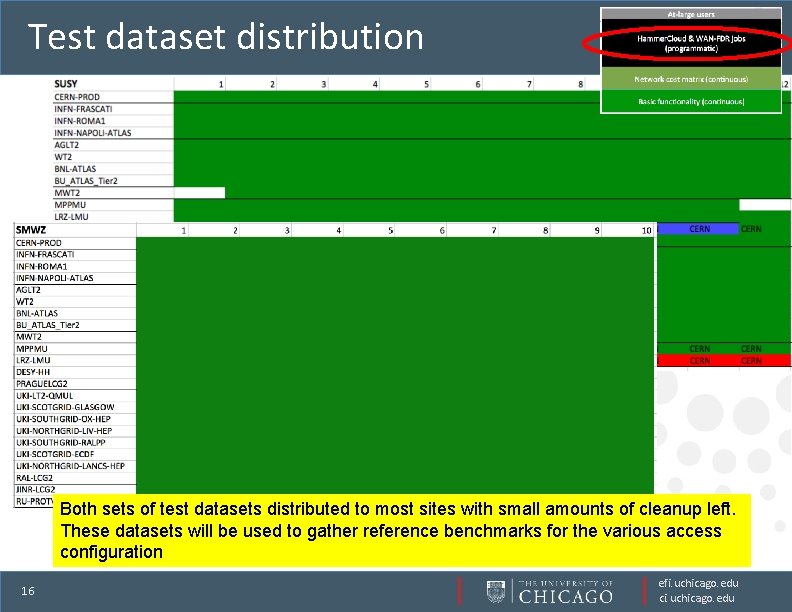

Test dataset distribution

Both sets of test datasets have been distributed to most sites, with small amounts of cleanup left. These datasets will be used to gather reference benchmarks for the various access configurations.

Queue configurations

• This turns out to be the hardest part
• Providing federated XRootD access exposes the full extent of heterogeneity of sites, in terms of schedconfig queue parameters
• Each site’s “copysetup” parameters seem to differ, and specific parameter settings need to be tried in the HammerCloud job submission scripts using --overwriteQueuedata
• Amazingly, in spite of this there is a good fraction of FAX-functional sites

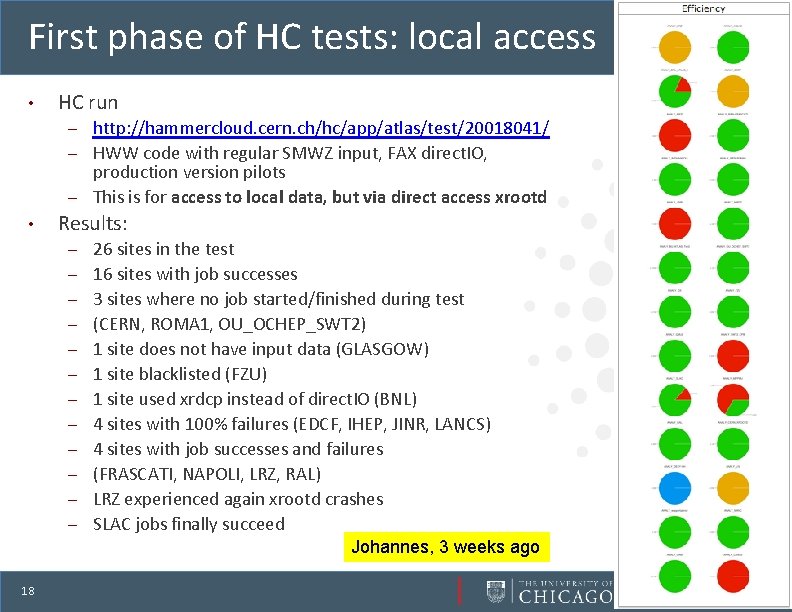

First phase of HC tests: local access

• HC run http://hammercloud.cern.ch/hc/app/atlas/test/20018041/
  – HWW code with regular SMWZ input, FAX directIO, production version pilots
  – This is for access to local data, but via direct access xrootd
• Results:
  – 26 sites in the test
  – 16 sites with job successes
  – 3 sites where no job started/finished during test (CERN, ROMA1, OU_OCHEP_SWT2)
  – 1 site does not have input data (GLASGOW)
  – 1 site blacklisted (FZU)
  – 1 site used xrdcp instead of directIO (BNL)
  – 4 sites with 100% failures (ECDF, IHEP, JINR, LANCS)
  – 4 sites with job successes and failures (FRASCATI, NAPOLI, LRZ, RAL)
  – LRZ again experienced xrootd crashes
  – SLAC jobs finally succeed

Johannes, 3 weeks ago
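A quick arithmetic check of the tallies in this run: assuming the listed categories are disjoint, and that the four sites with mixed successes and failures are already counted among the 16 with successes, the counts add up to the 26 sites in the test.

```python
# Site counts as quoted on the slide; the disjointness of the categories
# is an assumption of this check, not something the slide states.
outcomes = {
    "job successes": 16,
    "no job started/finished": 3,
    "no input data": 1,
    "blacklisted": 1,
    "used xrdcp instead of directIO": 1,
    "100% failures": 4,
}

total_sites = sum(outcomes.values())
success_fraction = outcomes["job successes"] / total_sites

if __name__ == "__main__":
    print(f"{total_sites} sites, {success_fraction:.1%} with job successes")
```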

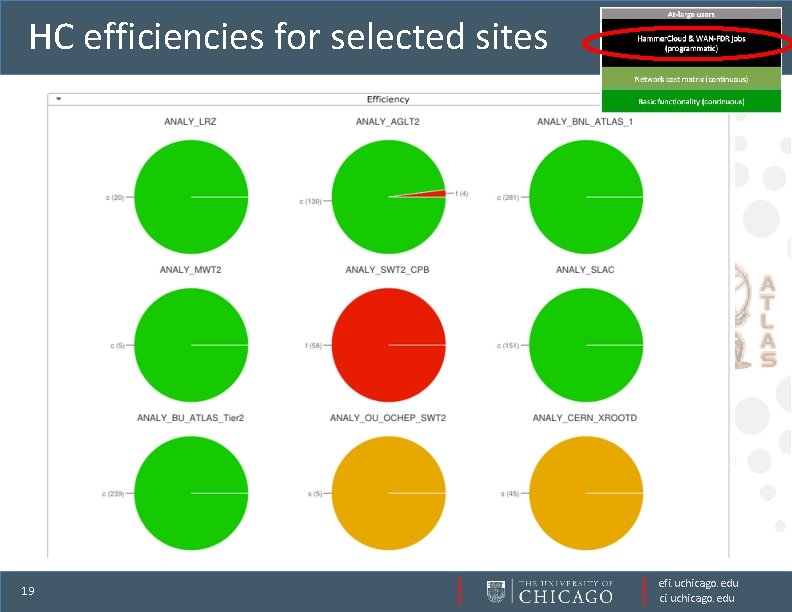

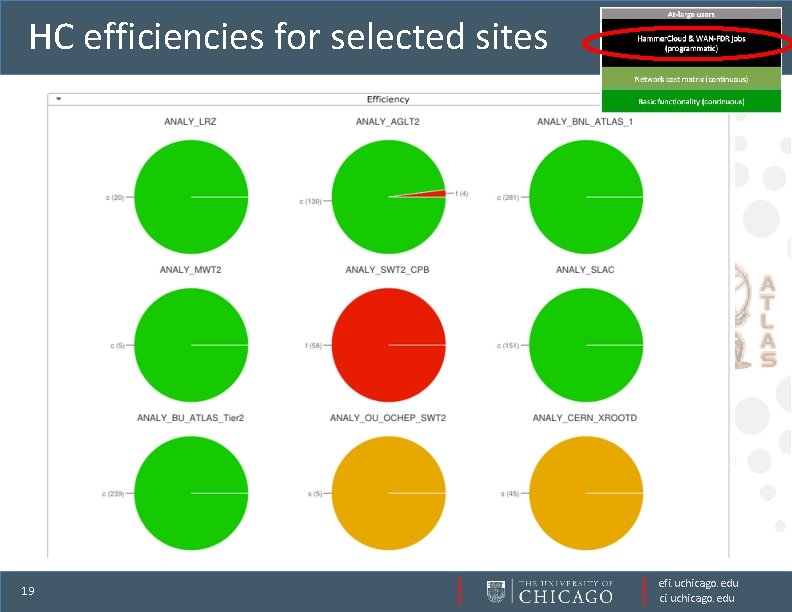

HC efficiencies for selected sites

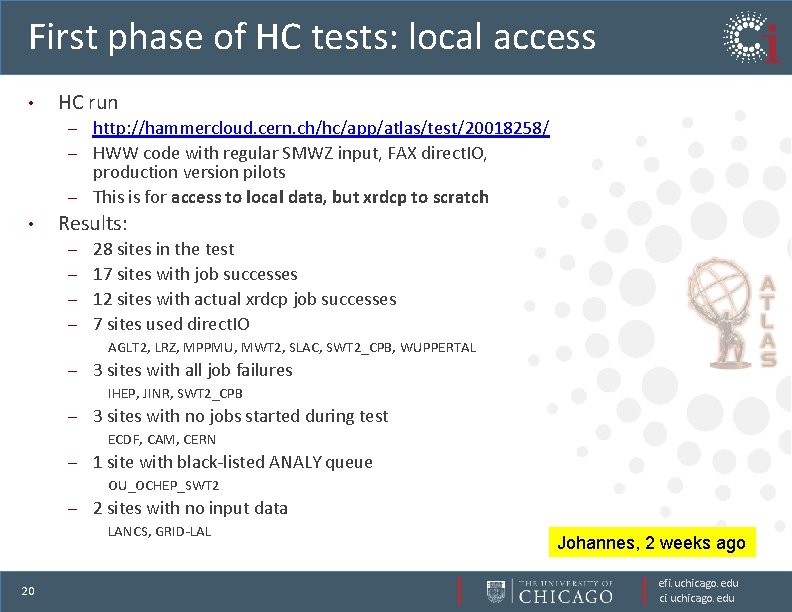

First phase of HC tests: local access

• HC run http://hammercloud.cern.ch/hc/app/atlas/test/20018258/
  – HWW code with regular SMWZ input, FAX directIO, production version pilots
  – This is for access to local data, but xrdcp to scratch
• Results:
  – 28 sites in the test
  – 17 sites with job successes
  – 12 sites with actual xrdcp job successes
  – 7 sites used directIO: AGLT2, LRZ, MPPMU, MWT2, SLAC, SWT2_CPB, WUPPERTAL
  – 3 sites with all job failures: IHEP, JINR, SWT2_CPB
  – 3 sites with no jobs started during test: ECDF, CAM, CERN
  – 1 site with black-listed ANALY queue: OU_OCHEP_SWT2
  – 2 sites with no input data: LANCS, GRID-LAL

Johannes, 2 weeks ago

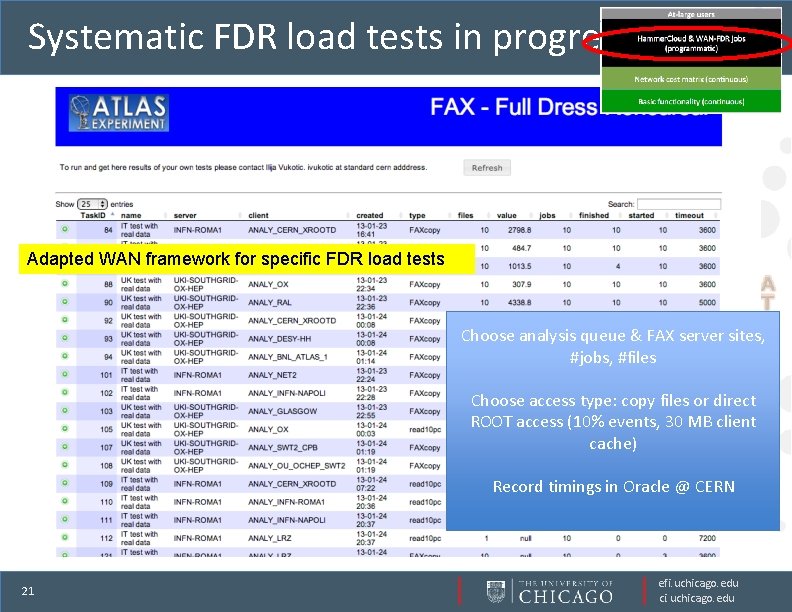

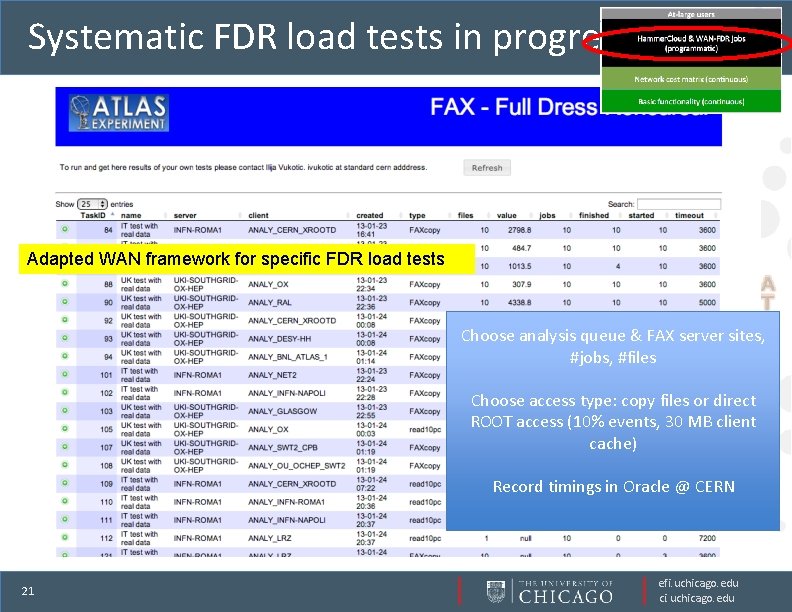

Systematic FDR load tests in progress

• Adapted WAN framework for specific FDR load tests
• Choose analysis queue & FAX server sites, #jobs, #files
• Choose access type: copy files or direct ROOT access (10% events, 30 MB client cache)
• Record timings in Oracle @ CERN

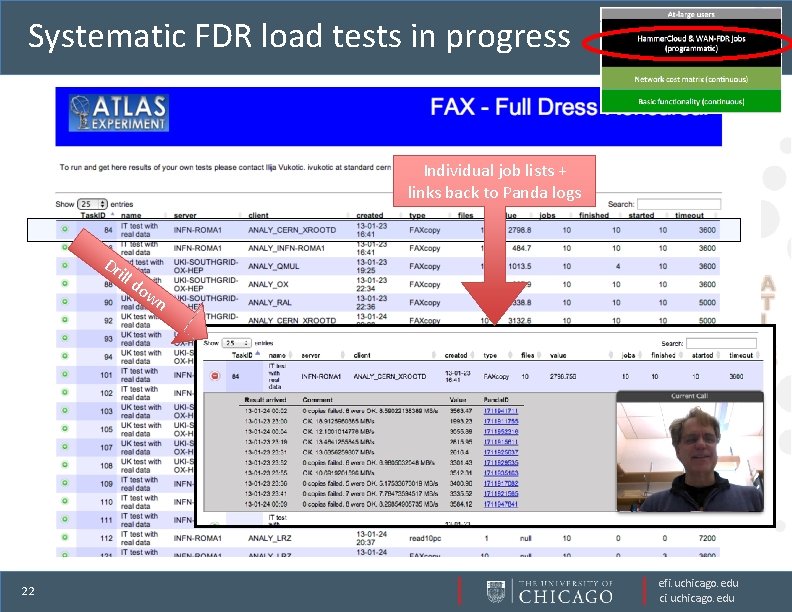

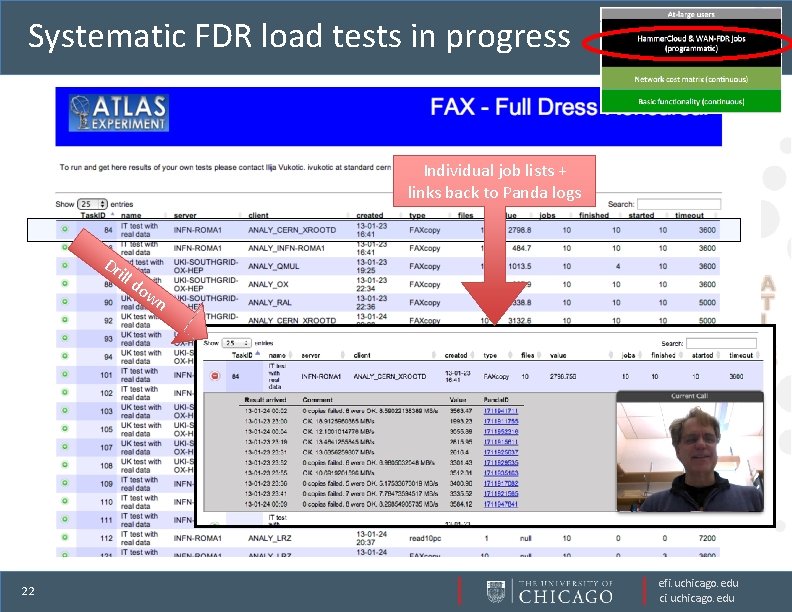

Systematic FDR load tests in progress

Individual job lists + links back to Panda logs (drill down)

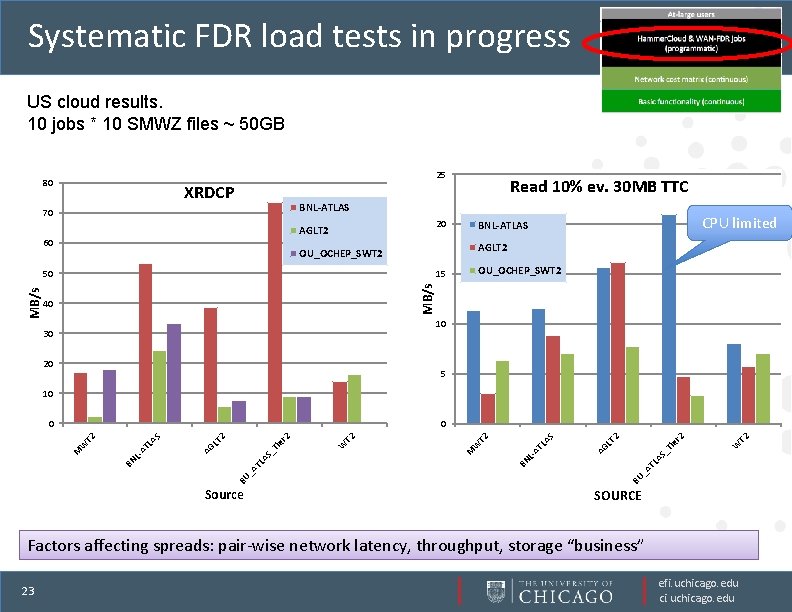

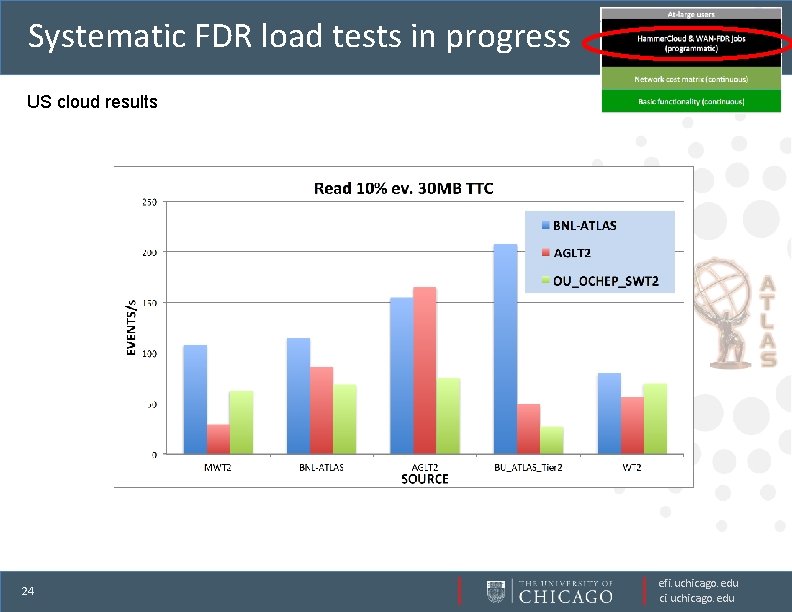

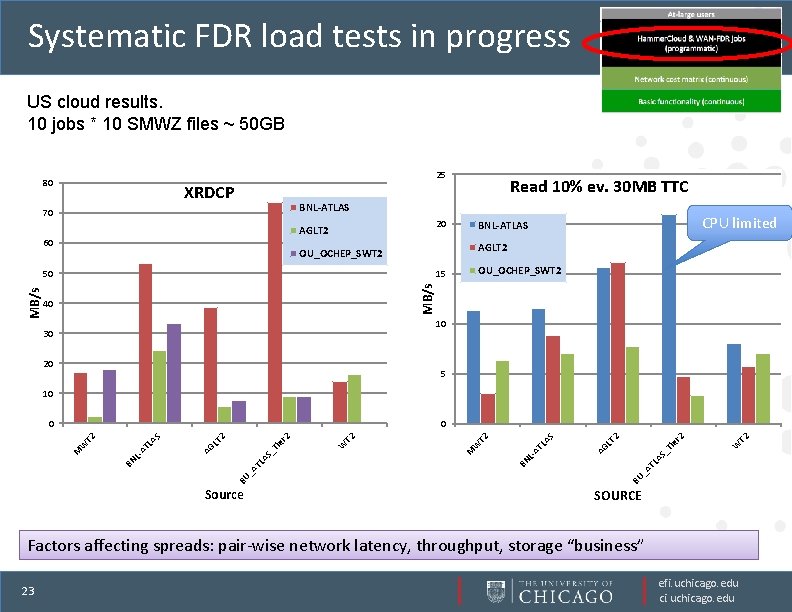

Systematic FDR load tests in progress

US cloud results. 10 jobs * 10 SMWZ files ~ 50 GB

[Figure: two bar charts of throughput (MB/s) by source site (MWT2, BNL-ATLAS, AGLT2, BU_ATLAS_Tier2), one for XRDCP and one for “Read 10% ev. 30 MB TTC”; series BNL-ATLAS, AGLT2, OU_OCHEP_SWT2; an annotation reads “CPU limited”]

Factors affecting spreads: pair-wise network latency, throughput, storage “business”

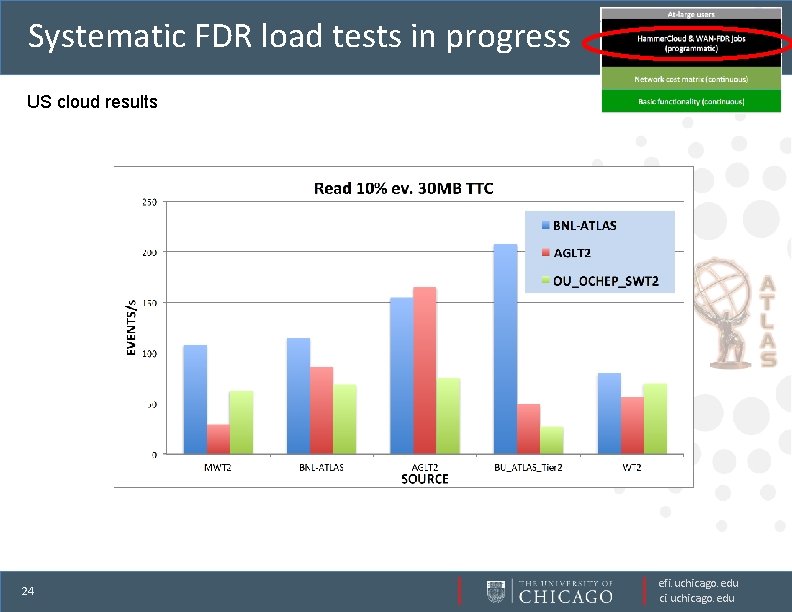

Systematic FDR load tests in progress

US cloud results

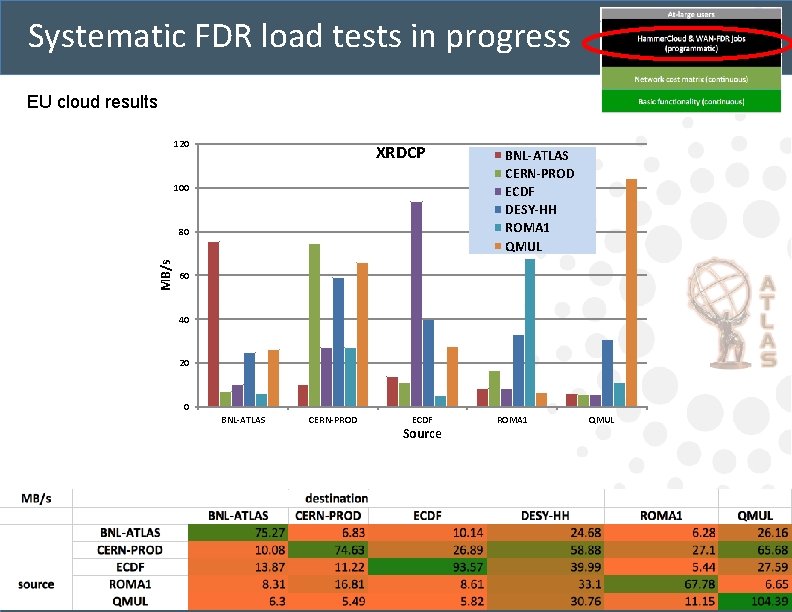

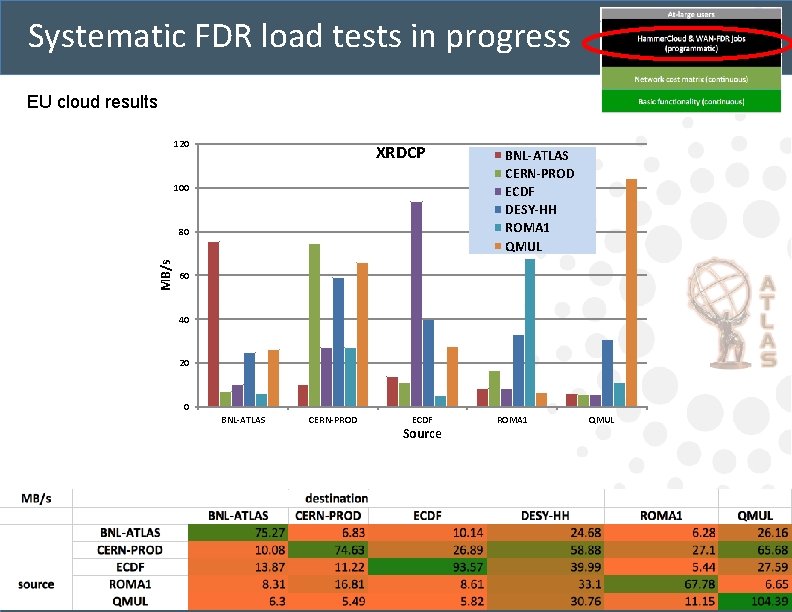

Systematic FDR load tests in progress

EU cloud results

[Figure: XRDCP throughput (MB/s) by source site (BNL-ATLAS, CERN-PROD, ECDF, ROMA1, QMUL); series BNL-ATLAS, CERN-PROD, ECDF, DESY-HH, ROMA1, QMUL]

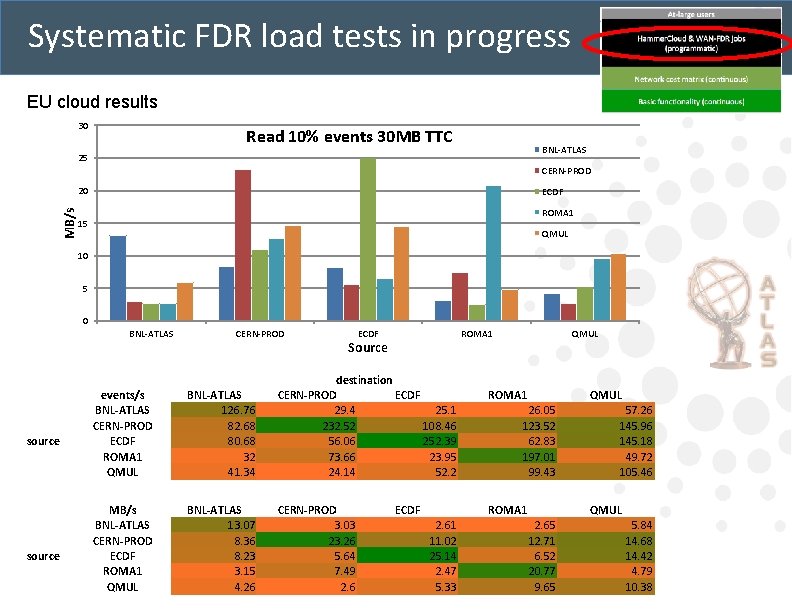

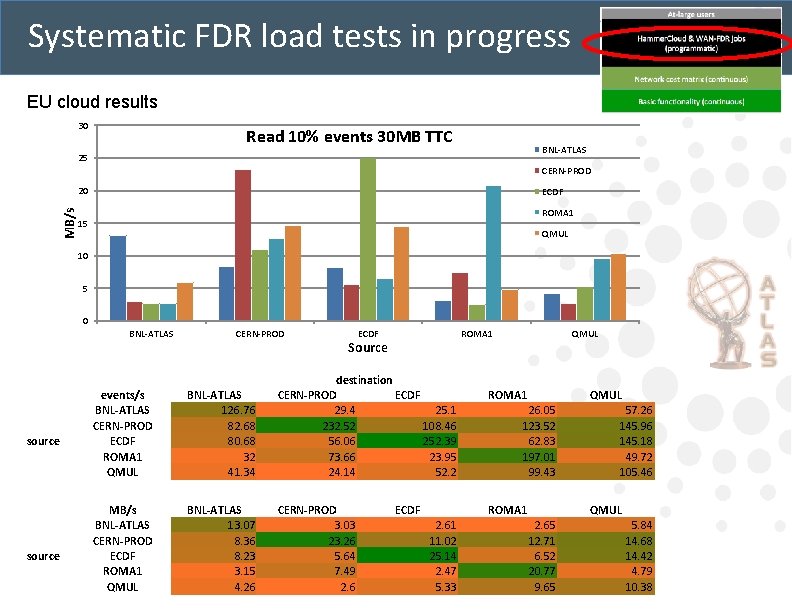

Systematic FDR load tests in progress

EU cloud results

[Figure: “Read 10% events, 30 MB TTC” throughput (MB/s) by source site; series BNL-ATLAS, CERN-PROD, ECDF, ROMA1, QMUL]

events/s (rows: source, columns: destination)

| Source | BNL-ATLAS | CERN-PROD | ECDF | ROMA1 | QMUL |
|---|---|---|---|---|---|
| BNL-ATLAS | 126.76 | 29.4 | 25.1 | 26.05 | 57.26 |
| CERN-PROD | 82.68 | 232.52 | 108.46 | 123.52 | 145.96 |
| ECDF | 80.68 | 56.06 | 252.39 | 62.83 | 145.18 |
| ROMA1 | 32 | 73.66 | 23.95 | 197.01 | 49.72 |
| QMUL | 41.34 | 24.14 | 52.2 | 99.43 | 105.46 |

MB/s (rows: source, columns: destination)

| Source | BNL-ATLAS | CERN-PROD | ECDF | ROMA1 | QMUL |
|---|---|---|---|---|---|
| BNL-ATLAS | 13.07 | 3.03 | 2.61 | 2.65 | 5.84 |
| CERN-PROD | 8.36 | 23.26 | 11.02 | 12.71 | 14.68 |
| ECDF | 8.23 | 5.64 | 25.14 | 6.52 | 14.42 |
| ROMA1 | 3.15 | 7.49 | 2.47 | 20.77 | 4.79 |
| QMUL | 4.26 | 2.6 | 5.33 | 9.65 | 10.38 |
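The events/s and MB/s tables describe the same reads in two units, so their ratio should be roughly constant (events per MB read). The sketch below checks this for the first column of numbers in each table, assuming those five values are the BNL-ATLAS entries paired with the five listed sites in order.

```python
# First numeric column of each table, taken from the slide; pairing the
# values with these site names in this order is an assumption of the sketch.
sites = ["BNL-ATLAS", "CERN-PROD", "ECDF", "ROMA1", "QMUL"]
events_per_s = [126.76, 82.68, 80.68, 32.0, 41.34]
mb_per_s = [13.07, 8.36, 8.23, 3.15, 4.26]

# Events delivered per MB transferred for each site pair.
events_per_mb = {
    site: ev / mb for site, ev, mb in zip(sites, events_per_s, mb_per_s)
}

if __name__ == "__main__":
    for site, ratio in events_per_mb.items():
        print(f"{site:10s} {ratio:5.2f} events/MB")
```

A near-constant ratio suggests the rate differences between sites come from throughput rather than from the jobs reading different amounts of data per event.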

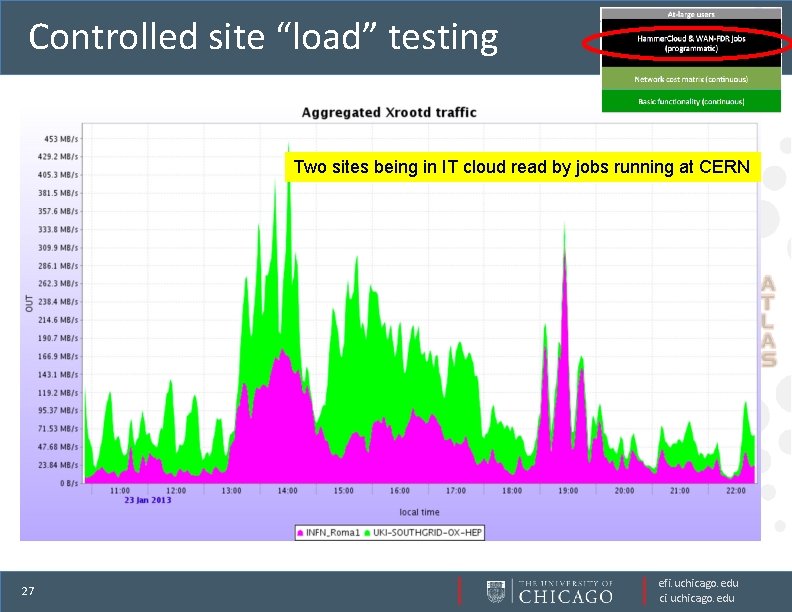

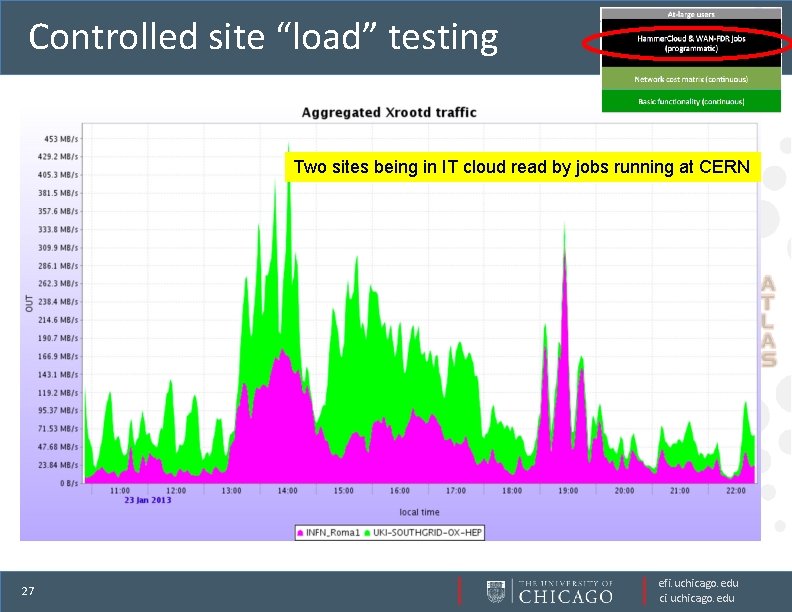

Controlled site “load” testing

Two sites in the IT cloud being read by jobs running at CERN

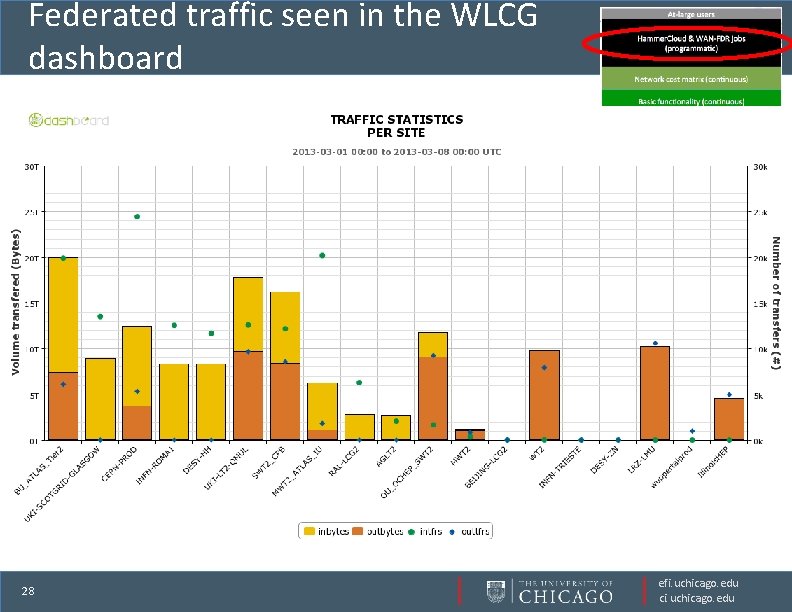

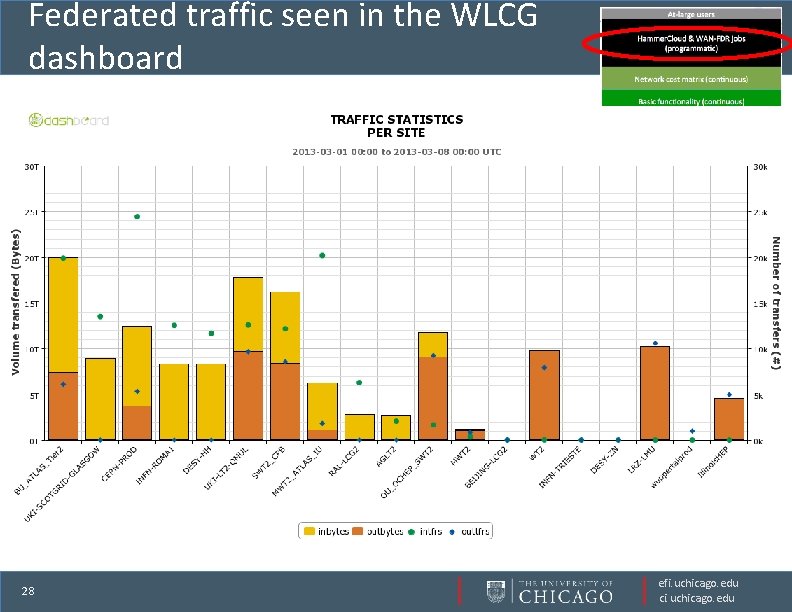

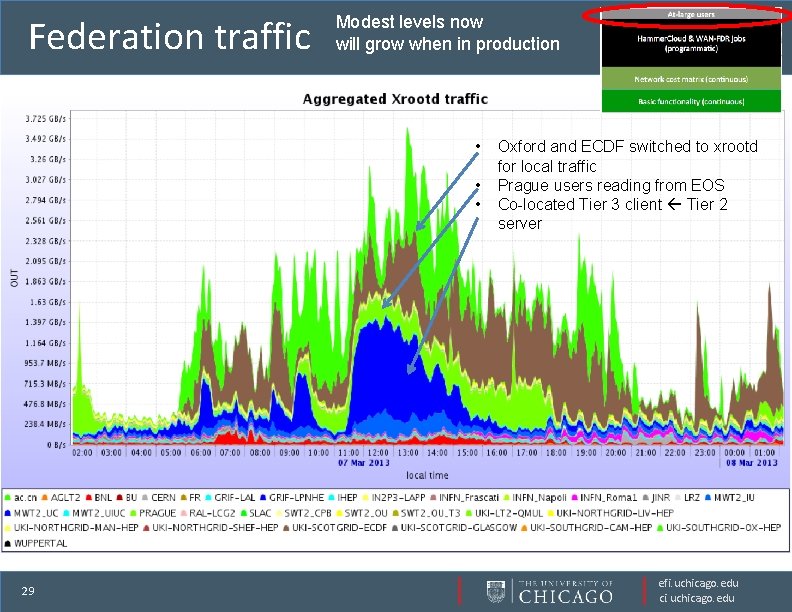

Federated traffic seen in the WLCG dashboard

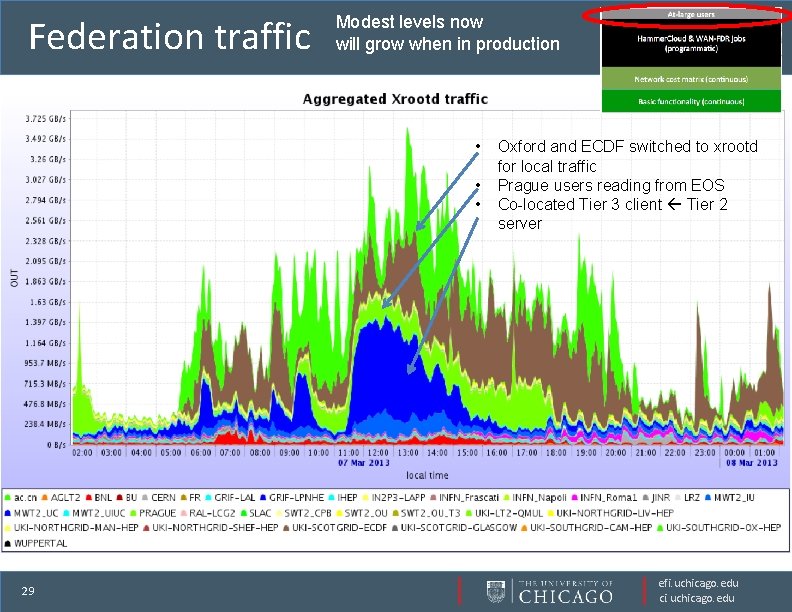

Federation traffic

Modest levels now; will grow when in production

• Oxford and ECDF switched to xrootd for local traffic
• Prague users reading from EOS
• Co-located Tier 3 client, Tier 2 server

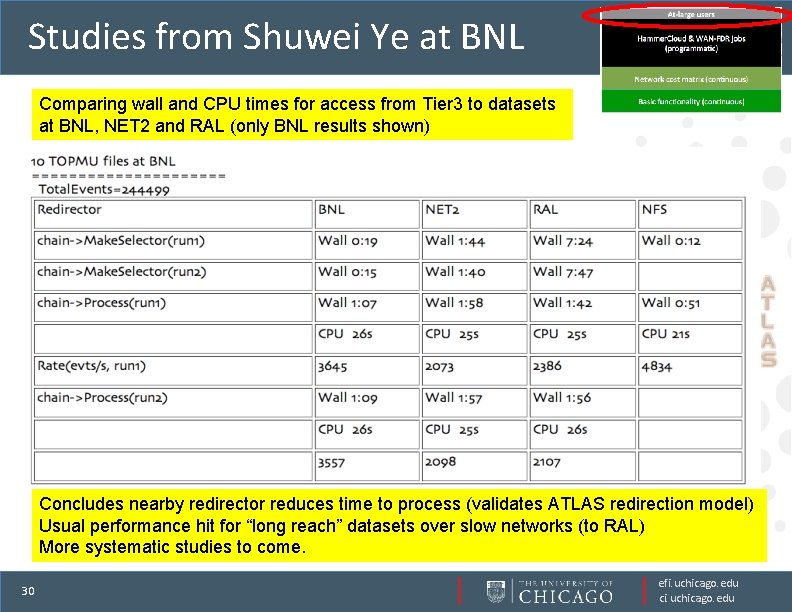

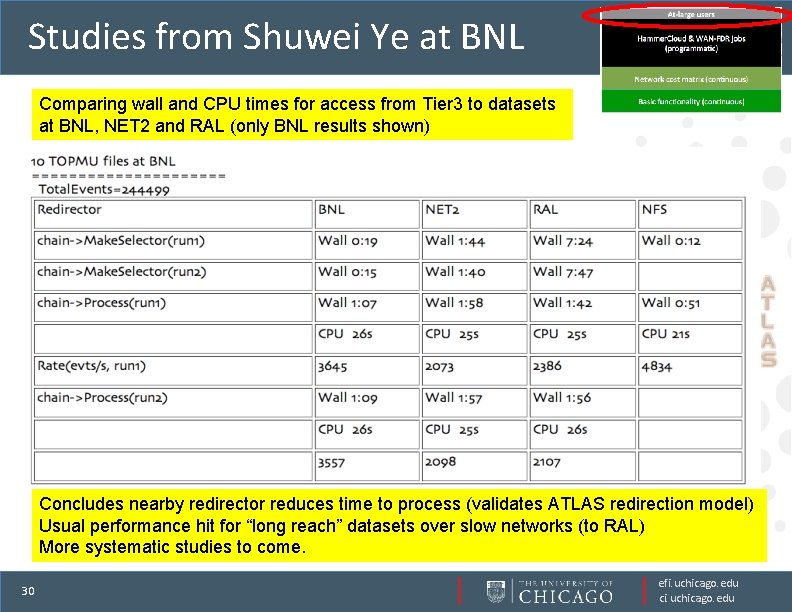

Studies from Shuwei Ye at BNL

• Comparing wall and CPU times for access from Tier 3 to datasets at BNL, NET2 and RAL (only BNL results shown)
• Concludes nearby redirector reduces time to process (validates ATLAS redirection model)
• Usual performance hit for “long reach” datasets over slow networks (to RAL)
• More systematic studies to come.

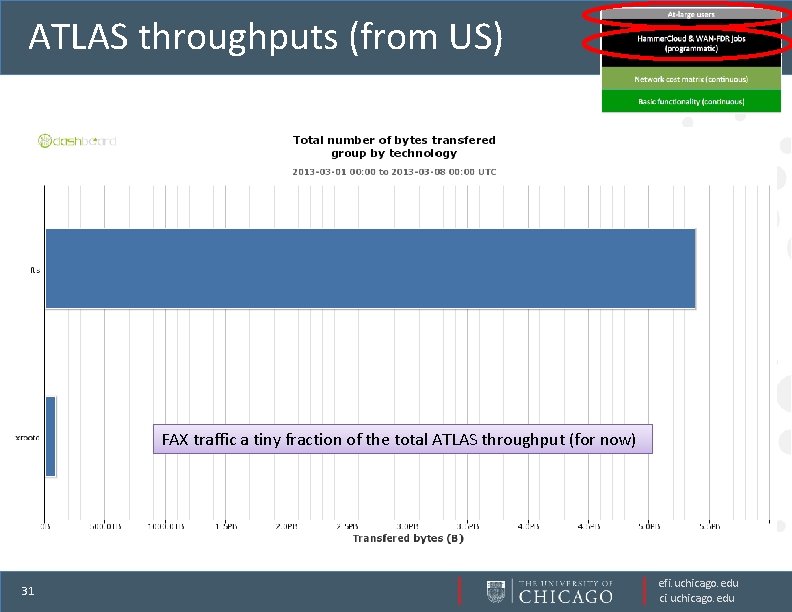

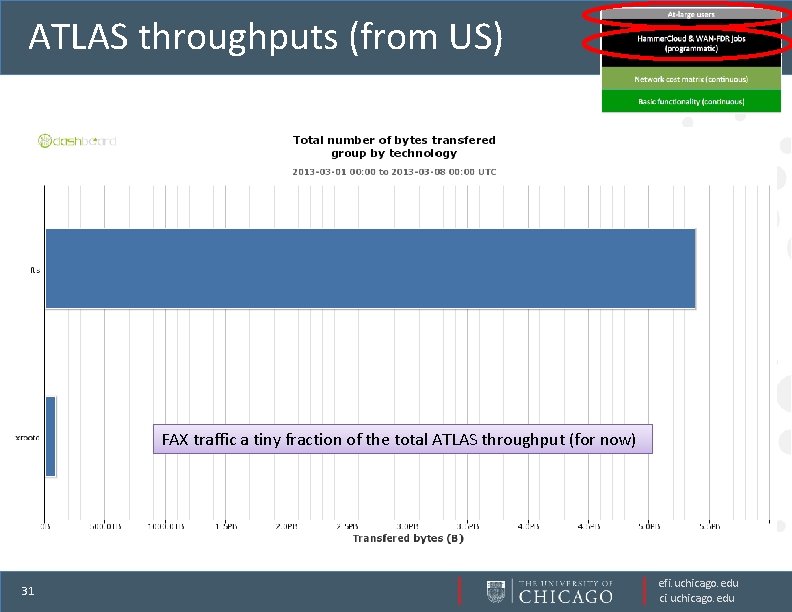

ATLAS throughputs (from US)

FAX traffic is a tiny fraction of the total ATLAS throughput (for now)

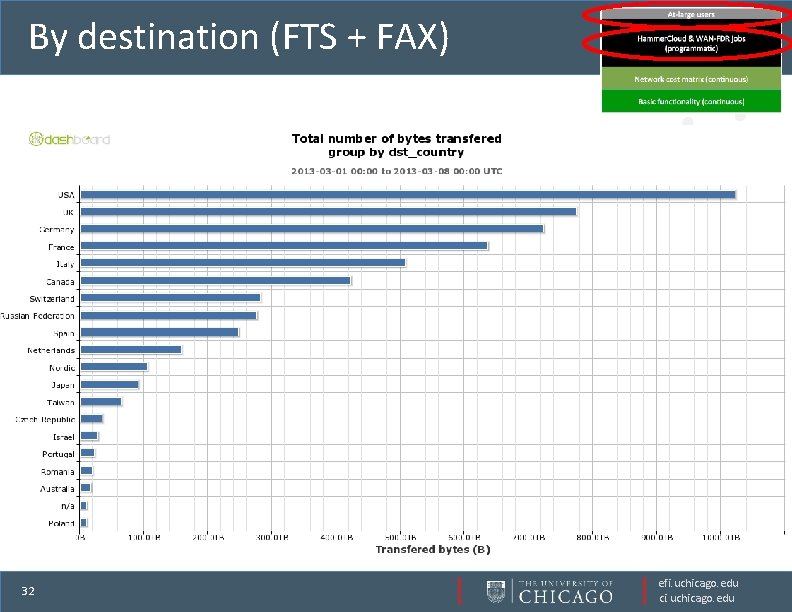

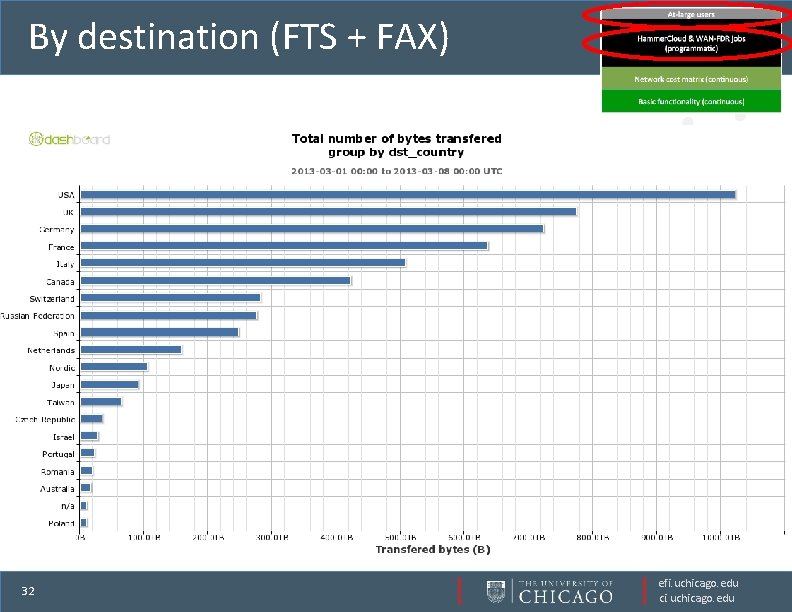

By destination (FTS + FAX)

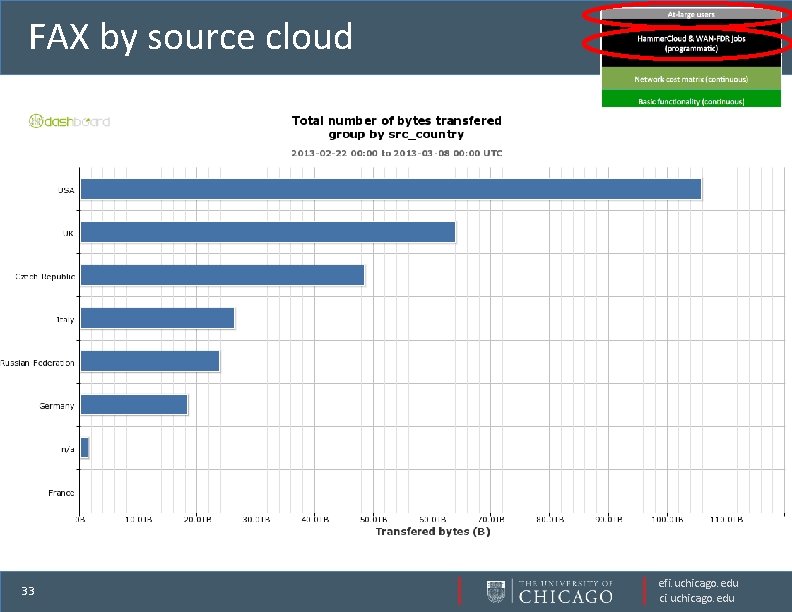

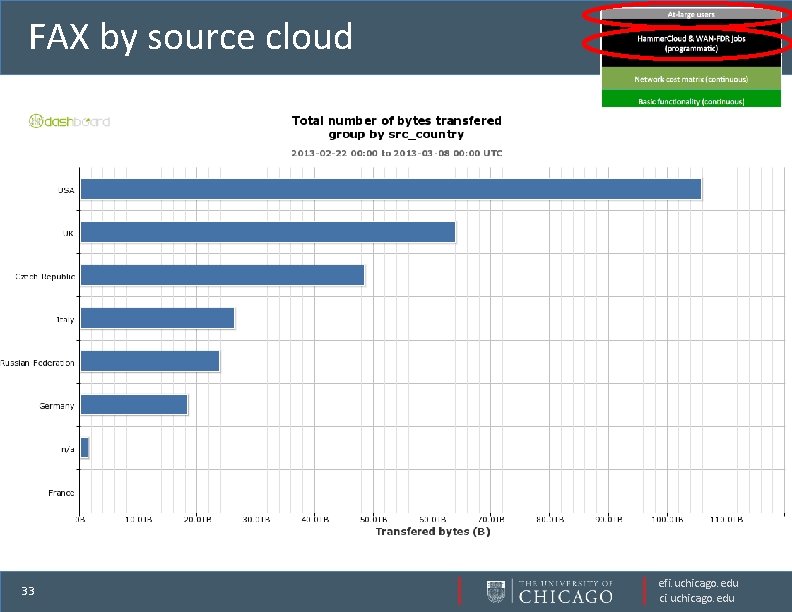

FAX by source cloud

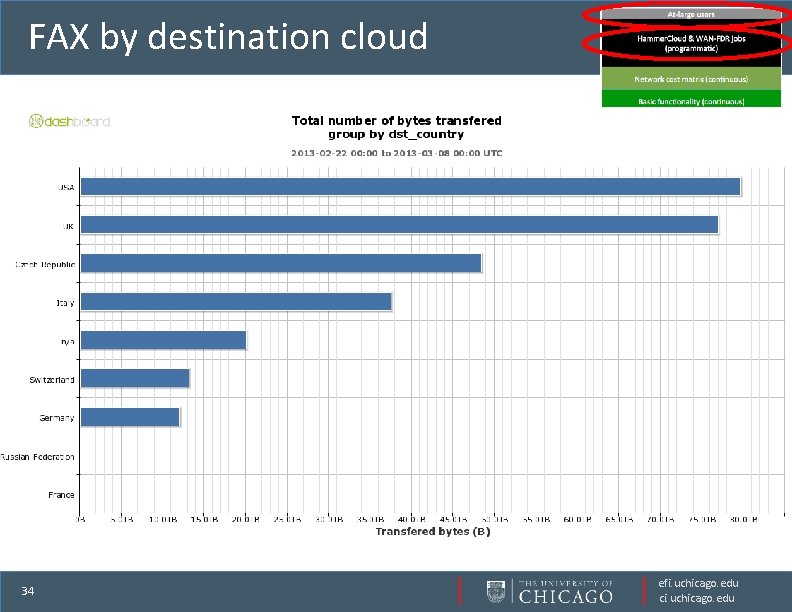

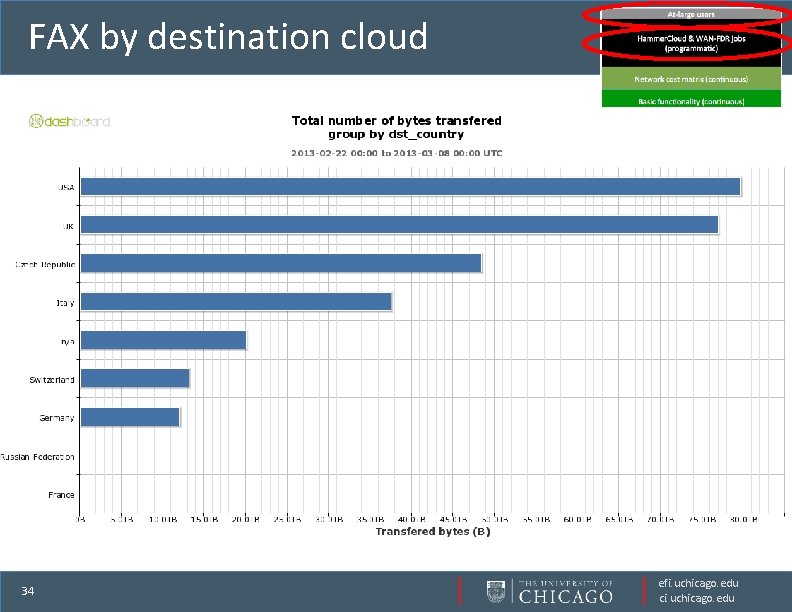

FAX by destination cloud

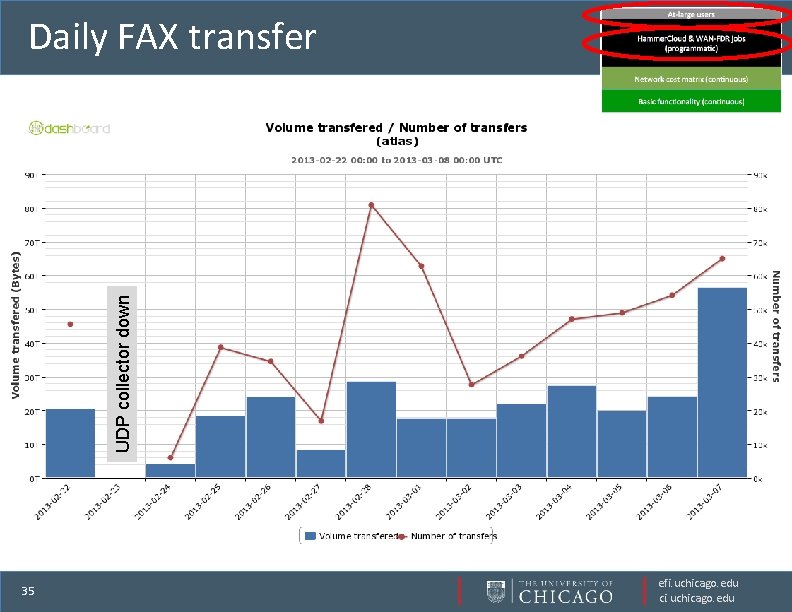

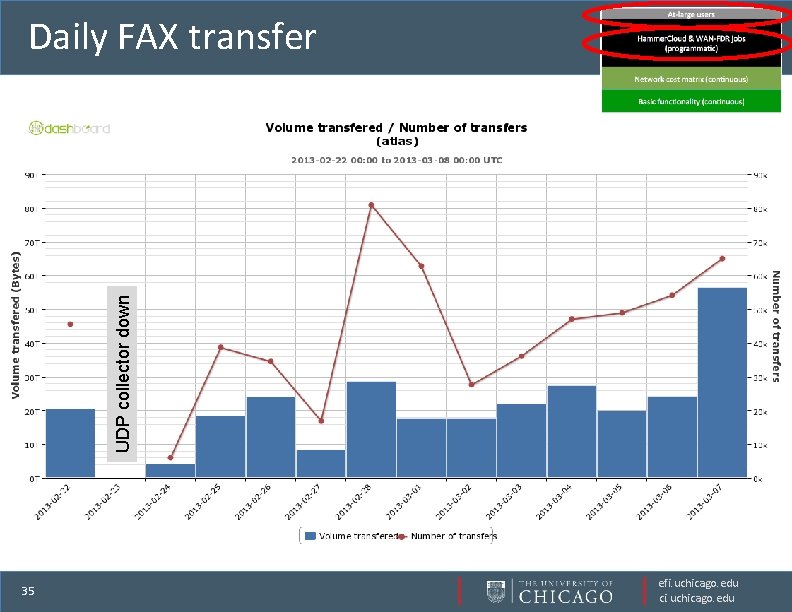

Daily FAX transfer

[Figure annotation: UDP collector down]

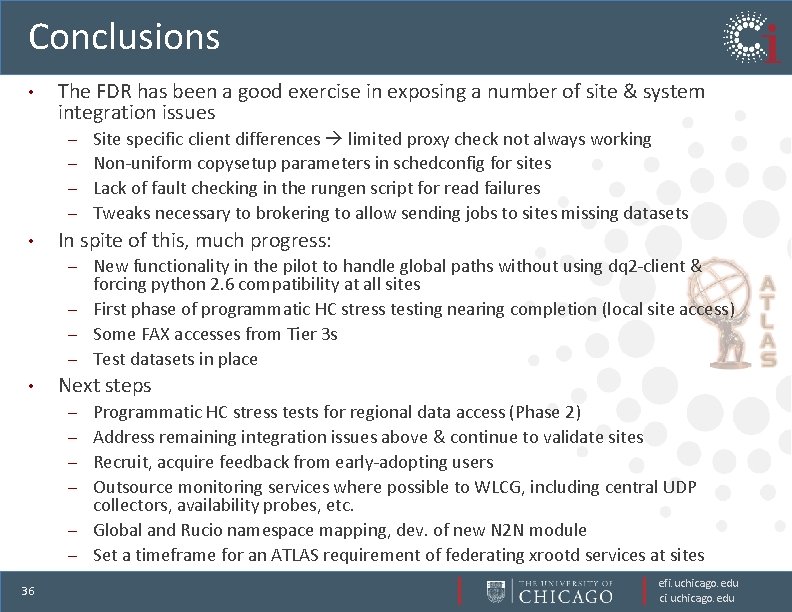

Conclusions

• The FDR has been a good exercise in exposing a number of site & system integration issues
  – Site-specific client differences, limited proxy check not always working
  – Non-uniform copysetup parameters in schedconfig for sites
  – Lack of fault checking in the rungen script for read failures
  – Tweaks necessary to brokering to allow sending jobs to sites missing datasets
• In spite of this, much progress:
  – New functionality in the pilot to handle global paths without using dq2-client & forcing python 2.6 compatibility at all sites
  – First phase of programmatic HC stress testing nearing completion (local site access)
  – Some FAX accesses from Tier 3s
  – Test datasets in place
• Next steps
  – Programmatic HC stress tests for regional data access (Phase 2)
  – Address remaining integration issues above & continue to validate sites
  – Recruit, acquire feedback from early-adopting users
  – Outsource monitoring services where possible to WLCG, including central UDP collectors, availability probes, etc.
  – Global and Rucio namespace mapping, dev. of new N2N module
  – Set a timeframe for an ATLAS requirement of federating xrootd services at sites

Thanks

• A hearty thanks goes out to all the members of the atlas-adc-federated-xrootd group, especially site admins and providers of redirection & monitoring infrastructure
• Special thanks to Johannes and Federica for preparing HC FAX analysis stress test templates and detailed reporting on test results
• Simone & Hiro for test dataset distribution & Simone for getting involved in HC testing
• Paul, John, Jose for pilot and wrapper changes
• Rob for testing and pushing us all
• Wei for doggedly tracking down xrootd security issues & other site problems & Andy for getting ATLAS’ required features into xrootd releases

EXTRA SLIDES

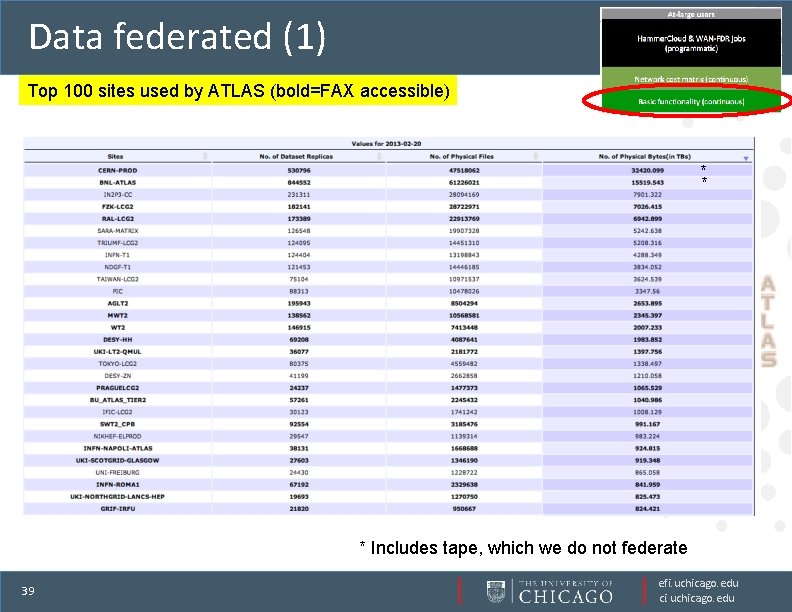

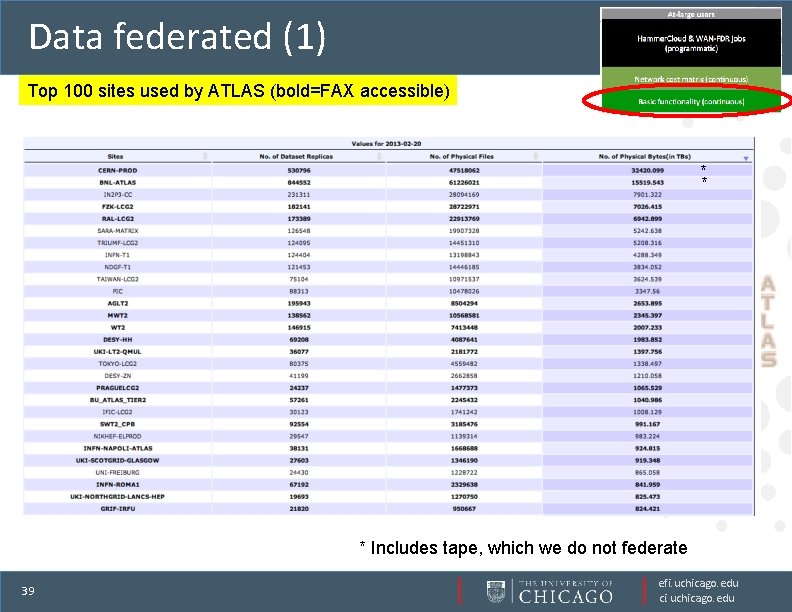

Data federated (1)

Top 100 sites used by ATLAS (bold=FAX accessible)

* Includes tape, which we do not federate

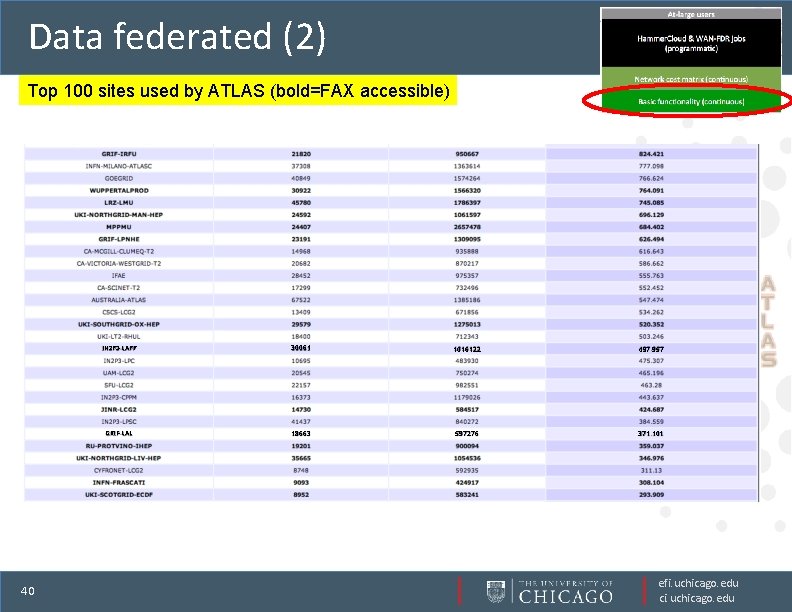

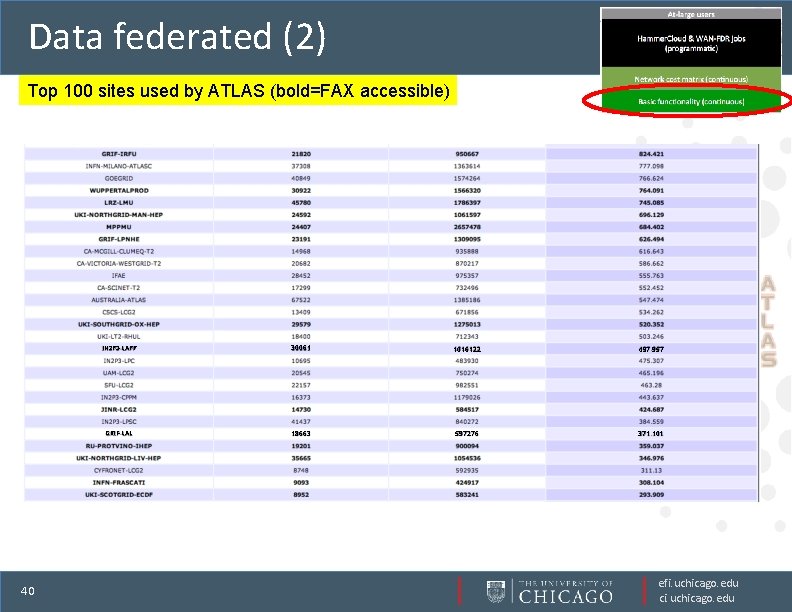

Data federated (2)

Top 100 sites used by ATLAS (bold=FAX accessible)

IN2P3-LAPP 30061 1016122 497.957
GRIF-LAL 18663 597276 371.101

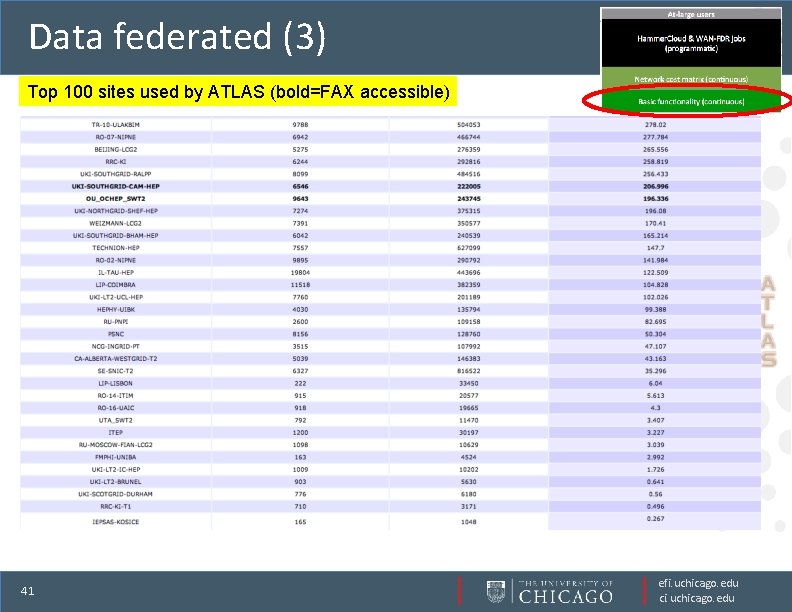

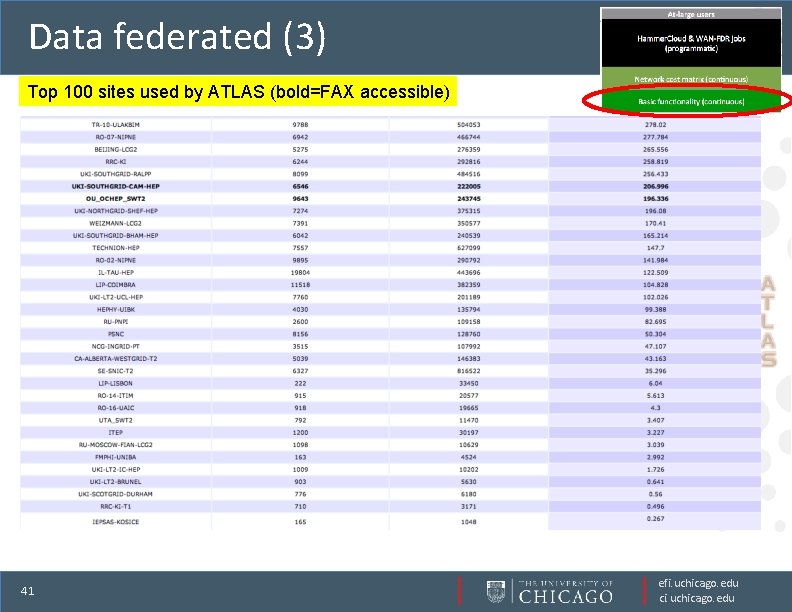

Data federated (3)

Top 100 sites used by ATLAS (bold=FAX accessible)

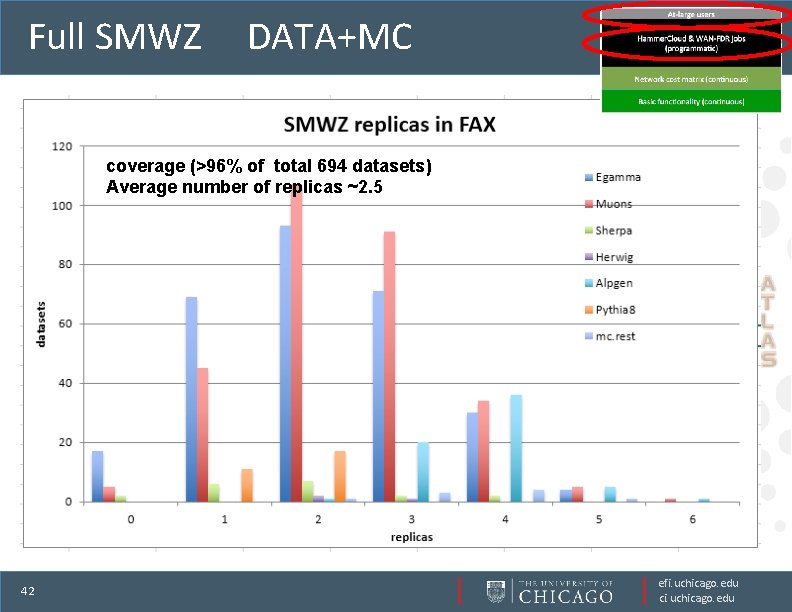

Full SMWZ DATA+MC coverage (>96% of total 694 datasets)

Average number of replicas ~2.5

SkimSlimService

FAX killer app.

• Free physicists from dealing with big data
• Free IT professionals from dealing with physicists; let them deal with what they do best: big data
• Efficiently use available resources (over the pledge, OSG, ANALY queues, EC2)

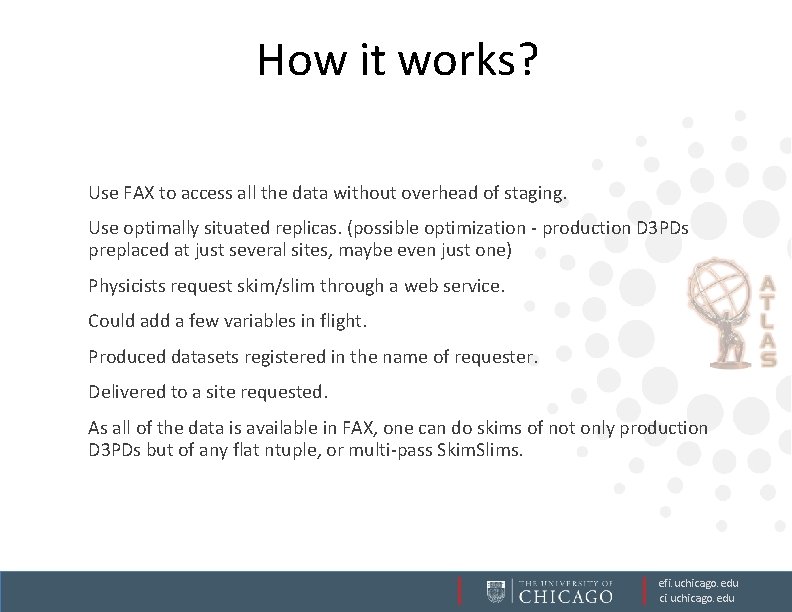

How does it work?

• Use FAX to access all the data without the overhead of staging. Use optimally situated replicas. (Possible optimization: production D3PDs pre-placed at just several sites, maybe even just one.)
• Physicists request a skim/slim through a web service. Could add a few variables in flight.
• Produced datasets are registered in the name of the requester and delivered to the requested site.
• As all of the data is available in FAX, one can do skims not only of production D3PDs but of any flat ntuple, or multi-pass SkimSlims.

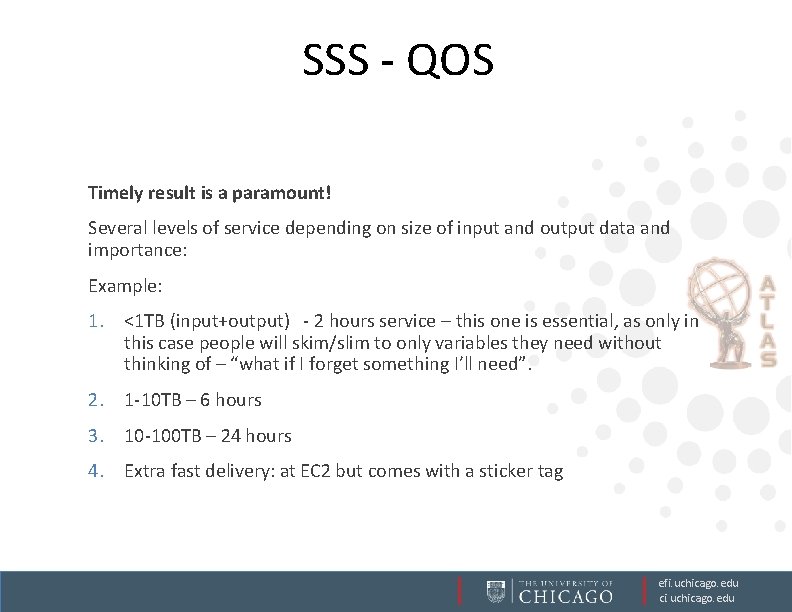

SSS - QOS

A timely result is paramount!

Several levels of service depending on size of input and output data and importance. Example:

1. <1 TB (input+output): 2 hours service. This one is essential, as only in this case will people skim/slim to only the variables they need without thinking “what if I forget something I’ll need”.
2. 1-10 TB: 6 hours
3. 10-100 TB: 24 hours
4. Extra fast delivery: at EC2, but comes with a price tag
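The example tiers can be written down as a simple lookup from request size to promised turnaround. This is a sketch of the slide's example only: treating the upper bound of each tier as inclusive, and returning `None` above 100 TB, are assumptions, and the EC2 fast-delivery option is left out.

```python
from typing import Optional


def service_hours(total_tb: float) -> Optional[int]:
    """Map request size (input + output, in TB) to the example turnaround in hours."""
    if total_tb < 0:
        raise ValueError("size must be non-negative")
    if total_tb < 1:
        return 2
    if total_tb <= 10:   # assumption: 10 TB still falls in the 6-hour tier
        return 6
    if total_tb <= 100:  # assumption: 100 TB still falls in the 24-hour tier
        return 24
    return None          # no tier is given on the slide above 100 TB


if __name__ == "__main__":
    for size in (0.5, 5, 50, 500):
        print(size, "TB ->", service_hours(size))
```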

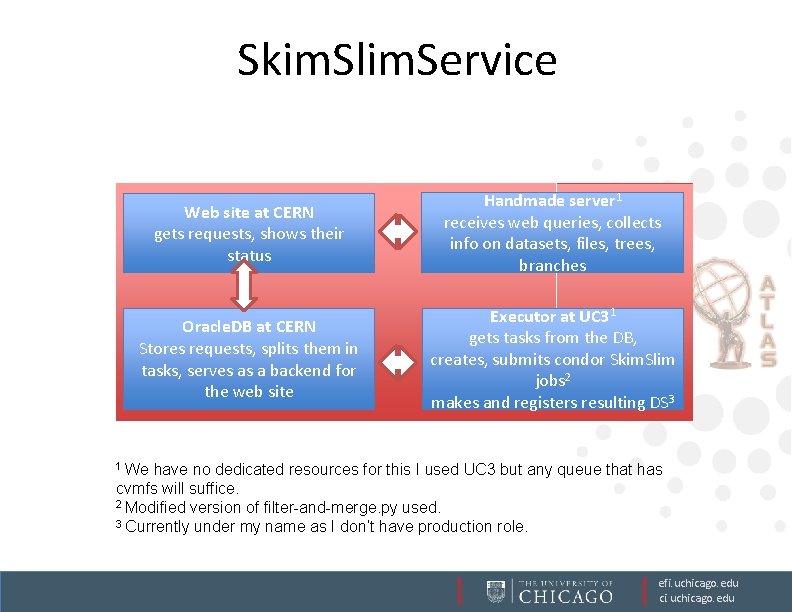

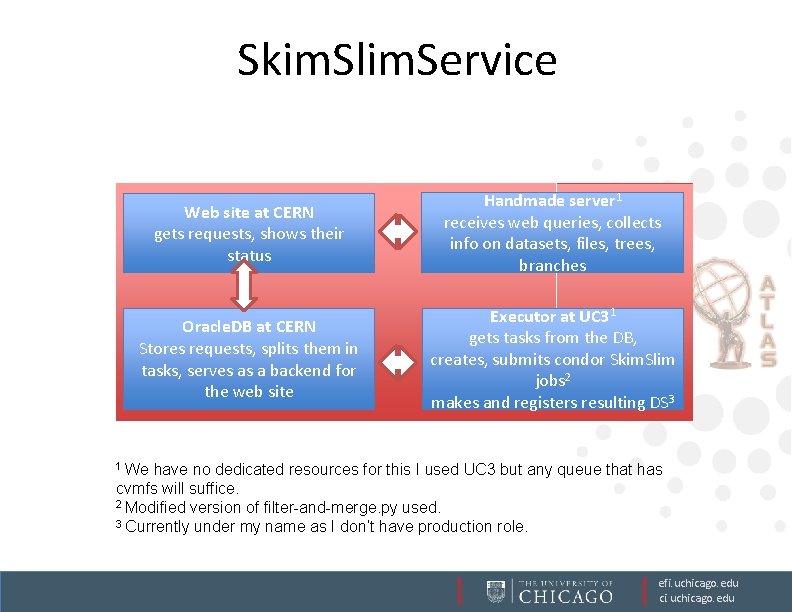

SkimSlimService

• Web site at CERN: gets requests, shows their status
• Handmade server (1): receives web queries, collects info on datasets, files, trees, branches
• OracleDB at CERN: stores requests, splits them in tasks, serves as a backend for the web site
• Executor at UC3 (1): gets tasks from the DB, creates and submits condor SkimSlim jobs (2), makes and registers resulting DS (3)

(1) We have no dedicated resources for this. I used UC3, but any queue that has cvmfs will suffice.
(2) Modified version of filter-and-merge.py used.
(3) Currently under my name as I don’t have production role.
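The "splits them in tasks" step can be pictured as chunking a request's input files into fixed-size groups, each of which becomes one job. The sketch below is purely illustrative: the slide does not say how the split is done, and the chunk size and file names are invented for the example.

```python
from typing import List, Sequence


def split_into_tasks(files: Sequence[str], files_per_task: int) -> List[List[str]]:
    """Chunk a request's input files into tasks of at most `files_per_task` files."""
    if files_per_task < 1:
        raise ValueError("files_per_task must be at least 1")
    return [
        list(files[i : i + files_per_task])
        for i in range(0, len(files), files_per_task)
    ]


if __name__ == "__main__":
    # Hypothetical input file names for a single skim/slim request.
    request_files = [f"NTUP_SMWZ.{n:05d}.root" for n in range(7)]
    for task in split_into_tasks(request_files, 3):
        print(task)
```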

http://ivukotic.web.cern.ch/ivukotic/SSS/index.asp
