Evaluating Learning

- Slides: 27


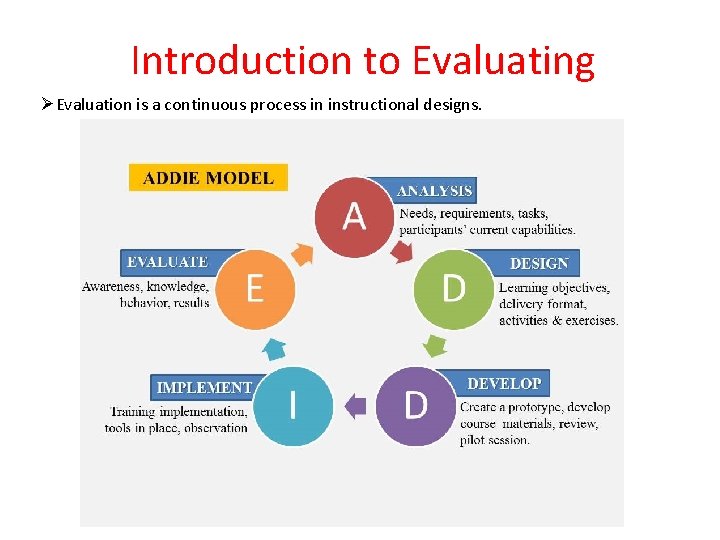

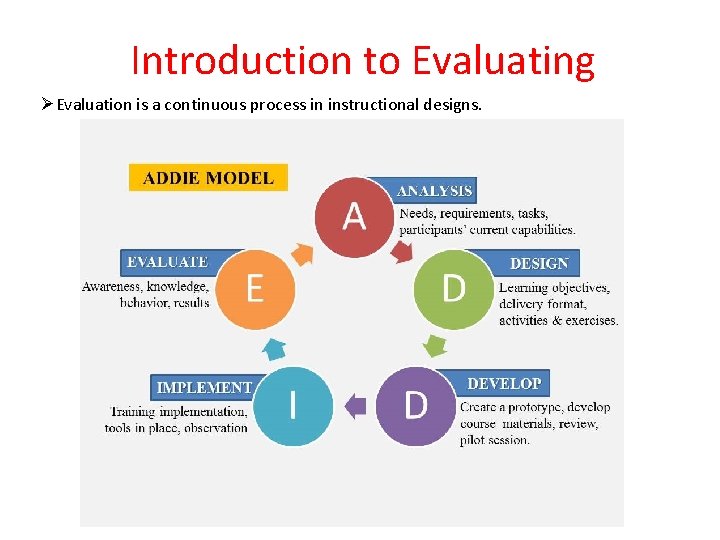

Introduction to Evaluation

- Evaluation is a continuous process in instructional design.

Fig. Design Phase in the ADDIE Model

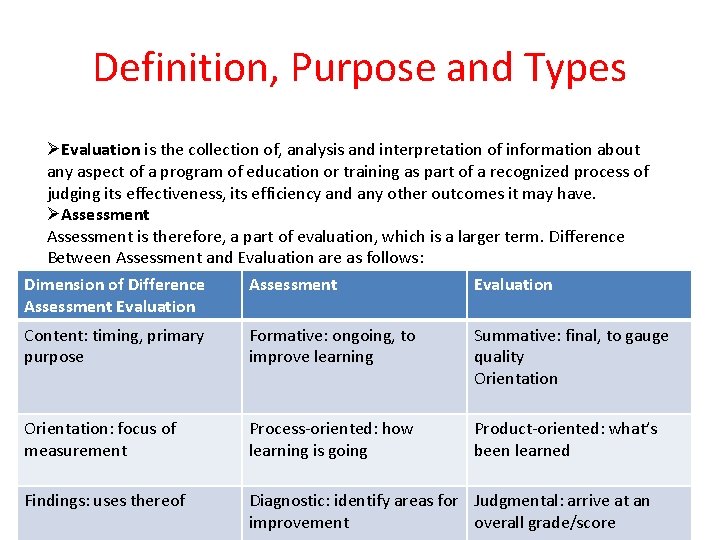

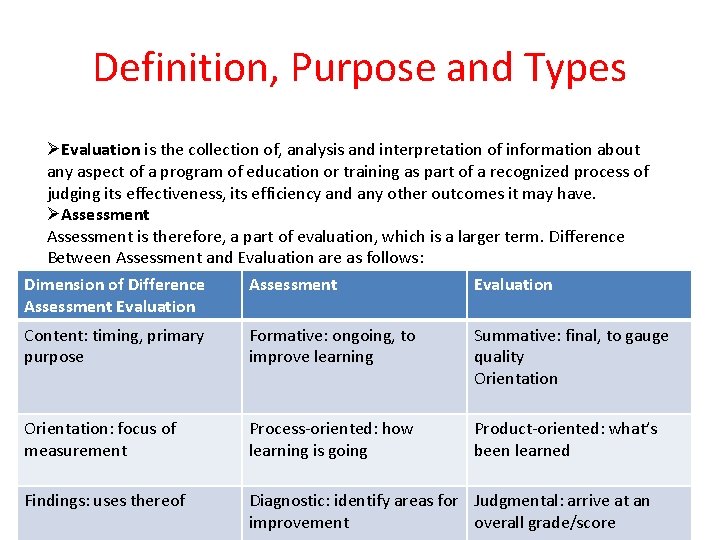

Definition, Purpose and Types

- Evaluation is the collection, analysis and interpretation of information about any aspect of a program of education or training, as part of a recognized process of judging its effectiveness, its efficiency and any other outcomes it may have.
- Assessment is therefore a part of evaluation, which is the broader term.

The differences between assessment and evaluation are as follows:

| Dimension of difference | Assessment | Evaluation |
|---|---|---|
| Content: timing, primary purpose | Formative: ongoing, to improve learning | Summative: final, to gauge quality |
| Orientation: focus of measurement | Process-oriented: how learning is going | Product-oriented: what has been learned |
| Findings: uses thereof | Diagnostic: identify areas for improvement | Judgmental: arrive at an overall grade/score |

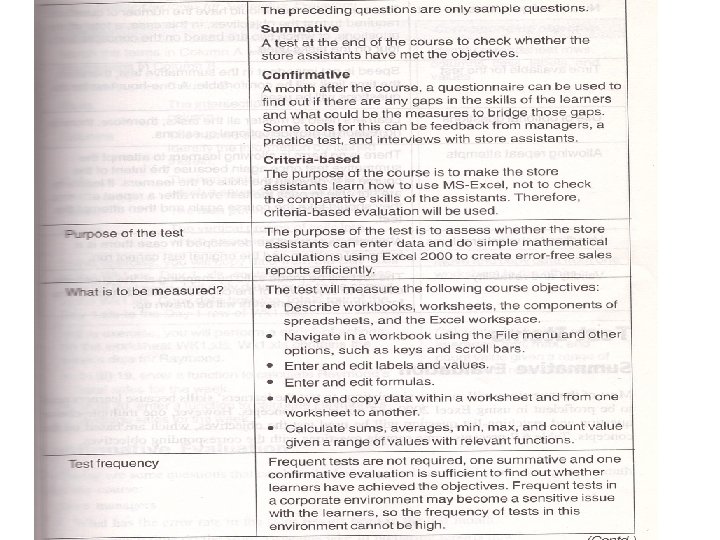

Types of Evaluation

Based on the stage of the course at which it is conducted:
1. Formative
2. Summative
3. Confirmative

Based on the purpose of evaluation:
1. Criteria-based
2. Norm-based

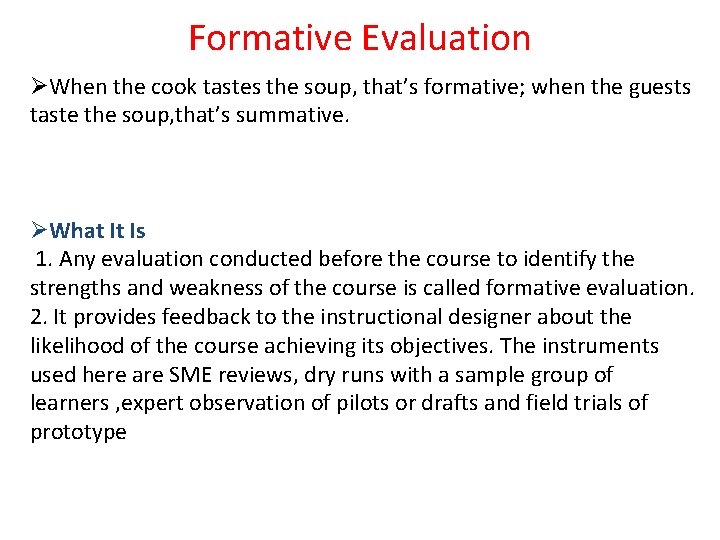

Formative Evaluation

- "When the cook tastes the soup, that's formative; when the guests taste the soup, that's summative."
- What it is:
  1. Any evaluation conducted before the course is delivered, to identify the strengths and weaknesses of the course, is called formative evaluation.
  2. It gives the instructional designer feedback about how likely the course is to achieve its objectives. The instruments used here are subject matter expert (SME) reviews, dry runs with a sample group of learners, expert observation of pilots or drafts, and field trials of prototypes.

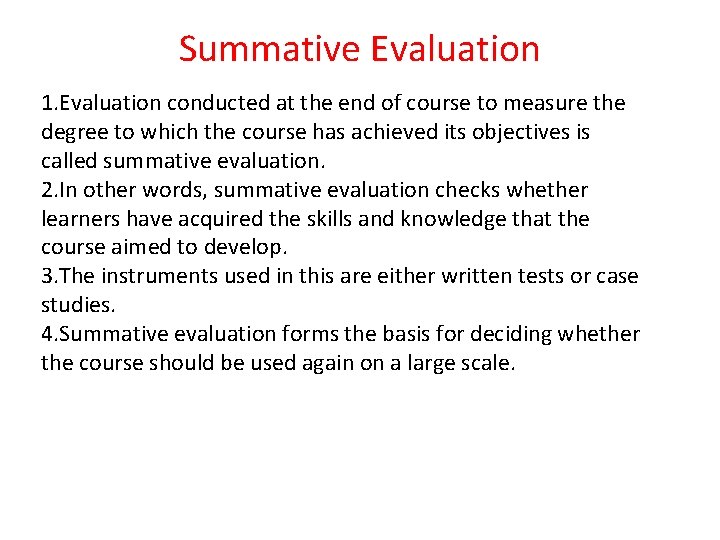

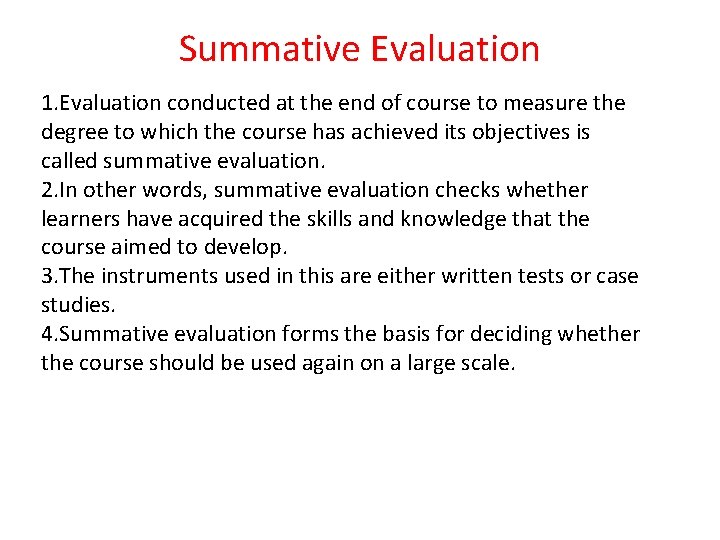

Summative Evaluation

1. Evaluation conducted at the end of the course to measure the degree to which the course has achieved its objectives is called summative evaluation.
2. In other words, summative evaluation checks whether learners have acquired the skills and knowledge that the course aimed to develop.
3. The instruments used are either written tests or case studies.
4. Summative evaluation forms the basis for deciding whether the course should be used again on a large scale.

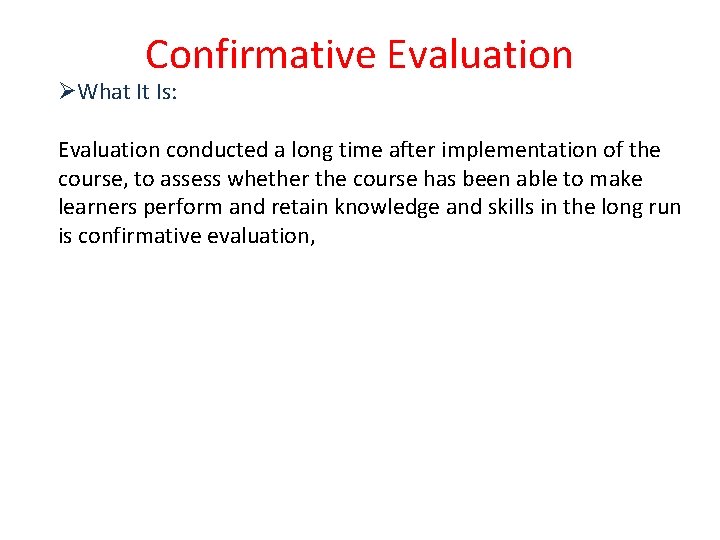

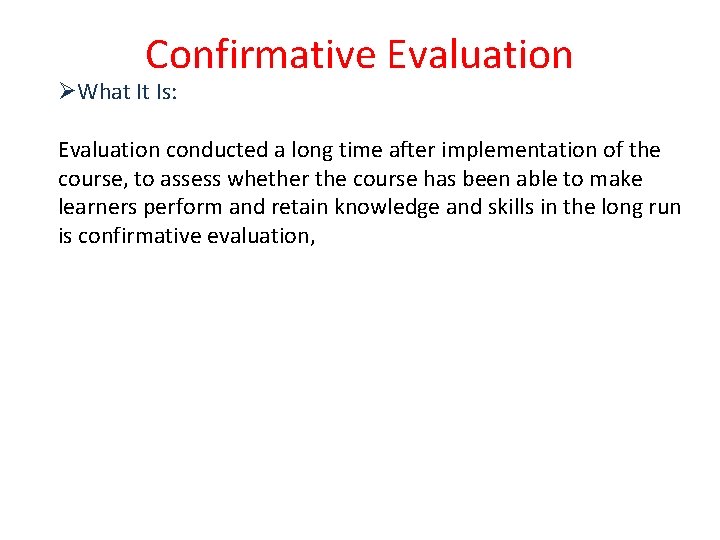

Confirmative Evaluation

- What it is: Evaluation conducted a long time after the course has been implemented, to assess whether the course has enabled learners to perform and to retain knowledge and skills in the long run, is called confirmative evaluation.

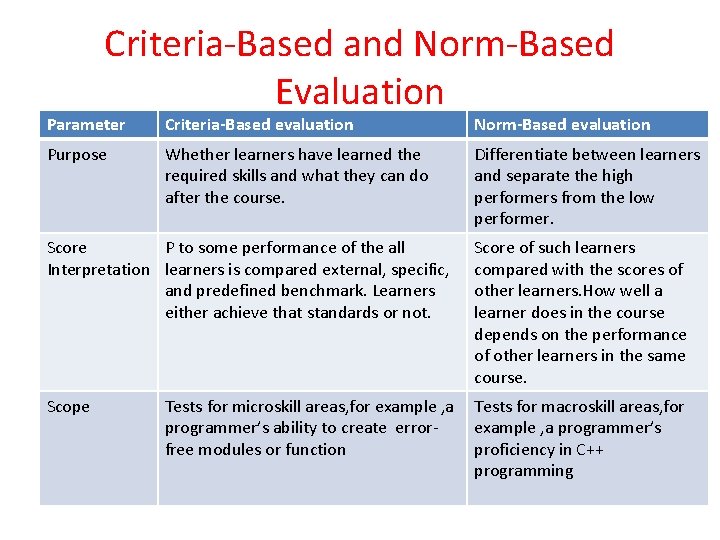

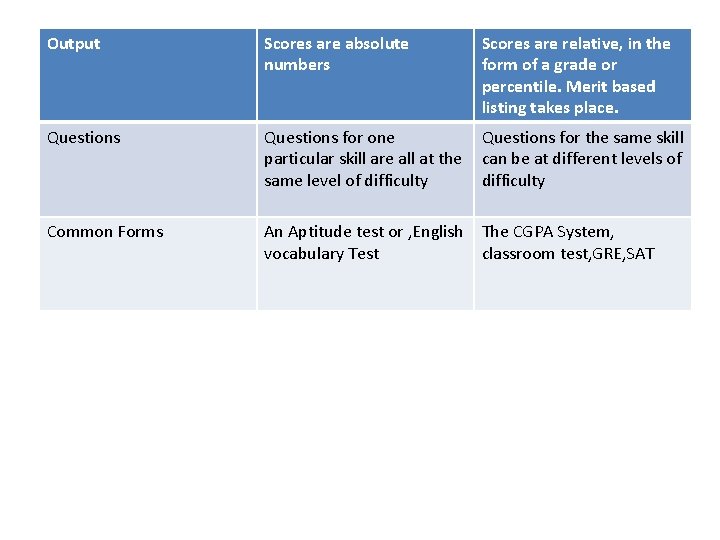

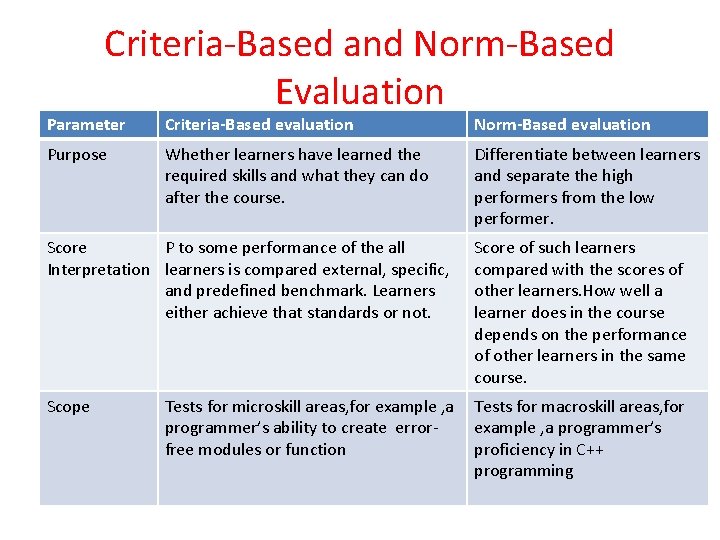

Criteria-Based and Norm-Based Evaluation

| Parameter | Criteria-based evaluation | Norm-based evaluation |
|---|---|---|
| Purpose | To find out whether learners have learned the required skills and what they can do after the course. | To differentiate between learners and separate the high performers from the low performers. |
| Score interpretation | The performance of all learners is compared to an external, specific and predefined benchmark. Learners either achieve that standard or they do not. | The score of each learner is compared with the scores of other learners. How well a learner does in the course depends on the performance of other learners in the same course. |
| Scope | Tests for macroskill areas, for example, a programmer's proficiency in C++ programming. | Tests for microskill areas, for example, a programmer's ability to create error-free modules or functions. |

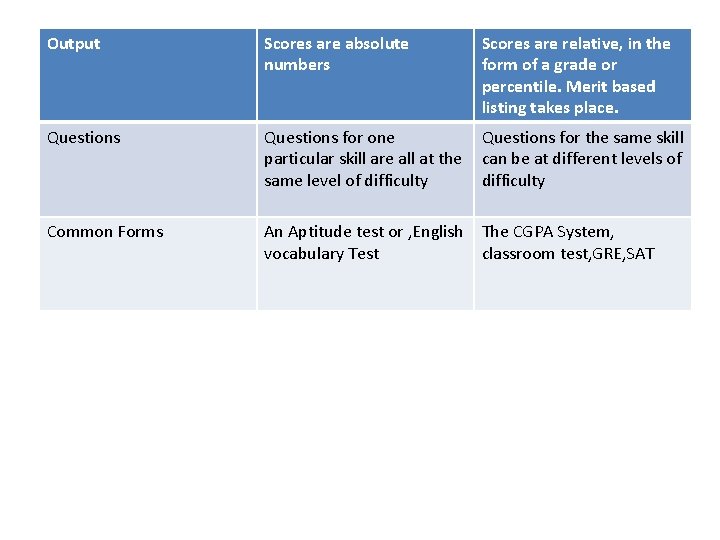

| Parameter | Criteria-based evaluation | Norm-based evaluation |
|---|---|---|
| Output | Scores are absolute numbers. | Scores are relative, in the form of a grade or percentile. A merit-based ranking of learners is produced. |
| Question difficulty | Questions for one particular skill are all at the same level of difficulty. | Questions for the same skill can be at different levels of difficulty. |
| Common forms | An aptitude test or an English vocabulary test | The CGPA system, classroom tests, GRE, SAT |
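To make the difference in score interpretation concrete, here is a minimal Python sketch (3.9+). It assumes a criteria-based result is simply pass/fail against a fixed cutoff, and it uses one common definition of percentile rank for the norm-based result: the percentage of the cohort scoring strictly below the learner. The function names, the cutoff of 65 and the cohort scores are hypothetical.

```python
from bisect import bisect_left


def criteria_based_result(score: float, benchmark: float) -> bool:
    """Criteria-based: compare the score with a fixed, predefined benchmark."""
    return score >= benchmark


def norm_based_percentile(score: float, cohort: list[float]) -> float:
    """Norm-based (assumed definition): % of the cohort scoring strictly below."""
    ordered = sorted(cohort)
    below = bisect_left(ordered, score)  # count of cohort scores < score
    return 100.0 * below / len(ordered)


if __name__ == "__main__":
    benchmark = 65  # hypothetical pass mark
    weak_cohort = [40, 50, 55, 70]
    strong_cohort = [70, 80, 85, 90]
    # The same score of 70 meets the benchmark regardless of the cohort...
    print(criteria_based_result(70, benchmark))     # True
    # ...but its percentile rank depends entirely on the other learners.
    print(norm_based_percentile(70, weak_cohort))    # 75.0
    print(norm_based_percentile(70, strong_cohort))  # 0.0
```

The criteria-based function never looks at other learners, while the norm-based one cannot be computed without them, which mirrors the "Score interpretation" row of the table.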

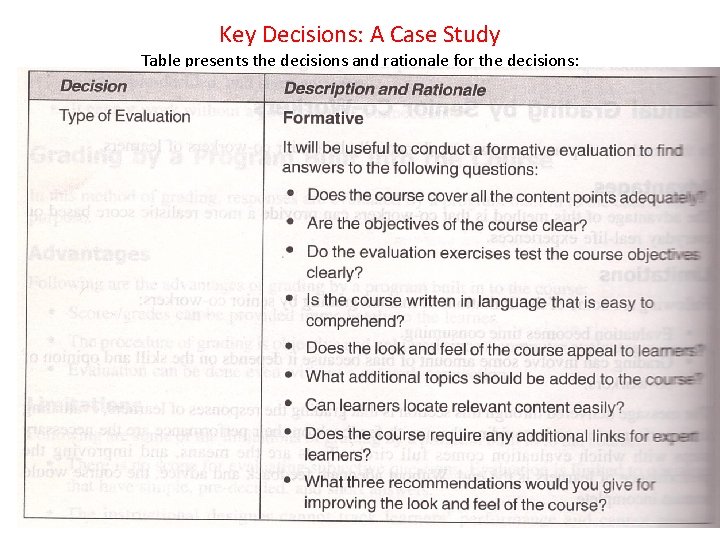

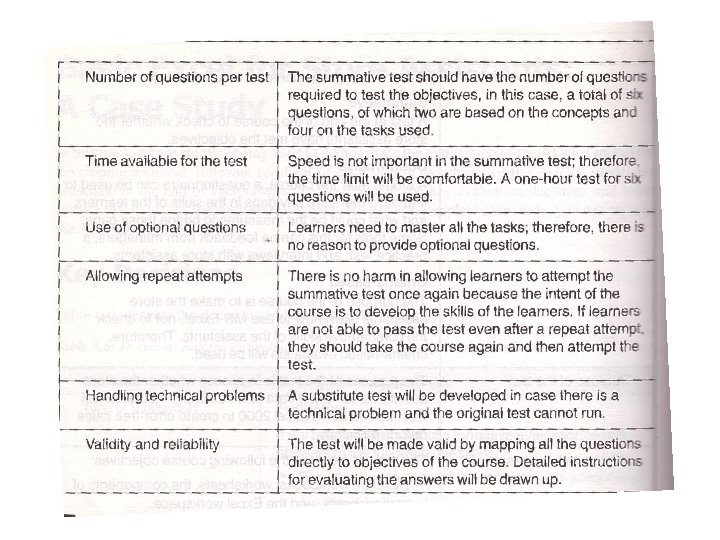

Key Decisions for Designing Tests

Each type of evaluation requires the instructional designer to make the following decisions before the actual test can be designed:
1. Purpose of the test
2. What the test should measure
3. Test frequency
4. Time available to learners for taking the test
5. Use of optional questions
6. Allowing repeat attempts
7. Handling technical problems
8. Validity and reliability of tests

Types of Test Items

Some common types of test items are:
1. Multiple-choice
2. True/false
3. Short-answer or text-input
4. Matching-list
5. Simulation
6. Fill-in-the-blank
7. Essay-type
8. Sequencing

Parts of a Multiple-Choice Question

1. Question item (stem): The question item is the problem statement, or body of the question, that poses the problem to the learner. The stem may be a complete sentence or a sentence completed by the question options. For example:
   Which of the following is the largest continent in the world?
   i) Asia ii) Africa iii) Australia iv) Europe
2. Keys: A test item can have one or more correct answers; these are called the keys. They may be numbers, full sentences, incomplete sentences, phrases or terms. In the example above, the key (the correct answer) is Asia.
3. Distractors: The incorrect options for the question are the distractors. In the example above, the distractors are Africa, Australia and Europe.

The keys and distractors together are called the options of a question.
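The three parts above map naturally onto a small data structure for a computer-graded test. The following Python 3.9+ sketch is illustrative only: the class name is hypothetical, and it assumes an all-or-nothing rule in which the learner must select exactly the keys (a real test might award partial credit instead).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MultipleChoiceQuestion:
    stem: str                    # the question item (problem statement)
    keys: frozenset[str]         # one or more correct answers
    distractors: frozenset[str]  # incorrect options

    def __post_init__(self) -> None:
        if not self.keys:
            raise ValueError("a question needs at least one key")
        if self.keys & self.distractors:
            raise ValueError("an option cannot be both a key and a distractor")

    @property
    def options(self) -> frozenset[str]:
        # Keys and distractors together make up the options.
        return self.keys | self.distractors

    def is_correct(self, selected: set[str]) -> bool:
        # Assumed all-or-nothing rule: the learner must select exactly the keys.
        unknown = set(selected) - self.options
        if unknown:
            raise ValueError(f"not an option: {sorted(unknown)}")
        return set(selected) == self.keys


question = MultipleChoiceQuestion(
    stem="Which of the following is the largest continent in the world?",
    keys=frozenset({"Asia"}),
    distractors=frozenset({"Africa", "Australia", "Europe"}),
)
print(question.is_correct({"Asia"}))    # True
print(question.is_correct({"Africa"}))  # False
```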

Variations of Form

Multiple-choice questions can also be presented in the following forms:
1. Common content as a base
2. Common set of options
3. Items in analogy form

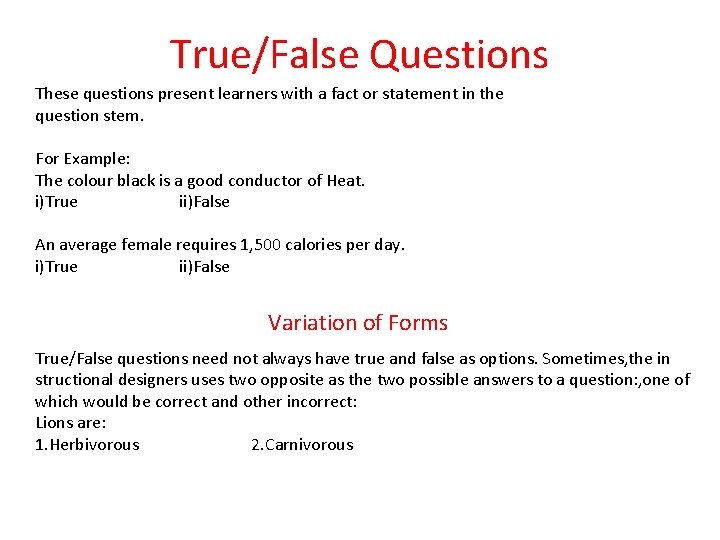

True/False Questions

These questions present learners with a fact or statement in the question stem. For example:

- The colour black is a good conductor of heat.
  i) True ii) False
- An average female requires 1,500 calories per day.
  i) True ii) False

Variations of Form

True/false questions need not always have "true" and "false" as options. Sometimes the instructional designer uses two opposites as the two possible answers to a question, one of which is correct and the other incorrect. For example:

Lions are:
1. Herbivorous
2. Carnivorous

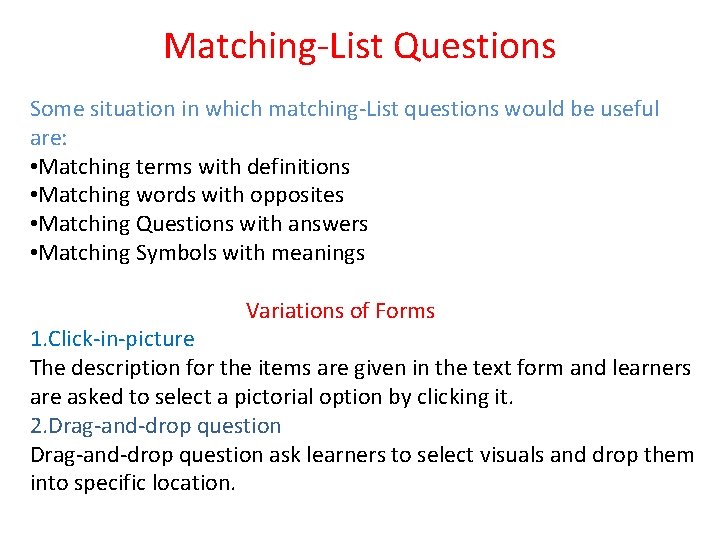

Matching-List Questions

Some situations in which matching-list questions are useful:
- Matching terms with definitions
- Matching words with opposites
- Matching questions with answers
- Matching symbols with meanings

Variations of Form
1. Click-in-picture: The descriptions of the items are given in text form, and learners are asked to select a pictorial option by clicking it.
2. Drag-and-drop: These questions ask learners to select visuals and drop them into specific locations.
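A matching-list answer can be stored as pairs from the left column to the right column. This Python 3.9+ sketch assumes one point per correctly matched pair; the function name and the "words with opposites" item are hypothetical.

```python
def score_matching(answer_key: dict[str, str], response: dict[str, str]) -> int:
    """Award one point for each left-column item matched to its correct partner."""
    return sum(1 for item, partner in answer_key.items() if response.get(item) == partner)


# Hypothetical "matching words with opposites" item.
answer_key = {"hot": "cold", "up": "down", "wet": "dry"}
response = {"hot": "cold", "up": "dry", "wet": "down"}
print(score_matching(answer_key, response), "of", len(answer_key))  # 1 of 3
```

Unanswered items are simply missing from `response`, so `response.get` returns `None` and they earn no point.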

Fill-in-the-Blank Questions

These questions require learners to complete a statement or a table by supplying a missing word or item. Example: The _____ option of Word 2000 allows you to change the case of selected text.
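Fill-in-the-blank and text-input answers are typed freely, so a computer-graded test usually has to decide which spellings count as correct. The sketch below assumes two normalization rules (ignore letter case and extra spaces) and a designer-supplied set of accepted answers; the function names and the sample item are hypothetical, and real tests may also need to handle spelling variants.

```python
def normalize(text: str) -> str:
    """Collapse runs of whitespace and ignore letter case."""
    return " ".join(text.split()).casefold()


def grade_text_input(response: str, accepted_answers: set[str]) -> bool:
    """True if the normalized response matches any accepted answer."""
    return normalize(response) in {normalize(a) for a in accepted_answers}


# Hypothetical item: "The largest continent in the world is _____."
print(grade_text_input("  asia ", {"Asia"}))  # True
print(grade_text_input("Africa", {"Asia"}))   # False
```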

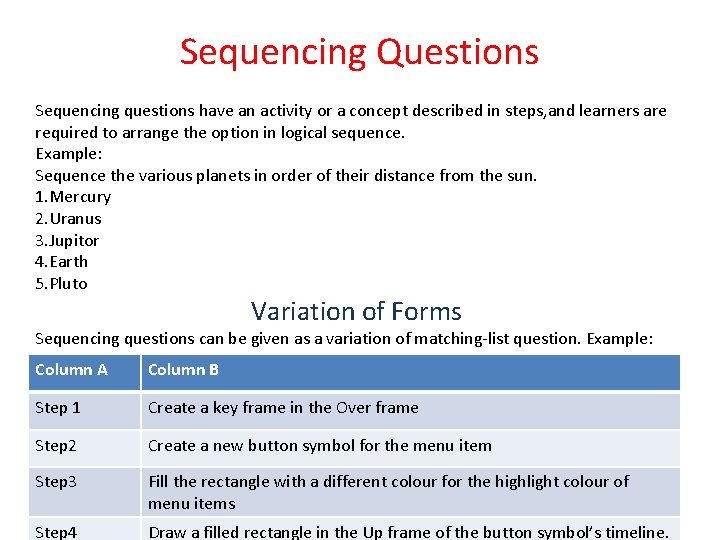

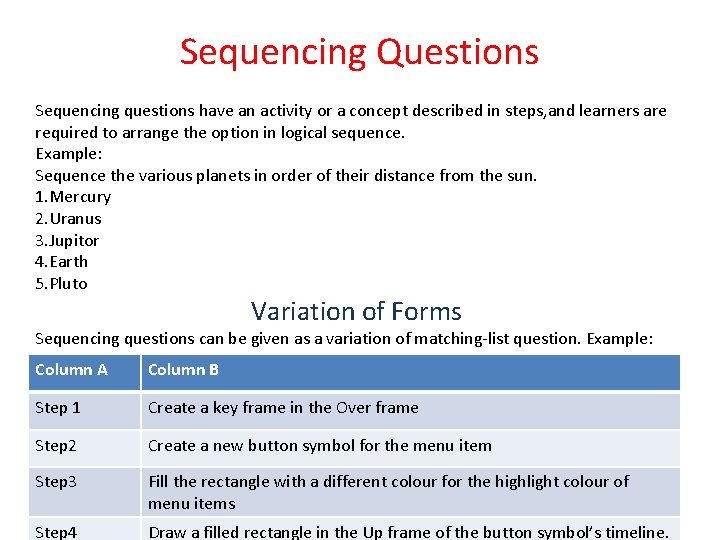

Sequencing Questions

Sequencing questions describe an activity or a concept in steps, and learners are required to arrange the options in a logical sequence. Example: Arrange the following in order of their distance from the Sun:
1. Mercury
2. Uranus
3. Jupiter
4. Earth
5. Pluto

Variations of Form

Sequencing questions can also be given as a variation of the matching-list question. Example:

| Column A | Column B |
|---|---|
| Step 1 | Create a key frame in the Over frame. |
| Step 2 | Create a new button symbol for the menu item. |
| Step 3 | Fill the rectangle with a different colour for the highlight colour of menu items. |
| Step 4 | Draw a filled rectangle in the Up frame of the button symbol's timeline. |
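A sequencing response is a rearrangement of the given options, so it can be checked position by position. This Python 3.9+ sketch reports both whether the whole sequence is right and how many options sit in the correct position; that partial-credit measure is an assumption, not a rule from the slides, and the function name is hypothetical.

```python
def score_sequence(correct: list[str], response: list[str]) -> tuple[bool, int]:
    """Return (fully correct?, number of options placed in the right position)."""
    if sorted(response) != sorted(correct):
        raise ValueError("the response must be a rearrangement of the given options")
    in_place = sum(1 for expected, given in zip(correct, response) if expected == given)
    return in_place == len(correct), in_place


# Ordered by distance from the Sun.
correct_order = ["Mercury", "Earth", "Jupiter", "Uranus", "Pluto"]
learner_order = ["Mercury", "Jupiter", "Earth", "Uranus", "Pluto"]
print(score_sequence(correct_order, learner_order))  # (False, 3)
```

Counting positions is only one possible partial-credit rule; a designer could instead award credit only for a fully correct order.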

Essay-Type Questions

Questions that require learners to apply their knowledge of a particular concept are called essay-type questions. Example: What were the major causes of the French Revolution?

Guidelines for Writing Test Items

1. Multiple-Choice Questions

Follow these guidelines when writing the different parts of a multiple-choice question:
- Writing stems
  - Use standard terminology.
  - Keep the stem concise.
  - Avoid giveaways.
  - Do not phrase the stem negatively.
- Writing options
  - Avoid giveaways.
  - Keep options parallel in form.
  - Keep options concise.
  - Use an optimum number of options.

2. True/False Questions

The guidelines for creating true/false questions are as follows:
1. Prevent guessing: Ask more than one true/false question on a subject to check whether learners have understood the key concepts taught in the course. Presenting a series of true/false items reduces guesswork by learners and adds complexity to the test.

3. Matching-List Questions

Guidelines:
1. Make the question easy to read and to attempt.
2. Avoid giveaways.

Guidelines for other forms of matching-list questions:
- Click-in-picture questions
- Drag-and-drop questions
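The true/false guideline on preventing guessing can be made concrete with a little probability. Assuming each item is an independent 50/50 guess, the chance of getting at least k of n items right by luck alone follows the binomial distribution. This Python sketch (the function name is hypothetical) computes it.

```python
from math import comb


def p_guess_at_least(k: int, n: int, p: float = 0.5) -> float:
    """Probability that blind guessing gets at least k of n items right.

    Each true/false item is treated as an independent guess with
    success probability p (0.5 for two options).
    """
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


print(p_guess_at_least(1, 1))   # 0.5: a single item is a coin toss
print(p_guess_at_least(5, 5))   # 0.03125: all five right by luck
print(p_guess_at_least(8, 10))  # 0.0546875: 8 or more out of 10
```

A single true/false item rewards guessing half the time, while a learner guessing through ten items reaches 8 or more correct only about 5% of the time, which is why a series of items reduces guesswork.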

4. Simulation Questions

Guidelines:
- Make the question easy to interpret.
- Keep an optimum number of steps.

5. Fill-in-the-Blank Questions

Guideline: The blank should preferably be at the end of the stem rather than at the beginning or in the middle.

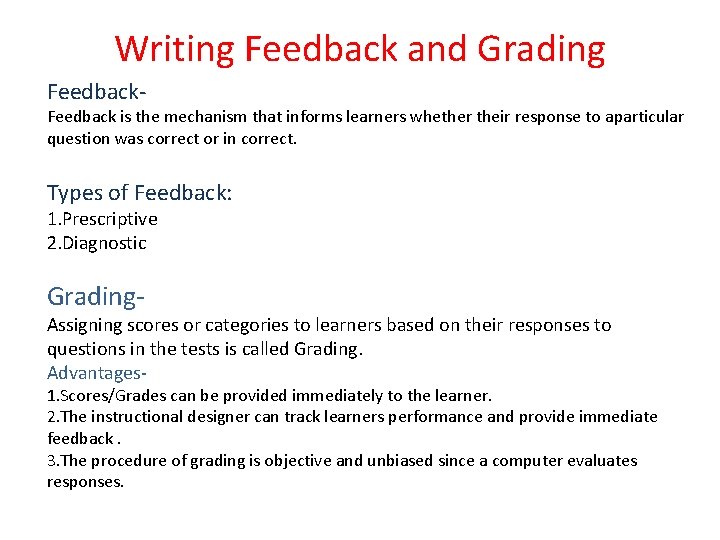

Writing Feedback and Grading

Feedback: Feedback is the mechanism that informs learners whether their response to a particular question was correct or incorrect. Types of feedback:
1. Prescriptive
2. Diagnostic

Grading: Assigning scores or categories to learners based on their responses to the questions in a test is called grading.

Advantages of computer-based grading:
1. Scores or grades can be provided to the learner immediately.
2. The instructional designer can track learners' performance and provide immediate feedback.
3. The grading procedure is objective and unbiased, since a computer evaluates the responses.
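The advantages listed above rest on a computer scoring each response as soon as it is submitted. The Python 3.9+ sketch below shows that idea under simplifying assumptions: each item has a single key, the designer supplies an optional hypothetical hint per item, and the result is a percentage score plus a feedback message per item. It does not attempt to model the prescriptive/diagnostic distinction precisely, and all names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class ItemResult:
    question_id: str
    correct: bool
    feedback: str


def grade_test(
    answer_key: dict[str, str],
    responses: dict[str, str],
    hints: dict[str, str],
) -> tuple[float, list[ItemResult]]:
    """Score every item immediately and attach a feedback message to each."""
    results = []
    for qid, key in answer_key.items():
        if responses.get(qid) == key:
            results.append(ItemResult(qid, True, "Correct."))
        else:
            hint = hints.get(qid, "Review this topic and try again.")
            results.append(ItemResult(qid, False, f"Incorrect. {hint}"))
    score = 100.0 * sum(r.correct for r in results) / len(answer_key)
    return score, results


score, results = grade_test(
    answer_key={"q1": "Asia", "q2": "Carnivorous"},
    responses={"q1": "Asia", "q2": "Herbivorous"},
    hints={"q2": "Lions hunt other animals for food."},
)
print(score)  # 50.0
for r in results:
    print(r.question_id, r.feedback)
```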

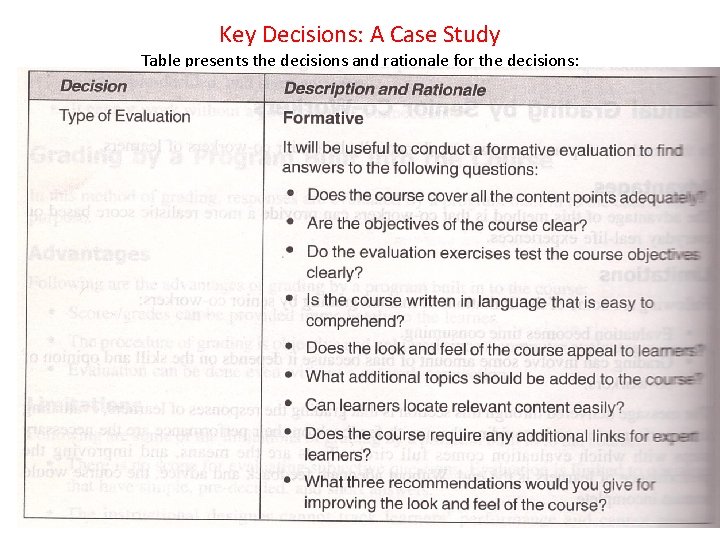

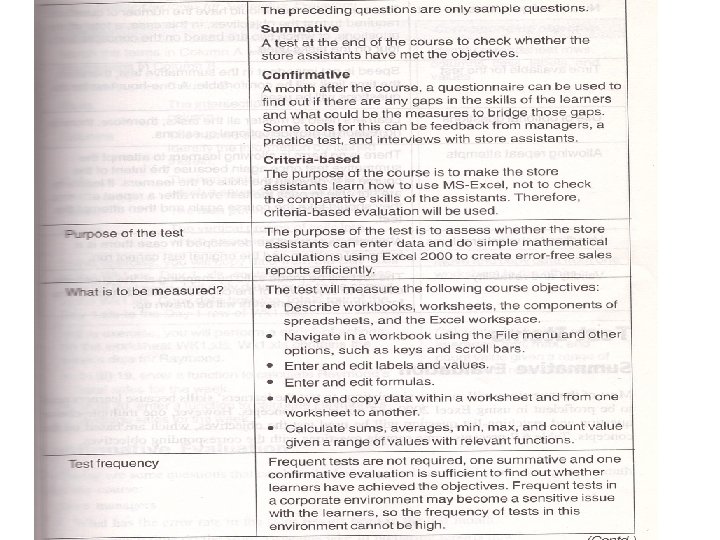

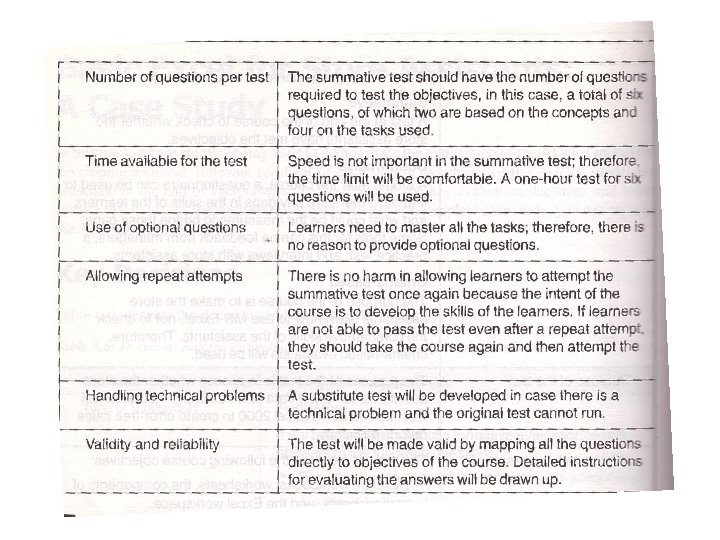

Key Decisions: A Case Study

The table presents the decisions and the rationale for each decision:

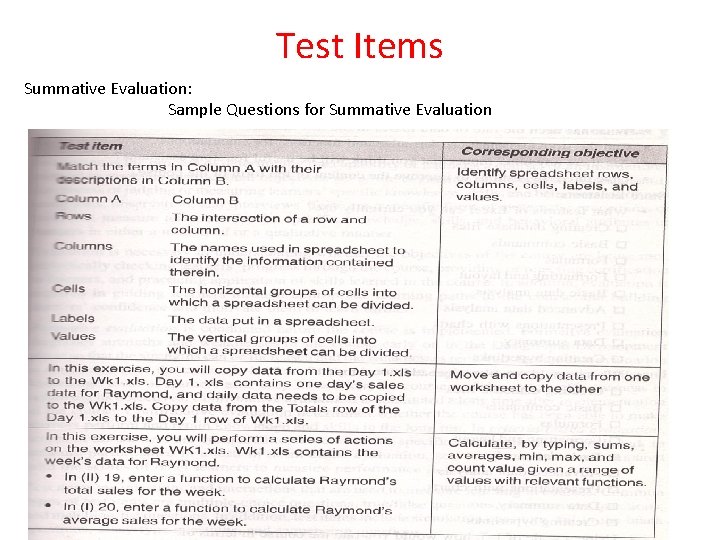

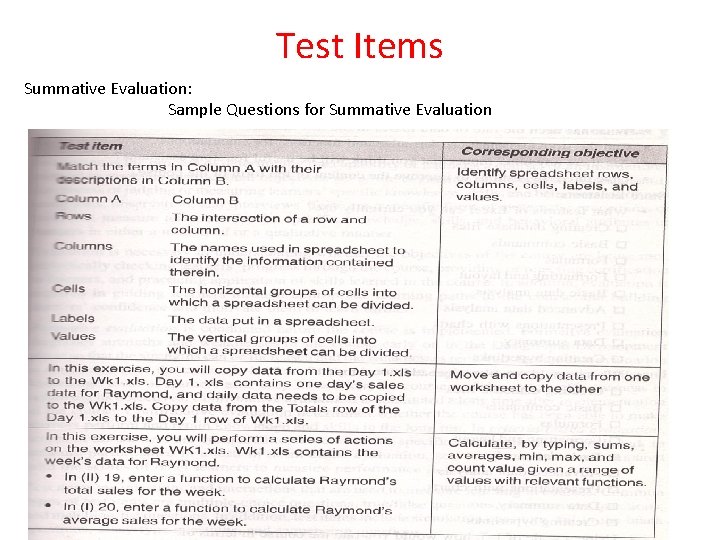

Test Items

Summative Evaluation: Sample Questions

Thank You!