Discount Evaluation: Evaluating with experts

Discount Evaluation Techniques
- Basis:
  - Observing users can be time-consuming and expensive
  - Try to predict usability rather than observing it directly
  - Conserve resources (quick & low cost)
- Approach:
  - HCI experts interact with system and try to find potential problems and give prescriptive feedback
  - Best if experts:
    - Haven’t used earlier prototype
    - Are familiar with domain or task
    - Understand user perspectives

Heuristic Evaluation
- Several expert usability evaluators assess system based on simple and general heuristics (principles or rules of thumb)
- Mainly qualitative
- Use with experts
- Predictive
- Developed by Jakob Nielsen (Web site: www.useit.com)

Procedure
1. Gather inputs
2. Evaluate system
3. Debriefing and collection
4. Severity rating

1: Gather Inputs
- Who are evaluators?
  - Need to learn about domain, its practices
- Get the prototype to be studied
  - May vary from mock-ups and storyboards to a working system

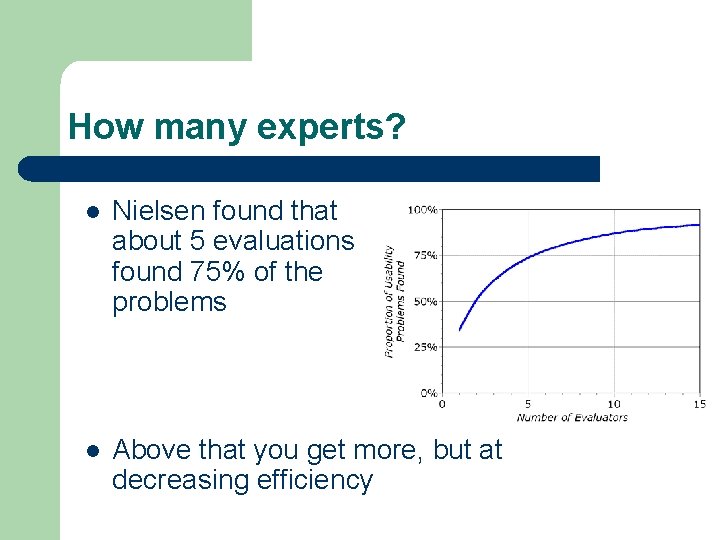

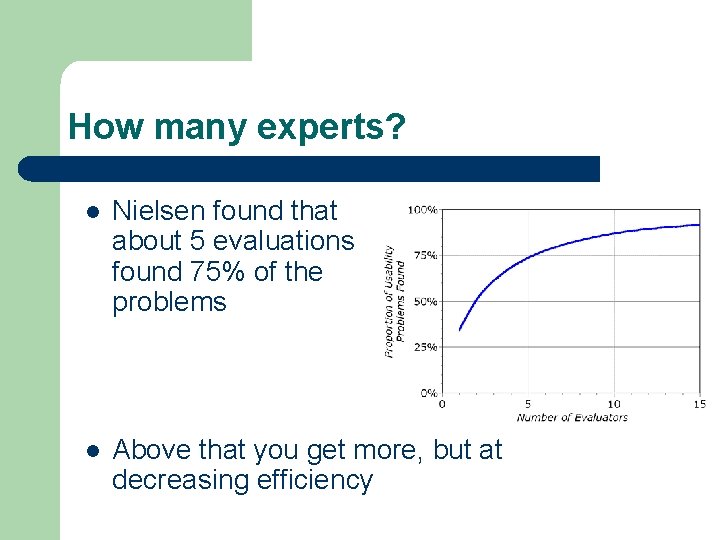

How many experts?
- Nielsen found that about 5 evaluators uncover about 75% of the problems
- Above that you get more, but at decreasing efficiency
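The diminishing return described above can be illustrated with a simple model. The sketch below assumes each evaluator independently finds a fixed share of the problems, so n evaluators find 1 - (1 - rate)^n of them. That model, the function name `proportion_found`, and the rate (chosen only so that 5 evaluators reach the 75% quoted on the slide) are assumptions for illustration, not figures from the deck.

```python
def proportion_found(evaluators: int, detection_rate: float) -> float:
    """Expected share of problems found by a number of independent evaluators."""
    if evaluators < 0:
        raise ValueError("evaluators must be non-negative")
    if not 0.0 <= detection_rate <= 1.0:
        raise ValueError("detection_rate must be between 0 and 1")
    return 1.0 - (1.0 - detection_rate) ** evaluators


# Assumption: per-evaluator rate picked so that 5 evaluators find 75%.
ASSUMED_RATE = 1.0 - 0.25 ** (1 / 5)

for n in range(1, 11):
    share = proportion_found(n, ASSUMED_RATE)
    gain = share - proportion_found(n - 1, ASSUMED_RATE)
    # Each added evaluator contributes a smaller gain than the previous one.
    print(f"{n:2d} evaluators: {share:5.1%} found (+{gain:.1%})")
```

Under this assumed model, each extra evaluator adds less than the one before, which is one way to read "more, but at decreasing efficiency".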

2: Evaluate System
- Reviewers evaluate system based on high-level heuristics
- Where to get heuristics?
  - http://www.useit.com/papers/heuristic/
  - http://www.asktog.com/basics/firstPrinciples.html

Heuristics
- use simple and natural dialog
- speak user’s language
- minimize memory load
- be consistent
- provide feedback
- provide clearly marked exits
- provide shortcuts
- provide good error messages
- prevent errors

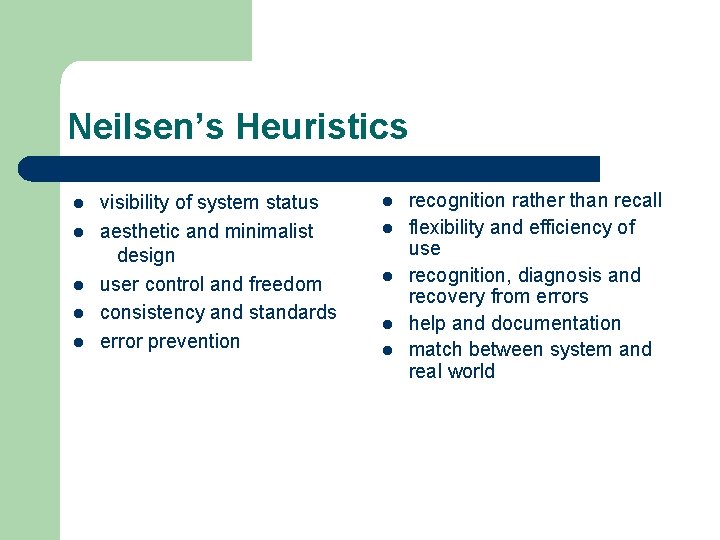

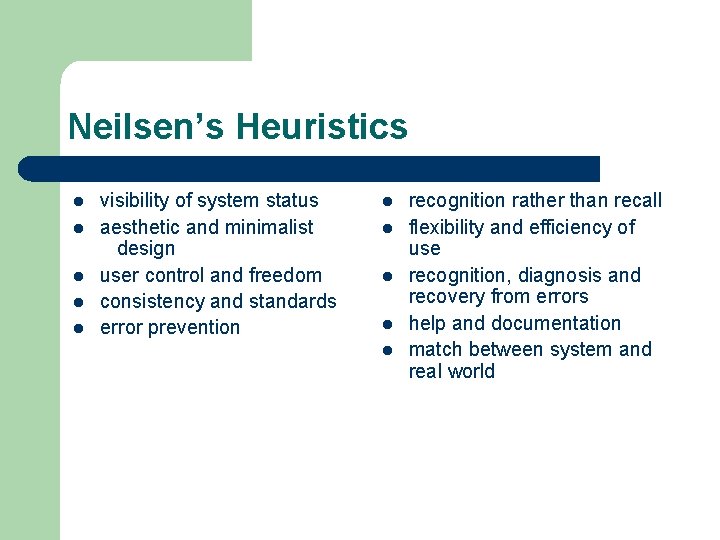

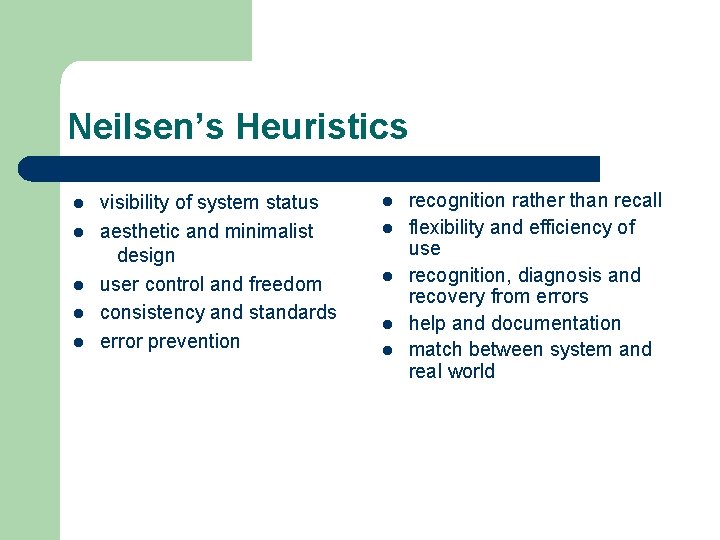

Nielsen’s Heuristics
- visibility of system status
- aesthetic and minimalist design
- user control and freedom
- consistency and standards
- error prevention
- recognition rather than recall
- flexibility and efficiency of use
- recognition, diagnosis and recovery from errors
- help and documentation
- match between system and real world

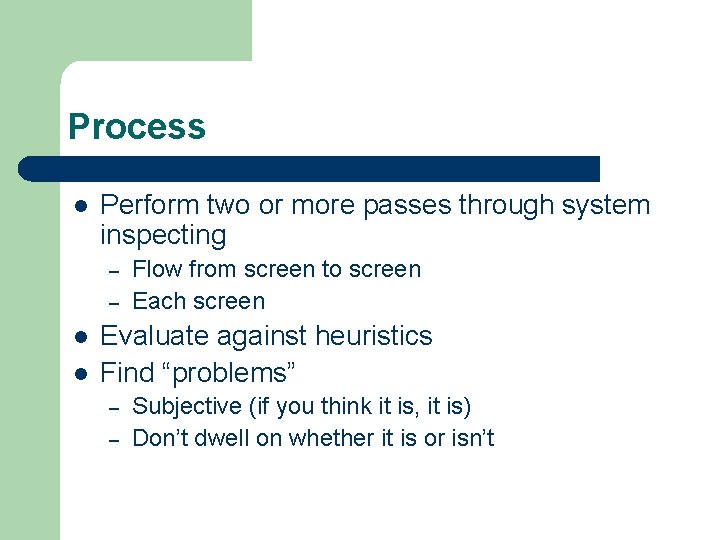

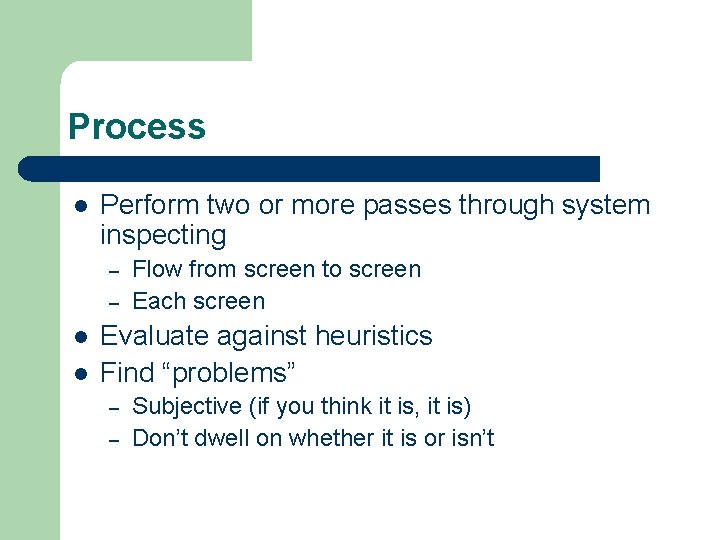

Process
- Perform two or more passes through system inspecting
  - Flow from screen to screen
  - Each screen
- Evaluate against heuristics
- Find “problems”
  - Subjective (if you think it is, it is)
  - Don’t dwell on whether it is or isn’t

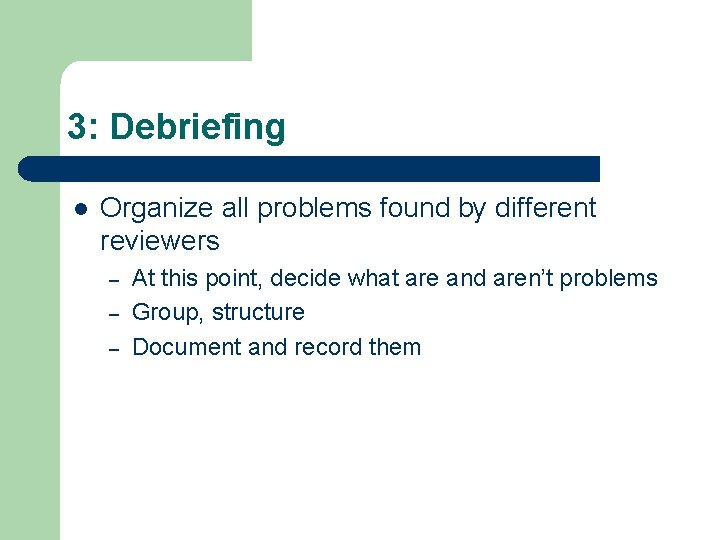

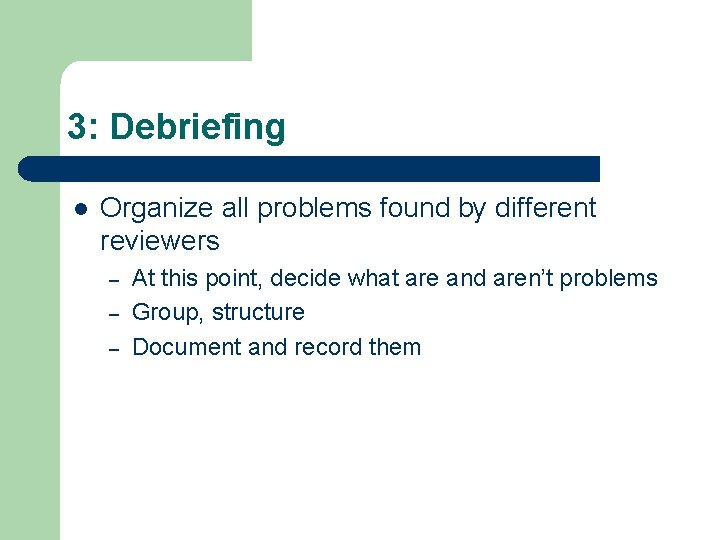

3: Debriefing
- Organize all problems found by different reviewers
  - At this point, decide what are and aren’t problems
  - Group, structure
  - Document and record them
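The grouping step in the debriefing can be sketched in code. This is a minimal illustration, assuming each evaluator hands in a list of (heuristic, description) pairs; the function `merge_reports`, the data layout, and the sample reports are all hypothetical. Matching on normalized text is a simplification, since deciding whether two reports describe the same problem normally takes human judgment.

```python
from collections import defaultdict


def merge_reports(reports):
    """Combine per-evaluator problem lists into one master list.

    `reports` maps an evaluator name to a list of (heuristic, description)
    pairs. Descriptions are matched on stripped, lower-cased text only.
    """
    found_by = defaultdict(set)
    for evaluator, problems in reports.items():
        for heuristic, description in problems:
            key = (heuristic, description.strip().lower())
            found_by[key].add(evaluator)

    master = [
        {"heuristic": h, "problem": d, "evaluators": sorted(names)}
        for (h, d), names in found_by.items()
    ]
    # Problems reported by more evaluators come first; ties sort alphabetically.
    master.sort(key=lambda p: (-len(p["evaluators"]), p["heuristic"], p["problem"]))
    return master


# Hypothetical reports, invented for illustration only.
reports = {
    "evaluator_a": [
        ("error prevention", "No confirmation before delete"),
        ("visibility of system status", "No progress indicator"),
    ],
    "evaluator_b": [
        ("error prevention", "no confirmation before delete "),
    ],
}

for entry in merge_reports(reports):
    print(entry["heuristic"], "|", entry["problem"], "|", entry["evaluators"])
```

Grouping by heuristic first keeps the master list aligned with the principles the evaluators were given, which makes the later severity discussion easier to structure.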

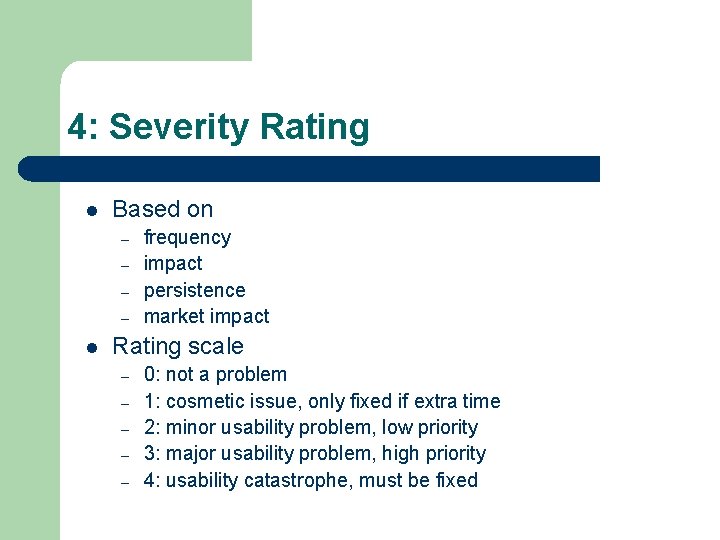

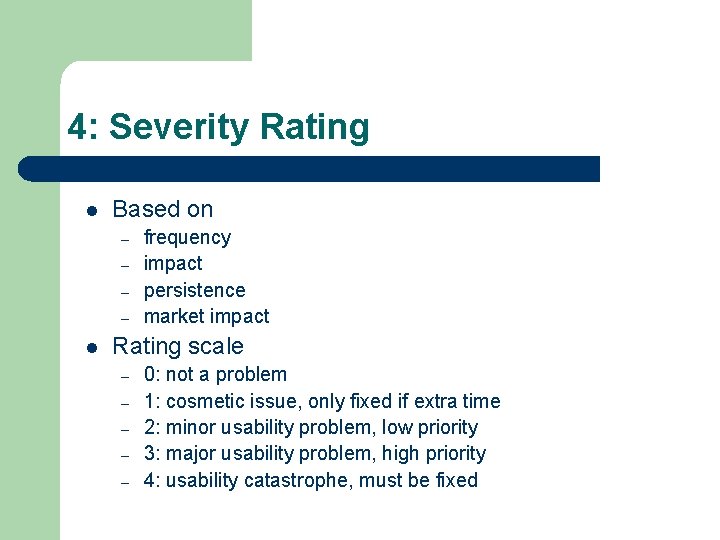

4: Severity Rating
- Based on
  - frequency
  - impact
  - persistence
  - market impact
- Rating scale
  - 0: not a problem
  - 1: cosmetic issue, only fixed if extra time
  - 2: minor usability problem, low priority
  - 3: major usability problem, high priority
  - 4: usability catastrophe, must be fixed
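One possible way to turn individual ratings into a priority list is sketched below. The 0 to 4 labels come from the scale above; averaging the evaluators' scores, the function name `rank_by_severity`, and the sample ratings are assumptions made for illustration, not a procedure the slides prescribe.

```python
from statistics import mean

# Labels taken from the rating scale on the slide.
SEVERITY_LABELS = {
    0: "not a problem",
    1: "cosmetic issue",
    2: "minor usability problem",
    3: "major usability problem",
    4: "usability catastrophe",
}


def rank_by_severity(ratings):
    """Return (problem, mean rating, label) tuples, most severe first.

    `ratings` maps a problem description to the list of 0-4 scores
    given by the individual evaluators.
    """
    ranked = []
    for problem, scores in ratings.items():
        if not scores:
            raise ValueError(f"no ratings for {problem!r}")
        if any(score not in SEVERITY_LABELS for score in scores):
            raise ValueError(f"ratings for {problem!r} must be integers 0-4")
        average = mean(scores)
        # Round half up to the nearest whole level to pick a label.
        label = SEVERITY_LABELS[int(average + 0.5)]
        ranked.append((problem, average, label))
    ranked.sort(key=lambda item: item[1], reverse=True)
    return ranked


# Hypothetical ratings, invented for illustration only.
ratings = {
    "No confirmation before delete": [3, 4, 3],
    "Inconsistent button labels": [1, 0, 1],
}

for problem, average, label in rank_by_severity(ratings):
    print(f"{average:.2f}  {label:<24}  {problem}")
```

A mean hides disagreement between evaluators, so a team might also look at the spread of scores before settling on a final rating.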

Advantages
- Few ethical issues to consider
- Inexpensive, quick
- Getting someone practiced in method and knowledgeable of domain is valuable

Challenges
- Very subjective assessment of problems
  - Depends on expertise of reviewers
- Why are these the right heuristics?
  - Others have been suggested
- How to determine what is a true usability problem
  - Some recent papers suggest that many identified “problems” really aren’t

Your turn:
- Banner – adding a course
- Use Nielsen’s heuristics (p 686)
- List all problems
- Come up to the board and put up at least one new one
- We’ll rate as a group

Nielsen’s Heuristics
- visibility of system status
- aesthetic and minimalist design
- user control and freedom
- consistency and standards
- error prevention
- recognition rather than recall
- flexibility and efficiency of use
- recognition, diagnosis and recovery from errors
- help and documentation
- match between system and real world

Heuristic Evaluation: review
- Design team provides prototype and chooses a set of heuristics
- Experts systematically step through entire prototype and write down all problems
- Design team creates master list, assigns severity rating
- Design team decides how to modify design

Cognitive Walkthrough: More evaluating with experts

Cognitive Walkthrough
- Assess learnability and usability through simulation of the way users explore and become familiar with an interactive system
  - Qualitative
  - Predictive
  - With experts
- Used to examine learnability and novice behavior
- From Polson, Lewis, et al. at UC Boulder

CW: Process
- Construct carefully designed tasks from system spec or screen mock-up
- Walk through (cognitive & operational) activities required to go from one screen to another
- Review actions needed for task, attempt to predict how users would behave and what problems they’ll encounter

CW: Assumptions
- User has rough plan
- User explores system, looking for actions to contribute to performance of action
- User selects the action that seems best for desired goal
- User interprets response and assesses whether progress has been made toward completing task

CW: Requirements
- Description of users and their backgrounds
- Description of task user is to perform
- Complete list of the actions required to complete task
- Prototype or description of system

CW: Methodology
- Experts step through action sequence
  - Action 1
  - Response A, B, ...
  - Action 2
  - Response A
  - ...
- For each one, ask four questions and try to construct a believability story

CW: Questions
1. Will users be trying to produce whatever effect action has?
2. Will users be able to notice that the correct action is available? (is it visible)
3. Once found, will they know it’s the right one for desired effect? (is it correct)
4. Will users understand feedback after action?
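The walkthrough bookkeeping (four questions per action, each answered with a short story) can be captured in a small record structure. This is only a sketch: the class and function names are hypothetical, the two actions are borrowed from the deck's Internet radio task, and the answers are invented to show the shape of the data.

```python
from dataclasses import dataclass

# The four questions asked for every action in the sequence.
QUESTIONS = (
    "Will users be trying to produce whatever effect the action has?",
    "Will users be able to notice that the correct action is available?",
    "Once found, will they know it's the right one for the desired effect?",
    "Will users understand the feedback after the action?",
)


@dataclass
class Answer:
    ok: bool      # True for a success story, False for a failure story
    story: str    # the evaluator's supporting evidence or failure scenario


@dataclass
class StepReview:
    action: str
    answers: list  # one Answer per entry in QUESTIONS, in the same order

    def __post_init__(self):
        if len(self.answers) != len(QUESTIONS):
            raise ValueError("each action needs exactly four answers")


def failure_stories(steps):
    """List (step number, action, question, story) for every failed question."""
    failures = []
    for number, step in enumerate(steps, start=1):
        for question, answer in zip(QUESTIONS, step.answers):
            if not answer.ok:
                failures.append((number, step.action, question, answer.story))
    return failures


# Hypothetical answers, invented for illustration only.
steps = [
    StepReview("Click genre", [
        Answer(True, "Part of the original task"),
        Answer(True, "Visible button"),
        Answer(True, "Label matches the goal"),
        Answer(True, "A list of genres appears"),
    ]),
    StepReview("Right-click on station, choose add to presets", [
        Answer(True, "Part of the original task"),
        Answer(False, "Nothing on screen hints at a popup menu"),
        Answer(True, "Menu item names the goal"),
        Answer(True, "Presets dialog opens"),
    ]),
]

for number, action, question, story in failure_stories(steps):
    print(f"Step {number} ({action}): {question} -> {story}")
```

Recording a story for every answer, not just the failures, keeps the evidence the design team needs when it later decides how to modify the design.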

Let’s practice: My Internet Radio
- User characteristics
  - Technology-savvy users
  - Familiar with computers
  - Understand Internet radio concept
  - Just joined and downloaded this radio

Task: add a station to presets
- Click genre
- Scroll list and choose genre
- Assuming station is on first page, add station to presets: right-click on station, choose add to presets from popup menu
- Click OK on Presets

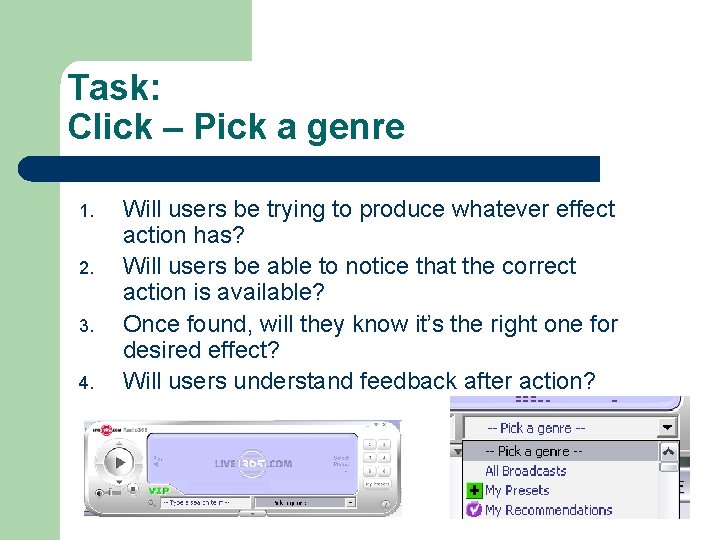

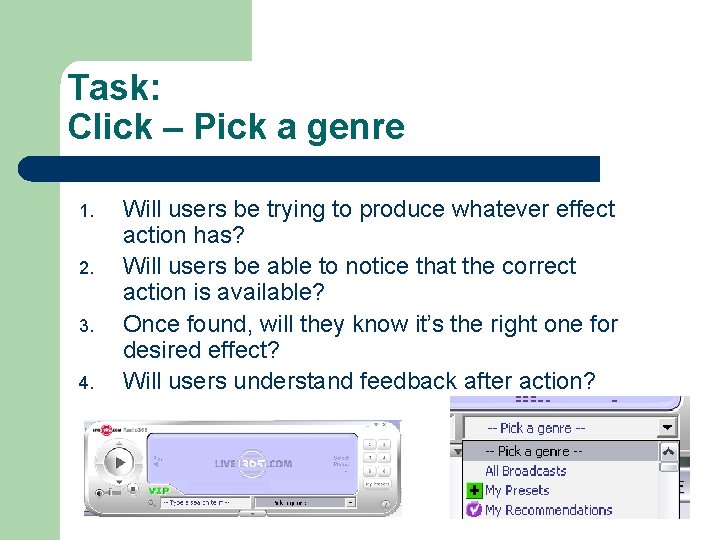

Task: Click – Pick a genre
1. Will users be trying to produce whatever effect action has?
2. Will users be able to notice that the correct action is available?
3. Once found, will they know it’s the right one for desired effect?
4. Will users understand feedback after action?

CW: Answering the Questions
1. Will user be trying to produce effect?
   - Typical supporting evidence
     - It is part of their original task
     - They have experience using the system
     - The system tells them to do it
   - No evidence?
     - Construct a failure scenario
     - Explain, back up opinion

CW: Next Question
2. Will user notice action is available?
   - Typical supporting evidence
     - Experience
     - Visible device, such as a button
     - Perceivable representation of an action such as a menu item

CW: Next Question
3. Will user know it’s the right one for the effect?
   - Typical supporting evidence
     - Experience
     - Interface provides a visual item (such as prompt) to connect action to result effect
     - All other actions look wrong

CW: Next Question
4. Will user understand the feedback?
   - Typical supporting evidence
     - Experience
     - Recognize a connection between a system response and what user was trying to do

Scroll list and choose genre
1. Will users be trying to produce whatever effect action has?
2. Will users be able to notice that the correct action is available?
3. Once found, will they know it’s the right one for desired effect?
4. Will users understand feedback after action?

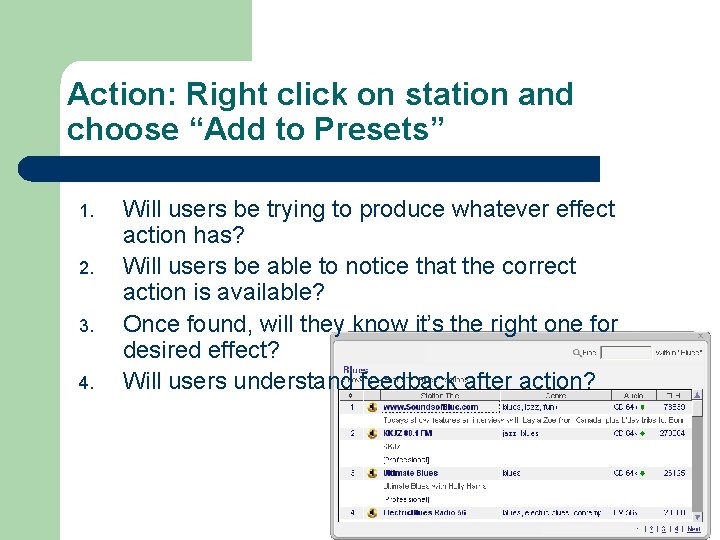

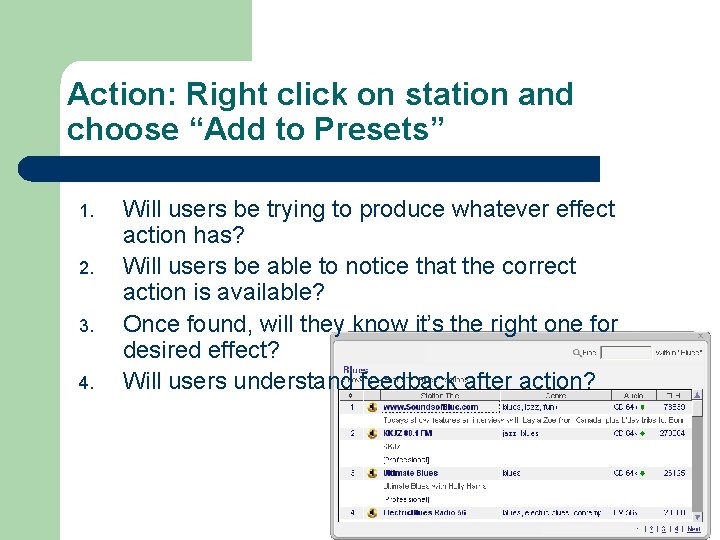

Action: Right click on station and choose “Add to Presets”
1. Will users be trying to produce whatever effect action has?
2. Will users be able to notice that the correct action is available?
3. Once found, will they know it’s the right one for desired effect?
4. Will users understand feedback after action?

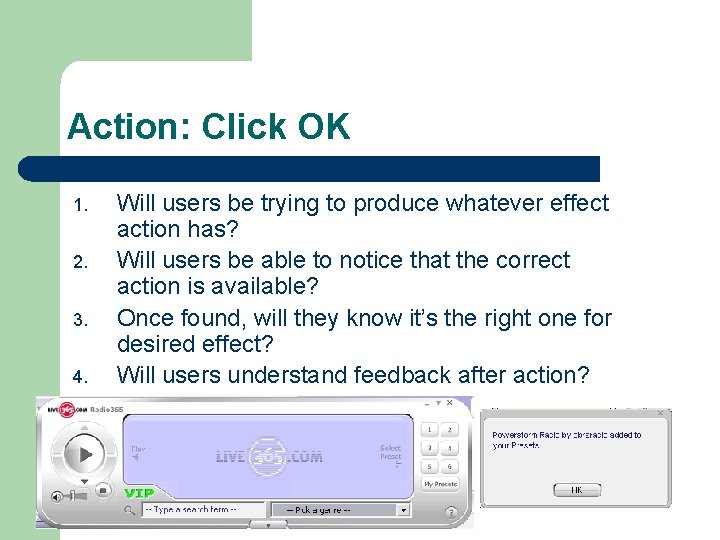

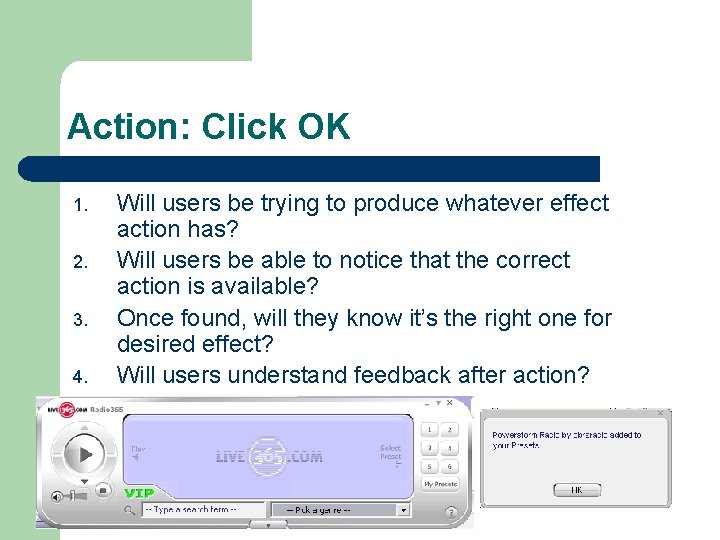

Action: Click OK
1. Will users be trying to produce whatever effect action has?
2. Will users be able to notice that the correct action is available?
3. Once found, will they know it’s the right one for desired effect?
4. Will users understand feedback after action?

Problems
- Did I pick the right task? Or list out the right sequence of actions?

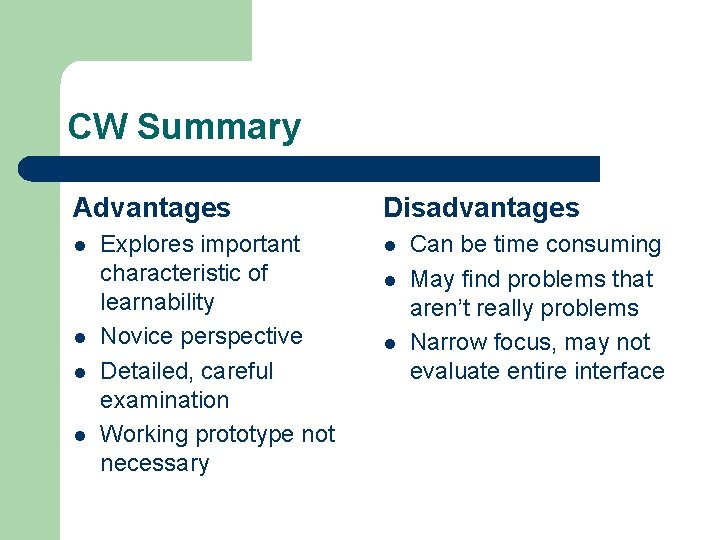

CW Summary
- Advantages
  - Explores important characteristic of learnability
  - Novice perspective
  - Detailed, careful examination
  - Working prototype not necessary
- Disadvantages
  - Can be time consuming
  - May find problems that aren’t really problems
  - Narrow focus, may not evaluate entire interface

Your turn
- Banner – adding a class
- What are our tasks?
- What are the actions?

CW: Questions
1. Will users be trying to produce whatever effect action has?
2. Will users be able to notice that the correct action is available? (is it visible)
3. Once found, will they know it’s the right one for desired effect? (is it correct)
4. Will users understand feedback after action?

CW: responsibilities
- Design team creates prototype, user characteristics
- Design team chooses tasks, lists out every action and response
- Experts answer 4 questions for every action/response
- Design team gathers responses and feedback
- Design team determines how to modify the design

Next Wednesday’s plan
- 3:30-4:05 Cognitive Walkthrough
- 1 member of design team (D) will facilitate 4 members of the evaluating team
- D walks through each task, evaluating team asks and answers the 4 questions for every action
- D makes sure all feedback is written down and takes it back to rest of design team

Cognitive Walkthrough pairs
- Rabid Bunnies – User One
- The Team – Satisfaction
- Inventive Innovators – No Spoon
- Awesome – No. Name

Monday’s plan
- 4:10-4:45 Heuristic evaluation
- 1 member of the Design team (D2) demos the prototype to the evaluating team
- D2 provides the set of heuristics
- Evaluating team individually writes down all problems they see and gives it to D2

Heuristic evaluation teams
- Rabid Bunnies – The Team
- User Won – Satisfaction
- Inventive Innovators – Awesome
- No Spoon – No. Name

Come Prepared!
- Bring your prototype, have it ready to go at 3:30
- Choose facilitator (D1 & D2) for both
- Bring task & action lists for cognitive walkthrough
- Bring heuristics for heuristic evaluation
- As an evaluator – be detailed, thorough, and constructive