CUDA (Compute Unified Device Architecture): Parallel Programming Using the GPU

- Slides: 32

CUDA (Compute Unified Device Architecture): Parallel Programming Using the GPU
By: Matt Sirabella, Neil Weber, Christian Casseus, Jordan Dubique

Outline
• History of Parallel Computing
• Computing Using the GPU
• What Is CUDA?
• Key Features
• Purpose of CUDA
• Example(s)

History of Parallel Computing

History of GPU Computing
Parallel Programming
• 1980s and early 1990s: the golden age of data-parallel computing, in which the same computation is performed on many different data elements.
Supercomputers
• Powerful, but expensive.
• Despite their limited availability, supercomputers generated excitement about parallel computing.

• Parallel programming is much more complex than sequential programming. This is where CUDA comes in!

GPU Computing
Why use GPUs in computing?
• GPUs are massively multithreaded many-core chips.
• Many-core chips (GPU) vs. multi-core chips (CPU): many-core chips contain hundreds of processor cores, while multi-core chips contain far fewer (e.g., dual-core, quad-core, eight-core).
• Increased application efficiency.

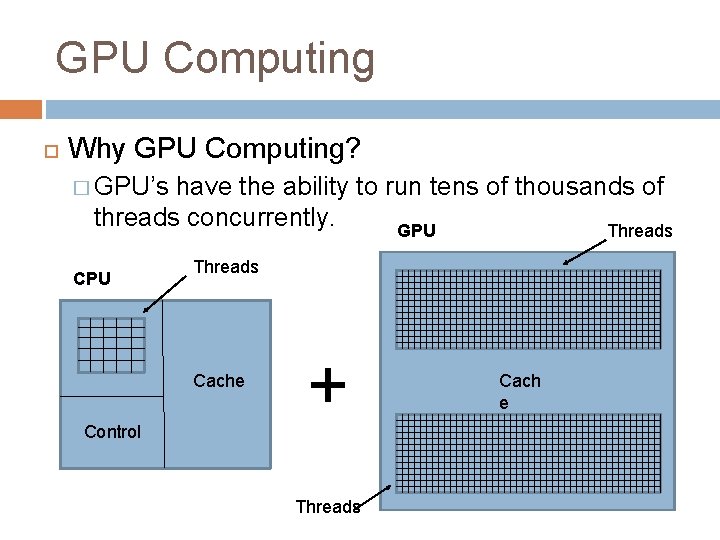

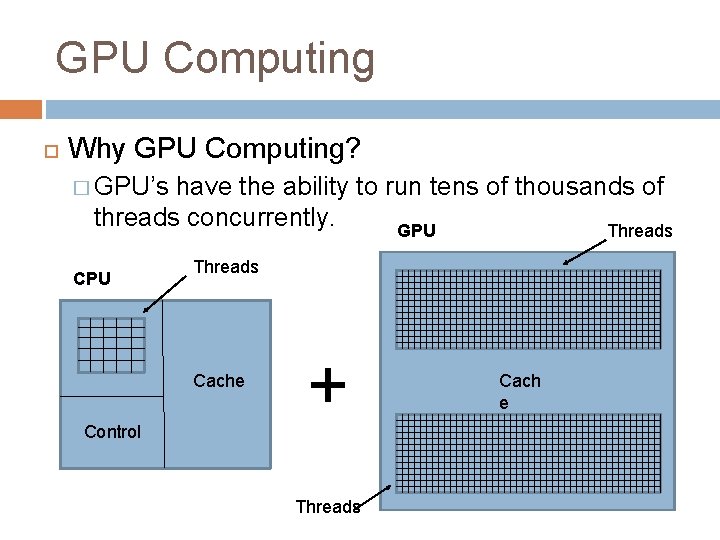

Why GPU Computing?
• GPUs can run tens of thousands of threads concurrently.
[Figure: CPU vs. GPU; labels: Control, Cache, Threads]

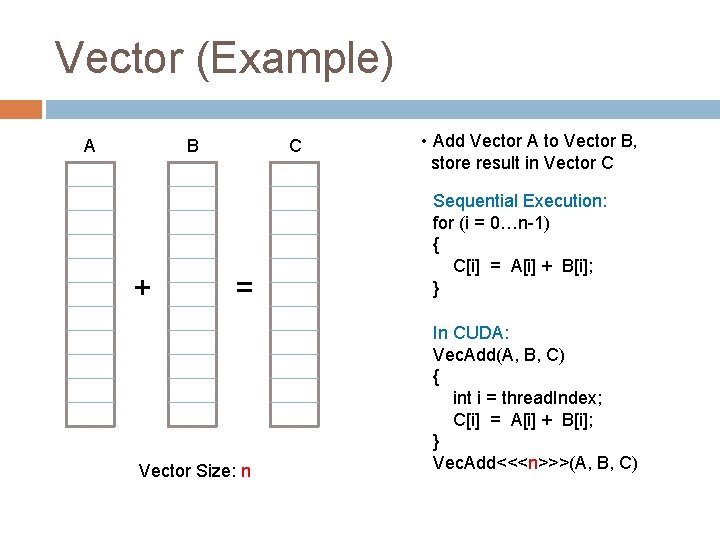

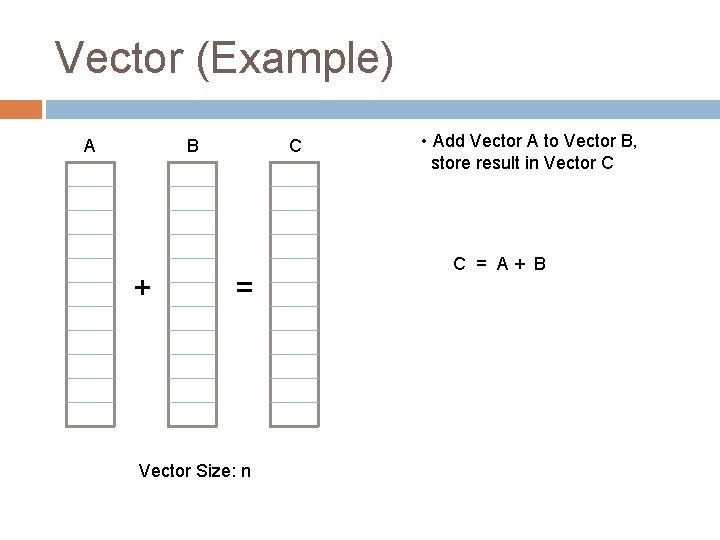


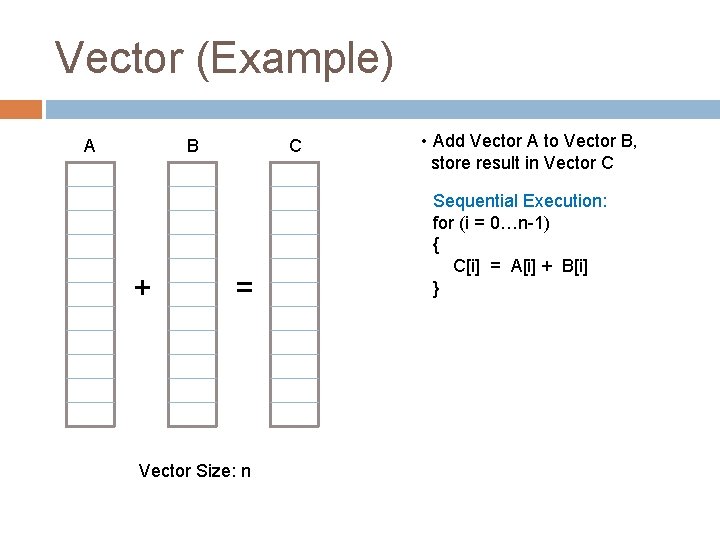

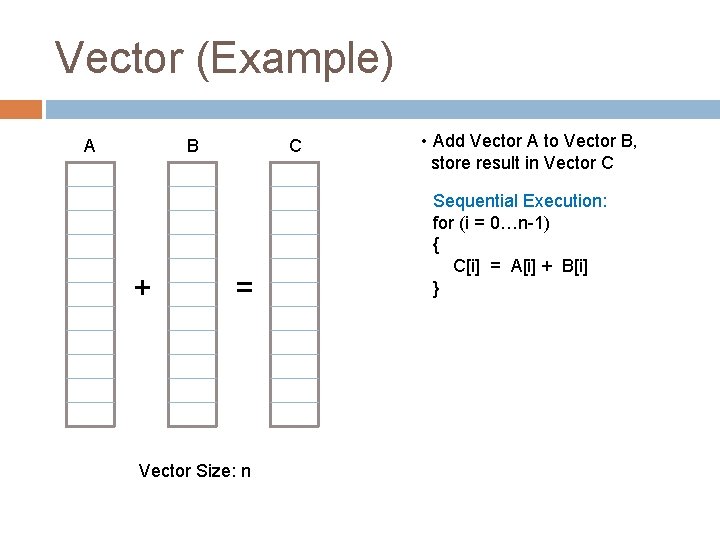


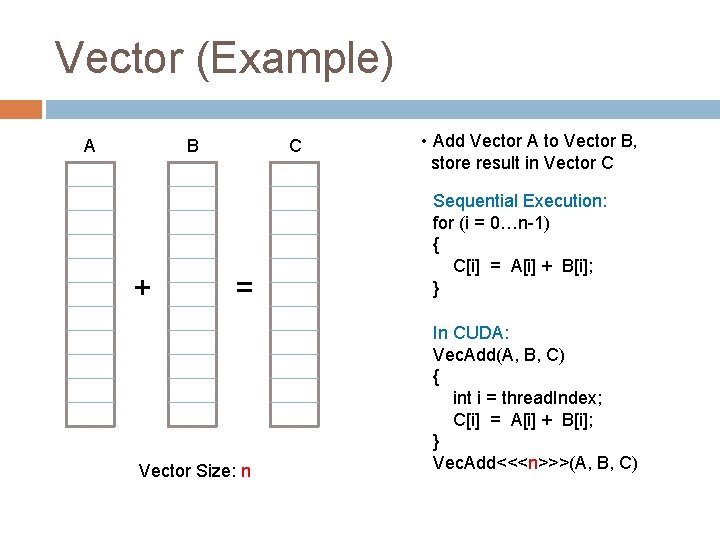

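Vector Addition (Example)
• Add vector A to vector B and store the result in vector C: C = A + B (vector size: n).
Sequential execution:
for (int i = 0; i < n; i++) { C[i] = A[i] + B[i]; }
In CUDA (one block of n threads, one thread per element, so n is limited by the maximum block size):
__global__ void VecAdd(float *A, float *B, float *C) { int i = threadIdx.x; C[i] = A[i] + B[i]; }
VecAdd<<<1, n>>>(A, B, C);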
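Because the slide's kernel runs in a single block, n is capped by the maximum block size. The sketch below is a fuller version, assuming float vectors and a block size of 256 chosen only for illustration; `vecAddOnDevice` is a hypothetical helper name, not part of the CUDA API. It shows the usual host-side steps: allocate device memory, copy the inputs over, launch enough blocks to cover n, copy the result back, and free.

```cuda
#include <cuda_runtime.h>

// Each thread computes one element of C = A + B.
__global__ void vecAdd(const float *A, const float *B, float *C, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global thread index
    if (i < n) {                                    // the last block may be only partly used
        C[i] = A[i] + B[i];
    }
}

// Hypothetical helper: runs C = A + B on the GPU for host arrays of length n (n > 0).
cudaError_t vecAddOnDevice(const float *hA, const float *hB, float *hC, int n)
{
    size_t bytes = (size_t)n * sizeof(float);
    float *dA = nullptr, *dB = nullptr, *dC = nullptr;

    cudaMalloc(&dA, bytes);
    cudaMalloc(&dB, bytes);
    cudaMalloc(&dC, bytes);

    cudaMemcpy(dA, hA, bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dB, hB, bytes, cudaMemcpyHostToDevice);

    int threadsPerBlock = 256;                                   // illustrative choice
    int blocks = (n + threadsPerBlock - 1) / threadsPerBlock;    // round up to cover n
    vecAdd<<<blocks, threadsPerBlock>>>(dA, dB, dC, n);

    cudaError_t err = cudaGetLastError();                        // catches launch errors
    if (err == cudaSuccess)
        err = cudaMemcpy(hC, dC, bytes, cudaMemcpyDeviceToHost); // waits for the kernel

    cudaFree(dA);
    cudaFree(dB);
    cudaFree(dC);
    return err;
}
```

Rounding the block count up and guarding with `i < n` lets the same kernel handle any vector length, not just multiples of the block size.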

What Is CUDA?
• CUDA is a parallel computing platform and programming model invented by NVIDIA.
• NVIDIA GPUs implement this architecture and programming model.
• CUDA works with all NVIDIA GPUs from the G8x series onward.
• By downloading the CUDA Toolkit, you can write algorithms for execution on the GPU.

History
• The CUDA project was announced in November 2006.
• A public beta of the CUDA SDK was released in February 2007 as the world's first solution for general-purpose computing on GPUs.
• Later that year came the CUDA 1.1 beta, which added CUDA functions to common NVIDIA drivers.
• Current version (at the time of these slides): CUDA 5.0, developer.nvidia.com/cuda-downloads

CUDA
• The developer still programs in familiar C, C++, Fortran, or another supported language, and uses extensions of these languages in the form of a few basic keywords.
"GPUs have evolved to the point where many real-world applications are easily implemented on them and run significantly faster than on multicore systems. Future computing architectures will be hybrid systems with parallel-core GPUs working in tandem with multi-core CPUs." -- Jack Dongarra, Professor, University of Tennessee

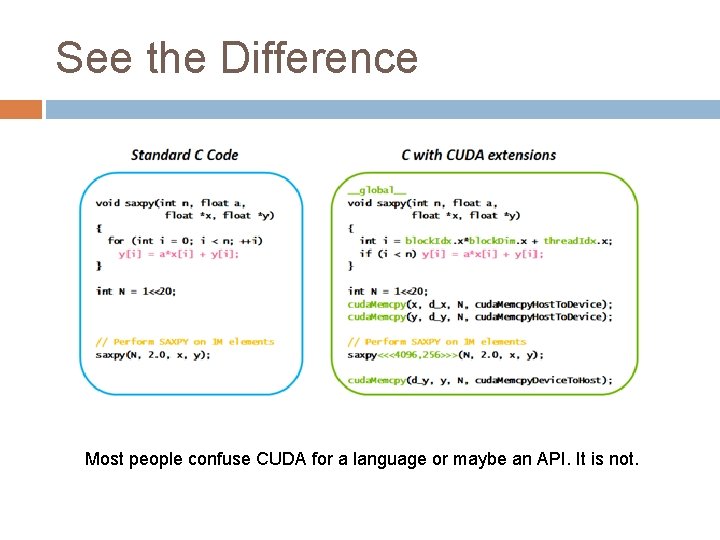

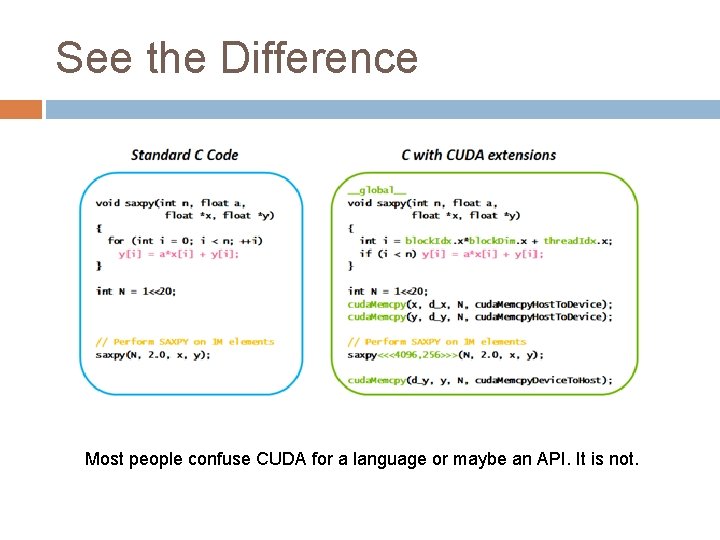

See the Difference
• Many people mistake CUDA for a language or an API. It is neither: it is a parallel computing platform and programming model.

Where to Learn
• developer.nvidia.com/cuda-education-training
• NVIDIA hosts regular webinars for developers.
"The key thing customers said was they didn't want to have to learn a whole new language or API. Some of them were hiring gaming developers because they knew GPUs were fast but didn't know how to get to them. Providing a solution that was easy, that you could learn in one session and see it outperform your CPU code was critical." -- Ian Buck, General Manager, NVIDIA

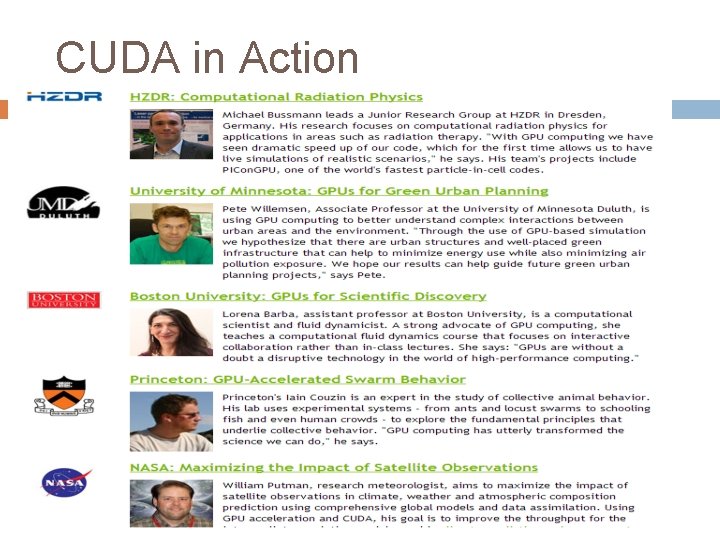

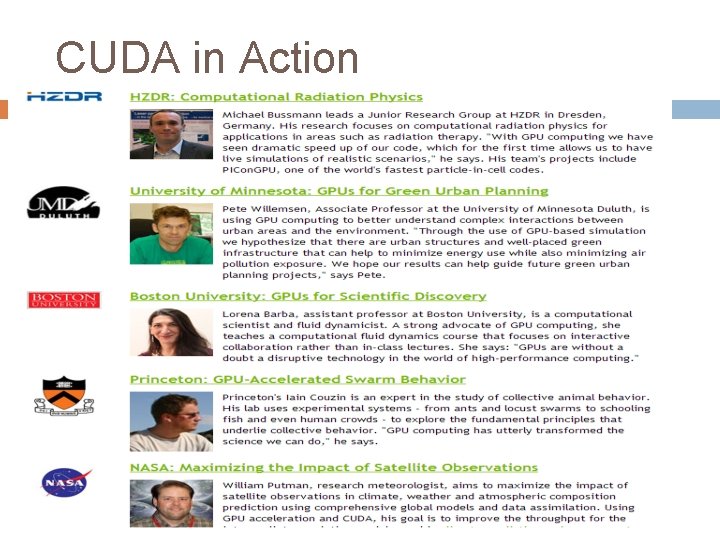

CUDA in Action

What Sets CUDA Apart

Accessible in Many Ways
• The CUDA platform is accessible to software developers through CUDA-accelerated libraries and compiler directives.
• It provides access through extensions to commonly used programming languages: C and C++ (CUDA C/C++) and Fortran (CUDA Fortran).
• The CUDA platform supports other computational interfaces: the Khronos Group's OpenCL, Microsoft's DirectCompute, and C++ AMP.
• Third-party wrappers are available for other languages: Python, Perl, Fortran, Java, Ruby, Lua, Haskell, MATLAB, and IDL.

Distinct Features
• Parallelism
• Data locality
• Thread cooperation

Parallelism
• A parallel throughput architecture that emphasizes executing many concurrent threads slowly, rather than executing a single thread very quickly.
• Facilitates heterogeneous computing: CPU + GPU.
• Parallel portions of an application are executed on the device as kernels.

Data Locality
• The CUDA model encourages data locality and reuse for good performance on the GPU.
• The data tiling and locality expressed in effective CUDA kernels also capture most of the benefits of hand-optimization for the CPU architecture.
• Expressing data locality and computational regularity in the CUDA programming model achieves much of the performance benefit of tuning code for the architecture by hand.
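Tiled matrix multiplication is a common illustration of data tiling: each block stages small tiles of the input matrices in fast on-chip shared memory and reuses every loaded value TILE times instead of rereading it from global memory. A minimal sketch for square, row-major n x n matrices, assuming a tile width of 16 (an illustrative choice); `matMulTiled` and `matMulOnDevice` are hypothetical names.

```cuda
#include <cuda_runtime.h>

#define TILE 16  // tile width (illustrative); blocks are TILE x TILE threads

// C = A * B for square n x n row-major matrices.
__global__ void matMulTiled(const float *A, const float *B, float *C, int n)
{
    __shared__ float tileA[TILE][TILE];
    __shared__ float tileB[TILE][TILE];

    int row = blockIdx.y * TILE + threadIdx.y;
    int col = blockIdx.x * TILE + threadIdx.x;
    float sum = 0.0f;

    for (int t = 0; t < (n + TILE - 1) / TILE; ++t) {
        int aCol = t * TILE + threadIdx.x;
        int bRow = t * TILE + threadIdx.y;
        // Each thread loads one element of each tile; out-of-range entries become 0.
        tileA[threadIdx.y][threadIdx.x] = (row < n && aCol < n) ? A[row * n + aCol] : 0.0f;
        tileB[threadIdx.y][threadIdx.x] = (bRow < n && col < n) ? B[bRow * n + col] : 0.0f;
        __syncthreads();  // both tiles fully loaded before anyone reads them

        for (int k = 0; k < TILE; ++k)
            sum += tileA[threadIdx.y][k] * tileB[k][threadIdx.x];
        __syncthreads();  // everyone done reading before the next load overwrites
    }
    if (row < n && col < n)
        C[row * n + col] = sum;
}

// Hypothetical helper: multiplies host matrices on the GPU (n > 0).
cudaError_t matMulOnDevice(const float *hA, const float *hB, float *hC, int n)
{
    size_t bytes = (size_t)n * n * sizeof(float);
    float *dA = nullptr, *dB = nullptr, *dC = nullptr;
    cudaMalloc(&dA, bytes);
    cudaMalloc(&dB, bytes);
    cudaMalloc(&dC, bytes);
    cudaMemcpy(dA, hA, bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dB, hB, bytes, cudaMemcpyHostToDevice);

    dim3 block(TILE, TILE);
    dim3 grid((n + TILE - 1) / TILE, (n + TILE - 1) / TILE);
    matMulTiled<<<grid, block>>>(dA, dB, dC, n);

    cudaError_t err = cudaGetLastError();
    if (err == cudaSuccess)
        err = cudaMemcpy(hC, dC, bytes, cudaMemcpyDeviceToHost);

    cudaFree(dA);
    cudaFree(dB);
    cudaFree(dC);
    return err;
}
```

Each element of A and B is read from global memory about n / TILE times instead of n times; the two `__syncthreads()` calls keep threads from reading a tile before it is loaded or overwriting it while others still use it.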

Thread Cooperation
• CUDA threads are extremely lightweight: very little creation overhead and fast switching.
• CUDA uses thousands of threads to achieve efficiency; multi-core CPUs can only use a few.
• Thread cooperation is valuable: threads can cooperate on memory accesses and share results to avoid redundant computation.
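As a sketch of thread cooperation, the kernel below has the threads of each block sum their elements together in shared memory, halving the number of active threads at each step, so each partial result is computed once and shared with the rest of the block. It assumes int data and a power-of-two block size of 256; `blockSum` and `sumOnDevice` are hypothetical names, and the few per-block sums are added on the CPU for simplicity.

```cuda
#include <cuda_runtime.h>

#define THREADS 256  // block size; must be a power of two for this tree reduction

// Each block sums up to THREADS elements and writes one partial sum.
__global__ void blockSum(const int *in, int *partial, int n)
{
    __shared__ int cache[THREADS];
    int tid = threadIdx.x;
    int i = blockIdx.x * blockDim.x + tid;

    cache[tid] = (i < n) ? in[i] : 0;  // out-of-range threads contribute 0
    __syncthreads();

    // Tree reduction: at each step the lower half adds in the upper half's values.
    for (int stride = blockDim.x / 2; stride > 0; stride /= 2) {
        if (tid < stride)
            cache[tid] += cache[tid + stride];
        __syncthreads();
    }
    if (tid == 0)
        partial[blockIdx.x] = cache[0];
}

// Hypothetical helper: sums n ints (n > 0) using one GPU pass plus a short CPU loop.
long long sumOnDevice(const int *hIn, int n)
{
    int blocks = (n + THREADS - 1) / THREADS;
    int *dIn = nullptr, *dPartial = nullptr;
    cudaMalloc(&dIn, n * sizeof(int));
    cudaMalloc(&dPartial, blocks * sizeof(int));
    cudaMemcpy(dIn, hIn, n * sizeof(int), cudaMemcpyHostToDevice);

    blockSum<<<blocks, THREADS>>>(dIn, dPartial, n);

    int *hPartial = new int[blocks];
    cudaMemcpy(hPartial, dPartial, blocks * sizeof(int), cudaMemcpyDeviceToHost);
    long long total = 0;
    for (int b = 0; b < blocks; ++b)
        total += hPartial[b];

    delete[] hPartial;
    cudaFree(dIn);
    cudaFree(dPartial);
    return total;
}
```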

CUDA SYNTAX

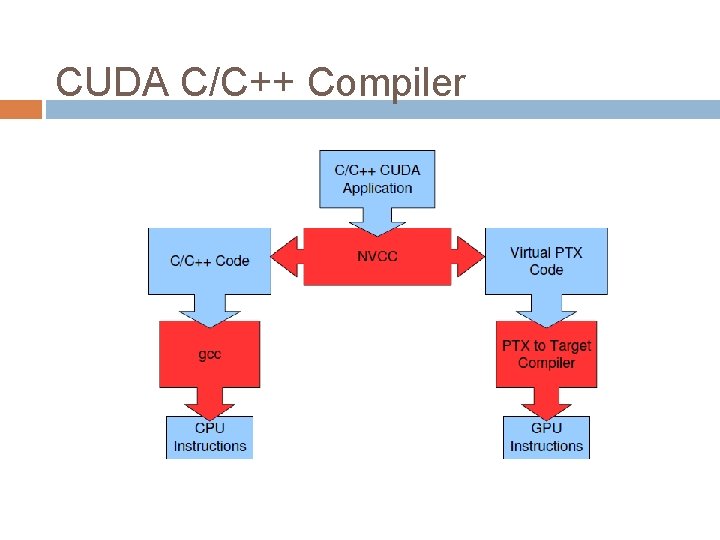

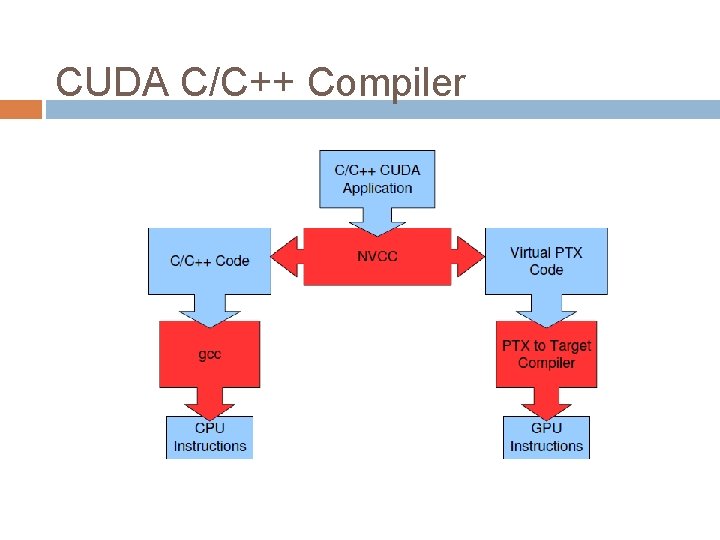

CUDA C/C++ Compiler

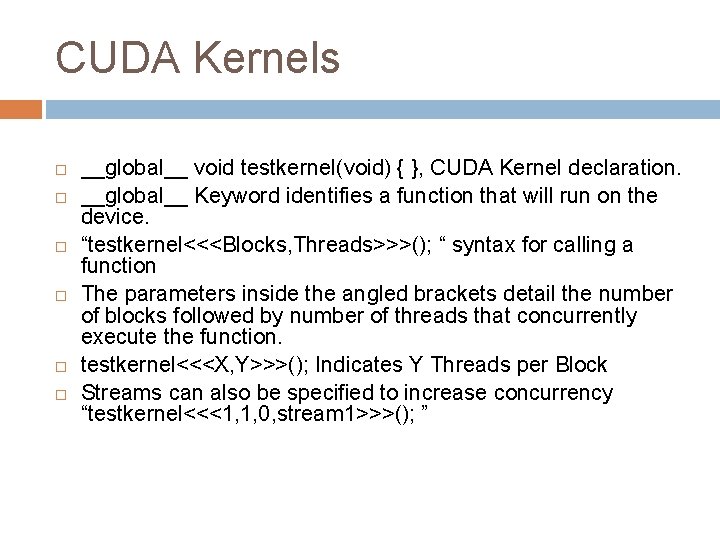

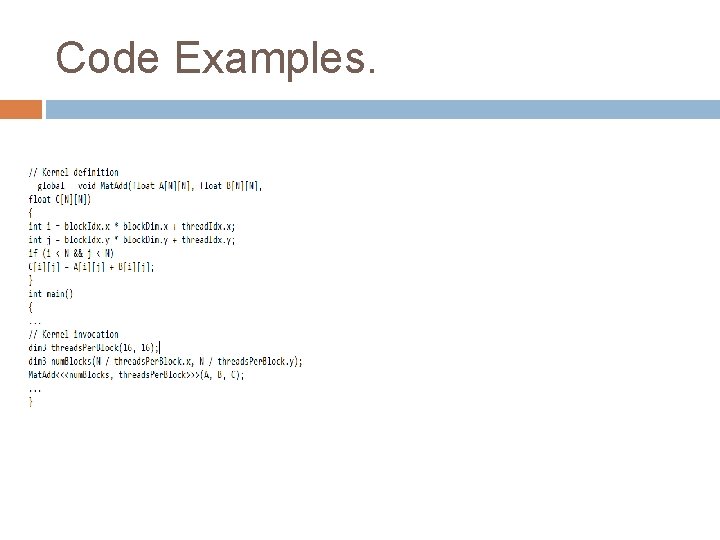

CUDA Kernels
• __global__ void testkernel(void) { } is a CUDA kernel declaration. The __global__ keyword identifies a function that runs on the device and is launched from the host.
• testkernel<<<Blocks, Threads>>>(); is the syntax for launching a kernel. The parameters inside the triple angle brackets give the number of blocks, followed by the number of threads per block, that execute the function concurrently. For example, testkernel<<<X, Y>>>(); launches X blocks of Y threads each.
• A stream can also be specified to increase concurrency: testkernel<<<1, 1, 0, stream1>>>(); (the third parameter is the number of bytes of dynamically allocated shared memory).
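To make the <<<X, Y>>> layout concrete, the sketch below launches X blocks of Y threads on a stream created with `cudaStreamCreate`, using the four-argument form (blocks, threads per block, dynamic shared-memory bytes, stream). Each thread records its own block and thread index; `whoAmI` and `launchLayout` are hypothetical names used only for illustration.

```cuda
#include <cuda_runtime.h>

// Each thread records which block it is in and its index within that block.
__global__ void whoAmI(int *blockOf, int *threadOf)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // unique global index
    blockOf[i] = blockIdx.x;
    threadOf[i] = threadIdx.x;
}

// Hypothetical helper: launches X blocks of Y threads (X * Y threads in total)
// on a user-created stream and copies the recorded indices back to the host.
cudaError_t launchLayout(int X, int Y, int *hBlockOf, int *hThreadOf)
{
    int total = X * Y;
    int *dBlockOf = nullptr, *dThreadOf = nullptr;
    cudaMalloc(&dBlockOf, total * sizeof(int));
    cudaMalloc(&dThreadOf, total * sizeof(int));

    cudaStream_t stream1;
    cudaStreamCreate(&stream1);

    // <<<blocks, threadsPerBlock, dynamicSharedMemBytes, stream>>>
    whoAmI<<<X, Y, 0, stream1>>>(dBlockOf, dThreadOf);
    cudaError_t err = cudaGetLastError();
    cudaStreamSynchronize(stream1);  // wait for the work queued on stream1

    if (err == cudaSuccess)
        err = cudaMemcpy(hBlockOf, dBlockOf, total * sizeof(int), cudaMemcpyDeviceToHost);
    if (err == cudaSuccess)
        err = cudaMemcpy(hThreadOf, dThreadOf, total * sizeof(int), cudaMemcpyDeviceToHost);

    cudaStreamDestroy(stream1);
    cudaFree(dBlockOf);
    cudaFree(dThreadOf);
    return err;
}
```

With X = 4 and Y = 8, global index 9 belongs to block 1, thread 1, and index 31 to block 3, thread 7.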

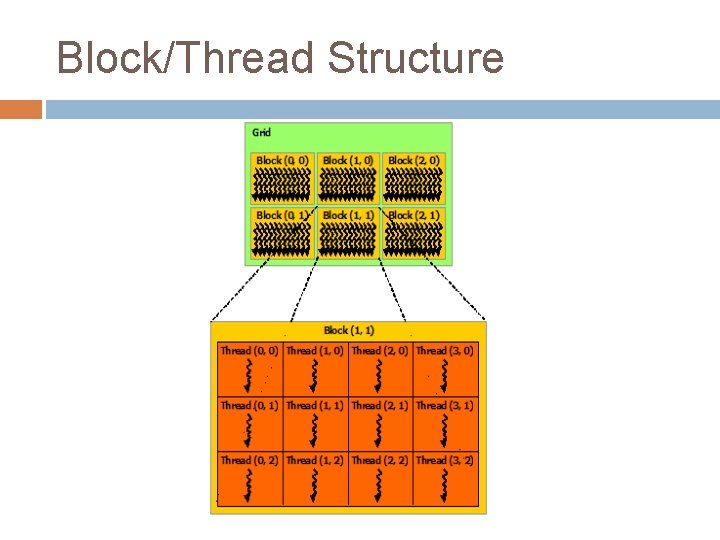

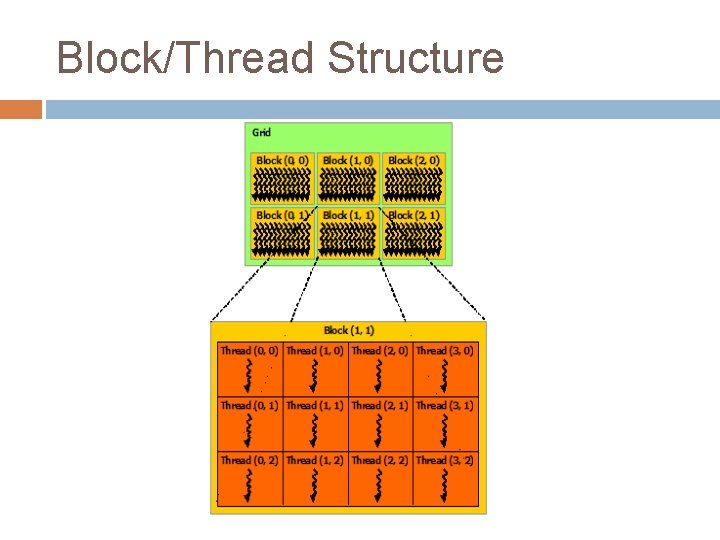

Block/Thread Structure

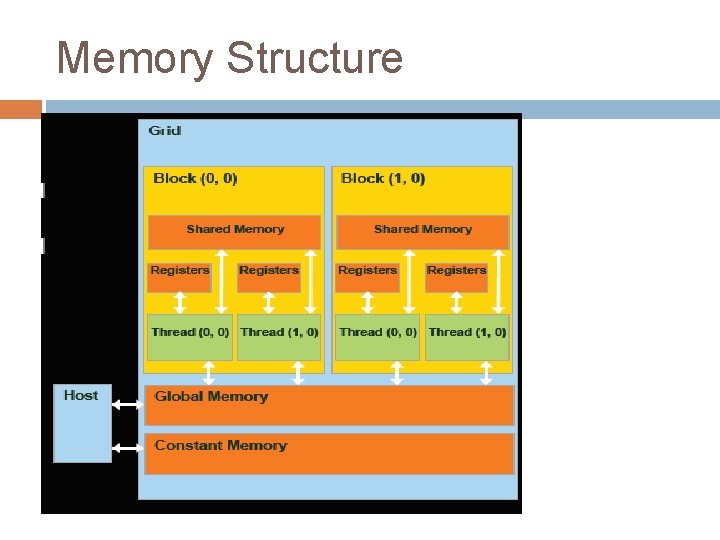

Memory
• The core memory functions in CUDA are cudaMalloc(), cudaFree(), and cudaMemcpy(); cudaMemcpyAsync() increases concurrency.
• Threads within a block can share memory using the __shared__ keyword.
• void __syncthreads(); is a barrier: each thread in the block waits there until all threads in the block have reached it, so shared-memory writes made before the barrier are visible to every thread in the block after it.
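A minimal sketch of how `cudaMemcpyAsync()` and streams can increase concurrency, assuming the host buffer is page-locked (allocated with `cudaMallocHost`), since asynchronous copies from ordinary pageable memory generally cannot overlap other work. The data is split into two chunks, and each chunk is copied in, processed, and copied back on its own stream, so one chunk's transfer can overlap the other's kernel. `addOne` and `addOneAsync` are hypothetical names.

```cuda
#include <cuda_runtime.h>

// Adds 1 to each element of a chunk.
__global__ void addOne(float *data, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n)
        data[i] += 1.0f;
}

// Hypothetical helper: hData must be page-locked (cudaMallocHost) for the
// copies to be truly asynchronous. Work is split across two streams.
cudaError_t addOneAsync(float *hData, int n)
{
    const int nStreams = 2;
    int chunk = (n + nStreams - 1) / nStreams;
    float *dData = nullptr;
    cudaMalloc(&dData, n * sizeof(float));

    cudaStream_t streams[nStreams];
    for (int s = 0; s < nStreams; ++s)
        cudaStreamCreate(&streams[s]);

    for (int s = 0; s < nStreams; ++s) {
        int offset = s * chunk;
        int count = (offset + chunk <= n) ? chunk : n - offset;
        if (count <= 0)
            break;
        size_t bytes = count * sizeof(float);
        // Copy in, compute, copy out: ordered within this stream, concurrent across streams.
        cudaMemcpyAsync(dData + offset, hData + offset, bytes, cudaMemcpyHostToDevice, streams[s]);
        addOne<<<(count + 255) / 256, 256, 0, streams[s]>>>(dData + offset, count);
        cudaMemcpyAsync(hData + offset, dData + offset, bytes, cudaMemcpyDeviceToHost, streams[s]);
    }
    cudaError_t err = cudaDeviceSynchronize();  // wait for all streams to finish

    for (int s = 0; s < nStreams; ++s)
        cudaStreamDestroy(streams[s]);
    cudaFree(dData);
    return err;
}
```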

Other Keywords and Variables
• gridDim and blockDim: contain the dimensions of the grid and of each block.
• blockIdx: contains the block index within the grid, for example blockIdx.x.
• threadIdx: contains the thread index within a block.
• __device__: declares a variable that resides in the device's global memory.
• __constant__: declares a variable that resides in the device's constant memory.
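The sketch below uses several of these keywords together, assuming a quadratic polynomial as the workload. The coefficients live in `__constant__` memory and are set from the host with `cudaMemcpyToSymbol`; a `__device__` counter in global memory is updated with `atomicAdd`; and a grid-stride loop steps by `gridDim.x * blockDim.x`, so a deliberately small grid still covers every element. `coeffs`, `evaluatedCount`, `evalPoly`, and `evalPolyOnDevice` are hypothetical names.

```cuda
#include <cuda_runtime.h>

__constant__ float coeffs[3];  // constant memory: read-only in kernels, set from the host
__device__ int evaluatedCount; // global memory: visible to all threads

// Computes y = c0 + c1*x + c2*x^2; the grid-stride loop covers any n.
__global__ void evalPoly(const float *x, float *y, int n)
{
    int stride = gridDim.x * blockDim.x;  // total threads in the grid
    for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n; i += stride) {
        float v = x[i];
        y[i] = coeffs[0] + coeffs[1] * v + coeffs[2] * v * v;
        atomicAdd(&evaluatedCount, 1);    // count every element processed
    }
}

// Hypothetical helper: evaluates the polynomial for n host values (n > 0)
// and reports how many elements the kernel processed.
cudaError_t evalPolyOnDevice(const float hCoeffs[3], const float *hX, float *hY,
                             int n, int *hCount)
{
    int zero = 0;
    cudaMemcpyToSymbol(coeffs, hCoeffs, 3 * sizeof(float));
    cudaMemcpyToSymbol(evaluatedCount, &zero, sizeof(int));

    float *dX = nullptr, *dY = nullptr;
    cudaMalloc(&dX, n * sizeof(float));
    cudaMalloc(&dY, n * sizeof(float));
    cudaMemcpy(dX, hX, n * sizeof(float), cudaMemcpyHostToDevice);

    evalPoly<<<4, 128>>>(dX, dY, n);  // 512 threads, possibly fewer than n elements
    cudaError_t err = cudaGetLastError();
    if (err == cudaSuccess)
        err = cudaMemcpy(hY, dY, n * sizeof(float), cudaMemcpyDeviceToHost);
    if (err == cudaSuccess)
        err = cudaMemcpyFromSymbol(hCount, evaluatedCount, sizeof(int));

    cudaFree(dX);
    cudaFree(dY);
    return err;
}
```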

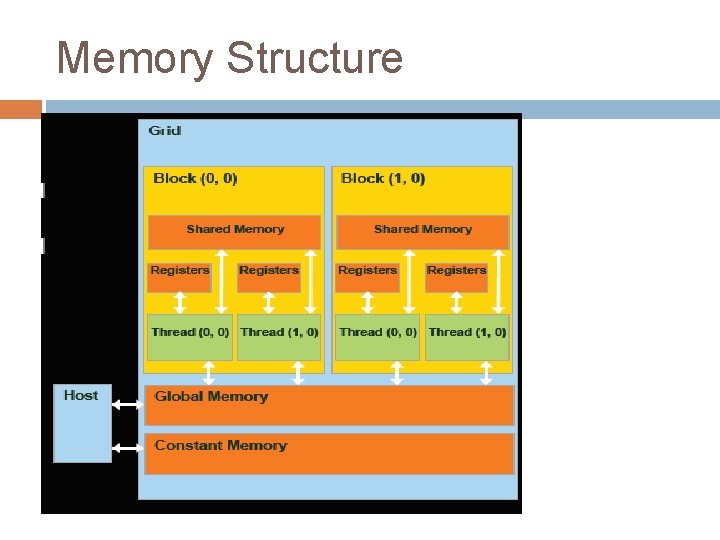

Memory Structure

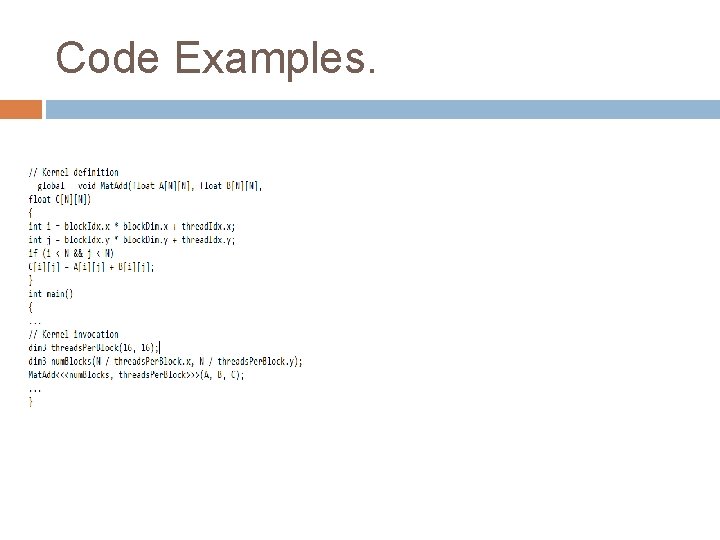

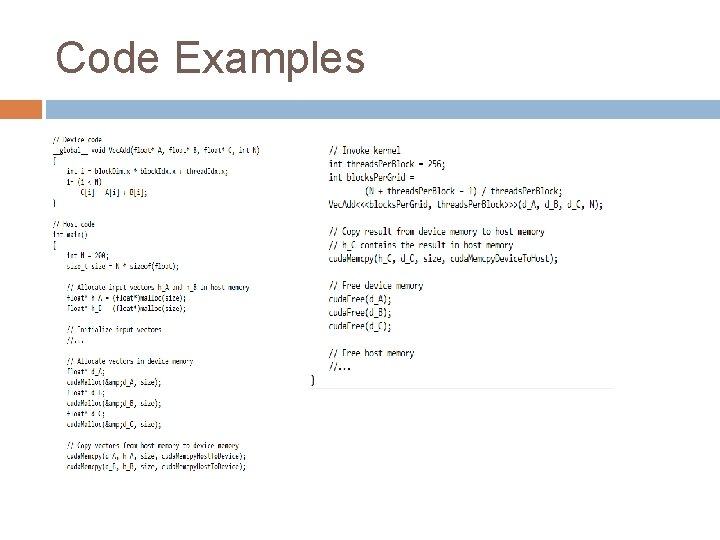

Code Examples

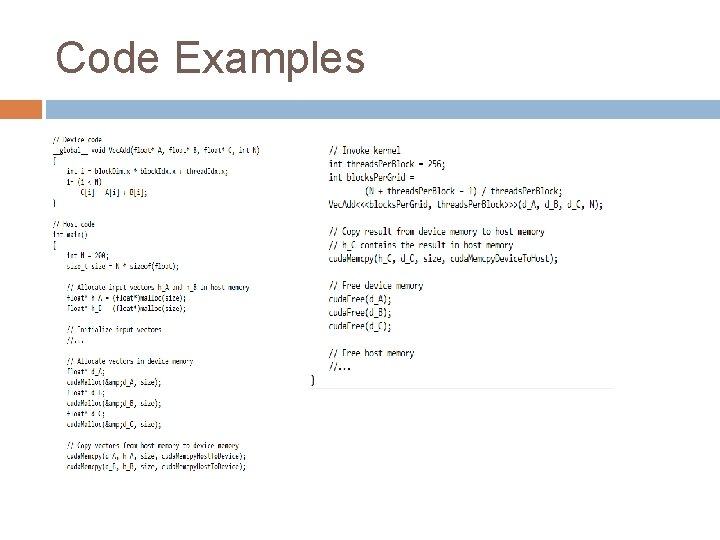


Larger Applications
Bioinformatics research:
• Sequence analysis and alignment
• Database searching and indexing
• Next-generation sequencing and its applications
• Phylogeny reconstruction
• Computational genomics and proteomics
• Gene expression, microarrays, and gene regulatory networks
• Protein structure prediction
• Production-level GPU parallelization of widely used algorithms and tools
GPUGRID.com