CS 148 Introduction to Computer Graphics and Imaging

- Slides: 42

CS 148: Introduction to Computer Graphics and Imaging

OPTICS I

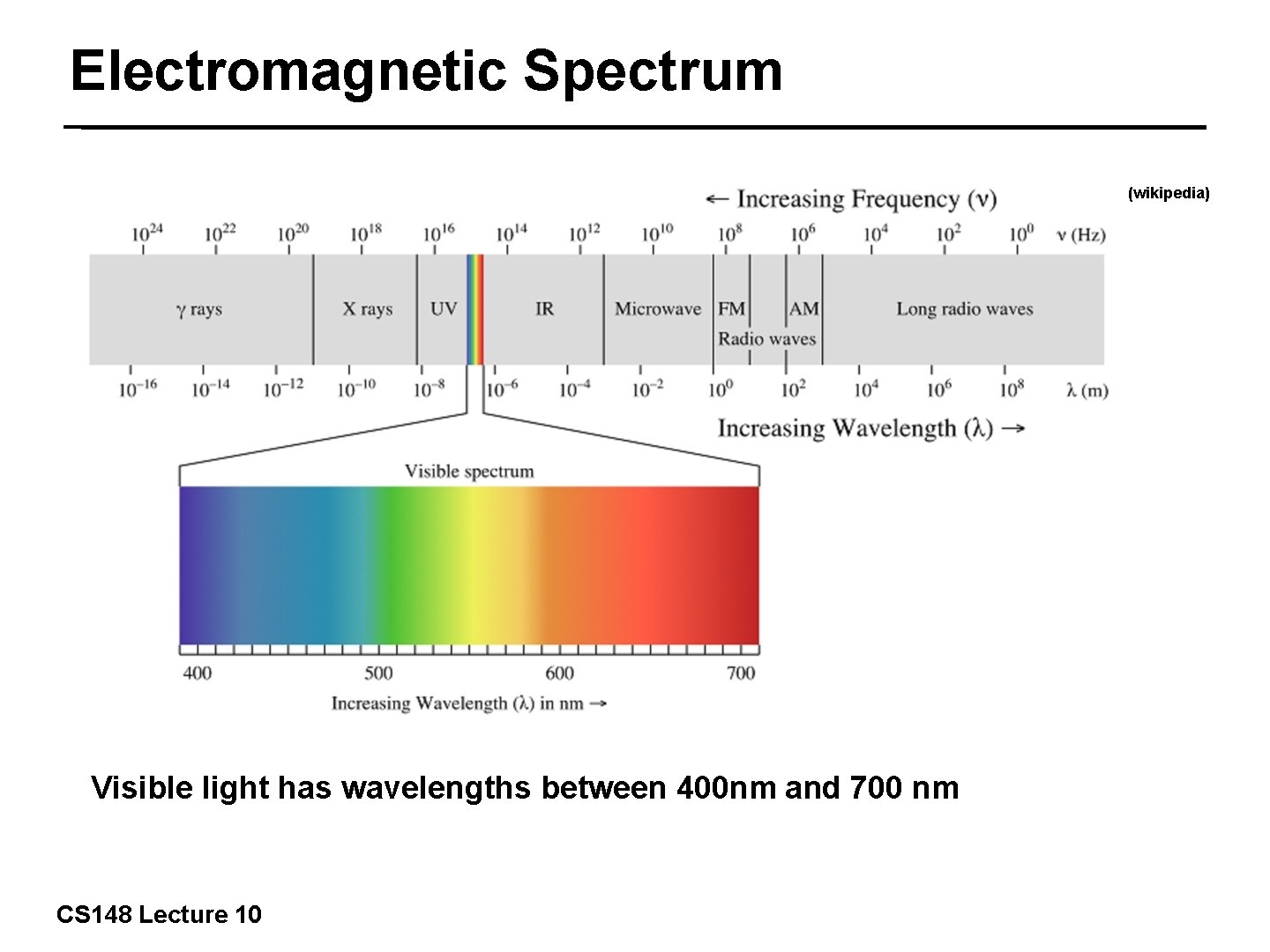

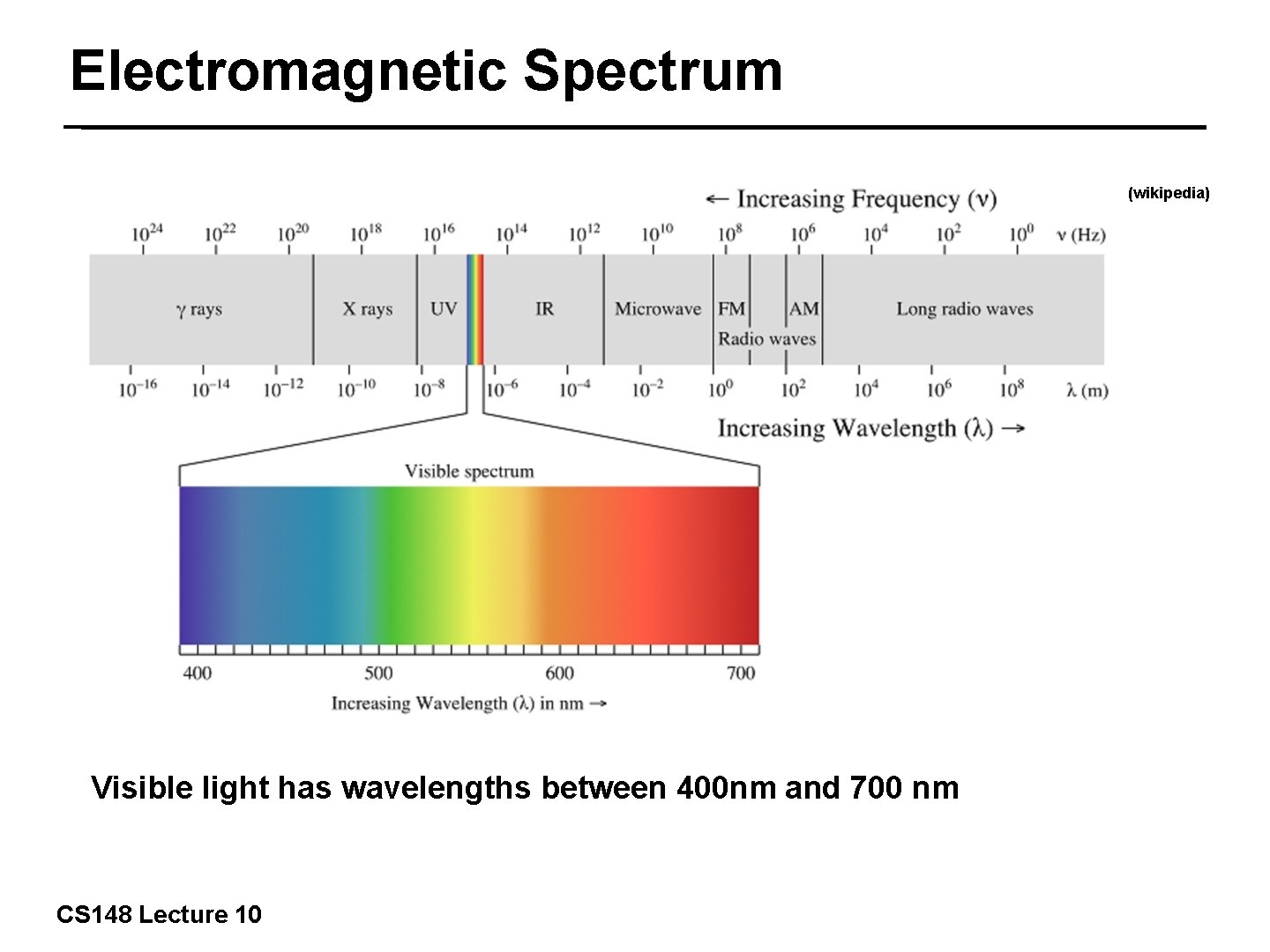

Electromagnetic Spectrum (Wikipedia)

Visible light has wavelengths between 400 nm and 700 nm.

CS 148 Lecture 10

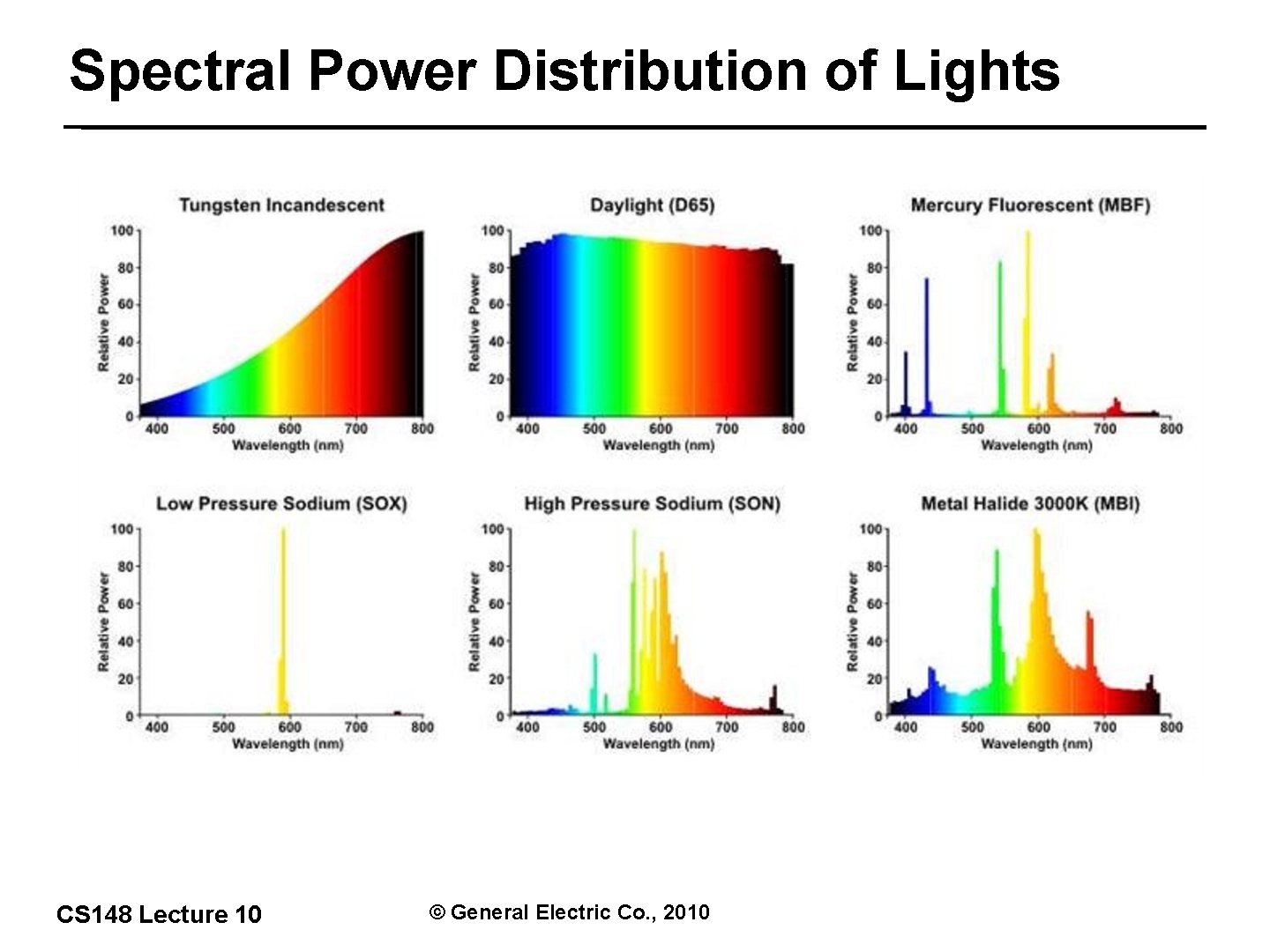

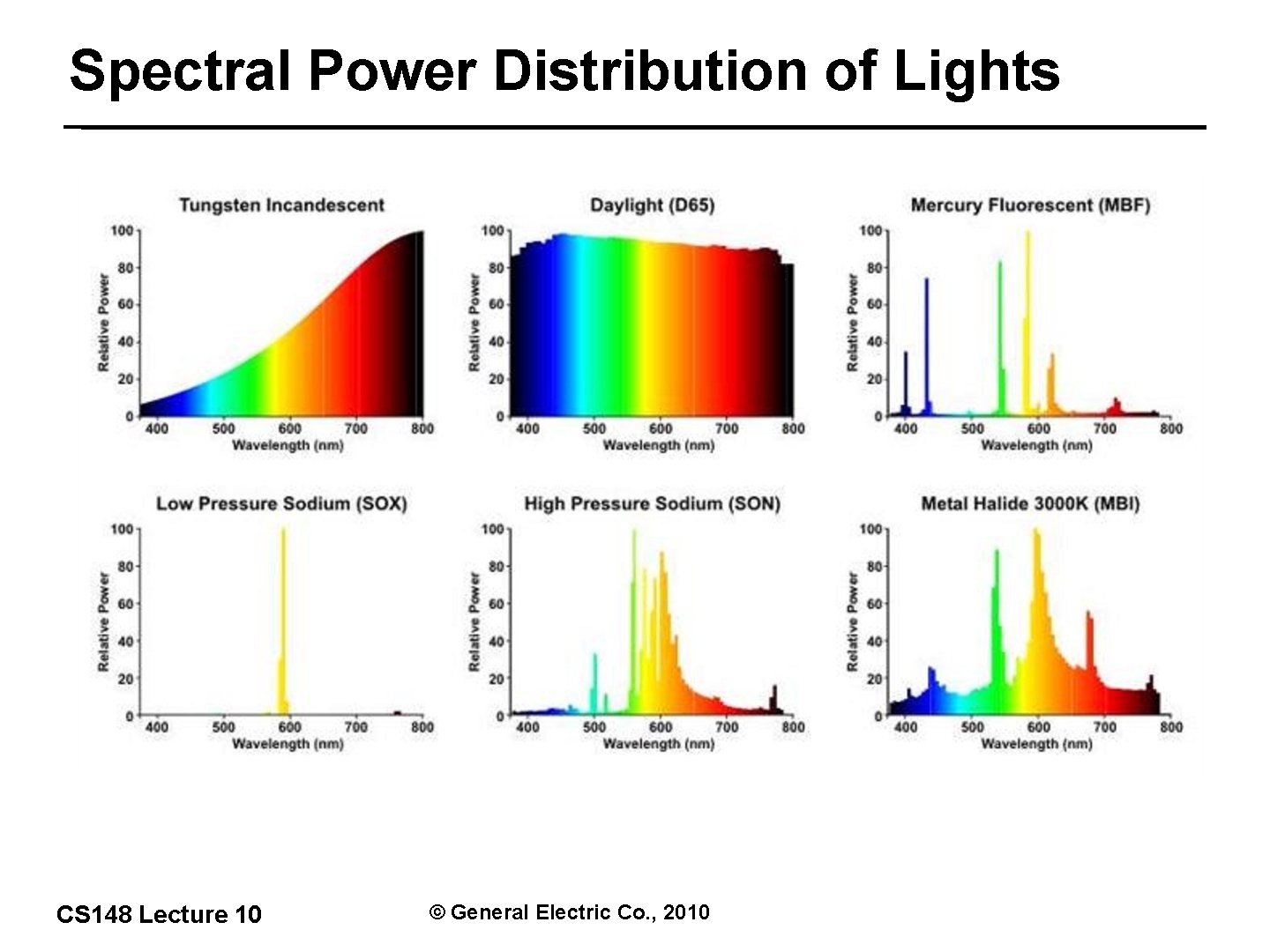

Spectral Power Distribution of Lights

© General Electric Co., 2010

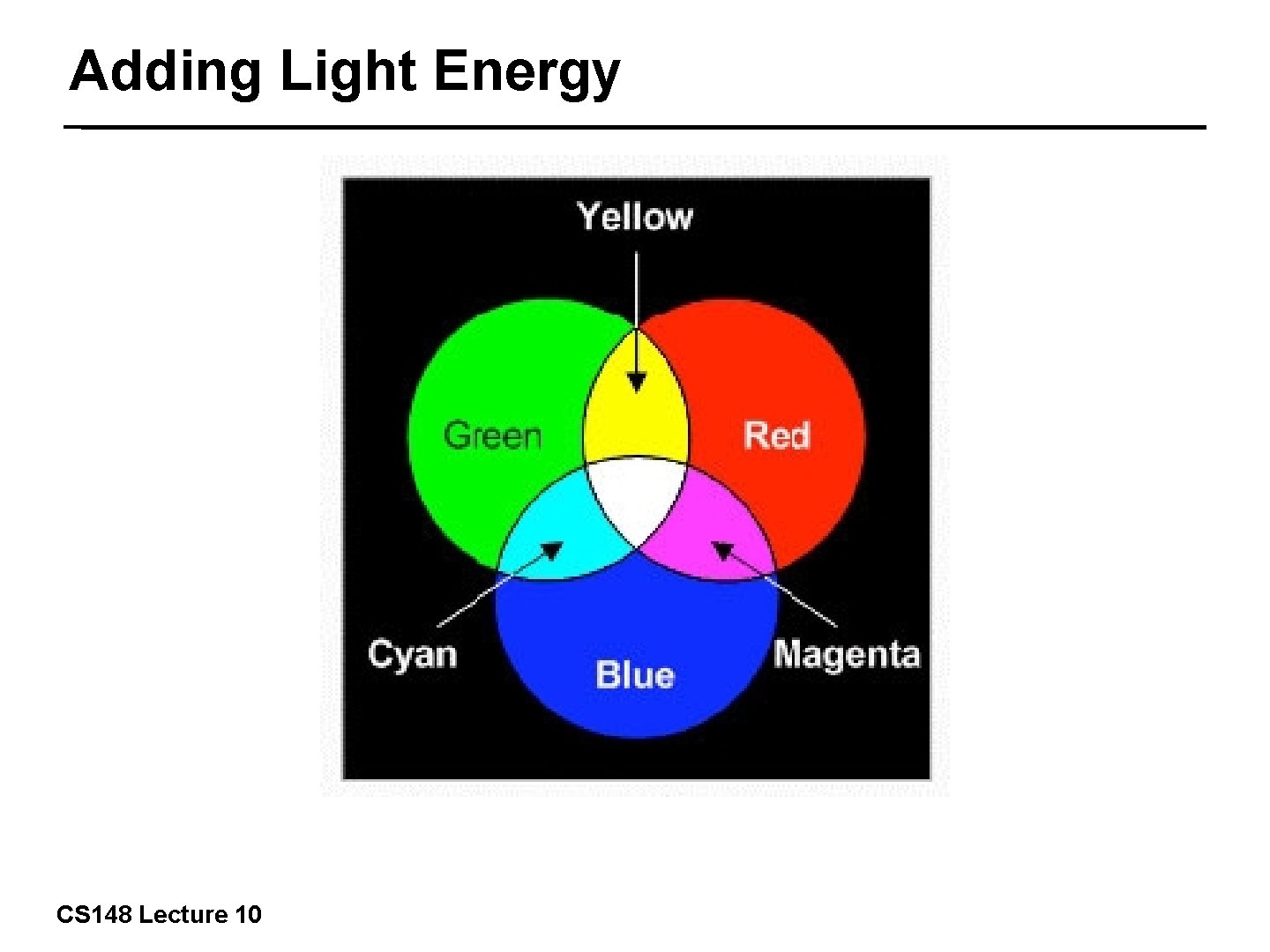

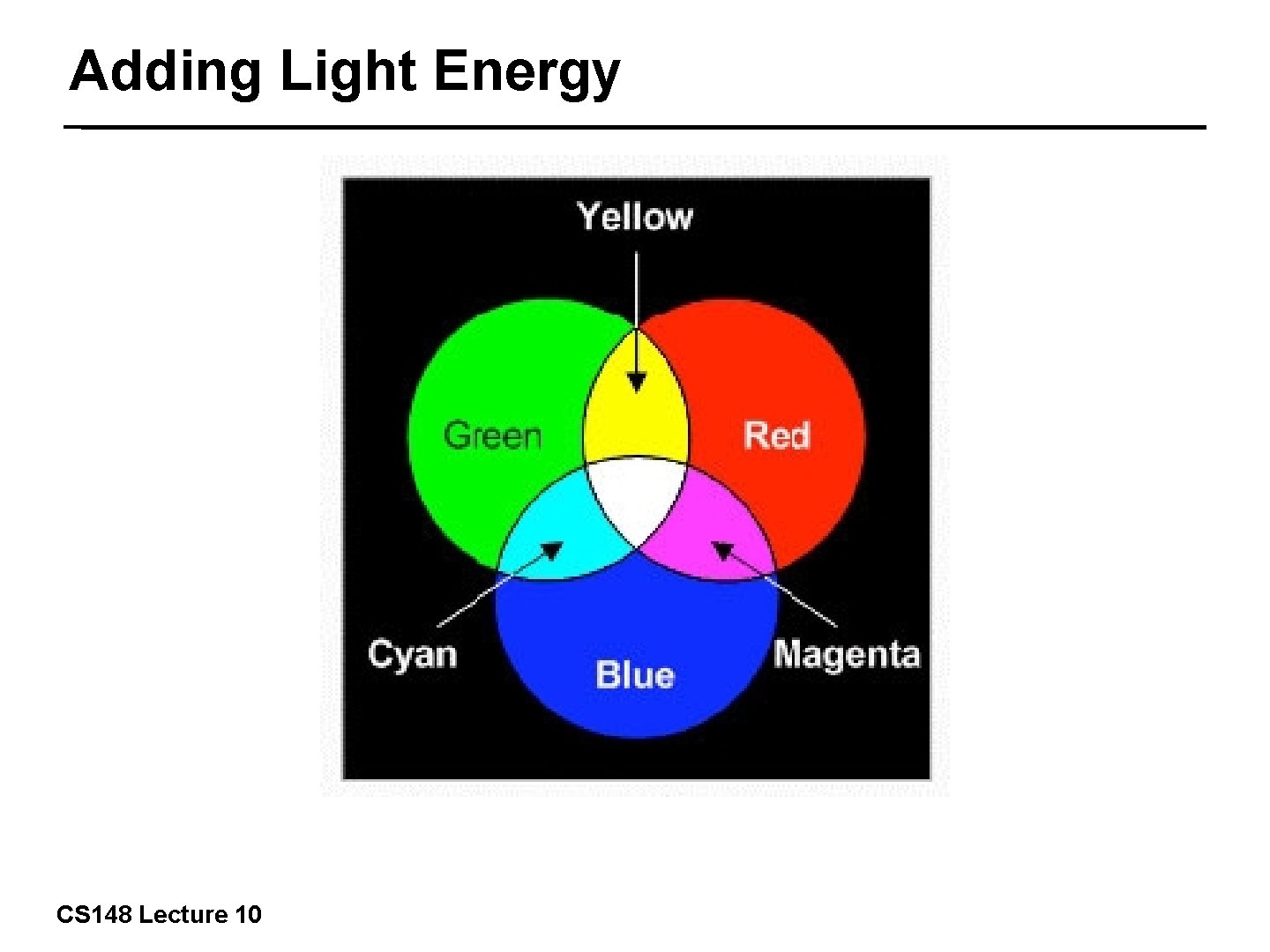

Adding Light Energy

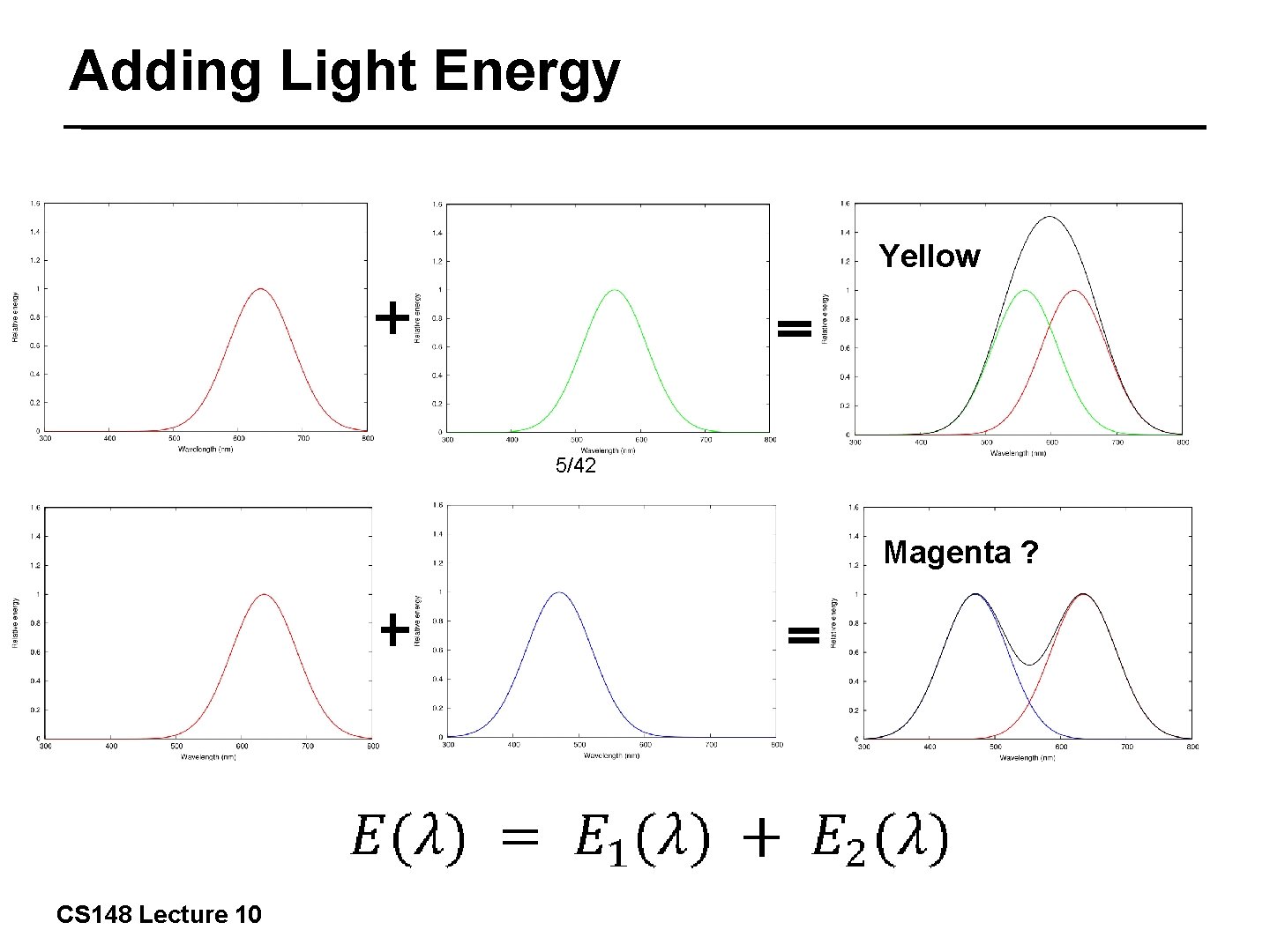

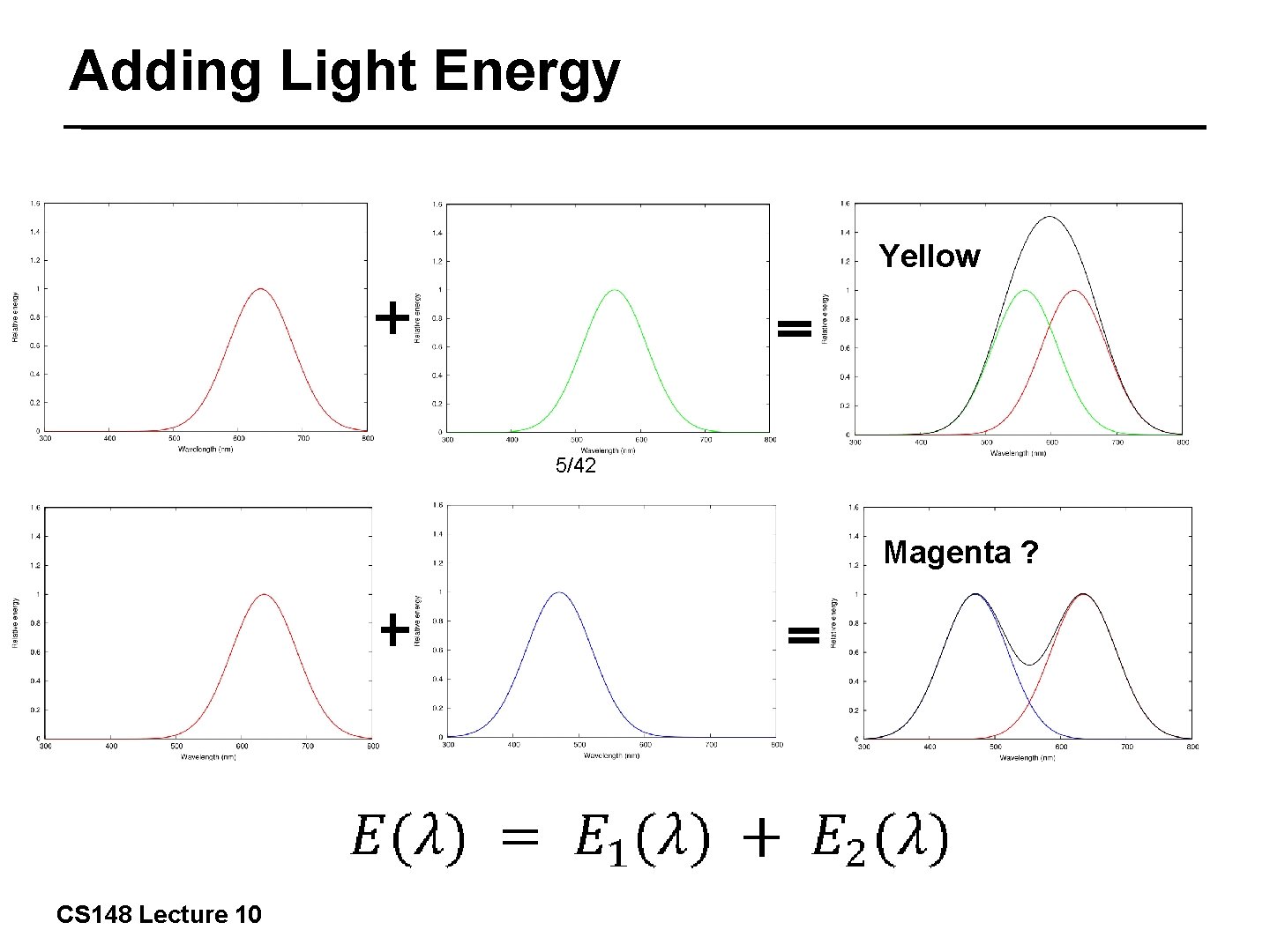

Adding Light Energy

[Figure: two spectra added to give yellow; a second sum involving magenta is posed as a question.]

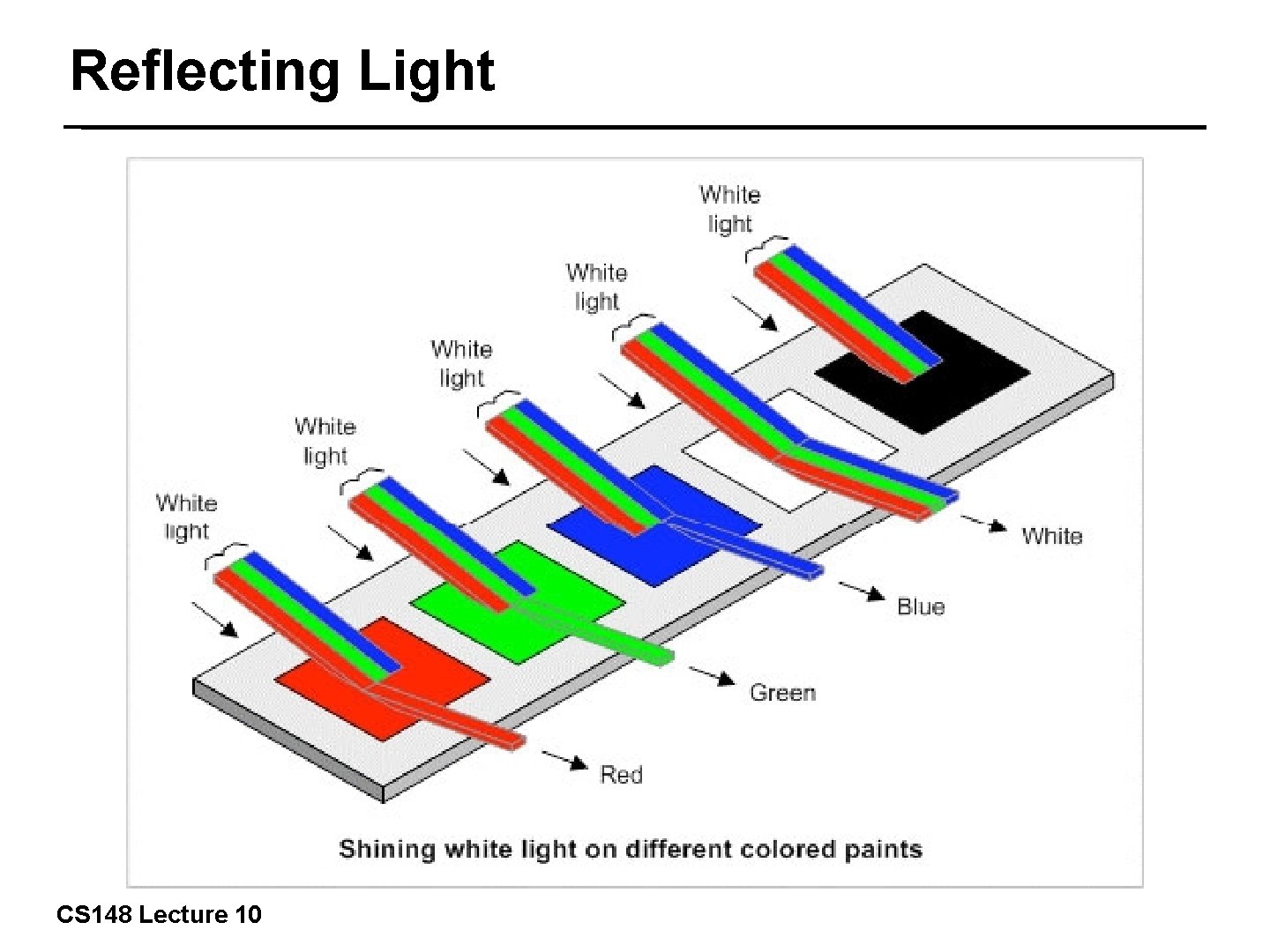

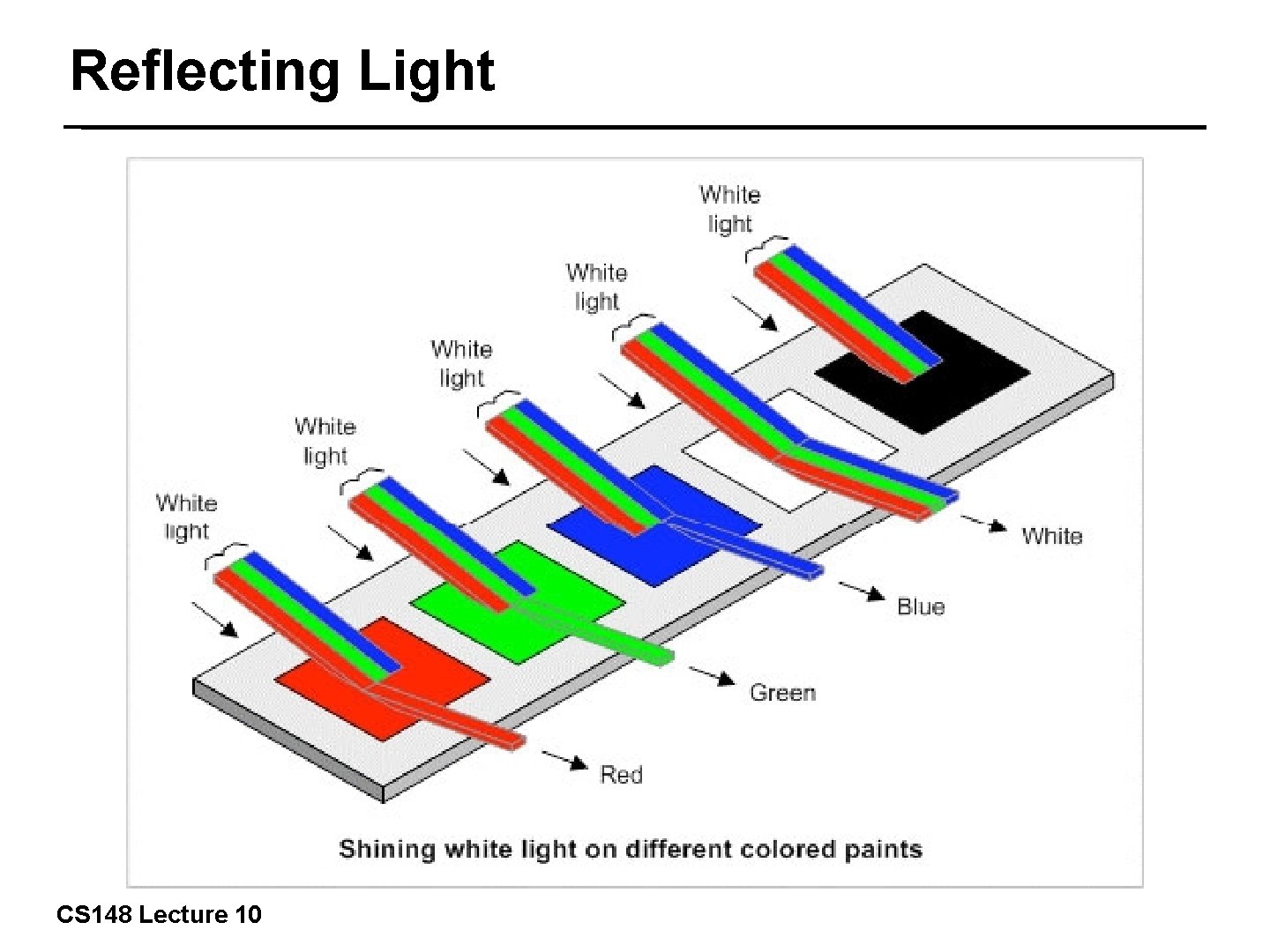

Reflecting Light

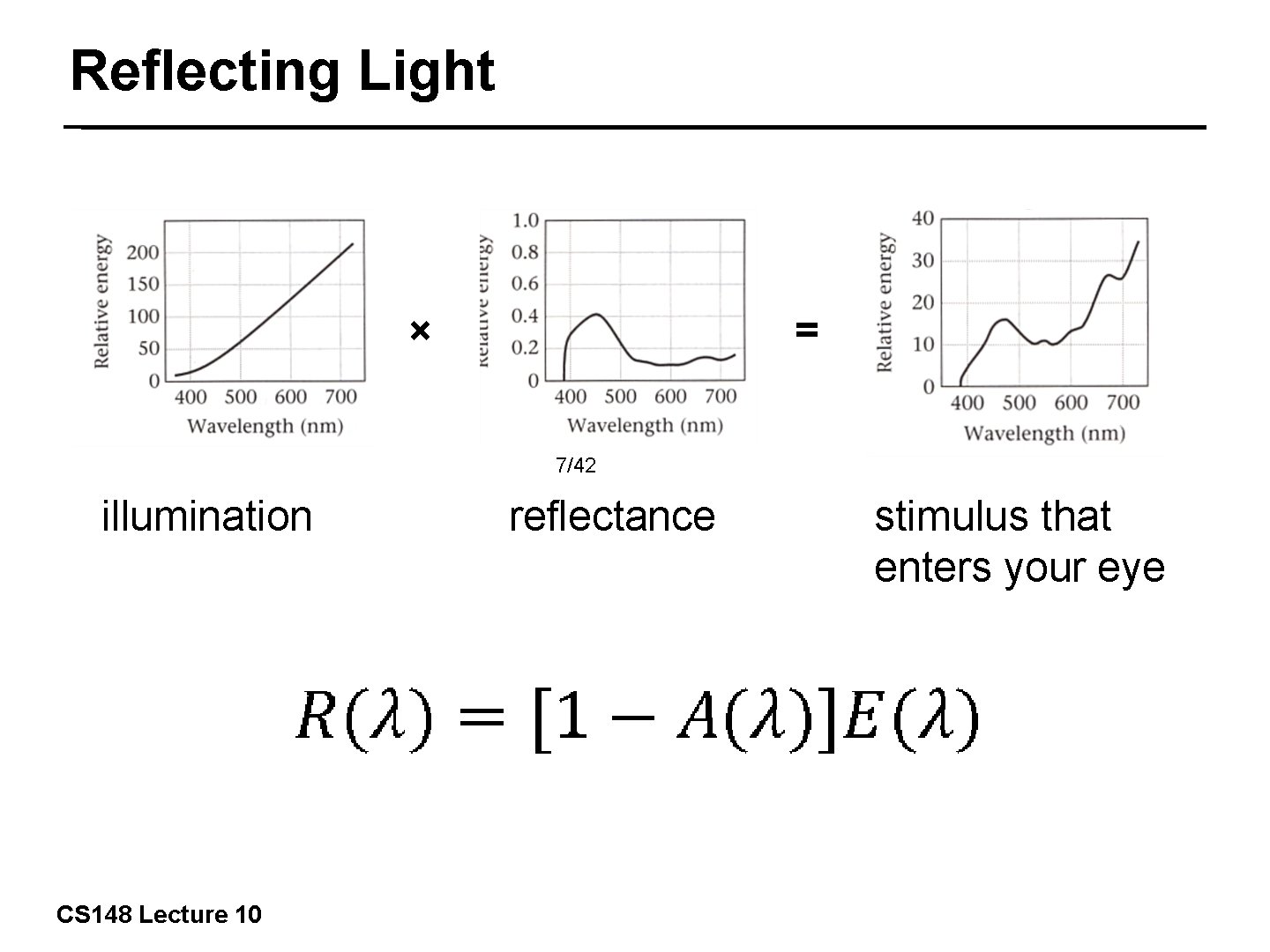

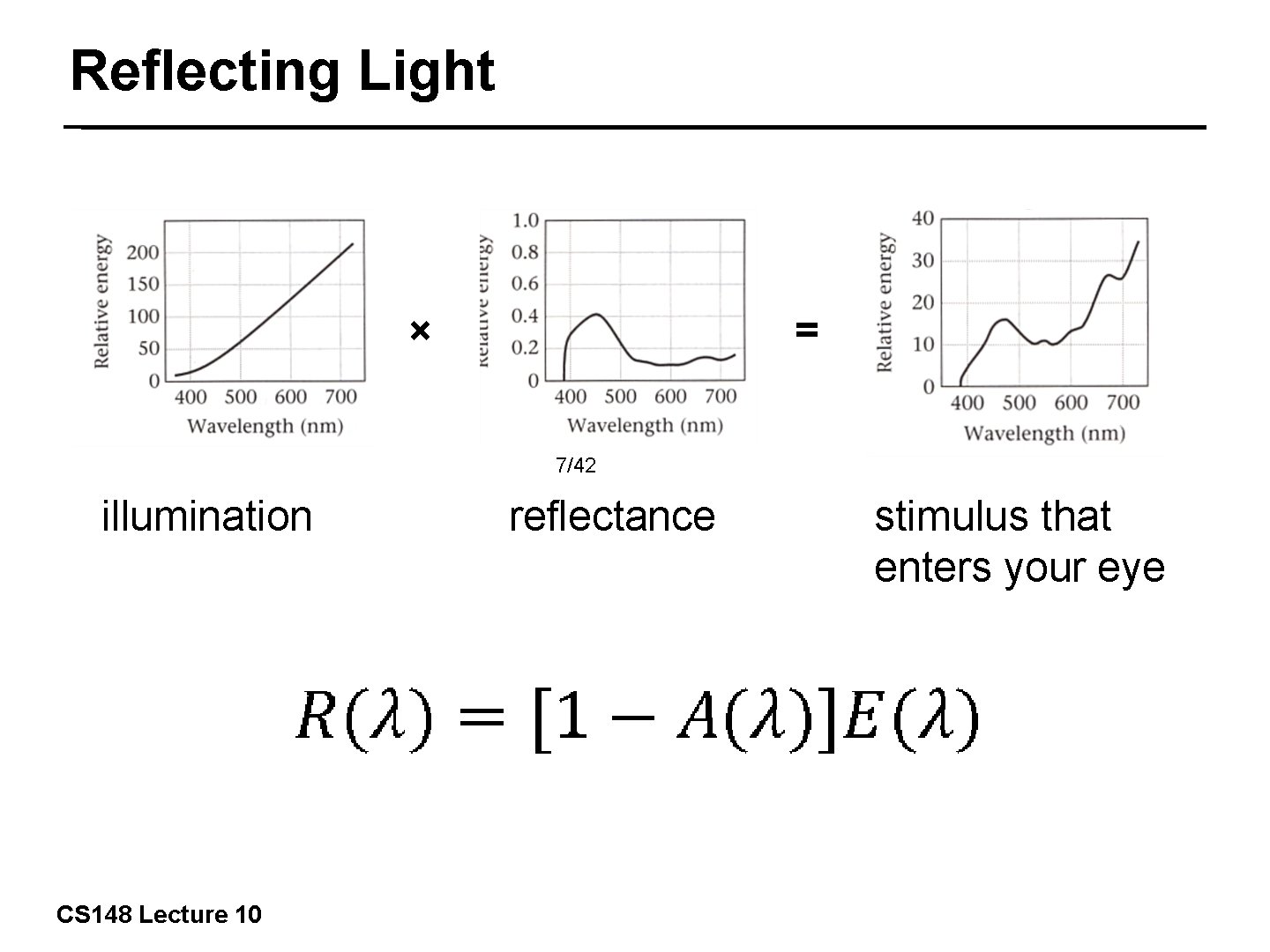

Reflecting Light

illumination × reflectance = stimulus that enters your eye
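The product on this slide can be sketched numerically. The snippet below is a minimal illustration, assuming spectra sampled at five wavelengths; the function name `reflect` and all spectral values are made up for the example and do not come from the slides.

```python
# Spectra sampled at a few wavelengths (nm). All numbers are illustrative only.
WAVELENGTHS_NM = [450, 500, 550, 600, 650]


def reflect(illumination, reflectance):
    """Multiply an illumination spectrum by a reflectance spectrum, sample by sample."""
    if len(illumination) != len(reflectance):
        raise ValueError("spectra must be sampled at the same wavelengths")
    return [e * r for e, r in zip(illumination, reflectance)]


white_light = [1.0, 1.0, 1.0, 1.0, 1.0]
bluish_light = [1.0, 0.8, 0.4, 0.2, 0.1]
red_paint = [0.05, 0.05, 0.10, 0.60, 0.90]  # reflects mostly long wavelengths

# Under flat white light the stimulus has the shape of the reflectance itself.
stimulus_white = reflect(white_light, red_paint)
# Under bluish light the same paint sends far less long-wavelength energy to the eye.
stimulus_blue = reflect(bluish_light, red_paint)
```

The same surface therefore produces different stimuli under different lights, which is why the stimulus, not the reflectance alone, determines what is seen.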

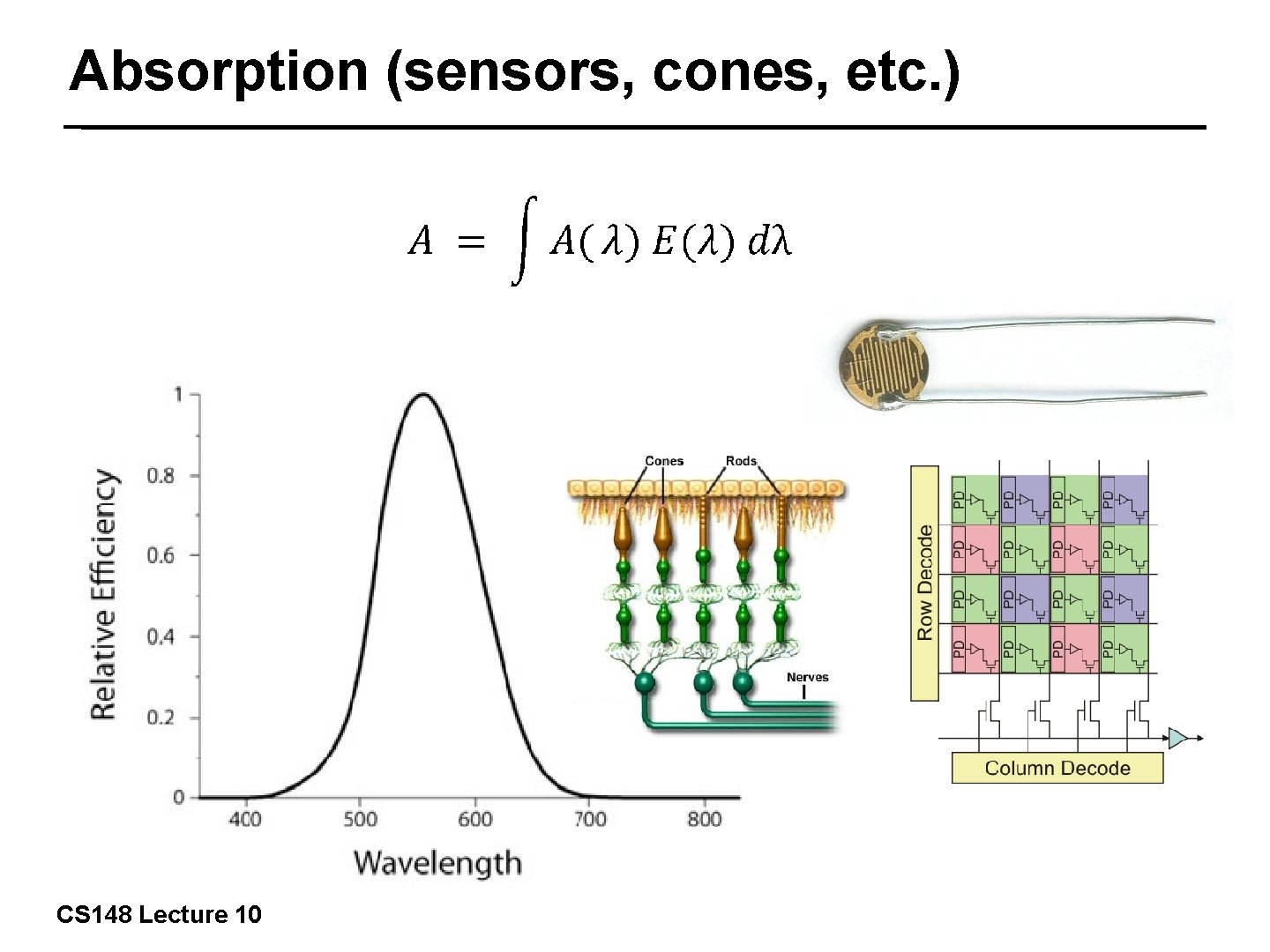

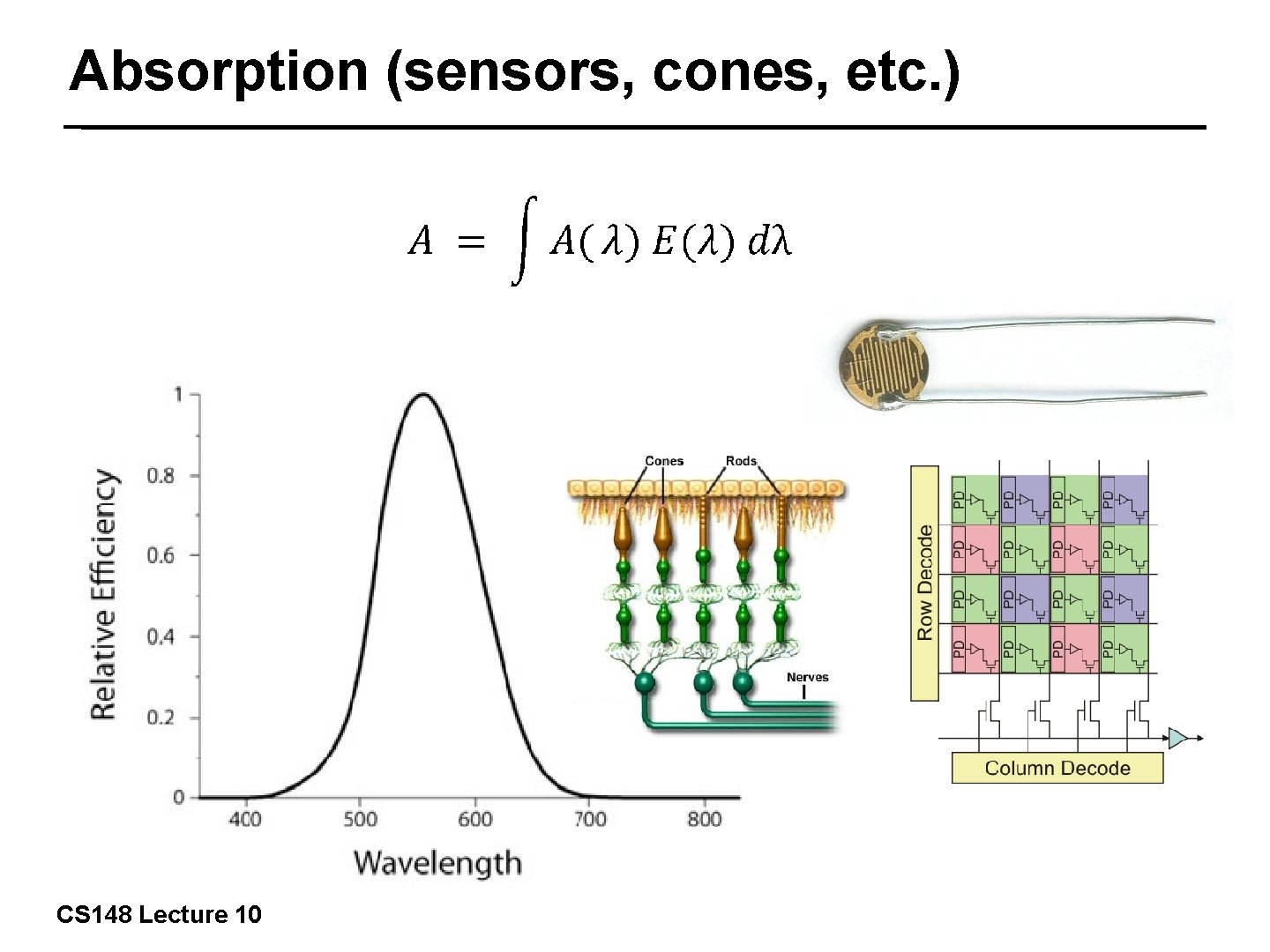

Absorption (sensors, cones, etc.)

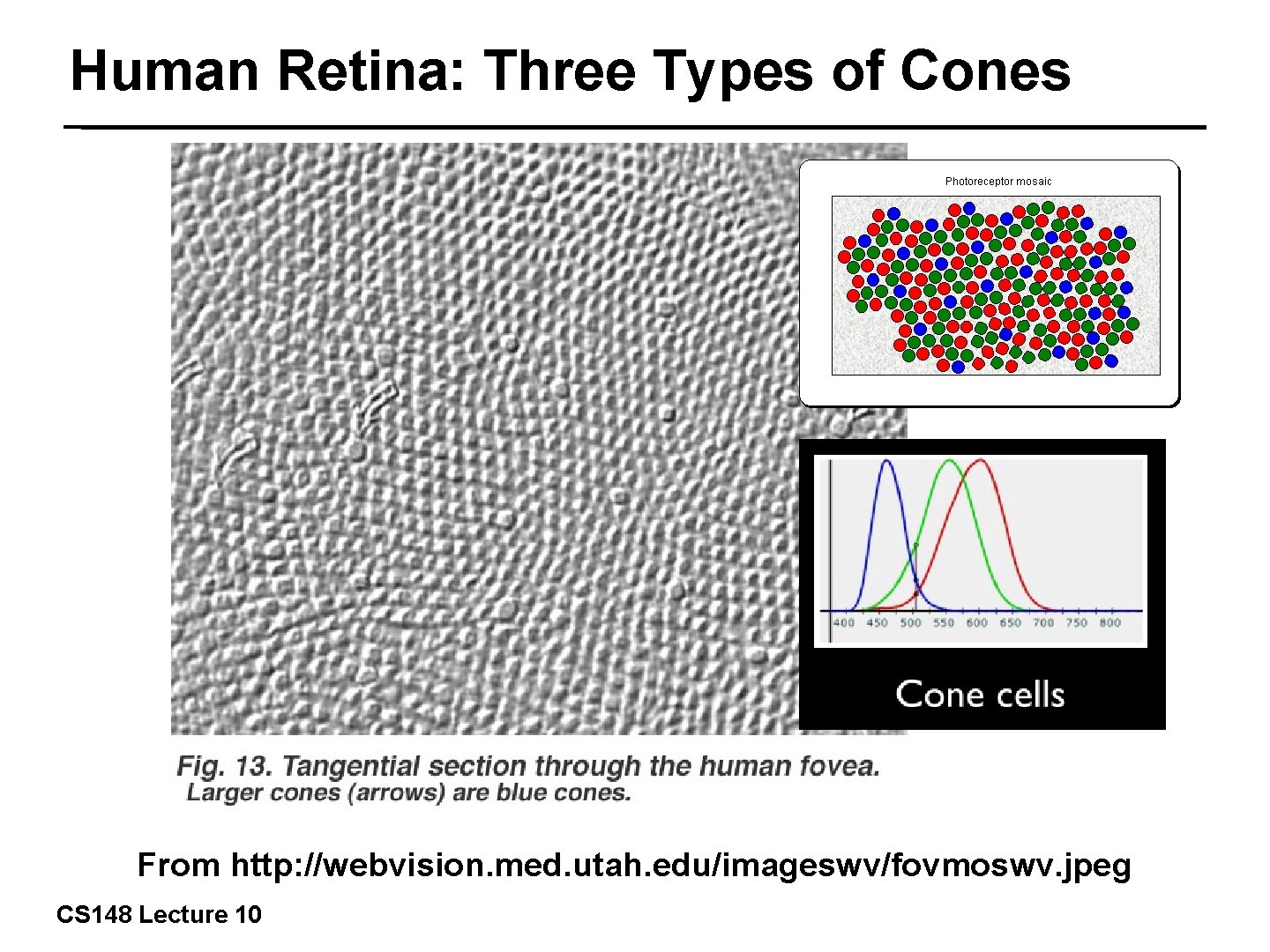

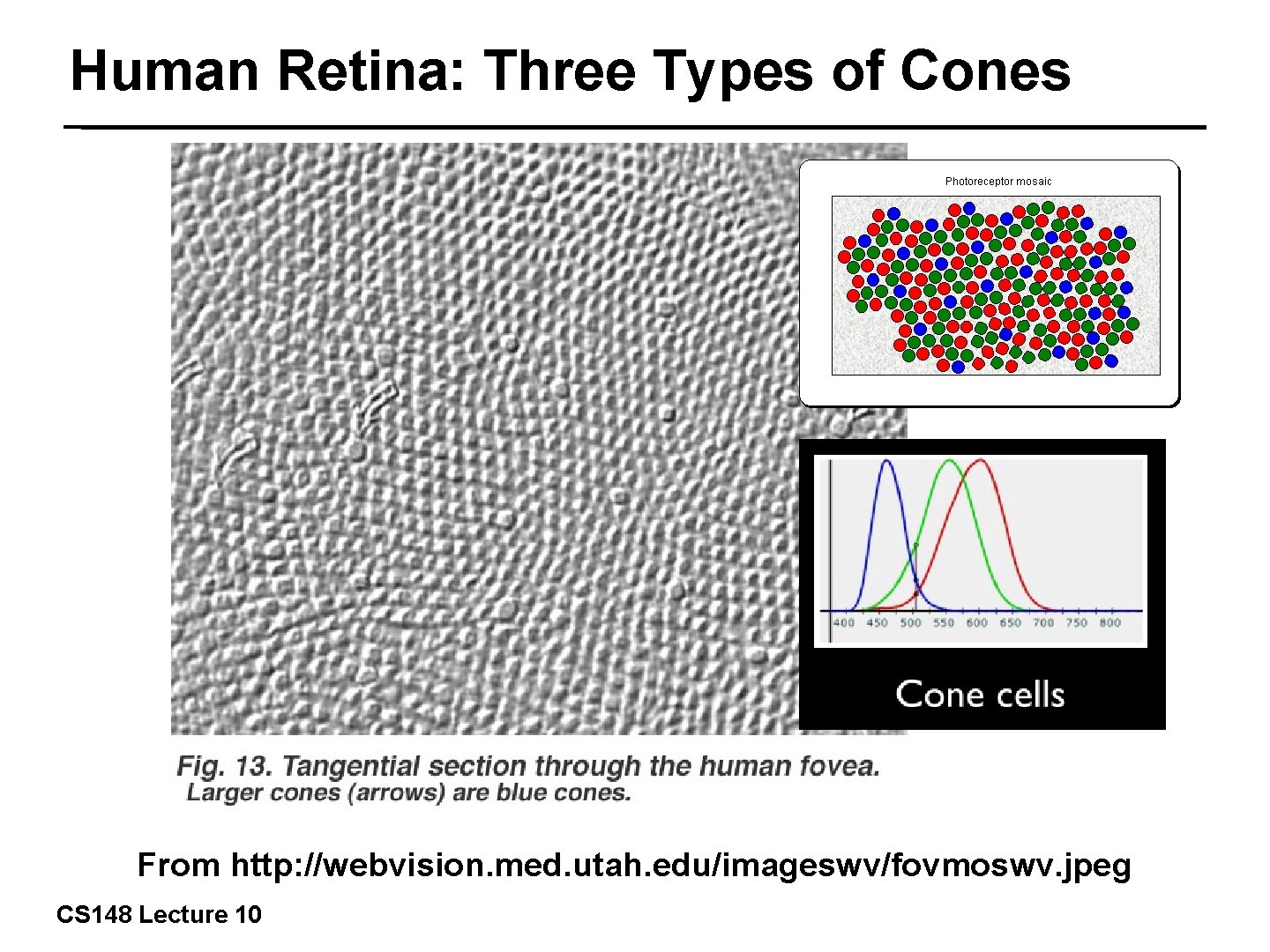

Human Retina: Three Types of Cones

From http://webvision.med.utah.edu/imageswv/fovmoswv.jpeg

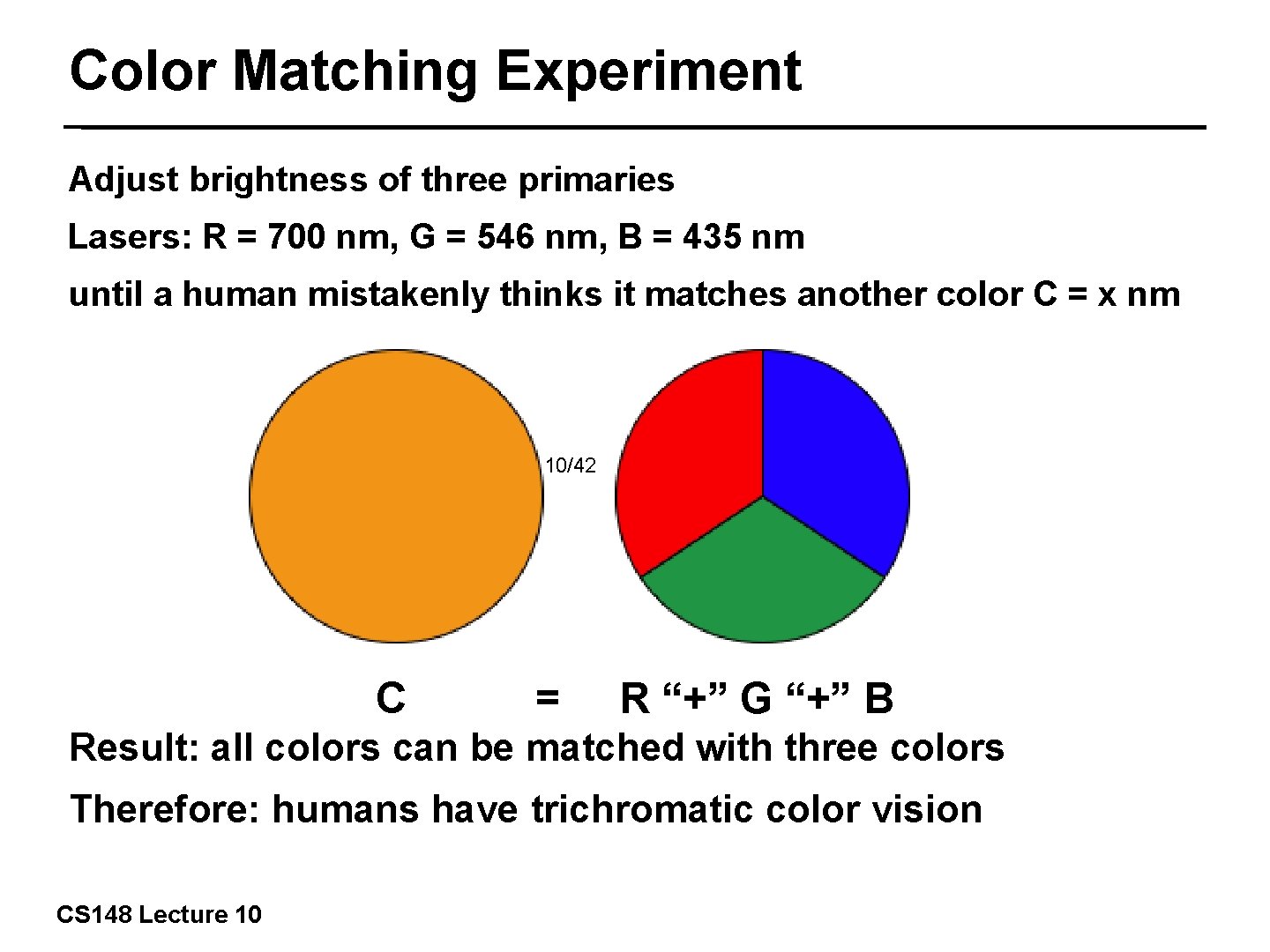

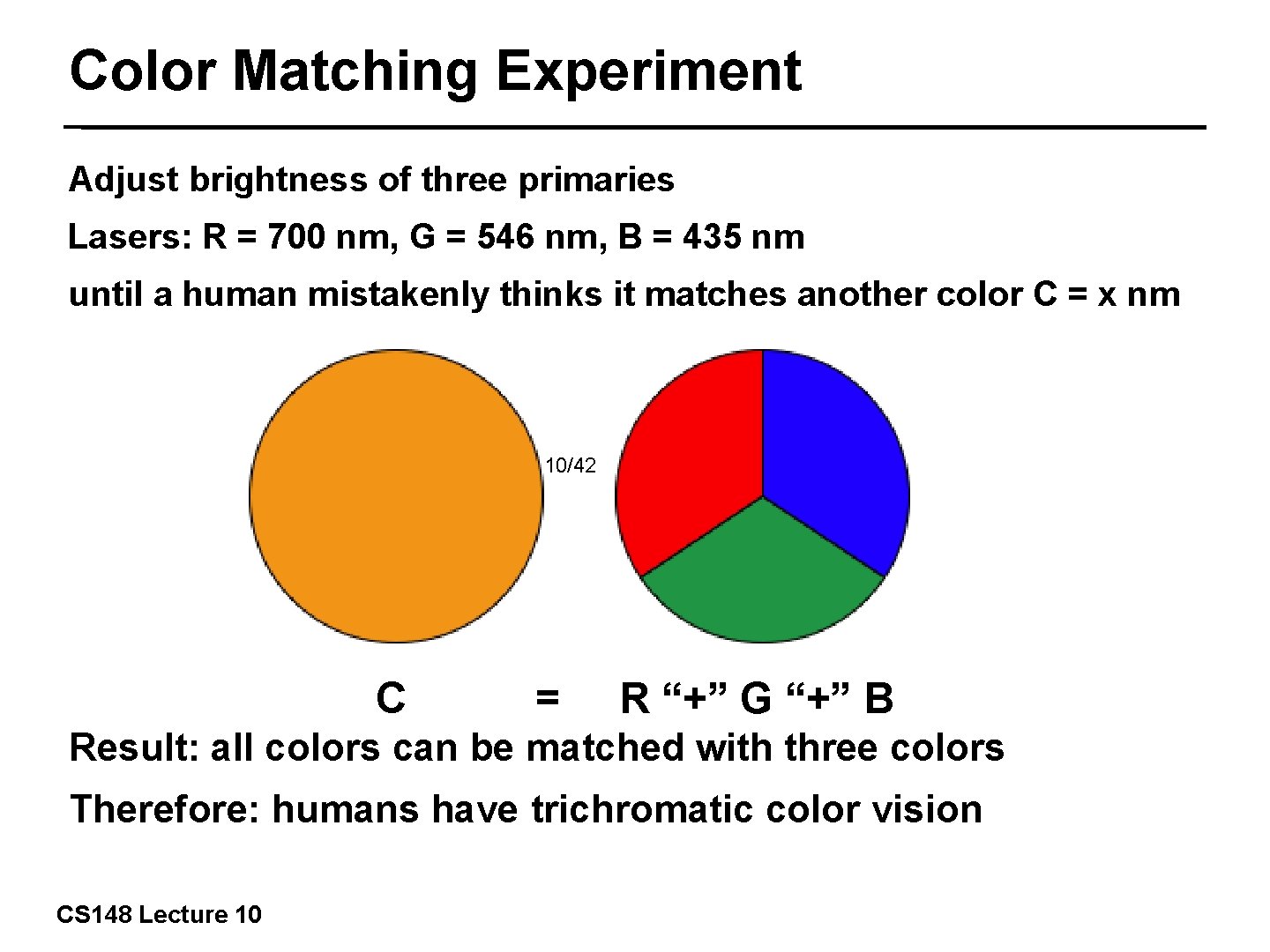

Color Matching Experiment

- Adjust the brightness of three primaries (lasers: R = 700 nm, G = 546 nm, B = 435 nm) until a human mistakenly thinks the mixture matches another color C = x nm.
- C = R “+” G “+” B
- Result: all colors can be matched with three colors.
- Therefore: humans have trichromatic color vision.

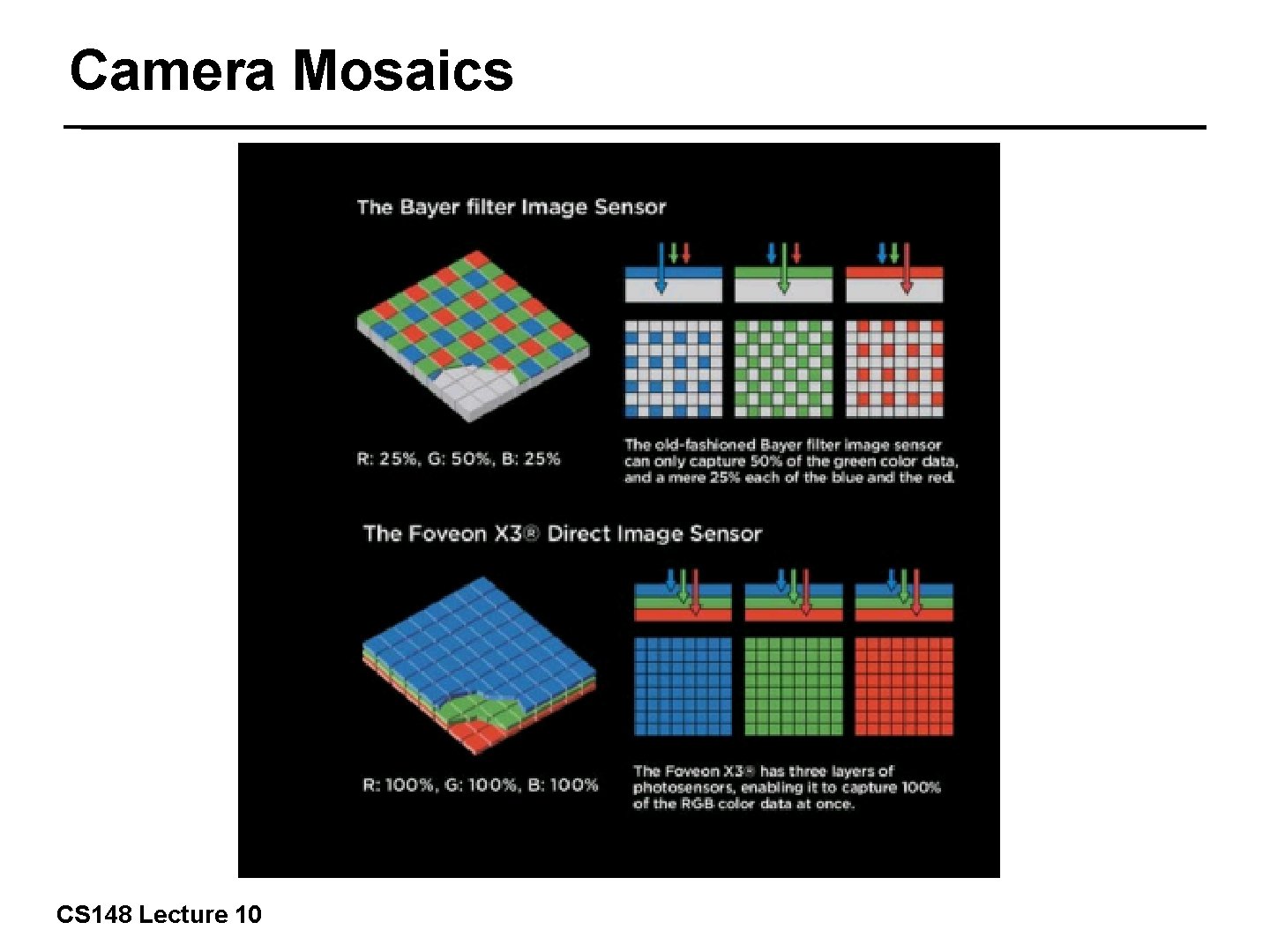

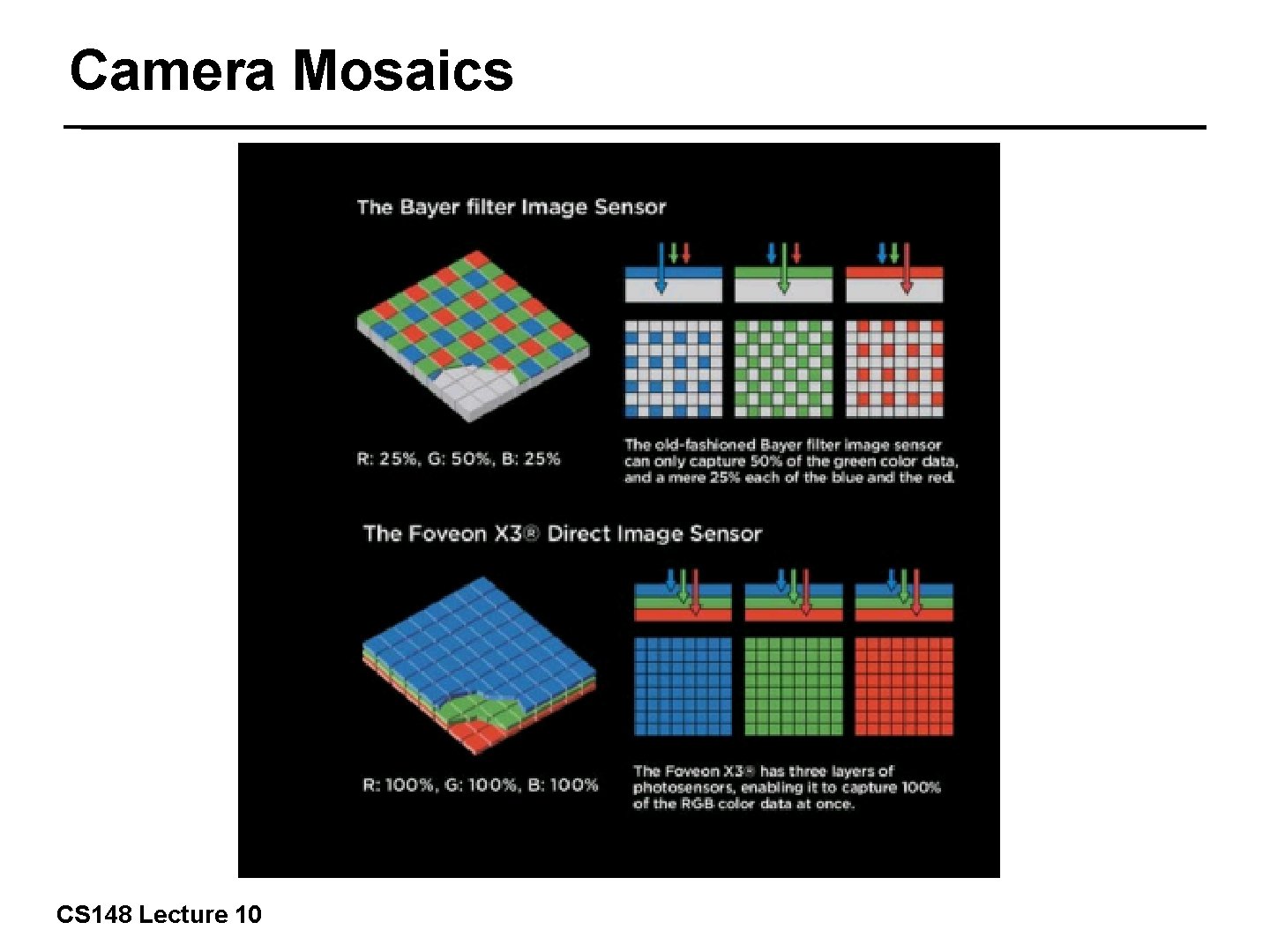

Camera Mosaics

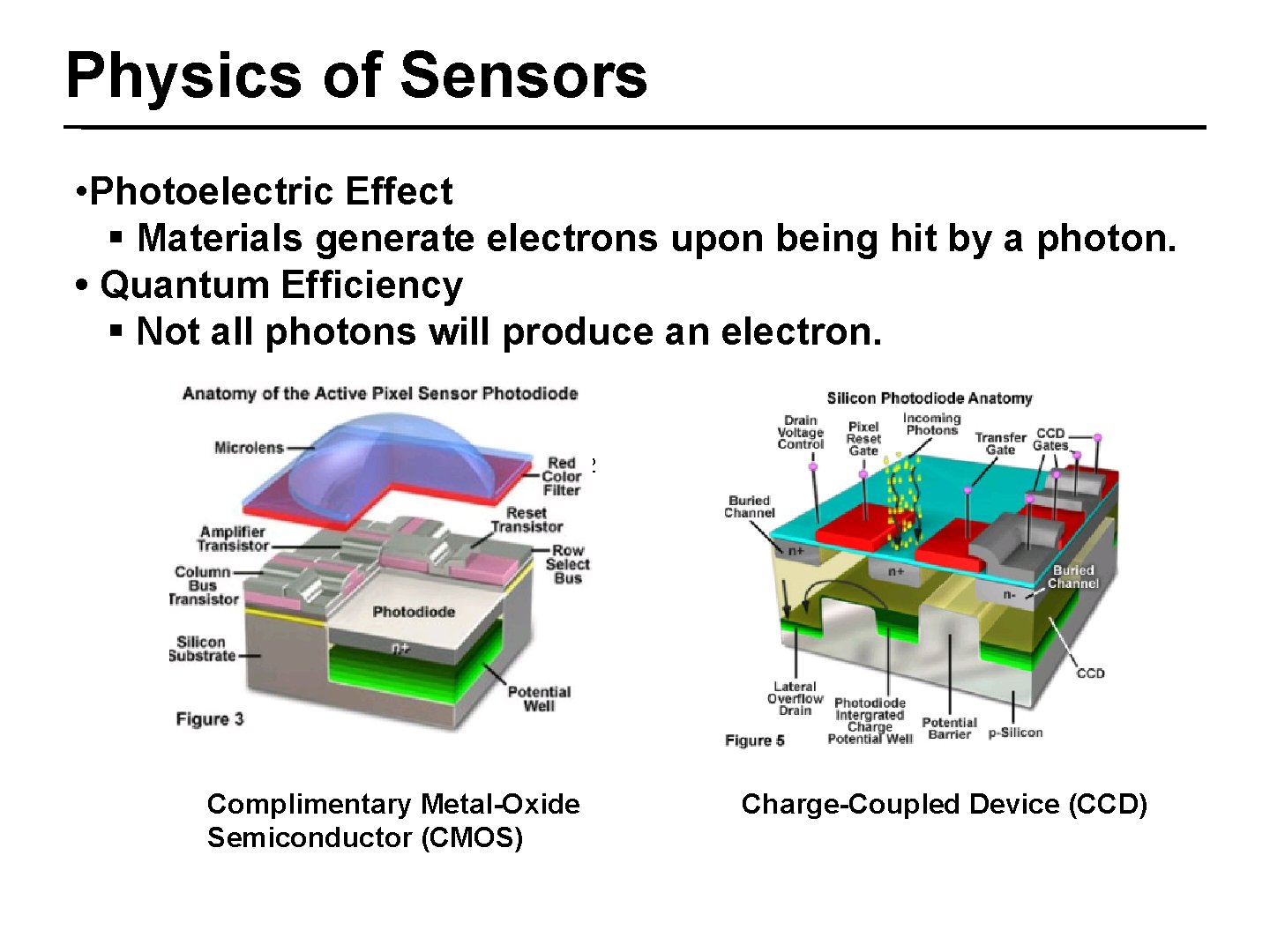

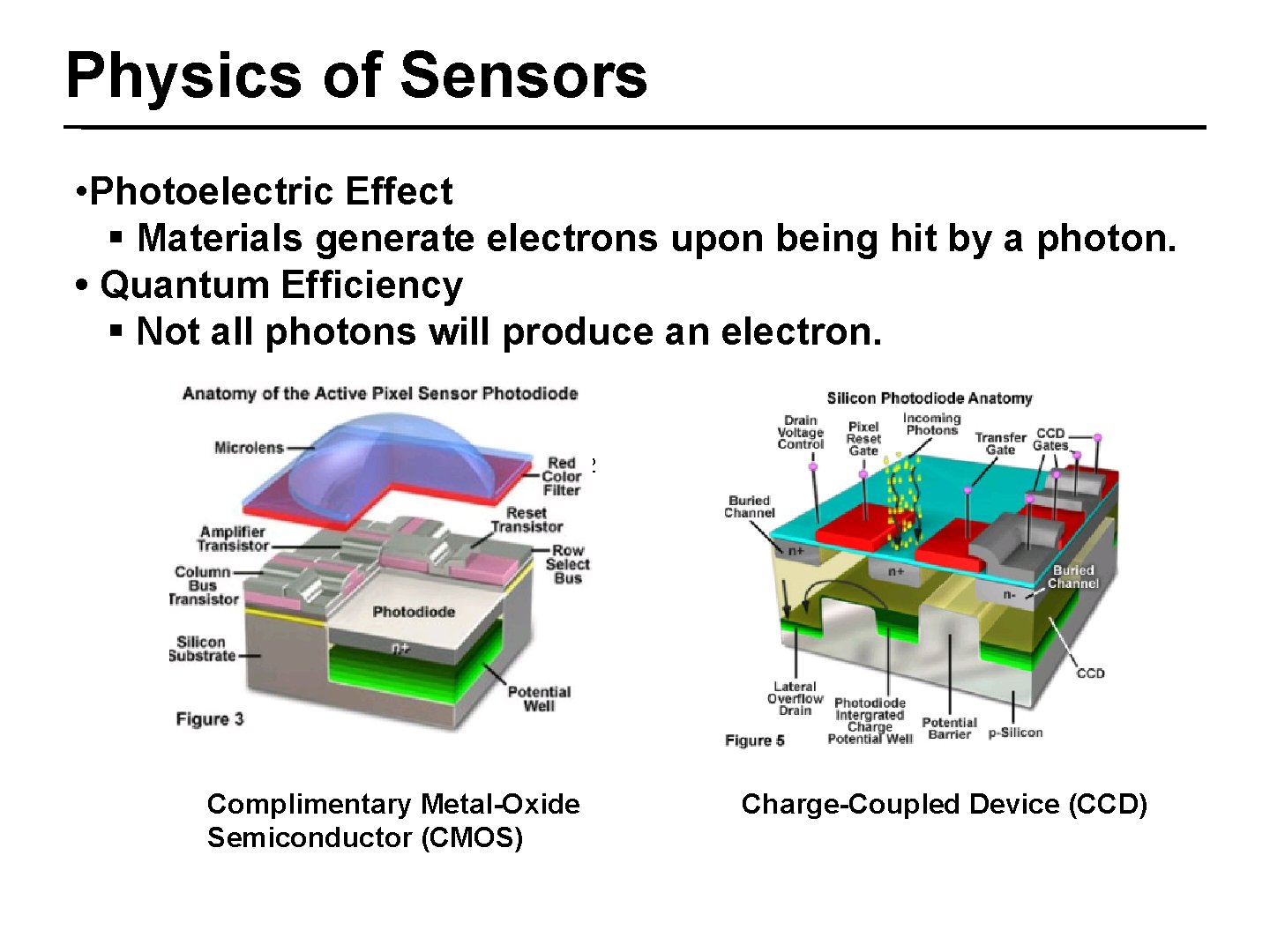

Physics of Sensors

- Photoelectric effect: materials generate electrons upon being hit by a photon.
- Quantum efficiency: not all photons will produce an electron.
- Sensor types: Complementary Metal-Oxide Semiconductor (CMOS) and Charge-Coupled Device (CCD).

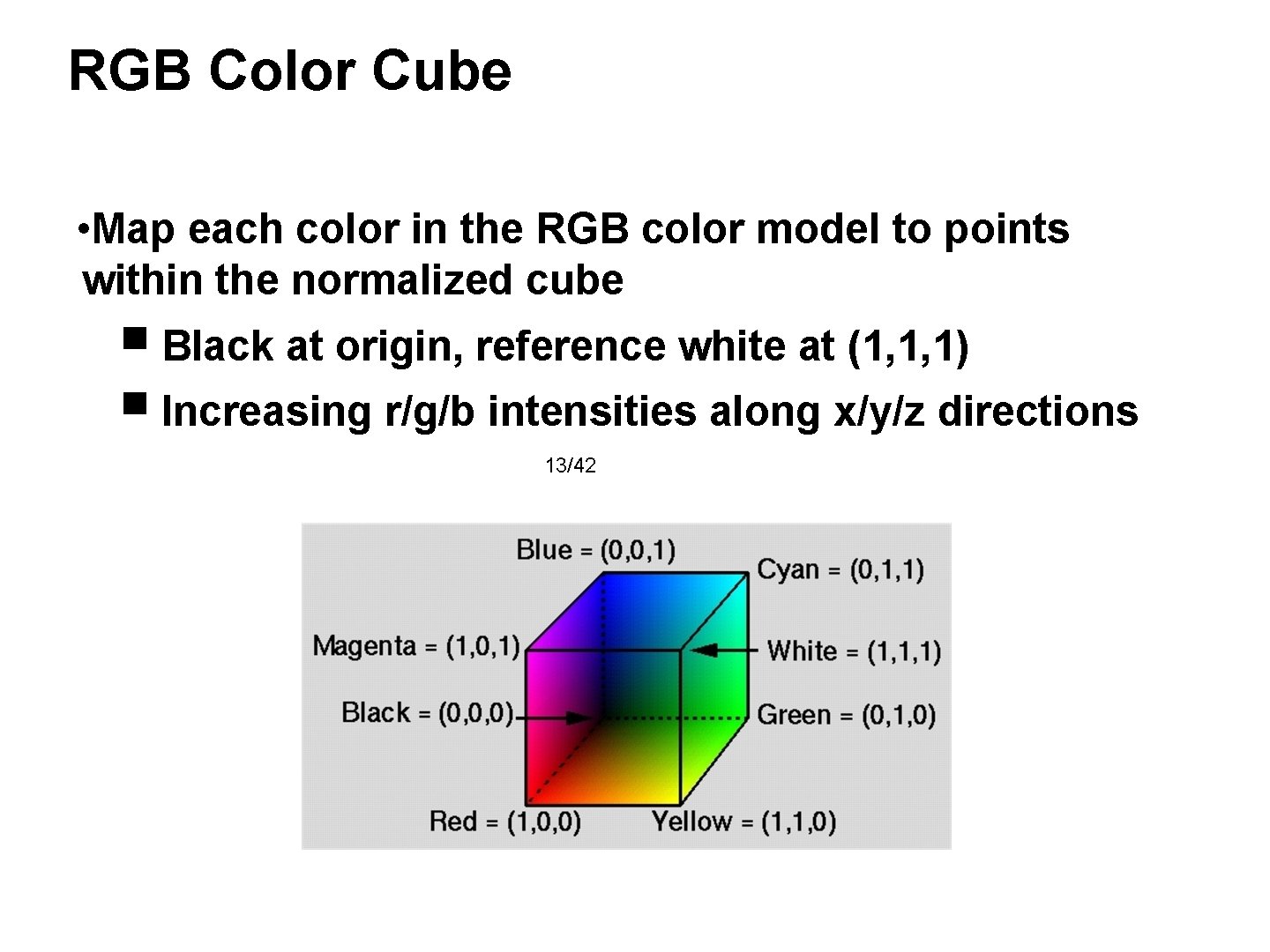

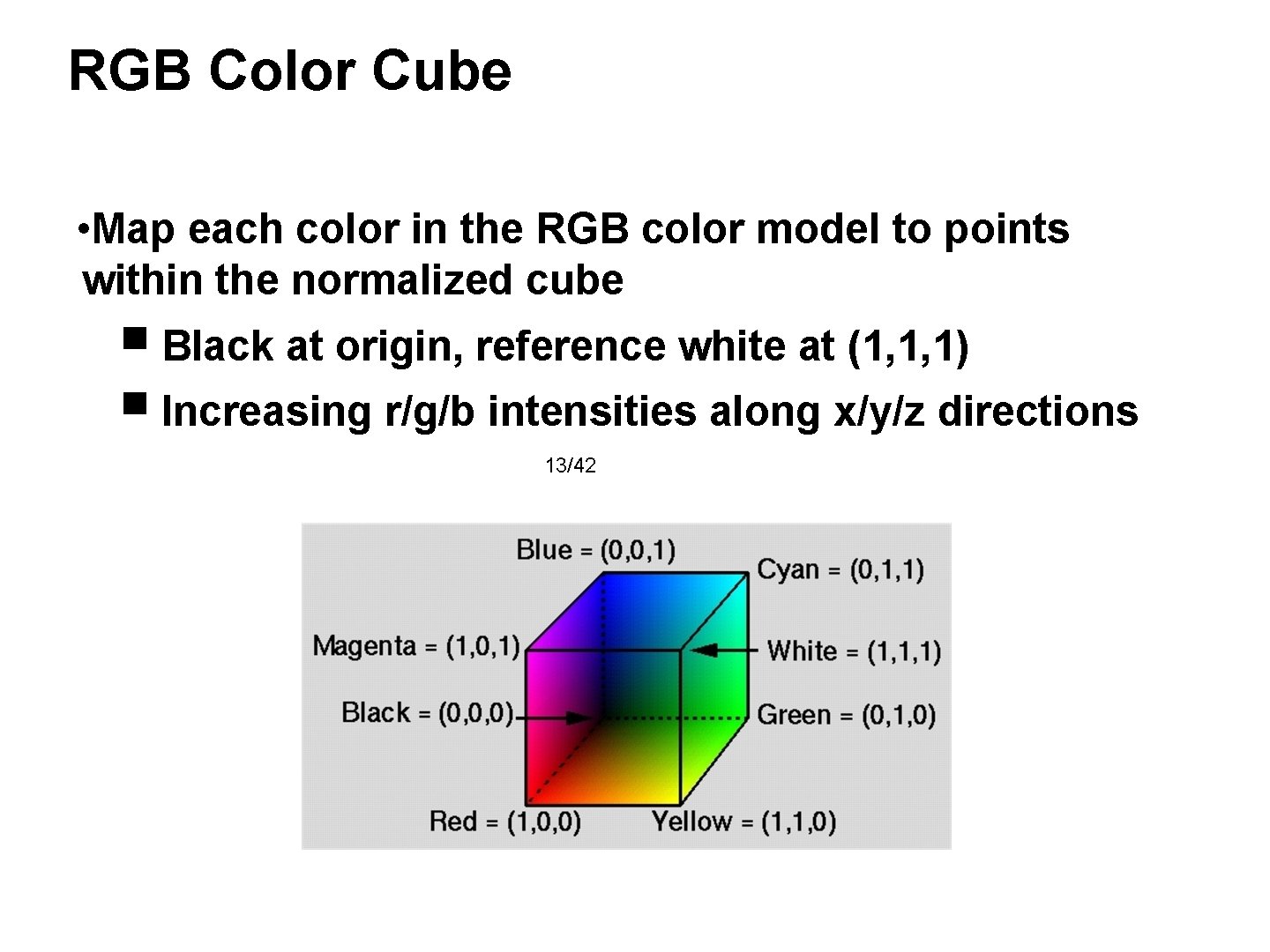

RGB Color Cube

- Map each color in the RGB color model to a point within the normalized cube
  - Black at the origin, reference white at (1, 1, 1)
  - Increasing r/g/b intensities along the x/y/z directions

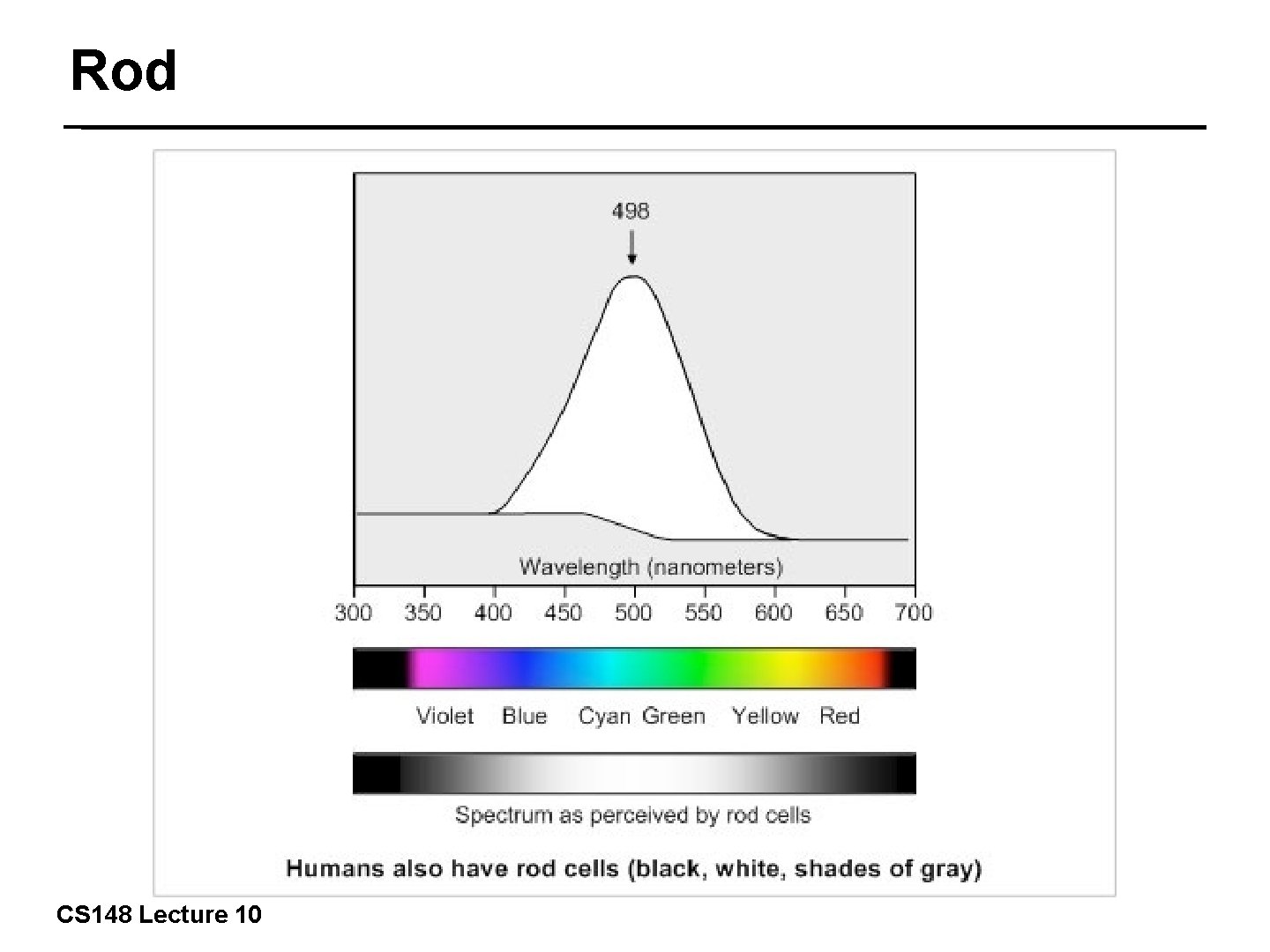

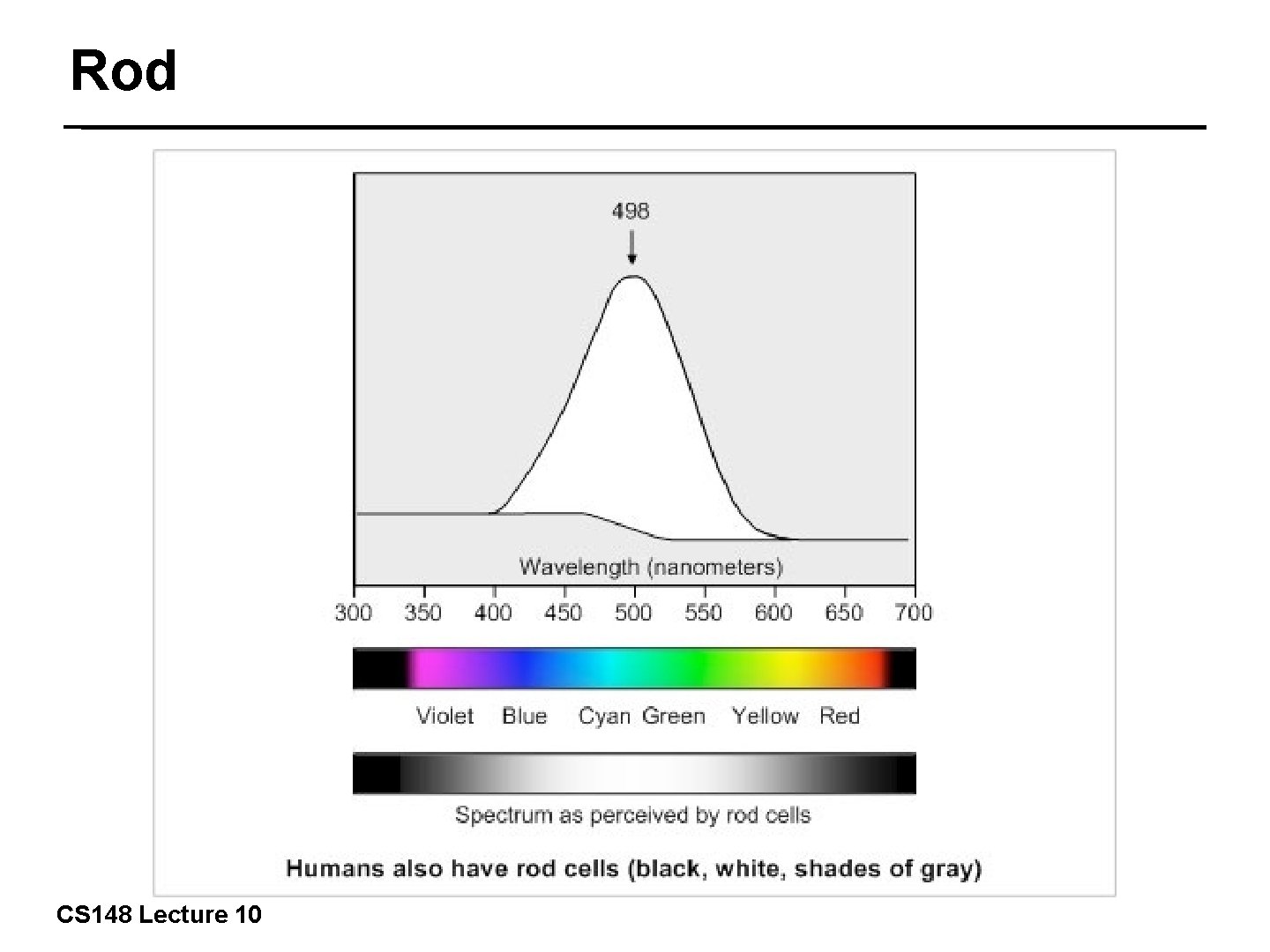

Rod

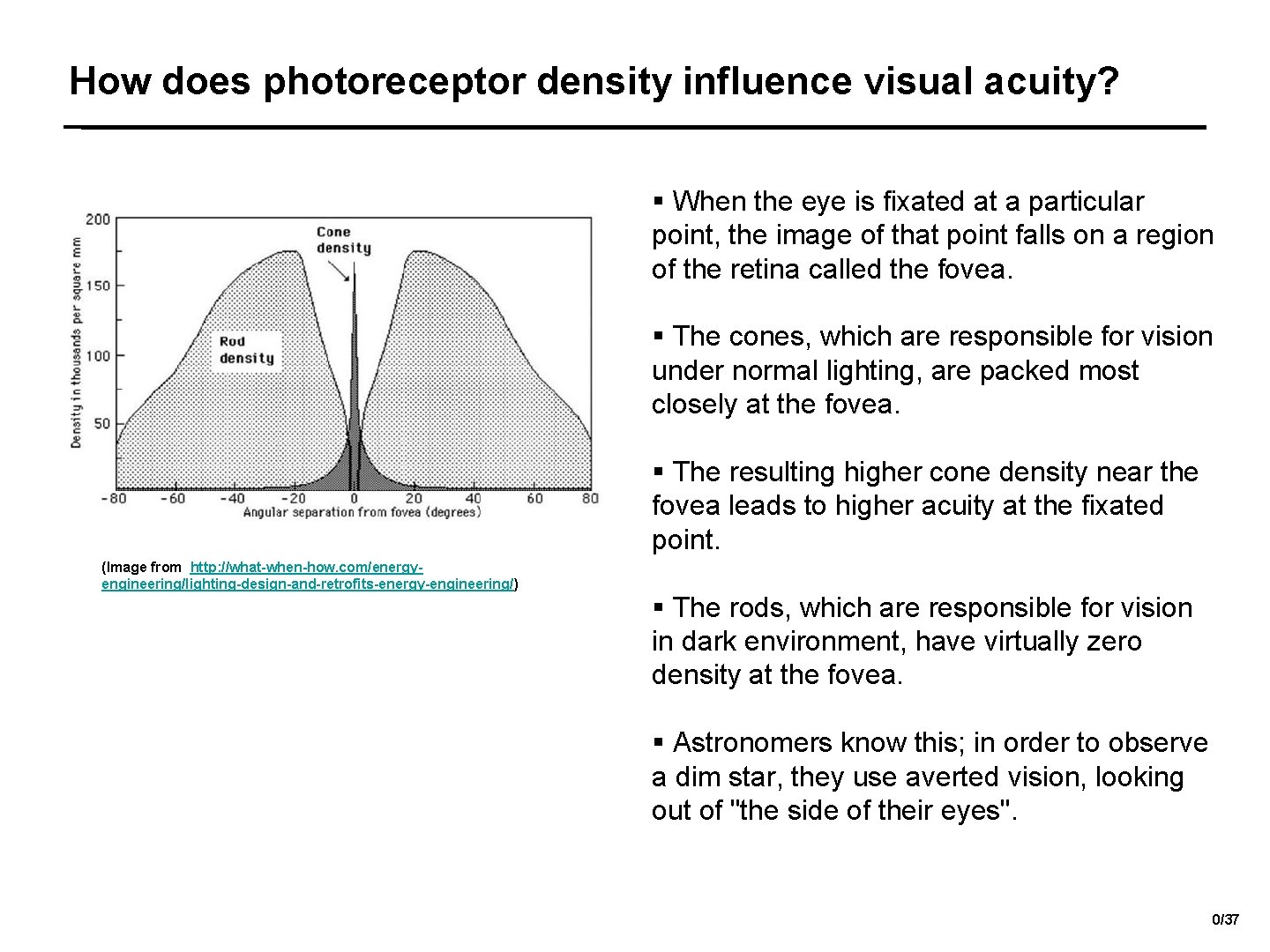

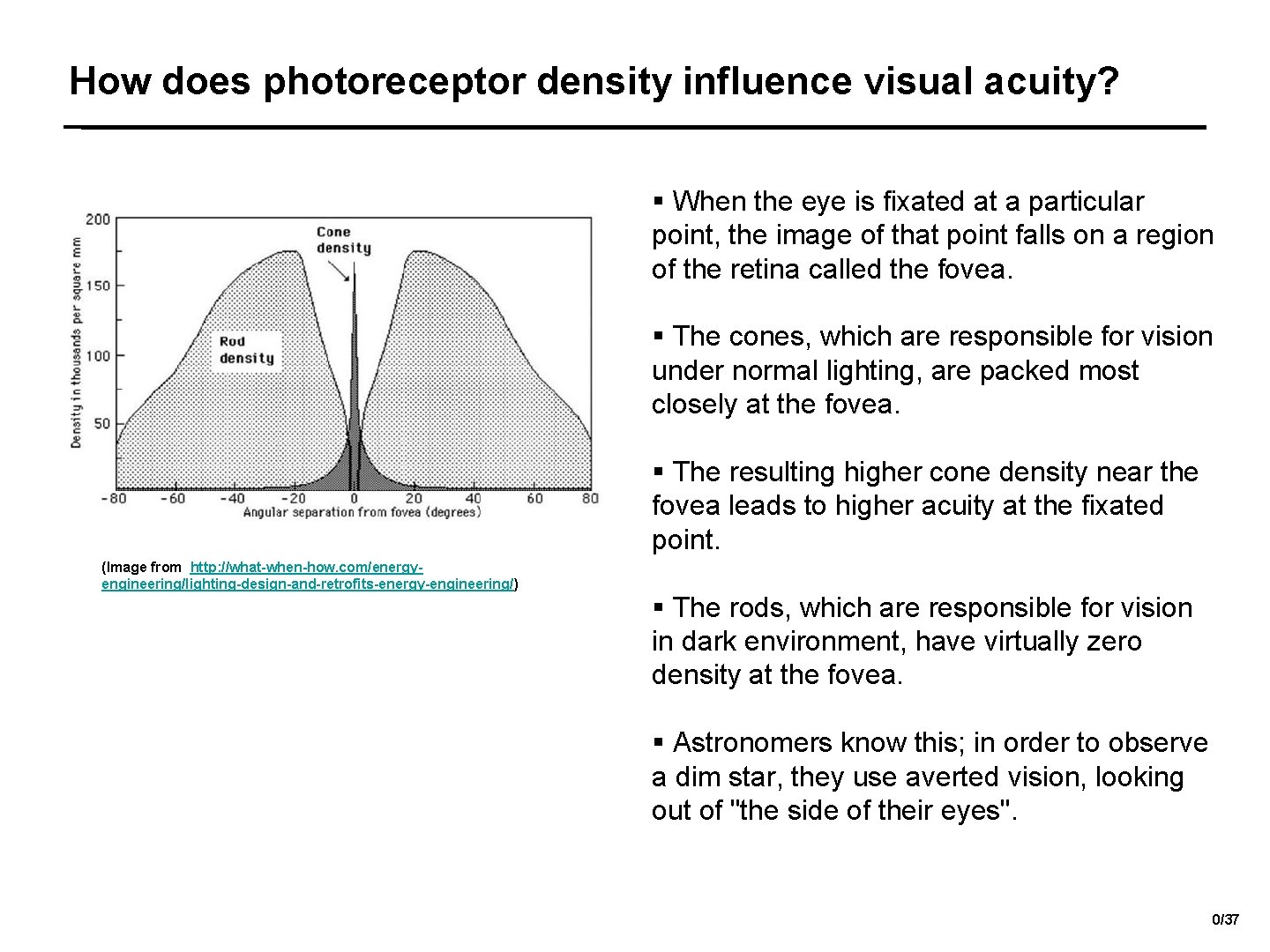

How does photoreceptor density influence visual acuity?

- When the eye is fixated at a particular point, the image of that point falls on a region of the retina called the fovea.
- The cones, which are responsible for vision under normal lighting, are packed most closely at the fovea.
- The resulting higher cone density near the fovea leads to higher acuity at the fixated point.
- The rods, which are responsible for vision in dark environments, have virtually zero density at the fovea.
- Astronomers know this; in order to observe a dim star, they use averted vision, looking out of "the side of their eyes".

(Image from http://what-when-how.com/energyengineering/lighting-design-and-retrofits-energy-engineering/)

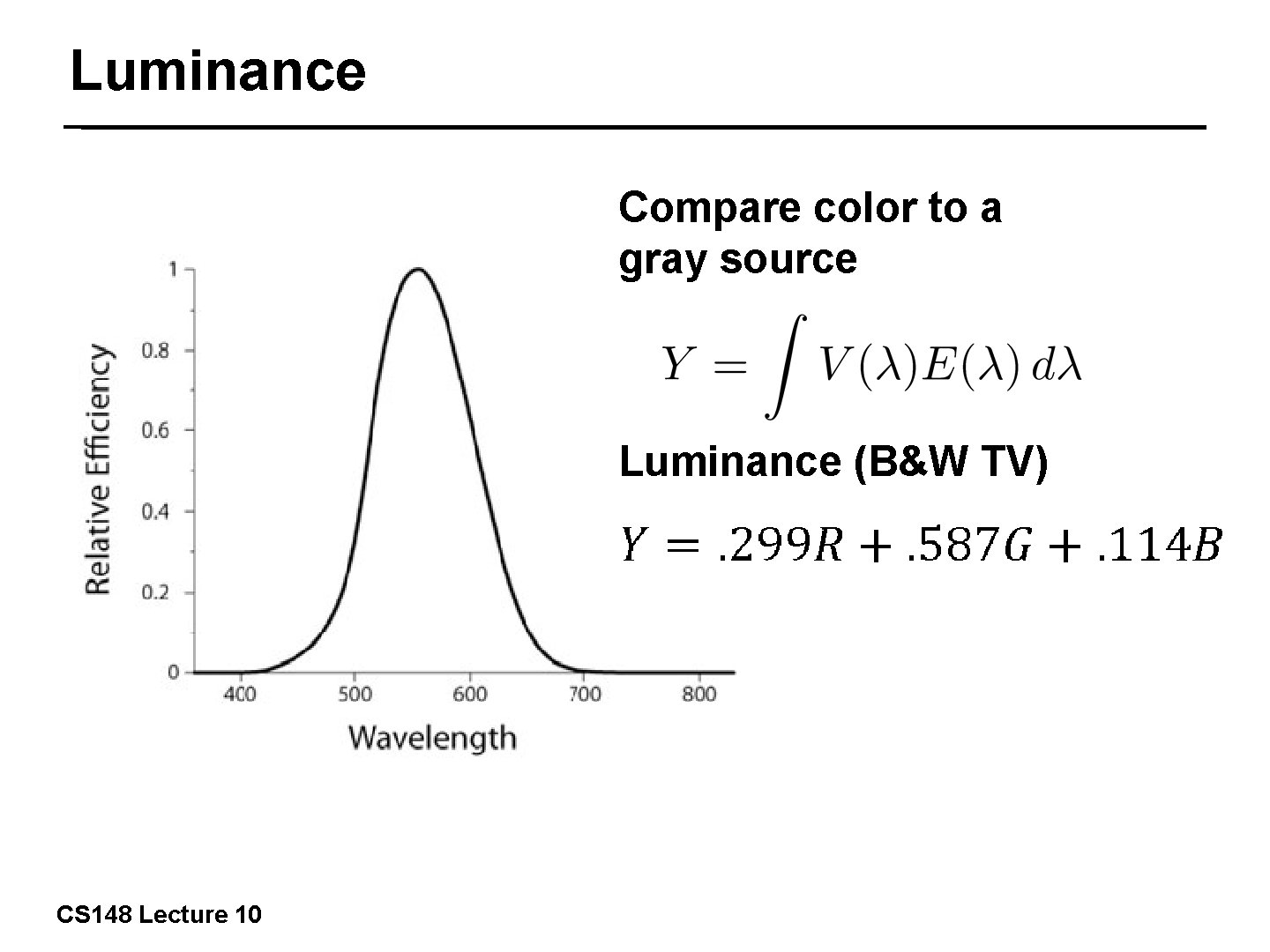

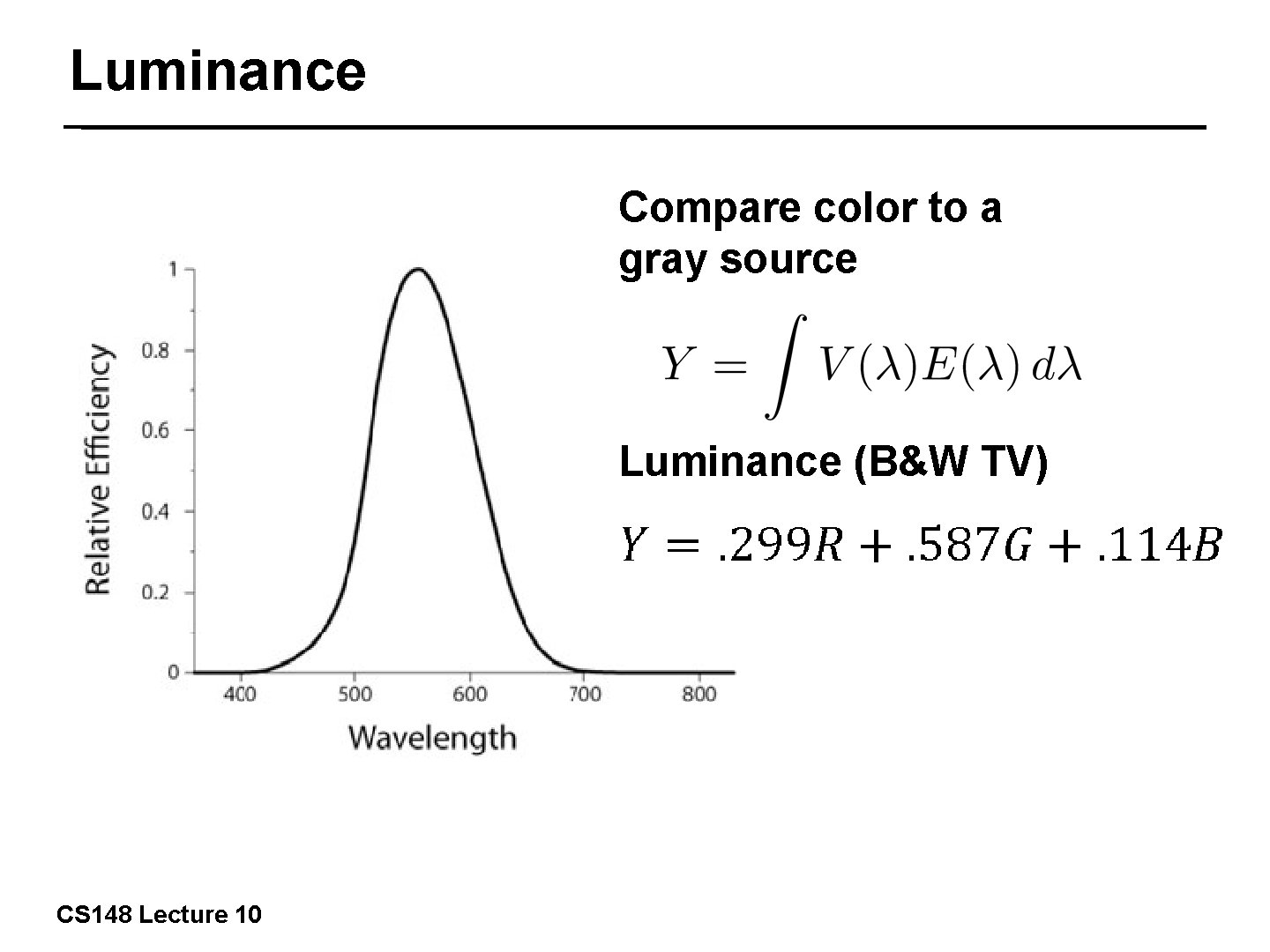

Luminance

Compare color to a gray source.

Luminance (B&W TV)

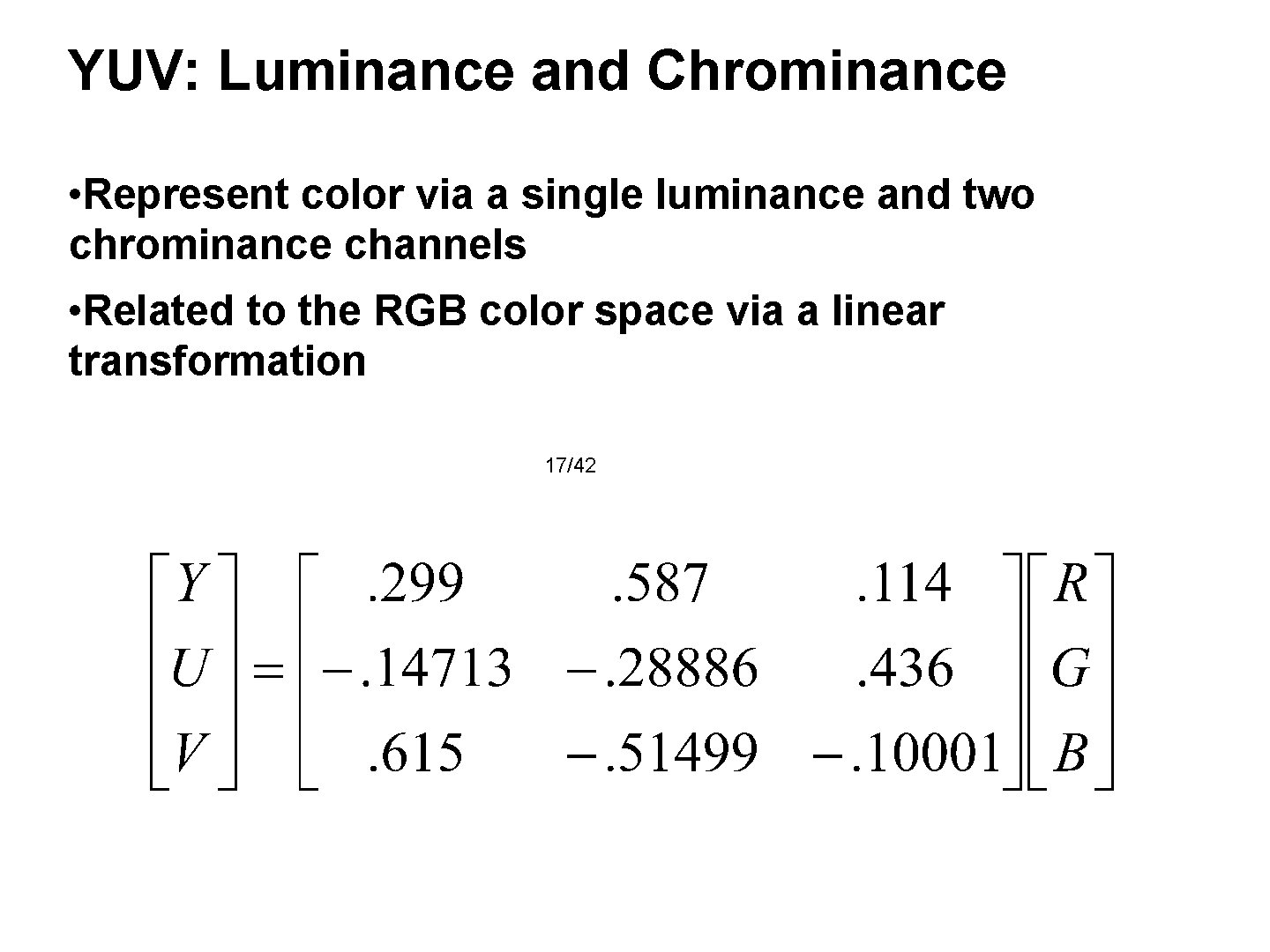

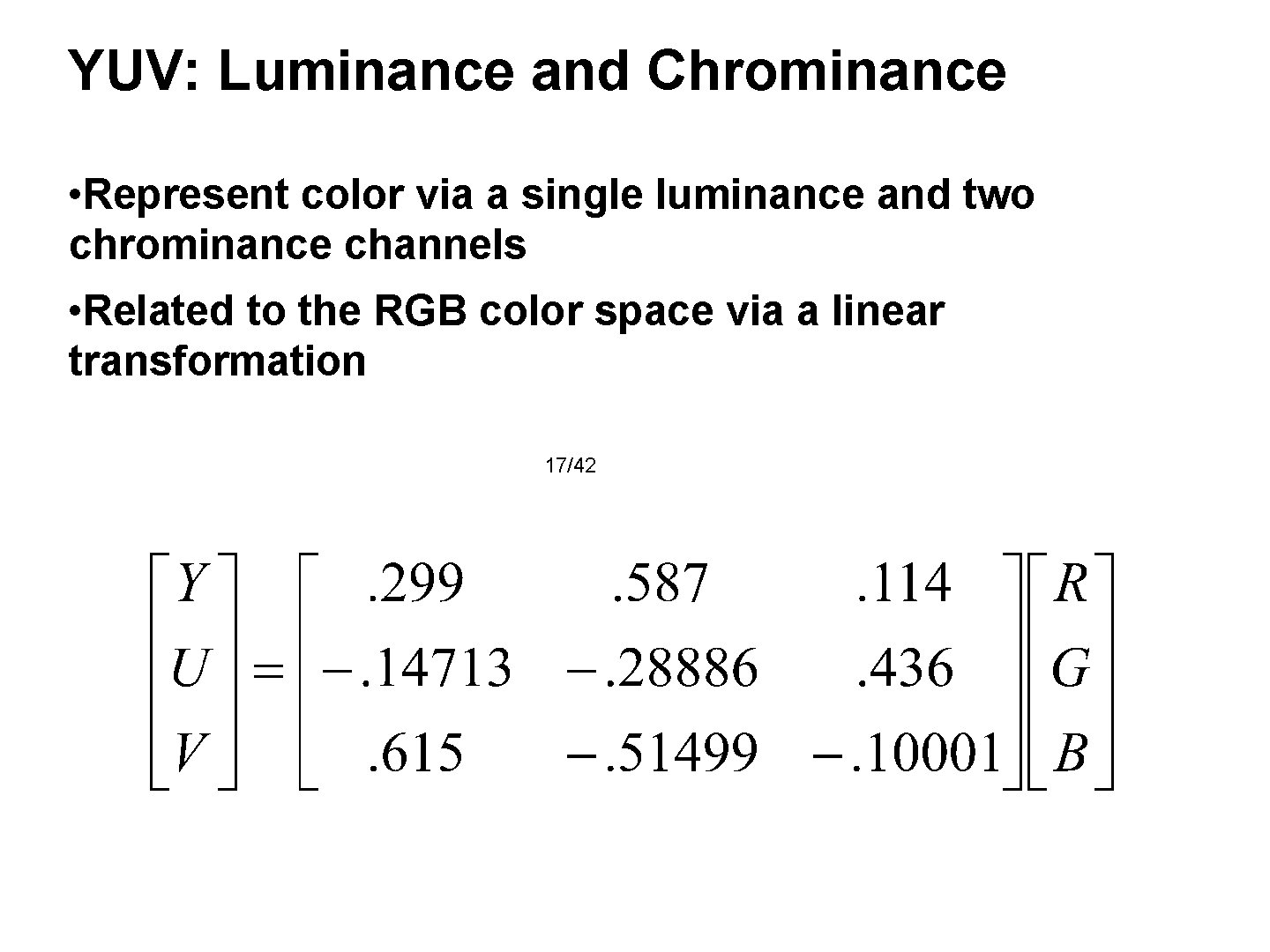

YUV: Luminance and Chrominance

- Represent color via a single luminance channel and two chrominance channels
- Related to the RGB color space via a linear transformation
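The linear transformation can be sketched as follows. The slide does not say which coefficients it uses, so this example assumes the common analog (BT.601-style) YUV weights; the function names are illustrative.

```python
def rgb_to_yuv(r, g, b):
    """Convert RGB in [0, 1] to YUV, assuming BT.601-style coefficients."""
    y = 0.299 * r + 0.587 * g + 0.114 * b  # luminance: weighted sum of R, G, B
    u = 0.492 * (b - y)                    # chrominance: scaled blue difference
    v = 0.877 * (r - y)                    # chrominance: scaled red difference
    return y, u, v


def yuv_to_rgb(y, u, v):
    """Invert rgb_to_yuv; possible because the transform is linear and invertible."""
    b = y + u / 0.492
    r = y + v / 0.877
    g = (y - 0.299 * r - 0.114 * b) / 0.587
    return r, g, b


gray_yuv = rgb_to_yuv(0.5, 0.5, 0.5)          # a gray has (near) zero chrominance
round_trip = yuv_to_rgb(*rgb_to_yuv(0.2, 0.6, 0.9))
```

Because gray inputs have zero chrominance, a black-and-white receiver can simply display the Y channel and ignore U and V.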

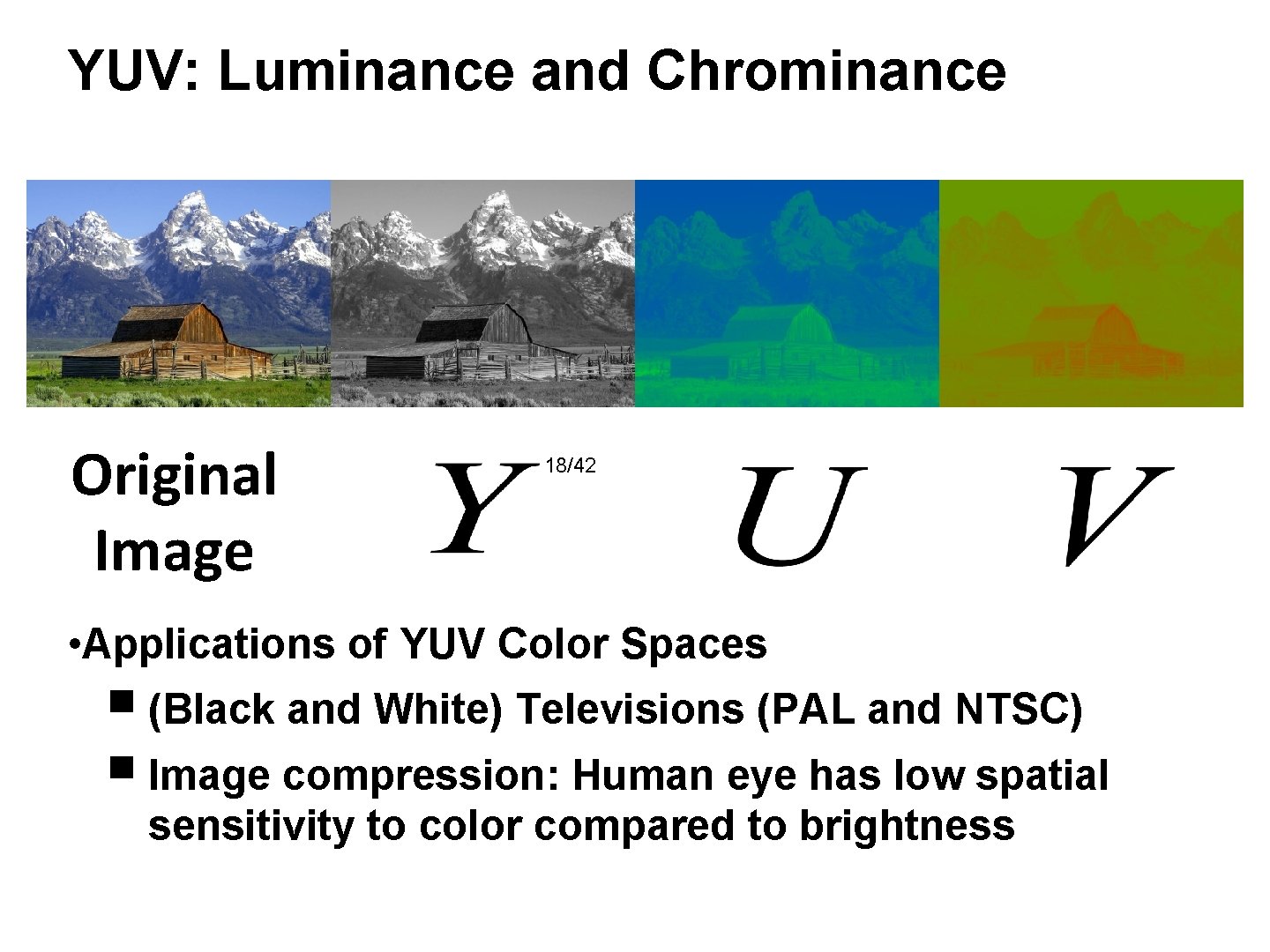

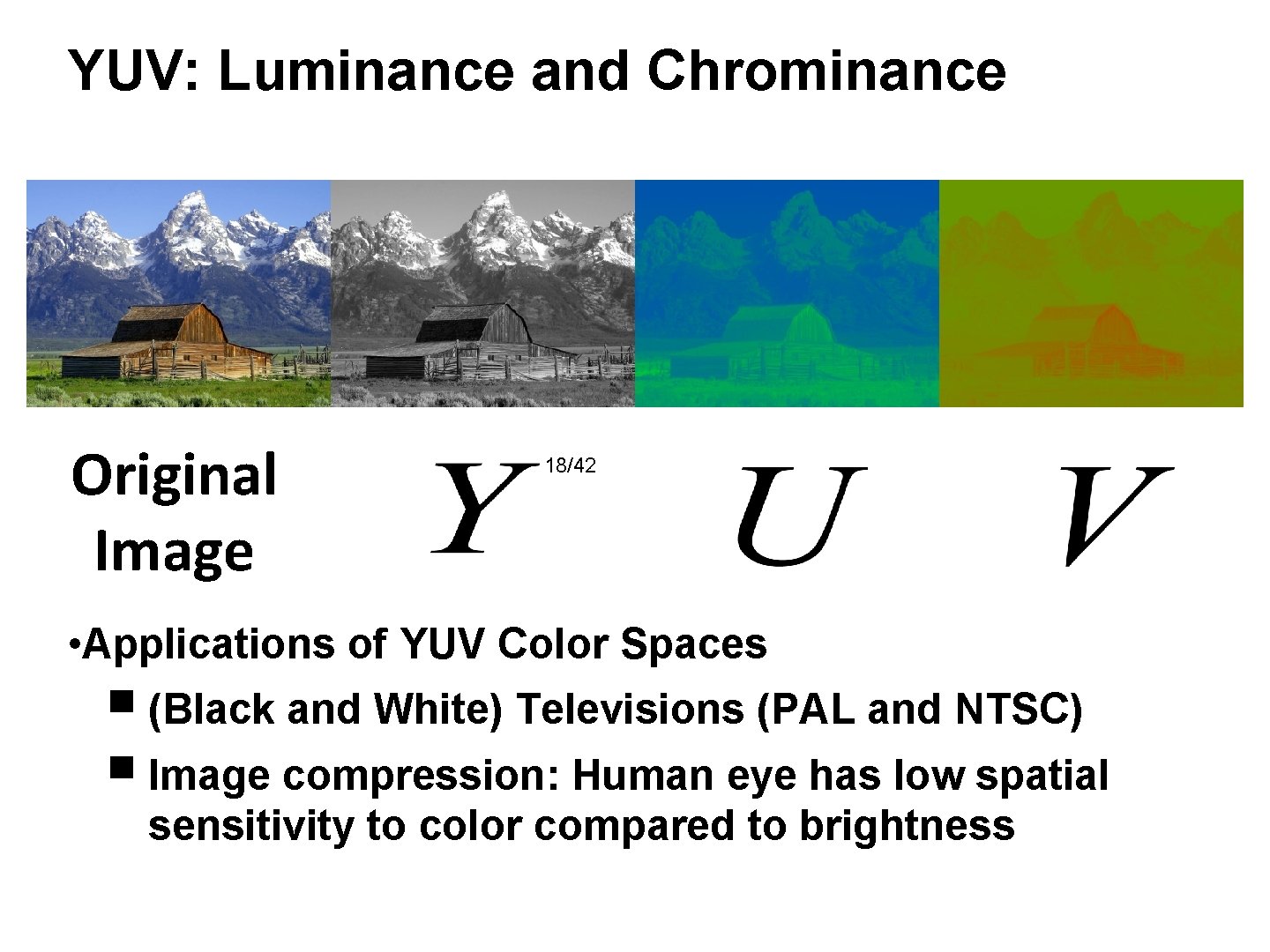

YUV: Luminance and Chrominance

[Figure: original image]

- Applications of YUV color spaces
  - (Black and white) televisions (PAL and NTSC)
  - Image compression: the human eye has low spatial sensitivity to color compared to brightness

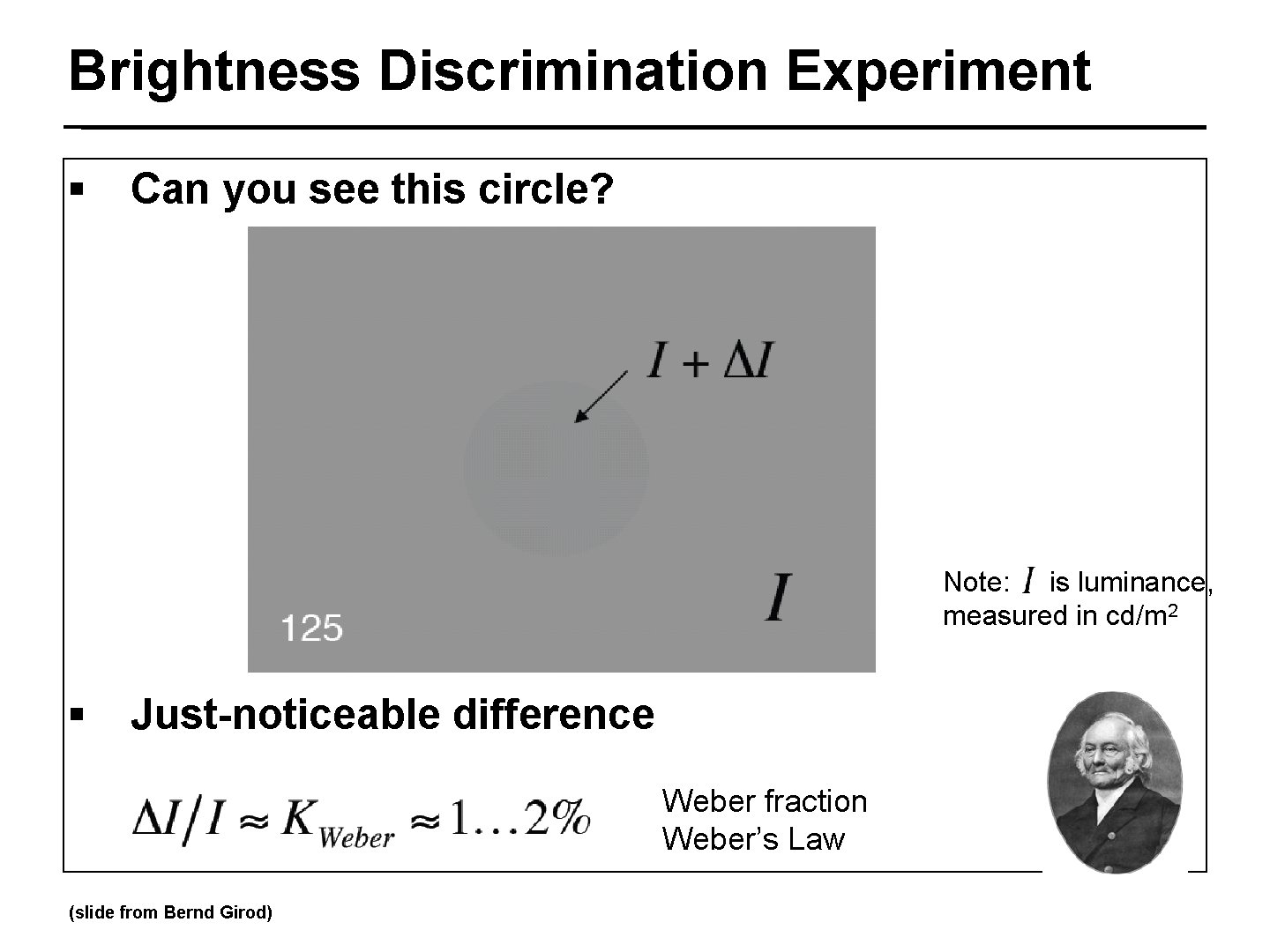

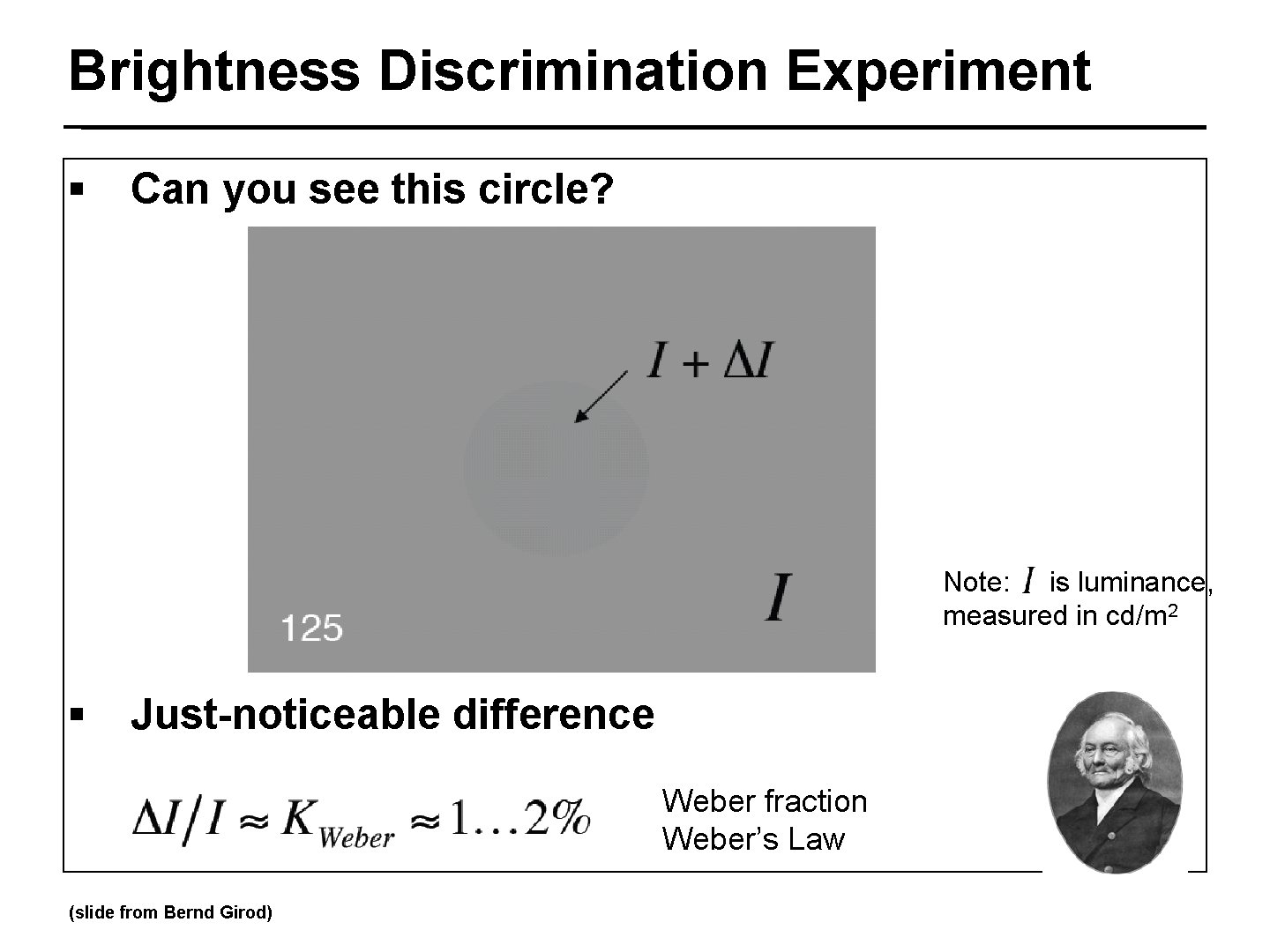

Brightness Discrimination Experiment

- Can you see this circle?
- Note: $I$ is luminance, measured in cd/m².
- Just-noticeable difference: $\Delta I$
- Weber fraction: $\Delta I / I$
- Weber's Law: $\Delta I / I \approx \text{constant}$

(slide from Bernd Girod)
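If the Weber fraction is roughly constant, each just-noticeable step multiplies luminance by a fixed factor, so the number of distinguishable steps across a luminance range follows from a logarithm. The sketch below assumes a Weber fraction of 0.02 and a 100:1 range; both numbers are illustrative choices, not values recovered from the slide.

```python
import math


def jnd_steps(contrast_ratio, weber_fraction):
    """Number of just-noticeable steps between the darkest and brightest luminance.

    Each step multiplies luminance by (1 + weber_fraction), so
    steps = ln(contrast_ratio) / ln(1 + weber_fraction).
    """
    if contrast_ratio <= 1.0 or weber_fraction <= 0.0:
        raise ValueError("need contrast_ratio > 1 and weber_fraction > 0")
    return math.log(contrast_ratio) / math.log(1.0 + weber_fraction)


# Assumed values: 2% Weber fraction over a 100:1 luminance range.
steps = jnd_steps(100.0, 0.02)
```

Under these assumptions the result is a little over 230 steps, which is the same order of magnitude as the 256 gray levels commonly used for digital images.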

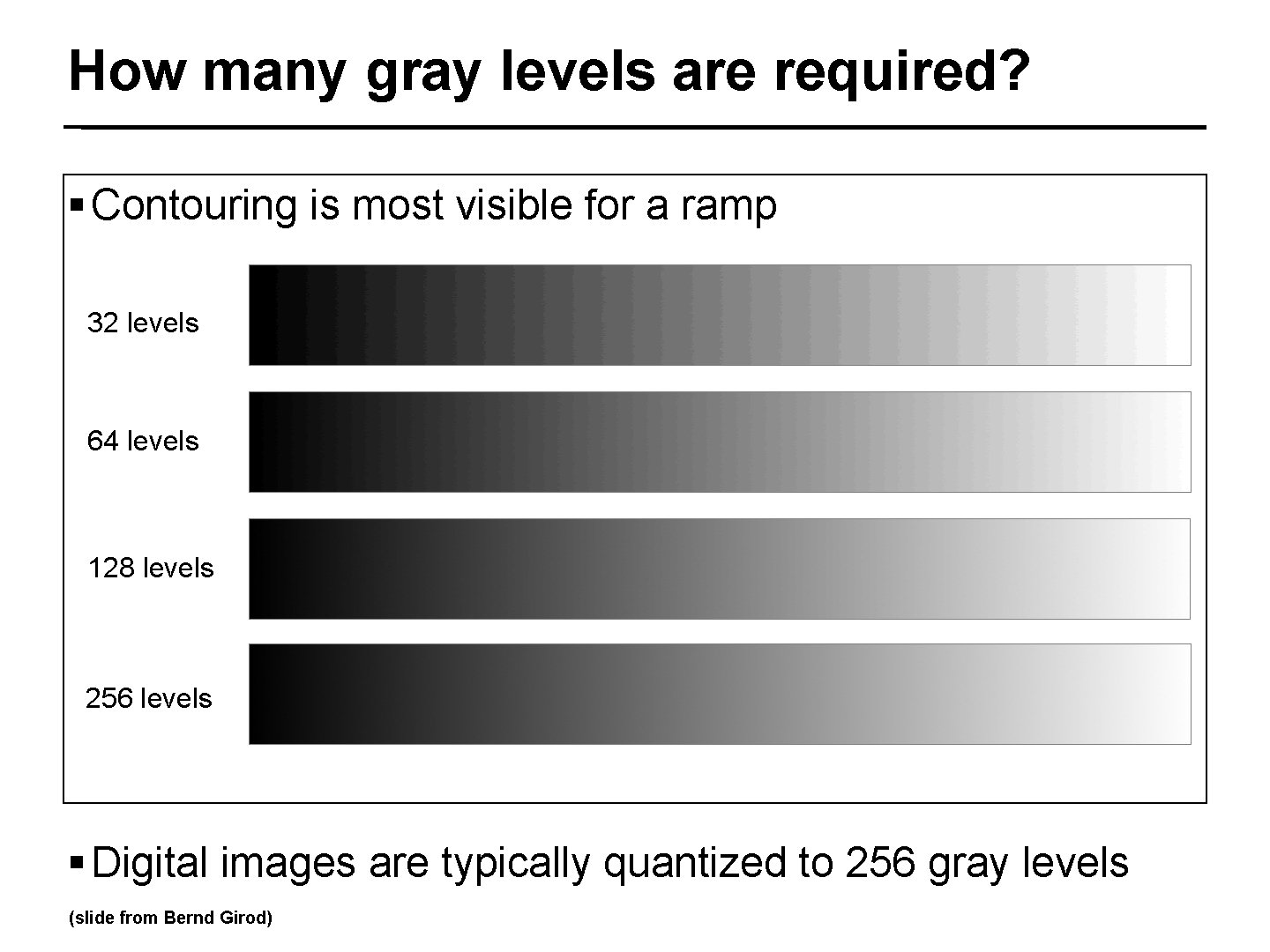

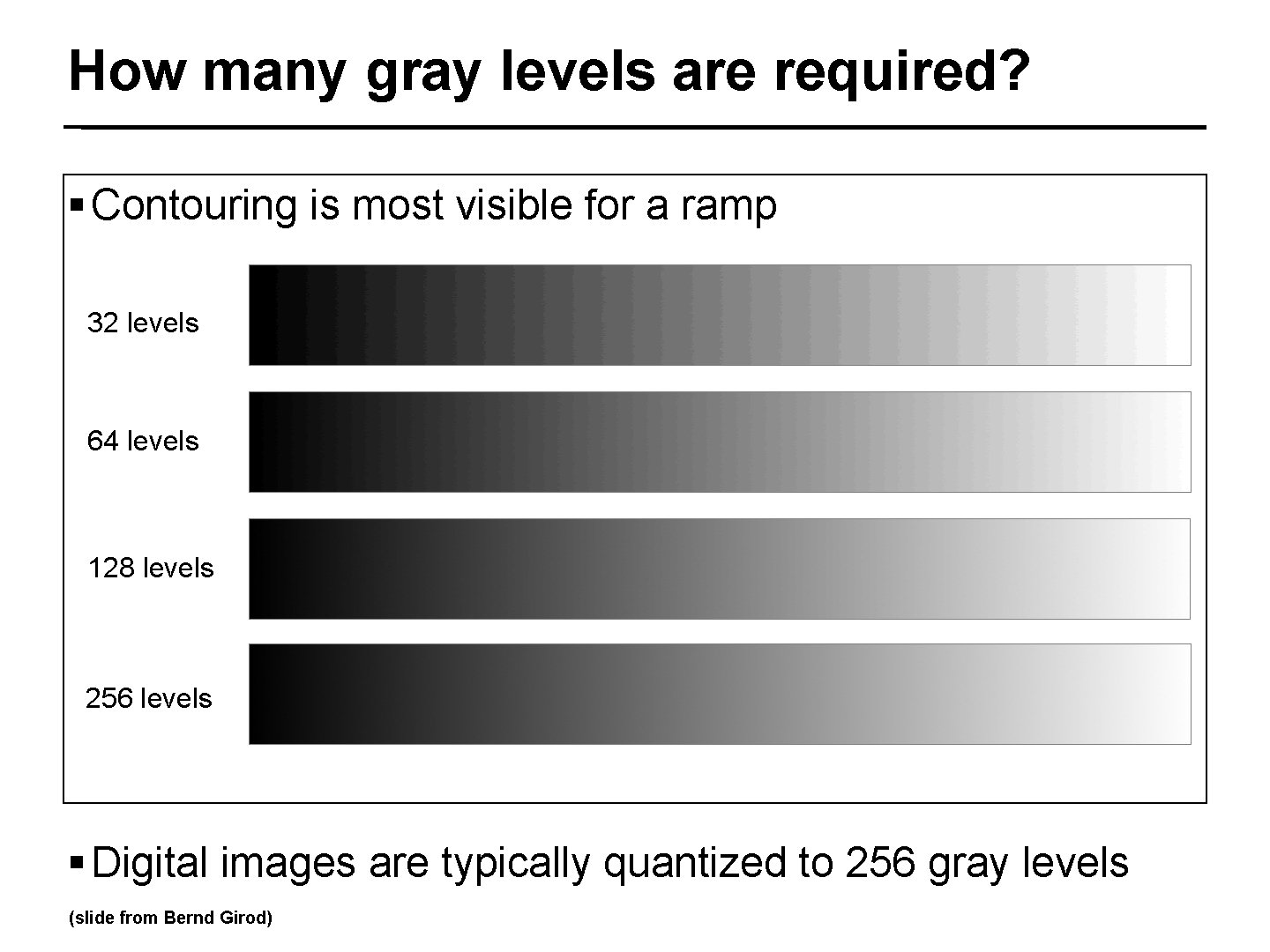

How many gray levels are required?

- Contouring is most visible for a ramp.
- Figure panels: 32 levels, 64 levels, 128 levels, 256 levels
- Digital images are typically quantized to 256 gray levels.

(slide from Bernd Girod)
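The contouring effect can be reproduced by quantizing a smooth ramp. This is a minimal sketch using uniform quantization; the helper names `quantize`, `ramp`, and `distinct_levels` are hypothetical.

```python
def quantize(value, levels):
    """Quantize a value in [0, 1] to one of `levels` uniformly spaced gray levels."""
    if levels < 2:
        raise ValueError("need at least two gray levels")
    index = min(int(value * levels), levels - 1)  # bin index, top edge clamped
    return index / (levels - 1)                   # map the bin back to [0, 1]


def ramp(n_samples):
    """A smooth horizontal ramp from black (0.0) to white (1.0)."""
    return [i / (n_samples - 1) for i in range(n_samples)]


def distinct_levels(samples, levels):
    """Count how many different output grays the quantized ramp contains."""
    return len({quantize(s, levels) for s in samples})


smooth = ramp(1024)
bands_32 = distinct_levels(smooth, 32)    # coarse: wide, visible bands
bands_256 = distinct_levels(smooth, 256)  # fine: bands only a few samples wide
```

With 32 levels, a 1024-sample ramp collapses into bands about 32 samples wide, each separated by a visible step; with 256 levels the bands are about 4 samples wide and the steps are much smaller.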

Limited Dynamic Range (Max/Min)

- World:
  - Possible: 100,000,000:1
  - Typical: 100,000:1
- Human:
  - Static: 100:1
  - Dynamic: 1,000:1
  - As soon as the eye moves, it adaptively adjusts its exposure by changing the size of the pupil.
- Media:
  - Newsprint: 10:1
  - Glossy print: 60:1
  - Samsung F2370H LCD monitor: static 3000:1, dynamic 150,000:1
- Static contrast ratio is the luminance ratio between the brightest white and the darkest black within a single image.
- Dynamic contrast ratio is the luminance ratio between an image with the brightest white level and an image with the darkest black level.

The contrast ratio in a TV or monitor specification is measured in a dark room. In normal office lighting conditions, the effective contrast ratio drops from 3000:1 to less than 200:1.

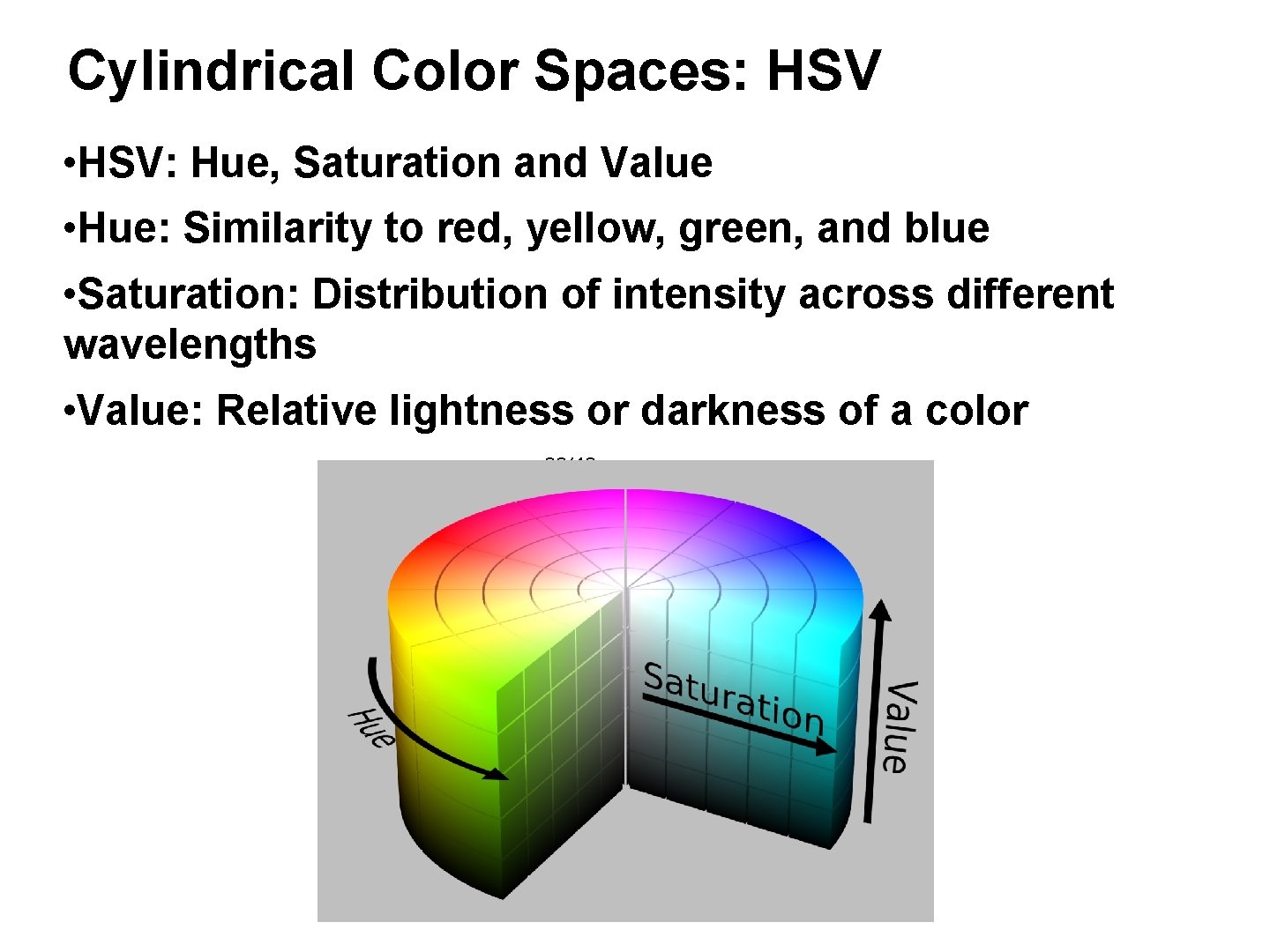

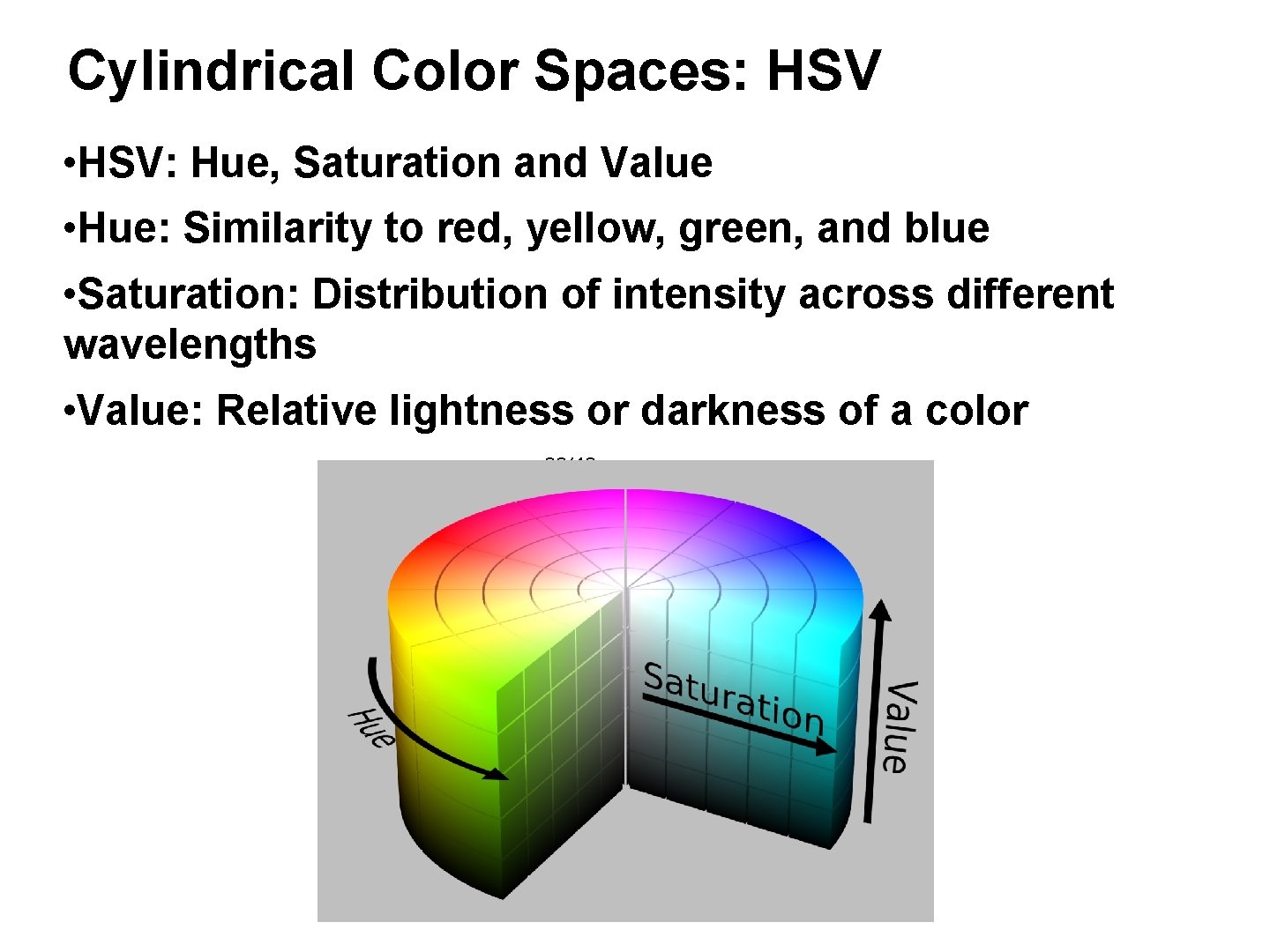

Cylindrical Color Spaces: HSV

- HSV: Hue, Saturation and Value
- Hue: similarity to red, yellow, green, and blue
- Saturation: distribution of intensity across different wavelengths
- Value: relative lightness or darkness of a color
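Python's standard `colorsys` module gives a quick way to see the three HSV coordinates. Note that it computes saturation from the RGB triple as (max - min) / max, which is a practical stand-in for the spectral description above; the helper name `describe` is illustrative.

```python
import colorsys


def describe(r, g, b):
    """Return (hue in degrees, saturation, value) for an RGB color in [0, 1]."""
    h, s, v = colorsys.rgb_to_hsv(r, g, b)  # colorsys returns hue in [0, 1)
    return round(h * 360) % 360, s, v


red = describe(1.0, 0.0, 0.0)     # fully saturated, hue 0 degrees
yellow = describe(1.0, 1.0, 0.0)  # fully saturated, hue 60 degrees
pink = describe(1.0, 0.5, 0.5)    # same hue as red, half the saturation
gray = describe(0.5, 0.5, 0.5)    # zero saturation: hue is meaningless
```

Moving around the cylinder changes hue, moving toward the axis lowers saturation, and moving down the axis lowers value.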

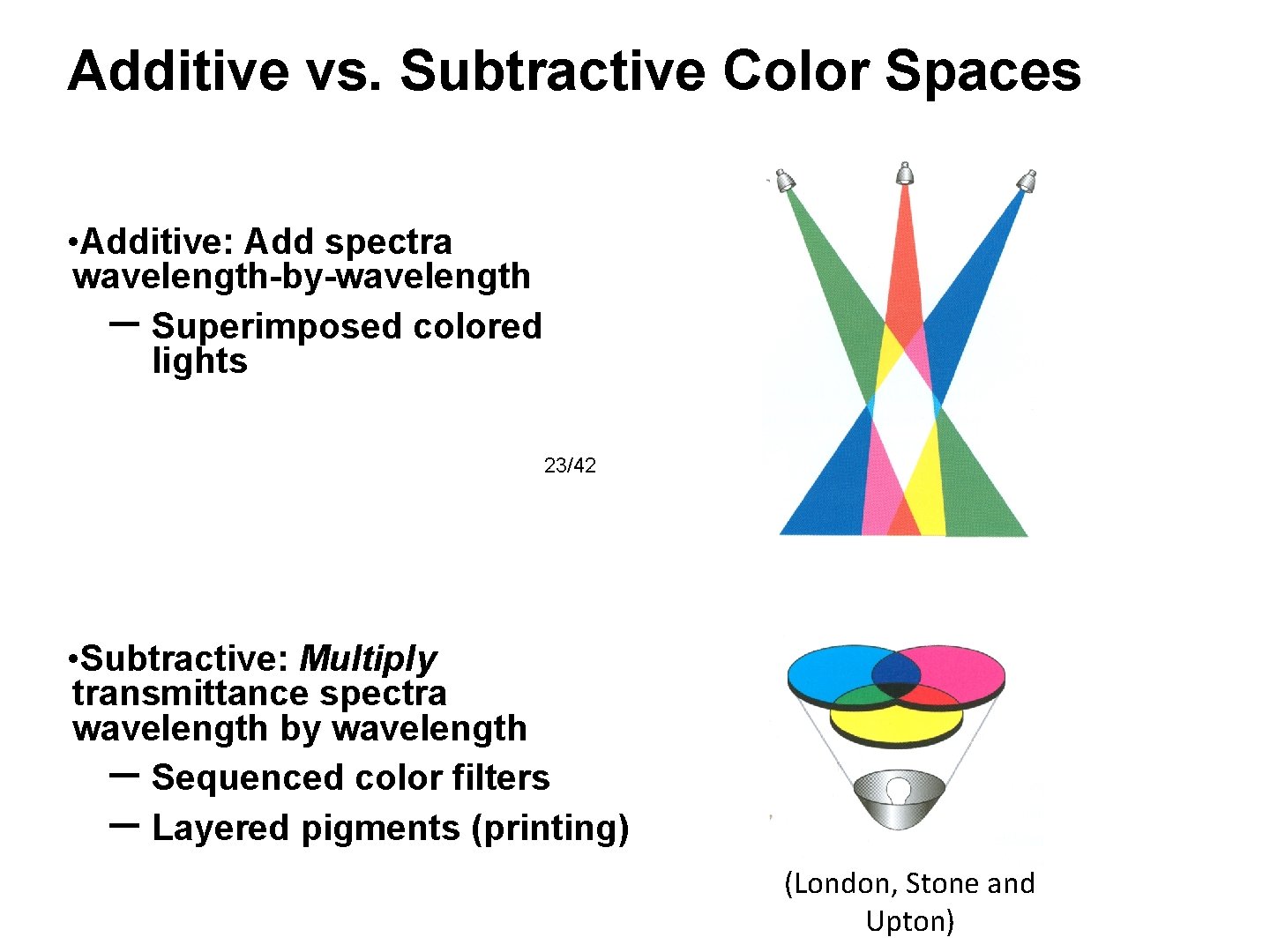

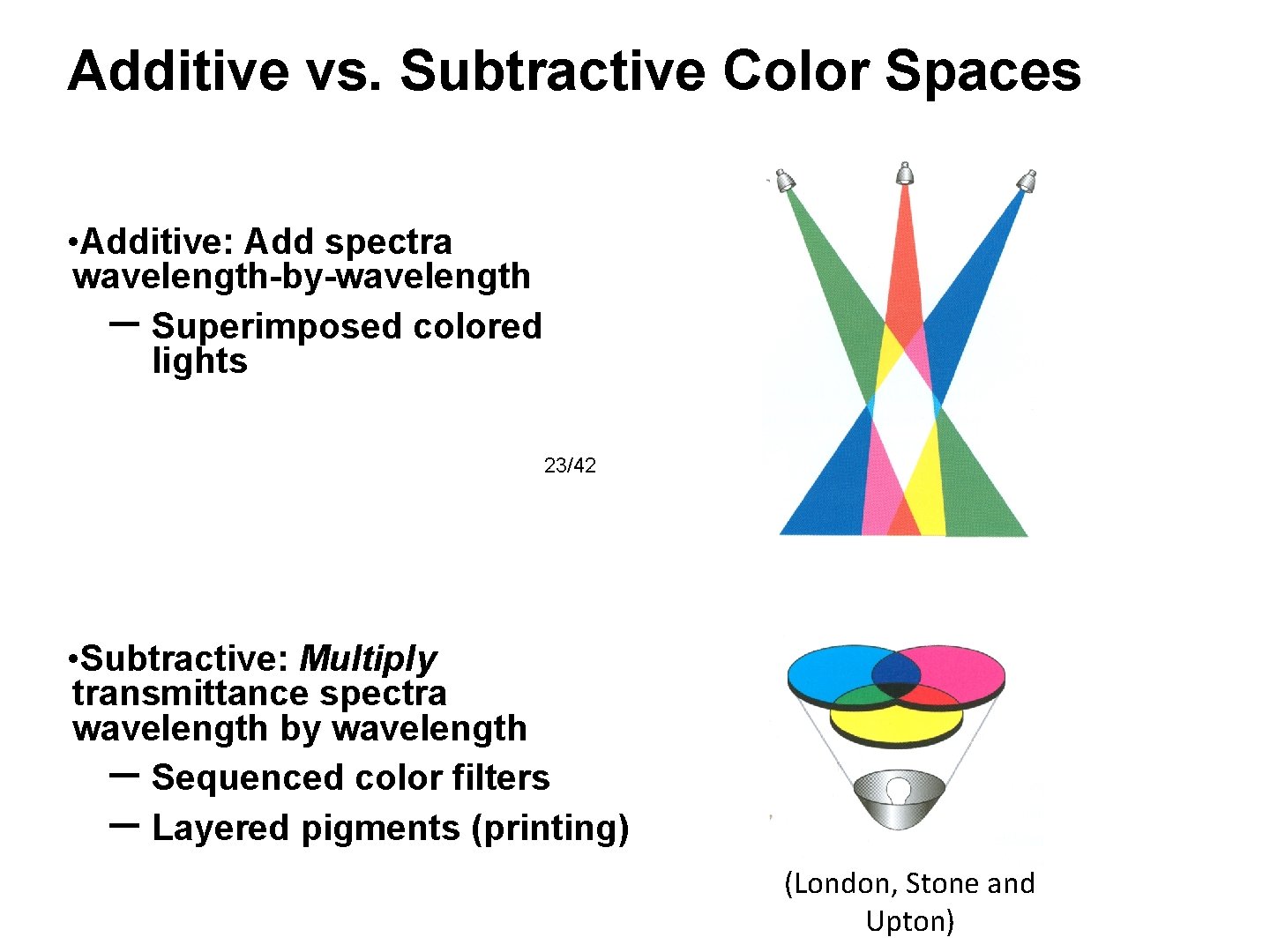

Additive vs. Subtractive Color Spaces

- Additive: add spectra wavelength by wavelength
  - Superimposed colored lights
- Subtractive: multiply transmittance spectra wavelength by wavelength
  - Sequenced color filters
  - Layered pigments (printing)

(London, Stone and Upton)
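The two mixing rules differ only in the per-wavelength operation. The sketch below uses coarse three-band spectra (long, medium, short wavelengths) with idealized values chosen for illustration; real lights and filters have much less clean spectra.

```python
def add_lights(spectrum_a, spectrum_b):
    """Additive mixing: superimposed lights sum wavelength by wavelength."""
    return [a + b for a, b in zip(spectrum_a, spectrum_b)]


def stack_filters(transmittance_a, transmittance_b):
    """Subtractive mixing: stacked filters multiply wavelength by wavelength."""
    return [a * b for a, b in zip(transmittance_a, transmittance_b)]


# Idealized three-band spectra: [long (red), medium (green), short (blue)].
red_light = [1.0, 0.0, 0.0]
green_light = [0.0, 1.0, 0.0]
yellow_light = add_lights(red_light, green_light)  # energy in red and green bands

yellow_filter = [1.0, 1.0, 0.0]  # blocks the short (blue) band
cyan_filter = [0.0, 1.0, 1.0]    # blocks the long (red) band
green_through = stack_filters(yellow_filter, cyan_filter)  # only green survives
```

Adding lights can only increase energy in each band, while stacking filters can only decrease it, which is why the two systems use different primaries.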

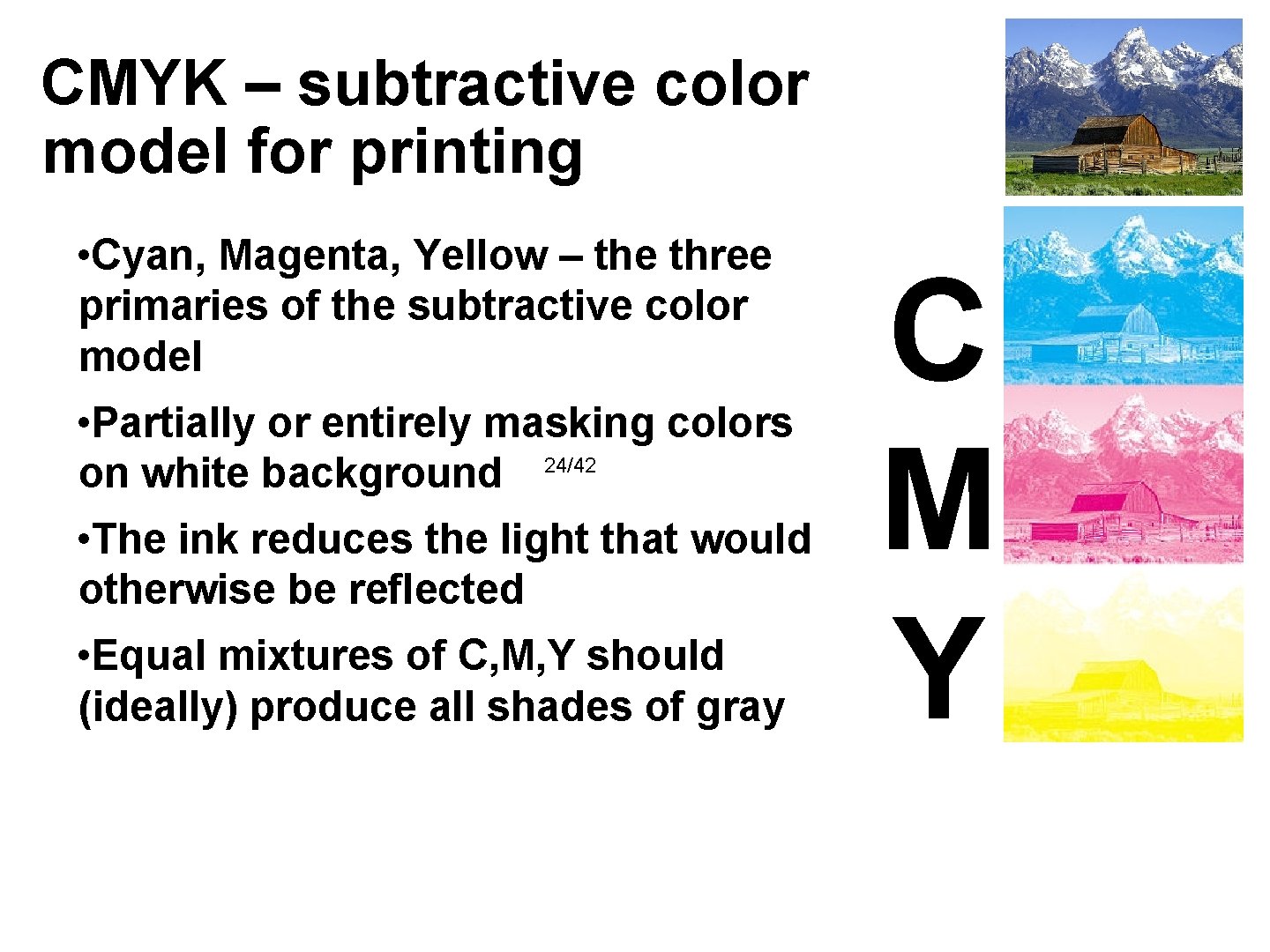

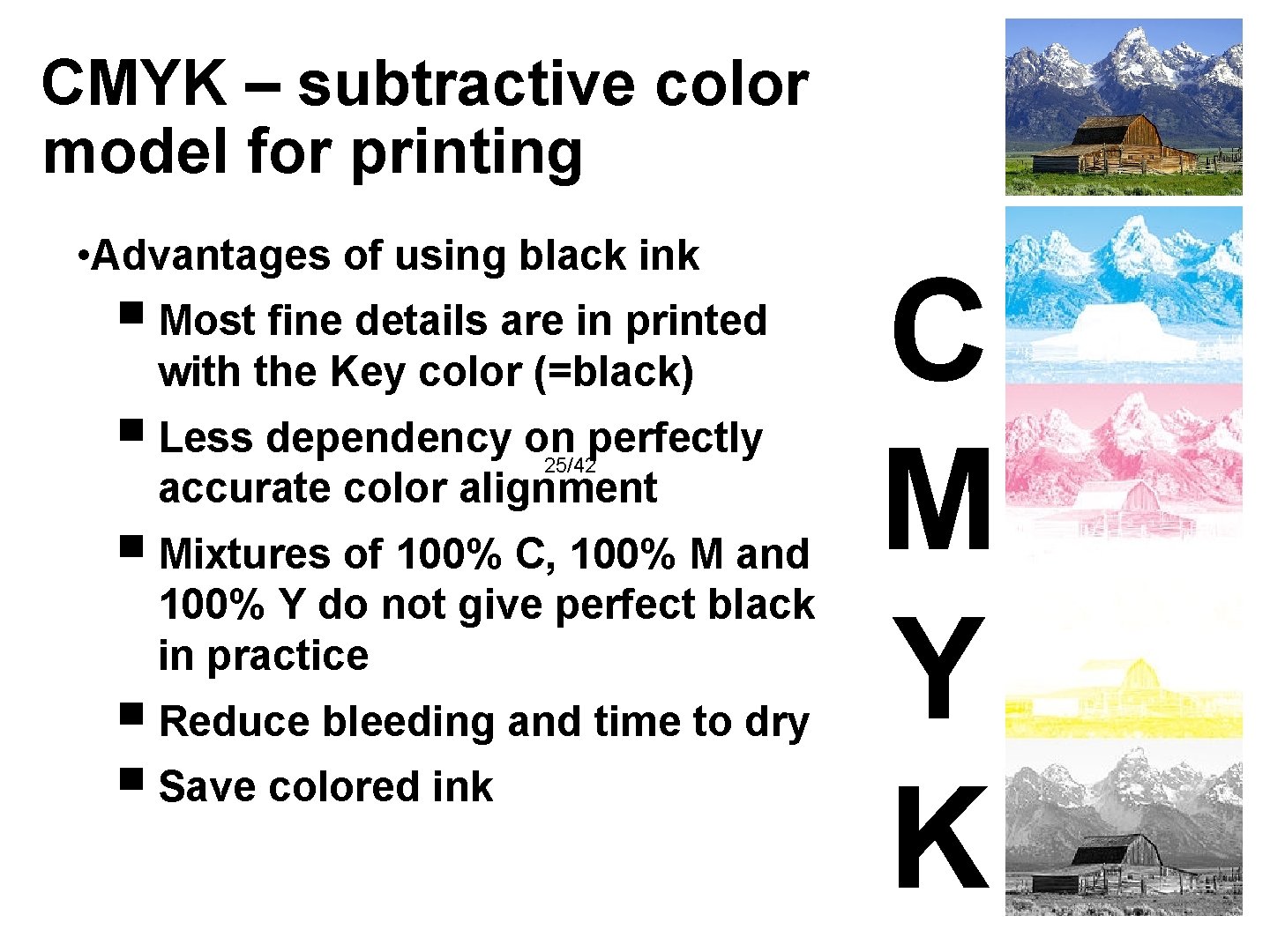

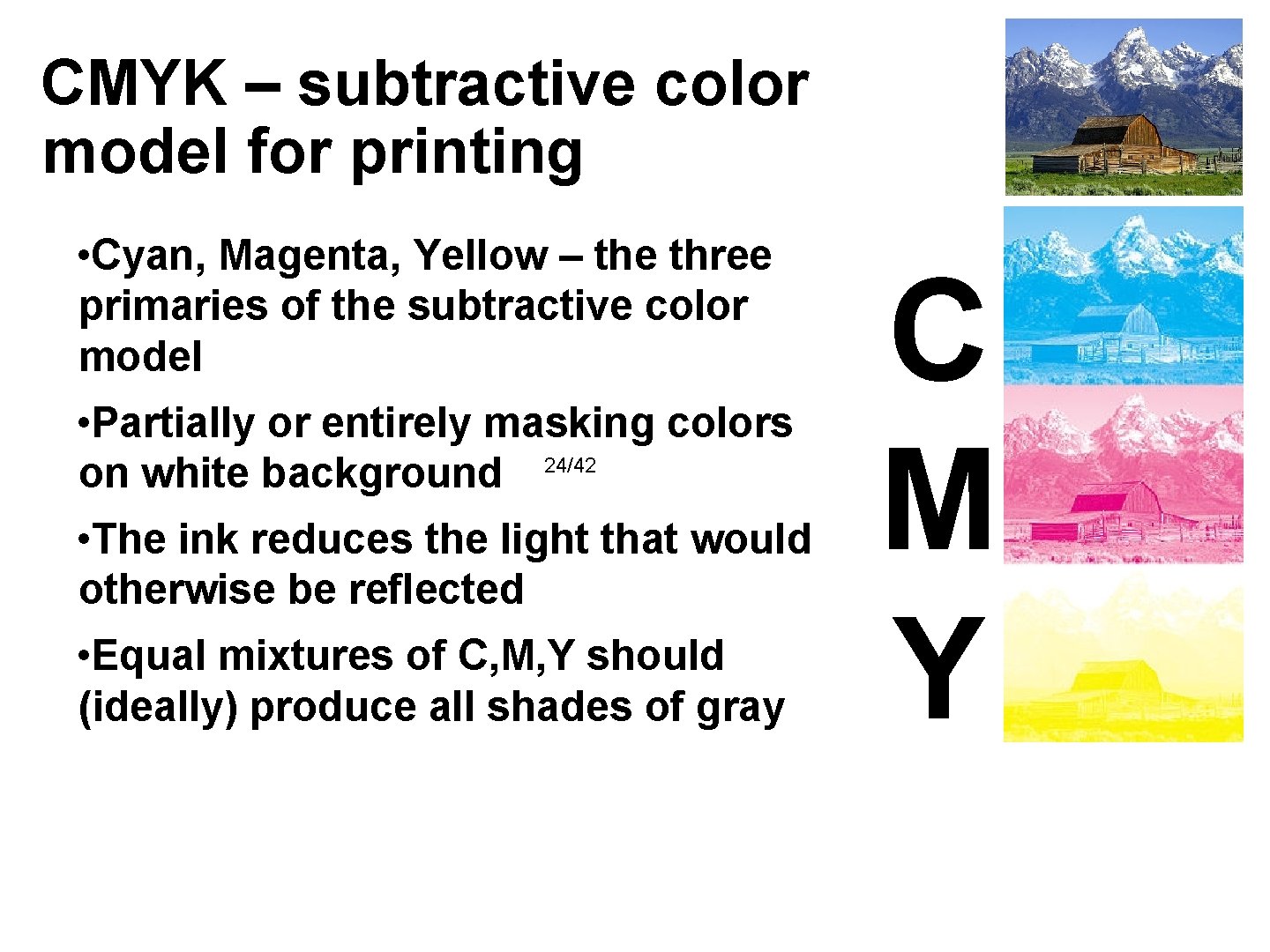

CMYK: subtractive color model for printing

- Cyan, Magenta, Yellow: the three primaries of the subtractive color model
- Partially or entirely masking colors on a white background
- The ink reduces the light that would otherwise be reflected
- Equal mixtures of C, M, Y should (ideally) produce all shades of gray

(Figure panels: C, M, Y)

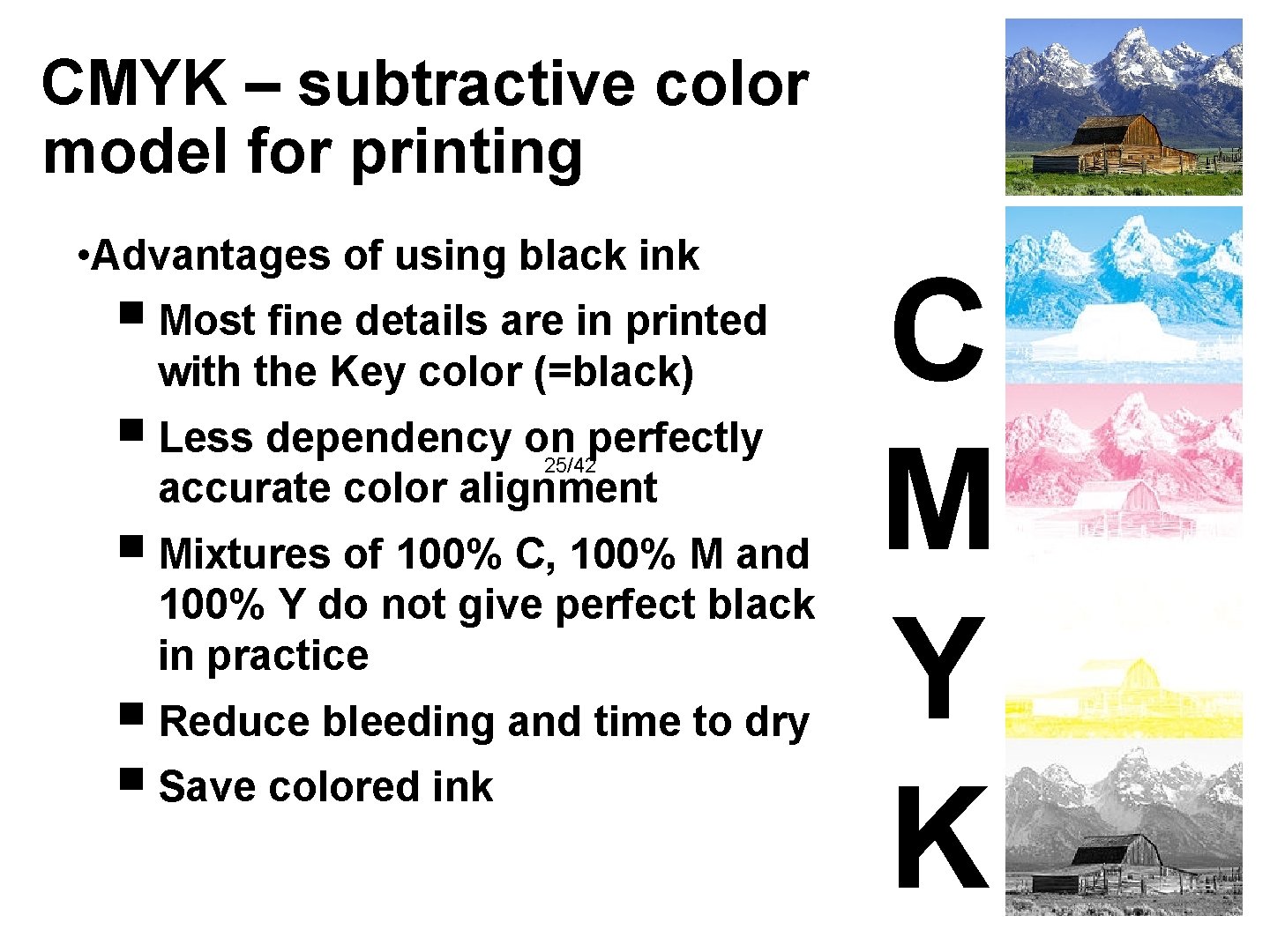

CMYK: subtractive color model for printing

- Advantages of using black ink
  - Most fine details are printed with the Key color (= black)
  - Less dependency on perfectly accurate color alignment
  - Mixtures of 100% C, 100% M and 100% Y do not give perfect black in practice
  - Reduces bleeding and drying time
  - Saves colored ink

(Figure panels: C, M, Y, K)
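The idea of replacing the common gray component of C, M, Y with black ink can be sketched with the textbook idealized conversion below. This is an assumption-laden simplification: real print workflows use measured ink profiles, and the function names are illustrative.

```python
def rgb_to_cmyk(r, g, b):
    """Idealized RGB -> CMYK: pull the shared gray component out into K."""
    c, m, y = 1.0 - r, 1.0 - g, 1.0 - b  # ideal subtractive complements
    k = min(c, m, y)                     # gray component shared by all three inks
    if k == 1.0:                         # pure black: use black ink only
        return 0.0, 0.0, 0.0, 1.0
    scale = 1.0 - k
    return (c - k) / scale, (m - k) / scale, (y - k) / scale, k


def cmyk_to_rgb(c, m, y, k):
    """Inverse of the idealized conversion above."""
    return (1.0 - c) * (1.0 - k), (1.0 - m) * (1.0 - k), (1.0 - y) * (1.0 - k)


red_inks = rgb_to_cmyk(1.0, 0.0, 0.0)   # magenta + yellow, no black
gray_inks = rgb_to_cmyk(0.5, 0.5, 0.5)  # black ink only, no colored ink
back = cmyk_to_rgb(*rgb_to_cmyk(0.2, 0.4, 0.6))
```

In this model a mid-gray uses no colored ink at all, which illustrates the ink-saving and alignment advantages listed above.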

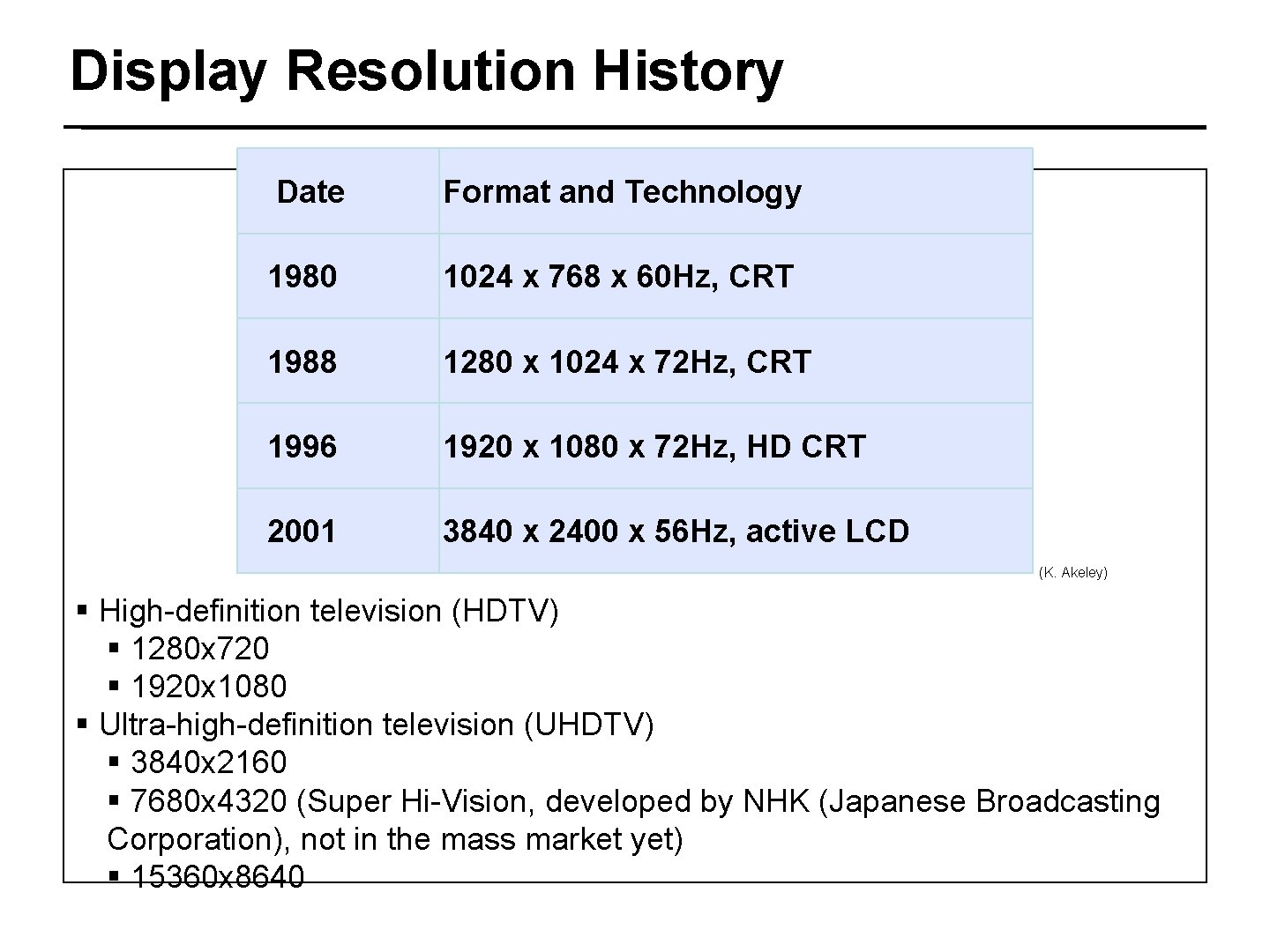

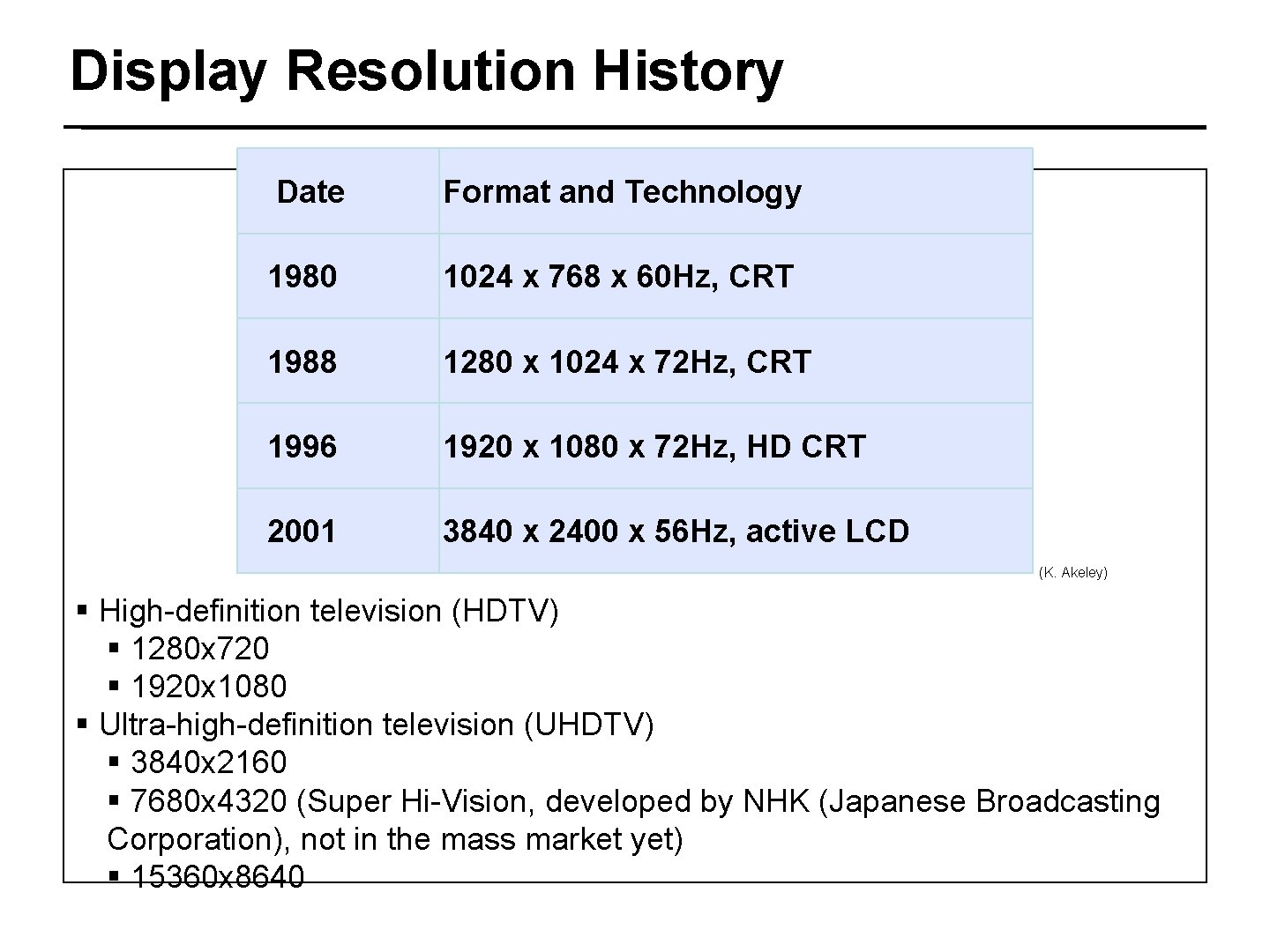

Display Resolution History

| Date | Format and Technology |
|------|-----------------------|
| 1980 | 1024 x 768 x 60 Hz, CRT |
| 1988 | 1280 x 1024 x 72 Hz, CRT |
| 1996 | 1920 x 1080 x 72 Hz, HD CRT |
| 2001 | 3840 x 2400 x 56 Hz, active LCD |

(K. Akeley)

- High-definition television (HDTV)
  - 1280 x 720
  - 1920 x 1080
- Ultra-high-definition television (UHDTV)
  - 3840 x 2160
  - 7680 x 4320 (Super Hi-Vision, developed by NHK (Japan Broadcasting Corporation), not in the mass market yet)
  - 15360 x 8640

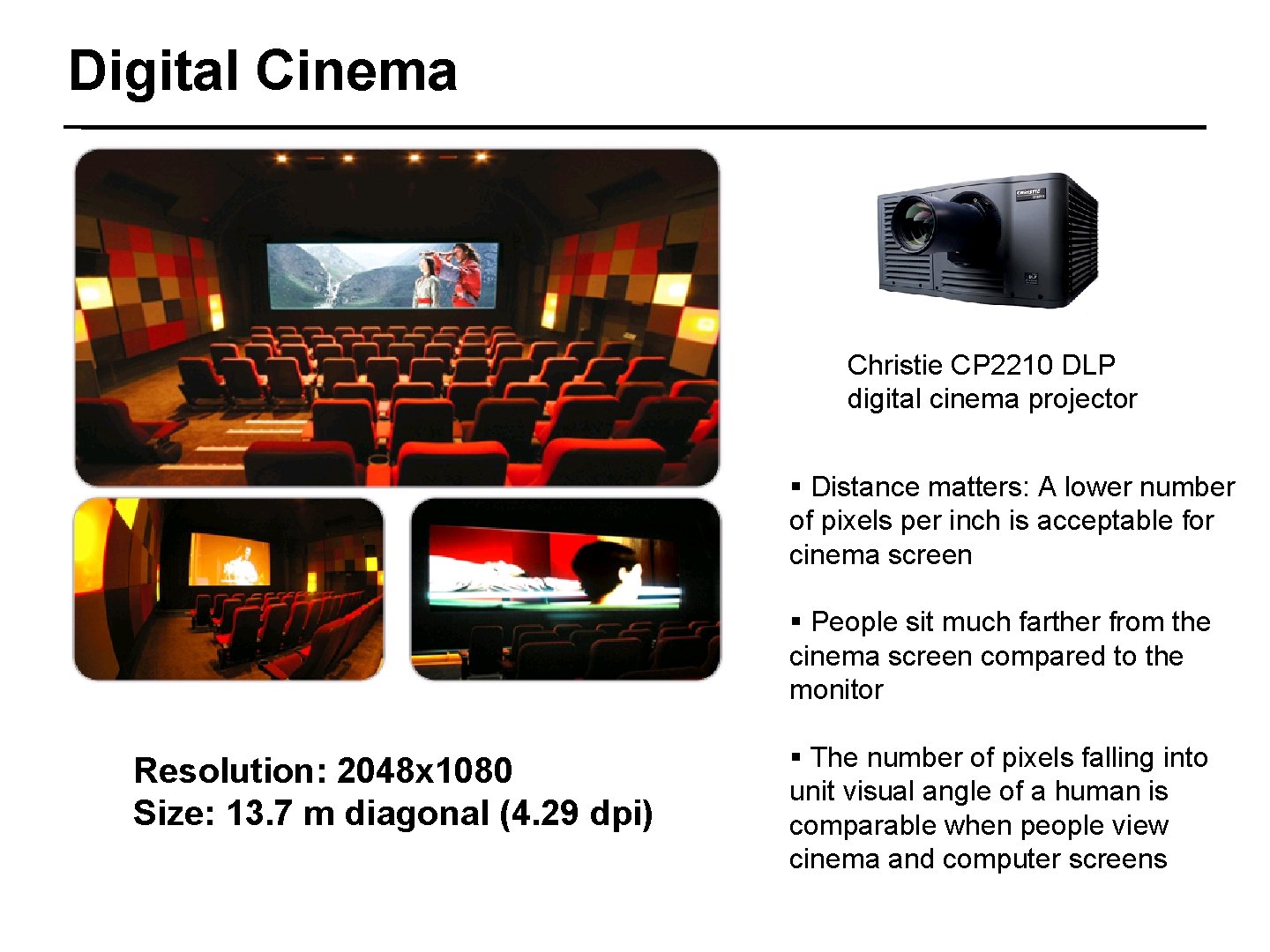

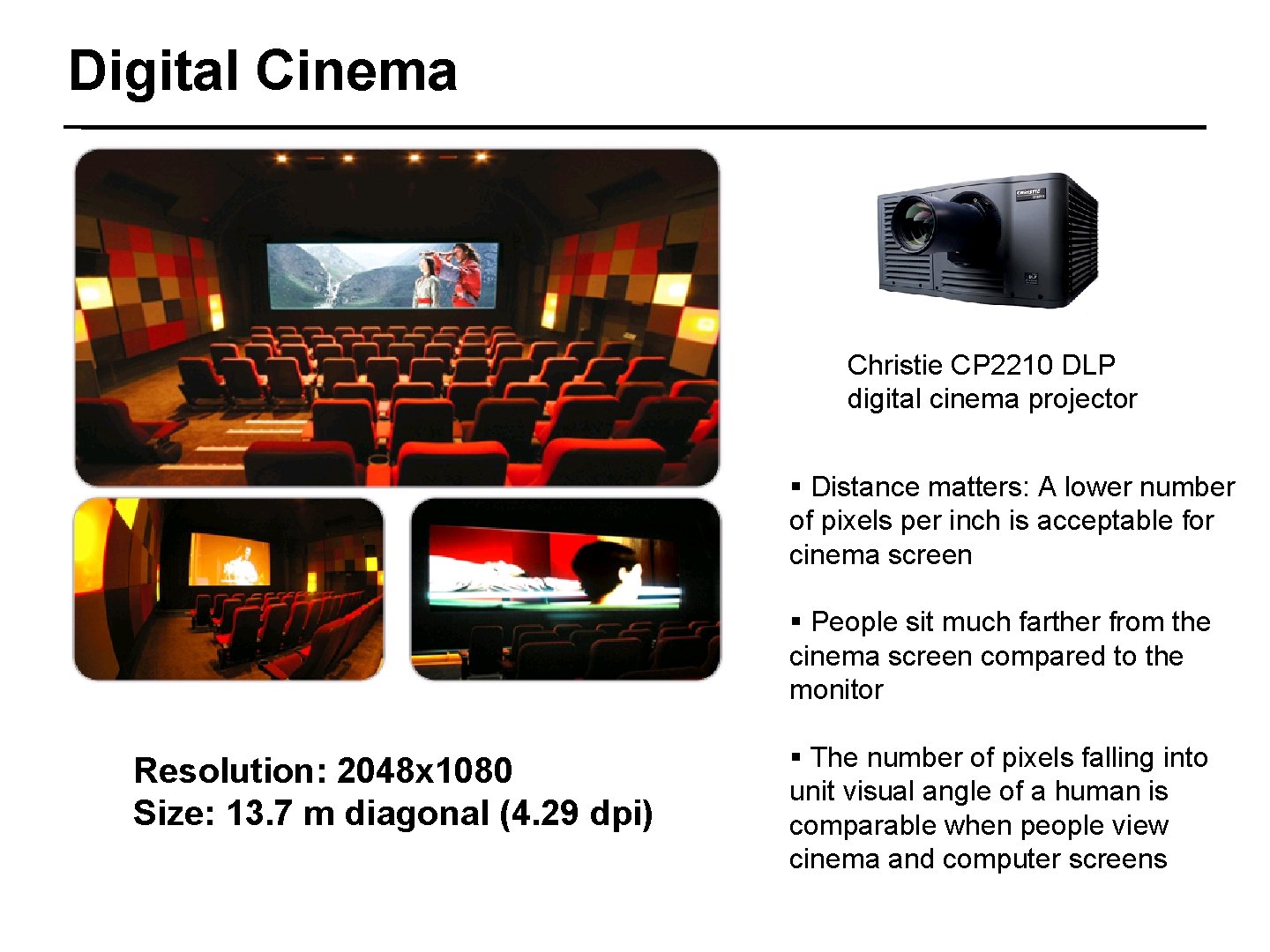

Digital Cinema

Christie CP2210 DLP digital cinema projector

- Resolution: 2048 x 1080
- Size: 13.7 m diagonal (4.29 dpi)
- Distance matters: a lower number of pixels per inch is acceptable for a cinema screen.
- People sit much farther from a cinema screen than from a monitor.
- The number of pixels falling into a unit visual angle is comparable when people view cinema and computer screens.
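The 4.29 dpi figure and the "comparable pixels per visual angle" claim can be checked with a little geometry. The cinema numbers come from the slide; the viewing distances (15 m and 0.6 m) and the 23-inch 1920 x 1080 monitor are assumptions made for this example.

```python
import math

METERS_PER_INCH = 0.0254


def pixels_per_inch(width_px, height_px, diagonal_m):
    """Pixel density along the diagonal of a screen."""
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / (diagonal_m / METERS_PER_INCH)


def pixels_per_degree(ppi, viewing_distance_m):
    """Pixels covered by one degree of visual angle at the screen center."""
    distance_in = viewing_distance_m / METERS_PER_INCH
    inches_per_degree = 2.0 * distance_in * math.tan(math.radians(0.5))
    return ppi * inches_per_degree


cinema_ppi = pixels_per_inch(2048, 1080, 13.7)                     # from the slide
monitor_ppi = pixels_per_inch(1920, 1080, 23 * METERS_PER_INCH)    # assumed monitor

cinema_ppd = pixels_per_degree(cinema_ppi, 15.0)    # assumed seat 15 m from screen
monitor_ppd = pixels_per_degree(monitor_ppi, 0.6)   # assumed desk distance 0.6 m
```

Under these assumptions both setups land at roughly 40 pixels per degree, even though the pixel densities differ by more than a factor of 20.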

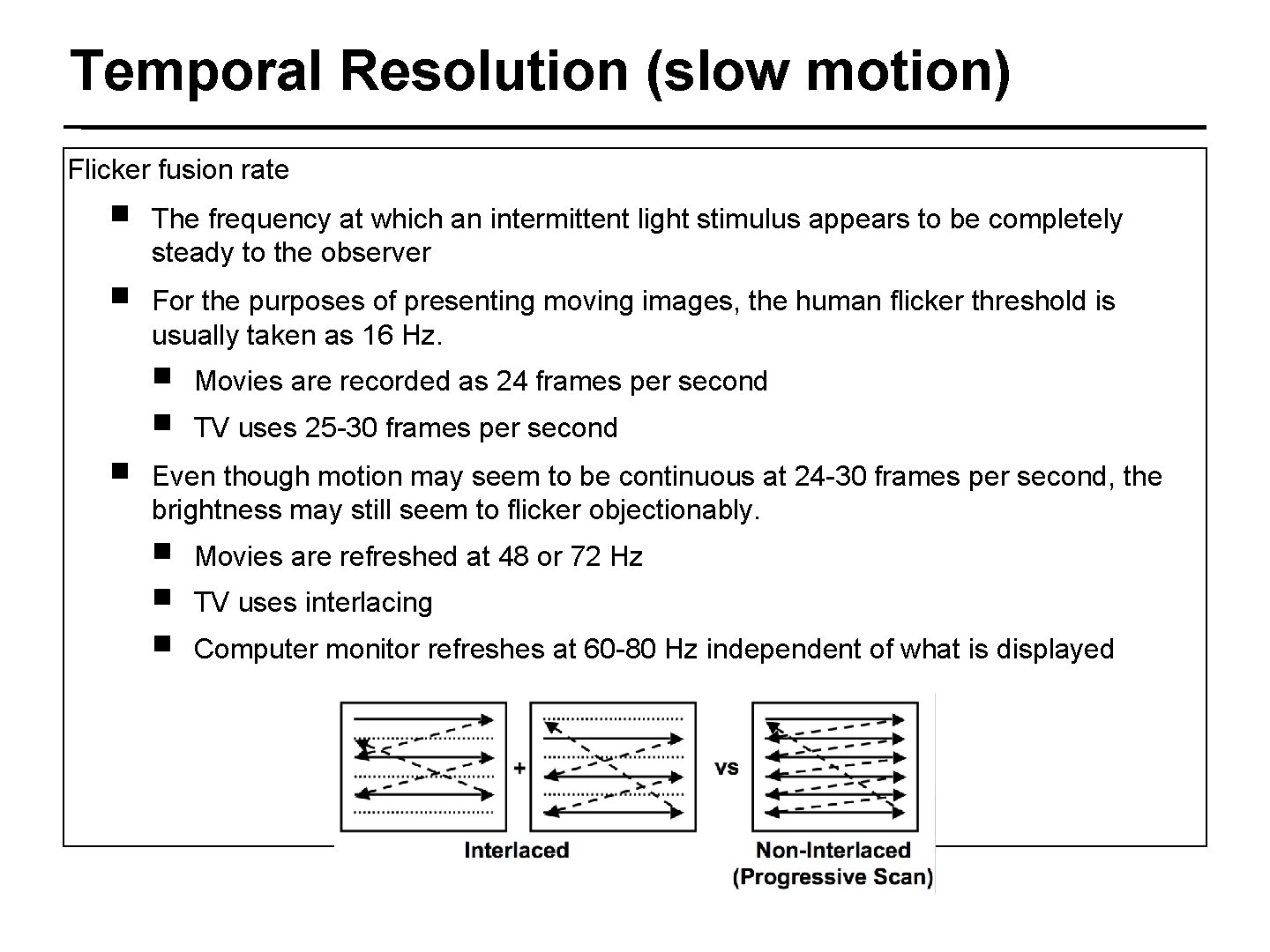

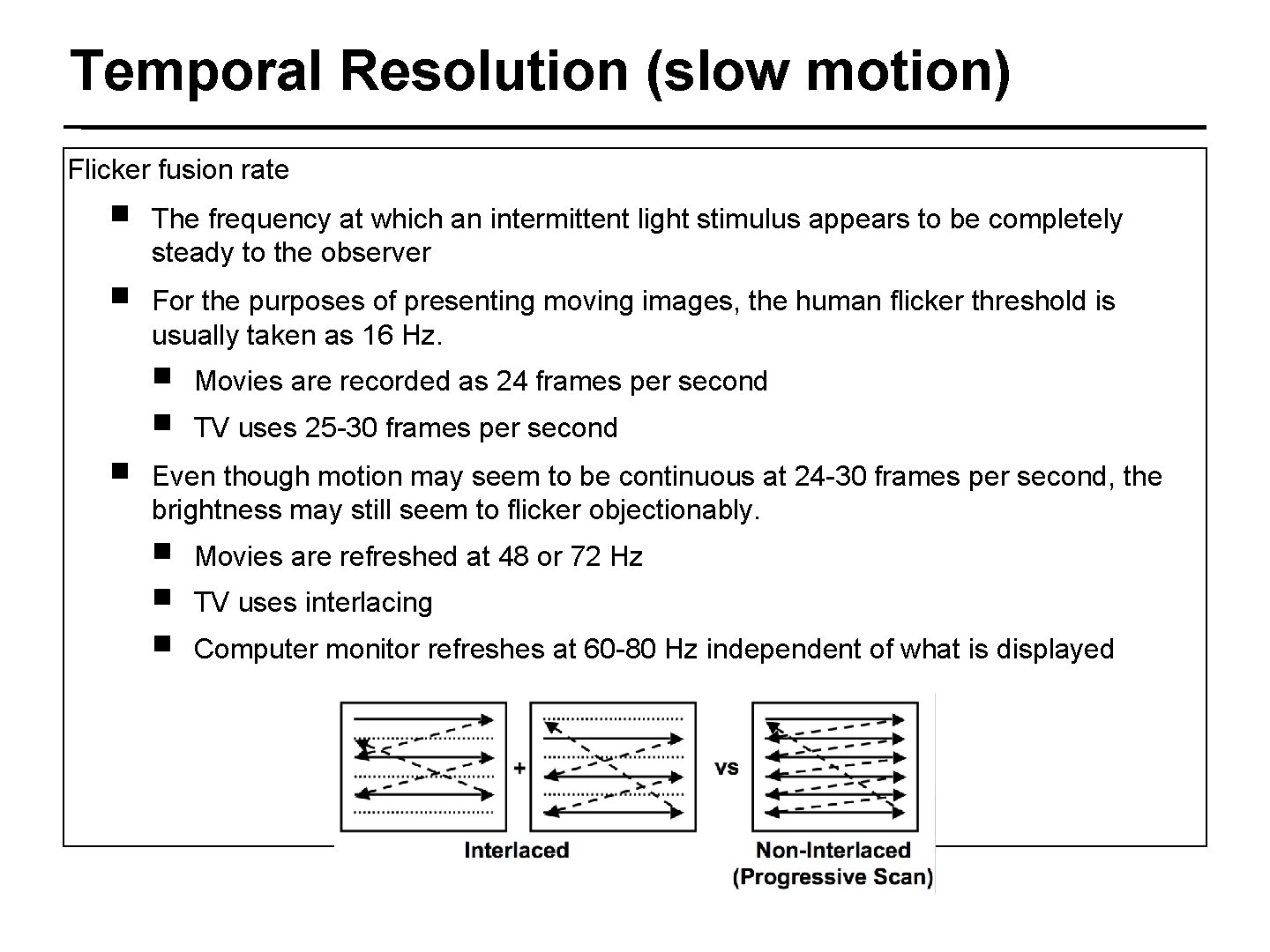

Temporal Resolution

(slow motion)

- Flicker fusion rate: the frequency at which an intermittent light stimulus appears to be completely steady to the observer
- For the purposes of presenting moving images, the human flicker threshold is usually taken as 16 Hz.
  - Movies are recorded at 24 frames per second
  - TV uses 25-30 frames per second
- Even though motion may seem to be continuous at 24-30 frames per second, the brightness may still seem to flicker objectionably.
  - Movies are refreshed at 48 or 72 Hz
  - TV uses interlacing
  - Computer monitors refresh at 60-80 Hz independent of what is displayed

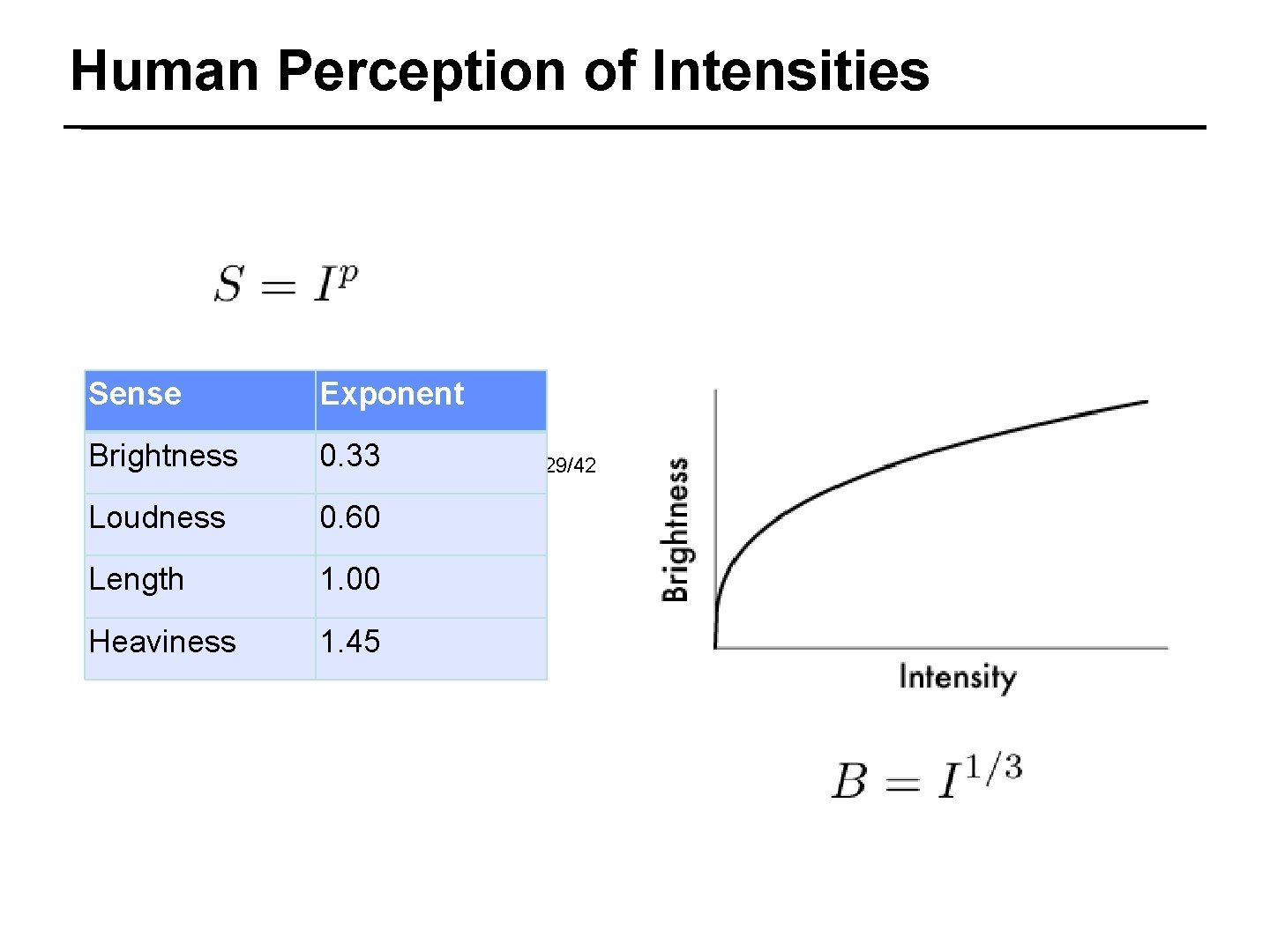

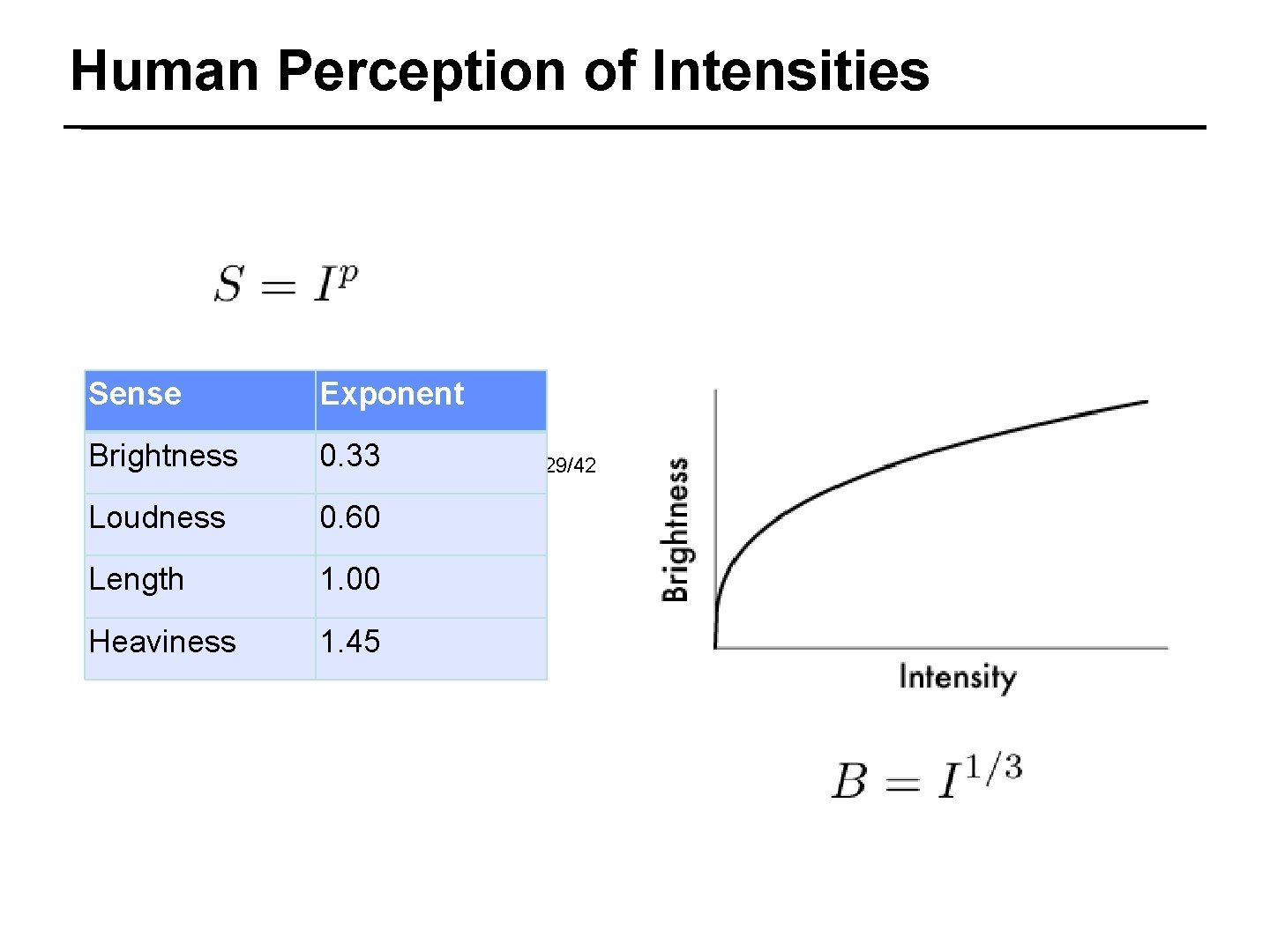

Human Perception of Intensities

| Sense | Exponent |
|-------|----------|
| Brightness | 0.33 |
| Loudness | 0.60 |
| Length | 1.00 |
| Heaviness | 1.45 |
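Exponents like these are usually read through a power law, where perceived magnitude grows as stimulus intensity raised to the exponent (Stevens' power law). The slide does not state the formula, so the sketch below is an interpretation under that assumption; the names are illustrative.

```python
# Exponents copied from the table above.
EXPONENTS = {
    "brightness": 0.33,
    "loudness": 0.60,
    "length": 1.00,
    "heaviness": 1.45,
}


def perceived_ratio(sense, stimulus_ratio):
    """How much stronger a stimulus feels when its physical intensity is scaled.

    Assumes a power law: perceived magnitude is proportional to intensity ** exponent.
    """
    return stimulus_ratio ** EXPONENTS[sense]


# Eight times the light energy looks only about twice as bright.
brightness_gain = perceived_ratio("brightness", 8.0)
# Length is perceived linearly: eight times longer looks eight times longer.
length_gain = perceived_ratio("length", 8.0)
```

The compressive exponent for brightness is the reason a perceptually uniform encoding spends more code values on dark tones than on bright ones.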

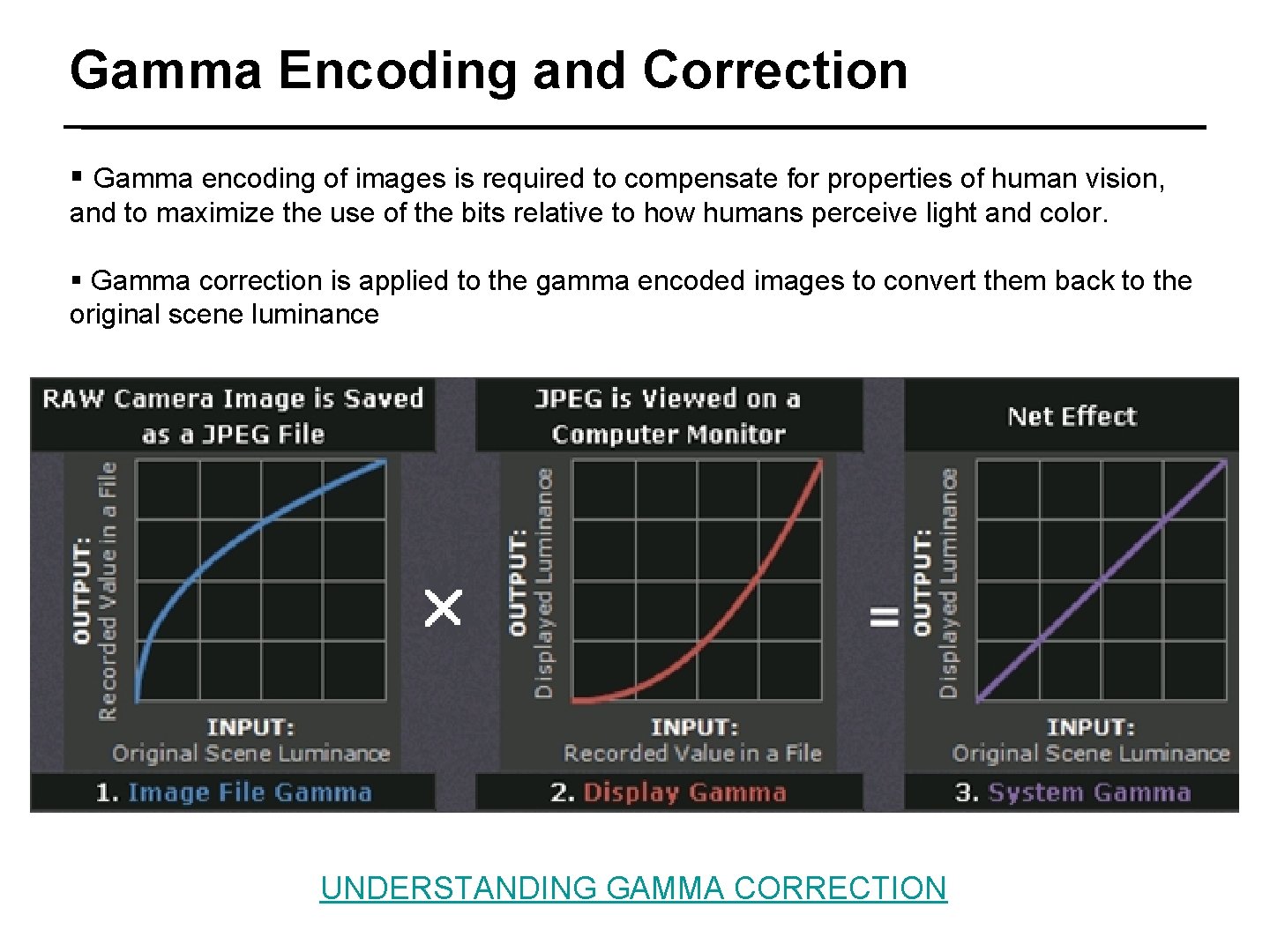

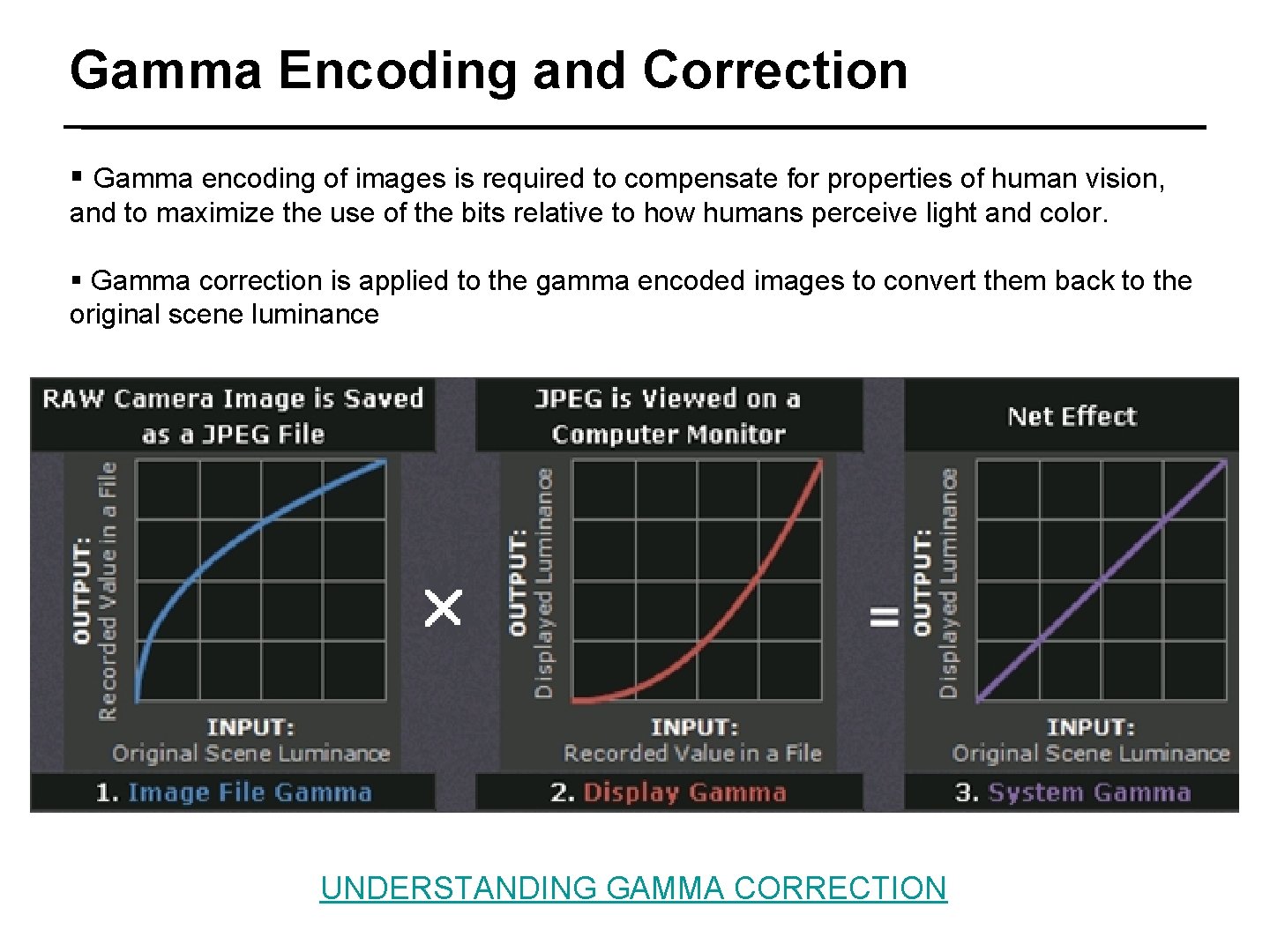

Gamma Encoding and Correction

- Gamma encoding of images is required to compensate for properties of human vision, and to maximize the use of the bits relative to how humans perceive light and color.
- Gamma correction is applied to the gamma-encoded images to convert them back to the original scene luminance.

(Figure: Understanding Gamma Correction)
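The encode and decode steps can be sketched with a pure power law. The exponent 2.2 is an assumed, commonly used value (the slide does not give one), and standards such as sRGB use a slightly different piecewise curve; function names are illustrative.

```python
GAMMA = 2.2  # assumed display gamma; not specified on the slide


def gamma_encode(linear):
    """Map linear luminance in [0, 1] to a gamma-encoded value in [0, 1]."""
    return linear ** (1.0 / GAMMA)


def gamma_decode(encoded):
    """Map a gamma-encoded value back to linear luminance."""
    return encoded ** GAMMA


def to_8bit(value):
    """Quantize a value in [0, 1] to an integer code in 0..255."""
    clamped = max(0.0, min(1.0, value))
    return int(clamped * 255.0 + 0.5)


# How many 8-bit codes lie below 10% linear luminance?
dark_codes_linear = to_8bit(0.1)               # linear storage: few codes for shadows
dark_codes_gamma = to_8bit(gamma_encode(0.1))  # gamma storage: many more codes
restored = gamma_decode(gamma_encode(0.25))
```

With gamma encoding, the darkest tenth of the luminance range receives more than three times as many code values as it would with linear storage, matching the eye's greater sensitivity to differences in dark tones.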

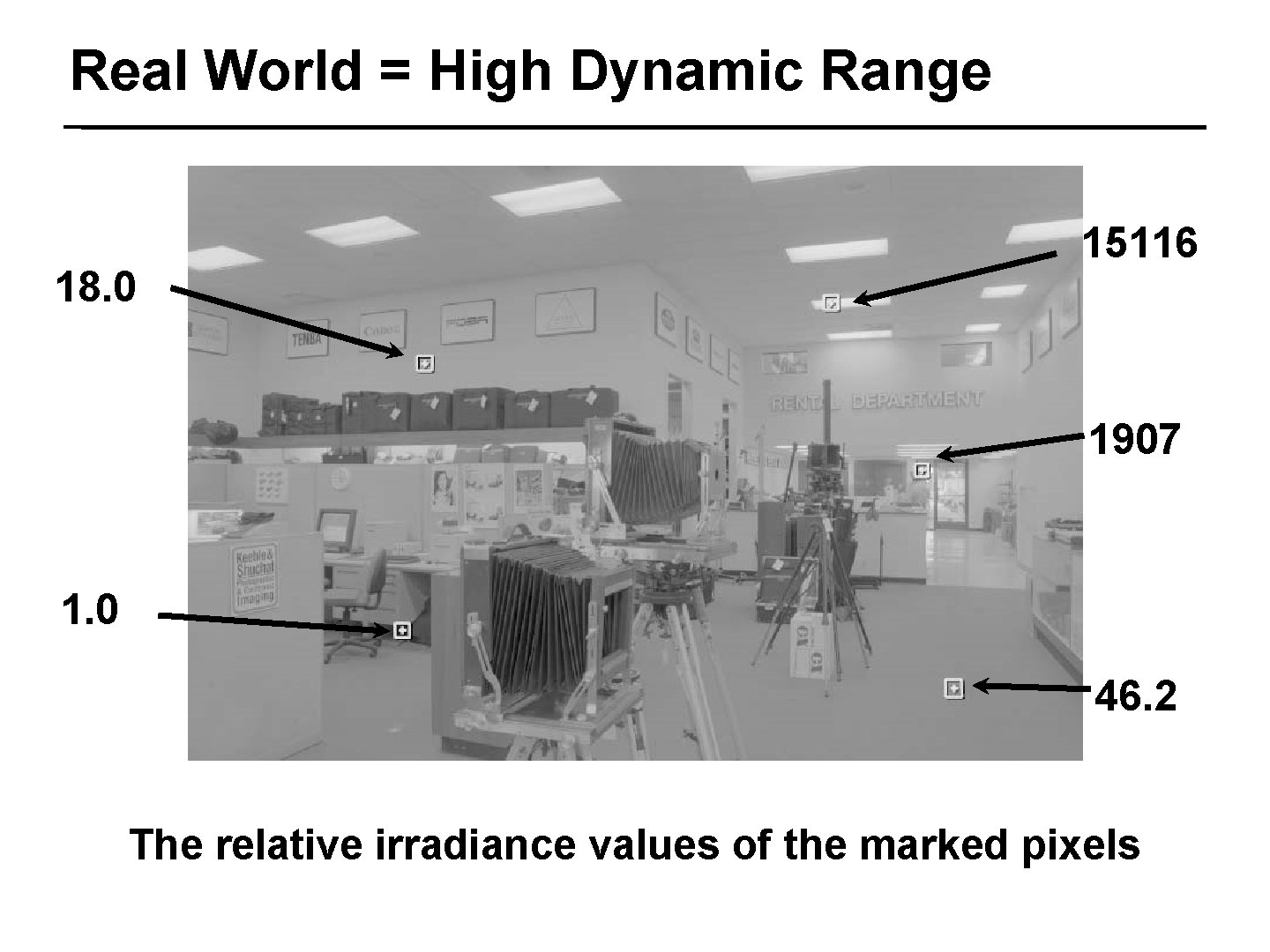

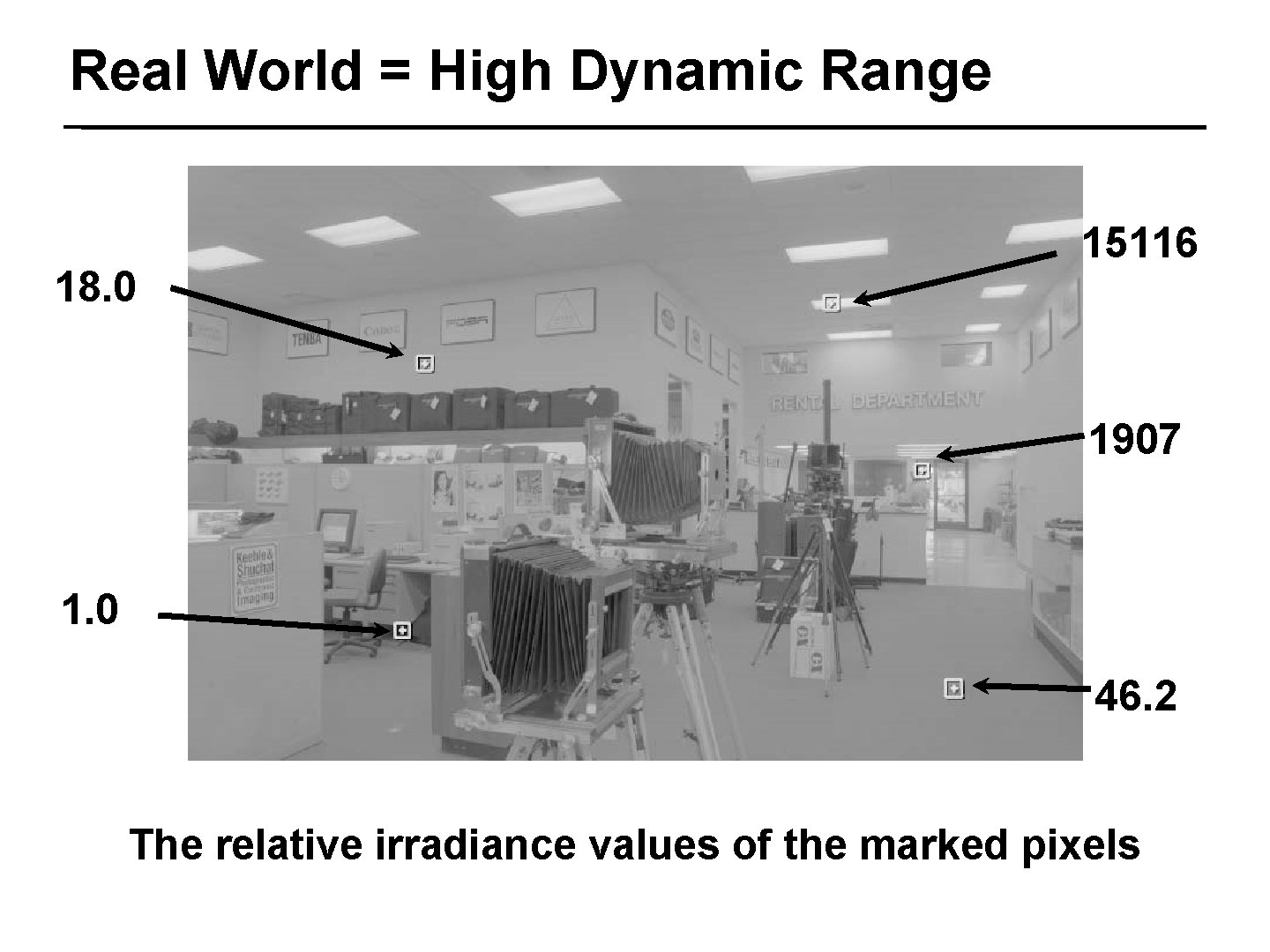

Real World = High Dynamic Range

The relative irradiance values of the marked pixels: 15116, 18.0, 1907, 1.0, 46.2

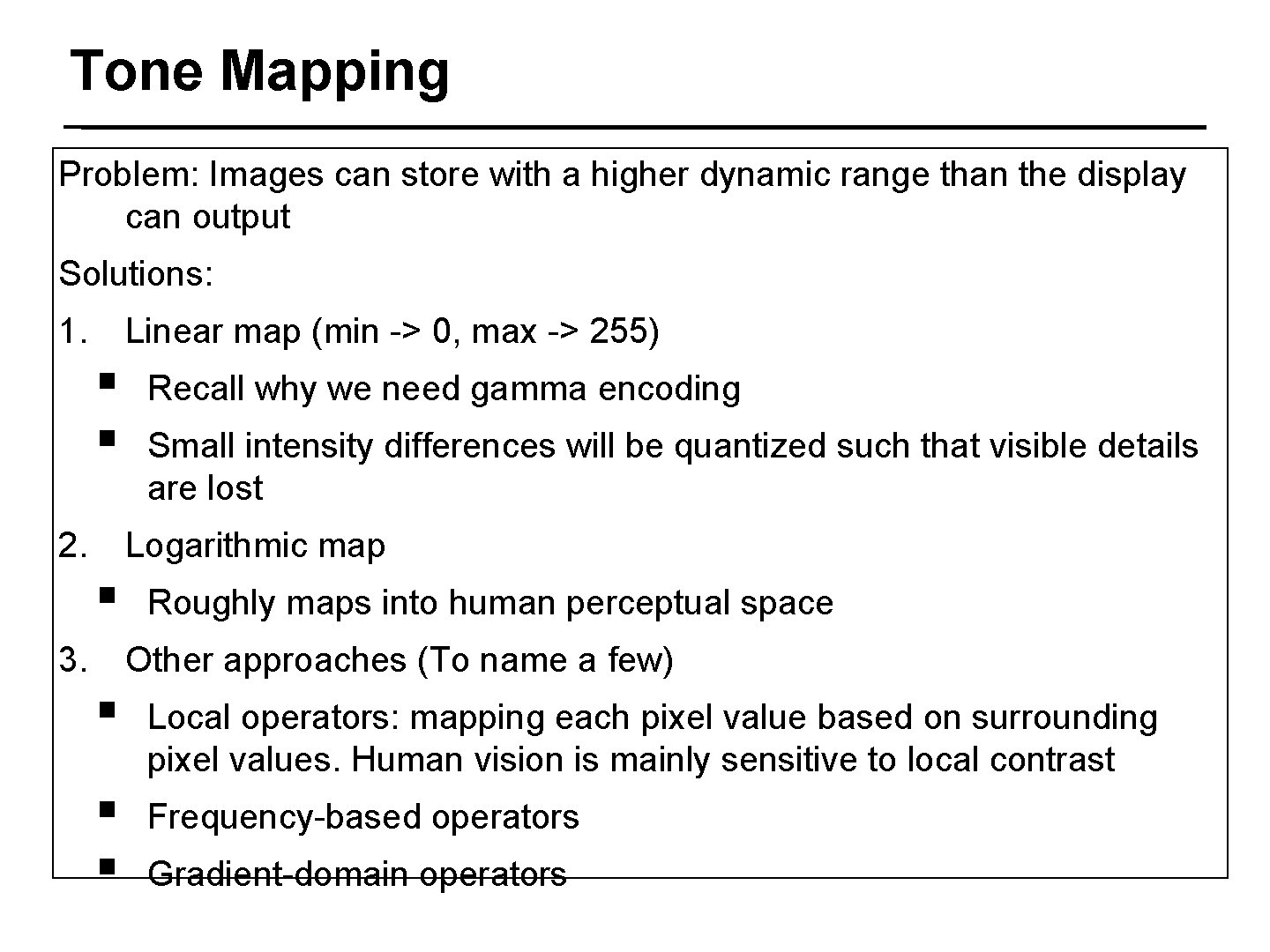

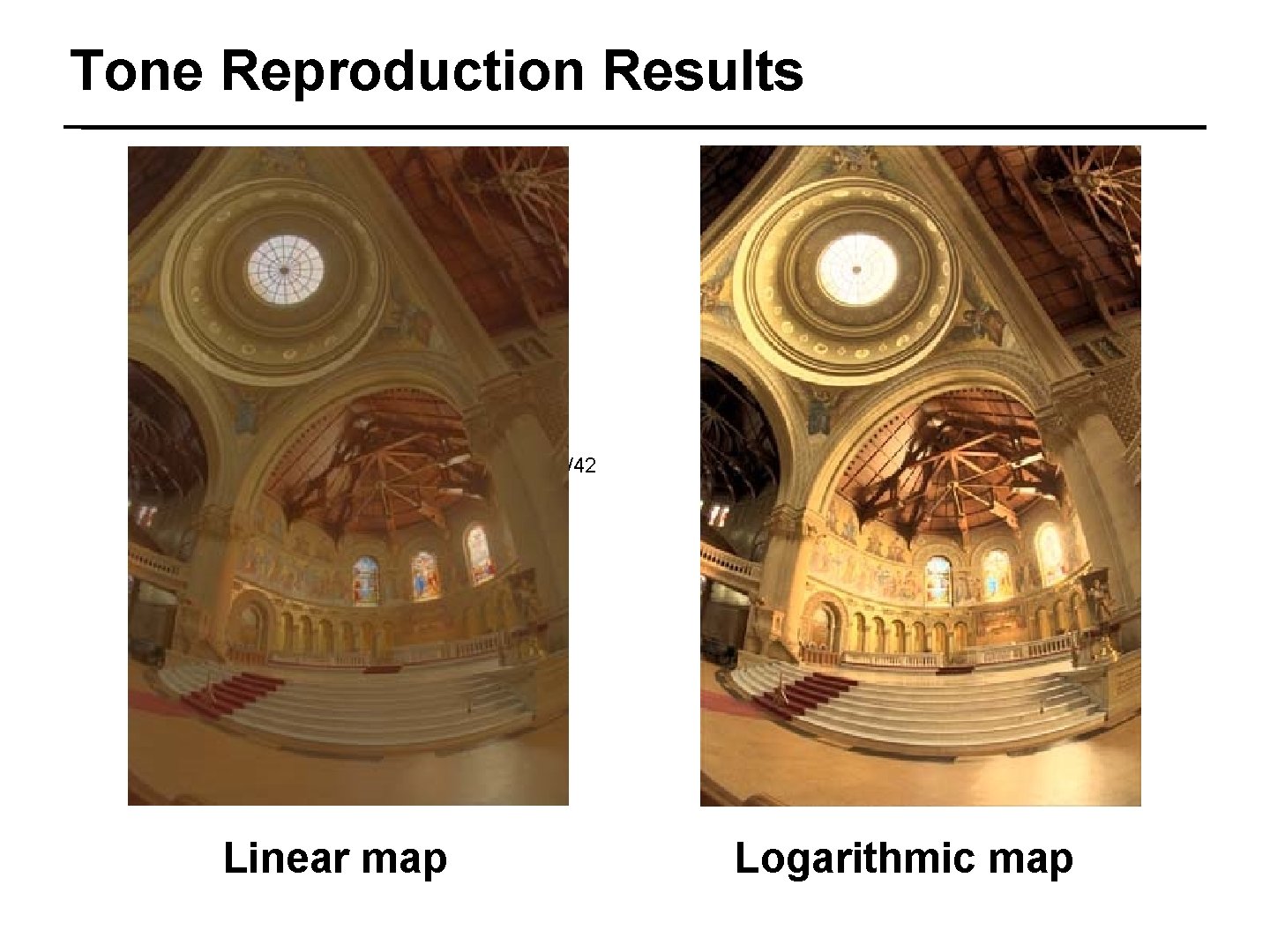

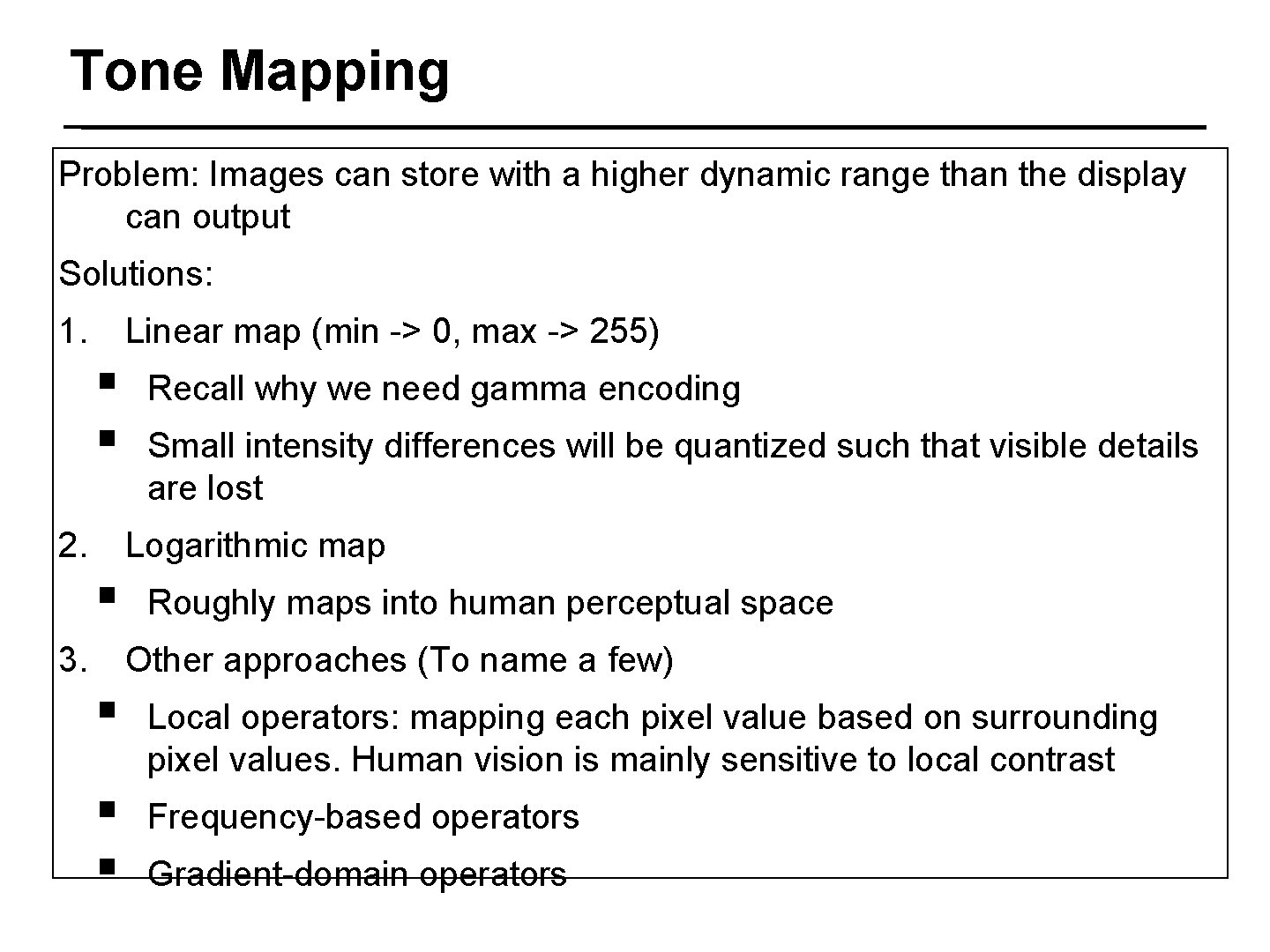

Tone Mapping

Problem: Images can be stored with a higher dynamic range than the display can output.

Solutions:

1. Linear map (min -> 0, max -> 255)
   - Recall why we need gamma encoding
   - Small intensity differences will be quantized such that visible details are lost
2. Logarithmic map
   - Roughly maps into human perceptual space
3. Other approaches (to name a few)
   - Local operators: map each pixel value based on surrounding pixel values. Human vision is mainly sensitive to local contrast
   - Frequency-based operators
   - Gradient-domain operators
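The first two solutions can be compared directly on a handful of values. This sketch uses the five relative irradiance values quoted on the "Real World = High Dynamic Range" slide; the function names are illustrative, and the logarithmic map assumes strictly positive inputs.

```python
import math


def linear_map(values):
    """Map min -> 0 and max -> 255 linearly, rounding to integer codes."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0 for _ in values]
    return [round(255 * (v - lo) / (hi - lo)) for v in values]


def log_map(values):
    """Apply the same min/max mapping to the logarithm of each value."""
    return linear_map([math.log(v) for v in values])


# Relative irradiance values of the marked pixels, sorted for readability.
irradiance = [1.0, 18.0, 46.2, 1907.0, 15116.0]

linear_codes = linear_map(irradiance)
log_codes = log_map(irradiance)
```

The linear map pushes the three darkest pixels into codes 0 and 1, so their differences are lost, whereas the logarithmic map spreads all five pixels across the output range.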

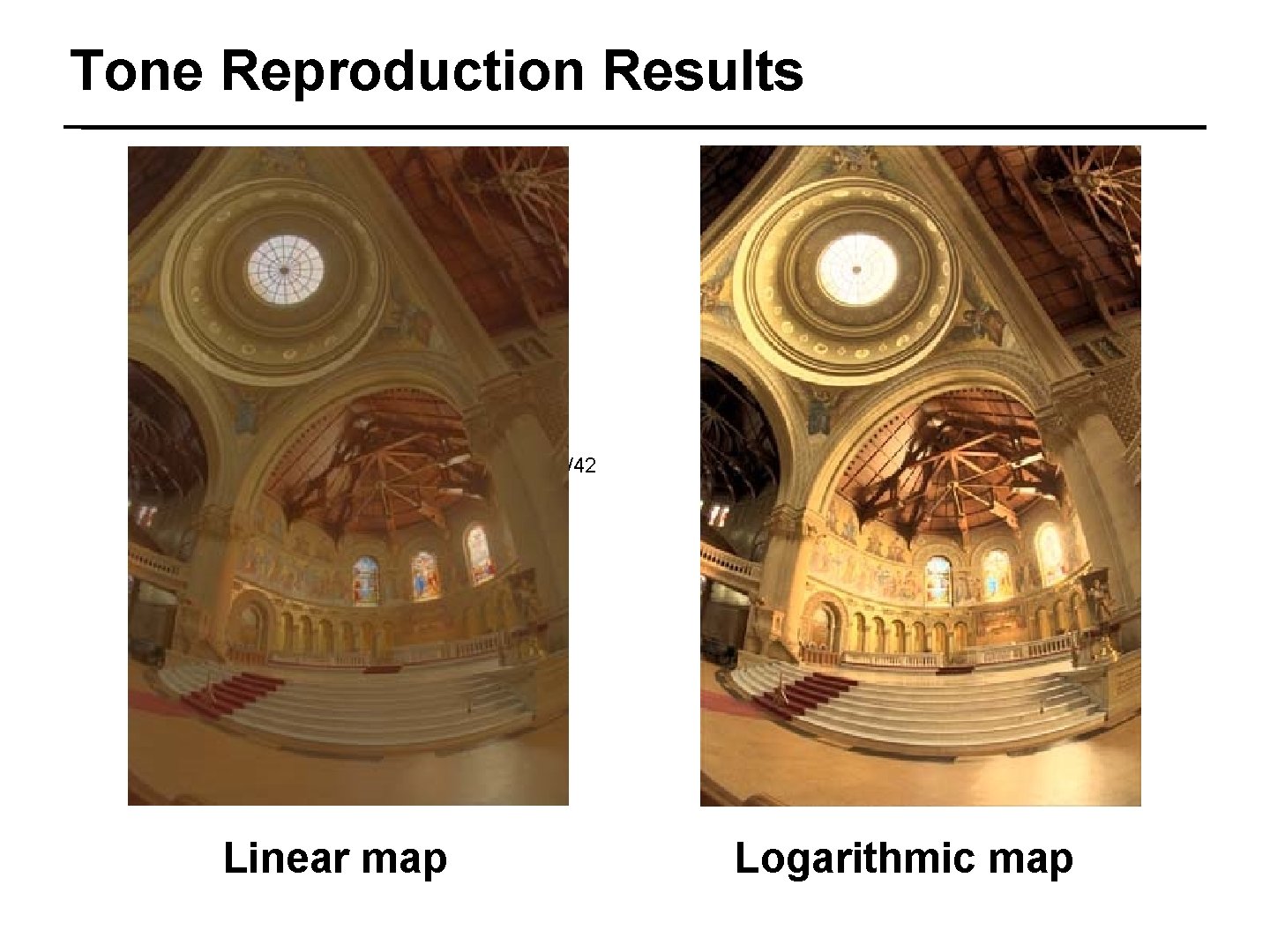

Tone Reproduction Results

Figure panels: linear map, logarithmic map

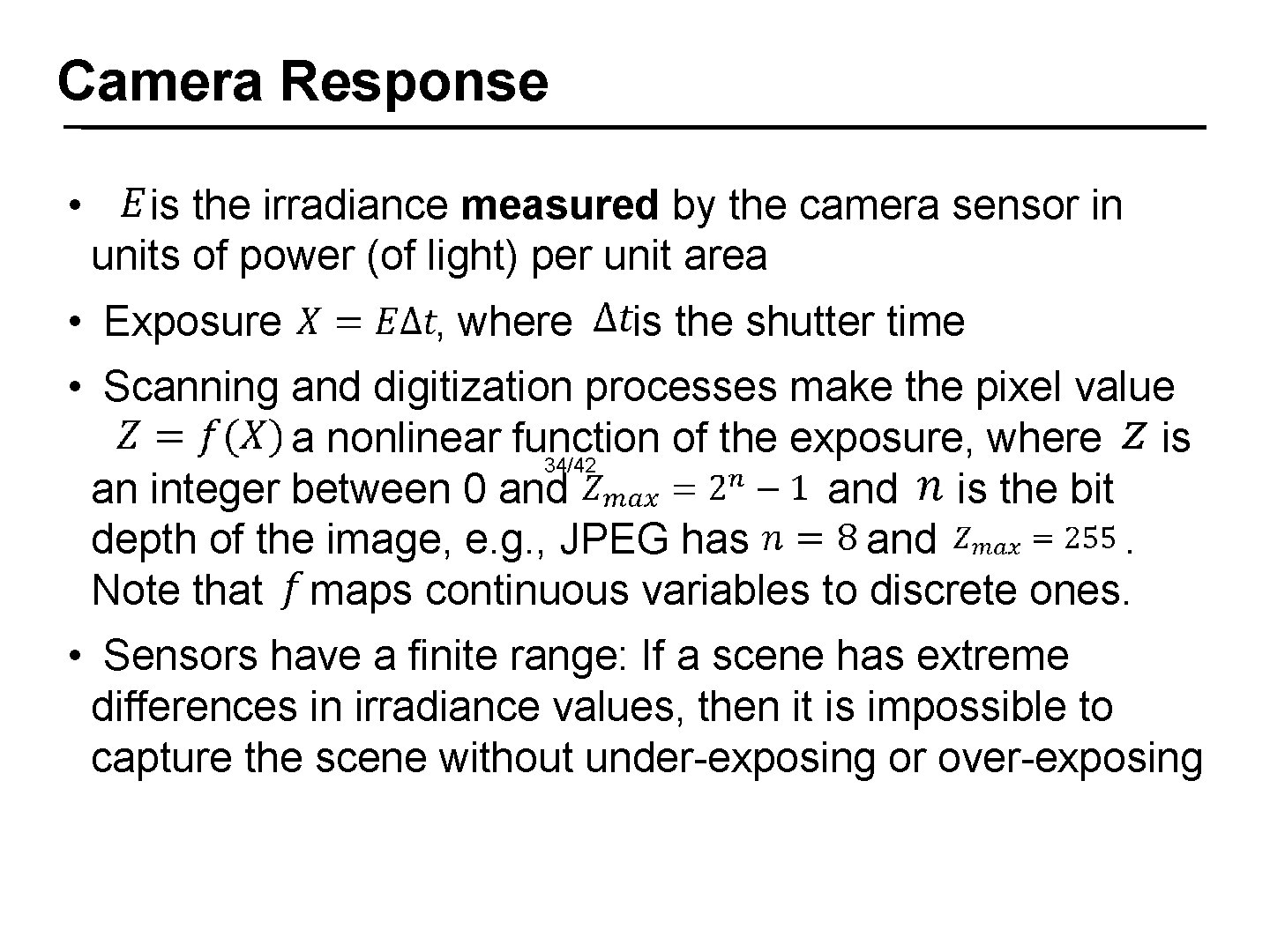

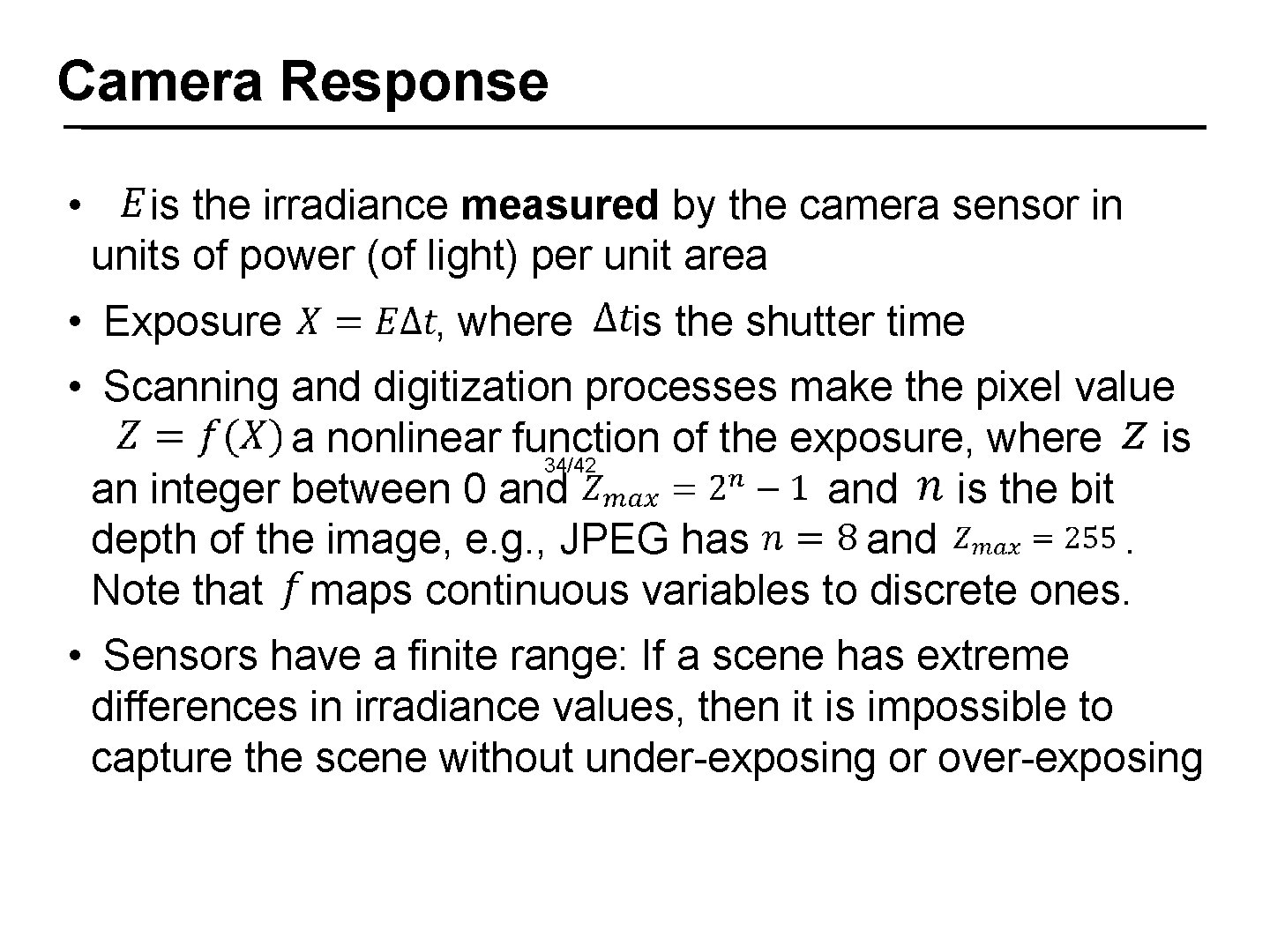

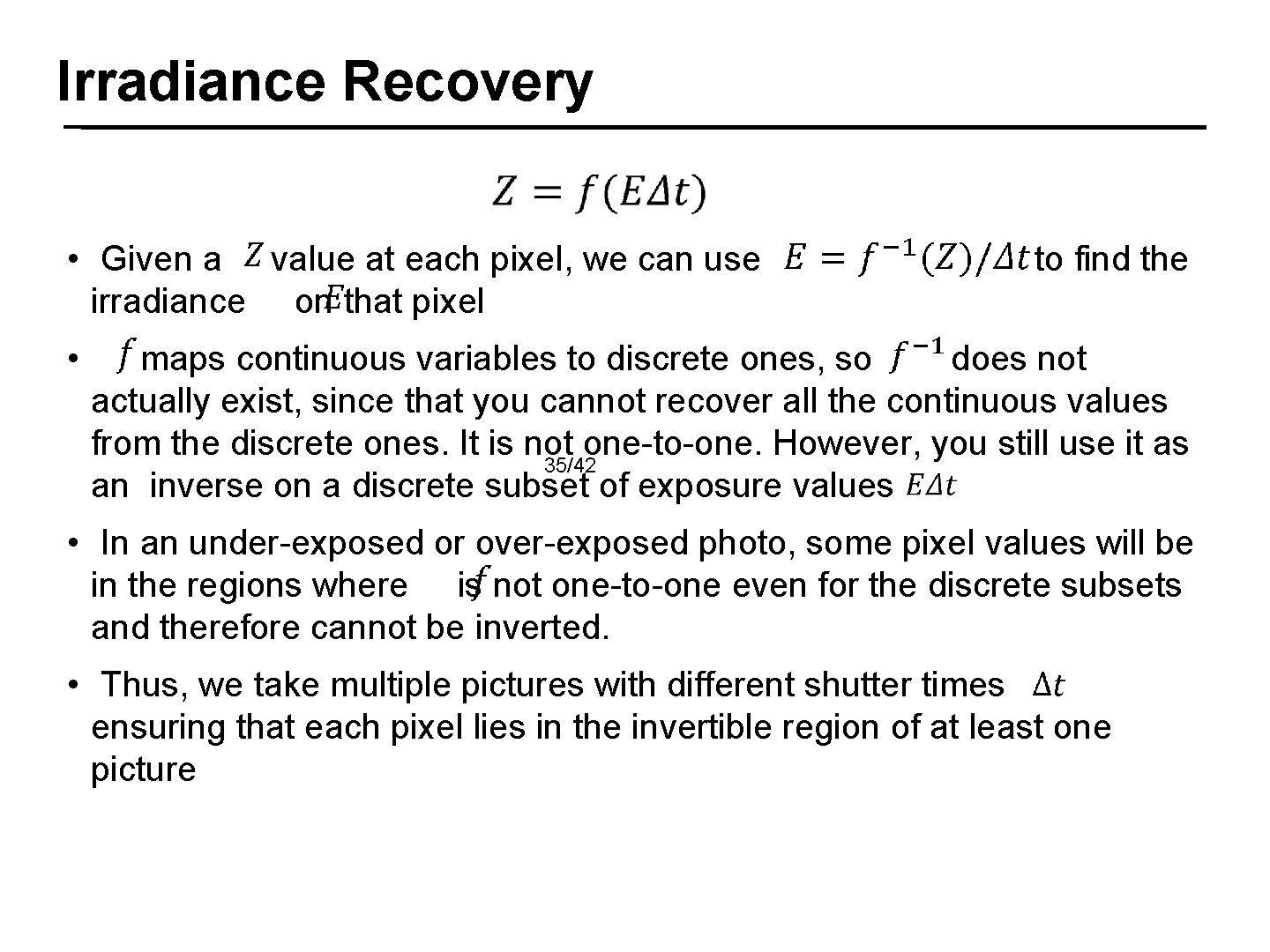

Camera Response

- $E$ is the irradiance measured by the camera sensor, in units of power (of light) per unit area.
- Exposure $X = E \, \Delta t$, where $\Delta t$ is the shutter time.
- Scanning and digitization processes make the pixel value $Z = f(X)$ a nonlinear function of the exposure, where $Z$ is an integer between 0 and $2^d - 1$ and $d$ is the bit depth of the image, e.g., JPEG has $d = 8$ and $2^d - 1 = 255$. Note that $f$ maps continuous variables to discrete ones.
- Sensors have a finite range: if a scene has extreme differences in irradiance values, then it is impossible to capture the scene without under-exposing or over-exposing.

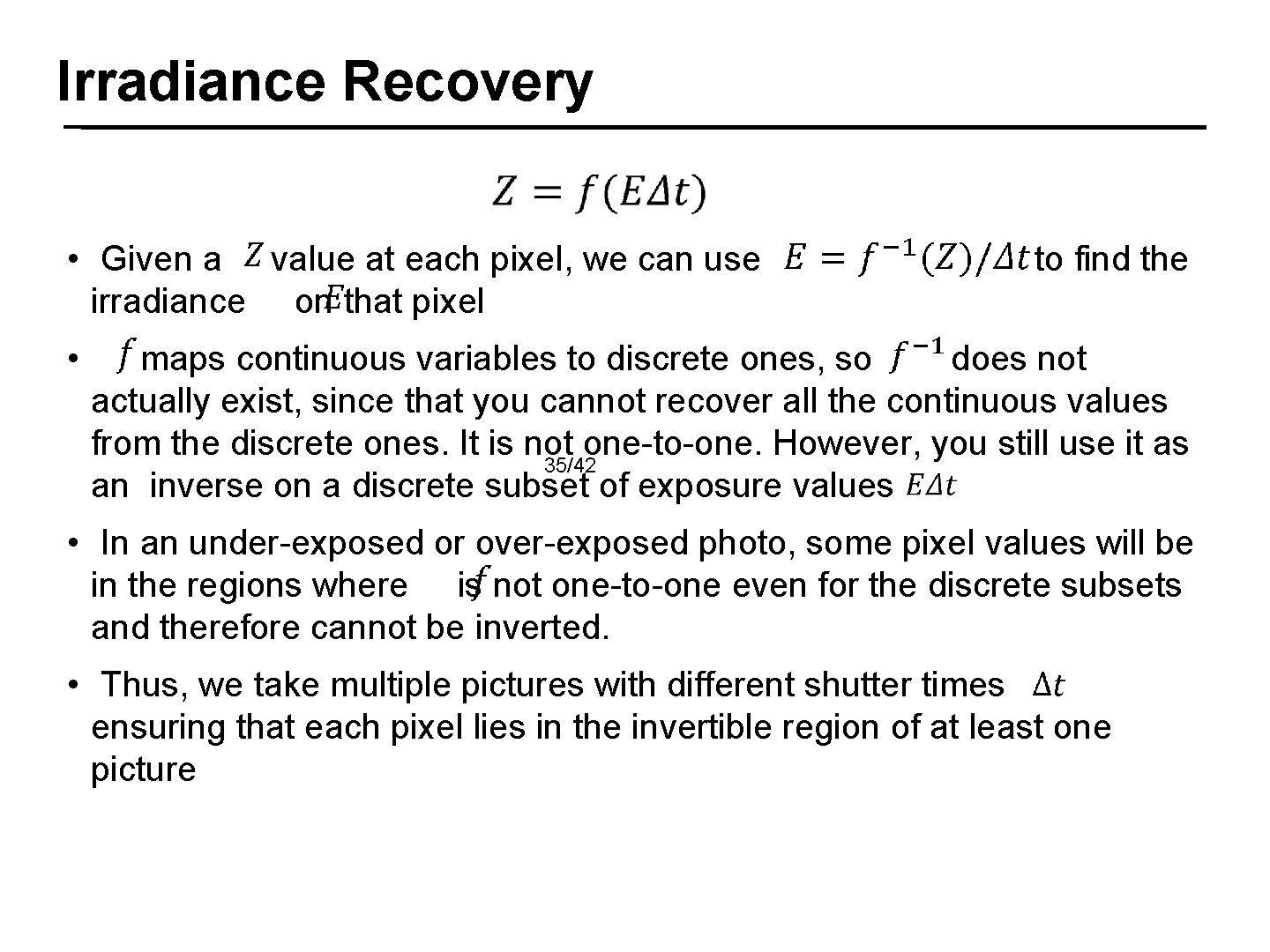

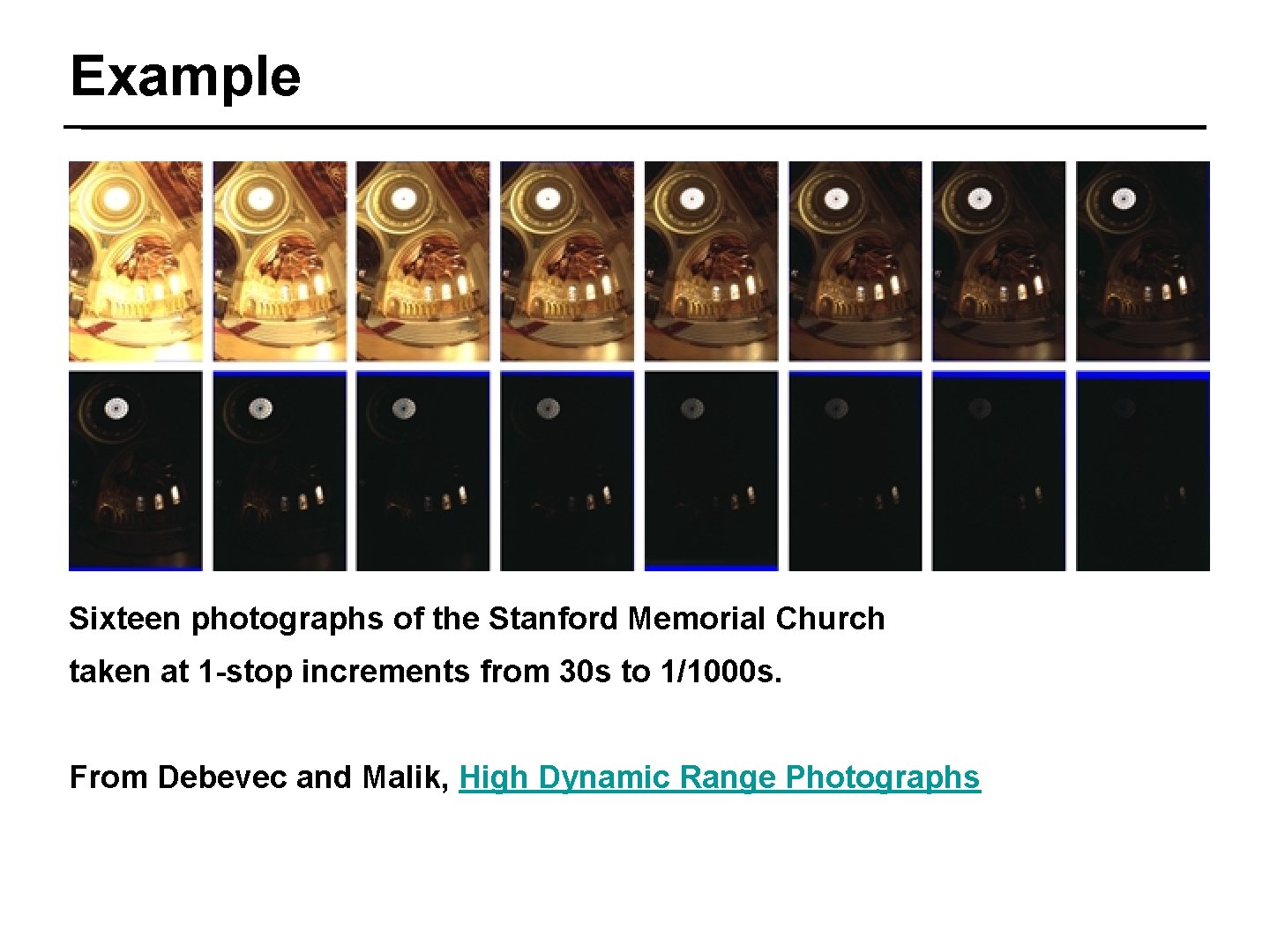

Irradiance Recovery

- Given a pixel value $Z$ at each pixel, we can use $E = f^{-1}(Z) / \Delta t$ to find the irradiance on that pixel.
- The response $f$ maps continuous variables to discrete ones, so $f^{-1}$ does not actually exist: $f$ is not one-to-one, and you cannot recover all the continuous values from the discrete ones. However, you can still use it as an inverse on a discrete subset of exposure values.
- In an under-exposed or over-exposed photo, some pixel values will be in regions where $f$ is not one-to-one even for the discrete subsets and therefore cannot be inverted.
- Thus, we take multiple pictures with different shutter times, ensuring that each pixel lies in the invertible region in at least one picture.
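A toy camera makes the invertibility argument concrete. The response below (clip, gamma 2.2, round to 8 bits) is a hypothetical stand-in for a real camera's response function, and every name in the snippet is illustrative.

```python
Z_MAX = 255   # 8-bit pixel values
GAMMA = 2.2   # assumed shape of the toy response curve


def camera_response(exposure):
    """Toy response: clip exposure to [0, 1], gamma-encode, quantize to 0..255."""
    clipped = min(max(exposure, 0.0), 1.0)
    return round(Z_MAX * clipped ** (1.0 / GAMMA))


def recover_irradiance(z, shutter_time):
    """Invert the toy response where possible; return None for clipped pixels."""
    if z <= 0 or z >= Z_MAX:
        return None  # under- or over-exposed: many exposures map to this value
    exposure = (z / Z_MAX) ** GAMMA
    return exposure / shutter_time


true_irradiance = 50.0
long_shot = camera_response(true_irradiance * 0.1)    # exposure 5.0: saturates
short_shot = camera_response(true_irradiance * 0.01)  # exposure 0.5: mid-range

from_long = recover_irradiance(long_shot, 0.1)
from_short = recover_irradiance(short_shot, 0.01)
```

The long exposure clips to 255 and cannot be inverted, while the shorter exposure lands mid-range and recovers the irradiance to within quantization error, which is the motivation for bracketing shutter times.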

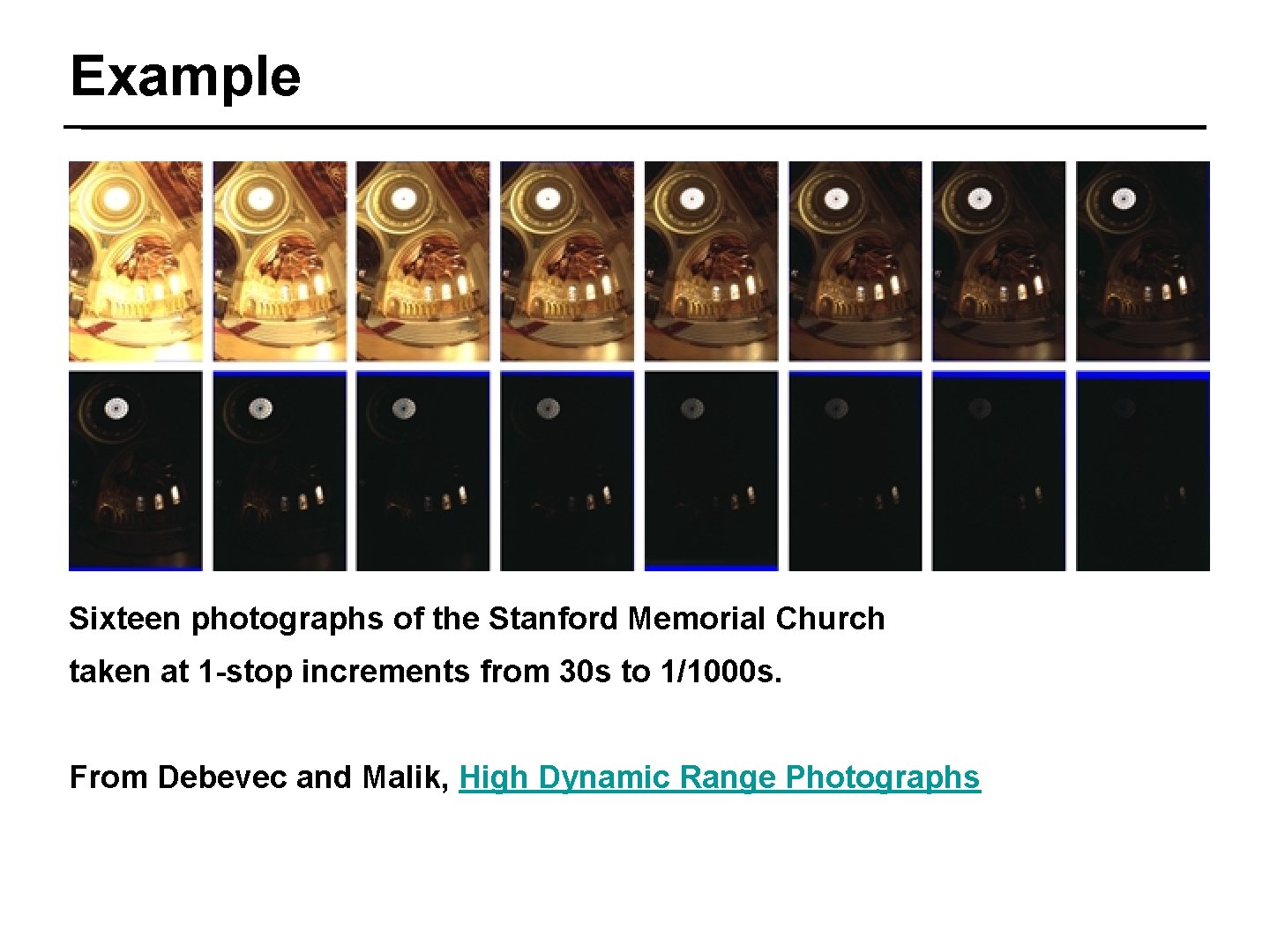

Example

Sixteen photographs of the Stanford Memorial Church taken at 1-stop increments from 30 s to 1/1000 s. From Debevec and Malik, High Dynamic Range Photographs

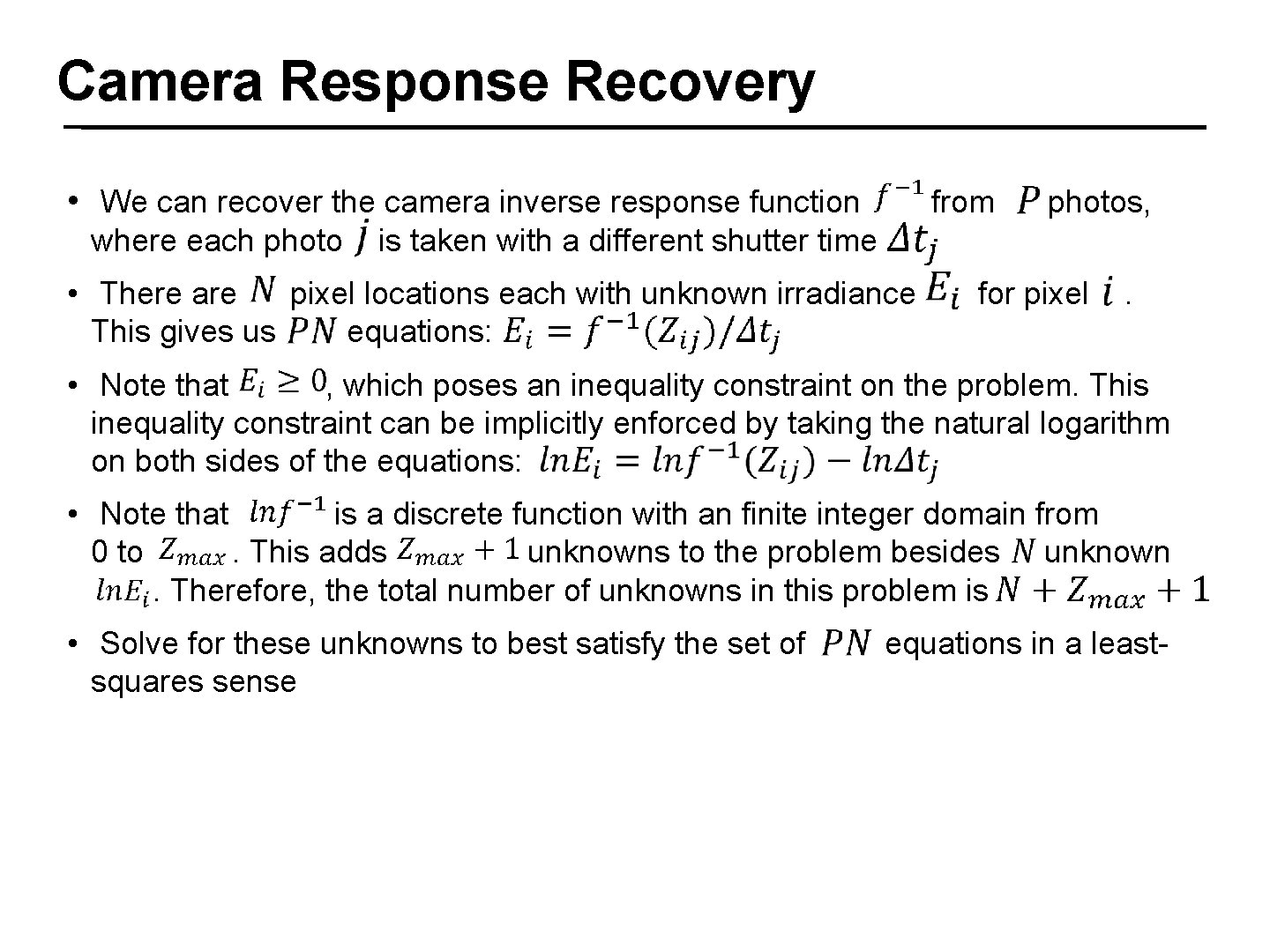

Camera Response Recovery

- We can recover the camera inverse response function $f^{-1}$ from $P$ photos, where each photo $j$ is taken with a different shutter time $\Delta t_j$.
- There are $N$ pixel locations, each with unknown irradiance $E_i$. This gives us $NP$ equations: $f^{-1}(Z_{ij}) = E_i \, \Delta t_j$ for pixel $i$ in photo $j$.
- Note that $E_i > 0$, which poses an inequality constraint on the problem. This inequality constraint can be implicitly enforced by taking the natural logarithm on both sides of the equations: $\ln f^{-1}(Z_{ij}) = \ln E_i + \ln \Delta t_j$
- Note that $f^{-1}$ is a discrete function with a finite integer domain from 0 to $2^d - 1$. This adds $2^d$ unknowns to the problem besides the $N$ unknown $E_i$. Therefore, the total number of unknowns in this problem is $N + 2^d$.
- Solve for these unknowns to best satisfy the set of equations in a least-squares sense.

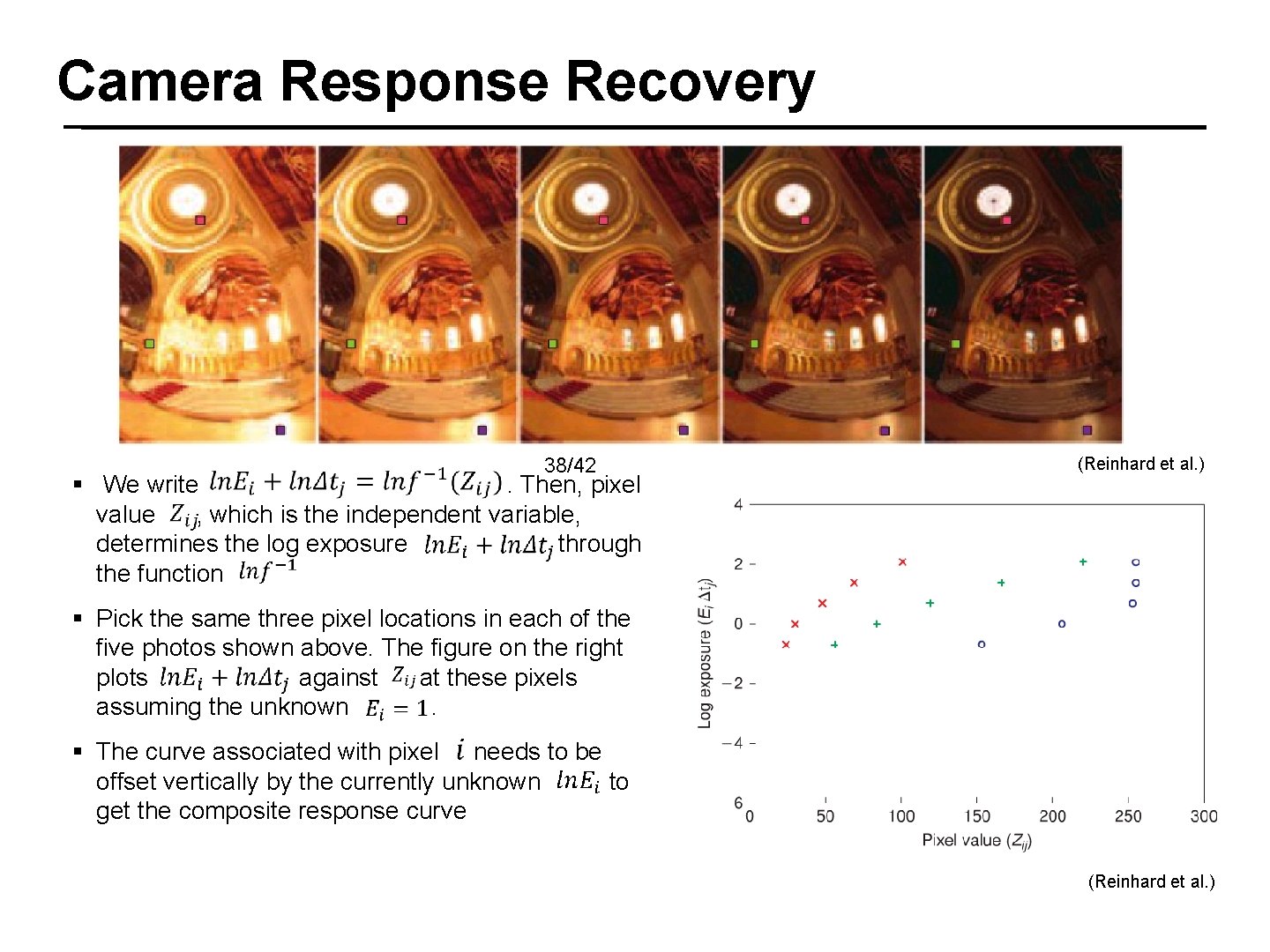

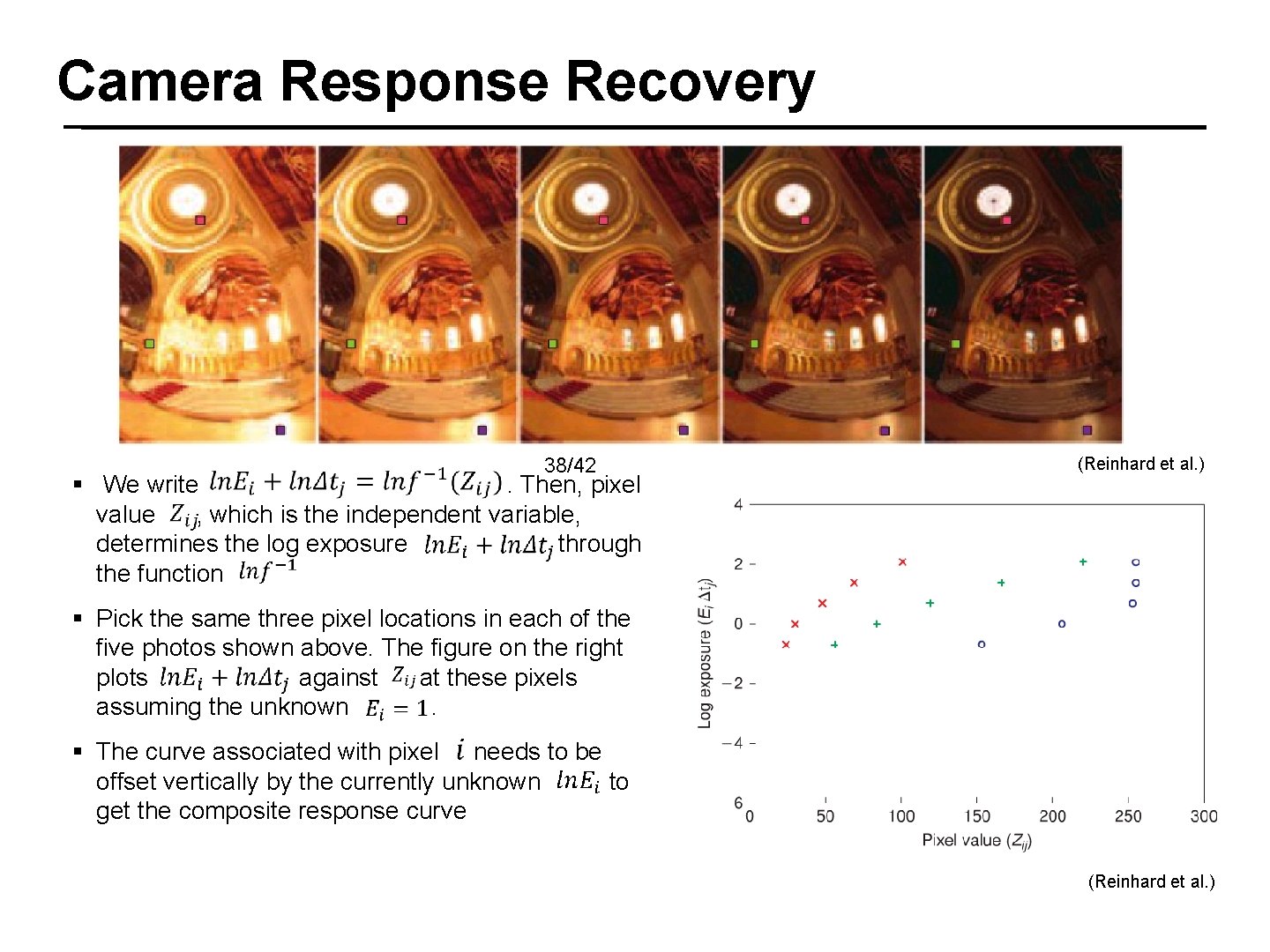

Camera Response Recovery

- We write $g = \ln f^{-1}$. Then the pixel value $Z_{ij}$, which is the independent variable, determines the log exposure through the function $g(Z_{ij}) = \ln E_i + \ln \Delta t_j$.
- Pick the same three pixel locations in each of the five photos shown above. The figure on the right plots $g(Z_{ij})$ against $Z_{ij}$ at these pixels, assuming the unknown $\ln E_i = 0$.
- The curve associated with pixel $i$ needs to be offset vertically by the currently unknown $\ln E_i$ to get the composite response curve.

(Reinhard et al.)

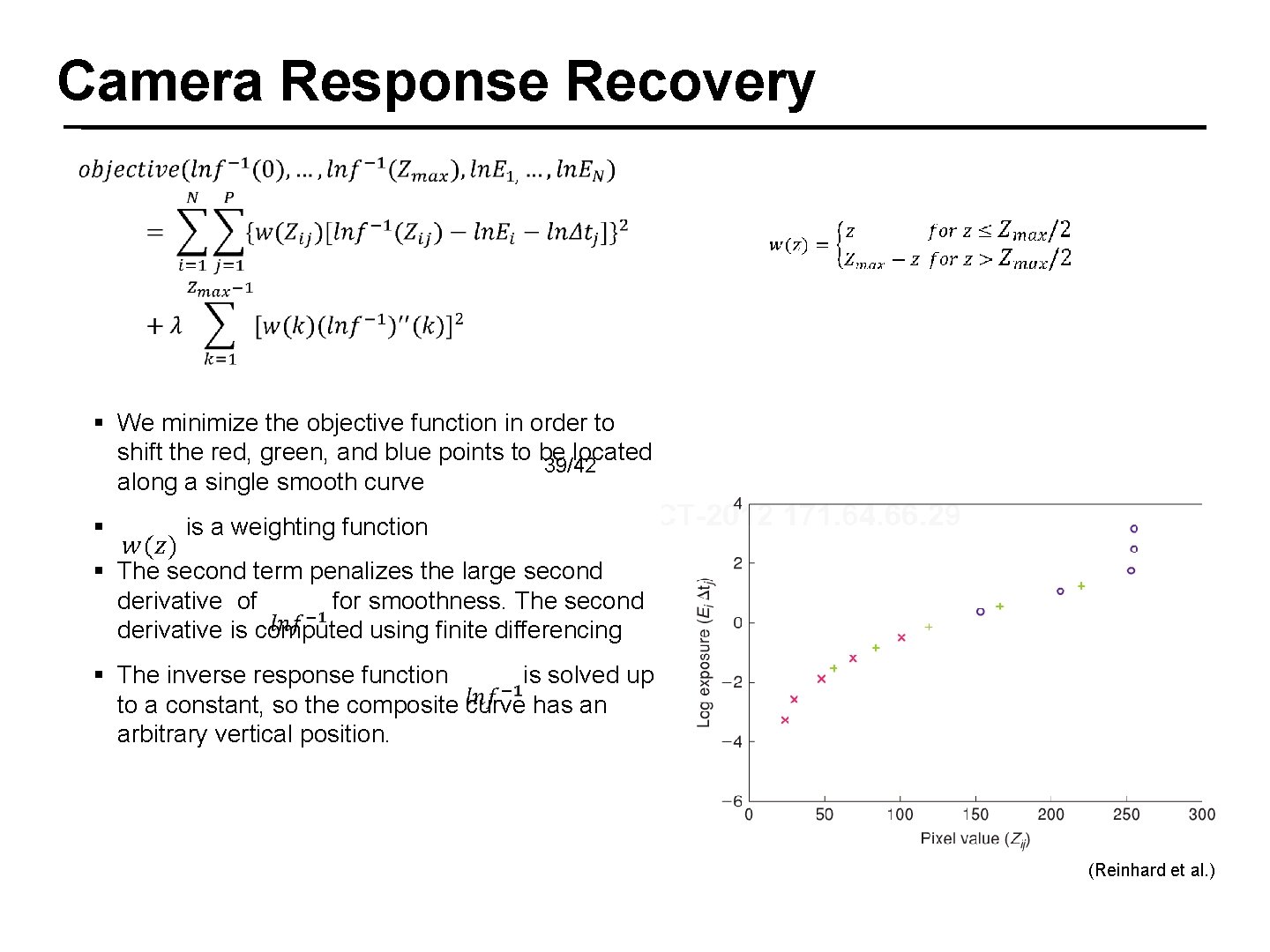

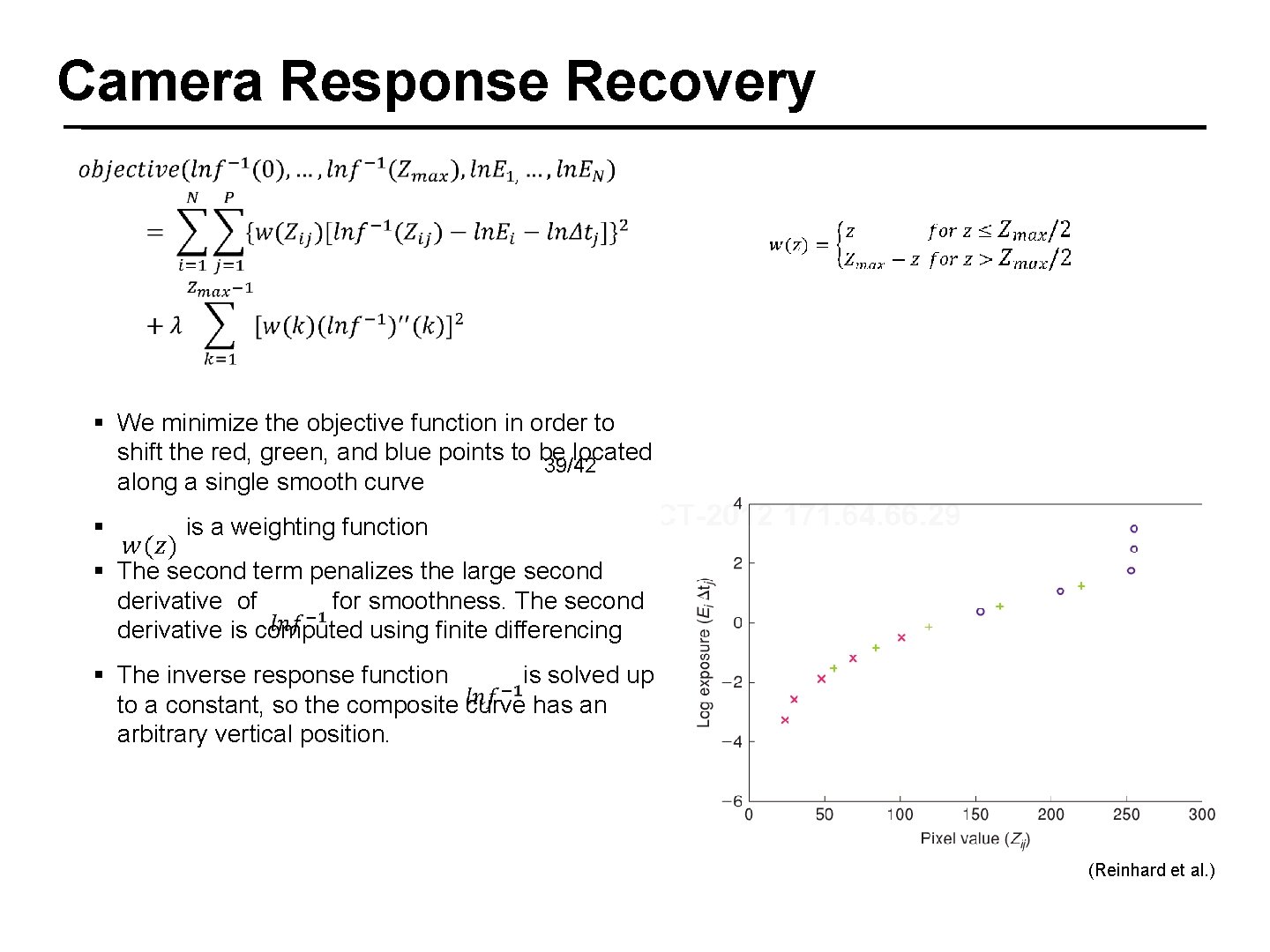

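Camera Response Recovery

- With $g = \ln f^{-1}$, we minimize the objective function (written here in the form used by Debevec and Malik)

  $$O = \sum_{i=1}^{N} \sum_{j=1}^{P} \left\{ w(Z_{ij}) \left[ g(Z_{ij}) - \ln E_i - \ln \Delta t_j \right] \right\}^2 + \lambda \sum_{z} \left[ w(z) \, g''(z) \right]^2$$

  in order to shift the red, green, and blue points to be located along a single smooth curve.
- $w$ is a weighting function.
- The second term penalizes a large second derivative of $g$ for smoothness. The second derivative is computed using finite differencing: $g''(z) = g(z-1) - 2g(z) + g(z+1)$.
- The log inverse response function $g$ is determined only up to an additive constant, so the composite curve has an arbitrary vertical position.

(Reinhard et al.)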
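The minimization can be posed as one linear least-squares system. The sketch below follows the general structure of Debevec and Malik's published solver but is not a transcription of it; it assumes NumPy, a triangle-shaped weighting function, and a synthetic gamma-2.2 camera used only to exercise the code. The names `hat_weight` and `solve_response` are illustrative.

```python
import numpy as np


def hat_weight(z, z_min, z_max):
    """Triangle weight: largest for mid-range pixel values, zero at the extremes."""
    return z - z_min if z <= 0.5 * (z_min + z_max) else z_max - z


def solve_response(Z, log_dt, lam, n_levels=256):
    """Least-squares estimate of g(z) = ln f^-1(z) and of ln E_i.

    Z      : integer array of pixel values, shape (N pixels, P photos)
    log_dt : natural log of each photo's shutter time, length P
    lam    : smoothness weight (lambda in the objective)
    """
    n_pixels, n_photos = Z.shape
    z_min, z_max = 0, n_levels - 1
    n_rows = n_pixels * n_photos + 1 + (n_levels - 2)
    A = np.zeros((n_rows, n_levels + n_pixels))
    b = np.zeros(n_rows)

    row = 0
    # Data term: w * (g(Z_ij) - ln E_i) = w * ln dt_j
    for i in range(n_pixels):
        for j in range(n_photos):
            z = int(Z[i, j])
            w = hat_weight(z, z_min, z_max)
            A[row, z] = w
            A[row, n_levels + i] = -w
            b[row] = w * log_dt[j]
            row += 1

    # Pin the free additive constant by asking for g(middle value) = 0.
    A[row, n_levels // 2] = 1.0
    row += 1

    # Smoothness term: sqrt(lam) * w(z) * (g(z-1) - 2 g(z) + g(z+1)) = 0
    s = np.sqrt(lam)
    for z in range(1, n_levels - 1):
        w = s * hat_weight(z, z_min, z_max)
        A[row, z - 1], A[row, z], A[row, z + 1] = w, -2.0 * w, w
        row += 1

    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x[:n_levels], x[n_levels:]


# Synthetic check with a made-up gamma-2.2 camera (not real photographs).
true_E = np.logspace(-1, 1, 50)       # 50 pixel irradiances over two decades
shutter = 2.0 ** np.arange(-7, 3)     # 10 shutter times, one stop apart
exposure = np.clip(np.outer(true_E, shutter), 0.0, 1.0)
Z = np.rint(255 * exposure ** (1 / 2.2)).astype(int)
g, ln_E = solve_response(Z, np.log(shutter), lam=10.0)
```

The extra row that pins g at the middle pixel value removes the additive ambiguity mentioned above; any other choice of reference would shift `g` and `ln_E` by the same constant.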

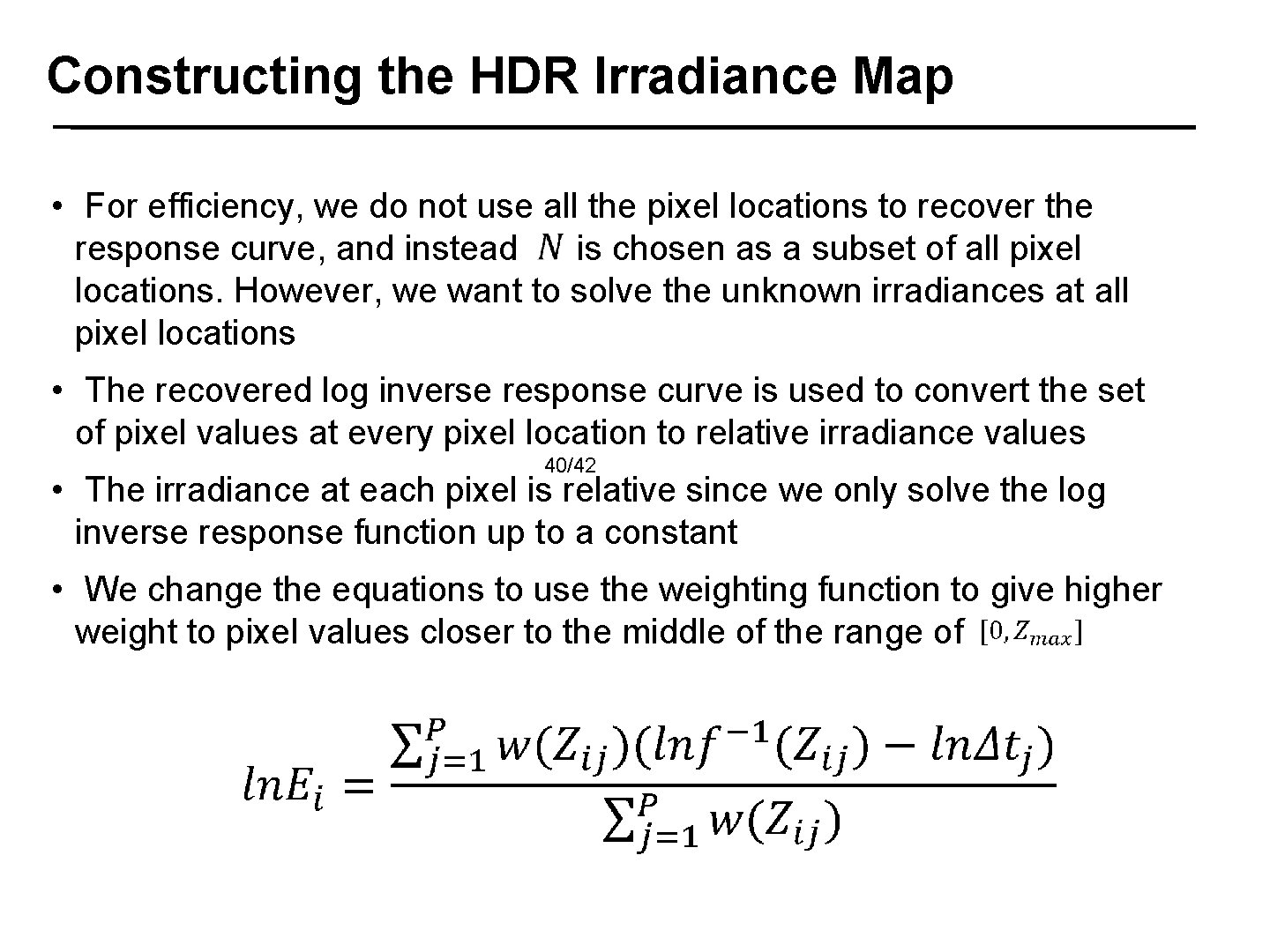

Constructing the HDR Irradiance Map

- For efficiency, we do not use all the pixel locations to recover the response curve; instead, the $N$ pixel locations are chosen as a subset of all pixel locations. However, we want to solve for the unknown irradiances at all pixel locations.
- The recovered log inverse response curve is used to convert the set of pixel values at every pixel location to relative irradiance values.
- The irradiance at each pixel is relative since we only solve the log inverse response function up to a constant.
- We change the equations to use the weighting function to give higher weight to pixel values closer to the middle of the pixel-value range.
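Once a log inverse response curve is available, each pixel's irradiance can be estimated as a weighted average of the per-photo estimates in the log domain. The sketch below assumes a triangle weighting function and, purely for illustration, a hypothetical gamma-2.2 curve standing in for the recovered response; all names are illustrative.

```python
import math

Z_MIN, Z_MAX = 0, 255


def hat_weight(z):
    """Triangle weight: highest for mid-range pixel values, zero at 0 and 255."""
    return z - Z_MIN if z <= 0.5 * (Z_MIN + Z_MAX) else Z_MAX - z


def merge_pixel(pixel_values, shutter_times, g):
    """Weighted average of ln E = g(Z) - ln(dt) over all photos of one pixel.

    Returns the relative irradiance, or None if the pixel is clipped everywhere.
    """
    numerator = 0.0
    denominator = 0.0
    for z, dt in zip(pixel_values, shutter_times):
        w = hat_weight(z)
        numerator += w * (g[z] - math.log(dt))
        denominator += w
    if denominator == 0.0:
        return None
    return math.exp(numerator / denominator)


# Hypothetical stand-in for a recovered curve: g(z) = 2.2 * ln(z / 255).
g_curve = [2.2 * math.log(max(z, 1) / Z_MAX) for z in range(Z_MAX + 1)]

# One pixel seen in three photos: saturated, mid-range, and fairly dark.
values = [255, 186, 65]
times = [0.1, 0.01, 0.001]
merged = merge_pixel(values, times, g_curve)
clipped = merge_pixel([255, 255], [1.0, 2.0], g_curve)
```

The saturated sample gets zero weight and drops out, so the estimate (close to 50 for these made-up values) comes only from the two usable exposures.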

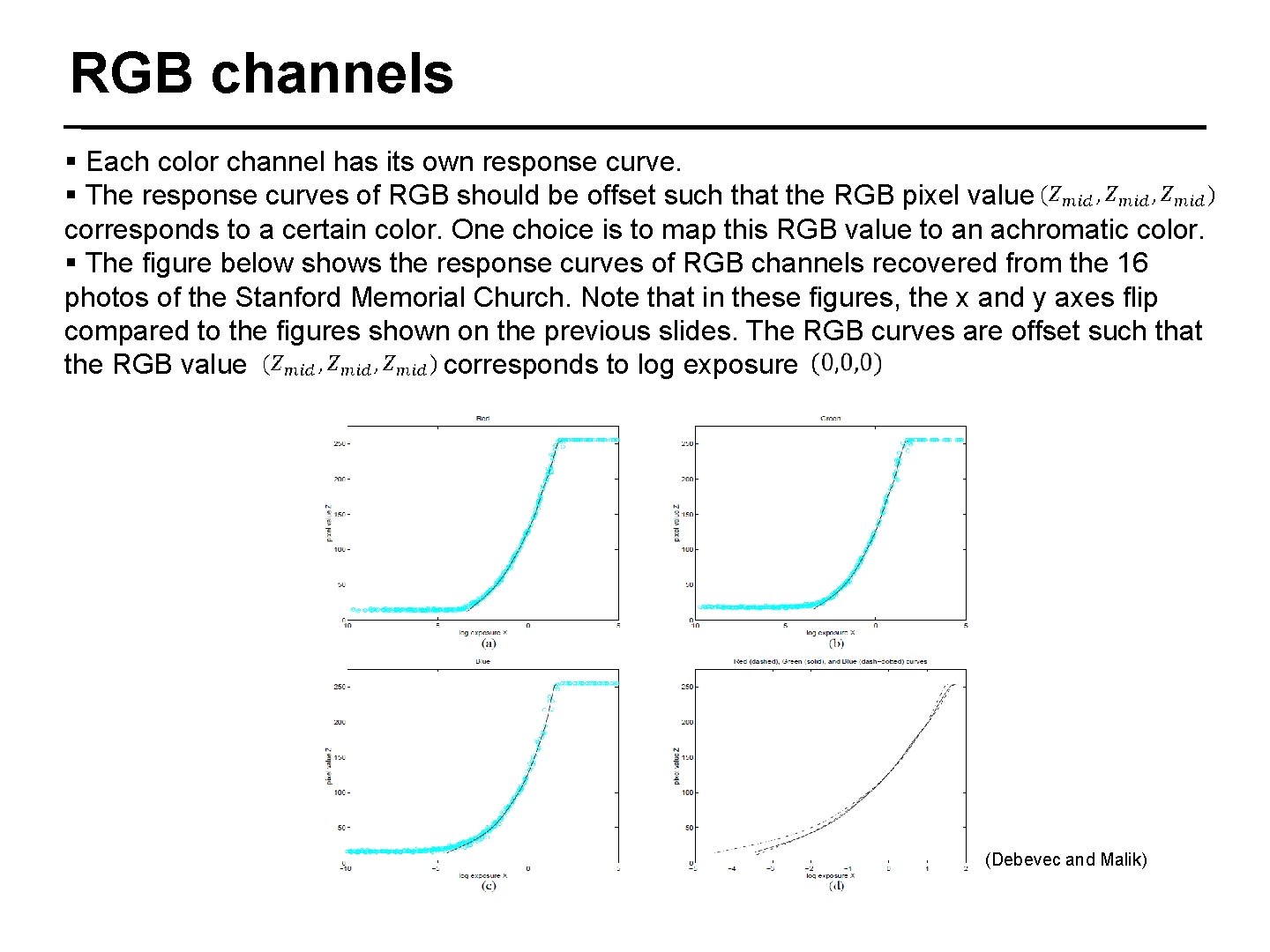

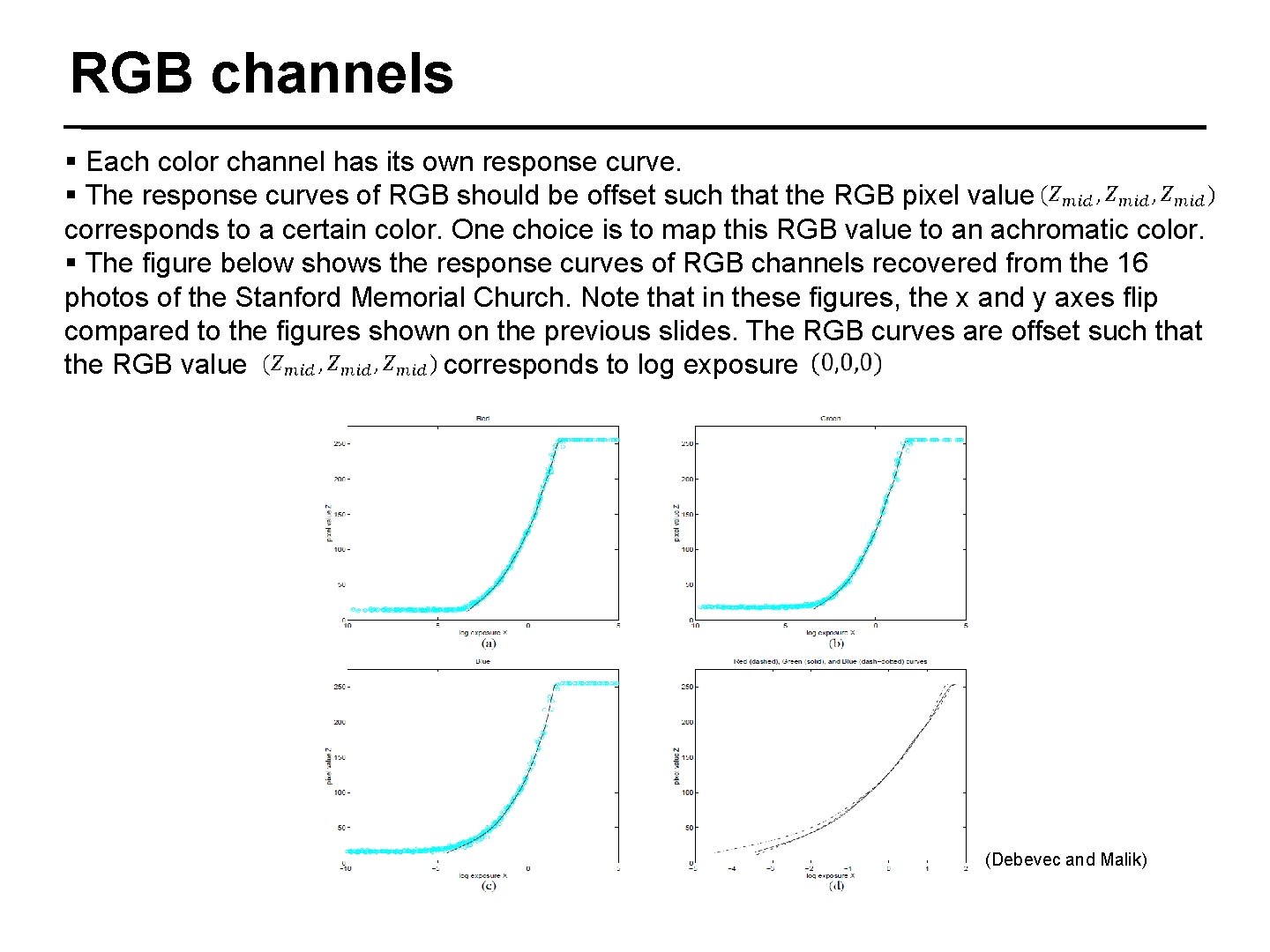

RGB channels

- Each color channel has its own response curve.
- The response curves of RGB should be offset such that a chosen RGB pixel value corresponds to a certain color. One choice is to map this RGB value to an achromatic color.
- The figure below shows the response curves of the RGB channels recovered from the 16 photos of the Stanford Memorial Church. Note that in these figures, the x and y axes are flipped compared to the figures shown on the previous slides. The RGB curves are offset such that a reference RGB value corresponds to a fixed log exposure.

(Debevec and Malik)

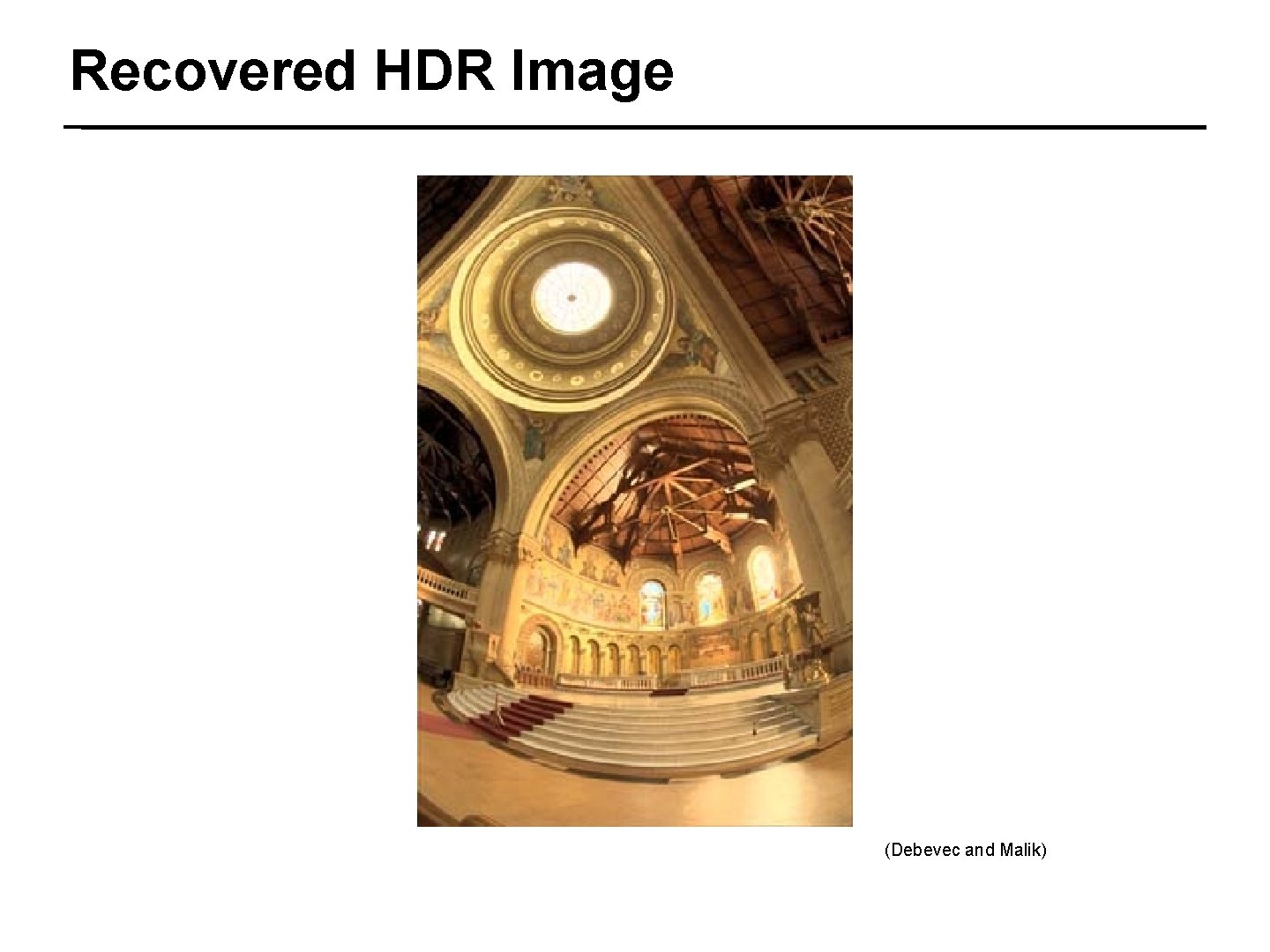

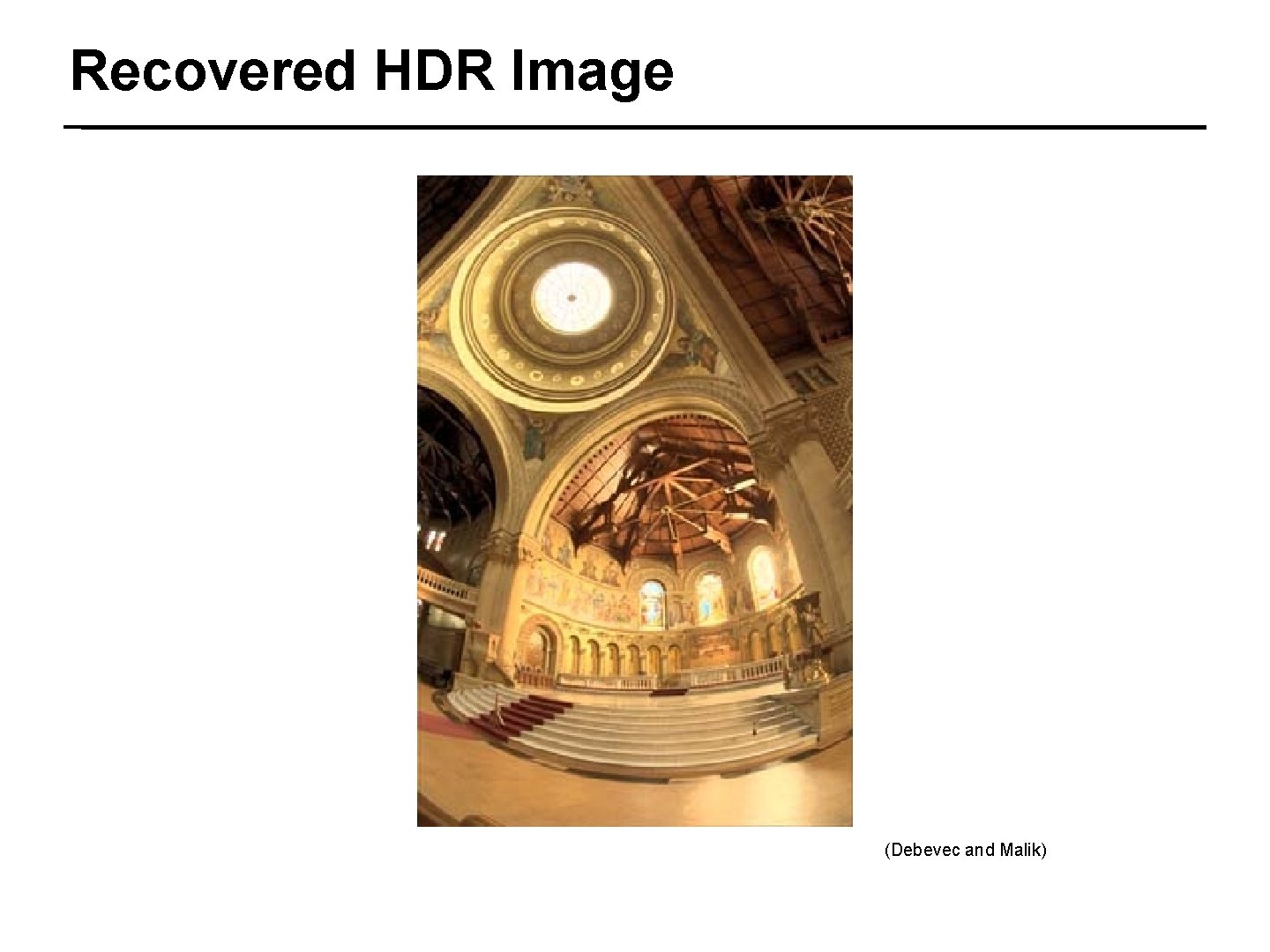

Recovered HDR Image (Debevec and Malik)