Computer vision: models, learning and inference, Chapter 2

- Slides: 27

Computer vision: models, learning and inference

Chapter 2: Introduction to probability

Please send errata to s.prince@cs.ucl.ac.uk

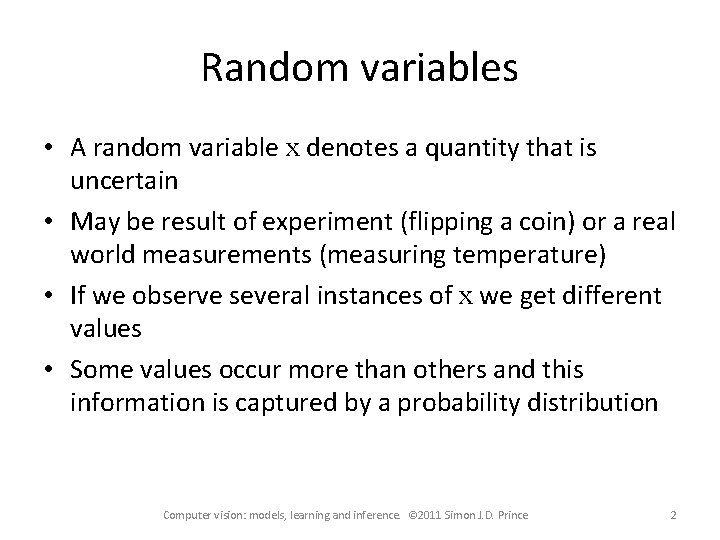

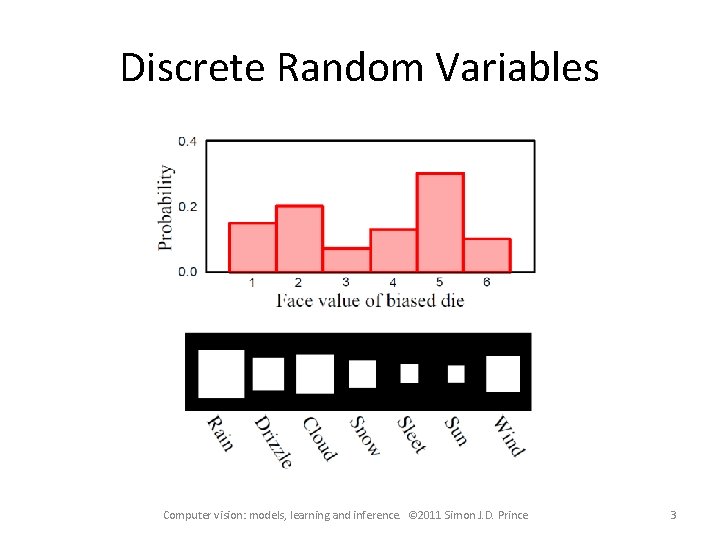

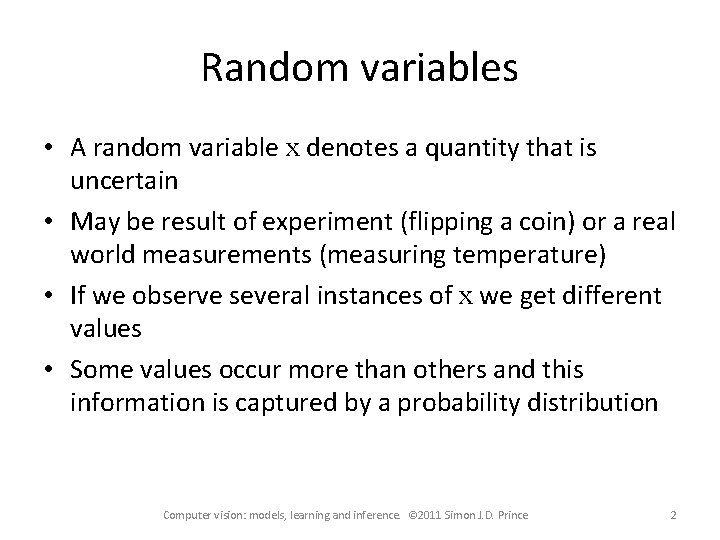

Random variables

- A random variable x denotes a quantity that is uncertain.
- It may be the result of an experiment (flipping a coin) or a real-world measurement (measuring temperature).
- If we observe several instances of x, we get different values.
- Some values occur more often than others, and this information is captured by a probability distribution.

Computer vision: models, learning and inference. © 2011 Simon J. D. Prince

Discrete Random Variables
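As a rough illustration of a discrete random variable, the sketch below draws repeated observations from a small distribution and estimates the probabilities from relative frequencies. The outcome names, the probabilities, and the helper functions `sample` and `empirical_distribution` are assumptions made for this example, not part of the slides.

```python
import random
from collections import Counter

# Hypothetical distribution Pr(x) over three outcomes (values are illustrative).
pr_x = {"sunny": 0.5, "cloudy": 0.3, "rainy": 0.2}

# A valid discrete distribution is non-negative and sums to one.
assert all(p >= 0 for p in pr_x.values())
assert abs(sum(pr_x.values()) - 1.0) < 1e-12


def sample(pr, n, seed=0):
    """Draw n independent observations of x from the distribution pr."""
    rng = random.Random(seed)
    outcomes = list(pr)
    weights = [pr[k] for k in outcomes]
    return rng.choices(outcomes, weights=weights, k=n)


def empirical_distribution(observations):
    """Estimate Pr(x) as the relative frequency of each observed value."""
    counts = Counter(observations)
    n = len(observations)
    return {k: counts[k] / n for k in counts}


observations = sample(pr_x, n=10_000)
estimate = empirical_distribution(observations)
```

With enough observations, the entries of `estimate` should lie close to the entries of `pr_x`, which is the sense in which the distribution captures how often each value occurs.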

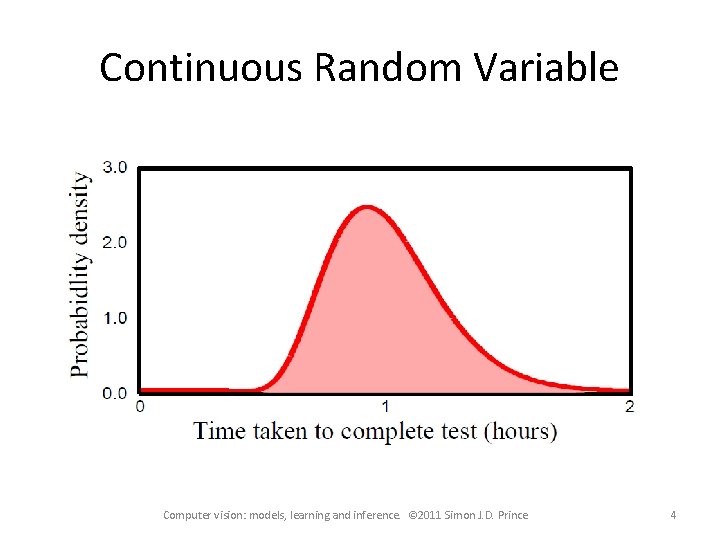

Continuous Random Variable

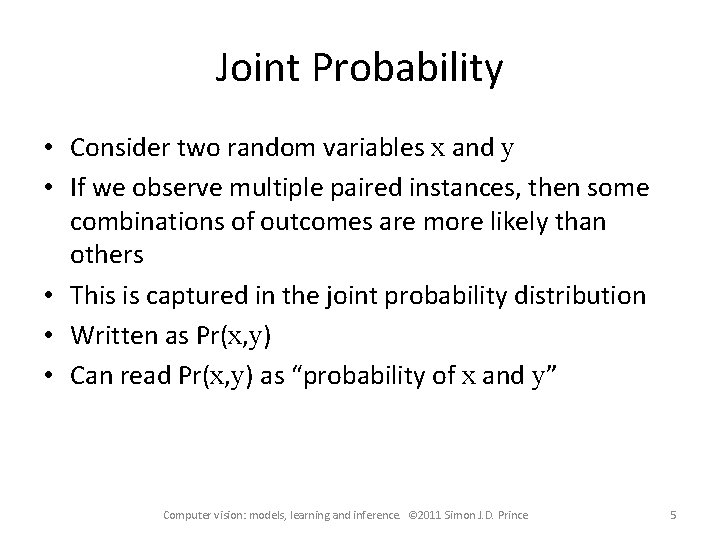

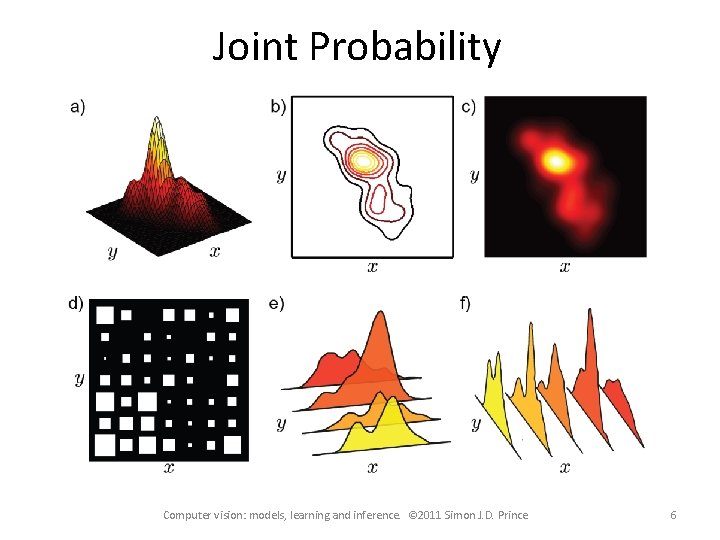

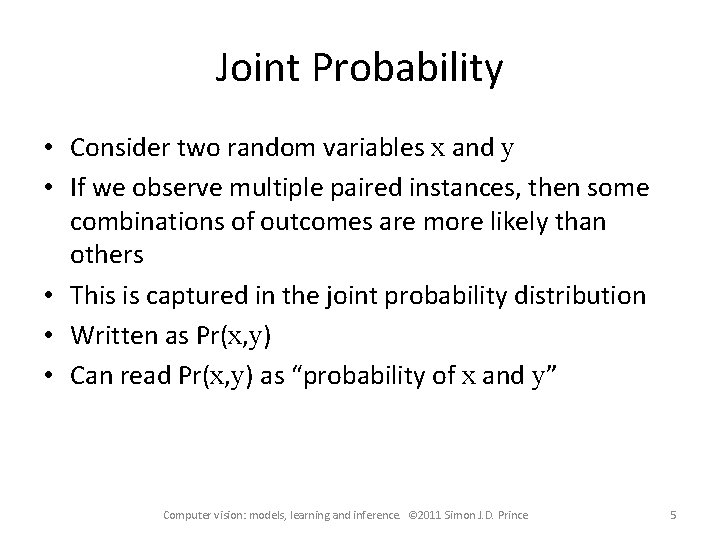

Joint Probability

- Consider two random variables x and y.
- If we observe multiple paired instances, some combinations of outcomes are more likely than others.
- This is captured in the joint probability distribution.
- Written as Pr(x, y).
- Pr(x, y) can be read as “probability of x and y”.

Joint Probability

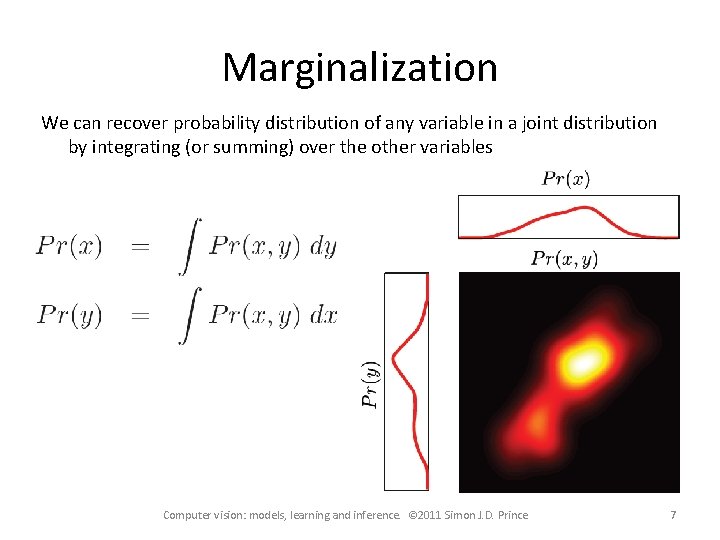

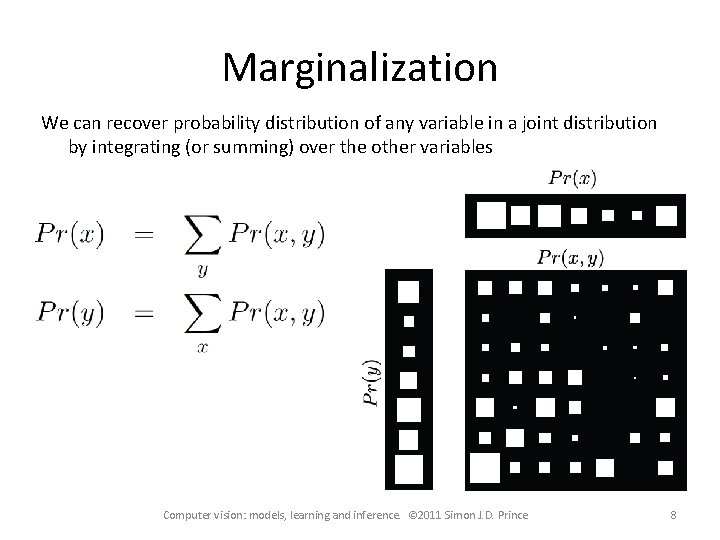

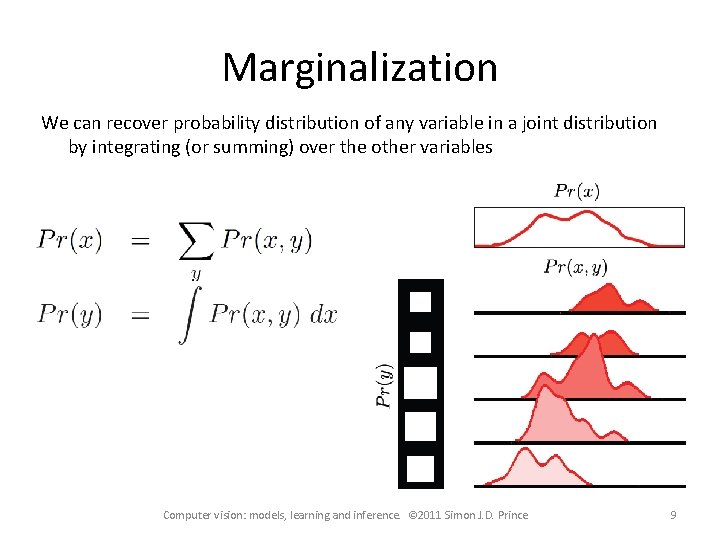

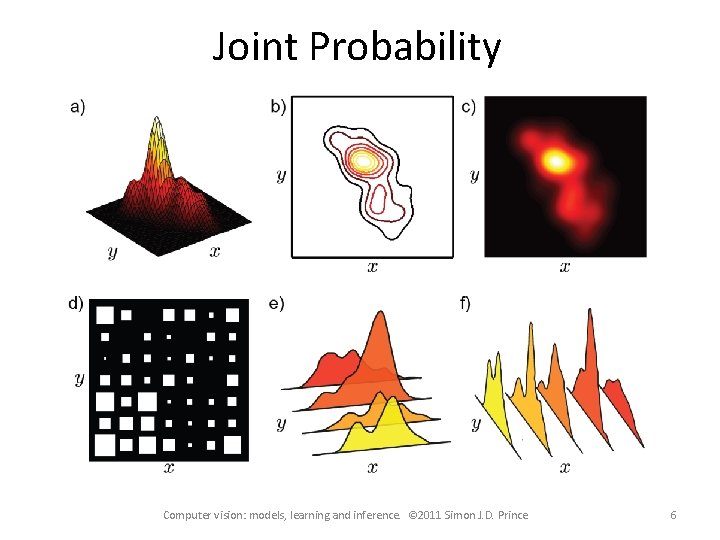

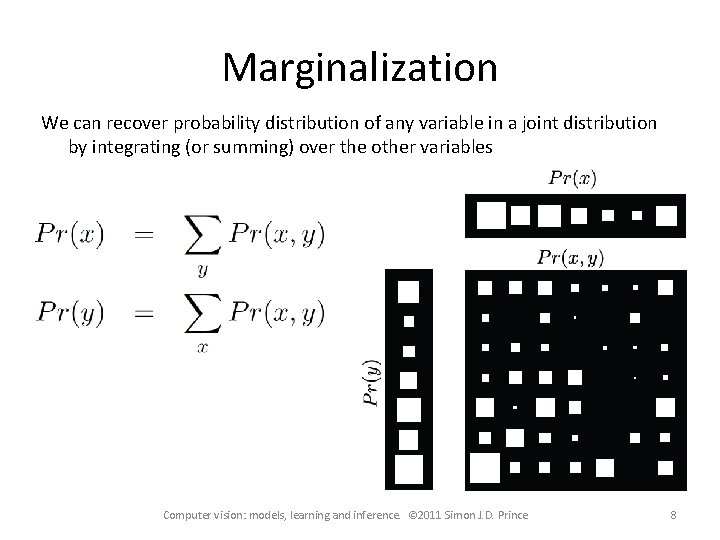

Marginalization

We can recover the probability distribution of any variable in a joint distribution by integrating (or summing) over the other variables.
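The slide equations are not preserved in this transcript. In the Pr(x, y) notation used here, marginalization is usually written as follows, with integrals for continuous variables and sums for discrete ones:

$$
\Pr(x) = \int \Pr(x, y)\, dy, \qquad \Pr(y) = \int \Pr(x, y)\, dx
$$

$$
\Pr(x) = \sum_{y} \Pr(x, y), \qquad \Pr(y) = \sum_{x} \Pr(x, y)
$$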

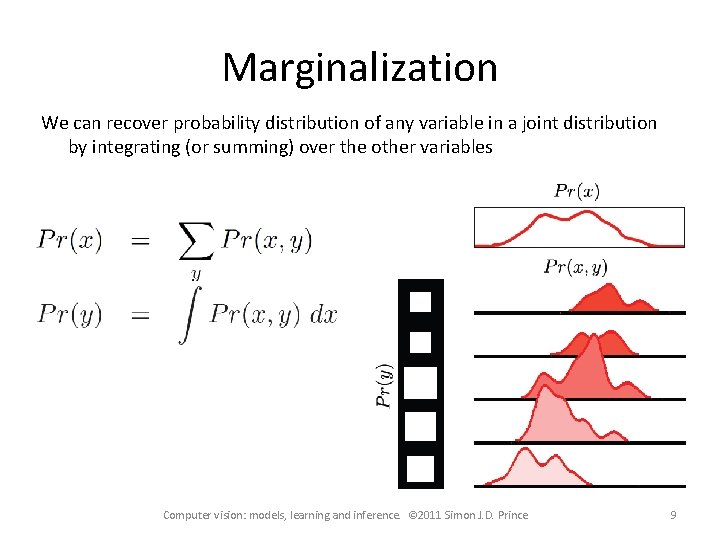

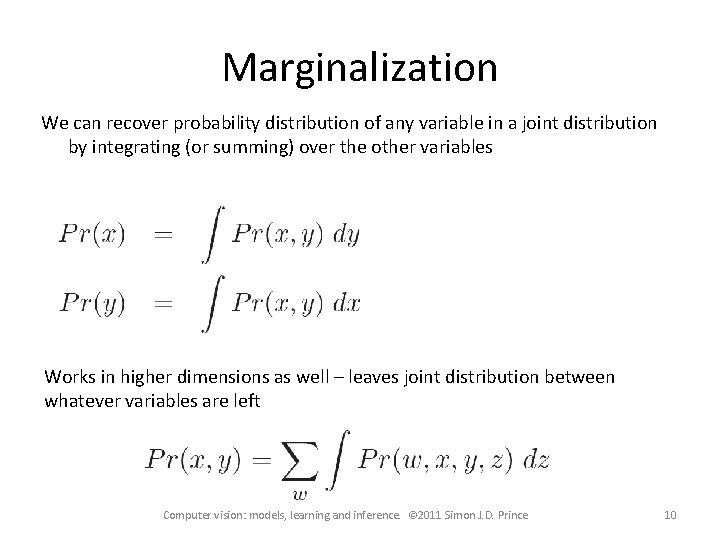

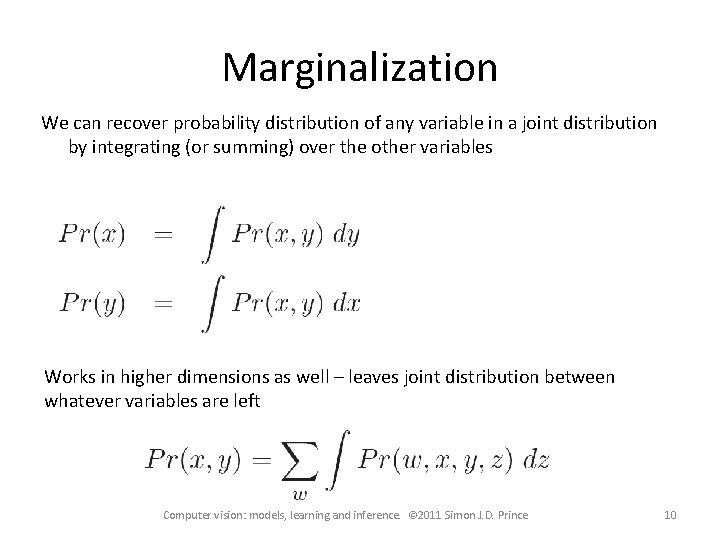

Marginalization

Marginalization works in higher dimensions as well: integrating (or summing) over some of the variables leaves the joint distribution of whatever variables remain.
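A minimal sketch of discrete marginalization, assuming the joint distribution is stored as a nested list. The table values, the `[y][x]` and `[z][y][x]` index layouts, and the function names are assumptions chosen for illustration.

```python
# Hypothetical joint table Pr(x, y): rows index y, columns index x.
joint_xy = [
    [0.10, 0.20, 0.10],  # y = 0
    [0.05, 0.25, 0.30],  # y = 1
]


def marginal_x(joint):
    """Pr(x): sum the joint over y (down each column)."""
    return [sum(col) for col in zip(*joint)]


def marginal_y(joint):
    """Pr(y): sum the joint over x (along each row)."""
    return [sum(row) for row in joint]


def marginalize_z(table):
    """Sum a table indexed [z][y][x] over z, leaving the joint Pr(x, y)."""
    n_y, n_x = len(table[0]), len(table[0][0])
    return [
        [sum(layer[j][i] for layer in table) for i in range(n_x)]
        for j in range(n_y)
    ]


# Hypothetical three-variable joint Pr(x, y, z), built so that summing
# over z gives back joint_xy (z takes two values with weights 0.4 and 0.6).
joint_xyz = [
    [[0.4 * p for p in row] for row in joint_xy],  # z = 0
    [[0.6 * p for p in row] for row in joint_xy],  # z = 1
]

pr_x = marginal_x(joint_xy)
pr_y = marginal_y(joint_xy)
recovered_xy = marginalize_z(joint_xyz)
```

Summing over z leaves a two-dimensional table, which is itself a joint distribution that can be marginalized again.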

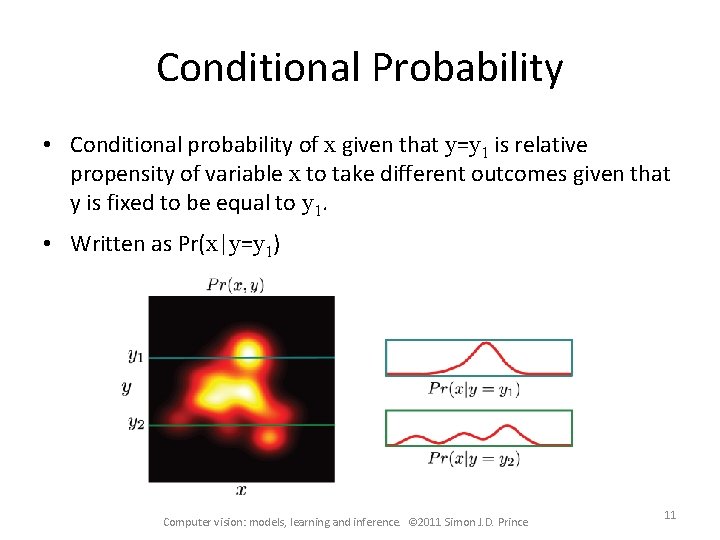

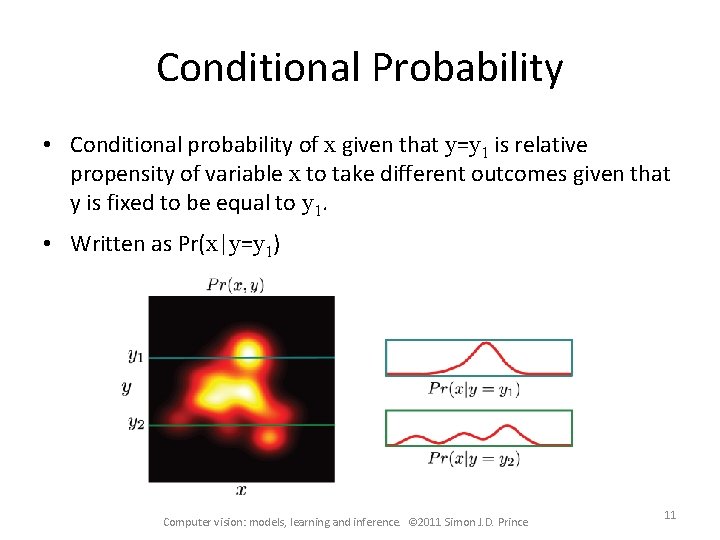

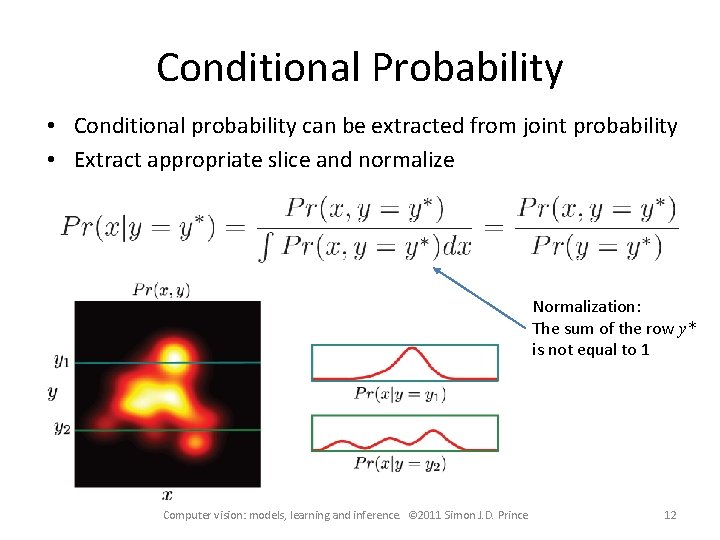

Conditional Probability

- The conditional probability of x given that $y = y_1$ is the relative propensity of variable x to take different outcomes given that y is fixed to be equal to $y_1$.
- Written as $\Pr(x \mid y = y_1)$.

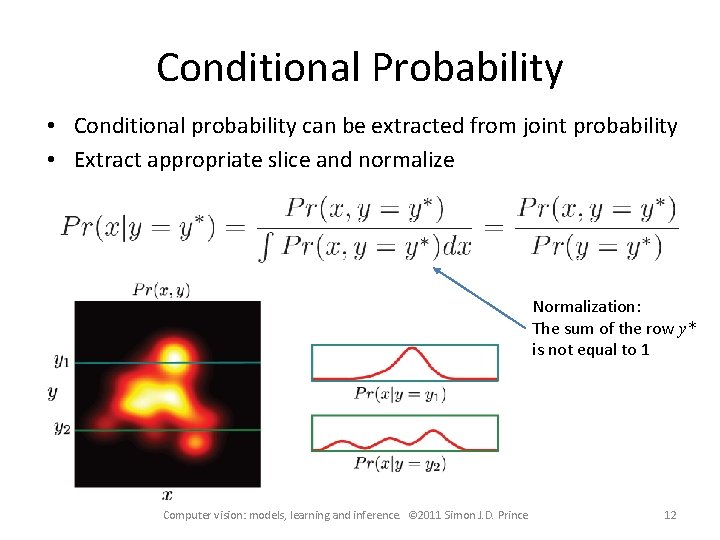

Conditional Probability

- Conditional probability can be extracted from the joint probability.
- Extract the appropriate slice and normalize.

Normalization: the values in the extracted row y = y* of the joint distribution do not generally sum to 1, so the slice is divided by its total to give a valid distribution.
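The slice-and-normalize step can be sketched for a discrete joint table as below. The table values, the `[y][x]` layout, and the function name `conditional_x_given_y` are assumptions made for this example.

```python
# Hypothetical joint table Pr(x, y): rows index y, columns index x.
joint = [
    [0.10, 0.20, 0.10],  # y = 0
    [0.05, 0.25, 0.30],  # y = 1
]


def conditional_x_given_y(joint, y_star):
    """Return Pr(x | y = y_star) by slicing the joint and normalizing."""
    row = joint[y_star]  # slice: Pr(x, y = y_star)
    total = sum(row)     # Pr(y = y_star), generally not equal to 1
    if total == 0:
        raise ValueError("Pr(y = y_star) is zero; the conditional is undefined")
    return [p / total for p in row]


pr_x_given_y1 = conditional_x_given_y(joint, 1)
```

Here the row for y = 1 sums to 0.6, so each entry is divided by 0.6 and the result sums to 1.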

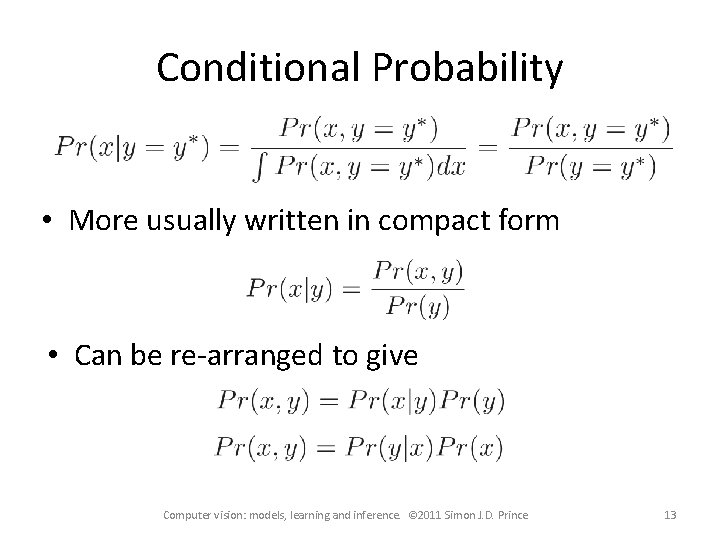

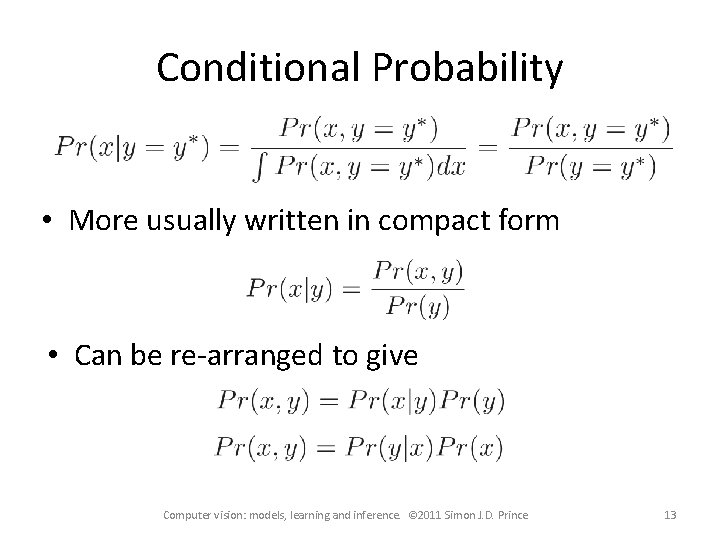

Conditional Probability

- More usually written in compact form.
- Can be rearranged to give the joint distribution.
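The equations for this slide are missing from the transcript. The standard compact form of the conditional probability relation, and its rearrangement, are:

$$
\Pr(x \mid y) = \frac{\Pr(x, y)}{\Pr(y)}
$$

$$
\Pr(x, y) = \Pr(x \mid y)\,\Pr(y)
$$

By symmetry, the joint can equally be written as $\Pr(x, y) = \Pr(y \mid x)\,\Pr(x)$.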

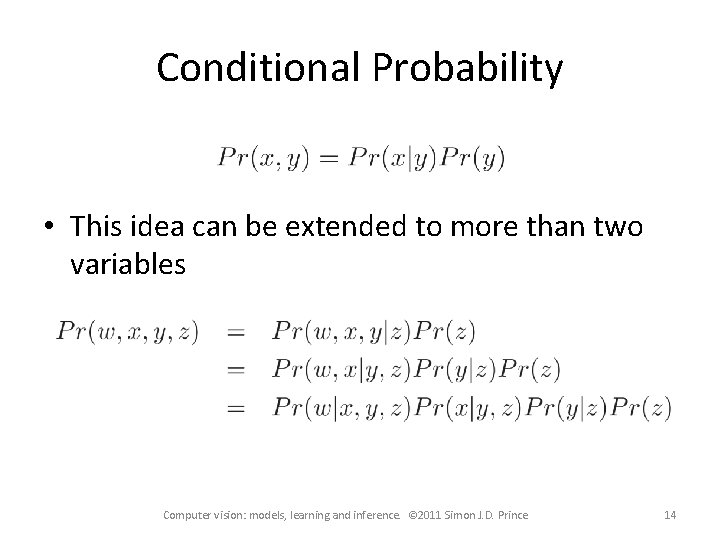

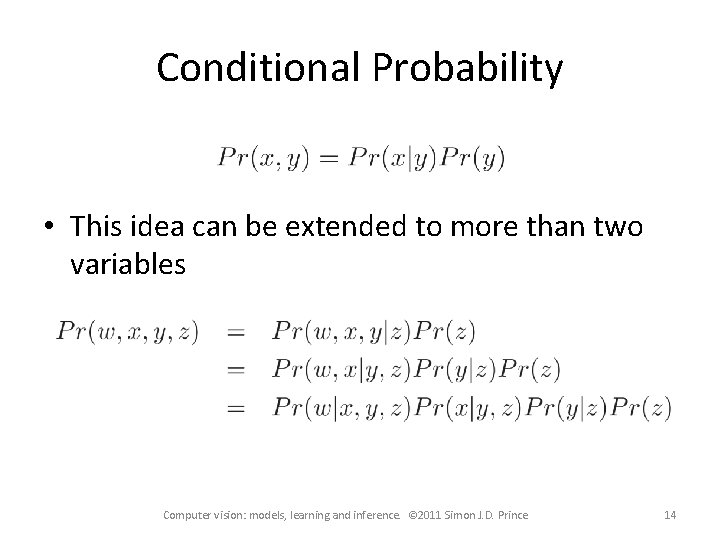

Conditional Probability

- This idea can be extended to more than two variables.
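As an illustration with four variables (the variable names w, x, y, z are chosen here for the example), the relation $\Pr(x, y) = \Pr(x \mid y)\,\Pr(y)$ can be applied repeatedly:

$$
\begin{aligned}
\Pr(w, x, y, z) &= \Pr(w, x, y \mid z)\,\Pr(z) \\
&= \Pr(w, x \mid y, z)\,\Pr(y \mid z)\,\Pr(z) \\
&= \Pr(w \mid x, y, z)\,\Pr(x \mid y, z)\,\Pr(y \mid z)\,\Pr(z)
\end{aligned}
$$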

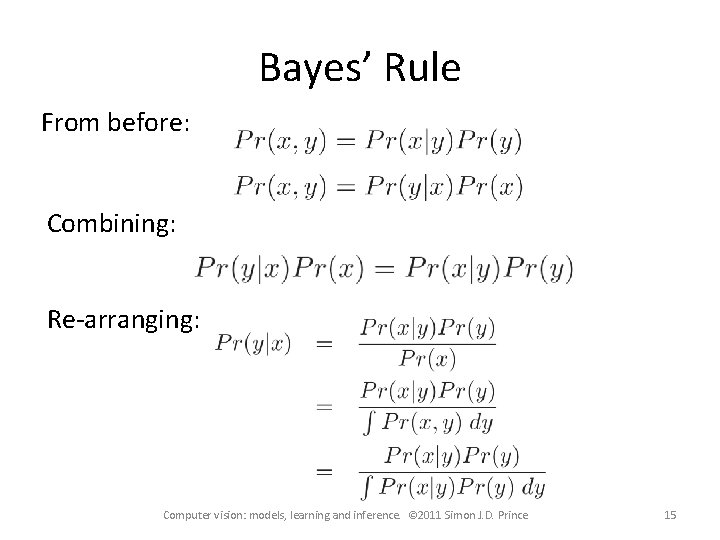

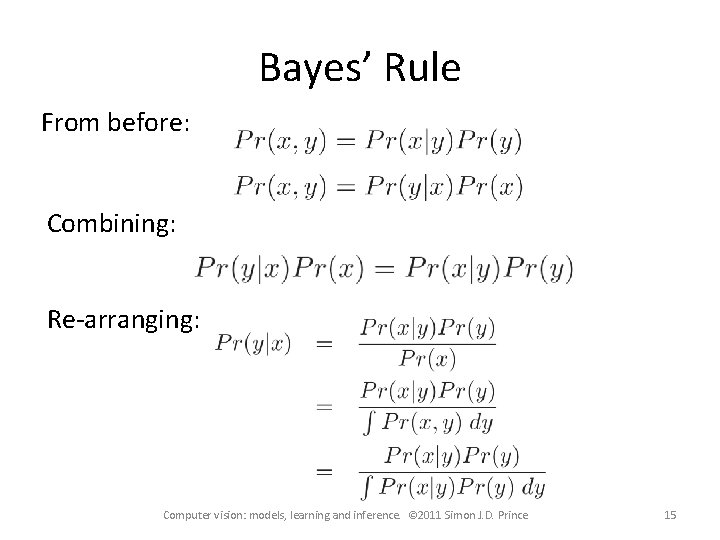

Bayes’ Rule

From before:

Combining:

Re-arranging:
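The three steps named on this slide can be reconstructed from the conditional probability relation; the equations themselves are not preserved in the transcript, so the following is the standard derivation rather than a copy of the slide:

$$
\begin{aligned}
\text{From before:}\quad & \Pr(x, y) = \Pr(x \mid y)\,\Pr(y), \qquad \Pr(x, y) = \Pr(y \mid x)\,\Pr(x) \\
\text{Combining:}\quad & \Pr(y \mid x)\,\Pr(x) = \Pr(x \mid y)\,\Pr(y) \\
\text{Re-arranging:}\quad & \Pr(y \mid x) = \frac{\Pr(x \mid y)\,\Pr(y)}{\Pr(x)}
\end{aligned}
$$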

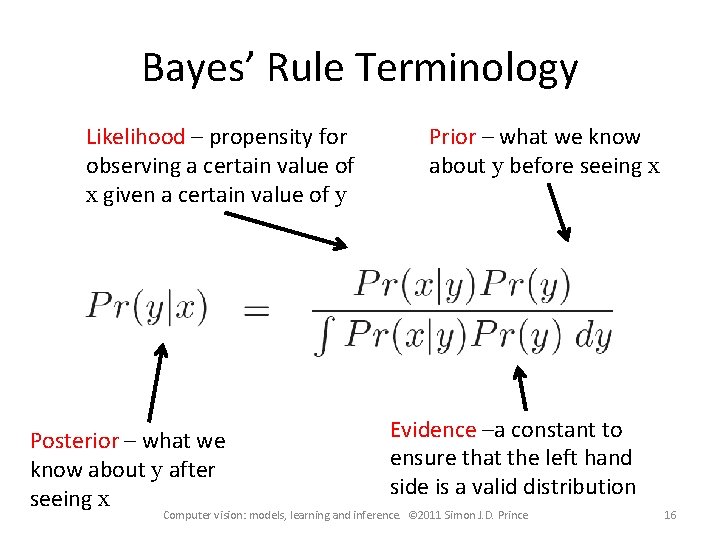

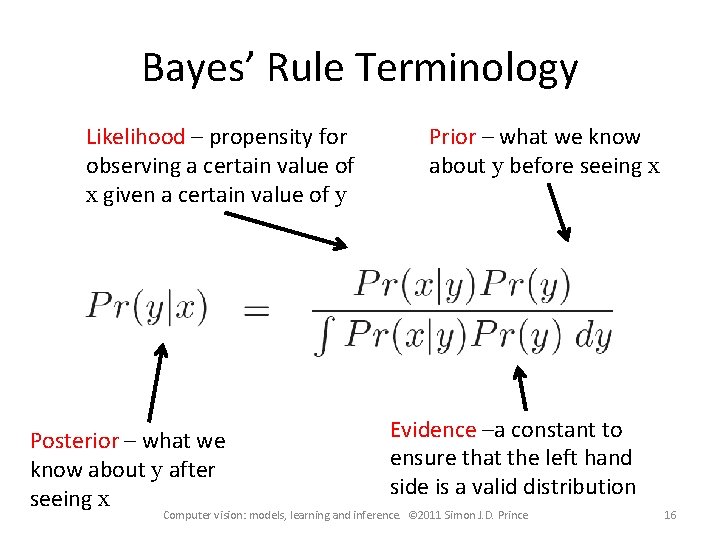

Bayes’ Rule Terminology

- Likelihood: the propensity for observing a certain value of x given a certain value of y.
- Posterior: what we know about y after seeing x.
- Prior: what we know about y before seeing x.
- Evidence: a constant that ensures the left-hand side is a valid distribution.
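To connect the four terms to a computation, here is a minimal sketch of Bayes’ rule for discrete variables. The prior and likelihood values and the function name `posterior` are assumptions for illustration only.

```python
# Hypothetical prior Pr(y) over two states of y.
prior = [0.4, 0.6]

# Hypothetical likelihood Pr(x | y): one row per y, one column per x.
likelihood = [
    [0.25, 0.50, 0.25],  # Pr(x | y = 0)
    [0.10, 0.40, 0.50],  # Pr(x | y = 1)
]


def posterior(prior, likelihood, x_obs):
    """Return Pr(y | x = x_obs) using Bayes' rule."""
    # Numerator: likelihood times prior, for every value of y.
    unnormalized = [likelihood[y][x_obs] * prior[y] for y in range(len(prior))]
    # Evidence Pr(x = x_obs): the numerator summed over y.
    evidence = sum(unnormalized)
    if evidence == 0:
        raise ValueError("Observation has zero probability under the model")
    return [u / evidence for u in unnormalized]


post = posterior(prior, likelihood, x_obs=2)
```

For the observation x = 2, the numerators are 0.1 and 0.3, the evidence is 0.4, and the posterior is approximately [0.25, 0.75].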

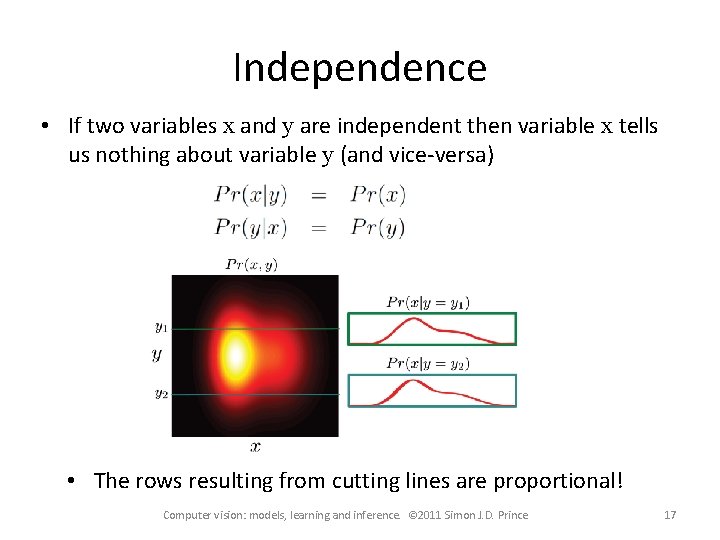

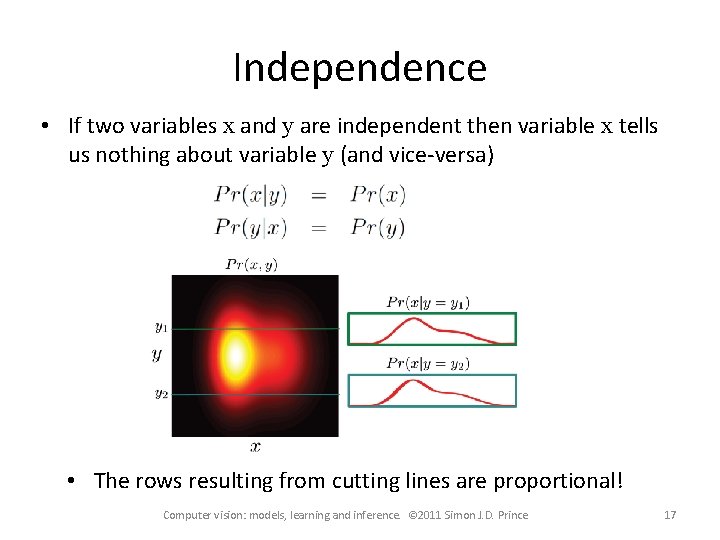

Independence

- If two variables x and y are independent, then variable x tells us nothing about variable y (and vice versa).
- Slices of the joint distribution taken at different values of one variable are proportional to one another, so every conditional distribution is the same.
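The proportional-slices property can be checked numerically for a discrete joint table. In this sketch the tables, the `[y][x]` layout, and the helper names are assumptions, and every row is assumed to have a non-zero sum.

```python
def conditionals_given_y(joint):
    """Normalize each row of Pr(x, y) to obtain Pr(x | y) for every y."""
    return [[p / sum(row) for p in row] for row in joint]


def rows_are_proportional(joint, tol=1e-9):
    """True if all normalized rows agree, i.e. Pr(x | y) does not depend on y."""
    conds = conditionals_given_y(joint)
    first = conds[0]
    return all(
        abs(a - b) <= tol
        for row in conds[1:]
        for a, b in zip(row, first)
    )


# Hypothetical marginals; their outer product is an independent joint.
pr_x = [0.2, 0.5, 0.3]
pr_y = [0.4, 0.6]
independent_joint = [[py * px for px in pr_x] for py in pr_y]

# Hypothetical joint in which x and y are dependent.
dependent_joint = [
    [0.10, 0.20, 0.10],
    [0.05, 0.25, 0.30],
]

independent_ok = rows_are_proportional(independent_joint)
dependent_ok = rows_are_proportional(dependent_joint)
```

For the independent table every normalized row equals `pr_x`, whereas the rows of the dependent table normalize to different distributions.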

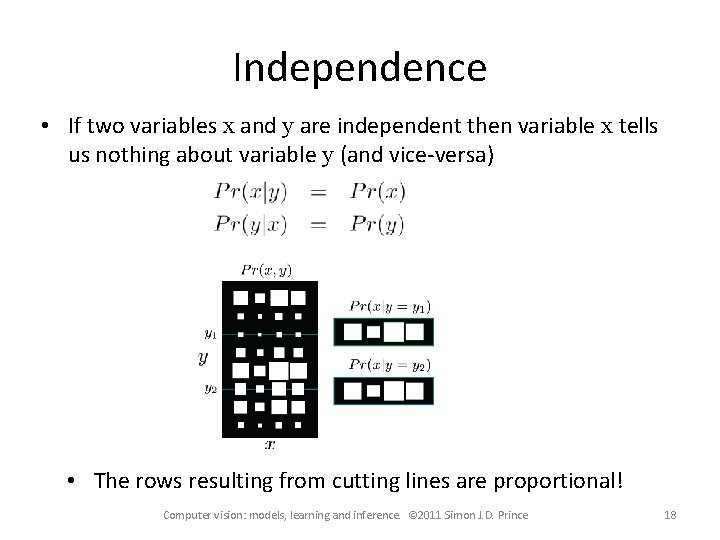

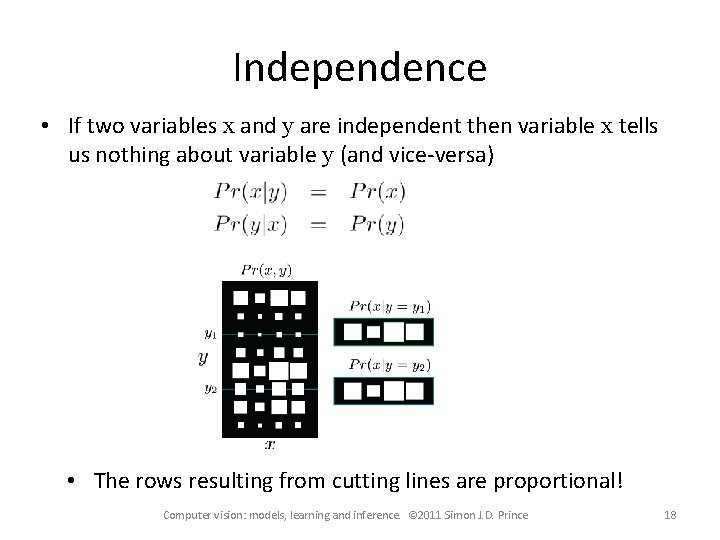

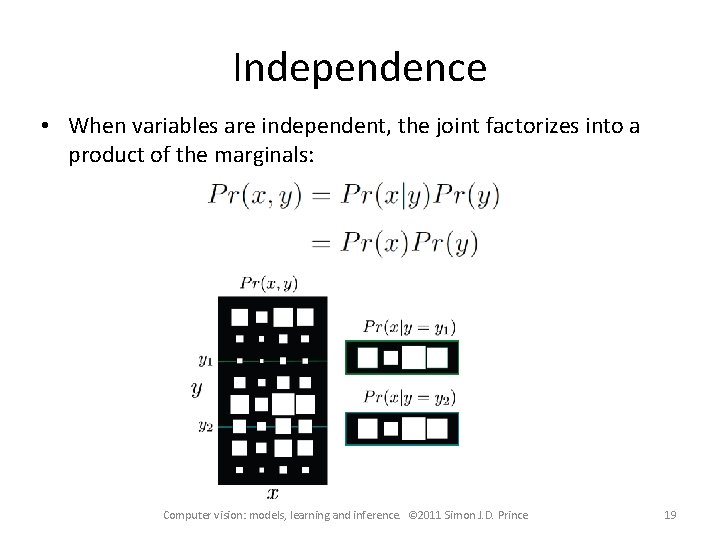

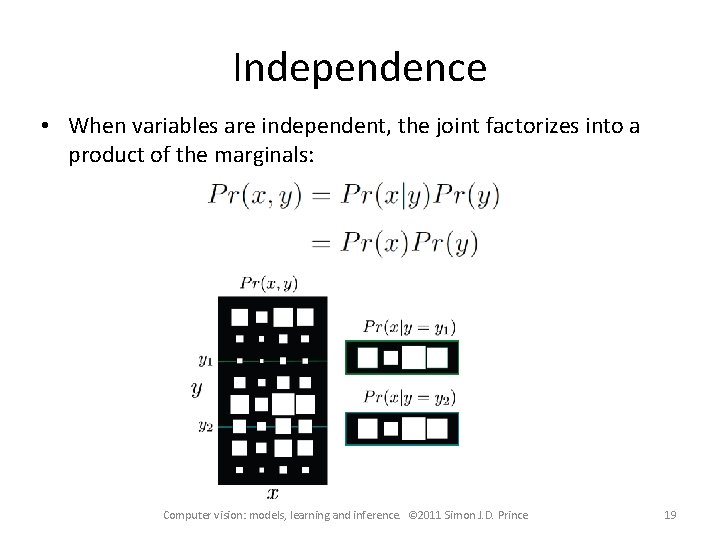

Independence

- When variables are independent, the joint factorizes into a product of the marginals.
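The factorization follows from the conditional probability relation, because independence means $\Pr(x \mid y) = \Pr(x)$. Written out in standard form (the slide equation is not preserved here):

$$
\Pr(x, y) = \Pr(x \mid y)\,\Pr(y) = \Pr(x)\,\Pr(y)
$$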

![Expectation: definition](https://slidetodoc.com/presentation_image_h2/da4cc1dfa297e2dfd4456d22eee96b13/image-20.jpg)

Expectation

Expectation tells us the expected or average value of some function f[x], taking into account the distribution of x.

Definition:
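In standard form, using the f[x] and Pr(x) notation of these slides, the definition for discrete and continuous variables is respectively:

$$
\mathrm{E}\big[f[x]\big] = \sum_{x} f[x]\,\Pr(x)
$$

$$
\mathrm{E}\big[f[x]\big] = \int f[x]\,\Pr(x)\, dx
$$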

![Expectation: definition in two dimensions](https://slidetodoc.com/presentation_image_h2/da4cc1dfa297e2dfd4456d22eee96b13/image-21.jpg)

Expectation

Definition in two dimensions:
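For a function of two continuous variables, the standard form of the definition integrates over both of them, weighting by the joint distribution:

$$
\mathrm{E}\big[f[x, y]\big] = \iint f[x, y]\,\Pr(x, y)\, dx\, dy
$$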

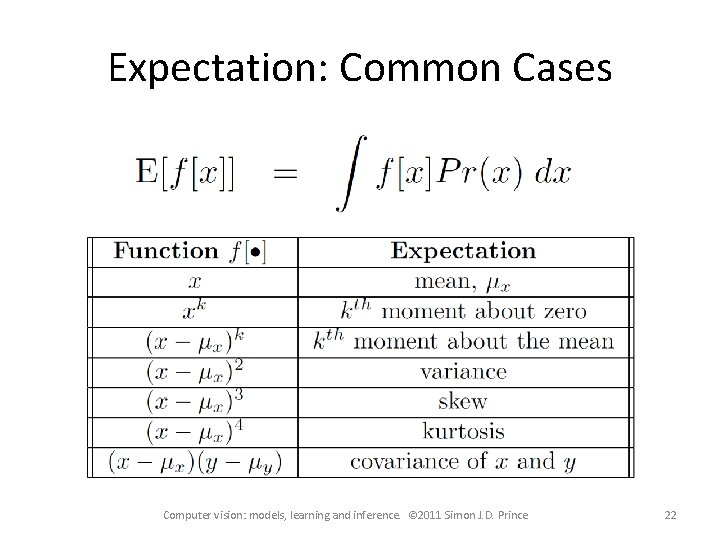

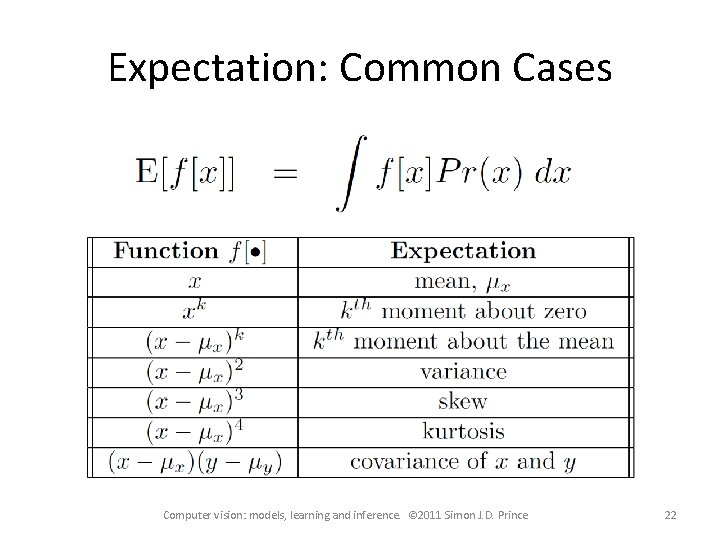

Expectation: Common Cases
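Two familiar cases are the mean, obtained with f[x] = x, and the variance, obtained with f[x] = (x − μ)², where μ is the mean. The sketch below computes both for a small discrete distribution; the distribution values and the helper name `expectation` are assumptions for illustration.

```python
def expectation(f, pr):
    """E[f[x]] for a discrete distribution given as {value: probability}."""
    return sum(f(x) * p for x, p in pr.items())


# Hypothetical distribution Pr(x) over the values 1, 2 and 3.
pr_x = {1: 0.2, 2: 0.5, 3: 0.3}

# Mean: f[x] = x.
mean = expectation(lambda x: x, pr_x)

# Second moment about zero: f[x] = x^2.
second_moment = expectation(lambda x: x ** 2, pr_x)

# Variance: f[x] = (x - mean)^2, the second moment about the mean.
variance = expectation(lambda x: (x - mean) ** 2, pr_x)
```

The variance computed this way agrees with the second moment minus the squared mean, up to floating-point rounding.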

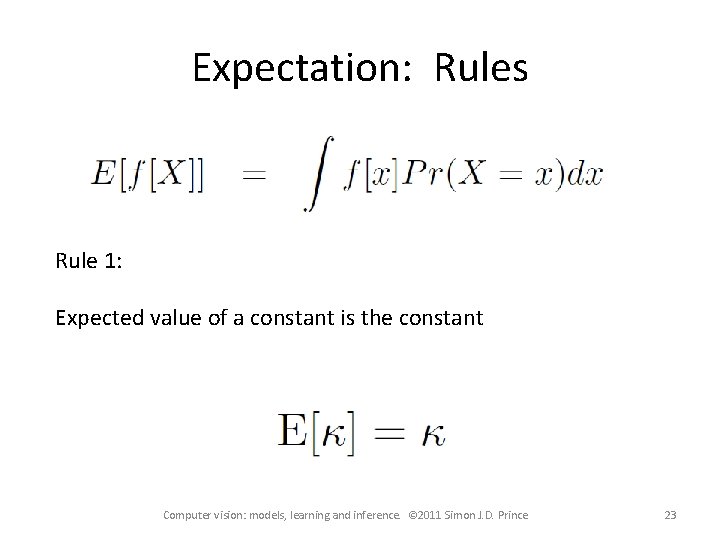

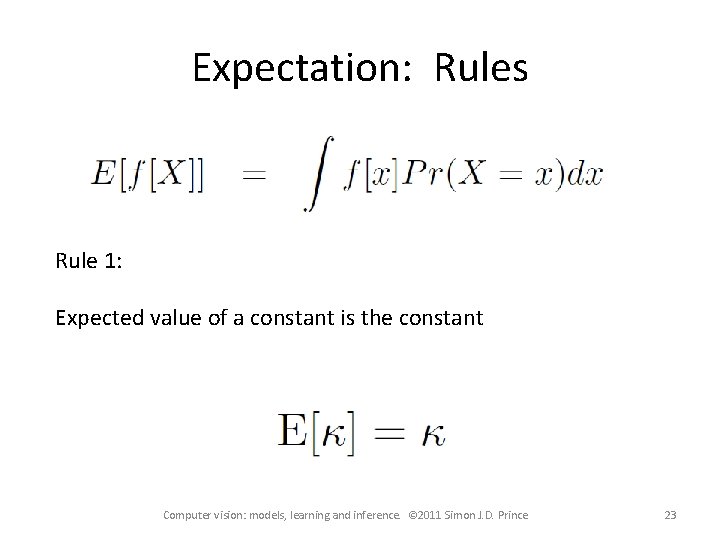

Expectation: Rules

Rule 1: The expected value of a constant is the constant.

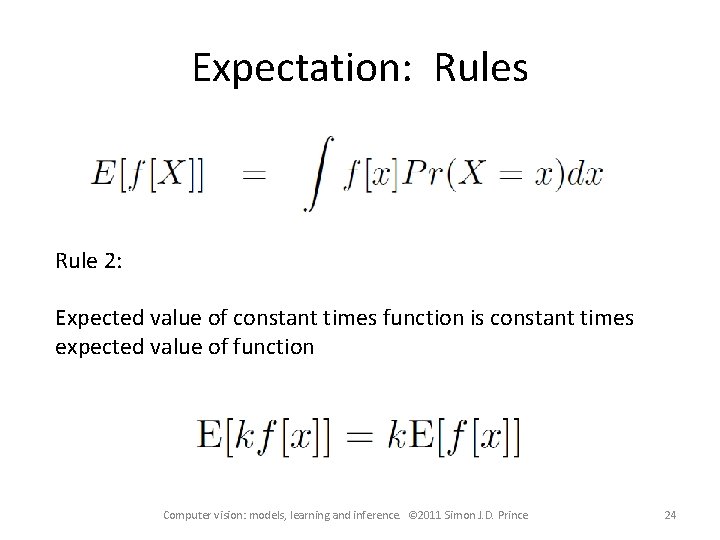

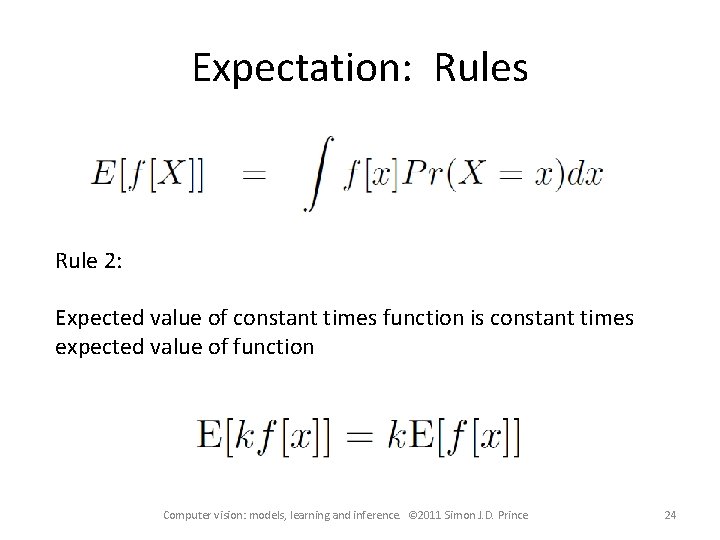

Expectation: Rules

Rule 2: The expected value of a constant times a function is the constant times the expected value of the function.

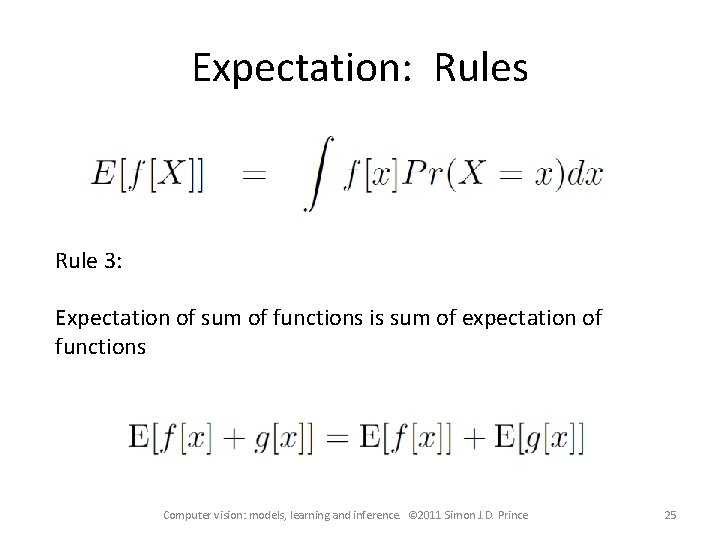

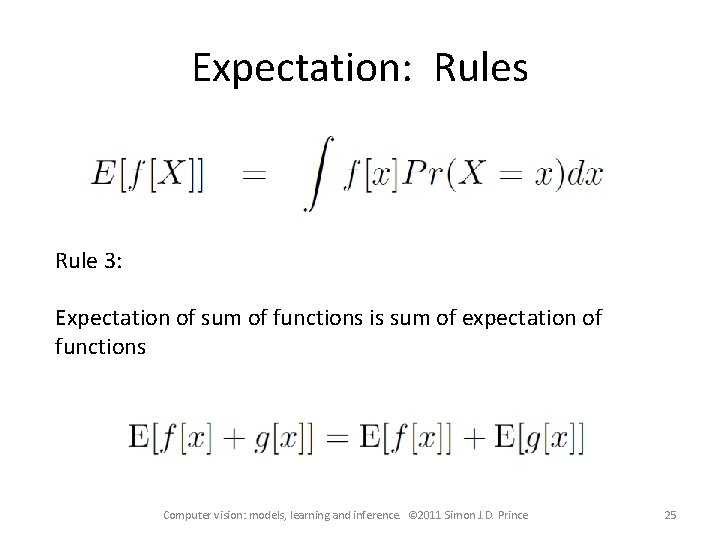

Expectation: Rules

Rule 3: The expectation of a sum of functions is the sum of the expectations of the functions.

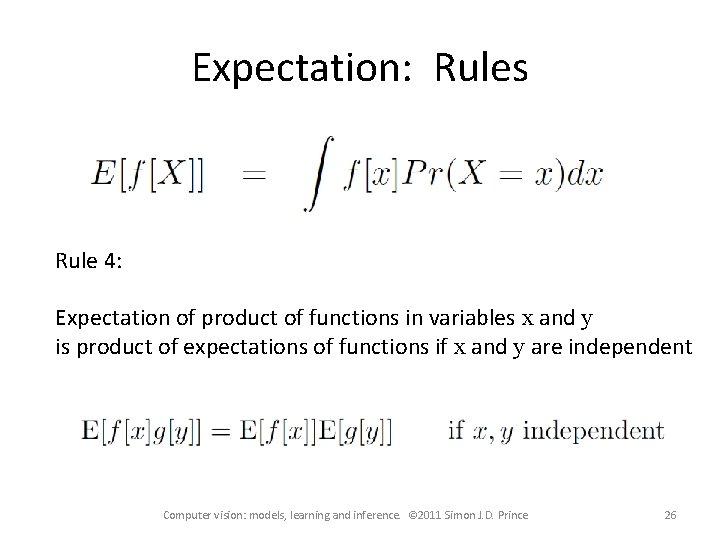

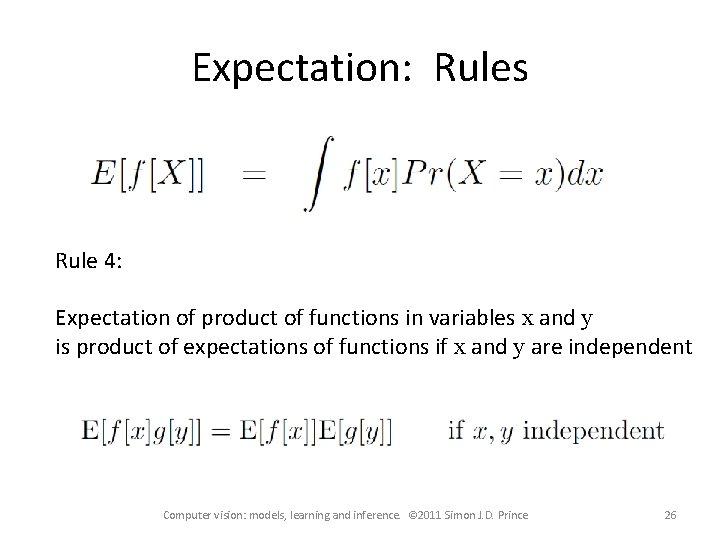

Expectation: Rules

Rule 4: The expectation of a product of functions in variables x and y is the product of the expectations of the functions if x and y are independent.
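In symbols, with κ a constant, the four rules read $\mathrm{E}[\kappa] = \kappa$, $\mathrm{E}[\kappa f[x]] = \kappa\,\mathrm{E}[f[x]]$, $\mathrm{E}[f[x] + g[x]] = \mathrm{E}[f[x]] + \mathrm{E}[g[x]]$, and $\mathrm{E}[f[x]\,g[y]] = \mathrm{E}[f[x]]\,\mathrm{E}[g[y]]$ when x and y are independent. The sketch below checks them numerically on small discrete distributions; all values, the functions `f` and `g`, and the helper `expect` are assumptions chosen for the example.

```python
from itertools import product

# Hypothetical marginal distributions for x and y.
pr_x = {0: 0.3, 1: 0.7}
pr_y = {1: 0.5, 2: 0.25, 4: 0.25}

# Joint of independent x and y: the product of the marginals.
pr_xy = {
    (x, y): px * py
    for (x, px), (y, py) in product(pr_x.items(), pr_y.items())
}


def expect(func, pr):
    """Expectation of func under a discrete distribution {value: probability}."""
    return sum(func(v) * p for v, p in pr.items())


def f(v):
    return v + 1


def g(v):
    return v * v


kappa = 3.0

# Rule 1: E[kappa] = kappa.
rule1 = expect(lambda x: kappa, pr_x)

# Rule 2: E[kappa * f[x]] = kappa * E[f[x]].
rule2_lhs = expect(lambda x: kappa * f(x), pr_x)
rule2_rhs = kappa * expect(f, pr_x)

# Rule 3: E[f[x] + g[x]] = E[f[x]] + E[g[x]].
rule3_lhs = expect(lambda x: f(x) + g(x), pr_x)
rule3_rhs = expect(f, pr_x) + expect(g, pr_x)

# Rule 4: E[f[x] * g[y]] = E[f[x]] * E[g[y]] for independent x and y.
rule4_lhs = expect(lambda xy: f(xy[0]) * g(xy[1]), pr_xy)
rule4_rhs = expect(f, pr_x) * expect(g, pr_y)
```

Rules 1 to 3 hold for any distribution, while Rule 4 relies on the joint being the product of the marginals, which is how `pr_xy` is constructed here.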

Conclusions

- The rules of probability are compact and simple.
- The concepts of marginalization, joint and conditional probability, Bayes’ rule and expectation underpin all of the models in this book.
- One remaining concept, conditional expectation, is discussed later.