COMP 740 Computer Architecture and Implementation Montek Singh



COMP 740: Computer Architecture and Implementation
Montek Singh
Sep 14, 2016
Topic: Optimization of Cache Performance

Outline

- Cache Performance
  - Means of improving performance

Read textbook Appendix B.3 and Ch. 2.2

How to Improve Cache Performance

- Latency
  - Reduce miss rate
  - Reduce miss penalty
  - Reduce hit time
- Bandwidth
  - Increase hit bandwidth
  - Increase miss bandwidth

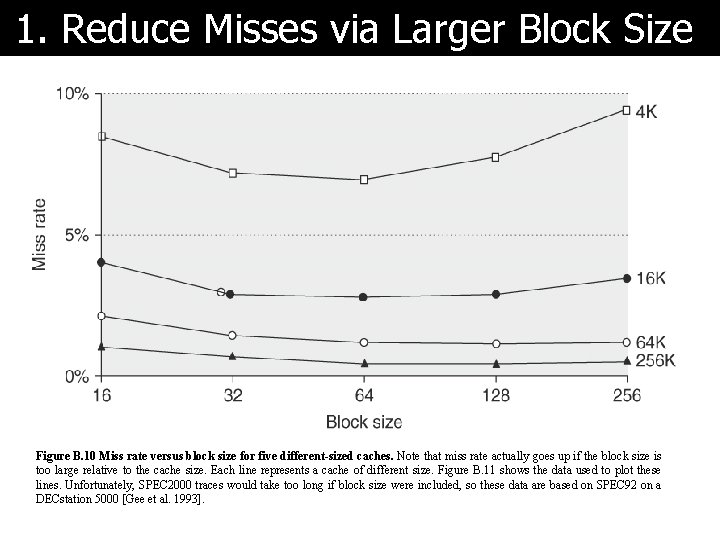

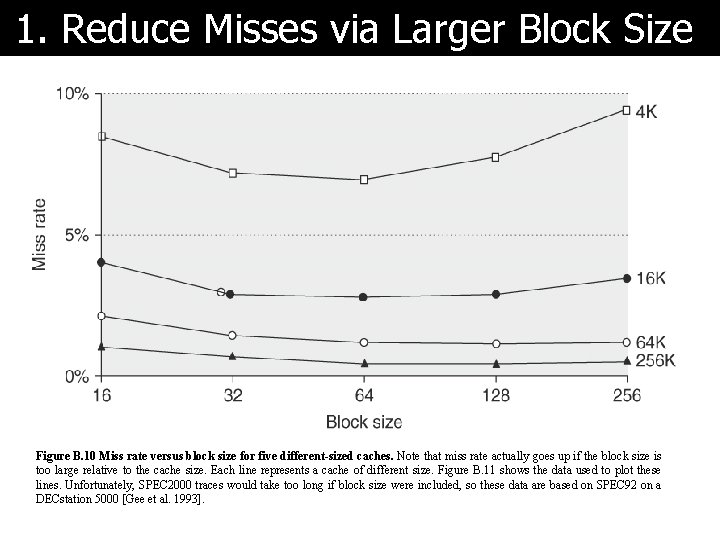

1. Reduce Misses via Larger Block Size

Figure B.10: Miss rate versus block size for five different-sized caches. Note that miss rate actually goes up if the block size is too large relative to the cache size. Each line represents a cache of different size. Figure B.11 shows the data used to plot these lines. Unfortunately, SPEC 2000 traces would take too long if block size were included, so these data are based on SPEC 92 on a DECstation 5000 [Gee et al. 1993].

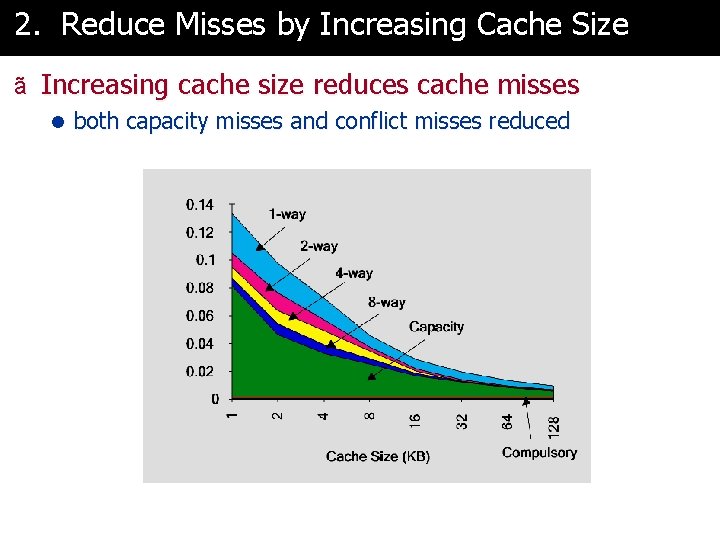

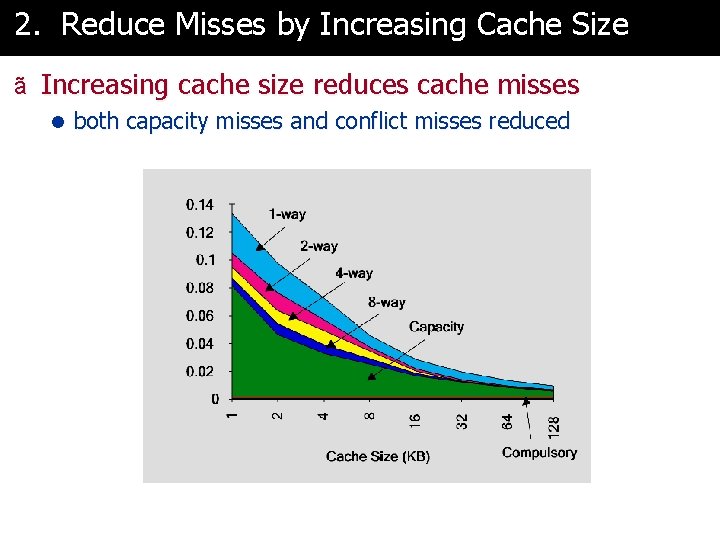

2. Reduce Misses by Increasing Cache Size

- Increasing cache size reduces cache misses
  - both capacity misses and conflict misses are reduced

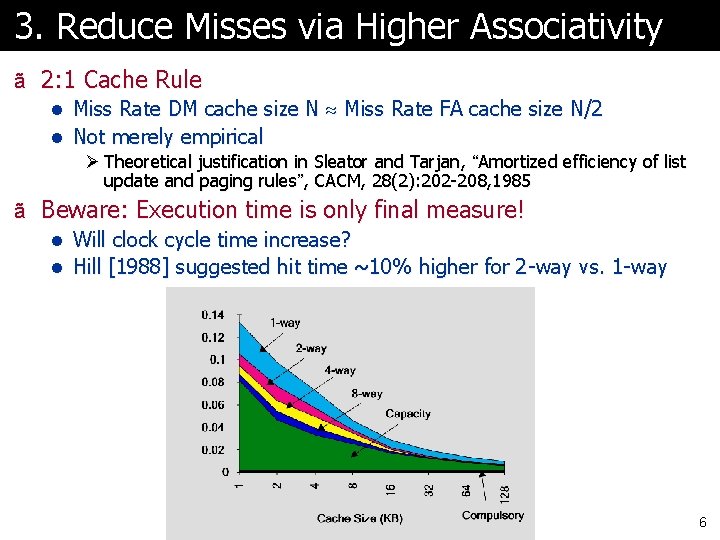

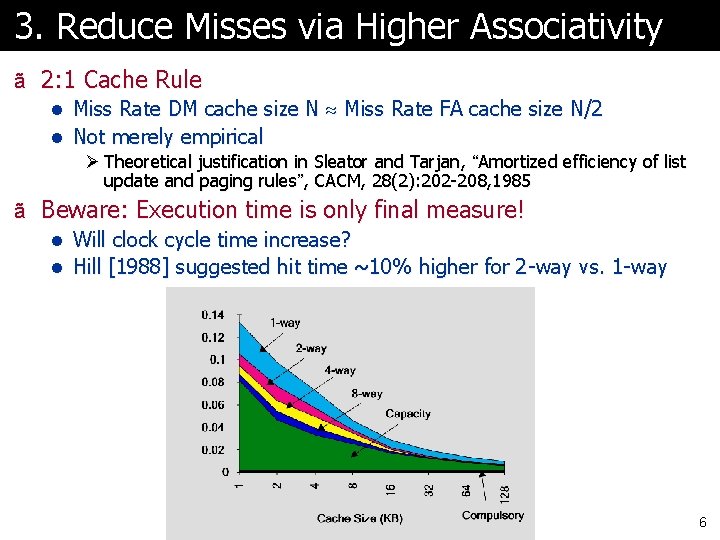

3. Reduce Misses via Higher Associativity

- 2:1 Cache Rule
  - Miss rate of a DM cache of size N ≈ miss rate of an FA cache of size N/2
  - Not merely empirical
    - Theoretical justification in Sleator and Tarjan, "Amortized efficiency of list update and paging rules", CACM, 28(2):202-208, 1985
- Beware: execution time is the only final measure!
  - Will clock cycle time increase?
  - Hill [1988] suggested hit time is ~10% higher for 2-way vs. 1-way

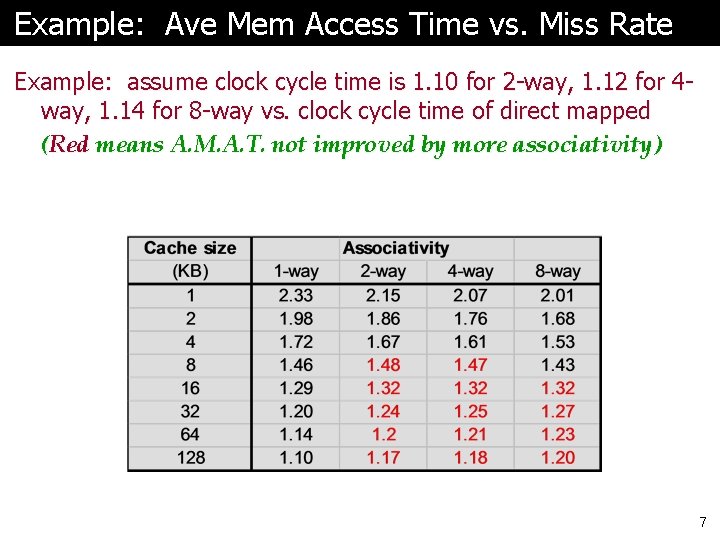

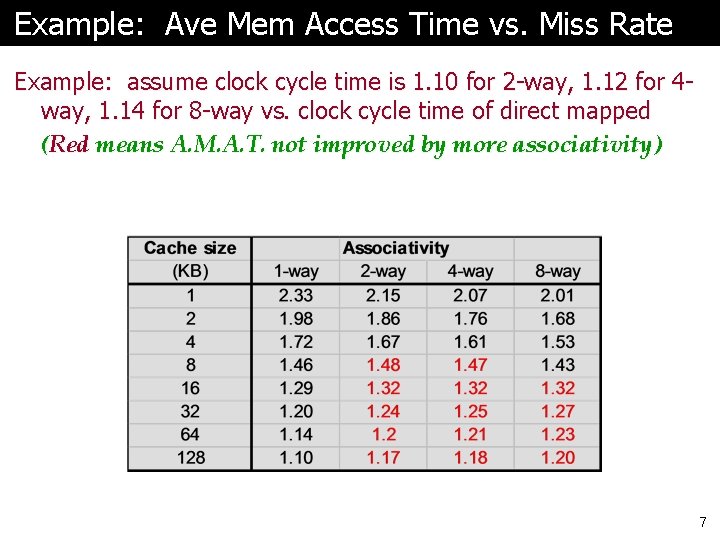

Example: Avg. Memory Access Time vs. Miss Rate

Example: assume clock cycle time is 1.10 for 2-way, 1.12 for 4-way, and 1.14 for 8-way, relative to the clock cycle time of direct mapped.

(Red means A.M.A.T. is not improved by more associativity)

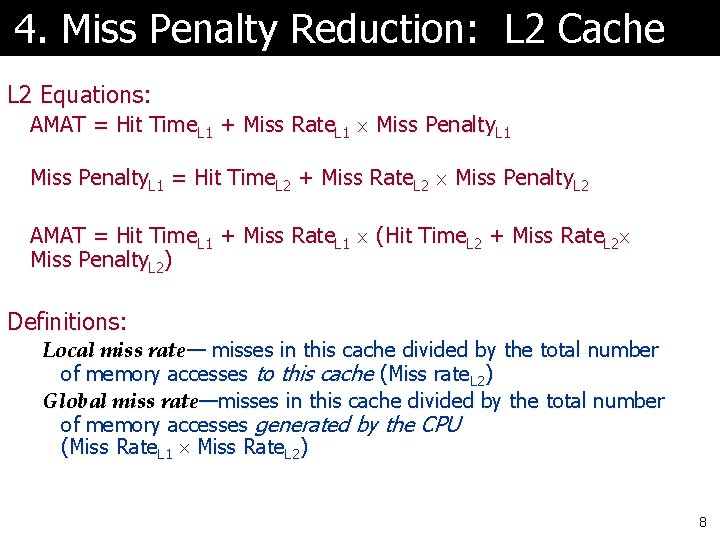

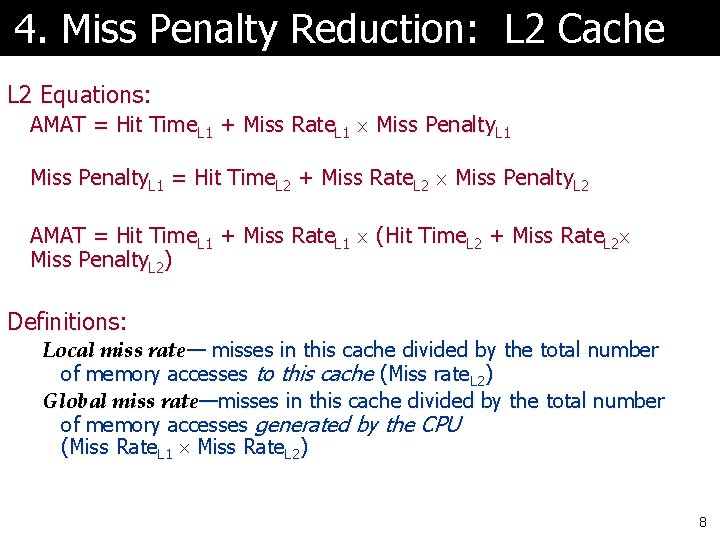

4. Miss Penalty Reduction: L2 Cache

L2 equations:

$$\text{AMAT} = \text{Hit Time}_{L1} + \text{Miss Rate}_{L1} \times \text{Miss Penalty}_{L1}$$

$$\text{Miss Penalty}_{L1} = \text{Hit Time}_{L2} + \text{Miss Rate}_{L2} \times \text{Miss Penalty}_{L2}$$

$$\text{AMAT} = \text{Hit Time}_{L1} + \text{Miss Rate}_{L1} \times (\text{Hit Time}_{L2} + \text{Miss Rate}_{L2} \times \text{Miss Penalty}_{L2})$$

Definitions:

- Local miss rate: misses in this cache divided by the total number of memory accesses to this cache ($\text{Miss Rate}_{L2}$)
- Global miss rate: misses in this cache divided by the total number of memory accesses generated by the CPU ($\text{Miss Rate}_{L1} \times \text{Miss Rate}_{L2}$)
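The two-level equations above can be evaluated directly. The following is a minimal sketch in C; the function names and the sample numbers in the usage note are illustrative assumptions, not values from the slides.

```c
#include <assert.h>

/* Average memory access time for a two-level cache hierarchy.
   Times are in clock cycles; miss rates are LOCAL miss rates in [0, 1]. */
double amat_two_level(double hit_l1, double miss_rate_l1,
                      double hit_l2, double miss_rate_l2,
                      double miss_penalty_l2)
{
    assert(miss_rate_l1 >= 0.0 && miss_rate_l1 <= 1.0);
    assert(miss_rate_l2 >= 0.0 && miss_rate_l2 <= 1.0);

    /* Miss Penalty(L1) = Hit Time(L2) + Miss Rate(L2) * Miss Penalty(L2) */
    double miss_penalty_l1 = hit_l2 + miss_rate_l2 * miss_penalty_l2;

    /* AMAT = Hit Time(L1) + Miss Rate(L1) * Miss Penalty(L1) */
    return hit_l1 + miss_rate_l1 * miss_penalty_l1;
}

/* Global L2 miss rate: fraction of all CPU accesses that miss in both levels. */
double global_miss_rate_l2(double miss_rate_l1, double local_miss_rate_l2)
{
    return miss_rate_l1 * local_miss_rate_l2;
}
```

For instance, with a hypothetical 1-cycle L1 hit, 4% L1 miss rate, 10-cycle L2 hit, 50% local L2 miss rate, and 200-cycle L2 miss penalty, `amat_two_level(1, 0.04, 10, 0.5, 200)` evaluates 1 + 0.04 × (10 + 0.5 × 200) = 5.4 cycles, and the global L2 miss rate is 0.04 × 0.5 = 2%.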

5. Reducing Miss Penalty: Read Priority over Write on Miss

- Goal: allow reads to be served before writes have completed
- Challenges:
  - Write-through caches:
    - Using write buffers: RAW conflicts with reads on cache misses
    - Simply waiting for the write buffer to empty might increase read miss penalty by 50% (old MIPS 1000)
    - Check write buffer contents before read; if no conflicts, let the memory access continue
  - Write-back caches:
    - Read miss replacing dirty block
    - Normal: write dirty block to memory, and then do the read
    - Instead: copy the dirty block to a write buffer, then do the read, and then do the write
    - CPU stalls less since it restarts as soon as the read completes

Summary of Basic Optimizations

Six basic cache optimizations:

1. Larger block size
   - Reduces compulsory misses
   - Increases capacity and conflict misses, increases miss penalty
2. Larger total cache capacity to reduce miss rate
   - Increases hit time, increases power consumption
3. Higher associativity
   - Reduces conflict misses
   - Increases hit time, increases power consumption
4. Higher number of cache levels
   - Reduces overall memory access time
5. Giving priority to read misses over writes
   - Reduces miss penalty
6. Avoiding address translation in cache indexing (later)
   - Reduces hit time

More advanced optimizations

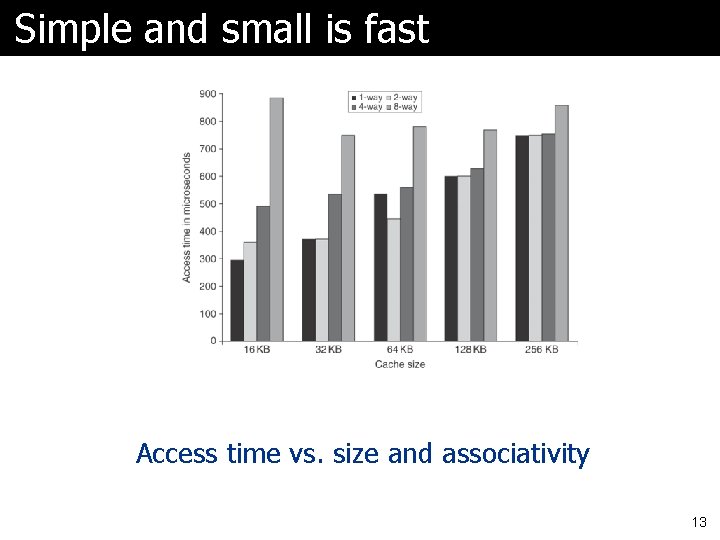

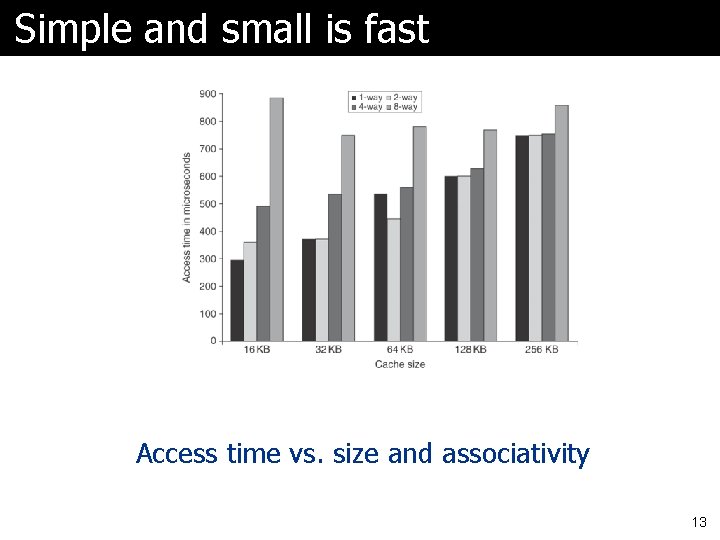

1. Fast Hit Times via Small, Simple Caches

- Simple caches can be faster
  - cache hit time is increasingly a bottleneck to CPU performance
    - set associativity requires complex tag matching → slower
    - direct-mapped caches are simpler → faster → shorter CPU cycle times
      - tag check can be overlapped with transmission of data
- Smaller caches can be faster
  - can fit on the same chip as the CPU
    - avoids the penalty of going off-chip
  - for L2 caches: compromise
    - keep tags on chip, and data off chip
      - fast tag check, yet greater cache capacity
  - L1 data cache reduced from 16 KB in Pentium III to 8 KB in Pentium IV

Simple and small is fast

Figure: access time vs. size and associativity

Simple and small is energy-efficient

Figure: energy per read vs. size and associativity

2. Way Prediction

- Way prediction to improve hit time
  - Goal: reduce conflict misses, yet maintain the hit speed of a direct-mapped cache
  - Approach: keep extra bits to predict the "way" within the set
    - the output multiplexor is pre-set to select the desired block
    - if the block is the correct one, fast hit time of 1 clock cycle
    - if the block isn't correct, check other blocks in the 2nd clock cycle
  - Mis-prediction gives longer hit time
- Prediction accuracy
  - > 90% for two-way
  - > 80% for four-way
    - I-cache has better accuracy than D-cache
  - First used on MIPS R10000 in the mid-90s
  - Used on ARM Cortex-A8
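As a rough worked example of what these accuracies mean for hit time, assume (as the slide states) that a correctly predicted hit takes 1 cycle and a mispredicted hit takes 2 cycles. The symbol $p$ for prediction accuracy is introduced here for illustration only.

$$t_{\text{hit}} = p \cdot 1 + (1 - p) \cdot 2 = 2 - p \ \text{cycles}$$

Under that assumption, $p = 0.9$ (two-way) gives an average hit time of about 1.1 cycles, and $p = 0.8$ (four-way) gives about 1.2 cycles.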

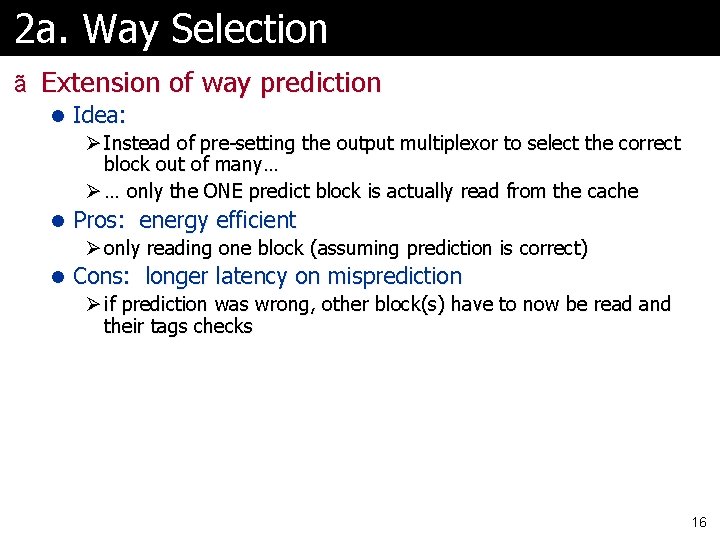

2a. Way Selection

- Extension of way prediction
  - Idea:
    - Instead of pre-setting the output multiplexor to select the correct block out of many…
    - … only the ONE predicted block is actually read from the cache
  - Pros: energy efficient
    - only one block is read (assuming the prediction is correct)
  - Cons: longer latency on misprediction
    - if the prediction was wrong, the other block(s) now have to be read and their tags checked

3. Pipelining Cache

- Pipeline cache access to improve bandwidth
  - For faster clock cycle time:
    - allow L1 hit time to be multiple clock cycles (instead of 1 cycle)
    - make the cache pipelined, so it still has high bandwidth
  - Examples:
    - Pentium: 1 cycle
    - Pentium Pro through Pentium III: 2 cycles
    - Pentium 4 through Core i7: 4 cycles
- Cons:
  - increases the number of pipeline stages for an instruction
    - longer branch mis-prediction penalty
    - more clock cycles between "load" and receiving the data
- Pros:
  - allows faster clock rate for the processor
  - makes it easier to increase associativity

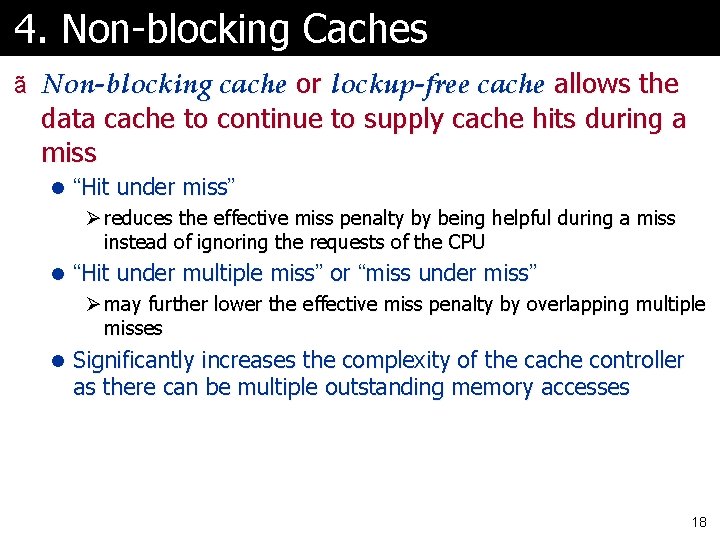

4. Non-blocking Caches

- A non-blocking cache (or lockup-free cache) allows the data cache to continue to supply cache hits during a miss
  - "Hit under miss"
    - reduces the effective miss penalty by being helpful during a miss instead of ignoring the requests of the CPU
  - "Hit under multiple miss" or "miss under miss"
    - may further lower the effective miss penalty by overlapping multiple misses
  - Significantly increases the complexity of the cache controller, as there can be multiple outstanding memory accesses

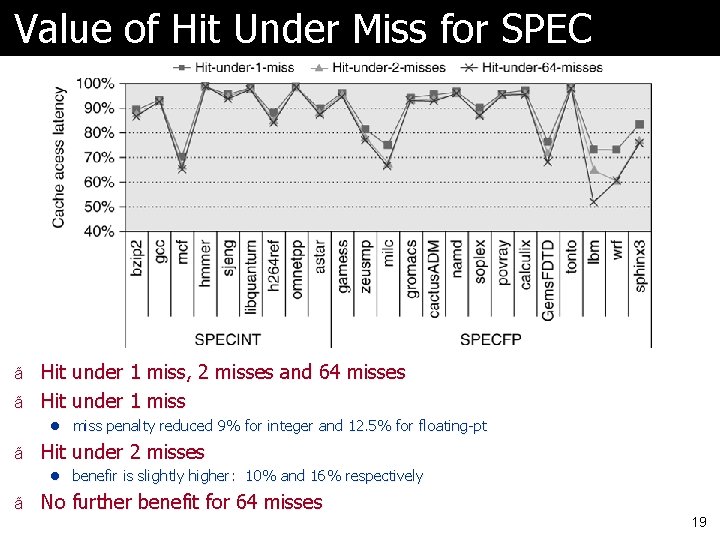

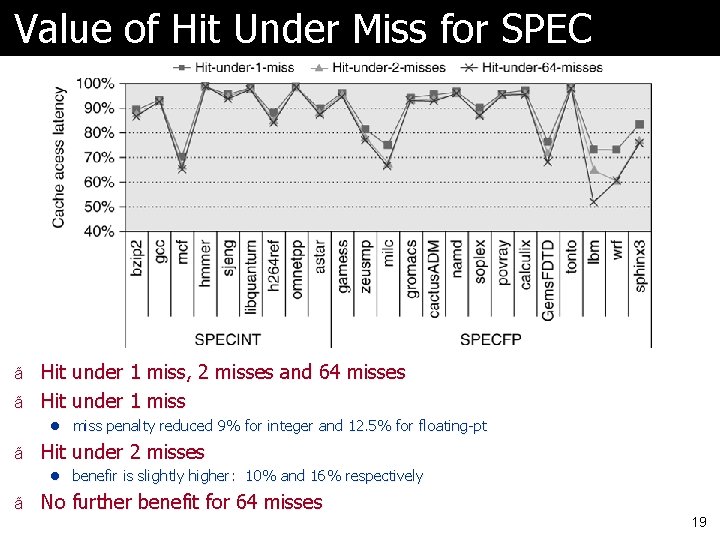

Value of Hit Under Miss for SPEC

- Hit under 1 miss, 2 misses and 64 misses
- Hit under 1 miss
  - miss penalty reduced 9% for integer and 12.5% for floating-point
- Hit under 2 misses
  - benefit is slightly higher: 10% and 16% respectively
- No further benefit for 64 misses

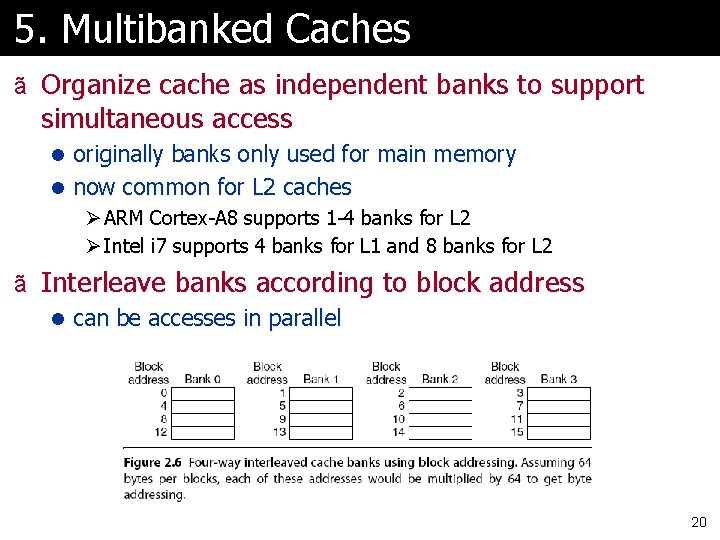

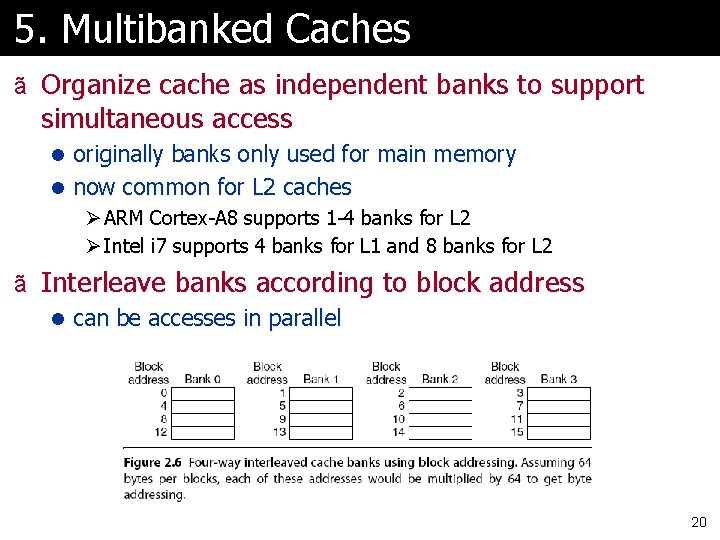

5. Multibanked Caches

- Organize the cache as independent banks to support simultaneous access
  - originally banks were only used for main memory
  - now common for L2 caches
    - ARM Cortex-A8 supports 1-4 banks for L2
    - Intel i7 supports 4 banks for L1 and 8 banks for L2
- Interleave banks according to block address
  - banks can be accessed in parallel
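Interleaving by block address can be sketched as a simple modulo mapping. This is a minimal illustration of sequential interleaving; the function name and its parameters are assumptions for the example, and real designs may use other bit selections or hashing.

```c
#include <assert.h>
#include <stdint.h>

/* Sequential interleaving: consecutive block addresses map to
   consecutive banks, so neighbouring blocks can be accessed in parallel. */
unsigned bank_of(uint64_t byte_addr, unsigned block_bytes, unsigned num_banks)
{
    assert(block_bytes > 0 && num_banks > 0);
    uint64_t block_addr = byte_addr / block_bytes; /* strip the block offset */
    return (unsigned)(block_addr % num_banks);     /* spread blocks over banks */
}
```

With 64-byte blocks and 4 banks, byte addresses 0, 64, 128, and 192 fall in banks 0, 1, 2, and 3, and address 256 wraps back to bank 0.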

6. Early Restart and Critical Word First

- Don't wait for the full block to be loaded before restarting the CPU
  - Early Restart: as soon as the requested word of the block arrives, send it to the CPU and let the CPU continue execution
  - Critical Word First: request the missed word first from memory and send it to the CPU as soon as it arrives
    - let the CPU continue while the rest of the words in the block are filled
    - also called "wrapped fetch" and "requested word first"
- Generally useful only with large blocks
- Spatial locality is a problem
  - programs tend to want the next sequential word, so it is not clear how much early restart helps
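The "wrapped fetch" order can be sketched as follows: start at the missed word and wrap around to the beginning of the block. The function name and signature are hypothetical, chosen only for this illustration.

```c
#include <assert.h>

/* Fill order[] with the word indices of a block in wrapped-fetch order,
   starting from the critical (missed) word and wrapping around.
   order[] must have room for words_per_block entries. */
void wrapped_fetch_order(unsigned words_per_block, unsigned critical_word,
                         unsigned order[])
{
    assert(words_per_block > 0 && critical_word < words_per_block);
    for (unsigned n = 0; n < words_per_block; n++)
        order[n] = (critical_word + n) % words_per_block;
}
```

For an 8-word block with a miss on word 5, the words are requested in the order 5, 6, 7, 0, 1, 2, 3, 4, so the CPU can restart after the first transfer.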

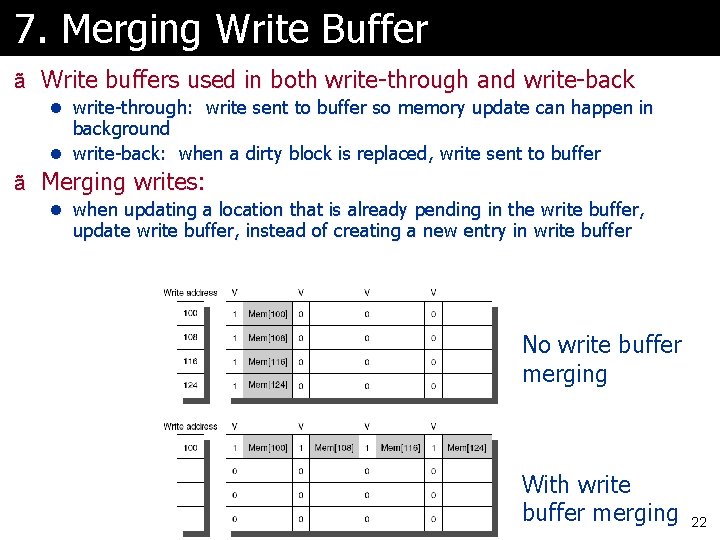

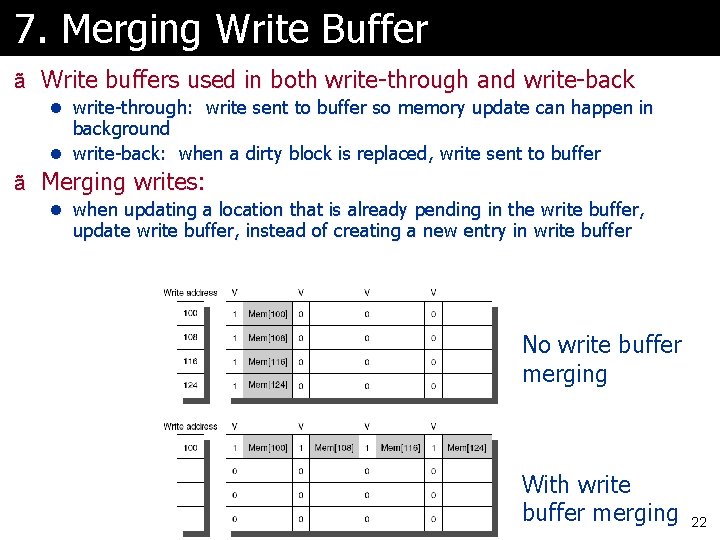

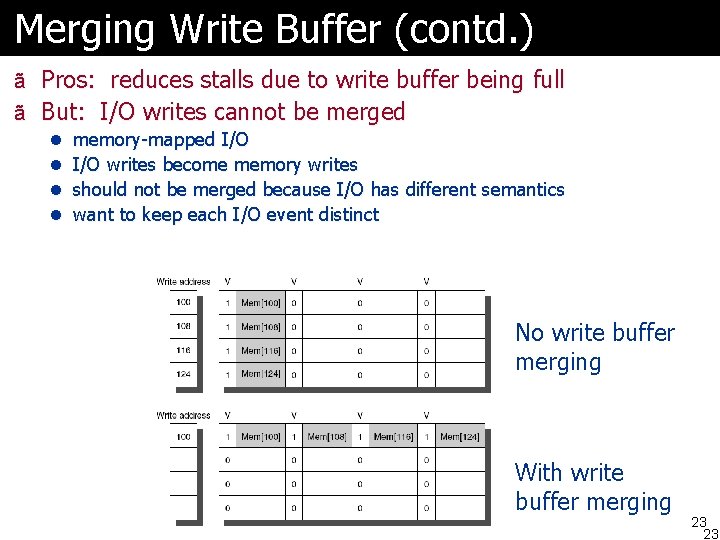

7. Merging Write Buffer

- Write buffers are used in both write-through and write-back caches
  - write-through: write sent to buffer so memory update can happen in the background
  - write-back: when a dirty block is replaced, write sent to buffer
- Merging writes:
  - when updating a location that is already pending in the write buffer, update the write buffer instead of creating a new entry in it

Figure: no write buffer merging vs. with write buffer merging

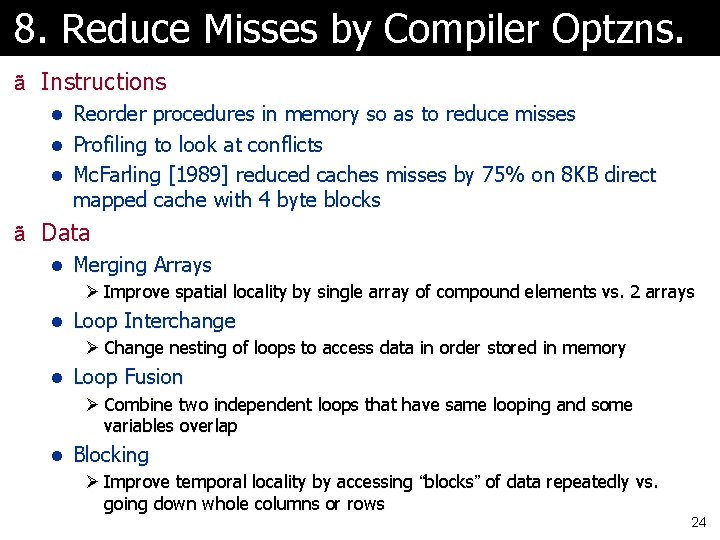

Merging Write Buffer (contd.)

- Pros: reduces stalls due to the write buffer being full
- But: I/O writes cannot be merged
  - memory-mapped I/O
  - I/O writes become memory writes
  - should not be merged because I/O has different semantics
  - want to keep each I/O event distinct

Figure: no write buffer merging vs. with write buffer merging
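The difference between the two buffer organizations can be sketched in C. This is a simplified model under stated assumptions: the buffer depth, the entry width (four aligned 8-byte words), and all names are hypothetical, and draining entries to memory is omitted.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define WB_ENTRIES      4  /* hypothetical buffer depth */
#define WORDS_PER_ENTRY 4  /* hypothetical: 4 aligned 8-byte words per entry */

struct wb_entry {
    bool     used;
    uint64_t base;                   /* address of the aligned 32-byte region */
    bool     valid[WORDS_PER_ENTRY]; /* per-word valid bits */
    uint64_t data[WORDS_PER_ENTRY];
};

struct write_buffer {
    bool            merging;         /* false models a non-merging buffer */
    struct wb_entry e[WB_ENTRIES];
};

/* Returns true if the write was accepted, false if the buffer is full
   (in which case the CPU would have to stall). */
bool wb_write(struct write_buffer *wb, uint64_t addr, uint64_t value)
{
    assert(addr % 8 == 0);
    uint64_t base = addr & ~(uint64_t)(8 * WORDS_PER_ENTRY - 1);
    unsigned word = (unsigned)((addr - base) / 8);

    if (wb->merging) {
        /* Merge into a pending entry that covers the same region. */
        for (int i = 0; i < WB_ENTRIES; i++) {
            if (wb->e[i].used && wb->e[i].base == base) {
                wb->e[i].valid[word] = true;
                wb->e[i].data[word] = value;
                return true;
            }
        }
    }
    /* Otherwise allocate a fresh entry, if one is free. */
    for (int i = 0; i < WB_ENTRIES; i++) {
        if (!wb->e[i].used) {
            wb->e[i] = (struct wb_entry){ .used = true, .base = base };
            wb->e[i].valid[word] = true;
            wb->e[i].data[word] = value;
            return true;
        }
    }
    return false;
}

/* Number of occupied entries. */
int wb_used_count(const struct write_buffer *wb)
{
    int n = 0;
    for (int i = 0; i < WB_ENTRIES; i++)
        n += wb->e[i].used ? 1 : 0;
    return n;
}
```

In this model, four sequential 8-byte writes to one aligned region occupy a single entry when `merging` is true but fill all four entries when it is false, so a fifth write stalls only in the non-merging case. Writes to memory-mapped I/O would be issued with merging disabled so that each I/O event stays distinct.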

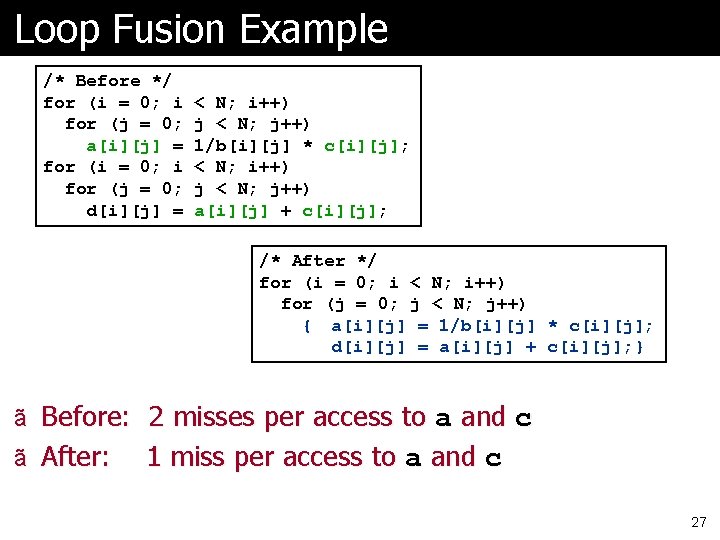

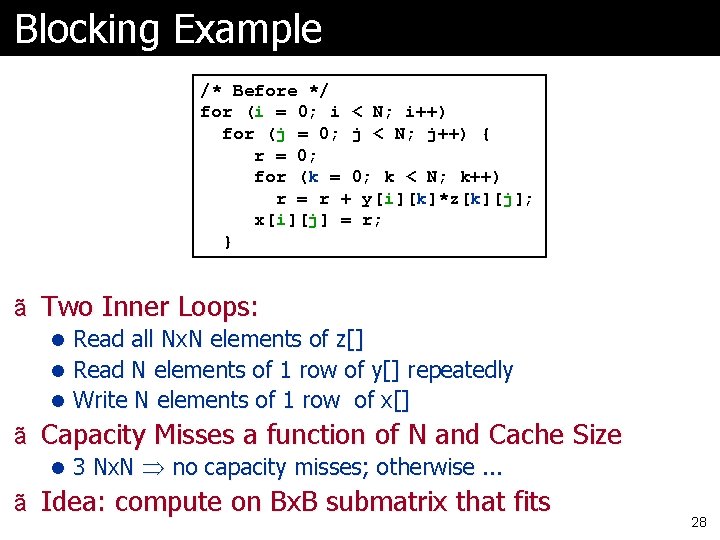

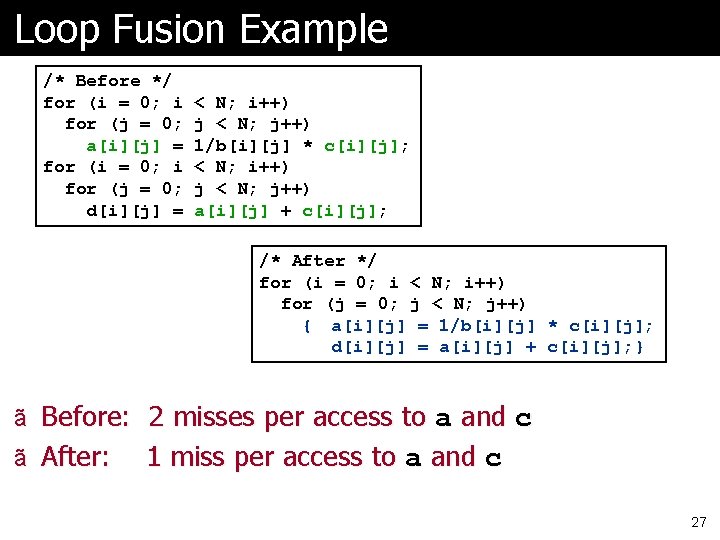

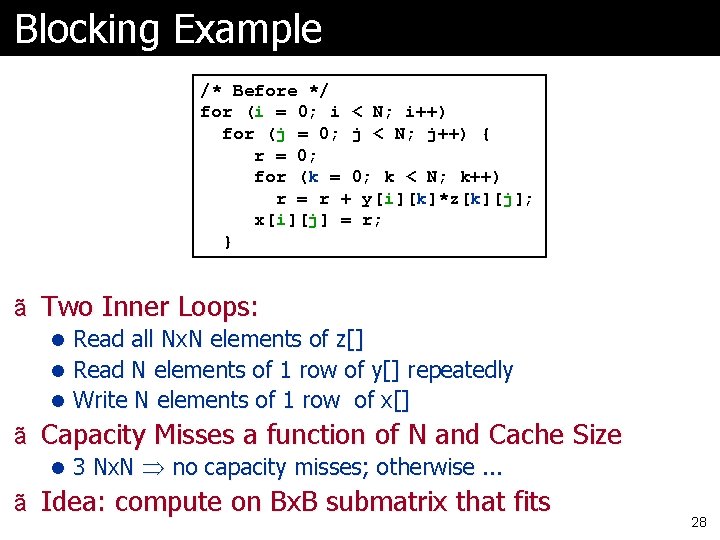

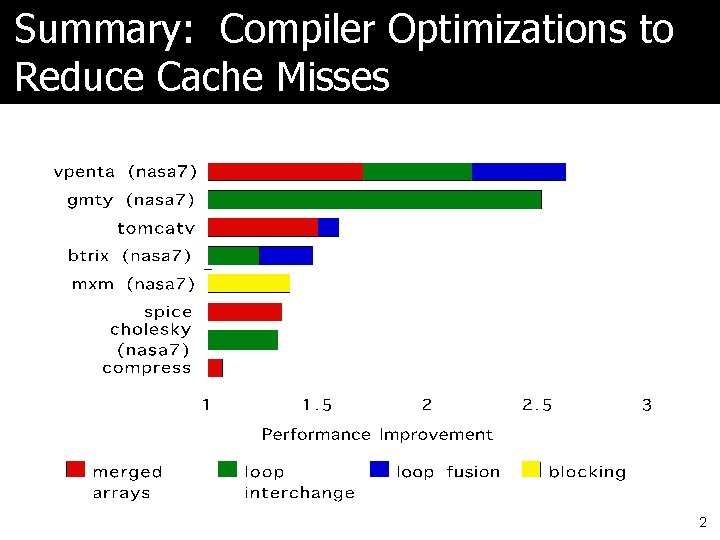

8. Reduce Misses by Compiler Optimizations

- Instructions
  - Reorder procedures in memory so as to reduce misses
  - Profiling to look at conflicts
  - McFarling [1989] reduced cache misses by 75% on an 8 KB direct mapped cache with 4-byte blocks
- Data
  - Merging Arrays
    - Improve spatial locality by using a single array of compound elements vs. 2 arrays
  - Loop Interchange
    - Change nesting of loops to access data in the order stored in memory
  - Loop Fusion
    - Combine two independent loops that have the same looping and some overlapping variables
  - Blocking
    - Improve temporal locality by accessing "blocks" of data repeatedly vs. going down whole columns or rows

![Merging Arrays Example](https://slidetodoc.com/presentation_image/134e0c5b3621f95d773a548f6bbb87b4/image-25.jpg)

Merging Arrays Example

    /* Before: two parallel arrays */
    int val[SIZE];
    int key[SIZE];

    /* After: one array of structures */
    struct merge {
        int val;
        int key;
    };
    struct merge merged_array[SIZE];

- Reduces conflicts between val and key
- Addressing expressions are different

Loop Interchange Example

    /* Before */
    for (k = 0; k < 100; k++)
        for (j = 0; j < 100; j++)
            for (i = 0; i < 5000; i++)
                x[i][j] = 2 * x[i][j];

    /* After */
    for (k = 0; k < 100; k++)
        for (i = 0; i < 5000; i++)
            for (j = 0; j < 100; j++)
                x[i][j] = 2 * x[i][j];

- Sequential accesses instead of striding through memory every 100 words

Loop Fusion Example

    /* Before */
    for (i = 0; i < N; i++)
        for (j = 0; j < N; j++)
            a[i][j] = 1/b[i][j] * c[i][j];
    for (i = 0; i < N; i++)
        for (j = 0; j < N; j++)
            d[i][j] = a[i][j] + c[i][j];

    /* After */
    for (i = 0; i < N; i++)
        for (j = 0; j < N; j++) {
            a[i][j] = 1/b[i][j] * c[i][j];
            d[i][j] = a[i][j] + c[i][j];
        }

- Before: 2 misses per access to a and c
- After: 1 miss per access to a and c

Blocking Example

    /* Before */
    for (i = 0; i < N; i++)
        for (j = 0; j < N; j++) {
            r = 0;
            for (k = 0; k < N; k++)
                r = r + y[i][k]*z[k][j];
            x[i][j] = r;
        }

- Two inner loops:
  - Read all N×N elements of z[]
  - Read N elements of 1 row of y[] repeatedly
  - Write N elements of 1 row of x[]
- Capacity misses are a function of N and cache size
  - if the cache can hold all 3 N×N matrices: no capacity misses; otherwise ...
- Idea: compute on a B×B submatrix that fits

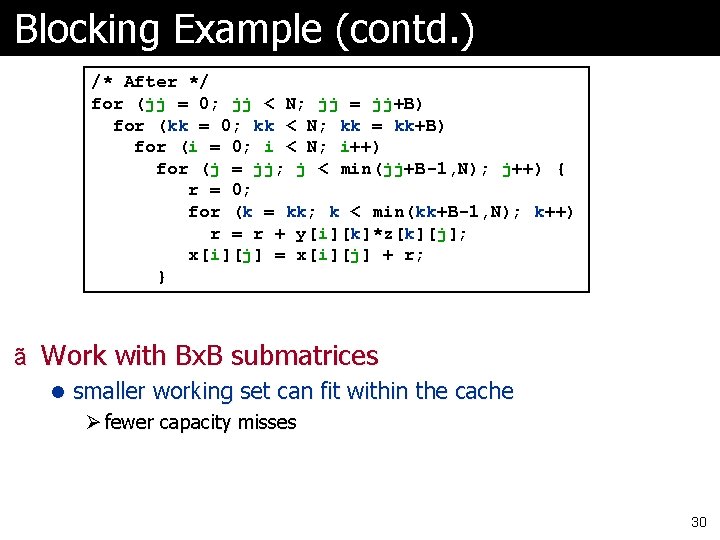

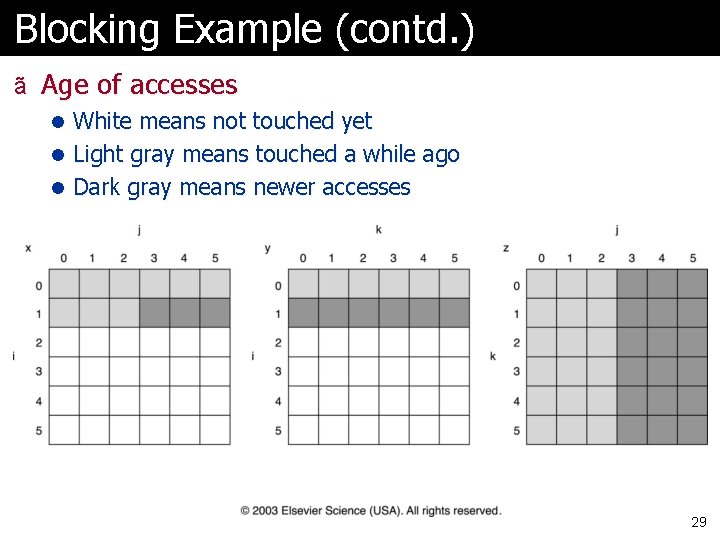

Blocking Example (contd.)

- Age of accesses
  - White means not touched yet
  - Light gray means touched a while ago
  - Dark gray means newer accesses

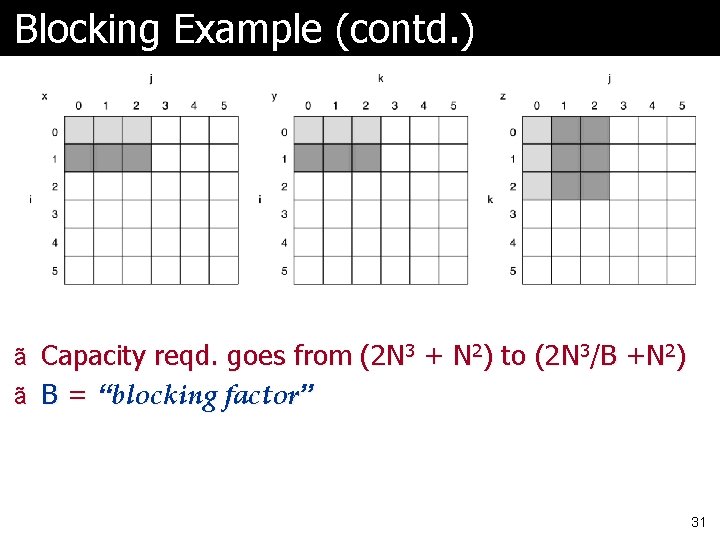

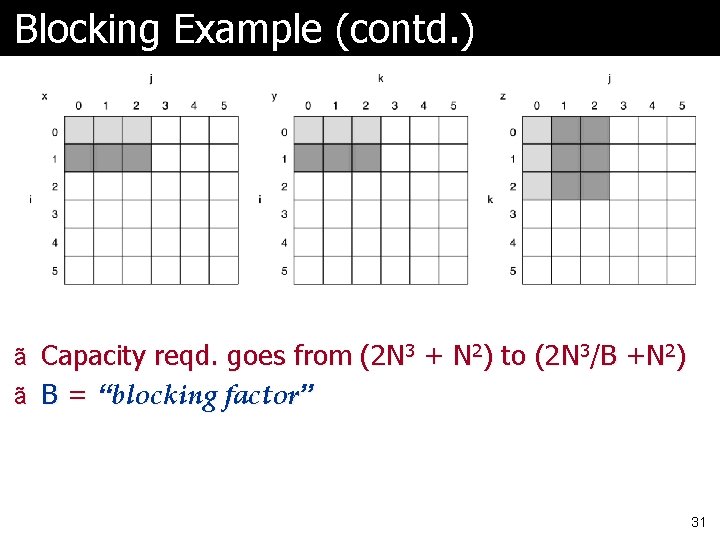

Blocking Example (contd.)

    /* After */
    /* x[][] must be zeroed beforehand: each (jj, kk) pass adds a partial sum */
    for (jj = 0; jj < N; jj = jj+B)
        for (kk = 0; kk < N; kk = kk+B)
            for (i = 0; i < N; i++)
                for (j = jj; j < min(jj+B, N); j++) {
                    r = 0;
                    for (k = kk; k < min(kk+B, N); k++)
                        r = r + y[i][k]*z[k][j];
                    x[i][j] = x[i][j] + r;
                }

- Work with B×B submatrices
  - smaller working set can fit within the cache
    - fewer capacity misses
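The blocked loop nest can be checked against the unblocked version with a small self-contained sketch. The matrix size `N`, the helper `min_int`, and the function names are assumptions made for this example; the blocking factor is passed as a runtime parameter `b` so that values that do not divide `N` can be tried.

```c
#include <assert.h>

#define N 6  /* small illustrative matrix size */

static int min_int(int a, int b) { return a < b ? a : b; }

/* x = y * z using the straightforward i-j-k loop nest. */
void matmul_naive(double x[N][N], double y[N][N], double z[N][N])
{
    for (int i = 0; i < N; i++)
        for (int j = 0; j < N; j++) {
            double r = 0.0;
            for (int k = 0; k < N; k++)
                r += y[i][k] * z[k][j];
            x[i][j] = r;
        }
}

/* x = y * z, working on b-by-b submatrices of z at a time. */
void matmul_blocked(double x[N][N], double y[N][N], double z[N][N], int b)
{
    assert(b > 0);

    /* x accumulates partial sums across kk passes, so clear it first. */
    for (int i = 0; i < N; i++)
        for (int j = 0; j < N; j++)
            x[i][j] = 0.0;

    for (int jj = 0; jj < N; jj += b)
        for (int kk = 0; kk < N; kk += b)
            for (int i = 0; i < N; i++)
                for (int j = jj; j < min_int(jj + b, N); j++) {
                    double r = 0.0;
                    for (int k = kk; k < min_int(kk + b, N); k++)
                        r += y[i][k] * z[k][j];
                    x[i][j] += r;
                }
}
```

The upper bounds use `min_int(jj + b, N)` with a strict `<` so that every column and every `k` index is visited exactly once, including the partial block at the edge when `b` does not divide `N`.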

Blocking Example (contd.)

- Capacity misses (worst-case memory words accessed) go from $2N^3 + N^2$ to $2N^3/B + N^2$
- B = "blocking factor"

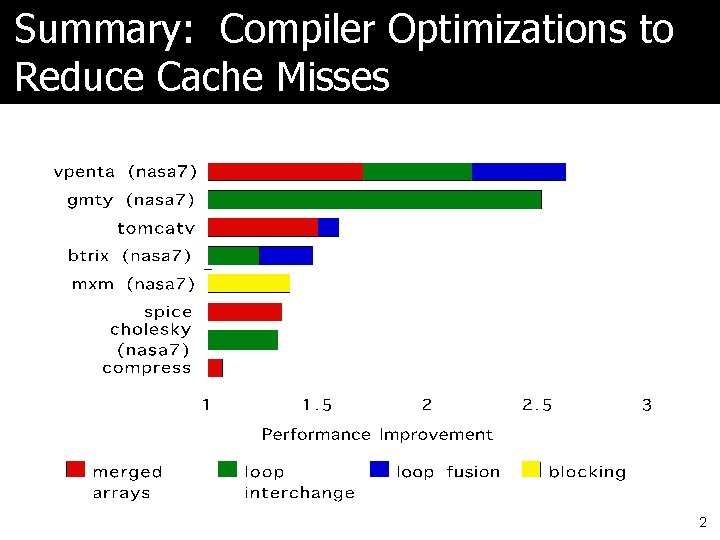

Summary: Compiler Optimizations to Reduce Cache Misses

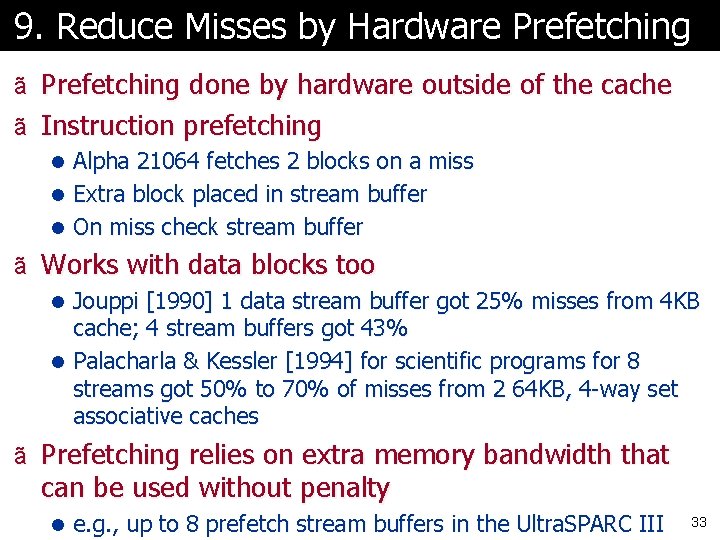

9. Reduce Misses by Hardware Prefetching

- Prefetching done by hardware outside of the cache
- Instruction prefetching
  - Alpha 21064 fetches 2 blocks on a miss
  - Extra block placed in a stream buffer
  - On a miss, check the stream buffer
- Works with data blocks too
  - Jouppi [1990]: 1 data stream buffer caught 25% of the misses from a 4 KB cache; 4 stream buffers caught 43%
  - Palacharla & Kessler [1994]: for scientific programs, 8 streams caught 50% to 70% of the misses from two 64 KB, 4-way set associative caches
- Prefetching relies on extra memory bandwidth that can be used without penalty
  - e.g., up to 8 prefetch stream buffers in the UltraSPARC III

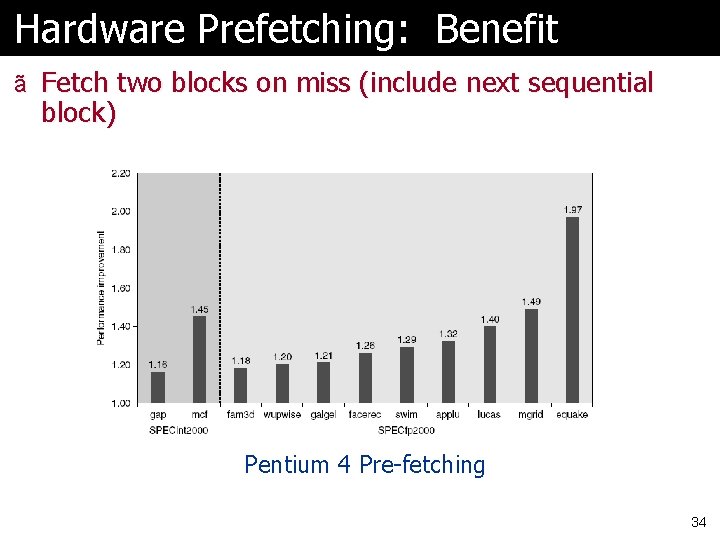

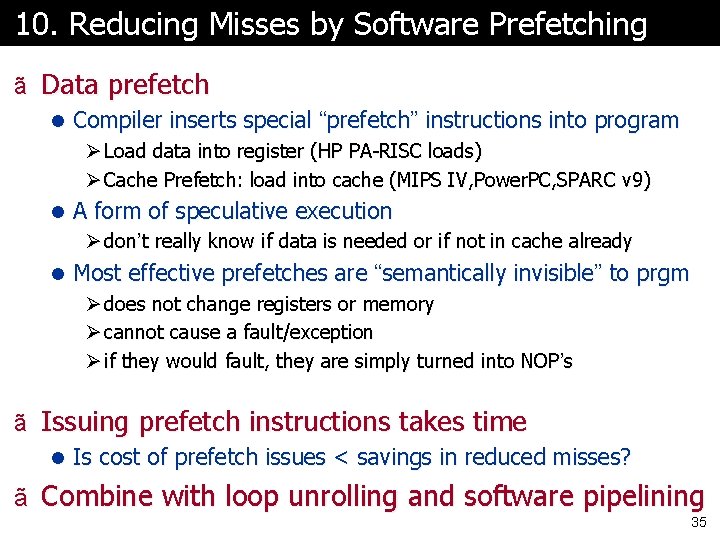

Hardware Prefetching: Benefit

- Fetch two blocks on a miss (include the next sequential block)

Figure: Pentium 4 pre-fetching

10. Reducing Misses by Software Prefetching

- Data prefetch
  - Compiler inserts special "prefetch" instructions into the program
    - Load data into register (HP PA-RISC loads)
    - Cache prefetch: load into cache (MIPS IV, PowerPC, SPARC v9)
  - A form of speculative execution
    - don't really know if the data is needed or if it is already in the cache
  - Most effective prefetches are "semantically invisible" to the program
    - do not change registers or memory
    - cannot cause a fault/exception
    - if they would fault, they are simply turned into NOPs
- Issuing prefetch instructions takes time
  - Is the cost of issuing prefetches < the savings from reduced misses?
- Combine with loop unrolling and software pipelining
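A cache prefetch of this kind can be written by hand in C. The sketch below assumes GCC or Clang, whose `__builtin_prefetch` builtin emits a non-faulting prefetch hint where the target supports one; the function name and the prefetch distance are hypothetical and would need tuning on a real machine.

```c
#include <assert.h>
#include <stddef.h>

#define PREFETCH_DISTANCE 16  /* hypothetical look-ahead, in elements */

/* Sum an array while hinting that elements a fixed distance ahead
   will be read soon. Requires GCC or Clang for __builtin_prefetch. */
double sum_with_prefetch(const double *a, size_t n)
{
    assert(a != NULL || n == 0);
    double sum = 0.0;
    for (size_t i = 0; i < n; i++) {
        /* Arguments: address, 0 = prefetch for read, 1 = low temporal locality. */
        if (i + PREFETCH_DISTANCE < n)
            __builtin_prefetch(&a[i + PREFETCH_DISTANCE], 0, 1);
        sum += a[i];
    }
    return sum;
}
```

The prefetch changes no registers or memory visible to the program, so the result is the same as a plain summation loop; only the timing may differ. Issuing one prefetch per element is wasteful when several elements share a cache block, which is one reason the slide suggests combining prefetching with loop unrolling.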

A couple other optimizations

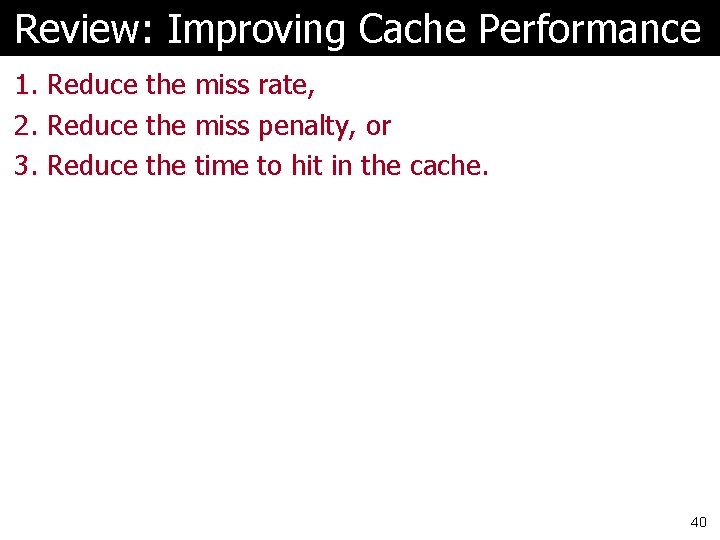

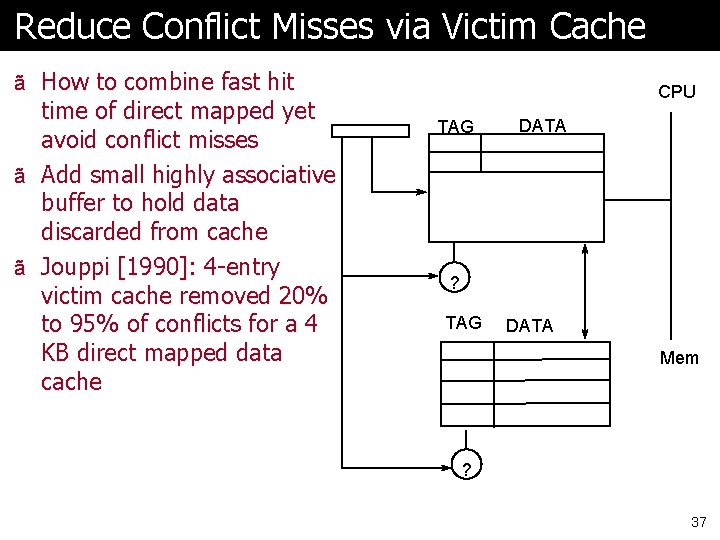

Reduce Conflict Misses via Victim Cache

- How to combine the fast hit time of direct mapped, yet avoid conflict misses?
- Add a small, highly associative buffer to hold data discarded from the cache
- Jouppi [1990]: a 4-entry victim cache removed 20% to 95% of conflicts for a 4 KB direct mapped data cache

Figure: victim cache organization (CPU, tag and data arrays, memory)

Reduce Conflict Misses via Pseudo-Associativity

- How to combine the fast hit time of direct mapped and have the lower conflict misses of a 2-way SA cache?
- Divide the cache: on a miss, check the other half of the cache to see if the block is there; if so, have a pseudo-hit (slow hit)
- Drawback: CPU pipeline design is hard if a hit can take 1 or 2 cycles
  - Better for caches not tied directly to the processor

Figure: timeline of hit time, pseudo-hit time, and miss penalty

Fetching Subblocks to Reduce Miss Penalty

- Don't have to load the full block on a miss
- Have bits per subblock to indicate which subblocks are valid

Figure: tags 100, 200, 300 with per-subblock valid bits

Review: Improving Cache Performance

1. Reduce the miss rate,
2. Reduce the miss penalty, or
3. Reduce the time to hit in the cache.

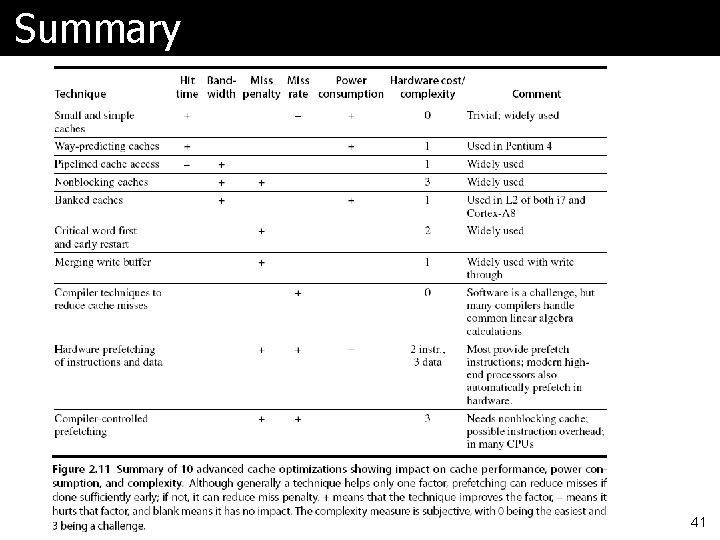

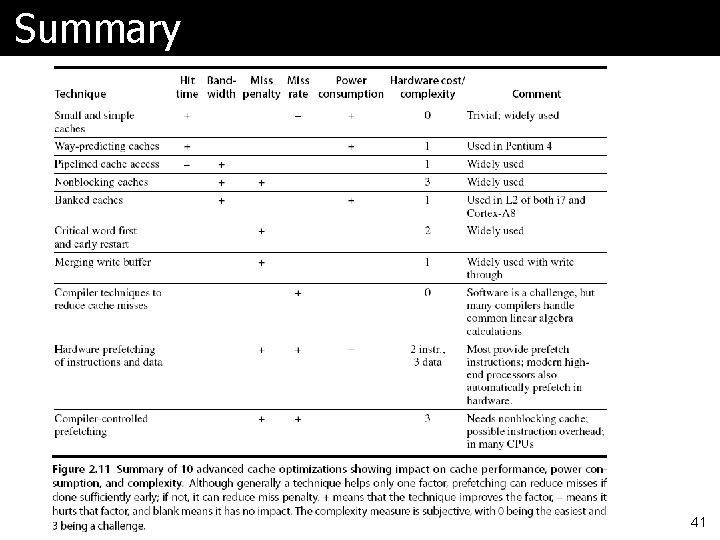

Summary