COMP 206 Computer Architecture and Implementation Montek Singh

- Slides: 18

COMP 206: Computer Architecture and Implementation, Montek Singh, Mon., Aug 30, 2004, Lecture 2

Outline

- Quantitative Principles of Computer Design
  - Amdahl’s law (make the common case fast)
- Performance Metrics
  - MIPS, FLOPS, and all that…
- Examples

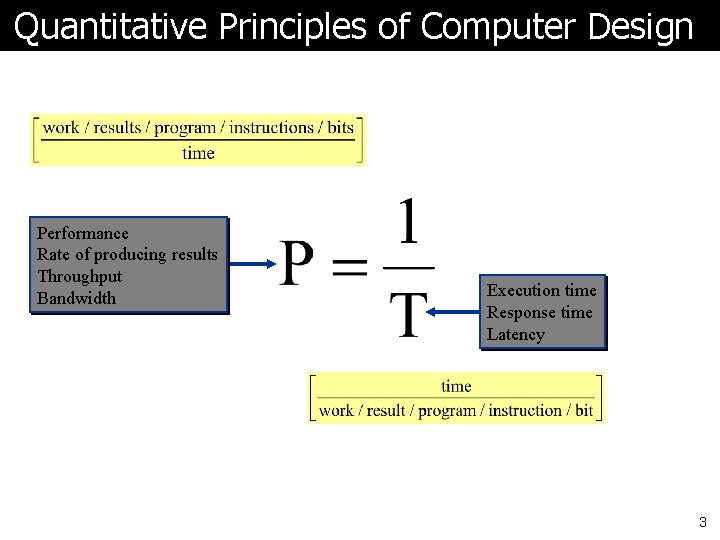

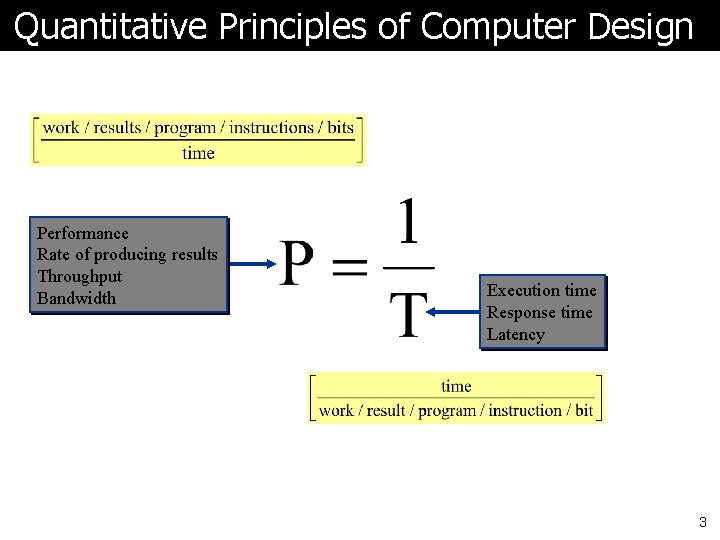

Quantitative Principles of Computer Design

Performance

- Rate of producing results: throughput, bandwidth
- Execution time: response time, latency

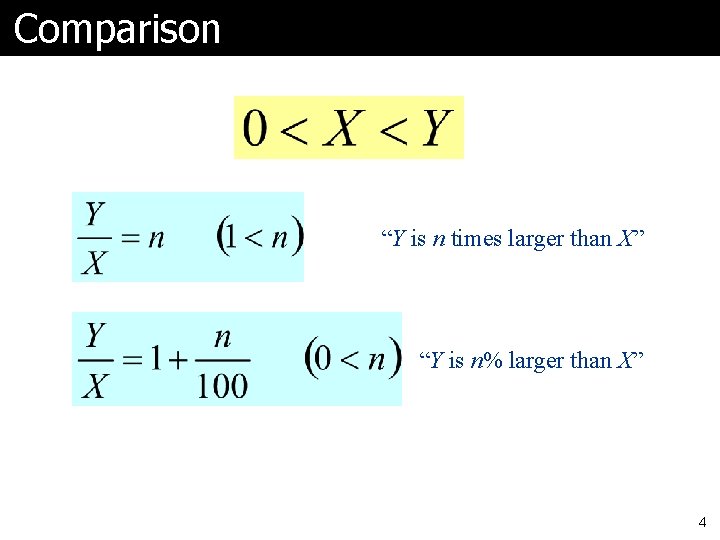

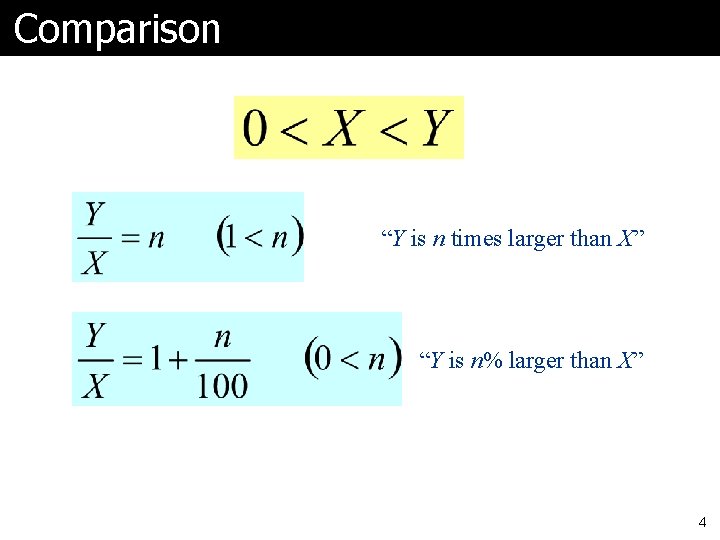

Comparison

- “Y is n times larger than X”
- “Y is n% larger than X”
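The defining equations for these two statements sit in the slide images and did not survive extraction. The sketch below assumes the usual definitions, n = Y / X for "n times larger" and n = 100 (Y - X) / X for "n% larger"; the function names and the sample values are illustrative only.

```python
def times_larger(y: float, x: float) -> float:
    """Return n such that "Y is n times larger than X" (assumed: n = Y / X)."""
    return y / x


def percent_larger(y: float, x: float) -> float:
    """Return n such that "Y is n% larger than X" (assumed: n = 100 * (Y - X) / X)."""
    return 100.0 * (y - x) / x


# Hypothetical values: Y = 15, X = 10
print(times_larger(15, 10))    # 1.5
print(percent_larger(15, 10))  # 50.0
```

Under these definitions, "1.5 times larger" and "50% larger" describe the same pair of values.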

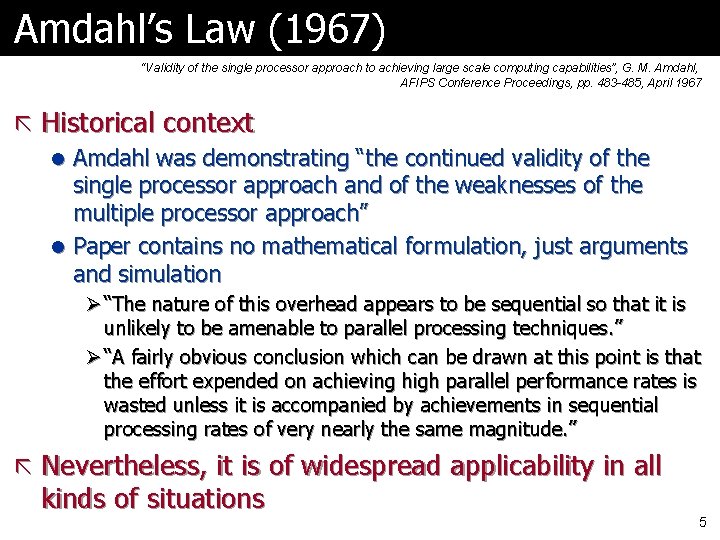

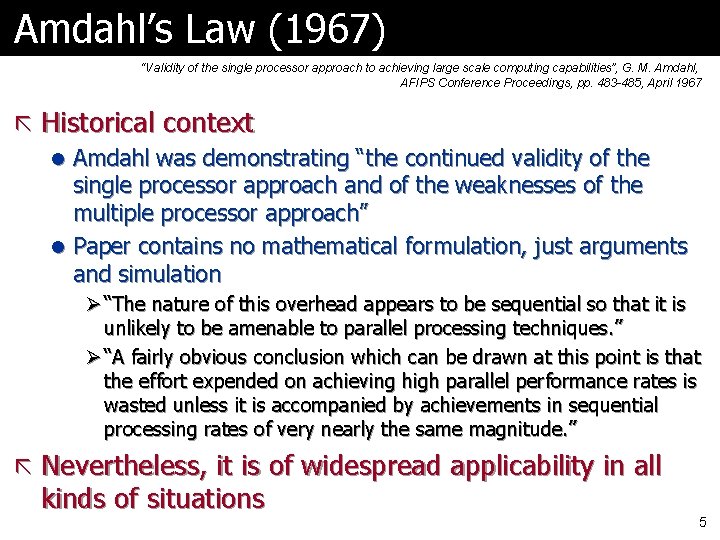

Amdahl’s Law (1967)

“Validity of the single processor approach to achieving large scale computing capabilities”, G. M. Amdahl, AFIPS Conference Proceedings, pp. 483-485, April 1967

- Historical context
  - Amdahl was demonstrating “the continued validity of the single processor approach and of the weaknesses of the multiple processor approach”
  - Paper contains no mathematical formulation, just arguments and simulation
    - “The nature of this overhead appears to be sequential so that it is unlikely to be amenable to parallel processing techniques.”
    - “A fairly obvious conclusion which can be drawn at this point is that the effort expended on achieving high parallel performance rates is wasted unless it is accompanied by achievements in sequential processing rates of very nearly the same magnitude.”
- Nevertheless, it is of widespread applicability in all kinds of situations

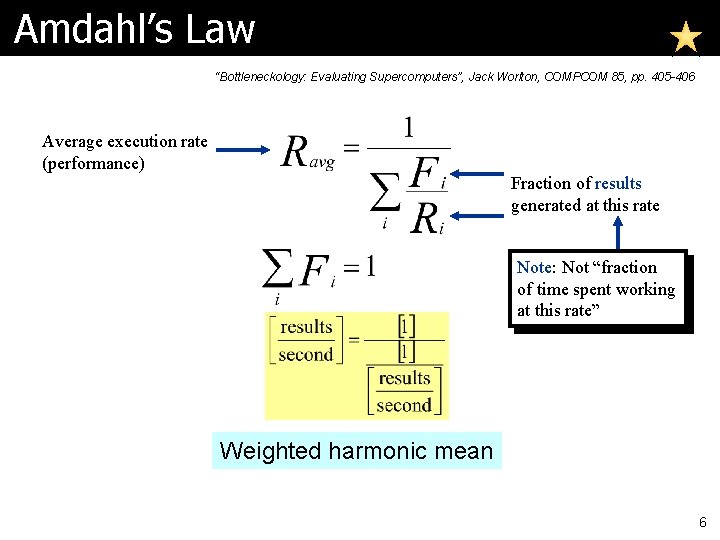

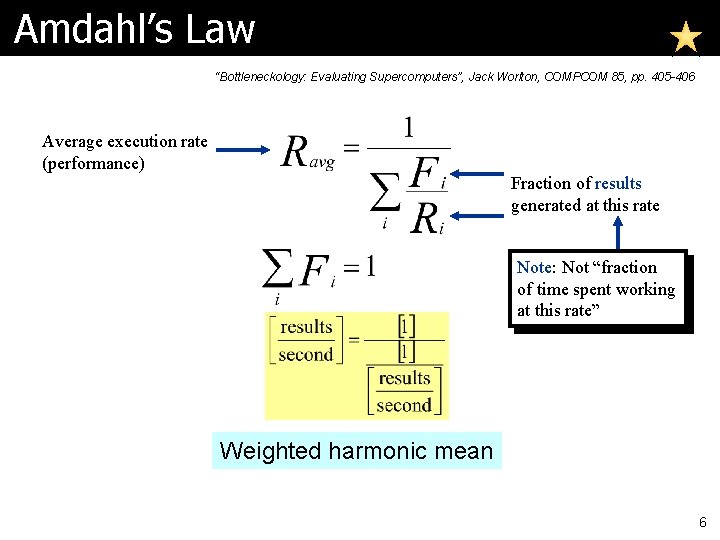

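Amdahl’s Law

“Bottleneckology: Evaluating Supercomputers”, Jack Worlton, COMPCOM 85, pp. 405-406

$$R_{\text{avg}} = \frac{1}{\sum_i \dfrac{f_i}{R_i}}$$

- $R_{\text{avg}}$: average execution rate (performance)
- $f_i$: fraction of results generated at rate $R_i$
  - Note: not “fraction of time spent working at this rate”
- The average rate is the weighted harmonic mean of the individual rates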

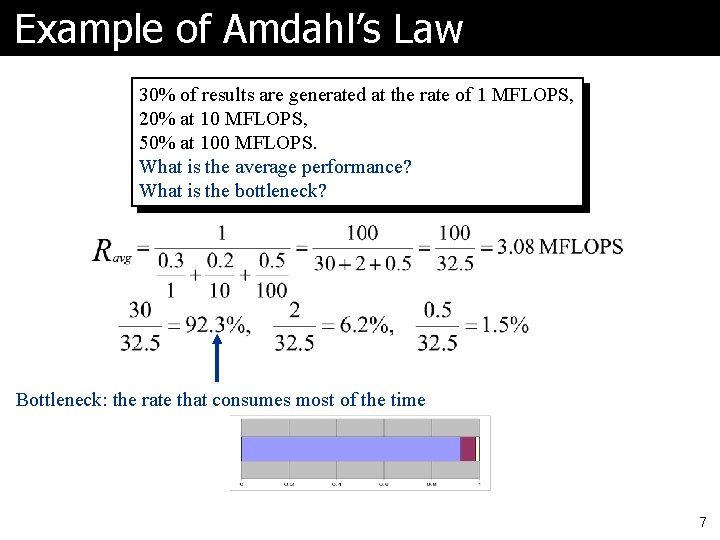

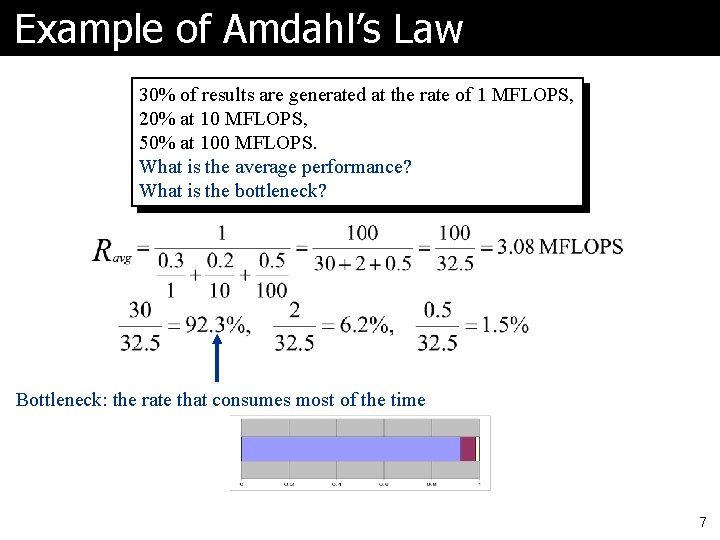

Example of Amdahl’s Law

30% of results are generated at the rate of 1 MFLOPS, 20% at 10 MFLOPS, 50% at 100 MFLOPS. What is the average performance? What is the bottleneck?

Bottleneck: the rate that consumes most of the time
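The example can be worked through numerically. The sketch below assumes the weighted-harmonic-mean form of Amdahl's Law (average rate = 1 / sum of fraction / rate) and takes the bottleneck to be the rate with the largest share of execution time; the function names are illustrative.

```python
def average_rate(fractions, rates):
    """Weighted harmonic mean: fractions are fractions of RESULTS, not of time."""
    return 1.0 / sum(f / r for f, r in zip(fractions, rates))


def time_shares(fractions, rates):
    """Share of total execution time spent at each rate."""
    times = [f / r for f, r in zip(fractions, rates)]
    total = sum(times)
    return [t / total for t in times]


fractions = [0.30, 0.20, 0.50]   # fraction of results at each rate
rates = [1.0, 10.0, 100.0]       # MFLOPS

avg = average_rate(fractions, rates)
shares = time_shares(fractions, rates)
bottleneck = rates[shares.index(max(shares))]

print(f"average rate: {avg:.2f} MFLOPS")
for r, s in zip(rates, shares):
    print(f"{r:6.1f} MFLOPS takes {100 * s:5.1f}% of the time")
print(f"bottleneck: the {bottleneck:g} MFLOPS rate")
```

The average comes out at roughly 3.08 MFLOPS, and the 1 MFLOPS rate accounts for about 92% of the time even though it produces only 30% of the results.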

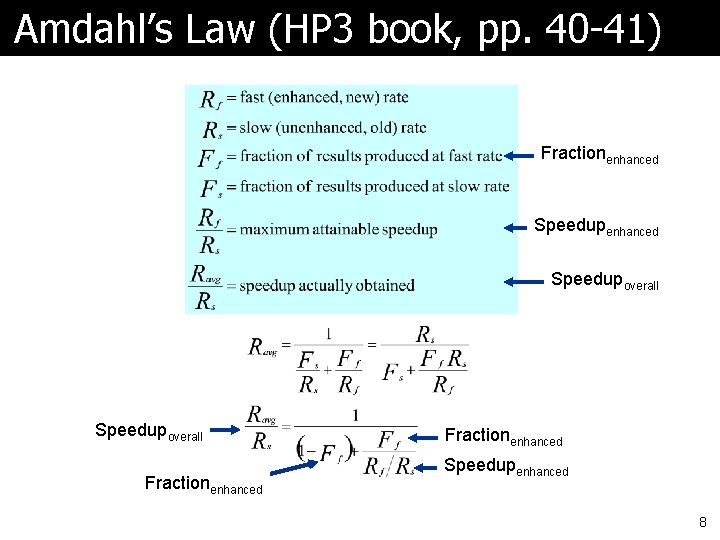

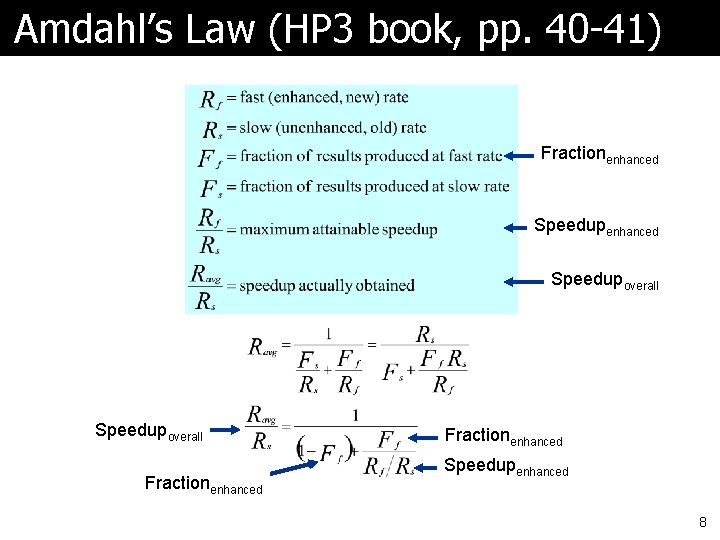

Amdahl’s Law (HP 3 book, pp. 40-41)

$$\text{Speedup}_{\text{overall}} = \frac{1}{(1 - \text{Fraction}_{\text{enhanced}}) + \dfrac{\text{Fraction}_{\text{enhanced}}}{\text{Speedup}_{\text{enhanced}}}}$$
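As a minimal sketch of this form of the law, the function below evaluates the overall speedup for a given enhanced fraction and enhancement speedup. The function name and the sample values (40% of the original execution time sped up 10 times) are illustrative assumptions, not taken from the slide.

```python
def overall_speedup(fraction_enhanced: float, speedup_enhanced: float) -> float:
    """Overall speedup when a fraction of the original execution time is sped up."""
    if not 0.0 <= fraction_enhanced <= 1.0:
        raise ValueError("fraction_enhanced must lie in [0, 1]")
    if speedup_enhanced <= 0.0:
        raise ValueError("speedup_enhanced must be positive")
    unenhanced_time = 1.0 - fraction_enhanced             # part left untouched
    enhanced_time = fraction_enhanced / speedup_enhanced  # part that gets faster
    return 1.0 / (unenhanced_time + enhanced_time)


# Hypothetical values: 40% of the time benefits from a 10x enhancement
print(overall_speedup(0.4, 10.0))  # roughly 1.56
```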

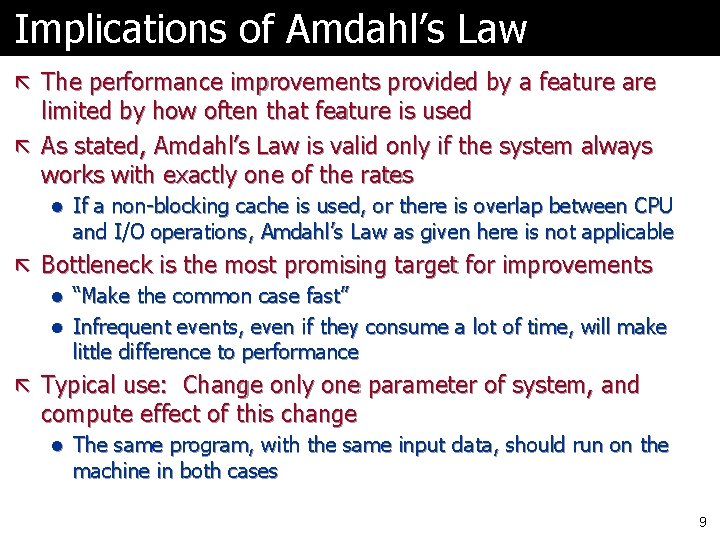

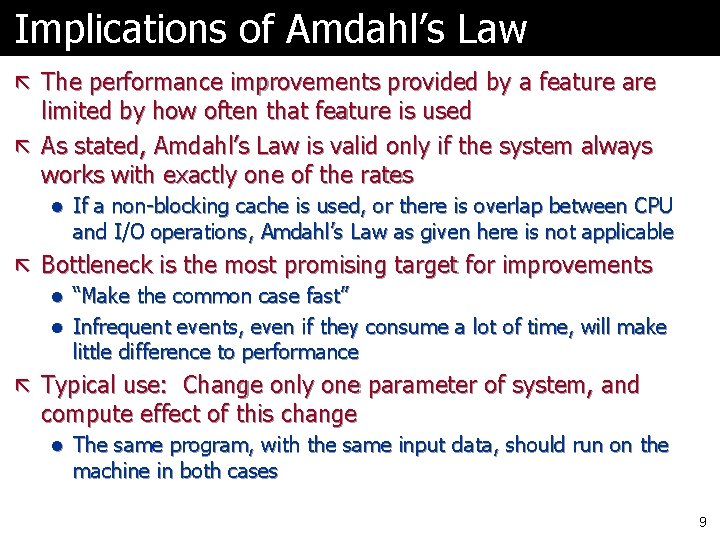

Implications of Amdahl’s Law

- The performance improvements provided by a feature are limited by how often that feature is used
- As stated, Amdahl’s Law is valid only if the system always works with exactly one of the rates
  - If a non-blocking cache is used, or there is overlap between CPU and I/O operations, Amdahl’s Law as given here is not applicable
- Bottleneck is the most promising target for improvements
  - “Make the common case fast”
  - Infrequent events, even if they consume a lot of time, will make little difference to performance
- Typical use: Change only one parameter of system, and compute effect of this change
  - The same program, with the same input data, should run on the machine in both cases
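The first implication can be illustrated numerically: however large the enhancement, the overall speedup stays below 1 / (1 - fraction enhanced). The sketch below assumes the speedup form of Amdahl's Law; the 40% fraction and the list of enhancement speedups are hypothetical.

```python
def overall_speedup(fraction_enhanced: float, speedup_enhanced: float) -> float:
    """Amdahl's Law in its speedup form."""
    return 1.0 / ((1.0 - fraction_enhanced) + fraction_enhanced / speedup_enhanced)


def speedup_limit(fraction_enhanced: float) -> float:
    """Upper bound on overall speedup as the enhancement becomes infinitely fast."""
    return 1.0 / (1.0 - fraction_enhanced)


FRACTION = 0.4  # hypothetical: the feature is used 40% of the time

for s in (2, 10, 100, 1000):
    print(f"enhancement {s:5d}x -> overall {overall_speedup(FRACTION, s):.4f}x")
print(f"limit: {speedup_limit(FRACTION):.4f}x")
```

With these values the overall speedup climbs toward about 1.67 and never reaches it, no matter how fast the enhanced part becomes.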

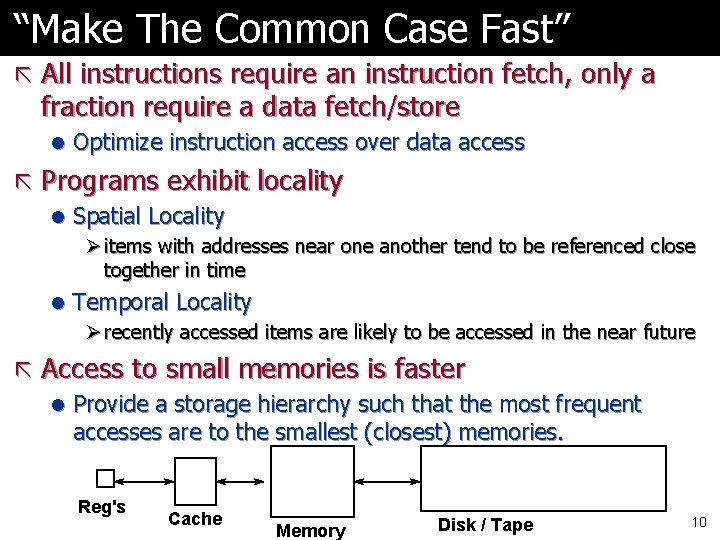

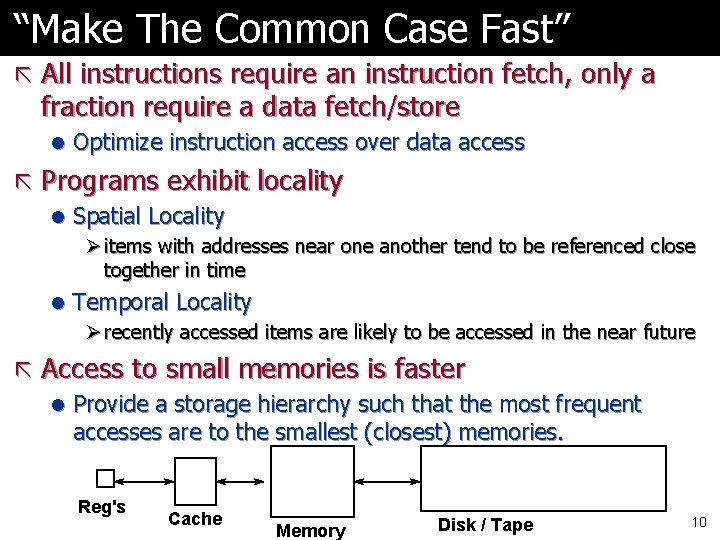

“Make The Common Case Fast”

- All instructions require an instruction fetch, only a fraction require a data fetch/store
  - Optimize instruction access over data access
- Programs exhibit locality
  - Spatial Locality
    - Items with addresses near one another tend to be referenced close together in time
  - Temporal Locality
    - Recently accessed items are likely to be accessed in the near future
- Access to small memories is faster
  - Provide a storage hierarchy such that the most frequent accesses are to the smallest (closest) memories
  - Registers → Cache → Memory → Disk / Tape

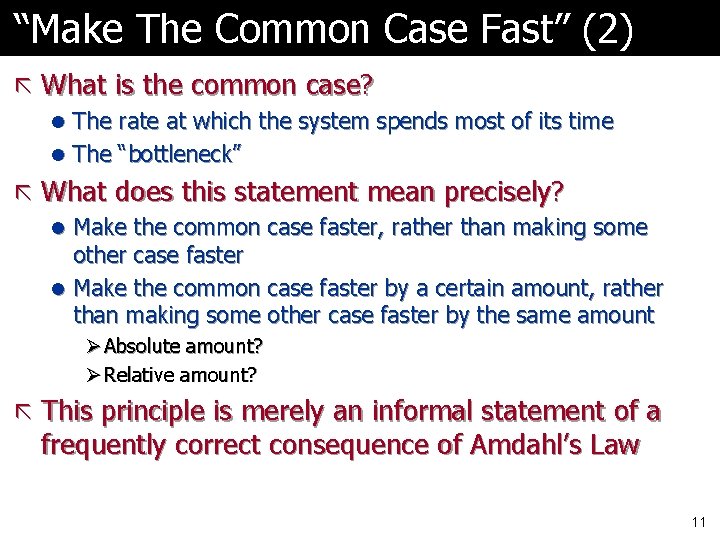

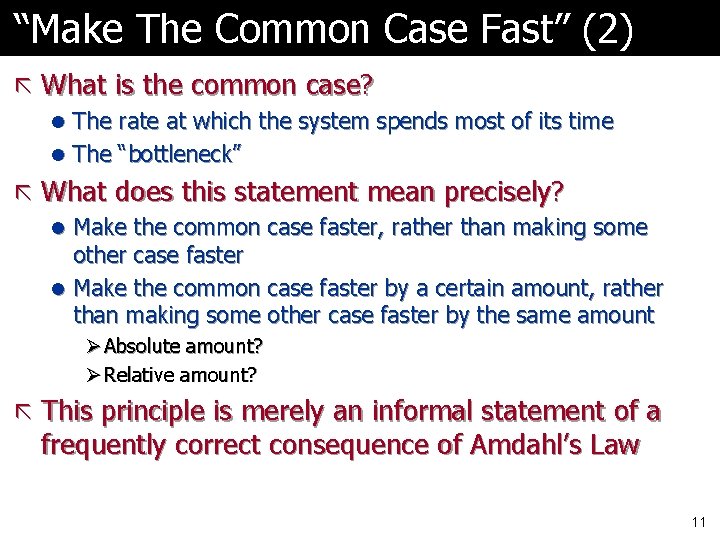

“Make The Common Case Fast” (2)

- What is the common case?
  - The rate at which the system spends most of its time
  - The “bottleneck”
- What does this statement mean precisely?
  - Make the common case faster, rather than making some other case faster
  - Make the common case faster by a certain amount, rather than making some other case faster by the same amount
    - Absolute amount?
    - Relative amount?
- This principle is merely an informal statement of a frequently correct consequence of Amdahl’s Law

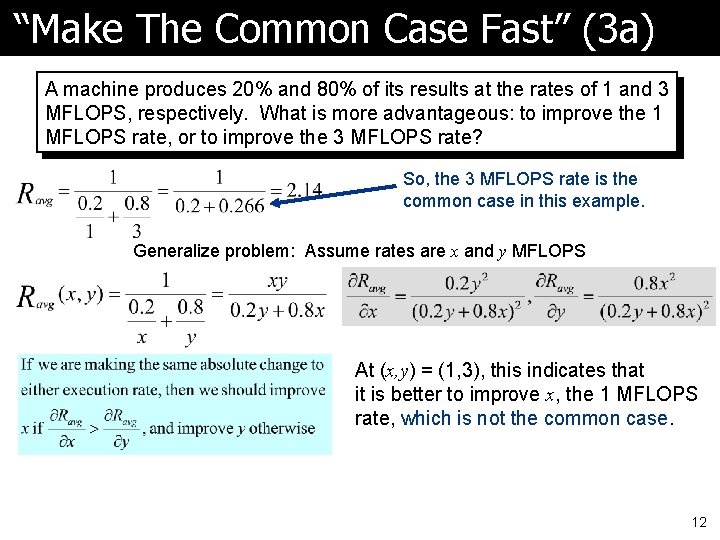

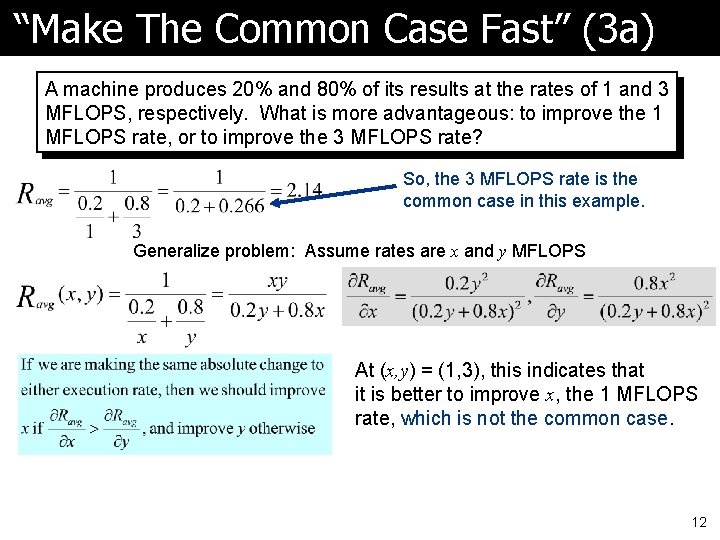

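“Make The Common Case Fast” (3a)

A machine produces 20% and 80% of its results at the rates of 1 and 3 MFLOPS, respectively. What is more advantageous: to improve the 1 MFLOPS rate, or to improve the 3 MFLOPS rate?

The time spent per unit of results is 0.2/1 = 0.20 at the 1 MFLOPS rate and 0.8/3 ≈ 0.27 at the 3 MFLOPS rate. So, the 3 MFLOPS rate is the common case in this example.

Generalize the problem: assume the rates are x and y MFLOPS, so that the average rate is $R(x, y) = 1 / (0.2/x + 0.8/y)$, and compare the effect of a small absolute change in each rate:

$$\frac{\partial R}{\partial x} = \frac{0.2}{x^2} R^2, \qquad \frac{\partial R}{\partial y} = \frac{0.8}{y^2} R^2$$

At (x, y) = (1, 3), 0.2/1 > 0.8/9, so this indicates that it is better to improve x, the 1 MFLOPS rate, which is not the common case.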
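The absolute-change case can be checked numerically. The sketch below assumes the weighted-harmonic-mean form of the average rate and adds the same absolute amount to each rate in turn; the increment of 0.5 MFLOPS is a hypothetical choice, and the names are illustrative.

```python
def average_rate(x: float, y: float) -> float:
    """Average rate when 20% of results run at x MFLOPS and 80% at y MFLOPS."""
    return 1.0 / (0.2 / x + 0.8 / y)


DELTA = 0.5  # hypothetical absolute improvement, in MFLOPS

base = average_rate(1.0, 3.0)
improve_x = average_rate(1.0 + DELTA, 3.0)  # speed up the 1 MFLOPS rate
improve_y = average_rate(1.0, 3.0 + DELTA)  # speed up the 3 MFLOPS (common) rate

print(f"baseline:      {base:.3f} MFLOPS")
print(f"x + {DELTA}: {improve_x:.3f} MFLOPS")
print(f"y + {DELTA}: {improve_y:.3f} MFLOPS")
```

For this increment, raising the 1 MFLOPS rate gives the higher average even though the 3 MFLOPS rate is where most of the time goes.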

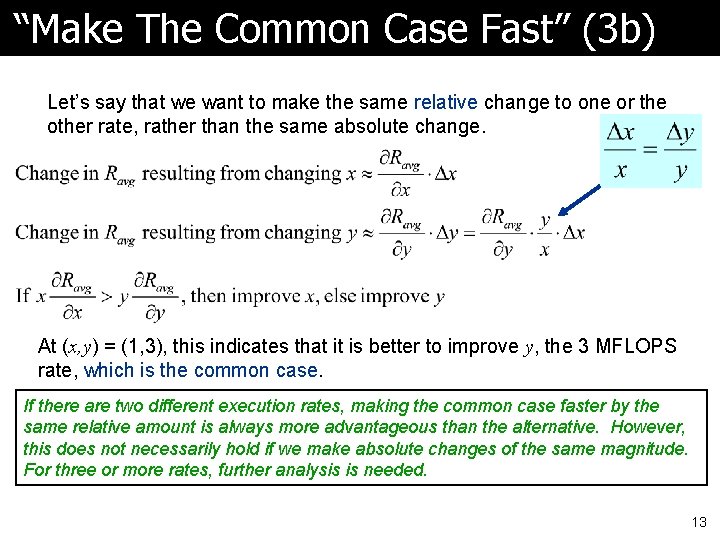

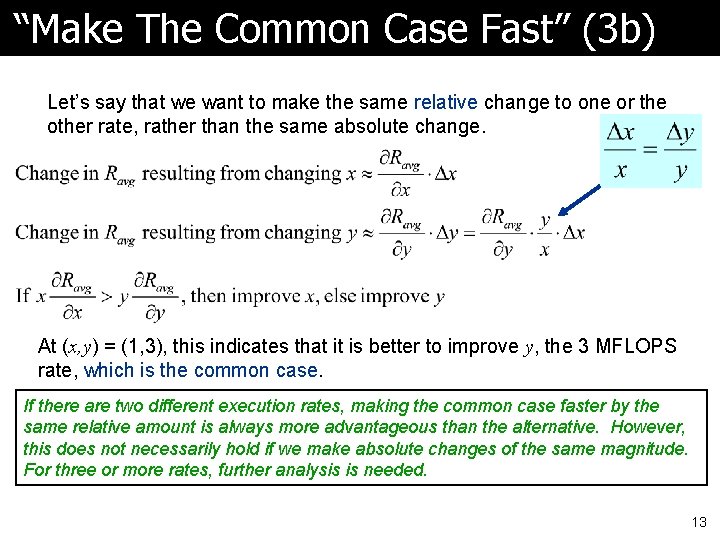

“Make The Common Case Fast” (3b)

Let’s say that we want to make the same relative change to one or the other rate, rather than the same absolute change. With average rate $R(x, y) = 1 / (0.2/x + 0.8/y)$, the effect of a small relative change in each rate is

$$x \frac{\partial R}{\partial x} = \frac{0.2}{x} R^2, \qquad y \frac{\partial R}{\partial y} = \frac{0.8}{y} R^2$$

At (x, y) = (1, 3), 0.2/1 < 0.8/3, so this indicates that it is better to improve y, the 3 MFLOPS rate, which is the common case.

If there are two different execution rates, making the common case faster by the same relative amount is always more advantageous than the alternative. However, this does not necessarily hold if we make absolute changes of the same magnitude. For three or more rates, further analysis is needed.
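The relative-change case can be checked the same way. The sketch below scales each rate in turn by the same factor, again assuming the weighted-harmonic-mean form of the average rate; the 10% factor is a hypothetical choice.

```python
def average_rate(x: float, y: float) -> float:
    """Average rate when 20% of results run at x MFLOPS and 80% at y MFLOPS."""
    return 1.0 / (0.2 / x + 0.8 / y)


FACTOR = 1.10  # hypothetical: make one rate 10% faster

base = average_rate(1.0, 3.0)
scale_x = average_rate(1.0 * FACTOR, 3.0)  # 1 MFLOPS rate made 10% faster
scale_y = average_rate(1.0, 3.0 * FACTOR)  # 3 MFLOPS (common) rate made 10% faster

print(f"baseline:  {base:.4f} MFLOPS")
print(f"x faster:  {scale_x:.4f} MFLOPS")
print(f"y faster:  {scale_y:.4f} MFLOPS")
```

Here the common case wins: scaling the 3 MFLOPS rate yields the higher average, because that rate accounts for the larger share of the execution time.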

Basics of Performance

Details of CPI
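The bodies of these two slides are not present in the extracted text. As a sketch only, assuming they cover the standard relations CPU time = instruction count × CPI × clock cycle time and CPI = Σ (count_i × CPI_i) / instruction count, the calculation might look as follows. The instruction classes, counts, per-class CPI values, and clock rate are all hypothetical.

```python
# Hypothetical dynamic instruction counts and cycles per instruction, by class
instruction_mix = {
    "alu":        (500_000_000, 1),  # (count, CPI for this class)
    "load_store": (300_000_000, 2),
    "branch":     (200_000_000, 3),
}
CLOCK_RATE_HZ = 1_000_000_000  # hypothetical 1 GHz clock

instruction_count = sum(count for count, _ in instruction_mix.values())
total_cycles = sum(count * cpi for count, cpi in instruction_mix.values())

overall_cpi = total_cycles / instruction_count  # weighted average CPI
cpu_time = total_cycles / CLOCK_RATE_HZ         # = count * CPI * cycle time

print(f"CPI:      {overall_cpi:.2f}")
print(f"CPU time: {cpu_time:.2f} s")
```

With this mix the overall CPI is 1.7 and the program takes 1.7 seconds at 1 GHz.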

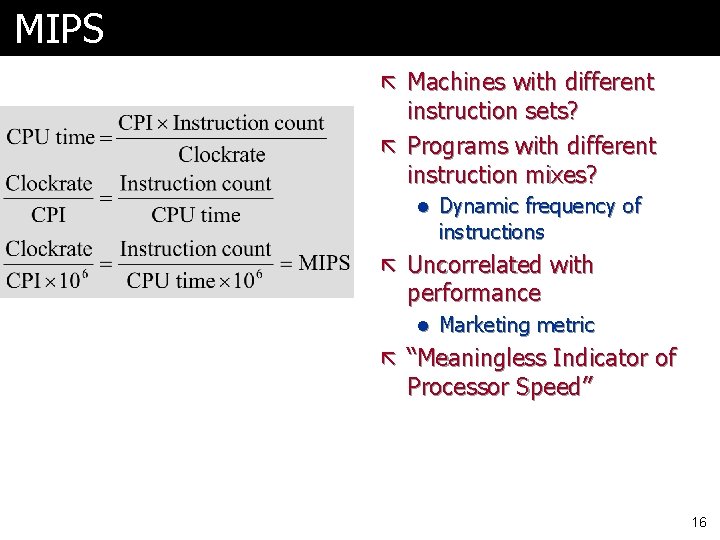

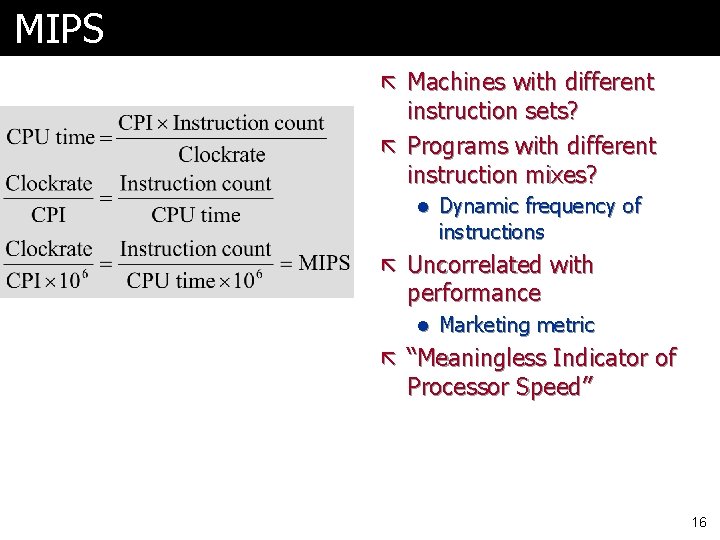

MIPS

- Machines with different instruction sets?
- Programs with different instruction mixes?
  - Dynamic frequency of instructions
- Uncorrelated with performance
  - Marketing metric
- “Meaningless Indicator of Processor Speed”
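To see why MIPS can be uncorrelated with performance, consider the same program compiled for two machines with different instruction sets. The sketch below assumes the usual definition MIPS = instruction count / (execution time × 10^6); both machines and all their numbers are hypothetical.

```python
def mips(instruction_count: float, execution_time_s: float) -> float:
    """Millions of instructions executed per second (assumed standard definition)."""
    return instruction_count / (execution_time_s * 1e6)


# Hypothetical: machine A needs many simple instructions, machine B fewer complex ones
time_a, count_a = 2.0, 2_000_000_000
time_b, count_b = 1.5, 1_000_000_000

mips_a = mips(count_a, time_a)
mips_b = mips(count_b, time_b)

print(f"A: {mips_a:7.1f} MIPS, {time_a} s")
print(f"B: {mips_b:7.1f} MIPS, {time_b} s")
```

Machine A posts the higher MIPS rating (1000 against roughly 667), yet machine B finishes the program sooner.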

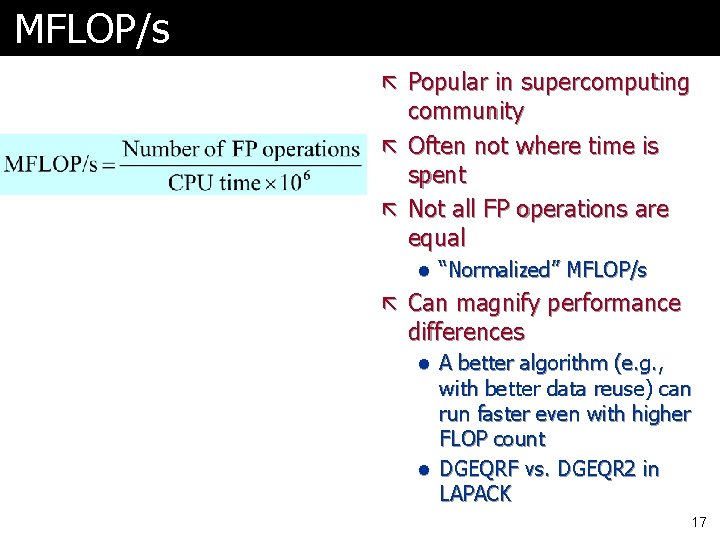

MFLOP/s

- Popular in supercomputing community
- Often not where time is spent
- Not all FP operations are equal
  - “Normalized” MFLOP/s
- Can magnify performance differences
  - A better algorithm (e.g., with better data reuse) can run faster even with higher FLOP count
  - DGEQRF vs. DGEQR2 in LAPACK
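The magnification effect can be sketched with made-up numbers. Below, algorithm B performs more floating-point operations than algorithm A but finishes sooner; the operation counts and times are hypothetical and are not measurements of DGEQRF or DGEQR2.

```python
def mflops(flop_count: float, execution_time_s: float) -> float:
    """Millions of floating-point operations per second."""
    return flop_count / (execution_time_s * 1e6)


# Hypothetical: B does 20% more FLOPs but reuses data better and runs faster
flops_a, time_a = 1.0e9, 4.0
flops_b, time_b = 1.2e9, 1.5

time_speedup = time_a / time_b  # what the user actually experiences
mflops_ratio = mflops(flops_b, time_b) / mflops(flops_a, time_a)

print(f"speedup in time:  {time_speedup:.2f}x")
print(f"ratio of MFLOP/s: {mflops_ratio:.2f}x")
```

The MFLOP/s ratio (3.2) overstates the real gain in execution time (about 2.67) because B's extra operations inflate its rate.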

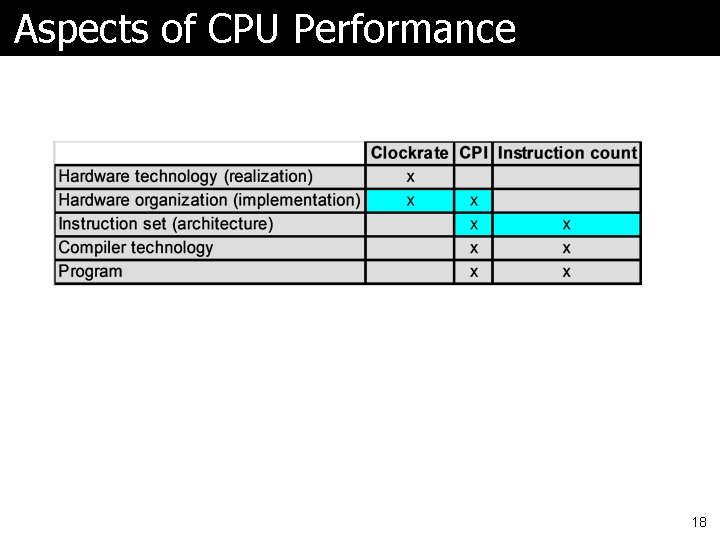

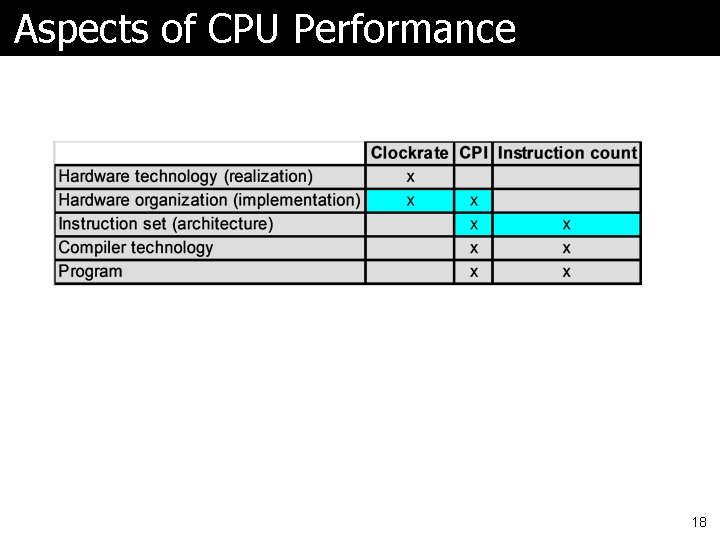

Aspects of CPU Performance