
CC5212-1 Procesamiento Masivo de Datos (Massive Data Processing), Otoño (Autumn) 2019. Lecture 5: Apache Spark (Core). Aidan Hogan, aidhog@gmail.com

Spark vs. Hadoop What is the main weakness of Hadoop? Let’s see …

Data Transport Costs

| Medium | Throughput | Access time |
|---|---|---|
| Main memory | 30 GB/s | 50–150 ns |
| Solid-state disk | 600 MB/s | 10–100 μs |
| Hard disk | 125 MB/s | 5–15 ms |
| Network | 10 MB/s | 10–100 μs (same rack), 100–500 μs (across racks) |
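To make these throughput figures concrete, the following minimal sketch estimates how long it would take to stream a dataset sequentially over each medium. The 1 TB dataset size and the function name `transfer_seconds` are assumptions chosen for illustration, and the assignment of throughputs to media follows one reading of the slide.

```python
# Throughput figures as read from the slide, in bytes per second.
THROUGHPUT = {
    "main memory": 30e9,
    "solid-state disk": 600e6,
    "hard disk": 125e6,
    "network": 10e6,
}

DATA_BYTES = 1e12  # hypothetical 1 TB dataset (not from the slides)


def transfer_seconds(num_bytes, bytes_per_second):
    """Time to stream num_bytes sequentially at the given throughput."""
    return num_bytes / bytes_per_second


for medium, rate in THROUGHPUT.items():
    secs = transfer_seconds(DATA_BYTES, rate)
    print(f"{medium:>16}: {secs:>8.0f} s ({secs / 3600:.2f} h)")
```

Under these assumptions, the gap between main memory and hard disk is more than two orders of magnitude, which is the motivation for the rest of the lecture.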

![Map ReduceHadoop 1 Input 2 Map 3 Partition Sort 4 Shuffle 5 Merge Sort Map. Reduce/Hadoop 1. Input 2. Map 3. Partition [Sort] 4. Shuffle 5. Merge Sort](https://slidetodoc.com/presentation_image/03f3f46ddb1babf53058d46403816a2c/image-4.jpg)

MapReduce/Hadoop

1. Input
2. Map
3. Partition [Sort]
4. Shuffle
5. Merge Sort
6. Reduce
7. Output

![Map ReduceHadoop Input Map Partition Sort Combine Shuffle Merge Sort 0 perro sed Map. Reduce/Hadoop Input Map Partition / [Sort] Combine Shuffle Merge Sort (0, perro sed](https://slidetodoc.com/presentation_image/03f3f46ddb1babf53058d46403816a2c/image-5.jpg)

MapReduce/Hadoop: word count example

- Input: (0, perro sed que), (13, que decir que), (26, la que sed)
- Map: (perro, 1), (sed, 1), (que, 1) | (que, 1), (decir, 1), (que, 1) | (la, 1), (que, 1), (sed, 1)
- Partition / [Sort]: the pairs from each map task are assigned to partitions and sorted by key
- Combine: the second map task merges (que, 1), (que, 1) into (que, 2)
- Shuffle / Merge Sort: (decir, {1}), (sed, {1, 1}), (perro, {1}), (que, {1, 1, 2}), (la, {1})
- Reduce / Output: (decir, 1), (sed, 2), (perro, 1), (que, 4), (la, 1)
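The phases of this word count can be traced with a small plain-Python sketch. It is only a single-process illustration of the data flow, not Hadoop code; the function names are hypothetical, and each input record is treated as its own map task.

```python
from collections import Counter, defaultdict

# Input records as on the slide: (byte offset, line).
records = [(0, "perro sed que"), (13, "que decir que"), (26, "la que sed")]


def map_phase(record):
    """Map: emit (word, 1) for every word in the line."""
    _, line = record
    return [(word, 1) for word in line.split()]


def combine(pairs):
    """Combine: pre-aggregate counts locally, per map task, sorted by key."""
    local = Counter()
    for word, n in pairs:
        local[word] += n
    return sorted(local.items())


def shuffle(all_pairs):
    """Shuffle + merge sort: group values by key across all map outputs."""
    groups = defaultdict(list)
    for word, n in all_pairs:
        groups[word].append(n)
    return dict(sorted(groups.items()))


def reduce_phase(groups):
    """Reduce: sum the grouped counts for each word."""
    return {word: sum(counts) for word, counts in groups.items()}


combined = [pair for rec in records for pair in combine(map_phase(rec))]
grouped = shuffle(combined)
word_counts = reduce_phase(grouped)
print(word_counts)
```

Note how the combiner turns the two (que, 1) pairs of the second record into a single (que, 2) before the shuffle, so the reducer for "que" receives three values rather than four.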

![R Input W RW R Map Partition Sort Combine Shuffle Merge Sort 0 R Input W R|W R Map Partition / [Sort] Combine Shuffle Merge Sort (0,](https://slidetodoc.com/presentation_image/03f3f46ddb1babf53058d46403816a2c/image-6.jpg)

The same word-count pipeline, annotated with disk reads (R) and writes (W) at the input and between the Map, Partition / [Sort], Combine, Shuffle and Merge Sort phases.

![R Input W RW R Map Partition Sort Combine Shuffle W Merge Sort R Input W R|W R Map Partition / [Sort] Combine Shuffle W Merge Sort](https://slidetodoc.com/presentation_image/03f3f46ddb1babf53058d46403816a2c/image-7.jpg)

MapReduce/Hadoop always coordinates between phases (Map → Shuffle → Reduce) and between high-level tasks (Count → Order) using the hard disk (HDFS).

![R Input W RW R Map Partition Sort Combine Shuffle W Merge Sort R Input W R|W R Map Partition / [Sort] Combine Shuffle W Merge Sort](https://slidetodoc.com/presentation_image/03f3f46ddb1babf53058d46403816a2c/image-8.jpg)

We saw this already counting words …

- In memory on one machine: seconds
- On disk on one machine: minutes
- Over MapReduce: minutes

Any alternative to these options?

- In memory on multiple machines: ???

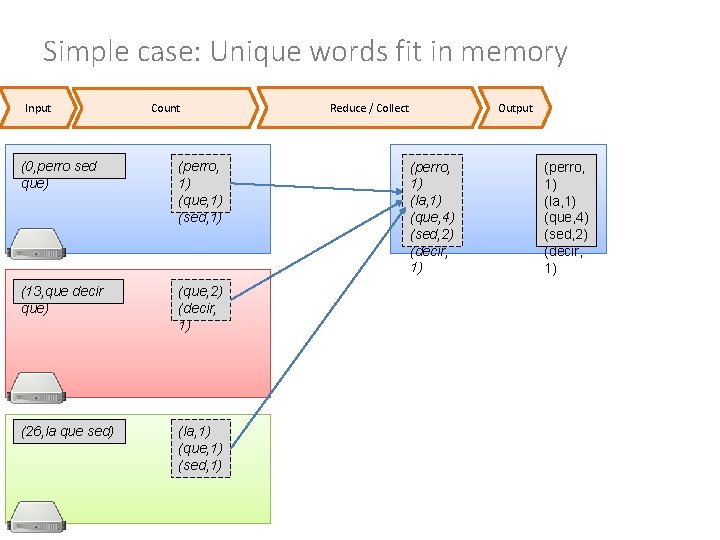

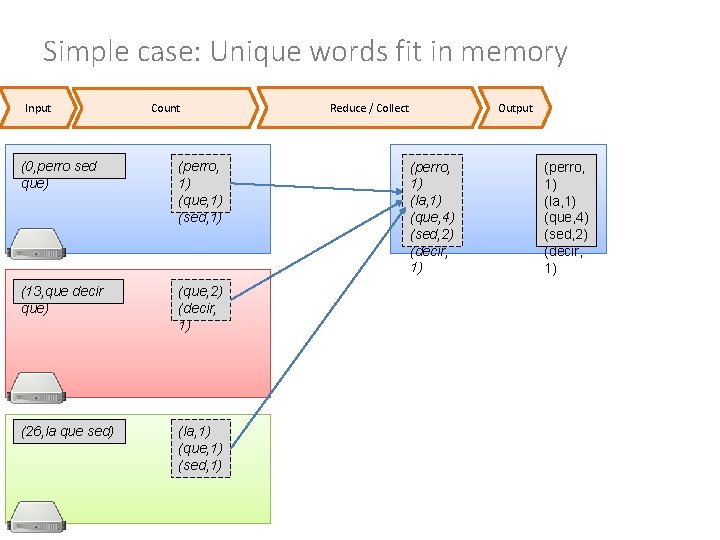

Simple case: Unique words fit in memory

- Input: (0, perro sed que), (13, que decir que), (26, la que sed)
- Count (per input split): (perro, 1), (que, 1), (sed, 1) | (que, 2), (decir, 1) | (la, 1), (que, 1), (sed, 1)
- Reduce / Collect, Output: (perro, 1), (la, 1), (que, 4), (sed, 2), (decir, 1)

The same example annotated with disk access: one read (R) and one write (W). If unique words don’t fit in memory? …

APACHE SPARK

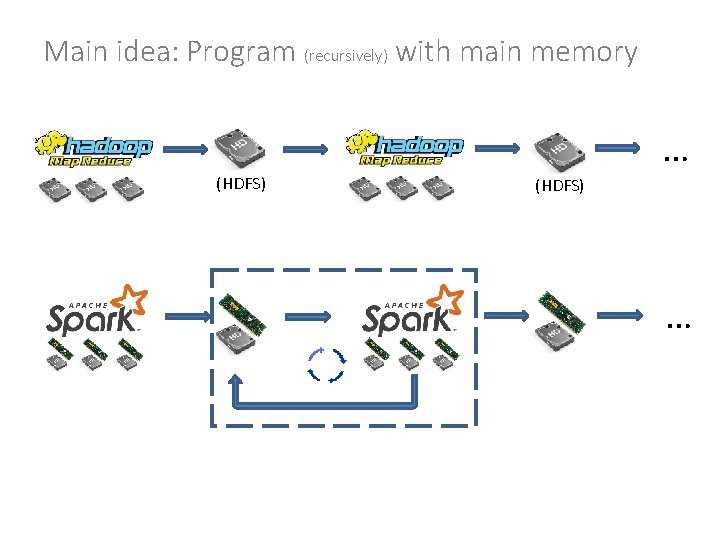

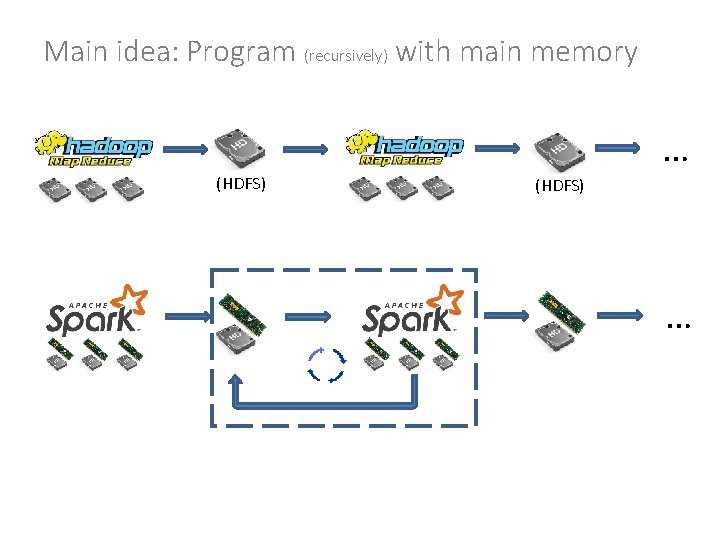

Main idea: Program with main memory (HDFS) …

Main idea: Program (recursively) with main memory (HDFS) …

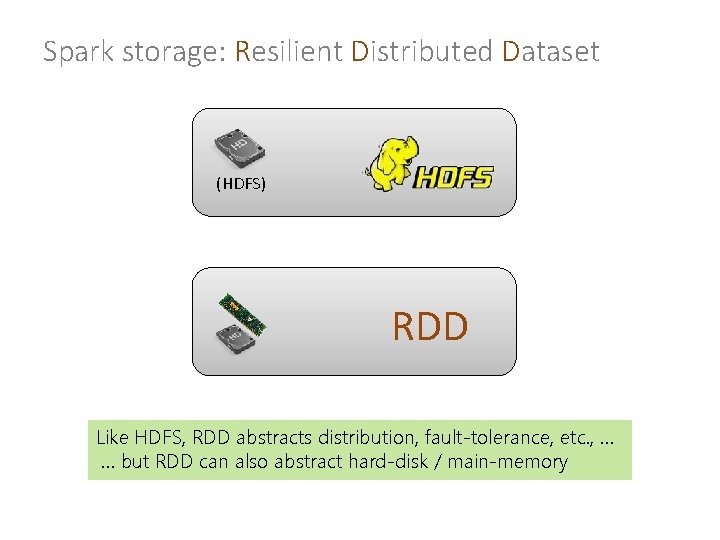

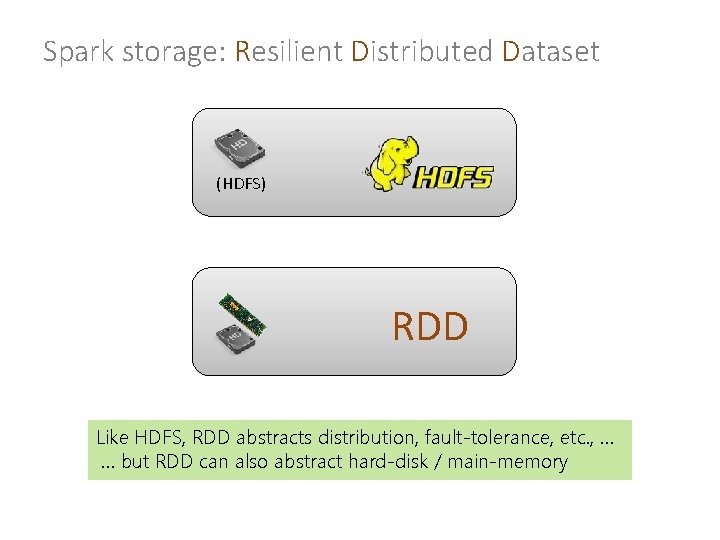

Spark storage: Resilient Distributed Dataset (RDD)

Like HDFS, an RDD abstracts distribution, fault-tolerance, etc. …

… but an RDD can also abstract hard disk / main memory.

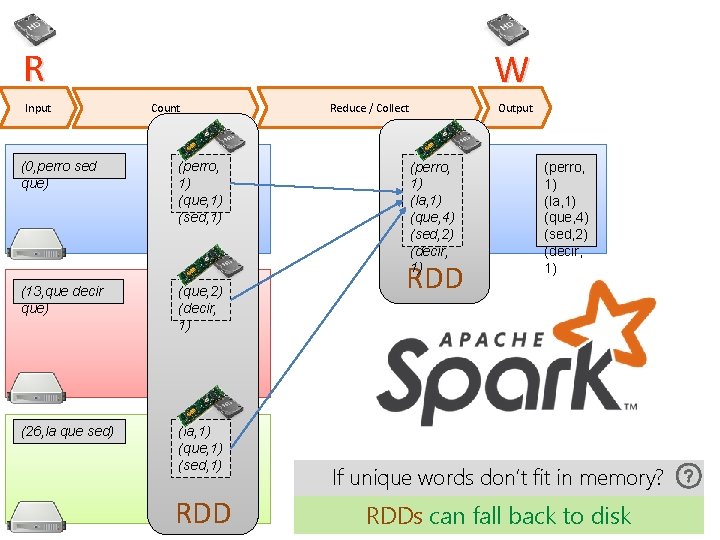

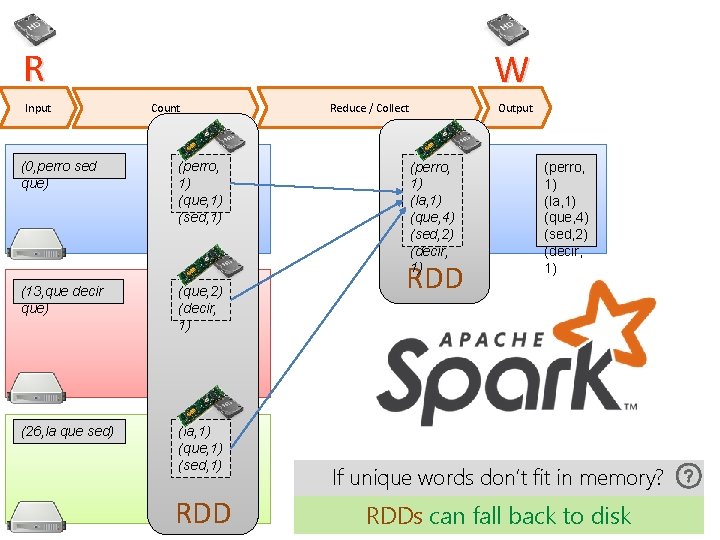

The in-memory word count with Spark: the per-split counts and the collected output, (perro, 1), (la, 1), (que, 4), (sed, 2), (decir, 1), are each stored as an RDD. If unique words don’t fit in memory? RDDs can fall back to disk.

Spark storage: Resilient Distributed Dataset

- Resilient: Fault-tolerant
- Distributed: Partitioned
- Dataset: Umm, a set of data

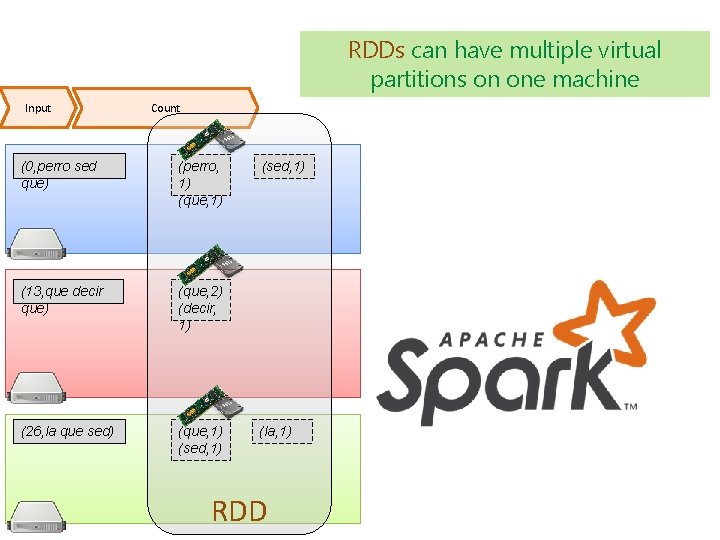

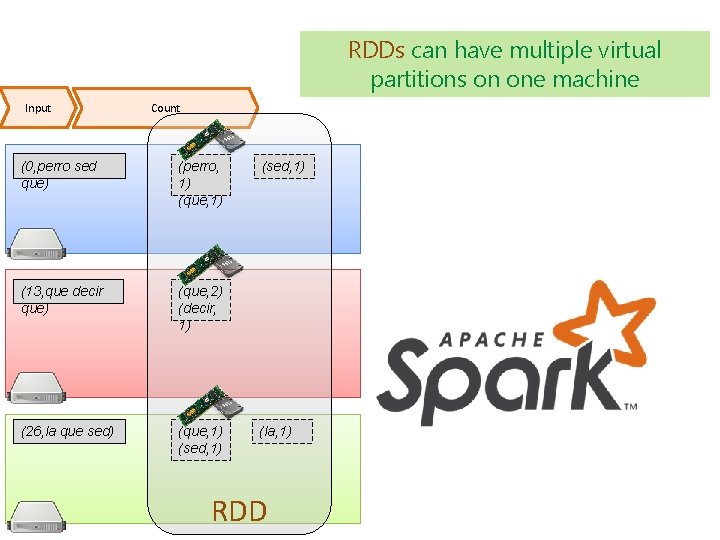

RDDs can have multiple virtual partitions on one machine. In the word-count example, the counts computed for each input split, (perro, 1), (que, 1) | (que, 2), (decir, 1) | (que, 1), (sed, 1), (la, 1), are partitions of the same RDD.

Types of RDDs in Spark

- HadoopRDD
- DoubleRDD
- CassandraRDD
- FilteredRDD
- JdbcRDD
- GeoRDD
- MappedRDD
- JsonRDD
- EsSpark
- PairRDD
- SchemaRDD
- ShuffledRDD
- VertexRDD
- UnionRDD
- EdgeRDD
- PythonRDD

Specific types of RDDs permit specific operations. PairRDD is of particular importance for M/R-style operators.

APACHE SPARK: EXAMPLE

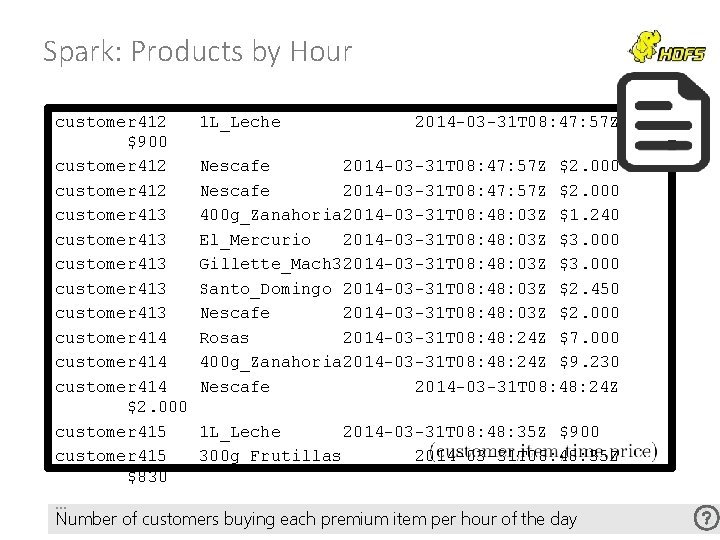

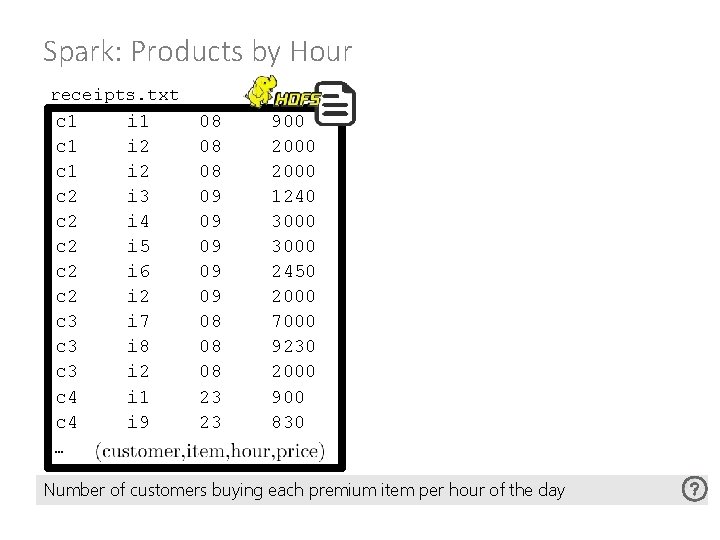

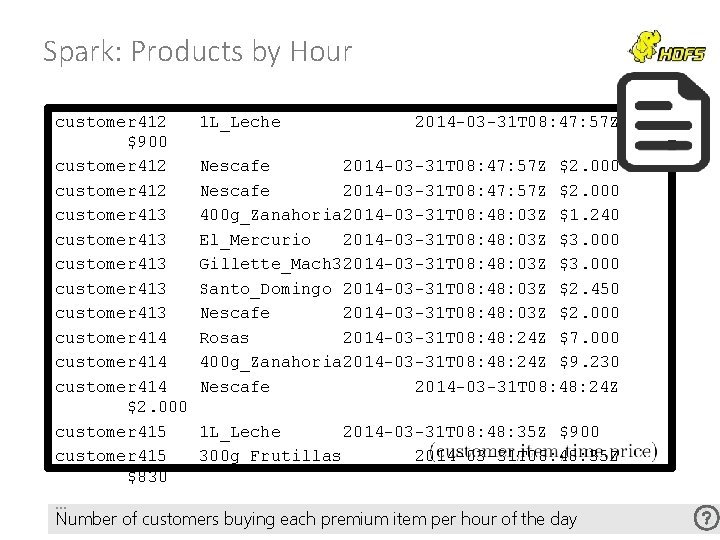

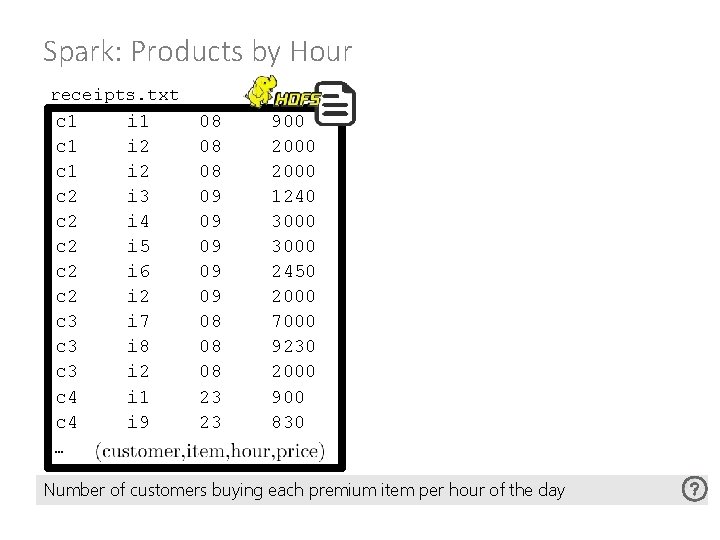

Spark: Products by Hour

| Customer | Item | Timestamp | Price |
|---|---|---|---|
| customer412 | 1L_Leche | 2014-03-31T08:47:57Z | $900 |
| customer412 | Nescafe | 2014-03-31T08:47:57Z | $2.000 |
| customer413 | 400g_Zanahoria | 2014-03-31T08:48:03Z | $1.240 |
| customer413 | El_Mercurio | 2014-03-31T08:48:03Z | $3.000 |
| customer413 | Gillette_Mach3 | 2014-03-31T08:48:03Z | $3.000 |
| customer413 | Santo_Domingo | 2014-03-31T08:48:03Z | $2.450 |
| customer413 | Nescafe | 2014-03-31T08:48:03Z | $2.000 |
| customer414 | Rosas | 2014-03-31T08:48:24Z | $7.000 |
| customer414 | 400g_Zanahoria | 2014-03-31T08:48:24Z | $9.230 |
| customer414 | Nescafe | 2014-03-31T08:48:24Z | $2.000 |
| customer415 | 1L_Leche | 2014-03-31T08:48:35Z | $900 |
| customer415 | 300g_Frutillas | 2014-03-31T08:48:35Z | $830 |
| … | | | |

Task: number of customers buying each premium item per hour of the day.

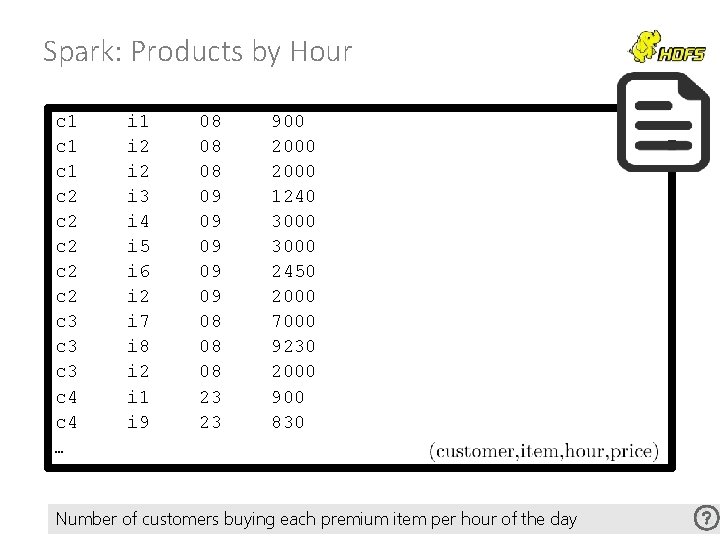

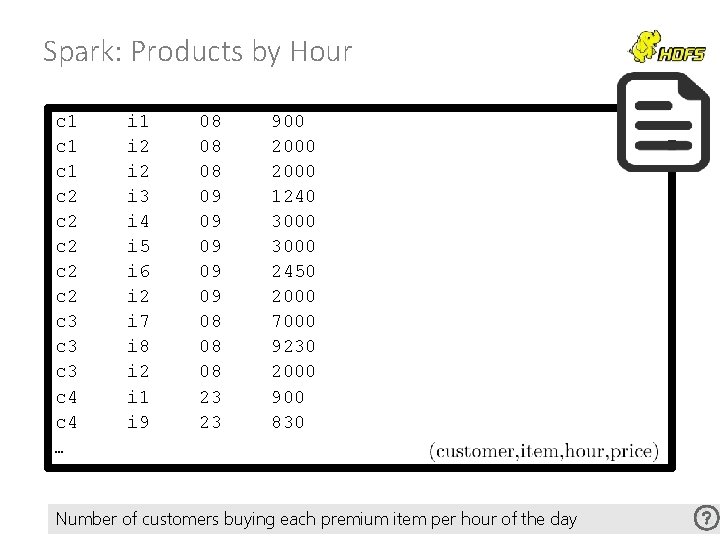

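Spark: Products by Hour

| Customer | Item | Hour | Price |
|---|---|---|---|
| c1 | i1 | 08 | 900 |
| c1 | i2 | 08 | 2000 |
| c2 | i3 | 09 | 1240 |
| c2 | i4 | 09 | 3000 |
| c2 | i5 | 09 | 3000 |
| c2 | i6 | 09 | 2450 |
| c2 | i2 | 09 | 2000 |
| c3 | i7 | 08 | 7000 |
| c3 | i8 | 08 | 9230 |
| c3 | i2 | 08 | 2000 |
| c4 | i1 | 23 | 900 |
| c4 | i9 | 23 | 830 |
| … | | | |

Task: number of customers buying each premium item per hour of the day.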

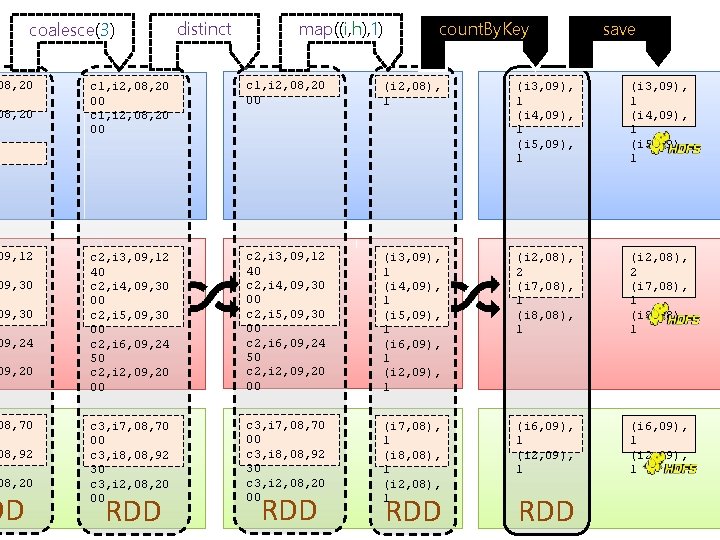

load → filter(p>1000) → coalesce(3)

- load: every line of the input becomes a tuple in an RDD: (c1, i1, 08, 900), (c1, i2, 08, 2000), (c2, i3, 09, 1240), …, (c4, i1, 23, 900), (c4, i9, 23, 830)
- filter(p>1000): keeps (c1, i2, 08, 2000), (c2, i3, 09, 1240), (c2, i4, 09, 3000), (c2, i5, 09, 3000), (c2, i6, 09, 2450), (c2, i2, 09, 2000), (c3, i7, 08, 7000), (c3, i8, 08, 9230), (c3, i2, 08, 2000)
- coalesce(3): merges the filtered tuples into three partitions of a new RDD

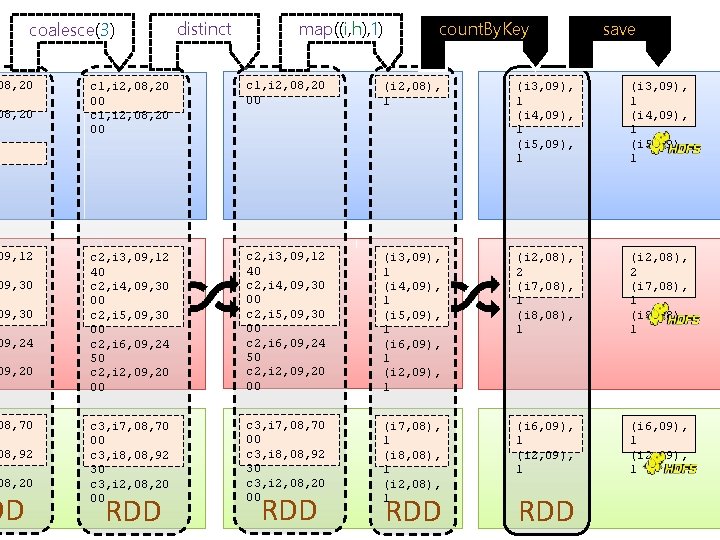

coalesce(3) → distinct → map((i, h), 1) → countByKey → save

- distinct: removes duplicate tuples from the three partitions
- map((i, h), 1): each tuple (c, i, h, p) becomes ((i, h), 1), for example (c1, i2, 08, 2000) ↦ ((i2, 08), 1)
- countByKey: ((i2, 08), 2), ((i7, 08), 1), ((i8, 08), 1), ((i3, 09), 1), ((i4, 09), 1), ((i5, 09), 1), ((i6, 09), 1), ((i2, 09), 1)
- save: writes the counts to the output
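The steps of this example can be checked with a plain-Python sketch over the abstracted receipts data. This is not Spark code: lists and a `Counter` stand in for RDDs and `countByKey`, and `coalesce(3)` is omitted because it only changes partitioning, not the result. The variable names are hypothetical.

```python
from collections import Counter

# (customer, item, hour, price) tuples from the abstracted receipts data.
receipts = [
    ("c1", "i1", "08", 900), ("c1", "i2", "08", 2000),
    ("c2", "i3", "09", 1240), ("c2", "i4", "09", 3000),
    ("c2", "i5", "09", 3000), ("c2", "i6", "09", 2450),
    ("c2", "i2", "09", 2000), ("c3", "i7", "08", 7000),
    ("c3", "i8", "08", 9230), ("c3", "i2", "08", 2000),
    ("c4", "i1", "23", 900), ("c4", "i9", "23", 830),
]

# filter(p>1000): keep only premium items.
premium = [r for r in receipts if r[3] > 1000]

# distinct: drop repeated tuples (none in this sample, but input lines may repeat).
unique = sorted(set(premium))

# map((i, h), 1): key each tuple by (item, hour).
pairs = [((item, hour), 1) for _, item, hour, _ in unique]

# countByKey: number of tuples per (item, hour) key.
customers_per_item_hour = Counter(key for key, _ in pairs)

for key, n in sorted(customers_per_item_hour.items()):
    print(key, n)
```

Only item i2 at hour 08 is bought by two customers (c1 and c3); every other premium (item, hour) pair has a single buyer.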

APACHE SPARK: TRANSFORMATIONS & ACTIONS

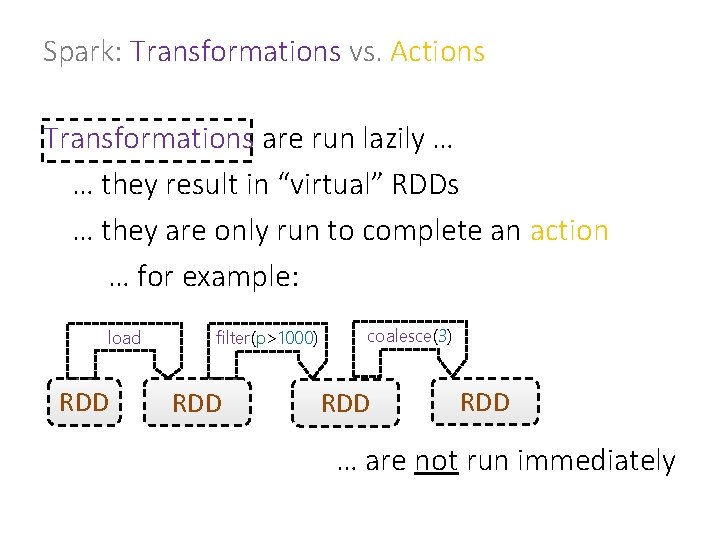

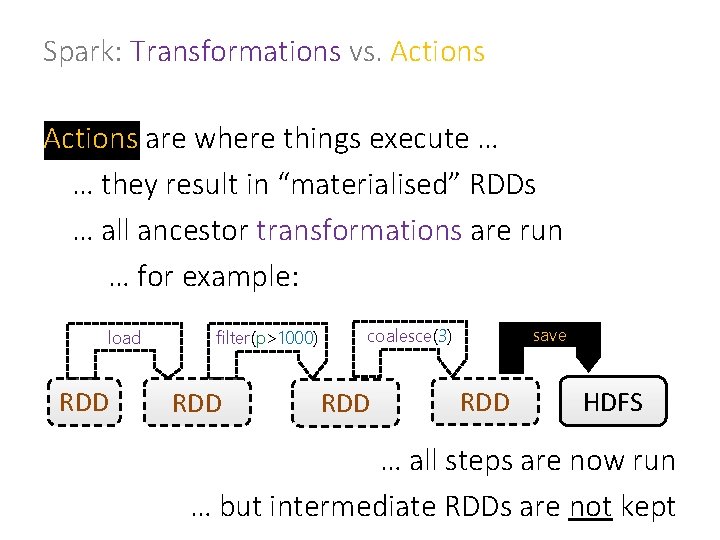

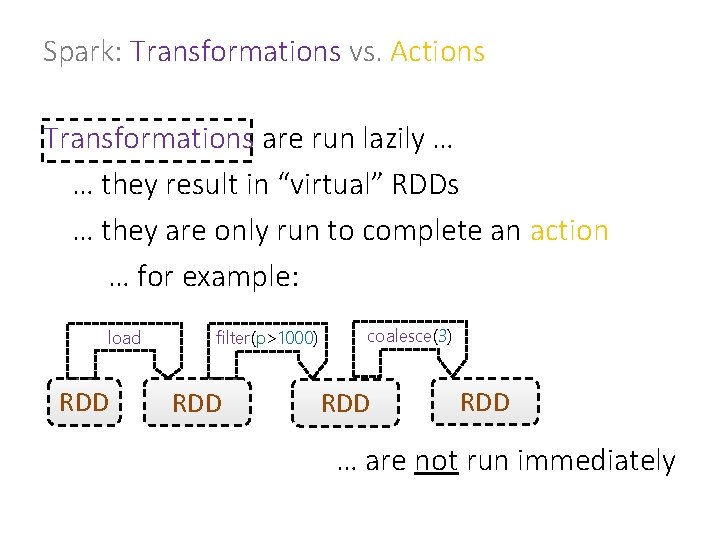

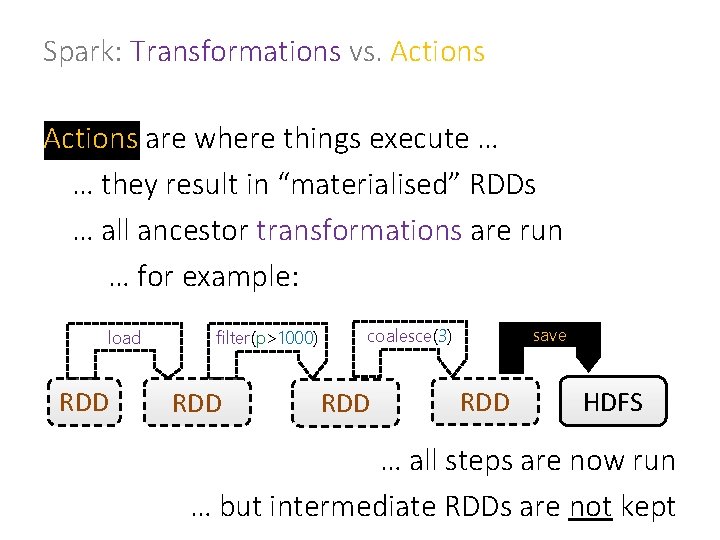

Spark: Transformations vs. Actions

Transformations are run lazily …

- … they result in “virtual” RDDs
- … they are only run to complete an action
- … for example, load → filter(p>1000) → coalesce(3) are not run immediately
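Lazy evaluation can be illustrated without Spark using Python generators, which also defer work until a consumer asks for results. The sketch below is an analogy under that assumption, not Spark's actual mechanism; `load`, `filter_premium`, and the `log` list are hypothetical names.

```python
log = []  # records which elements were actually processed


def load():
    """Stand-in for loading an input: yields prices one at a time."""
    for price in [900, 2000, 1240, 3000, 830]:
        log.append(f"load {price}")
        yield price


def filter_premium(prices):
    """Stand-in for filter(p>1000)."""
    for p in prices:
        if p > 1000:
            log.append(f"keep {p}")
            yield p


# "Transformations": building the pipeline runs nothing yet.
pipeline = filter_premium(load())
ran_before_action = list(log)

# "Action": consuming the pipeline triggers all ancestor steps.
result = list(pipeline)

print(ran_before_action)  # empty: no element has been touched yet
print(result)
```

The log stays empty until the final `list(...)` call, mirroring how Spark only records the transformations and runs them when an action needs their output.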

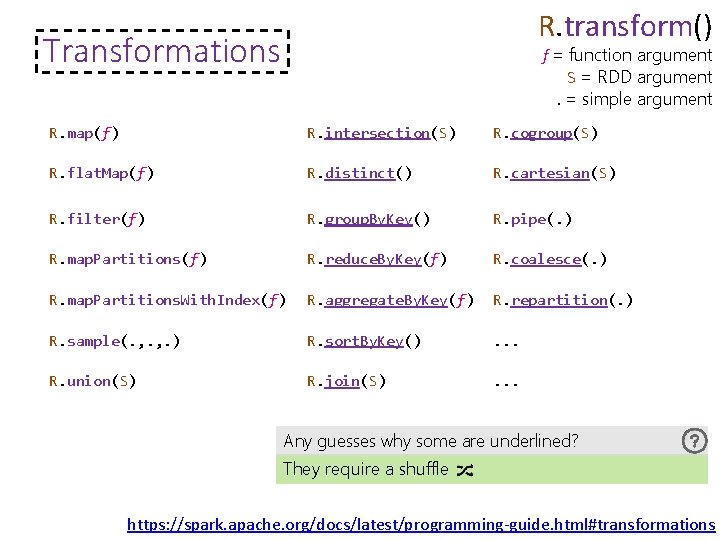

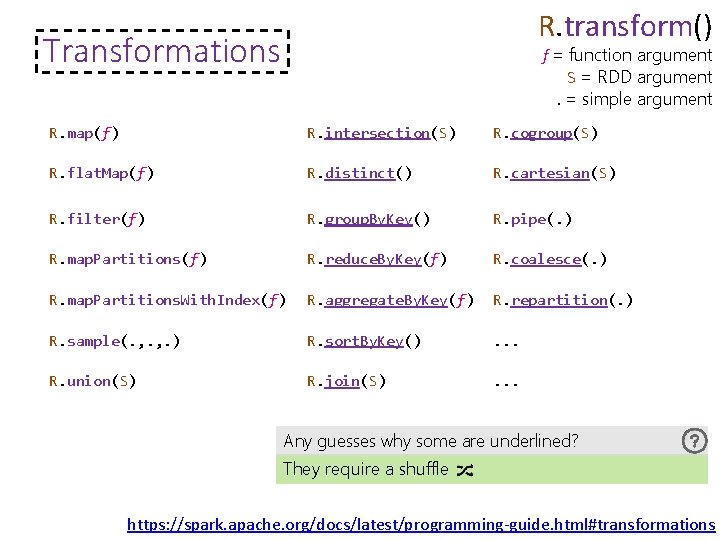

R.transform(): Transformations

f = function argument, S = RDD argument, . = simple argument

- R.map(f)
- R.flatMap(f)
- R.filter(f)
- R.mapPartitions(f)
- R.mapPartitionsWithIndex(f)
- R.sample(., .)
- R.union(S)
- R.intersection(S)
- R.distinct()
- R.groupByKey()
- R.reduceByKey(f)
- R.aggregateByKey(f)
- R.sortByKey()
- R.join(S)
- R.cogroup(S)
- R.cartesian(S)
- R.pipe(.)
- R.coalesce(.)
- R.repartition(.)
- ...

Any guesses why some are underlined? They require a shuffle.

https://spark.apache.org/docs/latest/programming-guide.html#transformations

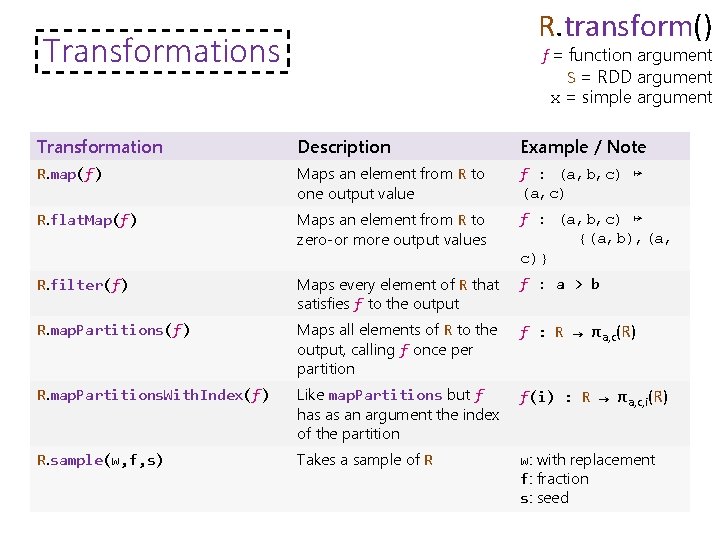

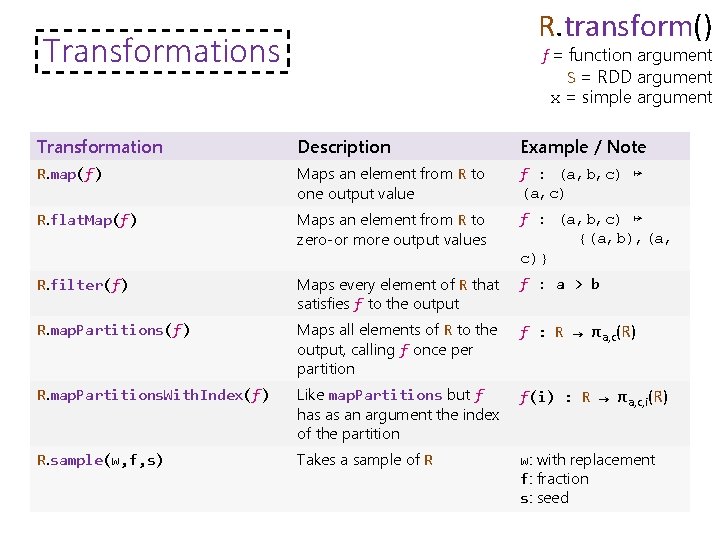

R.transform(): Transformations

f = function argument, S = RDD argument, x = simple argument

| Transformation | Description | Example / Note |
|---|---|---|
| R.map(f) | Maps an element from R to one output value | f : (a, b, c) ↦ (a, c) |
| R.flatMap(f) | Maps an element from R to zero or more output values | f : (a, b, c) ↦ {(a, b), (a, c)} |
| R.filter(f) | Maps every element of R that satisfies f to the output | f : a > b |
| R.mapPartitions(f) | Maps all elements of R to the output, calling f once per partition | f : R → π_{a,c}(R) |
| R.mapPartitionsWithIndex(f) | Like mapPartitions, but f takes the index of the partition as an argument | f(i) : R → π_{a,c,i}(R) |
| R.sample(w, f, s) | Takes a sample of R | w: with replacement; f: fraction; s: seed |
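The difference between these element-wise transformations is easiest to see on a tiny dataset. The sketch below models an RDD as a list of partitions in plain Python; the helper names (`rdd_map`, `rdd_flat_map`, and so on) are hypothetical stand-ins rather than Spark functions, and the lambdas mirror the examples in the table.

```python
from itertools import chain

# Hypothetical RDD stand-in: a list of partitions, each a list of (a, b, c) tuples.
partitions = [[(1, 5, "x"), (4, 2, "y")], [(3, 3, "z")]]


def rdd_map(parts, f):
    """Exactly one output element per input element."""
    return [[f(e) for e in part] for part in parts]


def rdd_flat_map(parts, f):
    """Zero or more output elements per input element."""
    return [list(chain.from_iterable(f(e) for e in part)) for part in parts]


def rdd_filter(parts, f):
    """Keep only the elements that satisfy f."""
    return [[e for e in part if f(e)] for part in parts]


def rdd_map_partitions(parts, f):
    """Call f once per partition, passing an iterator over its elements."""
    return [list(f(iter(part))) for part in parts]


mapped = rdd_map(partitions, lambda t: (t[0], t[2]))
flat = rdd_flat_map(partitions, lambda t: [(t[0], t[1]), (t[0], t[2])])
filtered = rdd_filter(partitions, lambda t: t[0] > t[1])
projected = rdd_map_partitions(partitions, lambda it: ((a, c) for a, b, c in it))

print(mapped)
print(flat)
print(filtered)
```

Here `projected` equals `mapped`; the difference is that the function passed to `rdd_map_partitions` is invoked twice (once per partition) rather than three times (once per element), which matters when the function has a costly setup step.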

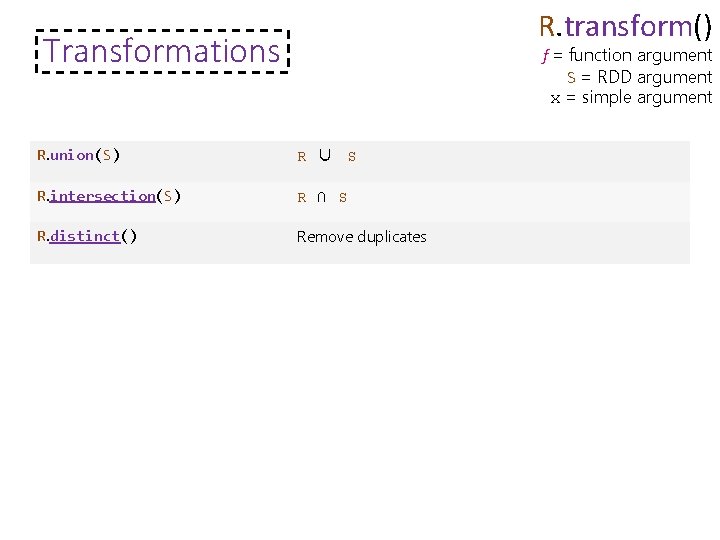

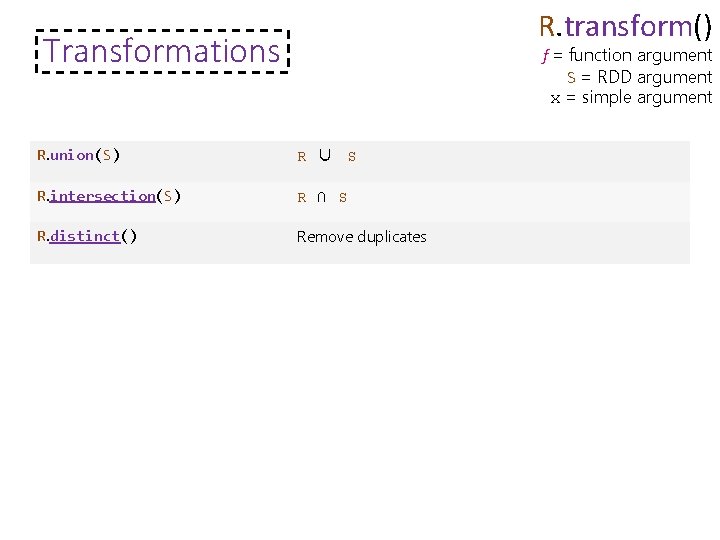

R.transform(): Transformations

| Transformation | Description |
|---|---|
| R.union(S) | R ∪ S |
| R.intersection(S) | R ∩ S |
| R.distinct() | Remove duplicates |

R.transform(): Transformations

Requiring a PairRDD ...

| Transformation | Description | Example / Note |
|---|---|---|
| R.groupByKey() | Groups values by key | |
| R.reduceByKey(f) | Groups values by key and calls f to combine and reduce values with the same key | f : (a, b) ↦ a + b |
| R.aggregateByKey(c, fc, fr) | Groups values by key using c as an initial value, fc as a combiner and fr as a reducer | c: initial value; fc: combiner; fr: reducer |
| R.sortByKey([a]) | Order by key | a: true ascending, false descending |
| R.join(S) | R ⋈ S, join by key | Also: leftOuterJoin, rightOuterJoin, and fullOuterJoin |
| R.cogroup(S) | Group values by key in R and S together | |
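The difference between join and cogroup is subtle, so a small plain-Python sketch may help. Lists of (key, value) pairs stand in for PairRDDs, and the functions `group_by_key`, `join`, and `cogroup` are hypothetical helpers that imitate the semantics described in the table, not Spark's implementation.

```python
from collections import defaultdict

R = [("a", 1), ("b", 2), ("a", 3)]
S = [("a", "x"), ("c", "y")]


def group_by_key(pairs):
    """Collect all values that share a key."""
    groups = defaultdict(list)
    for k, v in pairs:
        groups[k].append(v)
    return dict(groups)


def join(left, right):
    """Inner join by key: one output pair per matching (left, right) value."""
    right_groups = group_by_key(right)
    return [(k, (v, w)) for k, v in left for w in right_groups.get(k, [])]


def cogroup(left, right):
    """For every key in either input: (values from left, values from right)."""
    lg, rg = group_by_key(left), group_by_key(right)
    return {k: (lg.get(k, []), rg.get(k, [])) for k in sorted(set(lg) | set(rg))}


joined = join(R, S)
cogrouped = cogroup(R, S)
print(joined)
print(cogrouped)
```

The join drops keys "b" and "c" because they appear on only one side, whereas cogroup keeps every key and pairs its values with an empty list where the other side has none.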

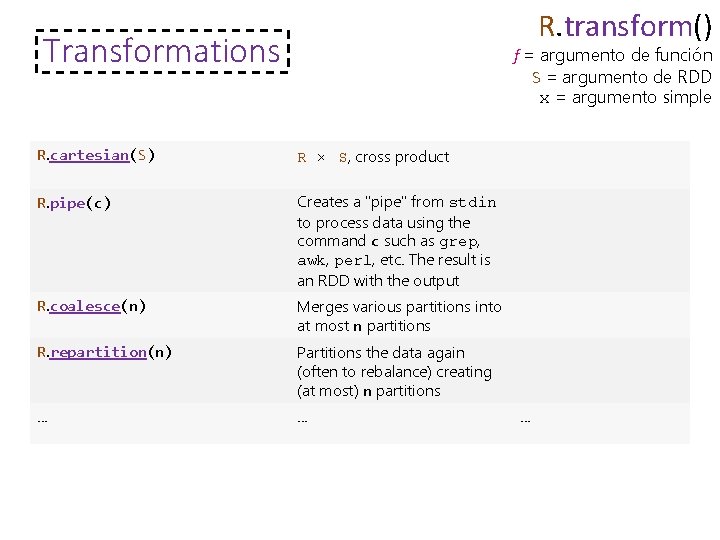

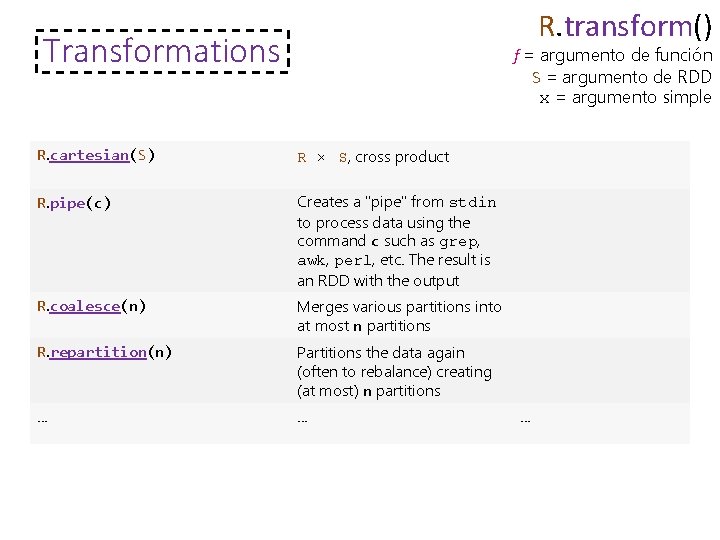

R.transform(): Transformations

f = function argument, S = RDD argument, x = simple argument

| Transformation | Description |
|---|---|
| R.cartesian(S) | R × S, cross product |
| R.pipe(c) | Creates a "pipe" from stdin to process data using the command c, such as grep, awk, perl, etc. The result is an RDD with the output |
| R.coalesce(n) | Merges various partitions into at most n partitions |
| R.repartition(n) | Partitions the data again (often to rebalance), creating (at most) n partitions |
| ... | |

Spark: Transformations vs. Actions

Actions are where things execute …

- … they result in “materialised” RDDs
- … all ancestor transformations are run
- … for example, in load → filter(p>1000) → coalesce(3) → save (to HDFS), all steps are now run
- … but intermediate RDDs are not kept

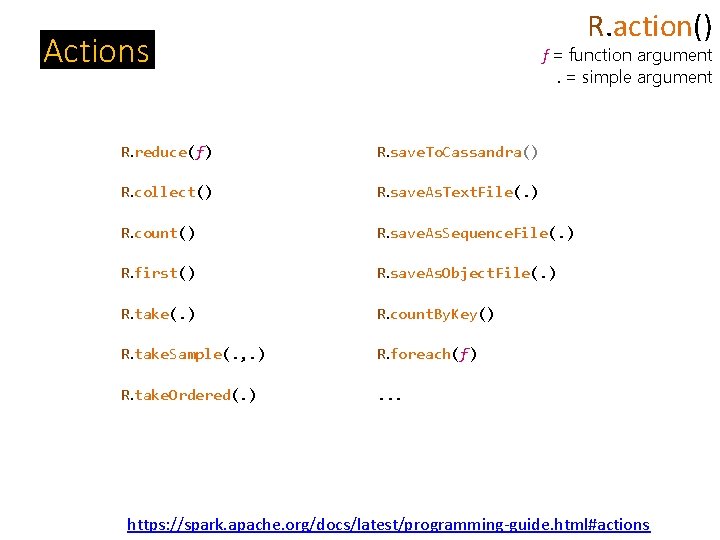

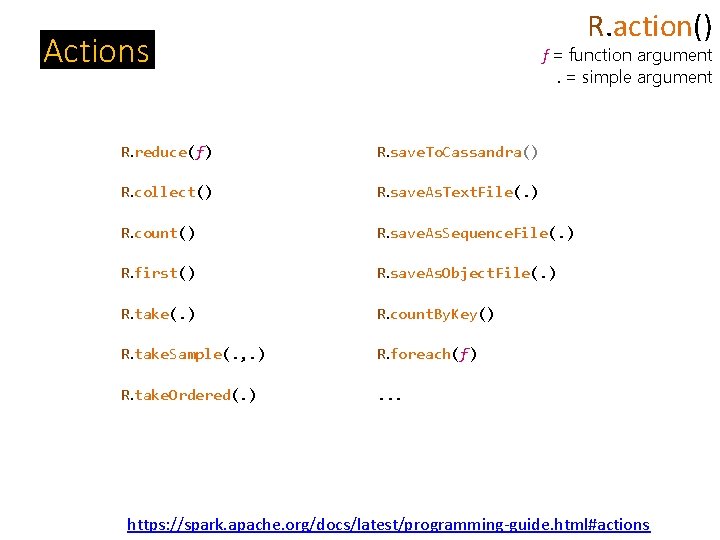

R.action(): Actions

f = function argument, . = simple argument

- R.reduce(f)
- R.collect()
- R.count()
- R.first()
- R.take(.)
- R.takeSample(., .)
- R.takeOrdered(.)
- R.countByKey()
- R.foreach(f)
- R.saveAsTextFile(.)
- R.saveAsSequenceFile(.)
- R.saveAsObjectFile(.)
- R.saveToCassandra()
- ...

https://spark.apache.org/docs/latest/programming-guide.html#actions

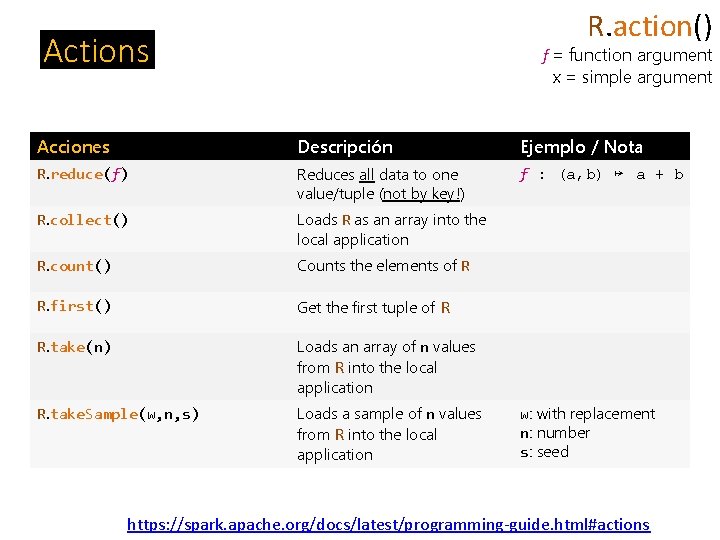

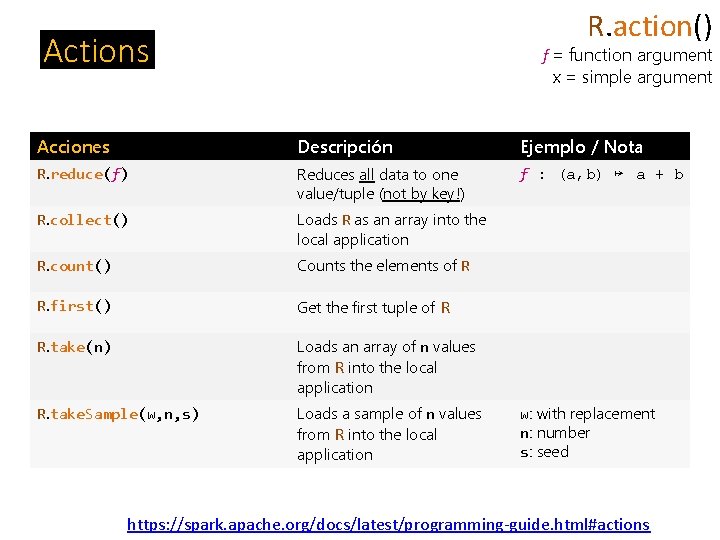

R.action(): Actions

f = function argument, x = simple argument

| Action | Description | Example / Note |
|---|---|---|
| R.reduce(f) | Reduces all data to one value/tuple (not by key!) | f : (a, b) ↦ a + b |
| R.collect() | Loads R as an array into the local application | |
| R.count() | Counts the elements of R | |
| R.first() | Gets the first tuple of R | |
| R.take(n) | Loads an array of n values from R into the local application | |
| R.takeSample(w, n, s) | Loads a sample of n values from R into the local application | w: with replacement; n: number; s: seed |

https://spark.apache.org/docs/latest/programming-guide.html#actions

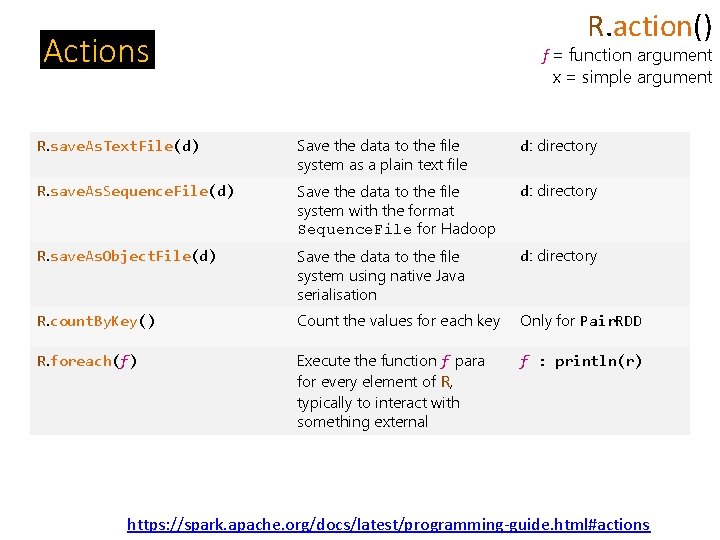

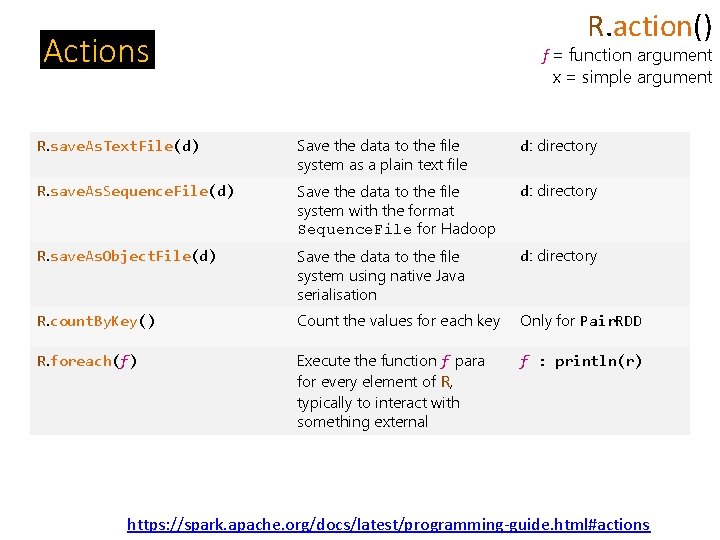

R.action(): Actions

f = function argument, x = simple argument

| Action | Description | Example / Note |
|---|---|---|
| R.saveAsTextFile(d) | Saves the data to the file system as a plain text file | d: directory |
| R.saveAsSequenceFile(d) | Saves the data to the file system in the SequenceFile format for Hadoop | d: directory |
| R.saveAsObjectFile(d) | Saves the data to the file system using native Java serialisation | d: directory |
| R.countByKey() | Counts the values for each key | Only for PairRDD |
| R.foreach(f) | Executes the function f for every element of R, typically to interact with something external | f : println(r) |

https://spark.apache.org/docs/latest/programming-guide.html#actions
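As a rough guide to what the local-result actions return, the sketch below computes their plain-Python equivalents on a small list of pairs. It is an analogy only (a Python list is already local and materialised), and the variable names are hypothetical.

```python
from collections import Counter
from functools import reduce
from itertools import islice

# A small list of (key, value) pairs standing in for a PairRDD R.
R = [("que", 1), ("sed", 2), ("que", 3)]

# Like reduce(f) over the values: everything collapses to one value, not per key.
total = reduce(lambda a, b: a + b, [v for _, v in R])

count = len(R)                 # like R.count()
first = R[0]                   # like R.first()
take_two = list(islice(R, 2))  # like R.take(2)

# Like R.countByKey(): number of elements per key (not the sum of their values).
count_by_key = dict(Counter(k for k, _ in R))

print(total, count, first, take_two, count_by_key)
```

A common point of confusion is that countByKey counts how many pairs share a key, so "que" maps to 2 here even though its values sum to 4.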

APACHE SPARK: TRANSFORMATIONS FOR PairRDD

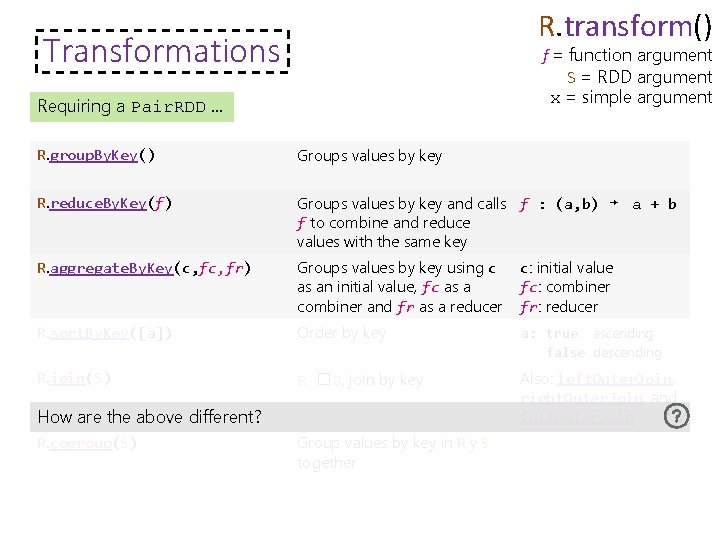

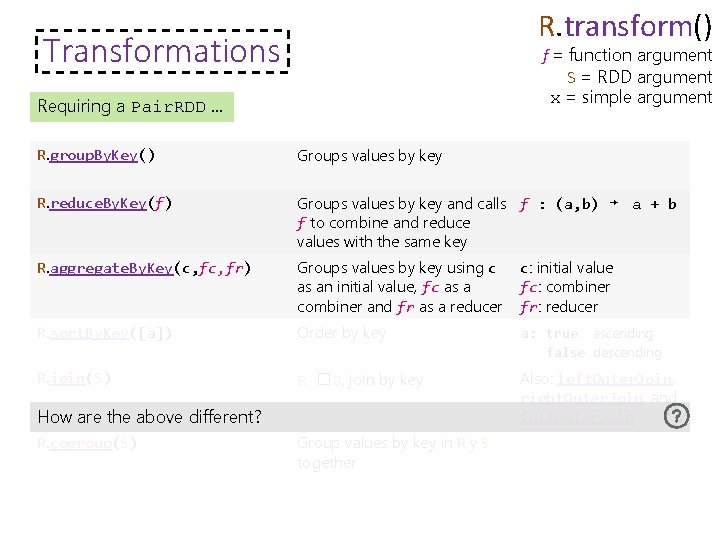

Recall three of the transformations requiring a PairRDD: R.groupByKey(), R.reduceByKey(f) and R.aggregateByKey(c, fc, fr). How are the above different?

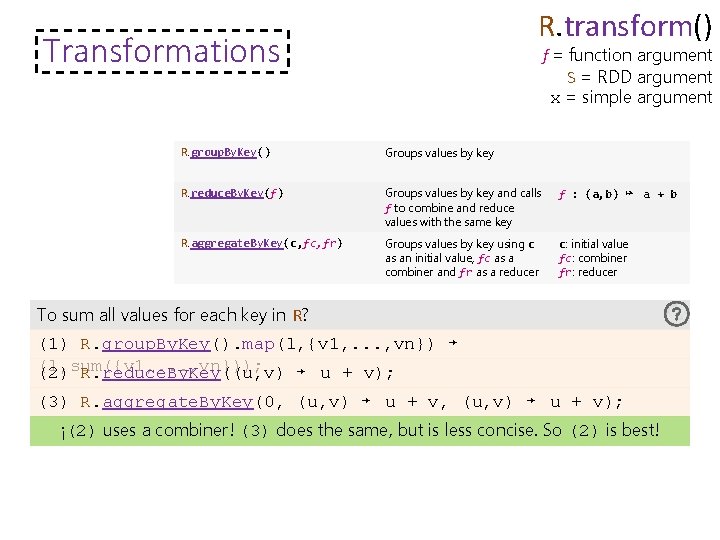

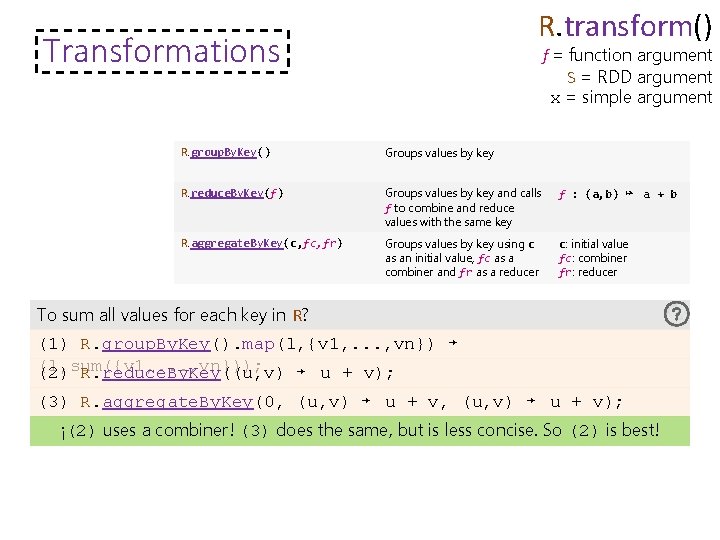

To sum all values for each key in R?

(1) R.groupByKey().map((l, {v1, ..., vn}) ↦ (l, sum({v1, ..., vn})));

(2) R.reduceByKey((u, v) ↦ u + v);

(3) R.aggregateByKey(0, (u, v) ↦ u + v, (u, v) ↦ u + v);

(2) uses a combiner! (3) does the same, but is less concise. So (2) is best!
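The benefit of the combiner can be made visible by counting how many records would cross the shuffle in options (1) and (2). The following plain-Python sketch simulates both over a two-partition dataset; the function names and the sample data are assumptions for illustration, and the "shuffle" is just a list that collects what each partition would send.

```python
from collections import defaultdict

# Hypothetical PairRDD stand-in: two partitions of (key, value) pairs.
partitions = [[("que", 1), ("que", 1), ("sed", 1)], [("que", 1), ("sed", 1)]]


def sum_with_group_by_key(parts):
    """Option (1): ship every pair across the shuffle, then sum each group."""
    shuffled = [pair for part in parts for pair in part]
    groups = defaultdict(list)
    for k, v in shuffled:
        groups[k].append(v)
    return {k: sum(vs) for k, vs in groups.items()}, len(shuffled)


def sum_with_reduce_by_key(parts, f):
    """Option (2): combine inside each partition first, then merge partials."""
    shuffled = []
    for part in parts:
        local = {}
        for k, v in part:
            local[k] = f(local[k], v) if k in local else v
        shuffled.extend(local.items())
    totals = {}
    for k, v in shuffled:
        totals[k] = f(totals[k], v) if k in totals else v
    return totals, len(shuffled)


grouped_totals, grouped_shuffled = sum_with_group_by_key(partitions)
reduced_totals, reduced_shuffled = sum_with_reduce_by_key(partitions, lambda u, v: u + v)

print(grouped_totals, grouped_shuffled)
print(reduced_totals, reduced_shuffled)
```

Both options give the same totals, but the combining version sends at most one record per key per partition, so the saving grows with the number of repeated keys inside each partition.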

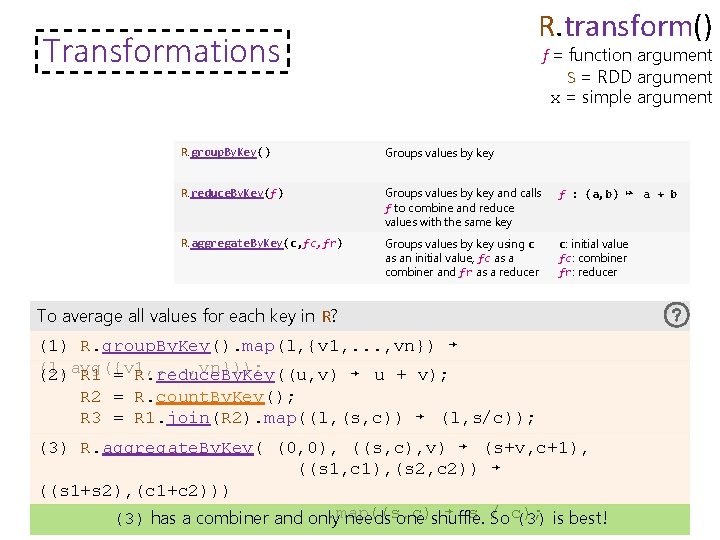

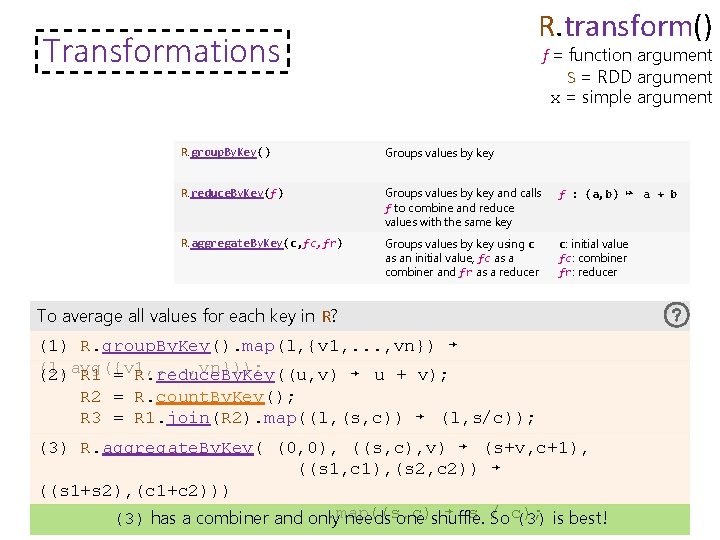

To average all values for each key in R?

(1) R.groupByKey().map((l, {v1, ..., vn}) ↦ (l, avg({v1, ..., vn})));

(2) R1 = R.reduceByKey((u, v) ↦ u + v); R2 = R.countByKey(); R3 = R1.join(R2).map((l, (s, c)) ↦ (l, s/c));

(3) R.aggregateByKey((0, 0), ((s, c), v) ↦ (s+v, c+1), ((s1, c1), (s2, c2)) ↦ (s1+s2, c1+c2)).map((s, c) ↦ s / c);

(3) has a combiner and only needs one shuffle. So (3) is best!
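Option (3) can be sketched in plain Python by carrying a (sum, count) accumulator per key. The helper `aggregate_by_key` below is a hypothetical single-process imitation of the idea (a per-partition combiner followed by a merge of partial results), and the sample data is invented for illustration.

```python
def aggregate_by_key(parts, zero, seq_op, comb_op):
    """Fold values into a per-key accumulator inside each partition,
    then merge the per-partition accumulators key by key."""
    partials = []
    for part in parts:
        local = {}
        for k, v in part:
            local[k] = seq_op(local.get(k, zero), v)
        partials.append(local)
    merged = {}
    for local in partials:
        for k, acc in local.items():
            merged[k] = comb_op(merged[k], acc) if k in merged else acc
    return merged


# Hypothetical PairRDD stand-in with two partitions.
partitions = [[("a", 2), ("b", 10), ("a", 4)], [("a", 6), ("b", 20)]]

sums_counts = aggregate_by_key(
    partitions,
    (0, 0),                                   # initial (sum, count)
    lambda acc, v: (acc[0] + v, acc[1] + 1),  # add one value to an accumulator
    lambda x, y: (x[0] + y[0], x[1] + y[1]),  # merge two accumulators
)
averages = {k: s / c for k, (s, c) in sums_counts.items()}
print(averages)
```

Averages cannot be combined directly (the average of averages is wrong when group sizes differ), which is why the accumulator keeps the sum and the count separately and divides only at the end.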

APACHE SPARK: "DIRECTED ACYCLIC GRAPH" ("DAG")

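Spark: Products by Hour

The input file receipts.txt holds the (customer, item, hour, price) tuples of the earlier example: (c1, i1, 08, 900), (c1, i2, 08, 2000), (c2, i3, 09, 1240), (c2, i4, 09, 3000), (c2, i5, 09, 3000), (c2, i6, 09, 2450), (c2, i2, 09, 2000), (c3, i7, 08, 7000), (c3, i8, 08, 9230), (c3, i2, 08, 2000), (c4, i1, 23, 900), (c4, i9, 23, 830), …

Task: number of customers buying each premium item per hour of the day.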

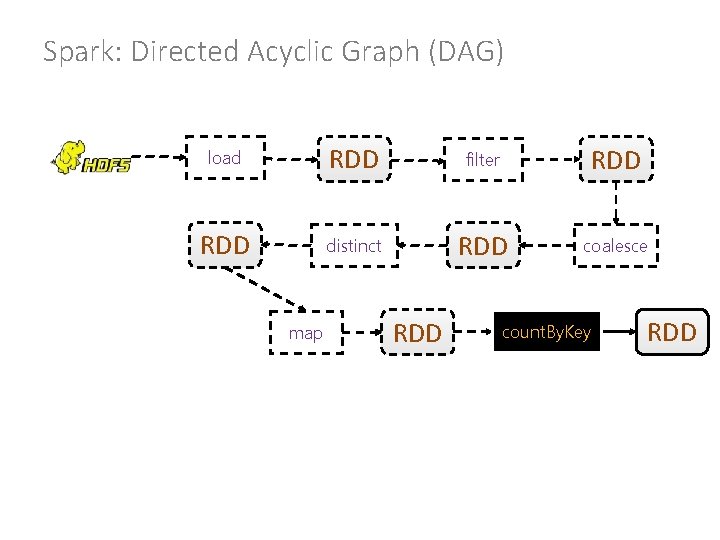

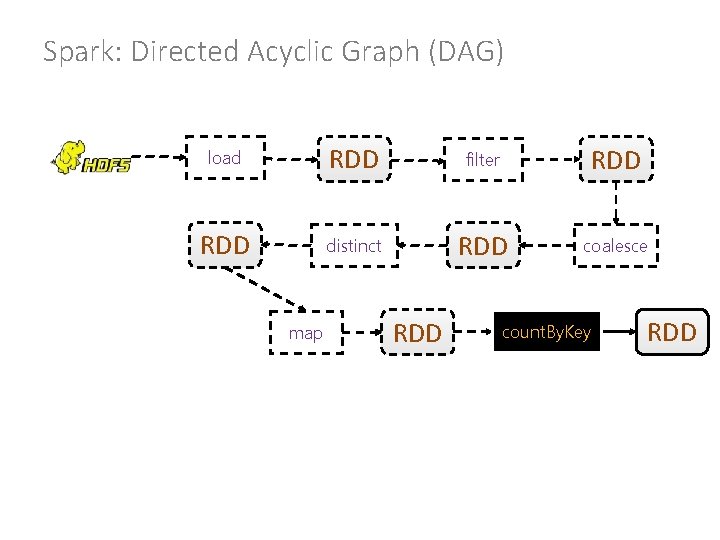

Spark: Directed Acyclic Graph (DAG)

load → filter → coalesce → distinct → map → countByKey, with an RDD produced after each step.

Spark: Products by Hour

Two input files are now used:

- receipts.txt: the (customer, item, hour, price) tuples, (c1, i1, 08, 900), (c1, i2, 08, 2000), …, (c4, i9, 23, 830), …
- customer.txt: one line per customer (c1, c2, c3, c4, …) with gender and age, for example (female, 24) and (female, 40); the ages listed are 24, 40, 73 and 21

Task: number of customers buying each premium item per hour of the day.

Also … number of females older than 30 buying each premium item per hour of the day.

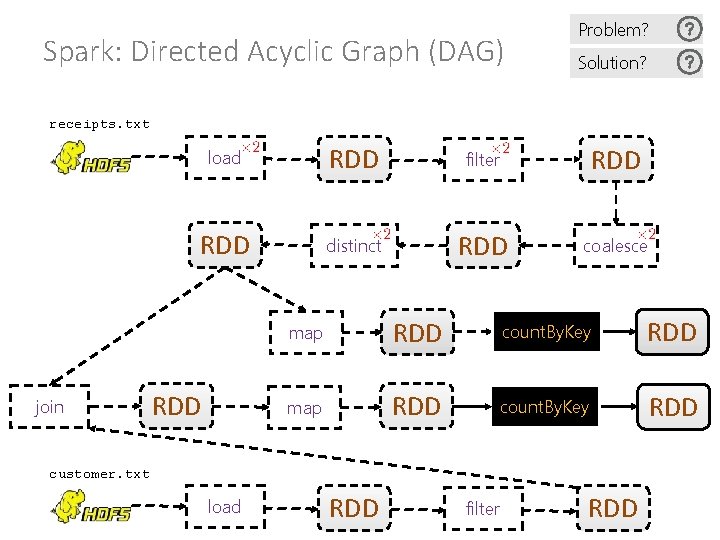

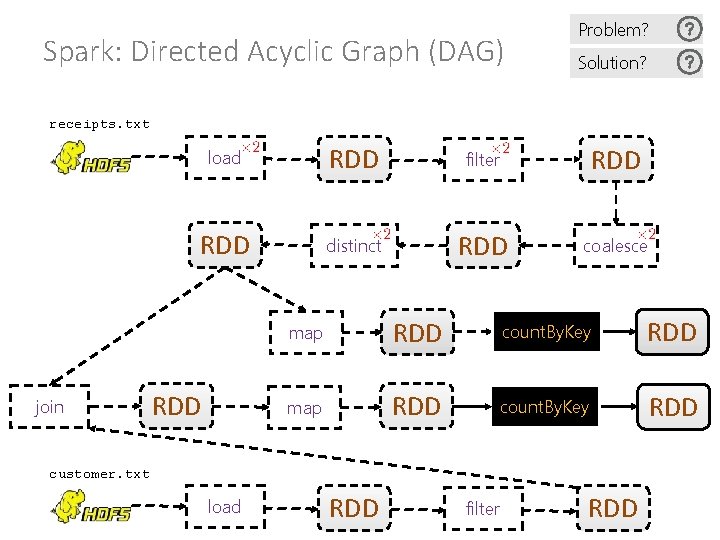

Spark: Directed Acyclic Graph (DAG)

The graph now has two branches that meet at a join:

- receipts.txt: load → filter → coalesce → distinct → map → countByKey
- customer.txt: load → filter, whose output is joined with the receipts.txt branch

Problem? Solution?

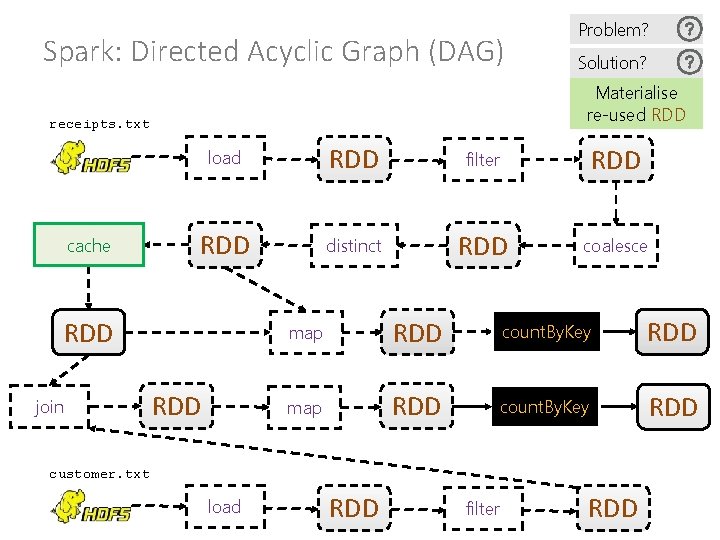

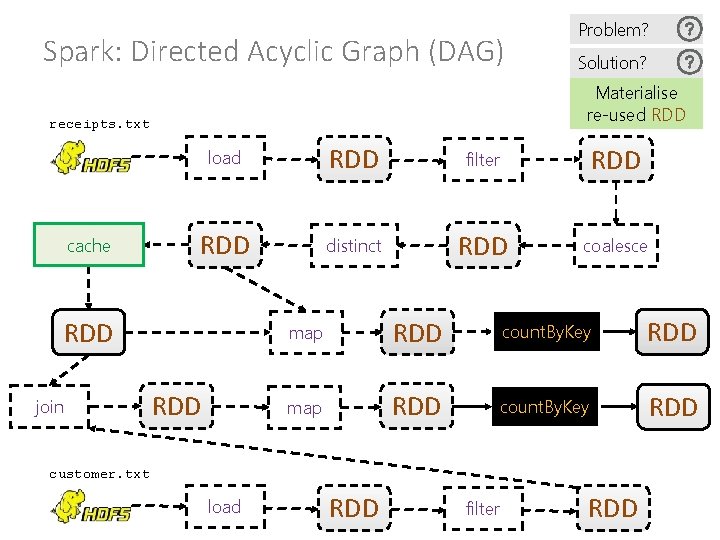

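Spark: Directed Acyclic Graph (DAG)

Problem? Both tasks reuse an RDD from the receipts.txt branch (load → filter → coalesce → distinct); since intermediate RDDs are not kept, it would be recomputed for each task.

Solution? Materialise the re-used RDD with cache, then continue from it with map → countByKey for the first task and with the join against customer.txt (load → filter) for the second.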

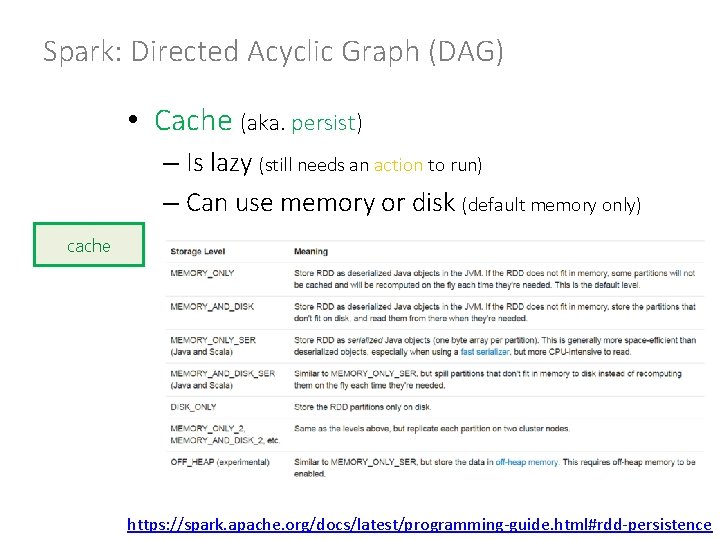

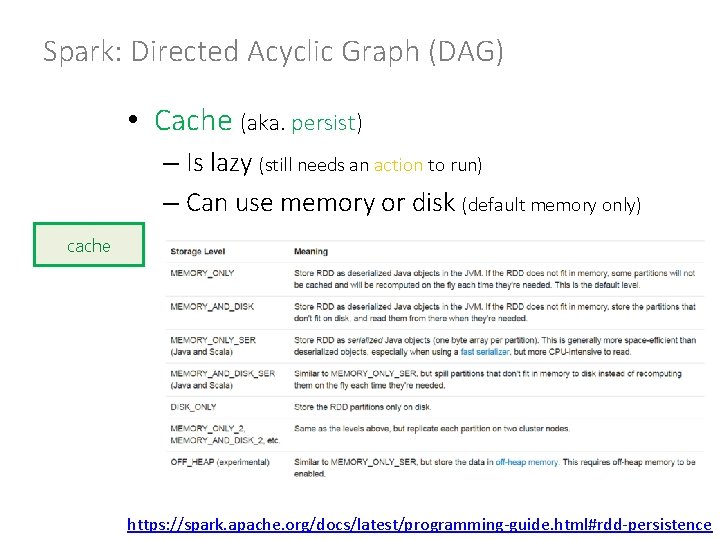

Spark: Directed Acyclic Graph (DAG)

- Cache (aka. persist)
  - Is lazy (still needs an action to run)
  - Can use memory or disk (default: memory only)

https://spark.apache.org/docs/latest/programming-guide.html#rdd-persistence
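The effect of caching a re-used dataset, and the fact that the cache call itself is lazy, can be imitated in plain Python. The `LazyDataset` class below is a hypothetical toy, not Spark's persistence mechanism: it counts how often the shared "load and filter" branch is recomputed with and without caching.

```python
calls = {"load": 0}  # how many times the shared branch was computed


def load_and_filter():
    """Stand-in for the shared 'load -> filter' branch of the DAG."""
    calls["load"] += 1
    rows = [("c1", "i2", "08", 2000), ("c3", "i2", "08", 2000), ("c4", "i9", "23", 830)]
    return [r for r in rows if r[3] > 1000]


class LazyDataset:
    """Toy dataset that recomputes on every action unless cached."""

    def __init__(self, compute):
        self._compute = compute
        self._cached = None
        self._use_cache = False

    def cache(self):
        self._use_cache = True  # lazy: nothing is computed here
        return self

    def collect(self):
        if not self._use_cache:
            return self._compute()
        if self._cached is None:
            self._cached = self._compute()  # materialised by the first action
        return self._cached


uncached = LazyDataset(load_and_filter)
uncached.collect()
uncached.collect()
loads_without_cache = calls["load"]

calls["load"] = 0
cached = LazyDataset(load_and_filter).cache()
loads_after_cache_call = calls["load"]
cached.collect()
cached.collect()
loads_with_cache = calls["load"]

print(loads_without_cache, loads_after_cache_call, loads_with_cache)
```

Without caching, each action recomputes the branch; with caching, the branch is computed once by the first action and reused afterwards, while the cache call alone triggers no work.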

APACHE SPARK: CORE SUMMARY

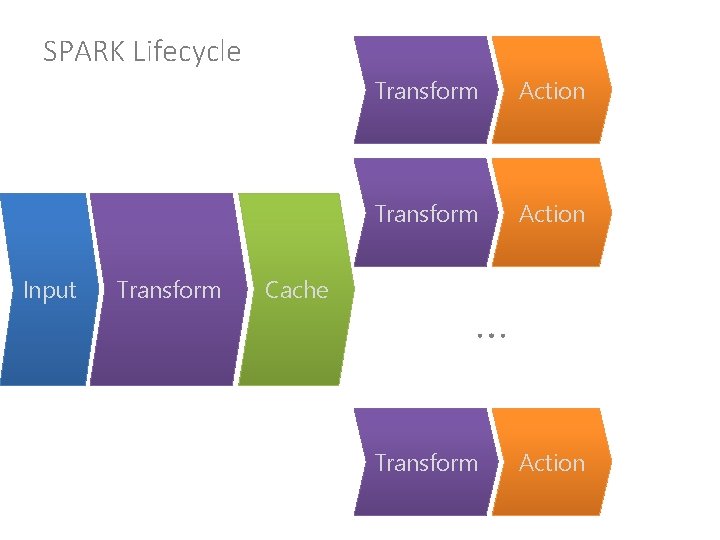

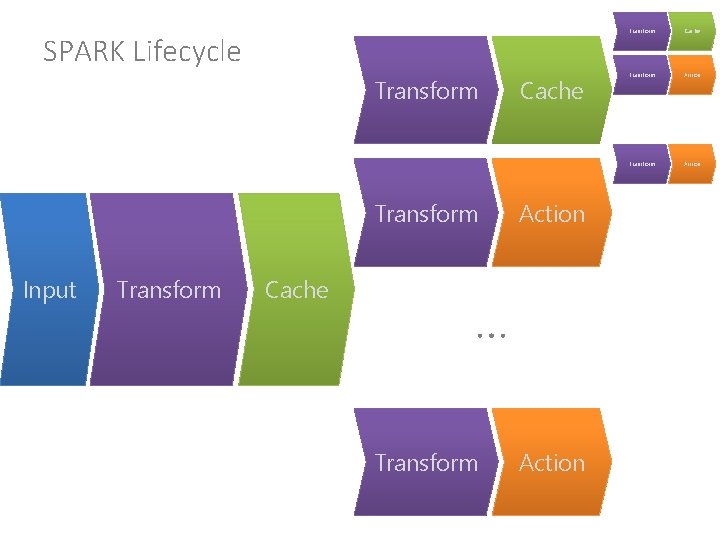

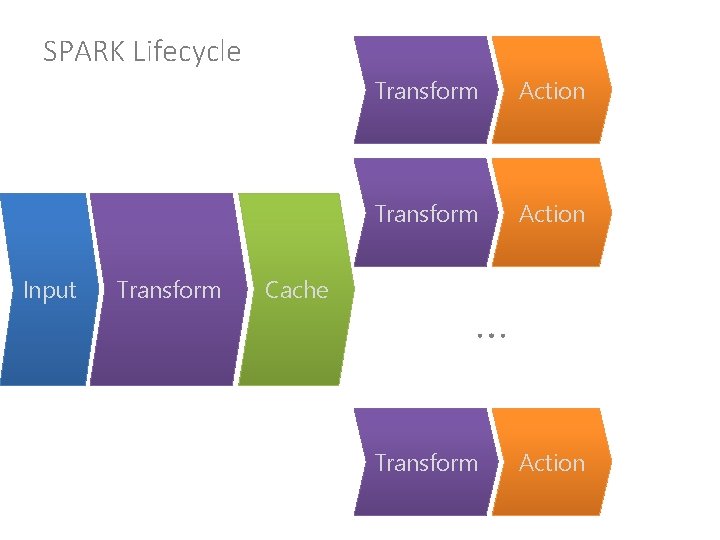

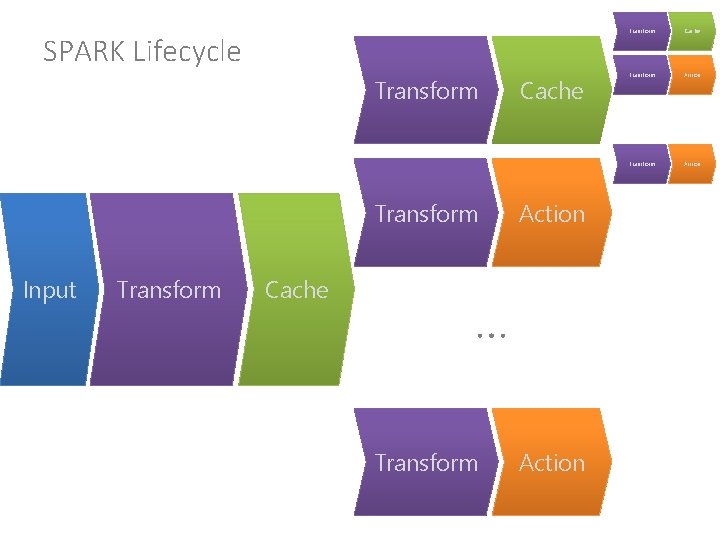

SPARK Lifecycle: Input → Transform → Cache → Transform → Action, … , Transform → Action

SPARK Lifecycle (general case): the pattern Input → Transform → Cache repeats, with each cached RDD feeding several Transform → Action chains.

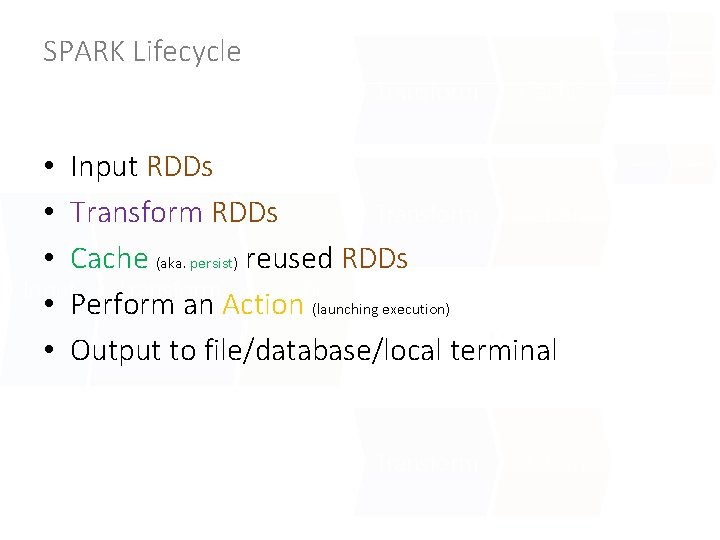

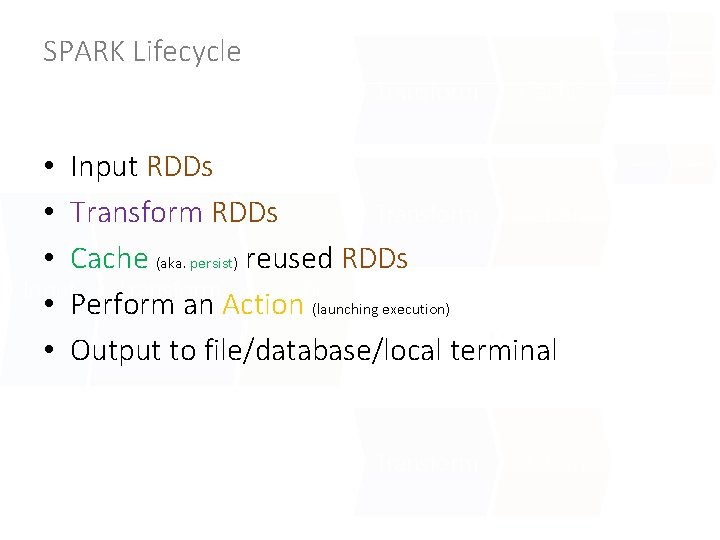

SPARK Lifecycle

- Input RDDs
- Transform RDDs
- Cache (aka. persist) reused RDDs
- Perform an Action (launching execution)
- Output to file/database/local terminal

SPARK: BEYOND THE CORE

Hadoop vs. Spark vs YARN Mesos Tachyon

Hadoop vs. Spark: SQL, ML, Streams, … vs YARN Mesos Tachyon SQL MLlib Streaming

SPARK VS. HADOOP

Spark can use the disk

https://databricks.com/blog/2014/10/10/spark-petabyte-sort.html

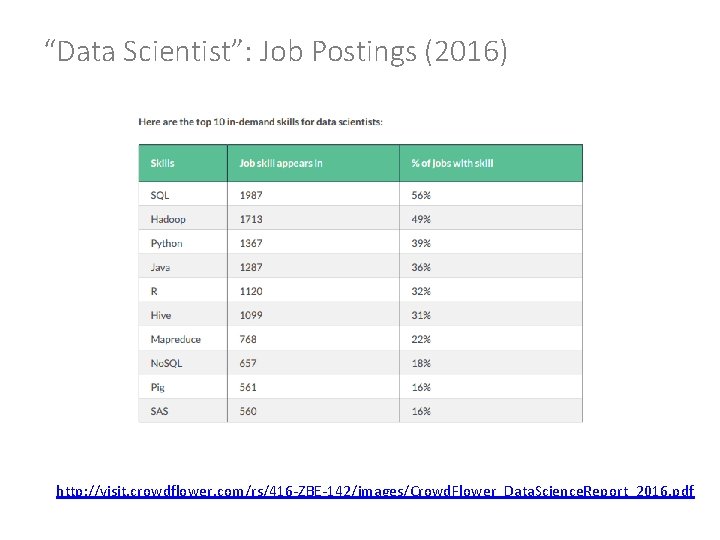

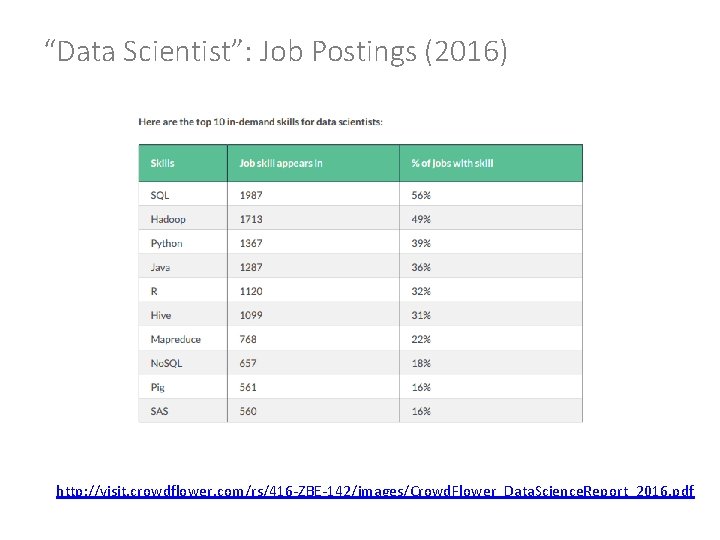

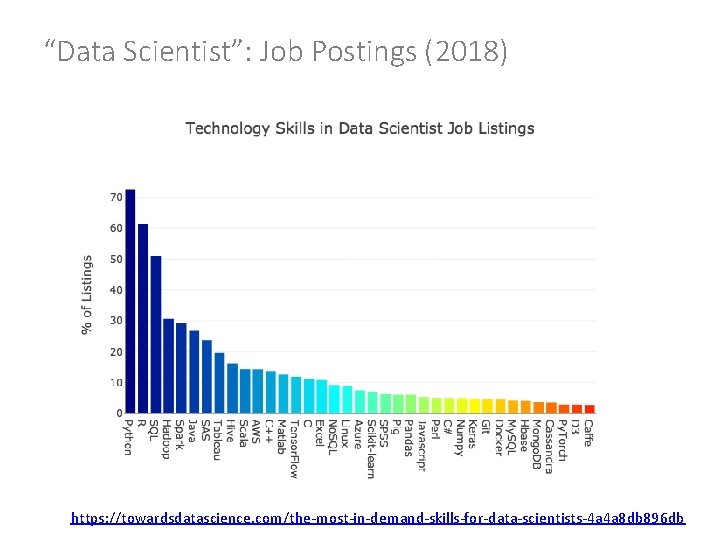

“Data Scientist”: Job Postings (2016)

http://visit.crowdflower.com/rs/416-ZBE-142/images/CrowdFlower_DataScienceReport_2016.pdf

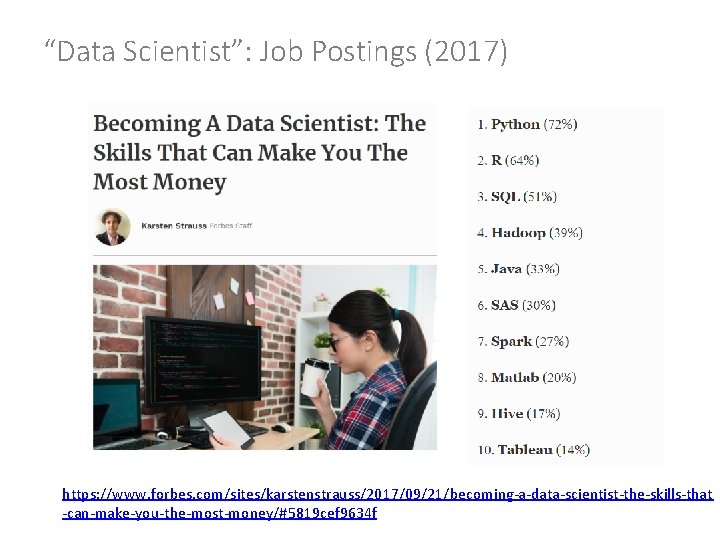

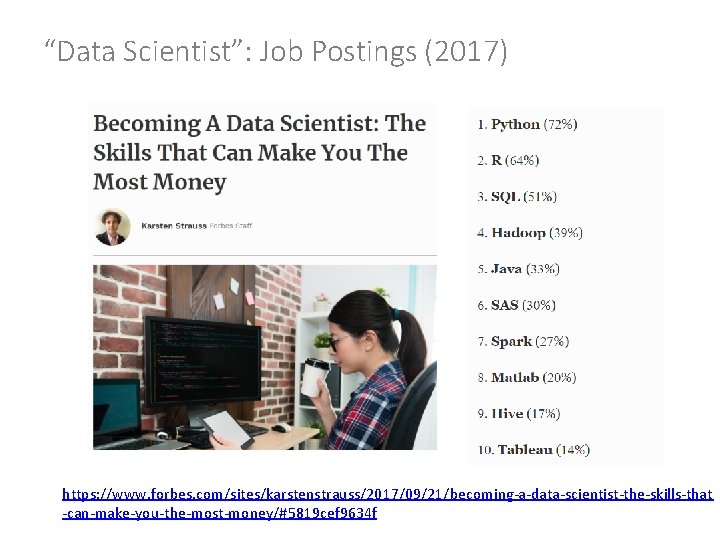

“Data Scientist”: Job Postings (2017)

https://www.forbes.com/sites/karstenstrauss/2017/09/21/becoming-a-data-scientist-the-skills-that-can-make-you-the-most-money/#5819cef9634f

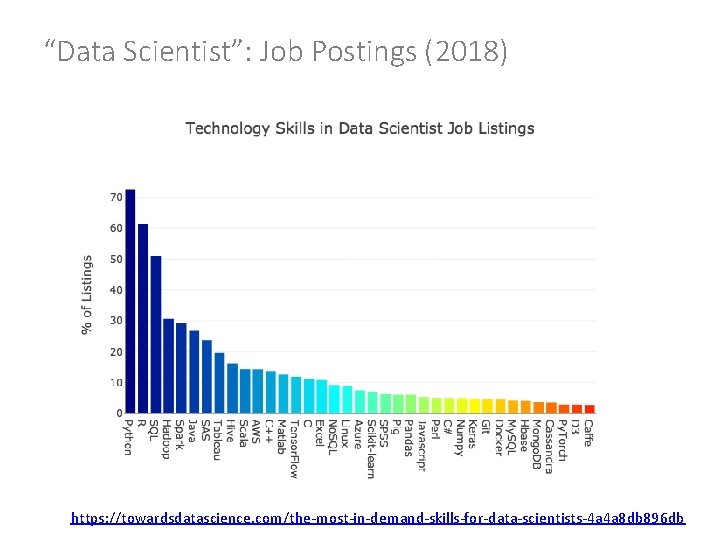

“Data Scientist”: Job Postings (2018)

https://towardsdatascience.com/the-most-in-demand-skills-for-data-scientists-4a4a8db896db

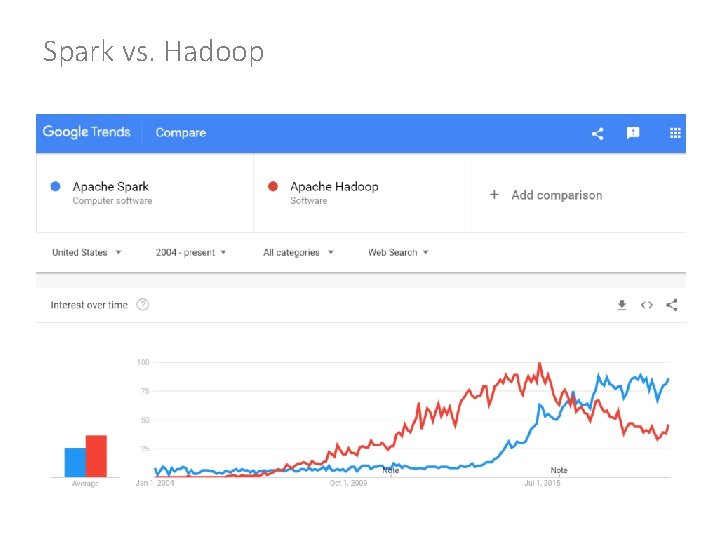

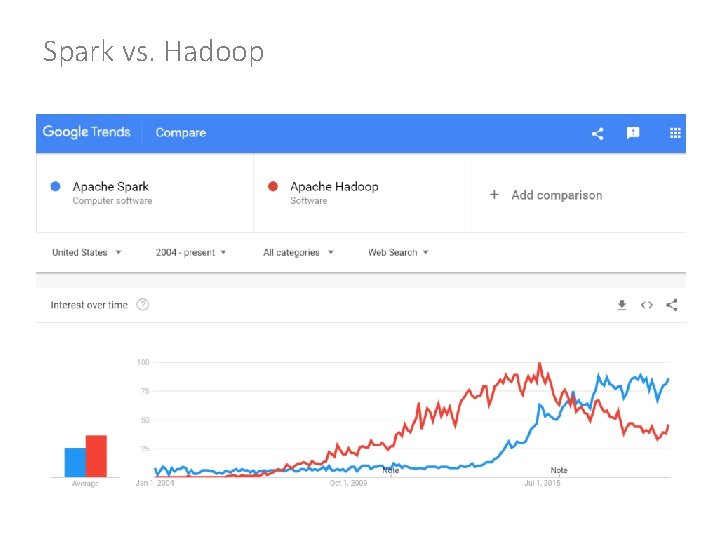

Spark vs. Hadoop

Questions?
