CC 5212-1 Procesamiento Masivo de Datos (Massive Data Processing), Autumn 2018 • Lecture 7: Information Retrieval: Ranking • Aidan Hogan, aidhog@gmail.com

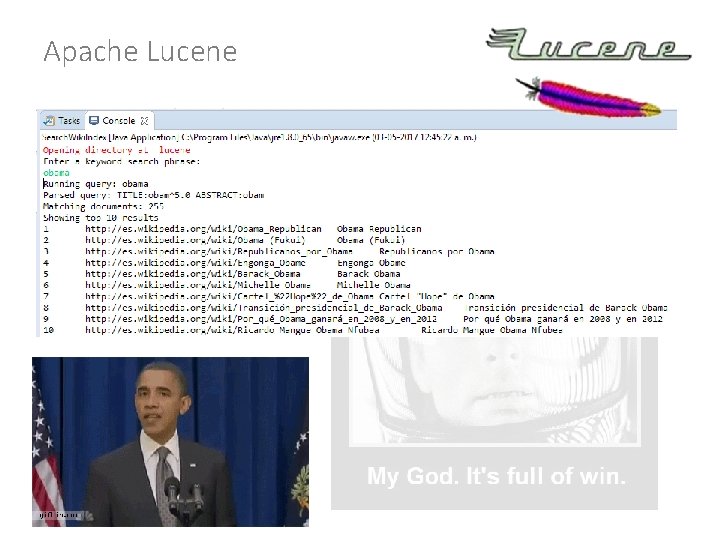

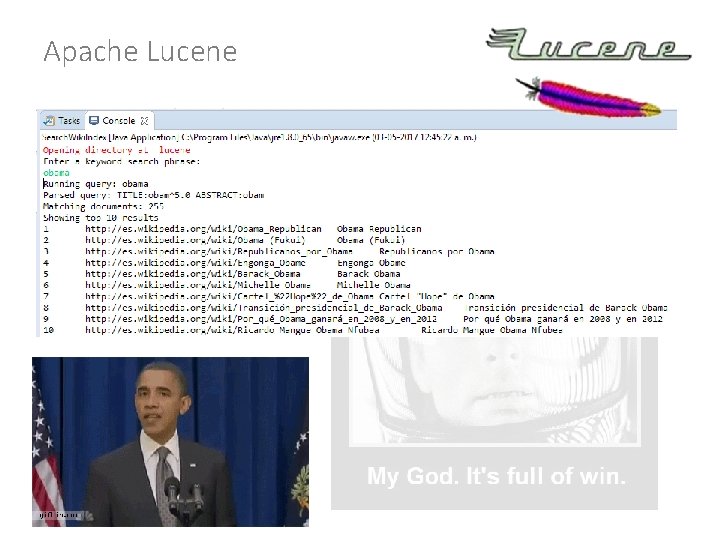

Apache Lucene • Inverted Index – They built one so you don’t have to! – Open Source in Java

INFORMATION RETRIEVAL: RANKING

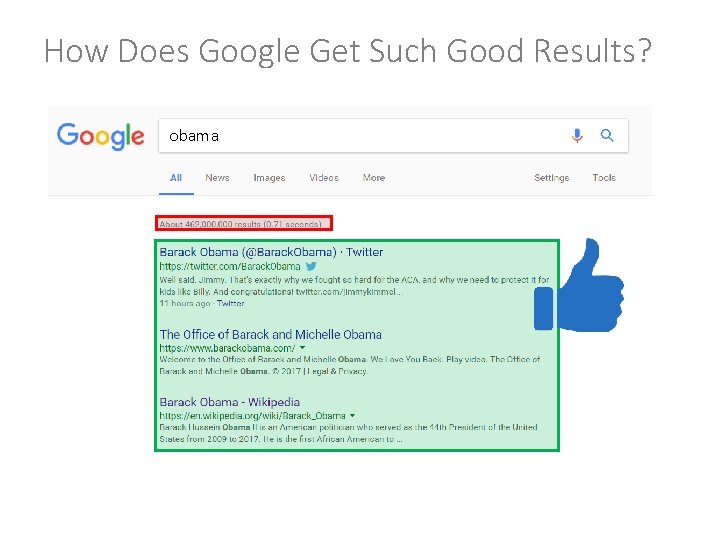

How Does Google Get Such Good Results? (Example query: "obama")

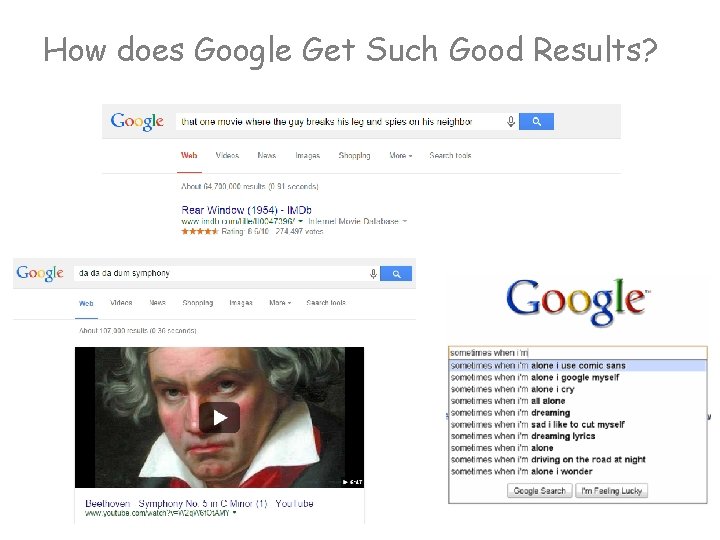

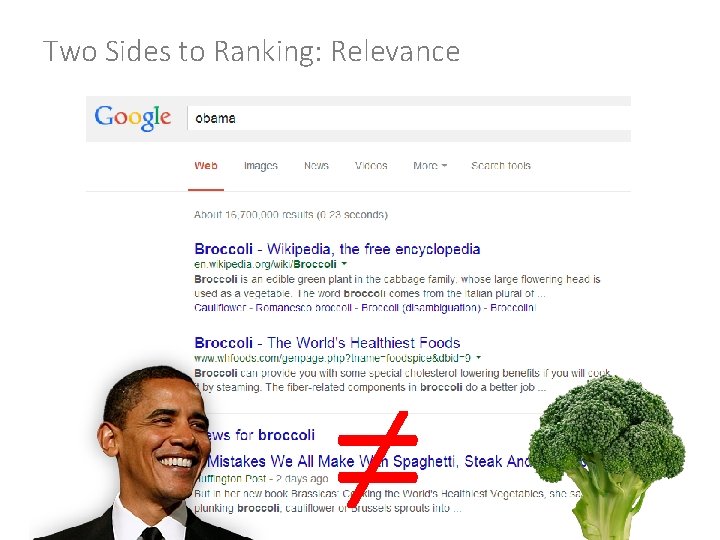

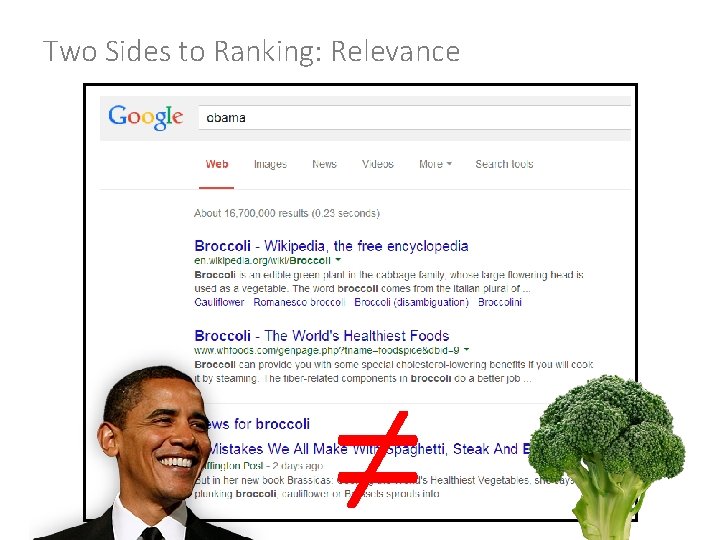

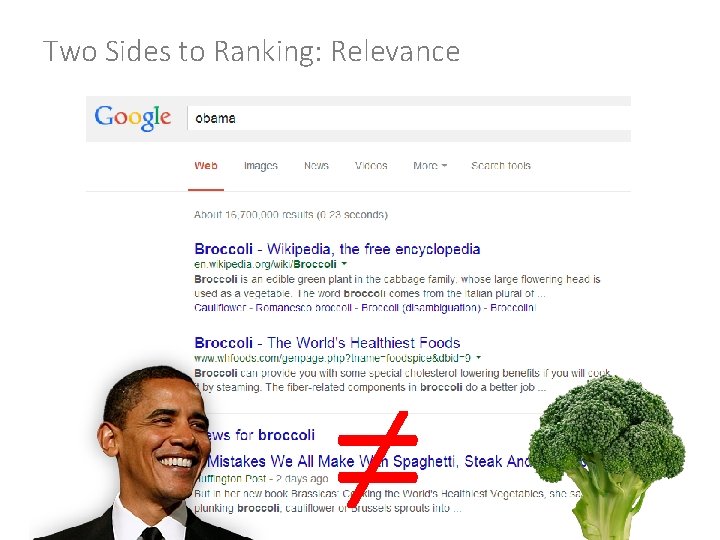

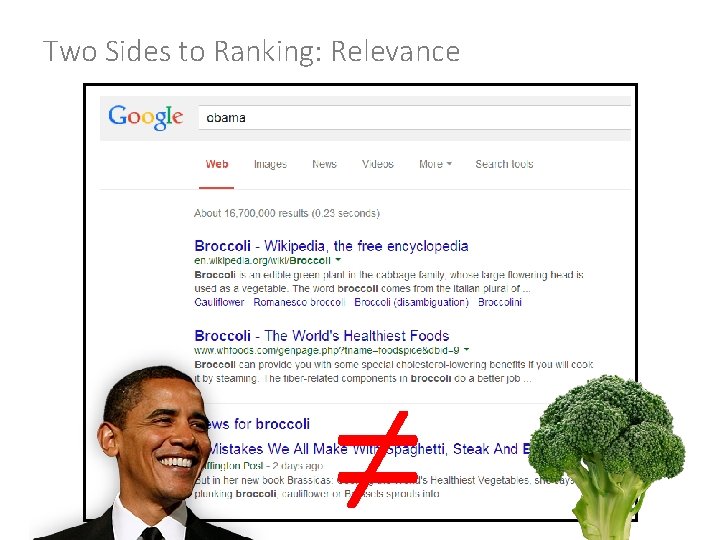

Two Sides to Ranking: Relevance

Two Sides to Ranking: Importance

RANKING: RELEVANCE

Example Query • Which of these three keyword terms is most “important”?

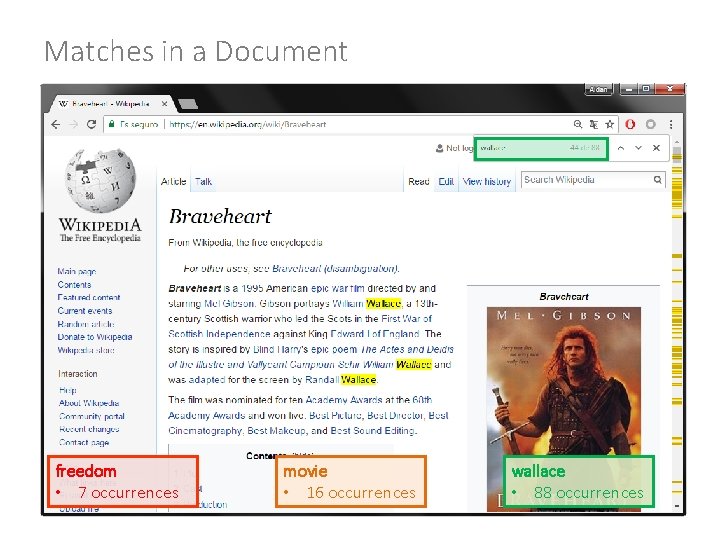

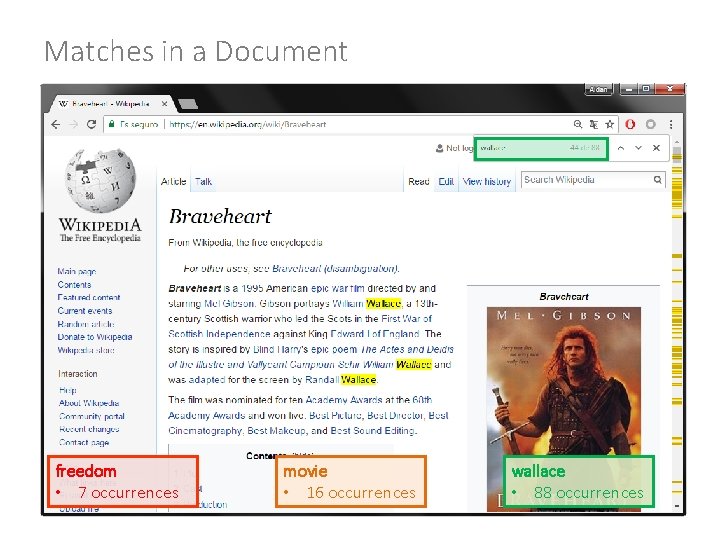

Matches in a Document • freedom: 7 occurrences • movie: 16 occurrences • wallace: 88 occurrences

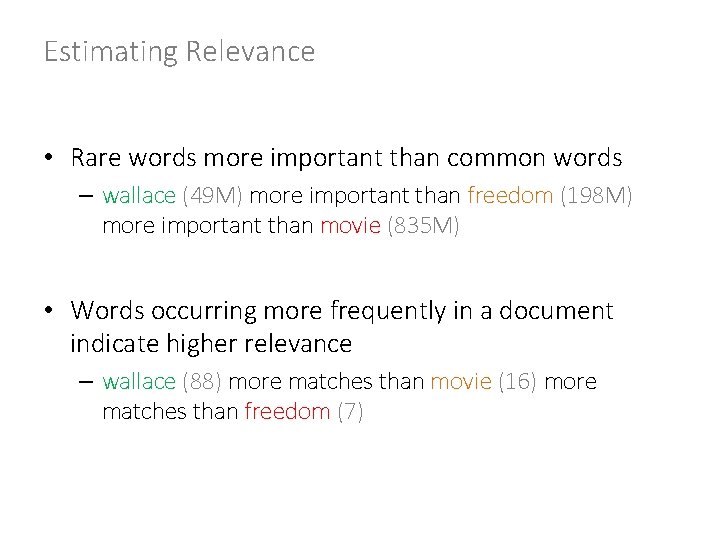

Usefulness of Words • movie: occurs very frequently • freedom: occurs frequently • wallace: occurs occasionally

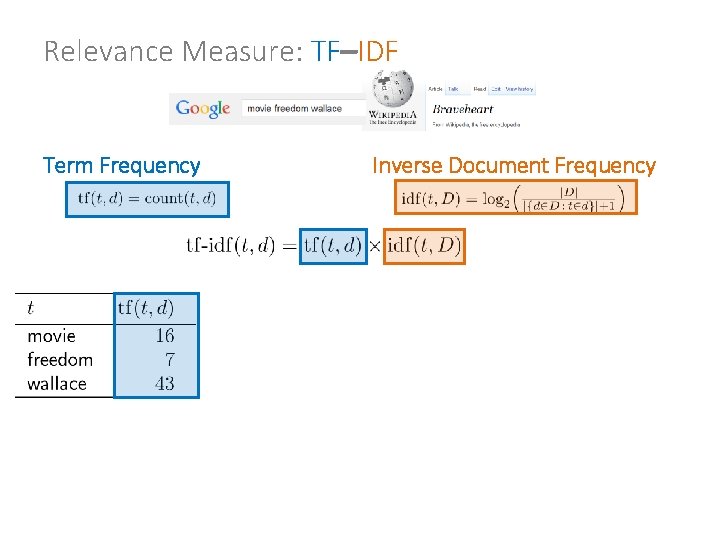

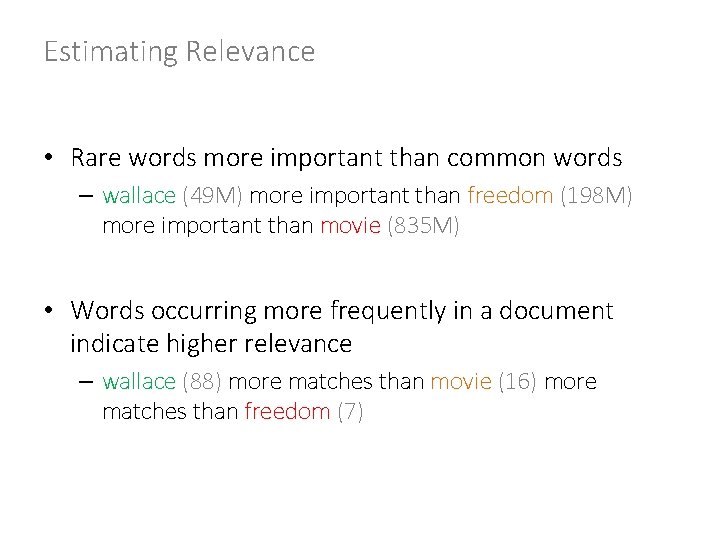

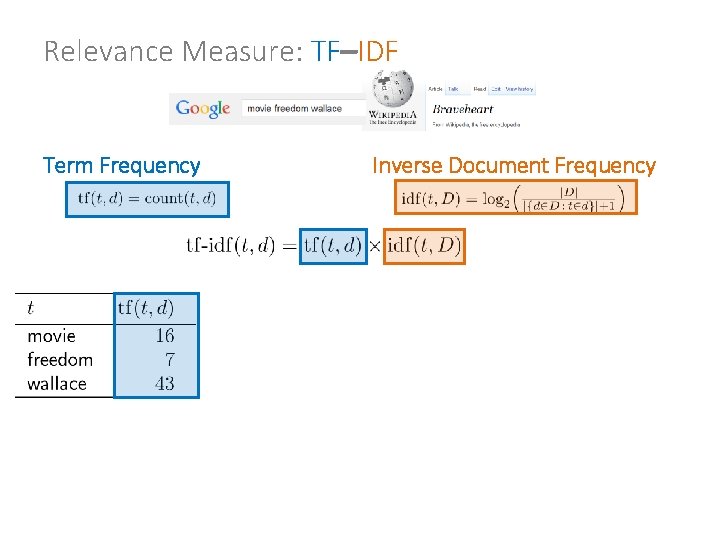

Estimating Relevance • Rare words are more important than common words: wallace (49 M) is more important than freedom (198 M), which is more important than movie (835 M) • Words occurring more frequently in a document indicate higher relevance: wallace (88 matches) counts for more than movie (16), which counts for more than freedom (7)

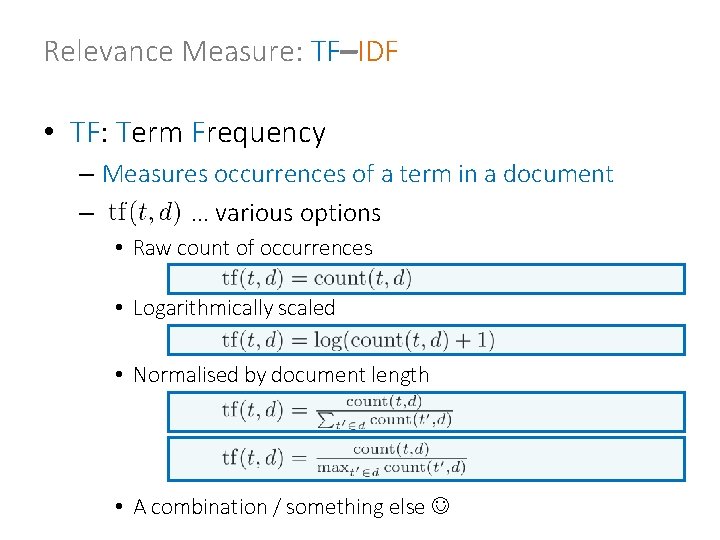

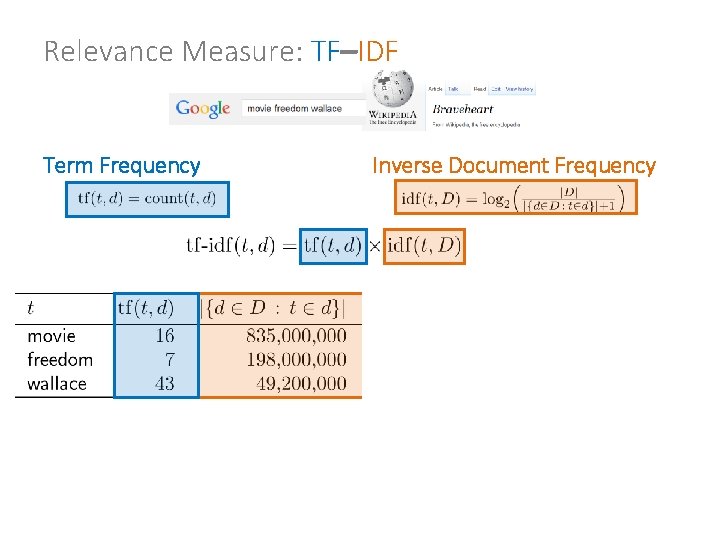

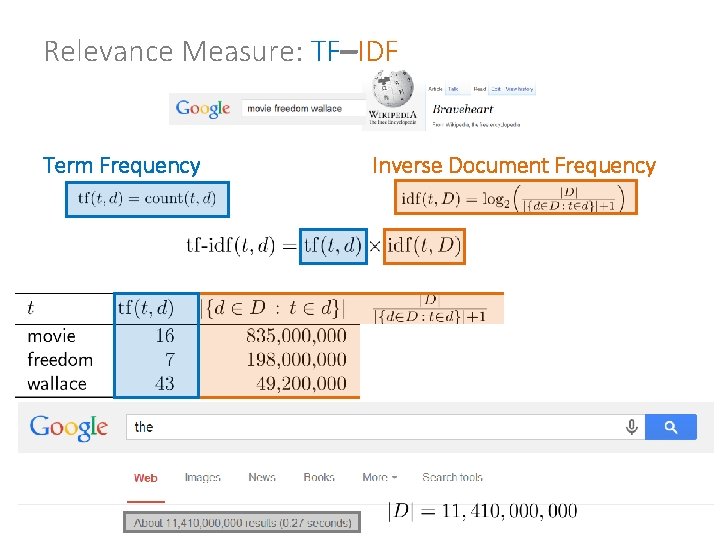

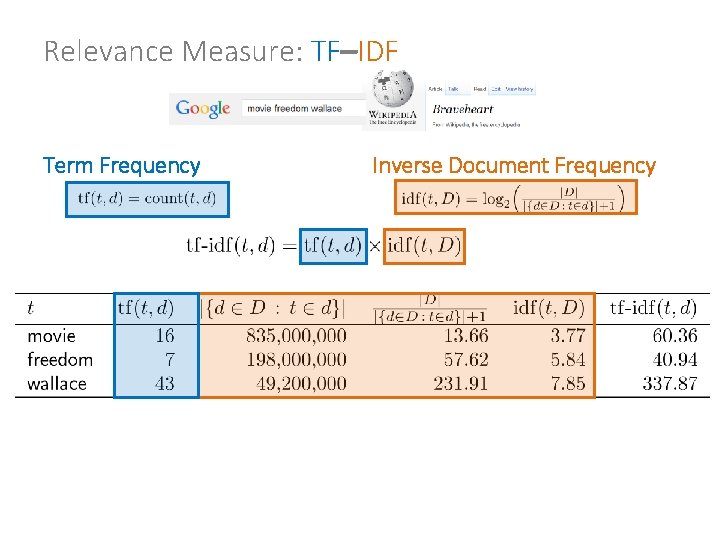

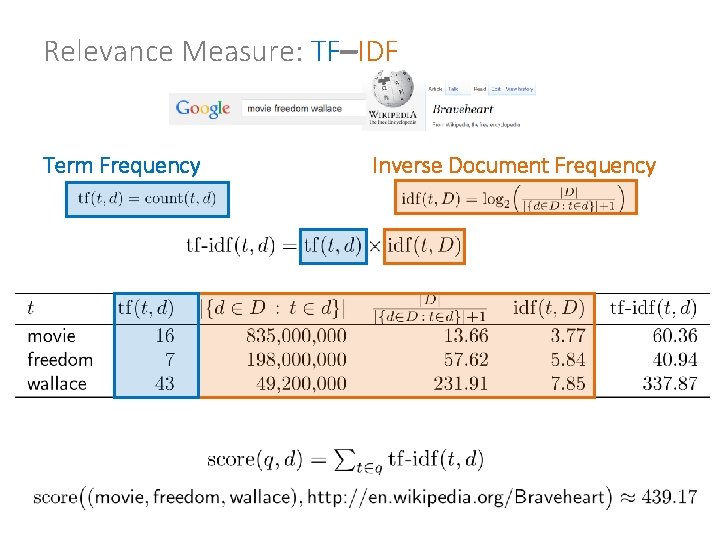

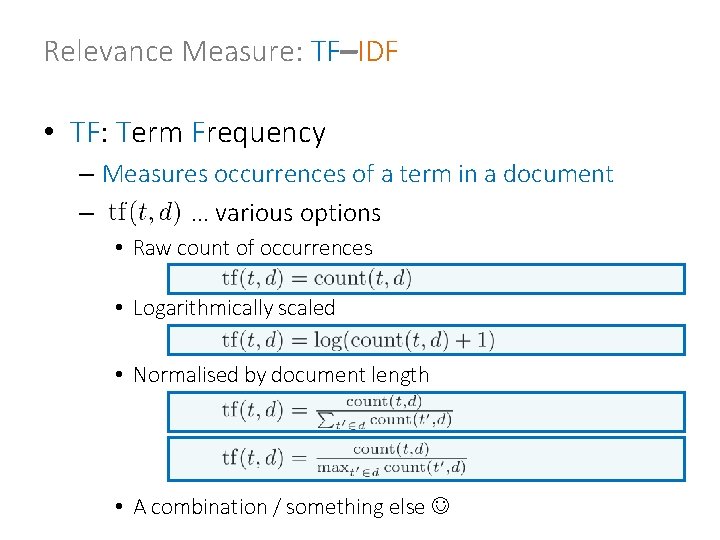

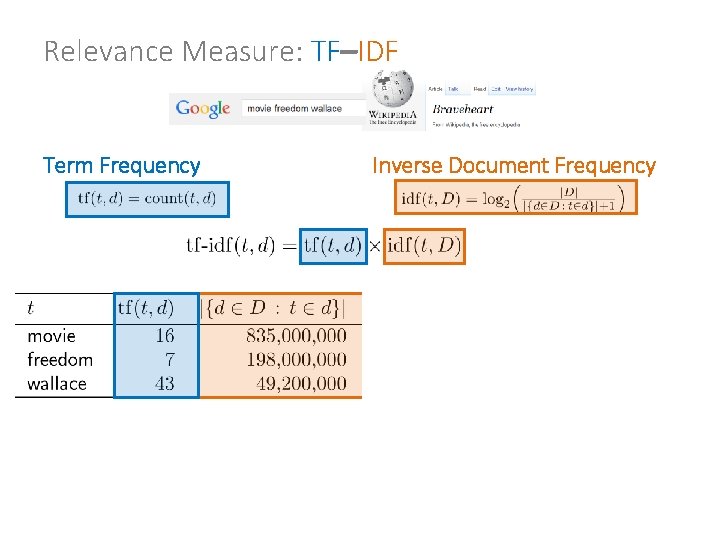

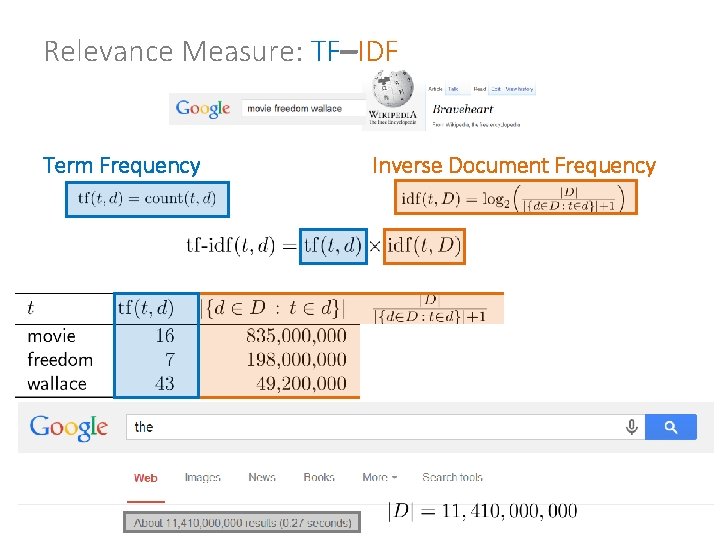

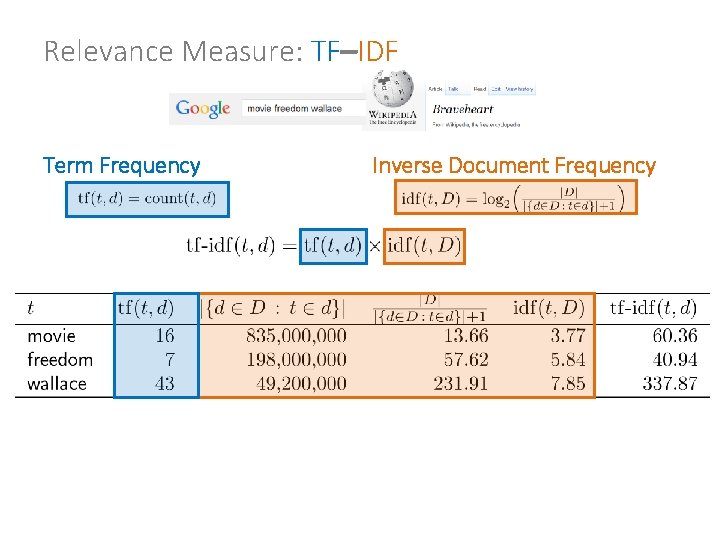

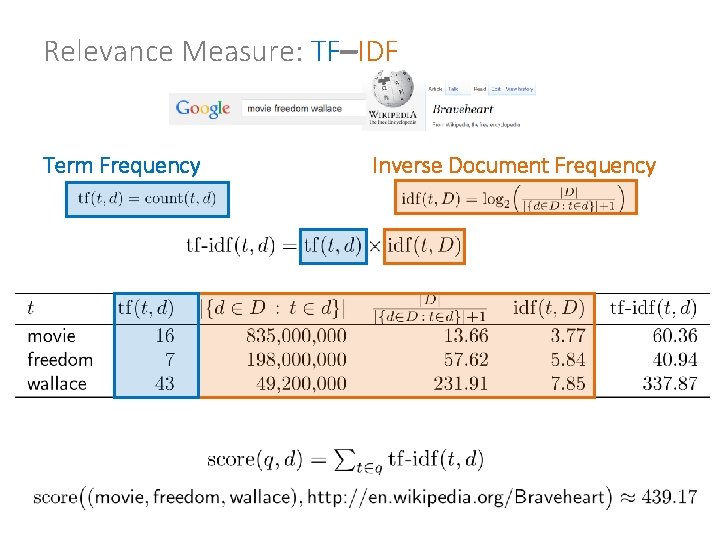

Relevance Measure: TF–IDF • TF: Term Frequency – Measures occurrences of a term in a document – … various options • Raw count of occurrences • Logarithmically scaled • Normalised by document length • A combination / something else
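The TF options listed above can be sketched in Python as follows. The function names, the naive whitespace tokenisation, and the choice of 1 + log(count) for log scaling are illustrative assumptions, not definitions taken from the slides.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Naive tokenisation (an assumption): lowercase, then split on whitespace.
    return text.lower().split()


def tf_raw(term: str, doc: list[str]) -> int:
    # Raw count of occurrences of the term in the document.
    return Counter(doc)[term]


def tf_log(term: str, doc: list[str]) -> float:
    # Logarithmically scaled count: 1 + log(count), or 0 if the term is absent.
    count = tf_raw(term, doc)
    return 1 + math.log(count) if count > 0 else 0.0


def tf_normalised(term: str, doc: list[str]) -> float:
    # Raw count divided by the document length (number of tokens).
    return tf_raw(term, doc) / len(doc) if doc else 0.0


doc = tokenize("wallace freedom movie wallace wallace")
print(tf_raw("wallace", doc))            # 3
print(round(tf_log("wallace", doc), 3))  # 2.099  (1 + ln 3)
print(tf_normalised("wallace", doc))     # 0.6    (3 / 5)
```

Log scaling damps the effect of repetition: a term that occurs 88 times is not treated as 88 times more relevant than one that occurs once.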

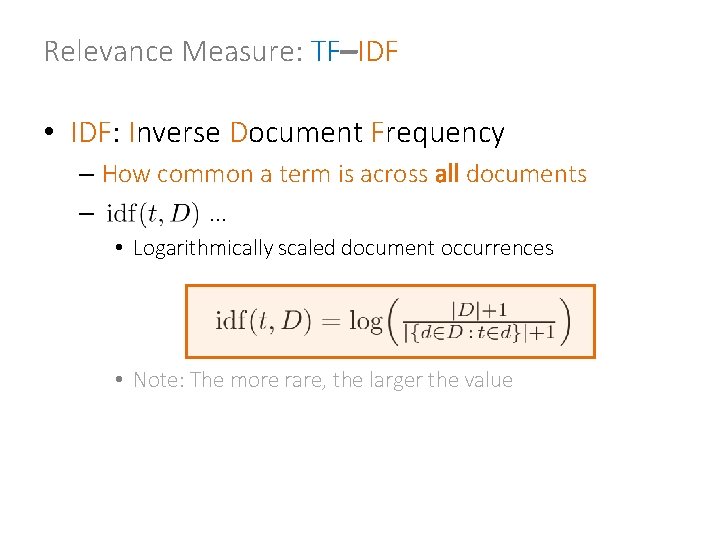

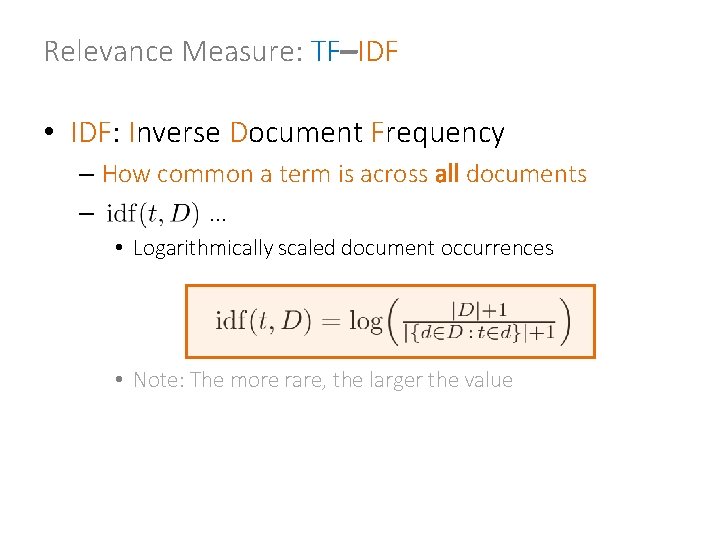

Relevance Measure: TF–IDF • IDF: Inverse Document Frequency – How common a term is across all documents – … • Logarithmically scaled document occurrences • Note: the rarer the term, the larger the value
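This is a minimal sketch of a logarithmically scaled IDF. It assumes the common form idf(t, D) = log(|D| / df(t)), where df(t) is the number of documents containing t. The four-document corpus is made up; it only illustrates that rarer terms receive larger values.

```python
import math


def idf(term: str, docs: list[set[str]]) -> float:
    # Number of documents in which the term occurs at least once.
    df = sum(1 for doc in docs if term in doc)
    if df == 0:
        return 0.0  # Assumption: a term that occurs nowhere contributes nothing.
    # Log-scaled inverse document frequency: rarer terms score higher.
    return math.log(len(docs) / df)


# Hypothetical corpus: each document reduced to its set of terms.
docs = [
    {"movie", "freedom", "wallace"},
    {"movie", "freedom"},
    {"movie", "review"},
    {"movie", "tickets"},
]
for term in ("movie", "freedom", "wallace"):
    print(term, round(idf(term, docs), 3))
# movie 0.0, freedom 0.693, wallace 1.386
```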

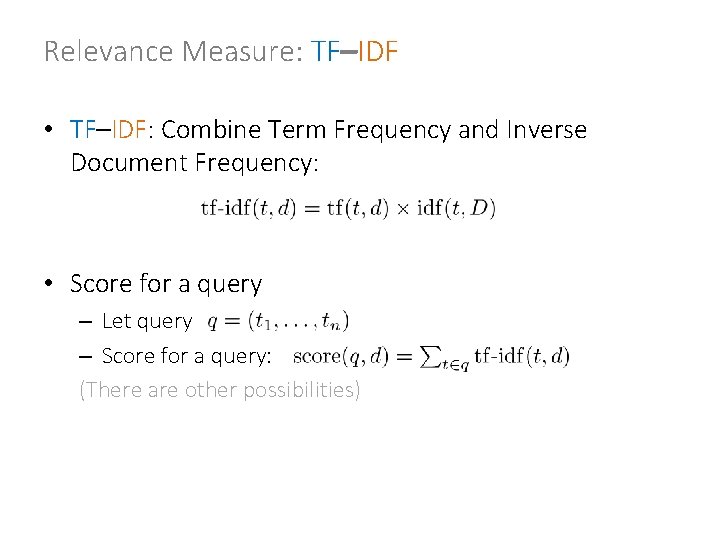

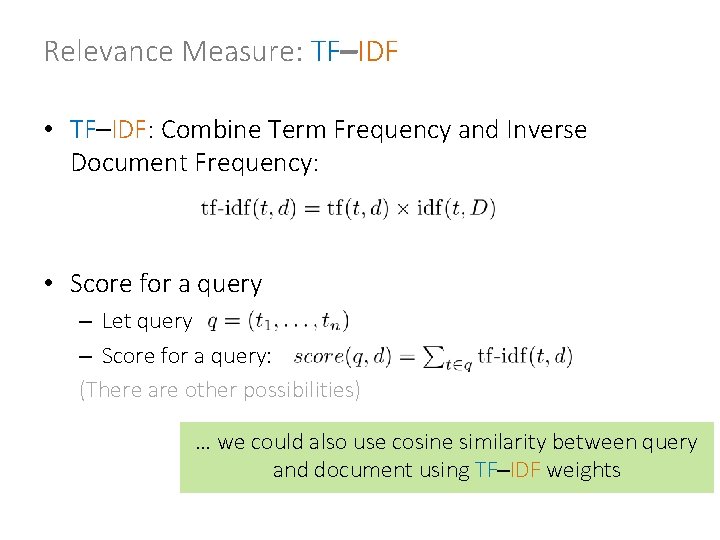

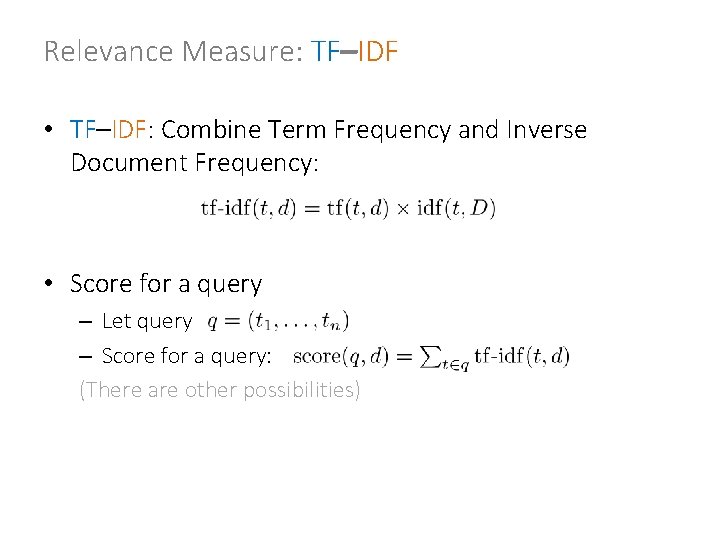

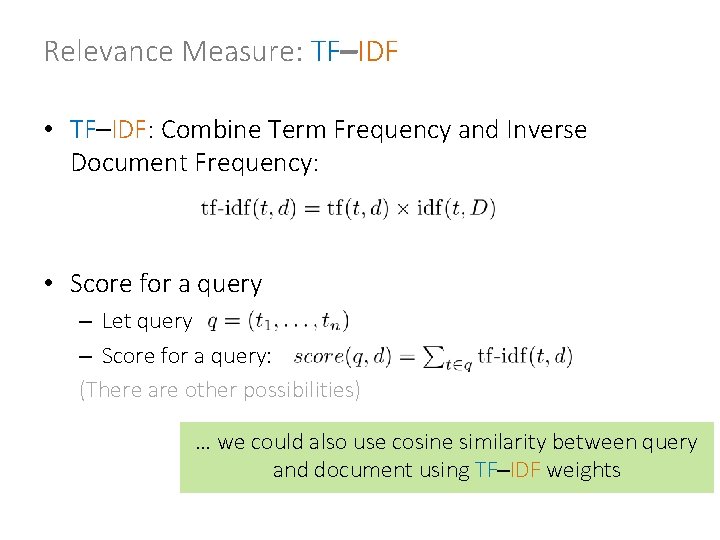

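Relevance Measure: TF–IDF • TF–IDF: combine Term Frequency and Inverse Document Frequency: $\text{tf-idf}(t,d,D) = \text{tf}(t,d) \times \text{idf}(t,D)$ • Score for a query – Let the query be $Q = \{q_1, \ldots, q_n\}$ – Score for a query: $\text{tf-idf}(Q,d,D) = \sum_{i=1}^{n} \text{tf-idf}(q_i,d,D)$ (There are other possibilities)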
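The sketch below implements one possible query score: the sum, over the query terms, of each term's TF–IDF weight in the document. It assumes raw-count TF and log(|D| / df) IDF, which are only two of the options listed earlier. The corpus is hypothetical.

```python
import math
from collections import Counter


def tf_idf_score(query: list[str], doc: list[str], corpus: list[list[str]]) -> float:
    """Sum over query terms of tf(q, d) * idf(q, D)."""
    counts = Counter(doc)
    score = 0.0
    for q in query:
        df = sum(1 for d in corpus if q in d)
        if df == 0:
            continue  # The term occurs nowhere in the corpus; it adds nothing.
        idf = math.log(len(corpus) / df)
        score += counts[q] * idf
    return score


# Hypothetical tokenised corpus.
corpus = [
    "wallace freedom movie wallace".split(),
    "freedom movie movie".split(),
    "movie tickets".split(),
]
query = ["wallace", "freedom", "movie"]
ranked = sorted(
    range(len(corpus)),
    key=lambda i: tf_idf_score(query, corpus[i], corpus),
    reverse=True,
)
print(ranked)  # [0, 1, 2]
```

Here "movie" occurs in every document, so its IDF is 0. It therefore cannot help distinguish documents, no matter how often it appears.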

Relevance Measure: TF–IDF [Worked example table with columns: Term Frequency, Inverse Document Frequency]

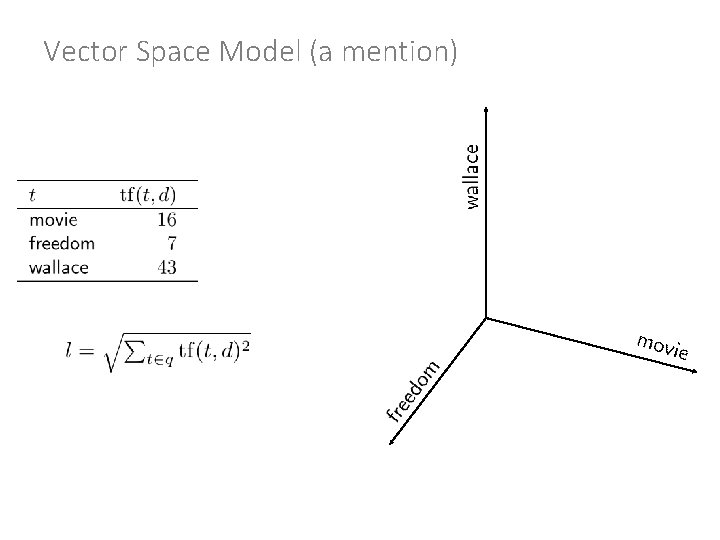

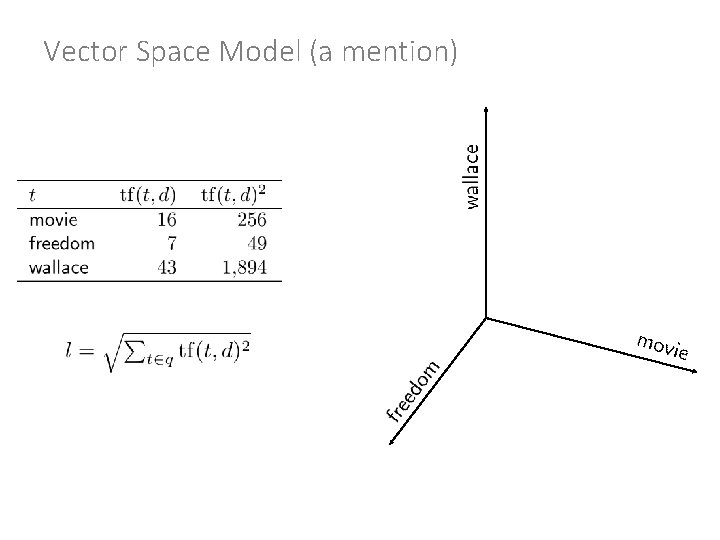

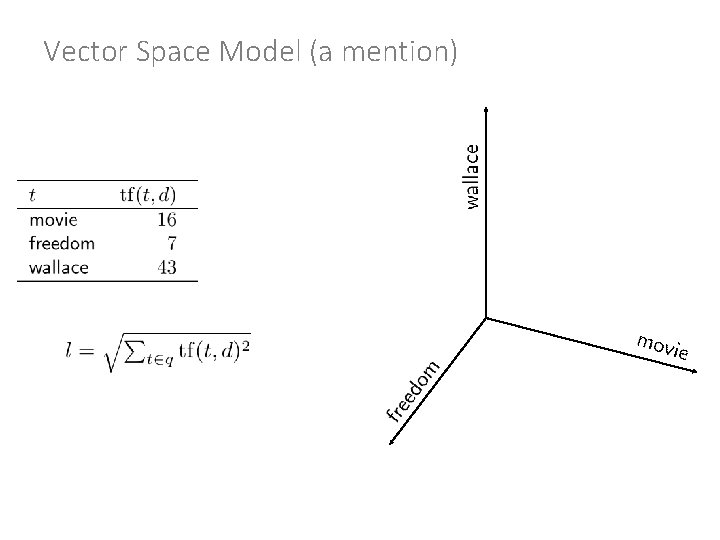

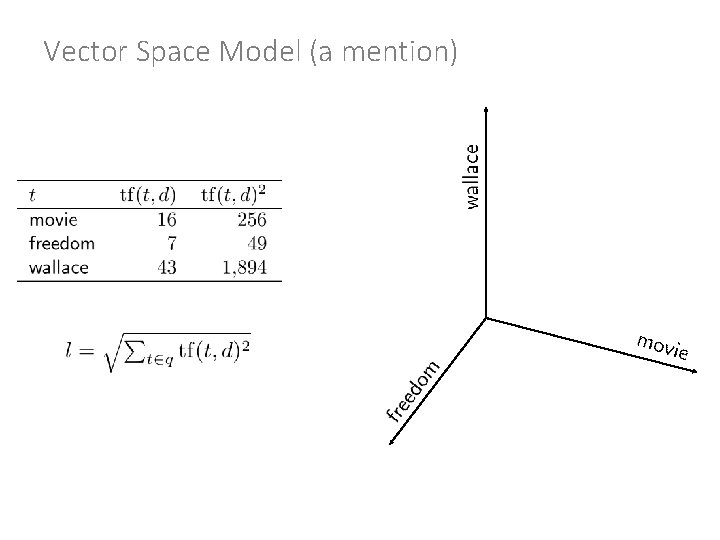

Vector Space Model (a mention)

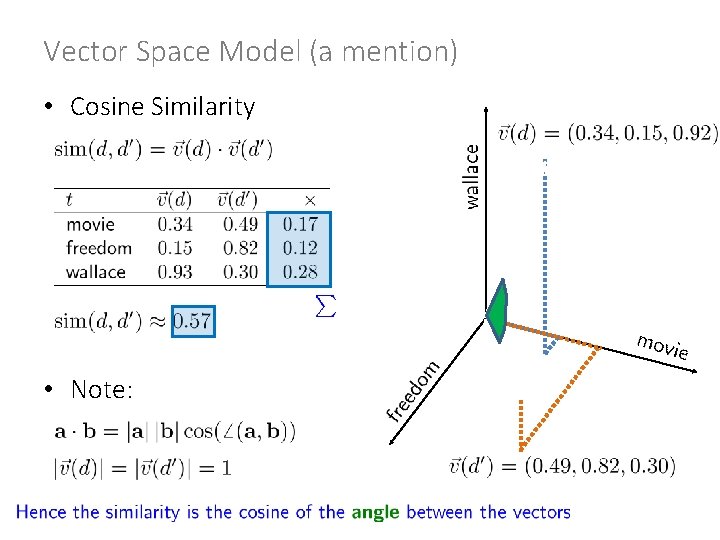

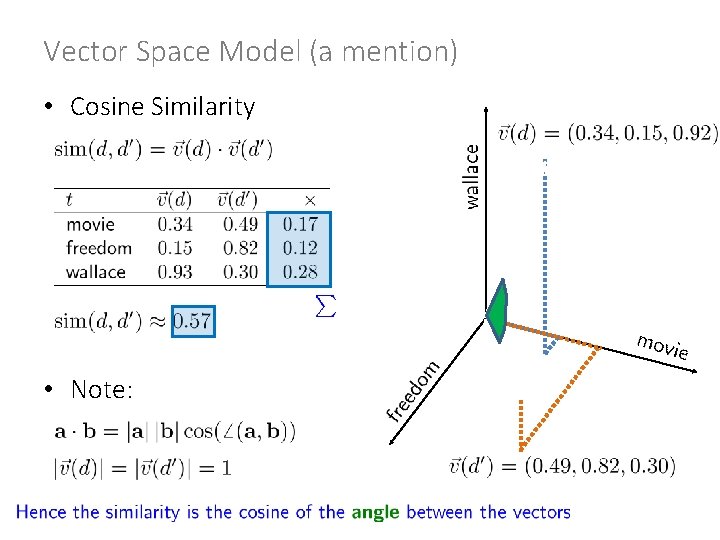

Vector Space Model (a mention) • Cosine Similarity • Note:

Relevance Measure: TF–IDF • Besides summing the TF–IDF scores of the query terms, we could also use the cosine similarity between the query and the document, using TF–IDF weights (there are other possibilities)
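This is a minimal sketch of cosine similarity, $\cos(\vec{q},\vec{d}) = \frac{\vec{q}\cdot\vec{d}}{\lVert\vec{q}\rVert\,\lVert\vec{d}\rVert}$, over sparse vectors stored as Python dicts from term to weight. The weights below are made-up stand-ins for TF–IDF values.

```python
import math


def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    # Dot product over the terms the two sparse vectors share.
    dot = sum(w * v[t] for t, w in u.items() if t in v)
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # Convention (an assumption): an empty vector matches nothing.
    return dot / (norm_u * norm_v)


# Hypothetical TF-IDF weights.
query = {"wallace": 1.0, "freedom": 1.0}
doc_a = {"wallace": 2.0, "freedom": 2.0}  # Same direction as the query.
doc_b = {"movie": 3.0}                    # No terms in common with the query.
print(cosine(query, doc_a), cosine(query, doc_b))
```

Because both vectors are normalised by their length, doc_a scores (essentially) 1.0 even though its weights are twice the query's. Cosine similarity compares direction, not magnitude.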

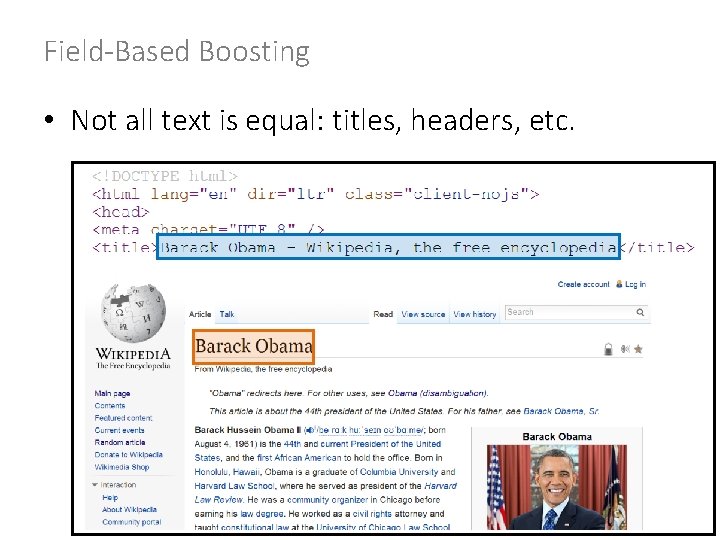

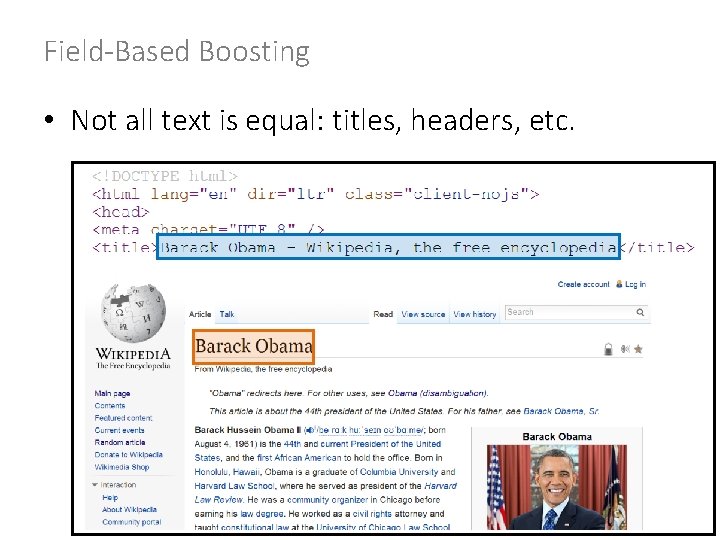

Field-Based Boosting • Not all text is equal: titles, headers, etc.
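One simple way to realise field-based boosting is to score each field separately and combine the per-field scores with weights. The field names and boost values here are hypothetical. The per-field score is a plain count of query-term occurrences; a real engine would apply its own relevance function within each field.

```python
# Hypothetical boosts: a title match counts three times as much as a body match.
FIELD_BOOSTS = {"title": 3.0, "headers": 2.0, "body": 1.0}


def field_score(query: list[str], text: str) -> int:
    # Per-field relevance: here simply the number of query-term occurrences.
    tokens = text.lower().split()
    return sum(tokens.count(q) for q in query)


def boosted_score(query: list[str], doc: dict[str, str]) -> float:
    # Weighted sum of per-field scores; a missing field scores zero.
    return sum(boost * field_score(query, doc.get(field, ""))
               for field, boost in FIELD_BOOSTS.items())


doc_title = {"title": "William Wallace", "body": "a Scottish knight"}
doc_body = {"title": "Scottish history", "body": "William Wallace was a knight"}
print(boosted_score(["wallace"], doc_title), boosted_score(["wallace"], doc_body))
# 3.0 1.0
```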

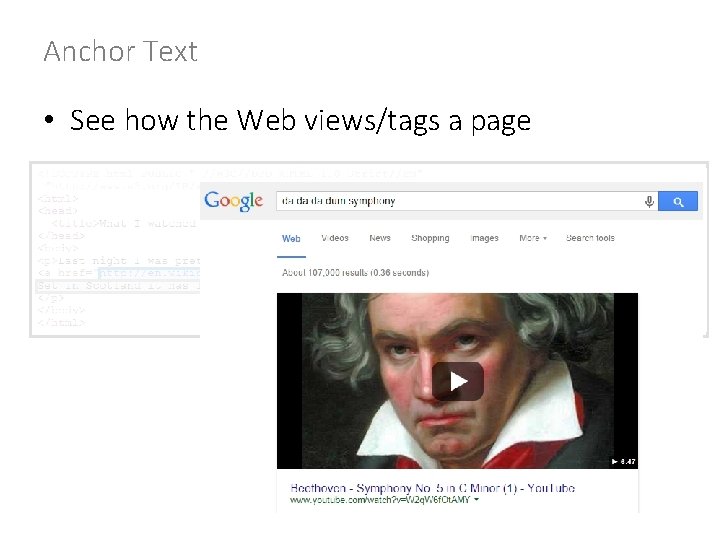

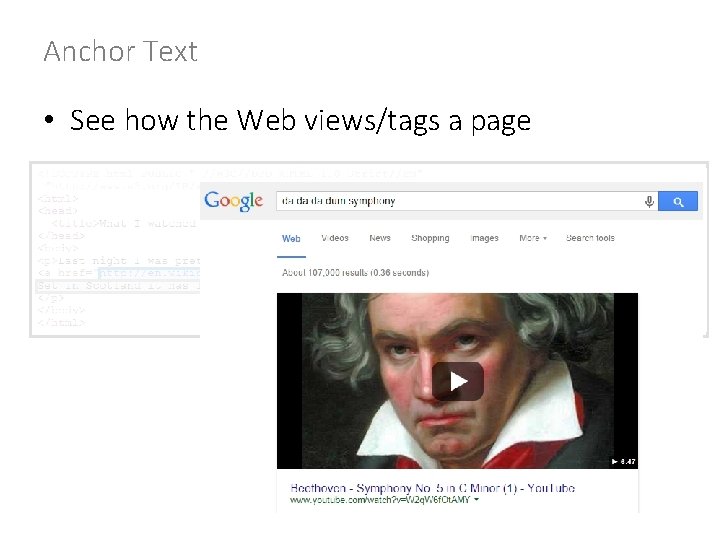

Anchor Text • See how the Web views/tags a page
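A sketch of how anchor text could be gathered: the text of each link is attached to the page the link points to, not the page it appears on. For example, it could be stored as an extra field of the target page for indexing and search. The (source, target, anchor text) triples are hypothetical.

```python
from collections import defaultdict

# Hypothetical links: (source page, target page, anchor text).
links = [
    ("news.example/a", "wiki/Barack_Obama", "the 44th US president"),
    ("blog.example/b", "wiki/Barack_Obama", "Obama"),
    ("travel.example/c", "wiki/Mount_Obama", "a mountain in the Caribbean"),
]


def anchor_text_field(link_triples):
    # Group anchor texts by the page they point to.
    field = defaultdict(list)
    for _source, target, text in link_triples:
        field[target].append(text)
    return dict(field)


anchors = anchor_text_field(links)
print(anchors["wiki/Barack_Obama"])  # ['the 44th US president', 'Obama']
```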

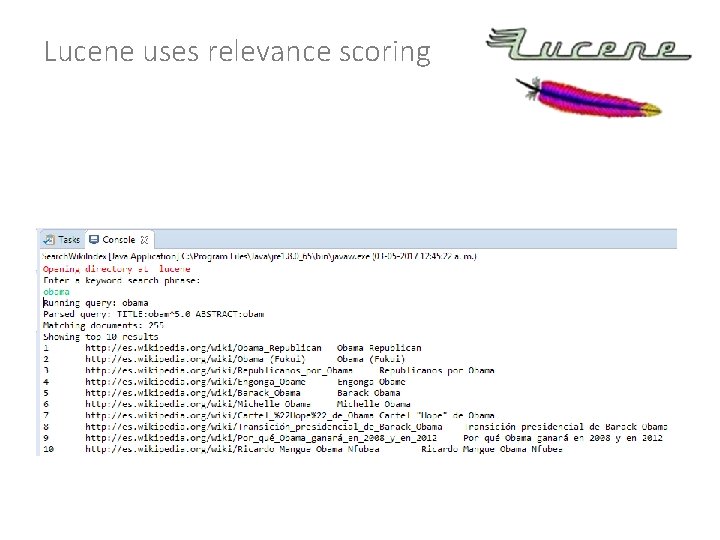

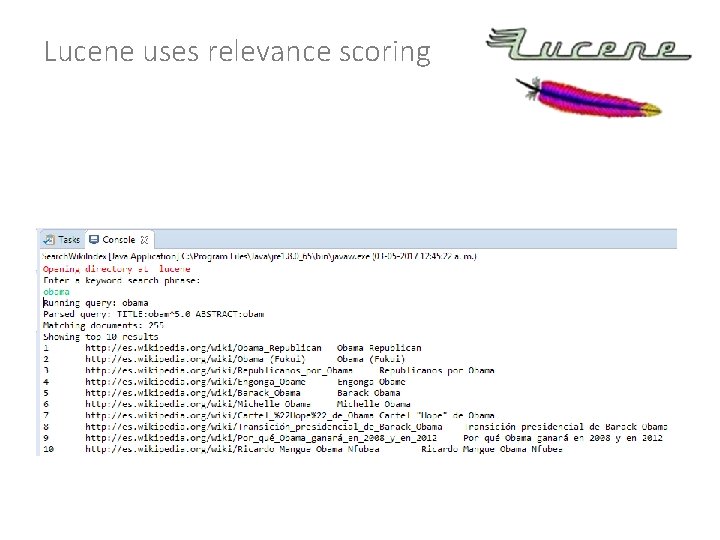

Lucene uses relevance scoring

RANKING: IMPORTANCE

Two Sides to Ranking: Importance • How could we determine that Barack Obama is more important than Mount Obama as a search result for "obama" on the Web?

Link Analysis • Which will have more links from other pages: the Wikipedia article for Mount Obama, or the Wikipedia article for Barack Obama?

Link Analysis • Consider links as votes of confidence in a page • A hyperlink is the open Web’s version of … (… even if the page is linked in a negative way.)

Link Analysis • So if we just count the links to a page, we can determine its importance and we are done?
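Counting links amounts to computing each page's in-degree, as in this sketch over a hypothetical edge list. The following slides explain why a plain count like this is easy to game.

```python
from collections import Counter

# Hypothetical directed links: (source, target).
edges = [("a", "b"), ("c", "b"), ("d", "b"), ("b", "a"), ("d", "a")]

# In-degree: how many links point to each page.
inlinks = Counter(target for _source, target in edges)
print(inlinks.most_common())  # [('b', 3), ('a', 2)]
```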

Link Spamming

Link Importance • So which should count for more: a link from http://en.wikipedia.org/wiki/Barack_Obama, or a link from http://freev1agra.com/shop.html?

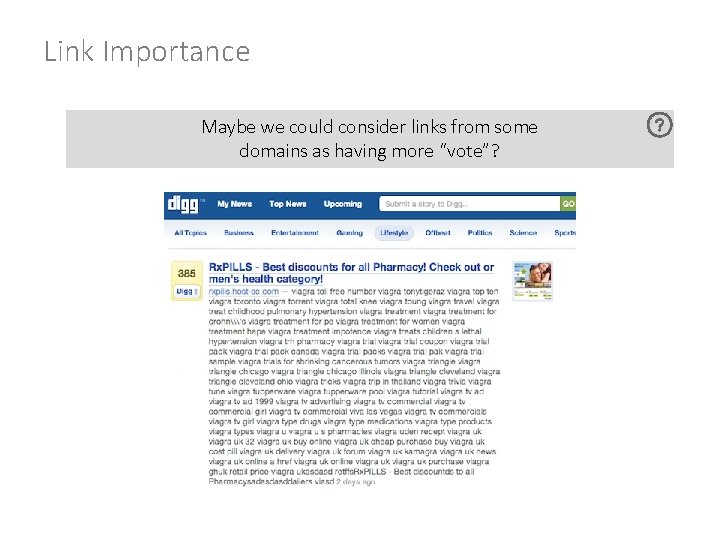

Link Importance Maybe we could consider links from some domains as having more “vote”?

PageRank

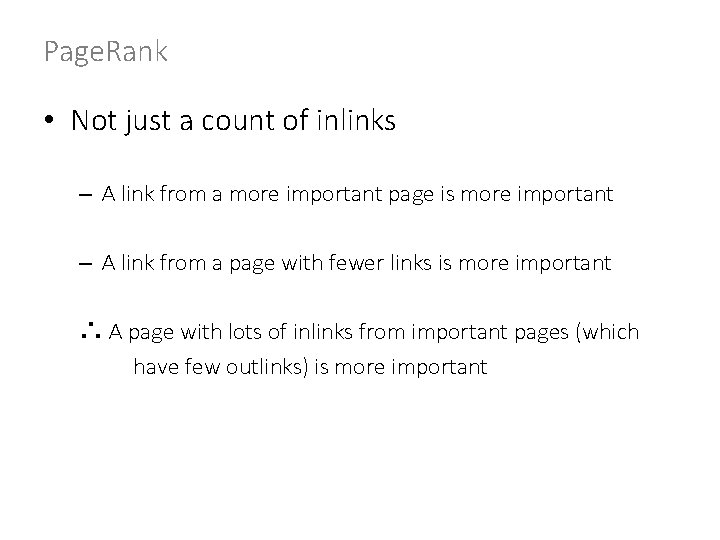

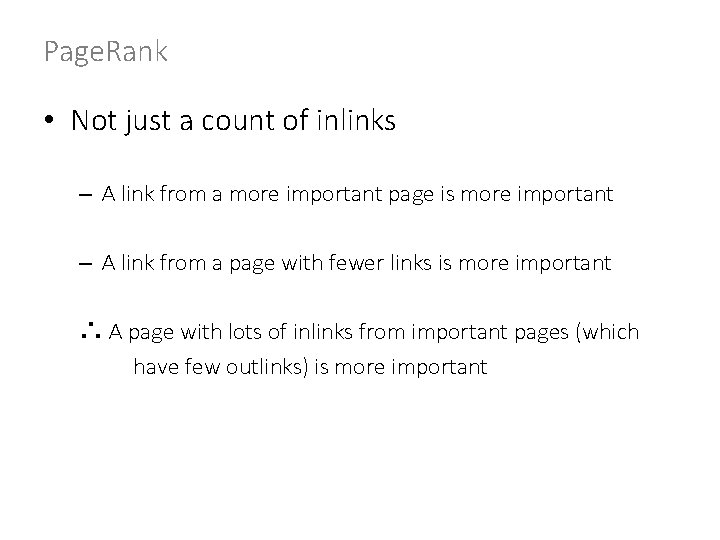

PageRank is Recursive • Not just a count of inlinks – A link from a more important page is more important – A link from a page with fewer links is more important ∴ A page with lots of inlinks from important pages (which have few outlinks) is more important
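The two rules above make the definition recursive. The following is one common way to write this simplified version, before the random-jump refinements introduced later. The notation is assumed here: $\mathrm{in}(p)$ is the set of pages linking to $p$, and $|\mathrm{out}(q)|$ is the number of out-links of $q$.

```latex
\mathrm{PR}(p) = \sum_{q \in \mathrm{in}(p)} \frac{\mathrm{PR}(q)}{|\mathrm{out}(q)|}
```

An inlink from $q$ counts for more when $q$ itself scores higher, and for less when $q$ splits its score across many out-links. Because $\mathrm{PR}$ appears on both sides, the scores have to be computed iteratively.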

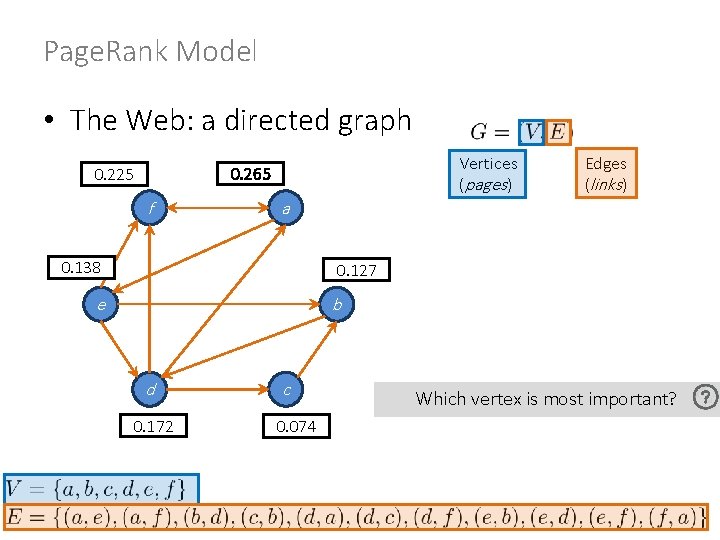

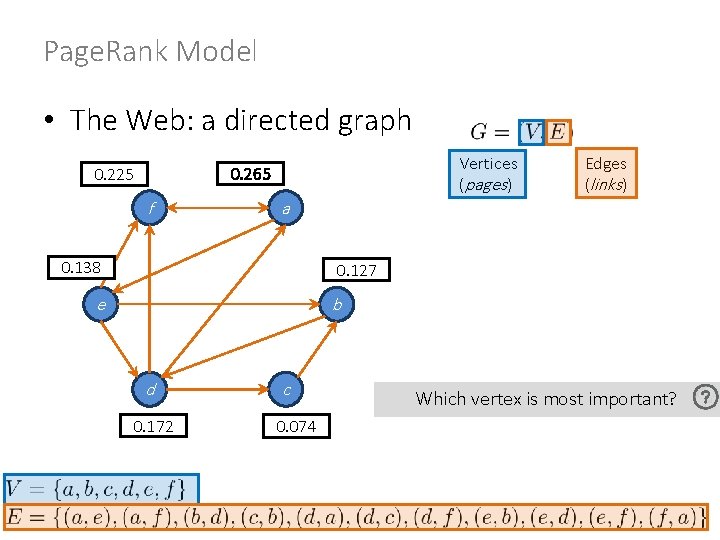

PageRank Model • The Web: a directed graph, with vertices (pages a–f) and edges (links) [Figure: the example graph, with a score shown next to each page: 0.265, 0.225, 0.172, 0.138, 0.127 and 0.074] • Which vertex is most important?

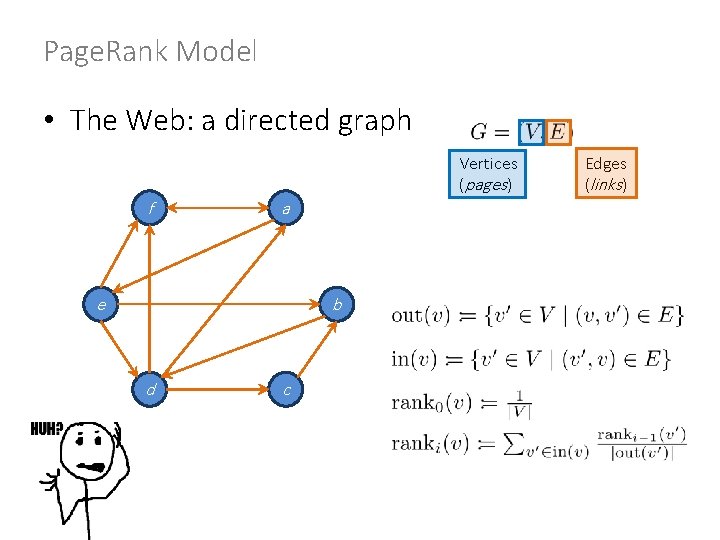

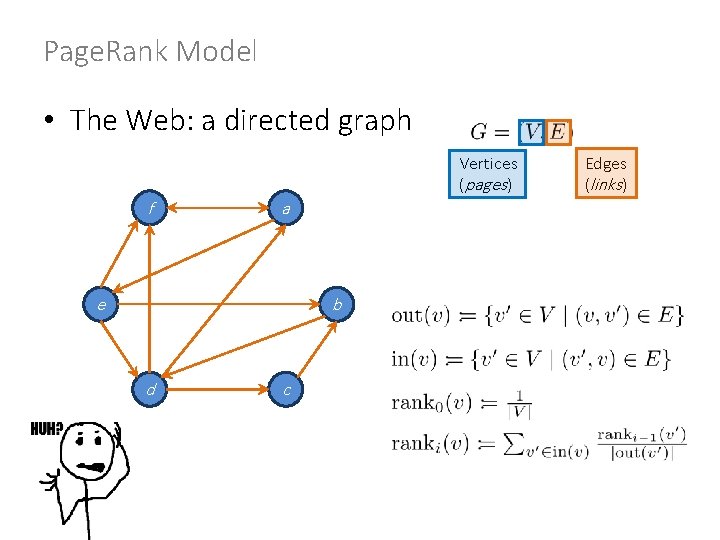

PageRank Model • The Web: a directed graph, with vertices (pages a–f) and edges (links)

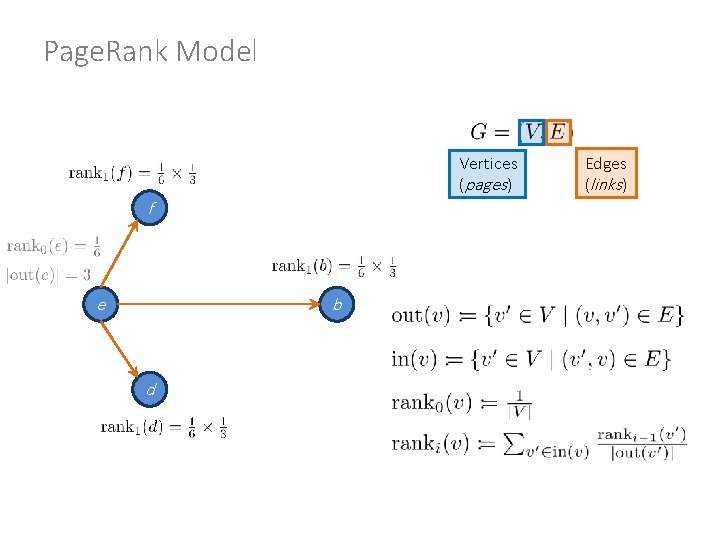

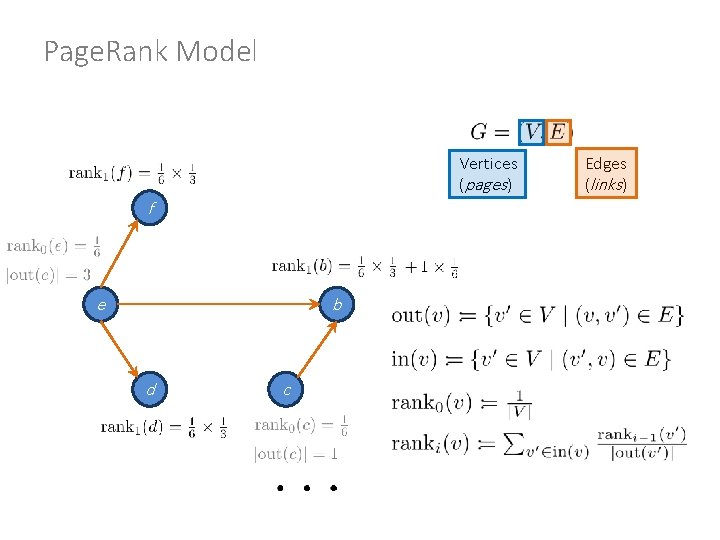

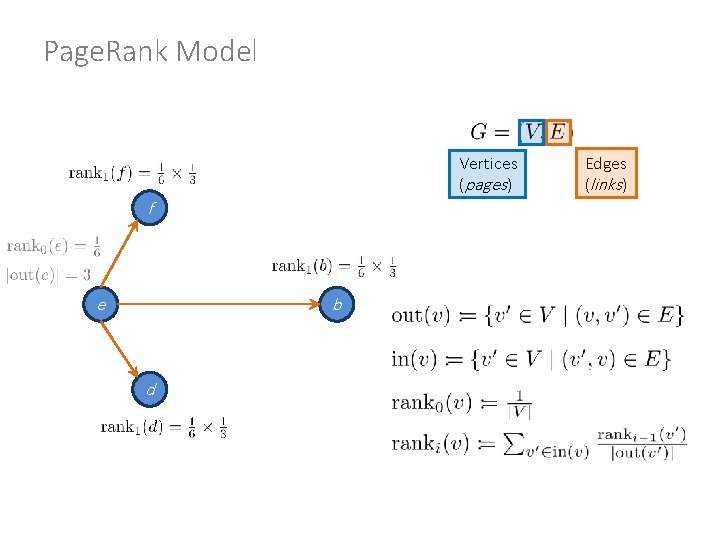

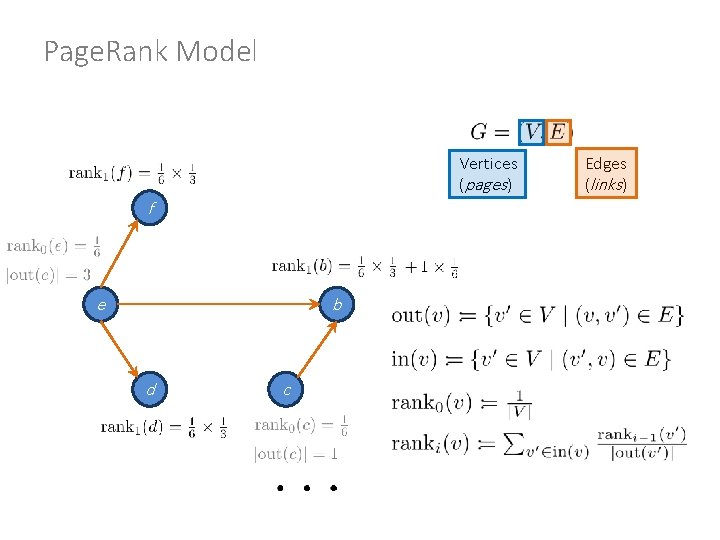

PageRank Model [Figure: vertices (pages b, c, d, e, f) and edges (links)]

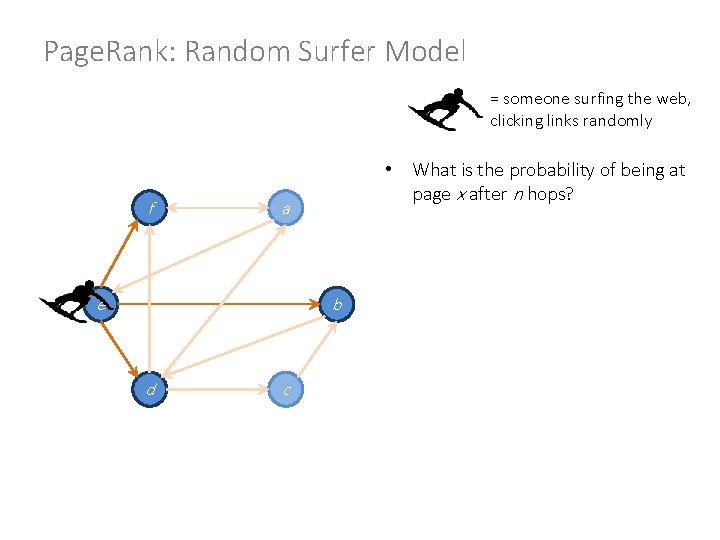

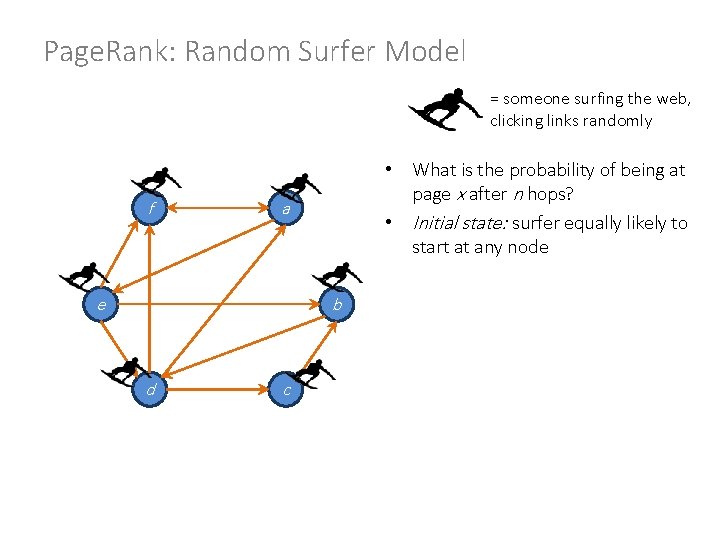

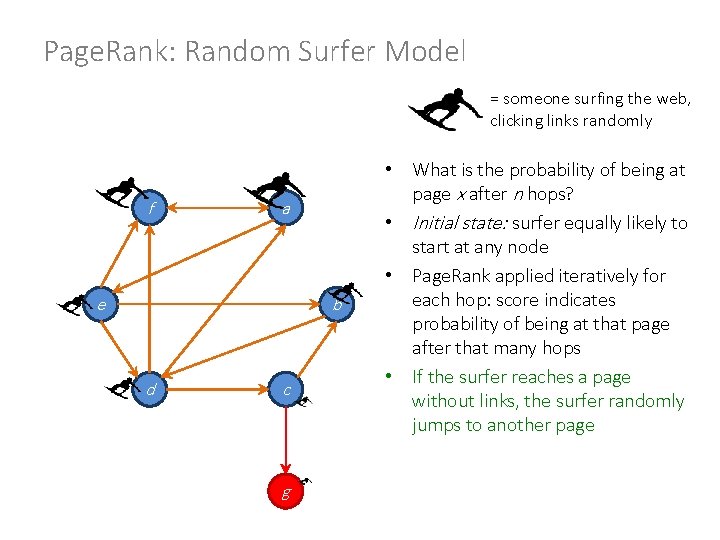

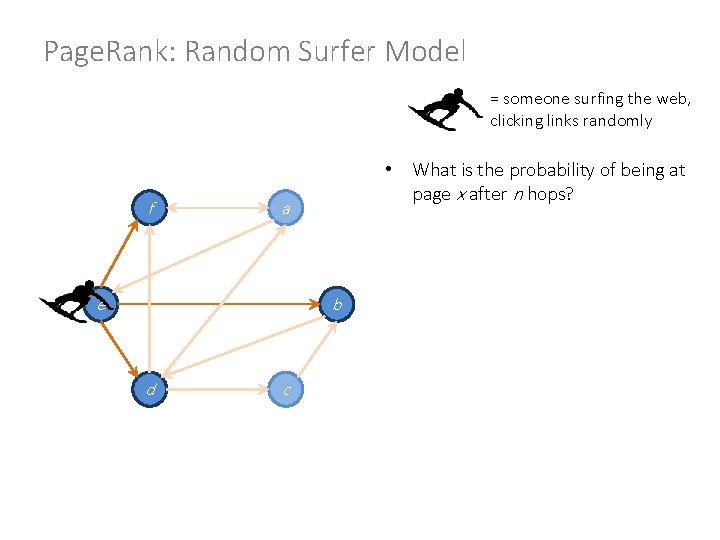

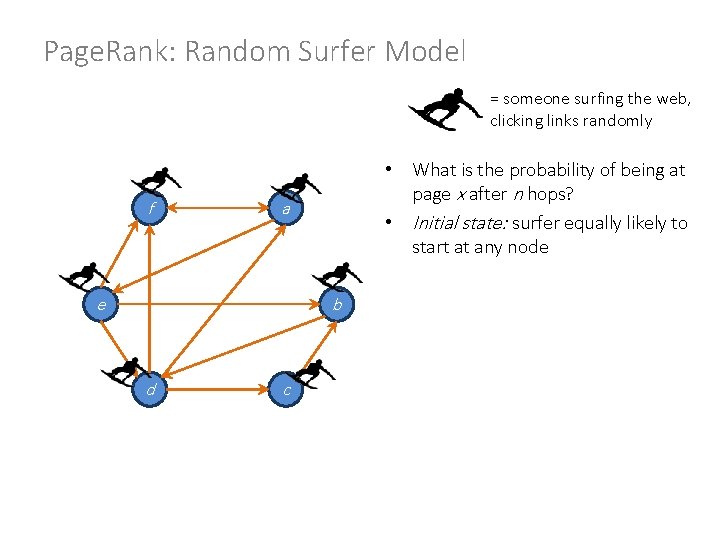

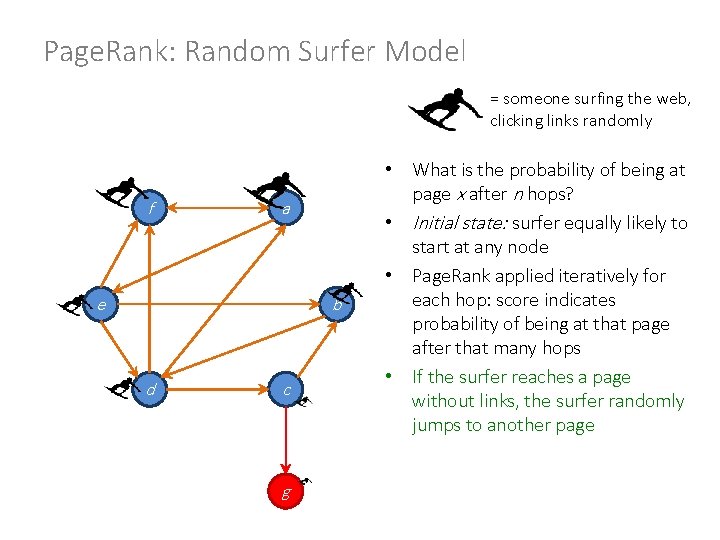

PageRank: Random Surfer Model • Random surfer = someone surfing the Web, clicking links randomly • What is the probability of being at page x after n hops? • Initial state: the surfer is equally likely to start at any node [Figure: the example graph over pages a–f]

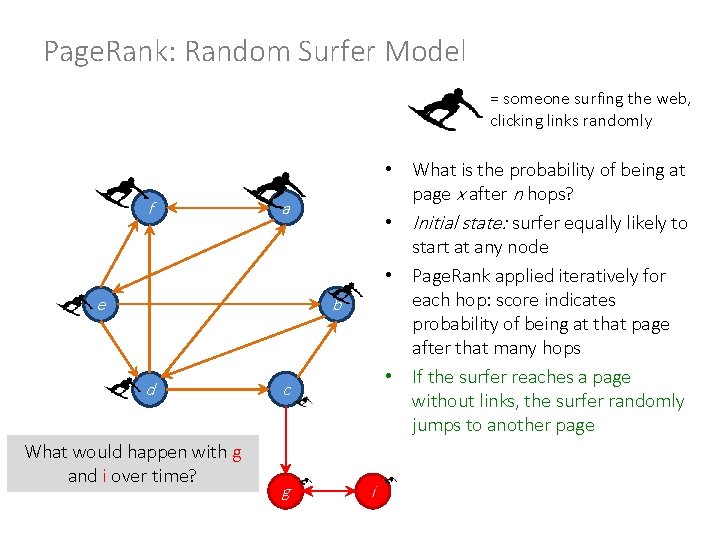

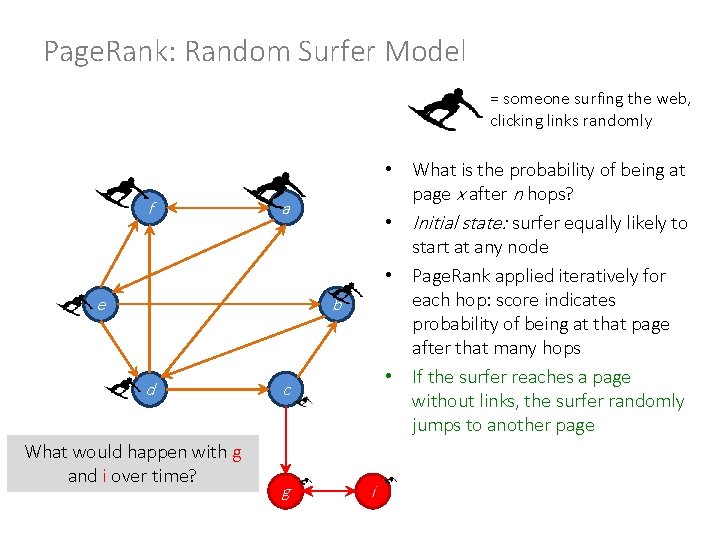

PageRank: Random Surfer Model (continued) • PageRank is applied iteratively for each hop: a page's score indicates the probability of being at that page after that many hops [Figure: the example graph extended with a page g] • What would happen with g over time?
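The per-hop iteration can be sketched by repeatedly pushing each page's probability along its out-links. This sketch follows only the rules stated so far: a uniform start, and a uniform choice among the current page's out-links. The graph is hypothetical. Page z has no out-links, so any probability that reaches z is never passed on.

```python
def surf(graph: dict[str, list[str]], hops: int) -> dict[str, float]:
    """Probability of the random surfer being at each page after `hops` hops.

    Implements only a uniform initial state and uniform link-following;
    there is no rule yet for pages without out-links.
    """
    n = len(graph)
    prob = {page: 1.0 / n for page in graph}  # Initial state: uniform.
    for _ in range(hops):
        nxt = {page: 0.0 for page in graph}
        for page, out in graph.items():
            for target in out:
                # Split this page's probability evenly over its out-links.
                nxt[target] += prob[page] / len(out)
        prob = nxt
    return prob


# Hypothetical graph: z has no out-links.
graph = {"x": ["y", "z"], "y": ["x"], "z": []}
for hops in (0, 1, 5, 20):
    print(hops, round(sum(surf(graph, hops).values()), 4))
# Total probability shrinks: 1.0, 0.6667, 0.1667, 0.001
```

The total probability leaks away over time, so the scores stop forming a probability distribution. The rules on the next slides address exactly this problem.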

PageRank: Random Surfer Model (continued) • If the surfer reaches a page without out-links, the surfer randomly jumps to another page

PageRank: Random Surfer Model (continued) [Figure: the example graph extended with pages g and i] • What would happen with g and i over time?

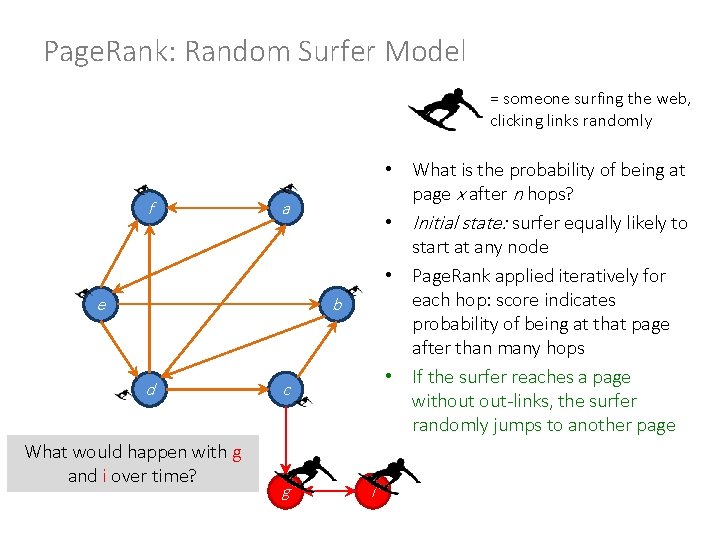

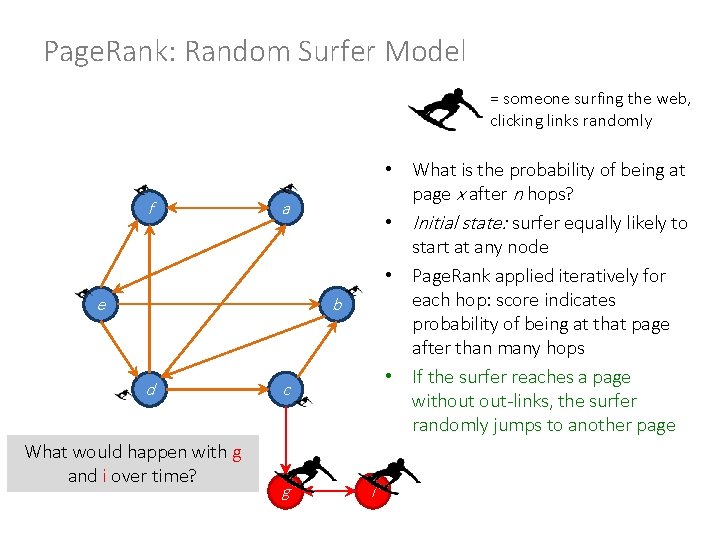

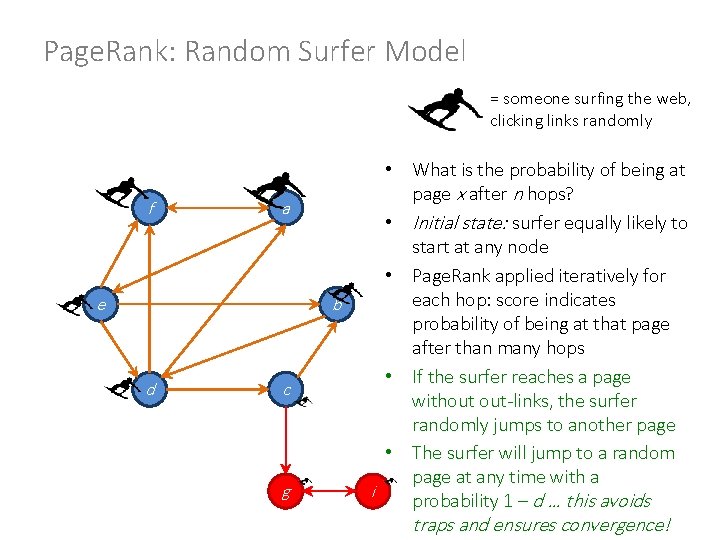

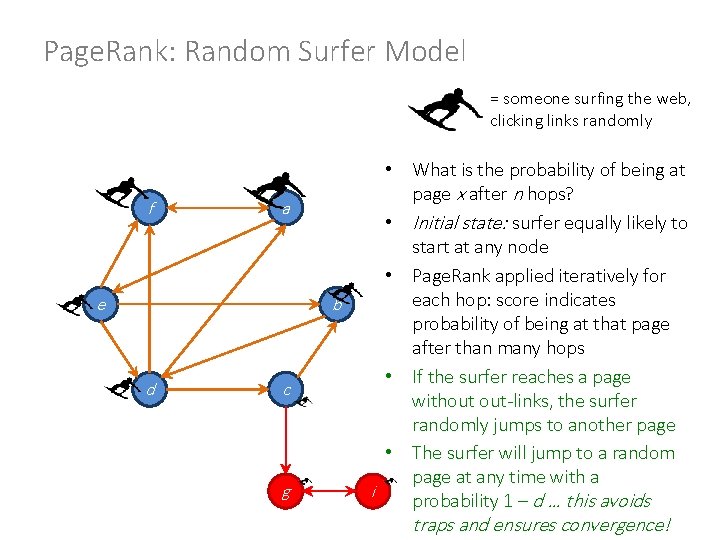

PageRank: Random Surfer Model (continued) • The surfer will jump to a random page at any time with probability 1 – d … this avoids traps and ensures convergence!

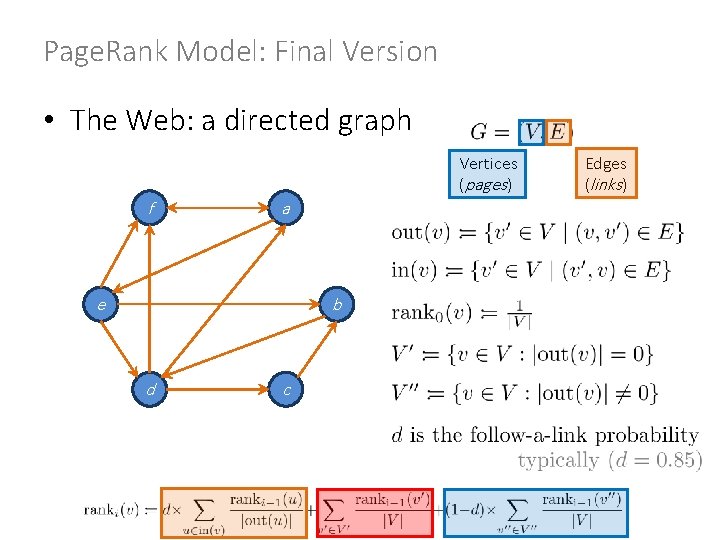

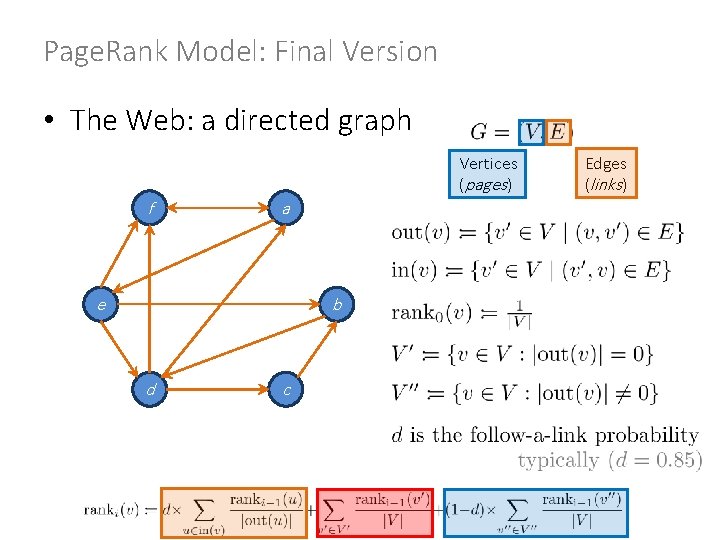

PageRank Model: Final Version • The Web: a directed graph, with vertices (pages a–f) and edges (links)
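Combining the random-surfer rules gives the usual iterative computation, sketched below under several assumptions. The damping factor is d = 0.85, a commonly cited value that the slides do not fix. A page without out-links passes its whole score uniformly to all pages. The loop runs a fixed number of iterations rather than testing for convergence. The graph is hypothetical.

```python
def pagerank(graph: dict[str, list[str]], d: float = 0.85,
             iters: int = 100) -> dict[str, float]:
    """Iterative PageRank with random jumps and dead-end handling.

    Assumptions: every link target is also a key of `graph`; at each hop the
    surfer follows a link with probability d and jumps to a uniformly random
    page with probability 1 - d; a page with no out-links sends its whole
    score to a random page.
    """
    n = len(graph)
    rank = {page: 1.0 / n for page in graph}  # Uniform initial state.
    for _ in range(iters):
        # Score held by dead ends (no out-links) is spread over all pages.
        dangling = sum(rank[page] for page, out in graph.items() if not out)
        nxt = {page: (1 - d) / n + d * dangling / n for page in graph}
        for page, out in graph.items():
            for target in out:
                nxt[target] += d * rank[page] / len(out)
        rank = nxt
    return rank


# Hypothetical graph: s and t only link to each other (a trap); u is a dead end.
graph = {
    "p": ["q"], "q": ["r"], "r": ["p", "s"],
    "s": ["t"], "t": ["s"], "u": [],
}
ranks = pagerank(graph)
for page, score in sorted(ranks.items(), key=lambda kv: kv[1], reverse=True):
    print(page, round(score, 3))
```

In this example the trap pages s and t still rank highest, but the random jumps keep them from absorbing all of the score. Every iteration also preserves a total of 1.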

PageRank: Benefits • More robust than a simple link count • Fewer ties than link counting • Scalable to approximate (for sparse graphs) • Convergence guaranteed

HOW DOES GOOGLE REALLY RANK? AN EDUCATED GUESS

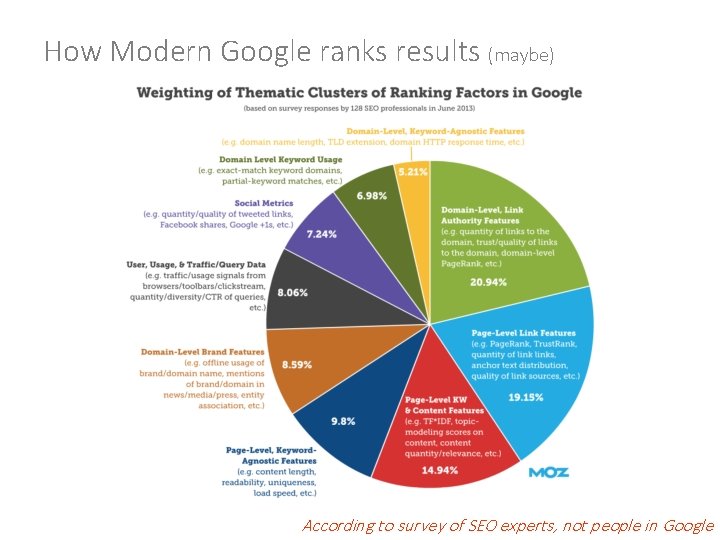

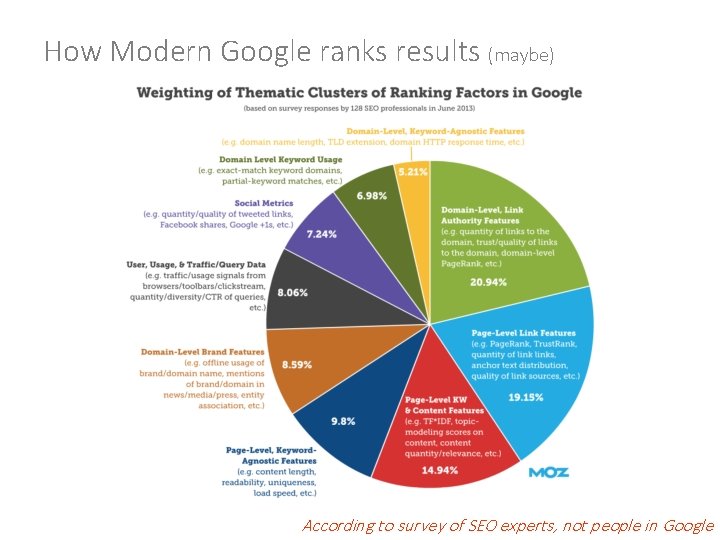

How Modern Google Ranks Results (maybe) • According to a survey of SEO experts, not people at Google • Why so secretive?

Ranking: Science or Art?

Questions?
