Wide Area Networks for HEP in the LHC Era

- Slides: 31

Wide Area Networks for HEP in the LHC Era
Harvey B Newman, California Institute of Technology
CHEP 2010 Conference, Taipei, October 19th, 2010

OUTLINE
- Global View of Networks from the ICFA SCIC Perspective
- Continental and Transoceanic Network Infrastructures
- Rise of Dark Fiber Networks
- DYNES: Dynamic Network System

CHEP 2001, Beijing. Harvey B Newman, California Institute of Technology, September 6, 2001. The Internet 2009

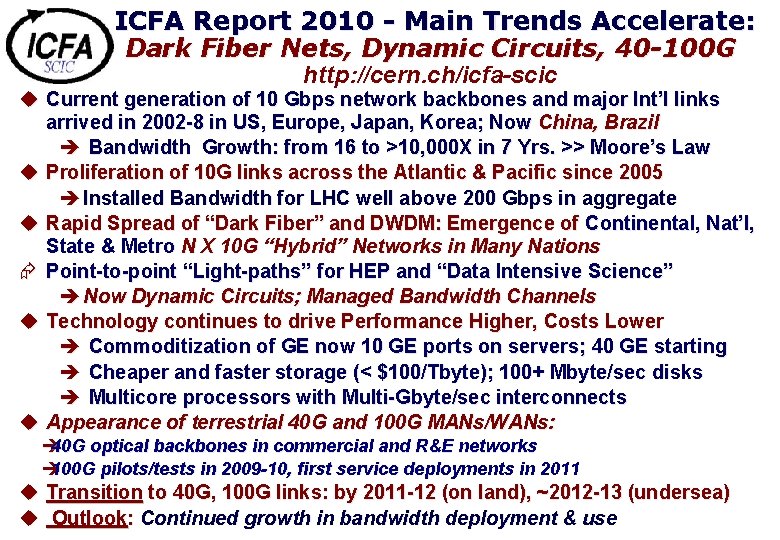

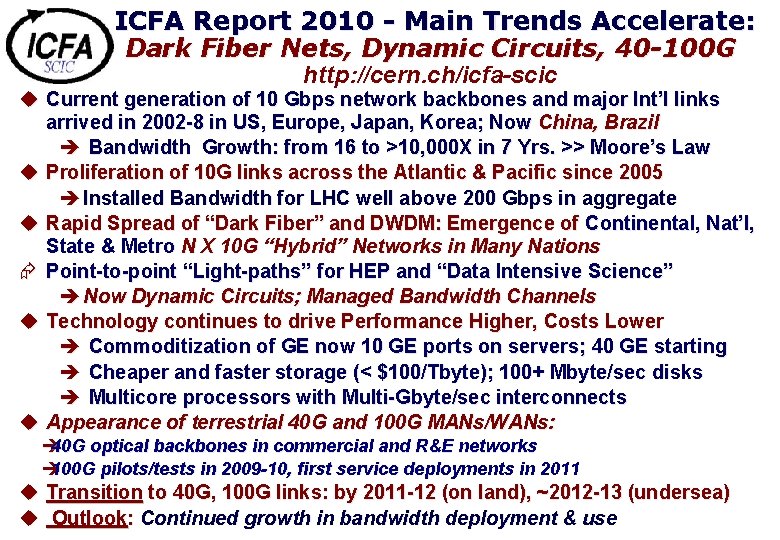

ICFA Report 2010, Main Trends Accelerate: Dark Fiber Nets, Dynamic Circuits, 40-100G (http://cern.ch/icfa-scic)
- Current generation of 10 Gbps network backbones and major Int’l links arrived in 2002-8 in the US, Europe, Japan, Korea; now China, Brazil
  - Bandwidth Growth: from 16X to >10,000X in 7 Yrs (>> Moore’s Law)
- Proliferation of 10G links across the Atlantic & Pacific since 2005
  - Installed Bandwidth for LHC well above 200 Gbps in aggregate
- Rapid Spread of “Dark Fiber” and DWDM: Emergence of Continental, Nat’l, State & Metro N X 10G “Hybrid” Networks in Many Nations
  - Point-to-point “Light-paths” for HEP and “Data Intensive Science”
  - Now Dynamic Circuits; Managed Bandwidth Channels
- Technology continues to drive Performance Higher, Costs Lower
  - Commoditization of GE, now of 10GE ports on servers; 40GE starting
  - Cheaper and faster storage (< $100/TByte); 100+ MByte/sec disks
  - Multicore processors with Multi-GByte/sec interconnects
- Appearance of terrestrial 40G and 100G MANs/WANs:
  - 40G optical backbones in commercial and R&E networks
  - 100G pilots/tests in 2009-10, first service deployments in 2011
- Transition to 40G, 100G links: by 2011-12 (on land), ~2012-13 (undersea)
- Outlook: Continued growth in bandwidth deployment & use
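To put the quoted bandwidth growth in perspective, this short sketch converts a total growth factor into an equivalent constant yearly multiplier and compares it with a Moore's-law pace. The Moore's-law doubling periods of 18 and 24 months are assumptions chosen for illustration; they are not figures from the ICFA report.

```python
def annual_growth(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_factor over `years`."""
    return total_factor ** (1.0 / years)


def moore_factor(years: float, doubling_years: float) -> float:
    """Total growth over `years` if capacity doubles every `doubling_years`."""
    return 2.0 ** (years / doubling_years)


YEARS = 7  # period quoted on the slide
for factor in (16, 10_000):  # low and high ends of the quoted bandwidth growth
    print(f"{factor}X in {YEARS} yr = {annual_growth(factor, YEARS):.2f}X per year")
for doubling in (1.5, 2.0):  # assumed Moore's-law doubling periods, in years
    print(f"Doubling every {doubling} yr = {moore_factor(YEARS, doubling):.1f}X in {YEARS} yr")
```

Under these assumptions the 16X end corresponds to about 1.49X per year and the >10,000X end to about 3.73X per year, while a Moore's-law doubling every 24 or 18 months compounds to roughly 11X or 25X over the same seven years.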

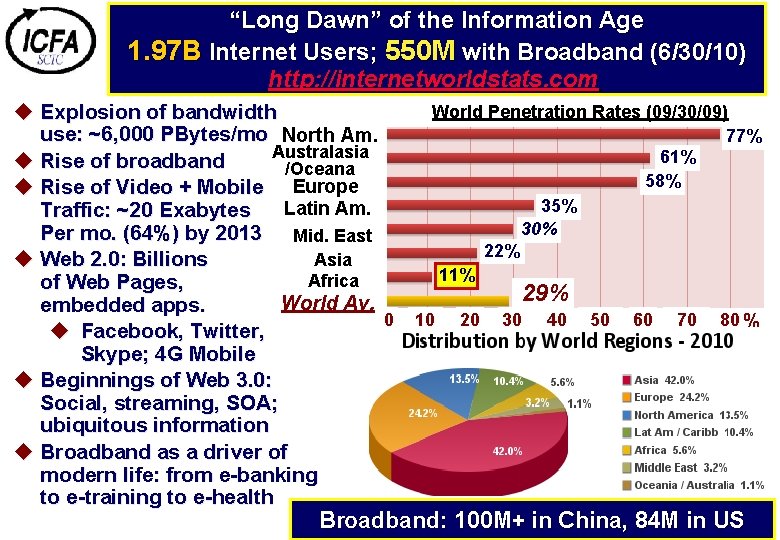

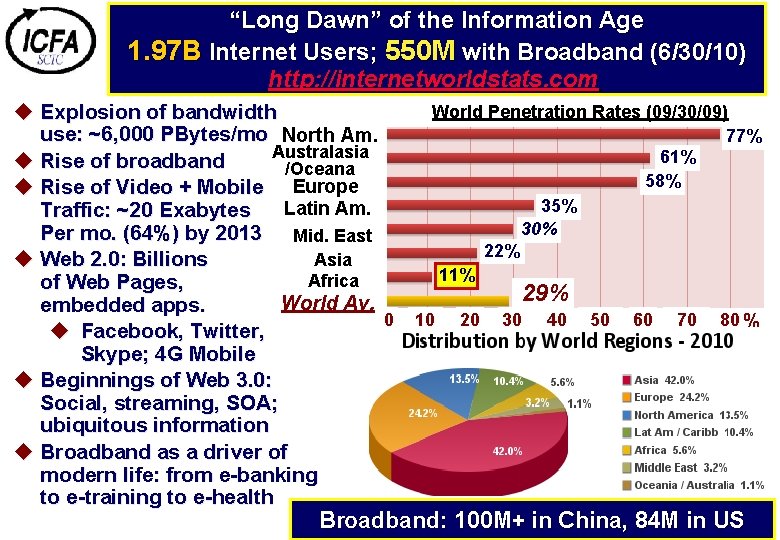

“Long Dawn” of the Information Age: Revolutions in Networking
- 1.97 B Internet Users; 550 M Broadband (6/30/10) (http://internetworldstats.com)
- Explosion of bandwidth use: ~6,000 PBytes/mo
- Rise of broadband
- Rise of Video + Mobile Traffic: ~20 Exabytes per mo. (64%) by 2013
- Web 2.0: Billions of Web Pages, embedded apps.
- Facebook, Twitter, Skype; 4G Mobile
- Beginnings of Web 3.0: Social, streaming, SOA; ubiquitous information
- Broadband as a driver of modern life: from e-banking to e-training to e-health
- Broadband: 100 M+ in China, 84 M in US

[Chart: World Internet Penetration Rates (09/30/09): North Am. 77%, Australasia/Oceania 61%, Europe 58%, Latin Am. 35%, Mid. East 30%, Asia 22%, Africa 11%, World Av. 29%]
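Monthly traffic volumes are easier to compare with link capacities once they are converted to an average bit rate. A minimal sketch, assuming decimal units (1 PB = 10^15 bytes, 1 EB = 10^18 bytes) and a 30-day month; real peak rates are well above these averages.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumes a 30-day month
PB = 1e15  # decimal petabyte, in bytes
EB = 1e18  # decimal exabyte, in bytes


def avg_rate_tbps(bytes_per_month: float) -> float:
    """Average bit rate, in Tbit/s, that carries bytes_per_month evenly over a month."""
    return bytes_per_month * 8 / SECONDS_PER_MONTH / 1e12


print(f"~6,000 PB/mo -> {avg_rate_tbps(6_000 * PB):.1f} Tbps average")
print(f"~20 EB/mo    -> {avg_rate_tbps(20 * EB):.1f} Tbps average")
```

With these assumptions, ~6,000 PB/mo works out to about 18.5 Tbps on average and ~20 EB/mo to about 61.7 Tbps.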

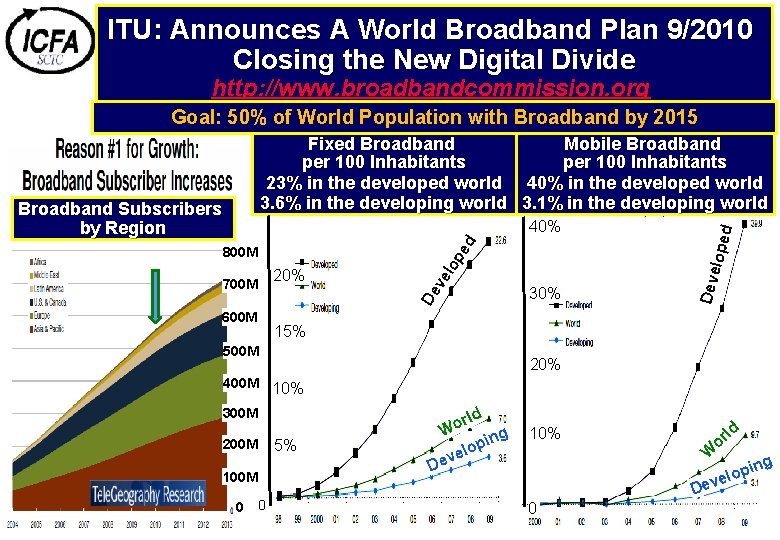

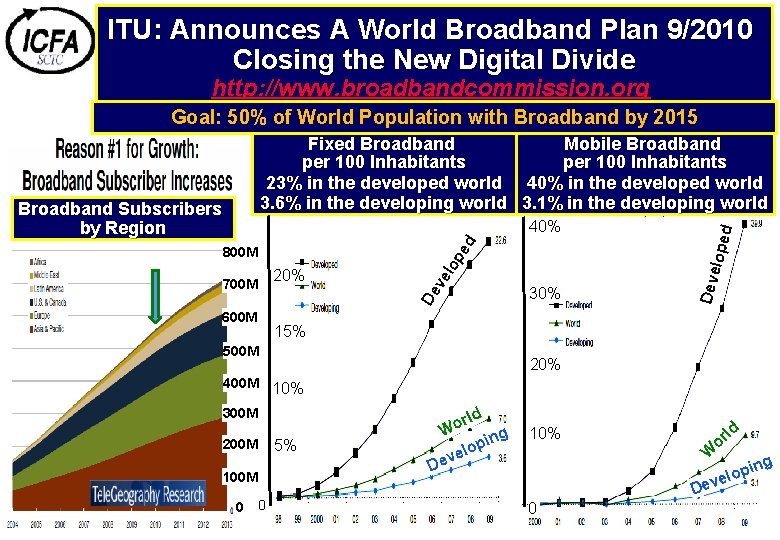

ITU Announces a World Broadband Plan (9/2010). Revolutions in Networking: Closing the New Digital Divide (http://www.broadbandcommission.org)
- Goal: 50% of World Population with Broadband by 2015

[Charts: Broadband Subscribers by Region (developed world, developing world, world); Fixed Broadband and Mobile Broadband per 100 Inhabitants. Values shown: 23% and 40% in the developed world; 3.6% and 3.1% in the developing world]

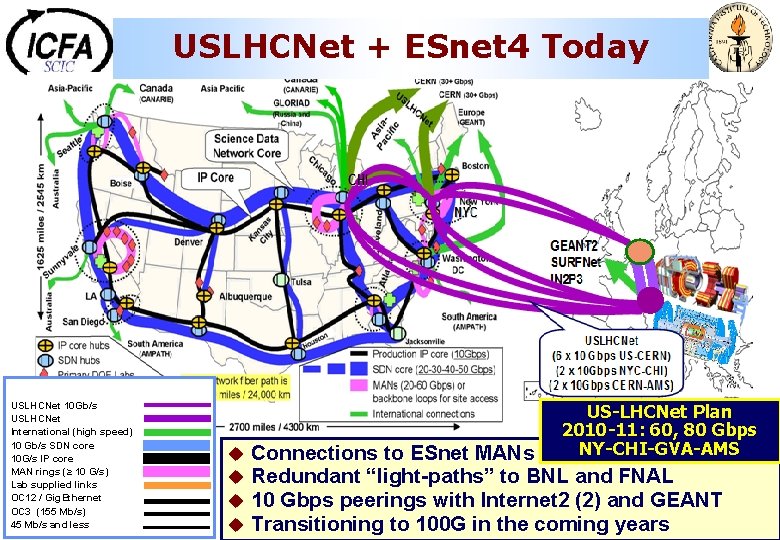

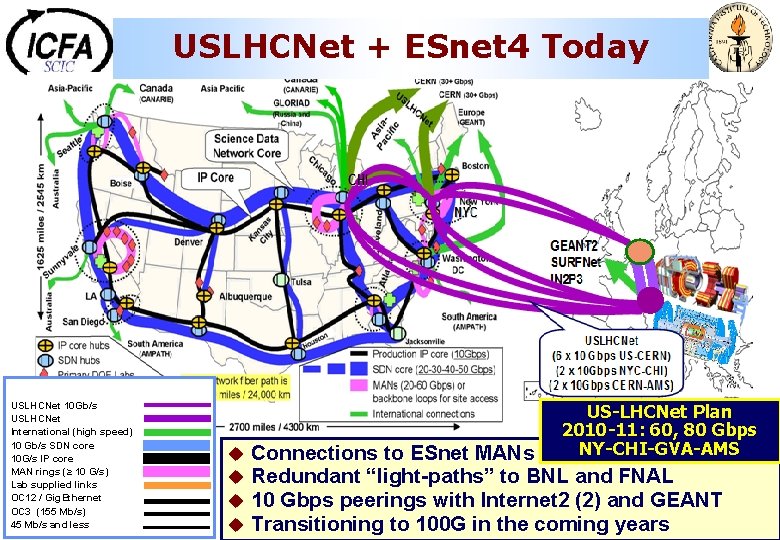

USLHCNet + ESnet4 Today
[Map legend: USLHCNet 10 Gb/s; USLHCNet 20 Gb/s; International (high speed); 10 Gb/s SDN core; 10 Gb/s IP core; MAN rings (≥ 10 Gb/s); Lab supplied links; OC12 / Gig. Ethernet; OC3 (155 Mb/s); 45 Mb/s and less]

US LHCNet Plan 2010-11: 60, 80 Gbps NY-CHI-GVA-AMS
- Connections to ESnet MANs in NYC & Chicago
- Redundant “light-paths” to BNL and FNAL
- 10 Gbps peerings with Internet2 (2) and GEANT
- Transitioning to 100G in the coming years
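One rough way to read these capacities is the ideal time needed to move a fixed volume of data. The sketch assumes a hypothetical 1 PB dataset in decimal units and an efficiency factor (1.0 means the full line rate is sustained, which real transfers rarely achieve).

```python
def transfer_hours(dataset_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Hours to move dataset_bytes over a link_gbps path at the given fraction of line rate."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    seconds = dataset_bytes * 8 / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


DATASET_BYTES = 1e15  # hypothetical 1 PB dataset, decimal units
for gbps in (10, 20, 60, 80, 100):  # link rates mentioned on the slide
    print(f"{gbps:>3} Gbps: {transfer_hours(DATASET_BYTES, gbps):6.1f} h at full line rate")
```

At full line rate, 1 PB takes about 222 hours at 10 Gbps, about 37 hours at 60 Gbps and about 28 hours at 80 Gbps.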

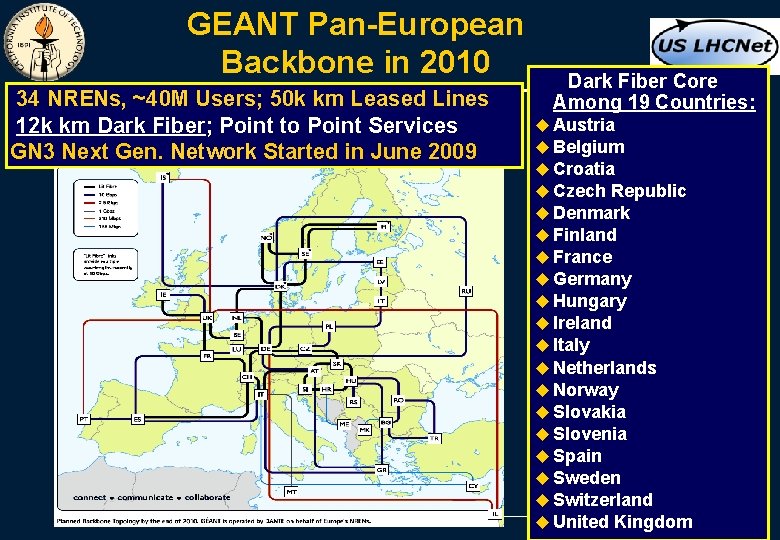

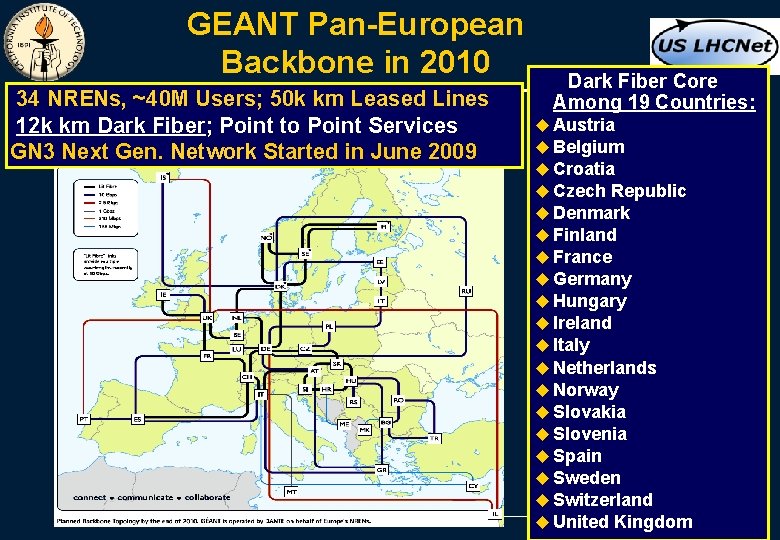

GEANT Pan-European Backbone in 2010
- 34 NRENs, ~40 M Users; 50k km Leased Lines, 12k km Dark Fiber; Point to Point Services
- GN3 Next Gen. Network Started in June 2009
- Dark Fiber Core Among 19 Countries: Austria, Belgium, Croatia, Czech Republic, Denmark, Finland, France, Germany, Hungary, Ireland, Italy, Netherlands, Norway, Slovakia, Slovenia, Spain, Sweden, Switzerland, United Kingdom

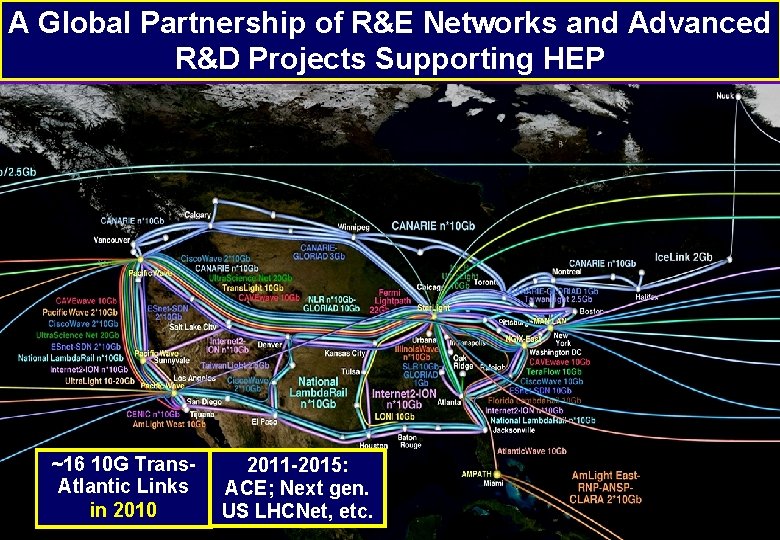

GLIF 2010 Map (DRAFT): A Global Partnership of R&E Networks and Advanced Network R&D Projects Supporting HEP

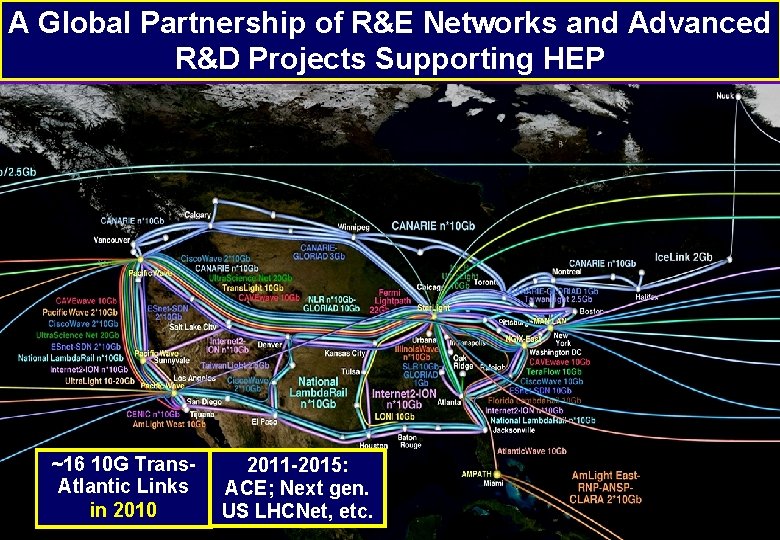

GLIF 2010 Map (DRAFT): A Global Partnership of R&E Networks and Advanced R&D Projects Supporting HEP
- ~16 10G Trans-Atlantic Links in 2010
- 2011-2015: ACE; Next gen. US LHCNet, etc.

The New Taj Expansion to India & Egypt
October 14, 2009: The National Science Foundation (NSF)-funded Taj network has expanded to the Global Ring Network for Advanced Application Development (GLORIAD), wrapping another ring of light around the northern hemisphere for science and education. Taj now connects India, Singapore, Vietnam and Egypt to the GLORIAD global infrastructure and dramatically improves existing U.S. network links with China and the Nordic region.

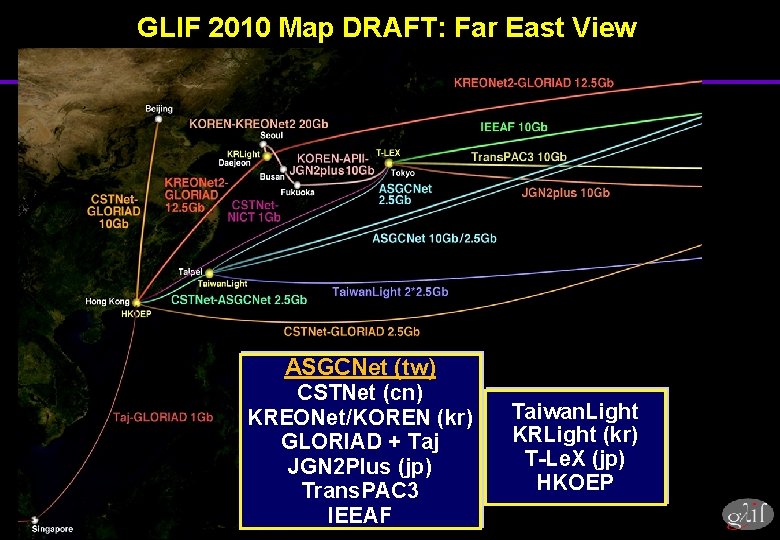

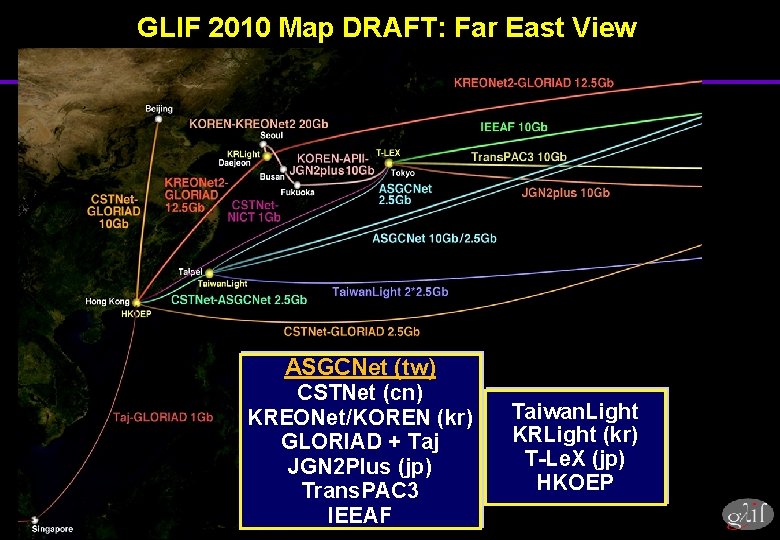

GLIF 2010 Map (DRAFT): Far East View
Networks shown: ASGCNet (tw), CSTNet (cn), KREONet/KOREN (kr), GLORIAD + Taj, JGN2plus (jp), TransPAC3, IEEAF, TaiwanLight, KRLight (kr), T-LEX (jp), HKOEP

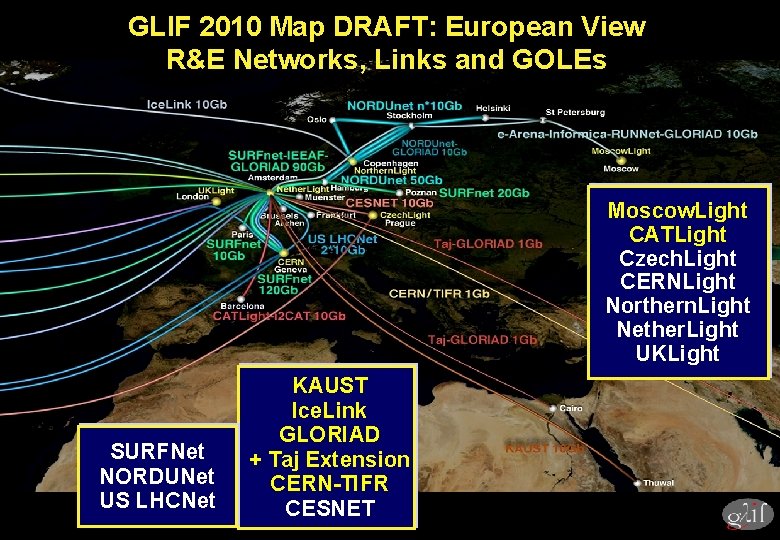

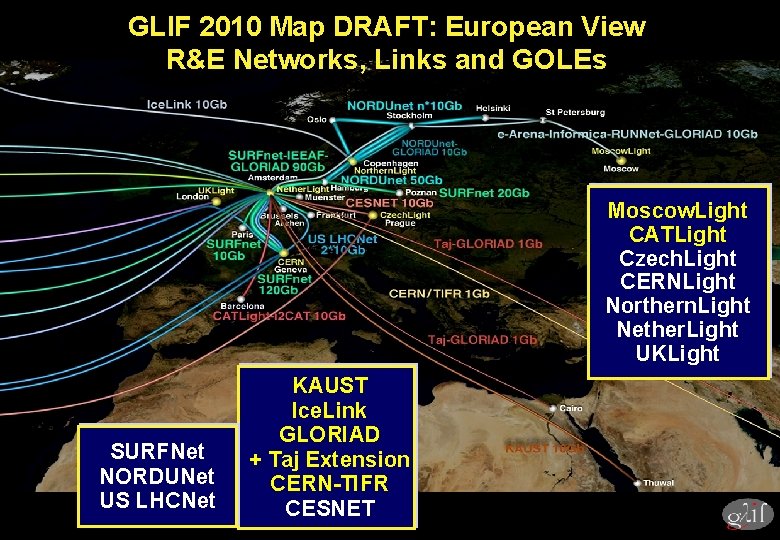

GLIF 2010 Map (DRAFT): European View
R&E Networks, Links and GOLEs shown: MoscowLight, CATLight, CzechLight, CERNLight, NorthernLight, NetherLight, UKLight, SURFNet, NORDUNet, US LHCNet, KAUST, IceLink, GLORIAD + Taj Extension, CERN-TIFR, CESNET

GLIF 2010 Map (DRAFT): Brazil
Networks shown: RNP-Ipe, RNP Giga, Kyatera (Sao Paulo), CLARA/RNP, Innova Red (br, ar, cl), REUNA-ESO, AmLight East, AmLight Andes

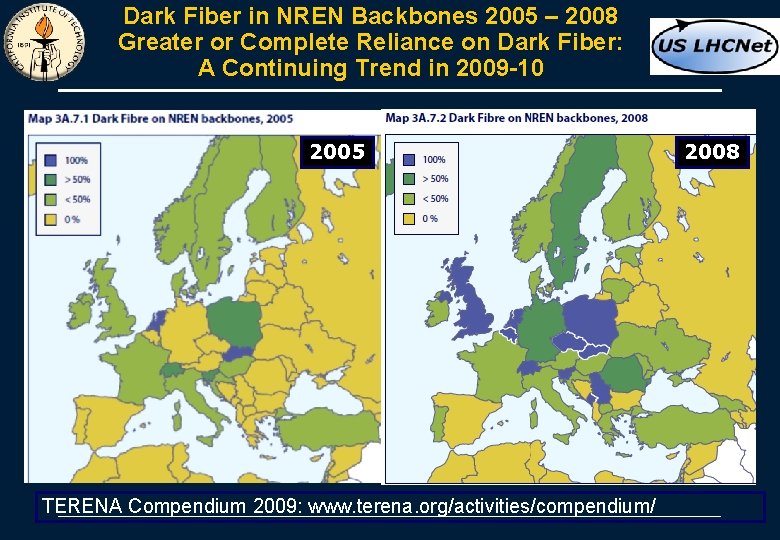

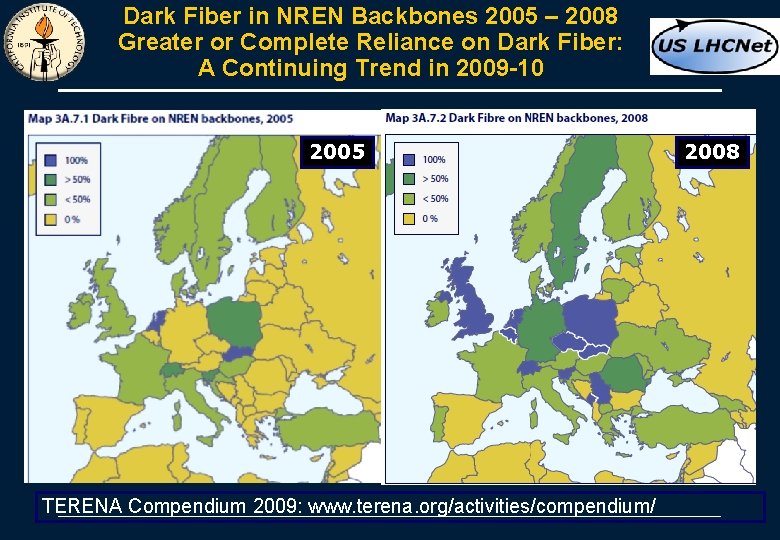

Dark Fiber in NREN Backbones, 2005-2008
Greater or Complete Reliance on Dark Fiber: A Continuing Trend in 2009-10
[Maps: 2005 and 2008. Source: TERENA Compendium 2009, www.terena.org/activities/compendium/]

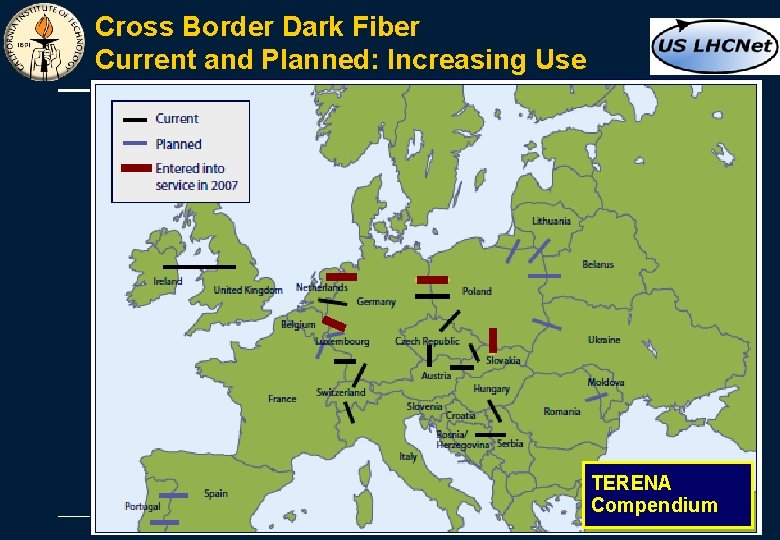

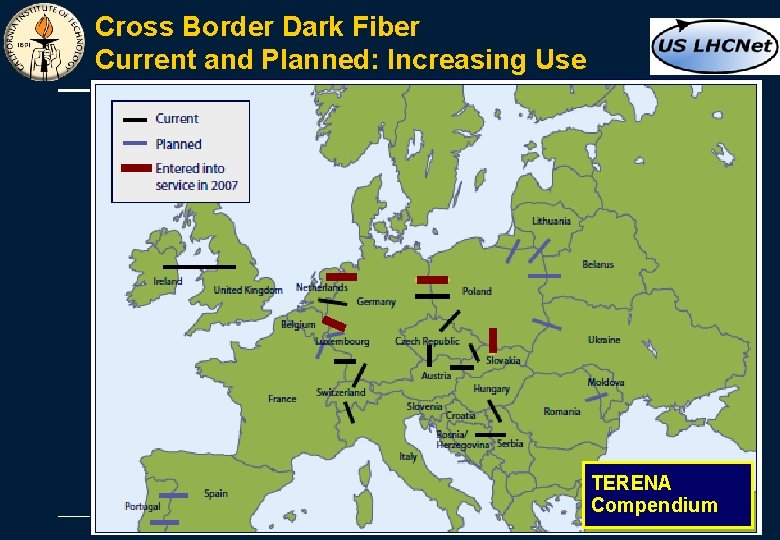

Cross-Border Dark Fiber, Current and Planned: Increasing Use (Source: TERENA Compendium)

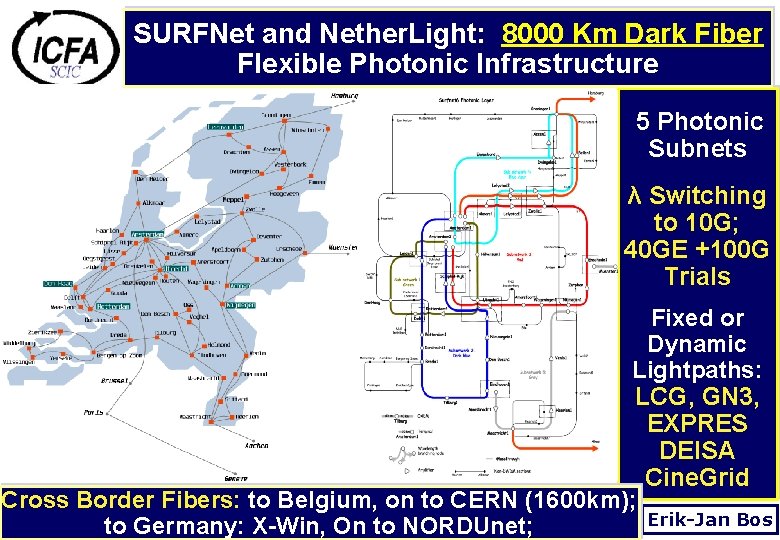

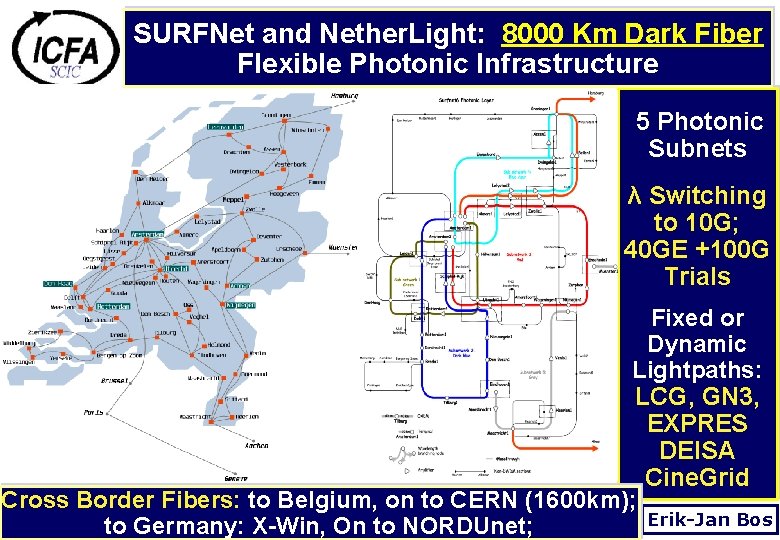

SURFNet and NetherLight: 8000 km Dark Fiber, Flexible Photonic Infrastructure (Erik-Jan Bos)
- 5 Photonic Subnets
- λ Switching to 10G; 40GE + 100G Trials
- Fixed or Dynamic Lightpaths: LCG, GN3, EXPRES, DEISA, CineGrid
- Cross Border Fibers: to Belgium, on to CERN (1600 km); to Germany: X-Win, on to NORDUnet

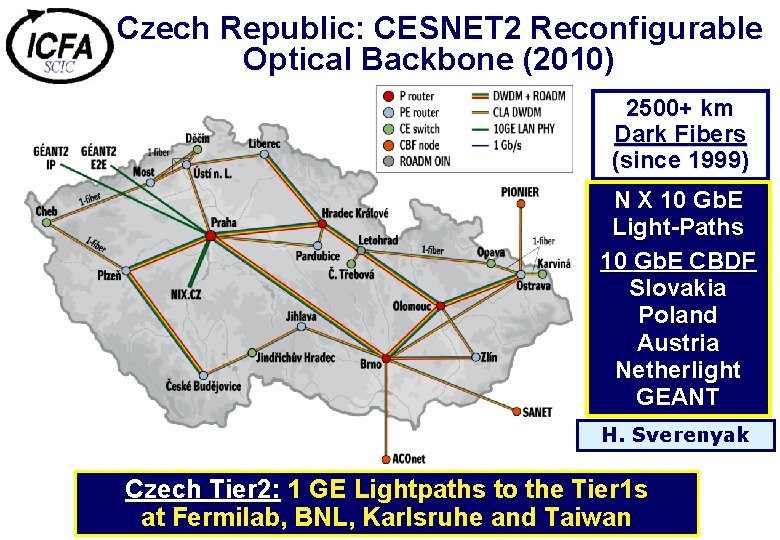

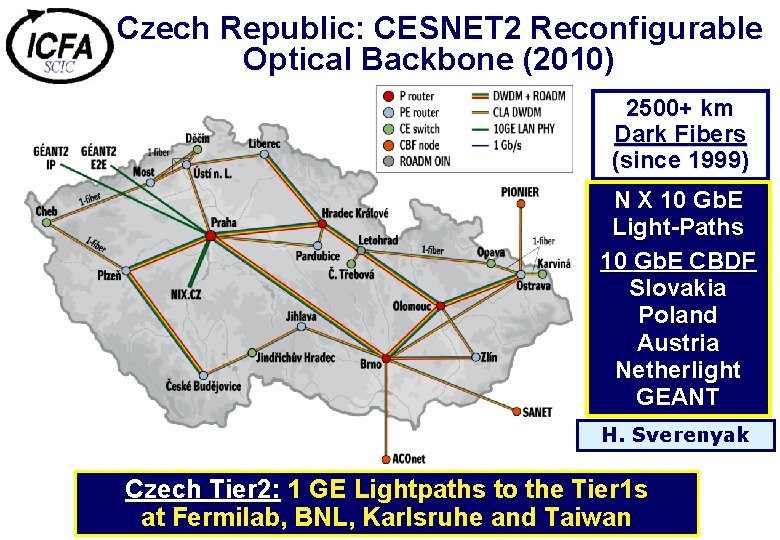

Czech Republic: CESNET2 Reconfigurable Optical Backbone (2010) (H. Sverenyak)
- 2500+ km Dark Fibers (since 1999)
- N X 10 GbE Light-Paths
- Czech Tier 2: 1 GE Lightpaths to the Tier 1s at Fermilab, BNL, Karlsruhe and Taiwan

[Map labels: 10 GbE CBDF; Slovakia, Poland, Austria, NetherLight, GEANT]

POLAND: PIONIER 6000 km Dark Fiber Network in 2010 (R. Lichwala)
- 2 X 10G Among 20 Major University Centers
- WLCG POLTIER2: Distributed Tier 2 (Poznan, Warsaw, Cracow); Connects to Karlsruhe Tier 1
- Cross Border Dark Fiber Links to Russia, Ukraine, Lithuania, Belarus, Czech Republic, and Slovakia

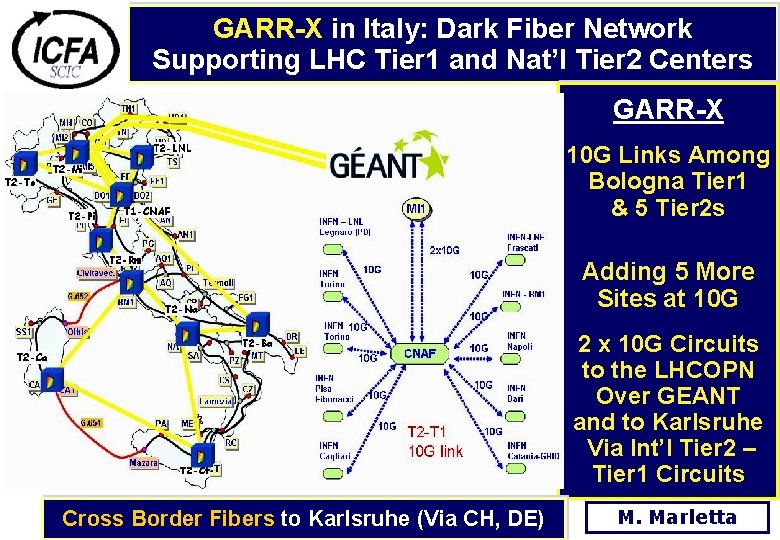

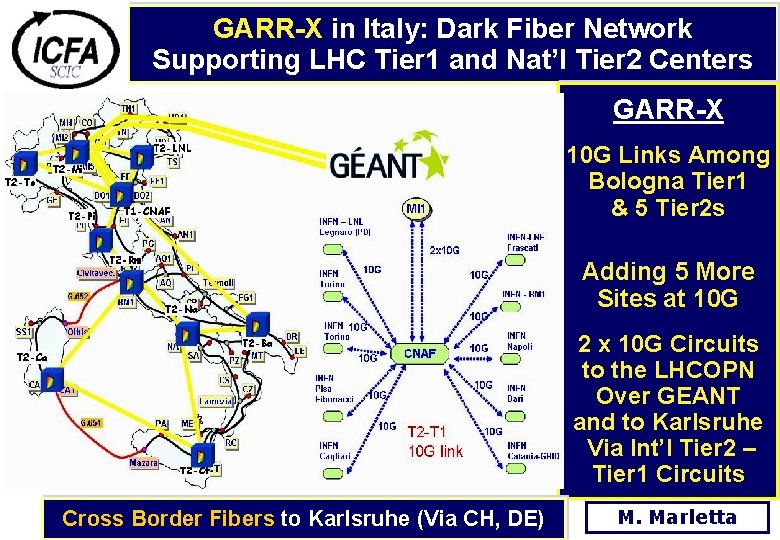

GARR-X in Italy: Dark Fiber Network Supporting LHC Tier 1 and Nat’l Tier 2 Centers (M. Marletta)
- GARR-X 10G Links Among Bologna Tier 1 & 5 Tier 2s; Adding 5 More Sites at 10G
- 2 x 10G Circuits to the LHCOPN Over GEANT, and to Karlsruhe Via Int’l Tier 2 – Tier 1 Circuits
- Cross Border Fibers to Karlsruhe (Via CH, DE)

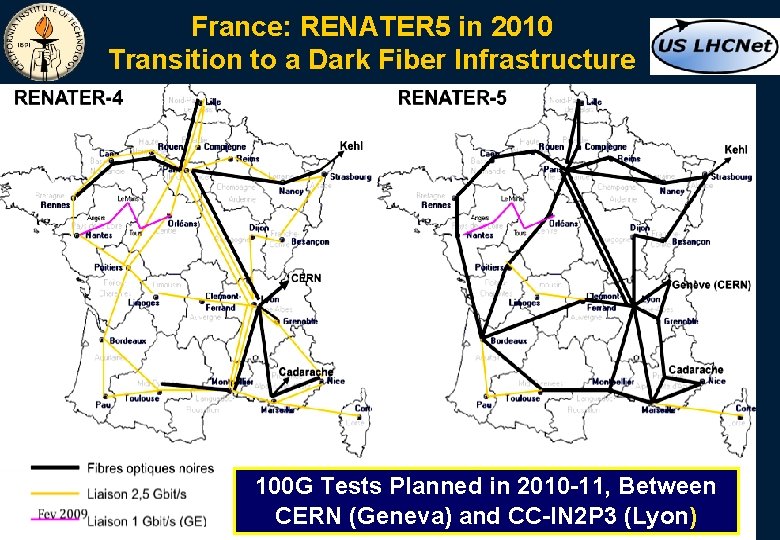

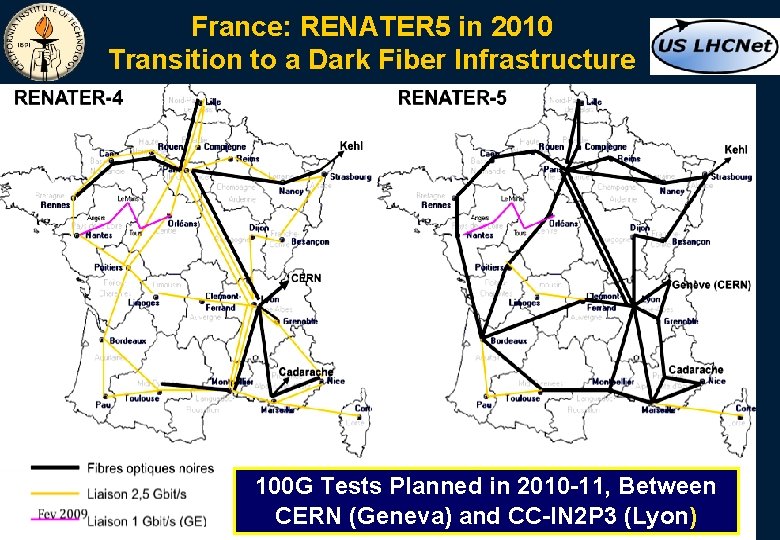

France: RENATER5 in 2010
- Transition to a Dark Fiber Infrastructure
- 100G Tests Planned in 2010-11, Between CERN (Geneva) and CC-IN2P3 (Lyon)

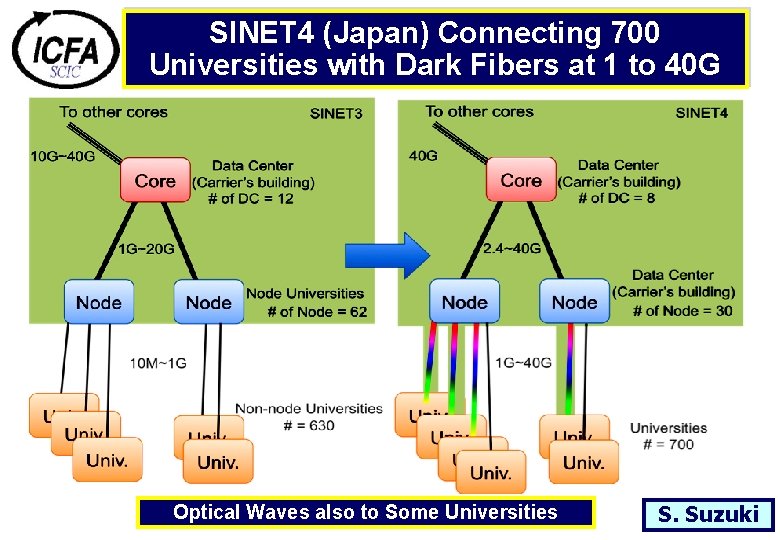

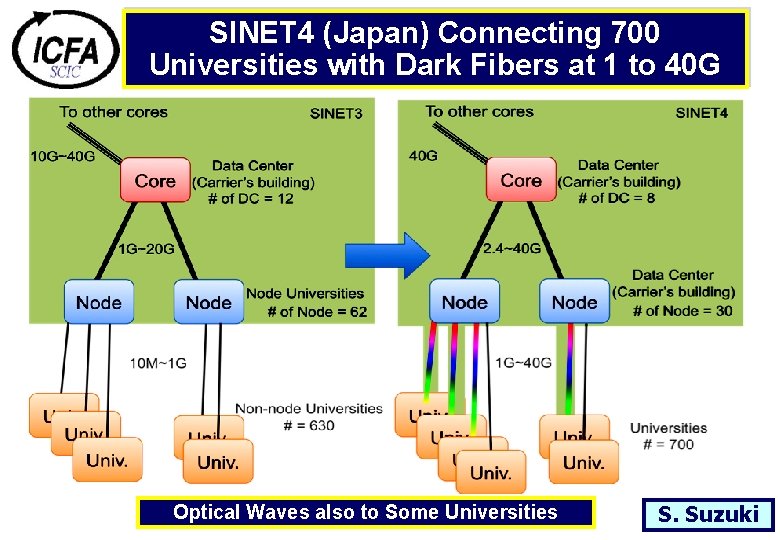

SINET4 (Japan): Connecting 700 Universities with Dark Fibers at 1 to 40G; Optical Waves also to Some Universities (S. Suzuki)

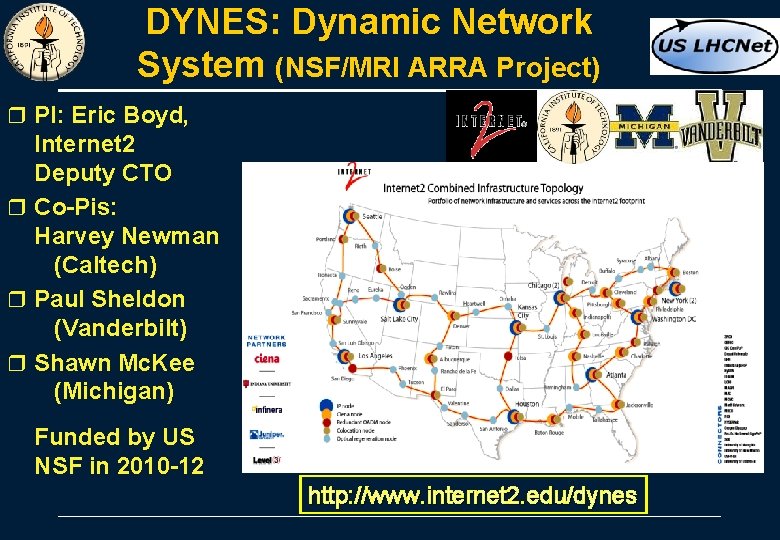

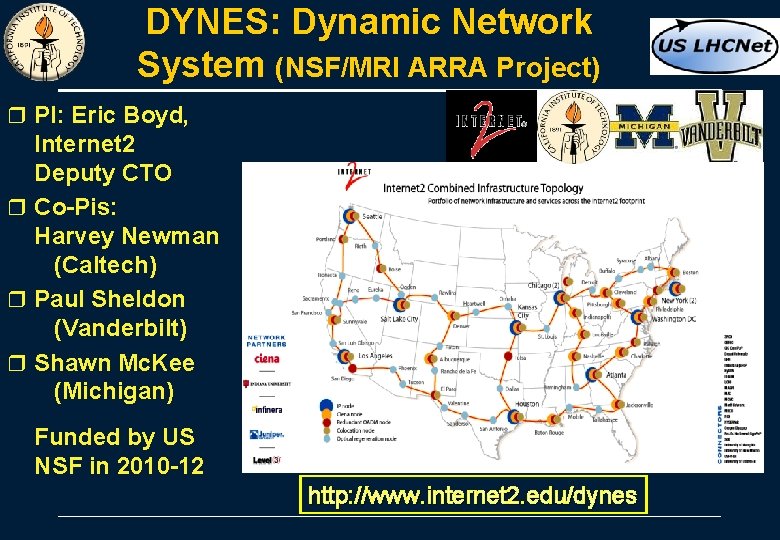

DYNES: Dynamic Network System (NSF/MRI ARRA Project)
- PI: Eric Boyd, Internet2 Deputy CTO
- Co-PIs: Harvey Newman (Caltech), Paul Sheldon (Vanderbilt), Shawn McKee (Michigan)
- Funded by US NSF in 2010-12
- http://www.internet2.edu/dynes

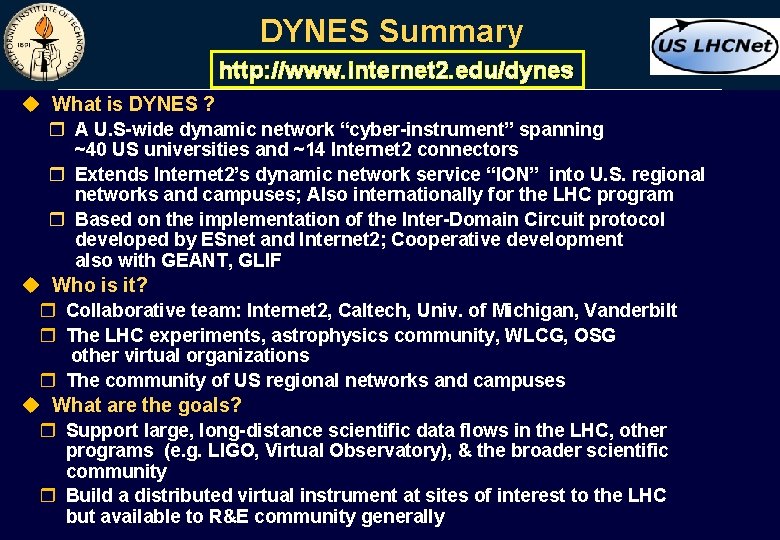

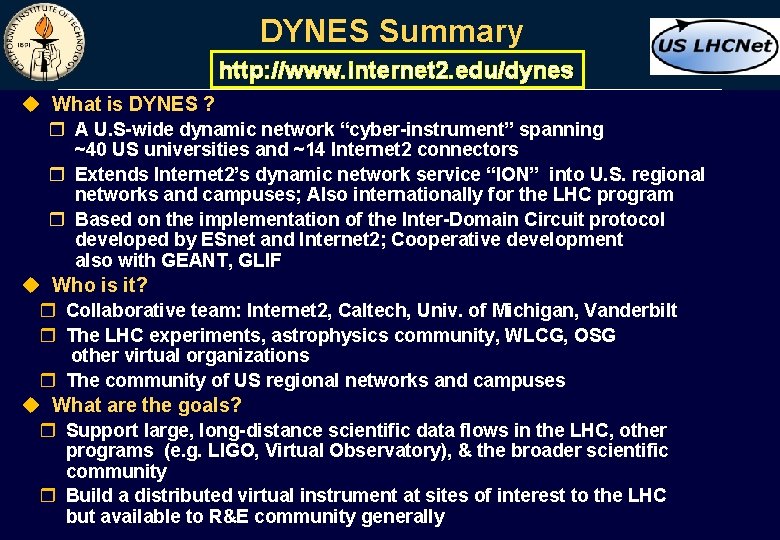

DYNES Summary (http://www.internet2.edu/dynes)
- What is DYNES?
  - A U.S.-wide dynamic network “cyber-instrument” spanning ~40 US universities and ~14 Internet2 connectors
  - Extends Internet2’s dynamic network service “ION” into U.S. regional networks and campuses; also internationally for the LHC program
  - Based on the implementation of the Inter-Domain Controller (IDC) protocol developed by ESnet and Internet2; cooperative development also with GEANT, GLIF
- Who is it?
  - Collaborative team: Internet2, Caltech, Univ. of Michigan, Vanderbilt
  - The LHC experiments, astrophysics community, WLCG, OSG, other virtual organizations
  - The community of US regional networks and campuses
- What are the goals?
  - Support large, long-distance scientific data flows in the LHC, other programs (e.g. LIGO, Virtual Observatory), & the broader scientific community
  - Build a distributed virtual instrument at sites of interest to the LHC but available to the R&E community generally

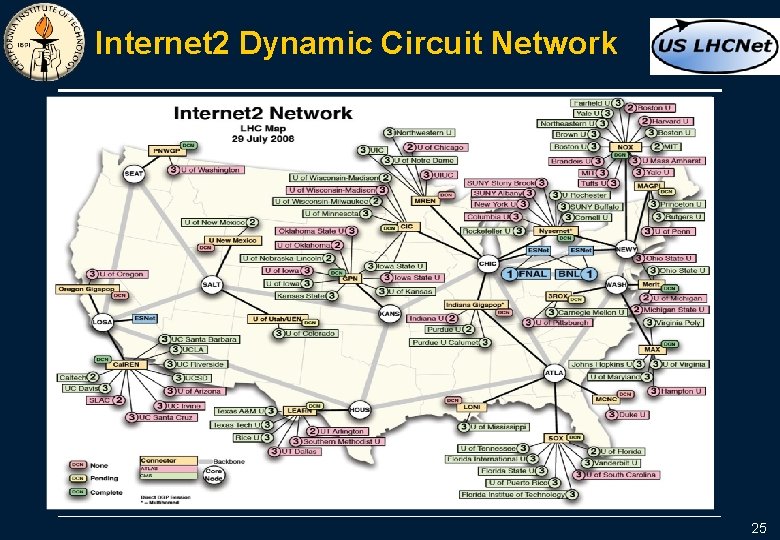

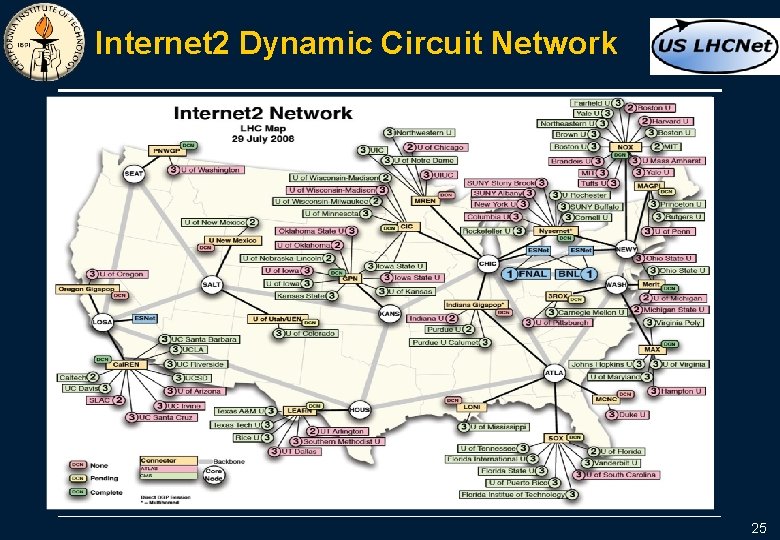

Internet2 Dynamic Circuit Network

DYNES: The Problem to be Addressed
- Sustained throughputs at 1-10 Gbps (and some > 10 Gbps) are in production use today by some Tier 2s as well as Tier 1s
- LHC data volumes and transfer rates are expected to expand by an order of magnitude over the next several years
  - As higher capacity storage and regional, national and transoceanic 40G and 100G network links become available and affordable
- Network usage on this scale can only be accommodated with planning, an appropriate architecture, and national and international community involvement by:
  - The LHC groups at universities and labs
  - Campuses, regional and state networks connecting to Internet2
  - ESnet, US LHCNet, NSF/IRNC, other major networks in US & Europe
- Network resource allocation and data operations need to be consistent
  - DYNES will help provide standard services and low cost equipment to help meet the needs

DYNES: Why Dynamic Circuits?
- To meet the requirements, Internet2 and ESnet, along with several US regional networks, US LHCNet, and NRENs and GEANT in Europe, have developed a strategy (starting with a meeting at CERN in March 2004)
  - Based on a ‘hybrid’ network architecture
  - Where the traditional IP network backbone is paralleled by a circuit-oriented core network reserved for large-scale science traffic
- Major examples are Internet2’s Dynamic Circuit Network (its “ION Service”) and ESnet’s Science Data Network (SDN), each of which provides:
  - Increased effective bandwidth capacity, and reliability of network access, by mutually isolating the large long-lasting flows (on ION and/or the ESnet SDN) and the traditional IP mix of many small flows
  - Guaranteed bandwidth as a service, by building a system to automatically schedule and implement virtual circuits traversing the network backbone
  - Improved ability of scientists to access network measurement data for all the network segments end-to-end through the perfSONAR monitoring infrastructure
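Guaranteed bandwidth as a service rests on admission control: a circuit request is accepted only if the link can carry it for its whole time window on top of what is already booked. This is a minimal illustration of that idea for a single link under a simple time-window model; the `Link` and `Reservation` classes are hypothetical and do not represent the ION or SDN scheduling software.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Reservation:
    start: float  # seconds, inclusive
    end: float    # seconds, exclusive
    gbps: float


@dataclass
class Link:
    """Hypothetical model of one backbone link with time-windowed circuit bookings."""
    capacity_gbps: float
    booked: list[Reservation] = field(default_factory=list)

    def peak_load(self, start: float, end: float) -> float:
        """Highest committed bandwidth at any instant in [start, end)."""
        overlapping = [r for r in self.booked if r.start < end and start < r.end]
        # Committed load only steps up where a reservation begins, so it is
        # enough to evaluate it at the window start and at each later start.
        points = {start} | {r.start for r in overlapping if r.start > start}
        return max(sum(r.gbps for r in overlapping if r.start <= t < r.end) for t in points)

    def request(self, start: float, end: float, gbps: float) -> bool:
        """Admit the circuit only if capacity is never exceeded during its window."""
        if self.peak_load(start, end) + gbps > self.capacity_gbps:
            return False
        self.booked.append(Reservation(start, end, gbps))
        return True


link = Link(capacity_gbps=10)
print(link.request(0, 3600, 6))     # True: link is empty
print(link.request(1800, 7200, 5))  # False: 6 + 5 Gbps would exceed 10 Gbps
print(link.request(3600, 7200, 5))  # True: starts when the first booking ends
```

Rejected requests leave the booking list untouched, so a scheduler built this way could retry with a different window or a lower rate.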

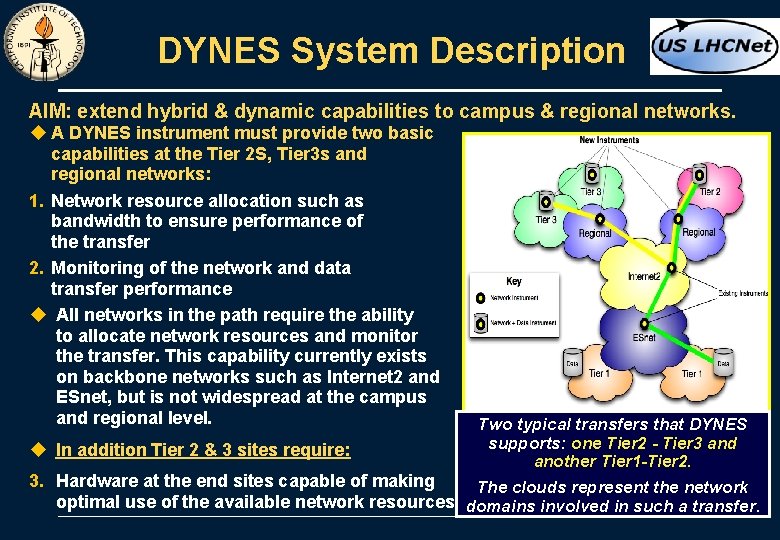

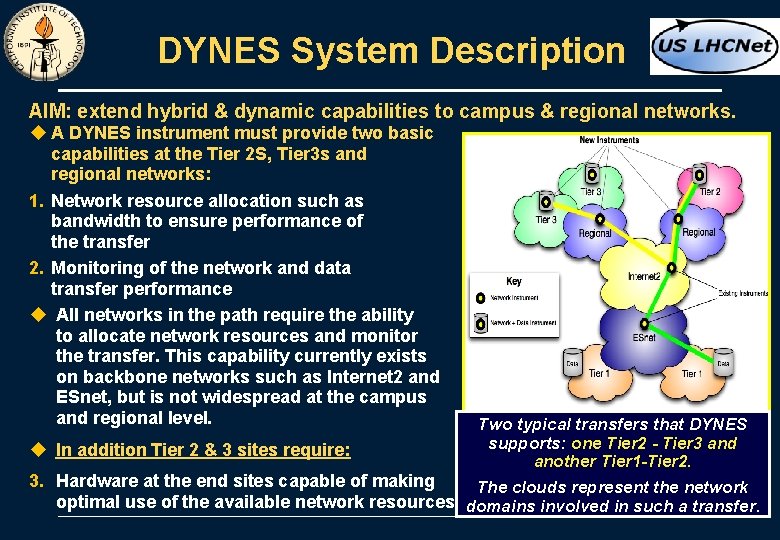

DYNES System Description
AIM: extend hybrid & dynamic capabilities to campus & regional networks.
- A DYNES instrument must provide two basic capabilities at the Tier 2s, Tier 3s and regional networks:
  1. Network resource allocation, such as bandwidth, to ensure performance of the transfer
  2. Monitoring of the network and data transfer performance
- All networks in the path require the ability to allocate network resources and monitor the transfer. This capability currently exists on backbone networks such as Internet2 and ESnet, but is not widespread at the campus and regional level.
- In addition, Tier 2 & 3 sites require:
  3. Hardware at the end sites capable of making optimal use of the available network resources

[Figure: Two typical transfers that DYNES supports: one Tier 2 - Tier 3 and another Tier 1 - Tier 2. The clouds represent the network domains involved in such a transfer.]
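Because every network in the path must be able to allocate resources, an end-to-end circuit is only usable if all domains accept it. This sketch illustrates an all-or-nothing reservation across domains that also picks one VLAN tag free in every domain. The `Domain` class, the domain names, and the single end-to-end VLAN tag are simplifying assumptions; the code does not implement the IDC protocol itself, and real deployments may translate VLAN tags between domains.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Domain:
    """Hypothetical view of one network domain's spare resources on a path."""
    name: str
    free_gbps: float
    free_vlans: set[int]


def reserve_path(path: list[Domain], gbps: float) -> int | None:
    """Reserve `gbps` in every domain using one VLAN tag free in all of them.

    All-or-nothing: if any domain lacks bandwidth, or no common VLAN tag
    exists, nothing is modified and None is returned.
    """
    if not path:
        return None
    common = set.intersection(*(d.free_vlans for d in path))
    if not common or any(d.free_gbps < gbps for d in path):
        return None
    vlan = min(common)  # deterministic pick among the common tags
    for d in path:  # commit only after every domain has been checked
        d.free_gbps -= gbps
        d.free_vlans.discard(vlan)
    return vlan


campus = Domain("campus", 10, {100, 101, 102})
regional = Domain("regional", 5, {101, 102})
backbone = Domain("backbone", 40, {102, 200})
print(reserve_path([campus, regional, backbone], 4))  # 102
print(reserve_path([campus, regional, backbone], 2))  # None: no common tag left
```

Checking every domain before committing anything is what keeps a failed request from leaving half-built circuits behind.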

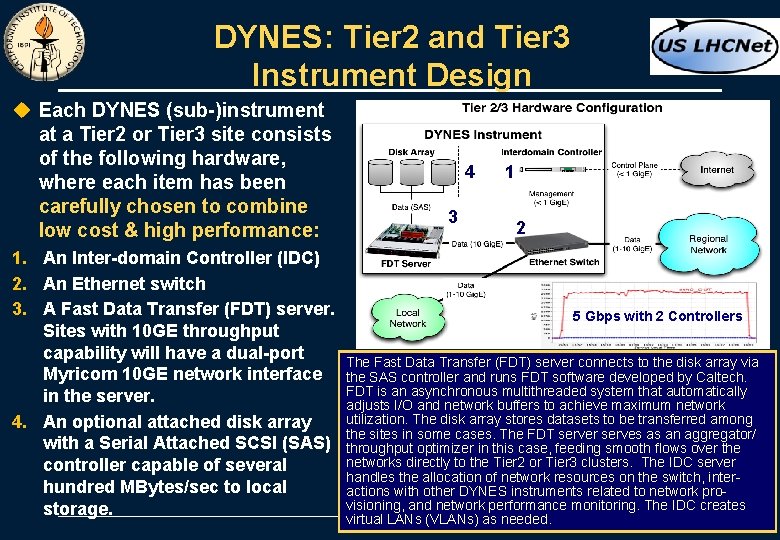

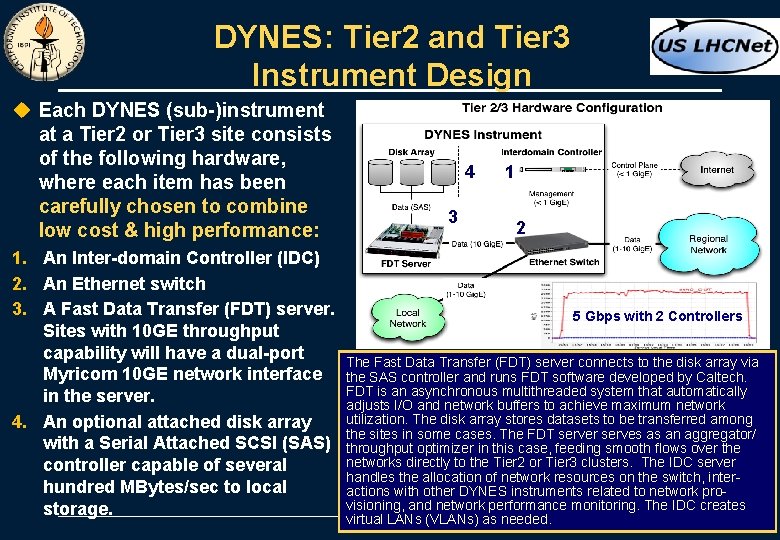

DYNES: Tier 2 and Tier 3 Instrument Design
- Each DYNES (sub-)instrument at a Tier 2 or Tier 3 site consists of the following hardware, where each item has been carefully chosen to combine low cost & high performance:
  1. An Inter-domain Controller (IDC)
  2. An Ethernet switch
  3. A Fast Data Transfer (FDT) server. Sites with 10 GE throughput capability will have a dual-port Myricom 10 GE network interface in the server.
  4. An optional attached disk array with a Serial Attached SCSI (SAS) controller capable of several hundred MBytes/sec to local storage.

[Figure: components numbered 1-4; annotation: “5 Gbps with 2 Controllers”]

The Fast Data Transfer (FDT) server connects to the disk array via the SAS controller and runs FDT software developed by Caltech. FDT is an asynchronous multithreaded system that automatically adjusts I/O and network buffers to achieve maximum network utilization. In some cases the disk array stores datasets to be transferred among the sites; the FDT server then serves as an aggregator/throughput optimizer, feeding smooth flows over the networks directly to the Tier 2 or Tier 3 clusters. The IDC server handles the allocation of network resources on the switch, interactions with other DYNES instruments related to network provisioning, and network performance monitoring. The IDC creates virtual LANs (VLANs) as needed.
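Buffer sizing matters on wide area paths because of the bandwidth-delay product: to keep a path of rate R and round-trip time RTT full, about R × RTT bytes must be in flight. This sketch computes that quantity and the throughput ceiling of a single stream with a fixed window. The 150 ms RTT and 4 MiB window are hypothetical values, and the code does not reflect how FDT itself tunes its buffers.

```python
def bdp_bytes(rate_gbps: float, rtt_ms: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to keep the path full."""
    return rate_gbps * 1e9 / 8 * (rtt_ms / 1e3)


def max_rate_gbps(window_bytes: float, rtt_ms: float) -> float:
    """Throughput ceiling of one stream that can have at most window_bytes in flight."""
    return window_bytes * 8 / (rtt_ms / 1e3) / 1e9


RTT_MS = 150              # hypothetical long-haul round-trip time
WINDOW_BYTES = 4 * 2**20  # hypothetical 4 MiB per-stream window

print(f"BDP at 10 Gbps, {RTT_MS} ms: {bdp_bytes(10, RTT_MS) / 1e6:.1f} MB")
print(f"One stream, 4 MiB window: {max_rate_gbps(WINDOW_BYTES, RTT_MS):.2f} Gbps")
```

At 10 Gbps and 150 ms about 187.5 MB must be in flight, whereas a single stream limited to a 4 MiB window tops out near 0.22 Gbps; this gap is why large buffers, or several parallel streams, are commonly needed on long high-capacity paths.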

Wide Area Networks for HEP in the LHC Era: Conclusions
- The Internet is undergoing a sustained revolution, as a driver of world economic progress
  - HEP is at the leading edge and is a driver of R&E networks and inter-regional links in support of our science
- A global community of networks has arisen that supports the LHC program (as a leading user) and science and education broadly
- We are benefitting from the press of global network traffic
  - The transition to the next generation of networks will occur just in time to meet the LHC experiments’ network needs of future years
- We need to build a network-aware Computing Model architecture
  - Focused on consistent computing, storage and network operations
- We are moving from static lightpaths to dynamic circuits crossing continents and oceans, based on standardized services
  - DYNES will extend these production capabilities to many US campuses and regional networks; just the start of a major new trend

THANK YOU! newman@hep.caltech.edu
