CERN Wide Area Networking requirements

- Slides: 45

Wide Area Networking requirements and challenges at CERN for the LHC era

Presented at the NEC'01 conference, 17 September 2001, Varna, Bulgaria

Olivier H. Martin, CERN - IT Division, September 2001 (Olivier.Martin@cern.ch)

3/6/2021 NEC'01 Varna (Bulgaria) Olivier H. Martin

Presentation Outline

- CERN connectivity update
- Academic & Research Networking in Europe
  - TEN-155
  - GEANT project update
- Interconnections with Academic & Research networks worldwide
  - STAR TAP
  - STAR LIGHT
  - DataTAG project
- Internet today
  - What it is
  - Growth
  - Technologies
  - Trends
- Challenges ahead:
  - QoS
  - Gigabit/second file transfer
  - Security architecture

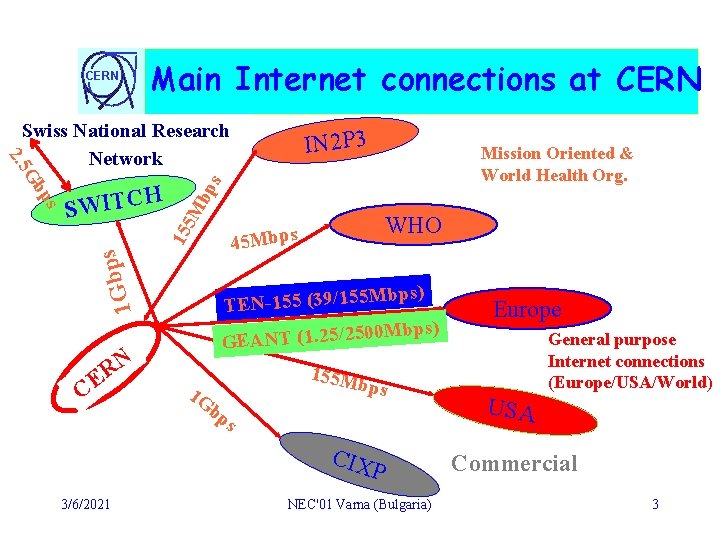

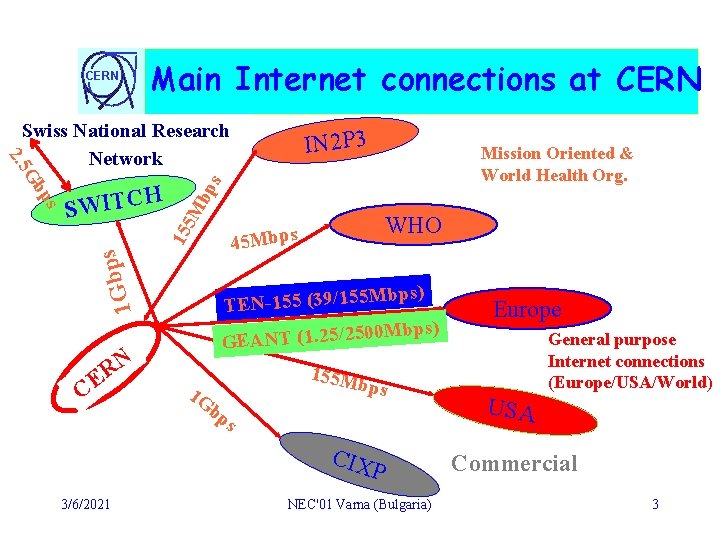

Main Internet connections at CERN

Diagram labels: Mission Oriented & World Health Org. (WHO), IN2P3, SWITCH (Swiss National Research Network), TEN-155 (39/155 Mbps), GEANT (1.25/2.5 Gbps), CIXP, Europe, USA, general purpose Internet connections (Europe/USA/World), commercial. Other link speeds shown: 45 Mbps, 155 Mbps, 1 Gbps, 2.5 Gbps.

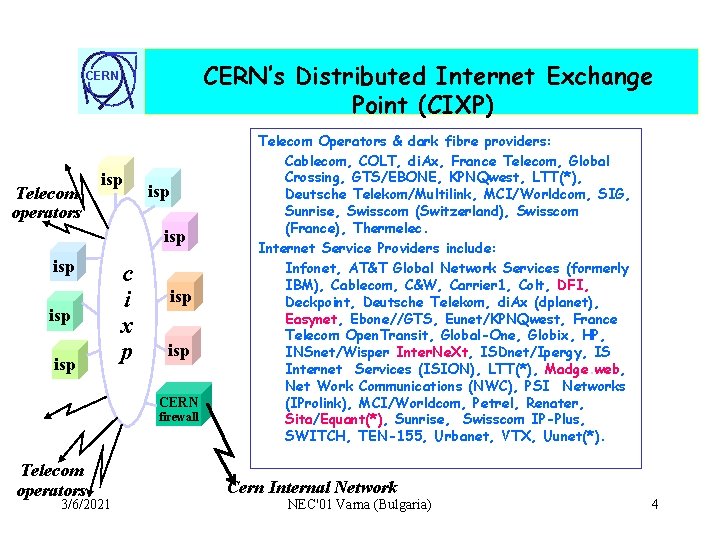

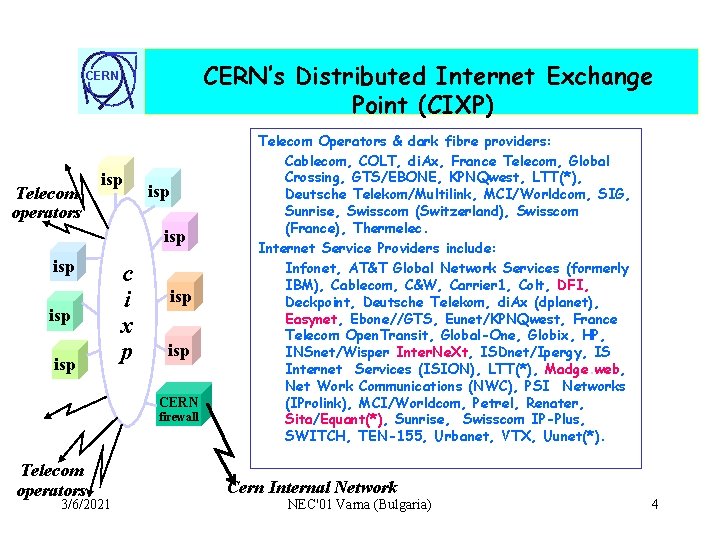

CERN's Distributed Internet Exchange Point (CIXP)

Diagram: telecom operators and ISPs interconnect at the CIXP, which connects to the CERN internal network through the CERN firewall.

- Telecom operators & dark fibre providers: Cablecom, COLT, diAx, France Telecom, Global Crossing, GTS/EBONE, KPNQwest, LTT(\*), Deutsche Telekom/Multilink, MCI/Worldcom, SIG, Sunrise, Swisscom (Switzerland), Swisscom (France), Thermelec.
- Internet Service Providers include: Infonet, AT&T Global Network Services (formerly IBM), Cablecom, C&W, Carrier 1, Colt, DFI, Deckpoint, Deutsche Telekom, diAx (dplanet), Easynet, Ebone/GTS, Eunet/KPNQwest, France Telecom OpenTransit, Global-One, Globix, HP, INSnet/Wisper, InterNeXt, ISDnet/Ipergy, IS Internet Services (ISION), LTT(\*), Madge.web, Net Work Communications (NWC), PSI Networks (IProlink), MCI/Worldcom, Petrel, Renater, Sita/Equant(\*), Sunrise, Swisscom IP-Plus, SWITCH, TEN-155, Urbanet, VTX, Uunet(\*).

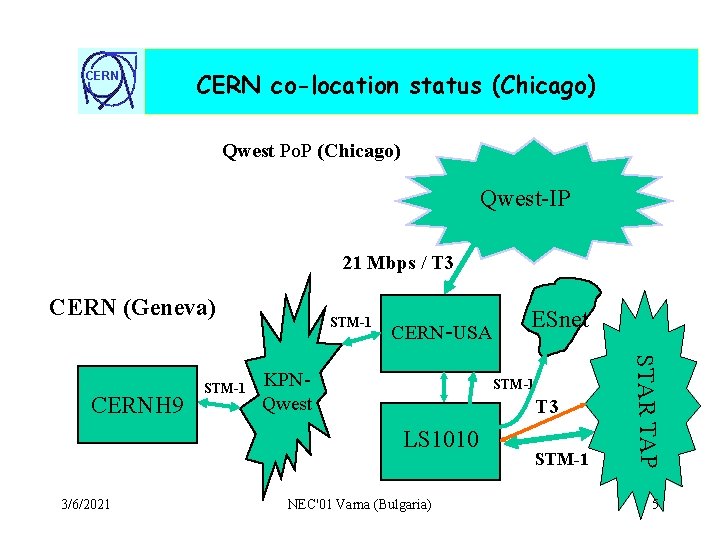

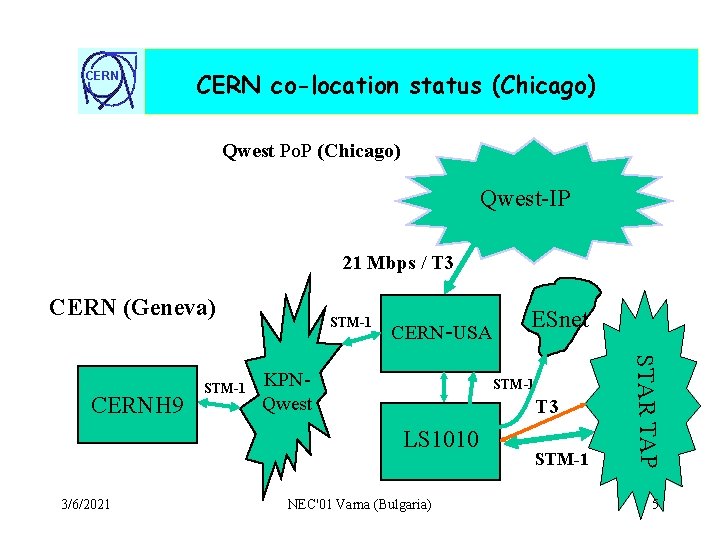

CERN co-location status (Chicago)

Diagram: CERN (Geneva) connects to the Qwest PoP (Chicago) over a KPNQwest STM-1 circuit (CERN-USA). Labels at the Chicago end: LS 1010, CERNH9, Qwest-IP (21 Mbps over a T3), and STM-1 connections to ESnet and STAR TAP.

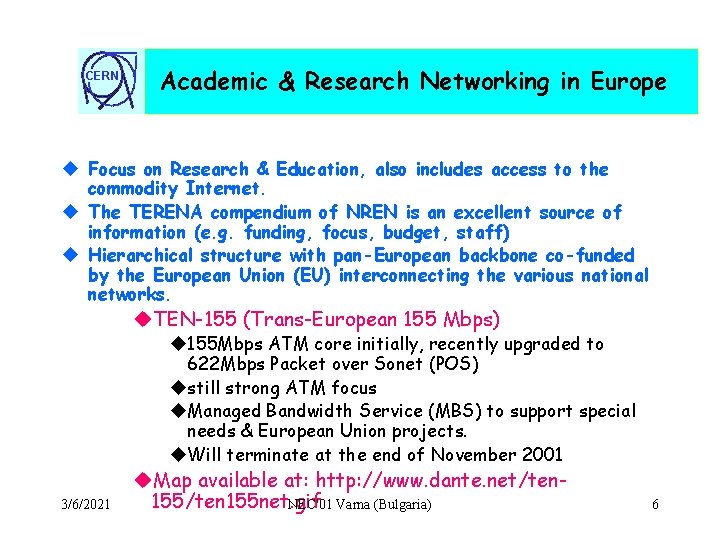

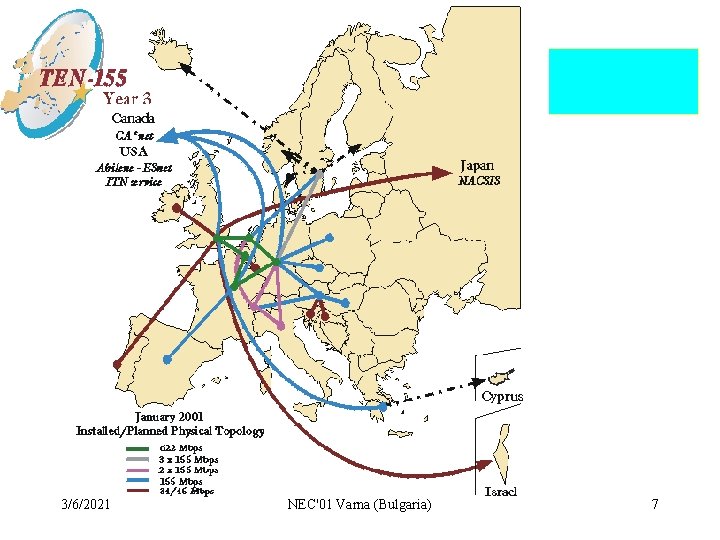

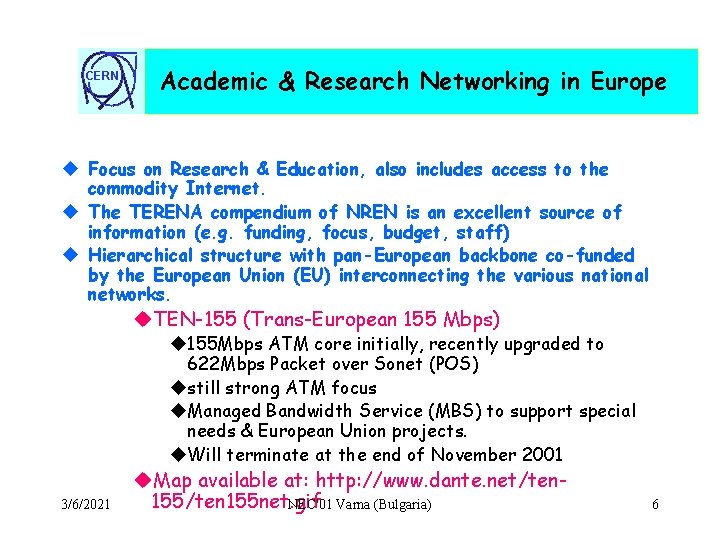

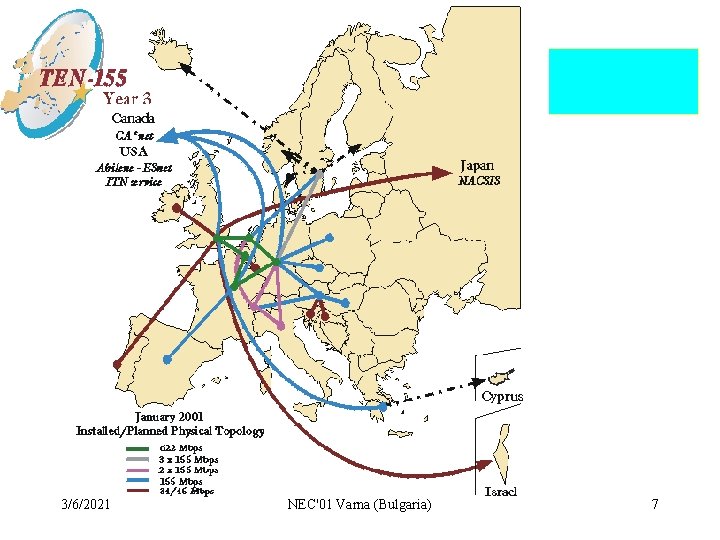

Academic & Research Networking in Europe

- Focus on Research & Education, but also includes access to the commodity Internet.
- The TERENA compendium of NRENs is an excellent source of information (e.g. funding, focus, budget, staff).
- Hierarchical structure, with a pan-European backbone co-funded by the European Union (EU) interconnecting the various national networks.
- TEN-155 (Trans-European 155 Mbps)
  - 155 Mbps ATM core initially, recently upgraded to 622 Mbps Packet over SONET (POS)
  - still a strong ATM focus
  - Managed Bandwidth Service (MBS) to support special needs & European Union projects
  - Will terminate at the end of November 2001
  - Map available at: http://www.dante.net/ten155net.gif

The GEANT Project

- 4-year project, started on December 1, 2000
- Call for tender issued June 2000, closed September 2000; adjudication made in June 2001
- First parts to be delivered in September 2001
- Provisional cost estimate: 233 MEuro
  - EC contribution 87 MEuro (37%), of which 62% will be spent during year 1 in order to keep the NRNs' contributions stable (i.e. 36 MEuro/year)
- Several 2.5/10 Gbps rings (mostly over unprotected lambdas), three main telecom providers (Colt/DT/Telia):
  - West ring (2 half rings) provided by COLT (UK-SE-DE-IT-CH-FR)
  - East legs and miscellaneous circuits provided by Deutsche Telekom (e.g. CH-AT, CZ-DE)

The GEANT Project (cont.)

- Access: mostly via 2.5 Gbps circuits
- Routers: Juniper
- CERN:
  - CERN & SWITCH will share a 2.5 Gbps access
  - CERN should purchase 50% of the total capacity (i.e. 1.25 Gbps)
  - The access bandwidth to GEANT is also expected to double every 12-18 months
  - The Swiss PoP of GEANT will be located at the Geneva airport (Ldcom@Halle de Fret)
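To put the expected 12-18 month doubling in perspective, the short Python sketch below projects CERN's 1.25 Gbps share of the GEANT access forward in time. The starting value and the doubling periods come from the slide; modelling growth as smooth exponential doubling (real upgrades happen in discrete steps) and the 4-year horizon (the length of the GEANT project) are assumptions made only for illustration.

```python
def projected_access_gbps(start_gbps: float, months: float, doubling_months: float) -> float:
    """Capacity after `months`, assuming it doubles every `doubling_months`.

    Smooth exponential growth is an illustrative assumption; real upgrades are discrete.
    """
    return start_gbps * 2 ** (months / doubling_months)


if __name__ == "__main__":
    start = 1.25  # Gbps: CERN's half of the 2.5 Gbps access shared with SWITCH
    for doubling in (12, 18):
        for years in (1, 2, 4):
            gbps = projected_access_gbps(start, 12 * years, doubling)
            print(f"doubling every {doubling} months, after {years} year(s): {gbps:.2f} Gbps")
```

Under these assumptions the 1.25 Gbps share would reach roughly 8 Gbps (18-month doubling) or 20 Gbps (12-month doubling) after four years.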

The GEANT Project (cont.)

- Connection to other world regions:
  - in principle via core nodes only; together, they will form a European Distributed Access (EDA) "point", conceptually similar to the STAR TAP
- Projected services & applications:
  - standard (i.e. best-effort) IP service
  - premium IP service (diffserv Expedited Forwarding (EF))
  - guaranteed capacity service (GCS), using diffserv Assured Forwarding (AF)
  - Virtual Private Networking (VPN), layer 2 (?) & layer 3
  - native multicast
- Various Grid projects (e.g. DataGrid) are expected to be leading applications.
- Use of MPLS is anticipated for traffic engineering and VPNs (e.g. DataGrid, IPv6).
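The premium and guaranteed-capacity services rely on diffserv per-hop behaviours, which a packet selects through the 6-bit DSCP field of its IP header. The sketch below encodes the IETF-recommended codepoints for EF (46) and for the AF classes (AFxy = 8x + 2y). The slide does not say which AF class a GCS-style service would use, so the `SERVICE_DSCP` mapping is a hypothetical example.

```python
EF = 46          # Expedited Forwarding (binary 101110)
BEST_EFFORT = 0  # default per-hop behaviour


def af_dscp(af_class: int, drop_precedence: int) -> int:
    """Recommended DSCP for Assured Forwarding class AFxy: 8*x + 2*y."""
    if not 1 <= af_class <= 4 or not 1 <= drop_precedence <= 3:
        raise ValueError("AF class must be 1-4 and drop precedence 1-3")
    return 8 * af_class + 2 * drop_precedence


def tos_byte(dscp: int) -> int:
    """The DSCP occupies the upper 6 bits of the former IPv4 ToS byte."""
    return dscp << 2


# Hypothetical mapping of GEANT's projected services to DSCPs (the AF class is an assumption).
SERVICE_DSCP = {
    "standard (best effort) IP": BEST_EFFORT,
    "premium IP": EF,
    "guaranteed capacity service (GCS)": af_dscp(1, 1),
}

if __name__ == "__main__":
    for service, dscp in SERVICE_DSCP.items():
        print(f"{service}: DSCP {dscp}, ToS byte 0x{tos_byte(dscp):02x}")
```

On many Unix-like systems an application can request such a marking by setting the `IP_TOS` socket option to `tos_byte(dscp)`; whether the network honours, polices or re-marks it at the domain edge is a matter of provider policy.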

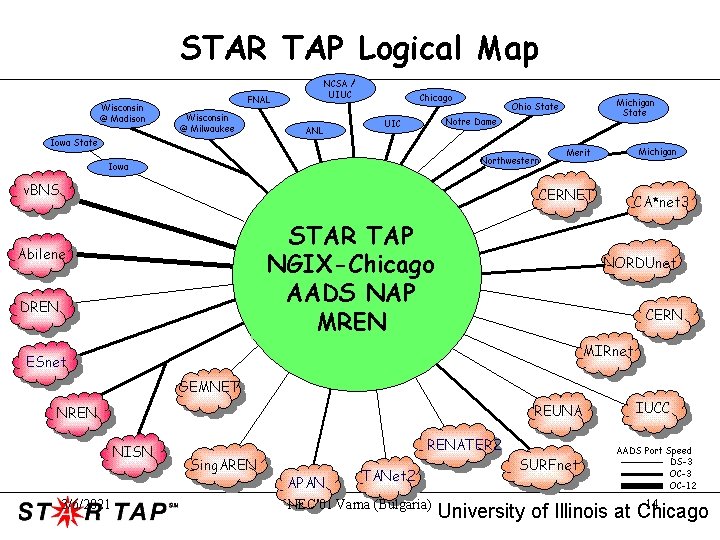

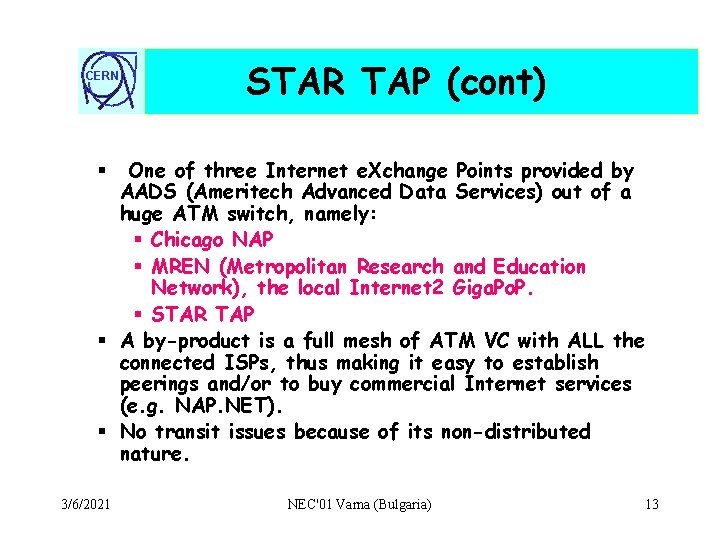

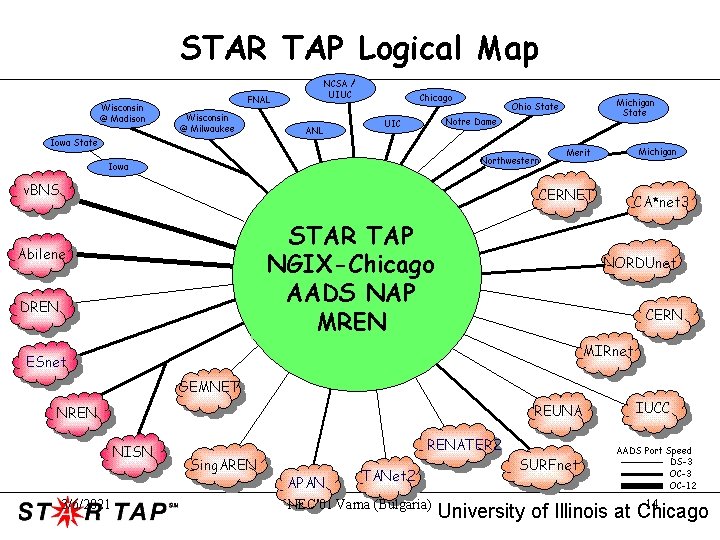

STAR TAP

- Science, Technology And Research Transit Access Point
- International connection point for Research and Education networks at the Ameritech NAP in Chicago
- Project goal: to facilitate the long-term interconnection and interoperability of advanced international networking in support of applications, performance measuring, and technology evaluations.
- Hosts the 6TAP IPv6 meet point
- http://www.startap.net/

STAR TAP (cont.)

One of three Internet eXchange Points provided by AADS (Ameritech Advanced Data Services) out of a huge ATM switch, namely:

- Chicago NAP
- MREN (Metropolitan Research and Education Network), the local Internet2 GigaPoP
- STAR TAP

A by-product is a full mesh of ATM VCs with ALL the connected ISPs, which makes it easy to establish peerings and/or to buy commercial Internet services (e.g. NAP.NET).

There are no transit issues, because of its non-distributed nature.
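The convenience of a full mesh comes at a quadratic cost: each newly connected network needs a VC to every network already present. The count below assumes one point-to-point VC per pair of networks; how AADS actually provisioned VCs on its switch is not described in the talk.

```python
def full_mesh_vcs(networks: int) -> int:
    """Point-to-point VCs needed to give every pair of networks its own VC."""
    return networks * (networks - 1) // 2


if __name__ == "__main__":
    for n in (10, 50, 100, 200):
        print(f"{n} networks -> {full_mesh_vcs(n)} VCs")
```

Going from 100 to 200 networks roughly quadruples the number of VCs, one illustration of why ATM meshes become hard to scale.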

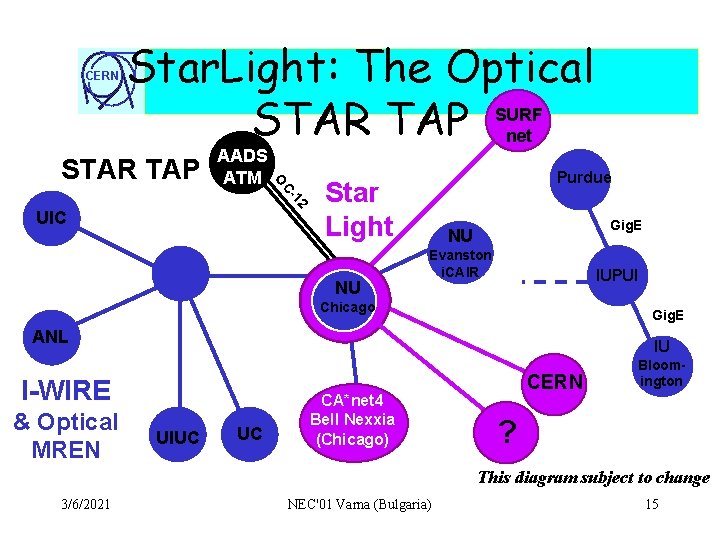

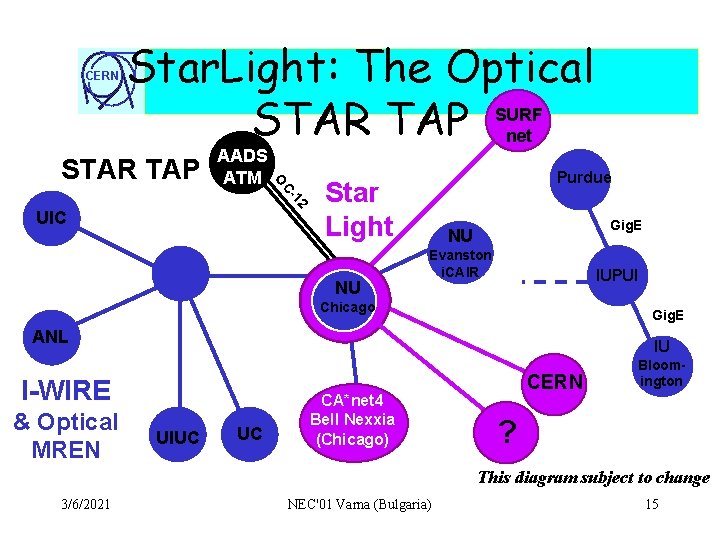

StarLight: The Optical STAR TAP

Diagram (subject to change) with labels: StarLight, STAR TAP (AADS ATM), SURFnet, CA*net 4 (Bell Nexxia, Chicago), CERN, I-WIRE & Optical MREN, NU (Chicago and Evanston, GigE), iCAIR, UIC, ANL, UC, UIUC, IU (Bloomington), IUPUI, Purdue.

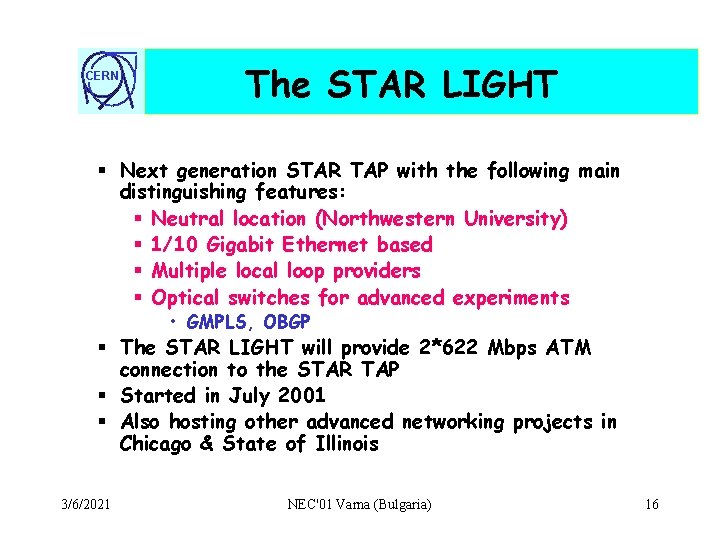

The STAR LIGHT

- Next-generation STAR TAP with the following main distinguishing features:
  - Neutral location (Northwestern University)
  - 1/10 Gigabit Ethernet based
  - Multiple local loop providers
  - Optical switches for advanced experiments
    - GMPLS, OBGP
- The STAR LIGHT will provide a 2 × 622 Mbps ATM connection to the STAR TAP
- Started in July 2001
- Also hosting other advanced networking projects in Chicago & the State of Illinois

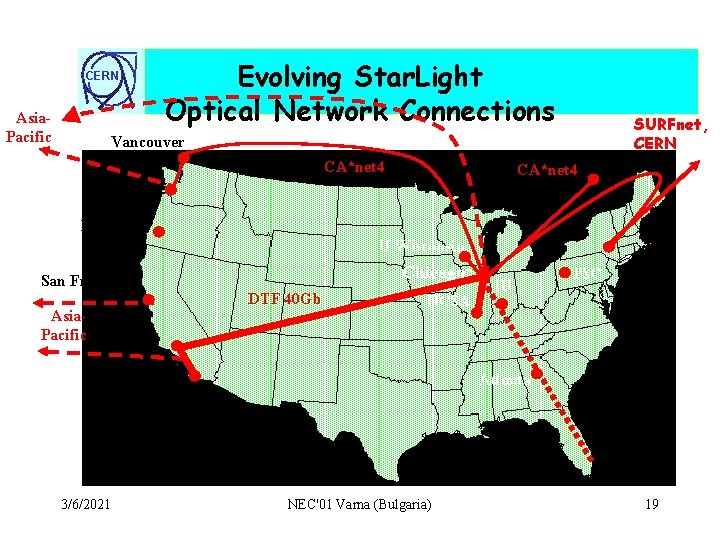

StarLight Connections

- STAR TAP (AADS NAP) is connected via two OC-12c ATM circuits, now operational.
- The Netherlands (SURFnet) is bringing two OC-12c POS circuits from Amsterdam to StarLight on September 1, 2001, and a 2.5 Gbps lambda on September 15, 2001.
- Abilene will soon connect via GigE.
- Canada (CA*net 3/4) is connected via GigE, soon 10 GigE.
- I-WIRE, a State-of-Illinois-funded dark-fiber multi-10 GigE DWDM effort involving Illinois research institutions, is being built. 36 strands to the Qwest Chicago PoP are in.
- The NSF Distributed Terascale Facility (DTF) 4 x 10 GigE network is being engineered by PACI and Qwest.
- NORDUnet will be using StarLight's OC-12 ATM connection.
- CERN should connect in March 2002 with an OC-12 from Geneva. A second, 2.5 Gbps research circuit is also expected during the second half of 2002 (EU DataTAG project).

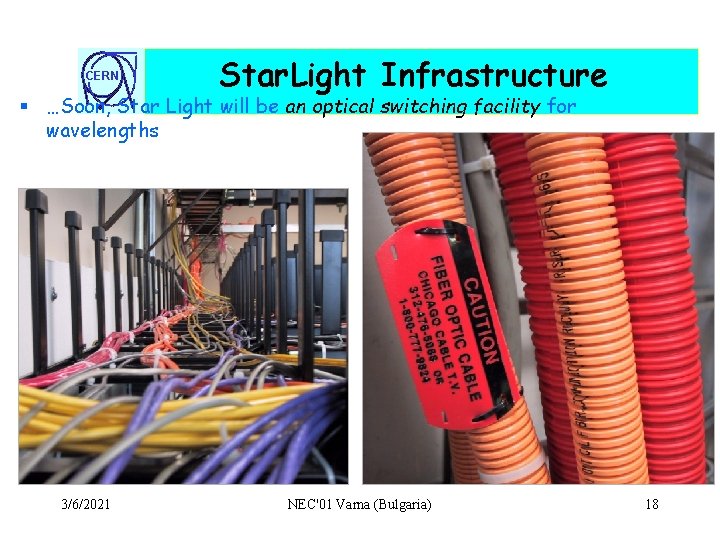

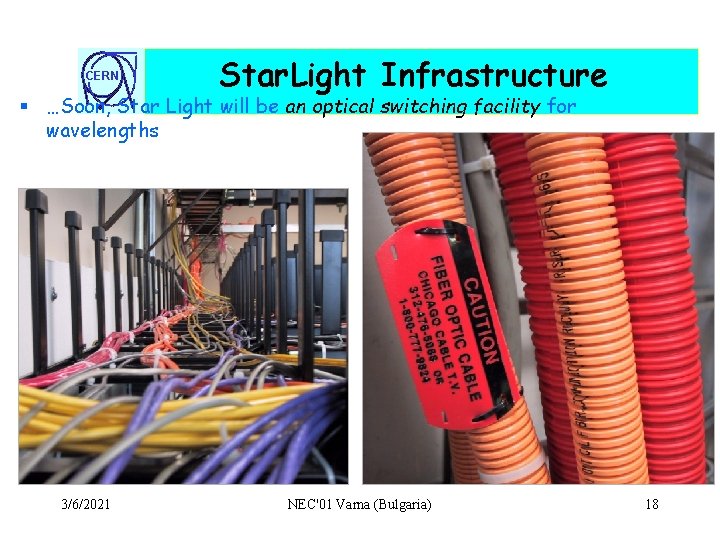

StarLight Infrastructure

- …Soon, StarLight will be an optical switching facility for wavelengths.

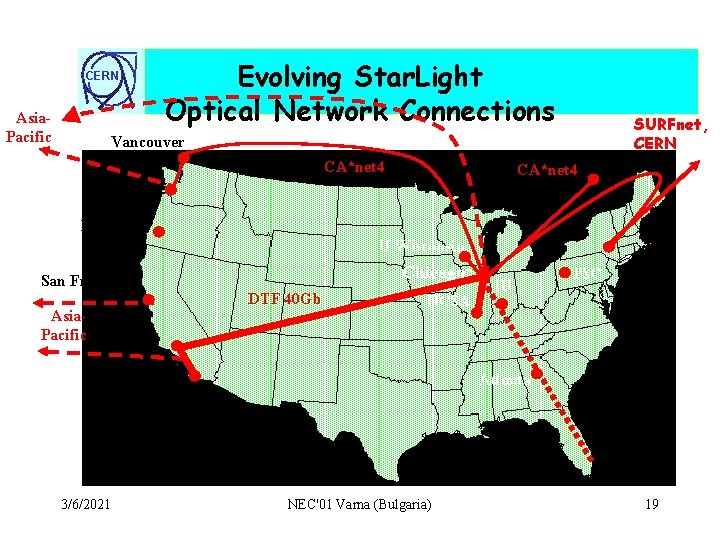

Evolving StarLight Optical Network Connections

Diagram labels: CERN, SURFnet, Asia-Pacific, CA*net 4, Vancouver, Seattle, Portland, San Francisco, U Wisconsin, Chicago (ANL, UIC, NU, UC, IIT, MREN), DTF 40 Gb, NCSA, Caltech, SDSC, PSC, IU, NYC, Atlanta, AMPATH.

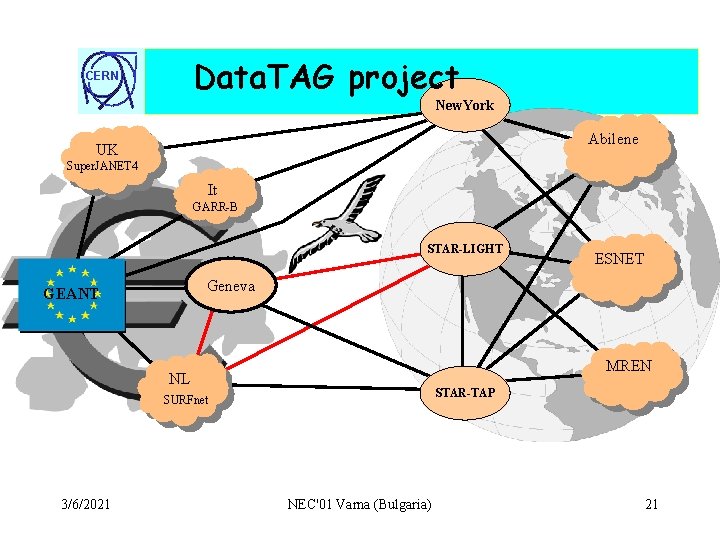

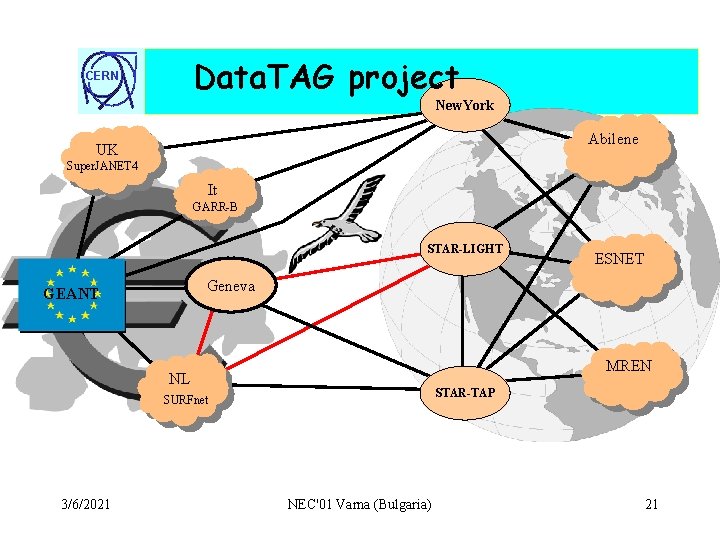

DataTAG project

- Main aims:
  - Ensure maximum interoperability between USA and EU Grid projects
  - Transatlantic test bed for advanced Grid-applied network research
    - 2.5 Gbps circuit between CERN and StarLight (Chicago)
- Partners:
  - PPARC (UK)
  - University of Amsterdam (NL)
  - INFN (IT)
  - CERN (Coordinating Partner)
- Negotiation with the EU is well advanced
  - Expected project start: 1/1/2002
  - Duration: 2 years

DataTAG project

Diagram labels: Europe: CERN (Geneva), GEANT, SuperJANET 4 (UK), GARR-B (It), SURFnet (NL); USA: STAR-LIGHT, STAR-TAP, Abilene, ESnet, MREN, New York.

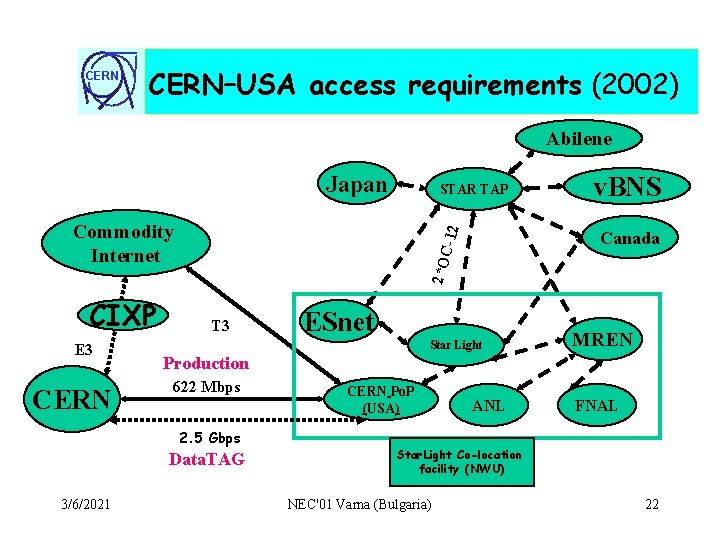

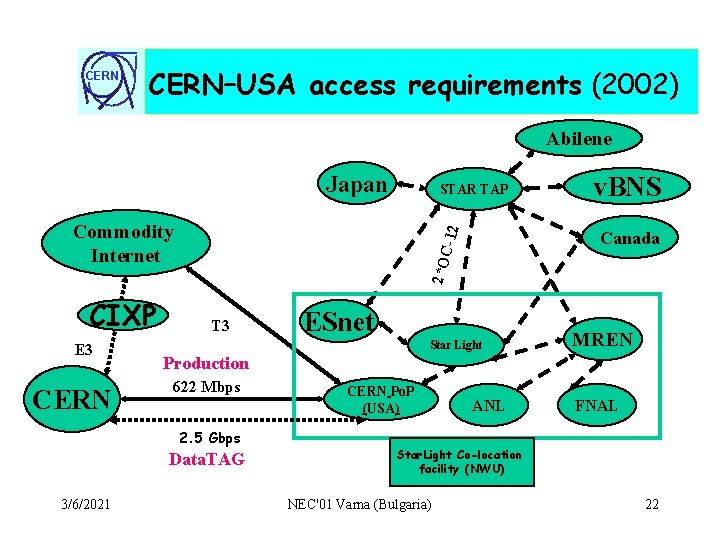

CERN–USA access requirements (2002)

Diagram: CERN connects to the CERN PoP (USA) at the StarLight co-location facility (NWU) over a 622 Mbps production circuit and the 2.5 Gbps DataTAG circuit. Other labels: STAR TAP, StarLight, 2*OC-12, Abilene, ESnet, MREN, vBNS, Canada, Japan, ANL, FNAL, commodity Internet (T3, E3), CIXP.

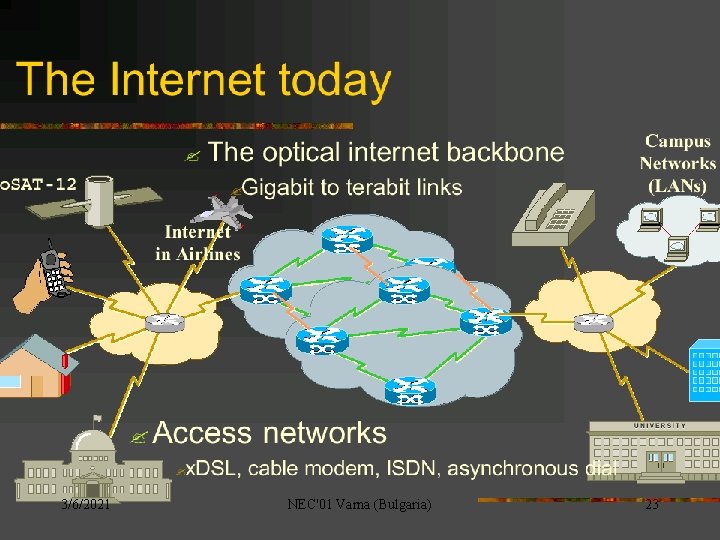

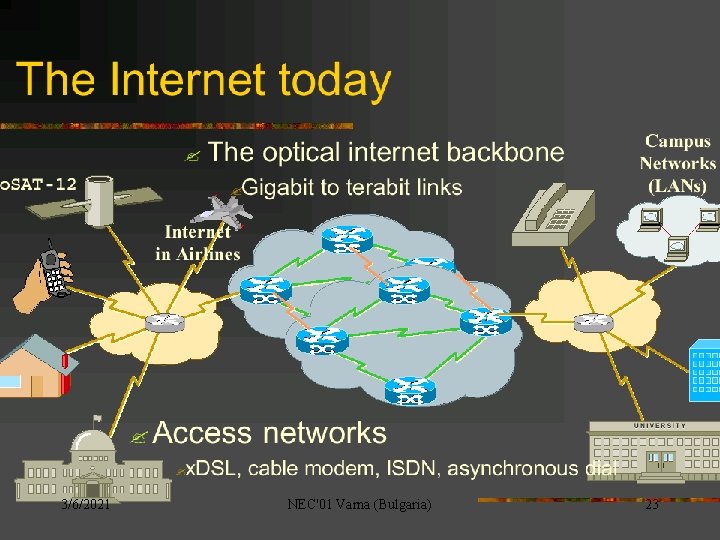

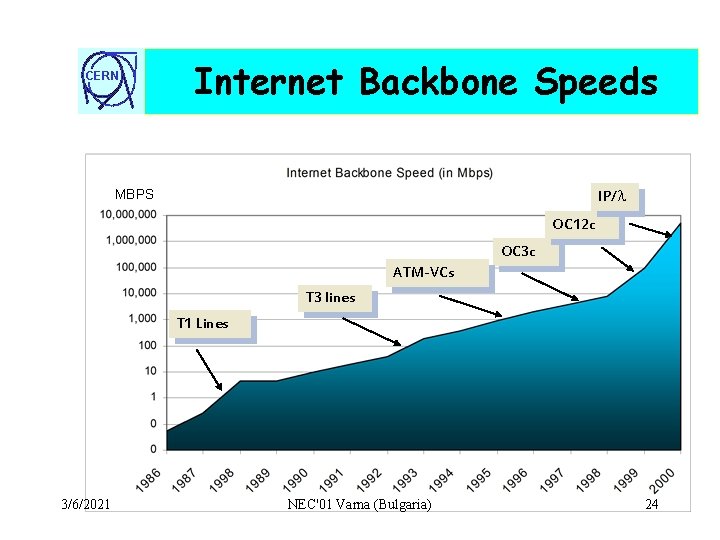

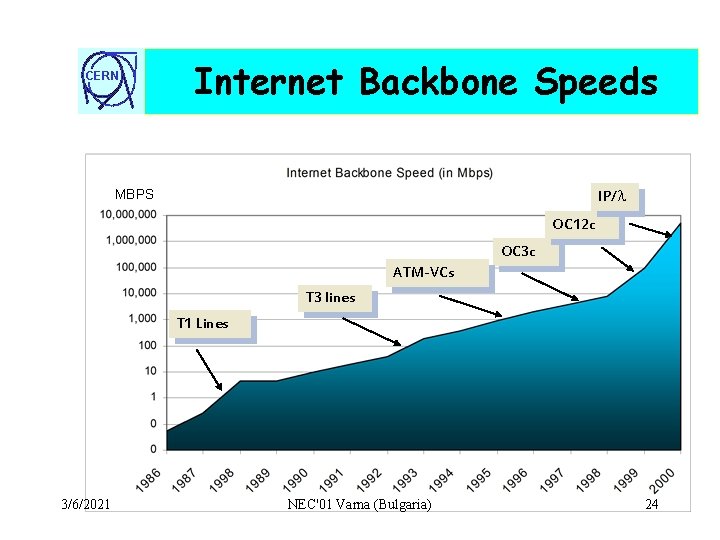

Internet Backbone Speeds

Chart: Internet backbone speeds in Mbps for T1 lines, T3 lines, ATM VCs, OC3c and IP/OC12c.

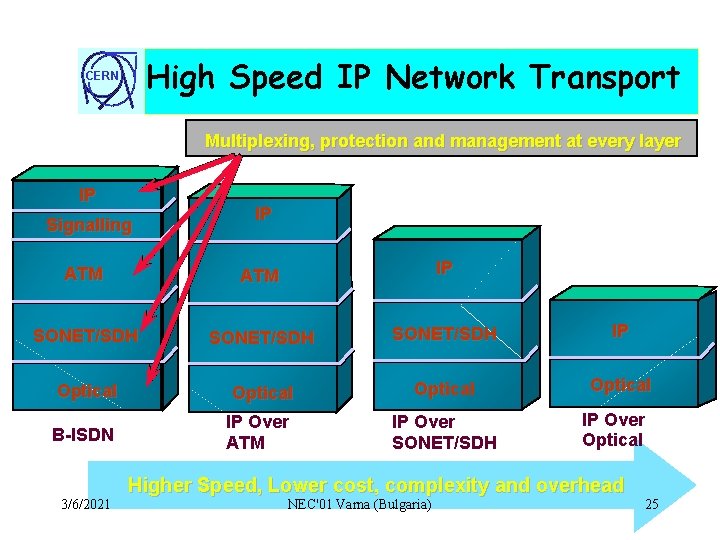

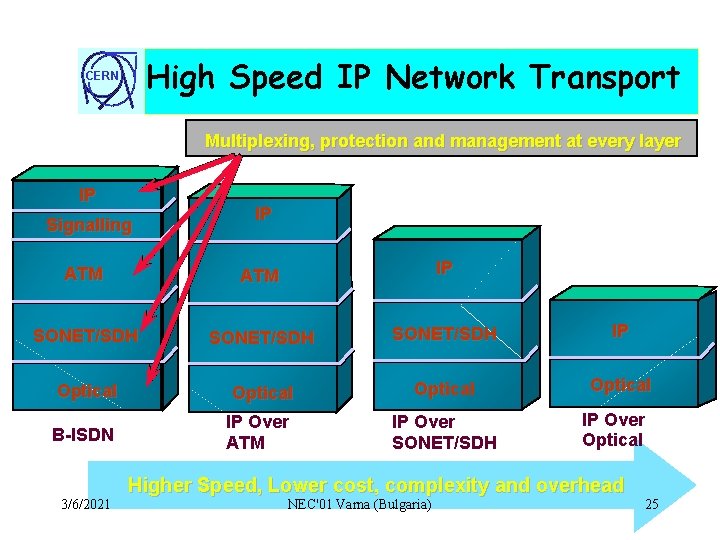

High Speed IP Network Transport

Diagram: protocol stacks evolving from B-ISDN (IP over signalling, ATM, SONET/SDH and optical layers, with multiplexing, protection and management at every layer) to IP over ATM, IP over SONET/SDH, and finally IP over optical, giving higher speed and lower cost, complexity and overhead.
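Part of the overhead that disappears in this evolution is the ATM "cell tax": IP packets are carried in 53-byte cells with 48 bytes of payload, padded to a whole number of cells. The sketch compares the per-packet efficiency of IP over ATM with IP over SONET/SDH (POS). It assumes AAL5 with RFC 2684 LLC/SNAP encapsulation for ATM and 9 bytes of PPP-in-HDLC framing for POS, and ignores HDLC byte stuffing and the SONET/SDH overhead common to both.

```python
import math

ATM_CELL_BYTES = 53      # one ATM cell on the wire
ATM_PAYLOAD_BYTES = 48   # payload carried per cell
AAL5_TRAILER_BYTES = 8   # AAL5 CPCS trailer
LLC_SNAP_BYTES = 8       # LLC/SNAP encapsulation header (assumed; VC multiplexing would use none)
PPP_HDLC_BYTES = 9       # assumed POS framing: flag, address, control, 2-byte protocol, 4-byte FCS


def ip_over_atm_efficiency(ip_bytes: int, llc_snap: bool = True) -> float:
    """Fraction of ATM line bytes that carry the IP packet."""
    pdu = ip_bytes + AAL5_TRAILER_BYTES + (LLC_SNAP_BYTES if llc_snap else 0)
    cells = math.ceil(pdu / ATM_PAYLOAD_BYTES)  # AAL5 pads the PDU to whole cells
    return ip_bytes / (cells * ATM_CELL_BYTES)


def ip_over_pos_efficiency(ip_bytes: int) -> float:
    """Fraction of POS frame bytes that carry the IP packet (byte stuffing ignored)."""
    return ip_bytes / (ip_bytes + PPP_HDLC_BYTES)


if __name__ == "__main__":
    for size in (40, 576, 1500):
        print(f"{size:5d}-byte IP packet: ATM {ip_over_atm_efficiency(size):.1%}, "
              f"POS {ip_over_pos_efficiency(size):.1%}")
```

Under these assumptions a 1500-byte packet uses about 88% of the ATM line bytes versus over 99% on POS, and a 40-byte TCP acknowledgement needs two full cells once the LLC/SNAP header and AAL5 trailer are added.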

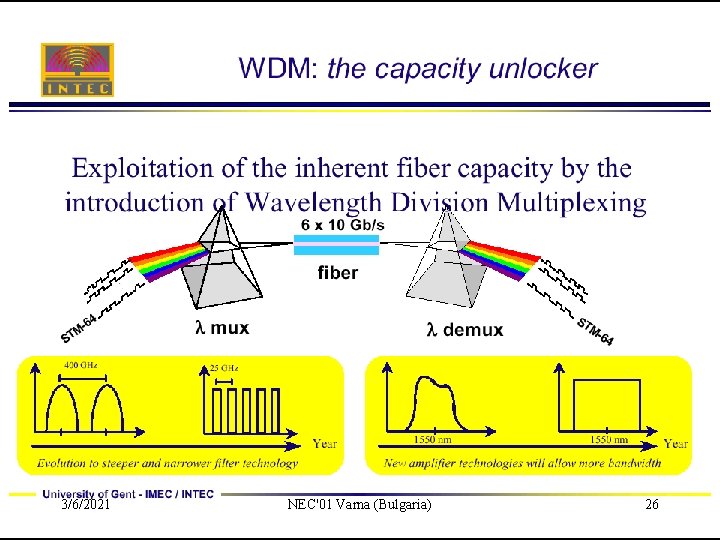

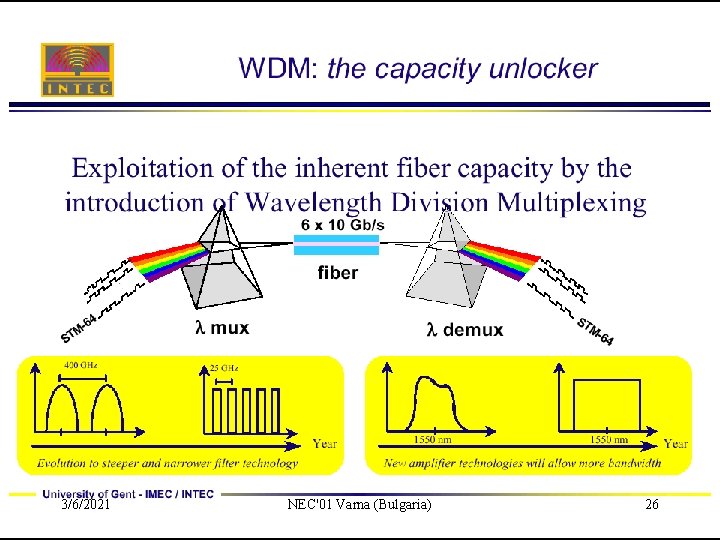

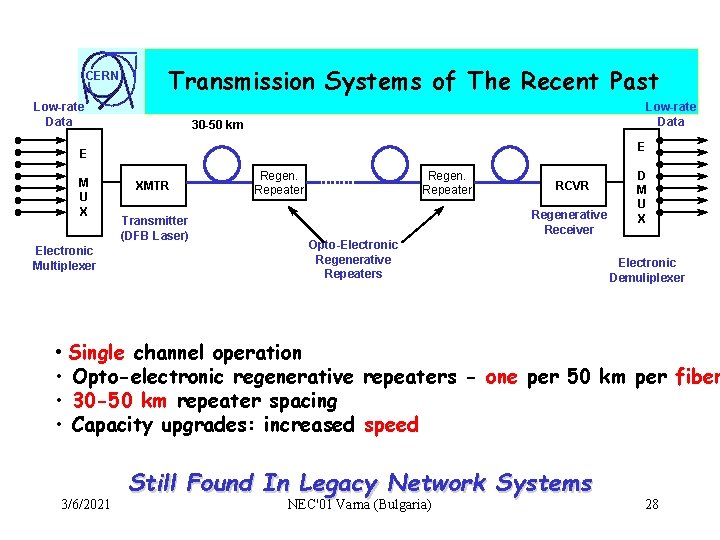

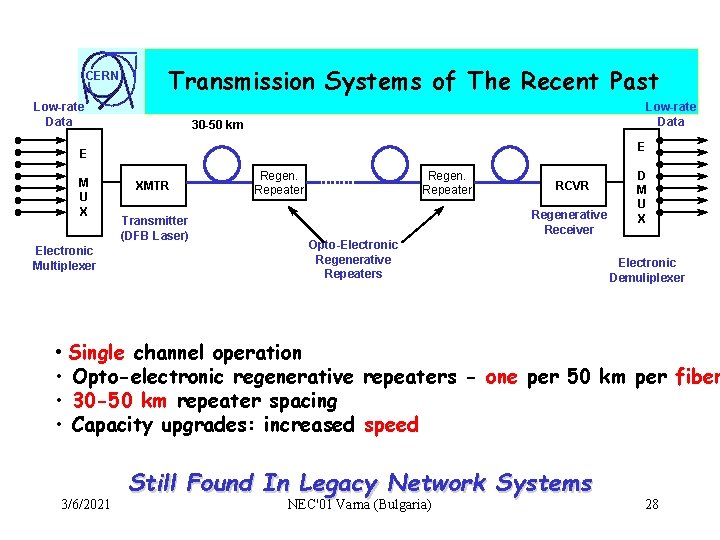

Transmission Systems of the Recent Past

Diagram: low-rate data enters an electronic multiplexer (EMUX), is sent by a transmitter (XMTR, DFB laser), passes through opto-electronic regenerative repeaters every 30-50 km, and reaches a regenerative receiver (RCVR) and an electronic demultiplexer (DMUX).

- Single channel operation
- Opto-electronic regenerative repeaters: one per 50 km per fiber
- 30-50 km repeater spacing
- Capacity upgrades: increased speed

Still found in legacy network systems.

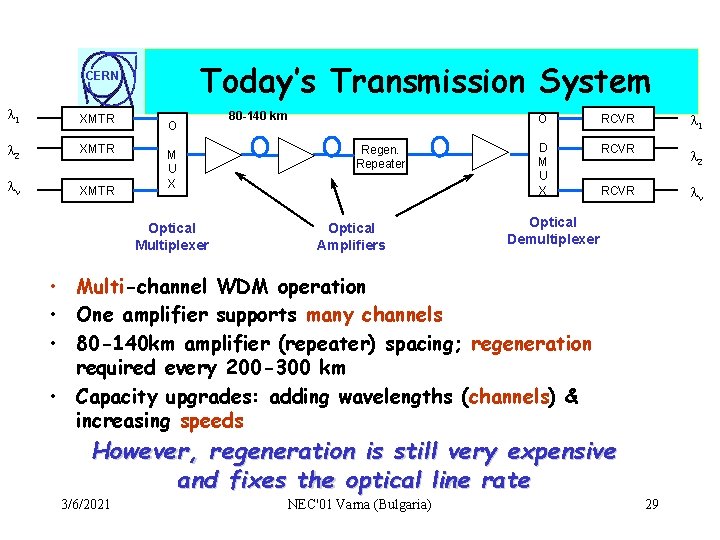

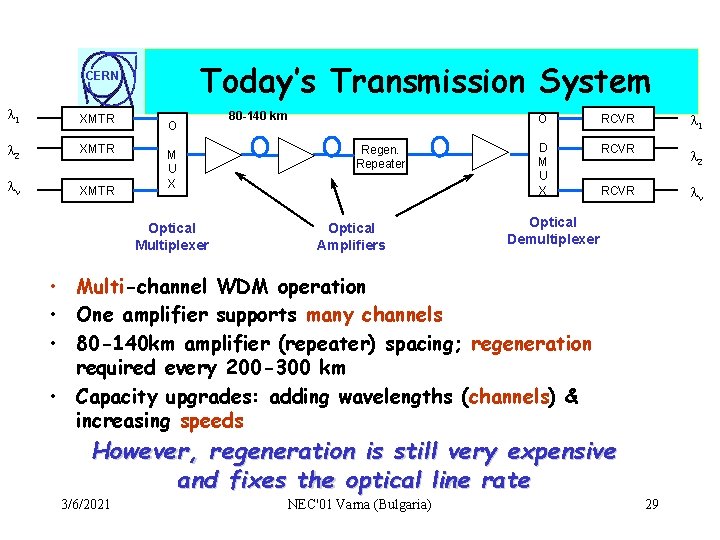

Today's Transmission System

Diagram: transmitters 1..n feed an optical multiplexer (OMUX); the signal crosses optical amplifiers spaced 80-140 km apart and a regenerative repeater before reaching the optical demultiplexer (ODMUX) and receivers 1..n.

- Multi-channel WDM operation
- One amplifier supports many channels
- 80-140 km amplifier (repeater) spacing; regeneration required every 200-300 km
- Capacity upgrades: adding wavelengths (channels) & increasing speeds

However, regeneration is still very expensive and fixes the optical line rate.

Next Generation…The Now Generation

Diagram: transmitters 1..n, optical multiplexer, optical amplifiers 80-140 km apart, optical demultiplexer and receivers 1..n, with 1600 km between regeneration points.

- Multi-channel WDM operation
- One amplifier supports many channels
- 80-140 km amplifier (repeater) spacing; regeneration required only every 1600 km
- Capacity upgrades: adding wavelengths (channels) & increasing speeds
- An optically transparent research network spanning over 1000 km has been tested on the Qwest network.
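Regeneration is expensive partly because, at every regeneration site, each WDM wavelength has to be converted optical-electrical-optical on its own. The sketch counts regeneration sites and per-channel regenerators for a route. The 3000 km route, the 40 channels and the 250 km spacing (the middle of the 200-300 km range) are hypothetical values chosen for illustration.

```python
import math


def regeneration_sites(route_km: float, spacing_km: float) -> int:
    """Intermediate O-E-O regeneration sites on a route (the two end points are excluded)."""
    return max(math.ceil(route_km / spacing_km) - 1, 0)


def regenerators(route_km: float, spacing_km: float, channels: int) -> int:
    """Each WDM channel must be regenerated separately at every regeneration site."""
    return regeneration_sites(route_km, spacing_km) * channels


if __name__ == "__main__":
    route_km = 3000  # hypothetical long-haul route
    channels = 40    # hypothetical number of WDM wavelengths
    for label, spacing_km in [("today's WDM (250 km)", 250), ("next generation (1600 km)", 1600)]:
        print(f"{label}: {regeneration_sites(route_km, spacing_km)} sites, "
              f"{regenerators(route_km, spacing_km, channels)} channel regenerators")
    print(f"legacy single-channel system (50 km): {regeneration_sites(route_km, 50)} repeaters per fiber")
```

With these numbers, moving from 250 km to 1600 km regeneration spacing cuts the channel regenerators on the route from 440 to 40.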

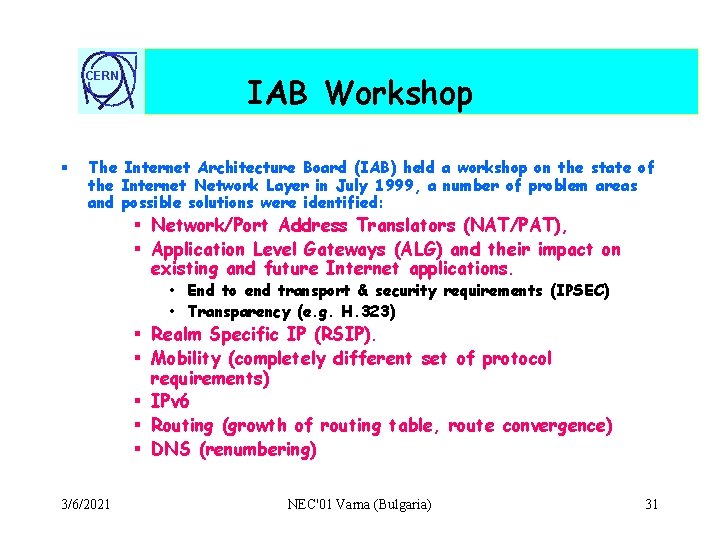

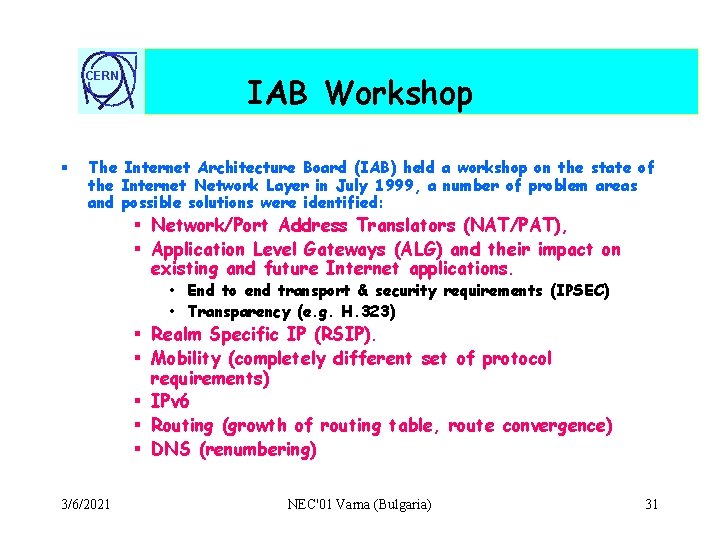

IAB Workshop

The Internet Architecture Board (IAB) held a workshop on the state of the Internet network layer in July 1999; a number of problem areas and possible solutions were identified:

- Network/Port Address Translators (NAT/PAT)
- Application Level Gateways (ALG) and their impact on existing and future Internet applications
  - End-to-end transport & security requirements (IPSEC)
  - Transparency (e.g. H.323)
- Realm Specific IP (RSIP)
- Mobility (a completely different set of protocol requirements)
- IPv6
- Routing (growth of the routing table, route convergence)
- DNS (renumbering)

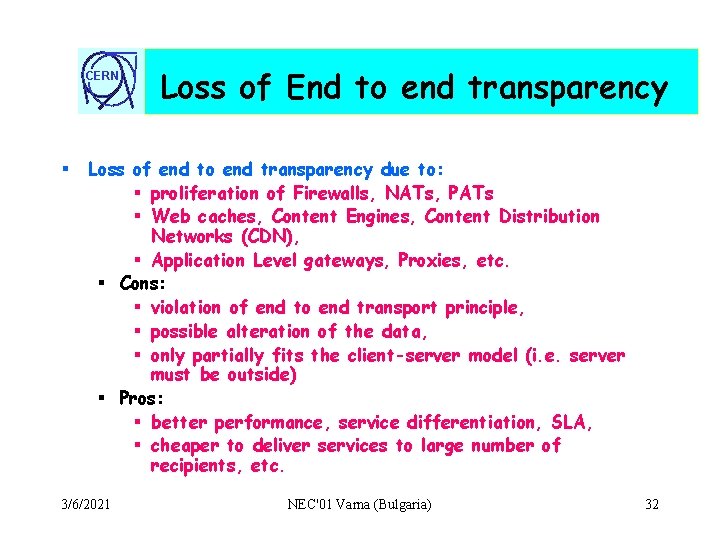

Loss of end-to-end transparency

Loss of end-to-end transparency is due to:

- proliferation of firewalls, NATs, PATs
- web caches, content engines, Content Distribution Networks (CDN)
- Application Level Gateways, proxies, etc.

Cons:

- violation of the end-to-end transport principle
- possible alteration of the data
- only partially fits the client-server model (i.e. the server must be outside)

Pros:

- better performance, service differentiation, SLA
- cheaper to deliver services to a large number of recipients, etc.

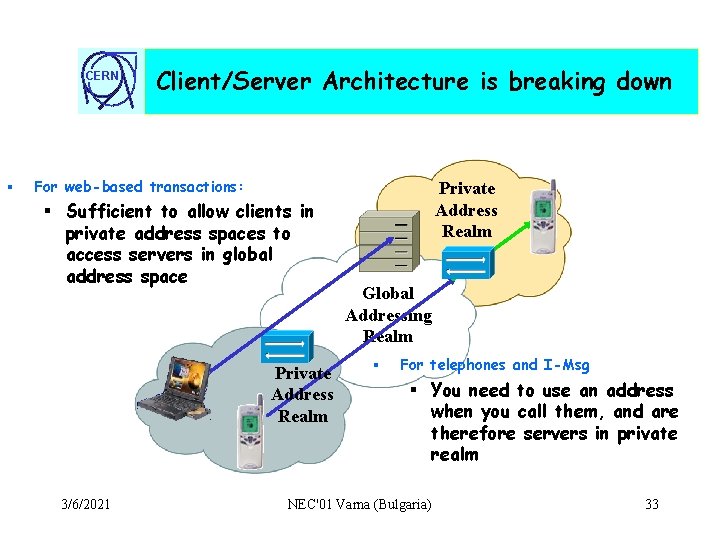

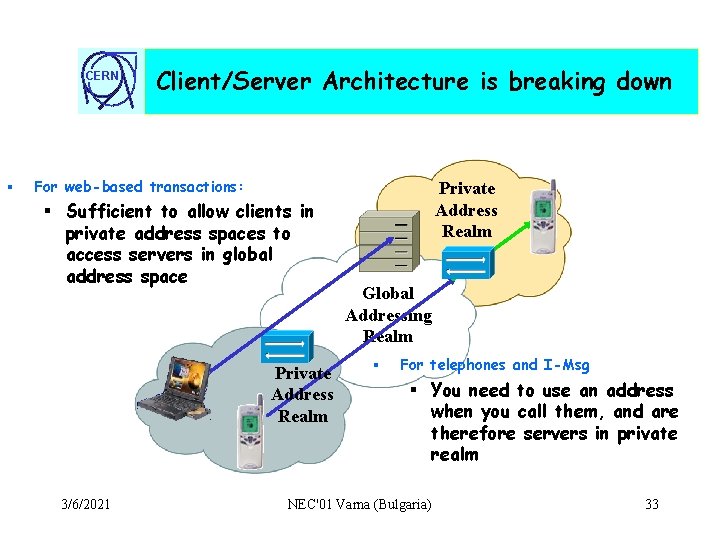

Client/Server Architecture is breaking down

- For web-based transactions:
  - it is sufficient to allow clients in private address spaces to access servers in the global address space.
- For telephones and instant messaging (I-Msg):
  - you need to use an address when you call them, so they are effectively servers in a private realm.

Diagram: two private address realms connected through the global addressing realm.
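Why the private realm suits clients but not "servers" can be seen in a toy NAT/PAT translation table: an outbound packet creates a mapping that replies can use, while an unsolicited inbound call finds no mapping and is dropped. The `NaptTable` class, its port range and the addresses (a documentation address for the public side, a 10.0.0.0/8 private address inside) are hypothetical; real translators also track protocols, remote endpoints and timeouts.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


@dataclass
class NaptTable:
    """Toy NAT/PAT table: outbound traffic creates mappings, inbound traffic can only use them."""
    public_ip: str
    next_port: int = 40000  # hypothetical start of the public port range
    outbound_map: Dict[Endpoint, int] = field(default_factory=dict)
    inbound_map: Dict[int, Endpoint] = field(default_factory=dict)

    def outbound(self, private: Endpoint) -> Endpoint:
        """Translate a private source endpoint, allocating a public port on first use."""
        if private not in self.outbound_map:
            self.outbound_map[private] = self.next_port
            self.inbound_map[self.next_port] = private
            self.next_port += 1
        return self.public_ip, self.outbound_map[private]

    def inbound(self, public_port: int) -> Optional[Endpoint]:
        """Return the private endpoint for an arriving packet, or None (dropped) if unmapped."""
        return self.inbound_map.get(public_port)


if __name__ == "__main__":
    nat = NaptTable(public_ip="192.0.2.1")
    # A web client in the private realm: its outbound request creates a mapping, so replies get back in.
    public = nat.outbound(("10.0.0.5", 51000))
    print("client mapped to", public, "-> reply reaches", nat.inbound(public[1]))
    # A phone in the private realm waiting for calls: nothing was sent out, so no mapping exists.
    print("unsolicited call to hypothetical port 5060 reaches", nat.inbound(5060))
```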

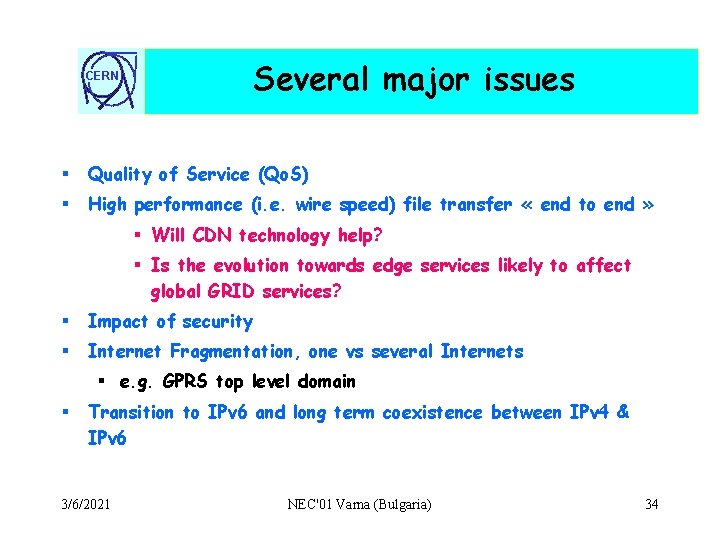

Several major issues

- Quality of Service (QoS)
- High performance (i.e. wire speed) file transfer « end to end »
- Will CDN technology help?
- Is the evolution towards edge services likely to affect global GRID services?
- Impact of security
- Internet fragmentation: one vs several Internets
  - e.g. the GPRS top level domain
- Transition to IPv6 and long-term coexistence between IPv4 & IPv6

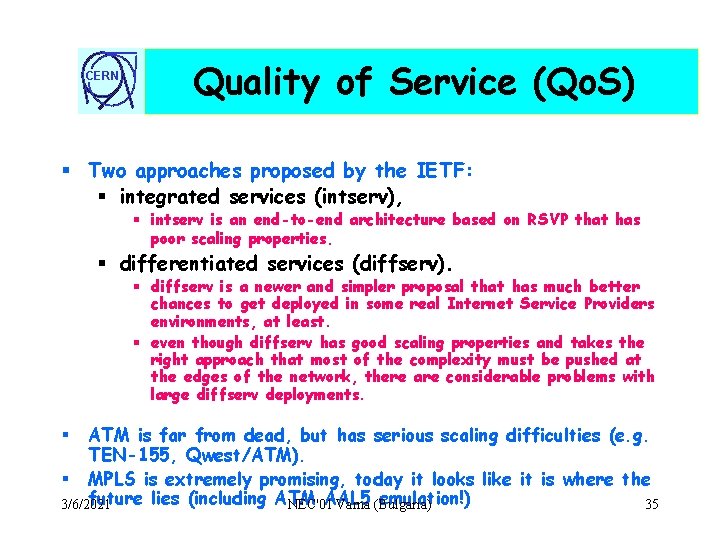

Quality of Service (QoS)

- Two approaches proposed by the IETF:
  - integrated services (intserv): an end-to-end architecture based on RSVP that has poor scaling properties.
  - differentiated services (diffserv): a newer and simpler proposal that has much better chances of being deployed, at least in some real Internet Service Provider environments.
    - Even though diffserv has good scaling properties and takes the right approach, namely that most of the complexity must be pushed to the edges of the network, there are considerable problems with large diffserv deployments.
- ATM is far from dead, but has serious scaling difficulties (e.g. TEN-155, Qwest/ATM).
- MPLS is extremely promising; today it looks like this is where the future lies (including ATM AAL5 emulation!).
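Pushing complexity to the edges means that edge routers meter each customer's traffic against its contract and then mark, re-mark or drop it, while core routers only forward per class. EF traffic is typically policed to its contracted rate at the domain ingress, whereas out-of-profile AF traffic may instead be re-marked to a higher drop precedence. The sketch uses a simple single-rate token bucket; the rate, burst and DSCP values are hypothetical, and standardized meters such as the single rate three color marker (RFC 2697) are more elaborate.

```python
from typing import Optional


class TokenBucket:
    """Single-rate token-bucket meter: `rate` in bytes/s, `burst` (bucket depth) in bytes."""

    def __init__(self, rate: float, burst: float) -> None:
        self.rate = rate
        self.burst = burst
        self.tokens = burst  # start with a full bucket
        self.last = 0.0      # time of the previous update, in seconds

    def conforms(self, now: float, packet_bytes: int) -> bool:
        # Add the tokens earned since the last packet, never exceeding the bucket depth.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False


def condition(bucket: TokenBucket, now: float, packet_bytes: int,
              in_profile_dscp: int, out_of_profile_dscp: Optional[int]) -> Optional[int]:
    """Edge conditioning: return the DSCP to mark, or None to drop the packet."""
    if bucket.conforms(now, packet_bytes):
        return in_profile_dscp
    return out_of_profile_dscp


if __name__ == "__main__":
    ef_policer = TokenBucket(rate=125_000, burst=3_000)  # hypothetical 1 Mbps premium contract
    for t in (0.0, 0.001, 0.002, 0.05):
        print(t, condition(ef_policer, t, 1500, in_profile_dscp=46, out_of_profile_dscp=None))
```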

Quality of Service (QoS) (cont.)

- QoS is an increasing nightmare as the understanding of its implications grows:
  - Delivering QoS at the edge, and only at the edge, is not sufficient to guarantee low-jitter, delay-bounded communications.
  - Therefore complex functionality must also be introduced in Internet core routers:
    - is it compatible with ASICs?
    - is it worthwhile?
- Is MPLS an adequate and scalable answer?
- Is circuit-oriented technology (e.g. dynamic wavelengths) appropriate?
  - If so, for which scenarios?

Internet Backbone Technologies (MPLS/1)

- MPLS (Multi-Protocol Label Switching) is an emerging IETF standard that is gaining impressive acceptance, especially with the traditional telecom operators and the large Tier 1 Internet providers.
- Recursive encapsulation mechanism that can be mapped over any layer 2 technology (e.g. ATM, but also POS).
- Departure from the destination-based routing that has been plaguing the Internet since the beginning.
- Fast packet switching performed on source and destination labels, as well as ToS. Like ATM VP/VC identifiers, MPLS labels only have local significance.
- Better integration of layers 2 and 3 than in an IP over ATM network, through the use of RSVP or LDP (Label Distribution Protocol).
- Ideal for traffic engineering, QoS routing, VPNs, and even IPv6.
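The local significance of labels can be sketched with per-router forwarding tables: each label switching router (LSR) does an exact-match lookup on the incoming label, swaps it, and passes the packet on, so the same label value can safely be reused on different links. The router names, label values and static tables are hypothetical (in practice LDP or RSVP distributes the labels), and the sketch switches on the incoming label only, ignoring the class-of-service bits and penultimate hop popping.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical per-router label forwarding tables:
# incoming label -> (next hop, outgoing label); None as outgoing label means "pop and deliver as IP".
LFIB: Dict[str, Dict[int, Tuple[str, Optional[int]]]] = {
    "LSR-A": {17: ("LSR-B", 22)},
    "LSR-B": {22: ("LSR-C", 17)},     # label 17 again: it only has meaning on this link
    "LSR-C": {17: ("egress", None)},
}


def label_switch(first_router: str, label: int) -> List[Tuple[str, int, Optional[int]]]:
    """Follow a labelled packet hop by hop, recording (router, in_label, out_label)."""
    path = []
    router, current = first_router, label
    while current is not None:
        next_hop, out_label = LFIB[router][current]  # exact match on the incoming label only
        path.append((router, current, out_label))
        router, current = next_hop, out_label
    return path


if __name__ == "__main__":
    for hop in label_switch("LSR-A", 17):
        print(hop)
```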

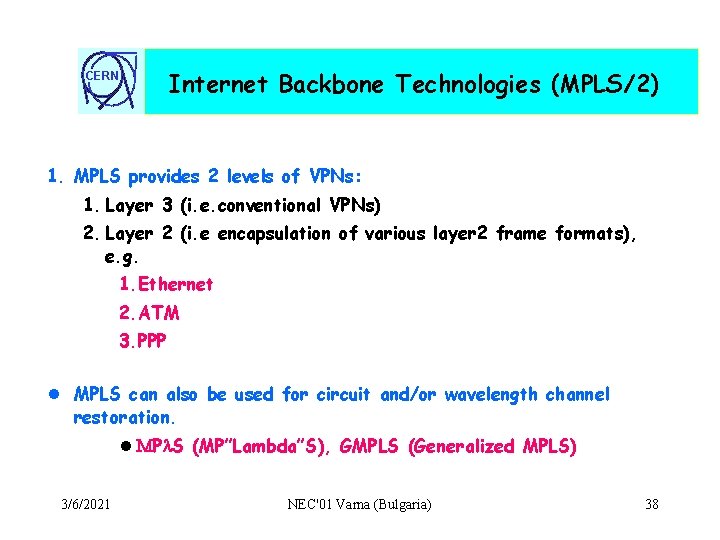

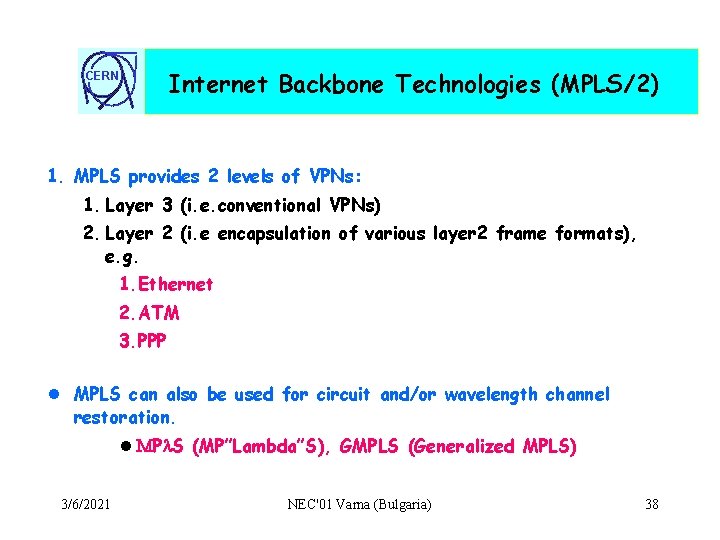

Internet Backbone Technologies (MPLS/2)

- MPLS provides 2 levels of VPNs:
  1. Layer 3 (i.e. conventional VPNs)
  2. Layer 2 (i.e. encapsulation of various layer 2 frame formats), e.g.:
     1. Ethernet
     2. ATM
     3. PPP
- MPLS can also be used for circuit and/or wavelength channel restoration.
- MPλS (MP"Lambda"S), GMPLS (Generalized MPLS)
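MPLS VPNs rely on the recursive encapsulation mentioned for MPLS: the ingress edge router pushes an inner label identifying the VPN (or, for layer 2 VPNs, the emulated Ethernet, ATM or PPP circuit) and an outer label selecting the path across the core. Core routers only swap the outer label; the egress router pops it and uses the inner label to deliver the packet. The label values and the `VPN_BY_INNER_LABEL` table below are hypothetical.

```python
from typing import Dict, List

LabelStack = List[int]  # index 0 is the top of the stack


def push(stack: LabelStack, label: int) -> LabelStack:
    return [label] + stack


def swap(stack: LabelStack, label: int) -> LabelStack:
    return [label] + stack[1:]


def pop(stack: LabelStack) -> LabelStack:
    return stack[1:]


# Hypothetical egress table: inner (VPN) label -> customer VPN it identifies.
VPN_BY_INNER_LABEL: Dict[int, str] = {100: "customer-A", 200: "customer-B"}

if __name__ == "__main__":
    stack = push([], 100)    # ingress edge: inner label selects the VPN
    stack = push(stack, 30)  # ingress edge: outer label selects the path across the core
    stack = swap(stack, 31)  # core router: only the outer label is examined and swapped
    stack = pop(stack)       # egress edge: remove the transport label
    print("delivered to", VPN_BY_INNER_LABEL[stack[0]])  # prints: delivered to customer-A
```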

Gigabit/second networking

- The start of a new era:
  - Very rapid progress towards 10 Gbps networking is being made in both the Local (LAN) and Wide Area (WAN) networking environments.
  - 40 Gbps is in sight on WANs, but what after?
  - The success of the LHC computing Grid critically depends on the availability of Gbps links between CERN and the LHC regional centers.
- What does it mean?
  - In theory (\*):
    - a 1 GB file is transferred in 11 seconds over a 1 Gbps circuit
    - a 1 TB file transfer would still require 3 hours
    - and a 1 PB file transfer would require 4 months
  - In practice:
    - major transmission protocol issues will need to be addressed

(\*) according to the 75% empirical rule
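The "in theory" figures follow from dividing the file size by 75% of the link rate. The sketch reproduces them, assuming decimal units (1 GB = 10^9 bytes) and the 75% efficiency factor from the slide; the function name is illustrative.

```python
def transfer_time_s(size_bytes: float, link_bps: float, efficiency: float = 0.75) -> float:
    """Seconds to move `size_bytes` over a link delivering `efficiency` of its nominal rate."""
    return size_bytes * 8 / (link_bps * efficiency)


if __name__ == "__main__":
    link_bps = 1e9  # 1 Gbps circuit
    for label, size in [("1 GB", 1e9), ("1 TB", 1e12), ("1 PB", 1e15)]:
        t = transfer_time_s(size, link_bps)
        print(f"{label}: {t:,.1f} s = {t / 3600:,.2f} h = {t / 86400:,.1f} days")
```

This gives about 10.7 s, 2.96 hours and 123 days (roughly 4 months), matching the rounded figures above.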

Very high speed file transfer (1)

- High performance switched LAN assumed:
  - requires time & money.
- High performance WAN also assumed:
  - also requires money, but is becoming possible.
  - very careful engineering is mandatory.
- Will remain very problematic, especially over high bandwidth-delay paths:
  - might force the use of Jumbo Frames, because of interactions between TCP/IP and link error rates.
  - could possibly conflict with strong security requirements (i.e. throughput, handling of TCP/IP options (e.g. window scaling)).
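The window scaling option matters because the 16-bit TCP window field cannot exceed 65535 bytes, far less than the bandwidth-delay product of a fast transatlantic path; a firewall that strips or mishandles the option caps throughput. The sketch computes the window needed and the smallest scale shift (at most 14) that covers it. The example paths (155 Mbps and 2.5 Gbps at 170 ms, 10 Gbps at 100 ms) are illustrative.

```python
MAX_UNSCALED_WINDOW = 65535  # largest window the 16-bit TCP header field can express
MAX_WINDOW_SHIFT = 14        # largest shift allowed by the TCP window scale option


def bdp_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to keep the path full."""
    return bandwidth_bps * rtt_s / 8


def window_scale_shift(window_bytes: float) -> int:
    """Smallest window scale shift whose maximum window covers `window_bytes`."""
    shift = 0
    while (MAX_UNSCALED_WINDOW << shift) < window_bytes:
        shift += 1
        if shift > MAX_WINDOW_SHIFT:
            raise ValueError("window too large even with the maximum scale factor")
    return shift


if __name__ == "__main__":
    for bw_bps, rtt_s in [(155e6, 0.170), (2.5e9, 0.170), (10e9, 0.100)]:
        w = bdp_bytes(bw_bps, rtt_s)
        print(f"{bw_bps / 1e6:,.0f} Mbps, {rtt_s * 1000:.0f} ms: window {w / 1e6:.1f} MB, "
              f"scale shift {window_scale_shift(w)}")
```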

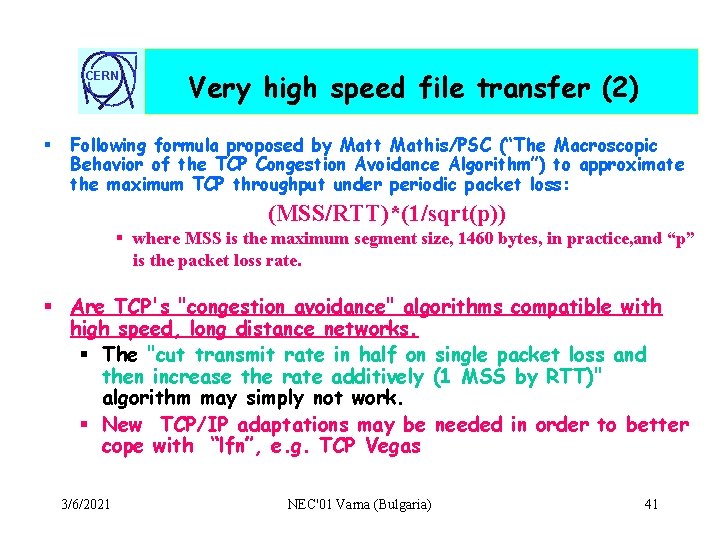

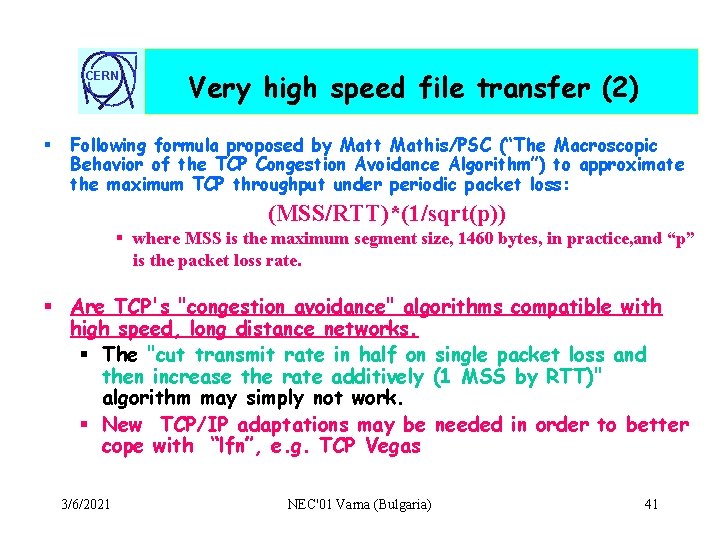

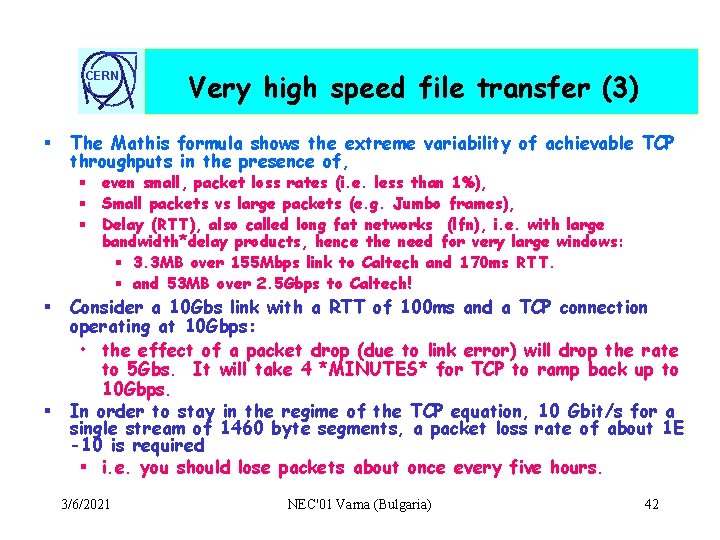

Very high speed file transfer (2)

The following formula, proposed by Matt Mathis/PSC ("The Macroscopic Behavior of the TCP Congestion Avoidance Algorithm"), approximates the maximum TCP throughput under periodic packet loss:

throughput ≈ (MSS/RTT) * (1/sqrt(p))

where MSS is the maximum segment size (1460 bytes in practice) and p is the packet loss rate.

- Are TCP's "congestion avoidance" algorithms compatible with high speed, long distance networks?
  - The "cut the transmit rate in half on a single packet loss, then increase it additively (1 MSS per RTT)" algorithm may simply not work.
  - New TCP/IP adaptations may be needed in order to better cope with "lfn" (long fat networks), e.g. TCP Vegas.
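A minimal sketch of the Mathis approximation, plus its inversion to find the loss rate a target throughput requires. The paper's formula includes a constant C of order one that the slide omits; it is exposed here as the parameter `c`, defaulting to 1 so the results match the formula as written. The 170 ms RTT, loss rate of 10^-6 and 8960-byte jumbo-frame MSS in the usage lines are hypothetical inputs.

```python
import math


def mathis_throughput_bps(mss_bytes: float, rtt_s: float, loss_rate: float, c: float = 1.0) -> float:
    """Approximate TCP throughput in bit/s: (MSS / RTT) * C / sqrt(p).

    c = 1.0 matches the formula as written on the slide; the paper's constant is of order one.
    """
    return (mss_bytes * 8 / rtt_s) * c / math.sqrt(loss_rate)


def max_loss_rate(target_bps: float, mss_bytes: float, rtt_s: float, c: float = 1.0) -> float:
    """Invert the formula: the loss rate at which the model still predicts `target_bps`."""
    return (c * mss_bytes * 8 / (rtt_s * target_bps)) ** 2


if __name__ == "__main__":
    # Standard 1460-byte segments vs a hypothetical 8960-byte jumbo-frame MSS, 170 ms RTT, p = 1e-6.
    for mss in (1460, 8960):
        print(f"MSS {mss}: {mathis_throughput_bps(mss, 0.170, 1e-6) / 1e6:,.1f} Mbps")
    print(f"loss rate needed for 10 Gbps at 100 ms: {max_loss_rate(10e9, 1460, 0.100):.2e}")
```

With these inputs the model gives about 69 Mbps for 1460-byte segments and about six times more with jumbo frames, while sustaining 10 Gbps over 100 ms with 1460-byte segments requires a loss rate of about 1.4 × 10^-10.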

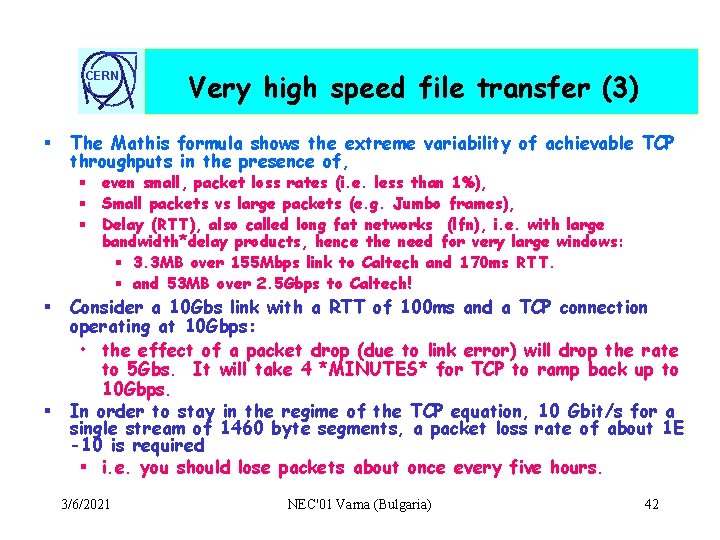

Very high speed file transfer (3)

The Mathis formula shows the extreme variability of achievable TCP throughput in the presence of:

- even small packet loss rates (i.e. less than 1%),
- small packets vs large packets (e.g. Jumbo frames),
- delay (RTT): long fat networks (lfn), i.e. paths with large bandwidth-delay products, hence the need for very large windows:
  - 3.3 MB over a 155 Mbps link to Caltech with a 170 ms RTT,
  - and 53 MB over 2.5 Gbps to Caltech!

Consider a 10 Gbps link with an RTT of 100 ms and a TCP connection operating at 10 Gbps:

- a single packet drop (e.g. due to a link error) will cut the rate to 5 Gbps. Adding back one 1460-byte segment per RTT, it will take roughly 42,800 RTTs, i.e. *over an hour*, for TCP to ramp back up to 10 Gbps.
- In order to stay in the regime of the TCP equation at 10 Gbit/s for a single stream of 1460-byte segments, a packet loss rate of about 1E-10 is required,
  - i.e. you should lose a packet only about once every three hours.
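The window sizes, recovery time and loss interval in this example can be checked with a few lines of arithmetic. The sketch assumes full-size 1460-byte segments and Reno-style congestion avoidance that adds one segment per RTT after halving the window; slow start, delayed ACKs and header overhead are ignored, and the function names are illustrative.

```python
MSS_BYTES = 1460  # segment size assumed throughout, as in the slide


def bdp_bytes(rate_bps: float, rtt_s: float) -> float:
    """Window (bytes in flight) needed to sustain `rate_bps` over a path with round-trip time `rtt_s`."""
    return rate_bps * rtt_s / 8


def aimd_recovery_s(rate_bps: float, rtt_s: float, mss: int = MSS_BYTES) -> float:
    """Time to climb from half rate back to full rate, gaining one MSS per RTT."""
    window_segments = bdp_bytes(rate_bps, rtt_s) / mss
    rtts_needed = window_segments / 2  # the window was halved by the loss
    return rtts_needed * rtt_s


def seconds_between_losses(rate_bps: float, loss_rate: float, mss: int = MSS_BYTES) -> float:
    """Average time between packet losses at a given packet loss rate."""
    packets_per_s = rate_bps / (mss * 8)
    return 1 / (loss_rate * packets_per_s)


if __name__ == "__main__":
    print(f"window, 155 Mbps x 170 ms: {bdp_bytes(155e6, 0.170) / 1e6:.1f} MB")
    print(f"window, 2.5 Gbps x 170 ms: {bdp_bytes(2.5e9, 0.170) / 1e6:.1f} MB")
    print(f"recovery at 10 Gbps, 100 ms: {aimd_recovery_s(10e9, 0.100) / 60:.0f} minutes")
    print(f"time between losses at p = 1e-10: {seconds_between_losses(10e9, 1e-10) / 3600:.1f} hours")
```

Under this simple model the windows come out at about 3.3 MB and 53 MB, recovery from a single loss at 10 Gbps over 100 ms takes about 71 minutes, and a loss rate of 1E-10 corresponds to one loss roughly every 3.2 hours.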

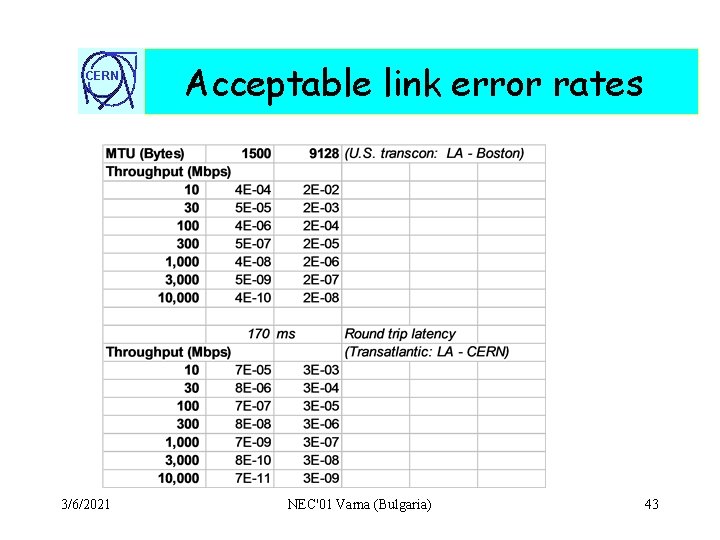

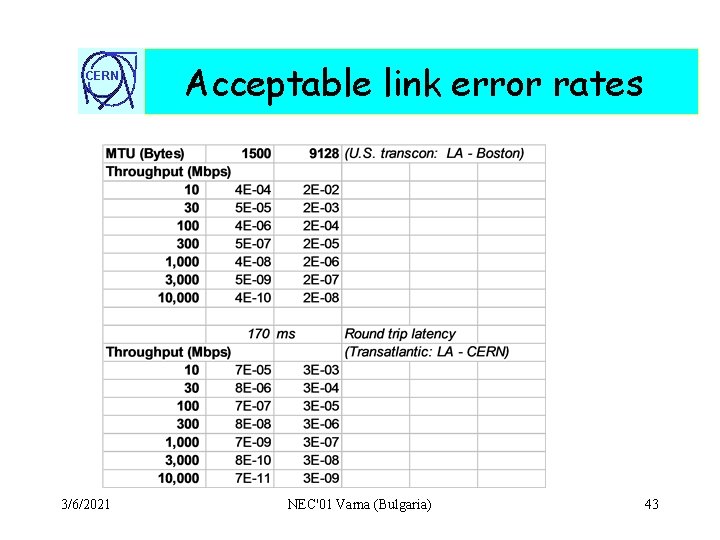

Acceptable link error rates

Very high speed file transfer (tentative conclusions)

- TCP/IP fairness only exists between similar flows, i.e.
  - similar duration,
  - similar RTTs.
- TCP/IP congestion avoidance algorithms need to be revisited (e.g. Vegas rather than Reno/NewReno).
- Current ways of circumventing the problem, e.g.
  - multi-stream & parallel sockets:
    - just bandages, or the practical solution to the problem?
- Web100, a 3 MUSD NSF project, might help enormously!
  - better TCP/IP instrumentation (MIB)
  - self-tuning
  - tools for measuring performance
  - improved FTP implementation
- Non-TCP/IP based transport solutions, use of Forward Error Correction (FEC), Explicit Congestion Notification (ECN) rather than active queue management techniques (RED/WRED)?
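Both the fairness caveat and the appeal of parallel streams follow from the Mathis approximation: throughput is inversely proportional to RTT, so at the same loss rate a short-RTT flow outpaces a long-RTT one, and N streams get roughly N times the throughput of one. The sketch assumes the constant C is 1 and, optimistically, that each parallel stream sees the same loss rate regardless of N; the loss rate and RTTs are hypothetical.

```python
import math


def mathis_bps(mss_bytes: float, rtt_s: float, loss_rate: float) -> float:
    """Mathis approximation of single-stream TCP throughput, in bit/s (constant C taken as 1)."""
    return mss_bytes * 8 / (rtt_s * math.sqrt(loss_rate))


def parallel_bps(streams: int, mss_bytes: float, rtt_s: float, loss_rate: float) -> float:
    """Aggregate of `streams` parallel connections, optimistically assuming each sees the same loss rate."""
    return streams * mathis_bps(mss_bytes, rtt_s, loss_rate)


if __name__ == "__main__":
    p = 1e-6  # hypothetical shared loss rate
    short_rtt, long_rtt = mathis_bps(1460, 0.010, p), mathis_bps(1460, 0.170, p)
    print(f"10 ms flow gets {short_rtt / long_rtt:.0f}x the throughput of a 170 ms flow")
    print(f"8 parallel 170 ms streams: {parallel_bps(8, 1460, 0.170, p) / 1e6:.0f} Mbps")
```

The same arithmetic shows why parallel streams are a mixed blessing: the gain for the bulk transfer comes partly at the expense of competing single-stream flows sharing the bottleneck.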

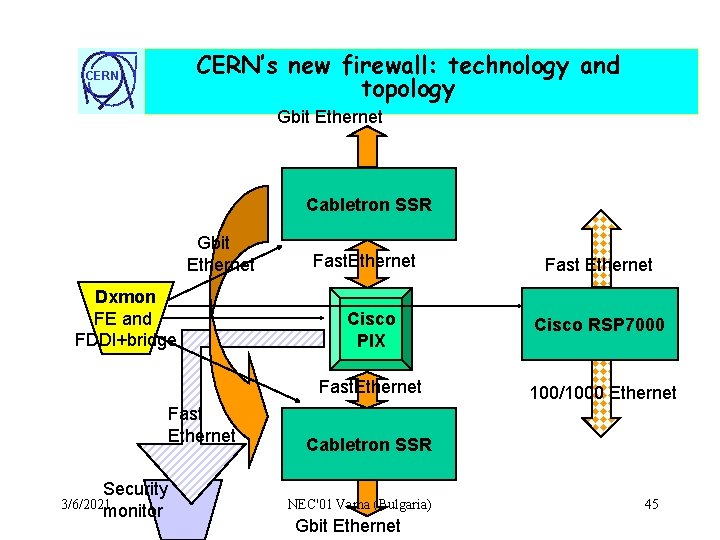

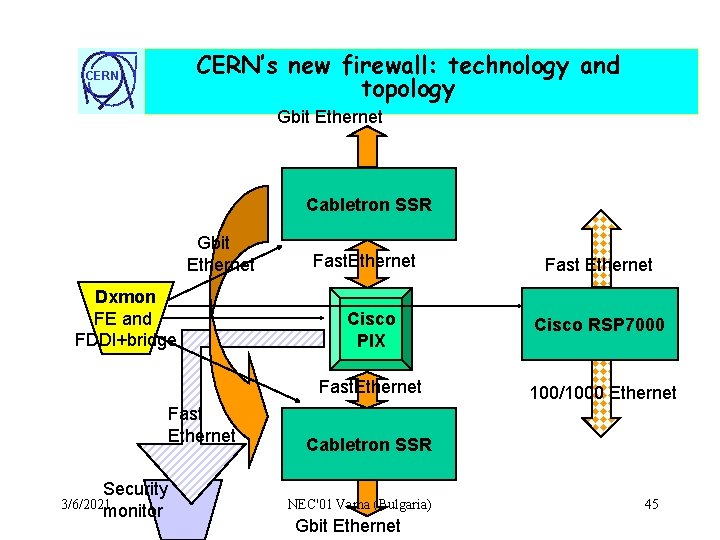

CERN's new firewall: technology and topology

Diagram components: two Cabletron SSRs with Gigabit Ethernet links, a Cisco PIX, a Cisco RSP 7000, a Dxmon security monitor, an FE and FDDI+bridge connection, and Fast Ethernet and 100/1000 Ethernet interconnections.