
LHC OPN and LHC ONE: "LHC networks"

Marco Marletta (GARR), Stefano Zani (INFN CNAF)

Workshop INFN CCR - GARR, Napoli, 2012

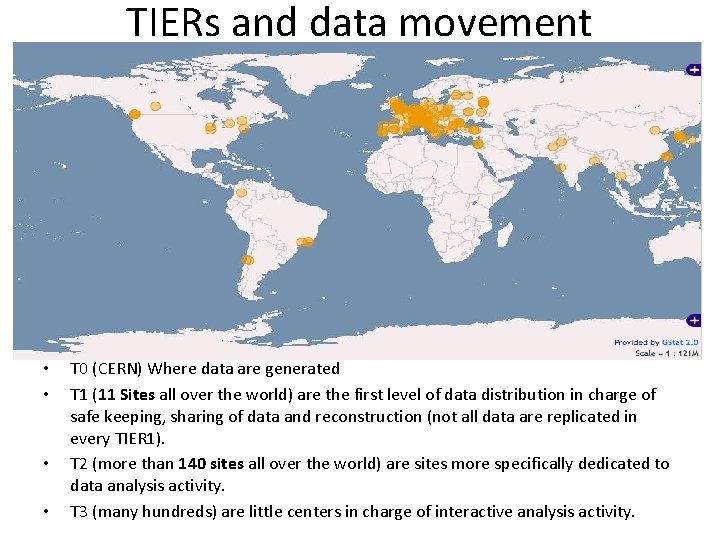

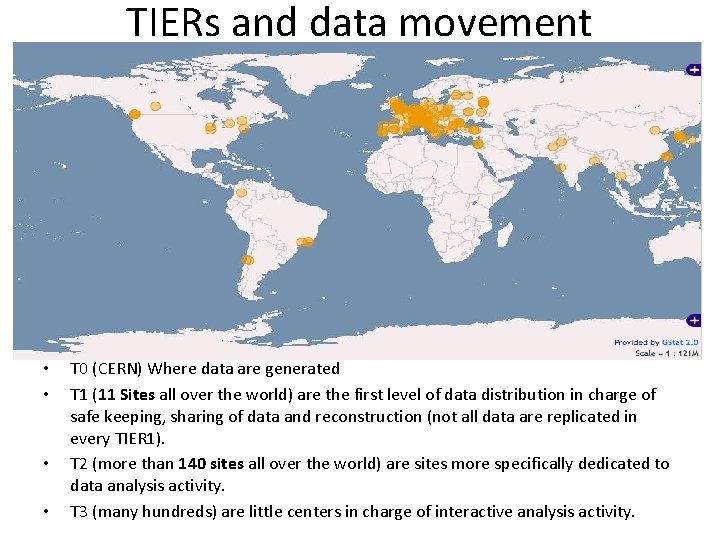

TIERs and data movement

- T0 (CERN): where the data are generated.
- T1 (11 sites all over the world): the first level of data distribution, in charge of safekeeping, sharing and reconstruction of data (not all data are replicated in every TIER 1).
- T2 (more than 140 sites all over the world): sites more specifically dedicated to data analysis.
- T3 (many hundreds): small centers in charge of interactive analysis.

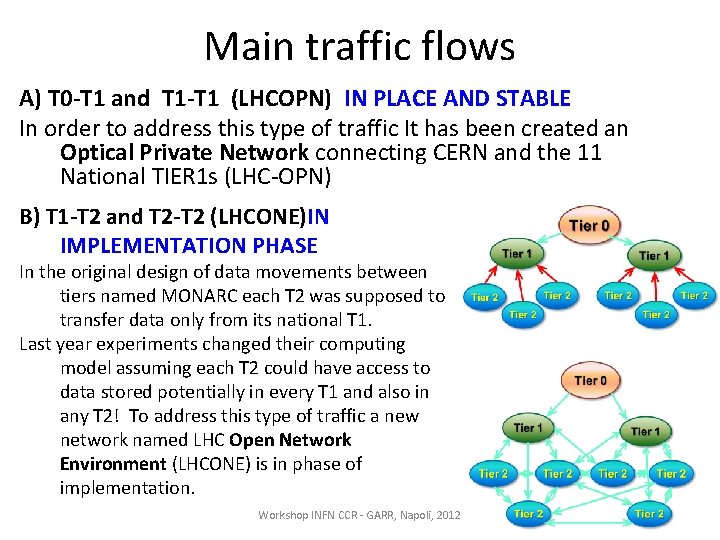

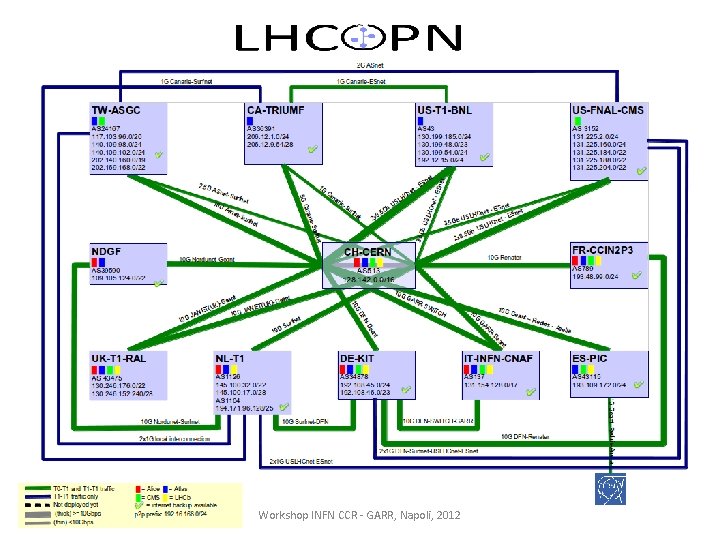

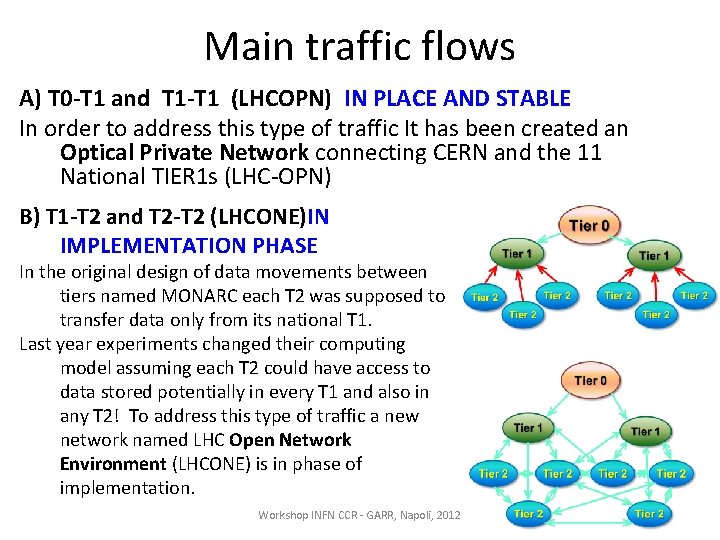

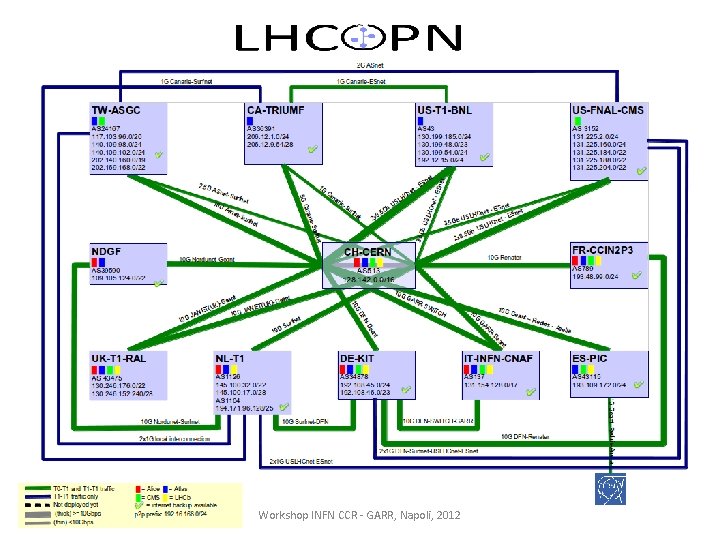

Main traffic flows

A) T0-T1 and T1-T1 (LHCOPN): IN PLACE AND STABLE

To address this type of traffic, an Optical Private Network (LHCOPN) has been created, connecting CERN and the 11 national TIER 1s.

B) T1-T2 and T2-T2 (LHCONE): IN IMPLEMENTATION PHASE

In the original design of data movement between tiers, named MONARC, each T2 was supposed to transfer data only from its national T1. Last year the experiments changed their computing model, assuming that each T2 could access data stored potentially in every T1 and also in any T2. To address this type of traffic, a new network named LHC Open Network Environment (LHCONE) is being implemented.
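The split between the two networks can be sketched as a small lookup. This is only an illustration of the rule stated on the slide: the `Tier` enum, the `network_for` function and the "general IP" fallback for pairs the slide does not mention are assumptions made for the example.

```python
from enum import Enum


class Tier(Enum):
    T0 = 0
    T1 = 1
    T2 = 2
    T3 = 3


# Flow A on the slide: T0-T1 and T1-T1 traffic.
LHCOPN_PAIRS = {frozenset({Tier.T0, Tier.T1}), frozenset({Tier.T1})}
# Flow B on the slide: T1-T2 and T2-T2 traffic.
LHCONE_PAIRS = {frozenset({Tier.T1, Tier.T2}), frozenset({Tier.T2})}


def network_for(src: Tier, dst: Tier) -> str:
    """Return the network expected to carry a transfer between two tiers."""
    pair = frozenset({src, dst})  # direction does not matter
    if pair in LHCOPN_PAIRS:
        return "LHCOPN"
    if pair in LHCONE_PAIRS:
        return "LHCONE"
    # Assumption: pairs not covered by the slide use general-purpose IP.
    return "general IP"


if __name__ == "__main__":
    print(network_for(Tier.T0, Tier.T1))
    print(network_for(Tier.T2, Tier.T1))
```

Using an unordered pair as the key keeps the rule symmetric, so a T2 pulling from a T1 and a T1 pushing to a T2 are classified the same way.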


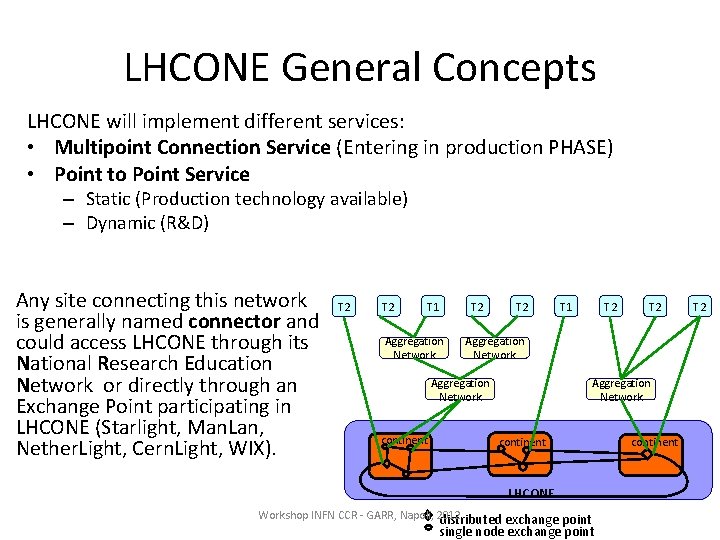

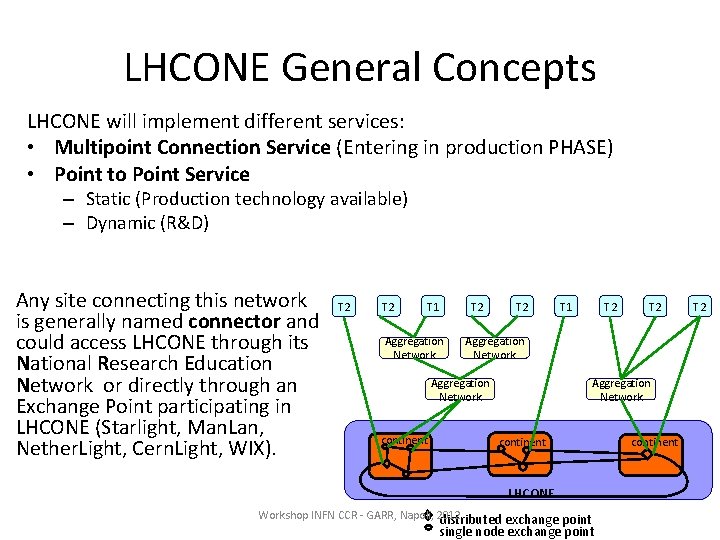

LHCONE General Concepts

LHCONE will implement different services:

- Multipoint Connection Service (entering the production phase)
- Point to Point Service
  - Static (production technology available)
  - Dynamic (R&D)

Any site connecting to this network is generically called a connector. It can access LHCONE through its National Research and Education Network (NREN) or directly through an Exchange Point participating in LHCONE (StarLight, MAN LAN, NetherLight, CERNLight, WIX).

[Diagram: T1 and T2 sites attached to aggregation networks, which interconnect across continents through LHCONE via distributed and single-node exchange points.]

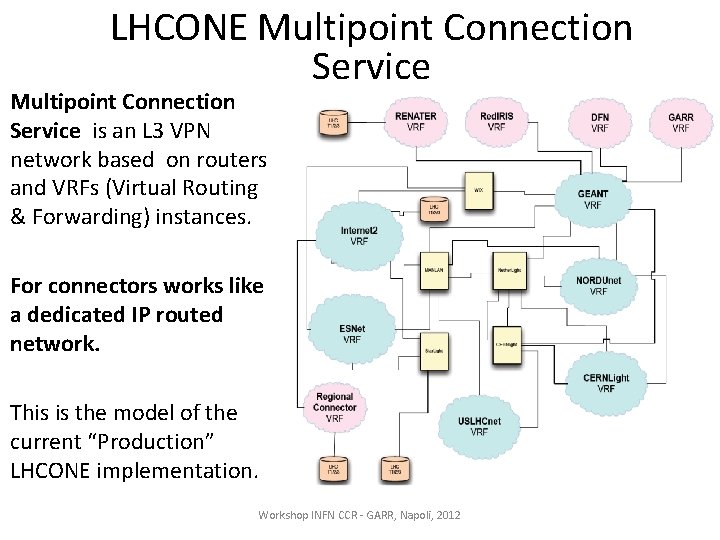

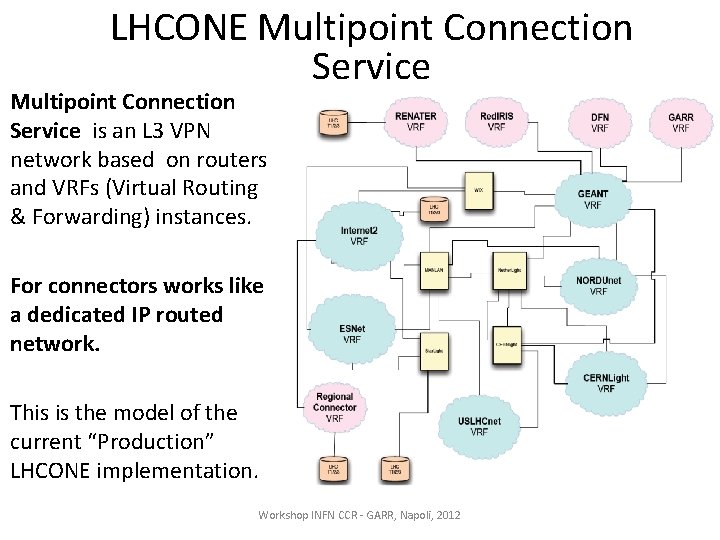

The LHCONE Multipoint Connection Service is an L3 VPN based on routers and VRF (Virtual Routing & Forwarding) instances. For connectors, it works like a dedicated IP routed network. This is the model of the current "production" LHCONE implementation.
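A minimal sketch of the VRF idea, assuming a toy `Router` class: each VRF name owns an independent routing table, and a lookup only ever consults the table of the VRF it is made in. The class, the next-hop names and the more specific /24 route are hypothetical; only the 131.154.128.0/17 prefix comes from the deck.

```python
import ipaddress
from typing import Dict, List, Optional, Tuple

Route = Tuple[ipaddress.IPv4Network, str]


class Router:
    """Toy router holding one independent routing table per VRF."""

    def __init__(self) -> None:
        self.vrfs: Dict[str, List[Route]] = {}

    def add_route(self, vrf: str, prefix: str, next_hop: str) -> None:
        network = ipaddress.ip_network(prefix)
        self.vrfs.setdefault(vrf, []).append((network, next_hop))

    def lookup(self, vrf: str, address: str) -> Optional[str]:
        """Longest-prefix match restricted to the given VRF."""
        addr = ipaddress.ip_address(address)
        matches = [(net, hop) for net, hop in self.vrfs.get(vrf, []) if addr in net]
        if not matches:
            return None  # no leakage into other VRFs
        return max(matches, key=lambda m: m[0].prefixlen)[1]


if __name__ == "__main__":
    r = Router()
    r.add_route("LHCONE", "131.154.128.0/17", "to-cnaf")        # prefix from the deck
    r.add_route("LHCONE", "131.154.130.0/24", "to-cnaf-farm")   # hypothetical route
    r.add_route("general", "0.0.0.0/0", "to-upstream")          # hypothetical default
    print(r.lookup("LHCONE", "131.154.130.1"))
    print(r.lookup("LHCONE", "192.0.2.1"))
```

The point of the sketch is the isolation: an address that only the general table knows is unreachable from inside the LHCONE VRF, which is what makes the VPN behave like a dedicated routed network.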

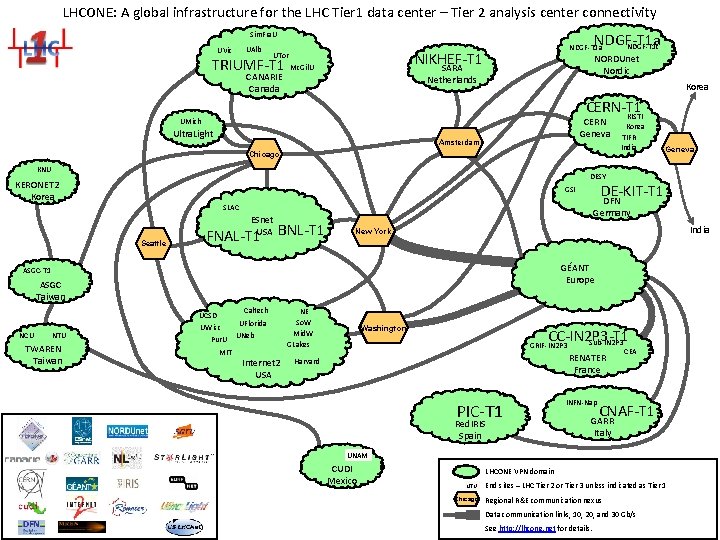

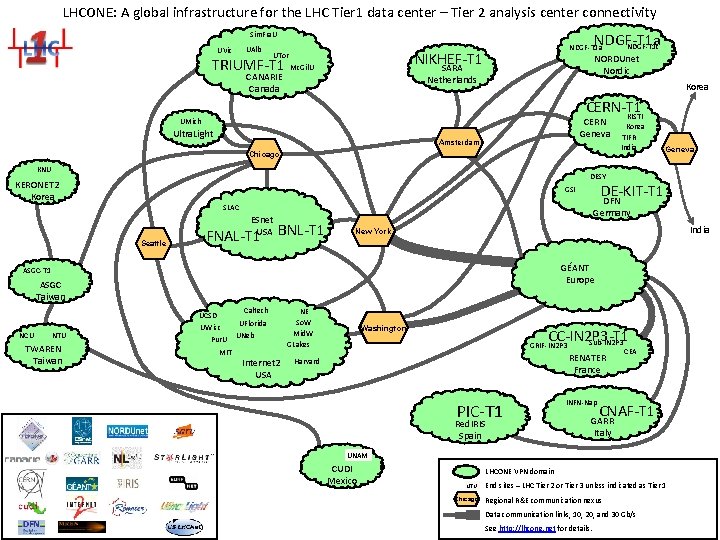

LHCONE: A global infrastructure for the LHC Tier 1 data center - Tier 2 analysis center connectivity

[Map figure: the LHCONE VPN domain interconnecting regional R&E networks (CANARIE Canada, NORDUnet Nordic, DFN Germany, GÉANT Europe, RENATER France, RedIRIS Spain, GARR Italy, ESnet USA, Internet2 USA, CUDI Mexico, ASGC and TWAREN Taiwan, KREONET2 Korea, and sites in India and the Netherlands) with the Tier 1 centers (NDGF-T1, TRIUMF-T1, NIKHEF-T1, CERN-T1, FNAL-T1, BNL-T1, ASGC-T1, DE-KIT-T1, CC-IN2P3-T1, PIC-T1, CNAF-T1) and end sites.]

Legend: end sites are LHC Tier 2 or Tier 3 unless indicated as Tier 1; regional R&E communication nexus; data communication links of 10, 20 and 30 Gb/s.

See http://lhcone.net for details.

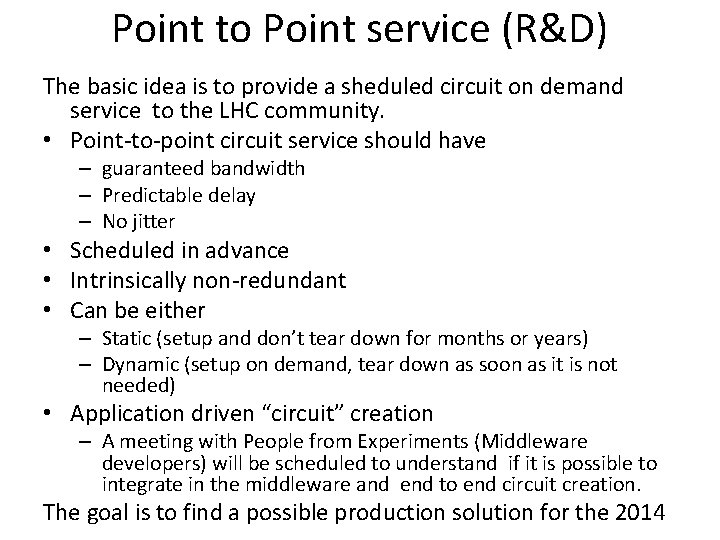

Point to Point service (R&D)

The basic idea is to provide a scheduled, on-demand circuit service to the LHC community.

- A point-to-point circuit service should have
  - Guaranteed bandwidth
  - Predictable delay
  - No jitter
- Scheduled in advance
- Intrinsically non-redundant
- Can be either
  - Static (set up and not torn down for months or years)
  - Dynamic (set up on demand, torn down as soon as it is no longer needed)
- Application-driven "circuit" creation
  - A meeting with people from the experiments (middleware developers) will be scheduled to understand whether end-to-end circuit creation can be integrated into the middleware.

The goal is to find a possible production solution for 2014.
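"Scheduled in advance" with "guaranteed bandwidth" implies some form of admission control: a new circuit is accepted only if the link still has room for it during the whole requested window. The sketch below illustrates that idea on a single link. `LinkCalendar`, `Reservation` and the integer time units are assumptions for the example and do not describe how OSCARS, AutoBAHN or any other system actually works.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Reservation:
    start: int    # start time, arbitrary units (e.g. hours)
    end: int      # end time, exclusive
    gbps: float   # guaranteed bandwidth


class LinkCalendar:
    """Advance-reservation calendar for one link of fixed capacity."""

    def __init__(self, capacity_gbps: float) -> None:
        self.capacity_gbps = capacity_gbps
        self.reservations: List[Reservation] = []

    def peak_load(self, start: int, end: int) -> float:
        """Highest total reserved bandwidth at any instant in [start, end)."""
        # The load can only rise when a reservation starts, so it is enough
        # to check the window start and every start time inside the window.
        instants = {start} | {
            r.start for r in self.reservations if start <= r.start < end
        }
        return max(
            sum(r.gbps for r in self.reservations if r.start <= t < r.end)
            for t in instants
        )

    def reserve(self, start: int, end: int, gbps: float) -> Optional[Reservation]:
        """Book a circuit, or return None if the guarantee cannot be met."""
        if end <= start:
            raise ValueError("end must be after start")
        if self.peak_load(start, end) + gbps > self.capacity_gbps:
            return None
        reservation = Reservation(start, end, gbps)
        self.reservations.append(reservation)
        return reservation


if __name__ == "__main__":
    link = LinkCalendar(capacity_gbps=10)
    print(link.reserve(0, 10, 6))   # accepted
    print(link.reserve(5, 15, 6))   # rejected: would exceed 10 Gb/s from t=5
```

A static circuit is then just a reservation with a very long window, while a dynamic one is booked and released around each transfer.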

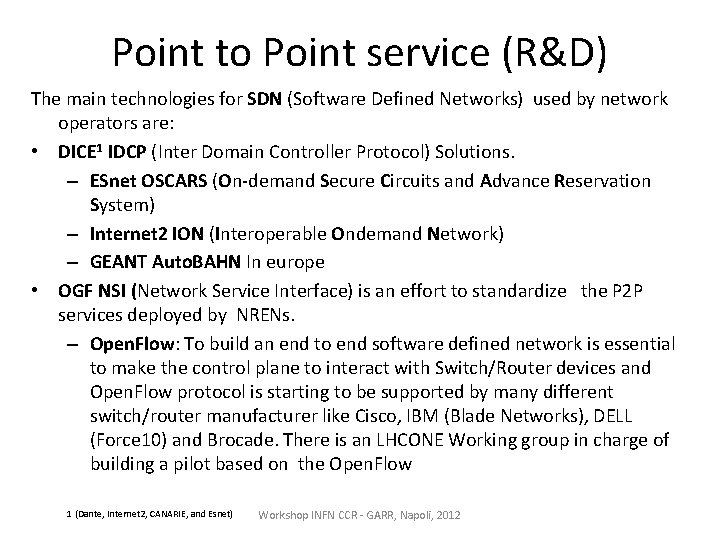

Point to Point service (R&D)

The main technologies for SDN (Software Defined Networks) used by network operators are:

- DICE¹ IDCP (Inter Domain Controller Protocol) solutions
  - ESnet OSCARS (On-demand Secure Circuits and Advance Reservation System)
  - Internet2 ION (Interoperable On-demand Network)
  - GÉANT AutoBAHN in Europe
- OGF NSI (Network Service Interface), an effort to standardize the P2P services deployed by NRENs
- OpenFlow: to build an end-to-end software defined network, the control plane must be able to interact with the switch/router devices. The OpenFlow protocol is starting to be supported by many switch/router manufacturers, such as Cisco, IBM (Blade Networks), DELL (Force10) and Brocade.

There is an LHCONE working group in charge of building a pilot based on OpenFlow.

¹ DANTE, Internet2, CANARIE and ESnet

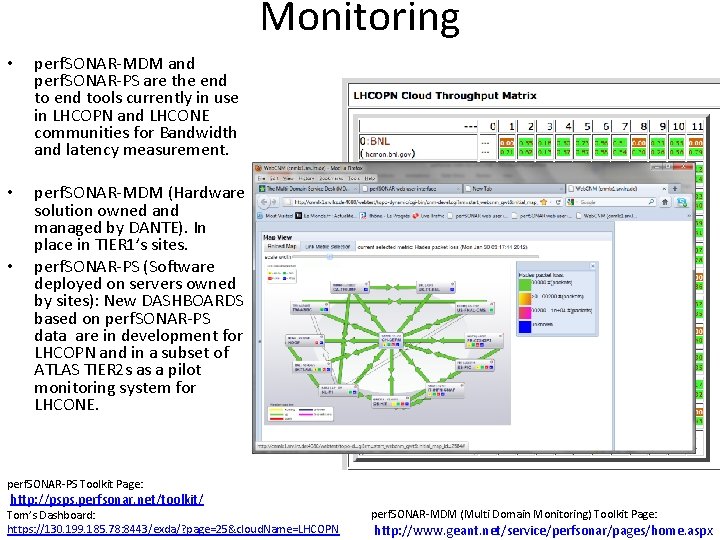

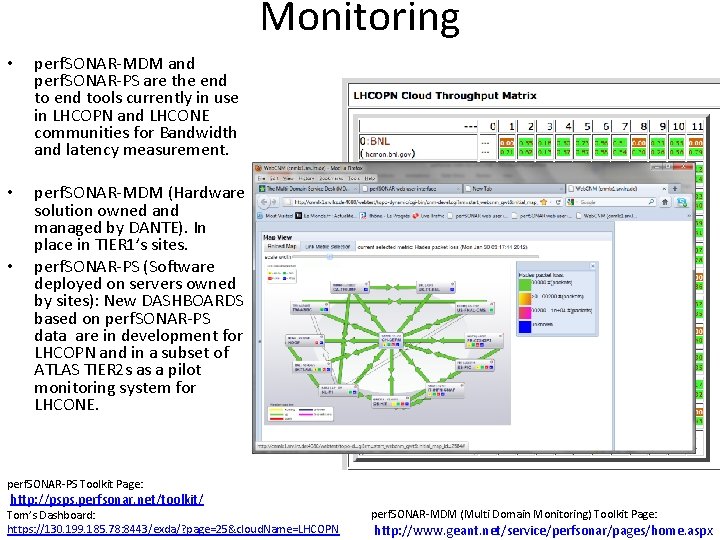

Monitoring

- perfSONAR-MDM and perfSONAR-PS are the tools currently in use in the LHCOPN and LHCONE communities for bandwidth and latency measurement.
  - perfSONAR-MDM: hardware solution owned and managed by DANTE, in place at the TIER 1 sites.
  - perfSONAR-PS: software deployed on servers owned by the sites.
- New dashboards based on perfSONAR-PS data are in development for LHCOPN and, as a pilot monitoring system for LHCONE, in a subset of ATLAS TIER 2s.

Links:

- perfSONAR-PS Toolkit page: http://psps.perfsonar.net/toolkit/
- Tom’s Dashboard: https://130.199.185.78:8443/exda/?page=25&cloudName=LHCOPN
- perfSONAR-MDM (Multi Domain Monitoring) Toolkit page: http://www.geant.net/service/perfsonar/pages/home.aspx

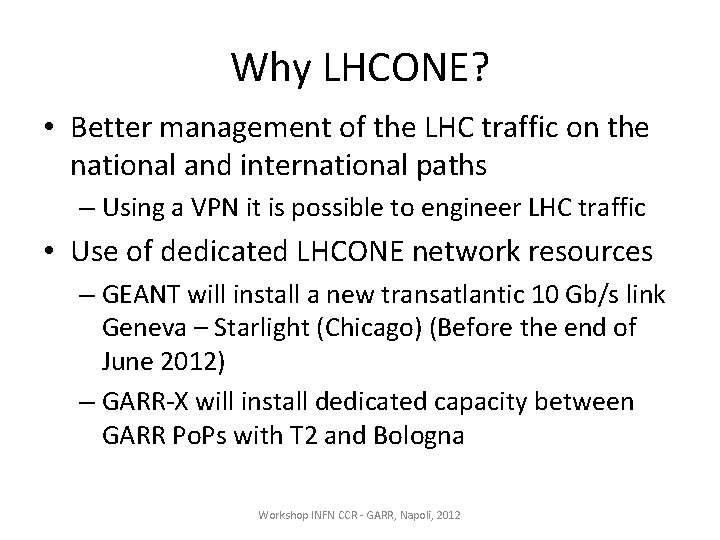

Why LHCONE?

- Better management of LHC traffic on national and international paths
  - Using a VPN makes it possible to engineer LHC traffic
- Use of dedicated LHCONE network resources
  - GÉANT will install a new 10 Gb/s transatlantic link Geneva - StarLight (Chicago) before the end of June 2012
  - GARR-X will install dedicated capacity between the GARR PoPs with a T2 and Bologna

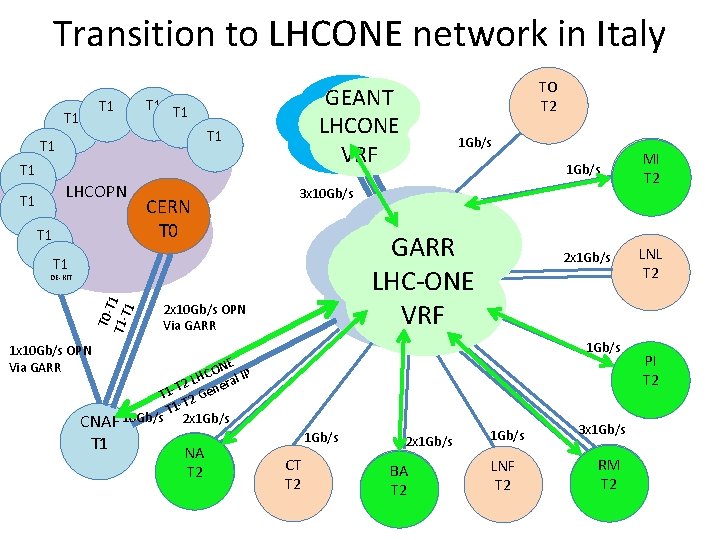

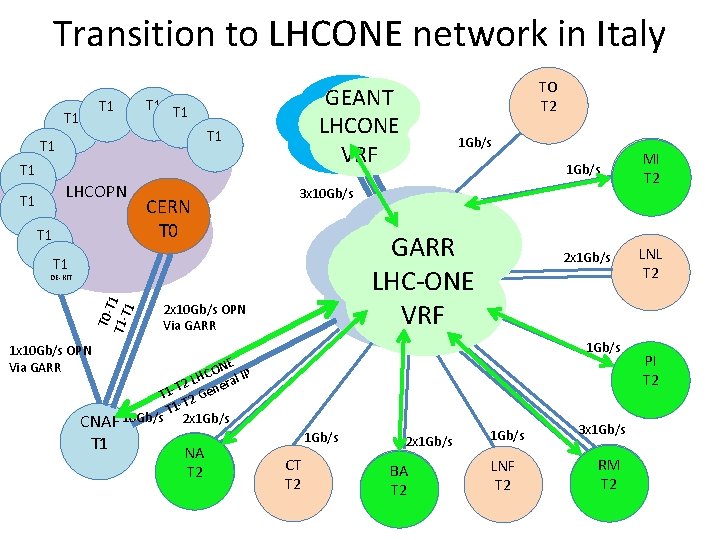

Transition to LHCONE network in Italy

[Diagram: T0-T1 and T1-T1 traffic between CNAF, CERN (T0) and the other T1s (e.g. DE-KIT) on LHCOPN via GARR; T1-T2 and T2-T2 traffic over GARR general IP and the LHC-ONE backbone VRF, connected to the GEANT LHCONE VRF. T2 sites shown: NA, MI, LNL, TO, CT, BA, LNF, RM, PI. Link labels include 1 Gb/s, 2 x 1 Gb/s, 3 x 1 Gb/s, 10 Gb/s, 1 x 10 Gb/s, 2 x 10 Gb/s and 3 x 10 Gb/s.]

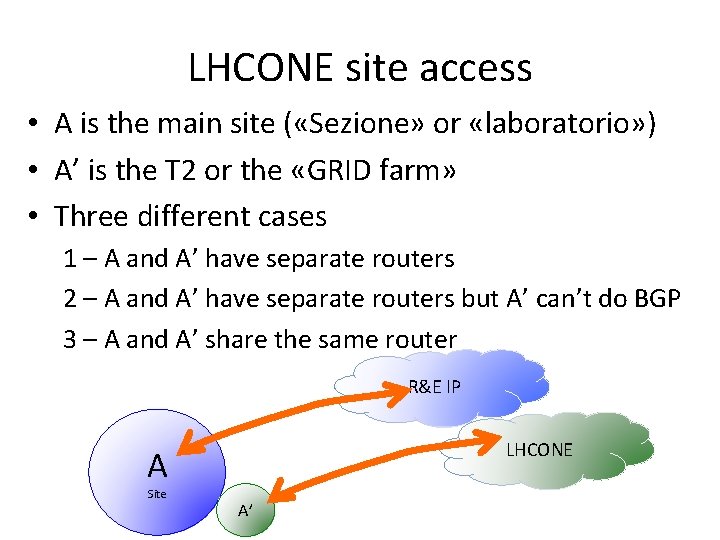

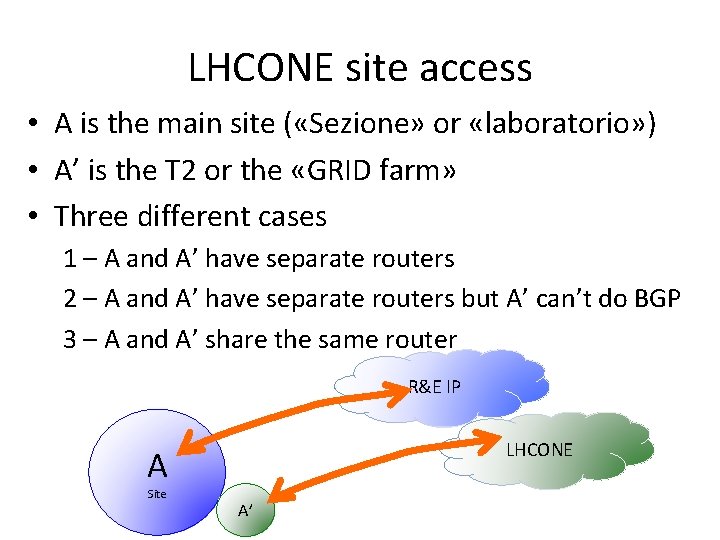

LHCONE site access

- A is the main site («Sezione» or «Laboratorio»)
- A’ is the T2 or the «GRID farm»
- Three different cases:
  1. A and A’ have separate routers
  2. A and A’ have separate routers, but A’ can’t do BGP
  3. A and A’ share the same router

[Diagram: site A and A’ connected to the R&E IP and LHCONE networks.]
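The three cases amount to a short decision procedure, sketched below. The function names and boolean parameters are illustrative assumptions. The mapping of cases to who runs BGP follows the later slide on asymmetric routing (GARR for cases 2 and 3, the site for case 1).

```python
def access_case(separate_routers: bool, t2_router_speaks_bgp: bool) -> int:
    """Return the LHCONE access case (1, 2 or 3) for a site A with T2 A'."""
    if not separate_routers:
        # A and A' share the same router; BGP capability is not considered.
        return 3
    return 1 if t2_router_speaks_bgp else 2


def bgp_operator(case: int) -> str:
    """Who runs the BGP routing towards LHCONE in each case."""
    if case not in (1, 2, 3):
        raise ValueError("case must be 1, 2 or 3")
    return "site" if case == 1 else "GARR"


if __name__ == "__main__":
    case = access_case(separate_routers=True, t2_router_speaks_bgp=False)
    print(case, bgp_operator(case))
```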

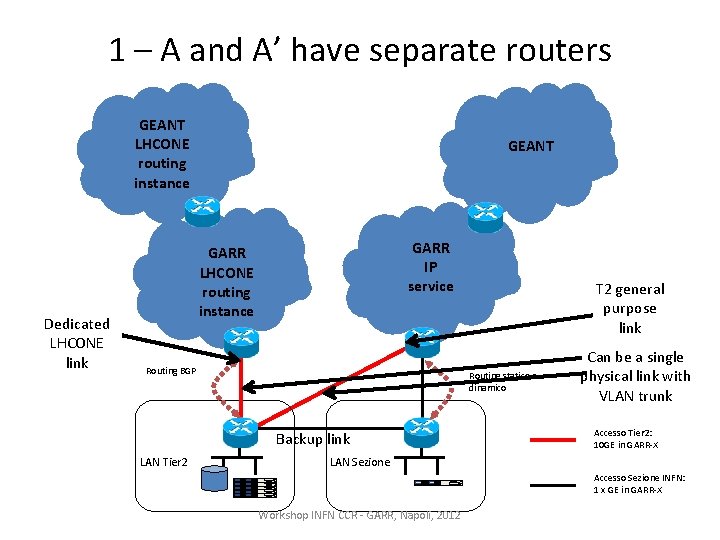

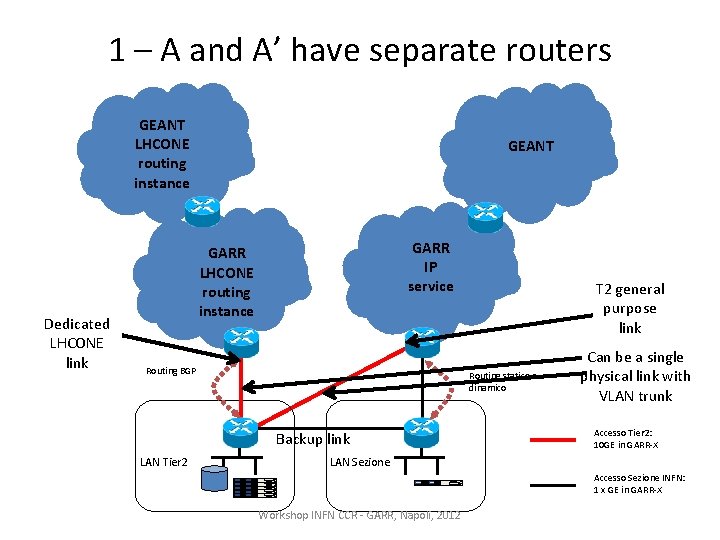

1 - A and A’ have separate routers

[Diagram labels: GEANT LHCONE routing instance; dedicated LHCONE link; GEANT; GARR IP service; GARR LHCONE routing instance; BGP routing; static or dynamic routing; backup link; Tier 2 LAN; T2 general purpose link (can be a single physical link with a VLAN trunk); Tier 2 access: 10 GE in GARR-X; Section LAN; INFN Section access: 1 x GE in GARR-X.]

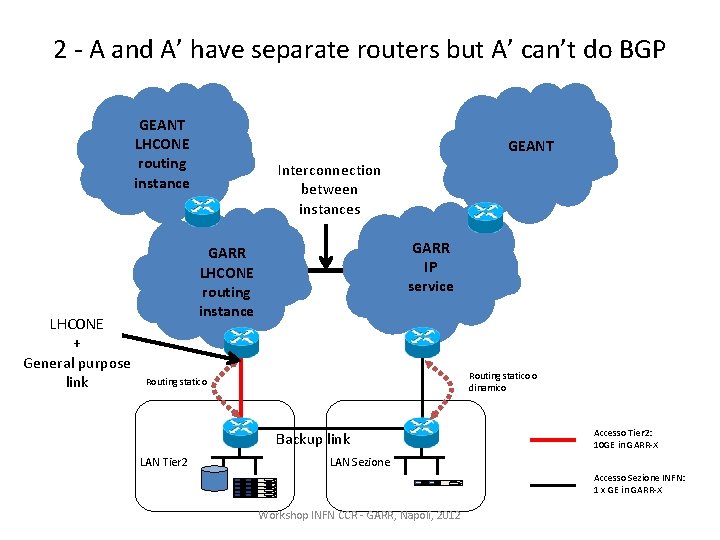

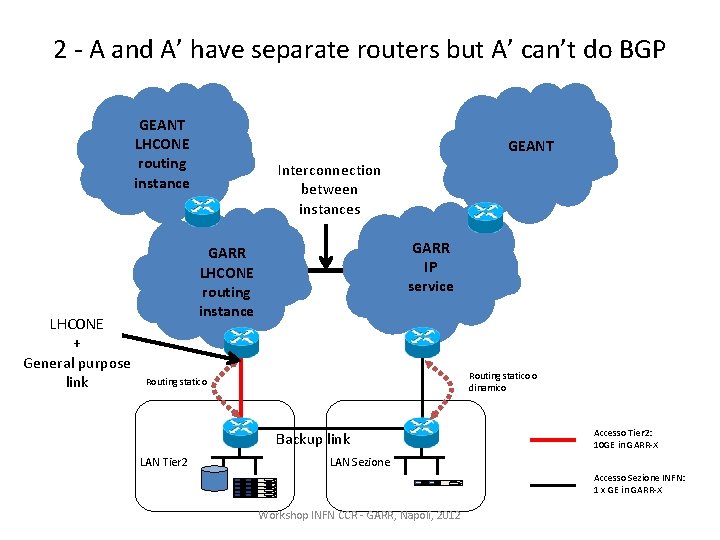

2 - A and A’ have separate routers but A’ can’t do BGP

[Diagram labels: GEANT LHCONE routing instance; LHCONE + general purpose link; GEANT; interconnection between instances; GARR IP service; GARR LHCONE routing instance; static or dynamic routing; static routing; backup link; Tier 2 LAN; Tier 2 access: 10 GE in GARR-X; Section LAN; INFN Section access: 1 x GE in GARR-X.]

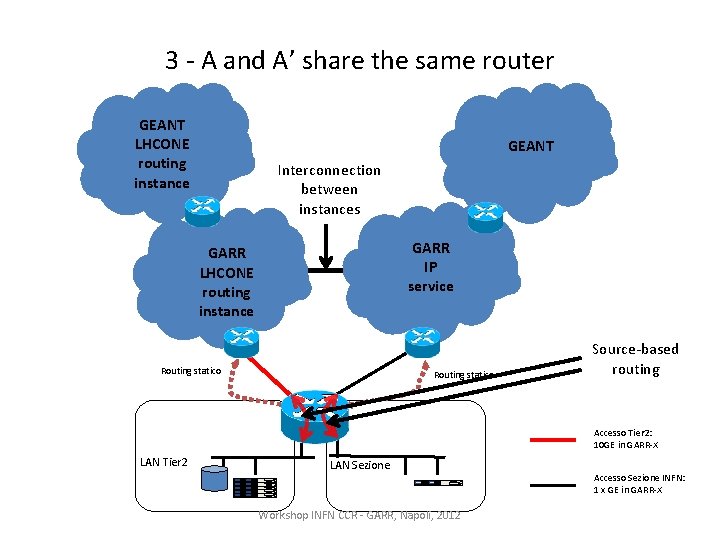

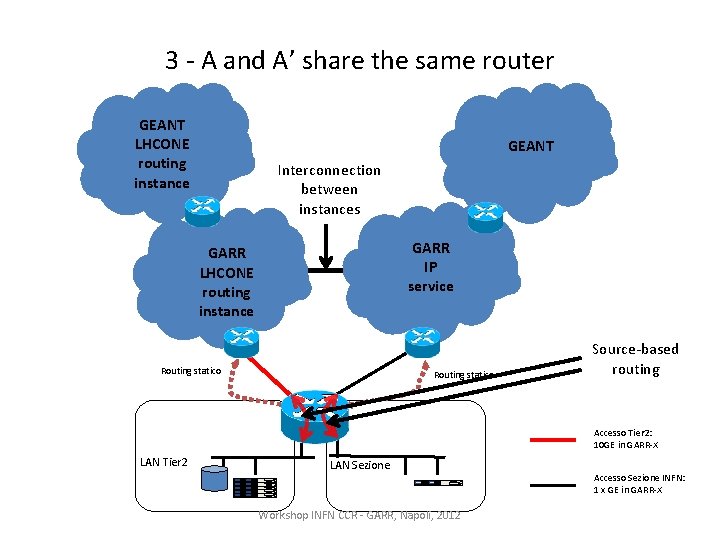

3 - A and A’ share the same router

[Diagram labels: GEANT LHCONE routing instance; GEANT; interconnection between instances; GARR IP service; GARR LHCONE routing instance; static routing; source-based routing; Tier 2 access: 10 GE in GARR-X; Tier 2 LAN; Section LAN; INFN Section access: 1 x GE in GARR-X.]

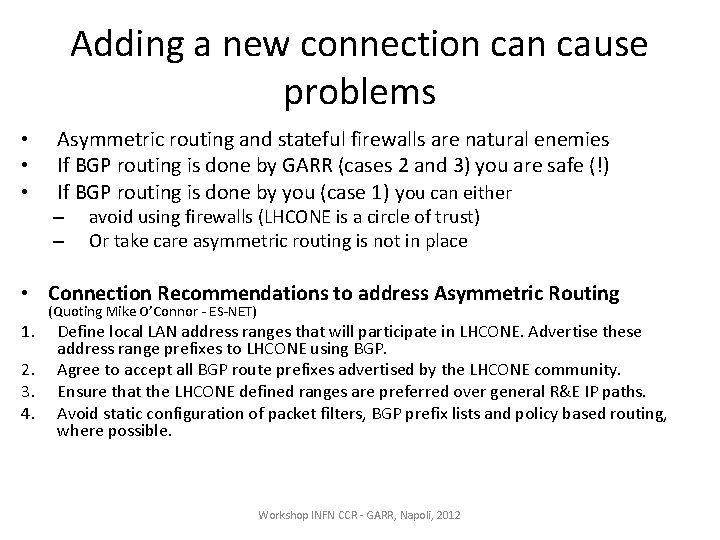

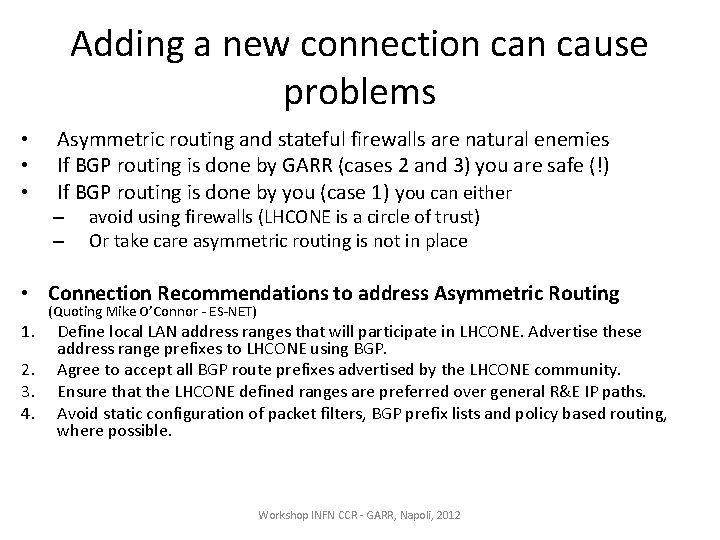

Adding a new connection causes problems

- Asymmetric routing and stateful firewalls are natural enemies
- If BGP routing is done by GARR (cases 2 and 3), you are safe (!)
- If BGP routing is done by you (case 1), you can either
  - avoid using firewalls (LHCONE is a circle of trust)
  - or make sure asymmetric routing does not occur
- Connection recommendations to address asymmetric routing (quoting Mike O’Connor, ESnet):
  1. Define local LAN address ranges that will participate in LHCONE. Advertise these address range prefixes to LHCONE using BGP.
  2. Agree to accept all BGP route prefixes advertised by the LHCONE community.
  3. Ensure that the LHCONE defined ranges are preferred over general R&E IP paths.
  4. Avoid static configuration of packet filters, BGP prefix lists and policy based routing, where possible.
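Why asymmetric routing and stateful firewalls clash can be shown with a toy model. A stateful firewall accepts a reply only if it saw the matching outbound packet. If the request leaves over one path and the reply comes back over another, the firewall on the return path has no state for the flow and drops it. The `StatefulFirewall` class and the host addresses below are hypothetical; the addresses were merely picked inside prefixes listed in the deck.

```python
class StatefulFirewall:
    """Toy stateful firewall: replies pass only for flows it saw leaving."""

    def __init__(self) -> None:
        self.seen_flows = set()

    def outbound(self, src: str, dst: str) -> None:
        # Record connection state when a packet leaves the site.
        self.seen_flows.add((src, dst))

    def inbound(self, src: str, dst: str) -> bool:
        # A reply from src to dst matches the flow recorded as (dst, src).
        return (dst, src) in self.seen_flows


def reply_accepted(out_fw: StatefulFirewall, back_fw: StatefulFirewall,
                   local: str, remote: str) -> bool:
    """Send a request through out_fw and receive the reply through back_fw."""
    out_fw.outbound(local, remote)
    return back_fw.inbound(remote, local)


if __name__ == "__main__":
    local, remote = "90.147.67.10", "131.154.130.1"  # hypothetical hosts

    # Symmetric: request and reply cross the same firewall.
    fw = StatefulFirewall()
    print("symmetric:", reply_accepted(fw, fw, local, remote))

    # Asymmetric: request leaves via the LHCONE path, reply returns via the
    # general purpose path, whose firewall never saw the request.
    lhcone_fw, gp_fw = StatefulFirewall(), StatefulFirewall()
    print("asymmetric:", reply_accepted(lhcone_fw, gp_fw, local, remote))
```

Preferring the LHCONE paths for all LHCONE prefixes in both directions, as recommended above, is what keeps the two halves of a flow on the same path.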

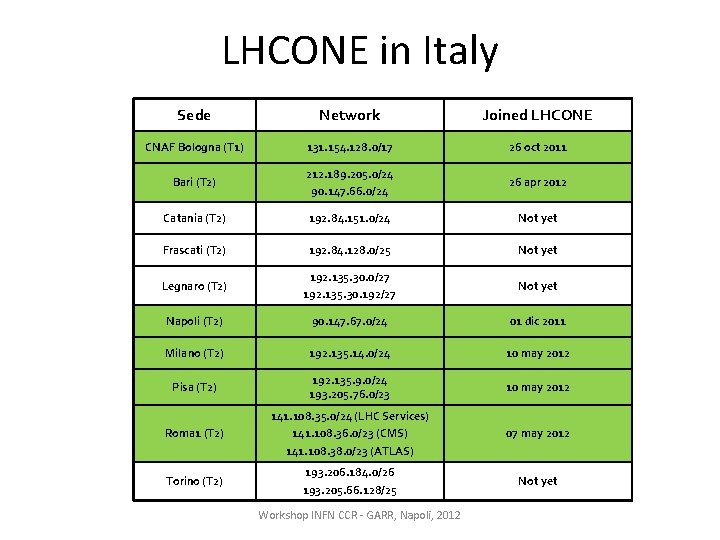

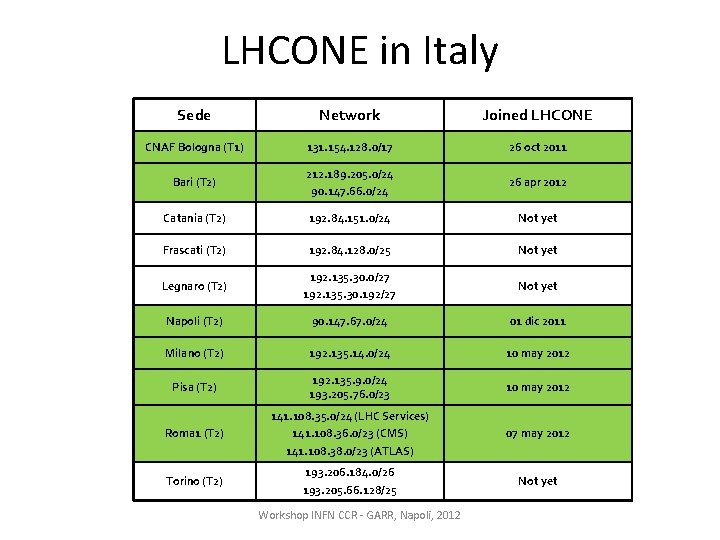

LHCONE in Italy

| Site | Network | Joined LHCONE |
|---|---|---|
| CNAF Bologna (T1) | 131.154.128.0/17 | 26 Oct 2011 |
| Bari (T2) | 212.189.205.0/24, 90.147.66.0/24 | 26 Apr 2012 |
| Catania (T2) | 192.84.151.0/24 | Not yet |
| Frascati (T2) | 192.84.128.0/25 | Not yet |
| Legnaro (T2) | 192.135.30.0/27, 192.135.30.192/27 | Not yet |
| Napoli (T2) | 90.147.67.0/24 | 01 Dec 2011 |
| Milano (T2) | 192.135.14.0/24 | 10 May 2012 |
| Pisa (T2) | 192.135.9.0/24, 193.205.76.0/23 | 10 May 2012 |
| Roma 1 (T2) | 141.108.35.0/24 (LHC Services), 141.108.36.0/23 (CMS), 141.108.38.0/23 (ATLAS) | 07 May 2012 |
| Torino (T2) | 193.206.184.0/26, 193.205.66.128/25 | Not yet |
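As a small worked example, the prefixes of the sites that had already joined can be loaded into Python's standard `ipaddress` module to check whether a given host falls inside an LHCONE range. The `LHCONE_PREFIXES` dictionary and the `site_for` helper are illustrative names, and the host addresses used are hypothetical.

```python
import ipaddress
from typing import Optional

# Prefixes of the sites listed as already joined in the table above.
LHCONE_PREFIXES = {
    "CNAF Bologna (T1)": ["131.154.128.0/17"],
    "Bari (T2)": ["212.189.205.0/24", "90.147.66.0/24"],
    "Napoli (T2)": ["90.147.67.0/24"],
    "Milano (T2)": ["192.135.14.0/24"],
    "Pisa (T2)": ["192.135.9.0/24", "193.205.76.0/23"],
    "Roma 1 (T2)": ["141.108.35.0/24", "141.108.36.0/23", "141.108.38.0/23"],
}


def site_for(address: str) -> Optional[str]:
    """Return the joined site whose prefix contains the address, if any."""
    addr = ipaddress.ip_address(address)
    for site, prefixes in LHCONE_PREFIXES.items():
        if any(addr in ipaddress.ip_network(p) for p in prefixes):
            return site
    return None


if __name__ == "__main__":
    print(site_for("90.147.67.10"))   # inside the Napoli range
    print(site_for("192.84.151.1"))   # Catania range, not yet joined
```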

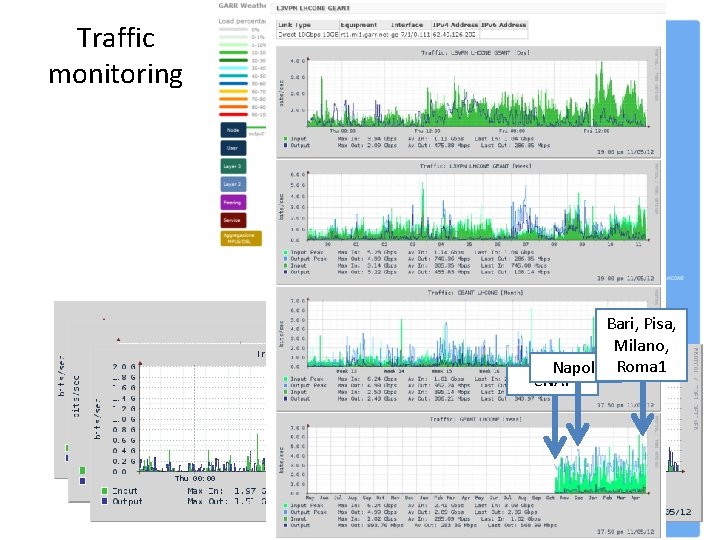

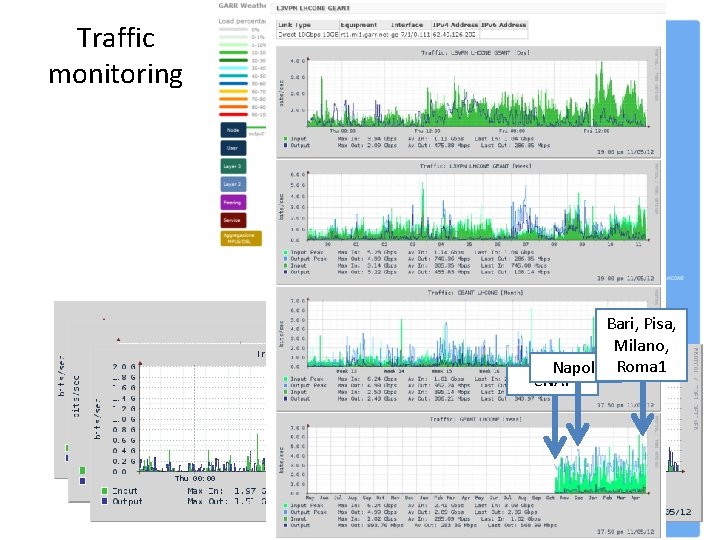

Traffic monitoring

[Plots: Bari, Pisa, Milano, Napoli; Roma 1; CNAF]

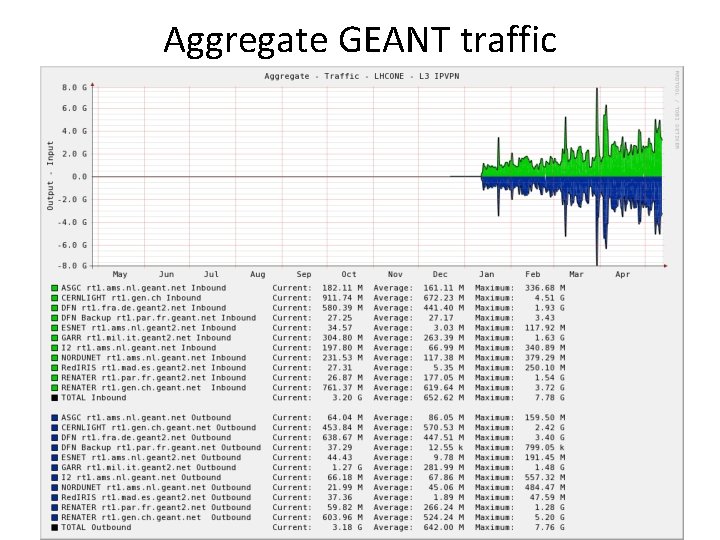

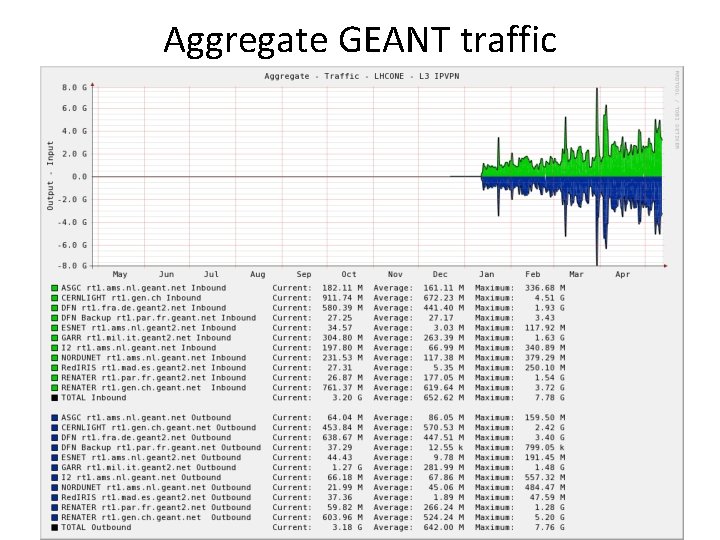

Aggregate GEANT traffic

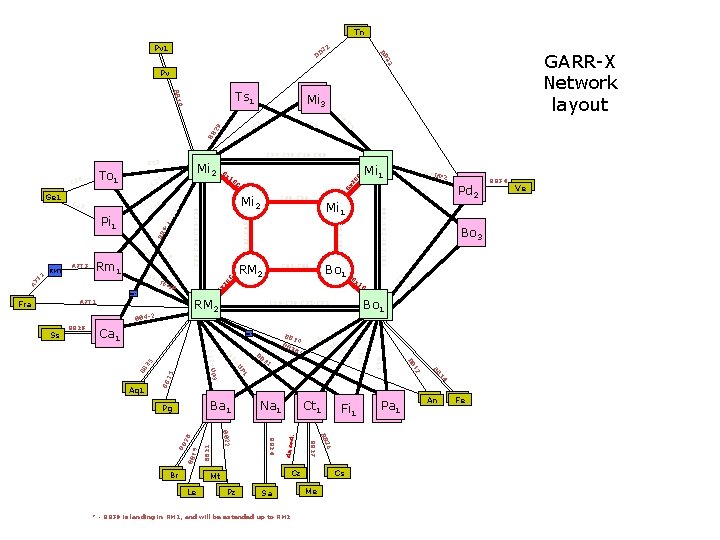

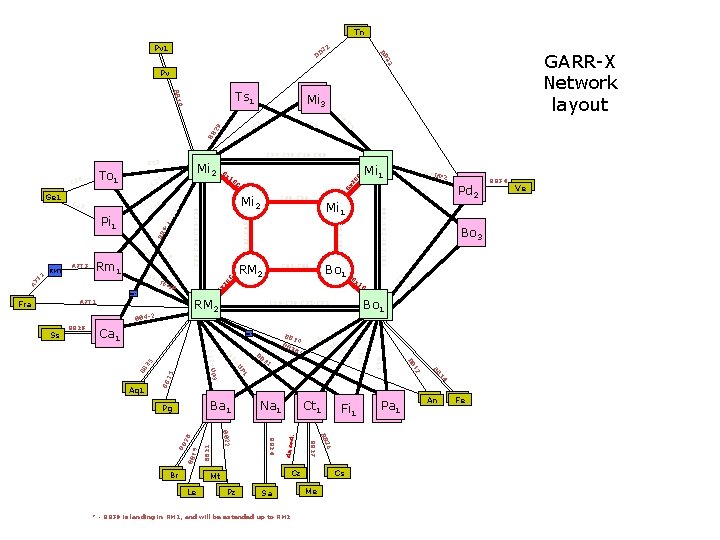

GARR-X Network layout

[Figure: map of the GARR-X backbone showing the PoPs and the numbered links labeled BB and C.]

Note (*): BB30 is landing in RM1, and will be extended up to RM2.

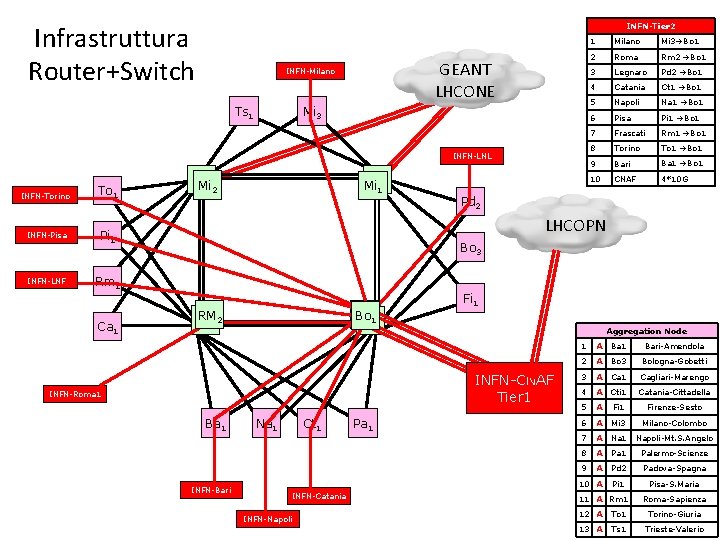

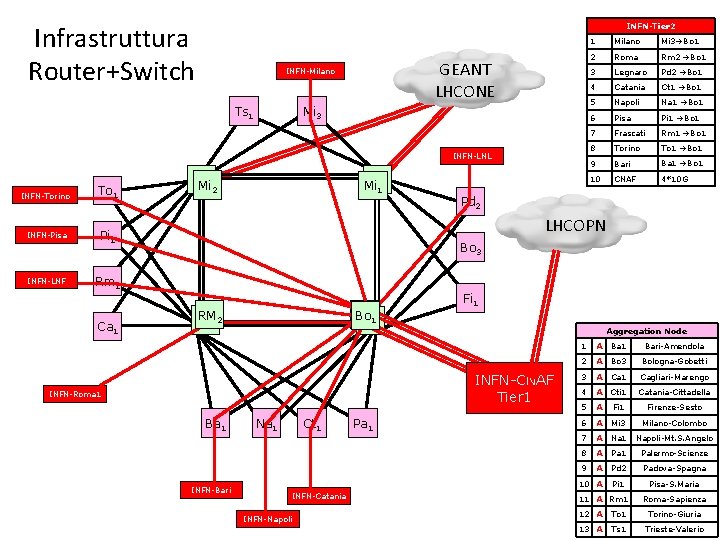

Router + Switch infrastructure: INFN Tier 2

[Diagram: GEANT LHCONE and LHCOPN connected through GARR-X PoPs (Ts1, Mi1, Mi2, Mi3, To1, Pi1, Rm1, RM2, Ca1, Pd2, Bo1, Bo3, Fi1, Ba1, Na1, Ct1, Pa1) to INFN-CNAF Tier 1 and to INFN-Milano, INFN-LNL, INFN-Torino, INFN-Pisa, INFN-LNF, INFN-Roma1, INFN-Bari, INFN-Napoli and INFN-Catania.]

Tier 2 links:

| # | Site | PoPs |
|---|---|---|
| 1 | Milano | Mi3, Bo1 |
| 2 | Roma | Rm2, Bo1 |
| 3 | Legnaro | Pd2, Bo1 |
| 4 | Catania | Ct1, Bo1 |
| 5 | Napoli | Na1, Bo1 |
| 6 | Pisa | Pi1, Bo1 |
| 7 | Frascati | Rm1, Bo1 |
| 8 | Torino | To1, Bo1 |
| 9 | Bari | Ba1, Bo1 |
| 10 | CNAF | 4*10G |

Aggregation nodes:

| # | PoP | Location |
|---|---|---|
| 1A | Ba1 | Bari-Amendola |
| 2A | Bo3 | Bologna-Gobetti |
| 3A | Ca1 | Cagliari-Marengo |
| 4A | Cti1 | Catania-Cittadella |
| 5A | Fi1 | Firenze-Sesto |
| 6A | Mi3 | Milano-Colombo |
| 7A | Na1 | Napoli-Mt. S. Angelo |
| 8A | Pa1 | Palermo-Scienze |
| 9A | Pd2 | Padova-Spagna |
| 10A | Pi1 | Pisa-S. Maria |
| 11A | Rm1 | Roma-Sapienza |
| 12A | To1 | Torino-Giuria |
| 13A | Ts1 | Trieste-Valerio |

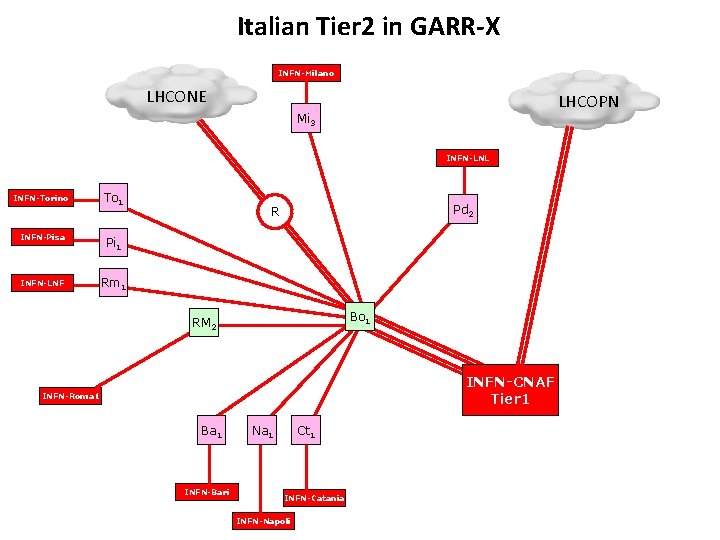

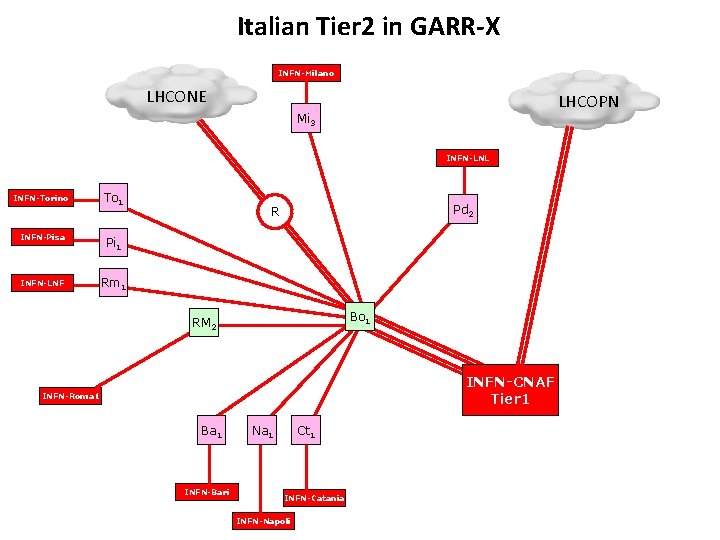

Italian Tier 2 in GARR-X

[Diagram labels: LHCONE; LHCOPN; PoPs Mi3, To1, Pi1, Rm1, Pd2, Bo1, RM2, Ba1, Na1, Ct1; INFN-CNAF Tier 1; INFN-Milano; INFN-LNL; INFN-Torino; INFN-Pisa; INFN-LNF; INFN-Roma1; INFN-Bari; INFN-Napoli; INFN-Catania.]

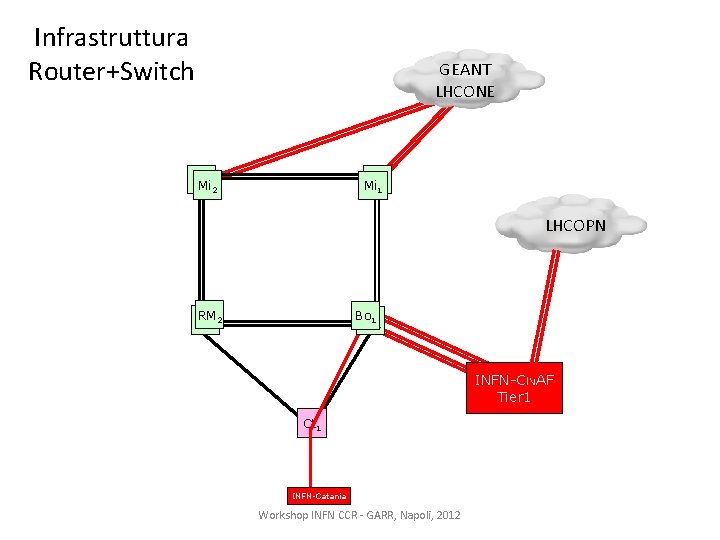

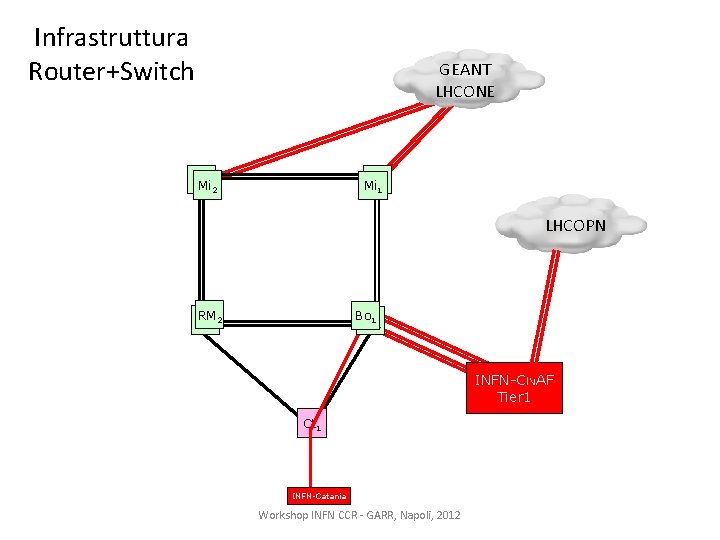

Router + Switch infrastructure

[Diagram labels: GEANT LHCONE; LHCOPN; PoPs Mi1, Mi2, RM2, Bo1, Ct1; INFN-CNAF Tier 1; INFN-Catania.]

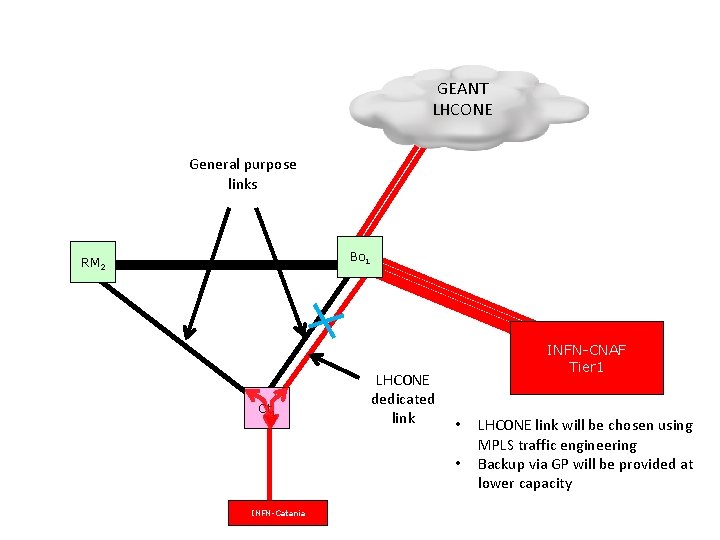

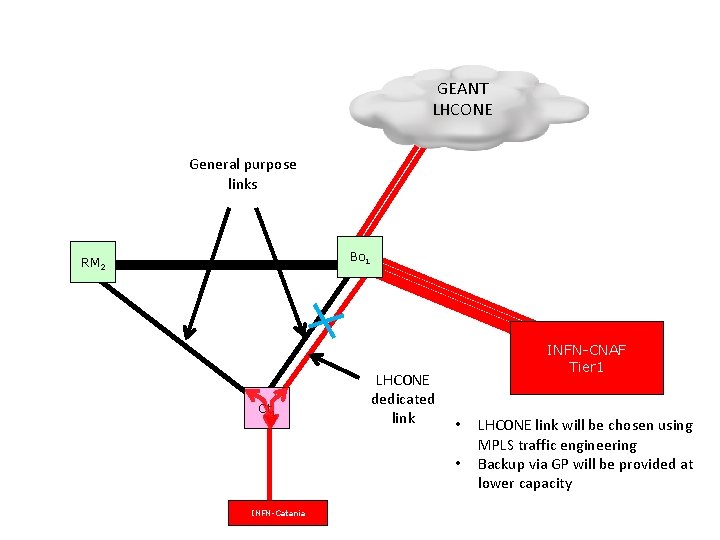

[Diagram labels: GEANT LHCONE; general purpose links; LHCONE dedicated link; PoPs Bo1, RM2, Ct1; INFN-CNAF Tier 1; INFN-Catania.]

- The LHCONE link will be chosen using MPLS traffic engineering
- Backup via the general purpose (GP) links will be provided at lower capacity
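The primary/backup behaviour described above can be sketched as a simple preference-based choice between paths. This is not an MPLS traffic engineering implementation, only an illustration of the resulting behaviour: the `Path` class, the preference values and the capacities (10 and 1 Gb/s) are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Path:
    name: str
    capacity_gbps: float   # hypothetical value, for illustration only
    preference: int        # lower value is preferred
    up: bool = True


def select_path(paths: List[Path]) -> Optional[Path]:
    """Pick the most preferred path that is currently up, if any."""
    usable = [p for p in paths if p.up]
    if not usable:
        return None
    return min(usable, key=lambda p: p.preference)


if __name__ == "__main__":
    dedicated = Path("LHCONE dedicated link", capacity_gbps=10.0, preference=0)
    backup = Path("general purpose backup", capacity_gbps=1.0, preference=1)

    print(select_path([backup, dedicated]).name)   # dedicated link while it is up
    dedicated.up = False
    print(select_path([backup, dedicated]).name)   # falls back at lower capacity
```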

The End. Thank you for your attention!

Backup Slides

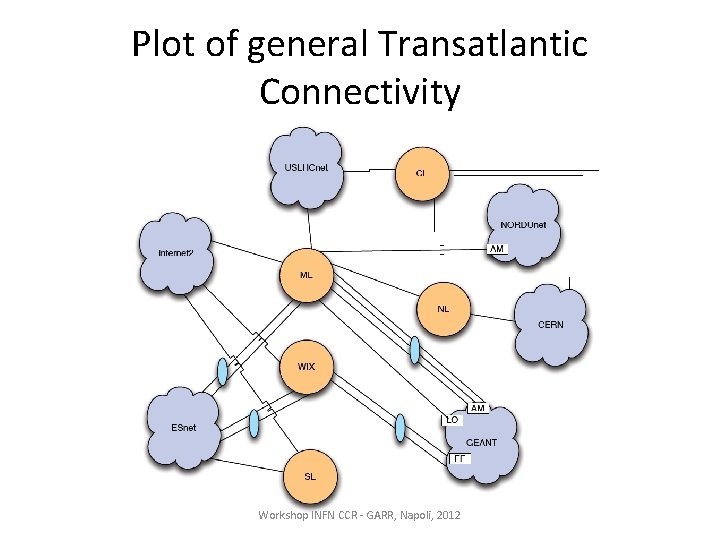

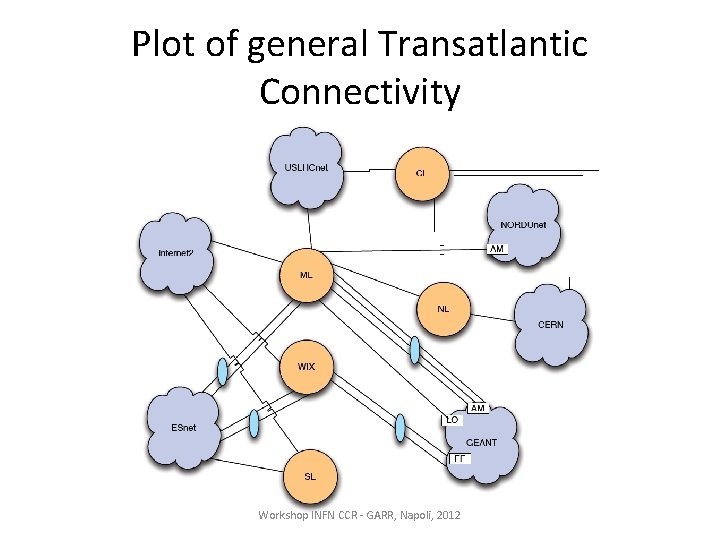

Plot of general Transatlantic Connectivity

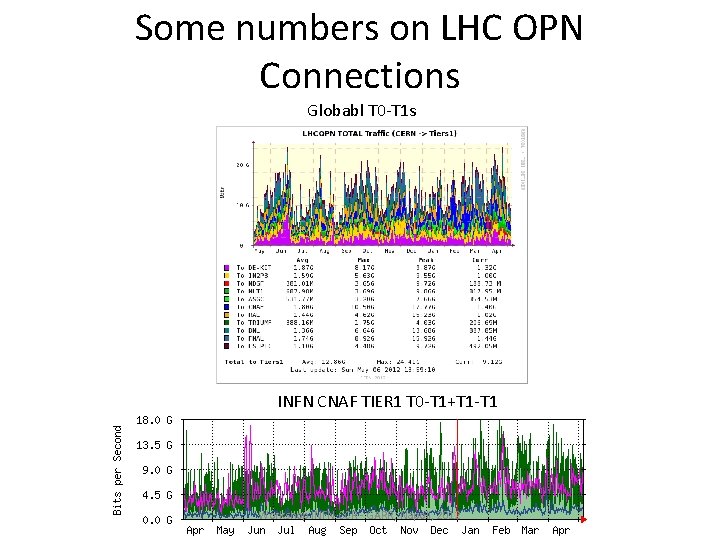

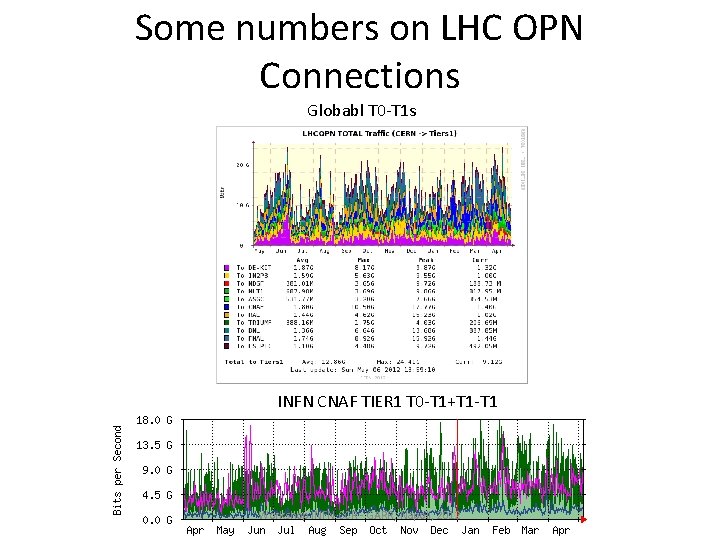

Some numbers on LHC OPN connections

[Plots: Global T0-T1s; INFN CNAF TIER 1, T0-T1 + T1-T1]