What Do You Want: Semantic Understanding? (You’ve Got to be Kidding)


- Slides: 106

What Do You Want: Semantic Understanding? (You’ve Got to be Kidding)

David W. Embley, Brigham Young University

January 2004

Funded in part by the National Science Foundation

Presentation Outline

- Grand Challenge
- Meaning, Knowledge, Information, Data
- Fun and Games with Data
- Information Extraction Ontologies
- Applications
- Limitations and Pragmatics
- Summary and Challenges

Grand Challenge: Semantic Understanding

Can we quantify & specify the nature of this grand challenge?

“If ever there were a technology that could generate trillions of dollars in savings worldwide …, it would be the technology that makes business information systems interoperable.” (Jeffrey T. Pollock, VP of Technology Strategy, Modulant Solutions)

“The Semantic Web: … content that is meaningful to computers [and that] will unleash a revolution of new possibilities … Properly designed, the Semantic Web can assist the evolution of human knowledge …” (Tim Berners-Lee, …, Weaving the Web)

“20th Century: Data Processing. 21st Century: Data Exchange. The issue now is mutual understanding.” (Stefano Spaccapietra, Editor in Chief, Journal on Data Semantics)

![Grand Challenge: Semantic Understanding](https://slidetodoc.com/presentation_image_h2/815117ea046e2b5b83957369c78243f2/image-7.jpg)

“The Grand Challenge [of semantic understanding] has become mission critical. Current solutions … won’t scale. Businesses need economic growth dependent on the web working and scaling (cost: $1 trillion/year).” (Michael Brodie, Chief Scientist, Verizon Communications)

Why Semantic Understanding?

- Because we’re overwhelmed with data
  - Point and click too slow
  - “Give me what I want when I want it.”
- Because it’s the key to revolutionary progress
  - Automated interoperability and knowledge sharing
  - Negotiation in e-business
  - Large-scale, in-silico experiments in e-science

We succeed in managing information if we can “[take] data and [analyze] it and [simplify] it and [tell] people exactly the information they want, rather than all the information they could have.” (Jim Gray, Microsoft Research)

What is Semantic Understanding?

- Semantics: “The meaning or the interpretation of a word, sentence, or other language form.”
- Understanding: “To grasp or comprehend [what’s] intended or expressed.”

(Dictionary.com)

Can We Achieve Semantic Understanding?

“A computer doesn’t truly ‘understand’ anything.” … But computers can manipulate terms “in ways that are useful and meaningful to the human user.” (Tim Berners-Lee)

Key Point: it only has to be good enough. And that’s our challenge and our opportunity!


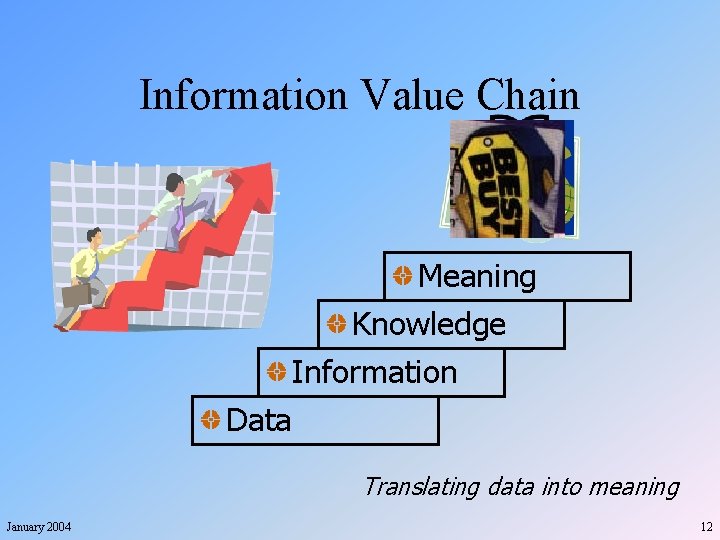

Information Value Chain

Meaning, Knowledge, Information, Data

Translating data into meaning

Foundational Definitions

- Meaning: knowledge that is relevant or activates
- Knowledge: information with a degree of certainty or community agreement (ontology)
- Information: data in a conceptual framework
- Data: attribute-value pairs

Adapted from [Meadow 92]

Data

- Attribute-Value Pairs
  - Fundamental for information
  - Thus, fundamental for knowledge & meaning
- Data Frame
  - Extensive knowledge about a data item
    - Everyday data: currency, dates, time, weights & measures
    - Textual appearance, units, context, operators, I/O conversion
  - Abstract data type with an extended framework
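To make the data-frame idea concrete, here is a minimal sketch in Python. It assumes a data frame can be approximated by a record holding a textual-appearance pattern, context keywords, a unit, and an input conversion. The class name `EverydayDataFrame`, its fields, and the price pattern are all illustrative assumptions, not the author's implementation.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class EverydayDataFrame:
    """Hypothetical data frame: knowledge about one kind of everyday data item."""
    name: str
    value_pattern: str                      # textual appearance; must contain one capture group
    context_keywords: List[str] = field(default_factory=list)  # words that signal this item
    unit: str = ""                          # unit of the internal value
    to_internal: Callable[[str], float] = float  # input conversion from text to a number

    def recognize(self, text: str) -> Optional[float]:
        """Return the first recognized value in internal form, or None."""
        match = re.search(self.value_pattern, text)
        return self.to_internal(match.group(1)) if match else None


# An assumed data frame for US-dollar prices such as "$11,995".
price = EverydayDataFrame(
    name="Price",
    value_pattern=r"\$(\d{1,3}(?:,\d{3})*)",
    context_keywords=["asking", "price"],
    unit="USD",
    to_internal=lambda s: float(s.replace(",", "")),
)

print(price.recognize("Asking only $11,995."))
```

The point of the design is that recognition and conversion travel together with the attribute, which is what lets the same attribute-value pair be found in free text and stored in a normalized form.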


? Olympus C-750 Ultra Zoom Sensor Resolution: Optical Zoom: Digital Zoom: Installed Memory: Lens Aperture: Focal Length min: Focal Length max: January 2004 4. 2 megapixels 10 x 4 x 16 MB F/8 -2. 8/3. 7 6. 3 mm 63. 0 mm 20

? Olympus C-750 Ultra Zoom Sensor Resolution: Optical Zoom: Digital Zoom: Installed Memory: Lens Aperture: Focal Length min: Focal Length max: January 2004 4. 2 megapixels 10 x 4 x 16 MB F/8 -2. 8/3. 7 6. 3 mm 63. 0 mm 21

? Olympus C-750 Ultra Zoom Sensor Resolution: Optical Zoom: Digital Zoom: Installed Memory: Lens Aperture: Focal Length min: Focal Length max: January 2004 4. 2 megapixels 10 x 4 x 16 MB F/8 -2. 8/3. 7 6. 3 mm 63. 0 mm 22

? Olympus C-750 Ultra Zoom Sensor Resolution Optical Zoom Digital Zoom Installed Memory Lens Aperture Focal Length min Focal Length max January 2004 4. 2 megapixels 10 x 4 x 16 MB F/8 -2. 8/3. 7 6. 3 mm 63. 0 mm 23

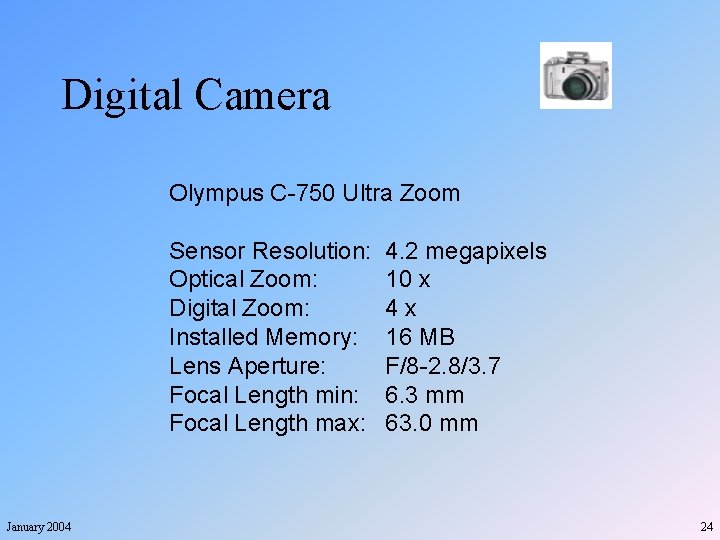

Digital Camera Olympus C-750 Ultra Zoom Sensor Resolution: Optical Zoom: Digital Zoom: Installed Memory: Lens Aperture: Focal Length min: Focal Length max: January 2004 4. 2 megapixels 10 x 4 x 16 MB F/8 -2. 8/3. 7 6. 3 mm 63. 0 mm 24

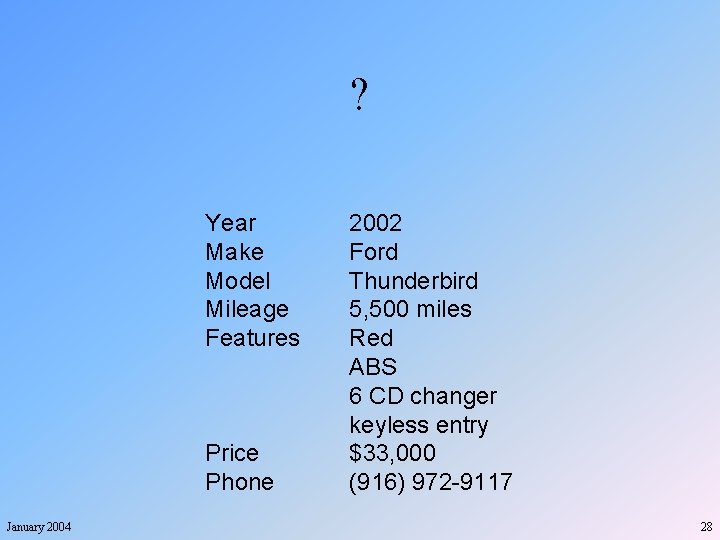

? Year Make Model Mileage Features Price Phone January 2004 2002 Ford Thunderbird 5, 500 miles Red ABS 6 CD changer keyless entry $33, 000 (916) 972 -9117 25

? Year Make Model Mileage Features Price Phone January 2004 2002 Ford Thunderbird 5, 500 miles Red ABS 6 CD changer keyless entry $33, 000 (916) 972 -9117 26

? Year Make Model Mileage Features Price Phone January 2004 2002 Ford Thunderbird 5, 500 miles Red ABS 6 CD changer keyless entry $33, 000 (916) 972 -9117 27

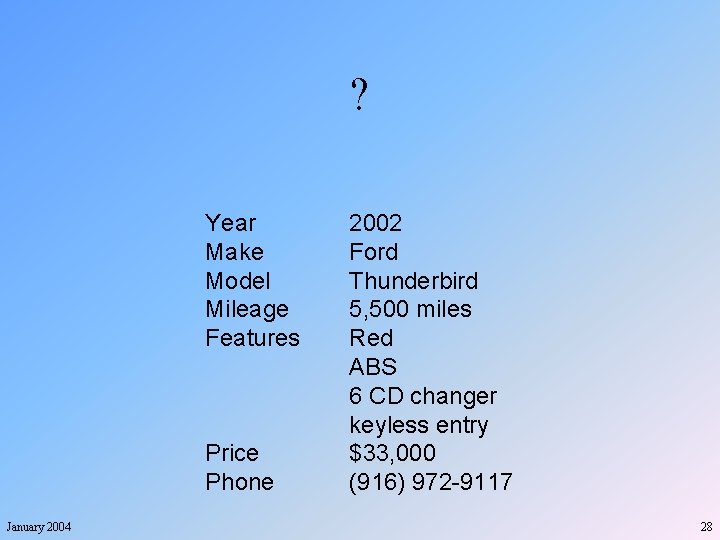

? Year Make Model Mileage Features Price Phone January 2004 2002 Ford Thunderbird 5, 500 miles Red ABS 6 CD changer keyless entry $33, 000 (916) 972 -9117 28

Car Advertisement Year Make Model Mileage Features Price Phone January 2004 2002 Ford Thunderbird 5, 500 miles Red ABS 6 CD changer keyless entry $33, 000 (916) 972 -9117 29

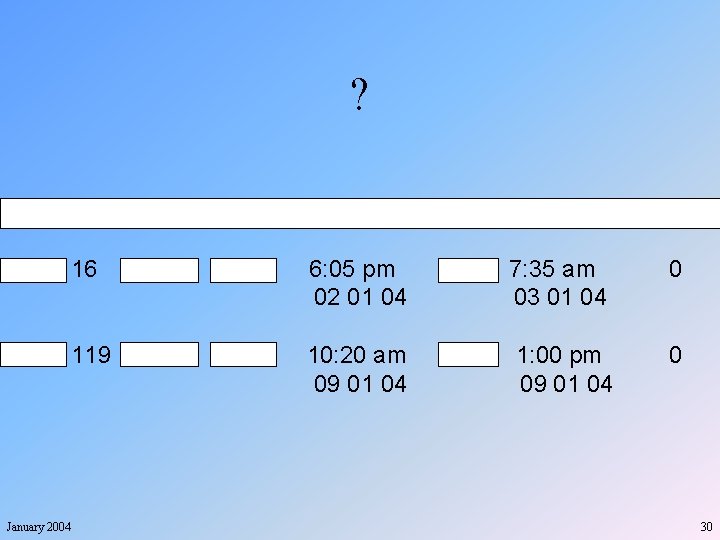

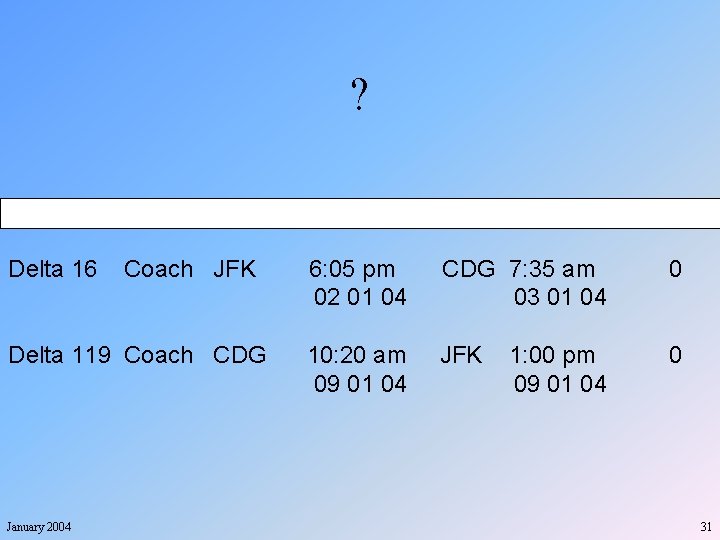

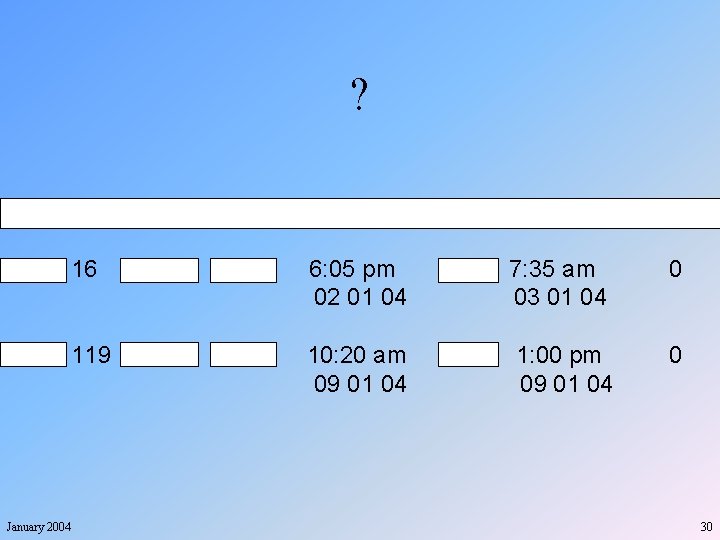

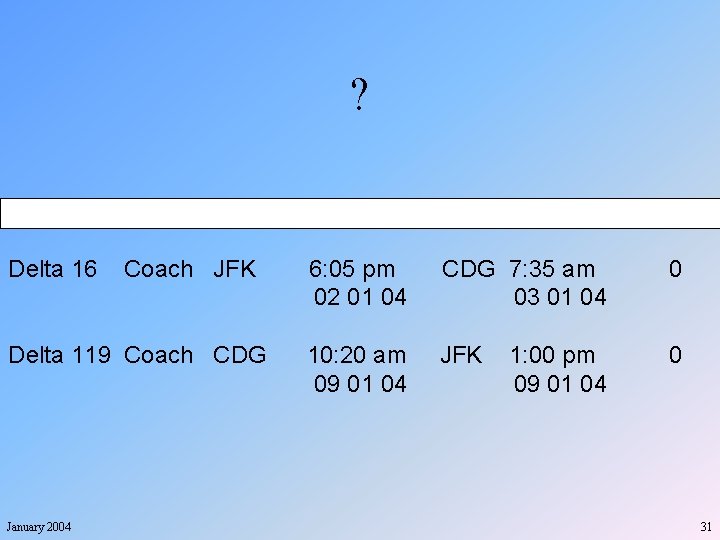

? Flight # Class From Delta 16 Coach JFK Delta 119 Coach CDG January 2004 Time/Date To Time/Date 6: 05 pm 02 01 04 CDG 7: 35 am 03 01 04 0 10: 20 am 09 01 04 JFK 0 1: 00 pm 09 01 04 Stops 30

? Flight # Class From Delta 16 Coach JFK Delta 119 Coach CDG January 2004 Time/Date To Time/Date 6: 05 pm 02 01 04 CDG 7: 35 am 03 01 04 0 10: 20 am 09 01 04 JFK 0 1: 00 pm 09 01 04 Stops 31

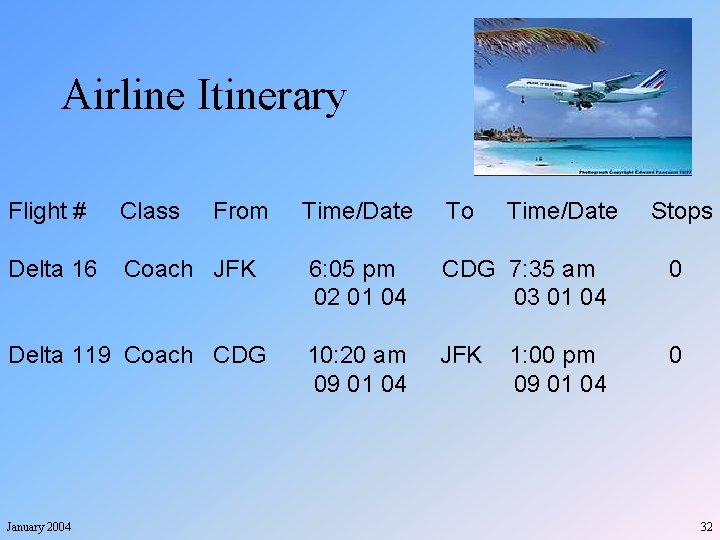

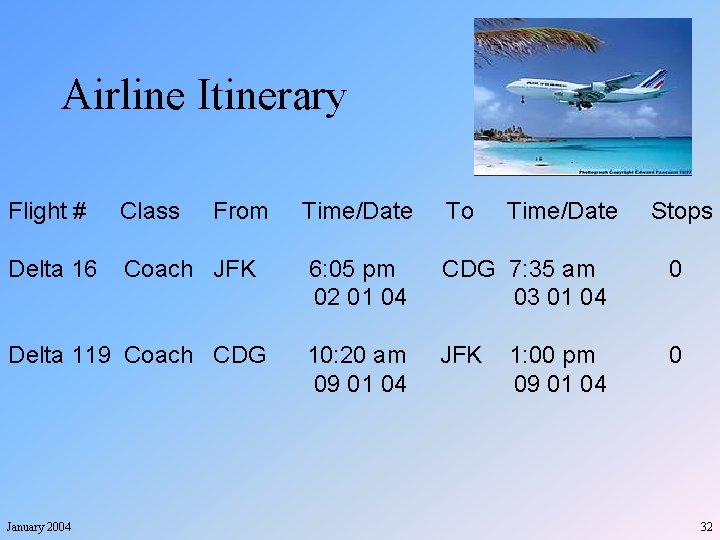

Airline Itinerary Flight # Class From Delta 16 Coach JFK Delta 119 Coach CDG January 2004 Time/Date To Time/Date 6: 05 pm 02 01 04 CDG 7: 35 am 03 01 04 0 10: 20 am 09 01 04 JFK 0 1: 00 pm 09 01 04 Stops 32

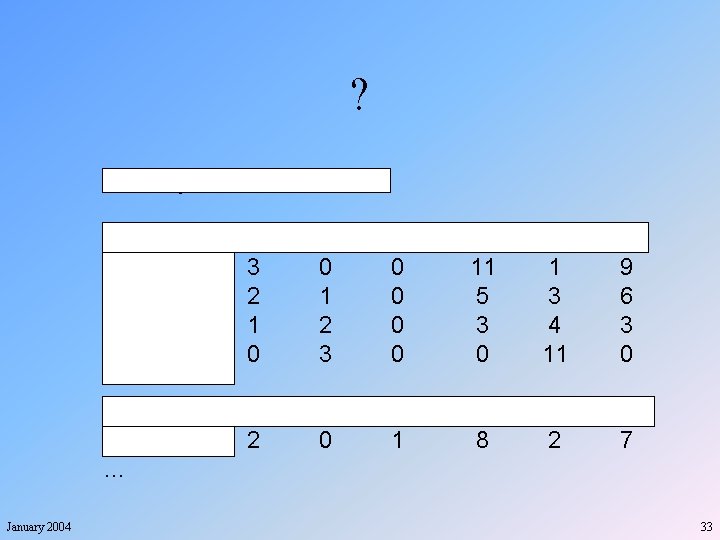

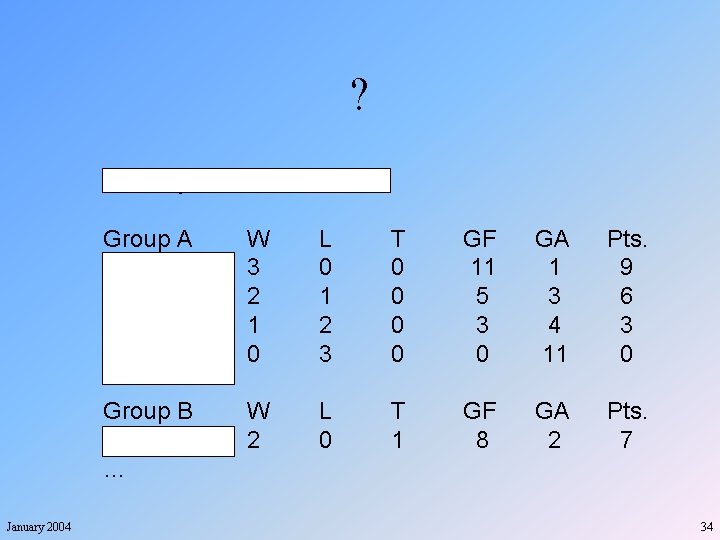

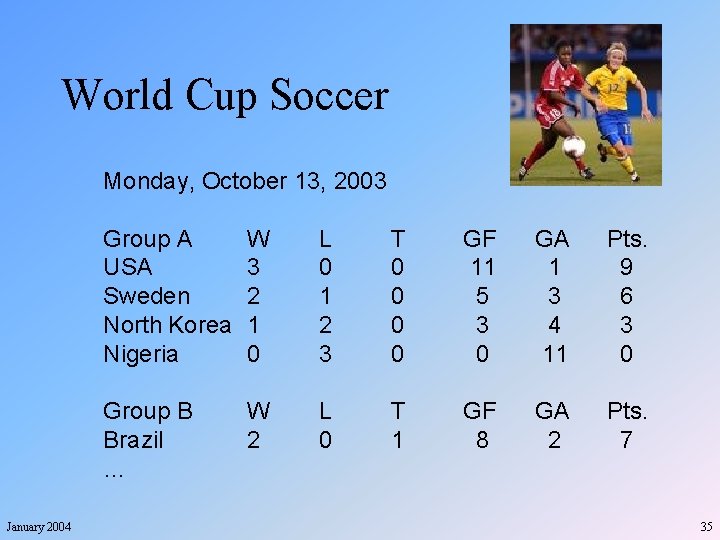

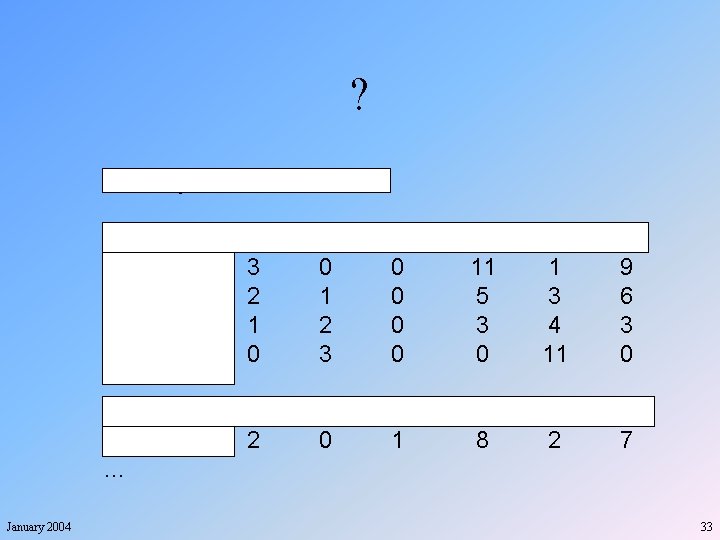

?

Monday, October 13, 2003

| Group A | W | L | T | GF | GA | Pts. |
| --- | --- | --- | --- | --- | --- | --- |
| USA | 3 | 0 | 0 | 11 | 1 | 9 |
| Sweden | 2 | 1 | 0 | 5 | 3 | 6 |
| North Korea | 1 | 2 | 0 | 3 | 4 | 3 |
| Nigeria | 0 | 3 | 0 | 0 | 11 | 0 |

| Group B | W | L | T | GF | GA | Pts. |
| --- | --- | --- | --- | --- | --- | --- |
| Brazil | 2 | 0 | 1 | 8 | 2 | 7 |
| … | | | | | | |

World Cup Soccer

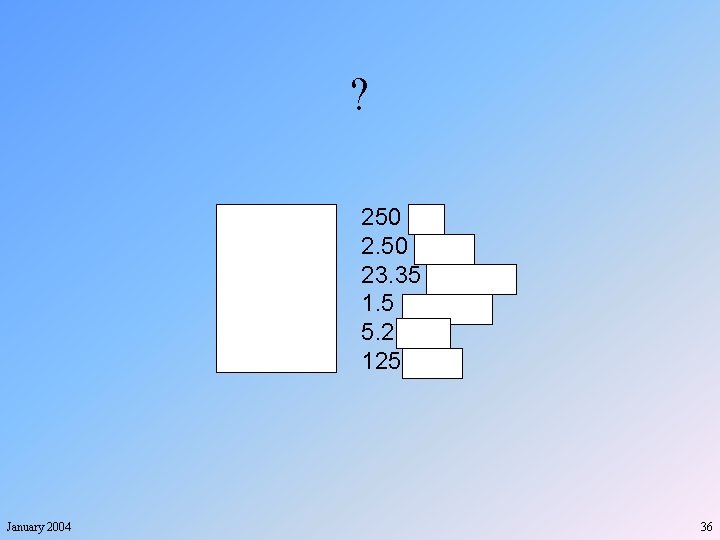

?

| Attribute | Value |
| --- | --- |
| Calories | 250 cal |
| Distance | 2.50 miles |
| Time | 23.35 minutes |
| Incline | 1.5 degrees |
| Speed | 5.2 mph |
| Heart Rate | 125 bpm |

Treadmill Workout

?

| Attribute | Value |
| --- | --- |
| Place | Bonnie Lake |
| County | Duchesne |
| State | Utah |
| Type | Lake |
| Elevation | 10,000 feet |
| USGS Quad | Mirror Lake |
| Latitude | 40.711ºN |
| Longitude | 110.876ºW |

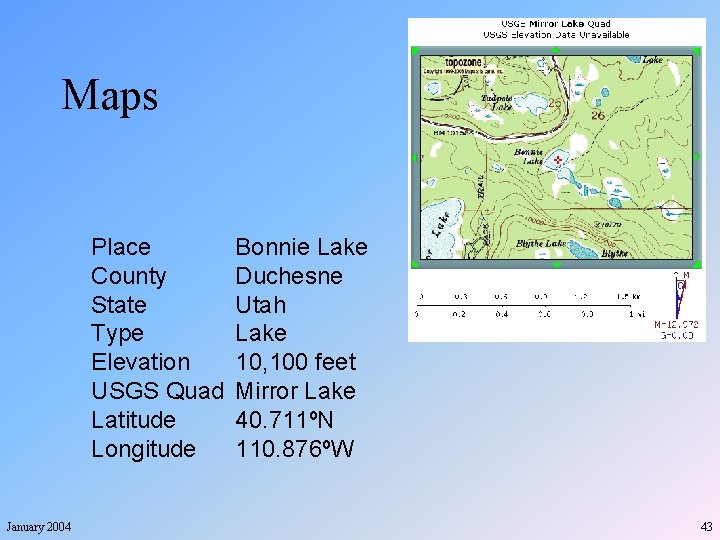

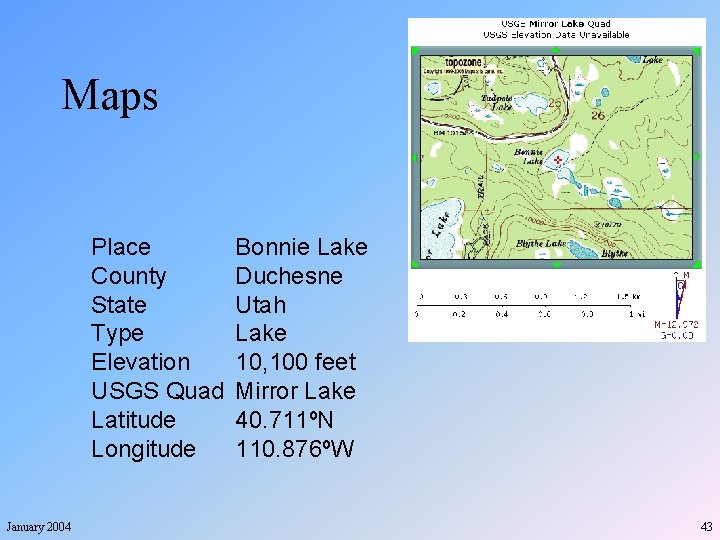

Maps

| Attribute | Value |
| --- | --- |
| Place | Bonnie Lake |
| County | Duchesne |
| State | Utah |
| Type | Lake |
| Elevation | 10,100 feet |
| USGS Quad | Mirror Lake |
| Latitude | 40.711ºN |
| Longitude | 110.876ºW |


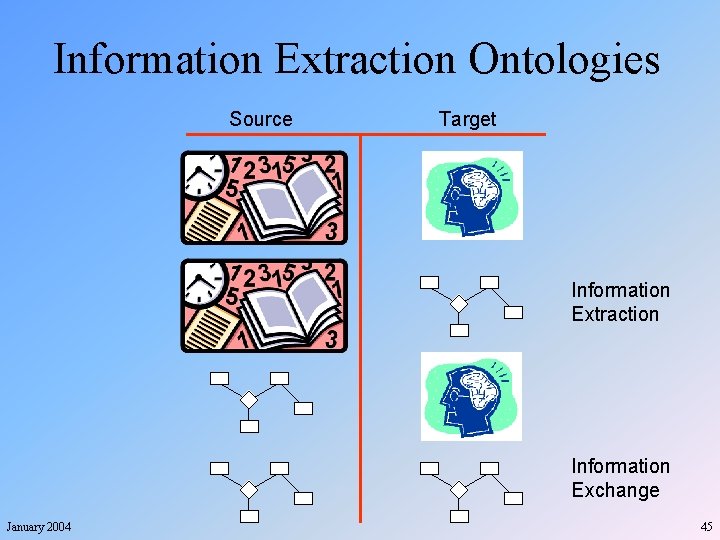

Information Extraction Ontologies

- Source, Target
- Information Extraction
- Information Exchange

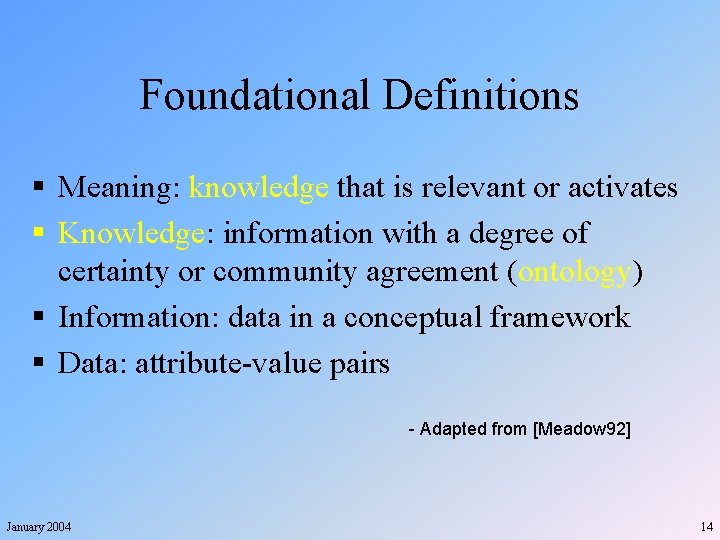

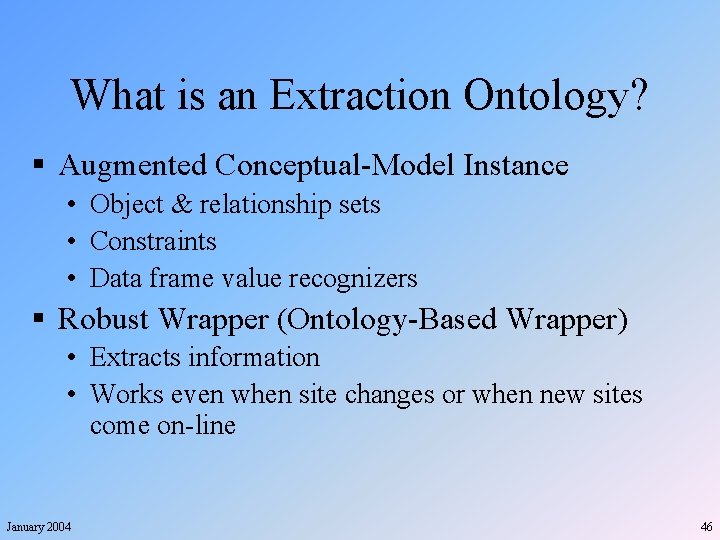

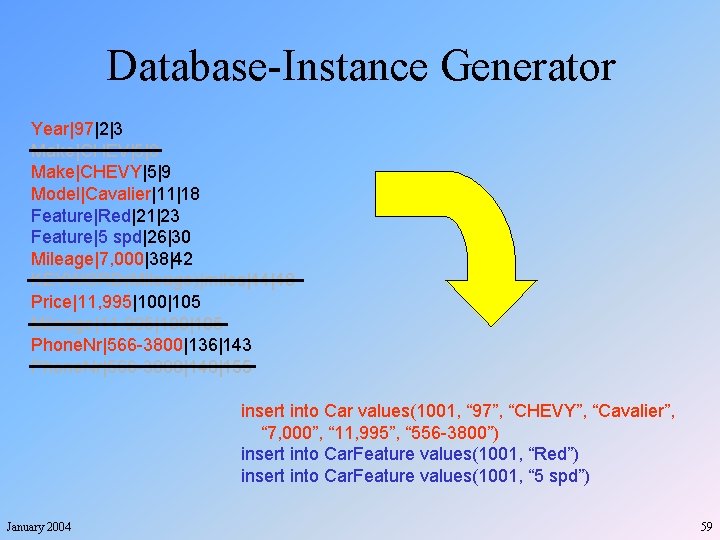

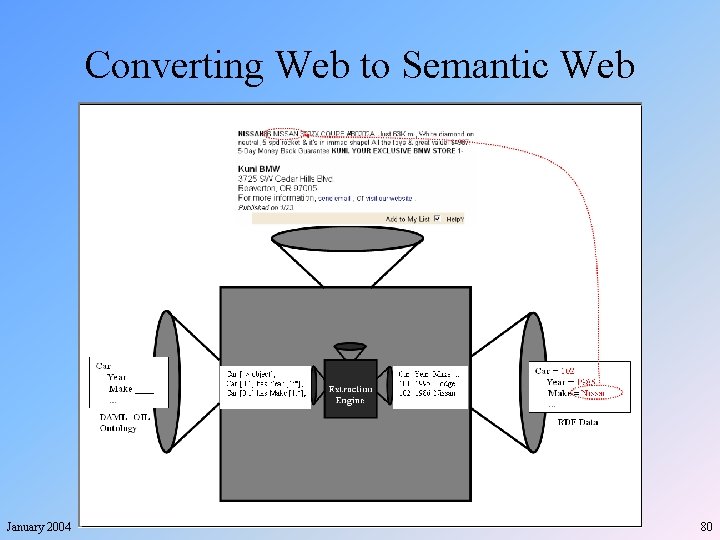

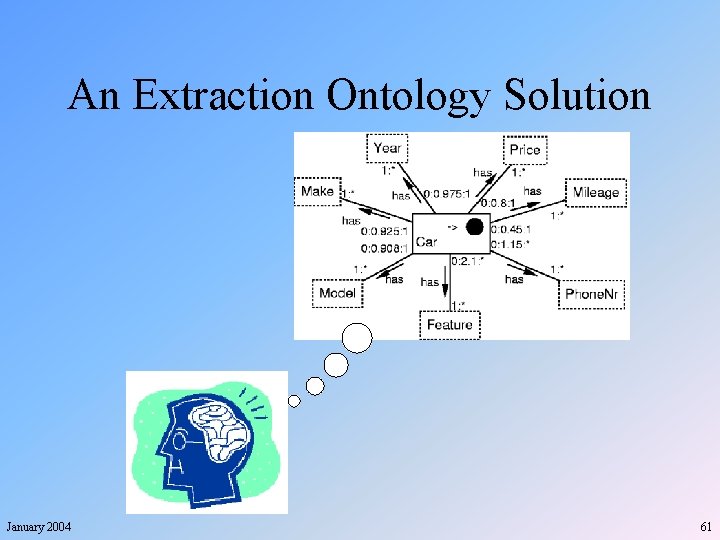

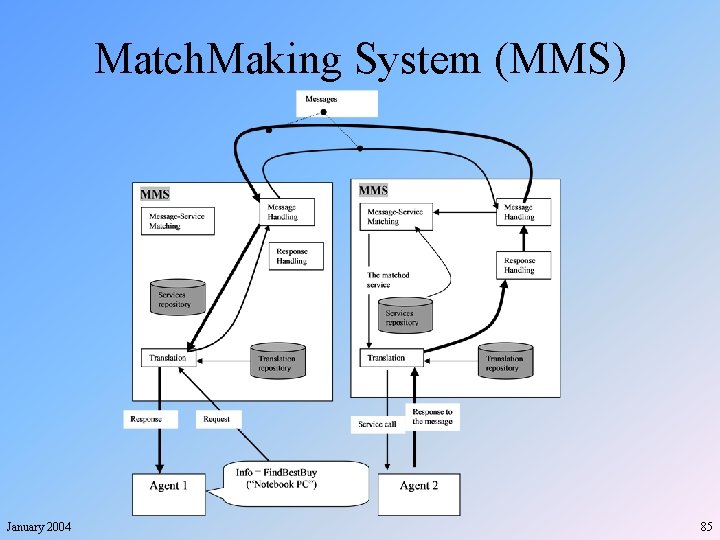

What is an Extraction Ontology?

- Augmented Conceptual-Model Instance
  - Object & relationship sets
  - Constraints
  - Data frame value recognizers
- Robust Wrapper (Ontology-Based Wrapper)
  - Extracts information
  - Works even when site changes or when new sites come on-line

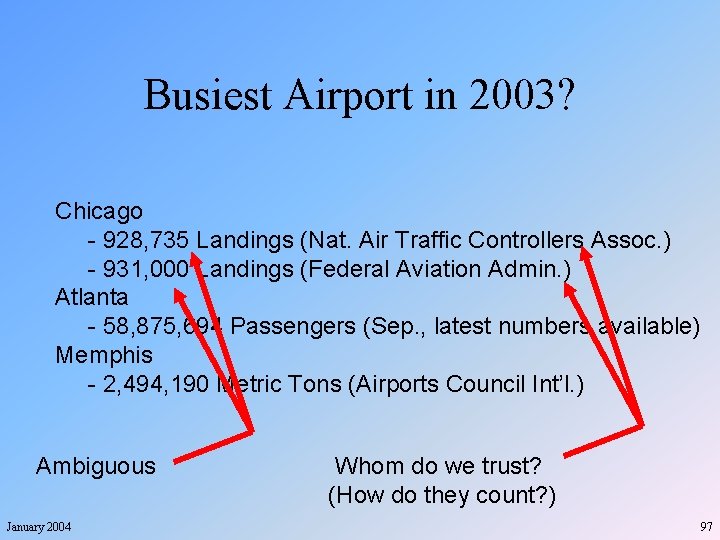

![Extraction Ontology: Example](https://slidetodoc.com/presentation_image_h2/815117ea046e2b5b83957369c78243f2/image-47.jpg)

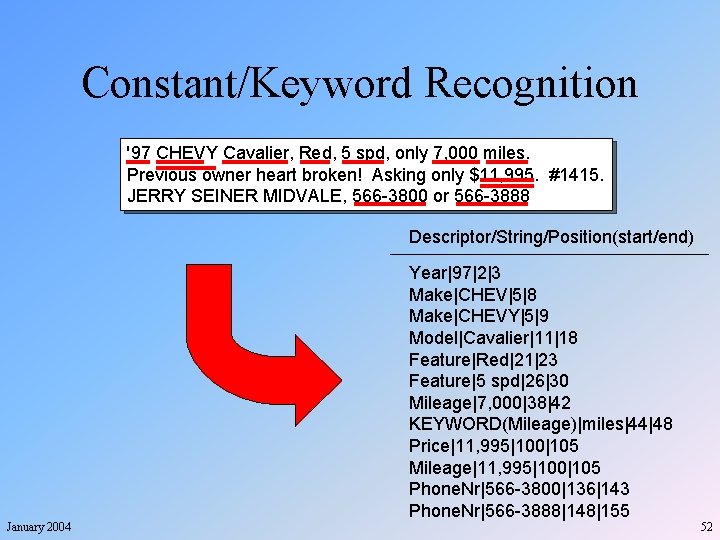

Extraction Ontology: Example

- `Car [-> object];`
- `Car [0:1] has Year [1:*];`
- `Car [0:1] has Make [1:*];`
- …
- `Car [0:*] has Feature [1:*];`
- `PhoneNr [1:*] is for Car [0:1];`
- `Year matches [4] constant {extract "\d{2}"; context "\b'[4-9]\d\b"; …}`
- …
- `Mileage matches [8] keyword {"\bmiles\b", "\bmi\b.", …}`
- …
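The declarations above pair object sets and cardinality constraints with value and keyword recognizers. A minimal Python sketch of that pairing follows. The `ObjectSet` class, the `max_per_car` field, and every regular expression here are illustrative assumptions. The slide elides most of the real patterns, and this sketch does not attempt to reproduce them.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ObjectSet:
    """Hypothetical object set attached to Car in a tiny extraction ontology."""
    name: str
    max_per_car: str                                     # "1" = at most one per car, "*" = many
    constants: List[str] = field(default_factory=list)   # value regexes, one capture group each
    keywords: List[str] = field(default_factory=list)    # context regexes


# Assumed patterns, chosen only to illustrate the structure.
CAR_ONTOLOGY = [
    ObjectSet("Year", "1", constants=[r"'(\d{2})\b"]),
    ObjectSet("Mileage", "1", constants=[r"\b(\d{1,3},\d{3})\b"],
              keywords=[r"\bmiles\b", r"\bmi\b"]),
    ObjectSet("Feature", "*", constants=[r"\b(Red|\d spd)\b"]),
]


def candidates(text: str, ontology: List[ObjectSet]) -> Dict[str, List[str]]:
    """Collect every constant each object set recognizes in the text."""
    found = {}
    for obj in ontology:
        found[obj.name] = [m.group(1)
                           for pattern in obj.constants
                           for m in re.finditer(pattern, text)]
    return found


print(candidates("'97 CHEVY Cavalier, Red, 5 spd, only 7,000 miles", CAR_ONTOLOGY))
```

Because the recognizers describe the data rather than the page layout, the same ontology can be pointed at a different site without rewriting it, which is the sense in which the wrapper is called robust.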

Extraction Ontologies: An Example of Semantic Understanding

- “Intelligent” Symbol Manipulation
- Gives the “Illusion of Understanding”
- Obtains Meaningful and Useful Results


A Variety of Applications

- Information Extraction
- High-Precision Classification
- Schema Mapping
- Semantic Web Creation
- Agent Communication
- Ontology Generation

Application #1: Information Extraction

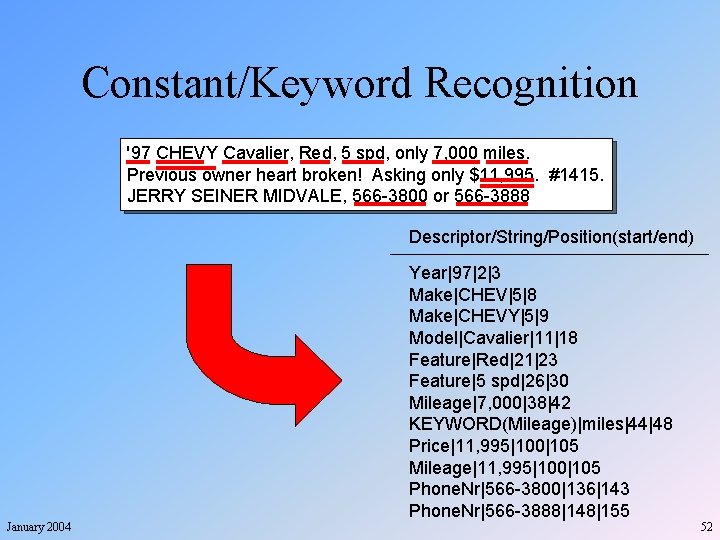

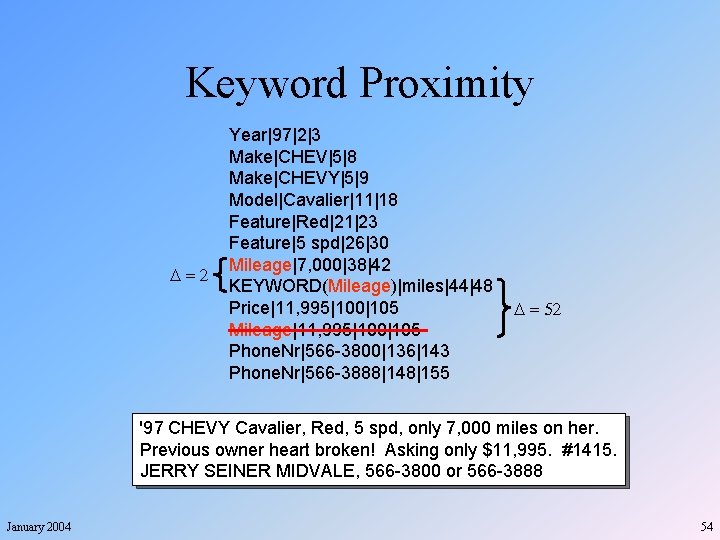

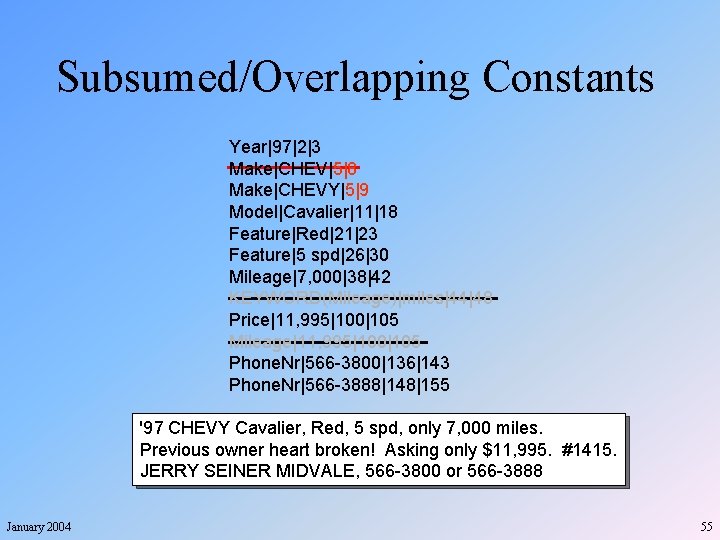

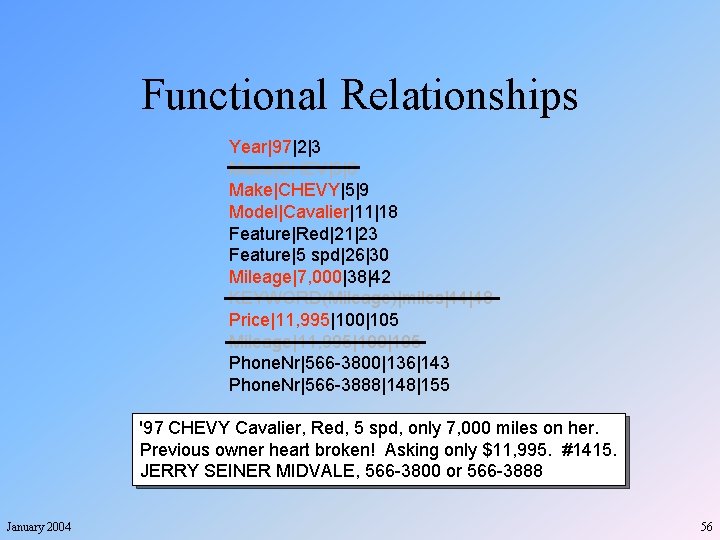

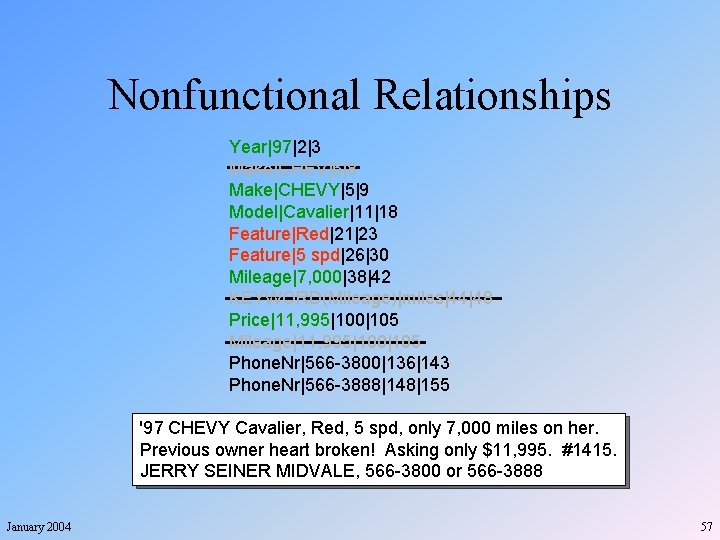

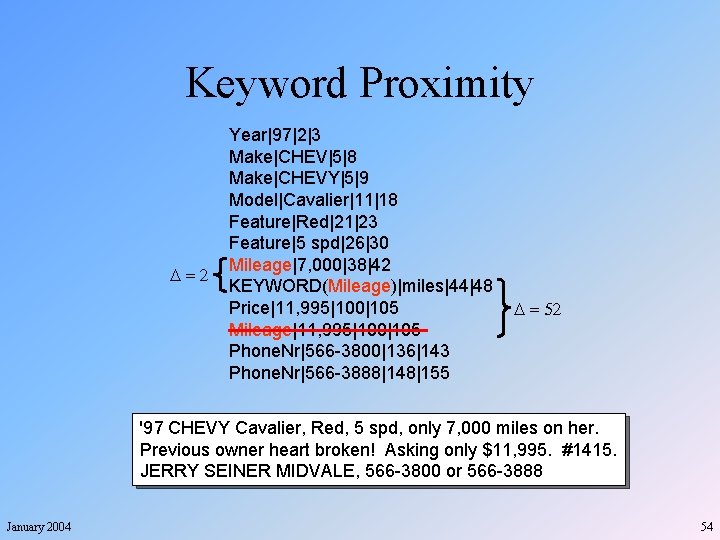

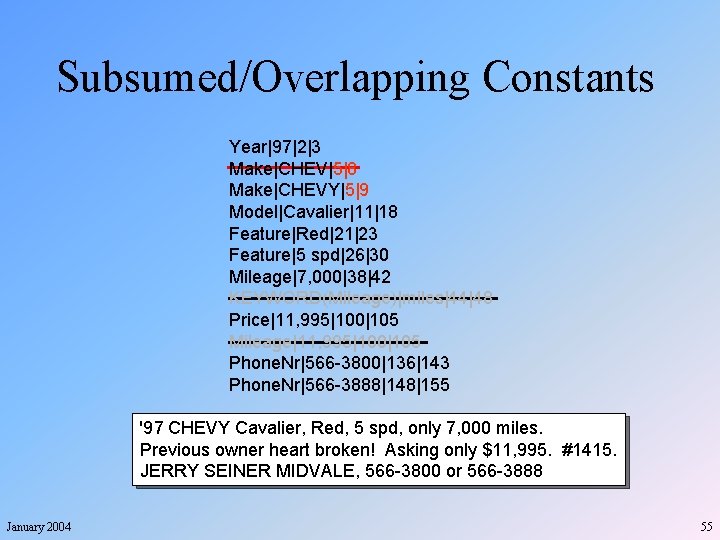

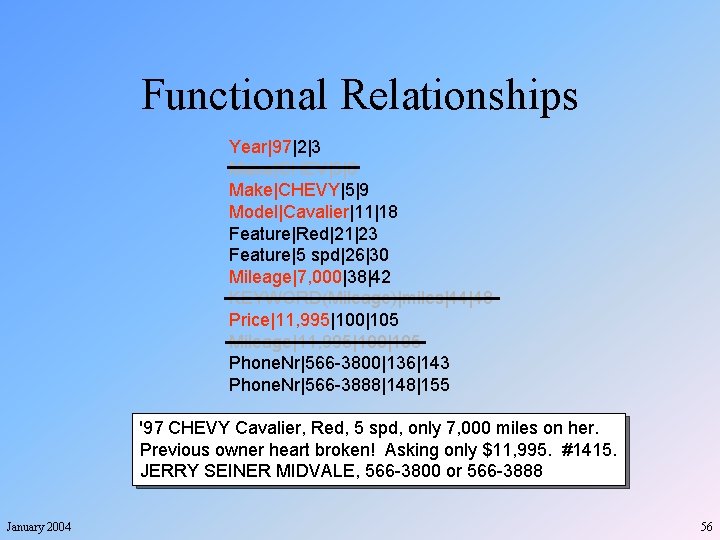

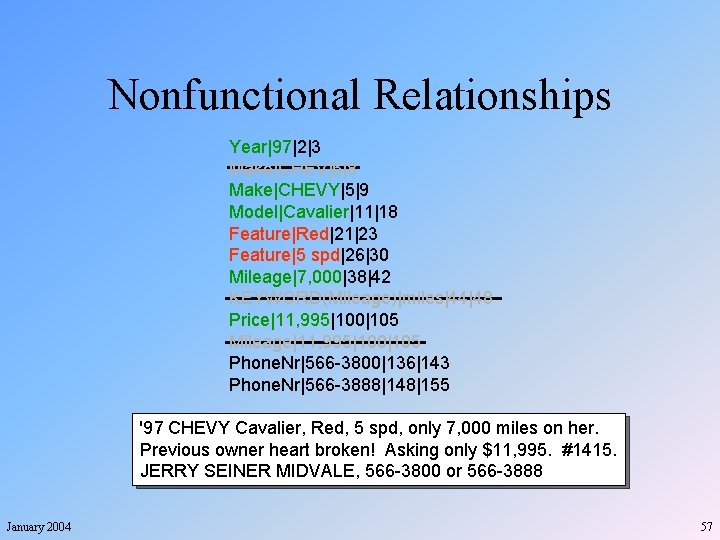

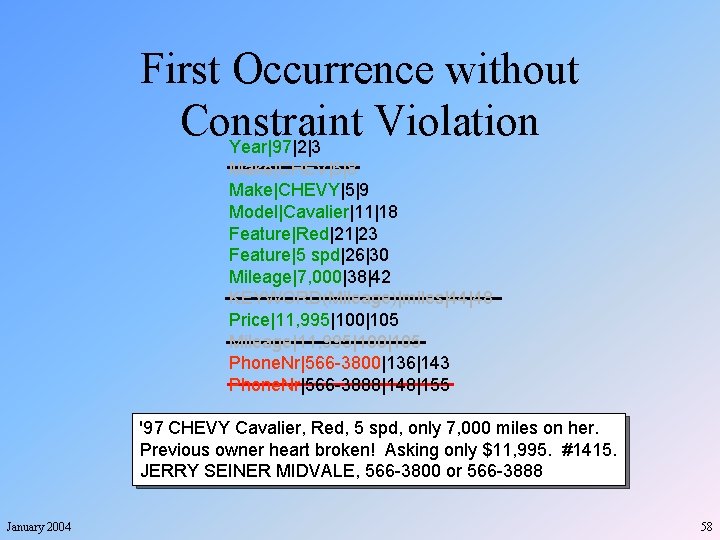

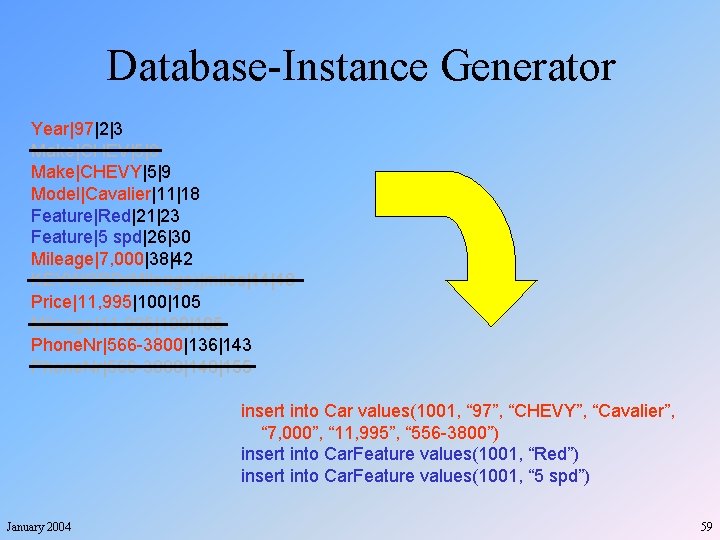

Constant/Keyword Recognition

'97 CHEVY Cavalier, Red, 5 spd, only 7,000 miles. Previous owner heart broken! Asking only $11,995. #1415. JERRY SEINER MIDVALE, 566-3800 or 566-3888

Descriptor/String/Position(start/end):

| Descriptor | String | Start | End |
| --- | --- | --- | --- |
| Year | 97 | 2 | 3 |
| Make | CHEV | 5 | 8 |
| Make | CHEVY | 5 | 9 |
| Model | Cavalier | 11 | 18 |
| Feature | Red | 21 | 23 |
| Feature | 5 spd | 26 | 30 |
| Mileage | 7,000 | 38 | 42 |
| KEYWORD(Mileage) | miles | 44 | 48 |
| Price | 11,995 | 100 | 105 |
| Mileage | 11,995 | 100 | 105 |
| PhoneNr | 566-3800 | 136 | 143 |
| PhoneNr | 566-3888 | 148 | 155 |
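A recognition table of this shape can be produced by running each recognizer over the ad and recording 1-indexed, inclusive character positions. The sketch below assumes the ad wording used on the later heuristic slides ("7,000 miles on her"), since the positions for Price line up with that wording. The regular expressions are illustrative assumptions, not the ontology's actual recognizers. Offsets near the end of the ad depend on the exact original spacing, which the transcript does not preserve.

```python
import re

AD = ("'97 CHEVY Cavalier, Red, 5 spd, only 7,000 miles on her. "
      "Previous owner heart broken! Asking only $11,995. #1415. "
      "JERRY SEINER MIDVALE, 566-3800 or 566-3888")

# (descriptor, regex) pairs; every pattern is an assumption made for illustration.
RECOGNIZERS = [
    ("Year", r"(?<=')\d{2}\b"),
    ("Make", r"\bCHEV"),              # deliberately loose, so it also fires inside CHEVY
    ("Make", r"\bCHEVY\b"),
    ("Model", r"\bCavalier\b"),
    ("Feature", r"\bRed\b"),
    ("Feature", r"\b\d spd\b"),
    ("Mileage", r"\b\d{1,3},\d{3}\b"),
    ("KEYWORD(Mileage)", r"\bmiles\b"),
    ("Price", r"(?<=\$)\d{1,3},\d{3}\b"),
    ("PhoneNr", r"\b\d{3}-\d{4}\b"),
]


def recognize(text):
    """Return (descriptor, string, start, end) rows with 1-indexed inclusive positions."""
    rows = []
    for descriptor, pattern in RECOGNIZERS:
        for m in re.finditer(pattern, text):
            rows.append((descriptor, m.group(0), m.start() + 1, m.end()))
    return sorted(rows, key=lambda row: (row[2], row[3]))


for row in recognize(AD):
    print("|".join(str(part) for part in row))
```

Note that the table intentionally contains conflicts, such as two Make rows and 11,995 recognized as both Price and Mileage. Resolving them is the job of the heuristics that follow.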

Heuristics

- Keyword proximity
- Subsumed and overlapping constants
- Functional relationships
- Nonfunctional relationships
- First occurrence without constraint violation

Keyword Proximity

'97 CHEVY Cavalier, Red, 5 spd, only 7,000 miles on her. Previous owner heart broken! Asking only $11,995. #1415. JERRY SEINER MIDVALE, 566-3800 or 566-3888

- `Mileage|7,000|38|42`: D = 2 from `KEYWORD(Mileage)|miles|44|48`
- `Mileage|11,995|100|105`: D = 52 from `KEYWORD(Mileage)|miles|44|48`
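The two distances on this slide are consistent with measuring the gap between the end of one span and the start of the other (44 - 42 = 2 and 100 - 48 = 52). A minimal sketch under that assumption follows. The function name and the tie-free selection rule are illustrative.

```python
def keyword_distance(candidate, keyword):
    """Gap between two (start, end) spans given as 1-indexed inclusive positions."""
    c_start, c_end = candidate
    k_start, k_end = keyword
    if c_end < k_start:        # candidate lies before the keyword
        return k_start - c_end
    if k_end < c_start:        # candidate lies after the keyword
        return c_start - k_end
    return 0                   # spans overlap


mileage_candidates = {"7,000": (38, 42), "11,995": (100, 105)}
miles_keyword = (44, 48)

distances = {value: keyword_distance(span, miles_keyword)
             for value, span in mileage_candidates.items()}
best = min(distances, key=distances.get)   # the candidate closest to the keyword wins

print(distances, best)
```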

Subsumed/Overlapping Constants

Functional Relationships

Nonfunctional Relationships

First Occurrence without Constraint Violation
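The slides name these heuristics without spelling out their rules. As one hedged reading of the subsumed-constants case, the sketch below drops a match when a strictly longer match for the same descriptor covers its span, which is what discarding `Make|CHEV|5|8` in favor of `Make|CHEVY|5|9` would require. The function name and the exact rule are assumptions.

```python
def drop_subsumed(rows):
    """Drop a row when a longer row with the same descriptor covers its span.

    Rows are (descriptor, string, start, end) with 1-indexed inclusive positions.
    """
    kept = []
    for descriptor, string, start, end in rows:
        covered = any(
            other_desc == descriptor
            and other_start <= start
            and end <= other_end
            and (other_end - other_start) > (end - start)
            for other_desc, _other_string, other_start, other_end in rows
        )
        if not covered:
            kept.append((descriptor, string, start, end))
    return kept


rows = [
    ("Make", "CHEV", 5, 8),
    ("Make", "CHEVY", 5, 9),
    ("Model", "Cavalier", 11, 18),
]
print(drop_subsumed(rows))
```

Overlaps between different descriptors, such as 11,995 as both Price and Mileage, are left alone here. In this example, keyword proximity already assigns Mileage to 7,000.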

Database-Instance Generator

- `insert into Car values(1001, "97", "CHEVY", "Cavalier", "7,000", "11,995", "566-3800")`
- `insert into CarFeature values(1001, "Red")`
- `insert into CarFeature values(1001, "5 spd")`
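The generated statements can be reproduced with a small sketch using Python's standard `sqlite3` module. The table layouts, column names, and the `generate_instance` helper are assumptions made for illustration. The slide shows only the insert statements, not a schema definition.

```python
import sqlite3


def generate_instance(conn, car_id, functional, features):
    """Insert one car and its features; the schema here is an assumed layout."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS Car (id INTEGER PRIMARY KEY, Year TEXT, "
        "Make TEXT, Model TEXT, Mileage TEXT, Price TEXT, PhoneNr TEXT)"
    )
    conn.execute("CREATE TABLE IF NOT EXISTS CarFeature (car_id INTEGER, Feature TEXT)")
    # Functional object sets contribute one column value each.
    conn.execute(
        "INSERT INTO Car VALUES (?, ?, ?, ?, ?, ?, ?)",
        (car_id, functional["Year"], functional["Make"], functional["Model"],
         functional["Mileage"], functional["Price"], functional["PhoneNr"]),
    )
    # Nonfunctional object sets contribute one row per value.
    conn.executemany(
        "INSERT INTO CarFeature VALUES (?, ?)",
        [(car_id, feature) for feature in features],
    )


conn = sqlite3.connect(":memory:")
generate_instance(
    conn,
    1001,
    {"Year": "97", "Make": "CHEVY", "Model": "Cavalier",
     "Mileage": "7,000", "Price": "11,995", "PhoneNr": "566-3800"},
    ["Red", "5 spd"],
)
print(conn.execute("SELECT * FROM Car").fetchall())
print(conn.execute("SELECT * FROM CarFeature").fetchall())
```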

Application #2 High-Precision Classification January 2004 60

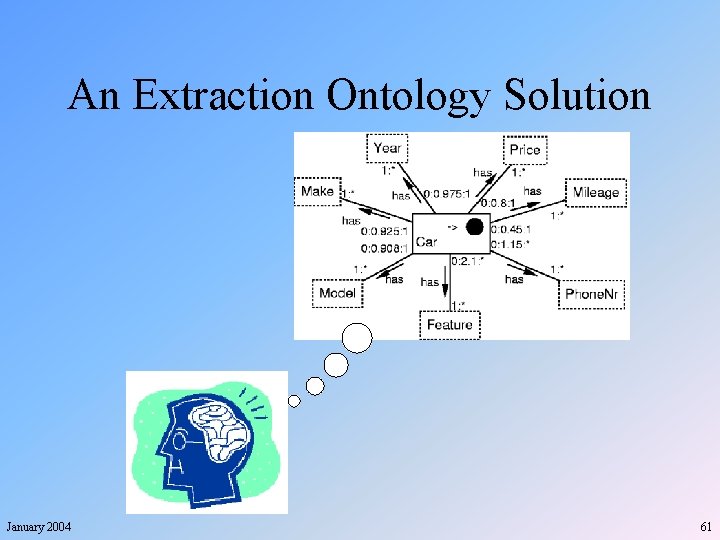

An Extraction Ontology Solution January 2004 61

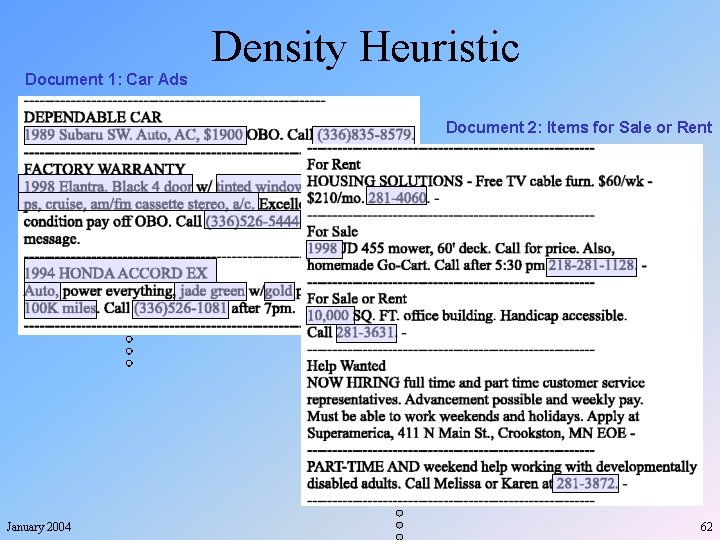

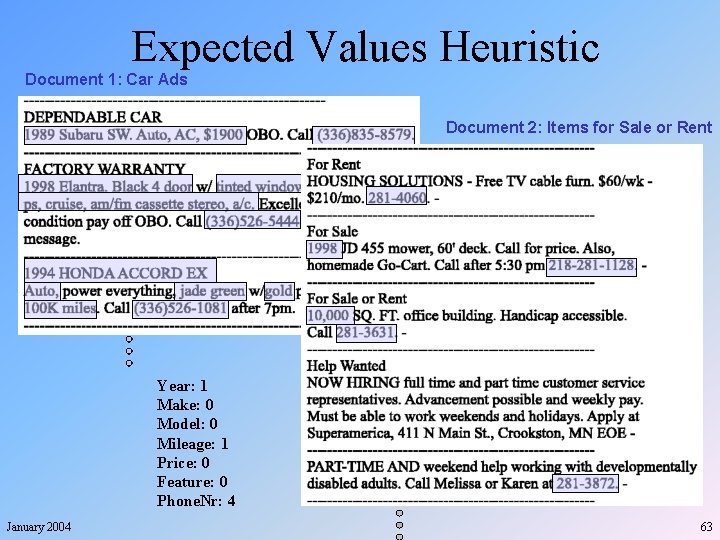

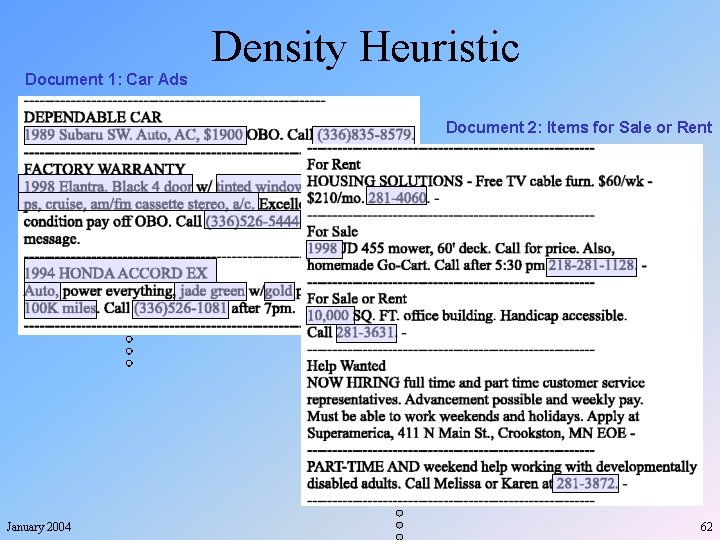

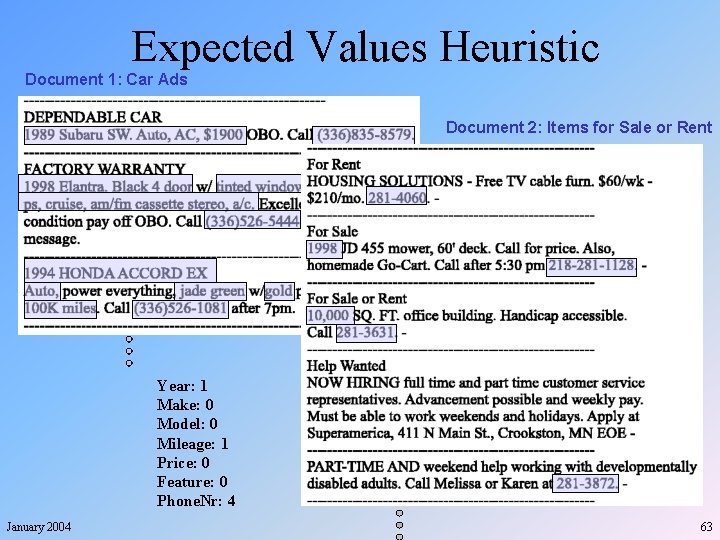

Density Heuristic

- Document 1: Car Ads
- Document 2: Items for Sale or Rent

Expected Values Heuristic

| Object set | Document 1: Car Ads | Document 2: Items for Sale or Rent |
| --- | --- | --- |
| Year | 3 | 1 |
| Make | 2 | 0 |
| Model | 3 | 0 |
| Mileage | 1 | 1 |
| Price | 1 | 0 |
| Feature | 15 | 0 |
| PhoneNr | 3 | 4 |

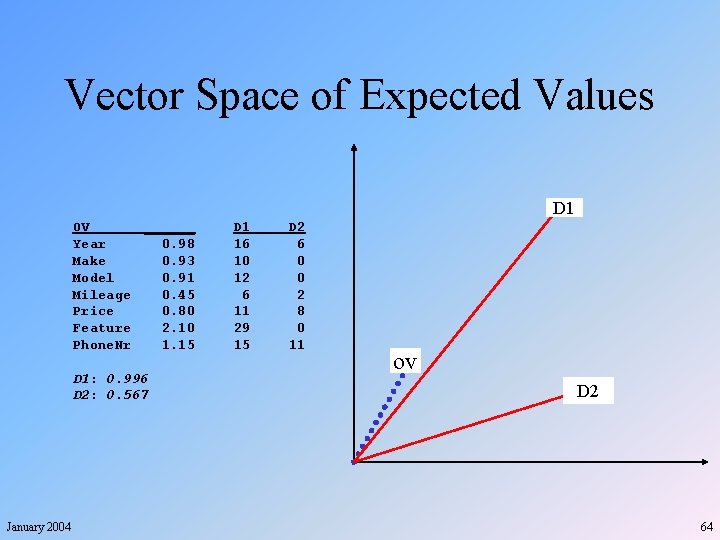

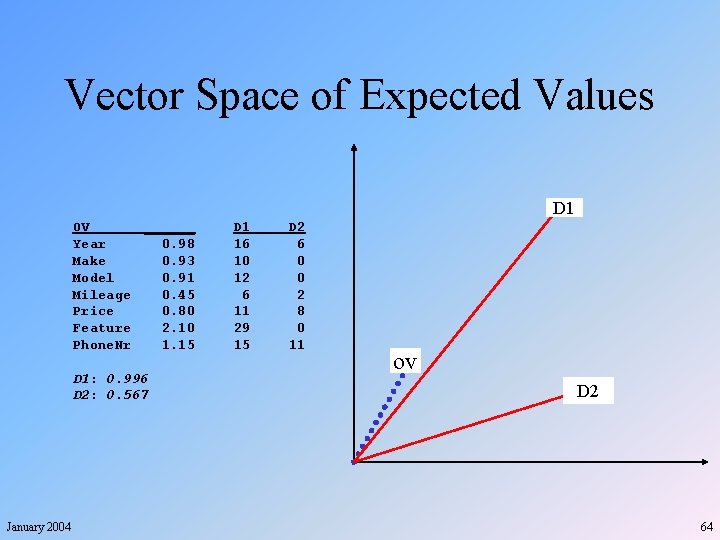

Vector Space of Expected Values

| Object set | OV | D1 | D2 |
| --- | --- | --- | --- |
| Year | 0.98 | 16 | 6 |
| Make | 0.93 | 10 | 0 |
| Model | 0.91 | 12 | 0 |
| Mileage | 0.45 | 6 | 2 |
| Price | 0.80 | 11 | 8 |
| Feature | 2.10 | 29 | 0 |
| PhoneNr | 1.15 | 15 | 11 |

D1: 0.996

D2: 0.567
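The slide does not name the similarity measure. Assuming it is the cosine of the angle between each document vector and the expected-values vector OV, the sketch below reproduces the two figures shown. The function and variable names are illustrative.

```python
import math

OBJECT_SETS = ["Year", "Make", "Model", "Mileage", "Price", "Feature", "PhoneNr"]
OV = [0.98, 0.93, 0.91, 0.45, 0.80, 2.10, 1.15]   # expected values per ad
D1 = [16, 10, 12, 6, 11, 29, 15]                  # counts in Document 1 (car ads)
D2 = [6, 0, 0, 2, 8, 0, 11]                       # counts in Document 2 (sale items)


def cosine(u, v):
    """Cosine similarity of two equal-length numeric vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


print(f"D1: {cosine(OV, D1):.3f}")
print(f"D2: {cosine(OV, D2):.3f}")
```

Cosine similarity ignores vector length, so a page with many car ads and a page with a few score alike as long as the proportions of Year, Make, Model, and so on match the expected proportions.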

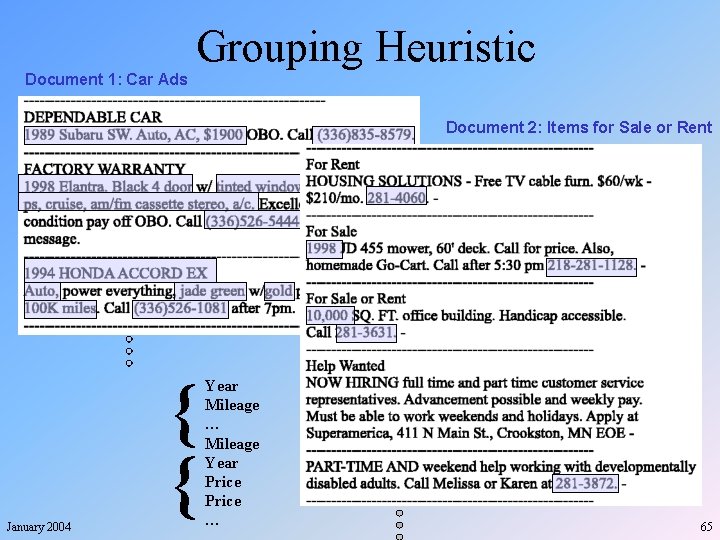

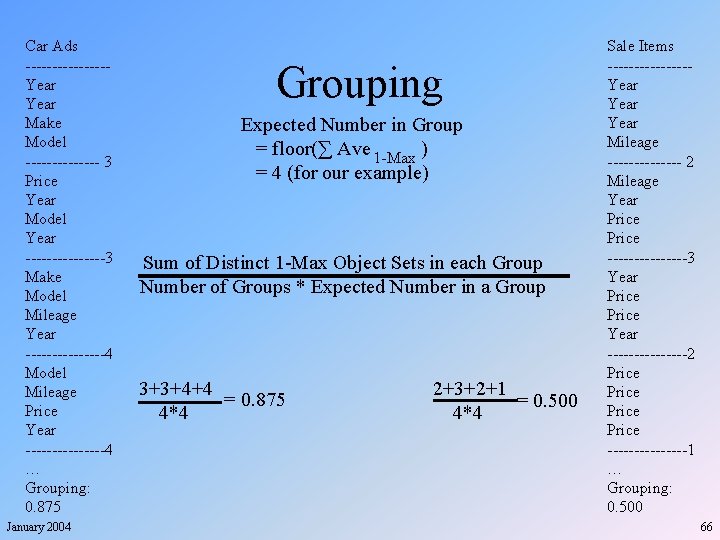

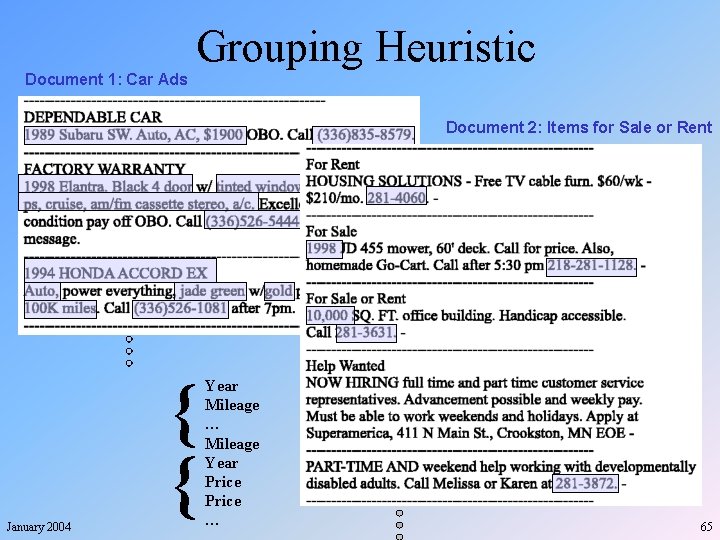

Grouping Heuristic

- Document 1: Car Ads: {Year, Make, Model, Price}, {Year, Model}, {Year, Make, Model, Mileage}, …
- Document 2: Items for Sale or Rent: {Year, Mileage, …}, {Mileage, Year, Price, …}

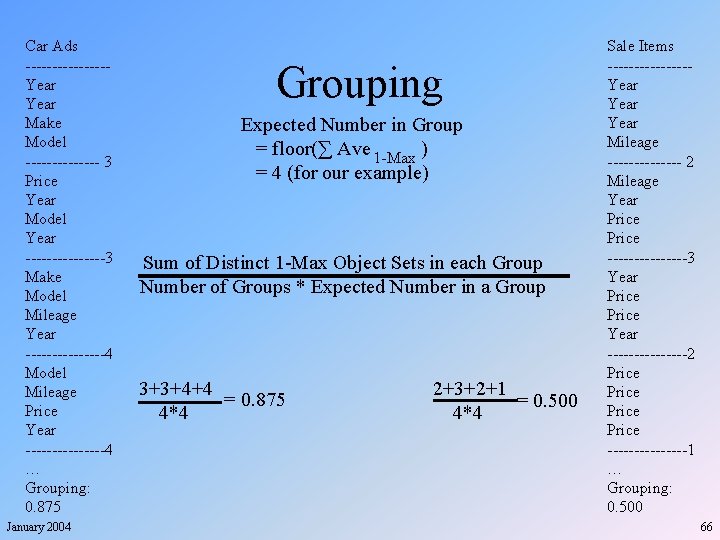

Grouping

$$\text{Expected Number in Group} = \left\lfloor \sum \text{Ave}_{\text{1-Max}} \right\rfloor = 4 \quad \text{(for our example)}$$

$$\text{Grouping} = \frac{\text{Sum of Distinct 1-Max Object Sets in each Group}}{\text{Number of Groups} \times \text{Expected Number in a Group}}$$

Car Ads

| Group | Distinct 1-Max object sets |
| --- | --- |
| Year, Make, Model | 3 |
| Price, Year, Model, Year | 3 |
| Make, Model, Mileage, Year | 4 |
| Model, Mileage, Price, Year | 4 |
| … | |

$$\text{Grouping} = \frac{3 + 3 + 4 + 4}{4 \times 4} = 0.875$$

Sale Items

| Group | Distinct 1-Max object sets |
| --- | --- |
| Year, Mileage | 2 |
| Mileage, Year, Price | 3 |
| Year, Price, Year | 2 |
| Price | 1 |
| … | |

$$\text{Grouping} = \frac{2 + 3 + 2 + 1}{4 \times 4} = 0.500$$
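The grouping arithmetic can be checked with a short sketch. It assumes the 1-Max object sets are Year, Make, Model, Mileage, and Price, and that their averages are the OV values from the vector-space slide, which sum to 4.07 and floor to 4, matching the slide. The groups are copied as they appear in the transcript. The function name is illustrative.

```python
import math

# Assumed: averages of the 1-Max (functional) object sets, taken from the OV column.
AVERAGES_1_MAX = {"Year": 0.98, "Make": 0.93, "Model": 0.91, "Mileage": 0.45, "Price": 0.80}

expected_in_group = math.floor(sum(AVERAGES_1_MAX.values()))


def grouping_score(groups, expected):
    """Sum of distinct 1-Max object sets per group over (groups * expected size)."""
    distinct_total = sum(len(set(group) & set(AVERAGES_1_MAX)) for group in groups)
    return distinct_total / (len(groups) * expected)


car_ads = [
    ["Year", "Make", "Model"],
    ["Price", "Year", "Model", "Year"],
    ["Make", "Model", "Mileage", "Year"],
    ["Model", "Mileage", "Price", "Year"],
]
sale_items = [
    ["Year", "Mileage"],
    ["Mileage", "Year", "Price"],
    ["Year", "Price", "Year"],
    ["Price"],
]

print(expected_in_group)
print(grouping_score(car_ads, expected_in_group))
print(grouping_score(sale_items, expected_in_group))
```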

Application #3: Schema Mapping

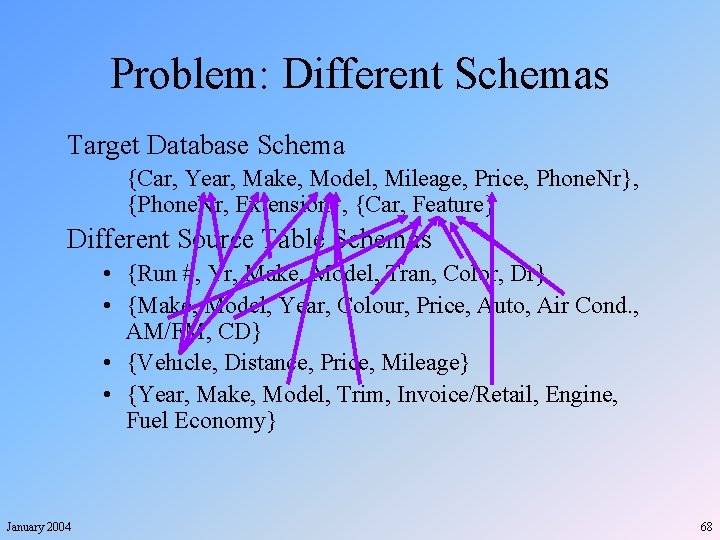

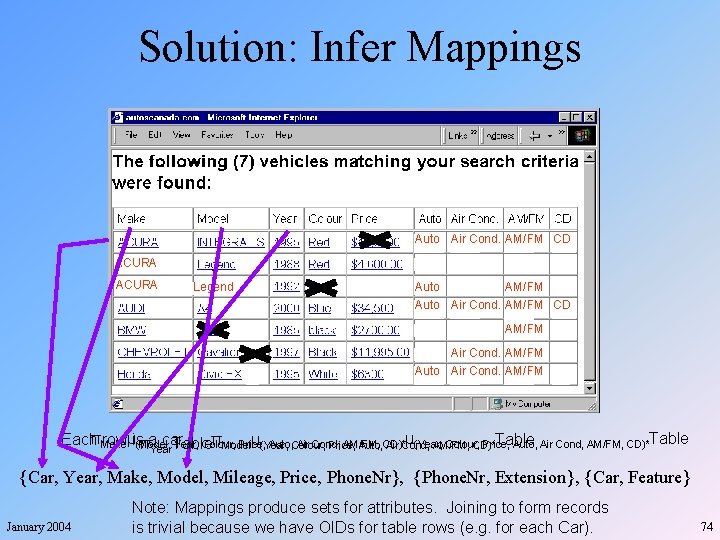

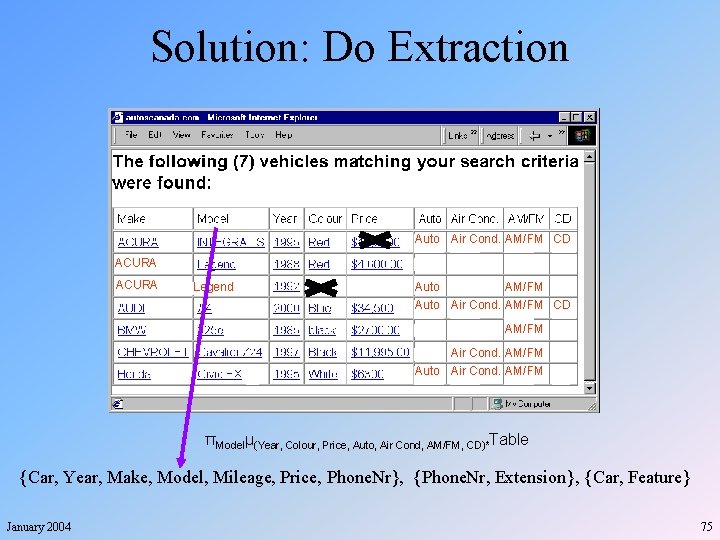

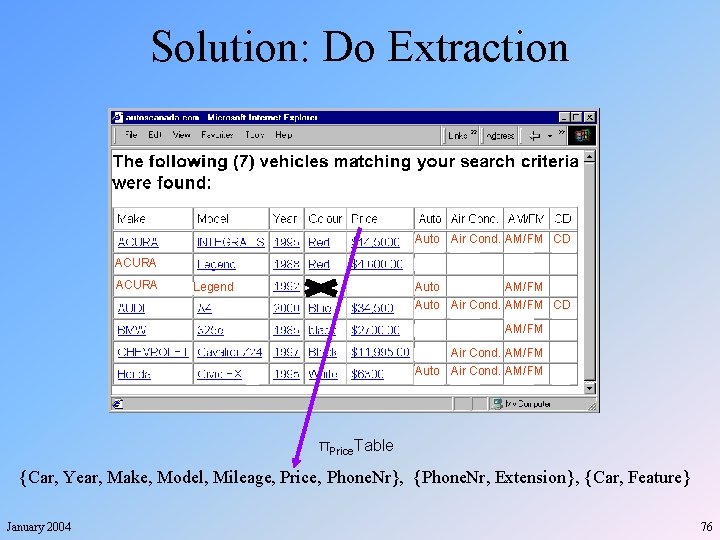

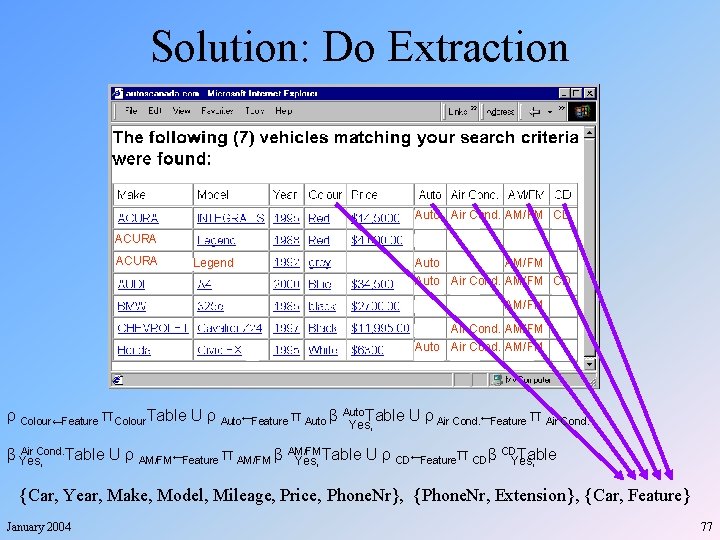

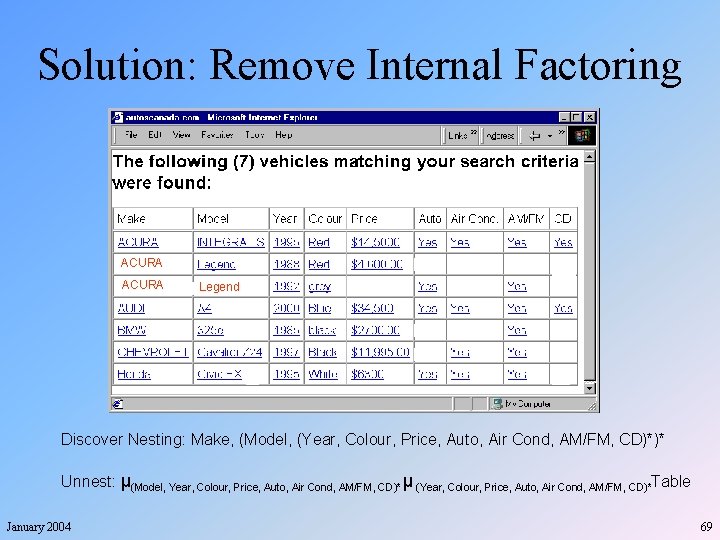

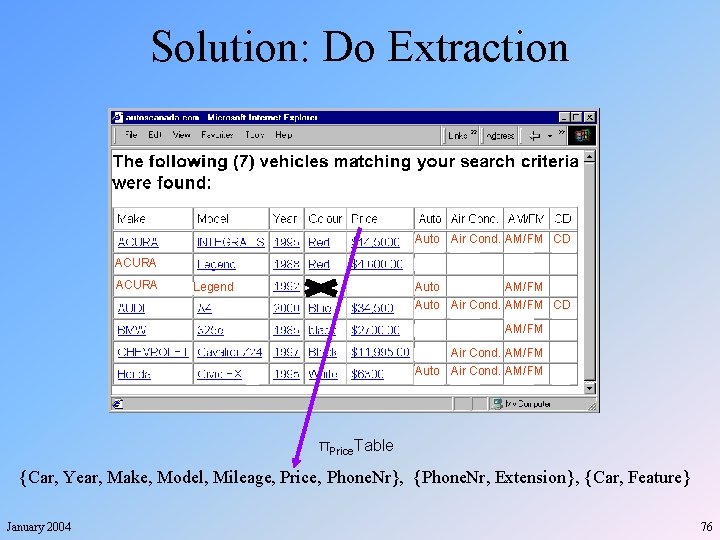

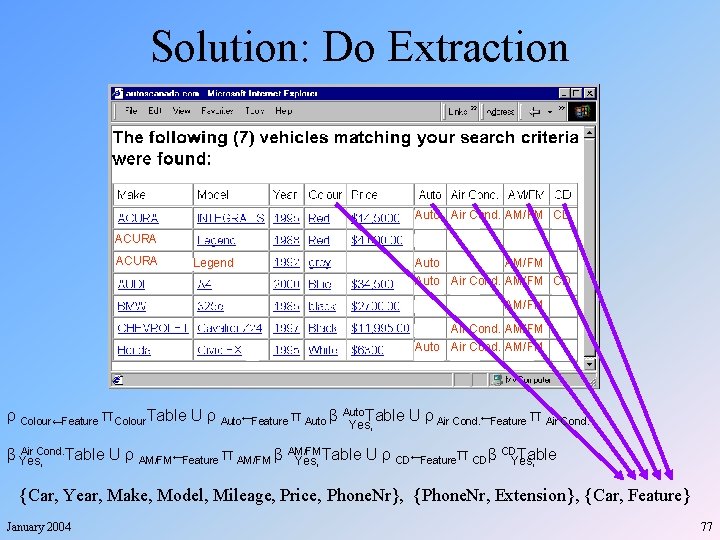

Problem: Different Schemas

Target Database Schema

- {Car, Year, Make, Model, Mileage, Price, PhoneNr}, {PhoneNr, Extension}, {Car, Feature}

Different Source Table Schemas

- {Run #, Yr, Make, Model, Tran, Color, Dr}
- {Make, Model, Year, Colour, Price, Auto, Air Cond., AM/FM, CD}
- {Vehicle, Distance, Price, Mileage}
- {Year, Make, Model, Trim, Invoice/Retail, Engine, Fuel Economy}

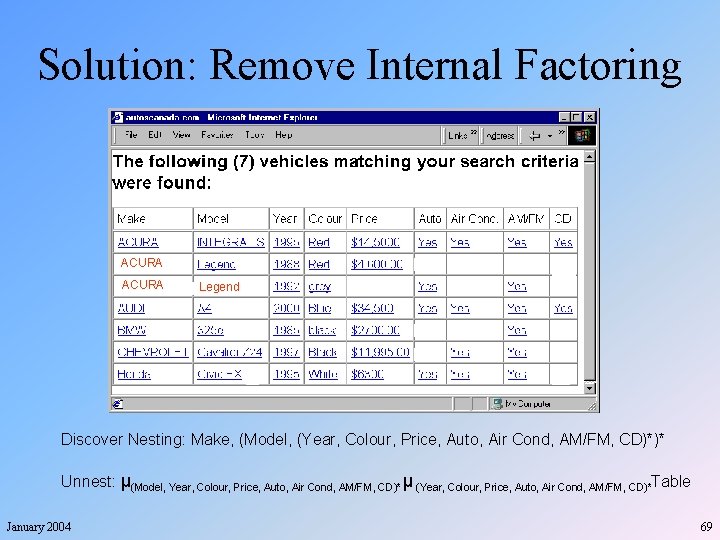

Solution: Remove Internal Factoring

[Figure: source table factored by make and model, e.g. ACURA Legend]

Discover Nesting: Make, (Model, (Year, Colour, Price, Auto, Air Cond, AM/FM, CD)\*)\*

Unnest: $\mu_{(\text{Model, Year, Colour, Price, Auto, Air Cond, AM/FM, CD})^*}\ \mu_{(\text{Year, Colour, Price, Auto, Air Cond, AM/FM, CD})^*}\ \text{Table}$
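Unnesting turns a table factored by Make and then Model into one flat row per car. The sketch below illustrates that step on a nested Python structure. The Honda Civic EX values come from a later slide. The ACURA Legend values are hypothetical placeholders, since the transcript gives none. The key names `Models` and `Rows` are assumptions.

```python
nested = [
    {"Make": "ACURA", "Models": [
        {"Model": "Legend", "Rows": [
            # Hypothetical values; the transcript does not preserve this row.
            {"Year": "1992", "Colour": "Grey", "Price": "$9500"},
        ]},
    ]},
    {"Make": "Honda", "Models": [
        {"Model": "Civic EX", "Rows": [
            {"Year": "1995", "Colour": "White", "Price": "$6300"},
        ]},
    ]},
]


def unnest(table):
    """Flatten Make -> Model -> row nesting into one record per row."""
    flat = []
    for make in table:
        for model in make["Models"]:
            for row in model["Rows"]:
                flat.append({"Make": make["Make"], "Model": model["Model"], **row})
    return flat


for record in unnest(nested):
    print(record)
```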

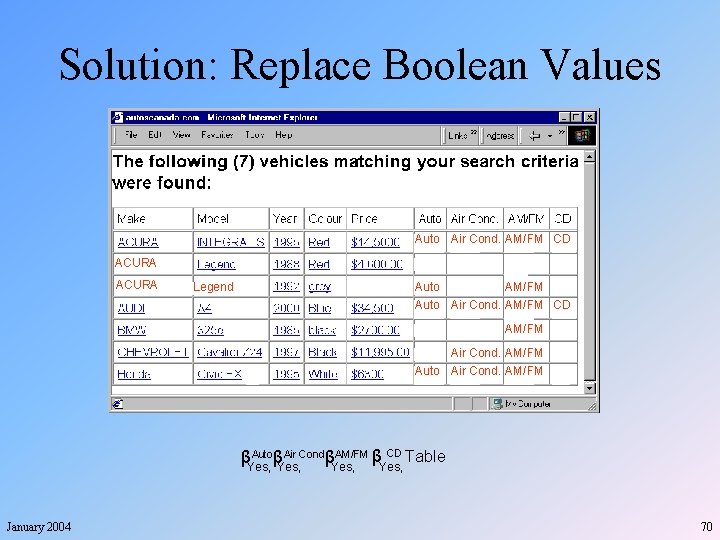

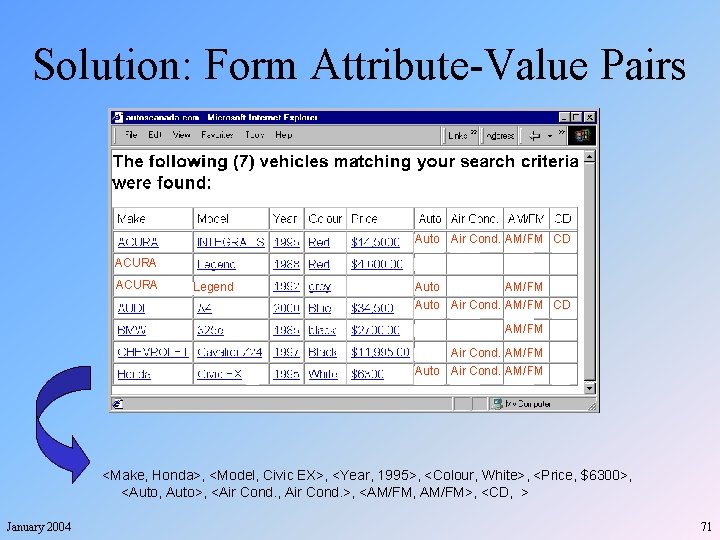

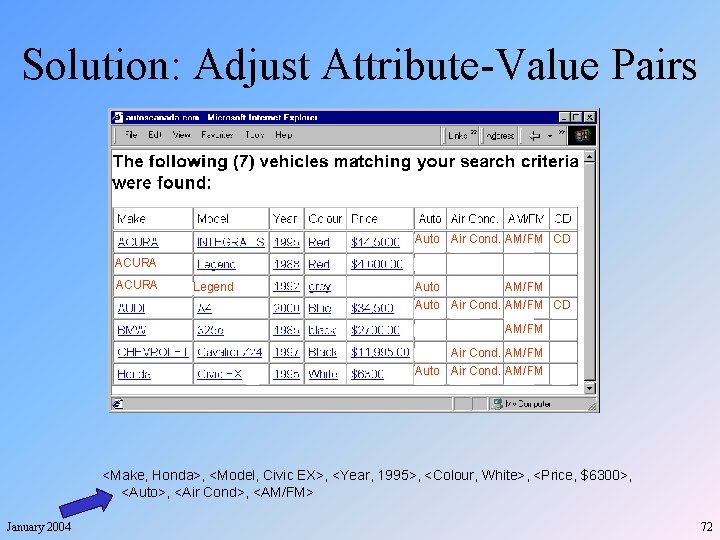

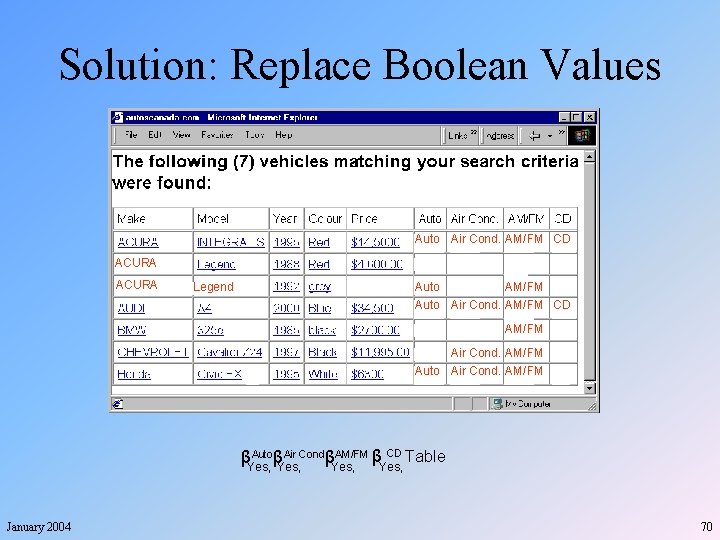

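Solution: Replace Boolean Values

[Figure: source table with Boolean columns Auto, Air Cond., AM/FM, CD; example row ACURA Legend]

$\beta^{\text{Auto}}_{\text{Yes},}\ \beta^{\text{Air Cond.}}_{\text{Yes},}\ \beta^{\text{AM/FM}}_{\text{Yes},}\ \beta^{\text{CD}}_{\text{Yes},}\ \text{Table}$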

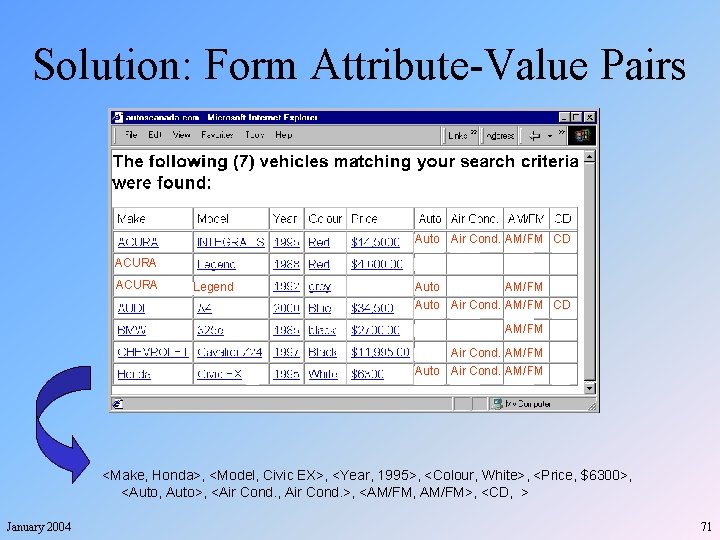

Solution: Form Attribute-Value Pairs

[Figure: source table with columns Auto, Air Cond., AM/FM, CD; example row ACURA Legend]

<Make, Honda>, <Model, Civic EX>, <Year, 1995>, <Colour, White>, <Price, $6300>, <Auto, Auto>, <Air Cond., Air Cond.>, <AM/FM, AM/FM>, <CD, >

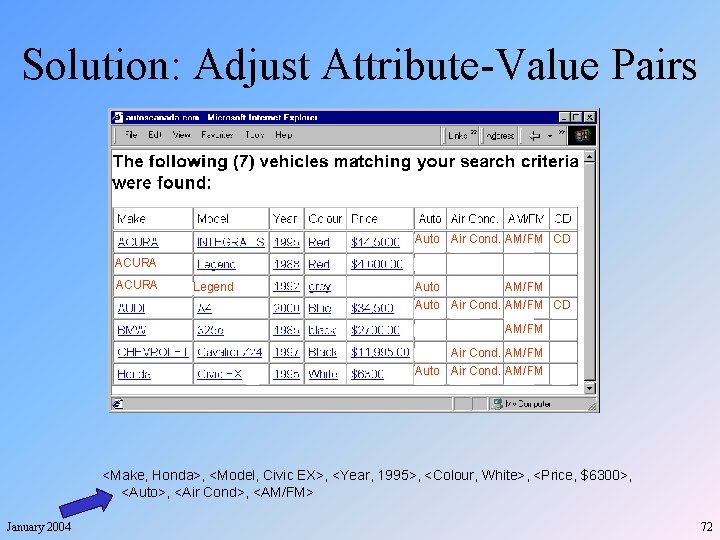

Solution: Adjust Attribute-Value Pairs

[Figure: source table with columns Auto, Air Cond., AM/FM, CD; example row ACURA Legend]

<Make, Honda>, <Model, Civic EX>, <Year, 1995>, <Colour, White>, <Price, $6300>, <Auto>, <Air Cond>, <AM/FM>
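The three steps (replace Boolean values, form attribute-value pairs, adjust the pairs) can be sketched end to end. The sketch assumes the source table marks a present feature with "Yes" and an absent one with an empty cell, which is how the "Yes," in the slide's operator is read here. The function names are illustrative.

```python
BOOLEAN_COLUMNS = ["Auto", "Air Cond.", "AM/FM", "CD"]

# One source row; "Yes" and "" as the Boolean encodings are assumptions.
row = {
    "Make": "Honda", "Model": "Civic EX", "Year": "1995", "Colour": "White",
    "Price": "$6300", "Auto": "Yes", "Air Cond.": "Yes", "AM/FM": "Yes", "CD": "",
}


def replace_booleans(record, true_value="Yes"):
    """Replace a true Boolean cell with its column name and anything else with ''."""
    replaced = {}
    for attribute, value in record.items():
        if attribute in BOOLEAN_COLUMNS:
            replaced[attribute] = attribute if value == true_value else ""
        else:
            replaced[attribute] = value
    return replaced


def attribute_value_pairs(record):
    """Form <attribute, value> pairs in column order."""
    return list(record.items())


def adjust_pairs(pairs):
    """Collapse <A, A> to <A> for Boolean columns and drop empty Boolean pairs."""
    adjusted = []
    for attribute, value in pairs:
        if attribute in BOOLEAN_COLUMNS:
            if value:
                adjusted.append((attribute,))
        else:
            adjusted.append((attribute, value))
    return adjusted


pairs = attribute_value_pairs(replace_booleans(row))
print(pairs)
print(adjust_pairs(pairs))
```

After adjustment the Boolean columns read like ordinary feature strings, so the extraction ontology's Feature recognizers can be applied to them unchanged.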

Solution: Do Extraction

[Figure: source table with columns Auto, Air Cond., AM/FM, CD; example row ACURA Legend]

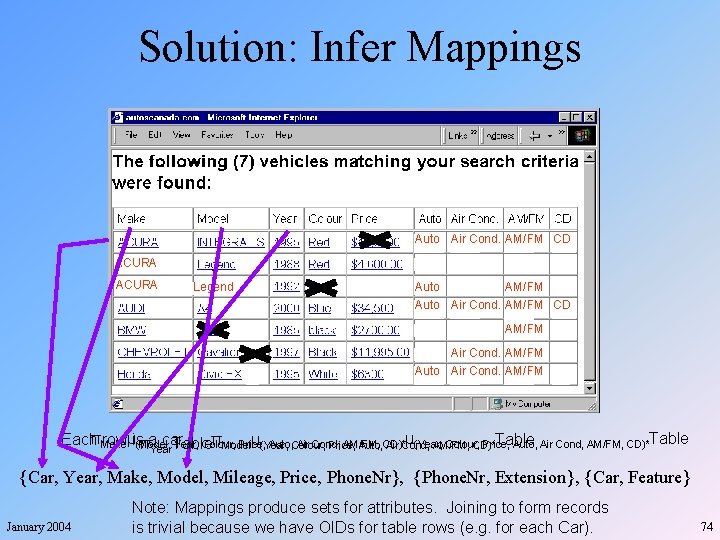

Solution: Infer Mappings

[Figure: source table with columns Auto, Air Cond., AM/FM, CD; example row ACURA Legend]

Each row is a car.

- $\pi_{\text{Make}}\ \mu_{(\text{Model, Year, Colour, Price, Auto, Air Cond, AM/FM, CD})^*}\ \mu_{(\text{Year, Colour, Price, Auto, Air Cond, AM/FM, CD})^*}\ \text{Table}$
- $\pi_{\text{Model}}\ \mu_{(\text{Year, Colour, Price, Auto, Air Cond, AM/FM, CD})^*}\ \text{Table}$
- $\pi_{\text{Year}}\ \text{Table}$

Target: {Car, Year, Make, Model, Mileage, Price, PhoneNr}, {PhoneNr, Extension}, {Car, Feature}

Note: Mappings produce sets for attributes. Joining to form records is trivial because we have OIDs for table rows (e.g. for each Car).

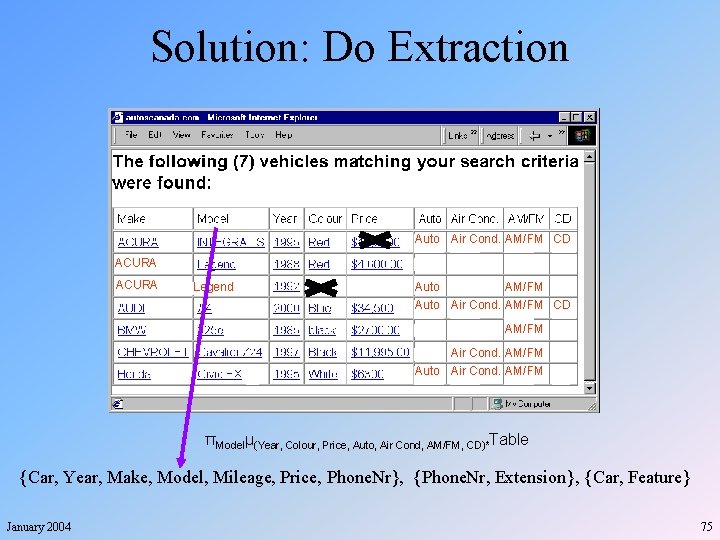

Solution: Do Extraction

- $\pi_{\text{Model}}\ \mu_{(\text{Year, Colour, Price, Auto, Air Cond, AM/FM, CD})^*}\ \text{Table}$
- $\pi_{\text{Price}}\ \text{Table}$
- $\rho_{\text{Colour} \leftarrow \text{Feature}}\ \pi_{\text{Colour}}\ \text{Table} \ \cup\ \rho_{\text{Auto} \leftarrow \text{Feature}}\ \pi_{\text{Auto}}\ \beta^{\text{Auto}}_{\text{Yes},}\ \text{Table} \ \cup\ \rho_{\text{Air Cond.} \leftarrow \text{Feature}}\ \pi_{\text{Air Cond.}}\ \beta^{\text{Air Cond.}}_{\text{Yes},}\ \text{Table} \ \cup\ \rho_{\text{AM/FM} \leftarrow \text{Feature}}\ \pi_{\text{AM/FM}}\ \beta^{\text{AM/FM}}_{\text{Yes},}\ \text{Table} \ \cup\ \rho_{\text{CD} \leftarrow \text{Feature}}\ \pi_{\text{CD}}\ \beta^{\text{CD}}_{\text{Yes},}\ \text{Table}$

Target: {Car, Year, Make, Model, Mileage, Price, PhoneNr}, {PhoneNr, Extension}, {Car, Feature}

Application #4: Semantic Web Creation

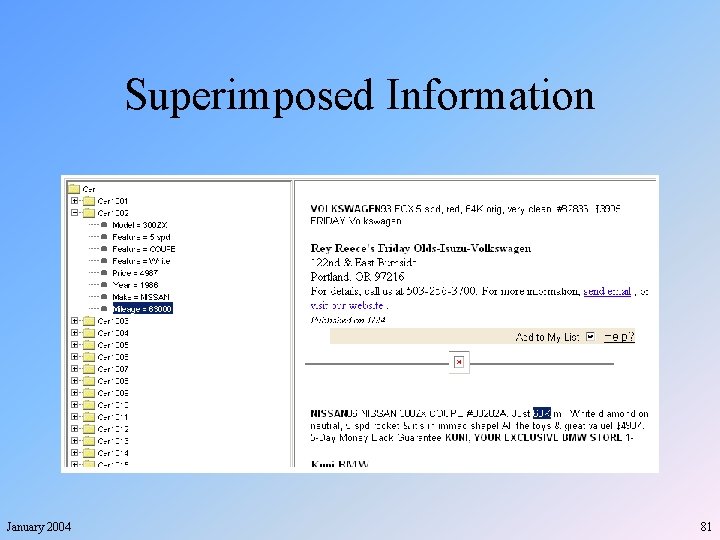

The Semantic Web

- Make web content accessible to machines
- What prevents this from working?
  - Lack of content
  - Lack of tools to create useful content
  - Difficulty of converting the web to the Semantic Web

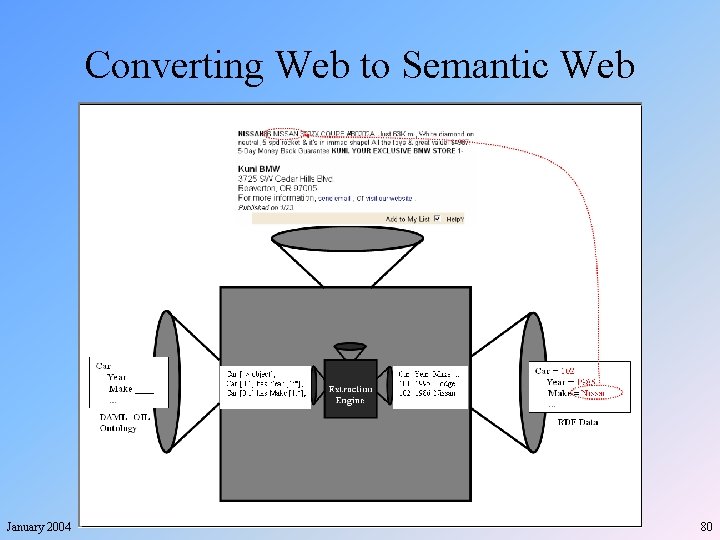

Converting Web to Semantic Web January 2004 80

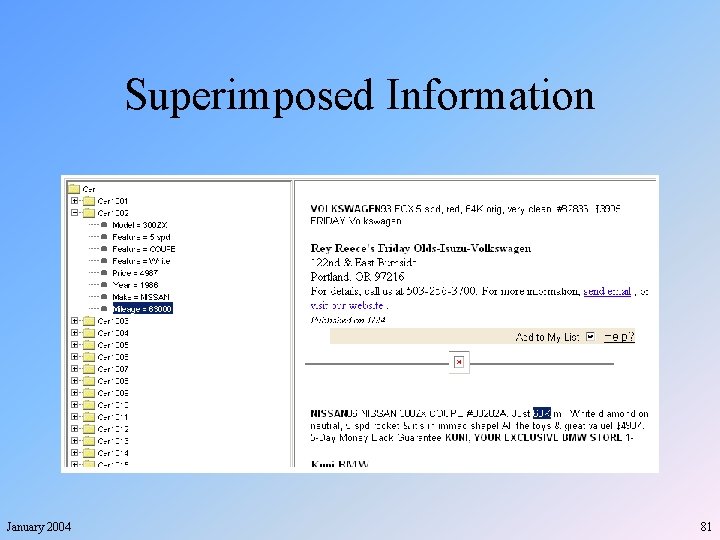

Superimposed Information January 2004 81

Application #5 Agent Communication January 2004 82

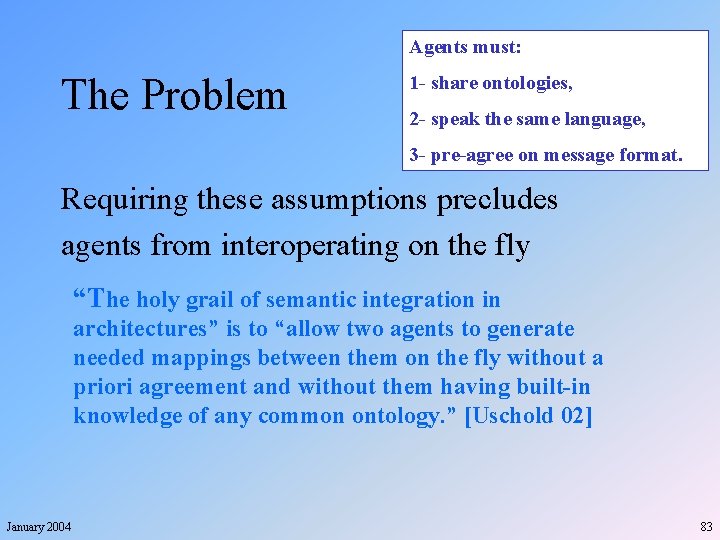

The Problem

Agents must:

1. share ontologies,
2. speak the same language,
3. pre-agree on message format.

Requiring these assumptions precludes agents from interoperating on the fly.

“The holy grail of semantic integration in architectures” is to “allow two agents to generate needed mappings between them on the fly without a priori agreement and without them having built-in knowledge of any common ontology.” [Uschold 02]

Solution

- Eliminate all assumptions that agents must:
  1. share ontologies,
  2. speak the same language,
  3. pre-agree on message format.
- This requires:
  - Translating (developing mutual understanding)
  - Dynamically capturing a message’s semantics
  - Matching a message with a service

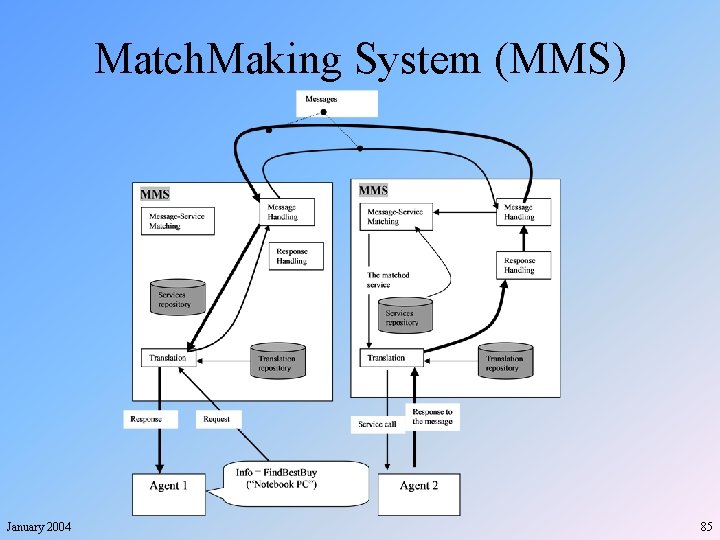

MatchMaking System (MMS)

Application #6: Ontology Generation

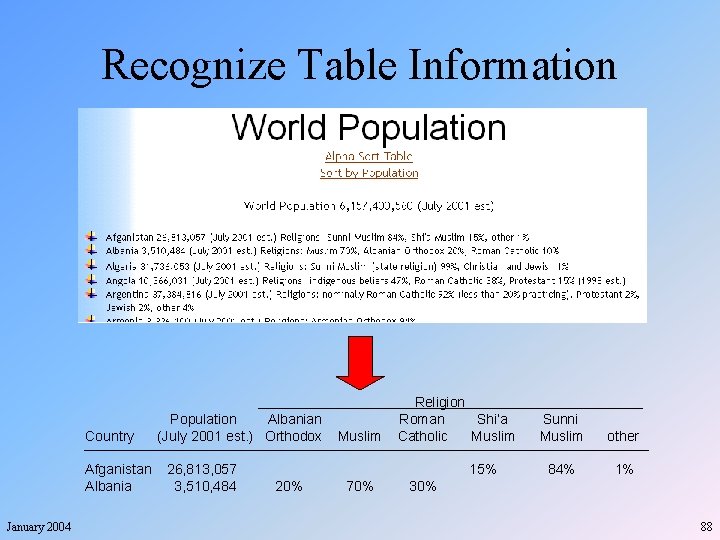

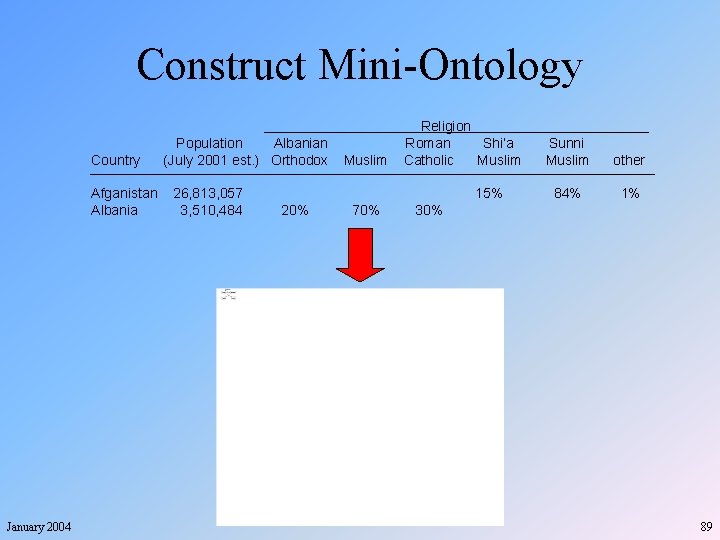

TANGO: Table Analysis for Generating Ontologies

- Recognize and normalize table information
- Construct mini-ontologies from tables
- Discover inter-ontology mappings
- Merge mini-ontologies into a growing ontology

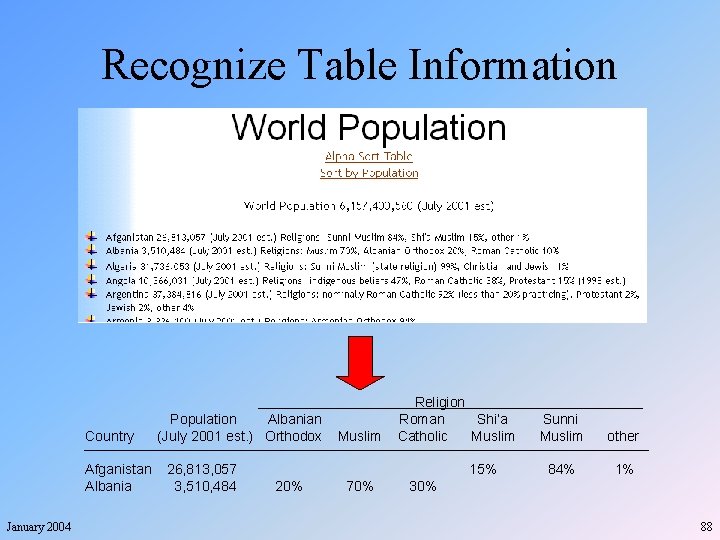

Recognize Table Information

[Table: Country (Afghanistan, Albania); Population (July 2001 est.): 26,813,057 and 3,510,484; Religion columns: Albanian Orthodox, Muslim, Roman Catholic, Shi’a Muslim, Sunni Muslim, other; percentage cells as extracted: 15%, 20%, 70%, 84%, 1%, 30%]

Construct Mini-Ontology

Discover Mappings

Merge


Limitations and Pragmatics

- Data-Rich, Narrow Domain
- Ambiguities ~ Context Assumptions
- Incompleteness ~ Implicit Information
- Common Sense Requirements
- Knowledge Prerequisites
- …

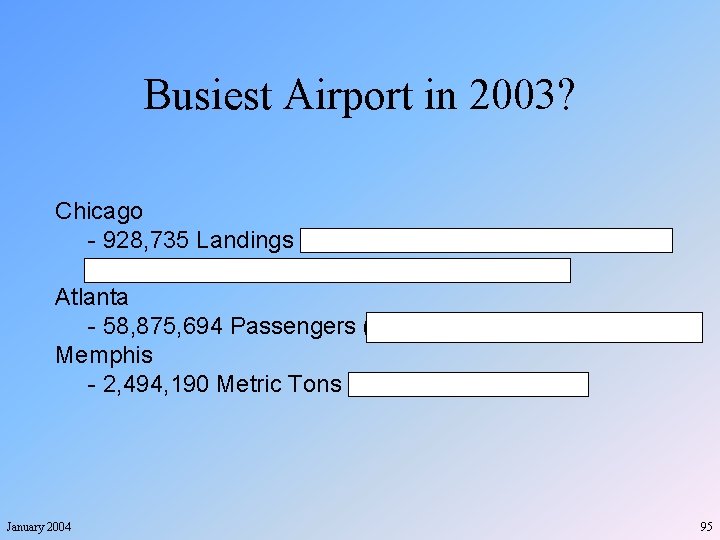

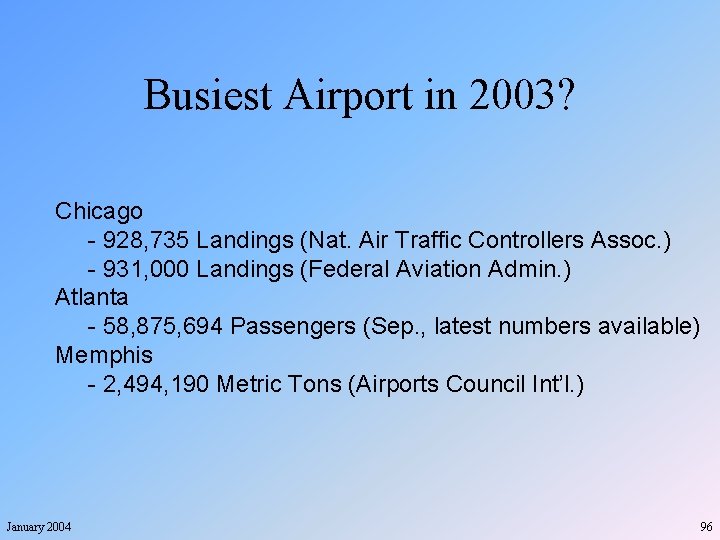

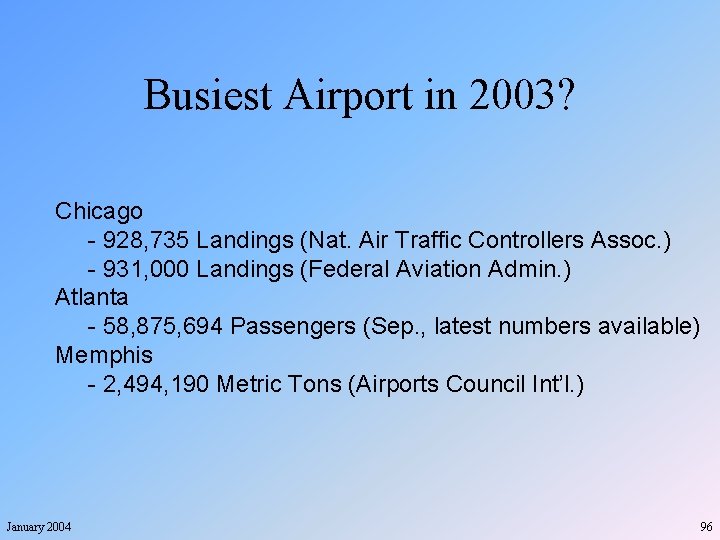

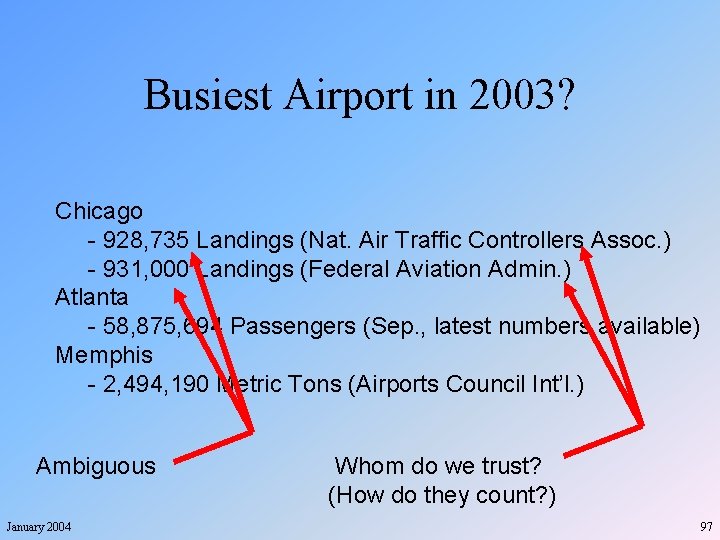

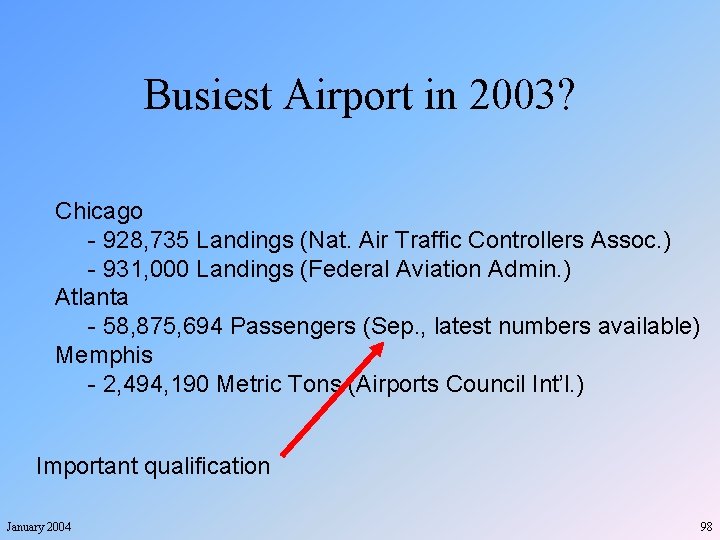

Busiest Airport in 2003?

- Chicago
  - 928,735 Landings (Nat. Air Traffic Controllers Assoc.)
  - 931,000 Landings (Federal Aviation Admin.)
- Atlanta
  - 58,875,694 Passengers (Sep., latest numbers available)
- Memphis
  - 2,494,190 Metric Tons (Airports Council Int’l.)

Ambiguous: Whom do we trust? (How do they count?)

Important qualification: “Sep., latest numbers available”

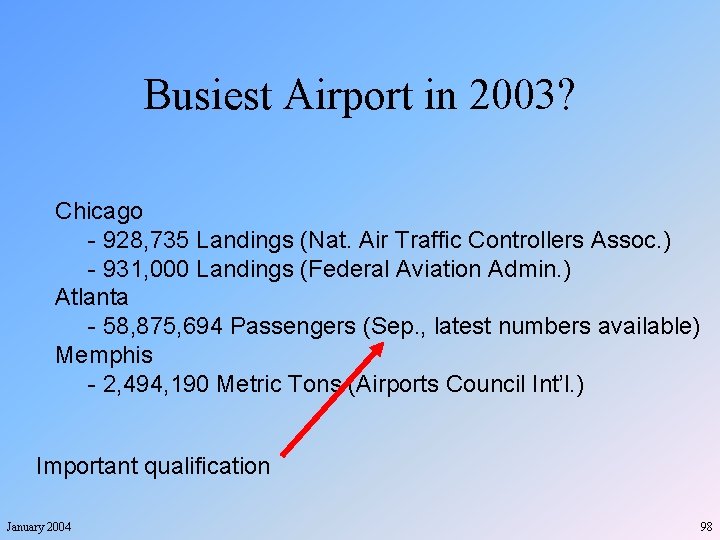

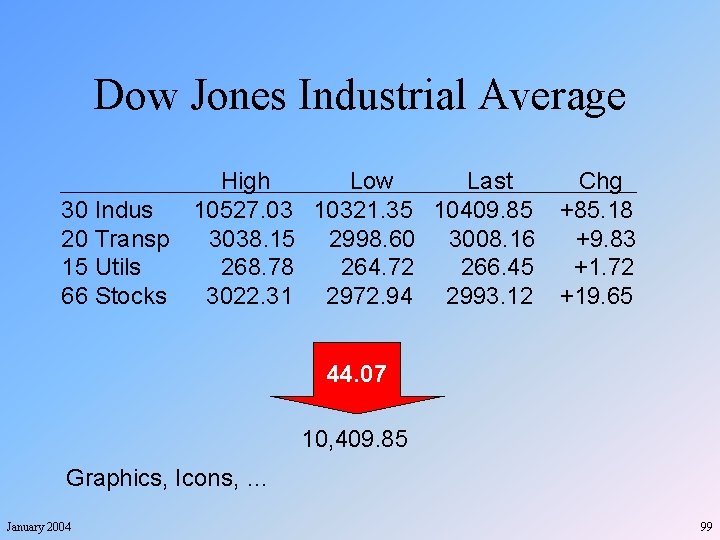

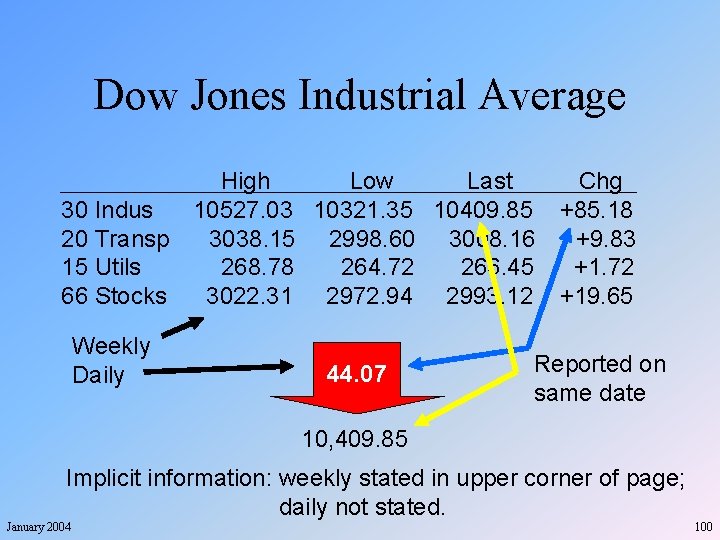

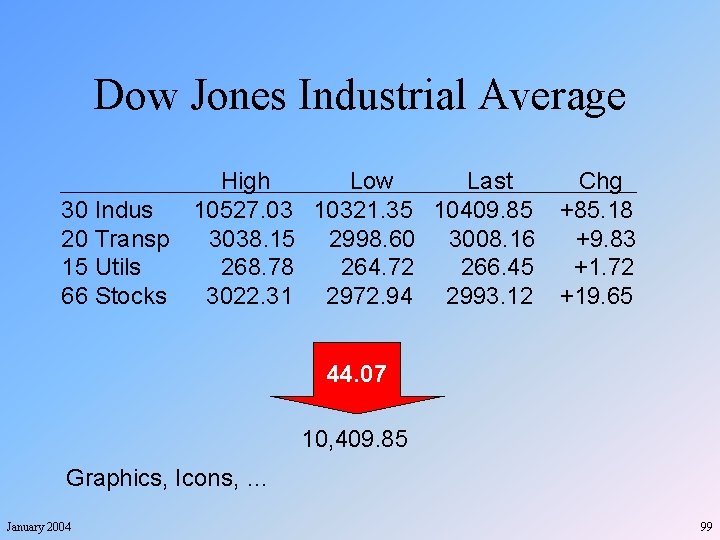

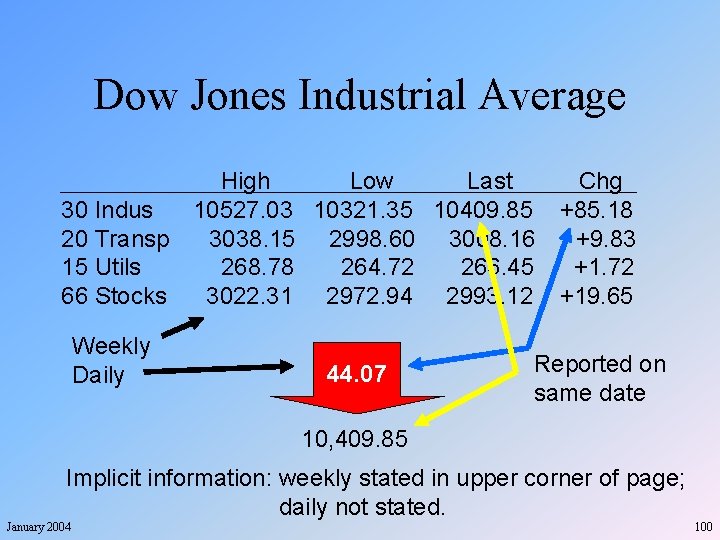

Dow Jones Industrial Average

| | High | Low | Last | Chg |
| --- | --- | --- | --- | --- |
| 30 Indus | 10527.03 | 10321.35 | 10409.85 | +85.18 |
| 20 Transp | 3038.15 | 2998.60 | 3008.16 | +9.83 |
| 15 Utils | 268.78 | 264.72 | 266.45 | +1.72 |
| 66 Stocks | 3022.31 | 2972.94 | 2993.12 | +19.65 |

Graphics, Icons, …: 44.07, 10,409.85

Weekly and daily figures reported on same date.

Implicit information: weekly stated in upper corner of page; daily not stated.

“Mad Cow” hurts Utah jobs

“Utah stands to lose 1,200 jobs from Asian countries’ import bans on beef products, ...”

Common sense: a cow can’t hurt jobs.

Knowledge required for understanding:

- Mad Cow disease discovered in Washington.
- Humans can get the disease by eating contaminated beef.
- People in Asian countries don’t want to get sick.
- Washington state (not DC), which is in the western US.
- Utah is in the western US.
- Beef cattle are regionally linked (somehow?)


Some Key Ideas

- Data, Information, and Knowledge
- Data Frames
  - Knowledge about everyday data items
  - Recognizers for data in context
- Ontologies
  - Resilient Extraction Ontologies
  - Shared Conceptualizations
- Limitations and Pragmatics

Some Research Issues

- Building a library of open source data recognizers
- Creating corpora of test data for extraction, integration, table understanding, …
- Precisely finding and gathering relevant information
  - Subparts of larger data
  - Scattered data (linked, factored, implied)
  - Data behind forms in the hidden web
- Improving concept matching
  - Indirect matching
  - Calculations and unit conversions
- …

Some Research Challenges

- Automating ontology construction
- Converting web data to Semantic Web data
- Accommodating different views
- Developing effective personal software agents
- …

www.deg.byu.edu