Understanding Performance of Concurrent Data Structures on Graphics Processors

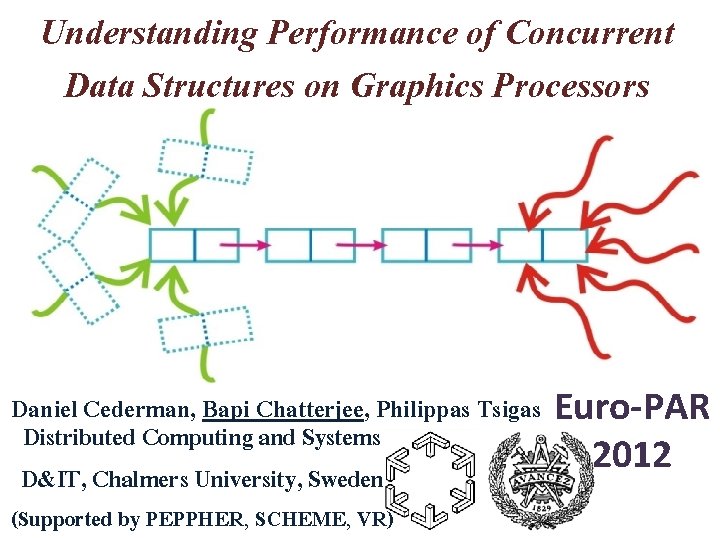

Understanding Performance of Concurrent Data Structures on Graphics Processors

Daniel Cederman, Bapi Chatterjee, Philippas Tsigas

Euro-PAR 2012, Distributed Computing and Systems

D&IT, Chalmers University, Sweden (supported by PEPPHER, SCHEME, VR)

Main processor
- Uniprocessors: no more
- Multi-core, many-core

Co-processor
- Graphics processors
- SIMD, N× speedup

Parallelization on GPU (GPGPU)
- CUDA, OpenCL
- Independent of CPU
- Ubiquitous

Data structures + multi-core/many-core = concurrent data structures (CDS)
- Rich and growing literature
- Applications

CDS on GPU
- Synchronization-aware applications on GPU
- Challenging but required

(Figure labels: Concurrent Programming; Parallel Slowdown.)

Concurrent Data Structures on GPU
- Implementation issues
- Performance portability

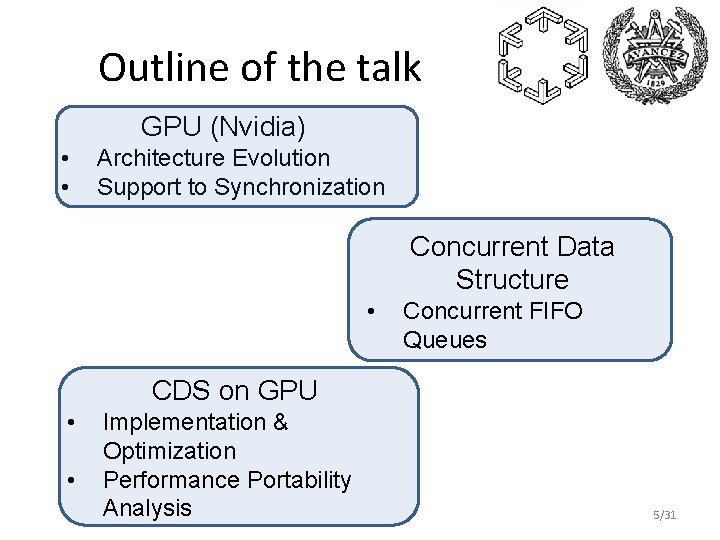

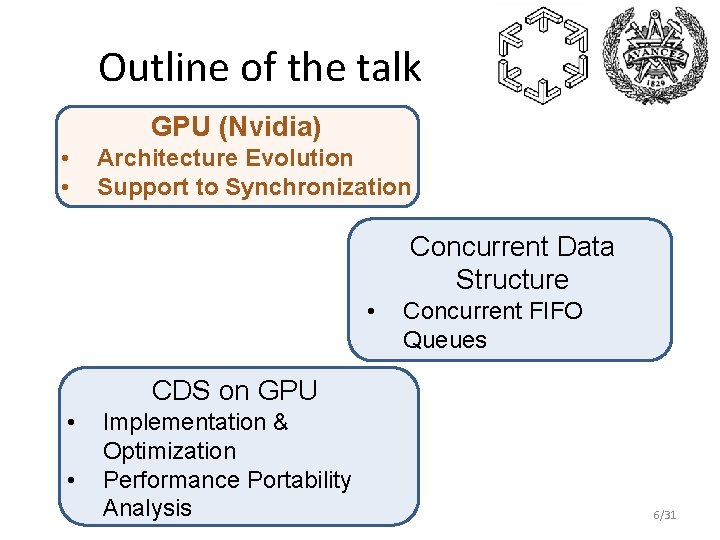

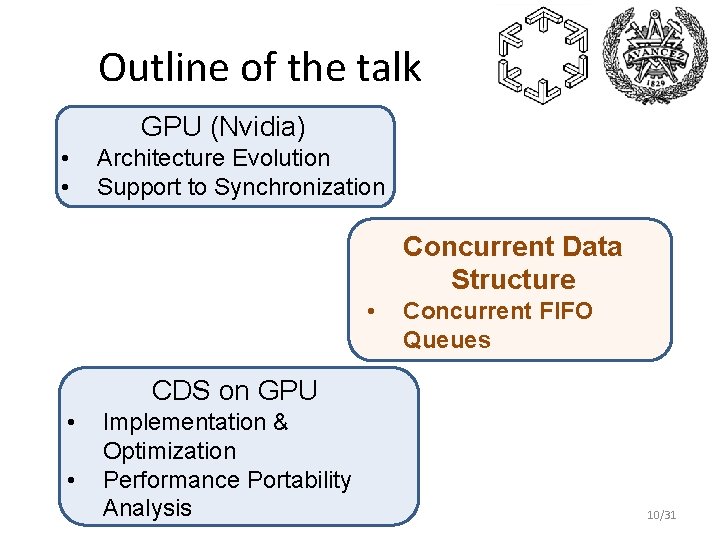

Outline of the talk

GPU (Nvidia)
- Architecture evolution
- Support for synchronization

Concurrent data structures
- Concurrent FIFO queues

CDS on GPU
- Implementation & optimization
- Performance portability analysis

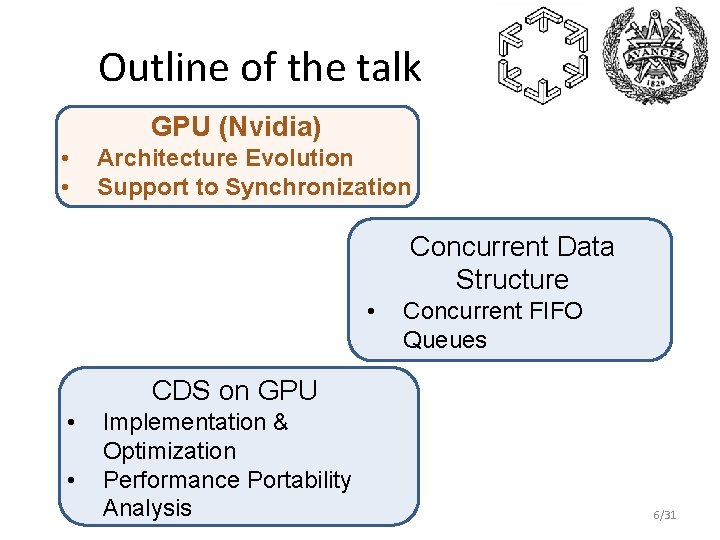

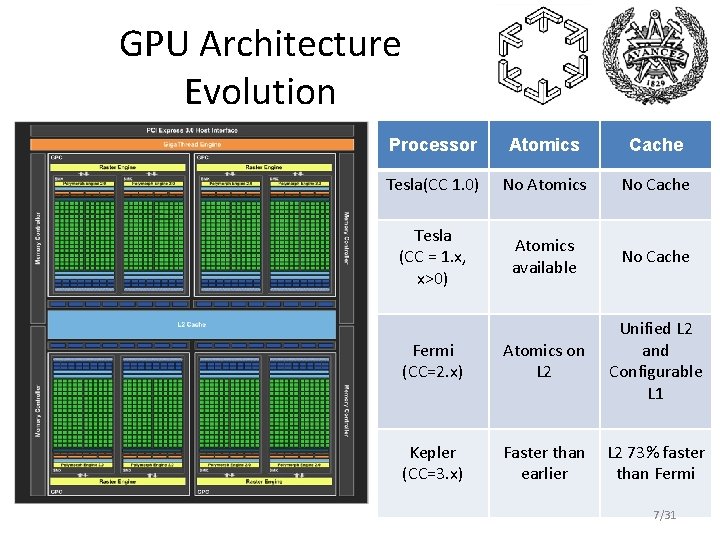

GPU Architecture Evolution

| Processor | Atomics | Cache |
|---|---|---|
| Tesla (CC 1.0) | No atomics | No cache |
| Tesla (CC 1.x, x > 0) | Atomics available | No cache |
| Fermi (CC 2.x) | Atomics on L2 | Unified L2 and configurable L1 |
| Kepler (CC 3.x) | Faster than earlier generations | L2 73% faster than Fermi |

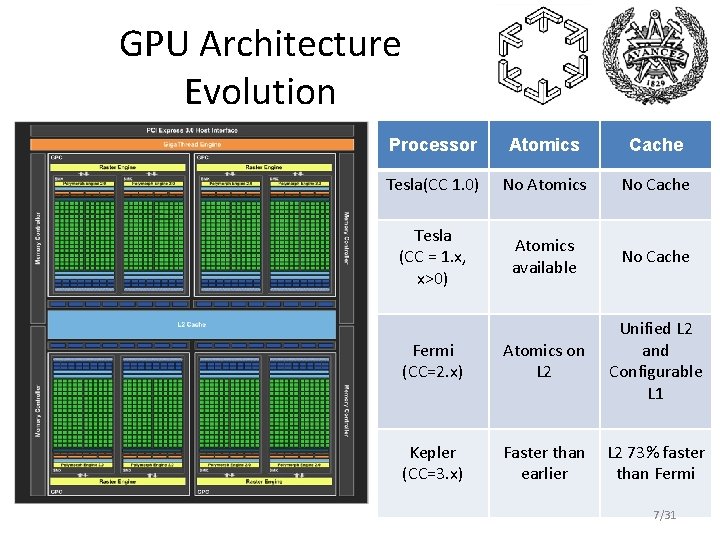

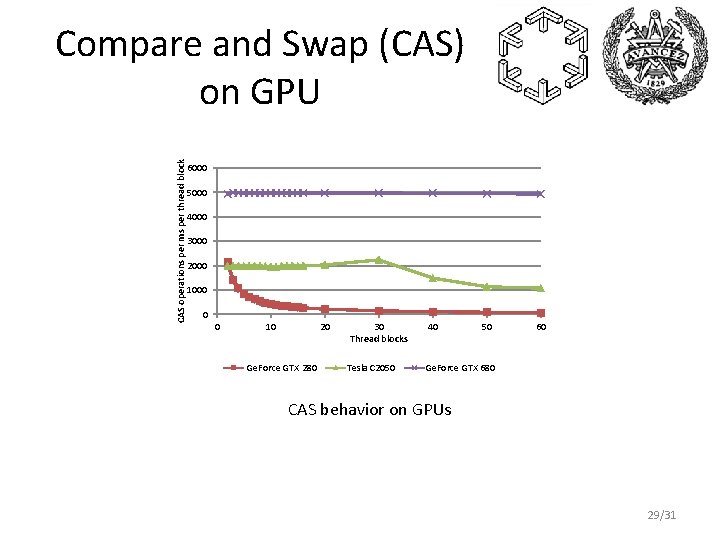

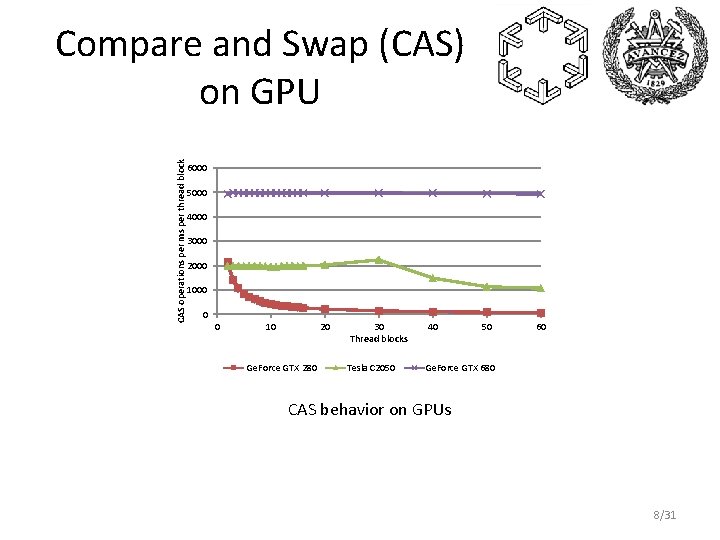

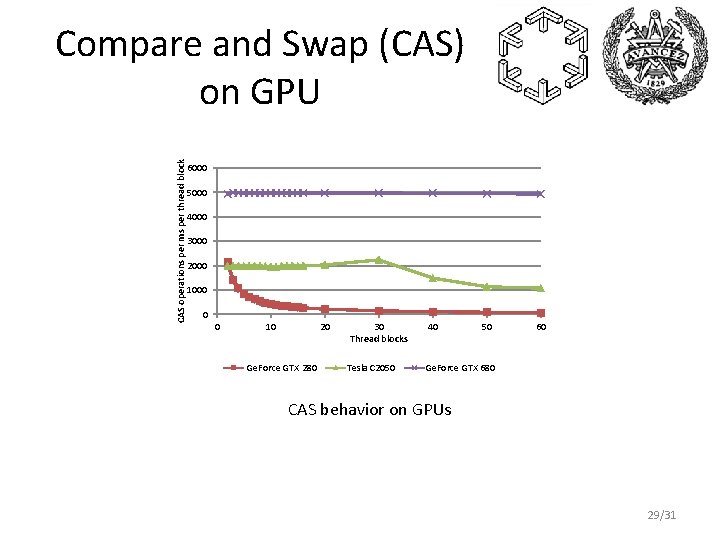

Compare and Swap (CAS) on GPU

(Figure: CAS behavior on GPUs. CAS operations per ms per thread block versus number of thread blocks, 0 to 60, on GeForce GTX 280, Tesla C2050 and GeForce GTX 680.)

CDS on GPU: Motivation & Challenges
- Transition from a pure co-processor to a more independent compute unit.
- CUDA and OpenCL.
- Synchronization primitives are getting cheaper with the availability of multilevel caches.
- Synchronization-aware programs vs. inherent SIMD.

Concurrent Data Structures

Synchronization progress guarantees:
1. Blocking.
2. Non-blocking:
   - Lock-free
   - Wait-free
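
To make the three progress guarantees concrete, here is a minimal C++17 sketch of a shared counter written three ways. The class names are hypothetical, and the wait-free variant assumes `fetch_add` compiles to a single hardware atomic instruction (as with x86 `LOCK XADD`), which the C++ standard itself does not promise.

```cpp
#include <atomic>
#include <mutex>

// Blocking: while one thread holds the mutex, every other caller must wait,
// so a stalled lock holder can stop all progress.
class BlockingCounter {
public:
    long increment() {
        std::lock_guard<std::mutex> guard(lock_);
        return ++value_;
    }

private:
    std::mutex lock_;
    long value_ = 0;
};

// Lock-free: a strong CAS fails only if another thread changed the value in the
// meantime, so some thread always makes progress, although one unlucky thread
// may retry indefinitely.
class LockFreeCounter {
public:
    long increment() {
        long expected = value_.load();
        while (!value_.compare_exchange_strong(expected, expected + 1)) {
            // On failure, `expected` now holds the current value; retry with it.
        }
        return expected + 1;
    }

private:
    std::atomic<long> value_{0};
};

// Wait-free, assuming fetch_add maps to one hardware atomic add (e.g. x86 LOCK XADD):
// every call completes in a bounded number of steps whatever other threads do.
class WaitFreeCounter {
public:
    long increment() { return value_.fetch_add(1) + 1; }

private:
    std::atomic<long> value_{0};
};
```

All three return the same results; they differ only in what a delayed thread can do to the others, which is exactly what the blocking/lock-free/wait-free classification captures.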

Concurrent FIFO Queues

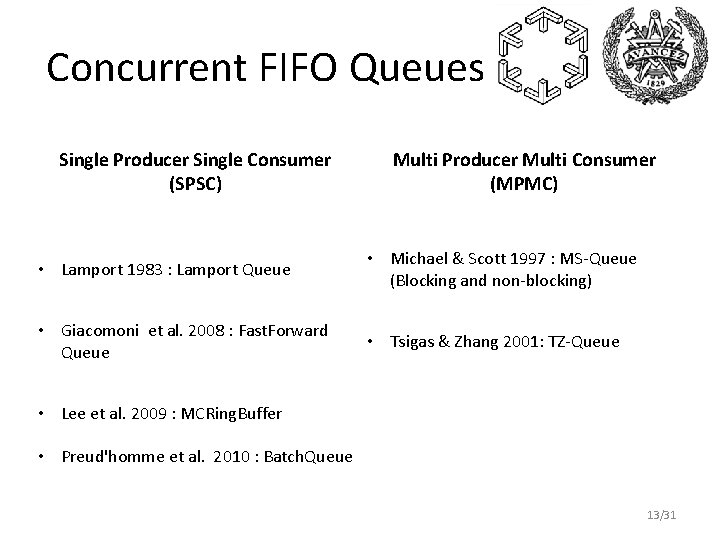

Concurrent FIFO Queues

Single Producer Single Consumer (SPSC)
- Lamport 1983: Lamport Queue
- Giacomoni et al. 2008: FastForward Queue
- Lee et al. 2009: MCRingBuffer
- Preud'homme et al. 2010: BatchQueue

Multi Producer Multi Consumer (MPMC)
- Michael & Scott 1996: MS-Queue (blocking and non-blocking)
- Tsigas & Zhang 2001: TZ-Queue

![SPSC FIFO Queues: Lamport [1]](https://slidetodoc.com/presentation_image/a79a9607e8d33d39c0b685329915dca3/image-14.jpg)

SPSC FIFO Queues: Lamport [1]

enqueue(data) { if (NEXT(head) == tail) return FALSE; buffer[head] = data; head = NEXT(head); return TRUE; }

dequeue(data) { if (head == tail) return FALSE; data = buffer[tail]; tail = NEXT(tail); return TRUE; }

1. Lock-free, array-based.
2. Synchronization through atomic reads and writes of the shared head and tail, which causes cache thrashing.
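
The slide's pseudocode can be turned into a small C++17 sketch of Lamport's SPSC ring buffer. The class name, the `capacity + 1` sizing (one slot is always left empty so that "full" and "empty" can be told apart) and the acquire/release memory orders are assumptions of this sketch rather than details taken from the talk. As on the slide, `head` is the enqueue index and `tail` the dequeue index.

```cpp
#include <atomic>
#include <cstddef>
#include <vector>

// Lamport-style SPSC ring buffer. head is written only by the producer,
// tail only by the consumer; head == tail means empty, NEXT(head) == tail means full.
template <typename T>
class LamportQueue {
public:
    explicit LamportQueue(std::size_t capacity) : buffer_(capacity + 1) {}

    bool enqueue(const T& data) {  // producer only
        std::size_t h = head_.load(std::memory_order_relaxed);
        if (next(h) == tail_.load(std::memory_order_acquire)) return false;  // full
        buffer_[h] = data;
        head_.store(next(h), std::memory_order_release);  // publish the filled slot
        return true;
    }

    bool dequeue(T& data) {  // consumer only
        std::size_t t = tail_.load(std::memory_order_relaxed);
        if (t == head_.load(std::memory_order_acquire)) return false;  // empty
        data = buffer_[t];
        tail_.store(next(t), std::memory_order_release);  // hand the slot back
        return true;
    }

private:
    std::size_t next(std::size_t i) const { return (i + 1) % buffer_.size(); }

    std::vector<T> buffer_;
    // Both indices are read by the other side on every operation, so their cache
    // lines bounce between cores: the cache thrashing mentioned on the slide.
    std::atomic<std::size_t> head_{0};
    std::atomic<std::size_t> tail_{0};
};
```

Usage: one thread calls only `enqueue`, another only `dequeue`, and each retries when the call returns `false`.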

![SPSC FIFO Queues: FastForward [2]](https://slidetodoc.com/presentation_image/a79a9607e8d33d39c0b685329915dca3/image-15.jpg)

SPSC FIFO Queues

FastForward [2]
- head and tail are private to the producer and the consumer respectively, lowering cache thrashing.

BatchQueue [3]
- The queue is divided into two batches: the producer writes to one of them while the consumer reads from the other.
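
How FastForward can keep head and tail private is easiest to see in code: instead of comparing indices, each side tests the slot itself against an "empty" sentinel. The C++17 sketch below assumes the queue stores non-null pointers and uses `nullptr` as the sentinel; the class name and memory orders are illustrative choices, not taken from the talk.

```cpp
#include <atomic>
#include <cstddef>
#include <vector>

// FastForward-style SPSC queue sketch: only the slots are shared.
// Assumption: items are non-null pointers and nullptr marks an empty slot.
template <typename T>
class FastForwardQueue {
public:
    explicit FastForwardQueue(std::size_t capacity) : slots_(capacity) {
        for (auto& s : slots_) s.store(nullptr, std::memory_order_relaxed);
    }

    bool enqueue(T* item) {  // producer only; item must not be nullptr
        if (slots_[head_].load(std::memory_order_acquire) != nullptr) return false;  // full
        slots_[head_].store(item, std::memory_order_release);
        head_ = (head_ + 1) % slots_.size();
        return true;
    }

    T* dequeue() {  // consumer only; returns nullptr when the queue is empty
        T* item = slots_[tail_].load(std::memory_order_acquire);
        if (item == nullptr) return nullptr;
        slots_[tail_].store(nullptr, std::memory_order_release);  // mark slot empty again
        tail_ = (tail_ + 1) % slots_.size();
        return item;
    }

private:
    std::vector<std::atomic<T*>> slots_;
    std::size_t head_ = 0;  // touched only by the producer
    std::size_t tail_ = 0;  // touched only by the consumer
};
```

Producer and consumer now share cache lines only when they work on nearby slots, which is why FastForward thrashes less than the Lamport queue.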

![SPSC FIFO Queues: MCRingBuffer [4]](https://slidetodoc.com/presentation_image/a79a9607e8d33d39c0b685329915dca3/image-16.jpg)

SPSC FIFO Queues: MCRingBuffer [4]
1. Similar to BatchQueue, but handles many batches.
2. Using many batches can lower latency when the producer is not fast enough.
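
The batching idea can be sketched in C++17 following the control-variable scheme described by Lee et al. [4]: each side keeps a private cursor and a cached copy of the other side's shared index, and publishes its own shared index only once per batch. The class name `BatchedRing`, the `flush()` helper and the `capacity >= 2 * batch` precondition are assumptions of this sketch; the precondition guarantees that unpublished reads and writes together can never leave both sides waiting on each other.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <vector>

// MCRingBuffer-style SPSC queue sketch with batched updates of shared indices.
template <typename T>
class BatchedRing {
public:
    BatchedRing(std::size_t capacity, std::size_t batch)
        : buffer_(capacity + 1), batch_(batch) {
        assert(batch >= 1 && capacity >= 2 * batch);  // assumption of this sketch
    }

    bool enqueue(const T& item) {  // producer only
        std::size_t after = next(next_write_);
        if (after == local_read_) {  // looks full: refresh the cached read index
            local_read_ = read_.load(std::memory_order_acquire);
            if (after == local_read_) return false;  // really full
        }
        buffer_[next_write_] = item;
        next_write_ = after;
        if (++w_batch_ >= batch_) flush();
        return true;
    }

    // Producer only: publish writes that have not yet filled a whole batch.
    void flush() {
        write_.store(next_write_, std::memory_order_release);
        w_batch_ = 0;
    }

    bool dequeue(T& item) {  // consumer only
        if (next_read_ == local_write_) {  // looks empty: refresh the cached write index
            local_write_ = write_.load(std::memory_order_acquire);
            if (next_read_ == local_write_) return false;  // really empty
        }
        item = buffer_[next_read_];
        next_read_ = next(next_read_);
        if (++r_batch_ >= batch_) {
            read_.store(next_read_, std::memory_order_release);
            r_batch_ = 0;
        }
        return true;
    }

private:
    std::size_t next(std::size_t i) const { return (i + 1) % buffer_.size(); }

    std::vector<T> buffer_;
    const std::size_t batch_;
    alignas(64) std::atomic<std::size_t> read_{0};   // shared, own cache line
    alignas(64) std::atomic<std::size_t> write_{0};  // shared, own cache line
    alignas(64) std::size_t local_write_ = 0;        // consumer-private state
    std::size_t next_read_ = 0;
    std::size_t r_batch_ = 0;
    alignas(64) std::size_t local_read_ = 0;         // producer-private state
    std::size_t next_write_ = 0;
    std::size_t w_batch_ = 0;
};
```

A consumer sees nothing until the producer has written a full batch or called `flush()`, which is the latency cost that the slide's second point refers to.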

![MPMC FIFO Queues: MS-queue (Blocking) [5]](https://slidetodoc.com/presentation_image/a79a9607e8d33d39c0b685329915dca3/image-17.jpg)

MPMC FIFO Queues: MS-queue (Blocking) [5]
1. Linked-list based.
2. Uses mutual exclusion locks to synchronize.
3. Locks used: a CAS-based spin lock and the bakery lock, each in fine-grained and coarse-grained variants.
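
A minimal C++17 sketch of the fine-grained blocking variant: the two-lock queue of Michael & Scott, with a dummy node so that enqueuers (tail lock) and dequeuers (head lock) never contend for the same lock, guarded here by a CAS-based test-and-test-and-set spin lock. The class names are hypothetical, and making `next` atomic is this sketch's way of expressing, in C++, the atomic pointer reads and writes the original algorithm assumes.

```cpp
#include <atomic>

// CAS-based spin lock (test-and-test-and-set).
class SpinLock {
public:
    void lock() {
        for (;;) {
            bool expected = false;
            if (locked_.compare_exchange_weak(expected, true, std::memory_order_acquire)) return;
            while (locked_.load(std::memory_order_relaxed)) {
                // Spin on plain reads until the lock looks free, then retry the CAS.
            }
        }
    }
    void unlock() { locked_.store(false, std::memory_order_release); }

private:
    std::atomic<bool> locked_{false};
};

// Two-lock MS-queue sketch: head_ is guarded by head_lock_, tail_ by tail_lock_.
template <typename T>
class TwoLockQueue {
    struct Node {
        explicit Node(const T& v) : value(v), next(nullptr) {}
        T value;
        std::atomic<Node*> next;
    };

public:
    TwoLockQueue() { head_ = tail_ = new Node(T{}); }  // dummy node
    ~TwoLockQueue() {
        while (head_ != nullptr) { Node* n = head_->next.load(); delete head_; head_ = n; }
    }

    void enqueue(const T& v) {
        Node* node = new Node(v);
        tail_lock_.lock();
        tail_->next.store(node, std::memory_order_release);  // link after the last node
        tail_ = node;
        tail_lock_.unlock();
    }

    bool dequeue(T& out) {
        head_lock_.lock();
        Node* dummy = head_;
        Node* first = dummy->next.load(std::memory_order_acquire);
        if (first == nullptr) { head_lock_.unlock(); return false; }  // empty
        out = first->value;
        head_ = first;  // the first real node becomes the new dummy
        head_lock_.unlock();
        delete dummy;
        return true;
    }

private:
    Node* head_;
    Node* tail_;
    SpinLock head_lock_;
    SpinLock tail_lock_;
};
```

A coarse-grained variant would simply use one lock for both operations.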

![MPMC FIFO Queues: MS-queue (Non-blocking) [5]](https://slidetodoc.com/presentation_image/a79a9607e8d33d39c0b685329915dca3/image-18.jpg)

MPMC FIFO Queues: MS-queue (Non-blocking) [5]
1. Lock-free.
2. Uses CAS to add nodes at the tail and remove nodes from the head.
3. A helping mechanism (a thread that finds the tail pointer lagging advances it on behalf of the slower thread) makes the queue truly lock-free.
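
A C++17 sketch of the lock-free MS-queue, showing both CAS sites and the helping step. One simplification is an assumption of this sketch, not part of the original algorithm: dequeued nodes are never reused or freed while the queue is alive (they stay linked from the first dummy node and are deleted in the destructor), which sidesteps both the ABA problem and safe memory reclamation.

```cpp
#include <atomic>

template <typename T>
class MSQueue {
    struct Node {
        explicit Node(const T& v) : value(v), next(nullptr) {}
        T value;
        std::atomic<Node*> next;
    };

public:
    MSQueue() : first_(new Node(T{})), head_(first_), tail_(first_) {}
    ~MSQueue() {
        // Every node ever enqueued is still linked from the first dummy node.
        for (Node* n = first_; n != nullptr;) { Node* nx = n->next.load(); delete n; n = nx; }
    }

    void enqueue(const T& v) {
        Node* node = new Node(v);
        for (;;) {
            Node* tail = tail_.load();
            Node* next = tail->next.load();
            if (tail != tail_.load()) continue;  // inconsistent snapshot, retry
            if (next == nullptr) {
                // CAS 1: link the new node after the current last node.
                if (tail->next.compare_exchange_weak(next, node)) {
                    tail_.compare_exchange_strong(tail, node);  // swing tail; may fail harmlessly
                    return;
                }
            } else {
                tail_.compare_exchange_strong(tail, next);  // helping: advance a lagging tail
            }
        }
    }

    bool dequeue(T& out) {
        for (;;) {
            Node* head = head_.load();
            Node* tail = tail_.load();
            Node* next = head->next.load();
            if (head != head_.load()) continue;  // inconsistent snapshot, retry
            if (next == nullptr) return false;   // empty
            if (head == tail) {
                tail_.compare_exchange_strong(tail, next);  // helping, then retry
                continue;
            }
            T value = next->value;  // read before the CAS, as in the original algorithm
            // CAS 2: swing head; the old first node becomes the new dummy.
            if (head_.compare_exchange_strong(head, next)) { out = value; return true; }
        }
    }

private:
    Node* first_;
    std::atomic<Node*> head_;
    std::atomic<Node*> tail_;
};
```

Because nodes are only freed in the destructor, memory grows with the number of enqueues; a production version needs a reclamation scheme such as hazard pointers, or the counted pointers used in the original paper.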

![MPMC FIFO Queues: TZ-Queue (Non-blocking) [6]](https://slidetodoc.com/presentation_image/a79a9607e8d33d39c0b685329915dca3/image-19.jpg)

MPMC FIFO Queues: TZ-Queue (Non-blocking) [6]
1. Lock-free, array-based.
2. Uses CAS to insert elements and to move head and tail.
3. The head and tail pointers are moved only after every x-th operation.

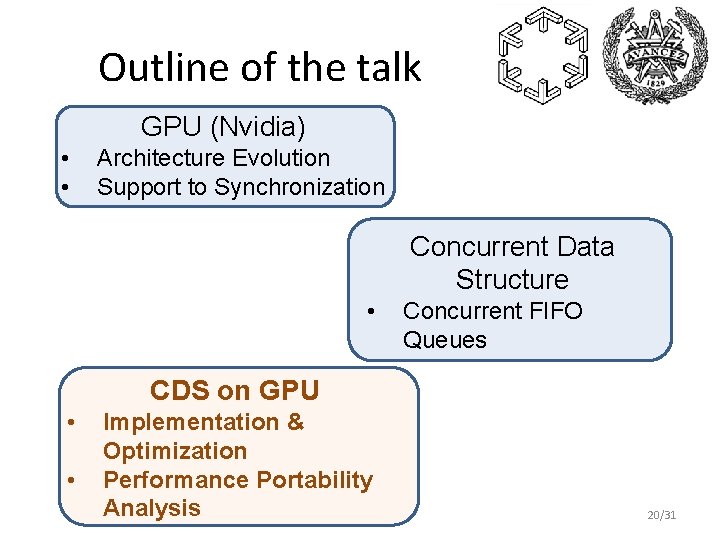

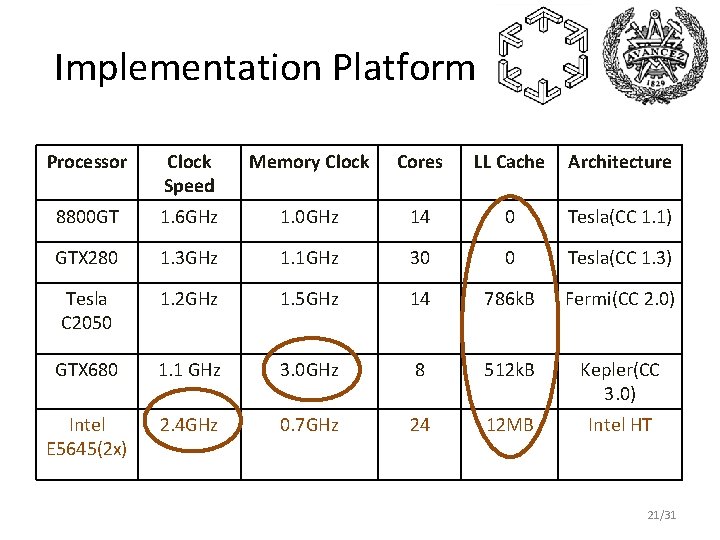

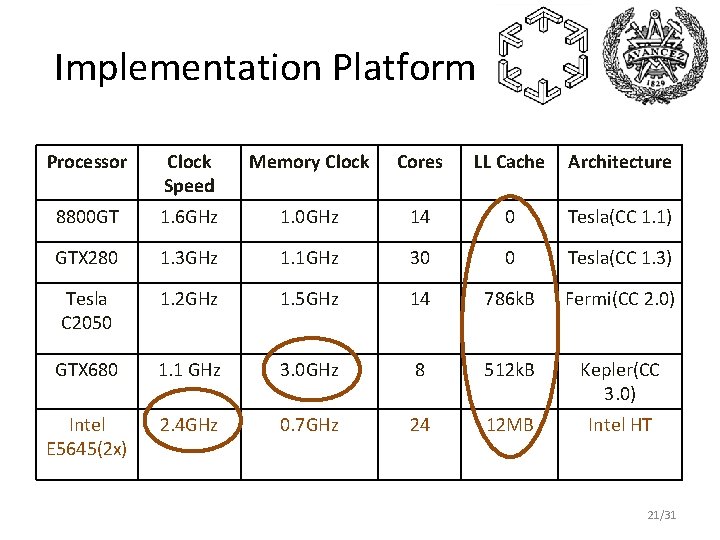

Implementation Platform

| Processor | Clock speed | Memory clock | Cores (multiprocessors on GPUs) | LL cache | Architecture |
|---|---|---|---|---|---|
| GeForce 8800 GT | 1.6 GHz | 1.0 GHz | 14 | None | Tesla (CC 1.1) |
| GeForce GTX 280 | 1.3 GHz | 1.1 GHz | 30 | None | Tesla (CC 1.3) |
| Tesla C2050 | 1.2 GHz | 1.5 GHz | 14 | 768 kB | Fermi (CC 2.0) |
| GeForce GTX 680 | 1.1 GHz | 3.0 GHz | 8 | 512 kB | Kepler (CC 3.0) |
| Intel E5645 (2×) | 2.4 GHz | 0.7 GHz | 24 | 12 MB | Intel HT |

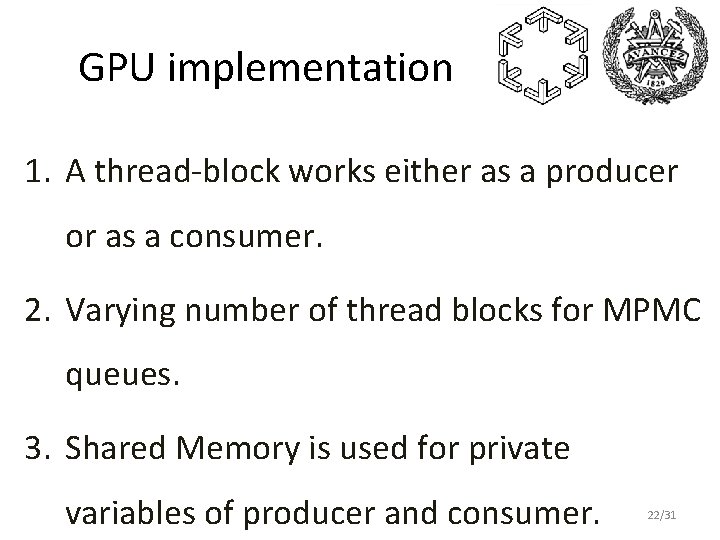

GPU implementation
1. A thread block works either as a producer or as a consumer.
2. The number of thread blocks is varied for the MPMC queues.
3. Shared memory is used for the private variables of the producer and the consumer.

GPU optimization
1. BatchQueue and MCRingBuffer take advantage of shared memory to become buffered queues.
2. Memory transfers in the buffered queues are coalesced.
3. Empirical optimization of the TZ-Queue: the pointers are moved after every second operation.

Experimental Setup
1. Throughput = number of successful enqueues and dequeues per ms.
2. MPMC experiments: 25% enqueues and 75% dequeues.
3. Contention: high and low.
4. On the CPU, producers and consumers were placed on different sockets.
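
The throughput metric and the 25%/75% operation mix can be illustrated with a tiny single-threaded C++17 harness. This is only a sketch of the metric, not the authors' benchmark: the deterministic mix (every fourth operation is an enqueue), the function name `run_mix` and the plain `std::queue` standing in for the concurrent queues and threads are all assumptions.

```cpp
#include <chrono>
#include <cstddef>
#include <queue>

struct RunResult {
    std::size_t successful_ops;
    double elapsed_ms;
    // Throughput = successful enqueues and dequeues per millisecond.
    double throughput() const { return elapsed_ms > 0 ? successful_ops / elapsed_ms : 0.0; }
};

// Hypothetical harness: runs `ops` operations, 25% enqueues and 75% dequeues,
// counting only the operations that succeed.
RunResult run_mix(std::size_t ops) {
    std::queue<int> q;
    std::size_t successful = 0;
    auto start = std::chrono::steady_clock::now();
    for (std::size_t i = 0; i < ops; ++i) {
        if (i % 4 == 0) {  // enqueue: always succeeds on an unbounded queue
            q.push(static_cast<int>(i));
            ++successful;
        } else if (!q.empty()) {  // dequeue: fails (and is not counted) when empty
            q.pop();
            ++successful;
        }
    }
    auto end = std::chrono::steady_clock::now();
    double ms = std::chrono::duration<double, std::milli>(end - start).count();
    return {successful, ms};
}
```

With this mix, two out of every three dequeues find the queue empty, so `run_mix(400)` counts 200 successful operations; counting only successes keeps failed dequeues from inflating the throughput figure.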

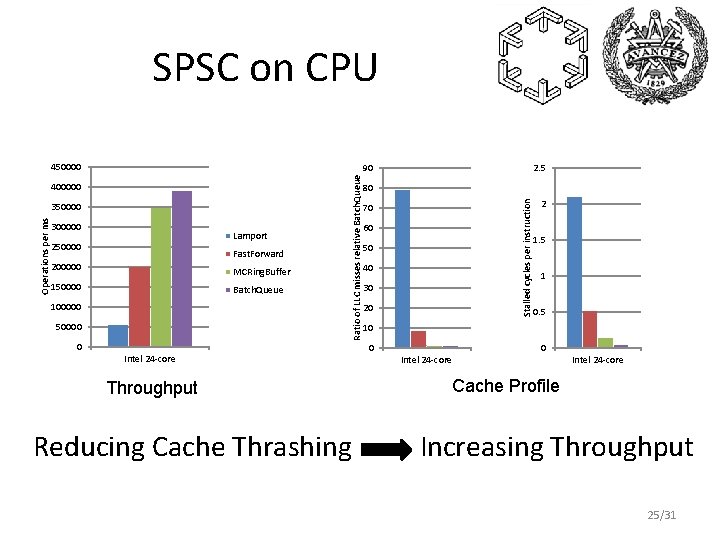

SPSC on CPU (Intel 24-core)

(Figure, two panels. Throughput: operations per ms for Lamport, FastForward, MCRingBuffer and BatchQueue. Cache profile: ratio of LLC misses relative to BatchQueue, and stalled cycles per instruction.)

- Reducing cache thrashing increases throughput.

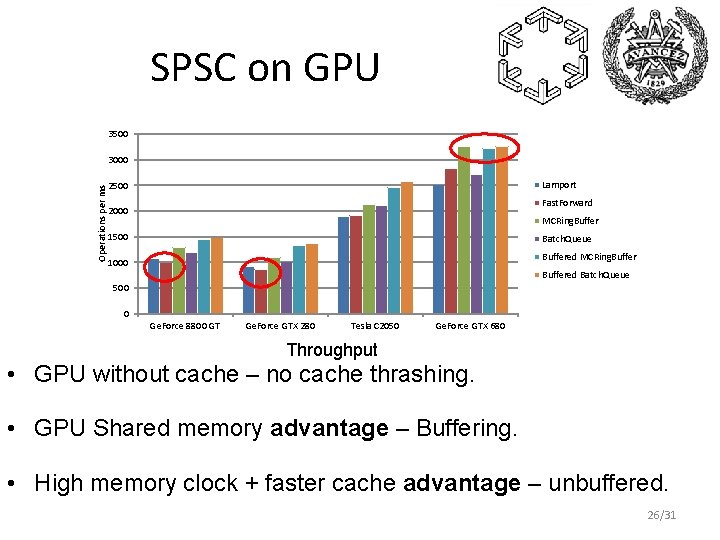

SPSC on GPU

(Figure: throughput in operations per ms for Lamport, FastForward, MCRingBuffer, BatchQueue, Buffered MCRingBuffer and Buffered BatchQueue on GeForce 8800 GT, GeForce GTX 280, Tesla C2050 and GeForce GTX 680.)

- GPUs without cache: no cache thrashing.
- GPU shared memory gives an advantage: buffering.
- A higher memory clock and a faster cache benefit the unbuffered queues.

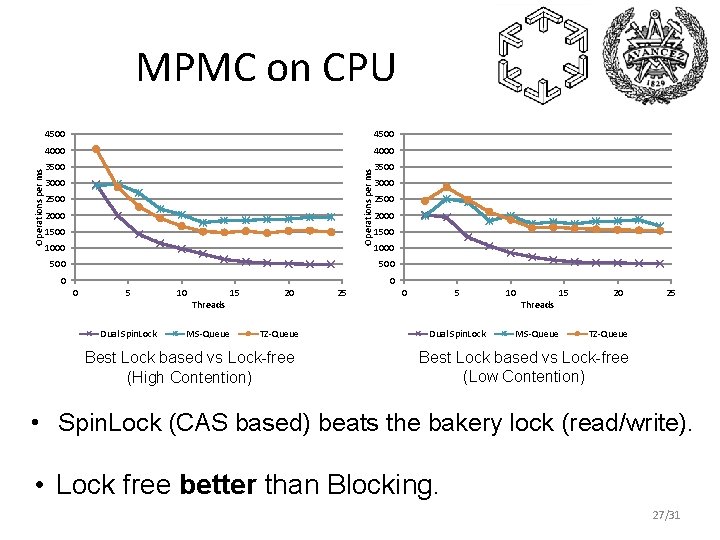

MPMC on CPU

(Figure, two panels: best lock-based vs. lock-free under high contention and under low contention. Operations per ms versus number of threads, 0 to 25, for Dual SpinLock, MS-Queue and TZ-Queue.)

- The CAS-based SpinLock beats the bakery lock (based on reads and writes).
- Lock-free is better than blocking.

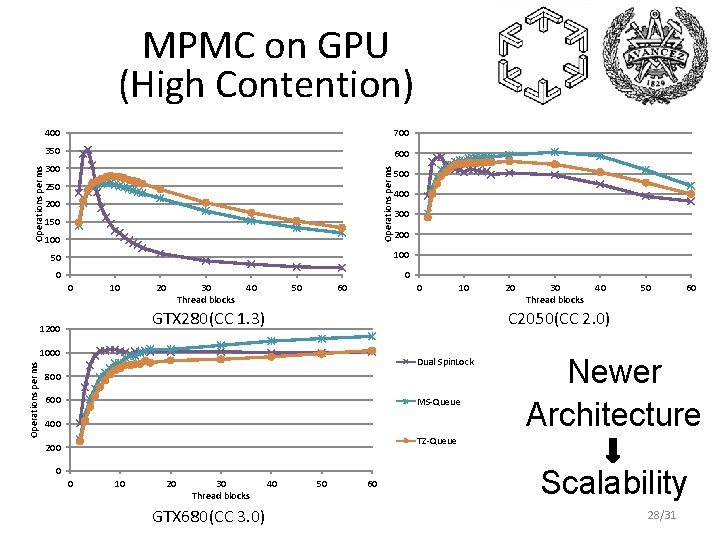

MPMC on GPU (High Contention)

(Figure: operations per ms versus number of thread blocks, 0 to 60, for Dual SpinLock, MS-Queue and TZ-Queue on GTX 280 (CC 1.3), C2050 (CC 2.0) and GTX 680 (CC 3.0). Annotations: Newer architecture; Scalability.)

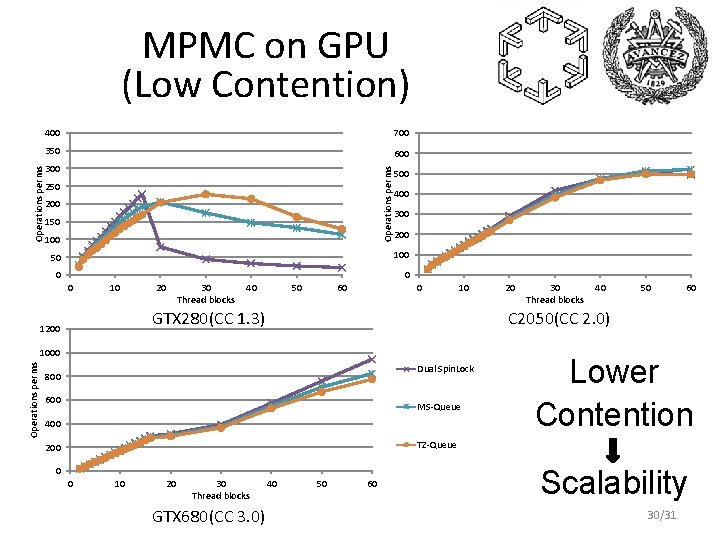

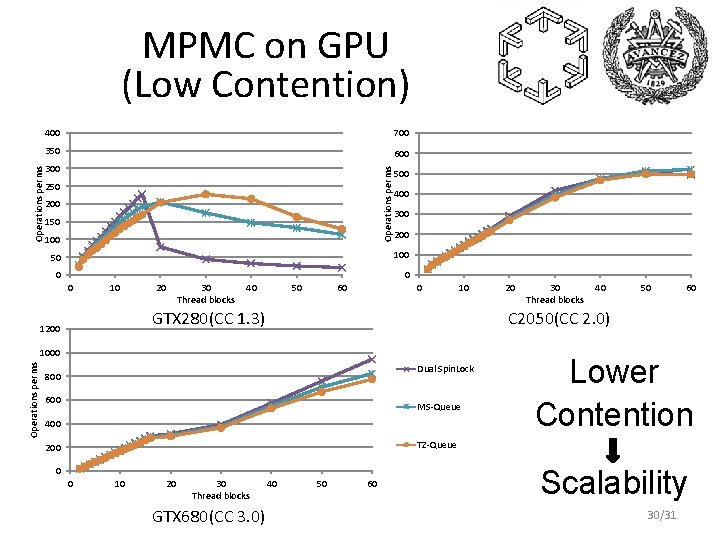

MPMC on GPU (Low Contention)

(Figure: operations per ms versus number of thread blocks, 0 to 60, for Dual SpinLock, MS-Queue and TZ-Queue on GTX 280 (CC 1.3), C2050 (CC 2.0) and GTX 680 (CC 3.0). Annotations: Lower contention; Scalability.)

Summary
1. Concurrent queues are, in general, performance portable from CPU to GPU.
2. The configurable caches are still NOT enough to remove the benefit of redesigning algorithms from the GPU shared-memory viewpoint.
3. The significantly improved atomics in Fermi, and further in Kepler, are a big motivation for designing CDS algorithms for GPUs.

References
1. Lamport, L.: Specifying Concurrent Program Modules. ACM Transactions on Programming Languages and Systems 5 (1983) 190-222
2. Giacomoni, J., Moseley, T., Vachharajani, M.: FastForward for Efficient Pipeline Parallelism: A Cache-Optimized Concurrent Lock-Free Queue. In: Proceedings of the 13th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, ACM (2008) 43-52
3. Preud'homme, T., Sopena, J., Thomas, G., Folliot, B.: BatchQueue: Fast and Memory-Thrifty Core to Core Communication. In: 22nd International Symposium on Computer Architecture and High Performance Computing (SBAC-PAD) (2010) 215-222
4. Lee, P.P.C., Bu, T., Chandranmenon, G.: A Lock-Free, Cache-Efficient Shared Ring Buffer for Multi-Core Architectures. In: Proceedings of the 5th ACM/IEEE Symposium on Architectures for Networking and Communications Systems (ANCS '09), New York, NY, USA, ACM (2009) 78-79
5. Michael, M., Scott, M.: Simple, Fast, and Practical Non-Blocking and Blocking Concurrent Queue Algorithms. In: Proceedings of the 15th Annual ACM Symposium on Principles of Distributed Computing, ACM (1996) 267-275
6. Tsigas, P., Zhang, Y.: A Simple, Fast and Scalable Non-Blocking Concurrent FIFO Queue for Shared Memory Multiprocessor Systems. In: Proceedings of the 13th Annual ACM Symposium on Parallel Algorithms and Architectures, ACM (2001) 134-143