Tutorial for MapReduce (Hadoop) & Large Scale Processing

- Slides: 36

Tutorial for MapReduce (Hadoop) & Large Scale Processing
Le Zhao (LTI, SCS, CMU)
Database Seminar & Large Scale Seminar, 2010-Feb-15
Some slides adapted from IR course lectures by Jamie Callan
© 2010, Le Zhao

Outline
• Why MapReduce (Hadoop)
• MapReduce basics
• The MapReduce way of thinking
• Manipulating large data

Outline
• Why MapReduce (Hadoop)
– Why go large scale
– Compared to other parallel computing models
– Hadoop related tools
• MapReduce basics
• The MapReduce way of thinking
• Manipulating large data

Why NOT to do parallel computing
• Concerns: a parallel system needs to provide:
– Data distribution
– Computation distribution
– Fault tolerance
– Job scheduling

Why MapReduce (Hadoop)
• Previous parallel computation models
– 1) scp + ssh
» Everything is manual
– 2) network cross-mounted disks + condor/torque
» No data distribution; disk access is the bottleneck
» Can only partition totally distributed computations
» No fault tolerance
» Prioritized job scheduling

Hadoop
• Parallel batch computation
– Data distribution
» Hadoop Distributed File System (HDFS)
» Like a Linux file system, but with automatic data replication
– Computation distribution
» Automatic; the user only needs to specify #input_splits
» Can distribute aggregation computations as well
– Fault tolerance
» Automatic recovery from failure
» Speculative execution (a backup task)
– Job scheduling
» OK, but still relies on the politeness of users

How you can use Hadoop
• Hadoop Streaming
– Quick hacking, much like shell scripting
» Uses STDIN & STDOUT to carry data
» cat file | mapper | sort | reducer > output
– Easy to reuse legacy code; works with any programming language
• Hadoop Java API
– Build large systems
» More data types
» More control over Hadoop's behavior
» Easier debugging with Java's error stack traces
– NetBeans plugin for Hadoop provides easy programming
» http://hadoopstudio.org/docs.html
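A minimal sketch of that Streaming pipeline for word count, assuming tab-separated `key<TAB>value` lines (Streaming's default separator). The names `mapper`, `reducer`, and `pipeline` are hypothetical; in a real Streaming job the mapper and reducer would be two separate scripts reading `sys.stdin` and printing to STDOUT, and Hadoop, not `sorted`, would sort between them.

```python
from itertools import groupby
from typing import Iterable, Iterator


def mapper(lines: Iterable[str]) -> Iterator[str]:
    """Emit one 'word<TAB>1' line per word, as a Streaming mapper would print to STDOUT."""
    for line in lines:
        for word in line.split():
            yield f"{word}\t1"


def reducer(sorted_lines: Iterable[str]) -> Iterator[str]:
    """Sum the counts per word; relies on equal keys being adjacent (sorted input)."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines)
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        yield f"{word}\t{sum(int(count) for _, count in group)}"


def pipeline(lines: Iterable[str]) -> list[str]:
    """Local stand-in for: cat file | mapper | sort | reducer > output."""
    return list(reducer(sorted(mapper(lines))))
```

Because the reducer only ever looks at adjacent lines, it needs memory for one key at a time, which is what makes the shell-style pipeline scale.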

Outline
• Why MapReduce (Hadoop)
• MapReduce basics
• The MapReduce way of thinking
• Manipulating large data

Map and Reduce
MapReduce is a new use of an old idea in Computer Science
• Map: Apply a function to every object in a list
– Each object is independent
» Order is unimportant
» Maps can be done in parallel
– The function produces a result
• Reduce: Combine the results to produce a final result
You may have seen this in a Lisp or functional programming course
© 2009, Jamie Callan
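The functional-programming version of the idea is available in most standard libraries. In Python, for instance, the built-in `map` and `functools.reduce` express it directly. This is only an analogy for the idea's roots, not Hadoop code.

```python
import operator
from functools import reduce

# Map: apply a function to every element; each call is independent,
# so in principle each could run on a different machine.
squares = list(map(lambda x: x * x, [1, 2, 3, 4]))

# Reduce: combine the mapped results into one final result.
total = reduce(operator.add, squares, 0)

print(squares)  # [1, 4, 9, 16]
print(total)    # 30
```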

MapReduce
• Input reader
– Divide input into splits, assign each split to a Map processor
• Map
– Apply the Map function to each record in the split
– Each Map function returns a list of (key, value) pairs
• Shuffle/Partition and Sort
– Shuffle distributes sorting & aggregation to many reducers
– All records for key k are directed to the same reduce processor
– Sort groups the same keys together, and prepares for aggregation
• Reduce
– Apply the Reduce function to each key
– The result of the Reduce function is a list of (key, value) pairs
© 2010, Jamie Callan
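To make the four stages concrete, here is a minimal single-process sketch. It is not Hadoop: `run_mapreduce`, the CRC32-based `partition` function, and the default of two reducers are assumptions chosen for illustration. Hadoop's default `HashPartitioner` likewise assigns a key to a reducer by hashing it modulo the number of reduce tasks.

```python
import zlib
from itertools import groupby
from typing import Any, Callable, Iterable

Pair = tuple[Any, Any]


def partition(key: Any, num_reducers: int) -> int:
    """Hash partitioner: all records with the same key go to the same reducer."""
    return zlib.crc32(repr(key).encode("utf-8")) % num_reducers


def run_mapreduce(
    splits: list[list[Any]],
    map_fn: Callable[[Any], Iterable[Pair]],
    reduce_fn: Callable[[Any, list[Any]], Iterable[Pair]],
    num_reducers: int = 2,
) -> list[list[Pair]]:
    """Single-process simulation of input splits -> Map -> Shuffle/Sort -> Reduce."""
    # Map: every record of every split is processed independently
    # (in Hadoop, each split would be handled by its own map task).
    mapped = [pair for split in splits for record in split for pair in map_fn(record)]

    # Shuffle/Partition: route each (key, value) pair to exactly one reducer.
    buckets: list[list[Pair]] = [[] for _ in range(num_reducers)]
    for key, value in mapped:
        buckets[partition(key, num_reducers)].append((key, value))

    outputs: list[list[Pair]] = []
    for bucket in buckets:
        # Sort: bring equal keys together; only keys are compared.
        bucket.sort(key=lambda kv: kv[0])
        out: list[Pair] = []
        # Reduce: called once per key with all of that key's values.
        for key, group in groupby(bucket, key=lambda kv: kv[0]):
            out.extend(reduce_fn(key, [value for _, value in group]))
        outputs.append(out)
    return outputs  # one output list per reducer


# Usage: word count over two input splits.
splits = [["a rose is", "a rose"], ["is a rose"]]
parts = run_mapreduce(
    splits,
    map_fn=lambda line: [(word, 1) for word in line.split()],
    reduce_fn=lambda word, counts: [(word, sum(counts))],
)
```

Each key lands in exactly one reducer's output, so concatenating the per-reducer lists gives the complete result.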

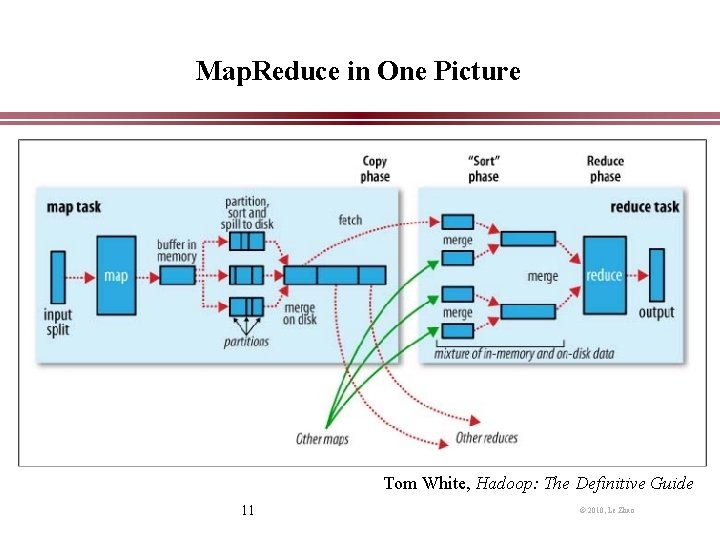

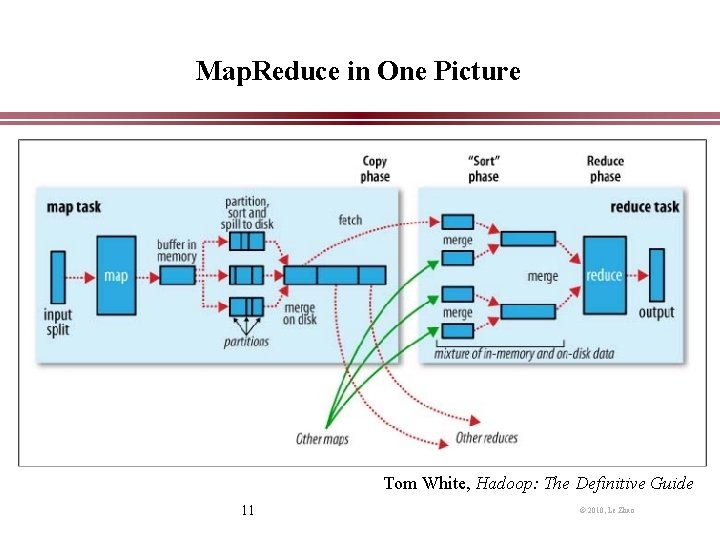

MapReduce in One Picture (Tom White, Hadoop: The Definitive Guide)

Outline
• Why MapReduce (Hadoop)
• MapReduce basics
• The MapReduce way of thinking
– Two simple use cases
– Two more advanced & useful MapReduce tricks
– Two MapReduce applications
• Manipulating large data

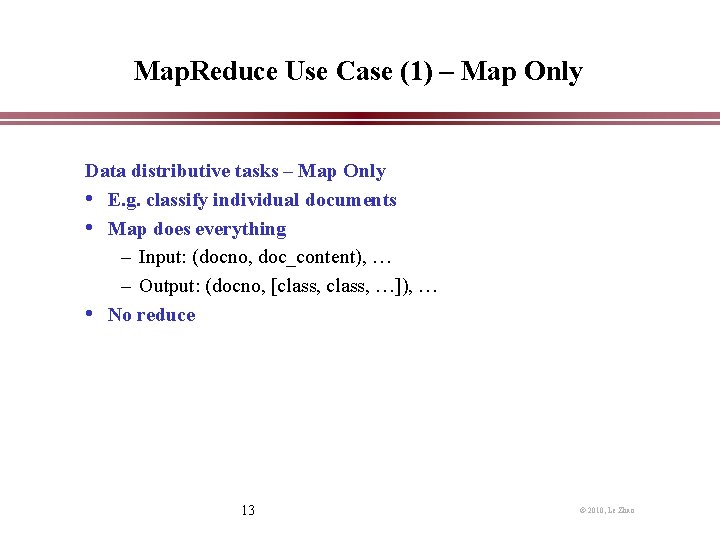

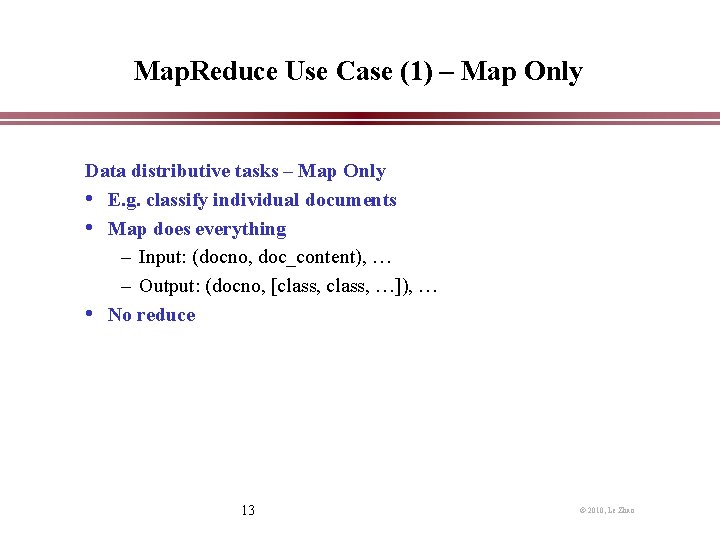

MapReduce Use Case (1) – Map Only
Data distributive tasks – Map Only
• E.g. classify individual documents
• Map does everything
– Input: (docno, doc_content), …
– Output: (docno, [class, …]), …
• No reduce
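A sketch of a map-only classification job, assuming a hypothetical keyword-rule classifier (`CLASS_KEYWORDS`) in place of a real one. Each document is labeled without looking at any other document, so there is nothing to shuffle or reduce. In Hadoop this corresponds to setting the number of reduce tasks to zero, in which case map output is written directly as the job output.

```python
from typing import Iterable, Iterator

# Hypothetical keyword rules standing in for a real document classifier.
CLASS_KEYWORDS = {
    "sports": {"game", "score", "team"},
    "finance": {"stock", "market", "bank"},
}


def classify_map(docno: str, content: str) -> tuple[str, list[str]]:
    """Map: label one document independently of all others."""
    words = set(content.lower().split())
    classes = sorted(c for c, keys in CLASS_KEYWORDS.items() if words & keys)
    return docno, classes


def run_map_only(records: Iterable[tuple[str, str]]) -> Iterator[tuple[str, list[str]]]:
    # No reduce: each map output record is already a final (docno, [class, ...]) pair.
    for docno, content in records:
        yield classify_map(docno, content)
```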

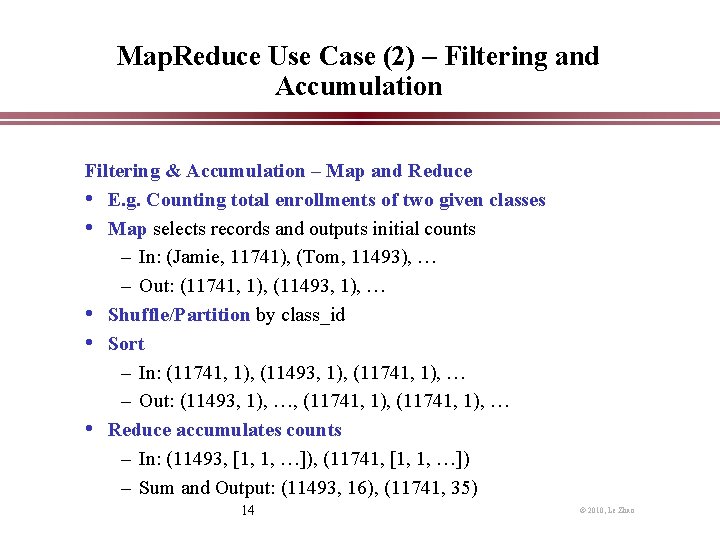

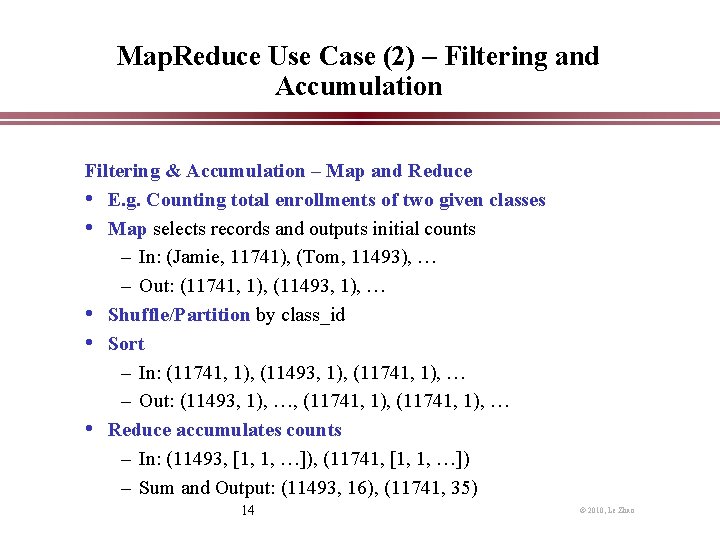

MapReduce Use Case (2) – Filtering and Accumulation
Filtering & Accumulation – Map and Reduce
• E.g. counting total enrollments of two given classes
• Map selects records and outputs initial counts
– In: (Jamie, 11741), (Tom, 11493), …
– Out: (11741, 1), (11493, 1), …
• Shuffle/Partition by class_id
• Sort
– In: (11741, 1), (11493, 1), (11741, 1), …
– Out: (11493, 1), …, (11741, 1), …
• Reduce accumulates counts
– In: (11493, [1, 1, …]), (11741, [1, 1, …])
– Sum and Output: (11493, 16), (11741, 35)
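The enrollment count can be sketched as follows. `WANTED` holds the two class ids from the example, and `enroll_map`, `enroll_reduce`, and `count_enrollments` are hypothetical names. A single `sort` plus `groupby` stands in for Hadoop's shuffle and sort.

```python
from itertools import groupby
from typing import Iterable, Iterator

WANTED = {11741, 11493}  # the two classes of interest, from the example above


def enroll_map(student: str, class_id: int) -> Iterator[tuple[int, int]]:
    # Filter: keep only enrollments in the wanted classes; emit an initial count of 1.
    if class_id in WANTED:
        yield class_id, 1


def enroll_reduce(class_id: int, counts: list[int]) -> tuple[int, int]:
    # Accumulate: sum the partial counts for one class.
    return class_id, sum(counts)


def count_enrollments(records: Iterable[tuple[str, int]]) -> list[tuple[int, int]]:
    mapped = [kv for student, cid in records for kv in enroll_map(student, cid)]
    mapped.sort(key=lambda kv: kv[0])  # shuffle/sort by class_id
    return [
        enroll_reduce(cid, [c for _, c in group])
        for cid, group in groupby(mapped, key=lambda kv: kv[0])
    ]
```

Because addition is associative and commutative, the same summing logic could also run as a combiner on each mapper's output, shrinking what has to be shuffled.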

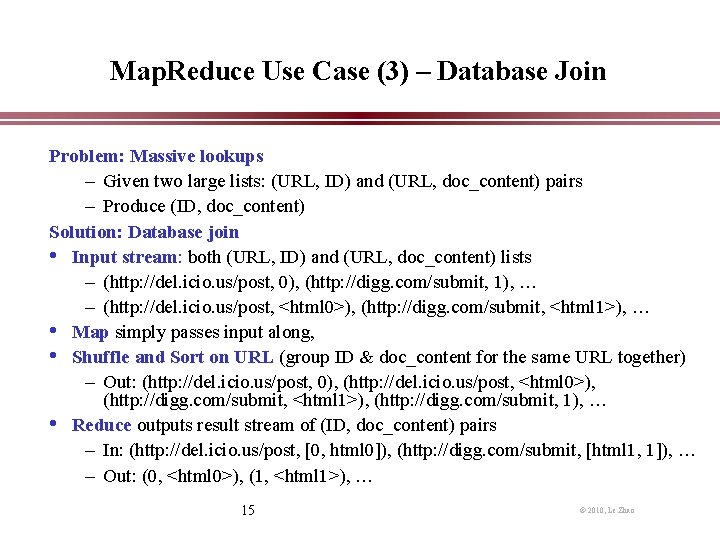

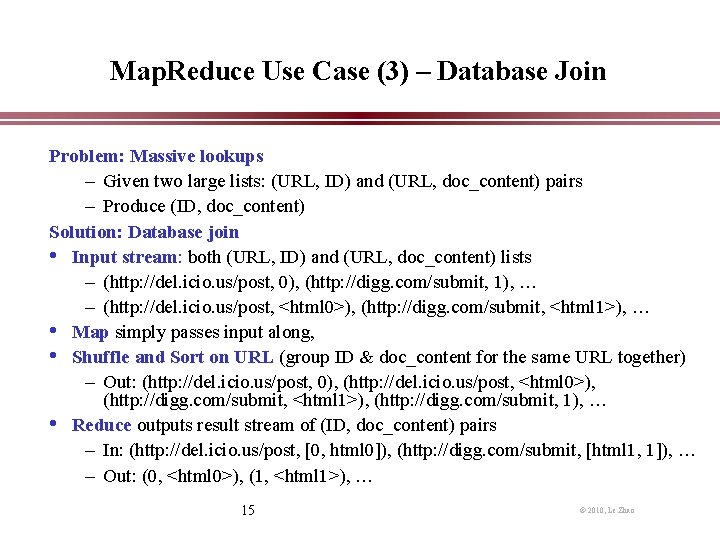

MapReduce Use Case (3) – Database Join
Problem: Massive lookups
– Given two large lists: (URL, ID) and (URL, doc_content) pairs
– Produce (ID, doc_content)
Solution: Database join
• Input stream: both (URL, ID) and (URL, doc_content) lists
– (http://del.icio.us/post, 0), (http://digg.com/submit, 1), …
– (http://del.icio.us/post, <html 0>), (http://digg.com/submit, <html 1>), …
• Map simply passes input along
• Shuffle and Sort on URL (group ID & doc_content for the same URL together)
– Out: (http://del.icio.us/post, 0), (http://del.icio.us/post, <html 0>), (http://digg.com/submit, <html 1>), (http://digg.com/submit, 1), …
• Reduce outputs a result stream of (ID, doc_content) pairs
– In: (http://del.icio.us/post, [0, html 0]), (http://digg.com/submit, [html 1, 1]), …
– Out: (0, <html 0>), (1, <html 1>), …
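A sketch of this reduce-side join. One assumption differs from the slide: here the map step tags each value with its source list (`"id"` or `"doc"`), whereas the slide tells IDs and contents apart by their content. Explicit tags are a common way to make that distinction robust. The function name `join` is hypothetical.

```python
from itertools import groupby
from typing import Iterable

ID_TAG, DOC_TAG = "id", "doc"  # hypothetical source tags added by the map step


def join(url_ids: Iterable[tuple[str, int]],
         url_docs: Iterable[tuple[str, str]]) -> list[tuple[int, str]]:
    # Map: pass both inputs through, keyed by URL, with a source tag on each value.
    mapped = [(url, (ID_TAG, i)) for url, i in url_ids]
    mapped += [(url, (DOC_TAG, d)) for url, d in url_docs]

    # Shuffle & sort on URL so the ID and the content of one URL meet in one reduce call.
    mapped.sort(key=lambda kv: kv[0])

    result = []
    for url, group in groupby(mapped, key=lambda kv: kv[0]):
        values = [v for _, v in group]
        ids = [x for tag, x in values if tag == ID_TAG]
        docs = [x for tag, x in values if tag == DOC_TAG]
        # Reduce: emit (ID, doc_content); URLs missing from either list produce nothing.
        result.extend((i, d) for i in ids for d in docs)
    return result
```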

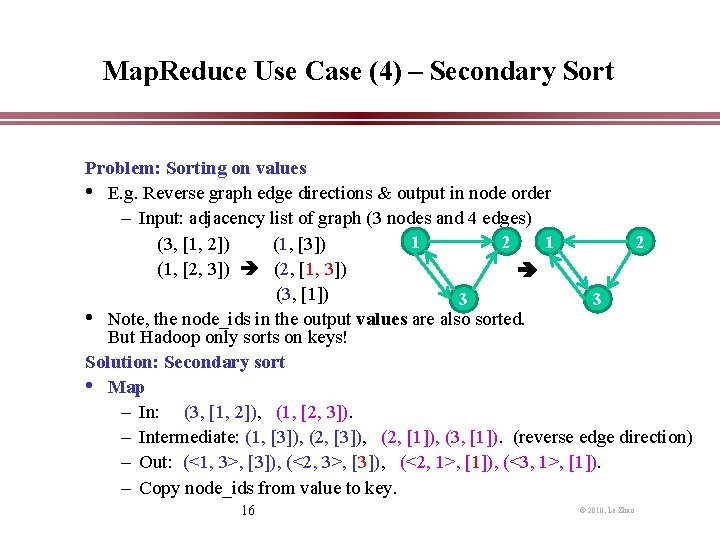

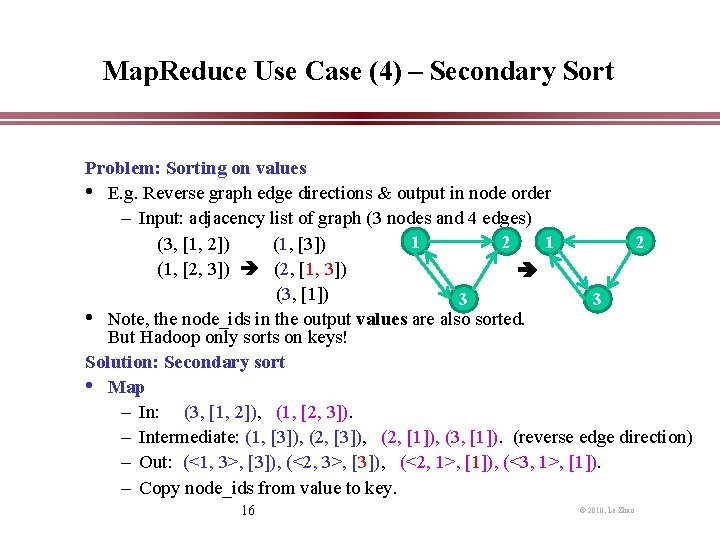

MapReduce Use Case (4) – Secondary Sort
Problem: Sorting on values
• E.g. Reverse graph edge directions & output in node order
– Input: adjacency list of a graph (3 nodes and 4 edges): (3, [1, 2]), (1, [2, 3])
– Output: (1, [3]), (2, [1, 3]), (3, [1])
• Note, the node_ids in the output values are also sorted. But Hadoop only sorts on keys!
Solution: Secondary sort
• Map
– In: (3, [1, 2]), (1, [2, 3])
– Intermediate: (1, [3]), (2, [3]), (2, [1]), (3, [1]) (reverse edge direction)
– Out: (<1, 3>, [3]), (<2, 3>, [3]), (<2, 1>, [1]), (<3, 1>, [1])
– Copy node_ids from value to key.

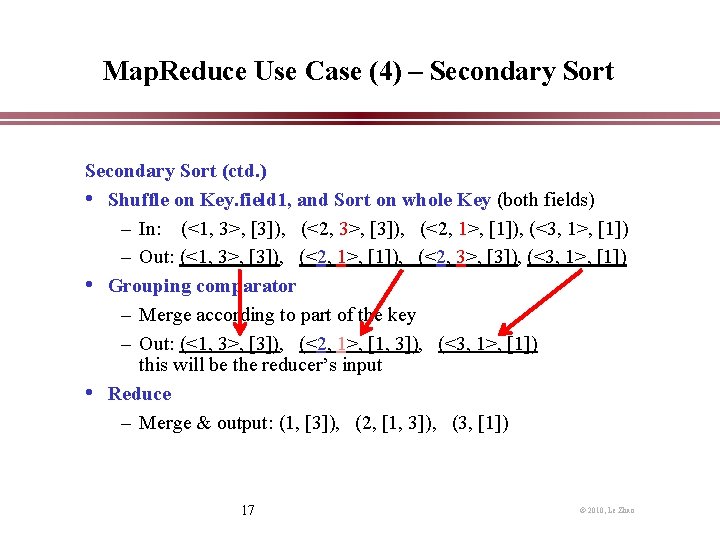

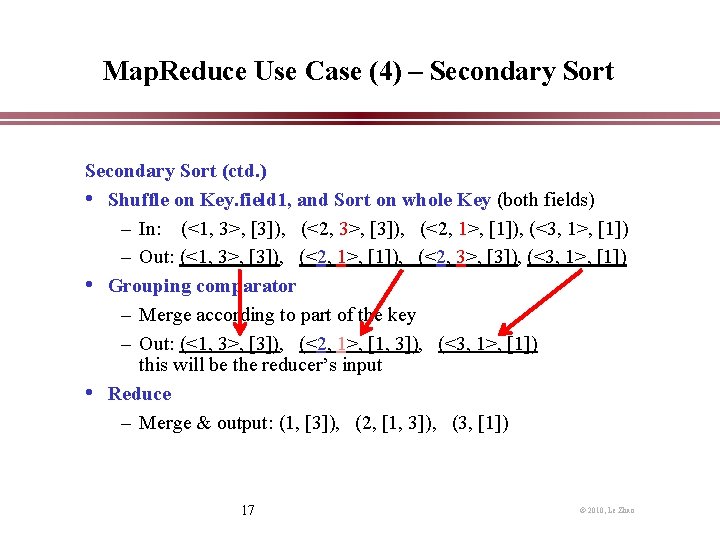

MapReduce Use Case (4) – Secondary Sort (ctd.)
• Shuffle on Key.field1, and Sort on the whole Key (both fields)
– In: (<1, 3>, [3]), (<2, 3>, [3]), (<2, 1>, [1]), (<3, 1>, [1])
– Out: (<1, 3>, [3]), (<2, 1>, [1]), (<2, 3>, [3]), (<3, 1>, [1])
• Grouping comparator
– Merge according to part of the key
– Out: (<1, 3>, [3]), (<2, 1>, [1, 3]), (<3, 1>, [1]); this will be the reducer's input
• Reduce
– Merge & output: (1, [3]), (2, [1, 3]), (3, [1])
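The whole secondary-sort example can be simulated in a few lines. The composite key is a tuple `(dst, src)`: sorting on the whole tuple orders the sources, while `groupby` on the first field plays the role of the grouping comparator. In a single process the "partition on field 1" step is implicit. In Hadoop's Java API these three ingredients correspond to a custom Partitioner, a sort comparator, and a grouping comparator (for example `Job.setPartitionerClass`, `Job.setSortComparatorClass`, and `Job.setGroupingComparatorClass` in the `org.apache.hadoop.mapreduce` API). The function name below is hypothetical.

```python
from itertools import groupby

# Adjacency list from the slide: node 3 -> [1, 2], node 1 -> [2, 3].
graph = [(3, [1, 2]), (1, [2, 3])]


def reverse_edges_secondary_sort(adjacency):
    # Map: reverse each edge src -> dst into dst -> src, and copy src into the key,
    # giving composite key <dst, src> with value [src].
    mapped = [((dst, src), [src]) for src, dsts in adjacency for dst in dsts]

    # Sort on the whole composite key, so srcs arrive in order for each dst.
    mapped.sort(key=lambda kv: kv[0])

    result = []
    # Grouping comparator: group on key field 1 (dst) only,
    # so one reduce call sees all srcs for that dst.
    for dst, group in groupby(mapped, key=lambda kv: kv[0][0]):
        # Reduce: values already arrive sorted, so just concatenate them.
        result.append((dst, [src for _, value in group for src in value]))
    return result
```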

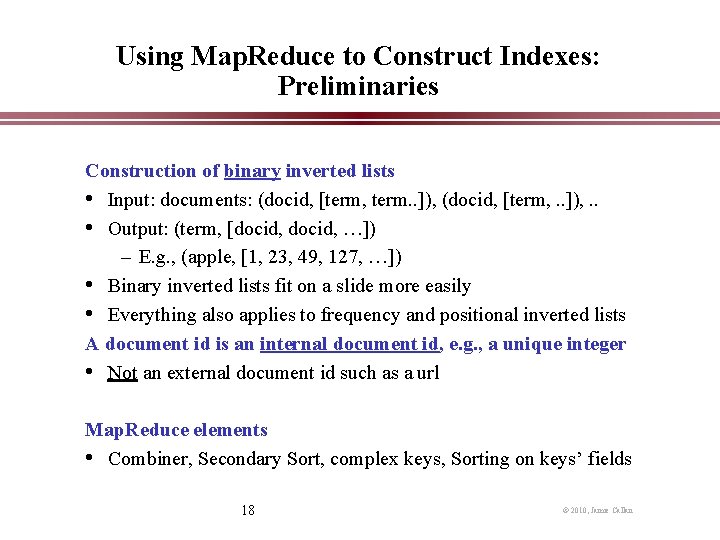

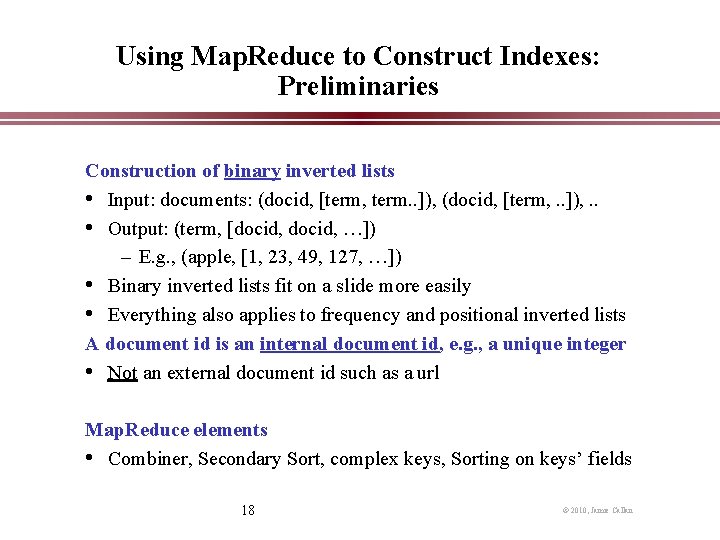

Using MapReduce to Construct Indexes: Preliminaries
Construction of binary inverted lists
• Input: documents: (docid, [term, term, …]), (docid, [term, …]), …
• Output: (term, [docid, …])
– E.g., (apple, [1, 23, 49, 127, …])
• Binary inverted lists fit on a slide more easily
• Everything also applies to frequency and positional inverted lists
A document id is an internal document id, e.g., a unique integer
• Not an external document id such as a URL
MapReduce elements
• Combiner, Secondary Sort, complex keys, Sorting on keys' fields

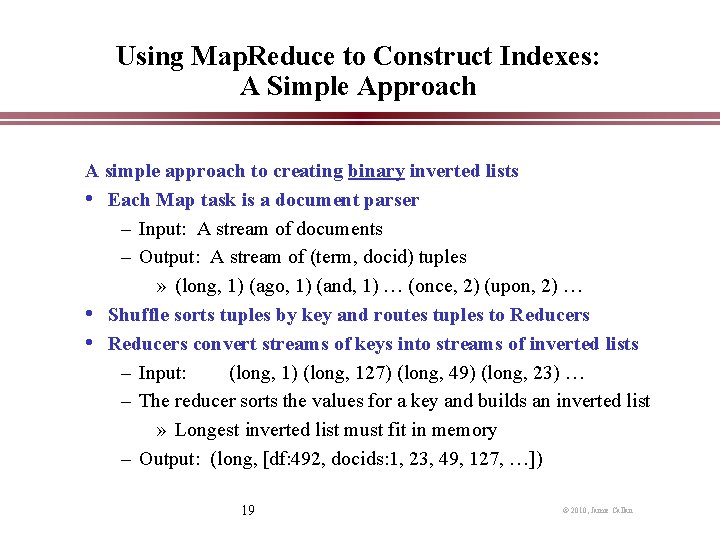

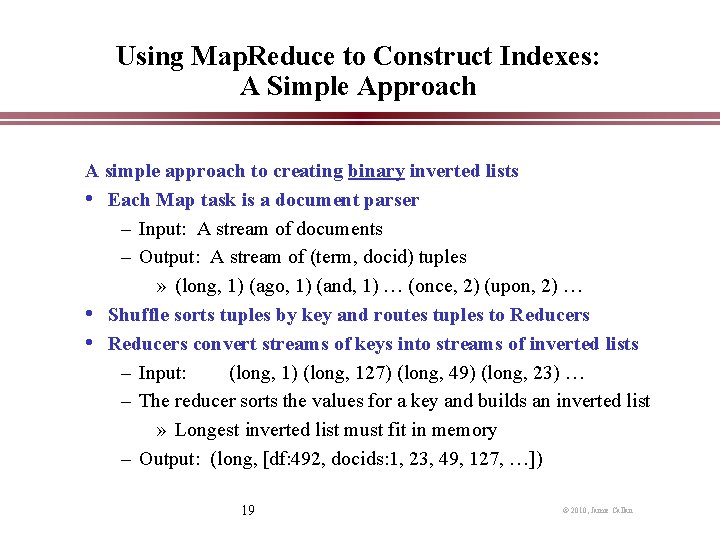

Using MapReduce to Construct Indexes: A Simple Approach
A simple approach to creating binary inverted lists
• Each Map task is a document parser
– Input: A stream of documents
– Output: A stream of (term, docid) tuples
» (long, 1) (ago, 1) (and, 1) … (once, 2) (upon, 2) …
• Shuffle sorts tuples by key and routes tuples to Reducers
• Reducers convert streams of keys into streams of inverted lists
– Input: (long, 1) (long, 127) (long, 49) (long, 23) …
– The reducer sorts the values for a key and builds an inverted list
» Longest inverted list must fit in memory
– Output: (long, [df: 492, docids: 1, 23, 49, 127, …])

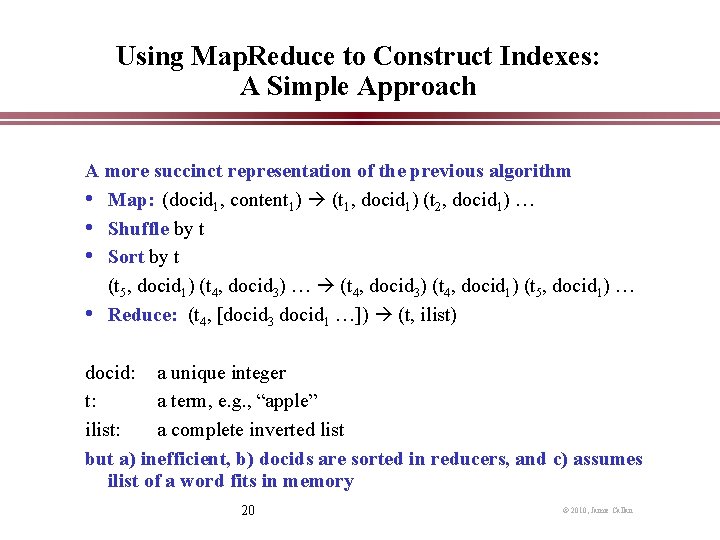

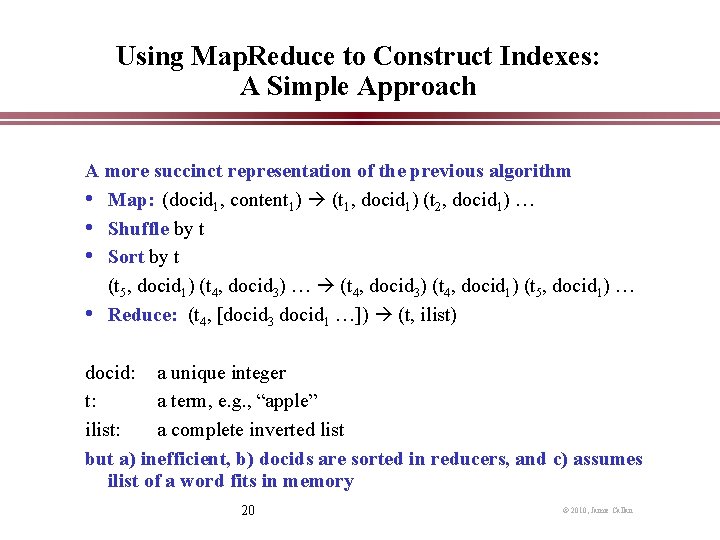

Using MapReduce to Construct Indexes: A Simple Approach
A more succinct representation of the previous algorithm
• Map: (docid_1, content_1) → (t_1, docid_1) (t_2, docid_1) …
• Shuffle by t
• Sort by t: (t_5, docid_1) (t_4, docid_3) … → (t_4, docid_3) (t_4, docid_1) (t_5, docid_1) …
• Reduce: (t_4, [docid_3 docid_1 …]) → (t, ilist)
docid: a unique integer
t: a term, e.g., “apple”
ilist: a complete inverted list
but a) inefficient, b) docids are sorted in reducers, and c) assumes ilist of a word fits in memory
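The simple approach on the two slides above can be sketched as follows, assuming whitespace tokenization and lowercasing (a real parser would do more). The function name is hypothetical. Note the in-reducer `sorted` call: it is exactly the step that requires the longest inverted list to fit in memory.

```python
from itertools import groupby


def build_index_simple(docs):
    """docs: iterable of (docid, text). Returns a list of (term, df, sorted docids)."""
    # Map: parse each document into (term, docid) tuples, one per distinct term.
    mapped = [(term, docid) for docid, text in docs for term in set(text.lower().split())]

    # Shuffle/sort on the term only, mirroring Hadoop, which does not order values.
    mapped.sort(key=lambda kv: kv[0])

    index = []
    for term, group in groupby(mapped, key=lambda kv: kv[0]):
        # Reduce: hold all docids of one term in memory and sort them here.
        docids = sorted(docid for _, docid in group)
        index.append((term, len(docids), docids))
    return index
```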

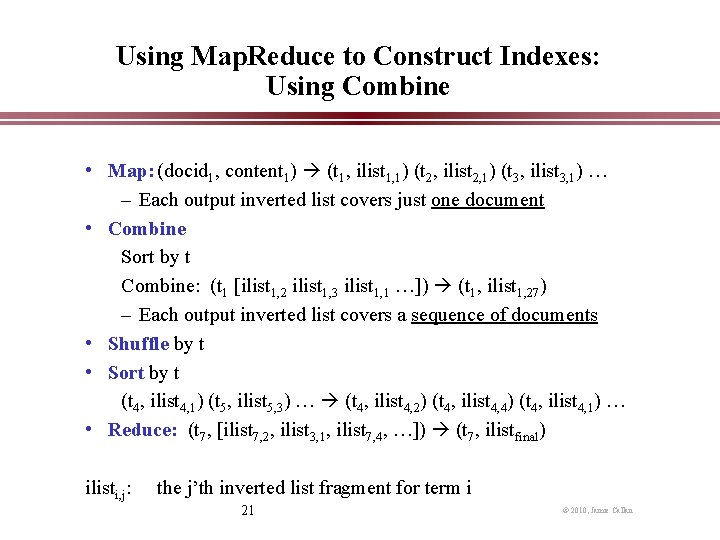

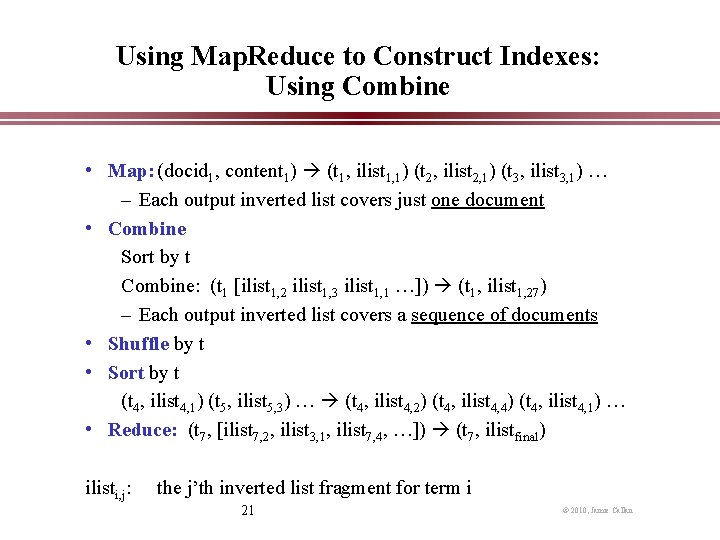

Using MapReduce to Construct Indexes: Using Combine
• Map: (docid_1, content_1) → (t_1, ilist_{1,1}) (t_2, ilist_{2,1}) (t_3, ilist_{3,1}) …
– Each output inverted list covers just one document
• Combine: sort by t, then combine: (t_1, [ilist_{1,2} ilist_{1,3} ilist_{1,1} …]) → (t_1, ilist_{1,27})
– Each output inverted list covers a sequence of documents
• Shuffle by t
• Sort by t: (t_4, ilist_{4,1}) (t_5, ilist_{5,3}) … → (t_4, ilist_{4,2}) (t_4, ilist_{4,4}) (t_4, ilist_{4,1}) …
• Reduce: (t_7, [ilist_{7,2}, ilist_{7,1}, ilist_{7,4}, …]) → (t_7, ilist_final)
ilist_{i,j}: the j'th inverted list fragment for term i
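A sketch of the combiner variant, under the same tokenization assumption as before. `map_and_combine` models one map task followed by its combiner: it merges single-document fragments locally, so fewer, larger fragments cross the network. `reduce_fragments` then merges each term's fragments into the final list. Both names are hypothetical.

```python
from itertools import groupby


def map_and_combine(split):
    """One map task plus its combiner. split: list of (docid, text)."""
    # Map: one single-document inverted list fragment per (term, document).
    mapped = [(term, [docid]) for docid, text in split for term in set(text.lower().split())]
    # Combine: sort by term and merge this task's fragments, so each emitted
    # fragment covers a sequence of documents.
    mapped.sort(key=lambda kv: kv[0])
    return [(term, sorted(d for _, frag in grp for d in frag))
            for term, grp in groupby(mapped, key=lambda kv: kv[0])]


def reduce_fragments(all_fragments):
    """Shuffle/sort by term, then merge each term's fragments into its final list."""
    ordered = sorted(all_fragments, key=lambda kv: kv[0])
    return {term: sorted(d for _, frag in grp for d in frag)
            for term, grp in groupby(ordered, key=lambda kv: kv[0])}


# Usage: two splits, i.e. two map tasks.
splits = [[(1, "apple pie"), (23, "apple tart")], [(49, "apple juice"), (127, "pie")]]
fragments = [f for s in splits for f in map_and_combine(s)]
index = reduce_fragments(fragments)
```

Here the combiner cuts the shuffled records from seven single-document fragments to six multi-document ones. On real collections, where a term repeats across many documents in a split, the saving is much larger.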

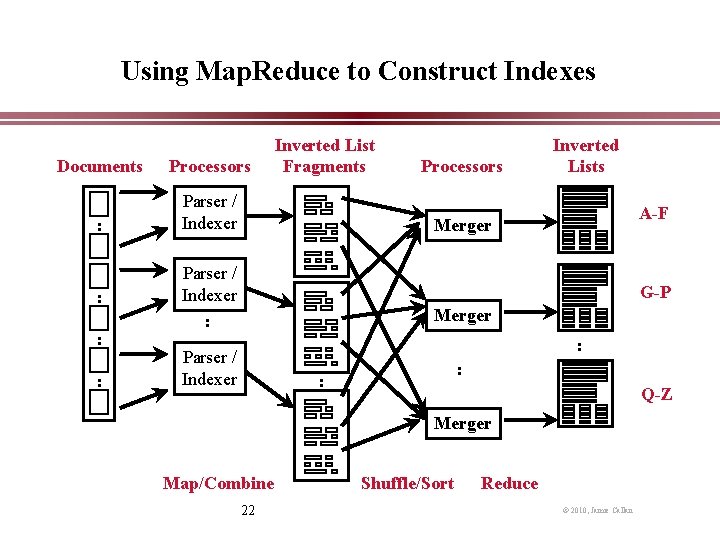

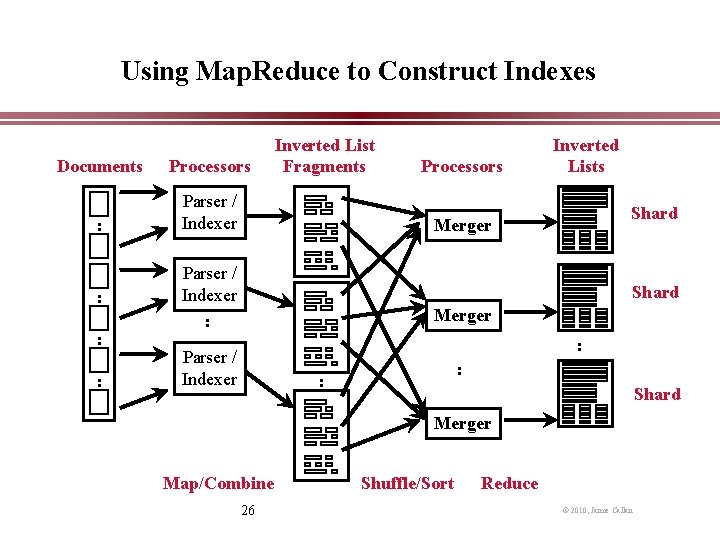

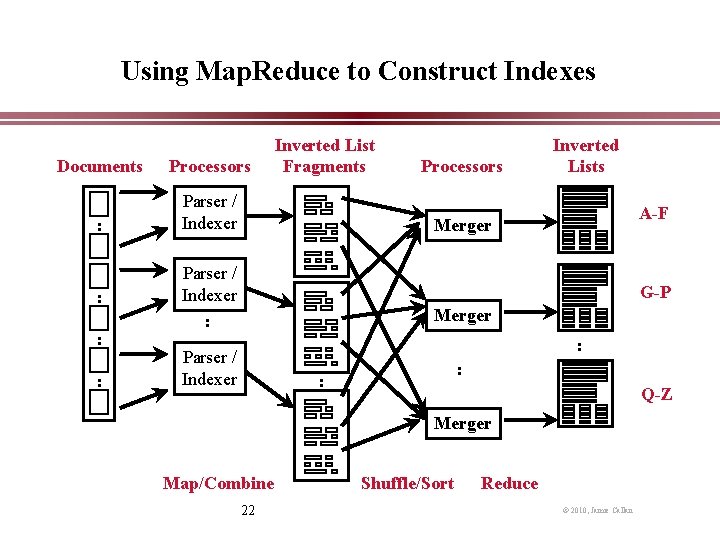

Using MapReduce to Construct Indexes
[Figure: documents → Parser / Indexer processors (Map/Combine) → inverted list fragments → Shuffle/Sort → A-F, G-P, and Q-Z Merger processors (Reduce) → inverted lists]

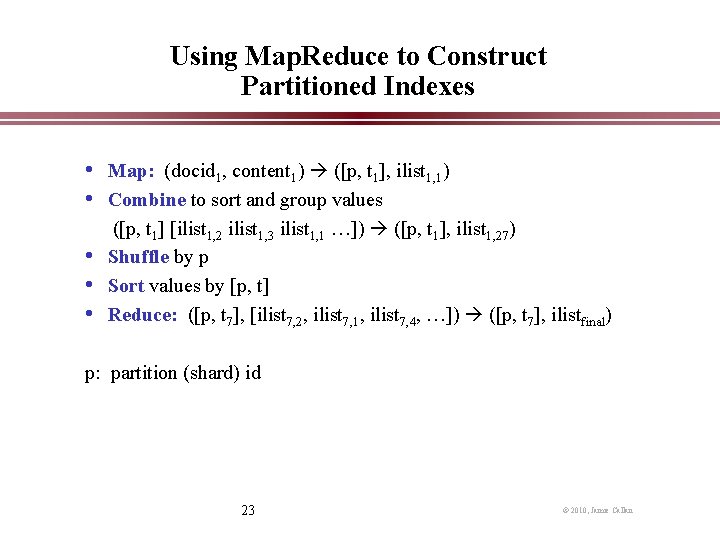

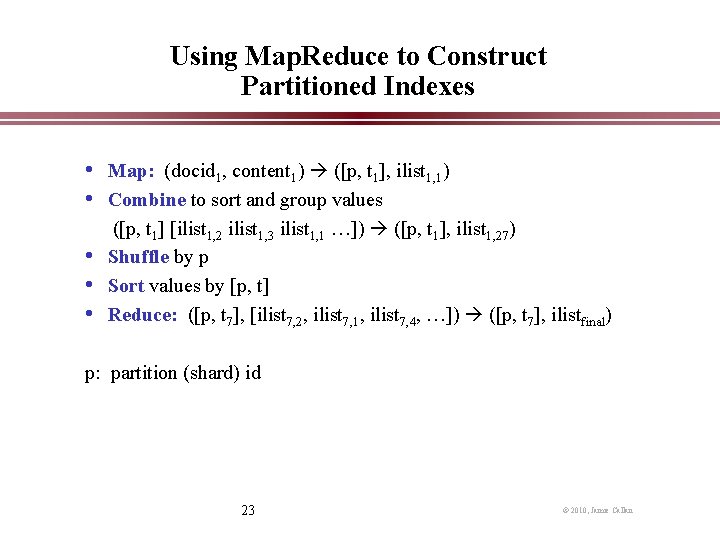

Using MapReduce to Construct Partitioned Indexes
• Map: (docid_1, content_1) → ([p, t_1], ilist_{1,1})
• Combine to sort and group values: ([p, t_1], [ilist_{1,2} ilist_{1,3} ilist_{1,1} …]) → ([p, t_1], ilist_{1,27})
• Shuffle by p
• Sort values by [p, t]
• Reduce: ([p, t_7], [ilist_{7,2}, ilist_{7,1}, ilist_{7,4}, …]) → ([p, t_7], ilist_final)
p: partition (shard) id

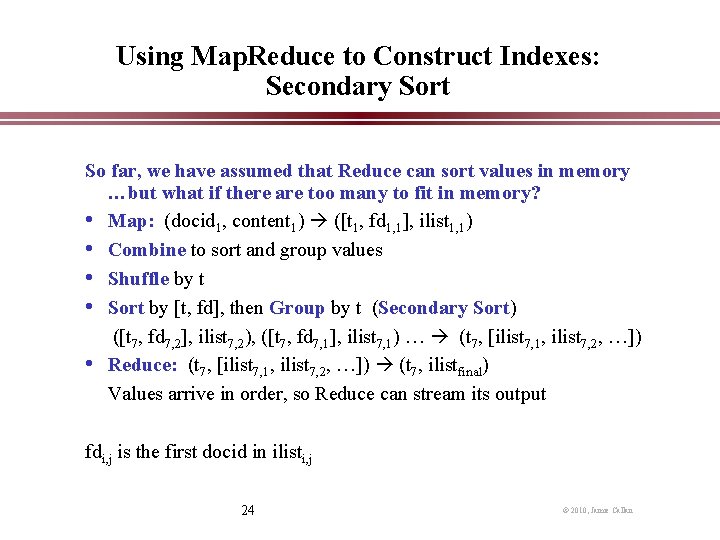

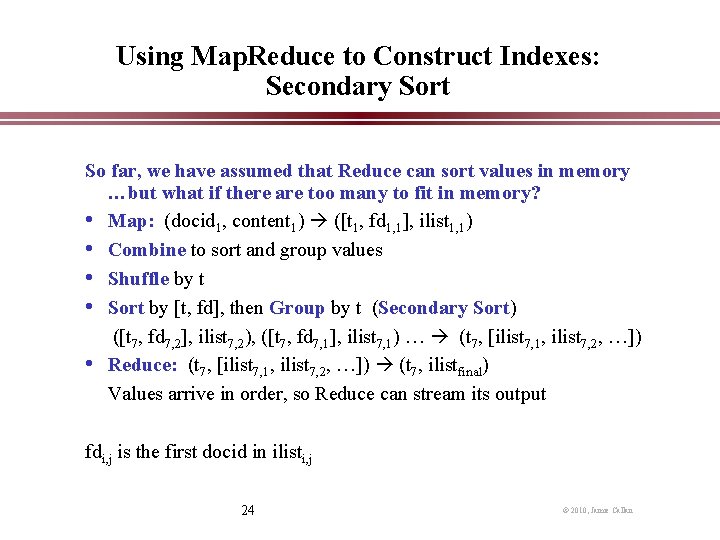

Using MapReduce to Construct Indexes: Secondary Sort
So far, we have assumed that Reduce can sort values in memory … but what if there are too many to fit in memory?
• Map: (docid_1, content_1) → ([t_1, fd_{1,1}], ilist_{1,1})
• Combine to sort and group values
• Shuffle by t
• Sort by [t, fd], then Group by t (Secondary Sort): ([t_7, fd_{7,2}], ilist_{7,2}), ([t_7, fd_{7,1}], ilist_{7,1}) … → (t_7, [ilist_{7,1}, ilist_{7,2}, …])
• Reduce: (t_7, [ilist_{7,1}, ilist_{7,2}, …]) → (t_7, ilist_final)
Values arrive in order, so Reduce can stream its output
fd_{i,j} is the first docid in ilist_{i,j}
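A sketch of the streaming reducer that secondary sort enables. The composite key is `(term, fd)`. Sorting on the whole key and grouping on the term alone delivers each term's fragments in first-docid order. This sketch additionally assumes that each map task indexed a contiguous, disjoint docid range, so fragments never interleave and can simply be concatenated. A check raises an error if that assumption is violated. `sorted` stands in for Hadoop's own disk-based sort, and the function names are hypothetical.

```python
from itertools import groupby
from typing import Iterator


def stream_postings(fragments) -> Iterator[tuple[str, Iterator[int]]]:
    """fragments: ((term, fd), docids) pairs, where fd is the fragment's first docid.

    Consume each term's postings iterator before advancing to the next term.
    """
    # Sort on the whole composite key [t, fd] ...
    ordered = sorted(fragments, key=lambda kv: kv[0])
    # ... but group on t alone (the grouping-comparator step of a secondary sort).
    for term, group in groupby(ordered, key=lambda kv: kv[0][0]):
        yield term, _concat(group)


def _concat(group) -> Iterator[int]:
    last = None
    for _key, docids in group:
        for d in docids:
            # Fragments arrive in fd order, so docids can be written out as they come.
            if last is not None and d <= last:
                raise ValueError("fragments overlap; concatenation would not be sorted")
            last = d
            yield d
```

The reducer never holds more than one fragment at a time, which removes the simple approach's requirement that the longest inverted list fit in memory.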

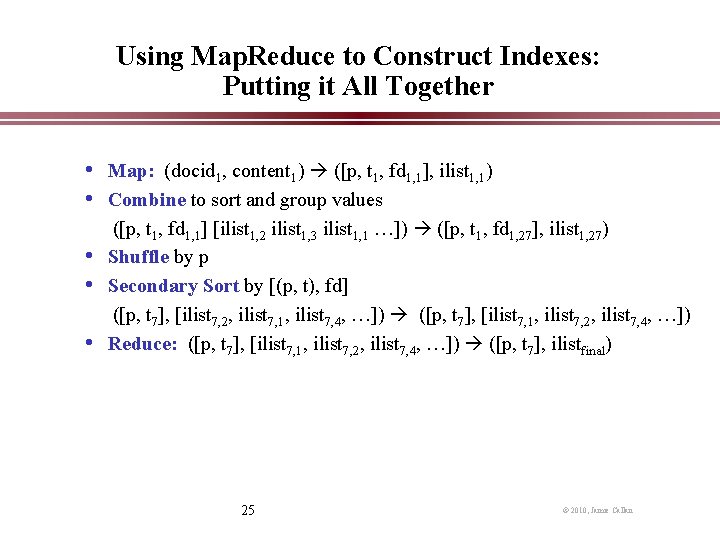

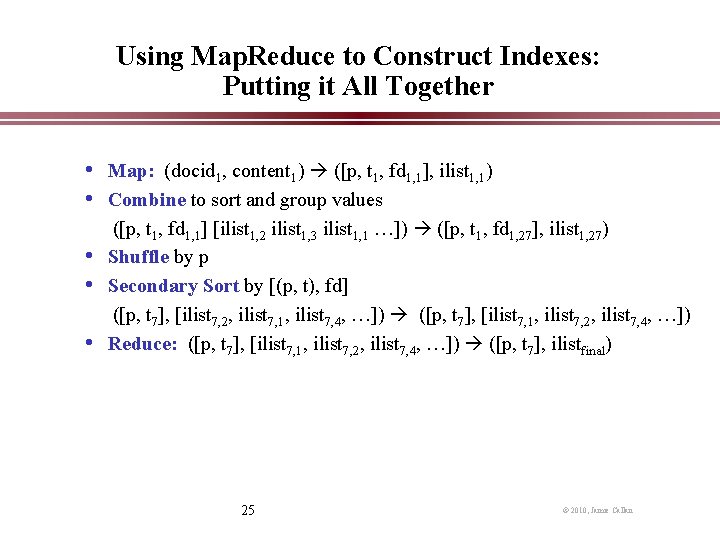

Using MapReduce to Construct Indexes: Putting it All Together
• Map: (docid_1, content_1) → ([p, t_1, fd_{1,1}], ilist_{1,1})
• Combine to sort and group values: ([p, t_1, fd_{1,1}], [ilist_{1,2} ilist_{1,3} ilist_{1,1} …]) → ([p, t_1, fd_{1,27}], ilist_{1,27})
• Shuffle by p
• Secondary Sort by [(p, t), fd]: ([p, t_7], [ilist_{7,2}, ilist_{7,1}, ilist_{7,4}, …]) → ([p, t_7], [ilist_{7,1}, ilist_{7,2}, ilist_{7,4}, …])
• Reduce: ([p, t_7], [ilist_{7,1}, ilist_{7,2}, ilist_{7,4}, …]) → ([p, t_7], ilist_final)

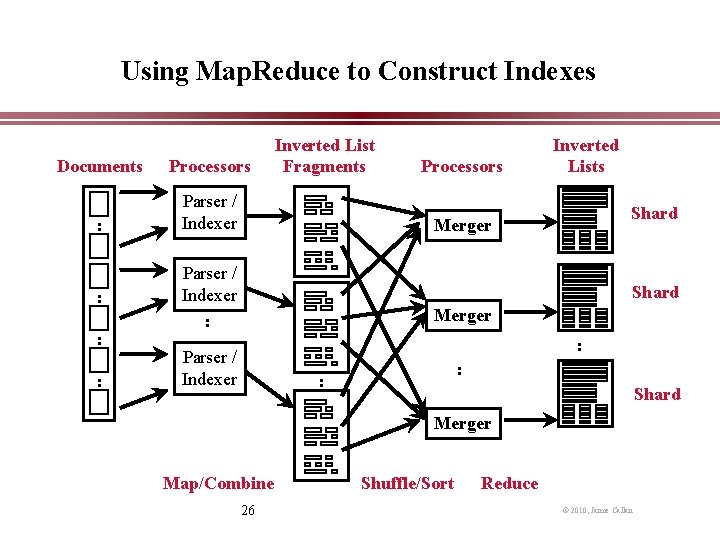

Using MapReduce to Construct Indexes
[Figure: documents → Parser / Indexer processors (Map/Combine) → inverted list fragments → Shuffle/Sort → one Shard Merger processor per shard (Reduce) → inverted lists]

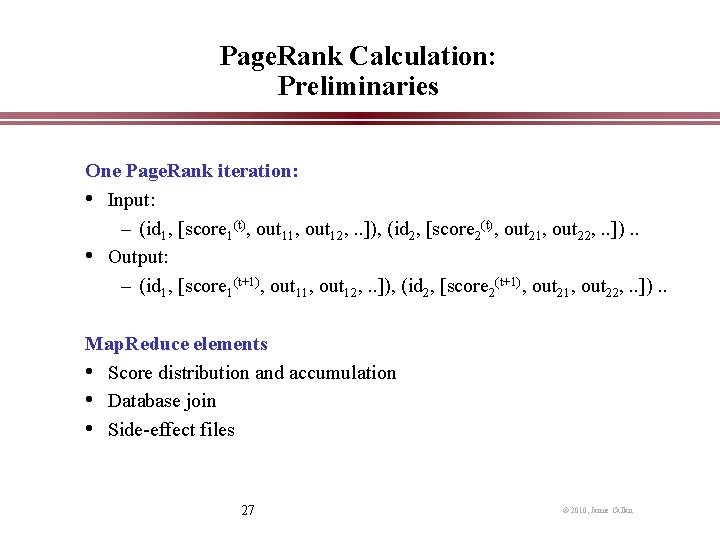

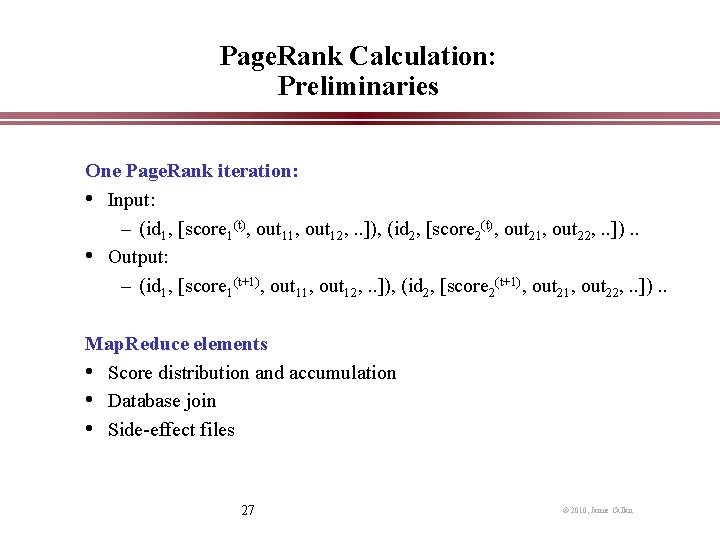

PageRank Calculation: Preliminaries
One PageRank iteration:
• Input:
– (id_1, [score_1(t), out_{1,1}, out_{1,2}, …]), (id_2, [score_2(t), out_{2,1}, out_{2,2}, …]), …
• Output:
– (id_1, [score_1(t+1), out_{1,1}, out_{1,2}, …]), (id_2, [score_2(t+1), out_{2,1}, out_{2,2}, …]), …
MapReduce elements
• Score distribution and accumulation
• Database join
• Side-effect files

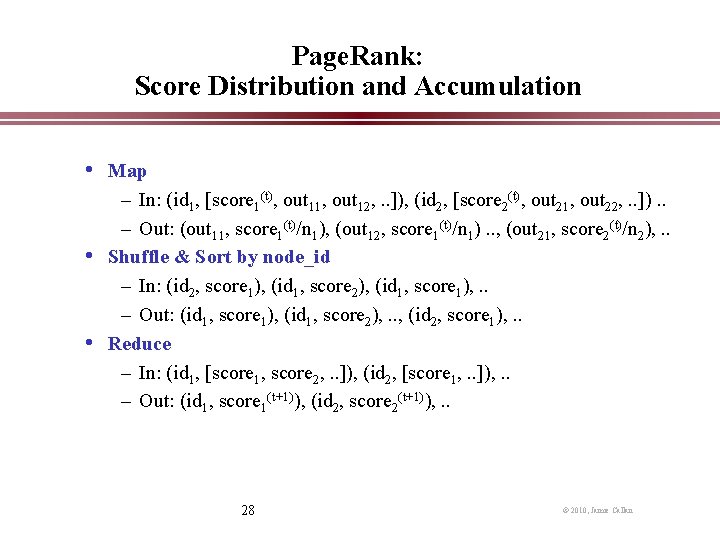

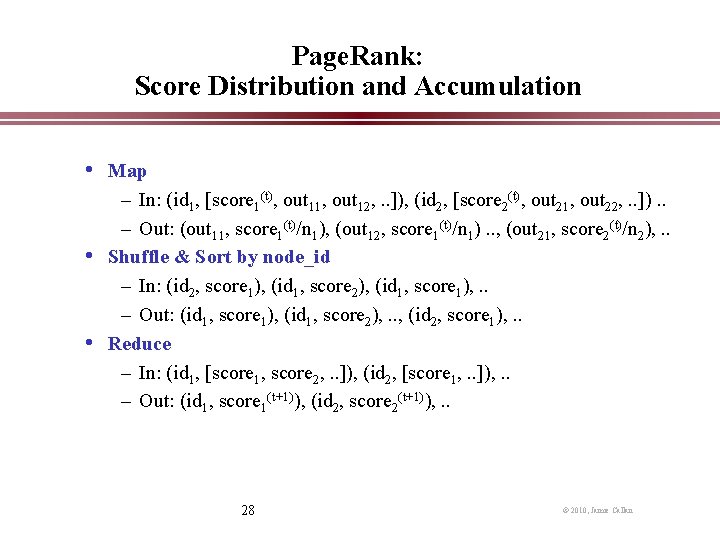

PageRank: Score Distribution and Accumulation
• Map
– In: (id_1, [score_1(t), out_{1,1}, out_{1,2}, …]), (id_2, [score_2(t), out_{2,1}, out_{2,2}, …]), …
– Out: (out_{1,1}, score_1(t)/n_1), (out_{1,2}, score_1(t)/n_1), …, (out_{2,1}, score_2(t)/n_2), …
• Shuffle & Sort by node_id
– In: (id_2, score_1), (id_1, score_2), (id_1, score_1), …
– Out: (id_1, score_1), (id_1, score_2), …, (id_2, score_1), …
• Reduce
– In: (id_1, [score_1, score_2, …]), (id_2, [score_1, …]), …
– Out: (id_1, score_1(t+1)), (id_2, score_2(t+1)), …
n_i: the number of outlinks of node i
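A sketch of one score-distribution step. The slides do not spell out the Reduce formula, so this sketch assumes one common formulation, score_i(t+1) = (1 − d)/N + d · Σ incoming shares, with damping d = 0.85 (the factor that appears on the dangling-node slide). A dictionary of running sums stands in for shuffle-and-sort plus the summing reducer. Dangling nodes are skipped here because they are handled separately. The function name is hypothetical.

```python
from collections import defaultdict

DAMPING = 0.85  # assumed damping factor


def distribute_and_accumulate(graph, scores):
    """graph: {node: [outlinks]}, scores: {node: score(t)} -> {node: score(t+1)}."""
    n_nodes = len(scores)
    # Map: each node sends score_i(t) / n_i to each of its n_i outlinks
    # (dangling nodes, with no outlinks, emit nothing here).
    contributions = [(dst, scores[src] / len(outs))
                     for src, outs in graph.items() if outs for dst in outs]
    # Shuffle & sort by node_id, then Reduce: sum the incoming shares per node.
    incoming = defaultdict(float)
    for dst, share in contributions:
        incoming[dst] += share
    return {node: (1 - DAMPING) / n_nodes + DAMPING * incoming[node] for node in scores}
```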

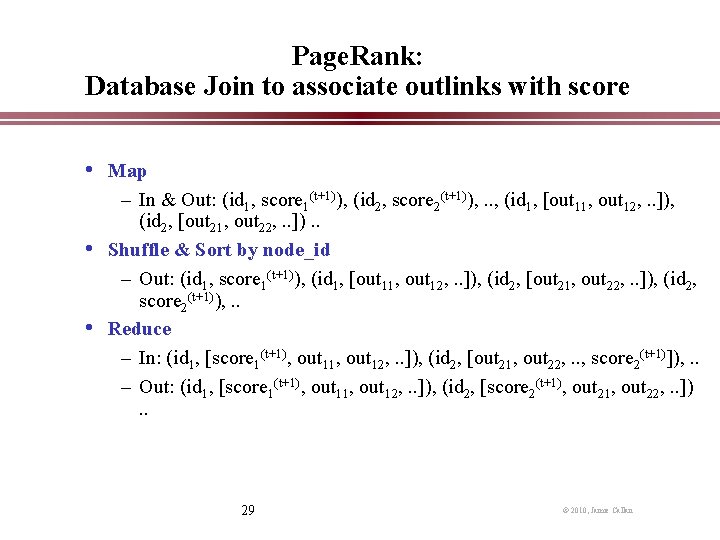

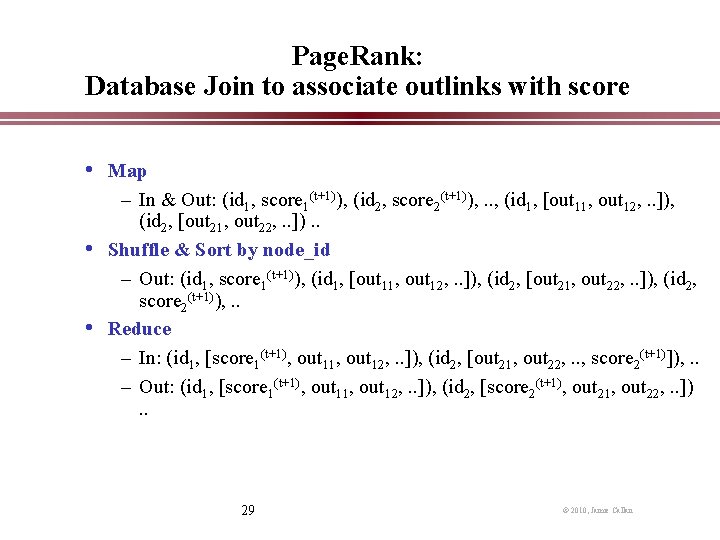

PageRank: Database Join to associate outlinks with score
• Map
– In & Out: (id_1, score_1(t+1)), (id_2, score_2(t+1)), …, (id_1, [out_{1,1}, out_{1,2}, …]), (id_2, [out_{2,1}, out_{2,2}, …]), …
• Shuffle & Sort by node_id
– Out: (id_1, score_1(t+1)), (id_1, [out_{1,1}, out_{1,2}, …]), (id_2, [out_{2,1}, out_{2,2}, …]), (id_2, score_2(t+1)), …
• Reduce
– In: (id_1, [score_1(t+1), out_{1,1}, out_{1,2}, …]), (id_2, [out_{2,1}, out_{2,2}, …, score_2(t+1)]), …
– Out: (id_1, [score_1(t+1), out_{1,1}, out_{1,2}, …]), (id_2, [score_2(t+1), out_{2,1}, out_{2,2}, …]), …
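This join reuses the tagged reduce-side join pattern from Use Case (3). The `"score"` and `"links"` tags are an assumption of this sketch. They let the reducer tell a score from an outlink list regardless of arrival order, and it then rebuilds the `(id, [score, out…])` layout that the next iteration's mapper reads. A node with no score record defaults to 0.0 here. The function name is hypothetical.

```python
from itertools import groupby


def join_scores_with_outlinks(new_scores, outlinks):
    """new_scores: [(id, score)], outlinks: [(id, [out, ...])] -> [(id, [score, out, ...])]."""
    # Map: pass both streams through unchanged, keyed by node_id, with a source tag.
    mapped = [(node, ("score", s)) for node, s in new_scores]
    mapped += [(node, ("links", outs)) for node, outs in outlinks]
    # Shuffle & sort by node_id; a score may arrive before or after its outlinks.
    mapped.sort(key=lambda kv: kv[0])
    joined = []
    for node, group in groupby(mapped, key=lambda kv: kv[0]):
        score, outs = 0.0, []
        for tag, value in (v for _, v in group):
            if tag == "score":
                score = value
            else:
                outs = value
        # Reduce: rebuild the record layout expected by the next iteration.
        joined.append((node, [score] + outs))
    return joined
```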

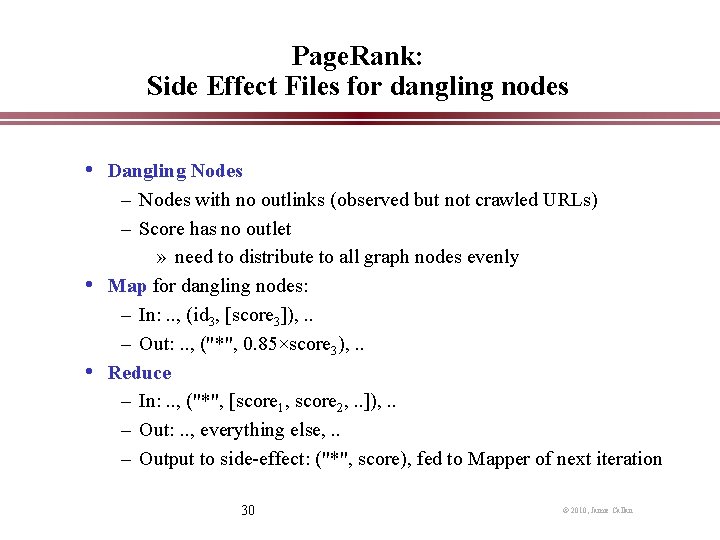

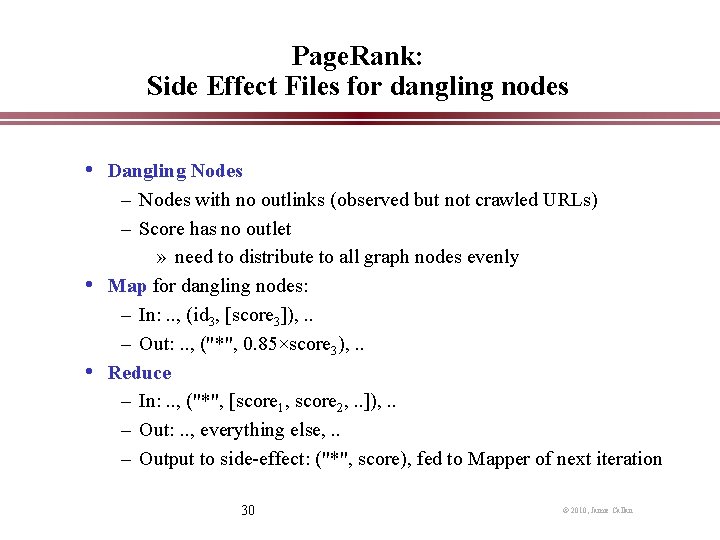

PageRank: Side Effect Files for dangling nodes
• Dangling Nodes
– Nodes with no outlinks (observed but not crawled URLs)
– Score has no outlet
» need to distribute to all graph nodes evenly
• Map for dangling nodes:
– In: …, (id_3, [score_3]), …
– Out: …, ("*", 0.85×score_3), …
• Reduce
– In: …, ("*", [score_1, score_2, …]), …
– Out: …, everything else, …
– Output to side-effect: ("*", score), fed to Mapper of next iteration
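A sketch of the side-effect-file mechanism. Dangling nodes emit their damped score under the special key `"*"`. The reducer sums those values and writes the total to a separate JSON file instead of the normal output, and the next iteration's mappers read that file to give every node an even share. The file format, the file name, and the helper names are hypothetical, and a temporary directory stands in for HDFS.

```python
import json
import tempfile
from pathlib import Path

DAMPING = 0.85  # the factor shown on the slide


def map_dangling(node, record):
    # A dangling node's record holds only its score: emit the damped score under "*".
    if len(record) == 1:
        yield "*", DAMPING * record[0]


def reduce_star(values, side_file: Path):
    # Sum the "*" values and write the total to a side-effect file, not the main output.
    side_file.write_text(json.dumps({"*": sum(values)}))


def per_node_share(side_file: Path, n_nodes: int) -> float:
    # Next iteration's mapper: every node receives an even share of the dangling mass.
    return json.loads(side_file.read_text())["*"] / n_nodes


# Usage with made-up scores: node 3 is dangling.
records = {1: [0.4, 2], 2: [0.3, 1], 3: [0.3]}
with tempfile.TemporaryDirectory() as tmp:
    side_file = Path(tmp) / "dangling_mass.json"  # hypothetical side-file name
    reduce_star([v for n, r in records.items() for _, v in map_dangling(n, r)], side_file)
    share = per_node_share(side_file, n_nodes=len(records))
```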

Outline
• Why MapReduce (Hadoop)
• MapReduce basics
• The MapReduce way of thinking
• Manipulating large data

Manipulating Large Data
• Do everything in Hadoop (and HDFS)
– Make sure every step is parallelized!
– Any serial step breaks your design
• E.g. storing the URL list for a Web graph
– Each node in the Web graph has an id
– [URL_1, URL_2, …], using the line number as id: a bottleneck, since line numbers can only be assigned by a serial pass over the file
– [(id_1, URL_1), (id_2, URL_2), …], explicit id

Hadoop based Tools
• For developing in Java: NetBeans plugin
– http://www.hadoopstudio.org/docs.html
• Pig Latin, a SQL-like high level data processing script language
• Hive, Data warehouse, SQL
• Cascading, Data processing
• Mahout, Machine Learning algorithms on Hadoop
• HBase, Distributed data store as a large table
• More
– http://hadoop.apache.org/
– http://en.wikipedia.org/wiki/Hadoop
– Many other toolkits: Nutch, Cloud9, Ivory

Get Your Hands Dirty
• Hadoop Virtual Machine
– http://www.cloudera.com/developers/downloads/virtualmachine/
» This runs Hadoop 0.20
– An earlier Hadoop 0.18.0 version is here: http://code.google.com/edu/parallel/tools/hadoopvm/index.html
• Amazon EC2
• Various other Hadoop clusters around
• The NetBeans plugin simulates Hadoop
– The workflow view works on Windows
– Local running & debugging works on Mac OS and Linux
– http://www.hadoopstudio.org/docs.html

Conclusions
• Why large scale
• MapReduce advantages
• Hadoop uses
• Use cases
– Map only: for totally distributive computation
– Map+Reduce: for filtering & aggregation
– Database join: for massive dictionary lookups
– Secondary sort: for sorting on values
– Inverted indexing: combiner, complex keys
– PageRank: side effect files
• Large data

For More Information
• L. A. Barroso, J. Dean, and U. Hölzle. “Web search for a planet: The Google cluster architecture.” IEEE Micro, 2003.
• J. Dean and S. Ghemawat. “MapReduce: Simplified Data Processing on Large Clusters.” Proceedings of the 6th Symposium on Operating System Design and Implementation (OSDI 2004), pages 137-150. 2004.
• S. Ghemawat, H. Gobioff, and S.-T. Leung. “The Google File System.” Proceedings of the 19th ACM Symposium on Operating Systems Principles (SOSP-03), pages 29-43. 2003.
• I. H. Witten, A. Moffat, and T. C. Bell. Managing Gigabytes. Morgan Kaufmann. 1999.
• J. Zobel and A. Moffat. “Inverted files for text search engines.” ACM Computing Surveys, 38(2). 2006.
• “Map/Reduce Tutorial”. http://hadoop.apache.org/common/docs/current/mapred_tutorial.html. Fetched January 21, 2010.
• Tom White. Hadoop: The Definitive Guide. O'Reilly Media. June 5, 2009.
• J. Lin and C. Dyer. Data-Intensive Text Processing with MapReduce, Book Draft. February 7, 2010.