2015-1 Paper Review: MapReduce: Simplified Data Processing on Large Clusters


2015, Semester 1, Special Topics in Operating Systems

Paper Review: MapReduce: Simplified Data Processing on Large Clusters

Jeffrey Dean and Sanjay Ghemawat (Google, Inc.)

72150272 홍민하

INDEX

- 01. Introduction
- 02. Programming Model
- 03. Implementation
- 04. Refinements
- 05. Performance
- 06. Conclusions

01. Introduction

- Why do we need a data processing system?
- Design

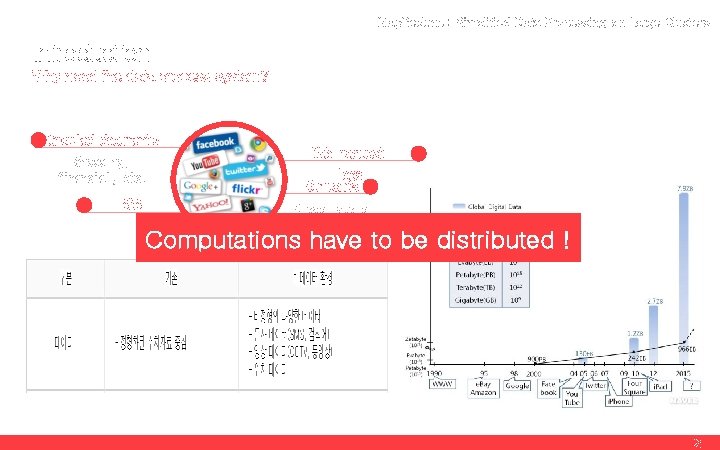

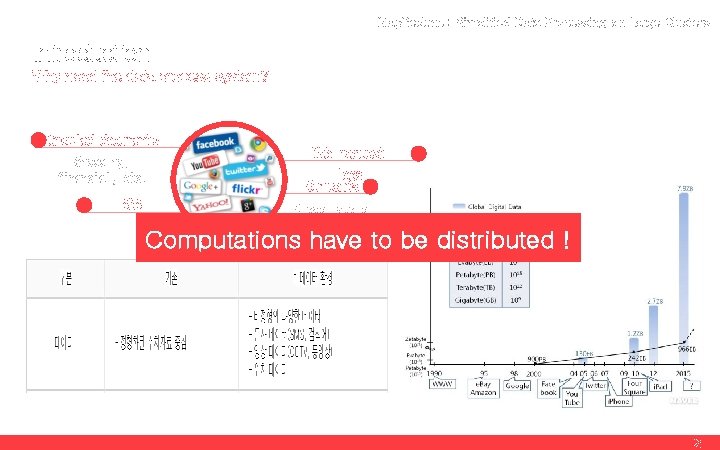

Introduction: Why do we need a data processing system?

Large amounts of raw data have to be processed:

- Crawled documents
- Shopping, financial, etc.
- SNS
- Web request logs
- Contents: video, audio, pictures, etc.

Computations have to be distributed!

Introduction: Design

MapReduce is a programming model and an associated implementation for processing and generating large data sets.

- Lets users express simple computations
- Hides the messy details (parallelization, fault tolerance, data distribution, load balancing) in a library
- Uses the map and reduce primitives present in Lisp and many other functional languages

02. Programming Model

- Map, Reduce Concept
- Example: counting the number of occurrences of each word
- More Examples

Programming Model: Map, Reduce Concept

Map

- Is applied to each logical "record" in the input
- Produces a set of intermediate key/value pairs

Reduce

- Accepts an intermediate key I and a set of values for that key
- Merges together the values that share the same key

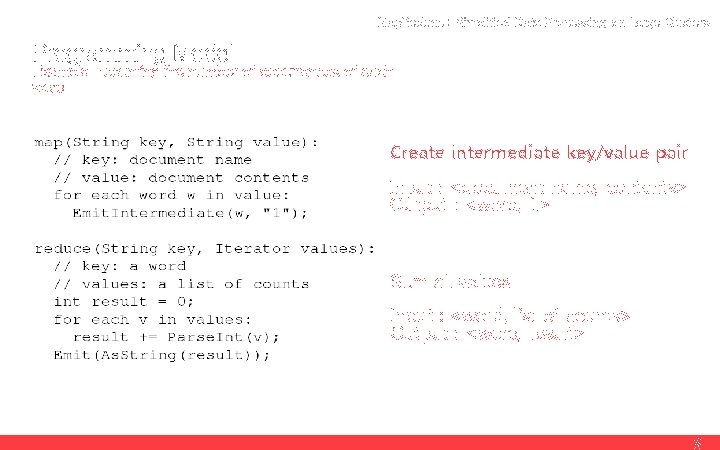

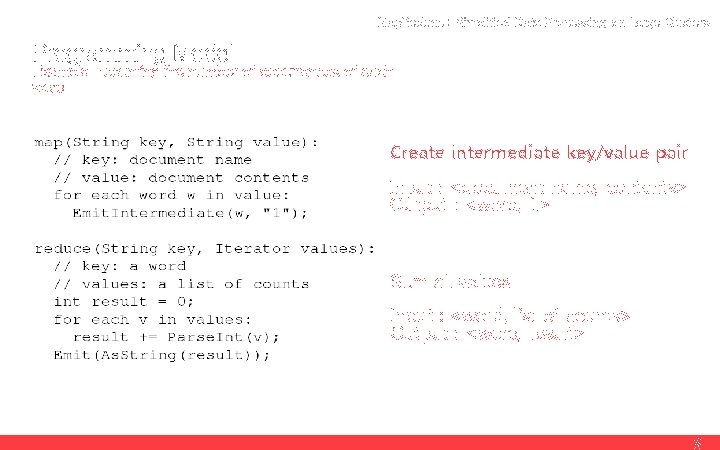

Programming Model: Example (counting the number of occurrences of each word)

Map: create intermediate key/value pairs

- Input: <document name, contents>
- Output: <word, 1>

Reduce: sum all values

- Input: <word, list of counts>
- Output: <word, result>
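The word-count example can be sketched in a few lines of Python. This is a minimal single-process illustration, not the MapReduce library itself: the names `map_fn`, `reduce_fn`, and `word_count` are hypothetical, and the grouping step stands in for the work the library does between the map and reduce phases.

```python
from collections import defaultdict
from typing import Iterator


def map_fn(doc_name: str, contents: str) -> Iterator[tuple[str, int]]:
    """Map: emit <word, 1> for every word in the document."""
    for word in contents.split():
        yield word, 1


def reduce_fn(word: str, counts: list[int]) -> tuple[str, int]:
    """Reduce: sum all counts emitted for one word."""
    return word, sum(counts)


def word_count(documents: dict[str, str]) -> dict[str, int]:
    # Group intermediate values by key; in real MapReduce the library does this.
    grouped: dict[str, list[int]] = defaultdict(list)
    for name, contents in documents.items():
        for word, one in map_fn(name, contents):
            grouped[word].append(one)
    return dict(reduce_fn(word, counts) for word, counts in grouped.items())


counts = word_count({"a.txt": "to be or not to be", "b.txt": "to do"})
```

Here `counts` maps each word to its total across both hypothetical documents.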

Programming Model: Example (counting the number of occurrences of each word), illustrated

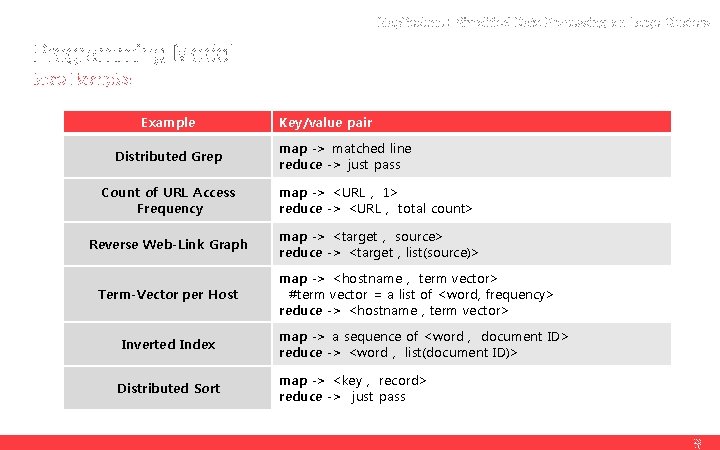

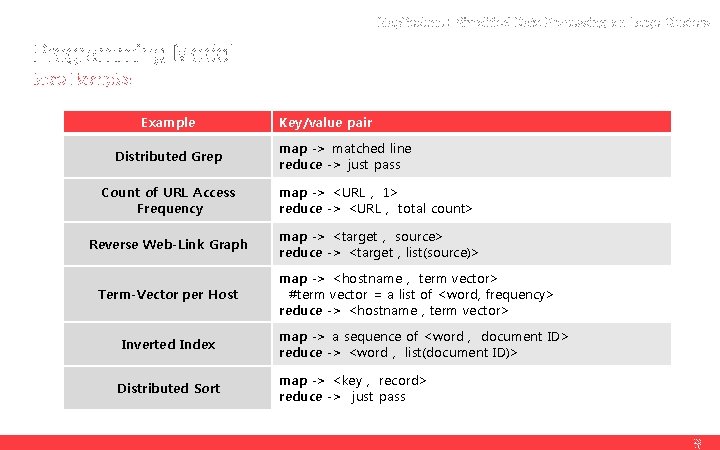

Programming Model: More Examples

- Distributed Grep: map -> matched line; reduce -> just pass
- Count of URL Access Frequency: map -> <URL, 1>; reduce -> <URL, total count>
- Reverse Web-Link Graph: map -> <target, source>; reduce -> <target, list(source)>
- Term-Vector per Host: map -> <hostname, term vector>; reduce -> <hostname, term vector> (term vector = a list of <word, frequency>)
- Inverted Index: map -> a sequence of <word, document ID>; reduce -> <word, list(document ID)>
- Distributed Sort: map -> <key, record>; reduce -> just pass
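As one illustration of the list above, the inverted index can be sketched as follows. This is a single-process approximation under simple assumptions (whitespace tokenization, lower-casing); `index_map`, `index_reduce`, and `inverted_index` are hypothetical names.

```python
from collections import defaultdict


def index_map(doc_id: str, contents: str):
    """Map: emit <word, document ID> once per distinct word."""
    for word in set(contents.lower().split()):
        yield word, doc_id


def index_reduce(word: str, doc_ids: list):
    """Reduce: emit <word, sorted list(document ID)>."""
    return word, sorted(doc_ids)


def inverted_index(documents: dict) -> dict:
    grouped = defaultdict(list)
    for doc_id, contents in documents.items():
        for word, d in index_map(doc_id, contents):
            grouped[word].append(d)
    return dict(index_reduce(w, ids) for w, ids in grouped.items())


index = inverted_index({"d1": "large clusters", "d2": "Large data sets"})
```

Looking up `index["large"]` returns the documents that contain the word, which is the structure a simple search would use.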

03. Implementation

- Environment
- Execution Overview
- Fault Tolerance
- Locality

Implementation: Environment

Computing environment in wide use at Google:

1. Machines are dual-processor x86 machines running Linux, with 2-4 GB of memory per machine.
2. Network bandwidth is 100 Mbit/s or 1 Gbit/s at the machine level.
3. A cluster consists of hundreds or thousands of machines, so machine failures are common.
4. Storage is provided by inexpensive IDE disks.

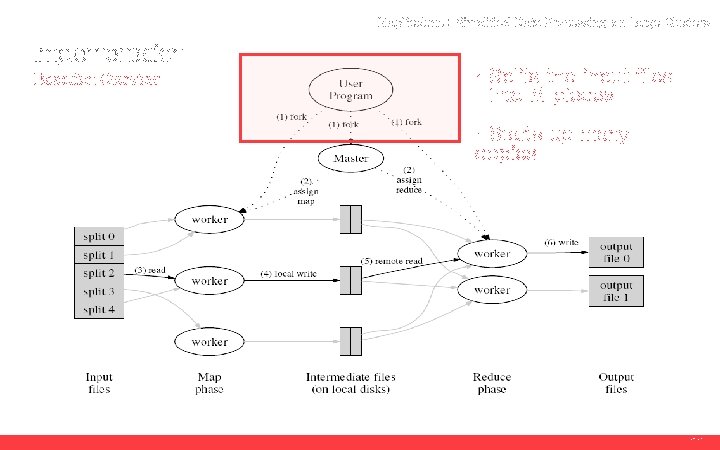

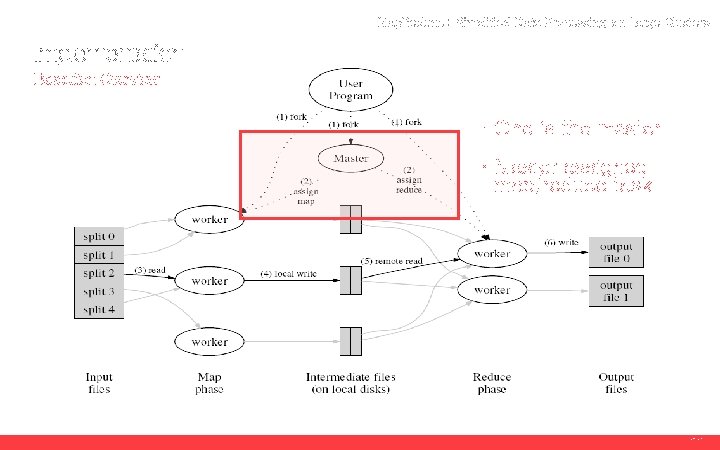

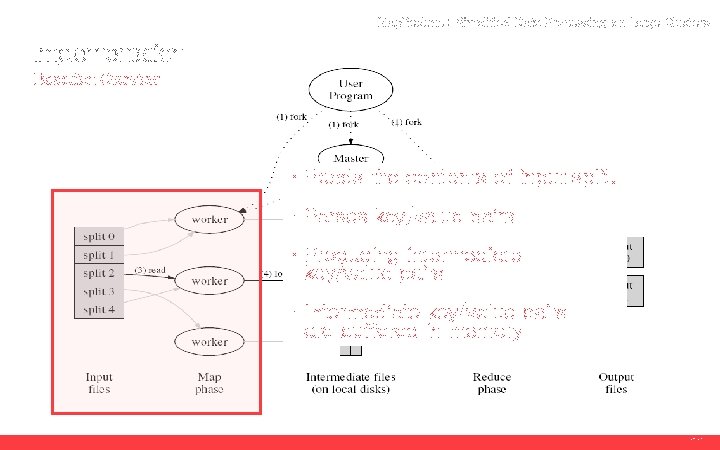

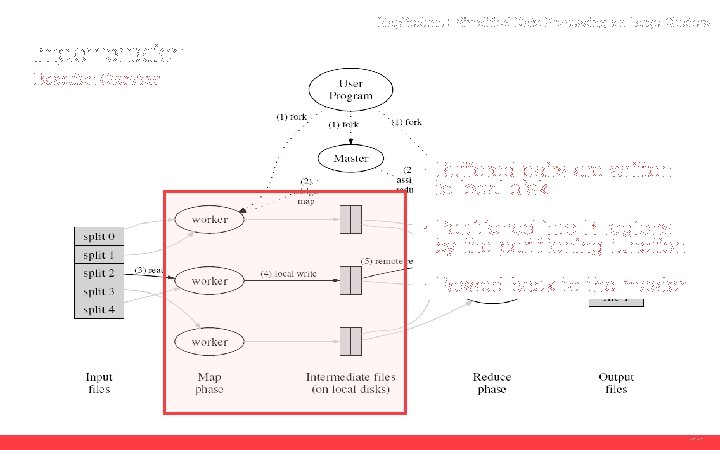

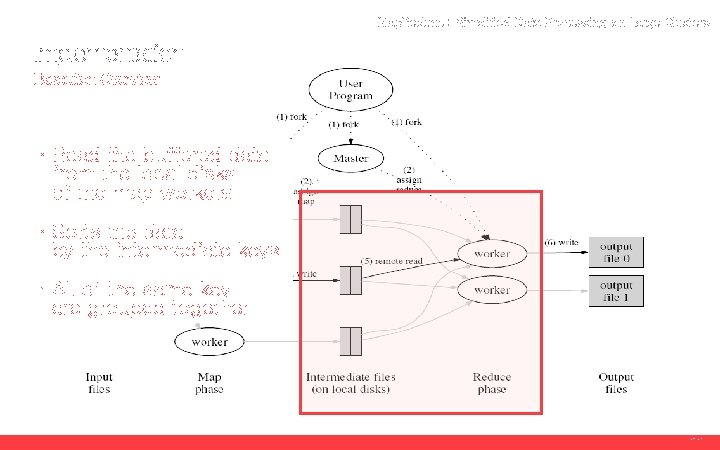

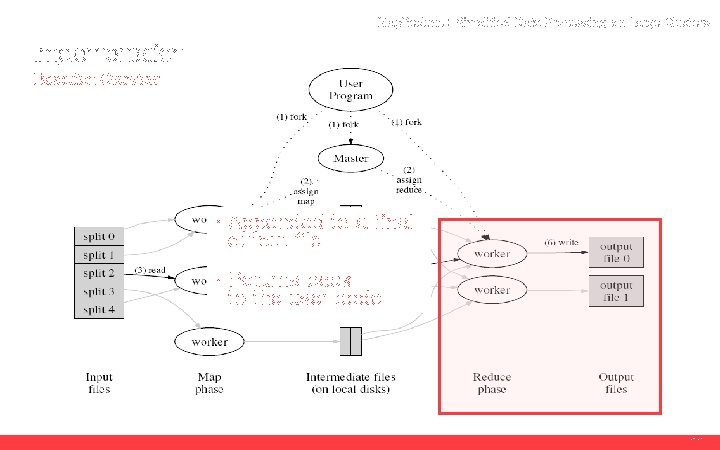

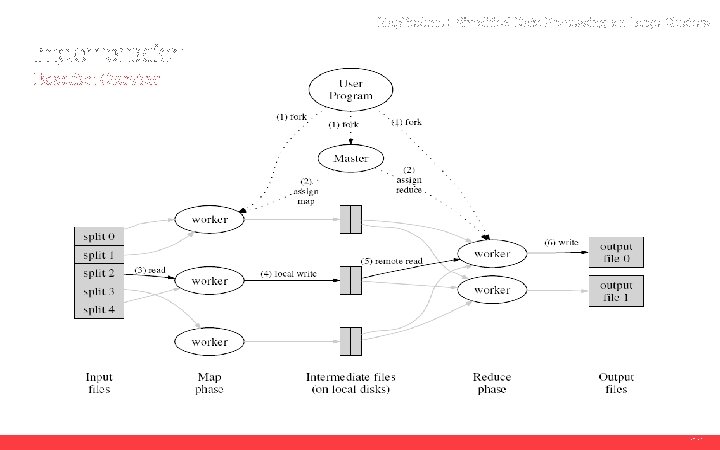

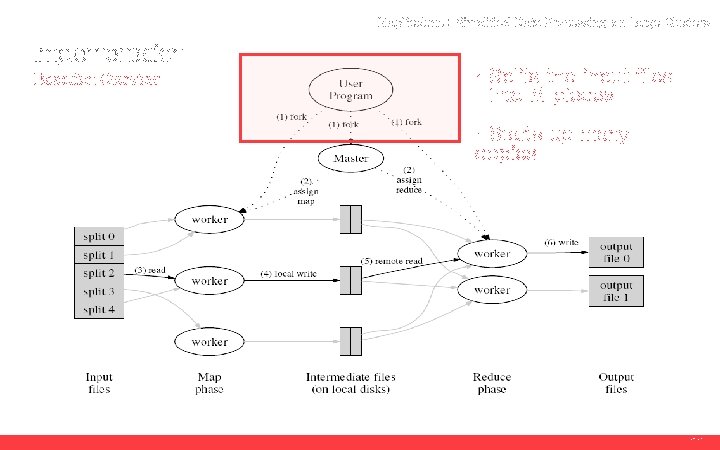

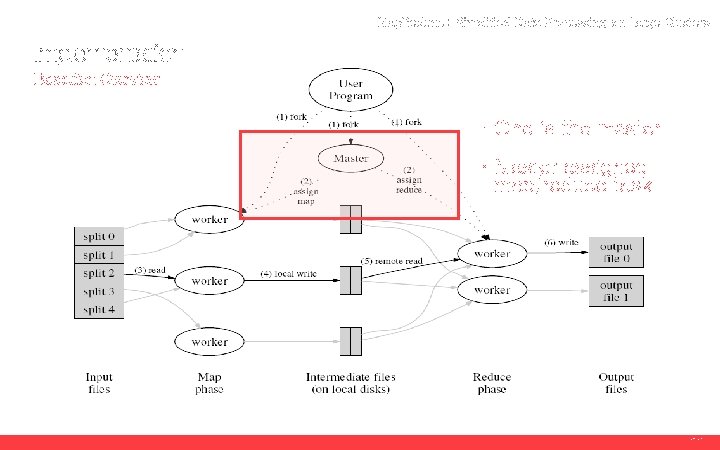

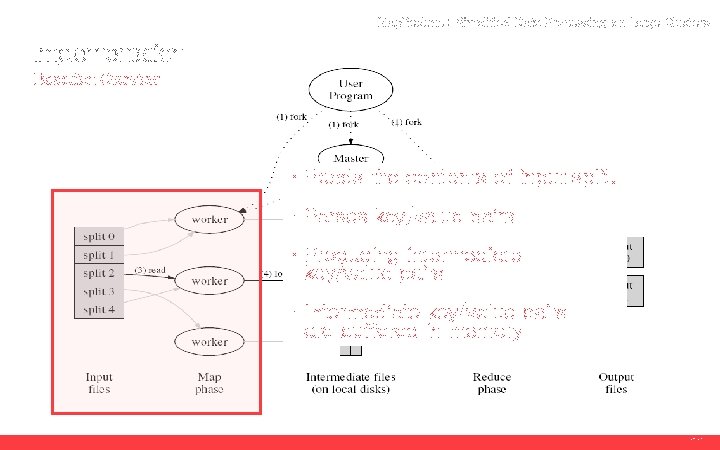

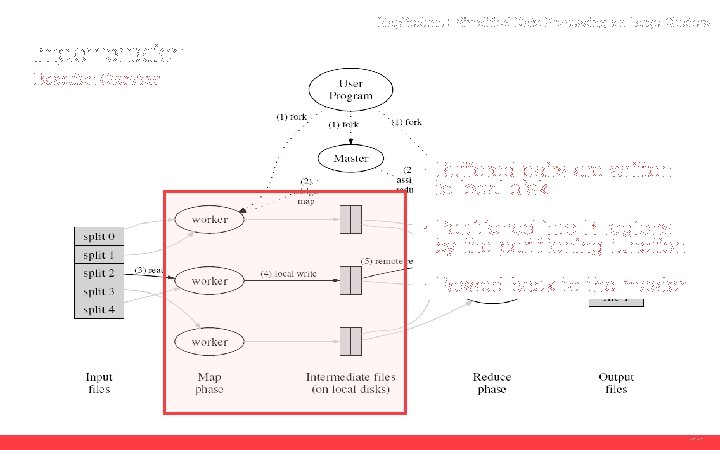

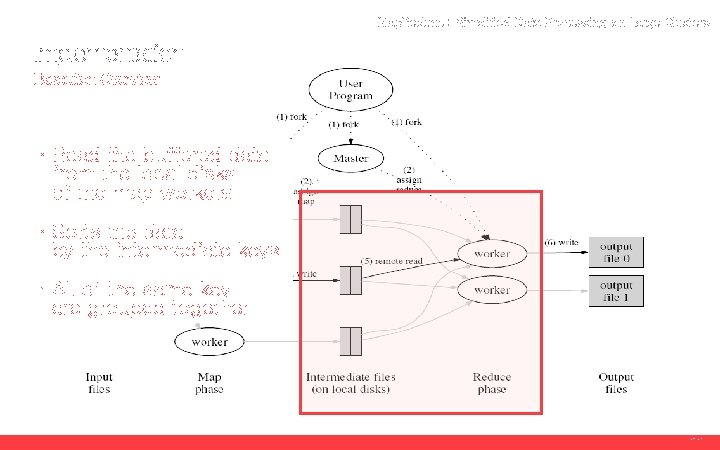

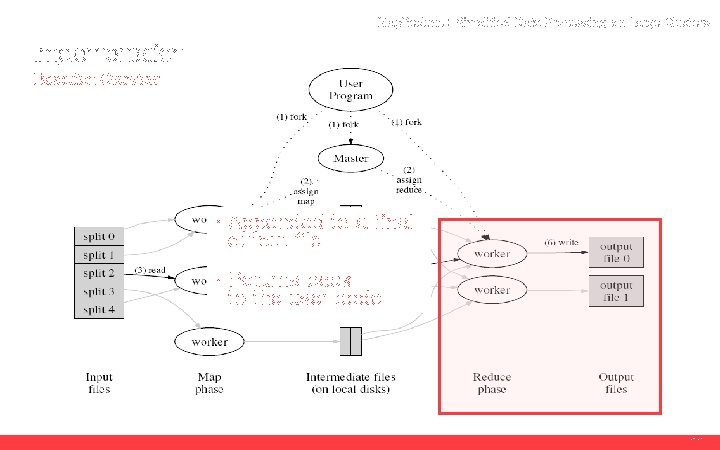

Implementation: Execution Overview

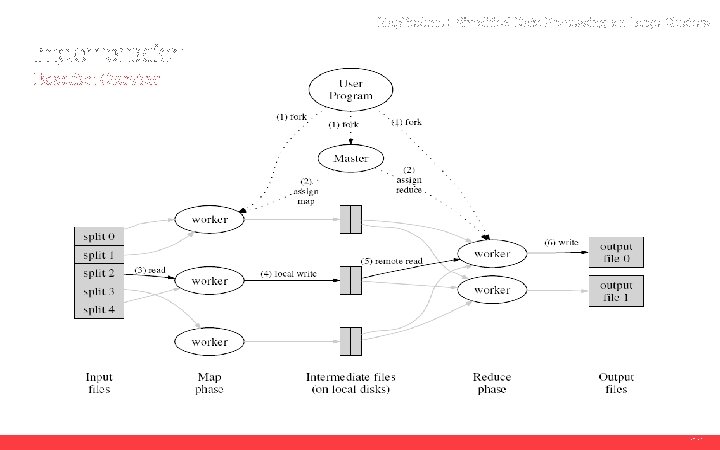

Execution Overview, step by step:

1. The MapReduce library splits the input files into M pieces and starts up many copies of the program on a cluster of machines.
2. One of the copies is the master. The master assigns map and reduce tasks to the remaining workers.
3. A map worker reads the contents of its input split, parses key/value pairs out of it, and passes them to the map function. The intermediate key/value pairs it produces are buffered in memory.
4. The buffered pairs are written to local disk, partitioned into R regions by the partitioning function. The locations of these pairs are passed back to the master.
5. A reduce worker reads the buffered data from the local disks of the map workers and sorts it by the intermediate keys, so that all occurrences of the same key are grouped together.
6. The reduce worker passes each key and its values to the reduce function. The output is appended to a final output file.
7. When all tasks have completed, control returns to the user code.
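The execution flow can be approximated in a single process. The sketch below is only an illustration under loud assumptions: `run_job` is a hypothetical name, there is no master or scheduling (step 2 is omitted), in-memory lists stand in for local disks and output files, and CRC32 stands in for the partitioning hash.

```python
import zlib
from itertools import groupby


def run_job(records, map_fn, reduce_fn, M=3, R=2):
    # Step 1: split the input into M pieces.
    splits = [records[i::M] for i in range(M)]

    # Steps 3-4: each map task partitions its intermediate pairs into R regions.
    regions = [[[] for _ in range(R)] for _ in splits]
    for m, split in enumerate(splits):
        for key, value in split:
            for ikey, ivalue in map_fn(key, value):
                r = zlib.crc32(ikey.encode()) % R
                regions[m][r].append((ikey, ivalue))

    # Steps 5-6: each reduce task reads its region from every map task,
    # sorts by key, groups equal keys, and appends to its own output.
    outputs = []
    for r in range(R):
        pairs = sorted(p for m in range(len(splits)) for p in regions[m][r])
        out = [reduce_fn(k, [v for _, v in grp])
               for k, grp in groupby(pairs, key=lambda p: p[0])]
        outputs.append(out)
    return outputs  # Step 7: control returns to the user code.


docs = [("a", "x y x"), ("b", "y z"), ("c", "x")]
outputs = run_job(docs,
                  lambda name, text: ((w, 1) for w in text.split()),
                  lambda word, ones: (word, sum(ones)))
merged = dict(pair for out in outputs for pair in out)
```

Each of the R outputs is sorted by key, and a given key appears in exactly one output because the partition depends only on the key.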

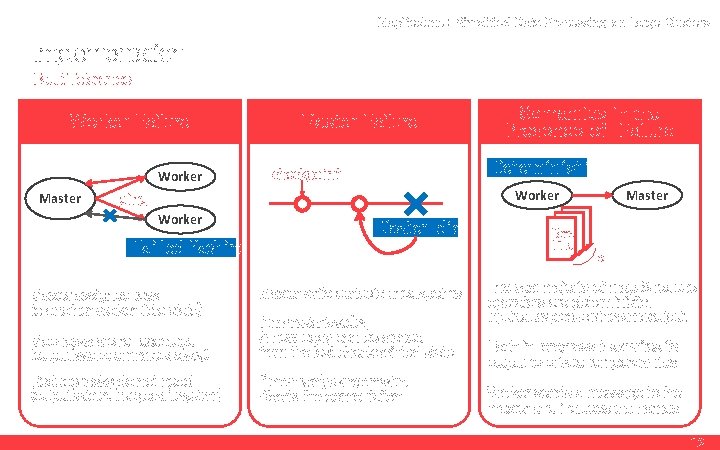

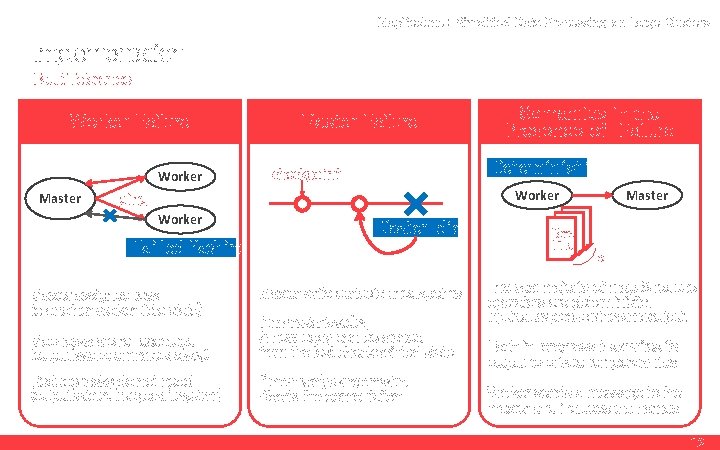

Implementation: Fault Tolerance

Worker Failure

- The master pings every worker periodically.
- If a worker fails, the master assigns its tasks to another worker (in the idle state).
- Map tasks on the failed machine are re-executed, because their output is stored on the local disk(s) of that machine.
- Completed reduce tasks do not need to be re-executed, because their output is stored in a global file system.

Master Failure

- The master writes periodic checkpoints.
- If the master task dies, a new copy can be started from the last checkpointed state.
- Since there is only a single master, the implementation aborts the computation if the master fails.

Semantics in the Presence of Failures

- The vast majority of map and reduce operators are deterministic: the same input values produce the same output.
- Each in-progress task writes its output to private temporary files.
- When a map task completes, the worker sends a message to the master that includes the names of the R temporary files.
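The worker-failure rules can be written down as a small piece of master-side bookkeeping. This is a sketch under assumptions: the `Task` record, its state names, and `handle_worker_failure` are hypothetical, and failure detection (the ping) is taken as given.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Task:
    kind: str                      # "map" or "reduce"
    state: str = "idle"            # "idle", "in_progress", or "completed"
    worker: Optional[str] = None


def handle_worker_failure(tasks: list, failed_worker: str) -> None:
    """Reset tasks of a failed worker so the master can reschedule them."""
    for task in tasks:
        if task.worker != failed_worker:
            continue
        lost_map_output = task.kind == "map" and task.state == "completed"
        if task.state == "in_progress" or lost_map_output:
            task.state, task.worker = "idle", None
        # Completed reduce tasks are left alone: their output is already
        # in the global file system.


tasks = [
    Task("map", "completed", "w1"),
    Task("map", "in_progress", "w2"),
    Task("reduce", "completed", "w1"),
    Task("reduce", "in_progress", "w1"),
]
handle_worker_failure(tasks, "w1")
```

After the call, the completed map task and the in-progress reduce task of `w1` are idle again, while the completed reduce task keeps its state.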

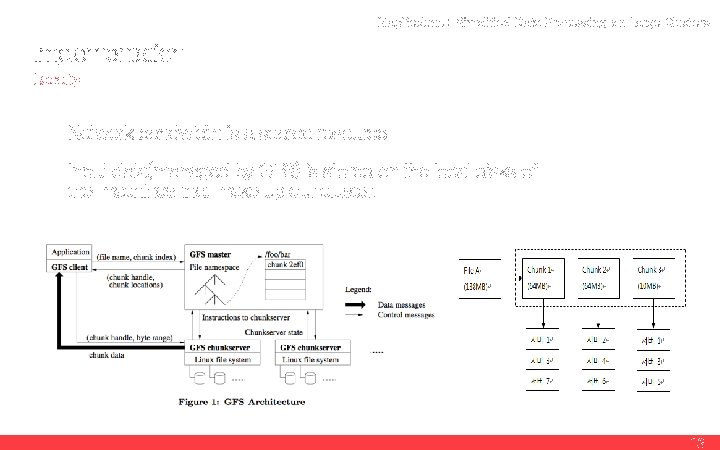

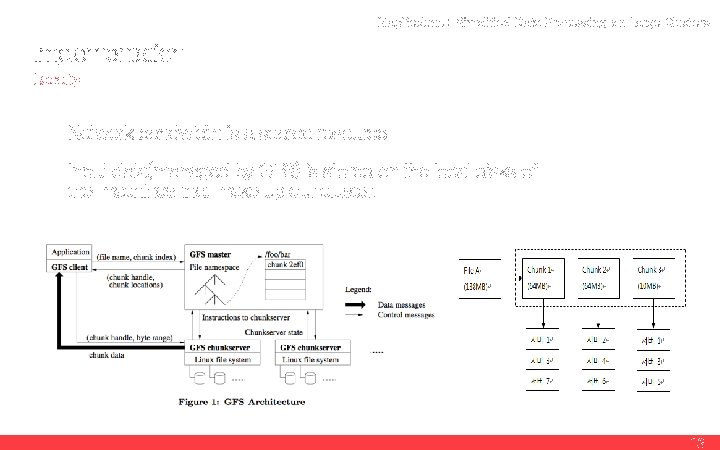

Implementation: Locality

- Network bandwidth is a scarce resource.
- Input data (managed by GFS) is stored on the local disks of the machines that make up the cluster.
- The master therefore tries to schedule each map task on a machine that holds a replica of its input data, so that most input is read locally.
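A locality-aware choice of worker might look like the sketch below. It is a simplification under assumptions: `pick_worker` and the host names are hypothetical, and only the "same machine" preference is modeled.

```python
from typing import Optional


def pick_worker(replica_hosts: set, idle_workers: list) -> Optional[str]:
    """Prefer an idle worker that already stores a replica of the input split."""
    for worker in idle_workers:
        if worker in replica_hosts:
            return worker  # local read, no network traffic for the input
    # Otherwise fall back to any idle worker, which has to read remotely.
    return idle_workers[0] if idle_workers else None


local = pick_worker({"host3", "host7"}, ["host1", "host7"])
remote = pick_worker({"host3"}, ["host1", "host2"])
```

In the first call a replica holder is idle and is chosen; in the second no replica holder is idle, so the task falls back to a remote read.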

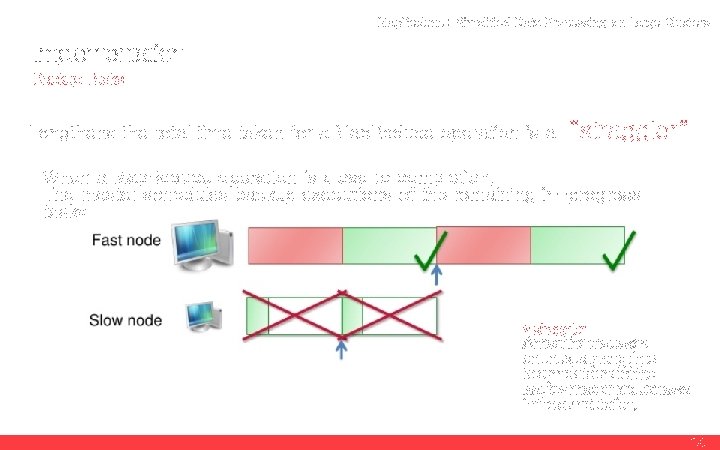

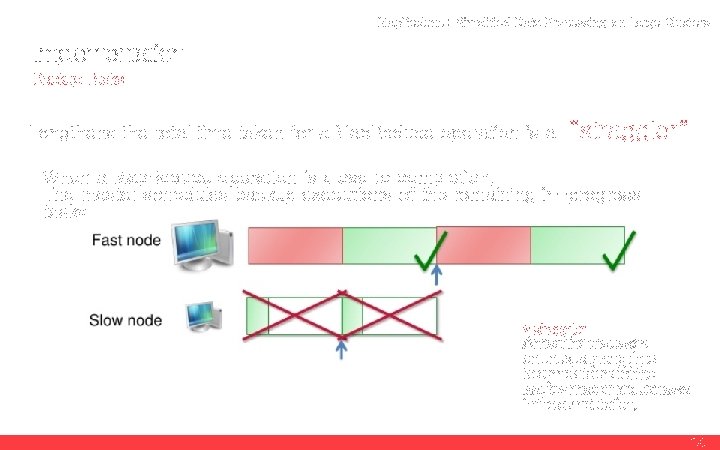

Implementation: Backup Tasks

- One of the common causes that lengthens the total time taken for a MapReduce operation is a "straggler".
- Straggler: a machine that takes an unusually long time to complete one of the last few map or reduce tasks in the computation.
- When a MapReduce operation is close to completion, the master schedules backup executions of the remaining in-progress tasks.
- A task is marked as completed as soon as either the primary or the backup execution completes.
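The effect of a backup execution on one task can be expressed as a one-line rule. The function name `completion_time` and all numbers below are hypothetical and only illustrate the idea.

```python
def completion_time(primary_finish: float, backup_start: float,
                    backup_duration: float) -> float:
    """A task is done when either the primary or the backup execution finishes."""
    return min(primary_finish, backup_start + backup_duration)


# Hypothetical numbers: a straggler would finish at t = 1200 s, but a backup
# copy started at t = 850 s needs only 40 s on a healthy machine.
with_backup = completion_time(1200.0, 850.0, 40.0)
without_backup = 1200.0
```

With these made-up numbers the backup copy finishes first, so the task no longer waits for the straggler.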

04. Refinements

- Combiner Function
- Skipping Bad Records
- Status Information
- Other Refinements

Refinements: Combiner Function

- In some cases, there is significant repetition in the intermediate keys produced by each map task, and the user-specified reduce function is commutative and associative.
- A combiner function does partial merging of this data before it is sent over the network.

Reduce vs. Combiner

- Similarity: the same code is used to implement both the combiner and the reduce functions.
- Difference: the output of a reduce function is written to the final output file, while the output of a combiner function is written to an intermediate file that will be sent to a reduce task.

Data flow: Mapper -> Combiner -> Reducer
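For word count, a combiner can be sketched as a map-side partial sum. The names `map_task` and `combine` are hypothetical; the point is only that fewer pairs have to cross the network.

```python
from collections import Counter


def map_task(text: str) -> list:
    """Emit <word, 1> for every word in this map task's input."""
    return [(word, 1) for word in text.split()]


def combine(pairs: list) -> list:
    """Partially merge pairs on the map side; same logic as the reducer."""
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    return sorted(totals.items())


raw = map_task("the cat and the hat and the bat")
combined = combine(raw)
```

The map task emits eight pairs, but only five leave the machine after combining. This works because addition is commutative and associative, so summing partial sums gives the same result as summing the original ones.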

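Refinements: Skipping Bad Records

- Problem: bugs in user code can make the map or reduce function crash on certain records. Such bugs prevent a MapReduce operation from completing.
- Solution: detect the records that cause crashes and skip them.

Sequence

1. Before invoking the user code on record N, the worker stores the sequence number N in a variable.
2. If the user code generates a signal, the worker's signal handler sends a UDP packet containing sequence number N to the master.
3. When the master has seen more than one failure on record N, it tells the worker to skip that record on the next re-execution.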
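The skip protocol can be imitated in one process. In this sketch a Python exception stands in for the signal, a shared `Counter` stands in for the UDP report to the master, and `run_task` and `run_with_skipping` are hypothetical names.

```python
from collections import Counter


def run_task(records, user_fn, skip, failures):
    """Run one task attempt; return results, or None if the attempt crashed."""
    results = []
    for seq, record in enumerate(records):
        if seq in skip:
            continue  # the master told this attempt to skip the record
        try:
            results.append(user_fn(record))
        except Exception:
            failures[seq] += 1  # stand-in for the UDP report to the master
            return None
    return results


def run_with_skipping(records, user_fn, max_attempts=5):
    failures, skip = Counter(), set()
    for _ in range(max_attempts):
        results = run_task(records, user_fn, skip, failures)
        if results is not None:
            return results, skip
        # Skip a record only after it has failed more than once.
        skip = {seq for seq, n in failures.items() if n > 1}
    raise RuntimeError("task did not complete")


results, skipped = run_with_skipping(["1", "2", "oops", "4"], int)
```

The record at sequence number 2 crashes `int` twice, is then skipped, and the third attempt completes with the remaining records.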

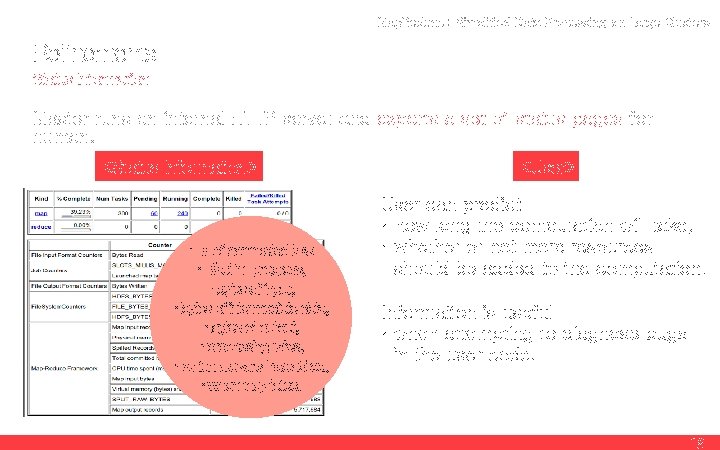

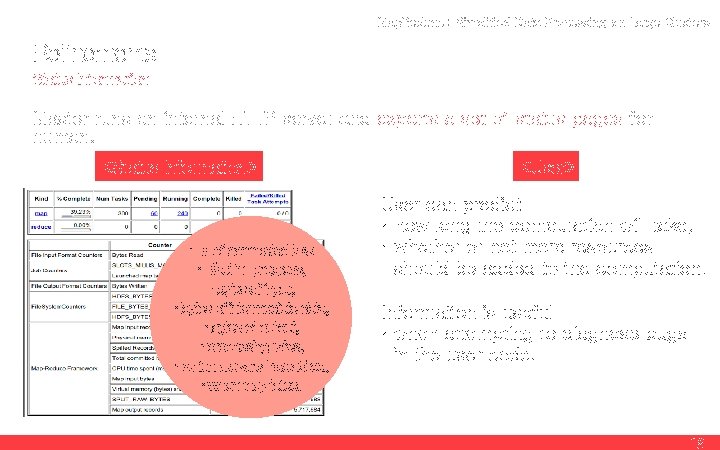

Refinements: Status Information

The master runs an internal HTTP server and exports a set of status pages for human consumption.

Status information

- number of completed tasks
- number of in-progress tasks
- bytes of input
- bytes of intermediate data
- bytes of output
- processing rates
- which workers have failed, and when they failed

With this information the user can

- predict how long the computation will take
- decide whether or not more resources should be added to the computation
- diagnose bugs in the user code

Refinements: Other

Partitioning Function

- Default: hash(key) mod R
- Custom: hash(Hostname(urlkey)) mod R, so that all URLs from the same host end up in the same output file

Ordering Guarantees

- Within a given partition, intermediate key/value pairs are processed in increasing key order.

Counters

- The MapReduce library provides a counter facility to count occurrences of various events.

Input and Output Types

- Each input type implementation knows how to split itself into meaningful ranges.
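The two partitioning functions can be sketched as follows. CRC32 is used here only as a stable stand-in hash (an assumption, not the hash the library uses), and `default_partition`, `host_partition`, and the example URLs are hypothetical.

```python
import zlib
from urllib.parse import urlparse


def default_partition(key: str, R: int) -> int:
    """hash(key) mod R, using CRC32 as a stable stand-in hash."""
    return zlib.crc32(key.encode("utf-8")) % R


def host_partition(url_key: str, R: int) -> int:
    """hash(Hostname(urlkey)) mod R: same host, same partition."""
    hostname = urlparse(url_key).hostname or ""
    return default_partition(hostname, R)


R = 8
p1 = host_partition("http://example.com/a", R)
p2 = host_partition("http://example.com/b?x=1", R)
```

Both URLs share a hostname, so they land in the same partition regardless of path or query string.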

05. Performance

- Grep
- Sort: normal execution
- Effect of Backup Tasks
- Machine Failures

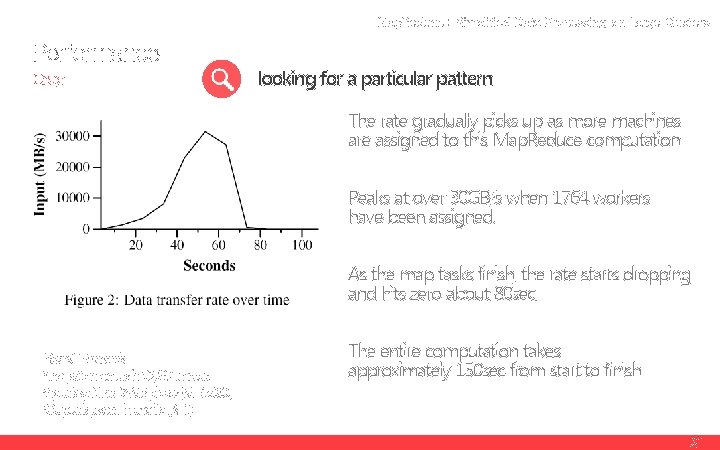

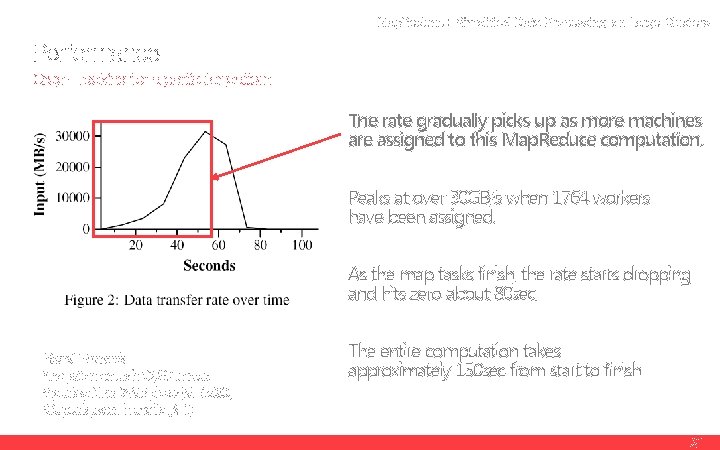

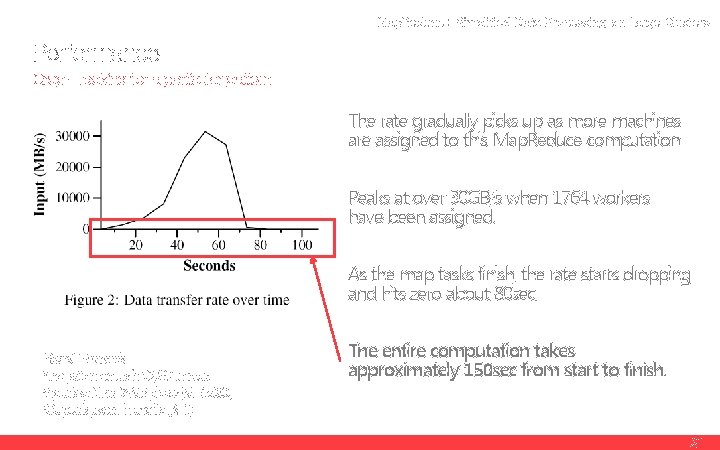

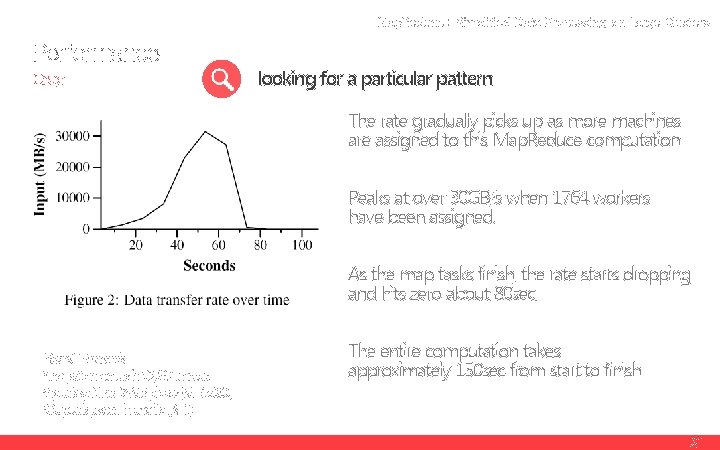

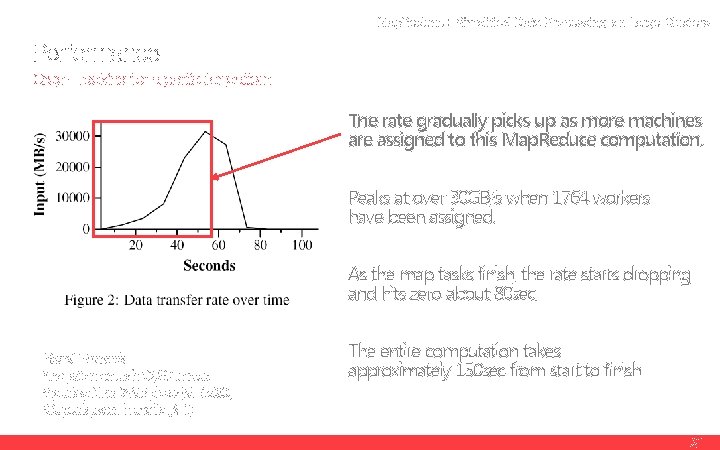

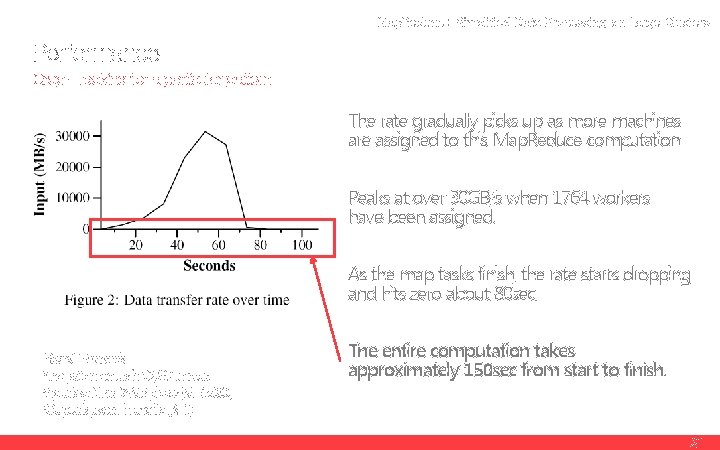

Performance: Grep (looking for a particular pattern)

Setup

- Scans about 1 TB of records
- The pattern occurs in 92,337 records
- Input is split into 64 MB pieces (M = 15000)
- Output is placed in one file (R = 1)

Results

- The rate gradually picks up as more machines are assigned to this MapReduce computation.
- It peaks at over 30 GB/s when 1764 workers have been assigned.
- As the map tasks finish, the rate starts dropping and hits zero at about 80 sec.
- The entire computation takes approximately 150 sec from start to finish, including about a minute of startup overhead.
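A quick back-of-the-envelope check of the grep figures, as a sketch. The unit conventions are assumptions: 1 TB is taken as 10^12 bytes, 1 GB as 10^9 bytes, and 64 MB as 64 x 2^20 bytes.

```python
INPUT_BYTES = 10**12          # about 1 TB of input (assumed decimal)
SPLIT_BYTES = 64 * 2**20      # 64 MB per input split (assumed binary)
PEAK_RATE = 30 * 10**9        # 30 GB/s peak scan rate
WORKERS = 1764

num_splits = INPUT_BYTES / SPLIT_BYTES          # compare with M = 15000
per_worker_mb_s = PEAK_RATE / WORKERS / 10**6   # MB/s per worker at peak
min_scan_seconds = INPUT_BYTES / PEAK_RATE      # lower bound at peak rate
```

Under these assumptions the split count comes out just under 15000, each worker reads roughly 17 MB/s at the peak, and scanning the input at the peak rate alone would take a little over 30 seconds, which fits an input phase that ends at about 80 sec after a gradual ramp-up.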


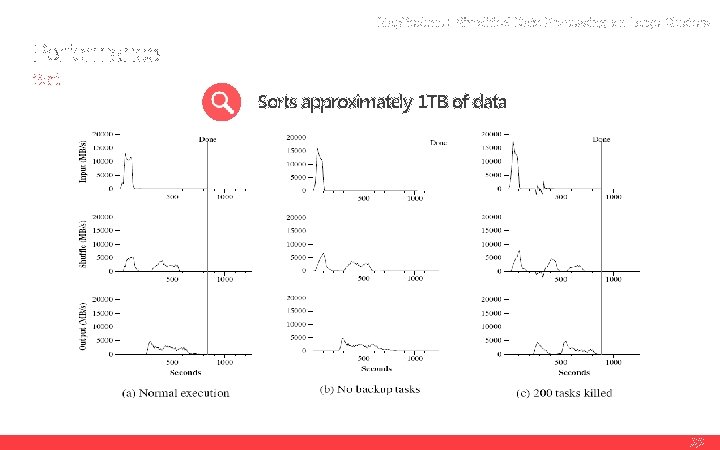

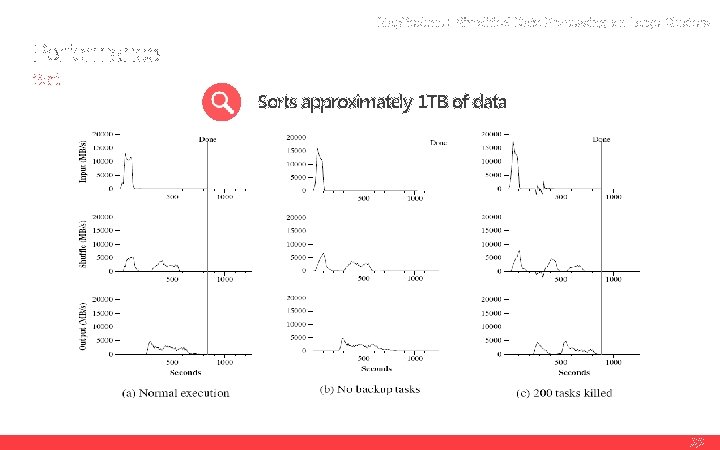

Performance: Sort (sorts approximately 1 TB of data)

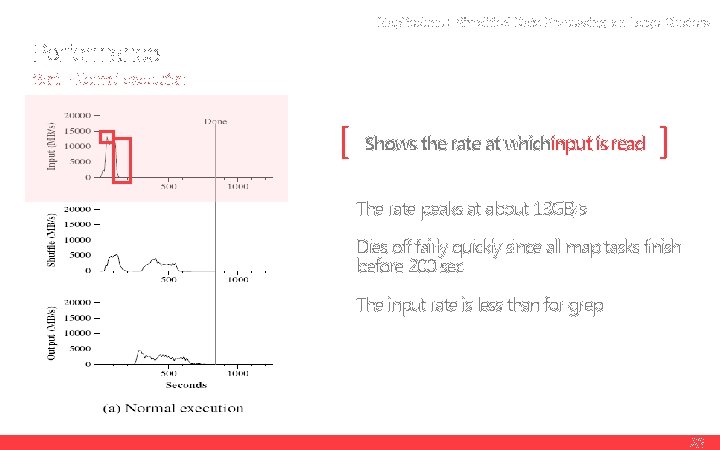

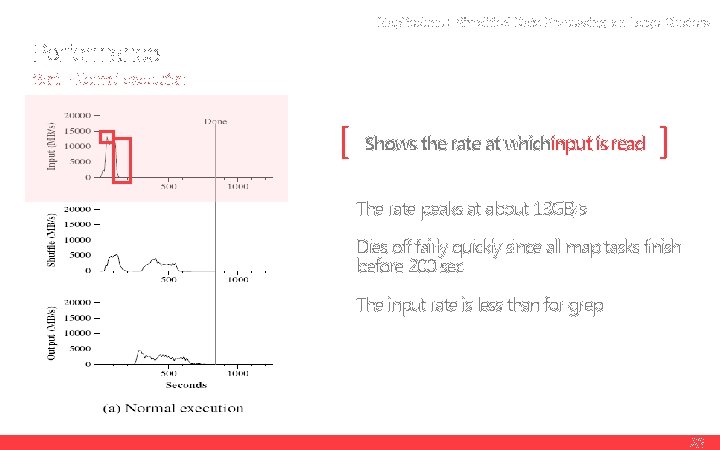

Performance: Sort, normal execution [rate at which input is read]

- The rate peaks at about 13 GB/s.
- It dies off fairly quickly, since all map tasks finish before 200 sec.
- The input rate is lower than for grep, because the sort map tasks also have to write intermediate output to their local disks.

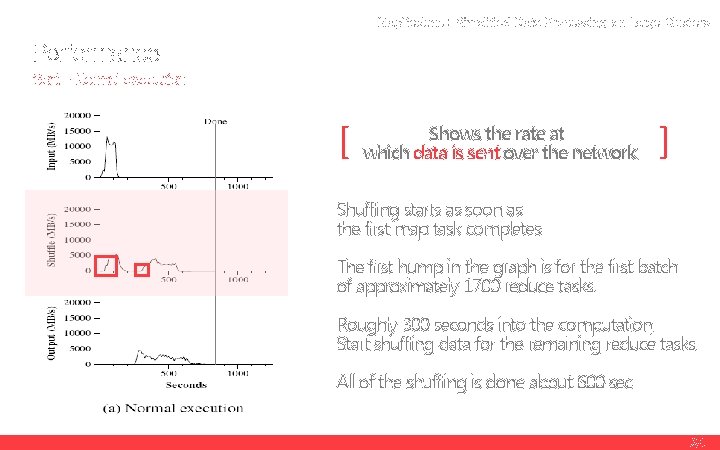

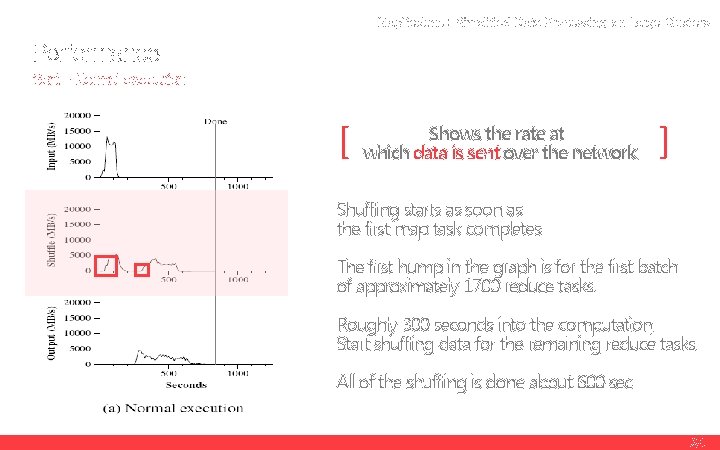

Performance: Sort, normal execution [rate at which data is sent over the network]

- Shuffling starts as soon as the first map task completes.
- The first hump in the graph is for the first batch of approximately 1700 reduce tasks.
- Roughly 300 seconds into the computation, some of the first batch of reduce tasks finish, and shuffling starts for the remaining reduce tasks.
- All of the shuffling is done at about 600 sec.

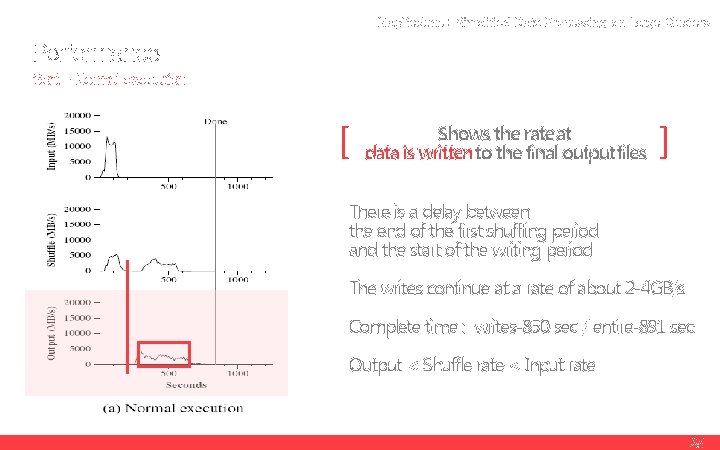

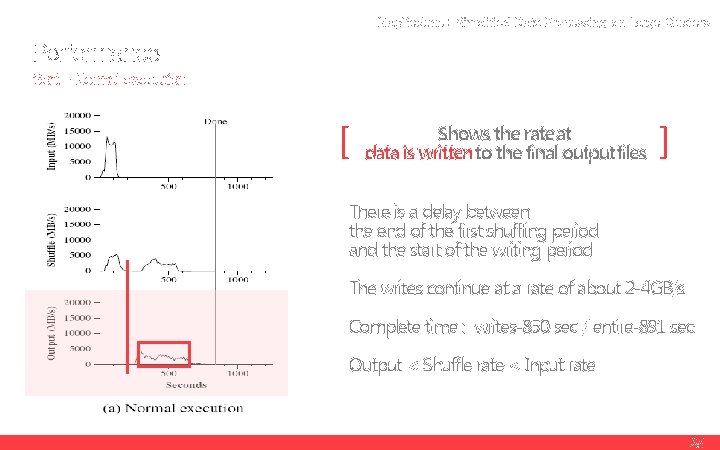

Performance: Sort, normal execution [rate at which data is written to the final output files]

- There is a delay between the end of the first shuffling period and the start of the writing period.
- The writes continue at a rate of about 2-4 GB/s.
- Completion time: the writes finish at about 850 sec, and the entire computation takes 891 sec.
- Output rate < shuffle rate < input rate

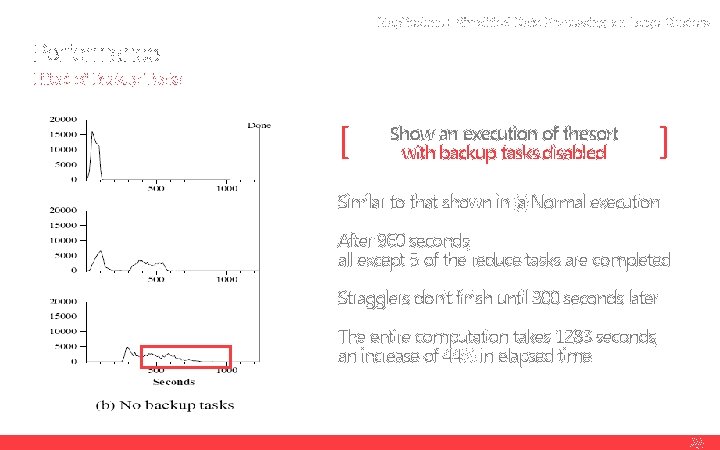

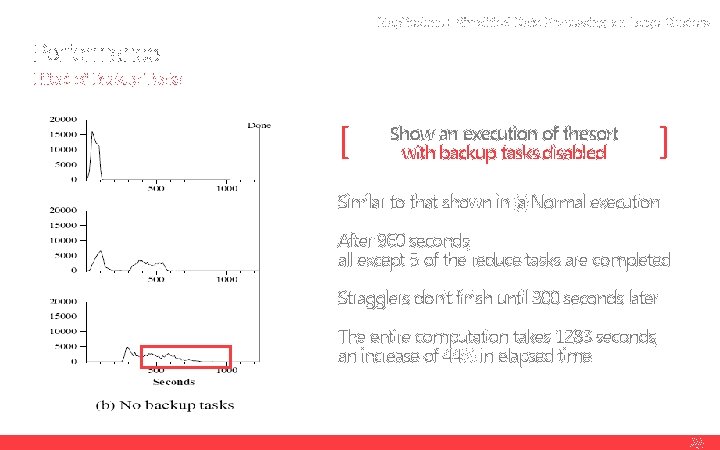

Performance: Effect of Backup Tasks [execution of the sort with backup tasks disabled]

- The execution flow is similar to that shown in (a) Normal execution.
- After 960 seconds, all except 5 of the reduce tasks are completed.
- The stragglers do not finish until 300 seconds later.
- The entire computation takes 1283 seconds, an increase of 44% in elapsed time.

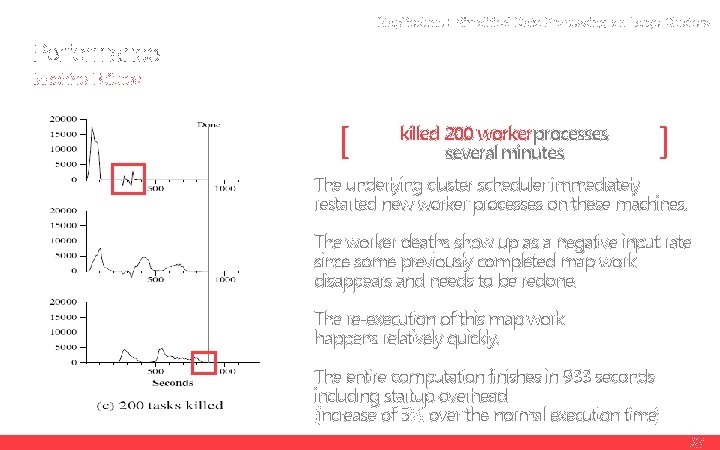

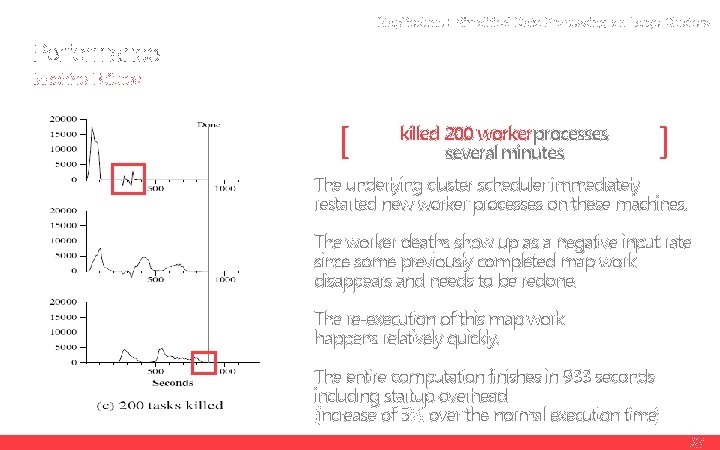

Performance: Machine Failures [200 worker processes were killed several minutes into the computation]

- The underlying cluster scheduler immediately restarted new worker processes on these machines.
- The worker deaths show up as a negative input rate, since some previously completed map work disappears and needs to be redone.
- The re-execution of this map work happens relatively quickly.
- The entire computation finishes in 933 seconds including startup overhead, an increase of 5% over the normal execution time.
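The two percentages quoted for the sort experiments can be checked against the elapsed times given in this section. The sketch below only redoes that arithmetic; `percent_increase` is a hypothetical helper.

```python
NORMAL = 891          # sort, normal execution (sec)
NO_BACKUP = 1283      # sort with backup tasks disabled (sec)
WITH_FAILURES = 933   # sort with 200 worker processes killed (sec)


def percent_increase(elapsed: float, baseline: float) -> float:
    """Relative increase of elapsed over baseline, in percent."""
    return (elapsed - baseline) / baseline * 100


no_backup_pct = percent_increase(NO_BACKUP, NORMAL)
failures_pct = percent_increase(WITH_FAILURES, NORMAL)
```

Rounded to whole percent, the results match the 44% and 5% figures on the slides.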

06. Conclusions

- Large-Scale Indexing
- Reasons for success
- References

Experience: Large-Scale Indexing

MapReduce was used to rewrite the production indexing system that produces the data structures used for the Google web search service.

- Input: a large set of documents retrieved by the crawling system, stored as a set of GFS files
- The documents amount to more than 20 TB of data
- The indexing process runs as a sequence of 5 to 10 MapReduce operations

Benefits

- The indexing code is simpler, smaller, and easier to understand.
- Conceptually unrelated computations can be kept separate, which makes it easy to change the indexing process.
- The indexing process has become much easier to operate, because most of the problems are dealt with automatically by the MapReduce library.

Conclusions: Reasons for success

- The model is easy to use: it hides the details of parallelization, fault tolerance, locality optimization, and load balancing.
- A large variety of problems are easily expressible: MapReduce is used for the generation of data for Google's production web search service, for sorting, for data mining, for machine learning, and for many other systems.
- The implementation scales to large clusters of machines: it makes efficient use of these machine resources and is therefore suitable for many of the large computational problems encountered at Google.


Reference documents

- [1] Jeffrey Dean and Sanjay Ghemawat, MapReduce: Simplified Data Processing on Large Clusters, 2004
- [2] Sanjay Ghemawat, Howard Gobioff, and Shun-Tak Leung, The Google File System, 2003
- [3] Matei Zaharia, Andy Konwinski, Anthony D. Joseph, Randy Katz, and Ion Stoica, Improving MapReduce Performance in Heterogeneous Environments
- [4] terms.naver.com
- [5] www.wikipedia.org
- [6] etc.

Thank You