MapReduce: What Is Hadoop?

- Slides: 23

MapReduce

What Is Hadoop?

- The Apache Hadoop project develops open-source software for reliable, scalable, distributed computing.
- In a nutshell, Hadoop provides a reliable shared storage and analysis system:
  - Storage is provided by HDFS.
  - Analysis is provided by MapReduce.

What is MapReduce?

- MapReduce is a software framework introduced (and patented) by Google to support distributed computing on large data sets on clusters of computers.
- Hadoop MapReduce, an open-source implementation of it, is a sub-project of the Apache Hadoop project.
- MapReduce is both a programming model and an associated implementation for processing and generating big data sets with a parallel, distributed algorithm on a cluster.

MapReduce serves two essential functions:

- It filters and parcels out work to various nodes within the cluster (the map). This function is sometimes referred to as the mapper.
- It organizes and reduces the results from each node into a cohesive answer to a query. This function is referred to as the reducer.

How MapReduce works

The original version of MapReduce involved several component daemons, including:

- JobTracker: the master node that manages all the jobs and resources in a cluster
- TaskTrackers: agents deployed to each machine in the cluster to run the map and reduce tasks
- JobHistory Server: a component that tracks completed jobs and is typically deployed as a separate function or with the JobTracker

MapReduce framework, per cluster node:

- A single JobTracker on the master
  - Schedules the jobs' component tasks on the slaves
  - Monitors slave progress
  - Re-executes failed tasks
- A single TaskTracker per slave
  - Executes the tasks as directed by the master

MapReduce core functionality

- Code is usually written in Java, though it can be written in other languages with the Hadoop Streaming API.
- Two fundamental pieces:
  - Map step
    - The master node takes the large problem input, slices it into smaller sub-problems, and distributes these to worker nodes.
    - A worker node may do this again, which leads to a multi-level tree structure.
    - Each worker processes its smaller problem and hands the result back to the master.
  - Reduce step
    - The master node takes the answers to the sub-problems and combines them in a predefined way to get the output (the answer to the original problem).

MapReduce core functionality (II)

Data flow beyond the two key pieces (map and reduce):

- Input reader: divides the input into appropriately sized splits, each of which is assigned to a Map function
- Map function: maps file data to smaller, intermediate <key, value> pairs
- Partition function: finds the correct reducer; given the key and the number of reducers, it returns the desired Reduce node
- Compare function: the input for Reduce is pulled from the Map intermediate output and sorted according to this compare function
- Reduce function: takes intermediate values and reduces them to a smaller solution that is handed back to the framework
- Output writer: writes the file output
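As an illustration of the partition function, here is a minimal plain-Java sketch that runs without Hadoop. The class name `PartitionSketch` and the reducer count of 3 are assumptions made for this example. The arithmetic follows the same idea as Hadoop's default hash partitioner: clear the sign bit of the key's hash code, then take the remainder by the number of reducers.

```java
// Plain-Java sketch of a hash-based partition function (no Hadoop dependency).
// PartitionSketch is a hypothetical name used only for this illustration.
class PartitionSketch {

    // Given a key and the number of reducers, return the index of the
    // reducer that should receive every pair with this key.
    static int partition(String key, int numReducers) {
        // Clearing the sign bit keeps the result non-negative even when
        // hashCode() is negative.
        return (key.hashCode() & Integer.MAX_VALUE) % numReducers;
    }

    public static void main(String[] args) {
        int numReducers = 3; // assumed value for illustration
        for (String key : new String[] {"hello", "world", "moon"}) {
            System.out.println(key + " -> reducer " + partition(key, numReducers));
        }
    }
}
```

Because the result depends only on the key, every pair with the same key lands on the same reducer, which is what lets a reducer see all values for that key.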

MapReduce core functionality (III)

- A MapReduce job controls the execution:
  - It splits the input dataset into independent chunks.
  - The chunks are processed by the map tasks in parallel.
- The framework sorts the outputs of the maps.
- The sorted map outputs are then passed as input to the reduce tasks.
- Both the input and the output of the job are stored in a file system.
- The framework handles scheduling, monitors tasks, and re-executes failed tasks.

MapReduce input and output

- MapReduce operates exclusively on <key, value> pairs.
- Job input: <key, value> pairs
- Job output: <key, value> pairs, conceivably of different types
- Key and value classes have to be serializable by the framework.
  - Default serialization requires keys and values to implement Writable.
  - Key classes must also implement WritableComparable to facilitate sorting by the framework.

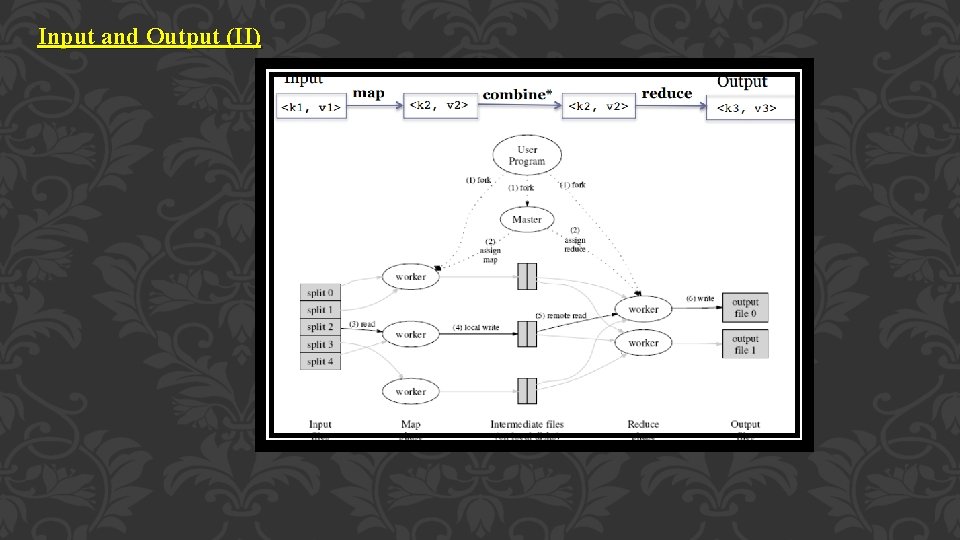

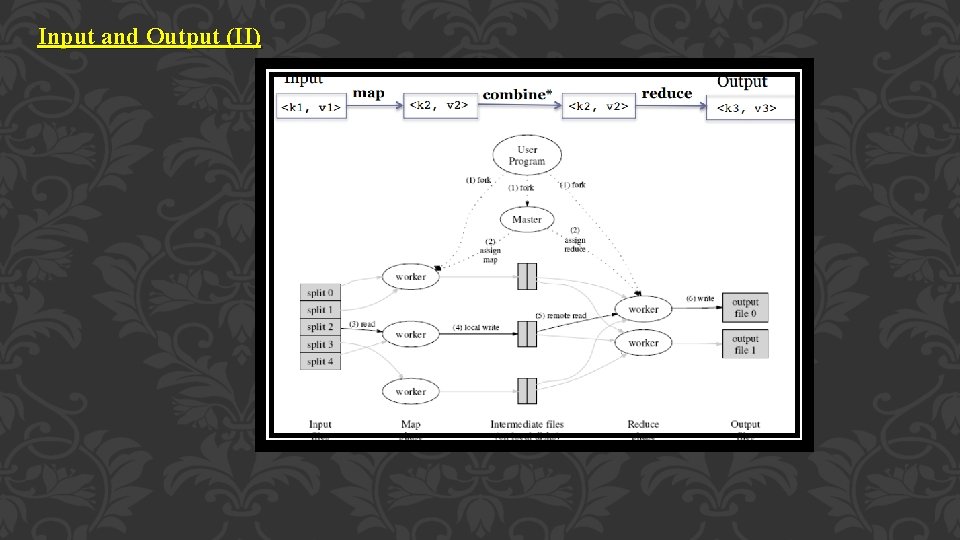

Input and Output (II)

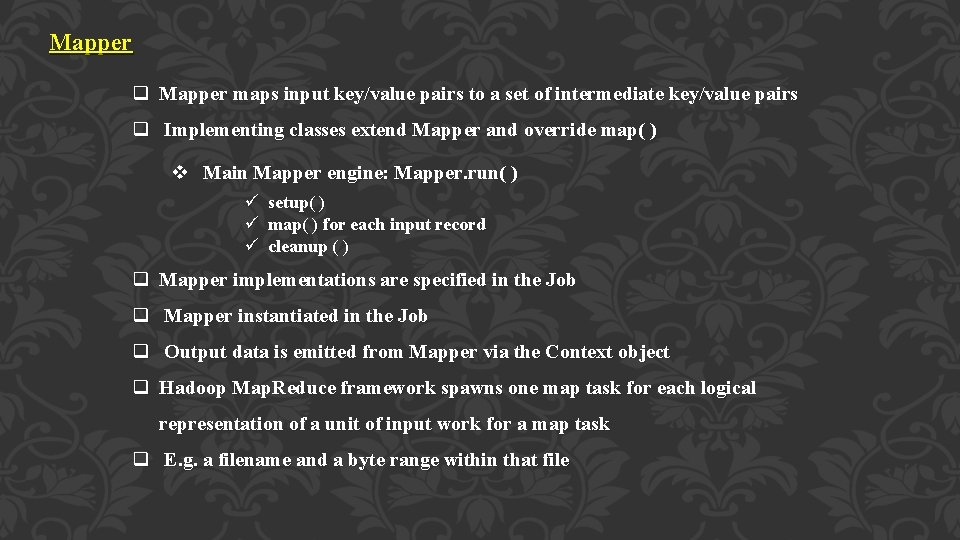

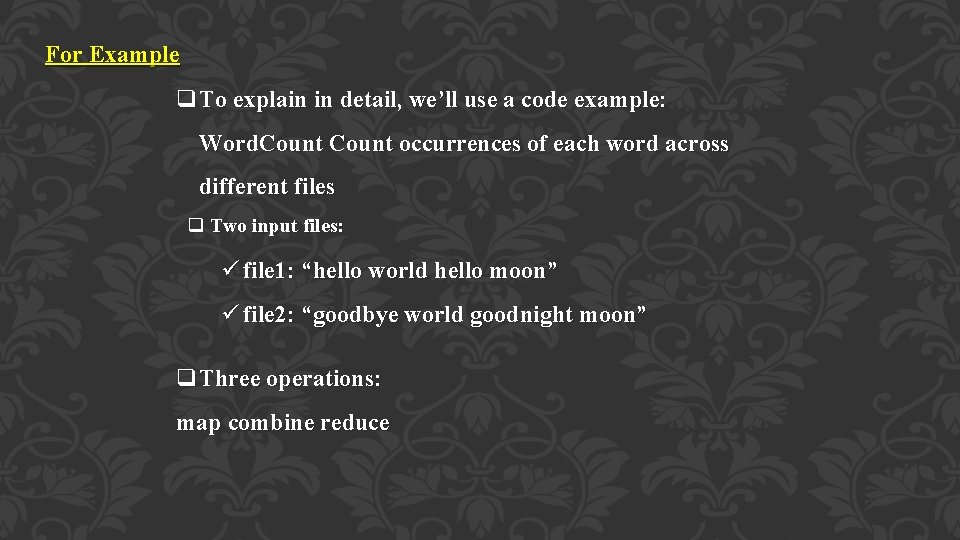

For example

- To explain in detail, we'll use a code example, WordCount: count the occurrences of each word across different files.
- Two input files:
  - file 1: "hello world hello moon"
  - file 2: "goodbye world goodnight moon"
- Three operations: map, combine, reduce

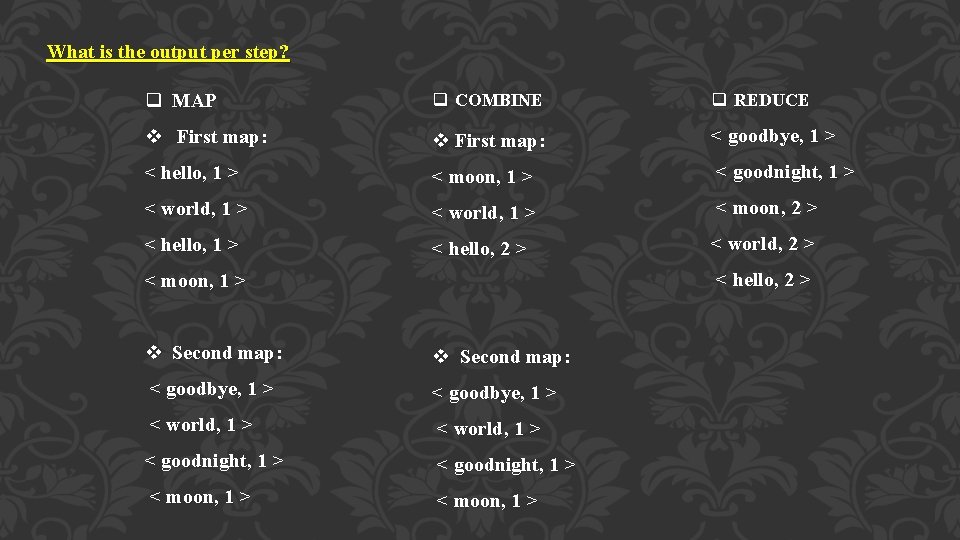

What is the output per step?

- MAP
  - First map: <hello, 1> <world, 1> <hello, 1> <moon, 1>
  - Second map: <goodbye, 1> <world, 1> <goodnight, 1> <moon, 1>
- COMBINE
  - First map: <moon, 1> <world, 1> <hello, 2>
  - Second map: <goodbye, 1> <world, 1> <goodnight, 1> <moon, 1>
- REDUCE
  - <goodbye, 1> <goodnight, 1> <moon, 2> <world, 2> <hello, 2>
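The three steps can be traced with a small plain-Java simulation that runs without Hadoop. This is only a sketch under stated assumptions: the class `WordCountSketch` and its method names are hypothetical, each input file is modeled as a string, and a sorted in-memory map stands in for the framework's shuffle and sort.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// In-memory simulation of map -> combine -> reduce for word counting.
// WordCountSketch is a hypothetical name; no Hadoop classes are used.
class WordCountSketch {

    // Map step: emit a <word, 1> pair for every token of one input file.
    static List<Map.Entry<String, Integer>> map(String fileContents) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : fileContents.trim().split("\\s+")) {
            if (!word.isEmpty()) {
                pairs.add(Map.entry(word, 1));
            }
        }
        return pairs;
    }

    // Combine and reduce do the same work here: sum the values per key.
    // The TreeMap keeps keys sorted, standing in for the framework's sort.
    static Map<String, Integer> sumByKey(List<Map.Entry<String, Integer>> pairs) {
        Map<String, Integer> sums = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            sums.merge(pair.getKey(), pair.getValue(), Integer::sum);
        }
        return sums;
    }

    // Full job: map each file, combine locally per file, then reduce globally.
    static Map<String, Integer> wordCount(List<String> files) {
        List<Map.Entry<String, Integer>> combined = new ArrayList<>();
        for (String file : files) {
            combined.addAll(sumByKey(map(file)).entrySet());
        }
        return sumByKey(combined);
    }

    public static void main(String[] args) {
        List<String> files = List.of(
                "hello world hello moon",
                "goodbye world goodnight moon");
        // Prints {goodbye=1, goodnight=1, hello=2, moon=2, world=2}
        System.out.println(wordCount(files));
    }
}
```

Running the combine step on each file before the global reduce shrinks the first file's four pairs to three, which is the saving a combiner gives on a real cluster.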

![Main run method: the engine](https://slidetodoc.com/presentation_image/5a748971b9f06931b6249d012bdf8d43/image-14.jpg)

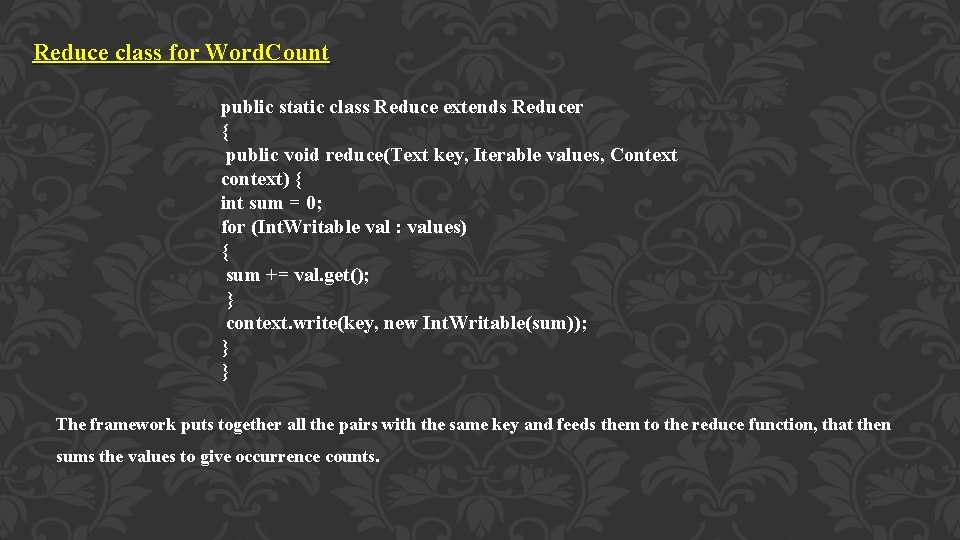

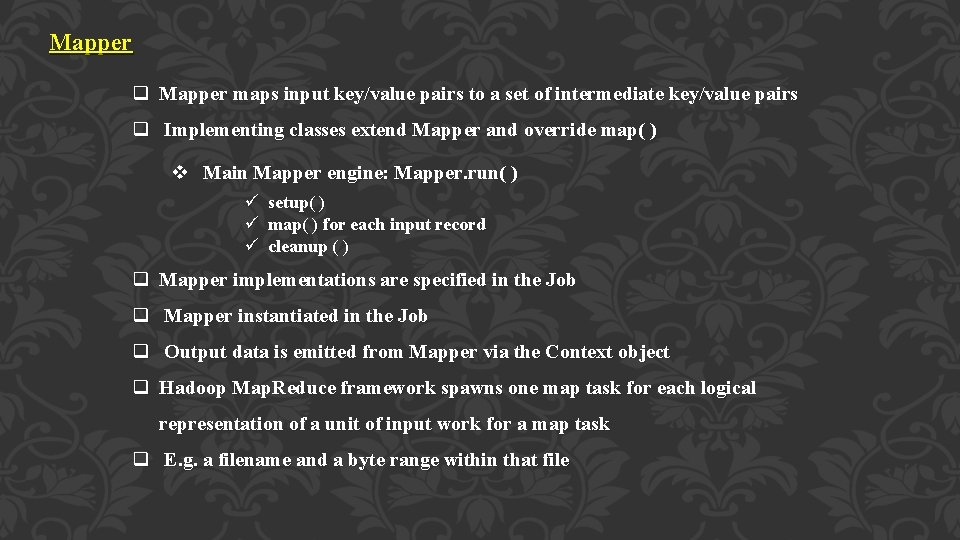

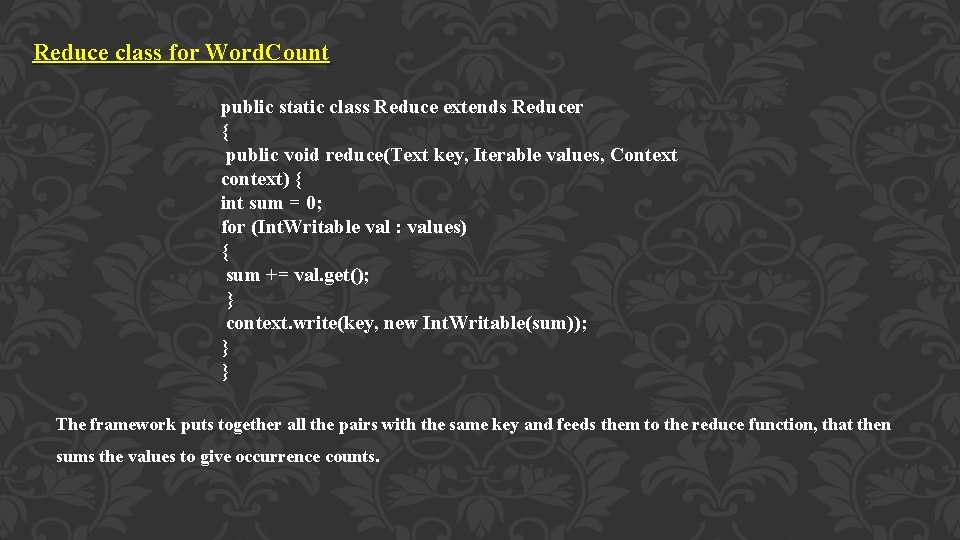

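Main run method: the engine

    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

    // Configures the WordCount job, submits it, and waits for it to finish.
    public int run(String[] args) throws Exception {
        Job job = Job.getInstance(getConf()); // new Job(conf) is deprecated
        job.setJarByClass(WordCount.class);
        job.setJobName("wordcount");
        // Types of the <key, value> pairs the job emits
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        // The reducer also serves as the combiner
        job.setMapperClass(Map.class);
        job.setCombinerClass(Reduce.class);
        job.setReducerClass(Reduce.class);
        job.setInputFormatClass(TextInputFormat.class);
        job.setOutputFormatClass(TextOutputFormat.class);
        // args[0] is the input path, args[1] is the output path
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));
        boolean success = job.waitForCompletion(true);
        return success ? 0 : 1;
    }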

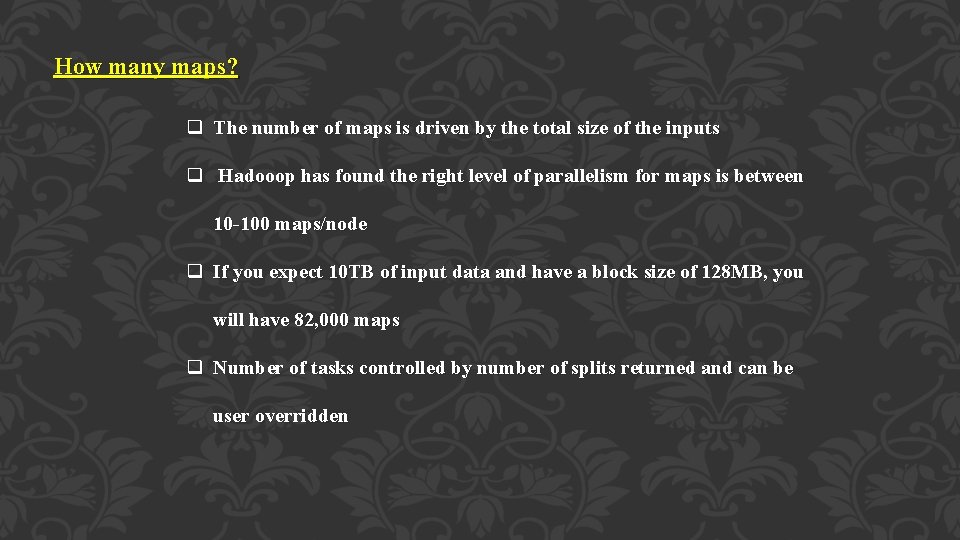

Mapper

- A Mapper maps input key/value pairs to a set of intermediate key/value pairs.
- Implementing classes extend Mapper and override map().
  - Main Mapper engine: Mapper.run()
    - setup()
    - map() for each input record
    - cleanup()
- Mapper implementations are specified in the Job.
- The Mapper is instantiated in the Job.
- Output data is emitted from the Mapper via the Context object.
- The Hadoop MapReduce framework spawns one map task for each InputSplit, the logical representation of a unit of input work for a map task (e.g. a filename and a byte range within that file).
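The call order of the Mapper engine can be shown with a plain-Java stand-in. This sketch does not use Hadoop's `Mapper` class: `LifecycleSketch` and `UpperCaseMapper` are hypothetical names, records are plain strings, and a log list replaces the Context object. It only demonstrates that setup runs once, map runs once per record, and cleanup runs once at the end.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a Mapper, showing only the lifecycle call order.
abstract class LifecycleSketch {
    // Records each lifecycle call so the order can be inspected afterwards.
    final List<String> log = new ArrayList<>();

    void setup() {
        log.add("setup");
    }

    // Subclasses supply the per-record logic, as with map() in a Mapper.
    abstract void map(String record);

    void cleanup() {
        log.add("cleanup");
    }

    // The engine: setup once, map once per input record, cleanup once.
    final void run(List<String> records) {
        setup();
        for (String record : records) {
            map(record);
        }
        cleanup();
    }
}

// Example subclass that overrides only map().
class UpperCaseMapper extends LifecycleSketch {
    @Override
    void map(String record) {
        log.add("map:" + record.toUpperCase());
    }
}
```

Calling `new UpperCaseMapper().run(List.of("a", "b"))` leaves the log as `[setup, map:A, map:B, cleanup]`.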

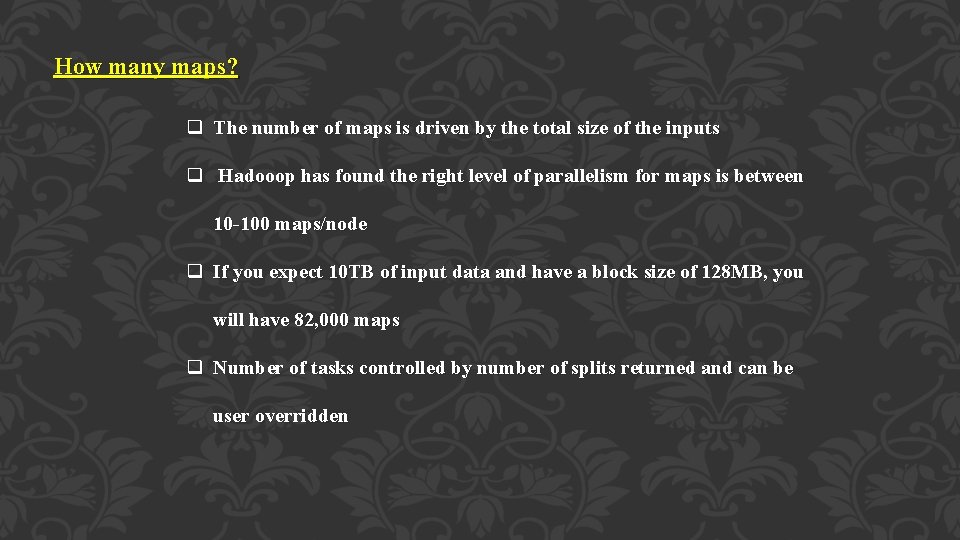

How many maps?

- The number of maps is driven by the total size of the inputs.
- The right level of parallelism for maps is typically between 10 and 100 maps per node.
- If you expect 10 TB of input data and have a block size of 128 MB, you will have about 82,000 maps.
- The number of map tasks is controlled by the number of splits returned, and the user can override it.
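The 82,000 figure is the input size divided by the block size. A minimal sketch of that arithmetic follows, assuming one map task per block-sized split and binary units (1 TB = 1024^4 bytes); `MapCountSketch` and `estimateMaps` are hypothetical names.

```java
// Estimates the number of map tasks as input size divided by block size.
// Assumes one split per block; real jobs may use a different split size.
class MapCountSketch {

    static long estimateMaps(long inputBytes, long blockBytes) {
        // Ceiling division: a partial final block still needs its own map.
        return (inputBytes + blockBytes - 1) / blockBytes;
    }

    public static void main(String[] args) {
        long tenTerabytes = 10L * 1024 * 1024 * 1024 * 1024;
        long blockSize = 128L * 1024 * 1024;
        // Prints 81920, which the slide rounds to 82,000
        System.out.println(estimateMaps(tenTerabytes, blockSize));
    }
}
```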

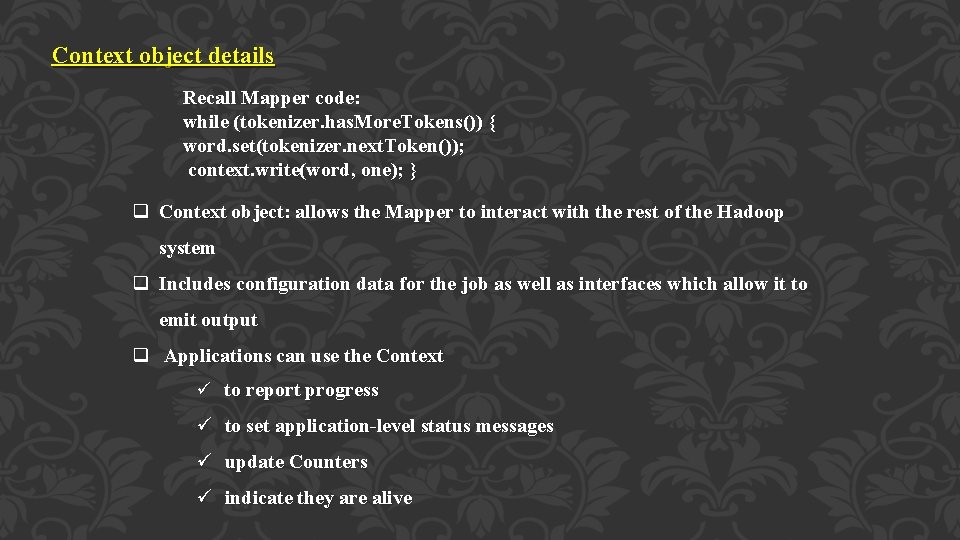

Context object details

Recall the Mapper code:

    while (tokenizer.hasMoreTokens()) {
        word.set(tokenizer.nextToken());
        context.write(word, one);
    }

- The Context object allows the Mapper to interact with the rest of the Hadoop system.
- It includes configuration data for the job as well as interfaces which allow it to emit output.
- Applications can use the Context to:
  - report progress
  - set application-level status messages
  - update Counters
  - indicate they are alive

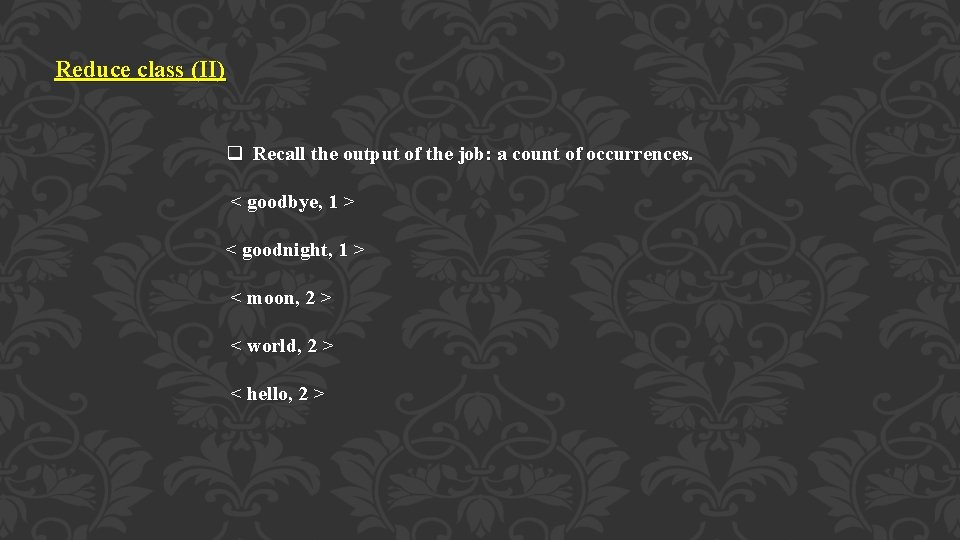

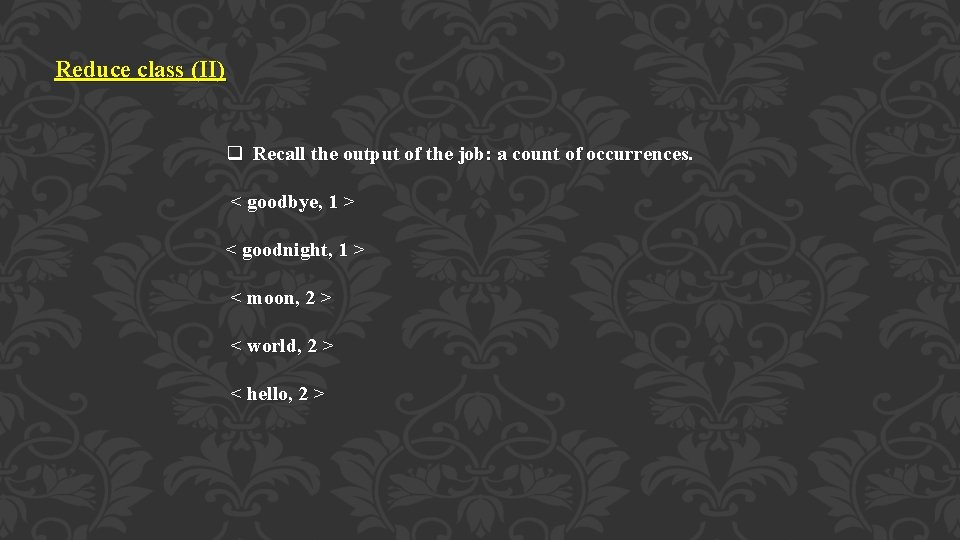

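Reduce class for WordCount

    import java.io.IOException;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public static class Reduce extends Reducer<Text, IntWritable, Text, IntWritable> {
        @Override
        public void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            // Sum all counts emitted for this word
            int sum = 0;
            for (IntWritable val : values) {
                sum += val.get();
            }
            context.write(key, new IntWritable(sum));
        }
    }

The framework groups all the pairs with the same key and feeds them to the reduce function, which then sums the values to give the occurrence counts.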

Reduce class (II)

Recall the output of the job, a count of occurrences:

- <goodbye, 1>
- <goodnight, 1>
- <moon, 2>
- <world, 2>
- <hello, 2>

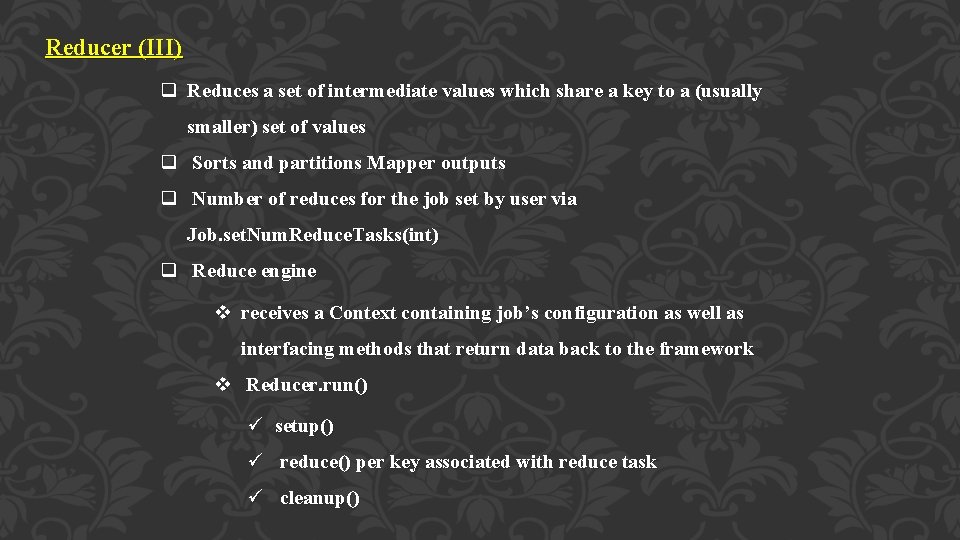

Reducer (III)

- Reduces a set of intermediate values which share a key to a (usually smaller) set of values
- Receives Mapper outputs that the framework has sorted and partitioned
- The number of reduces for the job is set by the user via Job.setNumReduceTasks(int)
- Reduce engine
  - Receives a Context containing the job's configuration as well as interfacing methods that return data back to the framework
  - Reducer.run()
    - setup()
    - reduce() per key associated with the reduce task
    - cleanup()

How many reduces?

- The recommended number is 0.95 or 1.75 multiplied by (numberOfNodes * mapreduce.tasktracker.reduce.tasks.maximum).
- With 0.95, all of the reduces can launch immediately and start transferring map outputs as the maps finish.
- With 1.75, the faster nodes finish their first round of reduces and launch a second wave of reduces, which does a better job of load balancing.
- Increasing the number of reduces increases framework overhead, but it also improves load balancing and lowers the cost of failures.
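The rule of thumb is easy to evaluate for a concrete cluster. The sketch below assumes a hypothetical cluster of 10 nodes with a per-node maximum of 2 reduce tasks. The rounding to the nearest integer is also an assumption, since the rule itself does not say how to round. `ReduceCountSketch` and `suggestedReduces` are names invented for this example.

```java
// Evaluates factor * (numberOfNodes * maxReduceTasksPerNode).
// Cluster sizes and the rounding choice are assumptions for illustration.
class ReduceCountSketch {

    static int suggestedReduces(double factor, int numberOfNodes, int maxReduceTasksPerNode) {
        return (int) Math.round(factor * (numberOfNodes * maxReduceTasksPerNode));
    }

    public static void main(String[] args) {
        int nodes = 10;      // hypothetical cluster size
        int maxPerNode = 2;  // hypothetical per-node reduce task maximum

        // 0.95 * 20 = 19: all reduces can start in a single wave
        System.out.println(suggestedReduces(0.95, nodes, maxPerNode));
        // 1.75 * 20 = 35: faster nodes run a second wave
        System.out.println(suggestedReduces(1.75, nodes, maxPerNode));
    }
}
```

The chosen value would then be passed to Job.setNumReduceTasks(int) when the job is configured.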

Summary

- Hadoop MapReduce is a large-scale, open-source software framework dedicated to scalable, distributed, data-intensive computing.
- The framework breaks up large data into smaller parallelizable chunks and handles scheduling:
  - Maps each piece to an intermediate value
  - Reduces intermediate values to a solution
  - Offers user-specified partition and combiner options
- It is fault tolerant, reliable, and supports thousands of nodes and petabytes of data.
- If you can rewrite algorithms into maps and reduces, and your problem can be broken up into small pieces solvable in parallel, then Hadoop's MapReduce is the way to go for a distributed problem-solving approach to large datasets.
- Tried and tested in production
- Many implementation options

Thank you

By V. Priyanka, M.Sc., CS, Bon Secours College for Women