Map Reduce Algorithm Design Chapter 3 the reduce

- Slides: 74

Map. Reduce Algorithm Design Chapter 3

the reduce task in hadoop contains three steps: shuffle, sort and reduce where the sort (and after that the reduce) can only start once all the mappers are done. In the Map. Reduce model, you need to wait for all mappers to finish, since the keys need to be grouped and sorted; plus, you may have some speculative mappers running and you do not know yet which of the duplicate mappers will finish first. However, as the "Breaking the Map. Reduce Stage Barrier" paper indicates, for some applications, it may make sense not to wait for all of the output of the mappers. If you would want to implement this sort of behavior (most likely for research purposes), then you should take a look at theorg. apache. hadoop. mapred. Reduce. Task. Reduce. Copier class, which implements Shuffle. Consumer. Plugin. Reduce. Copier. fetch. Outputs() method is the one that holds the reduce task from running until all map outputs are copied (through the while loop in line 2026 of Hadoop release 1. 0. 4).

Why this Chapter? • Everything you didn’t think could be done with Map. Reduce

Programmer • Only implements mapper, reducer • Optional: combiner, partitioner • Must express in a small number of rigidly defined components • Trickiest aspect: – Synchronization • Shuffle/short, otherwise everything runs in isolation in MR

But, user can • Construct complex data structures as keys, values • Execute user-specified initialization/ termination code • Control sort order of intermediate keys • Control partitioning of keys, ie. Set of keys encountered by particular reducer • May need more than a single job, sequence of jobs – Iteration – Convergence – may need drivers (counters) • Preserve state in mappers/reducers – really? ?

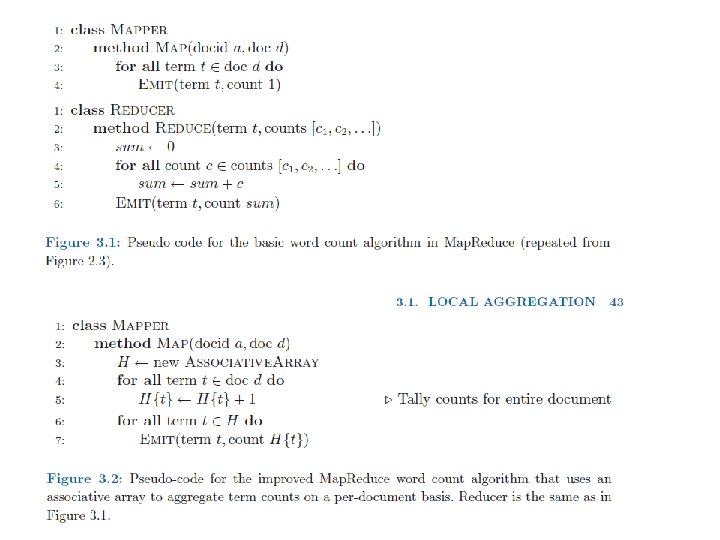

Local Aggregation • Hadoop writes intermediate results to local disk, then sends over network • Can have lots of data transmitted to reducers • How to avoid this? – Instead use Combiners and in-Mapper combining – Reduce amount to shuffle between M and R • Without combiners, as many intermediate values as input (k, v)

Combiners • Combiners – mini-Reducers (implemented in Map) – Input to combiner is output of Mapper – no sending over network – Combiners run on same machine as Mapper – Output of combiner is much smaller than input

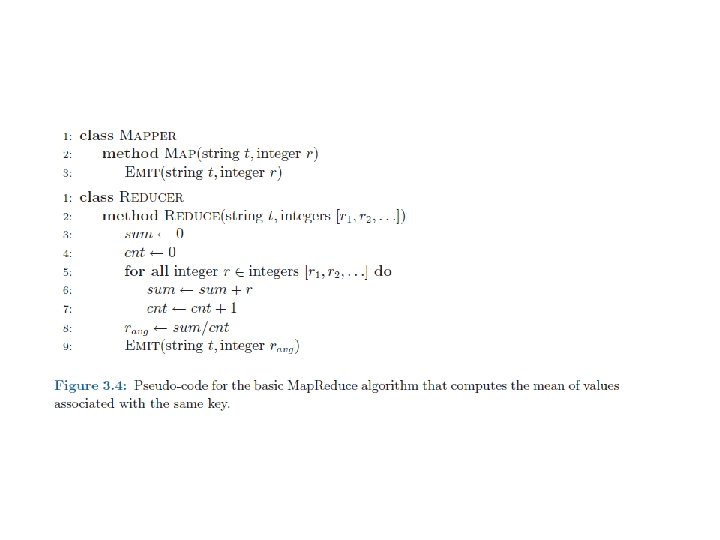

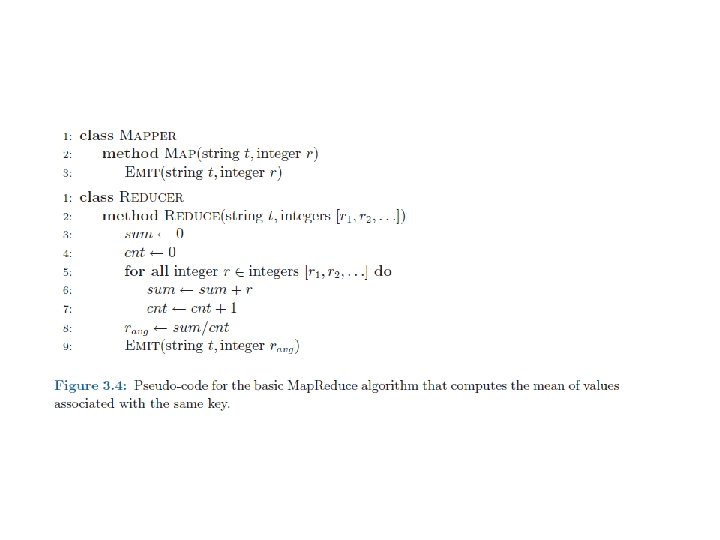

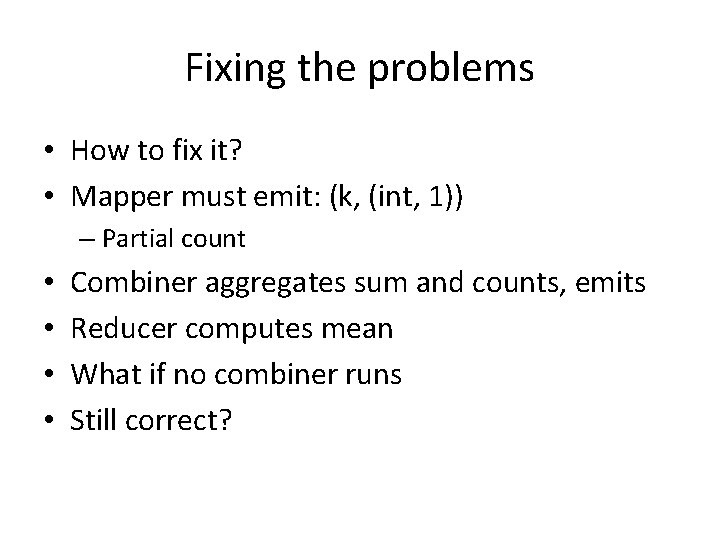

Correctness • Combiners can reduce running time, must take care to be correct • Example: – Compute mean of all integers associated with a key • E. g. key – user ids, value - elapsed time for session • Compute mean on per-user basis • Requires lots of shuffling

Use combiner? – Requires lots of shuffling – Solution? – Can use mini-REDUCER as combiner: • But, Mean(1, 2, 3, 4, 5) != Mean(1, 2), Mean(3, 4, 5))

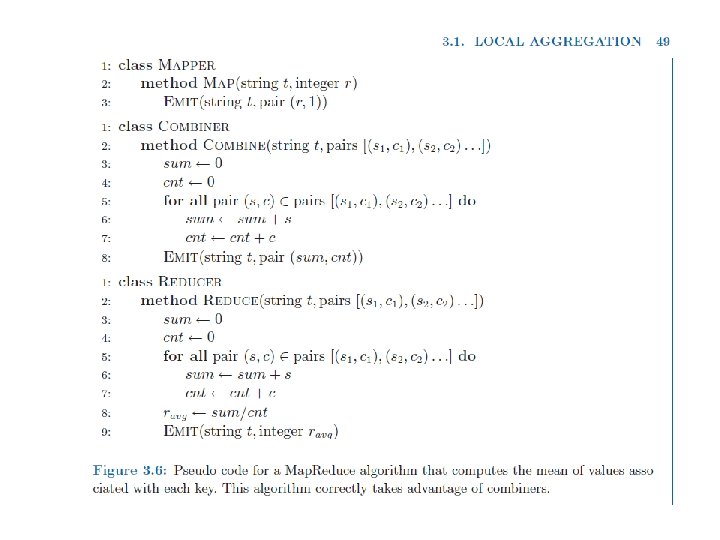

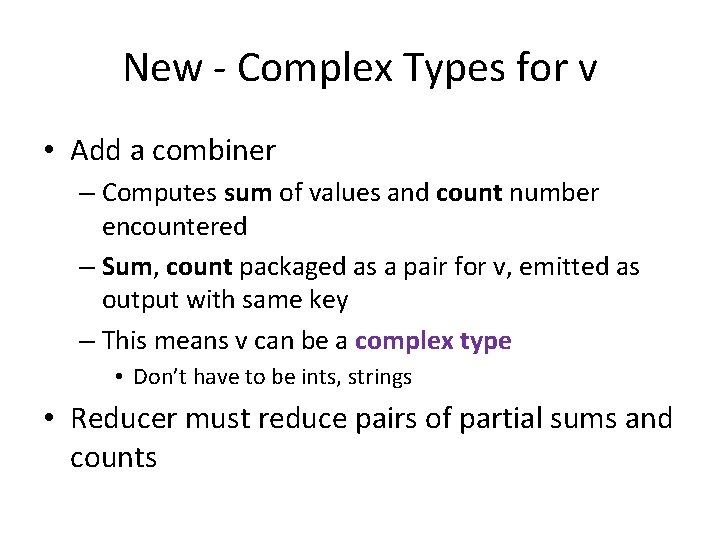

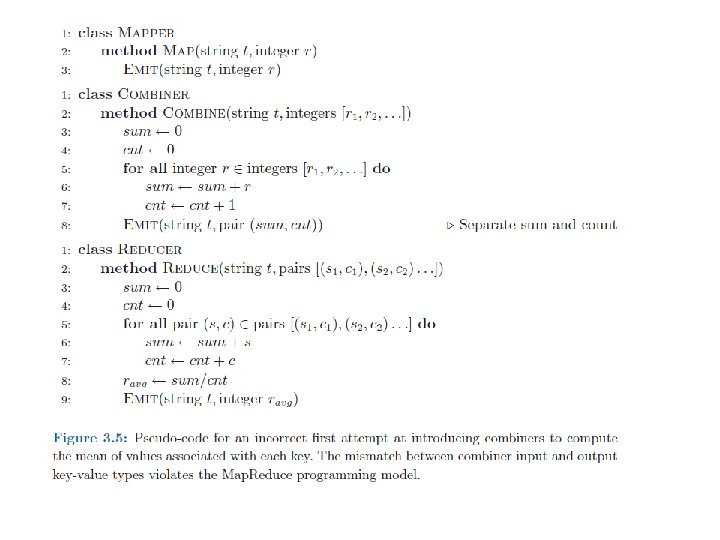

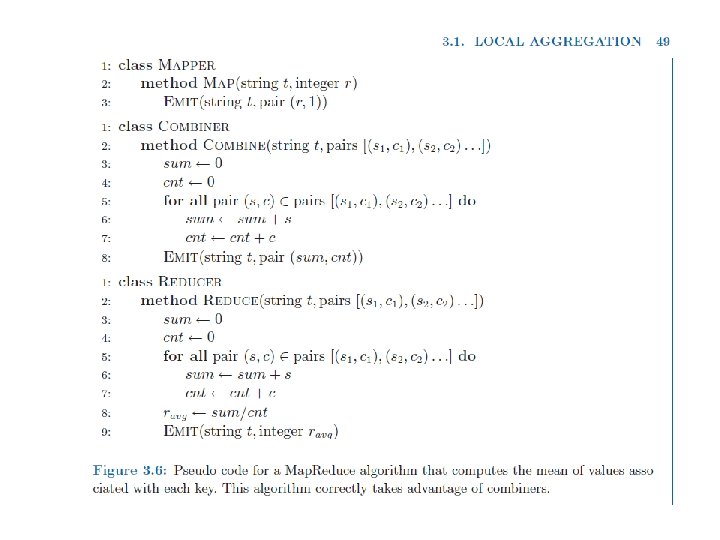

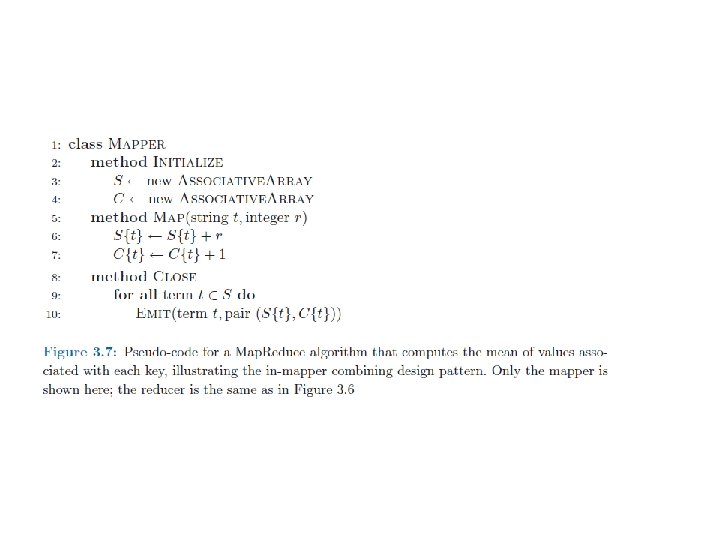

New - Complex Types for v • Add a combiner – Computes sum of values and count number encountered – Sum, count packaged as a pair for v, emitted as output with same key – This means v can be a complex type • Don’t have to be ints, strings • Reducer must reduce pairs of partial sums and counts

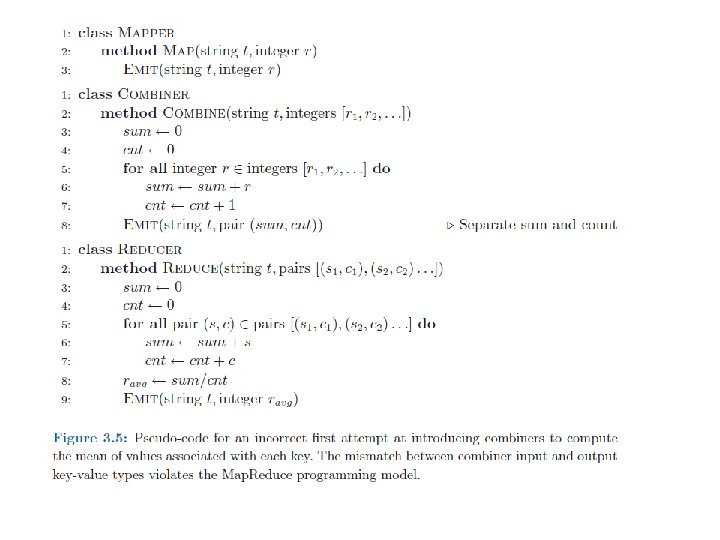

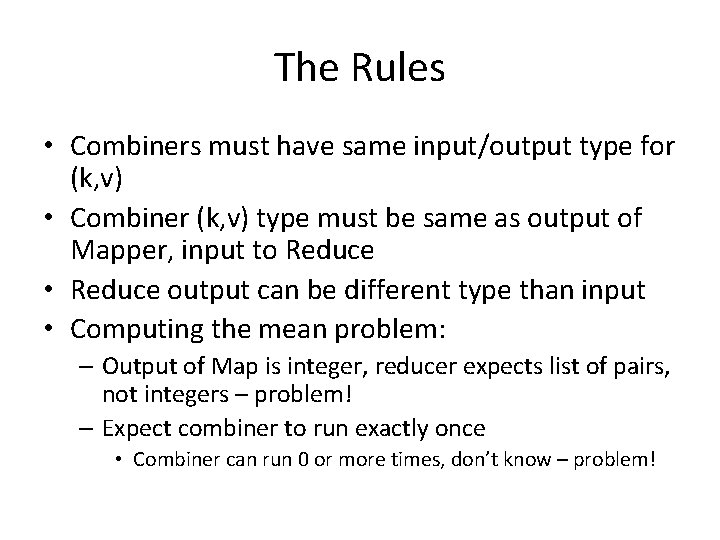

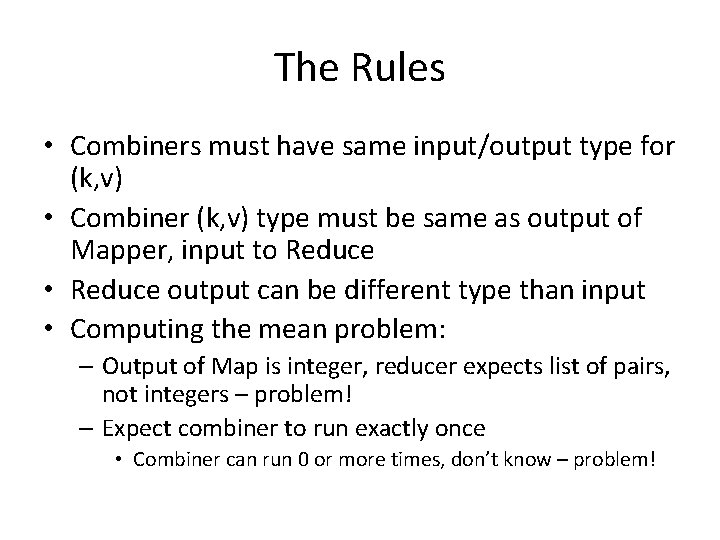

The Rules • Combiners must have same input/output type for (k, v) • Combiner (k, v) type must be same as output of Mapper, input to Reduce • Reduce output can be different type than input • Computing the mean problem: – Output of Map is integer, reducer expects list of pairs, not integers – problem! – Expect combiner to run exactly once • Combiner can run 0 or more times, don’t know – problem!

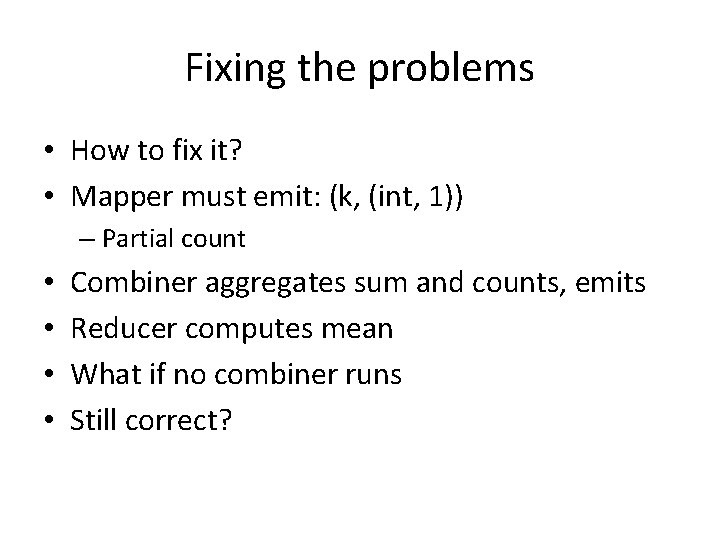

Fixing the problems • How to fix it? • Mapper must emit: (k, (int, 1)) – Partial count • • Combiner aggregates sum and counts, emits Reducer computes mean What if no combiner runs Still correct?

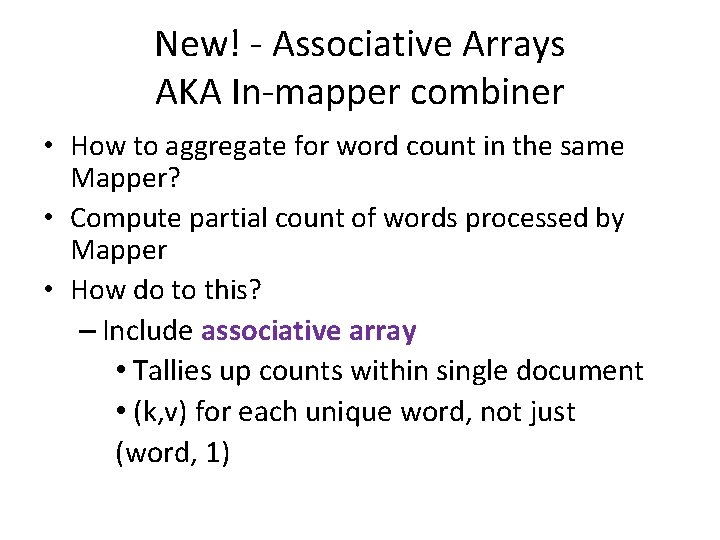

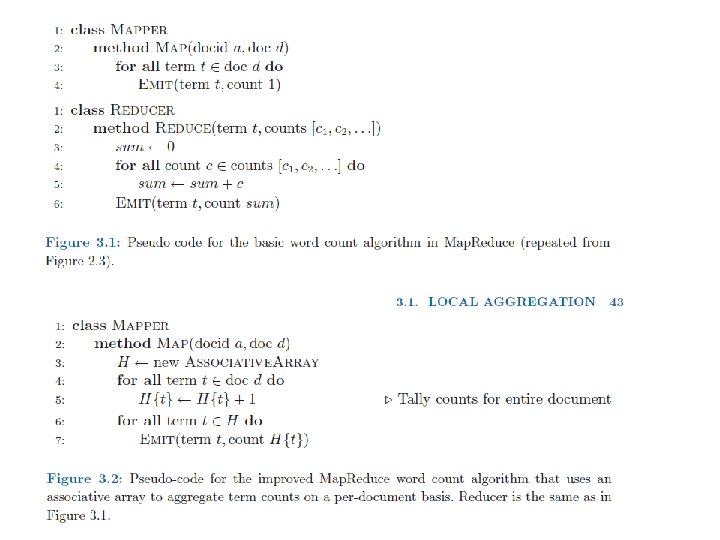

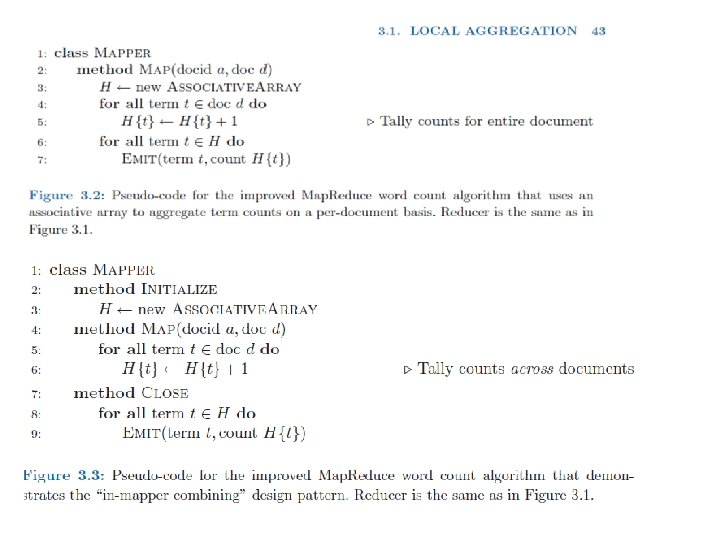

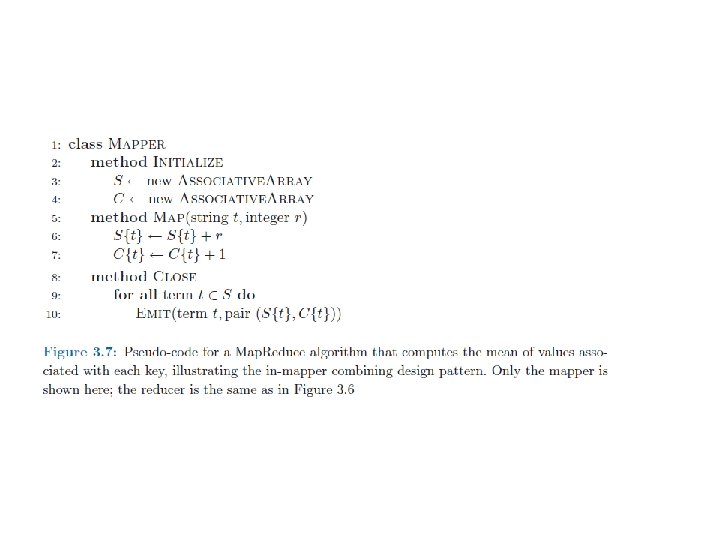

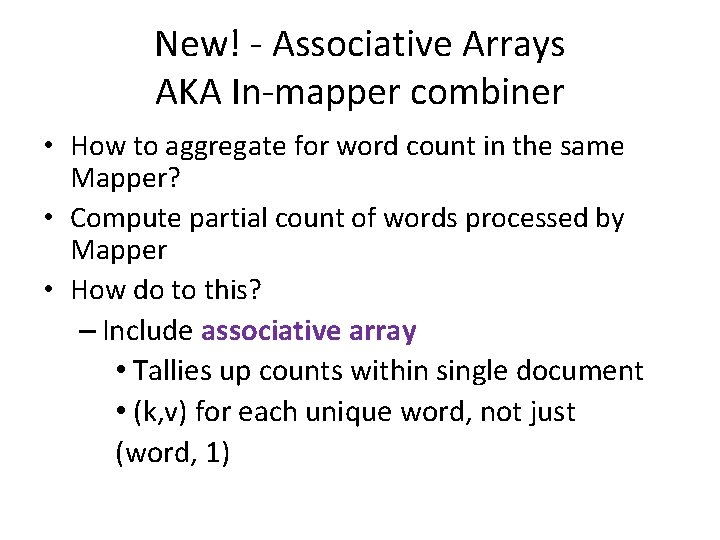

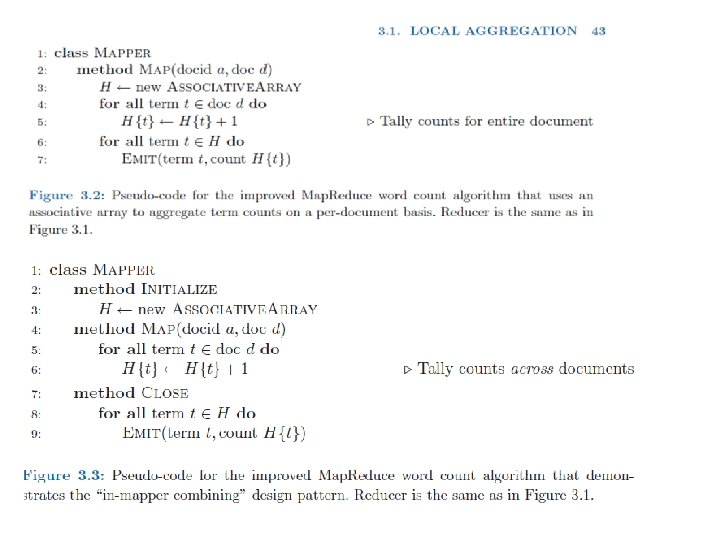

New! - Associative Arrays AKA In-mapper combiner • How to aggregate for word count in the same Mapper? • Compute partial count of words processed by Mapper • How do to this? – Include associative array • Tallies up counts within single document • (k, v) for each unique word, not just (word, 1)

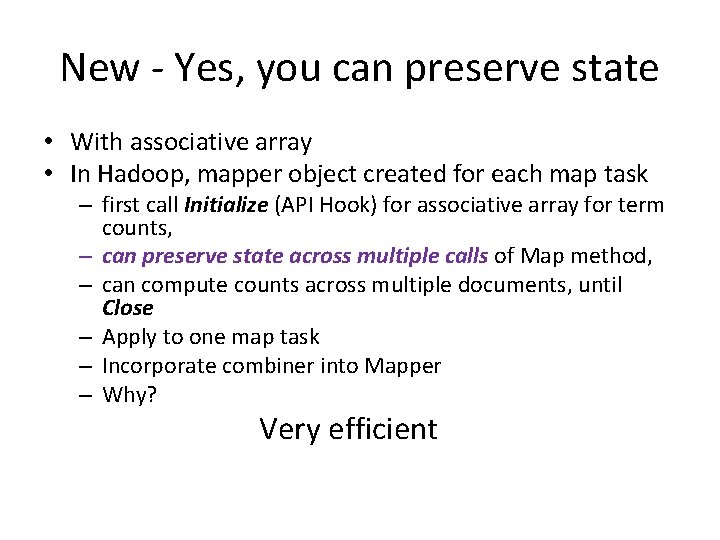

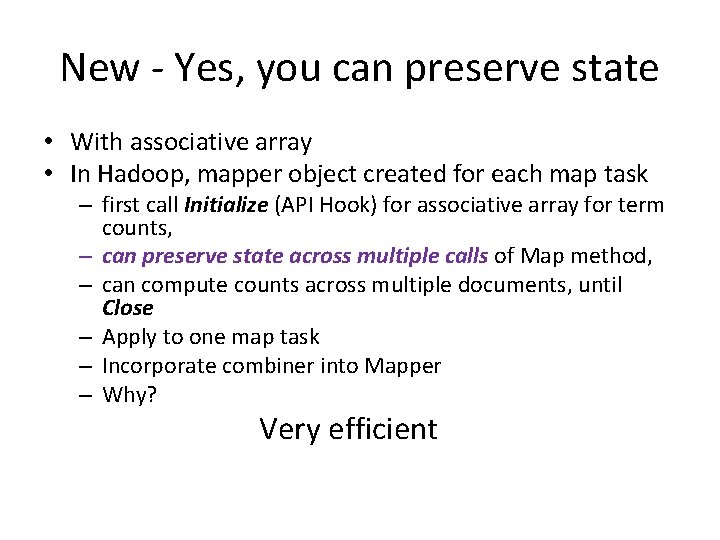

New - Yes, you can preserve state • With associative array • In Hadoop, mapper object created for each map task – first call Initialize (API Hook) for associative array for term counts, – can preserve state across multiple calls of Map method, – can compute counts across multiple documents, until Close – Apply to one map task – Incorporate combiner into Mapper – Why? Very efficient

Computing Mean - associative arrays • How to use associate arrays for this? – Must user combiner if no associate array

• Interesting link that talks about associative arrays (Map in Java) • https: //vangjee. wordpress. com/2012/03/07/t he-in-mapper-combining-design-pattern-formapreduce-programming/

Preserving State • But – violates functional programming paradigm of MR • Algorithm behavior may depend on order of (k, v) input • Scalability problems – depend on memory to store intermediate results – Solution – can emit intermediate results after every n (k, v) or after certain memory usage • Can reduce straggler problem

Information Retrieval with Map. Reduce Chapter 4 Some slides from Jimmy Lin

Topics • Introduction to IR • Inverted Indexing • Retrieval

Web search problems • Web search problems decompose into three components 1. Gather web content (crawling) 2. Construct inverted index based on web content gathered (indexing) 3. Ranking documents given a query using inverted index (retrieval)

Web search problems Crawling and creating indexes share similar characteristics and requirements – Both are offline problems, no need for real-time – Tolerable for a few minutes delay before content searchable – OK to run smaller-scale index updates frequently • Querying online problem – Demands sub-second response time – Low latency high throughput – Loads can very greatly •

Web Crawler • To acquire the document collection over which indexes are built • Acquiring web content requires crawling – Traverse web by repeatedly following hyperlinks and storing downloaded pages – Start by populating a queue with seed pages

Web Crawler Issues • Shouldn’t overload web servers with crawling • Prioritize order in which unvisited pages downloaded • Avoid downloading page multiple times – coordination and load balancing • • Robust when failures Learn update patterns so content current Identify near duplicates and select best for index Identify dominant language on page

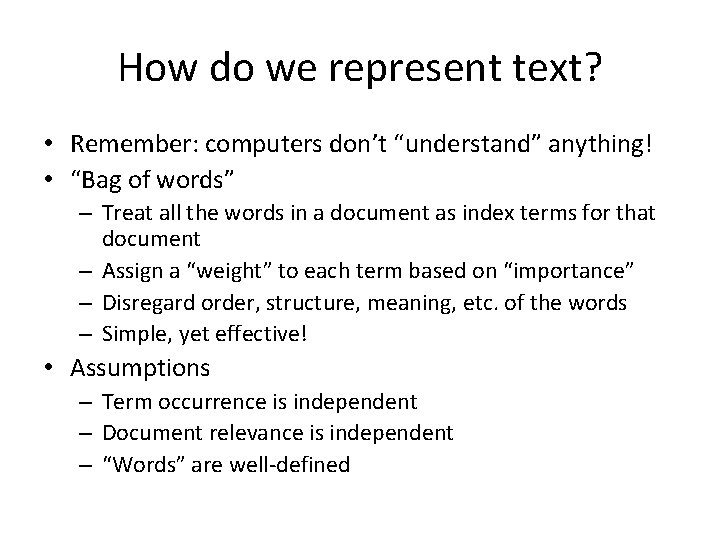

How do we represent text? • Remember: computers don’t “understand” anything! • “Bag of words” – Treat all the words in a document as index terms for that document – Assign a “weight” to each term based on “importance” – Disregard order, structure, meaning, etc. of the words – Simple, yet effective! • Assumptions – Term occurrence is independent – Document relevance is independent – “Words” are well-defined

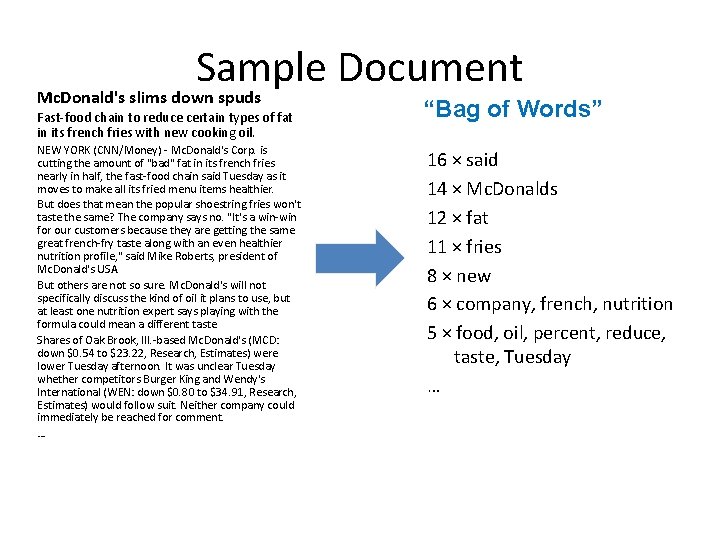

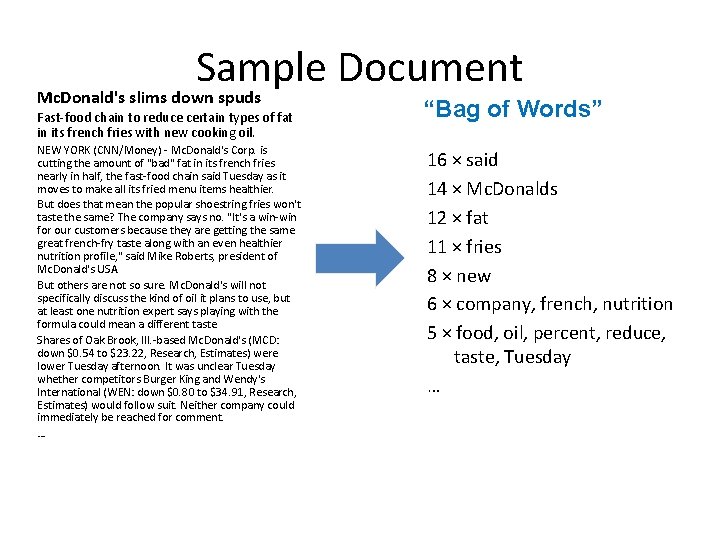

Sample Document Mc. Donald's slims down spuds Fast-food chain to reduce certain types of fat in its french fries with new cooking oil. NEW YORK (CNN/Money) - Mc. Donald's Corp. is cutting the amount of "bad" fat in its french fries nearly in half, the fast-food chain said Tuesday as it moves to make all its fried menu items healthier. But does that mean the popular shoestring fries won't taste the same? The company says no. "It's a win-win for our customers because they are getting the same great french-fry taste along with an even healthier nutrition profile, " said Mike Roberts, president of Mc. Donald's USA. But others are not so sure. Mc. Donald's will not specifically discuss the kind of oil it plans to use, but at least one nutrition expert says playing with the formula could mean a different taste. Shares of Oak Brook, Ill. -based Mc. Donald's (MCD: down $0. 54 to $23. 22, Research, Estimates) were lower Tuesday afternoon. It was unclear Tuesday whether competitors Burger King and Wendy's International (WEN: down $0. 80 to $34. 91, Research, Estimates) would follow suit. Neither company could immediately be reached for comment. … “Bag of Words” 16 × said 14 × Mc. Donalds 12 × fat 11 × fries 8 × new 6 × company, french, nutrition 5 × food, oil, percent, reduce, taste, Tuesday …

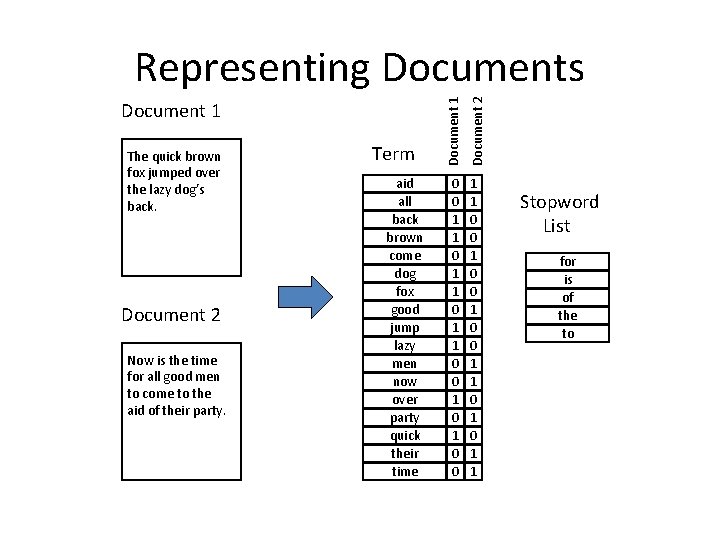

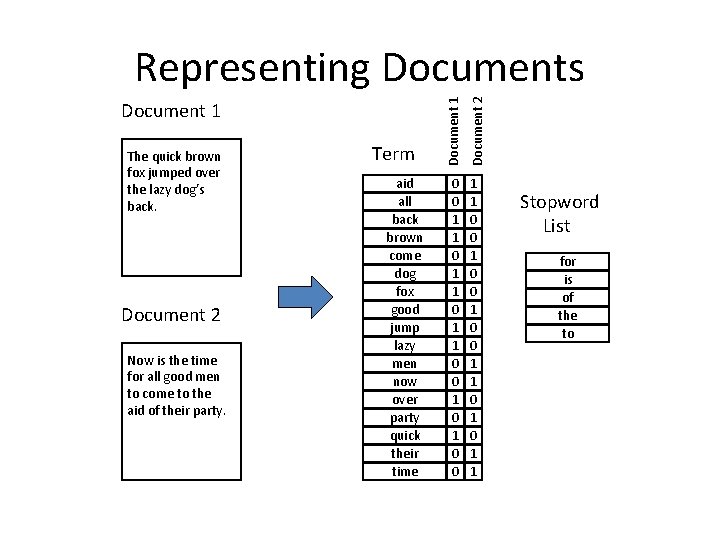

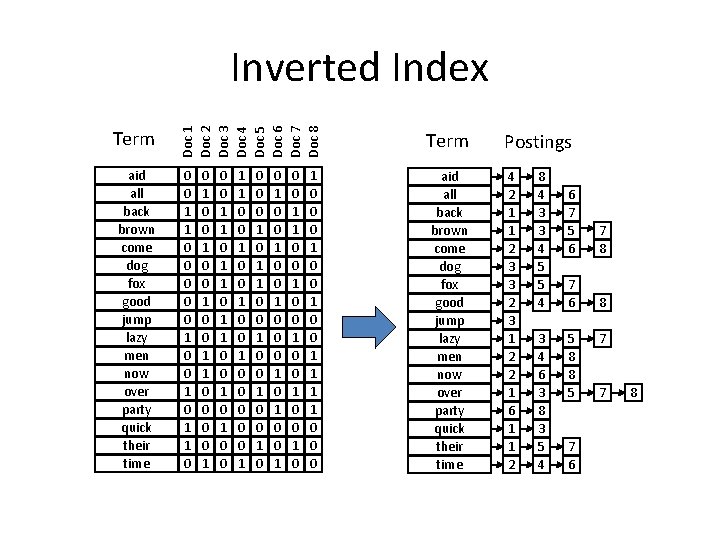

The quick brown fox jumped over the lazy dog’s back. Document 2 Now is the time for all good men to come to the aid of their party. Term aid all back brown come dog fox good jump lazy men now over party quick their time Document 2 Document 1 Representing Documents 0 0 1 1 0 0 1 0 0 1 1 0 1 1 Stopword List for is of the to

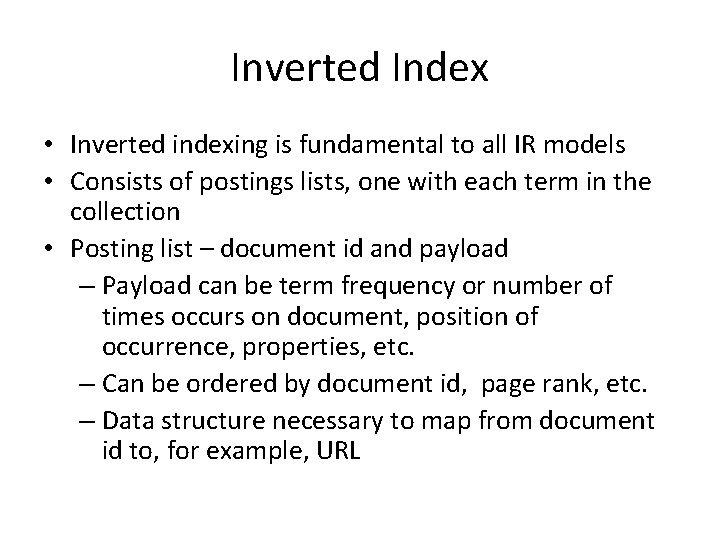

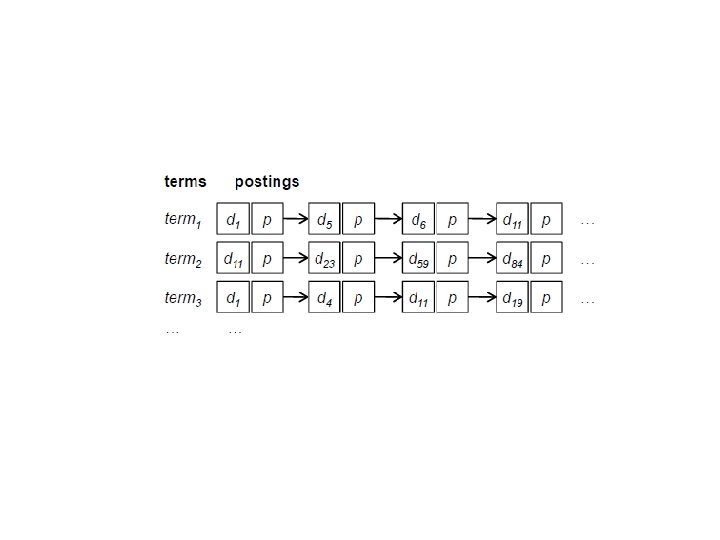

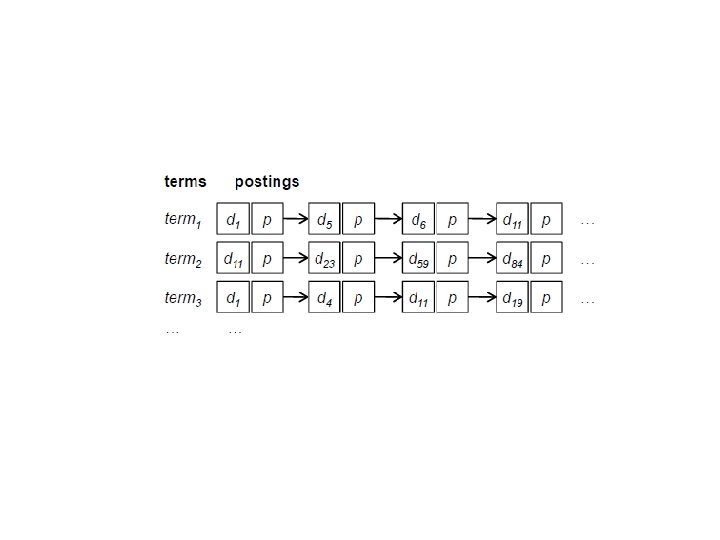

Inverted Index • Inverted indexing is fundamental to all IR models • Consists of postings lists, one with each term in the collection • Posting list – document id and payload – Payload can be term frequency or number of times occurs on document, position of occurrence, properties, etc. – Can be ordered by document id, page rank, etc. – Data structure necessary to map from document id to, for example, URL

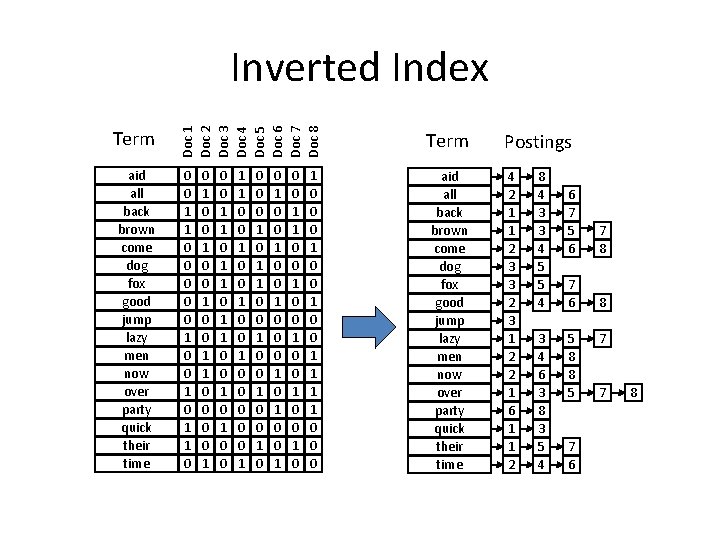

Term aid all back brown come dog fox good jump lazy men now over party quick their time Doc 1 Doc 2 Doc 3 Doc 4 Doc 5 Doc 6 Doc 7 Doc 8 Inverted Index 0 0 1 1 0 0 0 1 0 1 1 0 0 1 0 0 1 1 0 0 1 1 0 0 0 0 0 1 0 1 1 0 0 1 0 0 0 1 0 0 1 0 0 1 0 0 1 1 0 0 0 Term aid all back brown come dog fox good jump lazy men now over party quick their time Postings 4 2 1 1 2 3 3 2 3 1 2 2 1 6 1 1 2 8 4 3 3 4 5 5 4 3 4 6 3 8 3 5 4 6 7 5 6 7 8 7 6 8 5 8 8 5 7 6 7 7 8

• New due date for HW#4 – Wed. 3/29

Process query - retrieval • Given a query, fetch posting lists of index associated with query, traverse postings to compute result set • Query document scores must be computed • Top k documents extracted • Optimization strategies to reduce # postings must examine

Index • Size of index depends on payload • Well-optimized inverted index can be 1/10 of size of original document collection • If store position info, could be several times larger • Usually can hold entire vocabulary in memory (using front-coding) • Postings lists usually too large to store in memory • Query evaluation involves random disk access and decoding postings – Try to minimize random seeks

Indexing: Performance Analysis • Creating indexes problem – Must be relatively fast, but need not be real time – For Web, incremental updates are important • How large is the inverted index? – Size of vocabulary – Size of postings • Fundamentally, a large sorting problem – Do terms and/or postings fit in memory? – Terms usually fit in memory – Postings usually don’t

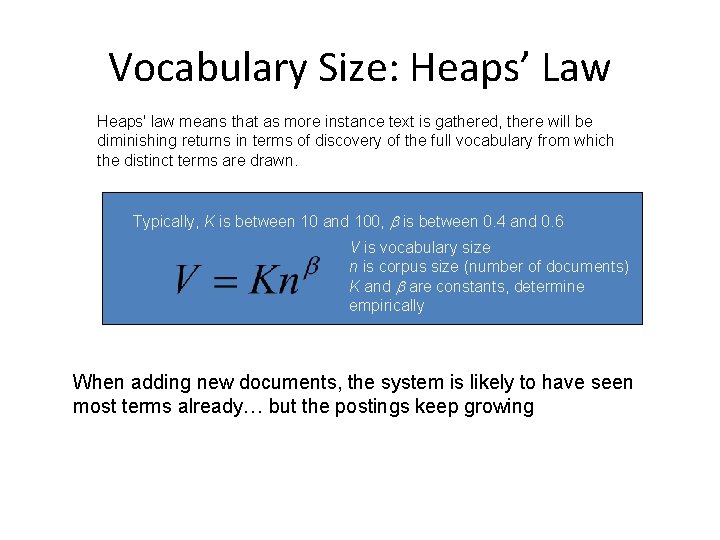

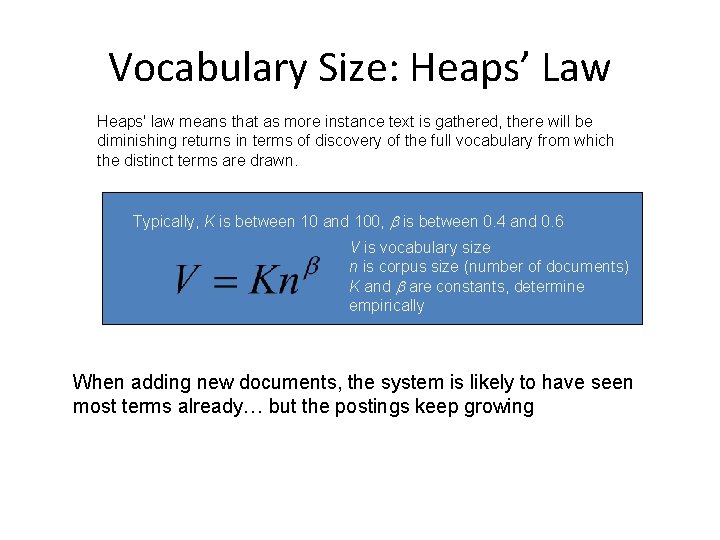

Vocabulary Size: Heaps’ Law Heaps' law means that as more instance text is gathered, there will be diminishing returns in terms of discovery of the full vocabulary from which the distinct terms are drawn. Typically, K is between 10 and 100, is between 0. 4 and 0. 6 V is vocabulary size n is corpus size (number of documents) K and are constants, determine empirically When adding new documents, the system is likely to have seen most terms already… but the postings keep growing

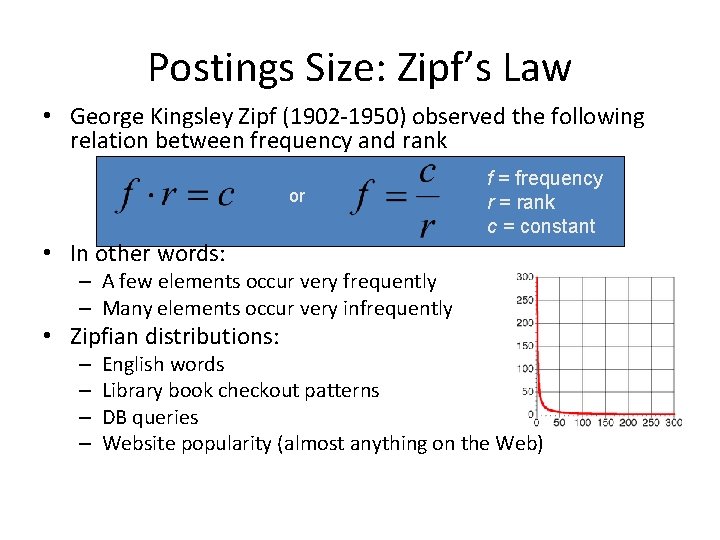

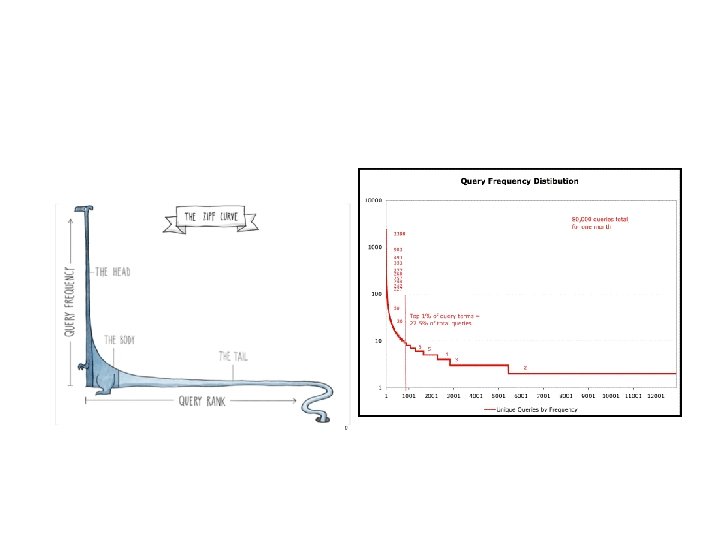

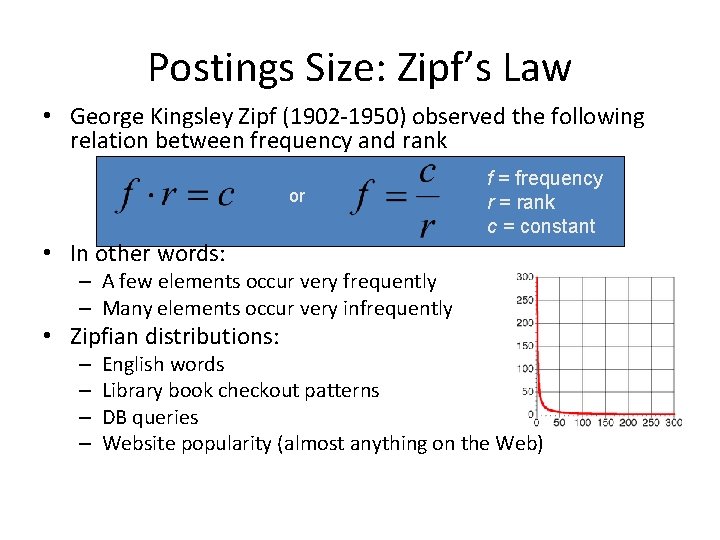

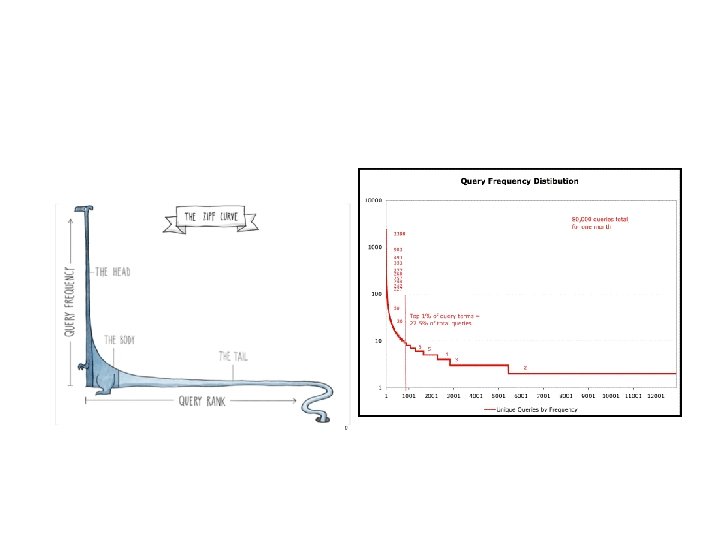

Postings Size: Zipf’s Law • George Kingsley Zipf (1902 -1950) observed the following relation between frequency and rank or f = frequency r = rank c = constant • In other words: – A few elements occur very frequently – Many elements occur very infrequently • Zipfian distributions: – – English words Library book checkout patterns DB queries Website popularity (almost anything on the Web)

Word Frequency in English Frequency of 50 most common words in English (sample of 19 million words)

Question to consider • How to create an inverted index using map reduce?

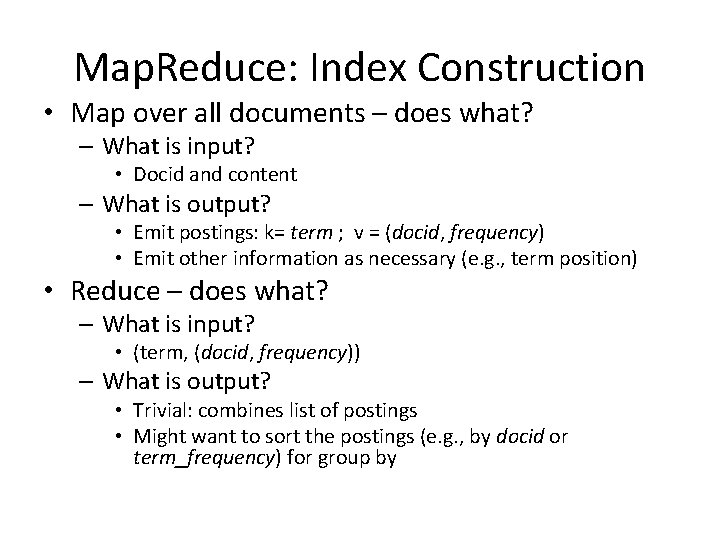

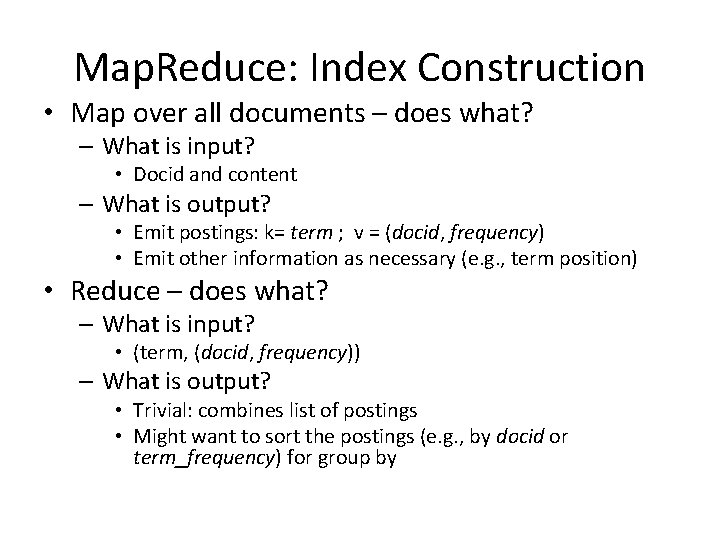

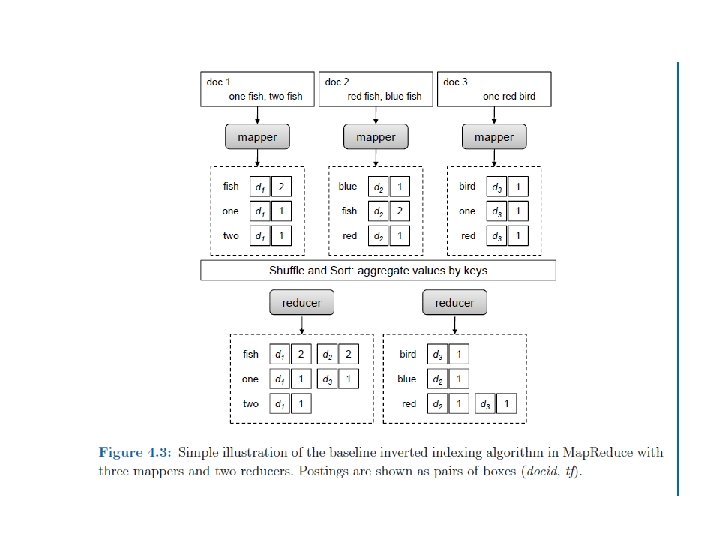

Map. Reduce: Index Construction • Map over all documents – does what? – What is input? • Docid and content – What is output? • Emit postings: k= term ; v = (docid, frequency) • Emit other information as necessary (e. g. , term position) • Reduce – does what? – What is input? • (term, (docid, frequency)) – What is output? • Trivial: combines list of postings • Might want to sort the postings (e. g. , by docid or term_frequency) for group by

Map. Reduce • Map emits term postings • Reduce performs large distributed group by of postings by term

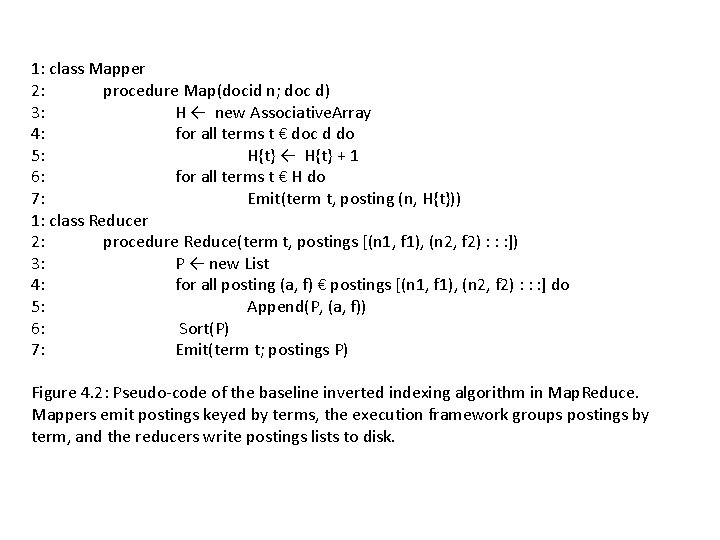

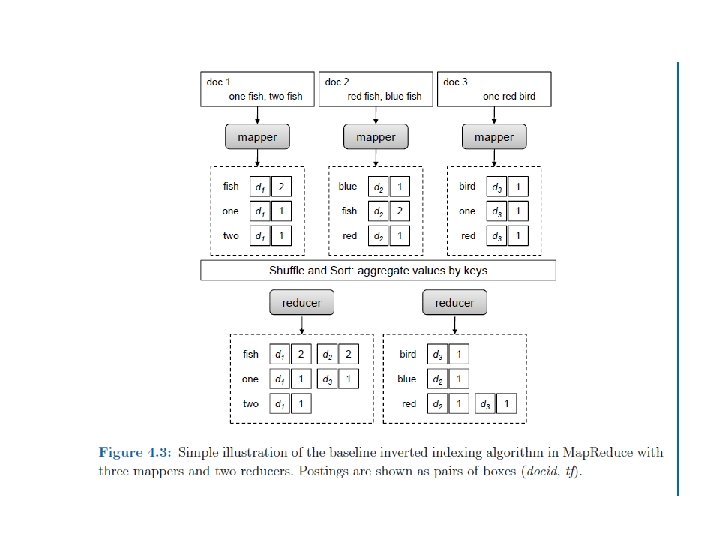

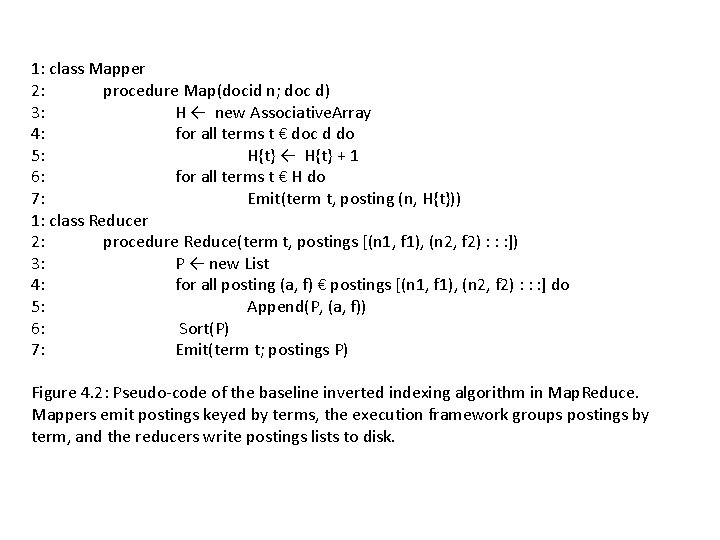

1: class Mapper 2: procedure Map(docid n; doc d) 3: H ← new Associative. Array 4: for all terms t € doc d do 5: H{t} ← H{t} + 1 6: for all terms t € H do 7: Emit(term t, posting (n, H{t})) 1: class Reducer 2: procedure Reduce(term t, postings [(n 1, f 1), (n 2, f 2) : : : ]) 3: P ← new List 4: for all posting (a, f) € postings [(n 1, f 1), (n 2, f 2) : : : ] do 5: Append(P, (a, f)) 6: Sort(P) 7: Emit(term t; postings P) Figure 4. 2: Pseudo-code of the baseline inverted indexing algorithm in Map. Reduce. Mappers emit postings keyed by terms, the execution framework groups postings by term, and the reducers write postings lists to disk.

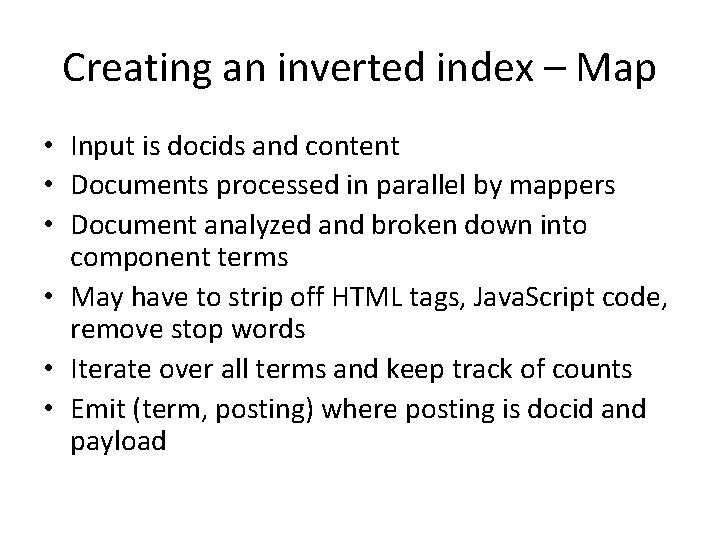

Creating an inverted index – Map • Input is docids and content • Documents processed in parallel by mappers • Document analyzed and broken down into component terms • May have to strip off HTML tags, Java. Script code, remove stop words • Iterate over all terms and keep track of counts • Emit (term, posting) where posting is docid and payload

Creating Inverted Index - Reduce • After shuffle sort, Reduce is easy – Just combines individual postings by appending to initially empty list and writes to disk – Usually compresses list

• How would this be done without Map. Reduce?

Retrieval • Have just shown how to create an index, but what about retrieving info for a query? • What is involved: – Identify query terms – Look up posting lists corresponding to query terms – Traverse posting lists to compute query-document scores – Return top k results

Retrieval • • • Must have sub-second response Optimized for low-latency True of Map. Reduce? True of underlying file system Issues? – Look up postings – Postings too large to fit in memory • (random disk seeks) – Round-trip network exchanges – Must find location of data block from master, etc.

Issues • Issues? – Look up postings to find terms in documents – Postings too large to fit in memory • (random disk seeks) – Round-trip network exchanges

Solution? • Distributed Retrieval

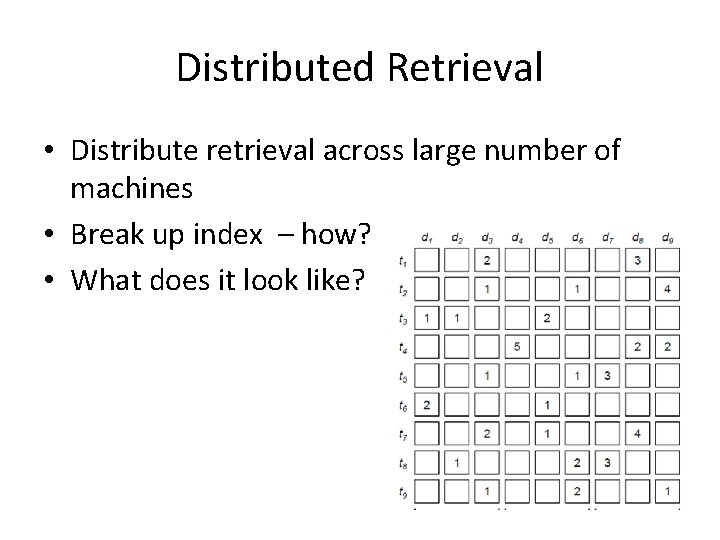

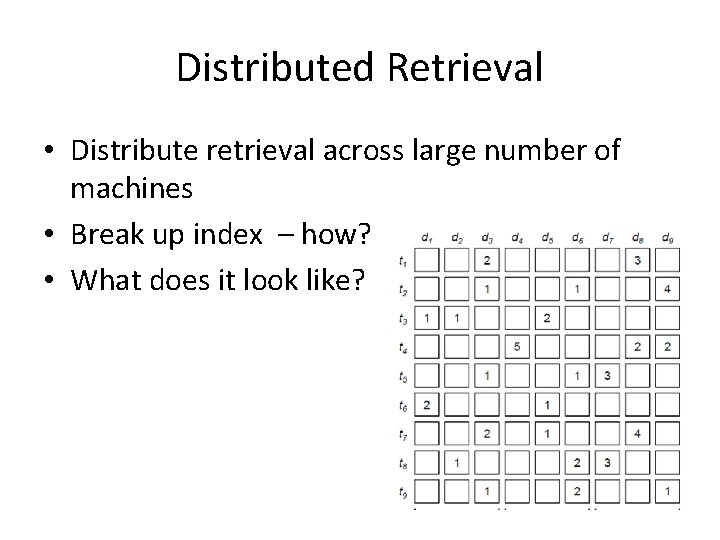

Distributed Retrieval • Distribute retrieval across large number of machines • Break up index – how? • What does it look like?

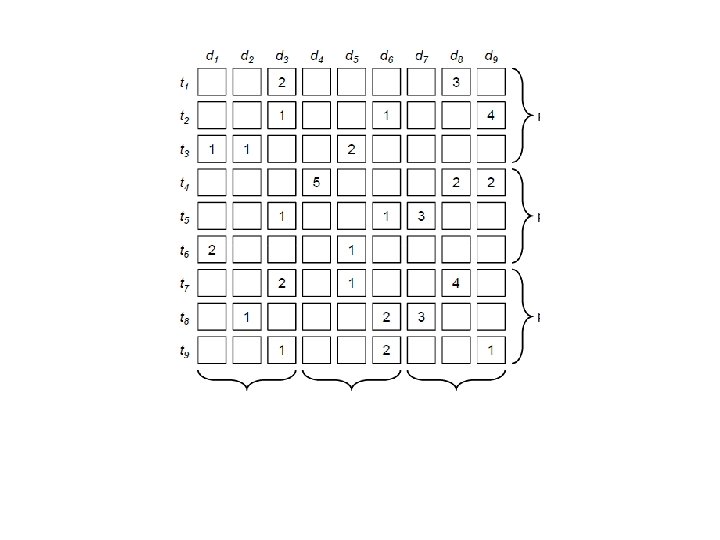

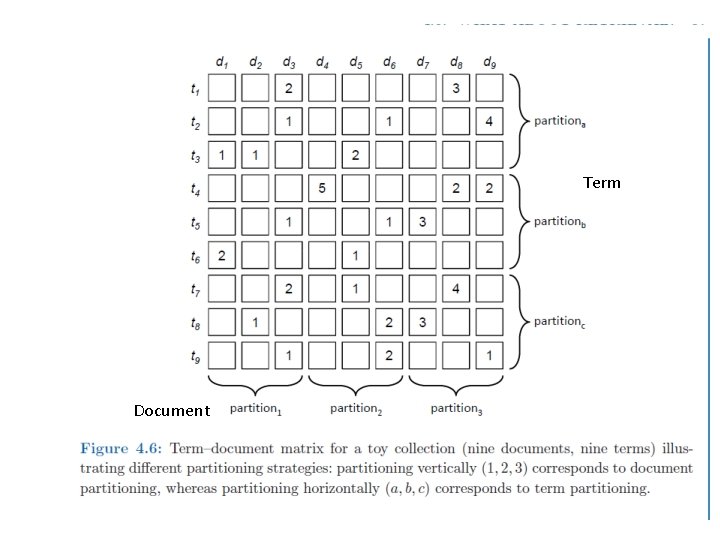

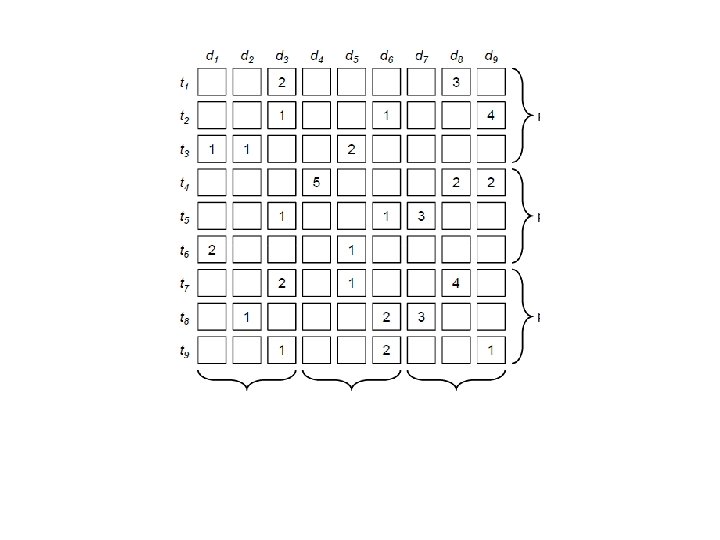

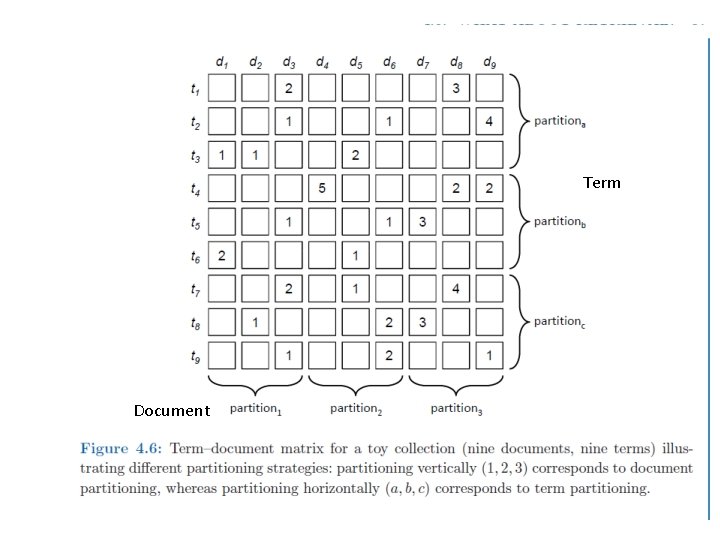

• • Document -chunks (cols) and terms (rows) Document partitioning Term partitioning Need Query Broker and Partition Servers

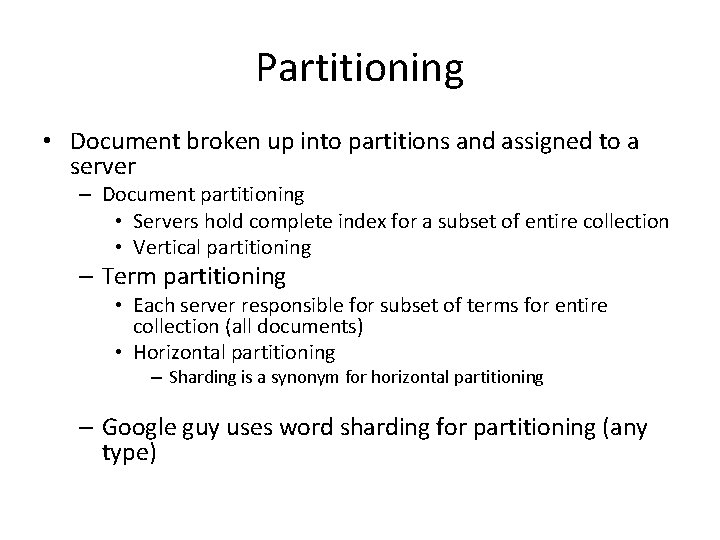

Partitioning • Document broken up into partitions and assigned to a server – Document partitioning • Servers hold complete index for a subset of entire collection • Vertical partitioning – Term partitioning • Each server responsible for subset of terms for entire collection (all documents) • Horizontal partitioning – Sharding is a synonym for horizontal partitioning – Google guy uses word sharding for partitioning (any type)

Term Document

Document Partitioning • Suppose query contains 3 terms, t 1, t 2 and t 3 • Document Partitioning – Query broker forwards query to all partition servers – Each server must search for t 1, t 2, t 3 in its subset of documents – Each server sends partial results to query broker – Query broker merges partial results from each – Query broker returns final result to user

Term Partitioning • Suppose query contains 3 terms, t 1, t 2 and t 3 • Term (word) Partitioning – Typical strategy Uses pipelined strategy – accumulators and route: • Broker forwards query to server that holds postings for t 1 (least frequent term) • Server traverses postings list, computes partial query scores • Partial scores sent to server with t 2 – only has to examine documents listed in postings list that have t 1 • Partial scores sent to server with t 3 – examines only documents in which t 1 and t 2 occur – Then final result passed to query broker

Document Partitioning • Pros/Cons? – Query must be processed by every server – Each partition operates independently, traverse postings in parallel • shorter query latencies • Assuming K words, N partitions (shards): • Number of disk accesses - Big O? – O(K*N)

Term Partitioning • Pros/Cons – – – Increases throughput - smaller total # of seeks per query Better if memory limited system Load balancing tricky - may create hotspots on servers Requires higher bandwidth – info spread across machines Need data in one place about each document – no obvious home • Number of disk accesses - Big O? – O(K) K words, at most K partitions (shards) – why? • only have to search for one term and in documents in which previous terms occurred, maximum documents

Term Partitioning • What if did in parallel? – Each term would be processed at a different server – Each server would have to traverse all documents and not just those in which previous terms exist – All would have to be combined at the end • O(K*D) where D is the number of documents? ?

Which is best? • Google adopts which partitioning? – Document partitioning – less bandwidth – Keeps indexes in memory (not commonly done in search engines) – Quality degrades with machine failure – Servers offline won’t deliver results • User doesn’t know • Users less discriminating as to which relevant documents appear in results • Even is no failure may cycle through different partitions

Other aspects of Partitioning • Can partition documents along a dimension, e. g. by quality • Search highest quality first • Search lower quality if needed • Include another dimension, e. g. content • Only send queries to partitions likely to contain relevant documents instead of to all partitions

Replication and Partitioning • Replication? – Within same partition as well as across geographically distributed data centers – Query routing problems • Serve clients from the closest data centers • Must also route to appropriate locations • If single data center, must balance load across replicas

Displaying to User • Postings only store docid • Get a list of ranked docids • Responsibility of document servers (not partition servers) to generate meaningful output – Title, URL, snippet of result entry • Caching useful if index not in memory and can even cache results of entire queries – Why cache results? – Zipfian!!

Summary 1. Documents gathered by web crawling 2. Inverted indexes built from documents 3. Use indexes to respond to queries • More info about Google’s approach 2009 • Jeff Dean 8: 06 http: //videolectures. net/wsdm 09_dean_cblirs/