MapReduce: Simplified Data Processing on Large Clusters


MapReduce: Simplified Data Processing on Large Clusters

Authors: Jeffrey Dean and Sanjay Ghemawat

Presented by: Yang Liu, University of Michigan

EECS 582 – W16

About the Authors

- Jeff Dean
- Sanjay Ghemawat

Motivation

- Challenges at Google
  - Input data is too large -> distributed computing
  - Most computations are straightforward (log processing, inverted index) -> boring work
- Complexity of distributed computing
  - Machine failure
  - Scheduling

Solution: MapReduce

- MapReduce as the distributed programming infrastructure
- Simple programming interface: Map + Reduce
- Distributed implementation that hides all the messy details
  - Fault tolerance
  - I/O scheduling
  - Parallelization

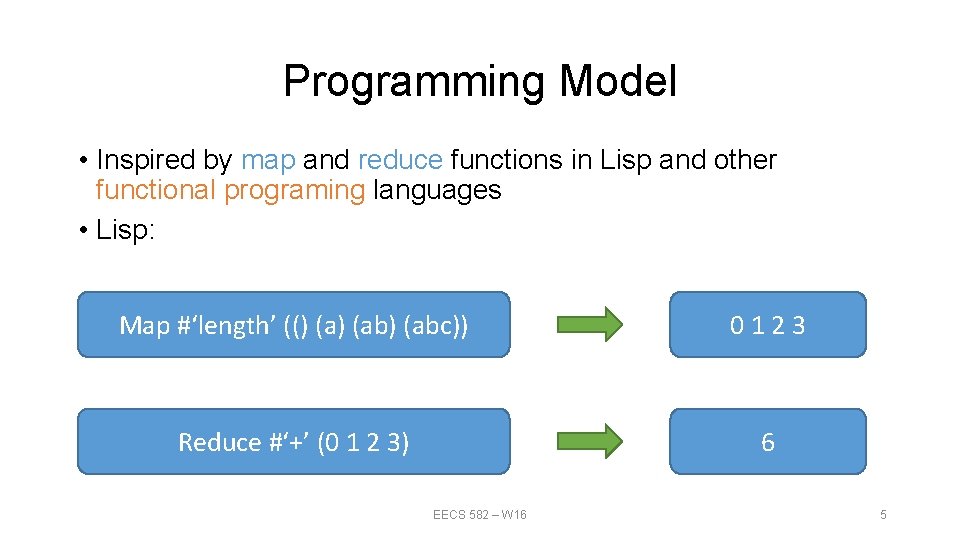

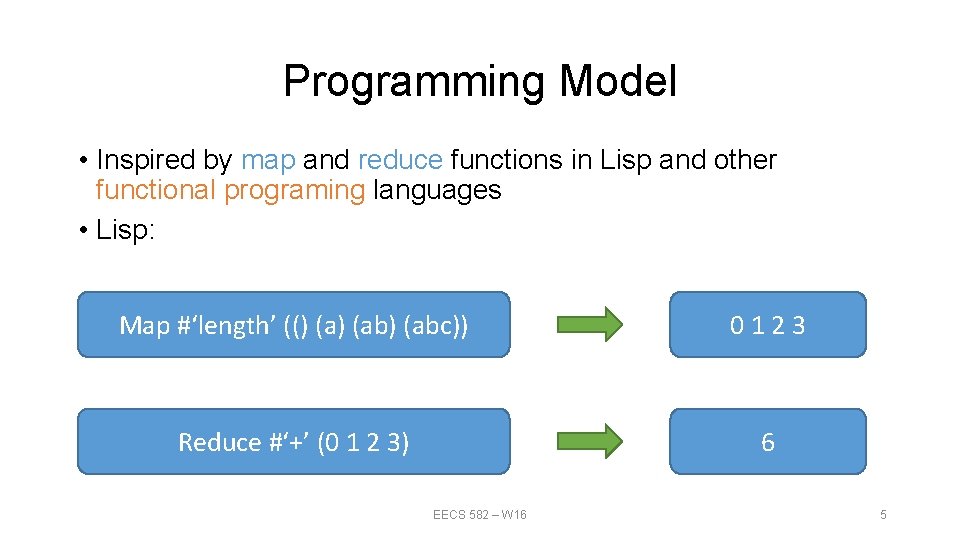

Programming Model

- Inspired by the map and reduce functions in Lisp and other functional programming languages
- Lisp:
  - `(map 'list #'length '(() (a) (a b) (a b c)))` returns `(0 1 2 3)`
  - `(reduce #'+ '(0 1 2 3))` returns `6`
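For readers less familiar with Lisp, the same two calls can be sketched in Python with the built-in `map` and `functools.reduce`. The input lists below mirror the Lisp example and are otherwise arbitrary.

```python
from functools import reduce
from operator import add

# Apply len to every element, like mapping #'length over a list in Lisp.
lengths = list(map(len, [[], ["a"], ["a", "b"], ["a", "b", "c"]]))

# Fold the list with +, like reducing with #'+ in Lisp.
total = reduce(add, lengths)

print(lengths)  # [0, 1, 2, 3]
print(total)    # 6
```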

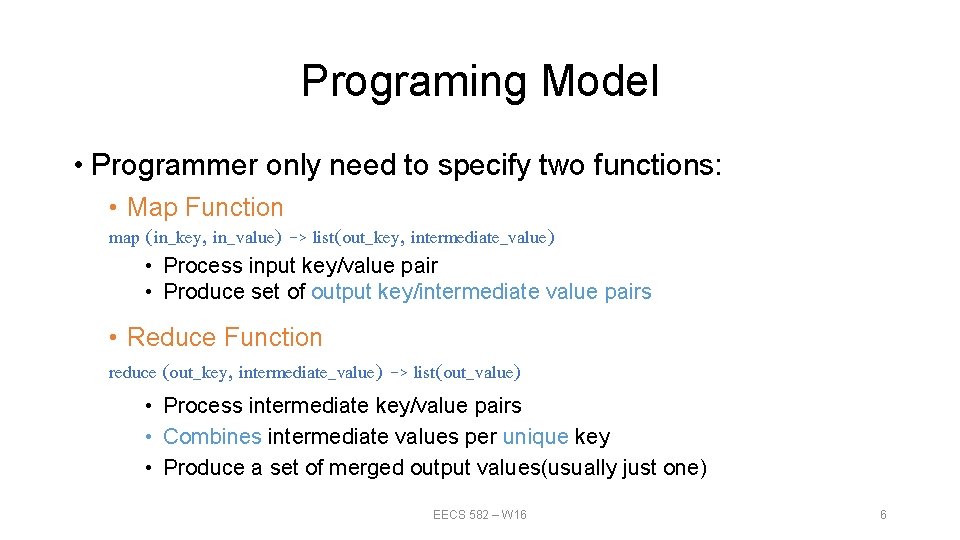

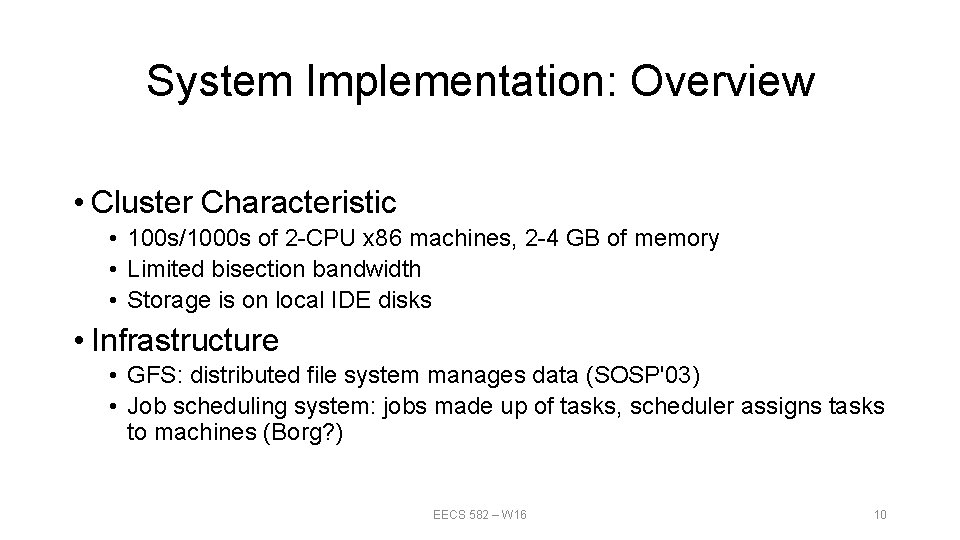

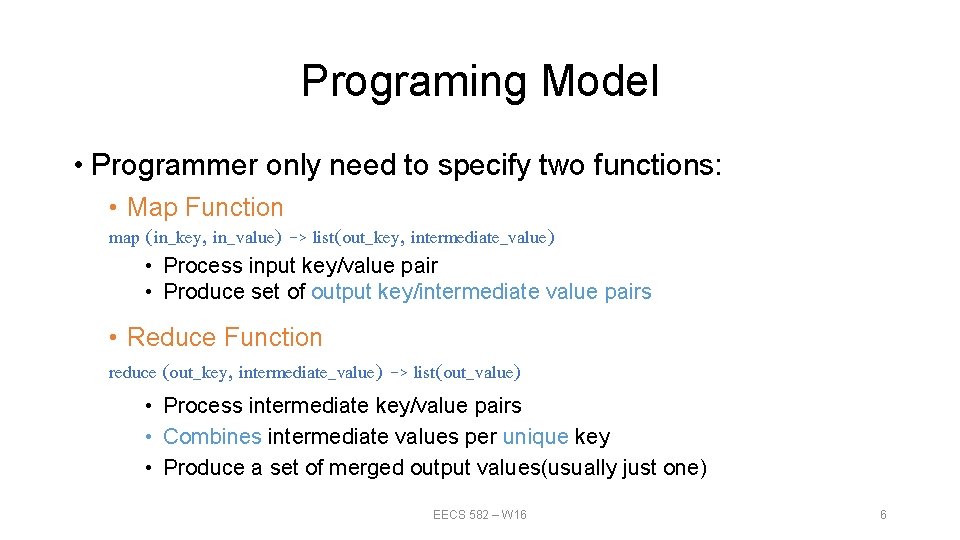

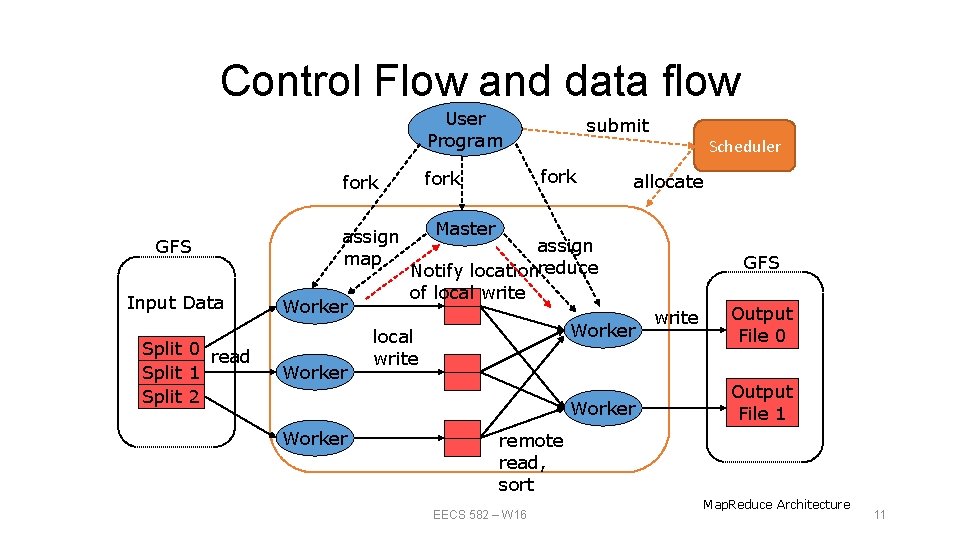

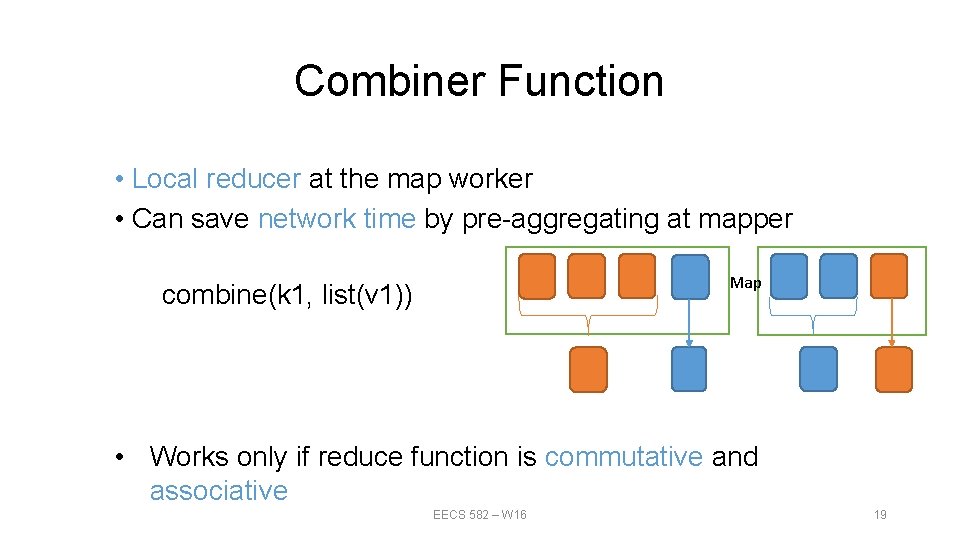

Programming Model

- The programmer only needs to specify two functions:
- Map function: `map(in_key, in_value) -> list(out_key, intermediate_value)`
  - Processes an input key/value pair
  - Produces a set of intermediate key/value pairs
- Reduce function: `reduce(out_key, list(intermediate_value)) -> list(out_value)`
  - Processes intermediate key/value pairs
  - Combines all intermediate values for each unique key
  - Produces a set of merged output values (usually just one)


Programming Model

- Map function: [input (key, value)] -> [intermediate (key, value)]
- Shuffle (merge sort by key)
- Reduce function: -> [unique key, output value list]
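The shuffle step between map and reduce can be sketched as a sort by key followed by grouping. This is a single-process illustration, not the distributed merge sort of the real system; the function name `shuffle` and the sample pairs are assumptions made for the example.

```python
from itertools import groupby
from operator import itemgetter

def shuffle(pairs):
    """Sort intermediate (key, value) pairs by key and group the values per unique key."""
    ordered = sorted(pairs, key=itemgetter(0))
    return [(key, [value for _, value in group])
            for key, group in groupby(ordered, key=itemgetter(0))]

intermediate = [("fox", 1), ("brown", 1), ("fox", 1), ("the", 1)]
print(shuffle(intermediate))
# [('brown', [1]), ('fox', [1, 1]), ('the', [1])]
```

Each `(key, values)` entry in the result is what a single reduce call would receive.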

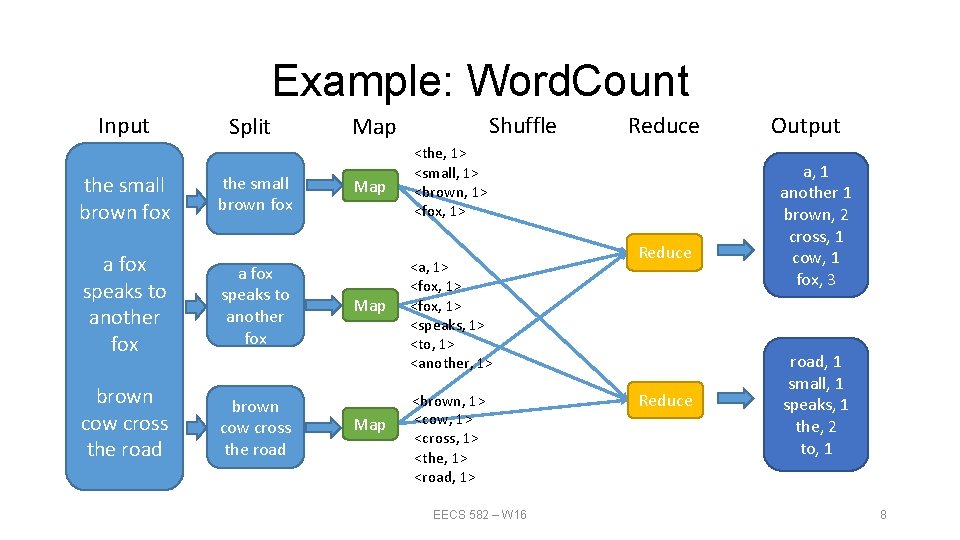

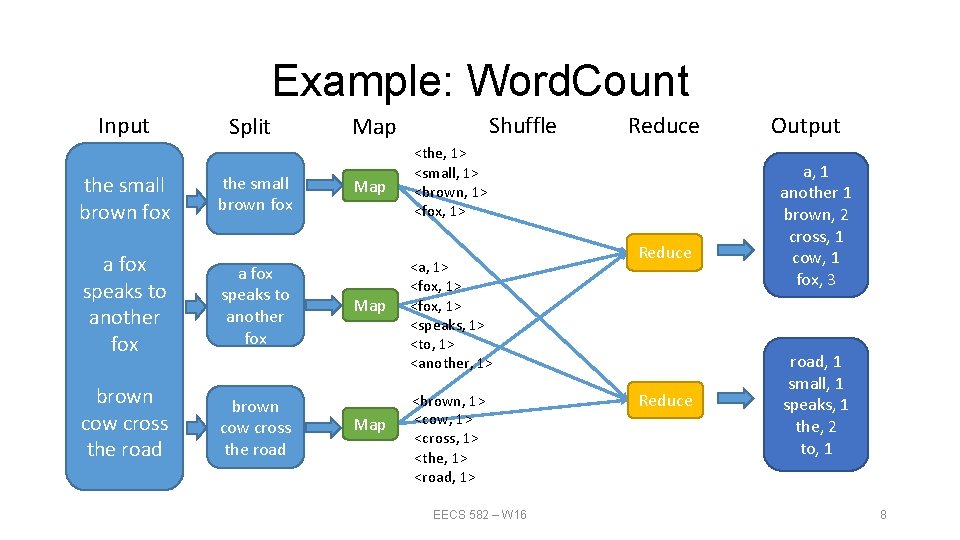

Example: WordCount

- Input, split into three map inputs:
  - the small brown fox
  - a fox speaks to another fox
  - brown cow cross the road
- Map output:
  - <the, 1> <small, 1> <brown, 1> <fox, 1>
  - <a, 1> <fox, 1> <speaks, 1> <to, 1> <another, 1> <fox, 1>
  - <brown, 1> <cow, 1> <cross, 1> <the, 1> <road, 1>
- Shuffle, then Reduce
- Output: a, 1; another, 1; brown, 2; cross, 1; cow, 1; fox, 3; road, 1; small, 1; speaks, 1; the, 2; to, 1
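The WordCount example can be reproduced with a minimal sequential sketch. The names `map_fn`, `reduce_fn`, and `run_word_count` are illustrative assumptions; a real MapReduce library would run the map and reduce calls on many machines.

```python
from collections import defaultdict

def map_fn(_key, line):
    """Emit (word, 1) for every word in one input split."""
    return [(word, 1) for word in line.split()]

def reduce_fn(_word, counts):
    """Sum all counts collected for one word."""
    return [sum(counts)]

def run_word_count(splits):
    # Map phase: one call per split (run sequentially here).
    intermediate = []
    for index, line in enumerate(splits):
        intermediate.extend(map_fn(index, line))
    # Shuffle phase: group values by key.
    grouped = defaultdict(list)
    for word, count in intermediate:
        grouped[word].append(count)
    # Reduce phase: one call per unique key, in sorted key order.
    return {word: reduce_fn(word, grouped[word])[0] for word in sorted(grouped)}

splits = [
    "the small brown fox",
    "a fox speaks to another fox",
    "brown cow cross the road",
]
counts = run_word_count(splits)
print(counts["fox"], counts["the"], counts["brown"])  # 3 2 2
```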

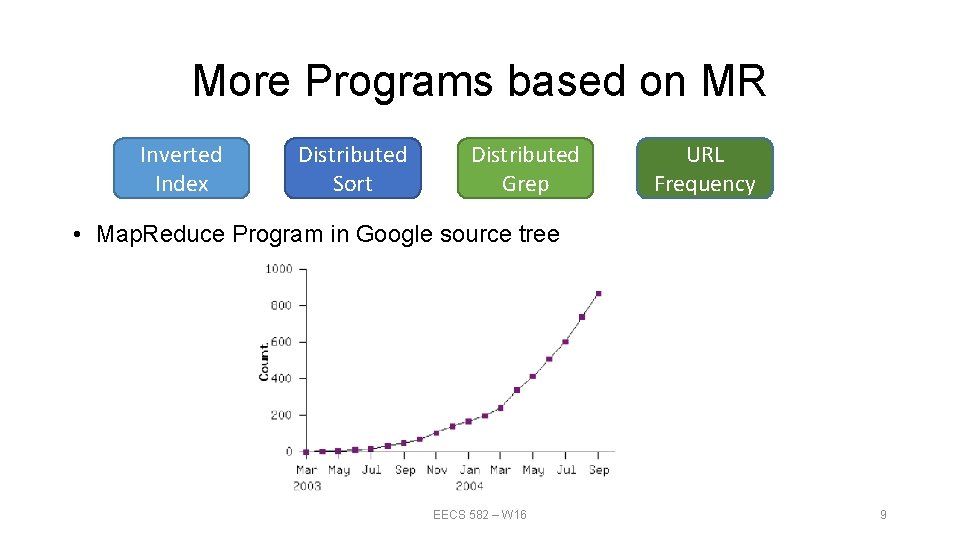

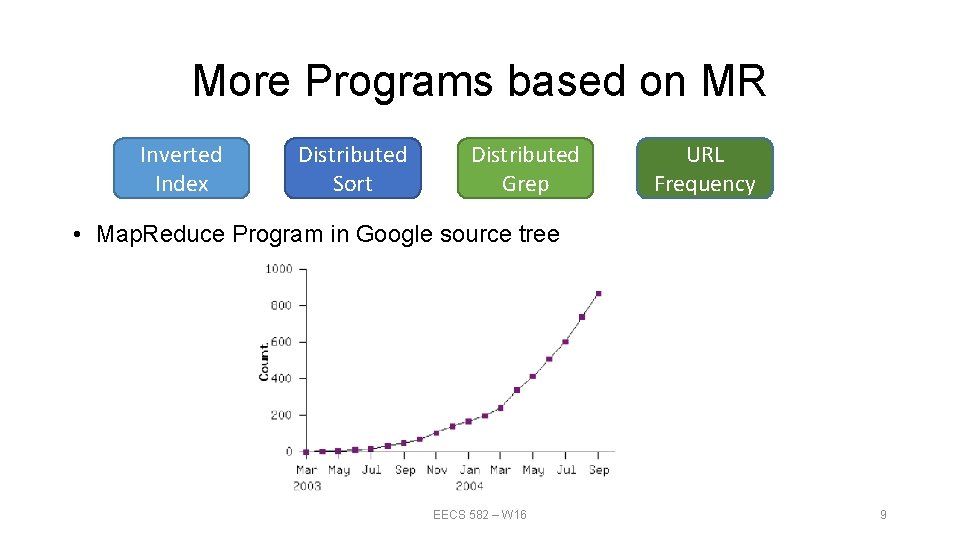

More Programs Based on MapReduce

- Inverted index
- Distributed sort
- Distributed grep
- URL frequency
- Figure: MapReduce programs in the Google source tree
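Two of these programs, inverted index and distributed grep, can be sketched as map/reduce function pairs. The helper `run` is a hypothetical sequential stand-in for the library, and the document IDs, sample text, and default pattern are invented for illustration.

```python
from collections import defaultdict

def run(map_fn, reduce_fn, inputs):
    """Tiny sequential stand-in for the MapReduce library (hypothetical helper)."""
    grouped = defaultdict(list)
    for key, value in inputs:
        for out_key, out_value in map_fn(key, value):
            grouped[out_key].append(out_value)
    return {key: reduce_fn(key, values) for key, values in sorted(grouped.items())}

# Inverted index: map emits (word, document id); reduce emits the sorted id list.
def index_map(doc_id, text):
    return [(word, doc_id) for word in set(text.split())]

def index_reduce(_word, doc_ids):
    return sorted(doc_ids)

# Distributed grep: map emits a line if it contains the pattern; reduce is the identity.
def grep_map(line_no, line, pattern="fox"):
    return [(line_no, line)] if pattern in line else []

def grep_reduce(_line_no, lines):
    return lines

docs = [(1, "the small brown fox"), (2, "brown cow cross the road")]
index = run(index_map, index_reduce, docs)
matches = run(grep_map, grep_reduce, docs)
print(index["brown"])  # [1, 2]
print(matches)         # {1: ['the small brown fox']}
```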

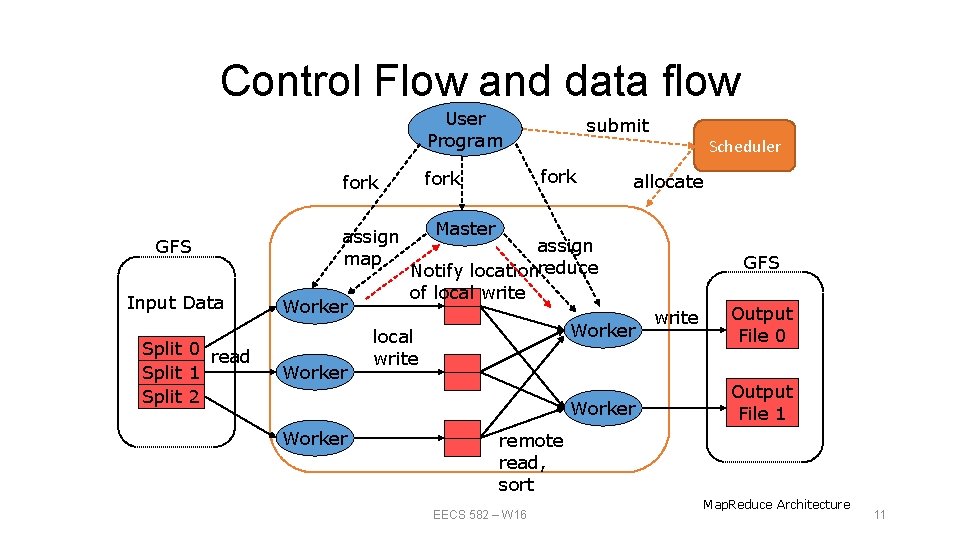

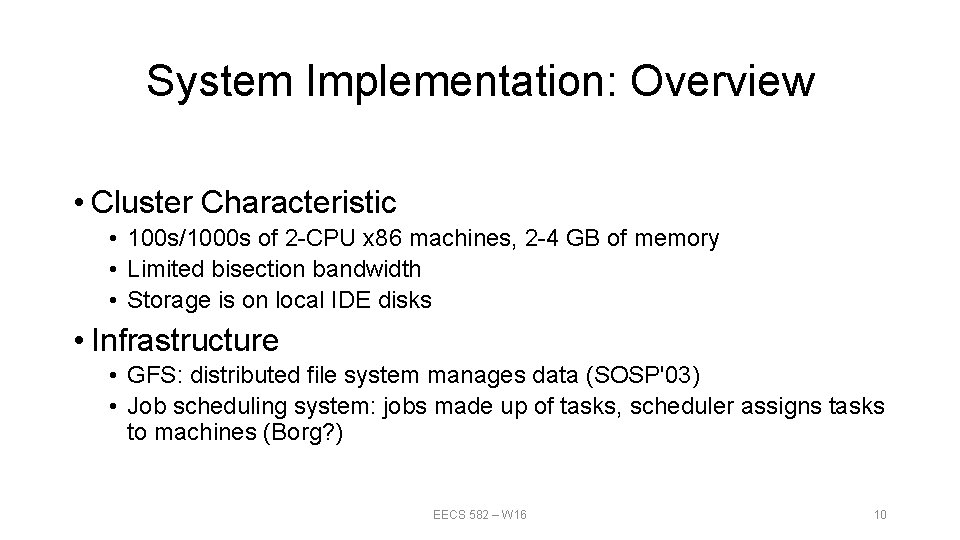

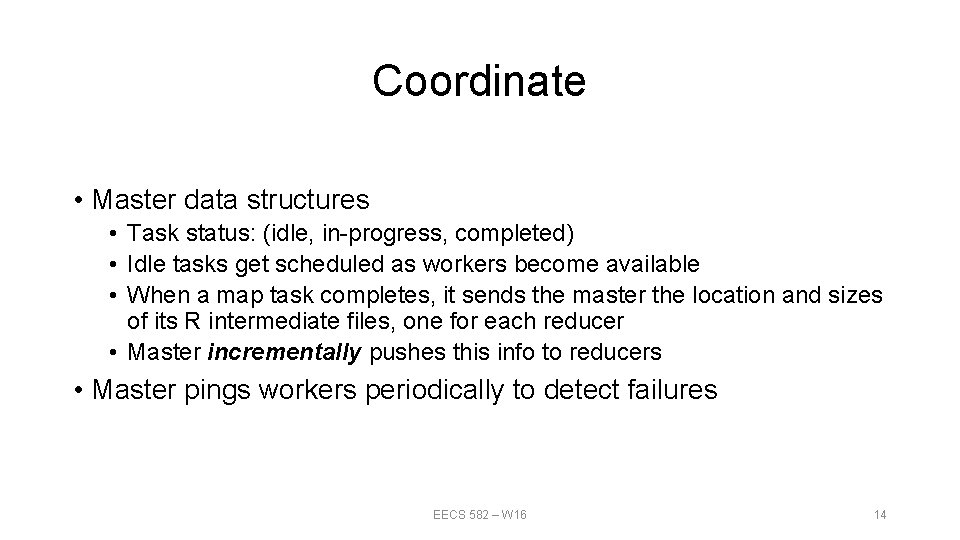

System Implementation: Overview

- Cluster characteristics
  - 100s/1000s of 2-CPU x86 machines, 2-4 GB of memory
  - Limited bisection bandwidth
  - Storage is on local IDE disks
- Infrastructure
  - GFS: distributed file system manages data (SOSP'03)
  - Job scheduling system: jobs are made up of tasks, and the scheduler assigns tasks to machines (Borg?)

Control Flow and Data Flow

- Figure: MapReduce architecture
- Components: User Program, Scheduler, Master, map Workers, reduce Workers, GFS
- Control flow: fork, submit, allocate, assign map, assign reduce, notify location of local write
- Data flow: input data in GFS (Split 0, Split 1, Split 2) is read by map workers, which write locally; reduce workers do a remote read, sort, and write Output File 0 and Output File 1 to GFS

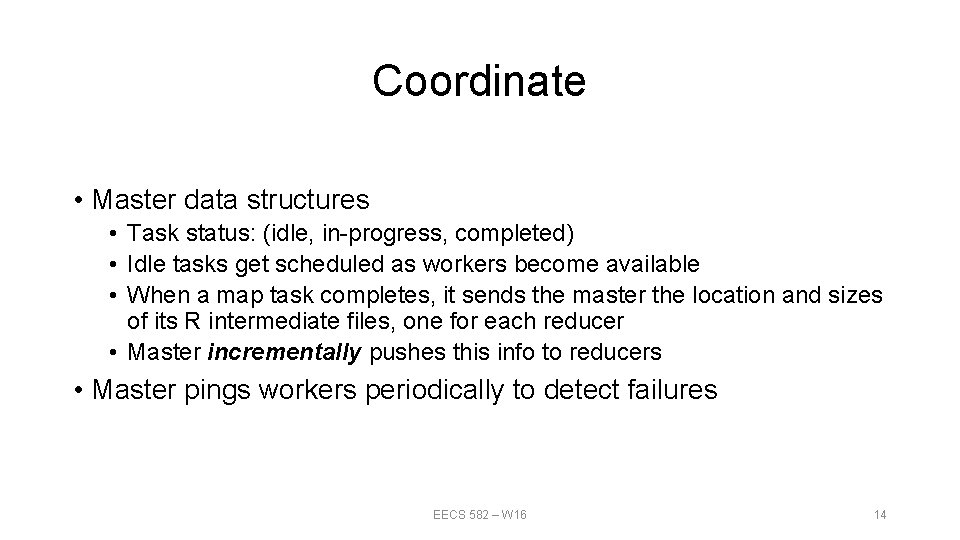

Coordination

- Master data structures
  - Task status: (idle, in-progress, completed)
  - Idle tasks get scheduled as workers become available
  - When a map task completes, it sends the master the locations and sizes of its R intermediate files, one for each reducer
  - Master incrementally pushes this info to reducers
- Master pings workers periodically to detect failures
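The master's bookkeeping can be sketched with a small task table. This is a minimal illustration, assuming invented names (`State`, `Task`, `Master`) and a made-up file path; it leaves out pushing locations to reducers and pinging workers.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional

class State(Enum):
    IDLE = "idle"
    IN_PROGRESS = "in-progress"
    COMPLETED = "completed"

@dataclass
class Task:
    task_id: int
    kind: str                       # "map" or "reduce"
    state: State = State.IDLE
    worker: Optional[str] = None    # machine running (or that ran) the task
    # For completed map tasks: reduce partition -> (file location, size in bytes).
    outputs: dict = field(default_factory=dict)

class Master:
    def __init__(self, tasks):
        self.tasks = {(t.kind, t.task_id): t for t in tasks}

    def assign(self, worker):
        """Hand the next idle task to a worker that became available."""
        for task in self.tasks.values():
            if task.state is State.IDLE:
                task.state, task.worker = State.IN_PROGRESS, worker
                return task
        return None

    def map_completed(self, task_id, outputs):
        """Record the locations and sizes of the R intermediate files."""
        task = self.tasks[("map", task_id)]
        task.state, task.outputs = State.COMPLETED, outputs

master = Master([Task(0, "map"), Task(0, "reduce")])
task = master.assign("worker-a")
master.map_completed(0, {0: ("worker-a:/tmp/m0-r0", 1024)})  # hypothetical path
print(task.state)  # State.COMPLETED
```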

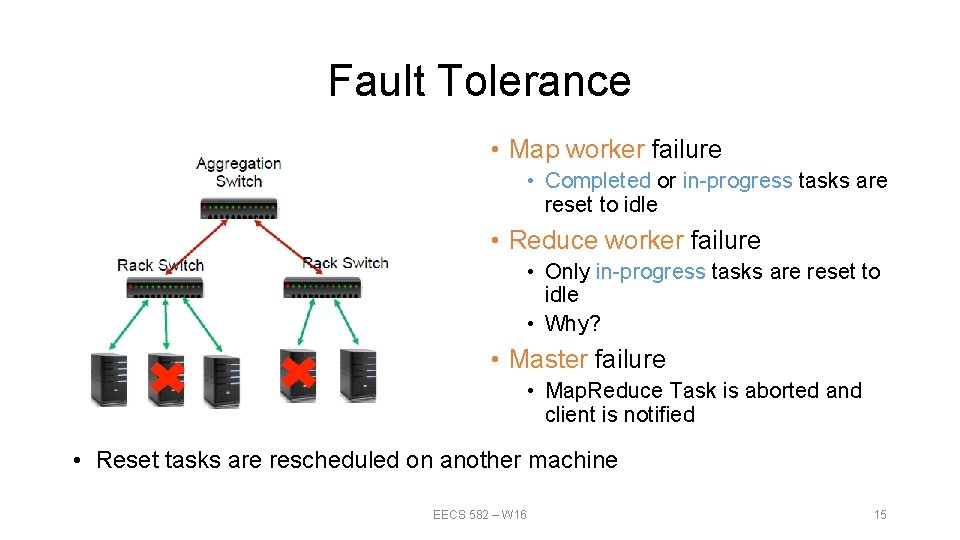

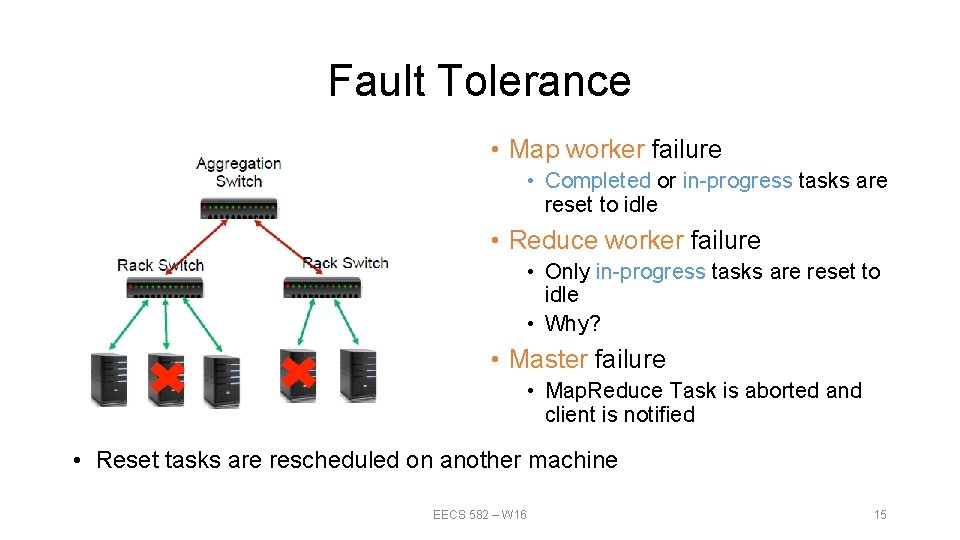

Fault Tolerance

- Map worker failure
  - Completed or in-progress tasks are reset to idle
- Reduce worker failure
  - Only in-progress tasks are reset to idle
  - Why? Completed map output is stored on the failed machine's local disk and becomes unreachable, while completed reduce output is already stored in GFS
- Master failure
  - The MapReduce task is aborted and the client is notified
- Reset tasks are rescheduled on another machine
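The reset rules for worker failure can be sketched as a single function. The dictionary layout and the name `handle_worker_failure` are assumptions for illustration. The asymmetry follows from where output lives: completed map output sits on the failed machine's local disk, while completed reduce output is in GFS.

```python
def handle_worker_failure(tasks, failed_worker):
    """Reset tasks of a failed worker to idle so they can be rescheduled.

    tasks: list of dicts with keys "kind", "state", and "worker".
    """
    reset = []
    for task in tasks:
        if task["worker"] != failed_worker:
            continue
        # Completed map tasks must rerun because their output is lost.
        lost_map_output = task["kind"] == "map" and task["state"] == "completed"
        if task["state"] == "in-progress" or lost_map_output:
            task["state"], task["worker"] = "idle", None
            reset.append(task)
    return reset

tasks = [
    {"kind": "map", "state": "completed", "worker": "w1"},
    {"kind": "map", "state": "in-progress", "worker": "w1"},
    {"kind": "reduce", "state": "completed", "worker": "w1"},
    {"kind": "reduce", "state": "in-progress", "worker": "w1"},
    {"kind": "map", "state": "completed", "worker": "w2"},
]
reset = handle_worker_failure(tasks, "w1")
print(len(reset))  # 3
```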

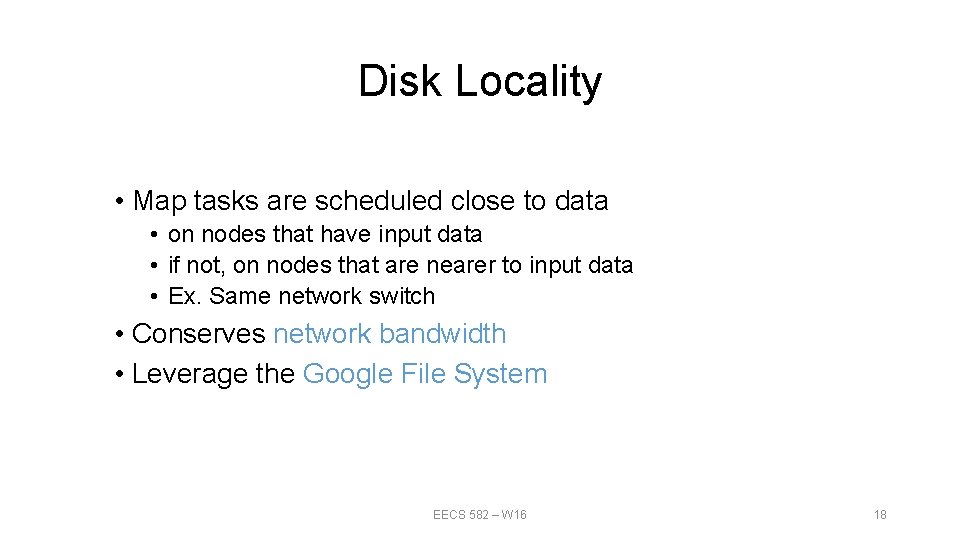

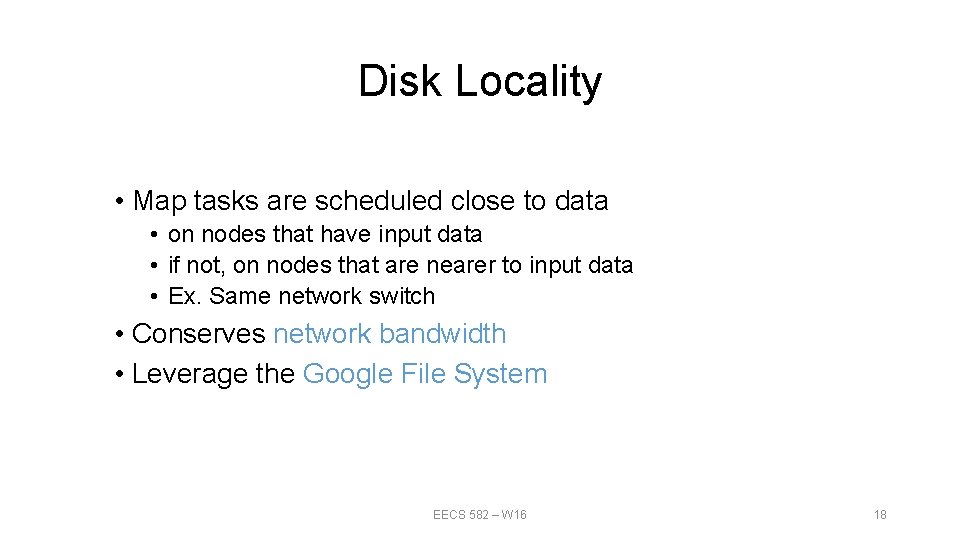

Disk Locality

- Map tasks are scheduled close to the data
  - On nodes that hold the input data
  - If not, on nodes that are nearer to the input data
    - Ex. same network switch
- Conserves network bandwidth
- Leverages the Google File System
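The locality preference can be sketched as a three-tier choice. The function `pick_worker`, the machine names, and the `switch_of` topology map are hypothetical; the real scheduler's policy is more involved than this.

```python
def pick_worker(replica_hosts, idle_workers, switch_of):
    """Choose an idle worker for a map task, preferring data locality.

    replica_hosts: machines holding a replica of the input split.
    idle_workers:  machines currently free to run a task.
    switch_of:     machine -> network switch name (hypothetical topology map).
    """
    # 1. Best: a worker that stores the input data on its own disk.
    for worker in idle_workers:
        if worker in replica_hosts:
            return worker
    # 2. Next best: a worker on the same network switch as a replica.
    replica_switches = {switch_of[host] for host in replica_hosts}
    for worker in idle_workers:
        if switch_of[worker] in replica_switches:
            return worker
    # 3. Otherwise: any idle worker (input is read over the network).
    return idle_workers[0] if idle_workers else None

switch_of = {"m1": "s1", "m2": "s1", "m3": "s2", "m4": "s2"}
print(pick_worker({"m1"}, ["m3", "m2"], switch_of))  # m2
print(pick_worker({"m1"}, ["m1", "m2"], switch_of))  # m1
print(pick_worker({"m1"}, ["m3", "m4"], switch_of))  # m3
```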

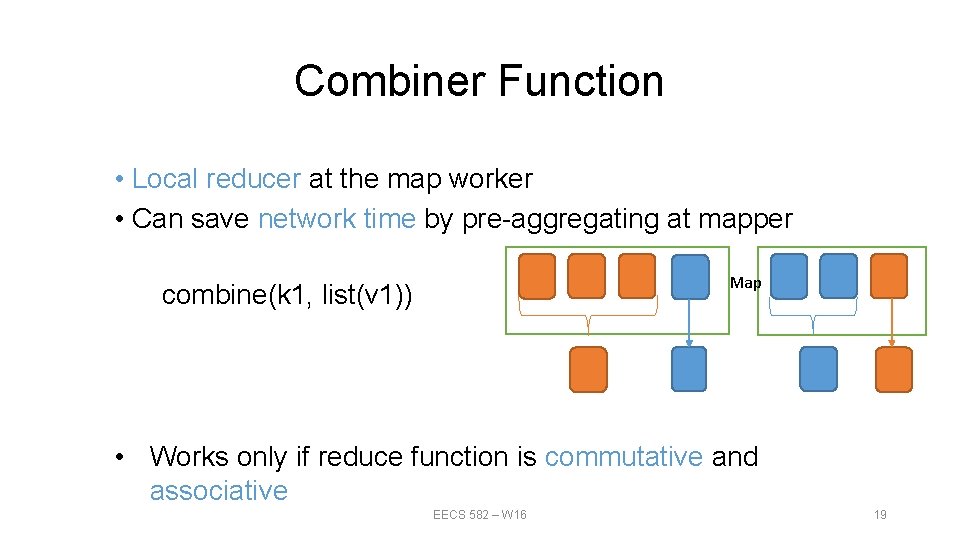

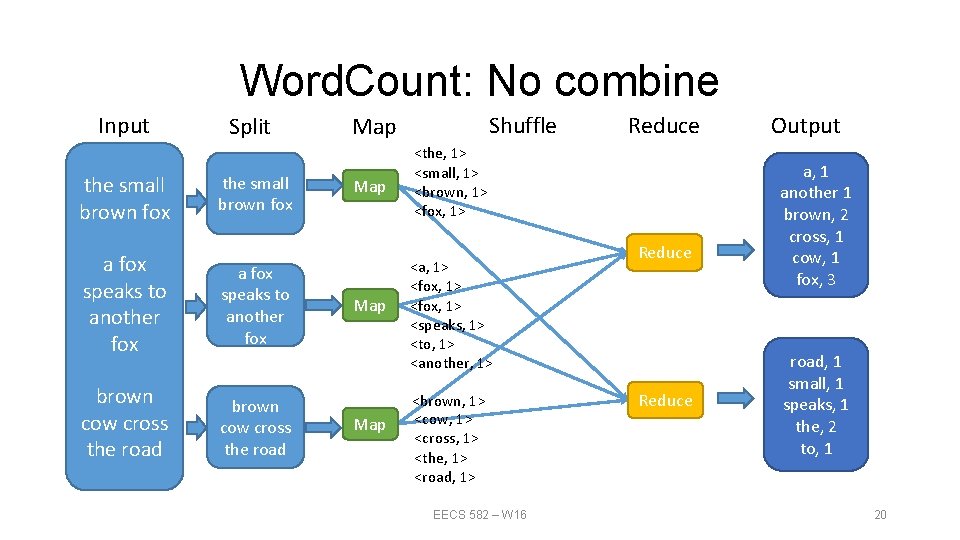

Combiner Function

- Local reducer at the map worker
- Can save network time by pre-aggregating at the mapper
  - `combine(k1, list(v1))`
- Works only if the reduce function is commutative and associative
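A small sketch shows why the reduce function must be commutative and associative before it can double as a combiner. The helper `combine_then_reduce` and the sample values are assumptions: summing gives the same answer with or without local pre-aggregation, but taking a mean does not.

```python
def combine_then_reduce(partitions, fn):
    """Apply fn to each mapper's values (combiner), then to the partial results (reducer)."""
    return fn([fn(values) for values in partitions])

def mean(values):
    return sum(values) / len(values)

partitions = [[1, 2, 3], [10]]  # values for one key on two map workers
all_values = [v for part in partitions for v in part]

print(combine_then_reduce(partitions, sum), sum(all_values))    # 16 16
print(combine_then_reduce(partitions, mean), mean(all_values))  # 6.0 4.0
```

A mean can still be pre-aggregated if the combiner emits (sum, count) pairs and the final division happens only in the reducer.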

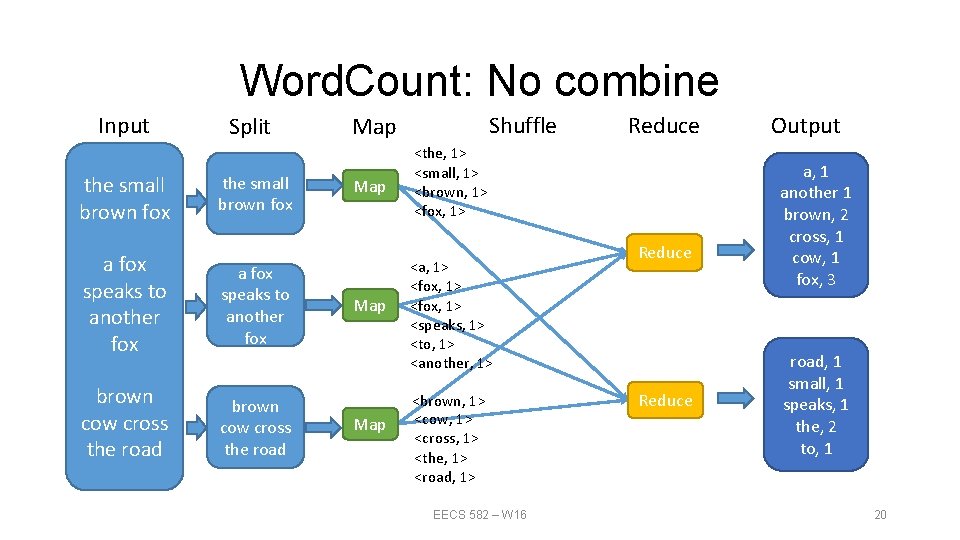

WordCount: No Combine

- Input, split into three map inputs:
  - the small brown fox
  - a fox speaks to another fox
  - brown cow cross the road
- Map output:
  - <the, 1> <small, 1> <brown, 1> <fox, 1>
  - <a, 1> <fox, 1> <speaks, 1> <to, 1> <another, 1> <fox, 1>
  - <brown, 1> <cow, 1> <cross, 1> <the, 1> <road, 1>
- Shuffle, then Reduce
- Output: a, 1; another, 1; brown, 2; cross, 1; cow, 1; fox, 3; road, 1; small, 1; speaks, 1; the, 2; to, 1

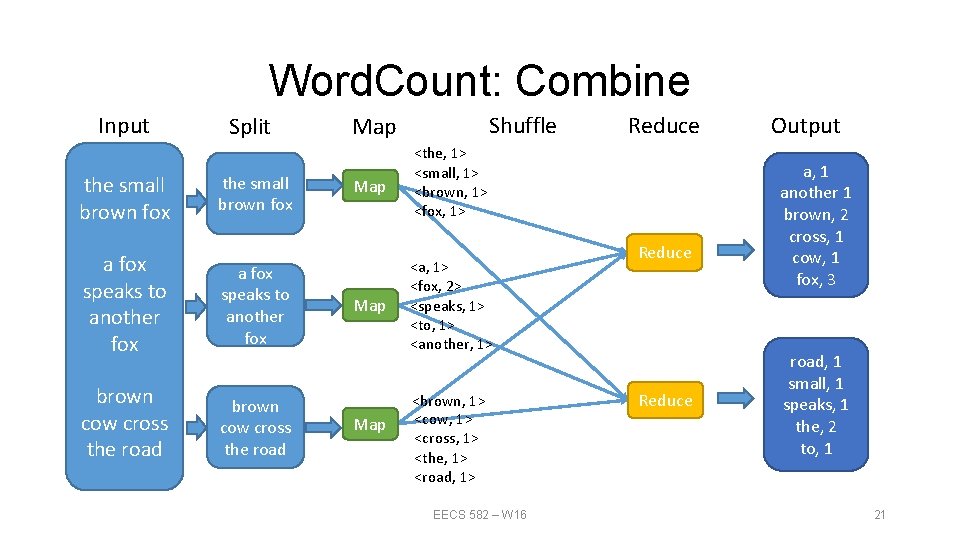

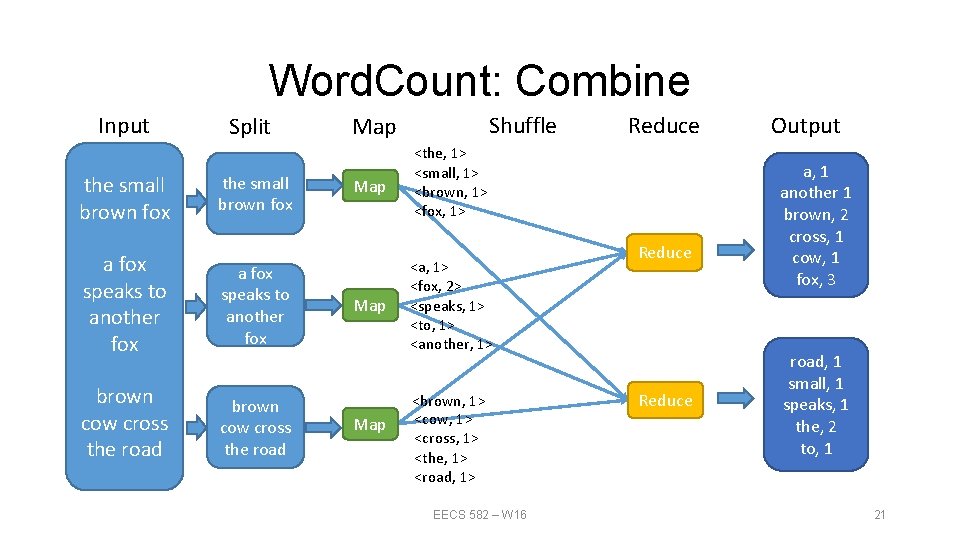

WordCount: Combine

- Input, split into three map inputs:
  - the small brown fox
  - a fox speaks to another fox
  - brown cow cross the road
- Map output after the combiner:
  - <the, 1> <small, 1> <brown, 1> <fox, 1>
  - <a, 1> <fox, 2> <speaks, 1> <to, 1> <another, 1>
  - <brown, 1> <cow, 1> <cross, 1> <the, 1> <road, 1>
- Shuffle, then Reduce
- Output: a, 1; another, 1; brown, 2; cross, 1; cow, 1; fox, 3; road, 1; small, 1; speaks, 1; the, 2; to, 1
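The saving from the combiner can be counted directly on this example. The function `map_pairs` is an illustrative assumption; it returns the pairs one map task would send to the shuffle, with or without local pre-aggregation.

```python
from collections import Counter

def map_pairs(line, use_combiner):
    """Return the (word, count) pairs one map task sends to the shuffle."""
    words = line.split()
    if use_combiner:
        # Pre-aggregate locally: one pair per distinct word in this split.
        return list(Counter(words).items())
    return [(word, 1) for word in words]

splits = [
    "the small brown fox",
    "a fox speaks to another fox",
    "brown cow cross the road",
]

without = [pair for line in splits for pair in map_pairs(line, use_combiner=False)]
combined = [pair for line in splits for pair in map_pairs(line, use_combiner=True)]

print(len(without), len(combined))  # 15 14
print(("fox", 2) in combined)       # True
```

The gain is a single pair here, but it grows with the number of repeated words per split.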

More Features

- Skipping bad records
- Input and output types
- Local execution
- Status information
- Counters
- etc.

Conclusion

- Inexpensive commodity machines can be the basis of a large-scale reliable system
- MapReduce hides all the messy details of distributed computing
- MapReduce provides a simple parallel programming interface

Lessons Learned

- General design
  - General abstraction -> solves many problems -> success
  - Simple interface -> fast adoption -> success
- Distributed system design
  - Network is a scarce resource
    - Locality matters
    - Pre-aggregate whenever possible
  - Master-worker architecture is simple yet powerful

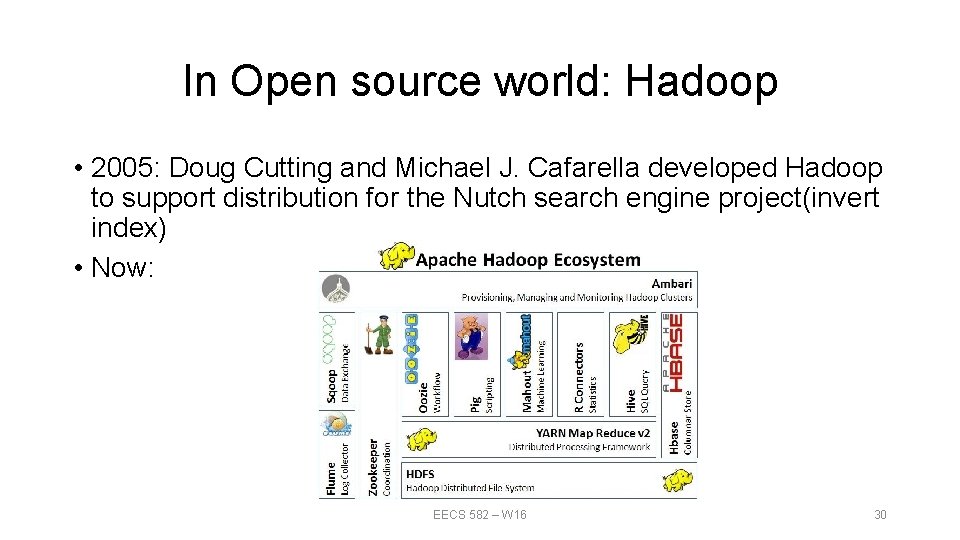

Influence

- MapReduce is one of the most cited systems papers: 16,648 citations as of 03/08/2016
- Together with the Google File System and Bigtable, it inspired the Big Data era
- What happened after MapReduce?

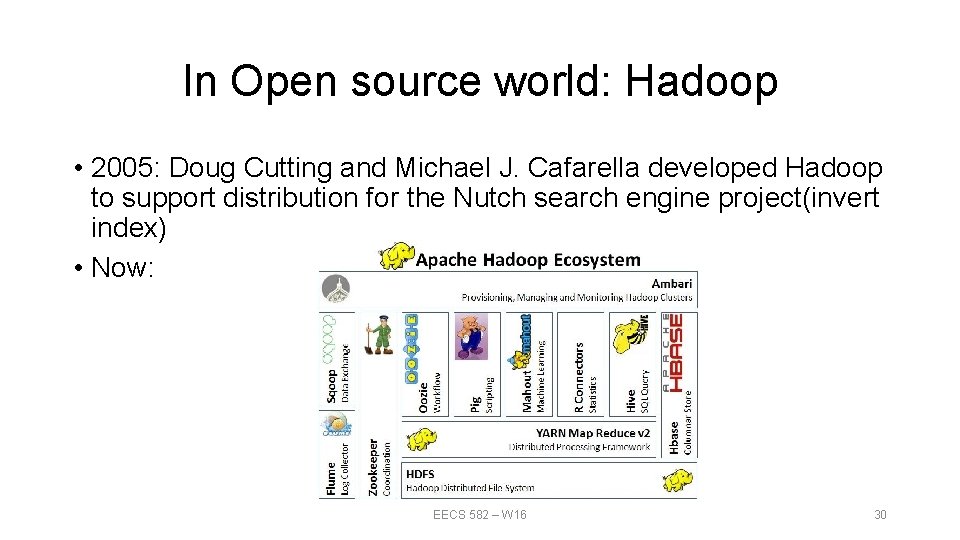

In the Open-Source World: Hadoop

- 2005: Doug Cutting and Michael J. Cafarella developed Hadoop to support distribution for the Nutch search engine project (inverted index)
- Now:

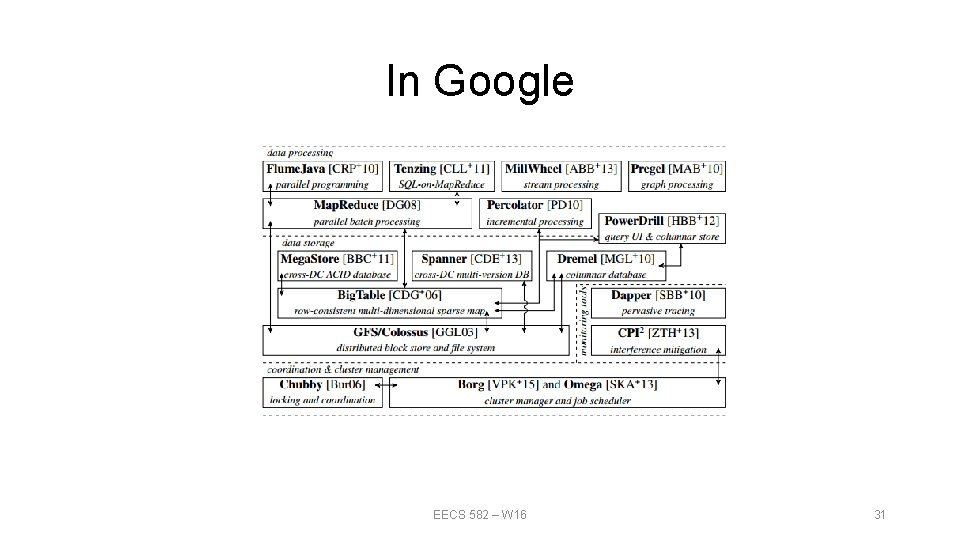

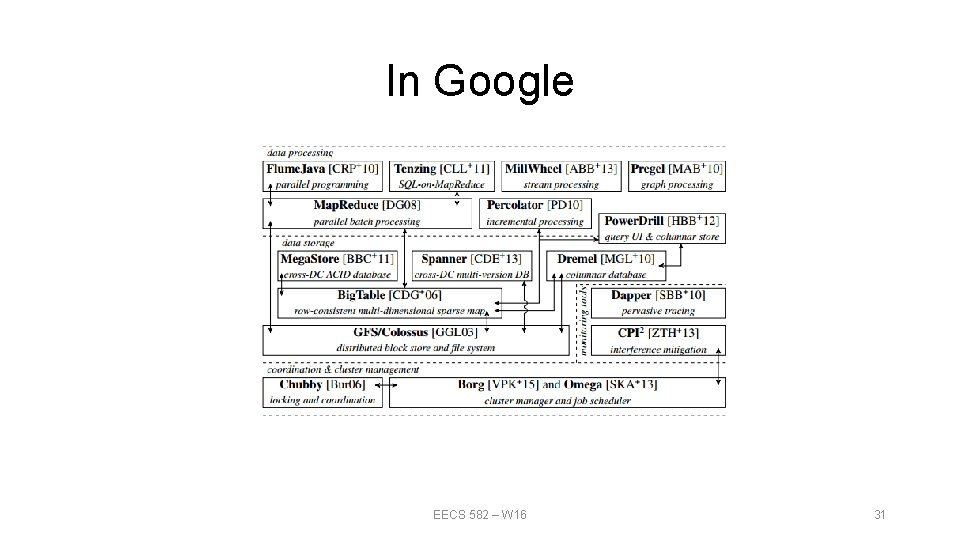

At Google

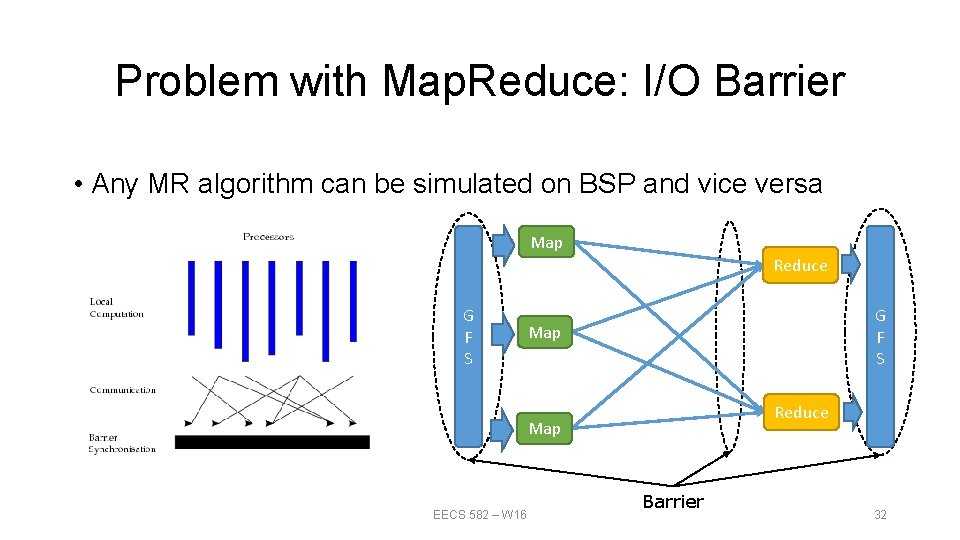

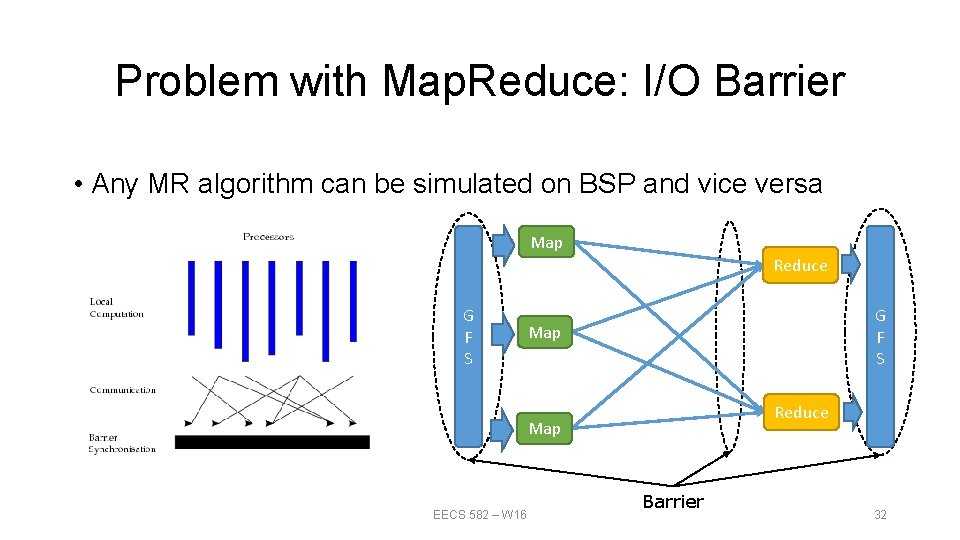

Problem with MapReduce: I/O Barrier

- Any MR algorithm can be simulated on BSP (bulk synchronous parallel), and vice versa
- Figure: Map and Reduce stages with GFS and Barrier labels

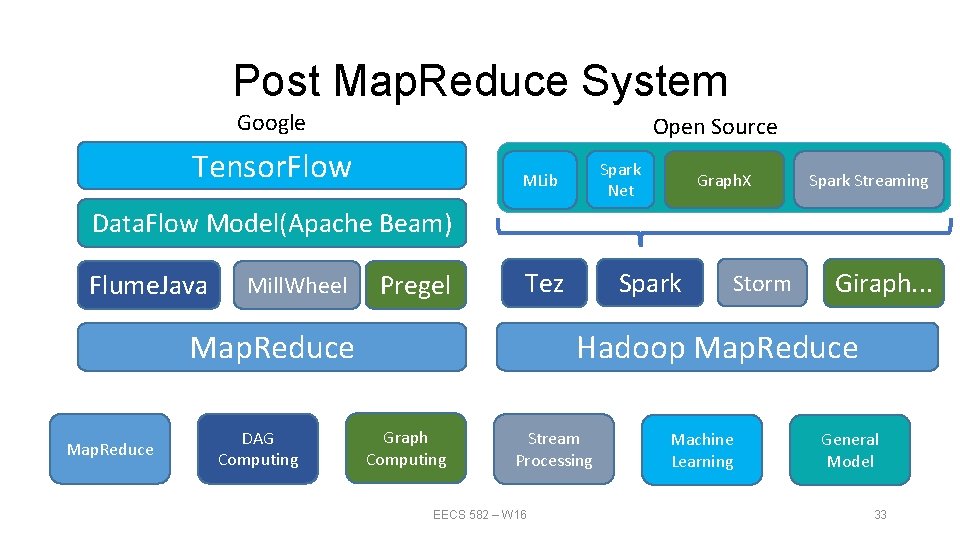

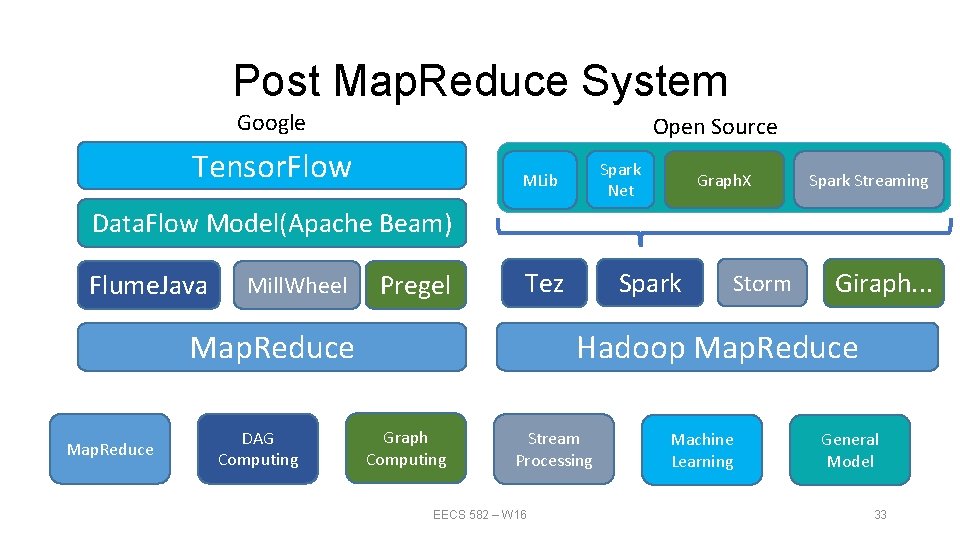

Post-MapReduce Systems

- Machine learning: TensorFlow (Google); SparkNet, MLlib (open source)
- General model: DataFlow Model (Google; Apache Beam in open source)
- DAG computing: FlumeJava (Google); Spark, Tez (open source)
- Graph computing: Pregel (Google); Giraph, GraphX (open source)
- Stream processing: MillWheel (Google); Storm, Spark Streaming (open source)
- Open-source MapReduce: Hadoop MapReduce

Questions?

References

- MapReduce Architecture: http://cecs.wright.edu/~tkprasad/courses/cs707/L06MapReduce.ppt/
- MapReduce Presentation: http://research.google.com/archive/mapreduce-osdi04-slides/
- MapReduce Presentation: http://web.eecs.umich.edu/~mozafari/fall2015/eecs584/presentations/lecture15-a.pdf/
- Operating system support for warehouse-scale computing: https://www.cl.cam.ac.uk/~ms705/pub/thesis-submitted.pdf/
- Apache Ecosystem Pic: http://blog.agroknow.com/?cat=1
- MapReduce: http://static.googleusercontent.com/media/research.google.com/en//archive/mapreduceosdi04.pdf/
- FlumeJava: http://pages.cs.wisc.edu/~akella/CS838/F12/838CloudPapers/FlumeJava.pdf/
- MillWheel: http://www.vldb.org/pvldb/vol6/p1033-akidau.pdf/
- Pregel: http://web.stanford.edu/class/cs347/reading/pregel.pdf/
- Giraph: http://giraph.apache.org/
- Spark: http://spark.apache.org/
- Tez: https://tez.apache.org/
- DataFlow: http://www.vldb.org/pvldb/vol8/p1792-Akidau.pdf/
- TensorFlow: https://www.tensorflow.org/
- Apache Beam: http://incubator.apache.org/projects/beam.html
- SparkNet: https://github.com/amplab/SparkNet
- Caffe on Spark: http://yahoohadoop.tumblr.com/post/129872361846/large-scaledistributed-deep-learning-on-hadoop