Traffic flow prediction and minimization of traffic congestion

- Slides: 47

Traffic flow prediction and minimization of traffic congestion using the Adaboost algorithm with Random Forest as a weak learner
(Hebrew subtitle, translated: Prediction of congestion states and minimization of traffic pressure by means of a learning system, the Adaboost algorithm with Random Forest as a weak learner, used for predicting and identifying traffic problems in an urban area)
by Guy Leshem
Joint work with Prof. Ya’acov Ritov, Department of Statistics, The Hebrew University of Jerusalem, Israel
9/15/2020

Outline

- Introduction
- Motivation
- Methods
- Results
- Conclusion
- Future work

Part I Introduction

Traffic in urban road network

- Road transportation is a critical link between all the other modes of transportation and their proper functioning.
- Signalized intersections are a critical element of an urban road transportation system.
- Traffic congestion in urban areas causes vehicular delays (which increase the total travel time through an urban road network). This reduces the speed, reliability and cost-effectiveness of the transportation system and increases air and noise pollution.

Part II Motivation

Our Motivation

- The first motivation for this research is to check whether heavy traffic in an urban area can be predicted with high accuracy from traffic-related data.
- The main goal of this research is to plan and build a learning system for traffic flow management and control in an urban area that predicts traffic flow problems, and to use these predictions to minimize traffic congestion.

Part III Methods

Definition of Machine learning

- Machine learning is concerned with the development of algorithms and techniques that allow computers to "learn". Inductive machine learning methods create computer programs by extracting rules and patterns out of massive data sets.
- Every discrete sample comes with a label, and the goal of machine learning is to predict the labels of new samples that the algorithm did not meet during the learning process. The learning algorithm receives partial feedback on its performance during the learning process, and it needs to conclude which decisions bring it success and which bring failure.

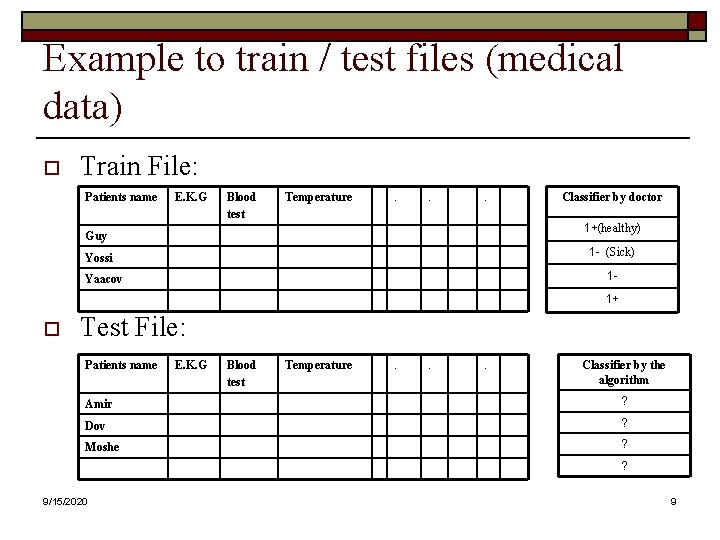

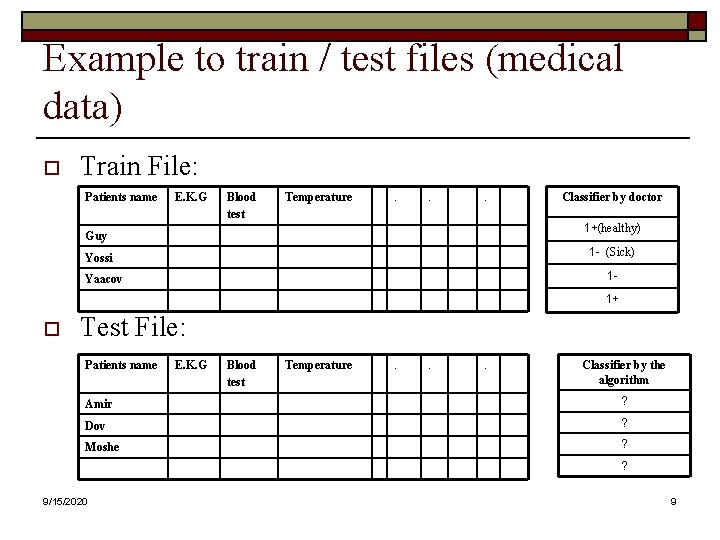

Example of train / test files (medical data)

- Train file: the columns are patient name, E.K.G., blood test, temperature, ..., and the classification given by a doctor (+1 = healthy, -1 = sick). Example patients: Guy, Yossi, Yaacov.
- Test file: the same columns, except that the classification is produced by the algorithm and is unknown in advance. Example patients: Amir (?), Dov (?), Moshe (?).

Part III - A System Part

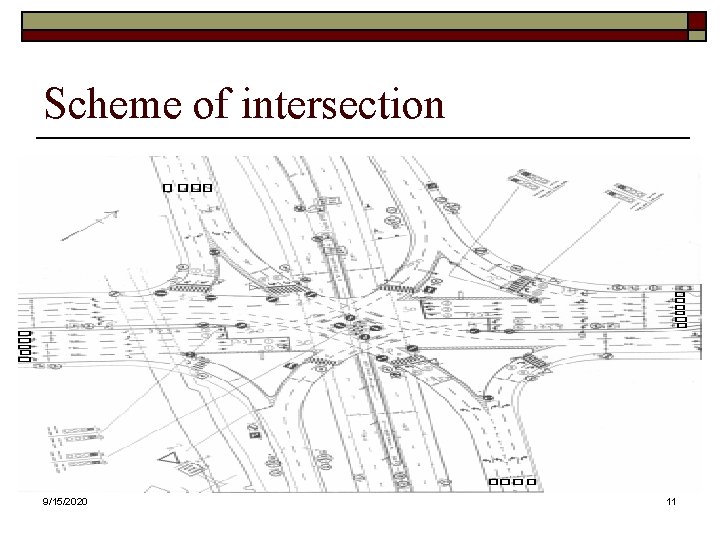

Scheme of intersection

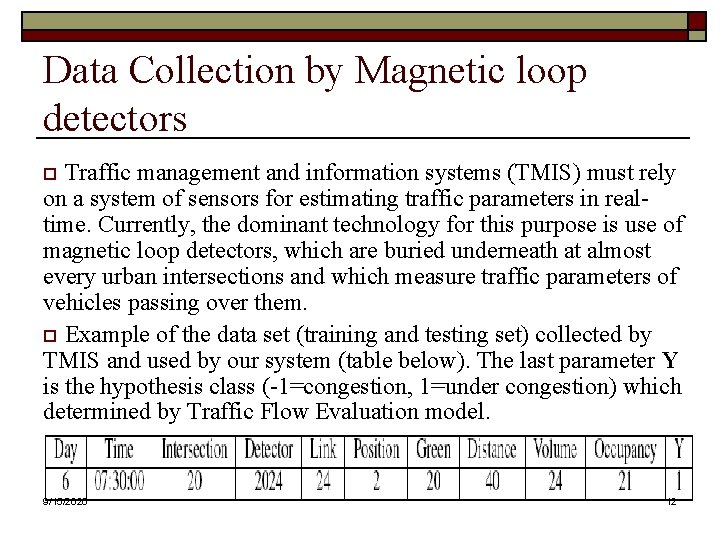

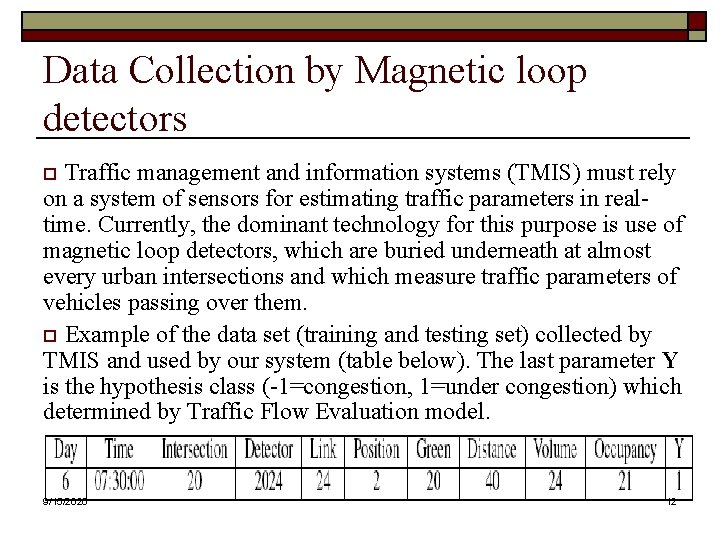

Data Collection by Magnetic Loop Detectors

- Traffic management and information systems (TMIS) must rely on a system of sensors for estimating traffic parameters in real time. Currently, the dominant technology for this purpose is magnetic loop detectors, which are buried under the road at almost every urban intersection and measure the traffic parameters of vehicles passing over them.
- Example of the data set (training and testing set) collected by TMIS and used by our system (table below). The last parameter, Y, is the hypothesis class (-1 = congestion, 1 = under congestion), which is determined by the Traffic Flow Evaluation model.

Data Collection by a Real-time Computer Vision System for Measuring Traffic Parameters

Video monitoring technology is an alternative and/or an addition to the magnetic loop detectors.

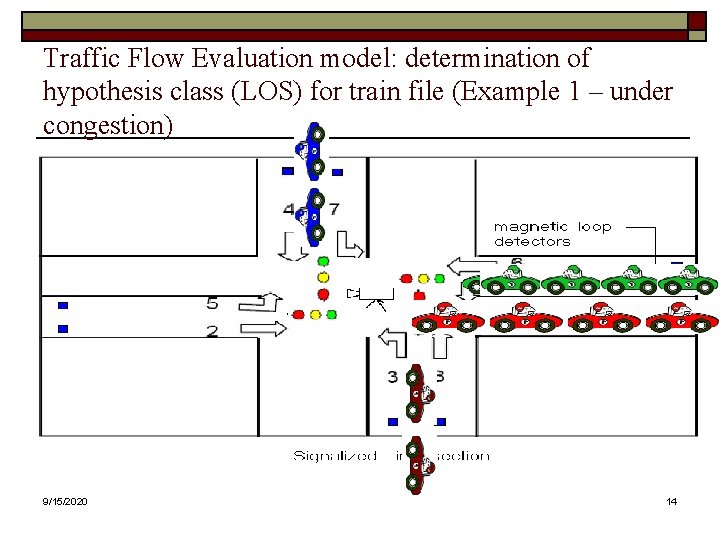

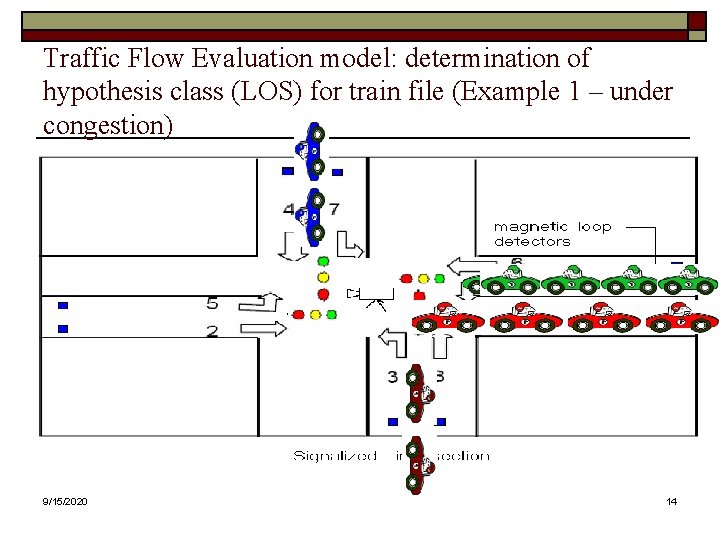

Traffic Flow Evaluation model: determination of the hypothesis class (LOS) for the train file (Example 1: under congestion)

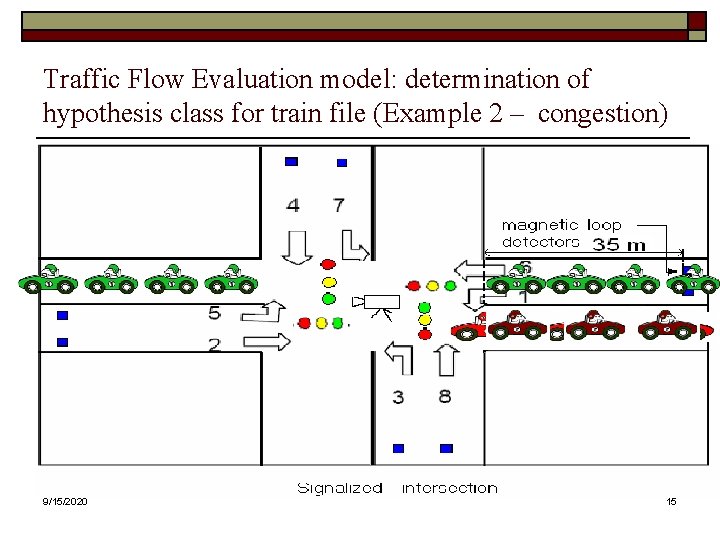

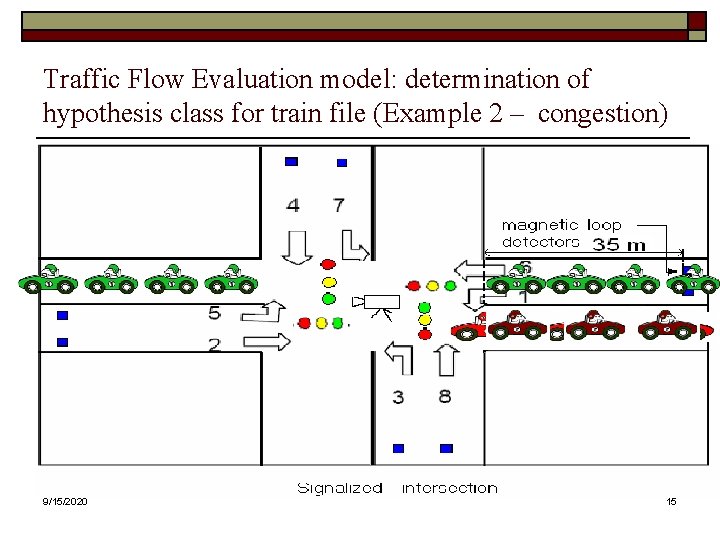

Traffic Flow Evaluation model: determination of the hypothesis class for the train file (Example 2: congestion)

Part III - B Algorithmic part

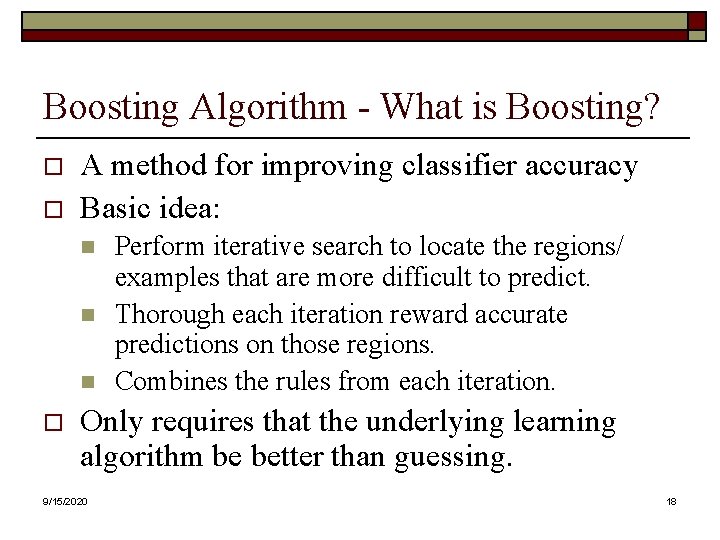

Boosting Algorithm - What is Boosting?

- A method for improving classifier accuracy.
- Basic idea:
  - Perform an iterative search to locate the regions/examples that are more difficult to predict.
  - Through each iteration, reward accurate predictions on those regions.
  - Combine the rules from each iteration.
- Only requires that the underlying learning algorithm be better than guessing.

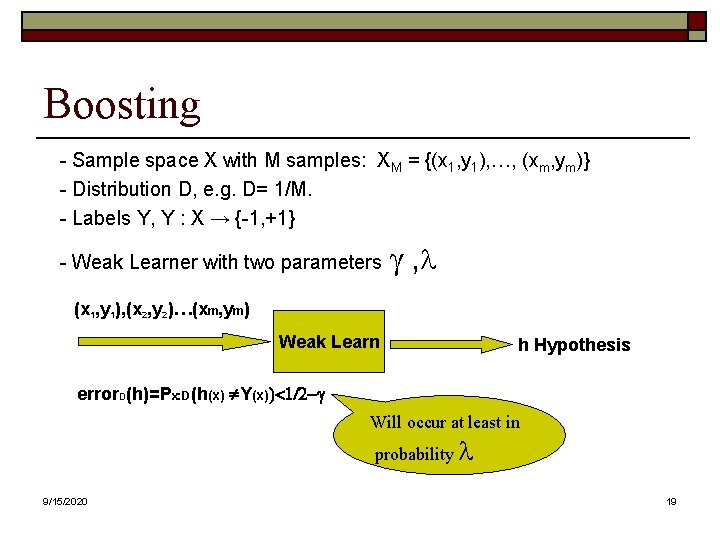

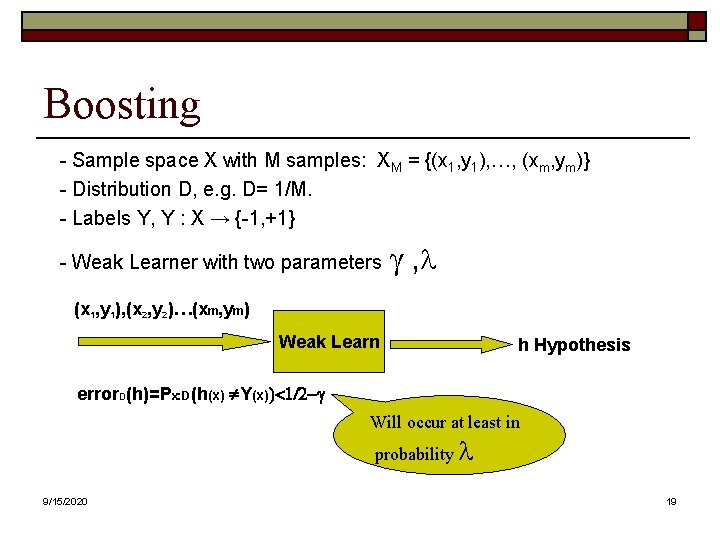

Boosting

- Sample space $X$ with $M$ samples: $X_M = \{(x_1, y_1), \dots, (x_m, y_m)\}$
- Distribution $D$, e.g. $D = 1/M$
- Labels $Y$, $Y : X \to \{-1, +1\}$
- Weak learner with two parameters $\gamma$, $\lambda$: given $(x_1, y_1), (x_2, y_2), \dots, (x_m, y_m)$, Weak Learn returns a hypothesis $h$ with $\mathrm{error}_D(h) = P_{x \sim D}\big(h(x) \neq Y(x)\big) < 1/2 - \gamma$, which occurs with probability at least $\lambda$.

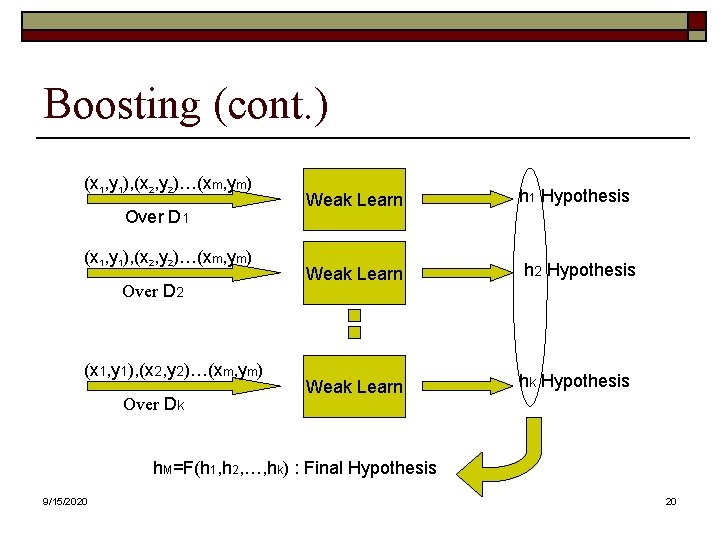

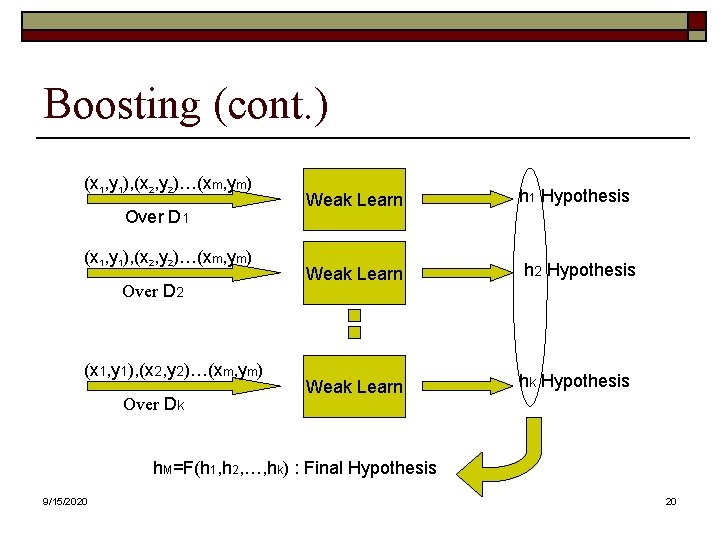

Boosting (cont.)

- The same samples $(x_1, y_1), (x_2, y_2), \dots, (x_m, y_m)$ are passed to Weak Learn under the distributions $D_1, D_2, \dots, D_k$, giving the hypotheses $h_1, h_2, \dots, h_k$.
- Final hypothesis: $h_M = F(h_1, h_2, \dots, h_k)$

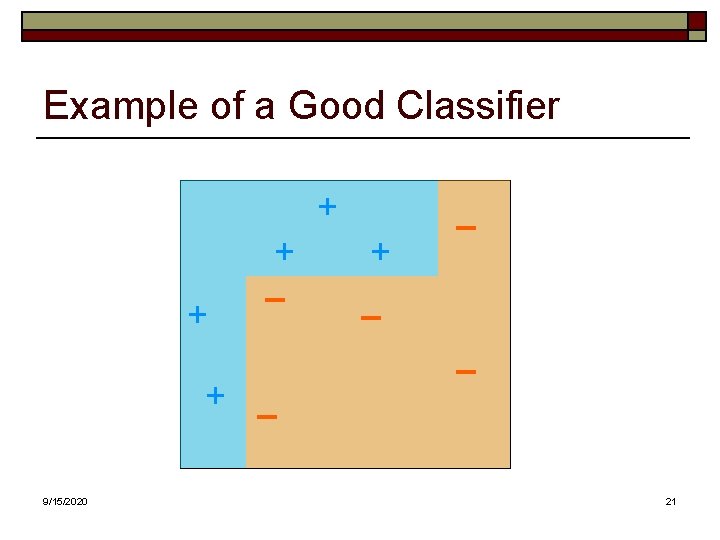

Example of a Good Classifier

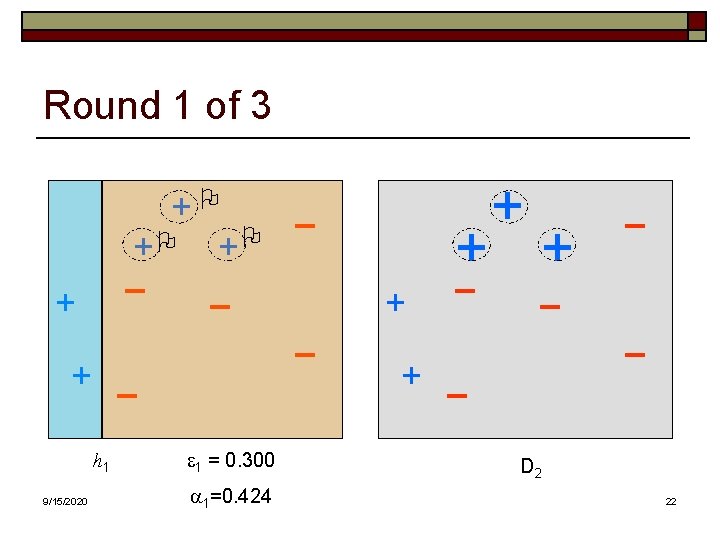

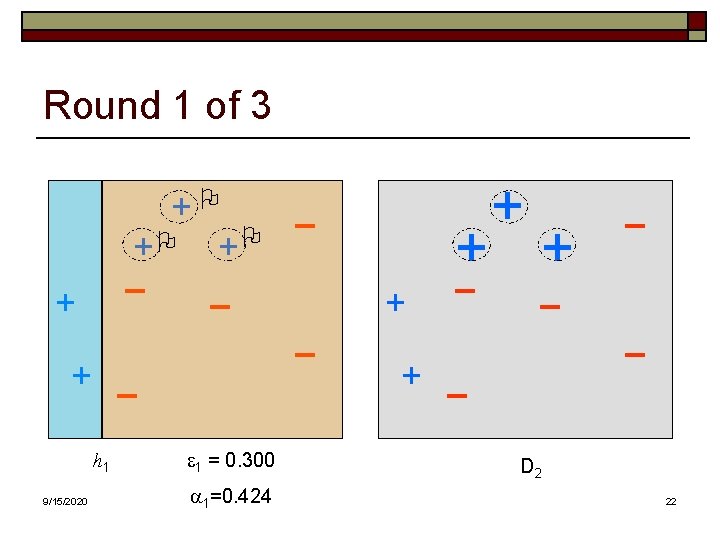

Round 1 of 3: hypothesis $h_1$, $\varepsilon_1 = 0.300$, $\alpha_1 = 0.424$, distribution $D_2$

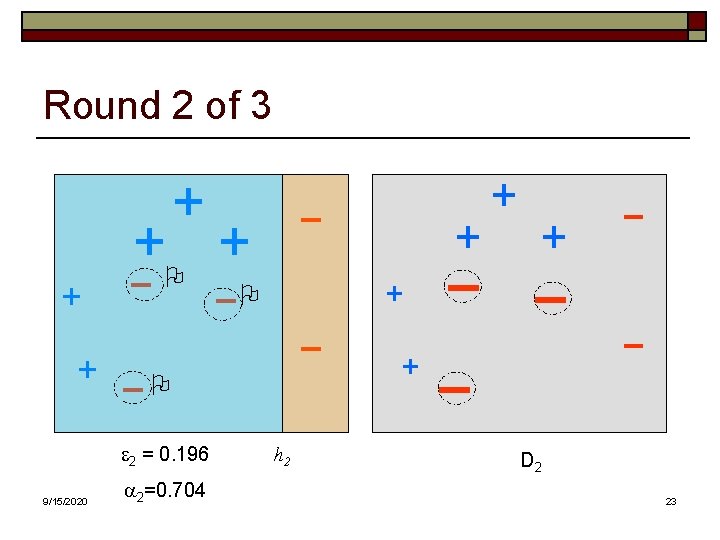

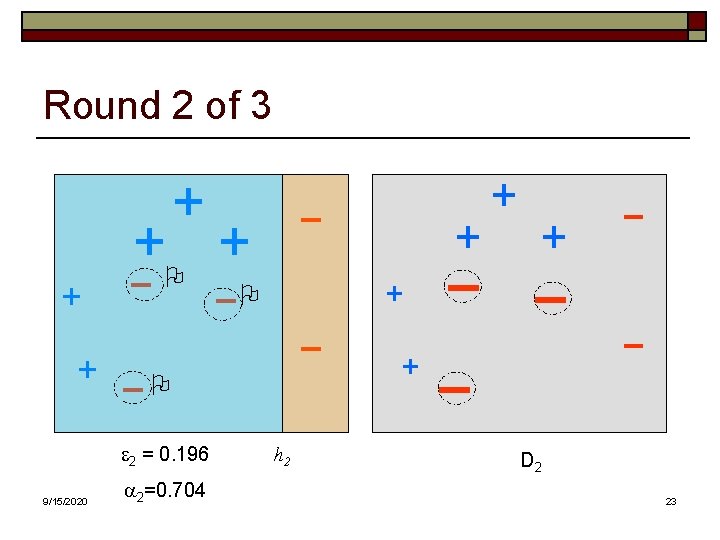

Round 2 of 3: hypothesis $h_2$, $\varepsilon_2 = 0.196$, $\alpha_2 = 0.704$, distribution $D_2$

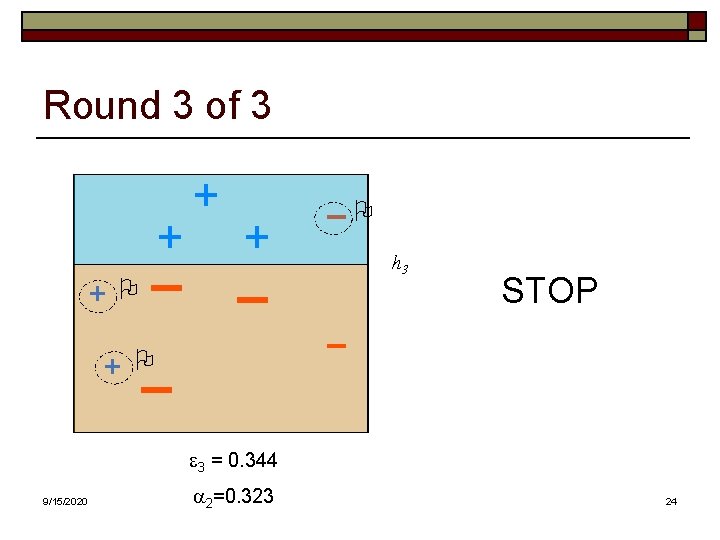

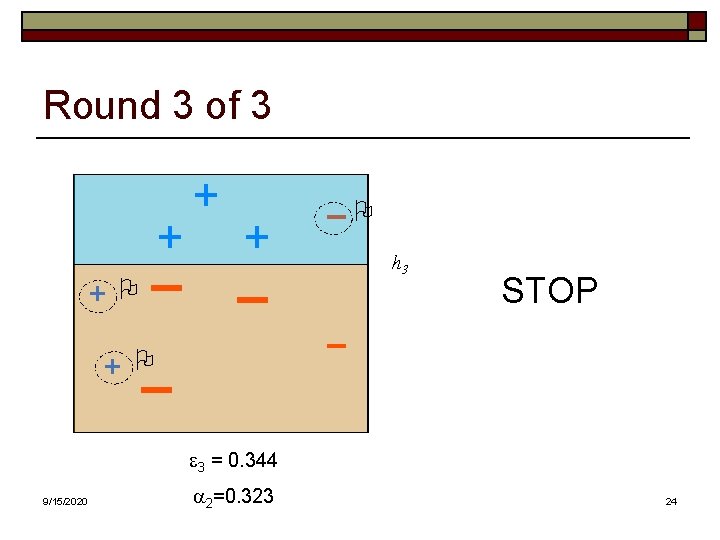

Round 3 of 3: hypothesis $h_3$, $\varepsilon_3 = 0.344$, $\alpha_3 = 0.323$, STOP

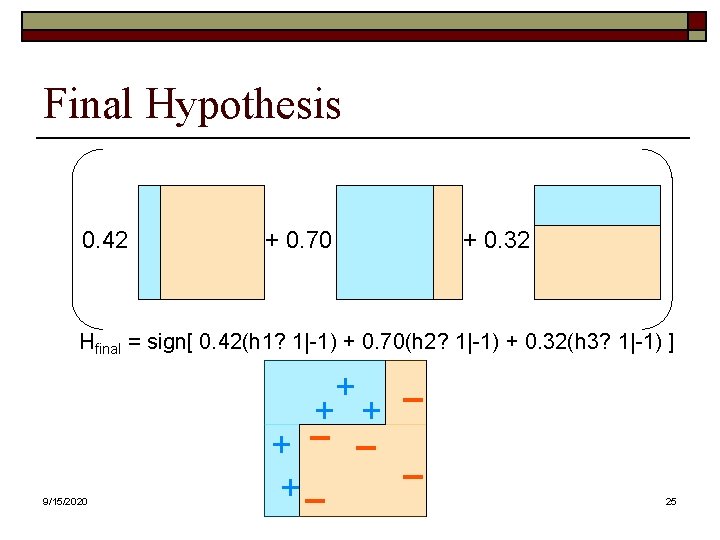

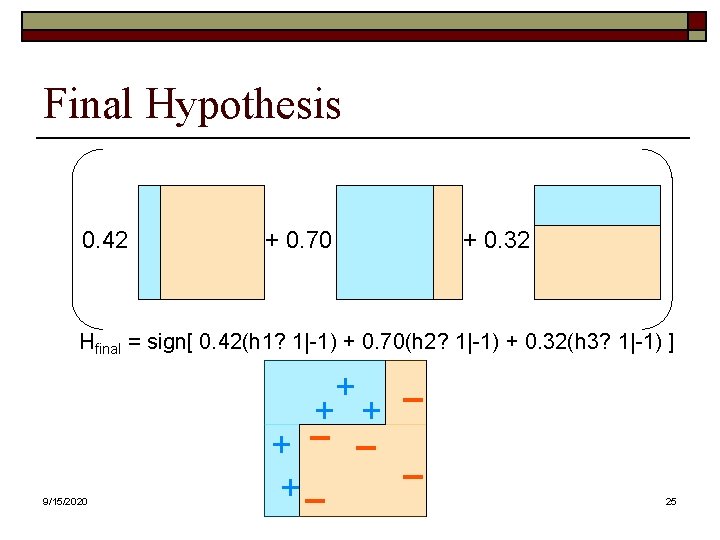

Final Hypothesis

$H_{\text{final}} = \mathrm{sign}\big[\,0.42\,h_1(x) + 0.70\,h_2(x) + 0.32\,h_3(x)\,\big]$, where each $h_t(x)$ is either 1 or -1.
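The hypothesis weights in the three rounds can be checked with a short sketch. It assumes the standard AdaBoost formula $\alpha = \tfrac{1}{2}\ln\frac{1-\varepsilon}{\varepsilon}$, which the slides do not state explicitly. The function names are illustrative only. With this formula, rounds 1 and 3 reproduce the quoted 0.424 and 0.323. Round 2 gives about 0.706 rather than 0.704, presumably because the quoted $\varepsilon_2$ is rounded.

```python
import math

def hypothesis_weight(error):
    """Standard AdaBoost weight: alpha = 0.5 * ln((1 - error) / error)."""
    return 0.5 * math.log((1.0 - error) / error)

def final_hypothesis(alphas, outputs):
    """Sign of the alpha-weighted sum of weak-hypothesis outputs (each +1 or -1)."""
    score = sum(a * h for a, h in zip(alphas, outputs))
    return 1 if score >= 0 else -1

errors = [0.300, 0.196, 0.344]                 # e1, e2, e3 quoted on the slides
alphas = [hypothesis_weight(e) for e in errors]

# h2 carries the largest weight, but h1 and h3 together can still outvote it.
label = final_hypothesis(alphas, [-1, +1, -1])
```

The last line illustrates why the final hypothesis is a weighted vote rather than a copy of the best single round: no one weight exceeds the sum of the other two.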

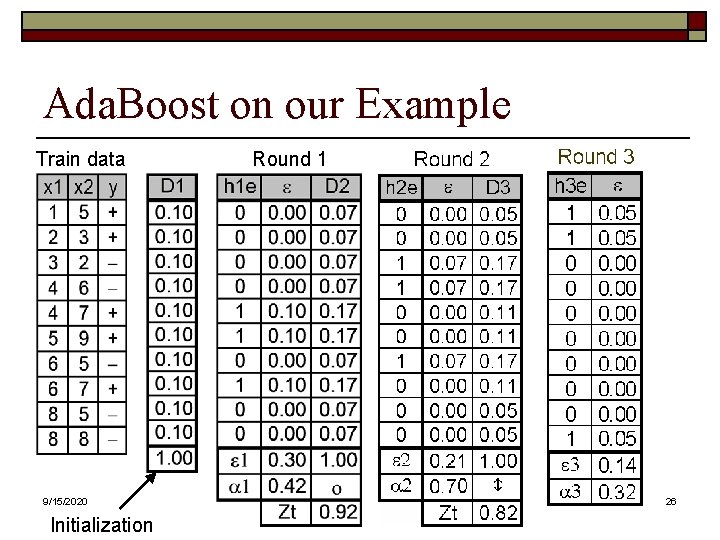

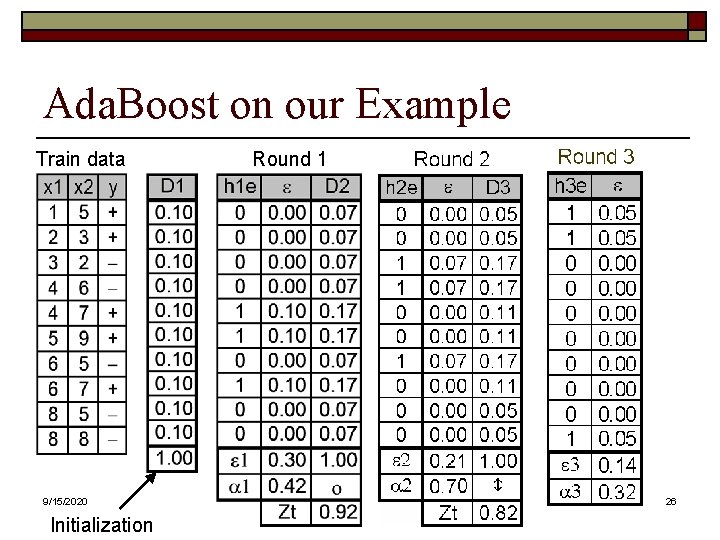

Adaboost on our Example: train data, initialization, Round 1

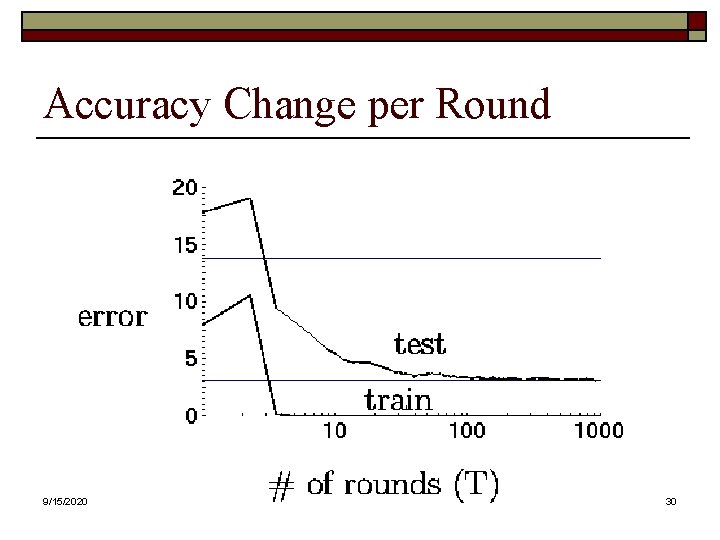

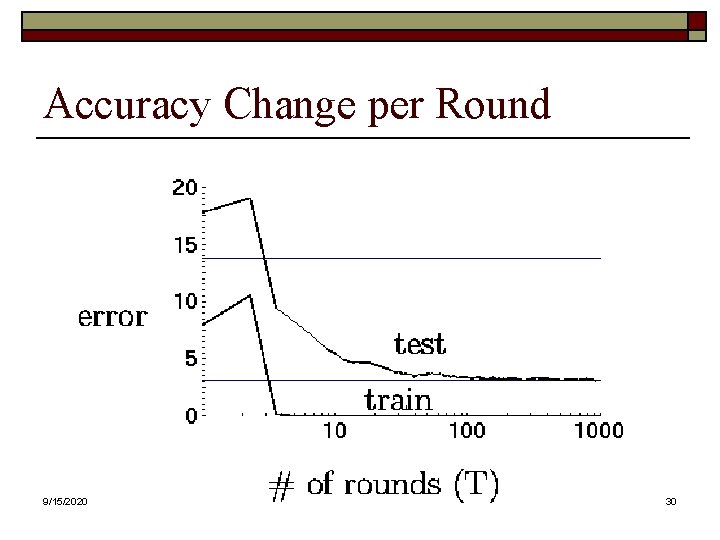

Accuracy Change per Round

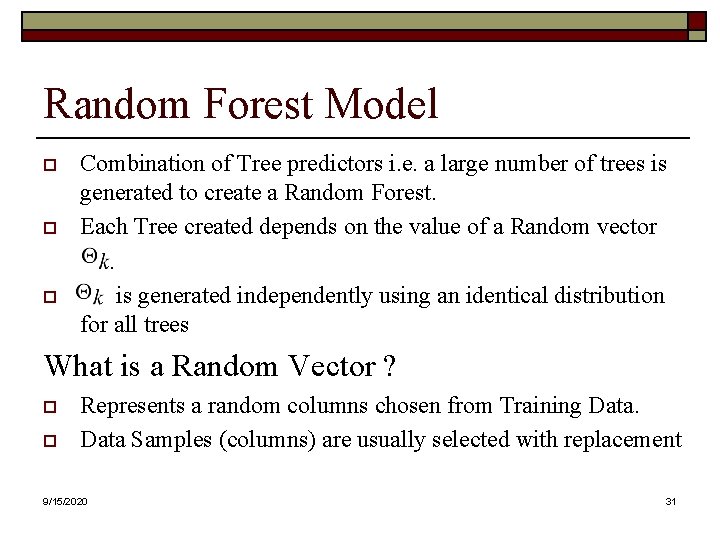

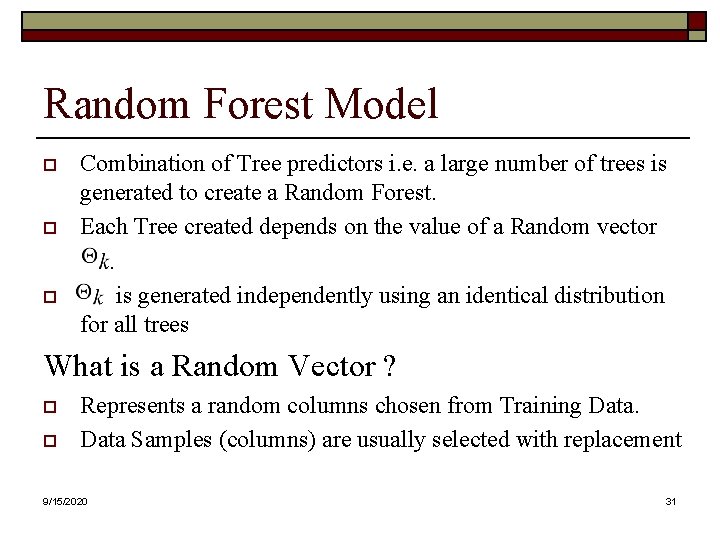

Random Forest Model

- A combination of tree predictors, i.e. a large number of trees is generated to create a Random Forest.
- Each tree created depends on the value of a random vector.
- The random vector is generated independently, using an identical distribution for all trees.

What is a Random Vector?

- It represents random columns chosen from the training data.
- Samples (columns) are usually selected with replacement.

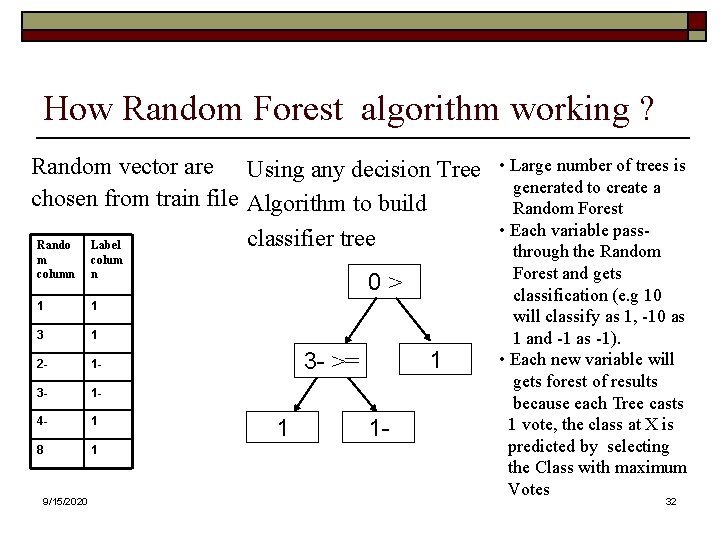

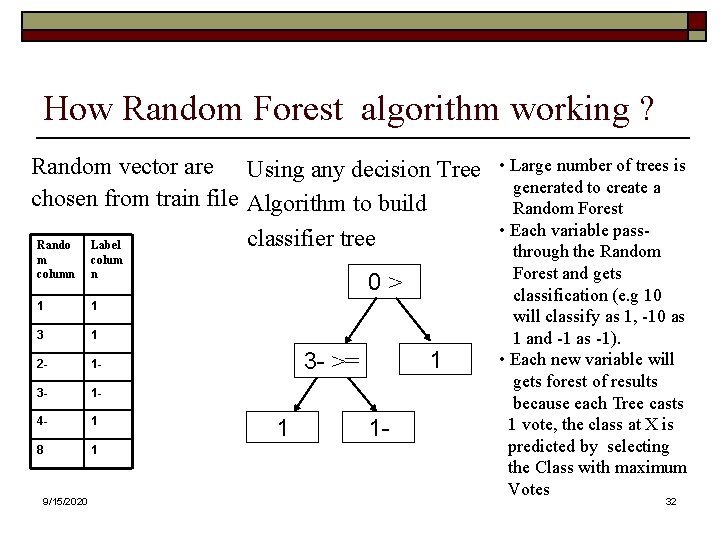

How does the Random Forest algorithm work?

- Random vectors are chosen from the train file (figure: a random column shown next to its label column).
- Any decision tree algorithm is used to build a classifier tree from each random vector.
- A large number of trees is generated to create a Random Forest.
- Each new value passes through the Random Forest and gets a classification (e.g. 10 is classified as 1, -10 as 1 and -1 as -1).
- Each new value gets a forest of results, because each tree casts 1 vote. The class at X is predicted by selecting the class with maximum votes.

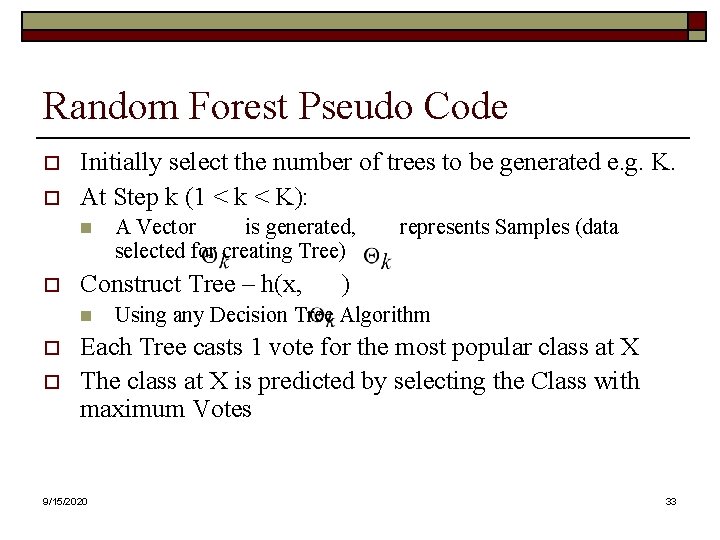

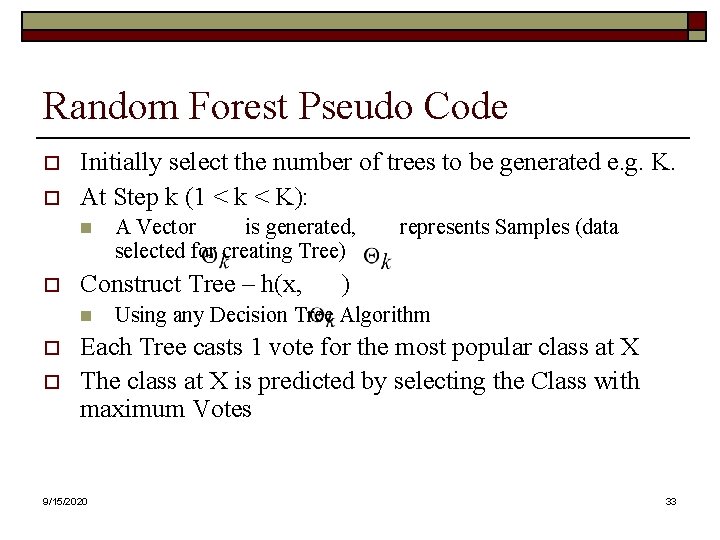

Random Forest Pseudo Code

- Initially select the number of trees to be generated, e.g. K.
- At step k (1 ≤ k ≤ K):
  - A vector $\Theta_k$ is generated; it represents the samples (data selected for creating the tree).
  - Construct tree $h(x, \Theta_k)$ using any decision tree algorithm.
- Each tree casts 1 vote for the most popular class at X.
- The class at X is predicted by selecting the class with maximum votes.
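The pseudo code above can be sketched in a few lines of plain Python. This is a simplified illustration, not the authors' implementation. Each "tree" is a depth-one decision tree (a threshold stump), the random vector is a list of column indices drawn with replacement, and all function names and the toy data are assumptions made for the example.

```python
import random
from collections import Counter

def train_stump(rows, labels, columns):
    """Depth-one tree: best (column, threshold, polarity) among the given columns."""
    best = None
    for column in columns:
        for threshold in sorted({row[column] for row in rows}):
            for polarity in (1, -1):
                errors = sum(
                    (polarity if row[column] >= threshold else -polarity) != label
                    for row, label in zip(rows, labels)
                )
                if best is None or errors < best[0]:
                    best = (errors, column, threshold, polarity)
    return best[1:]

def stump_predict(stump, row):
    column, threshold, polarity = stump
    return polarity if row[column] >= threshold else -polarity

def train_forest(rows, labels, n_trees, n_columns=1, seed=0):
    """Grow n_trees stumps, each on columns drawn at random with replacement."""
    rng = random.Random(seed)
    total_columns = len(rows[0])
    forest = []
    for _ in range(n_trees):
        random_vector = [rng.randrange(total_columns) for _ in range(n_columns)]
        forest.append(train_stump(rows, labels, random_vector))
    return forest

def forest_predict(forest, row):
    """Each tree casts one vote; the class with the most votes wins."""
    votes = Counter(stump_predict(stump, row) for stump in forest)
    return votes.most_common(1)[0][0]

rows = [(-3, -2), (-2, -3), (-1, -1), (1, 2), (2, 1), (3, 3)]   # toy data
labels = [-1, -1, -1, 1, 1, 1]
forest = train_forest(rows, labels, n_trees=7)
```

An odd number of trees avoids tied votes in the two-class case. A fuller implementation would grow deeper trees and might also resample rows.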

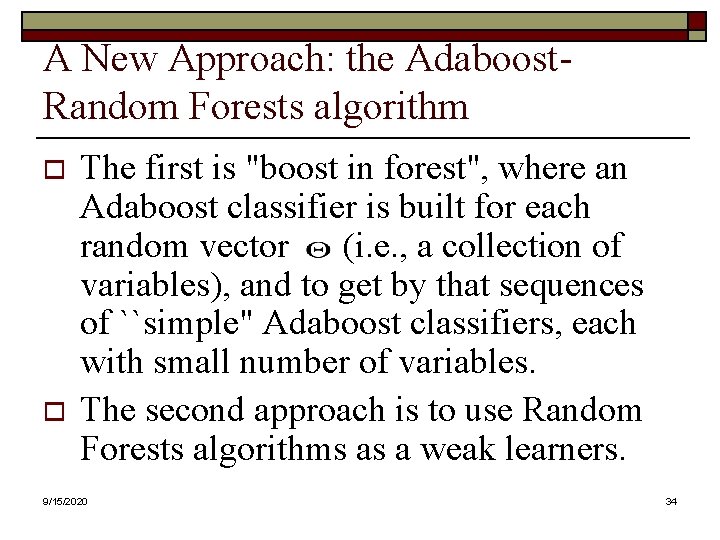

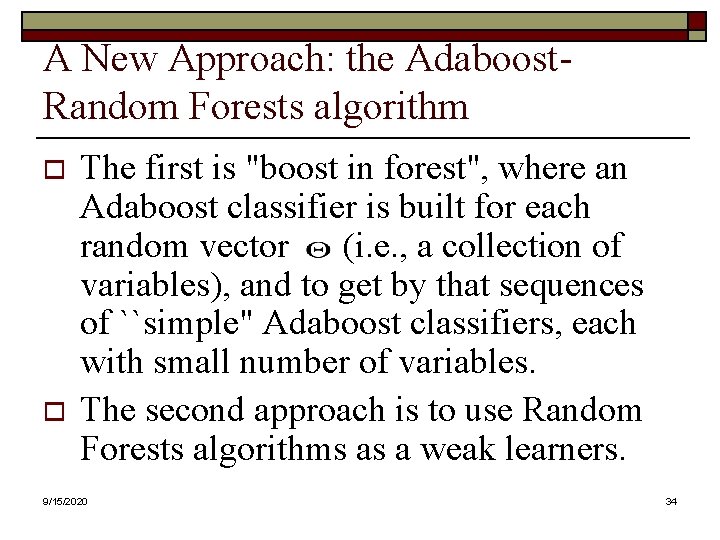

A New Approach: the Adaboost.Random Forests algorithm

- Two approaches are considered. The first is "boost in forest", where an Adaboost classifier is built for each random vector (i.e., a collection of variables). This yields a sequence of "simple" Adaboost classifiers, each with a small number of variables.
- The second approach is to use the Random Forests algorithm as the weak learner.

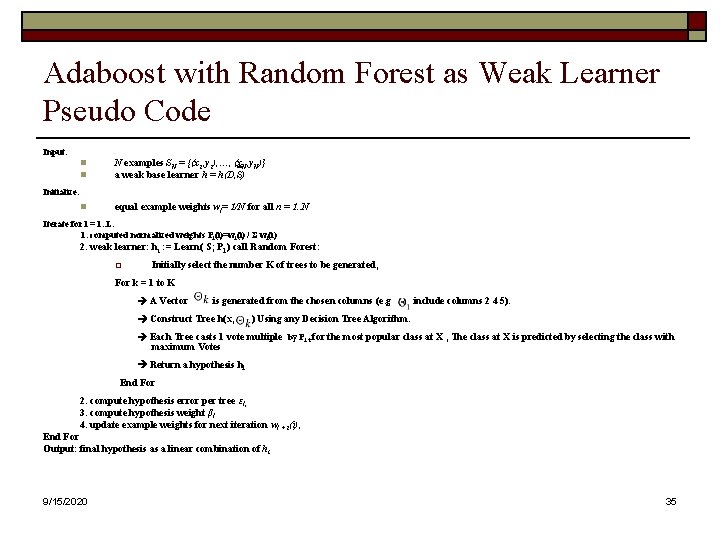

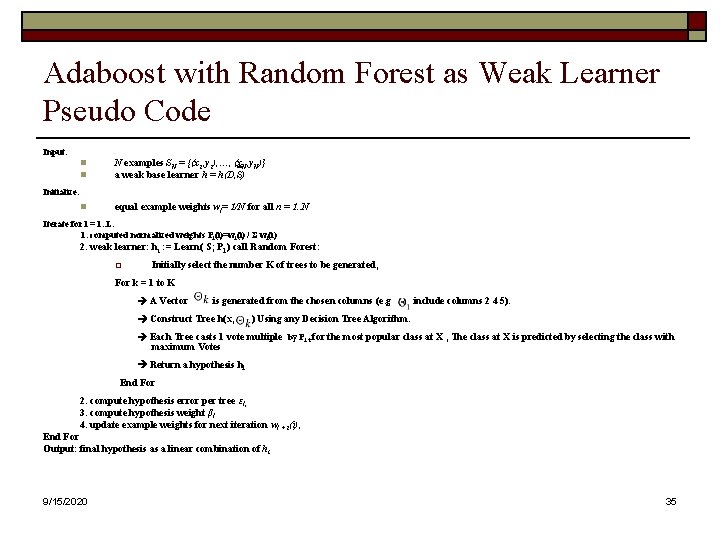

Adaboost with Random Forest as Weak Learner: Pseudo Code

Input:

- N examples $S_N = \{(x_1, y_1), \dots, (x_N, y_N)\}$
- a weak base learner $h = h(D, S)$

Initialize: equal example weights $w_1(i) = 1/N$ for all $i = 1..N$

Iterate for $l = 1..L$:

1. Compute normalized weights $P_l(i) = w_l(i) / \sum_i w_l(i)$.
2. Weak learner: $h_l := \mathrm{Learn}(S; P_l)$, which calls Random Forest:
   - Initially select the number K of trees to be generated.
   - For k = 1 to K:
     - A vector $\Theta_k$ is generated from the chosen columns (e.g. it includes columns 2, 4, 5).
     - Construct tree $h(x, \Theta_k)$ using any decision tree algorithm.
   - End For
   - Each tree casts 1 vote, multiplied by $P_l$, for the most popular class at X; the class at X is predicted by selecting the class with maximum votes.
   - Return a hypothesis $h_l$.
3. Compute the hypothesis error per tree, $\varepsilon_l$.
4. Compute the hypothesis weight $\beta_l$.
5. Update the example weights for the next iteration, $w_{l+1}(i)$.

End For

Output: the final hypothesis, a linear combination of the $h_l$.
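One possible reading of this pseudo code is sketched below in plain Python. It rests on several assumptions that the slides leave open. The trees are depth-one stumps. The example weights $P_l$ enter through the weighted training error of each tree rather than through the vote itself. The hypothesis weight uses the common form $\alpha_l = \tfrac{1}{2}\ln\frac{1-\varepsilon_l}{\varepsilon_l}$ in place of $\beta_l$. All names and the toy data are illustrative.

```python
import math
import random

def train_weighted_stump(X, y, p, column):
    """Depth-one tree on one column that minimises the weighted error under p."""
    best = None
    for threshold in sorted({row[column] for row in X}):
        for polarity in (1, -1):
            err = sum(pi for row, yi, pi in zip(X, y, p)
                      if (polarity if row[column] >= threshold else -polarity) != yi)
            if best is None or err < best[0]:
                best = (err, column, threshold, polarity)
    return best

def stump_predict(stump, row):
    _, column, threshold, polarity = stump
    return polarity if row[column] >= threshold else -polarity

def train_forest(X, y, p, n_trees, rng):
    """Weak learner: K stumps, each grown on one randomly chosen column."""
    return [train_weighted_stump(X, y, p, rng.randrange(len(X[0])))
            for _ in range(n_trees)]

def forest_predict(forest, row):
    # Each tree casts one vote; a tie is resolved as +1.
    return 1 if sum(stump_predict(s, row) for s in forest) >= 0 else -1

def adaboost_forest(X, y, n_rounds=5, n_trees=5, seed=0):
    rng = random.Random(seed)
    w = [1.0 / len(X)] * len(X)                 # equal initial example weights
    ensemble = []
    for _ in range(n_rounds):
        total = sum(w)
        p = [wi / total for wi in w]            # normalised weights P_l
        forest = train_forest(X, y, p, n_trees, rng)
        preds = [forest_predict(forest, row) for row in X]
        eps = sum(pi for pi, yi, hi in zip(p, y, preds) if hi != yi)
        if eps >= 0.5:
            continue                            # no better than guessing: skip
        eps = max(eps, 1e-10)                   # avoid division by zero
        alpha = 0.5 * math.log((1 - eps) / eps)
        ensemble.append((alpha, forest))
        w = [pi * math.exp(-alpha * yi * hi) for pi, yi, hi in zip(p, y, preds)]
    return ensemble

def predict(ensemble, row):
    score = sum(alpha * forest_predict(forest, row) for alpha, forest in ensemble)
    return 1 if score >= 0 else -1

X = [(-3, -2), (-2, -3), (-1, -1), (1, 2), (2, 1), (3, 3)]      # toy data
y = [-1, -1, -1, 1, 1, 1]
ensemble = adaboost_forest(X, y)
```

Passing the weights into tree training keeps the forest itself unchanged between rounds; only the data it sees is reweighted, so misclassified examples count for more in the next round.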

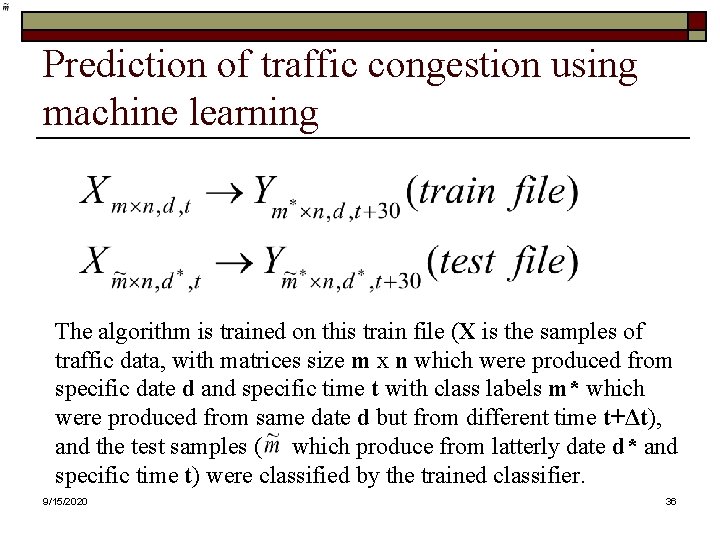

Prediction of traffic congestion using machine learning

The algorithm is trained on a train file in which X holds the traffic-data samples, an m x n matrix produced from a specific date d and a specific time t, and the m class labels are produced from the same date d but from a later time t+Δt. The test samples (produced from a later date d* and the same time t) are then classified by the trained classifier.
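The pairing of features at time t with labels at time t+Δt can be illustrated as follows. This is a minimal sketch under the assumption that the measurements are stored as equally spaced time steps, so that Δt corresponds to a whole number of steps. The function name and the toy values are hypothetical.

```python
def make_shifted_dataset(features, labels, shift):
    """Pair the features observed at step t with the label observed at step t + shift."""
    if len(features) != len(labels):
        raise ValueError("features and labels must have the same length")
    if shift < 0 or shift >= len(features):
        raise ValueError("shift must be between 0 and len(features) - 1")
    X = features[:len(features) - shift]   # samples at time t
    y = labels[shift:]                     # labels at time t + shift
    return X, y

# Toy values: one traffic parameter per time step, label -1 = congestion.
features = [[10], [20], [30], [40]]
labels = [1, 1, -1, -1]
X_train, y_train = make_shifted_dataset(features, labels, shift=1)
```

The last `shift` feature rows are dropped because their future labels are not yet known.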

Traffic Signal Timing Optimization

- There are a number of models and software packages for traffic signal timing optimization (e.g. PASSER).
- Based on the existing data, and with traffic signal timing optimization software, we can plan many traffic-light programs, one for every possible congestion scenario.
- The traffic-light program that suits a given prediction can then be found, and the current program is replaced by this optimized program before the congestion occurs.

Microscopic traffic simulator

- To check the quality of the traffic prediction and of the traffic signal timing optimization, we also used a microscopic traffic simulator.
- We found an error rate of approximately 7%. In comparison, the naive predictor (which estimates the future class label by its current value) gives an error rate of 16%.
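The naive baseline mentioned above predicts that the class at t+Δt equals the class at t. Its error rate can be computed as in the sketch below, which again assumes equally spaced time steps. The function name and the label sequence are made up for illustration and are not the data behind the 16% figure.

```python
def naive_error_rate(labels, shift):
    """Error rate of predicting the label at step t + shift by the label at step t."""
    if shift <= 0 or shift >= len(labels):
        raise ValueError("shift must be between 1 and len(labels) - 1")
    pairs = list(zip(labels[:-shift], labels[shift:]))
    wrong = sum(now != later for now, later in pairs)
    return wrong / len(pairs)

toy_labels = [1, 1, -1, -1, 1]          # illustrative sequence only
rate = naive_error_rate(toy_labels, shift=1)
```

The naive predictor is wrong exactly when the traffic state changes within the prediction horizon, so it is a natural lower bar for a learned classifier.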

Part IV Results

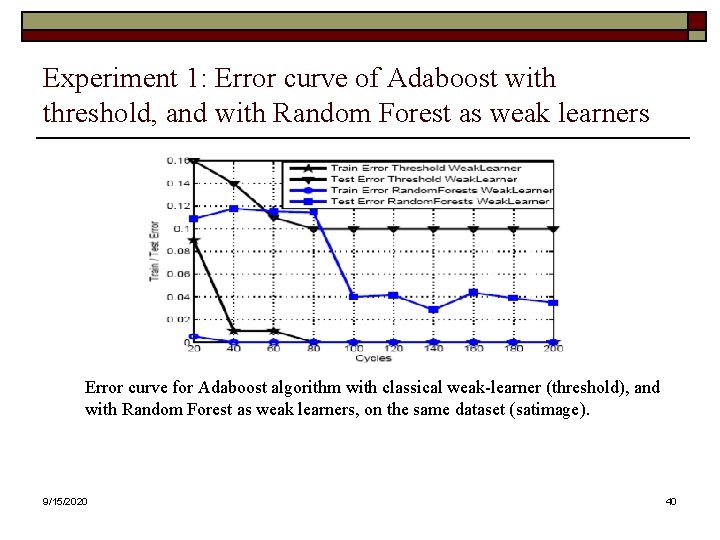

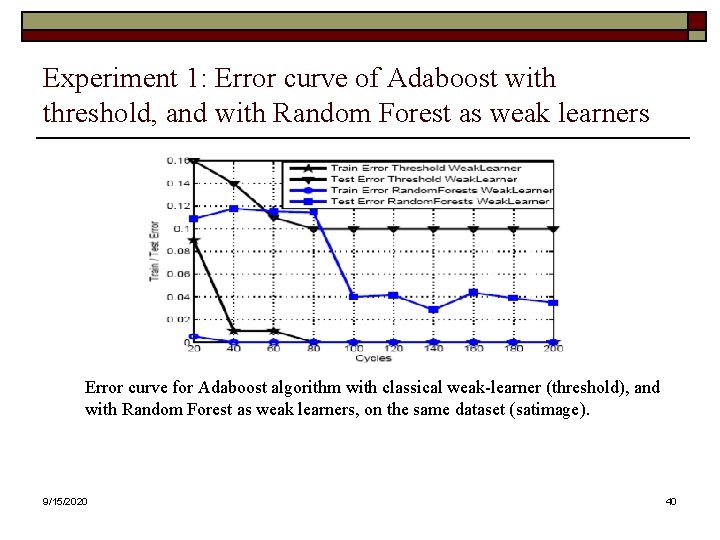

Experiment 1: Error curves of Adaboost with a classical weak learner (threshold) and with Random Forest as the weak learner, on the same dataset (satimage).

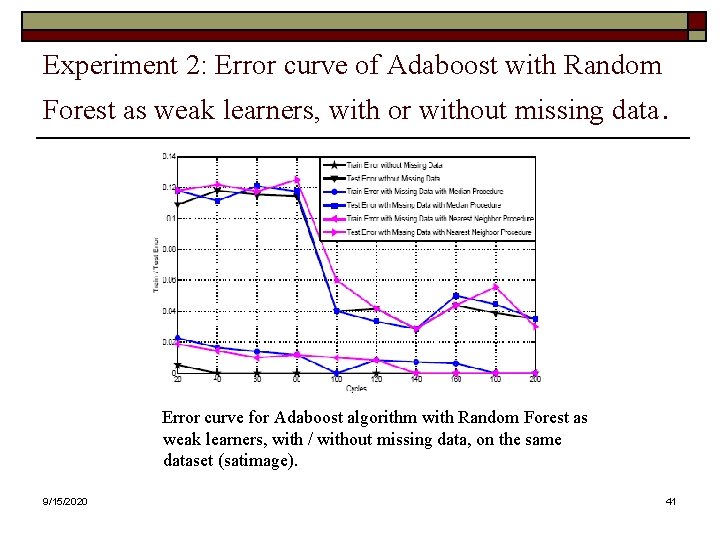

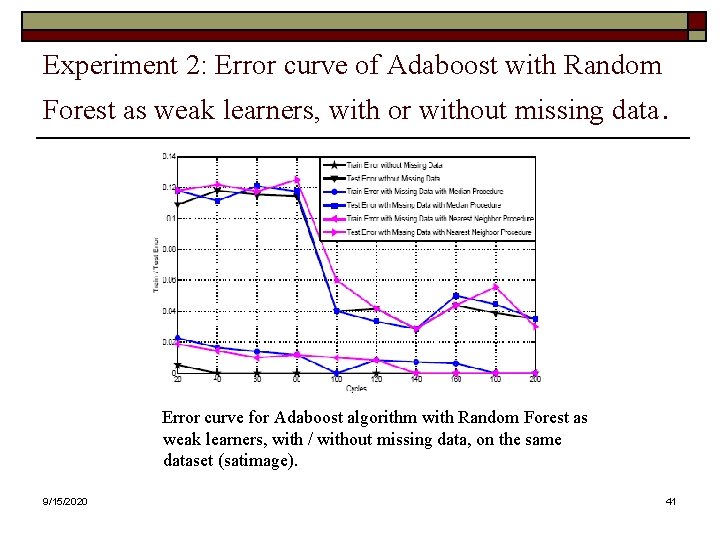

Experiment 2: Error curves of Adaboost with Random Forest as the weak learner, with and without missing data, on the same dataset (satimage).

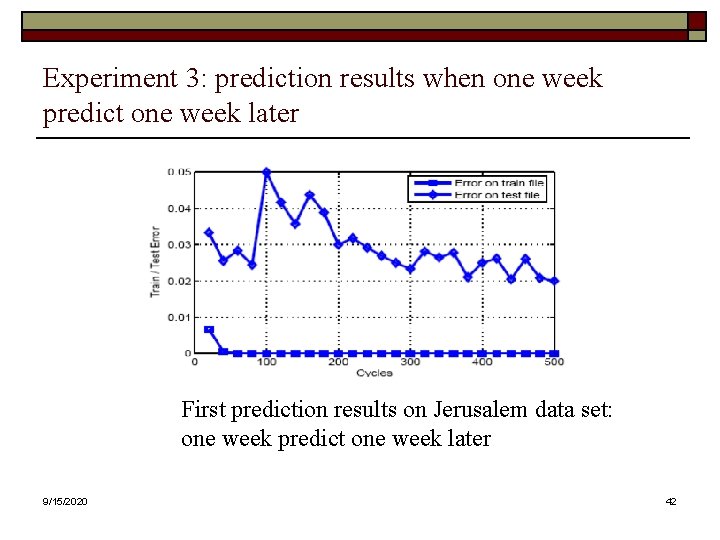

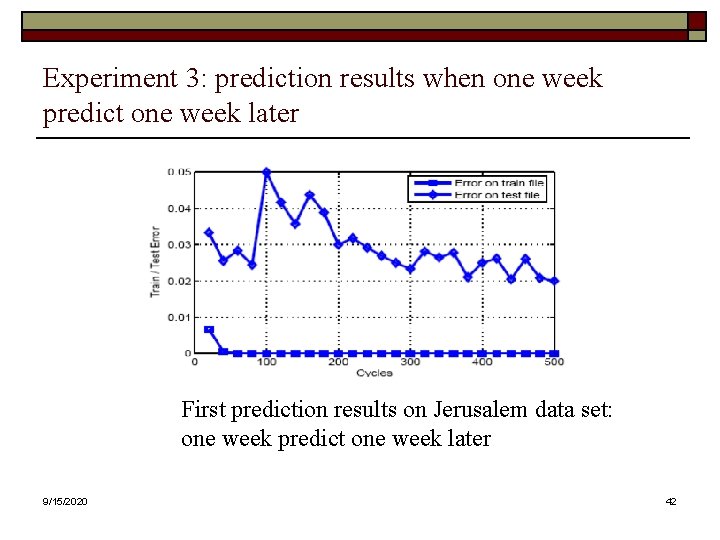

Experiment 3: First prediction results on the Jerusalem data set, where one week predicts one week later.

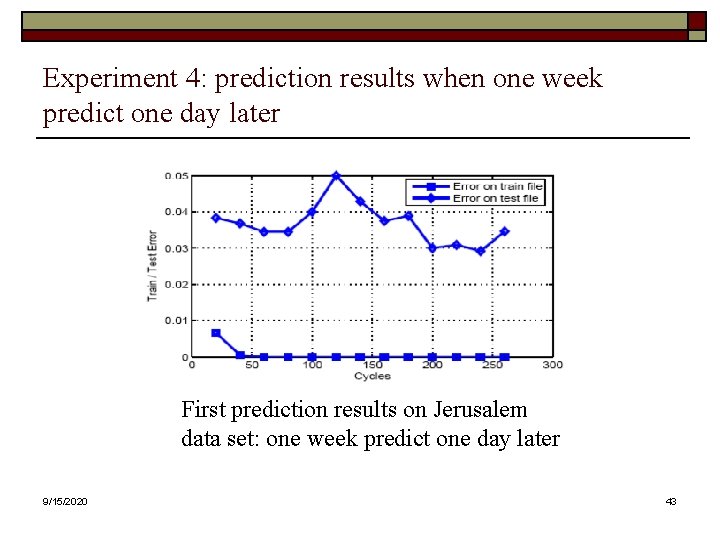

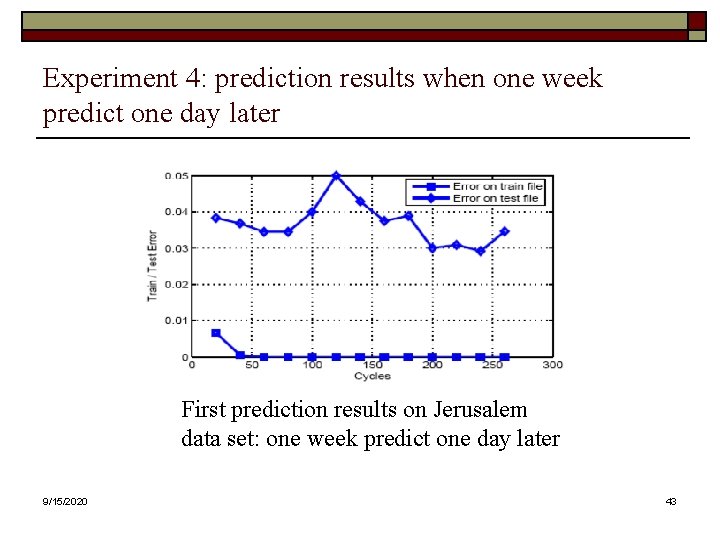

Experiment 4: First prediction results on the Jerusalem data set, where one week predicts one day later.

Experiment 5: Use with Traffic Signal Timing Optimization (preliminary test)

- Number of congested lanes along Yermiyahu road: 4.
- Number of congested lanes along Yermiyahu road with the optimization program: 1.

Part V Conclusions

Advantages of the new approach

- The final classifier of this approach (the Adaboost algorithm with Random Forest as the weak learner) is very accurate, with few misclassifications and strong prediction ability (it excels in accuracy among current algorithms).
- It has an effective method for dealing with missing data and maintains accuracy when a large proportion of the data is missing.
- It has an effective method for prediction in multi-class problems.
- It has an effective method for unbalanced data (for instance, when one label, e.g. "1" - congestion, is rare in the train file (e.g. 10%) and much more frequent in the test file (e.g. 35%)).

Conclusions from the prediction results

- This preliminary test showed very good results. The methodology used to predict traffic congestion for t + Δt = 07:30 gave an error rate of approximately 2% in the first experiment ("one week predicts the following week") and approximately 3% in the second experiment ("one week predicts a following day").
- The possibility of predicting traffic congestion half an hour before it happens makes it possible to discuss future traffic flow policies.

Part VI Future Work

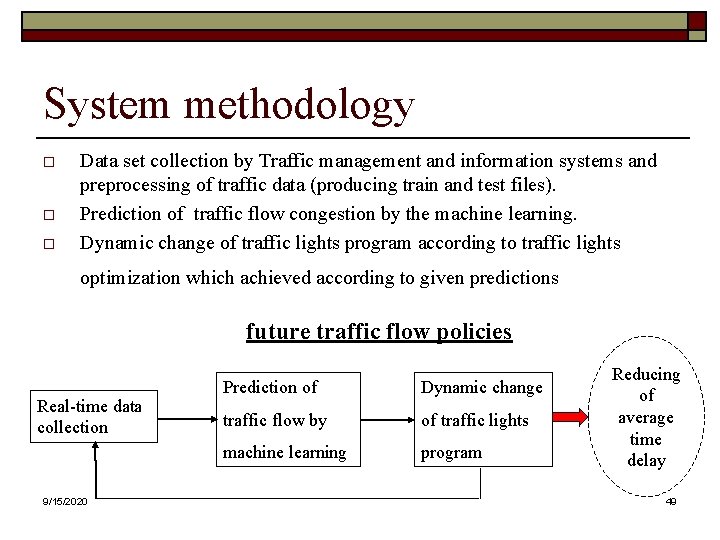

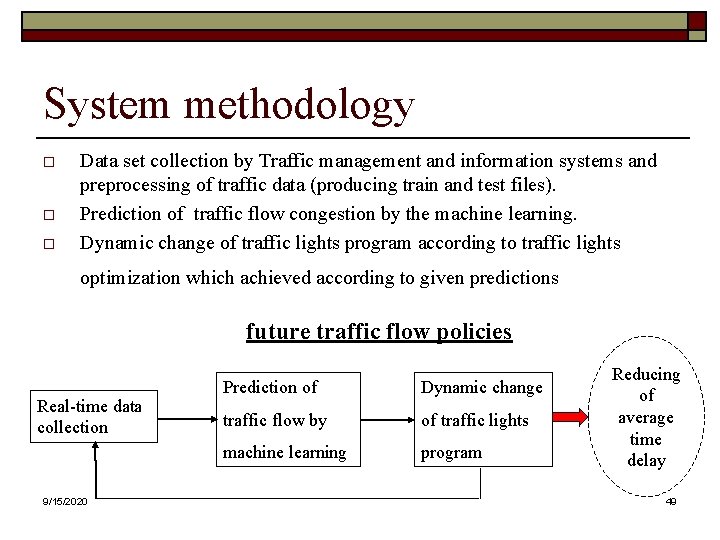

System methodology

- Data set collection by traffic management and information systems, and preprocessing of the traffic data (producing train and test files).
- Prediction of traffic flow congestion by machine learning.
- Dynamic change of the traffic-light program according to a traffic-light optimization that is driven by the given predictions (future traffic flow policies).

Flow: real-time data collection → prediction of traffic flow by machine learning → dynamic change of the traffic-light program → reduction of the average time delay.

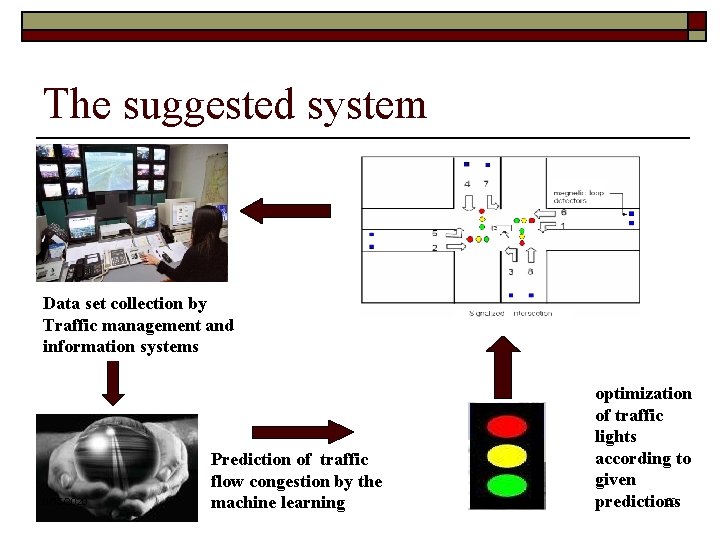

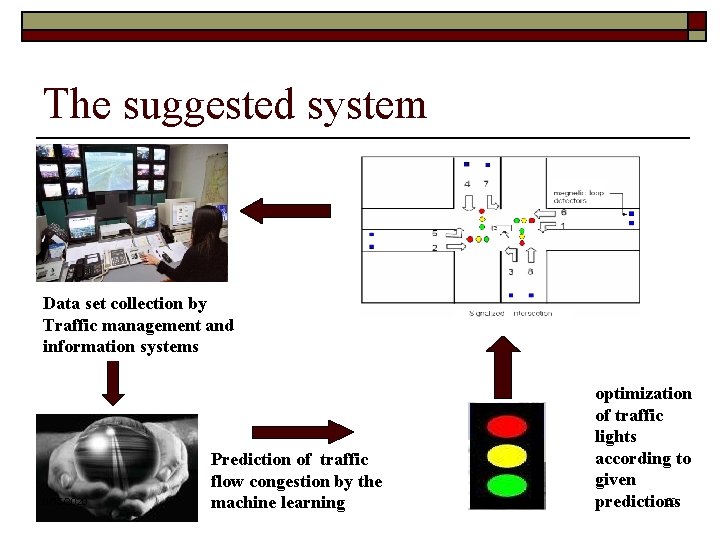

The suggested system

Data set collection by traffic management and information systems → prediction of traffic flow congestion by machine learning → optimization of traffic lights according to the given predictions.

That is all, folks… Thank you for your patience! Guy Leshem

Pincuegula

Pincuegula Traffic cause and effect

Traffic cause and effect Traffic congestion conclusion

Traffic congestion conclusion Inbound traffic vs outbound traffic

Inbound traffic vs outbound traffic All traffic solutions

All traffic solutions Hyperemia and congestion

Hyperemia and congestion Difference between hyperemia and congestion

Difference between hyperemia and congestion Capacity allocation and congestion management

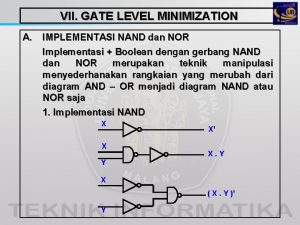

Capacity allocation and congestion management Karnaugh map definition

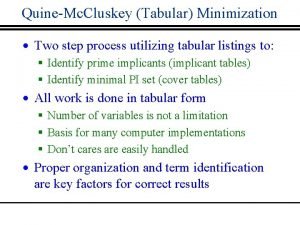

Karnaugh map definition Implication table

Implication table Simplex minimization problem

Simplex minimization problem Regularized risk minimization

Regularized risk minimization Cost minimizing rule

Cost minimizing rule Dual simplex method example

Dual simplex method example Finite state machine minimization

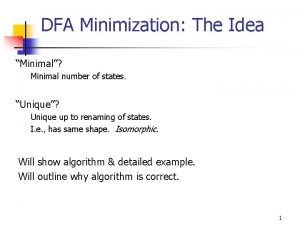

Finite state machine minimization Minimization techniques in digital electronics

Minimization techniques in digital electronics Optimal binary search tree

Optimal binary search tree Cost minimization

Cost minimization Minimization of dfa

Minimization of dfa Big m method minimization example

Big m method minimization example Subset construction nfa to dfa calculator

Subset construction nfa to dfa calculator Big m method minimization

Big m method minimization Interval halving method optimization example

Interval halving method optimization example Convert nfa to dfa

Convert nfa to dfa Tabular method of minimization

Tabular method of minimization Long run cost minimization

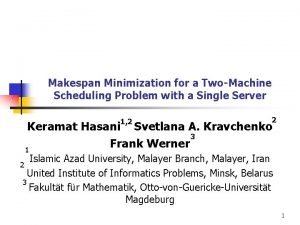

Long run cost minimization Makespan nedir

Makespan nedir Context minimization error

Context minimization error Dfa minimization examples

Dfa minimization examples Dfa minimization examples

Dfa minimization examples Nand xx

Nand xx Cost minimization analysis

Cost minimization analysis Empirical risk minimization python

Empirical risk minimization python Bddgaf

Bddgaf Risk minimization plan

Risk minimization plan Cost minimization perfect complements

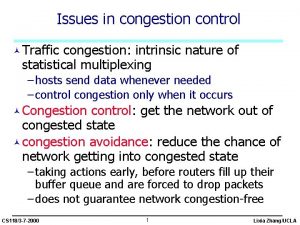

Cost minimization perfect complements Network congestion causes

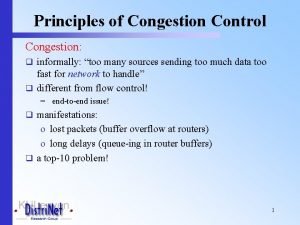

Network congestion causes Principles of congestion control

Principles of congestion control In2140

In2140 Tcp congestion control

Tcp congestion control Network provisioning in congestion control

Network provisioning in congestion control Principles of congestion control

Principles of congestion control Principles of congestion control in computer networks

Principles of congestion control in computer networks Eneritis

Eneritis Load shedding in congestion control

Load shedding in congestion control Udp congestion control

Udp congestion control Septae

Septae Carmen bove

Carmen bove