Congestion Control


- Slides: 48


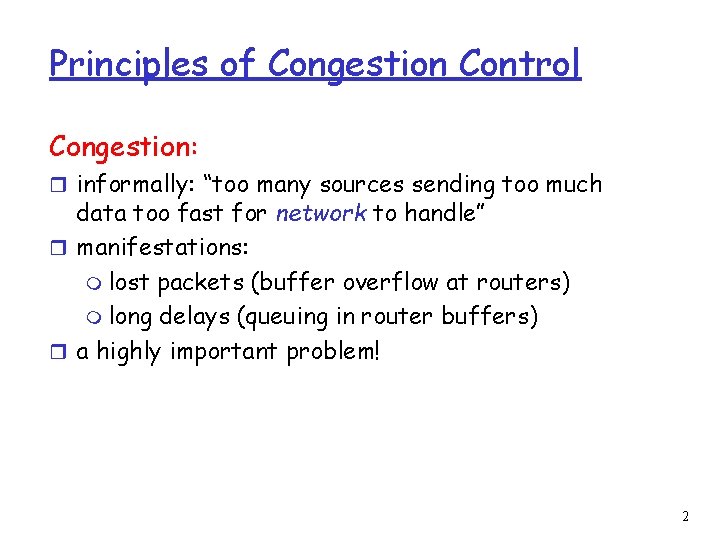

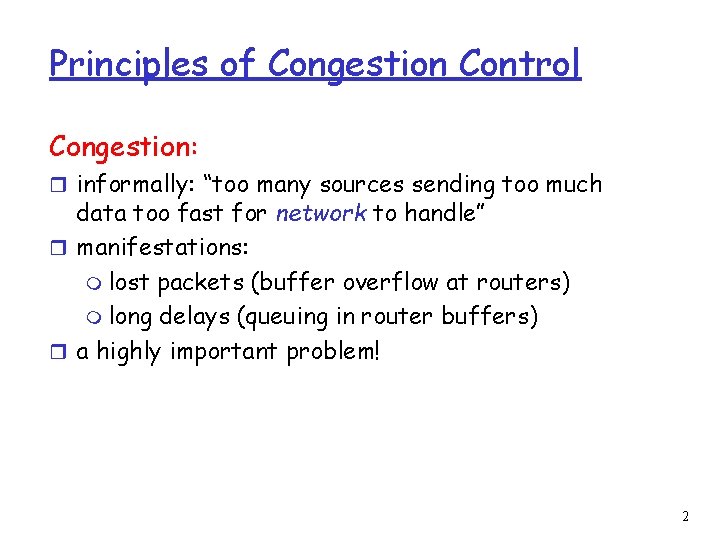

Principles of Congestion Control

Congestion:

- informally: “too many sources sending too much data too fast for the network to handle”
- manifestations:
  - lost packets (buffer overflow at routers)
  - long delays (queuing in router buffers)
- a highly important problem!

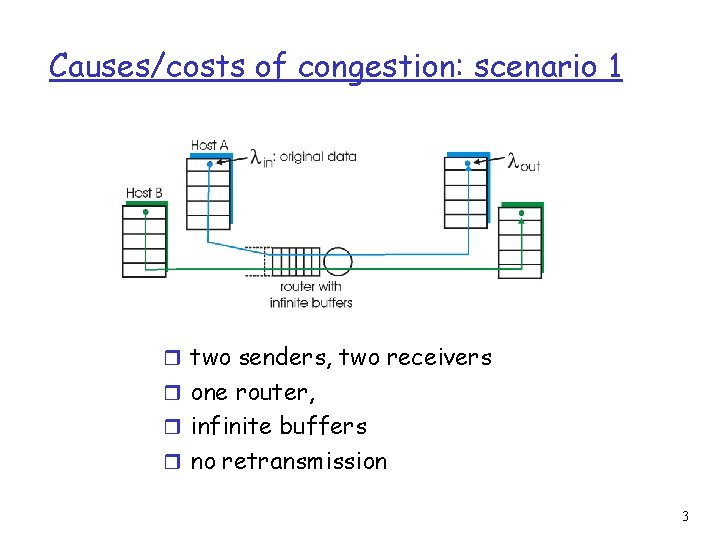

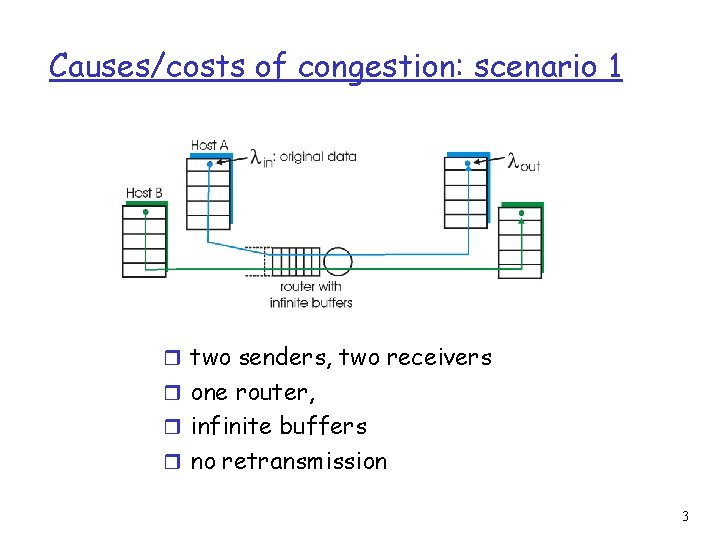

Causes/costs of congestion: scenario 1

- two senders, two receivers
- one router, infinite buffers
- no retransmission

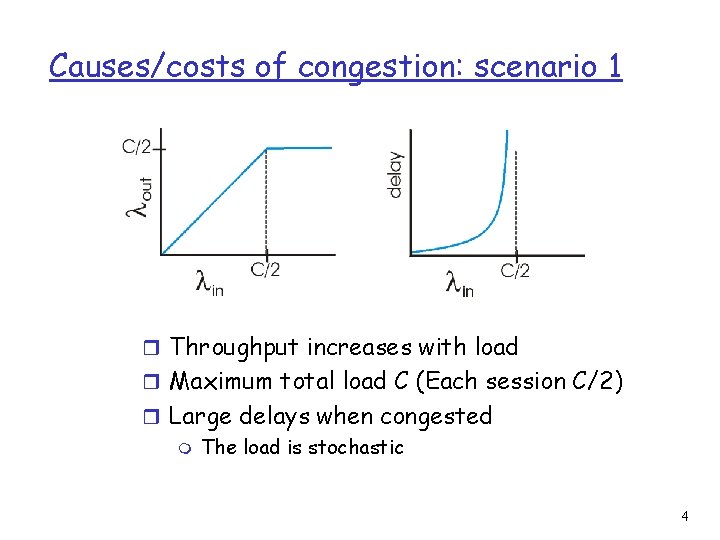

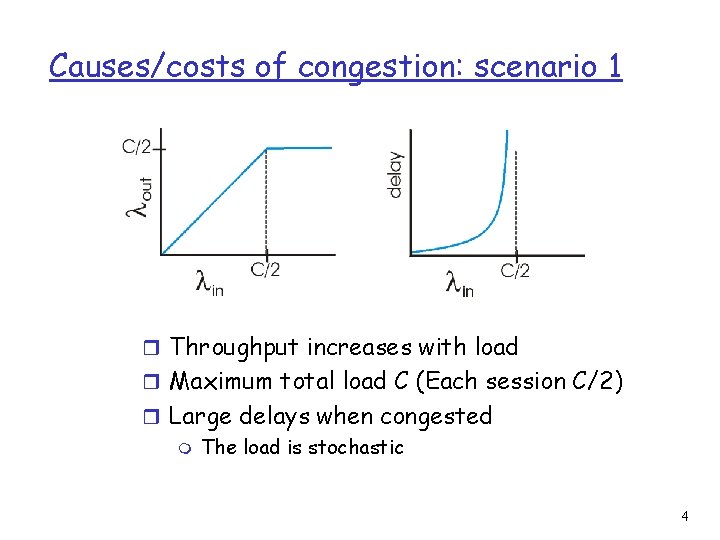

Causes/costs of congestion: scenario 1

- Throughput increases with load
- Maximum total load C (each session C/2)
- Large delays when congested
  - The load is stochastic

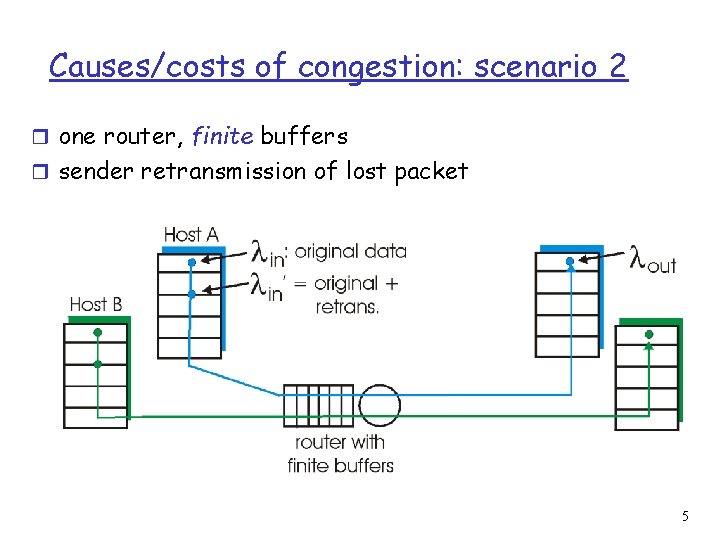

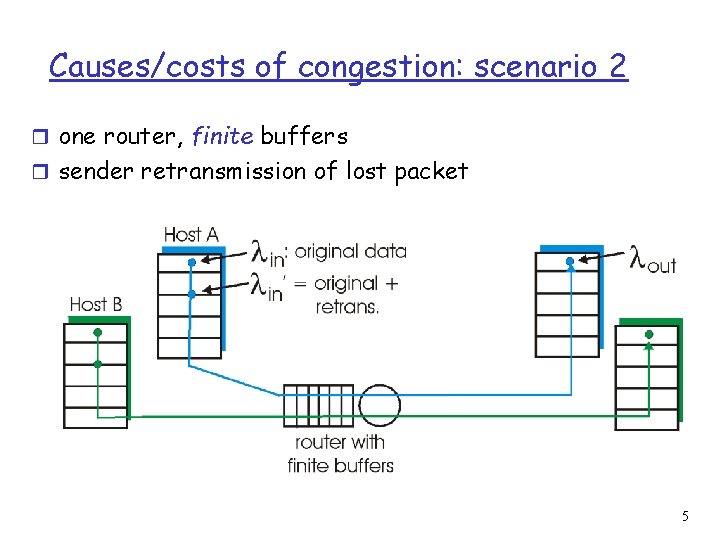

Causes/costs of congestion: scenario 2

- one router, finite buffers
- sender retransmission of lost packet

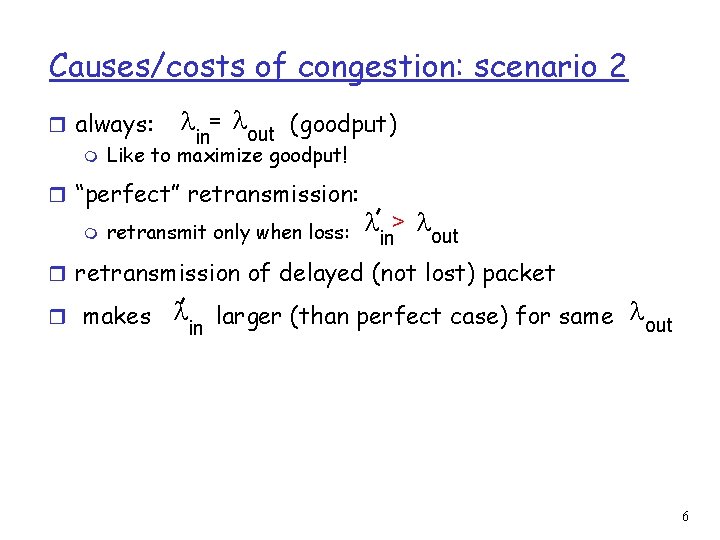

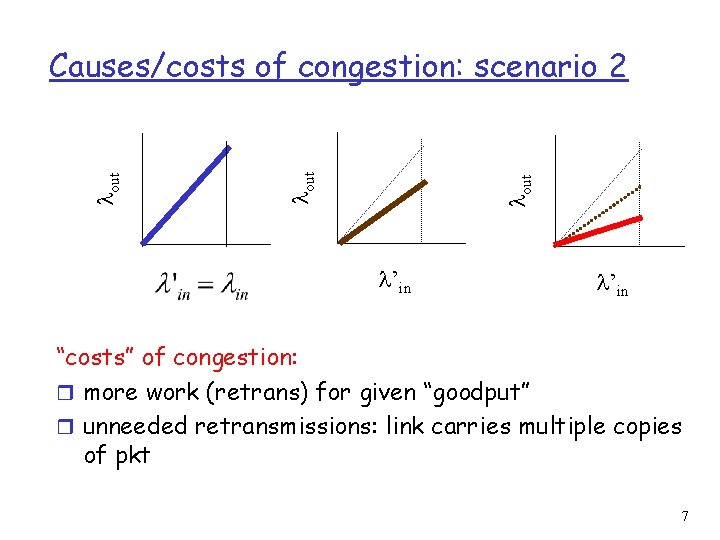

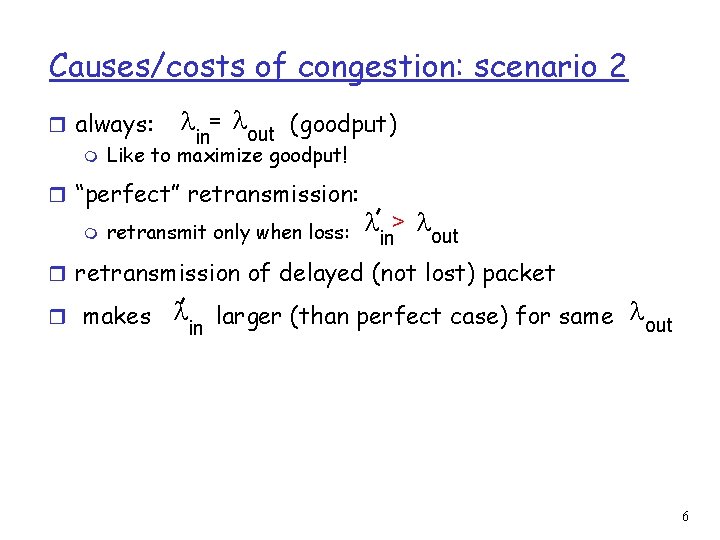

Causes/costs of congestion: scenario 2

- always: $\lambda_{in} = \lambda_{out}$ (goodput)
  - Like to maximize goodput!
- “perfect” retransmission:
  - retransmit only when loss: $\lambda'_{in} > \lambda_{out}$
- retransmission of delayed (not lost) packet
- makes $\lambda'_{in}$ larger (than perfect case) for same $\lambda_{out}$

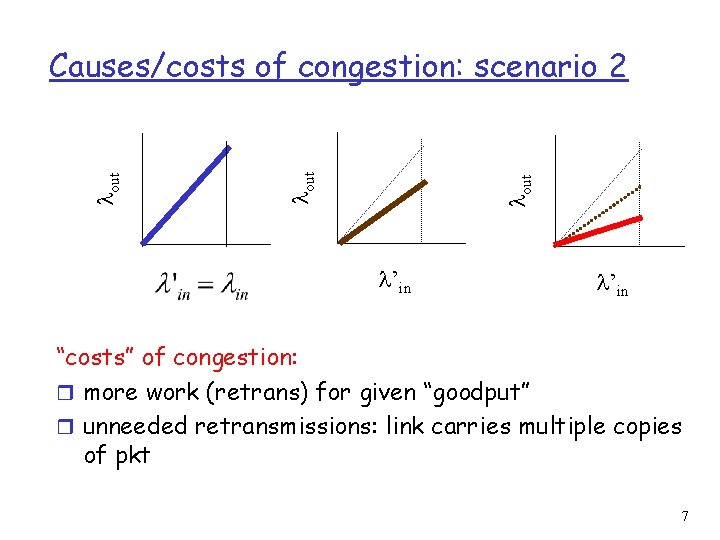

Causes/costs of congestion: scenario 2

[Figure: $\lambda_{out}$ plotted against $\lambda'_{in}$]

“costs” of congestion:

- more work (retransmissions) for given “goodput”
- unneeded retransmissions: link carries multiple copies of a packet

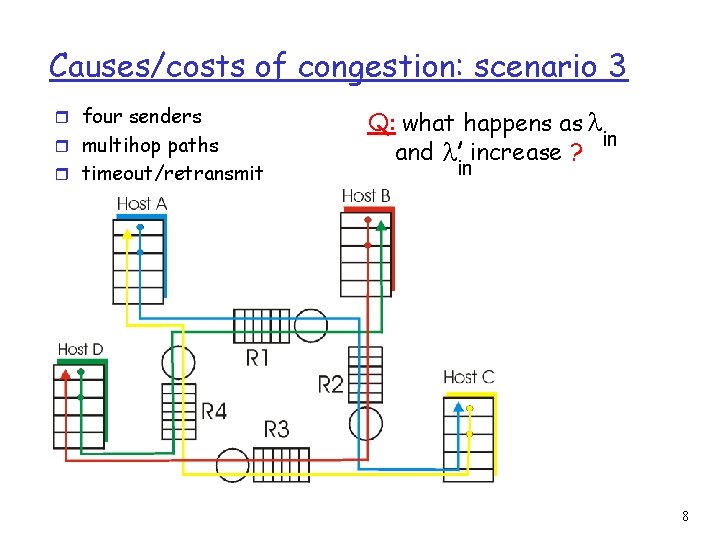

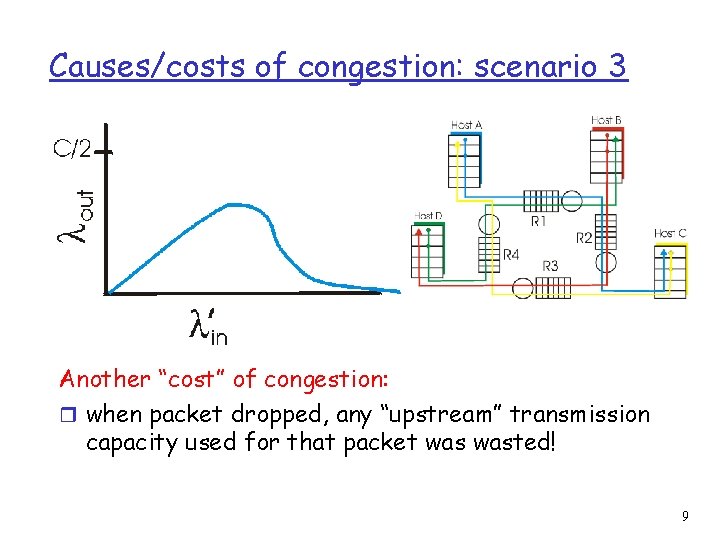

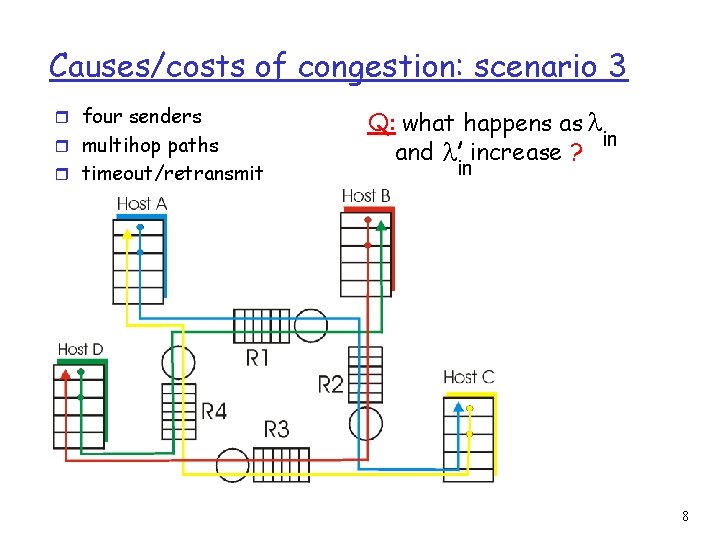

Causes/costs of congestion: scenario 3

- four senders
- multihop paths
- timeout/retransmit

Q: what happens as $\lambda_{in}$ and $\lambda'_{in}$ increase?

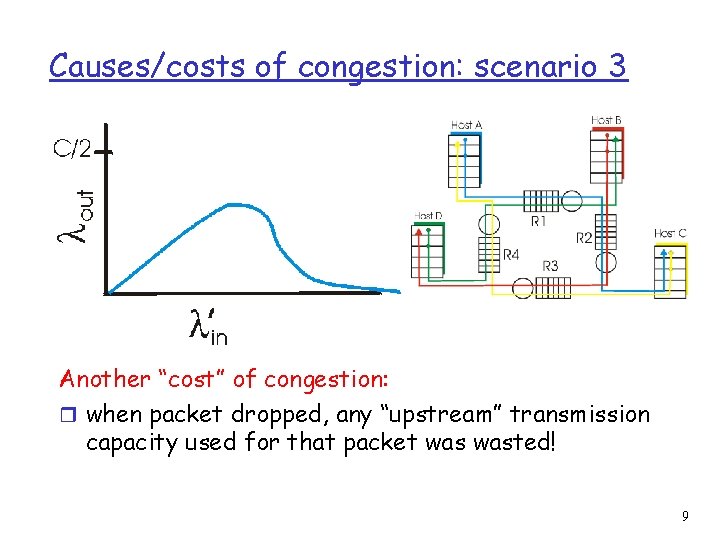

Causes/costs of congestion: scenario 3

Another “cost” of congestion:

- when a packet is dropped, any “upstream” transmission capacity used for that packet was wasted!

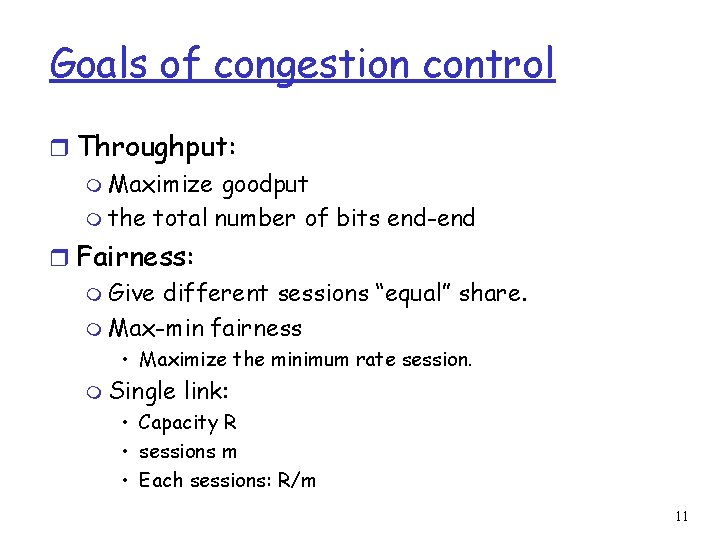

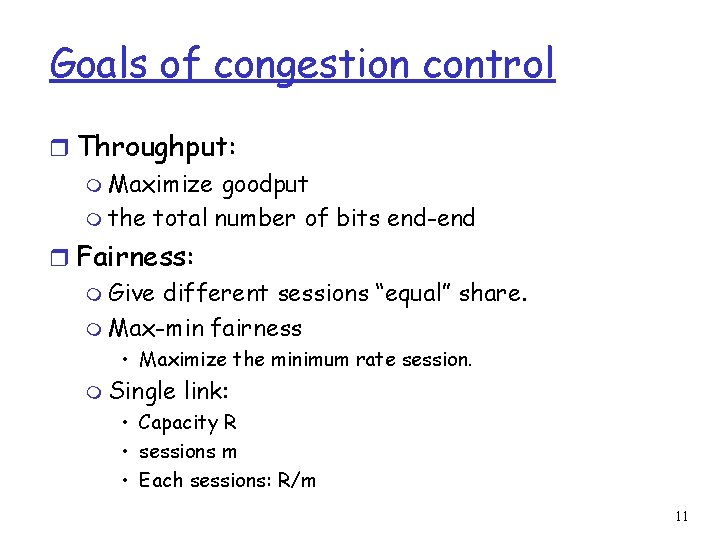

Approaches towards congestion control

Two broad approaches towards congestion control:

End-end congestion control:

- no explicit feedback from network
- congestion inferred from end-system observed loss, delay
- approach taken by TCP

Network-assisted congestion control:

- routers provide feedback to end systems
  - single bit indicating congestion (SNA, DECbit, TCP/IP ECN, ATM)
  - explicit rate sender should send at

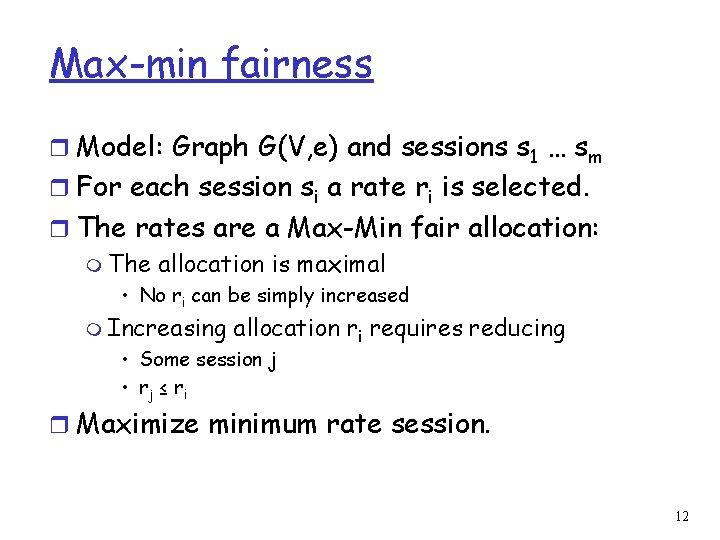

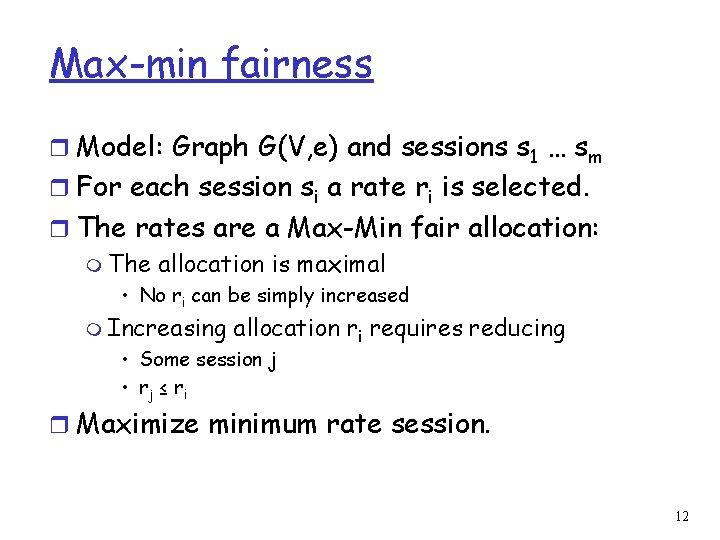

Goals of congestion control

- Throughput:
  - Maximize goodput
  - the total number of bits end-end
- Fairness:
  - Give different sessions “equal” share.
  - Max-min fairness
    - Maximize the minimum rate session.
  - Single link:
    - Capacity R
    - m sessions
    - Each session: R/m

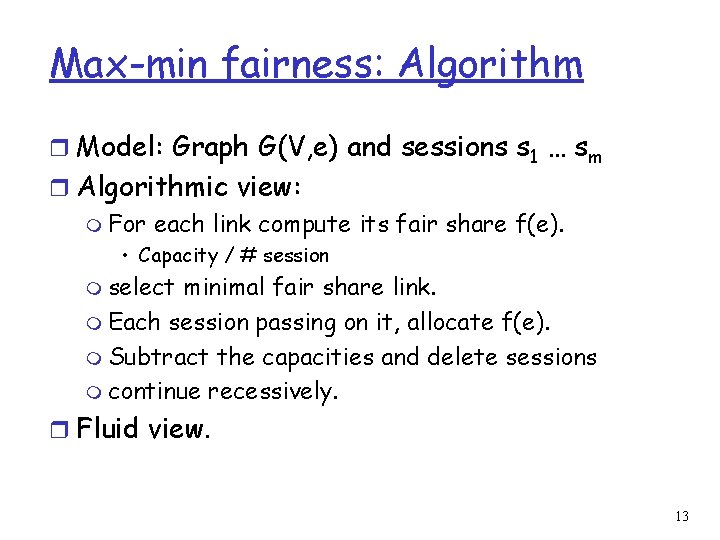

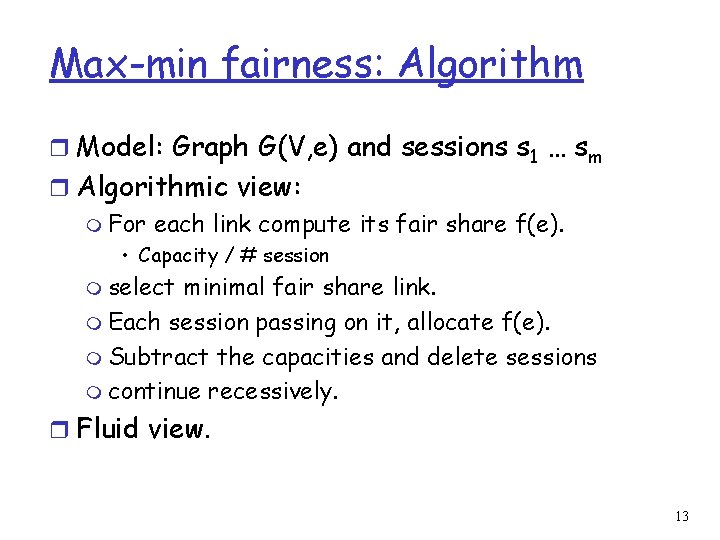

Max-min fairness

- Model: Graph G(V, E) and sessions $s_1, \dots, s_m$
- For each session $s_i$ a rate $r_i$ is selected.
- The rates are a max-min fair allocation:
  - The allocation is maximal
    - No $r_i$ can be simply increased
  - Increasing allocation $r_i$ requires reducing
    - some session j
    - with $r_j \le r_i$
- Maximize minimum rate session.

Max-min fairness: Algorithm

- Model: Graph G(V, E) and sessions $s_1, \dots, s_m$
- Algorithmic view:
  - For each link e compute its fair share f(e).
    - Capacity / number of sessions
  - Select the link with the minimal fair share.
  - To each session passing on it, allocate f(e).
  - Subtract the allocated capacities and delete those sessions.
  - Continue recursively.
- Fluid view.
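The algorithmic view can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `max_min_fair`, the dictionary inputs, and the three-session example are hypothetical and not from the slides; every session is assumed to cross at least one link, and every link it names must appear in `capacity`.

```python
def max_min_fair(capacity, routes):
    """Sketch of the bottleneck-first max-min allocation.

    capacity: dict link -> capacity (hypothetical input format)
    routes:   dict session -> list of links the session crosses
    Returns a dict session -> allocated rate.
    """
    remaining = dict(capacity)
    active = {s: set(links) for s, links in routes.items()}
    rates = {}
    while active:
        # Fair share f(e) = remaining capacity / number of active sessions on e.
        share = {}
        for link, cap in remaining.items():
            n = sum(1 for links in active.values() if link in links)
            if n:
                share[link] = cap / n
        # Select the link with the minimal fair share.
        bottleneck = min(share, key=share.get)
        f = share[bottleneck]
        # Allocate f to every session on that link, subtract the capacity
        # it uses on all of its links, and delete the session.
        for s in [s for s, links in active.items() if bottleneck in links]:
            rates[s] = f
            for link in active.pop(s):
                remaining[link] -= f
    return rates


# Two links: A (capacity 10) and B (capacity 4).
example = max_min_fair(
    {"A": 10, "B": 4},
    {"s1": ["A"], "s2": ["A", "B"], "s3": ["B"]},
)
print(example)
```

In this example link B is the first bottleneck (fair share 2), so s2 and s3 are fixed at 2 and s1 then takes what remains on link A.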

Max-min fairness

- Example
- Throughput versus fairness.

Case study: ATM ABR congestion control

ABR: available bit rate:

- “elastic service”
- if sender’s path “underloaded”:
  - sender can use available bandwidth
- if sender’s path congested:
  - sender lowers rate
  - a minimum guaranteed rate
- Aim:
  - coordinate increase/decrease of rate
  - avoid loss!

Case study: ATM ABR congestion control

RM (resource management) cells:

- sent by sender, in between data cells
  - one out of every 32 cells.
- RM cells returned to sender by receiver
- Each router modifies the RM cell
- Info in RM cell set by switches
  - “network-assisted”
- 2 bits of info.
  - NI bit: no increase in rate (mild congestion)
  - CI bit: congestion indication (lower rate)

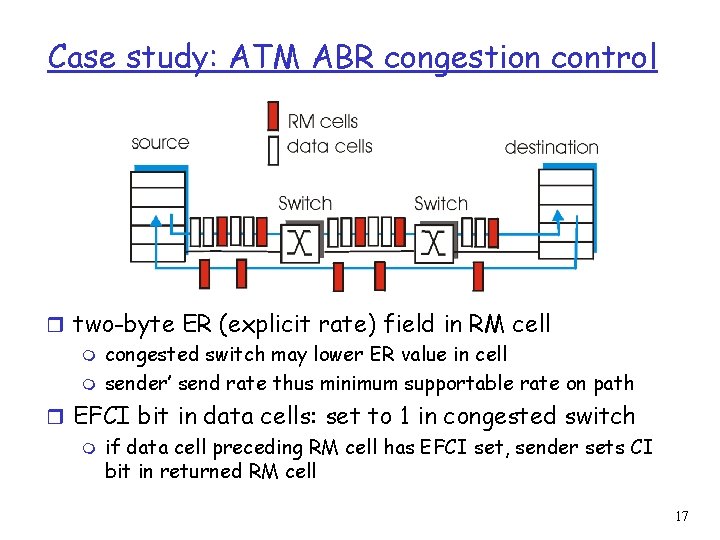

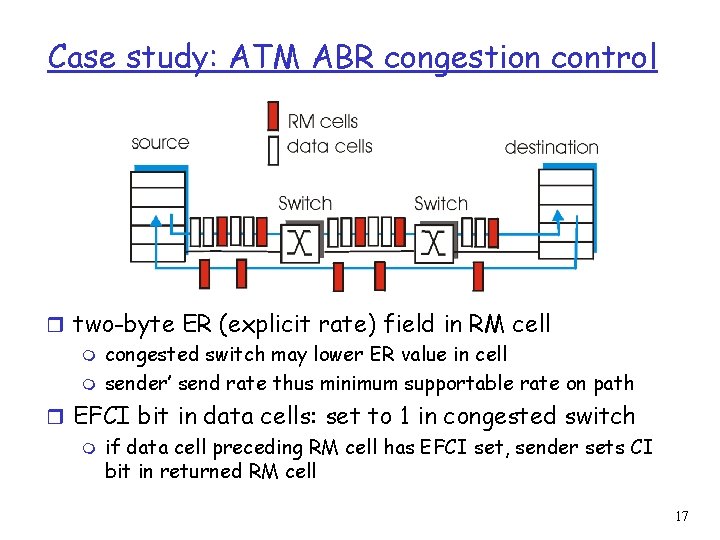

Case study: ATM ABR congestion control

- two-byte ER (explicit rate) field in RM cell
  - congested switch may lower ER value in cell
  - sender’s send rate is thus the minimum supportable rate on the path
- EFCI bit in data cells: set to 1 in congested switch
  - if data cell preceding RM cell has EFCI set, sender sets CI bit in returned RM cell

Case study: ATM ABR congestion control

- How does the router select its action?
  - Selects a rate
  - Sets congestion bits
  - Vendor-dependent functionality
- Advantages:
  - fast response
  - accurate response
- Disadvantages:
  - network-level design
  - Increases router tasks (load).
  - Interoperability issues.

End to end control

End to end feedback

- Abstraction:
  - Alarm flag.
  - Observable at the end stations.

Simple Abstraction

Simple Abstraction

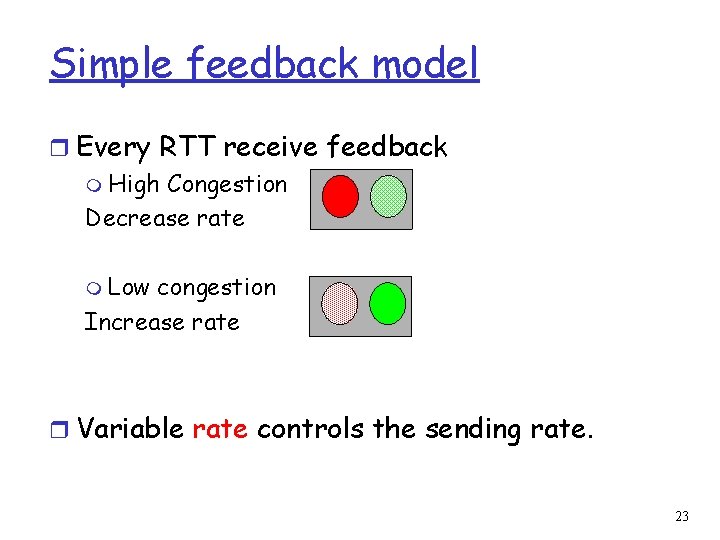

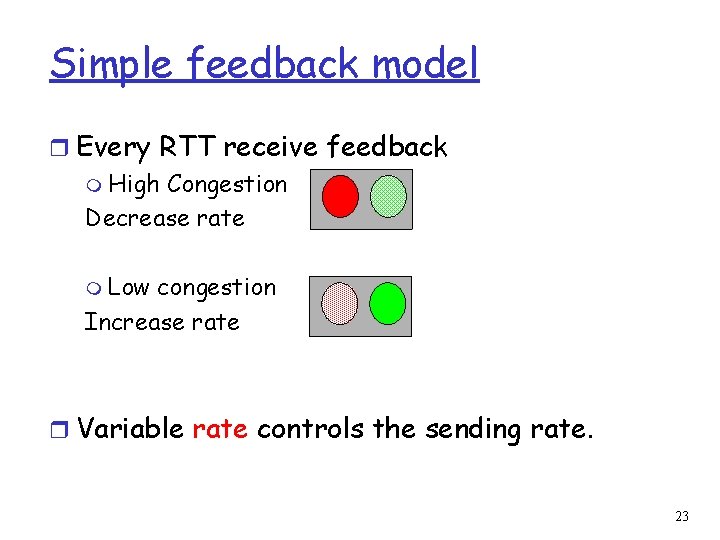

Simple feedback model

- Every RTT receive feedback
  - High congestion: decrease rate
  - Low congestion: increase rate
- Variable rate controls the sending rate.

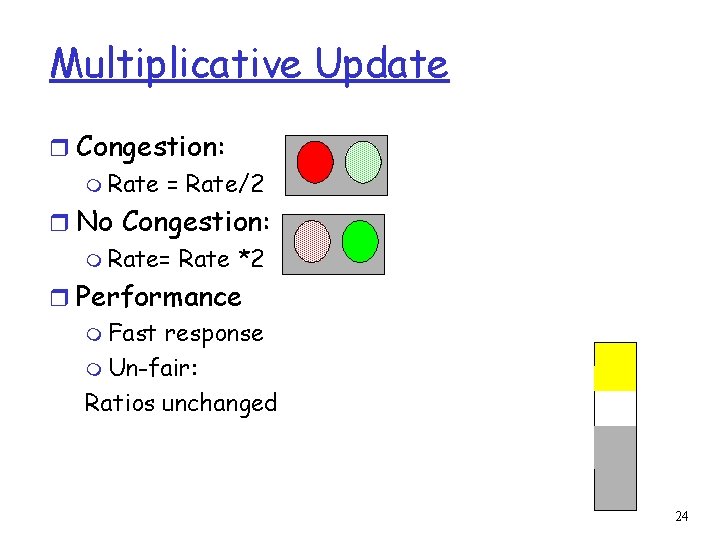

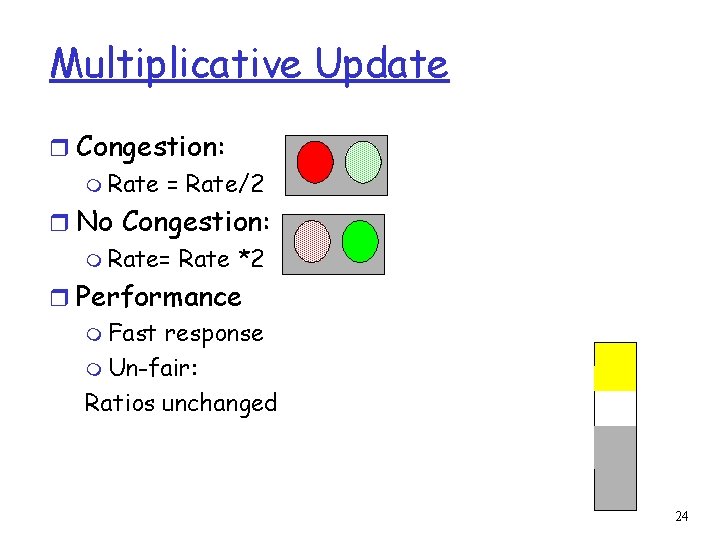

Multiplicative Update

- Congestion:
  - Rate = Rate / 2
- No congestion:
  - Rate = Rate * 2
- Performance:
  - Fast response
  - Unfair: ratios unchanged

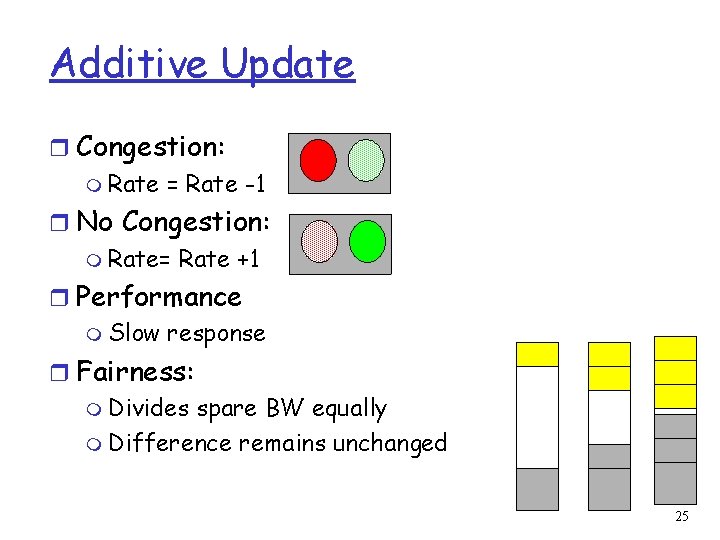

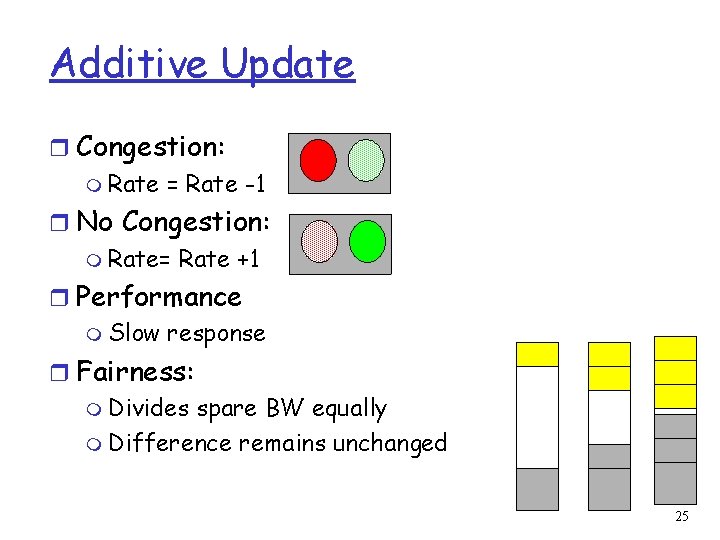

Additive Update

- Congestion:
  - Rate = Rate - 1
- No congestion:
  - Rate = Rate + 1
- Performance:
  - Slow response
- Fairness:
  - Divides spare BW equally
  - Difference remains unchanged

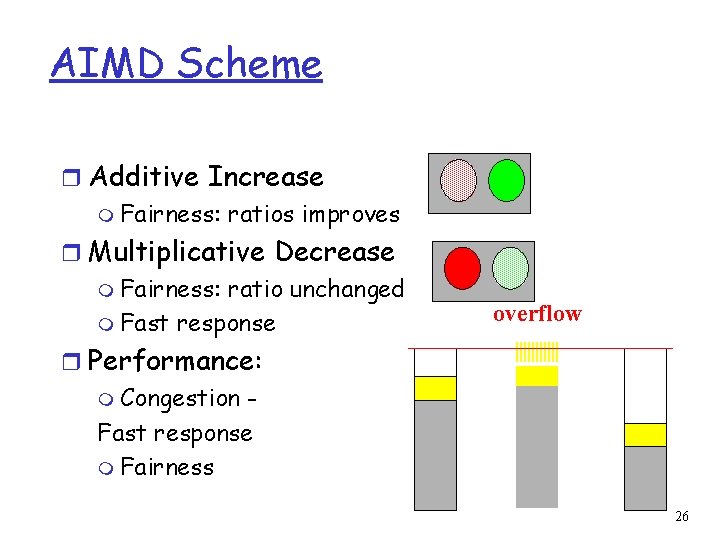

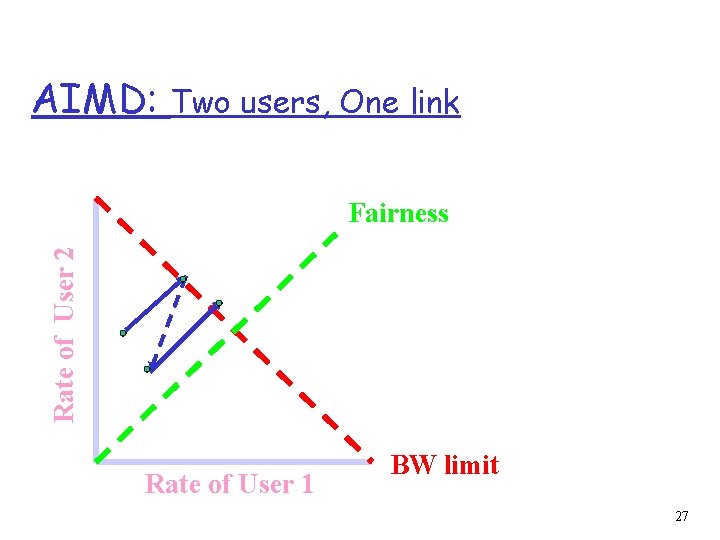

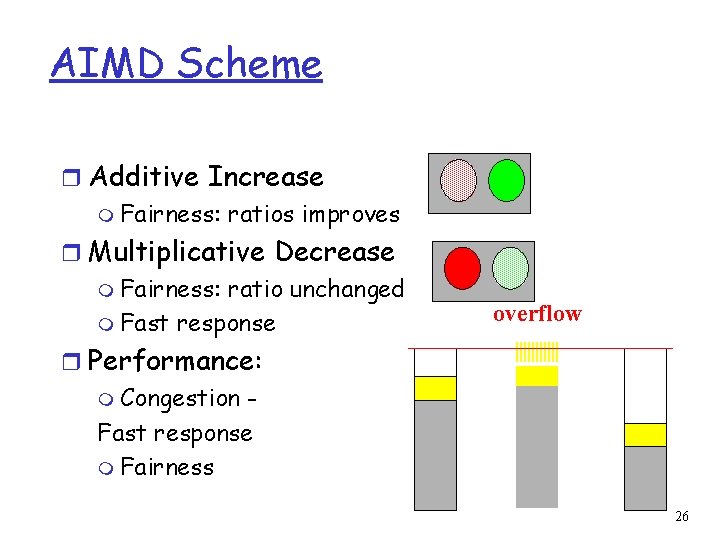

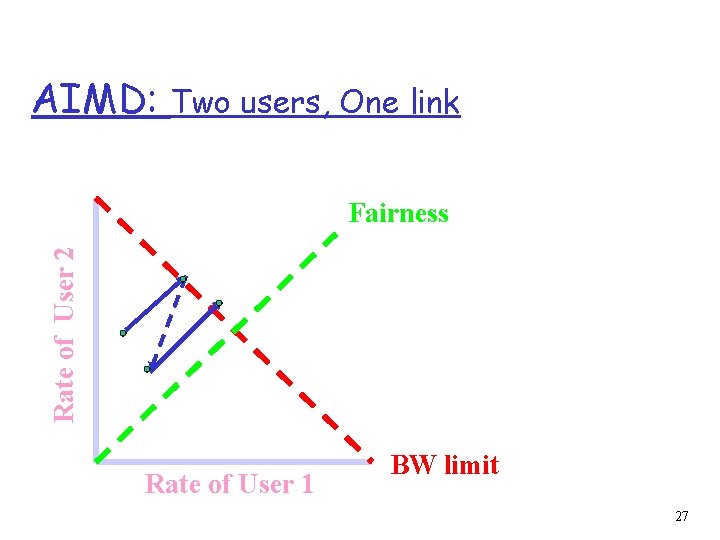

AIMD Scheme

- Additive Increase
  - Fairness: ratios improve
- Multiplicative Decrease
  - Fairness: ratios unchanged
  - Fast response to overflow
- Performance:
  - Congestion: fast response
  - Fairness

AIMD: Two users, One link

[Figure: rate of User 2 plotted against rate of User 1, with the fairness line and the BW limit line]
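A small simulation makes the two-user picture concrete. It is a sketch under assumptions that are not in the slides: both users see the same congestion signal in the same round, the signal is "sum of rates exceeds capacity", and the function name `aimd` and its parameters are hypothetical.

```python
def aimd(x1, x2, capacity, rounds, add=1.0, mult=0.5):
    """Run synchronized AIMD for two users sharing one link.

    Returns the final rates (x1, x2).
    """
    for _ in range(rounds):
        if x1 + x2 > capacity:
            # Multiplicative decrease: the ratio x1/x2 is unchanged,
            # but the difference |x1 - x2| is halved.
            x1, x2 = x1 * mult, x2 * mult
        else:
            # Additive increase: the difference is unchanged,
            # but the ratio moves toward 1.
            x1, x2 = x1 + add, x2 + add
    return x1, x2


start = (1.0, 50.0)
end = aimd(*start, capacity=100.0, rounds=1000)
print("gap before:", abs(start[0] - start[1]), "gap after:", abs(end[0] - end[1]))
```

Each decrease halves the gap between the two rates and each increase leaves it alone, so the rates drift toward the fairness line while oscillating around the BW limit.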

![TCP & AIMD: congestion](https://slidetodoc.com/presentation_image_h/1dfffe790fe3cdf185ca4570b7630deb/image-28.jpg)

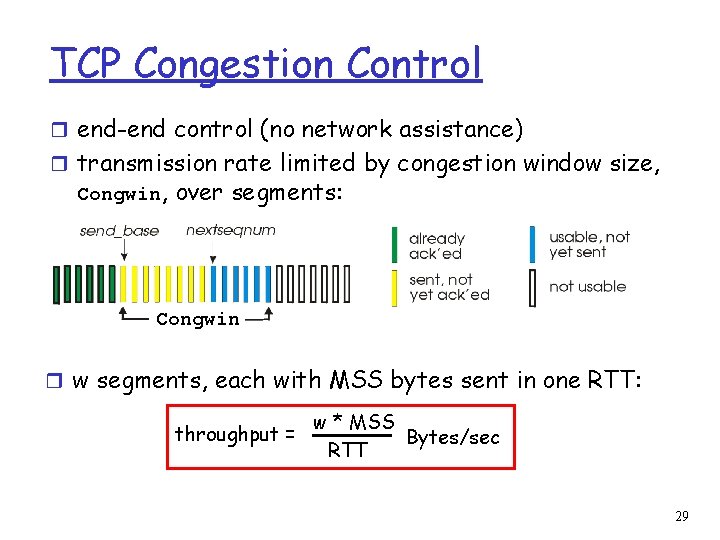

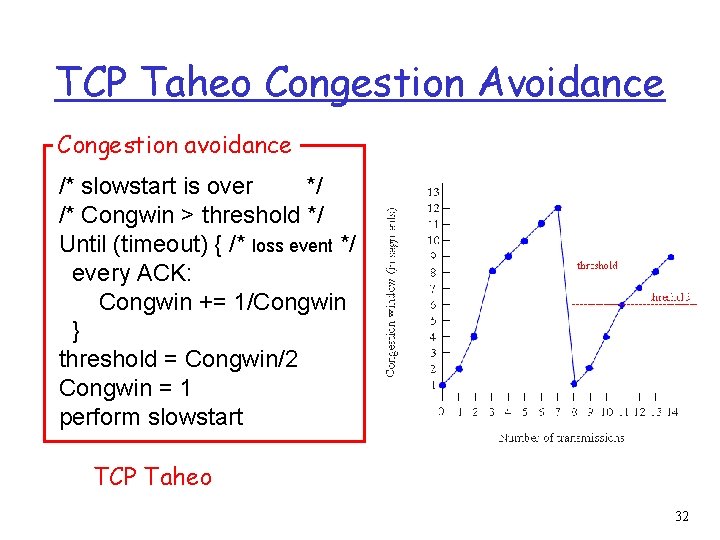

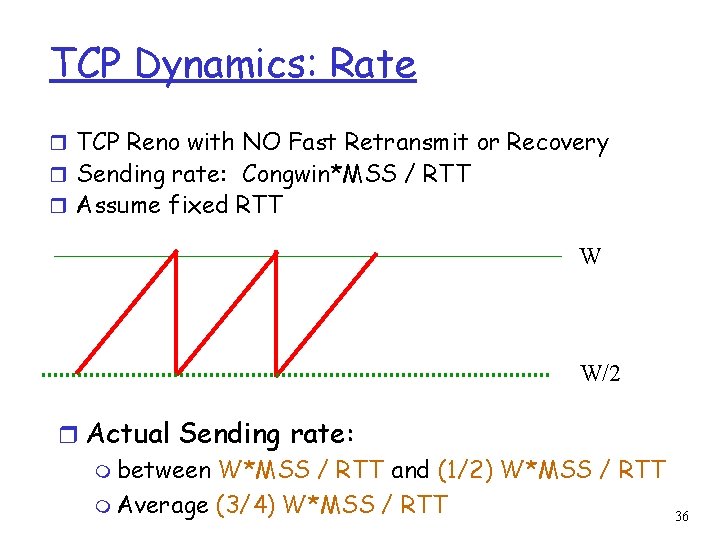

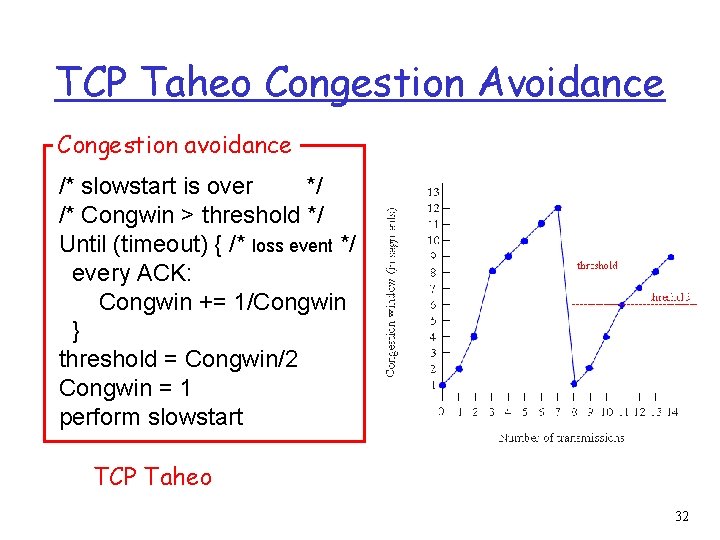

TCP & AIMD: congestion

- Dynamic window size [Van Jacobson]
  - Initialization: Slow start
  - Steady state: AIMD
- Congestion = timeout
  - TCP Tahoe
- Congestion = timeout || 3 duplicate ACK
  - TCP Reno & TCP new Reno
- Congestion = higher latency
  - TCP Vegas

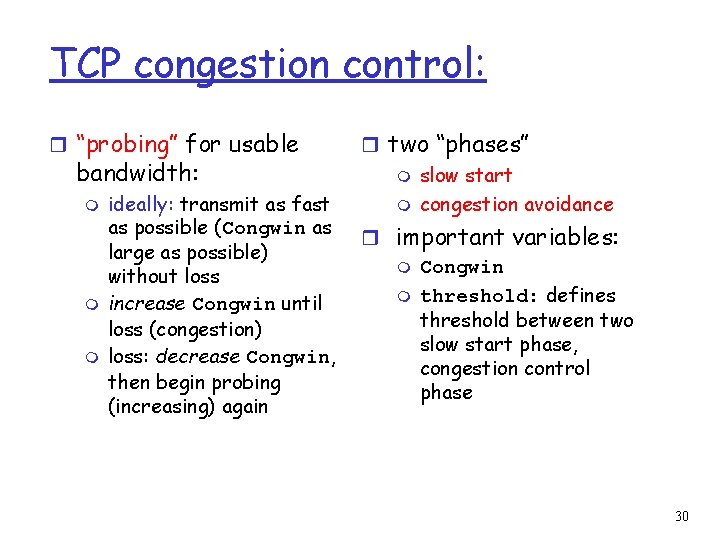

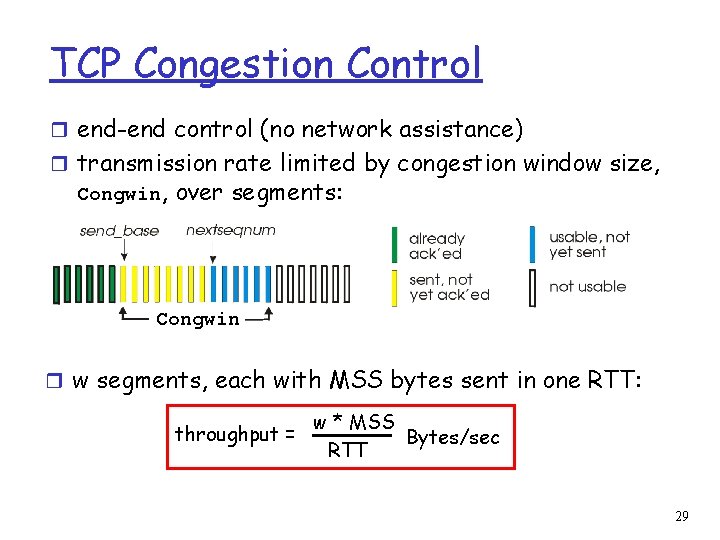

TCP Congestion Control

- end-end control (no network assistance)
- transmission rate limited by congestion window size, Congwin, over segments
- w segments, each with MSS bytes, sent in one RTT:

  throughput = (w * MSS) / RTT bytes/sec

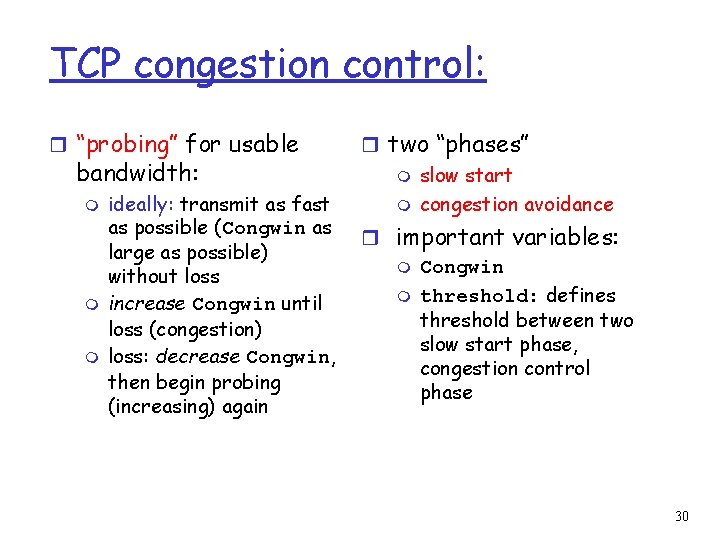

TCP congestion control:

- “probing” for usable bandwidth:
  - ideally: transmit as fast as possible (Congwin as large as possible) without loss
  - increase Congwin until loss (congestion)
  - loss: decrease Congwin, then begin probing (increasing) again
- two “phases”:
  - slow start
  - congestion avoidance
- important variables:
  - Congwin
  - threshold: defines the boundary between the slow start phase and the congestion avoidance phase

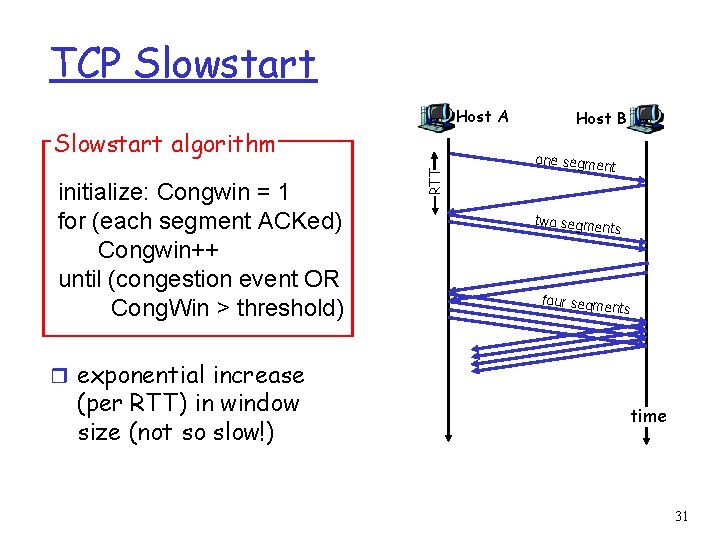

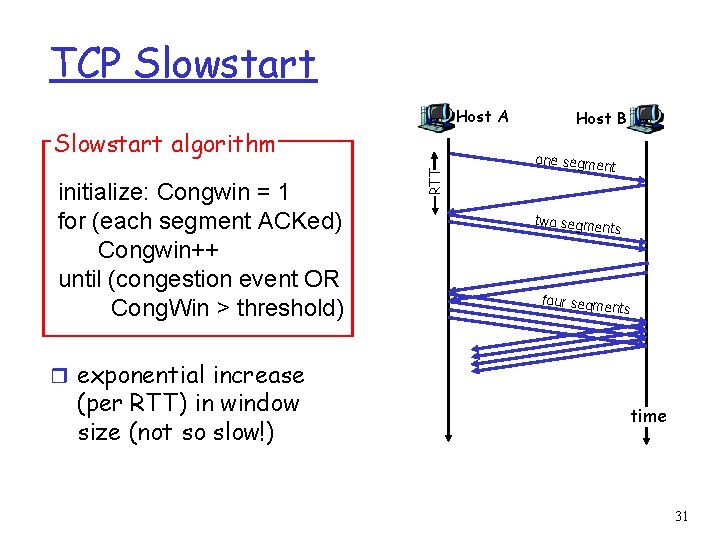

TCP Slowstart

Slowstart algorithm:

    initialize: Congwin = 1
    for (each segment ACKed)
        Congwin++
    until (congestion event OR Congwin > threshold)

- exponential increase (per RTT) in window size (not so slow!)

[Figure: timeline between Host A and Host B; Host A sends one segment, then two segments, then four segments, one burst per RTT]

TCP Tahoe Congestion Avoidance

Congestion avoidance:

    /* slowstart is over */
    /* Congwin > threshold */
    Until (timeout) {   /* loss event */
        every ACK:
            Congwin += 1/Congwin
    }
    threshold = Congwin/2
    Congwin = 1
    perform slowstart
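The slow start and congestion avoidance rules can be traced at per-RTT granularity. This is a rough sketch, not the protocol itself: it assumes every segment in a window is ACKed within one RTT (so "+1 per ACK" becomes doubling and "+1/Congwin per ACK" becomes "+1 per RTT"), it caps slow start at the threshold for simplicity, and `tahoe_trace` and its `loss_at` argument are hypothetical names.

```python
def tahoe_trace(rtts, threshold, loss_at):
    """Return Congwin (in segments) at the start of each RTT.

    loss_at: set of RTT indices at which a timeout is assumed to occur.
    """
    congwin = 1.0
    trace = []
    for t in range(rtts):
        trace.append(congwin)
        if t in loss_at:
            # Timeout: remember half the window, restart slow start.
            threshold = congwin / 2
            congwin = 1.0
        elif congwin < threshold:
            # Slow start: one extra segment per ACK, i.e. doubling per RTT.
            congwin = min(2 * congwin, threshold)
        else:
            # Congestion avoidance: +1/Congwin per ACK, about +1 per RTT.
            congwin += 1
    return trace


trace = tahoe_trace(rtts=8, threshold=8, loss_at={6})
print(trace)
```

With a threshold of 8 the window doubles up to 8, grows by one per RTT after that, and falls back to 1 after the assumed timeout in RTT 6.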

TCP Reno

- Fast retransmit:
  - After receiving 3 duplicate ACKs
  - Resend first packet in window.
    - Try to avoid waiting for timeout
- Fast recovery:
  - After retransmission do not enter slowstart.
  - Threshold = Congwin/2
  - Congwin = 3 + Congwin/2
  - Each duplicate ACK received: Congwin++
  - After new ACK
    - Congwin = Threshold
    - return to congestion avoidance
- Single packet drop: great!

TCP Vegas:

- Idea: track the RTT
  - Try to avoid packet loss
  - latency increases: lower rate
  - latency very low: increase rate
- Implementation:
  - sample_RTT: current RTT
  - Base_RTT: min. over sample_RTT
  - Expected = Congwin / Base_RTT
  - Actual = number of packets sent / sample_RTT
  - $\Delta$ = Expected - Actual

TCP Vegas

- $\Delta$ = Expected - Actual
- Congestion Avoidance:
  - two parameters: $\alpha$ and $\beta$, $\alpha < \beta$
  - If ($\Delta < \alpha$) Congwin = Congwin + 1
  - If ($\Delta > \beta$) Congwin = Congwin - 1
  - Otherwise no change
  - Note: Once per RTT
- Slowstart
  - parameter $\gamma$
  - If ($\Delta > \gamma$) then move to congestion avoidance
- Timeout: same as TCP Tahoe
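The congestion avoidance rule is a three-way comparison applied once per RTT. The sketch below follows the slide's definitions of Expected and Actual; the function name `vegas_update`, its argument names, and the numeric thresholds in the usage lines are hypothetical, and the two thresholds are expressed in the same units as Expected and Actual (packets per unit time).

```python
def vegas_update(congwin, packets_sent, base_rtt, sample_rtt, alpha, beta):
    """One per-RTT Vegas congestion avoidance step (sketch).

    Returns the new congestion window.
    """
    expected = congwin / base_rtt        # rate if nothing were queued
    actual = packets_sent / sample_rtt   # rate actually achieved
    diff = expected - actual
    if diff < alpha:
        return congwin + 1               # little queuing: probe for more
    if diff > beta:
        return congwin - 1               # latency rising: back off
    return congwin                       # inside the target band


# RTT equals the base RTT: no queuing, so the window grows.
grow = vegas_update(10, 10, base_rtt=0.1, sample_rtt=0.1, alpha=10, beta=30)
# RTT has doubled: the achieved rate is far below expected, so the window shrinks.
shrink = vegas_update(20, 20, base_rtt=0.1, sample_rtt=0.2, alpha=10, beta=30)
# Mild queuing: the difference sits between the two thresholds.
hold = vegas_update(20, 20, base_rtt=0.1, sample_rtt=0.11, alpha=10, beta=30)
print(grow, shrink, hold)
```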

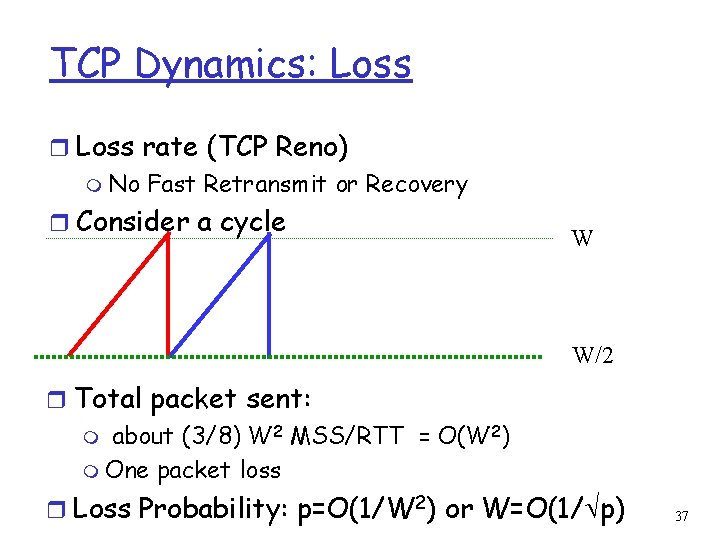

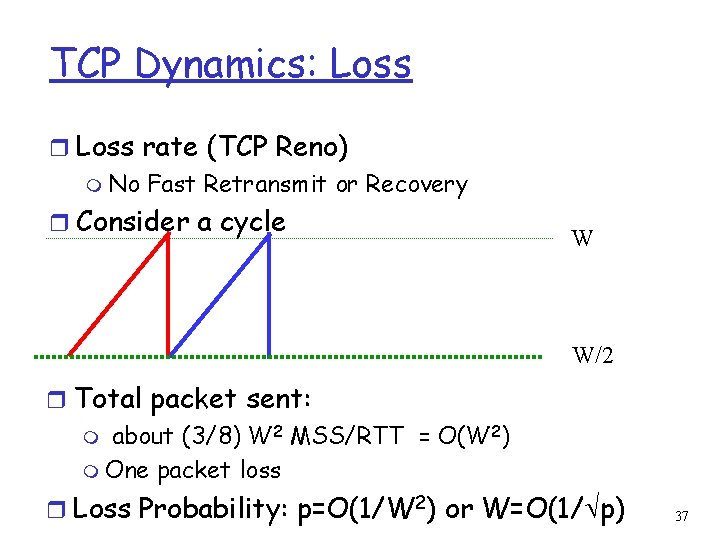

TCP Dynamics: Rate

- TCP Reno with NO Fast Retransmit or Recovery
- Sending rate: Congwin*MSS / RTT
- Assume fixed RTT

[Figure: Congwin over time, with levels W/2 and W marked]

- Actual sending rate:
  - between W*MSS / RTT and (1/2) W*MSS / RTT
  - Average (3/4) W*MSS / RTT

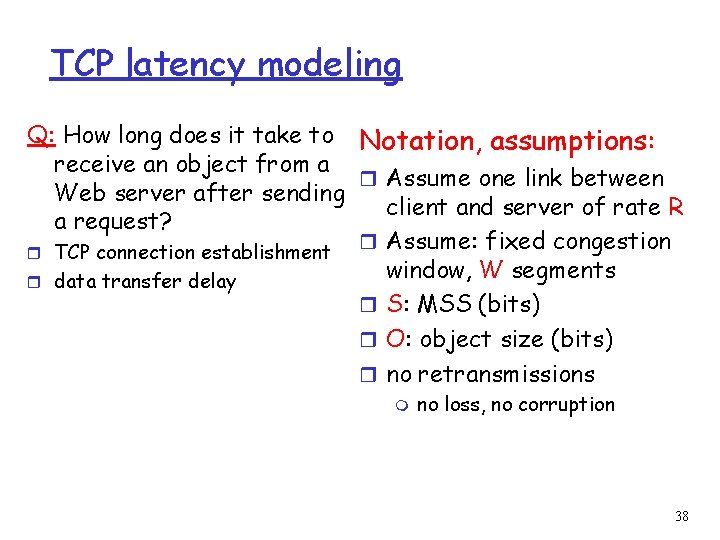

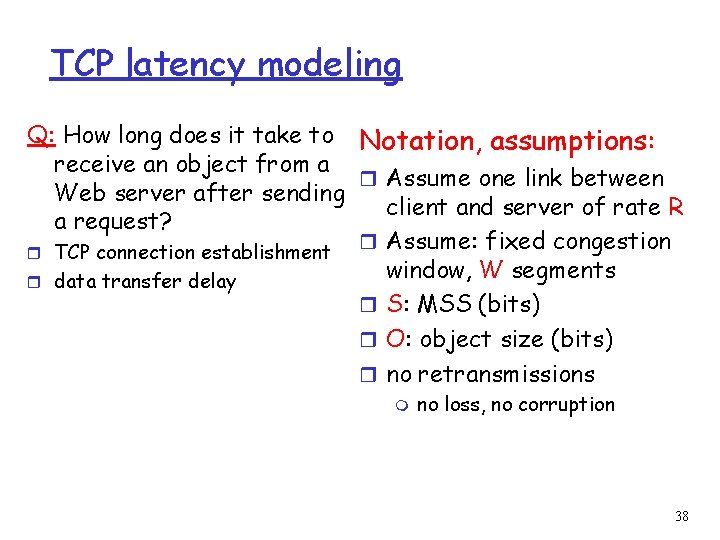

TCP Dynamics: Loss rate (TCP Reno)

- No Fast Retransmit or Recovery
- Consider one cycle of the window between W/2 and W
- Total packets sent in a cycle:
  - about $(3/8) W^2 = O(W^2)$
  - One packet loss
- Loss probability: $p = O(1/W^2)$, or $W = O(1/\sqrt{p})$
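The packet count per cycle can be worked out explicitly. The derivation below is a sketch under the slide's assumptions (fixed RTT, window growing by one segment per RTT from W/2 to W, exactly one loss per cycle); the final throughput expression is a consequence of those assumptions rather than something stated on the slide.

$$
\text{packets per cycle} \approx \sum_{w=W/2}^{W} w \approx \frac{W}{2}\cdot\frac{3W}{4} = \frac{3}{8}W^2
$$

$$
p \approx \frac{1}{\tfrac{3}{8}W^2} = \frac{8}{3W^2}
\quad\Longrightarrow\quad
W \approx \sqrt{\frac{8}{3p}}
$$

$$
\text{average rate} \approx \frac{3}{4}\cdot\frac{W \cdot MSS}{RTT}
= \sqrt{\frac{3}{2}}\cdot\frac{MSS}{RTT\sqrt{p}}
\approx \frac{1.22\, MSS}{RTT\sqrt{p}}
$$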

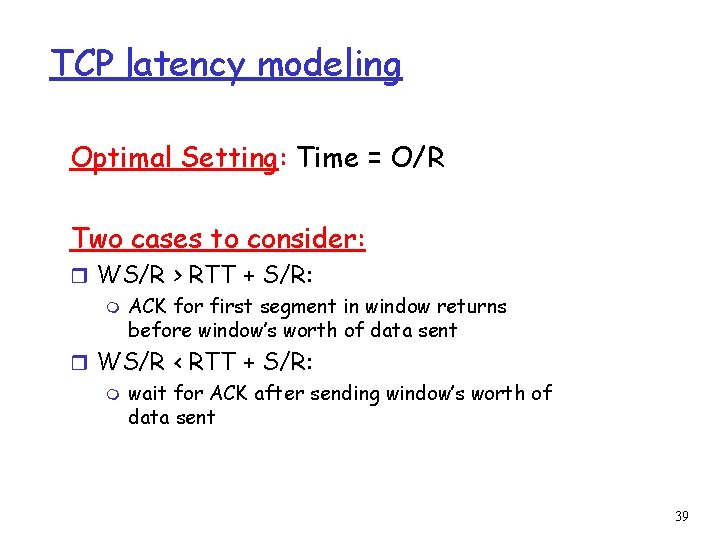

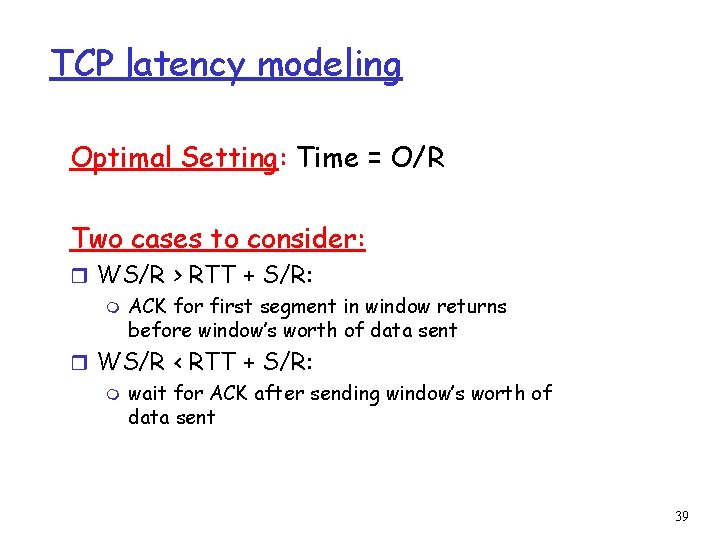

TCP latency modeling

Q: How long does it take to receive an object from a Web server after sending a request?

- TCP connection establishment
- data transfer delay

Notation, assumptions:

- Assume one link between client and server of rate R
- Assume: fixed congestion window, W segments
- S: MSS (bits)
- O: object size (bits)
- no retransmissions
  - no loss, no corruption

TCP latency modeling

Optimal setting: Time = O/R

Two cases to consider:

- WS/R > RTT + S/R:
  - ACK for first segment in window returns before window’s worth of data sent
- WS/R < RTT + S/R:
  - wait for ACK after sending window’s worth of data

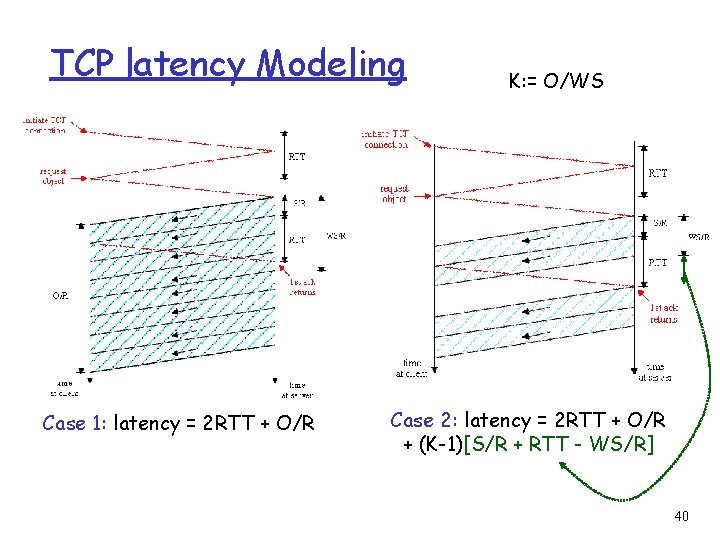

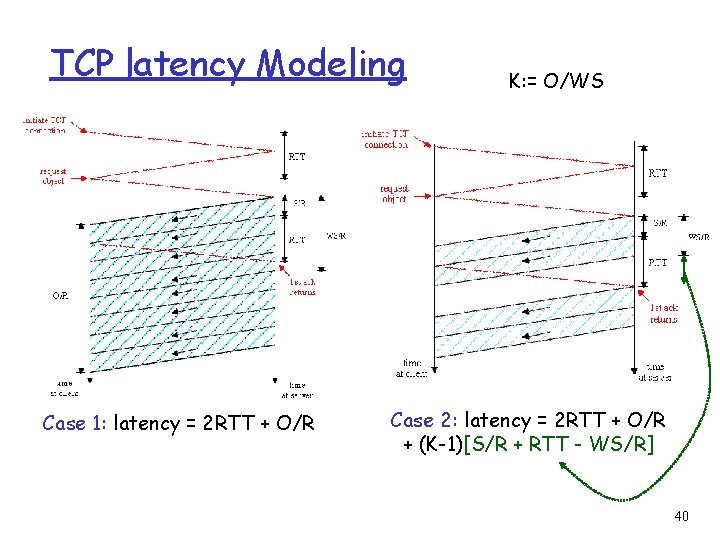

TCP latency modeling

- K := O/WS
- Case 1: latency = 2 RTT + O/R
- Case 2: latency = 2 RTT + O/R + (K-1)[S/R + RTT - WS/R]
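The two cases translate directly into a short function. This is a sketch: the name `static_window_latency` and the sample numbers are hypothetical, and K is rounded up to a whole number of windows, which is an assumption (the slide writes K := O/WS without saying how a fractional value is handled).

```python
import math


def static_window_latency(O, S, R, W, rtt):
    """Latency of fetching an object of O bits with a fixed window of W segments.

    S: segment size (bits), R: link rate (bits/sec), rtt: round trip time (sec).
    """
    base = 2 * rtt + O / R
    # Idle time per window while the server waits for the first ACK.
    stall = S / R + rtt - W * S / R
    if stall <= 0:
        # Case 1: the ACK returns before the window is exhausted.
        return base
    # Case 2: the server stalls after each of the first K-1 windows.
    K = math.ceil(O / (W * S))  # assumption: round up to whole windows
    return base + (K - 1) * stall


# S/R = 1 s and RTT = 2 s, so the boundary between the cases is W = 3.
case2 = static_window_latency(O=8000, S=1000, R=1000, W=2, rtt=2)
case1 = static_window_latency(O=8000, S=1000, R=1000, W=4, rtt=2)
print(case2, case1)
```

With W = 2 the server stalls for one second after each of the first three windows; with W = 4 it never stalls and only the 2 RTT + O/R term remains.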

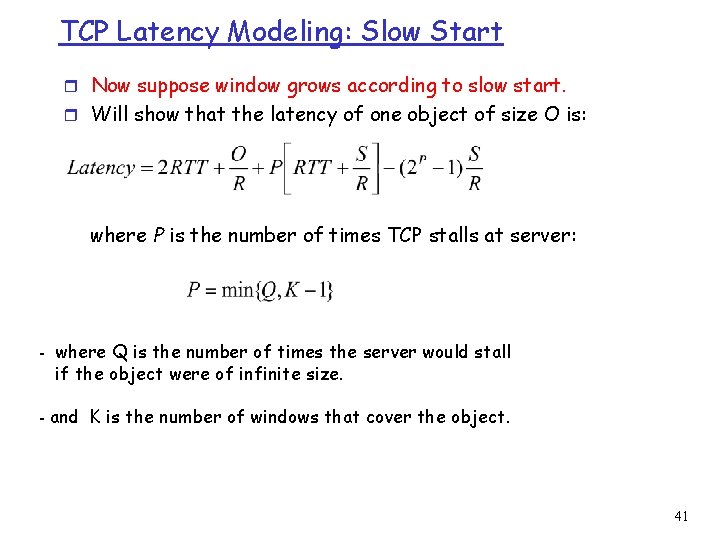

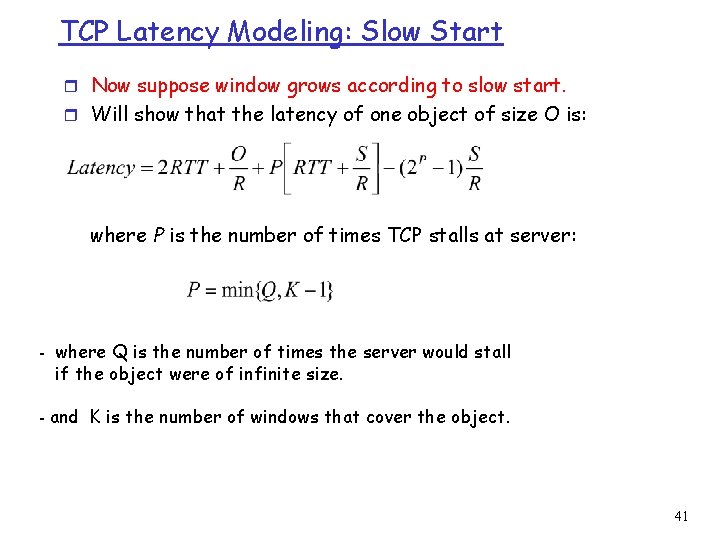

TCP Latency Modeling: Slow Start

- Now suppose the window grows according to slow start.
- Will show that the latency of one object of size O depends on:
  - P, the number of times TCP stalls at the server;
  - Q, the number of times the server would stall if the object were of infinite size;
  - K, the number of windows that cover the object.

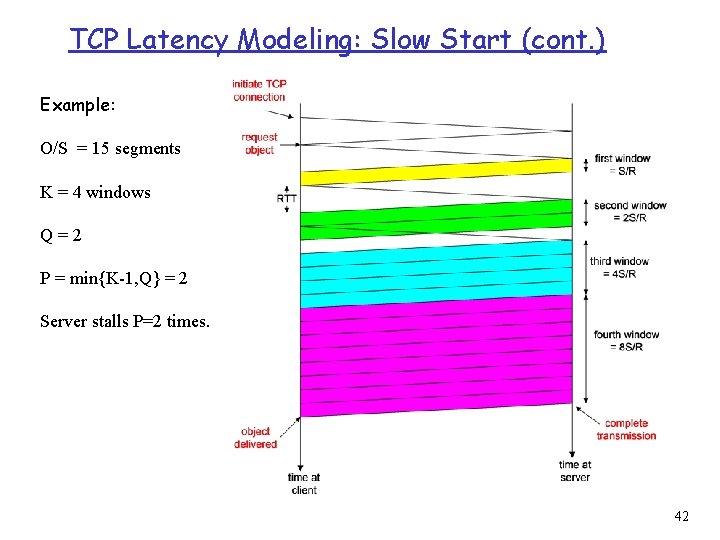

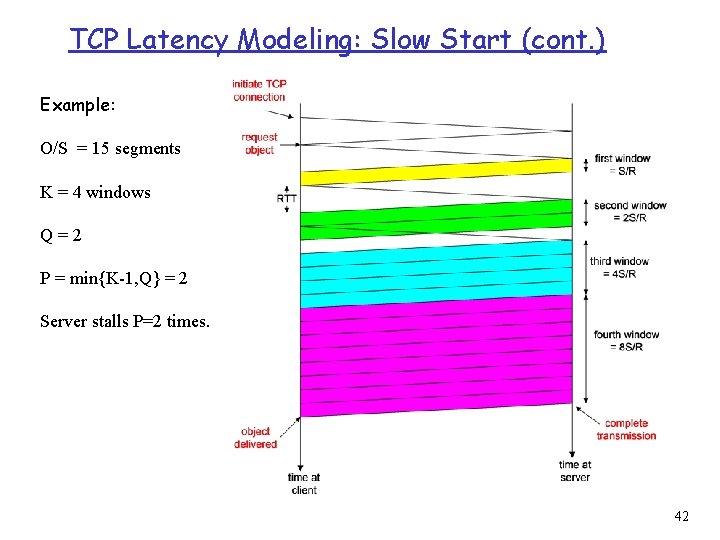

TCP Latency Modeling: Slow Start (cont.)

Example:

- O/S = 15 segments
- K = 4 windows
- Q = 2
- P = min{K-1, Q} = 2

Server stalls P = 2 times.
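The example values can be reproduced numerically. The closed forms used below for K, Q, and the latency are the ones commonly given in textbook treatments of this model; they do not appear in the slide text itself, so treat them as assumptions, along with the function name `slow_start_latency` and the choice S/R = 1 s, RTT = 2 s.

```python
import math


def slow_start_latency(O, S, R, rtt):
    """Return (K, Q, P, latency) for the slow start latency model (sketch)."""
    # K: windows of size 1, 2, 4, ... needed to cover O/S segments.
    K = math.ceil(math.log2(O / S + 1))
    # Q: stalls that would occur for an infinitely large object.
    Q = math.floor(math.log2(1 + rtt / (S / R))) + 1
    # P: stalls that actually occur (from the slide: P = min{K-1, Q}).
    P = min(K - 1, Q)
    # Assumed closed form: 2 RTT + O/R + P (RTT + S/R) - (2^P - 1) S/R.
    latency = 2 * rtt + O / R + P * (rtt + S / R) - (2 ** P - 1) * S / R
    return K, Q, P, latency


# O/S = 15 segments, as in the example above.
K, Q, P, latency = slow_start_latency(O=15000, S=1000, R=1000, rtt=2)
print(K, Q, P, latency)
```

With these numbers the server waits 2 s after the first window and 1 s after the second, and not at all once the window reaches four segments, which accounts for the 3 s added to 2 RTT + O/R.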

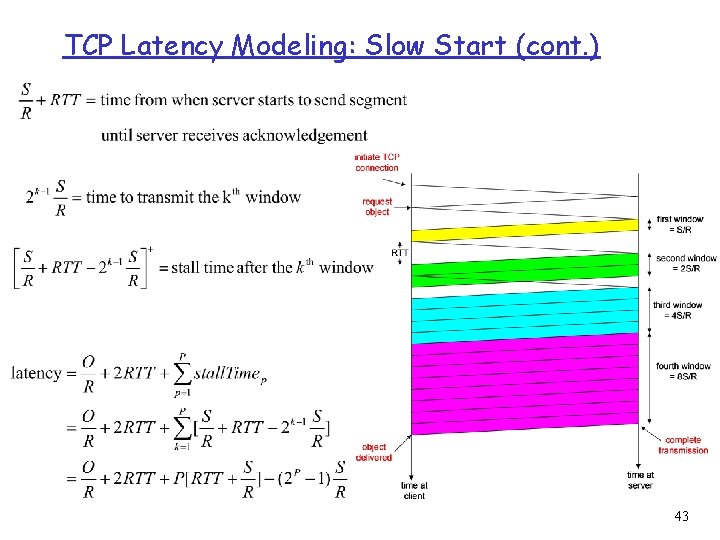

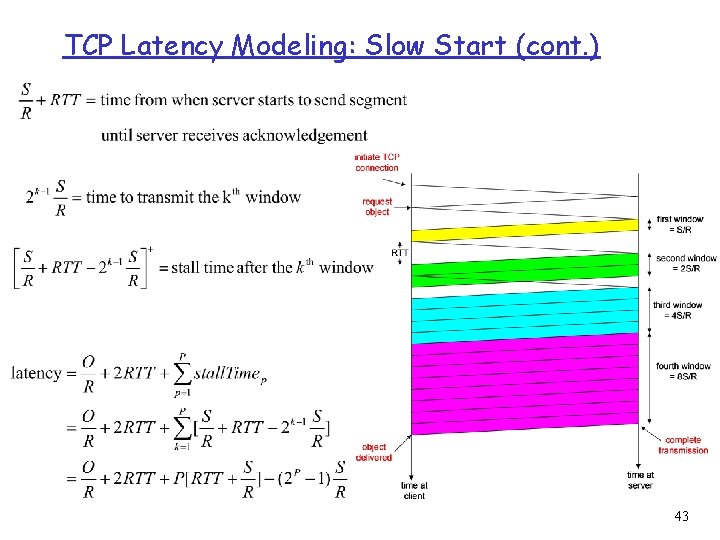

TCP Latency Modeling: Slow Start (cont.)

Flow Control

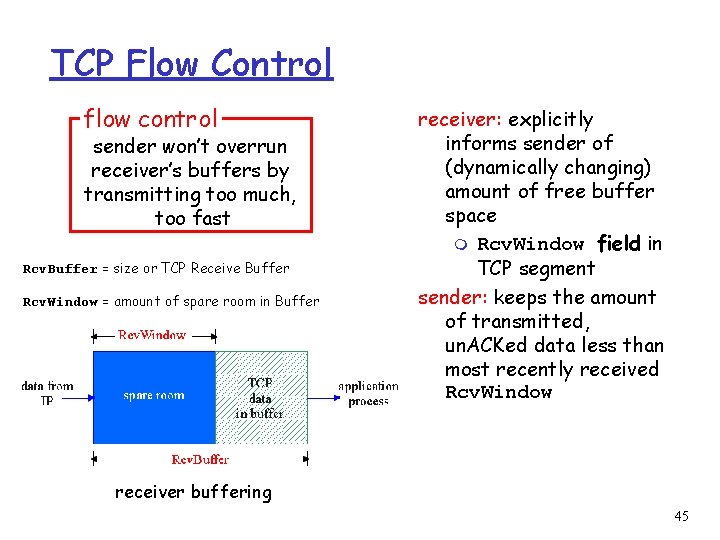

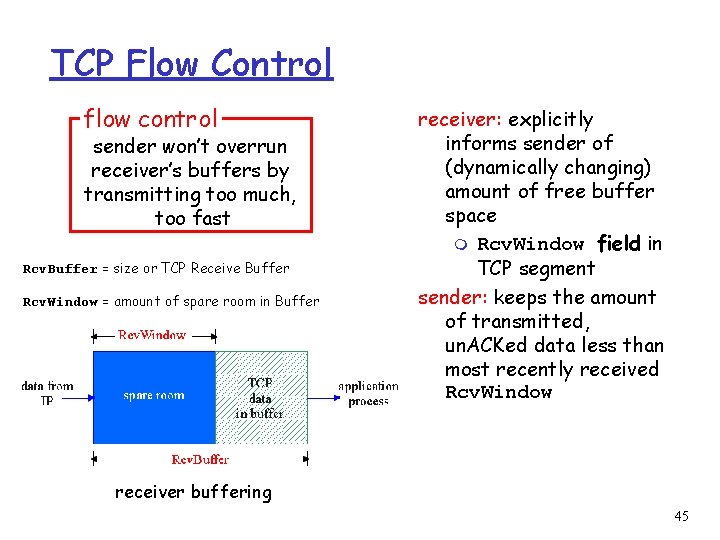

TCP Flow Control

Flow control: sender won’t overrun receiver’s buffers by transmitting too much, too fast.

- RcvBuffer = size of TCP receive buffer
- RcvWindow = amount of spare room in buffer
- receiver: explicitly informs sender of (dynamically changing) amount of free buffer space
  - RcvWindow field in TCP segment
- sender: keeps the amount of transmitted, unACKed data less than most recently received RcvWindow

[Figure: receiver buffering]

TCP: setting timeouts

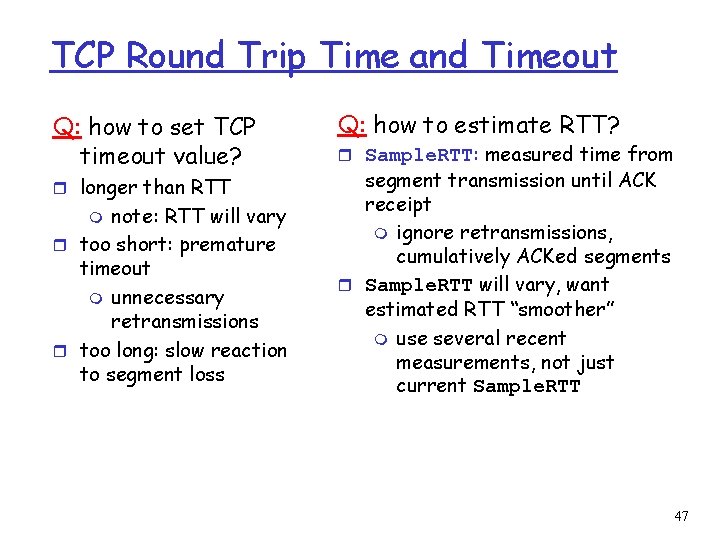

TCP Round Trip Time and Timeout

Q: how to set TCP timeout value?

- longer than RTT
  - note: RTT will vary
- too short: premature timeout
  - unnecessary retransmissions
- too long: slow reaction to segment loss

Q: how to estimate RTT?

- SampleRTT: measured time from segment transmission until ACK receipt
  - ignore retransmissions, cumulatively ACKed segments
- SampleRTT will vary, want estimated RTT “smoother”
  - use several recent measurements, not just current SampleRTT

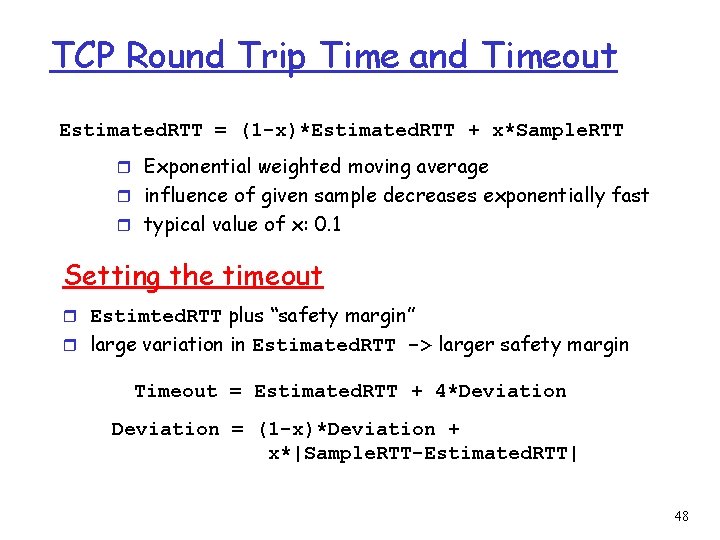

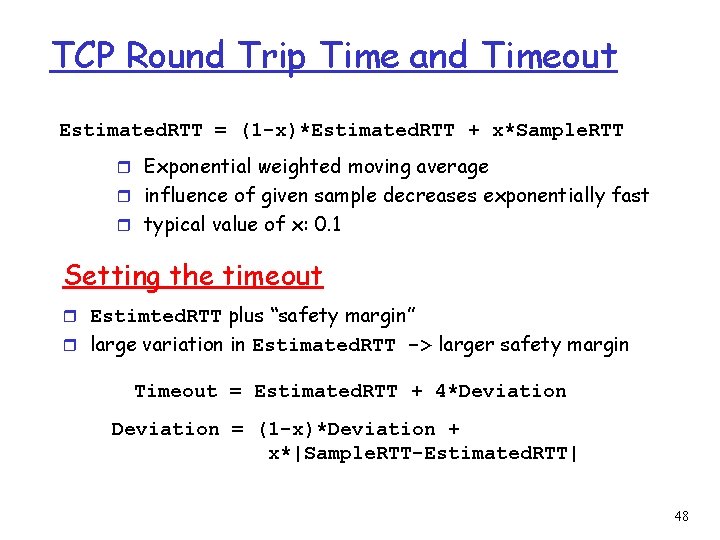

TCP Round Trip Time and Timeout

EstimatedRTT = (1-x)*EstimatedRTT + x*SampleRTT

- Exponential weighted moving average
- influence of given sample decreases exponentially fast
- typical value of x: 0.1

Setting the timeout

- EstimatedRTT plus “safety margin”
- large variation in EstimatedRTT -> larger safety margin

Timeout = EstimatedRTT + 4*Deviation

Deviation = (1-x)*Deviation + x*|SampleRTT - EstimatedRTT|
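The three update rules fit in a small class. This is a sketch with some choices the slide leaves open, all of them assumptions: the estimate is seeded with the first sample, Deviation starts at 0, and Deviation is updated using EstimatedRTT from before the current sample is folded in. The class name `RttEstimator` is hypothetical.

```python
class RttEstimator:
    """EWMA estimate of RTT and its deviation (sketch, times in ms)."""

    def __init__(self, first_sample, x=0.1):
        self.x = x
        self.estimated = first_sample   # assumption: seed with first sample
        self.deviation = 0.0            # assumption: start with no deviation

    def update(self, sample):
        x = self.x
        # Deviation uses the estimate from before this sample (assumption).
        self.deviation = (1 - x) * self.deviation + x * abs(sample - self.estimated)
        self.estimated = (1 - x) * self.estimated + x * sample
        return self.timeout()

    def timeout(self):
        # EstimatedRTT plus a safety margin of four deviations.
        return self.estimated + 4 * self.deviation


est = RttEstimator(first_sample=100.0)
rto = est.update(200.0)   # one sample far from the estimate
print(est.estimated, est.deviation, rto)
```

A single outlier moves the estimate by only a tenth of the gap, while the deviation term widens the timeout noticeably, which is the "larger safety margin" behaviour described above.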