Issues in congestion control

- Slides: 17

Issues in congestion control

- Traffic congestion: intrinsic nature of statistical multiplexing
  - hosts send data whenever needed
  - control congestion only when it occurs
- Congestion control: get the network out of a congested state
- Congestion avoidance: reduce the chance of the network getting into a congested state
  - taking action early, before routers fill up their buffer queues and are forced to drop packets
  - does not guarantee a congestion-free network

CS 118, 3-7-2000, Lixia Zhang/UCLA

Congestion Notification

Signal hosts to adjust their transmission rates

- extremely difficult, if not impossible, to tell individual hosts exactly how fast to send
  - traffic load changes randomly
  - communication with end users takes time
    - the delay is different for different users
  - very large number of users sharing the same net
- existing schemes
  - default: TCP takes packet losses as a congestion signal
  - DECBIT
  - RED: Random Early Detection of congestion

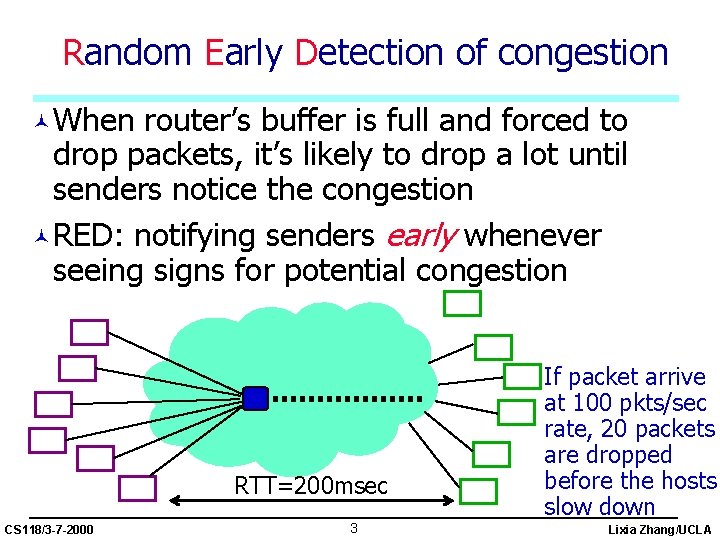

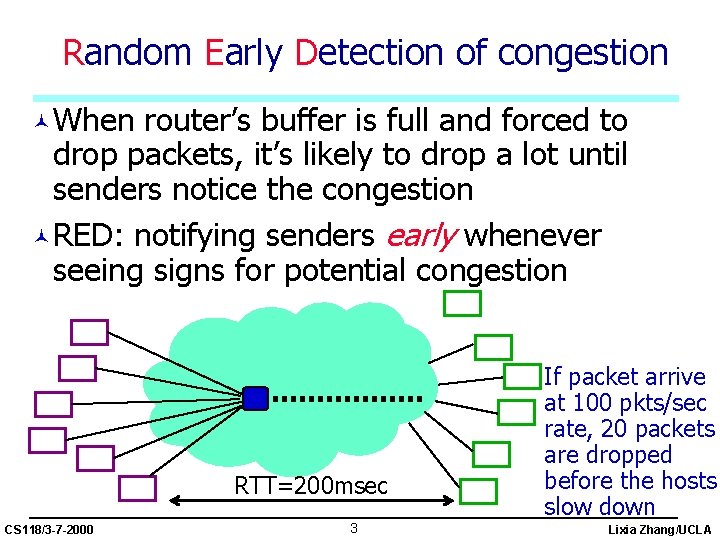

Random Early Detection of congestion

- When a router's buffer is full and it is forced to drop packets, it is likely to drop many of them before senders notice the congestion
  - example with RTT = 200 msec: if packets arrive at a rate of 100 pkts/sec, 20 packets are dropped before the hosts slow down
- RED: notify senders early, whenever there are signs of potential congestion
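
The figure of 20 dropped packets follows from a back-of-the-envelope estimate. It assumes (as the slide appears to) that senders need about one round-trip time to notice the loss and slow down, and that every packet arriving at the full buffer during that interval is dropped:

$$
\text{packets dropped} \approx \text{arrival rate} \times \text{RTT} = 100\ \text{pkts/sec} \times 0.2\ \text{sec} = 20\ \text{pkts}
$$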

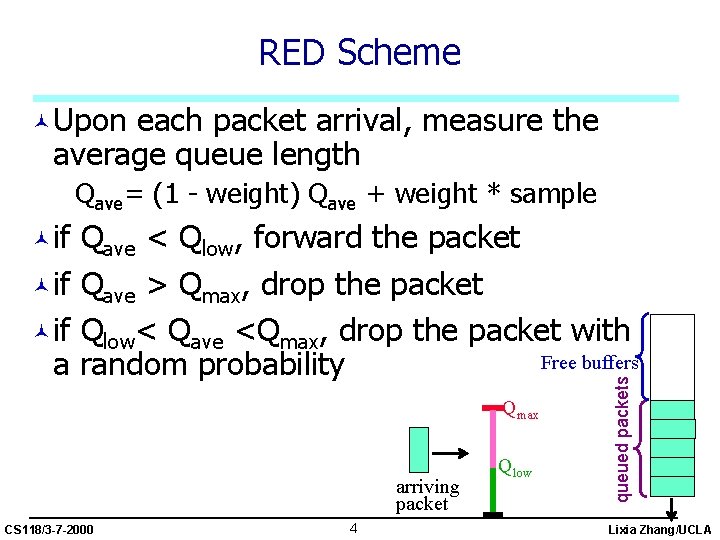

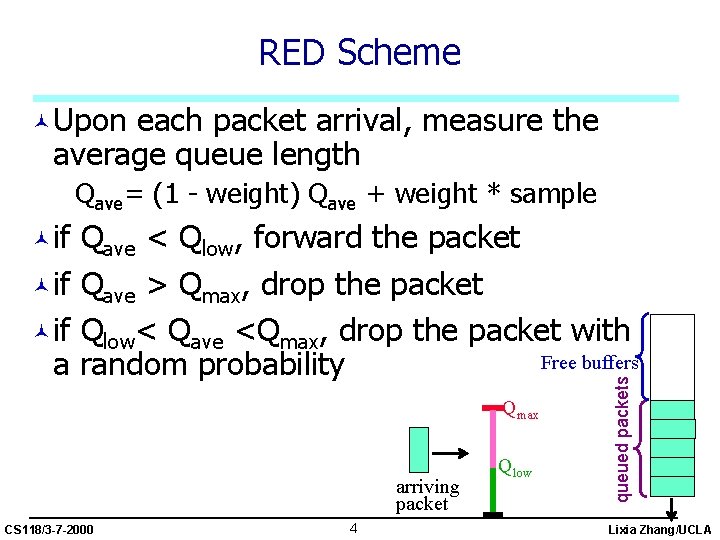

RED Scheme

- Upon each packet arrival, measure the average queue length: $Q_{ave} = (1 - \text{weight}) \cdot Q_{ave} + \text{weight} \cdot \text{sample}$
- if $Q_{ave} < Q_{low}$, forward the packet
- if $Q_{ave} > Q_{max}$, drop the packet
- if $Q_{low} < Q_{ave} < Q_{max}$, drop the packet with a random probability

Figure: a router buffer with an arriving packet, queued packets, free buffers, and the thresholds $Q_{low}$ and $Q_{max}$.
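
The three cases above can be sketched in a few lines of Python. This is only an illustration, not the reference RED algorithm: the class name `RedQueue`, the default `weight`, the `max_drop_prob` parameter, and the linear ramp of the drop probability between the two thresholds are assumptions, since the slide only says "a random probability". Averages exactly equal to a threshold are treated as part of the middle region.

```python
import random


class RedQueue:
    """Illustrative RED drop decision; parameter names and defaults are assumptions."""

    def __init__(self, q_low, q_max, weight=0.002, max_drop_prob=0.1, rng=None):
        if not q_low < q_max:
            raise ValueError("q_low must be smaller than q_max")
        self.q_low = q_low
        self.q_max = q_max
        self.weight = weight
        self.max_drop_prob = max_drop_prob  # assumption: not specified on the slide
        self.q_ave = 0.0
        self.rng = rng or random.Random()

    def update_average(self, sample):
        # Qave = (1 - weight) * Qave + weight * sample
        self.q_ave = (1 - self.weight) * self.q_ave + self.weight * sample
        return self.q_ave

    def drop_probability(self):
        if self.q_ave < self.q_low:
            return 0.0  # always forward
        if self.q_ave > self.q_max:
            return 1.0  # always drop
        # Assumption: probability grows linearly from 0 to max_drop_prob.
        span = self.q_max - self.q_low
        return self.max_drop_prob * (self.q_ave - self.q_low) / span

    def on_arrival(self, current_queue_length):
        """Update the average with the current queue length and decide."""
        self.update_average(current_queue_length)
        if self.rng.random() < self.drop_probability():
            return "drop"
        return "forward"
```

A small `weight` makes the average react slowly, so short bursts do not trigger drops while sustained queue growth does.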

RED (cont)

- Why drop randomly
  - stateless control: keep no record of individual data flows
  - whoever sends faster than the average receives proportionally more congestion signals
- Congestion feedback is implicit
  - just drop the packet; TCP will slow down
- can make it explicit by marking the packet instead of dropping it
  - new effort: Explicit Congestion Notification (ECN) for TCP

Controlling data delivery quality

- Congestion control
  - control total traffic to be under the resource limit
  - under the constraints, give everyone an equal share
    - open question: how to define "everyone"?
    - no quantitative statement on throughput or delay
- Quantitative performance control
  - allocate bandwidth to a specific class of traffic
    - either static allocation or dynamic signaling
  - assure that only qualified packets can use reserved bandwidth
    - packet classification
    - packet scheduling

What is a "flow"?

- a number of definitions exist
  - from one application process to another
  - from one source host to one destination host
  - all packets from one organization to another
- why we care: need to identify packets that should be treated as one entity and receive the same service
- how to identify a flow
  - explicit: a virtual circuit
  - implicit: routers examine packet headers to find out
    - the same source-destination pair? or
    - carrying the same service class flag?
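
Implicit flow identification amounts to choosing which header fields form the flow key. The sketch below groups the same packets under three different keys. The `Packet` fields and the function names are hypothetical and do not correspond to any real header layout.

```python
from collections import defaultdict
from typing import NamedTuple


class Packet(NamedTuple):
    # Hypothetical, simplified header fields.
    src_addr: str
    src_port: int
    dst_addr: str
    dst_port: int
    service_class: int


def host_pair_key(pkt):
    """One flow per source host / destination host pair."""
    return (pkt.src_addr, pkt.dst_addr)


def process_pair_key(pkt):
    """One flow per pair of application processes (address + port on each side)."""
    return (pkt.src_addr, pkt.src_port, pkt.dst_addr, pkt.dst_port)


def service_class_key(pkt):
    """One flow per service class flag."""
    return pkt.service_class


def group_into_flows(packets, key_fn):
    """Group packets that share the same key into one flow."""
    flows = defaultdict(list)
    for pkt in packets:
        flows[key_fn(pkt)].append(pkt)
    return dict(flows)
```

The same traffic yields a different number of flows depending on the key, which is why the definition of "flow" matters for any per-flow service.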

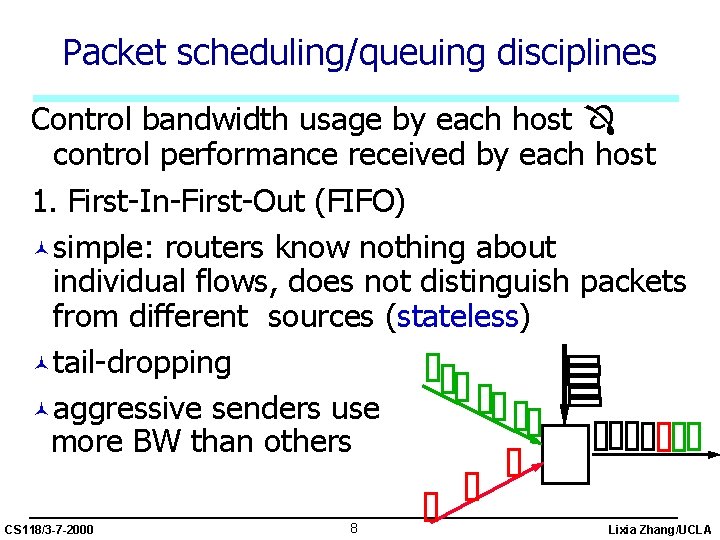

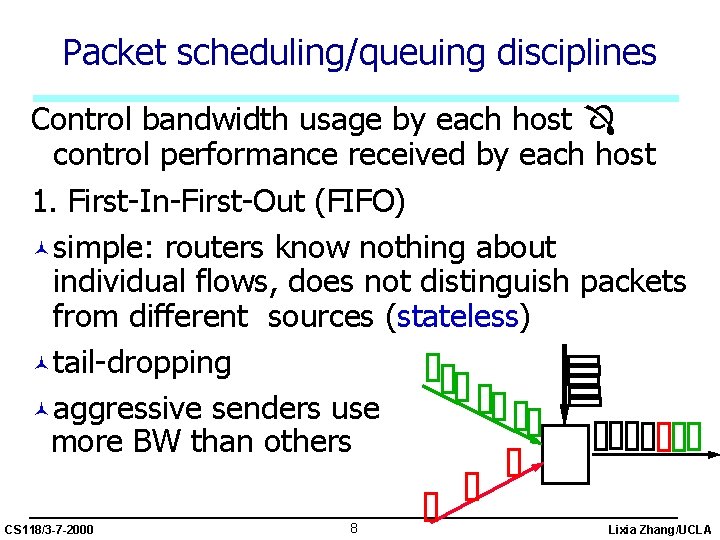

Packet scheduling/queuing disciplines

Control bandwidth usage by each host; control performance received by each host

1. First-In-First-Out (FIFO)

- simple: routers know nothing about individual flows and do not distinguish packets from different sources (stateless)
- tail-dropping
- aggressive senders use more bandwidth than others
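
A toy simulation can make the last point concrete. It is a sketch under simplifying assumptions that are not on the slide: time advances in discrete ticks, every sender offers a fixed number of packets per tick, arrivals within a tick are shuffled randomly, and the router serves a fixed number of packets per tick. The function name and parameters are made up for the example.

```python
import random
from collections import Counter, deque


def simulate_fifo(offered_per_tick, capacity, service_per_tick, ticks, seed=0):
    """Shared FIFO queue with tail-drop; returns (delivered, dropped) per sender."""
    rng = random.Random(seed)
    queue = deque()
    delivered, dropped = Counter(), Counter()
    for _ in range(ticks):
        # Build this tick's arrivals and mix them in random order.
        arrivals = [
            sender
            for sender, n_pkts in offered_per_tick.items()
            for _i in range(n_pkts)
        ]
        rng.shuffle(arrivals)
        for sender in arrivals:
            if len(queue) < capacity:
                queue.append(sender)
            else:
                dropped[sender] += 1  # tail-drop: the arriving packet is lost
        # Serve packets strictly in arrival order.
        for _i in range(min(service_per_tick, len(queue))):
            delivered[queue.popleft()] += 1
    return delivered, dropped


if __name__ == "__main__":
    delivered, dropped = simulate_fifo(
        {"polite": 3, "aggressive": 9}, capacity=8, service_per_tick=4, ticks=1000
    )
    print("delivered:", dict(delivered))
    print("dropped:", dict(dropped))
```

Because the queue does not distinguish senders, each sender's share of the link roughly tracks its share of the offered load, so the sender that offers three times as much traffic ends up with about three times the bandwidth.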

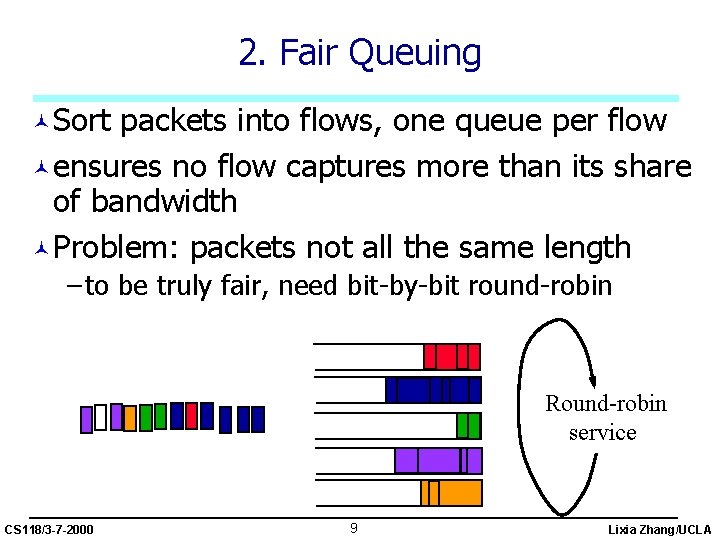

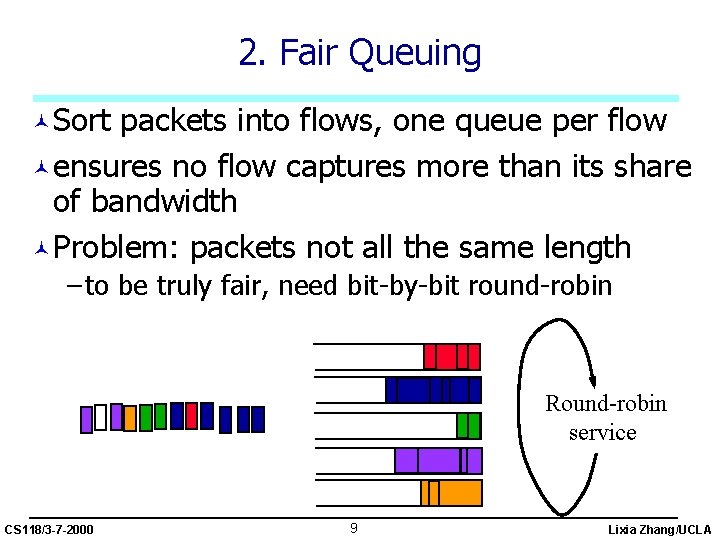

2. Fair Queuing

- Sort packets into flows, one queue per flow
- ensures no flow captures more than its share of bandwidth
- Problem: packets are not all the same length
  - to be truly fair, need bit-by-bit round-robin

Figure: round-robin service
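
The packet-length problem is easy to see with a small calculation. The sketch below assumes every flow always has a packet waiting and that each flow uses a single fixed packet size; the function name is made up for the example.

```python
def round_robin_bits(packet_sizes, rounds):
    """Packet-by-packet round-robin: one packet per flow per round.

    packet_sizes maps a flow name to its (fixed) packet length in bits.
    Returns the number of bits each flow has sent after `rounds` rounds.
    """
    sent = {flow: 0 for flow in packet_sizes}
    for _ in range(rounds):
        for flow, size in packet_sizes.items():
            sent[flow] += size
    return sent


print(round_robin_bits({"small": 500, "large": 1500}, rounds=10))
# prints {'small': 5000, 'large': 15000}
```

Each flow sends the same number of packets, yet the flow with larger packets gets three times the bandwidth, which is why fairness has to be defined per bit rather than per packet.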

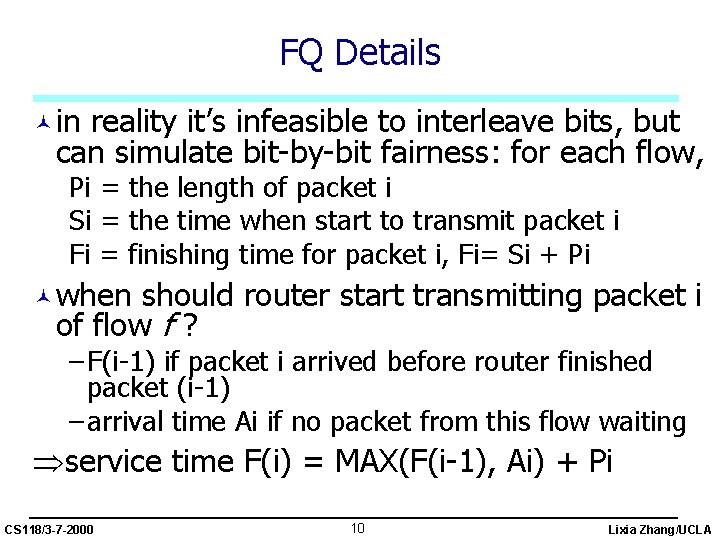

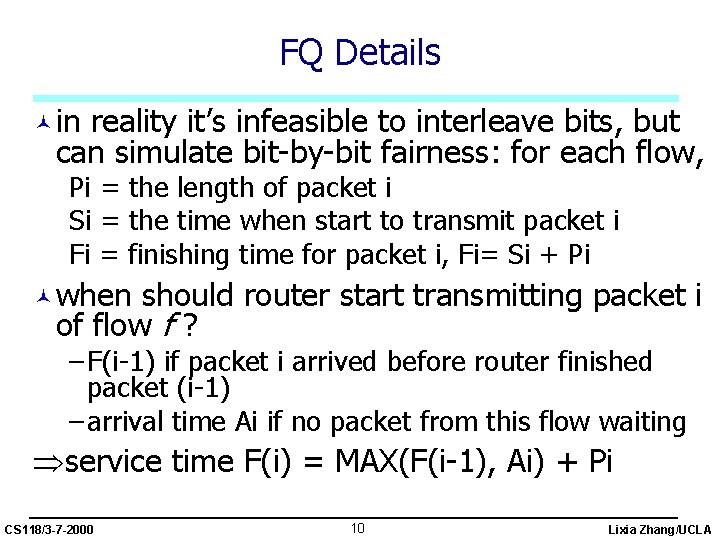

FQ Details

- in reality it is infeasible to interleave bits, but bit-by-bit fairness can be simulated: for each flow,
  - $P_i$ = the length of packet $i$
  - $S_i$ = the time when the router starts to transmit packet $i$
  - $F_i$ = the finishing time for packet $i$, $F_i = S_i + P_i$
- when should the router start transmitting packet $i$ of flow $f$?
  - at $F_{i-1}$, if packet $i$ arrived before the router finished packet $i-1$
  - at arrival time $A_i$, if no packet from this flow is waiting for service
- so $F_i = \max(F_{i-1}, A_i) + P_i$
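
The recurrence for the finishing time can be evaluated directly for one flow. The sketch below assumes times are measured in bit-times, so that a packet length can be added to a time as the slide does, and that the flow starts with $F_0 = 0$; the function name is made up for the example.

```python
def finish_times(arrivals):
    """Compute F_i = max(F_(i-1), A_i) + P_i for one flow.

    arrivals is a list of (arrival_time, packet_length) pairs in arrival order.
    """
    finishes = []
    prev_finish = 0  # assumption: F_0 = 0 before the first packet
    for arrival_time, length in arrivals:
        start = max(prev_finish, arrival_time)  # S_i
        prev_finish = start + length            # F_i = S_i + P_i
        finishes.append(prev_finish)
    return finishes


print(finish_times([(0, 100), (10, 50), (300, 20)]))
# prints [100, 150, 320]
```

The second packet arrives while the first is still being served, so it starts at $F_1 = 100$; the third arrives at an idle flow, so it starts at its own arrival time, 300.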

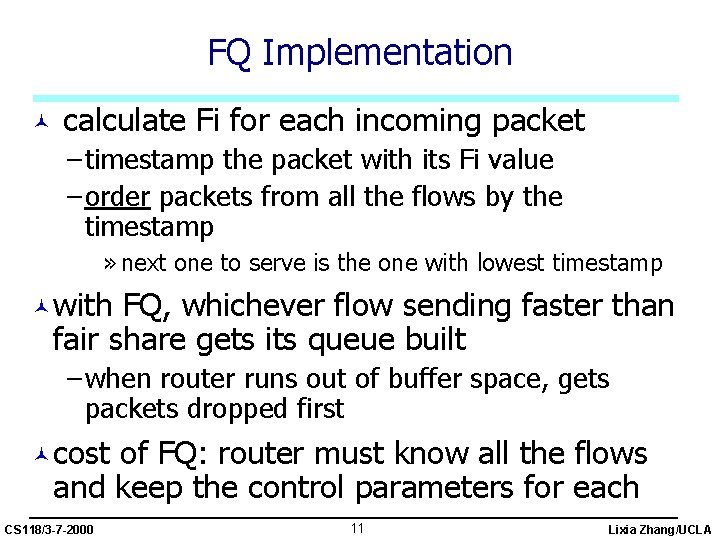

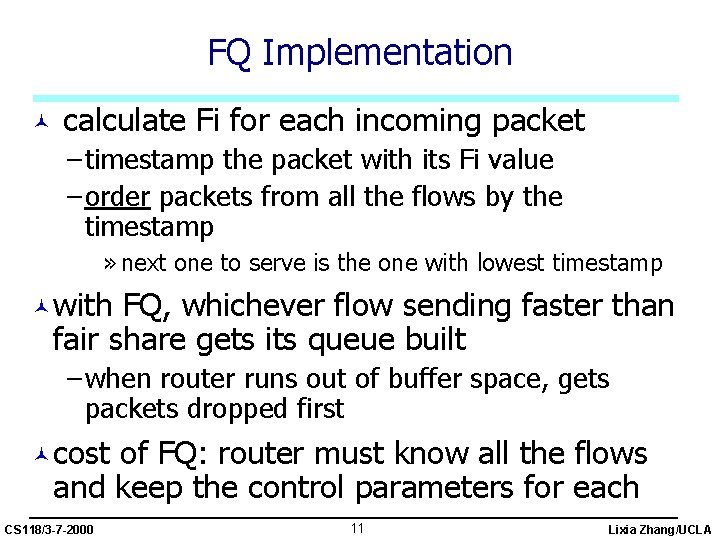

FQ Implementation

- calculate $F_i$ for each incoming packet
  - timestamp the packet with its $F_i$ value
  - order packets from all the flows by timestamp
    - the next one to serve is the one with the lowest timestamp
- with FQ, whichever flow sends faster than its fair share builds up its own queue
  - when the router runs out of buffer space, that flow gets its packets dropped first
- cost of FQ: the router must know all the flows and keep the control parameters for each
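
The timestamp ordering maps naturally onto a priority queue. The following is a minimal sketch, not a production scheduler: it uses the packet's arrival time directly as the slides do, keeps one "last finish time" per flow as the per-flow state, and leaves out buffer limits and the drop policy. The class and method names are made up for the example.

```python
import heapq
from itertools import count


class FairQueue:
    """Serve packets in order of their finish-time timestamp."""

    def __init__(self):
        self._heap = []
        self._last_finish = {}  # per-flow state: F of the flow's previous packet
        self._tie = count()     # keeps ordering stable when timestamps are equal

    def enqueue(self, flow, arrival_time, length):
        """Timestamp the packet with F = max(previous F, arrival) + length."""
        start = max(self._last_finish.get(flow, 0), arrival_time)
        finish = start + length
        self._last_finish[flow] = finish
        heapq.heappush(self._heap, (finish, next(self._tie), flow, length))
        return finish

    def dequeue(self):
        """Return (flow, length, finish) for the packet with the lowest timestamp."""
        finish, _, flow, length = heapq.heappop(self._heap)
        return flow, length, finish

    def __len__(self):
        return len(self._heap)
```

If flow A enqueues three 100-bit packets at time 0 and flow B enqueues one 150-bit packet at time 0, the timestamps are 100, 200, 300 for A and 150 for B, so B's packet is served second even though A sent its burst first. The `_last_finish` dictionary is the per-flow state that the slide lists as the cost of FQ.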

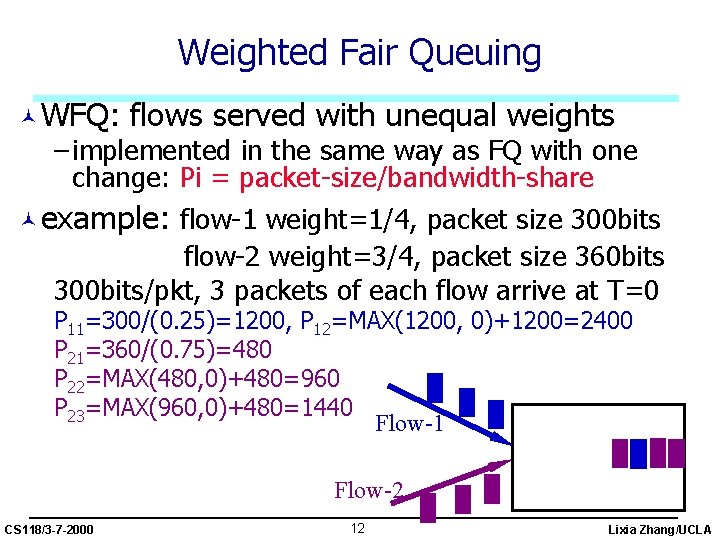

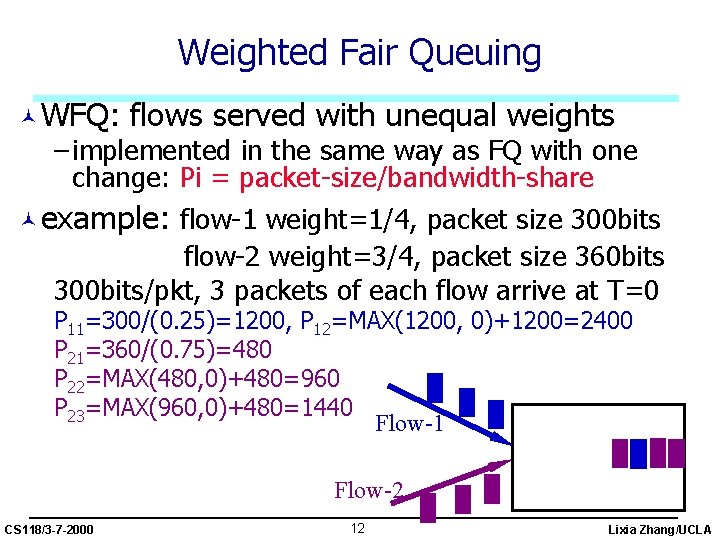

Weighted Fair Queuing

- WFQ: flows served with unequal weights
  - implemented in the same way as FQ with one change: $P_i$ = packet-size / bandwidth-share
- example: 3 packets of each flow arrive at $T = 0$
  - flow-1: weight = 1/4, packet size 300 bits, so $P = 300 / 0.25 = 1200$
    - $F_{1,1} = 1200$, $F_{1,2} = \max(1200, 0) + 1200 = 2400$
  - flow-2: weight = 3/4, packet size 360 bits, so $P = 360 / 0.75 = 480$
    - $F_{2,1} = 480$, $F_{2,2} = \max(480, 0) + 480 = 960$, $F_{2,3} = \max(960, 0) + 480 = 1440$
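
The numbers in this example can be reproduced with a short script. It is a sketch that assumes all packets of a flow have the same size and arrive at the same time, as in the example; the function names are made up.

```python
def wfq_finish_times(packet_bits, weight, n_packets, arrival_time=0):
    """Finish times for n equal-size packets of one flow under WFQ.

    The per-packet cost is packet_bits / weight, used in
    F_i = max(F_(i-1), A_i) + P_i.
    """
    cost = packet_bits / weight
    finishes = []
    prev_finish = 0
    for _ in range(n_packets):
        prev_finish = max(prev_finish, arrival_time) + cost
        finishes.append(prev_finish)
    return finishes


def service_order(flows):
    """Return flow names in order of increasing finish time."""
    tagged = [(finish, name) for name, fins in flows.items() for finish in fins]
    return [name for _finish, name in sorted(tagged)]


flow1 = wfq_finish_times(300, 0.25, 3)  # 1200, 2400, 3600
flow2 = wfq_finish_times(360, 0.75, 3)  # 480, 960, 1440
print(service_order({"flow-1": flow1, "flow-2": flow2}))
```

Sorting by finish time serves two flow-2 packets before the first flow-1 packet, and all three flow-2 packets before the second flow-1 packet, reflecting the 3:1 weights.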

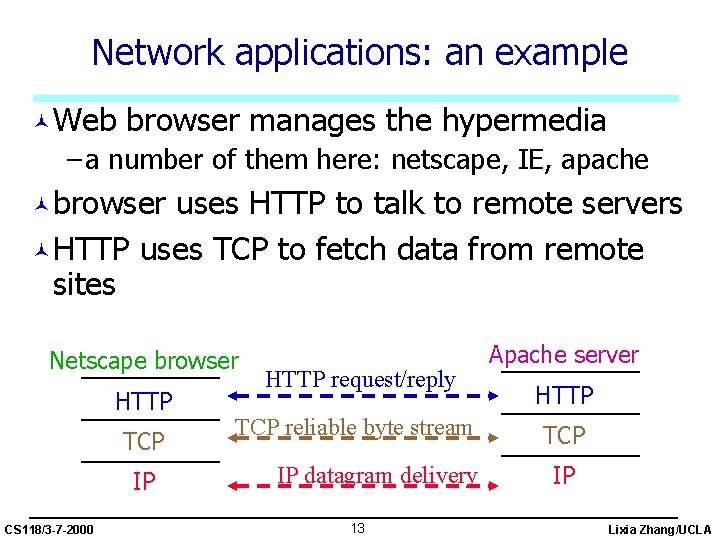

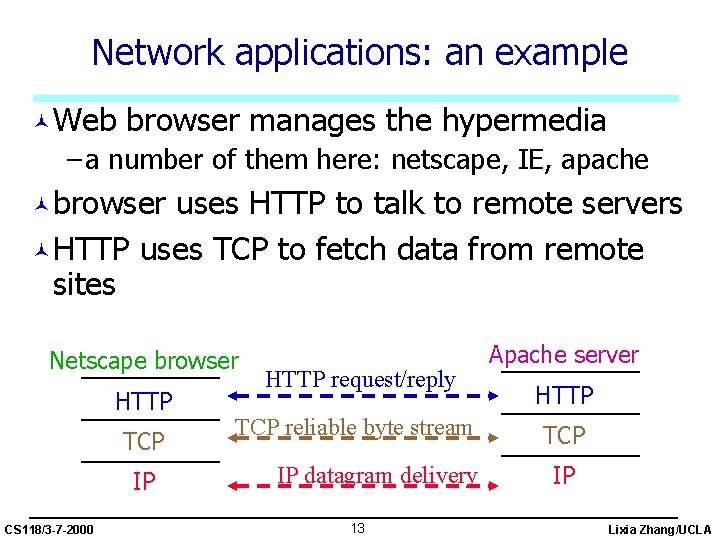

Network applications: an example

- Web browser manages the hypermedia
  - a number of programs here: Netscape and IE (browsers), Apache (server)
- browser uses HTTP to talk to remote servers
- HTTP uses TCP to fetch data from remote sites

Figure: a Netscape browser and an Apache server, each with an HTTP/TCP/IP stack; the peer layers exchange HTTP request/reply, a TCP reliable byte stream, and IP datagram delivery.

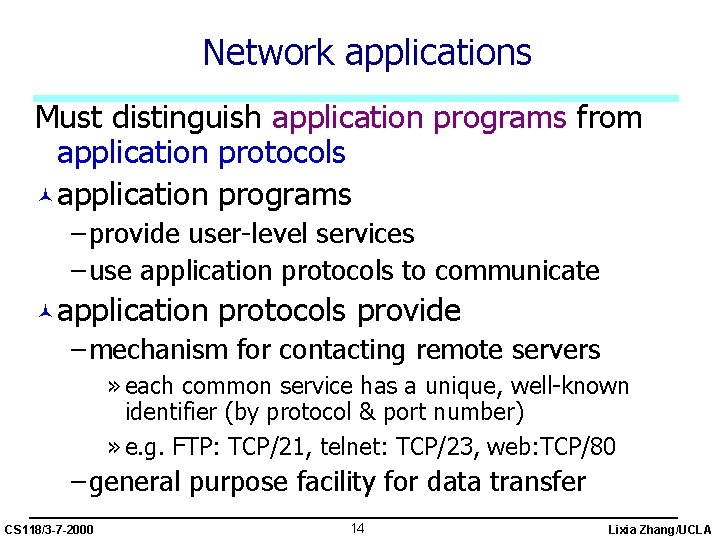

Network applications

Must distinguish application programs from application protocols

- application programs
  - provide user-level services
  - use application protocols to communicate
- application protocols provide
  - a mechanism for contacting remote servers
    - each common service has a unique, well-known identifier (by protocol and port number)
    - e.g. FTP: TCP/21, telnet: TCP/23, web: TCP/80
  - a general-purpose facility for data transfer

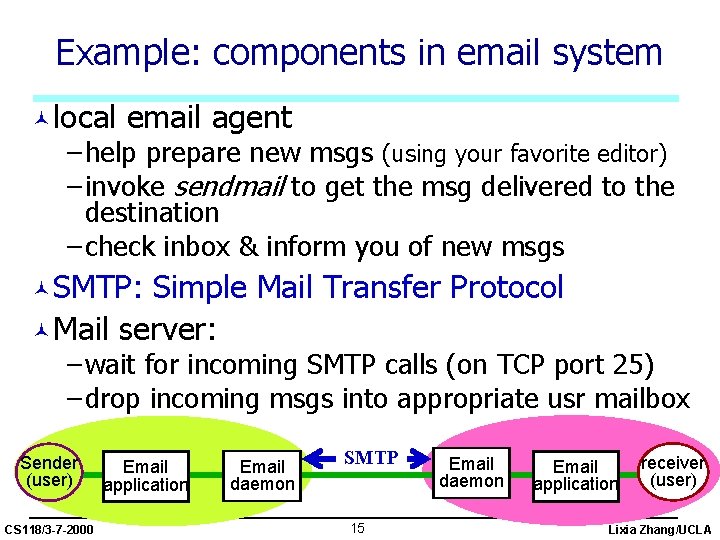

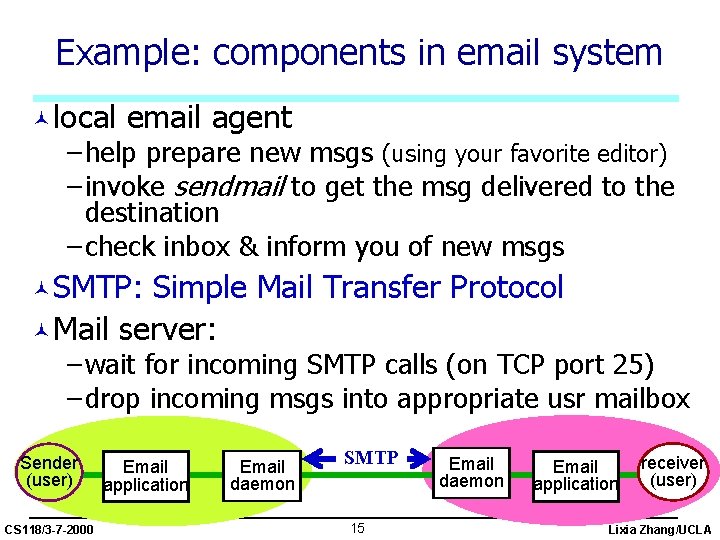

Example: components in email system

- local email agent
  - helps prepare new messages (using your favorite editor)
  - invokes sendmail to get the message delivered to the destination
  - checks the inbox and informs you of new messages
- SMTP: Simple Mail Transfer Protocol
- mail server
  - waits for incoming SMTP calls (on TCP port 25)
  - drops incoming messages into the appropriate user mailbox

Figure: sender (user), email application, email daemon, SMTP, email daemon, email application, receiver (user).

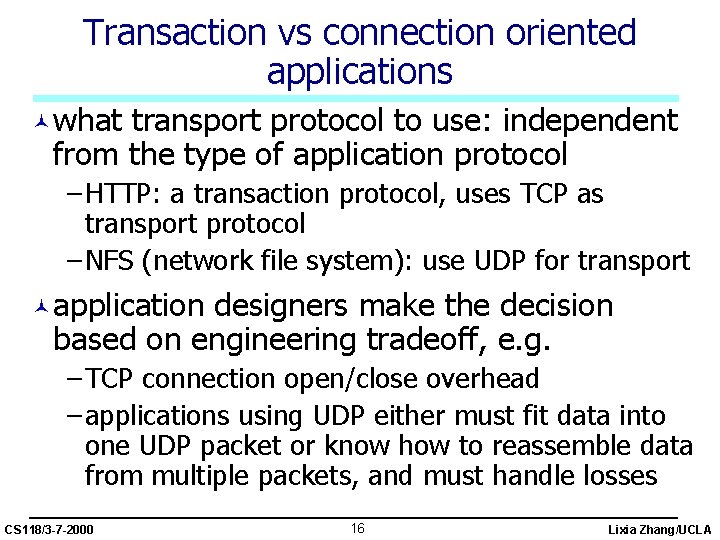

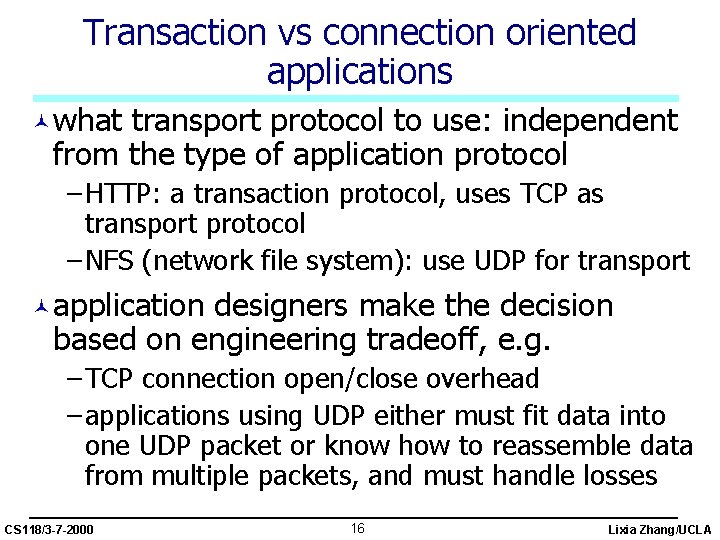

Transaction vs connection oriented applications

- which transport protocol to use is independent of the type of application protocol
  - HTTP: a transaction protocol, uses TCP as its transport protocol
  - NFS (Network File System): uses UDP for transport
- application designers make the decision based on engineering tradeoffs, e.g.
  - TCP connection open/close overhead
  - applications using UDP must either fit data into one UDP packet or know how to reassemble data from multiple packets, and must handle losses
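
The last bullet describes work that TCP would otherwise do for the application. The sketch below shows one way an application might split a message into numbered datagrams and detect missing pieces on the receiving side. The 4-byte header (sequence number, total count) is a made-up format for illustration, not part of UDP or of any real protocol, and retransmission is left out.

```python
import struct

# Hypothetical application-level header: (sequence number, total chunks).
HEADER = struct.Struct("!HH")


def split_message(data, max_payload):
    """Split data into datagram payloads, each prefixed with the header."""
    chunks = [data[i:i + max_payload] for i in range(0, len(data), max_payload)]
    if not chunks:
        chunks = [b""]
    total = len(chunks)
    return [HEADER.pack(seq, total) + chunk for seq, chunk in enumerate(chunks)]


def reassemble(datagrams):
    """Return (message, missing); message is None if any chunk is missing.

    Datagrams may arrive in any order; duplicates overwrite each other.
    """
    parts = {}
    total = None
    for dgram in datagrams:
        seq, total = HEADER.unpack_from(dgram)
        parts[seq] = dgram[HEADER.size:]
    if total is None:
        return None, []
    missing = [seq for seq in range(total) if seq not in parts]
    if missing:
        return None, missing
    return b"".join(parts[seq] for seq in range(total)), []
```

The list of missing sequence numbers is what the application would use to request retransmission, which is the loss handling the slide refers to.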

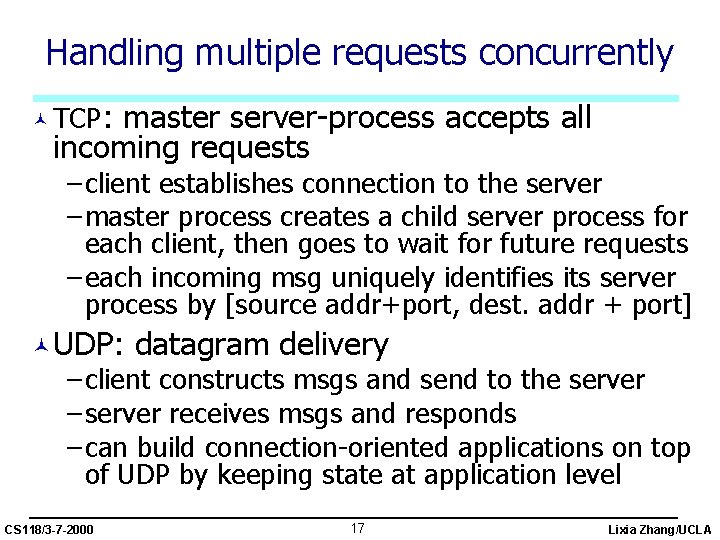

Handling multiple requests concurrently

- TCP: master server process accepts all incoming requests
  - client establishes a connection to the server
  - master process creates a child server process for each client, then goes back to waiting for future requests
  - each incoming message uniquely identifies its server process by [source addr + port, dest. addr + port]
- UDP: datagram delivery
  - client constructs messages and sends them to the server
  - server receives messages and responds
  - can build connection-oriented applications on top of UDP by keeping state at the application level
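
The TCP pattern can be sketched with Python's standard `socketserver` module. Note the substitution: the slide describes one child process per client, while this example uses one thread per client (`ThreadingTCPServer`) to stay portable. The echo behavior, `EchoHandler`, `start_server`, and `ask` are made up for illustration.

```python
import socket
import socketserver
import threading


class EchoHandler(socketserver.StreamRequestHandler):
    """Runs once per client connection, in its own thread."""

    def handle(self):
        # Echo every line back until the client closes the connection.
        for line in self.rfile:
            self.wfile.write(b"echo: " + line)


def start_server(host="127.0.0.1", port=0):
    """Start the master server in the background; port 0 picks a free port."""
    server = socketserver.ThreadingTCPServer((host, port), EchoHandler)
    server.daemon_threads = True
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def ask(address, text):
    """Open a connection, send one line, and return the one-line reply."""
    with socket.create_connection(address) as conn:
        conn.sendall(text.encode() + b"\n")
        return conn.makefile("rb").readline().decode().rstrip("\n")


if __name__ == "__main__":
    server = start_server()
    print(ask(server.server_address, "hello"))
    server.shutdown()
    server.server_close()
```

The master loop in `serve_forever` only accepts connections and hands each one to a handler, so a slow client does not block the others. The operating system delivers each incoming segment to the right connection using the [source addr + port, dest. addr + port] tuple.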

Circumciliary congestion and conjunctival congestion

Circumciliary congestion and conjunctival congestion Effect of traffic

Effect of traffic Conclusion of traffic congestion

Conclusion of traffic congestion Incomina

Incomina All traffic solutions

All traffic solutions Principles of congestion control

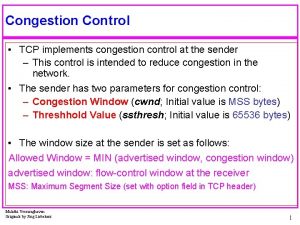

Principles of congestion control Tcp congestion control

Tcp congestion control Tcp congestion control

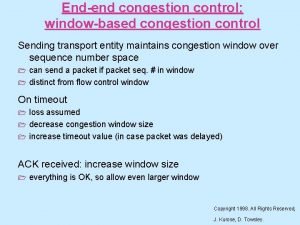

Tcp congestion control Traffic throttling and load shedding

Traffic throttling and load shedding Congestion control in virtual circuit

Congestion control in virtual circuit Congestion control principles

Congestion control principles Load shedding in congestion control

Load shedding in congestion control Udp congestion control

Udp congestion control Principles of congestion control

Principles of congestion control Principles of congestion control

Principles of congestion control Congestion control in network layer

Congestion control in network layer Choke packet in congestion control

Choke packet in congestion control Tcp segment header size

Tcp segment header size