The Domain-Specific Track at CLEF 2008, Vivien Petras

- Slides: 24

The Domain-Specific Track at CLEF 2008

Vivien Petras & Stefan Baerisch, GESIS Social Science Information Centre, Bonn, Germany

Aarhus, Denmark, September 17, 2008

Outline

- The Domain-Specific Task
- Collections & Controlled Vocabularies
- Participants, Runs & Relevance Assessments
- Themes
- Outlook

The Domain-Specific Task

CLIR (cross-language information retrieval) on structured scientific document collections:

- social science domain
- bibliographic metadata
- controlled vocabularies for subject description

The controlled vocabularies can be leveraged for:

- search
- query expansion
- translation

The Domain-Specific Tasks

- Monolingual: against German, English or Russian
- Bilingual: against German, English or Russian
- Multilingual: against the combined collection

Topics:

- 25 topics in standard TREC format (title, description, narrative)
- topic suggestions from 28 subject specialties
- translated from German into English and Russian

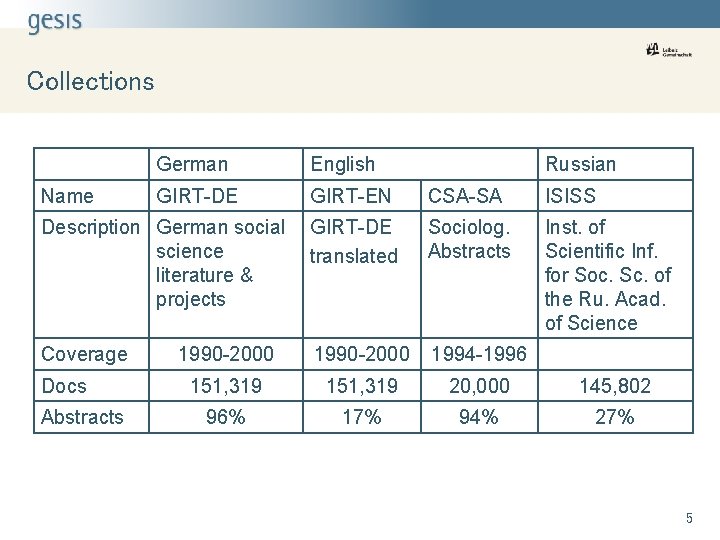

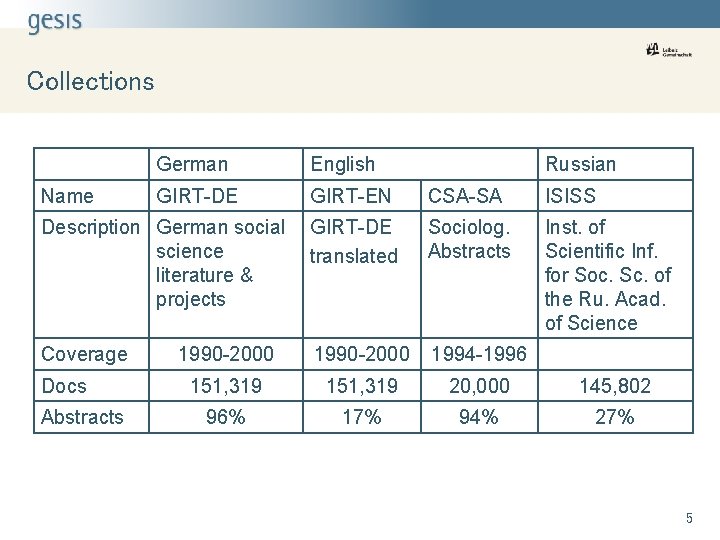

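Collections

| Name | Language | Description | Docs | Abstracts | Coverage |
|---|---|---|---|---|---|
| GIRT-DE | German | German social science literature & projects | 151,319 | 96% | 1990-2000 |
| GIRT-EN | English | GIRT-DE translated into English | 151,319 | 17% | 1990-2000 |
| CSA-SA | English | Sociological Abstracts | 20,000 | 94% | 1994-1996 |
| ISISS | Russian | Institute of Scientific Information for Social Sciences of the Russian Academy of Sciences | 145,802 | 27% | |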

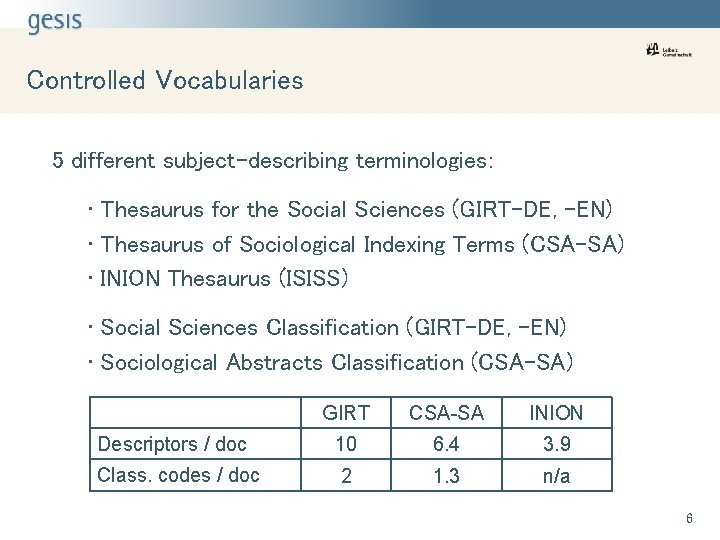

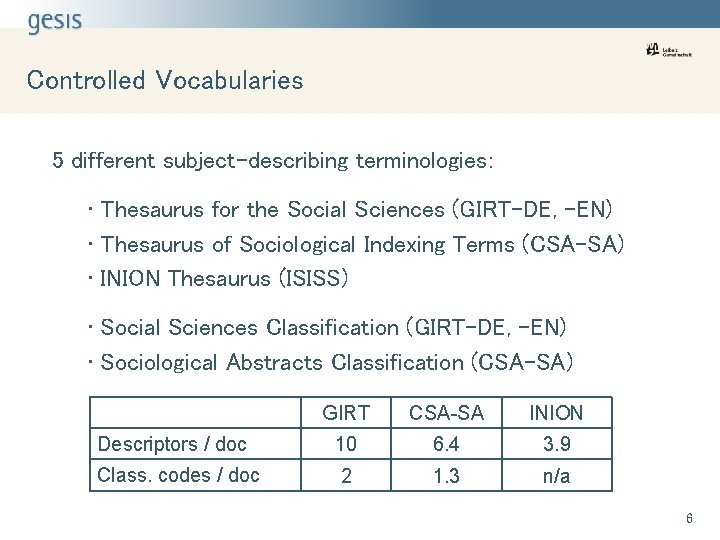

Controlled Vocabularies

5 different subject-describing terminologies:

- Thesaurus for the Social Sciences (GIRT-DE, -EN)
- Thesaurus of Sociological Indexing Terms (CSA-SA)
- INION Thesaurus (ISISS)
- Social Sciences Classification (GIRT-DE, -EN)
- Sociological Abstracts Classification (CSA-SA)

| | GIRT | CSA-SA | INION |
|---|---|---|---|
| Descriptors / doc | 10 | 6.4 | 3.9 |
| Class. codes / doc | 2 | 1.3 | n/a |

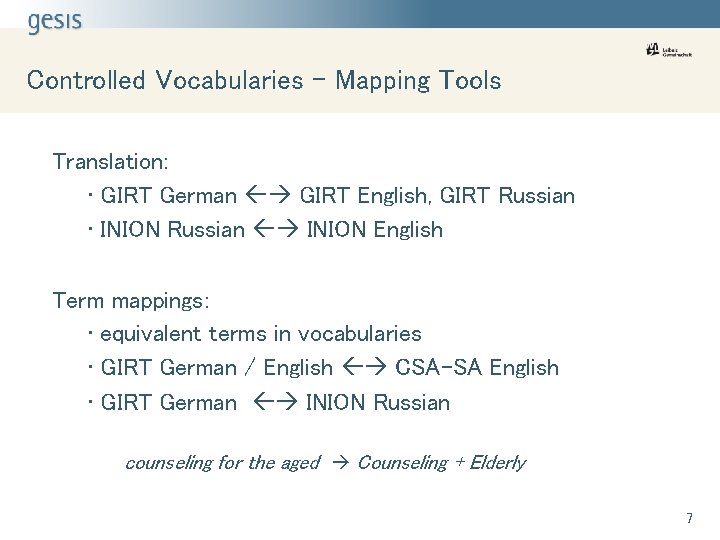

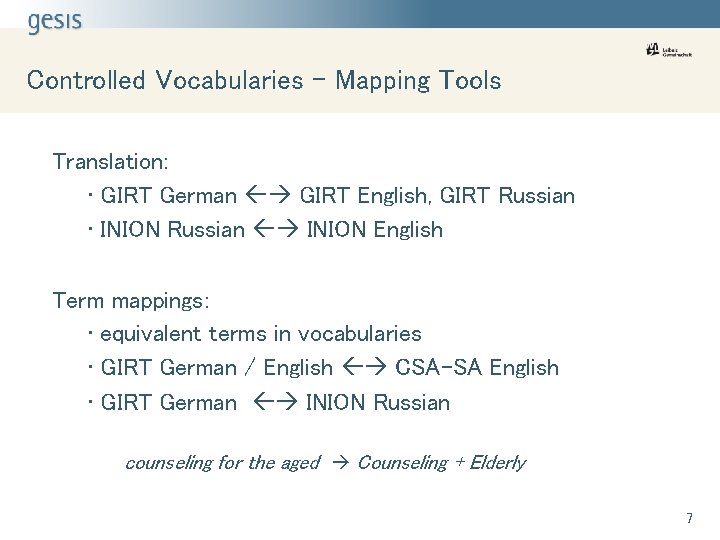

Controlled Vocabularies – Mapping Tools

Translation:

- GIRT German → GIRT English, GIRT Russian
- INION Russian → INION English

Term mappings (equivalent terms across vocabularies):

- GIRT German / English ↔ CSA-SA English
- GIRT German ↔ INION Russian
- Example: "counseling for the aged" → "Counseling" + "Elderly"
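To make the term-mapping idea concrete, here is a minimal Python sketch, assuming a mapping is available as an in-memory dictionary from a source-vocabulary term to one or more target-vocabulary terms. The only entry is the slide's own example; the names `TERM_MAPPING` and `map_terms` are hypothetical, and this is not the interface of the GESIS mapping tools.

```python
# Hypothetical one-to-many mapping between two controlled vocabularies.
# The single entry reuses the slide's example; a real mapping would be
# loaded from the published mapping resources (format not assumed here).
TERM_MAPPING: dict[str, list[str]] = {
    "counseling for the aged": ["Counseling", "Elderly"],
}


def map_terms(terms: list[str], mapping: dict[str, list[str]]) -> list[str]:
    """Replace each source term with its equivalent target terms.

    Lookup is case-insensitive on the source side. Terms without a mapping
    are kept unchanged, so no query term is lost. Duplicates are dropped
    while preserving first-seen order.
    """
    mapped: list[str] = []
    for term in terms:
        for target in mapping.get(term.lower(), [term]):
            if target not in mapped:
                mapped.append(target)
    return mapped


if __name__ == "__main__":
    print(map_terms(["counseling for the aged", "poverty"], TERM_MAPPING))
    # → ['Counseling', 'Elderly', 'poverty']
```

Keeping unmapped terms is a design choice for recall: a mapping gap then degrades to the original query term rather than dropping it.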

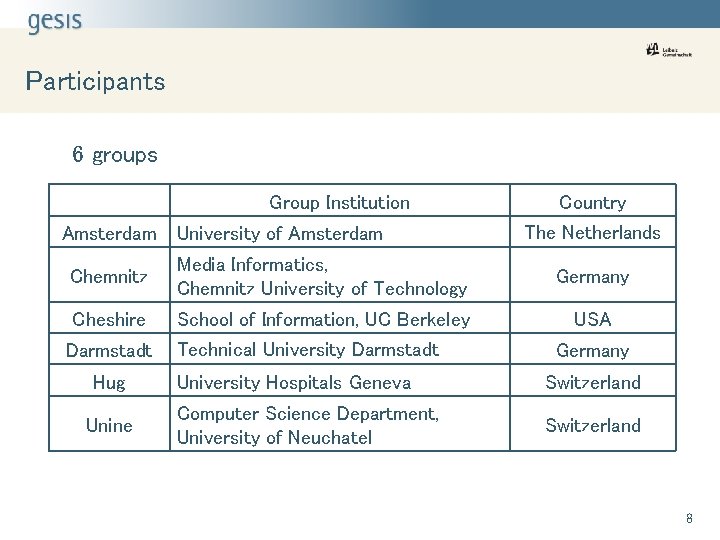

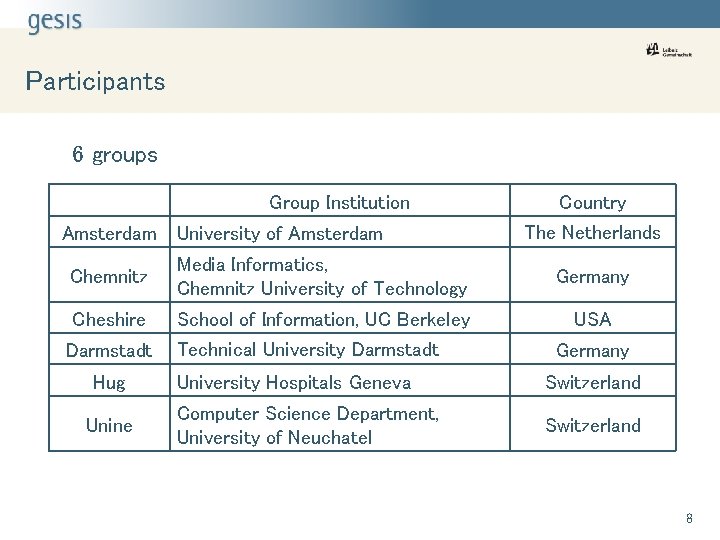

Participants: 6 groups

| Group | Institution | Country |
|---|---|---|
| Amsterdam | University of Amsterdam | The Netherlands |
| Chemnitz | Media Informatics, Chemnitz University of Technology | Germany |
| Cheshire | School of Information, UC Berkeley | USA |
| Darmstadt | Technical University Darmstadt | Germany |
| Hug | University Hospitals Geneva | Switzerland |
| Unine | Computer Science Department, University of Neuchatel | Switzerland |

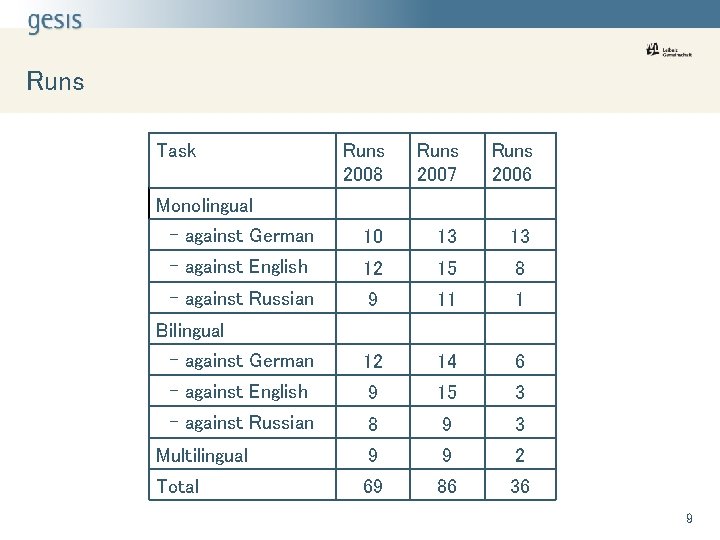

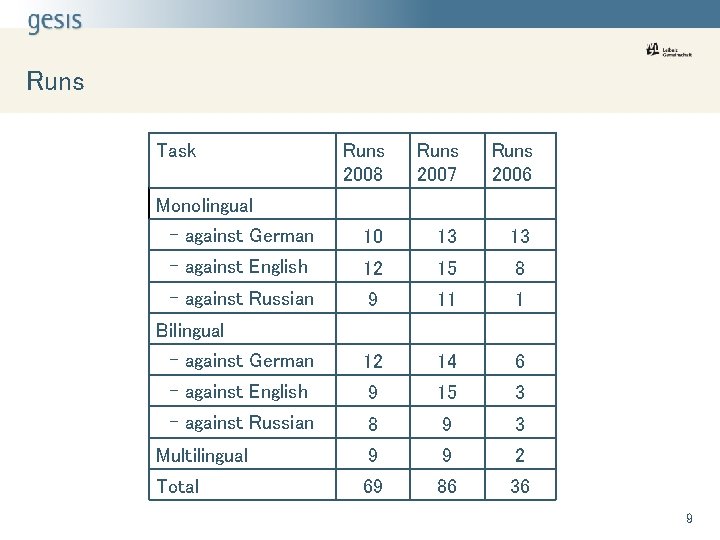

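Runs

| Task | Runs 2008 | Runs 2007 | Runs 2006 |
|---|---|---|---|
| Monolingual against German | 10 | 13 | 13 |
| Monolingual against English | 12 | 15 | 8 |
| Monolingual against Russian | 9 | 11 | 1 |
| Bilingual against German | 12 | 14 | 6 |
| Bilingual against English | 9 | 15 | 3 |
| Bilingual against Russian | 8 | 9 | 3 |
| Multilingual | 9 | 9 | 2 |
| Total | 69 | 86 | 36 |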

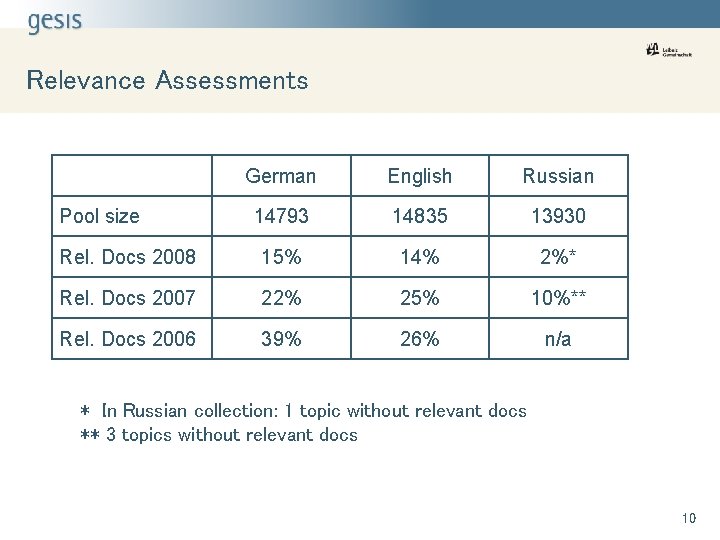

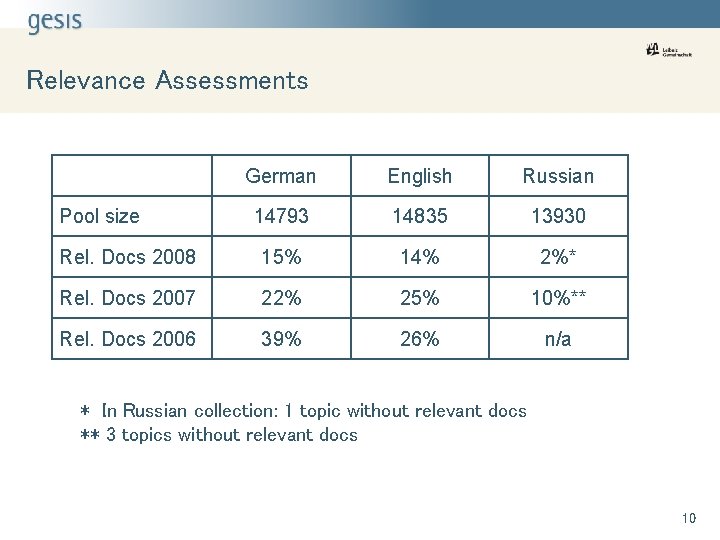

Relevance Assessments

| | German | English | Russian |
|---|---|---|---|
| Pool size | 14,793 | 14,835 | 13,930 |
| Rel. docs 2008 | 15% | 14% | 2%* |
| Rel. docs 2007 | 22% | 25% | 10%** |
| Rel. docs 2006 | 39% | 26% | n/a |

\* In the Russian collection, 1 topic had no relevant documents.
\*\* In the Russian collection, 3 topics had no relevant documents.

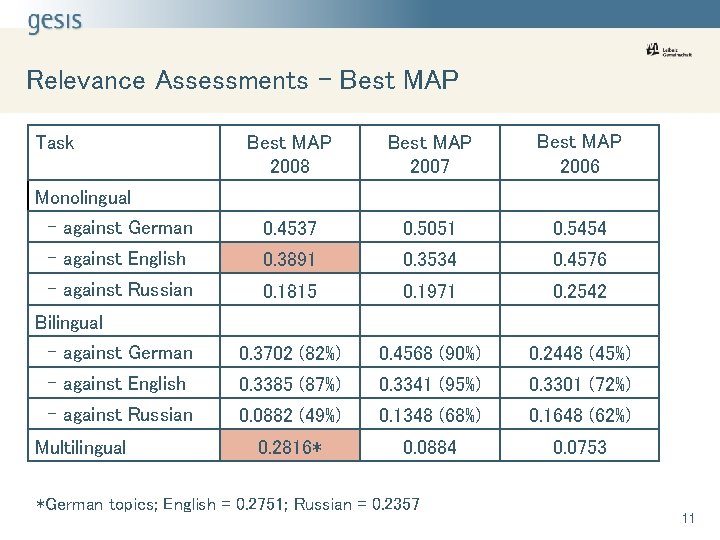

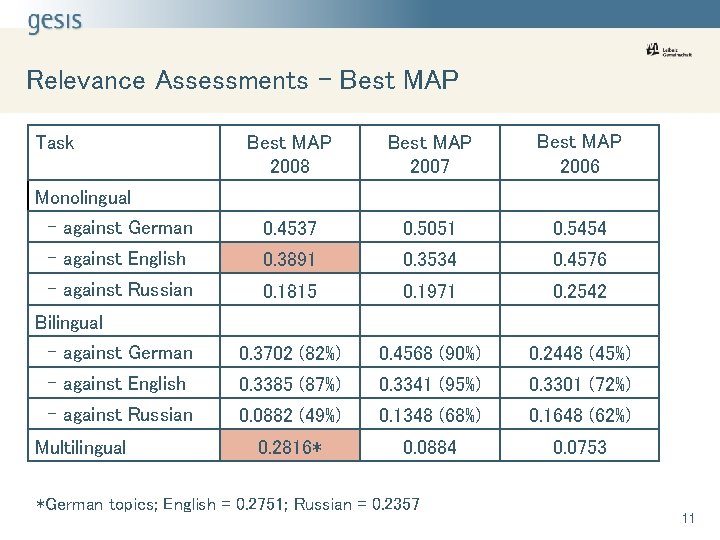

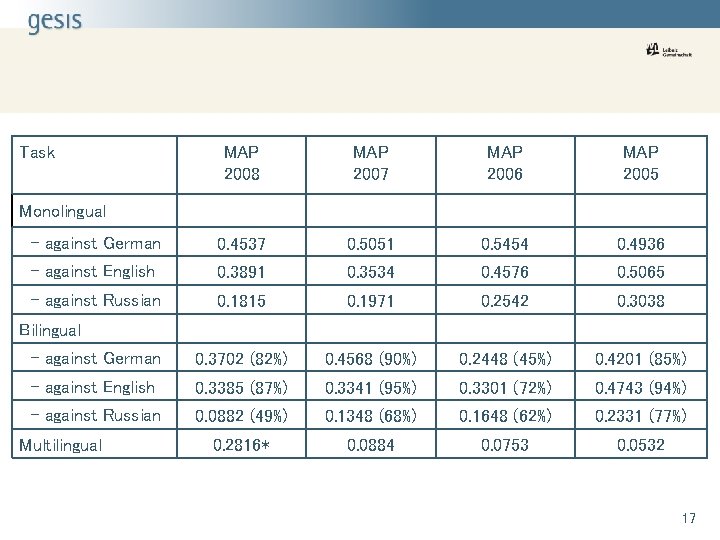

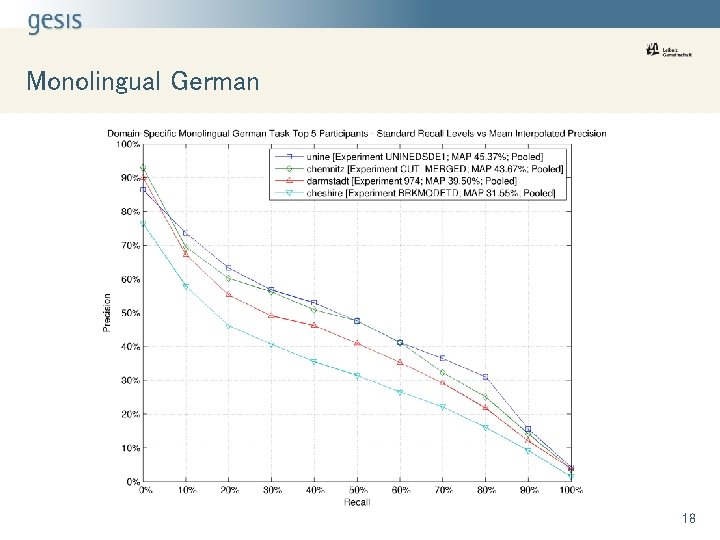

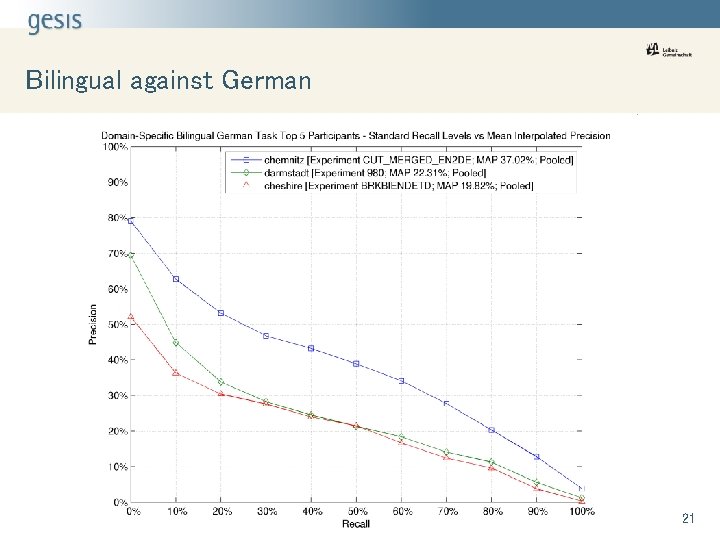

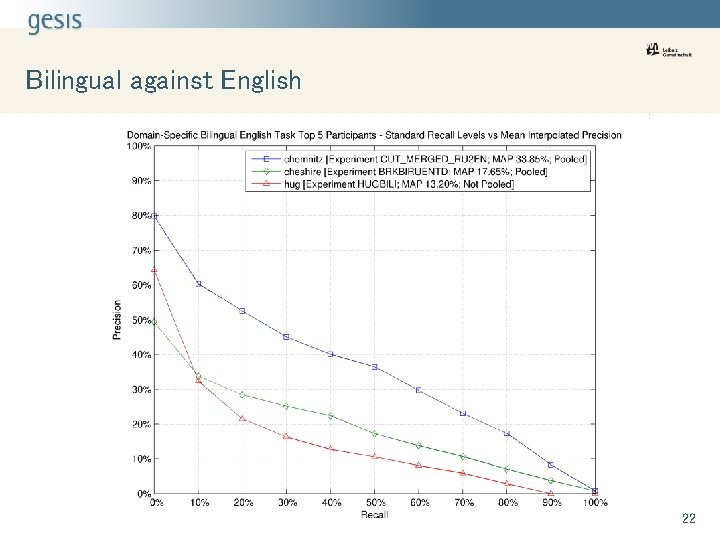

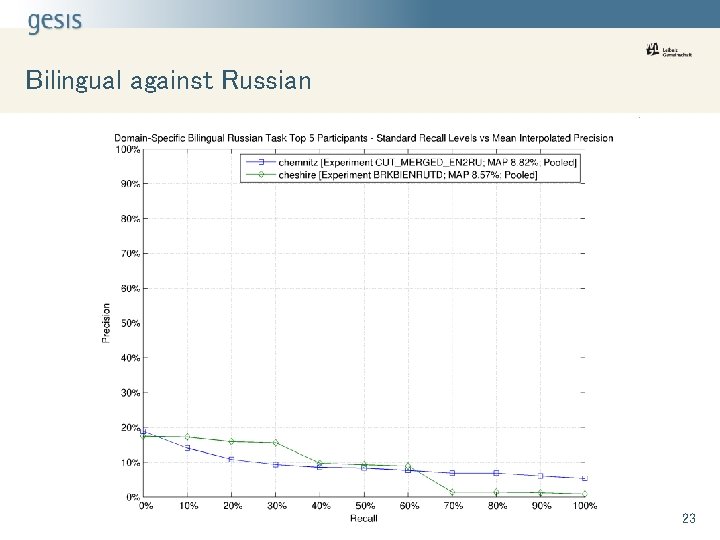

Relevance Assessments – Best MAP

| Task | Best MAP 2008 | Best MAP 2007 | Best MAP 2006 |
|---|---|---|---|
| Monolingual against German | 0.4537 | 0.5051 | 0.5454 |
| Monolingual against English | 0.3891 | 0.3534 | 0.4576 |
| Monolingual against Russian | 0.1815 | 0.1971 | 0.2542 |
| Bilingual against German | 0.3702 (82%) | 0.4568 (90%) | 0.2448 (45%) |
| Bilingual against English | 0.3385 (87%) | 0.3341 (95%) | 0.3301 (72%) |
| Bilingual against Russian | 0.0882 (49%) | 0.1348 (68%) | 0.1648 (62%) |
| Multilingual | 0.2816* | 0.0884 | 0.0753 |

\* German topics; English topics = 0.2751; Russian topics = 0.2357.
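MAP (mean average precision) is the measure reported in the table above. A minimal Python sketch of the standard non-interpolated definition follows, run on made-up toy data (all topic and document IDs are hypothetical); it is not the official CLEF evaluation code. The last line checks one reading of the parenthesized percentages, namely the best bilingual MAP relative to the best monolingual MAP for the same target language (0.3702 / 0.4537 for German in 2008). That interpretation is inferred from the numbers, not stated on the slide.

```python
def average_precision(ranking: list[str], relevant: set[str]) -> float:
    """Non-interpolated average precision for one topic.

    Precision is recorded at the rank of every relevant document that was
    retrieved; relevant documents never retrieved add 0. The sum is divided
    by the total number of relevant documents for the topic.
    """
    if not relevant:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for rank, doc_id in enumerate(ranking, start=1):
        if doc_id in relevant:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / len(relevant)


def mean_average_precision(
    runs: dict[str, list[str]], qrels: dict[str, set[str]]
) -> float:
    """Mean of per-topic AP over topics with at least one relevant document.

    Skipping topics without relevant documents is an assumption here;
    evaluation tools do not all treat such topics the same way.
    A topic with relevant documents but no ranking contributes AP = 0.
    """
    scores = [
        average_precision(runs.get(topic, []), relevant)
        for topic, relevant in qrels.items()
        if relevant
    ]
    return sum(scores) / len(scores) if scores else 0.0


if __name__ == "__main__":
    # Toy data with hypothetical IDs; t3 has no relevant documents.
    runs = {"t1": ["d1", "d2", "d3", "d4"], "t2": ["d5", "d6", "d7"]}
    qrels = {"t1": {"d1", "d3"}, "t2": {"d7", "d9"}, "t3": set()}
    print(round(mean_average_precision(runs, qrels), 4))  # → 0.5

    # Best bilingual vs. best monolingual MAP, German target, 2008.
    print(f"{0.3702 / 0.4537:.0%}")  # → 82%
```

In the toy run, topic t1 scores (1/1 + 2/3) / 2 = 5/6 and t2 scores (1/3) / 2 = 1/6, so their mean is 0.5.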

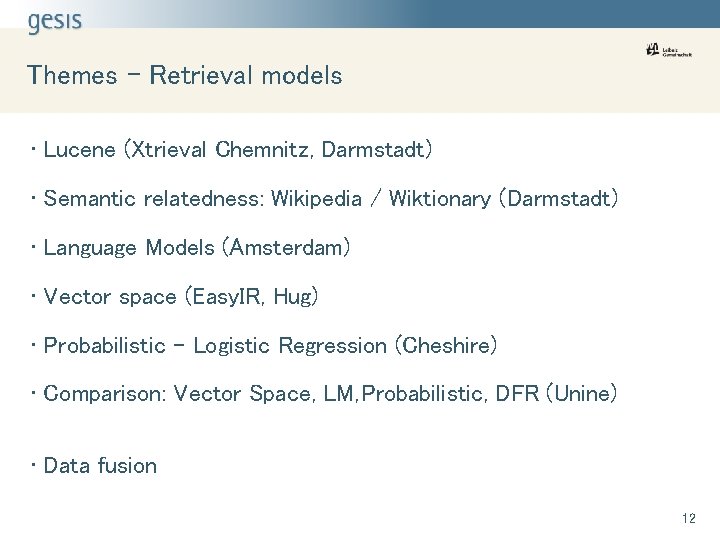

Themes – Retrieval Models

- Lucene (Xtrieval at Chemnitz; Darmstadt)
- Semantic relatedness: Wikipedia / Wiktionary (Darmstadt)
- Language models (Amsterdam)
- Vector space (EasyIR, Hug)
- Probabilistic: logistic regression (Cheshire)
- Comparison of vector space, language model, probabilistic and DFR (divergence from randomness) models (Unine)
- Data fusion
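The slide lists data fusion without naming a method. One widely used family is score-based fusion such as CombSUM and CombMNZ; the Python sketch below implements CombMNZ with min-max score normalization purely as an illustration. It is an assumption, not necessarily what any participating group used, and the run and document IDs are made up.

```python
def min_max_normalize(scores: dict[str, float]) -> dict[str, float]:
    """Rescale one run's scores to [0, 1] so that runs become comparable.

    If every document has the same score, all are mapped to 1.0.
    """
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def comb_mnz(runs: list[dict[str, float]]) -> list[tuple[str, float]]:
    """CombMNZ: summed normalized score times the number of runs returning the doc.

    Dropping the multiplication by the count gives CombSUM.
    """
    totals: dict[str, float] = {}
    counts: dict[str, int] = {}
    for run in runs:
        for doc, score in min_max_normalize(run).items():
            totals[doc] = totals.get(doc, 0.0) + score
            counts[doc] = counts.get(doc, 0) + 1
    fused = {doc: totals[doc] * counts[doc] for doc in totals}
    # Highest fused score first; ties keep first-seen order (sorted is stable).
    return sorted(fused.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Two hypothetical runs for one topic, e.g. from two retrieval models.
    run_a = {"d1": 12.0, "d2": 8.0, "d3": 4.0}
    run_b = {"d2": 0.9, "d4": 0.3}
    print([doc for doc, _ in comb_mnz([run_a, run_b])])
    # → ['d2', 'd1', 'd3', 'd4']
```

Normalization matters because raw scores from, say, a language model and a vector-space engine live on different scales; CombMNZ additionally rewards documents that several runs agree on, which is why d2 moves to the top.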

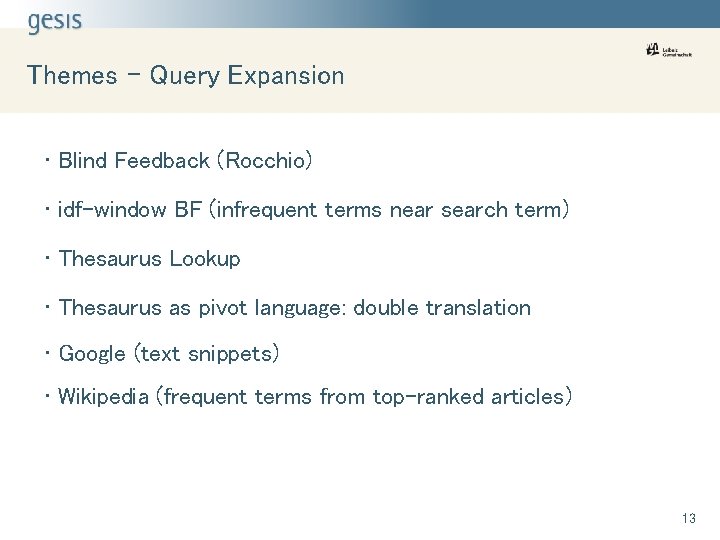

Themes – Query Expansion

- Blind feedback (Rocchio)
- idf-window blind feedback (infrequent terms near the search term)
- Thesaurus look-up
- Thesaurus as pivot language: double translation
- Google (text snippets)
- Wikipedia (frequent terms from top-ranked articles)
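Rocchio blind feedback, the first technique in the list, can be sketched in a few lines of Python. This is a minimal illustration assuming queries and documents are already represented as term-weight dictionaries (for example tf-idf weights); the parameter values `alpha=1.0`, `beta=0.75` and `n_terms` are placeholder choices, not settings reported by any participant.

```python
from collections import Counter


def rocchio_blind_feedback(
    query: dict[str, float],
    top_docs: list[dict[str, float]],
    alpha: float = 1.0,
    beta: float = 0.75,
    n_terms: int = 10,
) -> dict[str, float]:
    """Expand a weighted query with terms from the top-ranked documents.

    Blind (pseudo-relevance) feedback treats the top documents as relevant
    and computes q' = alpha * q + beta * centroid(top_docs). The negative
    Rocchio component is omitted because no non-relevant set is assumed.
    Only the n_terms strongest new terms are added.
    """
    # Centroid of the pseudo-relevant documents' term weights.
    centroid = Counter()
    for doc in top_docs:
        for term, weight in doc.items():
            centroid[term] += weight / len(top_docs)

    # Reweight the original query terms.
    expanded = {t: alpha * w + beta * centroid.get(t, 0.0) for t, w in query.items()}

    # Add the highest-weighted terms not already in the query.
    new_terms = [(t, w) for t, w in centroid.most_common() if t not in query]
    for term, weight in new_terms[:n_terms]:
        expanded[term] = beta * weight
    return expanded


if __name__ == "__main__":
    query = {"elderly": 1.0}
    top_docs = [
        {"elderly": 0.5, "counseling": 0.8, "care": 0.2},
        {"elderly": 0.3, "counseling": 0.4, "pension": 0.6},
    ]
    expanded = rocchio_blind_feedback(query, top_docs, n_terms=2)
    print(sorted(expanded))  # → ['counseling', 'elderly', 'pension']
```

Capping the number of added terms keeps the expanded query focused; without a cap, every term in the top documents would enter the query with some weight.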

Themes – Translation

- Google AJAX language API
- Commercial software (Systran, LEC)
- Bilingual thesaurus look-up
- ML retrieval thesaurus look-up
- Wikipedia (cross-language links)

Summary & Outlook

- Enough interest for 2009?
- Different corpora
- Different tasks:
  - full topic run (125 topics)
  - result: controlled vocabulary terms (not documents)
  - robust task
- Full-text retrieval with open access literature

Domain-Specific Track: http://www.gesis.org/en/research/information_technology/clef_ds.htm

Vocabulary Mappings: http://www.gesis.org/en/research/information_technology/komohe.htm

Email: vivien.petras@gesis.org

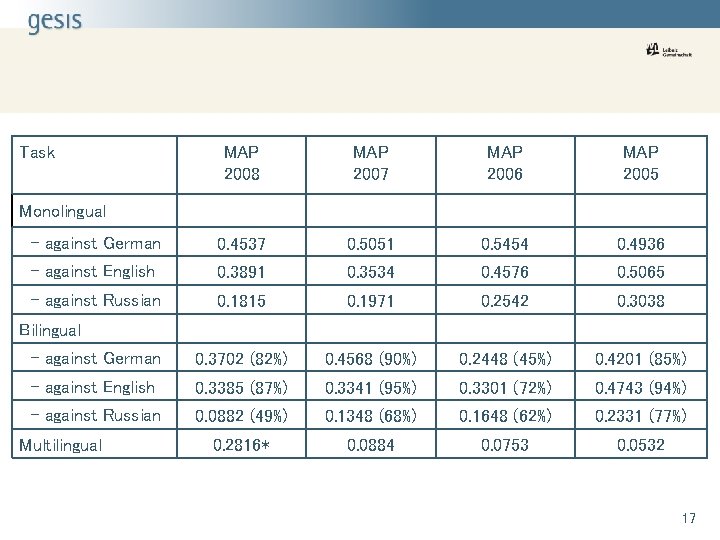

| Task | MAP 2008 | MAP 2007 | MAP 2006 | MAP 2005 |
|---|---|---|---|---|
| Monolingual against German | 0.4537 | 0.5051 | 0.5454 | 0.4936 |
| Monolingual against English | 0.3891 | 0.3534 | 0.4576 | 0.5065 |
| Monolingual against Russian | 0.1815 | 0.1971 | 0.2542 | 0.3038 |
| Bilingual against German | 0.3702 (82%) | 0.4568 (90%) | 0.2448 (45%) | 0.4201 (85%) |
| Bilingual against English | 0.3385 (87%) | 0.3341 (95%) | 0.3301 (72%) | 0.4743 (94%) |
| Bilingual against Russian | 0.0882 (49%) | 0.1348 (68%) | 0.1648 (62%) | 0.2331 (77%) |
| Multilingual | 0.2816* | 0.0884 | 0.0753 | 0.0532 |

\* German topics.

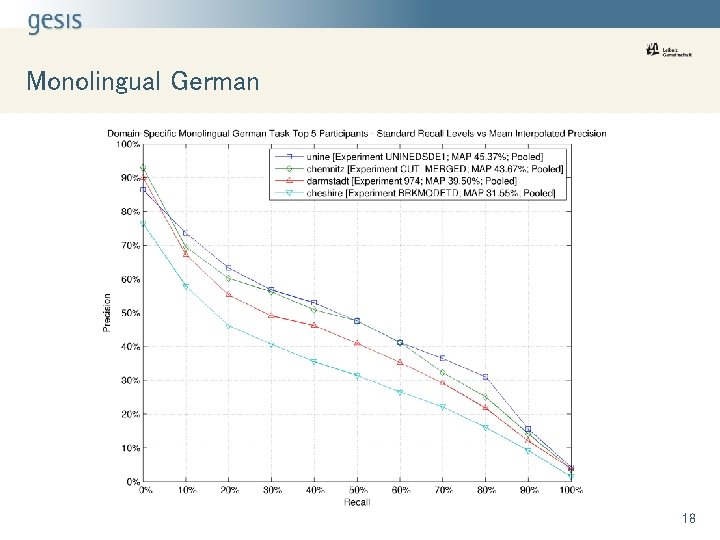

Monolingual German

Monolingual English

Monolingual Russian

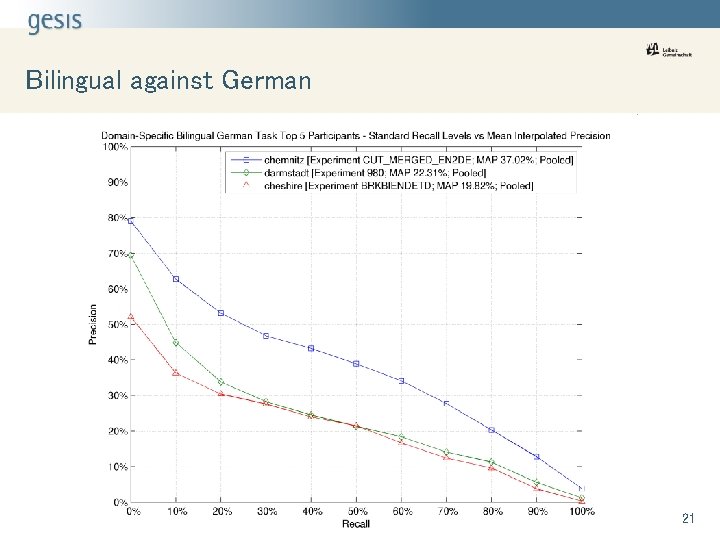

Bilingual against German

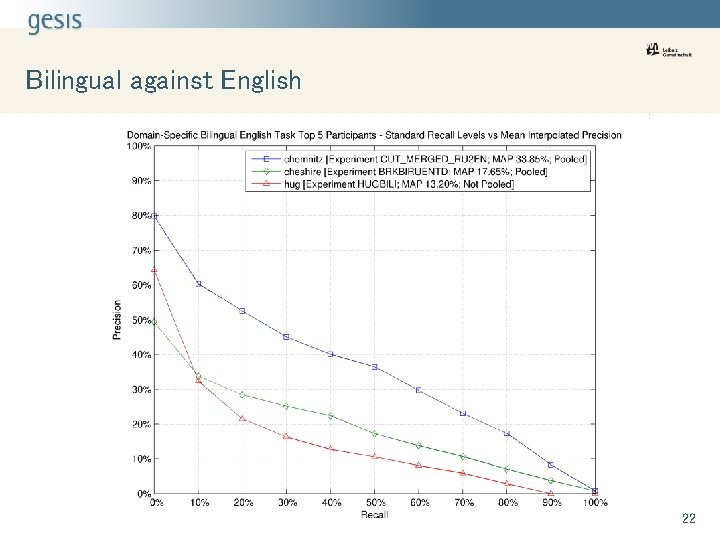

Bilingual against English

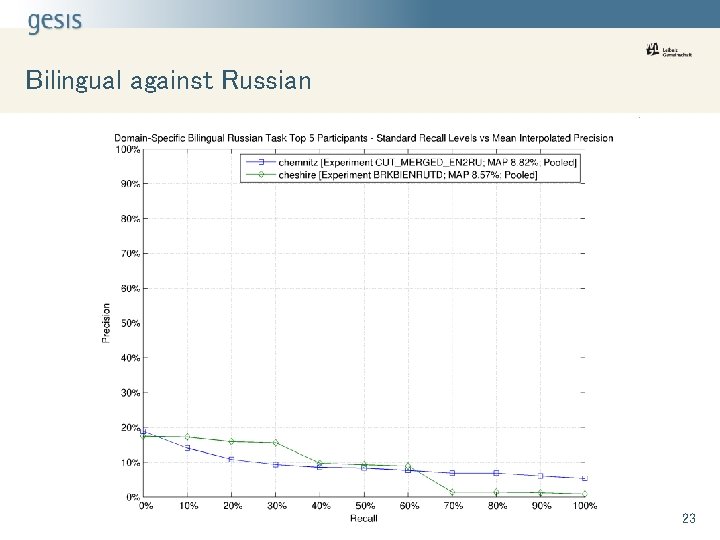

Bilingual against Russian

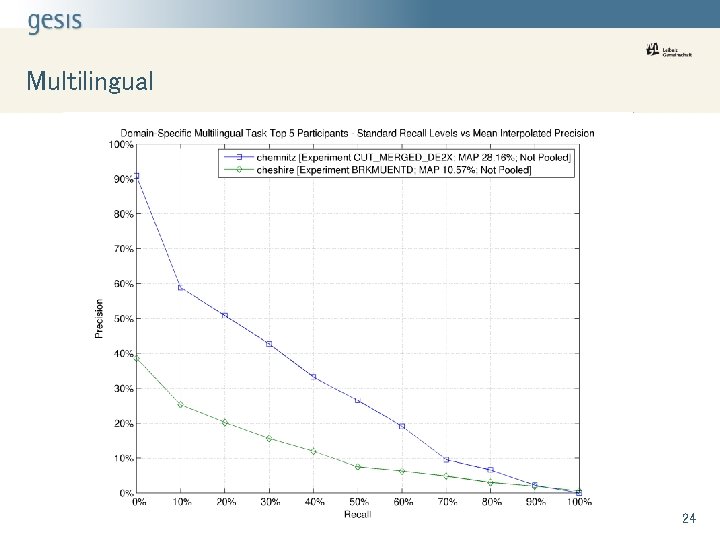

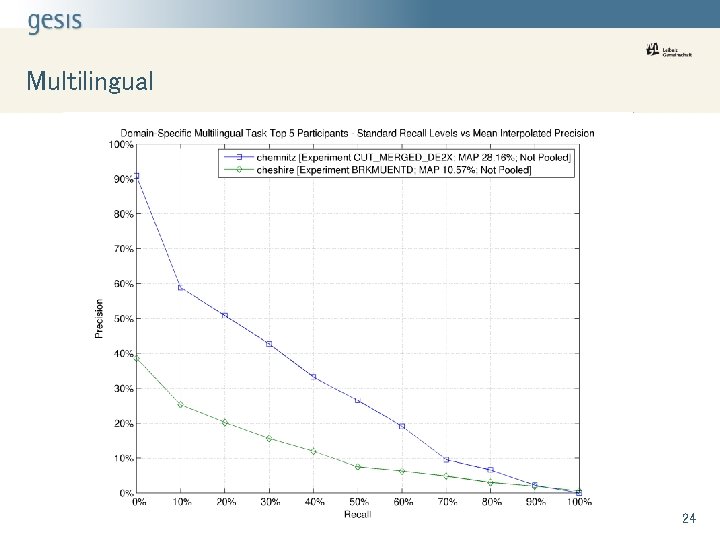

Multilingual
