iCLEF: CLEF Interactive Track Overview

- Slides: 16

CLEF Interactive Track Overview (iCLEF)
Douglas W. Oard, UMD, USA
Julio Gonzalo, UNED, Spain
21 August 2003, CLEF 2003

Outline
• Goals
• Track design
• Participating teams
• Results

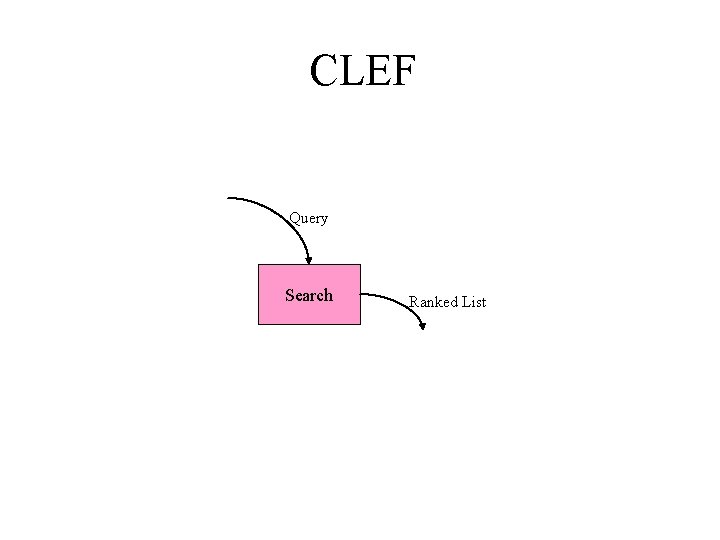

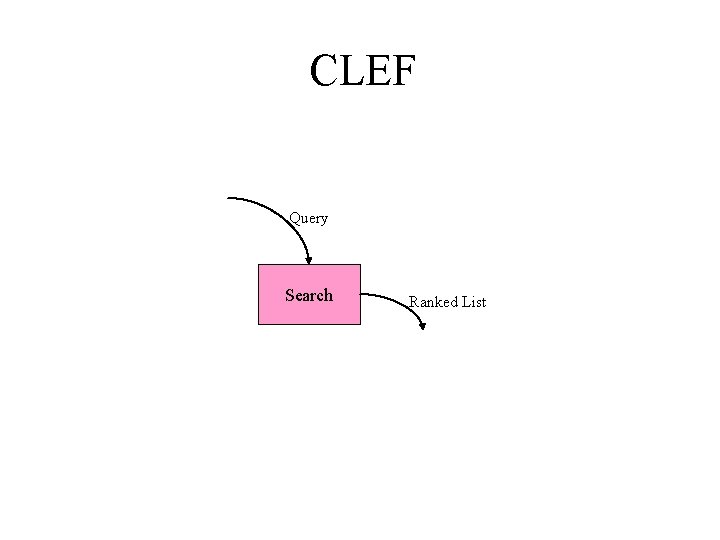

CLEF (figure): Query → Search → Ranked List

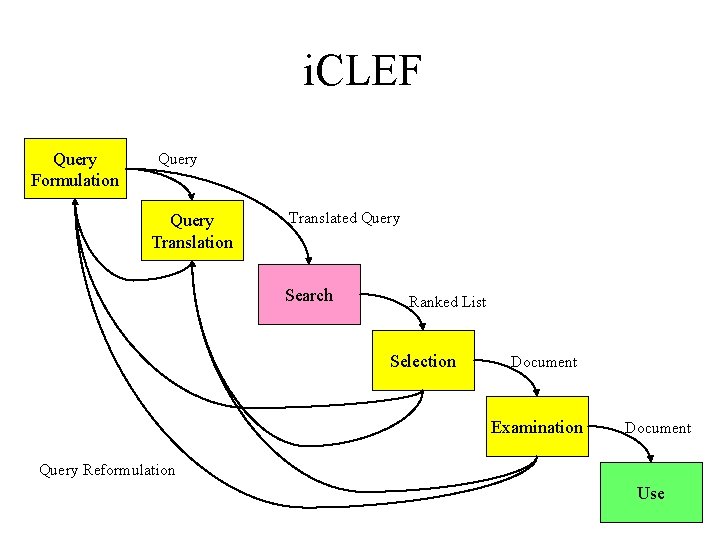

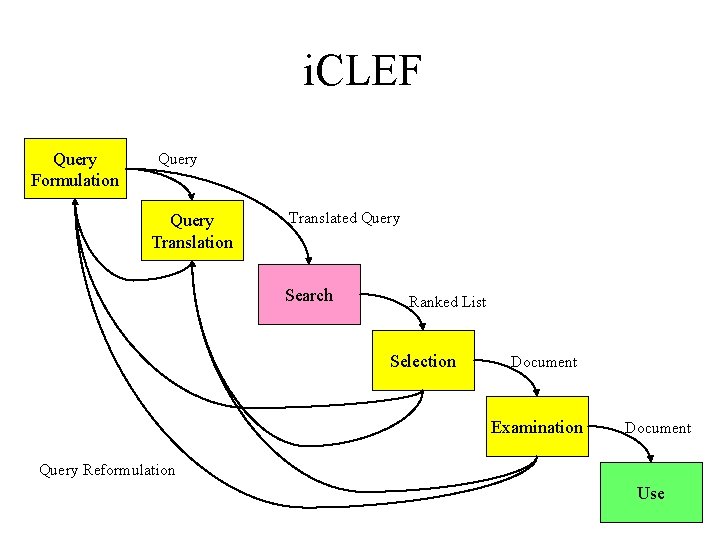

iCLEF (figure): Query Formulation → Query Translation → Translated Query → Search → Ranked List → Selection → Document → Examination → Document → Use, plus a Query Reformulation loop

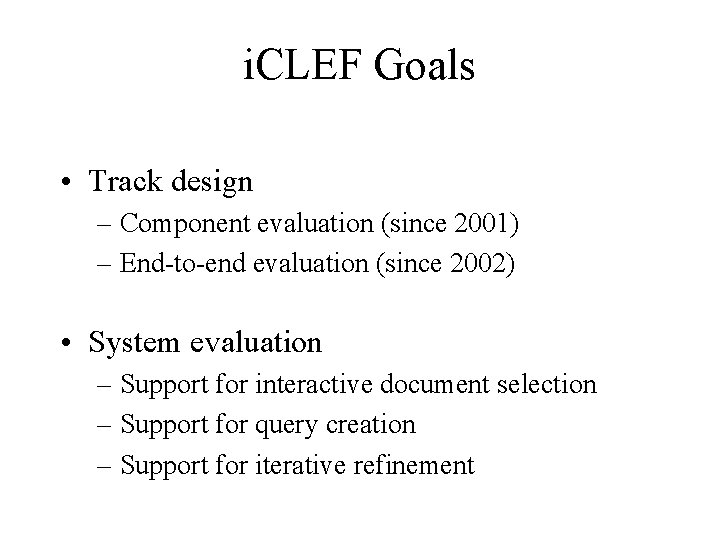

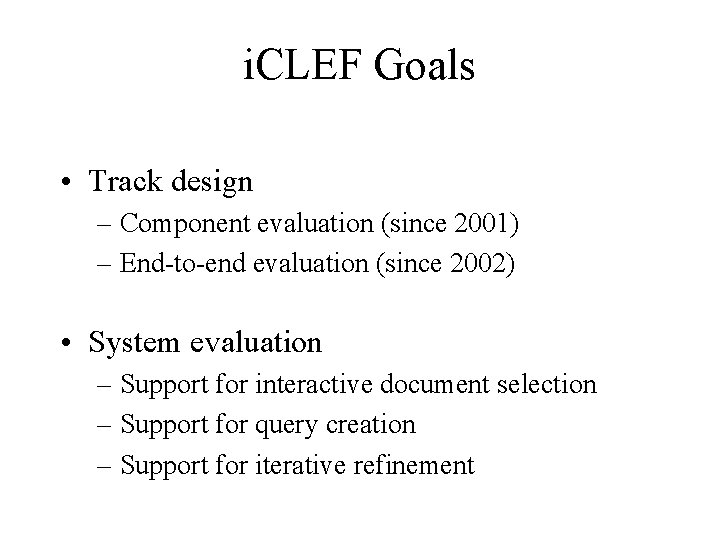

iCLEF Goals
• Track design
  – Component evaluation (since 2001)
  – End-to-end evaluation (since 2002)
• System evaluation
  – Support for interactive document selection
  – Support for query creation
  – Support for iterative refinement

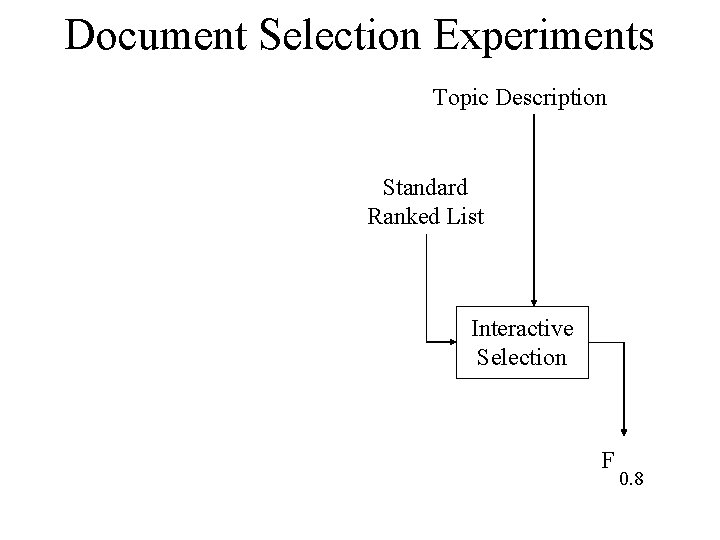

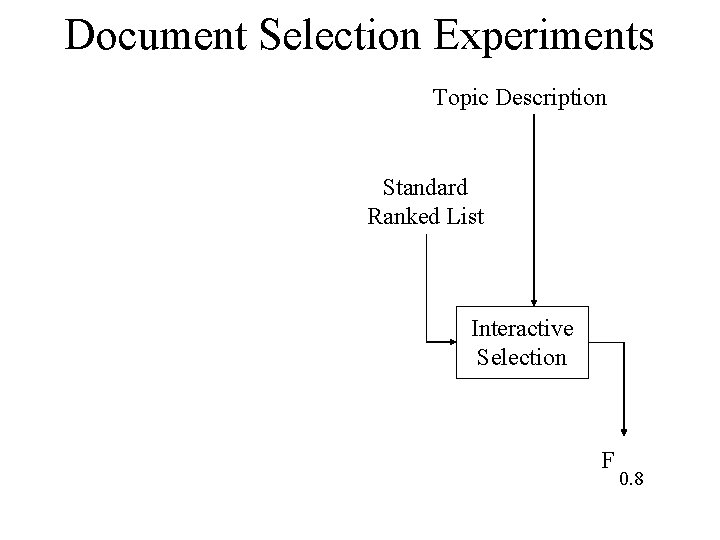

Document Selection Experiments (figure): Topic Description and Standard Ranked List → Interactive Selection → F (α = 0.8)

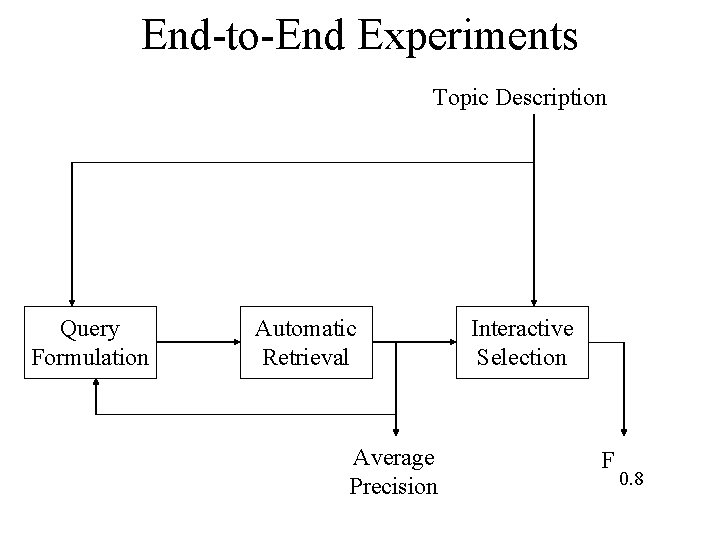

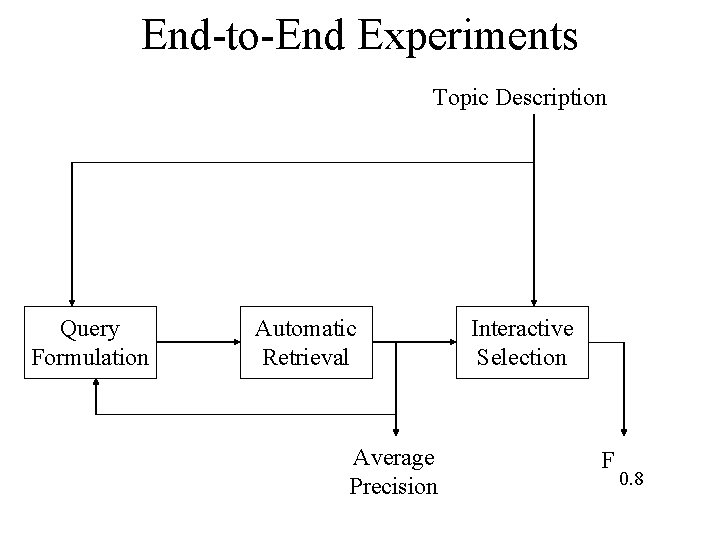

End-to-End Experiments (figure): Topic Description → Query Formulation → Automatic Retrieval (Average Precision) → Interactive Selection → F (α = 0.8)

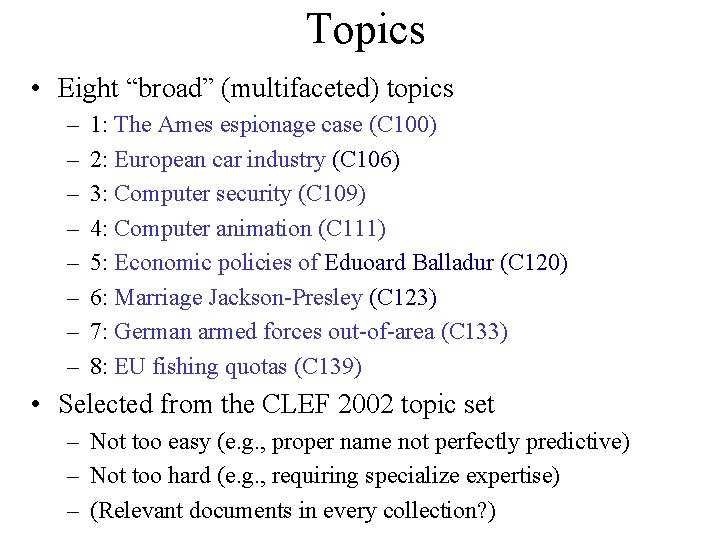

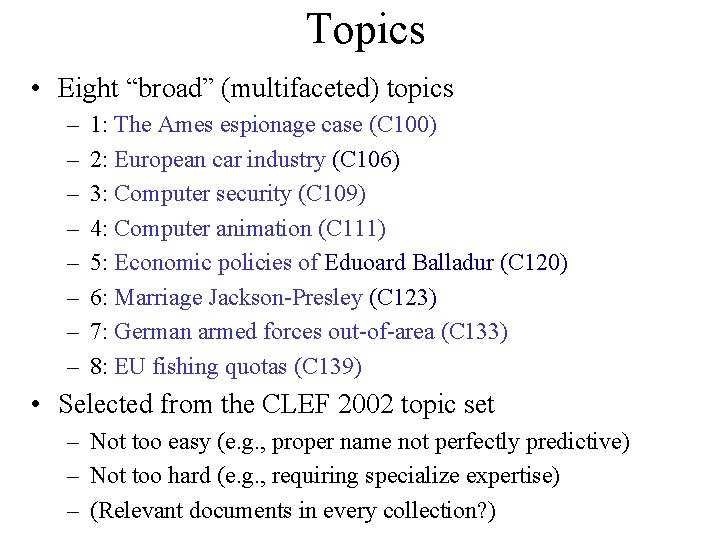

Topics
• Eight “broad” (multifaceted) topics
  – 1: The Ames espionage case (C100)
  – 2: European car industry (C106)
  – 3: Computer security (C109)
  – 4: Computer animation (C111)
  – 5: Economic policies of Edouard Balladur (C120)
  – 6: Marriage Jackson-Presley (C123)
  – 7: German armed forces out-of-area (C133)
  – 8: EU fishing quotas (C139)
• Selected from the CLEF 2002 topic set
  – Not too easy (e.g., a proper name is not perfectly predictive of relevance)
  – Not too hard (e.g., requiring specialized expertise)
  – (Relevant documents in every collection?)

Test Collection
• Any CLEF-2002 language collection
  – Systran baseline translations
    • Spanish to English, English to Spanish
• Augmented relevance judgments
  – Start with CLEF-2002 judgments
  – Enrich pools with:
    • Top 20 documents from every iteration
    • Every document judged by a user
  – Judge all additions to the pools
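The pool-enrichment steps above can be sketched as a set computation. This is a minimal illustration under stated assumptions, not the track's actual tooling: the function name `augment_pool`, its parameters, and the representation of documents as string IDs are all hypothetical.

```python
from typing import Iterable, List, Set


def augment_pool(
    clef2002_judged: Set[str],
    iteration_rankings: Iterable[List[str]],
    user_judged: Iterable[str],
    depth: int = 20,
) -> Set[str]:
    """Hypothetical sketch: return pool additions that still need assessor judgments.

    Each ranked list is the system output for one search iteration, best first.
    """
    additions: Set[str] = set()
    for ranking in iteration_rankings:
        # Top `depth` documents (20 on the slide) from every iteration.
        additions.update(ranking[:depth])
    # Every document a user judged during the interactive sessions.
    additions.update(user_judged)
    # Only documents not already covered by the CLEF-2002 judgments need assessment.
    return additions - clef2002_judged


if __name__ == "__main__":
    existing = {"d1", "d2"}
    rankings = [["d1", "d3", "d4"], ["d5", "d3"]]
    print(sorted(augment_pool(existing, rankings, ["d2", "d6"], depth=2)))
```

Returning a set difference keeps assessors from re-judging documents that the CLEF-2002 judgments already cover.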

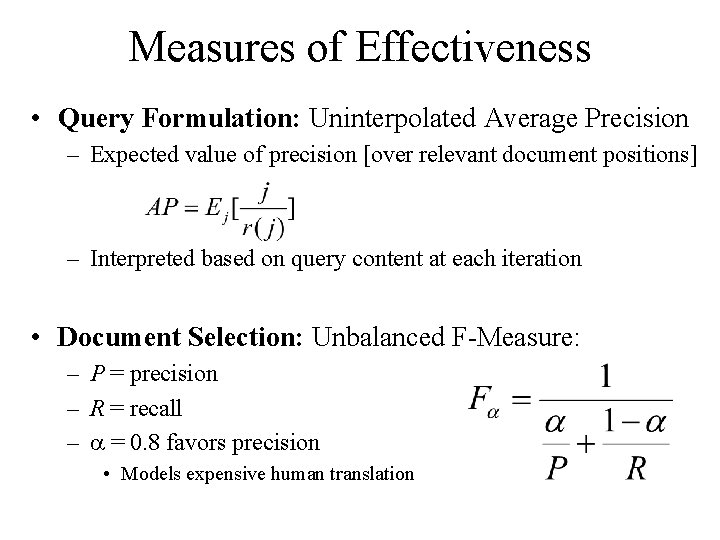

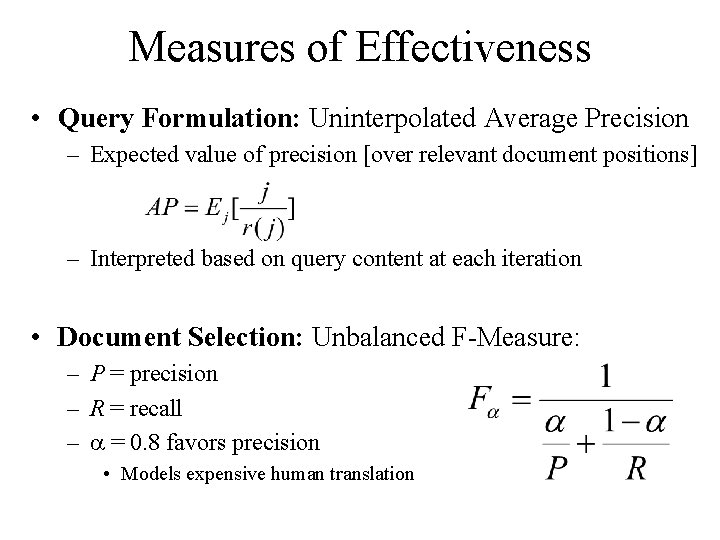

Measures of Effectiveness
• Query Formulation: Uninterpolated Average Precision
  – Expected value of precision [over relevant document positions]
  – Interpreted based on query content at each iteration
• Document Selection: Unbalanced F-Measure
  – $F_\alpha = \dfrac{1}{\alpha/P + (1-\alpha)/R}$
  – P = precision
  – R = recall
  – α = 0.8 favors precision
    • Models a setting where selected documents go on to expensive human translation
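Both measures can be computed directly from their definitions. The sketch below assumes rankings and judgments are given as document-ID strings; the helper names `average_precision` and `f_alpha` are hypothetical, and scoring F as 0 when no relevant document is selected is a convention chosen here, not one stated on the slide.

```python
from typing import List, Set


def average_precision(ranking: List[str], relevant: Set[str]) -> float:
    """Uninterpolated AP: mean of the precision at each relevant document's rank.

    Relevant documents that are never retrieved contribute zero.
    """
    if not relevant:
        return 0.0
    hits = 0
    total = 0.0
    for rank, doc in enumerate(ranking, start=1):
        if doc in relevant:
            hits += 1
            total += hits / rank  # precision at this relevant document's position
    return total / len(relevant)


def f_alpha(selected: Set[str], relevant: Set[str], alpha: float = 0.8) -> float:
    """Unbalanced F-measure: F_alpha = 1 / (alpha/P + (1 - alpha)/R)."""
    true_pos = len(selected & relevant)
    if true_pos == 0:
        return 0.0  # P or R is zero (or undefined); convention: score 0
    precision = true_pos / len(selected)
    recall = true_pos / len(relevant)
    return 1.0 / (alpha / precision + (1.0 - alpha) / recall)


if __name__ == "__main__":
    print(average_precision(["a", "b", "c", "d"], {"a", "c", "e"}))
    print(f_alpha({"a", "b"}, {"a", "c", "e"}), f_alpha({"a", "b"}, {"a", "c", "e"}, alpha=0.5))
```

With P = 0.5 and R = 1/3, α = 0.8 gives F = 1/2.2 ≈ 0.455, versus 0.4 for the balanced α = 0.5, which shows how α = 0.8 rewards the higher precision.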

Variation in Automatic Measures
• System
  – What we seek to measure
• Topic
  – Sample topic space, compute expected value
• Topic+System
  – Pair by topic and compute statistical significance
• Collection
  – Repeat the experiment using several collections
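Pairing by topic means comparing two systems on the same topics and testing the per-topic differences. The slide does not say which test was used; as an assumption, the sketch below uses an exact two-sided sign test (a Wilcoxon signed-rank test is a common alternative). The function name `sign_test` and its dictionary inputs are hypothetical.

```python
from math import comb
from typing import Dict


def sign_test(scores_a: Dict[str, float], scores_b: Dict[str, float]) -> float:
    """Exact two-sided sign test on per-topic score pairs (ties are dropped).

    Assumed input: topic ID -> score (e.g., average precision) for each system.
    """
    topics = scores_a.keys() & scores_b.keys()  # pair by topic
    wins_a = sum(1 for t in topics if scores_a[t] > scores_b[t])
    wins_b = sum(1 for t in topics if scores_a[t] < scores_b[t])
    n = wins_a + wins_b
    if n == 0:
        return 1.0  # every pair tied: no evidence of a difference
    k = min(wins_a, wins_b)
    # P(X <= k) under Binomial(n, 0.5), doubled for two sides, capped at 1.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)
```

With only eight topics, the smallest achievable two-sided p-value is 2/2^8 ≈ 0.0078 (one system wins on every topic), which limits what a single comparison of this size can show.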

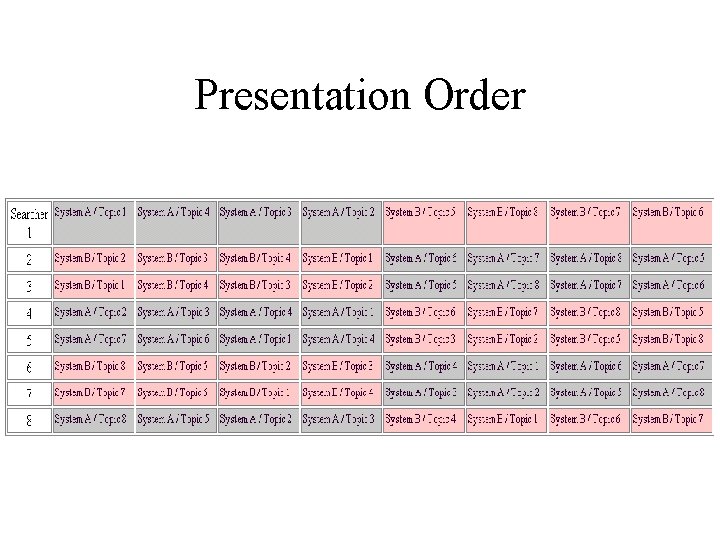

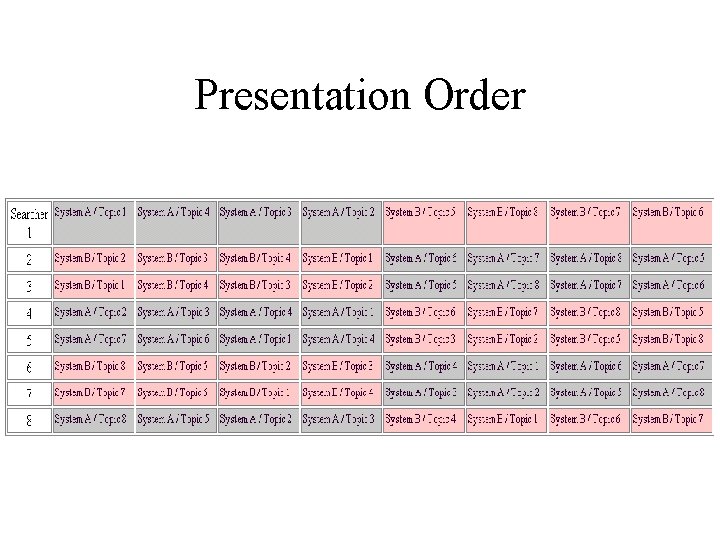

Additional Effects in iCLEF
• Learning
  – Vary topic presentation order
• Fatigue
  – Vary system presentation order
• Topic+User (Expertise)
  – Ask about prior knowledge of each topic
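Varying topic and system order is usually done by counterbalancing across users. The following is a hypothetical sketch, not the actual iCLEF presentation matrix: the function `presentation_orders`, the cyclic rotation of topics, and the even split of each session between two systems are all assumptions made for illustration.

```python
from typing import List, Tuple


def presentation_orders(
    topics: List[str], systems: Tuple[str, str], n_users: int
) -> List[List[Tuple[str, str]]]:
    """Hypothetical counterbalancing: one (topic, system) sequence per user.

    User u sees the topics rotated by u positions (a cyclic Latin square), uses
    one system for the first half of the session and the other for the second,
    and the system order alternates between consecutive users.
    """
    k = len(topics)
    half = k // 2
    orders = []
    for u in range(n_users):
        shift = u % k
        rotated = topics[shift:] + topics[:shift]  # vary topic order (learning)
        # Vary system order (fatigue): even users start with systems[0].
        first, second = systems if u % 2 == 0 else systems[::-1]
        orders.append([(t, first if i < half else second) for i, t in enumerate(rotated)])
    return orders


if __name__ == "__main__":
    for row in presentation_orders(["T1", "T2", "T3", "T4"], ("A", "B"), 4):
        print(row)
```

With four topics, two systems, and four users, this rotation pairs each topic with each system exactly twice.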

Presentation Order

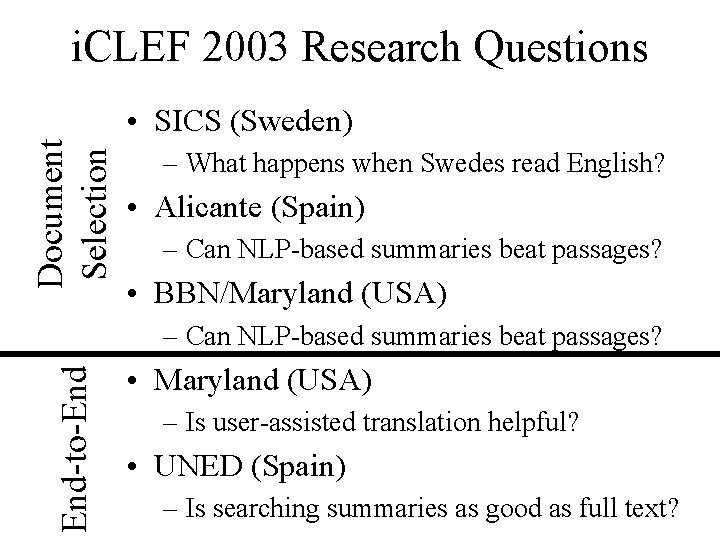

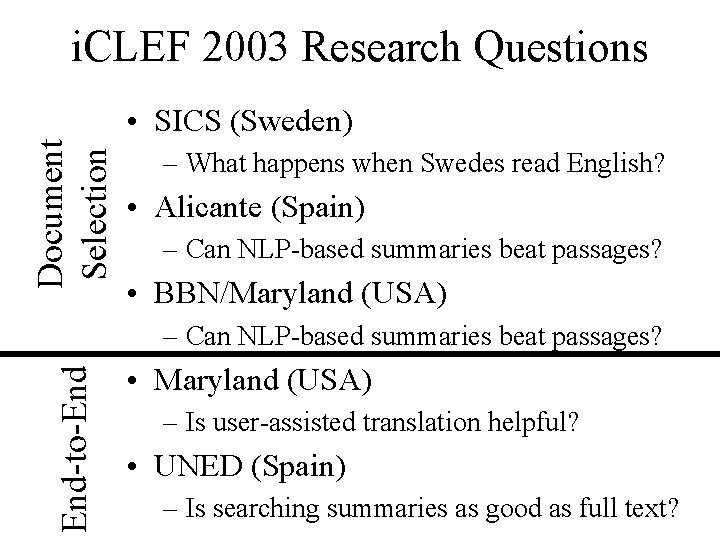

iCLEF 2003 Research Questions
Document Selection
• SICS (Sweden)
  – What happens when Swedes read English?
• Alicante (Spain)
  – Can NLP-based summaries beat passages?
• BBN/Maryland (USA)
  – Can NLP-based summaries beat passages?
End-to-End
• Maryland (USA)
  – Is user-assisted translation helpful?
• UNED (Spain)
  – Is searching summaries as good as full text?

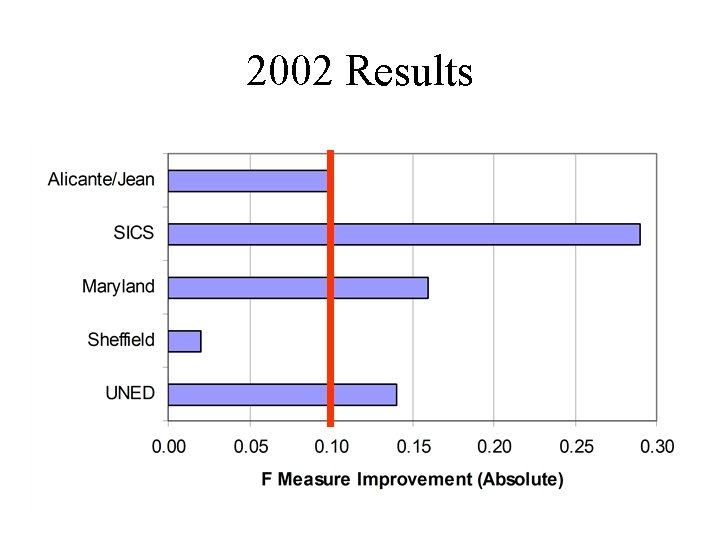

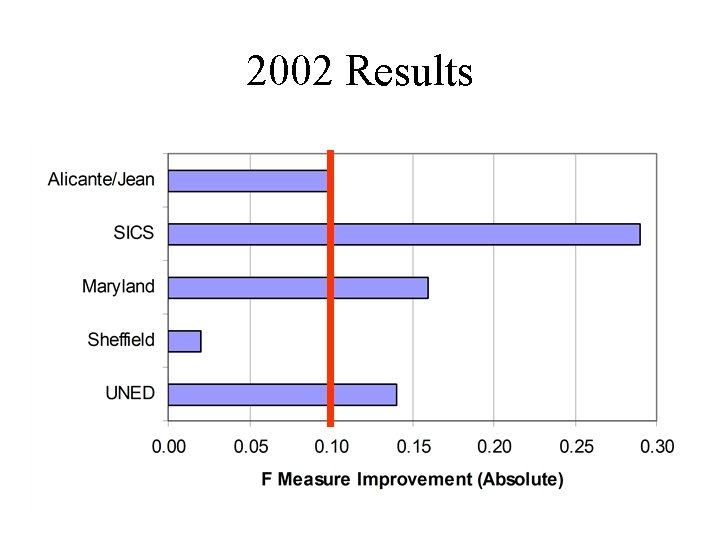

2002 Results

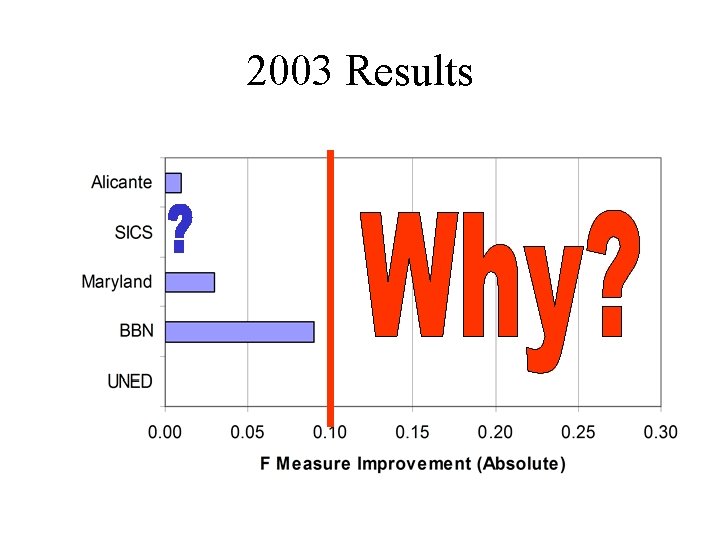

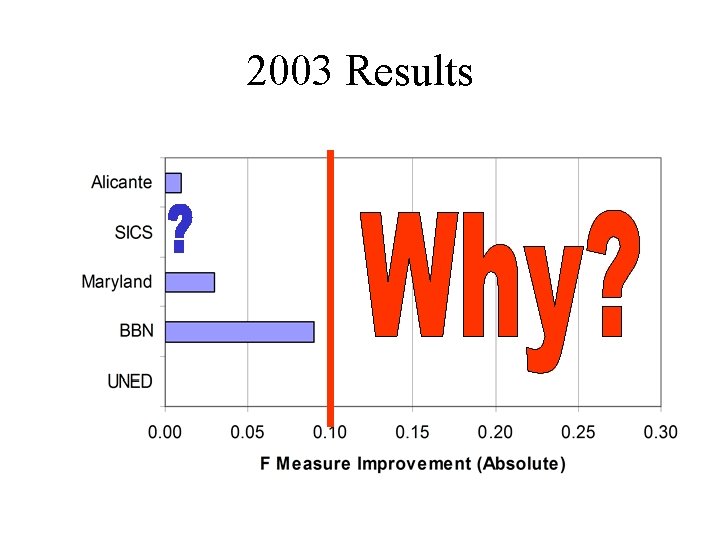

2003 Results