CLEF 2009, Corfu: Question Answering Track Overview

- Slides: 31

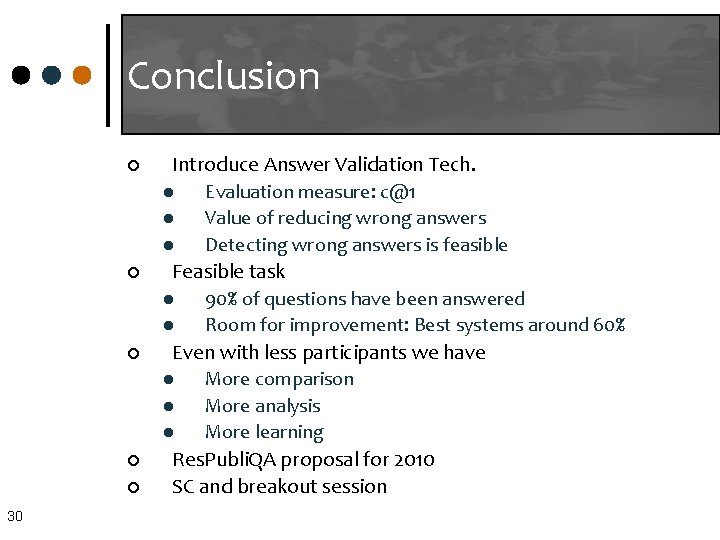

A. Peñas, P. Forner, R. Sutcliffe, Á. Rodrigo, C. Forascu, I. Alegria, D. Giampiccolo, N. Moreau, P. Osenova, J. Turmo, P. R. Comas, S. Rosset, O. Galibert, N. Moreau, D. Mostefa, P. Rosso, D. Buscaldi, D. Santos, L. M. Cabral

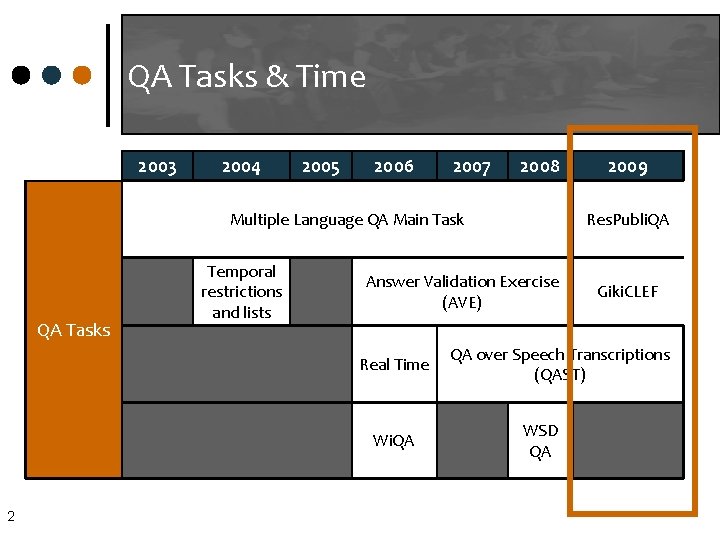

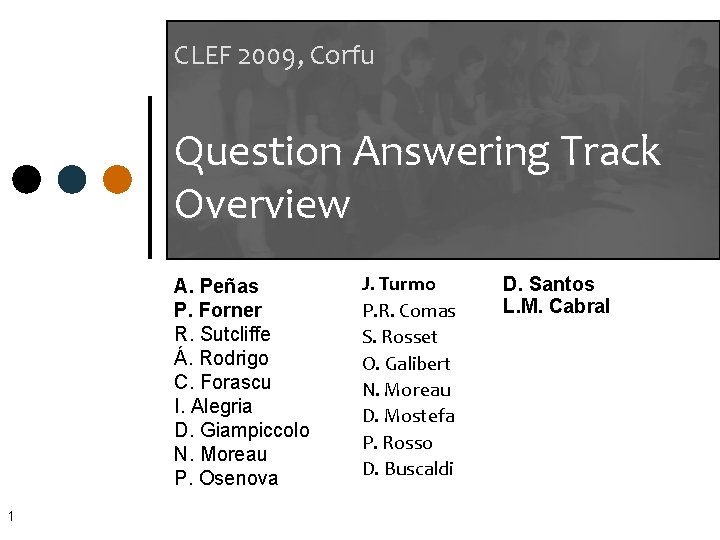

QA tasks over time (2003 to 2009)

- Multiple Language QA Main Task
- ResPubliQA
- Temporal restrictions and lists
- Answer Validation Exercise (AVE)
- Real Time
- WiQA
- GikiCLEF
- QA over Speech Transcriptions (QAST)
- WSD QA

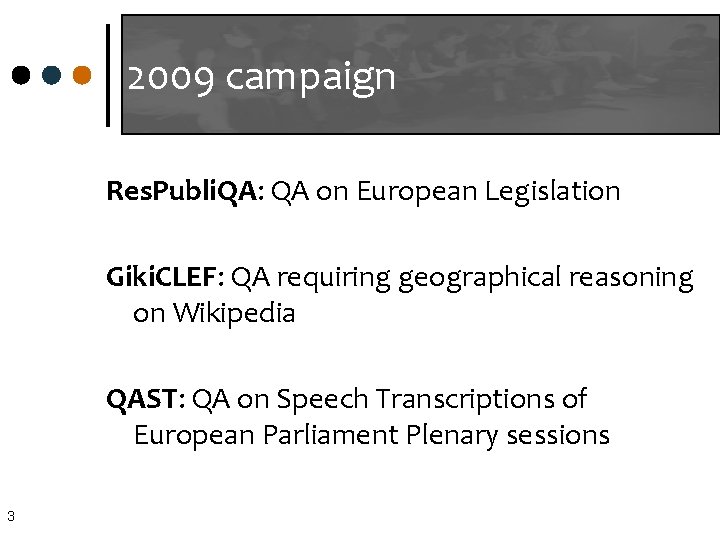

2009 campaign

- ResPubliQA: QA on European Legislation
- GikiCLEF: QA requiring geographical reasoning on Wikipedia
- QAST: QA on Speech Transcriptions of European Parliament Plenary sessions

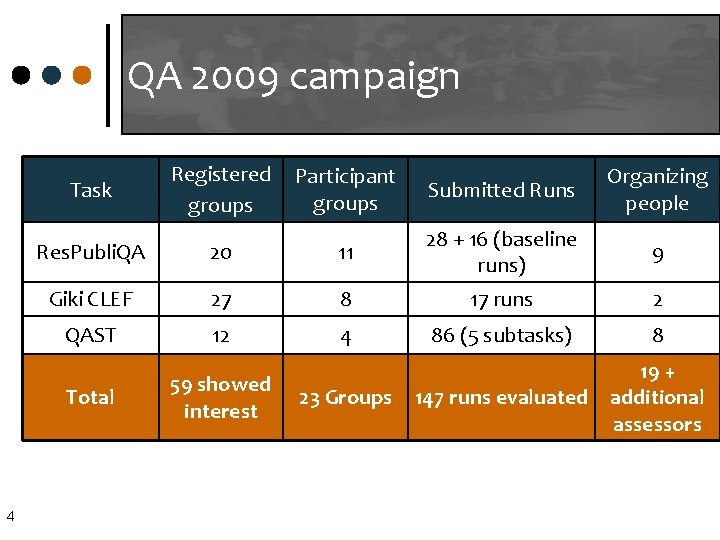

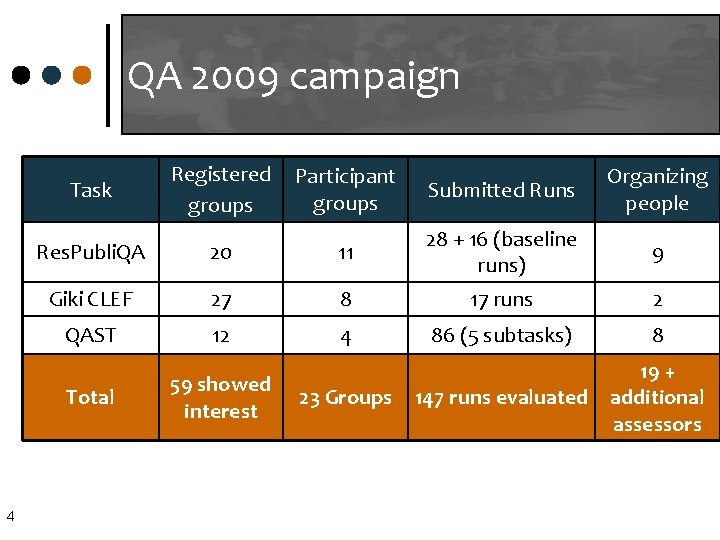

QA 2009 campaign

| Task | Registered groups | Participant groups | Submitted runs | Organizing people |
|---|---|---|---|---|
| ResPubliQA | 20 | 11 | 28 + 16 (baseline runs) | 9 |
| GikiCLEF | 27 | 8 | 17 | 2 |
| QAST | 12 | 4 | 86 (5 subtasks) | 8 |
| Total | 59 showed interest | 23 groups | 147 runs evaluated | 19 + additional assessors |

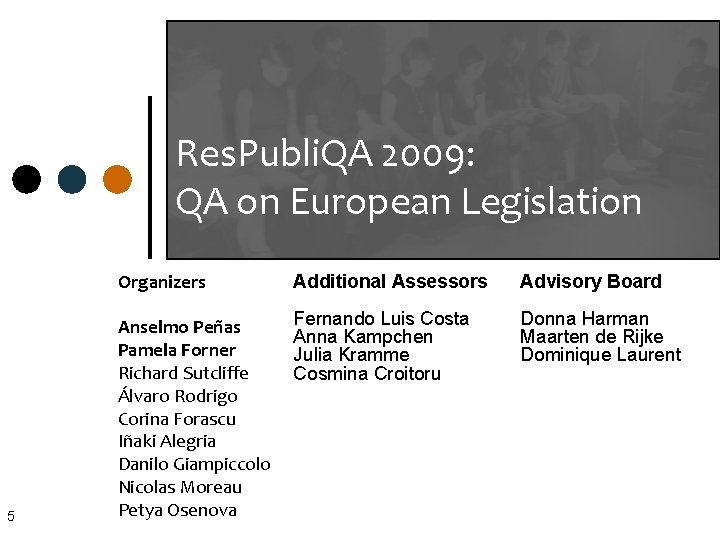

ResPubliQA 2009: QA on European Legislation

- Organizers: Anselmo Peñas, Pamela Forner, Richard Sutcliffe, Álvaro Rodrigo, Corina Forascu, Iñaki Alegria, Danilo Giampiccolo, Nicolas Moreau, Petya Osenova
- Additional assessors: Fernando Luis Costa, Anna Kampchen, Julia Kramme, Cosmina Croitoru
- Advisory board: Donna Harman, Maarten de Rijke, Dominique Laurent

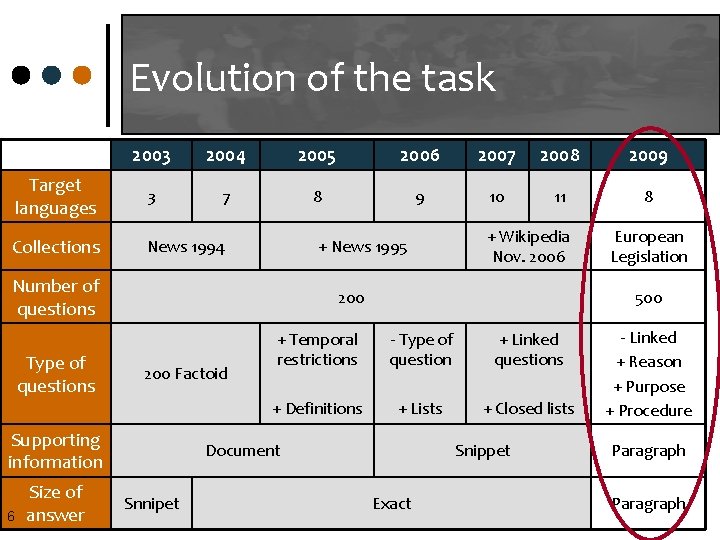

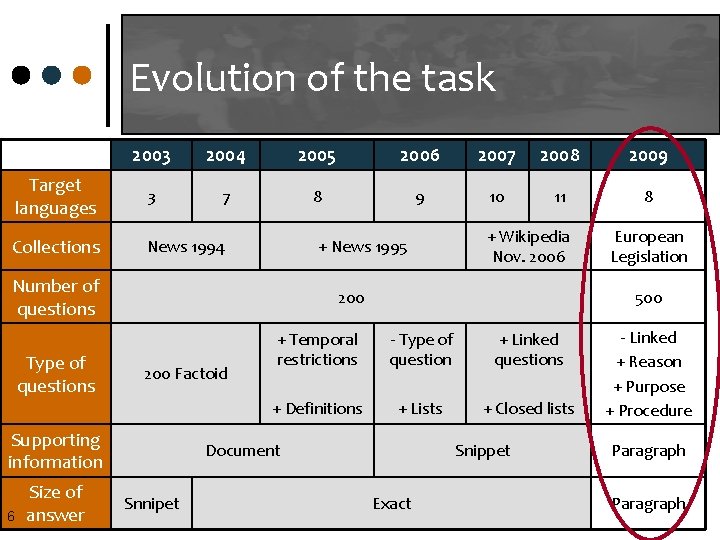

Evolution of the task (2003 to 2009)

- Target languages: 3 (2003), 7 (2004), 8 (2005), 9 (2006), 10 (2007), 11 (2008), 8 (2009)
- Collections: News 1994, + News 1995, + Wikipedia Nov. 2006; European Legislation in 2009
- Number of questions: 200; 500 in 2009
- Type of questions: Factoid, + Temporal restrictions, - Type of question, + Linked questions, + Definitions, + Lists, + Closed lists; in 2009: - Linked, + Reason, + Purpose, + Procedure
- Supporting information: Document, Snippet; Paragraph in 2009
- Size of answer: Snippet, Exact; Paragraph in 2009

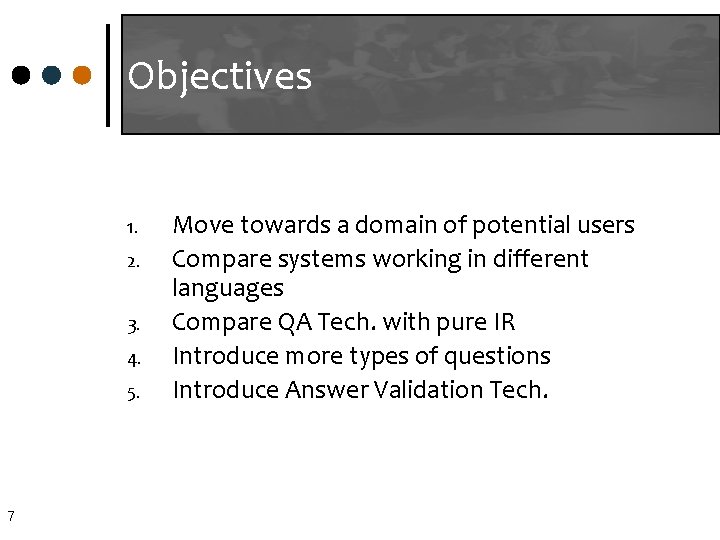

Objectives

1. Move towards a domain of potential users
2. Compare systems working in different languages
3. Compare QA technology with pure IR
4. Introduce more types of questions
5. Introduce answer validation technology

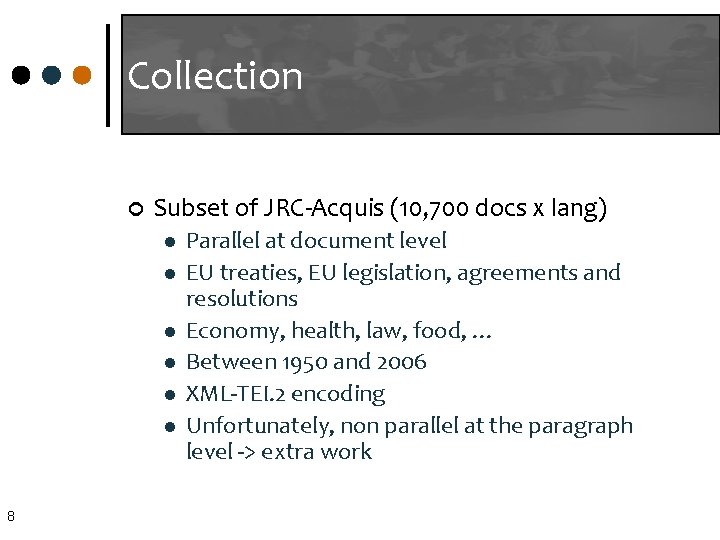

Collection

- Subset of JRC-Acquis (10,700 documents per language)
  - Parallel at document level
  - EU treaties, EU legislation, agreements and resolutions
  - Economy, health, law, food, …
  - Between 1950 and 2006
  - XML-TEI.2 encoding
- Unfortunately, not parallel at the paragraph level, which meant extra work

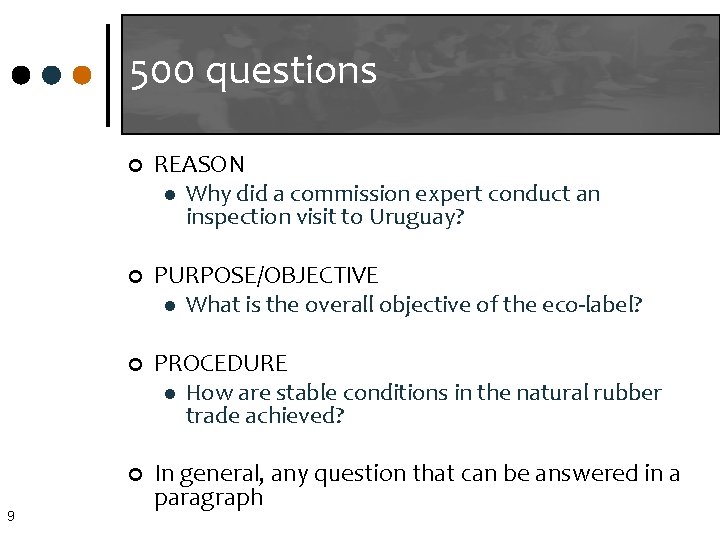

500 questions

- REASON
  - Why did a commission expert conduct an inspection visit to Uruguay?
- PURPOSE/OBJECTIVE
  - What is the overall objective of the eco-label?
- PROCEDURE
  - How are stable conditions in the natural rubber trade achieved?
- In general, any question that can be answered in a paragraph

500 questions

- Also
  - FACTOID: In how many languages is the Official Journal of the Community published?
  - DEFINITION: What is meant by “whole milk”?
- No NIL questions

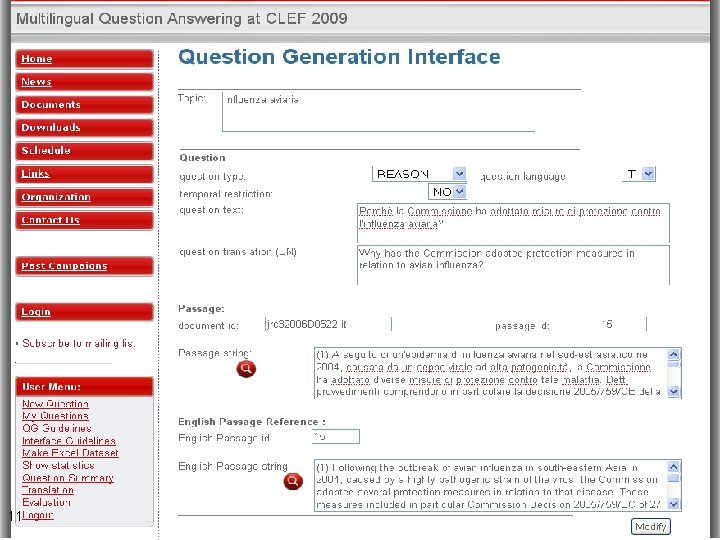


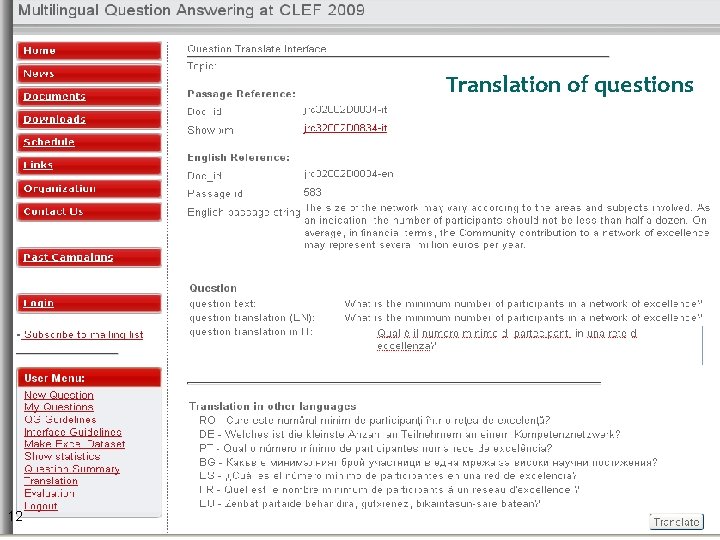

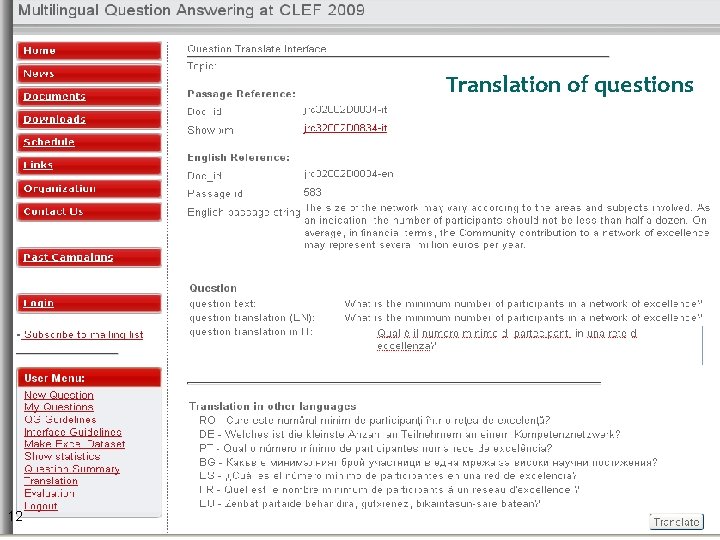

Translation of questions

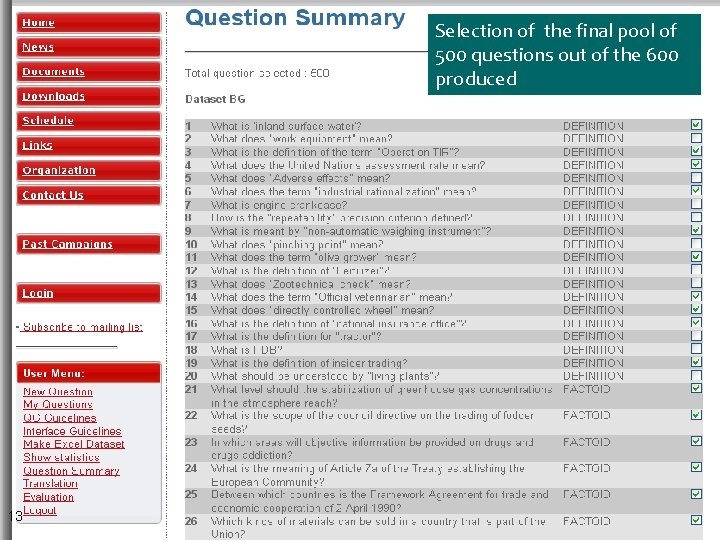

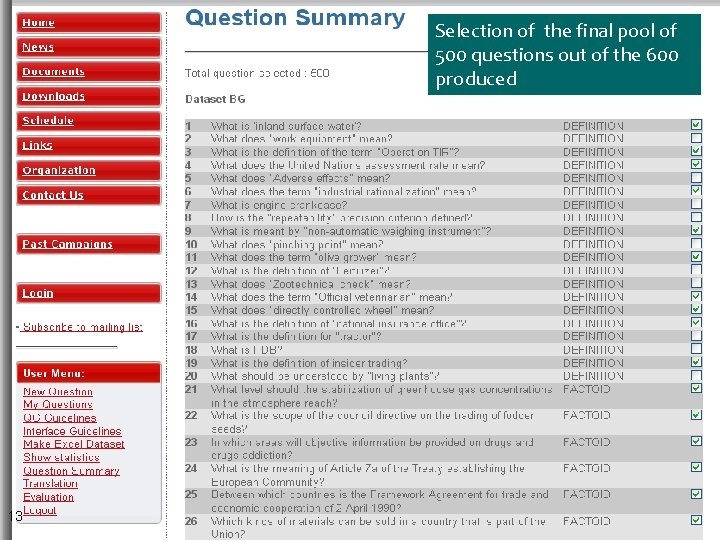

Selection of the final pool of 500 questions out of the 600 produced


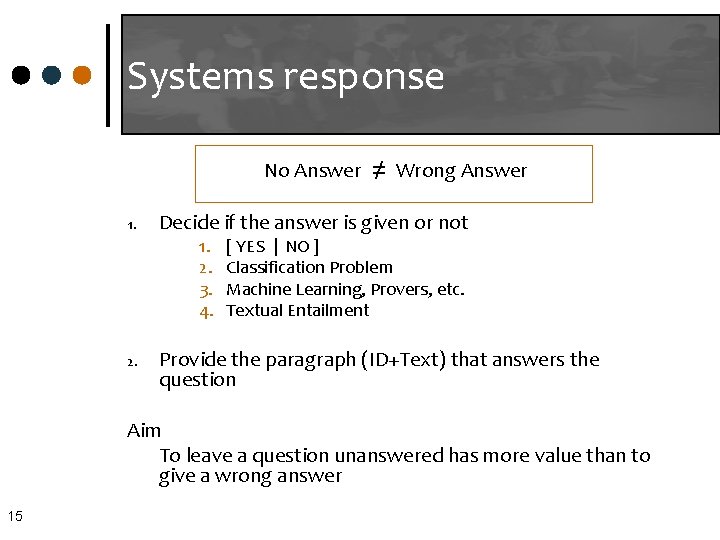

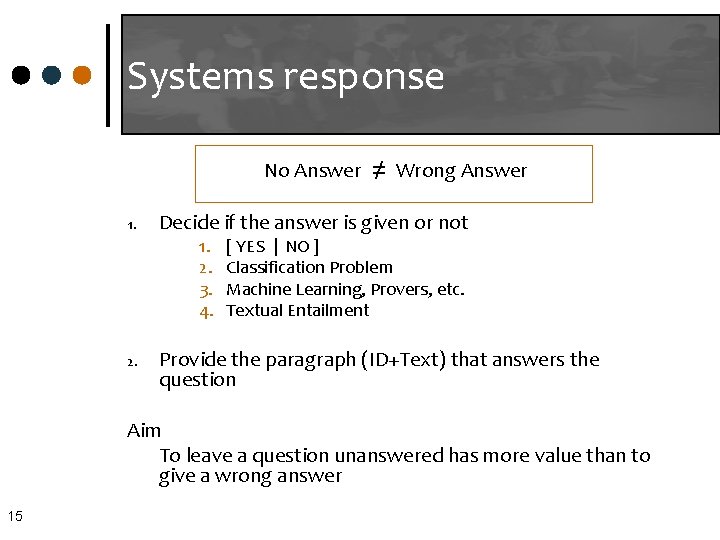

System response

No Answer ≠ Wrong Answer

1. Decide whether or not to give the answer [YES | NO]
   - A classification problem: machine learning, provers, etc.
   - Textual entailment
2. Provide the paragraph (ID + text) that answers the question

Aim: leaving a question unanswered has more value than giving a wrong answer.
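The two-step response can be sketched as a threshold on a validation score. This is a minimal sketch, assuming a hypothetical `Candidate` record whose `confidence` comes from some answer-validation component (a classifier, a prover or an entailment model); the names and the 0.5 threshold are illustrative, not part of the track definition.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    paragraph_id: str
    text: str
    confidence: float  # hypothetical validation score in [0, 1]


@dataclass
class Response:
    question_id: str
    answered: bool                   # step 1: the YES/NO decision
    candidate: Optional[Candidate]   # step 2: paragraph, kept even when not answered


def respond(question_id: str, candidate: Optional[Candidate],
            threshold: float = 0.5) -> Response:
    """Answer only if validation is confident enough; otherwise abstain,
    but still report the candidate so it can be judged (NoA R / NoA W)."""
    if candidate is None:
        return Response(question_id, answered=False, candidate=None)
    return Response(question_id, candidate.confidence >= threshold, candidate)
```

Keeping the candidate on abstention is a design choice that lets assessors tell whether the system withheld a correct or a wrong paragraph.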

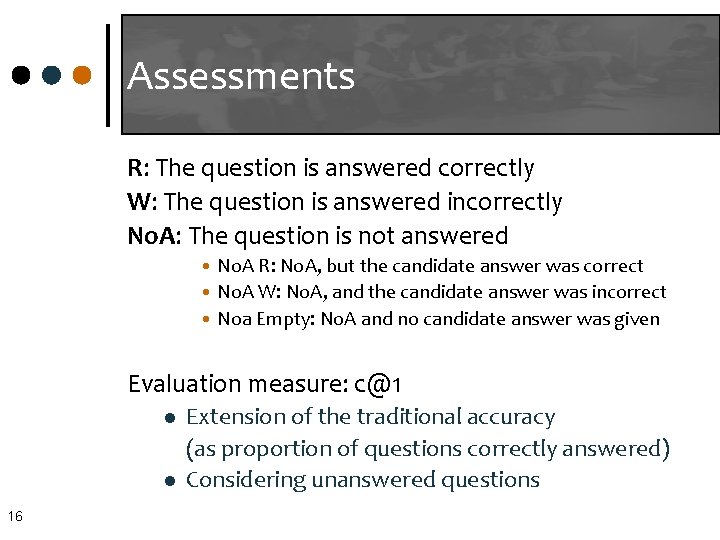

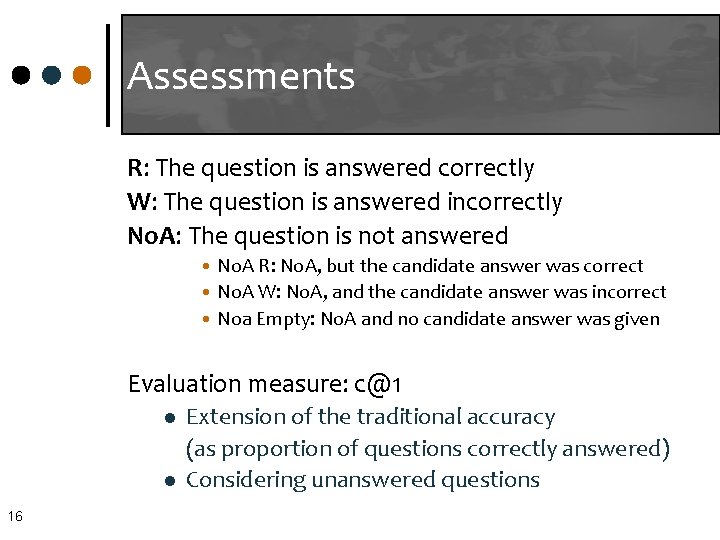

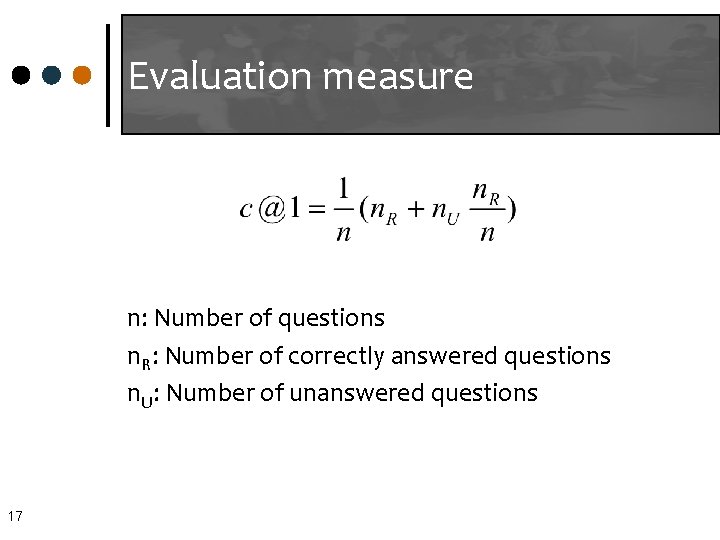

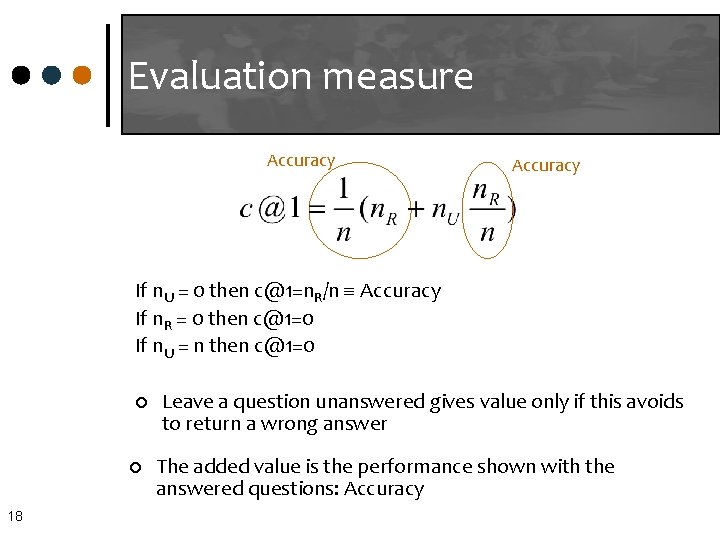

Assessments

- R: the question is answered correctly
- W: the question is answered incorrectly
- NoA: the question is not answered
  - NoA R: not answered, but the candidate answer was correct
  - NoA W: not answered, and the candidate answer was incorrect
  - NoA empty: not answered, and no candidate answer was given

Evaluation measure: c@1

- An extension of traditional accuracy (the proportion of questions answered correctly)
- It takes unanswered questions into account
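Mapping judged responses onto these categories is a small lookup. The sketch assumes a hypothetical judgment format: a flag saying whether the system answered, plus whether its candidate paragraph was judged correct (`None` when no candidate was returned).

```python
from collections import Counter
from typing import Iterable, Optional, Tuple


def assess(answered: bool, candidate_correct: Optional[bool]) -> str:
    """Return the assessment label for one judged response."""
    if answered:
        # An answered question always carries a paragraph; a missing or
        # incorrect one counts as wrong.
        return "R" if candidate_correct else "W"
    if candidate_correct is None:
        return "NoA empty"
    return "NoA R" if candidate_correct else "NoA W"


def tally(judgments: Iterable[Tuple[bool, Optional[bool]]]) -> Counter:
    """Count labels over a run, e.g. to fill one row of a results table."""
    return Counter(assess(answered, correct) for answered, correct in judgments)
```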

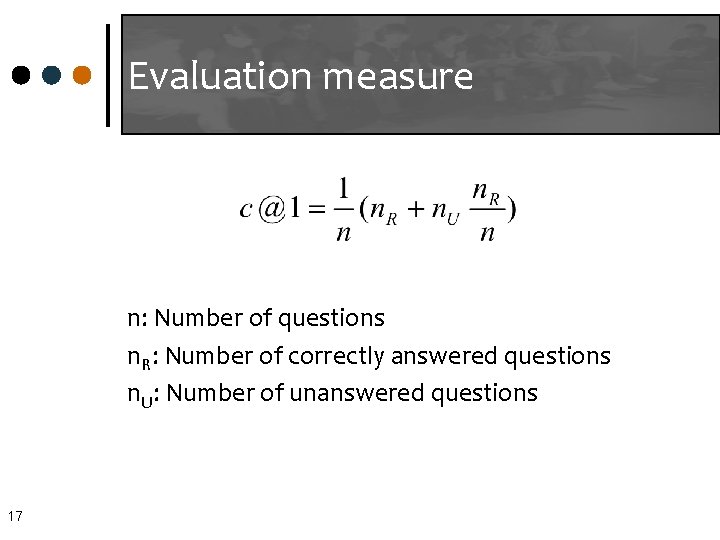

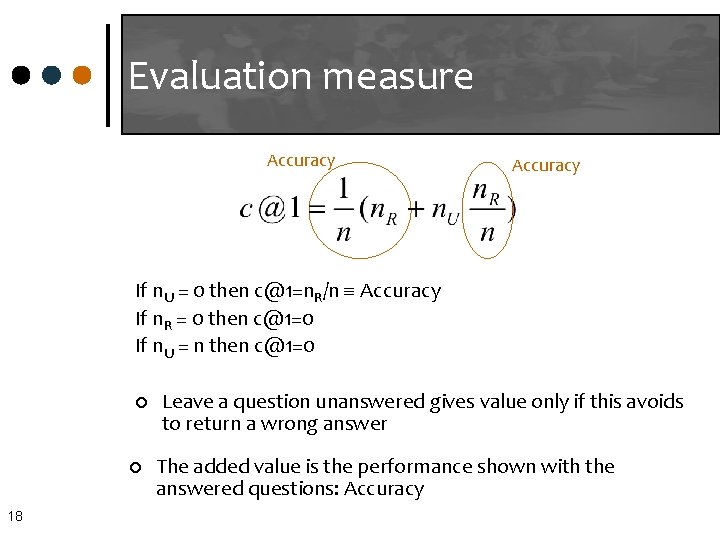

Evaluation measure

- $n$: number of questions
- $n_R$: number of correctly answered questions
- $n_U$: number of unanswered questions

$$c@1 = \frac{1}{n}\left(n_R + n_U \frac{n_R}{n}\right)$$

Evaluation measure

- If $n_U = 0$, then $c@1 = n_R/n$ (accuracy)
- If $n_R = 0$, then $c@1 = 0$
- If $n_U = n$, then $c@1 = 0$
- Leaving a question unanswered adds value only if it avoids returning a wrong answer
- The added value is the performance the system showed on the questions it answered, measured as accuracy ($n_R/n$)
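c@1 can be computed directly from the three counts. A minimal Python sketch; the function name and the input check are illustrative choices. The assertions exercise the three properties listed above and one row of the Romanian results (icia 092: 260 correct and 156 unanswered out of 500).

```python
def c_at_1(n_r: int, n_u: int, n: int) -> float:
    """c@1 = (n_R + n_U * n_R / n) / n.

    Each unanswered question is credited with the accuracy the system
    achieved overall (n_R / n); wrong answers earn nothing."""
    if n <= 0 or n_r < 0 or n_u < 0 or n_r + n_u > n:
        raise ValueError("need n > 0, n_r, n_u >= 0 and n_r + n_u <= n")
    return (n_r + n_u * n_r / n) / n
```

For icia 092, `c_at_1(260, 156, 500)` gives about 0.68, against an accuracy of 260/500 = 0.52.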

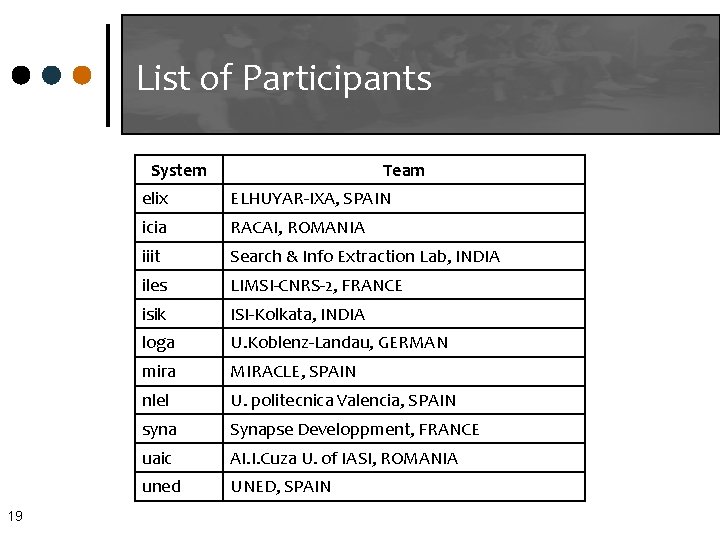

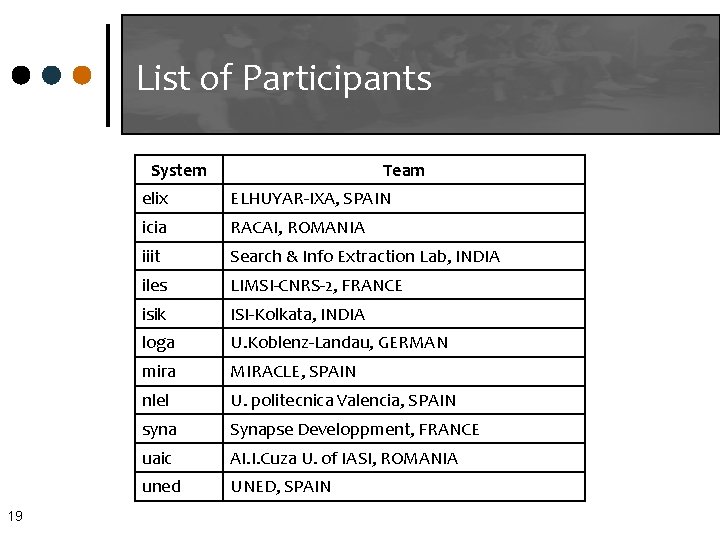

List of Participants

| System | Team |
|---|---|
| elix | ELHUYAR-IXA, SPAIN |
| icia | RACAI, ROMANIA |
| iiit | Search & Info Extraction Lab, INDIA |
| iles | LIMSI-CNRS-2, FRANCE |
| isik | ISI-Kolkata, INDIA |
| loga | U. Koblenz-Landau, GERMANY |
| mira | MIRACLE, SPAIN |
| nlel | U. Politecnica Valencia, SPAIN |
| syna | Synapse Développement, FRANCE |
| uaic | Al. I. Cuza U. of Iasi, ROMANIA |
| uned | UNED, SPAIN |

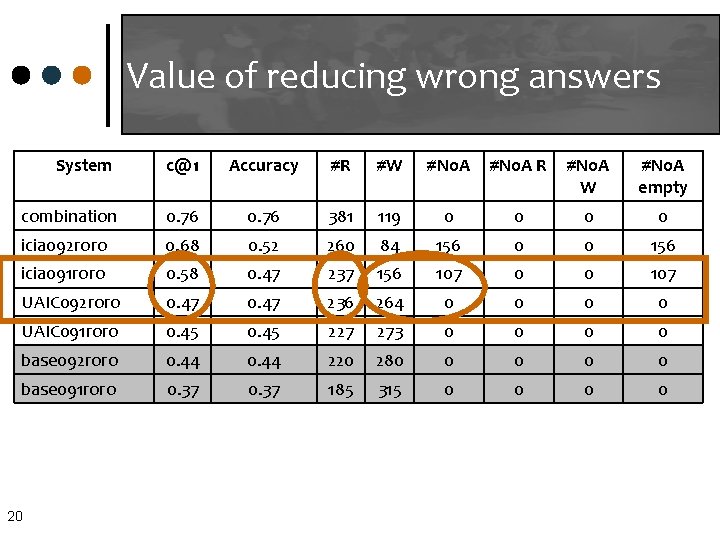

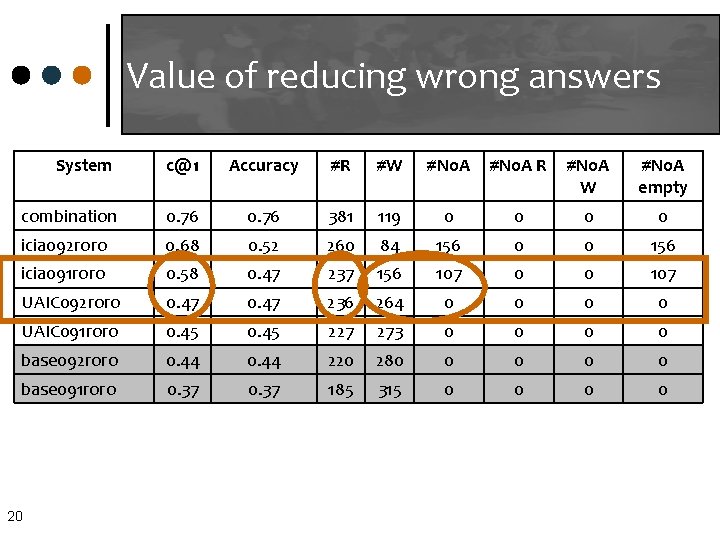

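Value of reducing wrong answers

| System | c@1 | Accuracy | #R | #W | #NoA | #NoA R | #NoA W | #NoA empty |
|---|---|---|---|---|---|---|---|---|
| combination | 0.76 | 0.76 | 381 | 119 | 0 | 0 | 0 | 0 |
| icia 092 roro | 0.68 | 0.52 | 260 | 84 | 156 | 0 | 0 | 156 |
| icia 091 roro | 0.58 | 0.47 | 237 | 156 | 107 | 0 | 0 | 107 |
| UAIC 092 roro | 0.47 | 0.47 | 236 | 264 | 0 | 0 | 0 | 0 |
| UAIC 091 roro | 0.45 | 0.45 | 227 | 273 | 0 | 0 | 0 | 0 |
| base 092 roro | 0.44 | 0.44 | 220 | 280 | 0 | 0 | 0 | 0 |
| base 091 roro | 0.37 | 0.37 | 185 | 315 | 0 | 0 | 0 | 0 |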
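The c@1 and Accuracy columns can be recomputed from the counts. In this sketch the Accuracy column is assumed to be (#R + #NoA R)/n, i.e. crediting correct candidates of unanswered questions; that is an inference which matches the reported rows (for example loga 091 dede: (186 + 16)/500 ≈ 0.40), not a definition stated on the slides. The `Row` type is hypothetical.

```python
from typing import NamedTuple

N = 500  # questions in the test set


class Row(NamedTuple):
    system: str
    r: int      # answered correctly
    w: int      # answered wrongly
    noa: int    # left unanswered
    noa_r: int  # unanswered, but the candidate was correct


def c_at_1(row: Row, n: int = N) -> float:
    return (row.r + row.noa * row.r / n) / n


def accuracy(row: Row, n: int = N) -> float:
    # Assumption: correct candidates of unanswered questions are credited.
    return (row.r + row.noa_r) / n


rows = [
    Row("icia 092 roro", 260, 84, 156, 0),
    Row("icia 091 roro", 237, 156, 107, 0),
    Row("loga 091 dede", 186, 221, 93, 16),
]

for row in rows:
    assert row.r + row.w + row.noa == N, row.system
    print(f"{row.system}: c@1={c_at_1(row):.2f} accuracy={accuracy(row):.2f}")
```

With the same 260 correct answers, icia 092 would have scored c@1 = 0.52 had it returned wrong answers for its 156 unanswered questions; abstaining instead lifts it to 0.68.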

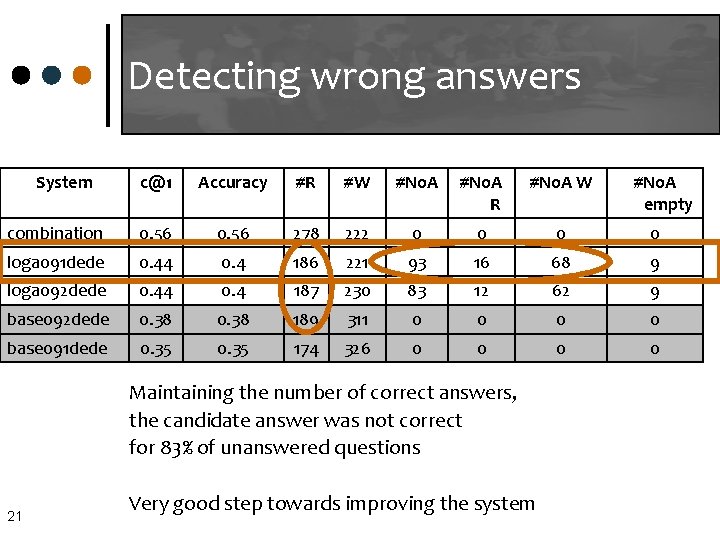

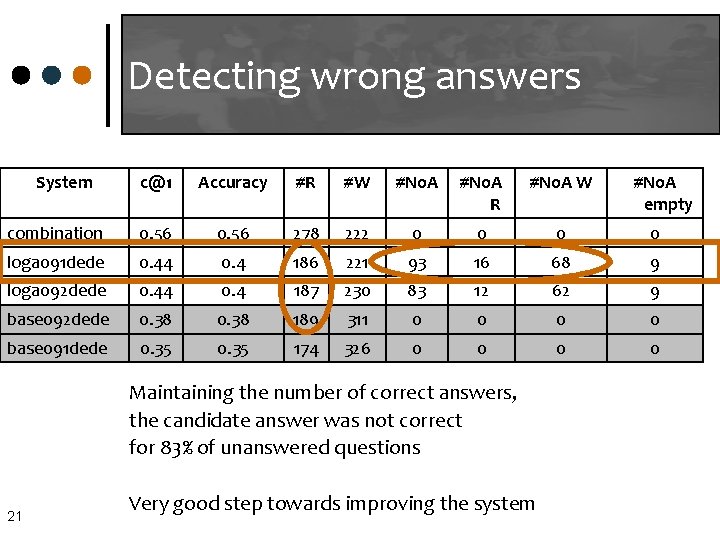

Detecting wrong answers

| System | c@1 | Accuracy | #R | #W | #NoA | #NoA R | #NoA W | #NoA empty |
|---|---|---|---|---|---|---|---|---|
| combination | 0.56 | 0.56 | 278 | 222 | 0 | 0 | 0 | 0 |
| loga 091 dede | 0.44 | 0.40 | 186 | 221 | 93 | 16 | 68 | 9 |
| loga 092 dede | 0.44 | 0.40 | 187 | 230 | 83 | 12 | 62 | 9 |
| base 092 dede | 0.38 | 0.38 | 189 | 311 | 0 | 0 | 0 | 0 |
| base 091 dede | 0.35 | 0.35 | 174 | 326 | 0 | 0 | 0 | 0 |

While maintaining the number of correct answers, the candidate answer was not correct for 83% of the unanswered questions: a very good step towards improving the system.
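The 83% figure can be reproduced from the loga 091 row if questions left unanswered with no candidate (#NoA empty) are counted as "not correct". That reading is an assumption; the sketch also computes the stricter ratio over returned candidates only, #NoA W / (#NoA R + #NoA W), for comparison.

```python
def share_not_correct(noa_r: int, noa_w: int, noa_empty: int) -> float:
    """Unanswered questions whose candidate was wrong or missing, over all unanswered."""
    noa = noa_r + noa_w + noa_empty
    return (noa_w + noa_empty) / noa if noa else 0.0


def share_wrong_candidates(noa_r: int, noa_w: int) -> float:
    """Wrong candidates over the candidates that were returned but withheld."""
    judged = noa_r + noa_w
    return noa_w / judged if judged else 0.0


# (#NoA R, #NoA W, #NoA empty) from the German results table
runs = {"loga 091 dede": (16, 68, 9), "loga 092 dede": (12, 62, 9)}

for name, (noa_r, noa_w, noa_empty) in runs.items():
    print(f"{name}: {share_not_correct(noa_r, noa_w, noa_empty):.0%} not correct, "
          f"{share_wrong_candidates(noa_r, noa_w):.0%} of candidates wrong")
```

This prints 83% and 81% for loga 091, and 86% and 84% for loga 092.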

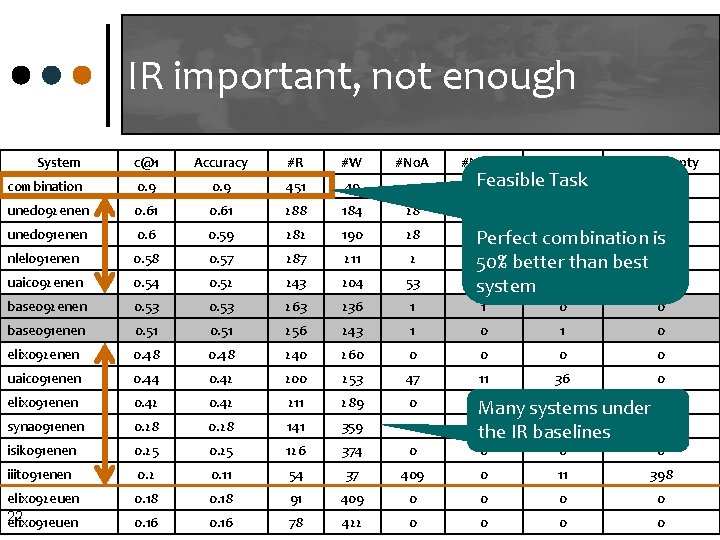

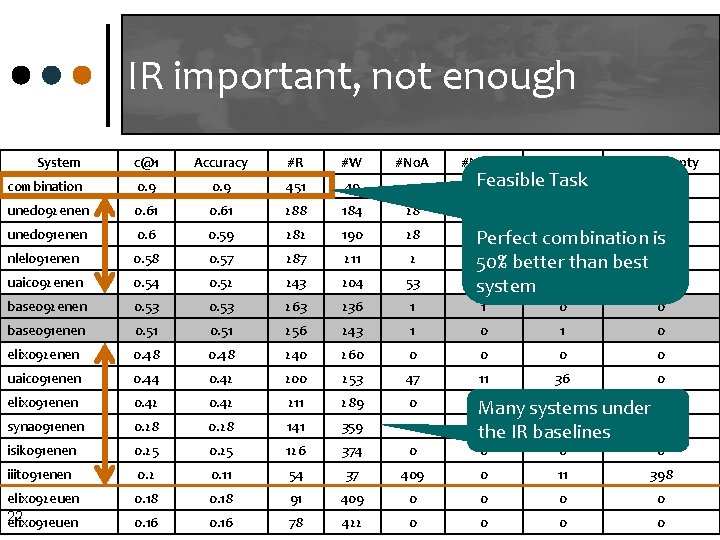

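IR is important, but not enough

| System | c@1 | Accuracy | #R | #W | #NoA | #NoA R | #NoA W | #NoA empty |
|---|---|---|---|---|---|---|---|---|
| combination | 0.90 | 0.90 | 451 | 49 | 0 | 0 | 0 | 0 |
| uned 092 enen | 0.61 | 0.61 | 288 | 184 | 28 | 15 | 12 | 1 |
| uned 091 enen | 0.60 | 0.59 | 282 | 190 | 28 | 15 | 13 | 0 |
| nlel 091 enen | 0.58 | 0.57 | 287 | 211 | 2 | 0 | 2 | 0 |
| uaic 092 enen | 0.54 | 0.52 | 243 | 204 | 53 | 18 | 35 | 0 |
| base 092 enen | 0.53 | 0.53 | 263 | 236 | 1 | 1 | 0 | 0 |
| base 091 enen | 0.51 | 0.51 | 256 | 243 | 1 | 0 | | |
| elix 092 enen | 0.48 | 0.48 | 240 | 260 | 0 | 0 | 0 | 0 |
| uaic 091 enen | 0.44 | 0.42 | 200 | 253 | 47 | 11 | 36 | 0 |
| elix 091 enen | 0.42 | 0.42 | 211 | 289 | 0 | 0 | 0 | 0 |
| syna 091 enen | 0.28 | 0.28 | 141 | 359 | 0 | 0 | 0 | 0 |
| isik 091 enen | 0.25 | 0.25 | 126 | 374 | 0 | 0 | 0 | 0 |
| iiit 091 enen | 0.20 | 0.11 | 54 | 37 | 409 | 0 | 11 | 398 |
| elix 092 euen | 0.18 | 0.18 | 91 | 409 | 0 | 0 | 0 | 0 |
| elix 091 euen | 0.16 | 0.16 | 78 | 422 | 0 | 0 | 0 | 0 |

- Feasible task
- A perfect combination is 50% better than the best system
- Many systems fall below the IR baselines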
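The slide does not define the combination row; a common reading, assumed here, is an oracle that counts a question as correct when at least one run answered it correctly. A minimal sketch with hypothetical question IDs, plus the relative-gain arithmetic behind "50% better".

```python
from typing import Dict, Set


def oracle_accuracy(correct_by_run: Dict[str, Set[str]], n_questions: int) -> float:
    """Share of questions answered correctly by at least one run."""
    solved: Set[str] = set().union(*correct_by_run.values())
    return len(solved) / n_questions


def relative_gain(new: float, old: float) -> float:
    """How much better `new` is than `old`, as a fraction of `old`."""
    return new / old - 1.0
```

With the reported values, `relative_gain(0.90, 0.61)` is about 0.48, which the slide rounds to 50%.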

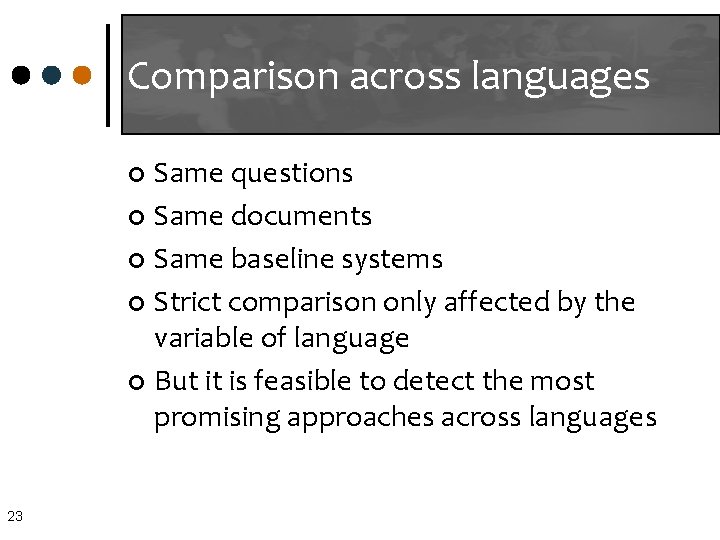

Comparison across languages

- Same questions
- Same documents
- Same baseline systems
- A strict comparison, affected only by the variable of language
- But it is feasible to detect the most promising approaches across languages

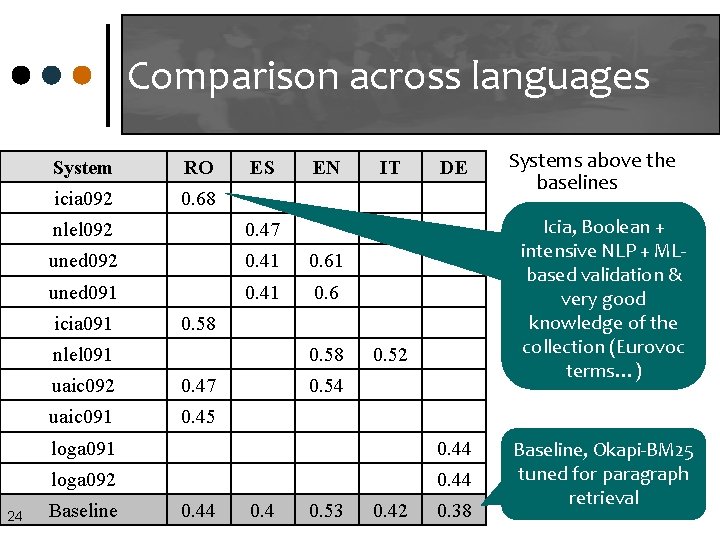

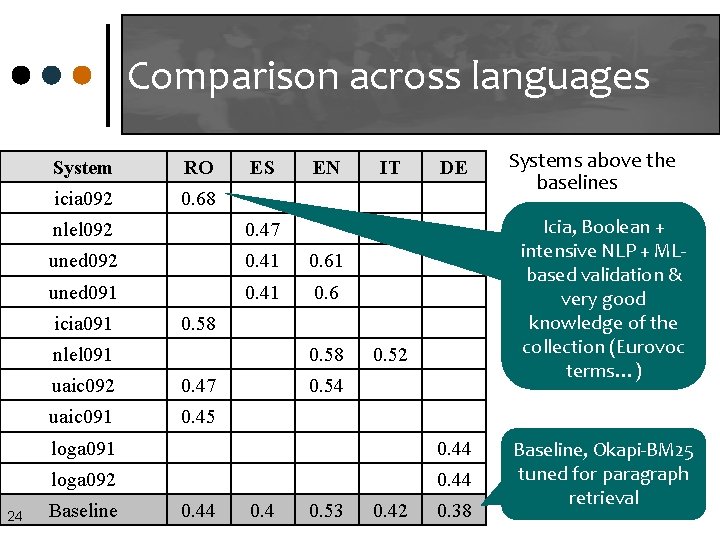

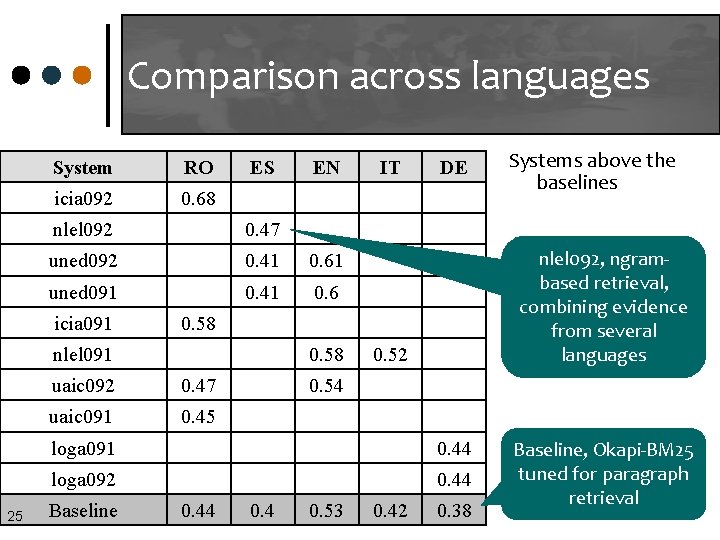

Comparison across languages (c@1)

| System | RO | ES | IT | DE | EN |
|---|---|---|---|---|---|
| icia 092 | 0.68 | | | | |
| nlel 092 | | 0.47 | | | |
| uned 092 | | 0.41 | | | 0.61 |
| uned 091 | | 0.41 | | | 0.60 |
| icia 091 | 0.58 | | | | |
| nlel 091 | | | 0.52 | | 0.58 |
| uaic 092 | 0.47 | | | | 0.54 |
| uaic 091 | 0.45 | | | | |
| loga 091 | | | | 0.44 | |
| loga 092 | | | | 0.44 | |
| Baseline | 0.44 | 0.40 | 0.42 | 0.38 | 0.53 |

- Systems above the baselines: icia (Boolean + intensive NLP + ML-based validation, and very good knowledge of the collection: Eurovoc terms…)
- Baseline: Okapi BM25 tuned for paragraph retrieval
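The baseline is described only as Okapi BM25 tuned for paragraph retrieval. The sketch below scores paragraphs with the textbook BM25 formula; the tokeniser, the defaults k1 = 1.2 and b = 0.75, and the non-negative IDF variant (the one Lucene uses) are assumptions, since the baseline's actual tuning is not given here.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    # Simplistic tokenisation (assumption): lowercased word characters, no stemming.
    return re.findall(r"\w+", text.lower())


def bm25_scores(query: str, paragraphs: List[str],
                k1: float = 1.2, b: float = 0.75) -> List[float]:
    """Okapi BM25 score of every paragraph for the query."""
    docs = [tokenize(p) for p in paragraphs]
    if not docs:
        return []
    n_docs = len(docs)
    avgdl = sum(len(d) for d in docs) / n_docs
    df = Counter(term for d in docs for term in set(d))  # document frequencies
    q_terms = tokenize(query)
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in q_terms:
            f = tf[term]
            if f == 0:
                continue
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            norm = f + k1 * (1 - b + b * len(d) / avgdl)  # length normalisation
            score += idf * f * (k1 + 1) / norm
        scores.append(score)
    return scores
```

Ranking is then `sorted(range(len(scores)), key=lambda i: -scores[i])`; a parameter `b` closer to 1 penalises long paragraphs more strongly.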

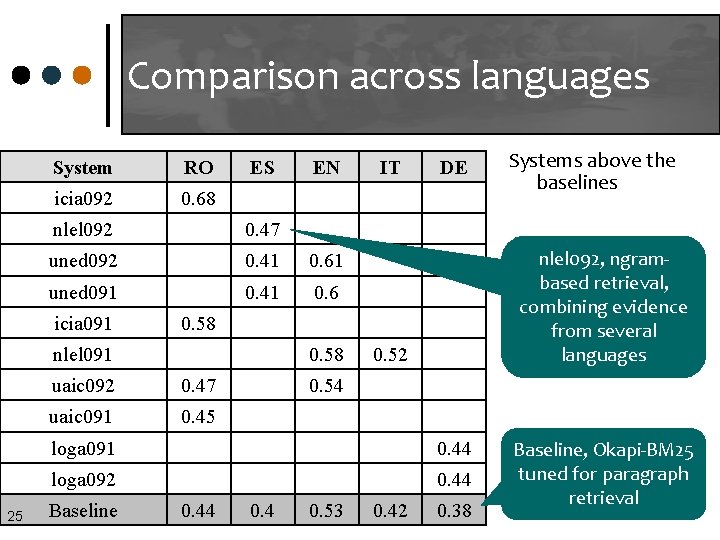

Comparison across languages

- Systems above the baselines: nlel 092 (n-gram-based retrieval, combining evidence from several languages)
- Baseline: Okapi BM25 tuned for paragraph retrieval

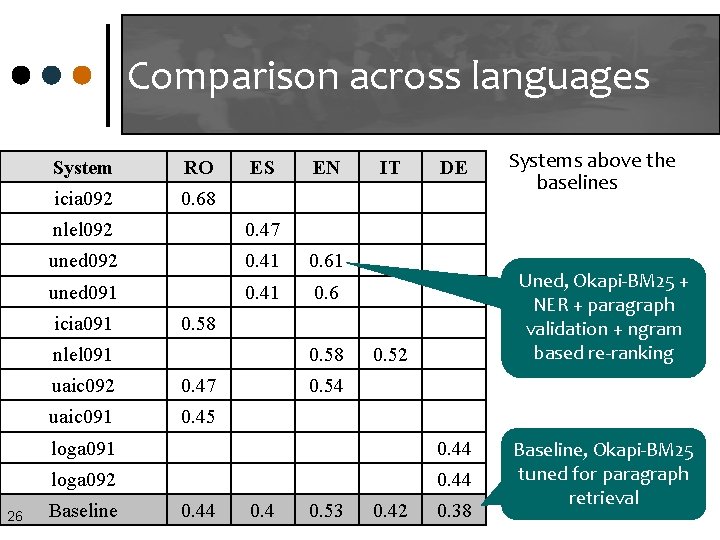

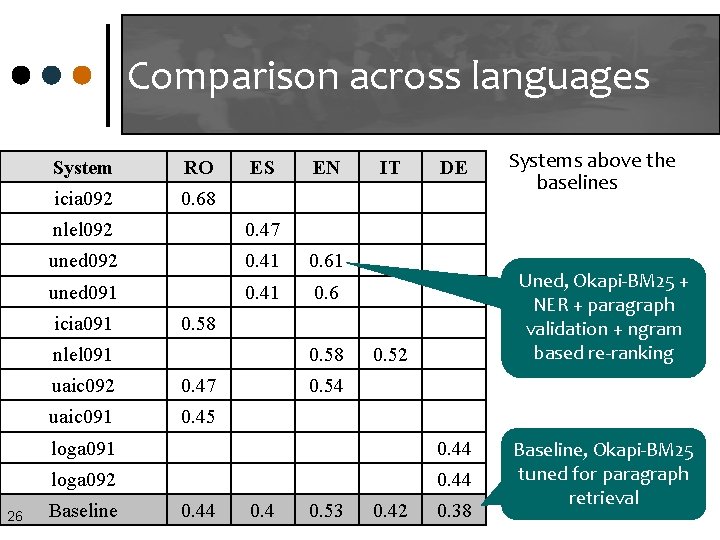

Comparison across languages

- Systems above the baselines: uned (Okapi BM25 + NER + paragraph validation + n-gram-based re-ranking)
- Baseline: Okapi BM25 tuned for paragraph retrieval

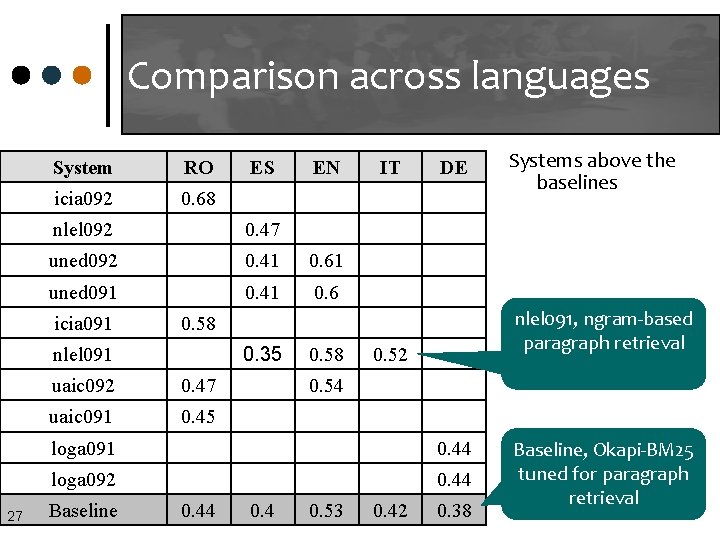

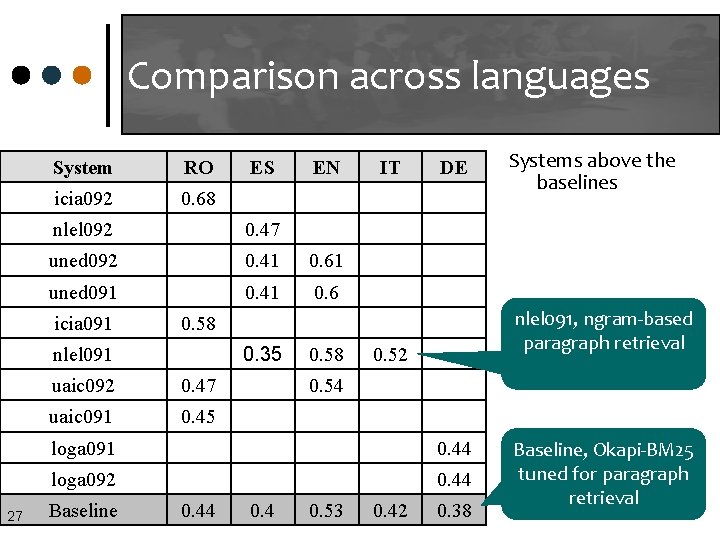

Comparison across languages

- Systems above the baselines: nlel 091 (n-gram-based paragraph retrieval)
- Baseline: Okapi BM25 tuned for paragraph retrieval
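To illustrate the idea behind n-gram-based paragraph retrieval, here is a simplified stand-in, not the nlel system itself: a paragraph scores higher the more query n-grams (n = 1 to 3) it contains, with longer n-grams weighted by n so that matching whole phrases counts more than scattered words. The weighting scheme is an assumption chosen for clarity.

```python
from typing import List, Set, Tuple


def ngrams(tokens: List[str], n: int) -> Set[Tuple[str, ...]]:
    """All contiguous n-grams of a token list (empty if it is too short)."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def ngram_score(query: List[str], paragraph: List[str], max_n: int = 3) -> float:
    """Weighted share of query n-grams found in the paragraph, in [0, 1]."""
    total = matched = 0
    for n in range(1, max_n + 1):
        q = ngrams(query, n)
        if not q:
            continue
        total += n * len(q)
        matched += n * len(q & ngrams(paragraph, n))
    return matched / total if total else 0.0
```

For the query `["whole", "milk"]`, a paragraph containing the phrase "whole milk" scores 1.0, while one containing both words only in the order "milk whole" scores 0.5.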

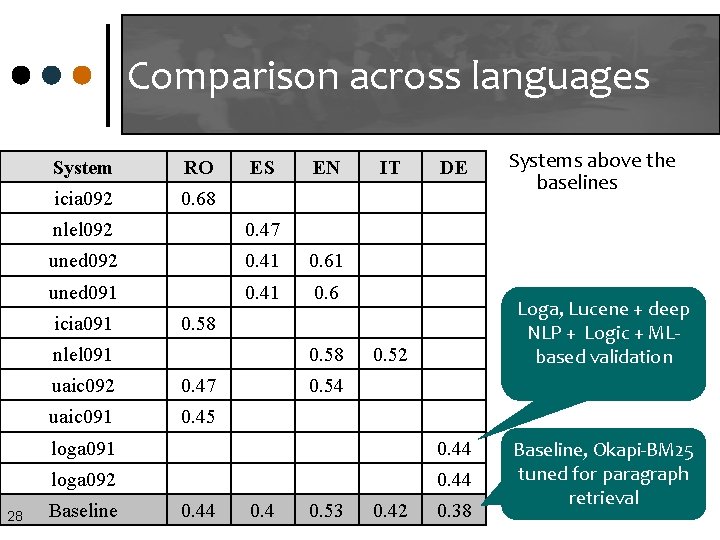

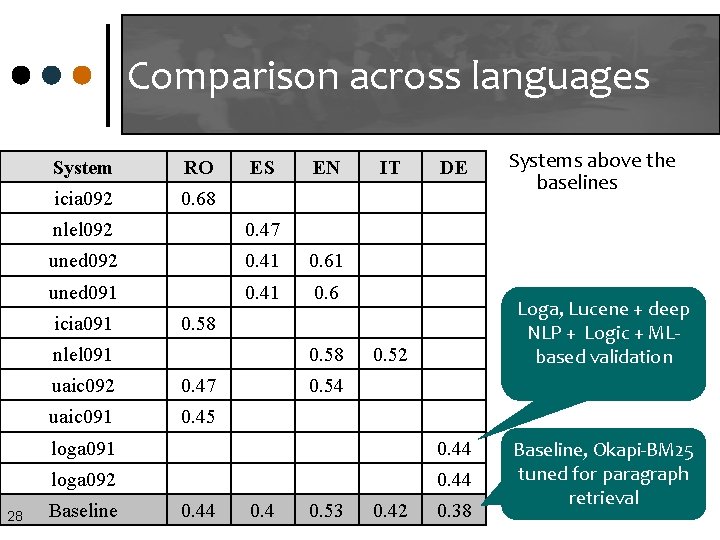

Comparison across languages

- Systems above the baselines: loga (Lucene + deep NLP + logic + ML-based validation)
- Baseline: Okapi BM25 tuned for paragraph retrieval

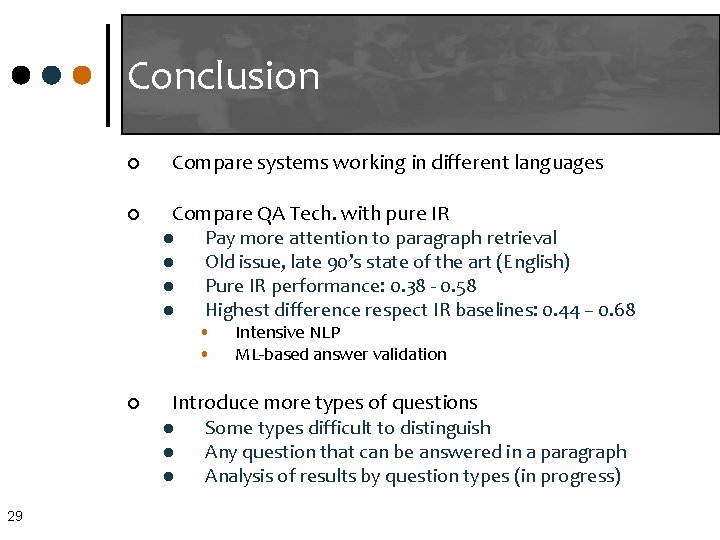

Conclusion

- Compare systems working in different languages
- Compare QA technology with pure IR
  - Pay more attention to paragraph retrieval
  - An old issue: late-90s state of the art (English)
  - Pure IR performance: 0.38 to 0.58
  - Largest difference with respect to the IR baselines: 0.44 to 0.68
    - Intensive NLP
    - ML-based answer validation
- Introduce more types of questions
  - Some types are difficult to distinguish
  - Any question that can be answered in a paragraph
  - Analysis of results by question type (in progress)

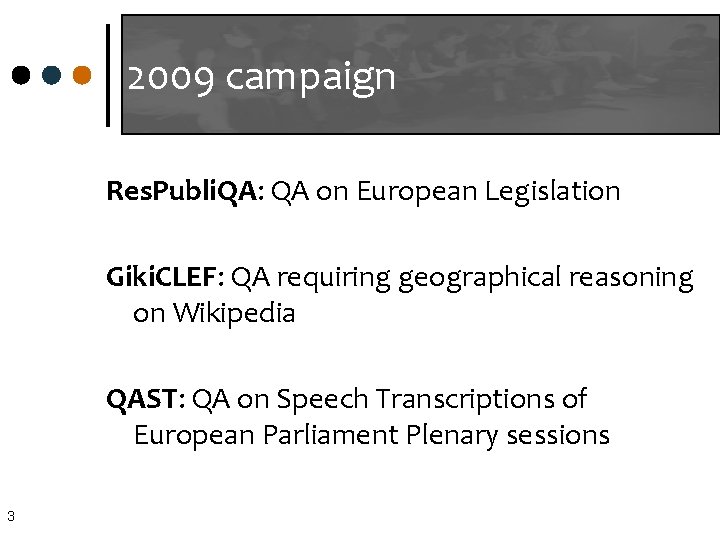

Conclusion

- Introduce answer validation technology
  - Evaluation measure: c@1
  - Value of reducing wrong answers
  - Detecting wrong answers is feasible
- Feasible task
  - 90% of the questions have been answered
  - Room for improvement: the best systems are around 60%
- Even with fewer participants, we have
  - More comparison
  - More analysis
  - More learning
- ResPubliQA proposal for 2010: SC and breakout session

Interest in ResPubliQA 2010

1. Uni. "Al. I. Cuza" Iasi (Dan Cristea, Diana Trandabat)
2. Linguateca (Nuno Cardoso)
3. RACAI (Dan Tufis, Radu Ion)
4. Jesus Vilares
5. Univ. Koblenz-Landau (Bjorn Pelzer)
6. Thomson Reuters (Isabelle Moulinier)
7. Gracinda Carvalho
8. UNED (Alvaro Rodrigo)
9. Uni. Politecnica Valencia (Paolo Rosso & Davide Buscaldi)
10. Uni. Hagen (Ingo Glockner)
11. Linguit (Jochen L. Leidner)
12. Uni. Saarland (Dietrich Klakow)
13. ELHUYAR-IXA (Arantxa Otegi)
14. MIRACLE TEAM (Paloma Martínez Fernández)

But we need more. You already have a Gold Standard of 500 questions & answers to play with…