Memory Networks for Question Answering

- Slides: 41

Memory Networks for Question Answering

Question Answering

- Return the correct answer to a question expressed in natural language.
- Different from IR (search), which returns the most relevant documents for a query expressed with keywords.
- In principle, most AI/NLP tasks can be reduced to QA.

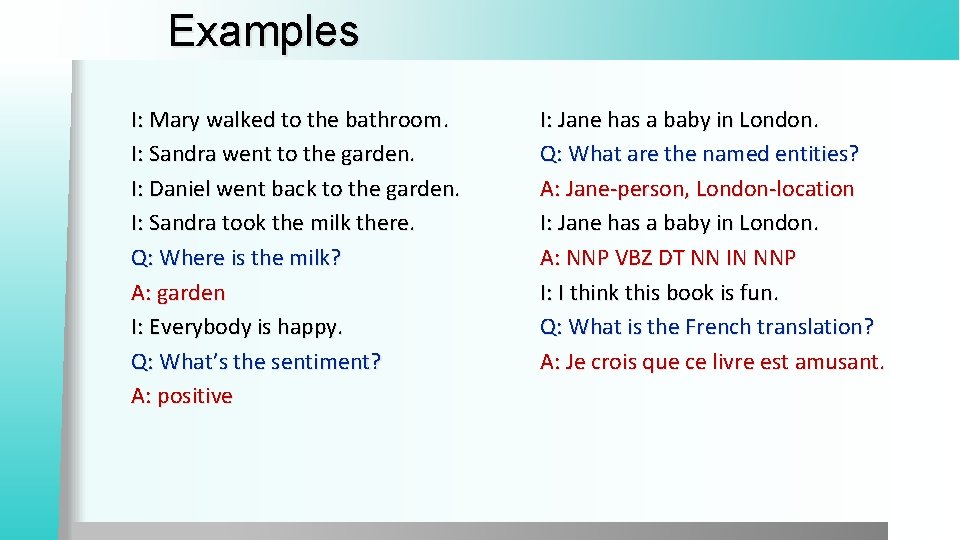

Examples

- I: Mary walked to the bathroom. I: Sandra went to the garden. I: Daniel went back to the garden. I: Sandra took the milk there. Q: Where is the milk? A: garden
- I: Everybody is happy. Q: What’s the sentiment? A: positive
- I: Jane has a baby in London. Q: What are the named entities? A: Jane-person, London-location
- I: Jane has a baby in London. Q: What are the POS tags? A: NNP VBZ DT NN IN NNP
- I: I think this book is fun. Q: What is the French translation? A: Je crois que ce livre est amusant.

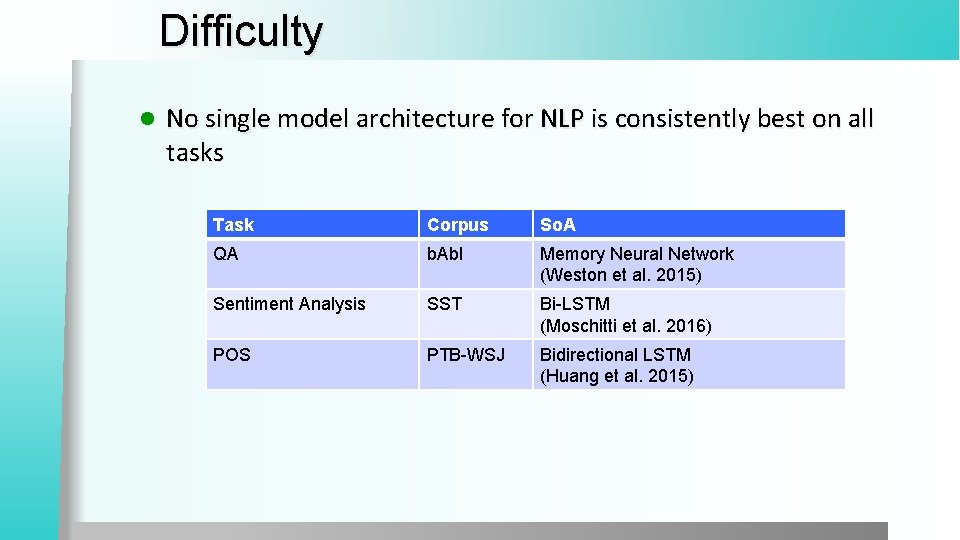

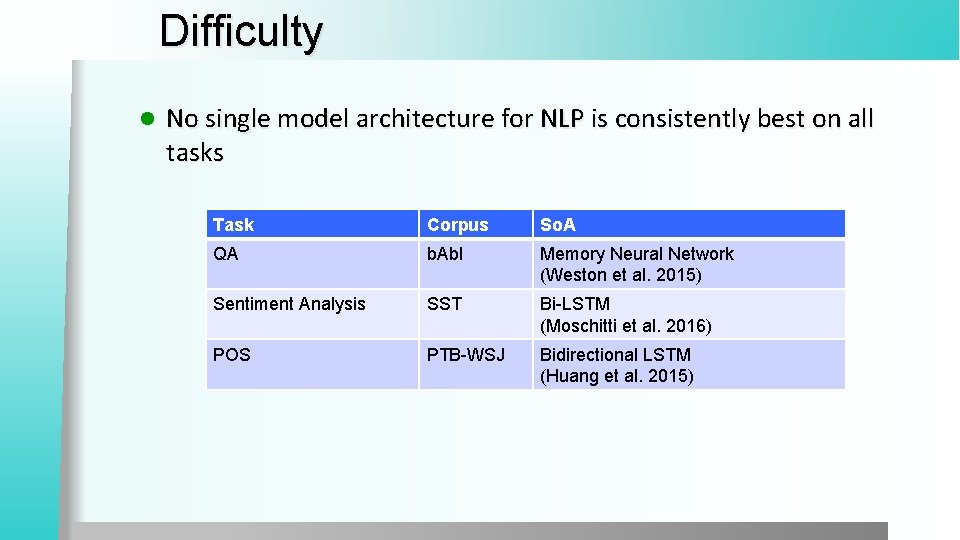

Difficulty

- No single model architecture for NLP is consistently best on all tasks.

| Task | Corpus | SoA |
|---|---|---|
| QA | bAbI | Memory Neural Network (Weston et al. 2015) |
| Sentiment Analysis | SST | Bi-LSTM (Moschitti et al. 2016) |
| POS | PTB-WSJ | Bidirectional LSTM (Huang et al. 2015) |

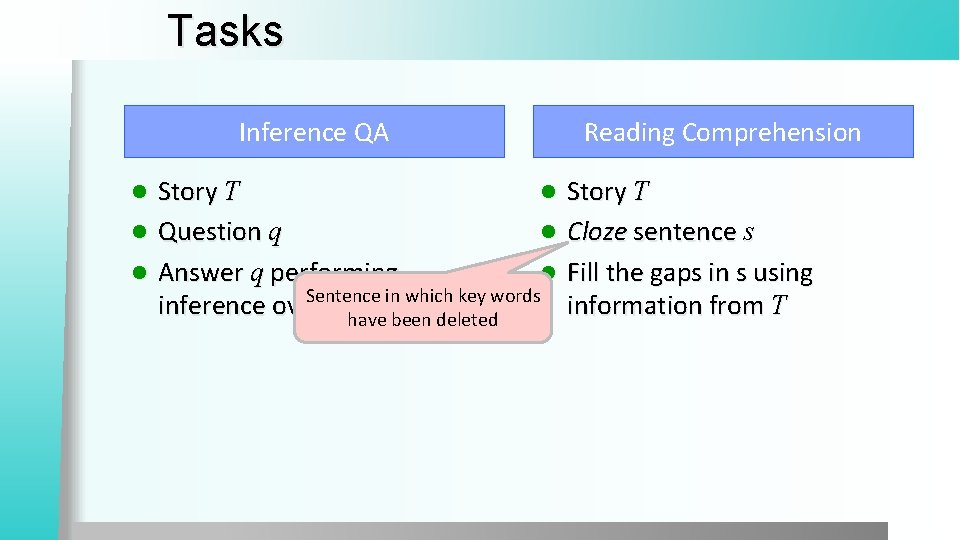

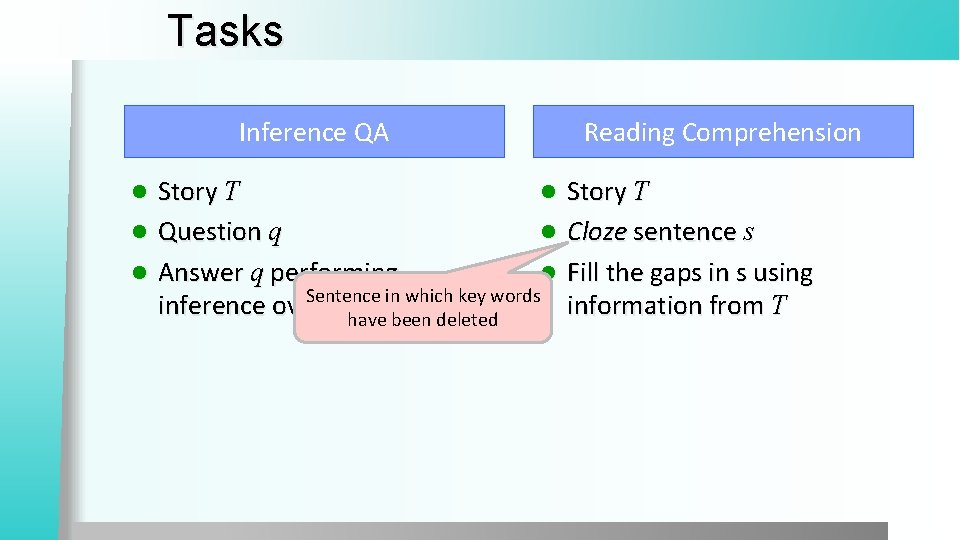

Tasks

Inference QA

- Story T
- Question q
- Answer q performing inference over T

Reading Comprehension

- Story T
- Cloze sentence s: a sentence in which key words have been deleted
- Fill the gaps in s using information from T

bAbI Dataset

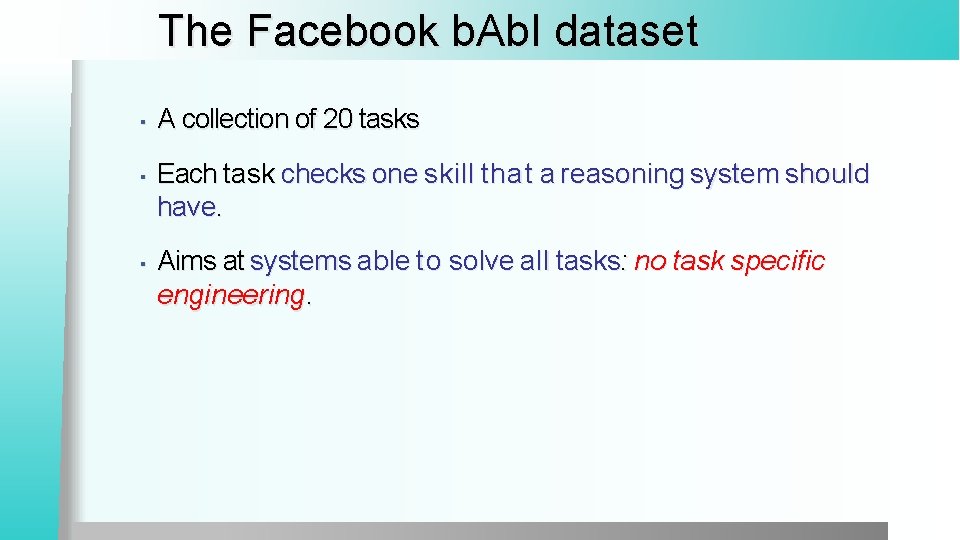

The Facebook bAbI dataset

- A collection of 20 tasks.
- Each task checks one skill that a reasoning system should have.
- Aims at systems able to solve all tasks: no task-specific engineering.

(T1) Single supporting fact

- Questions where a single supporting fact, previously given, provides the answer.
- Simplest case of this: asking for the location of a person.

Example: John is in the playground. Bob is in the office. Where is John? A: playground

(T2) Two supporting facts

- Harder task: questions where two supporting statements have to be chained to answer the question.

Example: John is in the playground. Bob is in the office. John picked up the football. Bob went to the kitchen. Where is the football? A: playground. Where was Bob before the kitchen? A: office
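To make the chaining in T2 concrete, here is a minimal rule-based sketch. It is only an illustration of which two facts must be combined, not how a memory network solves the task; the function name `answer_where_is` and the tiny sentence patterns it recognizes are assumptions made for this example.

```python
def answer_where_is(story, target):
    """Return the last known location of a person, or of an object via its holder."""
    location = {}  # person -> current location
    holder = {}    # object -> person who picked it up
    for sentence in story:
        words = sentence.rstrip(".").split()
        person = words[0]
        if "picked" in words:
            # Pattern: "<person> picked up the <object>"
            holder[words[-1]] = person
        elif words[1] in ("is", "went"):
            # Patterns: "<person> is in the <place>" / "<person> went to the <place>"
            location[person] = words[-1]
    if target in holder:
        # Two supporting facts: who holds the object, and where that person is.
        return location.get(holder[target])
    # Single supporting fact: the person's own location.
    return location.get(target)


story = [
    "John is in the playground.",
    "Bob is in the office.",
    "John picked up the football.",
    "Bob went to the kitchen.",
]
print(answer_where_is(story, "football"))  # playground
print(answer_where_is(story, "Bob"))       # kitchen
```

The hand-written rules are exactly the task-specific engineering that the bAbI benchmark asks learned models to do without.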

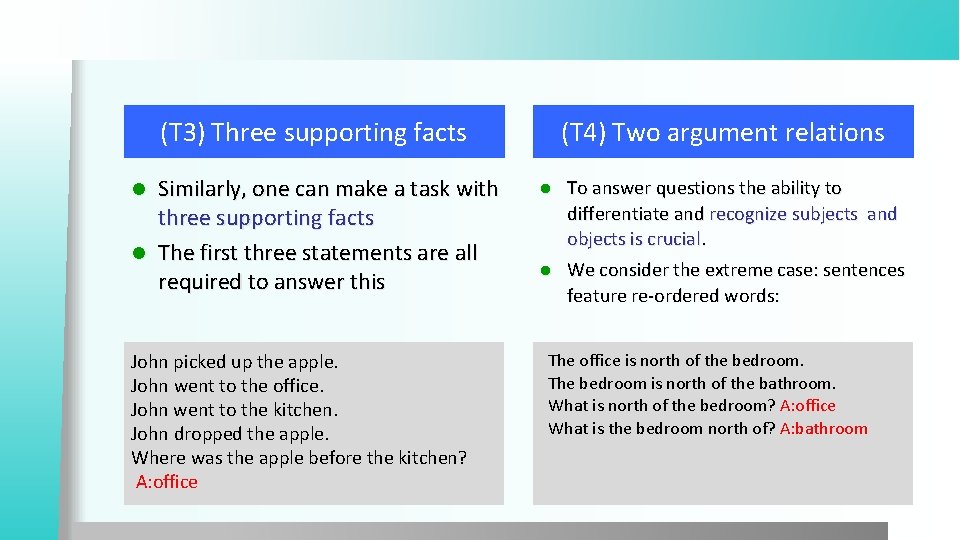

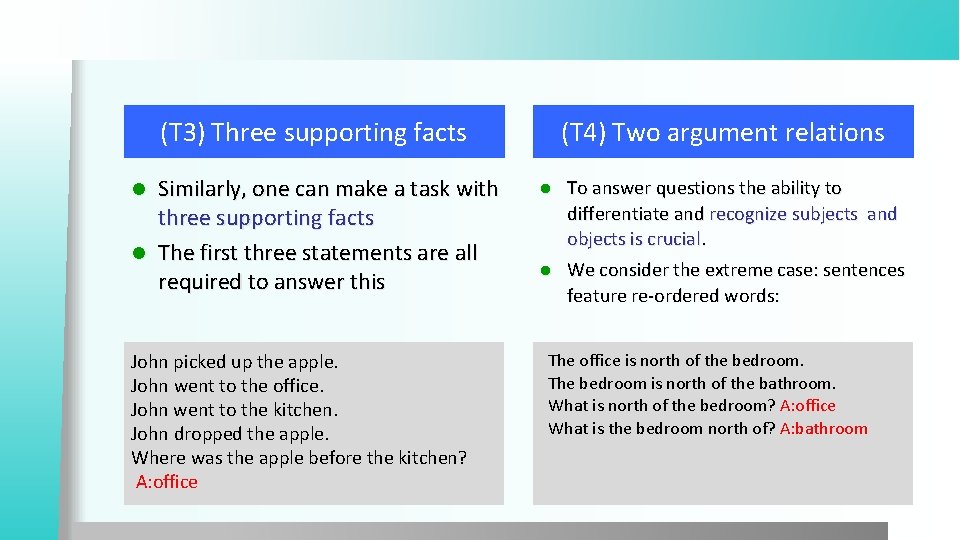

(T3) Three supporting facts

- Similarly, one can make a task with three supporting facts.
- The first three statements are all required to answer this.

Example: John picked up the apple. John went to the office. John went to the kitchen. John dropped the apple. Where was the apple before the kitchen? A: office

(T4) Two argument relations

- To answer questions, the ability to differentiate and recognize subjects and objects is crucial.
- We consider the extreme case: sentences feature re-ordered words.

Example: The office is north of the bedroom. The bedroom is north of the bathroom. What is north of the bedroom? A: office. What is the bedroom north of? A: bathroom

(T6) Yes/No questions

- This task tests, in the simplest case possible (with a single supporting fact), the ability of a model to answer true/false type questions.

Example: John is in the playground. Daniel picks up the milk. Is John in the classroom? A: no. Does Daniel have the milk? A: yes

(T7) Counting

- This task tests the ability of the QA system to perform simple counting operations, by asking about the number of objects with a certain property.

Example: Daniel picked up the football. Daniel dropped the football. Daniel got the milk. Daniel took the apple. How many objects is Daniel holding? A: two

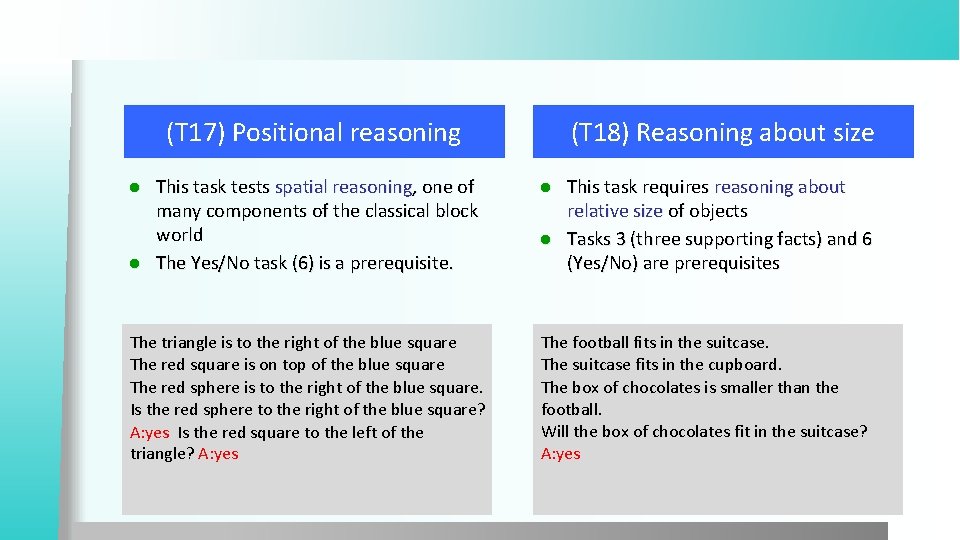

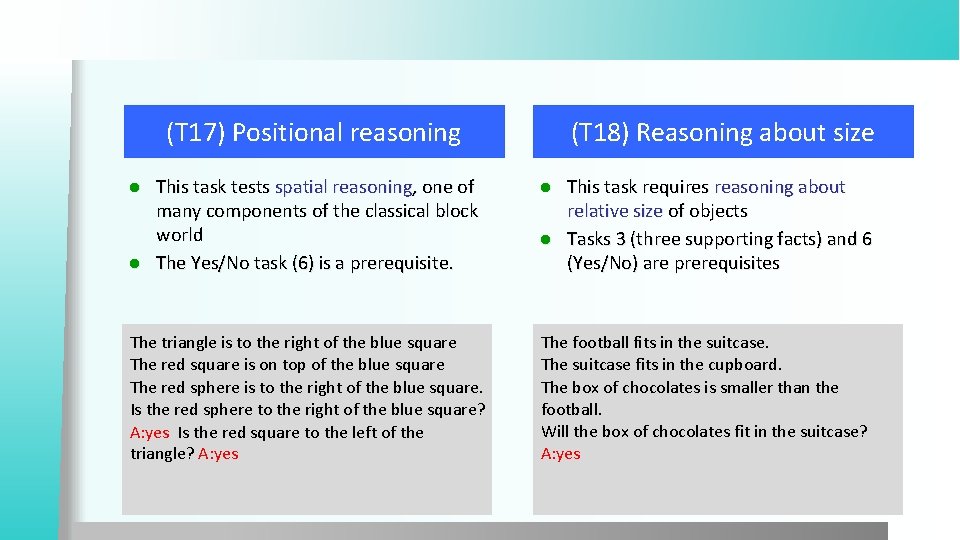

(T17) Positional reasoning

- This task tests spatial reasoning, one of many components of the classical block world.
- The Yes/No task (6) is a prerequisite.

Example: The triangle is to the right of the blue square. The red square is on top of the blue square. The red sphere is to the right of the blue square. Is the red sphere to the right of the blue square? A: yes. Is the red square to the left of the triangle? A: yes

(T18) Reasoning about size

- This task requires reasoning about the relative size of objects.
- Tasks 3 (three supporting facts) and 6 (Yes/No) are prerequisites.

Example: The football fits in the suitcase. The suitcase fits in the cupboard. The box of chocolates is smaller than the football. Will the box of chocolates fit in the suitcase? A: yes

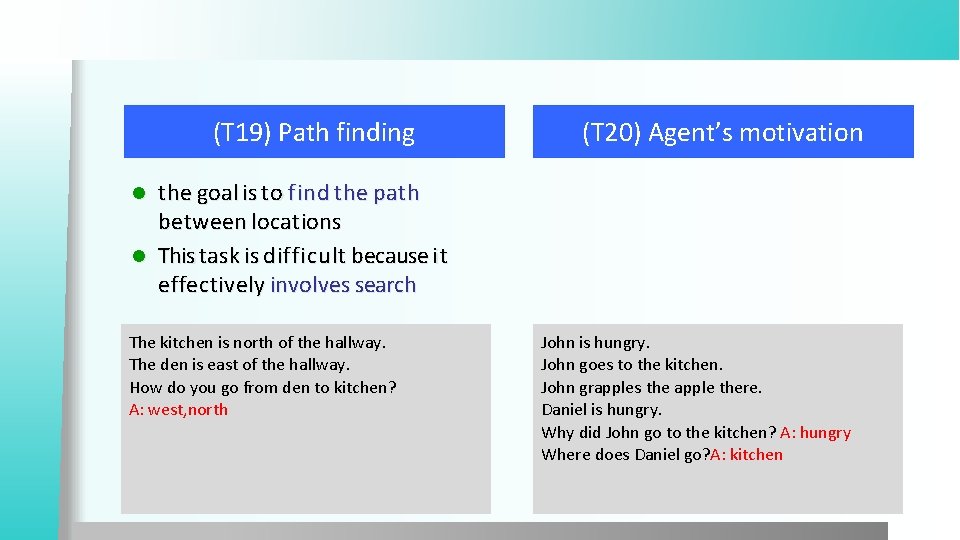

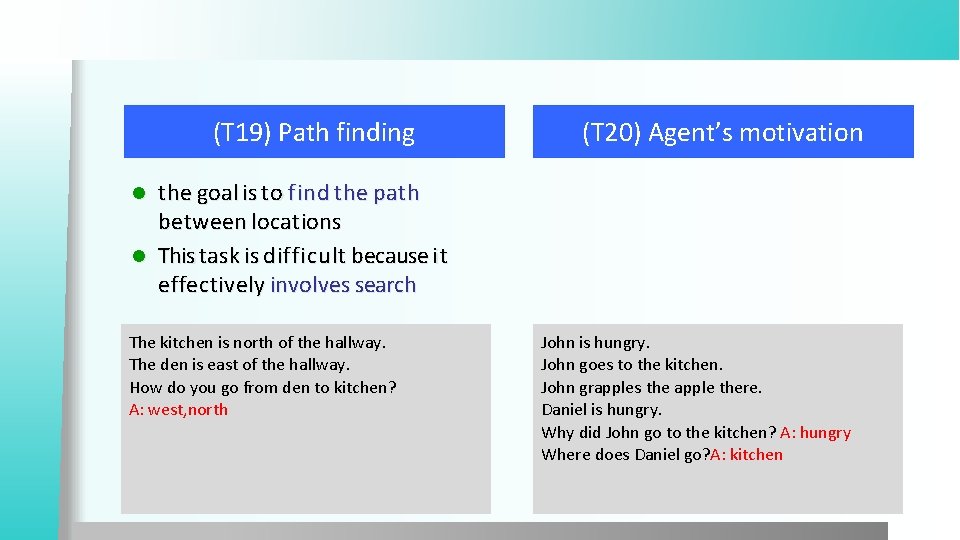

(T19) Path finding

- The goal is to find the path between locations.
- This task is difficult because it effectively involves search.

Example: The kitchen is north of the hallway. The den is east of the hallway. How do you go from den to kitchen? A: west, north

(T20) Agent’s motivation

Example: John is hungry. John goes to the kitchen. John grabs the apple there. Daniel is hungry. Why did John go to the kitchen? A: hungry. Where does Daniel go? A: kitchen
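The remark that path finding "effectively involves search" can be illustrated with a breadth-first search over the stated facts. This is a sketch of the symbolic search a model has to emulate, not part of any of the networks discussed here; `build_graph` and `find_path` are hypothetical helper names.

```python
from collections import deque

OPPOSITE = {"north": "south", "south": "north", "east": "west", "west": "east"}


def build_graph(facts):
    """facts: (a, direction, b) triples meaning 'a is <direction> of b'."""
    graph = {}
    for a, direction, b in facts:
        # Moving in `direction` from b reaches a; the opposite move leads back.
        graph.setdefault(b, []).append((direction, a))
        graph.setdefault(a, []).append((OPPOSITE[direction], b))
    return graph


def find_path(graph, start, goal):
    """Breadth-first search returning the list of moves from start to goal."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        place, moves = queue.popleft()
        if place == goal:
            return moves
        for direction, nxt in graph.get(place, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, moves + [direction]))
    return None  # no route between the two locations


facts = [("kitchen", "north", "hallway"), ("den", "east", "hallway")]
graph = build_graph(facts)
print(find_path(graph, "den", "kitchen"))  # ['west', 'north']
```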

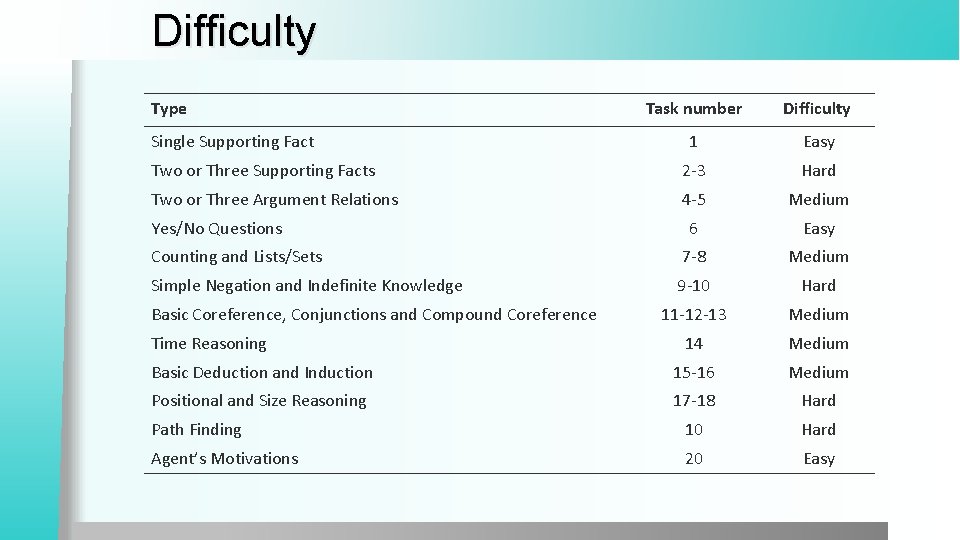

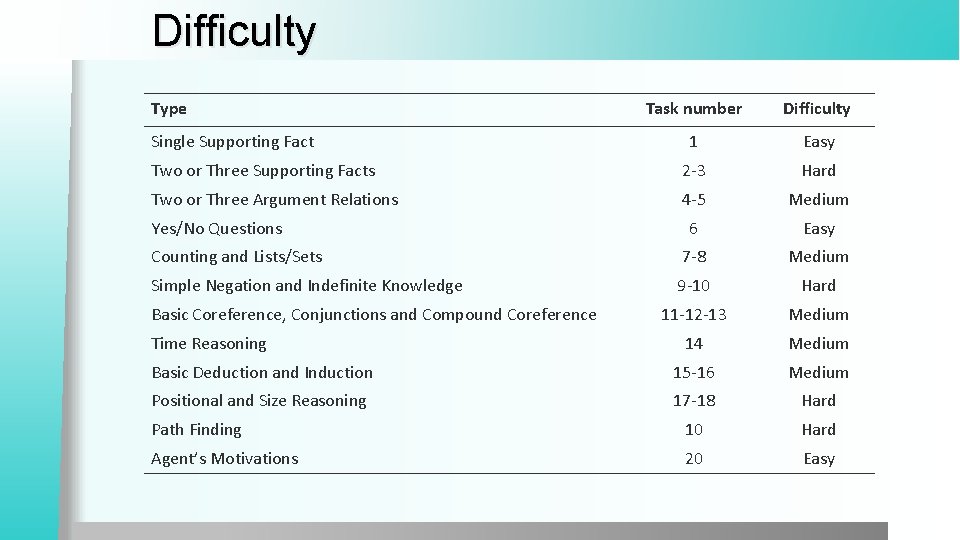

Difficulty

| Type | Task number | Difficulty |
|---|---|---|
| Single Supporting Fact | 1 | Easy |
| Two or Three Supporting Facts | 2-3 | Hard |
| Two or Three Argument Relations | 4-5 | Medium |
| Yes/No Questions | 6 | Easy |
| Counting and Lists/Sets | 7-8 | Medium |
| Simple Negation and Indefinite Knowledge | 9-10 | Hard |
| Basic Coreference, Conjunctions and Compound Coreference | 11-12-13 | Medium |
| Time Reasoning | 14 | Medium |
| Basic Deduction and Induction | 15-16 | Medium |
| Positional and Size Reasoning | 17-18 | Hard |
| Path Finding | 19 | Hard |
| Agent’s Motivations | 20 | Easy |

Dynamic Memory Networks

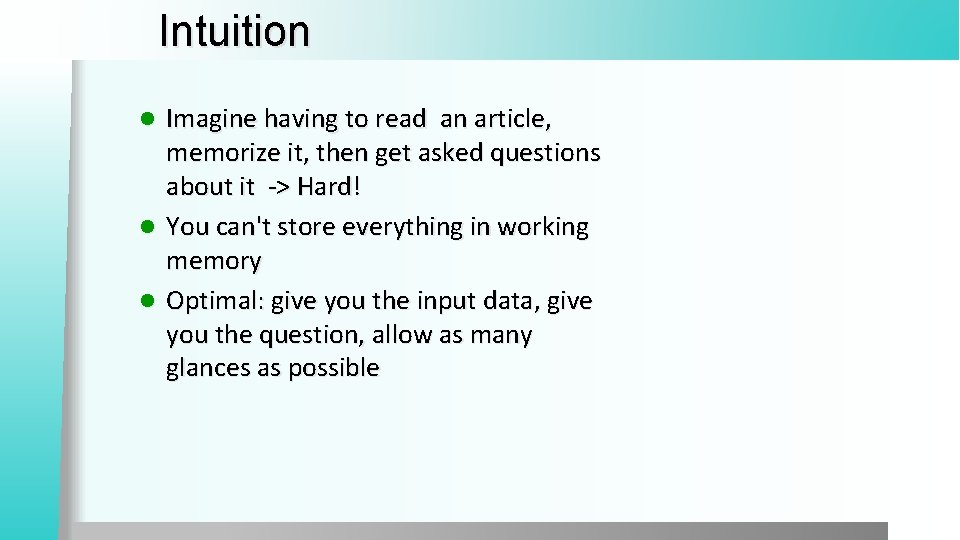

Intuition

- Imagine having to read an article, memorize it, then get asked questions about it -> Hard!
- You can't store everything in working memory.
- Optimal: give you the input data, give you the question, allow as many glances as possible.

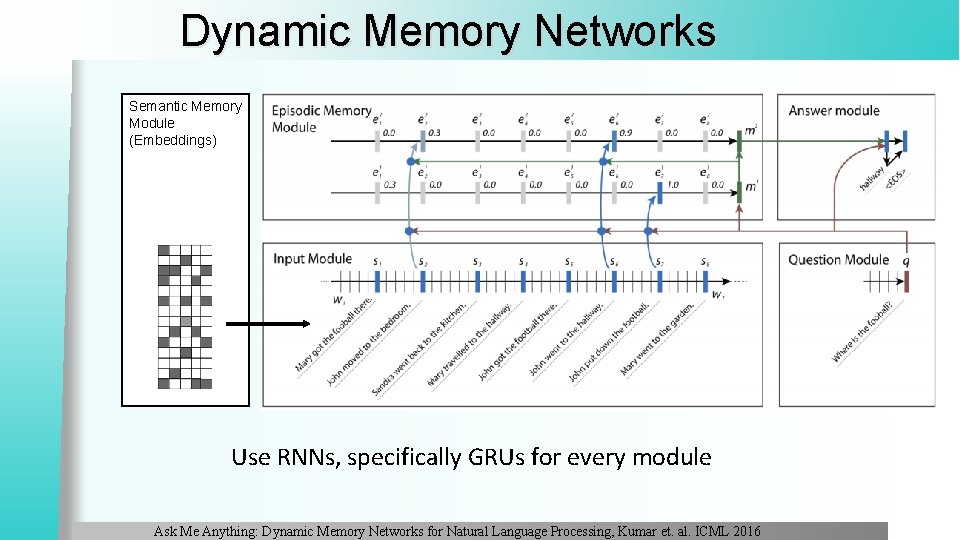

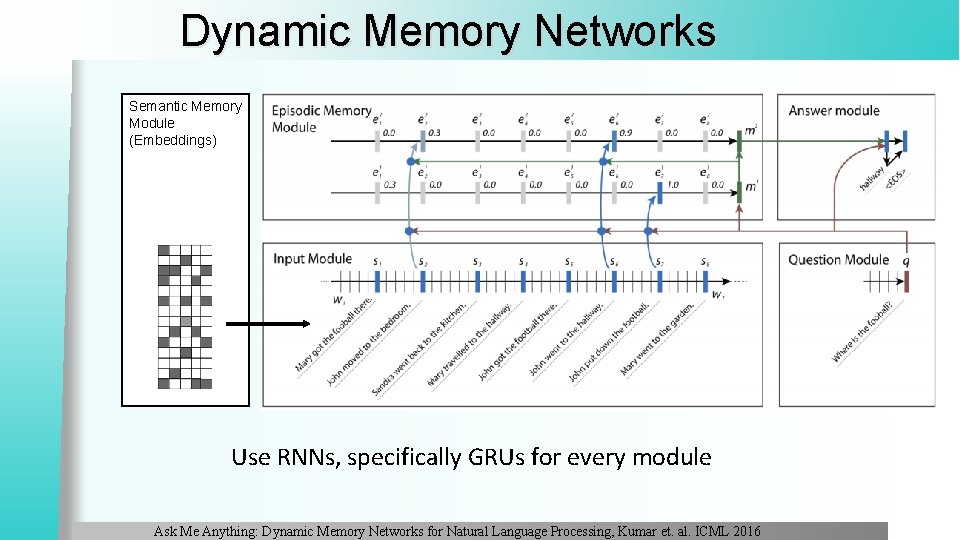

Dynamic Memory Networks

- Semantic Memory Module (Embeddings)
- Use RNNs, specifically GRUs, for every module

Ask Me Anything: Dynamic Memory Networks for Natural Language Processing, Kumar et al., ICML 2016
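Since every DMN module is built from GRUs, a single GRU step is sketched below in plain Python. The parameter names (`Wz`, `Uz`, `bz`, and so on), the random initialization and the sizes are assumptions for illustration; the update follows the standard formulation in which the new state is `z * candidate + (1 - z) * previous`.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def gru_step(x, h, p):
    """One GRU update for input x and previous hidden state h."""
    def affine(W, U, b, state):
        return [a + c + d for a, c, d in zip(matvec(p[W], x), matvec(p[U], state), p[b])]

    z = [sigmoid(v) for v in affine("Wz", "Uz", "bz", h)]   # update gate
    r = [sigmoid(v) for v in affine("Wr", "Ur", "br", h)]   # reset gate
    reset_h = [ri * hi for ri, hi in zip(r, h)]
    cand = [math.tanh(v) for v in affine("Wh", "Uh", "bh", reset_h)]  # candidate state
    return [zi * ci + (1.0 - zi) * hi for zi, ci, hi in zip(z, cand, h)]


def random_params(input_size, hidden_size, seed=0):
    """Hypothetical initializer: small uniform weights, zero biases."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    p = {}
    for gate in "zrh":
        p["W" + gate] = mat(hidden_size, input_size)
        p["U" + gate] = mat(hidden_size, hidden_size)
        p["b" + gate] = [0.0] * hidden_size
    return p


params = random_params(input_size=3, hidden_size=2)
h = [0.0, 0.0]
for x in ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]):
    h = gru_step(x, h, params)  # the final h summarizes the sequence
print(h)
```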

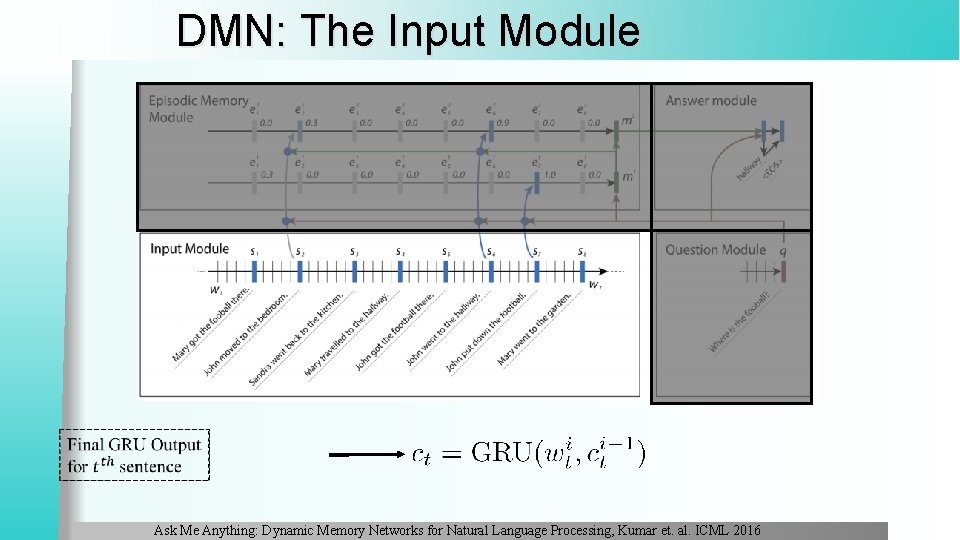

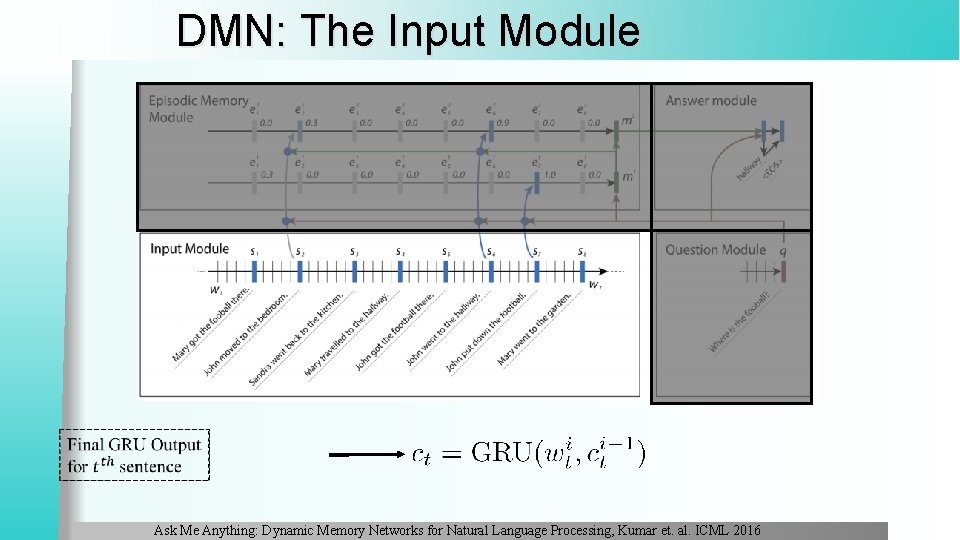

DMN: The Input Module

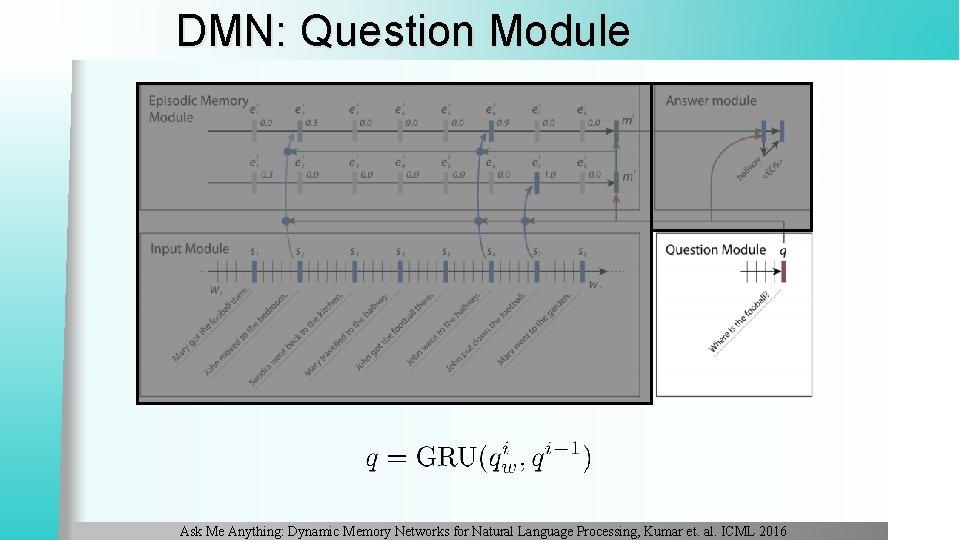

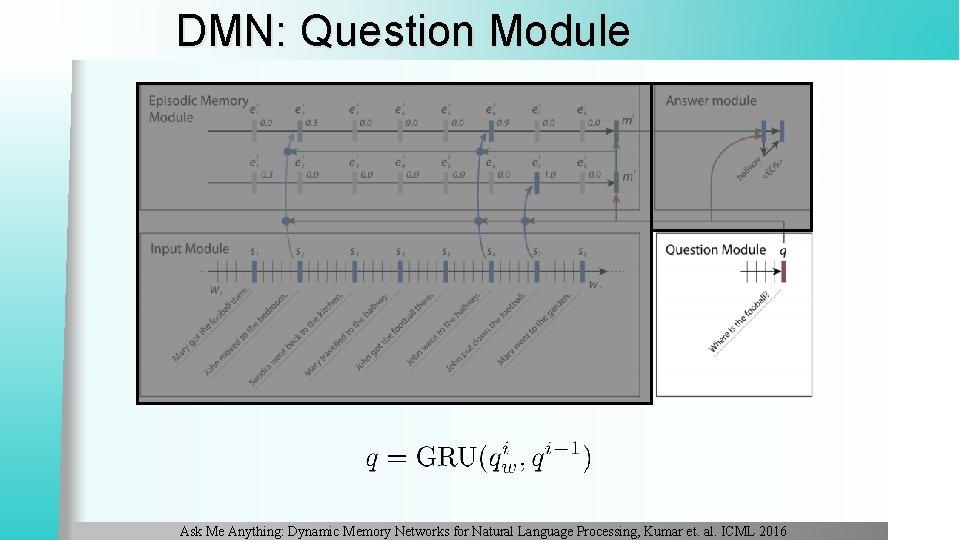

DMN: Question Module

DMN: Episodic Memory, 1st hop

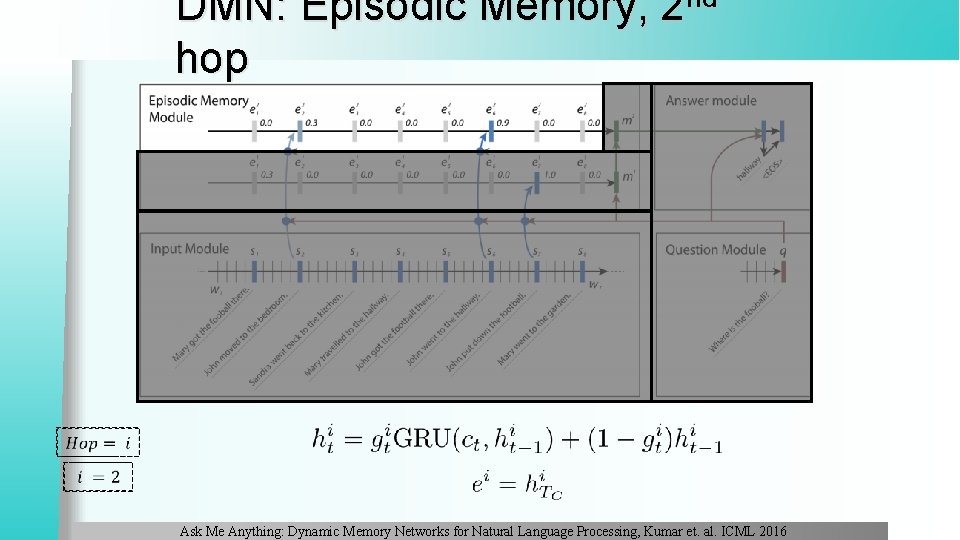

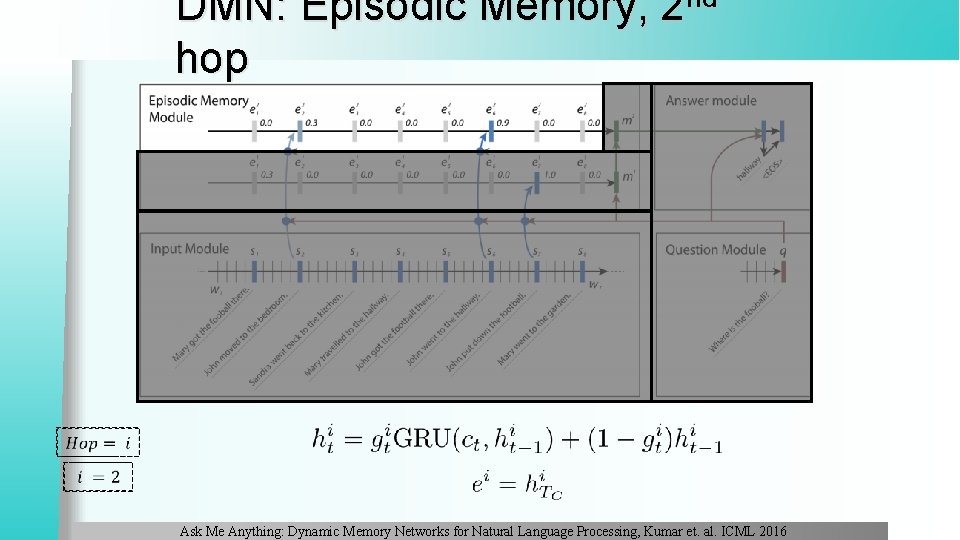

DMN: Episodic Memory, 2nd hop

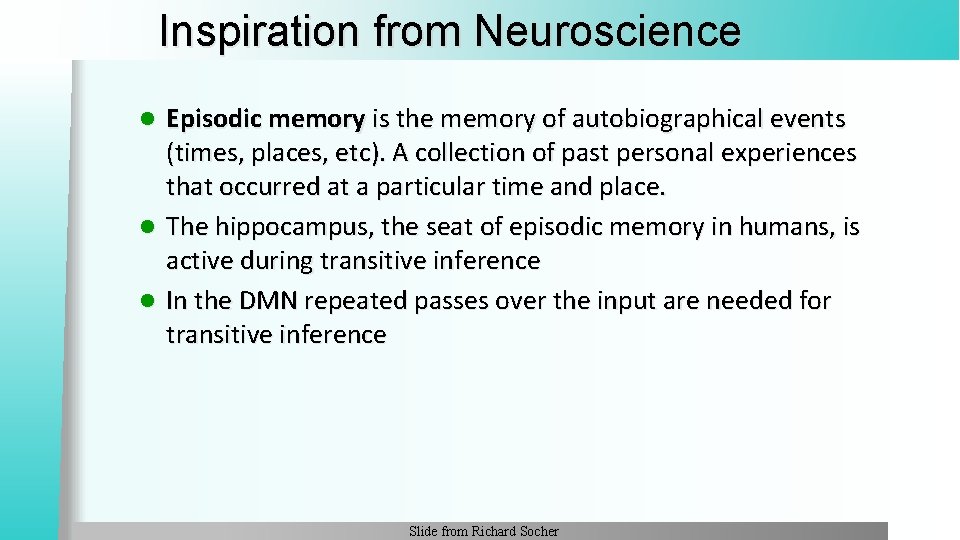

Inspiration from Neuroscience

- Episodic memory is the memory of autobiographical events (times, places, etc.): a collection of past personal experiences that occurred at a particular time and place.
- The hippocampus, the seat of episodic memory in humans, is active during transitive inference.
- In the DMN, repeated passes over the input are needed for transitive inference.

Slide from Richard Socher

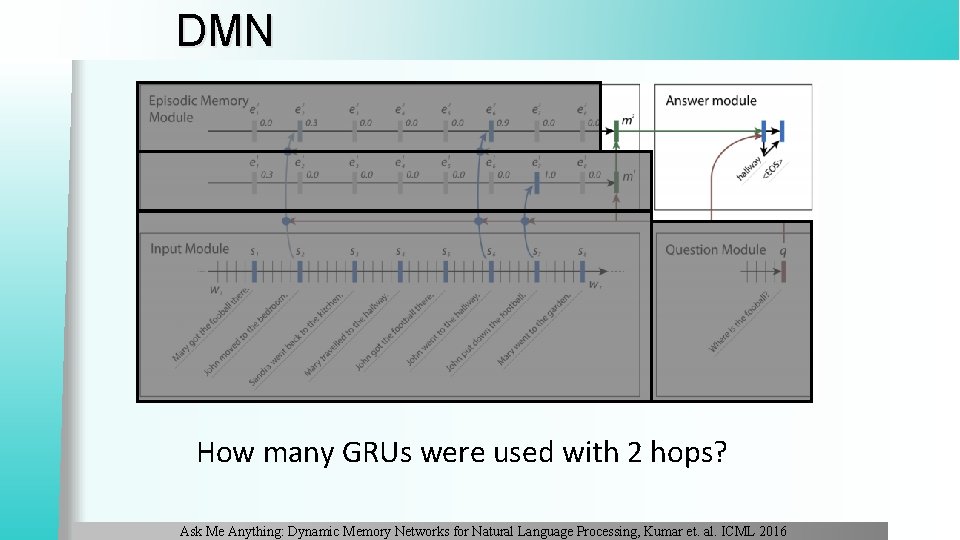

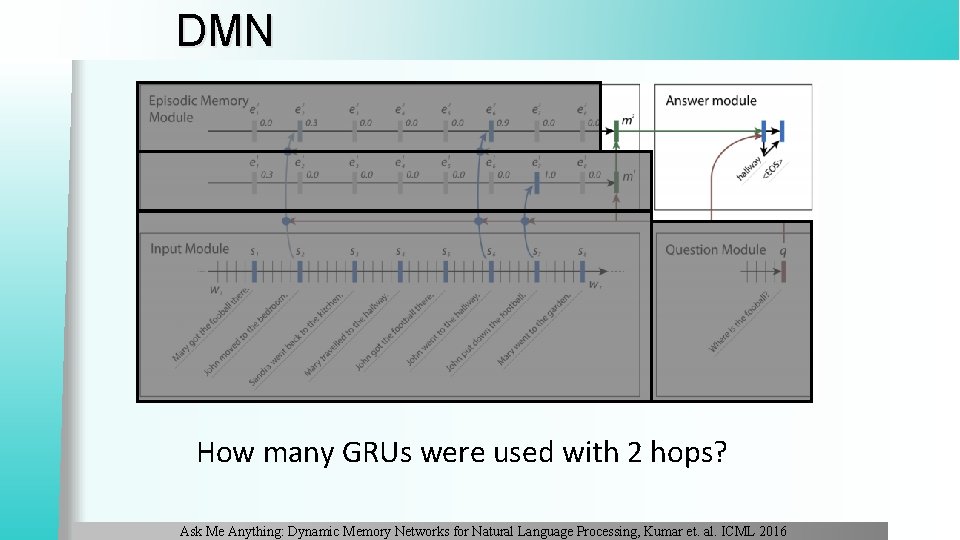

DMN

- When the end of the input is reached, the relevant facts are summarized in another GRU or simple NNet.
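One pass ("hop") of the episodic memory can be sketched as an attention-gated recurrence: each fact proposes a new hidden state, and a gate between 0 and 1 decides how much of the proposal is accepted. In the sketch below the gates are supplied by hand and a simple `tanh(c + h)` update stands in for the GRU; both are assumptions made to keep the example short, whereas in the DMN the gates are computed from the fact, the question and the previous memory.

```python
import math


def episode_pass(facts, gates, h0):
    """Run one gated pass over the facts and return the final state (the episode)."""
    h = list(h0)
    for c, g in zip(facts, gates):
        # Stand-in recurrent update (assumption): tanh(c + h) replaces the GRU.
        proposed = [math.tanh(ci + hi) for ci, hi in zip(c, h)]
        # The gate g blends the proposed state with the previous one.
        h = [g * pi + (1.0 - g) * hi for pi, hi in zip(proposed, h)]
    return h


facts = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
h0 = [0.0, 0.0]

# Hypothetical gates: the first hop attends to fact 2, the second hop to fact 1.
hop1 = episode_pass(facts, [0.0, 1.0, 0.0], h0)
hop2 = episode_pass(facts, [1.0, 0.0, 0.0], h0)
print(hop1, hop2)
```

A fact whose gate is 0 leaves the state untouched, which is how a hop can ignore everything except the facts it currently considers relevant.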

DMN: Answer Module

DMN

How many GRUs were used with 2 hops?

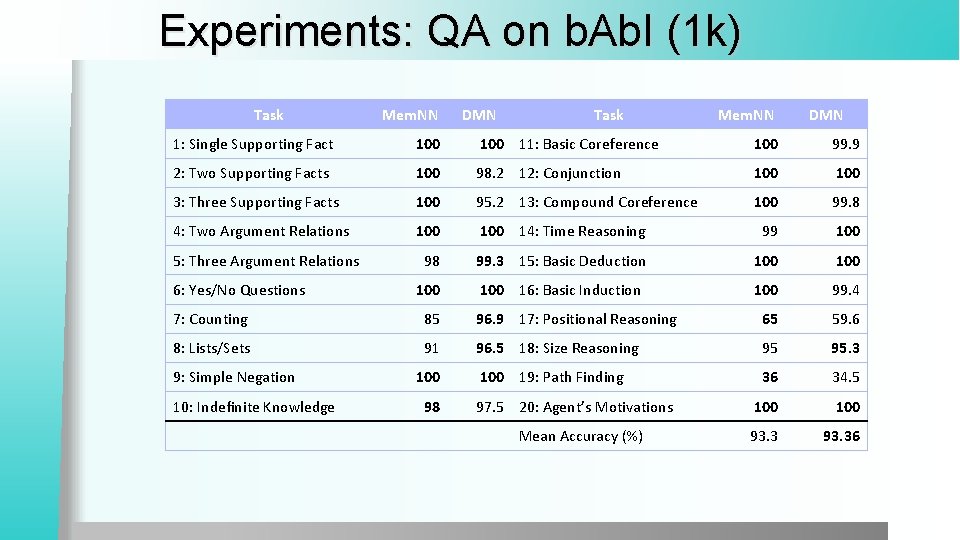

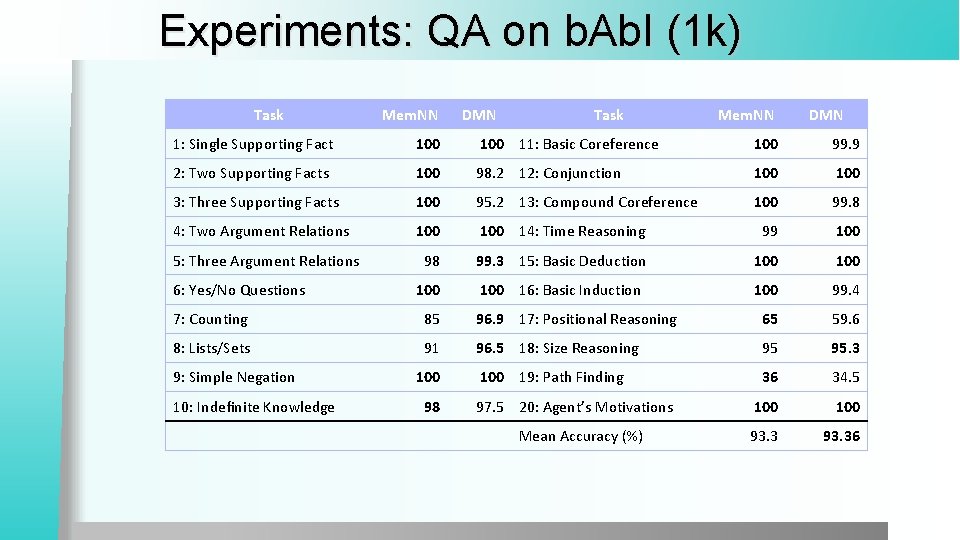

Experiments: QA on bAbI (1k)

| Task | MemNN | DMN |
|---|---|---|
| 1: Single Supporting Fact | 100 | 100 |
| 2: Two Supporting Facts | 100 | 98.2 |
| 3: Three Supporting Facts | 100 | 95.2 |
| 4: Two Argument Relations | 100 | 100 |
| 5: Three Argument Relations | 98 | 99.3 |
| 6: Yes/No Questions | 100 | 100 |
| 7: Counting | 85 | 96.9 |
| 8: Lists/Sets | 91 | 96.5 |
| 9: Simple Negation | 100 | 100 |
| 10: Indefinite Knowledge | 98 | 97.5 |
| 11: Basic Coreference | 100 | 99.9 |
| 12: Conjunction | 100 | 100 |
| 13: Compound Coreference | 100 | 99.8 |
| 14: Time Reasoning | 99 | 100 |
| 15: Basic Deduction | 100 | 100 |
| 16: Basic Induction | 100 | 99.4 |
| 17: Positional Reasoning | 65 | 59.6 |
| 18: Size Reasoning | 95 | 95.3 |
| 19: Path Finding | 36 | 34.5 |
| 20: Agent’s Motivations | 100 | 100 |
| Mean Accuracy (%) | 93.3 | 93.6 |

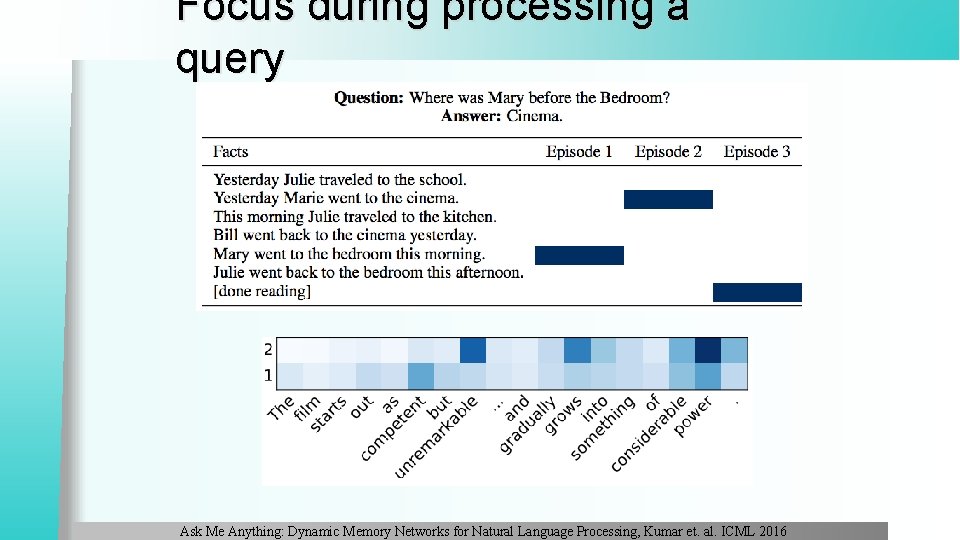

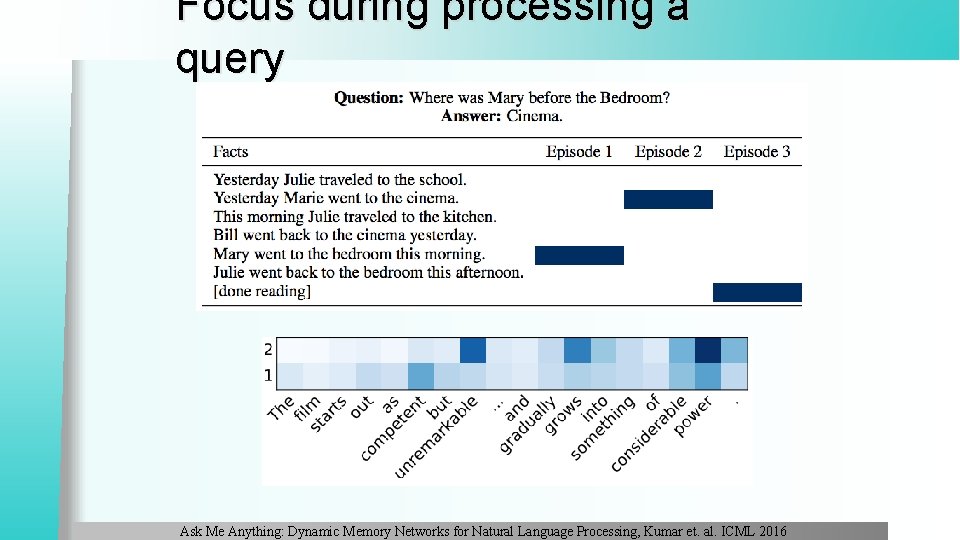

Focus during processing a query

Comparison: MemNets vs DMN

Similarities

- MemNets and DMNs have input, scoring, attention and response mechanisms.

Differences

- For input representations, MemNets use bag-of-words, nonlinear or linear embeddings that explicitly encode position.
- MemNets iteratively run functions for attention and response.
- DMNs show that neural sequence models can be used for input representation, attention and response mechanisms; this naturally captures position and temporality.
- This enables a broader range of applications.

Question Dependent Recurrent Entity Network for Question Answering Andrea Madotto Giuseppe Attardi

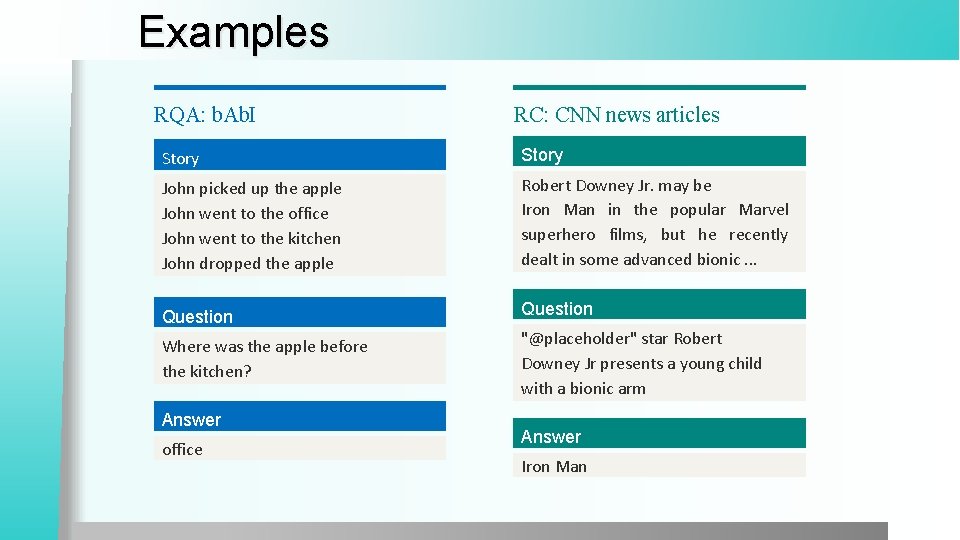

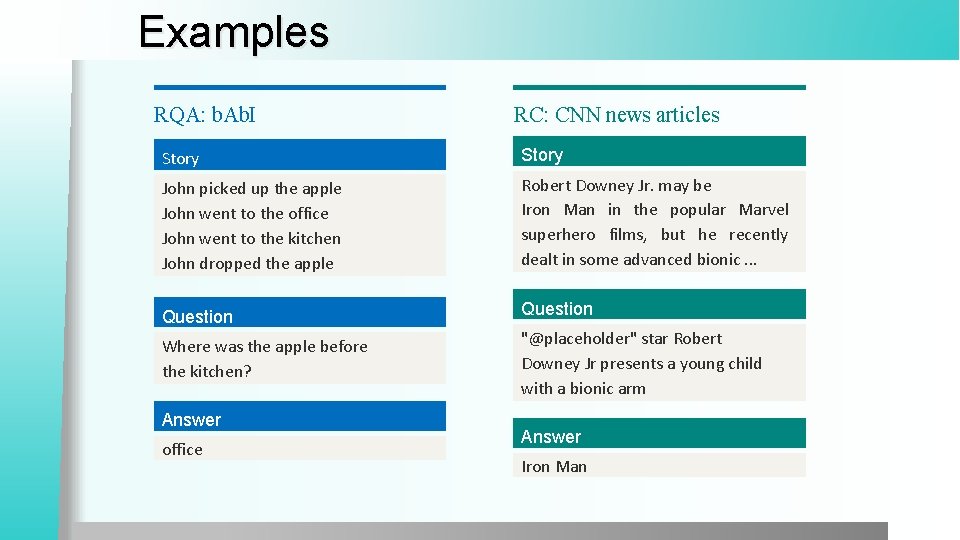

Examples

| | RQA: bAbI | RC: CNN news articles |
|---|---|---|
| Story | John picked up the apple. John went to the office. John went to the kitchen. John dropped the apple. | Robert Downey Jr. may be Iron Man in the popular Marvel superhero films, but he recently dealt in some advanced bionic... |
| Question | Where was the apple before the kitchen? | "@placeholder" star Robert Downey Jr presents a young child with a bionic arm |
| Answer | office | Iron Man |

Related Work

Many models have been proposed to solve the RQA and RC tasks.

Pointer Networks

- Attentive Sum Reader
- Attention Over Attention
- EpiReader
- Stanford Attentive Reader
- Dynamic Entity Representation

Memory Networks

- Memory Network
- Recurrent Entity Network
- Dynamic Memory Network
- Neural Turing Machine
- End To End Memory Network (MemN2N)

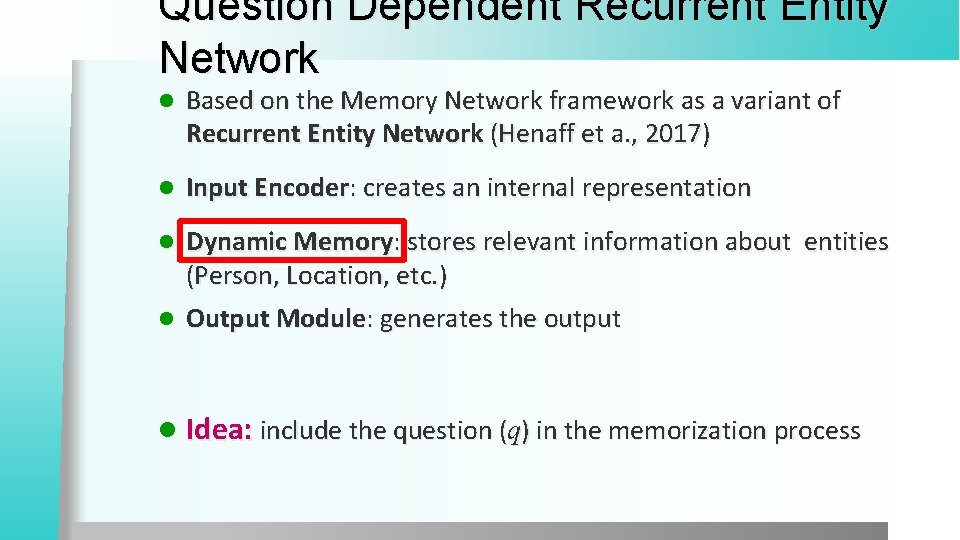

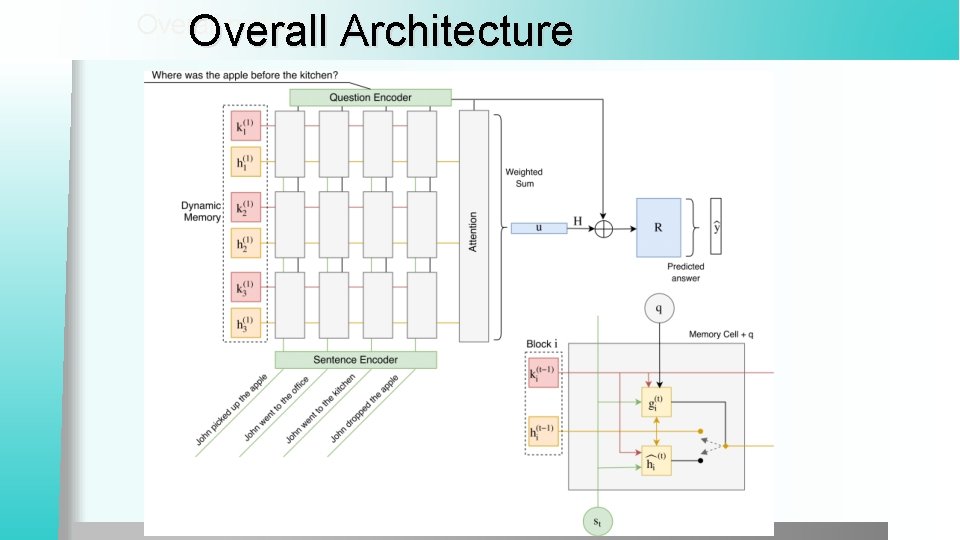

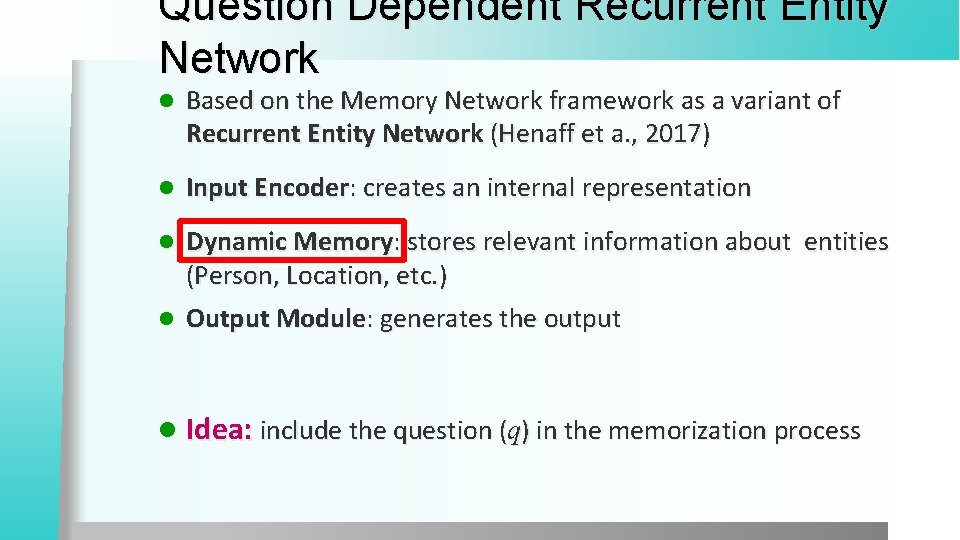

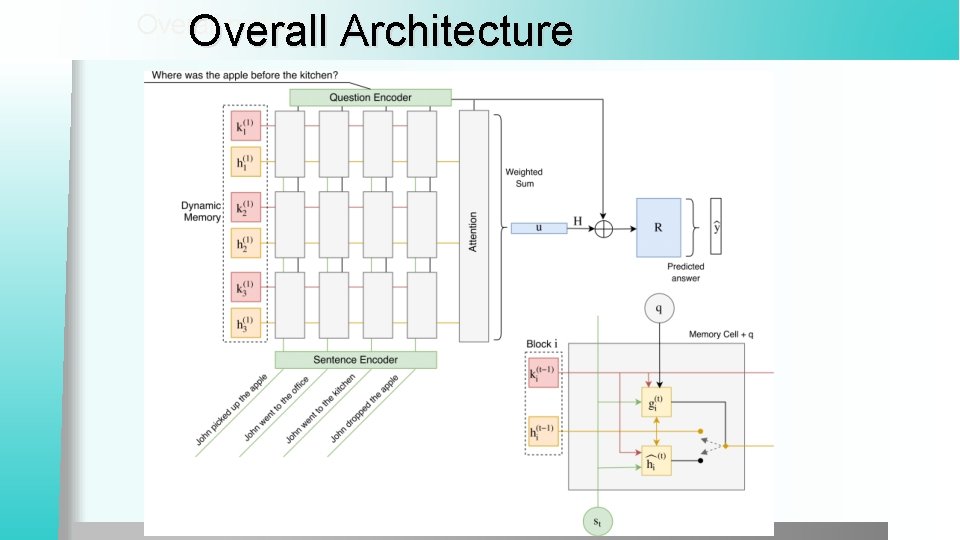

Question Dependent Recurrent Entity Network

- Based on the Memory Network framework, as a variant of the Recurrent Entity Network (Henaff et al., 2017)
- Input Encoder: creates an internal representation
- Dynamic Memory: stores relevant information about entities (Person, Location, etc.)
- Output Module: generates the output
- Idea: include the question (q) in the memorization process

Input Encoder

Set of sentences $\{s_1, \dots, s_t\}$ and a question $q$.

- $E \in \mathbb{R}^{|V| \times d}$ embedding matrix → $E(w) = e \in \mathbb{R}^d$
- $\{e_1^{(s)}, \dots, e_m^{(s)}\}$ vectors for the words in $s_t$
- $\{e_1^{(q)}, \dots, e_k^{(q)}\}$ vectors for the words in $q$
- $f^{(s/q)} = \{f_1, \dots, f_m\}$ multiplicative masks with $f_i \in \mathbb{R}^d$
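A minimal sketch of how the multiplicative masks can be applied, assuming (as in the Recurrent Entity Network of Henaff et al.) that a sentence is encoded as the sum over its words of the element-wise product of mask and word vector. The toy embedding table, mask values and the function name `encode` are made up for illustration.

```python
def encode(word_vectors, masks):
    """Encode a sentence as sum_i f_i * e_i (element-wise product, then sum)."""
    d = len(word_vectors[0])
    out = [0.0] * d
    for e, f in zip(word_vectors, masks):
        for j in range(d):
            out[j] += f[j] * e[j]
    return out


# Hypothetical 2-dimensional embeddings and position masks.
E = {"john": [1.0, 0.0], "went": [0.0, 1.0], "home": [1.0, 1.0]}
masks = [[1.0, 1.0], [0.5, 0.5], [2.0, 0.0]]

sentence = ["john", "went", "home"]
s = encode([E[w] for w in sentence], masks)
print(s)  # [3.0, 0.5]
```

Because each position has its own mask, the same bag of words in a different order yields a different encoding. The question is encoded the same way with its own set of masks.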

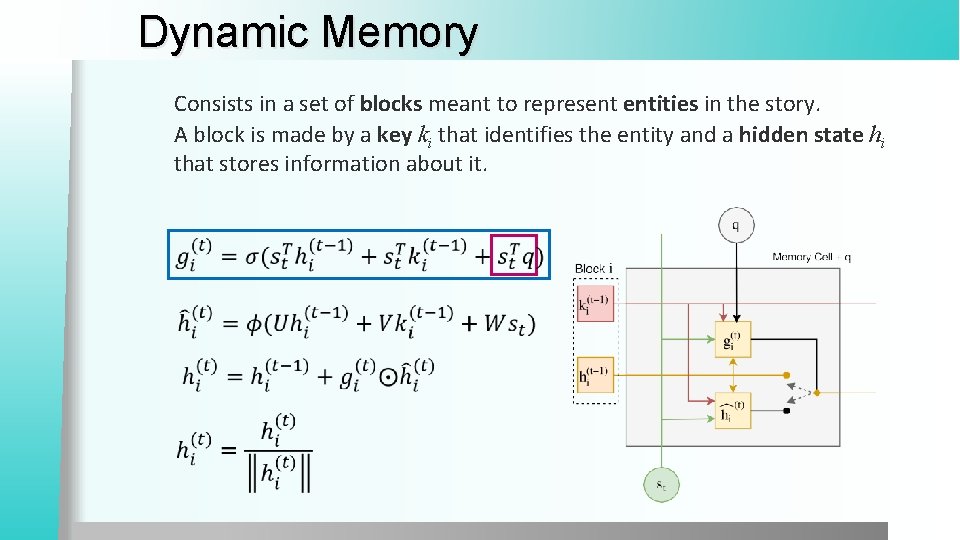

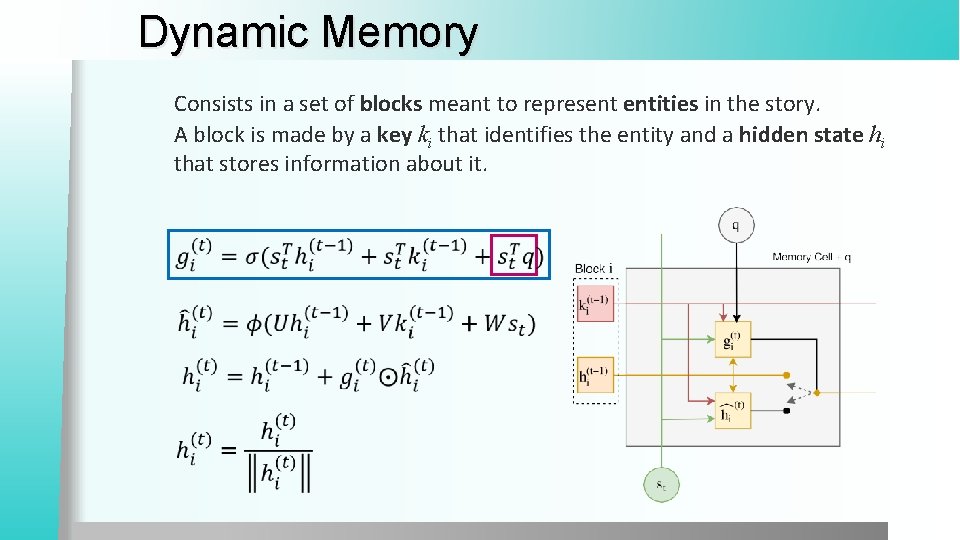

Dynamic Memory

Consists of a set of blocks meant to represent entities in the story. A block is made of a key $k_i$ that identifies the entity and a hidden state $h_i$ that stores information about it.
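The update of a single memory block can be sketched as follows. The gate is assumed to take the form $g_i = \sigma(s_t^\top h_i + s_t^\top k_i + s_t^\top q)$, that is, the Recurrent Entity Network gate with an added question term. The candidate state here uses `tanh(h + k + s)` with no learned matrices, which is a simplification for illustration and not the trained model.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def update_block(h, k, s, q):
    """Update one memory block (key k, state h) with sentence s and question q."""
    # Gate: how much this sentence matters for this block, given the question.
    gate = 1.0 / (1.0 + math.exp(-(dot(s, h) + dot(s, k) + dot(s, q))))
    # Candidate state (assumption: identity weights and tanh non-linearity).
    cand = [math.tanh(hi + ki + si) for hi, ki, si in zip(h, k, s)]
    new_h = [hi + gate * ci for hi, ci in zip(h, cand)]
    # Normalize so that old information is gradually forgotten.
    norm = math.sqrt(dot(new_h, new_h)) or 1.0
    return [x / norm for x in new_h], gate


k = [1.0, 0.0]   # hypothetical key of an entity block
h = [0.0, 0.0]   # empty block
q = [1.0, 0.0]   # hypothetical question encoding, aligned with the key

h_rel, gate_rel = update_block(h, k, [1.0, 0.0], q)   # sentence about the entity
h_irr, gate_irr = update_block(h, k, [0.0, 1.0], q)   # unrelated sentence
print(gate_rel > gate_irr)  # True
```

The question term raises the gate for sentences that match the question, so those sentences are written to memory more strongly.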

Output Module

- Scores memories $h_i$ using $q$
- Uses the embedding matrix $E \in \mathbb{R}^{|V| \times d}$ to generate the output $\hat{y}$, where $\hat{y} \in \mathbb{R}^{|V|}$ represents the model answer

Training

Given $\{(x_i, y_i)\}_{i=1}^{n}$, we used the cross entropy loss function $H(y, \hat{y})$. End-to-end training with the standard Backpropagation Through Time (BPTT) algorithm.
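A rough sketch of the two steps: score the memories with the question, then project onto the vocabulary and compute the cross entropy. It assumes softmax attention over the scores $q^\top h_i$ and a direct projection of $q + u$ through $E$; any additional learned matrices and non-linearities of the real model are left out, and the toy vocabulary and vectors are made up.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def answer_distribution(memories, q, E):
    """Attend over memories with q, then score every vocabulary word."""
    p = softmax([dot(q, h) for h in memories])            # attention weights
    u = [sum(pi * h[j] for pi, h in zip(p, memories))     # weighted memory summary
         for j in range(len(q))]
    logits = [dot(row, [qj + uj for qj, uj in zip(q, u)]) for row in E]
    return softmax(logits)


def cross_entropy(target_index, y_hat):
    """H(y, y_hat) for a one-hot target y."""
    return -math.log(y_hat[target_index])


vocab = ["garden", "kitchen", "office"]
E = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]   # hypothetical embedding rows
memories = [[1.0, 0.0], [0.0, 1.0]]
q = [2.0, 0.0]

y_hat = answer_distribution(memories, q, E)
predicted = vocab[y_hat.index(max(y_hat))]
loss = cross_entropy(vocab.index("garden"), y_hat)
print(predicted, loss)
```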

Overall Architecture Overall picture

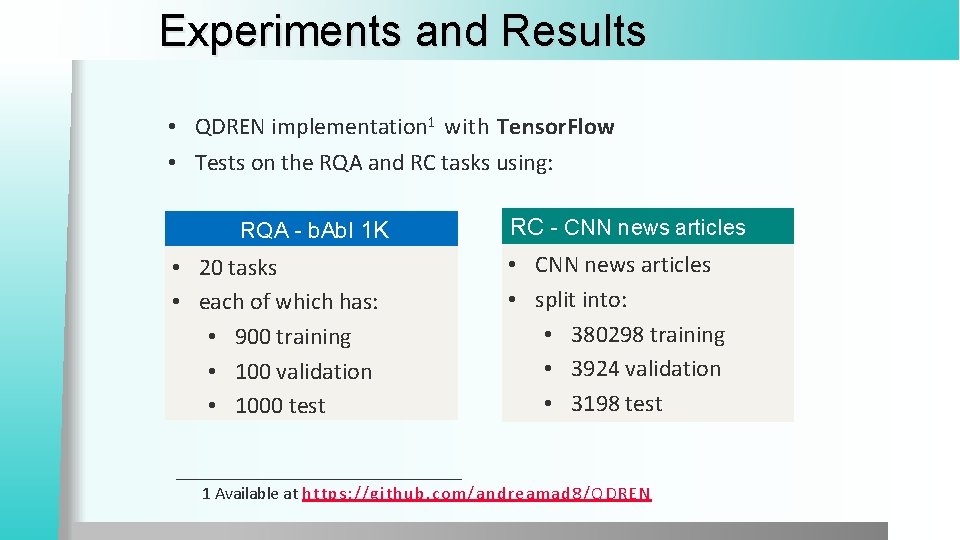

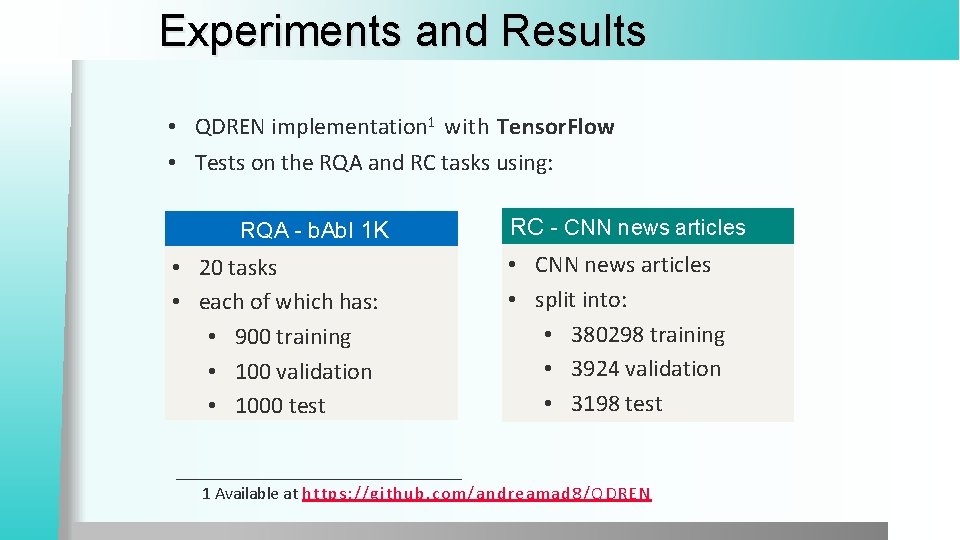

Experiments and Results

- QDREN implementation with TensorFlow, available at https://github.com/andreamad8/QDREN
- Tests on the RQA and RC tasks using:

RQA - bAbI 1K

- 20 tasks, each of which has:
  - 900 training
  - 100 validation
  - 1000 test

RC - CNN news articles

- CNN news articles, split into:
  - 380298 training
  - 3924 validation
  - 3198 test

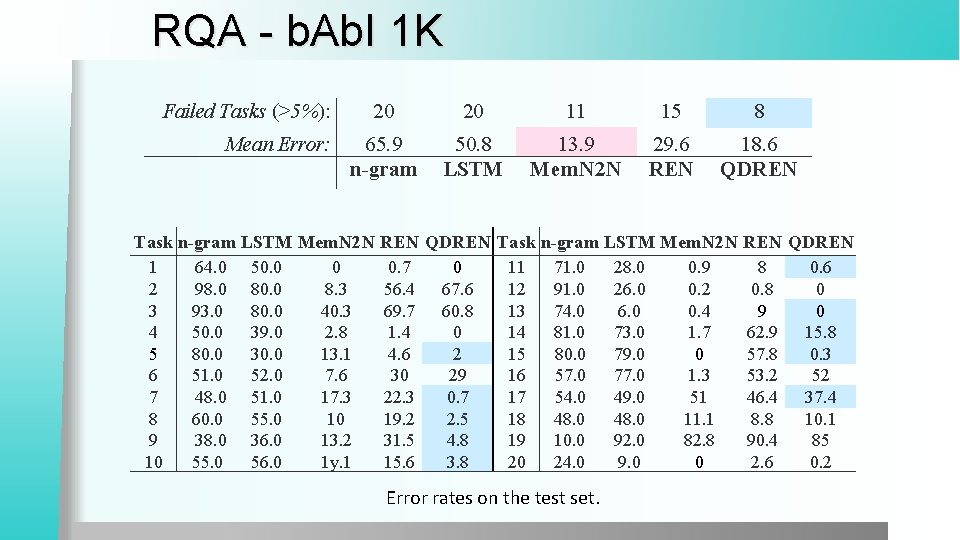

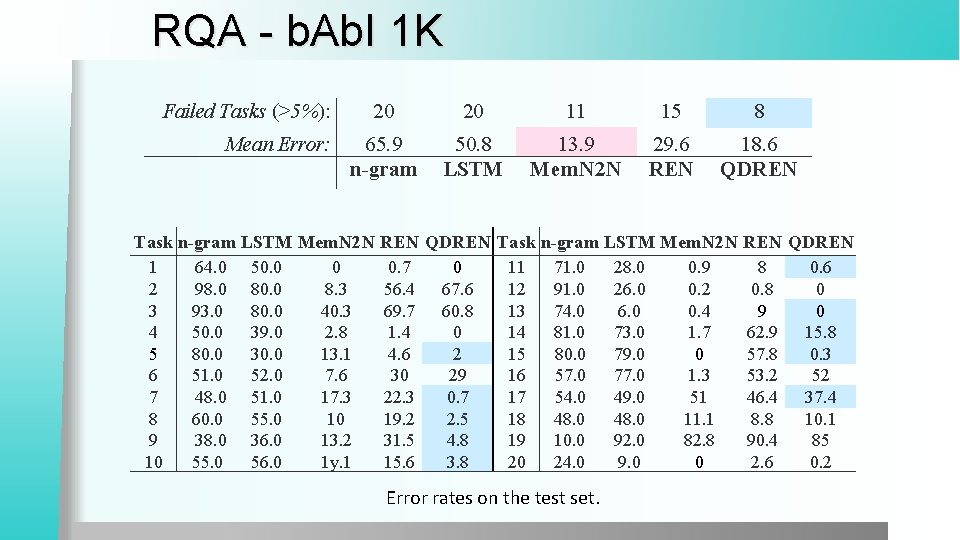

RQA - bAbI 1K

Error rates on the test set.

| Task | n-gram | LSTM | MemN2N | REN | QDREN |
|---|---|---|---|---|---|
| Failed Tasks (>5%) | 20 | 20 | 11 | 15 | 8 |
| Mean Error | 65.9 | 50.8 | 13.9 | 29.6 | 18.6 |
| 1 | 64.0 | 50.0 | 0 | 0.7 | 0 |
| 2 | 98.0 | 80.0 | 8.3 | 56.4 | 67.6 |
| 3 | 93.0 | 80.0 | 40.3 | 69.7 | 60.8 |
| 4 | 50.0 | 39.0 | 2.8 | 1.4 | 0 |
| 5 | 80.0 | 30.0 | 13.1 | 4.6 | 2 |
| 6 | 51.0 | 52.0 | 7.6 | 30 | 29 |
| 7 | 48.0 | 51.0 | 17.3 | 22.3 | 0.7 |
| 8 | 60.0 | 55.0 | 10 | 19.2 | 2.5 |
| 9 | 38.0 | 36.0 | 13.2 | 31.5 | 4.8 |
| 10 | 55.0 | 56.0 | 15.1 | 15.6 | 3.8 |
| 11 | 71.0 | 28.0 | 0.9 | 8 | 0.6 |
| 12 | 91.0 | 26.0 | 0.2 | 0.8 | 0 |
| 13 | 74.0 | 6.0 | 0.4 | 9 | 0 |
| 14 | 81.0 | 73.0 | 1.7 | 62.9 | 15.8 |
| 15 | 80.0 | 79.0 | 0 | 57.8 | 0.3 |
| 16 | 57.0 | 77.0 | 1.3 | 53.2 | 52 |
| 17 | 54.0 | 49.0 | 51 | 46.4 | 37.4 |
| 18 | 48.0 | 48.0 | 11.1 | 8.8 | 10.1 |
| 19 | 100.0 | 92.0 | 82.8 | 90.4 | 85 |
| 20 | 24.0 | 9.0 | 0 | 2.6 | 0.2 |
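As a quick consistency check, the two summary figures for QDREN can be recomputed from its per-task error rates. The list below copies the QDREN values for tasks 1 to 20 from the results above; the variable names are arbitrary.

```python
# QDREN error rates (%) for bAbI tasks 1..20, in task order.
qdren_errors = [0, 67.6, 60.8, 0, 2, 29, 0.7, 2.5, 4.8, 3.8,
                0.6, 0, 0, 15.8, 0.3, 52, 37.4, 10.1, 85, 0.2]

mean_error = sum(qdren_errors) / len(qdren_errors)
# A task counts as failed when its error rate exceeds 5%.
failed = [task for task, err in enumerate(qdren_errors, start=1) if err > 5]

print(round(mean_error, 1))  # 18.6
print(len(failed), failed)   # 8 [2, 3, 6, 14, 16, 17, 18, 19]
```

Both values agree with the reported summary: a mean error of 18.6 and 8 failed tasks.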

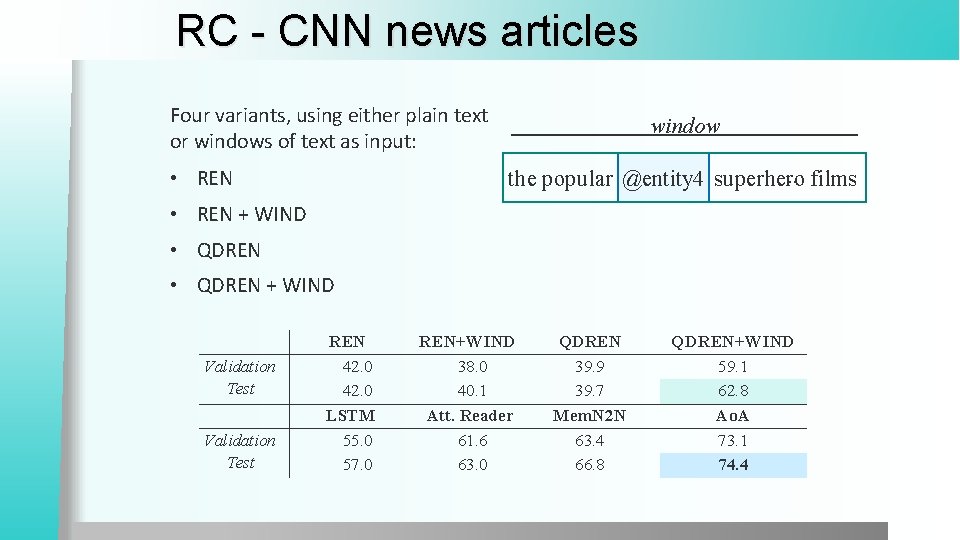

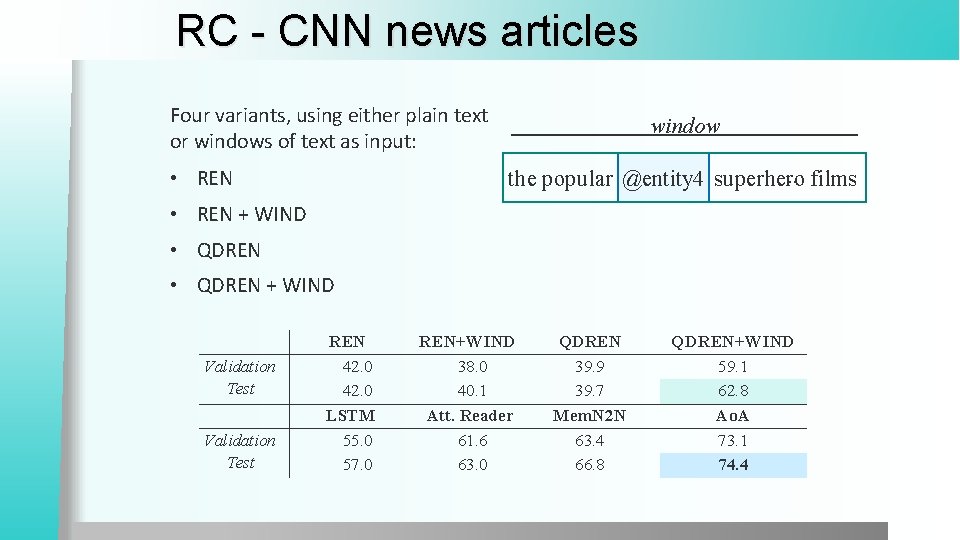

RC - CNN news articles

Four variants, using either plain text or windows of text as input: REN, QDREN, REN + WIND and QDREN + WIND.

Window example: ... the popular @entity4 superhero films ...

| Model | Validation | Test |
|---|---|---|
| REN | 42.0 | 42.0 |
| REN+WIND | 38.0 | 40.1 |
| QDREN | 39.9 | 39.7 |
| QDREN+WIND | 59.1 | 62.8 |
| LSTM | 55.0 | 57.0 |
| Att. Reader | 61.6 | 63.0 |
| MemN2N | 63.4 | 66.8 |
| AoA | 73.1 | 74.4 |

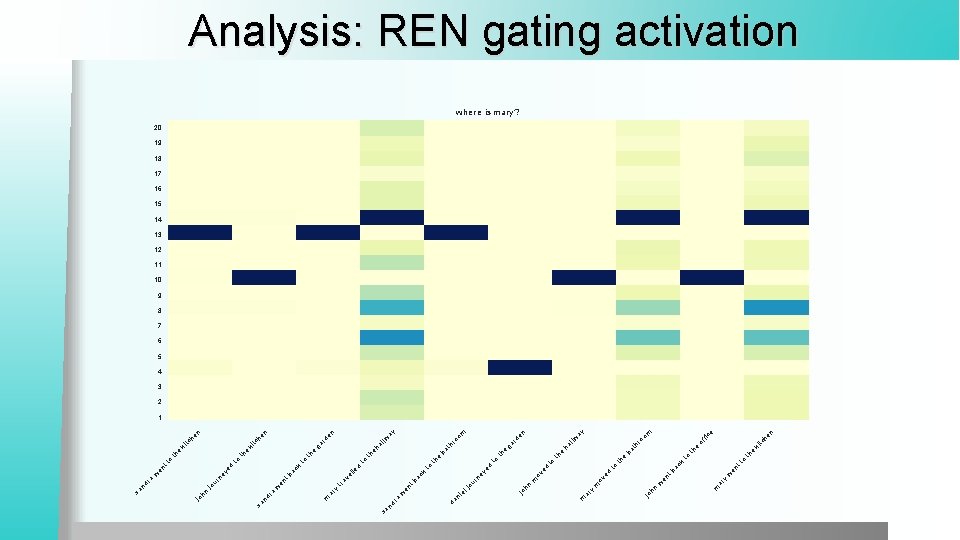

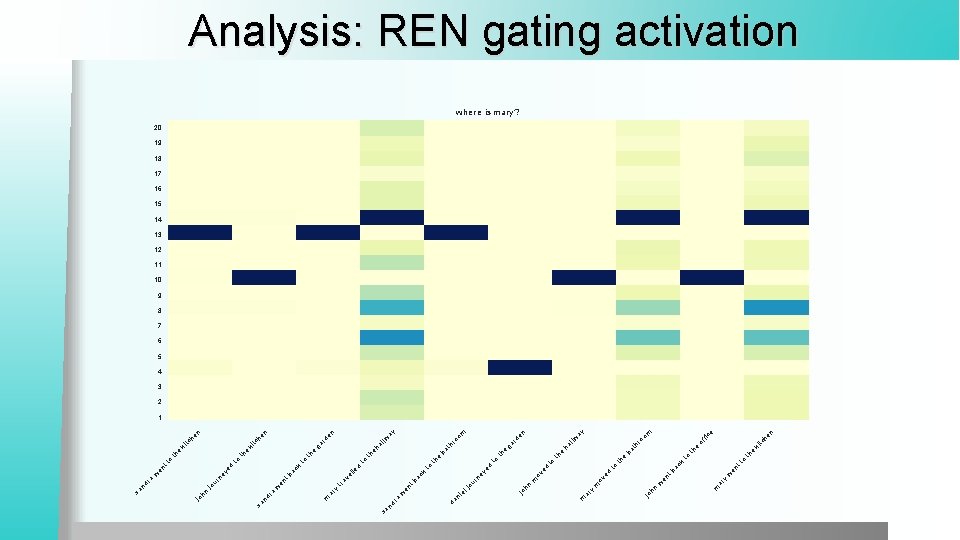

Analysis: REN gating activation

[Figure: heatmap of gate activations for the question "where is mary?". One axis lists the story sentences; the other is numbered 1 to 20.]

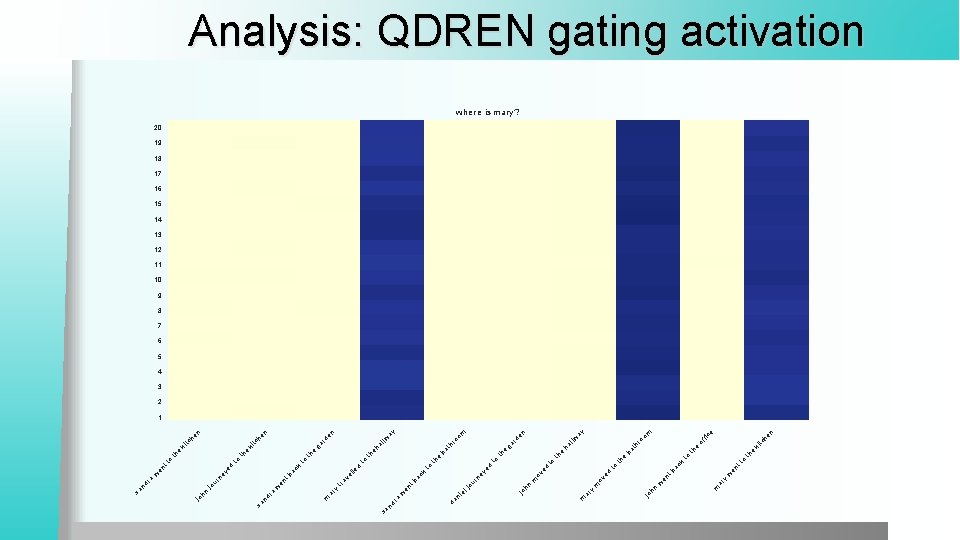

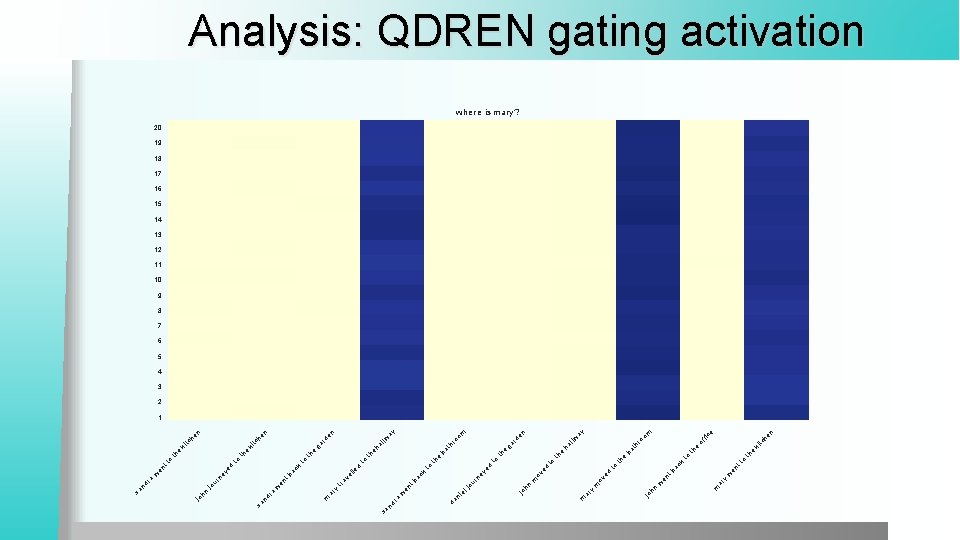

Analysis: QDREN gating activation

[Figure: heatmap of gate activations for the question "where is mary?". One axis lists the story sentences; the other is numbered 1 to 20.]

References

- Ask Me Anything: Dynamic Memory Networks for Natural Language Processing (Kumar et al., 2015)
- Dynamic Memory Networks for Visual and Textual Question Answering (Xiong et al., 2016)
- Sequence to Sequence (Sutskever et al., 2014)
- Neural Turing Machines (Graves et al., 2014)
- Teaching Machines to Read and Comprehend (Hermann et al., 2015)
- Learning to Transduce with Unbounded Memory (Grefenstette 2015)
- Structured Memory for Neural Turing Machines (Wei Zhang 2015)
- End to end memory networks (Sukhbaatar et al., 2015)
- A. Moschitti, A. Severyn. 2015. UNITN: Training Deep Convolutional Network for Twitter Sentiment Classification. SemEval 2015.
- M. Henaff, J. Weston, A. Szlam, A. Bordes, Y. LeCun. Tracking the World State with Recurrent Entity Network. arXiv preprint arXiv:1612.03969, 2017.
- J. Weston, S. Chopra, A. Bordes. Memory Networks. arXiv preprint arXiv:1410.3916, 2014.
- A. Madotto, G. Attardi. Question Dependent Recurrent Entity Network for Question Answering. 2017.