- Slides: 26

Text Classification, Active/Interactive learning

Text Categorization

- Categorization of documents, based on topics
- When the topics are known: categorization (supervised)
- When the topics are unknown: classification (a.k.a. clustering, unsupervised)

Supervised learning

- Training data: documents with their assigned (true) categories
- Documents are represented by feature vectors $x = (x_1, x_2, \ldots, x_n)$

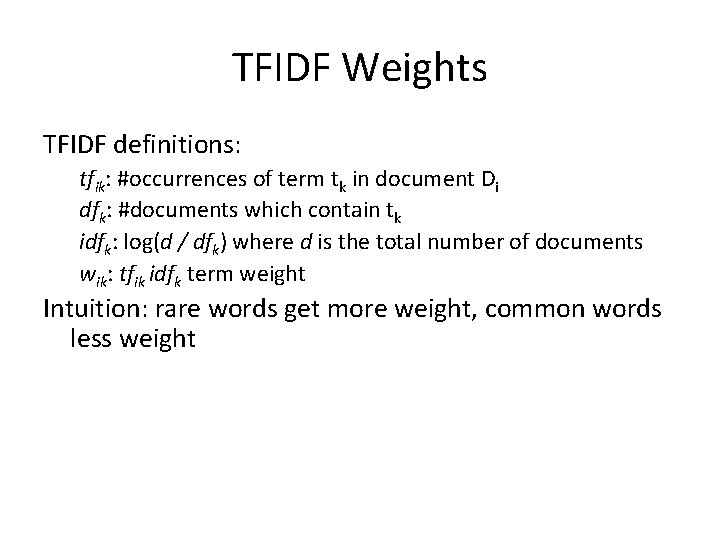

Document features

- Word frequencies
- Stems/lemmas
- Phrases
- POS tags
- Semantic features (concepts, named entities)
- tf-idf: tf = term frequency, idf = inverse document frequency

TFIDF Weights

TFIDF definitions:

- $tf_{ik}$: number of occurrences of term $t_k$ in document $D_i$
- $df_k$: number of documents which contain $t_k$
- $idf_k = \log(d / df_k)$, where $d$ is the total number of documents
- $w_{ik} = tf_{ik} \cdot idf_k$: term weight

Intuition: rare words get more weight, common words less weight

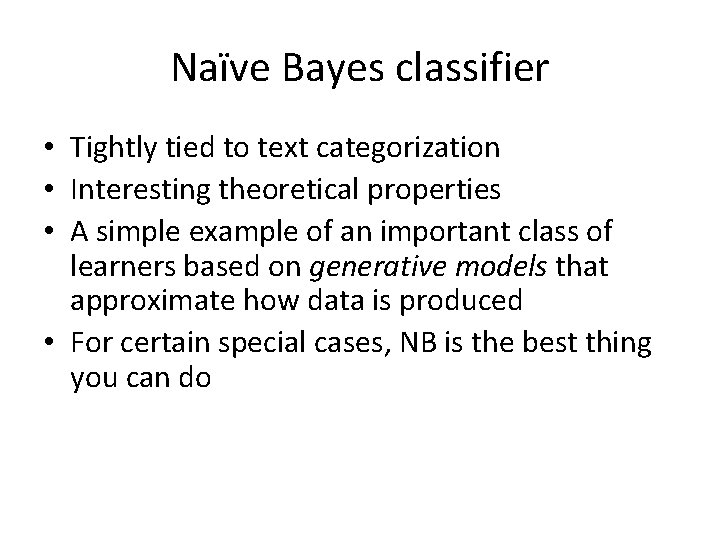
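The TFIDF definitions can be tried out on a toy corpus. The following is a minimal sketch, assuming whitespace tokenization, raw counts for $tf_{ik}$, and the natural logarithm for $idf_k$ (the slides do not fix the base); the function name `tfidf_weights` and the example documents are made up for illustration.

```python
import math
from collections import Counter

def tfidf_weights(docs):
    """Return one {term: weight} dict per document, with w_ik = tf_ik * log(d / df_k)."""
    d = len(docs)  # total number of documents
    tokenized = [doc.lower().split() for doc in docs]  # assumption: whitespace tokens
    # df_k: number of documents that contain term k at least once
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        tf = Counter(tokens)  # tf_ik: raw count of term k in document i
        weights.append({t: tf[t] * math.log(d / df[t]) for t in tf})
    return weights

docs = [
    "the game went to overtime",
    "the election went to a recount",
    "the playoffs game",
]
w = tfidf_weights(docs)
# "the" occurs in every document, so its weight is log(3/3) = 0;
# "overtime" occurs in one document only, so its weight is log(3).
```

With this unsmoothed form of idf, a term that appears in every document contributes nothing, which matches the intuition that common words get less weight.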

Naïve Bayes classifier

- Tightly tied to text categorization
- Interesting theoretical properties
- A simple example of an important class of learners based on generative models that approximate how data is produced
- For certain special cases, NB is the best thing you can do

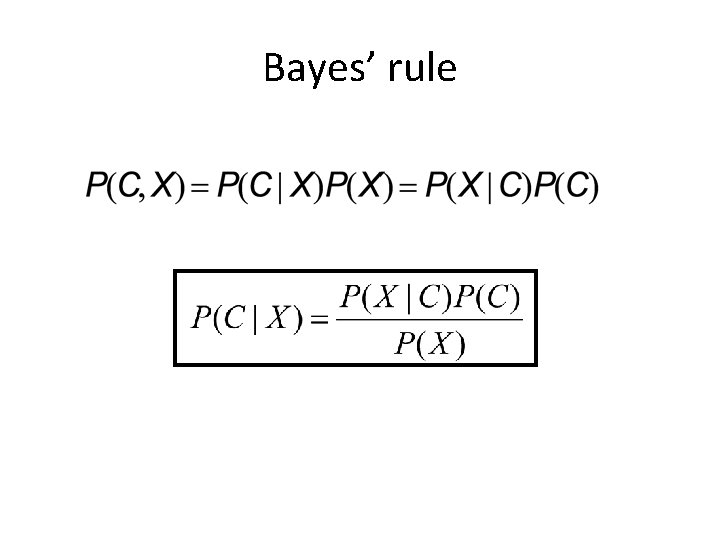

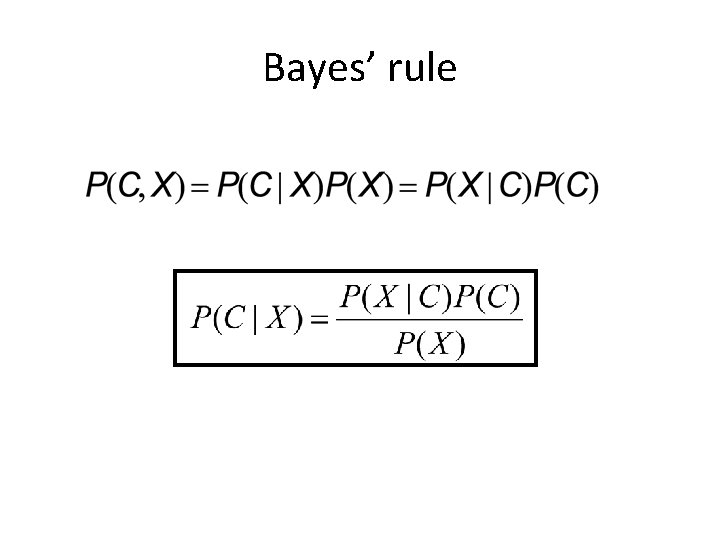

Bayes’ rule

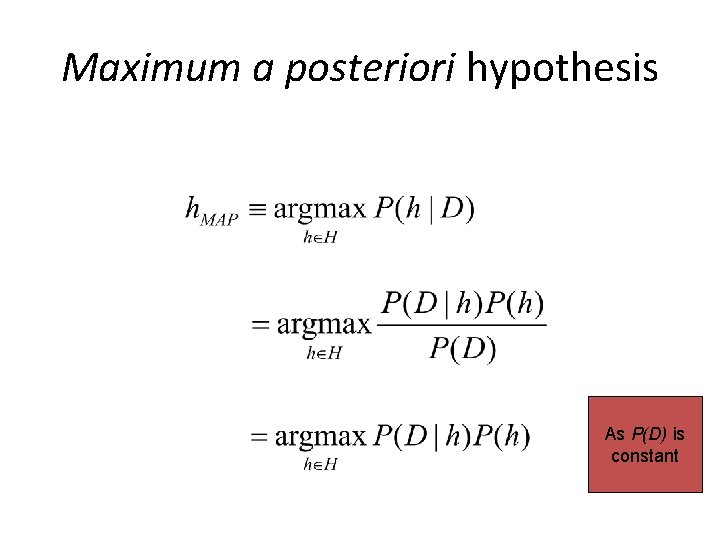

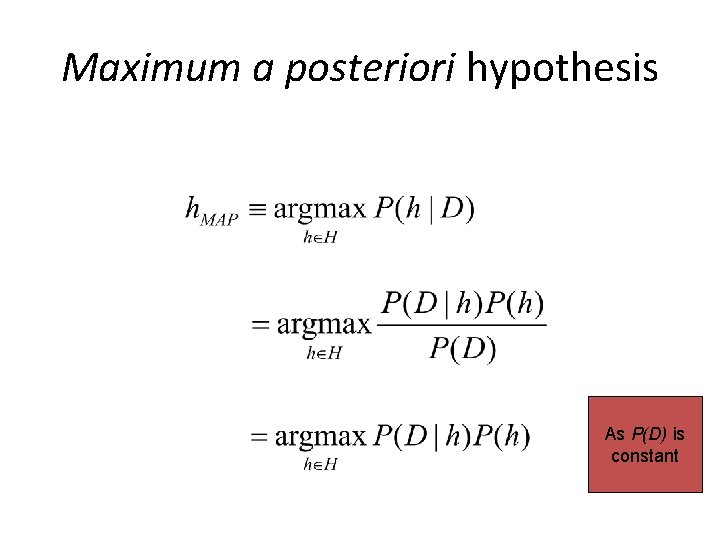

Maximum a posteriori hypothesis

As $P(D)$ is constant, it can be dropped from the maximization.

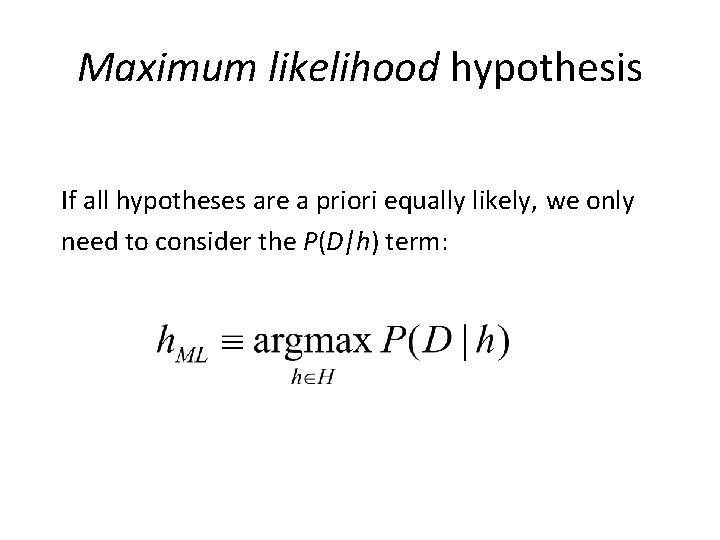

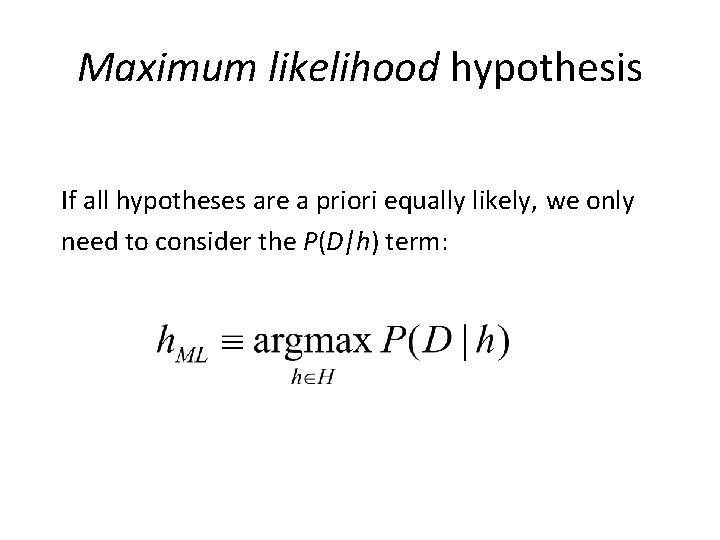

Maximum likelihood hypothesis

If all hypotheses are a priori equally likely, we only need to consider the $P(D \mid h)$ term:

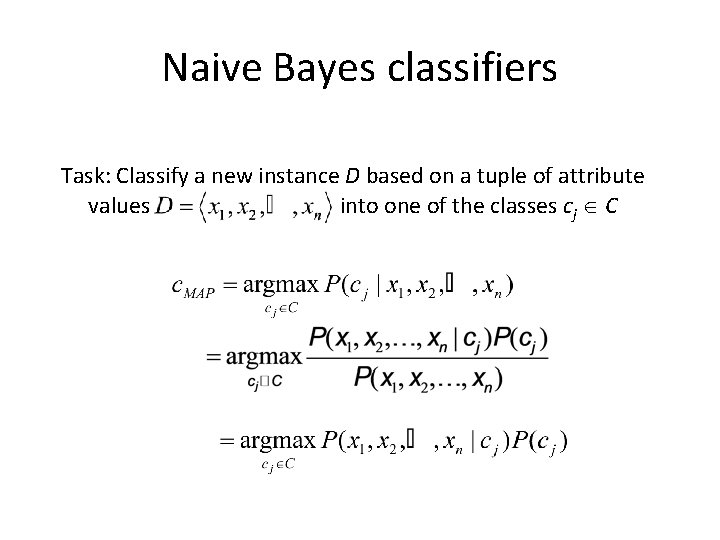

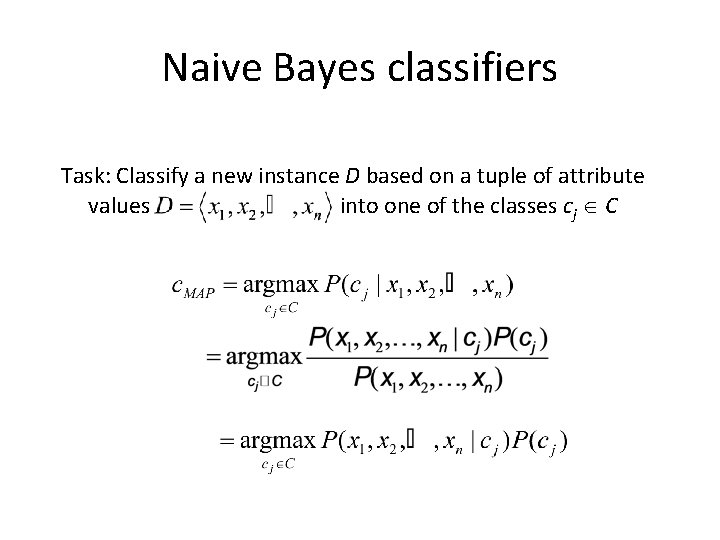
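For reference, the chain from Bayes' rule to the two hypotheses can be written out in the standard textbook form. The symbol $H$ for the hypothesis space is an assumption here; the slides' own notation is in the figures.

```latex
\begin{align*}
P(h \mid D) &= \frac{P(D \mid h)\, P(h)}{P(D)} \\
h_{MAP} &= \arg\max_{h \in H} P(h \mid D)
         = \arg\max_{h \in H} \frac{P(D \mid h)\, P(h)}{P(D)}
         = \arg\max_{h \in H} P(D \mid h)\, P(h) \\
h_{ML} &= \arg\max_{h \in H} P(D \mid h)
\end{align*}
```

The last step of the $h_{MAP}$ line uses the fact that $P(D)$ does not depend on $h$; the $h_{ML}$ line follows when $P(h)$ is the same for every $h$.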

Naïve Bayes classifiers

Task: Classify a new instance $D$ based on a tuple of attribute values into one of the classes $c_j \in C$

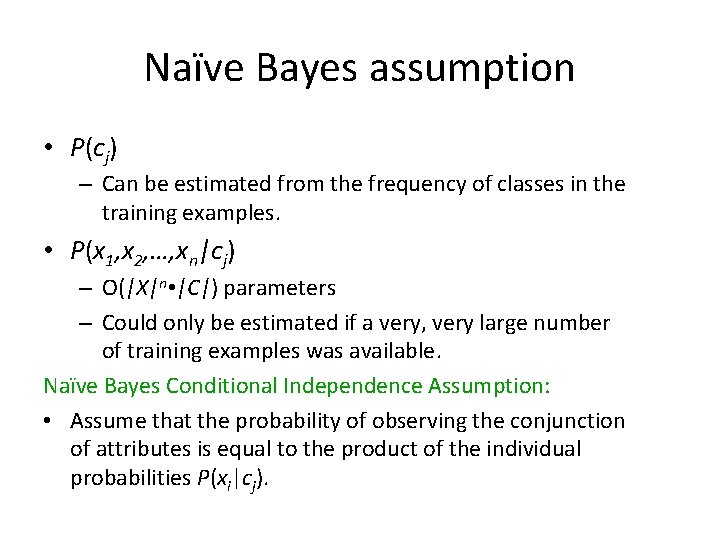

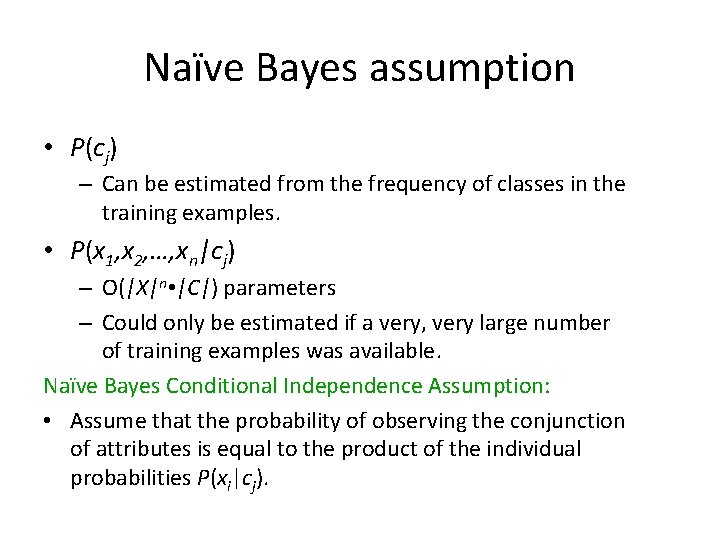

Naïve Bayes assumption

- $P(c_j)$
  - Can be estimated from the frequency of classes in the training examples.
- $P(x_1, x_2, \ldots, x_n \mid c_j)$
  - $O(|X|^n \cdot |C|)$ parameters
  - Could only be estimated if a very, very large number of training examples was available.

Naïve Bayes Conditional Independence Assumption:

- Assume that the probability of observing the conjunction of attributes is equal to the product of the individual probabilities $P(x_i \mid c_j)$.

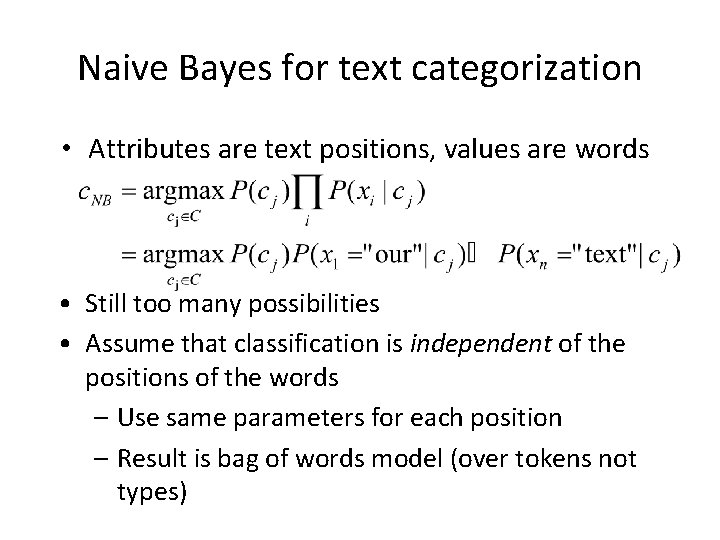

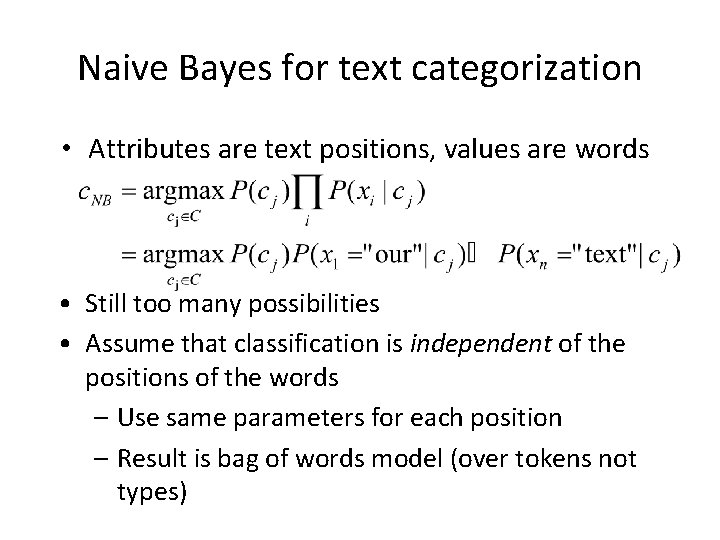
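Written out with the symbols used above, the conditional independence assumption turns the MAP decision rule into the Naïve Bayes rule. This is the usual textbook statement, given here as a reading aid rather than a transcription of the figures; the label $c_{MAP}$ is an assumed name.

```latex
\begin{align*}
c_{MAP} &= \arg\max_{c_j \in C} P(c_j)\, P(x_1, x_2, \ldots, x_n \mid c_j) \\
P(x_1, x_2, \ldots, x_n \mid c_j) &\approx \prod_{i=1}^{n} P(x_i \mid c_j) \\
c_{NB} &= \arg\max_{c_j \in C} P(c_j) \prod_{i=1}^{n} P(x_i \mid c_j)
\end{align*}
```

Under the factored form, the number of parameters drops from $O(|X|^n \cdot |C|)$ to $O(n \cdot |X| \cdot |C|)$, since each of the $n$ attributes needs only one distribution over $|X|$ values per class.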

Naïve Bayes for text categorization

- Attributes are text positions, values are words
- Still too many possibilities
- Assume that classification is independent of the positions of the words
  - Use the same parameters for each position
  - Result is the bag-of-words model (over tokens, not types)

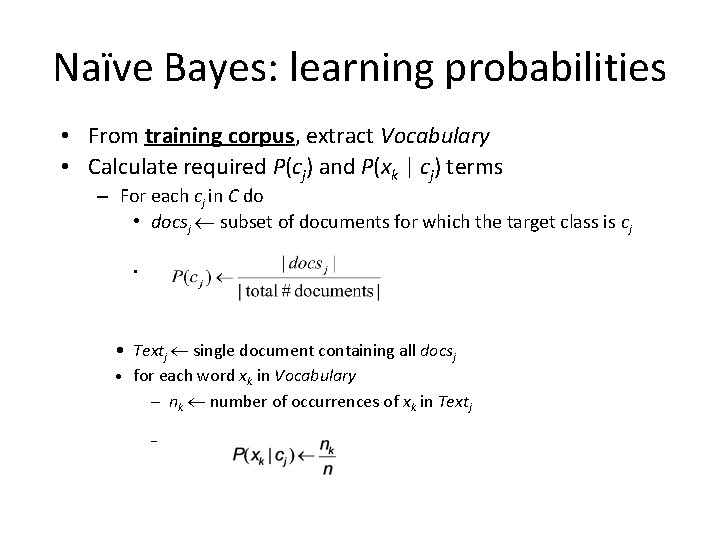

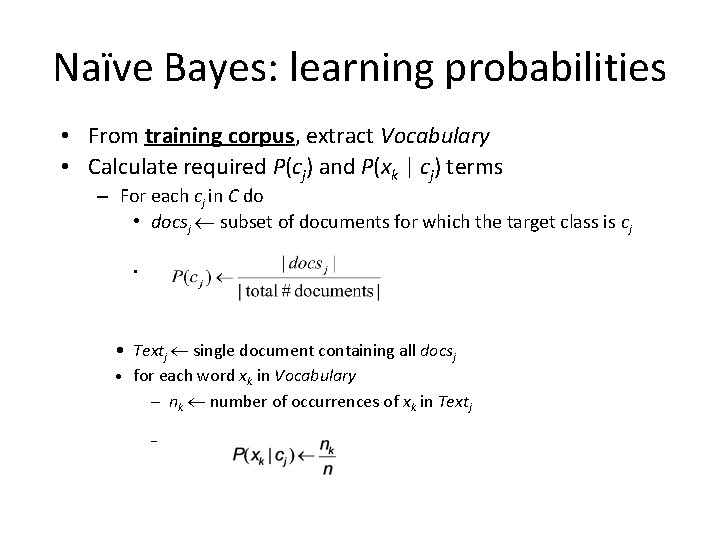

Naïve Bayes: learning probabilities

- From the training corpus, extract Vocabulary
- Calculate the required $P(c_j)$ and $P(x_k \mid c_j)$ terms
  - For each $c_j$ in $C$ do
    - $docs_j$: subset of documents for which the target class is $c_j$
    - $Text_j$: single document containing all $docs_j$
    - for each word $x_k$ in Vocabulary
      - $n_k$: number of occurrences of $x_k$ in $Text_j$

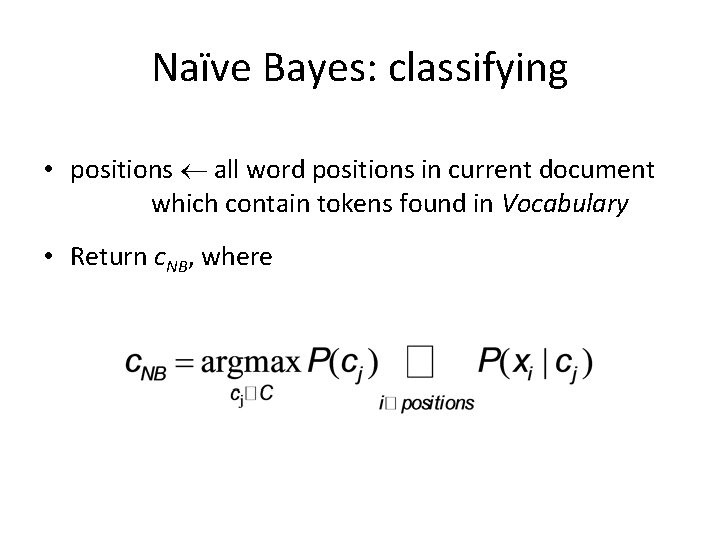

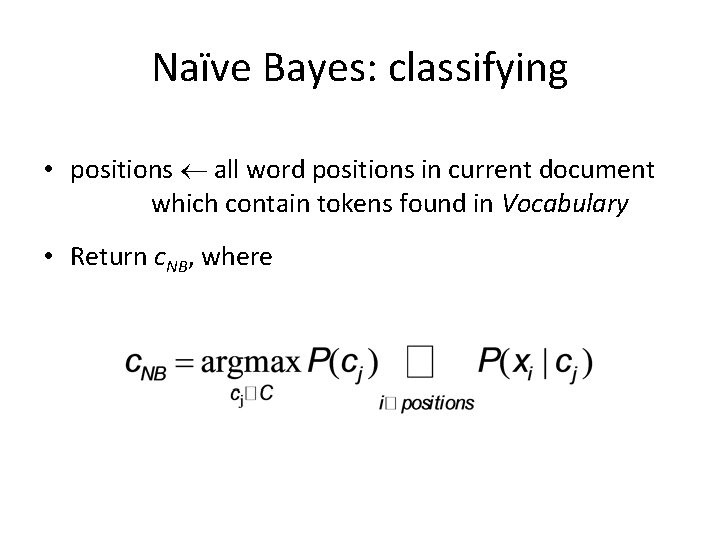
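The learning procedure above can be sketched in a few lines. The estimation formulas themselves are in the figures, so this sketch makes two labeled assumptions: $P(c_j)$ is the fraction of training documents in class $c_j$, and $P(x_k \mid c_j)$ uses add-`alpha` (Laplace) smoothing. The function name `train_multinomial_nb` and the toy data are hypothetical.

```python
from collections import Counter, defaultdict

def train_multinomial_nb(labeled_docs, alpha=1.0):
    """labeled_docs: list of (text, class) pairs.

    Returns (vocabulary, priors, cond_probs). The add-alpha smoothing is an
    assumption of this sketch, not something taken from the slide text.
    """
    vocabulary = sorted({w for text, _ in labeled_docs for w in text.split()})
    docs_by_class = defaultdict(list)
    for text, c in labeled_docs:
        docs_by_class[c].append(text)  # docs_j

    priors, cond_probs = {}, {}
    for c, docs in docs_by_class.items():
        priors[c] = len(docs) / len(labeled_docs)  # P(c_j)
        text_j = " ".join(docs).split()            # Text_j as one token list
        counts = Counter(text_j)                   # n_k for every word x_k
        n = len(text_j)                            # total tokens in Text_j
        cond_probs[c] = {
            w: (counts[w] + alpha) / (n + alpha * len(vocabulary))
            for w in vocabulary
        }
    return vocabulary, priors, cond_probs

train = [
    ("game playoffs game", "sports"),
    ("election vote", "politics"),
    ("playoffs vote game", "sports"),
]
vocab, priors, cond = train_multinomial_nb(train)
```

Smoothing keeps a word that never occurs in a class (here "election" in sports) from receiving probability zero, which would otherwise zero out the whole product at classification time.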

Naïve Bayes: classifying

- positions: all word positions in the current document which contain tokens found in Vocabulary
- Return $c_{NB}$, where

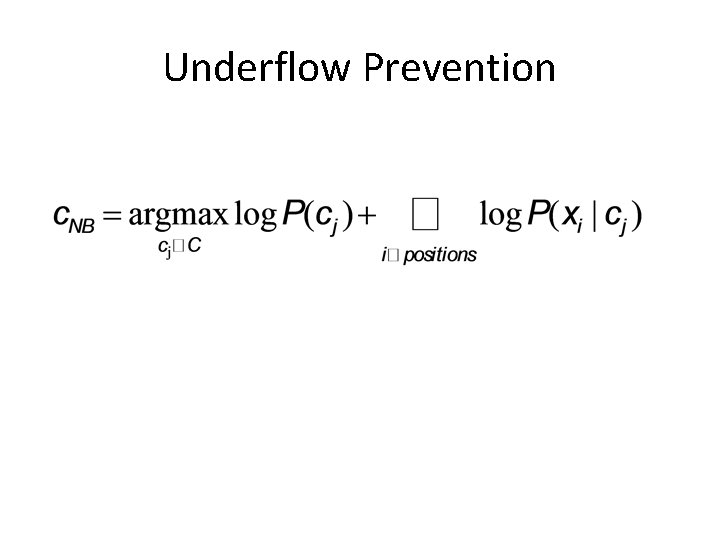

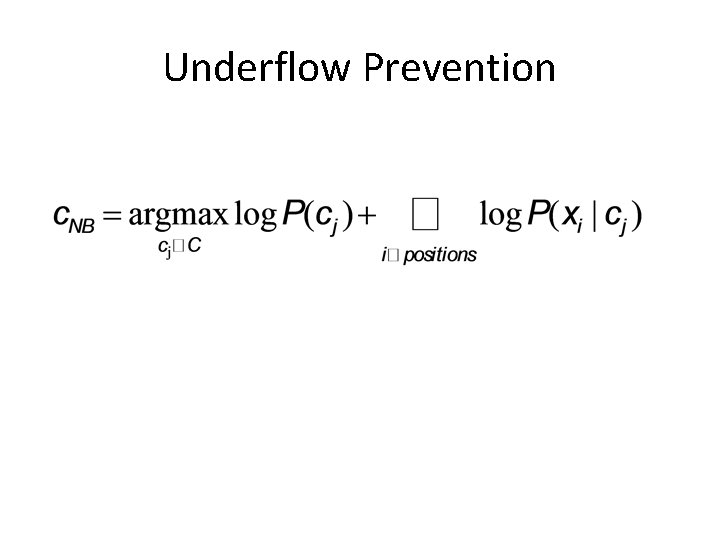

Underflow Prevention

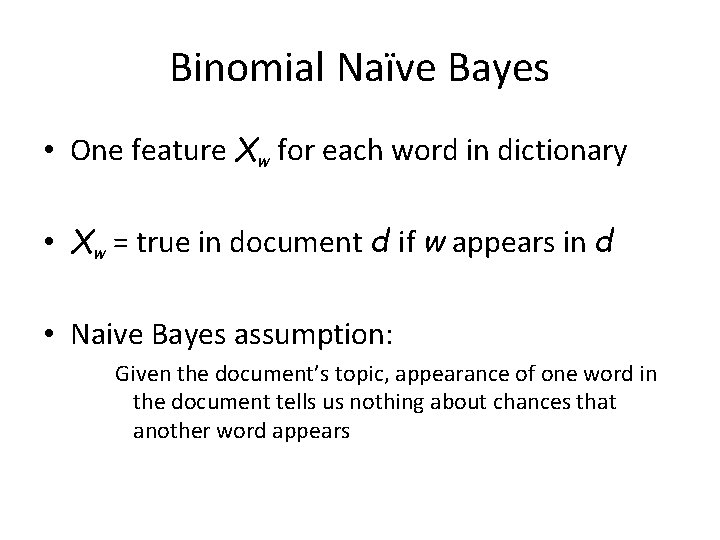

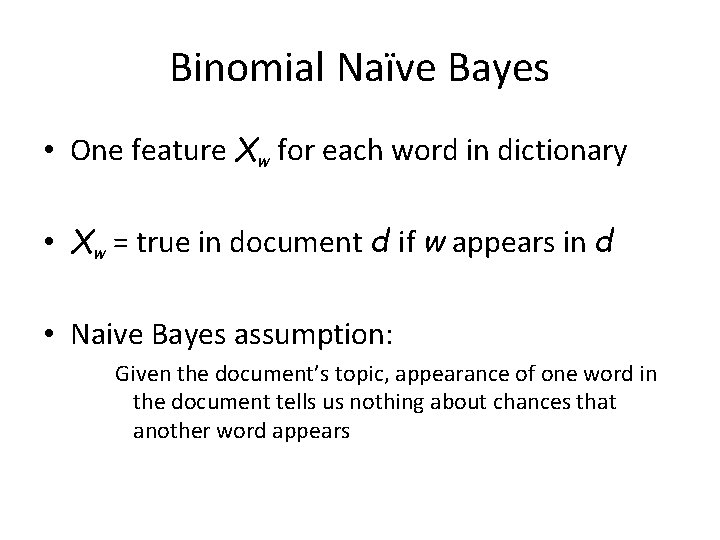
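Multiplying many small probabilities quickly underflows floating point, and the usual remedy is to add log-probabilities instead, which leaves the argmax unchanged because the logarithm is monotonic. The sketch below illustrates this with hard-coded, hypothetical parameters (`priors`, `cond_probs`) rather than a trained model.

```python
import math

# Hypothetical parameters, hard-coded for illustration only.
priors = {"sports": 0.5, "politics": 0.5}
cond_probs = {
    "sports": {"game": 0.5, "vote": 0.1, "playoffs": 0.4},
    "politics": {"game": 0.1, "vote": 0.7, "playoffs": 0.2},
}

def classify_log(tokens):
    """Return (best_class, scores), where scores are log P(c) + sum of log P(x_i | c)."""
    scores = {}
    for c in priors:
        score = math.log(priors[c])
        for t in tokens:
            if t in cond_probs[c]:  # skip positions whose token is not in the vocabulary
                score += math.log(cond_probs[c][t])
        scores[c] = score
    return max(scores, key=scores.get), scores

long_doc = ["game", "playoffs", "vote"] * 400  # 1200 tokens

# Naive product of probabilities for one class: underflows to 0.0.
naive = priors["sports"]
for t in long_doc:
    naive *= cond_probs["sports"][t]

label, scores = classify_log(long_doc)  # log-space scores stay finite
```

For this document the direct product is exactly `0.0` for both classes, so the classes cannot be compared, whereas the log-space scores remain finite and still rank the classes.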

Binomial Naïve Bayes

- One feature $X_w$ for each word in the dictionary
- $X_w$ = true in document $d$ if $w$ appears in $d$
- Naïve Bayes assumption: given the document's topic, the appearance of one word in the document tells us nothing about the chances that another word appears

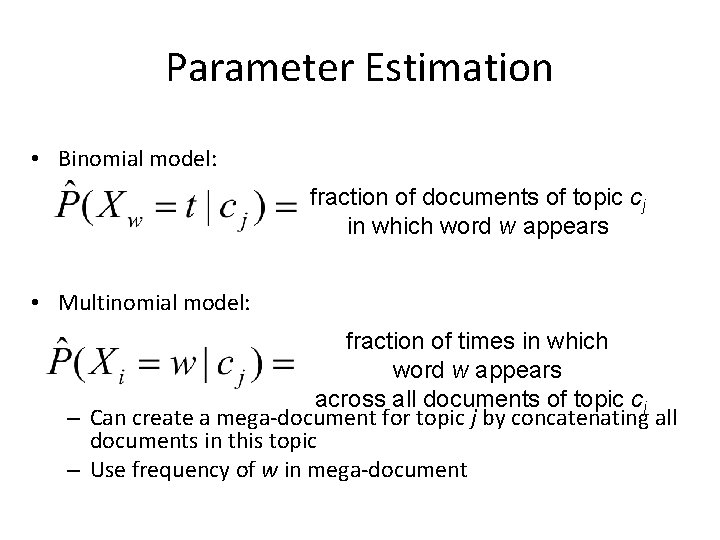

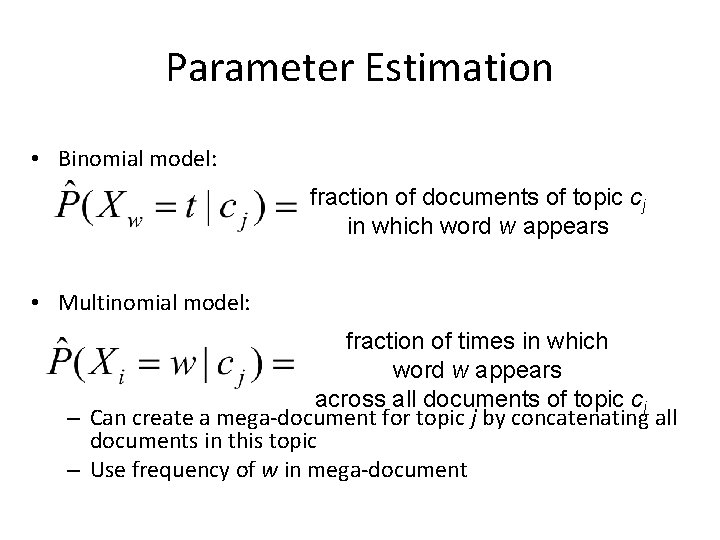

Parameter Estimation

- Binomial model: fraction of documents of topic $c_j$ in which word $w$ appears
- Multinomial model: fraction of times in which word $w$ appears across all documents of topic $c_j$
  - Can create a mega-document for topic $j$ by concatenating all documents in this topic
  - Use the frequency of $w$ in the mega-document

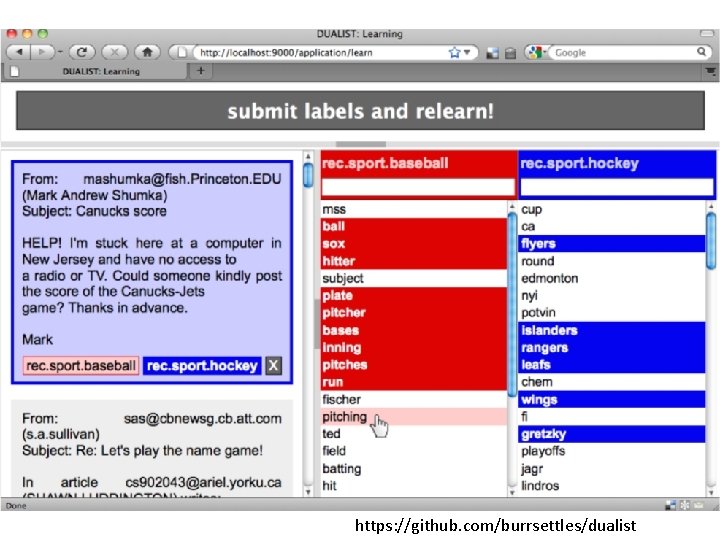

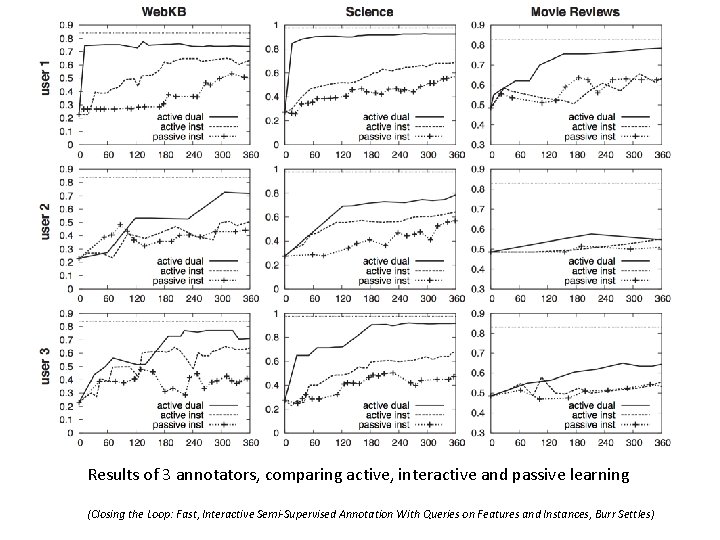
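The difference between the two estimates is easiest to see on a small example. This is a minimal sketch of the unsmoothed estimates described above, assuming whitespace tokenization; the function names and the three documents are made up.

```python
from collections import Counter

def binomial_estimate(docs, word):
    """Fraction of the topic's documents in which `word` appears at least once."""
    return sum(word in doc.split() for doc in docs) / len(docs)

def multinomial_estimate(docs, word):
    """Relative frequency of `word` in the concatenation of the topic's documents."""
    mega_document = " ".join(docs).split()
    return Counter(mega_document)[word] / len(mega_document)

sports_docs = ["game game game playoffs", "coach interview", "game score"]
b = binomial_estimate(sports_docs, "game")     # appears in 2 of 3 documents
m = multinomial_estimate(sports_docs, "game")  # 4 of the 8 tokens
```

The binomial estimate ignores how often a word repeats inside a document, while the multinomial estimate counts every occurrence, so the two can differ noticeably for words that cluster in a few documents.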

Learning probabilities

- Passive learning: learning from the annotated corpus
- Active learning: learning only from the most informative instances (real-time annotation)
- Interactive learning: the annotator can choose to suggest specific features (e.g., playoffs to indicate sports) and not just complete instances

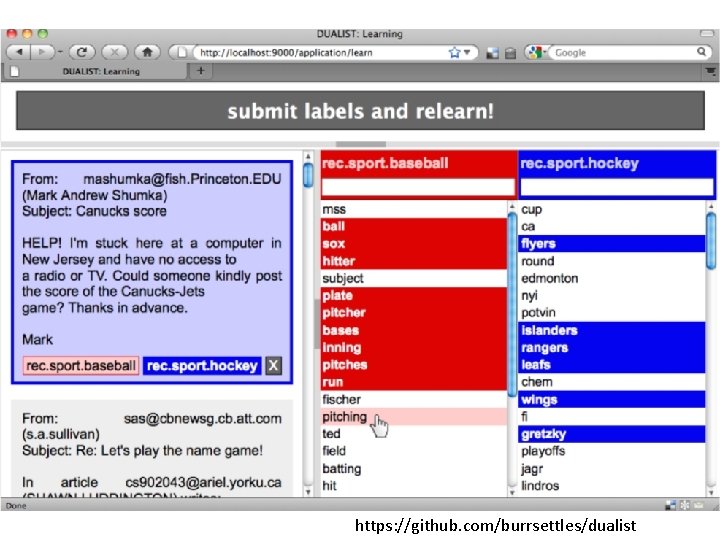

https://github.com/burrsettles/dualist

{Inter}active learning

- Available actions:
  - Annotate an instance with a class
  - Annotate a feature with a class
  - Suggest a new feature and annotate it with a class

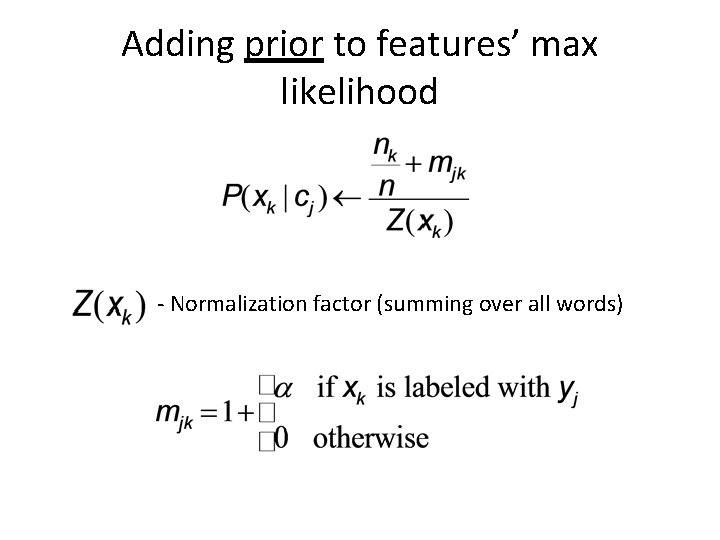

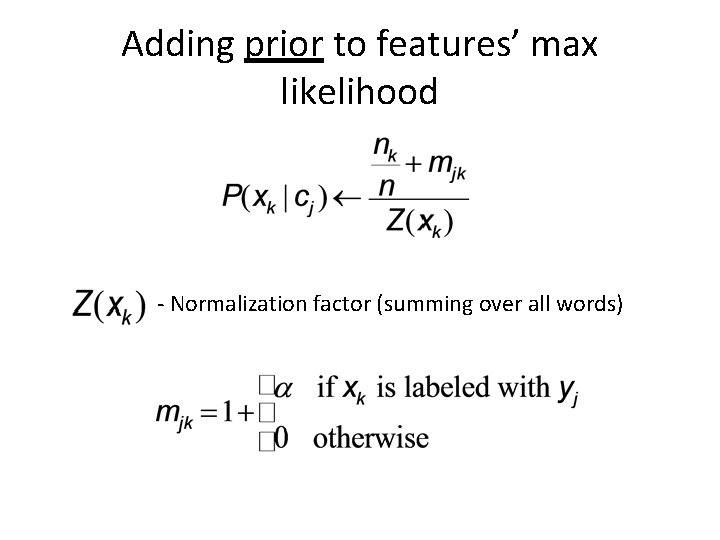

Adding a prior to the features' maximum-likelihood estimate

- Normalization factor (summing over all words)

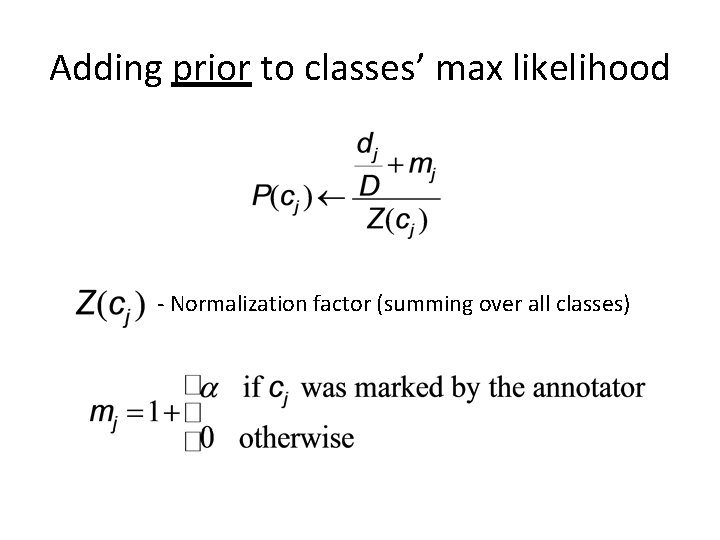

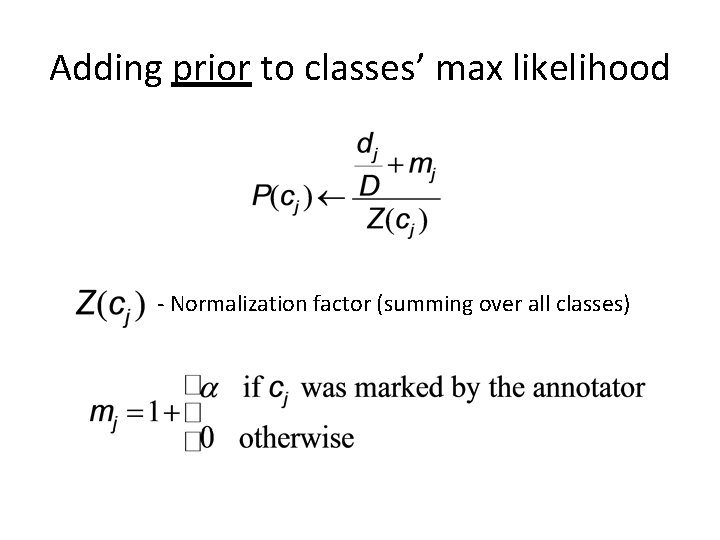

Adding a prior to the classes' maximum-likelihood estimate

- Normalization factor (summing over all classes)

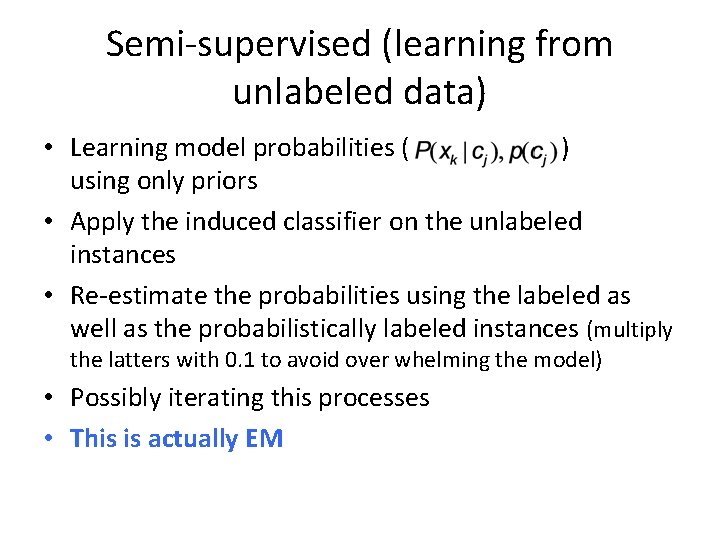
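One common way to add a prior to a maximum-likelihood estimate is to treat it as a pseudo-count that is added to the observed counts before normalizing. The sketch below assumes that form; the exact formulas are in the figures and may differ. The helper name `normalize_with_prior` and all numbers are hypothetical, including the larger pseudo-count given to an annotated feature.

```python
def normalize_with_prior(counts, prior):
    """Return {key: (prior + count) / Z}, where Z sums prior + count over all keys."""
    keys = set(counts) | set(prior)
    unnormalized = {k: prior.get(k, 0.0) + counts.get(k, 0.0) for k in keys}
    z = sum(unnormalized.values())  # normalization factor
    return {k: v / z for k, v in unnormalized.items()}

# Word probabilities for one class: Z sums over all words.
# "playoffs" was annotated for this class, so it gets a larger (made-up) prior.
word_counts = {"game": 3, "vote": 1, "playoffs": 0}
word_prior = {"game": 1.0, "vote": 1.0, "playoffs": 5.0}
word_probs = normalize_with_prior(word_counts, word_prior)

# Class probabilities: Z sums over all classes.
class_counts = {"sports": 2, "politics": 1}
class_prior = {"sports": 1.0, "politics": 1.0}
class_probs = normalize_with_prior(class_counts, class_prior)
```

In this toy example the annotated word "playoffs" ends up with the highest probability in its class even though it was never observed, which is how a feature annotation can influence the model before any instance is labeled.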

Semi-supervised (learning from unlabeled data)

- Learn the model probabilities using only the priors
- Apply the induced classifier to the unlabeled instances
- Re-estimate the probabilities using the labeled as well as the probabilistically labeled instances (multiply the latter by 0.1 to avoid overwhelming the model)
- Possibly iterate this process
- This is actually EM

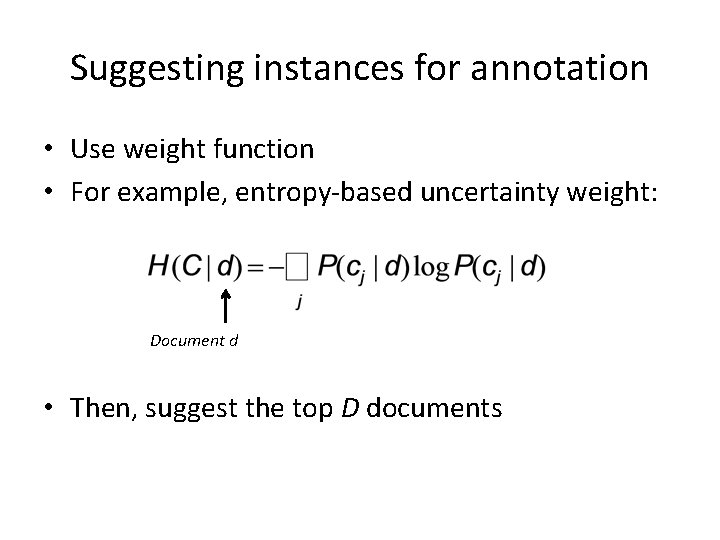

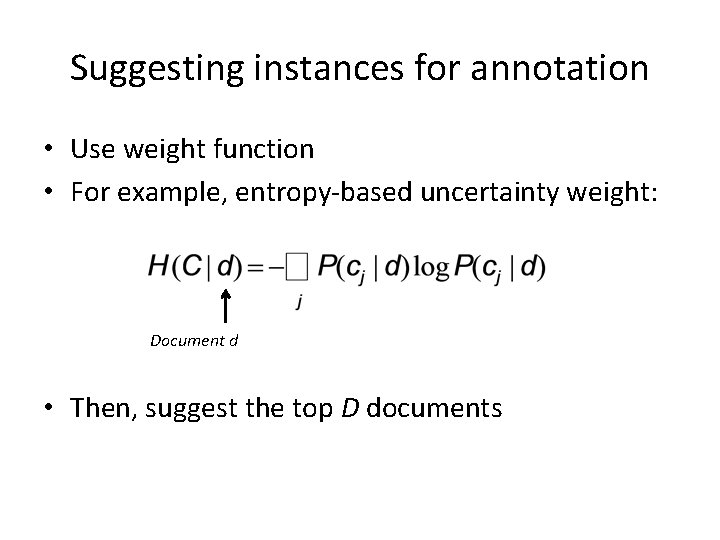
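The steps above can be sketched as a single EM-style pass. This is an illustrative sketch under stated assumptions, not the method as implemented in any particular tool: priors are pseudo-counts, an annotated feature gets an extra pseudo-count of 5.0 (a made-up value), and the 0.1 factor from the slide scales the soft counts of unlabeled documents. All function and variable names are hypothetical.

```python
import math

def estimate(weighted_docs, class_prior, word_prior):
    """Normalize prior pseudo-counts plus weighted counts.

    weighted_docs: list of (tokens, {class: weight}) pairs.
    Returns (class_probs, word_probs).
    """
    class_mass = dict(class_prior)
    word_mass = {c: dict(ws) for c, ws in word_prior.items()}
    for tokens, weights in weighted_docs:
        for c, wt in weights.items():
            class_mass[c] += wt
            for t in tokens:
                if t in word_mass[c]:
                    word_mass[c][t] += wt
    z_classes = sum(class_mass.values())
    class_probs = {c: m / z_classes for c, m in class_mass.items()}
    word_probs = {}
    for c, masses in word_mass.items():
        z_words = sum(masses.values())
        word_probs[c] = {w: m / z_words for w, m in masses.items()}
    return class_probs, word_probs

def posterior(tokens, class_probs, word_probs):
    """P(class | tokens) under Naive Bayes, computed in log space."""
    log_scores = {}
    for c in class_probs:
        s = math.log(class_probs[c])
        for t in tokens:
            if t in word_probs[c]:
                s += math.log(word_probs[c][t])
        log_scores[c] = s
    top = max(log_scores.values())
    exp_scores = {c: math.exp(s - top) for c, s in log_scores.items()}
    z = sum(exp_scores.values())
    return {c: v / z for c, v in exp_scores.items()}

classes = ["sports", "politics"]
vocabulary = ["debate", "election", "game", "playoffs", "score", "vote"]
feature_labels = {"playoffs": "sports", "election": "politics"}  # hypothetical annotations
class_prior = {c: 1.0 for c in classes}
word_prior = {
    c: {w: 1.0 + (5.0 if feature_labels.get(w) == c else 0.0) for w in vocabulary}
    for c in classes
}
labeled = [(["game", "playoffs"], "sports")]
unlabeled = [["playoffs", "score"], ["election", "debate"]]

# Step 1: model probabilities from the priors only.
class_probs, word_probs = estimate([], class_prior, word_prior)
# Step 2: apply the induced classifier to the unlabeled instances.
soft_labels = [posterior(toks, class_probs, word_probs) for toks in unlabeled]
# Step 3: re-estimate from labeled plus down-weighted, probabilistically labeled instances.
weighted = [(toks, {y: 1.0}) for toks, y in labeled]
weighted += [
    (toks, {c: 0.1 * p for c, p in post.items()})
    for toks, post in zip(unlabeled, soft_labels)
]
class_probs, word_probs = estimate(weighted, class_prior, word_prior)
```

Repeating steps 2 and 3 with the updated model gives the iterative version; the sketch stops after one pass to keep the arithmetic easy to follow.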

Suggesting instances for annotation

- Use a weight function
- For example, the entropy-based uncertainty weight of a document $d$
- Then, suggest the top $D$ documents

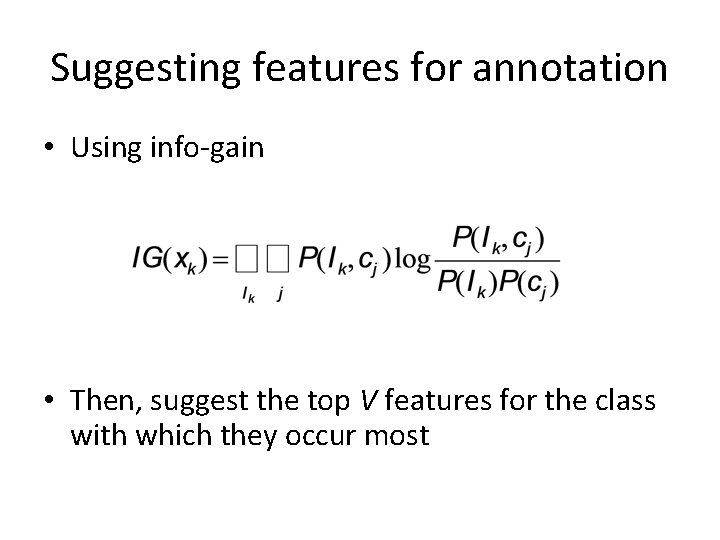
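A simple version of entropy-based instance selection is sketched below. It assumes the weight of a document is the Shannon entropy of the classifier's posterior over classes, which is the standard uncertainty measure; whether the figure includes further terms is not recoverable from the text. The names `entropy` and `top_uncertain` and the posteriors are made up.

```python
import math

def entropy(posterior):
    """Shannon entropy (in nats) of a {class: probability} distribution."""
    return -sum(p * math.log(p) for p in posterior.values() if p > 0)

def top_uncertain(posteriors, top_d):
    """Return the ids of the top_d documents with the highest posterior entropy."""
    ranked = sorted(posteriors, key=lambda doc_id: entropy(posteriors[doc_id]), reverse=True)
    return ranked[:top_d]

posteriors = {
    "d1": {"sports": 0.5, "politics": 0.5},    # maximally uncertain
    "d2": {"sports": 0.99, "politics": 0.01},  # nearly certain
    "d3": {"sports": 0.7, "politics": 0.3},
}
queue = top_uncertain(posteriors, top_d=2)
```

Here `queue` contains `d1` and `d3`, the two documents the classifier is least sure about, while the confidently classified `d2` is left out.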

Suggesting features for annotation

- Using info-gain
- Then, suggest the top $V$ features for the class with which they occur most

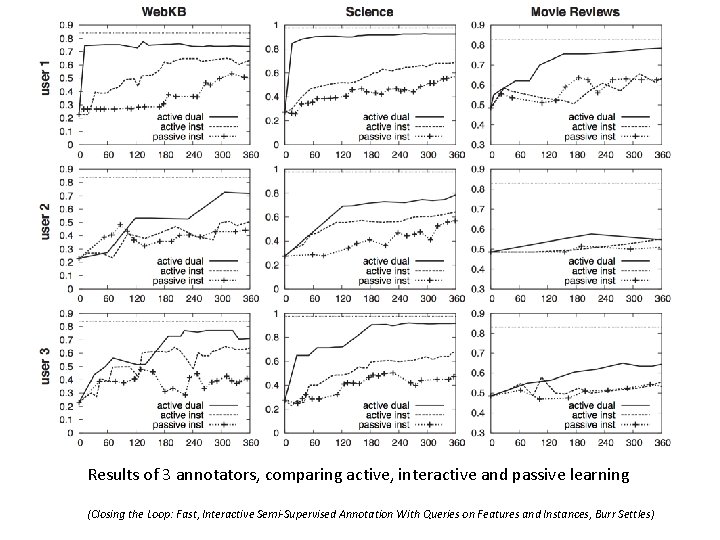
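Feature suggestion by information gain can be sketched as follows. This sketch assumes information gain is computed as the mutual information between a word's presence in a document and the document's class, and it uses hard class labels for simplicity; a real system might use the classifier's predicted labels for unlabeled documents instead. Function names and data are hypothetical.

```python
import math
from collections import Counter

def info_gain(docs, labels, word):
    """Mutual information between presence of `word` and the class label.

    docs: list of token sets, labels: list of class names of the same length.
    """
    n = len(docs)
    joint = Counter((word in doc, y) for doc, y in zip(docs, labels))
    presence = Counter(word in doc for doc in docs)
    classes = Counter(labels)
    ig = 0.0
    for (present, y), count in joint.items():
        p_xy = count / n
        ig += p_xy * math.log(p_xy / ((presence[present] / n) * (classes[y] / n)))
    return ig

def suggest_features(docs, labels, vocabulary, top_v):
    """Return the top_v (word, class) pairs, pairing each word with its most frequent class.

    Assumes every word in `vocabulary` occurs in at least one document.
    """
    ranked = sorted(vocabulary, key=lambda w: info_gain(docs, labels, w), reverse=True)
    suggestions = []
    for w in ranked[:top_v]:
        by_class = Counter(y for doc, y in zip(docs, labels) if w in doc)
        suggestions.append((w, by_class.most_common(1)[0][0]))
    return suggestions

docs = [{"game", "playoffs"}, {"game", "score"}, {"vote", "election"}, {"vote", "game"}]
labels = ["sports", "sports", "politics", "politics"]
vocabulary = ["election", "game", "playoffs", "score", "vote"]
suggested = suggest_features(docs, labels, vocabulary, top_v=1)
```

In this toy corpus "vote" appears in exactly the politics documents, so it has the highest information gain and is suggested for the politics class.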

Results of 3 annotators, comparing active, interactive and passive learning (Closing the Loop: Fast, Interactive Semi-Supervised Annotation With Queries on Features and Instances, Burr Settles)