Supercomputer End Users: the OptIPuter Killer Application

- Slides: 45

Supercomputer End Users: the OptIPuter Killer Application. Keynote, DREN Networking and Security Conference, San Diego, CA, August 13, 2008. Dr. Larry Smarr, Director, California Institute for Telecommunications and Information Technology; Harry E. Gruber Professor, Dept. of Computer Science and Engineering, Jacobs School of Engineering, UCSD

Abstract: During the last few years, a radical restructuring of optical networks supporting e-Science projects has occurred around the world. U.S. universities are beginning to acquire access to high-bandwidth lightwaves (termed "lambdas") on fiber optics through the National LambdaRail, Internet2's Circuit Services, and the Global Lambda Integrated Facility. The NSF-funded OptIPuter project explores how user-controlled 1- or 10-Gbps lambdas can provide direct access to global data repositories, scientific instruments, and computational resources from the researcher's Linux clusters in their campus laboratories. These end-user clusters are reconfigured as "OptIPortals," providing the end user with local scalable visualization, computing, and storage. Integrating high-definition video with OptIPortals creates a high-performance collaboration workspace of global reach. A major new user community is emerging: end users of NSF's TeraGrid and DOD's HPCMP. These users can connect optically and directly to remote terascale or petascale resources from their local laboratories. They can also bring disciplinary experts from multiple sites into the local data and visualization analysis process.

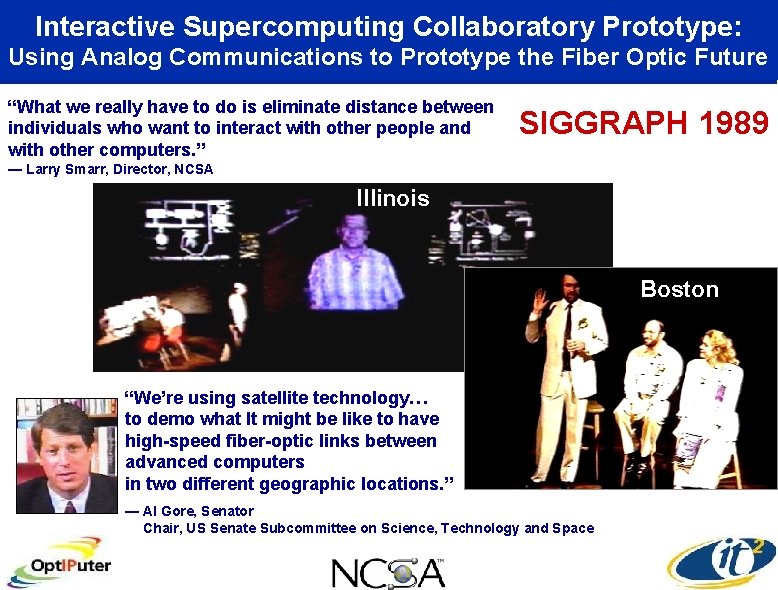

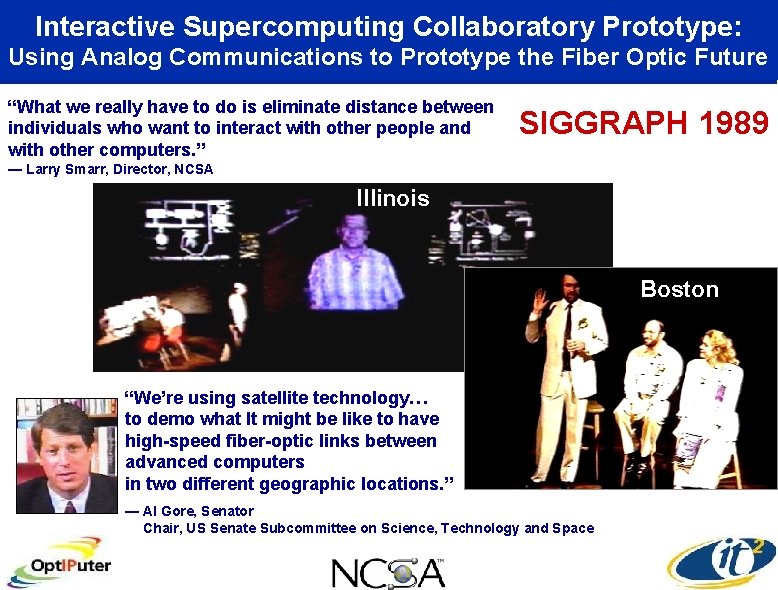

Interactive Supercomputing Collaboratory Prototype: Using Analog Communications to Prototype the Fiber Optic Future. “What we really have to do is eliminate distance between individuals who want to interact with other people and with other computers.” (Larry Smarr, Director, NCSA; SIGGRAPH 1989, Illinois and Boston) “We’re using satellite technology… to demo what it might be like to have high-speed fiber-optic links between advanced computers in two different geographic locations.” (Al Gore, Senator; Chair, US Senate Subcommittee on Science, Technology and Space)

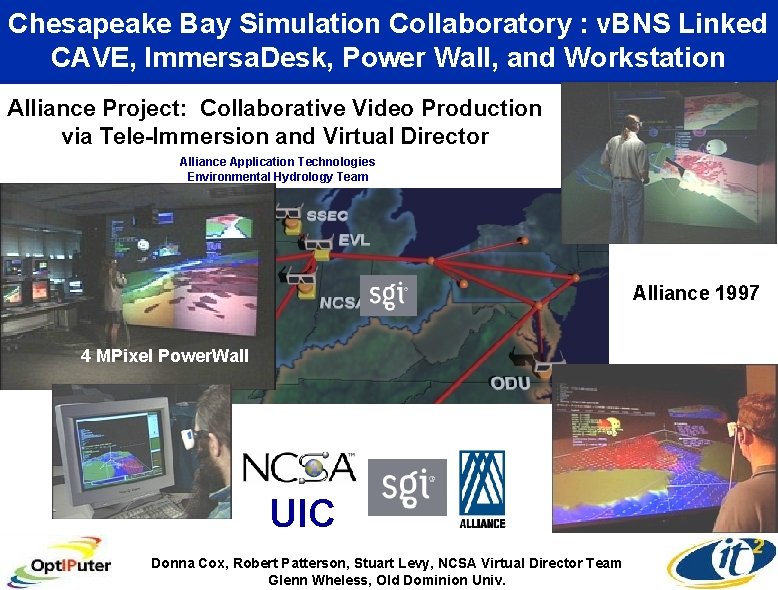

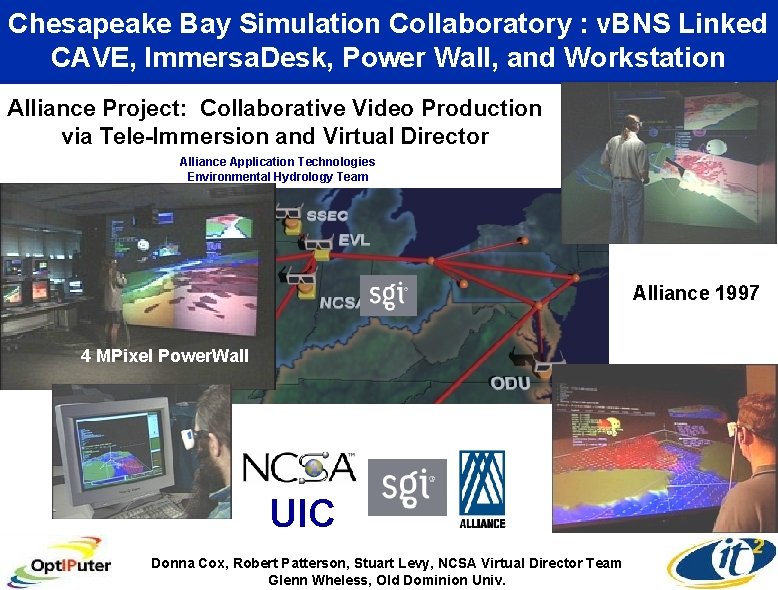

Chesapeake Bay Simulation Collaboratory: vBNS Linked CAVE, ImmersaDesk, PowerWall, and Workstation. Alliance Project: Collaborative Video Production via Tele-Immersion and Virtual Director. Alliance Application Technologies Environmental Hydrology Team, Alliance 1997. 4 MPixel PowerWall, UIC. Donna Cox, Robert Patterson, Stuart Levy, NCSA Virtual Director Team. Glenn Wheless, Old Dominion Univ.

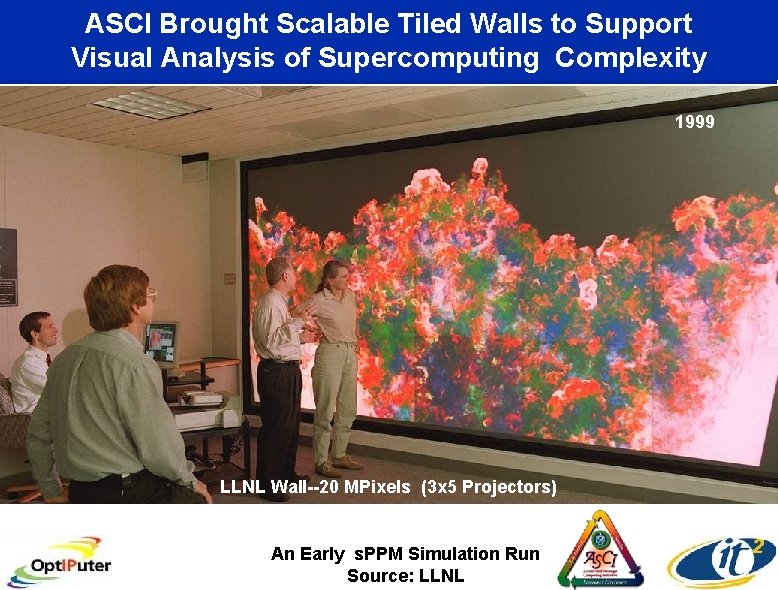

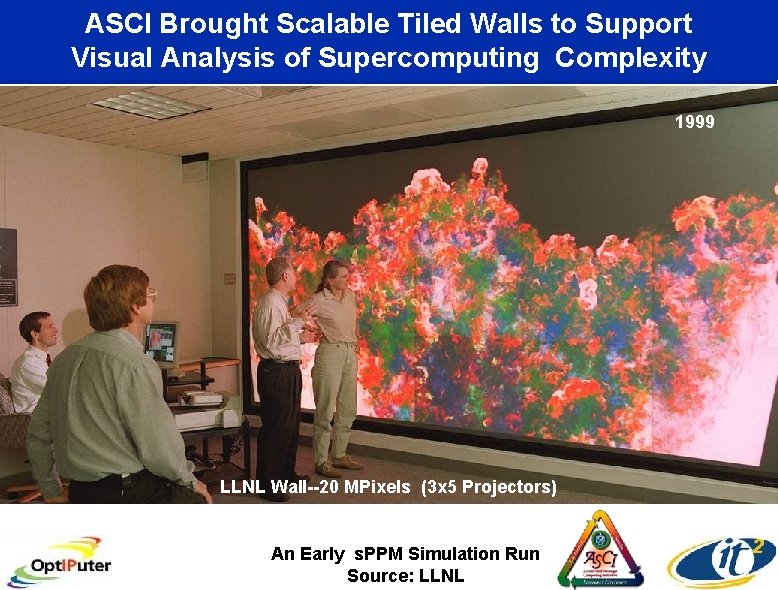

ASCI Brought Scalable Tiled Walls to Support Visual Analysis of Supercomputing Complexity. 1999 LLNL Wall: 20 MPixels (3 x 5 Projectors). An Early sPPM Simulation Run. Source: LLNL

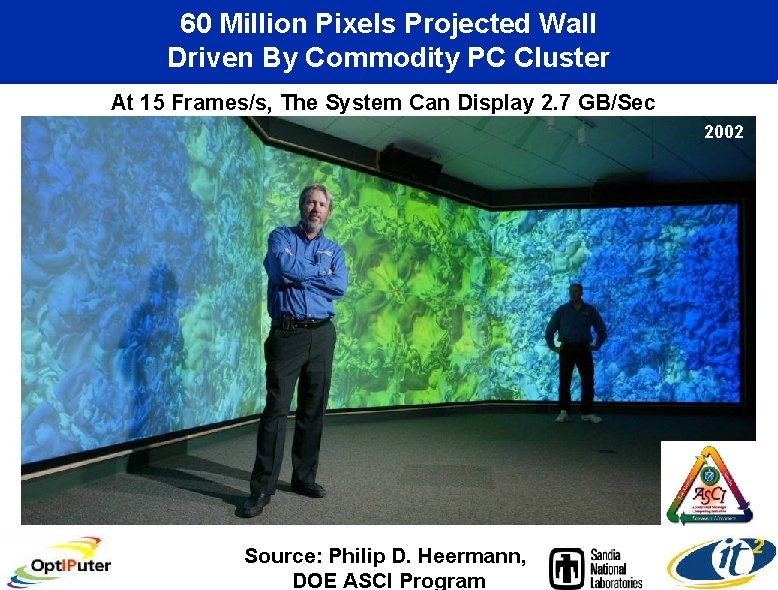

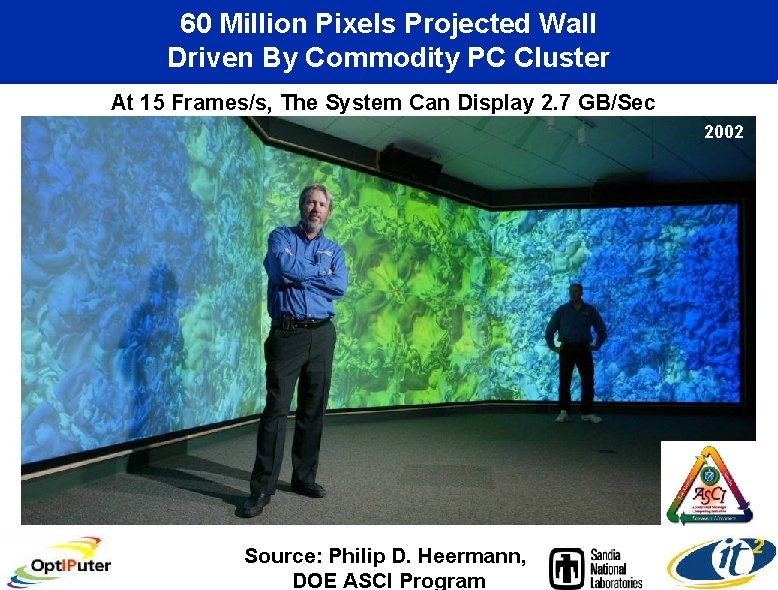

60 Million Pixels Projected Wall Driven by Commodity PC Cluster. At 15 Frames/s, the System Can Display 2.7 GB/s. 2002. Source: Philip D. Heermann, DOE ASCI Program
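
The 2.7 GB/s figure is the product of pixel count, frame rate, and bytes per pixel. Here is a minimal check. It assumes 24-bit RGB (3 bytes per pixel), which the slide does not state.

```python
def display_bandwidth_bytes_per_s(pixels: int, fps: float, bytes_per_pixel: int = 3) -> float:
    """Raw (uncompressed) pixel bandwidth needed to refresh a display.

    bytes_per_pixel=3 assumes 24-bit RGB; this is an assumption,
    not a figure taken from the slide.
    """
    return pixels * fps * bytes_per_pixel


rate = display_bandwidth_bytes_per_s(pixels=60_000_000, fps=15)
print(f"{rate / 1e9:.1f} GB/s")  # 60e6 pixels * 15 frames/s * 3 bytes = 2.7e9 bytes/s
```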

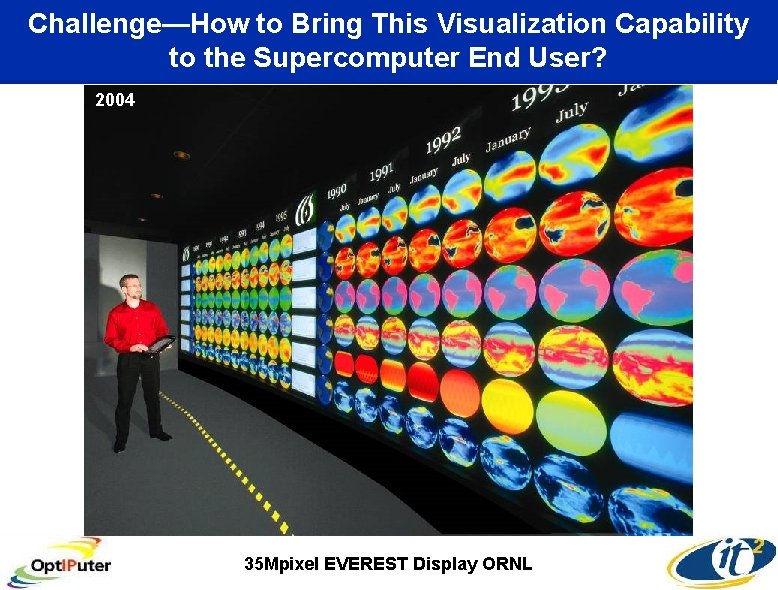

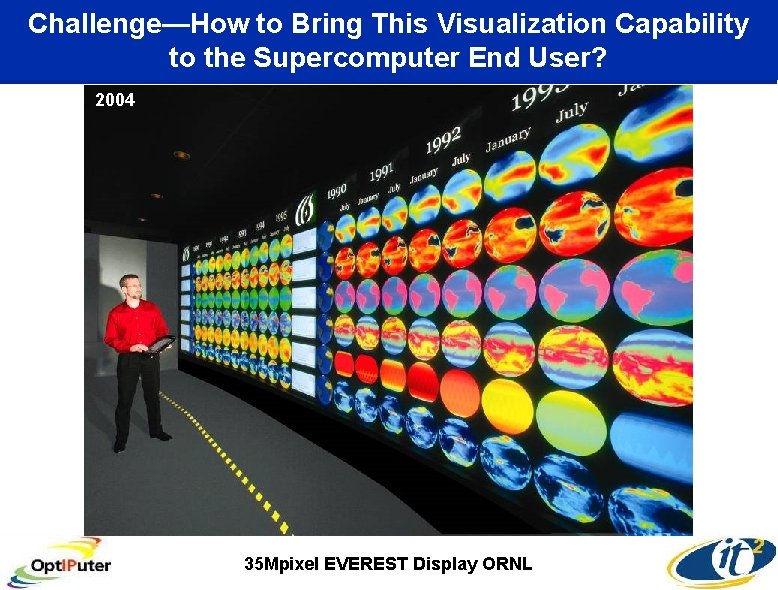

Challenge: How to Bring This Visualization Capability to the Supercomputer End User? 2004: 35 Mpixel EVEREST Display, ORNL

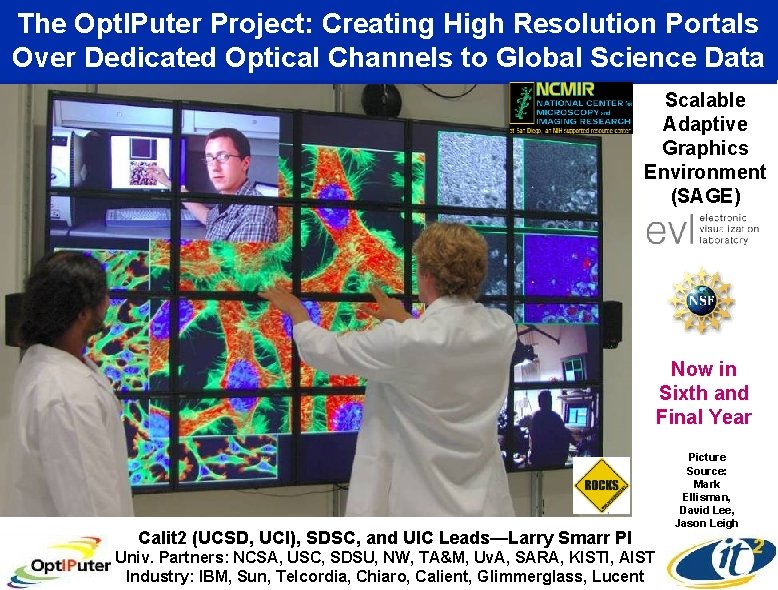

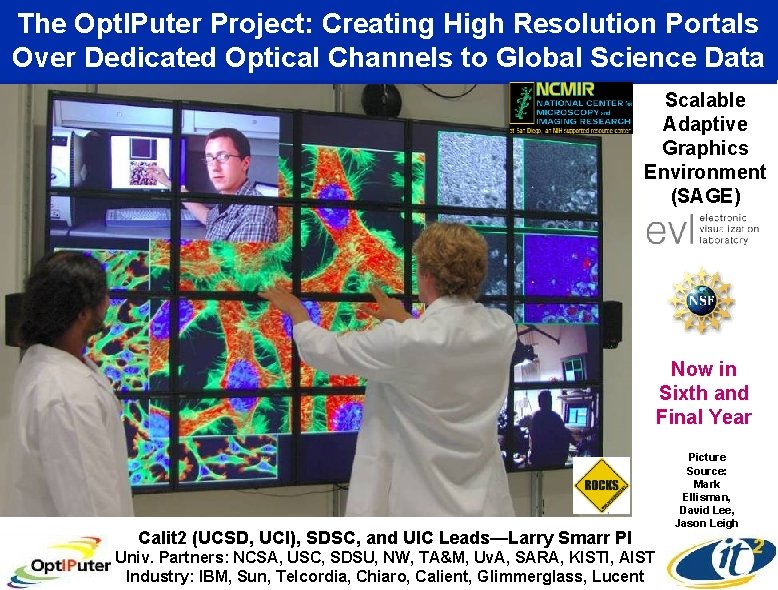

The OptIPuter Project: Creating High Resolution Portals Over Dedicated Optical Channels to Global Science Data. Scalable Adaptive Graphics Environment (SAGE). Now in Sixth and Final Year. Calit2 (UCSD, UCI), SDSC, and UIC Leads; Larry Smarr, PI. Univ. Partners: NCSA, USC, SDSU, NW, TA&M, UvA, SARA, KISTI, AIST. Industry: IBM, Sun, Telcordia, Chiaro, Calient, Glimmerglass, Lucent. Picture Source: Mark Ellisman, David Lee, Jason Leigh

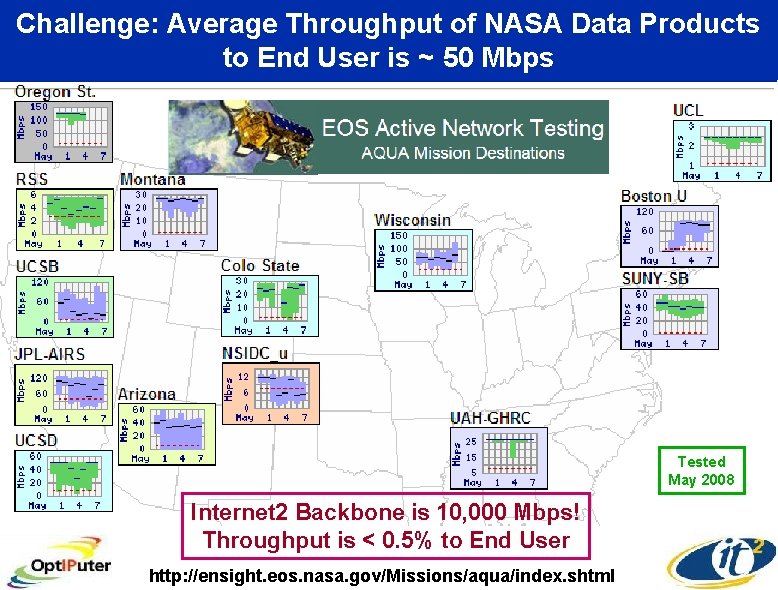

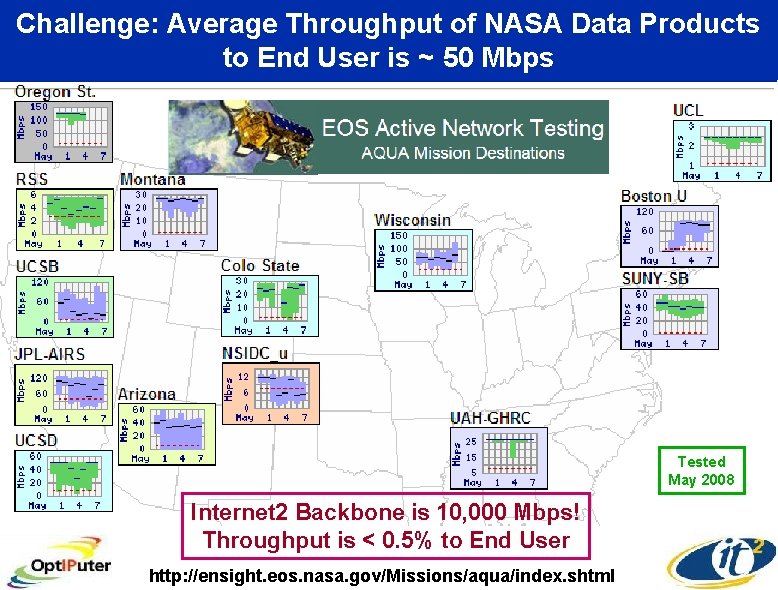

Challenge: Average Throughput of NASA Data Products to End User is ~50 Mbps (Tested May 2008). Internet2 Backbone is 10,000 Mbps! Throughput to End User is < 0.5% of Backbone. http://ensight.eos.nasa.gov/Missions/aqua/index.shtml
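
This sketch checks the gap with the slide's rounded figures. At exactly 50 Mbps, the end user sees 0.5% of a 10,000 Mbps backbone. The same ratio, inverted, gives the ~200x advantage of a dedicated 10 Gbps lambda over the shared path. The function name is illustrative.

```python
def utilization(end_user_mbps: float, backbone_mbps: float) -> float:
    """Fraction of backbone capacity that actually reaches the end user."""
    return end_user_mbps / backbone_mbps


end_user = 50.0      # ~50 Mbps average NASA data-product throughput (May 2008 test)
backbone = 10_000.0  # 10,000 Mbps Internet2 backbone

print(f"utilization: {utilization(end_user, backbone):.1%}")            # 0.5%
print(f"10 Gbps lambda vs. measured path: {backbone / end_user:.0f}x")  # 200x
```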

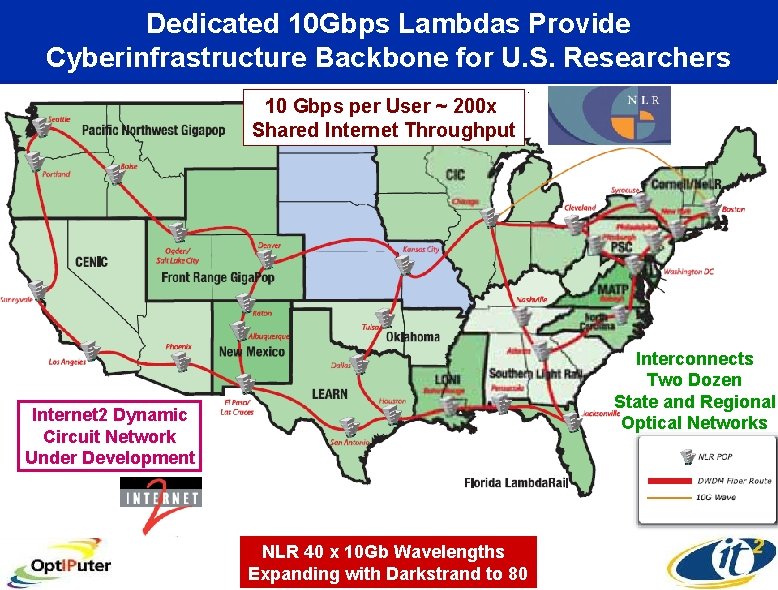

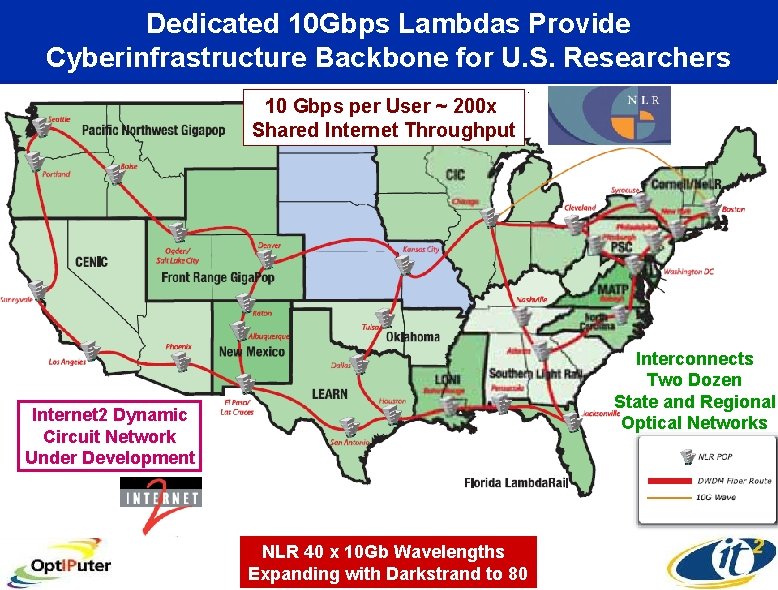

Dedicated 10 Gbps Lambdas Provide Cyberinfrastructure Backbone for U.S. Researchers. 10 Gbps per User, ~200x Shared Internet Throughput. Interconnects Two Dozen State and Regional Optical Networks. Internet2 Dynamic Circuit Network Under Development. NLR: 40 x 10 Gb Wavelengths, Expanding with Darkstrand to 80

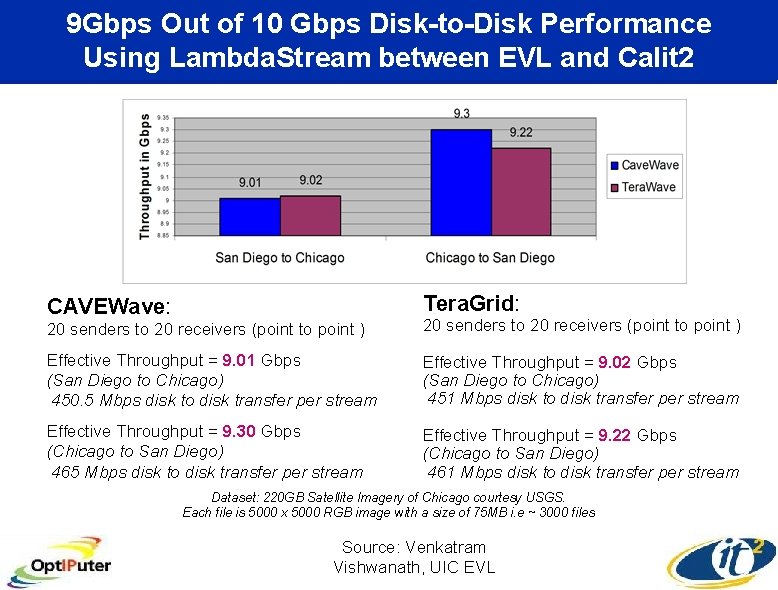

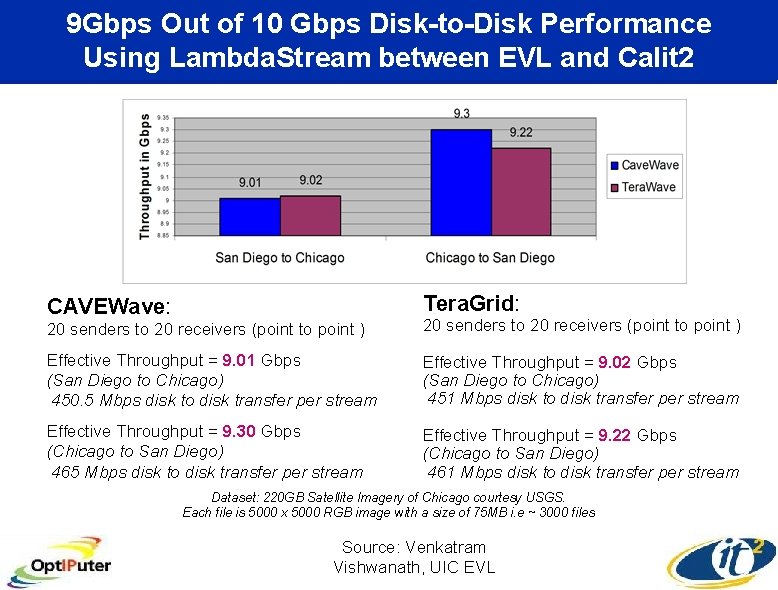

9 Gbps Out of 10 Gbps Disk-to-Disk Performance Using LambdaStream between EVL and Calit2. Networks: TeraGrid and CAVEWave; 20 senders to 20 receivers (point to point). Effective Throughput = 9.01 Gbps (San Diego to Chicago), 450.5 Mbps disk-to-disk transfer per stream. Effective Throughput = 9.02 Gbps (San Diego to Chicago), 451 Mbps per stream. Effective Throughput = 9.30 Gbps (Chicago to San Diego), 465 Mbps per stream. Effective Throughput = 9.22 Gbps (Chicago to San Diego), 461 Mbps per stream. Dataset: 220 GB Satellite Imagery of Chicago, courtesy USGS. Each file is a 5000 x 5000 RGB image with a size of 75 MB, i.e., ~3000 files. Source: Venkatram Vishwanath, UIC EVL
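
These numbers are internally consistent. Twenty parallel streams at the per-stream rate give each effective throughput. A 5000 x 5000 RGB file is 75 MB, so 220 GB is roughly 2,900 files. This sketch assumes decimal units (1 GB = 10^9 bytes, 1 MB = 10^6 bytes) and 3 bytes per RGB pixel. The transfer time is an idealized estimate that ignores setup and disk effects.

```python
STREAMS = 20  # 20 senders to 20 receivers, point to point

# (direction, per-stream Mbps) pairs as reported on the slide
runs = [
    ("San Diego -> Chicago", 450.5),
    ("San Diego -> Chicago", 451.0),
    ("Chicago -> San Diego", 465.0),
    ("Chicago -> San Diego", 461.0),
]

for direction, per_stream_mbps in runs:
    aggregate_gbps = STREAMS * per_stream_mbps / 1000
    print(f"{direction}: {aggregate_gbps:.2f} Gbps")

file_bytes = 5000 * 5000 * 3          # one RGB image, 3 bytes/pixel: 75,000,000 bytes
dataset_bytes = 220e9                 # 220 GB (decimal units assumed)
n_files = dataset_bytes / file_bytes  # ~2,933, i.e. "~3000 files"

# Idealized time to move the whole dataset at 9.01 Gbps
seconds = dataset_bytes * 8 / 9.01e9
print(f"{n_files:.0f} files, ~{seconds:.0f} s at 9.01 Gbps")
```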

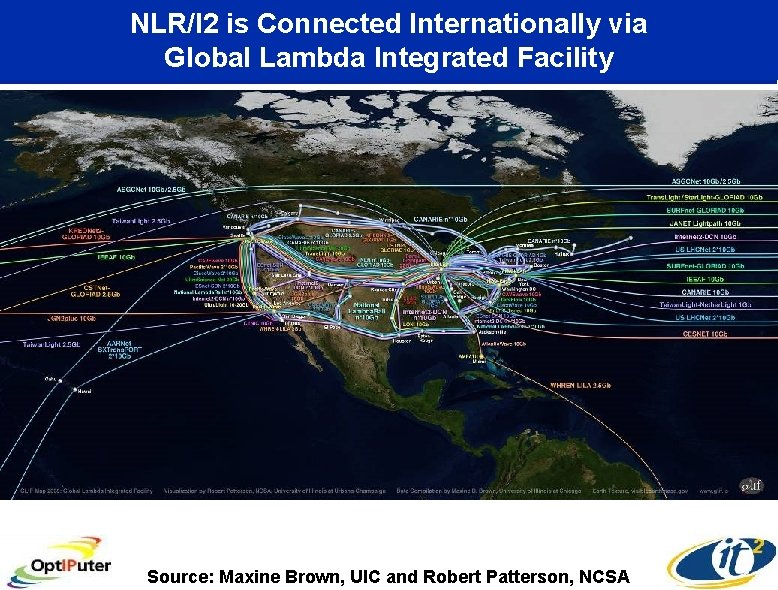

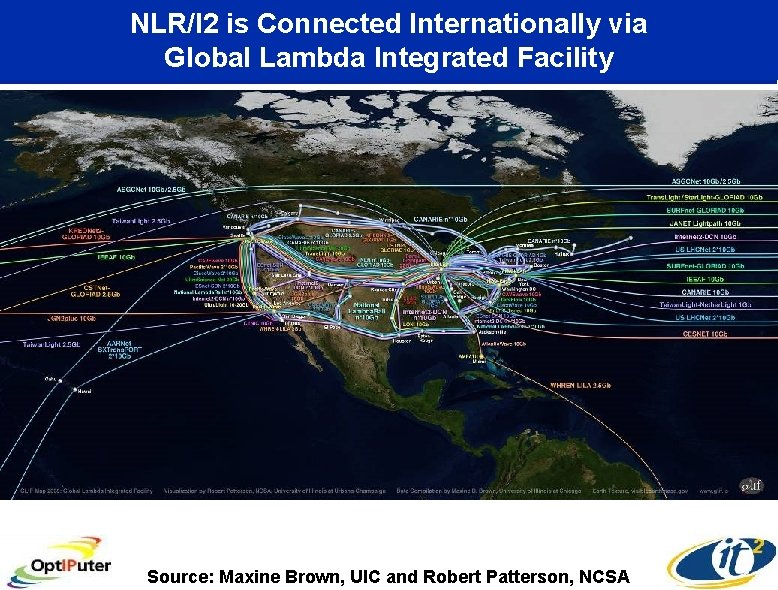

NLR/I2 Is Connected Internationally via Global Lambda Integrated Facility. Source: Maxine Brown, UIC and Robert Patterson, NCSA

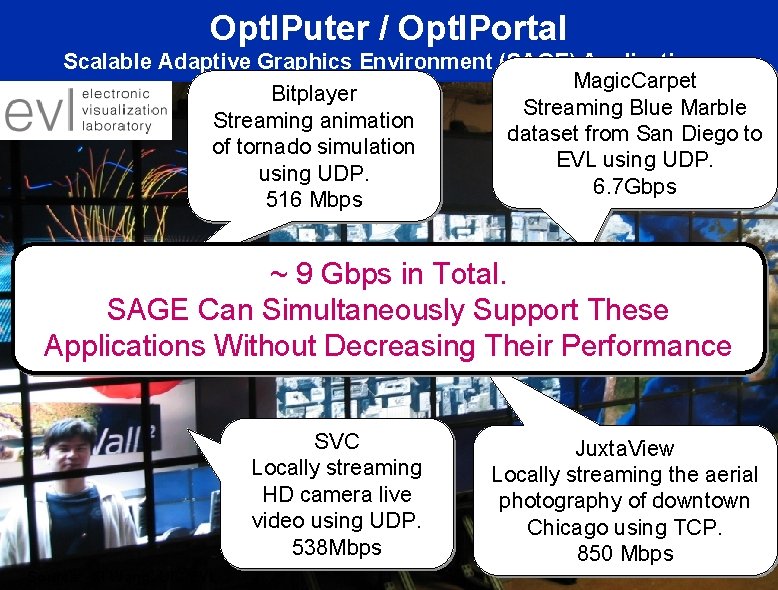

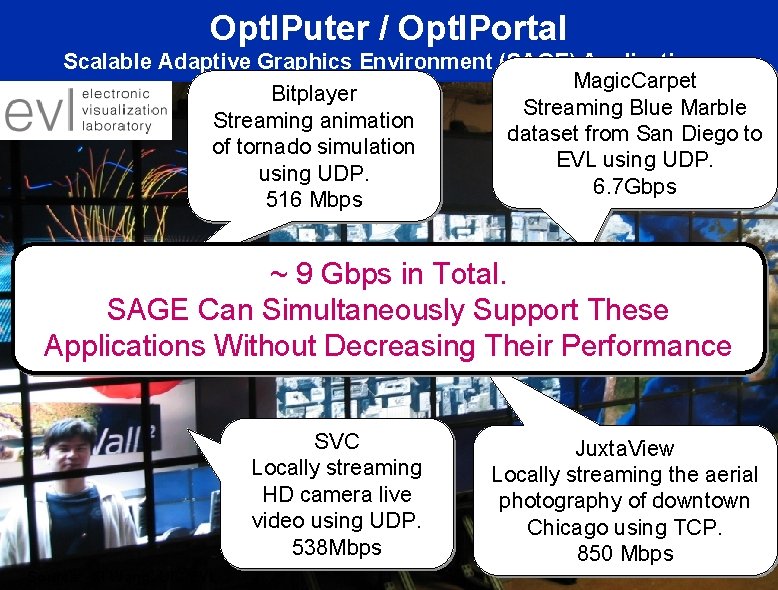

OptIPuter/OptIPortal Scalable Adaptive Graphics Environment (SAGE) Applications. MagicCarpet: Streaming Blue Marble dataset from San Diego to EVL using UDP, 6.7 Gbps. Bitplayer: Streaming animation of tornado simulation, 516 Mbps. SVC: Locally streaming HD camera live video using UDP, 538 Mbps. JuxtaView: Locally streaming the aerial photography of downtown Chicago using TCP, 850 Mbps. ~9 Gbps in Total: SAGE Can Simultaneously Support These Applications Without Decreasing Their Performance. Source: Xi Wang, UIC/EVL
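
Summing the four per-application rates recovers the "~9 Gbps in Total" figure, which is 8.6 Gbps before rounding. The dictionary labels are descriptive only.

```python
# Per-application streaming rates from the slide, in Mbps
sage_streams_mbps = {
    "MagicCarpet (Blue Marble, San Diego to EVL, UDP)": 6700,
    "Bitplayer (tornado simulation animation)": 516,
    "SVC (local HD camera video, UDP)": 538,
    "JuxtaView (aerial photography of Chicago, TCP)": 850,
}

total_gbps = sum(sage_streams_mbps.values()) / 1000
print(f"aggregate: {total_gbps:.2f} Gbps")  # 8.60 Gbps, reported as ~9 Gbps
```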

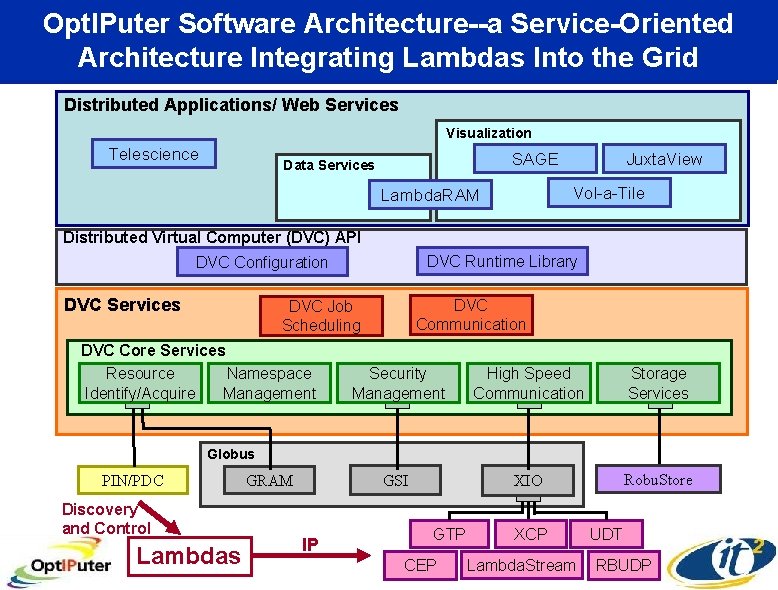

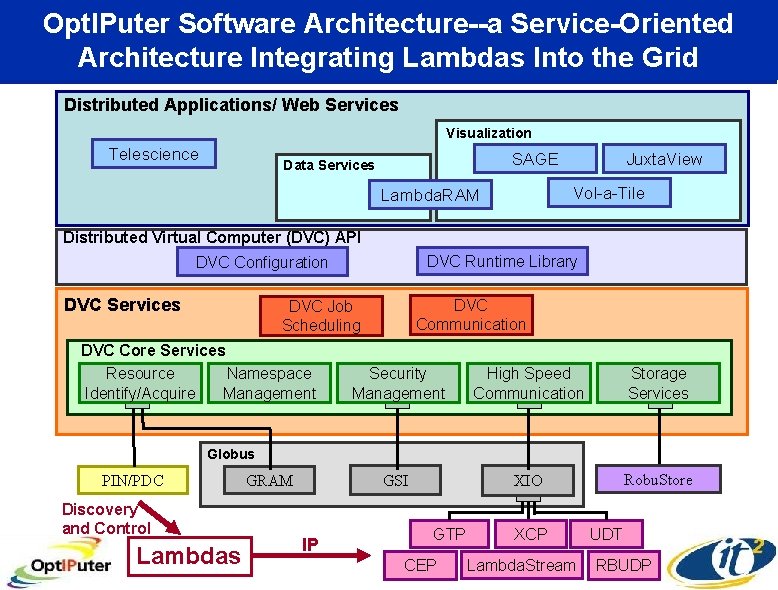

OptIPuter Software Architecture: a Service-Oriented Architecture Integrating Lambdas Into the Grid. Distributed Applications/Web Services: Visualization, Telescience, Data Services (SAGE, JuxtaView, Vol-a-Tile, LambdaRAM). Distributed Virtual Computer (DVC) API. DVC Runtime Library. DVC Services: DVC Configuration, DVC Communication, DVC Job Scheduling. DVC Core Services: Resource Identify/Acquire, Namespace Management, Security Management, High Speed Communication, Storage Services. Globus (GSI, XIO, GRAM), RobuStore, PIN/PDC Discovery and Control, Lambdas. Protocols: IP, GTP, CEP, XCP, LambdaStream, UDT, RBUDP
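
RBUDP (Reliable Blast UDP) is one of the transport protocols at the bottom of this stack. It works roughly as follows. The sender blasts every datagram over UDP. The receiver then reports over a TCP control channel which datagrams arrived, typically as a bitmap. The sender resends only the missing datagrams and repeats until none remain.

Below is a minimal single-process simulation of that recovery loop, not a network implementation. The lossy channel, the 5% default loss rate, and the function names `blast` and `rbudp_transfer` are hypothetical.

```python
import random


def blast(seqs, loss_rate, rng):
    """Return the sequence numbers that survive one simulated UDP blast.

    Hypothetical lossy channel: each datagram is dropped independently
    with probability loss_rate.
    """
    return {s for s in seqs if rng.random() >= loss_rate}


def rbudp_transfer(n_packets, loss_rate=0.05, seed=0, max_rounds=100):
    """Simulate an RBUDP-style transfer and return the number of blast rounds."""
    rng = random.Random(seed)
    received = set()
    missing = list(range(n_packets))  # round 1 sends every datagram
    rounds = 0
    while missing and rounds < max_rounds:
        rounds += 1
        received |= blast(missing, loss_rate, rng)
        # In RBUDP the receiver returns this information over TCP;
        # the sender then resends only the datagrams still missing.
        missing = [s for s in range(n_packets) if s not in received]
    if missing:
        raise RuntimeError(f"{len(missing)} datagrams lost after {max_rounds} rounds")
    return rounds


print(rbudp_transfer(10_000))
```

Each round resends only what was lost. With independent loss, the missing set therefore shrinks geometrically, so a handful of rounds usually suffices.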

Two New Calit2 Buildings Provide New Laboratories for “Living in the Future” • “Convergence” Laboratory Facilities – Nanotech, BioMEMS, Chips, Radio, Photonics – Virtual Reality, Digital Cinema, HDTV, Gaming • Over 1000 Researchers in Two Buildings – Linked via Dedicated Optical Networks. UC Irvine. www.calit2.net. Preparing for a World in Which Distance is Eliminated…

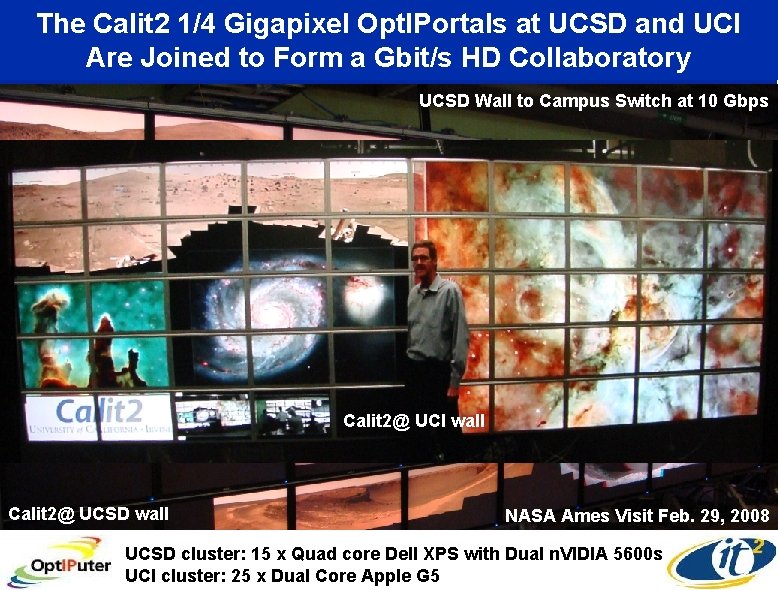

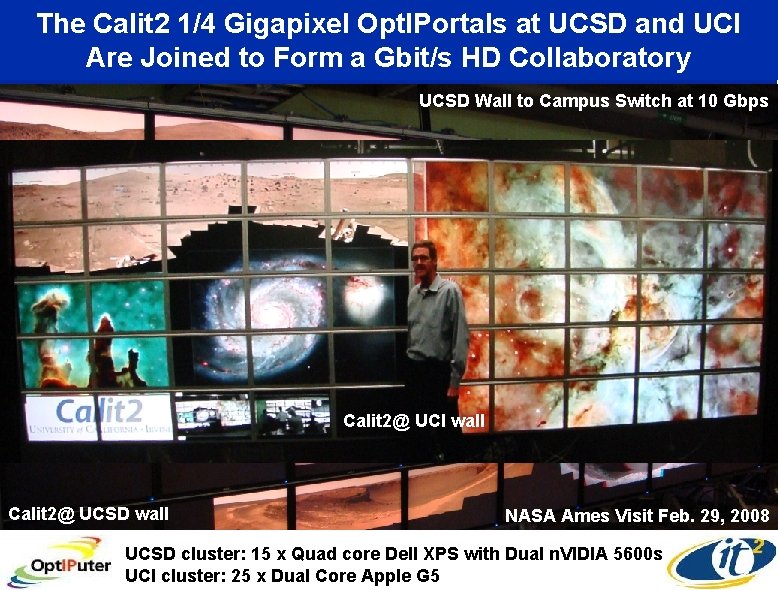

The Calit2 1/4 Gigapixel OptIPortals at UCSD and UCI Are Joined to Form a Gbit/s HD Collaboratory. UCSD Wall to Campus Switch at 10 Gbps. Calit2@UCI wall; Calit2@UCSD wall. NASA Ames Visit, Feb. 29, 2008. UCSD cluster: 15 x Quad-core Dell XPS with Dual NVIDIA 5600s. UCI cluster: 25 x Dual-core Apple G5

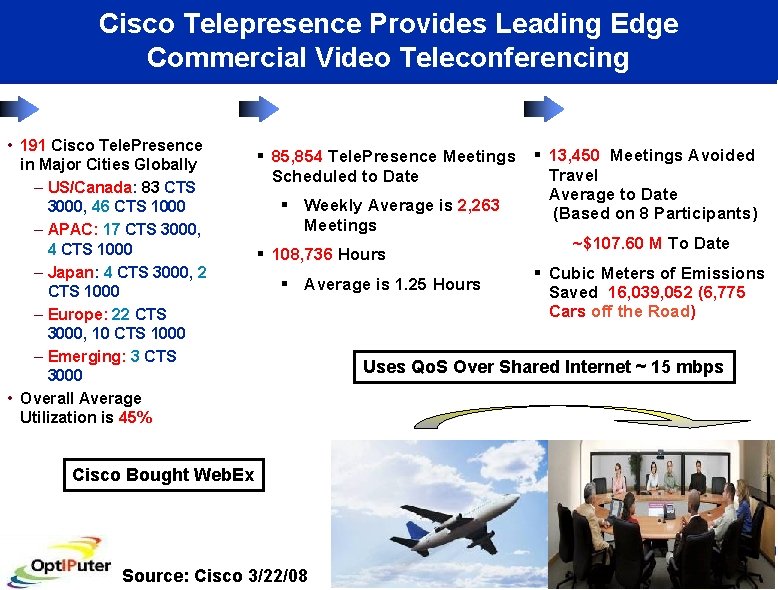

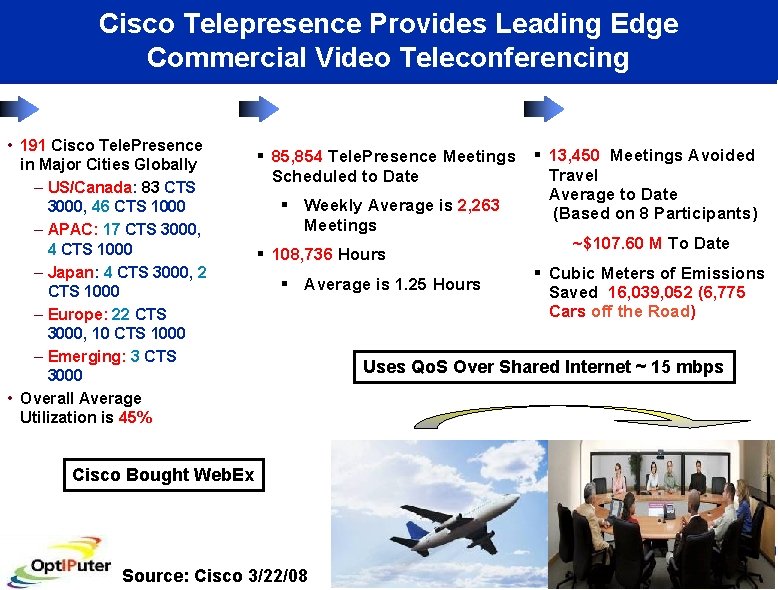

Cisco TelePresence Provides Leading-Edge Commercial Video Teleconferencing • 191 Cisco TelePresence Systems in Major Cities Globally – US/Canada: 83 CTS 3000, 46 CTS 1000 – APAC: 17 CTS 3000, 4 CTS 1000 – Japan: 4 CTS 3000, 2 CTS 1000 – Europe: 22 CTS 3000, 10 CTS 1000 – Emerging: 3 CTS 3000 • Overall Average Utilization is 45% § 85,854 TelePresence Meetings Scheduled to Date § Weekly Average is 2,263 Meetings § 108,736 Hours § Average is 1.25 Hours § 13,450 Meetings Avoided Travel; Average to Date (Based on 8 Participants) ~$107.60M § Cubic Meters of Emissions Saved: 16,039,052 (6,775 Cars off the Road). Uses QoS Over Shared Internet, ~15 Mbps. Cisco Bought WebEx. Source: Cisco, 3/22/08
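
The regional counts add up to the 191 systems cited. This tally treats the "Emerging" region as having zero CTS 1000 units, since none are listed for it; that is an assumption.

```python
# (CTS 3000, CTS 1000) counts per region, from Cisco, 3/22/08
deployments = {
    "US/Canada": (83, 46),
    "APAC": (17, 4),
    "Japan": (4, 2),
    "Europe": (22, 10),
    "Emerging": (3, 0),  # no CTS 1000 count listed; 0 assumed
}

cts3000 = sum(a for a, _ in deployments.values())
cts1000 = sum(b for _, b in deployments.values())
print(cts3000, cts1000, cts3000 + cts1000)  # 129 62 191
```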

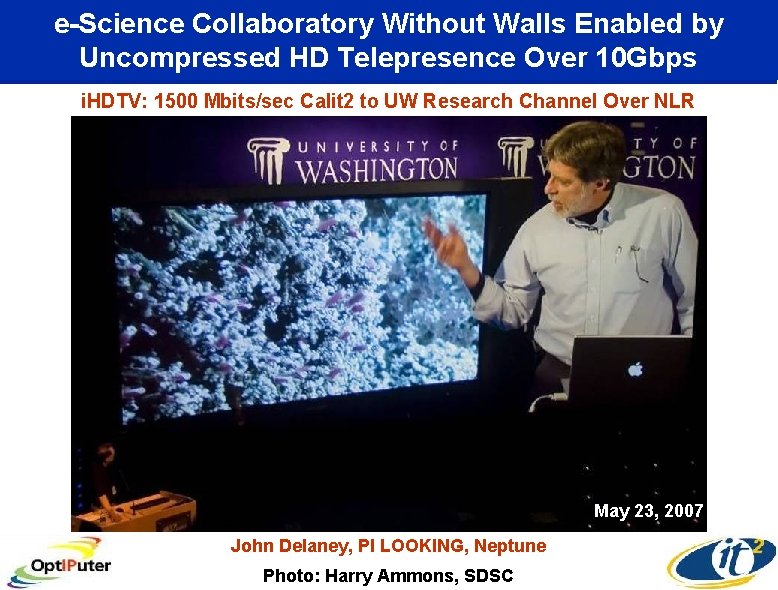

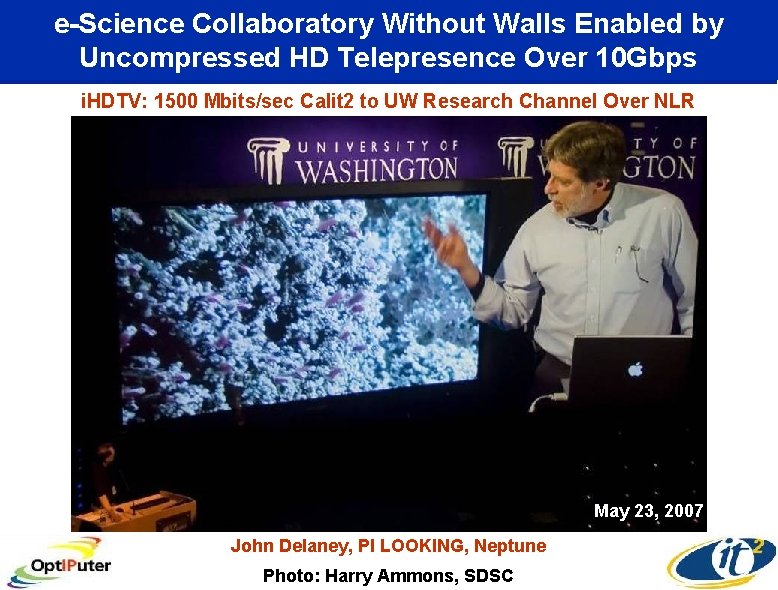

e-Science Collaboratory Without Walls Enabled by Uncompressed HD Telepresence Over 10 Gbps. iHDTV: 1500 Mbits/sec, Calit2 to UW Research Channel Over NLR, May 23, 2007. John Delaney, PI, LOOKING, Neptune. Photo: Harry Ammons, SDSC
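
Uncompressed HD needs roughly 1.5 Gbit/s, as a back-of-the-envelope estimate shows. The estimate assumes 1920 x 1080 frames at 30 frames/s and 24 bits per pixel. None of these parameters is specified on the slide, and real HD-SDI framing and chroma subsampling differ. The result is about 100 times the ~15 Mbps that commercial telepresence uses over the shared Internet.

```python
def uncompressed_video_bps(width, height, fps, bits_per_pixel):
    """Raw video bit rate with no compression and no framing overhead."""
    return width * height * fps * bits_per_pixel


# Assumed format: 1080-line HD, 30 frames/s, 24-bit color
hd_bps = uncompressed_video_bps(1920, 1080, 30, 24)
print(f"uncompressed HD: {hd_bps / 1e6:.0f} Mbit/s")       # ~1493 Mbit/s
print(f"vs. ~15 Mbps telepresence: {hd_bps / 15e6:.0f}x")  # ~100x
```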

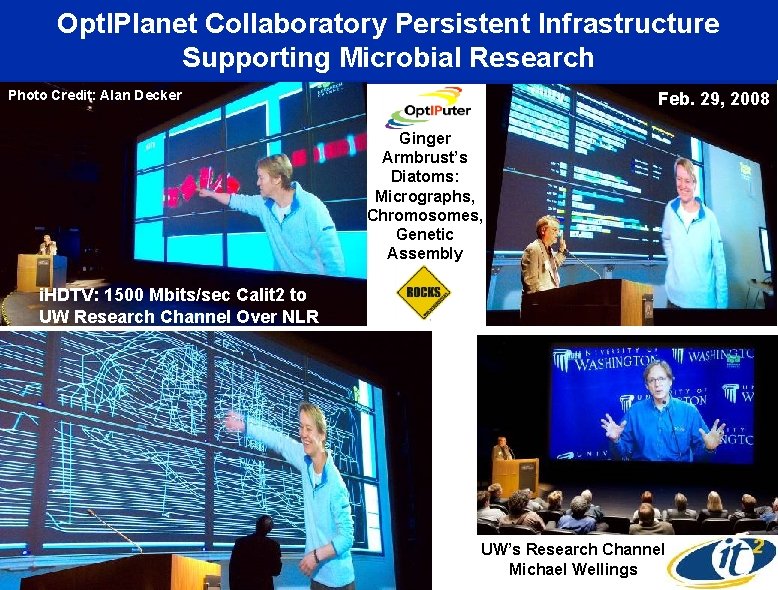

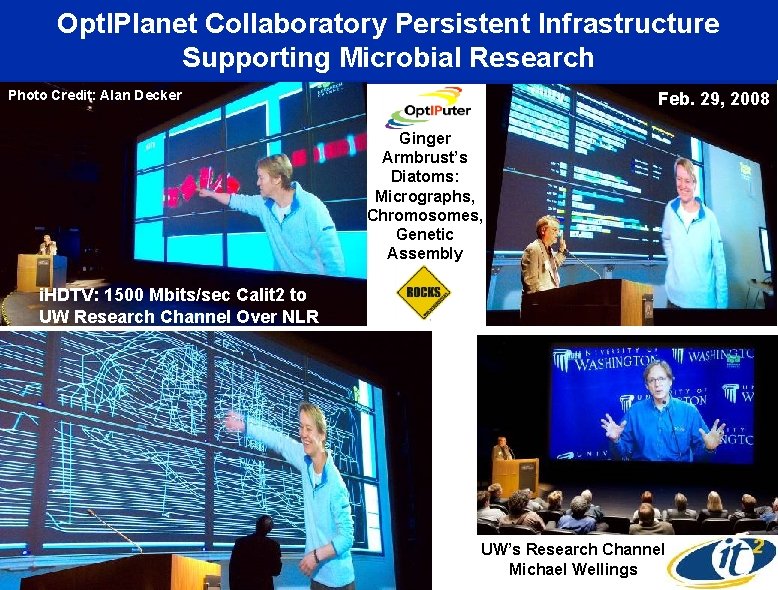

OptIPlanet Collaboratory: Persistent Infrastructure Supporting Microbial Research. Photo Credit: Alan Decker, Feb. 29, 2008. Ginger Armbrust’s Diatoms: Micrographs, Chromosomes, Genetic Assembly. iHDTV: 1500 Mbits/sec, Calit2 to UW Research Channel Over NLR. UW’s Research Channel: Michael Wellings

OptIPortals Are Being Adopted Globally: AIST (Japan), Osaka U (Japan), NCHC (Taiwan), KISTI (Korea), CNIC (China), UZurich, SARA (Netherlands), Brno (Czech Republic), EVL@UIC, Calit2@UCSD, Calit2@UCI, U. Melbourne (Australia)

Green Initiative: Can Optical Fiber Replace Airline Travel for Continuing Collaborations? Source: Maxine Brown, OptIPuter Project Manager

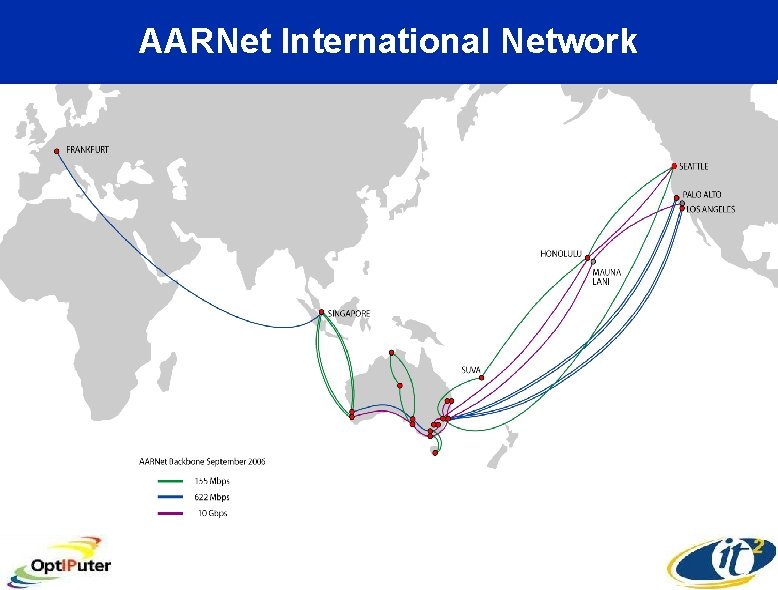

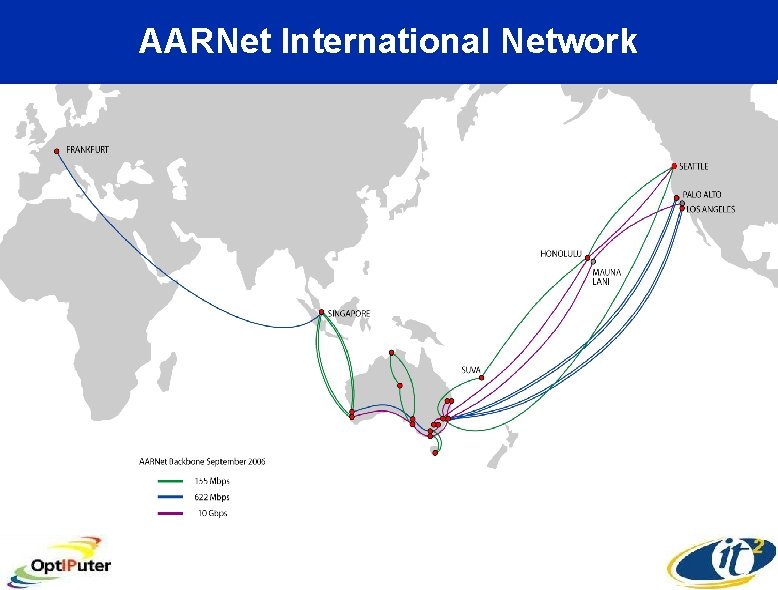

AARNet International Network

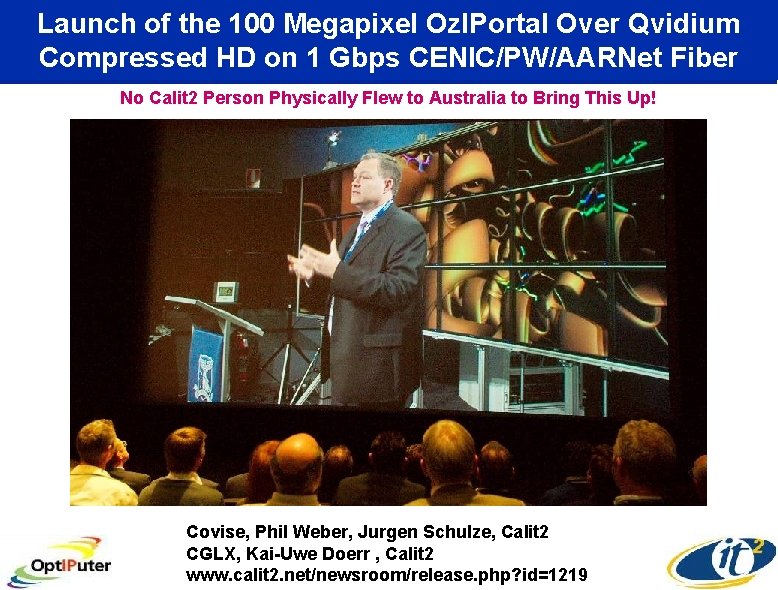

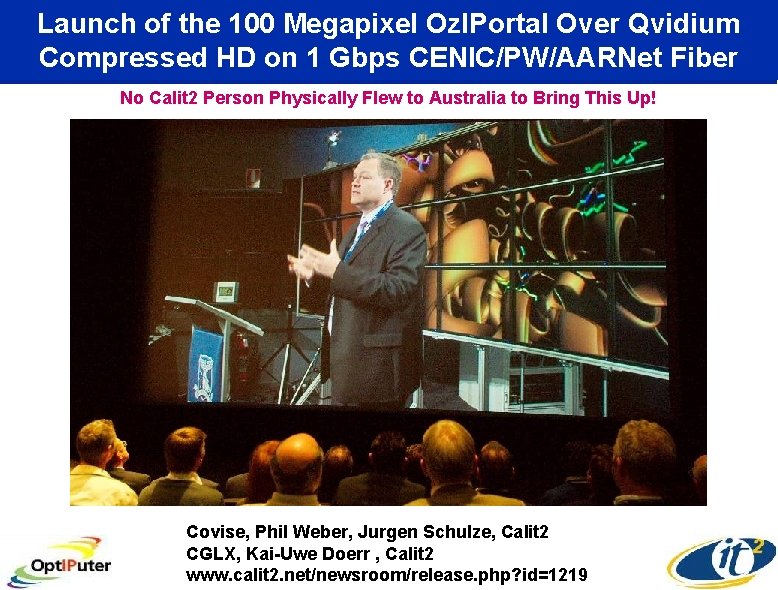

Launch of the 100 Megapixel OzIPortal Over Qvidium Compressed HD on 1 Gbps CENIC/PW/AARNet Fiber. No Calit2 Person Physically Flew to Australia to Bring This Up! January 15, 2008. Covise: Phil Weber, Jurgen Schulze, Calit2. CGLX: Kai-Uwe Doerr, Calit2. www.calit2.net/newsroom/release.php?id=1219

Victoria Premier and Australian Deputy Prime Minister Asking Questions

University of Melbourne Vice Chancellor Glyn Davis in Calit2 Replies to Question from Australia

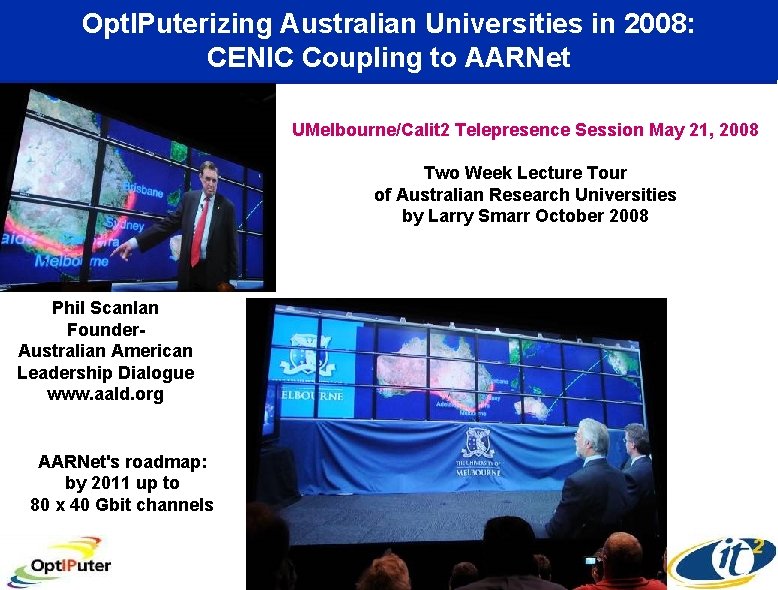

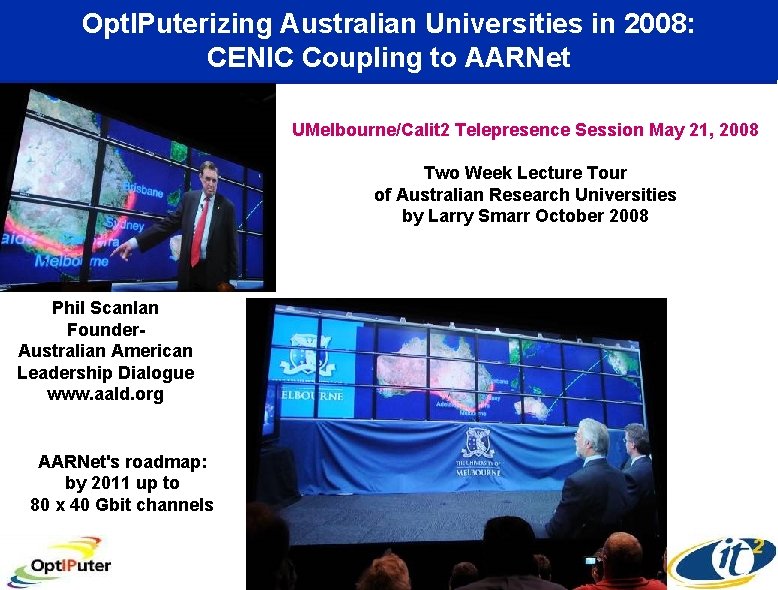

OptIPuterizing Australian Universities in 2008: CENIC Coupling to AARNet. UMelbourne/Calit2 Telepresence Session, May 21, 2008. Two-Week Lecture Tour of Australian Research Universities by Larry Smarr, October 2008. Phil Scanlan, Founder, Australian American Leadership Dialogue, www.aald.org. AARNet's roadmap: by 2011, up to 80 x 40 Gbit channels

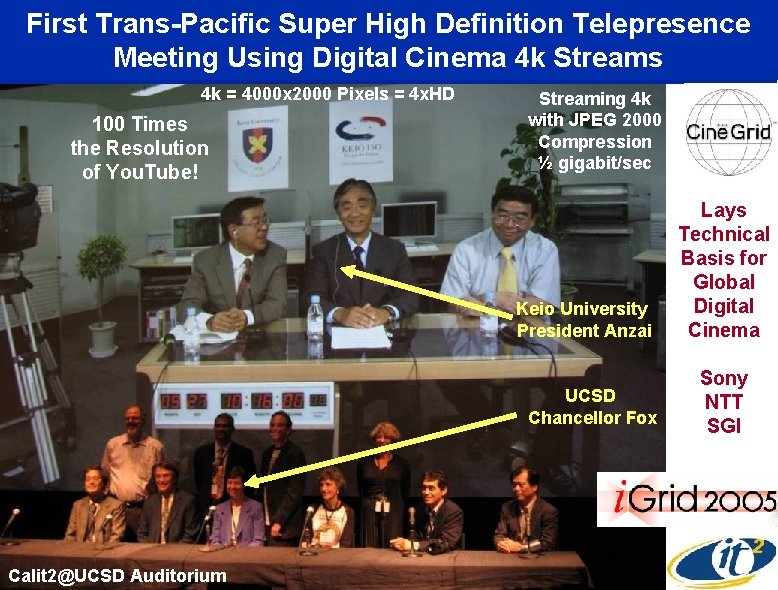

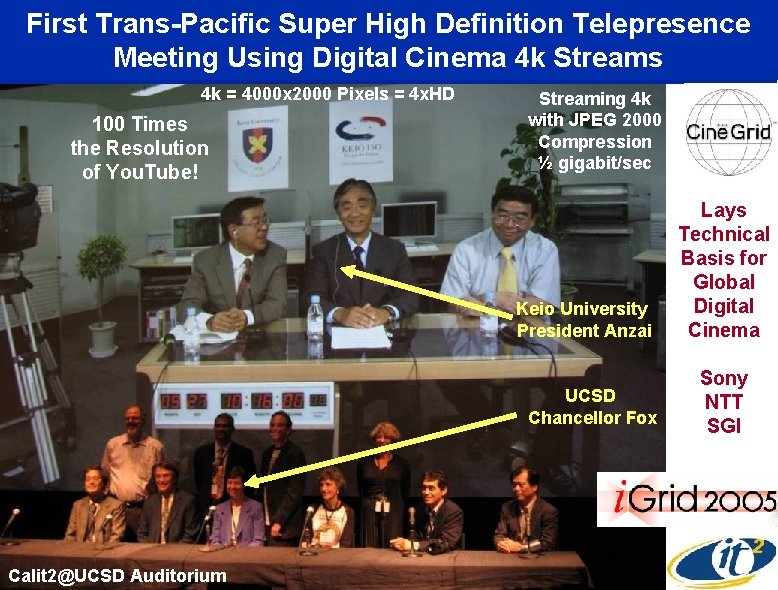

First Trans-Pacific Super High Definition Telepresence Meeting Using Digital Cinema 4K Streams. 4K = 4000 x 2000 Pixels = 4 x HD, 100 Times the Resolution of YouTube! Streaming 4K with JPEG 2000 Compression at ½ Gigabit/sec. Keio University President Anzai; UCSD Chancellor Fox; Calit2@UCSD Auditorium. Lays Technical Basis for Global Digital Cinema. Sony, NTT, SGI
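
The 4K arithmetic starts from 4000 x 2000, which is 8 Mpixels, or about four 1920 x 1080 HD frames. The "100 times YouTube" claim holds if 2008-era YouTube video is taken as 320 x 240. The ½ gigabit/sec stream implies roughly 9:1 JPEG 2000 compression, assuming 24 frames/s at 24 bits per pixel. The HD frame size, the YouTube frame size, the frame rate, and the bit depth are all assumptions, not figures from the slide.

```python
PIXELS_4K = 4000 * 2000          # "4K = 4000 x 2000 Pixels" as stated on the slide
PIXELS_HD = 1920 * 1080          # assumed HD frame size
PIXELS_YOUTUBE_2008 = 320 * 240  # assumed 2008-era YouTube frame size

print(f"4K / HD: {PIXELS_4K / PIXELS_HD:.1f}x")                 # ~3.9x, i.e. "4 x HD"
print(f"4K / YouTube: {PIXELS_4K / PIXELS_YOUTUBE_2008:.0f}x")  # ~104x, i.e. "100 times"

raw_bps = PIXELS_4K * 24 * 24  # assumed 24 frames/s and 24 bits per pixel
streamed_bps = 0.5e9           # ½ gigabit/sec with JPEG 2000
print(f"raw: {raw_bps / 1e9:.2f} Gbit/s, compression ~{raw_bps / streamed_bps:.0f}:1")
```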

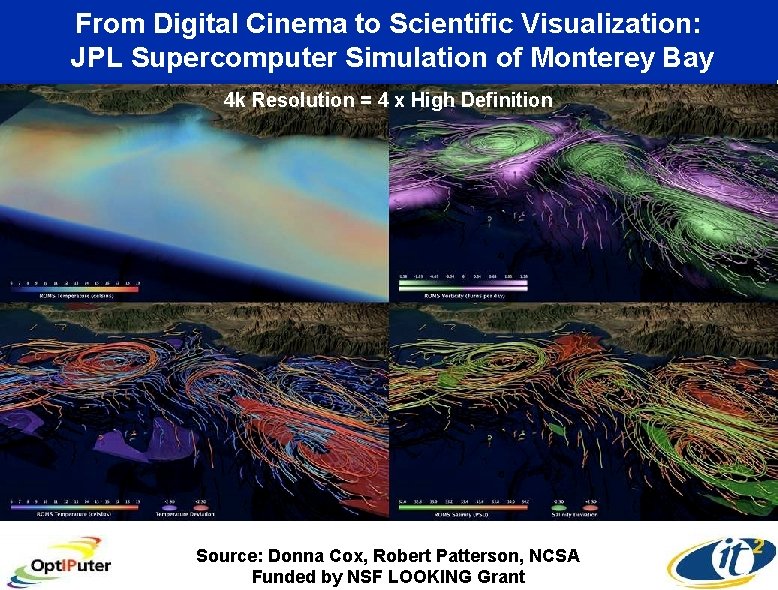

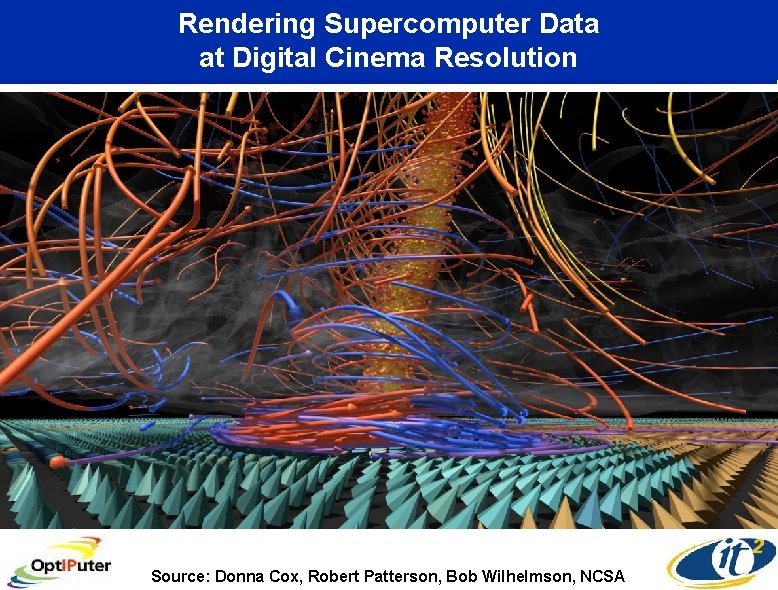

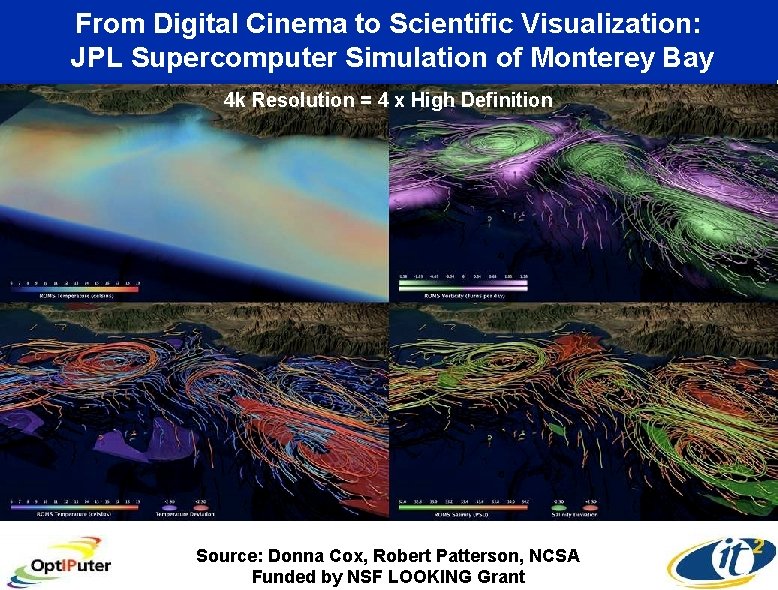

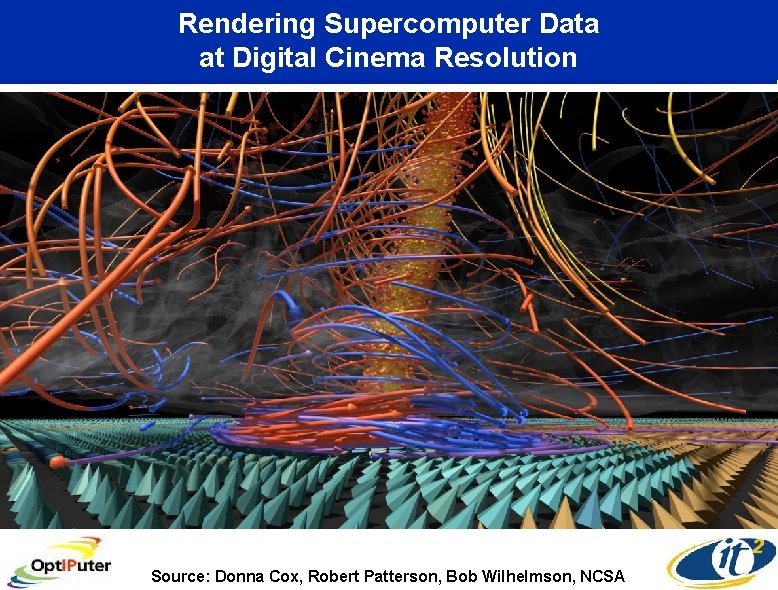

From Digital Cinema to Scientific Visualization: JPL Supercomputer Simulation of Monterey Bay. 4K Resolution = 4 x High Definition. Source: Donna Cox, Robert Patterson, NCSA. Funded by NSF LOOKING Grant

Rendering Supercomputer Data at Digital Cinema Resolution. Source: Donna Cox, Robert Patterson, Bob Wilhelmson, NCSA

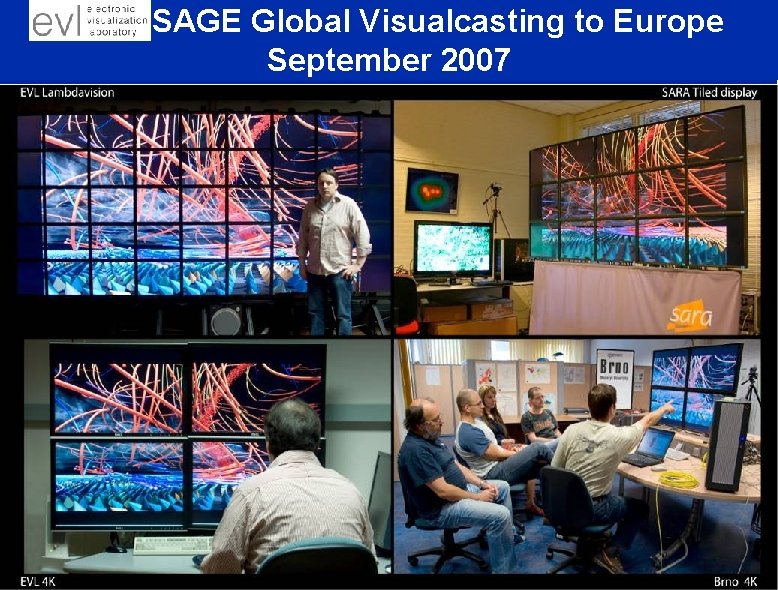

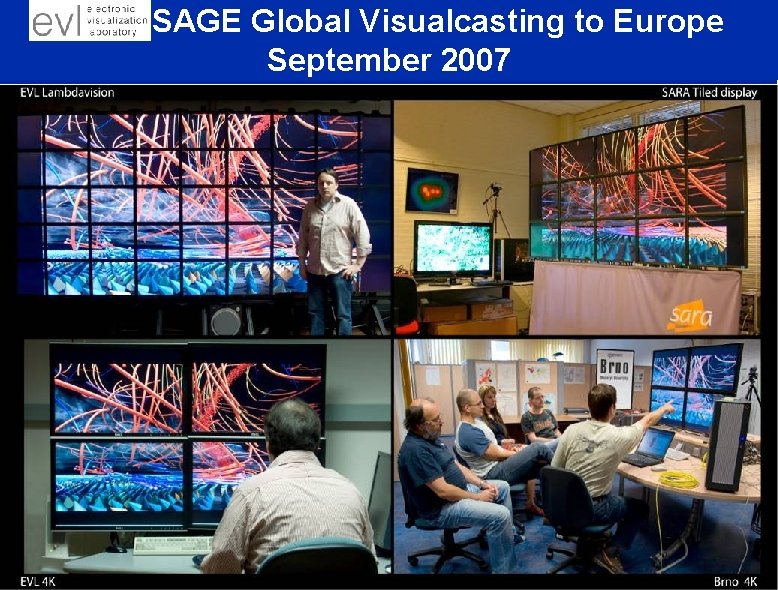

EVL’s SAGE Global Visualcasting to Europe, September 2007. Gigabit Streams. Image Source: OptIPuter servers at Calit2 San Diego. Image Replication: OptIPuter SAGEBridge at StarLight Chicago. Image Viewing: OptIPortals at EVL Chicago; OptIPortal at SARA Amsterdam; OptIPortal at Masaryk University Brno; OptIPortal at Russian Academy of Sciences Moscow (Oct 1). Source: Luc Renambot, EVL

Creating a California Cyberinfrastructure of OptIPuter “On-Ramps” to NLR & TeraGrid Resources. UC Davis, UC San Francisco, UC Berkeley, UC Merced, UC Santa Cruz, UC Los Angeles, UC Santa Barbara, UC Riverside, UC Irvine, UC San Diego. Creating a Critical Mass of OptIPuter End Users on a Secure LambdaGrid. CENIC Workshop at Calit2, Sept 15-16, 2008. Source: Fran Berman, SDSC; Larry Smarr, Calit2

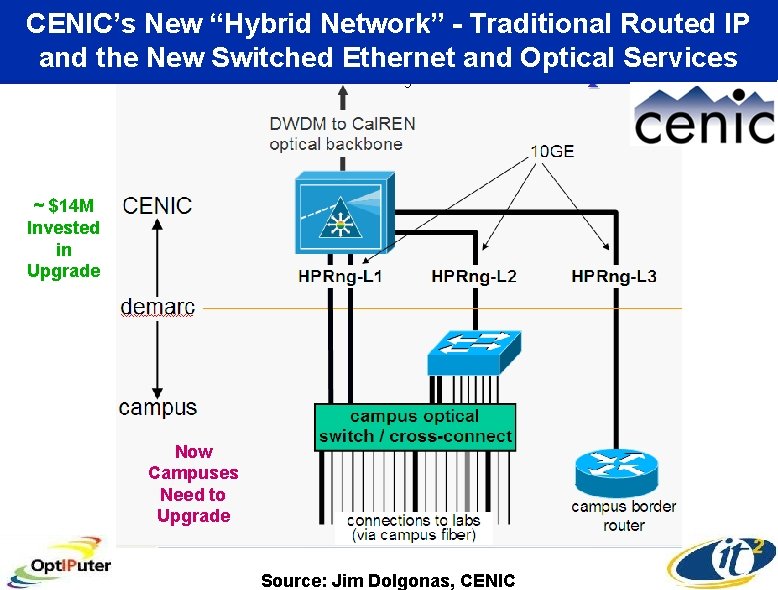

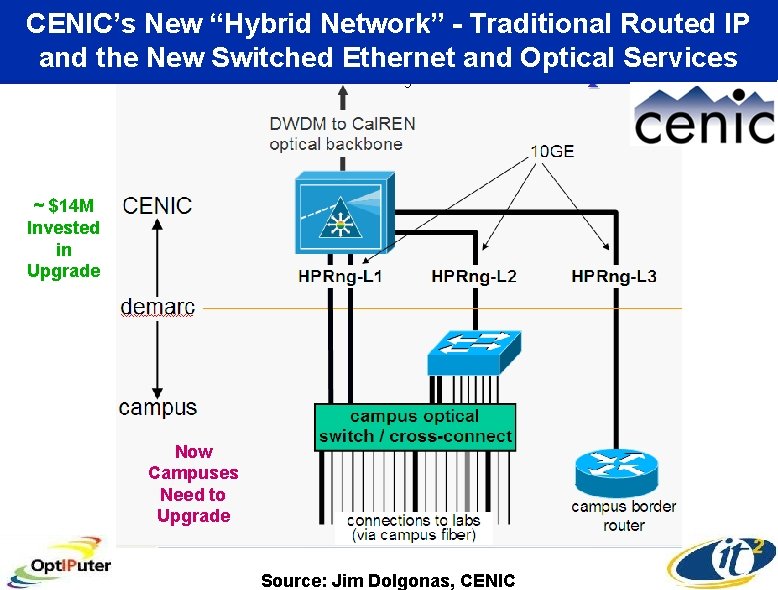

CENIC’s New “Hybrid Network”: Traditional Routed IP and the New Switched Ethernet and Optical Services. ~$14M Invested in Upgrade; Now Campuses Need to Upgrade. Source: Jim Dolgonas, CENIC

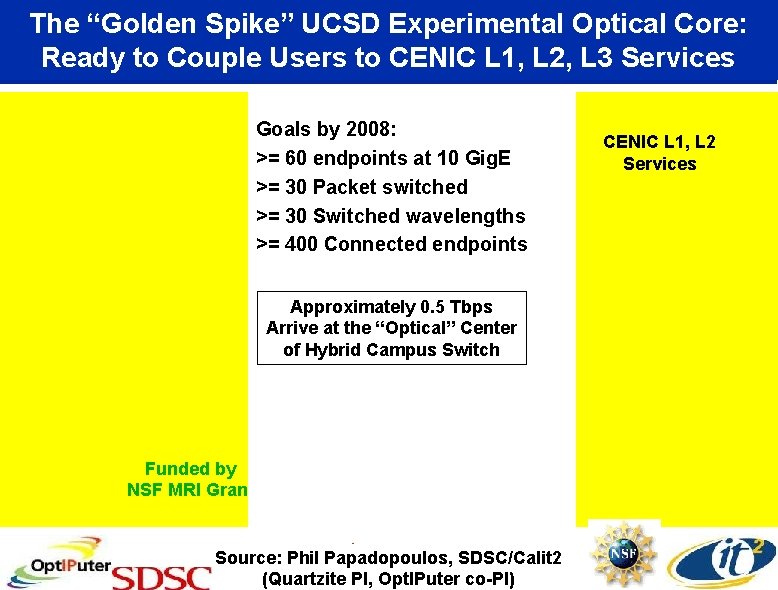

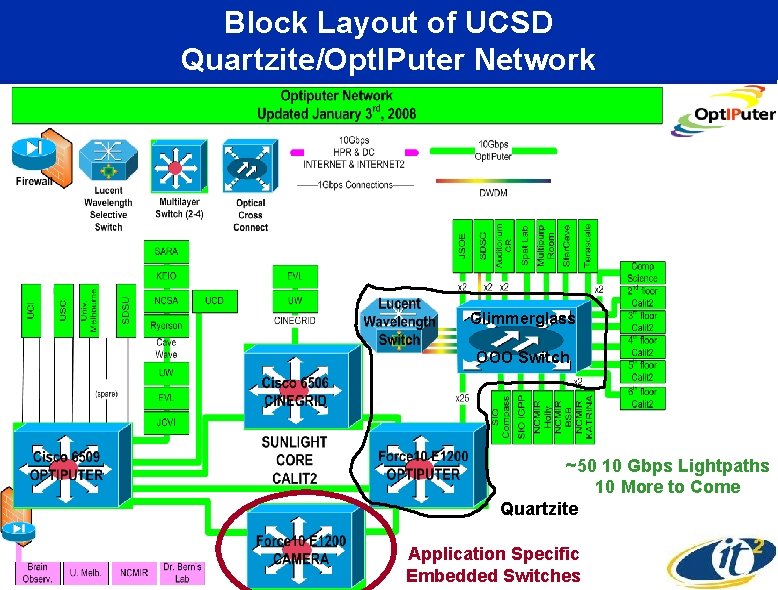

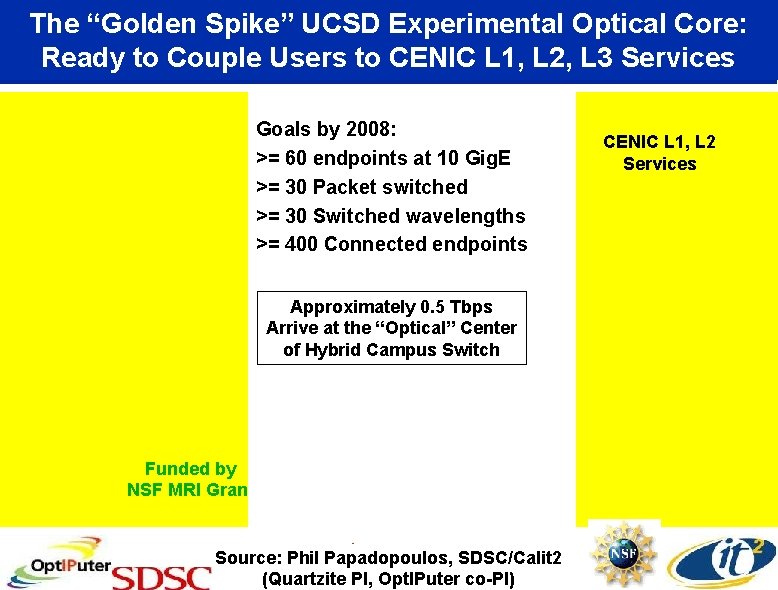

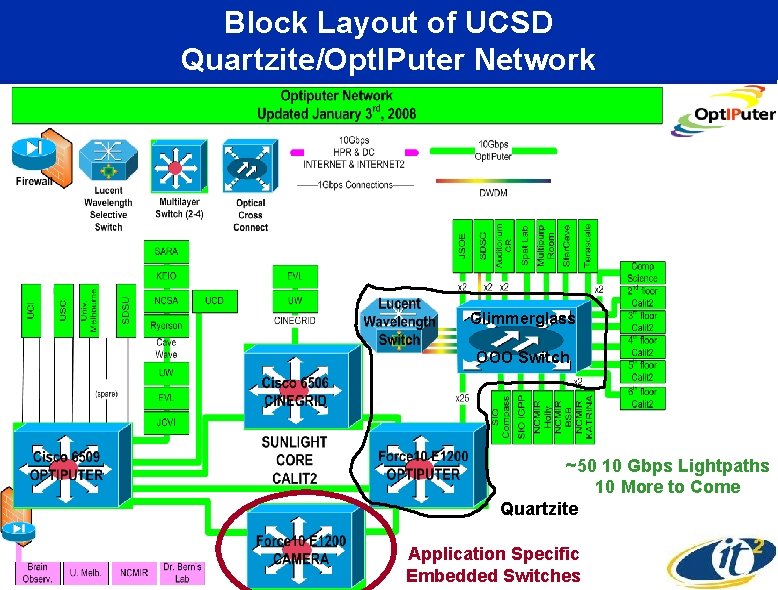

The “Golden Spike” UCSD Experimental Optical Core: Ready to Couple Users to CENIC L1, L2, L3 Services. Goals by 2008: >= 60 endpoints at 10 GigE; >= 30 packet switched; >= 30 switched wavelengths; >= 400 connected endpoints. Approximately 0.5 Tbps Arrive at the “Optical” Center of Hybrid Campus Switch. Components: Lucent, Glimmerglass, Force10, Cisco 6509 OptIPuter Border Router, CENIC L1, L2 Services. Funded by NSF MRI Grant. Source: Phil Papadopoulos, SDSC/Calit2 (Quartzite PI, OptIPuter co-PI)

Calit2 Sunlight Optical Exchange Contains Quartzite. 10:45 am, Feb. 21, 2008

Block Layout of UCSD Quartzite/OptIPuter Network. Glimmerglass OOO Switch: ~50 10 Gbps Lightpaths, 10 More to Come. Quartzite. Application-Specific Embedded Switches
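
About 50 lightpaths at 10 Gbps each give the ~0.5 Tbps that reaches the optical center of the Quartzite core. The 10 additional lightpaths would raise this to ~0.6 Tbps, in line with the goal of at least 60 endpoints at 10 GigE. This sketch assumes every lightpath runs at the full 10 Gbps line rate.

```python
LIGHTPATH_GBPS = 10  # each lightpath assumed to carry a full 10 Gbps


def aggregate_tbps(n_lightpaths, gbps_each=LIGHTPATH_GBPS):
    """Aggregate capacity of n lightpaths, in Tbps."""
    return n_lightpaths * gbps_each / 1000


print(aggregate_tbps(50))       # 0.5  (~50 current lightpaths)
print(aggregate_tbps(50 + 10))  # 0.6  (with 10 more to come)
```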

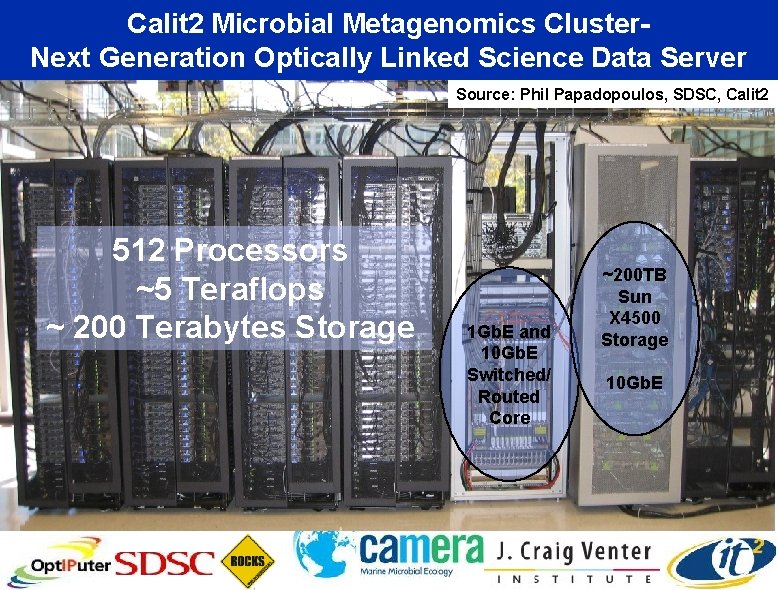

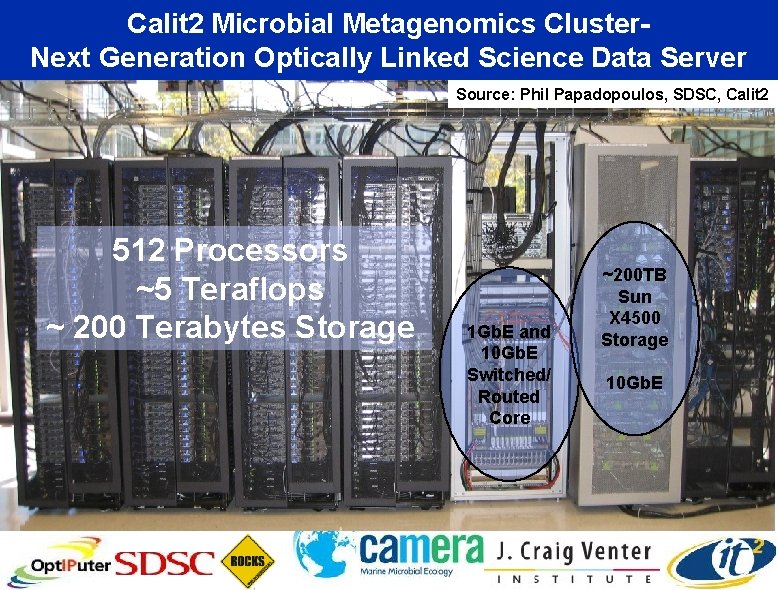

Calit2 Microbial Metagenomics Cluster: Next Generation Optically Linked Science Data Server. 512 Processors, ~5 Teraflops, ~200 Terabytes Storage. 1 GbE and 10 GbE Switched/Routed Core. ~200 TB Sun X4500 Storage, 10 GbE. Source: Phil Papadopoulos, SDSC, Calit2
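
An idealized comparison shows why a ~200 TB data server needs optical links. It estimates the time to move the full store over a dedicated 10 Gbps lambda and over a path at the ~50 Mbps average measured for NASA data products. The sketch assumes decimal terabytes and a link running at full rate with no protocol overhead, so real transfers would be slower.

```python
def transfer_seconds(n_bytes, bits_per_second):
    """Idealized transfer time: payload bits divided by line rate."""
    return n_bytes * 8 / bits_per_second


DATASET = 200e12  # ~200 TB (decimal terabytes assumed)

for label, rate in [("10 Gbps lambda", 10e9), ("~50 Mbps shared path", 50e6)]:
    s = transfer_seconds(DATASET, rate)
    print(f"{label}: {s / 3600:,.0f} hours ({s / 86400:,.1f} days)")
```

The lambda moves the store in under two days, versus roughly a year on the shared path.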

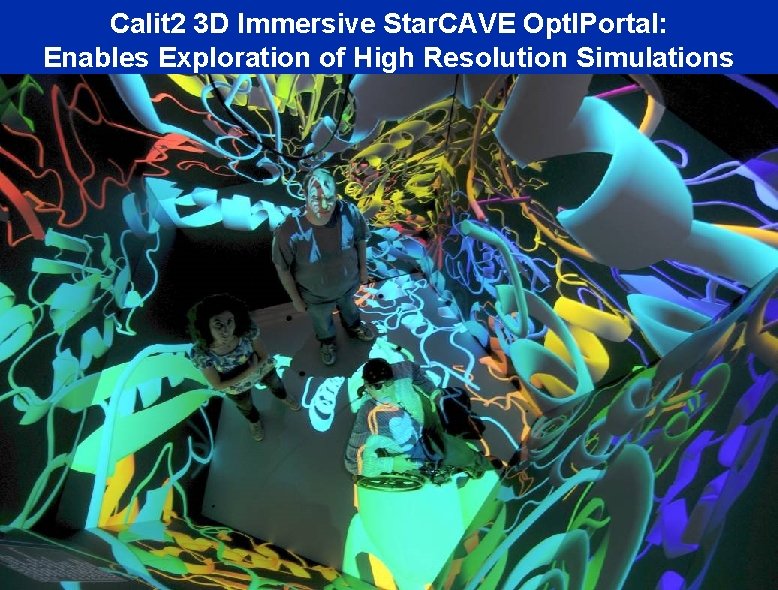

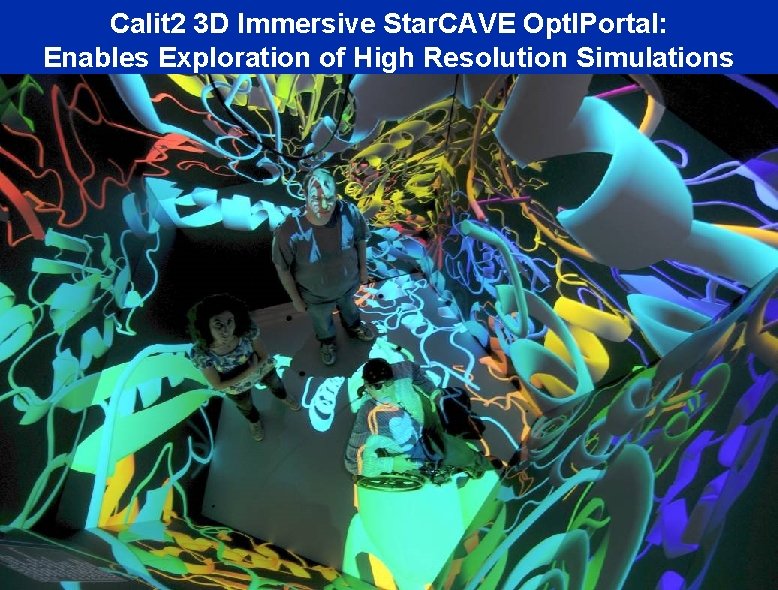

Calit2 3D Immersive StarCAVE OptIPortal: Enables Exploration of High Resolution Simulations. Connected at 50 Gb/s to Quartzite. 30 HD Projectors! Passive Polarization: Optimized the Polarization Separation and Minimized Attenuation. 15 Meyer Sound Speakers + Subwoofer. Cluster with 30 NVIDIA 5600 Cards, 60 GB Texture Memory. Source: Tom DeFanti, Greg Dawe, Calit2

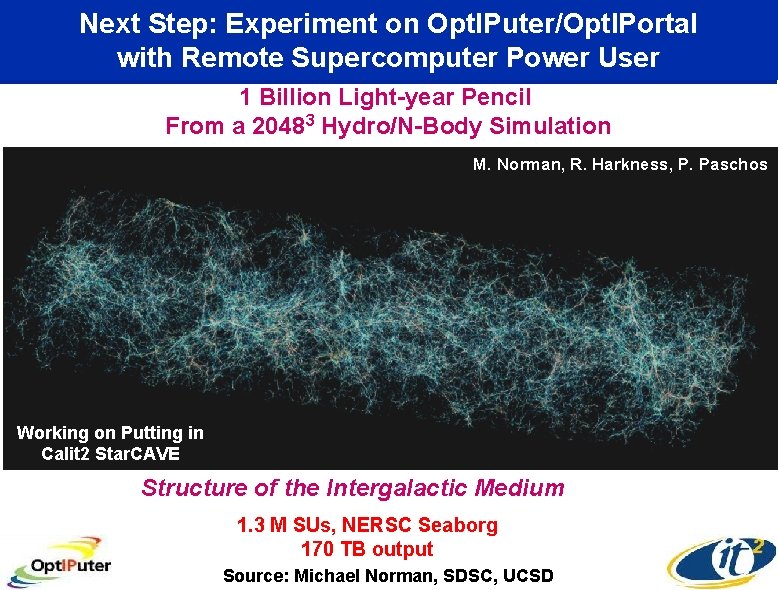

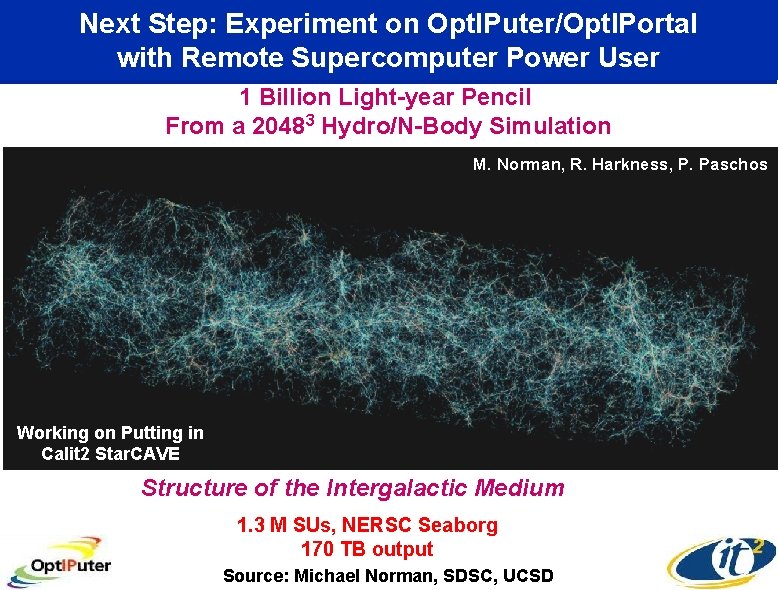

Next Step: Experiment on OptIPuter/OptIPortal with Remote Supercomputer Power User. 1 Billion Light-year Pencil From a 2048³ Hydro/N-Body Simulation (M. Norman, R. Harkness, P. Paschos). Working on Putting It in the Calit2 StarCAVE. Structure of the Intergalactic Medium. 1.3M SUs, NERSC Seaborg, 170 TB Output. Source: Michael Norman, SDSC, UCSD
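
A 2048³ grid holds about 8.6 billion cells. This sketch also sizes one scalar field, assuming one single-precision (4-byte) value per cell. That storage format is an assumption; the slide does not state it.

```python
N = 2048                     # cells per side of the 2048^3 grid
cells = N ** 3
bytes_per_field = cells * 4  # assumes one 4-byte float per cell
print(f"{cells:,} cells")                                     # 8,589,934,592
print(f"{bytes_per_field / 2**30:.0f} GiB per scalar field")  # 32
```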

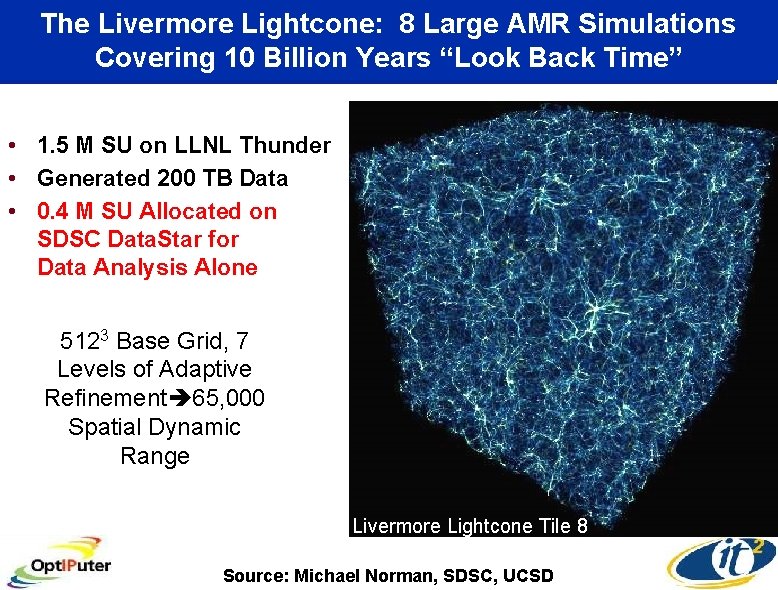

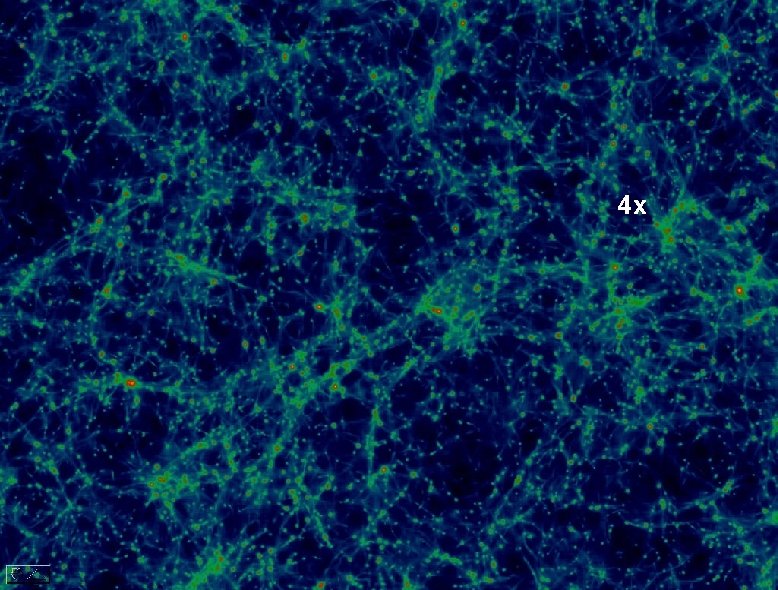

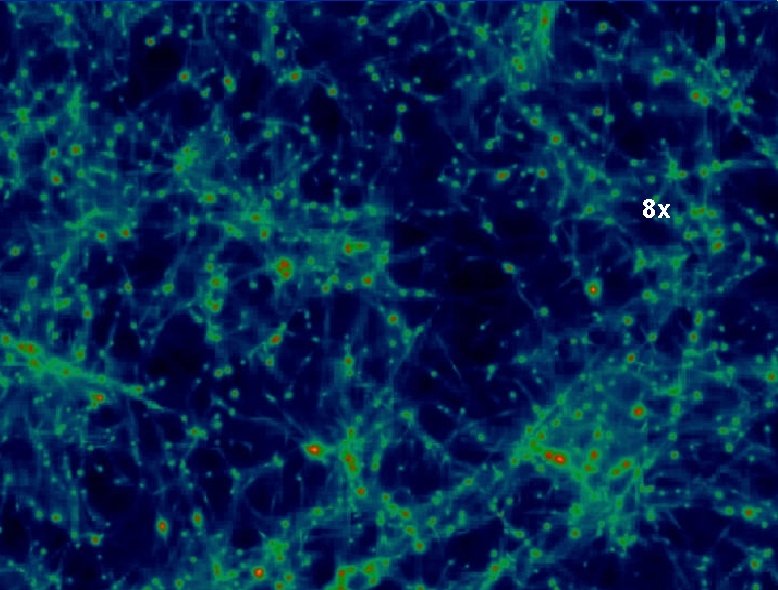

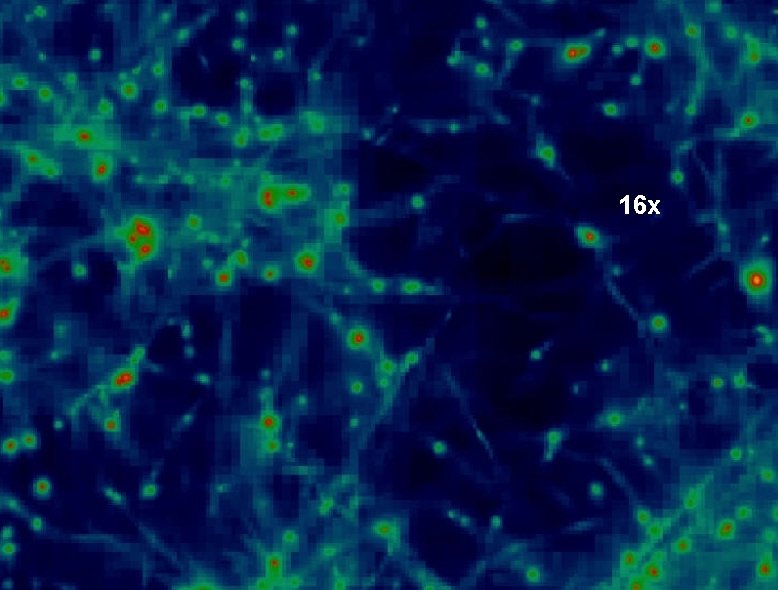

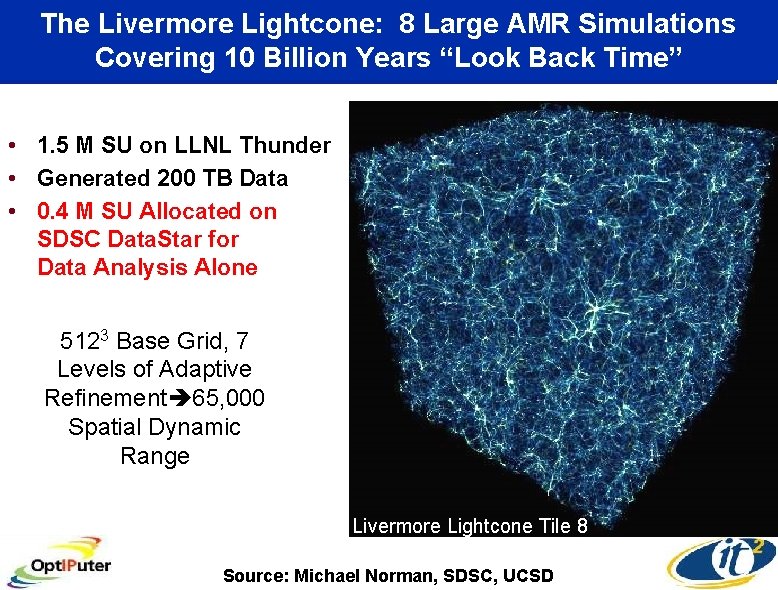

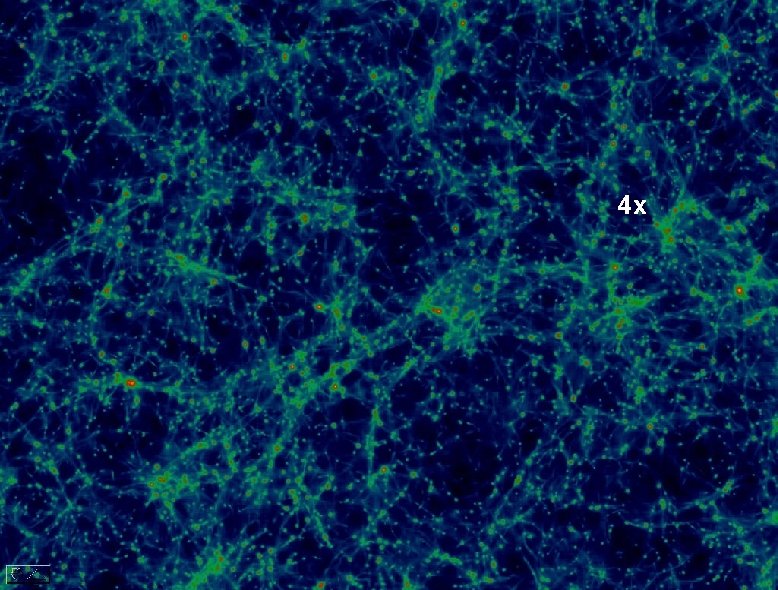

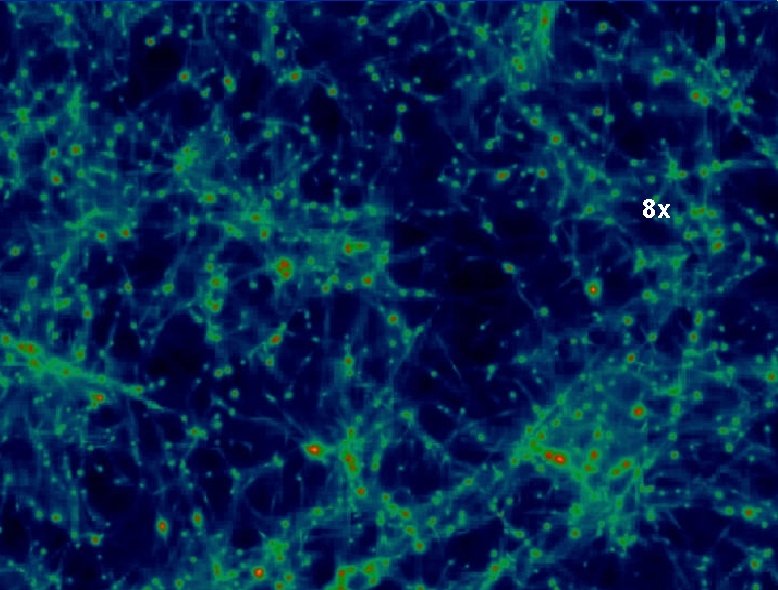

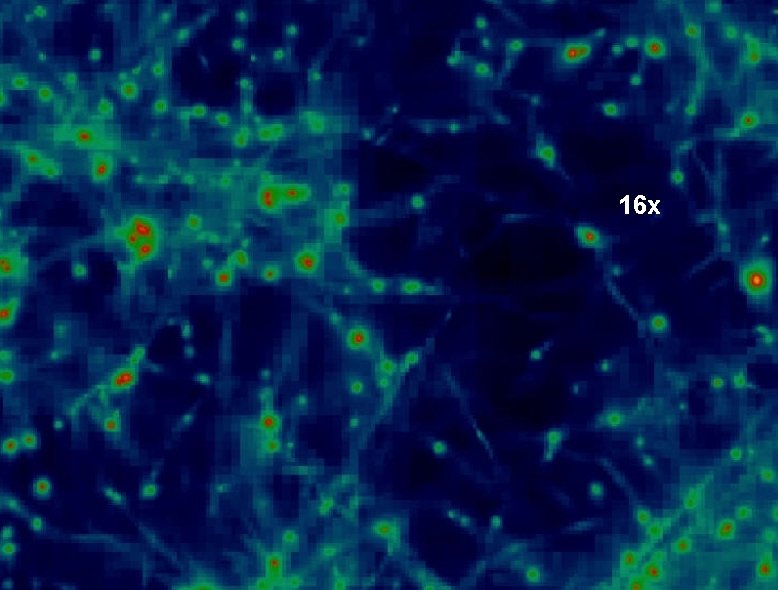

The Livermore Lightcone: 8 Large AMR Simulations Covering 10 Billion Years of “Look Back Time” • 1.5M SU on LLNL Thunder • Generated 200 TB Data • 0.4M SU Allocated on SDSC DataStar for Data Analysis Alone. 512³ Base Grid, 7 Levels of Adaptive Refinement, 65,000 Spatial Dynamic Range. Livermore Lightcone Tile 8. Source: Michael Norman, SDSC, UCSD
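
The 65,000 spatial dynamic range follows from the base grid and the number of refinement levels. Each level divides the cell size by the refinement factor, so the finest resolution is 512 x 2^7 = 65,536 cells across the box. This sketch assumes a refinement factor of 2 per level, which the slide does not state.

```python
def spatial_dynamic_range(base_cells_per_side, levels, refine_factor=2):
    """Ratio of box size to finest cell size in a nested AMR hierarchy."""
    return base_cells_per_side * refine_factor ** levels


print(f"{spatial_dynamic_range(512, 7):,}")  # 65,536, i.e. "65,000"
```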

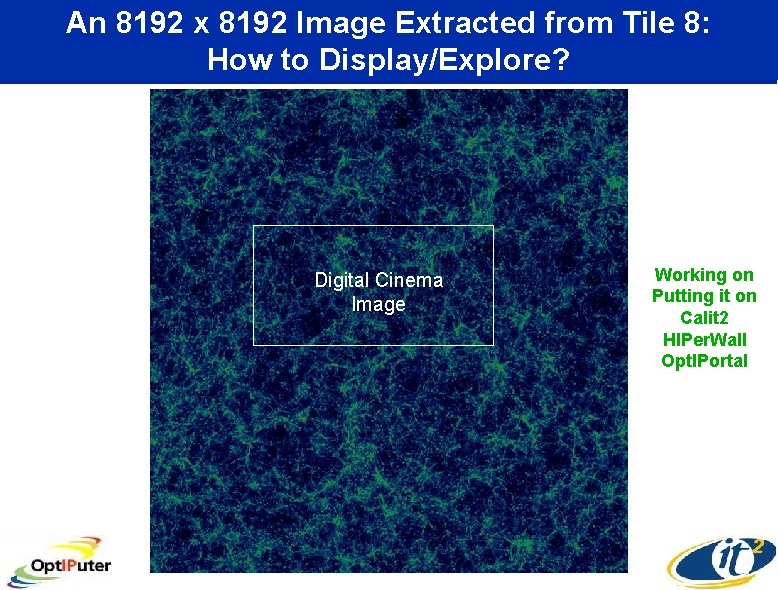

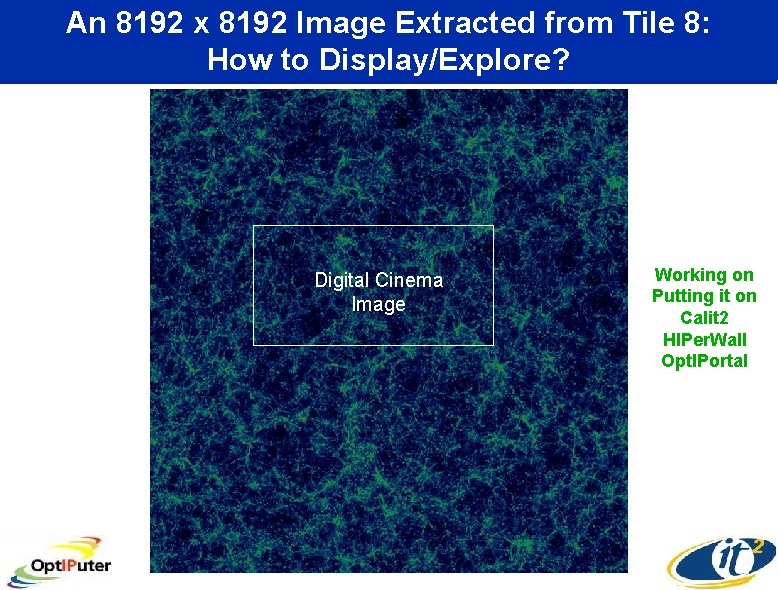

An 8192 x 8192 Image Extracted from Tile 8: How to Display/Explore? Digital Cinema Image. Working on Putting It on the Calit2 HIPerWall OptIPortal

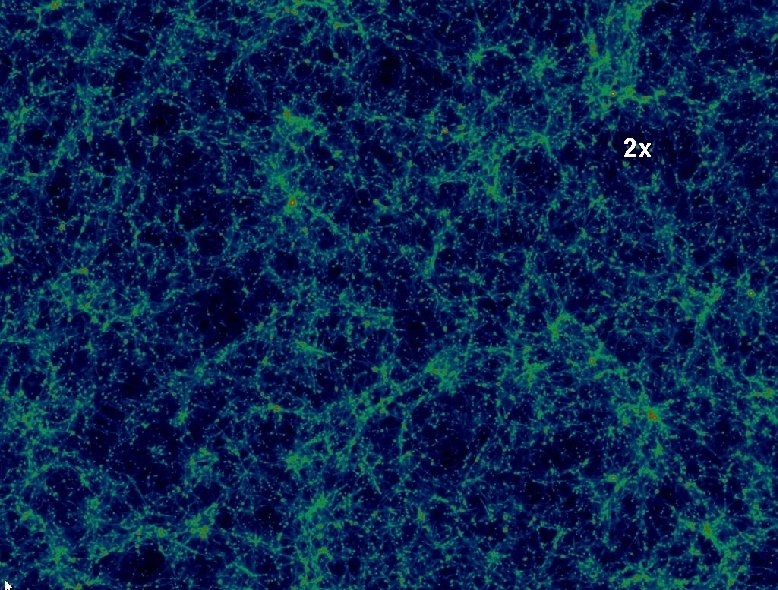

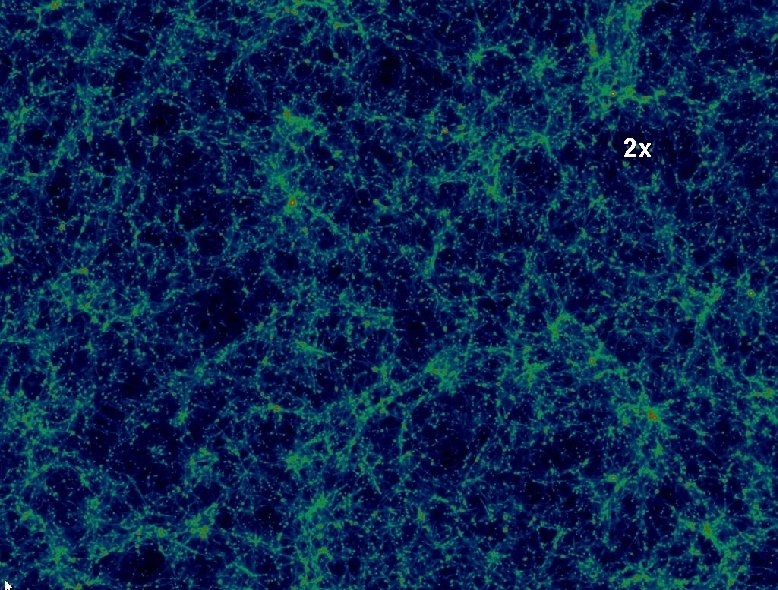

Zoom levels: 2x, 4x, 8x, 16x

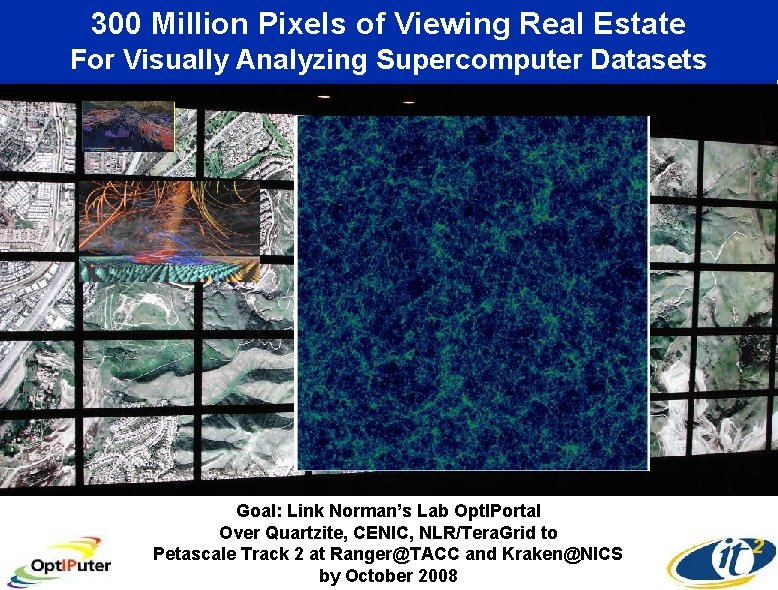

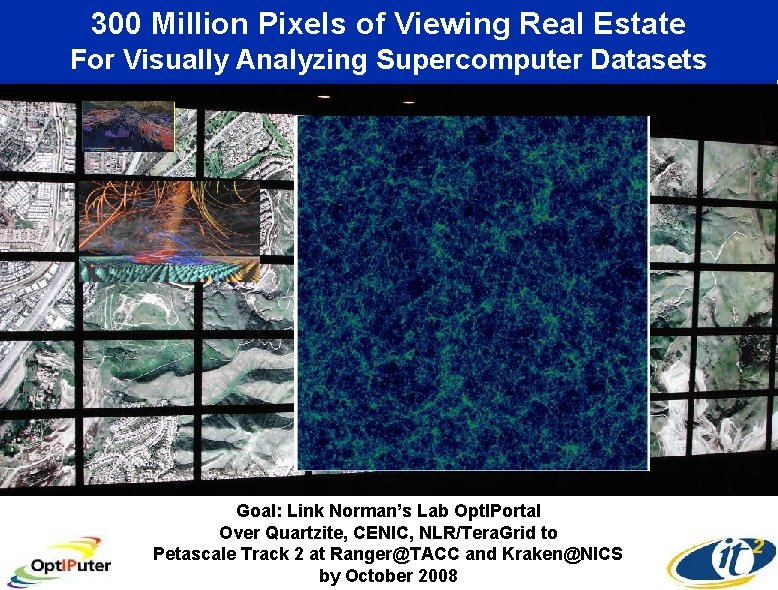

300 Million Pixels of Viewing Real Estate for Visually Analyzing Supercomputer Datasets. HDTV, Digital Cameras, Digital Cinema. Goal: Link Norman’s Lab OptIPortal Over Quartzite, CENIC, NLR/TeraGrid to Petascale Track 2 at Ranger@TACC and Kraken@NICS by October 2008