Supercomputing the Next Century: Talk to the Max-Planck-Institut

- Slides: 40

Supercomputing the Next Century • Talk to the Max-Planck-Institut fuer Gravitationsphysik Albert-Einstein-Institut, Potsdam, Germany • June 15, 1998

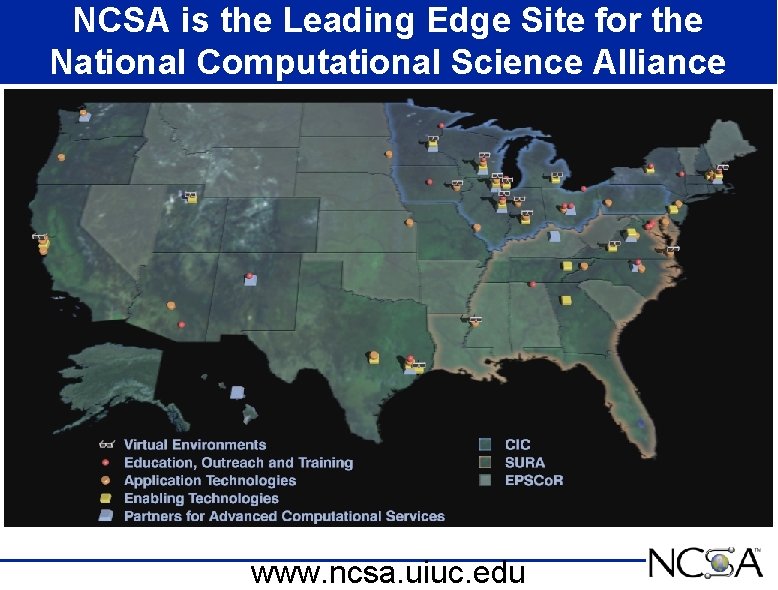

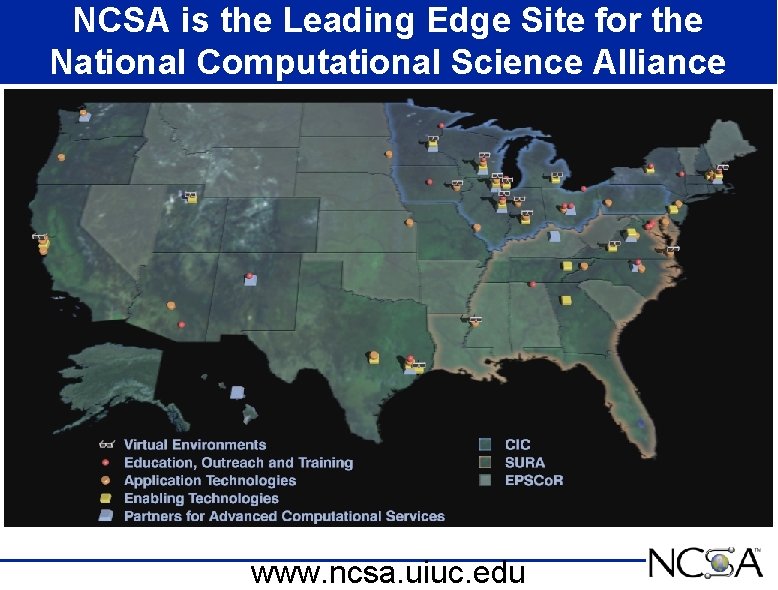

NCSA is the Leading Edge Site for the National Computational Science Alliance

www.ncsa.uiuc.edu

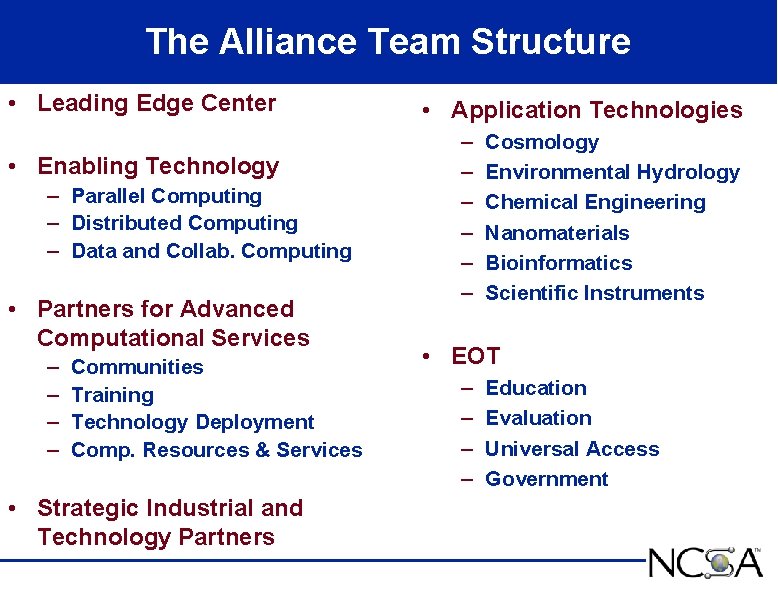

The Alliance Team Structure

- Leading Edge Center
- Enabling Technology
  - Parallel Computing
  - Distributed Computing
  - Data and Collab. Computing
- Partners for Advanced Computational Services
  - Communities
  - Training
  - Technology Deployment
  - Comp. Resources & Services
- Strategic Industrial and Technology Partners
- Application Technologies
  - Cosmology
  - Environmental Hydrology
  - Chemical Engineering
  - Nanomaterials
  - Bioinformatics
  - Scientific Instruments
- EOT
  - Education
  - Evaluation
  - Universal Access
  - Government

Alliance '98 Hosts 1000 Attendees With Hundreds On-Line!

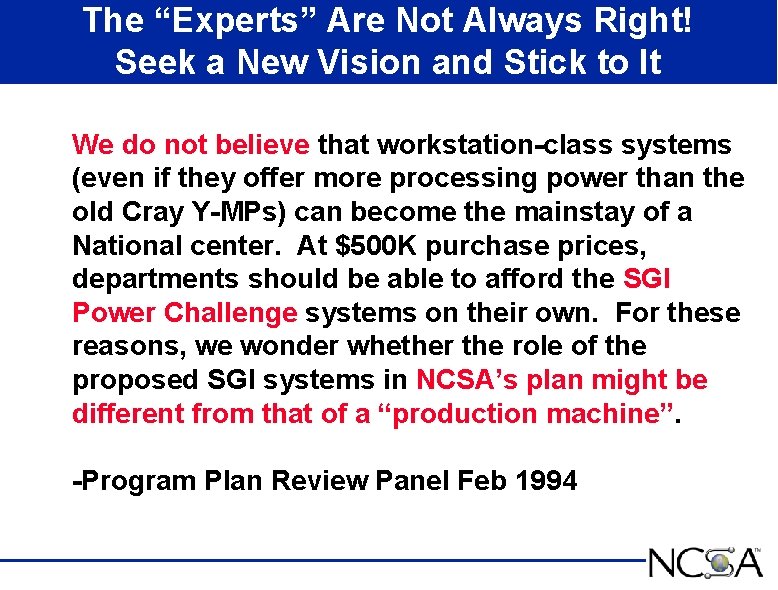

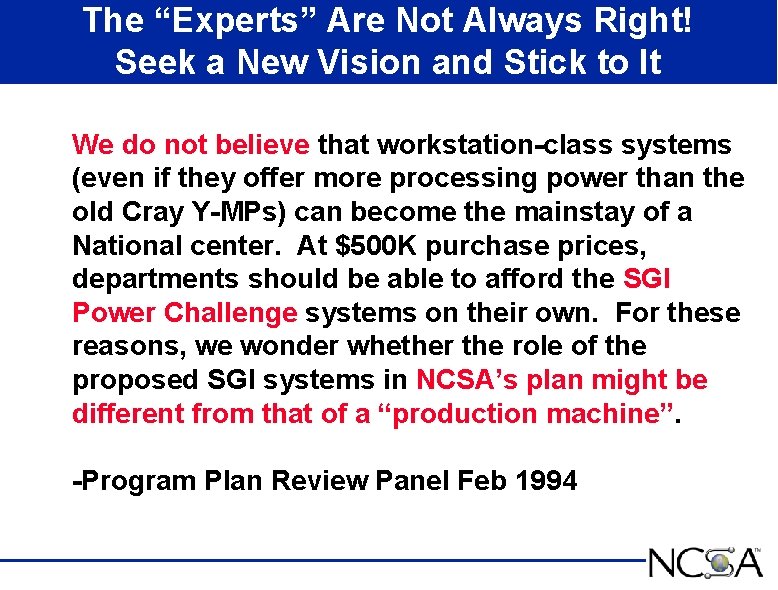

The “Experts” Are Not Always Right! Seek a New Vision and Stick to It

We do not believe that workstation-class systems (even if they offer more processing power than the old Cray Y-MPs) can become the mainstay of a National center. At $500K purchase prices, departments should be able to afford the SGI Power Challenge systems on their own. For these reasons, we wonder whether the role of the proposed SGI systems in NCSA’s plan might be different from that of a “production machine”.

Source: Program Plan Review Panel, Feb 1994

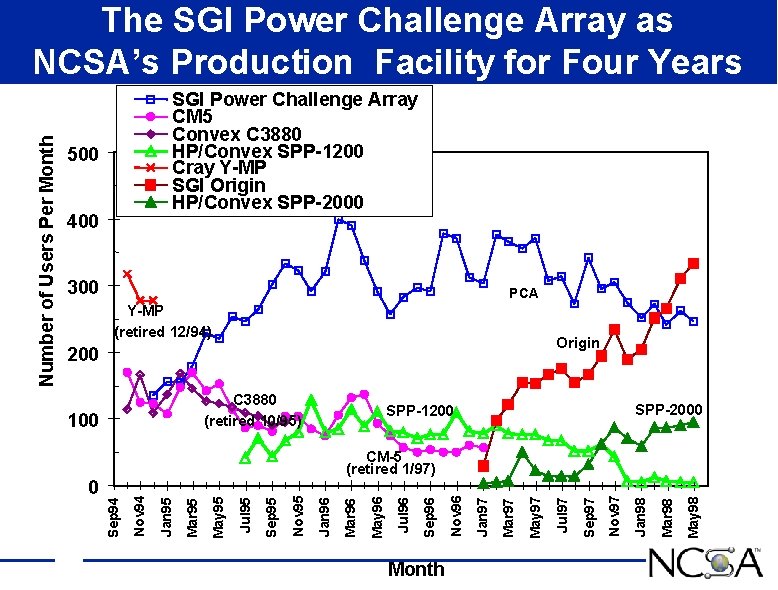

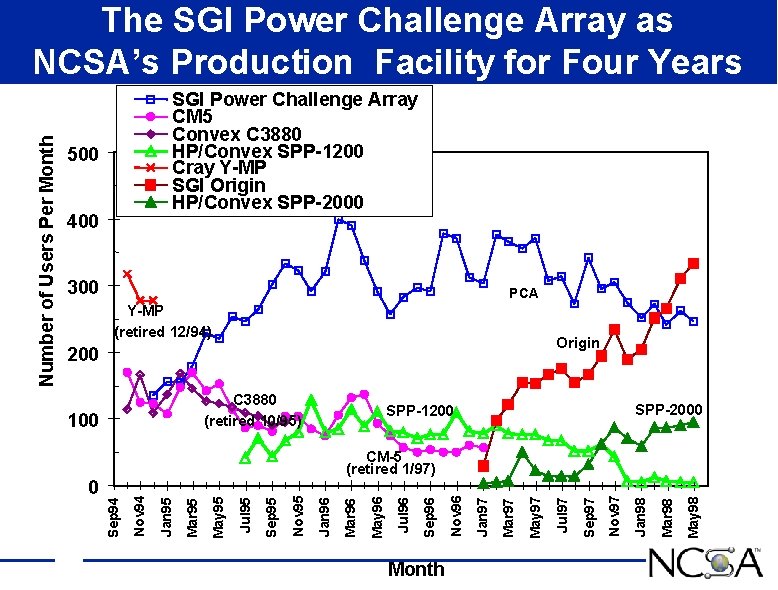

The SGI Power Challenge Array as NCSA’s Production Facility for Four Years

[Chart: number of users per month (0 to 500), Sep 94 through May 98, by system: SGI Power Challenge Array (PCA), CM-5 (retired 1/97), Convex C3880 (retired 10/95), HP/Convex SPP-1200, Cray Y-MP (retired 12/94), SGI Origin, HP/Convex SPP-2000.]

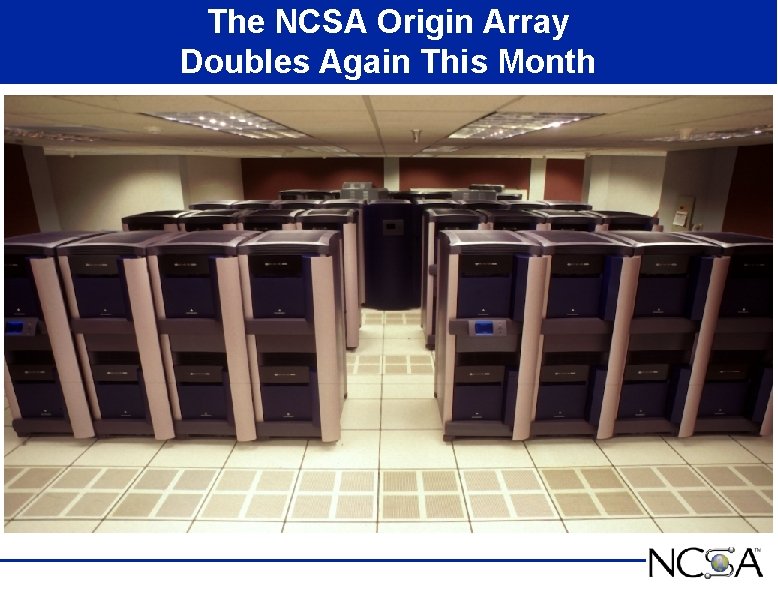

The NCSA Origin Array Doubles Again This Month

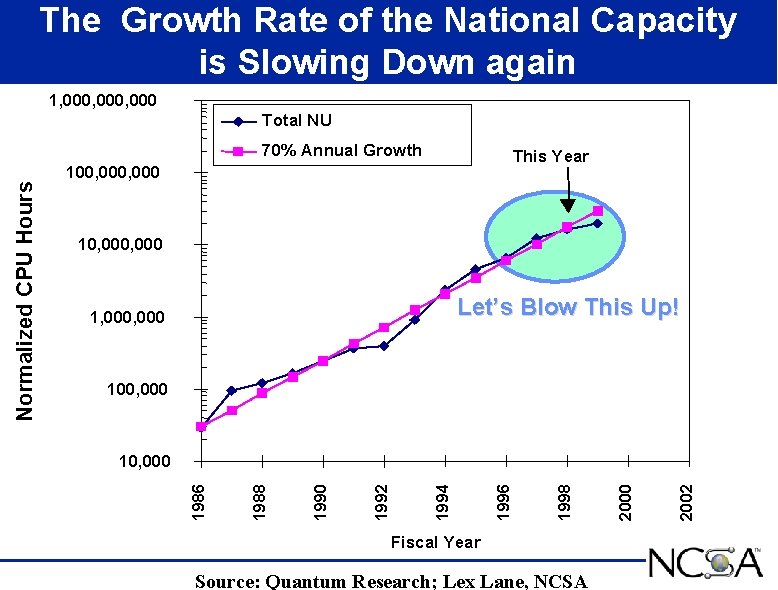

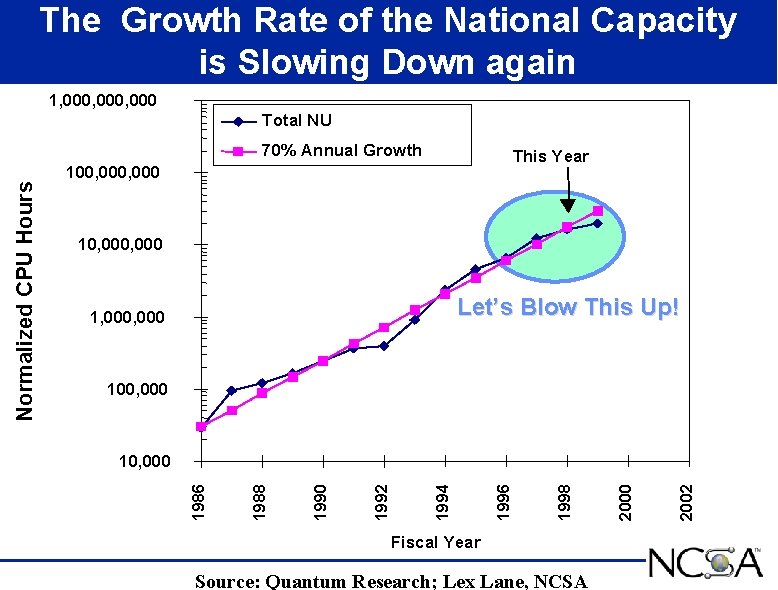

The Growth Rate of the National Capacity is Slowing Down Again

[Chart: normalized CPU hours (total NU) per fiscal year, 1986 to 2002, on a logarithmic axis, against a 70% annual growth line. Annotations: "This Year"; "Let’s Blow This Up!". Source: Quantum Research; Lex Lane, NCSA.]

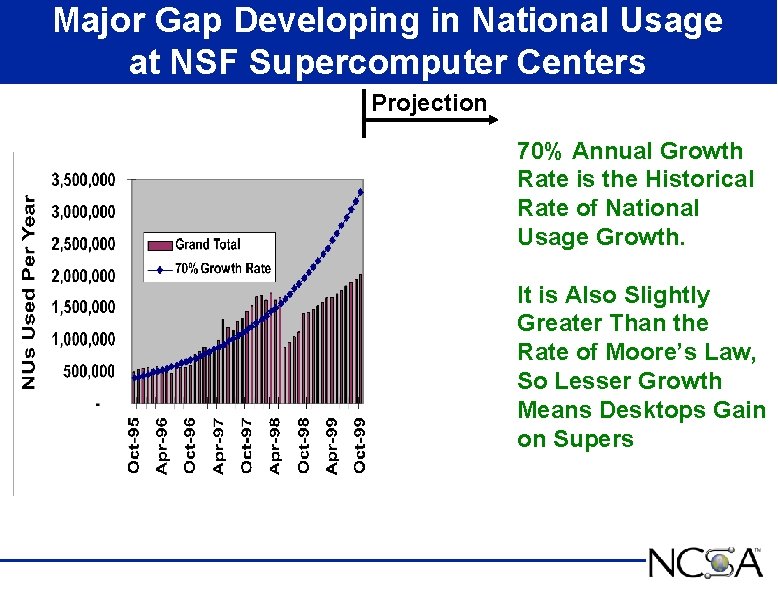

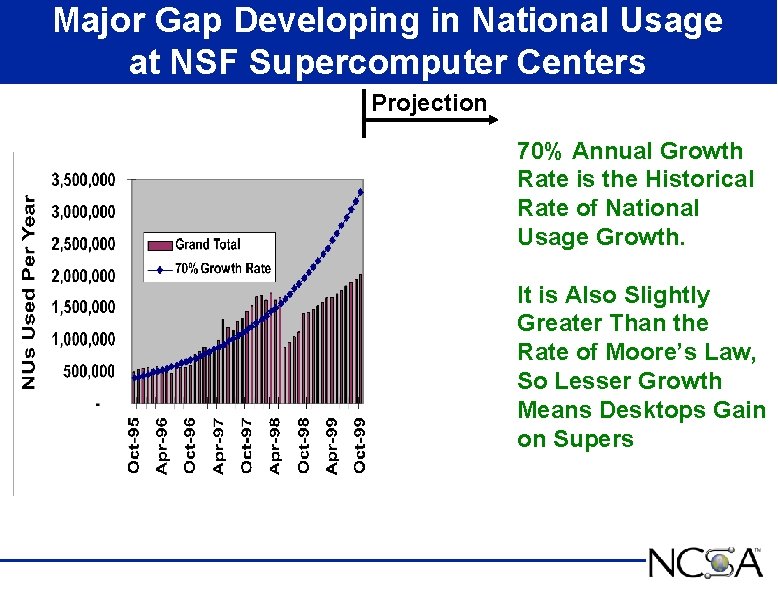

Major Gap Developing in National Usage at NSF Supercomputer Centers

[Chart label: Projection]

70% Annual Growth Rate is the Historical Rate of National Usage Growth. It is Also Slightly Greater Than the Rate of Moore’s Law, So Lesser Growth Means Desktops Gain on Supers.
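The comparison between 70% annual growth and Moore's Law can be checked with a little arithmetic. The sketch below assumes Moore's Law means a doubling every 18 months, which is a common reading but is not stated on the slide; the function names are illustrative.

```python
import math

def annual_growth_from_doubling(months_to_double):
    """Annual growth rate (as a fraction) implied by a doubling period in months."""
    return 2 ** (12 / months_to_double) - 1

def doubling_months_from_annual(rate):
    """Doubling period in months implied by an annual growth rate (as a fraction)."""
    return 12 * math.log(2) / math.log(1 + rate)

# Assumption: Moore's Law taken as a doubling every 18 months.
moore_rate = annual_growth_from_doubling(18)

# The slide's historical national usage growth rate.
usage_rate = 0.70
usage_doubling = doubling_months_from_annual(usage_rate)

print(f"Moore's Law (18-month doubling): {moore_rate:.1%} per year")
print(f"70% annual growth doubles every {usage_doubling:.1f} months")
```

Under that assumption Moore's Law works out to a bit under 60% per year, so 70% is indeed slightly faster, and any usage growth below roughly 59% per year would let desktop-class machines close the gap.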

Monthly National Usage at NSF Supercomputer Centers

[Chart: monthly usage with labels FY 96, FY 97, FY 98, Transition Period, FY 99, Projection, and the Capacity Level NSF Proposed at 3/97 NSB Meeting.]

Accelerated Strategic Computing Initiative is Coupling DOE DP Labs to Universities

- Access to ASCI Leading Edge Supercomputers
- Academic Strategic Alliances Program
- Data and Visualization Corridors

http://www.llnl.gov/asci-alliances/centers.html

Comparison of the DoE ASCI and the NSF PACI Origin Array Scale Through FY 99

- Los Alamos Origin System, FY 99: 5-6000 processors (www.lanl.gov/projects/asci/bluemtn/Hardware/schedule.html)
- NCSA Proposed System, FY 99: 6 x 128 and 4 x 64 = 1024 processors

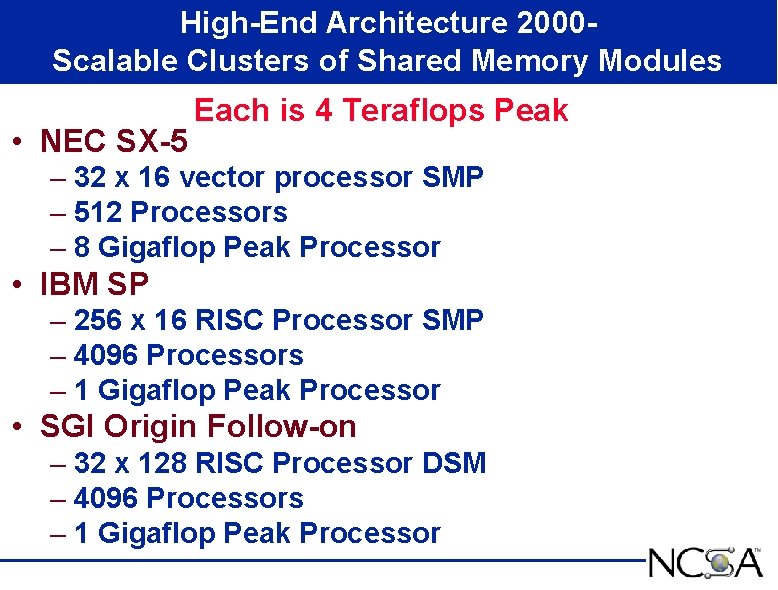

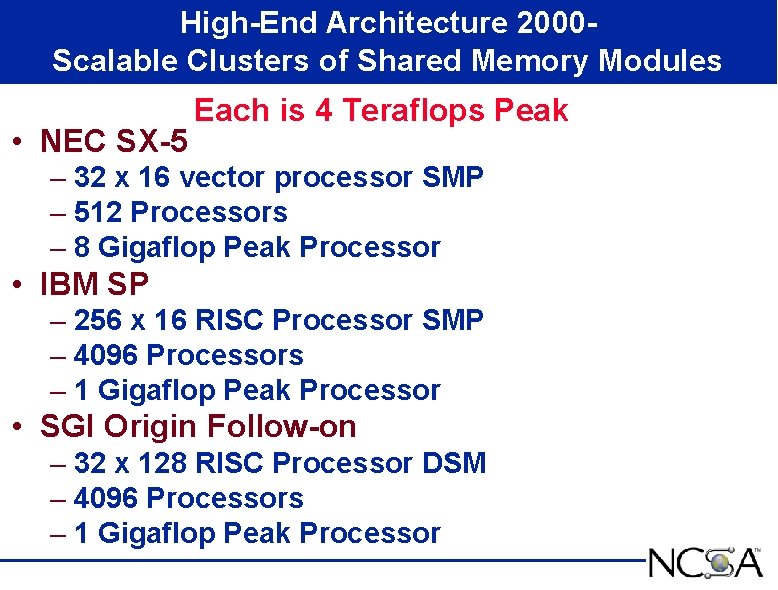

High-End Architecture 2000: Scalable Clusters of Shared Memory Modules

Each is 4 Teraflops Peak

- NEC SX-5
  - 32 x 16 vector processor SMP
  - 512 Processors
  - 8 Gigaflop Peak Processor
- IBM SP
  - 256 x 16 RISC Processor SMP
  - 4096 Processors
  - 1 Gigaflop Peak Processor
- SGI Origin Follow-on
  - 32 x 128 RISC Processor DSM
  - 4096 Processors
  - 1 Gigaflop Peak Processor
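The "4 Teraflops Peak" figure follows directly from the module counts and per-processor peaks listed on the slide. The sketch below reads "A x B" as A modules of B processors each, which is an interpretation of the slide's notation; the dictionary layout and function names are illustrative.

```python
# Each entry: (modules, processors per module, peak Gigaflops per processor).
# Assumption: "A x B" on the slide means A shared-memory modules of B processors.
systems = {
    "NEC SX-5": (32, 16, 8),
    "IBM SP": (256, 16, 1),
    "SGI Origin Follow-on": (32, 128, 1),
}

def total_processors(modules, procs_per_module):
    """Total processor count of the cluster."""
    return modules * procs_per_module

def peak_teraflops(modules, procs_per_module, gflops_per_proc):
    """Aggregate peak in Teraflops (1 Teraflop = 1000 Gigaflops)."""
    return total_processors(modules, procs_per_module) * gflops_per_proc / 1000

for name, (modules, per_module, gflops) in systems.items():
    procs = total_processors(modules, per_module)
    print(f"{name}: {procs} processors, {peak_teraflops(modules, per_module, gflops):.3f} TF peak")
```

All three configurations come out to 4096 Gigaflops, so the vector design reaches the same peak with an eighth as many processors as the two RISC designs.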

Emerging Portable Computing Standards

- HPF
- MPI
- OpenMP
- Hybrids of MPI and OpenMP

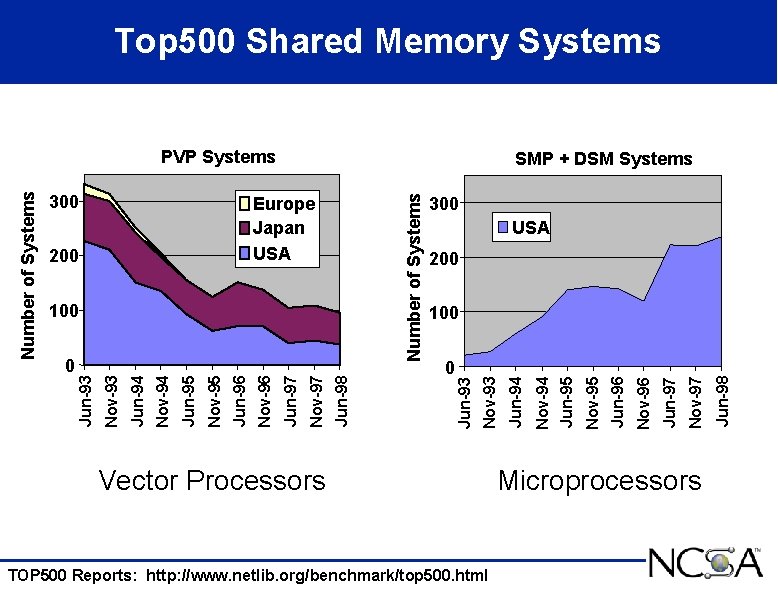

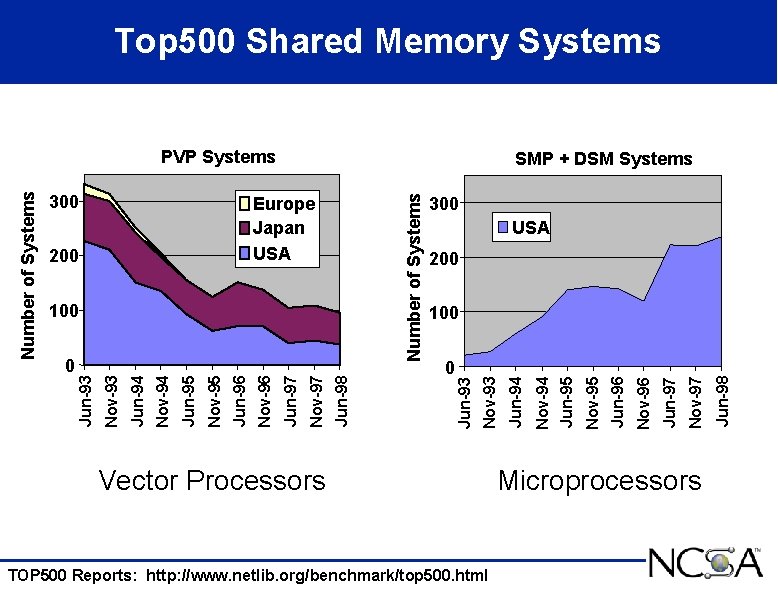

Top 500 Shared Memory Systems

[Two charts: number of systems (0 to 300) per TOP500 list, Jun-93 through Jun-98. Panel titles: "PVP Systems" (Vector Processors) and "SMP + DSM Systems" (Microprocessors). Legend entries: Europe, Japan, USA.]

TOP 500 Reports: http://www.netlib.org/benchmark/top500.html

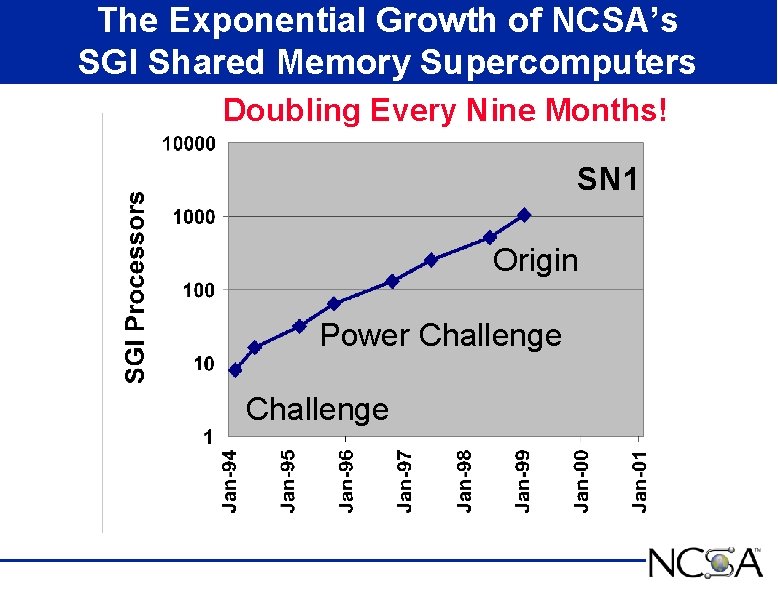

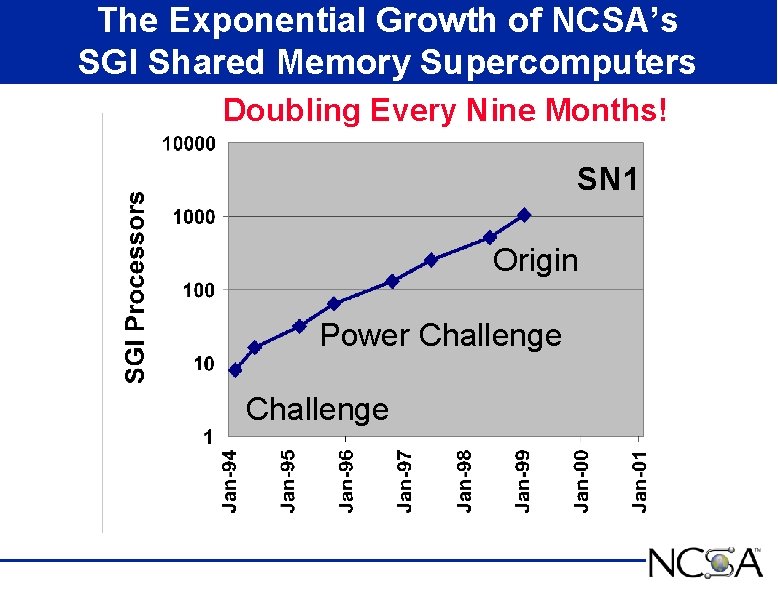

The Exponential Growth of NCSA’s SGI Shared Memory Supercomputers: Doubling Every Nine Months!

[Chart series: Power Challenge, Origin, SN1]

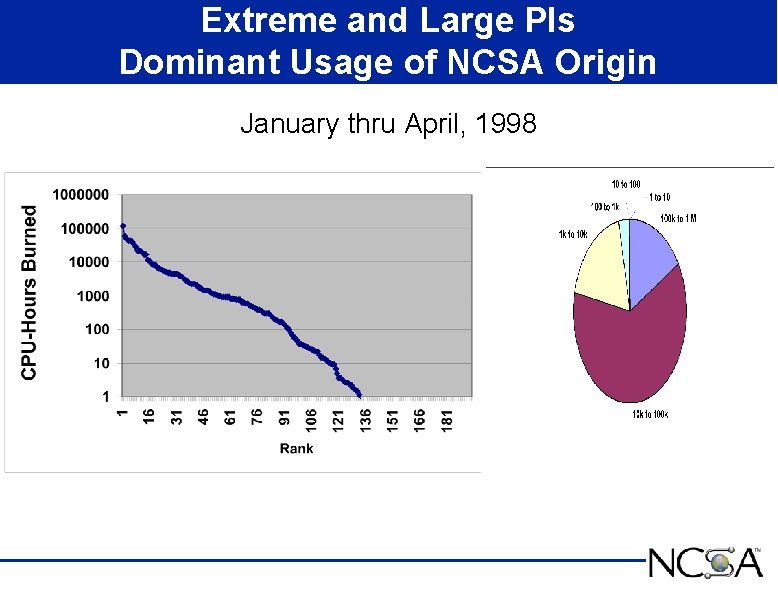

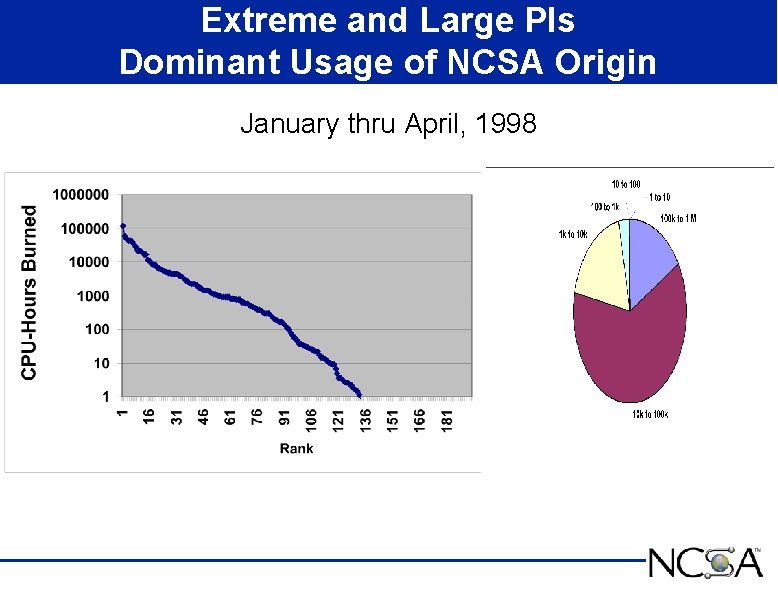

Extreme and Large PIs Dominant Usage of NCSA Origin January thru April, 1998

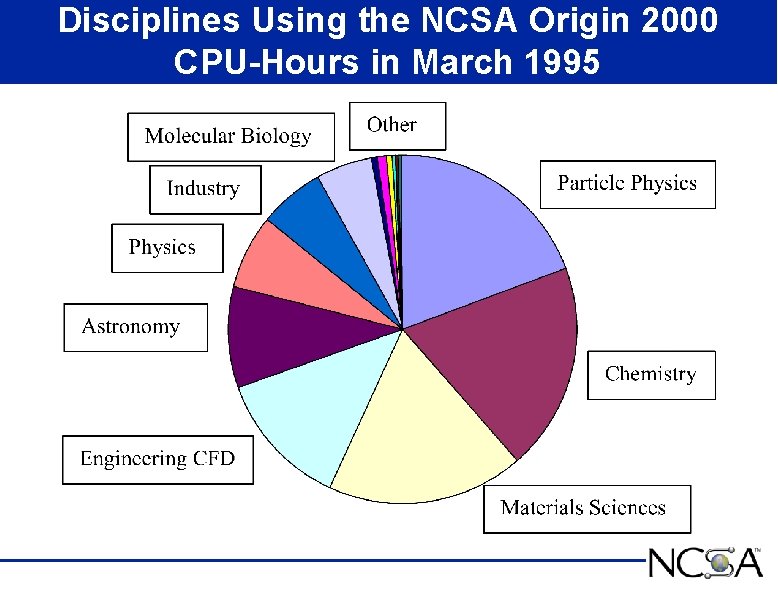

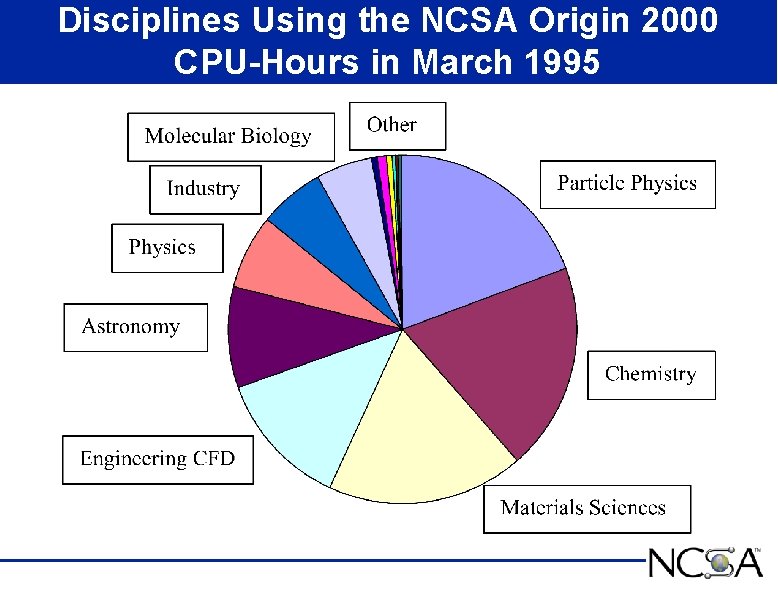

Disciplines Using the NCSA Origin 2000 CPU-Hours in March 1995

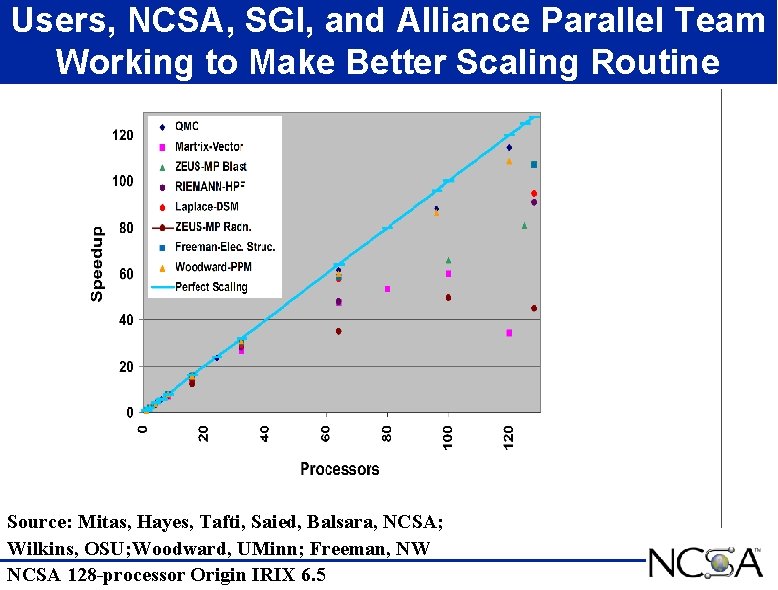

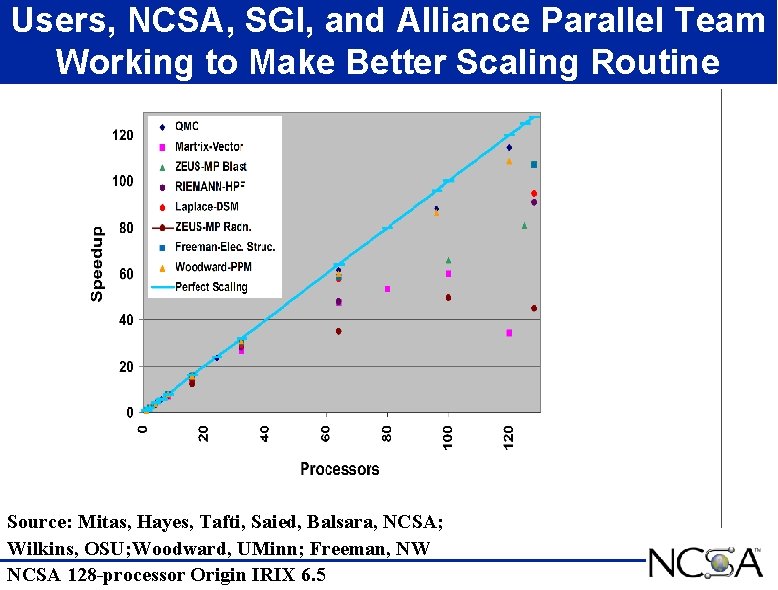

Users, NCSA, SGI, and Alliance Parallel Team Working to Make Better Scaling Routine

Source: Mitas, Hayes, Tafti, Saied, Balsara, NCSA; Wilkins, OSU; Woodward, UMinn; Freeman, NW

NCSA 128-processor Origin, IRIX 6.5

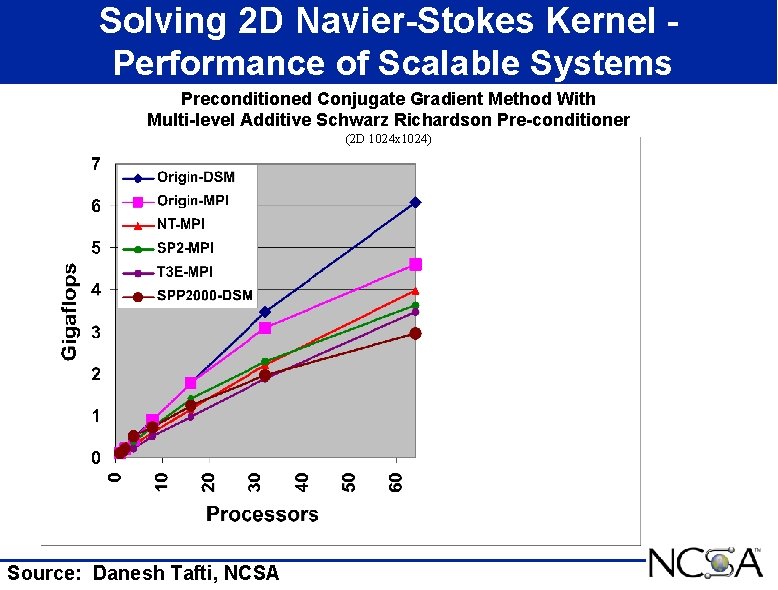

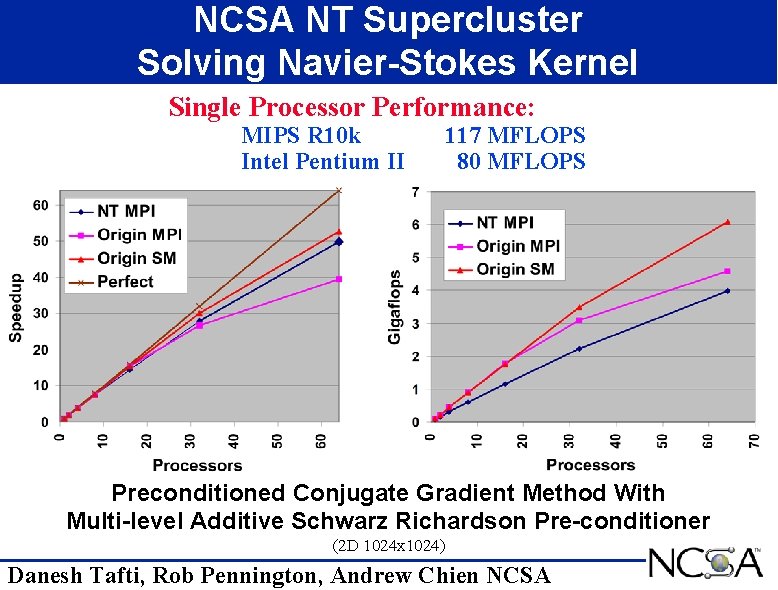

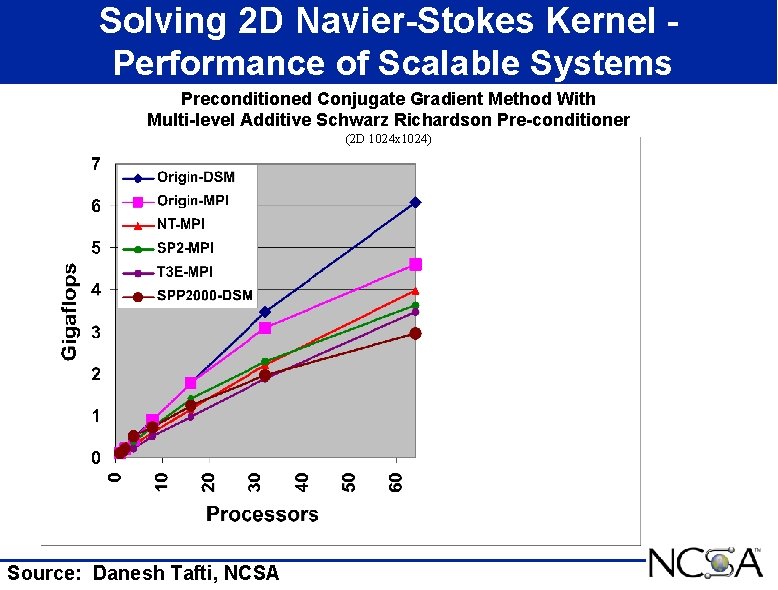

Solving 2D Navier-Stokes Kernel: Performance of Scalable Systems

Preconditioned Conjugate Gradient Method With Multi-level Additive Schwarz Richardson Pre-conditioner (2D 1024 x 1024)

Source: Danesh Tafti, NCSA
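For readers unfamiliar with the kernel being benchmarked, here is a minimal sketch of a preconditioned conjugate gradient iteration. It is not the NCSA code: it swaps in a simple Jacobi (diagonal) preconditioner in place of the multi-level additive Schwarz Richardson preconditioner named on the slide, and it solves a small 1D Poisson system rather than the 2D 1024 x 1024 problem. All function names are illustrative.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))

def apply_poisson_1d(v):
    """Matrix-free product with the tridiagonal [-1, 2, -1] Poisson matrix."""
    n = len(v)
    out = []
    for i in range(n):
        left = v[i - 1] if i > 0 else 0.0
        right = v[i + 1] if i < n - 1 else 0.0
        out.append(2.0 * v[i] - left - right)
    return out

def jacobi(r):
    """Stand-in preconditioner: divide by the diagonal of the matrix (2)."""
    return [ri / 2.0 for ri in r]

def pcg(apply_A, b, apply_Minv, tol=1e-10, max_iter=1000):
    """Preconditioned conjugate gradient for a symmetric positive definite A."""
    x = [0.0] * len(b)
    r = list(b)                      # residual b - A x for the zero initial guess
    z = apply_Minv(r)
    p = list(z)
    rz = dot(r, z)
    if rz == 0.0:                    # b is zero, so x = 0 already solves it
        return x, 0
    b_norm = dot(b, b) ** 0.5
    for iteration in range(1, max_iter + 1):
        Ap = apply_A(p)
        alpha = rz / dot(p, Ap)      # step length along the search direction
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        if dot(r, r) ** 0.5 <= tol * b_norm:
            return x, iteration
        z = apply_Minv(r)
        rz_new = dot(r, z)
        beta = rz_new / rz           # keeps the new direction A-conjugate
        p = [zi + beta * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x, max_iter

n = 50
b = [1.0] * n
x, iterations = pcg(apply_poisson_1d, b, jacobi)
residual = [bi - ai for bi, ai in zip(b, apply_poisson_1d(x))]
print(iterations, max(abs(ri) for ri in residual))
```

The per-iteration cost is one matrix-vector product, one preconditioner application, and a few vector updates and dot products, which is why this kernel is a useful probe of both floating-point rate and memory or communication performance on scalable systems.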

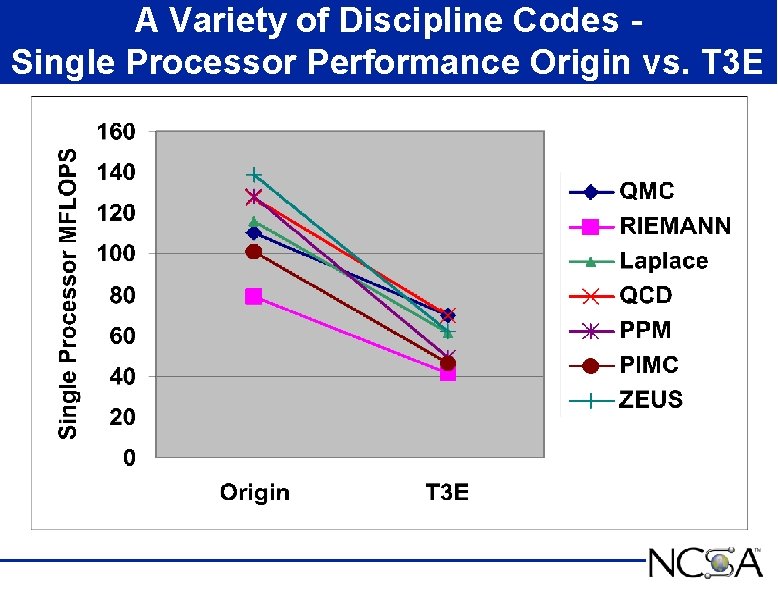

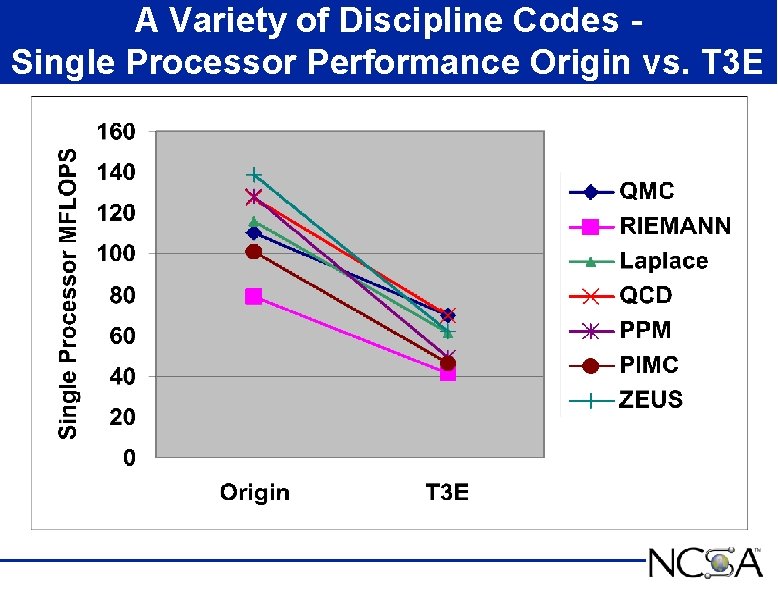

A Variety of Discipline Codes: Single Processor Performance, Origin vs. T3E

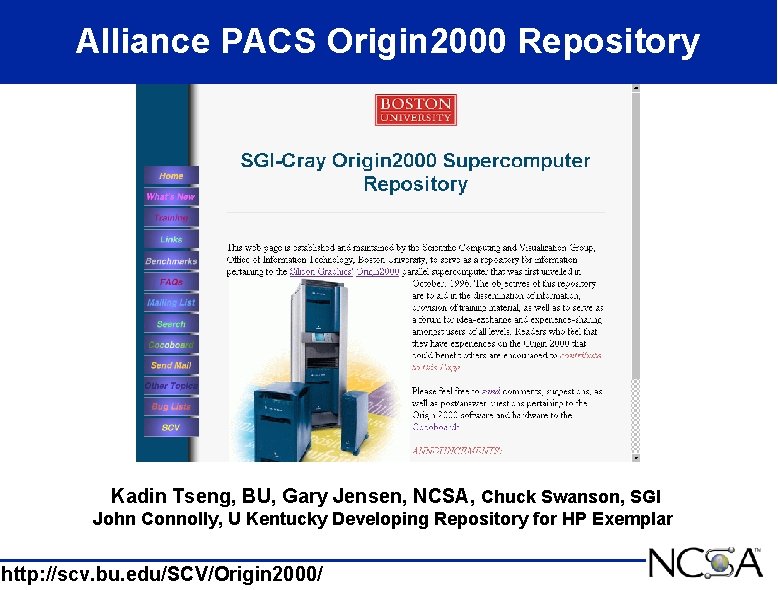

Alliance PACS Origin 2000 Repository

Kadin Tseng, BU; Gary Jensen, NCSA; Chuck Swanson, SGI

John Connolly, U Kentucky: Developing Repository for HP Exemplar

http://scv.bu.edu/SCV/Origin2000/

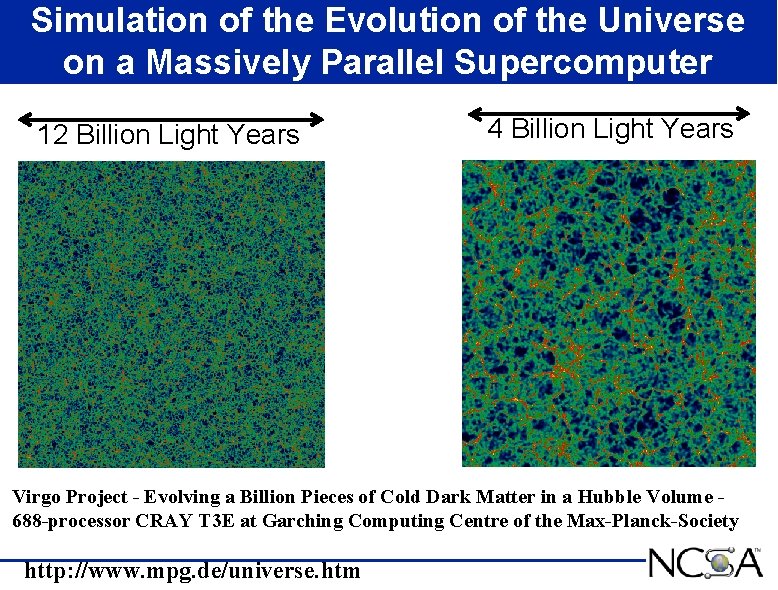

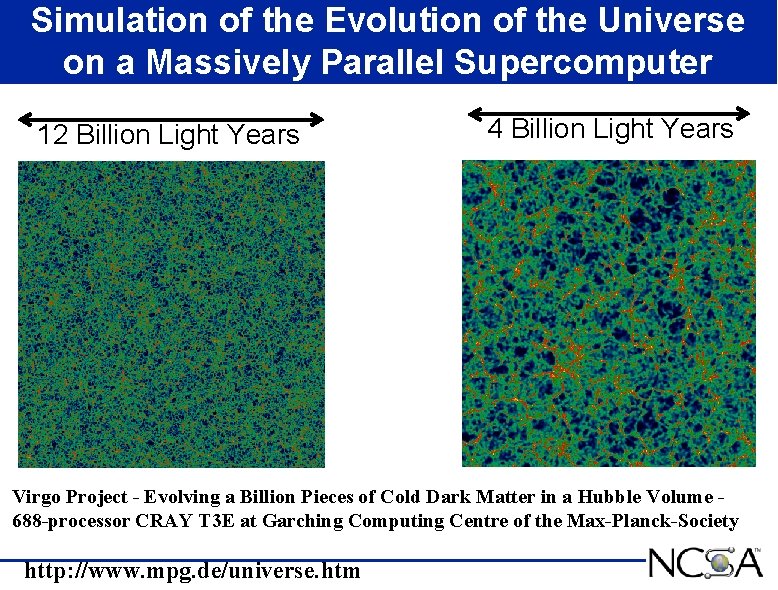

Simulation of the Evolution of the Universe on a Massively Parallel Supercomputer

[Image labels: 12 Billion Light Years; 4 Billion Light Years]

Virgo Project: Evolving a Billion Pieces of Cold Dark Matter in a Hubble Volume. 688-processor CRAY T3E at Garching Computing Centre of the Max-Planck-Society

http://www.mpg.de/universe.htm

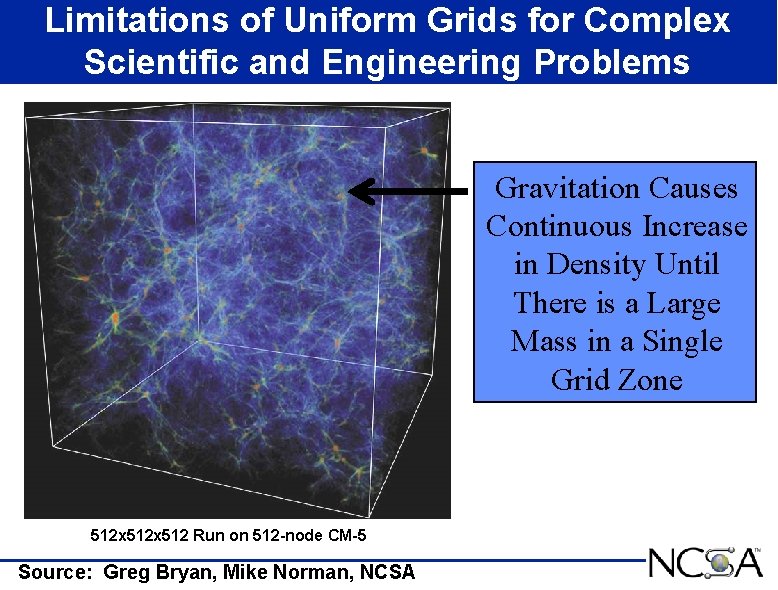

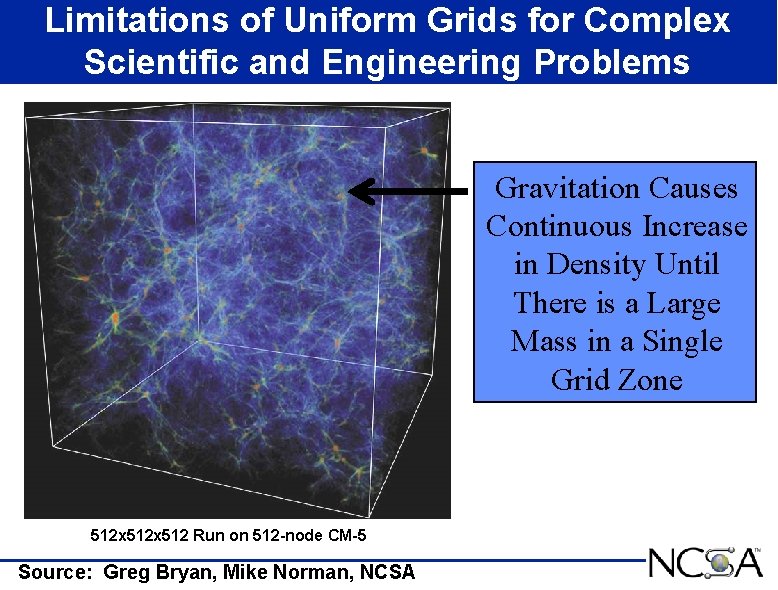

Limitations of Uniform Grids for Complex Scientific and Engineering Problems

Gravitation Causes Continuous Increase in Density Until There is a Large Mass in a Single Grid Zone

512 x 512 Run on 512-node CM-5

Source: Greg Bryan, Mike Norman, NCSA

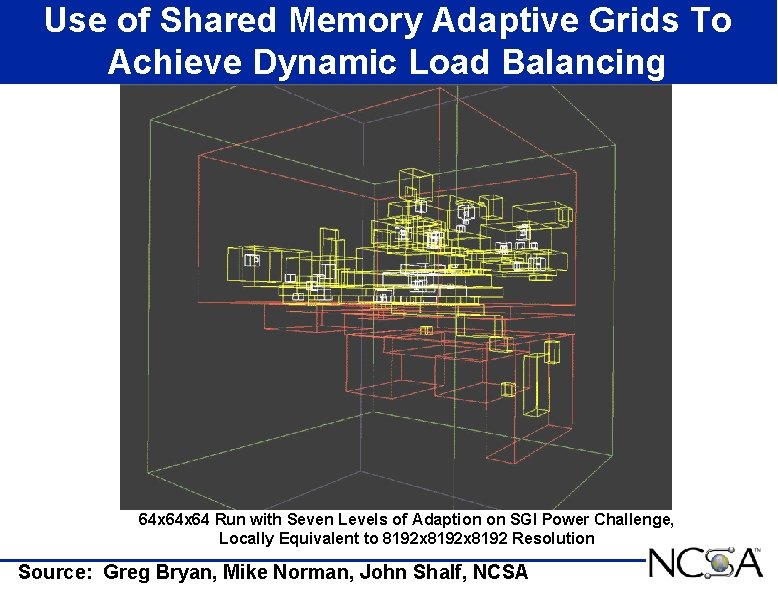

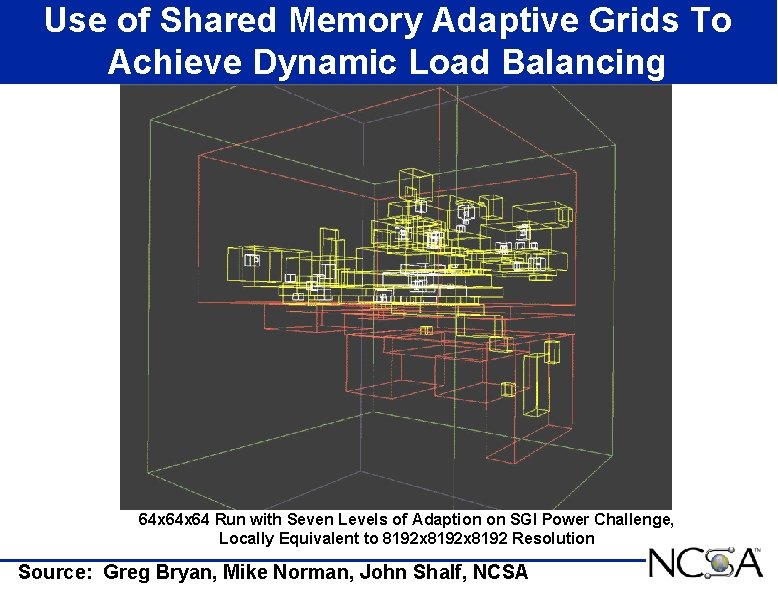

Use of Shared Memory Adaptive Grids To Achieve Dynamic Load Balancing

64 x 64 Run with Seven Levels of Adaption on SGI Power Challenge, Locally Equivalent to 8192 x 8192 Resolution

Source: Greg Bryan, Mike Norman, John Shalf, NCSA
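The "locally equivalent to 8192 x 8192" figure can be reproduced with a short calculation. The sketch below assumes each level of adaption refines the grid by a factor of 2 per dimension, which is consistent with the slide's numbers (64 x 2^7 = 8192) but is not stated explicitly; the function name is illustrative.

```python
def effective_resolution(base_cells, levels, refinement_factor=2):
    """Cells per dimension a uniform grid would need to match the finest level."""
    return base_cells * refinement_factor ** levels

base = 64      # cells per dimension on the coarsest grid (from the slide)
levels = 7     # levels of adaption (from the slide)

finest = effective_resolution(base, levels)
uniform_cells = finest ** 2    # cells in an equivalent uniform 2D grid
root_cells = base ** 2         # cells in the 64 x 64 root grid

print(f"Effective resolution: {finest} x {finest}")
print(f"Uniform grid cells:   {uniform_cells}")
print(f"Root grid cells:      {root_cells}")
print(f"Ratio:                {uniform_cells // root_cells}")
```

A uniform grid at the finest resolution would need about 67 million cells, compared with 4096 in the root grid. The adaptive run only pays for fine cells where the density actually requires them, which is also why the work per region changes over time and dynamic load balancing matters.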

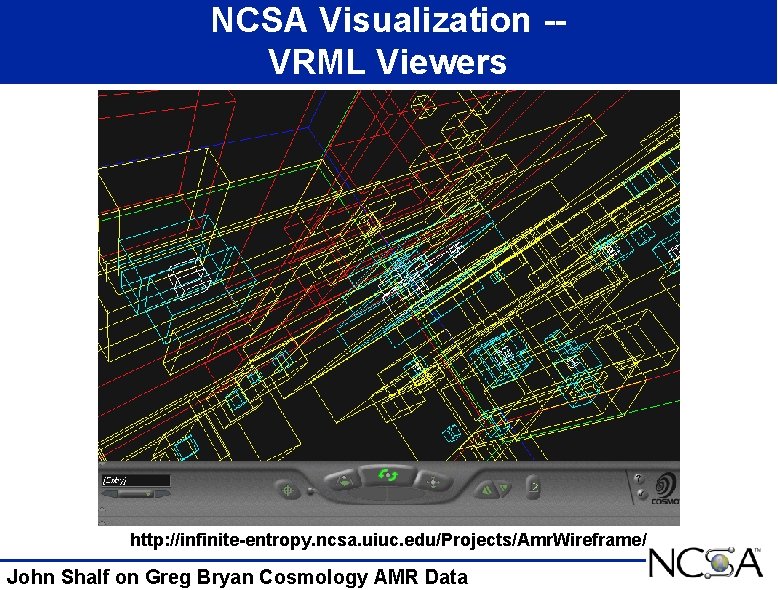

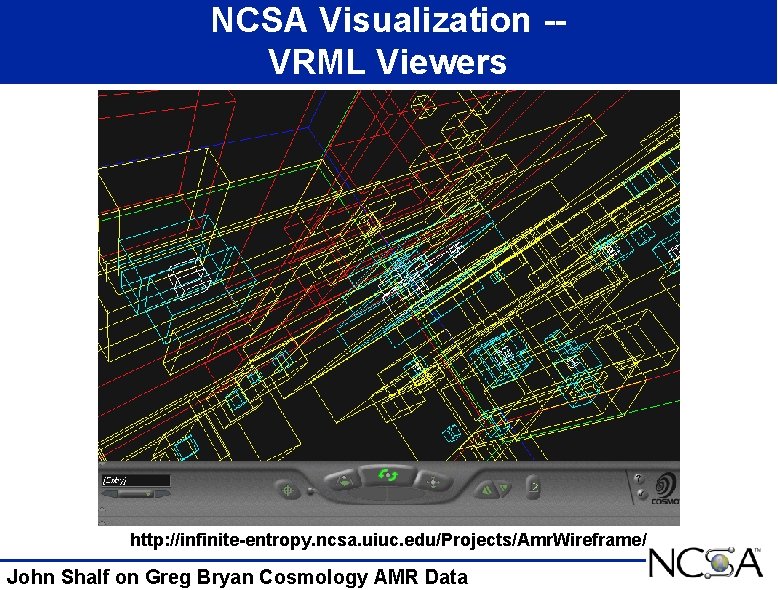

NCSA Visualization: VRML Viewers

John Shalf on Greg Bryan Cosmology AMR Data

http://infinite-entropy.ncsa.uiuc.edu/Projects/AmrWireframe/

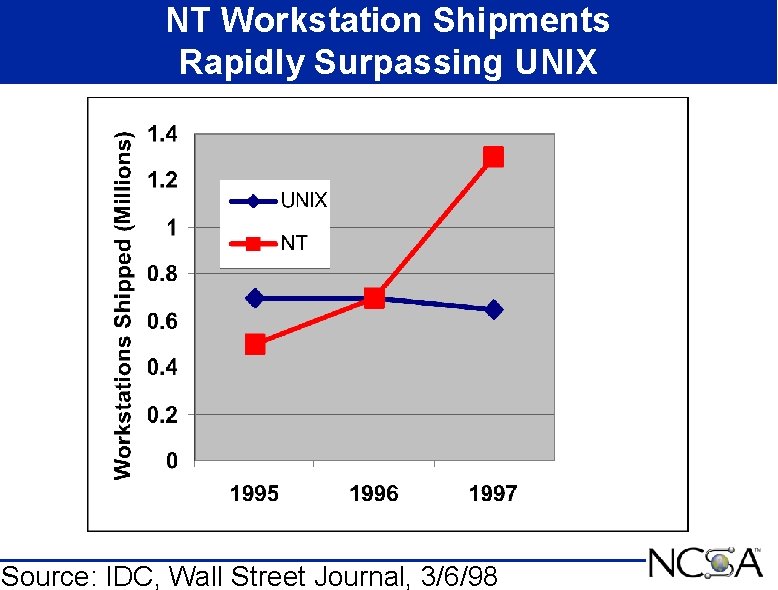

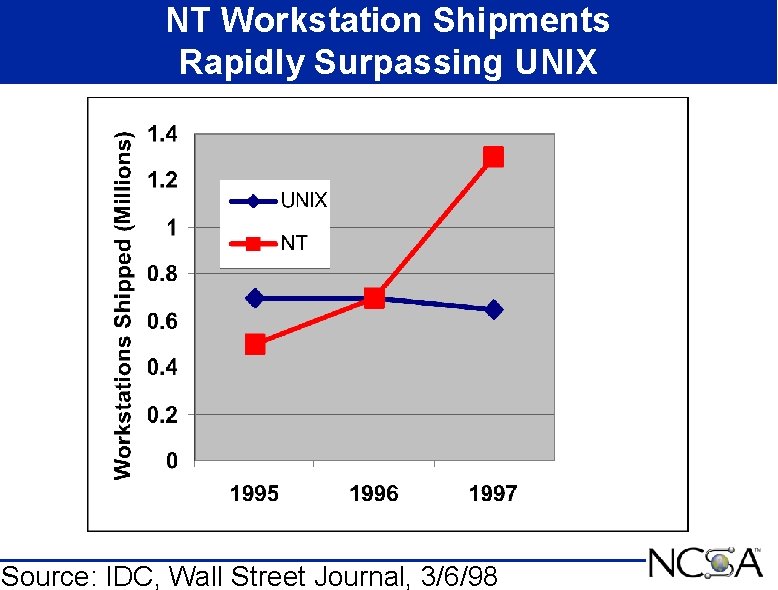

NT Workstation Shipments Rapidly Surpassing UNIX

Source: IDC, Wall Street Journal, 3/6/98

Current Alliance LES NT Cluster Testbed: Compaq Computer and Hewlett-Packard

- Schedule of NT Supercluster Goals
  - 1998 Deploy First Production Clusters
  - Scientific and Engineering Tuned Cluster
  - Andrew Chien, Alliance Parallel Computing Team
  - Rob Pennington, NCSA C&C
  - Currently 256 processors of HP and Compaq Pentium II SMPs
  - Data Intensive Tuned Cluster
  - 1999 Enlarge to 512 Processors in Cluster
  - 2000 Move to Merced
  - 2002-2005 Achieve Teraflop Performance
- UNIX/RISC & NT/Intel will Co-exist for 5 Years
  - 1998-2000 Move Applications to NT/Intel
  - 2000-2005 Convergence toward NT/Merced

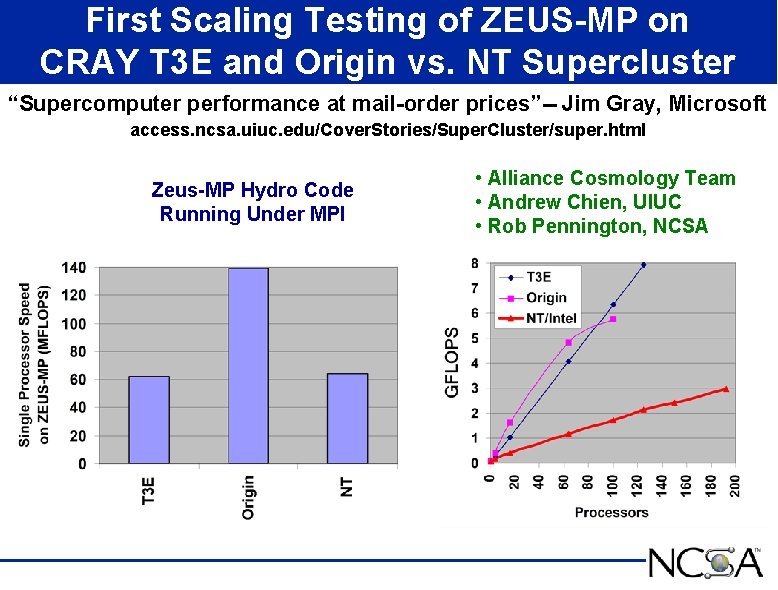

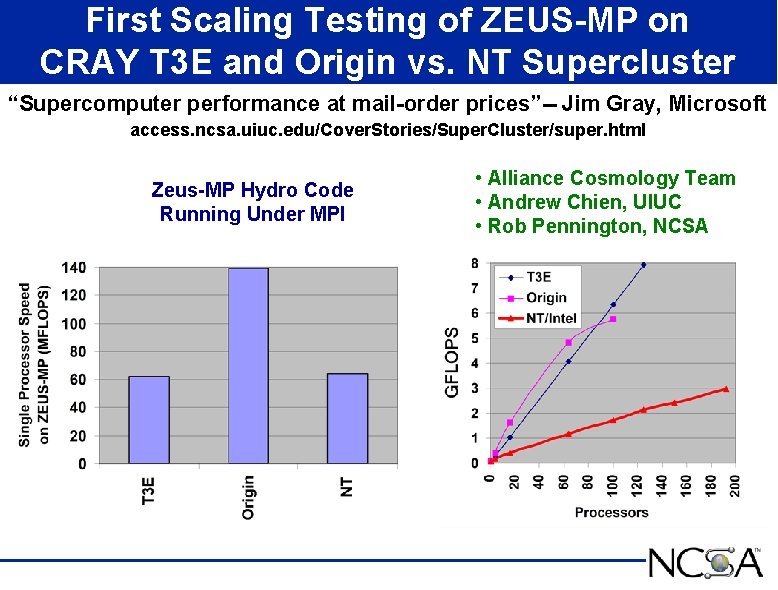

First Scaling Testing of ZEUS-MP on CRAY T3E and Origin vs. NT Supercluster

“Supercomputer performance at mail-order prices” (Jim Gray, Microsoft)

access.ncsa.uiuc.edu/CoverStories/SuperCluster/super.html

Zeus-MP Hydro Code Running Under MPI

- Alliance Cosmology Team
- Andrew Chien, UIUC
- Rob Pennington, NCSA

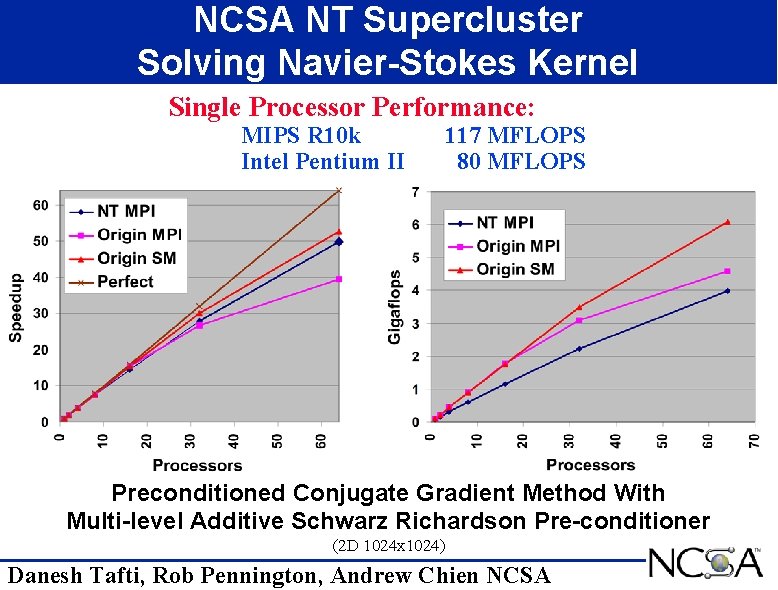

NCSA NT Supercluster Solving Navier-Stokes Kernel

Single Processor Performance:

- MIPS R10k: 117 MFLOPS
- Intel Pentium II: 80 MFLOPS

Preconditioned Conjugate Gradient Method With Multi-level Additive Schwarz Richardson Pre-conditioner (2D 1024 x 1024)

Danesh Tafti, Rob Pennington, Andrew Chien, NCSA

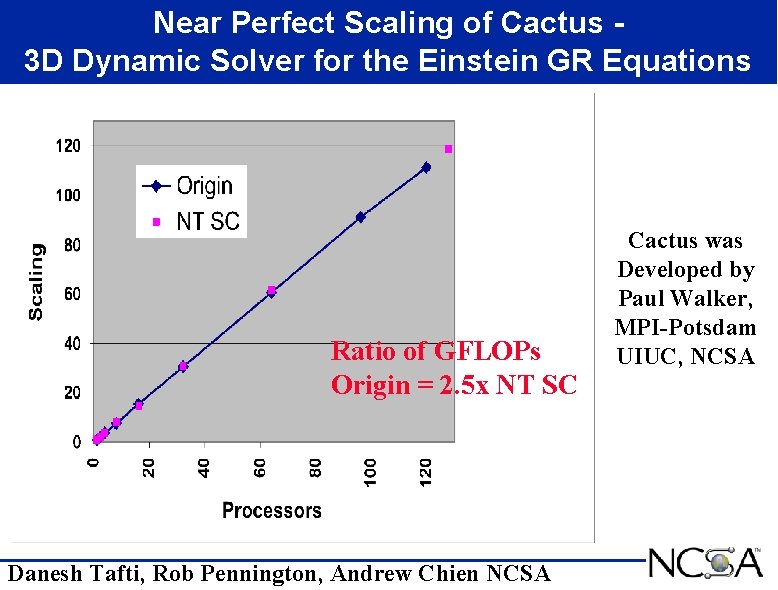

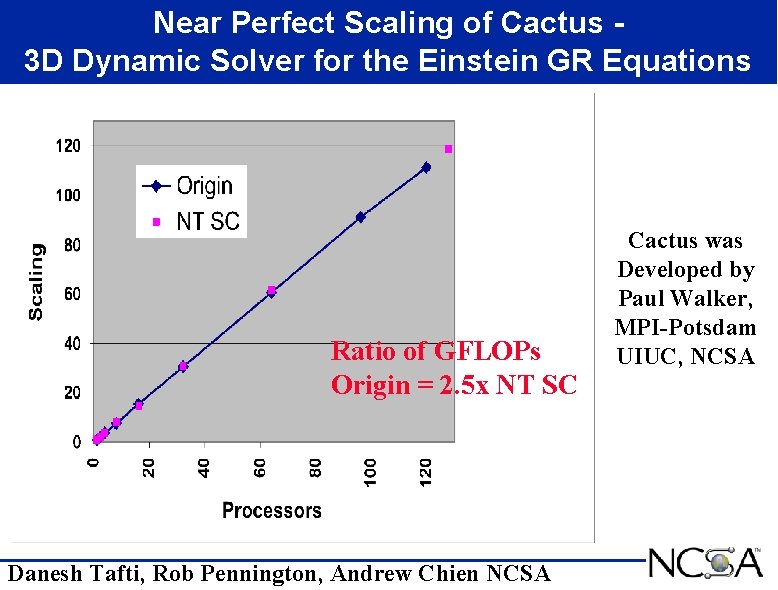

Near Perfect Scaling of Cactus 3D Dynamic Solver for the Einstein GR Equations

Ratio of GFLOPs: Origin = 2.5x NT SC

Danesh Tafti, Rob Pennington, Andrew Chien, NCSA

Cactus was Developed by Paul Walker, MPI-Potsdam, UIUC, NCSA

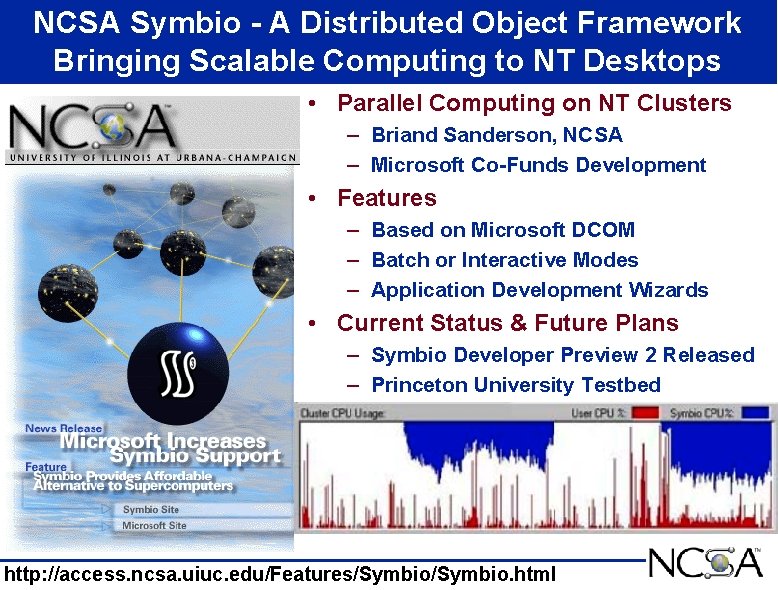

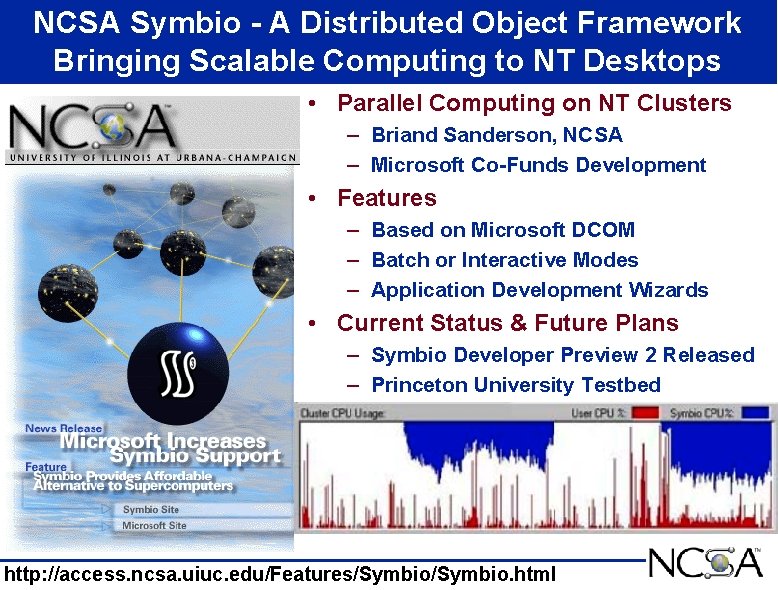

NCSA Symbio: A Distributed Object Framework Bringing Scalable Computing to NT Desktops

- Parallel Computing on NT Clusters
  - Briand Sanderson, NCSA
  - Microsoft Co-Funds Development
- Features
  - Based on Microsoft DCOM
  - Batch or Interactive Modes
  - Application Development Wizards
- Current Status & Future Plans
  - Symbio Developer Preview 2 Released
  - Princeton University Testbed

http://access.ncsa.uiuc.edu/Features/Symbio.html

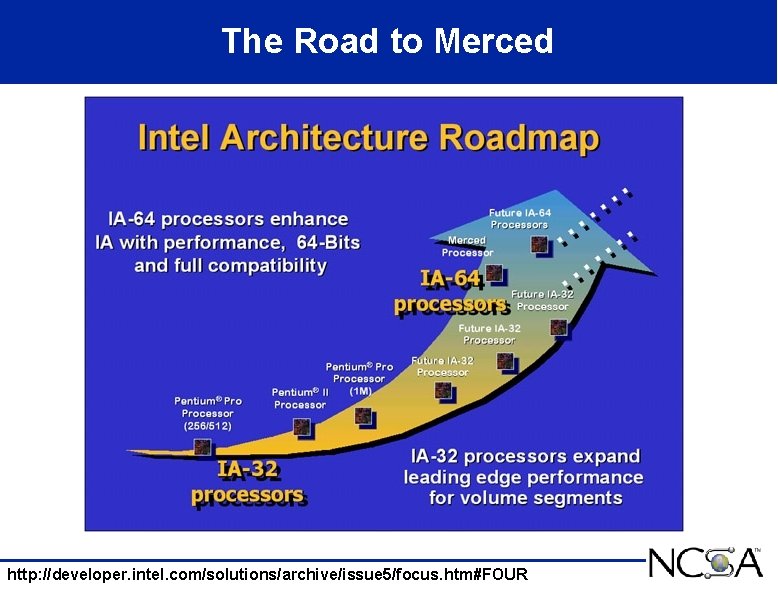

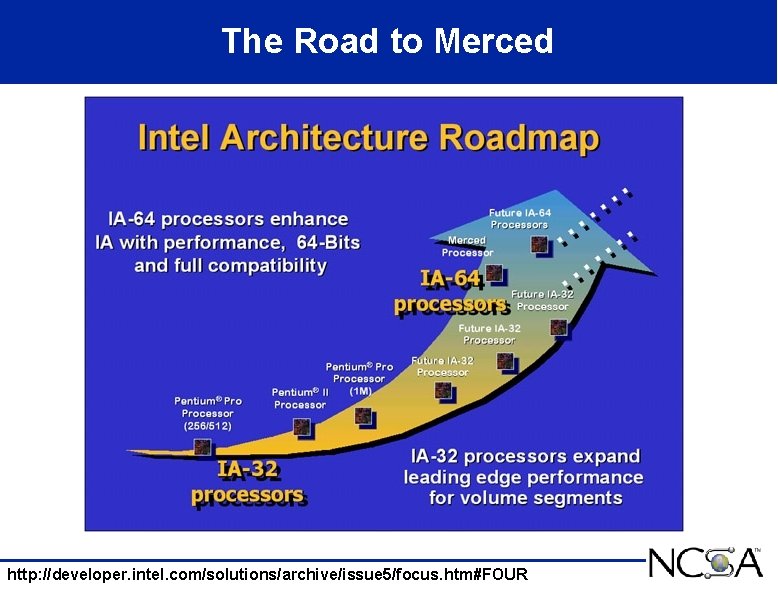

The Road to Merced

http://developer.intel.com/solutions/archive/issue5/focus.htm#FOUR

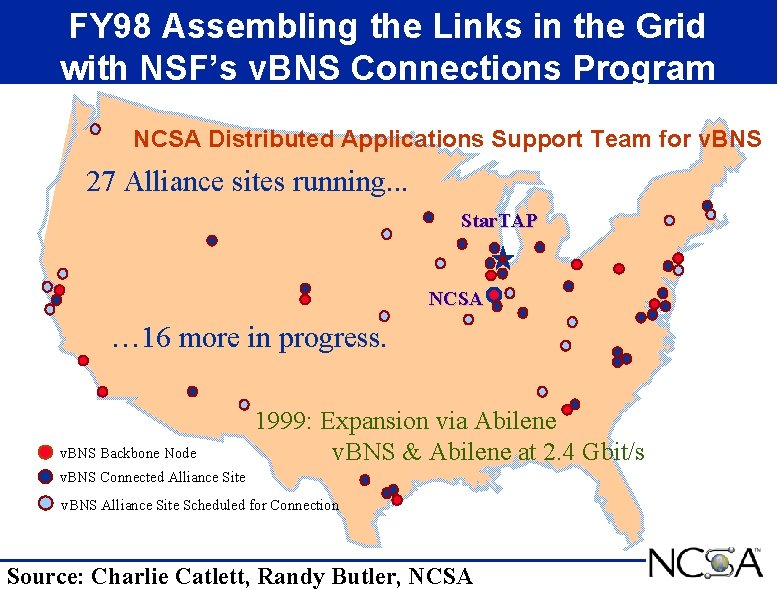

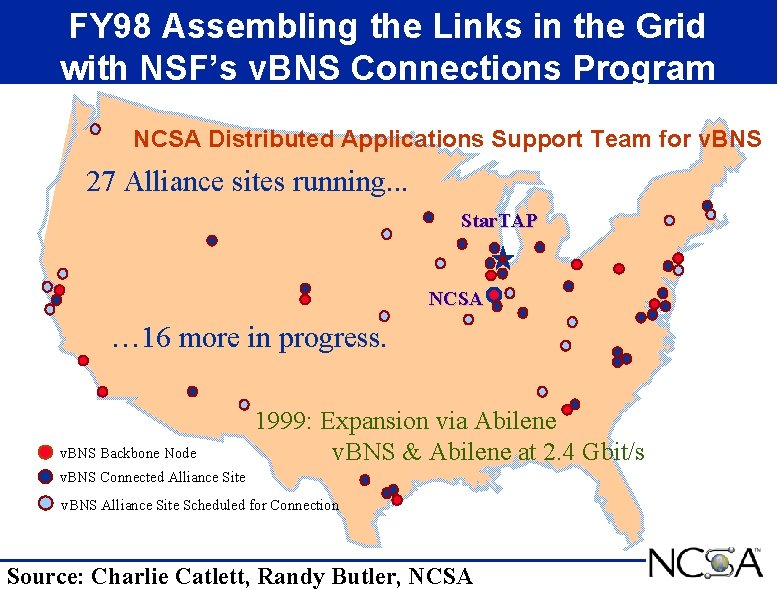

FY 98 Assembling the Links in the Grid with NSF’s vBNS Connections Program

NCSA Distributed Applications Support Team for vBNS

- 27 Alliance sites running … 16 more in progress.
- 1999: Expansion via Abilene
- vBNS & Abilene at 2.4 Gbit/s

[Map labels: StarTAP; NCSA; vBNS Backbone Node; vBNS Connected Alliance Site; vBNS Alliance Site Scheduled for Connection]

Source: Charlie Catlett, Randy Butler, NCSA

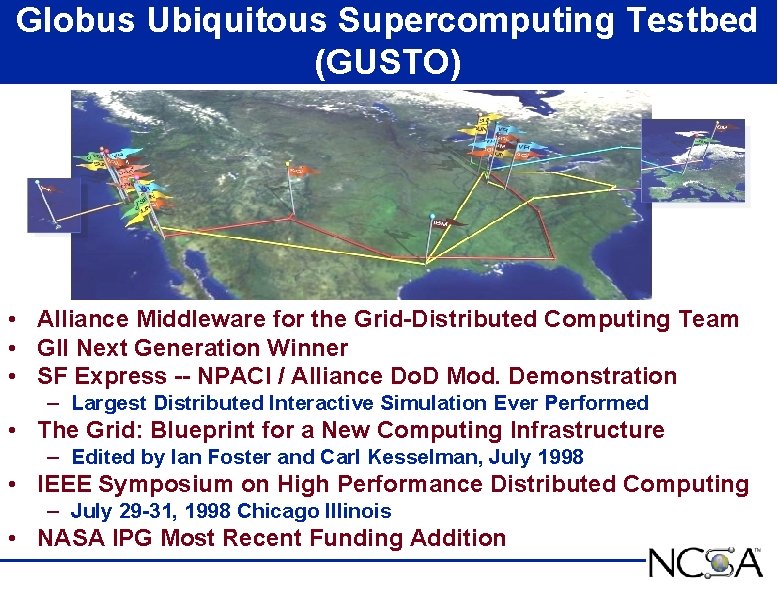

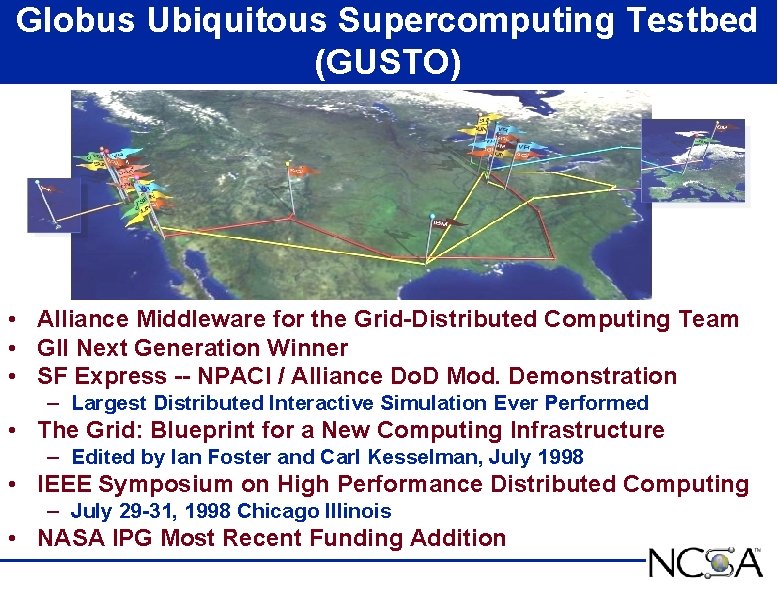

Globus Ubiquitous Supercomputing Testbed (GUSTO)

- Alliance Middleware for the Grid-Distributed Computing Team
- GII Next Generation Winner
- SF Express: NPACI / Alliance DoD Mod. Demonstration
  - Largest Distributed Interactive Simulation Ever Performed
- The Grid: Blueprint for a New Computing Infrastructure
  - Edited by Ian Foster and Carl Kesselman, July 1998
- IEEE Symposium on High Performance Distributed Computing
  - July 29-31, 1998, Chicago, Illinois
- NASA IPG Most Recent Funding Addition

Alliance National Technology Grid Workshop and Training Facilities

Powered by Silicon Graphics, Linked by the NSF vBNS

Jason Leigh and Tom DeFanti, EVL; Rick Stevens, ANL

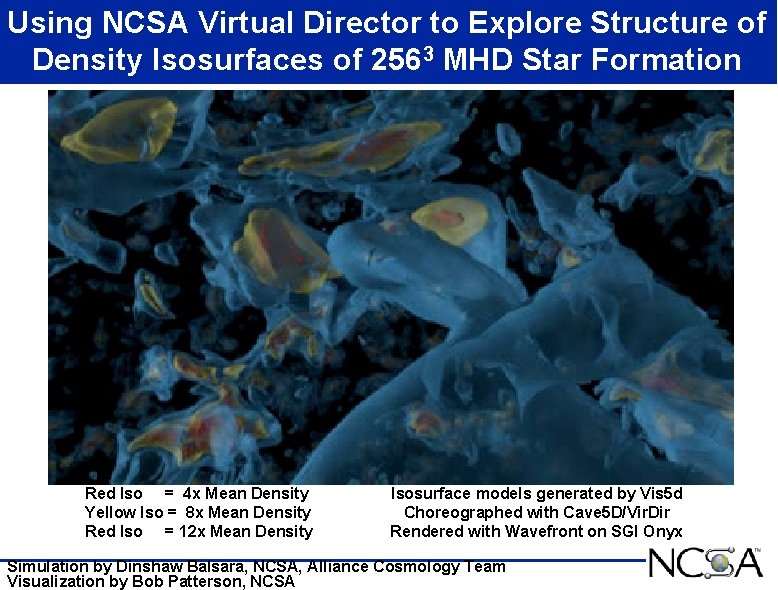

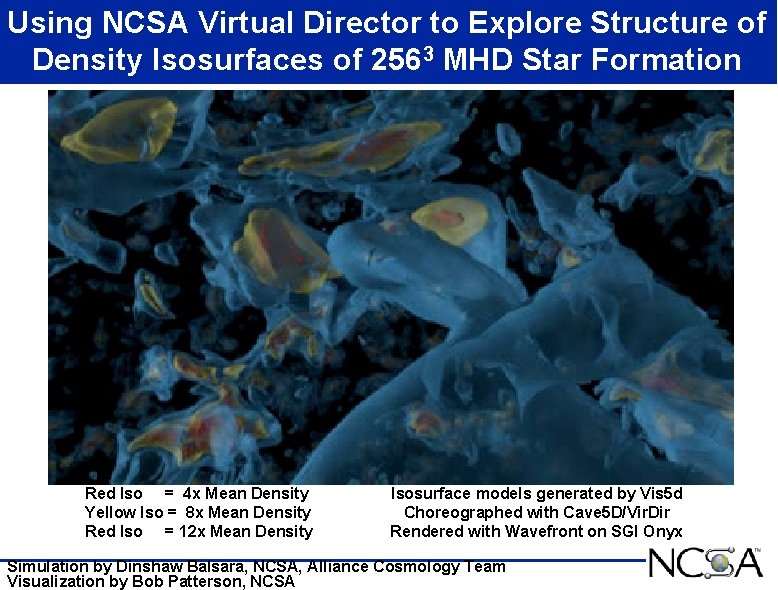

Using NCSA Virtual Director to Explore Structure of Density Isosurfaces of 256^3 MHD Star Formation

- Red Iso = 4x Mean Density
- Yellow Iso = 8x Mean Density
- Red Iso = 12x Mean Density

Isosurface models generated by Vis5D. Choreographed with Cave5D/VirDir. Rendered with Wavefront on SGI Onyx.

Simulation by Dinshaw Balsara, NCSA, Alliance Cosmology Team. Visualization by Bob Patterson, NCSA.

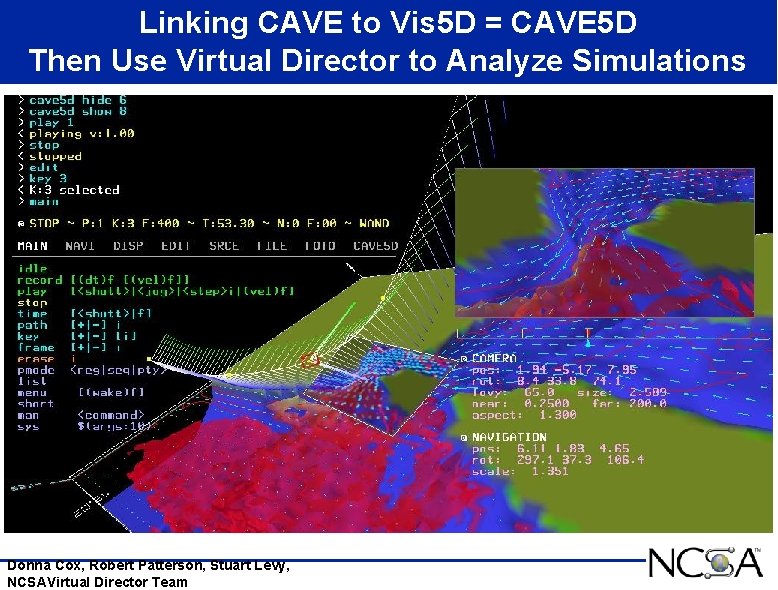

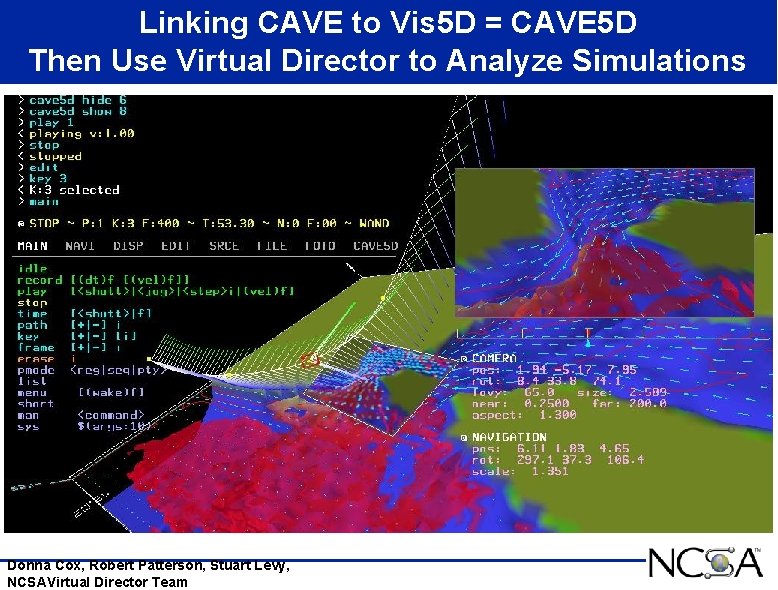

Linking CAVE to Vis5D = CAVE5D, Then Use Virtual Director to Analyze Simulations

Donna Cox, Robert Patterson, Stuart Levy, NCSA Virtual Director Team

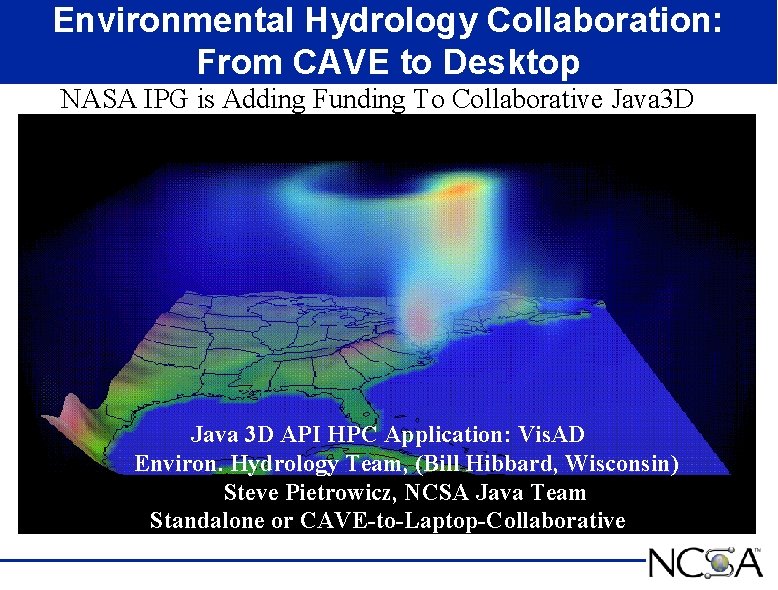

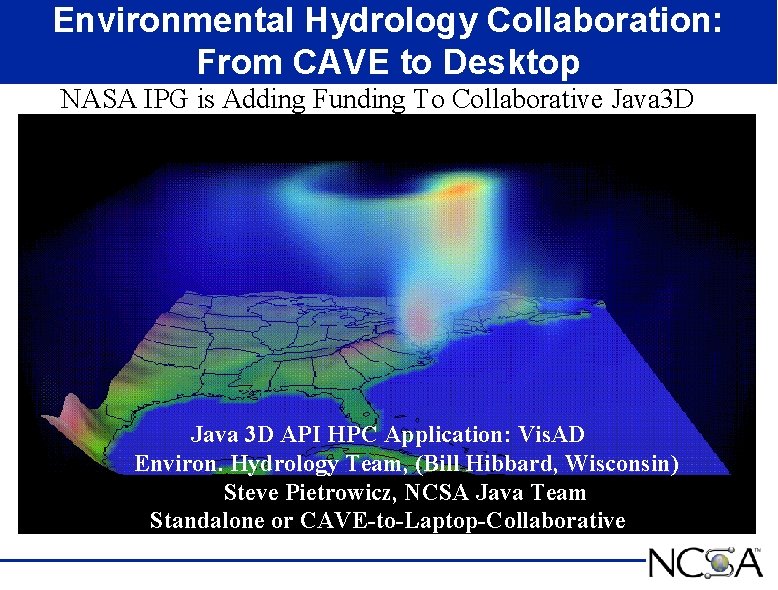

Environmental Hydrology Collaboration: From CAVE to Desktop

NASA IPG is Adding Funding To Collaborative Java3D API HPC Application: VisAD

Environ. Hydrology Team (Bill Hibbard, Wisconsin); Steve Pietrowicz, NCSA Java Team

Standalone or CAVE-to-Laptop-Collaborative

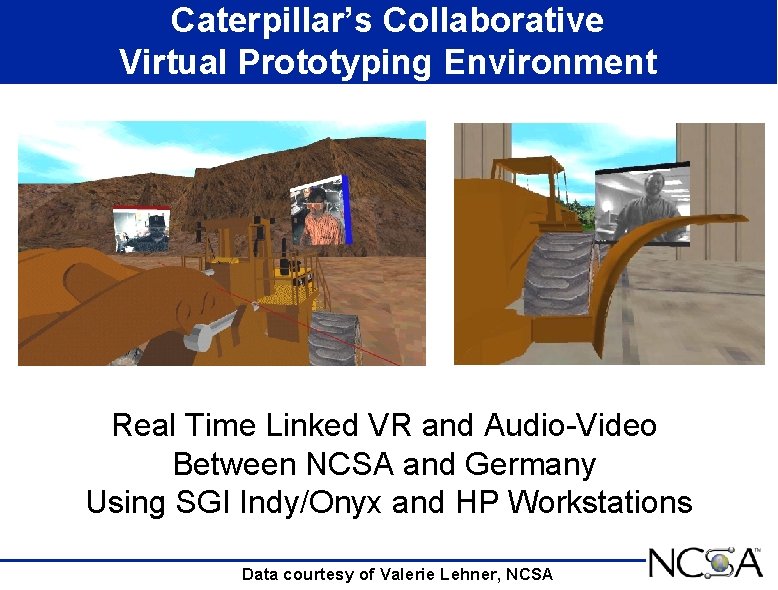

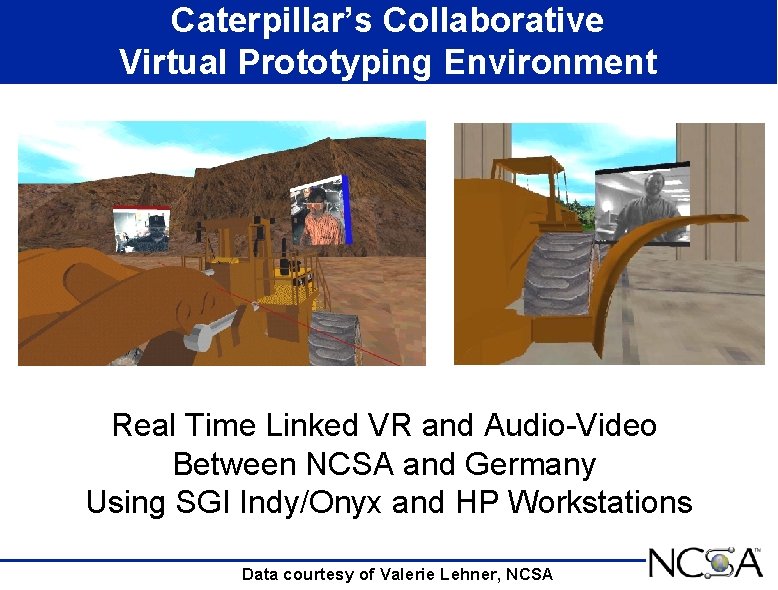

Caterpillar’s Collaborative Virtual Prototyping Environment

Real Time Linked VR and Audio-Video Between NCSA and Germany Using SGI Indy/Onyx and HP Workstations

Data courtesy of Valerie Lehner, NCSA